Dell HPC Compute Cluster: An overview for administrators

- Slides: 120

Dell HPC Compute Cluster: An overview for administrators and researchers © 2015 Alces Software Ltd 1

HPC Cluster Overview: An overview for administrators and researchers

Beowulf Architecture

- Loosely coupled Linux supercomputer
- Efficient for a number of use cases:
  - Embarrassingly parallel / single-threaded jobs
  - SMP / multi-threaded, single-node jobs
  - MPI / parallel, multi-node jobs
- Very cost-effective HPC solution:
  - Commodity x86_64 server hardware and storage
  - Linux-based operating system
  - Specialist high-performance interconnect and software

Beowulf Architecture

- Scalable architecture
  - Dedicated management and storage nodes
  - High-performance, low-latency Omni-Path interconnect
  - Multiple compute nodes for different jobs:
    - Standard-memory compute nodes (4 GB/core)
    - High-memory compute nodes (16 GB/core)
  - Multiple storage tiers for user data:
    - 1 TB local scratch disk on every node
    - 680 TB archive storage

User facilities

- Modern 64-bit Linux operating system
  - Compatible with a wide range of software
- Pre-installed with tuned HPC applications
  - Compiled for the latest CPU architecture
- Comprehensive software development environment
  - C, C++, Fortran, Java, Ruby, Python, Perl, R
  - Modules environment management

User facilities

- High-throughput job scheduler for increased utilisation
  - Resource request limits to protect running jobs
  - Fair-share for cluster load-balancing
  - Resource reservation with job backfilling
- Multiple user interfaces to suit all abilities
  - Command-line access
  - Graphical desktop access

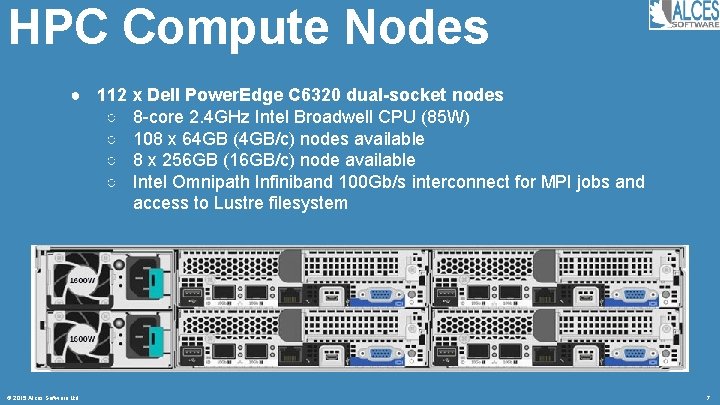

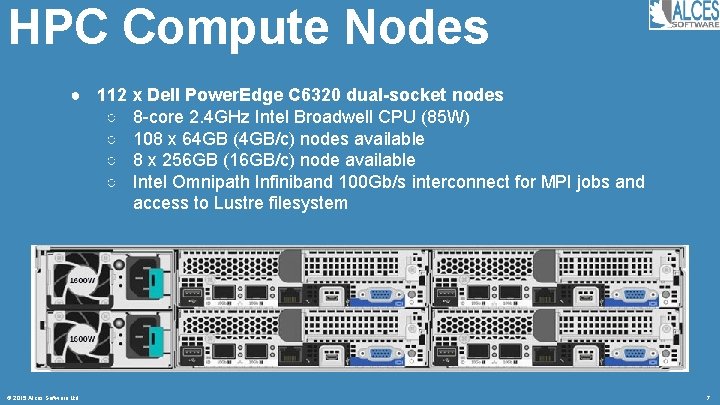

HPC Compute Nodes

- 112 x Dell PowerEdge C6320 dual-socket nodes
  - 8-core 2.4 GHz Intel Broadwell CPUs (85 W)
  - 108 x 64 GB (4 GB/core) nodes available
  - 8 x 256 GB (16 GB/core) nodes available
  - Intel Omni-Path InfiniBand 100 Gb/s interconnect for MPI jobs and access to the Lustre filesystem

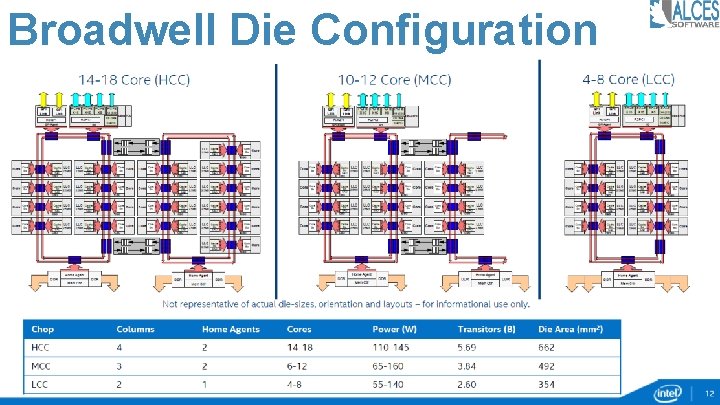

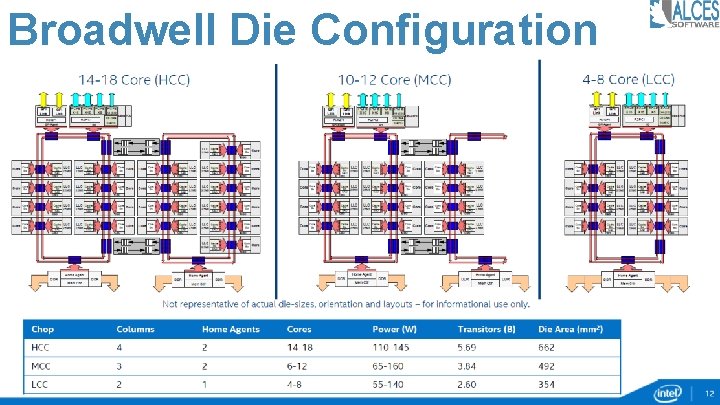

Broadwell Die Configuration

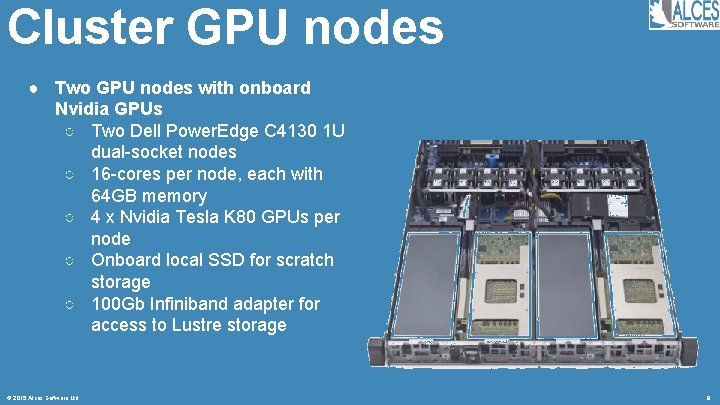

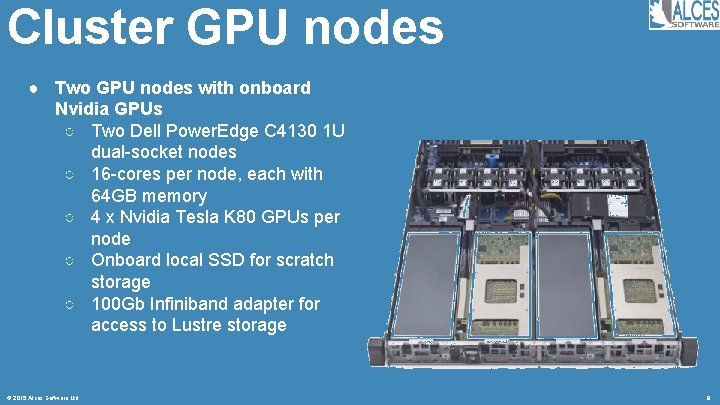

Cluster GPU nodes

- Two GPU nodes with onboard NVIDIA GPUs
  - Two Dell PowerEdge C4130 1U dual-socket nodes
  - 16 cores and 64 GB memory per node
  - 4 x NVIDIA Tesla K80 GPUs per node
  - Onboard local SSD for scratch storage
  - 100 Gb InfiniBand adapter for access to Lustre storage

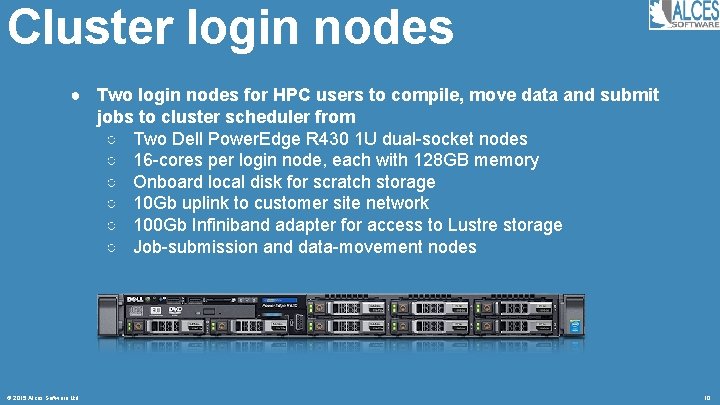

Cluster login nodes

- Two login nodes from which HPC users compile code, move data and submit jobs to the cluster scheduler
  - Two Dell PowerEdge R430 1U dual-socket nodes
  - 16 cores and 128 GB memory per login node
  - Onboard local disk for scratch storage
  - 10 Gb uplink to the customer site network
  - 100 Gb InfiniBand adapter for access to Lustre storage
  - Job-submission and data-movement nodes

Lustre filesystem

- 680 TB Lustre filesystem using Dell PowerVault arrays with high-availability OSS pairs
  - Four Dell PowerVault 4U disk arrays, each with 60 x 4 TB disks
  - RAID 6-protected data area, with RAID 10 metadata disks
  - Four Dell PowerEdge servers configured as Lustre MDS and OSS high-availability pairs
- Capable of 4.7 GB/s sustained sequential write performance

Omni-Path InfiniBand fabric

- Five 48-port 100 Gb InfiniBand edge switches with 32 hosts each
- Four 48-port 100 Gb InfiniBand core switches
- 1,600 Gb/s bandwidth between each edge switch and the core
- One single connection provides:
  - 1.19 µs latency (measured) for small messages
  - 11,700 MB/s throughput (measured) for large messages
  - 1,214 MB/s storage throughput for a single-threaded application
  - 4,715 MB/s storage throughput for a multi-threaded, single-node application
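The figures on this slide can be combined into two quick back-of-envelope checks: how heavily each edge switch is oversubscribed, and roughly how long a message of a given size takes on one connection. This is a sketch, not a fabric model: it assumes "1,600 Gbps between each edge and the core" means per edge switch, that 1 MB = 10^6 bytes, and it uses the simple latency + size/bandwidth approximation for transfer time.

```python
# Back-of-envelope figures for the Omni-Path fabric described on the slide.
# Switch/port counts and measured values come from the slide; the transfer-time
# formula is the simple latency + size/bandwidth model, an approximation only.

EDGE_PORTS = 48            # ports per edge switch
HOSTS_PER_EDGE = 32        # host-facing ports in use per edge switch
LINK_GBPS = 100            # line rate of one port, Gb/s
UPLINK_GBPS = 1600         # stated edge-to-core bandwidth per edge switch, Gb/s

LATENCY_S = 1.19e-6        # measured small-message latency, seconds
BANDWIDTH_BPS = 11_700e6   # measured large-message throughput, assuming 1 MB = 10**6 bytes


def uplink_ports() -> int:
    """Number of 100 Gb/s ports needed to carry the stated uplink bandwidth."""
    return UPLINK_GBPS // LINK_GBPS


def oversubscription() -> float:
    """Host-facing bandwidth divided by core-facing bandwidth for one edge switch."""
    return (HOSTS_PER_EDGE * LINK_GBPS) / UPLINK_GBPS


def transfer_time(nbytes: int) -> float:
    """Estimated one-way time for a single message: fixed latency + size / bandwidth."""
    return LATENCY_S + nbytes / BANDWIDTH_BPS


if __name__ == "__main__":
    print(f"Uplink ports per edge switch: {uplink_ports()}")
    print(f"Ports in use: {HOSTS_PER_EDGE + uplink_ports()} of {EDGE_PORTS}")
    print(f"Edge oversubscription: {oversubscription():.1f}:1")
    for size in (8, 64 * 1024, 64 * 1024 * 1024):
        print(f"{size:>10} bytes -> {transfer_time(size) * 1e6:12.1f} us")
```

Under these assumptions, 32 host ports plus 16 uplink ports account for all 48 ports on an edge switch, giving 2:1 oversubscription. That ratio only matters when many hosts on the same edge switch talk to nodes elsewhere at full line rate at once; small messages are dominated by latency, large ones by bandwidth.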

Dell Ethernet Fabric

- Each rack of 48 nodes includes:
  - Dell PowerConnect N2048 1U 48-port Ethernet switches
  - Dual-redundant, LACP-configured 10 Gb bonds to the Ethernet core
  - Dell PowerConnect 2848 management switches
- Ethernet fabric used for:
  - Operating system software deployment
  - Interactive traffic (SSH, qrsh)
  - Data movement to the “/users” filesystem
  - Monitoring traffic (Ganglia, Nagios)
  - Management traffic (via a separate out-of-band interface)

HPC Software

- HPC cluster managed by a series of virtual software appliances:
  - Software deployment and configuration management
  - System performance and availability monitoring
  - Software package repository and update manager
  - User authentication and name services
- HPC content deployed on bare metal and in service containers:
  - HPC login nodes (bare metal)
  - HPC compute nodes (bare metal)
  - HPC head nodes (virtual containers)

Physical environment

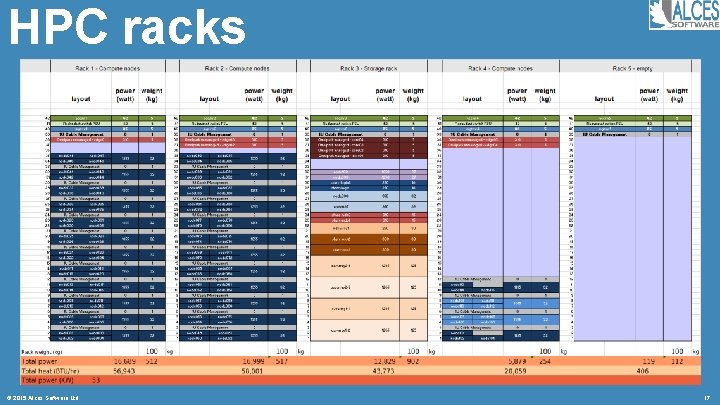

Rack enclosures

- 5 x Dell 42U rack enclosures
- 17 kW maximum power per rack
- HPC cluster nodes in racks 1 and 2
- Core systems in rack 3
- Space for expansion

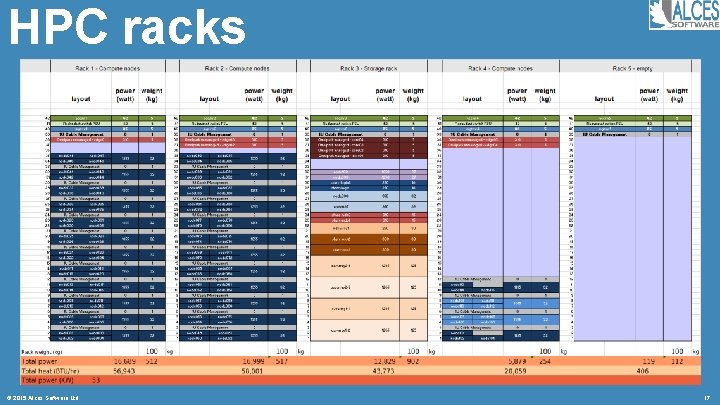

HPC racks

Lustre Filesystem: An overview for administrators and researchers

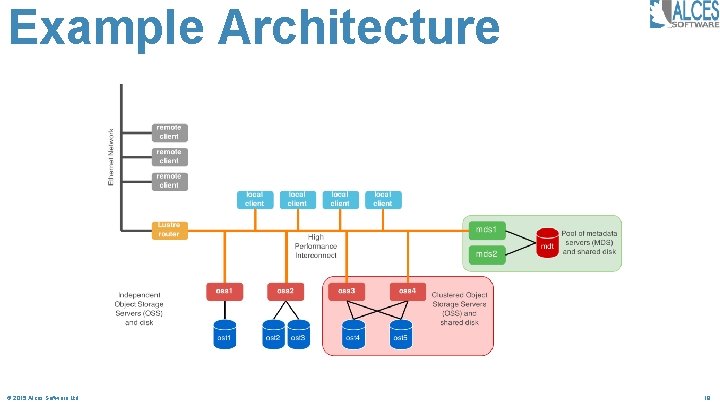

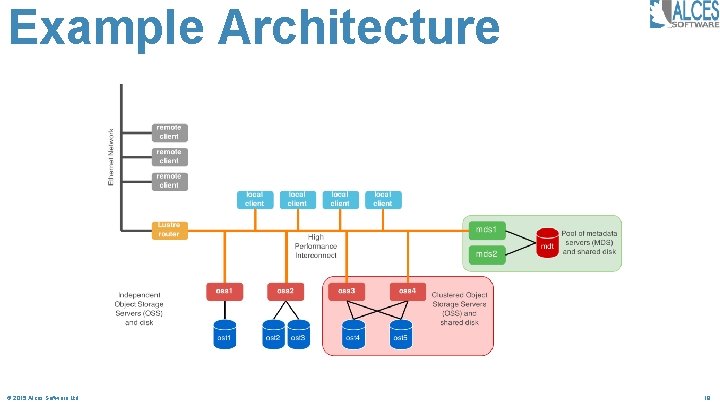

Example Architecture

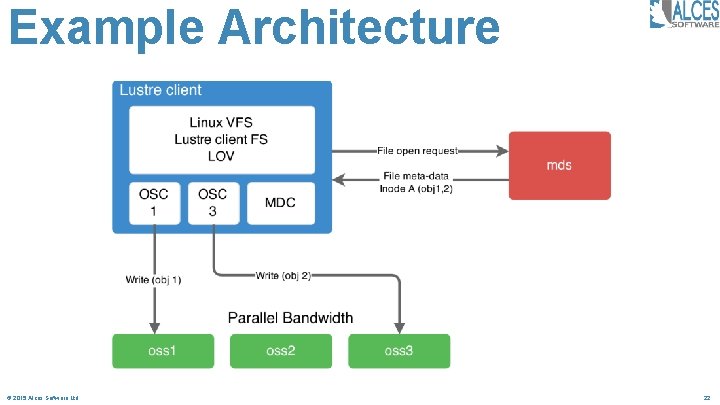

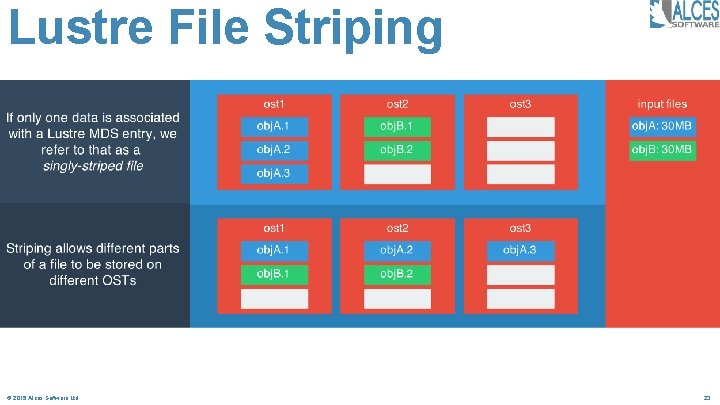

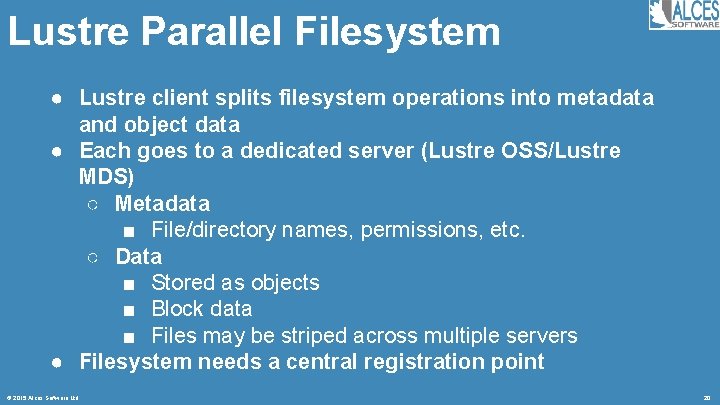

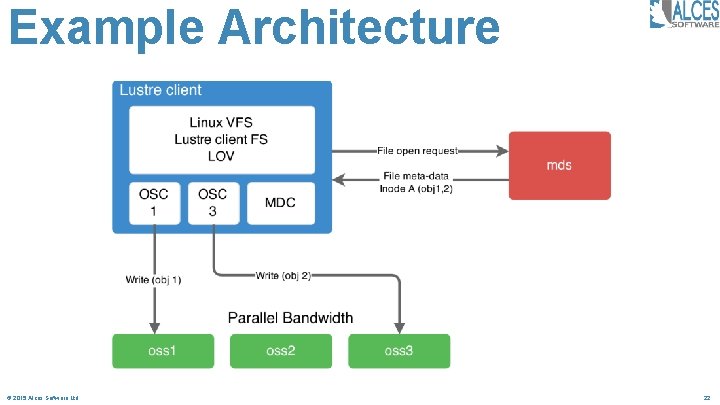

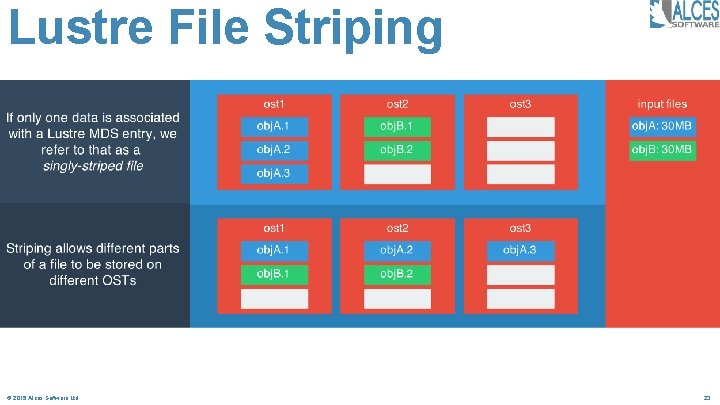

Lustre Parallel Filesystem

- The Lustre client splits filesystem operations into metadata and object data
- Each goes to a dedicated server (Lustre MDS for metadata, Lustre OSS for data)
  - Metadata:
    - File/directory names, permissions, etc.
  - Data:
    - Stored as objects
    - Block data
    - Files may be striped across multiple servers
- The filesystem needs a central registration point
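Striping a file across several servers means each byte offset lands in a particular object on a particular OST. A minimal Python sketch of that mapping, assuming plain round-robin (RAID-0 style) striping with a fixed stripe size and stripe count; the 1 MiB / 4-OST values in the usage lines are illustrative, not this cluster's configuration, and the returned index is a position in the file's own OST list rather than a global OST number.

```python
from __future__ import annotations

MiB = 1024 * 1024


def stripe_location(offset: int, stripe_size: int, stripe_count: int) -> tuple[int, int]:
    """Map a byte offset in a file to (layout index, offset inside that OST object).

    Assumes round-robin striping: the file is cut into stripe_size chunks that
    are dealt out to stripe_count objects in turn.
    """
    if offset < 0 or stripe_size <= 0 or stripe_count <= 0:
        raise ValueError("offset must be >= 0; stripe_size and stripe_count must be > 0")
    chunk = offset // stripe_size             # which stripe-sized chunk of the file
    layout_index = chunk % stripe_count       # objects receive chunks in turn
    full_rounds = chunk // stripe_count       # chunks already stored in this object
    object_offset = full_rounds * stripe_size + offset % stripe_size
    return layout_index, object_offset


if __name__ == "__main__":
    # A file striped over 4 OSTs with 1 MiB stripes.
    for off in (0, 1 * MiB, 4 * MiB + 10, 5 * MiB):
        print(off, stripe_location(off, stripe_size=1 * MiB, stripe_count=4))
```

Because consecutive chunks go to different OSTs, a large sequential read or write can be served by several OSS nodes in parallel, which is what makes striping worthwhile for big files.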

Lustre Servers

- Metadata Server (MDS)
  - Responsible for storing file information
  - Manages names and directories
- Object Storage Server (OSS)
  - Provides file I/O service
- Management Server (MGS)
  - Holds cluster configuration and exports the mount point to clients
  - New Lustre servers register with the management server to join the filesystem
  - Lustre clients contact the management server to mount the filesystem
  - Requires its own storage device, the MGT (or can share the MDT)
  - Can serve multiple filesystems (MDTs)
  - Each server can register with only one management server

Example Architecture

Lustre File Striping

Allocation of Resources

- The Lustre filesystem provides several mechanisms to control how data is stored
- Directory-level policies
  - Control which OSTs data is stored on
  - Control the striping policy for new files
- Storage pools
  - Arbitrary groups of OSTs
- Quotas
  - Similar to standard Linux quotas
- ACLs
  - Ability to store ACL details with user files

Effortless HPC on demand

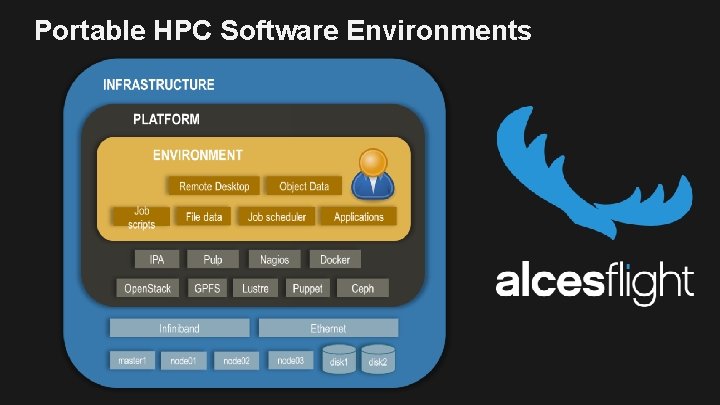

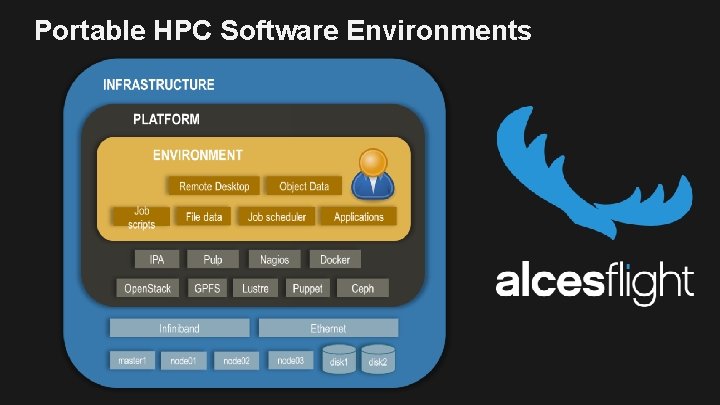

Portable HPC Software Environments

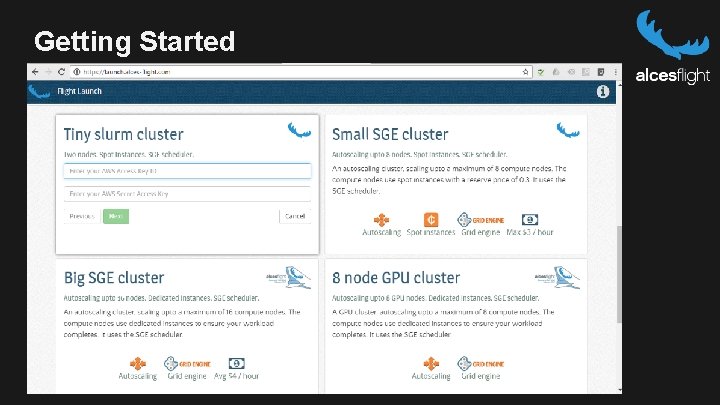

Alces Flight – versions available

- Run your software the way you want it
- Use both on-premises and remote resources
- Self-service HPC environments
- Two versions of Flight available:
  - Alces Flight Solo (community and professional editions)
  - Alces Flight Enterprise:
    - Persistent, shared HPC environment
    - Hybrid HPC
    - Multi-site usage

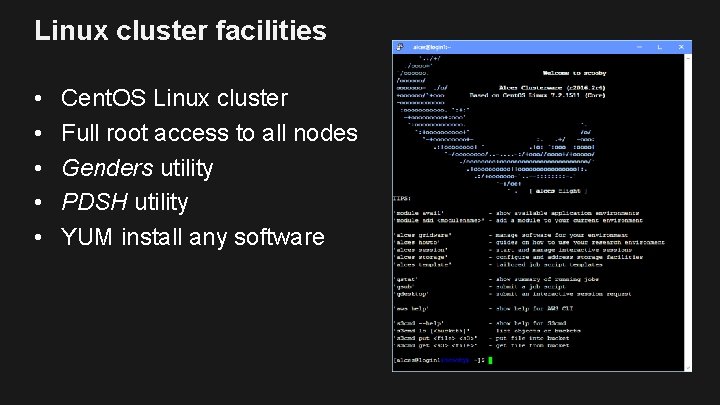

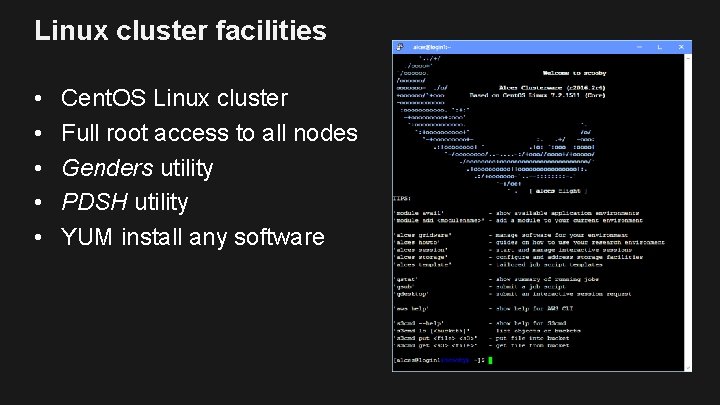

Linux cluster facilities

- CentOS Linux cluster
- Full root access to all nodes
- Genders utility
- PDSH utility
- Install any software with YUM

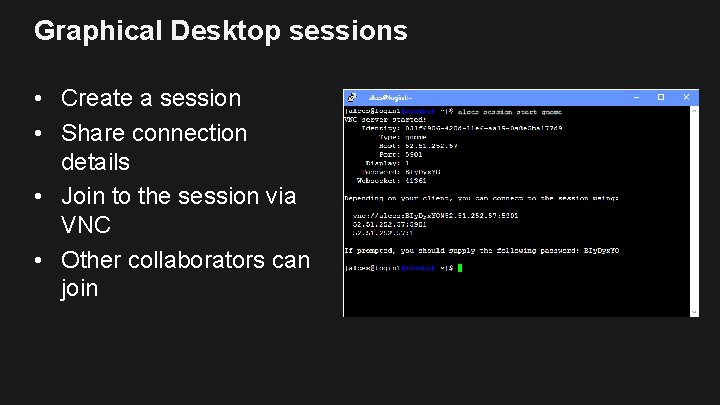

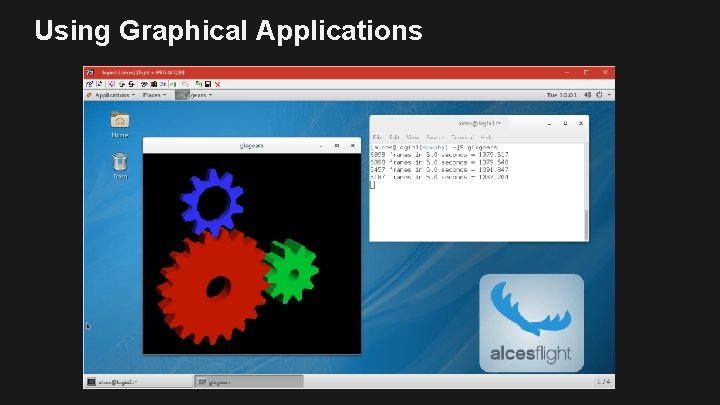

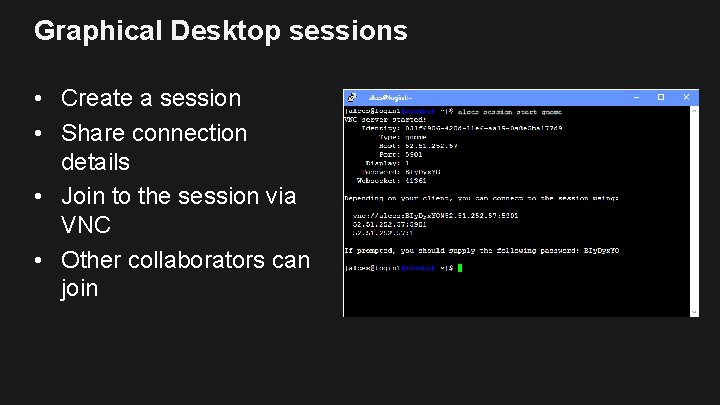

Graphical Desktop sessions

- Create a session
- Share the connection details
- Join the session via VNC
- Other collaborators can join

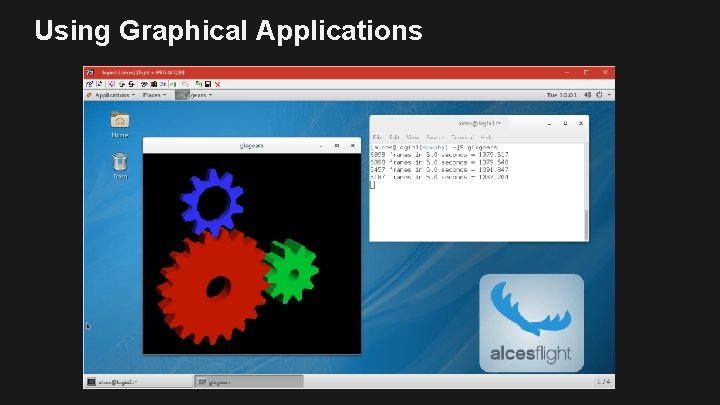

Using Graphical Applications

Alces Gridware Application library

- Over 1,250 application, library and MPI versions
- Pre-optimized and stored in S3
- Option to compile and optimize on demand
- Includes modules environment management
- Gridware project keeps pace with the latest versions
- Support for commercial and licensed applications
- http://gridware.alces-flight.com

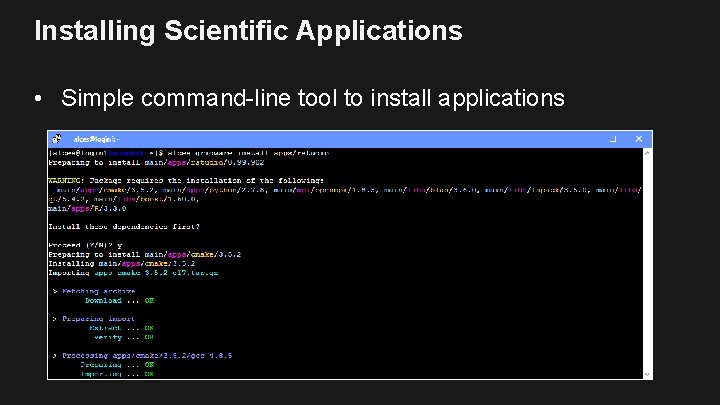

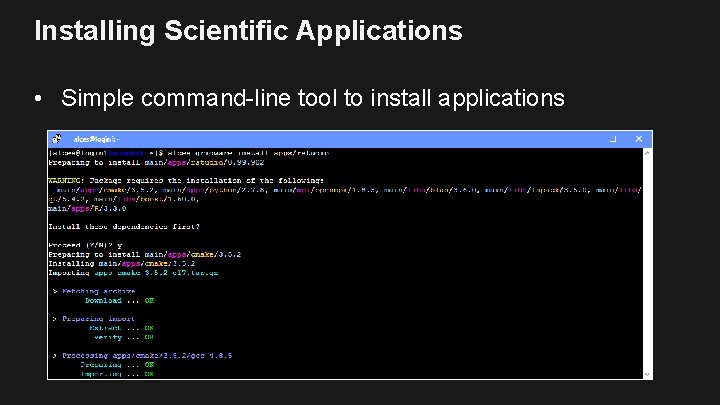

Installing Scientific Applications

- Simple command-line tool to install applications

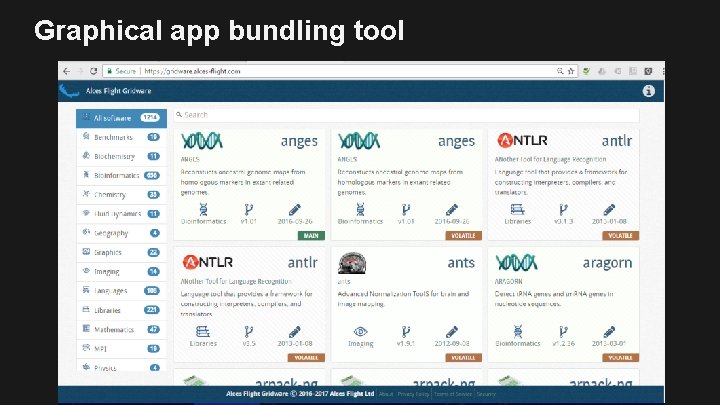

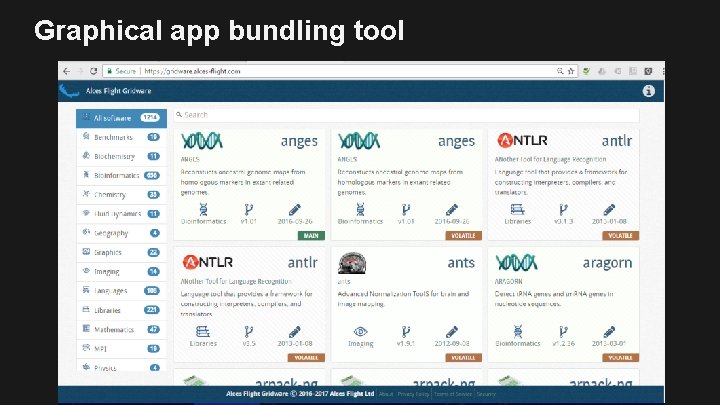

Graphical app bundling tool

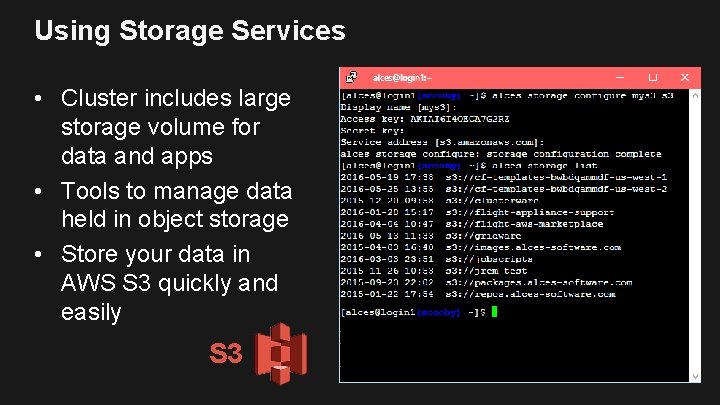

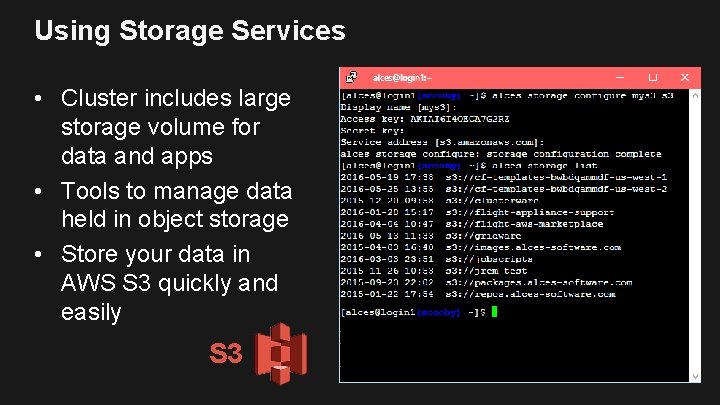

Using Storage Services

- Cluster includes a large storage volume for data and apps
- Tools to manage data held in object storage
- Store your data in AWS S3 quickly and easily

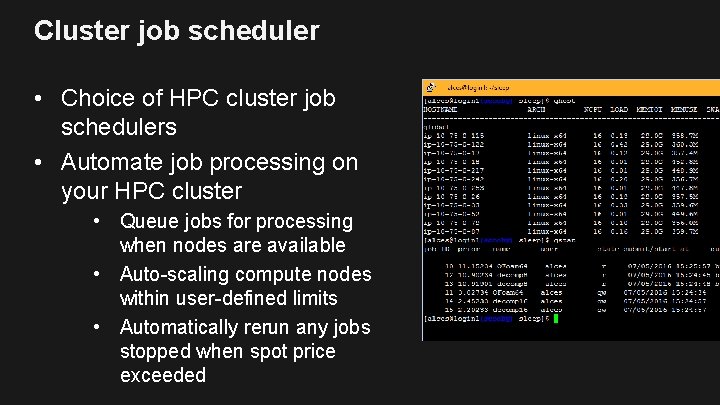

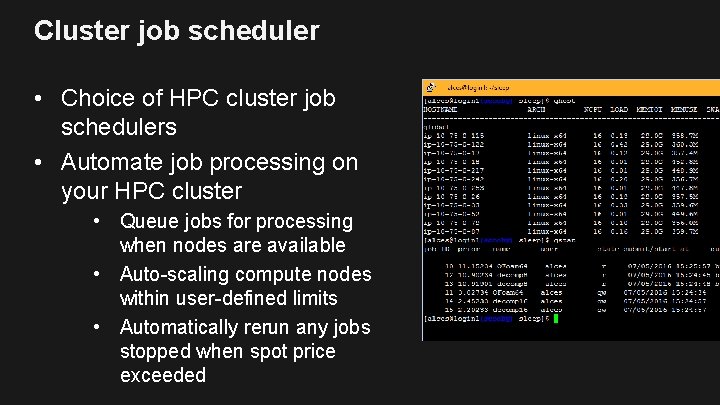

Cluster job scheduler

- Choice of HPC cluster job schedulers
- Automate job processing on your HPC cluster
- Queue jobs for processing when nodes are available
- Auto-scaling compute nodes within user-defined limits
- Automatically rerun any jobs stopped when the spot price is exceeded

Alces Flight – advanced topics

- Pre-installing applications on your cluster
- Using alternative job schedulers
- Synchronizing your home directory between clusters
- Optimizing auto-scaling by choosing instances
- Setting user quotas for usage
- Running Docker containers
- Choosing data volumes:
  - Size, performance, scalability, encryption
  - Parallel and distributed filesystems
  - Managing and archiving data

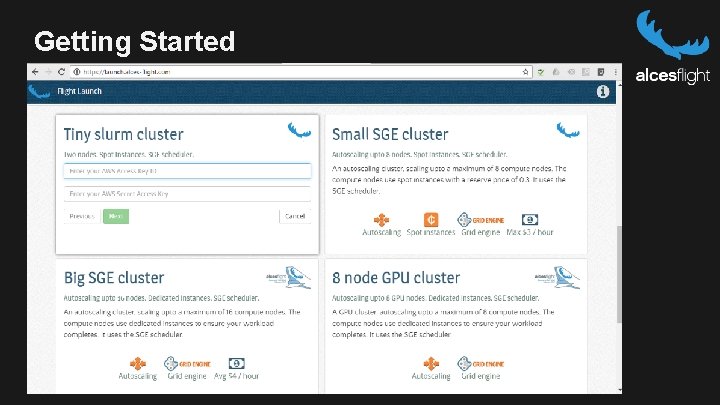

Getting Started

Questions?

Backup slides

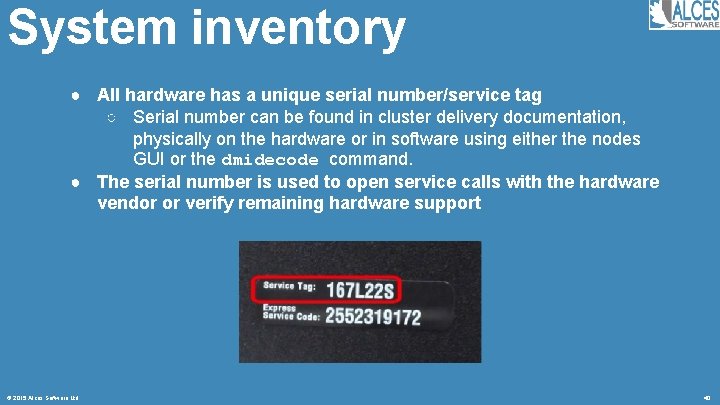

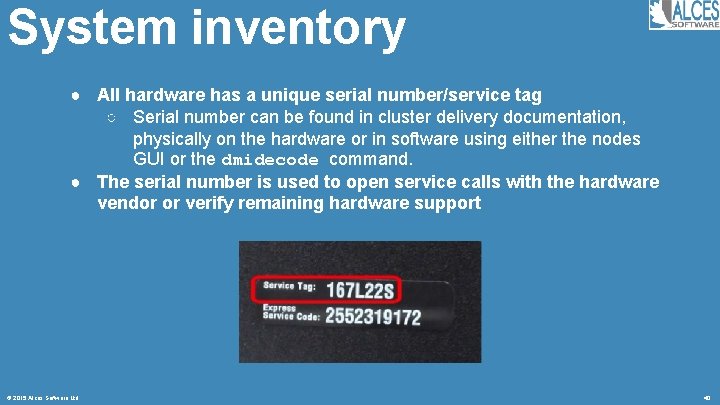

System inventory

- All hardware has a unique serial number/service tag
  - The serial number can be found in the cluster delivery documentation, physically on the hardware, or in software using either the node's GUI or the dmidecode command
- The serial number is used to open service calls with the hardware vendor or to verify remaining hardware support

Regular maintenance

- Review the queue scheduler system
  - Look for stuck jobs and errors on queues
  - Check for over- or under-loaded nodes
  - Review queue waiting times
  - Carry out performance and capacity planning for compute resources
- Filesystem maintenance
  - Check for full filesystems
  - Look for duplicated data to be rationalised
  - Work with users exceeding their soft quotas
  - Balance data between filesystems
  - Check user data backups and test restores

Regular maintenance

- Physical maintenance
  - Review temperature and airflow in the data centre
  - Keep systems clean and free from debris
- Service maintenance
  - Prioritise user requests and support requests
  - Hold regular user group meetings
  - Maintain user lists; disable inactive users
  - Review system performance, throughput and availability
  - Attend regular service review meetings with the vendor

User Management (FreeIPA): An overview for administrators and researchers

User management

- Users and site administrators have IPA accounts
  - Multiple access methods to the cloud/HPC sides of the cluster via the same account:
    - SSH access to OpenStack/HPC environments
    - Web GUI access to the OpenStack environment
    - API access to the OpenStack environment
    - Graphical X-forwarding support (via SSH)
    - SCP access for transferring files

User management

- Admin functions available via CLI and GUI interfaces
- CLI
  - Available from any node in the cluster, given a valid site-administrator Kerberos ticket
- GUI
  - Available when connected to the admin VPN
    - https://directory.rosalind.compute.estate

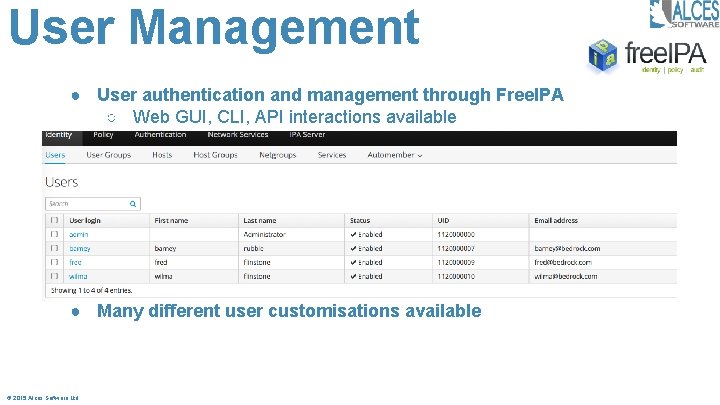

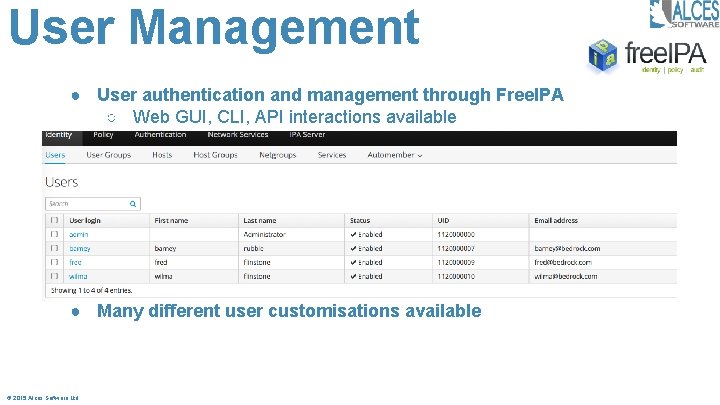

User Management

- User authentication and management through FreeIPA
  - Web GUI, CLI and API interactions available
- Many different user customisations available

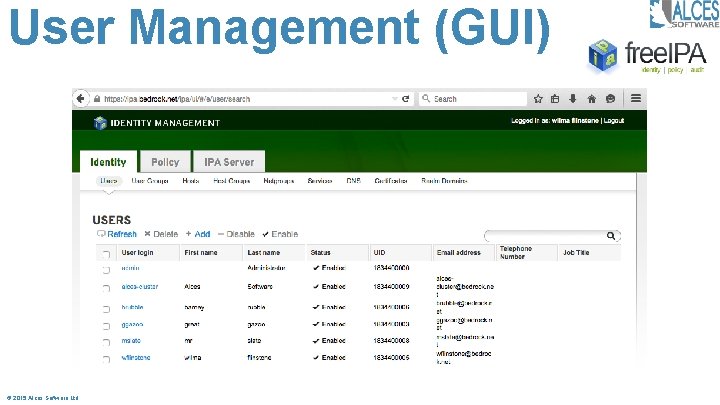

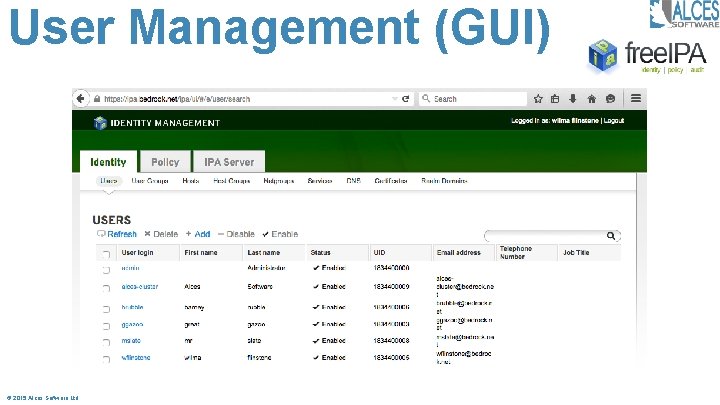

User Management (GUI)

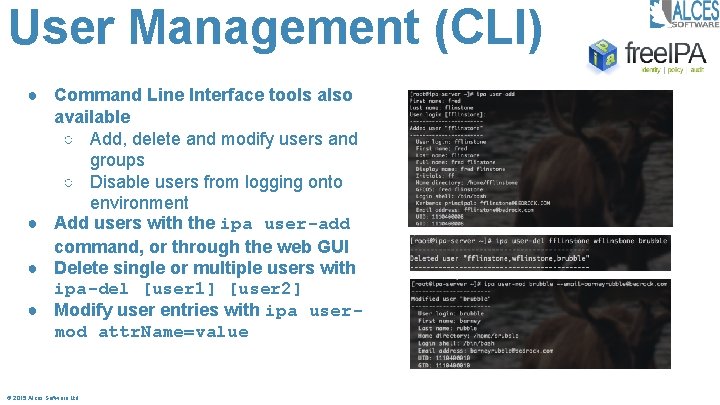

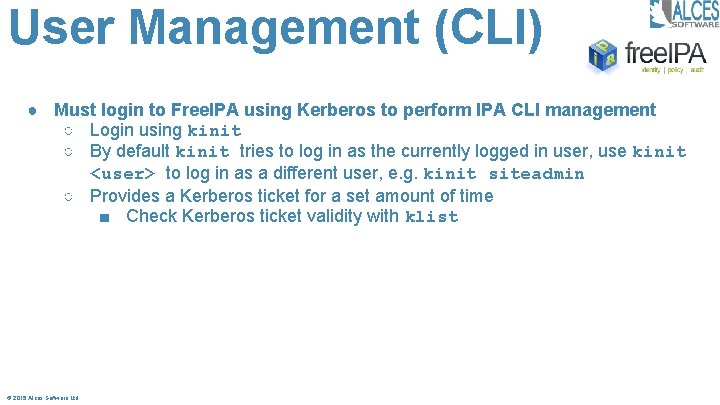

User Management (CLI)

- You must log in to FreeIPA with Kerberos to perform IPA CLI management
  - Log in using kinit
  - By default, kinit logs in as the currently logged-in user; use kinit <user> to log in as a different user, e.g. kinit siteadmin
  - Provides a Kerberos ticket valid for a set amount of time
    - Check Kerberos ticket validity with klist

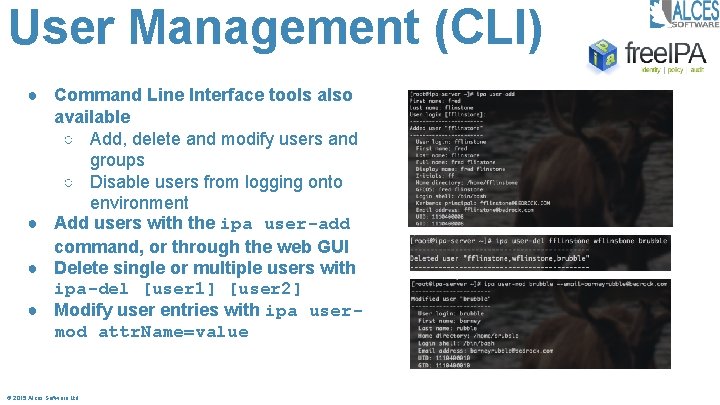

User Management (CLI)

- Command-line interface tools are also available
  - Add, delete and modify users and groups
  - Disable users from logging on to the environment (ipa user-disable <user>)
- Add users with the ipa user-add command, or through the web GUI
- Delete single or multiple users with ipa user-del [user1] [user2]
- Modify user entries with ipa user-mod <user> --setattr=<attrName>=<value>

Using OpenStack: An overview for administrators and researchers

Horizon Dashboard

Using Ceph: An overview for administrators and researchers

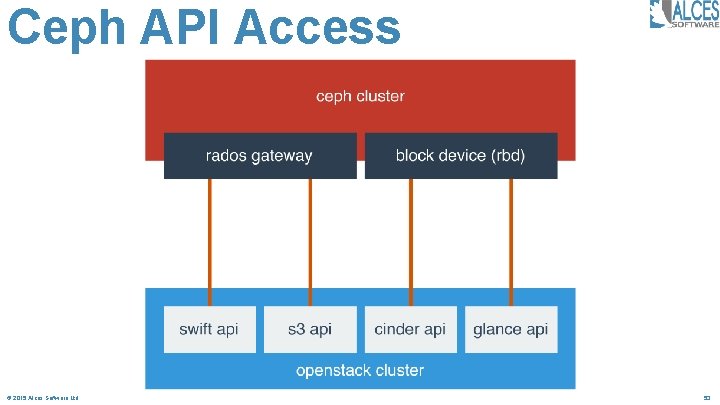

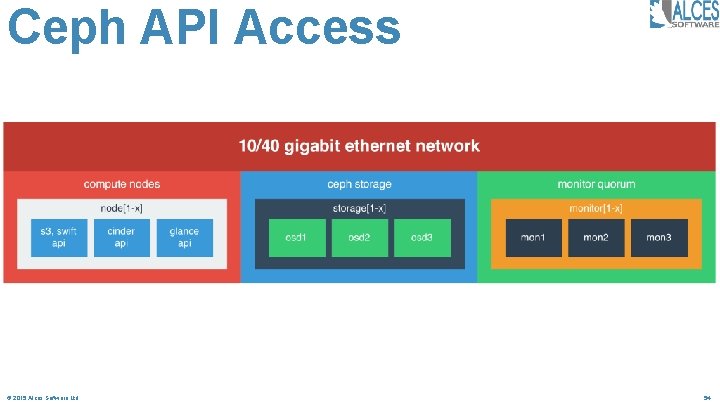

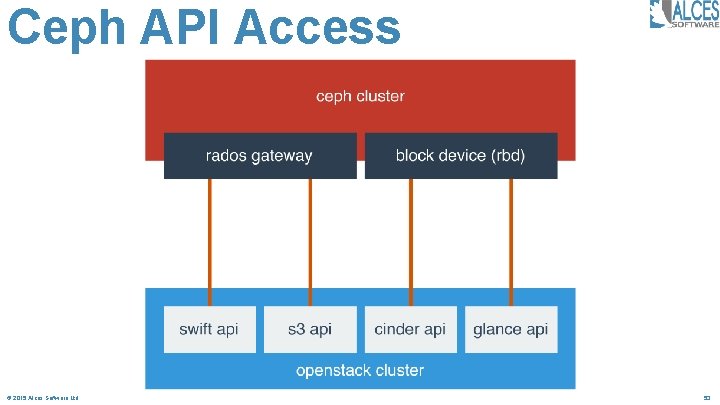

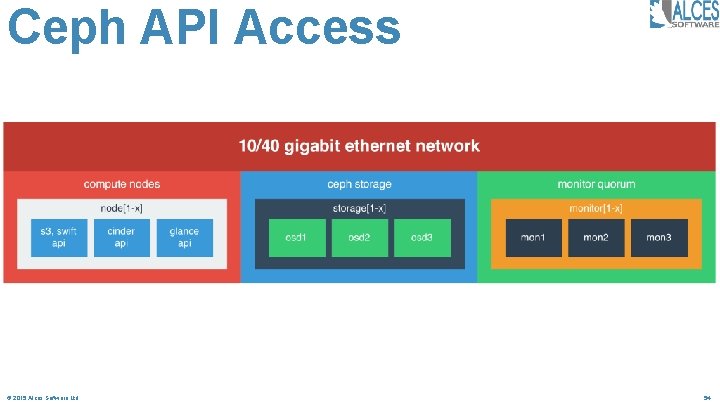

Ceph API Access

Ceph API Access

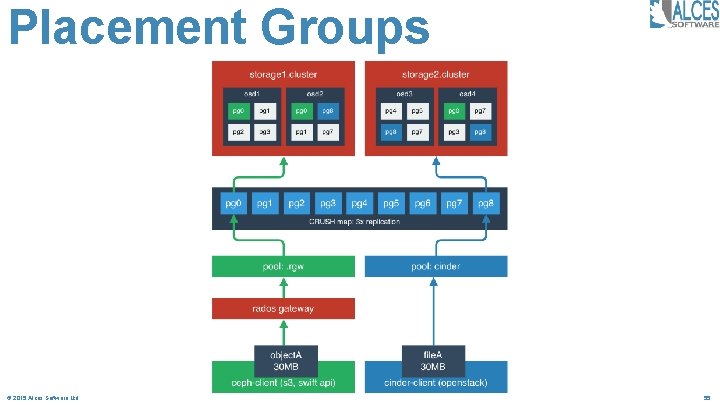

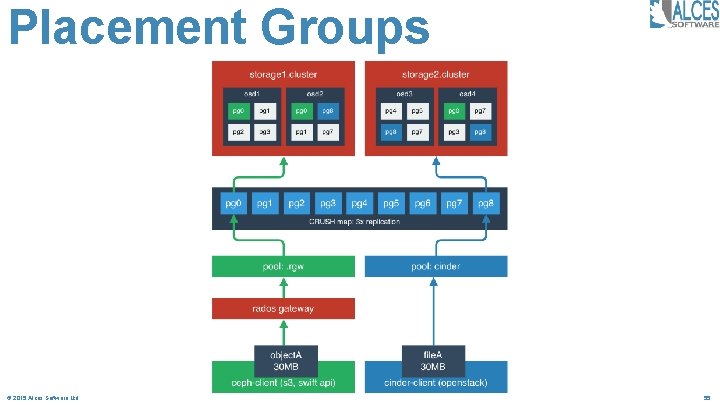

Placement Groups

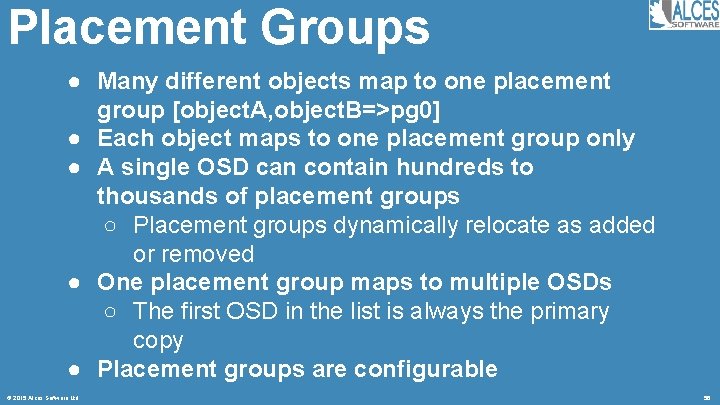

Placement Groups

- Many different objects map to one placement group [objectA, objectB => pg 0]
- Each object maps to exactly one placement group
- A single OSD can contain hundreds to thousands of placement groups
  - Placement groups are dynamically relocated as OSDs are added or removed
- One placement group maps to multiple OSDs
  - The first OSD in the list always holds the primary copy
- The number of placement groups is configurable per pool (pg_num)
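The two-step mapping on this slide (object to placement group, placement group to an ordered list of OSDs) can be illustrated with a toy Python model. This is not how Ceph computes placement: Ceph hashes object names with its own hash function and places PGs with the CRUSH algorithm. Here SHA-256 and rendezvous (highest-random-weight) hashing stand in for both, purely to show the shape of the mapping.

```python
from __future__ import annotations

import hashlib


def _hash(text: str) -> int:
    """Stable 64-bit hash; Ceph itself uses a different hash function (rjenkins)."""
    return int.from_bytes(hashlib.sha256(text.encode()).digest()[:8], "big")


def object_to_pg(name: str, pg_num: int) -> int:
    """Each object name maps to exactly one placement group."""
    return _hash(name) % pg_num


def pg_to_osds(pg: int, osds: list[int], size: int) -> list[int]:
    """Pick `size` distinct OSDs for a PG; the first entry plays the primary role.

    Toy stand-in for CRUSH using rendezvous hashing: every OSD gets a score
    for this PG and the OSDs with the top `size` scores win.
    """
    ranked = sorted(osds, key=lambda osd: _hash(f"{pg}:{osd}"), reverse=True)
    return ranked[:size]


if __name__ == "__main__":
    osds = list(range(12))
    for obj in ("objectA", "objectB", "object1"):
        pg = object_to_pg(obj, pg_num=128)
        acting = pg_to_osds(pg, osds, size=3)
        print(f"{obj} -> pg {pg} -> OSDs {acting} (primary {acting[0]})")
```

A property worth noticing: adding an OSD only changes the OSD list of PGs that the new OSD joins, so most PGs stay put. CRUSH is likewise designed to limit data movement when OSDs are added or removed.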

Placement Groups Demo

- Demo 1:
  - PUT our new 30 MB data file object1 into the .rgw Ceph pool
- Demo 2:
  - Save our new 30 MB file fileA from a newly created Nova instance

Erasure Coding

- Erasure-code individual or multiple pools
- Highly configurable to provide more or less redundancy across OSDs, racks or even data centres
- Move large, unused input data to cold/cheap storage
- m = the number of additional (coding) chunks; defines how many OSDs can be lost simultaneously without losing data
- k = the number of data chunks each object is split into
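To show why extra chunks let data survive lost OSDs, here is the simplest possible erasure code in Python: k data chunks plus a single XOR parity chunk (m = 1). This is a deliberately simplified stand-in: Ceph's default erasure-code plugin (jerasure) uses Reed-Solomon codes, which support m greater than 1. Any one missing chunk can be rebuilt by XOR-ing all the survivors.

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length chunks."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> list[bytes]:
    """Split data into k zero-padded data chunks and append one XOR parity chunk (m = 1)."""
    if k < 1:
        raise ValueError("k must be at least 1")
    chunk_len = -(-len(data) // k)                  # ceiling division
    padded = data.ljust(chunk_len * k, b"\0")
    chunks = [padded[i * chunk_len:(i + 1) * chunk_len] for i in range(k)]
    return chunks + [reduce(xor_bytes, chunks)]


def recover(chunks: list[bytes | None]) -> list[bytes]:
    """Rebuild at most one missing chunk (marked None) by XOR-ing the survivors."""
    missing = [i for i, c in enumerate(chunks) if c is None]
    if len(missing) > 1:
        raise ValueError("a single parity chunk can only repair one loss")
    if missing:
        survivors = [c for c in chunks if c is not None]
        chunks = list(chunks)
        chunks[missing[0]] = reduce(xor_bytes, survivors)
    return chunks


def decode(chunks: list[bytes], k: int, length: int) -> bytes:
    """Join the k data chunks and strip the padding."""
    return b"".join(chunks[:k])[:length]


if __name__ == "__main__":
    data = b"large unused input data"
    stored = encode(data, k=5)
    stored[2] = None                                # simulate one failed OSD
    print(decode(recover(stored), 5, len(data)))
```

With m = 1 a second simultaneous loss is unrecoverable, which is exactly the limit the m parameter describes; larger m needs a more general code such as Reed-Solomon.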

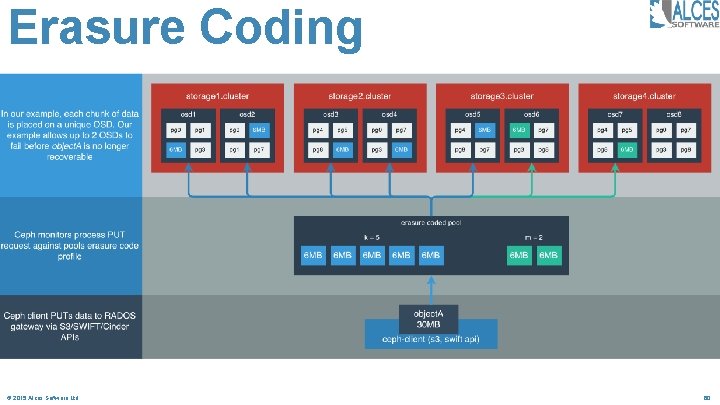

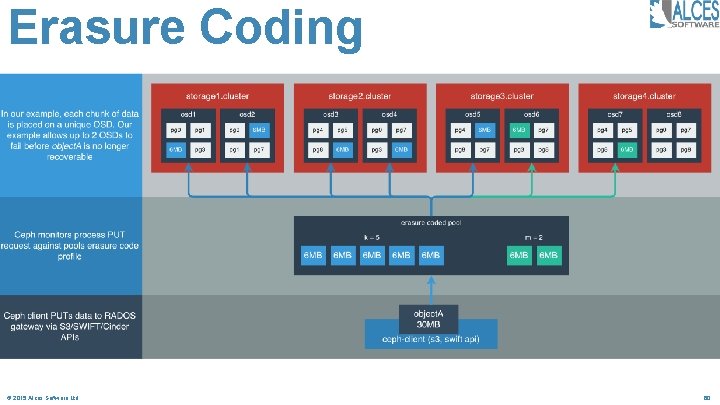

Erasure Coding Example

- Take a new 30 MB file, objectA
- PUT it into my pool with the erasure-coding profile k=5, m=2
  - This splits the 30 MB file into five 6 MB chunks (k=5) spread across multiple OSDs, and creates two parity chunks (m=2)
    - The two parity chunks allow two simultaneous OSD losses before any data is lost
- Take 2 OSDs offline
- GET objectA back from the pool and verify its size
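The arithmetic behind this example, and how it compares with plain three-way replication, in a short Python sketch. It ignores Ceph's stripe-unit alignment and padding, so real raw usage may be slightly higher than these figures.

```python
def ec_footprint(size_mb: float, k: int, m: int) -> dict:
    """Chunk size, raw usage and loss tolerance for a k+m erasure-coded object."""
    chunk_mb = size_mb / k
    return {
        "chunk_mb": chunk_mb,                  # each data or parity chunk
        "chunks": k + m,                       # one chunk per OSD
        "raw_mb": chunk_mb * (k + m),          # total space consumed across OSDs
        "overhead": (k + m) / k,               # raw space / logical size
        "tolerated_osd_losses": m,
    }


def replication_footprint(size_mb: float, copies: int) -> dict:
    """Same figures for plain replication, for comparison."""
    return {"raw_mb": size_mb * copies, "overhead": float(copies),
            "tolerated_osd_losses": copies - 1}


if __name__ == "__main__":
    print(ec_footprint(30, k=5, m=2))
    print(replication_footprint(30, copies=3))
```

Both layouts survive two OSD losses, but the k=5, m=2 profile uses 42 MB of raw space (1.4x) against 90 MB (3x) for three-way replication, which is why erasure coding suits large, rarely used data.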

Erasure Coding

Accessing the cluster: An overview for administrators and researchers

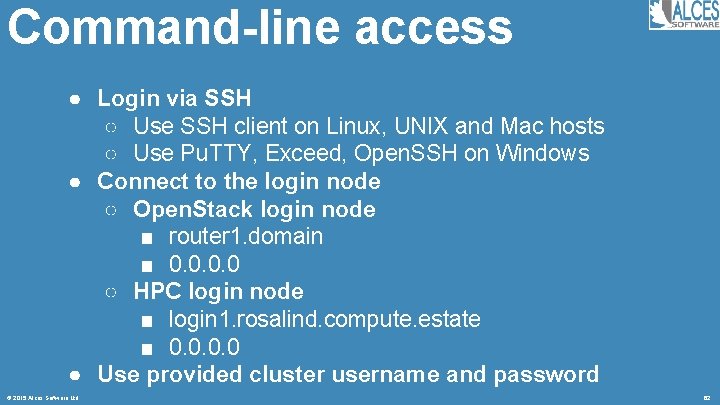

Command-line access

- Log in via SSH
  - Use an SSH client on Linux, UNIX and Mac hosts
  - Use PuTTY, Exceed or OpenSSH on Windows
- Connect to the login node
  - OpenStack login node
    - router1.domain
    - 0.0
  - HPC login node
    - login1.rosalind.compute.estate
    - 0.0
- Use the provided cluster username and password

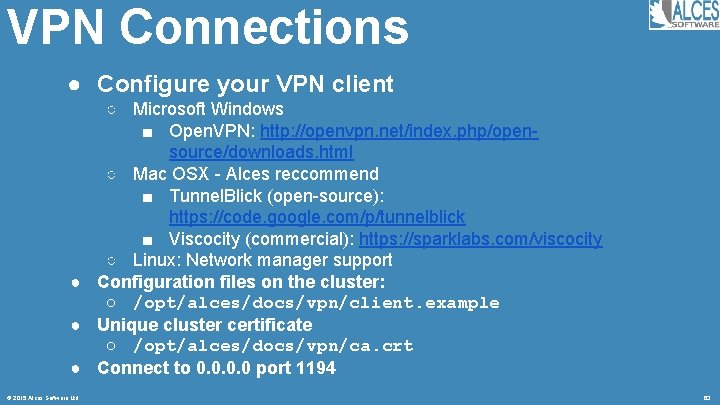

VPN Connections

- Configure your VPN client
  - Microsoft Windows
    - OpenVPN: http://openvpn.net/index.php/opensource/downloads.html
  - Mac OS X (recommended by Alces)
    - Tunnelblick (open source): https://code.google.com/p/tunnelblick
    - Viscosity (commercial): https://sparklabs.com/viscosity
  - Linux: NetworkManager support
- Configuration files on the cluster:
  - /opt/alces/docs/vpn/client.example
- Unique cluster certificate
  - /opt/alces/docs/vpn/ca.crt
- Connect to 0.0 on port 1194

Linux Modules: An overview for administrators and researchers

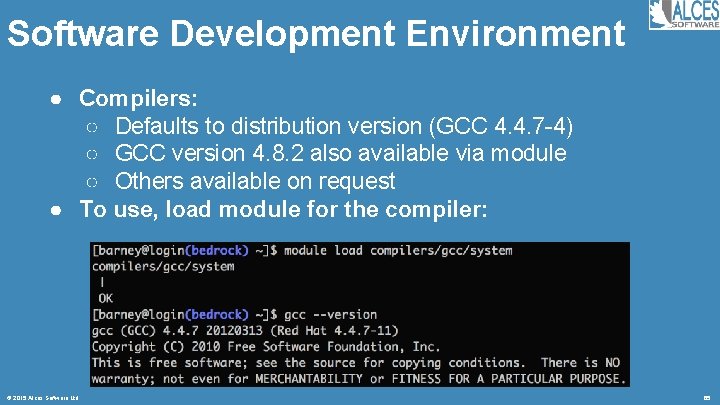

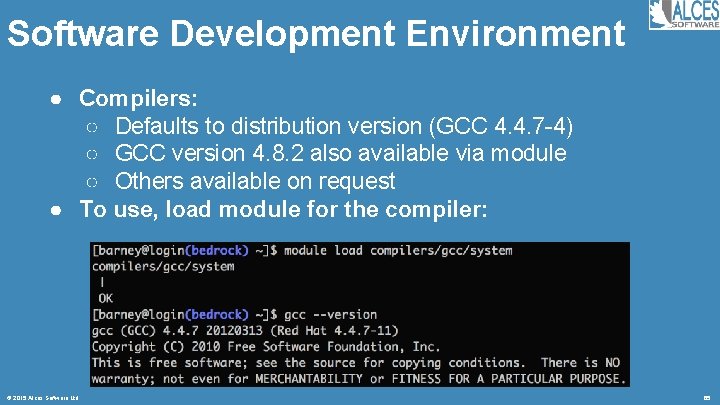

Software Development Environment

- Compilers:
  - Defaults to the distribution version (GCC 4.4.7-4)
  - GCC version 4.8.2 also available via a module
  - Others available on request
- To use, load the module for the compiler:

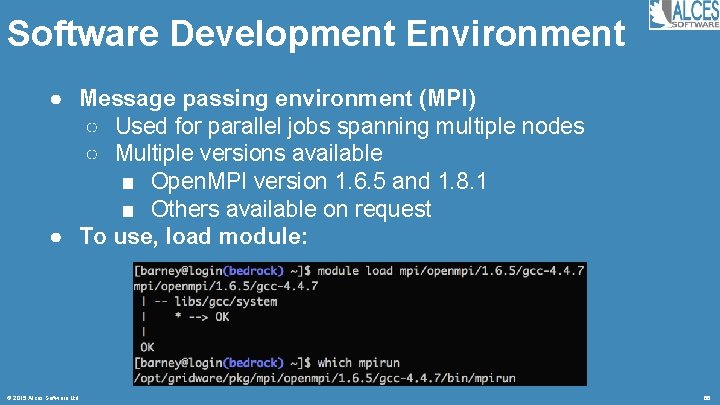

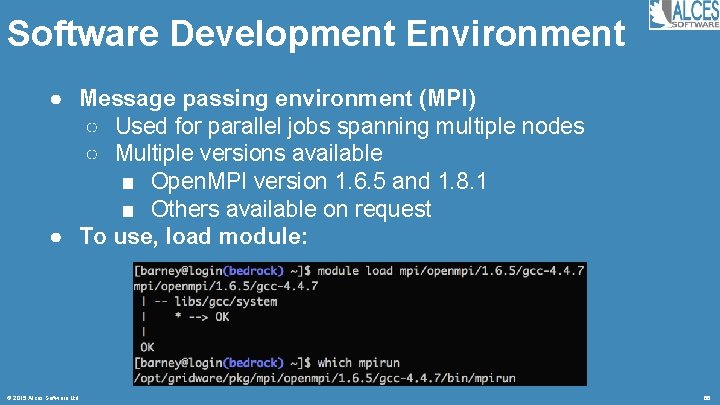

Software Development Environment

- Message-passing environment (MPI)
  - Used for parallel jobs spanning multiple nodes
  - Multiple versions available
    - Open MPI versions 1.6.5 and 1.8.1
    - Others available on request
- To use, load the module:

Software Development Environment

- Modules environment management
  - The primary method for users to access software
  - Enables a central, shared software library
  - Provides separation between software packages
    - Supports multiple incompatible versions
    - Automatic dependency analysis and module loading
  - Available for all users to:
    - Use the centralised application repository
    - Build their own applications and modules in their home directories
    - Ignore modules and set up their user account manually

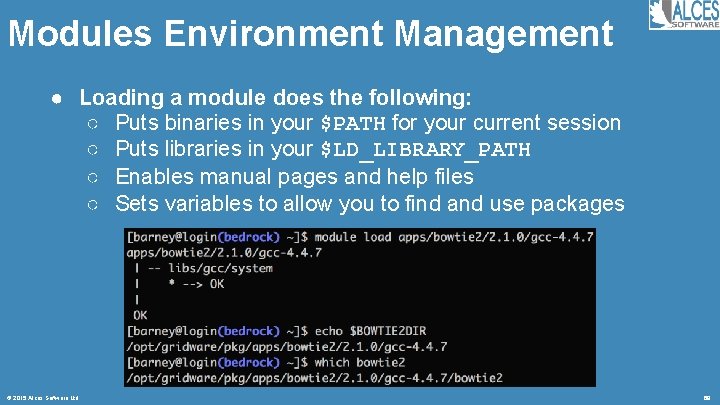

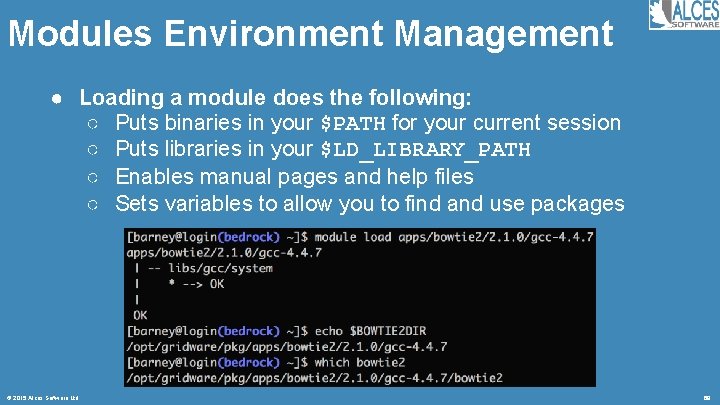

Modules Environment Management

- Loading a module does the following:
  - Puts binaries in your $PATH for your current session
  - Puts libraries in your $LD_LIBRARY_PATH
  - Enables manual pages and help files
  - Sets variables to allow you to find and use packages
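The effect of loading a module can be mimicked in a few lines of Python operating on a copy of the environment. This is an illustration of the idea, not how the modules tool is implemented: the install prefix /opt/gridware/apps/gcc/4.8.2 and the GCC_DIR-style variable name are hypothetical, and real module files can make many other changes.

```python
import os


def prepend_path(env: dict, var: str, directory: str) -> None:
    """Put directory at the front of a search path, without duplicating it."""
    parts = [p for p in env.get(var, "").split(os.pathsep) if p and p != directory]
    env[var] = os.pathsep.join([directory, *parts])


def load_module(env: dict, name: str, prefix: str) -> None:
    """Mimic the environment changes a typical module makes for one session."""
    prepend_path(env, "PATH", os.path.join(prefix, "bin"))              # binaries
    prepend_path(env, "LD_LIBRARY_PATH", os.path.join(prefix, "lib"))   # shared libraries
    prepend_path(env, "MANPATH", os.path.join(prefix, "share", "man"))  # manual pages
    env[f"{name.upper()}_DIR"] = prefix                                 # hypothetical variable name


if __name__ == "__main__":
    session = dict(os.environ)        # a copy: only this "session" changes
    load_module(session, "gcc", "/opt/gridware/apps/gcc/4.8.2")  # hypothetical install prefix
    print(session["PATH"].split(os.pathsep)[0])
```

Prepending (rather than appending) is what lets a module's binaries and libraries take precedence over the distribution defaults, such as the system GCC, for the rest of the session.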

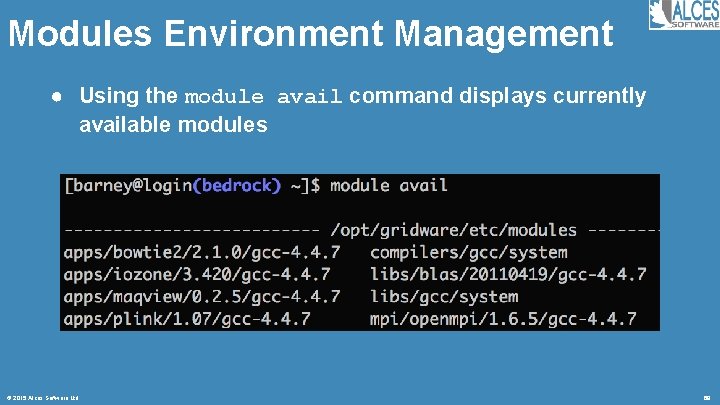

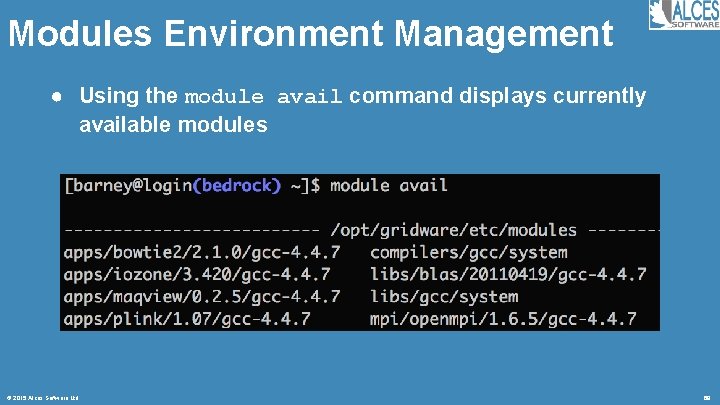

Modules Environment Management

- Use the module avail command to display the currently available modules

Modules Environment Management

- Other modules commands available:
  - module unload <module>
    - Removes the module from the current environment
  - module list
    - Shows the currently loaded modules
  - module display <module>
    - Shows the environment changes the module makes
  - module whatis <module>
    - Shows information about the software
  - module keyword <search term>
    - Searches for the supplied term in the whatis entries of the available modules

Modules Environment Management

- By default, modules are loaded for your current session only
- Loading modules automatically (all sessions):
  - module initadd <module>
    - Loads the named module every time you log in
  - module initlist
    - Shows which modules are automatically loaded at login
  - module initrm <module>
    - Stops a module being loaded at login
  - module use <new module dir>
    - Allows users to supply their own module trees

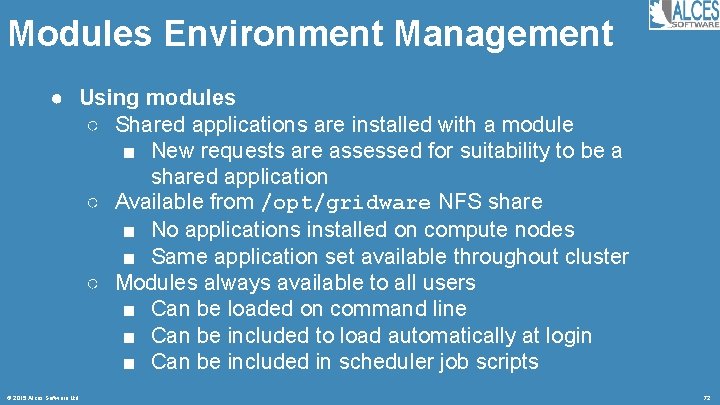

Modules Environment Management

- Using modules
  - Shared applications are installed with a module
    - New requests are assessed for suitability as a shared application
  - Available from the /opt/gridware NFS share
    - No applications installed locally on compute nodes
    - The same application set is available throughout the cluster
  - Modules are always available to all users
    - Can be loaded on the command line
    - Can be set to load automatically at login
    - Can be included in scheduler job scripts

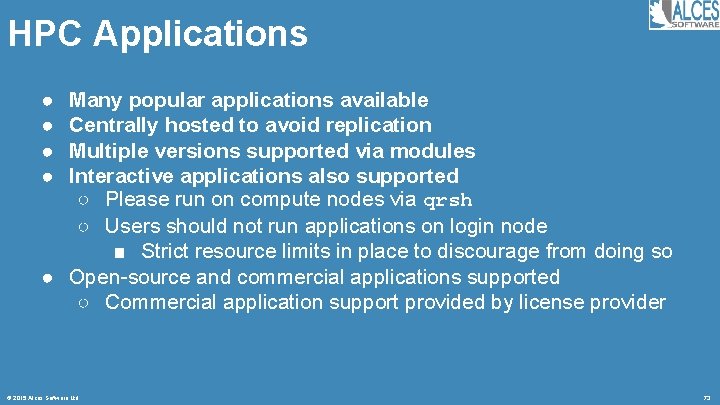

HPC Applications

- Many popular applications available
- Centrally hosted to avoid replication
- Multiple versions supported via modules
- Interactive applications also supported
  - Please run them on compute nodes via qrsh
  - Users should not run applications on the login nodes
    - Strict resource limits are in place to discourage this
- Open-source and commercial applications supported
  - Commercial application support is provided by the license provider

Requesting New Applications

- Contact your site admin for information
- Application requests will be reviewed
  - Most open-source applications can be incorporated
  - Commercial applications require appropriate licenses
    - A site license may be required
    - A license server is available on the cluster service nodes
- Users can install their own packages in their home directories
- Many software tools are available in Linux

HPC Job Scheduler: An overview for administrators and researchers

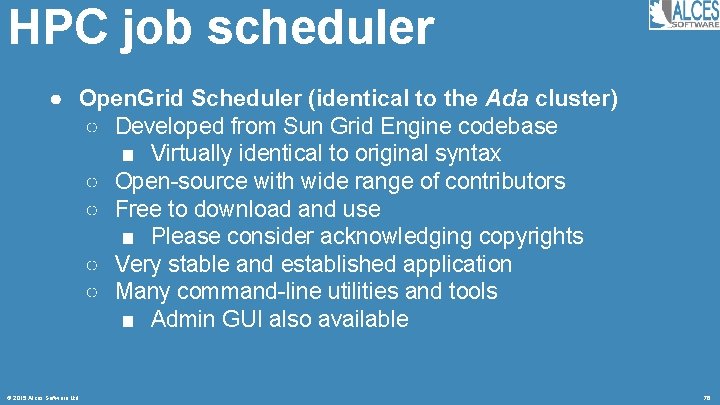

HPC job scheduler

- Open Grid Scheduler (the same scheduler as on the Ada cluster)
  - Developed from the Sun Grid Engine codebase
    - Syntax virtually identical to the original
  - Open source with a wide range of contributors
  - Free to download and use
    - Please consider acknowledging copyrights
  - Very stable and established application
  - Many command-line utilities and tools
    - Admin GUI also available

HPC job scheduler

- Why do we need a job scheduler?
  - Need to allocate compute resources to users
  - Need to prevent user applications from overloading compute nodes
  - Want to queue work to run overnight and out of hours
  - Want to ensure that users each get a fair share of the available resources

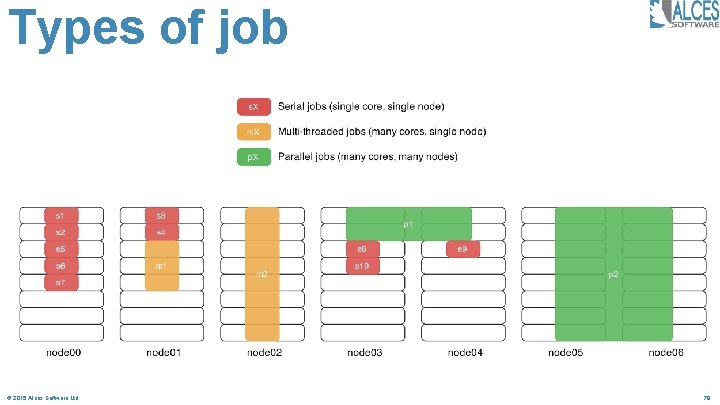

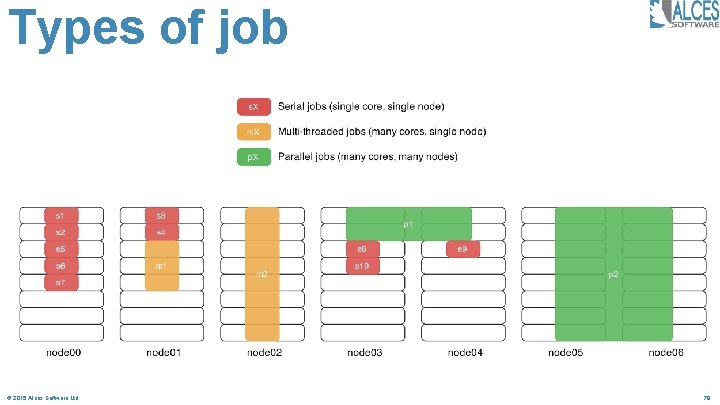

Types of job

Types of job

- Serial jobs
  - Use a single CPU core
  - May be submitted as a task array (many single jobs)
- Multi-threaded jobs
  - Use two or more CPU cores on the same node
  - User must request the number of cores required
- Parallel jobs
  - Use two or more CPU cores on one or more nodes
  - User must request the number of cores required
  - User may optionally request a number of nodes to use

Class of job

- Interactive jobs
  - Available from the web portal
  - Started with the “qrsh” or “qdesktop” commands
    - Will start immediately if resources are available
    - Will exit immediately if no resources are available
  - May be of serial, multi-threaded or parallel type
  - Users must request a maximum runtime
  - Users should specify the resources required to run
  - Input data is the shell session or application started
  - Output data is shown on screen

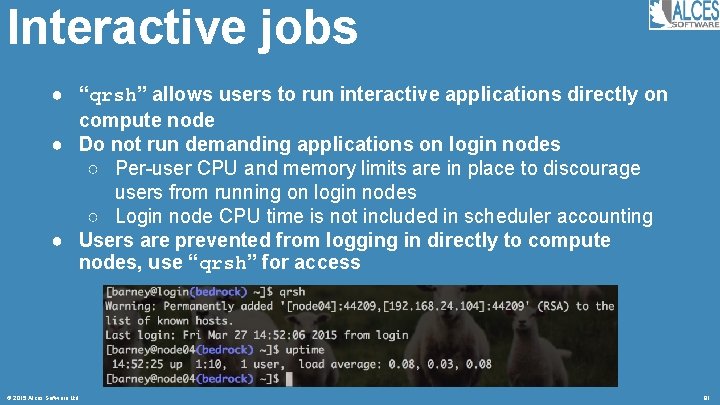

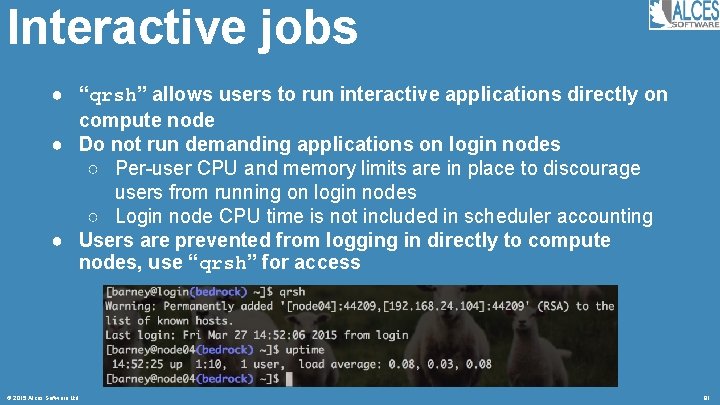

Interactive jobs

- “qrsh” allows users to run interactive applications directly on a compute node
- Do not run demanding applications on login nodes
  - Per-user CPU and memory limits are in place to discourage users from running on login nodes
  - Login node CPU time is not included in scheduler accounting
- Users are prevented from logging in directly to compute nodes; use “qrsh” for access

Interactive Linux desktop

- Connect to the cluster VPN
- Connect using your favourite VNC client (after using qdesktop or via the Alces Portal)

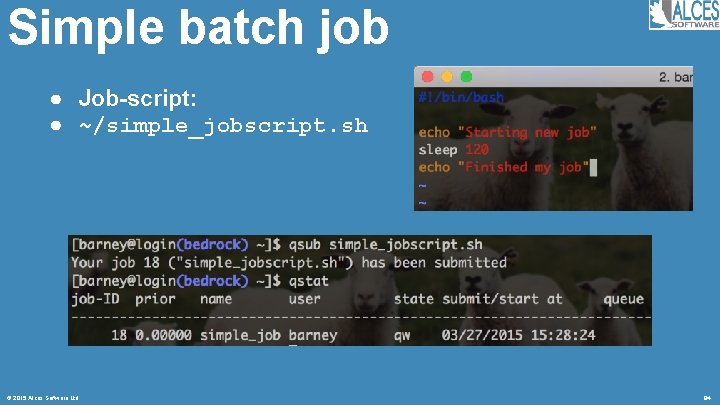

Non-interactive jobs

- Jobs are submitted via a job script
  - Contains the commands to run your job
  - Can contain instructions for the job scheduler
- Submission of a simple job script
  - Echo commands to stdout
  - Single-core batch job
  - Runs with default resource requests
  - Assigned a unique, one-time job number (e.g. 2573)
  - Output sent to your home directory by default

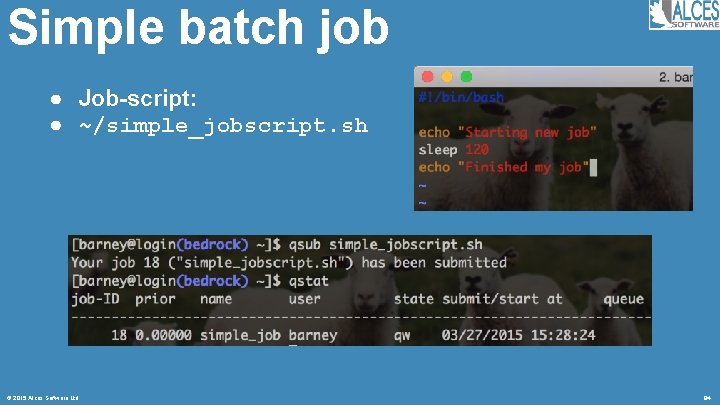

Simple batch job

- Job script:
- ~/simple_jobscript.sh

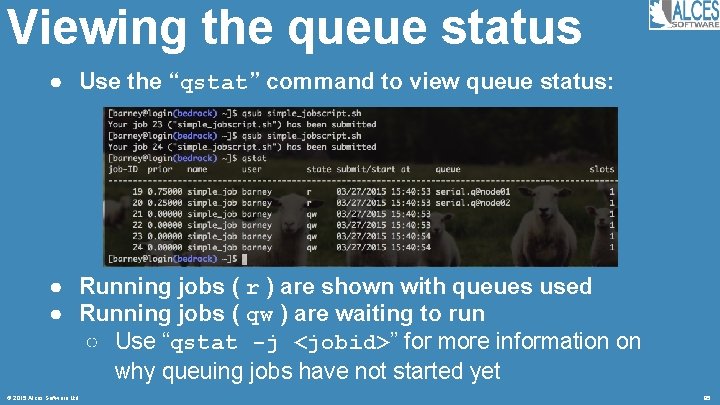

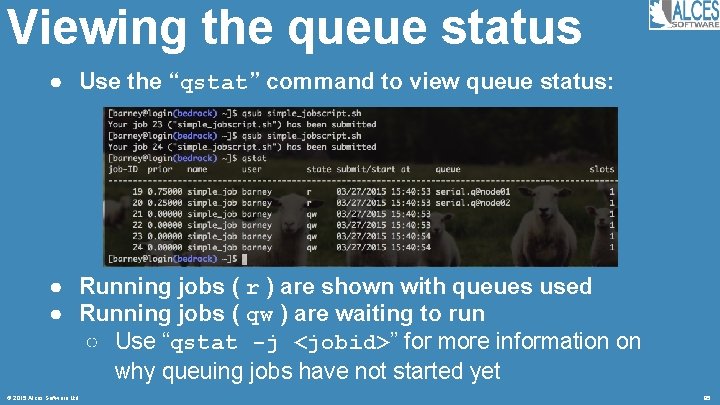

Viewing the queue status

- Use the “qstat” command to view queue status:
- Running jobs (r) are shown with the queues used
- Queued jobs (qw) are waiting to run
  - Use “qstat -j <jobid>” for more information on why queued jobs have not started yet

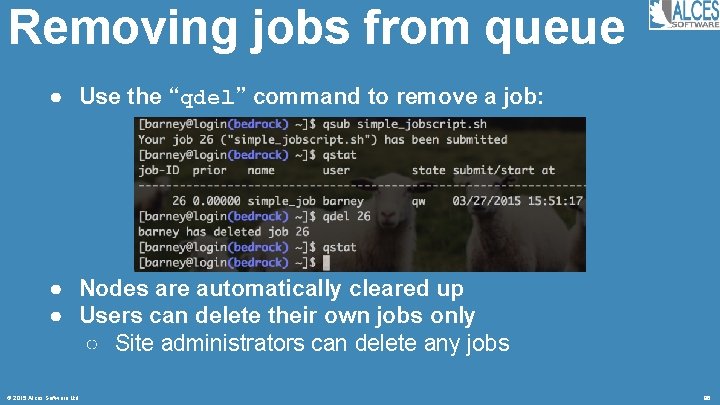

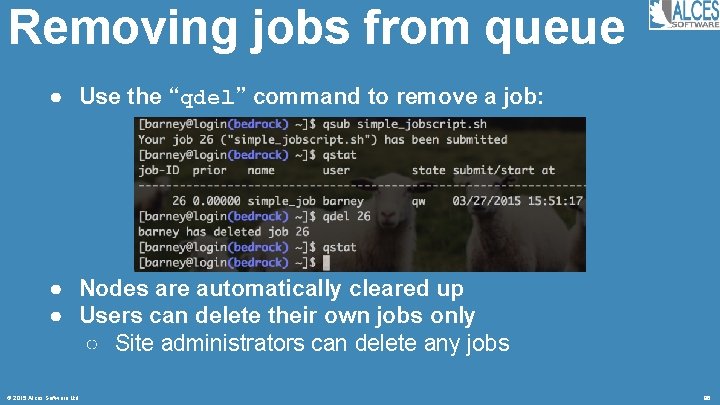

Removing jobs from queue

- Use the “qdel” command to remove a job:
- Nodes are automatically cleaned up
- Users can delete only their own jobs
  - Site administrators can delete any job

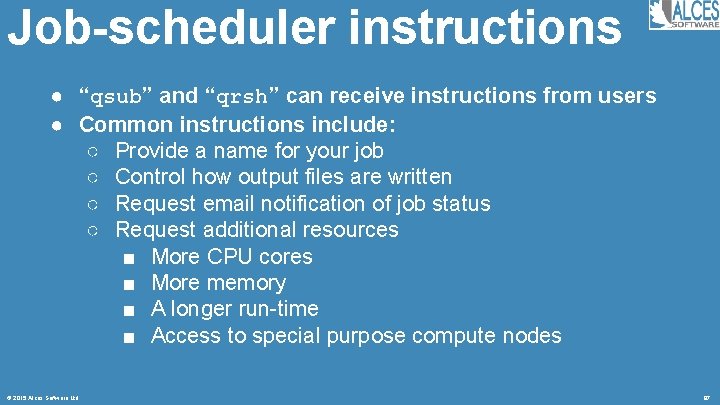

Job-scheduler instructions

- “qsub” and “qrsh” can receive instructions from users
- Common instructions include:
  - Provide a name for your job
  - Control how output files are written
  - Request email notification of job status
  - Request additional resources
    - More CPU cores
    - More memory
    - A longer runtime
    - Access to special-purpose compute nodes

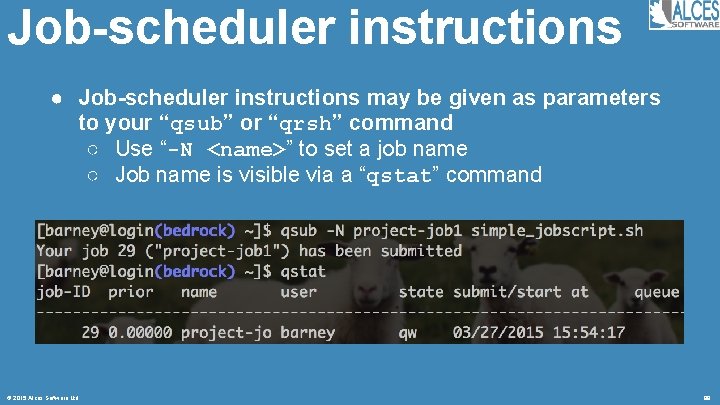

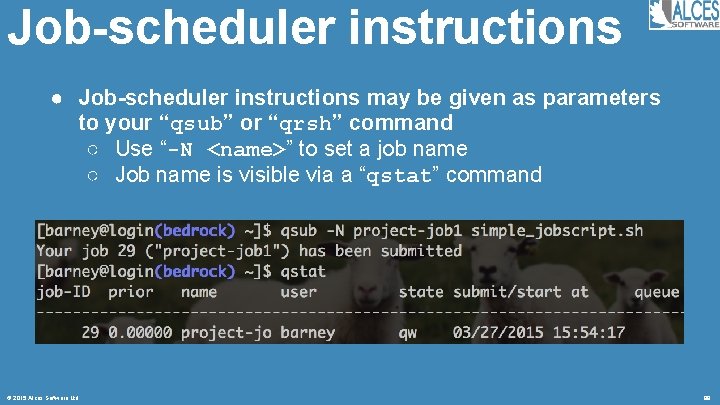

Job-scheduler instructions

- Job-scheduler instructions may be given as parameters to your “qsub” or “qrsh” command
  - Use “-N <name>” to set a job name
  - The job name is visible via the “qstat” command

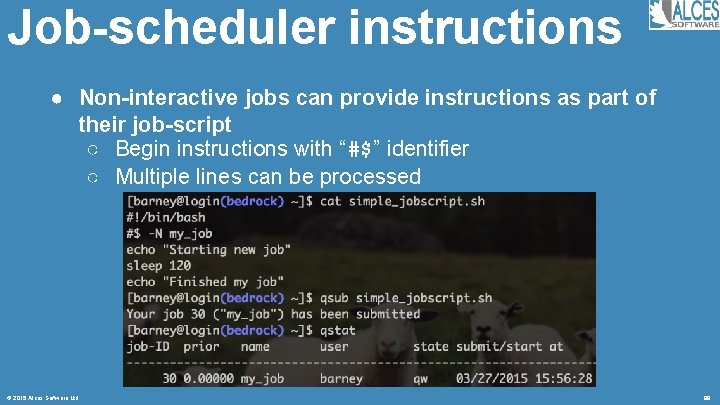

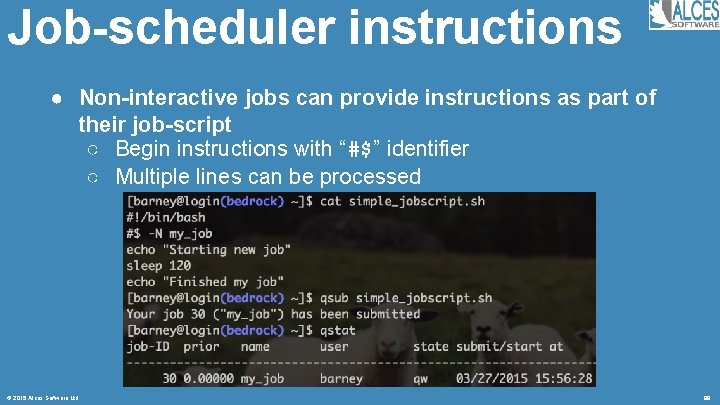

Job-scheduler instructions

- Non-interactive jobs can provide instructions as part of their job script
  - Begin each instruction line with the “#$” identifier
  - Multiple lines can be processed
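To see how the scheduler options on these slides combine into “#$” lines, here is a small Python helper that writes a job script as text. It is a convenience sketch, not part of Grid Engine: the function name and parameters are hypothetical, and it only emits options that appear on these slides (-N, -o, -M, -m, -pe, -l h_rt, -t, -R y).

```python
from __future__ import annotations


def render_jobscript(commands: list[str], *, name: str | None = None,
                     output: str | None = None, email: str | None = None,
                     mail_events: str | None = None, pe: str | None = None,
                     slots: int | None = None, runtime: str | None = None,
                     tasks: str | None = None, reserve: bool = False,
                     shell: str = "/bin/bash") -> str:
    """Build a job script whose scheduler instructions are '#$' lines."""
    if (pe is None) != (slots is None):
        raise ValueError("a parallel environment needs a slot count, and vice versa")
    directives = []
    if name:
        directives.append(f"-N {name}")            # job name shown by qstat
    if output:
        directives.append(f"-o {output}")          # e.g. a path containing $JOB_ID
    if email:
        directives.append(f"-M {email}")
    if mail_events:
        directives.append(f"-m {mail_events}")     # any of b, e, a
    if pe:
        directives.append(f"-pe {pe} {slots}")     # e.g. smp, mpislots, mpinodes
    if runtime:
        directives.append(f"-l h_rt={runtime}")    # hours:mins:secs
    if tasks:
        directives.append(f"-t {tasks}")           # task-array range, e.g. 1-100
    if reserve:
        directives.append("-R y")                  # request a resource reservation
    lines = [f"#!{shell}"] + [f"#$ {d}" for d in directives] + list(commands)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_jobscript(["echo Hello from $HOSTNAME"], name="hello",
                           output="$HOME/hello.$JOB_ID.out", runtime="1:00:00"))
```

The resulting text could be saved as, say, job.sh and submitted with qsub job.sh. Because the instructions live inside the script, the same settings apply every time the script is resubmitted.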

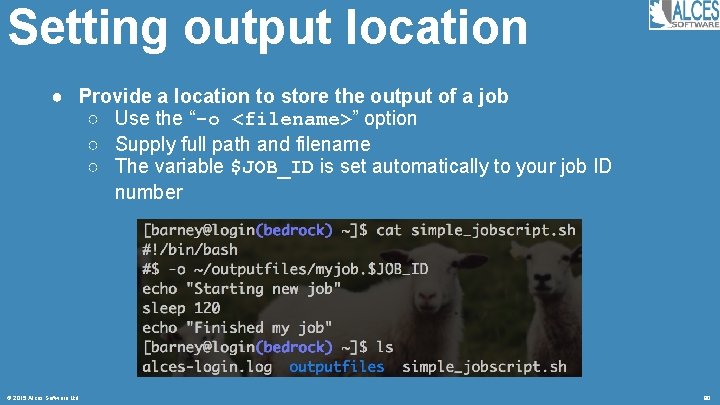

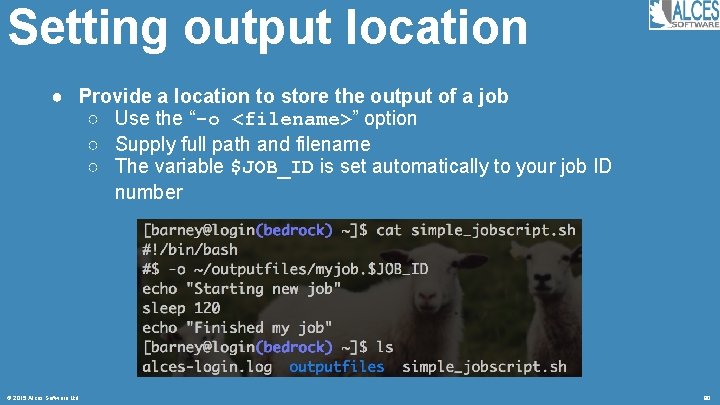

Setting output location

- Provide a location to store the output of a job
  - Use the “-o <filename>” option
  - Supply a full path and filename
  - The variable $JOB_ID is set automatically to your job ID number

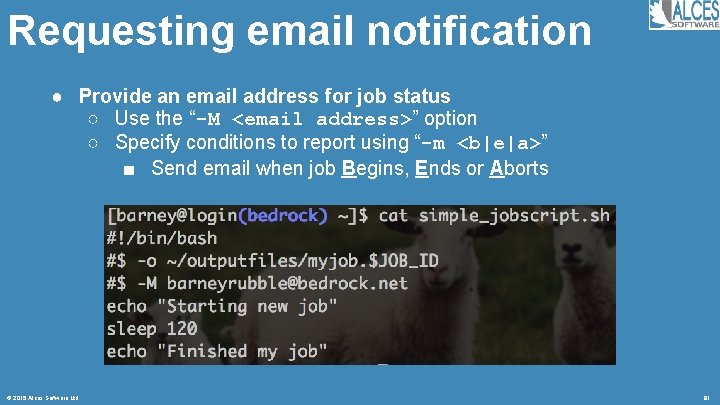

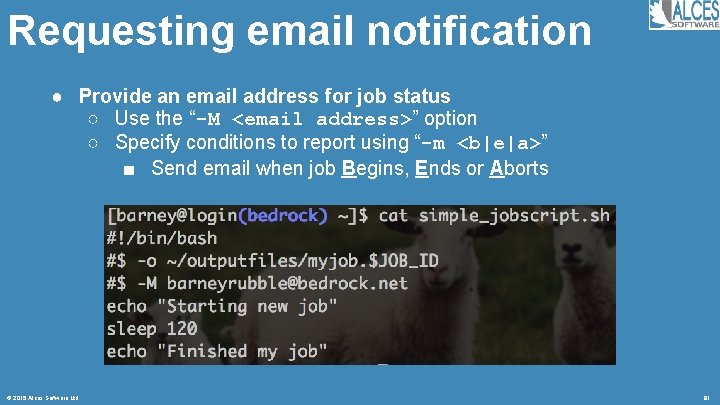

Requesting email notification

- Provide an email address for job status
  - Use the “-M <email address>” option
  - Specify conditions to report using “-m <b|e|a>”
    - Send email when the job begins, ends or aborts

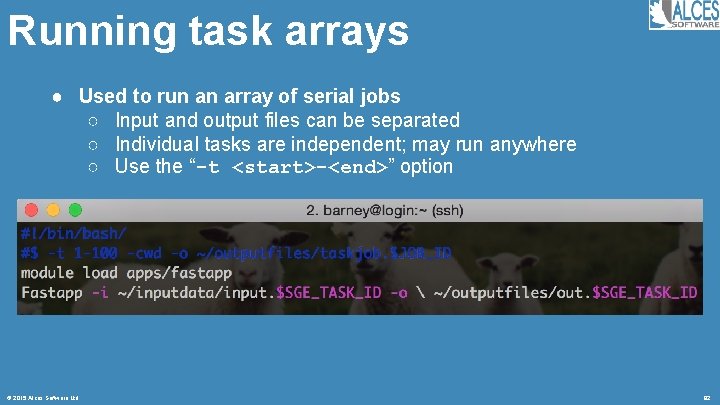

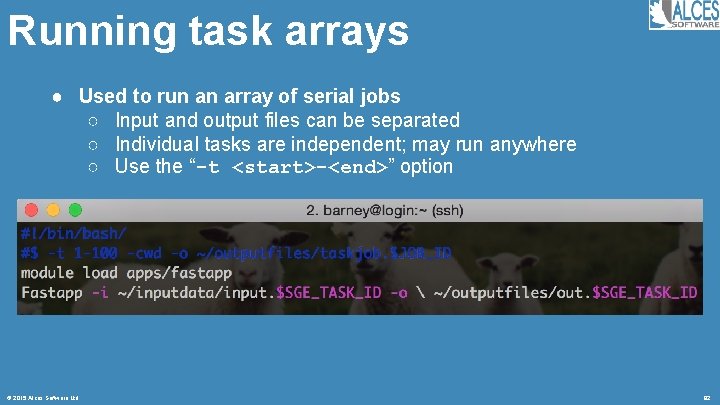

Running task arrays

- Used to run an array of serial jobs
  - Input and output files can be kept separate for each task
  - Individual tasks are independent and may run anywhere
  - Use the “-t <start>-<end>” option
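Inside a task array, each task finds out which element it is from the SGE_TASK_ID environment variable that Grid Engine sets, and uses it to pick its own input and output files. A minimal Python sketch, assuming a hypothetical input.N.dat / output.N.dat naming scheme; for a job submitted with, e.g., -t 1-100, SGE_TASK_ID runs from 1 to 100.

```python
from __future__ import annotations

import os
from collections.abc import Mapping


def current_task_id(env: Mapping[str, str] | None = None) -> int:
    """Return this task's index from SGE_TASK_ID (set by Grid Engine for task arrays)."""
    env = os.environ if env is None else env
    value = env.get("SGE_TASK_ID")
    if value is None or value == "undefined":   # non-array jobs report "undefined"
        raise RuntimeError("not running as a task-array job")
    return int(value)


def task_files(task_id: int, input_pattern: str = "input.{}.dat",
               output_pattern: str = "output.{}.dat") -> tuple[str, str]:
    """Give each task its own input and output file (naming scheme is hypothetical)."""
    return input_pattern.format(task_id), output_pattern.format(task_id)


if __name__ == "__main__":
    try:
        print("task files:", task_files(current_task_id()))
    except RuntimeError as err:
        print(err)
```

Keying every file on the task index is what lets the tasks run independently on any node without overwriting each other's results.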

Requesting additional resources

- If no additional resources are requested, jobs are automatically assigned default resources:
  - One CPU core
  - Up to 8 GB RAM
  - A maximum runtime of 72 hours (wall-clock)
- These limits are automatically enforced
- Jobs must request different limits if required

Requesting more CPU cores

- Multi-threaded and parallel jobs require users to request the number of CPU cores needed
- The scheduler uses a Parallel Environment (PE)
  - Must be requested by name
  - Number of slots (CPU cores) must be requested
  - Enables the scheduler to prepare nodes for the job
- Three PEs are available on the cluster:
  - smp / smp-verbose
  - mpislots / mpislots-verbose
  - mpinodes / mpinodes-verbose

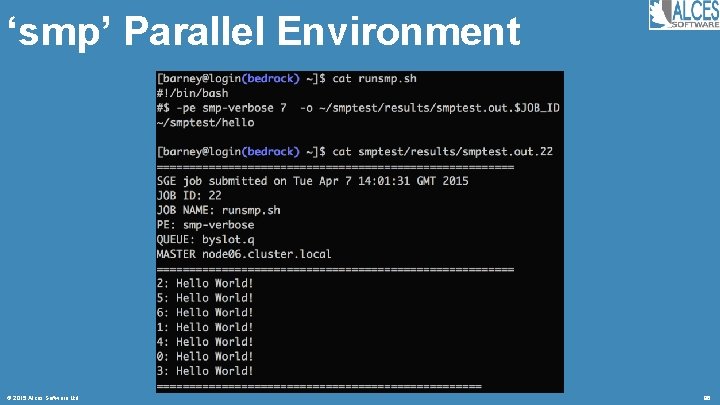

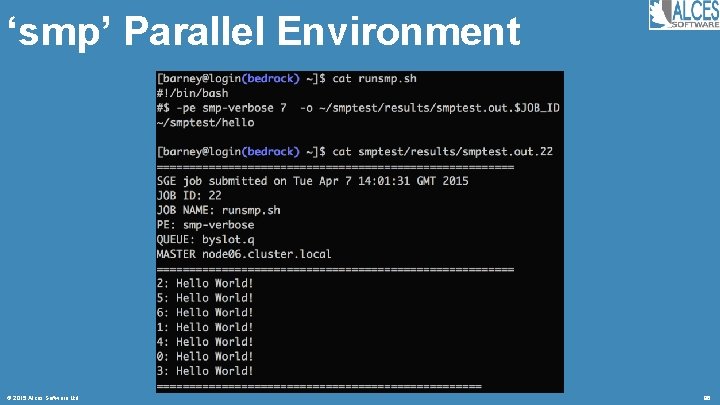

‘smp’ Parallel Environment

- Designed for SMP / multi-threaded jobs
  - Job must be contained within a single node
  - Requested with a number of slots (CPU cores)
  - Verbose variant shows setup information in job output
  - Memory limit is requested per slot
- Requested with “-pe smp-verbose <slots>”
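Because an smp job must fit on one node and memory is requested per slot, the total request is slots x per-slot memory, and both that and the slot count must fit on a single node. A Python sketch of that check, using the installed figures from the compute-node slide (16 cores; 64 GB or 256 GB). The memory actually available to jobs will be somewhat lower once the operating system takes its share, so treat these as upper bounds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    name: str
    cores: int
    memory_gb: int


# Installed figures from the compute-node slide (2 x 8-core CPUs per node).
STANDARD = NodeType("standard", cores=16, memory_gb=64)
HIGH_MEMORY = NodeType("high-memory", cores=16, memory_gb=256)


def smp_fits(slots: int, mem_per_slot_gb: int, node: NodeType) -> bool:
    """An smp job must fit on one node: enough cores and slots x per-slot memory."""
    return slots <= node.cores and slots * mem_per_slot_gb <= node.memory_gb


def max_smp_slots(mem_per_slot_gb: int, node: NodeType) -> int:
    """Largest smp slot count one node of this type can hold at the given per-slot memory."""
    return min(node.cores, node.memory_gb // mem_per_slot_gb)


if __name__ == "__main__":
    for node in (STANDARD, HIGH_MEMORY):
        print(node.name, "max smp slots at 8 GB/slot:", max_smp_slots(8, node))
```

With the 8 GB default memory request quoted on these slides, a 64 GB standard node can hold at most eight slots of one smp job; using all 16 cores needs a per-slot request of 4 GB or less, or a high-memory node.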

‘smp’ Parallel Environment

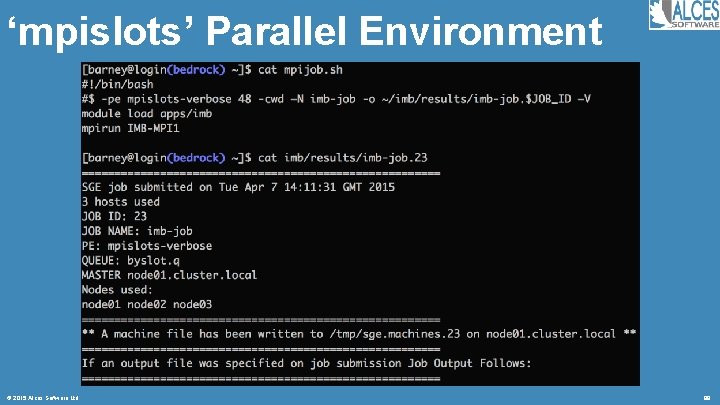

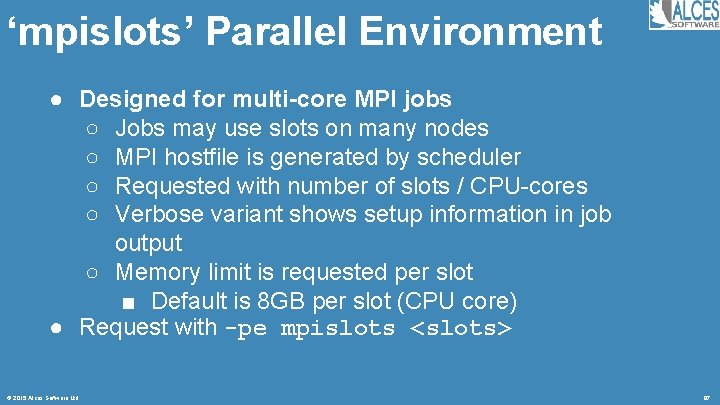

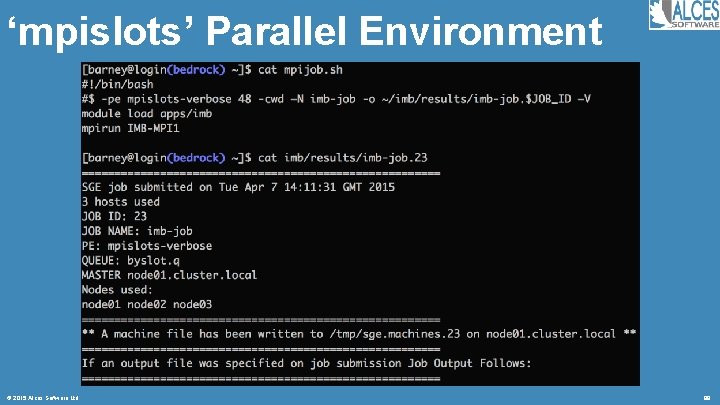

‘mpislots’ Parallel Environment

- Designed for multi-core MPI jobs
  - Jobs may use slots on many nodes
  - MPI hostfile is generated by the scheduler
  - Requested with a number of slots / CPU cores
  - Verbose variant shows setup information in job output
  - Memory limit is requested per slot
    - Default is 8 GB per slot (CPU core)
- Request with -pe mpislots <slots>

‘mpislots’ Parallel Environment

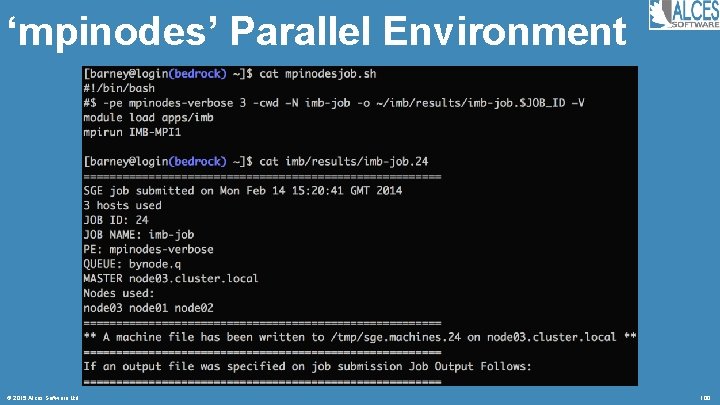

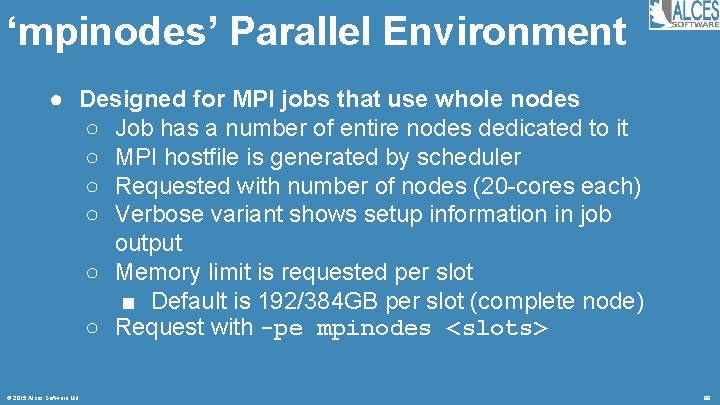

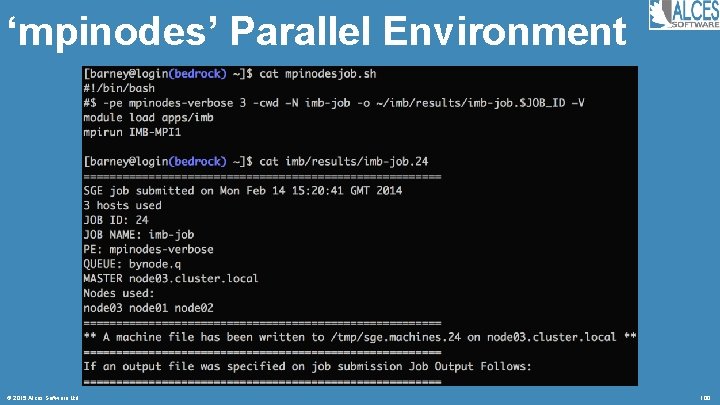

‘mpinodes’ Parallel Environment

- Designed for MPI jobs that use whole nodes
  - Job has a number of entire nodes dedicated to it
  - MPI hostfile is generated by the scheduler
  - Requested with a number of nodes (20 cores each)
  - Verbose variant shows setup information in job output
  - Memory limit is requested per slot
    - Default is 192/384 GB per slot (complete node)
  - Request with -pe mpinodes <slots>

‘mpinodes’ Parallel Environment

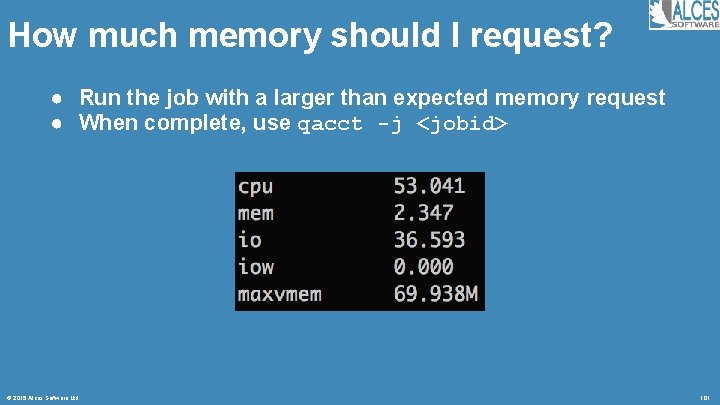

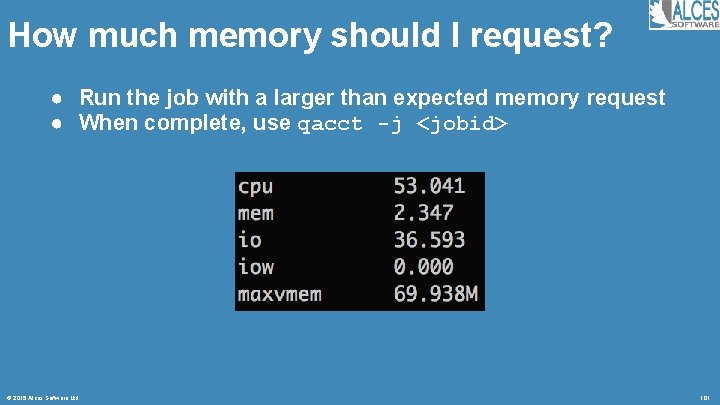

How much memory should I request?

- Run the job with a larger-than-expected memory request
- When it completes, use qacct -j <jobid> to check the peak memory actually used (maxvmem)
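Once qacct has reported the job's peak memory, the next request can be sized from it. A Python sketch, assuming you convert the reported maxvmem to MB by hand (qacct prints it with a unit suffix) and that 1 GB = 1024 MB; the 20% headroom is an arbitrary starting point, not a site policy. Integer arithmetic is used so that rounding up is exact.

```python
def suggest_mem_per_slot_gb(maxvmem_mb: int, slots: int, headroom_pct: int = 20) -> int:
    """Per-slot memory request, in whole GB, from the observed peak (maxvmem).

    Adds headroom_pct percent to the peak, divides across the job's slots,
    and rounds up to the next whole GB.
    """
    if maxvmem_mb < 0 or slots < 1 or headroom_pct < 0:
        raise ValueError("maxvmem_mb and headroom_pct must be >= 0 and slots >= 1")
    numerator = maxvmem_mb * (100 + headroom_pct)
    denominator = 100 * 1024 * slots
    return -(-numerator // denominator)  # ceiling division


if __name__ == "__main__":
    # e.g. a 4-slot job whose peak was 10 GB (10240 MB)
    print(suggest_mem_per_slot_gb(10240, slots=4), "GB per slot")
```

Because memory is requested per slot on this cluster, the peak is divided by the slot count; forgetting that step would over-request by a factor of the number of slots.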

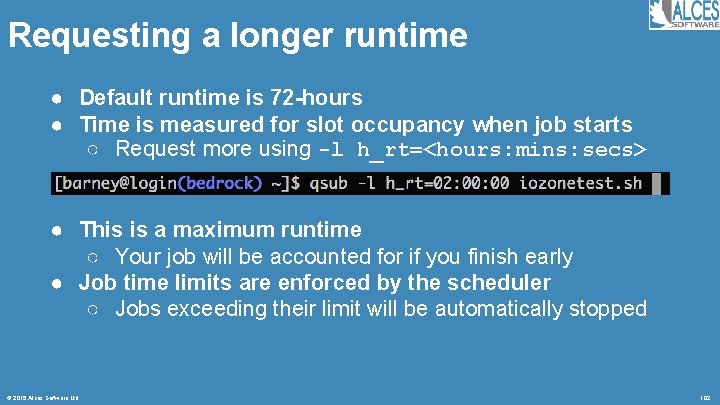

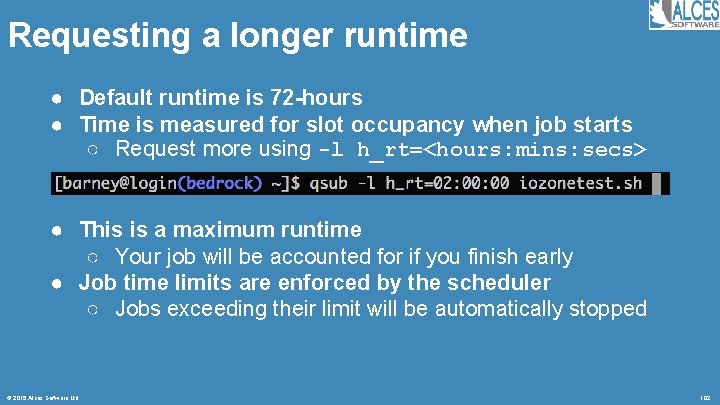

Requesting a longer runtime

- Default runtime is 72 hours
- Time is measured from when the job starts occupying its slots
  - Request more using -l h_rt=<hours:mins:secs>
- This is a maximum runtime
  - If your job finishes early, only the time actually used is accounted for
- Job time limits are enforced by the scheduler
  - Jobs exceeding their limit will be automatically stopped
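A small Python helper for working with h_rt values in the hours:mins:secs form shown above: converting to and from seconds and checking whether an estimate exceeds the 72-hour default. Grid Engine also accepts other time formats; this sketch handles only the colon-separated form, and the 0 to 59 range check on minutes and seconds is a deliberately strict choice of this example.

```python
DEFAULT_RUNTIME_S = 72 * 3600   # the 72-hour default wall-clock limit


def parse_h_rt(value: str) -> int:
    """Convert an h_rt value written as hours:mins:secs into seconds."""
    hours, minutes, seconds = (int(part) for part in value.split(":"))
    if hours < 0 or not 0 <= minutes < 60 or not 0 <= seconds < 60:
        raise ValueError(f"not a valid hours:mins:secs value: {value!r}")
    return hours * 3600 + minutes * 60 + seconds


def format_h_rt(total_seconds: int) -> str:
    """Render seconds as hours:mins:secs for use in -l h_rt=..."""
    hours, rest = divmod(total_seconds, 3600)
    minutes, seconds = divmod(rest, 60)
    return f"{hours}:{minutes:02d}:{seconds:02d}"


def needs_runtime_request(estimate_s: int) -> bool:
    """True if a job is expected to run longer than the default limit."""
    return estimate_s > DEFAULT_RUNTIME_S


if __name__ == "__main__":
    estimate = 96 * 3600 + 30 * 60
    if needs_runtime_request(estimate):
        print(f"-l h_rt={format_h_rt(estimate)}")
```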

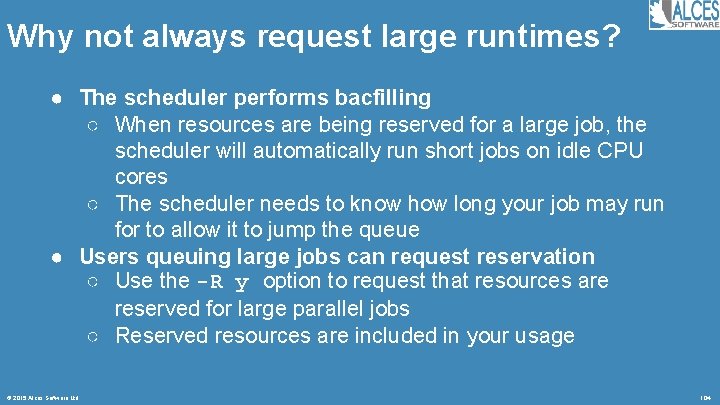


Why not always request large runtimes?

- The scheduler performs backfilling
  - When resources are being reserved for a large job, the scheduler will automatically run short jobs on idle CPU cores
  - The scheduler needs to know how long your job may run for to allow it to jump the queue
- Users queuing large jobs can request a reservation
  - Use the -R y option to request that resources are reserved for large parallel jobs
  - Reserved resources are included in your usage
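The core of the backfilling decision can be written as a one-line test: a waiting job may start on idle cores only if its requested maximum runtime ends before the reserved job is due to start. This Python sketch is a simplification for illustration; the real scheduler weighs more factors (other resources, priorities, queue limits) than cores and time.

```python
def can_backfill(now_s: int, h_rt_s: int, cores_needed: int,
                 idle_cores: int, reservation_start_s: int) -> bool:
    """Simplified backfill test for a waiting job.

    The job may start now only if enough cores are idle and its requested
    maximum runtime ends before the reserved job is due to start.
    """
    return cores_needed <= idle_cores and now_s + h_rt_s <= reservation_start_s


if __name__ == "__main__":
    gap = 2 * 3600   # reservation starts in two hours; 8 cores idle until then
    print("1-hour request:", can_backfill(0, 3600, 4, 8, gap))
    print("72-hour default:", can_backfill(0, 72 * 3600, 4, 8, gap))
```

This is why an honest, short h_rt helps: the same four-core job fits into a two-hour gap when it asks for one hour, but not when it carries the 72-hour default.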

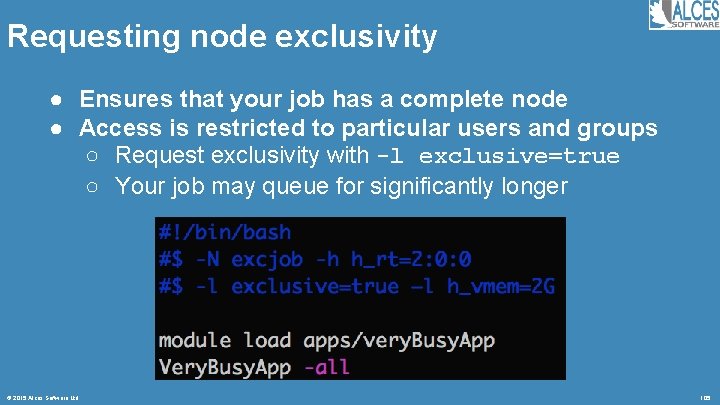

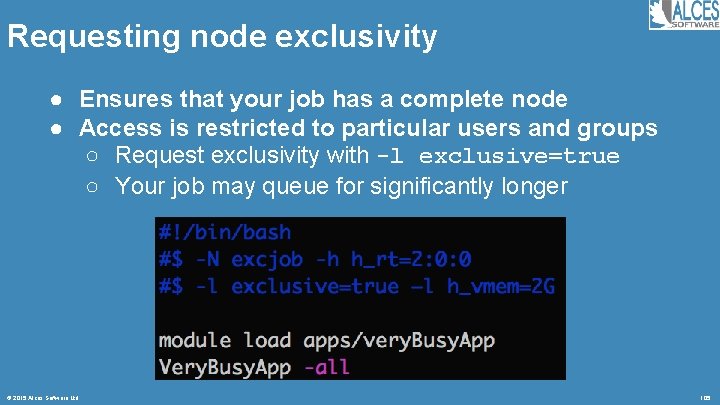

Requesting node exclusivity

- Ensures that your job has a complete node
- Access is restricted to particular users and groups
  - Request exclusivity with -l exclusive=true
  - Your job may queue for significantly longer

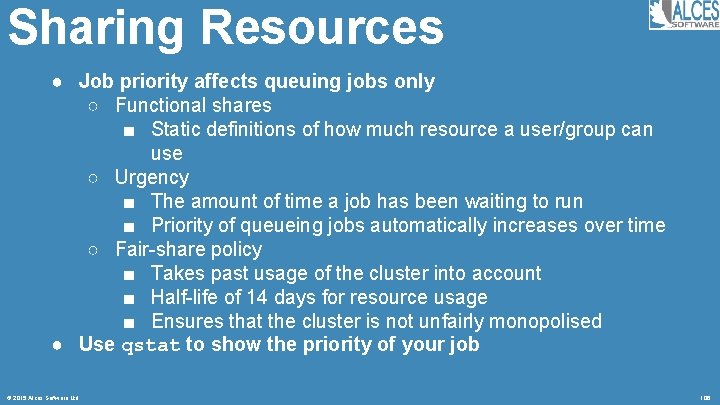

Sharing Resources

- Job priority affects queuing jobs only
  - Functional shares
    - Static definitions of how much resource a user/group can use
  - Urgency
    - The amount of time a job has been waiting to run
    - The priority of queuing jobs automatically increases over time
  - Fair-share policy
    - Takes past usage of the cluster into account
    - Half-life of 14 days for resource usage
    - Ensures that the cluster is not unfairly monopolised
- Use qstat to show the priority of your job
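A 14-day half-life means past usage counts for half as much after two weeks, a quarter after four, and so on. A Python sketch of that exponential decay, treating usage as a list of (day used, amount) records; this models the idea behind the fair-share policy rather than reproducing Grid Engine's internal accounting.

```python
from __future__ import annotations


def decayed_usage(usage: float, age_days: float, half_life_days: float = 14.0) -> float:
    """Weight of past usage after age_days, halving every half_life_days."""
    return usage * 0.5 ** (age_days / half_life_days)


def effective_usage(records: list[tuple[float, float]], now_day: float,
                    half_life_days: float = 14.0) -> float:
    """Sum of decayed usage over (day_used, amount) records."""
    return sum(decayed_usage(amount, now_day - day, half_life_days)
               for day, amount in records)


if __name__ == "__main__":
    # 100 core-hours used on day 0 and another 100 on day 14, viewed on day 28
    print(effective_usage([(0, 100), (14, 100)], now_day=28))
```

Under this model, a heavy burst of usage stops weighing much on a user's priority after a few half-lives, so nobody is penalised indefinitely for past work.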

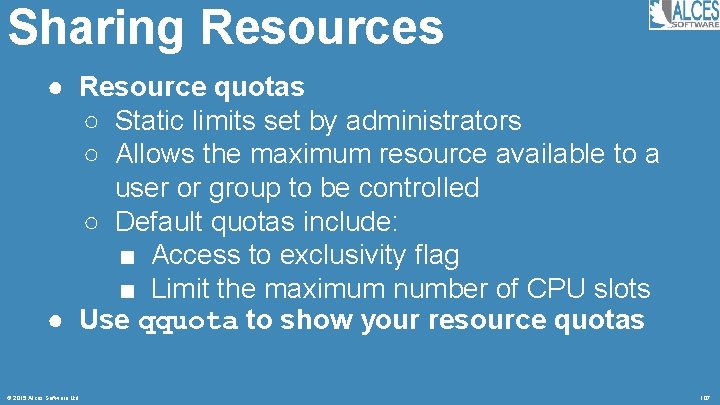

Sharing Resources

- Resource quotas
  - Static limits set by administrators
  - Allow the maximum resource available to a user or group to be controlled
  - Default quotas include:
    - Access to the exclusivity flag
    - A limit on the maximum number of CPU slots
- Use qquota to show your resource quotas

Queue Administration

- Administrators can perform queue management
  - Delete jobs (qdel)
  - Enable and disable queues (qmod)
    - Disabled queues finish running jobs but do not start more


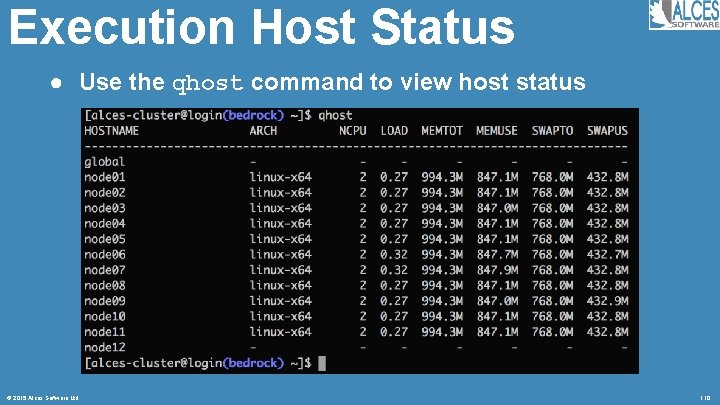

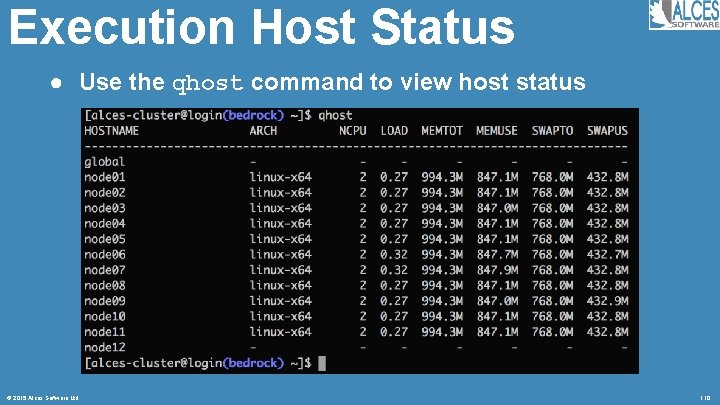

Execution Host Status

- Use the qhost command to view host status

Requesting Support: An overview for administrators and researchers

Support services

- Site administrator
  - On-site point of contact for all users
    - Service delivery lead for the site
  - Organises, prioritises and referees user requests
    - Determines usage policy, time sharing, etc.
  - Responsible for data management
    - Able to organise data files stored on the cluster
    - Can set user quotas and change file ownership and groups
  - Requests vendor service via support tickets
    - Changes to the service must be authorized by the site admin
    - Responsibility is delegated to another admin during absence

Remote managed service

- System maintenance tasks
  - “It used to work and now it doesn’t”
  - General system maintenance
    - Generally non-intrusive to user workloads
    - System monitoring, reporting and tuning
    - Redundant hardware to enhance availability
  - Scheduled preventative maintenance
    - Generally requires a pre-arranged outage of the HPC service
    - Typically 1 day of scheduled maintenance every calendar quarter
  - Emergency maintenance (priority 1 requests)
    - Restoration of service after serious failure

Remote managed service

- Usage assistance
  - “Something isn’t working the way I expected”
  - Primary contact for users is the site administrator
  - Further assistance provided by the remote support service
  - Example user queries include:
    - My HPC job runs slower than it did last week
    - How do I write a job script for the HPC scheduler and my application?
    - Performance of my job is not scaling as expected
    - Where should I store my files?
    - Why is my HPC job/OpenStack VM still waiting in the queue?

Remote managed service

- Enhancement requests
  - “I need help to do a new thing”
  - Changes to the existing environment
  - May require a scheduled maintenance period
  - Example enhancements may include:
    - Alternative HPC scheduler configuration
    - New storage system pool or resource
    - New or upgraded system software
    - New or upgraded application software
    - New OpenStack Glance images with pre-loaded application(s)

Requesting assistance

- Support request email sent by the site administrator
  - Must contain a description of the problem
  - Username or group experiencing the problem
  - Example job output or log files that show the issue
  - Method for replicating the problem
  - Request priority and business justification
- support@alces-software.com

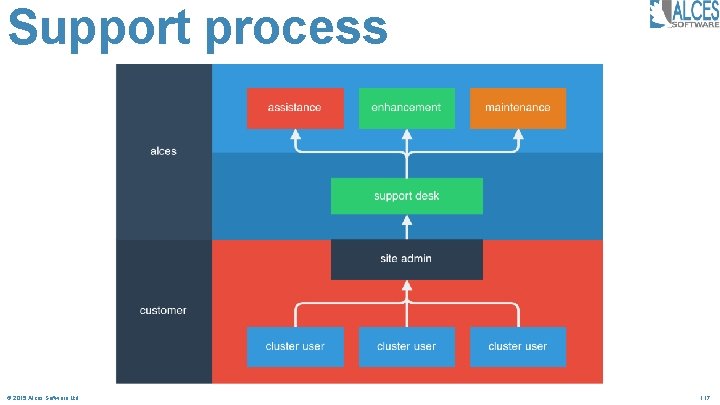

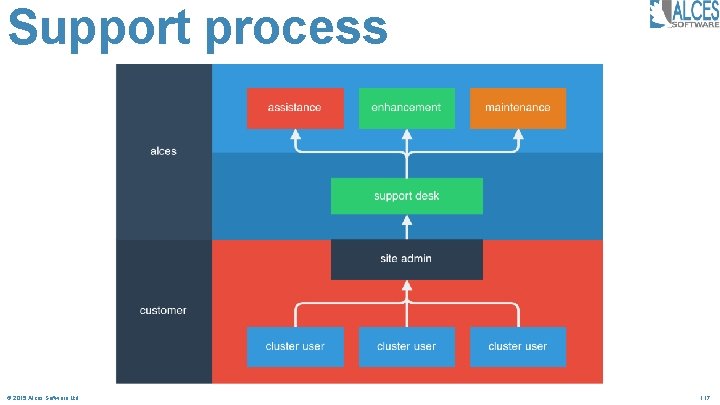

Support process

Visiting site

- Site engineer visits
  - Hot-swap components typically ship direct to site
  - Dell/Alces engineers may need to visit site for fixes
  - 4-hour response for critical components
  - Next-business-day response for compute nodes
  - All hardware replacements scheduled by Alces during the managed-service period
    - Dell/Alces responsible for all site visits and hardware replacements
  - May be scheduled by the customer in an emergency

Service review meetings

- Regular account meeting
  - Review maintenance requests for the cluster
    - Plan preventative maintenance sessions
    - Root-cause analysis for unscheduled outages
  - Review assistance requests from users
    - Identify common requests and feed them back into documentation
    - Determine future training requirements
  - Review enhancement requests
    - Consider cluster utilisation and update policies as required
    - Prioritise outstanding requests for new software packages
    - Rationalise the installed software base
- Quarterly visit with site administrators to review the service during the managed-service period

Site Admin Tasks

- Point of contact for HPC users
- User data manipulation
- Storage quota management
- Final authorization for service changes
- Arbitration for resource conflicts
- Point of contact for regular service reviews
- Request reporting to managed service support