Introduction to Tufts High Performance Compute (HPC) Cluster


- Slides: 41

Introduction to Tufts High Performance Compute (HPC) Cluster & Slurm
Delilah Maloney
Research Technology, Tufts Technology Services
Delilah.Maloney@tufts.edu
tts-research@tufts.edu

What is an HPC Cluster?
• A computer cluster is a set of loosely or tightly connected computers that work together so that, in many respects, they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. [Wikipedia]

What is an HPC Cluster?
• Computers
  - CPUs: up to 72 cores per node
  - Memory: high-memory nodes available with up to 1 TB
  - Storage: home directory and project storage
  - Accelerators: GPUs
• Interconnect/Network
• Operating System and Software

What is an HPC Cluster?
• Linux Operating System
  - Since Nov. 2017, all of the TOP500 supercomputers have used Linux-based operating systems.

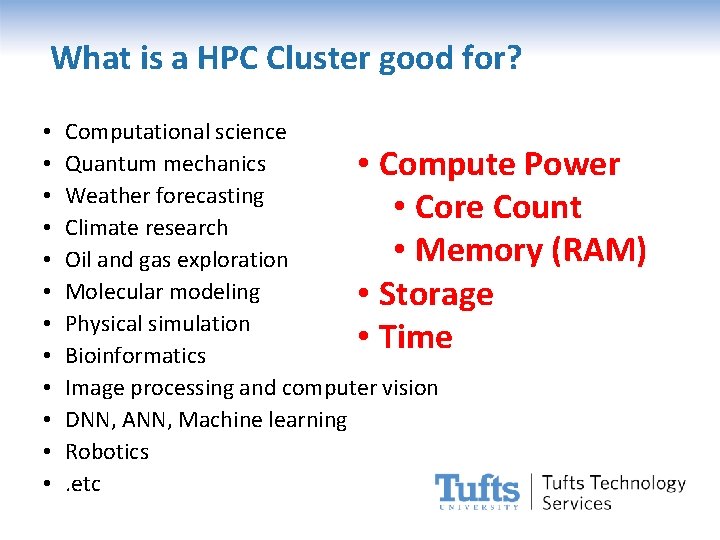

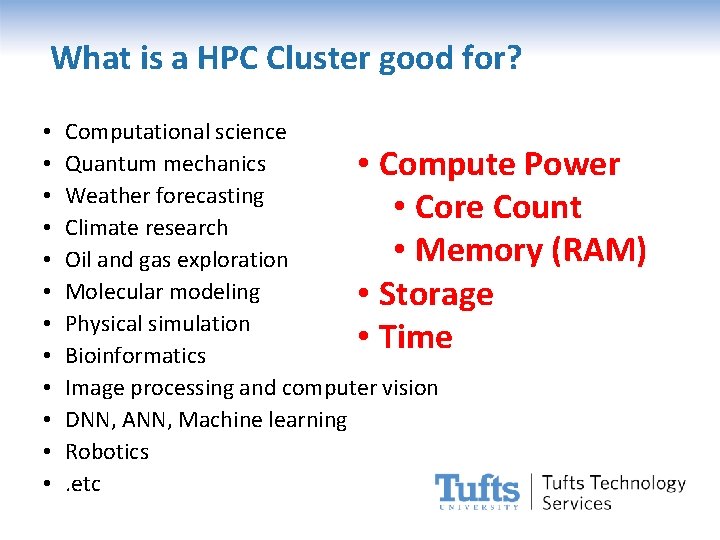

What is an HPC Cluster good for?
• Application areas: computational science, quantum mechanics, weather forecasting, climate research, oil and gas exploration, molecular modeling, physical simulation, bioinformatics, image processing and computer vision, DNN, ANN, machine learning, robotics, etc.
• Resources: compute power, core count, memory (RAM), storage, time

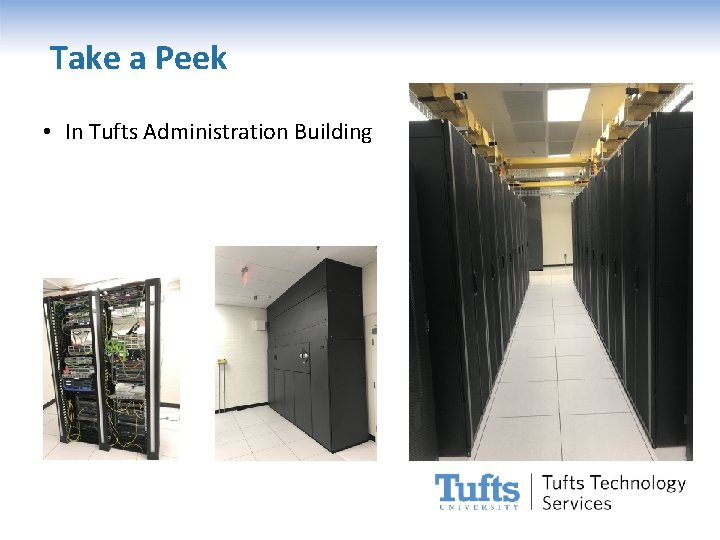

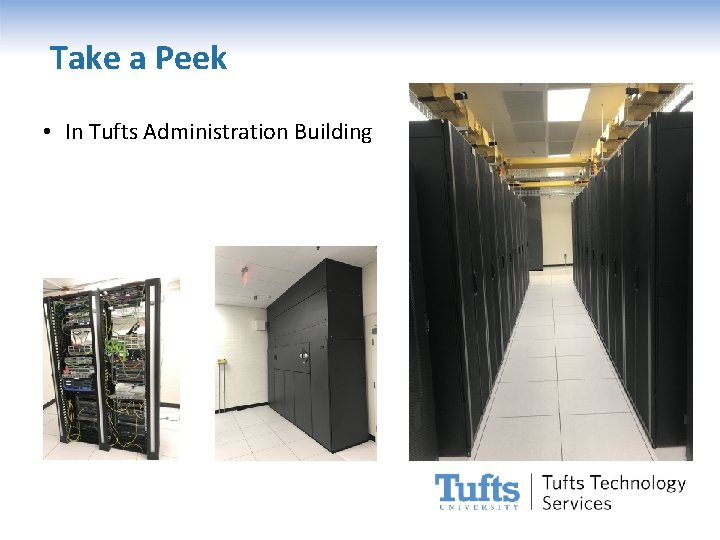

Take a Peek • In Tufts Administration Building

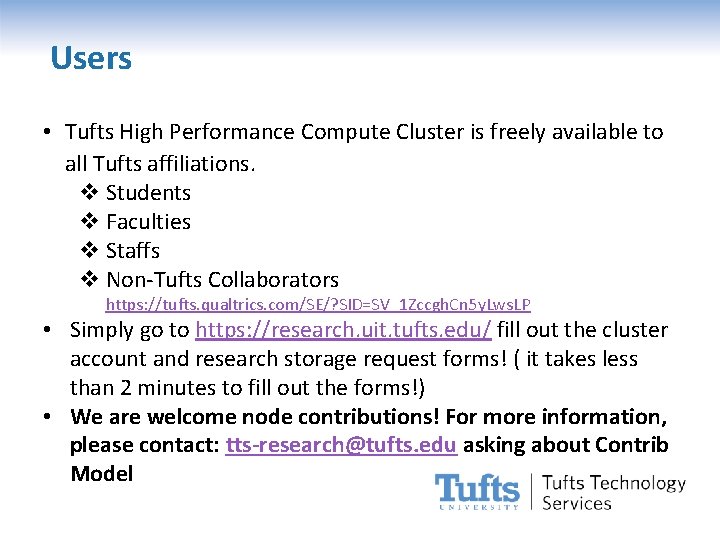

Users
• The Tufts High Performance Compute Cluster is freely available to all Tufts affiliates:
  - Students
  - Faculty
  - Staff
  - Non-Tufts collaborators: https://tufts.qualtrics.com/SE/?SID=SV_1ZccghCn5yLwsLP
• Go to https://research.uit.tufts.edu/ and fill out the cluster account and research storage request forms (it takes less than 2 minutes).
• We welcome node contributions! For more information, contact tts-research@tufts.edu and ask about the Contrib Model.

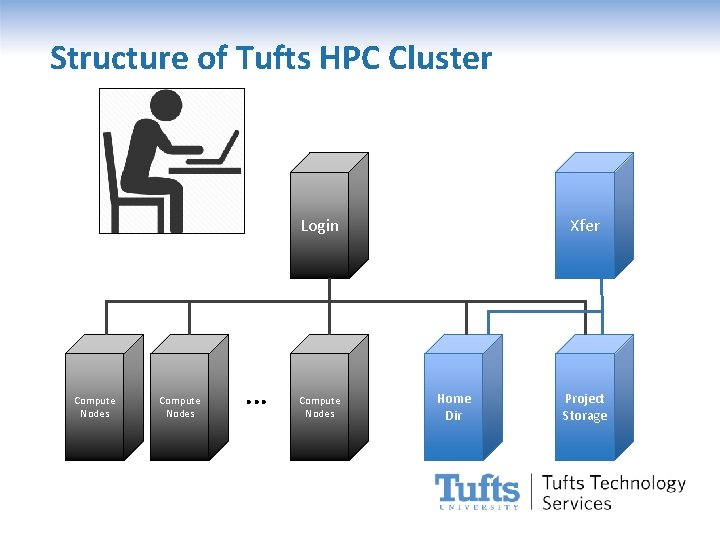

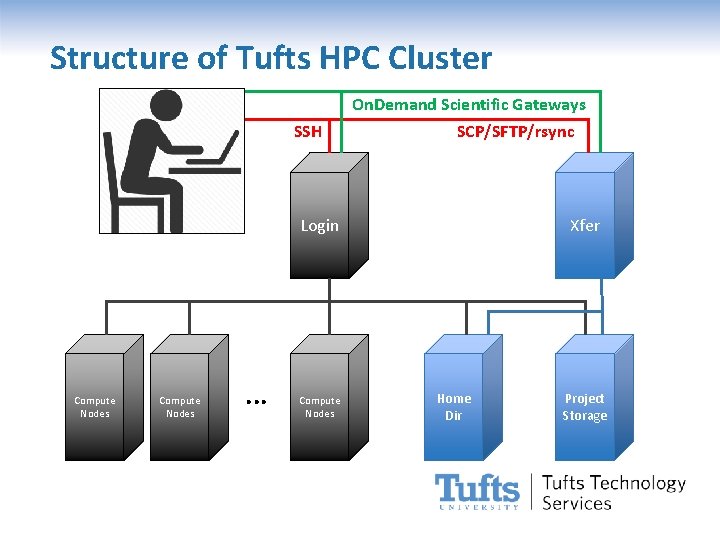

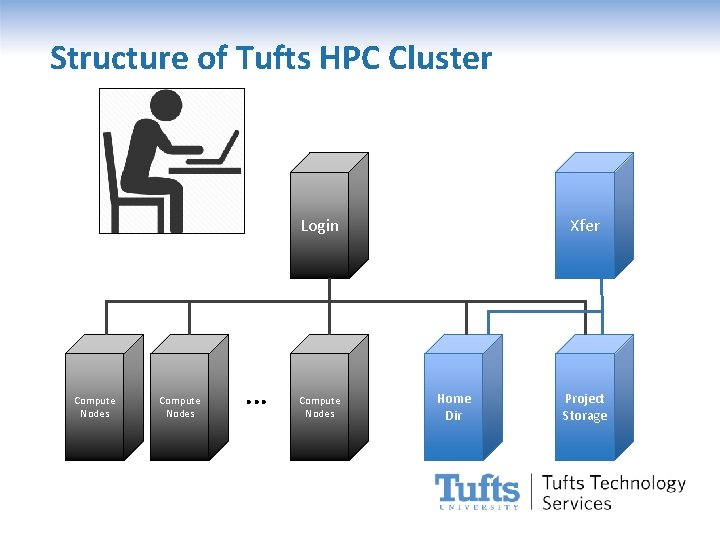

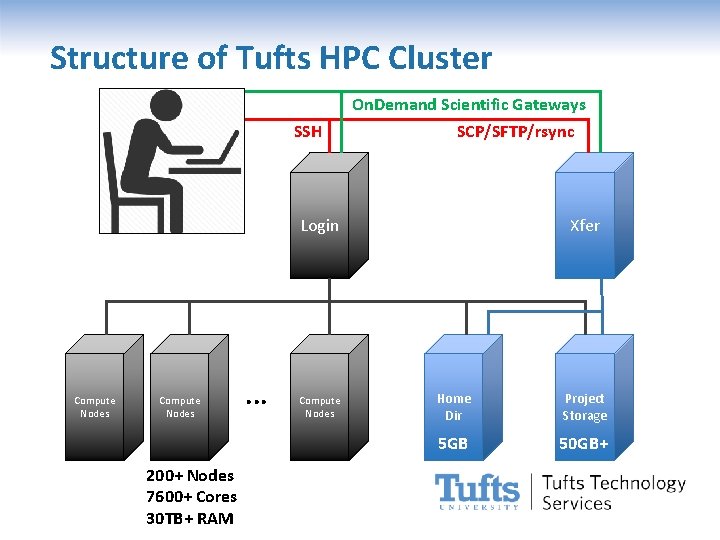


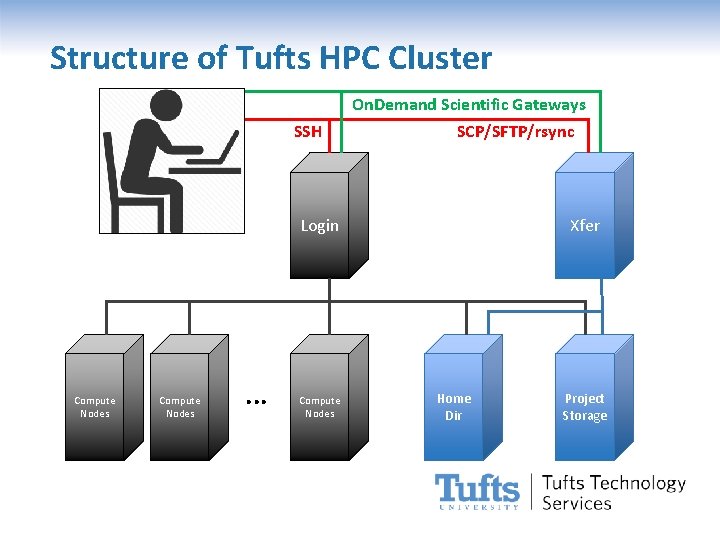


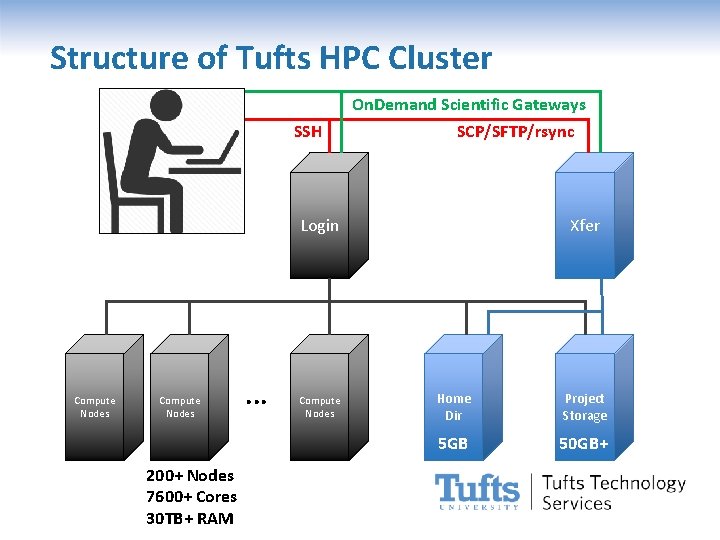

Structure of Tufts HPC Cluster
• Access: OnDemand, Scientific Gateways, SSH, SCP/SFTP/rsync
• Login node
• Xfer node
• Compute nodes: 200+ nodes, 7600+ cores, 30 TB+ RAM
• Storage: Home Dir (5 GB), Project Storage (50 GB+)

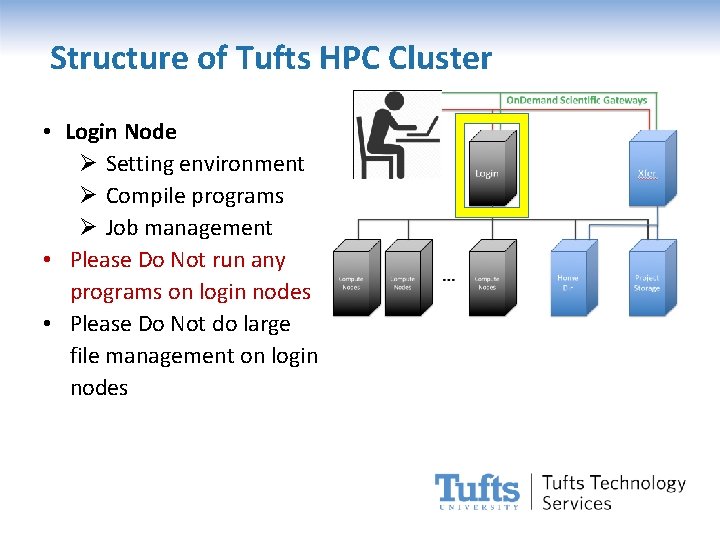

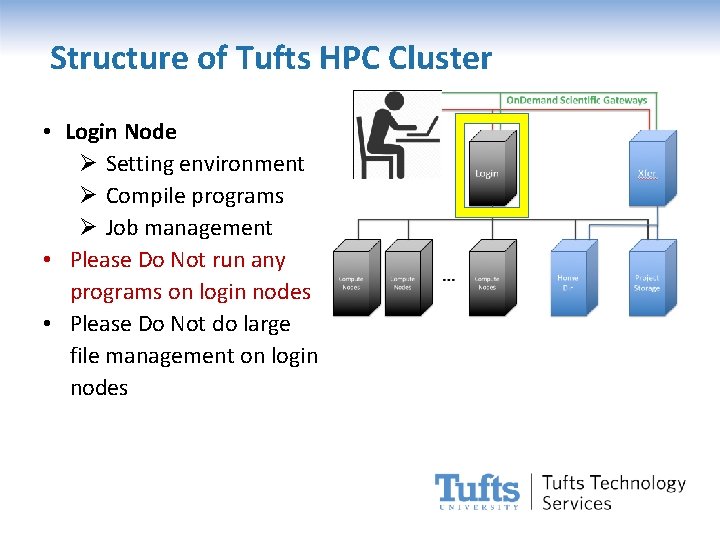

Structure of Tufts HPC Cluster
• Login Node
  - Setting up your environment
  - Compiling programs
  - Job management
• Please do NOT run any programs on login nodes.
• Please do NOT do large file management on login nodes.

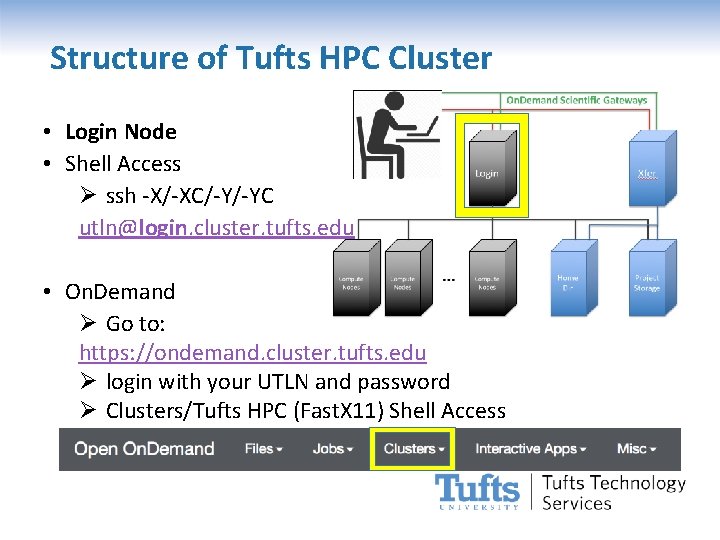

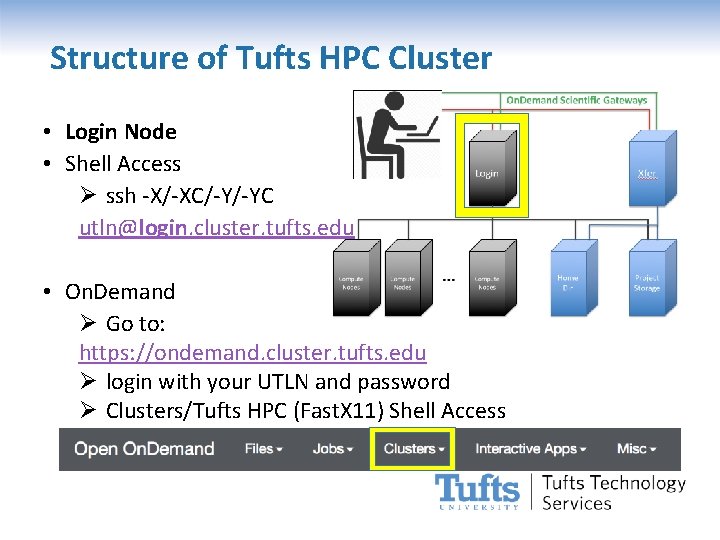

Structure of Tufts HPC Cluster
• Login Node
• Shell Access
  - ssh -X/-XC/-Y/-YC utln@login.cluster.tufts.edu
• OnDemand
  - Go to: https://ondemand.cluster.tufts.edu
  - Log in with your UTLN and password
  - Clusters/Tufts HPC (FastX11) Shell Access

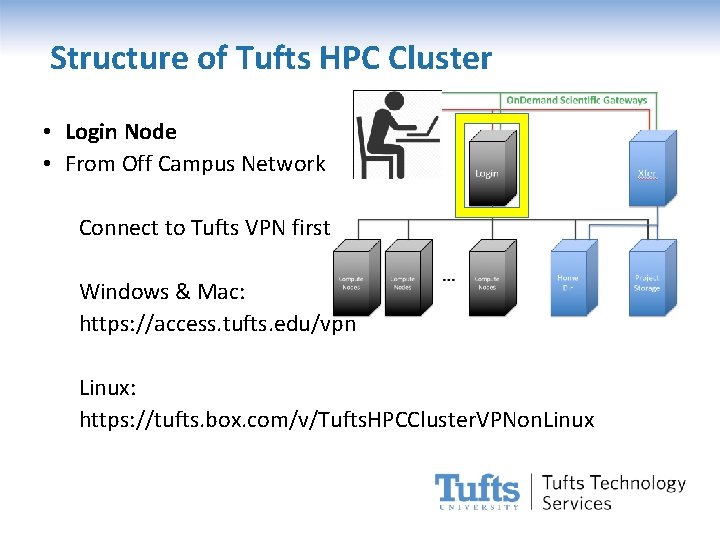

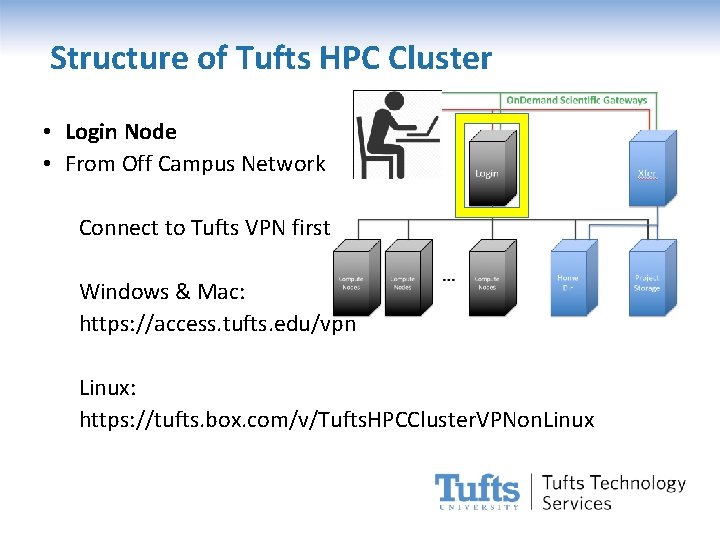

Structure of Tufts HPC Cluster
• Login Node
• From an off-campus network, connect to the Tufts VPN first:
  - Windows & Mac: https://access.tufts.edu/vpn
  - Linux: https://tufts.box.com/v/TuftsHPCClusterVPNonLinux

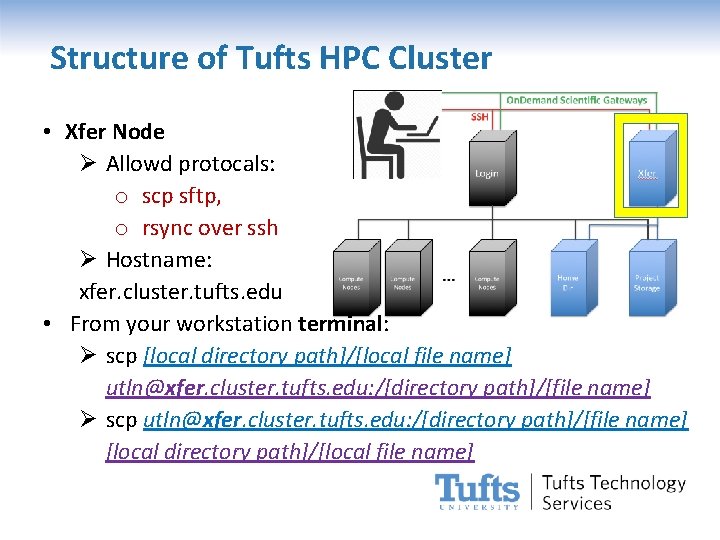

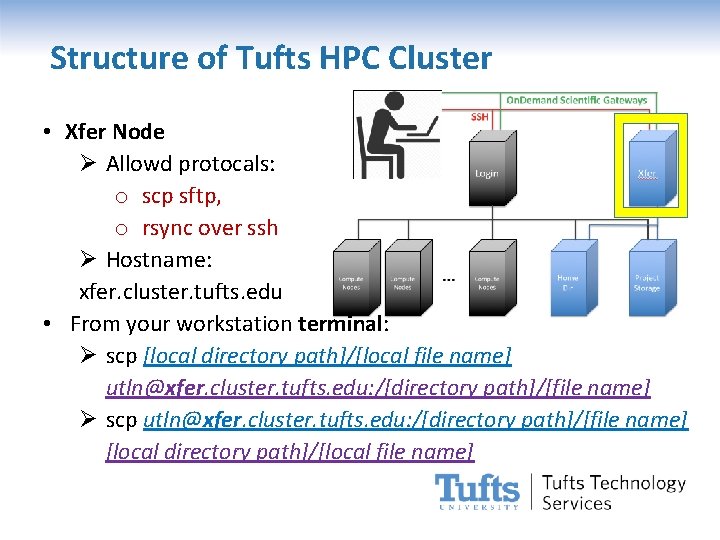

Structure of Tufts HPC Cluster
• Xfer Node
  - Allowed protocols: scp, sftp, rsync over ssh
  - Hostname: xfer.cluster.tufts.edu
• From your workstation terminal:
  - Upload: scp [local directory path]/[local file name] utln@xfer.cluster.tufts.edu:/[directory path]/[file name]
  - Download: scp utln@xfer.cluster.tufts.edu:/[directory path]/[file name] [local directory path]/[local file name]
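The transfer commands above could be scripted roughly as follows. This is only a dry-run sketch: it prints the commands instead of running them, so it is safe to try anywhere. The account name `your_utln`, the file names, and the remote `workshop` directory are placeholders, not values from the slides.

```shell
#!/bin/bash
# Dry-run sketch: prints transfer commands instead of running them.
# UTLN, the file names, and REMOTE_DIR are placeholders.
UTLN="your_utln"
XFER_HOST="xfer.cluster.tufts.edu"
REMOTE_DIR="/cluster/home/${UTLN}/workshop"   # hypothetical directory

# Upload: local file -> cluster
upload_cmd() {
    printf 'scp %s %s@%s:%s/\n' "$1" "$UTLN" "$XFER_HOST" "$REMOTE_DIR"
}

# Download: cluster -> current local directory
download_cmd() {
    printf 'scp %s@%s:%s/%s .\n' "$UTLN" "$XFER_HOST" "$REMOTE_DIR" "$1"
}

# rsync over ssh: skips files that are already up to date on the other side
sync_cmd() {
    printf 'rsync -av -e ssh %s/ %s@%s:%s/\n' "$1" "$UTLN" "$XFER_HOST" "$REMOTE_DIR"
}

upload_cmd results.tar
download_cmd results.tar
sync_cmd ./project
```

Copy a printed line into your workstation terminal (with real names filled in) to perform the transfer.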

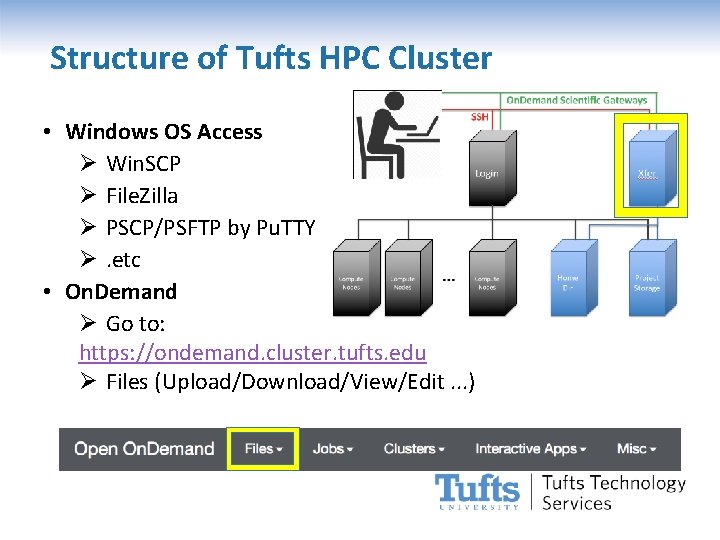

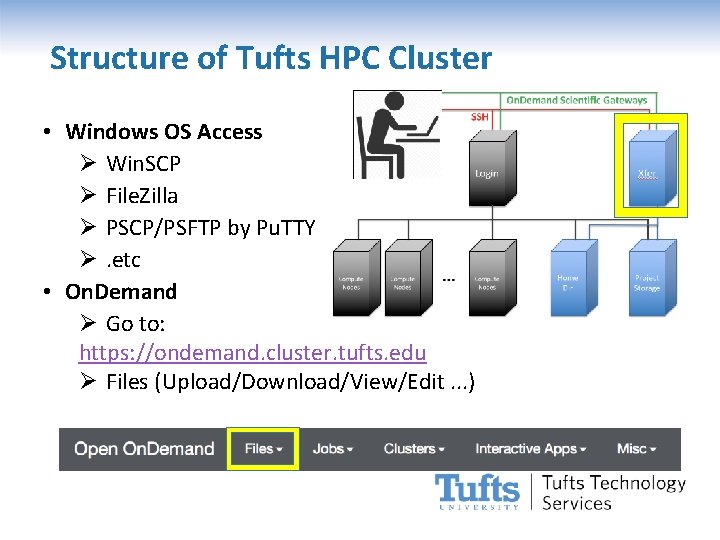

Structure of Tufts HPC Cluster
• Windows OS Access
  - WinSCP
  - FileZilla
  - PSCP/PSFTP by PuTTY
  - etc.
• OnDemand
  - Go to: https://ondemand.cluster.tufts.edu
  - Files (Upload/Download/View/Edit...)

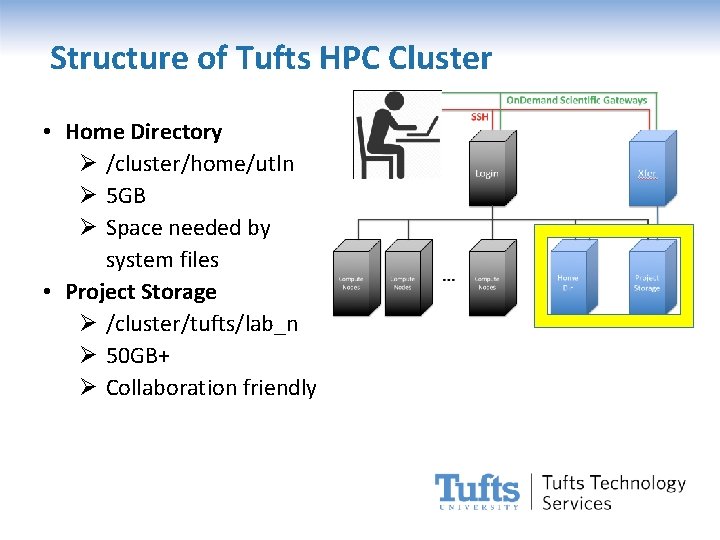

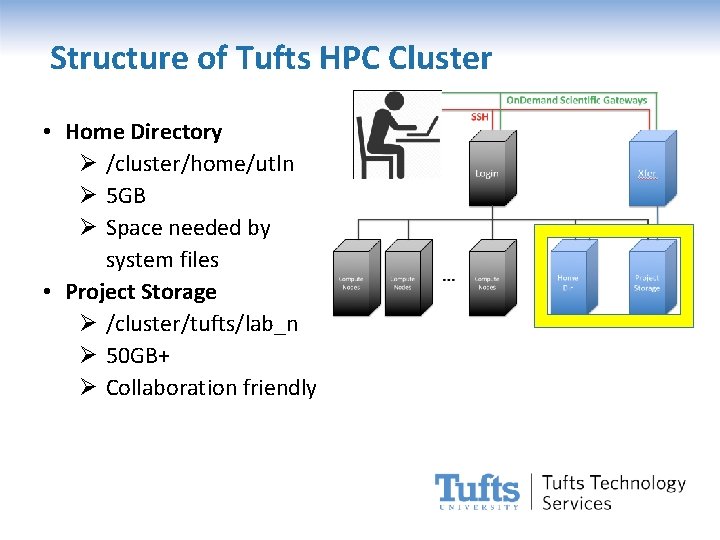

Structure of Tufts HPC Cluster
• Home Directory
  - /cluster/home/utln
  - 5 GB
  - Space needed by system files
• Project Storage
  - /cluster/tufts/lab_n
  - 50 GB+
  - Collaboration friendly

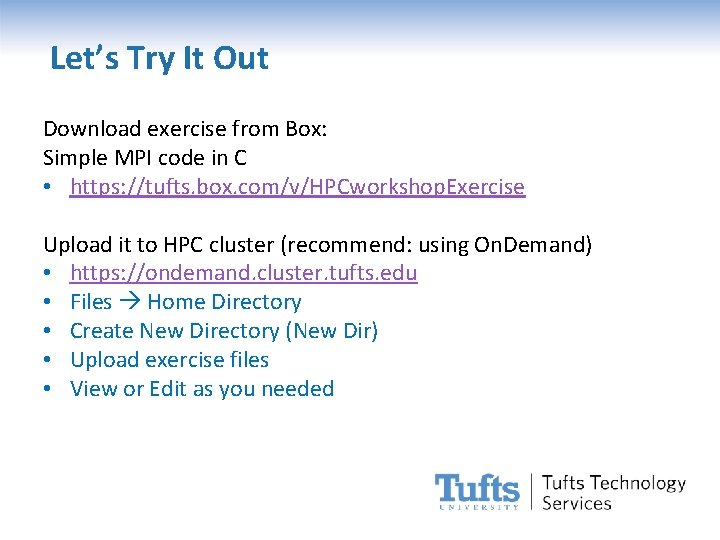

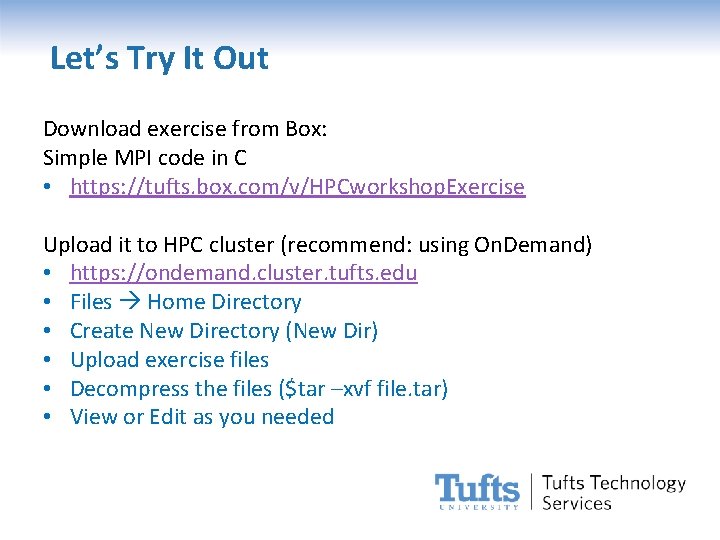

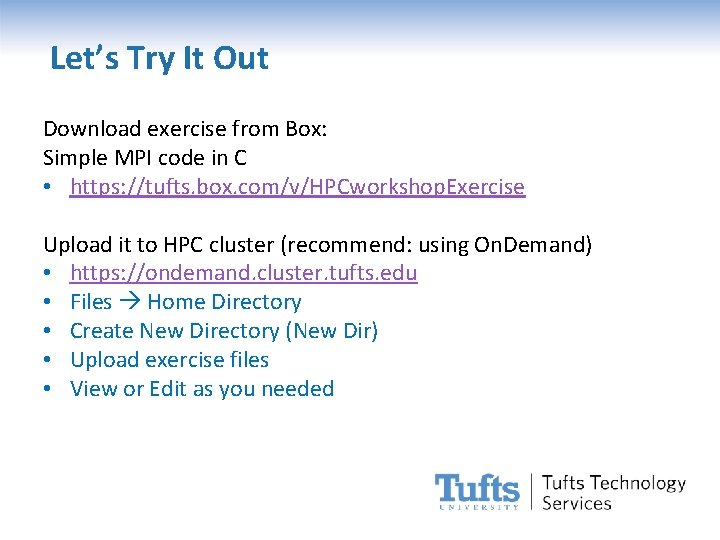

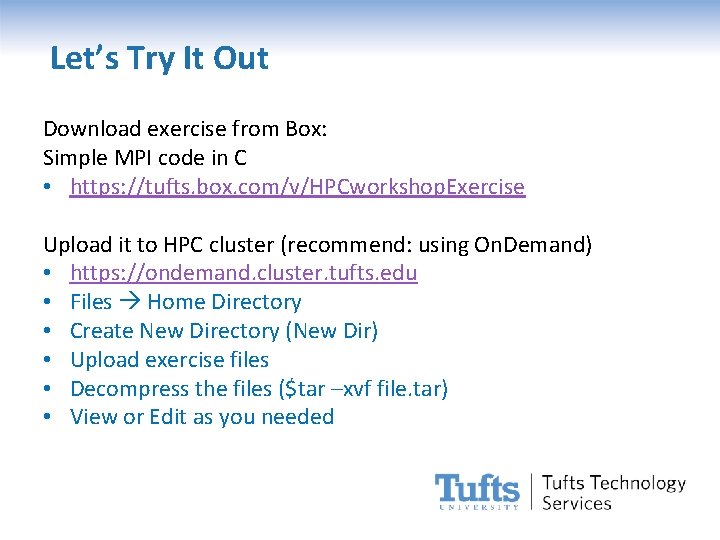

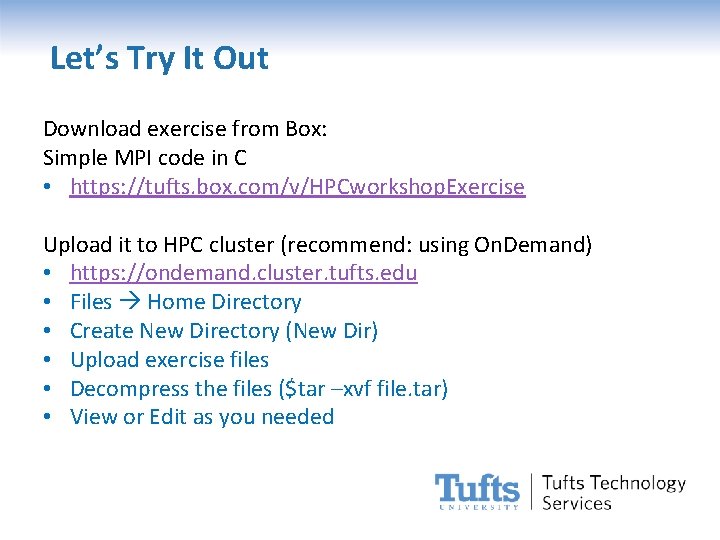


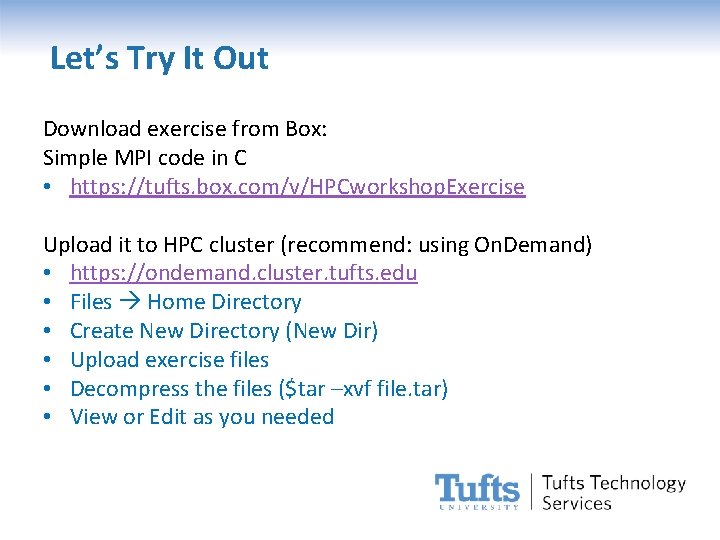

Let’s Try It Out
Download the exercise from Box (simple MPI code in C):
• https://tufts.box.com/v/HPCworkshopExercise
Upload it to the HPC cluster (recommended: use OnDemand):
• https://ondemand.cluster.tufts.edu
• Files > Home Directory
• Create New Directory (New Dir)
• Upload the exercise files
• Decompress the files ($ tar -xvf file.tar)
• View or edit as needed
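To see what the decompress step does without touching the real exercise, the sketch below builds a throwaway archive in a temporary directory and unpacks it. The file and directory names are made up for illustration. Note that the option must be typed with a plain hyphen (`-xvf`); a long dash pasted from a slide will not be accepted by tar.

```shell
#!/bin/bash
# Self-contained sketch: builds a throwaway archive, then unpacks it the
# same way the exercise archive would be unpacked. File names are made up.
workdir="$(mktemp -d)"
mkdir -p "$workdir/src/exercise"
echo 'int main(void) { return 0; }' > "$workdir/src/exercise/hello.c"

# Create the archive (stands in for the file downloaded from Box).
tar -cf "$workdir/file.tar" -C "$workdir/src" exercise

# List the contents first, then extract into a new directory.
tar -tf "$workdir/file.tar"
mkdir -p "$workdir/unpacked"
tar -xvf "$workdir/file.tar" -C "$workdir/unpacked"

ls "$workdir/unpacked/exercise"
```

Listing with `-t` before extracting is a cheap way to check where the files will land.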

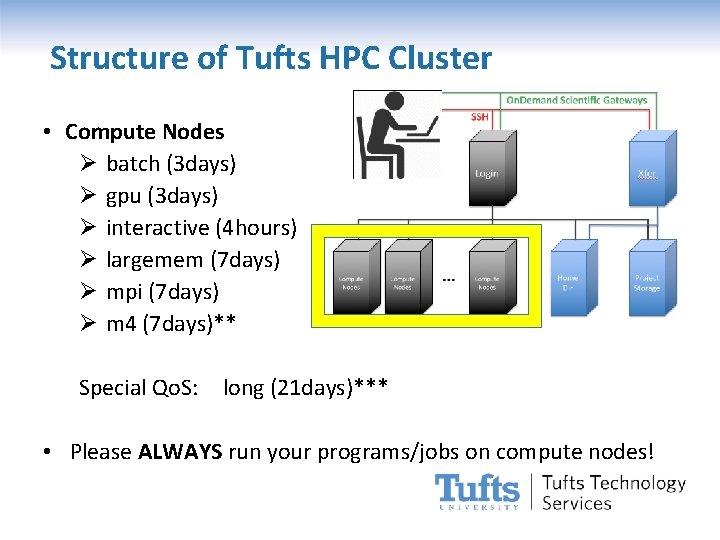

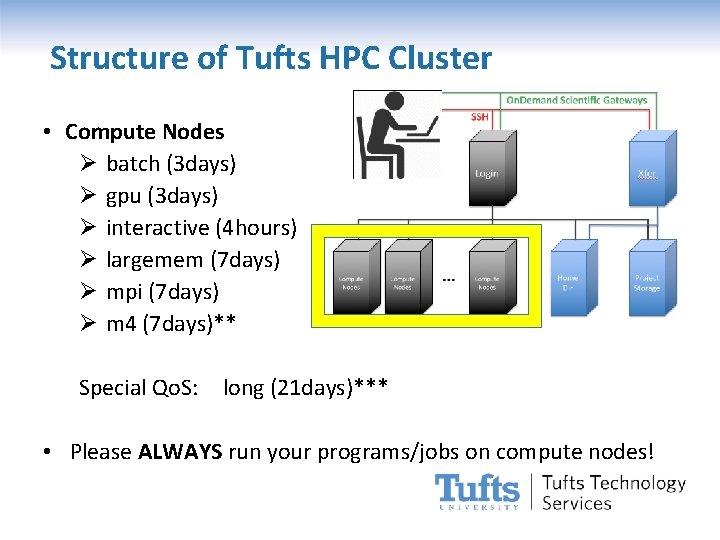

Structure of Tufts HPC Cluster
• Compute Nodes (partitions and maximum run time)
  - batch (3 days)
  - gpu (3 days)
  - interactive (4 hours)
  - largemem (7 days)
  - mpi (7 days)
  - m4 (7 days)**
  - Special QoS: long (21 days)***
• Please ALWAYS run your programs/jobs on compute nodes!

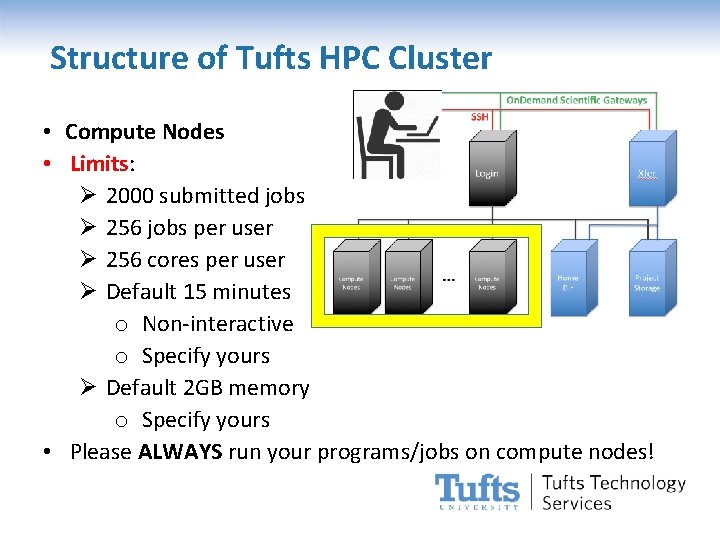

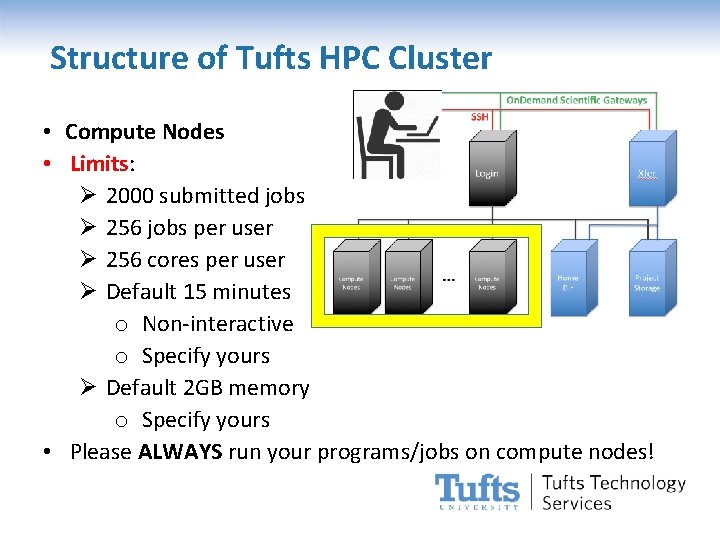

Structure of Tufts HPC Cluster
• Compute Nodes
• Limits:
  - 2000 submitted jobs
  - 256 jobs per user
  - 256 cores per user
  - Default time limit: 15 minutes (non-interactive); specify your own
  - Default memory: 2 GB; specify your own
• Please ALWAYS run your programs/jobs on compute nodes!

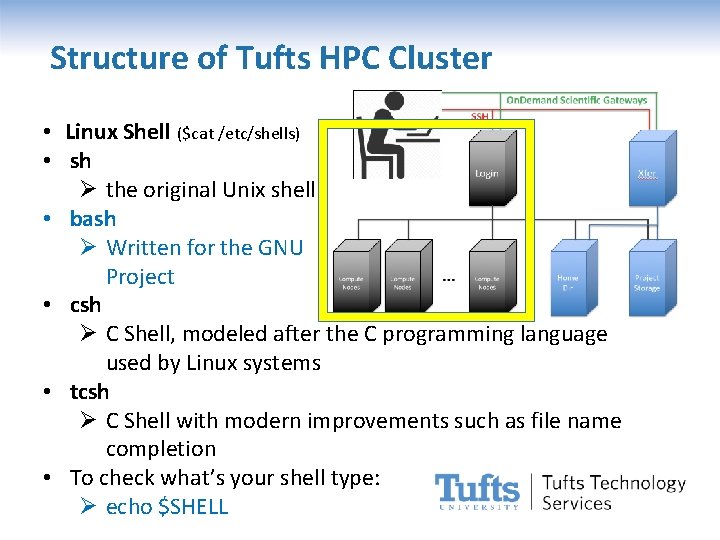

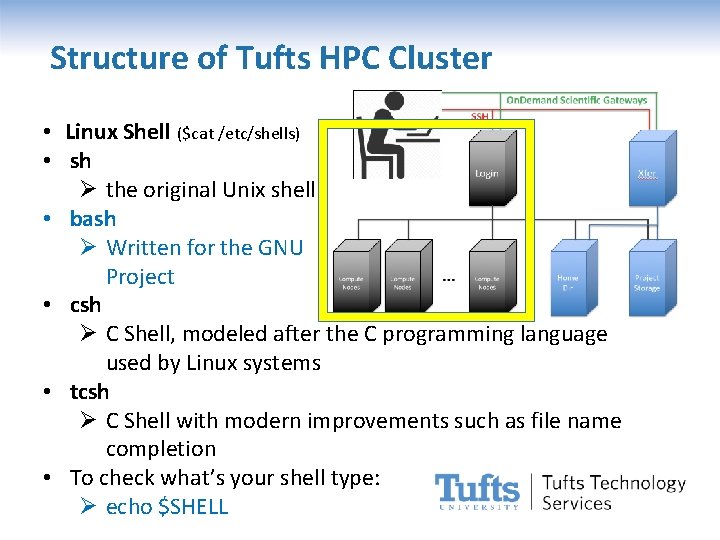

Structure of Tufts HPC Cluster
• Linux Shell ($ cat /etc/shells)
• sh: the original Unix shell
• bash: written for the GNU Project
• csh: C Shell, with syntax modeled after the C programming language
• tcsh: C Shell with modern improvements such as file name completion
• To check which shell you are using:
  - echo $SHELL
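Knowing your shell matters mostly because each shell reads a different startup file. The sketch below reports the login shell and the per-user startup file it conventionally reads. The function name `startup_file_for` is illustrative, and the mapping covers only the shells listed above.

```shell
#!/bin/bash
# Map a shell path (as printed by `echo $SHELL`) to the per-user startup
# file that shell conventionally reads. The function name is illustrative.
startup_file_for() {
    case "$(basename "$1")" in
        bash)      echo ".bashrc" ;;
        csh|tcsh)  echo ".cshrc" ;;
        sh)        echo ".profile" ;;
        *)         echo "unknown" ;;
    esac
}

login_shell="${SHELL:-/bin/sh}"
echo "Login shell:  $login_shell"
echo "Startup file: $(startup_file_for "$login_shell")"

# Show the shells installed on this machine, if the list is readable.
if [ -r /etc/shells ]; then
    cat /etc/shells
fi
```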

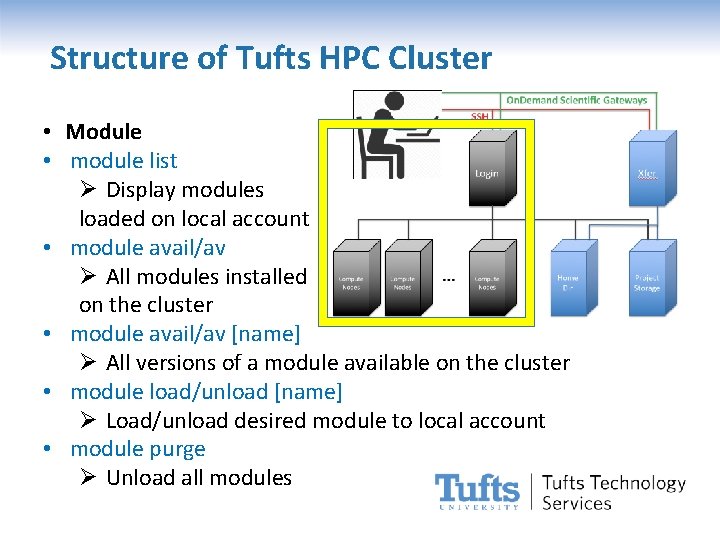

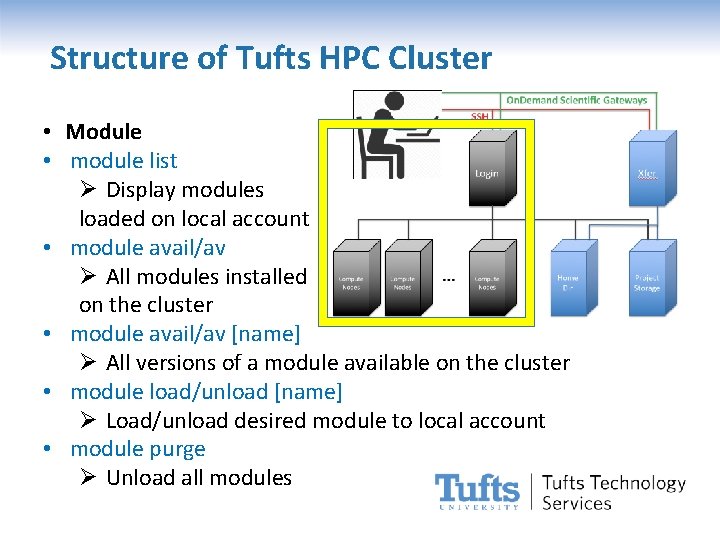

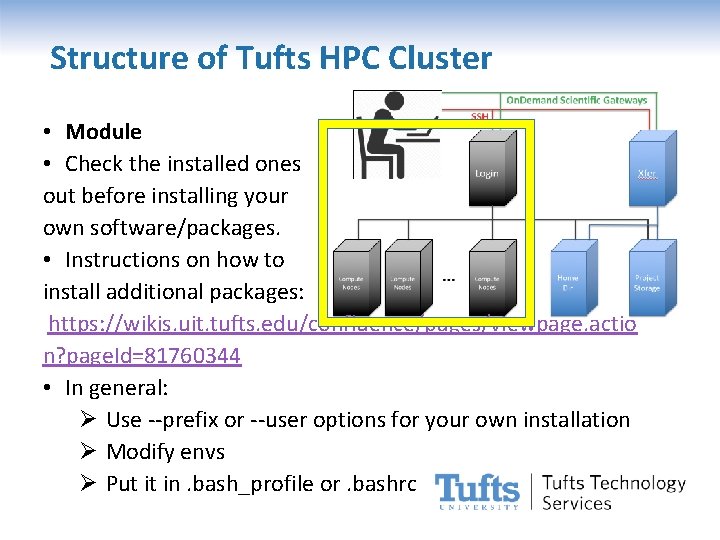

Structure of Tufts HPC Cluster
• Module
• module list: display the modules loaded in your current session
• module avail (or module av): list all modules installed on the cluster
• module avail [name]: list all available versions of a module
• module load/unload [name]: load or unload a module in your current session
• module purge: unload all modules

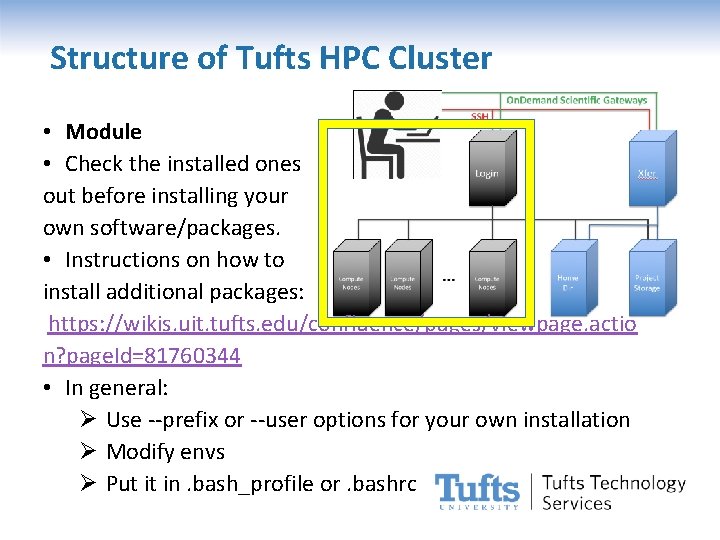

Structure of Tufts HPC Cluster
• Module
• Check what is already installed before installing your own software/packages.
• Instructions on how to install additional packages: https://wikis.uit.tufts.edu/confluence/pages/viewpage.action?pageId=81760344
• In general:
  - Use the --prefix or --user option for your own installation
  - Modify your environment variables
  - Put the changes in .bash_profile or .bashrc
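The "install under your own prefix, then modify the environment" pattern can be illustrated with a small stand-in. This is a sketch under stated assumptions: the prefix is a temporary directory, and `hello-tool` is a made-up script that plays the role of whatever a real `--prefix` install would place in `bin/`.

```shell
#!/bin/bash
# Sketch of a user-level install: put a program under a private prefix and
# make the shell find it. The prefix and tool name are hypothetical.
PREFIX="$(mktemp -d)/mytools"   # on the cluster this could live in project storage
mkdir -p "$PREFIX/bin"

# Stand-in for the files that an installer run with --prefix="$PREFIX"
# would place under $PREFIX/bin.
cat > "$PREFIX/bin/hello-tool" <<'EOF'
#!/bin/sh
echo "hello from a user-installed tool"
EOF
chmod +x "$PREFIX/bin/hello-tool"

# "Modify your environment": prepend the new bin directory to PATH.
export PATH="$PREFIX/bin:$PATH"

# To make the change permanent, a line like this would go in ~/.bashrc:
echo "export PATH=\"$PREFIX/bin:\$PATH\""

hello-tool
```

Prepending (rather than appending) to `PATH` means your own build is found before a system copy with the same name.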


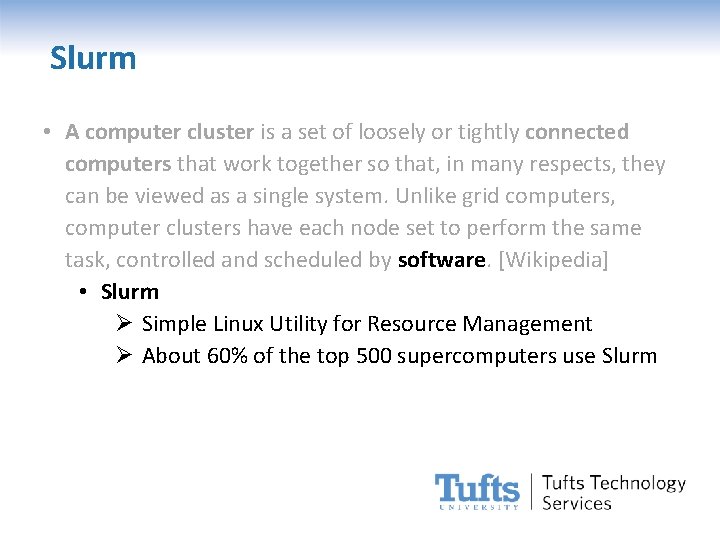

Slurm
• Simple Linux Utility for Resource Management
• About 60% of the TOP500 supercomputers use Slurm

Slurm
• An open source, fault-tolerant, and highly scalable cluster management and job scheduling system for large and small Linux clusters.
• Allocates exclusive and/or non-exclusive access to resources to users for some duration of time so they can perform work.
• Provides a framework for starting, executing, and monitoring work on the set of allocated nodes.
• Arbitrates contention for resources by managing a queue of pending work.

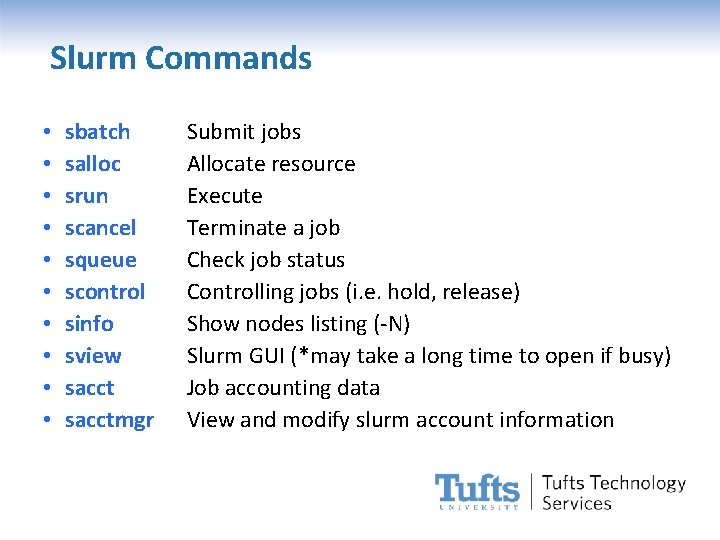

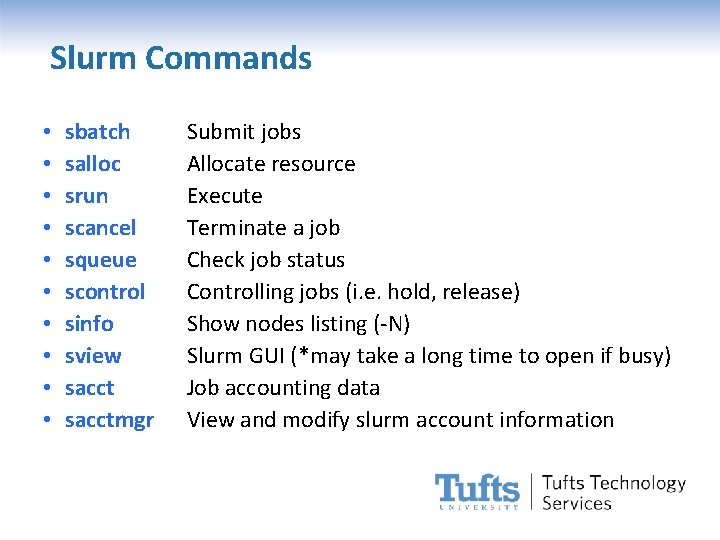

Slurm Commands
• sbatch: submit jobs
• salloc: allocate resources
• srun: execute
• scancel: terminate a job
• squeue: check job status
• scontrol: control jobs (e.g. hold, release)
• sinfo: show node listing (-N)
• sview: Slurm GUI (*may take a long time to open if busy)
• sacct: job accounting data
• sacctmgr: view and modify Slurm account information

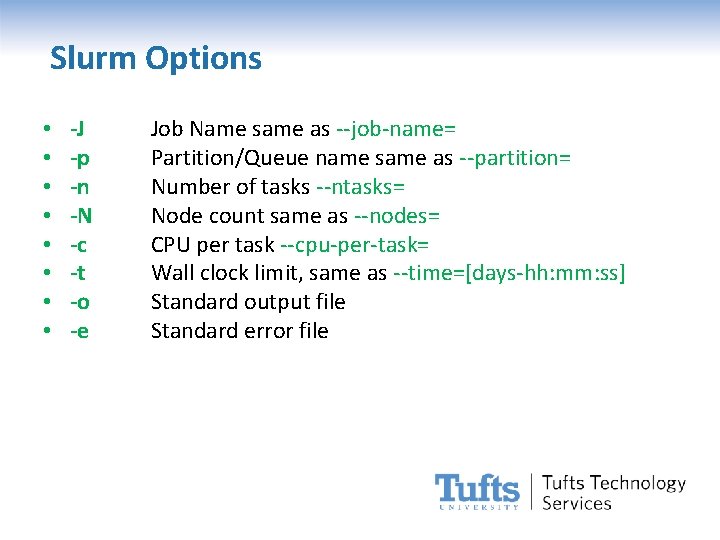

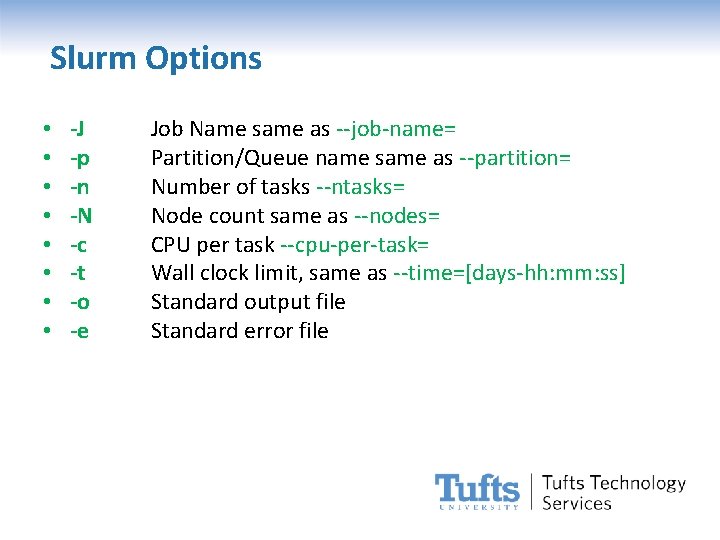

Slurm Options
• -J: job name, same as --job-name=
• -p: partition/queue name, same as --partition=
• -n: number of tasks, same as --ntasks=
• -N: node count, same as --nodes=
• -c: CPUs per task, same as --cpus-per-task=
• -t: wall clock limit, same as --time=[days-hh:mm:ss]
• -o: standard output file, same as --output=
• -e: standard error file, same as --error=


Slurm Options
• --mem=[in MB]: memory size
• --tasks-per-node=[#]: tasks per node
• --depend=[state:job_id]: job dependency (short for --dependency=)
• --nodelist=[nodes]: select nodes
• --constraint=[features]: constrain node selection by feature
• --exclude=[nodes]: exclude nodes
• --pty: pseudo terminal
• --x11=first: interactive session with GUI

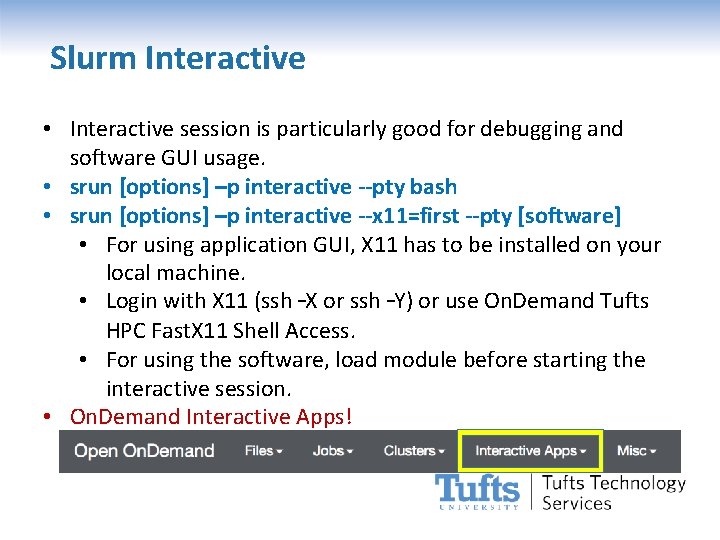

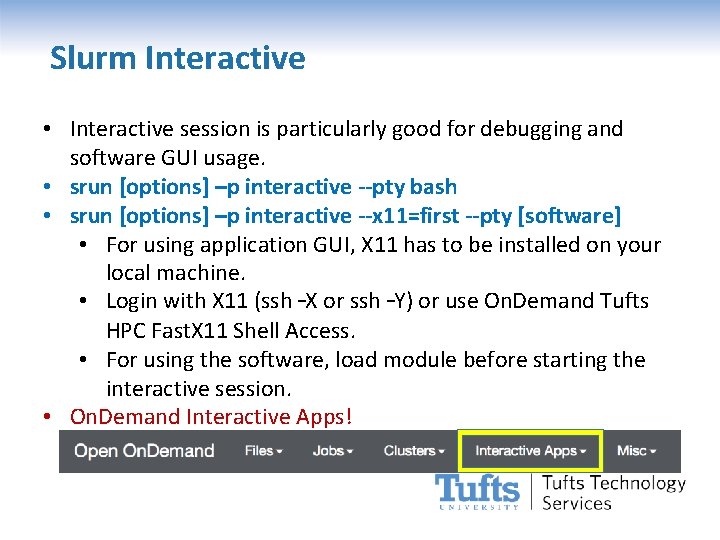

Slurm Interactive
• An interactive session is particularly good for debugging and for using software with a GUI.
• srun [options] -p interactive --pty bash
• srun [options] -p interactive --x11=first --pty [software]
• To use an application GUI, X11 must be installed on your local machine.
• Log in with X11 forwarding (ssh -X or ssh -Y) or use OnDemand Tufts HPC FastX11 Shell Access.
• To use the software, load its module before starting the interactive session.
• OnDemand Interactive Apps!
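The two srun forms above can be assembled with explicit resource requests, as in the dry-run sketch below. It only prints the commands. The memory sizes, time limits, the helper name `interactive_cmd`, and the use of `xterm` as the GUI program are illustrative assumptions; the time limits are chosen to stay within the 4-hour limit of the interactive partition.

```shell
#!/bin/bash
# Dry-run sketch: prints srun commands rather than starting sessions.
# Resource values and the program names are placeholders.
interactive_cmd() {
    # $1 = memory in MB, $2 = time limit, $3 = program, $4 = "gui" (optional)
    local mem="$1" limit="$2" program="$3" x11_flag=""
    if [ "${4:-}" = "gui" ]; then
        x11_flag="--x11=first "
    fi
    echo "srun -p interactive -n 1 --mem=$mem --time=$limit ${x11_flag}--pty $program"
}

# Plain shell on a compute node: 2000 MB for 1 hour.
interactive_cmd 2000 0-01:00:00 bash

# GUI program with X11 forwarding (load its module before calling srun).
interactive_cmd 4000 0-02:00:00 xterm gui
```

Requesting time and memory explicitly avoids falling back to the 15-minute and 2 GB defaults.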

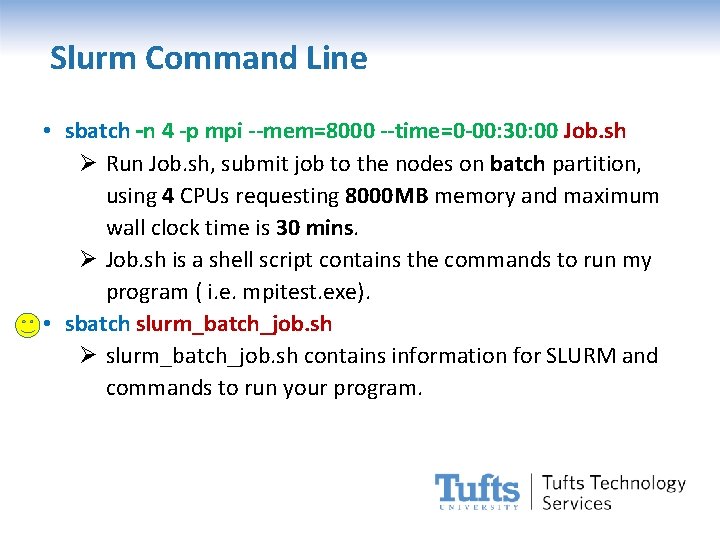

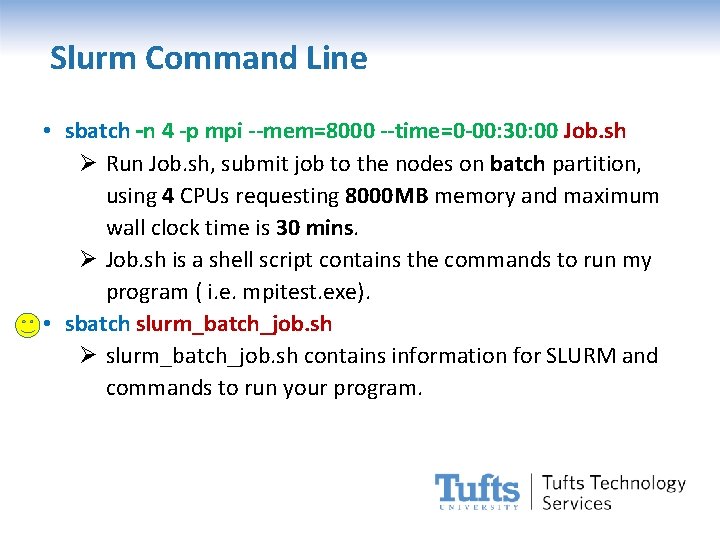

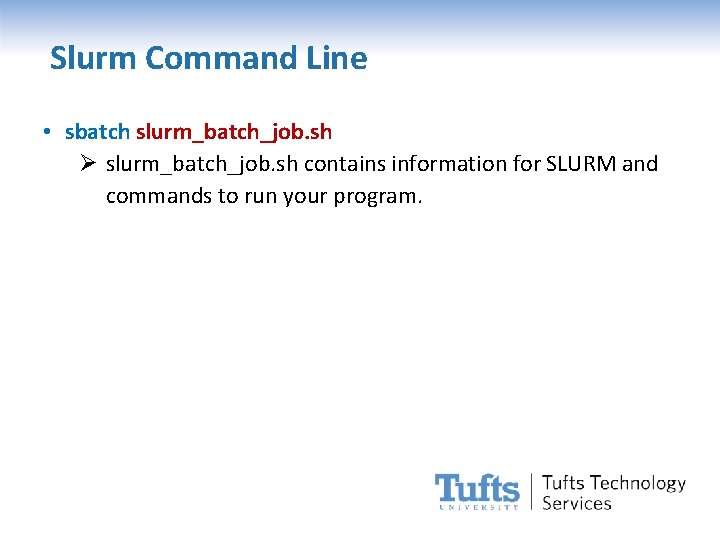

Slurm Command Line
• sbatch -n 4 -p mpi --mem=8000 --time=0-00:30:00 Job.sh
  - Submits Job.sh to the mpi partition, requesting 4 tasks (CPU cores), 8000 MB of memory, and a maximum wall clock time of 30 minutes.
  - Job.sh is a shell script that contains the commands to run your program (e.g. mpitest.exe).
• sbatch slurm_batch_job.sh
  - slurm_batch_job.sh contains the options for Slurm and the commands to run your program.
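The `days-hh:mm:ss` time format is easy to mistype, so a small helper that converts minutes into that format may be useful. The helper name `slurm_time` is made up for this sketch, and the sbatch line is only printed, not submitted.

```shell
#!/bin/bash
# Convert a number of minutes into Slurm's days-hh:mm:ss time format.
slurm_time() {
    local total="$1"
    local days=$(( total / 1440 ))
    local hours=$(( (total % 1440) / 60 ))
    local mins=$(( total % 60 ))
    printf '%d-%02d:%02d:00\n' "$days" "$hours" "$mins"
}

# Dry run: print the submission command with a computed 30-minute limit.
echo "sbatch -n 4 -p mpi --mem=8000 --time=$(slurm_time 30) Job.sh"

slurm_time 90      # 1 hour 30 minutes
slurm_time 4320    # 3 days, the batch partition limit
```

The three calls print `0-00:30:00` (inside the sbatch line), `0-01:30:00`, and `3-00:00:00`.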

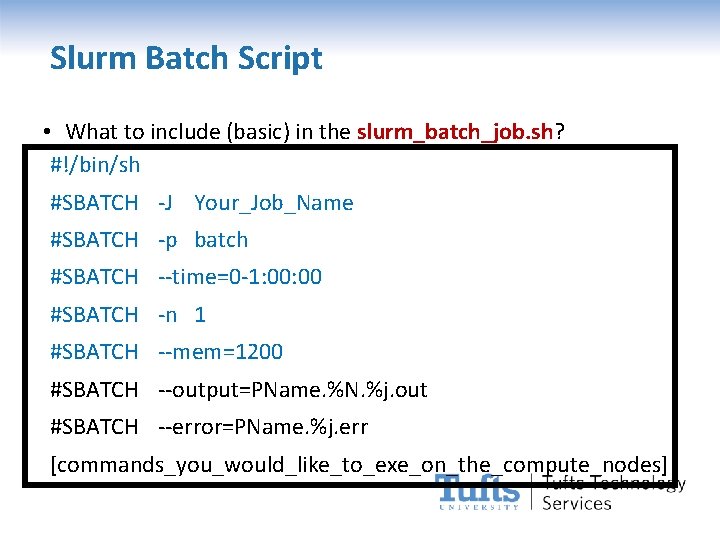

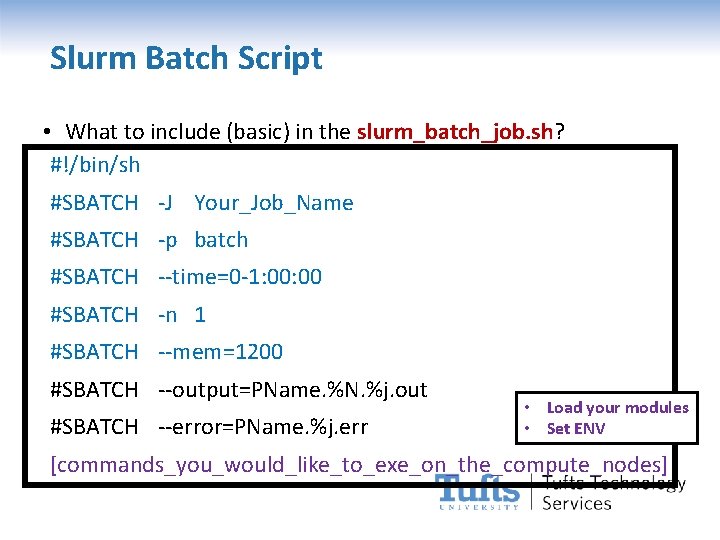

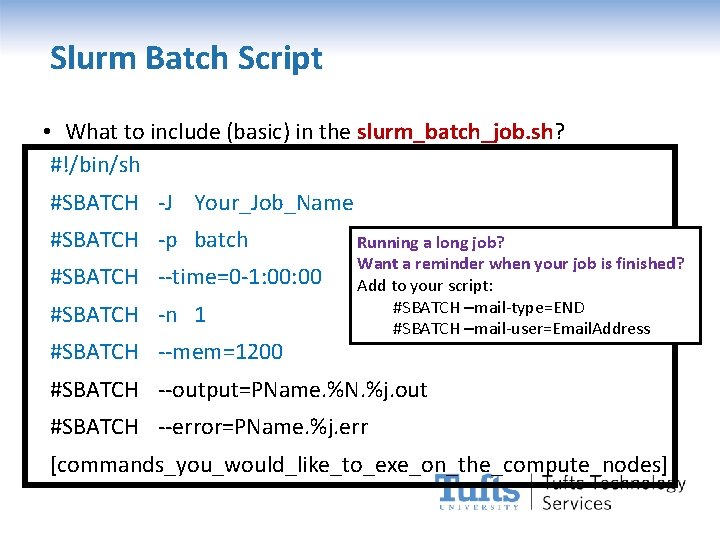

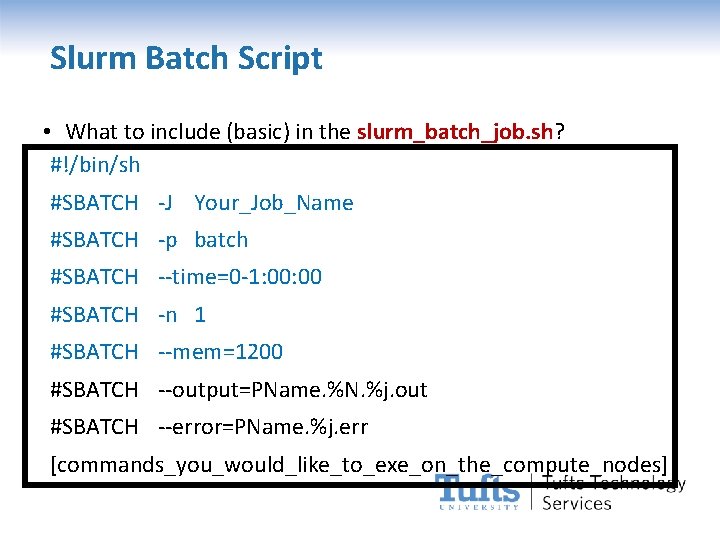

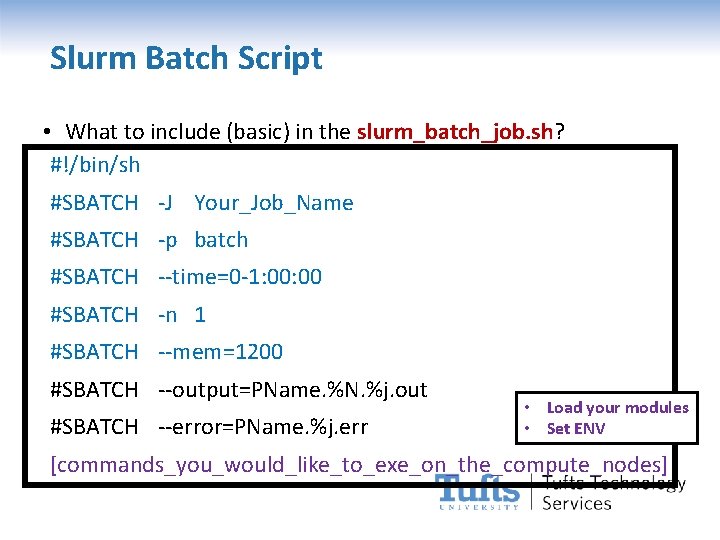

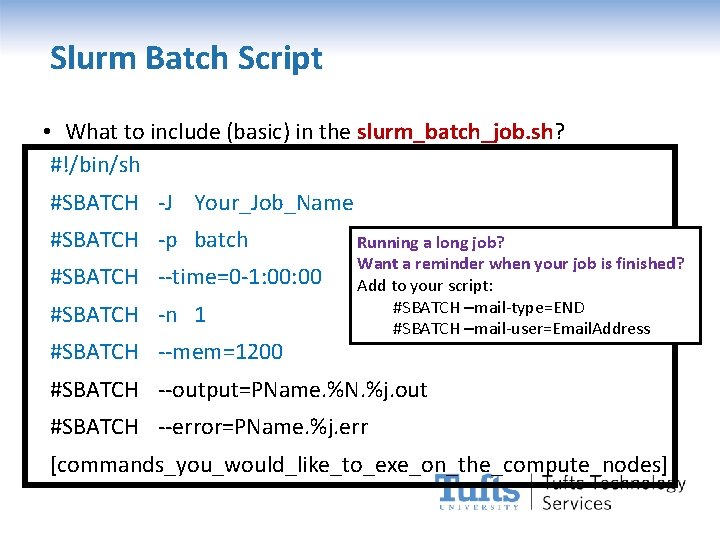

Slurm Batch Script
• What to include (basic) in slurm_batch_job.sh?

    #!/bin/sh
    #SBATCH -J Your_Job_Name
    #SBATCH -p batch
    #SBATCH --time=0-1:00
    #SBATCH -n 1
    #SBATCH --mem=1200
    #SBATCH --output=PName.%N.%j.out
    #SBATCH --error=PName.%j.err
    [commands_you_would_like_to_exe_on_the_compute_nodes]

Slurm Batch Script
• After the #SBATCH lines and before your commands:
  - Load your modules
  - Set environment variables

Slurm Batch Script
• Running a long job? Want a reminder when your job is finished? Add to your script:

    #SBATCH --mail-type=END
    #SBATCH --mail-user=EmailAddress
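Putting the pieces together, a complete batch script might look like the one written out by the sketch below. The job name, the commented module line, and the email address are placeholders. The sketch writes the script to a temporary file and checks its syntax locally; on the cluster you would save it as slurm_batch_job.sh and submit it with sbatch.

```shell
#!/bin/bash
# Write an example batch script to a temporary file and syntax-check it.
# Job name, module name, and email address are placeholders.
script="$(mktemp)"
cat > "$script" <<'EOF'
#!/bin/bash
#SBATCH -J hello_job
#SBATCH -p batch
#SBATCH --time=0-1:00
#SBATCH -n 1
#SBATCH --mem=1200
#SBATCH --output=hello_job.%N.%j.out
#SBATCH --error=hello_job.%j.err
#SBATCH --mail-type=END
#SBATCH --mail-user=your_utln@tufts.edu

# Load modules and set environment variables here, for example:
# module load some_module

# Commands to run on the compute node.
echo "Running on $(hostname)"
EOF

# bash -n parses the script without executing it.
bash -n "$script" && echo "syntax OK"

# Count the scheduler directives that sbatch would read.
grep -c '^#SBATCH' "$script"
```

All `#SBATCH` lines must come before the first executable command; Slurm stops reading directives once the commands begin.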



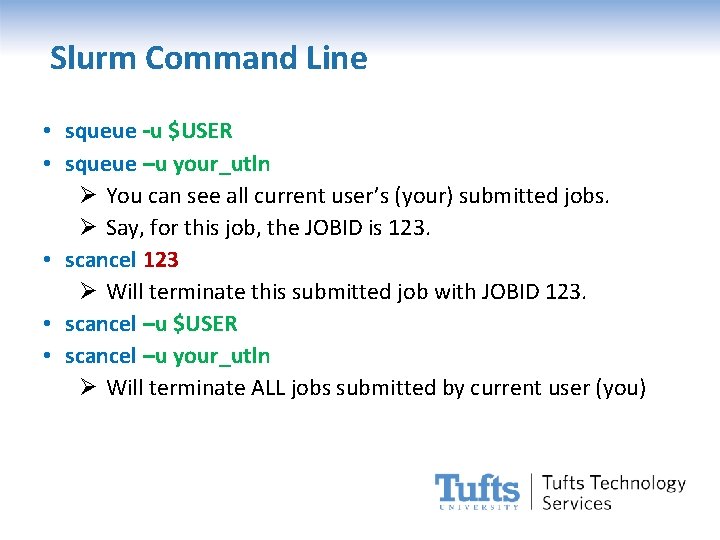

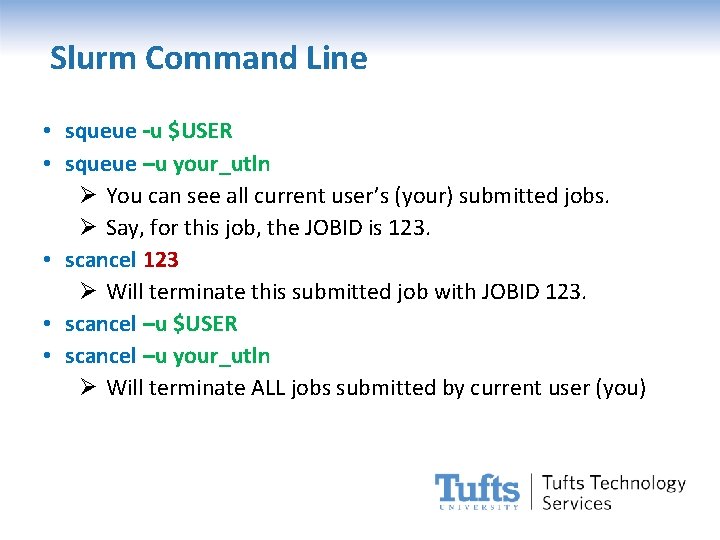

Slurm Command Line
• squeue -u $USER (or squeue -u your_utln)
  - Lists all jobs submitted by the current user (you).
  - Say the JOBID of one of these jobs is 123.
• scancel 123
  - Terminates the submitted job with JOBID 123.
• scancel -u $USER (or scancel -u your_utln)
  - Terminates ALL jobs submitted by the current user (you).
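Because `scancel -u` removes every job you own, it can help to rehearse these commands before running them for real. The sketch below wraps them in a hypothetical `run` helper that only prints each command unless `DRY_RUN=0` is set; the helper and the variable name are assumptions for illustration, not part of Slurm.

```shell
#!/bin/bash
# Dry-run wrapper: prints Slurm commands by default so the sketch is safe to
# run anywhere. Set DRY_RUN=0 on the cluster to execute them for real.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

me="${USER:-your_utln}"   # falls back to a placeholder if USER is unset

run squeue -u "$me"       # list my jobs
run scancel 123           # cancel one job; 123 is the example JOBID
run scancel -u "$me"      # cancel ALL of my jobs, use with care
```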

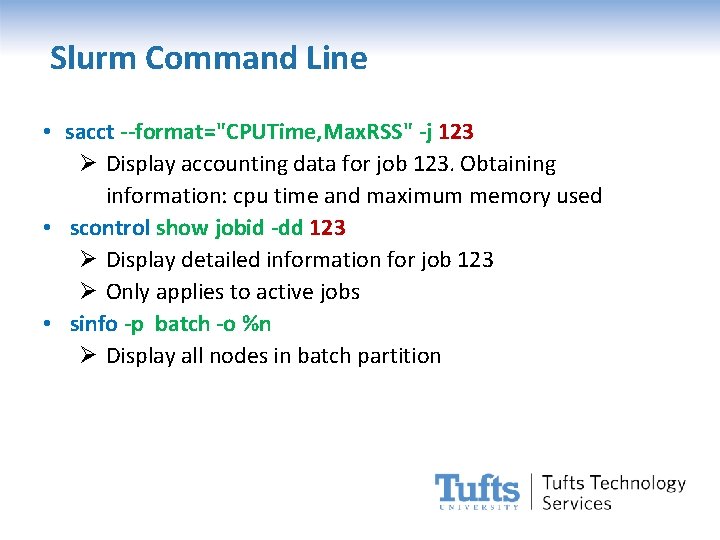

Slurm Command Line
• sacct --format="CPUTime,MaxRSS" -j 123
  - Displays accounting data for job 123: CPU time and maximum memory used.
• scontrol show jobid -dd 123
  - Displays detailed information for job 123.
  - Only applies to active jobs.
• sinfo -p batch -o %n
  - Displays all nodes in the batch partition.

More Slurm Information
• Wiki:
  - go.tufts.edu/cluster or
  - https://wikis.uit.tufts.edu/confluence/display/TuftsUITResearchComputing/Home
  - Go to High Performance Compute Cluster/Slurm
• Slurm documentation:
  - https://slurm.schedmd.com/
• ...and Google

Need More Help? Contact RT-TTS
If you have any questions regarding Tufts HPC cluster access or usage, please feel free to contact us at tts-research@tufts.edu