
Welcome to HPC
- Please log in with your HawkID (IOWA domain) and password on the type of machine with which you are most comfortable (Mac or PC)
- To switch between the Dell and the Apple computer, press Scroll Lock twice

11/28/2020

Intro to HPC with the Helium Cluster
Ben Rogers
ITS-Research Services
July 25, 2014

What is your field?
- Chemistry
- Engineering
- Genetics
- Hydrology
- Imaging
- Physics
- Statistics
- Others?

Poll
- Has anyone not used a Unix or Linux command line before?
- Mac OS X Terminal counts

Overview
- What is a Compute Cluster?
- High Performance Computing
- High Throughput Computing
- The Helium & Neon Clusters
- Mapping Your Problem to a Cluster

What is a Compute Cluster?
- Large number of computers
- Software that allows them to work together
- A tool for solving large computational problems that require more memory or CPU than is available on a single system

http://www.flickr.com/photos/fullaperture/5435786866/sizes/l/in/photostream/

High Performance Computing
- Using multiple computers in a coordinated way to solve a single problem
- Provides the ability to:
  - Use 10s-1000s of cores to solve a single problem
  - Access 10s-1000s of GB of RAM
- Likely to require substantial code modification to use a library such as MPI
- Common Examples:
  - Computational Fluid Dynamics
  - Molecular Dynamics

High Throughput Computing
- Using multiple computers in a coordinated way to solve many individual problems
- Provides the ability to:
  - Analyze many data sets simultaneously
  - Efficiently perform a parameter sweep
- Requires minimal code modifications
- Common Examples:
  - Image Analysis
  - Genomics

The Helium Cluster
- Collaborative Cluster
- CentOS 5 (Linux)
- ~400 compute nodes
- ~3800 processor cores
- 24-144 GB of RAM/node
- 300 TB+ Storage
- 40 Gb/s Infiniband Network

Helium Storage
- Home Account Storage
  - NFS
  - 80 TB Total
  - 1 TB per User
- Shared Scratch Storage
  - NFS: 150 TB - /nfsscratch
  - FhGFS: 140 TB - /scratch
  - Deleted after 30 days
- Local Scratch Storage
  - 600 GB+/Compute Node
- No Backups!

The Neon Cluster
- Upgrades & Outage Coming Soon!
- Collaborative Cluster
- CentOS 6 (Linux)
- ~175 compute nodes
- ~2600 processor cores
- 64-512 GB of RAM/node
- 40 Gb/s Infiniband
- Xeon Phi & Nvidia K20 Accelerators

Neon Storage
- Home Account Storage
  - NFS
  - 50 TB Total
  - 1 TB per User
- Shared Scratch Storage
  - NFS: 85 TB - /nfsscratch
  - Deleted after 30 days
- Local Scratch Storage
  - 2 TB/Compute Node
- No Backups!

Storage Continued
- Home & Scratch Storage Not Shared Between Clusters
- Paid Storage Service - $60/TB/Year/Copy of Data
  - May be shared between clusters
  - Available outside cluster
  - Backups available
  - Found at /Shared/$LAB & /Dedicated/$LAB

Transferring Data to Helium
- Globus Online/GridFTP
  - https://wiki.uiowa.edu/display/hpcdocs/Globus+Online
- sftp
- IPSwitch WS_FTP on Windows
  - https://helpdesk.its.uiowa.edu/software/download/wsftp/
- Fetch on Mac
  - https://helpdesk.its.uiowa.edu/software/download/fetch/

So It Just Runs Faster, Right?
- Not quite!
- Just running on Helium or Neon won’t necessarily make your program faster.

http://basementgeographer.blogspot.com/2012/03/international-racing-colours.html

Mapping Your Problem to a Cluster
- Is a cluster a good fit?
  - If your problem
    - Is not tractable on your desktop system
    - Requires more memory than your desktop has available
    - Requires rapid turnaround of results that you can’t achieve with a desktop system
    - Would benefit from having jobs scheduled
    - Would otherwise tie up your desktop computer
- Your problem may be a good candidate for a cluster!

Mapping Your Problem to a Cluster
- Next Questions
  - Does your job run on Linux?
  - Can your job run in batch mode?
  - Is your job HPC or HTC?
- Next Steps
  - Develop Strategy for Running Jobs
  - Install Software
  - Develop Job Submission Scripts
  - Run Your Job

The Challenge: Analyze 1000 MRIs
- Run Freesurfer on 1000 MRIs
  - Takes 20 Hours per MRI
  - Requires 2 GB of Memory/analysis
- Desktop Analysis Time
  - 20 Hours x 1000 MRIs = 20,000 Hours
    - 2.3 Years!
  - But I have a Quad Core Desktop with 8 GB
    - That’s still over six months!

http://surfer.nmr.mgh.harvard.edu/
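
The estimates on this slide can be checked with a few lines of shell arithmetic. This is only a sketch: it assumes a 365-day year and that the quad-core desktop runs as many analyses at once as both its cores and its 8 GB of memory allow.

```shell
#!/bin/sh
# Serial estimate from the slide: 1000 MRIs at 20 hours each.
mris=1000
hours_per_mri=20
total_hours=$((mris * hours_per_mri))

# Quad-core desktop with 8 GB: concurrency is capped by cores and by memory.
cores=4
mem_gb=8
mem_per_job_gb=2
concurrent=$((mem_gb / mem_per_job_gb))
if [ "$concurrent" -gt "$cores" ]; then
    concurrent=$cores
fi

quad_hours=$((total_hours / concurrent))
quad_days=$((quad_hours / 24))    # integer division, rounds down

# awk handles the fractional year count (assumes 365-day years).
serial_years=$(awk -v h="$total_hours" 'BEGIN { printf "%.1f", h / (24 * 365) }')

echo "Serial: $total_hours hours (about $serial_years years)"
echo "Quad core: $quad_hours hours (about $quad_days days)"
```

Roughly 208 days on four cores is where the "over six months" figure comes from.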

Analyze 1000 MRIs: Using the Helium Cluster
- Good fit for cluster? – Yes
- Type of problem – HTC
- Software – Runs on Linux in batch mode

Time to Analyze
- On Helium – As little as 20 hours
  - Time dependent on cores available, likely complete within a week.
  - Possible to run all analyses simultaneously
    - 1000 processor cores – Total on Helium > 3800
    - 2000 GB of memory – Total on Helium > 9000 GB
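
One common way to run many independent analyses under Grid Engine is an array job, where each task picks its own input. The slides do not say this is how the MRI runs were submitted, so treat the following as an illustrative sketch: the file names and the `pick_input` helper are made up, and the analysis step is replaced by an `echo`.

```shell
#!/bin/sh
# Hypothetical list of inputs, one MRI per line (file names are invented).
workdir=$(mktemp -d)
printf '%s\n' subj001.mgz subj002.mgz subj003.mgz > "$workdir/mri_list.txt"

# Print line N of a list file: pick_input N FILE
pick_input() {
    sed -n "${1}p" "$2"
}

# In a Grid Engine array job (for example "qsub -t 1-1000 job.sh"),
# SGE_TASK_ID holds the index of the current task.
# Default to 1 so this sketch also runs outside the cluster.
task_id=${SGE_TASK_ID:-1}

input=$(pick_input "$task_id" "$workdir/mri_list.txt")
echo "Task $task_id would analyze $input"
```

Each task then needs only one slot and 2 GB of memory, which is what makes this an HTC problem rather than an HPC one.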

Analyze 1000 MRIs: Using the Helium Cluster
- What’s the catch?
  - Time and effort needed to understand how to run your analysis on Helium
  - Shared Resource
    - Job wait time
    - Job eviction

Hands On Activities
- Any questions before we proceed?
- Anyone need a break?

Logging In
- Getting logged in to Helium
  - Need to use your HawkID (Iowa domain) password
  - Windows will use SecureCRT
  - Mac will use SecureCRT or ssh from Terminal
  - Make sure you do not check “save password”
  - You will be prompted to accept a key.
    - Say yes to accepting the key

I’m logged in; now what?
- I’m connected; how do I start working?
  - Helium is a batch, queued system
  - Jobs must be submitted to a queue and wait their turn; then they are processed
    - When running in a batch system you do not interact with your program in real time.
    - You have to specify options in your job script

Queues
- Investor queues
  - Investor owned, guaranteed access
  - Access restricted to specified users
- UI queue
  - Treated like an investor queue but everyone has access
  - Limited to 25 RUNNING jobs per user on Helium
- all.q
  - No job limits
  - Subject to eviction

Slots
- Slots are equivalent to a share of a machine's resources (1 slot on a 16 core machine = 1/16th of the system resources)
  - Processor Cores
  - Memory
- The number of slots you specify can determine how soon your job will be able to run
- When using MPI we recommend using full machines (e.g., request a number of slots evenly divisible by the number of slots on a machine)

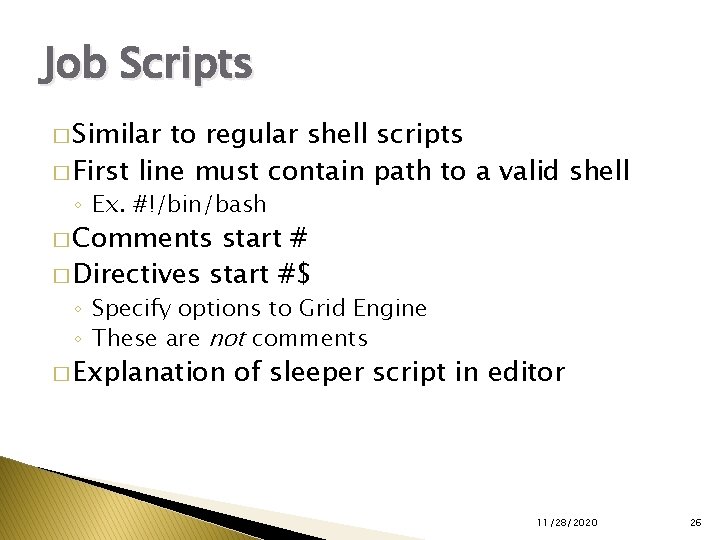
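
To follow the full-machine recommendation for MPI jobs, a slot request can be rounded up to the next multiple of the slots per machine. A small sketch, assuming 16-slot machines as in the slide's example and an arbitrary wish for 40 slots:

```shell
#!/bin/sh
slots_per_node=16   # assumption: 16-core machines, as in the slide's example
wanted=40           # hypothetical number of slots the job would like

# Round up to whole machines, then convert back to slots.
full_nodes=$(( (wanted + slots_per_node - 1) / slots_per_node ))
request=$(( full_nodes * slots_per_node ))

echo "Request $request slots ($full_nodes full machines) instead of $wanted"
```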

Job Scripts
- Similar to regular shell scripts
- First line must contain path to a valid shell
  - Ex. #!/bin/bash
- Comments start #
- Directives start #$
  - Specify options to Grid Engine
  - These are not comments
- Explanation of sleeper script in editor

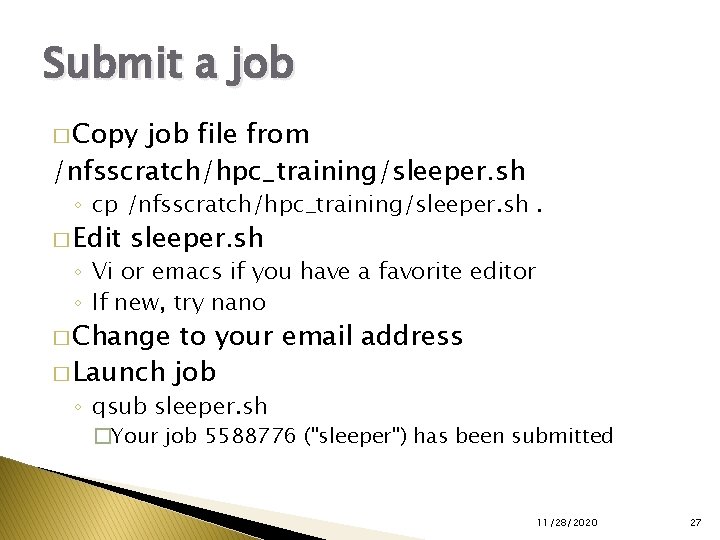

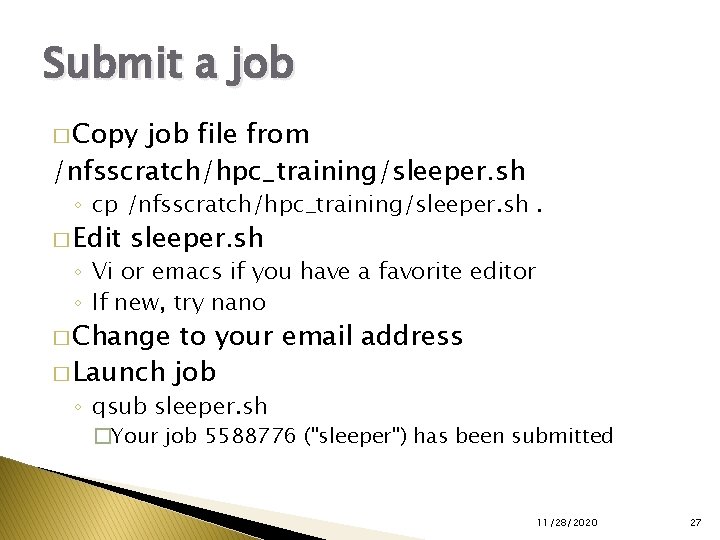
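
The training script itself is only shown in the screenshots, so the following is not a copy of it. It is a minimal sketch of what a sleeper-style job script might look like, using standard Grid Engine options (`-N` job name, `-cwd` run in the submission directory, `-M` mail address, `-m be` mail at begin and end). The file name and email address are placeholders. The script is written to a temporary file and only syntax-checked here, not run.

```shell
#!/bin/sh
workdir=$(mktemp -d)

# Write a hypothetical job script; the quoted 'EOF' keeps $(hostname) literal.
cat > "$workdir/sleeper_sketch.sh" <<'EOF'
#!/bin/bash
# A plain comment: ignored by the shell and by Grid Engine.
#$ -N sleeper_sketch
#$ -cwd
#$ -M your-address@example.edu
#$ -m be
echo "Started on $(hostname)"
sleep 60
echo "Finished"
EOF

# Check the syntax without executing, then list the directive lines.
sh -n "$workdir/sleeper_sketch.sh" && echo "syntax OK"
grep '^#\$ ' "$workdir/sleeper_sketch.sh"
```

To the shell, the `#$` lines are ordinary comments; only Grid Engine reads them as options when the script is submitted with `qsub`.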

Submit a job
- Copy job file from /nfsscratch/hpc_training/sleeper.sh
  - cp /nfsscratch/hpc_training/sleeper.sh .
- Edit sleeper.sh
  - vi or emacs if you have a favorite editor
  - If new, try nano
- Change to your email address
- Launch job
  - qsub sleeper.sh
    - Your job 5588776 ("sleeper") has been submitted

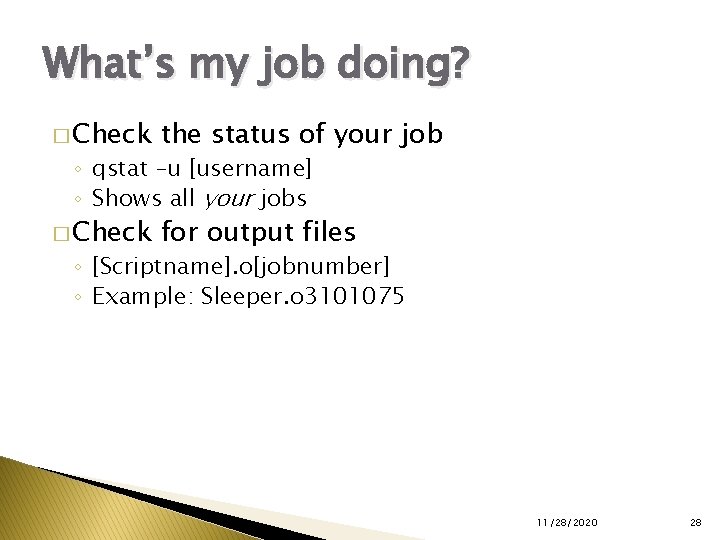

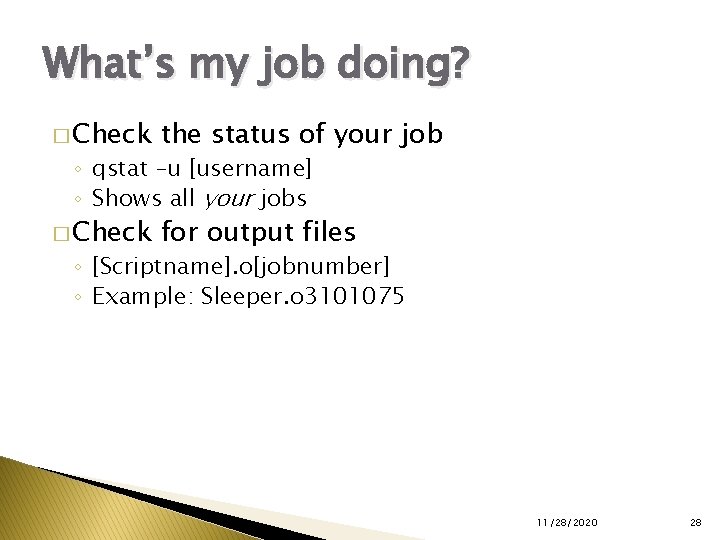
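
The job number in the confirmation message is what `qstat` and `qdel` need later. A small sketch of pulling it out of the message text, using the sample message from the slide rather than a live `qsub` call:

```shell
#!/bin/sh
# Sample confirmation message, copied from the slide.
msg='Your job 5588776 ("sleeper") has been submitted'

# The job number is the third whitespace-separated field.
job_id=$(printf '%s\n' "$msg" | awk '{ print $3 }')
echo "Job number: $job_id"
```

Grid Engine's `qsub` also has a `-terse` option that prints only the job number, which may be simpler in scripts if the installed version supports it.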

What’s my job doing?
- Check the status of your job
  - qstat -u [username]
  - Shows all your jobs
- Check for output files
  - [Scriptname].o[jobnumber]
  - Example: sleeper.o3101075

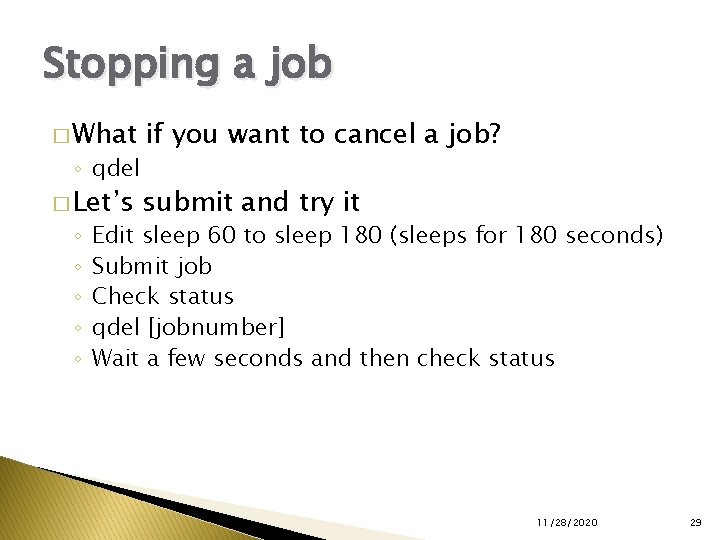

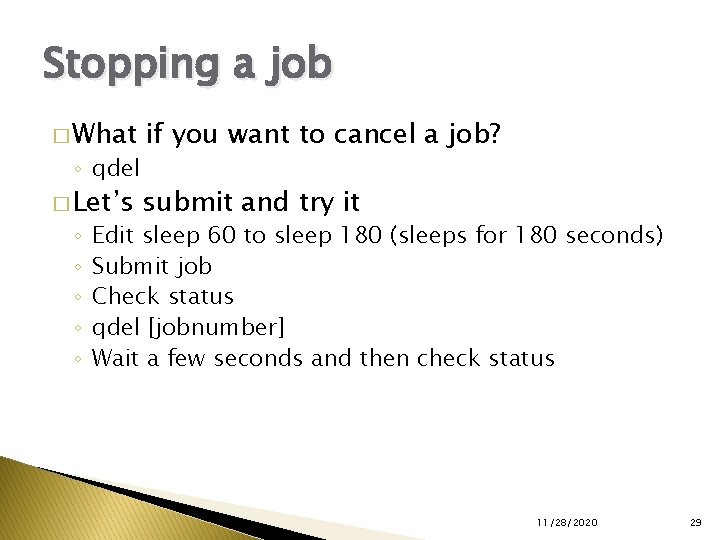
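
The output file name can be built from the job name and job number. The sketch below uses the example values from the slide; the matching `.e` file for standard error follows Grid Engine's default naming. The `qstat` call is skipped when the command is not available, so the snippet also runs off the cluster.

```shell
#!/bin/sh
job_name=sleeper
job_id=3101075   # example job number from the slide

stdout_file="${job_name}.o${job_id}"
stderr_file="${job_name}.e${job_id}"
echo "Expect output in $stdout_file and errors in $stderr_file"

# Only query the scheduler where qstat actually exists.
if command -v qstat >/dev/null 2>&1; then
    qstat -u "$(id -un)"
else
    echo "qstat not found; run this part on the cluster"
fi
```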

Stopping a job
- What if you want to cancel a job?
  - qdel
- Let’s submit and try it
  - Edit sleep 60 to sleep 180 (sleeps for 180 seconds)
  - Submit job
  - Check status
  - qdel [jobnumber]
  - Wait a few seconds and then check status

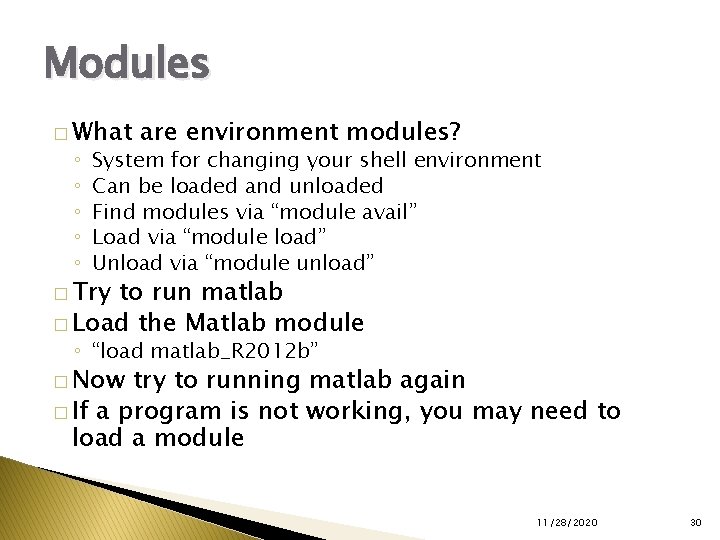
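
The edit step can also be done without opening an editor. This sketch works on a stand-in copy of the script in a temporary directory (the real sleeper.sh has more in it), and leaves the cluster commands as comments because they need Grid Engine.

```shell
#!/bin/sh
workdir=$(mktemp -d)

# Stand-in for sleeper.sh; only the sleep line matters here.
printf '%s\n' '#!/bin/bash' 'sleep 60' > "$workdir/sleeper.sh"

# Change the sleep time. Writing to a new file and moving it back avoids
# the differences between GNU and BSD "sed -i".
sed 's/^sleep 60$/sleep 180/' "$workdir/sleeper.sh" > "$workdir/sleeper.sh.new"
mv "$workdir/sleeper.sh.new" "$workdir/sleeper.sh"
cat "$workdir/sleeper.sh"

# On the cluster the remaining steps would be:
#   qsub sleeper.sh          # note the job number it reports
#   qstat -u [username]      # job should be listed
#   qdel [jobnumber]         # cancel it
#   qstat -u [username]      # after a few seconds it should be gone
```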

Modules
- What are environment modules?
  - System for changing your shell environment
  - Can be loaded and unloaded
  - Find modules via “module avail”
  - Load via “module load”
  - Unload via “module unload”
- Try to run matlab
- Load the Matlab module
  - “module load matlab_R2012b”
- Now try running matlab again
- If a program is not working, you may need to load a module
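
The before-and-after check from this exercise can be scripted. In this sketch `have_cmd` is a helper name chosen for illustration, and the module name is the one given on the slide; the module steps are skipped on machines that have no `module` command.

```shell
#!/bin/sh
# Succeeds if the named command (or shell function) can be found.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

if have_cmd module; then
    if ! have_cmd matlab; then
        echo "matlab not found before loading the module"
    fi
    module load matlab_R2012b
    if have_cmd matlab; then
        echo "matlab is available after loading the module"
    fi
else
    echo "No module command here; try this on the cluster"
fi
```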

I/O Streams & Redirection
- I/O streams
  - Standard input (stdin)
  - Standard output (stdout) (1)
  - Standard error (stderr) (2)
  - Both stdout & stderr (&)
    - Only some shells (bash) support this
  - Redirect using > for output and < for input
  - 2> redirects stderr and &> redirects both
- What’s an example of how you’ve used them?
  - Redirection happens when your job runs on the cluster

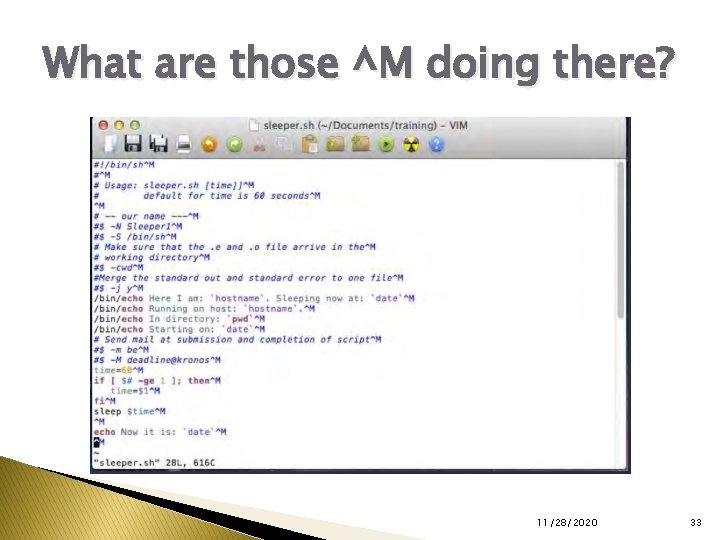
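
A short sketch of the redirections listed above. The `emit` function is a stand-in for any program that writes to both streams. To stay portable, the combined case uses `> file 2>&1`, which works in any POSIX shell; in bash, `&> file` is shorthand for the same thing.

```shell
#!/bin/sh
workdir=$(mktemp -d)

# Stand-in program: one line to stdout, one line to stderr.
emit() {
    echo "to stdout"
    echo "to stderr" >&2
}

# Separate files for the two streams.
emit > "$workdir/out.txt" 2> "$workdir/err.txt"

# Both streams into one file (bash shorthand: emit &> both.txt).
emit > "$workdir/both.txt" 2>&1

# Input redirection: wc reads the file on stdin.
wc -l < "$workdir/both.txt"
```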

Common issue
- Newline characters
- “I’m getting weird errors and I don’t see anything wrong with my file.”

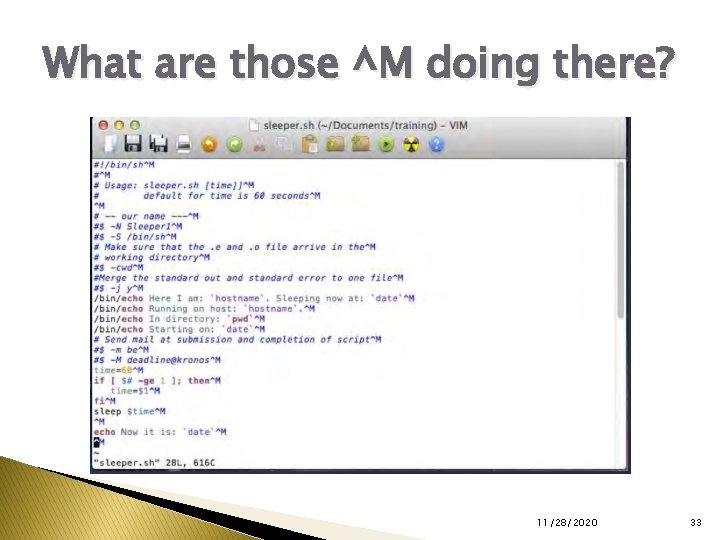

What are those ^M doing there?

Options for Windows Users
- Use a Windows editor that has a UNIX line ending setting, such as Notepad++ (free software)
- Edit your text files exclusively on Helium
- Use dos2unix on Helium to convert the files
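
The ^M characters are carriage returns left over from Windows line endings. The sketch below creates a small file with such endings, counts the carriage returns, and strips them with `tr`, which is a portable stand-in for what `dos2unix` does. The file names are made up.

```shell
#!/bin/sh
workdir=$(mktemp -d)

# A two-line script saved with Windows (CRLF) line endings.
printf '#!/bin/bash\r\necho hello\r\n' > "$workdir/dos.sh"

# Count carriage returns before conversion.
cr_before=$(( $(tr -cd '\r' < "$workdir/dos.sh" | wc -c) ))

# Strip them; "dos2unix dos.sh" would convert the file in place instead.
tr -d '\r' < "$workdir/dos.sh" > "$workdir/unix.sh"
cr_after=$(( $(tr -cd '\r' < "$workdir/unix.sh" | wc -c) ))

echo "carriage returns before: $cr_before, after: $cr_after"
```

Viewing the original file with `cat -v` shows each carriage return as ^M at the end of the line.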

Additional Help
- Individual Consulting
  - By appointment
- Contact Us:
  - HPC-Sysadmins@iowa.uiowa.edu
- For additional details visit
  - http://www.hpc.uiowa.edu

Questions?
- hpc-sysadmins@iowa.uiowa.edu
- ben-rogers@uiowa.edu
- Suggestions?
- Additional Information
  - http://www.hpc.uiowa.edu
  - http://its.uiowa.edu/hpc
  - https://wiki.uiowa.edu/display/hpcdocs/Helium+Cluster+Overview+and+Quick+Start+Guide
  - https://wiki.uiowa.edu/display/hpcdocs/Neon+Overview+and+Quick+Start+Guide