Hola Hadoop

0. Danger! Please please please please please please please please please please please please please please please please please please please please please please please please please

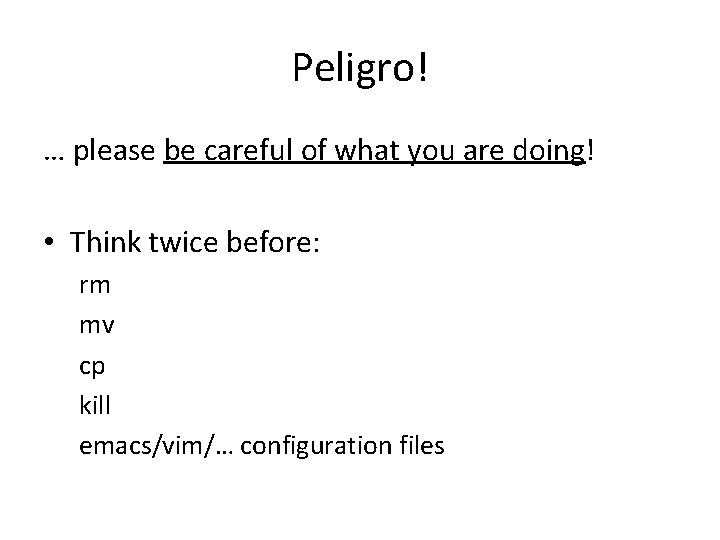

Danger! … please be careful of what you are doing!
• Think twice before using rm, mv, cp, or kill, or before editing configuration files with emacs/vim/…

• cluster.dcc.uchile.cl

1. Download tools
• http://aidanhogan.com/teaching/cc5212-1-2015/tools/
• Unzip them somewhere you can find them

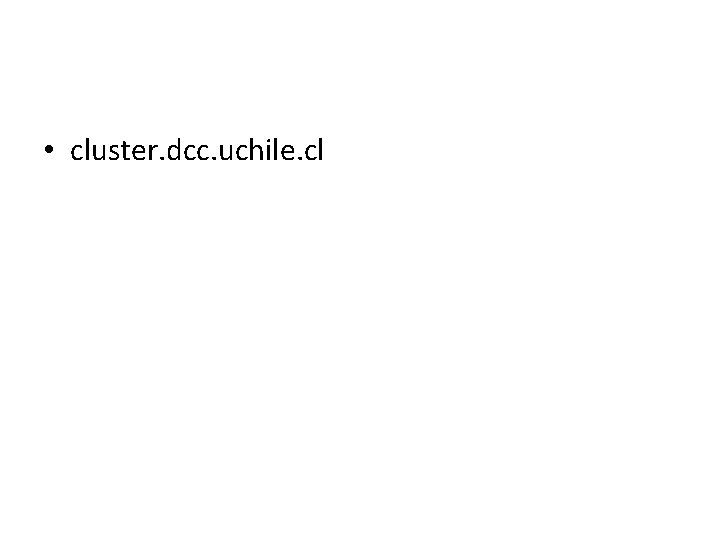

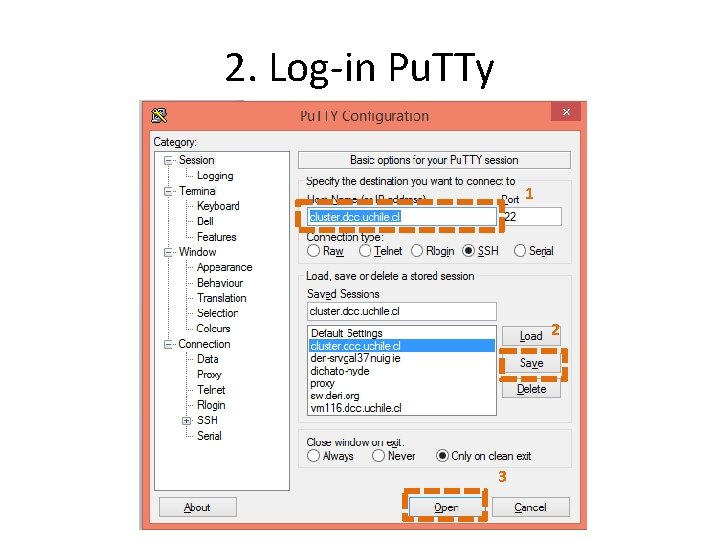

2. Log in with PuTTY (follow the numbered callouts 1, 2, 3 in the screenshot)

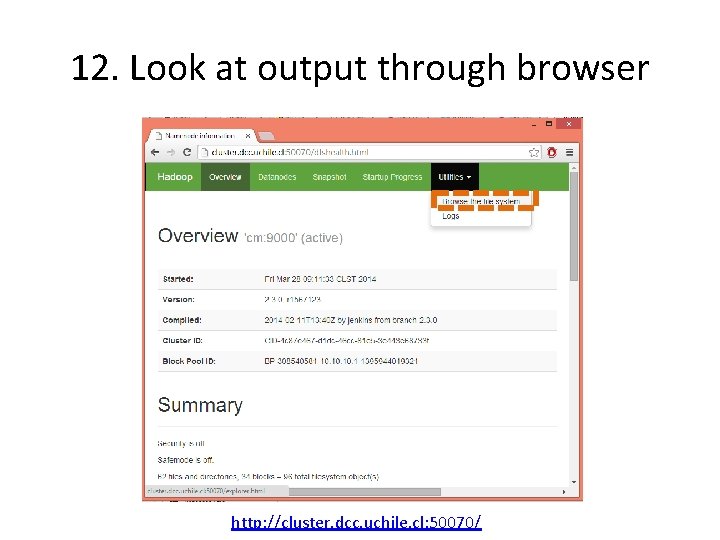

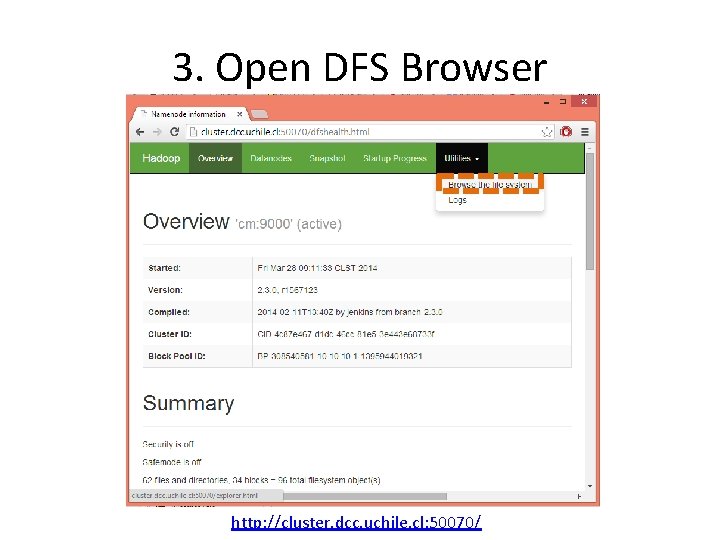

3. Open DFS Browser
• http://cluster.dcc.uchile.cl:50070/

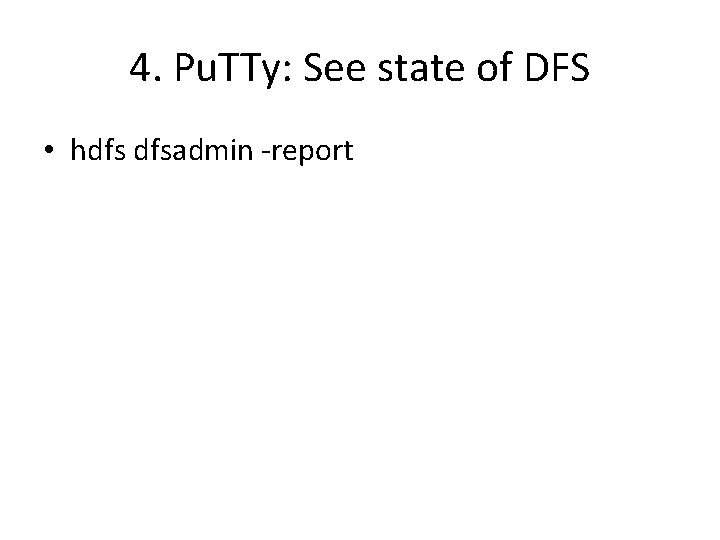

4. PuTTY: See state of DFS
• hdfs dfsadmin -report

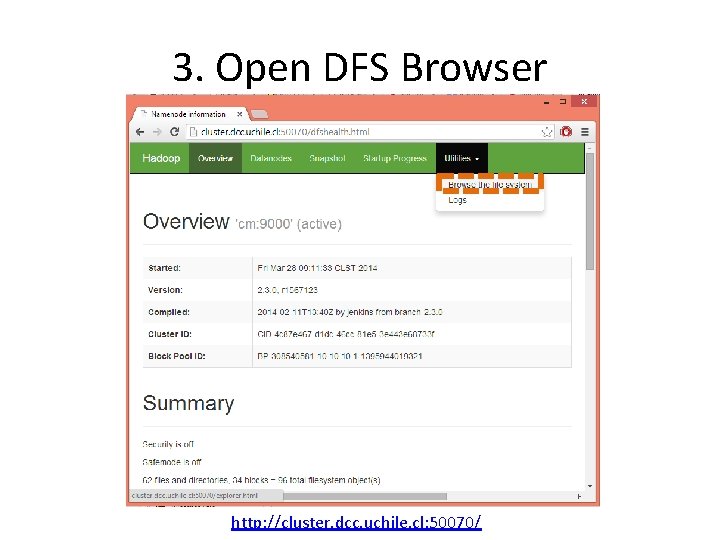

5. PuTTY: Create folder
• hdfs dfs -ls /uhadoop
• hdfs dfs -mkdir /uhadoop/[username]
  – [username] = first letter of first name, then last name (e.g., “ahogan”)
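The [username] rule can be sketched in shell. This is an illustration only: make_username is a hypothetical helper, not part of the lab tools, and the last line prints the folder-creation command rather than running it.

```shell
# Hypothetical helper: first letter of first name + last name, lower-cased.
make_username() {
  initial=$(printf '%s' "$1" | cut -c1)                          # $1 = first name
  printf '%s%s\n' "$initial" "$2" | tr '[:upper:]' '[:lower:]'   # $2 = last name
}

username=$(make_username Aidan Hogan)

# Print (do not run) the folder-creation command for that user.
echo "hdfs dfs -mkdir /uhadoop/${username}"
```

Printing first gives you a chance to check the path before touching the shared cluster.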

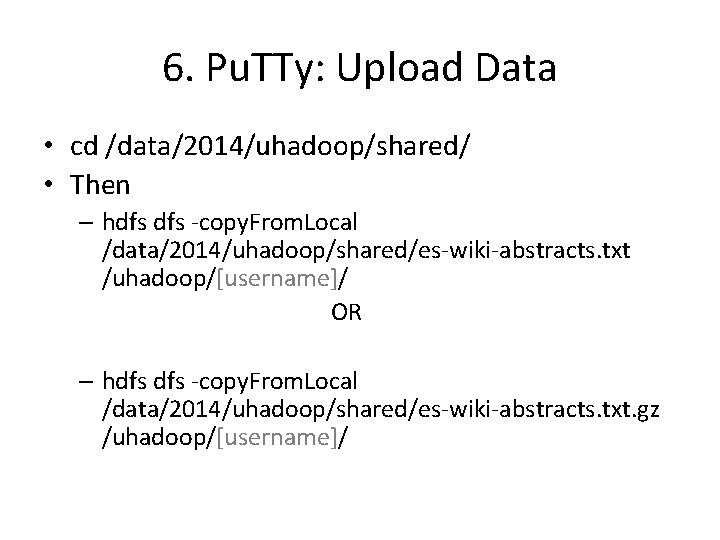

6. PuTTY: Upload Data
• cd /data/2014/uhadoop/shared/
• Then
  – hdfs dfs -copyFromLocal /data/2014/uhadoop/shared/es-wiki-abstracts.txt /uhadoop/[username]/
  OR
  – hdfs dfs -copyFromLocal /data/2014/uhadoop/shared/es-wiki-abstracts.txt.gz /uhadoop/[username]/

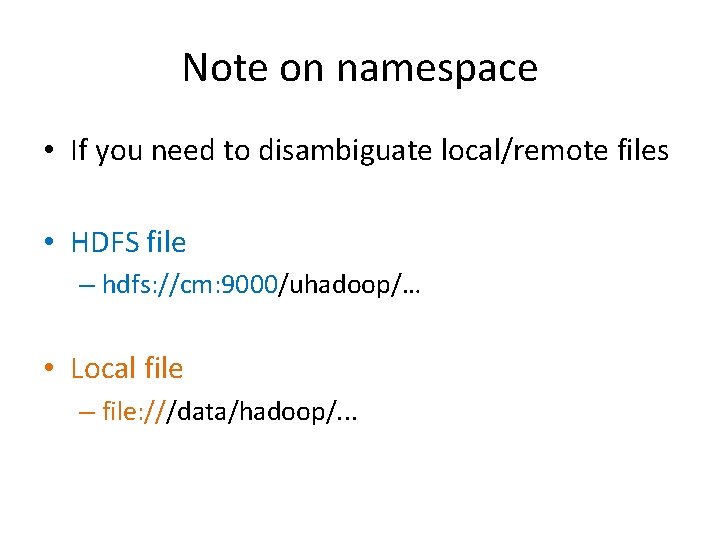

Note on namespace
• If you need to disambiguate local/remote files
• HDFS file
  – hdfs://cm:9000/uhadoop/…
• Local file
  – file:///data/hadoop/...
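To make the note concrete, the upload from step 6 could be written with both sides fully qualified. This is a sketch, assuming the hdfs://cm:9000 and file:// prefixes from the note and the shared file from step 6. The variable names are made up, and the script only prints the command. The hdfs dfs shell should accept URIs in this position, but treat that as an assumption to check on the cluster.

```shell
user="ahogan"   # example username from step 5; replace with your own

# Explicit schemes remove any doubt about which file system each path is on.
src="file:///data/2014/uhadoop/shared/es-wiki-abstracts.txt"   # local disk
dst="hdfs://cm:9000/uhadoop/${user}/"                           # HDFS

# Print (do not run) the fully qualified upload command.
qualified_copy="hdfs dfs -copyFromLocal ${src} ${dst}"
echo "${qualified_copy}"
```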

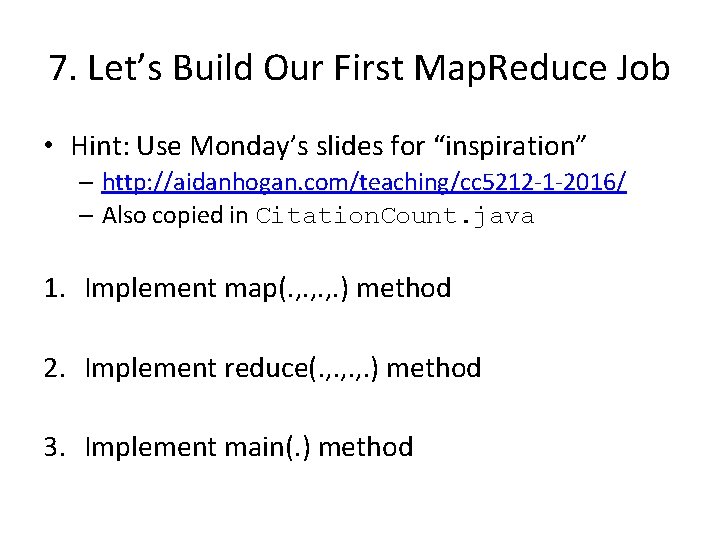

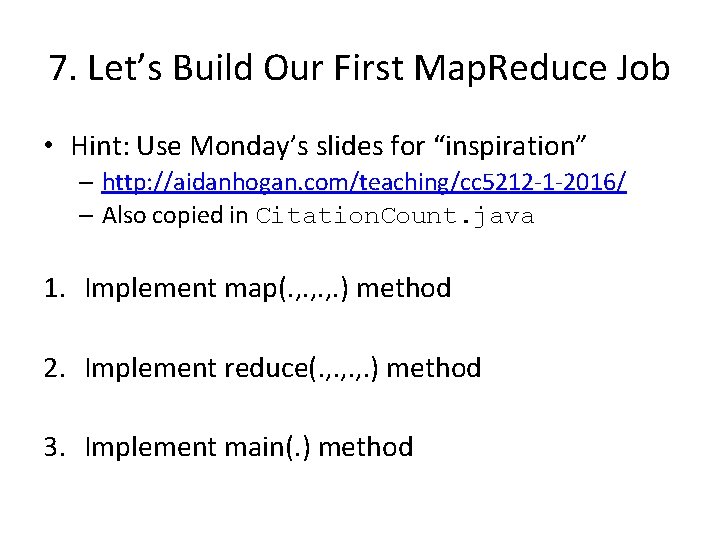

7. Let’s Build Our First MapReduce Job
• Hint: Use Monday’s slides for “inspiration”
  – http://aidanhogan.com/teaching/cc5212-1-2016/
  – Also copied in CitationCount.java
1. Implement map(., ., .) method
2. Implement reduce(., ., .) method
3. Implement main(.) method
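Before writing the Java, it can help to see the same map, shuffle and reduce steps as a local shell pipeline. This is only an analogy on a tiny made-up input, not the Hadoop API and not the lab solution. wordcount_local is a hypothetical name.

```shell
# Local word count mimicking the three MapReduce phases.
wordcount_local() {
  tr -s '[:space:]' '\n' |           # "map": emit one word per line
    grep -v '^$' |                   # drop empty lines
    LC_ALL=C sort |                  # "shuffle": bring equal words together
    uniq -c |                        # "reduce": count each group
    awk '{ print $2 "\t" $1 }'       # print word<TAB>count
}

# Tiny invented input, two lines of Spanish text.
printf 'la casa de la familia\nde la ciudad\n' | wordcount_local
```

In the real job, map(., ., .) plays the role of the tr step, the framework does the sort, and reduce(., ., .) does the counting.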

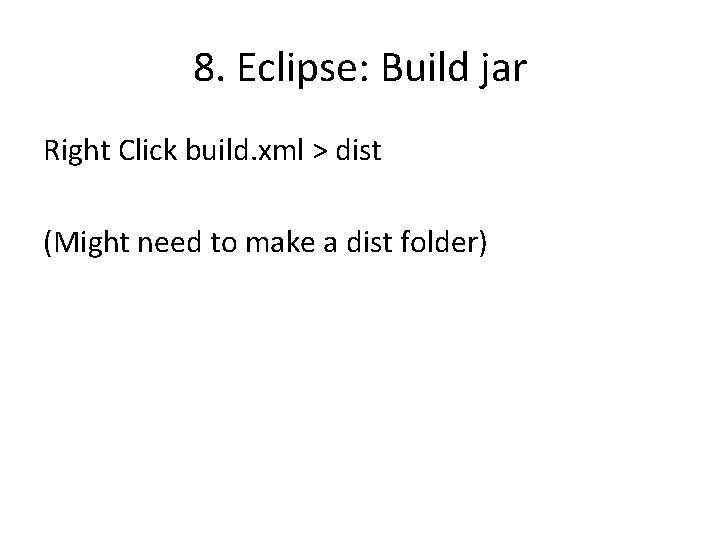

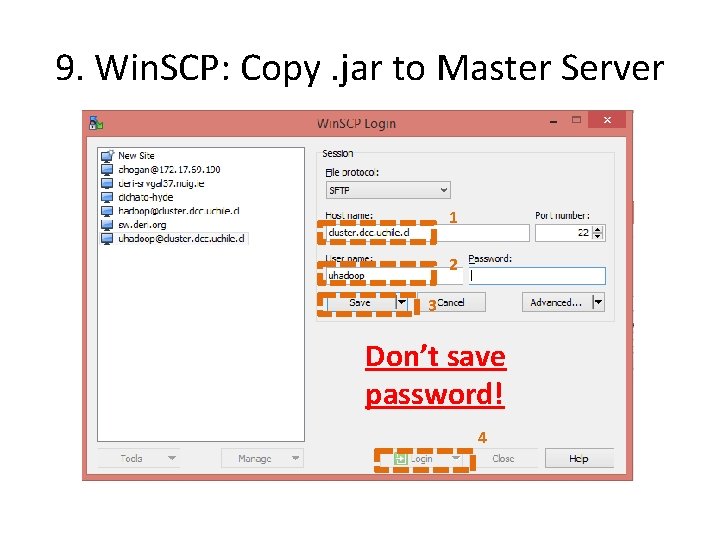

8. Eclipse: Build jar
• Right-click build.xml > dist (you might need to create a dist folder)

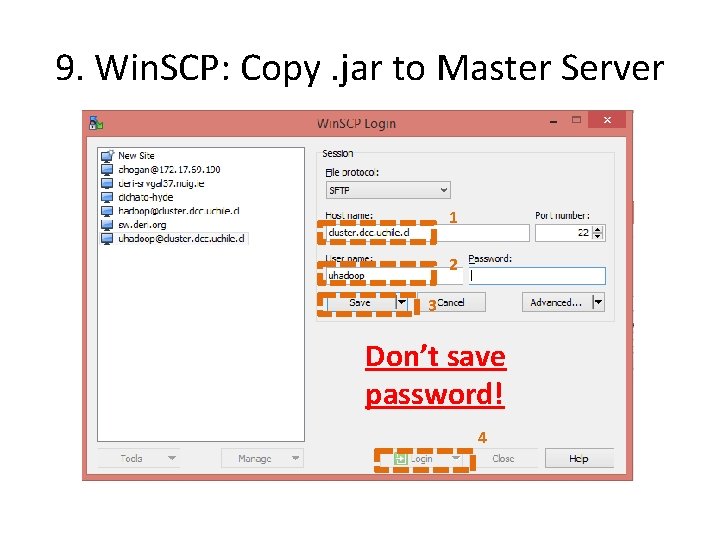

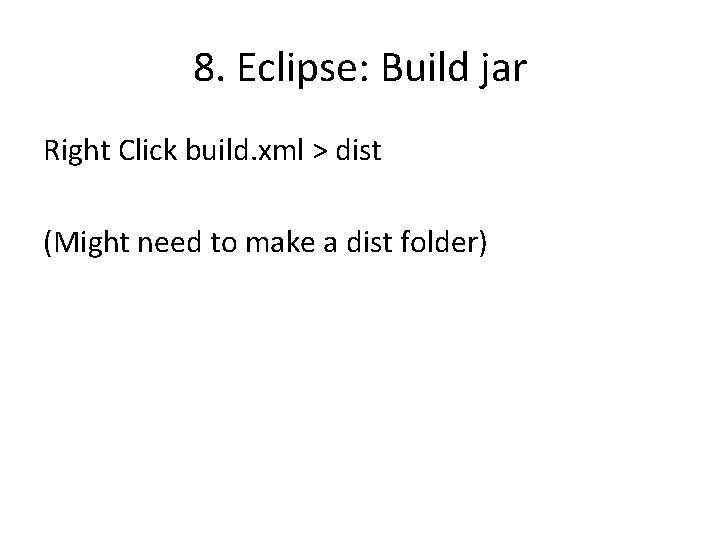

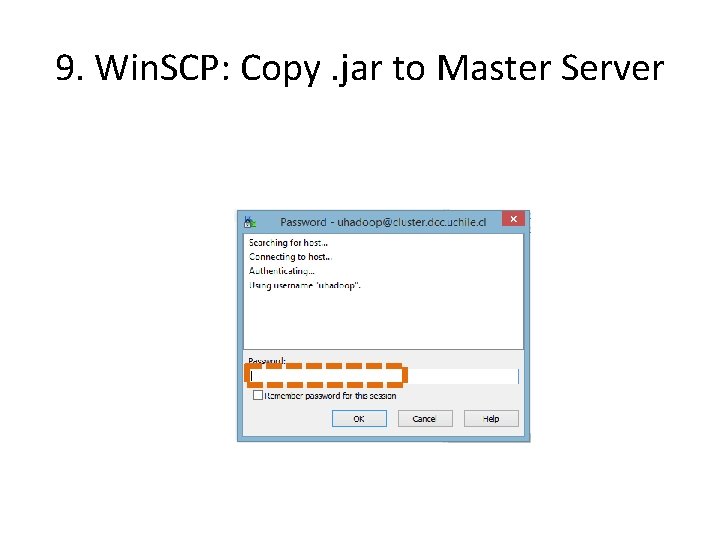

9. WinSCP: Copy .jar to Master Server
• Follow the numbered callouts 1 to 4 in the screenshot
• Don’t save password!

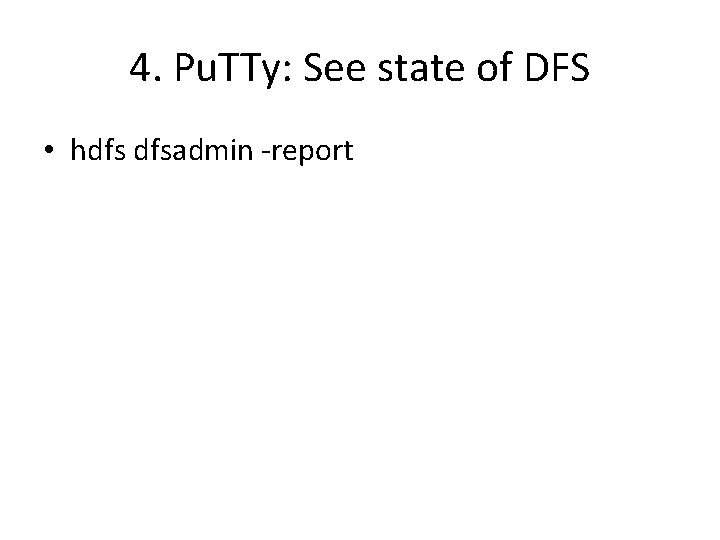

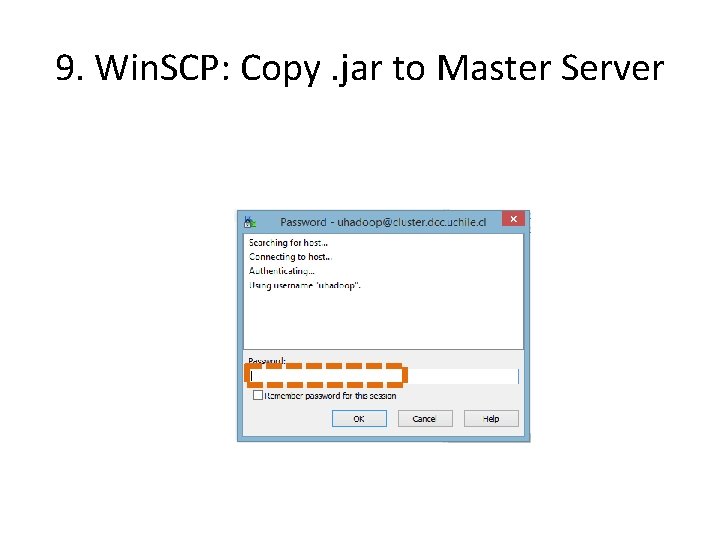

9. WinSCP: Copy .jar to Master Server
• Create dir: /data/2014/uhadoop/[username]/
• Copy your mdp-lab4.jar into it

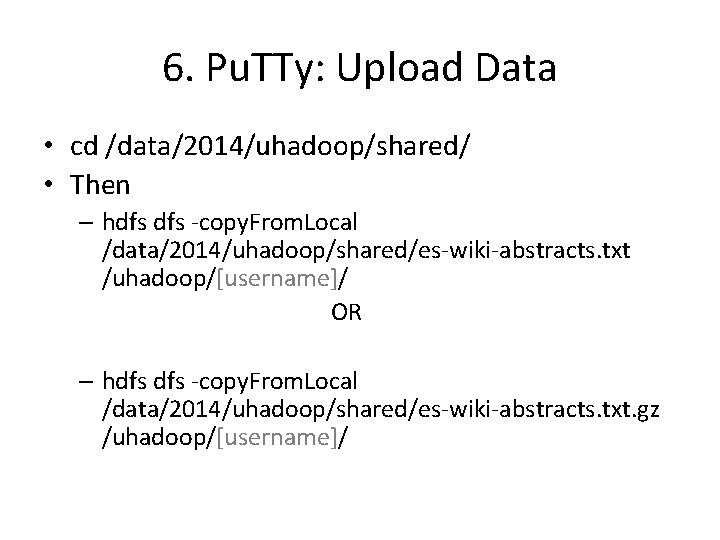

10. PuTTY: Run Job
All one command!
• hadoop jar /data/2014/uhadoop/[username]/mdp-lab4.jar WordCount /uhadoop/[username]/es-wiki-abstracts.txt[.gz] /uhadoop/[username]/wc/
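Because the whole invocation has to be a single command, one way to avoid typos is to assemble it from variables first. This is a sketch, assuming the jar name and paths from steps 6 and 9 and the example username ahogan. It prints the command so you can check it before running it.

```shell
user="ahogan"   # example username; replace with your own
jar="/data/2014/uhadoop/${user}/mdp-lab4.jar"     # jar copied in step 9
input="/uhadoop/${user}/es-wiki-abstracts.txt"    # file uploaded in step 6
output="/uhadoop/${user}/wc/"                     # job output folder

# Four arguments after "hadoop jar": jar, main class, input, output.
run_cmd="hadoop jar ${jar} WordCount ${input} ${output}"
echo "${run_cmd}"
```

If you rerun the job, note that MapReduce normally refuses to write into an output folder that already exists.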

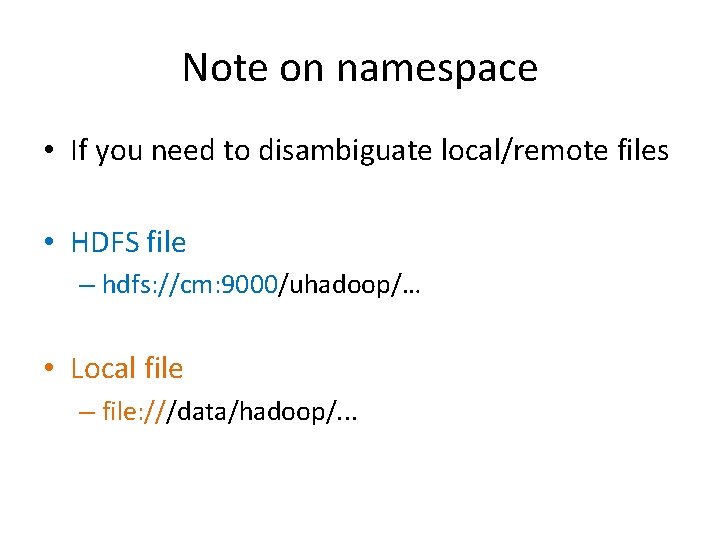

11. PuTTY: Look at output
• hdfs dfs -ls /uhadoop/[username]/wc/
• hdfs dfs -cat /uhadoop/[username]/wc/part-r-00000 | more
• hdfs dfs -cat /uhadoop/[username]/wc/part-r-00000 | grep -P "^de\t" | more (all one command!)
Look for “de” … 4916432 occurrences in local run
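The grep filter can be tried locally first. This is a sketch, assuming the job writes one word<TAB>count pair per line (the default text output), so that anchoring on the word followed by a tab selects exactly that word. Apart from the count for “de” quoted above, the sample lines are invented for illustration.

```shell
# Sample reducer-style output: word<TAB>count (only the "de" count is from the slides).
sample=$(printf 'de\t4916432\ndel\t1\ndetalle\t1\n')

# -P enables Perl-style regexes so \t means a tab;
# ^de\t matches the word "de" itself, not "del" or "detalle".
printf '%s\n' "$sample" | grep -P '^de\t'
```

Note that grep -P needs a grep built with PCRE support, such as GNU grep on Linux.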

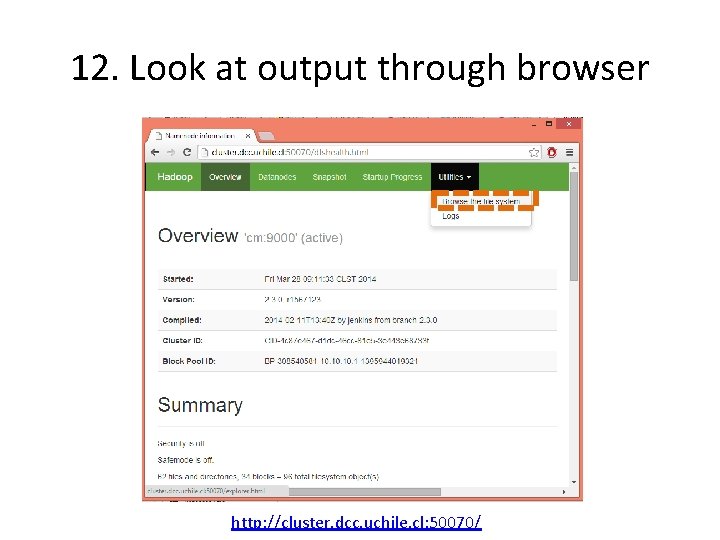

12. Look at output through browser
• http://cluster.dcc.uchile.cl:50070/