Linux Clusters for High Performance Computing Jim Phillips


Linux Clusters for High-Performance Computing
Jim Phillips and Tim Skirvin
Theoretical and Computational Biophysics, Beckman Institute

HPC vs High-Availability
There are two major types of Linux clusters:
– High-Performance Computing
  • Multiple computers running a single job for increased performance
– High-Availability
  • Multiple computers running the same job for increased reliability
– We will be talking about the former!

Why Clusters?
Cheap alternative to “big iron”
Local development platform for “big iron” code
Built to task (buy only what you need)
Built from COTS (commercial off-the-shelf) components
Runs COTS software (Linux/MPI)
Lower yearly maintenance costs
Single failure does not take down entire facility
Re-deploy as desktops or “throw away”

Why Not Clusters?
Non-parallelizable or tightly coupled application
Cost of porting large existing codebase too high
No source code for application
No local expertise (don’t know Unix)
No vendor hand-holding
Massive I/O or memory requirements

Know Your Users
Who are you building the cluster for?
– Yourself and two grad students?
– Yourself and twenty grad students?
– Your entire department or university?
Are they clueless, competitive, or malicious?
How will you allocate resources among them?
Will they expect an existing infrastructure?
How well will they tolerate system downtimes?

Your Users’ Goals
Do you want increased throughput?
– Large number of queued serial jobs.
– Standard applications, no changes needed.
Or decreased turnaround time?
– Small number of highly parallel jobs.
– Parallelized applications, changes required.

Your Application
The best benchmark for making decisions is your application running your dataset.
Designing a cluster is about trade-offs.
– Your application determines your choices.
– No supercomputer runs everything well either.
Never buy hardware until the application is parallelized, ported, tested, and debugged.

Your Application: Parallel Performance
How much memory per node?
How would it scale on an ideal machine?
How is scaling affected by:
– Latency (time needed for small messages)?
– Bandwidth (time per byte for large messages)?
– Multiprocessor nodes?
How fast do you need to run?
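The latency/bandwidth distinction can be made concrete with the usual first-order cost model: time = latency + bytes / bandwidth. The minimal Python sketch below applies that model. The two network profiles are hypothetical round numbers chosen for illustration, not measurements from this talk. Substitute figures from your own message-passing benchmark.

```python
def message_time(n_bytes: int, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """First-order model: fixed per-message latency plus per-byte transfer cost."""
    return latency_s + n_bytes / bandwidth_bytes_per_s


# Hypothetical network profiles (illustrative round numbers, not measurements).
GIGABIT = {"latency_s": 50e-6, "bandwidth_bytes_per_s": 100e6}
LOW_LATENCY = {"latency_s": 5e-6, "bandwidth_bytes_per_s": 250e6}

if __name__ == "__main__":
    for size in (64, 1_000_000):  # one small message and one large message
        for name, net in (("gigabit", GIGABIT), ("low-latency", LOW_LATENCY)):
            t_us = message_time(size, **net) * 1e6
            print(f"{name:12s} {size:>9d} bytes: {t_us:10.1f} us")
```

Under this model, small messages are dominated by the latency term and large ones by the bandwidth term. If your application mostly exchanges small messages, a low-latency interconnect matters more than raw bandwidth.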

Budget
Figure out how much money you have to spend.
Don’t spend money on problems you won’t have.
– Design the system to just run your application.
Never solve problems you can’t afford to have.
– Fast network on 20 nodes or slower on 100?
Don’t buy the hardware until…
– The application is ported, tested, and debugged.
– The science is ready to run.

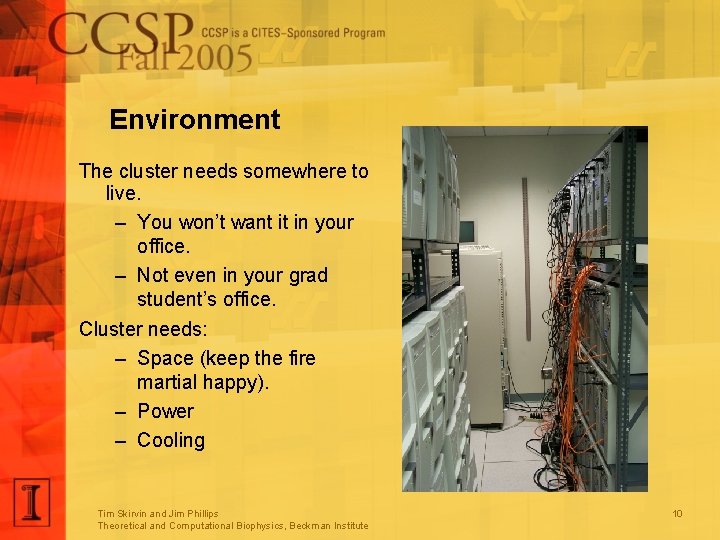

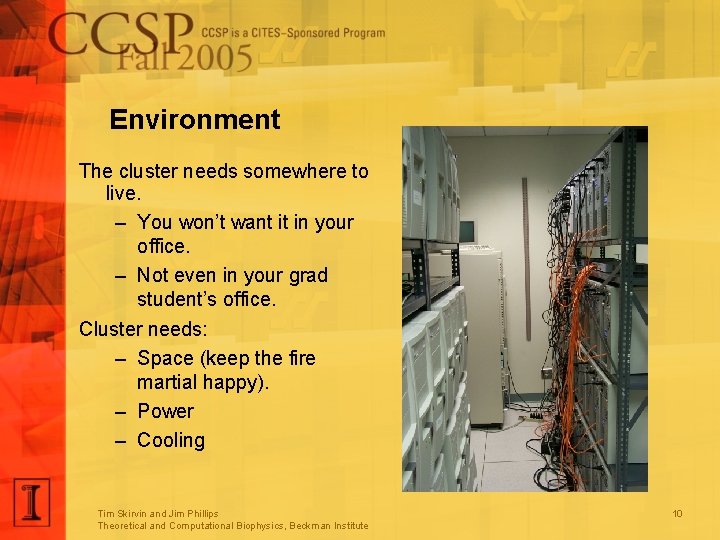

Environment
The cluster needs somewhere to live.
– You won’t want it in your office.
– Not even in your grad student’s office.
Cluster needs:
– Space (keep the fire marshal happy).
– Power
– Cooling

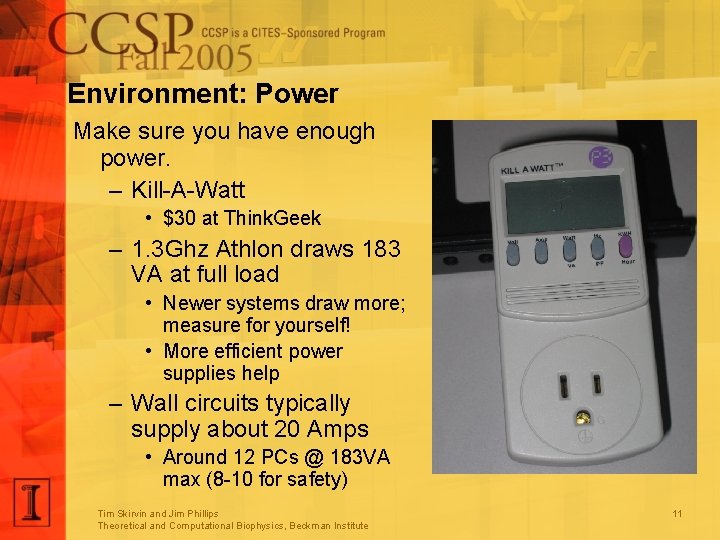

Environment: Power
Make sure you have enough power.
– Kill-A-Watt
  • $30 at ThinkGeek
– A 1.3 GHz Athlon draws 183 VA at full load
  • Newer systems draw more; measure for yourself!
  • More efficient power supplies help
– Wall circuits typically supply about 20 amps
  • Around 12 PCs @ 183 VA max (8-10 for safety)
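The "12 PCs per circuit, 8-10 for safety" estimate is simple arithmetic on apparent power. The minimal Python sketch below reproduces it under two stated assumptions. First, the nominal line voltage is about 110 V, which is consistent with the VA/A ratios in the power-factor measurements. Second, it applies an 80% derating, a common rule of thumb for continuous loads; check your local electrical code rather than relying on this figure.

```python
import math


def nodes_per_circuit(amps: float, volts: float, va_per_node: float, derate: float = 1.0) -> int:
    """Whole nodes whose combined apparent power (VA) fits on one circuit.

    derate < 1.0 leaves headroom below the breaker rating.
    """
    return math.floor(amps * volts * derate / va_per_node)


if __name__ == "__main__":
    # Assumptions: 20 A circuit, ~110 V nominal line voltage, 183 VA per fully loaded node.
    print(nodes_per_circuit(20, 110, 183))              # 12: the theoretical maximum
    print(nodes_per_circuit(20, 110, 183, derate=0.8))  # 9: with an assumed 80% continuous-load margin
```

The same function can size a UPS. Pass the UPS VA rating as `amps * volts`, or divide it directly by the per-node VA.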

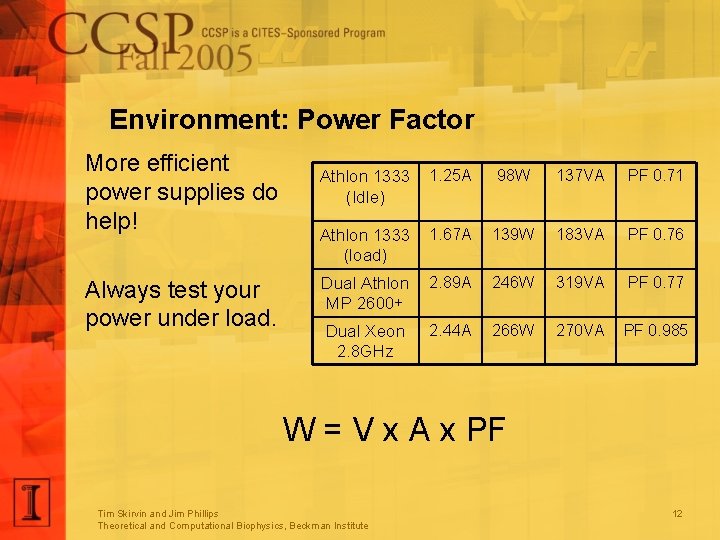

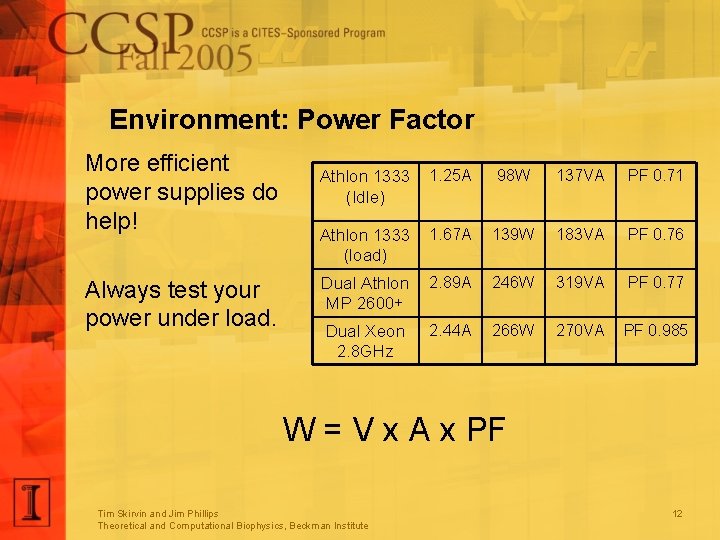

Environment: Power Factor
More efficient power supplies do help! Always test your power under load.

| System | Current | Real power | Apparent power | Power factor |
|---|---|---|---|---|
| Athlon 1333 (idle) | 1.25 A | 98 W | 137 VA | 0.71 |
| Athlon 1333 (load) | 1.67 A | 139 W | 183 VA | 0.76 |
| Dual Athlon MP 2600+ | 2.89 A | 246 W | 319 VA | 0.77 |
| Dual Xeon 2.8 GHz | 2.44 A | 266 W | 270 VA | 0.985 |

W = V × A × PF
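The relation W = V × A × PF can be checked against the readings on this slide. Dividing real power (W) by apparent power (VA = V × A) recovers the meter's power factor to within rounding. Dividing VA by amps gives the implied line voltage. The small Python sketch below does both; the dictionary layout is just one convenient way to hold the measurements.

```python
# Meter readings from this slide: name -> (amps, watts, volt-amps, reported PF)
READINGS = {
    "Athlon 1333 (idle)": (1.25, 98, 137, 0.71),
    "Athlon 1333 (load)": (1.67, 139, 183, 0.76),
    "Dual Athlon MP 2600+": (2.89, 246, 319, 0.77),
    "Dual Xeon 2.8 GHz": (2.44, 266, 270, 0.985),
}


def power_factor(watts: float, volt_amps: float) -> float:
    """Power factor = real power (W) / apparent power (VA)."""
    return watts / volt_amps


def line_voltage(volt_amps: float, amps: float) -> float:
    """Apparent power is V x A, so the implied RMS voltage is VA / A."""
    return volt_amps / amps


if __name__ == "__main__":
    for name, (amps, watts, va, reported_pf) in READINGS.items():
        pf = power_factor(watts, va)
        volts = line_voltage(va, amps)
        print(f"{name:22s} PF {pf:.3f} (meter: {reported_pf})  ~{volts:.0f} V")
```

A low power factor means the circuit carries more current (VA) than the useful work (W) suggests. This is why circuits and UPSes should be sized by VA, not watts.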

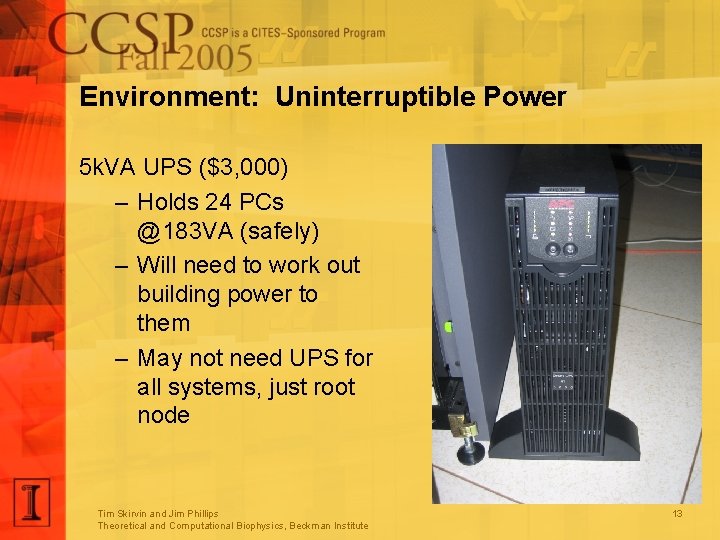

Environment: Uninterruptible Power
5 kVA UPS ($3,000)
– Holds 24 PCs @ 183 VA (safely)
– Will need to work out building power to them
– May not need UPS for all systems, just root node

Environment: Cooling
Building AC will only get you so far.
Make sure you have enough cooling.
– One PC @ 183 VA puts out ~600 BTU/hr of heat.
– 1 ton of AC = 12,000 BTU/hr ≈ 3,500 W
– Can run ~20 such PCs per ton of AC
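The cooling rule of thumb follows from the conversion 1 W ≈ 3.412 BTU/hr together with 12,000 BTU/hr per ton. The slide's ~600 BTU/hr figure treats the 183 VA rating as if it were watts. Heat actually tracks real power, so using the measured 139 W from the power-factor readings gives a less conservative count. The minimal Python sketch below shows both.

```python
W_TO_BTU_PER_HR = 3.412        # 1 W of dissipated power is about 3.412 BTU/hr of heat
BTU_PER_HR_PER_TON = 12_000    # 1 ton of air conditioning


def heat_btu_per_hr(watts: float) -> float:
    """Essentially all electrical power drawn by a PC ends up as heat."""
    return watts * W_TO_BTU_PER_HR


def nodes_per_ton(watts_per_node: float) -> int:
    """How many nodes one ton of cooling can absorb, rounded down."""
    return int(BTU_PER_HR_PER_TON // heat_btu_per_hr(watts_per_node))


if __name__ == "__main__":
    print(f"1 ton = {BTU_PER_HR_PER_TON / W_TO_BTU_PER_HR:.0f} W")   # about 3517 W
    print(nodes_per_ton(183))  # 19: treating the 183 VA rating as watts (conservative)
    print(nodes_per_ton(139))  # 25: using the measured 139 W real power under load
```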

Hardware
Many important decisions to make
Keep application performance, users, environment, local expertise, and budget in mind
An exercise in systems integration, making many separate components work well as a unit
A reliable but slightly slower cluster is better than a fast but non-functioning cluster
Always benchmark a demo system first!

Hardware: Networking
Two main options:
– Gigabit Ethernet: cheap ($100-200/node), universally supported and tested, cheap commodity switches up to 48 ports.
  • 24-port switches seem the best bang for the buck.
– Special interconnects:
  • Myrinet: very expensive (thousands of dollars per node), very low latency, logarithmic cost model for very large clusters.
  • InfiniBand: similar, less common, not as well supported.

Hardware: Other Components
Filtered Power (Isobar, Data Shield, etc.)
Network Cables: buy good ones, you’ll save debugging time later
If a cable is at all questionable, throw it away!
Power Cables
Monitor
Video/Keyboard Cables

User Rules of Thumb
1-4 users:
– Yes, you still want a queueing system.
– Plan ahead to avoid idle time and conflicts.
5-20 users:
– Put one person in charge of running things.
– Work out a fair-share or reservation system.
>20 users:
– User documentation and examples are essential.
– Decide who makes resource allocation decisions.
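To make "work out a fair-share system" concrete, here is a toy Python sketch. It is a hypothetical scheme, not how Sun Grid Engine, PBS, or any other scheduler computes priority. Each user gets an agreed target share. Users whose recent usage falls furthest below their target go first. Real schedulers also decay old usage over time. The user names and numbers are made up.

```python
from __future__ import annotations


def fair_share_order(targets: dict[str, float], usage_hours: dict[str, float]) -> list[str]:
    """Order users by how far their recent usage falls below their target share.

    targets: each user's agreed fraction of the cluster (should sum to 1.0).
    usage_hours: CPU-hours each user consumed over a recent window.
    """
    total = sum(usage_hours.values()) or 1.0  # avoid dividing by zero on an idle cluster

    def deficit(user: str) -> float:
        return targets[user] - usage_hours.get(user, 0.0) / total

    # Largest deficit first: that user's next job should start first.
    return sorted(targets, key=deficit, reverse=True)


if __name__ == "__main__":
    # Hypothetical users and usage numbers.
    targets = {"alice": 0.50, "bob": 0.25, "carol": 0.25}
    usage = {"alice": 60.0, "bob": 10.0, "carol": 30.0}
    print(fair_share_order(targets, usage))  # ['bob', 'carol', 'alice']
```

In this example, alice has the largest target share but has already used 60% of the recent CPU-hours, so bob's jobs go first.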

Application Rules of Thumb
1-2 programs:
– Don’t pay for anything you won’t use.
– Benchmark, benchmark!
  • Be sure to use your typical data.
  • Try different compilers and compiler options.
>2 programs:
– Select the most standard OS environment.
– Benchmark those that will run the most.
  • Consider a specialized cluster for dominant apps only.

Parallelization Rules of Thumb
Throughput is easy… the app runs as is.
Turnaround is not:
– Parallel speedup is limited by:
  • Time spent in non-parallel code.
  • Time spent waiting for data from the network.
– Improve serial performance first:
  • Profile to find the most time-consuming functions.
  • Try new algorithms, libraries, hand tuning.
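The first limit, time spent in non-parallel code, is what Amdahl's law quantifies. The slide does not name it, but it is the standard bound. If a fraction p of the runtime parallelizes perfectly over N processors, speedup is at most 1 / ((1 - p) + p / N). The Python sketch below ignores network wait, so real cluster speedup will be lower still.

```python
def amdahl_speedup(parallel_fraction: float, n_procs: int) -> float:
    """Upper bound on speedup when only parallel_fraction of the runtime parallelizes.

    Ignores communication cost, so real speedup on a cluster will be lower still.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_procs)


if __name__ == "__main__":
    for p in (0.90, 0.99):
        row = ", ".join(f"{n}: {amdahl_speedup(p, n):5.1f}" for n in (8, 32, 128))
        print(f"{p:.0%} parallel -> {row}")
```

With 10% serial code, speedup can never exceed 10 no matter how many nodes you buy. This is why the slide says to improve serial performance first.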

Some Details Matter More
What limiting factor do you hit first?
– Budget?
– Space, power, and cooling?
– Network speed?
– Memory speed?
– Processor speed?
– Expertise?

Limited by Budget
Don’t waste money solving problems you can’t afford to have right now:
– Regular PCs on shelves (rolling carts)
– Gigabit networking and multiple jobs
Benchmark performance per dollar.
– The last dollar you spend should go to whatever improves your performance the most.
Ask for equipment funds in proposals!
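"Benchmark performance per dollar" amounts to ranking candidate configurations by measured throughput divided by quoted price. The minimal Python sketch below does this under stated assumptions. The option names, prices, and rates are hypothetical placeholders. The `rate` field stands for whatever throughput your own application reports on your own dataset.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Option:
    name: str
    cost_usd: float
    rate: float  # benchmark throughput on YOUR application and dataset, e.g. steps/s

    @property
    def rate_per_dollar(self) -> float:
        return self.rate / self.cost_usd


def rank_options(options: list[Option], budget_usd: float) -> list[Option]:
    """Affordable options, best performance per dollar first."""
    affordable = [o for o in options if o.cost_usd <= budget_usd]
    return sorted(affordable, key=lambda o: o.rate_per_dollar, reverse=True)


if __name__ == "__main__":
    # Hypothetical quotes and benchmark results, for illustration only.
    options = [
        Option("A", cost_usd=40_000, rate=10.0),
        Option("B", cost_usd=60_000, rate=18.0),
        Option("C", cost_usd=25_000, rate=5.0),
    ]
    for o in rank_options(options, budget_usd=100_000):
        print(o.name, f"{o.rate_per_dollar * 1000:.3f} per $1000")
```

The same ranking applies when space or power is the binding limit. Replace `cost_usd` with rack units or watts to get performance per rack or per watt.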

Limited by Space
Benchmark performance per rack
Consider all combinations of:
– Rackmount nodes
  • More expensive but no performance loss
– Dual-processor nodes
  • Less memory bandwidth per processor
– Dual-core processors
  • Less memory bandwidth per core

Limited by Power/Cooling
Benchmark performance per watt
Consider:
– Opteron or PowerPC rather than Xeon
– Dual-processor nodes
– Dual-core processors

Limited by Network Speed
Benchmark your code at NCSA.
– 10,000 CPU-hours is easy to get.
– Try running one process per node.
  • If that works, buy single-processor nodes.
– Try Myrinet.
  • If that works, can you run at NCSA?
– Can you run more, smaller jobs?

Limited by Serial Performance
Is it memory performance? Try:
– Single-core Opterons
– Single-processor nodes
– Larger cache CPUs
– Lower clock speed CPUs
Is it really the processor itself? Try:
– Higher clock speed CPUs
– Dual-core CPUs

Limited by Expertise
There is no substitute for a local expert.
Qualifications:
– Comfortable with the Unix command line.
– Comfortable with Linux administration.
– Cluster experience if you can get it.

System Software
“Linux” is just a starting point.
– Operating system
– Libraries: message passing, numerical
– Compilers
– Queuing systems
Performance
Stability
System security
Existing infrastructure considerations

Scyld Beowulf / Clustermatic
Single front-end master node:
– Fully operational normal Linux installation.
– BProc patches incorporate slave nodes.
Severely restricted slave nodes:
– Minimum installation, downloaded at boot.
– No daemons, users, logins, scripts, etc.
– No access to NFS servers except for master.
– Highly secure slave nodes as a result

Oscar/ROCKS
Each node is a full Linux install
– Offers access to a file system.
– Software tools help manage these large numbers of machines.
– Still more complicated than only maintaining one “master” node.
– Better suited for running multiple jobs on a single cluster, vs. one job on the whole cluster.

System Software: Compilers
No point in buying fast hardware just to run poorly performing executables
Good compilers might provide a 50-150% performance improvement
May be cheaper to buy a $2,500 compiler license than to buy more compute nodes
Benchmark the real application with the compiler; get an eval compiler license if necessary
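The license-versus-nodes comparison can be written out as a quick break-even check. A 50% improvement is a speedup of 1.5. On N nodes, getting that much extra throughput from hardware would require about N × 0.5 more nodes. The Python sketch below assumes throughput scales linearly with node count, which roughly holds for many independent jobs but not for one tightly coupled run. The node count and per-node price are hypothetical.

```python
def equivalent_extra_nodes(n_nodes: int, speedup: float) -> float:
    """Extra nodes needed to match a compiler speedup, assuming throughput scales linearly."""
    return n_nodes * (speedup - 1.0)


def compiler_is_cheaper(license_usd: float, n_nodes: int, node_cost_usd: float, speedup: float) -> bool:
    """True if the license costs less than buying the equivalent extra hardware."""
    return license_usd < equivalent_extra_nodes(n_nodes, speedup) * node_cost_usd


if __name__ == "__main__":
    # Hypothetical: 24 nodes at $2,000 each, and a compiler that makes the code 1.5x faster.
    print(equivalent_extra_nodes(24, 1.5))                # 12.0 nodes
    print(compiler_is_cheaper(2_500, 24, 2_000, 1.5))     # True: $2,500 vs $24,000
```

The speedup must come from benchmarking your real application with the evaluation compiler, not from the vendor's figures.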

System Software: Message Passing Libraries
Usually dictated by application code
Choose something that will work well with hardware, OS, and application
User-space message passing?
MPI: industry standard, many implementations by many vendors, as well as several free implementations
Others: Charm++, BIP, Fast Messages

System Software: Numerical Libraries
Can provide a huge performance boost over “Numerical Recipes” or in-house routines
Typically hand-optimized for each platform
When applications spend a large fraction of runtime in library code, it pays to buy a license for a highly tuned library
Examples: BLAS, FFTW, Interval libraries

System Software: Batch Queueing
Clusters, although cheaper than “big iron”, are still expensive, so they should be used efficiently
The use of a batch queueing system can keep a cluster running jobs 24/7
Things to consider:
– Allocation of sub-clusters?
– 1-CPU jobs on SMP nodes?
Examples: Sun Grid Engine, PBS, LoadLeveler

System Software: Operating System
Any annoying management or reliability issues get hugely multiplied in a cluster environment.
Plan for security from the outset.
Clusters have special needs; use something appropriate for the application and hardware.

System Software: Install It Yourself
Don’t use the vendor’s pre-loaded OS.
– They would love to sell you 100 licenses.
– What happens when you have to reinstall?
– Do you like talking to tech support?
– Are those flashy graphics really useful?
– How many security holes are there?

Security Tips
Restrict physical access to the cluster, if possible.
– Be involved in all tours, so that nobody touches anything.
If you’re on campus, put your clusters into the Fully Closed network group
– Might cause some limitations if you’re trying to submit from off-site
– Will cause problems with Globus
– The built-in firewall is your friend!

Purchasing Tips: Before You Begin
Get your budget.
Work out the space, power, and cooling capacities of the room.
Start talking to vendors early
– But don’t commit!
Don’t fall in love with any one vendor until you’ve looked at them all.

Purchasing Tips: Design Notes
Make sure to order some spare nodes
– Serial nodes and hot-swap spares
– Keep them running to make sure they work.
If possible, install HDs only in the head node
– State law and UIUC policy require all hard drives to be wiped before disposal
– It doesn’t matter if the drive never stored anything!
– Each drive will take 8-10 hours to wipe.
  • Save yourself a world of pain in a few years…
  • …or just give your machines to some other campus group, and make them worry about it.

Purchasing Tips: Get Local Service
If a node dies, do you want to ship it?
Two choices:
– Local business (Champaign Computer)
– Major vendor (Sun)
Ask others about responsiveness.
Design your cluster so that you can still run jobs if a couple of nodes are down.

Purchasing Tips: Dealing with Purchasing
You will want to put the cluster order on a Purchase Order (PO)
– Do not pay for the cluster until it works completely.
Prepare a ten-point letter
– Necessary for all purchases >$25k.
– Examples are available from your business office (or bug us for ours).
– These aren’t difficult to write, but will probably be necessary.

Purchasing Tips: The Bid Process
Any purchase >$28k must go up for bid
– Exception: sole-source vendors
– The limit grows every year
– Adds a month or so to the purchase time
– If you can keep the numbers below the magic $28k, do it!
  • The bid limit can be leverage to get vendors to drop their prices just below it; plan accordingly.
You will get lots of junk bids
– Be very specific about your requirements to keep them away!

Purchasing Tips: Working the Bid Process
Use sole-source vendors where possible.
– This is a major reason why we buy from Sun.
– Check with your purchasing people.
– This won’t help you avoid the month-long delay, as the item still has to be posted.
Purchase your clusters in small chunks
– Only works if you’re looking at a relatively small cluster.
– Again, you may be able to use this as leverage with your vendor to lower their prices.

Purchasing Tips: Receiving Your Equipment
Let Receiving know that the machines are coming.
– It will take up a lot of space on the loading dock.
– Working with them to save space will earn you goodwill (and faster turnaround).
– Take your machines out of Receiving’s space as soon as reasonably possible.

Purchasing Tips: Consolidated Inventory
Try to convince your Inventory workers to tag each cluster, and not each machine
– It’s really going to be running as a cluster anyway (right?).
– This will make life easier on you.
  • Repairs are easier when you don’t have to worry about inventory stickers
– This will make life easier for them.
  • 3 items to track instead of 72

Purchasing Tips: Assembly
Get extra help for assembly
– It’s reasonably fun work
  • …as long as the assembly line goes fast.
– Demand pizza.
Test the assembly instructions before you begin
– Nothing is more annoying than having to realign all of the rails after they’re all screwed in.

Purchasing Tips: Testing and Benchmarking
Test the cluster before you put it into production!
– Sample jobs + cpuburn
– Look at power consumption
– Test for dead nodes
Remember: vendors make mistakes!
– Even their demo applications may not work; check for yourself.
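One way to "test for dead nodes" is to run the same sample job on every node. Treat a node with no result as dead, and flag any node that finishes well above the median time. Such a node is worth inspecting for bad memory, a failing fan, or a mis-set BIOS. The Python sketch below assumes you have already collected the runtimes; how you launch the jobs is up to you. The node names and the 10% tolerance are hypothetical.

```python
from __future__ import annotations

from statistics import median
from typing import Optional


def check_nodes(runtimes: dict[str, Optional[float]], tolerance: float = 0.10) -> tuple[list[str], list[str]]:
    """Split nodes into dead (no result) and slow (well above the median runtime).

    runtimes maps node name -> seconds taken by the same test job, or None if it never finished.
    """
    dead = sorted(n for n, t in runtimes.items() if t is None)
    finished = [t for t in runtimes.values() if t is not None]
    if not finished:
        return dead, []
    cutoff = median(finished) * (1.0 + tolerance)
    slow = sorted(n for n, t in runtimes.items() if t is not None and t > cutoff)
    return dead, slow


if __name__ == "__main__":
    # Hypothetical timings from one sample job per node.
    times = {"node01": 100.0, "node02": 101.5, "node03": 99.0, "node04": 130.0, "node05": None}
    print(check_nodes(times))  # (['node05'], ['node04'])
```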

Case Studies
The best way to illustrate cluster design is to look at how somebody else has done it.
– The TCB Group has designed four separate Linux clusters in the last six years

2001 Case Study
Users:
– Many researchers with MD simulations
– Need to supplement time on supercomputers
Application:
– Not memory-bound, runs well on IA-32
– Scales to 32 CPUs with 100 Mbps Ethernet
– Scales to 100+ CPUs with Myrinet

2001 Case Study 2
Budget:
– Initially $20K, eventually grew to $100K
Environment:
– Full machine room, slowly clear out space
– Under-utilized 12 kVA UPS, staff electrician
– 3-ton chilled water air conditioner (Liebert)

2001 Case Study 3
Hardware:
– Fastest AMD Athlon CPUs available (1333 MHz).
– Fast CL2 SDRAM, but not DDR.
– Switched 100 Mbps Ethernet, Intel EEPro cards.
– Small 40 GB hard drives and CD-ROMs.
System Software:
– Scyld clusters of 32 machines, 1 job/cluster.
– Existing DQS, NIS, NFS, etc. infrastructure.

2003 Case Study
What changed since 2001:
– 50% increase in processor speed
– 50% increase in NAMD serial performance
– Improved stability of SMP Linux kernel
– Inexpensive gigabit cards and 24-port switches
– Nearly full machine room and power supply
– Popularity of compact form factor cases
– Emphasis on interactive MD of small systems

2003 Case Study 2
Budget:
– Initially $65K, eventually grew to ~$100K
Environment:
– Same general machine room environment
– Additional machine room space is available in server room
  • Just switched to using rack-mount equipment
– Still using the old clusters; don’t want to get rid of them entirely
  • Need to be more space-conscious

2003 Case Study 3
Option #1:
– Single-processor, small form factor nodes.
– Hyperthreaded Pentium 4 processors.
– 32-bit, 33 MHz gigabit network cards.
– 24-port gigabit switch (24-processor clusters).
Problems:
– No ECC memory.
– Limited network performance.
– Too small for next-generation video cards.

2003 Case Study 4
Final decision:
– Dual Athlon MP 2600+ in normal cases.
  • No hard drives or CD-ROMs.
  • 64-bit, 66 MHz gigabit network cards.
– 24-port gigabit switch (48-processor clusters).
– Clustermatic OS, boot slaves off of floppy.
  • Floppies have proven very unreliable, especially when left in the drives.
Benefits:
– Server-class hardware w/ ECC memory.
– Maximum processor count for large simulations.
– Maximum network bandwidth for small simulations.

2003 Case Study 5
Athlon clusters from 2001 recycled:
– 36 nodes outfitted as desktops
  • Added video cards, hard drives, extra RAM
  • Cost: ~$300/machine
  • Now dead or in 16-node Condor test cluster
– 32 nodes donated to another group
– Remaining nodes moved to server room
  • 16-node Clustermatic cluster (used by guests)
  • 12 spares and build/test boxes for developers

2004 Case Study
What changed since 2003:
– Technologically, not much!
– Space is more of an issue.
– A new machine room has been built for us.
– Vendors are desperate to sell systems at any price.

2004 Case Study 2
Budget:
– Initially ~$130K, eventually grew to ~$180K
Environment:
– New machine room will store the new clusters.
– Two five-ton Liebert air conditioners have been installed.
– There is minimal floor space, enough for four racks of equipment.

2004 Case Study 3
Final decision:
– 72 × Sun V60x rack-mount servers.
  • Dual 3.06 GHz Intel processors, only slightly faster
  • 2 GB RAM, dual 36 GB HDs, DVD-ROM included in deal
  • Network-bootable gigabit Ethernet built in
  • Significantly more stable than any old cluster machine
– 3 × 24-port gigabit switch (3 × 48-processor clusters)
– 6 × serial nodes (identical to above, also serve as spares)
– Sun Rack 900-38
  • 26 systems per rack, plus switch and UPS for head nodes
– Clustermatic 4 on Red Hat 9

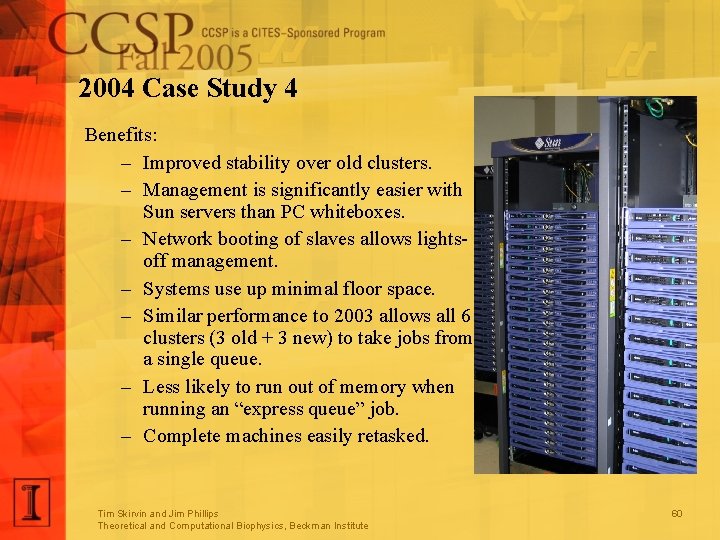

2004 Case Study 4
Benefits:
– Improved stability over old clusters.
– Management is significantly easier with Sun servers than PC whiteboxes.
– Network booting of slaves allows lights-off management.
– Systems use up minimal floor space.
– Similar performance to 2003 allows all 6 clusters (3 old + 3 new) to take jobs from a single queue.
– Less likely to run out of memory when running an “express queue” job.
– Complete machines easily retasked.

For More Information…
http://www.ks.uiuc.edu/Development/Computers/Cluster/
http://www.ks.uiuc.edu/Training/Workshop/Clusters/
We will be setting up a Clusters mailing list some time in the next week or two.
We will also be setting up a Clusters User Group shortly, but that will take some more effort.