Hops: The Hops Hadoop Stack

Jim Dowling, Assoc. Prof. @ KTH Stockholm, CEO @ Logical Clocks AB

CERN Talk, April 16th 2018

Evolution of Hadoop, 2009 → 2018: Huge Body (DataNodes), Tiny Brain (NameNode, ResourceMgr)?

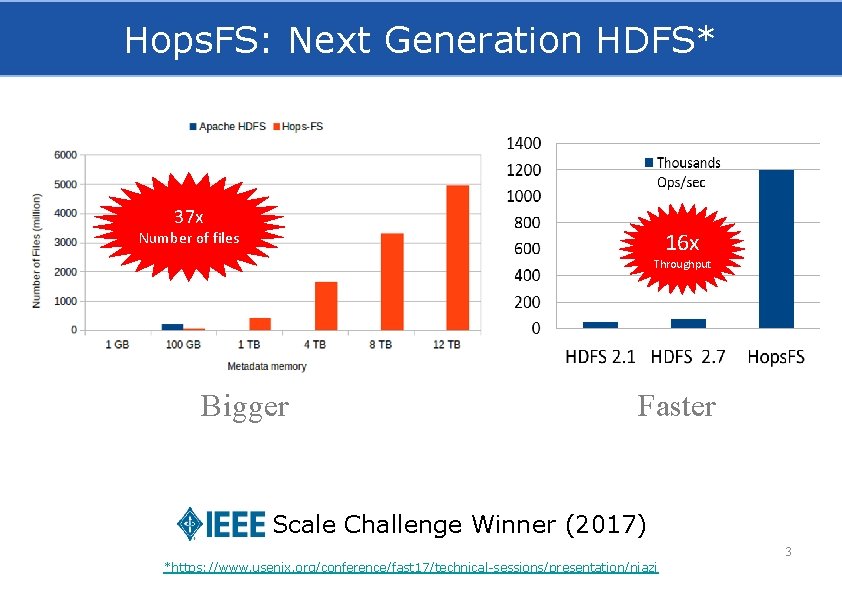

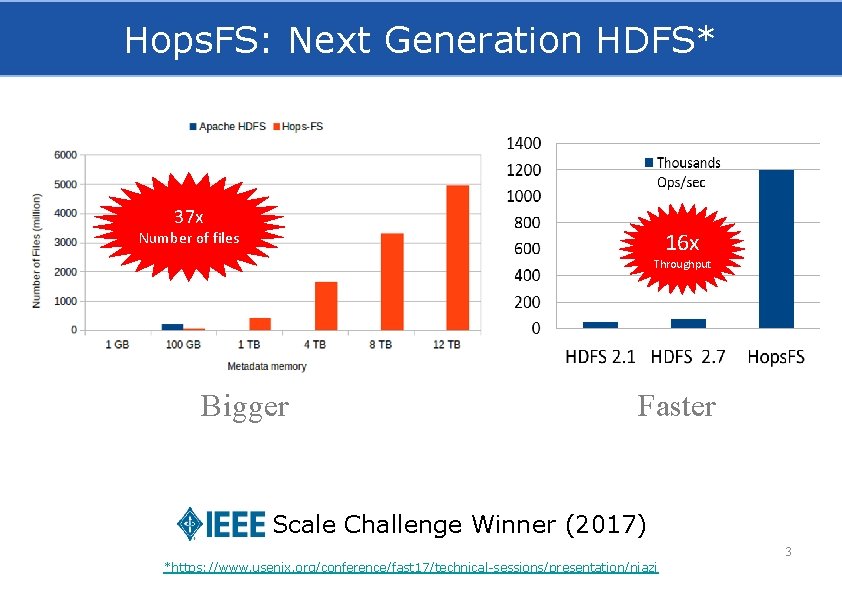

HopsFS: Next Generation HDFS*

- 37x bigger: number of files
- 16x faster: throughput
- Scale Challenge Winner (2017)

*https://www.usenix.org/conference/fast17/technical-sessions/presentation/niazi

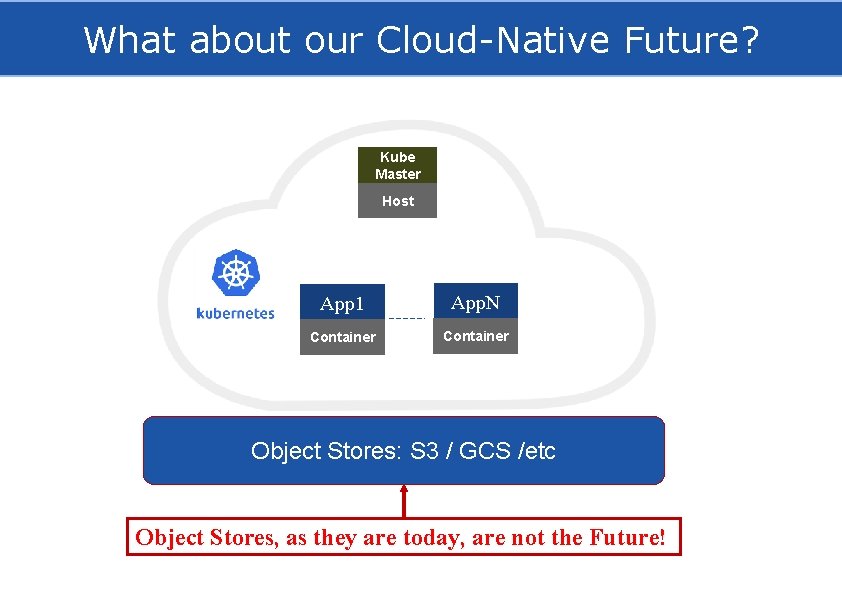

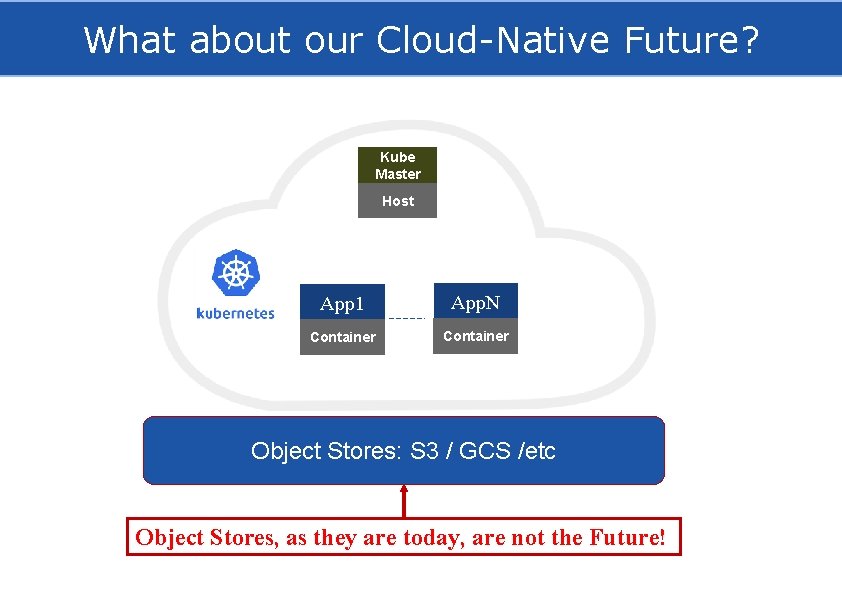

What about our Cloud-Native Future? [Diagram: a Kube Master and hosts running App 1 … App N in containers, backed by object stores: S3 / GCS / etc.]

Object stores, as they are today, are not the future!

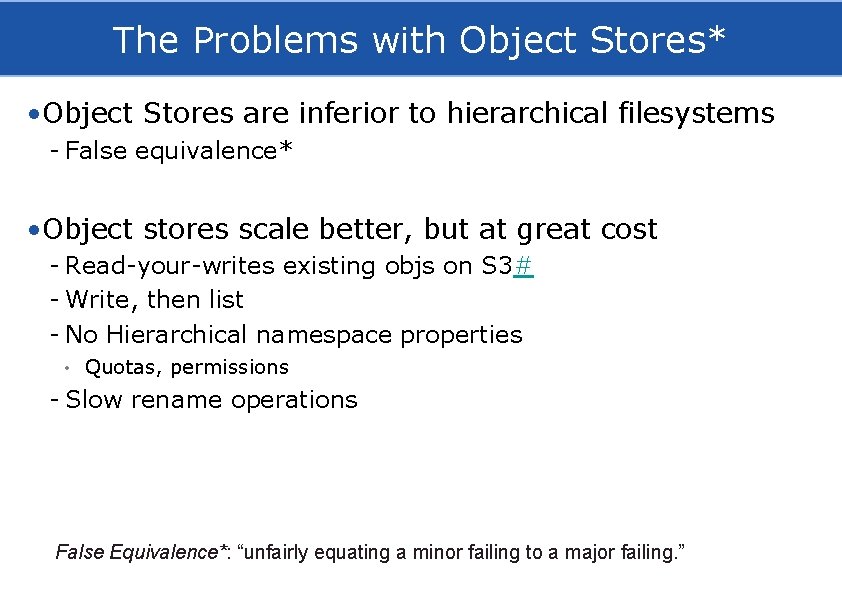

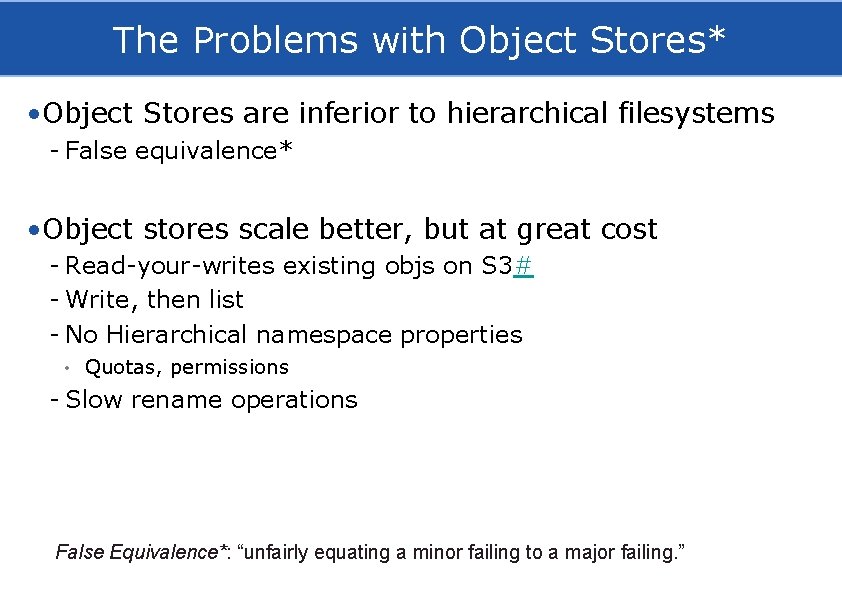

The Problems with Object Stores*

- Object stores are inferior to hierarchical filesystems
  - False equivalence*
- Object stores scale better, but at great cost:
  - No read-your-writes for existing (overwritten) objects on S3#
  - Write, then list: a new object may not appear in an immediately following listing
  - No hierarchical namespace properties (quotas, permissions)
  - Slow rename operations

*False equivalence: "unfairly equating a minor failing to a major failing."
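To make "slow rename operations" concrete, here is a minimal sketch assuming a flat key-value object store with no real directories. `FlatObjectStore` and its methods are hypothetical and are not the S3 or GCS API; the point is only that renaming a "directory" costs a copy and a delete per object, and the sequence is not atomic.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical flat object store: keys are full paths and there are no real directories.
public class FlatObjectStore {
    private final Map<String, byte[]> objects = new TreeMap<>();
    private int requests = 0; // simulated remote API calls

    public void put(String key, byte[] data) {
        requests++;
        objects.put(key, data);
    }

    public boolean exists(String key) {
        return objects.containsKey(key);
    }

    public int requests() {
        return requests;
    }

    // "Renaming a directory": LIST the prefix, then COPY and DELETE every object under it.
    // The work grows with the number of objects, and a crash part-way leaves both prefixes populated.
    public void renamePrefix(String from, String to) {
        requests++; // LIST
        List<String> keys = new ArrayList<>();
        for (String k : objects.keySet()) {
            if (k.startsWith(from)) {
                keys.add(k);
            }
        }
        for (String k : keys) {
            objects.put(to + k.substring(from.length()), objects.get(k)); // COPY
            objects.remove(k);                                            // DELETE
            requests += 2;
        }
    }

    public static void main(String[] args) {
        FlatObjectStore store = new FlatObjectStore();
        for (int i = 0; i < 1000; i++) {
            store.put("logs/2018/part-" + i, new byte[0]);
        }
        int before = store.requests();
        store.renamePrefix("logs/", "archive/");
        System.out.println("requests for one rename: " + (store.requests() - before)); // 2001
    }
}
```

In a hierarchical filesystem such as HDFS, the same rename is a single metadata update, independent of how many files sit under the directory.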

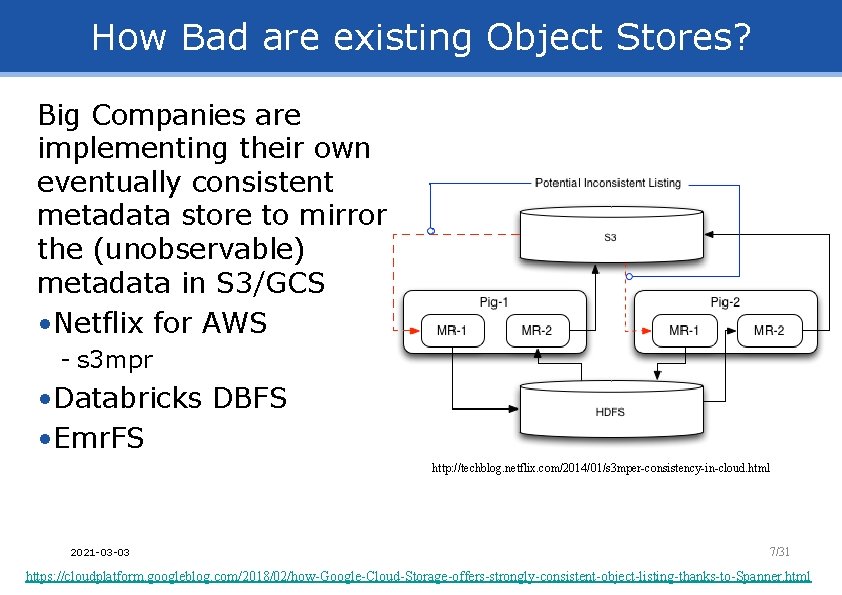

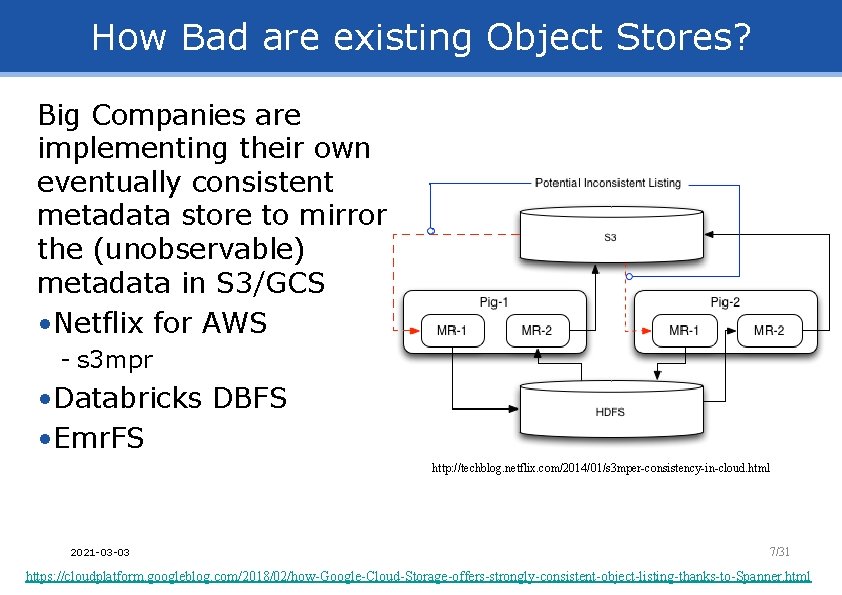

How Bad Are Existing Object Stores?

Big companies are implementing their own eventually consistent metadata stores to mirror the (unobservable) metadata in S3/GCS:

- Netflix for AWS: s3mper
- Databricks DBFS
- EMRFS

http://techblog.netflix.com/2014/01/s3mper-consistency-in-cloud.html
https://cloudplatform.googleblog.com/2018/02/how-Google-Cloud-Storage-offers-strongly-consistent-object-listing-thanks-to-Spanner.html

HDFS: A POSIX-Style Distributed FS

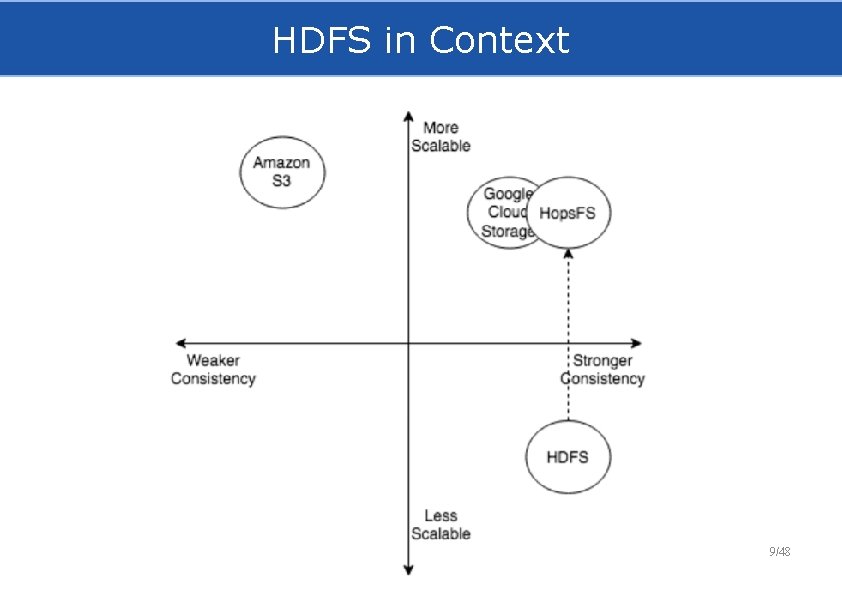

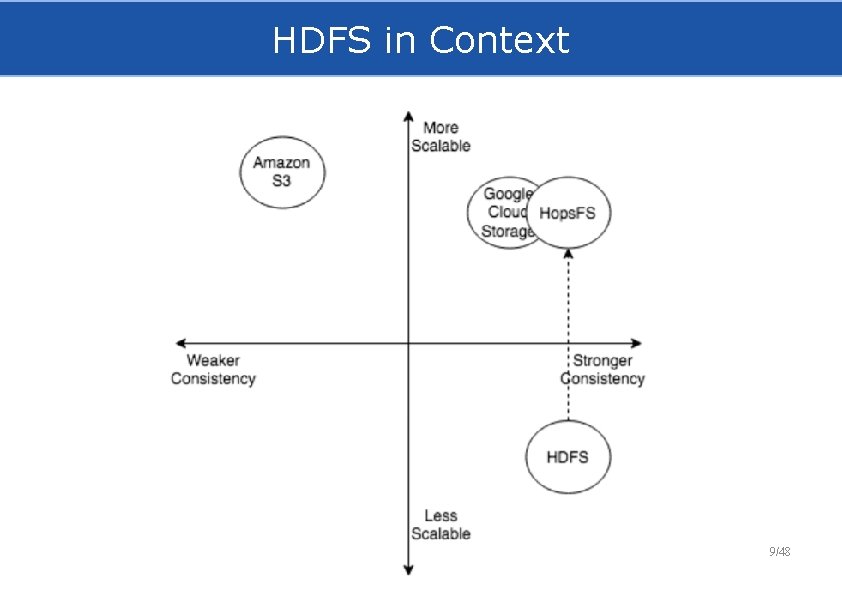

HDFS in Context

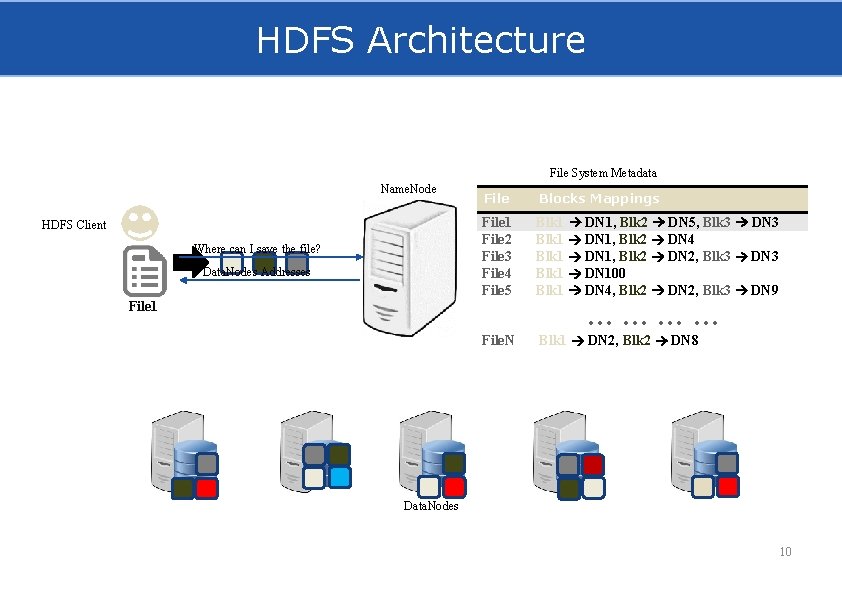

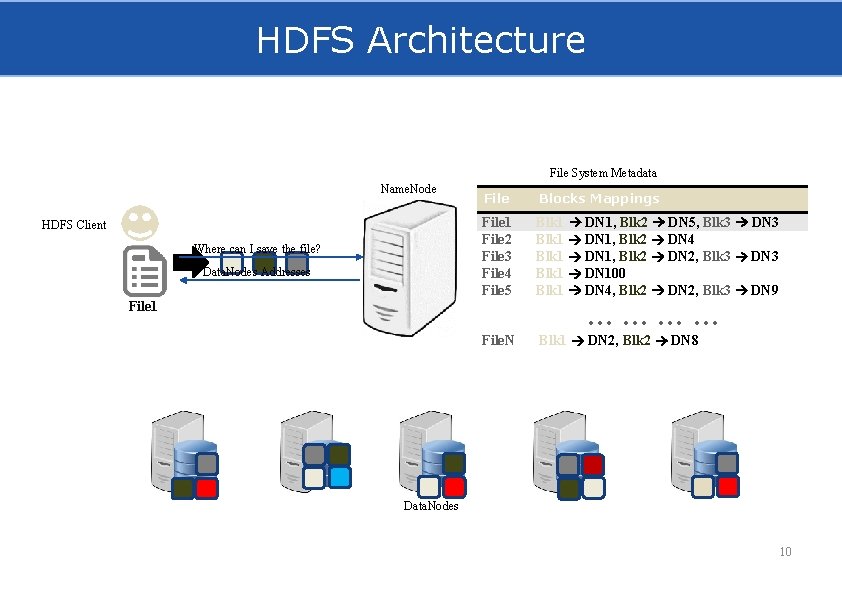

HDFS Architecture

The HDFS client asks the NameNode "Where can I save the file?" and gets back DataNode addresses. The NameNode holds the file system metadata, including the mapping from files to blocks:

| File | Blocks (block → DataNode) |
|---|---|
| File 1 | Blk 1 → DN 1, Blk 2 → DN 5, Blk 3 → DN 3 |
| File 2 | Blk 1 → DN 1, Blk 2 → DN 4 |
| File 3 | Blk 1 → DN 1, Blk 2 → DN 2, Blk 3 → DN 3 |
| File 4 | Blk 1 → DN 100 |
| File 5 | Blk 1 → DN 4, Blk 2 → DN 2, Blk 3 → DN 9 |
| … | … |
| File N | Blk 1 → DN 2, Blk 2 → DN 8 |
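The file-to-block table above can be sketched as an in-memory map, which is roughly why the namespace is bounded by the NameNode's heap. `BlockMap`, `addBlock` and `locate` are hypothetical names, not HDFS classes. Like the slide, the sketch records one DataNode per block, whereas real HDFS keeps several replicas. It uses a record, so it needs Java 16+.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory NameNode metadata: file -> ordered list of (block index, DataNode).
public class BlockMap {
    public record BlockLocation(int blockIndex, String dataNode) {}

    private final Map<String, List<BlockLocation>> files = new HashMap<>();

    // Appends the next block of a file and records which DataNode stores it.
    public void addBlock(String file, String dataNode) {
        List<BlockLocation> blocks = files.computeIfAbsent(file, f -> new ArrayList<>());
        blocks.add(new BlockLocation(blocks.size() + 1, dataNode));
    }

    // What a client receives when it asks where the blocks of a file live.
    public List<BlockLocation> locate(String file) {
        return files.getOrDefault(file, List.of());
    }

    public static void main(String[] args) {
        BlockMap nameNode = new BlockMap();
        nameNode.addBlock("File 1", "DN 1");
        nameNode.addBlock("File 1", "DN 5");
        nameNode.addBlock("File 1", "DN 3");
        nameNode.addBlock("File 4", "DN 100");
        for (BlockLocation b : nameNode.locate("File 1")) {
            System.out.println("Blk " + b.blockIndex() + " -> " + b.dataNode());
        }
    }
}
```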

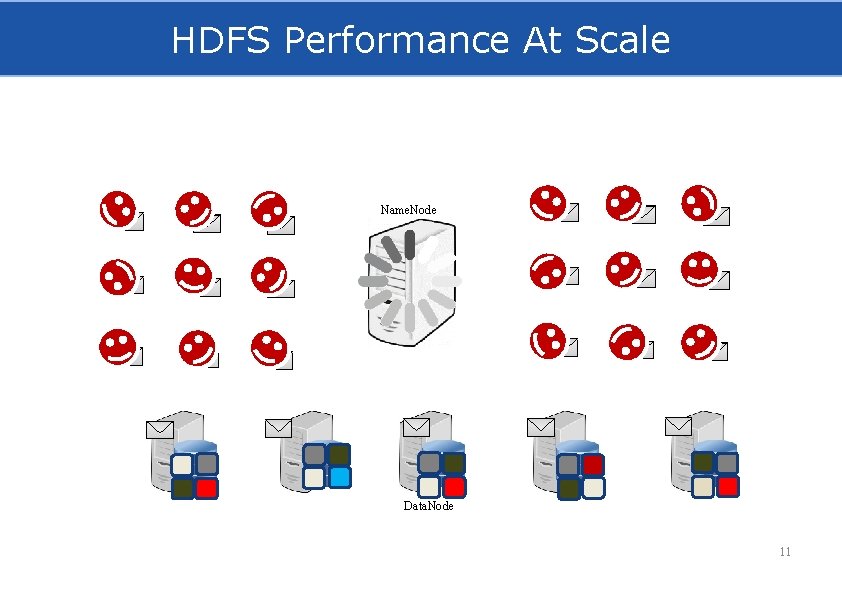

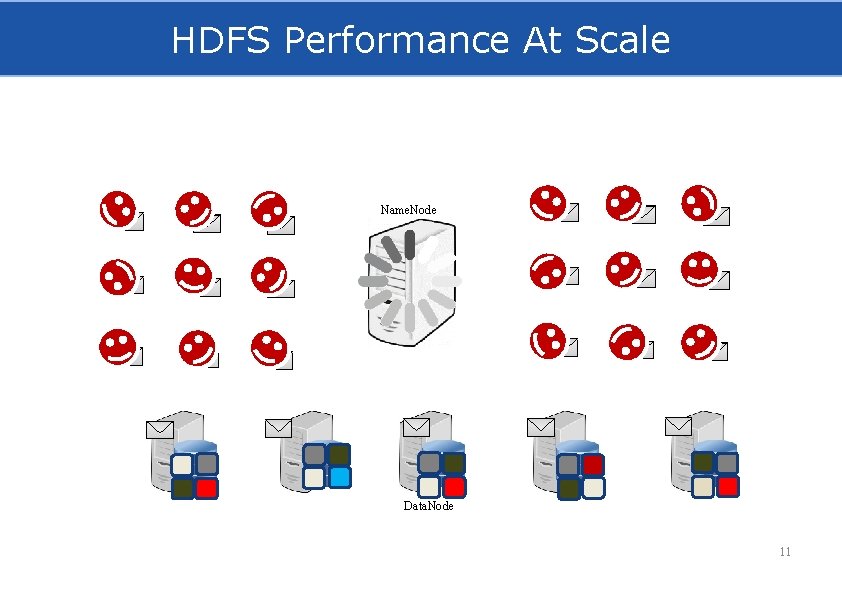

HDFS Performance at Scale [Diagram: NameNode, DataNodes]

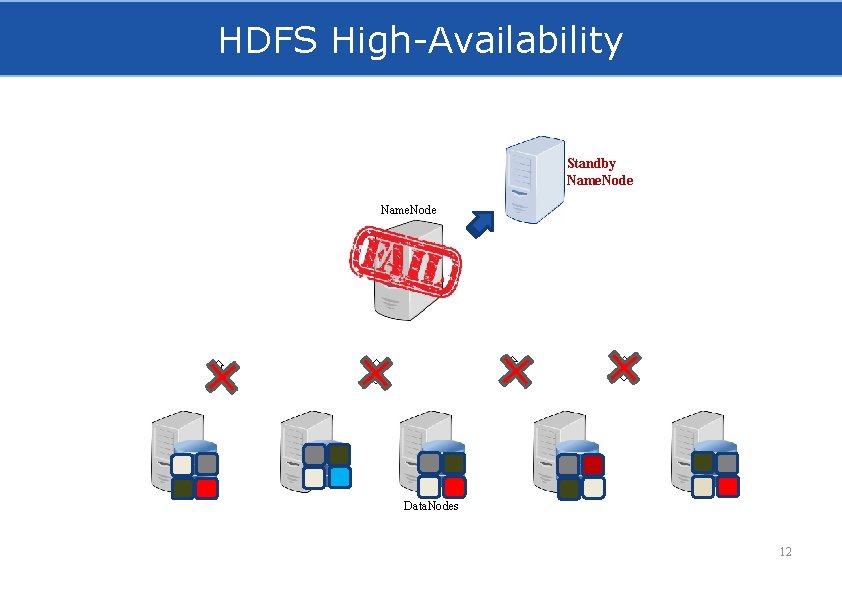

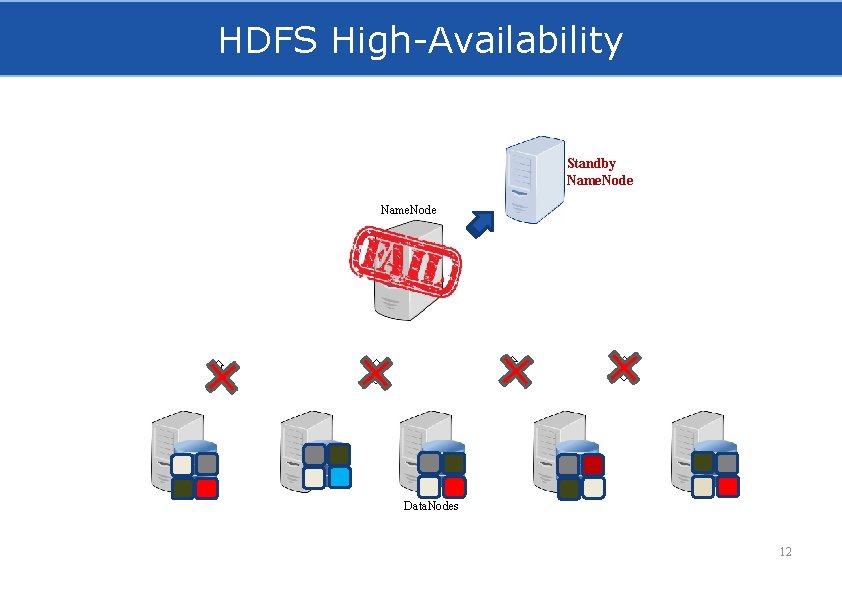

HDFS High-Availability [Diagram: Standby NameNode, DataNodes]

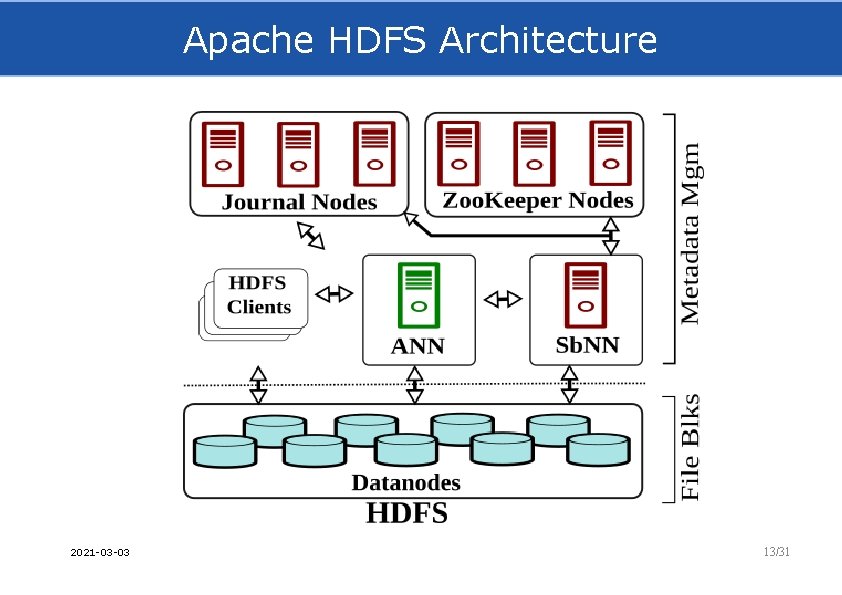

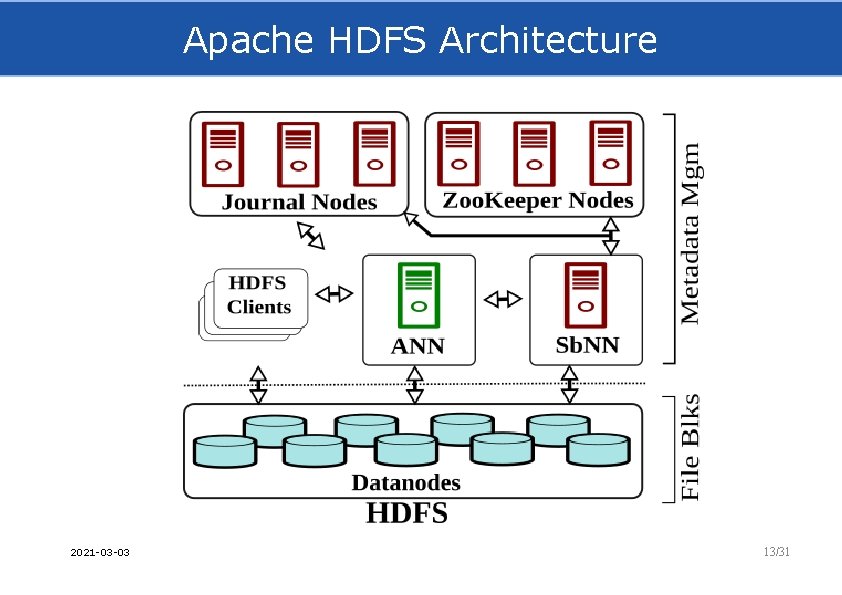

Apache HDFS Architecture

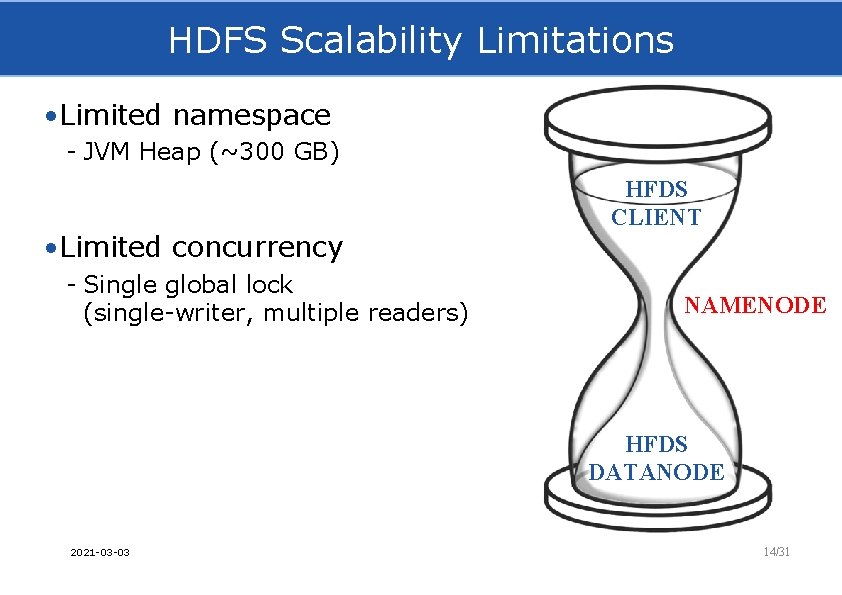

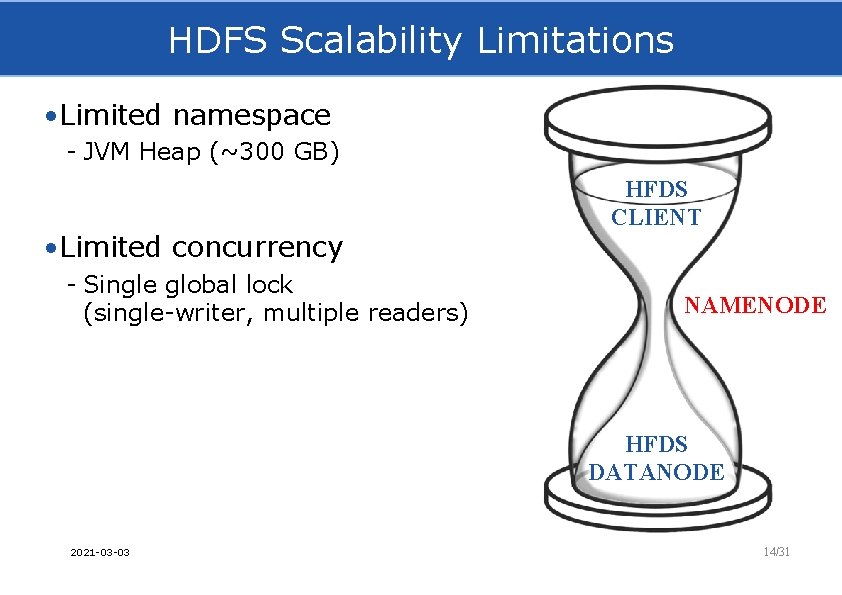

HDFS Scalability Limitations

- Limited namespace: bounded by the JVM heap (~300 GB)
- Limited concurrency: a single global lock (single writer, multiple readers)

[Diagram: HDFS client, NameNode, HDFS DataNode]
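What "single global lock (single writer, multiple readers)" means in practice can be sketched with `java.util.concurrent.locks.ReentrantReadWriteLock`. The class and method names are hypothetical and this is not the NameNode's code; it only shows that while any write holds the lock, even a read of an unrelated path has to wait.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Sketch of a namespace guarded by ONE read-write lock (hypothetical names).
public class GlobalLockNamespace {
    private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
    private final Map<String, Long> inodes = new HashMap<>(); // path -> file length

    public Long stat(String path) {
        lock.readLock().lock(); // any number of readers may hold the read lock together
        try {
            return inodes.get(path);
        } finally {
            lock.readLock().unlock();
        }
    }

    public void create(String path, long length) {
        lock.writeLock().lock(); // excludes every other operation, whatever path it touches
        try {
            inodes.put(path, length);
        } finally {
            lock.writeLock().unlock();
        }
    }

    // While one writer holds the lock, a reader of an UNRELATED path cannot proceed.
    public boolean writerBlocksUnrelatedReader() {
        lock.writeLock().lock(); // stands in for a slow write under /a
        try {
            Thread reader = new Thread(() -> stat("/b"));
            reader.start();
            reader.join(200);        // wait up to 200 ms for the reader
            return reader.isAlive(); // still alive: it is waiting for the read lock
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            lock.writeLock().unlock(); // lets the blocked reader finish
        }
    }

    public static void main(String[] args) {
        GlobalLockNamespace ns = new GlobalLockNamespace();
        ns.create("/a/file", 42L);
        System.out.println(ns.stat("/a/file"));                // 42
        System.out.println(ns.writerBlocksUnrelatedReader()); // true
    }
}
```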

The NameNode and the Tragedy of the Commons [Diagram: a single-host NameNode shared by everyone]

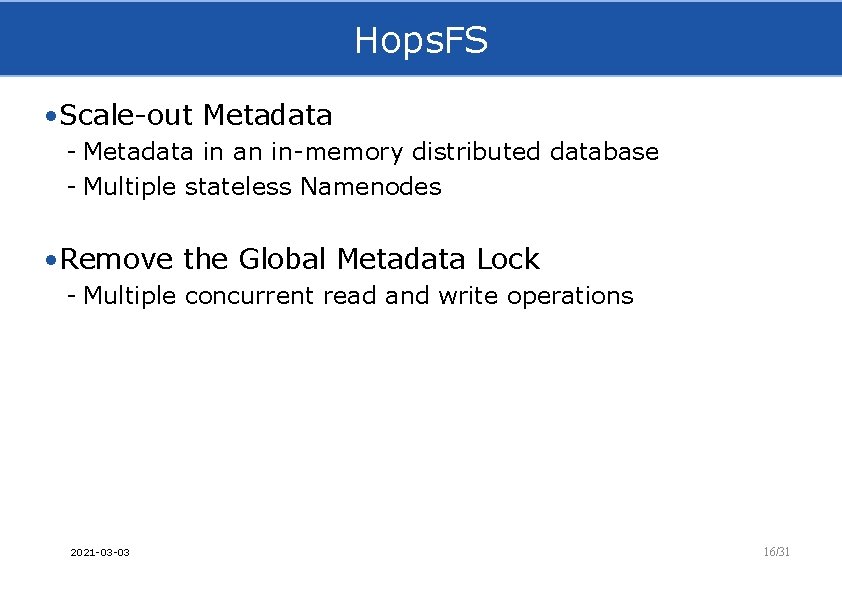

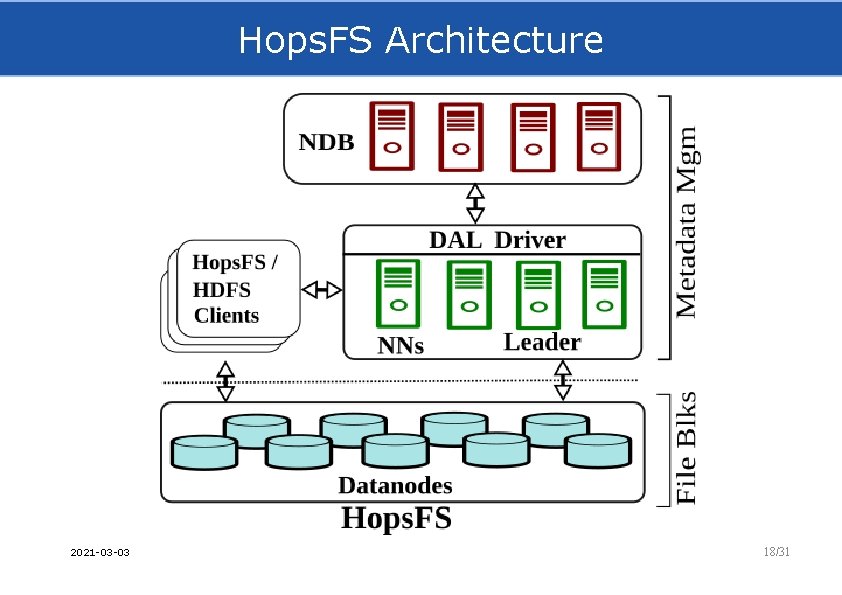

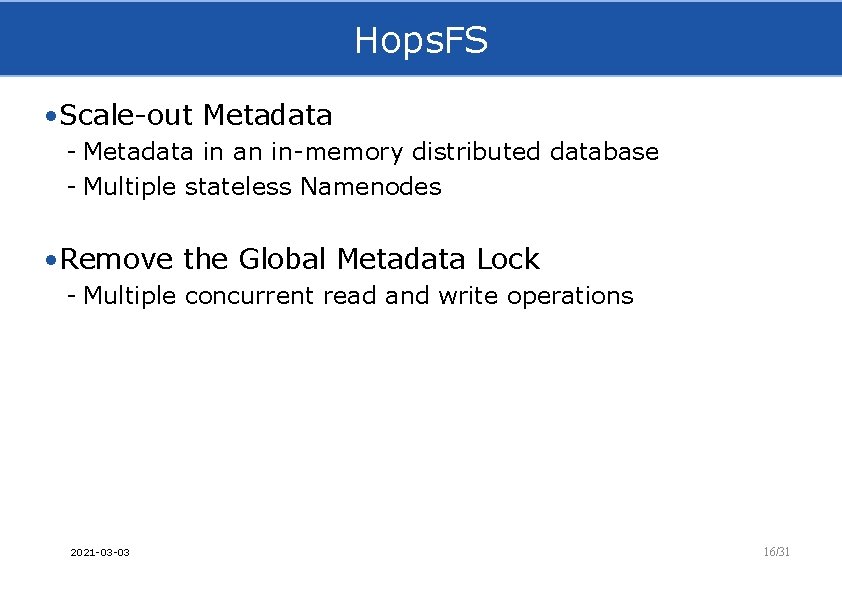

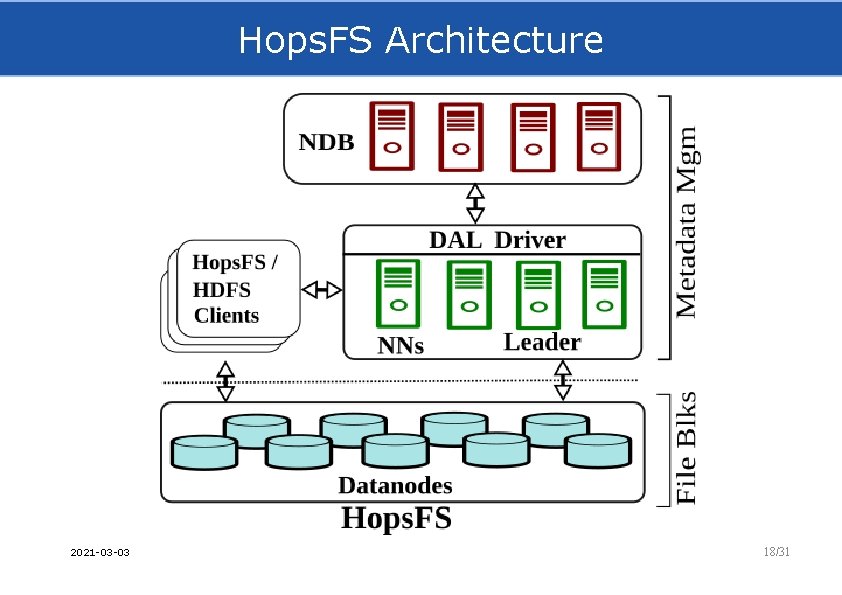

HopsFS

- Scale-out metadata
  - Metadata in an in-memory distributed database
  - Multiple stateless NameNodes
- Remove the global metadata lock
  - Multiple concurrent read and write operations

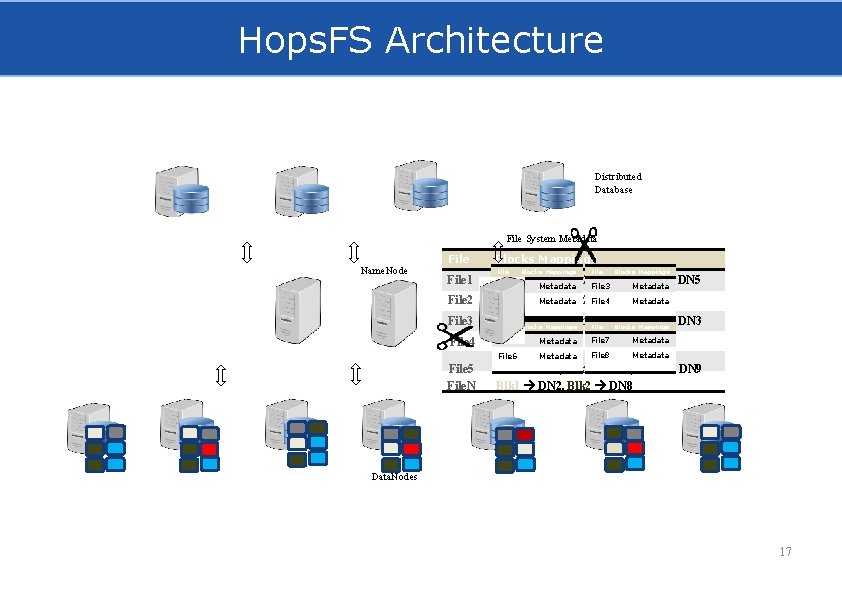

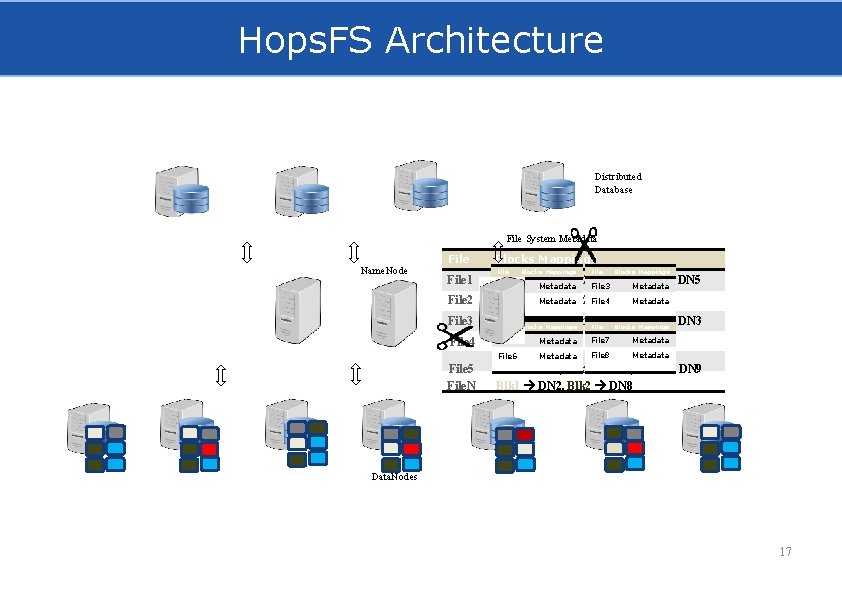

HopsFS Architecture [Diagram: multiple NameNodes; the file system metadata and the file-to-block mappings for File 1 … File N are partitioned across a distributed database; DataNodes store the blocks]

Hops. FS Architecture 2021 -03 -03 18/31

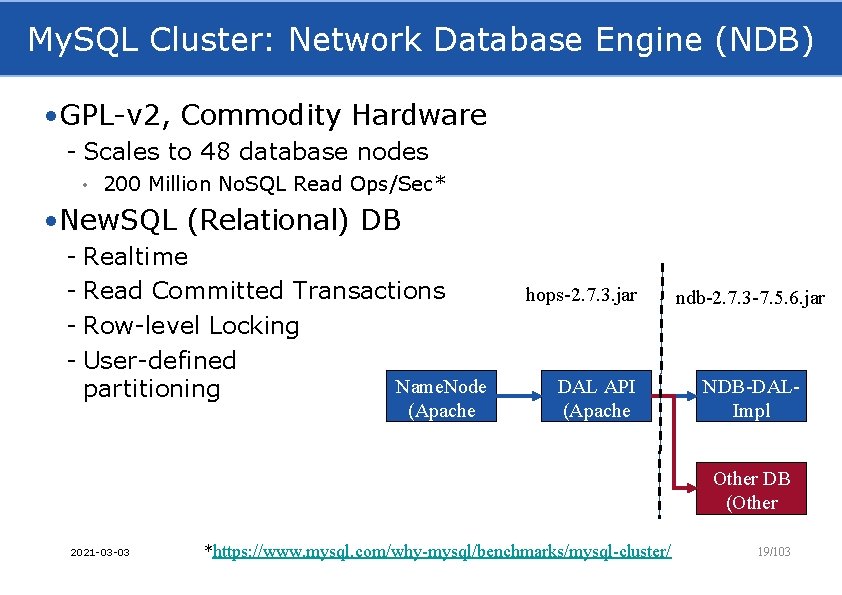

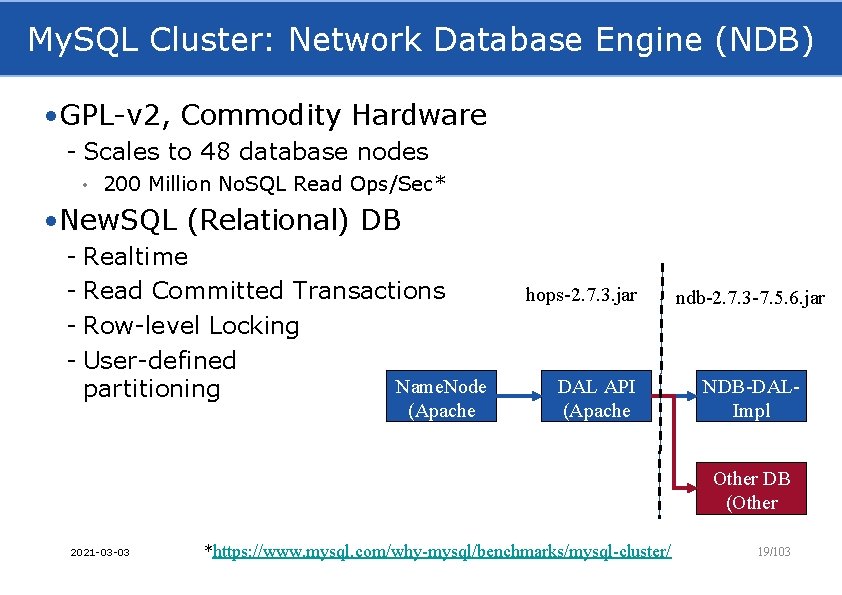

MySQL Cluster: Network Database Engine (NDB)

- GPL v2, commodity hardware
  - Scales to 48 database nodes
  - 200 million NoSQL read ops/sec*
- NewSQL (relational) DB
  - Real-time
  - Read-committed transactions
  - Row-level locking
  - User-defined partitioning

[Diagram: the NameNode (Apache v2, hops-2.7.3.jar) talks to the database through the DAL API (Apache v2), implemented either by NDB-DALImpl (GPL v2, ndb-2.7.3-7.5.6.jar) or by an implementation for another DB (other license)]

*https://www.mysql.com/why-mysql/benchmarks/mysql-cluster/
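The DAL layering keeps the Apache-licensed NameNode code separate from the GPL-licensed NDB driver. Below is a minimal sketch of that plug-in pattern, assuming the driver class is chosen by name at runtime. `InodeDataAccess`, `InMemoryInodeDataAccess` and `DalLoader` are hypothetical names, not the Hops DAL classes.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical DAL interface: the NameNode compiles only against this.
interface InodeDataAccess {
    void put(long inodeId, long parentId, String name);

    Optional<Long> lookup(long parentId, String name);
}

// Hypothetical driver standing in for a database-specific implementation;
// in practice it would ship in its own jar under its own license.
class InMemoryInodeDataAccess implements InodeDataAccess {
    private final Map<String, Long> byParentAndName = new HashMap<>();

    @Override
    public void put(long inodeId, long parentId, String name) {
        byParentAndName.put(parentId + "/" + name, inodeId);
    }

    @Override
    public Optional<Long> lookup(long parentId, String name) {
        return Optional.ofNullable(byParentAndName.get(parentId + "/" + name));
    }
}

public class DalLoader {
    // The driver class name would come from configuration, so switching databases
    // means switching jars, not recompiling the NameNode.
    public static InodeDataAccess load(String driverClassName) {
        try {
            return (InodeDataAccess) Class.forName(driverClassName).getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("cannot load DAL driver " + driverClassName, e);
        }
    }

    public static void main(String[] args) {
        InodeDataAccess dal = load(InMemoryInodeDataAccess.class.getName());
        dal.put(1L, 0L, "user");
        System.out.println(dal.lookup(0L, "user").orElse(-1L)); // 1
    }
}
```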

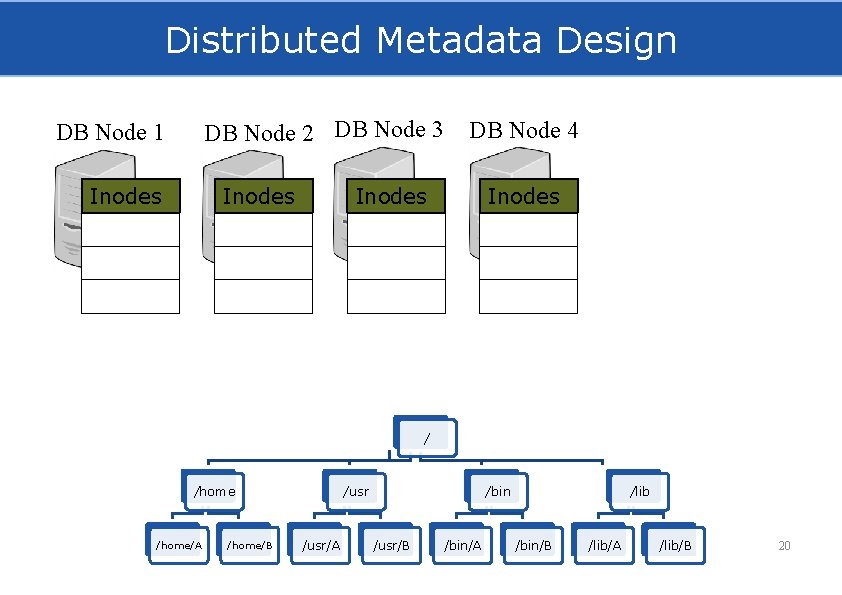

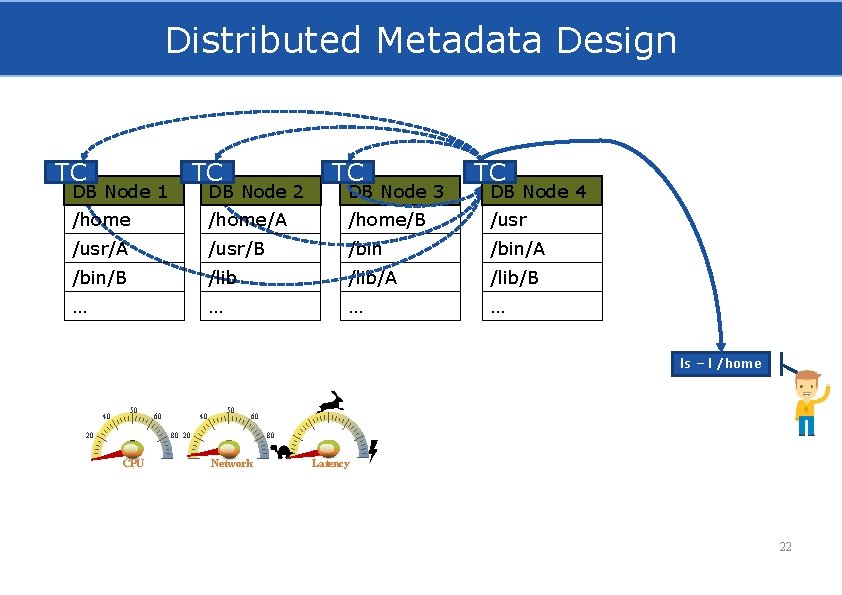

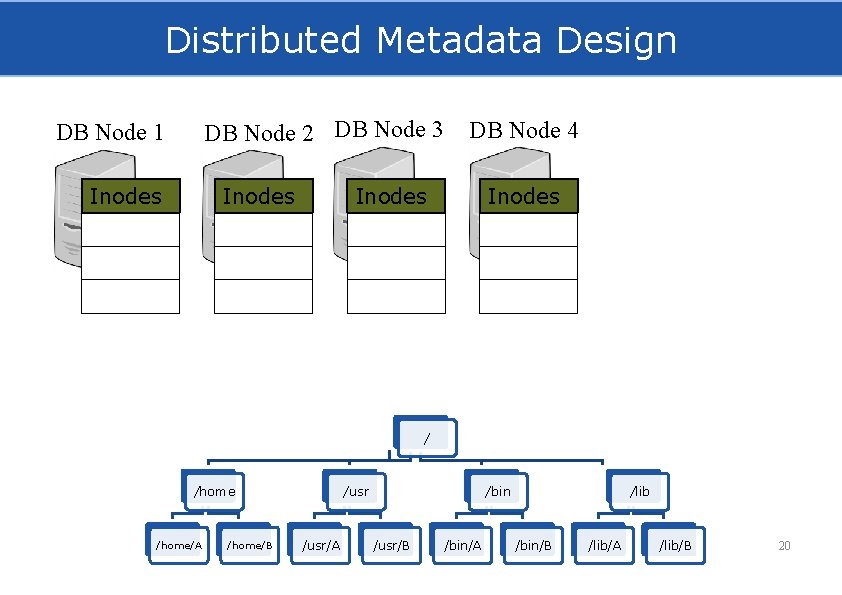

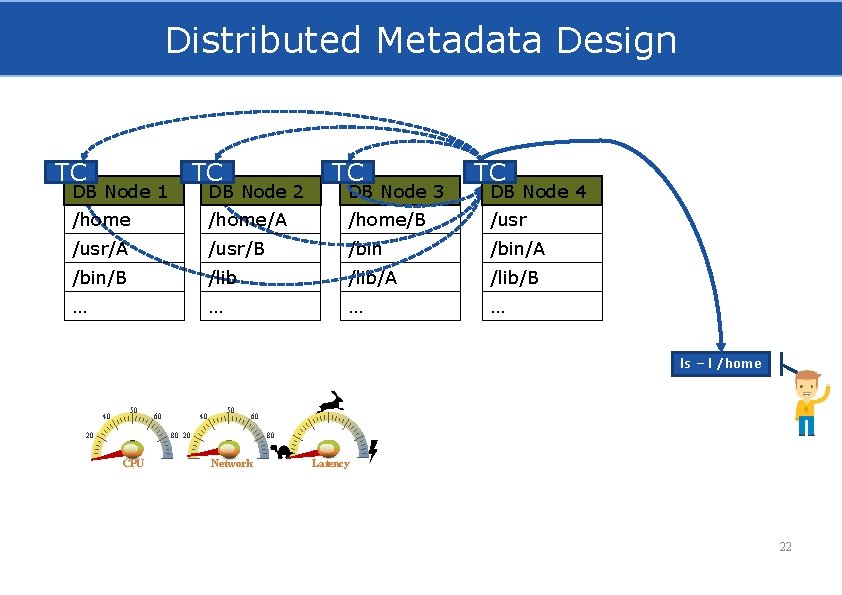

Distributed Metadata Design [Diagram: the Inodes table (/, /home/A, /home/B, /usr/A, /bin, /usr/B, /bin/A, /lib, /bin/B, /lib/A, /lib/B) spread across DB Nodes 1 to 4]

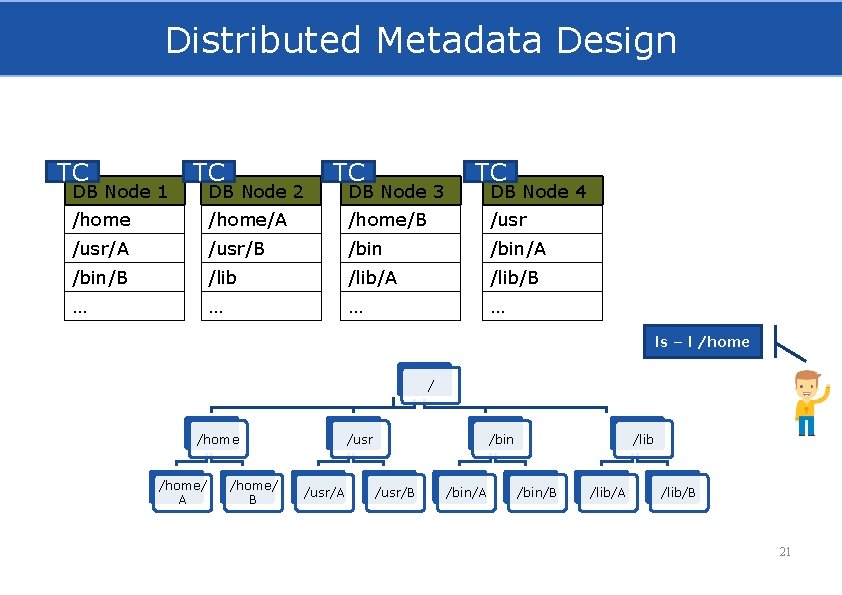

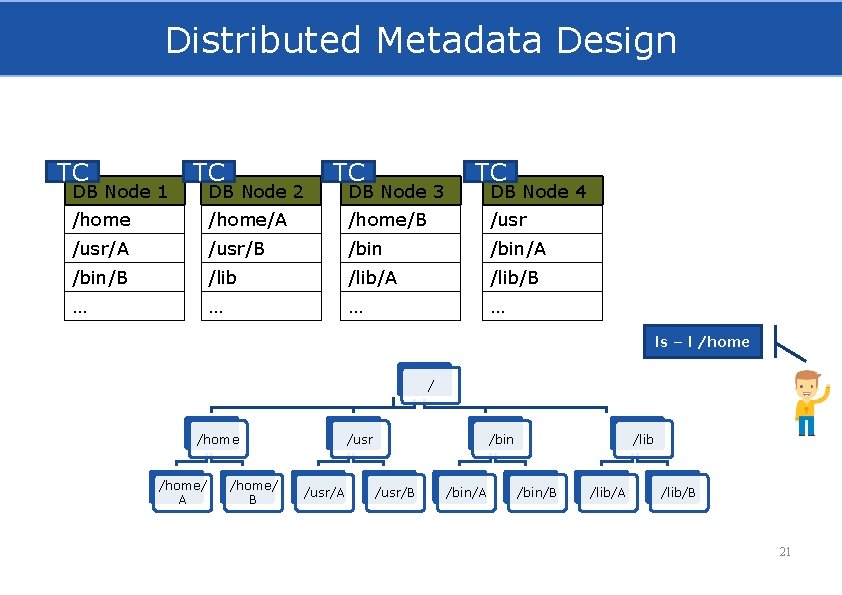

Distributed Metadata Design (contd.) [Diagram: each DB node runs a Transaction Coordinator (TC); the inodes /home/A, /home/B, /usr/A, /usr/B, /bin/A, /bin/B, /lib/A, /lib/B, … are spread across DB Nodes 1 to 4; a client runs `ls -l /home`]

Distributed Metadata Design (contd.) [Diagram: `ls -l /home` against the same layout, with gauges for CPU and network latency]

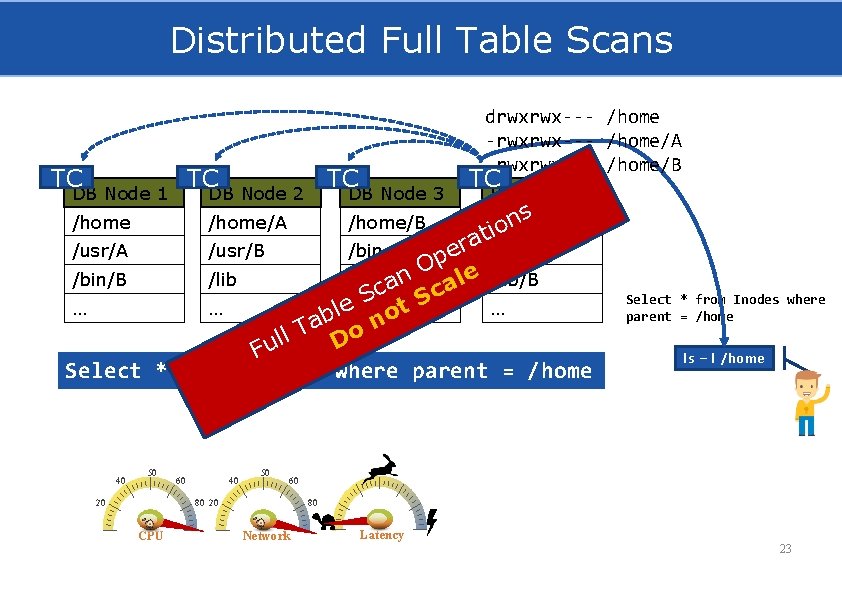

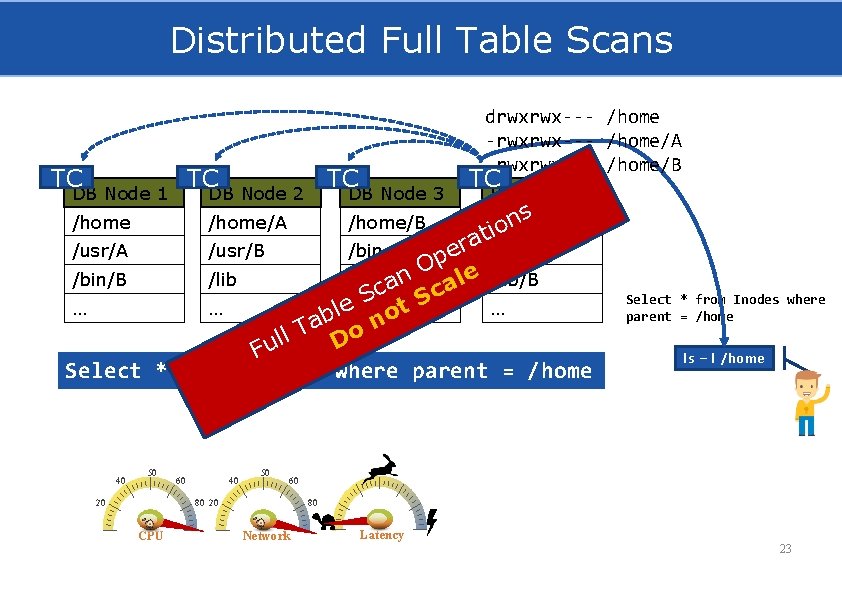

Distributed Full Table Scans

`ls -l /home` becomes `Select * from Inodes where parent = /home`, which runs as a distributed full table scan operation on every DB node. Result: `drwxrwx--- /home`, `-rwxrwx--- /home/A`, `-rwxrwx--- /home/B`. [Gauges: CPU and network latency]

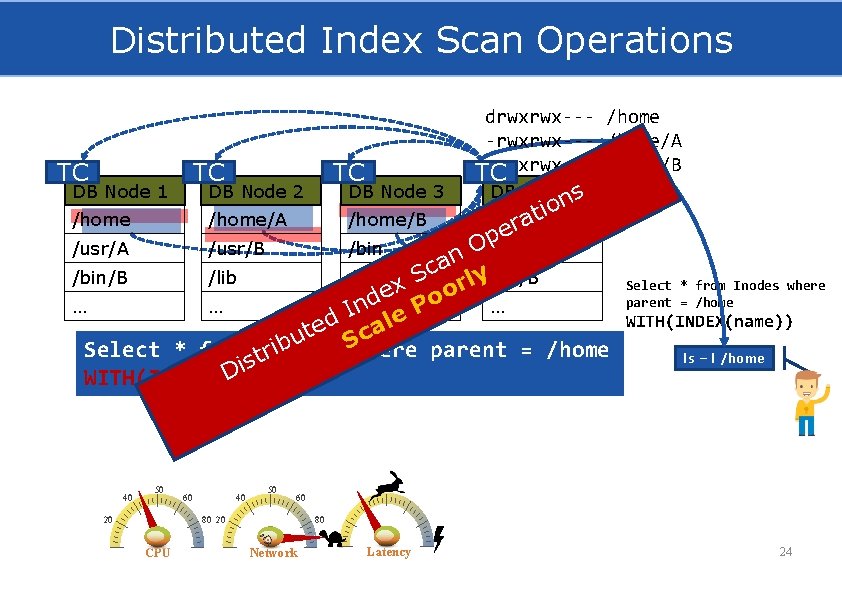

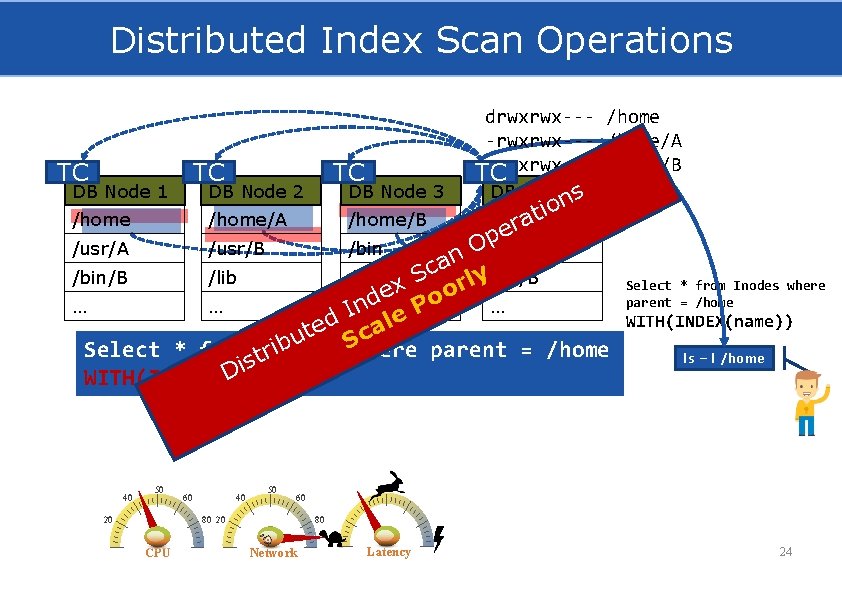

Distributed Index Scan Operations

`ls -l /home` becomes `Select * from Inodes where parent = /home WITH(INDEX(name))`: an index scan, but still distributed across all DB nodes, so it scales poorly. Result: `drwxrwx--- /home`, `-rwxrwx--- /home/A`, `-rwxrwx--- /home/B`. [Gauges: CPU and network latency]

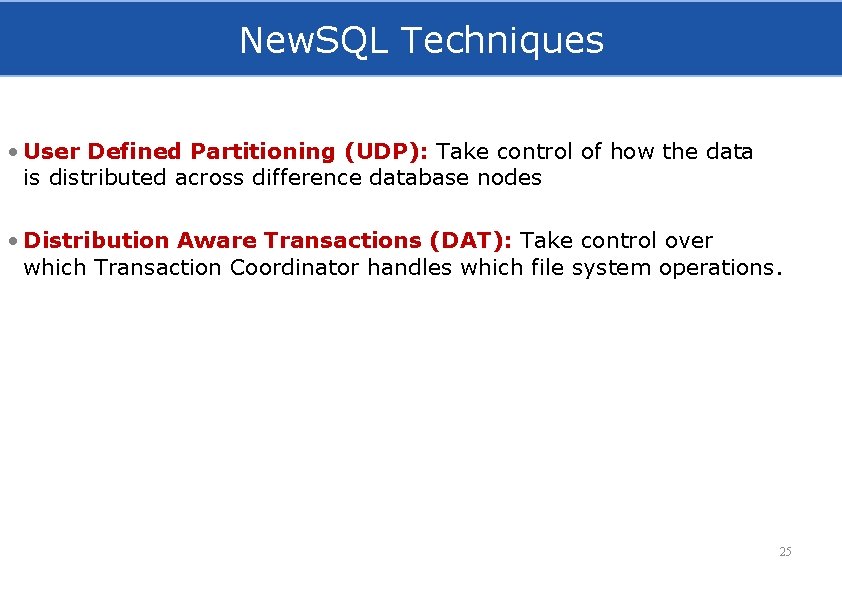

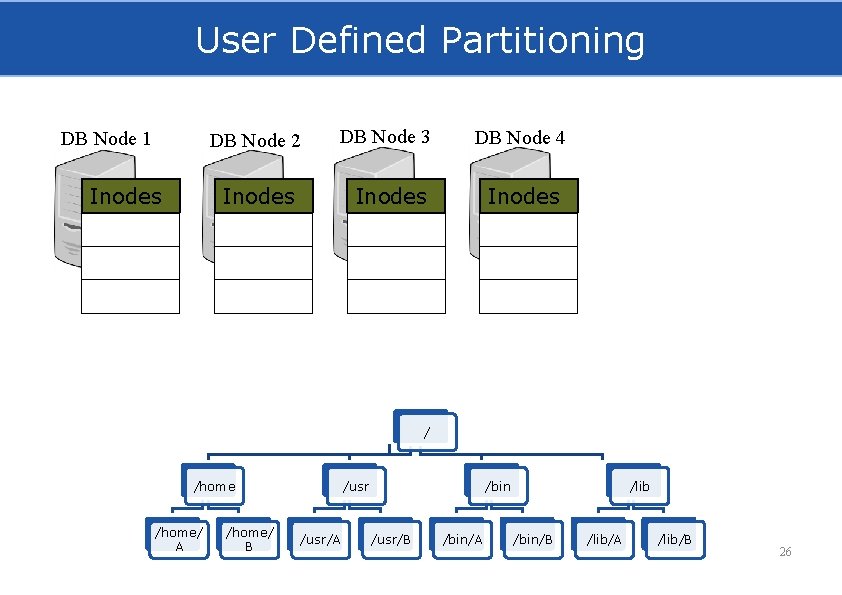

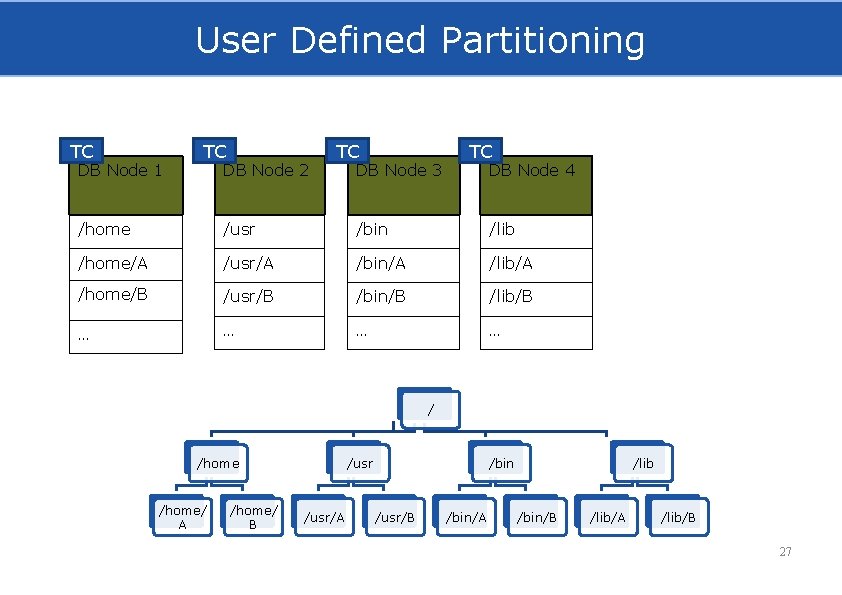

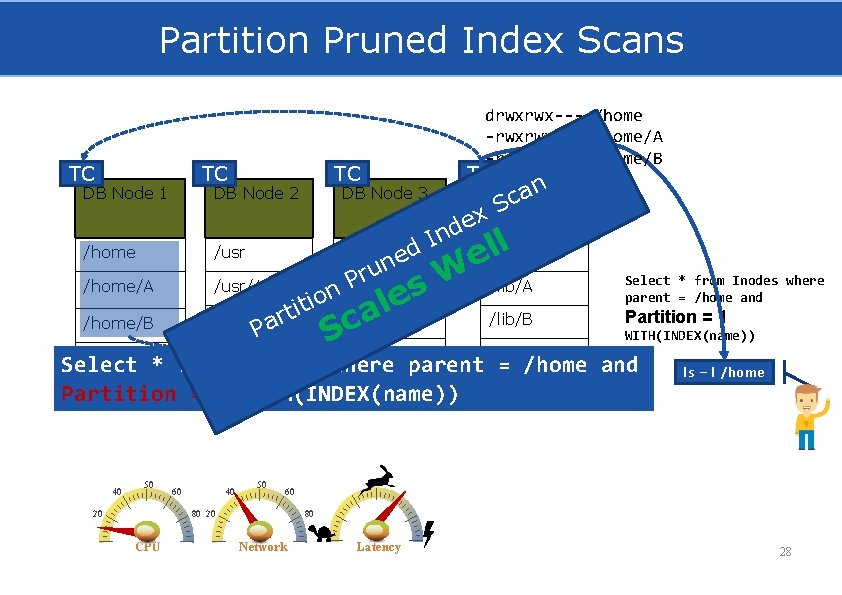

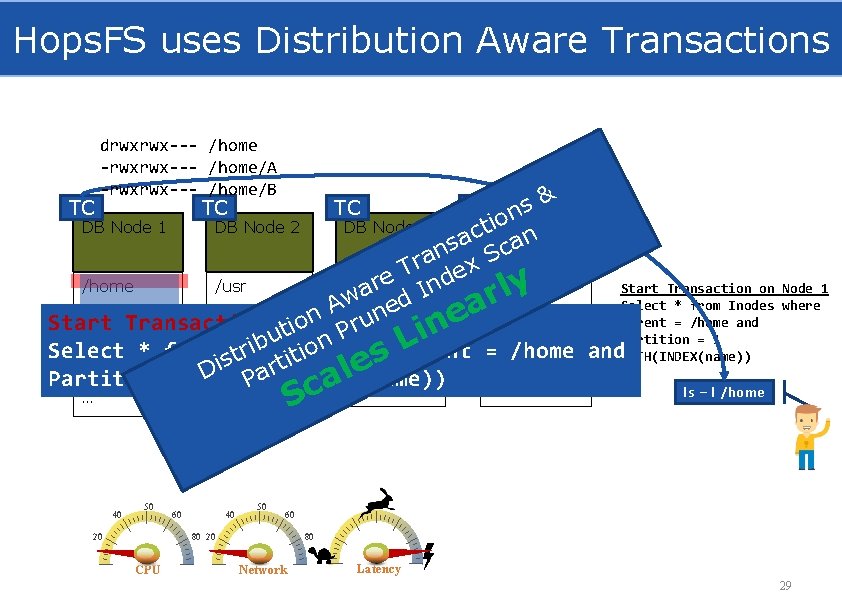

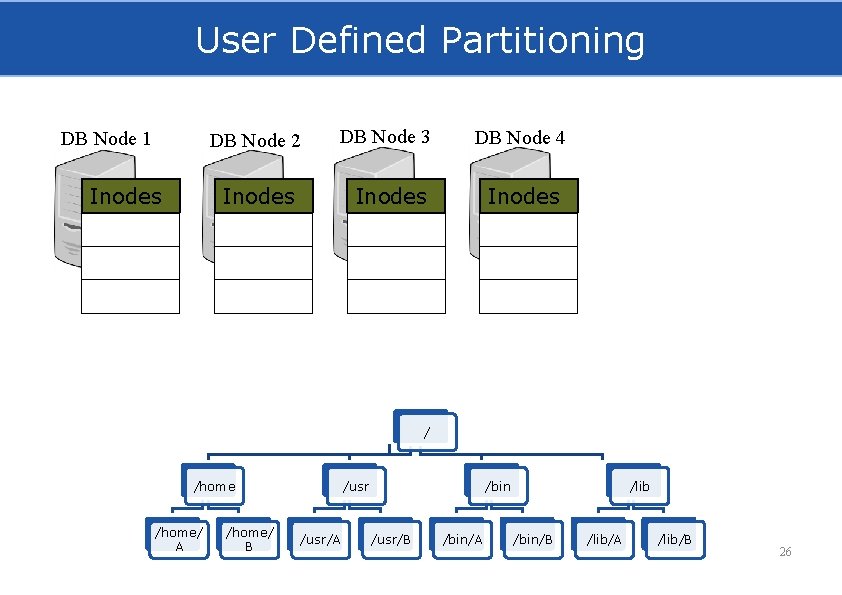

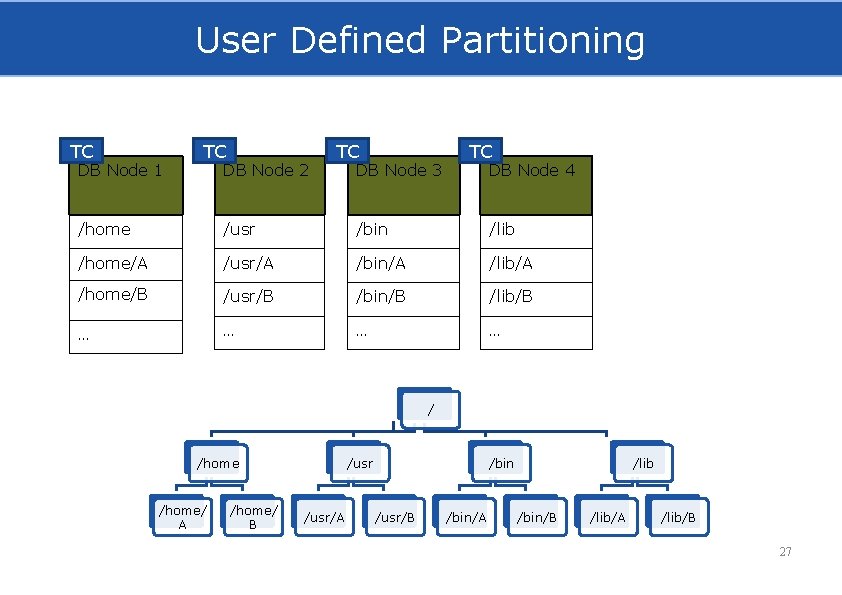

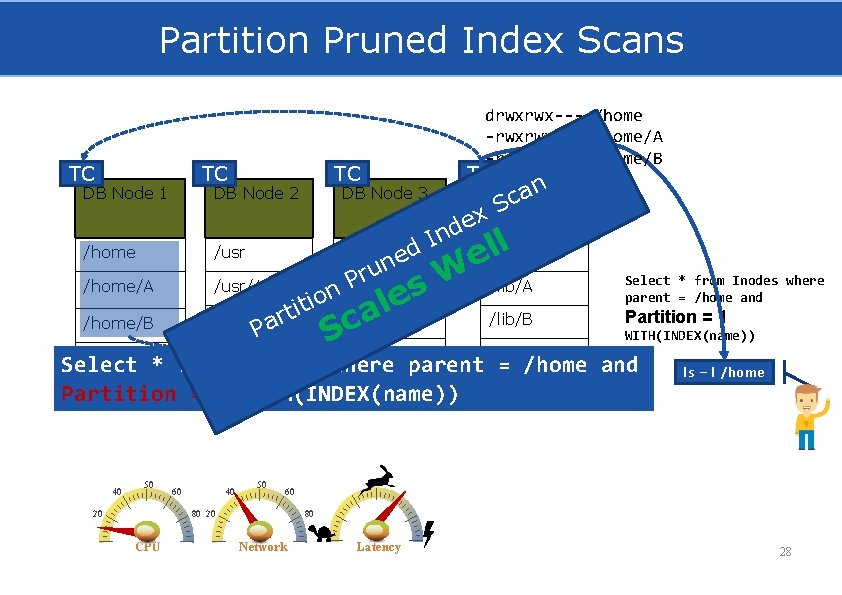

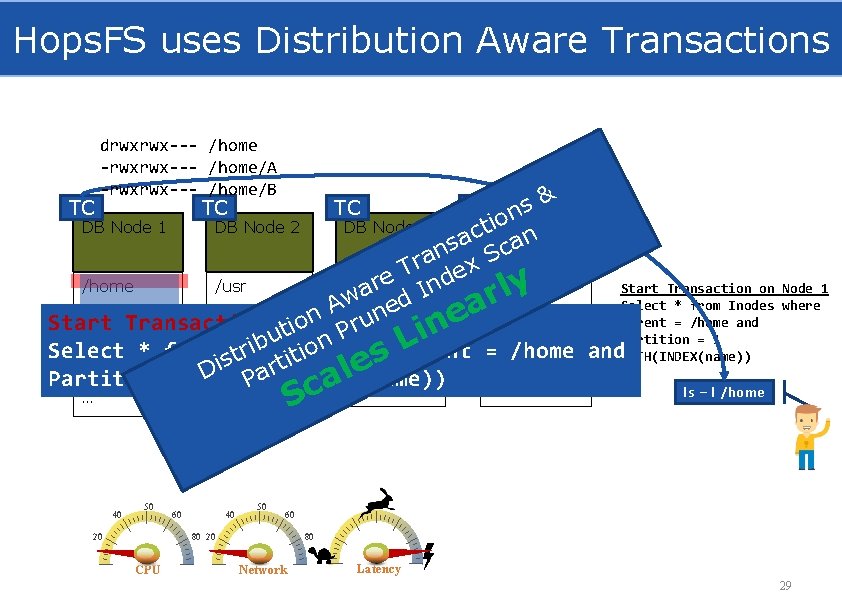

NewSQL Techniques

- User Defined Partitioning (UDP): take control of how the data is distributed across different database nodes.
- Distribution Aware Transactions (DAT): take control over which Transaction Coordinator handles which file system operations.

User Defined Partitioning [Diagram: the Inodes table (/, /home/A, /home/B, /usr/A, /bin, /usr/B, /bin/A, /lib, /bin/B, /lib/A, /lib/B) across DB Nodes 1 to 4]

User Defined Partitioning (contd.) [Diagram: inodes grouped by directory, one group per DB node, each with its own TC: DB Node 1 holds /home, /home/A, /home/B, …; DB Node 2 holds /usr, /usr/A, /usr/B, …; DB Node 3 holds /bin, /bin/A, /bin/B, …; DB Node 4 holds /lib, /lib/A, /lib/B, …]

Partition Pruned Index Scans

With user defined partitioning, `ls -l /home` becomes `Select * from Inodes where parent = /home and Partition = 1 WITH(INDEX(name))`, a partition pruned index scan that only touches the partition holding the children of /home. Result: `drwxrwx--- /home`, `-rwxrwx--- /home/A`, `-rwxrwx--- /home/B`. [Gauges: CPU and network latency]
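The gain from partition pruning can be sketched by counting how many DB nodes a directory listing has to contact. The sketch assumes inodes are placed by their parent inode id, so all children of a directory share one partition. `PartitionedInodes` and its methods are hypothetical; in HopsFS the database does the real partitioning. It uses records, so it needs Java 16+.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch of user-defined partitioning: an inode row lives on the DB node chosen
// from its PARENT inode id, so all children of one directory share a partition.
public class PartitionedInodes {
    public record Inode(long id, long parentId, String name) {}

    public record ScanResult(List<Inode> rows, int dbNodesContacted) {}

    private final List<List<Inode>> dbNodes = new ArrayList<>();

    public PartitionedInodes(int numDbNodes) {
        for (int i = 0; i < numDbNodes; i++) {
            dbNodes.add(new ArrayList<>());
        }
    }

    public int partitionOf(long parentId) {
        return (int) Math.floorMod(parentId, (long) dbNodes.size());
    }

    public void insert(Inode inode) {
        dbNodes.get(partitionOf(inode.parentId())).add(inode);
    }

    // Without the partition key, every DB node has to scan for matching rows.
    public ScanResult listFullScan(long parentId) {
        List<Inode> rows = new ArrayList<>();
        for (List<Inode> node : dbNodes) {
            for (Inode i : node) {
                if (i.parentId() == parentId) rows.add(i);
            }
        }
        return new ScanResult(rows, dbNodes.size());
    }

    // With the partition key, only the node that owns the parent's partition is asked.
    public ScanResult listPrunedScan(long parentId) {
        List<Inode> rows = new ArrayList<>();
        for (Inode i : dbNodes.get(partitionOf(parentId))) {
            if (i.parentId() == parentId) rows.add(i);
        }
        return new ScanResult(rows, 1);
    }

    public static void main(String[] args) {
        PartitionedInodes db = new PartitionedInodes(4);
        db.insert(new Inode(2, 1, "home"));
        db.insert(new Inode(3, 2, "A")); // /home/A
        db.insert(new Inode(4, 2, "B")); // /home/B
        db.insert(new Inode(5, 1, "usr"));
        db.insert(new Inode(6, 5, "A")); // /usr/A
        System.out.println(db.listFullScan(2).dbNodesContacted());   // 4
        System.out.println(db.listPrunedScan(2).dbNodesContacted()); // 1
    }
}
```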

HopsFS uses Distribution Aware Transactions

The transaction is started on the DB node that holds the data (`Start Transaction on Node 1`). `Select * from Inodes where parent = /home and Partition = 1 WITH(INDEX(name))` then runs as a partition pruned index scan through that node's Transaction Coordinator. [Gauges: CPU and network latency]
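The benefit of choosing the Transaction Coordinator (TC) can be sketched with a deliberately simple cost model. In this model, each operation pays one extra network hop whenever its TC is not on the node that stores the rows it touches. The placement rule (children live on the node chosen by the parent inode id) and all names are hypothetical assumptions.

```java
// Sketch of distribution-aware transactions (hypothetical names and cost model).
public class DistributionAwareTx {
    private final int numDbNodes;

    public DistributionAwareTx(int numDbNodes) {
        this.numDbNodes = numDbNodes;
    }

    // Assumed placement rule: children of a directory live on the node chosen by the parent's id.
    public int partitionOf(long parentId) {
        return (int) Math.floorMod(parentId, (long) numDbNodes);
    }

    // Without a hint, TCs are picked round-robin and often sit on the wrong node.
    public int tcRoundRobin(int txNumber) {
        return txNumber % numDbNodes;
    }

    // With a hint (the partition key of the rows to be touched), pick the co-located TC.
    public int tcWithHint(long parentId) {
        return partitionOf(parentId);
    }

    // One extra network hop whenever the TC is not on the node holding the data.
    public int extraHops(int tcNode, long parentId) {
        return tcNode == partitionOf(parentId) ? 0 : 1;
    }

    public static void main(String[] args) {
        DistributionAwareTx dat = new DistributionAwareTx(4);
        long home = 1L; // inode id of the directory being listed
        int roundRobinHops = 0;
        int hintedHops = 0;
        for (int tx = 0; tx < 4; tx++) {
            roundRobinHops += dat.extraHops(dat.tcRoundRobin(tx), home);
            hintedHops += dat.extraHops(dat.tcWithHint(home), home);
        }
        System.out.println(roundRobinHops + " vs " + hintedHops); // 3 vs 0
    }
}
```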

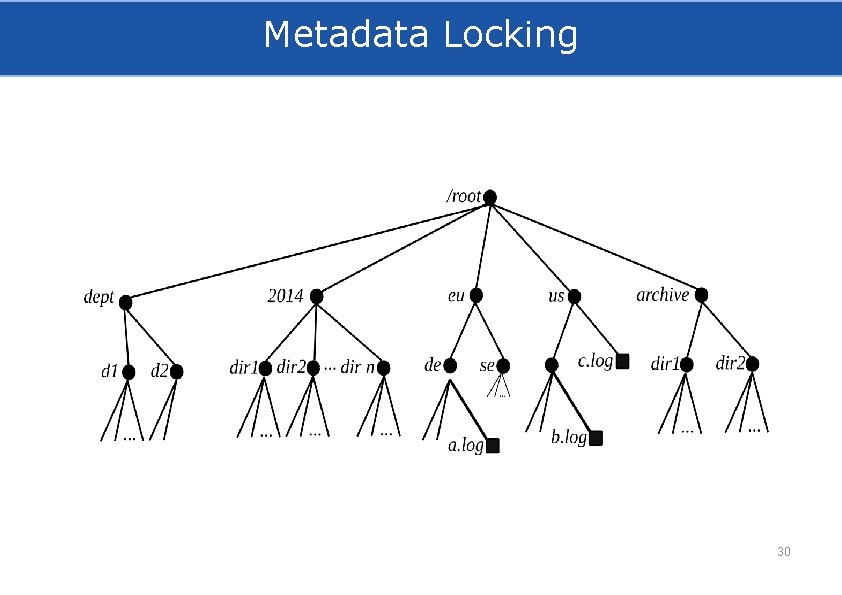

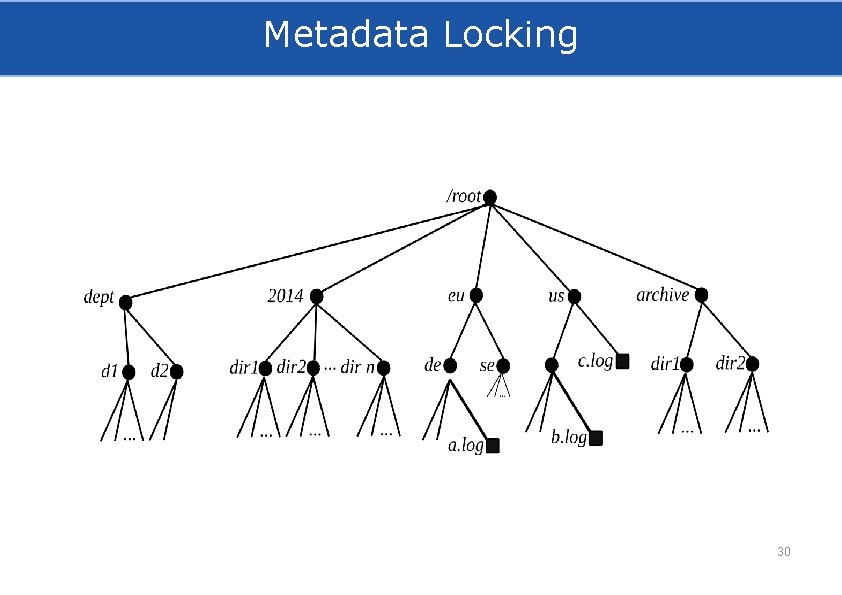

Metadata Locking

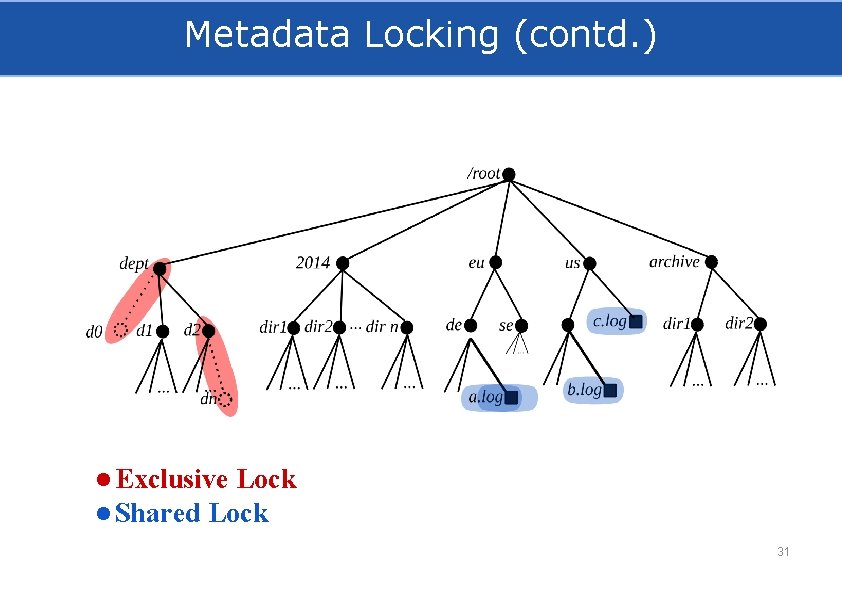

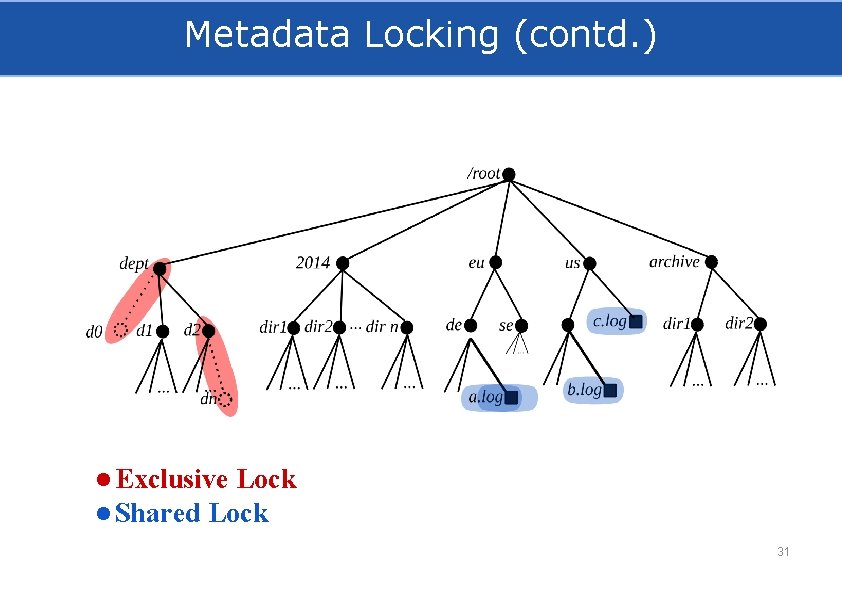

Metadata Locking (contd. ) ● Exclusive Lock ● Shared Lock 31

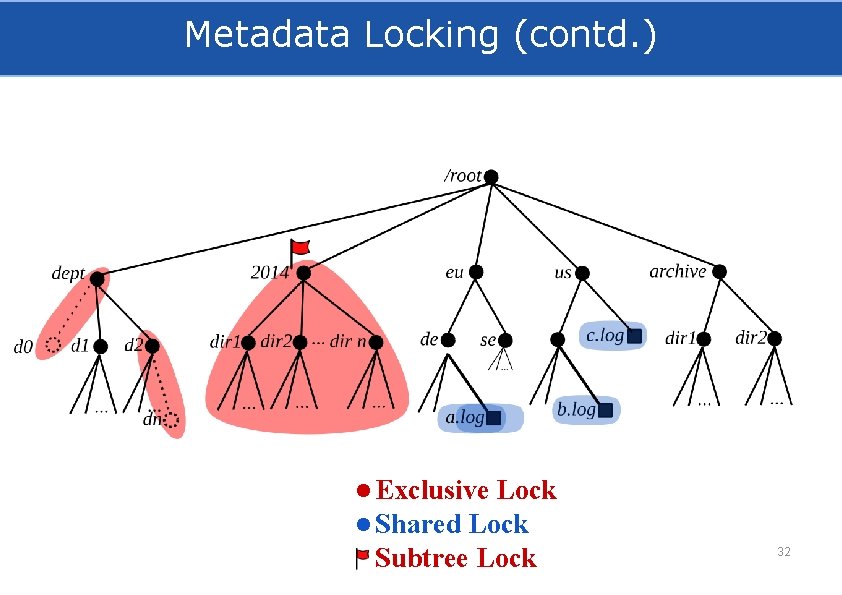

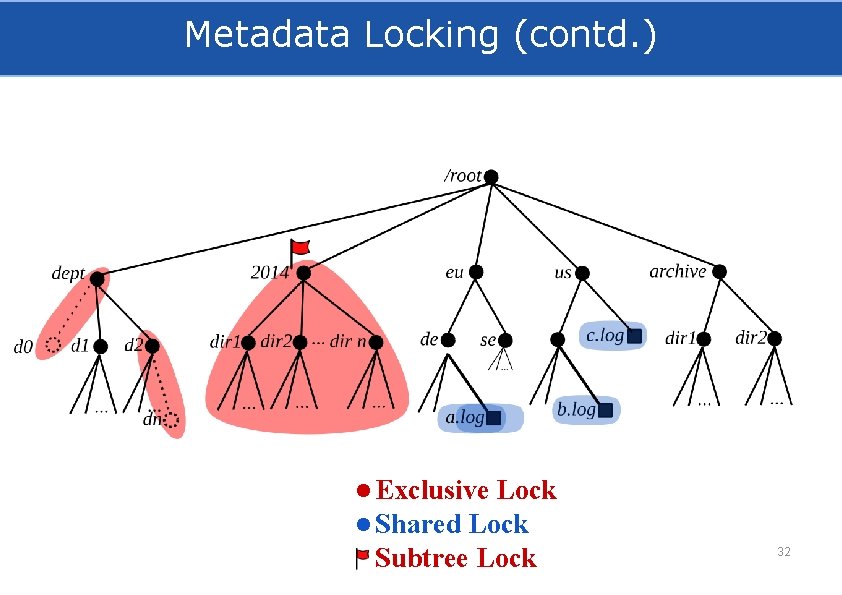

Metadata Locking (contd.)

● Exclusive Lock
● Shared Lock
● Subtree Lock
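The three lock kinds can be sketched as follows. Row locks follow the classic shared/exclusive compatibility rule. The subtree lock is modeled, as an assumption, as a flag on the subtree's root inode that records which NameNode owns it, so an operation backs off if its path or any ancestor is flagged by another NameNode. All names are hypothetical.

```java
import java.util.Map;

// Sketch of exclusive, shared and subtree locks (hypothetical names).
public class MetadataLocks {
    public enum Mode { SHARED, EXCLUSIVE }

    // Only SHARED + SHARED can be held on the same inode at the same time.
    public static boolean compatible(Mode held, Mode requested) {
        return held == Mode.SHARED && requested == Mode.SHARED;
    }

    // Walks from the path up to "/" and reports whether another NameNode holds a subtree lock.
    // Assumes absolute, normalized paths without a trailing slash.
    public static boolean blockedBySubtreeLock(String path, Map<String, Integer> subtreeLockOwner, int myNameNode) {
        String p = path;
        while (true) {
            Integer owner = subtreeLockOwner.get(p);
            if (owner != null && owner != myNameNode) {
                return true;
            }
            if (p.equals("/")) {
                return false;
            }
            int slash = p.lastIndexOf('/');
            p = (slash == 0) ? "/" : p.substring(0, slash); // move to the parent directory
        }
    }

    public static void main(String[] args) {
        Map<String, Integer> locks = Map.of("/home", 2); // NameNode 2 is, e.g., deleting /home
        System.out.println(compatible(Mode.SHARED, Mode.EXCLUSIVE));       // false
        System.out.println(blockedBySubtreeLock("/home/A/file", locks, 1)); // true
        System.out.println(blockedBySubtreeLock("/usr/A", locks, 1));       // false
    }
}
```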

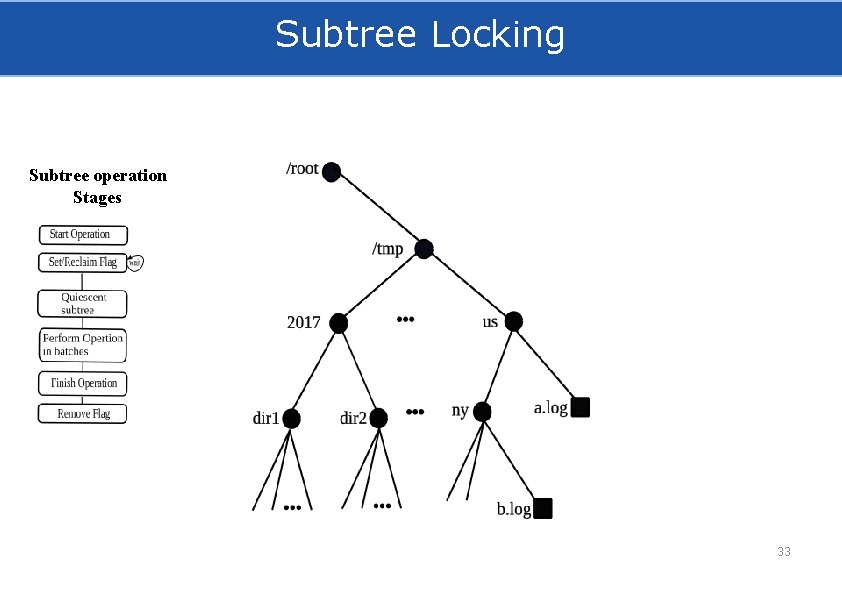

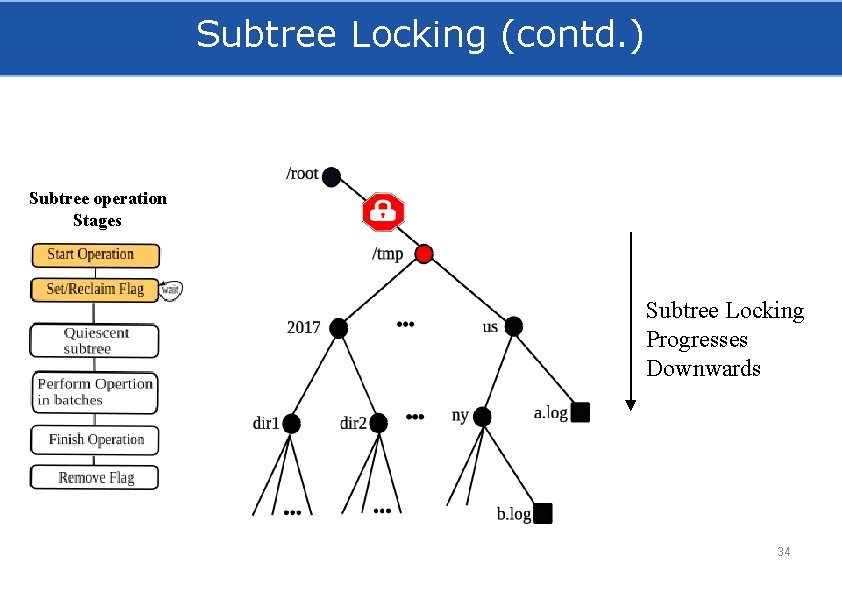

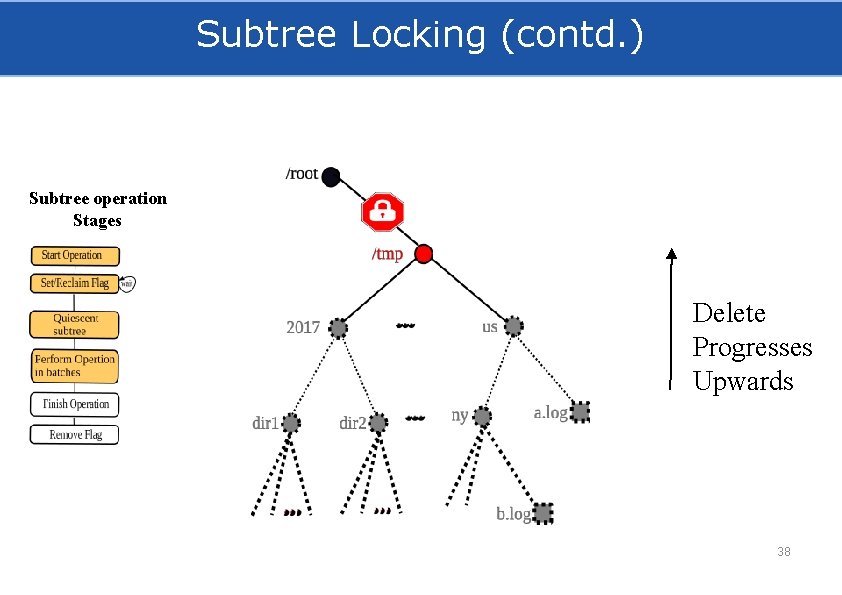

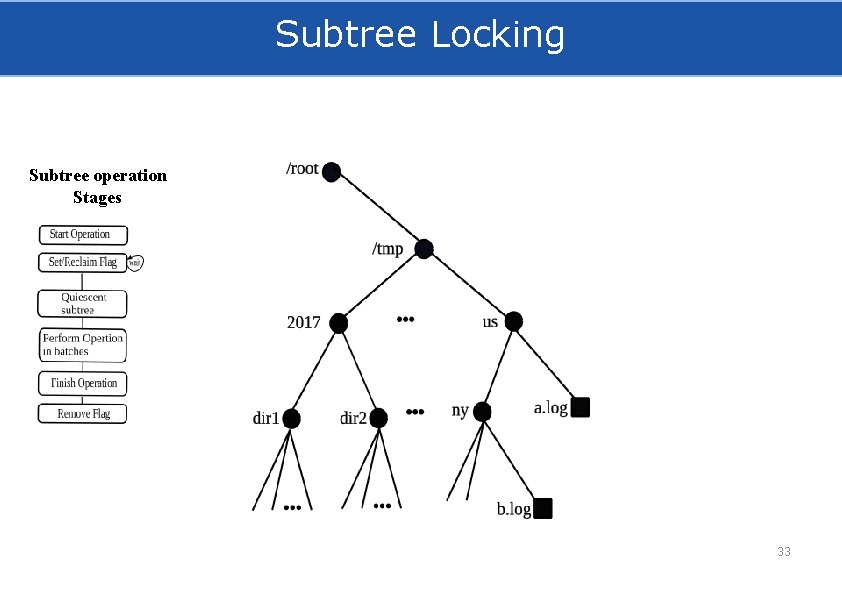

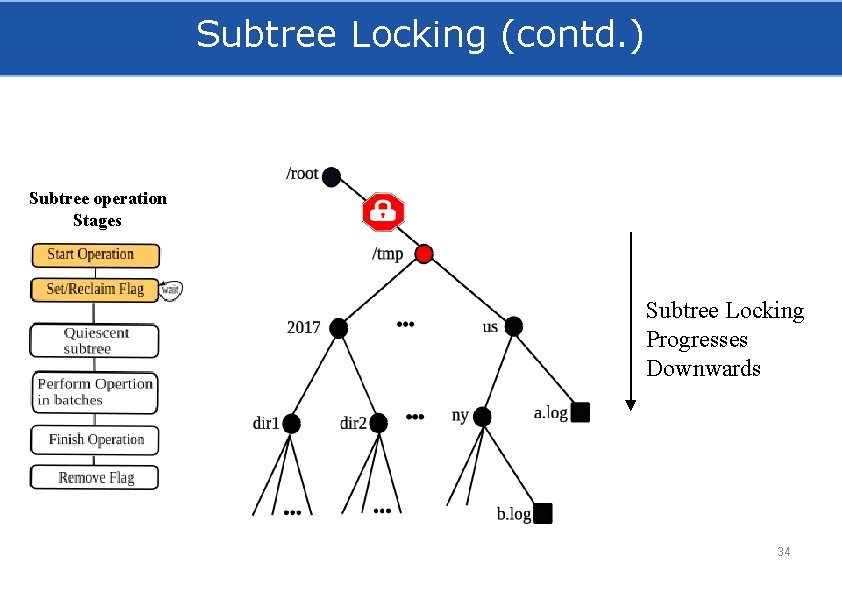

Subtree Locking: Subtree Operation Stages

Subtree Locking (contd.): Subtree Operation Stages. Subtree locking progresses downwards.

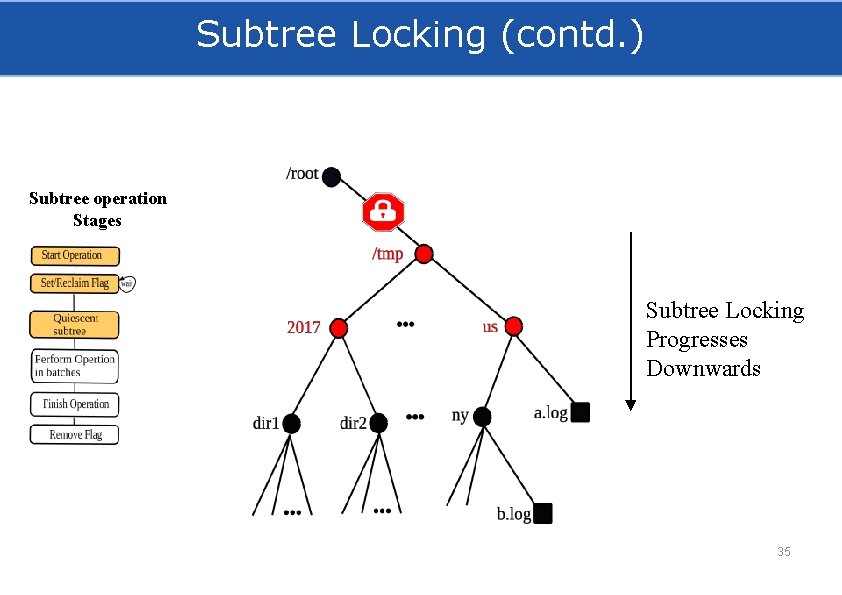

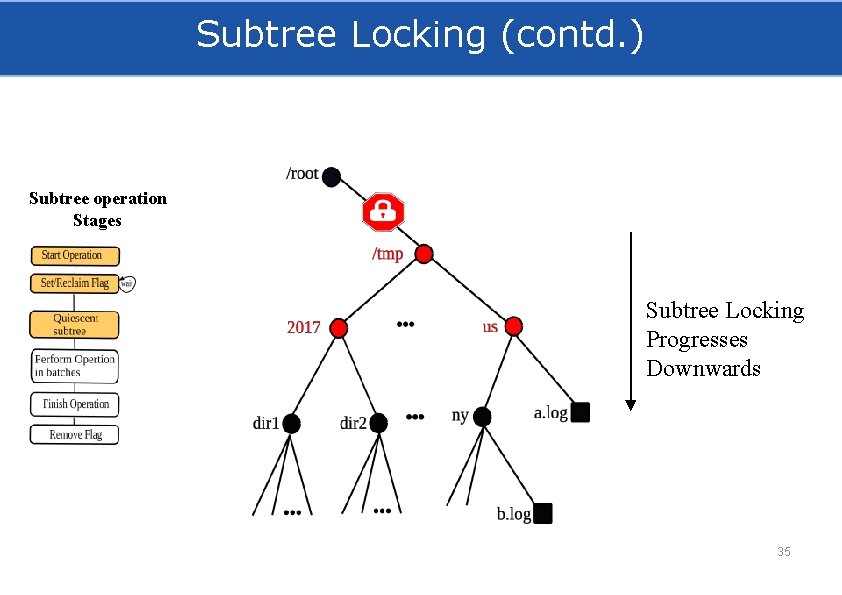

Subtree Locking (contd. ) Subtree operation Stages Subtree Locking Progresses Downwards 35

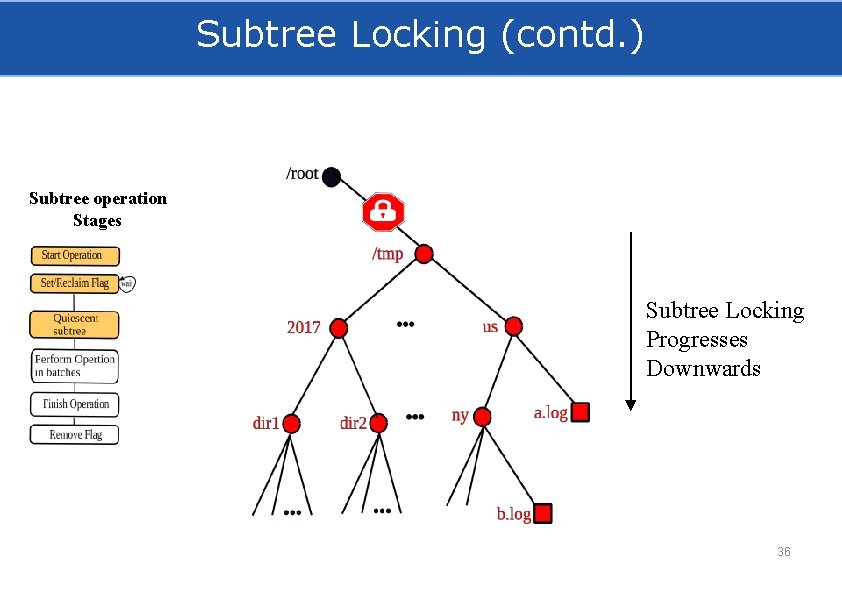

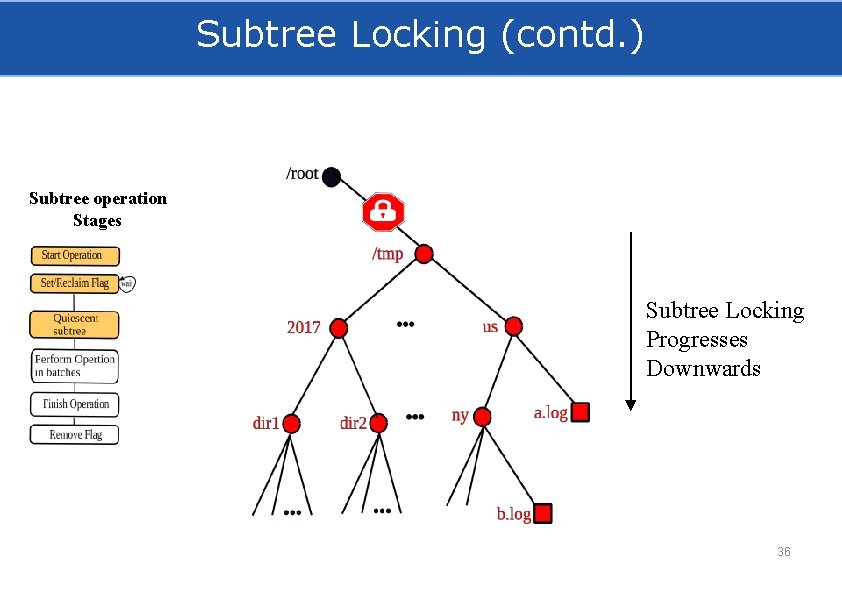

Subtree Locking (contd. ) Subtree operation Stages Subtree Locking Progresses Downwards 36

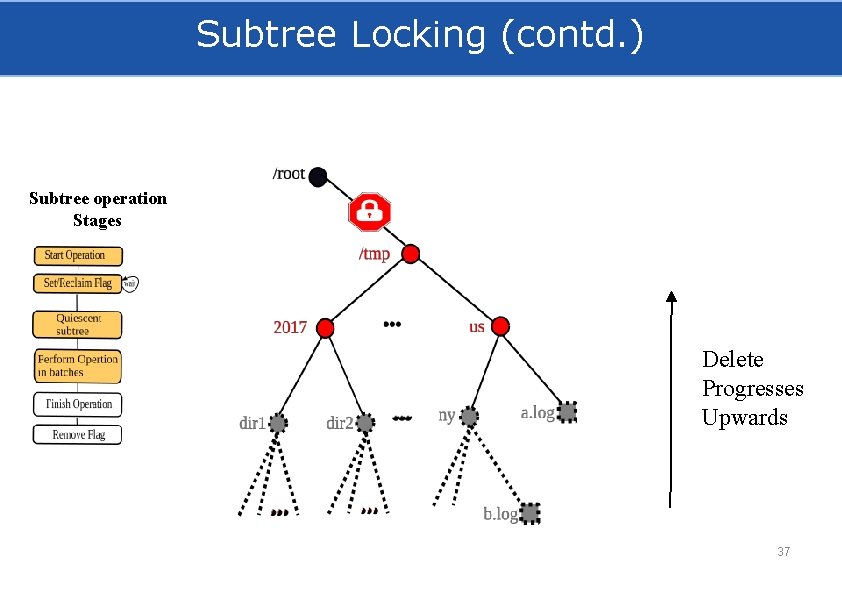

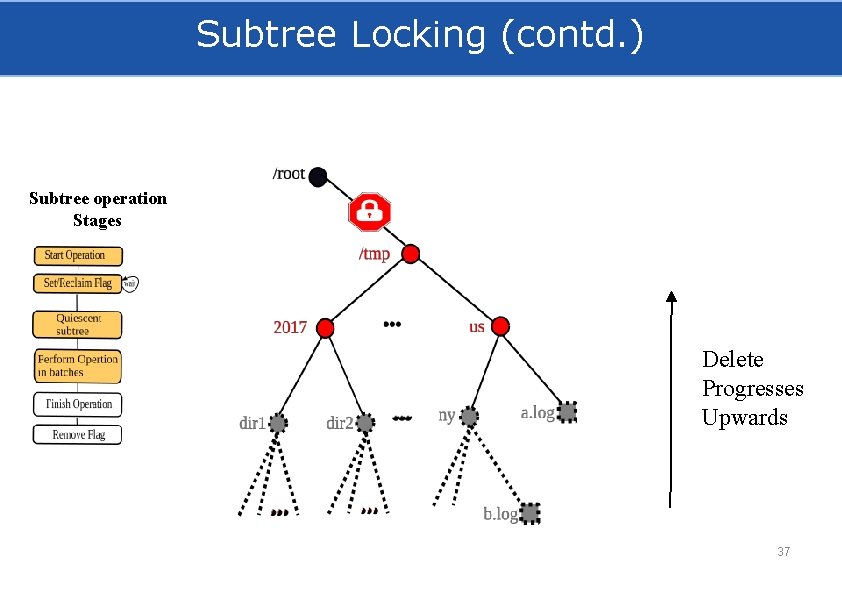

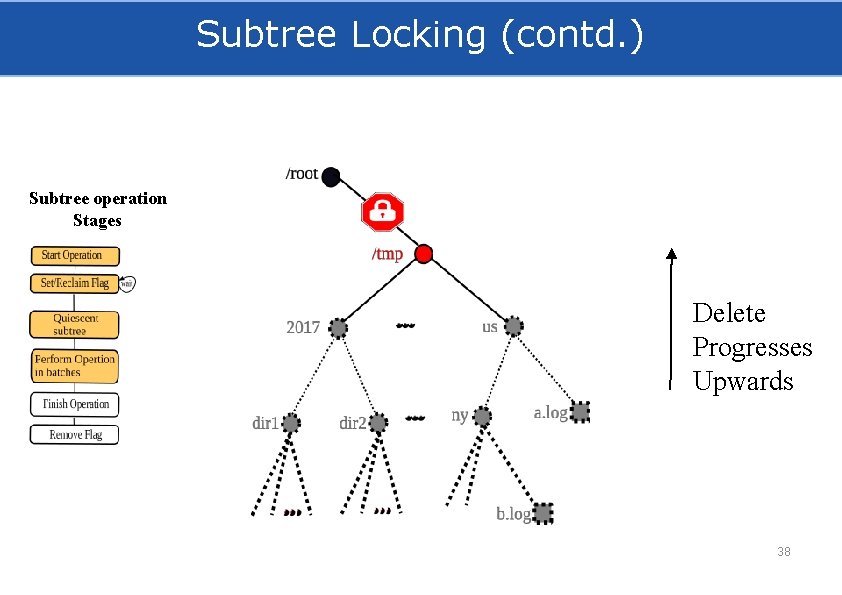

Subtree Locking (contd.): Subtree Operation Stages. Delete progresses upwards.
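The two directions (lock top-down, delete bottom-up) can be sketched on a hypothetical in-memory namespace. A breadth-first walk visits every parent before its descendants. Deleting in the reverse of that order removes children before parents, so if the delete stops part-way, every remaining inode still has its parent. Assumption: in a real system each batch of deletes would run in its own database transaction.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Sketch of a subtree delete in two phases (hypothetical, no database).
public class SubtreeDelete {
    // Phase 1: walk (and, in a real system, lock) the subtree top-down.
    public static List<String> lockDownwards(String root, Map<String, List<String>> children) {
        List<String> topDown = new ArrayList<>();
        Deque<String> queue = new ArrayDeque<>();
        queue.add(root);
        while (!queue.isEmpty()) {
            String dir = queue.poll();
            topDown.add(dir); // breadth-first: every parent precedes its descendants
            queue.addAll(children.getOrDefault(dir, List.of()));
        }
        return topDown;
    }

    // Phase 2: delete in reverse order, children before parents.
    public static List<String> deleteUpwards(List<String> topDown, Set<String> namespace) {
        List<String> deleted = new ArrayList<>();
        for (int i = topDown.size() - 1; i >= 0; i--) {
            namespace.remove(topDown.get(i));
            deleted.add(topDown.get(i));
        }
        return deleted;
    }

    public static void main(String[] args) {
        Map<String, List<String>> children = Map.of(
                "/a", List.of("/a/b", "/a/c"),
                "/a/b", List.of("/a/b/d"));
        Set<String> namespace = new HashSet<>(List.of("/a", "/a/b", "/a/c", "/a/b/d"));
        List<String> order = lockDownwards("/a", children);
        System.out.println(order);                           // [/a, /a/b, /a/c, /a/b/d]
        System.out.println(deleteUpwards(order, namespace)); // [/a/b/d, /a/c, /a/b, /a]
    }
}
```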

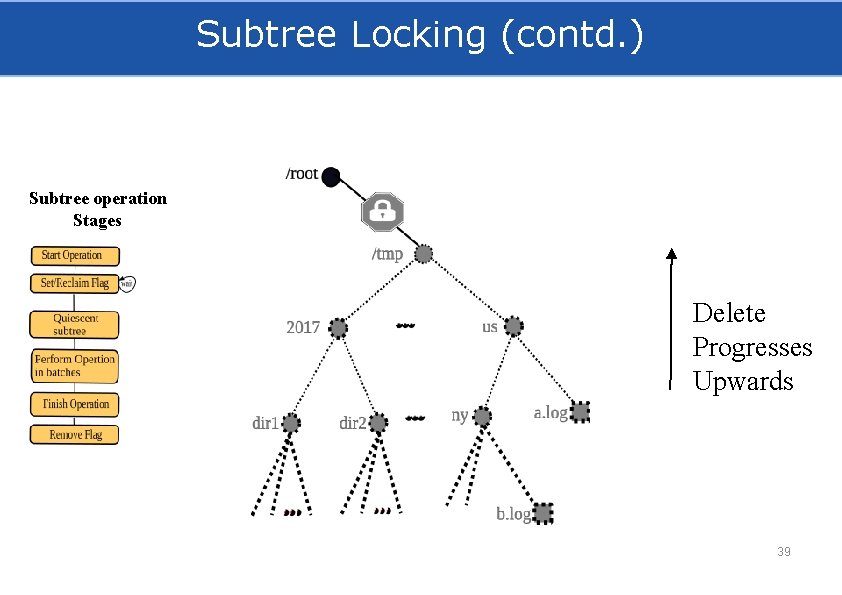

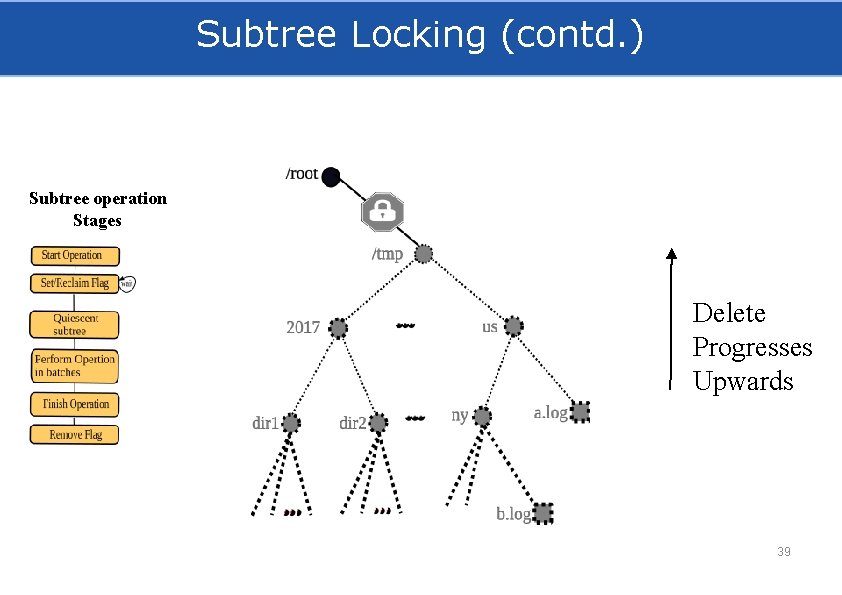

Subtree Locking (contd. ) Subtree operation Stages Delete Progresses Upwards 38

Subtree Locking (contd. ) Subtree operation Stages Delete Progresses Upwards 39

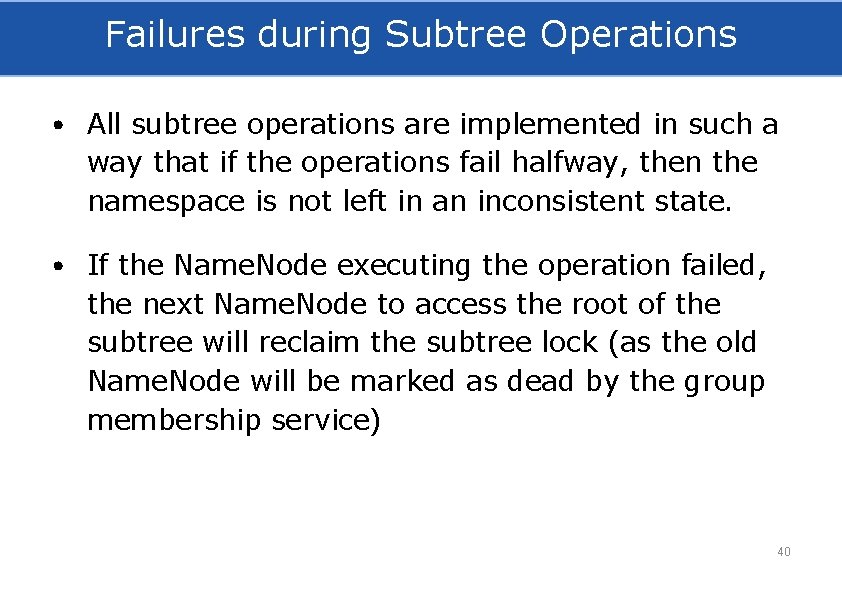

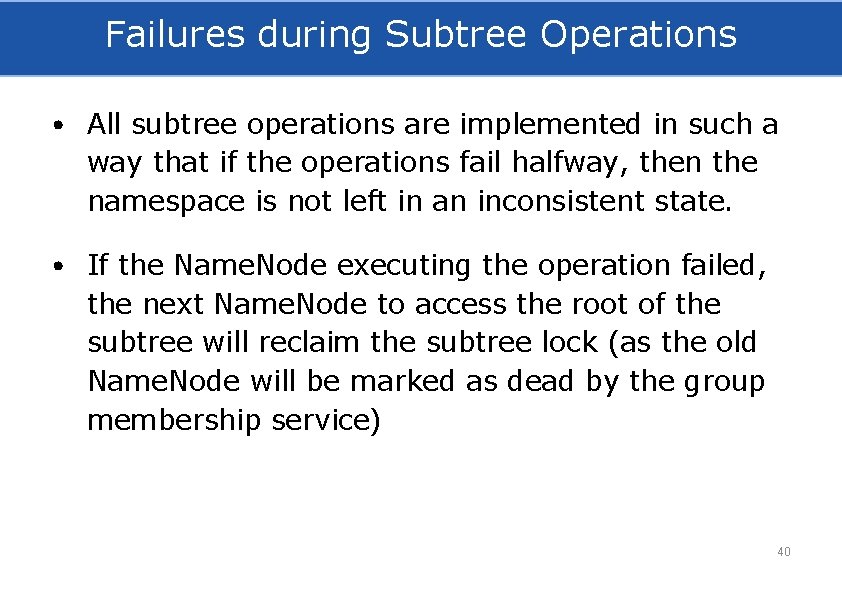

Failures during Subtree Operations

- All subtree operations are implemented so that, if an operation fails halfway, the namespace is not left in an inconsistent state.
- If the NameNode executing the operation fails, the next NameNode to access the root of the subtree reclaims the subtree lock (the old NameNode will have been marked as dead by the group membership service).
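Reclaiming a subtree lock from a dead NameNode can be sketched as below. Assumptions: the lock is an owner id stored on the subtree root, and the set of live NameNodes comes from the group membership service. A `synchronized` method stands in for the database transaction that would update the flag. All names are hypothetical.

```java
import java.util.Set;

// Sketch of reclaiming a subtree lock whose owner has died (hypothetical names).
public class SubtreeLockReclaim {
    private long ownerId = 0; // 0 = unlocked, otherwise the owning NameNode's id (ids assumed positive)

    // aliveNameNodes is the current view of the group membership service.
    public synchronized boolean tryLock(long myId, Set<Long> aliveNameNodes) {
        if (ownerId == 0 || ownerId == myId || !aliveNameNodes.contains(ownerId)) {
            ownerId = myId; // free, already ours, or the owner was declared dead
            return true;
        }
        return false; // a live NameNode is still working on this subtree
    }

    public synchronized void unlock(long myId) {
        if (ownerId == myId) {
            ownerId = 0;
        }
    }

    public synchronized long owner() {
        return ownerId;
    }

    public static void main(String[] args) {
        SubtreeLockReclaim lock = new SubtreeLockReclaim();
        System.out.println(lock.tryLock(1, Set.of(1L, 2L))); // true: NN1 starts a subtree operation
        System.out.println(lock.tryLock(2, Set.of(1L, 2L))); // false: NN1 is still alive
        System.out.println(lock.tryLock(2, Set.of(2L)));     // true: NN1 died, NN2 reclaims the lock
    }
}
```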

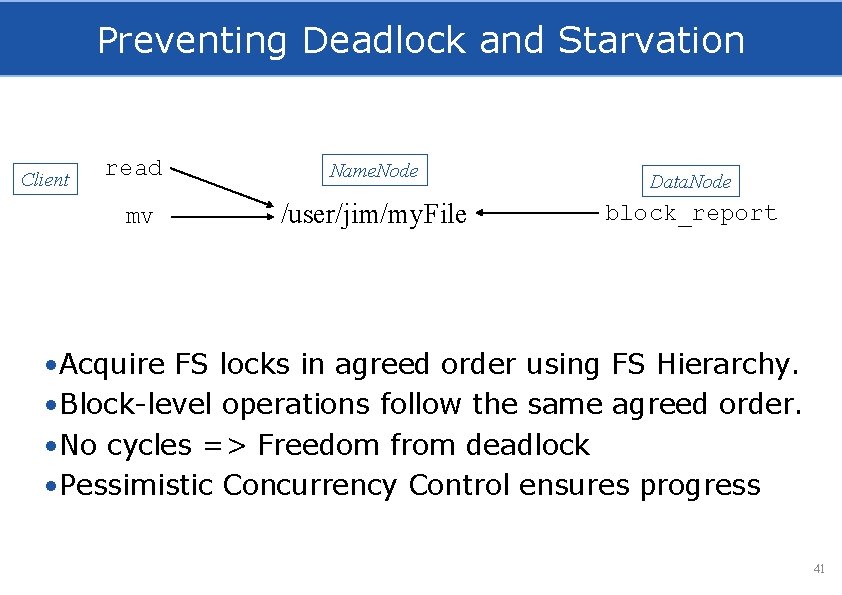

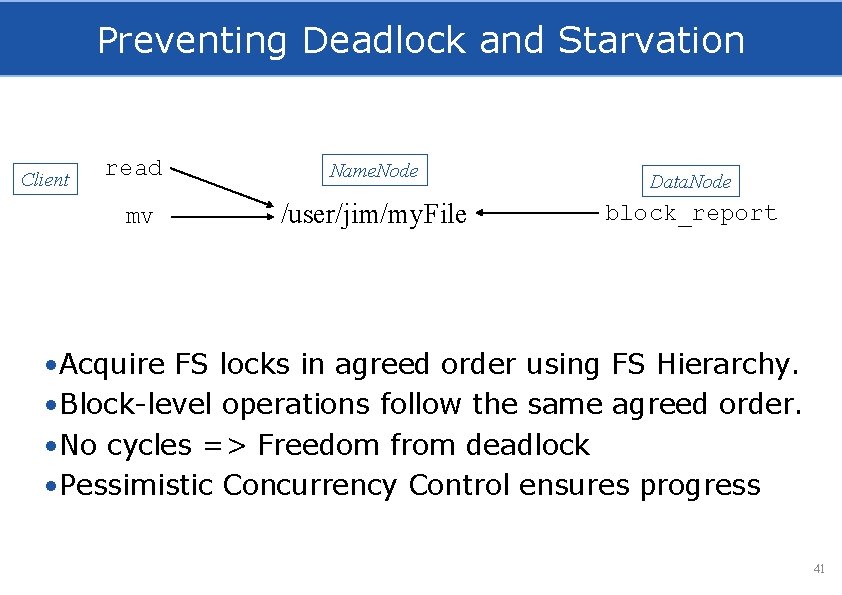

Preventing Deadlock and Starvation [Diagram: a client `read`, a client `mv`, and a DataNode `block_report` all touching /user/jim/myFile through the NameNode]

- Acquire FS locks in an agreed order using the FS hierarchy.
- Block-level operations follow the same agreed order.
- No cycles ⇒ freedom from deadlock.
- Pessimistic concurrency control ensures progress.
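An "agreed order using the FS hierarchy" can be sketched as a comparator over paths. It compares path components left to right and places an ancestor before its descendants. If every operation sorts the paths it needs this way before locking them, two operations can never wait on each other in a cycle. `LockOrder` is a hypothetical helper and assumes absolute, normalized paths.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Sketch of a total lock order over paths (hypothetical helper).
public class LockOrder {
    public static final Comparator<String> HIERARCHY = (p, q) -> {
        String[] a = p.split("/");
        String[] b = q.split("/");
        for (int i = 0; i < Math.min(a.length, b.length); i++) {
            int c = a[i].compareTo(b[i]);
            if (c != 0) {
                return c;
            }
        }
        return Integer.compare(a.length, b.length); // shorter path (the ancestor) first
    };

    // Returns the order in which an operation should acquire its locks.
    public static List<String> lockOrder(List<String> paths) {
        List<String> sorted = new ArrayList<>(paths);
        sorted.sort(HIERARCHY);
        return sorted;
    }

    public static void main(String[] args) {
        // Two renames that touch the same two paths lock them in the same order.
        System.out.println(lockOrder(List.of("/b/y", "/a/x"))); // [/a/x, /b/y]
        System.out.println(lockOrder(List.of("/a/x", "/b/y"))); // [/a/x, /b/y]
    }
}
```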

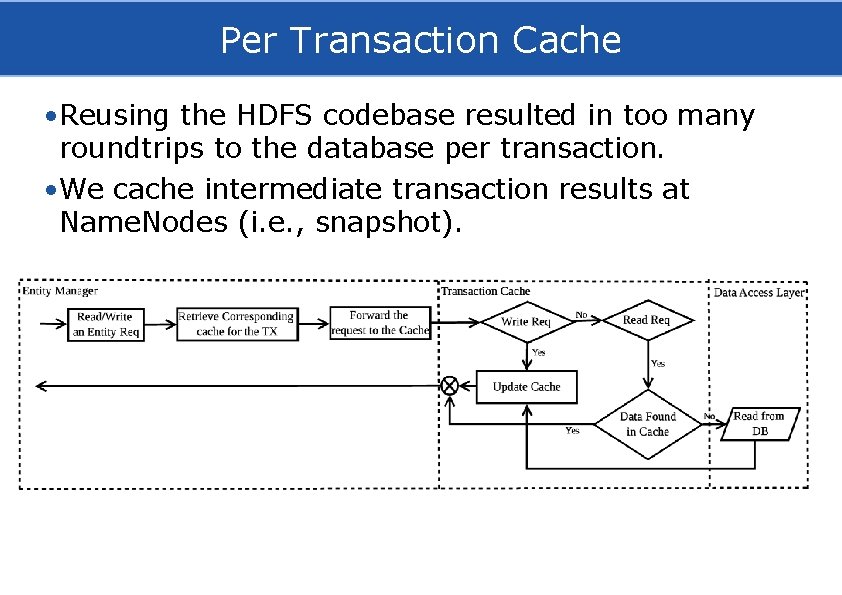

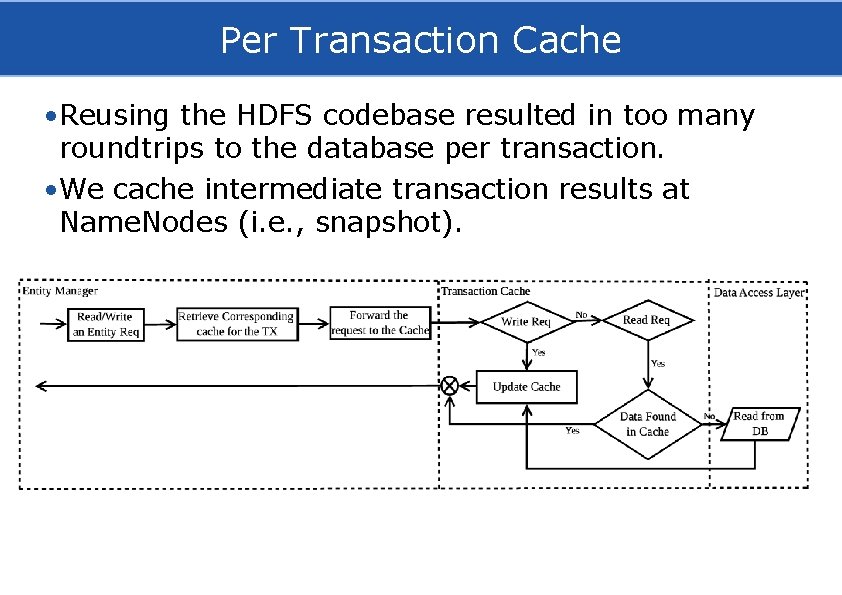

Per Transaction Cache

- Reusing the HDFS codebase resulted in too many round trips to the database per transaction.
- We cache intermediate transaction results at NameNodes (i.e., a snapshot).

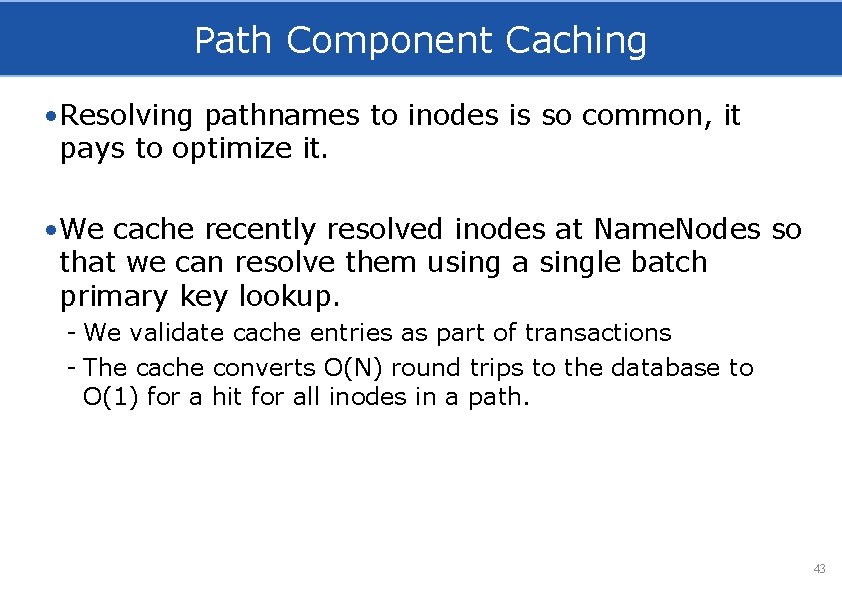

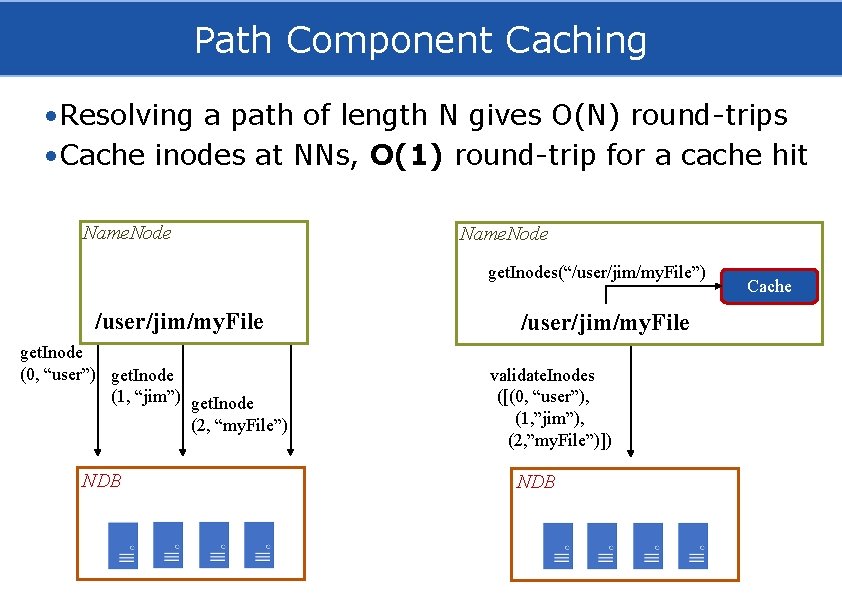

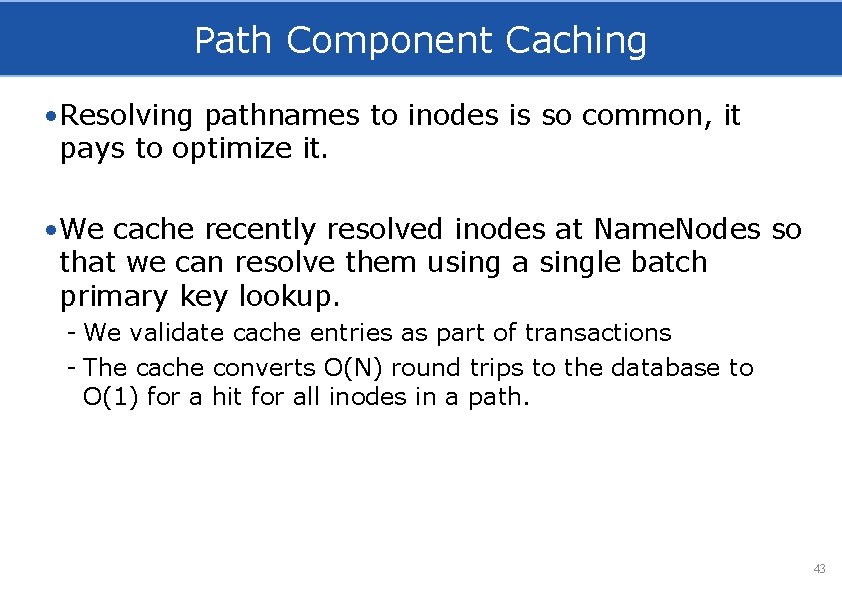

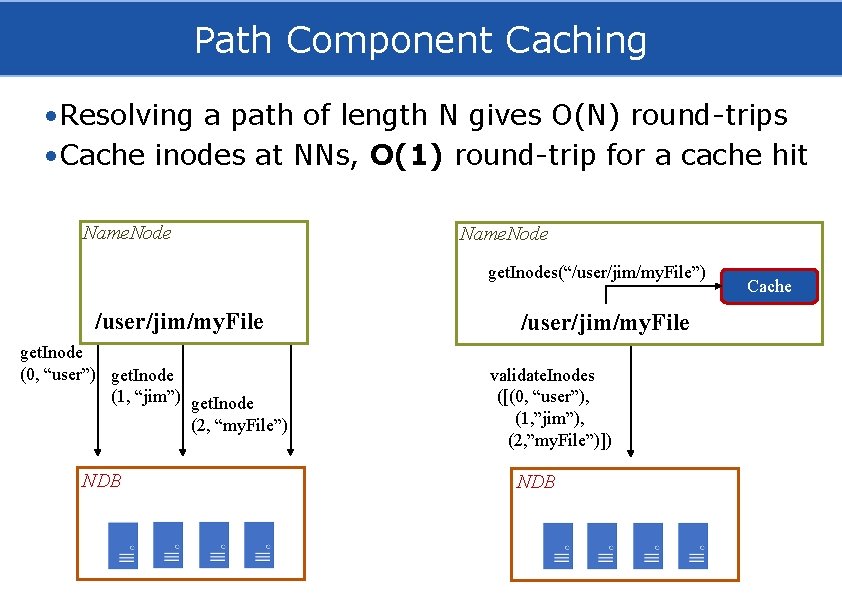

Path Component Caching

- Resolving pathnames to inodes is so common that it pays to optimize it.
- We cache recently resolved inodes at NameNodes so that we can resolve them using a single batch primary key lookup.
  - We validate cache entries as part of transactions.
  - On a cache hit, the O(N) database round trips needed for the N inodes in a path become O(1).

Path Component Caching (contd.)

- Resolving a path of length N gives O(N) round trips.
- Caching inodes at NameNodes gives O(1) round trips for a cache hit.

[Diagram: without the cache, the NameNode resolves `getInodes("/user/jim/myFile")` as `getInode(0, "user")`, `getInode(1, "jim")`, `getInode(2, "myFile")`, one NDB round trip each; with the cache, it issues a single `validateInodes([(0, "user"), (1, "jim"), (2, "myFile")])` call to NDB.]
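The O(N) versus O(1) difference can be sketched with a fake database that counts round trips. On a miss, the resolver issues one lookup per path component. On a hit, it sends one batched validation of all cached (parent id, name) → inode id entries and falls back to the slow path if any entry is stale. `PathResolver`, `Db`, `getInode` and `validateInodes` are hypothetical stand-ins modeled on the names in the diagram, not the HopsFS code.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Sketch of path component caching; inode 0 is the root, as in getInode(0, "user").
public class PathResolver {
    public static class Db {
        public final Map<String, Long> rows = new HashMap<>(); // "parentId:name" -> inodeId
        public int roundTrips = 0;

        public Long getInode(long parentId, String name) {
            roundTrips++;
            return rows.get(parentId + ":" + name);
        }

        // One batched primary-key read that re-checks every cached (parentId, name) -> id pair.
        public boolean validateInodes(String[] names, List<Long> ids) {
            roundTrips++;
            long parent = 0;
            for (int i = 0; i < names.length; i++) {
                if (!ids.get(i).equals(rows.get(parent + ":" + names[i]))) {
                    return false;
                }
                parent = ids.get(i);
            }
            return true;
        }
    }

    private final Db db;
    private final Map<String, List<Long>> cache = new HashMap<>(); // path -> inode ids of its components

    public PathResolver(Db db) {
        this.db = db;
    }

    public List<Long> resolve(String path) {
        String[] names = path.substring(1).split("/");
        List<Long> cached = cache.get(path);
        if (cached != null && db.validateInodes(names, cached)) {
            return cached; // hit: one round trip, whatever the path length
        }
        List<Long> ids = new ArrayList<>();
        long parent = 0;
        for (String name : names) { // miss or stale entry: one round trip per component
            Long id = db.getInode(parent, name);
            if (id == null) {
                throw new IllegalArgumentException("no such path: " + path);
            }
            ids.add(id);
            parent = id;
        }
        cache.put(path, ids);
        return ids;
    }

    public static void main(String[] args) {
        Db db = new Db();
        db.rows.put("0:user", 1L);
        db.rows.put("1:jim", 2L);
        db.rows.put("2:myFile", 3L);
        PathResolver resolver = new PathResolver(db);
        resolver.resolve("/user/jim/myFile");
        System.out.println(db.roundTrips); // 3 (cold cache)
        resolver.resolve("/user/jim/myFile");
        System.out.println(db.roundTrips); // 4 (one validation round trip)
    }
}
```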

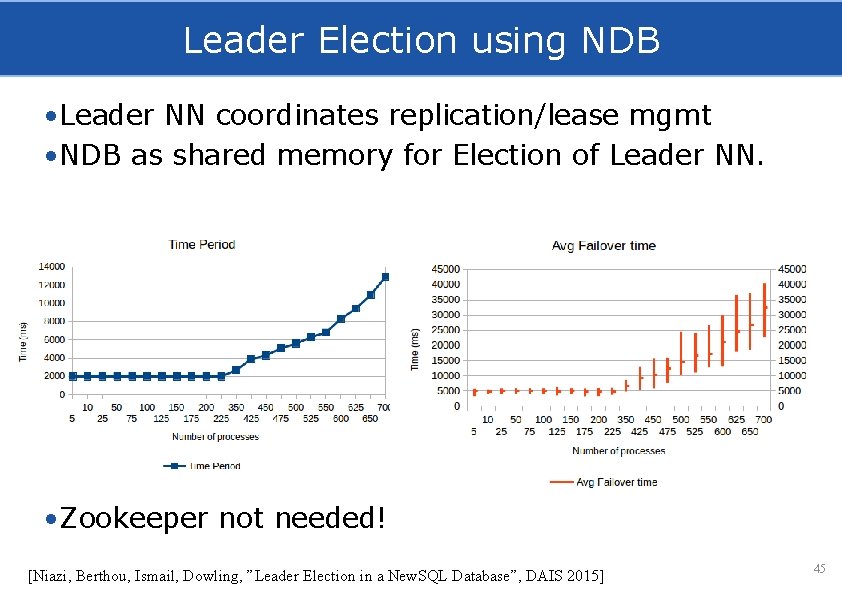

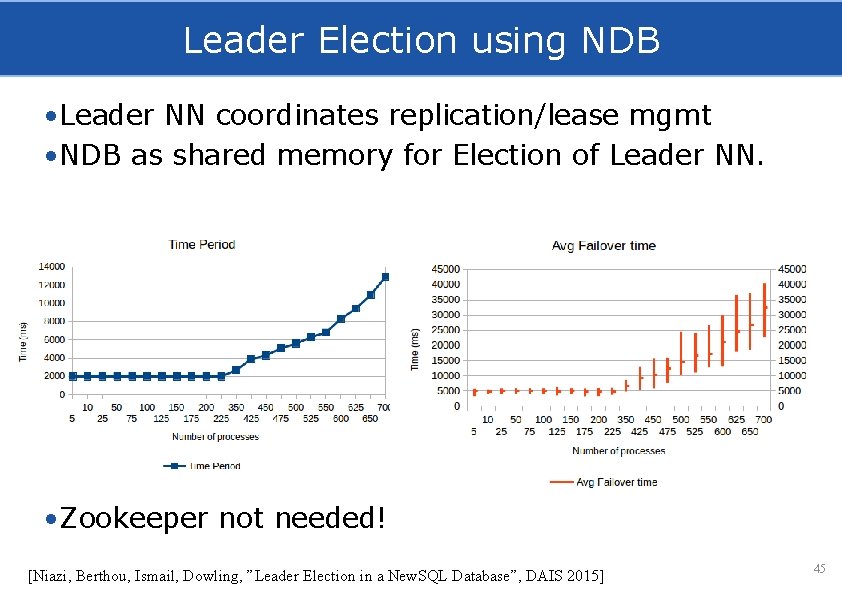

Leader Election using NDB

- The leader NameNode coordinates replication and lease management.
- NDB is used as shared memory for electing the leader NameNode.
- ZooKeeper is not needed!

[Niazi, Berthou, Ismail, Dowling, "Leader Election in a NewSQL Database", DAIS 2015]
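Using the database as shared memory for leader election can be sketched as follows. Each NameNode periodically increments its own counter row. Once per round, NameNodes whose counter did not advance are treated as dead, and (as an assumption) the live NameNode with the smallest id becomes leader. This is a simplified illustration with hypothetical names; the protocol in the cited DAIS 2015 paper differs in its details and runs its steps inside database transactions.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

// Simplified sketch of leader election over a shared table (hypothetical names).
public class DbLeaderElection {
    private final Map<Long, Long> counters = new TreeMap<>();  // "table": NameNode id -> heartbeat counter
    private final Map<Long, Long> lastRound = new HashMap<>(); // counters seen in the previous round

    // Each NameNode periodically increments its own row.
    public synchronized void heartbeat(long nameNodeId) {
        counters.merge(nameNodeId, 1L, Long::sum);
    }

    // Once per round: the smallest id whose counter advanced is the leader (-1 if none).
    public synchronized long electLeader() {
        long leader = -1;
        for (Map.Entry<Long, Long> e : counters.entrySet()) { // TreeMap: ascending ids
            boolean alive = !e.getValue().equals(lastRound.get(e.getKey()));
            if (alive && leader == -1) {
                leader = e.getKey();
            }
        }
        lastRound.clear();
        lastRound.putAll(counters);
        return leader;
    }

    public static void main(String[] args) {
        DbLeaderElection election = new DbLeaderElection();
        election.heartbeat(3);
        election.heartbeat(1);
        System.out.println(election.electLeader()); // 1
        election.heartbeat(3);                      // NameNode 1 stops heartbeating
        System.out.println(election.electLeader()); // 3
    }
}
```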

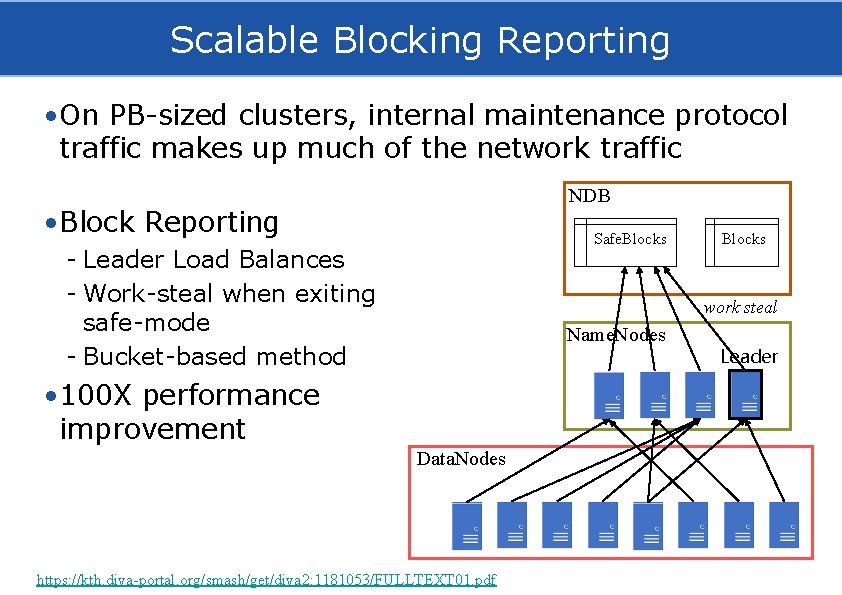

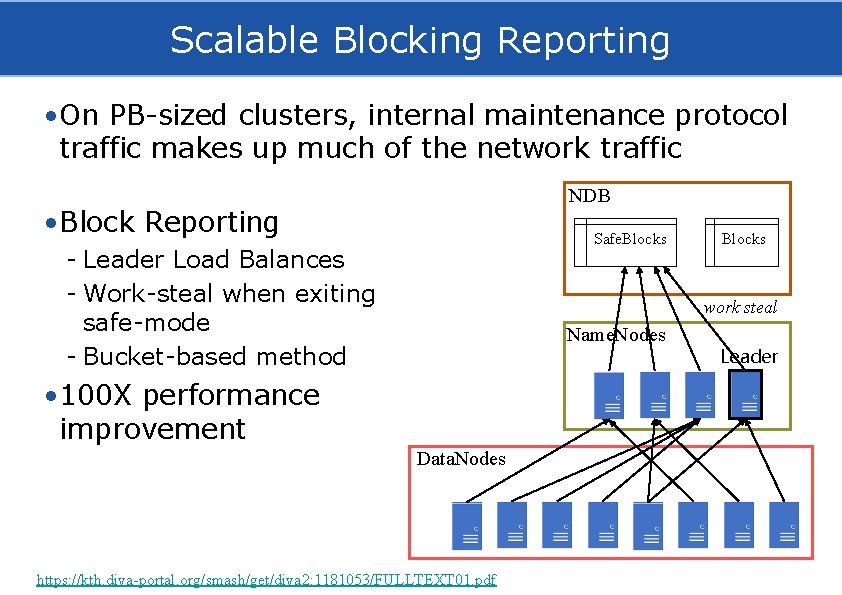

Scalable Block Reporting

- On PB-sized clusters, internal maintenance protocol traffic makes up much of the network traffic.
- Block reporting:
  - The leader load-balances
  - Work-stealing when exiting safe mode (SafeBlocks)
  - Bucket-based method
- 100x performance improvement

[Diagram: leader NameNode, NameNodes, NDB, DataNodes]

https://kth.diva-portal.org/smash/get/diva2:1181053/FULLTEXT01.pdf
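The slide does not spell out the bucket-based method. One plausible reading, sketched here purely as an assumption, is that a DataNode groups its block ids into a fixed number of buckets and sends one hash per bucket, so that only buckets whose hash differs from the NameNode's last view need a full report. The XOR of mixed ids makes a bucket hash independent of block order. All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Sketch of hash-per-bucket block reports (hypothetical; an assumed reading of the slide).
public class BucketBlockReport {
    // Assign each block to a bucket and XOR the mixed block ids into that bucket's hash.
    public static long[] bucketHashes(Set<Long> blockIds, int numBuckets) {
        long[] hashes = new long[numBuckets];
        for (long id : blockIds) {
            int bucket = (int) Math.floorMod(id, (long) numBuckets);
            hashes[bucket] ^= mix(id);
        }
        return hashes;
    }

    // Buckets whose hash changed since the NameNode's last view must be reported in full.
    public static List<Integer> changedBuckets(long[] reported, long[] known) {
        List<Integer> changed = new ArrayList<>();
        for (int b = 0; b < reported.length; b++) {
            if (reported[b] != known[b]) changed.add(b);
        }
        return changed;
    }

    // SplitMix64 finalizer: spreads block ids so that XOR-ing them is a reasonable fingerprint.
    static long mix(long z) {
        z = (z ^ (z >>> 30)) * 0xbf58476d1ce4e5b9L;
        z = (z ^ (z >>> 27)) * 0x94d049bb133111ebL;
        return z ^ (z >>> 31);
    }

    public static void main(String[] args) {
        long[] known = bucketHashes(Set.of(1L, 2L, 3L, 10L), 4);      // NameNode's last view
        long[] report = bucketHashes(Set.of(1L, 2L, 3L, 10L, 5L), 4); // DataNode gained block 5
        System.out.println(changedBuckets(report, known));            // [1]
    }
}
```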

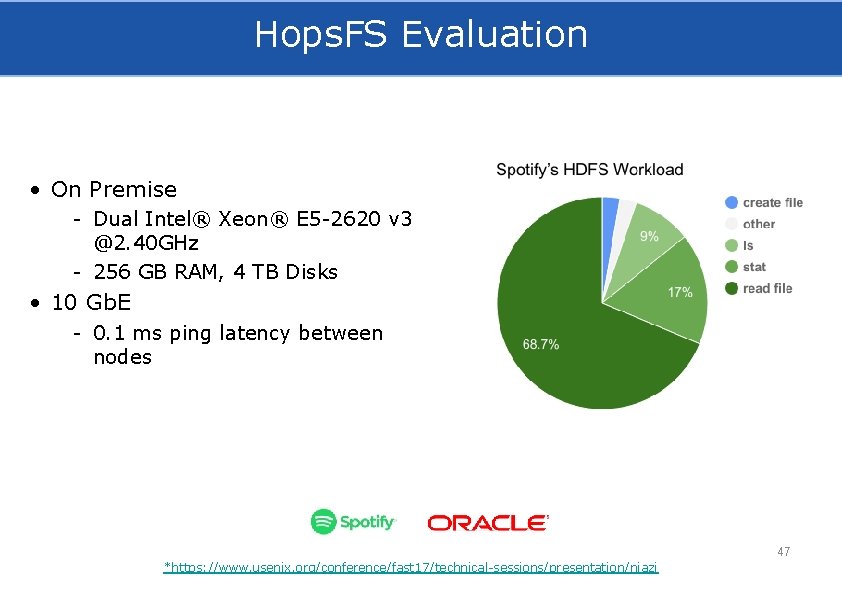

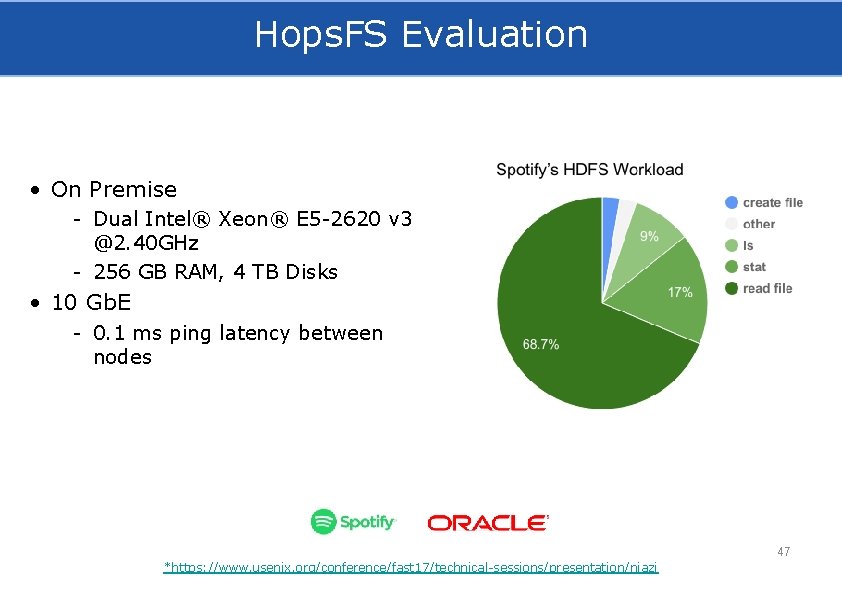

HopsFS Evaluation*

- On premise
  - Dual Intel® Xeon® E5-2620 v3 @ 2.40 GHz
  - 256 GB RAM, 4 TB disks
- 10 GbE
  - 0.1 ms ping latency between nodes

*https://www.usenix.org/conference/fast17/technical-sessions/presentation/niazi

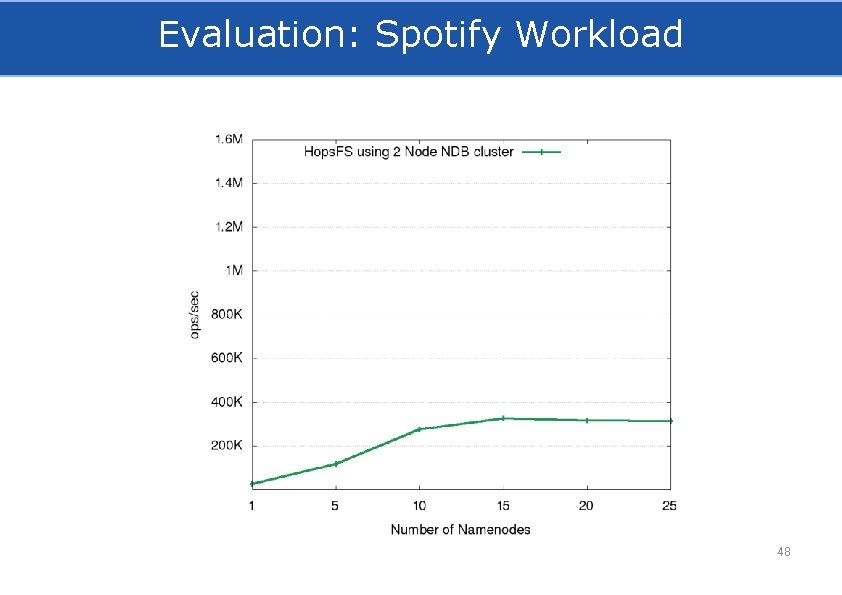

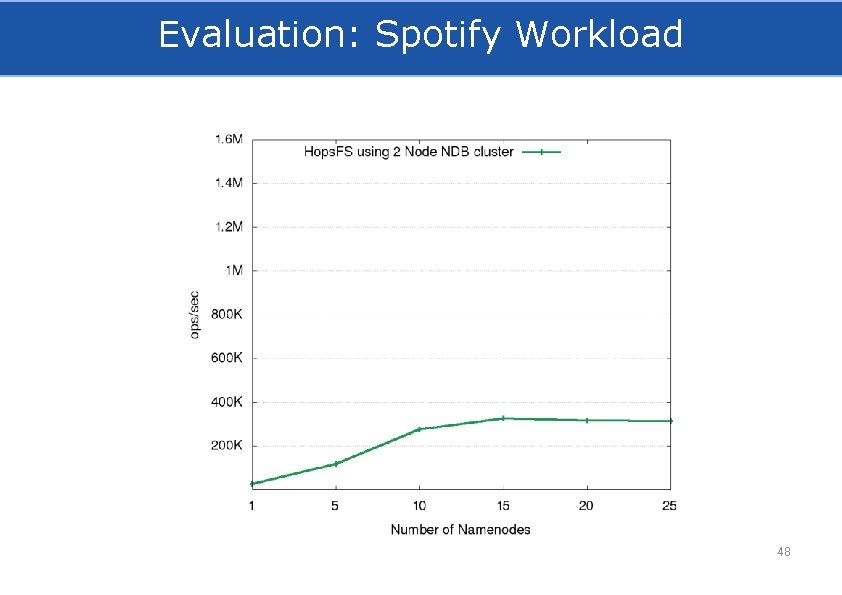

Evaluation: Spotify Workload

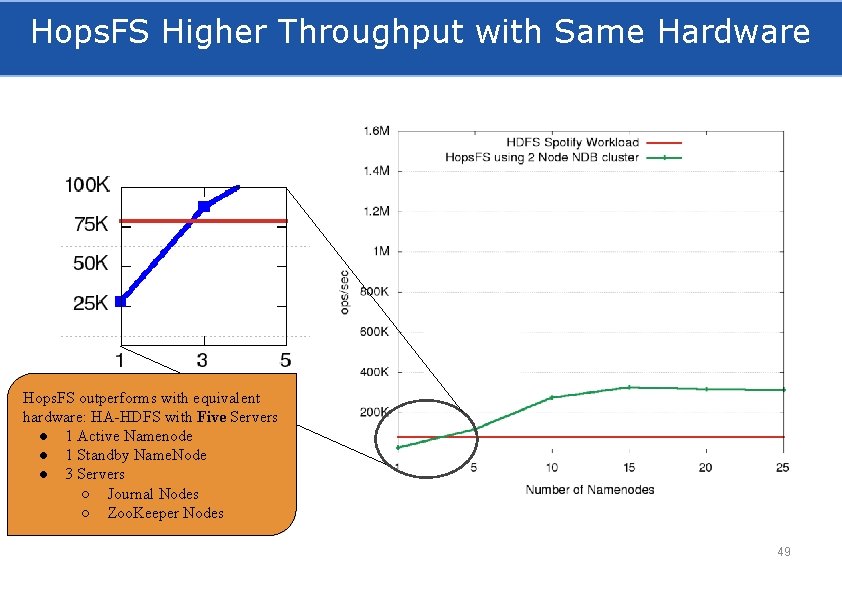

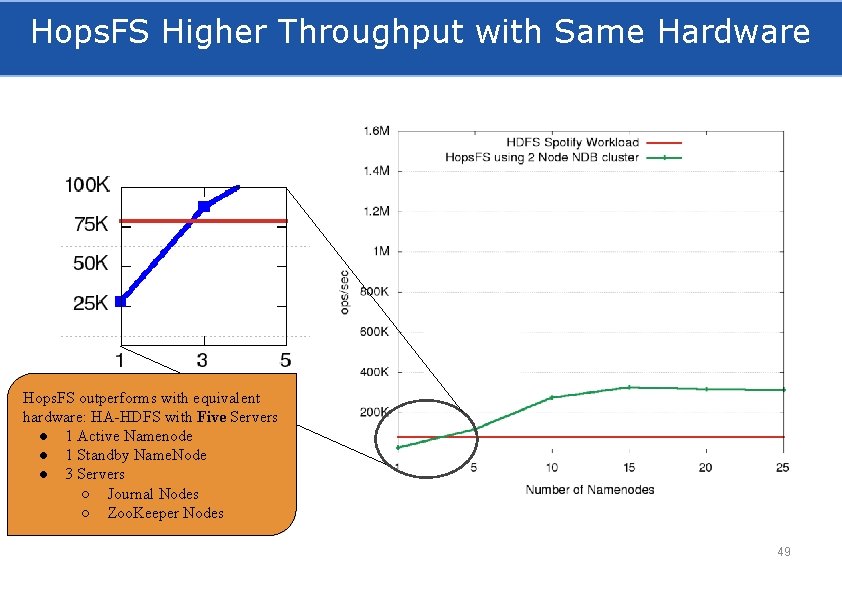

HopsFS: Higher Throughput with the Same Hardware

HopsFS outperforms HDFS on equivalent hardware. HA-HDFS with five servers:

● 1 active NameNode
● 1 standby NameNode
● 3 servers
  ○ Journal Nodes
  ○ ZooKeeper nodes

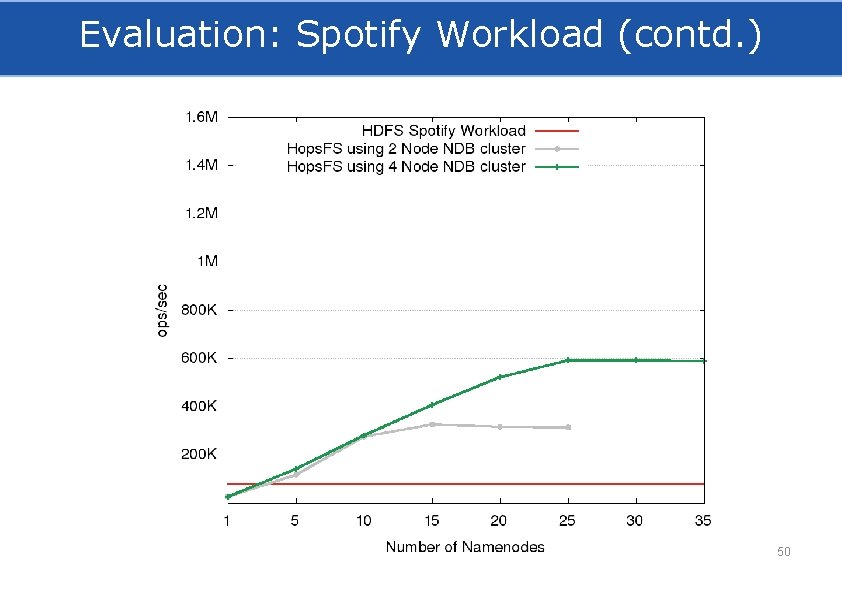

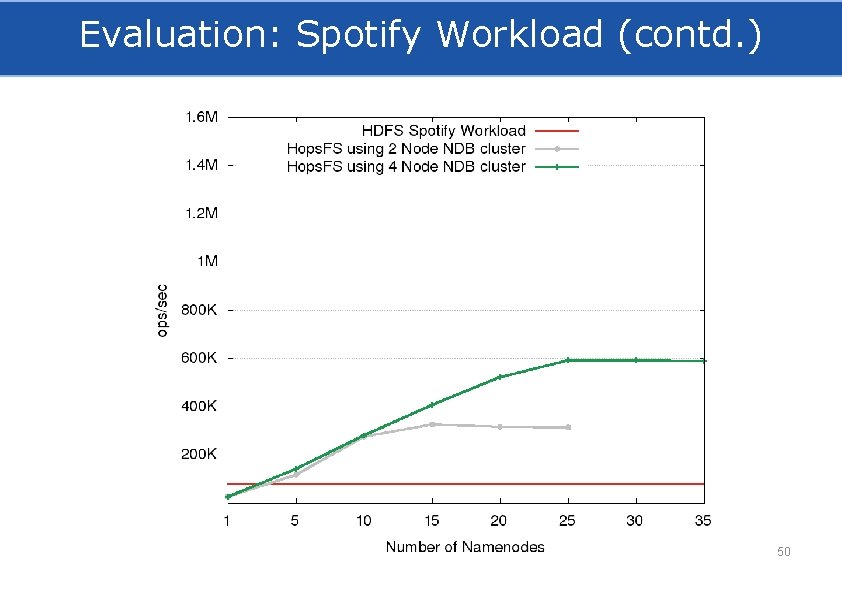

Evaluation: Spotify Workload (contd. ) 50

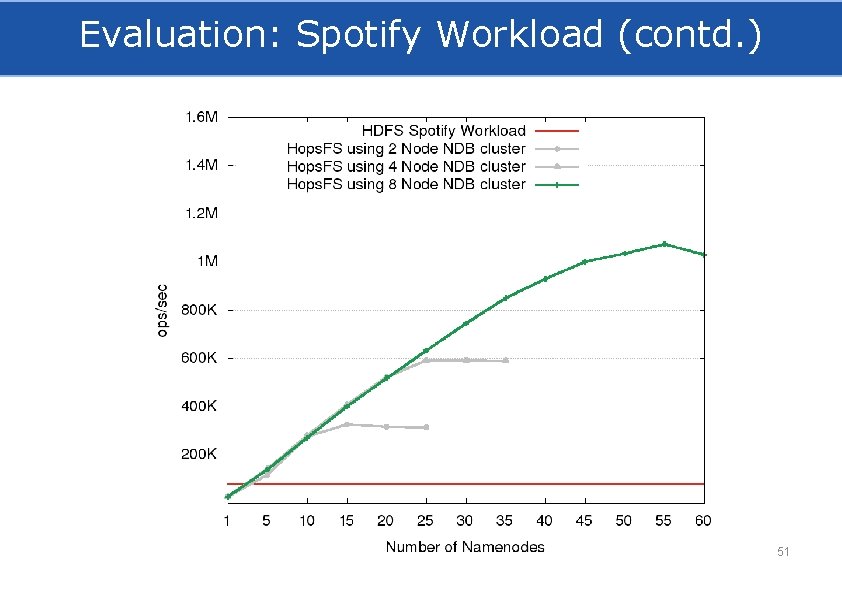

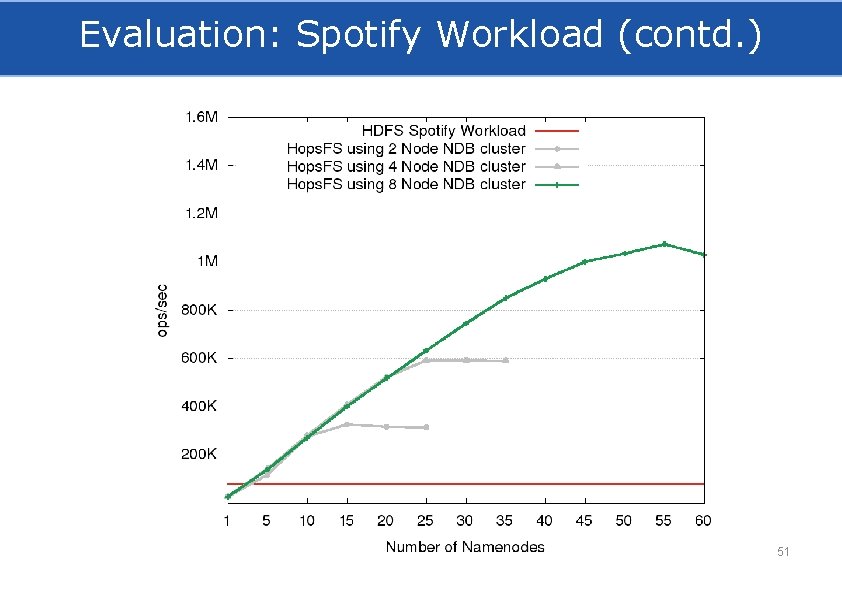

Evaluation: Spotify Workload (contd. ) 51

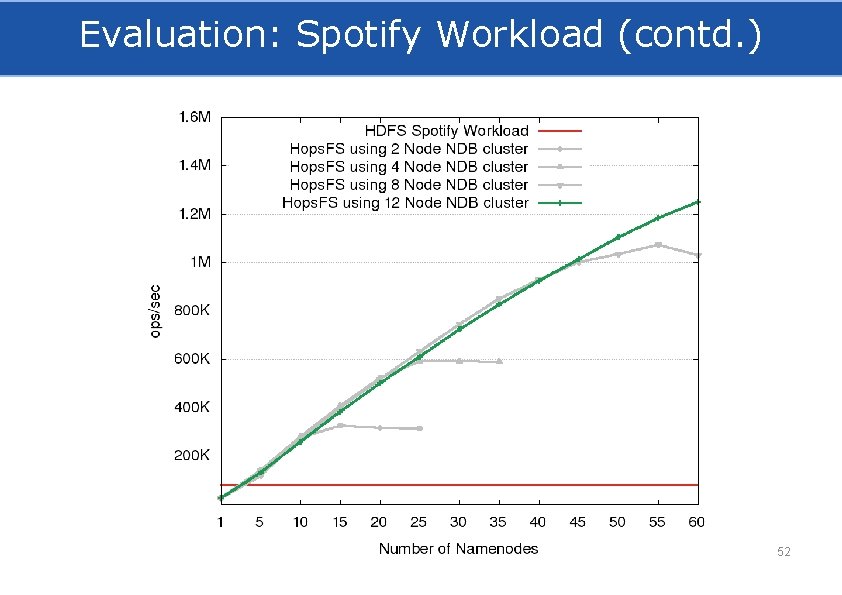

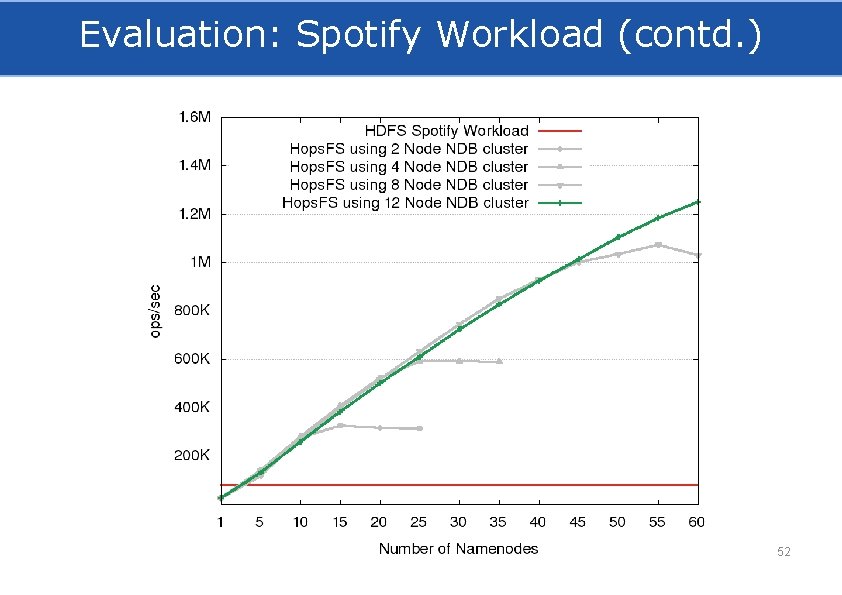

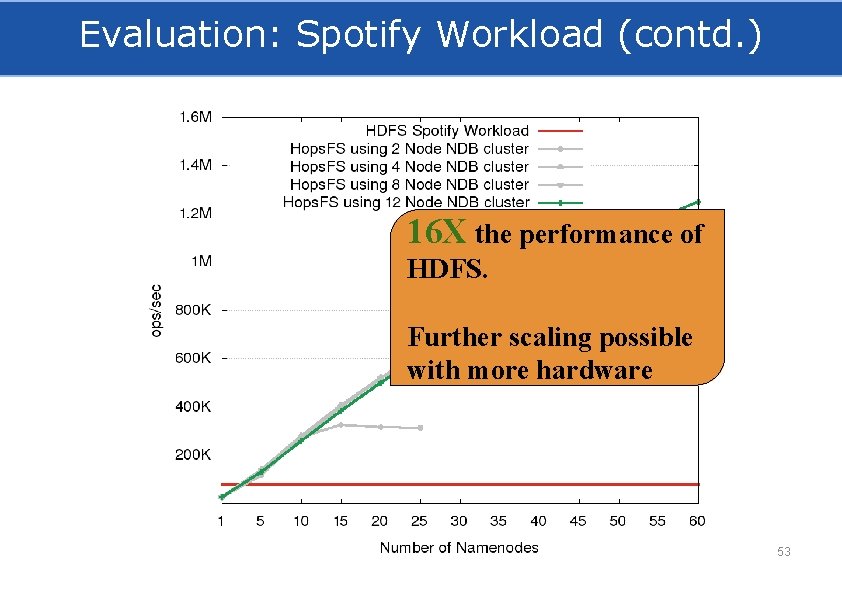

Evaluation: Spotify Workload (contd. ) 52

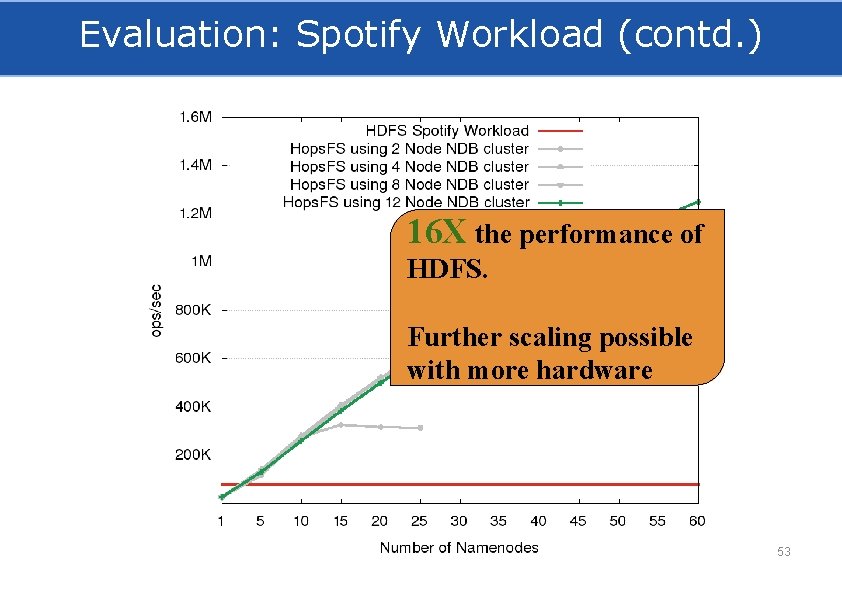

Evaluation: Spotify Workload (contd.): 16x the performance of HDFS. Further scaling is possible with more hardware.

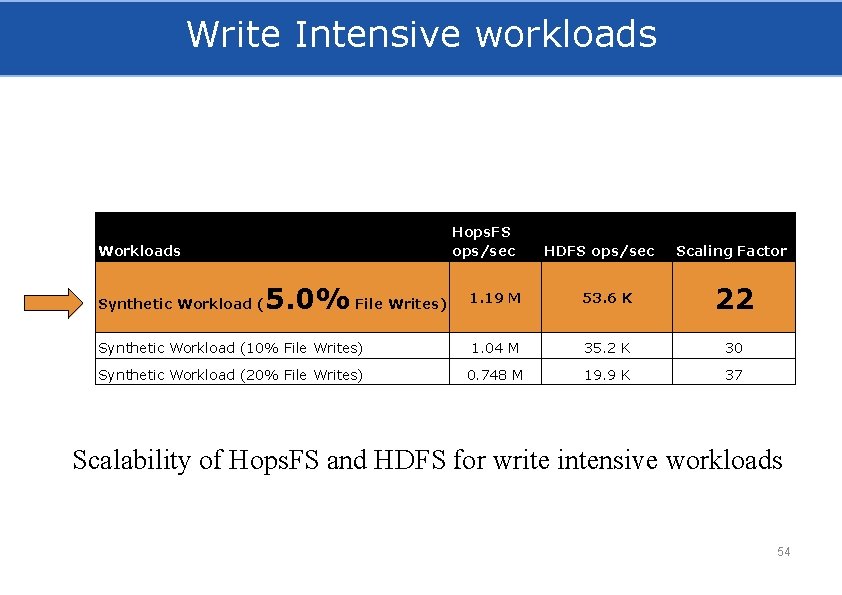

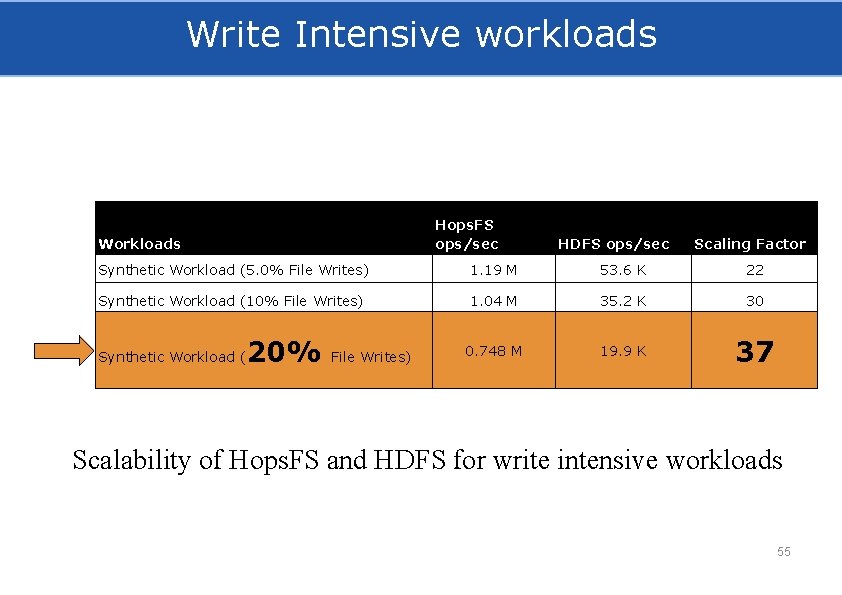

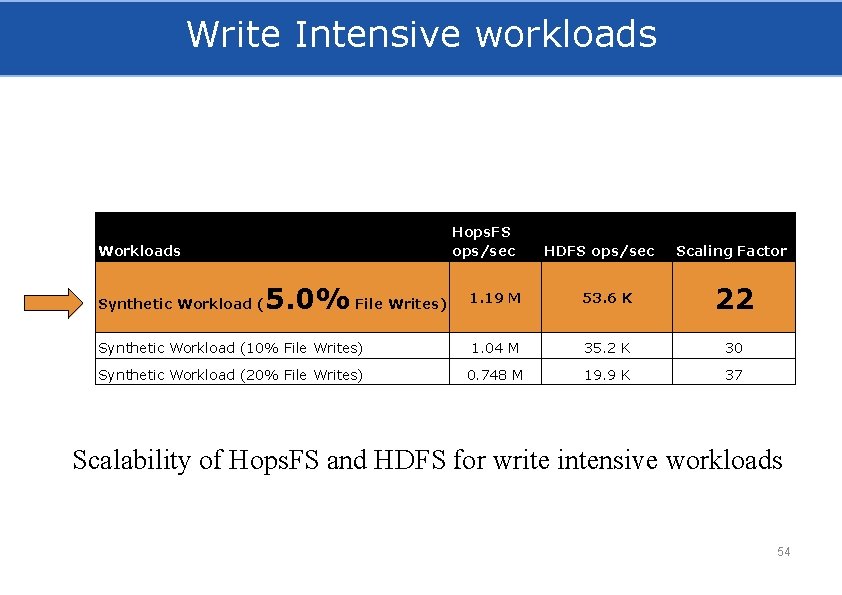

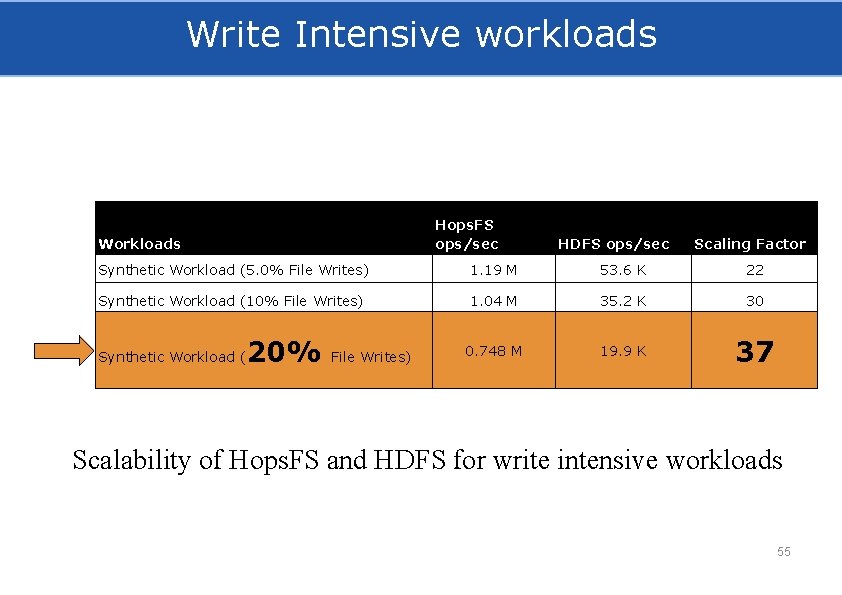

Write Intensive workloads Hops. FS ops/sec Workloads Scaling Factor 1. 19 M 53. 6 K 22 Synthetic Workload (10% File Writes) 1. 04 M 35. 2 K 30 Synthetic Workload (20% File Writes) 0. 748 M 19. 9 K 37 Synthetic Workload ( 5. 0% File Writes) HDFS ops/sec Scalability of Hops. FS and HDFS for write intensive workloads 54

Write Intensive Workloads

| Workload | HopsFS ops/sec | HDFS ops/sec | Scaling Factor |
|---|---|---|---|
| Synthetic Workload (5.0% File Writes) | 1.19 M | 53.6 K | 22 |
| Synthetic Workload (10% File Writes) | 1.04 M | 35.2 K | 30 |
| Synthetic Workload (20% File Writes) | 0.748 M | 19.9 K | 37 |

Scalability of HopsFS and HDFS for write intensive workloads.

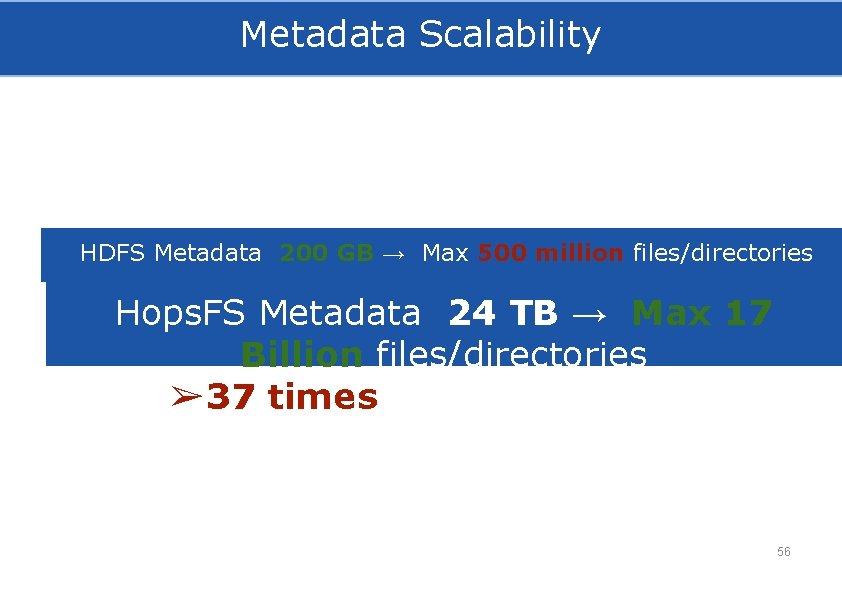

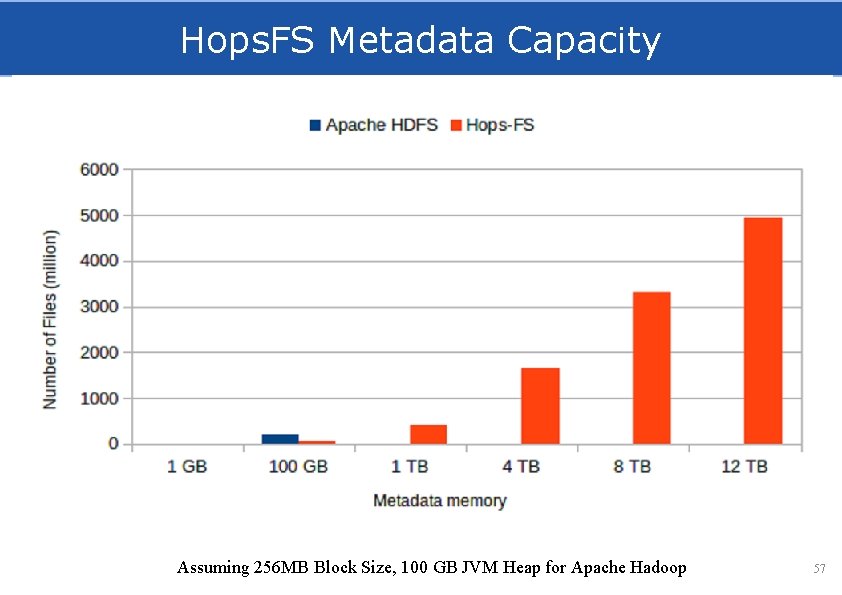

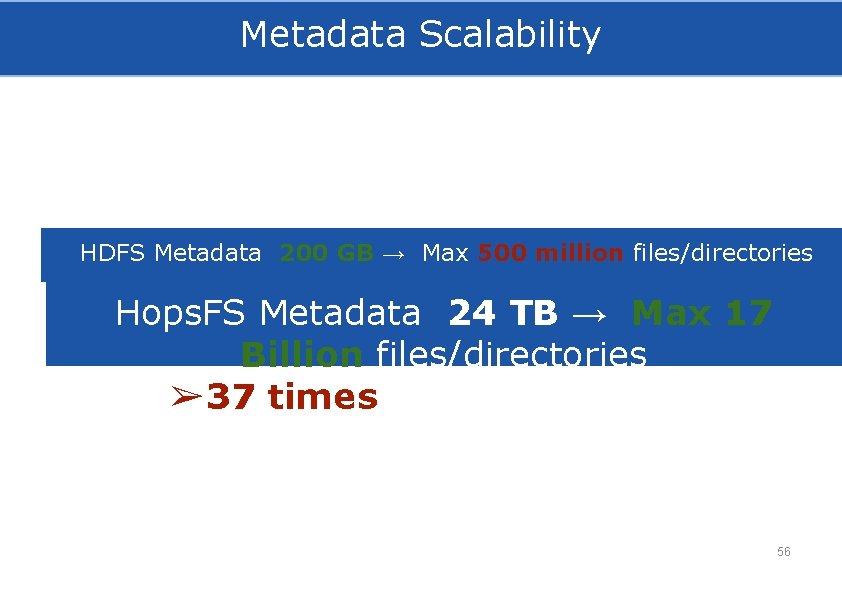

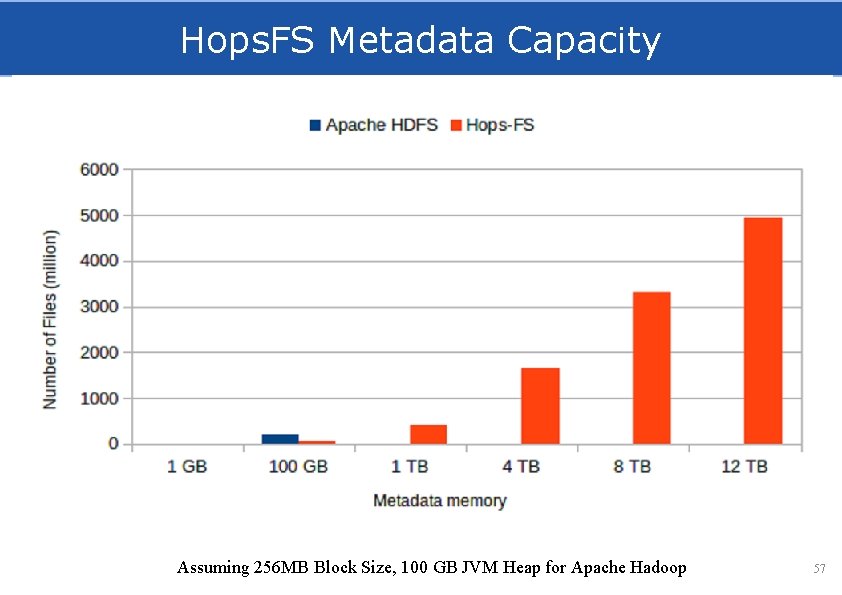

Metadata Scalability

- HDFS metadata: 200 GB → max 500 million files/directories
- HopsFS metadata: 24 TB → max 17 billion files/directories

➢ 37 times more files than HDFS

HopsFS Metadata Capacity (assuming a 256 MB block size and a 100 GB JVM heap for Apache Hadoop)

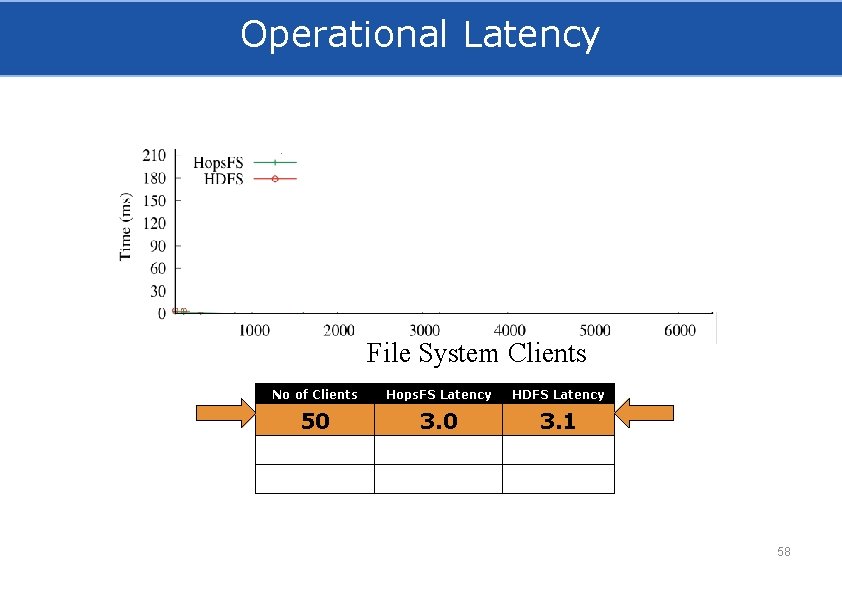

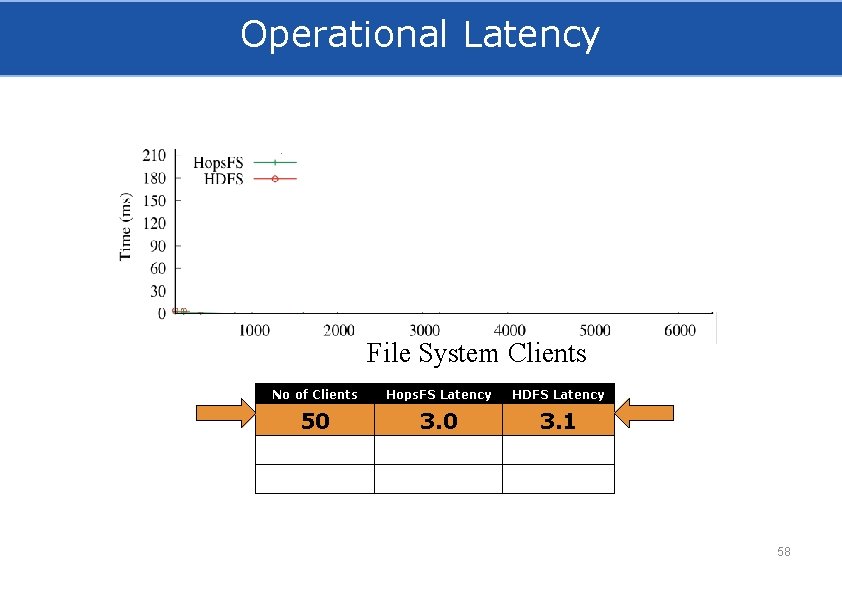

Operational Latency File System Clients No of Clients Hops. FS Latency HDFS Latency 50 3. 1 58

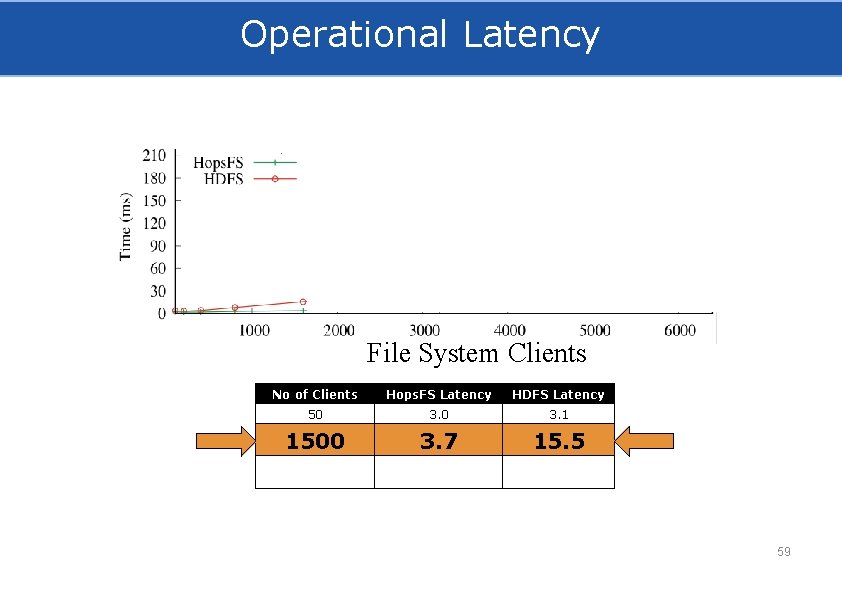

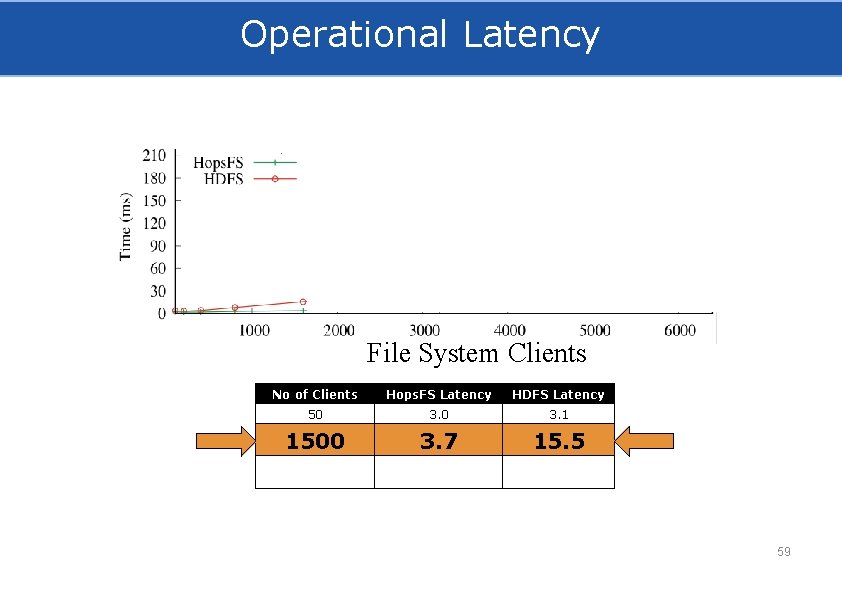

Operational Latency File System Clients No of Clients Hops. FS Latency HDFS Latency 50 3. 1 1500 3. 7 15. 5 59

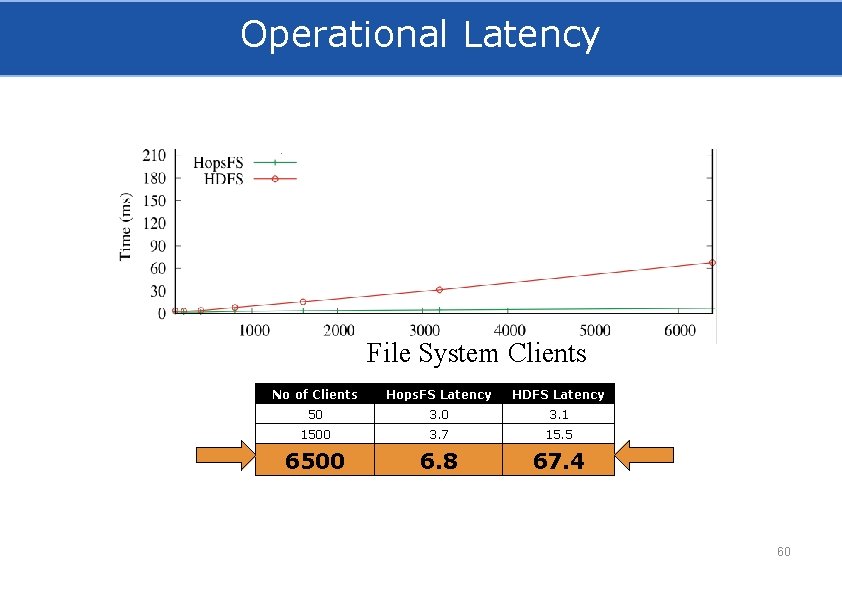

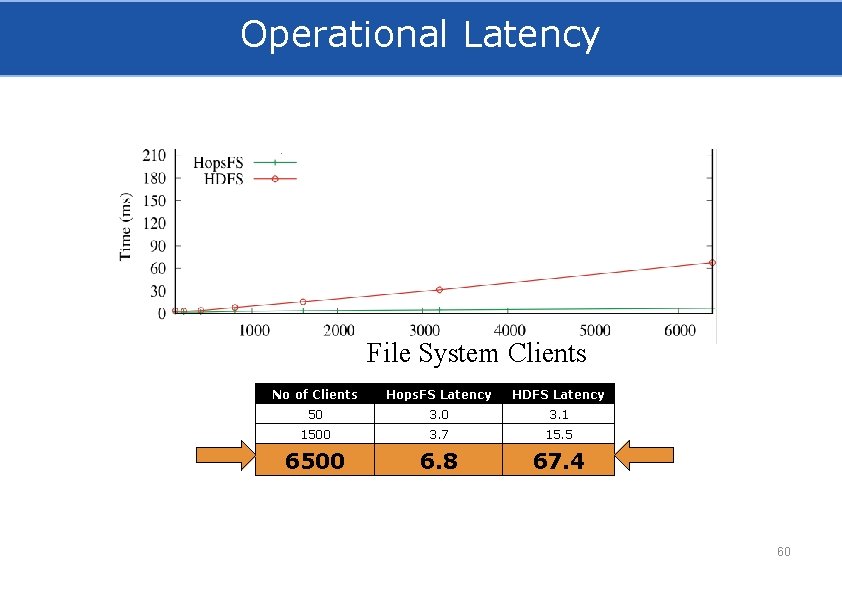

Operational Latency File System Clients No of Clients Hops. FS Latency HDFS Latency 50 3. 1 1500 3. 7 15. 5 6500 6. 8 67. 4 60

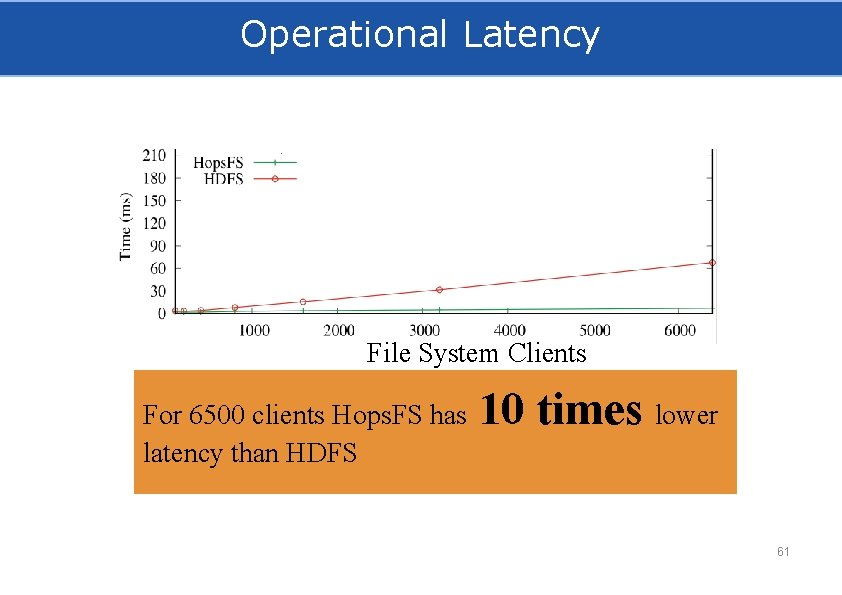

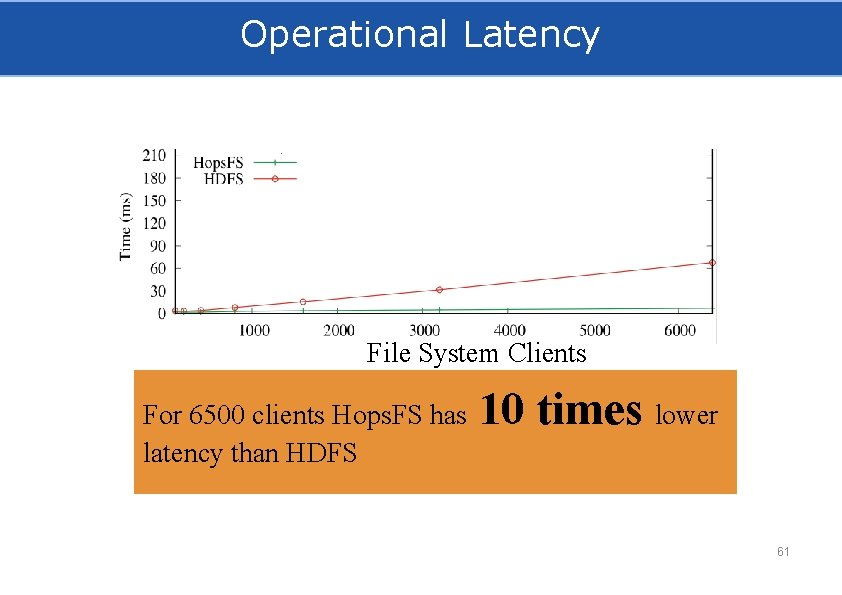

Operational Latency: File System Clients

| No. of Clients | HopsFS Latency | HDFS Latency |
|---|---|---|
| 50 | 3.0 | 3.1 |
| 1500 | 3.7 | 15.5 |
| 6500 | 6.8 | 67.4 |

For 6500 clients, HopsFS has 10 times lower latency than HDFS.

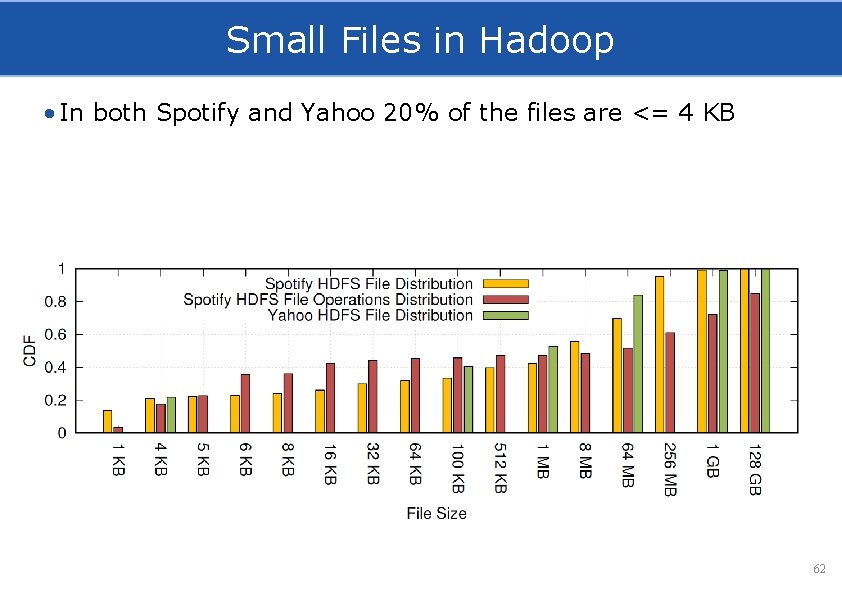

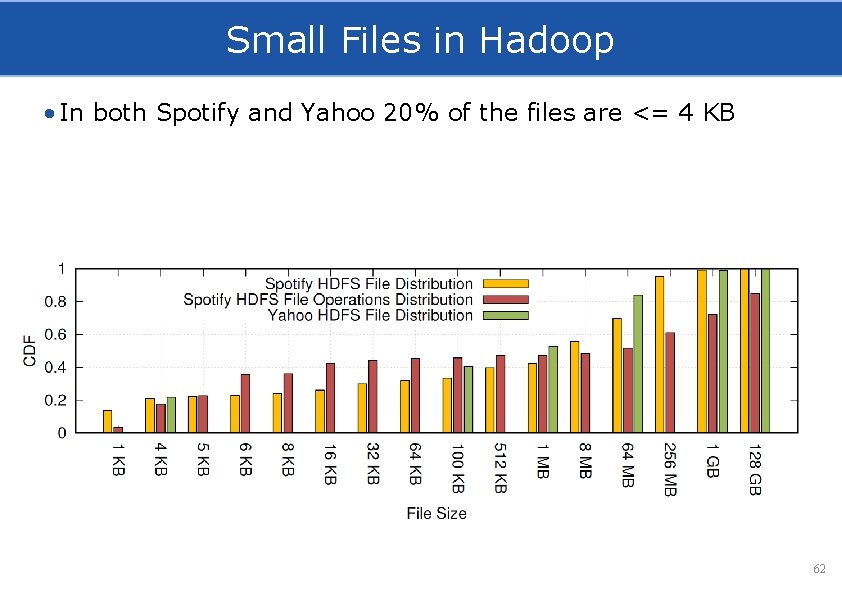

Small Files in Hadoop

- In both Spotify and Yahoo, 20% of the files are ≤ 4 KB.

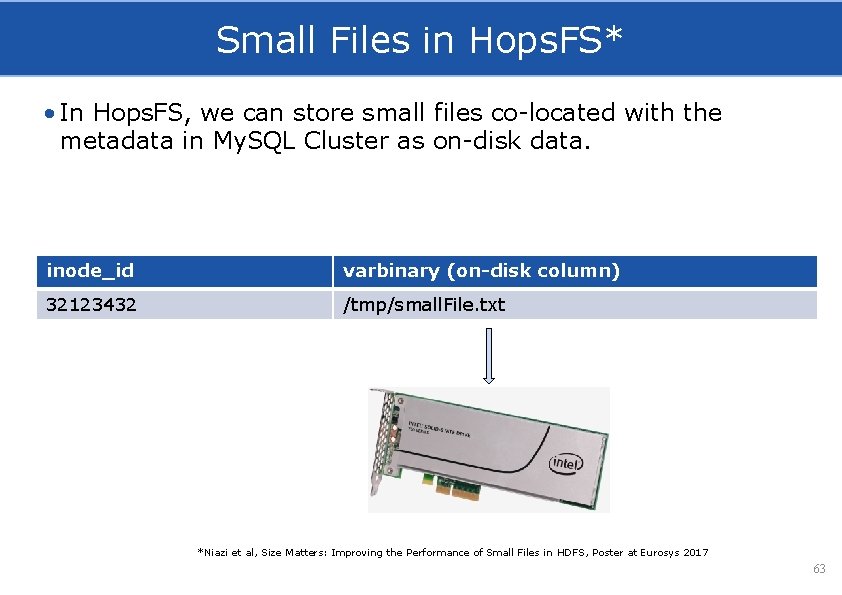

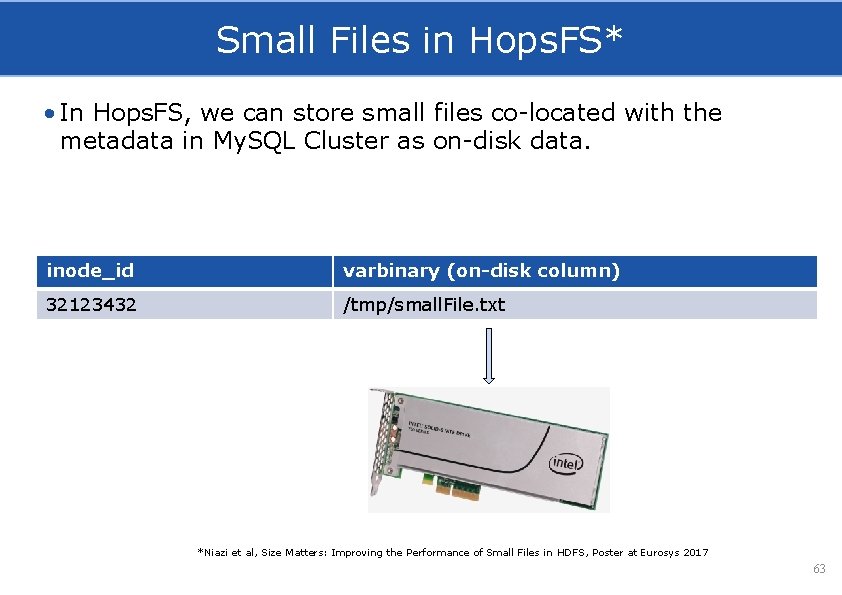

Small Files in HopsFS*

- In HopsFS, we can store small files co-located with the metadata in MySQL Cluster as on-disk data.

[Diagram: a table with columns `inode_id` and `varbinary (on-disk column)`; for example, inode 32123432 holds the contents of /tmp/smallFile.txt]

*Niazi et al., Size Matters: Improving the Performance of Small Files in HDFS, Poster at EuroSys 2017
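The placement decision for small files can be sketched as a size check at write time. Files at or below a threshold are stored next to the inode (the map stands in for the on-disk `varbinary` column), and larger files go to DataNode blocks. The threshold is a constructor parameter because the slides give no value, and `SmallFilePlacement` is a hypothetical name.

```java
import java.util.HashMap;
import java.util.Map;

// Sketch of small-file placement (hypothetical; the threshold is an assumed parameter).
public class SmallFilePlacement {
    public enum Location { DATABASE, DATANODES }

    private final int maxInlineBytes;
    private final Map<Long, byte[]> inodeData = new HashMap<>(); // stands in for the on-disk column

    public SmallFilePlacement(int maxInlineBytes) {
        this.maxInlineBytes = maxInlineBytes;
    }

    public Location write(long inodeId, byte[] contents) {
        if (contents.length <= maxInlineBytes) {
            inodeData.put(inodeId, contents.clone()); // stored with the inode's metadata
            return Location.DATABASE;
        }
        return Location.DATANODES; // large files still use DataNode blocks (not modeled here)
    }

    public byte[] readInline(long inodeId) {
        return inodeData.get(inodeId);
    }

    public static void main(String[] args) {
        SmallFilePlacement placement = new SmallFilePlacement(4096);
        System.out.println(placement.write(32123432L, new byte[4096])); // DATABASE
        System.out.println(placement.write(1L, new byte[100_000]));     // DATANODES
    }
}
```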

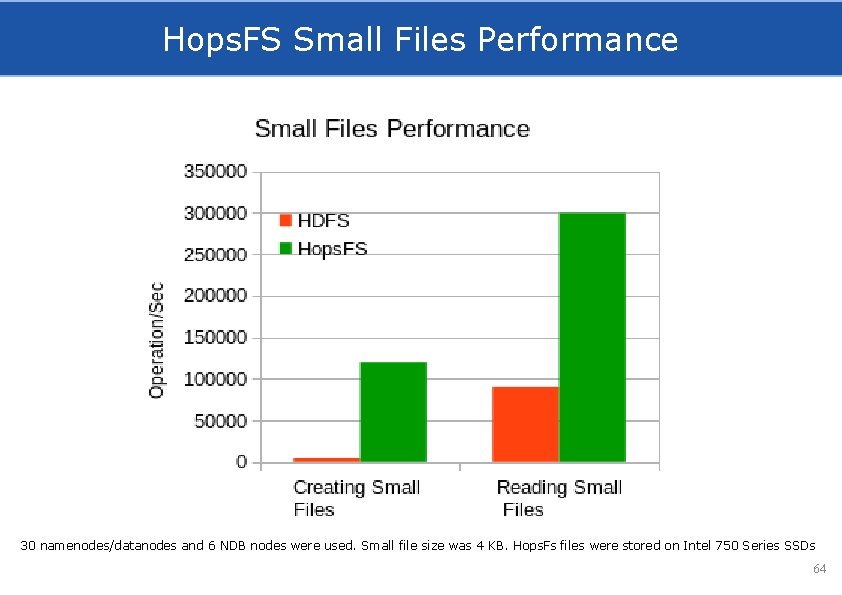

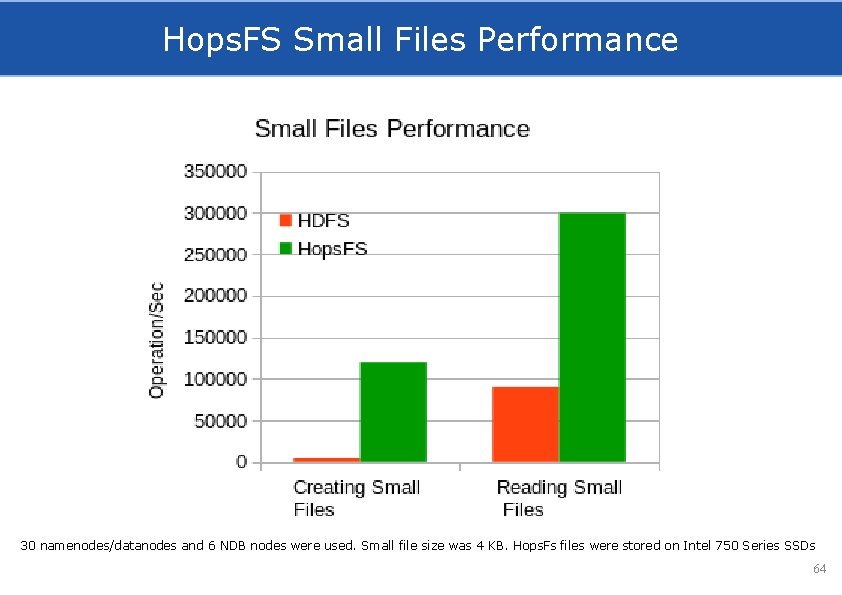

HopsFS Small Files Performance

30 NameNodes/DataNodes and 6 NDB nodes were used. The small file size was 4 KB. HopsFS files were stored on Intel 750 Series SSDs.

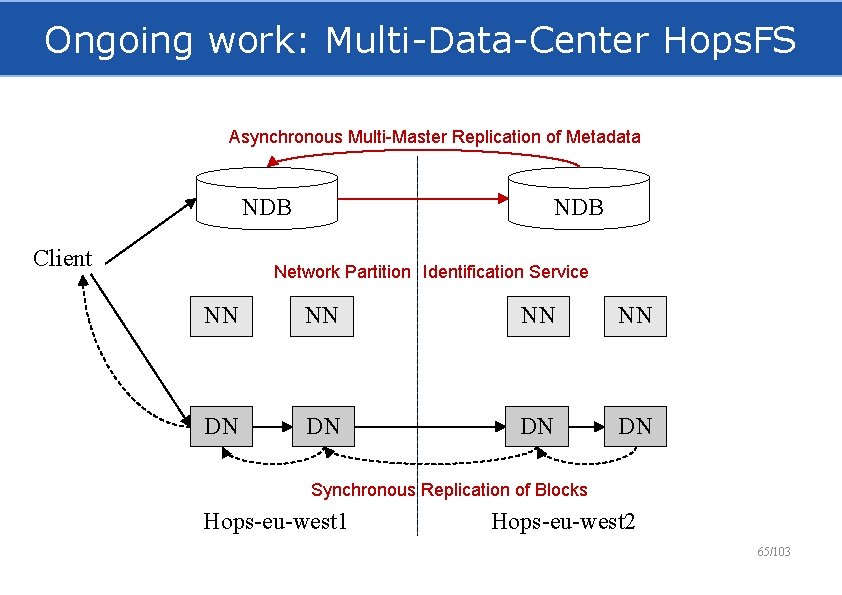

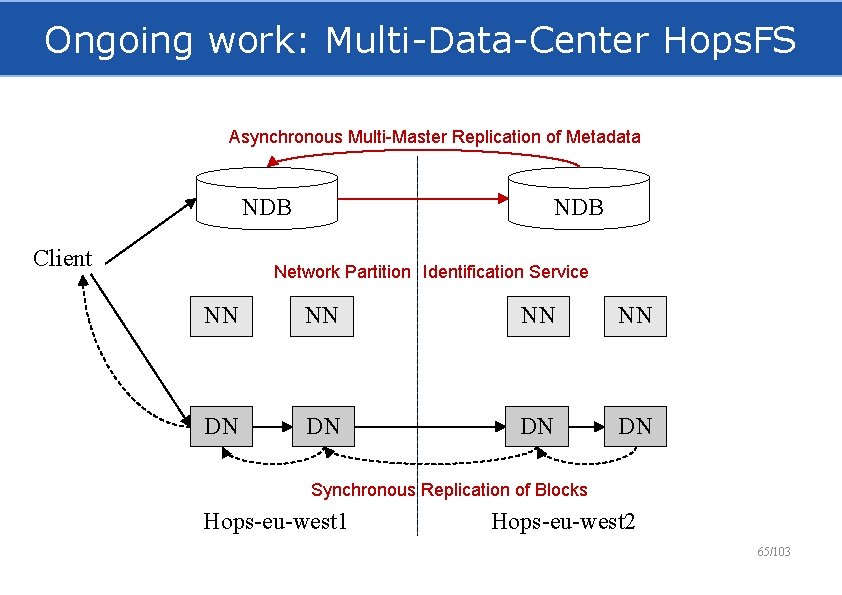

Ongoing work: Multi-Data-Center HopsFS [Diagram: two sites, Hops-eu-west1 and Hops-eu-west2, each with NDB, NameNodes (NN) and DataNodes (DN); asynchronous multi-master replication of metadata between the NDB clusters; synchronous replication of blocks; an NDB client and a network partition identification service]

What do I do with all this metadata?

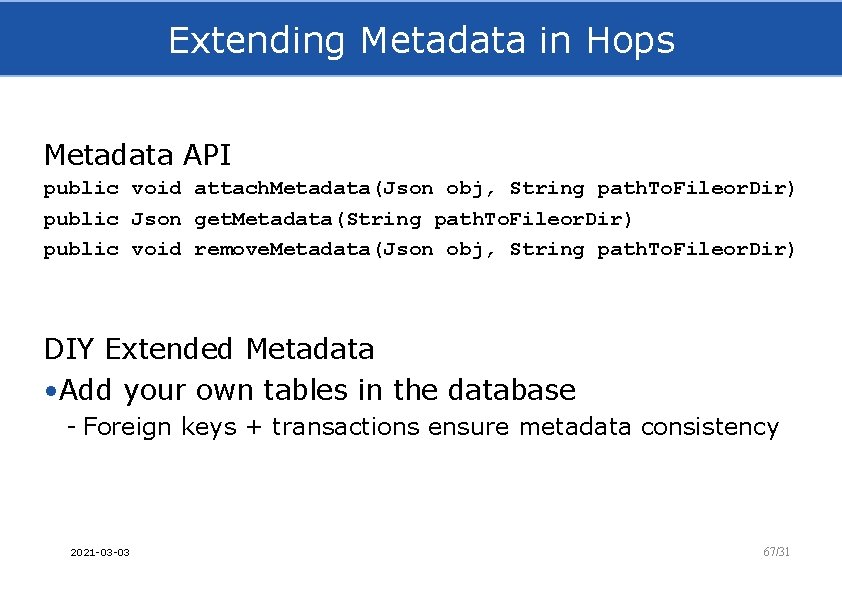

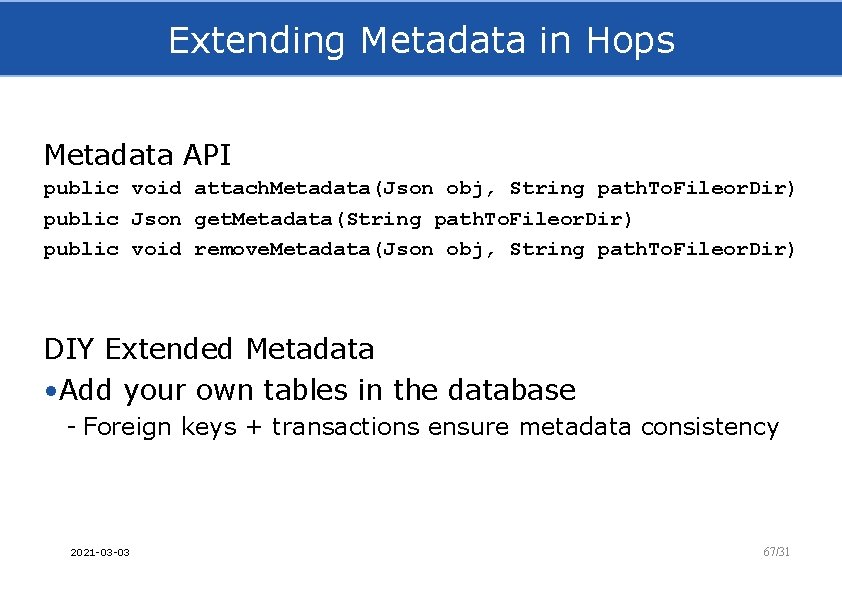

Extending Metadata in Hops

Metadata API:

- `public void attachMetadata(Json obj, String pathToFileorDir)`
- `public Json getMetadata(String pathToFileorDir)`
- `public void removeMetadata(Json obj, String pathToFileorDir)`

DIY extended metadata:

- Add your own tables in the database
  - Foreign keys + transactions ensure metadata consistency
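The consistency guarantee ("foreign keys + transactions") can be sketched in memory. Attaching metadata to a missing path fails, the way a foreign-key violation would, and deleting a file cascades to its metadata. This is a hypothetical illustration of the metadata API, not its implementation. JSON is passed as a plain `String` because the slide's `Json` type is not specified, and `getMetadata` returns every document attached to a path.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// In-memory sketch of extended metadata with foreign-key-like checks (hypothetical).
public class ExtendedMetadata {
    private final Set<String> inodes = new HashSet<>();
    private final Map<String, List<String>> metadata = new HashMap<>();

    public void createFile(String path) {
        inodes.add(path);
    }

    public void attachMetadata(String json, String pathToFileOrDir) {
        if (!inodes.contains(pathToFileOrDir)) { // like a foreign-key violation: no such inode
            throw new IllegalArgumentException("no such file or directory: " + pathToFileOrDir);
        }
        metadata.computeIfAbsent(pathToFileOrDir, p -> new ArrayList<>()).add(json);
    }

    public List<String> getMetadata(String pathToFileOrDir) {
        return metadata.getOrDefault(pathToFileOrDir, List.of());
    }

    public void removeMetadata(String json, String pathToFileOrDir) {
        List<String> docs = metadata.get(pathToFileOrDir);
        if (docs != null) {
            docs.remove(json);
        }
    }

    // Deleting the inode also deletes its metadata, like ON DELETE CASCADE.
    public void deleteFile(String path) {
        inodes.remove(path);
        metadata.remove(path);
    }

    public static void main(String[] args) {
        ExtendedMetadata fs = new ExtendedMetadata();
        fs.createFile("/Projects/demo/data.csv");
        fs.attachMetadata("{\"owner\": \"alice\"}", "/Projects/demo/data.csv");
        System.out.println(fs.getMetadata("/Projects/demo/data.csv").size()); // 1
        fs.deleteFile("/Projects/demo/data.csv");
        System.out.println(fs.getMetadata("/Projects/demo/data.csv").size()); // 0
    }
}
```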

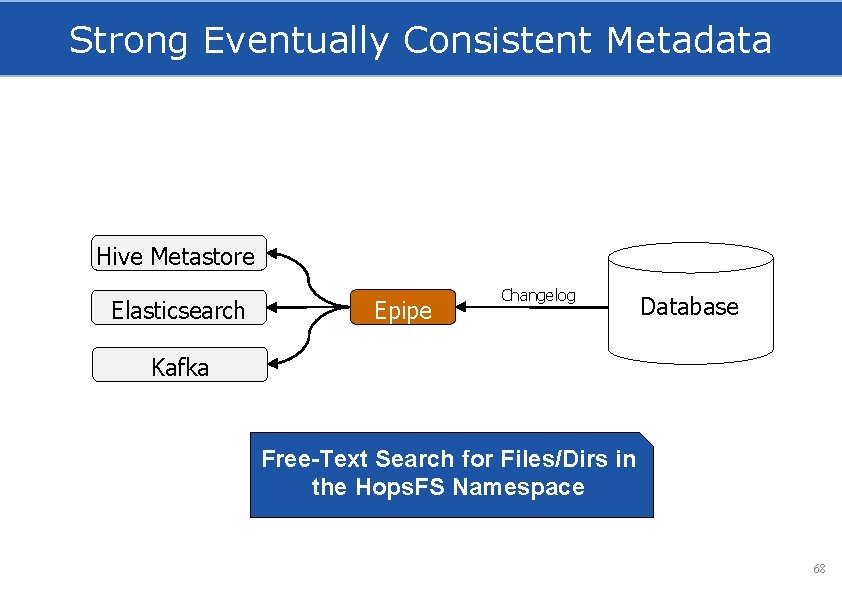

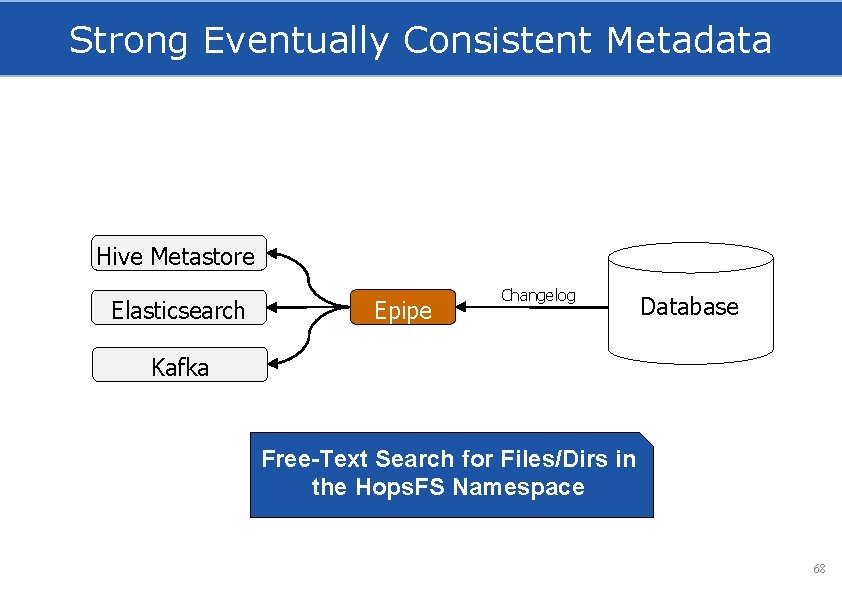

Strong Eventually Consistent Metadata [Diagram: Epipe reads the database changelog and propagates it to Elasticsearch, Kafka and the Hive Metastore]

Free-text search for files/dirs in the HopsFS namespace.

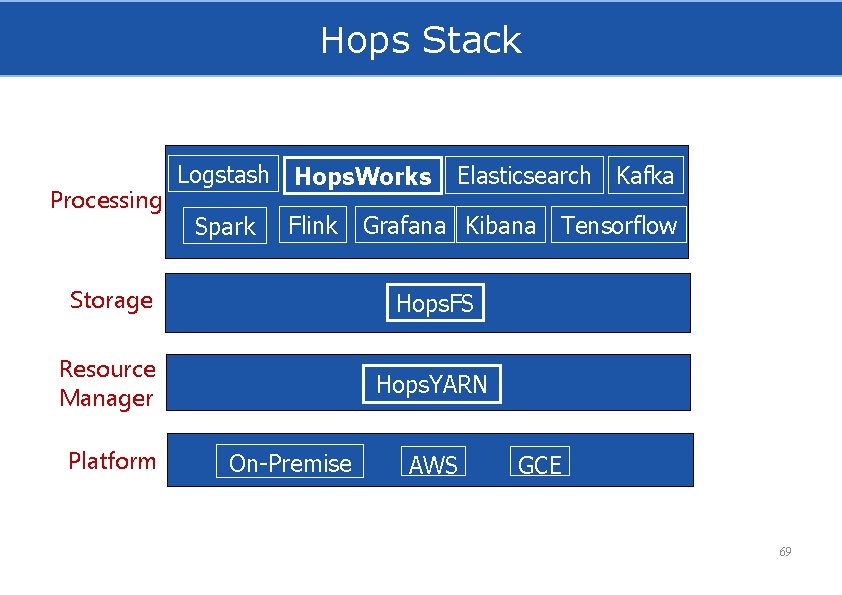

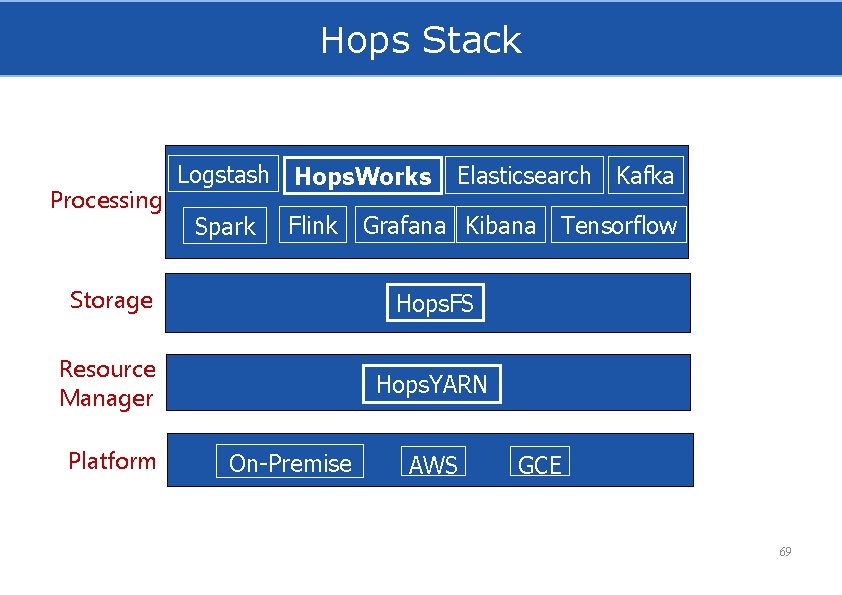

Hops Stack [Diagram: Processing (Spark, Flink, Tensorflow); Storage (HopsFS); Resource Manager (HopsYARN); Platform (On-Premise, AWS, GCE); alongside Hopsworks, Logstash, Elasticsearch, Kafka, Grafana and Kibana]

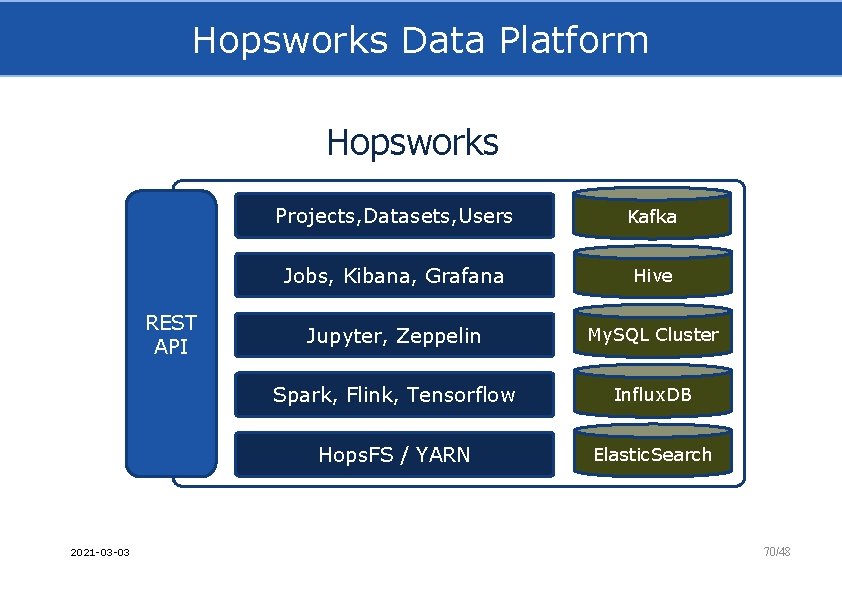

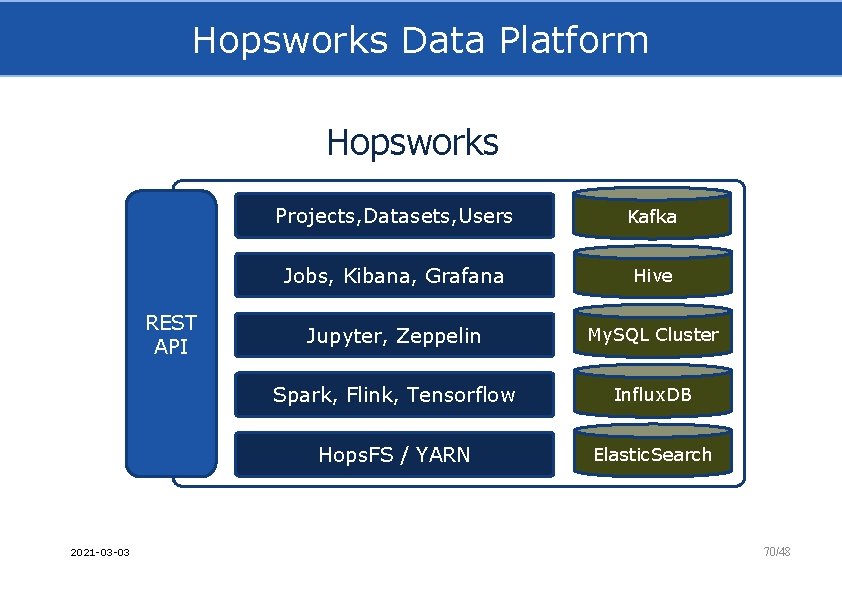

Hopsworks Data Platform [Diagram: the Hopsworks REST API exposes Projects, Datasets, Users; Kafka; Jobs, Kibana, Grafana; Hive; Jupyter, Zeppelin; Spark, Flink, Tensorflow; built on MySQL Cluster, InfluxDB, HopsFS / YARN and ElasticSearch]

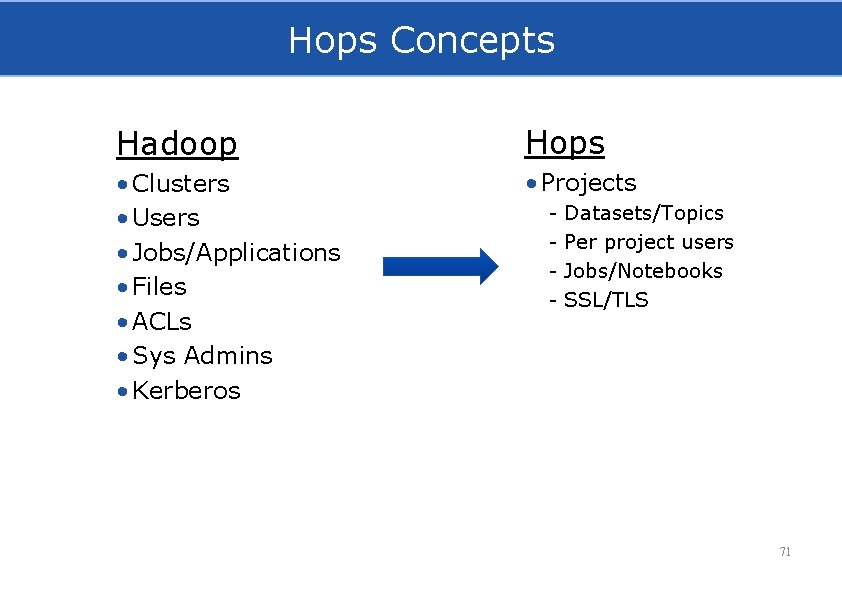

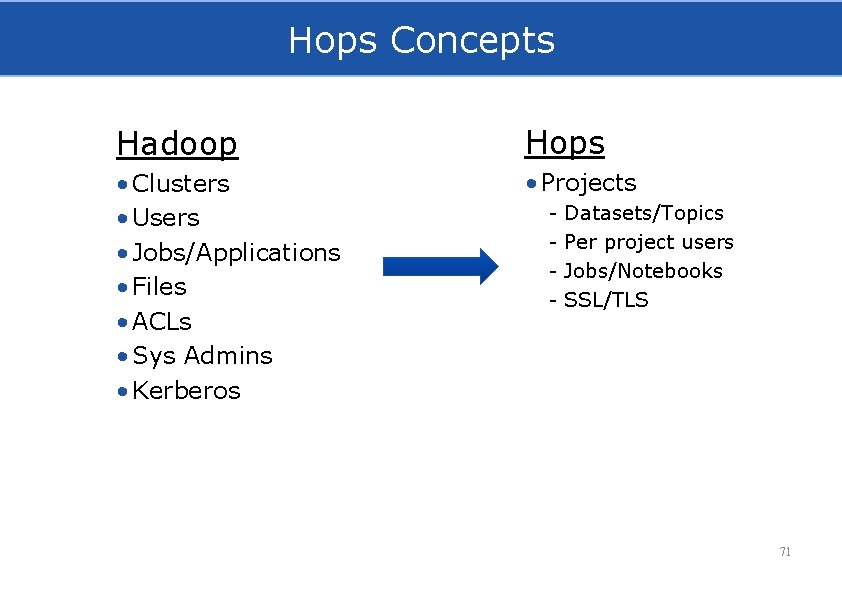

Hops Concepts

- Hadoop: Clusters, Users, Jobs/Applications, Files, ACLs, Sys Admins, Kerberos
- Hops: Projects, Datasets/Topics, Per-project users, Jobs/Notebooks, SSL/TLS

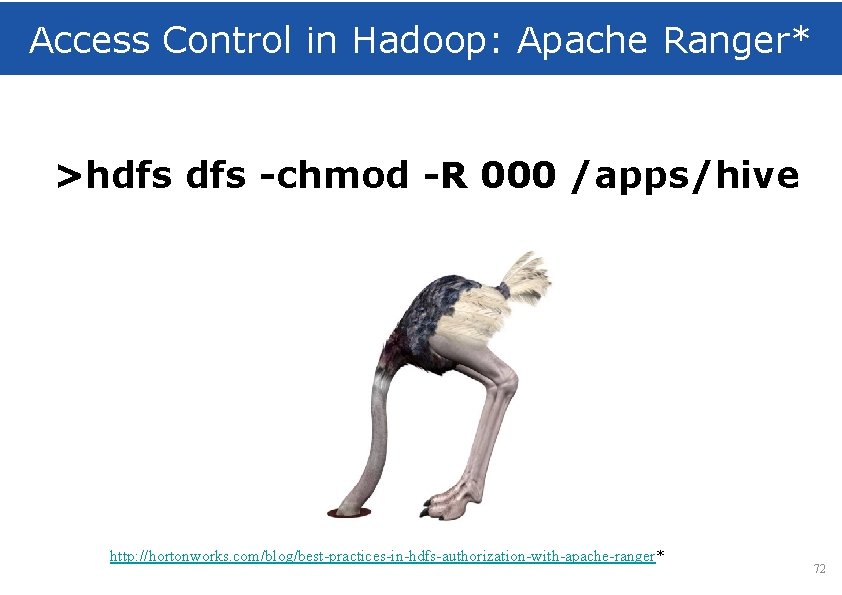

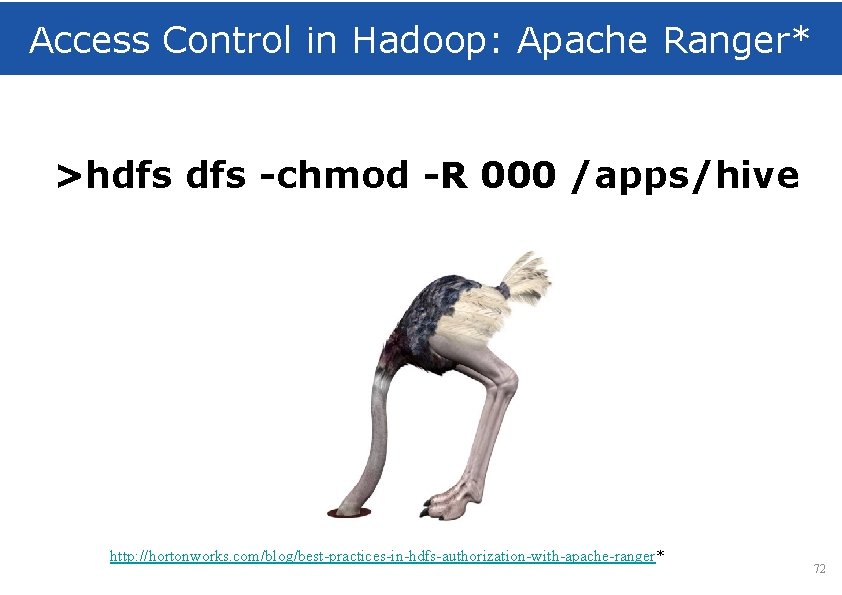

Access Control in Hadoop: Apache Ranger*

`> hdfs dfs -chmod -R 000 /apps/hive`

*http://hortonworks.com/blog/best-practices-in-hdfs-authorization-with-apache-ranger

![Access Control in Hadoop Apache Sentry Mujumdar 15 How do you ensure the consistency Access Control in Hadoop: Apache Sentry [Mujumdar’ 15] How do you ensure the consistency](https://slidetodoc.com/presentation_image_h/200c57b7ff7885382b56058c13fc4564/image-73.jpg)

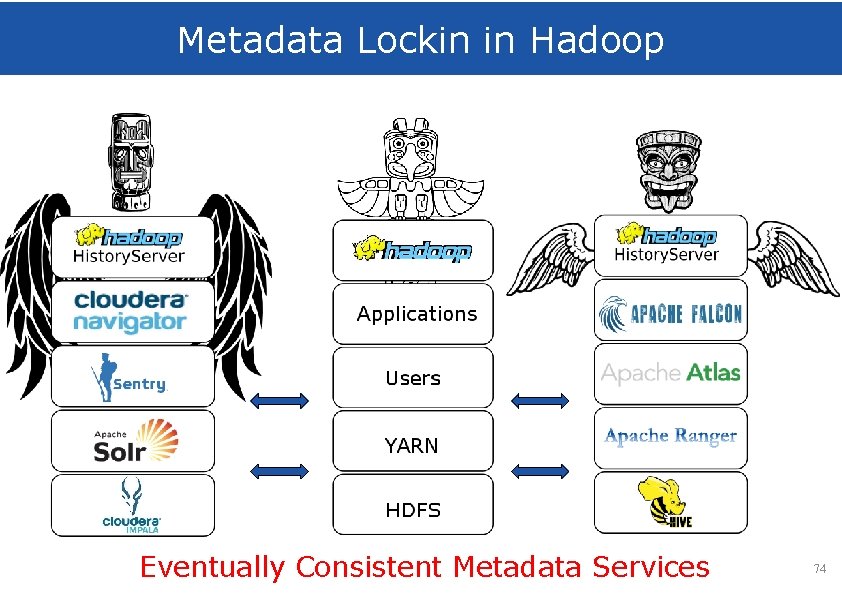

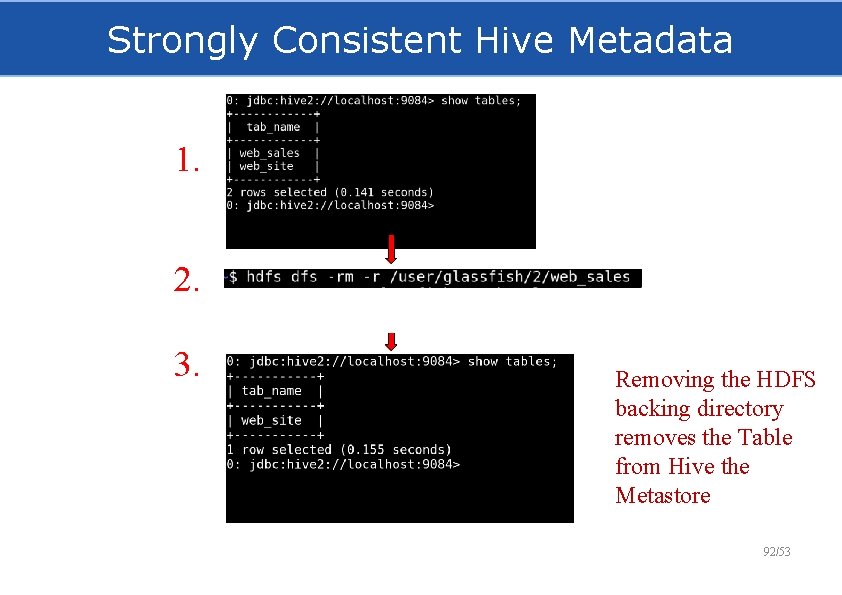

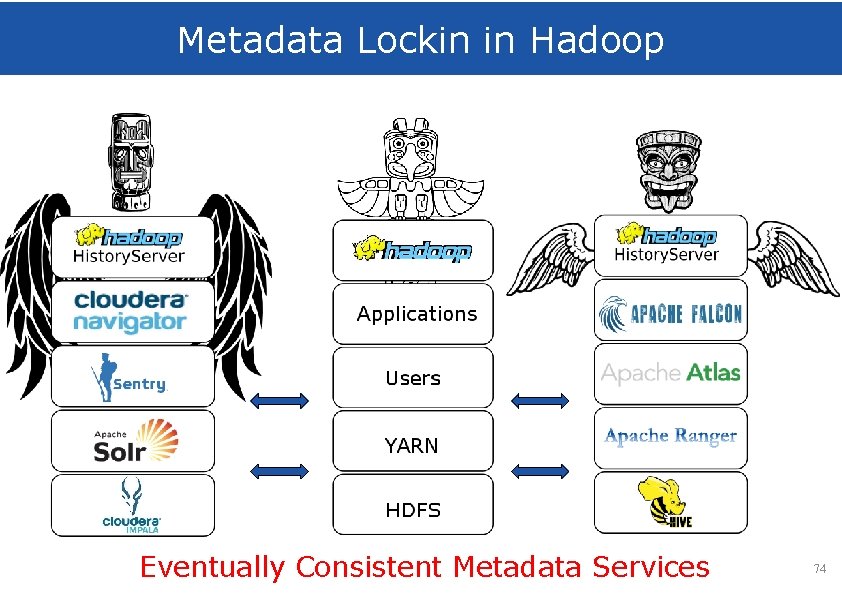

Access Control in Hadoop: Apache Sentry [Mujumdar'15]. How do you ensure the consistency of the policies and the data?

Metadata Lock-in in Hadoop: Eventually Consistent Metadata Services

SSL/TLS, not Kerberos

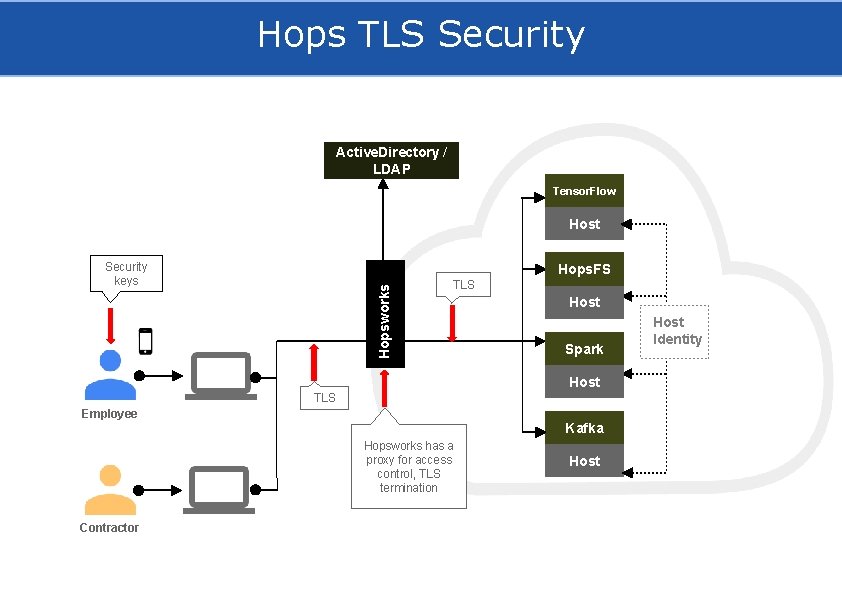

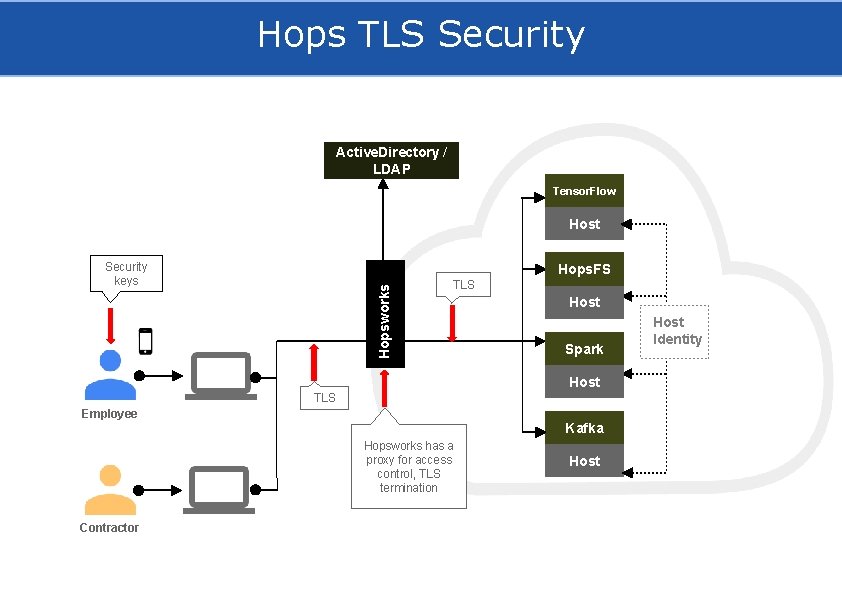

Hops TLS Security [Diagram: Employee and Contractor users; Active Directory / LDAP; Hopsworks with the security keys; TensorFlow, Spark, Kafka and HopsFS hosts, each with a host identity, connected over TLS]

Hopsworks has a proxy for access control and TLS termination.

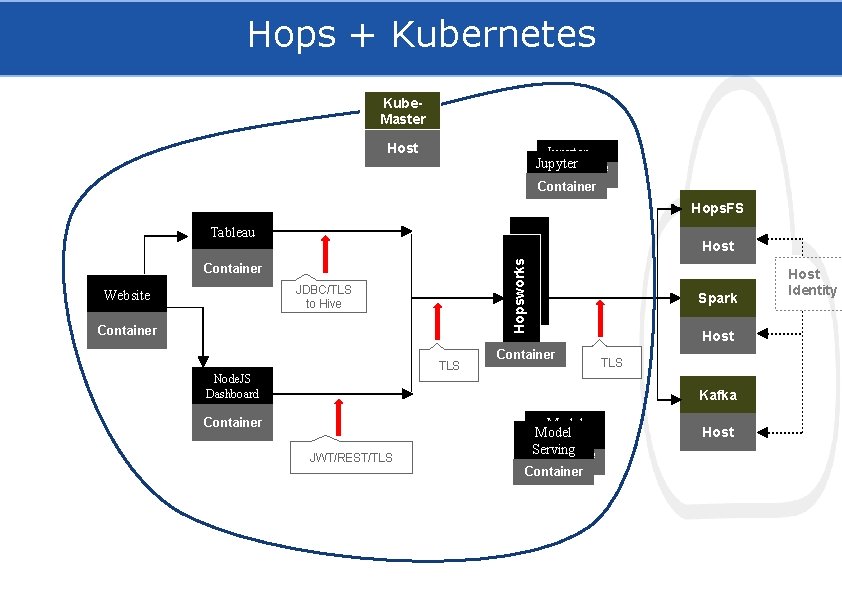

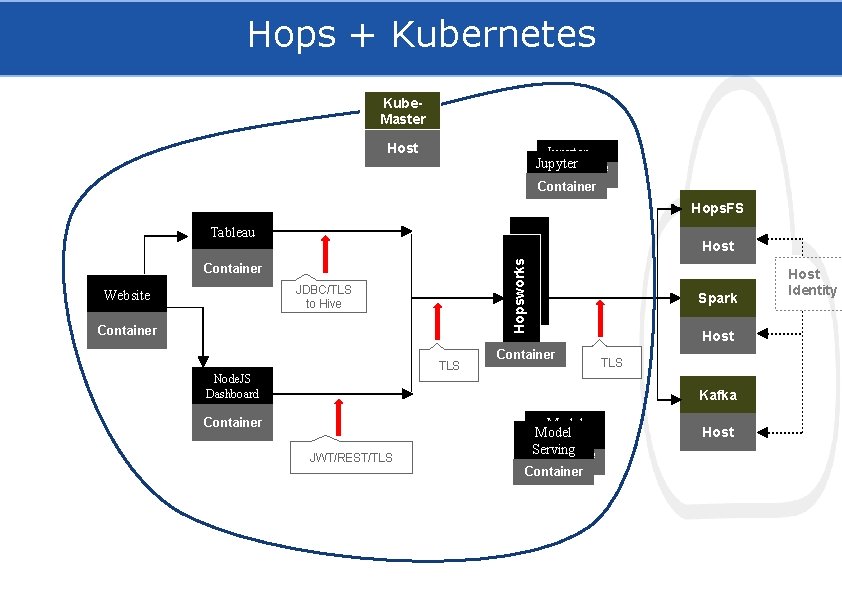

Hops + Kubernetes [Diagram: a KubeMaster managing Jupyter, Hopsworks, Model Serving and Node.js dashboard website containers; HopsFS, Spark and Kafka hosts reached over TLS; Tableau connecting via JDBC/TLS to Hive; JWT/REST/TLS between services; a host identity per host]

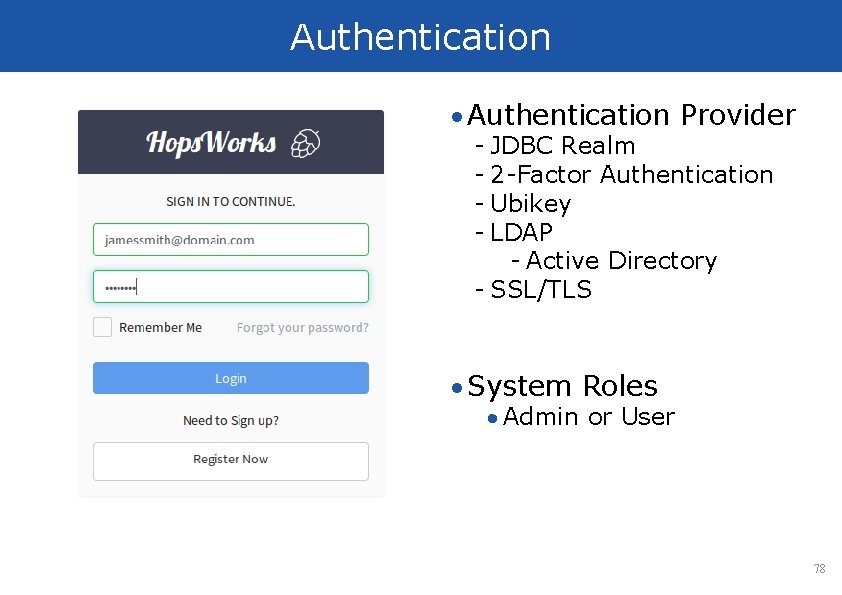

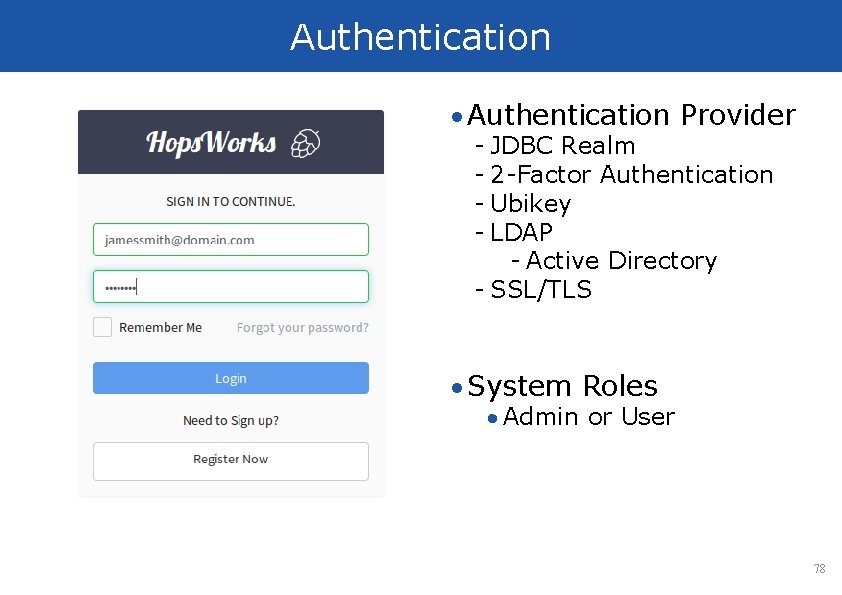

Authentication Provider

- JDBC Realm
  - 2-Factor Authentication
  - YubiKey
- LDAP
- Active Directory
- SSL/TLS

System Roles: Admin or User

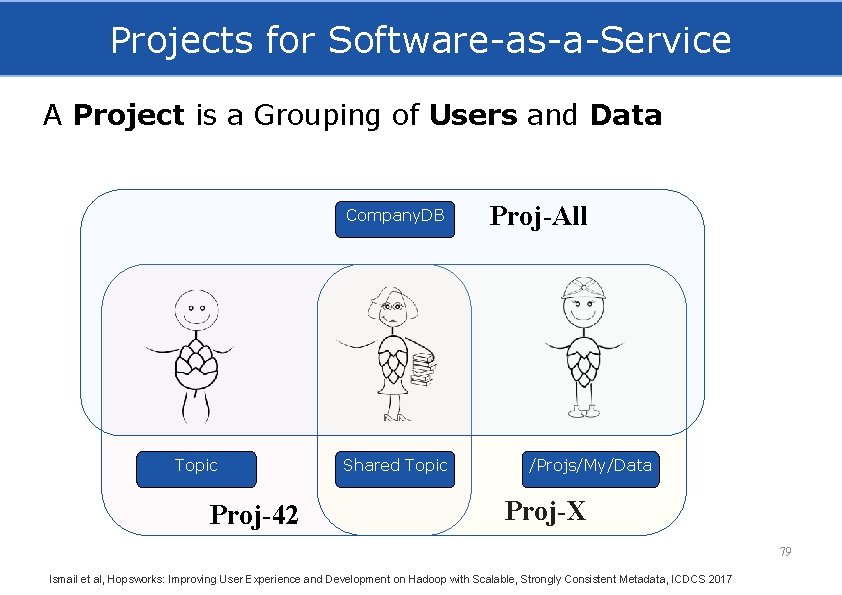

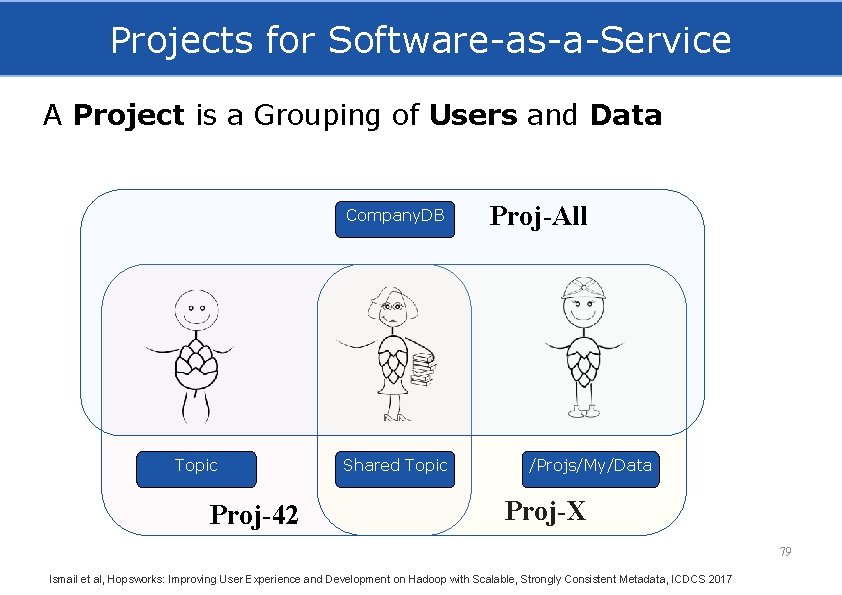

Projects for Software-as-a-Service

A project is a grouping of users and data. [Diagram: projects Proj-42, Proj-X and Proj-All; a CompanyDB topic, a shared topic and the dataset /Projs/My/Data]

Ismail et al., Hopsworks: Improving User Experience and Development on Hadoop with Scalable, Strongly Consistent Metadata, ICDCS 2017

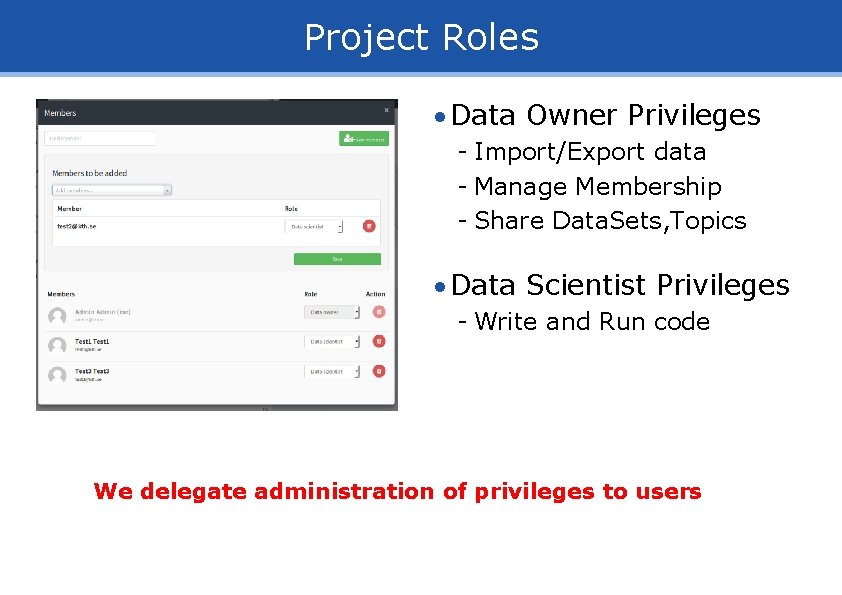

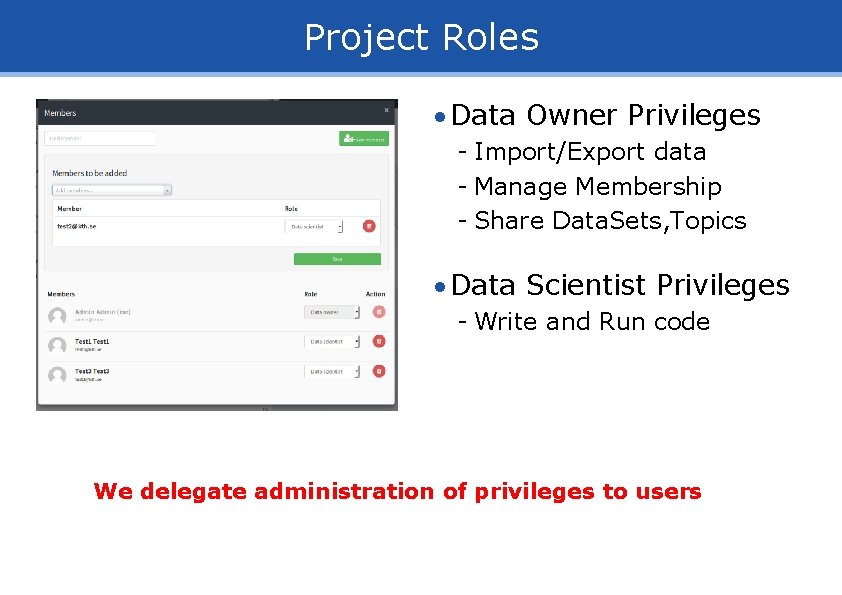

Project Roles

- Data Owner privileges
  - Import/export data
  - Manage membership
  - Share DataSets, Topics
- Data Scientist privileges
  - Write and run code

We delegate administration of privileges to users.

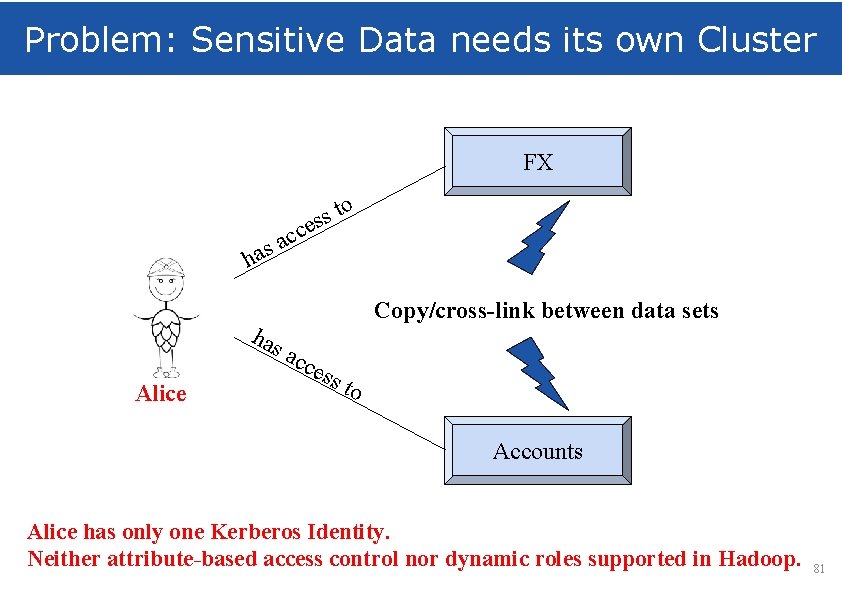

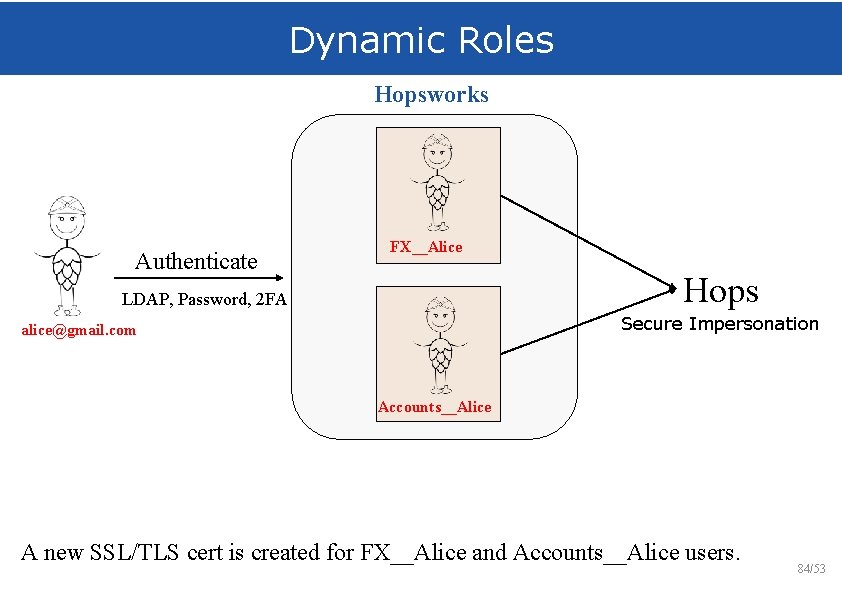

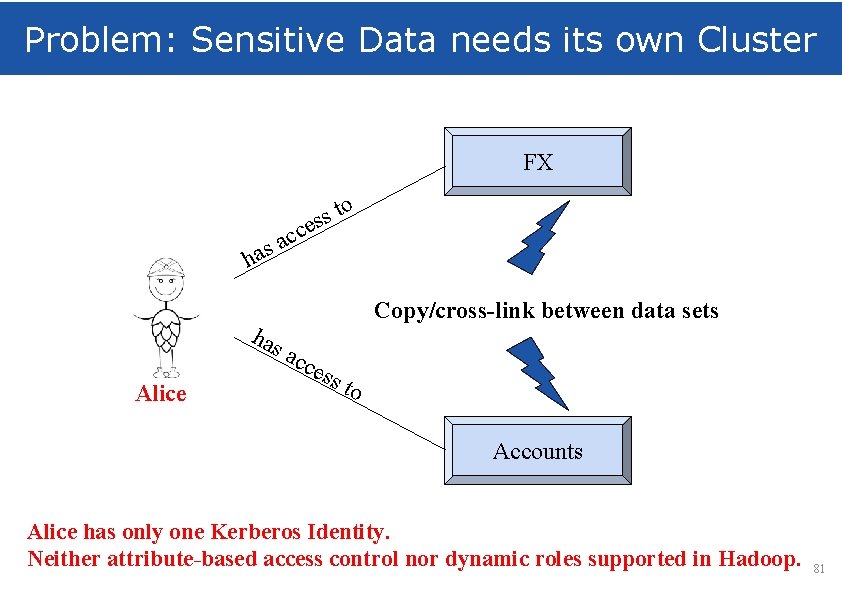

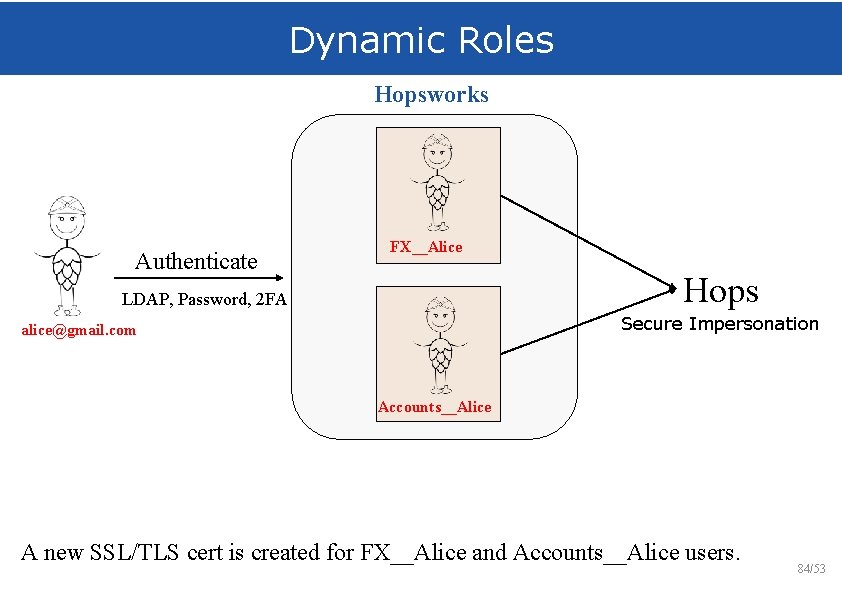

Problem: Sensitive Data Needs Its Own Cluster [Diagram: Alice has access to both the FX and the Accounts data sets, so she can copy/cross-link between them]

Alice has only one Kerberos identity. Neither attribute-based access control nor dynamic roles are supported in Hadoop.

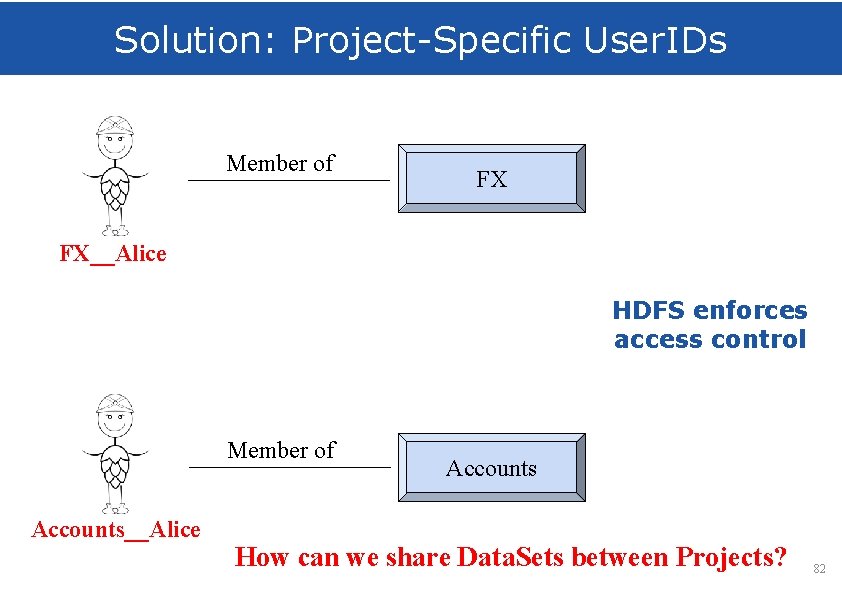

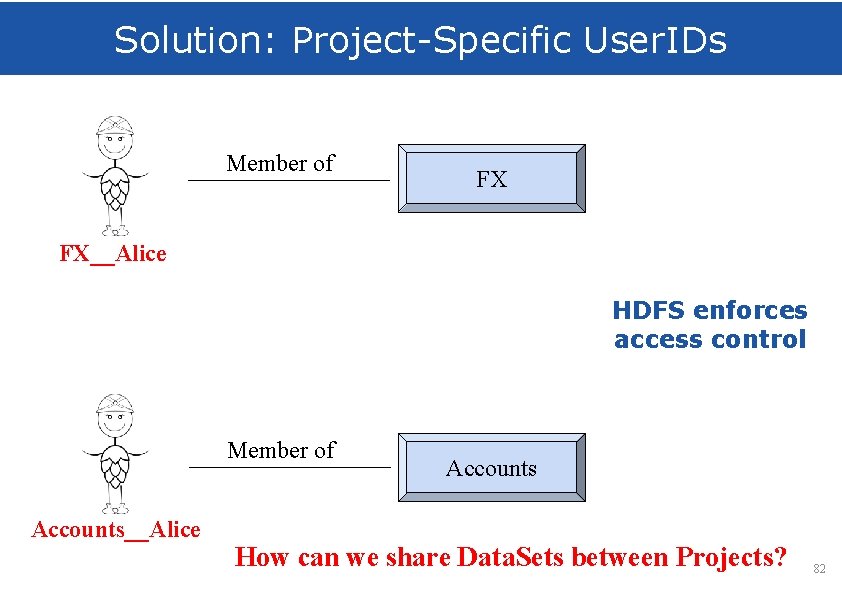

Solution: Project-Specific UserIDs [Diagram: Alice is a member of FX as FX__Alice and a member of Accounts as Accounts__Alice; HDFS enforces access control]

How can we share DataSets between projects?

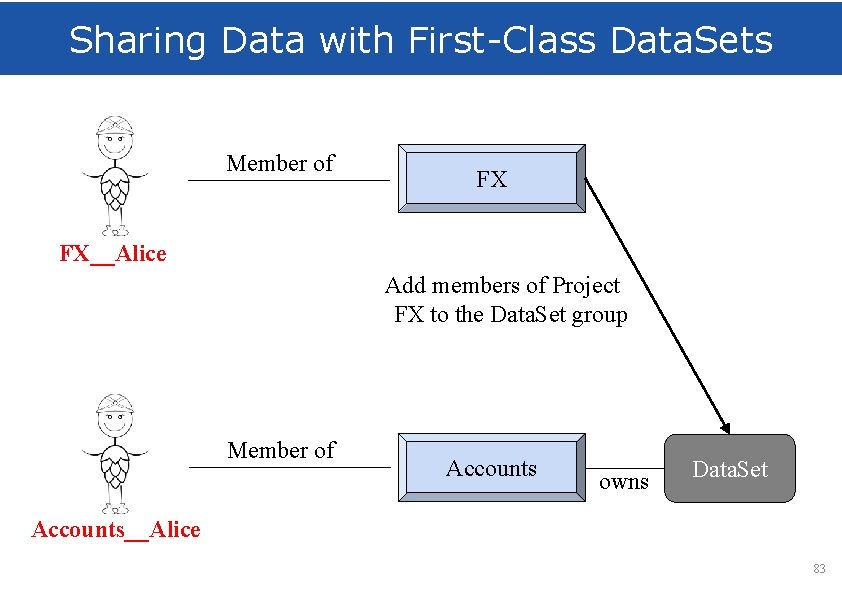

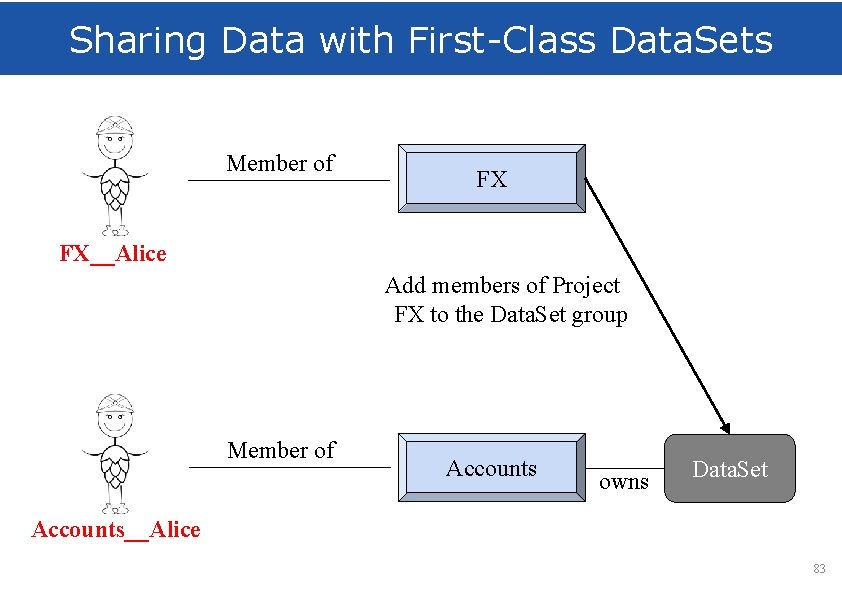

Sharing Data with First-Class DataSets: add the members of project FX to the DataSet's group. [Diagram: FX__Alice is a member of FX; Accounts__Alice is a member of Accounts; Accounts owns the DataSet]
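Project-specific identities and DataSet sharing can be sketched with group membership. A platform user gets one HDFS identity per project (for example `FX__Alice`), and a DataSet is readable by the HDFS users in its group. Sharing adds the project-specific identities of another project's members to that group. All names are hypothetical. As a simplification, people who join a project after a share are not added.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Sketch of project-specific users and DataSet sharing (hypothetical names).
public class ProjectIdentities {
    private final Map<String, Set<String>> projectMembers = new HashMap<>(); // project -> platform users
    private final Map<String, Set<String>> datasetGroup = new HashMap<>();   // dataset -> HDFS users

    // HDFS-level user name for a platform user inside a project.
    public static String projectUser(String project, String user) {
        return project + "__" + user;
    }

    public void addMember(String project, String user) {
        projectMembers.computeIfAbsent(project, p -> new HashSet<>()).add(user);
    }

    // The owning project's members get access through their project-specific identities.
    public void createDataset(String dataset, String ownerProject) {
        datasetGroup.put(dataset, new HashSet<>());
        share(dataset, ownerProject);
    }

    // Adds the current members of another project, as that project's identities, to the group.
    public void share(String dataset, String project) {
        for (String user : projectMembers.getOrDefault(project, Set.of())) {
            datasetGroup.get(dataset).add(projectUser(project, user));
        }
    }

    public boolean canAccess(String hdfsUser, String dataset) {
        return datasetGroup.getOrDefault(dataset, Set.of()).contains(hdfsUser);
    }

    public static void main(String[] args) {
        ProjectIdentities ids = new ProjectIdentities();
        ids.addMember("Accounts", "Alice");
        ids.addMember("FX", "Alice");
        ids.createDataset("Ledger", "Accounts");
        System.out.println(ids.canAccess("FX__Alice", "Ledger")); // false
        ids.share("Ledger", "FX");
        System.out.println(ids.canAccess("FX__Alice", "Ledger")); // true
    }
}
```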

Dynamic Roles [Diagram: alice@gmail.com authenticates to Hopsworks (LDAP, password, 2FA), which uses secure impersonation to act in Hops as FX__Alice or Accounts__Alice]

A new SSL/TLS certificate is created for the FX__Alice and Accounts__Alice users.

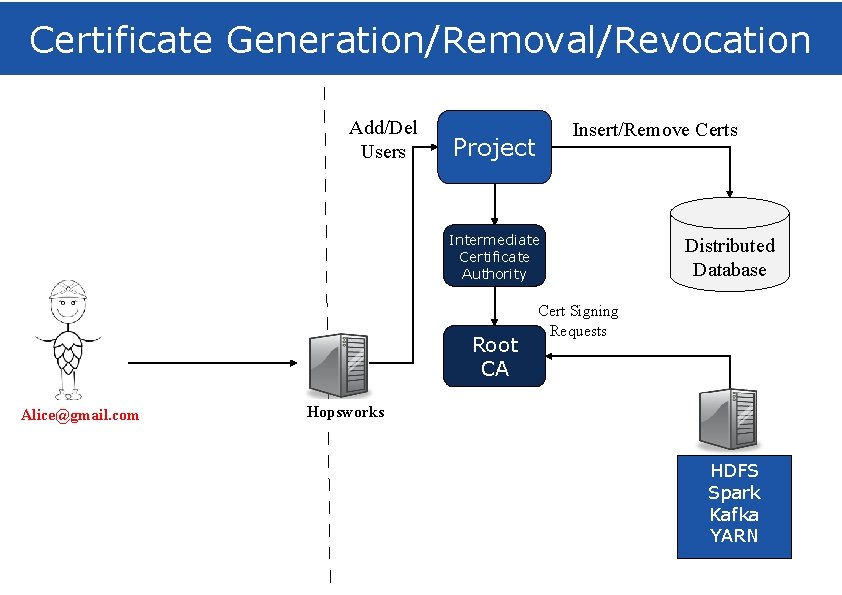

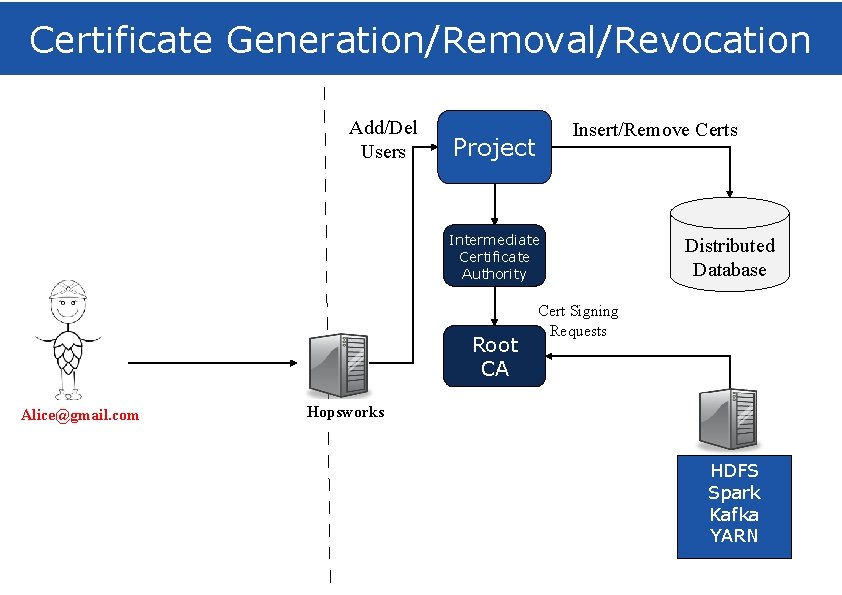

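Certificate Generation/Removal/Revocation [Diagram: when users are added to or deleted from a project, Hopsworks inserts/removes their certificates in the distributed database; certificate signing requests go to a project intermediate certificate authority under the root CA; HDFS, Spark, Kafka and YARN use the certificates; user Alice@gmail.com]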

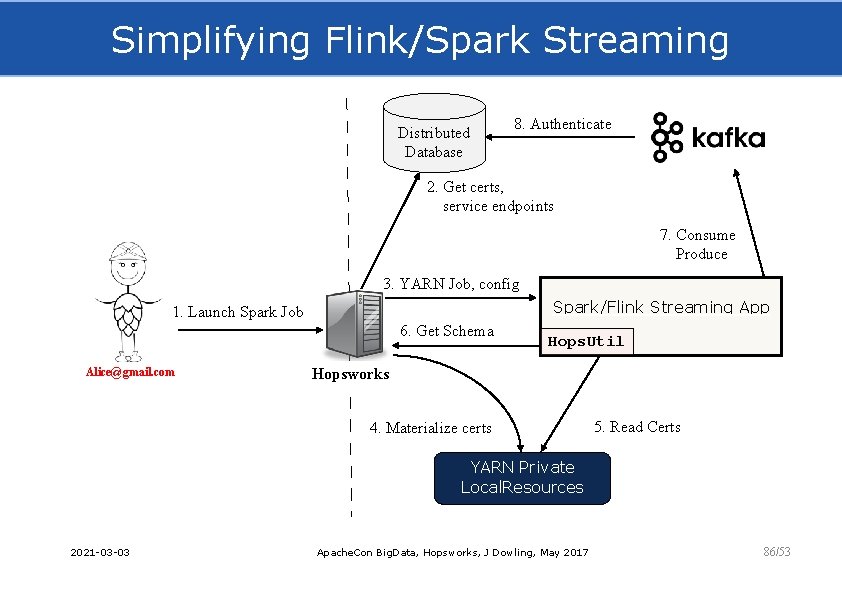

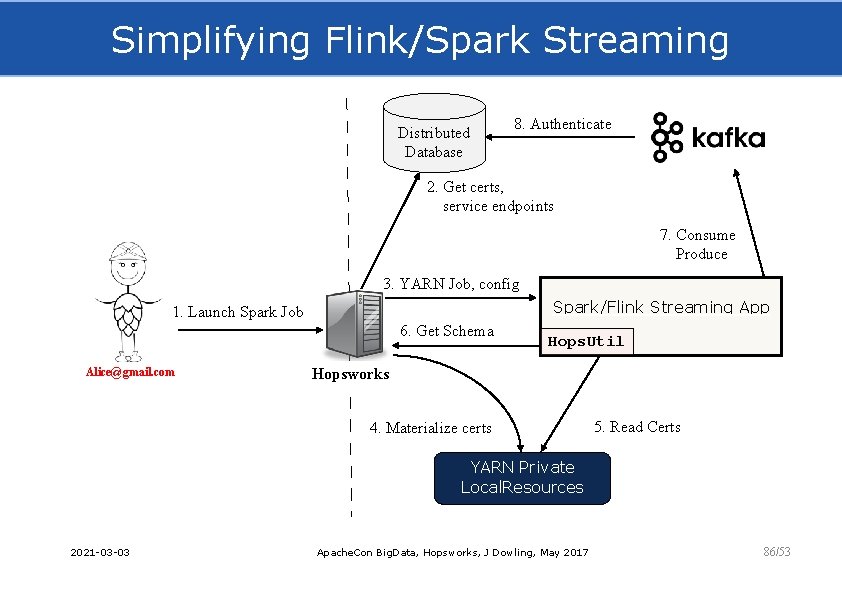

Simplifying Flink/Spark Streaming [Diagram: Alice@gmail.com, Hopsworks, the distributed database, YARN, and a Spark/Flink streaming app using HopsUtil]

1. Launch Spark job
2. Get certs, service endpoints
3. YARN job, config
4. Materialize certs
5. Read certs (YARN private LocalResources)
6. Get schema
7. Consume/Produce
8. Authenticate

ApacheCon BigData, Hopsworks, J Dowling, May 2017

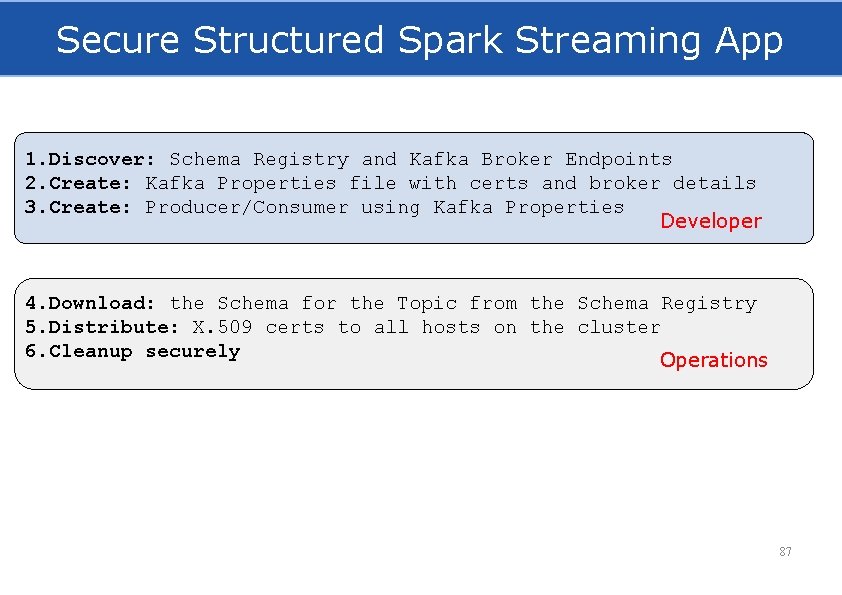

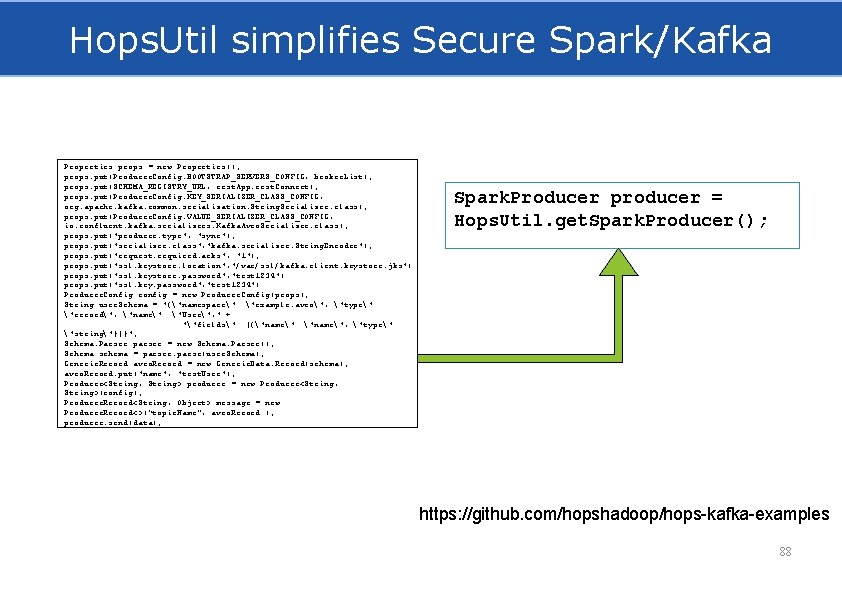

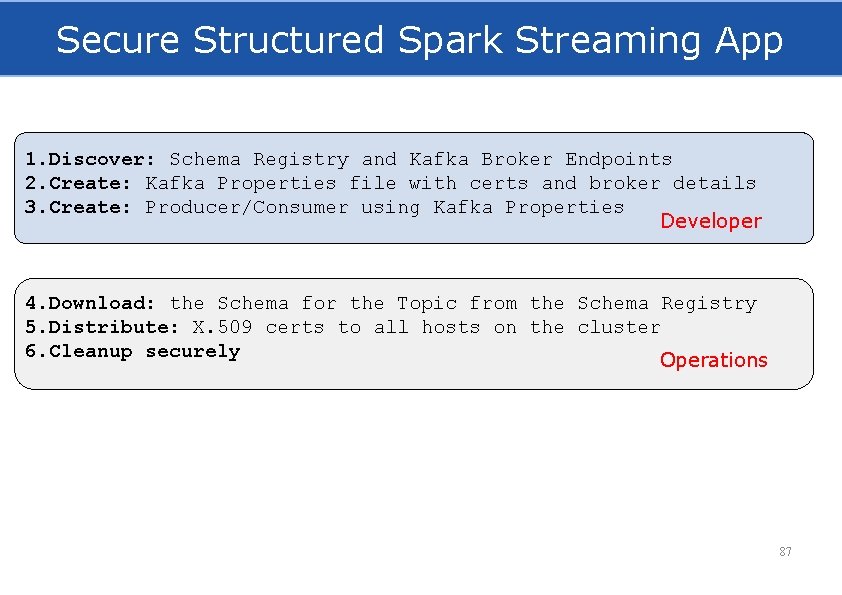

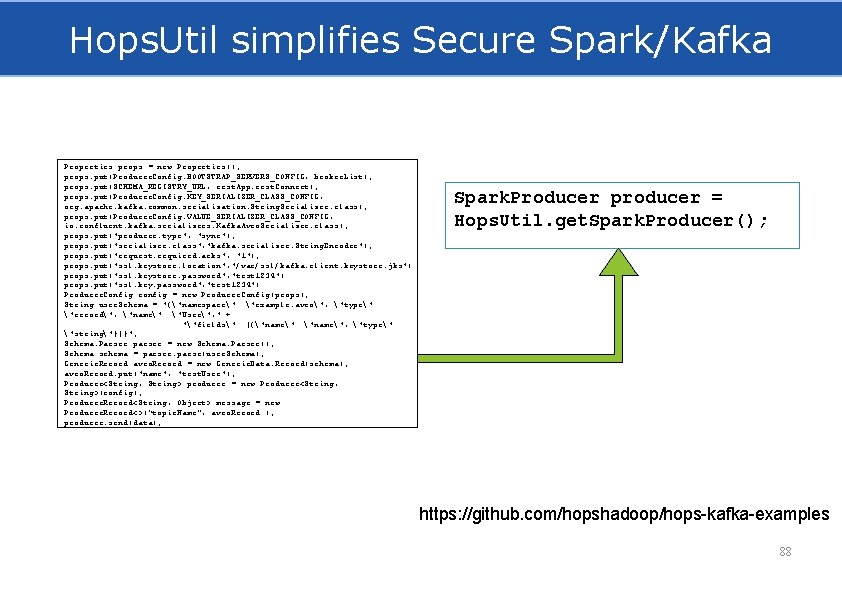

Secure Structured Spark Streaming App

1. Discover: Schema Registry and Kafka broker endpoints
2. Create: Kafka properties file with certs and broker details
3. Create: producer/consumer using Kafka properties
4. Download: the schema for the topic from the Schema Registry
5. Distribute: X.509 certs to all hosts on the cluster
6. Cleanup securely

[Diagram labels: Developer, Operations]

HopsUtil simplifies Secure Spark/Kafka

Without HopsUtil, a secure Avro producer needs all of this:

```java
import java.util.Properties;
import org.apache.avro.Schema;
import org.apache.avro.generic.GenericData;
import org.apache.avro.generic.GenericRecord;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.common.serialization.StringSerializer;
import io.confluent.kafka.serializers.KafkaAvroSerializer;

// brokerList and schemaRegistryUrl must first be discovered (steps 1 and 2 above).
Properties props = new Properties();
props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, brokerList);
props.put("schema.registry.url", schemaRegistryUrl);
props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, KafkaAvroSerializer.class);
props.put(ProducerConfig.ACKS_CONFIG, "1");
// TLS: the keystore holds this user's X.509 certificate and private key
props.put("security.protocol", "SSL");
props.put("ssl.keystore.location", "/var/ssl/kafka.client.keystore.jks");
props.put("ssl.keystore.password", "test1234");
props.put("ssl.key.password", "test1234");

String userSchema = "{\"namespace\": \"example.avro\", \"type\": \"record\", \"name\": \"User\", "
    + "\"fields\": [{\"name\": \"name\", \"type\": \"string\"}]}";
Schema schema = new Schema.Parser().parse(userSchema);
GenericRecord avroRecord = new GenericData.Record(schema);
avroRecord.put("name", "testUser");

try (KafkaProducer<String, GenericRecord> producer = new KafkaProducer<>(props)) {
    producer.send(new ProducerRecord<>("topicName", avroRecord));
}
```

With HopsUtil:

```java
SparkProducer producer = HopsUtil.getSparkProducer();
```

https://github.com/hopshadoop/hops-kafka-examples

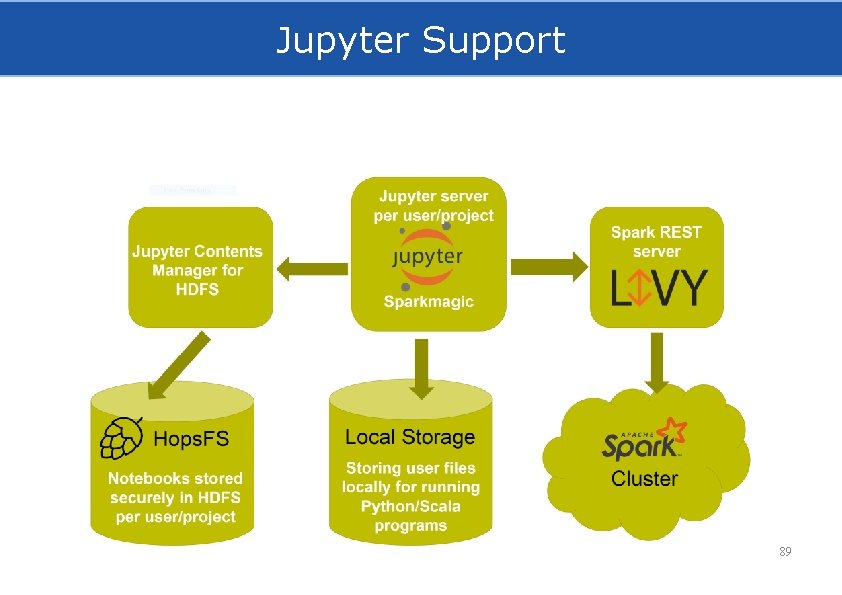

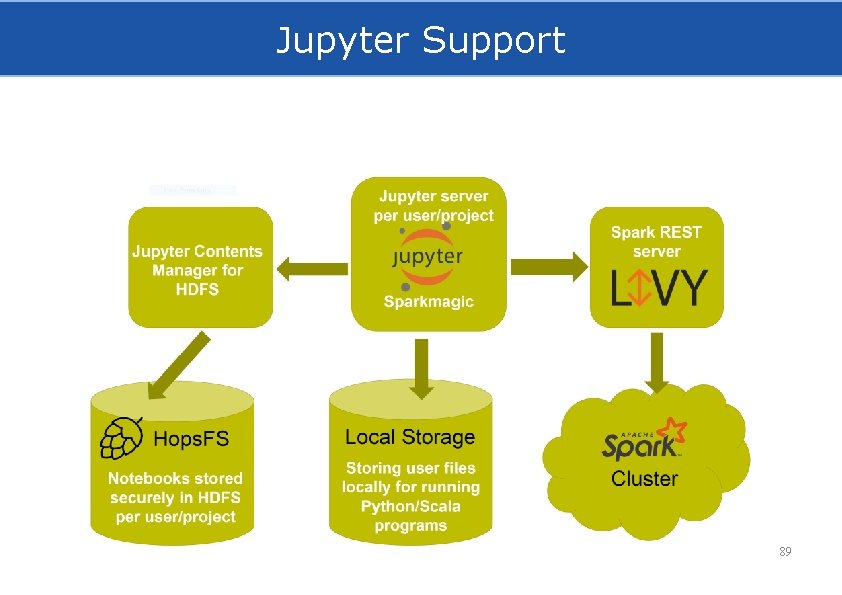

Jupyter Support

Hive Metastore in HopsFS [Diagram: Hive MetaStore, HopsFS]

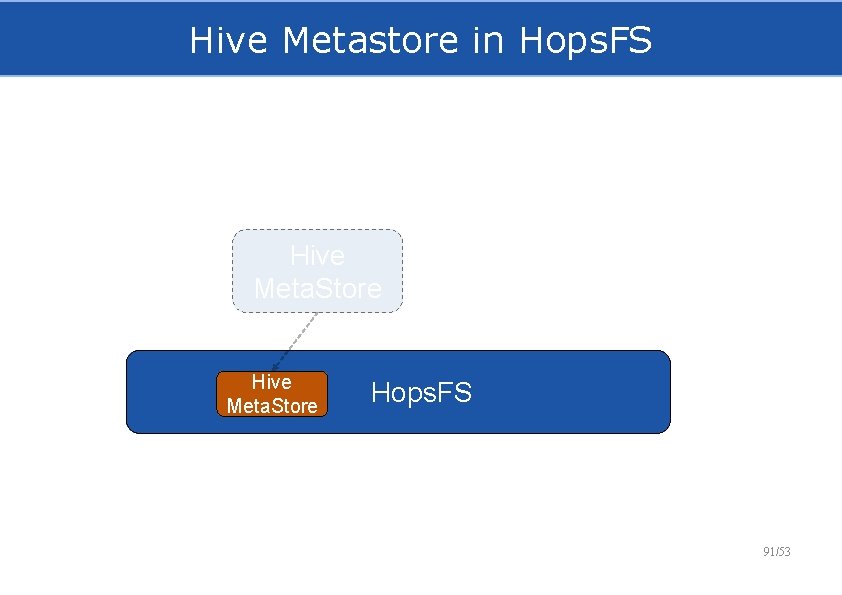

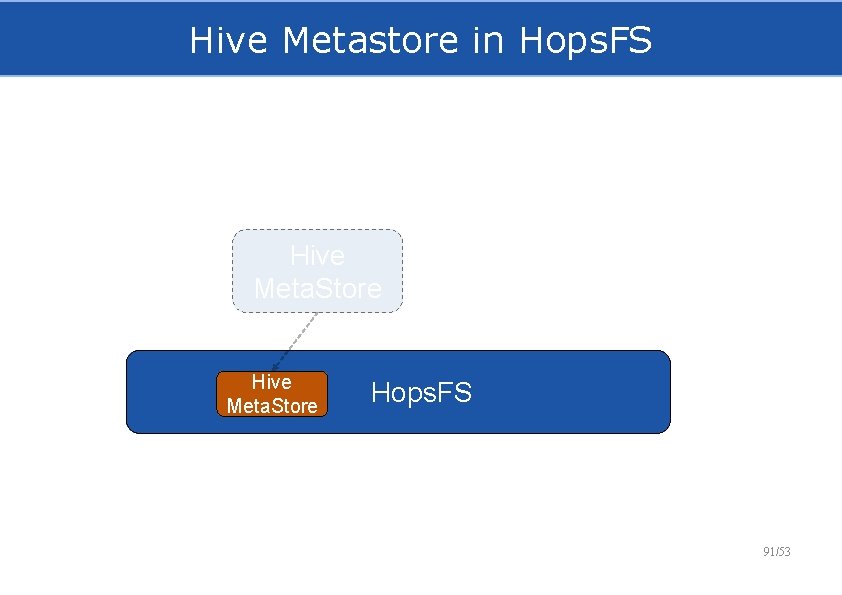

Hive Metastore in Hops. FS Hive Meta. Store Hops. FS 91/53

Strongly Consistent Hive Metadata: removing the HDFS backing directory removes the table from the Hive Metastore.

Extra Features

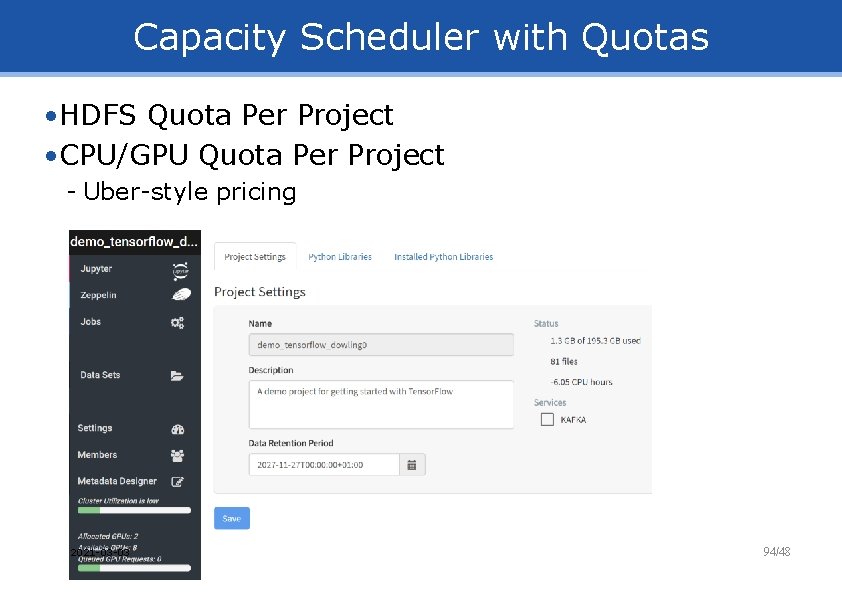

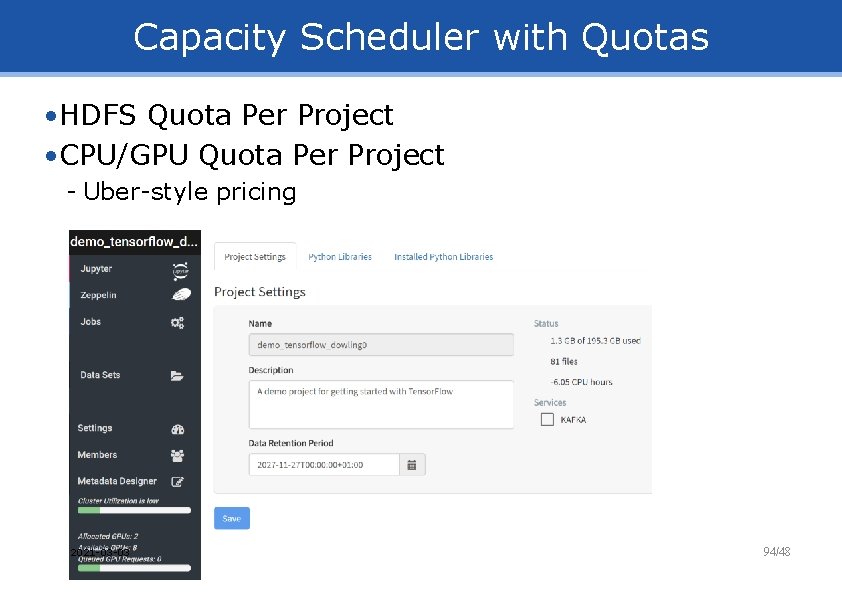

Capacity Scheduler with Quotas

- HDFS quota per project
- CPU/GPU quota per project
  - Uber-style pricing

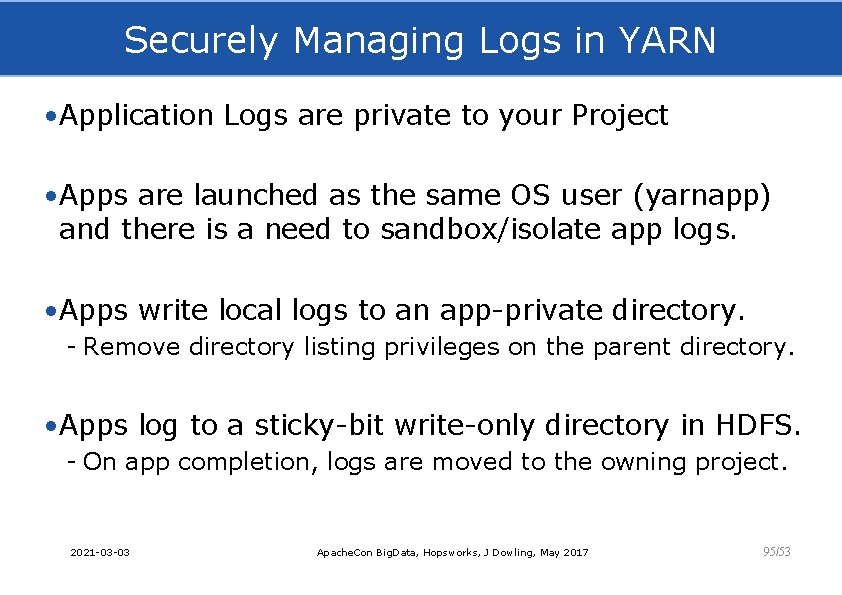

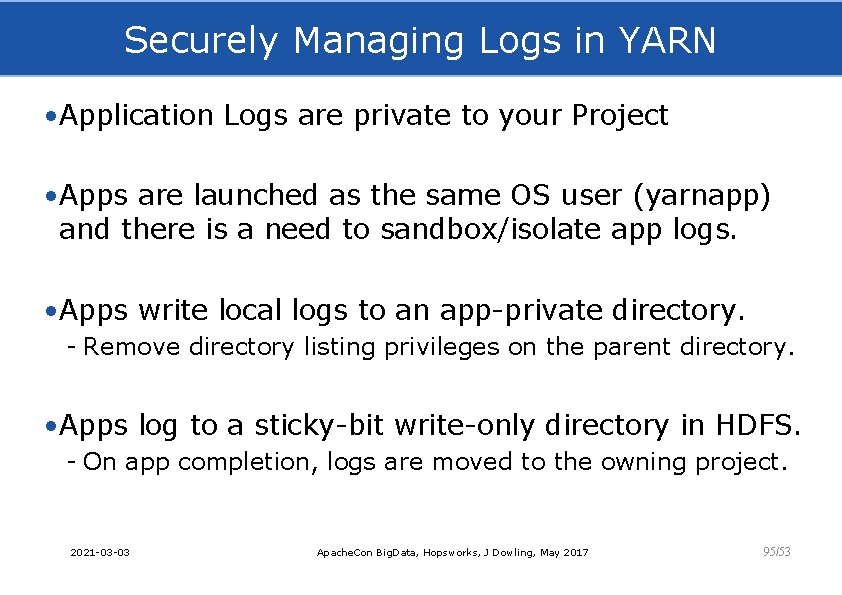

Securely Managing Logs in YARN

- Application logs are private to your project.
- Apps are launched as the same OS user (yarnapp), so app logs need to be sandboxed/isolated.
- Apps write local logs to an app-private directory.
  - Remove directory listing privileges on the parent directory.
- Apps log to a sticky-bit, write-only directory in HDFS.
  - On app completion, logs are moved to the owning project.
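The local-directory part of this scheme can be sketched with `java.nio.file` POSIX permissions. The parent keeps execute (traverse) permission for others but loses read, so other apps cannot list it. Each app writes into a directory that only its owner can open. The modes, the `app-<uuid>` naming scheme and the class name are hypothetical choices, not the Hops configuration. The sticky bit cannot be set through `PosixFilePermission`, and the sketch needs a POSIX filesystem.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.util.Set;
import java.util.UUID;

// Sketch of an app-private log directory under a non-listable parent (hypothetical modes).
public class AppLogDirs {
    // Parent: the owner may list it; everyone else may only traverse (x), not list (r).
    static final Set<PosixFilePermission> PARENT = PosixFilePermissions.fromString("rwx--x--x");
    // App directory: only its owner can read, write or enter it.
    static final Set<PosixFilePermission> APP = PosixFilePermissions.fromString("rwx------");

    public static Path createAppLogDir(Path parent) {
        try {
            Files.setPosixFilePermissions(parent, PARENT);
            // Hard-to-guess name, since other apps cannot list the parent to discover it.
            Path appDir = parent.resolve("app-" + UUID.randomUUID());
            Files.createDirectory(appDir);
            Files.setPosixFilePermissions(appDir, APP); // set explicitly so the umask cannot interfere
            return appDir;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        Path parent = Files.createTempDirectory("yarn-logs");
        Path appDir = createAppLogDir(parent);
        System.out.println(PosixFilePermissions.toString(Files.getPosixFilePermissions(parent))); // rwx--x--x
        System.out.println(PosixFilePermissions.toString(Files.getPosixFilePermissions(appDir))); // rwx------
    }
}
```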

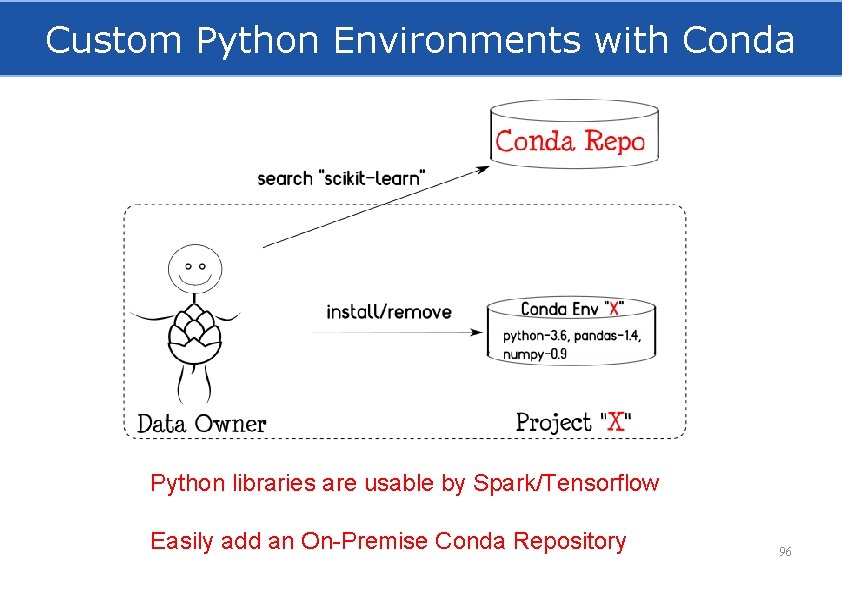

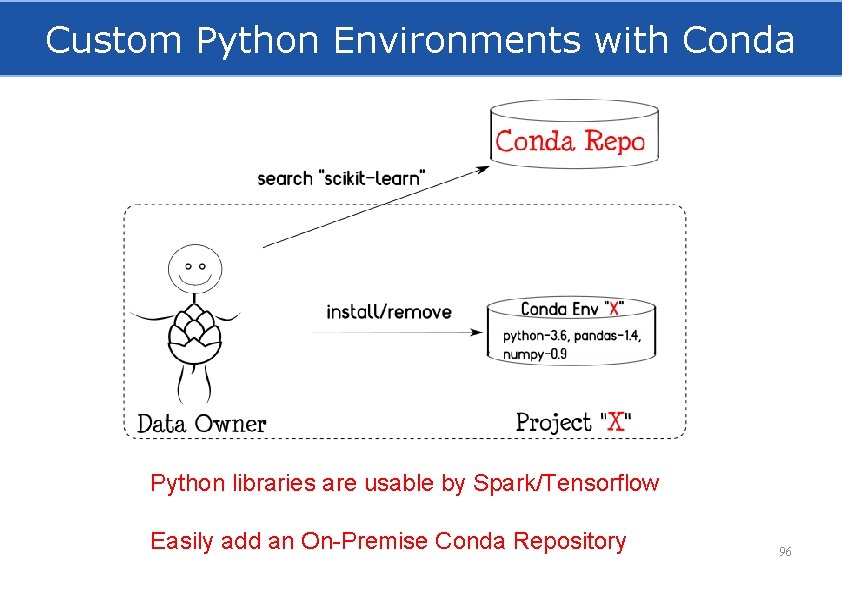

Custom Python Environments with Conda

- Python libraries are usable by Spark/Tensorflow.
- Easily add an on-premise Conda repository.

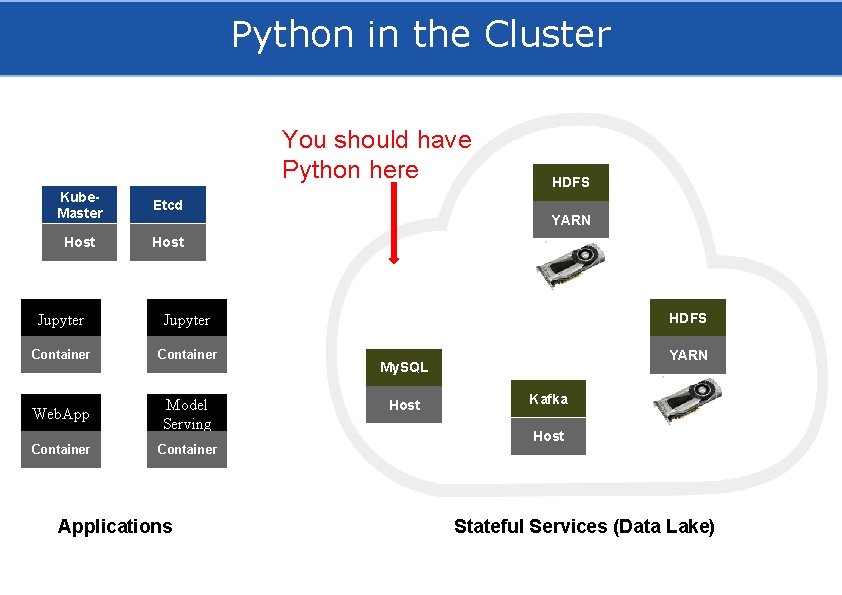

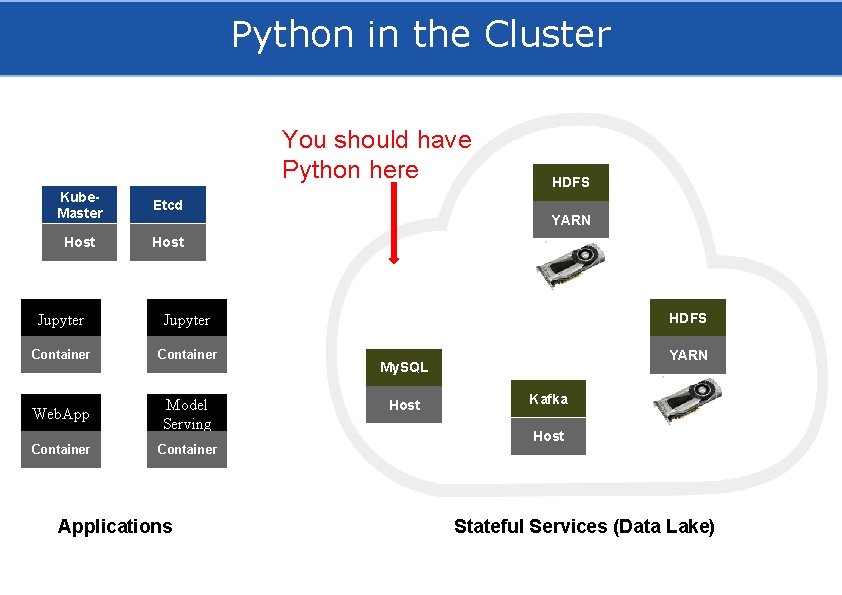

Python in the Cluster [Diagram: applications (Jupyter container, WebApp, Model Serving container) on a KubeMaster/Etcd host, and stateful services (the data lake: HDFS, YARN, MySQL, Kafka) on other hosts, with the callout "You should have Python here"]

Admin Services

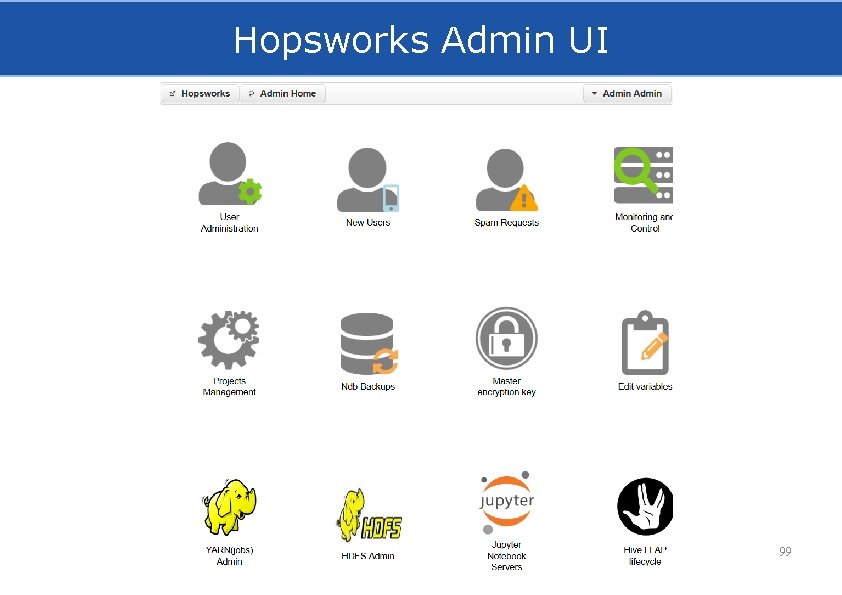

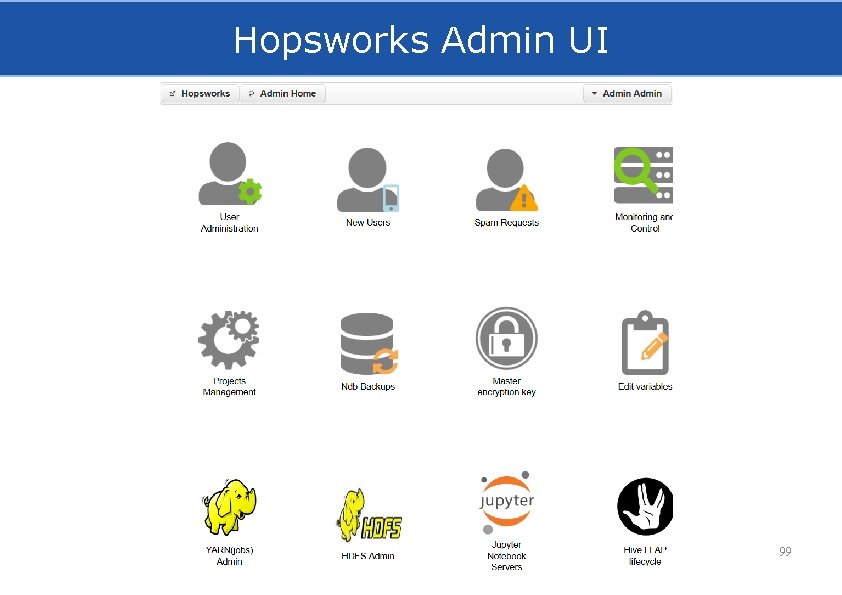

Hopsworks Admin UI

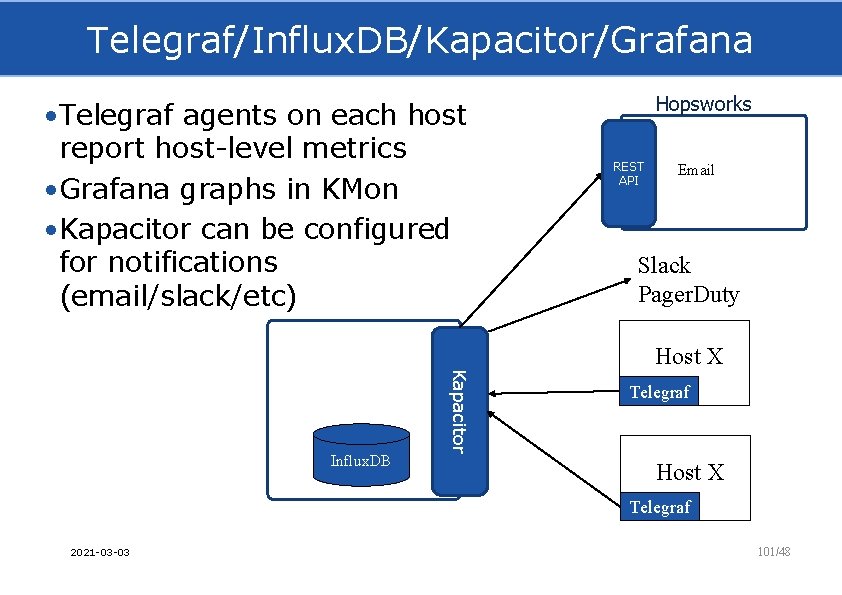

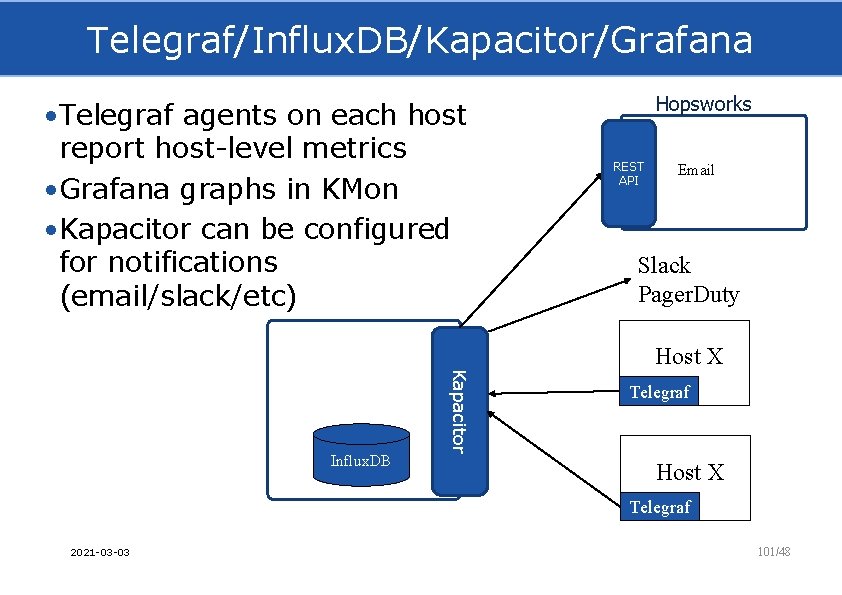

KMon

- A Python agent (kagent) runs on all hosts
  - Heartbeats Hopsworks with service availability info
  - Restarts services with REST/HTTPS (password protected)
  - Conda library installs/removals
  - SSL/TLS

[Diagram: kagent on each host (Host X) managing Services A … Z and talking to the Hopsworks REST API, backed by MySQL Cluster]

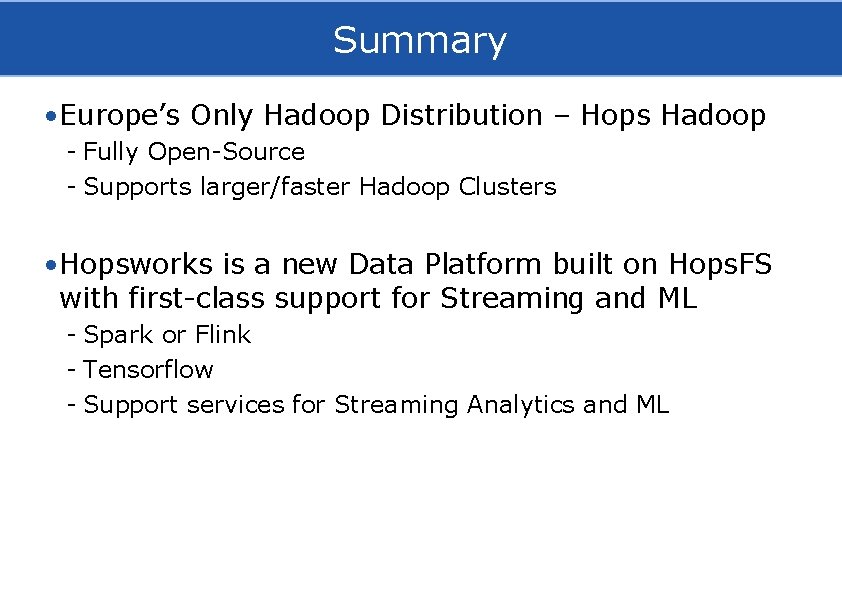

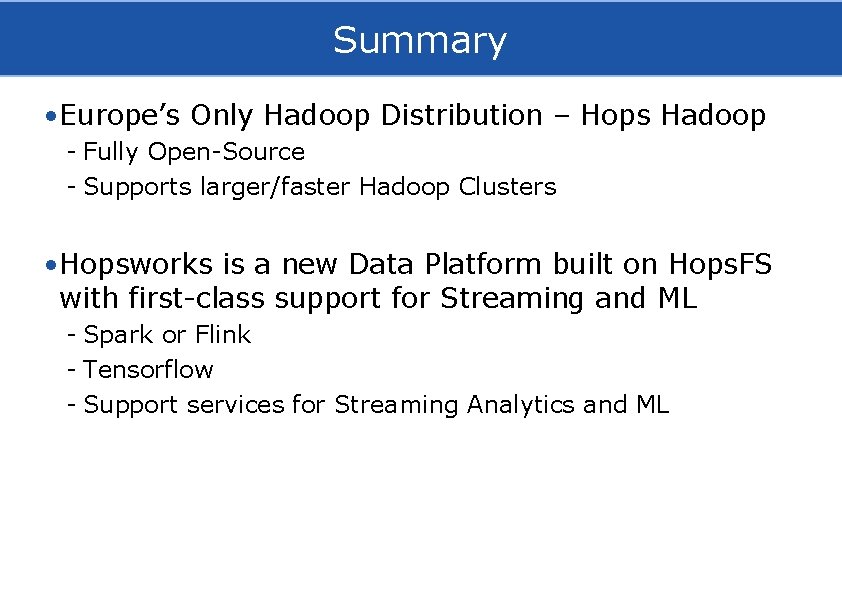

Telegraf/InfluxDB/Kapacitor/Grafana

- Telegraf agents on each host report host-level metrics.
- Grafana graphs in KMon.
- Kapacitor can be configured for notifications (email/Slack/etc.).

[Diagram: Telegraf on Host X → InfluxDB → Kapacitor → Email, Slack, PagerDuty; Hopsworks REST API]

Summary

- Europe's only Hadoop distribution: Hops Hadoop
  - Fully open-source
  - Supports larger/faster Hadoop clusters
- Hopsworks is a new data platform built on HopsFS with first-class support for streaming and ML
  - Spark or Flink
  - Tensorflow
  - Support services for streaming analytics and ML

The Team

Active: Jim Dowling, Seif Haridi, Tor Björn Minde, Gautier Berthou, Salman Niazi, Mahmoud Ismail, Theofilos Kakantousis, Ermias Gebremeskel, Antonios Kouzoupis, Alex Ormenisan, Fabio Buso, Robin Andersson, August Bonds, Filotas Siskos, Mahmoud Hamed.

Alumni: Vasileios Giannokostas, Johan Svedlund Nordström, Rizvi Hasan, Paul Mälzer, Bram Leenders, Juan Roca, Misganu Dessalegn, K "Sri" Srijeyanthan, Jude D'Souza, Alberto Lorente, Andre Moré, Ali Gholami, Davis Jaunzems, Stig Viaene, Hooman Peiro, Evangelos Savvidis, Steffen Grohsschmiedt, Qi Qi, Gayana Chandrasekara, Nikolaos Stanogias, Daniel Bali, Ioannis Kerkinos, Peter Buechler, Pushparaj Motamari, Hamid Afzali, Wasif Malik, Lalith Suresh, Mariano Valles, Ying Lieu, Fanti Machmount Al Samisti, Braulio Grana, Adam Alpire, Zahin Azher Rashid, ArunaKumari Yedurupaka, Tobias Johansson, Roberto Bampi.

www.hops.io @hopshadoop

Hops: Thank You.

- Follow us: @hopshadoop
- Star us: http://github.com/hopshadoop/hopsworks
- Join us: http://www.hops.io