OMOP CDM on Hadoop Reference Architecture

- Slides: 9

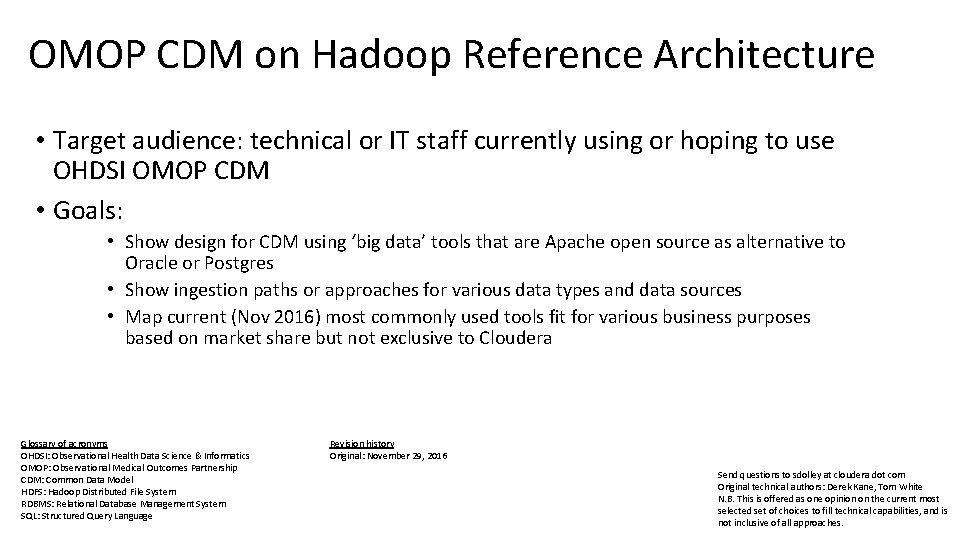

- Target audience: technical or IT staff who currently use, or hope to use, the OHDSI OMOP CDM
- Goals:
  - Show a design for the CDM built on Apache open-source "big data" tools, as an alternative to Oracle or PostgreSQL
  - Show ingestion paths or approaches for various data types and data sources
  - Map the most commonly used tools (as of November 2016) to the business purposes they fit, based on market share; the tools are not exclusive to Cloudera

Glossary of acronyms

- OHDSI: Observational Health Data Sciences and Informatics
- OMOP: Observational Medical Outcomes Partnership
- CDM: Common Data Model
- HDFS: Hadoop Distributed File System
- RDBMS: Relational Database Management System
- SQL: Structured Query Language

Revision history

- Original: November 29, 2016
- Send questions to sdolley at cloudera dot com
- Original technical authors: Derek Kane, Tom White

N.B. This is offered as one opinion on the most commonly selected set of tools for filling each technical capability; it does not cover every approach.

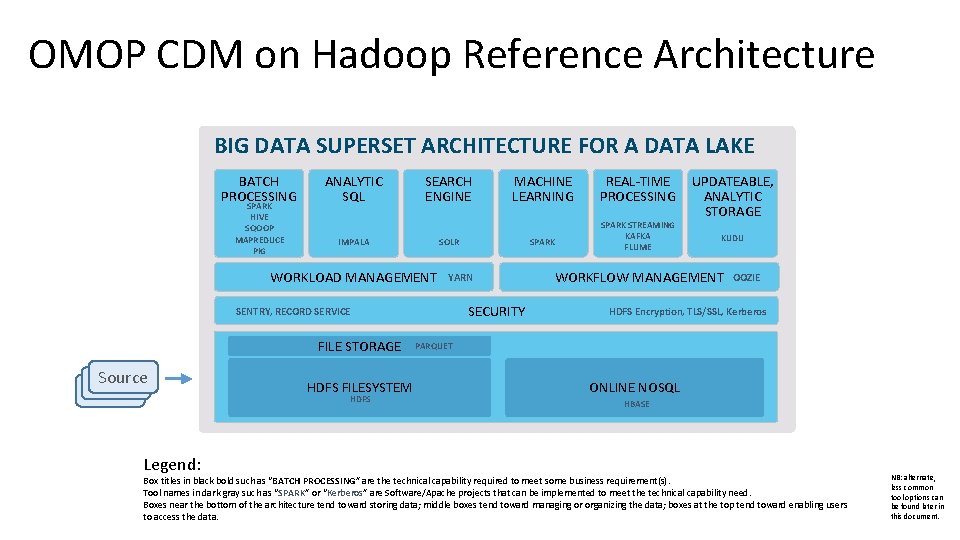

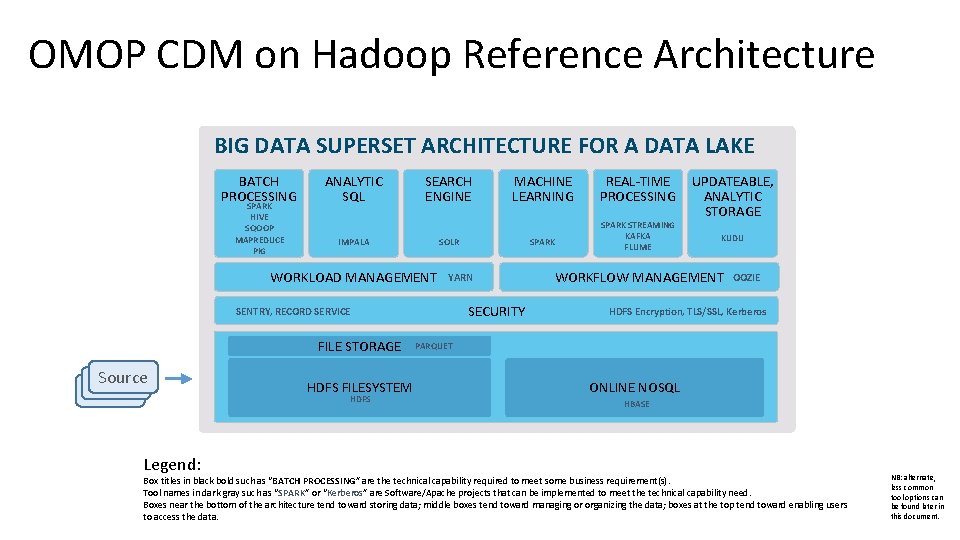

Big data superset architecture for a data lake

- Batch processing: Spark, Hive, Sqoop, MapReduce, Pig
- Analytic SQL: Impala
- Search engine: Solr
- Machine learning: Spark
- Real-time processing: Spark Streaming, Kafka, Flume
- Workload management: YARN
- Workflow management: Oozie
- Security: Sentry, RecordService, HDFS encryption, TLS/SSL, Kerberos
- Updateable, analytic storage: Kudu
- Online NoSQL: HBase
- File storage: HDFS (filesystem), Parquet (file format)

Legend: box titles in black bold, such as "BATCH PROCESSING", name a technical capability required to meet one or more business requirements. Tool names in dark gray, such as "SPARK" or "Kerberos", are software or Apache projects that can be implemented to provide that capability. Boxes near the bottom of the diagram tend toward storing data, middle boxes toward managing or organizing it, and boxes at the top toward giving users access to it.

NB: less common alternative tools are listed later in this document.
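One way to use this diagram when planning a cluster is to encode each capability and its tools as a dictionary, then list the capabilities a proposed tool set leaves unfilled. The Python sketch below is a minimal illustration under assumptions: the dictionary is one reading of the flattened slide text (assigning YARN to workload management and Spark to machine learning is an interpretation), and `SUPERSET_ARCHITECTURE` and `uncovered_capabilities` are hypothetical names.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical encoding of the superset diagram: capability (box title) -> tools.
# The capability/tool pairing is an interpretation of the flattened slide text.
SUPERSET_ARCHITECTURE: Dict[str, Set[str]] = {
    "batch processing": {"Spark", "Hive", "Sqoop", "MapReduce", "Pig"},
    "analytic SQL": {"Impala"},
    "search engine": {"Solr"},
    "machine learning": {"Spark"},
    "real-time processing": {"Spark Streaming", "Kafka", "Flume"},
    "workload management": {"YARN"},
    "workflow management": {"Oozie"},
    "security": {"Sentry", "RecordService", "HDFS Encryption", "TLS/SSL", "Kerberos"},
    "updateable, analytic storage": {"Kudu"},
    "online NoSQL": {"HBase"},
    "file storage": {"HDFS", "Parquet"},
}


def uncovered_capabilities(stack: Iterable[str]) -> List[str]:
    """Return, sorted, the capabilities for which no tool in `stack` appears."""
    chosen = set(stack)
    return sorted(
        capability
        for capability, tools in SUPERSET_ARCHITECTURE.items()
        # A capability counts as covered if at least one of its tools is chosen.
        if not tools & chosen
    )


if __name__ == "__main__":
    print(uncovered_capabilities({"HDFS", "Hive", "Impala", "Sqoop", "Spark"}))
```

For the stack `{"HDFS", "Hive", "Impala", "Sqoop", "Spark"}`, the function returns `['online NoSQL', 'real-time processing', 'search engine', 'security', 'updateable, analytic storage', 'workflow management', 'workload management']`, which makes the gaps of a bare-minimum deployment explicit.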

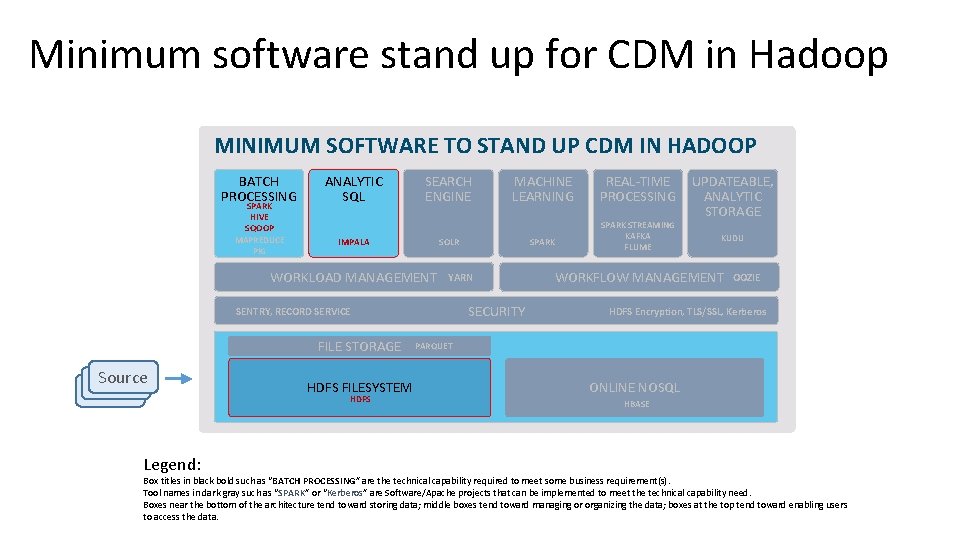

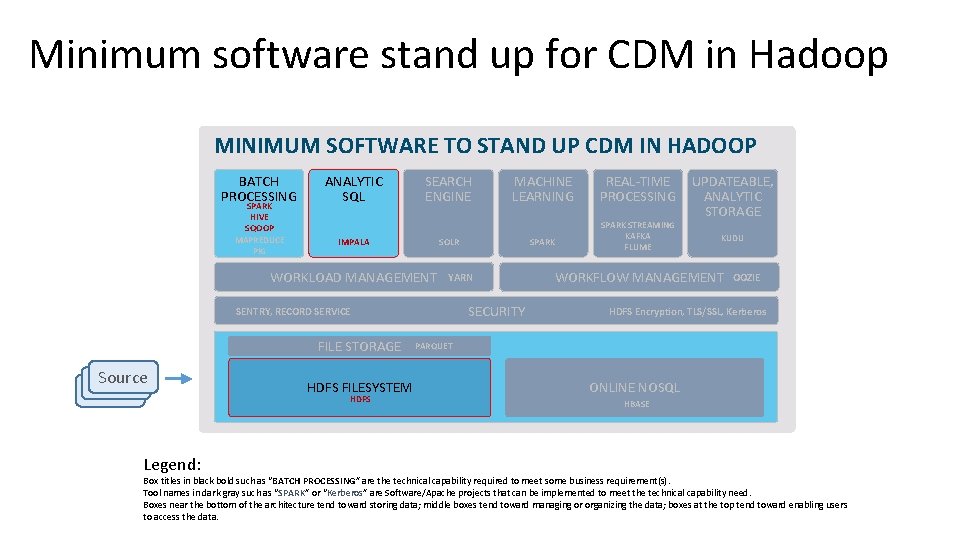

Minimum software to stand up the CDM in Hadoop

The diagram uses the same capability boxes, tool names, and legend as the superset architecture.

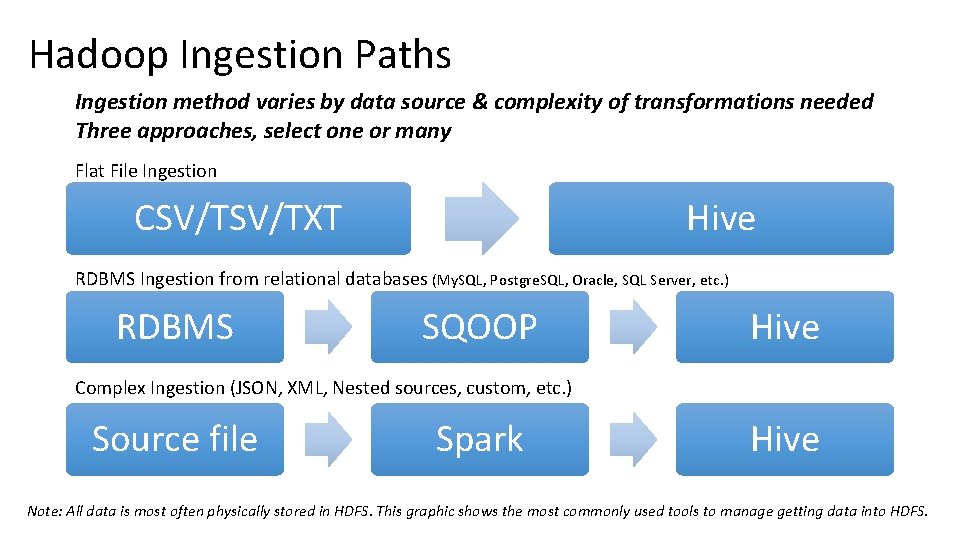

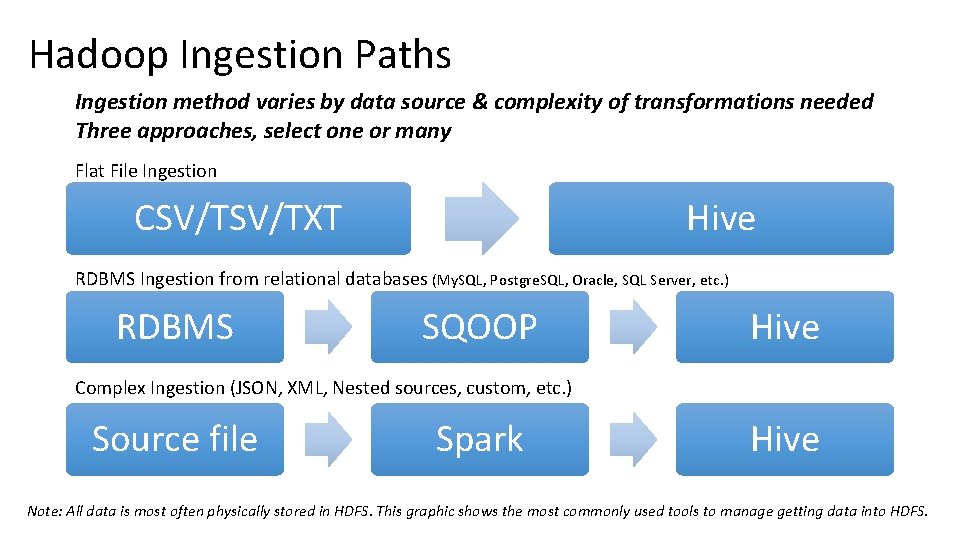

Hadoop ingestion paths

The ingestion method varies by data source and by the complexity of the transformations needed. There are three approaches; select one or several:

- Flat-file ingestion (CSV/TXT): file → Hive
- RDBMS ingestion from relational databases (MySQL, PostgreSQL, Oracle, SQL Server, etc.): RDBMS → Sqoop → Hive
- Complex ingestion (JSON, XML, nested sources, custom formats, etc.): source file → Spark → Hive

Note: in every path, the data is most often physically stored in HDFS. The graphic shows the tools most commonly used to get data into HDFS.
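The choice among the three ingestion paths can be expressed as a small dispatch function. This Python sketch is illustrative only: `ingestion_path` and the source-type labels are hypothetical, and any source that is neither a flat file nor a relational database is routed to the Spark path, mirroring the slide's "complex ingestion" catch-all for JSON, XML, nested, and custom sources.

```python
from typing import List

# Hypothetical source-type labels for the first two approaches on the slide.
FLAT_FILE_TYPES = {"csv", "txt"}
RDBMS_TYPES = {"mysql", "postgresql", "oracle", "sqlserver"}


def ingestion_path(source_type: str) -> List[str]:
    """Return the tool chain that lands a source as Hive tables (stored in HDFS)."""
    kind = source_type.strip().lower()
    if kind in FLAT_FILE_TYPES:
        # Flat files: Hive exposes delimited CSV/TXT files as tables directly.
        return ["CSV/TXT file", "Hive"]
    if kind in RDBMS_TYPES:
        # Relational sources: Sqoop copies tables over JDBC, then Hive manages them.
        return ["RDBMS", "Sqoop", "Hive"]
    # JSON, XML, nested, or custom sources: Spark parses and transforms them first.
    return ["source file", "Spark", "Hive"]


if __name__ == "__main__":
    for source in ("csv", "Oracle", "json"):
        print(source, "->", " -> ".join(ingestion_path(source)))
```

Defaulting unknown formats to Spark reflects that Spark is the only path on the slide with a general-purpose programming framework for custom parsing.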

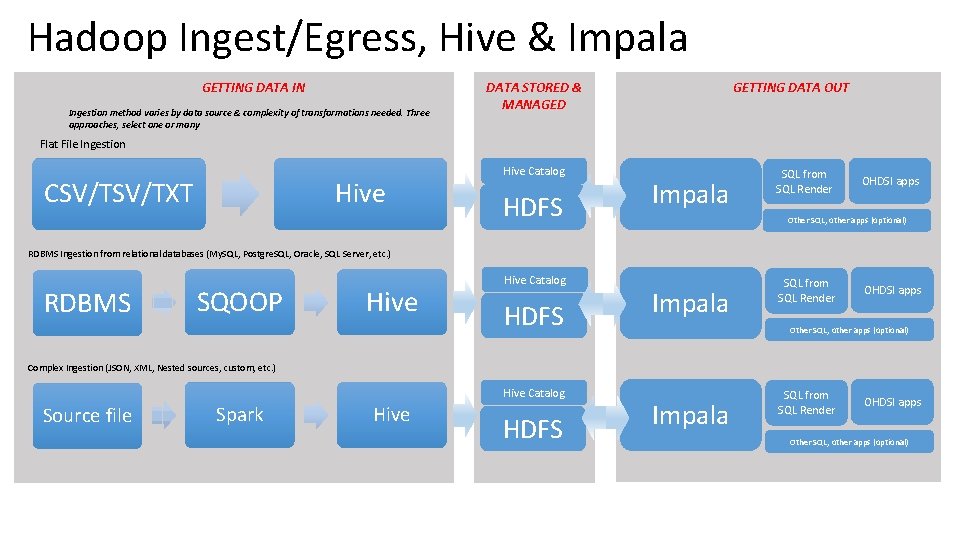

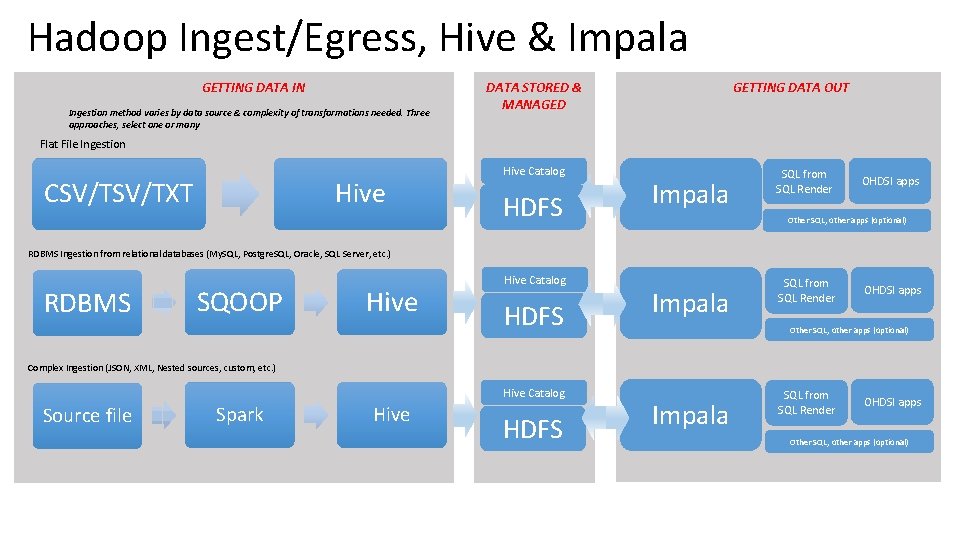

Hadoop ingest/egress, Hive and Impala

- Getting data in: the ingestion method varies by data source and by the complexity of the transformations needed. There are three approaches; select one or several.
- Data stored and managed: files in HDFS, with table definitions in the Hive catalog.
- Getting data out: Impala runs the SQL produced by SqlRender for OHDSI apps and, optionally, other SQL from other apps.

The three end-to-end paths:

- Flat-file ingestion (CSV/TXT): file → Hive → Hive catalog + HDFS → Impala → OHDSI apps (optionally other SQL and other apps)
- RDBMS ingestion from relational databases (MySQL, PostgreSQL, Oracle, SQL Server, etc.): RDBMS → Sqoop → Hive → Hive catalog + HDFS → Impala → OHDSI apps (optionally other SQL and other apps)
- Complex ingestion (JSON, XML, nested sources, custom, etc.): source file → Spark → Hive → Hive catalog + HDFS → Impala → OHDSI apps (optionally other SQL and other apps)
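For the flat-file path, the Hive step typically means defining a table over files already copied into HDFS. The Python sketch below only builds HiveQL strings and assumes comma-delimited files without a header row; the database name `cdm`, the HDFS directory, the `_csv` staging-table suffix, the helper name `flat_file_ddl`, and the column subset are hypothetical. The second statement copies the staging data into Parquet, the file format this architecture lists for storage.

```python
from typing import List, Tuple


def flat_file_ddl(database: str, table: str,
                  columns: List[Tuple[str, str]], hdfs_dir: str) -> List[str]:
    """Build HiveQL that exposes a CSV directory on HDFS, then copies it into Parquet."""
    cols = ",\n  ".join(f"{name} {hive_type}" for name, hive_type in columns)
    staging = f"{database}.{table}_csv"
    return [
        # External table: Hive records only the schema in its catalog;
        # the CSV files stay where they are in HDFS.
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {staging} (\n  {cols}\n)\n"
        "ROW FORMAT DELIMITED FIELDS TERMINATED BY ','\n"
        "STORED AS TEXTFILE\n"
        f"LOCATION '{hdfs_dir}'",
        # CREATE TABLE ... AS SELECT rewrites the rows as Parquet files.
        f"CREATE TABLE {database}.{table} STORED AS PARQUET AS SELECT * FROM {staging}",
    ]


if __name__ == "__main__":
    # Illustrative subset of OMOP person columns; not the full CDM definition.
    person_columns = [("person_id", "BIGINT"),
                      ("gender_concept_id", "INT"),
                      ("year_of_birth", "INT")]
    for statement in flat_file_ddl("cdm", "person", person_columns, "/data/omop/person"):
        print(statement + ";\n")
```

If the CSV files do contain a header line, the Hive table property `TBLPROPERTIES ('skip.header.line.count'='1')` can be appended to the external table definition.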

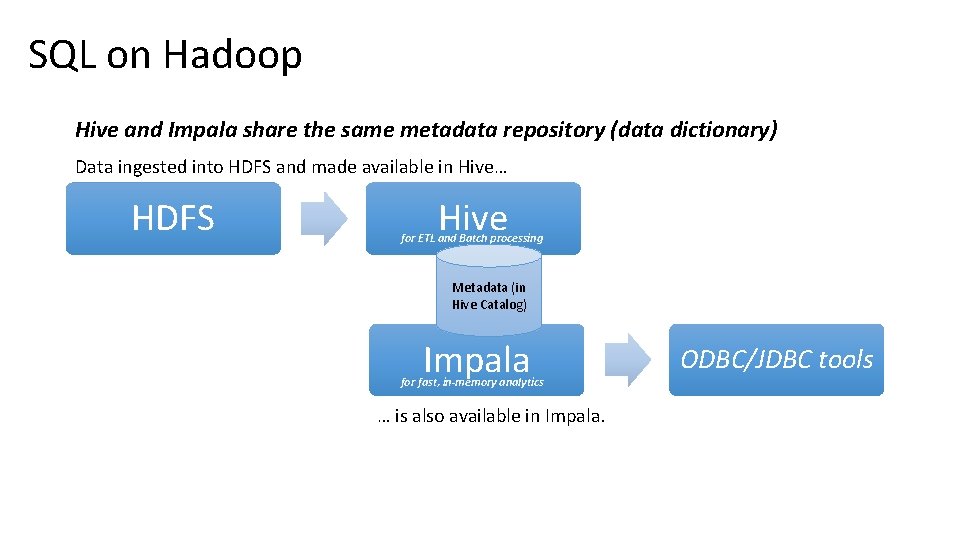

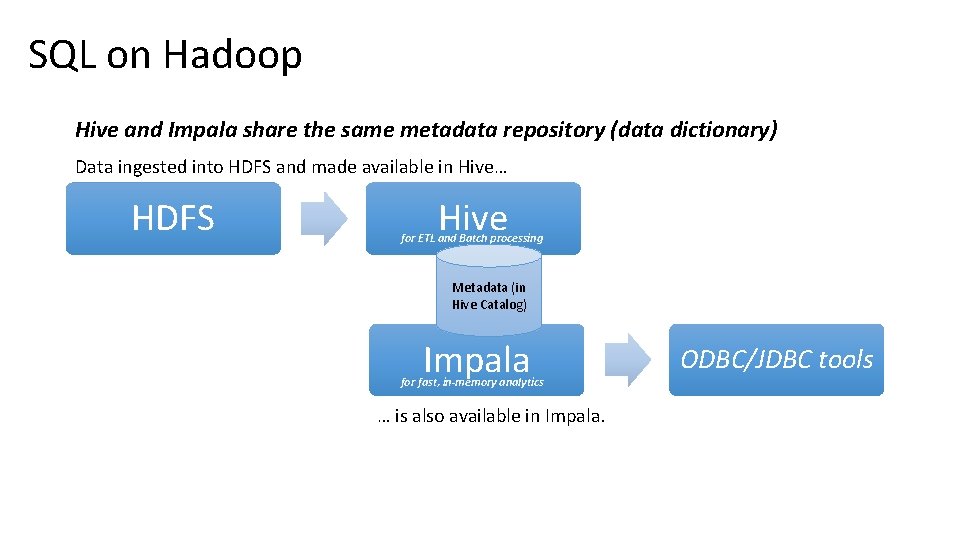

SQL on Hadoop

Hive and Impala share the same metadata repository (data dictionary): the Hive catalog. Data ingested into HDFS and made available in Hive is therefore also available in Impala.

- Hive: ETL and batch processing
- Impala: fast, in-memory analytics
- Both can be queried from ODBC/JDBC tools.
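Sharing the catalog does not mean Impala sees Hive changes instantly: in Impala versions that do not synchronize metadata automatically, Impala caches catalog metadata, so a table created or loaded through Hive must be reloaded in Impala first. A minimal Python sketch of choosing the statement; the helper name and its parameters are hypothetical, while `INVALIDATE METADATA` and `REFRESH` are Impala SQL statements.

```python
def impala_sync_statement(database: str, table: str, table_is_new: bool) -> str:
    """Return the Impala statement that makes a Hive-side change visible in Impala."""
    qualified = f"{database}.{table}"
    if table_is_new:
        # Table newly created through Hive: Impala has no cached entry for it yet.
        return f"INVALIDATE METADATA {qualified}"
    # Existing table that gained new data files (e.g. a Hive INSERT): reload its file list.
    return f"REFRESH {qualified}"


if __name__ == "__main__":
    print(impala_sync_statement("cdm", "person", table_is_new=True))
    print(impala_sync_statement("cdm", "person", table_is_new=False))
```

Naming the table keeps the reload cheap; an unqualified `INVALIDATE METADATA` discards cached metadata for every table.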

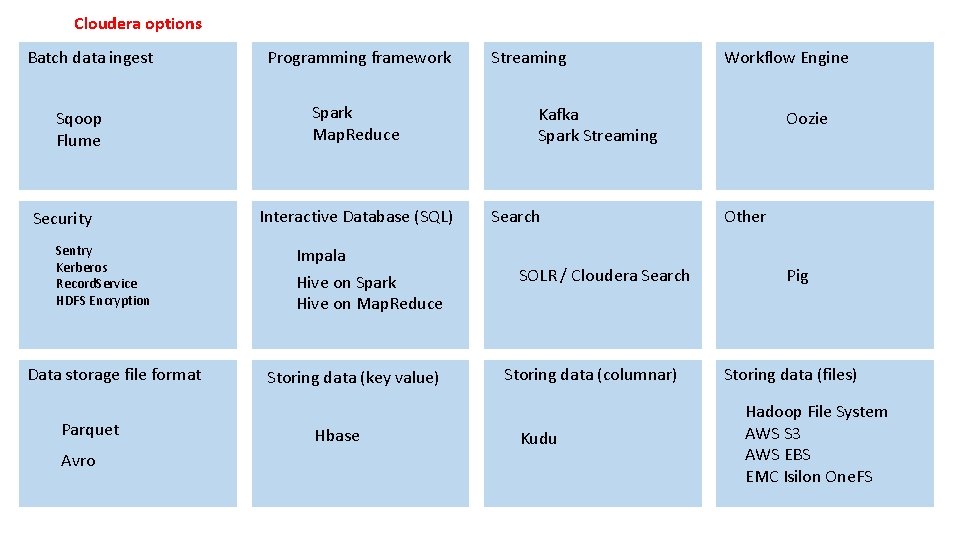

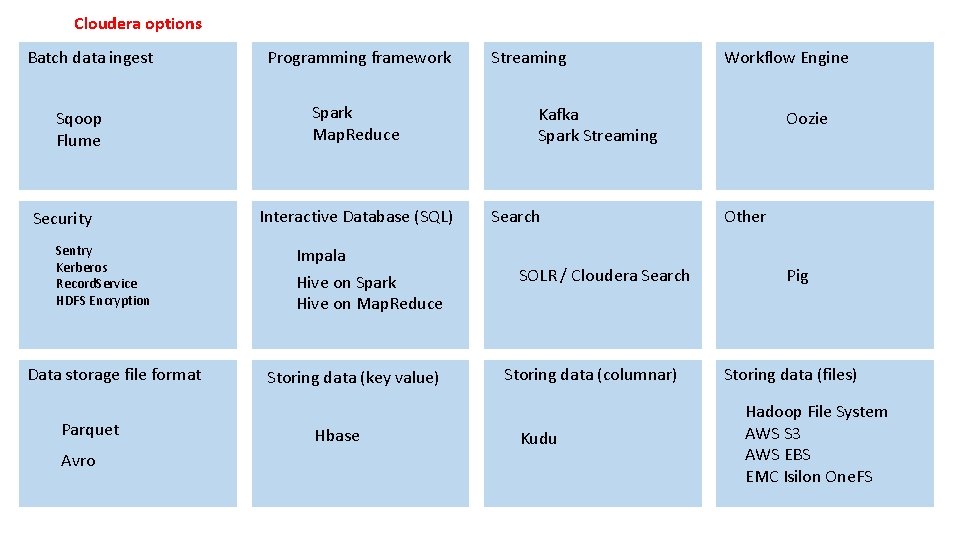

Cloudera options

- Batch data ingest: Sqoop, Flume
- Security: Sentry, Kerberos, RecordService, HDFS Encryption
- Data storage file format: Parquet, Avro
- Programming framework: Spark, MapReduce
- Interactive database (SQL): Impala, Hive on Spark, Hive on MapReduce
- Streaming: Kafka, Spark Streaming
- Workflow engine: Oozie
- Search: Solr / Cloudera Search
- Storing data (key value): HBase
- Storing data (columnar): Kudu
- Storing data (files): Hadoop Distributed File System (HDFS), AWS S3, AWS EBS, EMC Isilon OneFS
- Other: Pig

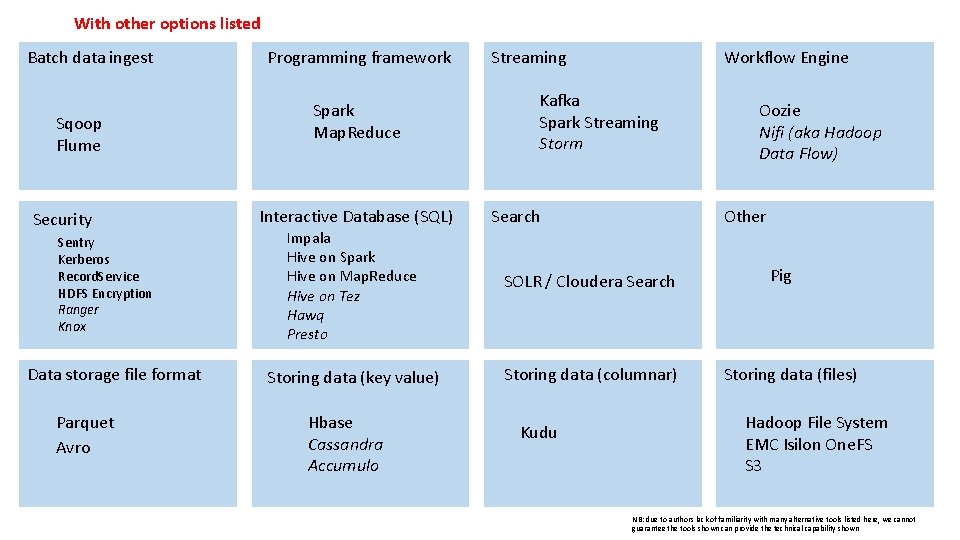

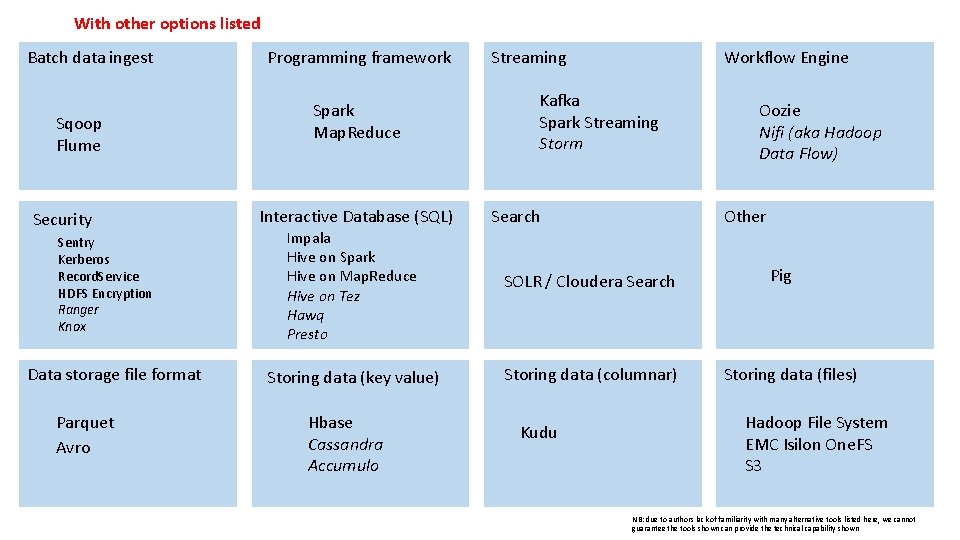

With other options listed

- Batch data ingest: Sqoop, Flume
- Security: Sentry, Kerberos, RecordService, HDFS Encryption, Ranger, Knox
- Data storage file format: Parquet, Avro
- Programming framework: Spark, MapReduce
- Interactive database (SQL): Impala, Hive on Spark, Hive on MapReduce, Hive on Tez, HAWQ, Presto
- Streaming: Kafka, Spark Streaming, Storm
- Workflow engine: Oozie, NiFi (aka Hortonworks DataFlow)
- Search: Solr / Cloudera Search
- Storing data (key value): HBase, Cassandra, Accumulo
- Storing data (columnar): Kudu
- Storing data (files): Hadoop Distributed File System (HDFS), EMC Isilon OneFS, S3
- Other: Pig

NB: because the authors are not familiar with many of the alternative tools listed here, we cannot guarantee that each tool provides the technical capability it is listed under.

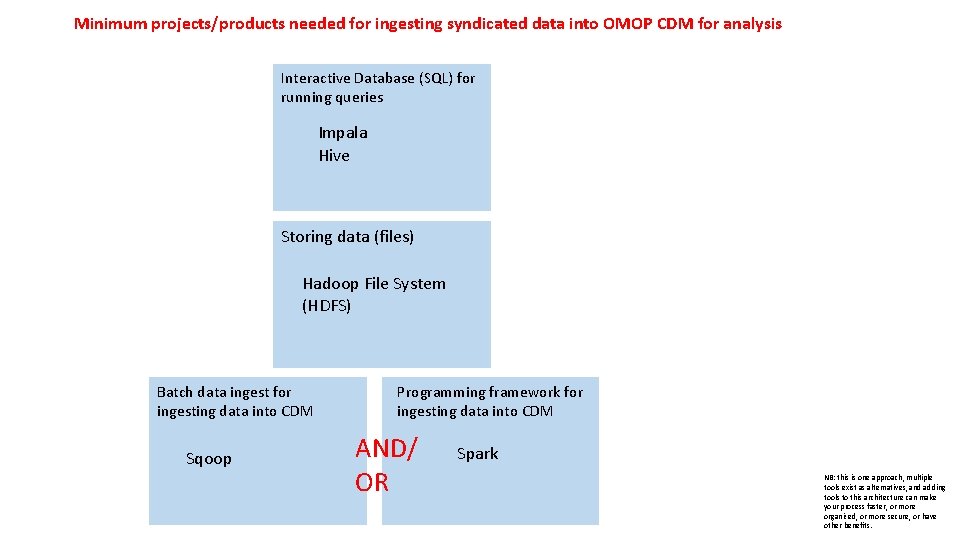

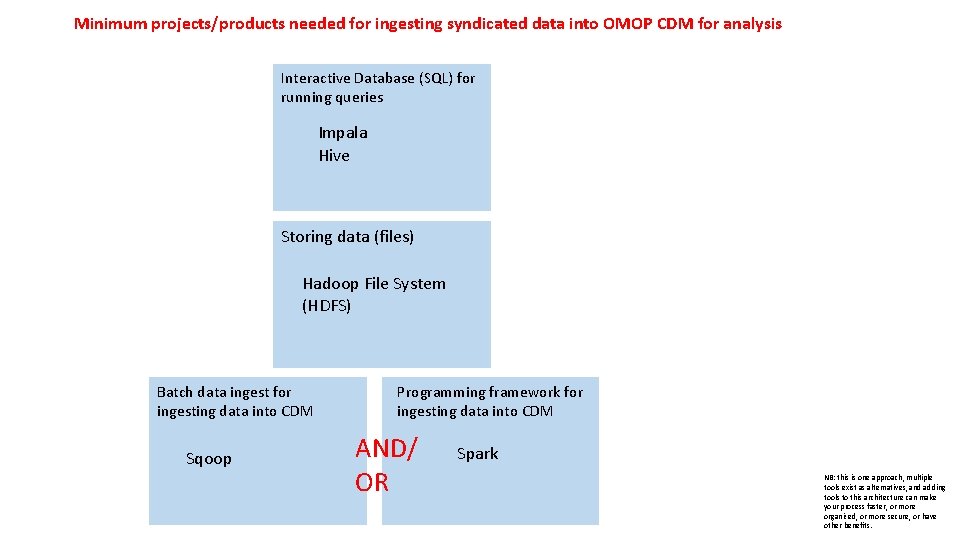

Minimum projects/products needed to ingest syndicated data into the OMOP CDM for analysis

- Interactive database (SQL), for running queries: Impala, Hive
- Storing data (files): Hadoop Distributed File System (HDFS)
- Loading data into the CDM: Sqoop (batch data ingest) and/or Spark (programming framework)

NB: this is one approach. Multiple alternative tools exist, and adding tools to this architecture can make your process faster, more organized, or more secure, or bring other benefits.
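Sqoop, the batch-ingest piece of this minimum stack, is usually driven from the command line. The Python sketch below assembles a Sqoop 1 `import` argument list for one source table; `sqoop_import_command` is a hypothetical helper, the JDBC URL, user, password-file path, and table names in the usage example are placeholders, and the flags used (`--connect`, `--username`, `--password-file`, `--table`, `--hive-import`, `--hive-table`, `--num-mappers`) are standard Sqoop 1 import options.

```python
import shlex
from typing import List


def sqoop_import_command(jdbc_url: str, username: str, password_file: str,
                         source_table: str, hive_db: str, hive_table: str,
                         mappers: int = 4) -> List[str]:
    """Build argv for a Sqoop 1 import that lands one RDBMS table as a Hive table."""
    return [
        "sqoop", "import",
        "--connect", jdbc_url,
        "--username", username,
        # Read the password from a protected file rather than the command line.
        "--password-file", password_file,
        "--table", source_table,
        # After copying to HDFS, create and load the table in the Hive catalog.
        "--hive-import",
        "--hive-table", f"{hive_db}.{hive_table}",
        # Number of parallel map tasks reading from the source database.
        "--num-mappers", str(mappers),
    ]


if __name__ == "__main__":
    cmd = sqoop_import_command(
        "jdbc:postgresql://ehr-db.example.org/ehr",  # placeholder source database
        "etl_user", "/user/etl_user/.sqoop.pw", "person", "cdm", "person")
    print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` on a node with Sqoop installed would run the import, provided the source database's JDBC driver is on Sqoop's classpath. Building an argument list rather than a shell string avoids quoting problems with passwords or paths.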