- Slides: 51

Running Hadoop

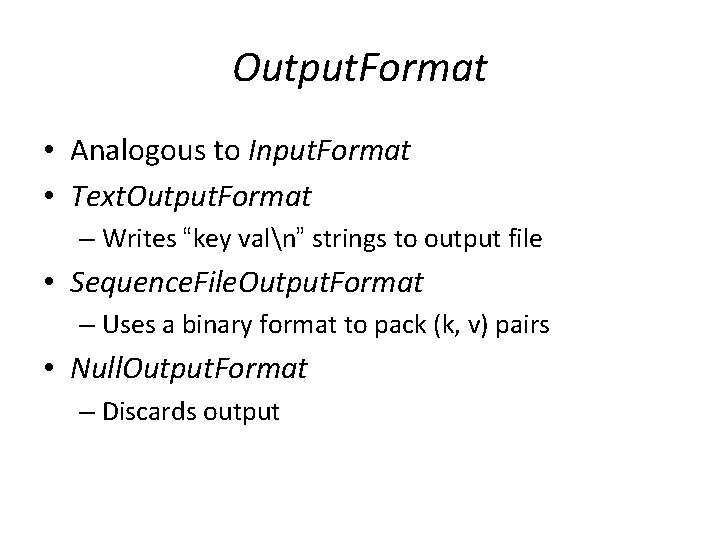

Hadoop Platforms

- Platforms: Unix and Windows.
  - Linux: the only supported production platform.
  - Other variants of Unix, like Mac OS X: run Hadoop for development.
  - Windows + Cygwin: development platform (openssh).
- Java 6
  - Java 1.6.x (aka 6.0.x aka 6) is recommended for running Hadoop.

Hadoop Installation

- Download a stable version of Hadoop:
  - http://hadoop.apache.org/core/releases.html
- Untar the hadoop file:
  - `tar xvfz hadoop-0.2.tar.gz`
- Set JAVA_HOME in `hadoop/conf/hadoop-env.sh`:
  - Mac OS: `/System/Library/Frameworks/JavaVM.framework/Versions/1.6.0/Home` (`/Library/Java/Home`)
  - Linux: `which java`
- Environment variables:
  - `export PATH=$PATH:$HADOOP_HOME/bin`

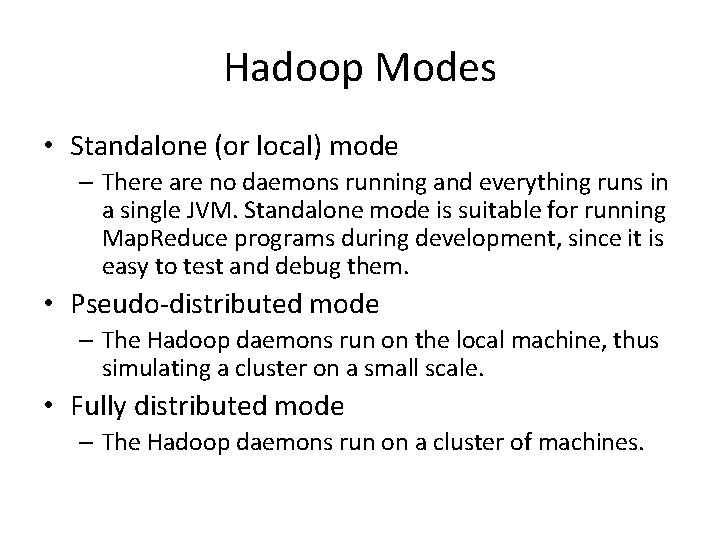

Hadoop Modes

- Standalone (or local) mode
  - There are no daemons running and everything runs in a single JVM. Standalone mode is suitable for running MapReduce programs during development, since it is easy to test and debug them.
- Pseudo-distributed mode
  - The Hadoop daemons run on the local machine, thus simulating a cluster on a small scale.
- Fully distributed mode
  - The Hadoop daemons run on a cluster of machines.

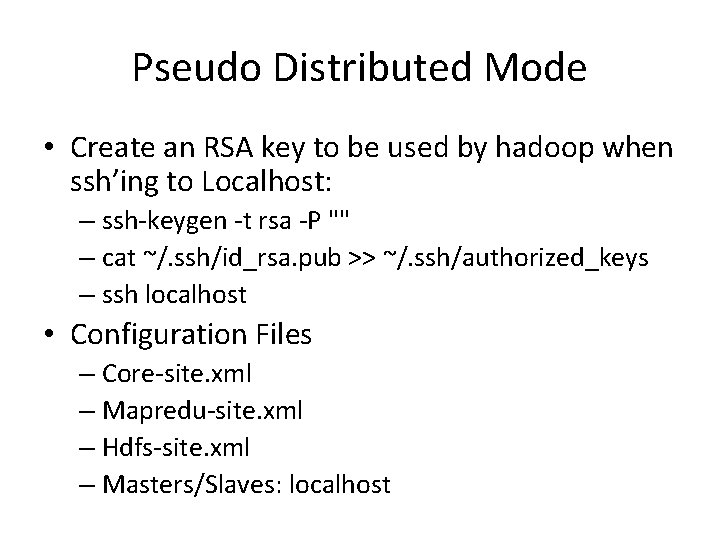

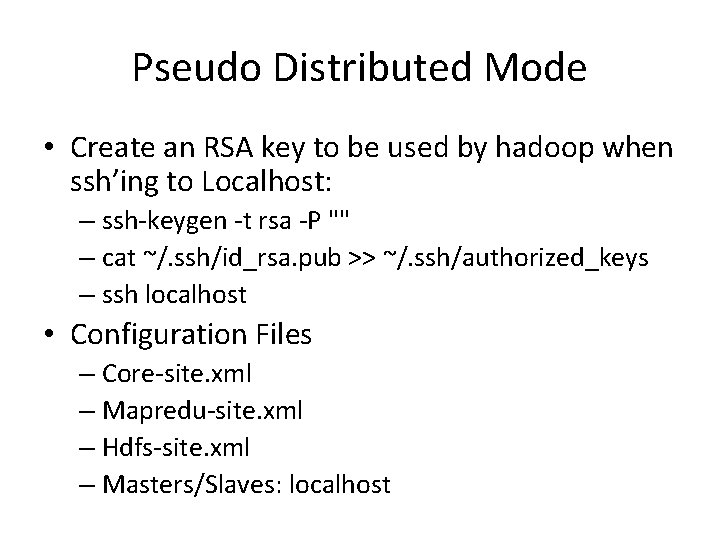

Pseudo Distributed Mode

- Create an RSA key to be used by Hadoop when ssh'ing to localhost:
  - `ssh-keygen -t rsa -P ""`
  - `cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys`
  - `ssh localhost`
- Configuration files
  - core-site.xml
  - mapred-site.xml
  - hdfs-site.xml
  - masters/slaves: localhost

Contents of the three configuration files:

    <?xml version="1.0"?>
    <!-- core-site.xml -->
    <configuration>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://localhost/</value>
      </property>
    </configuration>

    <?xml version="1.0"?>
    <!-- hdfs-site.xml -->
    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
    </configuration>

    <?xml version="1.0"?>
    <!-- mapred-site.xml -->
    <configuration>
      <property>
        <name>mapred.job.tracker</name>
        <value>localhost:8021</value>
      </property>
    </configuration>

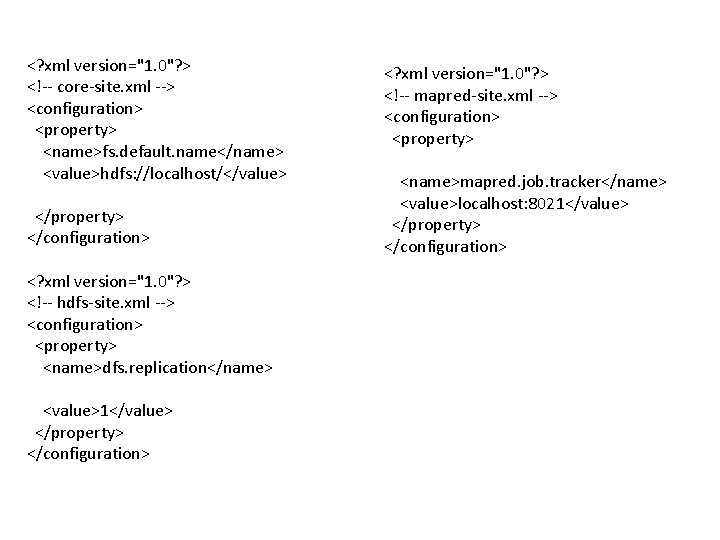

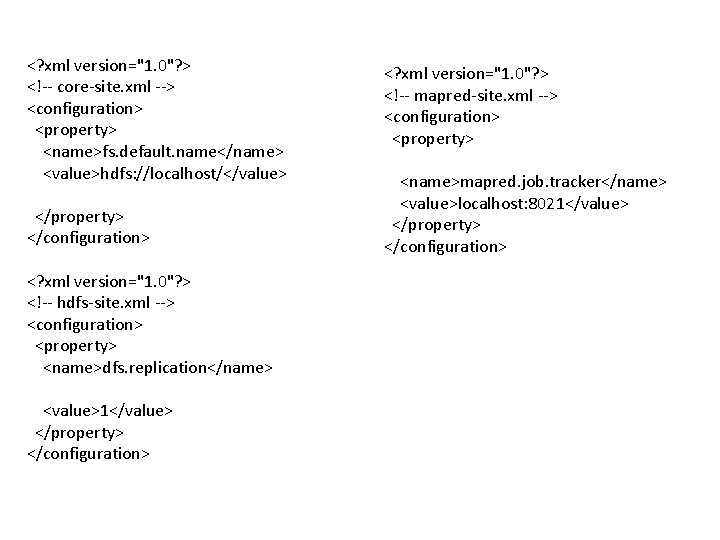

Start Hadoop

- `hadoop namenode -format`
- `bin/start-all.sh` (`start-dfs.sh` / `start-mapred.sh`)
- `jps`
- `bin/stop-all.sh`
- Web-based UI
  - http://localhost:50070 (Namenode report)
  - http://localhost:50030 (Jobtracker)

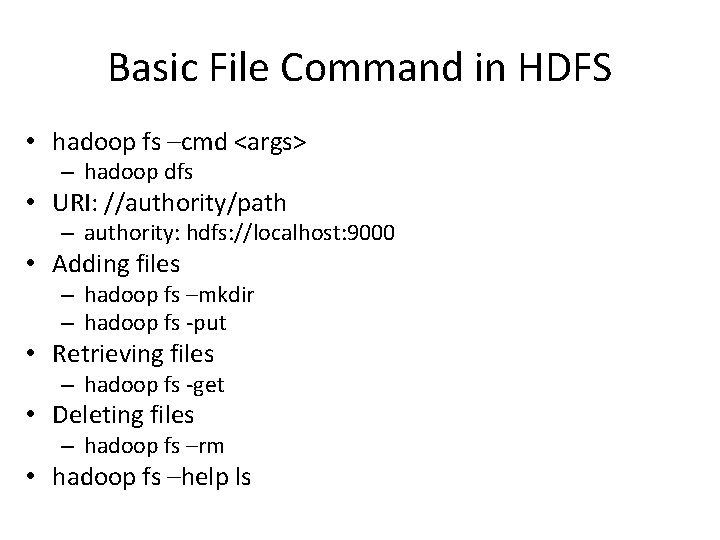

Basic File Commands in HDFS

- `hadoop fs -cmd <args>`
  - `hadoop dfs`
- URI: `scheme://authority/path`
  - scheme and authority: `hdfs://localhost:9000`
- Adding files
  - `hadoop fs -mkdir`
  - `hadoop fs -put`
- Retrieving files
  - `hadoop fs -get`
- Deleting files
  - `hadoop fs -rm`
- `hadoop fs -help ls`

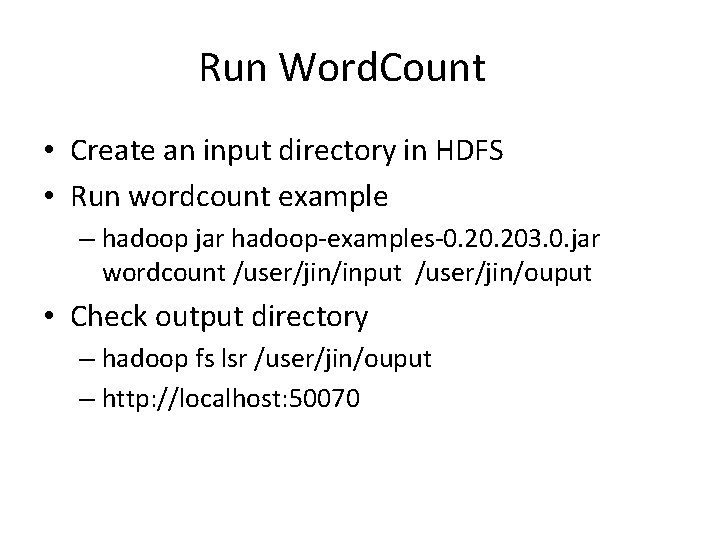

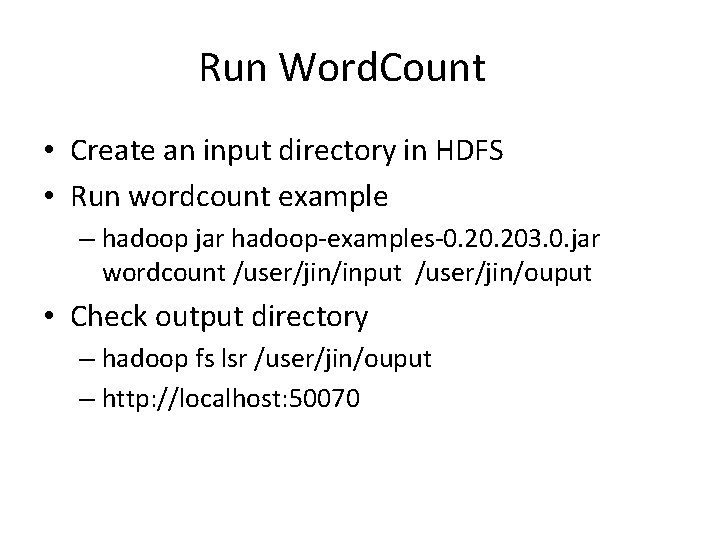

Run WordCount

- Create an input directory in HDFS
- Run the wordcount example
  - `hadoop jar hadoop-examples-0.203.0.jar wordcount /user/jin/input /user/jin/output`
- Check the output directory
  - `hadoop fs -lsr /user/jin/output`
  - http://localhost:50070

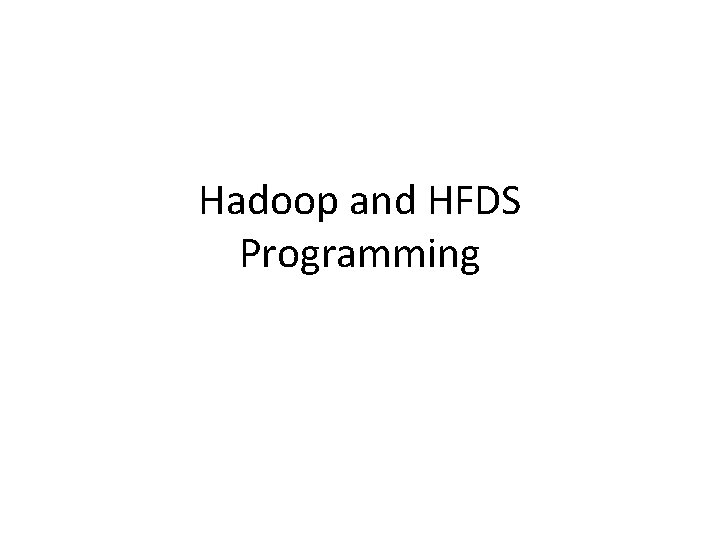

Hadoop and HDFS Programming

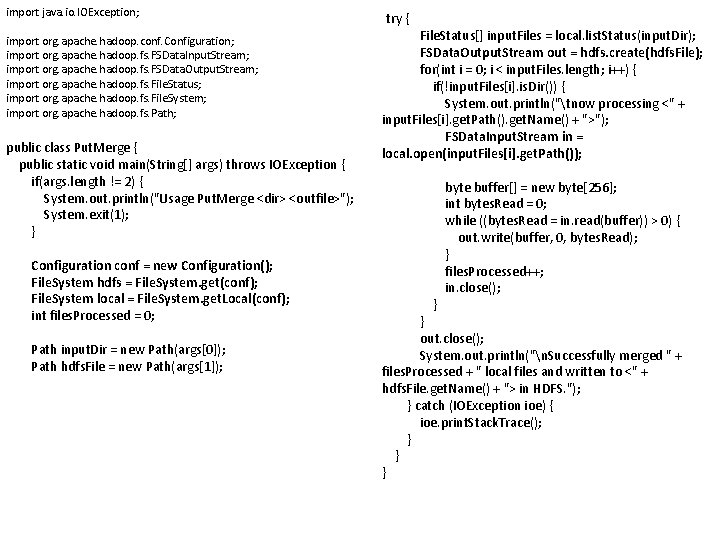

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class PutMerge {
        public static void main(String[] args) throws IOException {
            if (args.length != 2) {
                System.out.println("Usage PutMerge <dir> <outfile>");
                System.exit(1);
            }
            Configuration conf = new Configuration();
            FileSystem hdfs = FileSystem.get(conf);       // destination: HDFS
            FileSystem local = FileSystem.getLocal(conf); // source: local filesystem
            int filesProcessed = 0;
            Path inputDir = new Path(args[0]);
            Path hdfsFile = new Path(args[1]);
            try {
                FileStatus[] inputFiles = local.listStatus(inputDir);
                FSDataOutputStream out = hdfs.create(hdfsFile);
                for (int i = 0; i < inputFiles.length; i++) {
                    // Skip subdirectories; append each regular file to the HDFS output.
                    if (!inputFiles[i].isDir()) {
                        System.out.println("\tnow processing <" + inputFiles[i].getPath().getName() + ">");
                        FSDataInputStream in = local.open(inputFiles[i].getPath());
                        byte buffer[] = new byte[256];
                        int bytesRead = 0;
                        while ((bytesRead = in.read(buffer)) > 0) {
                            out.write(buffer, 0, bytesRead);
                        }
                        filesProcessed++;
                        in.close();
                    }
                }
                out.close();
                System.out.println("\nSuccessfully merged " + filesProcessed
                        + " local files and written to <" + hdfsFile.getName() + "> in HDFS.");
            } catch (IOException ioe) {
                ioe.printStackTrace();
            }
        }
    }

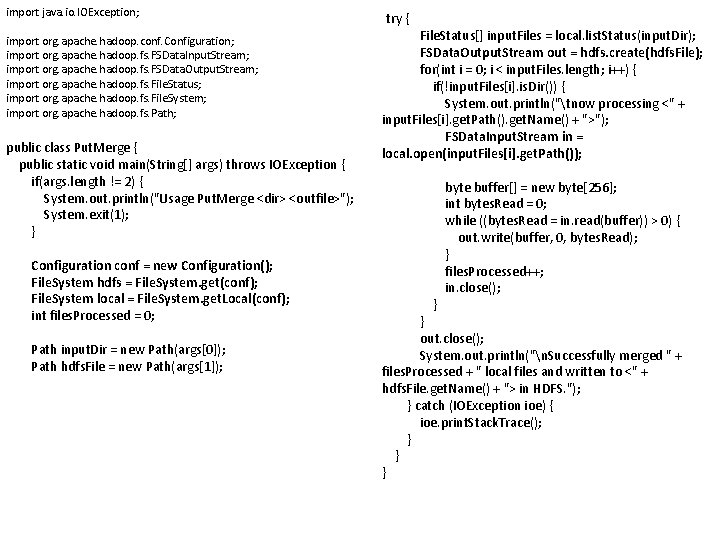

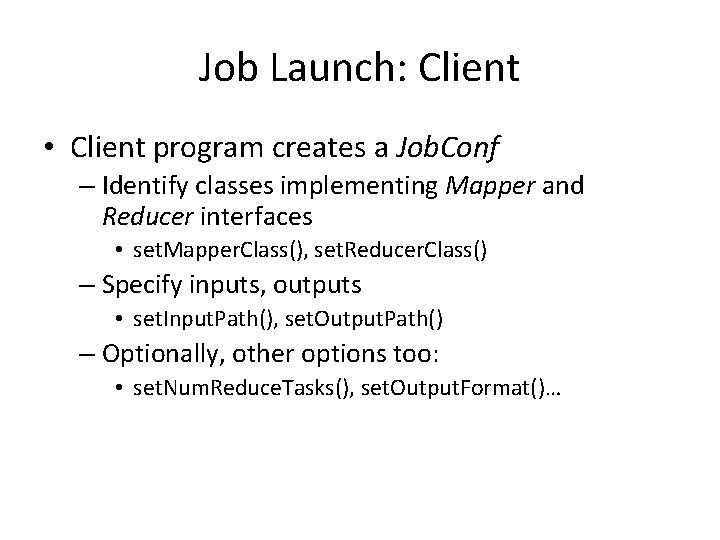

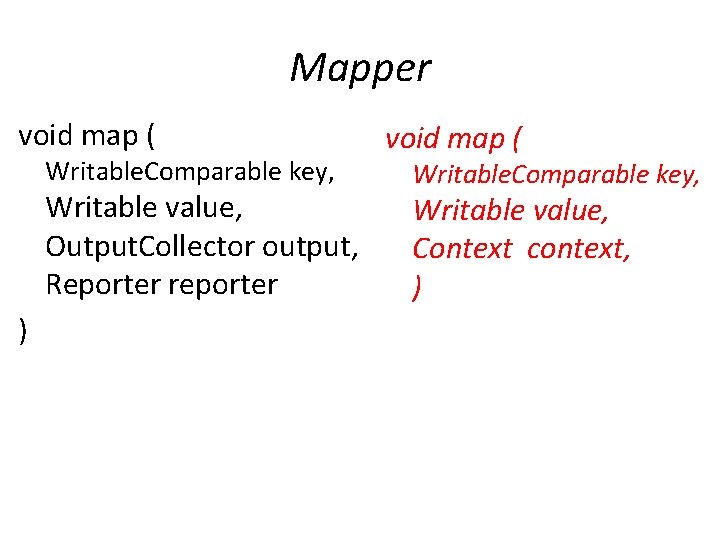

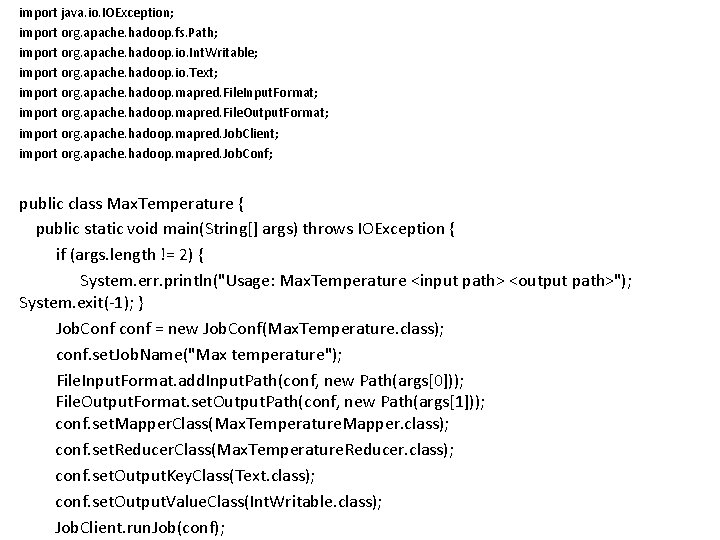

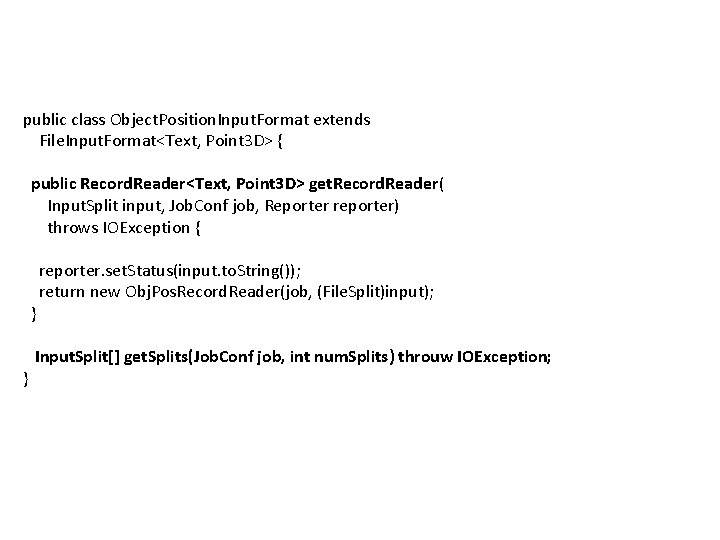

    import java.io.IOException;

    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapred.FileInputFormat;
    import org.apache.hadoop.mapred.FileOutputFormat;
    import org.apache.hadoop.mapred.JobClient;
    import org.apache.hadoop.mapred.JobConf;

    public class MaxTemperature {
        public static void main(String[] args) throws IOException {
            if (args.length != 2) {
                System.err.println("Usage: MaxTemperature <input path> <output path>");
                System.exit(-1);
            }
            JobConf conf = new JobConf(MaxTemperature.class);
            conf.setJobName("Max temperature");
            FileInputFormat.addInputPath(conf, new Path(args[0]));
            FileOutputFormat.setOutputPath(conf, new Path(args[1]));
            conf.setMapperClass(MaxTemperatureMapper.class);
            conf.setReducerClass(MaxTemperatureReducer.class);
            conf.setOutputKeyClass(Text.class);
            conf.setOutputValueClass(IntWritable.class);
            JobClient.runJob(conf);
        }
    }

JobClient.runJob(conf)

A job run involves four entities:

- The client, which submits the MapReduce job.
- The jobtracker, which coordinates the job run. The jobtracker is a Java application whose main class is JobTracker.
- The tasktrackers, which run the tasks that the job has been split into. Tasktrackers are Java applications whose main class is TaskTracker.
- The distributed filesystem, which is used for sharing job files between the other entities.

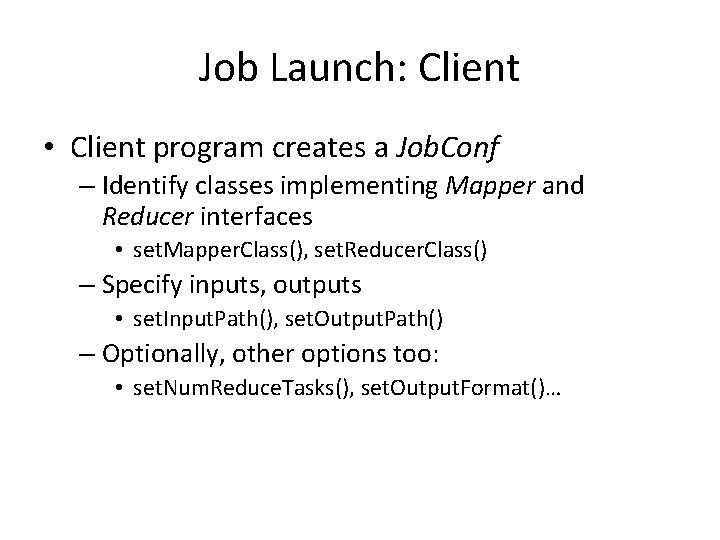

Job Launch: Client

- Client program creates a JobConf
  - Identify classes implementing Mapper and Reducer interfaces
    - setMapperClass(), setReducerClass()
  - Specify inputs, outputs
    - setInputPath(), setOutputPath()
  - Optionally, other options too:
    - setNumReduceTasks(), setOutputFormat()…

Job Launch: JobClient

- Pass JobConf to
  - JobClient.runJob() (blocks)
  - JobClient.submitJob() (does not block)
- JobClient:
  - Determines proper division of input into InputSplits
  - Sends job data to master JobTracker server
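The difference between a blocking and a non-blocking submission can be sketched in plain Java. This is only an analogy, assuming a JDK 8 or later: `JobSubmissionSketch`, its single worker thread, and its method bodies are hypothetical and do not use or reproduce the Hadoop `JobClient`.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Plain-Java analogy only; none of these names are Hadoop classes.
class JobSubmissionSketch {
    // A single daemon worker thread stands in for the cluster.
    private final ExecutorService cluster = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "sketch-cluster");
        t.setDaemon(true);
        return t;
    });

    // Non-blocking: hand the job over and return a handle immediately.
    <T> Future<T> submitJob(Callable<T> job) {
        return cluster.submit(job);
    }

    // Blocking: submit the job, then wait until it has finished.
    <T> T runJob(Callable<T> job) {
        try {
            return submitJob(job).get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("job failed", e.getCause());
        }
    }

    void shutdown() {
        cluster.shutdown();
    }

    public static void main(String[] args) {
        JobSubmissionSketch client = new JobSubmissionSketch();
        Future<Integer> handle = client.submitJob(() -> 6 * 7); // returns at once
        int result = client.runJob(() -> 1 + 1);                // waits for completion
        System.out.println("runJob result: " + result);
        System.out.println("first job finished: " + handle.isDone());
        client.shutdown();
    }
}
```

The caller of `submitJob` keeps a handle and can do other work or poll it later, while the caller of `runJob` simply waits for the job to finish.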

Job Launch: JobTracker

- JobTracker:
  - Inserts jar and JobConf (serialized to XML) in shared location
  - Posts a JobInProgress to its run queue

Job Launch: TaskTracker

- TaskTrackers running on slave nodes periodically query JobTracker for work
- Retrieve job-specific jar and config
- Launch task in separate instance of Java
  - main() is provided by Hadoop

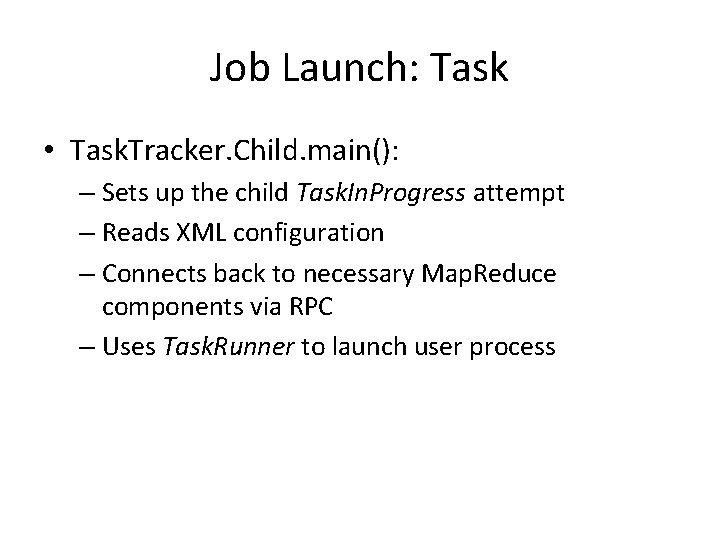

Job Launch: Task

- TaskTracker.Child.main():
  - Sets up the child TaskInProgress attempt
  - Reads XML configuration
  - Connects back to necessary MapReduce components via RPC
  - Uses TaskRunner to launch user process

Job Launch: TaskRunner

- TaskRunner, MapRunner work in a daisy-chain to launch Mapper
  - Task knows ahead of time which InputSplits it should be mapping
  - Calls Mapper once for each record retrieved from the InputSplit
- Running the Reducer is much the same
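The "one call per record" loop can be sketched in plain Java, assuming a JDK 8 or later. `MapRunnerSketch` is a hypothetical stand-in, not the Hadoop `MapRunner`; it assumes line-oriented input with single-byte characters and passes each line to a callback together with its offset in the split.

```java
import java.util.function.BiConsumer;

// Hypothetical stand-in for the record loop; not a Hadoop class.
class MapRunnerSketch {
    // Call the mapper once per line, passing (offset of the line, line text).
    // Returns the number of records that were mapped.
    static int run(String splitText, BiConsumer<Long, String> mapper) {
        if (splitText.isEmpty()) {
            return 0;
        }
        int records = 0;
        long offset = 0;
        for (String line : splitText.split("\n")) {
            mapper.accept(offset, line);
            offset += line.length() + 1; // +1 for the newline; assumes 1 byte per char
            records++;
        }
        return records;
    }

    public static void main(String[] args) {
        int n = run("first line\nsecond line\n",
                (offset, line) -> System.out.println(offset + "\t" + line));
        System.out.println(n + " records mapped");
    }
}
```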

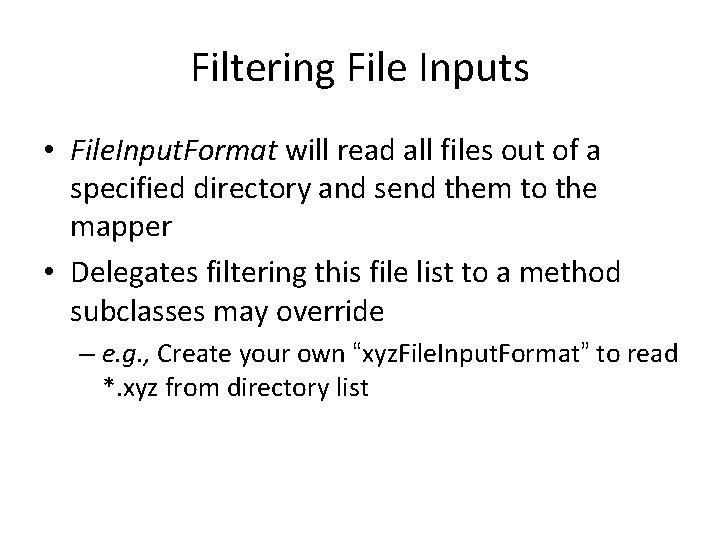

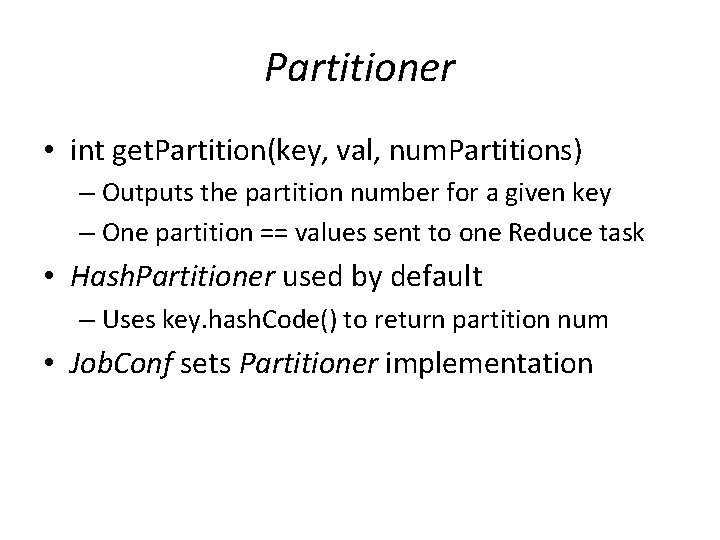

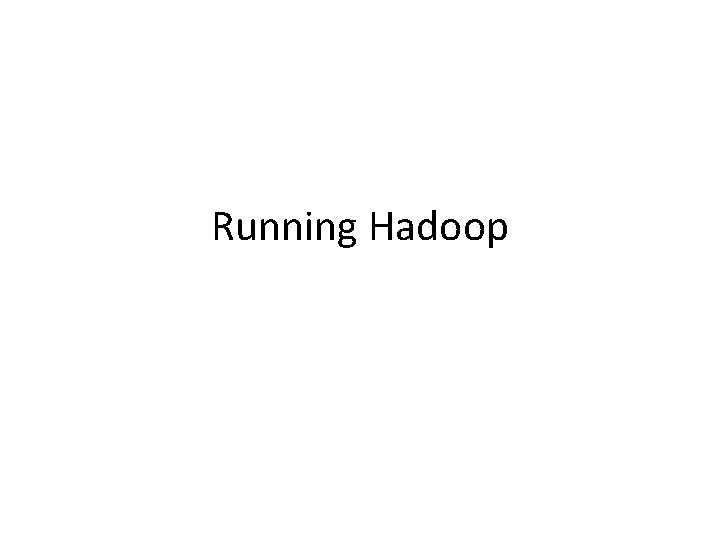

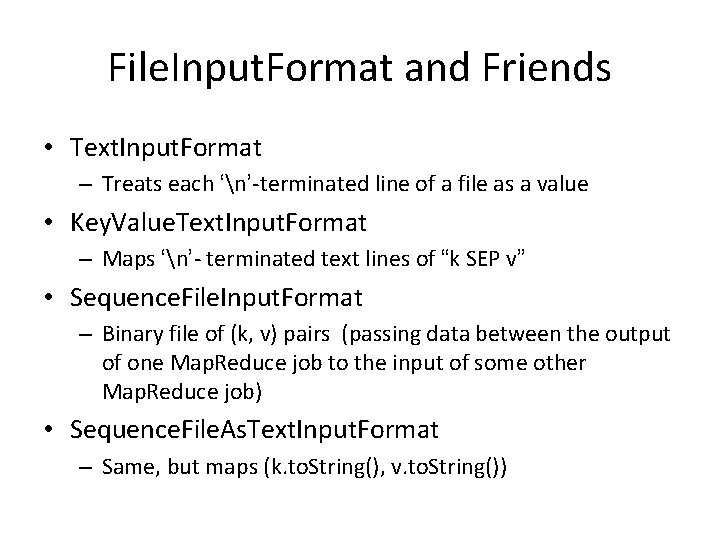

    public class MaxTemperature {
        public static void main(String[] args) throws IOException {
            if (args.length != 2) {
                System.err.println("Usage: MaxTemperature <input path> <output path>");
                System.exit(-1);
            }
            JobConf conf = new JobConf(MaxTemperature.class);
            conf.setJobName("Max temperature");
            FileInputFormat.addInputPath(conf, new Path(args[0]));
            FileOutputFormat.setOutputPath(conf, new Path(args[1]));
            conf.setMapperClass(MaxTemperatureMapper.class);
            conf.setReducerClass(MaxTemperatureReducer.class);
            conf.setOutputKeyClass(Text.class);
            conf.setOutputValueClass(IntWritable.class);
            JobClient.runJob(conf);
        }
    }

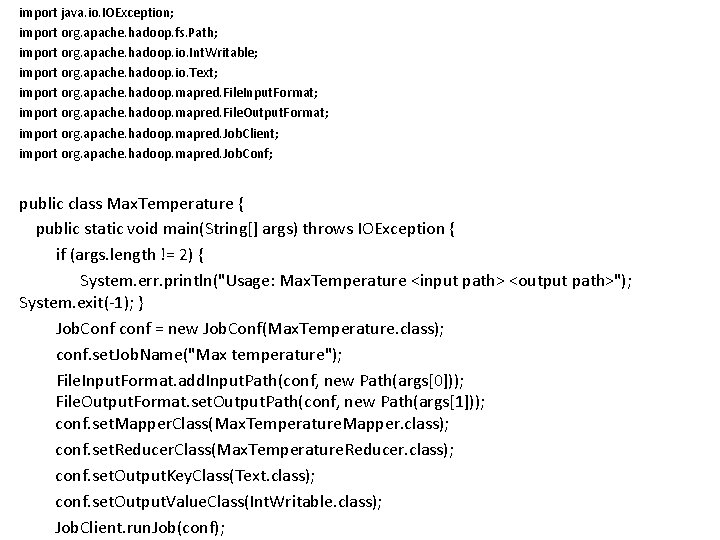

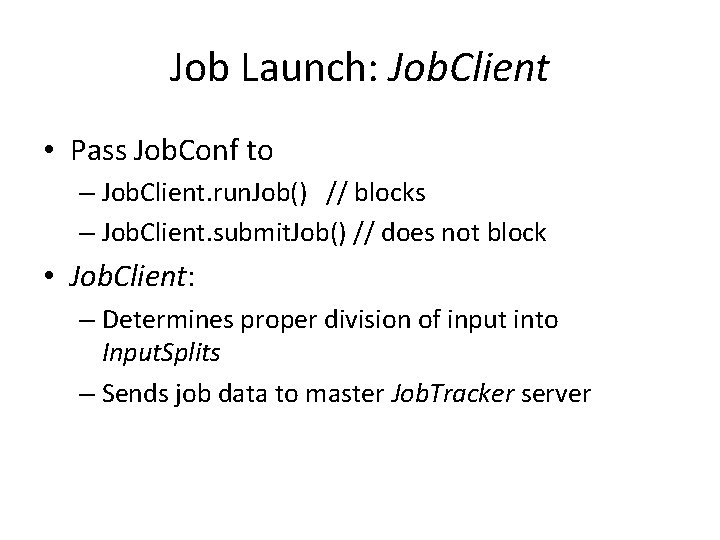

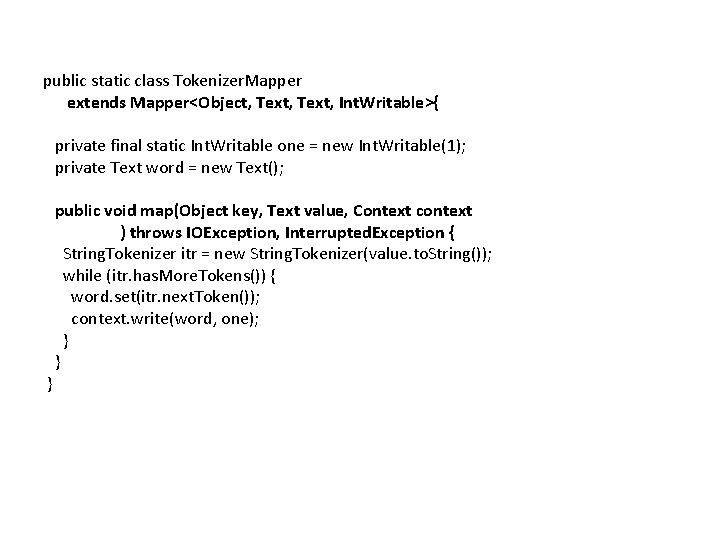

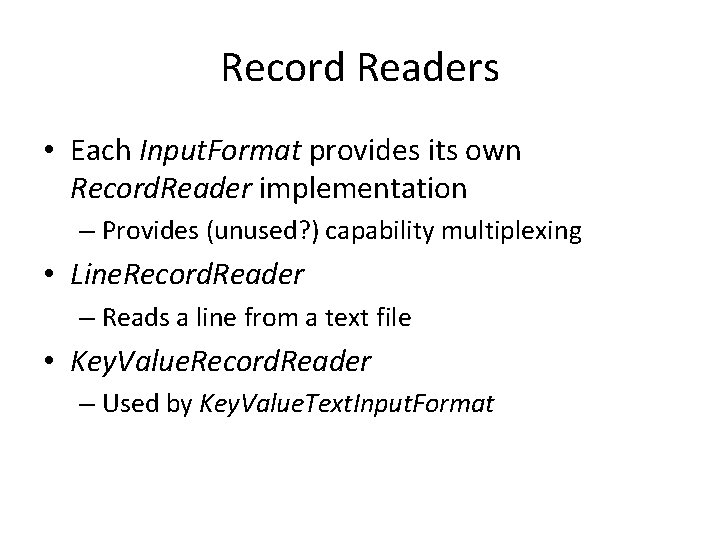

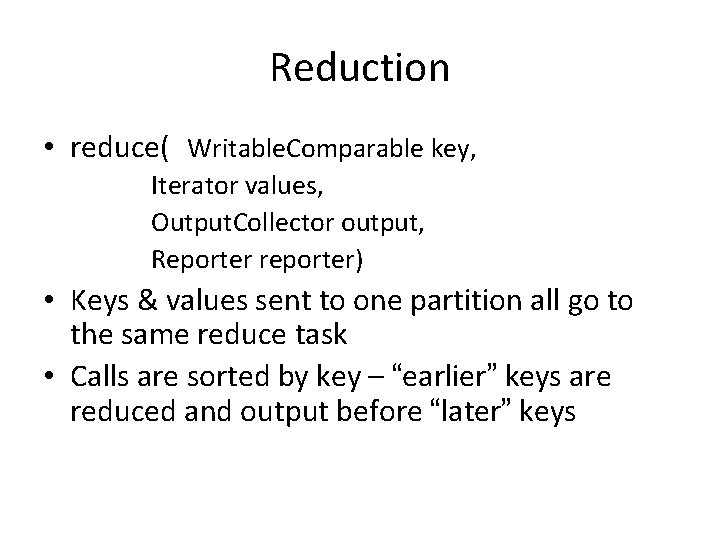

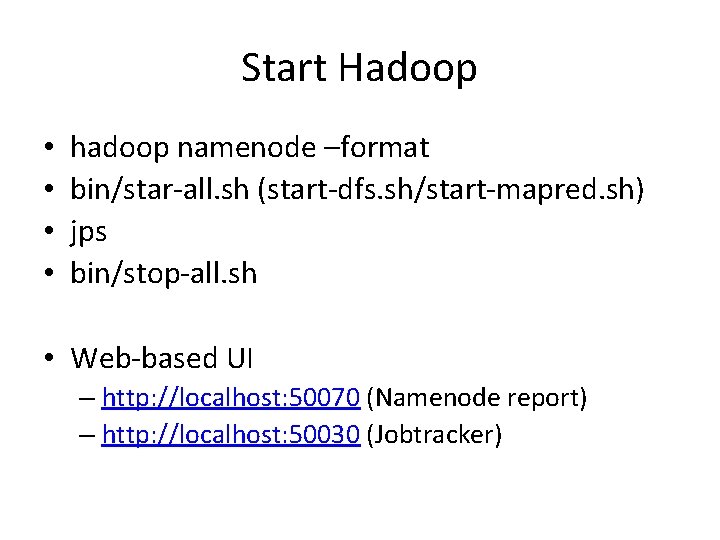

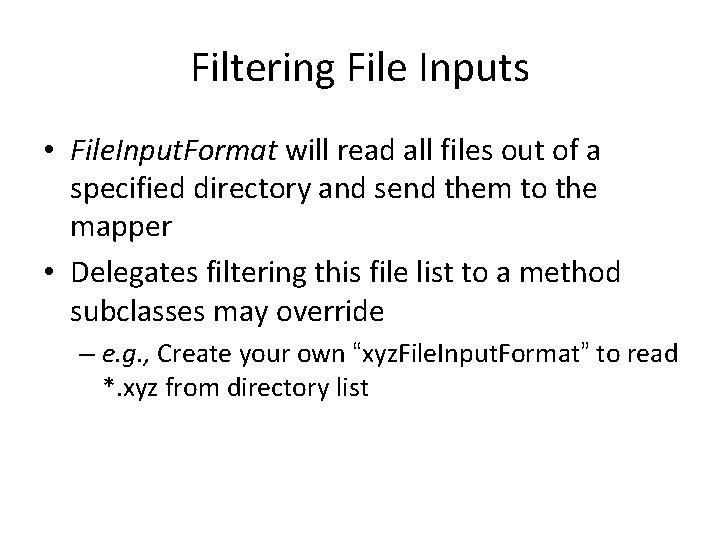

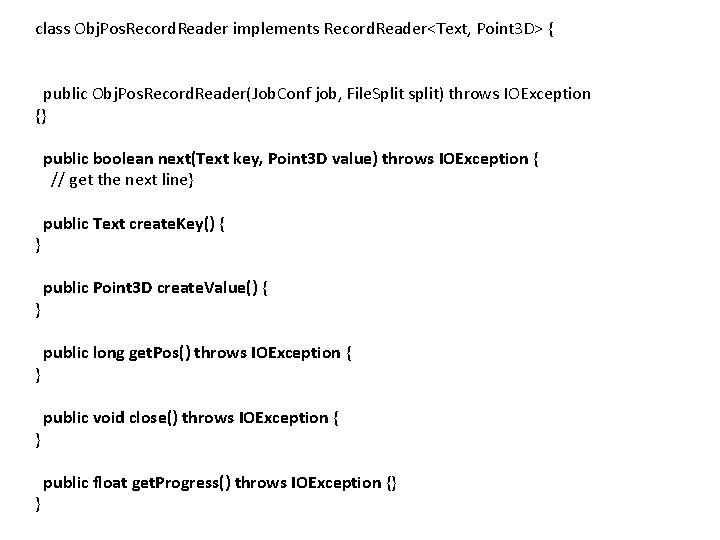

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
        if (otherArgs.length != 2) {
            System.err.println("Usage: wordcount <in> <out>");
            System.exit(2);
        }
        Job job = new Job(conf, "word count");
        job.setJarByClass(WordCount.class);
        job.setMapperClass(TokenizerMapper.class);
        job.setCombinerClass(IntSumReducer.class);
        job.setReducerClass(IntSumReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
        FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
        // Block until the job finishes; exit code 0 on success, 1 on failure.
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }

Creating the Mapper

- Your instance of Mapper should extend MapReduceBase
- One instance of your Mapper is initialized by the MapTaskRunner for a TaskInProgress
  - Exists in separate process from all other instances of Mapper: no data sharing!

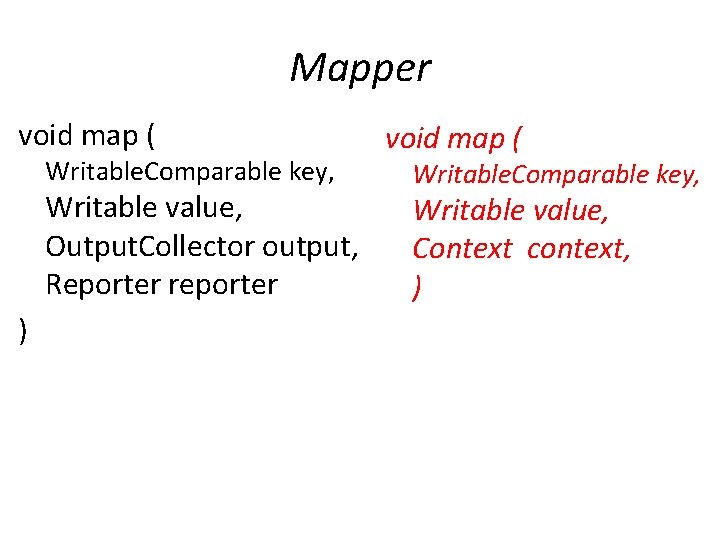

Mapper

    // Old API (org.apache.hadoop.mapred)
    void map(WritableComparable key, Writable value,
             OutputCollector output, Reporter reporter)

    // New API (org.apache.hadoop.mapreduce)
    void map(WritableComparable key, Writable value, Context context)

    public static class TokenizerMapper extends Mapper<Object, Text, Text, IntWritable> {
        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        // Emit (word, 1) for every whitespace-separated token in the line.
        public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {
            StringTokenizer itr = new StringTokenizer(value.toString());
            while (itr.hasMoreTokens()) {
                word.set(itr.nextToken());
                context.write(word, one);
            }
        }
    }

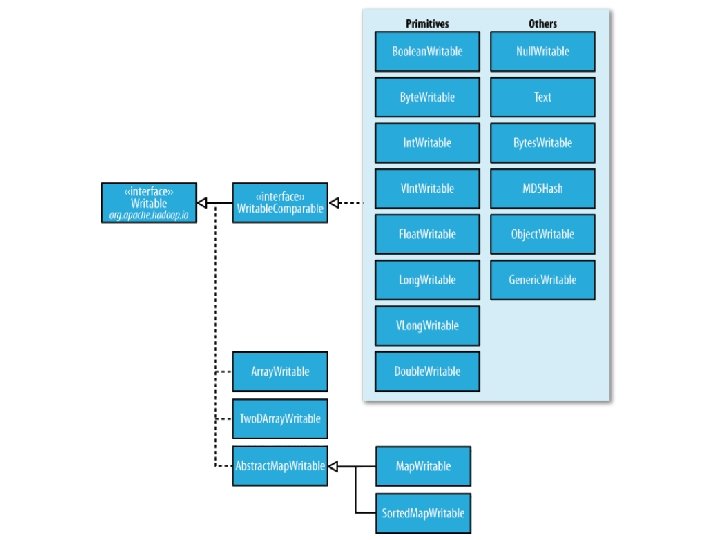

What is Writable?

- Hadoop defines its own "box" classes for strings (Text), integers (IntWritable), etc.
- All values are instances of Writable
- All keys are instances of WritableComparable
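To make the "box" idea concrete, here is a minimal sketch of the write/readFields pairing using only `java.io`. `BoxedInt` is a hypothetical stand-in for a class like IntWritable and does not implement the Hadoop interfaces; the `roundTrip` helper exists only for this illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical stand-in for a boxed integer; not the Hadoop IntWritable.
class BoxedInt implements Comparable<BoxedInt> {
    private int value;

    BoxedInt() { }

    BoxedInt(int value) { this.value = value; }

    int get() { return value; }

    // Serialize this object's state to a binary stream.
    void write(DataOutput out) throws IOException {
        out.writeInt(value);
    }

    // Overwrite this object's state from a binary stream.
    void readFields(DataInput in) throws IOException {
        value = in.readInt();
    }

    // Keys additionally need an ordering so they can be sorted.
    @Override
    public int compareTo(BoxedInt other) {
        return Integer.compare(value, other.value);
    }

    // Serialize one instance and read it back into a fresh one.
    static BoxedInt roundTrip(BoxedInt original) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            original.write(new DataOutputStream(bytes));
            BoxedInt copy = new BoxedInt();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip(new BoxedInt(42)).get());
    }
}
```

The no-argument constructor matters in this pattern: the receiving side creates an empty object first and then fills it from the stream.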

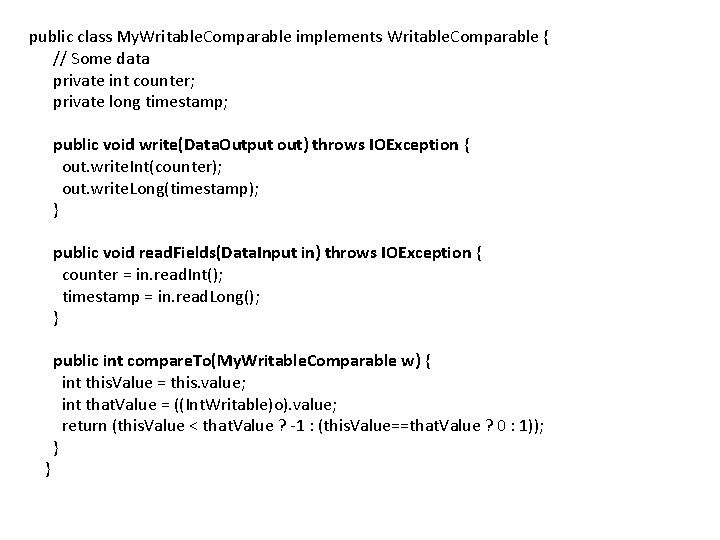

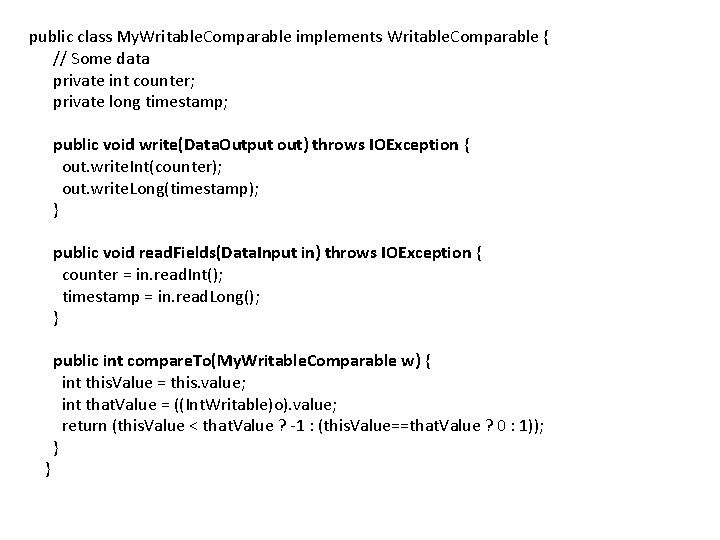

    public class MyWritableComparable implements WritableComparable<MyWritableComparable> {
        // Some data
        private int counter;
        private long timestamp;

        public void write(DataOutput out) throws IOException {
            out.writeInt(counter);
            out.writeLong(timestamp);
        }

        public void readFields(DataInput in) throws IOException {
            counter = in.readInt();
            timestamp = in.readLong();
        }

        // Assumption: order by counter (the original body used an undefined "value" field).
        public int compareTo(MyWritableComparable w) {
            int thisValue = this.counter;
            int thatValue = w.counter;
            return (thisValue < thatValue ? -1 : (thisValue == thatValue ? 0 : 1));
        }
    }

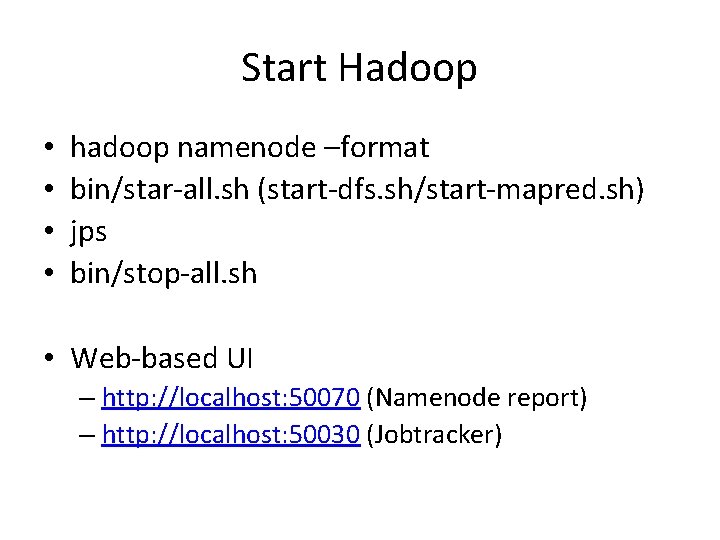

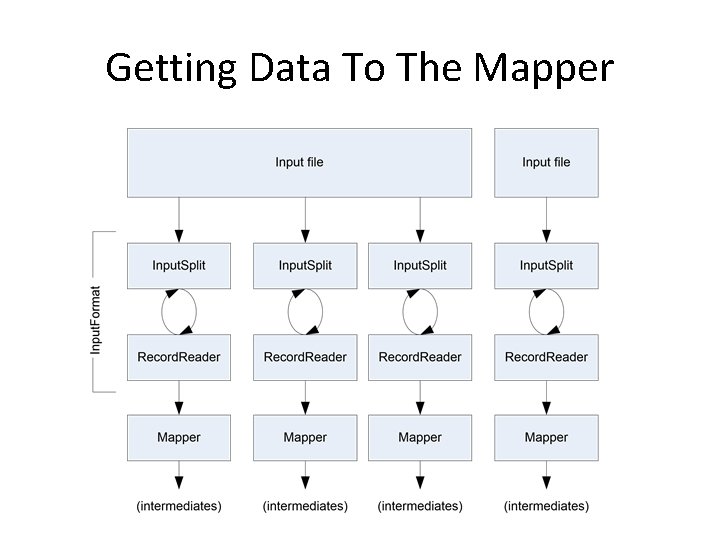

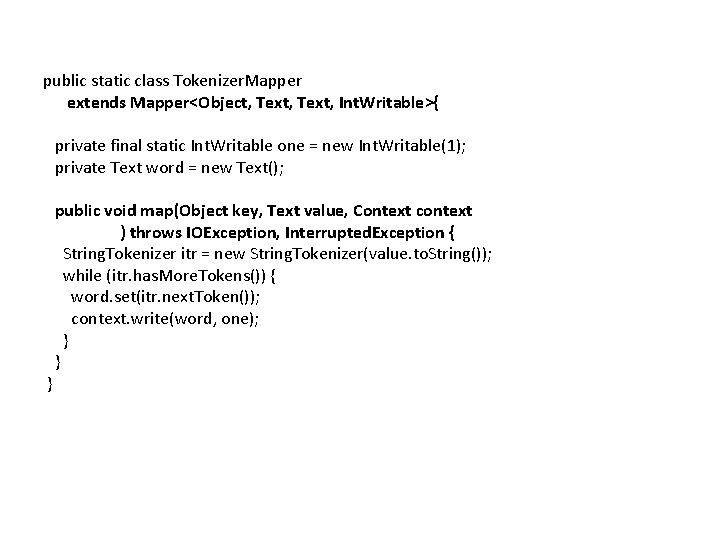

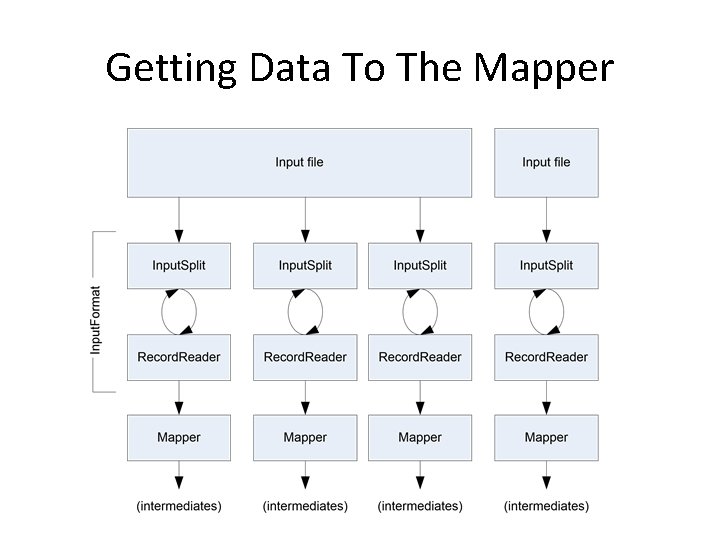

Getting Data To The Mapper

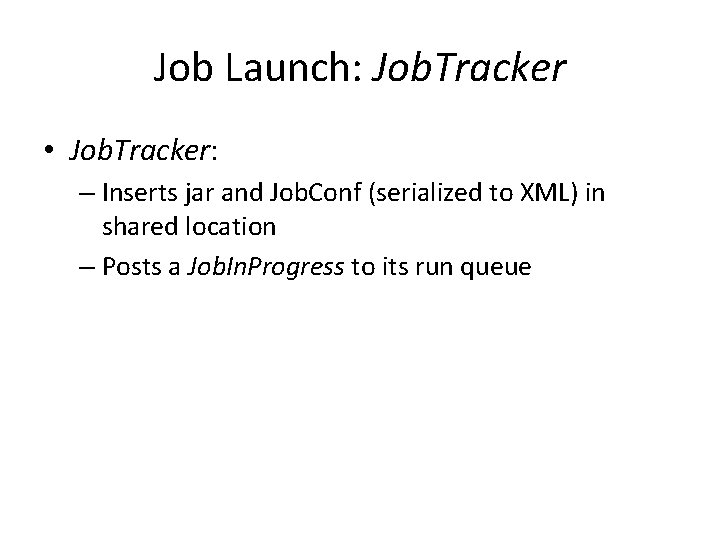

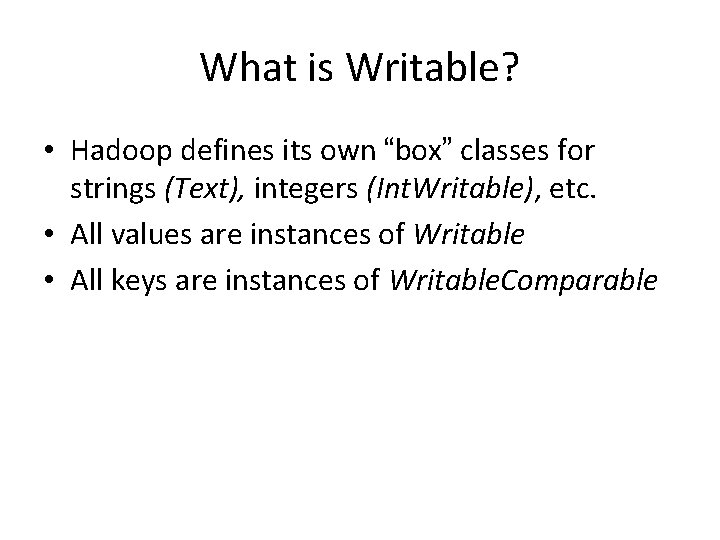

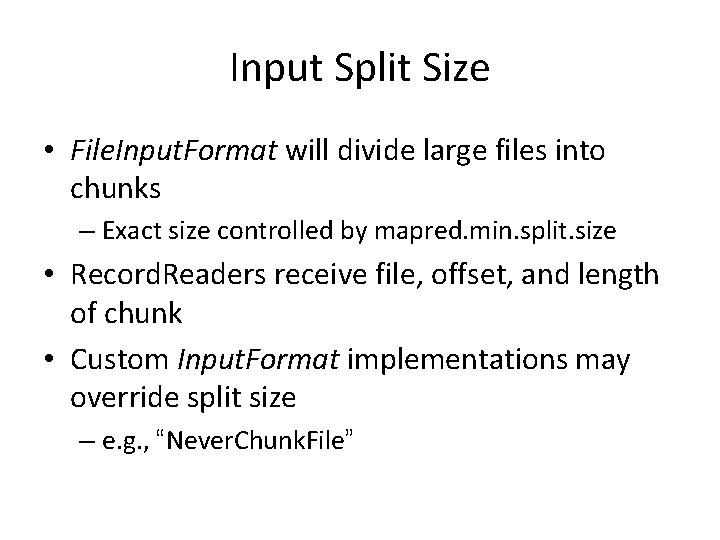

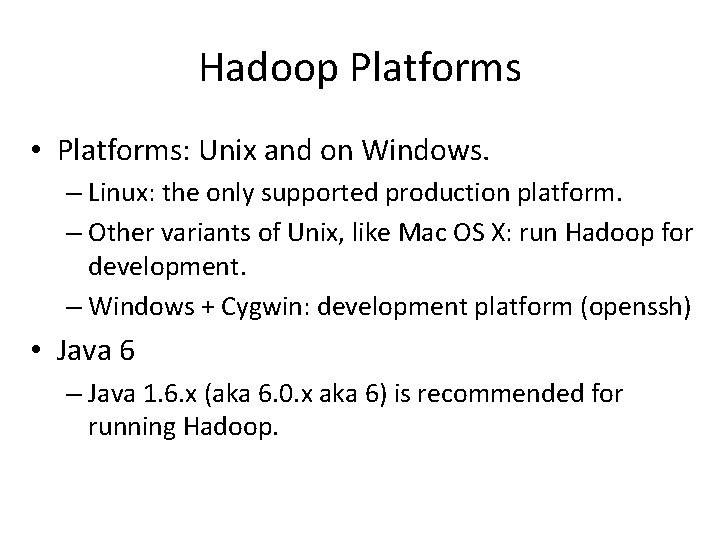

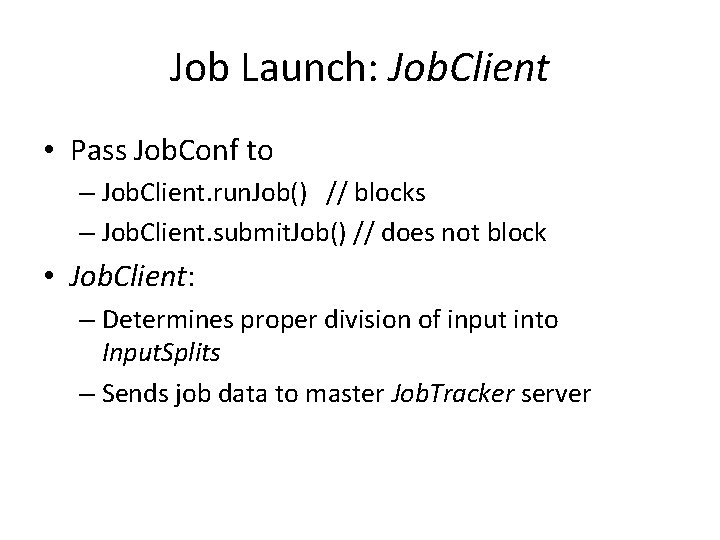

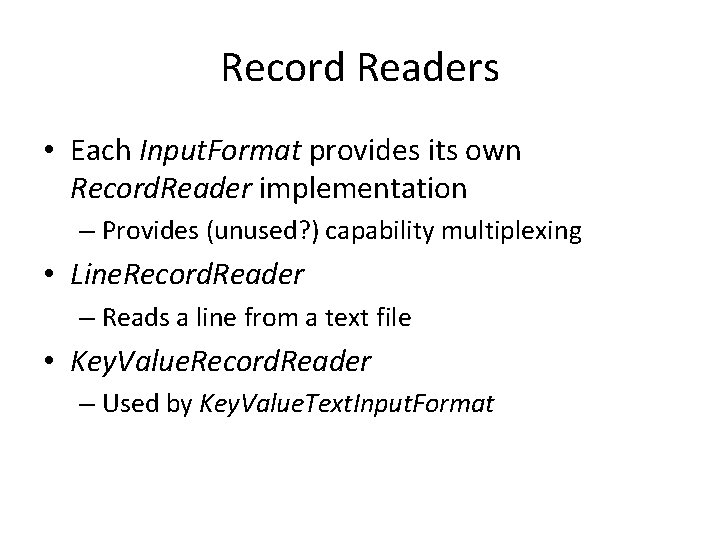

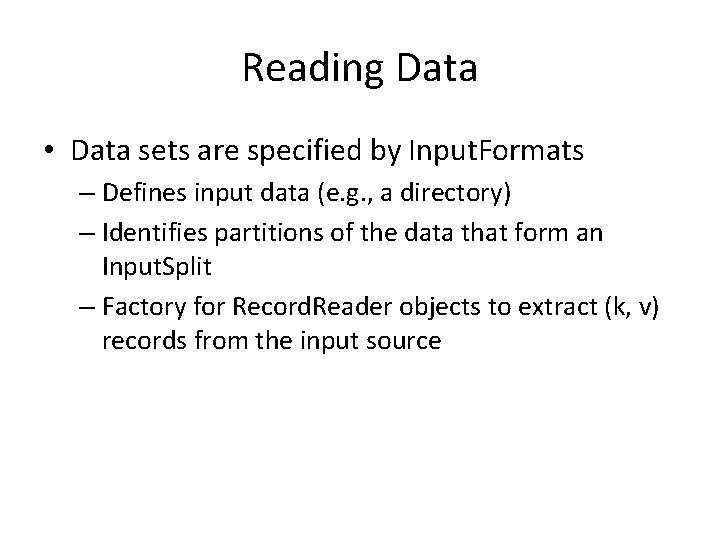

Reading Data

- Data sets are specified by InputFormats
  - Defines input data (e.g., a directory)
  - Identifies partitions of the data that form an InputSplit
  - Factory for RecordReader objects to extract (k, v) records from the input source

FileInputFormat and Friends

- TextInputFormat
  - Treats each '\n'-terminated line of a file as a value
- KeyValueTextInputFormat
  - Maps '\n'-terminated text lines of "k SEP v"
- SequenceFileInputFormat
  - Binary file of (k, v) pairs (for passing data from the output of one MapReduce job to the input of another MapReduce job)
- SequenceFileAsTextInputFormat
  - Same, but maps (k.toString(), v.toString())
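The "k SEP v" convention can be sketched in plain Java. `KeyValueLineSketch` is a hypothetical helper, not a Hadoop class. It assumes the line is cut at the first separator, and that a line without a separator becomes the key with an empty value, which is the behaviour I understand KeyValueTextInputFormat to have with its default tab separator.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.Map;

// Hypothetical helper illustrating the "k SEP v" line convention.
class KeyValueLineSketch {
    // Split at the first separator; with no separator the whole line is the key.
    static Map.Entry<String, String> split(String line, char sep) {
        int pos = line.indexOf(sep);
        if (pos < 0) {
            return new SimpleEntry<>(line, "");
        }
        return new SimpleEntry<>(line.substring(0, pos), line.substring(pos + 1));
    }

    public static void main(String[] args) {
        Map.Entry<String, String> kv = split("hadoop\tis a framework", '\t');
        System.out.println("key=" + kv.getKey() + ", value=" + kv.getValue());
    }
}
```

Only the first separator is significant, so a value may itself contain further separator characters.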

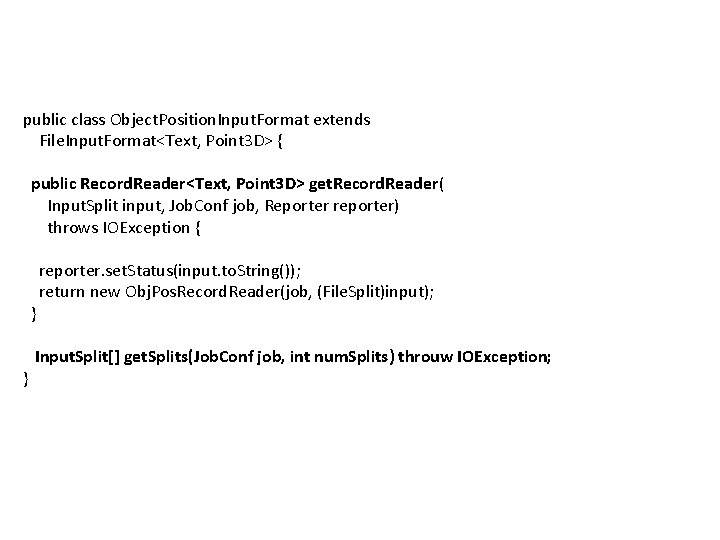

Filtering File Inputs

- FileInputFormat will read all files out of a specified directory and send them to the mapper
- Delegates filtering this file list to a method subclasses may override
  - e.g., create your own "xyzFileInputFormat" to read *.xyz from directory list
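The filtering step itself is small. The sketch below shows only the idea of keeping `*.xyz` entries from a directory listing; `ExtensionFilterSketch` and its `accept` method are hypothetical and work on plain file names rather than on Hadoop paths or on any FileInputFormat hook.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper; it filters plain file names, not Hadoop Path objects.
class ExtensionFilterSketch {
    // Keep only the names that end with the wanted extension, e.g. ".xyz".
    static List<String> accept(List<String> fileNames, String extension) {
        List<String> kept = new ArrayList<>();
        for (String name : fileNames) {
            if (name.endsWith(extension)) {
                kept.add(name);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<String> listing = Arrays.asList("a.xyz", "notes.txt", "b.xyz", "_logs");
        System.out.println(accept(listing, ".xyz"));
    }
}
```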

Record Readers

- Each InputFormat provides its own RecordReader implementation
  - Provides (unused?) capability multiplexing
- LineRecordReader
  - Reads a line from a text file
- KeyValueRecordReader
  - Used by KeyValueTextInputFormat

Input Split Size

- FileInputFormat will divide large files into chunks
  - Exact size controlled by mapred.min.split.size
- RecordReaders receive file, offset, and length of chunk
- Custom InputFormat implementations may override split size
  - e.g., "NeverChunkFile"
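A simplified sketch of how a file could be cut into (offset, length) chunks follows. `SplitSketch` is hypothetical. The rule `max(minSize, min(goalSize, blockSize))` is how I recall the old-API FileInputFormat choosing a split size, so treat it and the parameter names as assumptions to check against your Hadoop version; details such as how the real implementation treats the tail of a file are left out.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of split computation.
class SplitSketch {
    // At least minSize, otherwise the smaller of the goal size and the block size.
    static long computeSplitSize(long goalSize, long minSize, long blockSize) {
        return Math.max(minSize, Math.min(goalSize, blockSize));
    }

    // Cut a file of fileLength bytes into {offset, length} chunks of at most splitSize bytes.
    static List<long[]> splits(long fileLength, long splitSize) {
        if (splitSize <= 0) {
            throw new IllegalArgumentException("splitSize must be positive");
        }
        List<long[]> chunks = new ArrayList<>();
        for (long offset = 0; offset < fileLength; offset += splitSize) {
            chunks.add(new long[] { offset, Math.min(splitSize, fileLength - offset) });
        }
        return chunks;
    }

    public static void main(String[] args) {
        long size = computeSplitSize(1000L, 1L, 64L);
        for (long[] chunk : splits(250L, size)) {
            System.out.println("offset=" + chunk[0] + " length=" + chunk[1]);
        }
    }
}
```

Raising the minimum above the block size forces larger splits, which is the effect the mapred.min.split.size setting is described as controlling.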

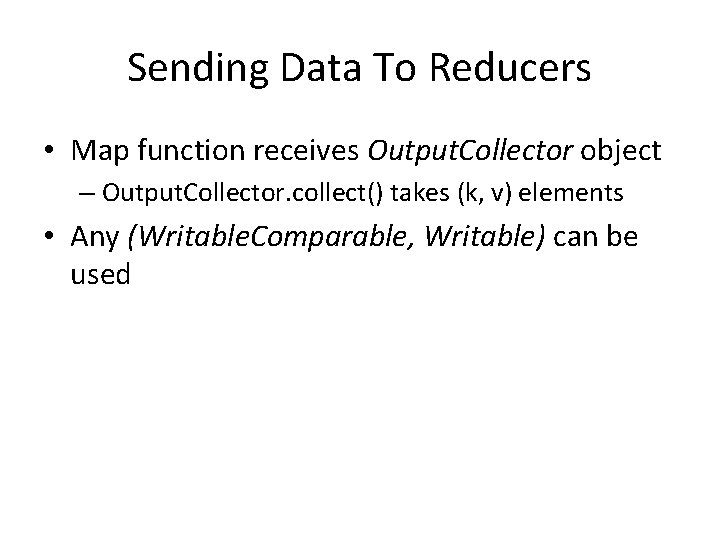

    public class ObjectPositionInputFormat extends FileInputFormat<Text, Point3D> {

        public RecordReader<Text, Point3D> getRecordReader(
                InputSplit input, JobConf job, Reporter reporter) throws IOException {
            reporter.setStatus(input.toString());
            return new ObjPosRecordReader(job, (FileSplit) input);
        }

        // Inherited from FileInputFormat:
        // InputSplit[] getSplits(JobConf job, int numSplits) throws IOException;
    }

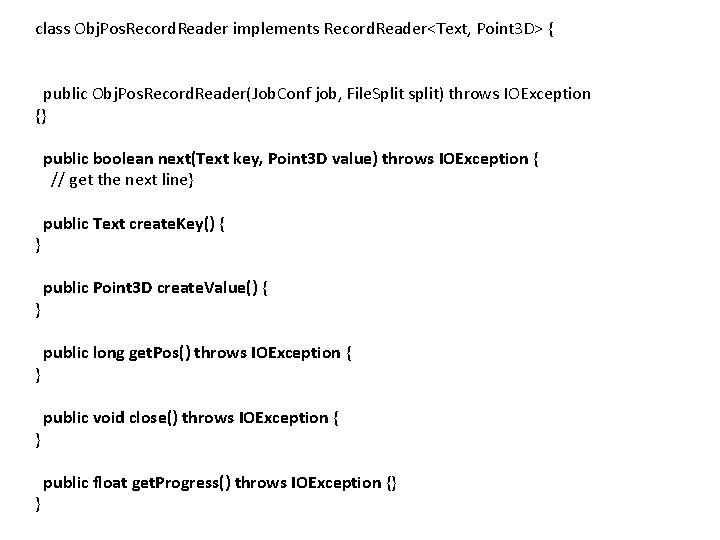

    // Skeleton only: method bodies are omitted.
    class ObjPosRecordReader implements RecordReader<Text, Point3D> {

        public ObjPosRecordReader(JobConf job, FileSplit split) throws IOException { }

        public boolean next(Text key, Point3D value) throws IOException {
            // get the next line
        }

        public Text createKey() { }

        public Point3D createValue() { }

        public long getPos() throws IOException { }

        public void close() throws IOException { }

        public float getProgress() throws IOException { }
    }

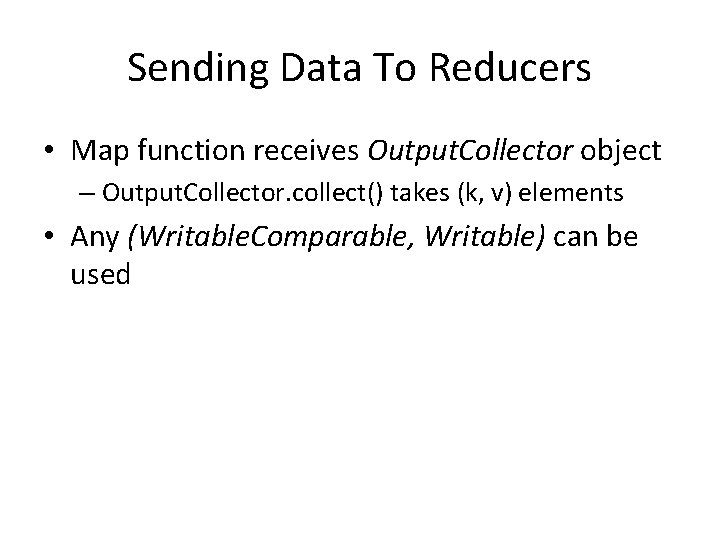

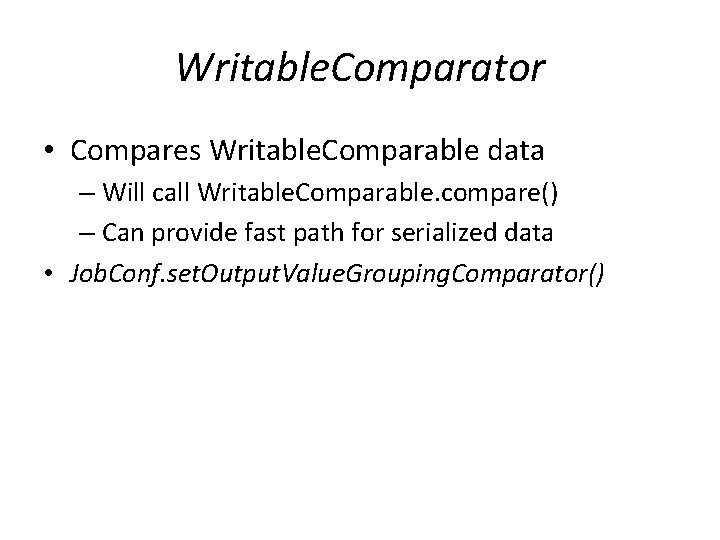

Sending Data To Reducers

- Map function receives OutputCollector object
  - OutputCollector.collect() takes (k, v) elements
- Any (WritableComparable, Writable) can be used

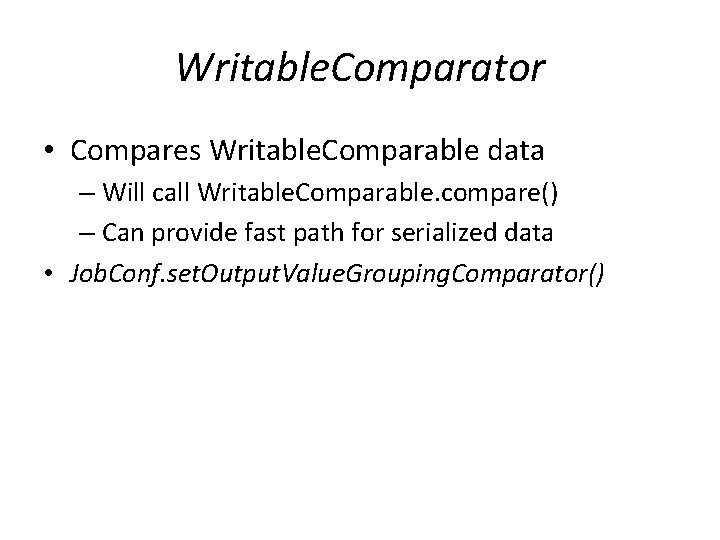

WritableComparator

- Compares WritableComparable data
  - Will call WritableComparable.compareTo()
  - Can provide fast path for serialized data
- JobConf.setOutputValueGroupingComparator()
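The "fast path for serialized data" means comparing keys directly in their byte form instead of deserializing them into objects first. Below is a minimal plain-Java sketch for 4-byte big-endian integers, the layout `DataOutput.writeInt` produces. `RawIntComparatorSketch` and its method names are hypothetical and do not extend the Hadoop WritableComparator.

```java
import java.nio.ByteBuffer;

// Hypothetical sketch of comparing serialized ints without deserializing objects.
class RawIntComparatorSketch {
    // Encode an int as 4 big-endian bytes (ByteBuffer's default byte order).
    static byte[] serialize(int value) {
        return ByteBuffer.allocate(4).putInt(value).array();
    }

    // Decode a big-endian int starting at the given position in the buffer.
    static int readInt(byte[] bytes, int start) {
        return ((bytes[start] & 0xff) << 24)
                | ((bytes[start + 1] & 0xff) << 16)
                | ((bytes[start + 2] & 0xff) << 8)
                | (bytes[start + 3] & 0xff);
    }

    // Compare two serialized ints in place; no key objects are created.
    static int compare(byte[] b1, int s1, byte[] b2, int s2) {
        return Integer.compare(readInt(b1, s1), readInt(b2, s2));
    }

    public static void main(String[] args) {
        System.out.println(compare(serialize(3), 0, serialize(7), 0) < 0);
    }
}
```

Because sorting compares keys many times, skipping object creation on every comparison is where the saving comes from.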

Sending Data To The Client

- Reporter object sent to Mapper allows simple asynchronous feedback
  - incrCounter(Enum key, long amount)
  - setStatus(String msg)
- Allows self-identification of input
  - InputSplit getInputSplit()

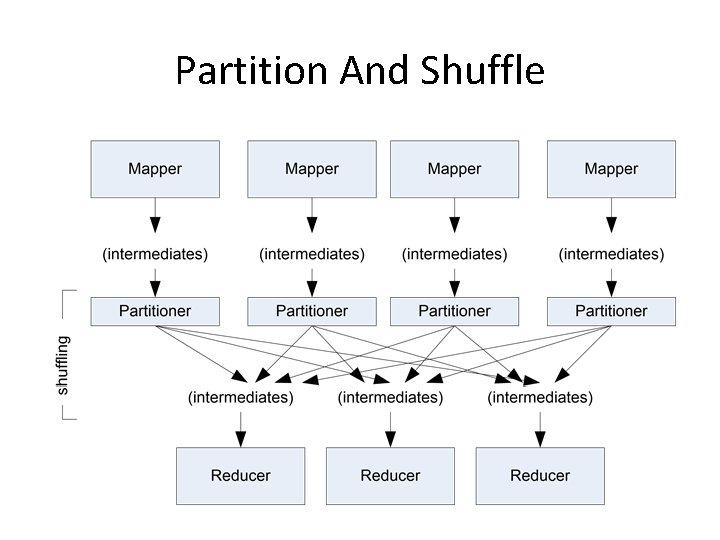

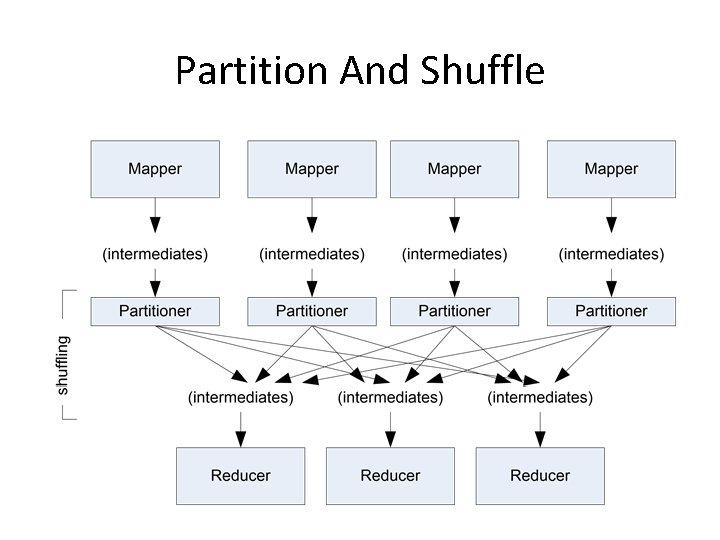

Partition And Shuffle

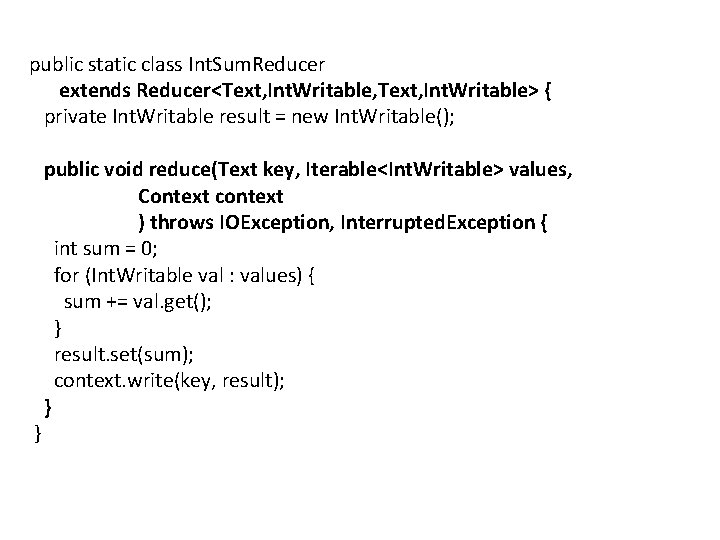

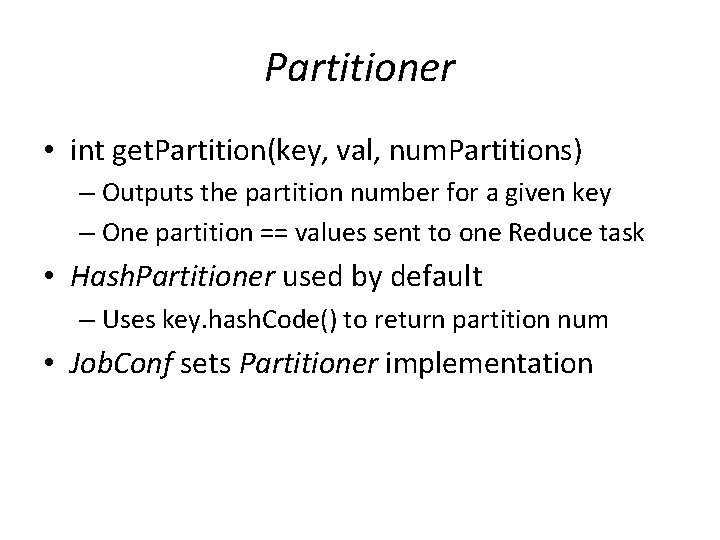

Partitioner

- int getPartition(key, val, numPartitions)
  - Outputs the partition number for a given key
  - One partition == values sent to one Reduce task
- HashPartitioner used by default
  - Uses key.hashCode() to return partition num
- JobConf sets Partitioner implementation

Example of a custom Partitioner and how to register it:

    public class MyPartitioner implements Partitioner<IntWritable, Text> {
        @Override
        public int getPartition(IntWritable key, Text value, int numPartitions) {
            /* Pretty ugly hard coded partitioning function. Don't do that in
               practice, it is just for the sake of understanding. */
            int nbOccurences = key.get();
            if (nbOccurences < 3)
                return 0;
            else
                return 1;
        }

        @Override
        public void configure(JobConf arg0) {
        }
    }

    // In the job driver:
    conf.setPartitionerClass(MyPartitioner.class);
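For comparison with the hard-coded partitioner, the default hash-based rule can be sketched in plain Java. `HashPartitionSketch` is a hypothetical class; the expression `(key.hashCode() & Integer.MAX_VALUE) % numPartitions` is the rule I understand HashPartitioner to apply, so verify it against your Hadoop version before relying on it.

```java
// Hypothetical stand-alone version of hash partitioning; not the Hadoop class.
class HashPartitionSketch {
    // Clear the sign bit so the result is never negative, then take the remainder.
    static int getPartition(Object key, int numPartitions) {
        return (key.hashCode() & Integer.MAX_VALUE) % numPartitions;
    }

    public static void main(String[] args) {
        String[] keys = { "apple", "banana", "cherry", "apple" };
        for (String key : keys) {
            System.out.println(key + " -> partition " + getPartition(key, 4));
        }
    }
}
```

Equal keys always land in the same partition, which is what guarantees that all values for one key reach the same reduce task.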

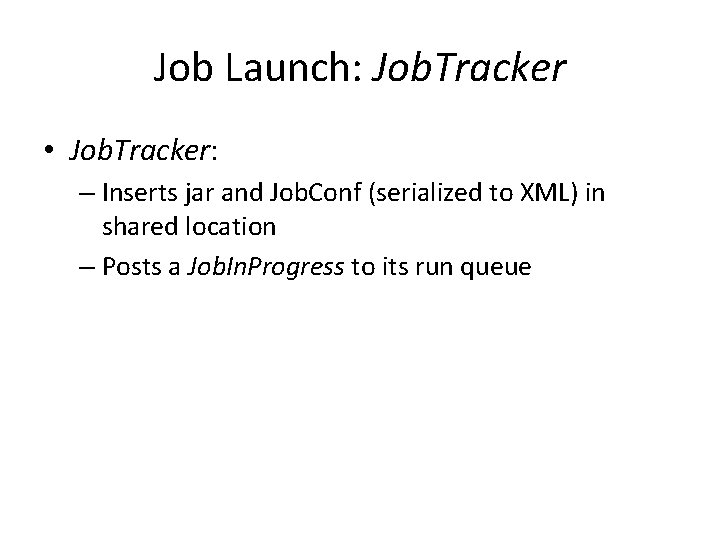

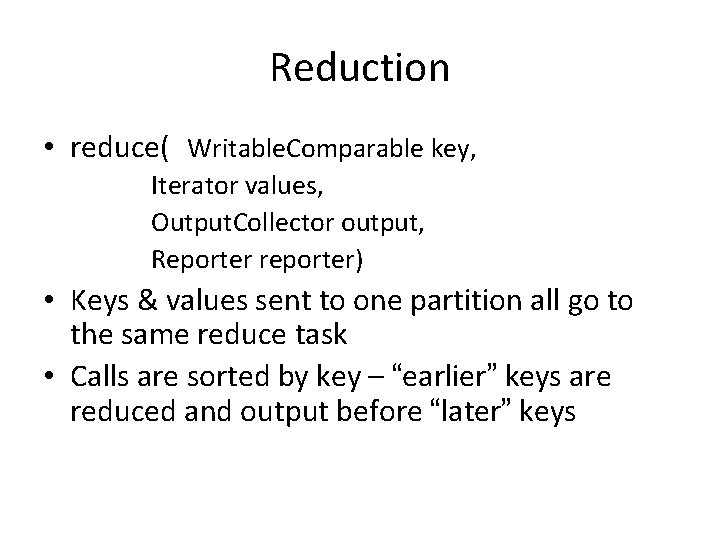

Reduction

- reduce(WritableComparable key, Iterator values, OutputCollector output, Reporter reporter)
- Keys & values sent to one partition all go to the same reduce task
- Calls are sorted by key
  - "earlier" keys are reduced and output before "later" keys

Example: the WordCount reducer.

    public static class IntSumReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
        private IntWritable result = new IntWritable();

        // Sum all counts received for one word and emit (word, total).
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable val : values) {
                sum += val.get();
            }
            result.set(sum);
            context.write(key, result);
        }
    }
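The whole map, shuffle, and reduce flow for word count can be imitated in a single JVM to see why the reducer receives one sorted key at a time. This is a plain-Java sketch assuming a JDK 8 or later; `LocalWordCountSketch` is hypothetical, and a `TreeMap` stands in for the sort-and-group step that the framework performs between the two phases.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical in-memory imitation of map -> shuffle -> reduce for word count.
class LocalWordCountSketch {
    // Map phase emits (word, 1) per token; the TreeMap groups values by key in sorted order.
    static TreeMap<String, List<Integer>> mapAndShuffle(List<String> lines) {
        TreeMap<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            StringTokenizer itr = new StringTokenizer(line);
            while (itr.hasMoreTokens()) {
                grouped.computeIfAbsent(itr.nextToken(), k -> new ArrayList<>()).add(1);
            }
        }
        return grouped;
    }

    // Reduce phase: one pass per key, in key order, summing that key's values.
    static Map<String, Integer> reduce(TreeMap<String, List<Integer>> grouped) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> entry : grouped.entrySet()) {
            int sum = 0;
            for (int value : entry.getValue()) {
                sum += value;
            }
            counts.put(entry.getKey(), sum);
        }
        return counts;
    }

    static Map<String, Integer> wordCount(List<String> lines) {
        return reduce(mapAndShuffle(lines));
    }

    public static void main(String[] args) {
        // prints {be=2, not=1, or=1, to=2}
        System.out.println(wordCount(Arrays.asList("to be or", "not to be")));
    }
}
```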

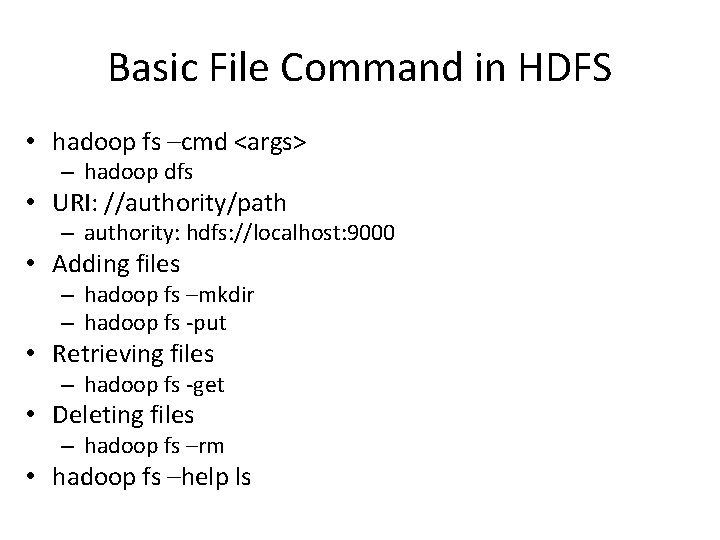

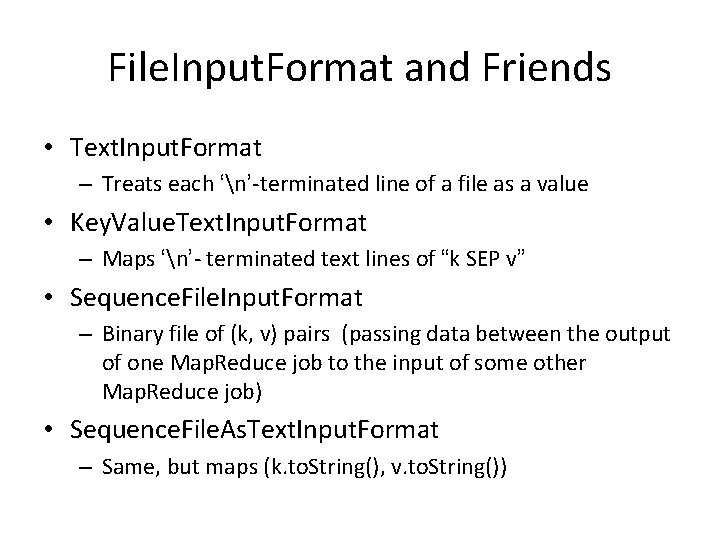

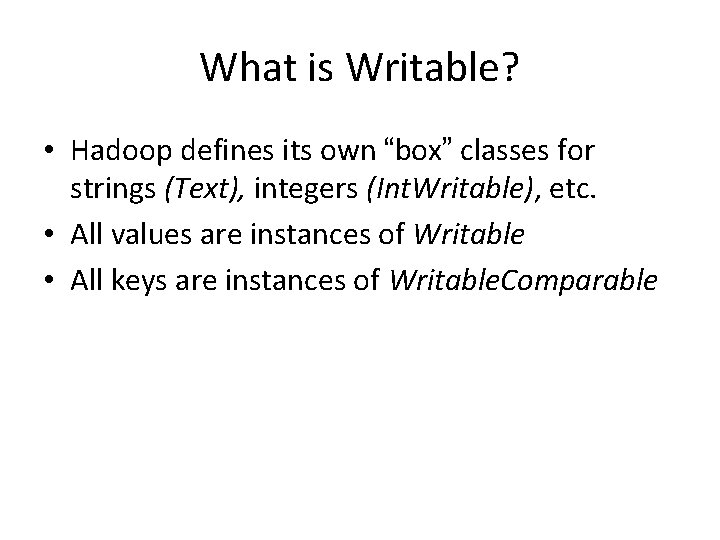

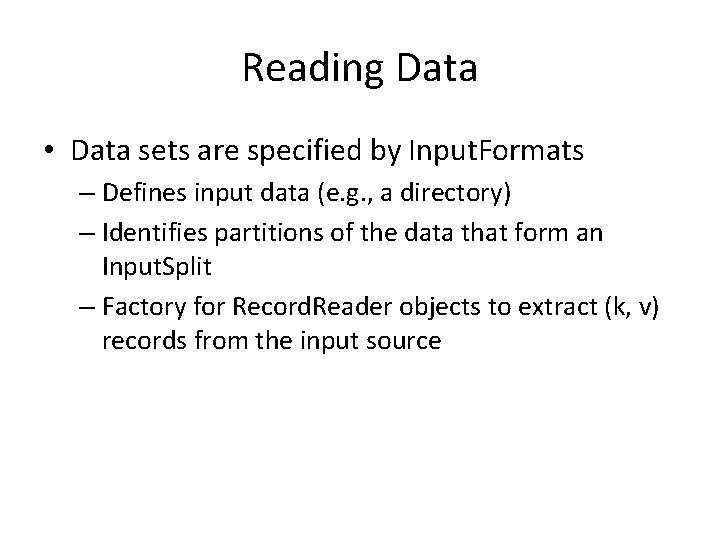

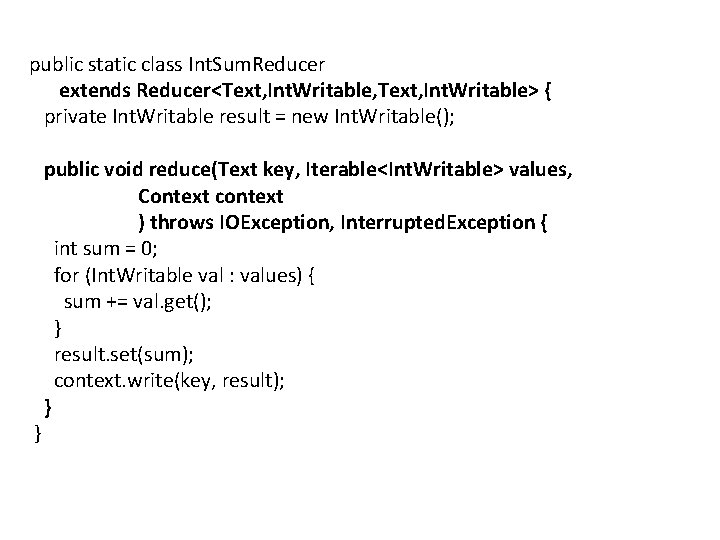

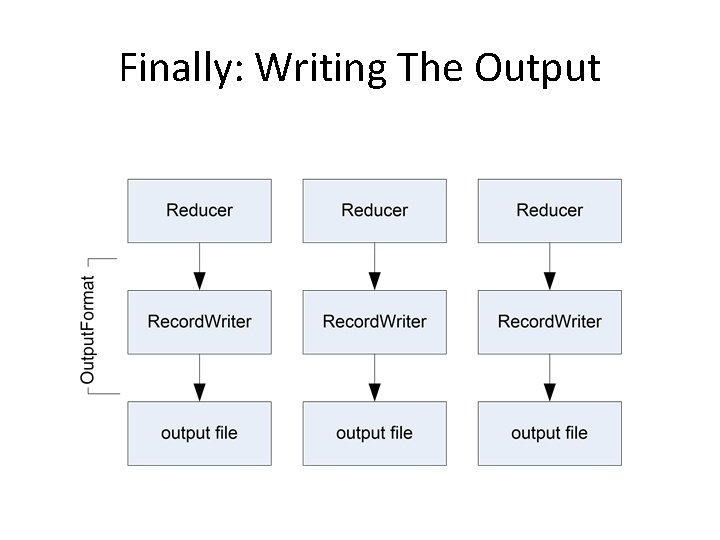

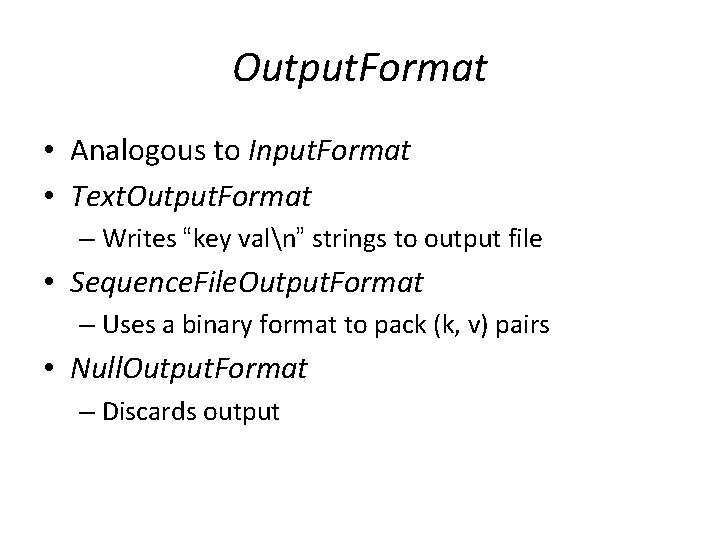

Finally: Writing The Output

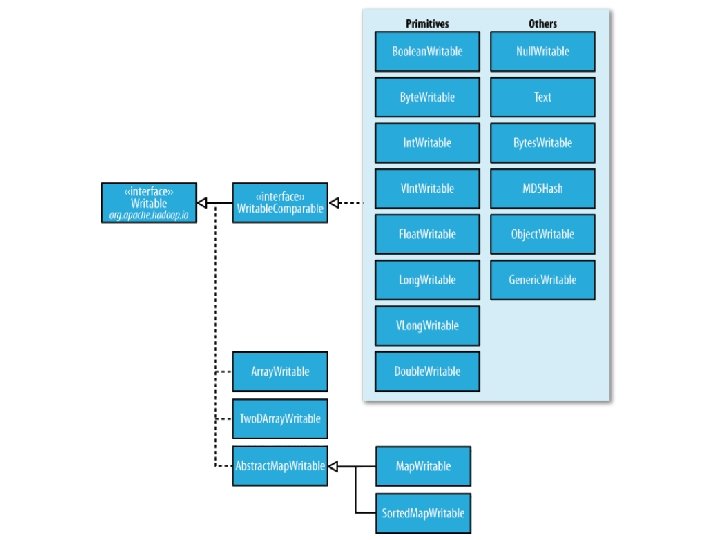

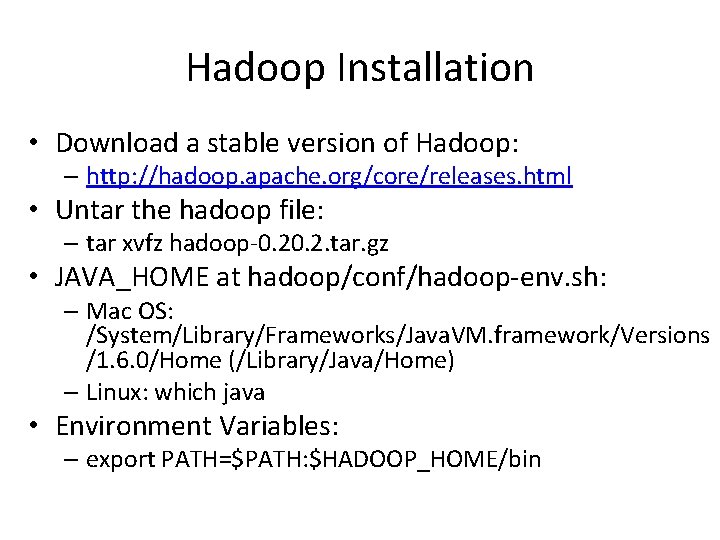

OutputFormat

- Analogous to InputFormat
- TextOutputFormat
  - Writes "key val\n" strings to output file
- SequenceFileOutputFormat
  - Uses a binary format to pack (k, v) pairs
- NullOutputFormat
  - Discards output
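The text output layout can be sketched in plain Java as one record per line. `TextOutputSketch` is a hypothetical helper, and the tab between key and value is an assumption based on what I understand the default TextOutputFormat separator to be.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper that renders records the way a text output file would look.
class TextOutputSketch {
    // One line per record: key, a tab (assumed default separator), value, newline.
    static String format(Map<String, Integer> records) {
        StringBuilder out = new StringBuilder();
        for (Map.Entry<String, Integer> record : records.entrySet()) {
            out.append(record.getKey()).append('\t').append(record.getValue()).append('\n');
        }
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, Integer> counts = new TreeMap<>();
        counts.put("be", 2);
        counts.put("to", 2);
        System.out.print(format(counts));
    }
}
```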

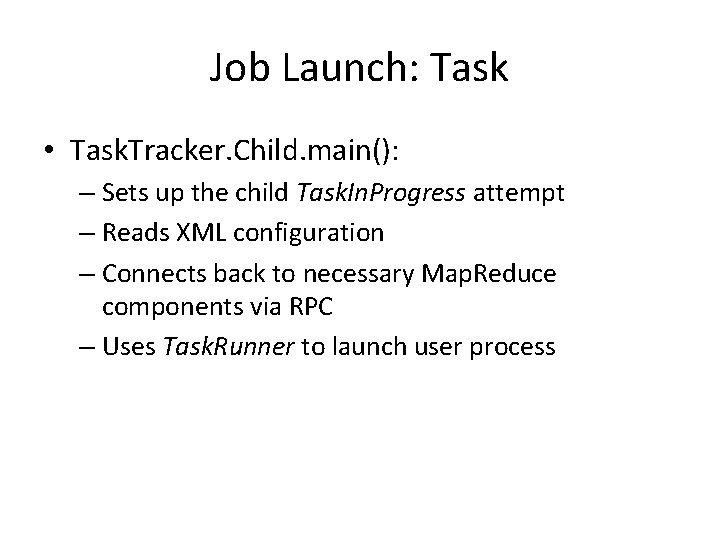

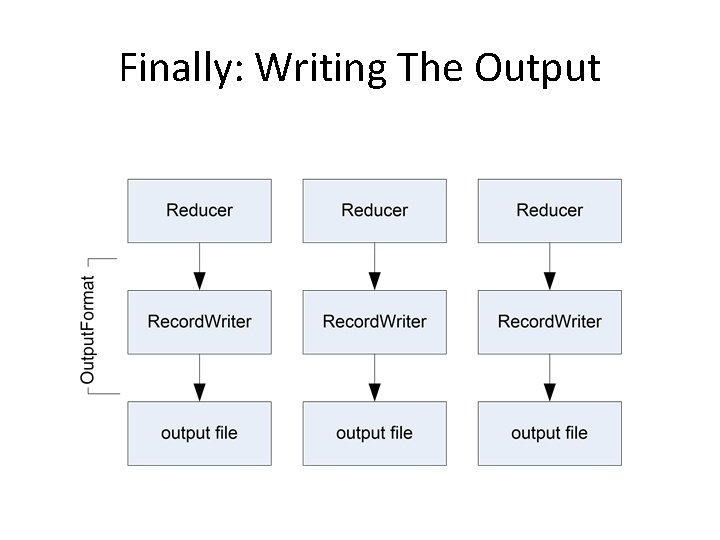

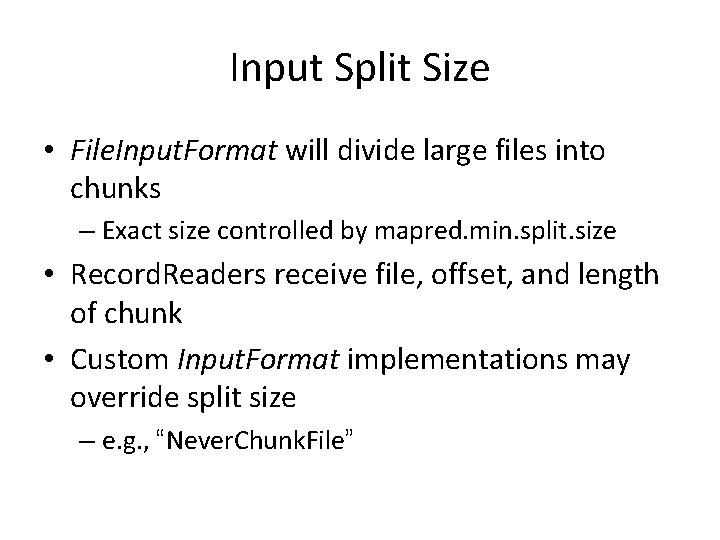
