Learning for Semantic Parsing with Kernels under Various Forms of Supervision


- Slides: 120

Learning for Semantic Parsing with Kernels under Various Forms of Supervision • Rohit J. Kate • Ph.D. Final Defense • Supervisor: Raymond J. Mooney • Machine Learning Group, Department of Computer Sciences, University of Texas at Austin

Semantic Parsing • Semantic Parsing: Transforming natural language (NL) sentences into computer executable complete meaning representations (MRs) for domain-specific applications • Requires deeper semantic analysis than other semantic tasks like semantic role labeling, word sense disambiguation, information extraction • Example application domains – CLang: Robocup Coach Language – Geoquery: A Database Query Application 2

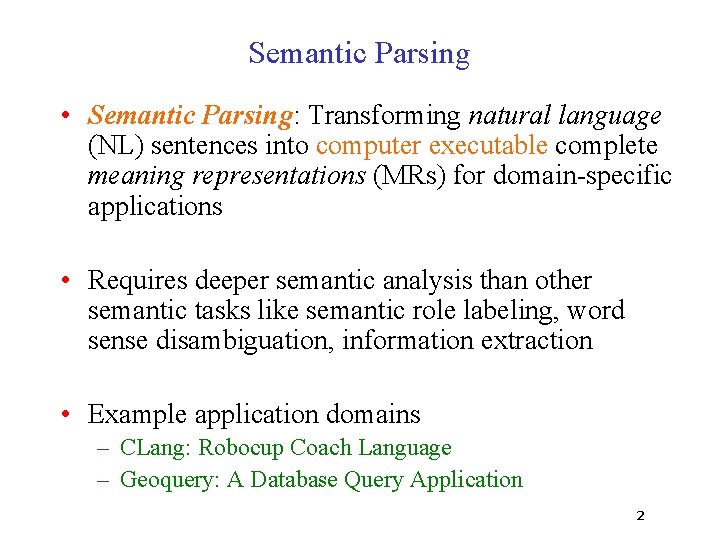

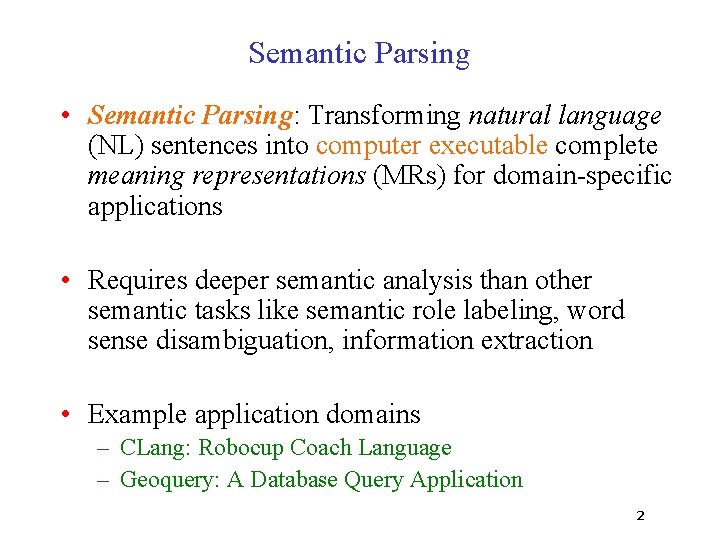

CLang: RoboCup Coach Language • In the RoboCup Coach competition, teams compete to coach simulated players on a simulated soccer field [http://www.robocup.org] • The coaching instructions are given in a formal language called CLang [Chen et al. 2003] • NL instruction: “If the ball is in our goal area then player 1 should intercept it.” • Semantic parsing produces the CLang expression: (bpos (goal-area our) (do our {1} intercept)) 3

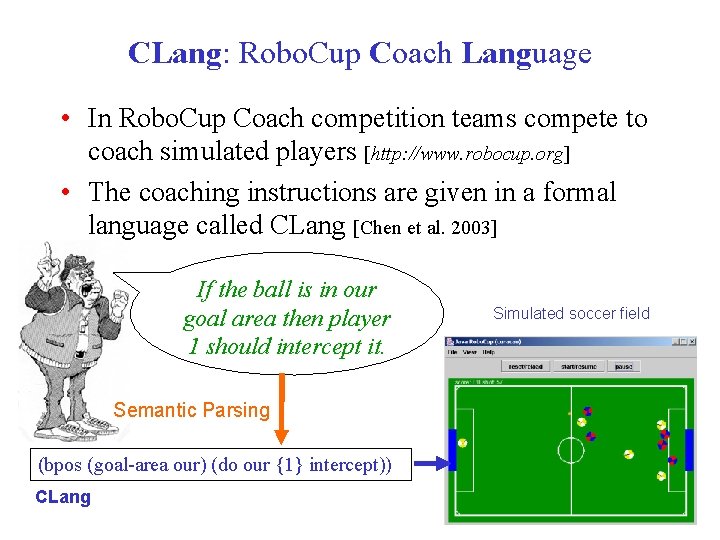

Geoquery: A Database Query Application • Query application for a U.S. geography database containing about 800 facts [Zelle & Mooney, 1996] • NL question: “Which rivers run through the states bordering Texas?” • Semantic parsing produces the query: answer(traverse(next_to(stateid('texas')))) • Answer: Arkansas, Canadian, Cimarron, Gila, Mississippi, Rio Grande … 4

Engineering Motivation for Semantic Parsing • Most computational language-learning research analyzes open-domain text but the analysis is shallow • Realistic semantic parsing currently entails domain dependence • Applications of domain-dependent semantic parsing – Natural language interfaces to computing systems – Communication with robots in natural language – Personalized software assistants – Question-answering systems • Machine Learning makes developing semantic parsers for specific applications more tractable 5

Cognitive Science Motivation for Semantic Parsing • Most natural-language learning methods require supervised training data that is not available to a child – No POS-tagged or treebank data • Assuming a child can infer the likely meaning of an utterance from context, NL-MR pairs are more cognitively plausible training data 6

Thesis Contributions • A new framework for learning for semantic parsing based on kernel-based string classification – Requires no feature engineering – Does not use any hard-matching rules or any grammar rules for natural language which makes it robust • First semi-supervised learning system for semantic parsing • Considers learning for semantic parsing under cognitively motivated weaker and more general form of ambiguous supervision • Introduces transformations for meaning representation grammars to make them conform better with natural language semantics 7

Outline • KRISP: A Semantic Parsing Learning System • Utilizing Weaker Forms of Supervision – Semi-supervision – Ambiguous supervision • Transforming meaning representation grammar • Directions for Future Work • Conclusions 8


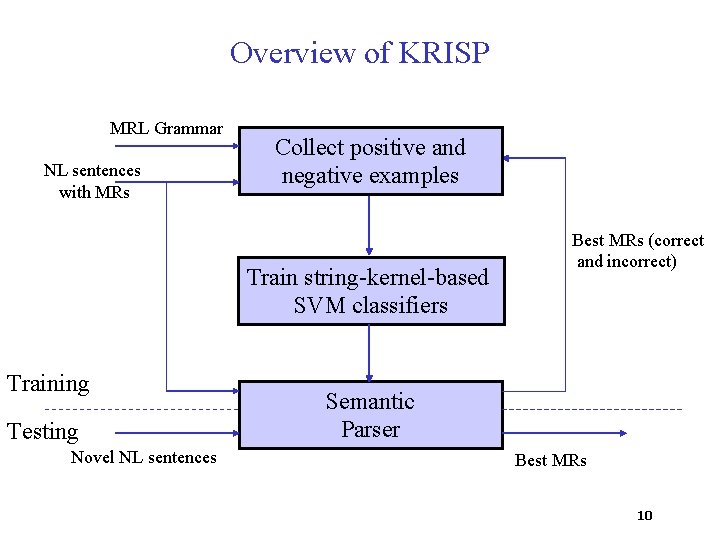

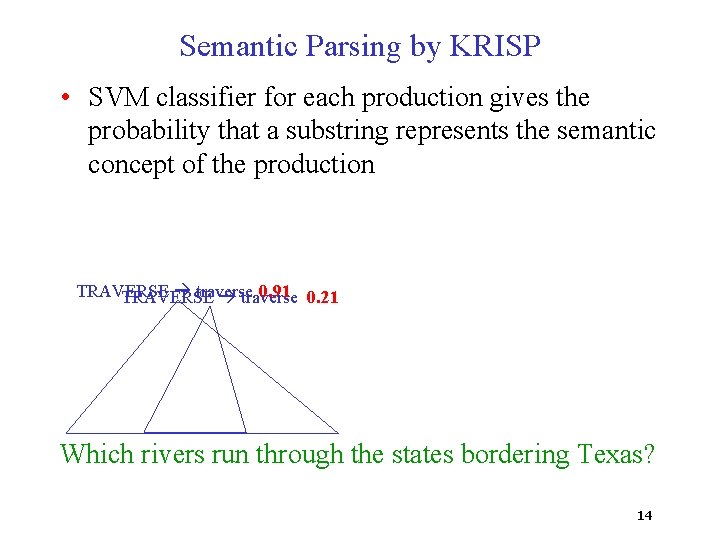

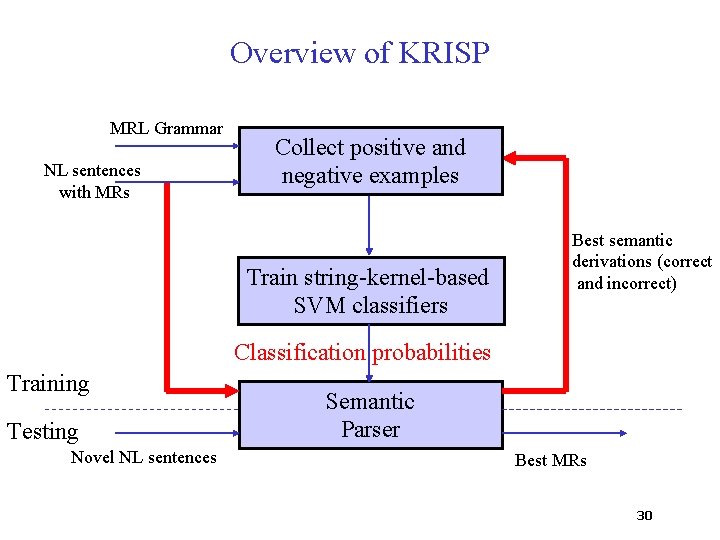

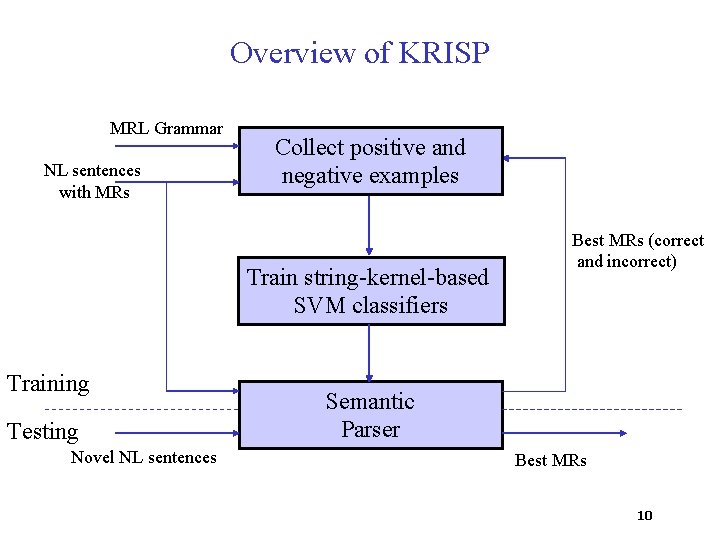

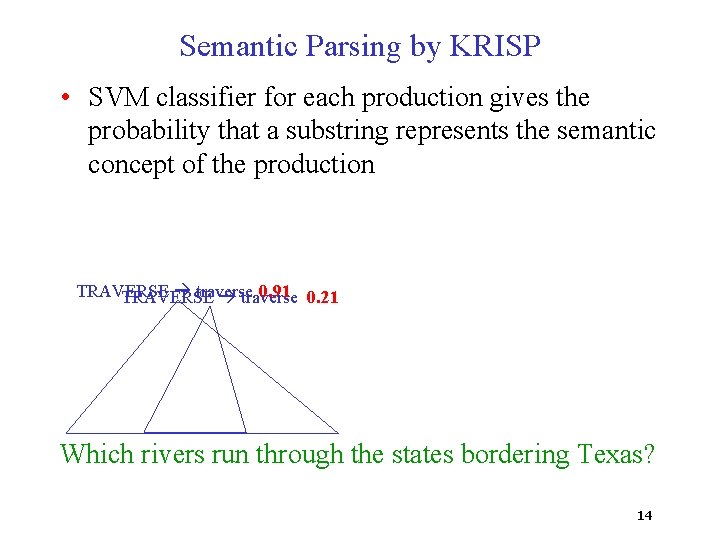

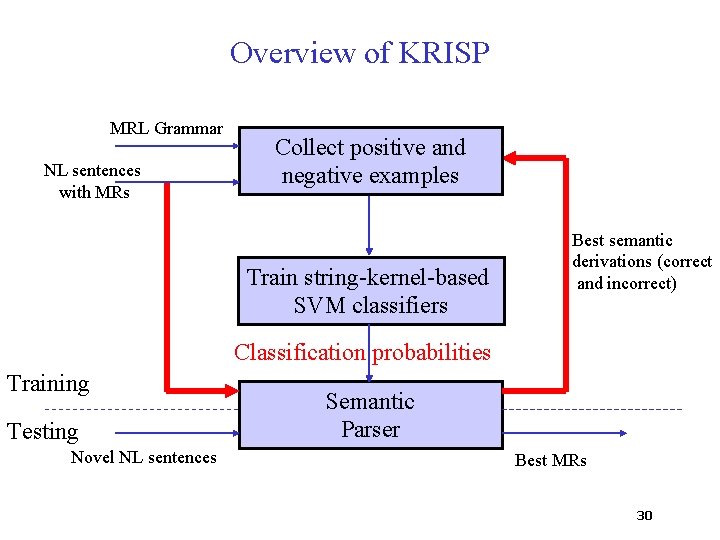

KRISP: Kernel-based Robust Interpretation for Semantic Parsing [Kate & Mooney, 2006] • Learns semantic parser from NL sentences paired with their respective MRs given meaning representation language (MRL) grammar • Productions of MRL are treated like semantic concepts • SVM classifier with string subsequence kernel is trained for each production to identify if an NL substring represents the semantic concept • These classifiers are used to compositionally build MRs of the sentences 9

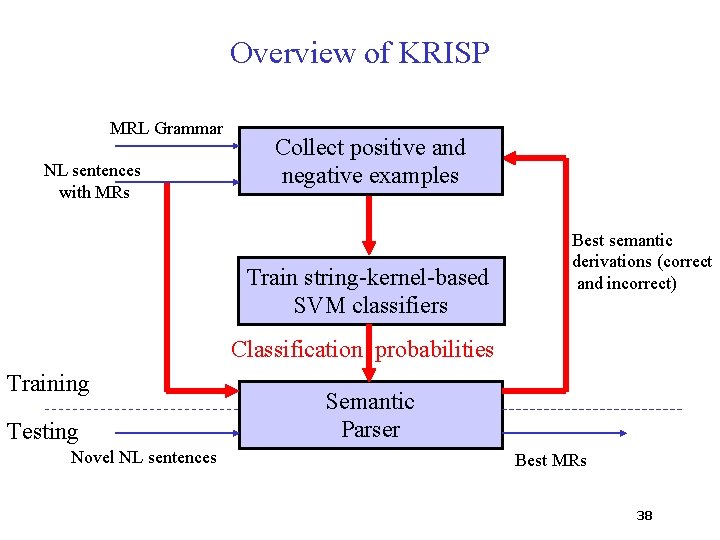

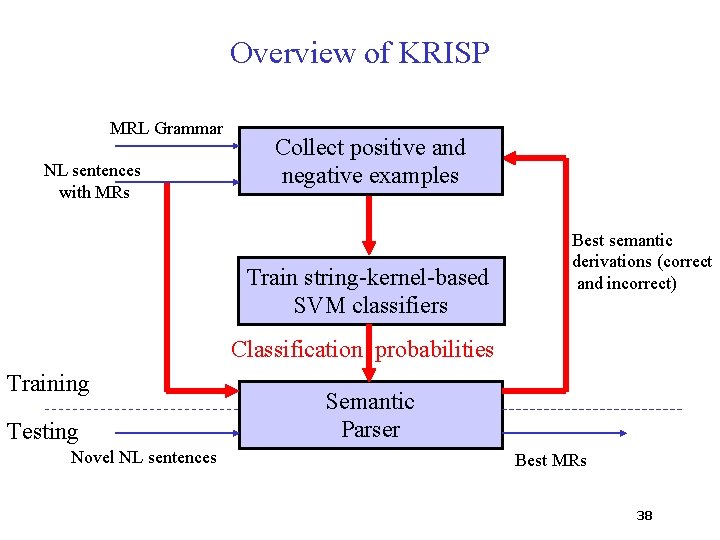

Overview of KRISP • Training: MRL grammar and NL sentences with MRs; collect positive and negative examples; train string-kernel-based SVM classifiers; obtain the best MRs (correct and incorrect), which feed the next round of example collection • Testing: novel NL sentences go to the semantic parser, which outputs the best MRs 10-11

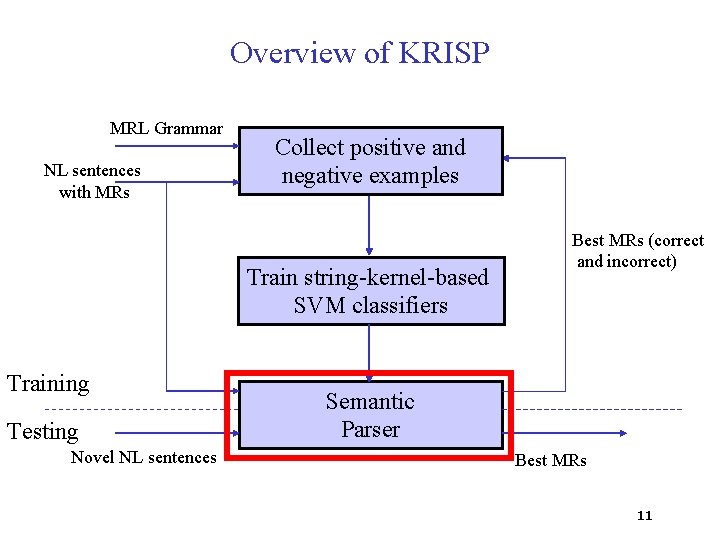

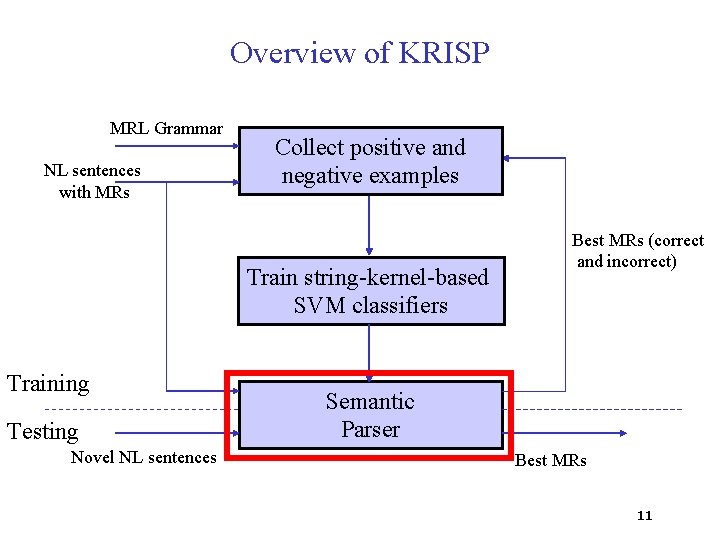

Meaning Representation Language • MR: answer(traverse(next_to(stateid('texas')))) • Parse tree of MR (top-down): ANSWER → answer(RIVER); RIVER → TRAVERSE(STATE); TRAVERSE → traverse; STATE → NEXT_TO(STATE); NEXT_TO → next_to; STATE → STATEID; STATEID → 'texas' • Productions: ANSWER → answer(RIVER), RIVER → TRAVERSE(STATE), STATE → NEXT_TO(STATE), STATE → STATEID, TRAVERSE → traverse, NEXT_TO → next_to, STATEID → 'texas' 12

Semantic Parsing by KRISP • SVM classifier for each production gives the probability that a substring represents the semantic concept of the production • Example: for the production NEXT_TO → next_to, three different substrings of “Which rivers run through the states bordering Texas?” receive probabilities 0.02, 0.01 and 0.95 13

Semantic Parsing by KRISP • SVM classifier for each production gives the probability that a substring represents the semantic concept of the production • Example: for the production TRAVERSE → traverse, two different substrings of “Which rivers run through the states bordering Texas?” receive probabilities 0.91 and 0.21 14

Semantic Parsing by KRISP • Semantic parsing is done by finding the most probable derivation of the sentence [Kate & Mooney 2006] • Derivation of “Which rivers run through the states bordering Texas?” with the probability at each node: ANSWER → answer(RIVER) 0.89; RIVER → TRAVERSE(STATE) 0.92; TRAVERSE → traverse 0.91; STATE → NEXT_TO(STATE) 0.81; NEXT_TO → next_to 0.95; STATE → STATEID 0.98; STATEID → 'texas' 0.99 • Probability of the derivation is the product of the probabilities at the nodes. 15
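The product rule on this slide can be checked with a short sketch. The node probabilities are the ones shown in the example derivation; the function names are illustrative and not part of KRISP. Summing log-probabilities is a common way to avoid underflow on long derivations and is included only as a design note.

```python
import math

# Node probabilities copied from the example derivation on the slide.
DERIVATION = [
    ("ANSWER -> answer(RIVER)", 0.89),
    ("RIVER -> TRAVERSE(STATE)", 0.92),
    ("TRAVERSE -> traverse", 0.91),
    ("STATE -> NEXT_TO(STATE)", 0.81),
    ("NEXT_TO -> next_to", 0.95),
    ("STATE -> STATEID", 0.98),
    ("STATEID -> 'texas'", 0.99),
]


def derivation_probability(nodes):
    """Product of the classifier probabilities at the derivation nodes."""
    return math.prod(p for _, p in nodes)


def derivation_log_probability(nodes):
    """Same quantity in log space (hypothetical helper, numerically safer)."""
    return sum(math.log(p) for _, p in nodes)


probability = derivation_probability(DERIVATION)
print(f"{probability:.3f}")  # roughly 0.556
```

Because every node probability is at most 1, adding a node can never raise the product, so the ranking of derivations is the same in log space.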

Overview of KRISP • Training: MRL grammar and NL sentences with MRs; collect positive and negative examples; train string-kernel-based SVM classifiers, which give classification probabilities; obtain the best semantic derivations (correct and incorrect), which feed the next round of example collection • Testing: novel NL sentences go to the semantic parser, which outputs the best MRs 16

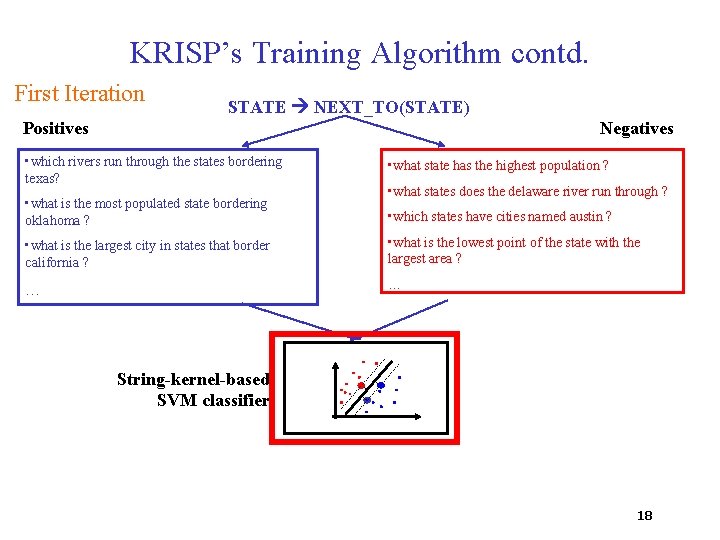

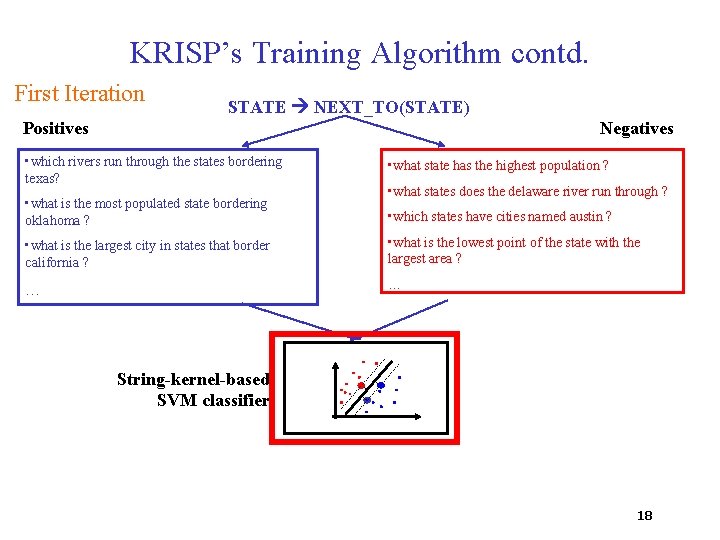

KRISP’s Training Algorithm • Takes NL sentences paired with their respective MRs as input • Obtains MR parses • Induces the semantic parser and refines it in iterations • In the first iteration, for every production: – Call those sentences positives whose MR parses use that production – Call the remaining sentences negatives 17
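The first-iteration rule above can be sketched as follows. This is a minimal illustration, not the KRISP implementation: the corpus format (a sentence paired with the set of productions used in its MR parse) is an assumption, and the production sets for the second and third sentences are abbreviated, hypothetical placeholders.

```python
def first_iteration_examples(corpus):
    """Map each production to (positives, negatives) whole sentences.

    corpus: list of (sentence, set_of_productions_in_its_MR_parse).
    """
    all_productions = set().union(*(prods for _, prods in corpus))
    examples = {}
    for production in all_productions:
        positives = [s for s, prods in corpus if production in prods]
        negatives = [s for s, prods in corpus if production not in prods]
        examples[production] = (positives, negatives)
    return examples


NEXT_TO_STATE = "STATE -> NEXT_TO(STATE)"

corpus = [
    ("which rivers run through the states bordering texas ?",
     {"ANSWER -> answer(RIVER)", "RIVER -> TRAVERSE(STATE)",
      "TRAVERSE -> traverse", NEXT_TO_STATE, "NEXT_TO -> next_to",
      "STATE -> STATEID", "STATEID -> 'texas'"}),
    # Hypothetical, abbreviated production sets for illustration only.
    ("what state has the highest population ?",
     {"ANSWER -> answer(STATE)"}),
    ("what is the most populated state bordering oklahoma ?",
     {"ANSWER -> answer(STATE)", NEXT_TO_STATE, "NEXT_TO -> next_to"}),
]

examples = first_iteration_examples(corpus)
positives, negatives = examples[NEXT_TO_STATE]
print(positives)
print(negatives)
```

Whole sentences are noisy examples for a production that only covers a substring, which is why later iterations refine them.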

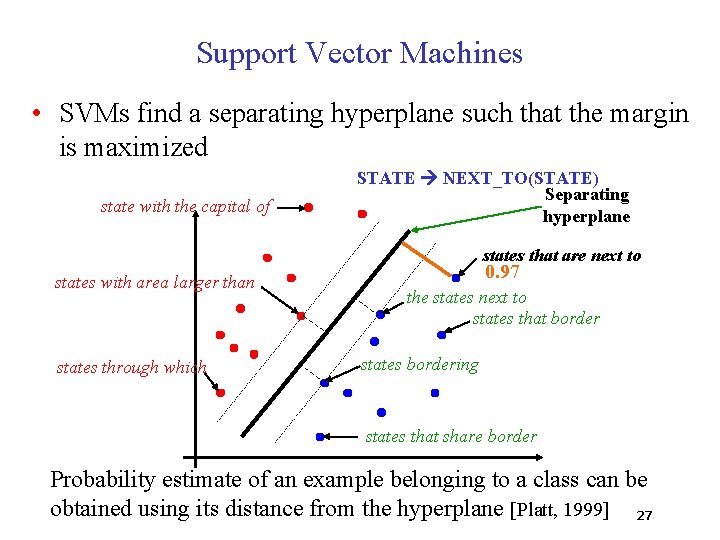

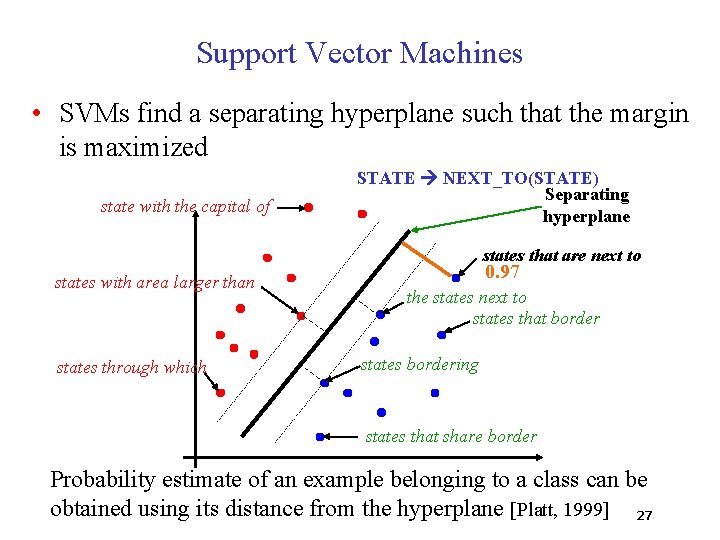

KRISP’s Training Algorithm contd. • First iteration, production STATE → NEXT_TO(STATE) • Positives: which rivers run through the states bordering texas? / what is the most populated state bordering oklahoma ? / what is the largest city in states that border california ? / … • Negatives: what state has the highest population ? / which states have cities named austin ? / what states does the delaware river run through ? / what is the lowest point of the state with the largest area ? / … • These examples train a string-kernel-based SVM classifier 18

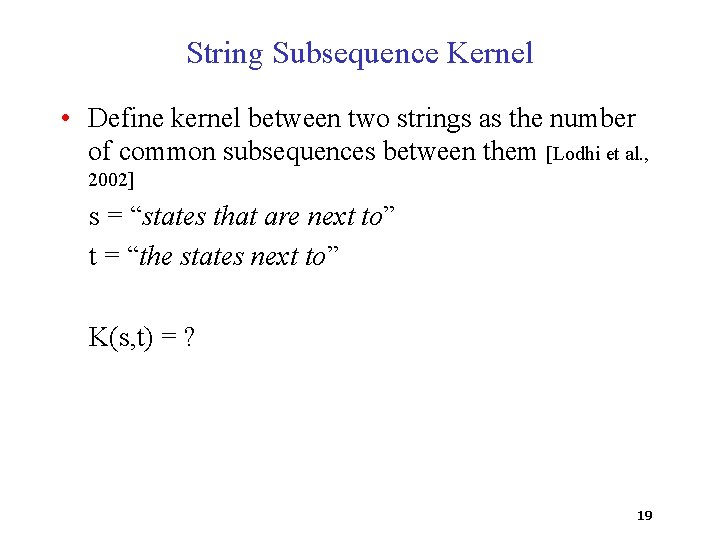

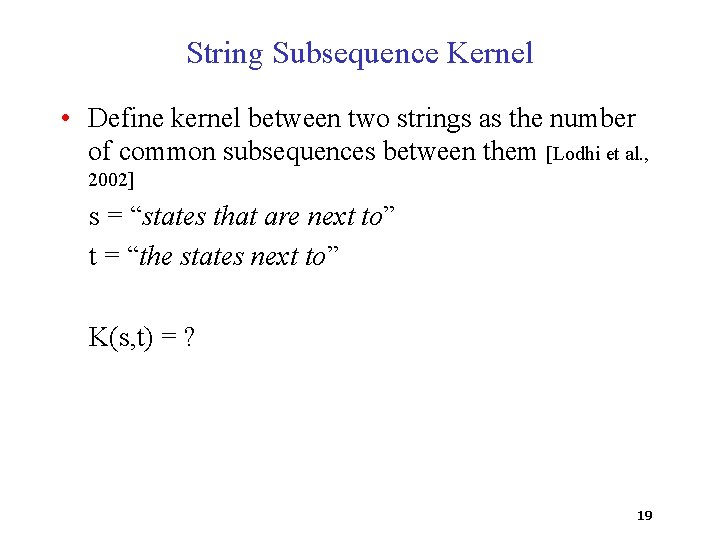

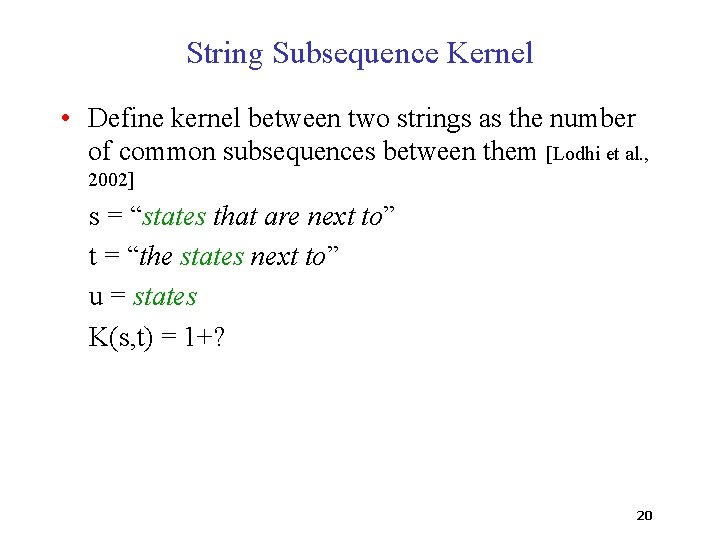

String Subsequence Kernel • Define kernel between two strings as the number of common subsequences between them [Lodhi et al., 2002] • s = “states that are next to”, t = “the states next to” • Counting the common word subsequences u one at a time: “states” (1), “next” (2), “to” (3), “states next” (4), and likewise “states to”, “next to” and “states next to” • K(s, t) = 7 19-24

String Subsequence Kernel contd. • The kernel is normalized to remove any bias due to different string lengths • Lodhi et al. [2002] give an O(n|s||t|) algorithm for computing the string subsequence kernel, where n is the subsequence length • Used for Text Categorization [Lodhi et al., 2002] and Information Extraction [Bunescu & Mooney, 2005] 25
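The count K(s, t) = 7 from the worked example can be reproduced with a small dynamic program over words. This is a simplified sketch of the kernel as the slides describe it (an unweighted count of common subsequences of any length); the kernel of Lodhi et al. additionally uses a gap-decay factor and a fixed subsequence length, which are omitted here. The normalization shown, dividing by the square root of K(s, s) K(t, t), is the usual kernel normalization and is assumed to be the one meant on the slide.

```python
import math


def common_subsequence_count(s, t):
    """Count pairs of index sequences in s and t that spell the same
    non-empty word subsequence (unweighted, all lengths)."""
    rows, cols = len(s) + 1, len(t) + 1
    dp = [[0] * cols for _ in range(rows)]
    for i in range(1, rows):
        for j in range(1, cols):
            # Inclusion-exclusion over dropping the last word of s or t.
            dp[i][j] = dp[i - 1][j] + dp[i][j - 1] - dp[i - 1][j - 1]
            if s[i - 1] == t[j - 1]:
                # Extend every earlier match, or start a new one here.
                dp[i][j] += dp[i - 1][j - 1] + 1
    return dp[-1][-1]


def normalized_kernel(s, t):
    """K(s, t) / sqrt(K(s, s) * K(t, t)); 0.0 if either string is empty."""
    denom = common_subsequence_count(s, s) * common_subsequence_count(t, t)
    if denom == 0:
        return 0.0
    return common_subsequence_count(s, t) / math.sqrt(denom)


s = "states that are next to".split()
t = "the states next to".split()
print(common_subsequence_count(s, t))  # 7, as on the slide
print(round(normalized_kernel(s, t), 3))
```

The table has |s| by |t| cells, so this unweighted variant runs in O(|s||t|) time.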

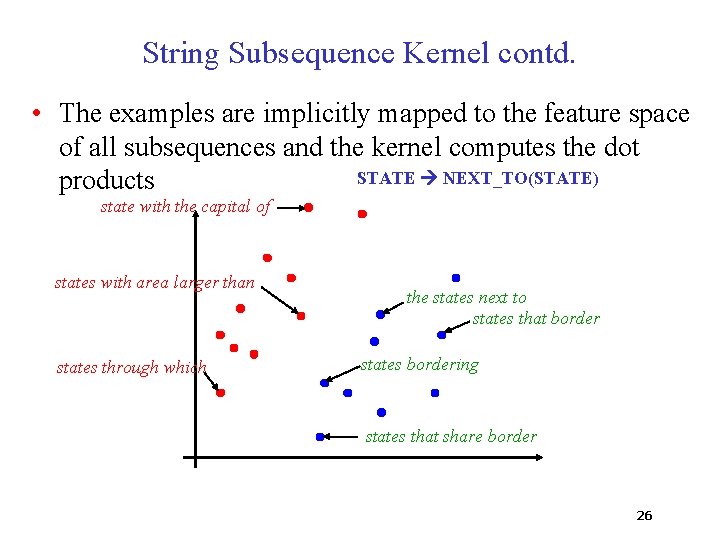

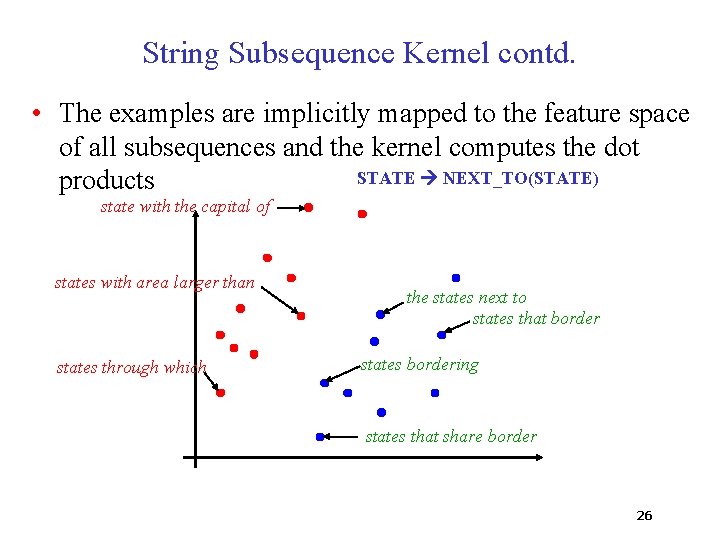

String Subsequence Kernel contd. • The examples are implicitly mapped to the feature space of all subsequences and the kernel computes the dot products • Example substrings plotted for STATE → NEXT_TO(STATE): “state with the capital of”, “states with area larger than”, “states through which”, “the states next to”, “states that border”, “states bordering”, “states that share border” 26

Support Vector Machines • SVMs find a separating hyperplane such that the margin is maximized • Figure for STATE → NEXT_TO(STATE): the example substrings (“state with the capital of”, “states with area larger than”, “states through which”, “the states next to”, “states that border”, “states bordering”, “states that share border”) lie on either side of the separating hyperplane; “states that are next to” is shown with a probability estimate of 0.97 • Probability estimate of an example belonging to a class can be obtained using its distance from the hyperplane [Platt, 1999] 27
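The last bullet refers to Platt's method, which passes the SVM decision value through a fitted sigmoid. A minimal sketch follows; the parameter values `a` and `b` below are hypothetical (in practice they are fitted on held-out decision values), and the function name is illustrative.

```python
import math


def platt_probability(decision_value, a, b):
    """P(positive | f) = 1 / (1 + exp(a * f + b)), Platt's sigmoid.

    decision_value: signed distance-like SVM output f(x).
    a, b: sigmoid parameters; a is negative when larger f means
    'more positive'. The values used below are made up.
    """
    return 1.0 / (1.0 + math.exp(a * decision_value + b))


A, B = -2.0, 0.0  # hypothetical fitted parameters
for f in (-1.5, 0.0, 1.5):
    print(f, round(platt_probability(f, A, B), 3))
```

With these parameters an example on the hyperplane gets probability 0.5, and the estimate rises toward 1 as the example moves further to the positive side.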

KRISP’s Training Algorithm contd. • First iteration, production STATE → NEXT_TO(STATE) • Positives: which rivers run through the states bordering texas? / what is the most populated state bordering oklahoma ? / what is the largest city in states that border california ? / … • Negatives: what state has the highest population ? / which states have cities named austin ? / what states does the delaware river run through ? / what is the lowest point of the state with the largest area ? / … • The trained string-kernel-based SVM classifier outputs classification probabilities 28


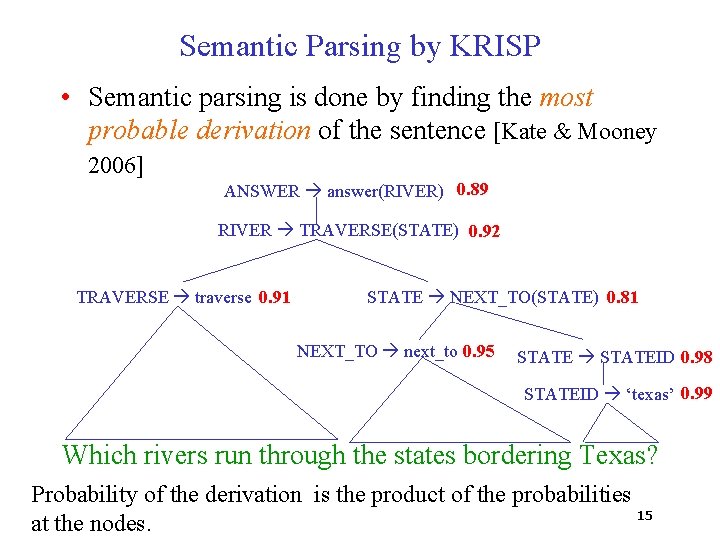

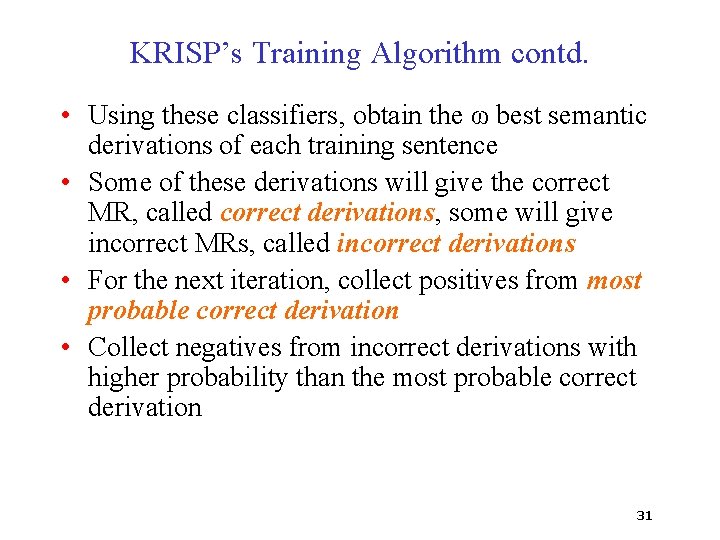

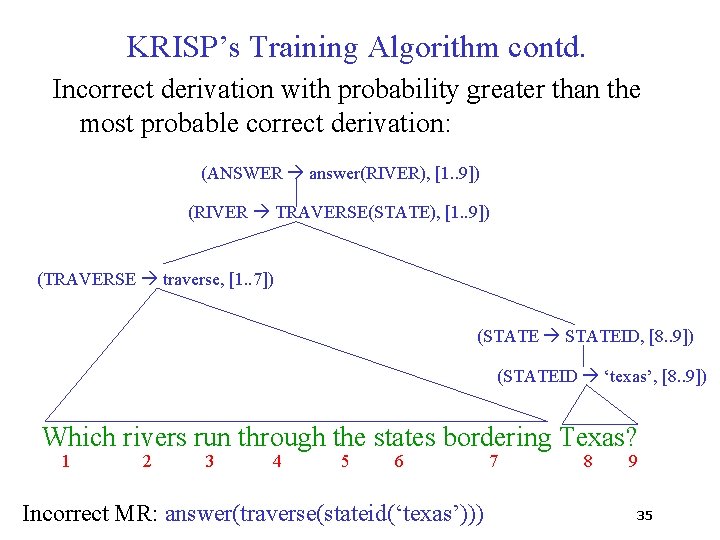

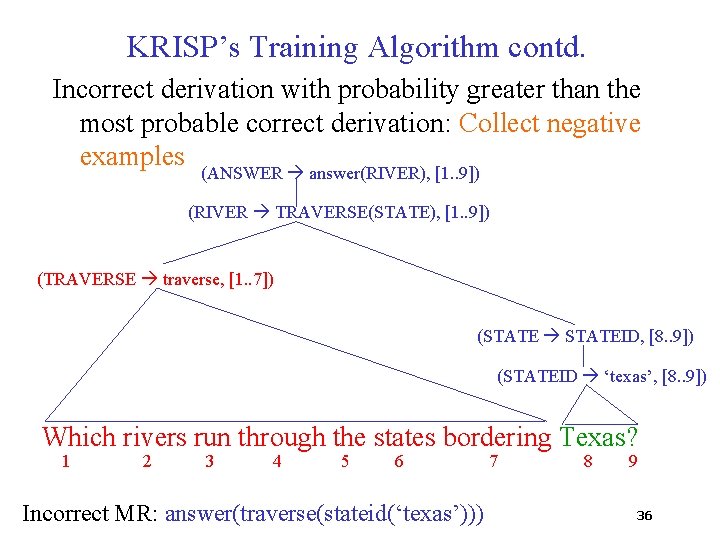

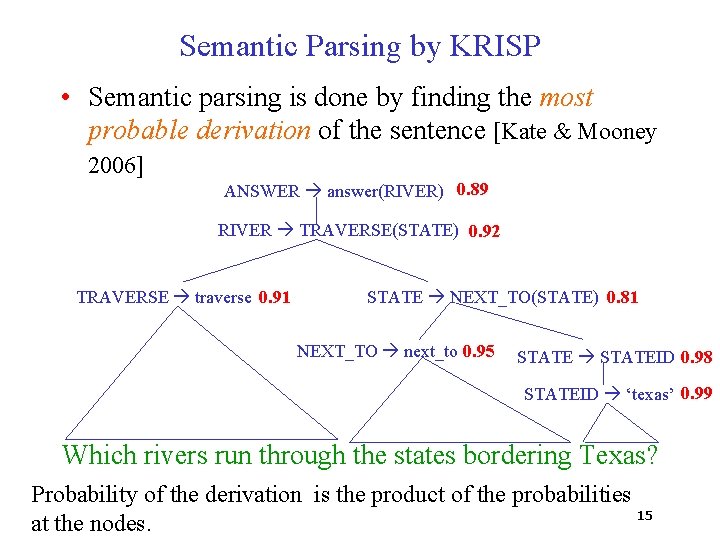

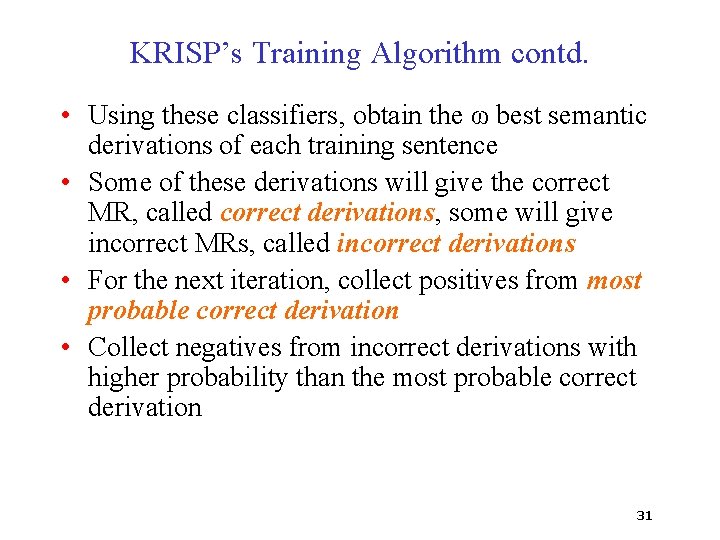

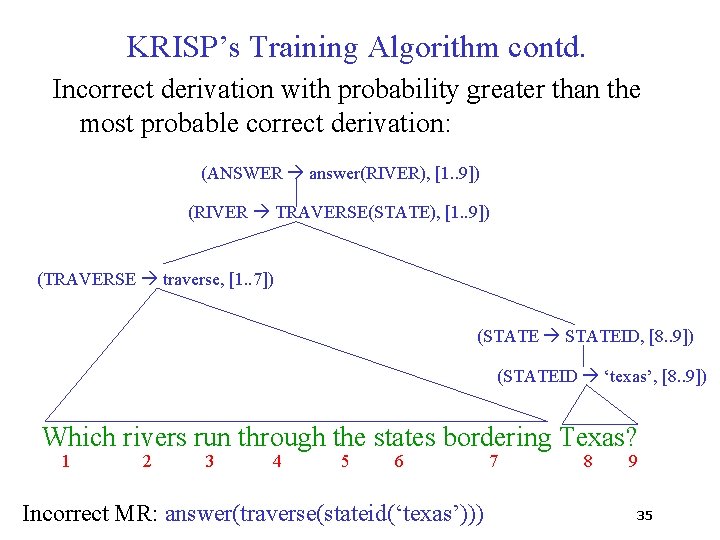

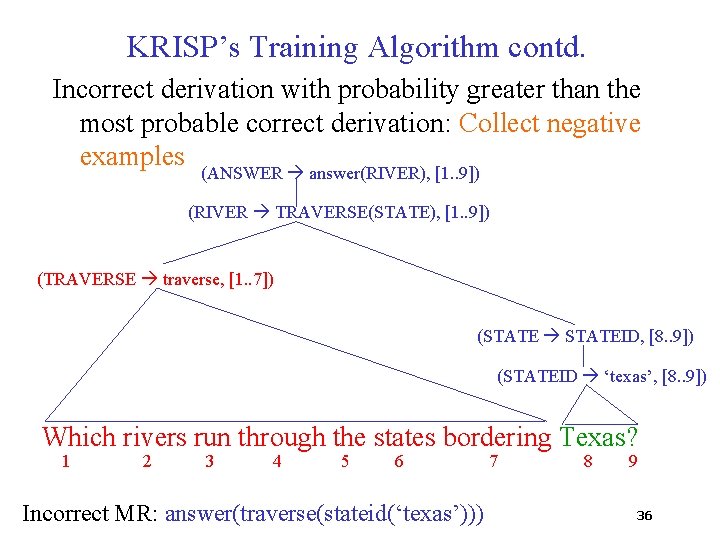

KRISP’s Training Algorithm contd. • Using these classifiers, obtain the ω best semantic derivations of each training sentence • Some of these derivations will give the correct MR, called correct derivations, some will give incorrect MRs, called incorrect derivations • For the next iteration, collect positives from most probable correct derivation • Collect negatives from incorrect derivations with higher probability than the most probable correct derivation 31
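The selection step described above can be sketched as a filter over the ω best derivations. This is an illustration under assumptions, not the KRISP code: the `Derivation` record, the function name, and the two probabilities in the example are hypothetical, and the sketch simply returns nothing when no correct derivation is among the candidates.

```python
from dataclasses import dataclass, field


@dataclass
class Derivation:
    mr: str                # MR that the derivation yields
    probability: float     # product of its node probabilities
    nodes: list = field(default_factory=list)  # (production, (start, end))


def select_training_derivations(derivations, gold_mr):
    """Return (most probable correct derivation, incorrect derivations
    that outrank it). Returns (None, []) if no derivation is correct."""
    correct = [d for d in derivations if d.mr == gold_mr]
    if not correct:
        return None, []
    best_correct = max(correct, key=lambda d: d.probability)
    outranking = [
        d for d in derivations
        if d.mr != gold_mr and d.probability > best_correct.probability
    ]
    return best_correct, outranking


gold = "answer(traverse(next_to(stateid('texas'))))"
candidates = [
    # Probabilities here are made up for the example.
    Derivation("answer(traverse(stateid('texas')))", 0.60),
    Derivation(gold, 0.55),
]
best, negatives_source = select_training_derivations(candidates, gold)
print(best.mr)
print([d.mr for d in negatives_source])
```

Positives for the next iteration would then come from the nodes of `best`, and negatives from the derivations in `negatives_source`.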


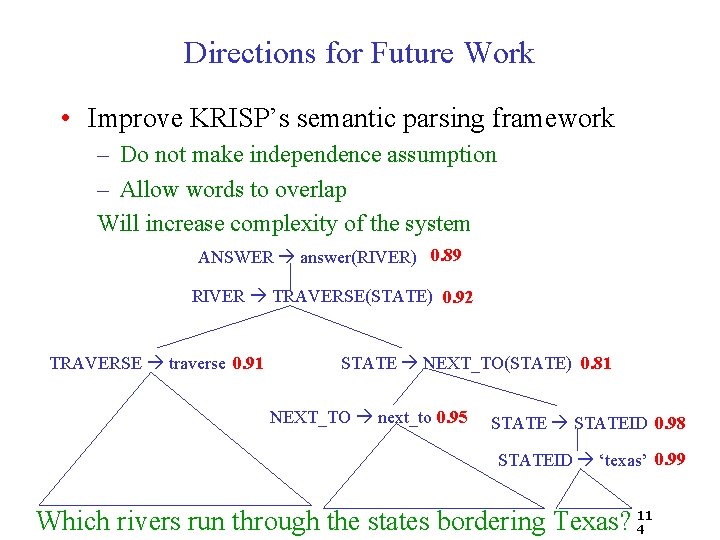

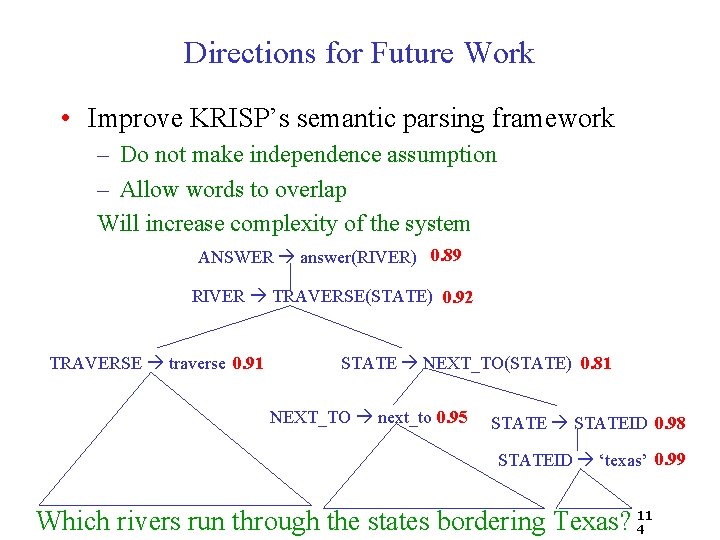

KRISP’s Training Algorithm contd. • Most probable correct derivation, from whose nodes positive examples are collected: (ANSWER → answer(RIVER), [1..9]) (RIVER → TRAVERSE(STATE), [1..9]) (TRAVERSE → traverse, [1..4]) (STATE → NEXT_TO(STATE), [5..9]) (NEXT_TO → next_to, [5..7]) (STATE → STATEID, [8..9]) (STATEID → 'texas', [8..9]) • Word positions: Which(1) rivers(2) run(3) through(4) the(5) states(6) bordering(7) Texas(8) ?(9) 32-34

KRISP’s Training Algorithm contd. • Incorrect derivation with probability greater than the most probable correct derivation, from which negative examples are collected: (ANSWER → answer(RIVER), [1..9]) (RIVER → TRAVERSE(STATE), [1..9]) (TRAVERSE → traverse, [1..7]) (STATE → STATEID, [8..9]) (STATEID → 'texas', [8..9]) • Word positions: Which(1) rivers(2) run(3) through(4) the(5) states(6) bordering(7) Texas(8) ?(9) • Incorrect MR: answer(traverse(stateid('texas'))) 35-36

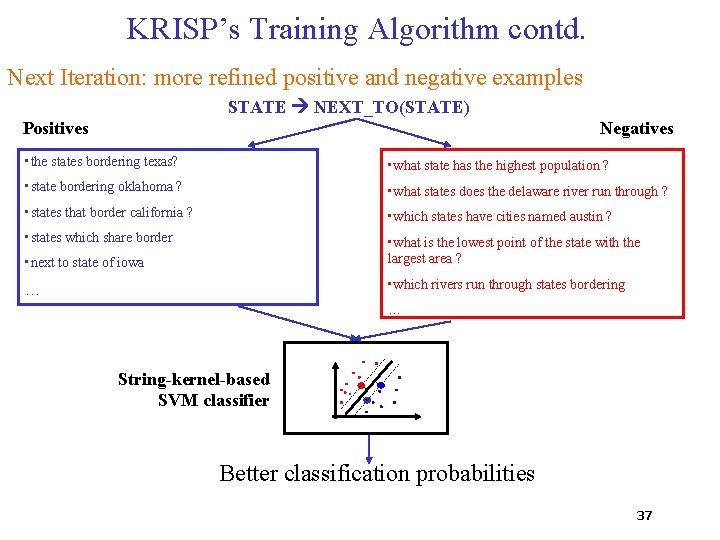

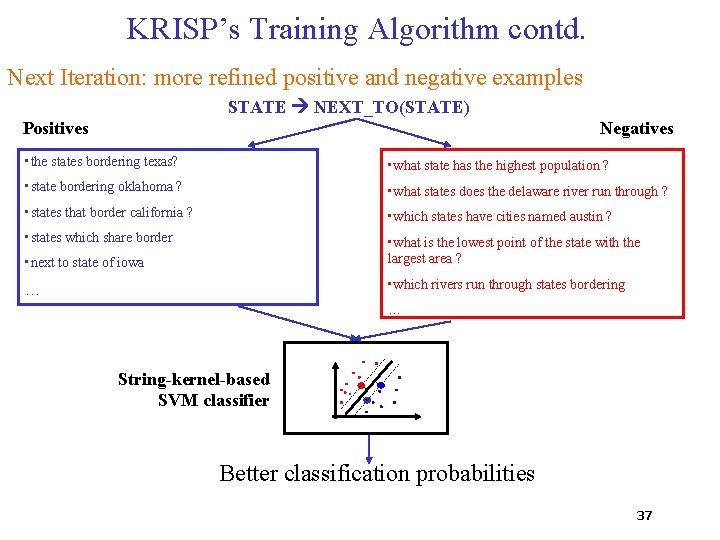

KRISP’s Training Algorithm contd. • Next iteration: more refined positive and negative examples for STATE → NEXT_TO(STATE) • Positives: the states bordering texas? / state bordering oklahoma ? / states that border california ? / states which share border / next to state of iowa / … • Negatives: what state has the highest population ? / what states does the delaware river run through ? / which states have cities named austin ? / what is the lowest point of the state with the largest area ? / which rivers run through states bordering / … • The retrained string-kernel-based SVM classifier gives better classification probabilities 37



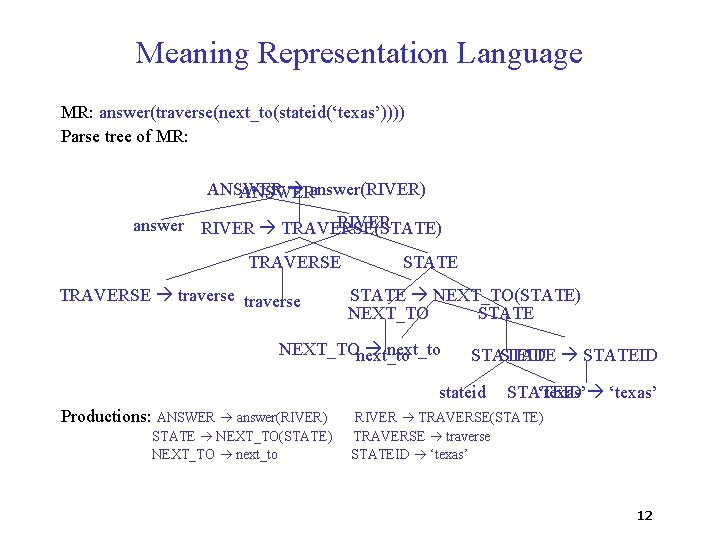

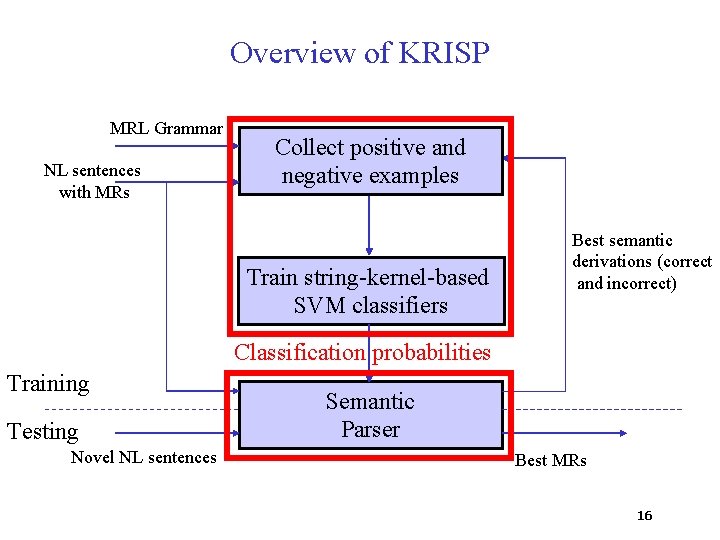

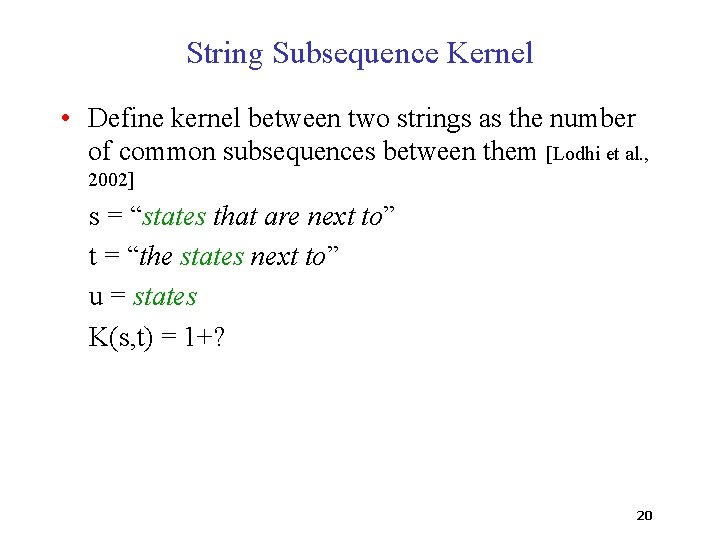

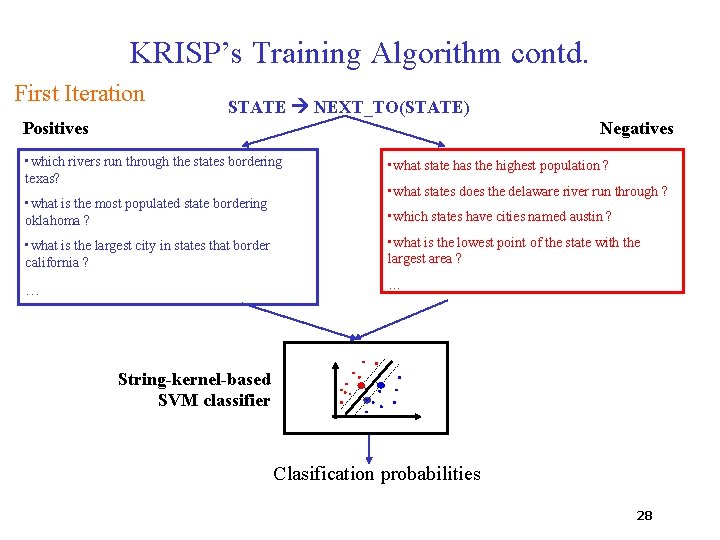

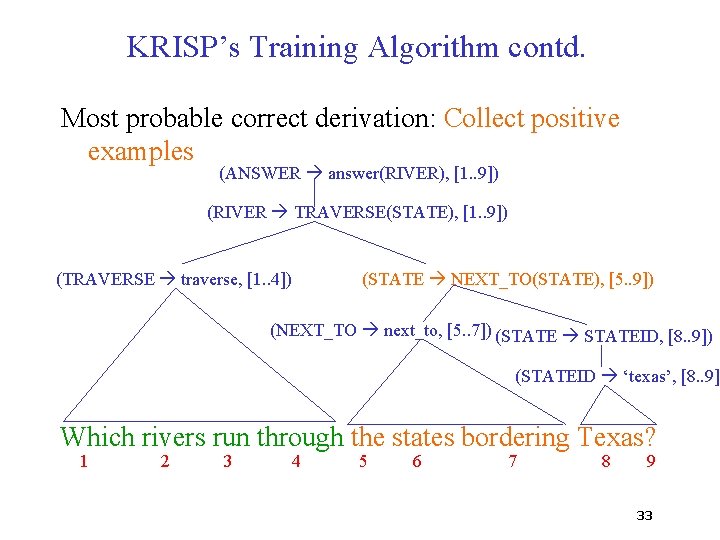

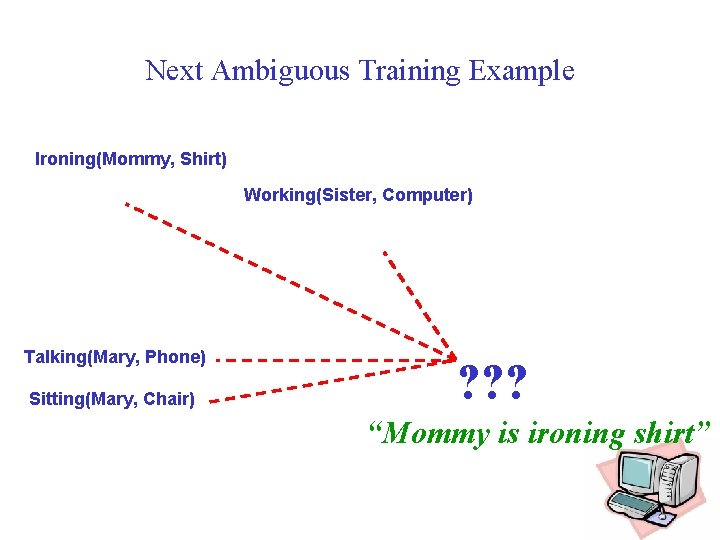

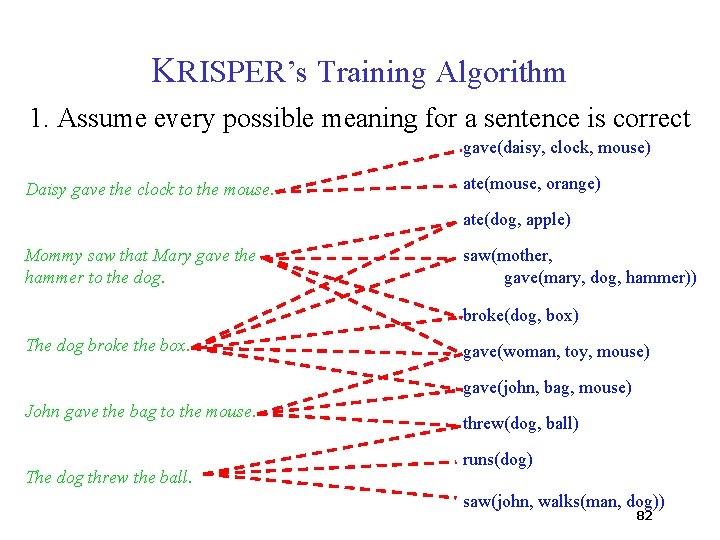

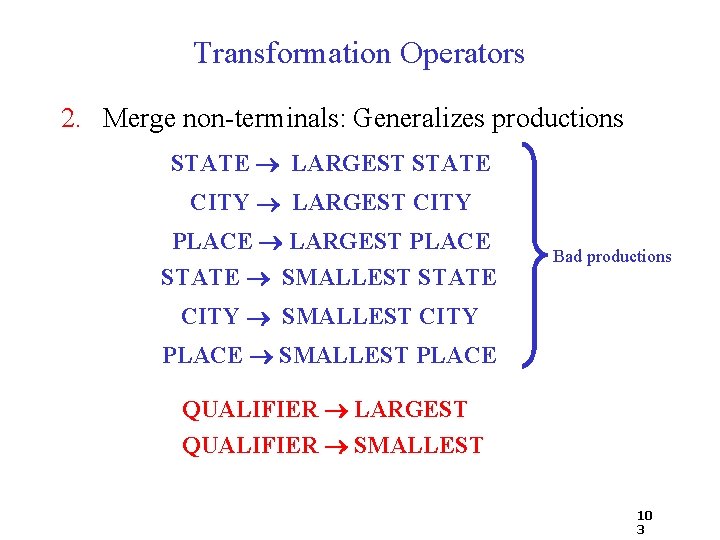

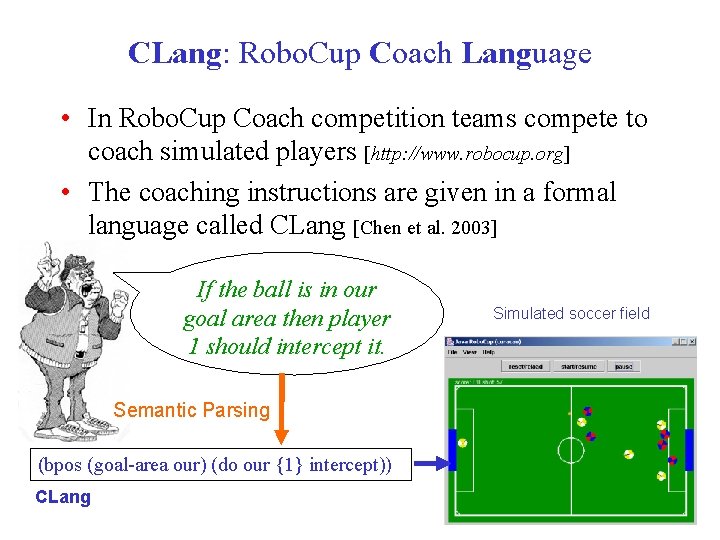

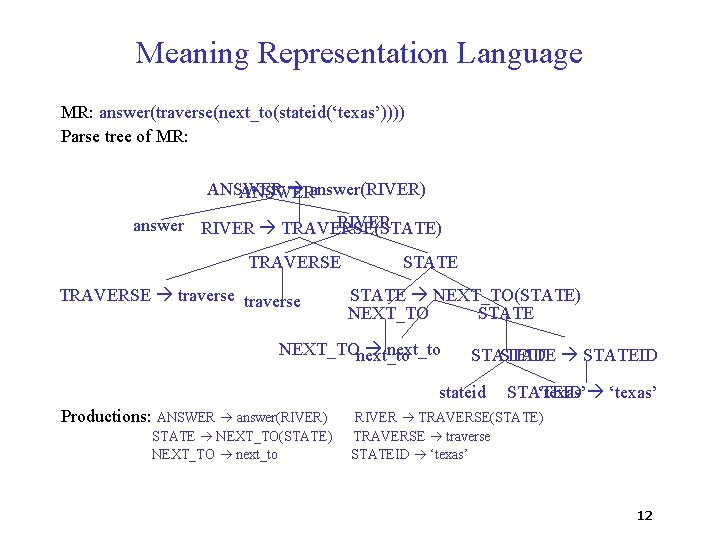

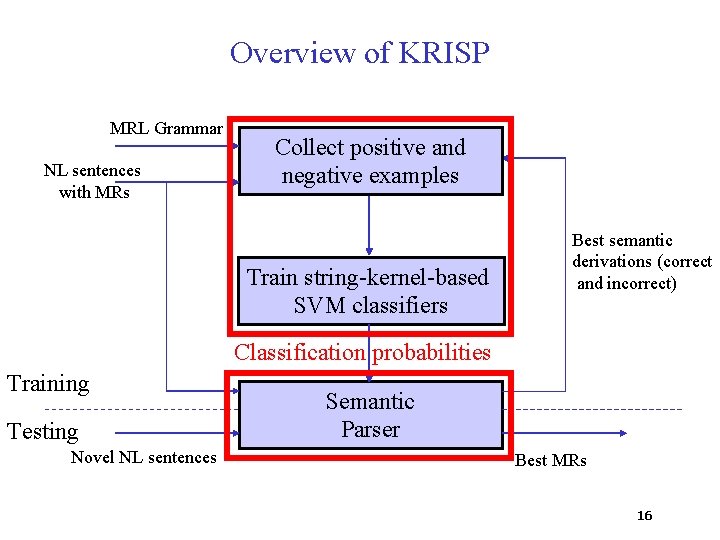

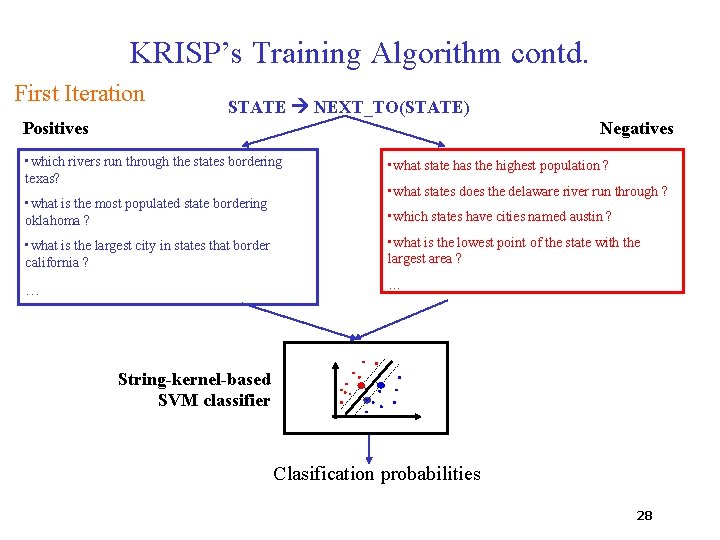

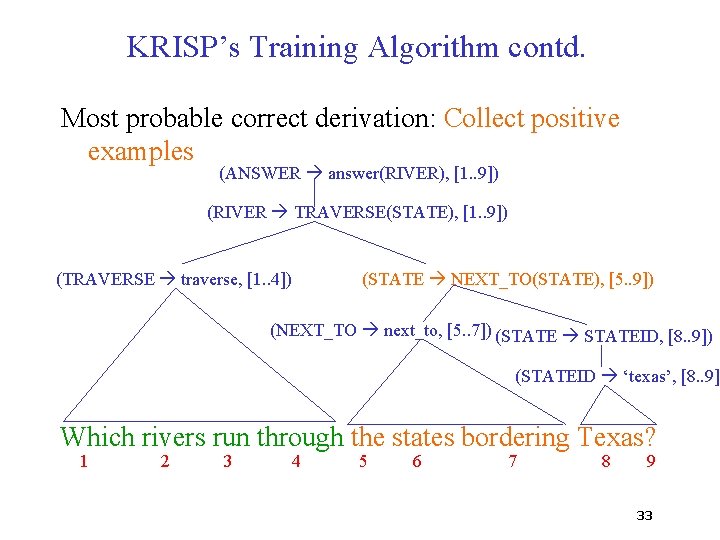

Experimental Corpora • CLang [Kate, Wong & Mooney, 2005] – 300 randomly selected pieces of coaching advice from the log files of the 2003 RoboCup Coach Competition – 22.52 words on average in NL sentences – 13.42 tokens on average in MRs • Geoquery [Tang & Mooney, 2001] – 880 queries for the given U.S. geography database – 7.48 words on average in NL sentences – 6.47 tokens on average in MRs 39

Experimental Methodology • Evaluated using standard 10-fold cross validation • Correctness – CLang: output exactly matches the correct representation – Geoquery: the resulting query retrieves the same answer as the correct representation • Metrics 40


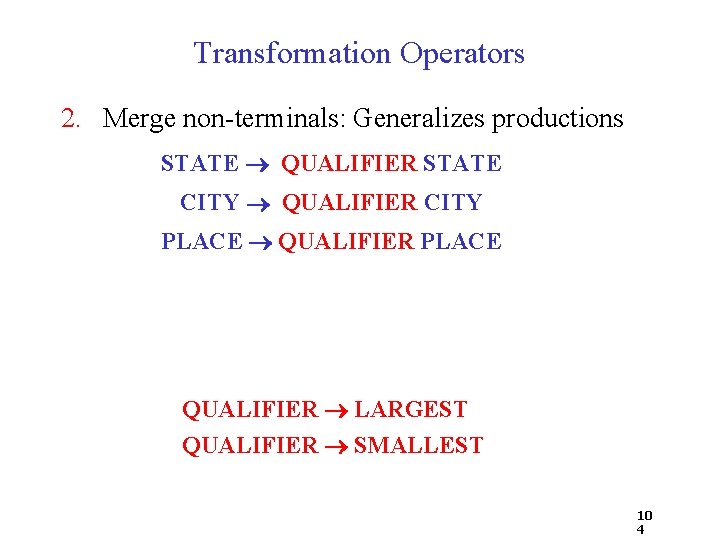

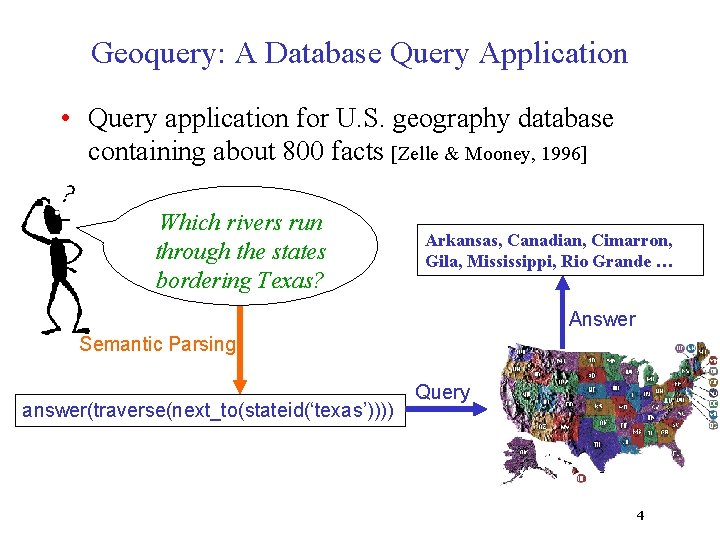

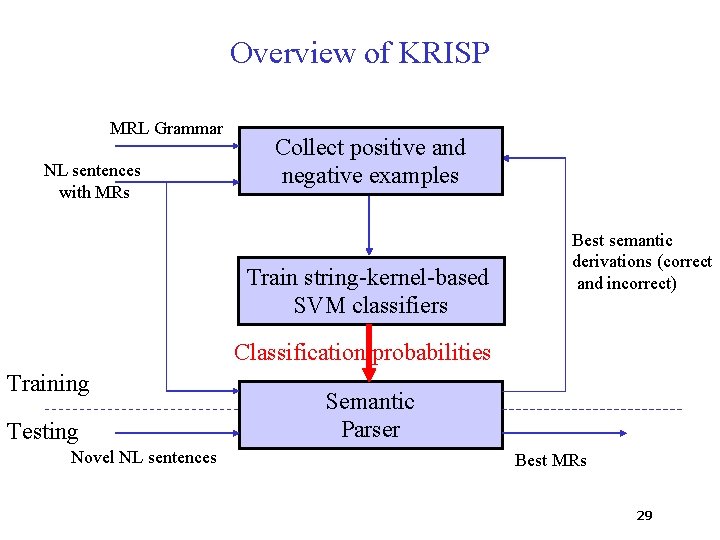

Experimental Methodology contd. • Compared Systems: – CHILL [Tang & Mooney, 2001]: Inductive Logic Programming based semantic parser – SCISSOR [Ge & Mooney, 2005]: learns an integrated syntactic-semantic parser, needs extra annotations – WASP [Wong & Mooney, 2006]: uses statistical machine translation techniques – Zettlemoyer & Collins (2007): Combinatory Categorial Grammar (CCG) based semantic parser; uses a different experimental setup (600 training, 280 testing examples) and requires an initial hand-built lexicon 41

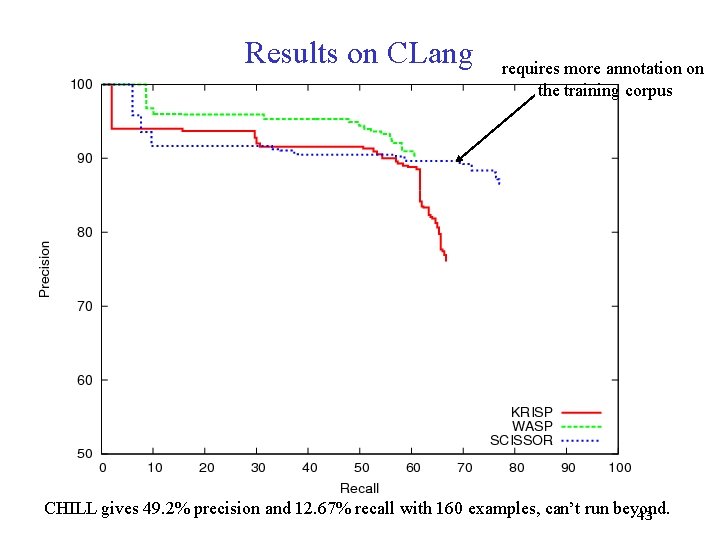

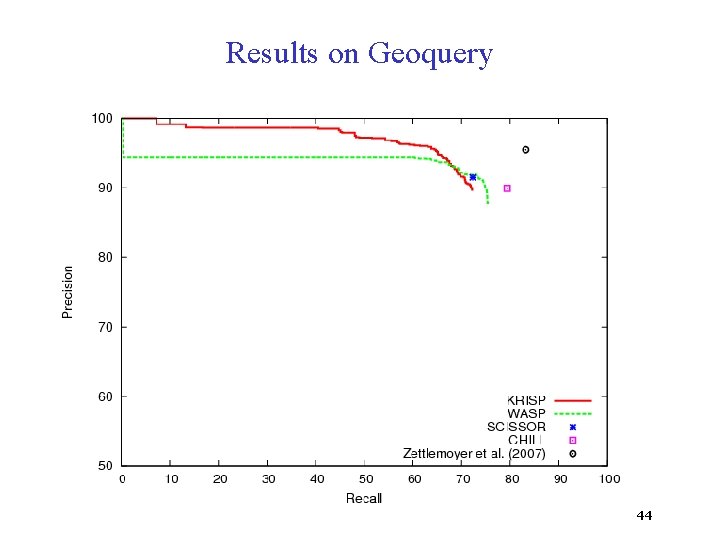

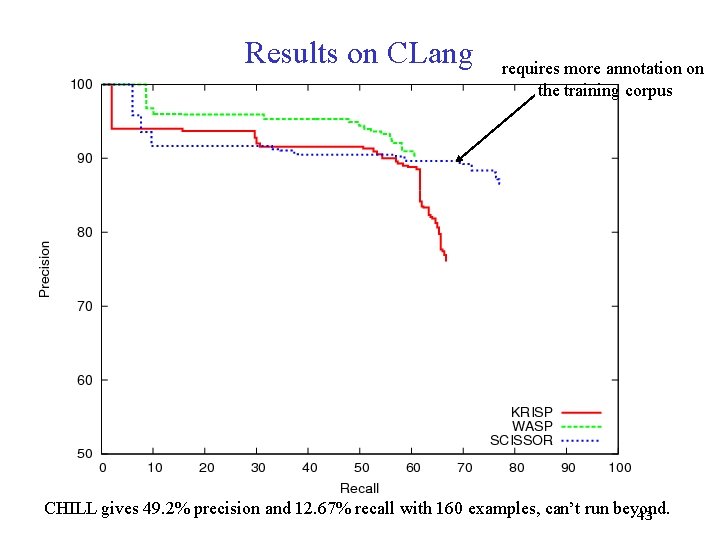

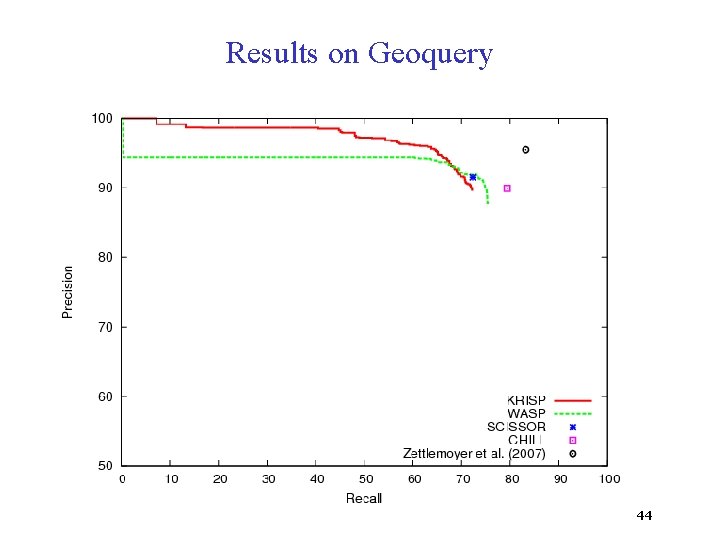

Experimental Methodology contd. • KRISP gives probabilities for its semantic derivation which are taken as confidences of the MRs • We plot precision-recall curves by first sorting the best MR for each sentence by confidences and then finding precision for every recall value • WASP and SCISSOR also output confidences so we show their precision-recall curves • Results of other systems shown as points on precision-recall graphs 42
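The curve construction described above can be sketched as follows. The definitions used are assumptions, since the metric formulas are not reproduced in the text: precision is taken as correct MRs over MRs output so far in the confidence ranking, and recall as correct MRs over all test sentences. The function name and the toy results are illustrative.

```python
def precision_recall_points(results, total_sentences):
    """results: (confidence, is_correct) for each sentence that got an MR.
    Returns (recall, precision) after each prefix of the ranking."""
    ranked = sorted(results, key=lambda r: r[0], reverse=True)
    points = []
    correct = 0
    for rank, (_, is_correct) in enumerate(ranked, start=1):
        correct += int(is_correct)
        points.append((correct / total_sentences, correct / rank))
    return points


# Toy data: 5 test sentences, 4 of which received an output MR.
toy_results = [(0.4, False), (0.9, True), (0.3, True), (0.8, True)]
points = precision_recall_points(toy_results, total_sentences=5)
for recall, precision in points:
    print(f"recall={recall:.2f} precision={precision:.2f}")
```

A system without confidences contributes only the final pair, which is why such systems appear as single points on the graphs.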

Results on CLang • [Figure: precision-recall curves, with one system annotated “requires more annotation on the training corpus”] • CHILL gives 49.2% precision and 12.67% recall with 160 examples, can’t run beyond. 43

Results on Geoquery 44

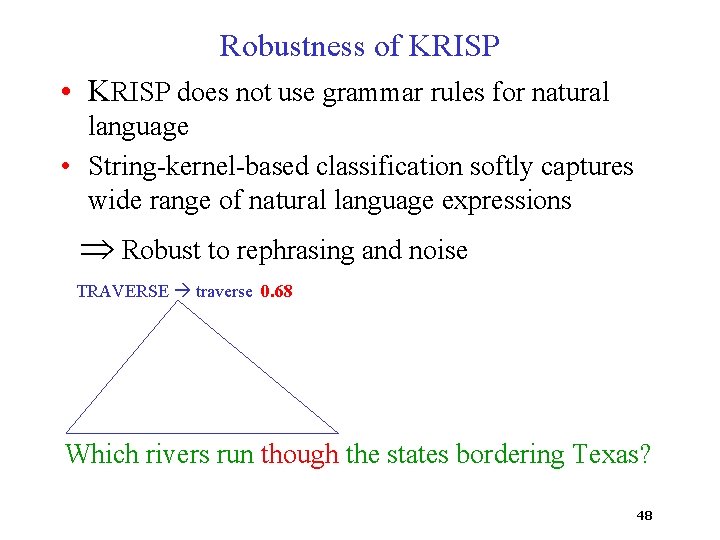

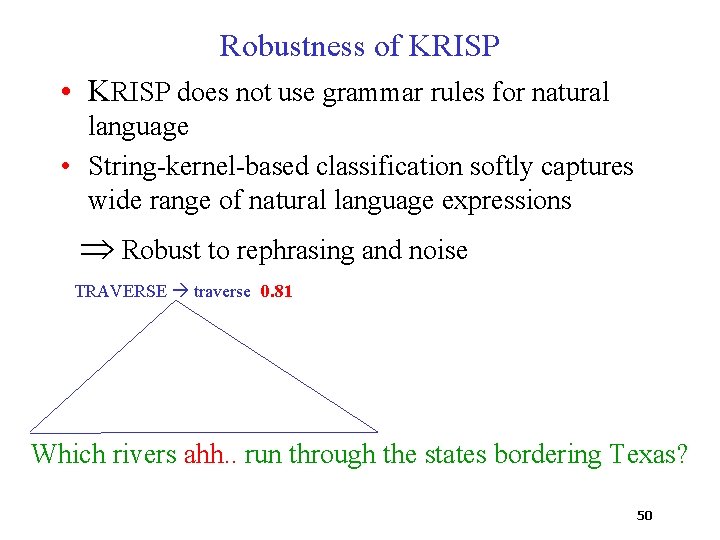

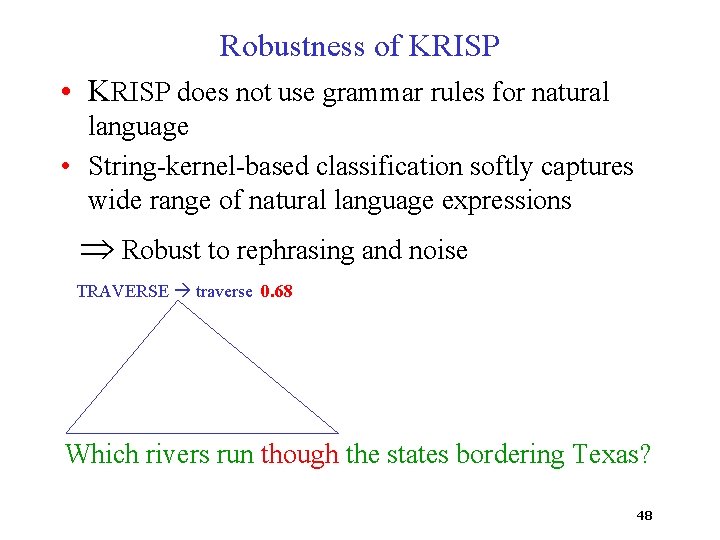

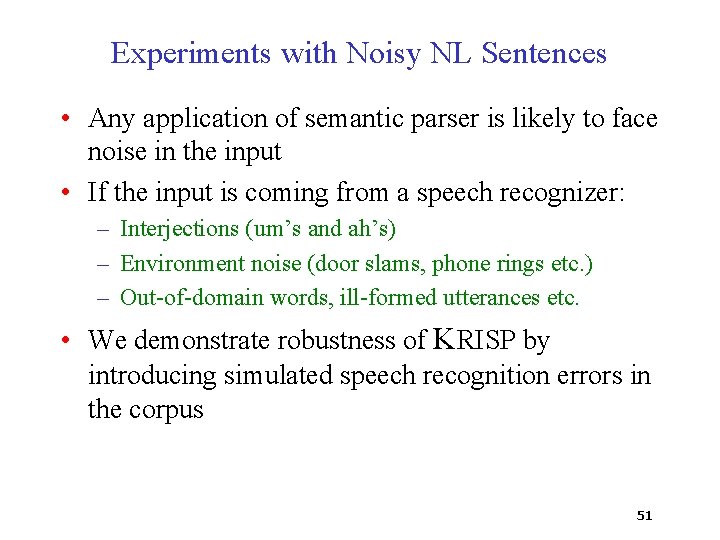

Robustness of KRISP • KRISP does not use grammar rules for natural language • String-kernel-based classification softly captures a wide range of natural language expressions, which makes it robust to rephrasing and noise • Probability for TRAVERSE → traverse shown on each variant of the sentence: 0.95 on “Which rivers run through the states bordering Texas?”; 0.78 on “Which are the rivers that run through the states bordering Texas?”; 0.68 on “Which rivers run though the states bordering Texas?”; 0.65 on “Which rivers through the states bordering Texas?”; 0.81 on “Which rivers ahh.. run through the states bordering Texas?” 45-50

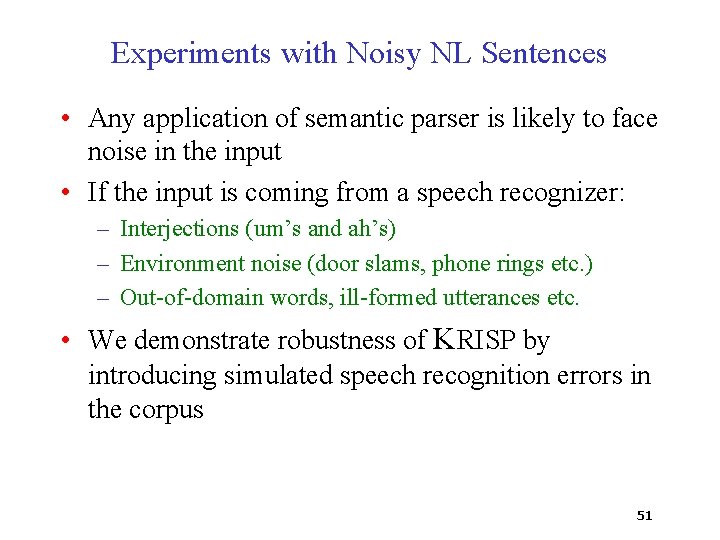

Experiments with Noisy NL Sentences • Any application of a semantic parser is likely to face noise in the input • If the input is coming from a speech recognizer: – Interjections (um’s and ah’s) – Environment noise (door slams, phone rings etc.) – Out-of-domain words, ill-formed utterances etc. • We demonstrate robustness of KRISP by introducing simulated speech recognition errors in the corpus 51

Experiments with Noisy NL Sentences contd. • Noise was introduced in the NL sentences by: – Adding extra words chosen according to their frequencies in the BNC – Dropping words randomly – Substituting words with phonetically close high frequency words • Four levels of noise were created by increasing the probabilities of the above • Results shown when only test sentences are corrupted, qualitatively similar results when both test and train sentences are corrupted • We show best F-measures (harmonic mean of precision and recall) 52
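The three corruption operations and the F-measure can be sketched as below. Everything specific here is hypothetical: the filler-word frequencies stand in for BNC counts, the confusion table stands in for phonetically close high-frequency words, and the probabilities are arbitrary; the slides do not give the actual values.

```python
import random


def add_noise(words, p_add, p_drop, p_sub, filler_freqs, confusions, rng):
    """Corrupt a word list by random insertion, deletion and substitution."""
    fillers = list(filler_freqs)
    weights = [filler_freqs[w] for w in fillers]
    noisy = []
    for word in words:
        if rng.random() < p_add:
            # Extra word sampled in proportion to its corpus frequency.
            noisy.append(rng.choices(fillers, weights=weights, k=1)[0])
        if rng.random() < p_drop:
            continue  # drop this word
        if word in confusions and rng.random() < p_sub:
            word = confusions[word]  # similar-sounding replacement
        noisy.append(word)
    return noisy


def f_measure(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


FILLER_FREQS = {"the": 6, "of": 3, "and": 3}  # made-up relative counts
CONFUSIONS = {"through": "though"}            # made-up confusion pair
SENTENCE = "which rivers run through the states bordering texas".split()

rng = random.Random(0)  # seeded so a run is repeatable
print(" ".join(add_noise(SENTENCE, 0.1, 0.1, 0.1,
                         FILLER_FREQS, CONFUSIONS, rng)))
```

Raising the three probabilities together gives progressively noisier versions of the corpus, which is one way to realize the noise levels mentioned above.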

Results on Noisy CLang Corpus 53

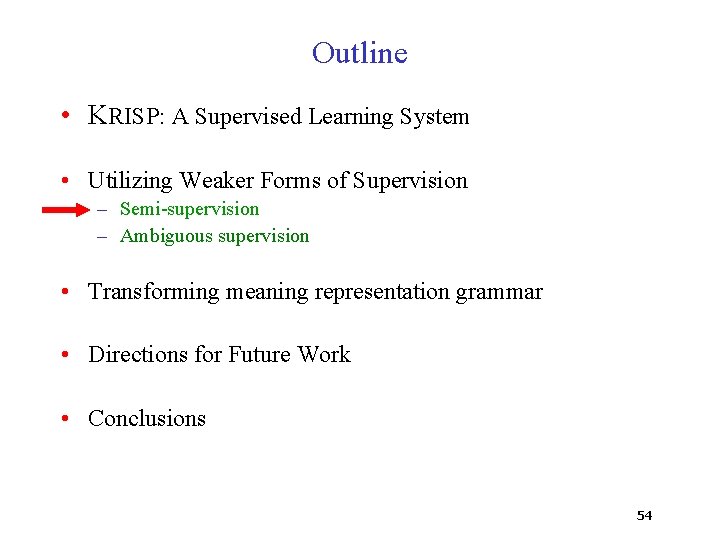

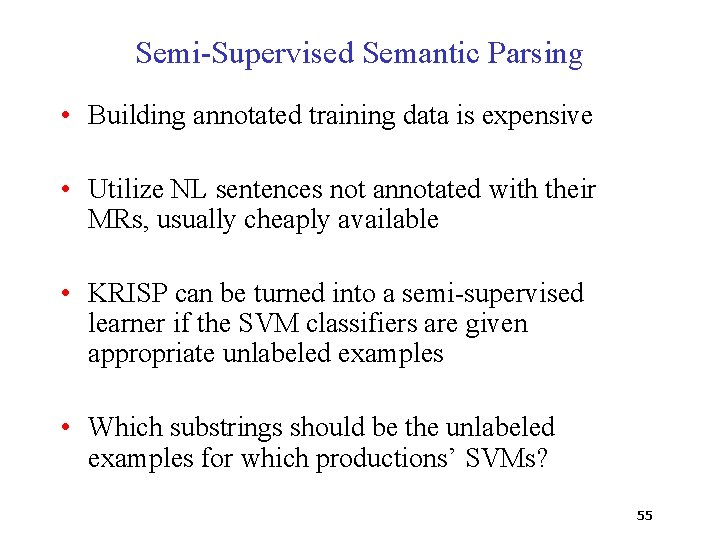

Outline • KRISP: A Supervised Learning System • Utilizing Weaker Forms of Supervision – Semi-supervision – Ambiguous supervision • Transforming meaning representation grammar • Directions for Future Work • Conclusions 54

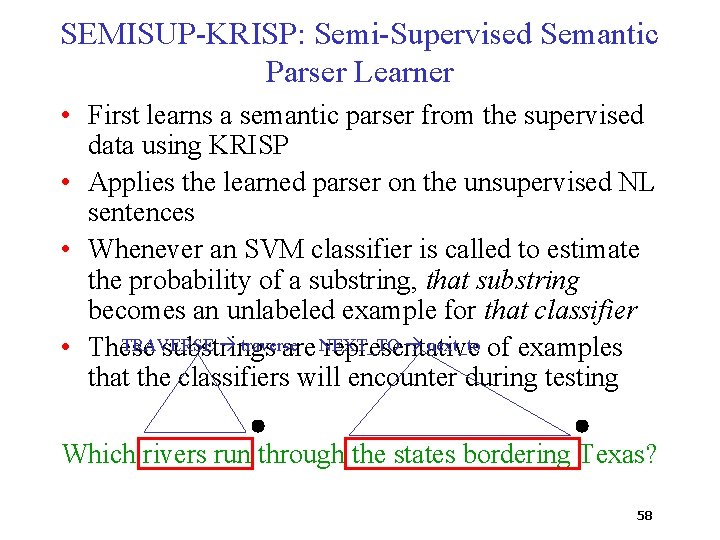

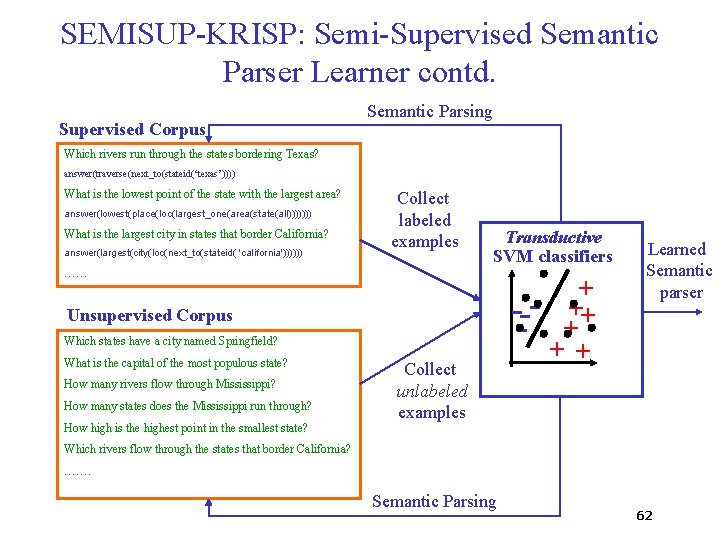

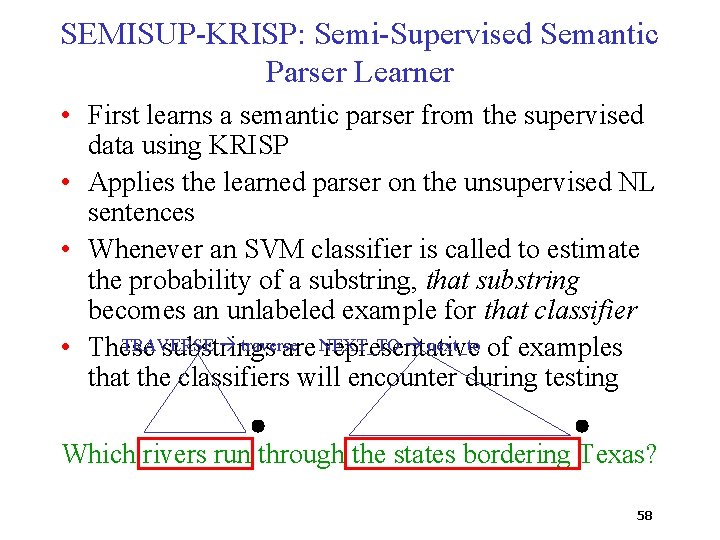

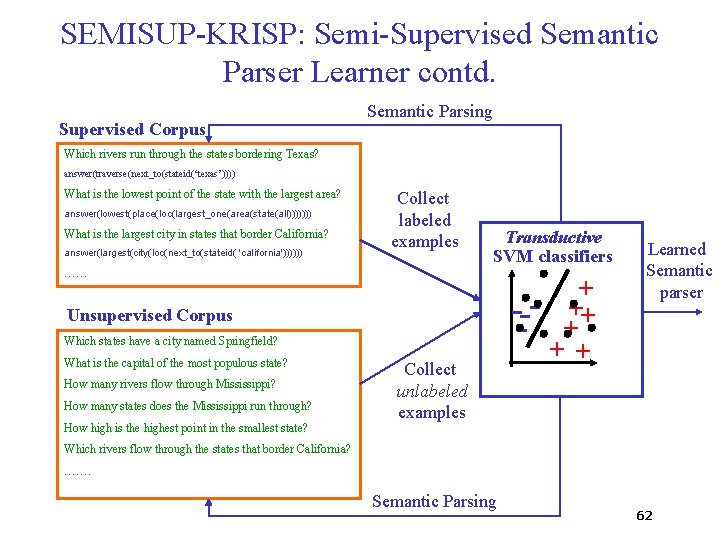

Semi-Supervised Semantic Parsing • Building annotated training data is expensive • Utilize NL sentences not annotated with their MRs, usually cheaply available • KRISP can be turned into a semi-supervised learner if the SVM classifiers are given appropriate unlabeled examples • Which substrings should be the unlabeled examples for which productions’ SVMs? 55


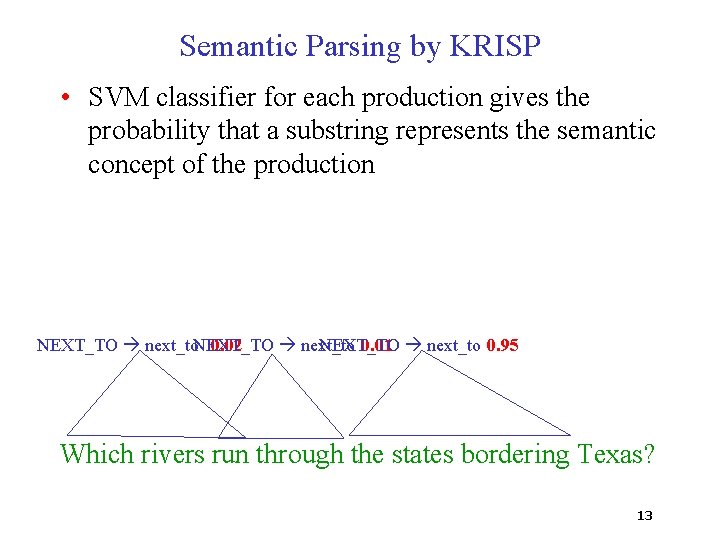

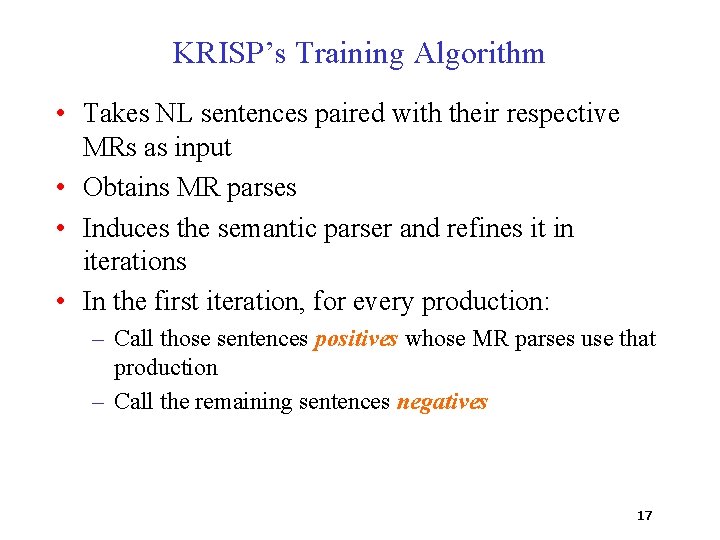

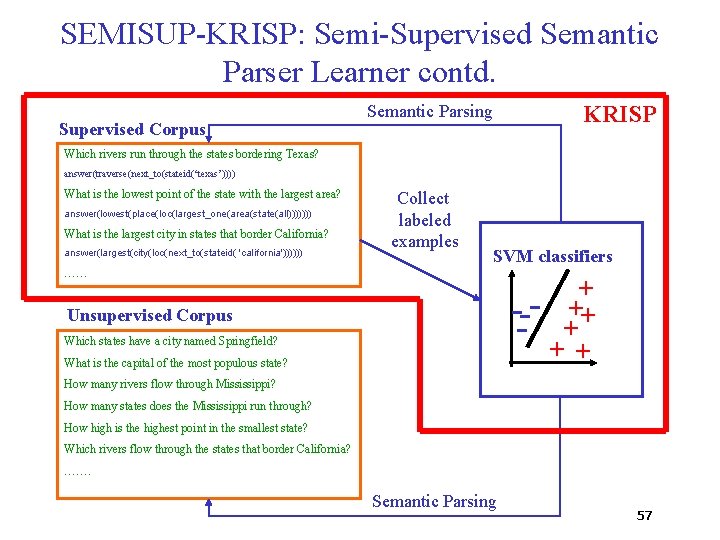

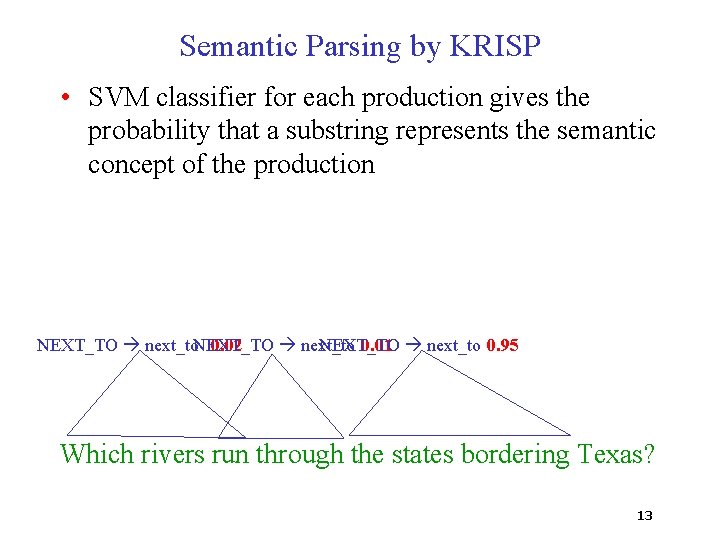

SEMISUP-KRISP: Semi-Supervised Semantic Parser Learner [Kate & Mooney, 2007a] • First learns a semantic parser from the supervised data using KRISP 56

SEMISUP-KRISP: Semi-Supervised Semantic Parser Learner contd. • Supervised corpus (NL sentences with MRs): Which rivers run through the states bordering Texas? answer(traverse(next_to(stateid('texas')))) / What is the lowest point of the state with the largest area? answer(lowest(place(loc(largest_one(area(state(all))))))) / What is the largest city in states that border California? answer(largest(city(loc(next_to(stateid('california')))))) / … • KRISP collects labeled examples (+ and -) from the supervised corpus and trains SVM classifiers for semantic parsing • Unsupervised corpus (NL sentences only): Which states have a city named Springfield? / What is the capital of the most populous state? / How many rivers flow through Mississippi? / How many states does the Mississippi run through? / How high is the highest point in the smallest state? / Which rivers flow through the states that border California? / … 57

SEMISUP-KRISP: Semi-Supervised Semantic Parser Learner • First learns a semantic parser from the supervised data using KRISP • Applies the learned parser on the unsupervised NL sentences • Whenever an SVM classifier is called to estimate the probability of a substring, that substring becomes an unlabeled example for that classifier (figure: the classifiers for TRAVERSE → traverse and NEXT_TO → next_to applied to substrings of “Which rivers run through the states bordering Texas?”) • These substrings are representative of examples that the classifiers will encounter during testing 58
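One way to picture the collection step is a thin wrapper that records every substring a production's classifier is asked about while the learned parser runs over unannotated sentences. This is a sketch under assumptions: the class name, its interface, and the constant scorer standing in for a trained SVM are all hypothetical.

```python
class RecordingClassifier:
    """Wraps a production's probability function and remembers each
    distinct substring it was queried with, in first-seen order."""

    def __init__(self, production, probability_fn):
        self.production = production
        self.probability_fn = probability_fn
        self.unlabeled = []

    def probability(self, substring):
        if substring not in self.unlabeled:
            self.unlabeled.append(substring)
        return self.probability_fn(substring)


# Stand-in scorer; a real system would call the trained SVM here.
next_to = RecordingClassifier("NEXT_TO -> next_to", lambda sub: 0.5)

# Substrings the parser might query while parsing an unannotated sentence.
for sub in ("the states bordering", "states bordering Texas",
            "the states bordering"):
    next_to.probability(sub)

print(next_to.unlabeled)
```

The recorded substrings can then be handed to a transductive SVM as the unlabeled set for that production.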

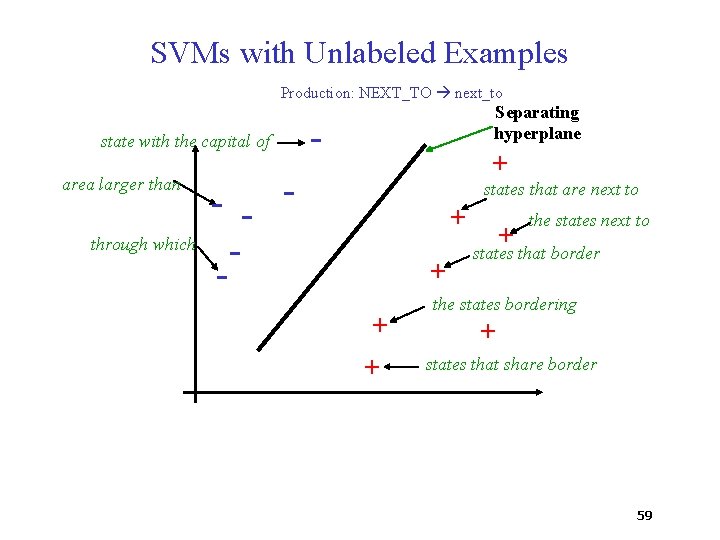

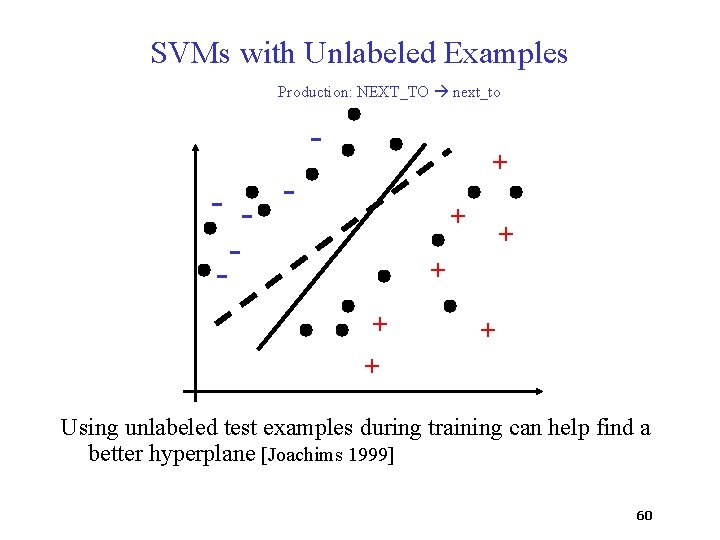

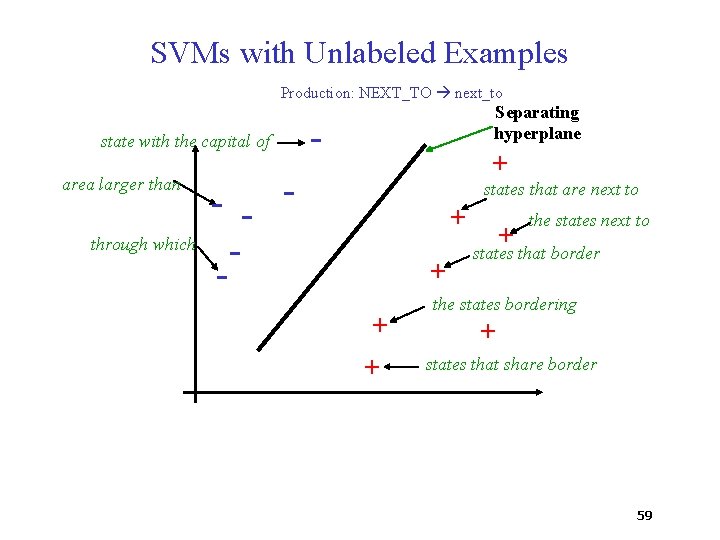

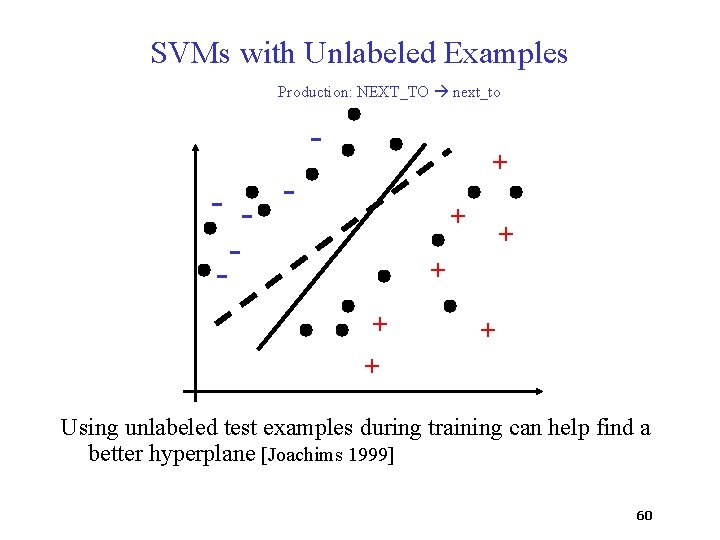

SVMs with Unlabeled Examples • Production: NEXT_TO → next_to • [Figure: labeled positive (+) and negative (-) examples and unlabeled substrings plotted around the separating hyperplane; substrings shown: “state with the capital of”, “area larger than”, “through which”, “states that are next to”, “the states next to”, “states that border”, “the states bordering”, “states that share border”] 59

SVMs with Unlabeled Examples • Production: NEXT_TO → next_to • [Figure: the same + and - examples with a different separating hyperplane] • Using unlabeled test examples during training can help find a better hyperplane [Joachims 1999] 60

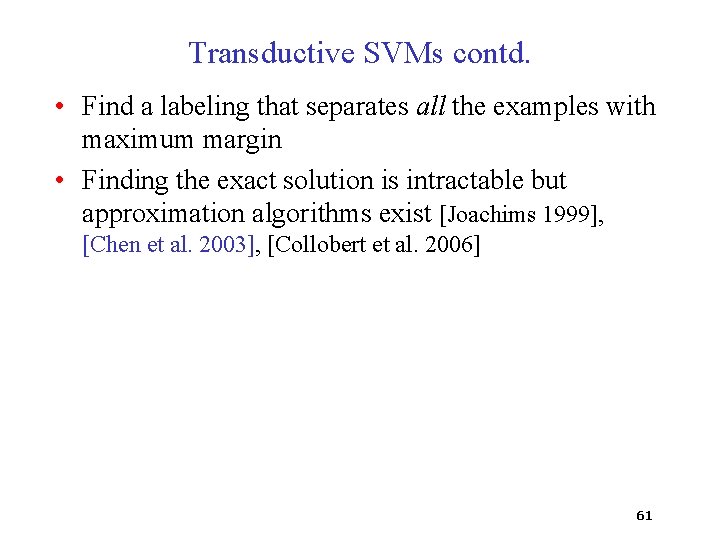

Transductive SVMs contd. • Find a labeling that separates all the examples with maximum margin • Finding the exact solution is intractable but approximation algorithms exist [Joachims 1999], [Chen et al. 2003], [Collobert et al. 2006] 61

SEMISUP-KRISP: Semi-Supervised Semantic Parser Learner contd. • Supervised corpus (NL sentences with MRs): Which rivers run through the states bordering Texas? answer(traverse(next_to(stateid('texas')))) / What is the lowest point of the state with the largest area? answer(lowest(place(loc(largest_one(area(state(all))))))) / What is the largest city in states that border California? answer(largest(city(loc(next_to(stateid('california')))))) / … • Labeled examples (+ and -) are collected from the supervised corpus • Unsupervised corpus (NL sentences only): Which states have a city named Springfield? / What is the capital of the most populous state? / How many rivers flow through Mississippi? / How many states does the Mississippi run through? / How high is the highest point in the smallest state? / Which rivers flow through the states that border California? / … • Unlabeled examples are collected by running semantic parsing on the unsupervised corpus • Transductive SVM classifiers trained on both kinds of examples give the learned semantic parser 62

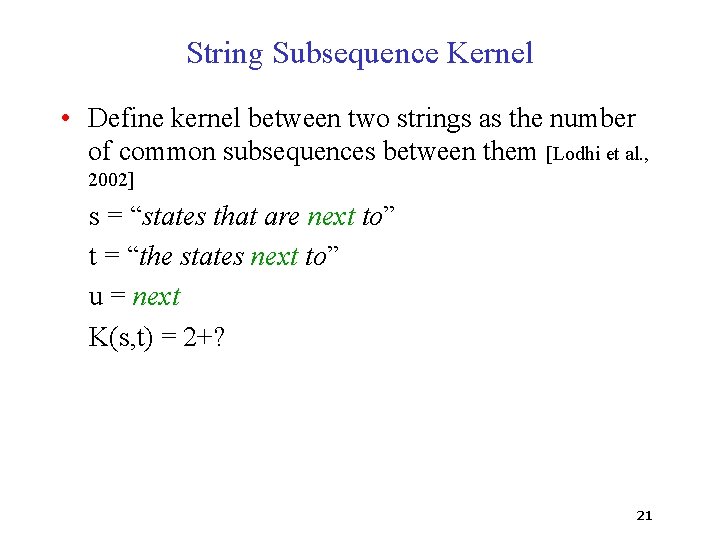

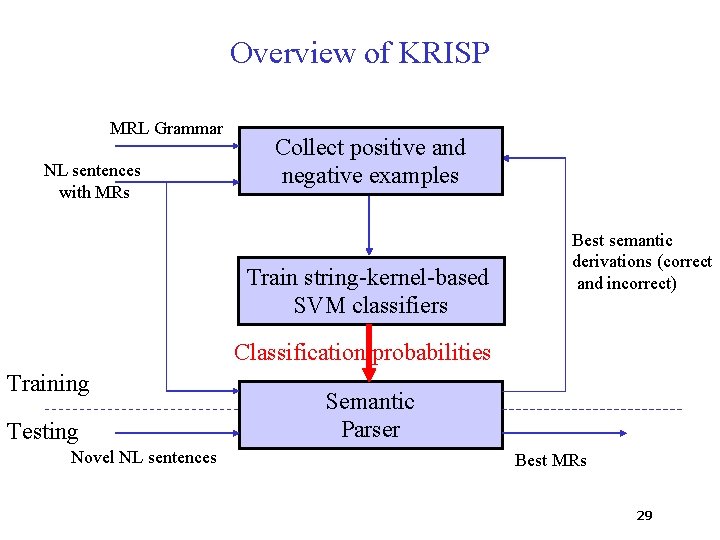

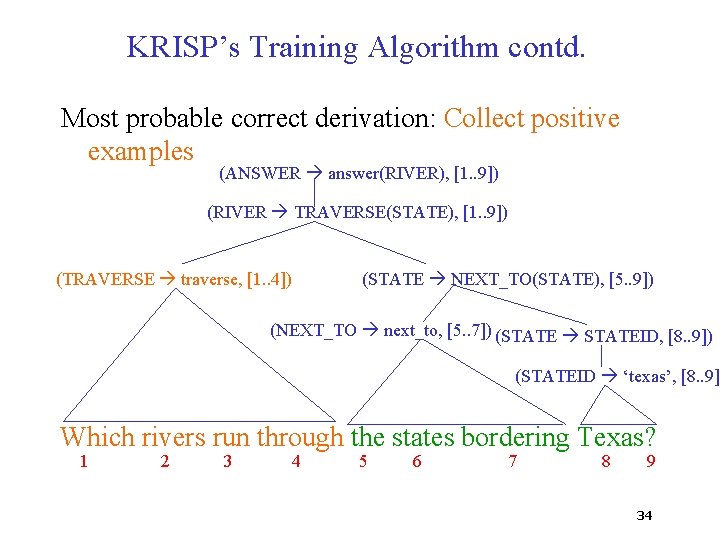

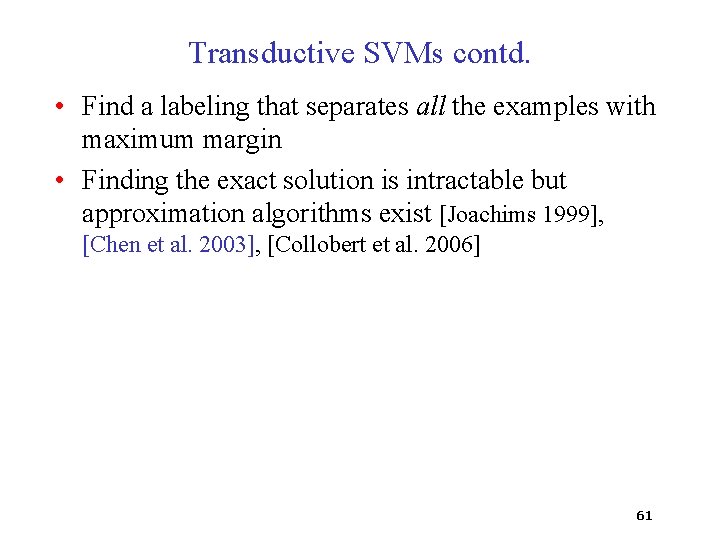

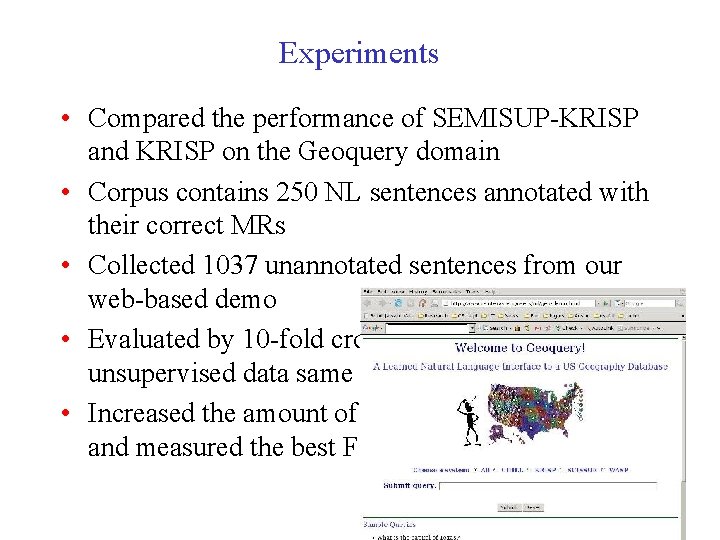

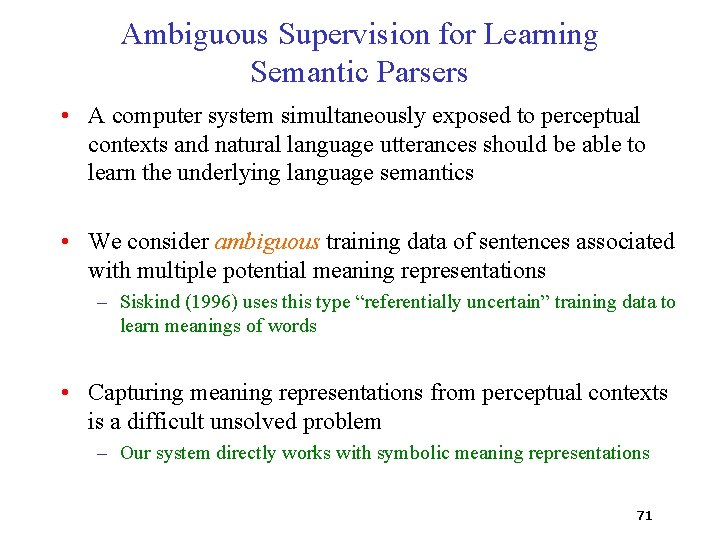

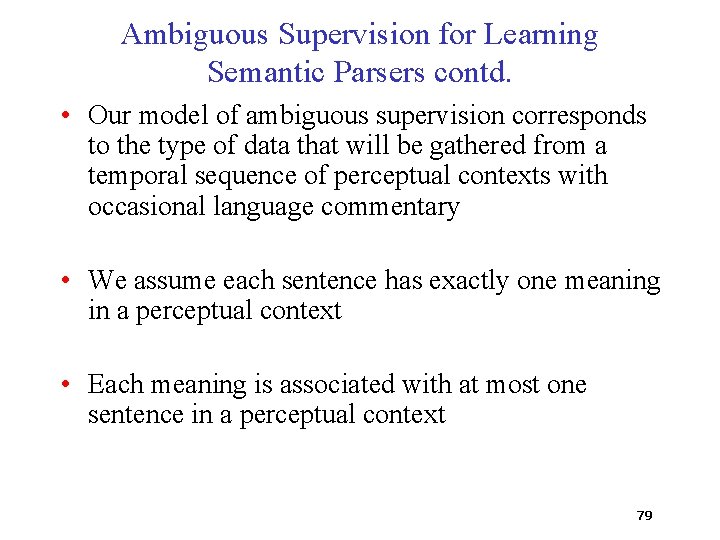

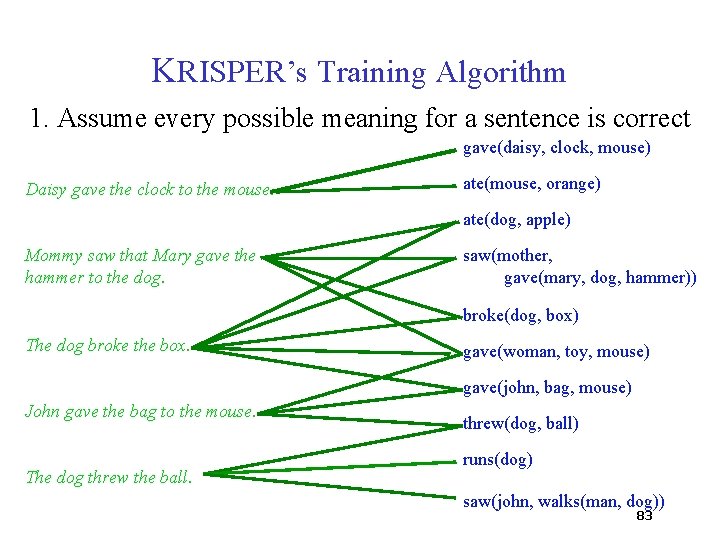

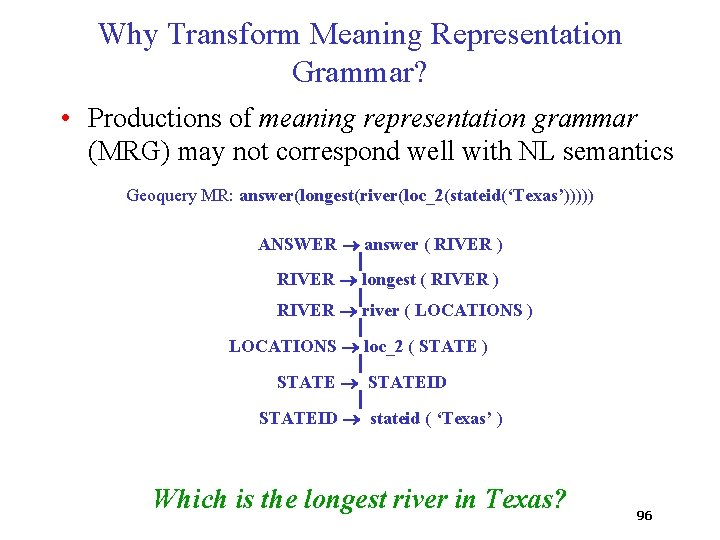

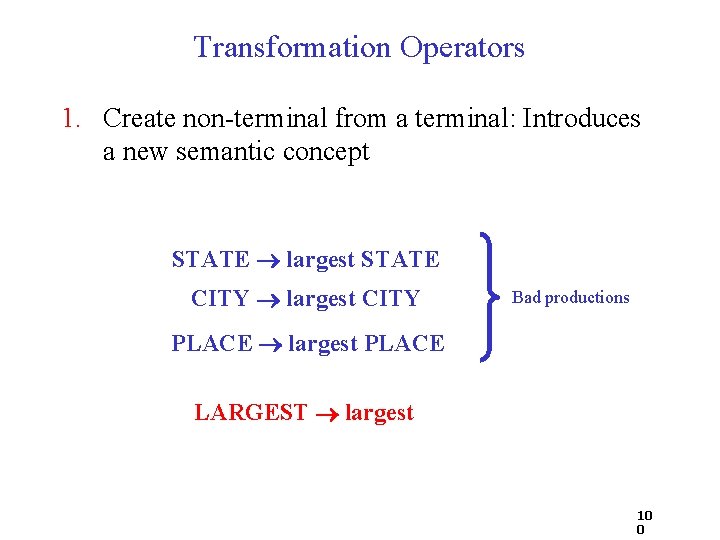

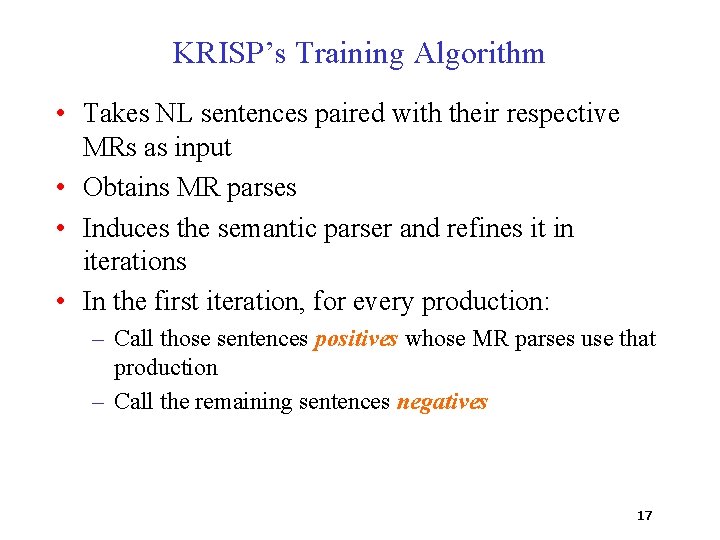

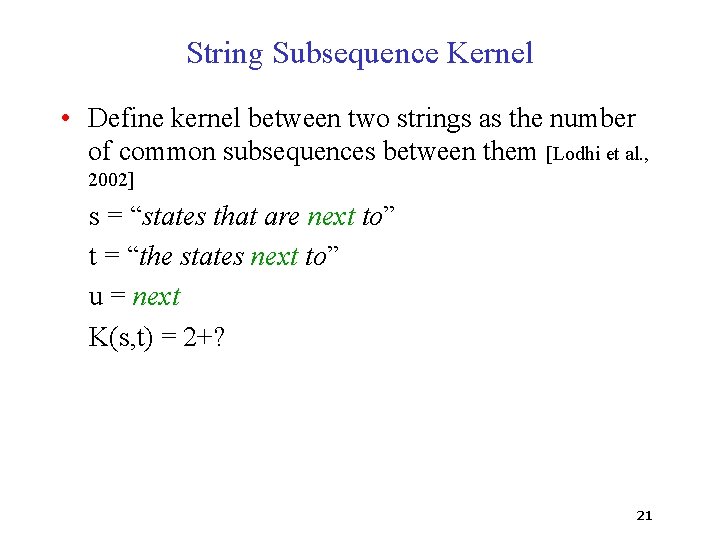

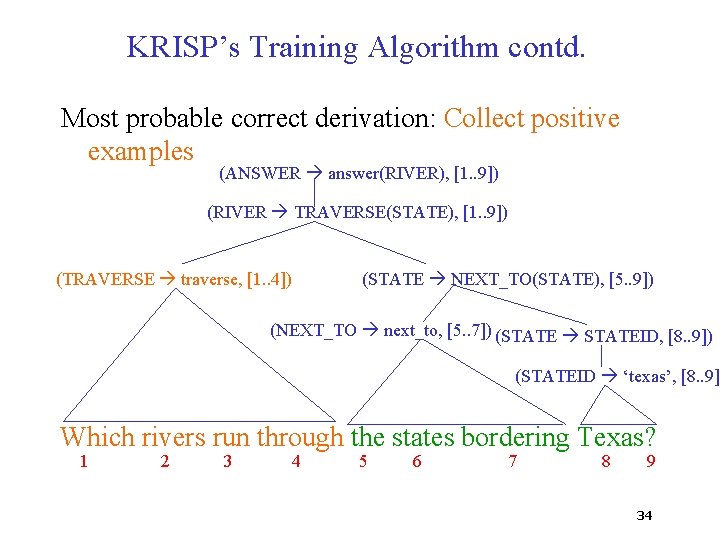

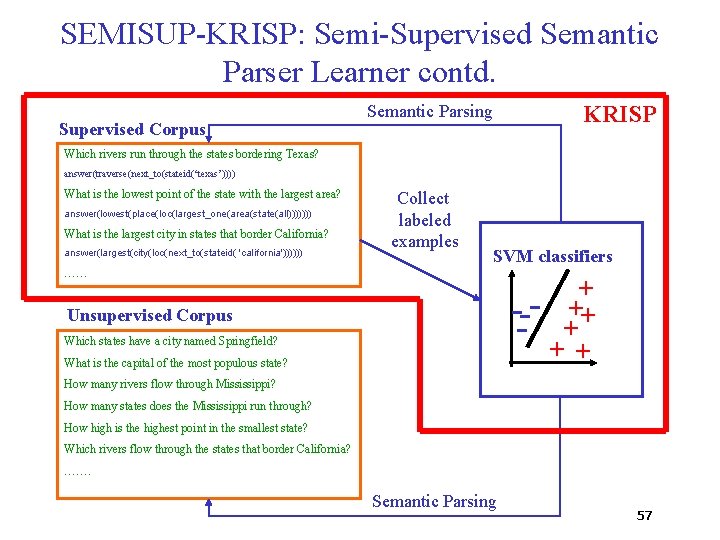

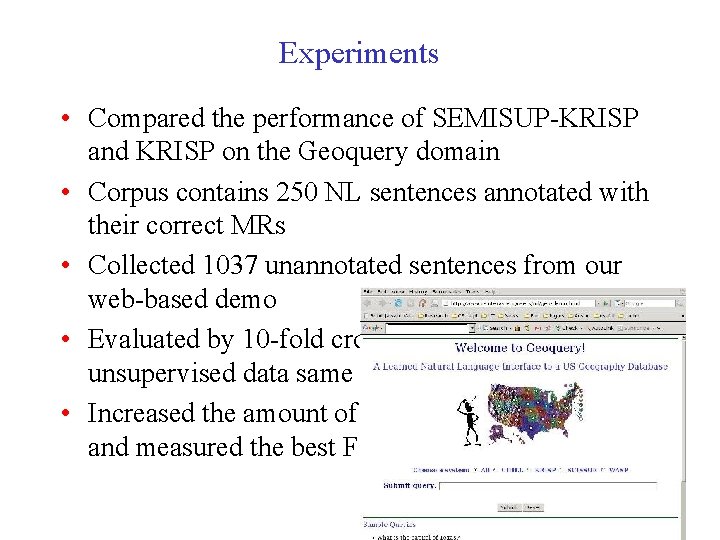

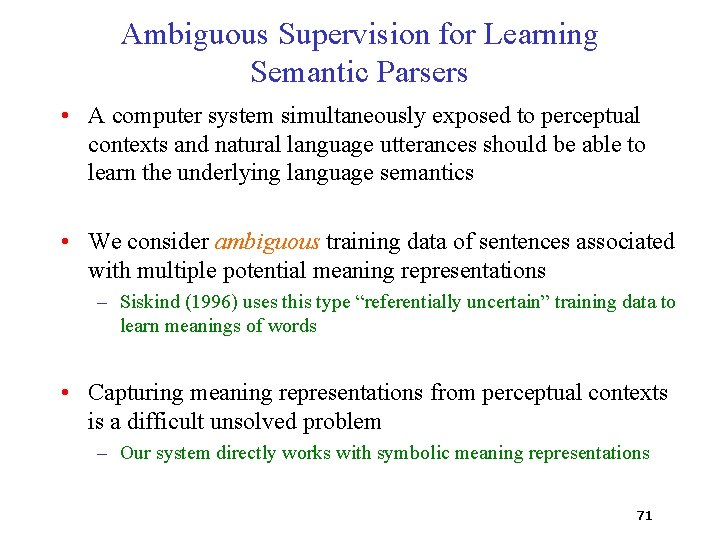

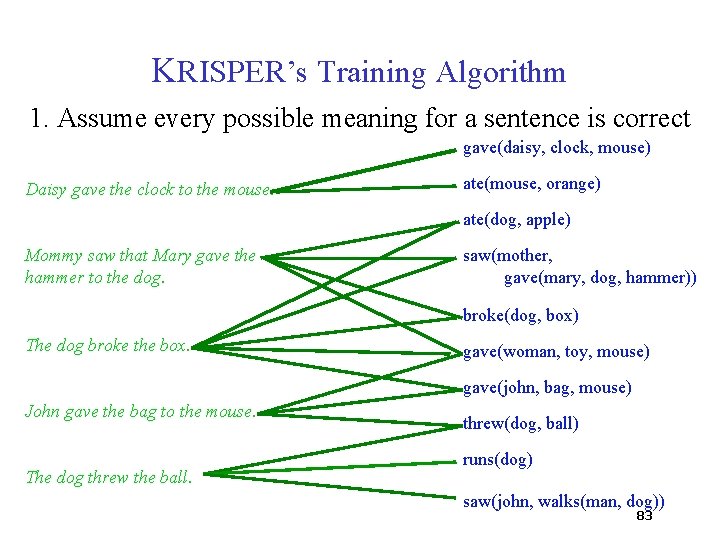

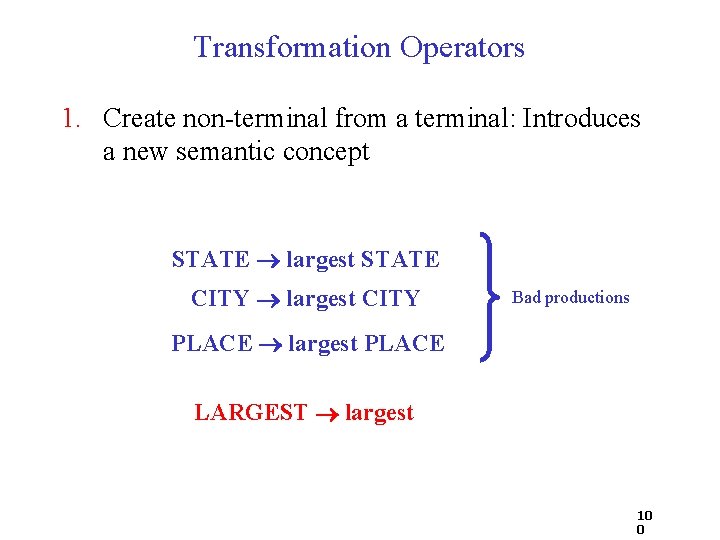

Experiments • Compared the performance of SEMISUP-KRISP and KRISP on the Geoquery domain • Corpus contains 250 NL sentences annotated with their correct MRs • Collected 1037 unannotated sentences from our web-based demo • Evaluated by 10-fold cross validation keeping the unsupervised data the same in each fold • Increased the amount of supervised training data and measured the best F-measure 63

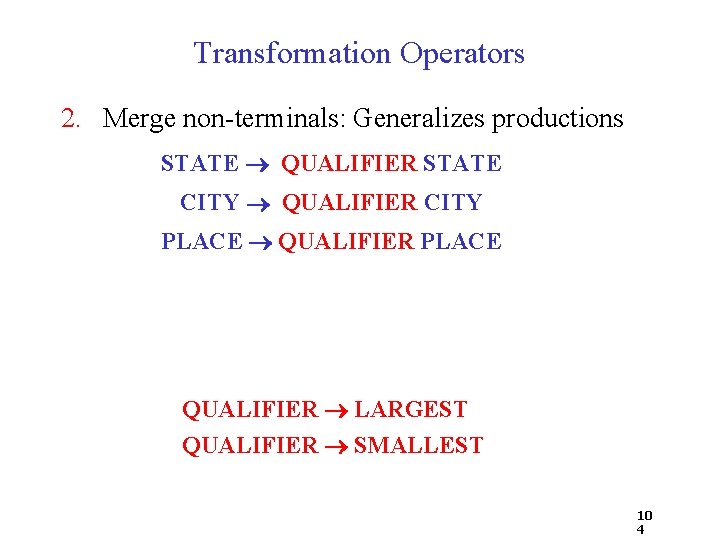

Results 64


Results 25% saving GEOBASE: Hand-built semantic parser [Borland International, 1988] 65

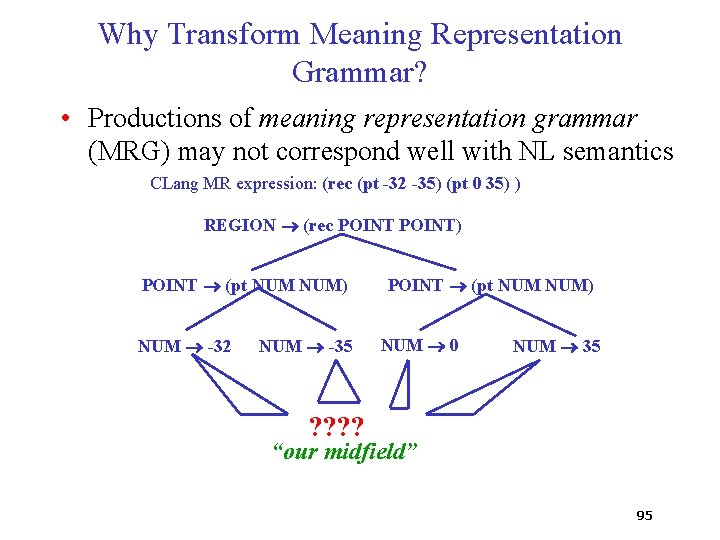

Outline • KRISP: A Supervised Learning System • Utilizing Weaker Forms of Supervision – Semi-supervision – Ambiguous supervision • Transforming meaning representation grammar • Directions for Future Work • Conclusions 66

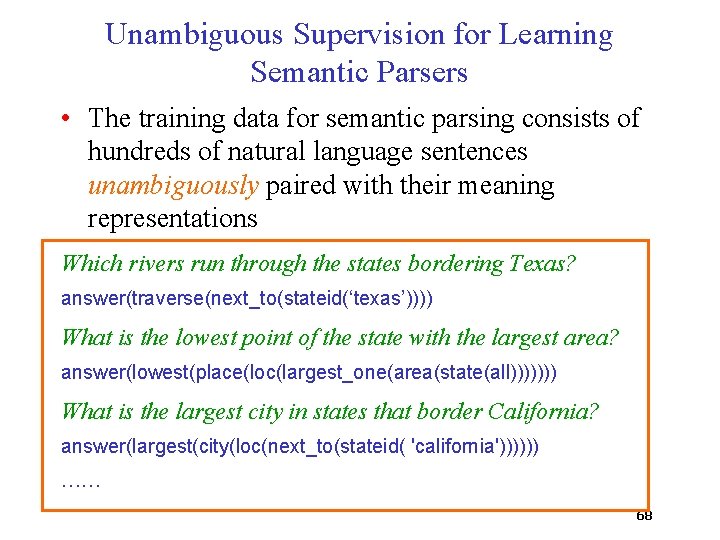

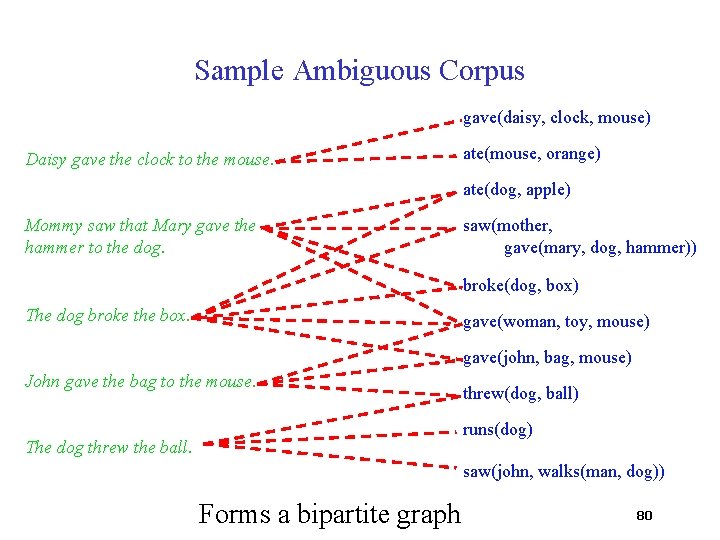

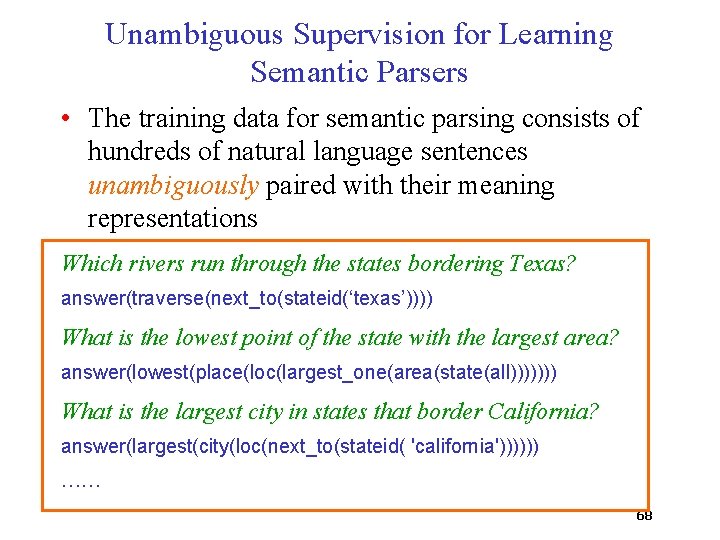

Unambiguous Supervision for Learning Semantic Parsers • The training data for semantic parsing consists of hundreds of natural language sentences unambiguously paired with their meaning representations • Which rivers run through the states bordering Texas? answer(traverse(next_to(stateid('texas')))) • What is the lowest point of the state with the largest area? answer(lowest(place(loc(largest_one(area(state(all))))))) • What is the largest city in states that border California? answer(largest(city(loc(next_to(stateid('california')))))) • … 67-68

Shortcomings of Unambiguous Supervision • It requires considerable human effort to annotate each sentence with its correct meaning representation • Does not model the type of supervision children receive when they are learning a language – Children are not taught meanings of individual sentences – They learn to identify the correct meaning of a sentence from several meanings possible in their perceptual context 69

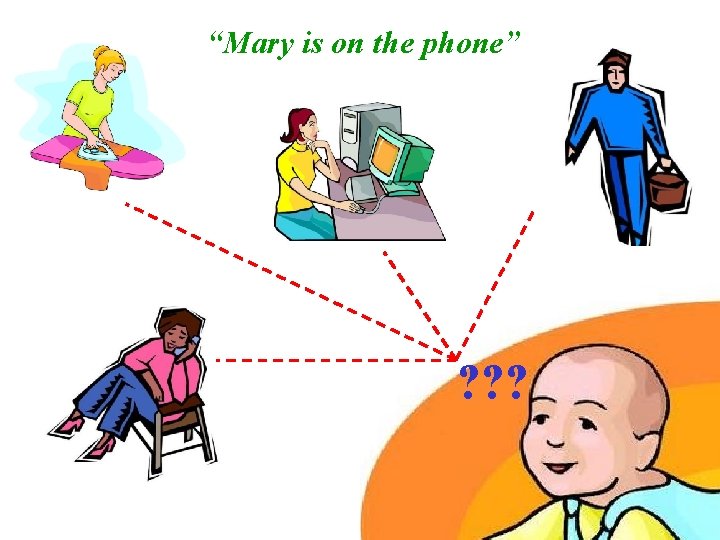

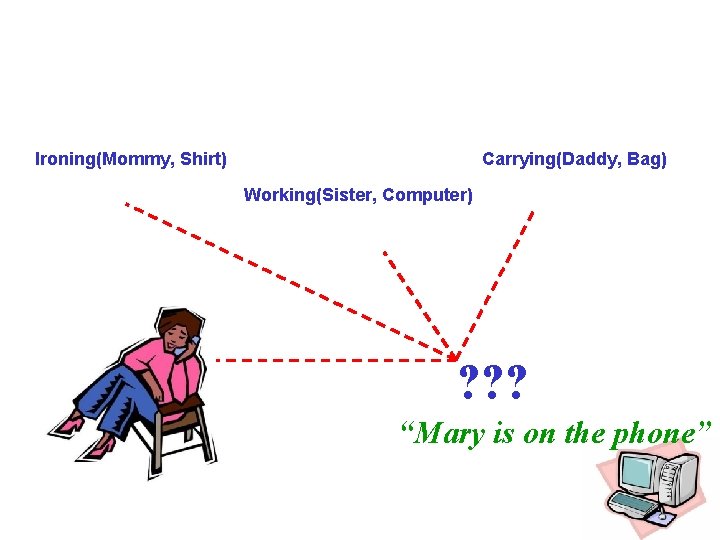

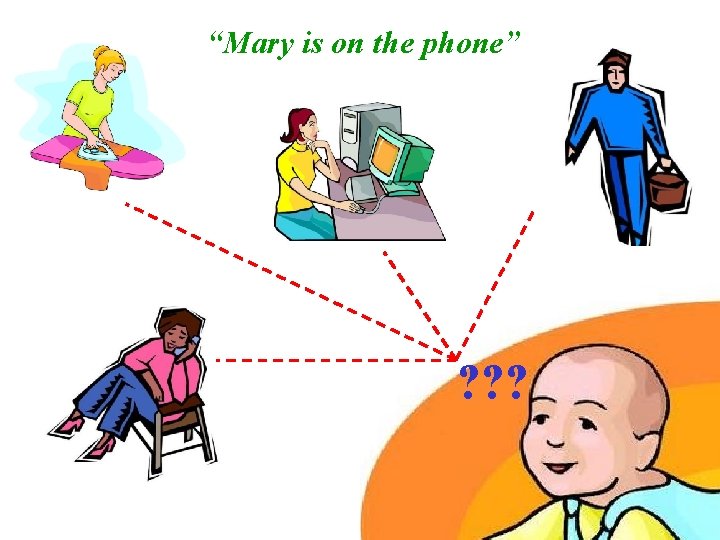

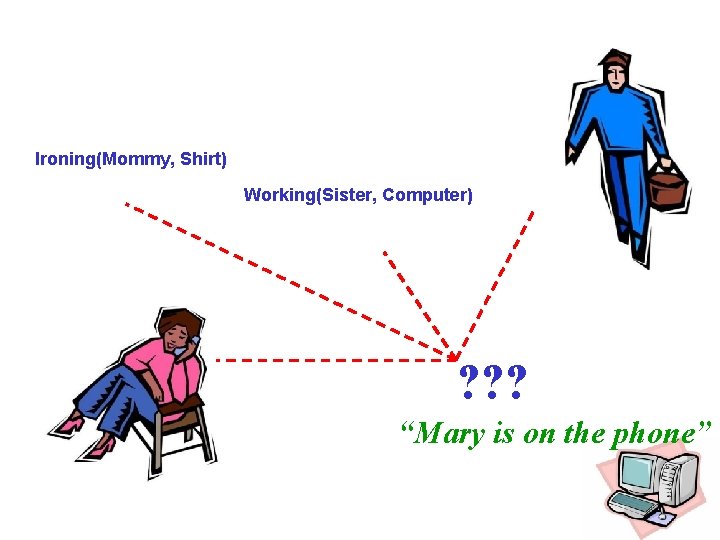

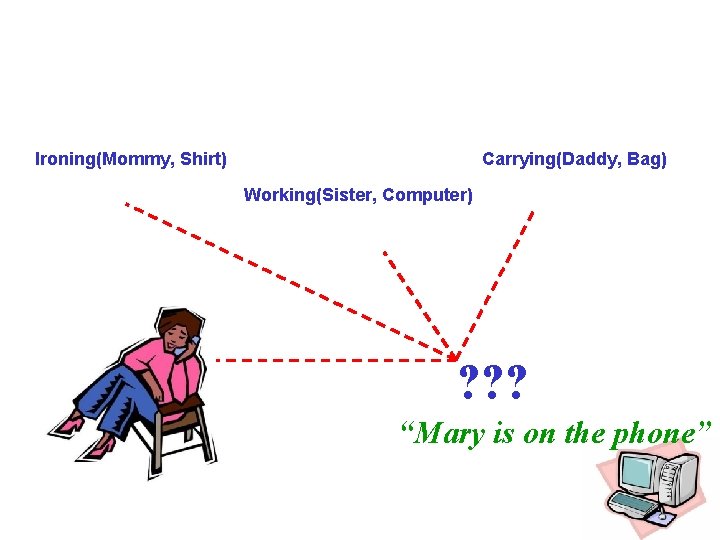

“Mary is on the phone” ??? 70

Ambiguous Supervision for Learning Semantic Parsers • A computer system simultaneously exposed to perceptual contexts and natural language utterances should be able to learn the underlying language semantics • We consider ambiguous training data of sentences associated with multiple potential meaning representations – Siskind (1996) uses this type of “referentially uncertain” training data to learn meanings of words • Capturing meaning representations from perceptual contexts is a difficult unsolved problem – Our system directly works with symbolic meaning representations 71

“Mary is on the phone” ??? • Candidate meanings from the perceptual context, revealed one at a time: Ironing(Mommy, Shirt), Working(Sister, Computer), Carrying(Daddy, Bag) 72-76

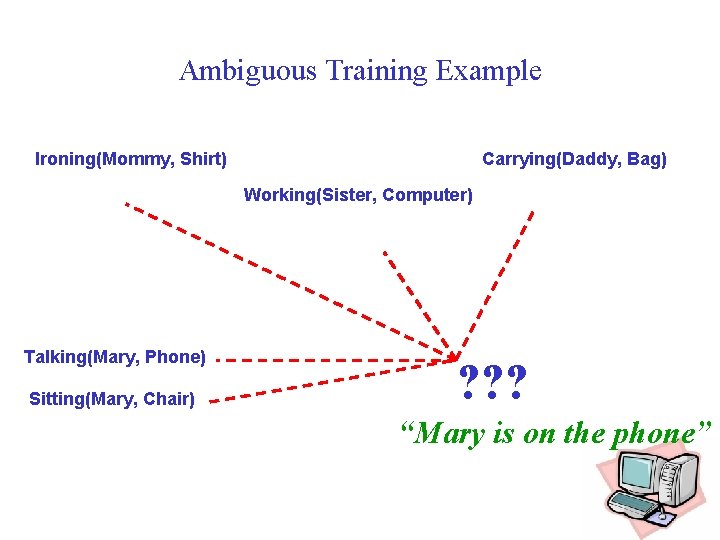

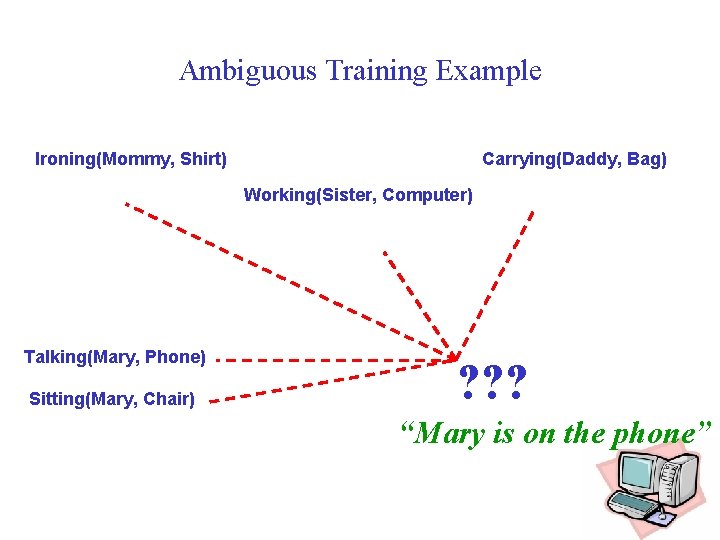

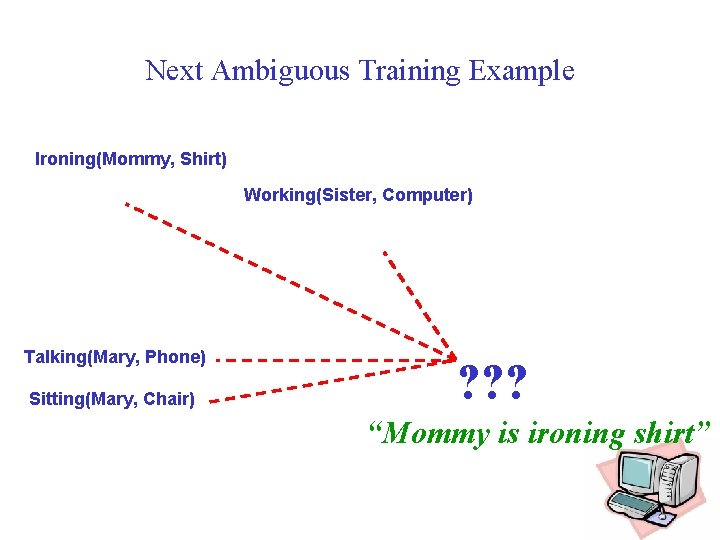

Ambiguous Training Example • Candidate meanings: Ironing(Mommy, Shirt), Carrying(Daddy, Bag), Working(Sister, Computer), Talking(Mary, Phone), Sitting(Mary, Chair) • Sentence: “Mary is on the phone” 77

Next Ambiguous Training Example • Candidate meanings: Ironing(Mommy, Shirt), Working(Sister, Computer), Talking(Mary, Phone), Sitting(Mary, Chair) • Sentence: “Mommy is ironing shirt” 78

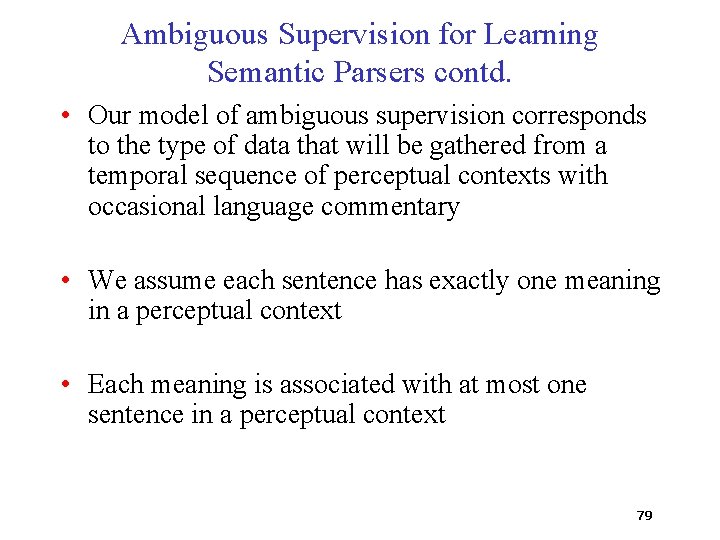

Ambiguous Supervision for Learning Semantic Parsers contd. • Our model of ambiguous supervision corresponds to the type of data that will be gathered from a temporal sequence of perceptual contexts with occasional language commentary • We assume each sentence has exactly one meaning in a perceptual context • Each meaning is associated with at most one sentence in a perceptual context 79

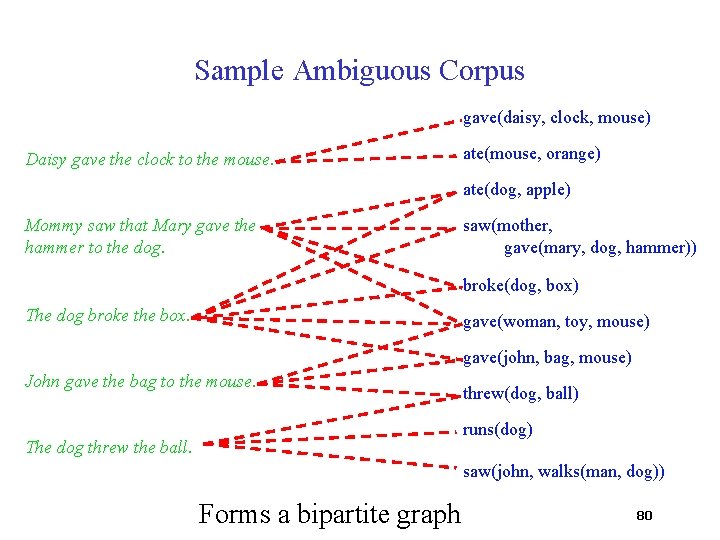

Sample Ambiguous Corpus • Sentences: Daisy gave the clock to the mouse. / Mommy saw that Mary gave the hammer to the dog. / The dog broke the box. / John gave the bag to the mouse. / The dog threw the ball. • Candidate MRs: gave(daisy, clock, mouse), ate(mouse, orange), ate(dog, apple), saw(mother, gave(mary, dog, hammer)), broke(dog, box), gave(woman, toy, mouse), gave(john, bag, mouse), threw(dog, ball), runs(dog), saw(john, walks(man, dog)) • Sentences and their candidate MRs form a bipartite graph 80

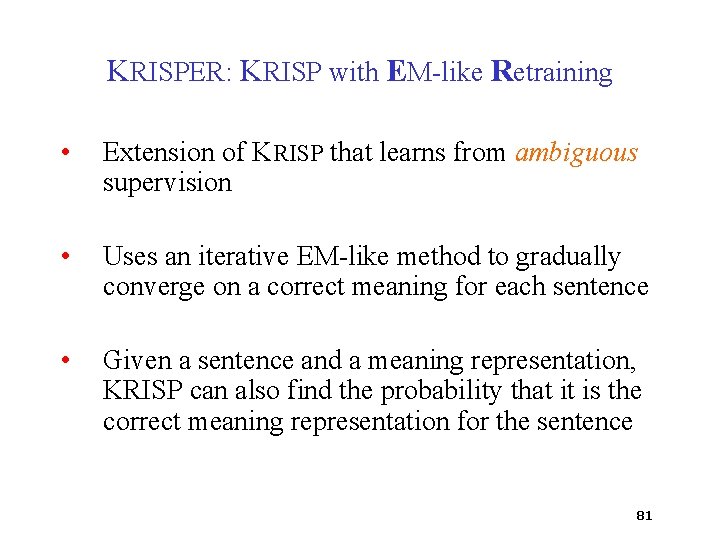

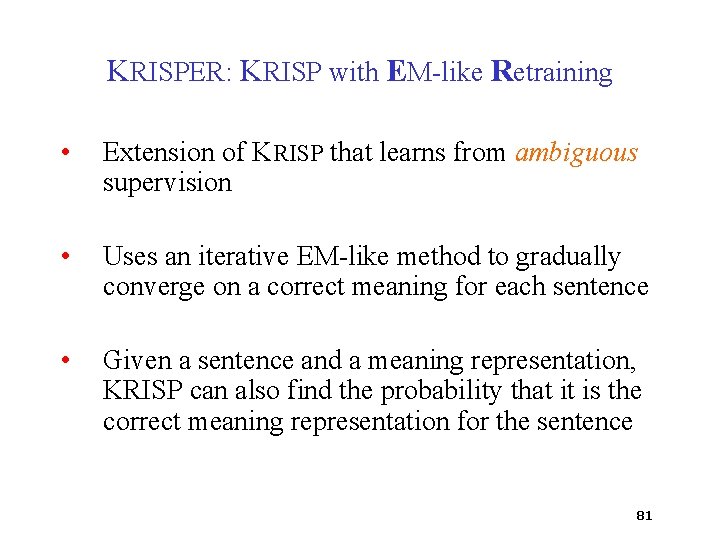

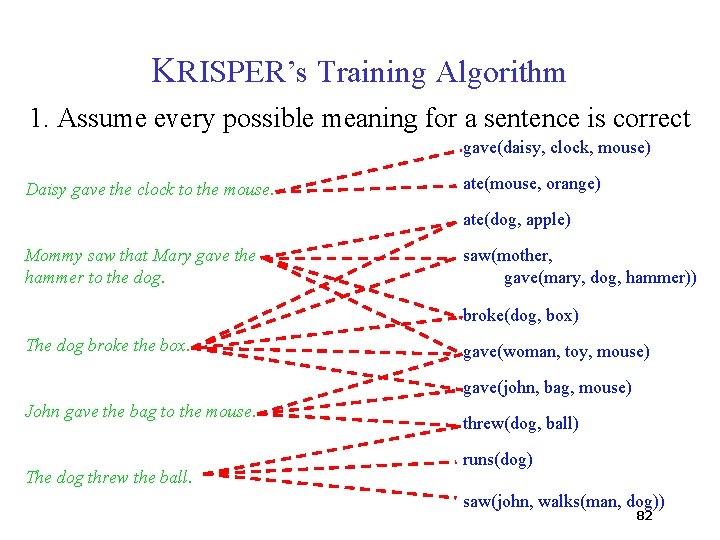

KRISPER: KRISP with EM-like Retraining • Extension of KRISP that learns from ambiguous supervision • Uses an iterative EM-like method to gradually converge on a correct meaning for each sentence • Given a sentence and a meaning representation, KRISP can also find the probability that it is the correct meaning representation for the sentence 81

KRISPER’s Training Algorithm
1. Assume every possible meaning for a sentence is correct
(Figure: bipartite graph linking each sentence of the sample ambiguous corpus to all of its candidate MRs.)

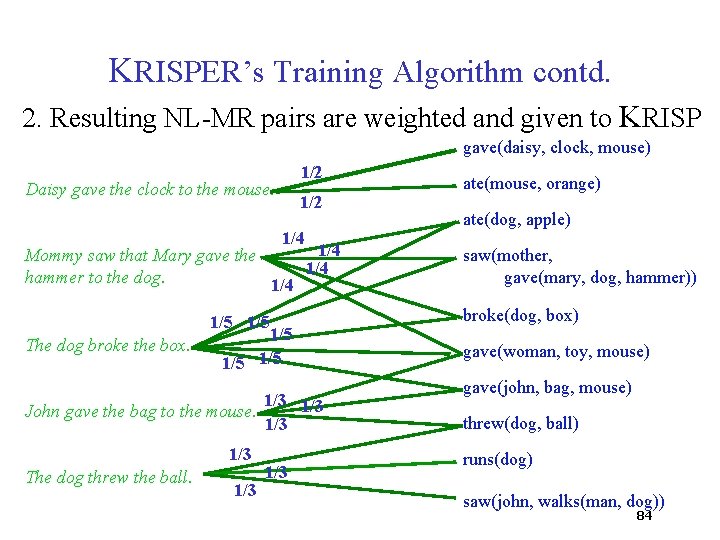

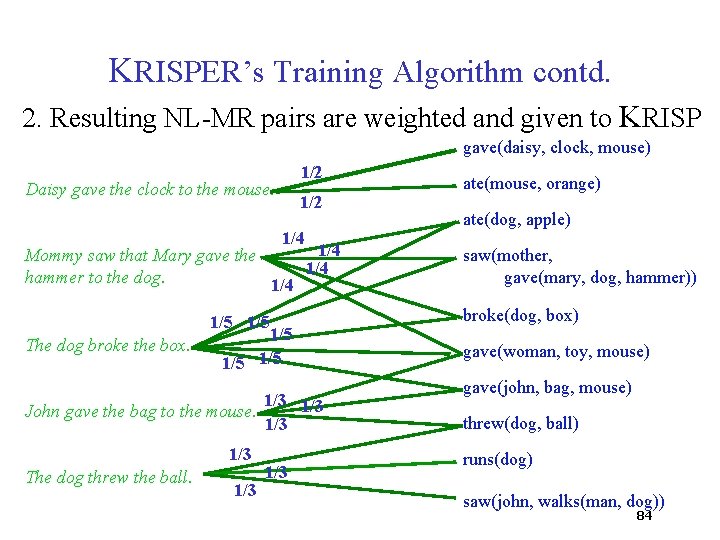

KRISPER’s Training Algorithm contd.
2. Resulting NL-MR pairs are weighted and given to KRISP
(Figure: the same bipartite graph with a weight on each edge; the edge labels shown are 1/2, 1/4, 1/5 and 1/3.)
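The initial weighting in step 2 can be sketched as follows. This is a minimal illustration that assumes, as the edge labels suggest, that each pair receives weight 1/k where k is the number of candidate MRs for its sentence. The function name `initial_weights` and the candidate sets in the usage note are hypothetical; the slide text does not preserve which edges exist in the figure.

```python
from fractions import Fraction


def initial_weights(corpus):
    """Weight every candidate NL-MR pair by 1/k.

    corpus maps each sentence to its list of candidate MRs; k is the
    number of candidates for that sentence (an assumed weighting rule).
    """
    weights = {}
    for sentence, candidates in corpus.items():
        for mr in candidates:
            weights[(sentence, mr)] = Fraction(1, len(candidates))
    return weights
```

For example, a sentence with two candidate MRs (an illustrative candidate set, not one read off the figure) yields two pairs weighted 1/2 each, so the weights for any one sentence always sum to 1.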

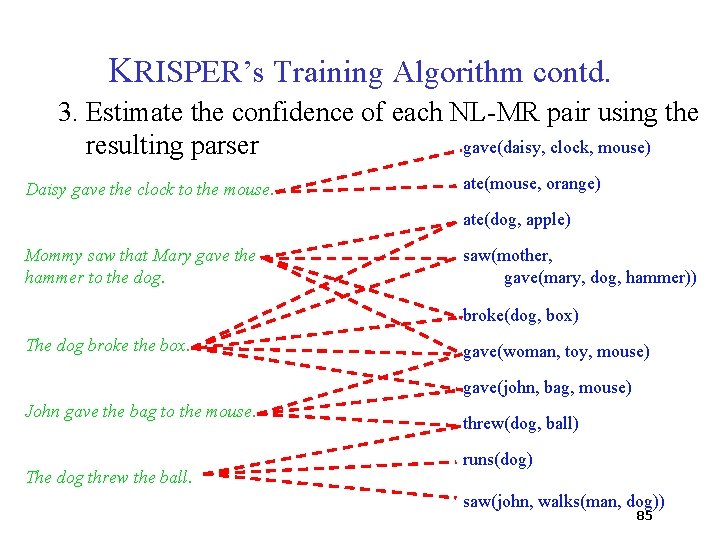

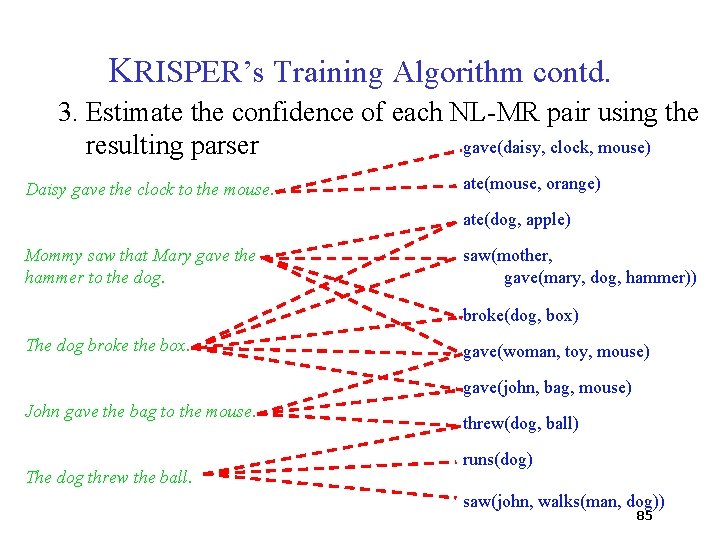

KRISPER’s Training Algorithm contd.
3. Estimate the confidence of each NL-MR pair using the resulting parser
(Figure: the same bipartite graph with a confidence on each edge; the values shown range from 0.11 to 0.97.)

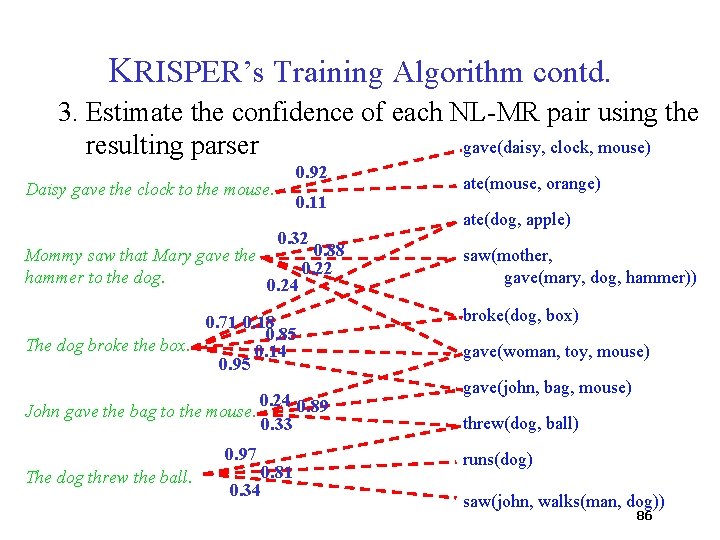

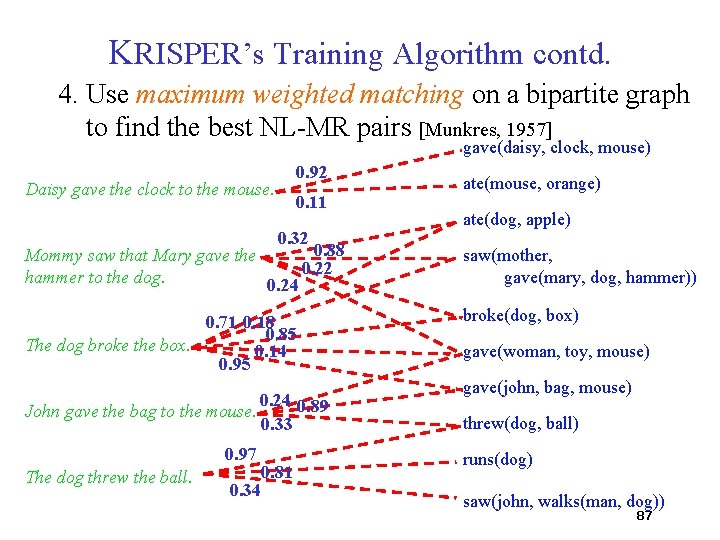

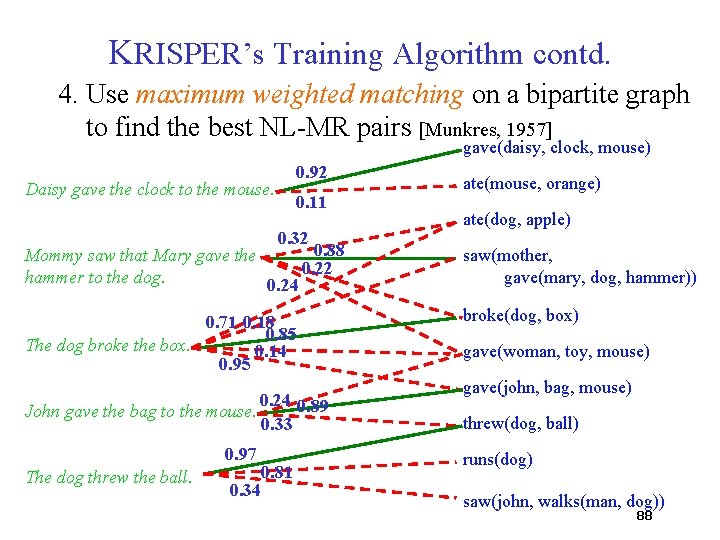

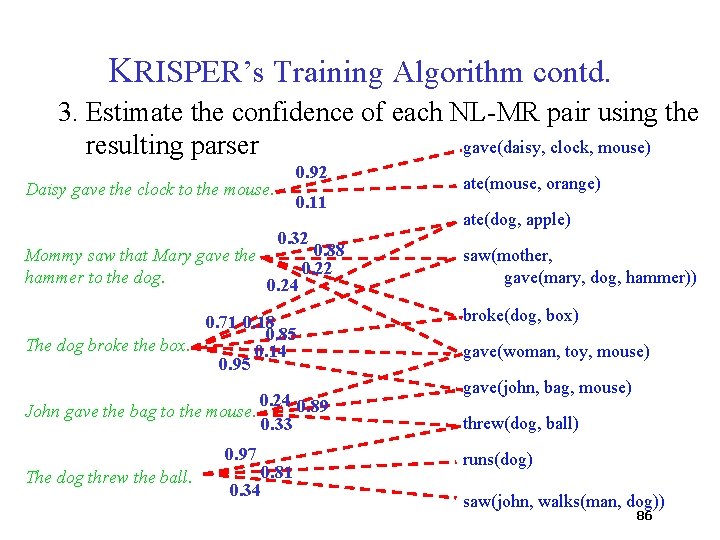

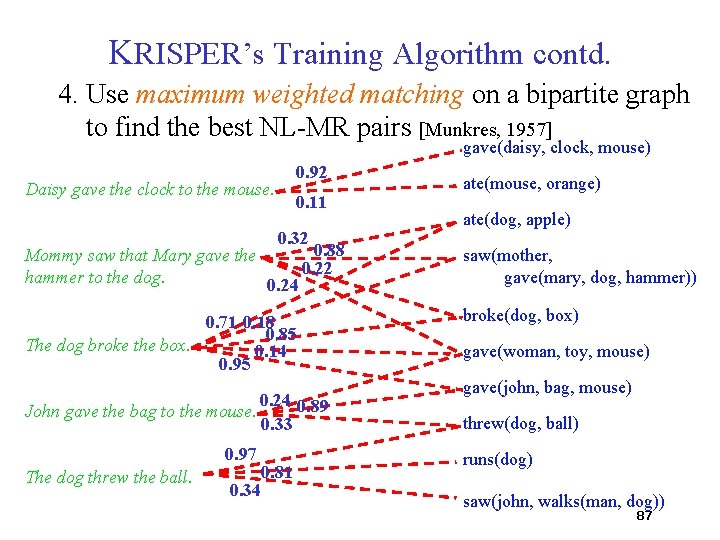

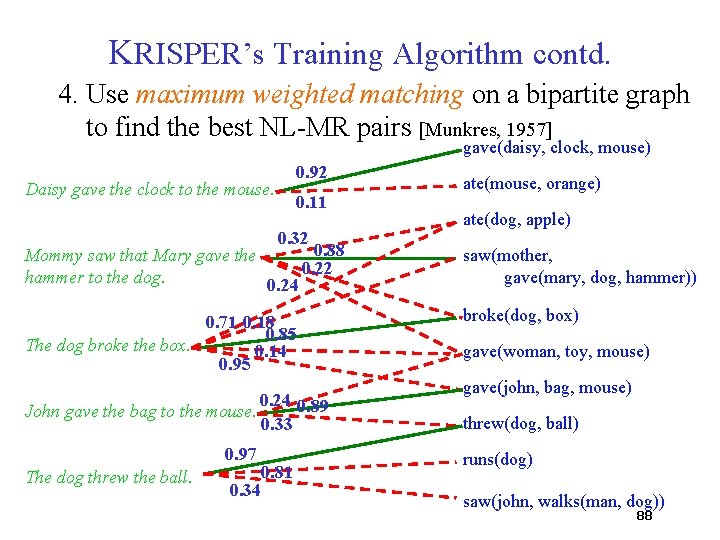

KRISPER’s Training Algorithm contd.
4. Use maximum weighted matching on a bipartite graph to find the best NL-MR pairs [Munkres, 1957]
(Figure: the confidence-weighted bipartite graph with the matched edges selected.)
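The matching in step 4 can be illustrated with a small sketch. The slide cites Munkres' algorithm; the code below instead uses exhaustive search over assignments, which finds the same optimum but is only practical for tiny graphs. The function name `best_matching` and the scores in the usage note are assumptions made for illustration.

```python
from itertools import permutations


def best_matching(sentences, meanings, score):
    """Return the sentence-to-MR assignment with the highest total score.

    score maps (sentence, mr) pairs to confidences; pairs missing from
    score are treated as non-edges. Each sentence gets exactly one MR and
    each MR is used at most once. Brute force, NOT Munkres' algorithm.
    """
    best_pairs, best_total = None, float("-inf")
    for assignment in permutations(meanings, len(sentences)):
        pairs = list(zip(sentences, assignment))
        if any(pair not in score for pair in pairs):
            continue  # assignment uses an edge that is not in the graph
        total = sum(score[pair] for pair in pairs)
        if total > best_total:
            best_pairs, best_total = pairs, total
    return best_pairs
```

Matching differs from picking the best MR for each sentence independently: with hypothetical scores s1-m1 = 0.92, s1-m2 = 0.11, s2-m1 = 0.95 and s2-m2 = 0.88, both sentences prefer m1, but the matching assigns m1 to s1 and m2 to s2 because that total is higher.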

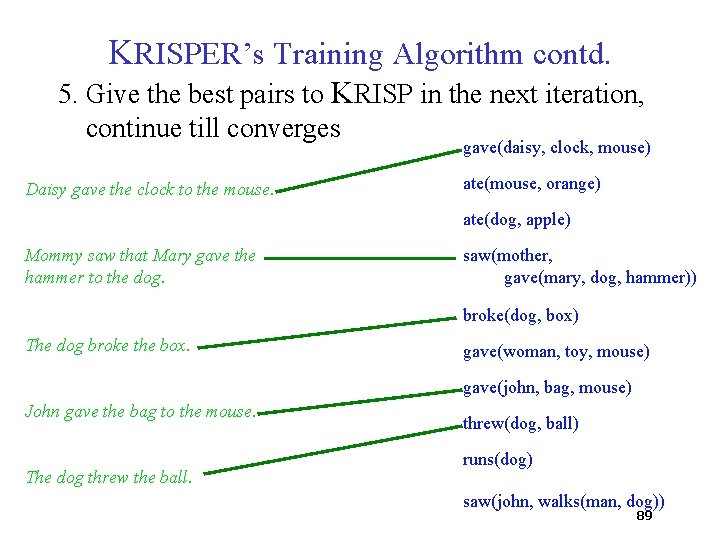

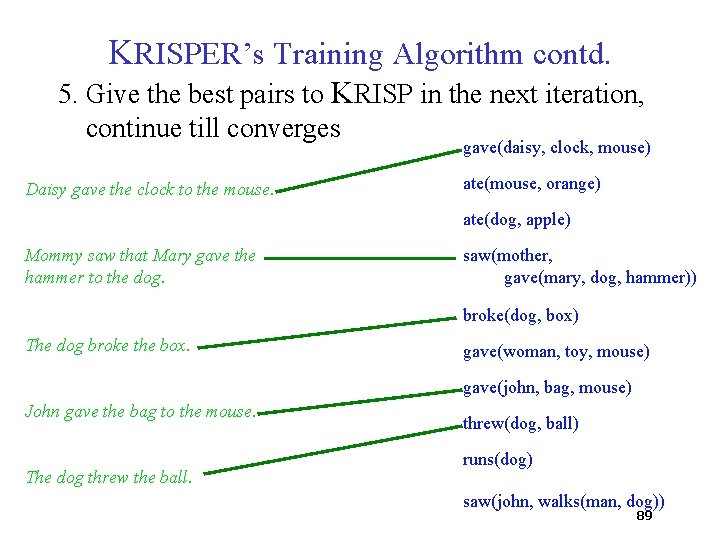

KRISPER’s Training Algorithm contd.
5. Give the best pairs to KRISP in the next iteration, and continue until convergence
(Figure: the bipartite graph with only the best NL-MR pairs retained.)
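Steps 1 to 5 can be summarized as a loop skeleton. This is only a sketch: `train`, `confidence` and `best_matching` are hypothetical callables standing in for KRISP training, KRISP's pair-probability estimate and the matching step, and the stopping rule (the chosen pairs stop changing), the 1/k initial weights and the weight of 1.0 for chosen pairs are assumptions rather than details given on the slides.

```python
def krisper_loop(corpus, train, confidence, best_matching, max_iters=10):
    """EM-like retraining over an ambiguous corpus.

    corpus: list of (sentence, candidate_mrs) tuples.
    train(pairs) -> parser, where pairs are (sentence, mr, weight).
    confidence(parser, sentence, mr) -> float.
    best_matching(scores) -> set of (sentence, mr) pairs.
    """
    # Steps 1-2: treat every candidate as correct, weighted 1/k (assumed).
    pairs = [(s, m, 1.0 / len(ms)) for s, ms in corpus for m in ms]
    chosen = None
    for _ in range(max_iters):
        parser = train(pairs)
        # Step 3: score every candidate pair with the current parser.
        scores = {(s, m): confidence(parser, s, m) for s, ms in corpus for m in ms}
        # Step 4: keep the best consistent set of pairs.
        new_chosen = best_matching(scores)
        if new_chosen == chosen:
            break  # assumed convergence test: selection is unchanged
        chosen = new_chosen
        # Step 5: retrain on the chosen pairs only.
        pairs = [(s, m, 1.0) for s, m in sorted(chosen)]
    return chosen
```

Passing the three components as arguments keeps the skeleton independent of any particular parser or matching implementation.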

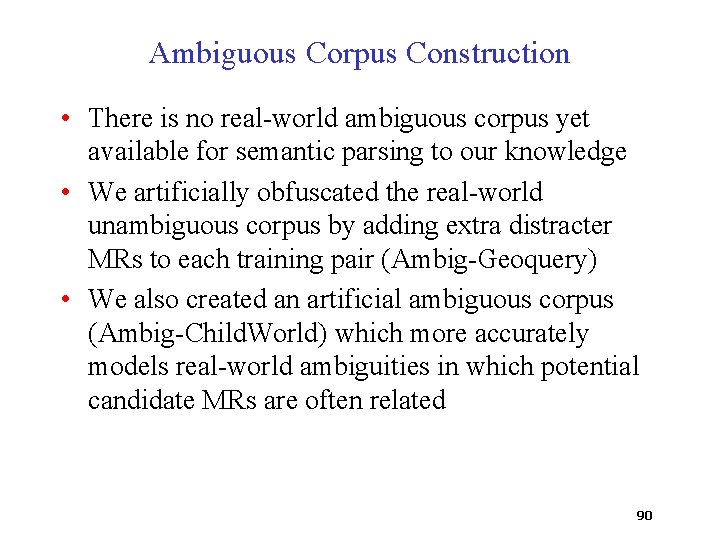

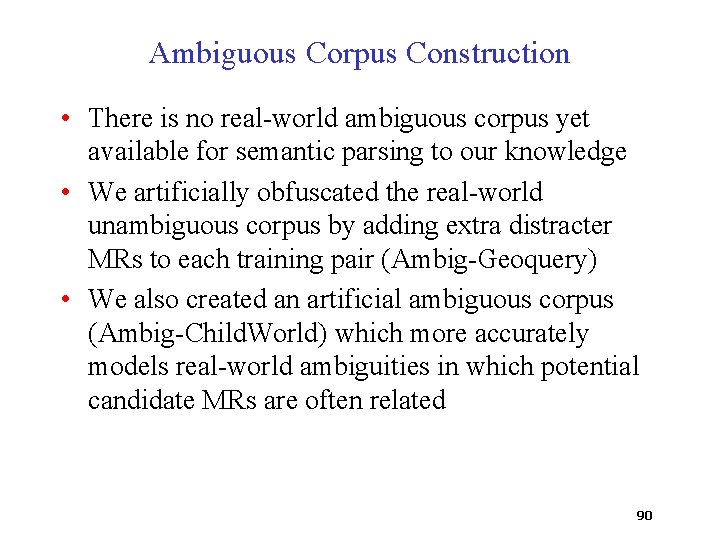

Ambiguous Corpus Construction
• To our knowledge, no real-world ambiguous corpus is yet available for semantic parsing
• We artificially obfuscated the real-world unambiguous corpus by adding extra distracter MRs to each training pair (Ambig-Geoquery)
• We also created an artificial ambiguous corpus (Ambig-ChildWorld) which more accurately models real-world ambiguities in which potential candidate MRs are often related

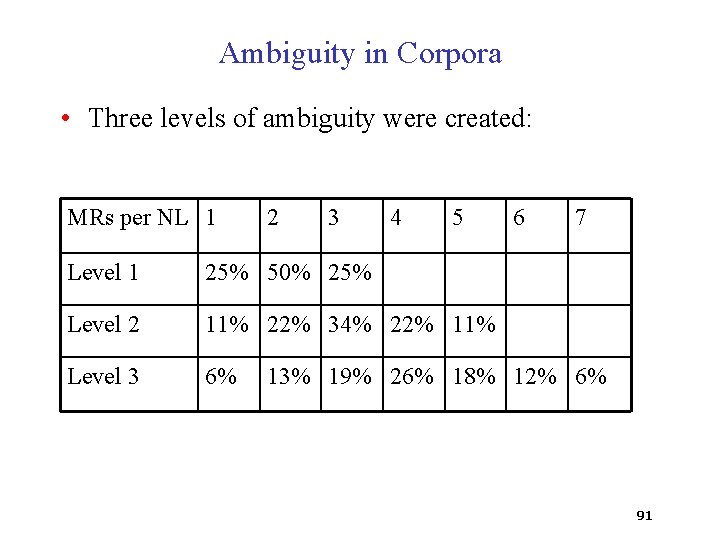

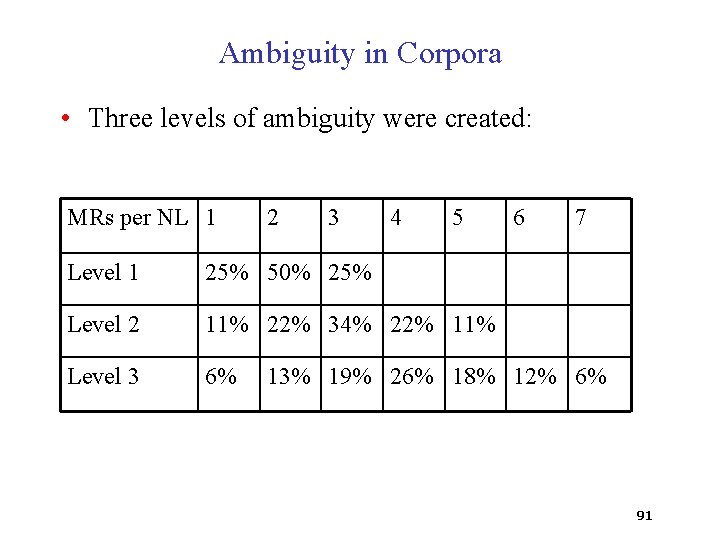

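Ambiguity in Corpora
• Three levels of ambiguity were created:

| MRs per NL | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| Level 1 | 25% | 50% | 25% | | | | |
| Level 2 | 11% | 22% | 34% | 22% | 11% | | |
| Level 3 | 6% | 13% | 19% | 26% | 18% | 12% | 6% |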
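As a quick check on the three distributions, the sketch below encodes them, computes the average number of candidate MRs per sentence at each level, and draws a candidate count at random. The names `LEVELS`, `expected_mrs` and `sample_num_mrs` are hypothetical, and the sampling step is only one plausible way such a corpus could be generated.

```python
import random

# Percentage of sentences having k candidate MRs, per ambiguity level.
LEVELS = {
    1: {1: 25, 2: 50, 3: 25},
    2: {1: 11, 2: 22, 3: 34, 4: 22, 5: 11},
    3: {1: 6, 2: 13, 3: 19, 4: 26, 5: 18, 6: 12, 7: 6},
}


def expected_mrs(level):
    """Average number of candidate MRs per sentence at this level."""
    return sum(k * pct for k, pct in LEVELS[level].items()) / 100


def sample_num_mrs(level, rng):
    """Draw how many candidate MRs one sentence receives."""
    dist = LEVELS[level]
    return rng.choices(list(dist), weights=list(dist.values()), k=1)[0]
```

Each row sums to 100%, and the averages come to 2, 3 and 3.97 MRs per sentence for levels 1, 2 and 3 respectively.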

Results on Ambig-Geoquery Corpus

Results on Ambig-ChildWorld Corpus

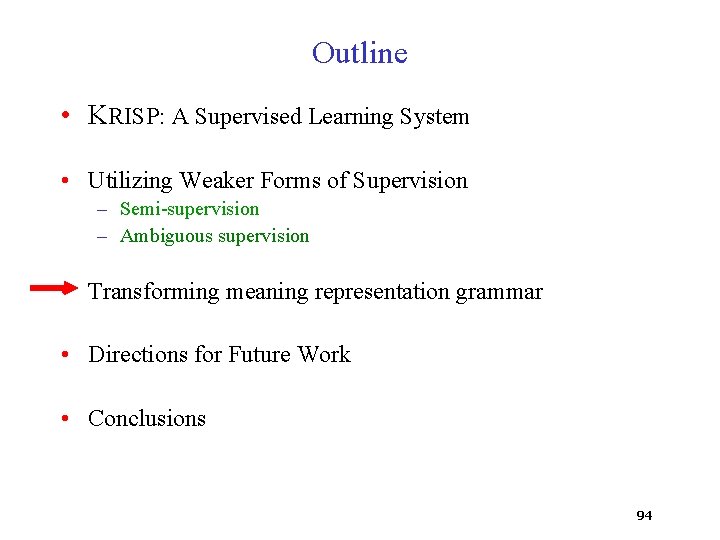

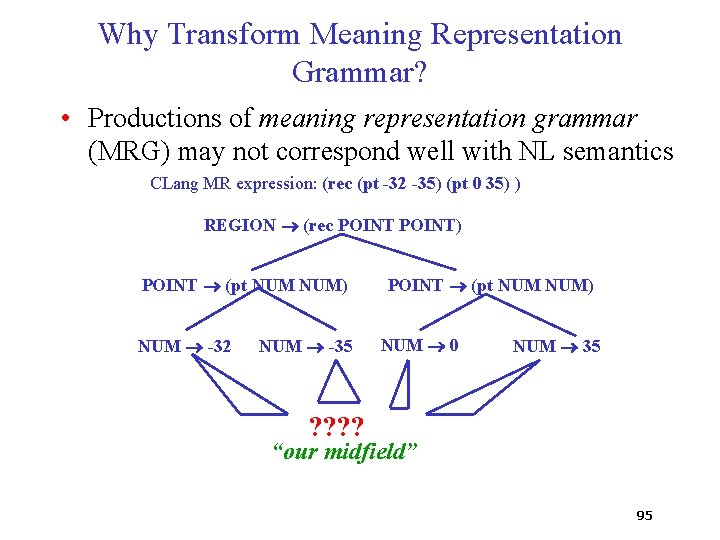

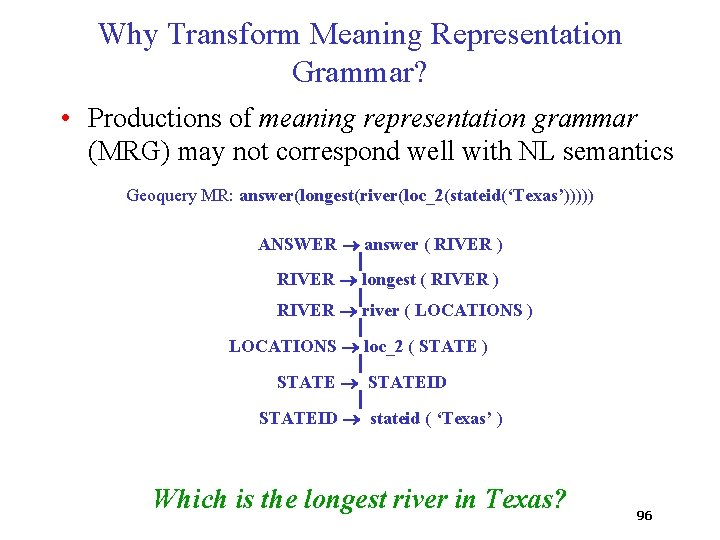

Outline
• KRISP: A Supervised Learning System
• Utilizing Weaker Forms of Supervision
– Semi-supervision
– Ambiguous supervision
• Transforming meaning representation grammar
• Directions for Future Work
• Conclusions

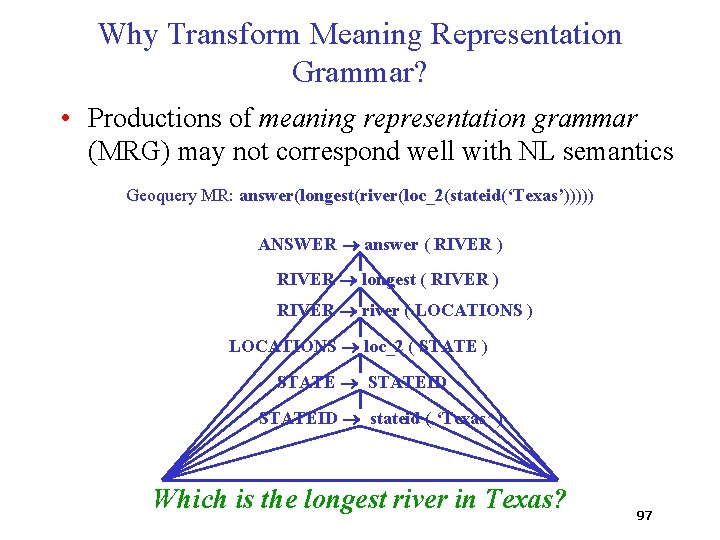

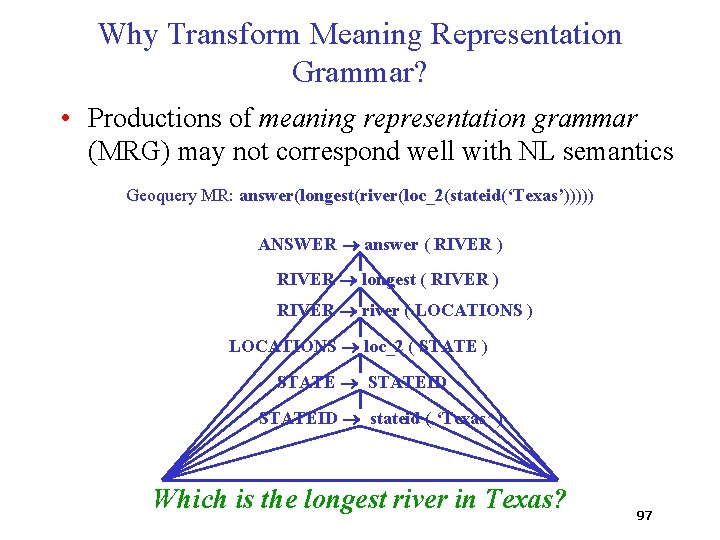

Why Transform Meaning Representation Grammar?
• Productions of meaning representation grammar (MRG) may not correspond well with NL semantics
CLang MR expression: (rec (pt -32 -35) (pt 0 35))
(Figure: parse tree of the MR over the non-terminals REGION, POINT and NUM.)
NL: “our midfield” ??

Why Transform Meaning Representation Grammar?
• Productions of meaning representation grammar (MRG) may not correspond well with NL semantics
Geoquery MR: answer(longest(river(loc_2(stateid(‘Texas’)))))
MR parse productions:
ANSWER → answer(RIVER)
RIVER → longest(RIVER)
RIVER → river(LOCATIONS)
LOCATIONS → loc_2(STATE)
STATEID → stateid(‘Texas’)
NL: Which is the longest river in Texas?

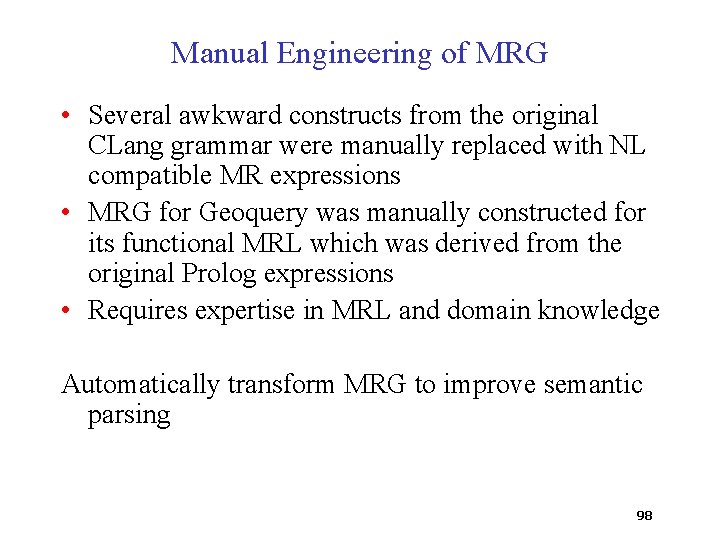

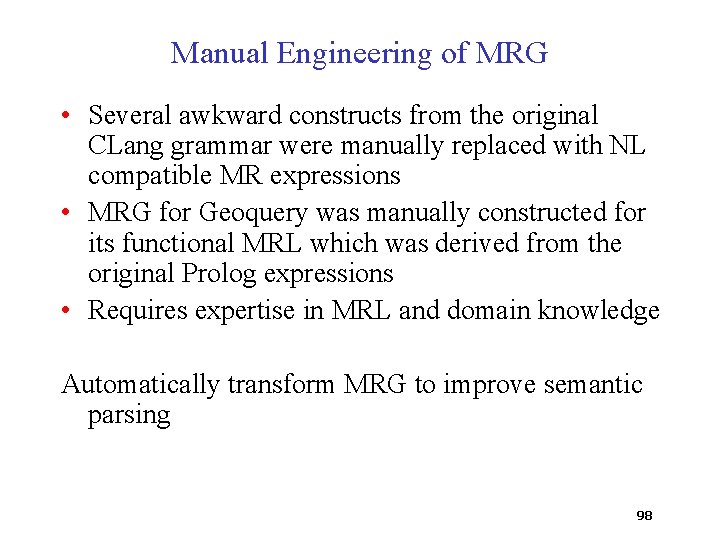

Manual Engineering of MRG
• Several awkward constructs from the original CLang grammar were manually replaced with NL-compatible MR expressions
• MRG for Geoquery was manually constructed for its functional MRL, which was derived from the original Prolog expressions
• Requires expertise in MRL and domain knowledge
Automatically transform MRG to improve semantic parsing

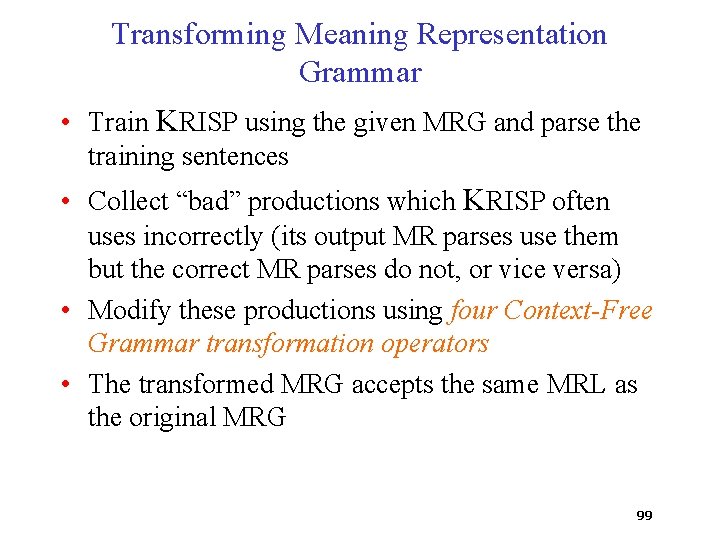

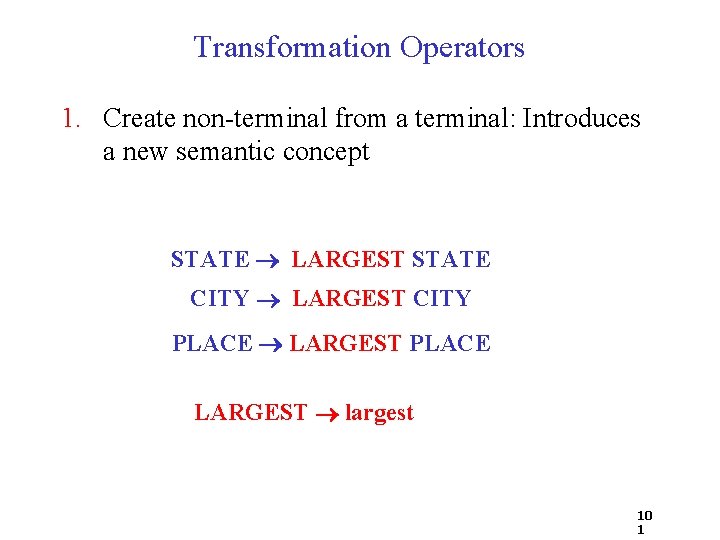

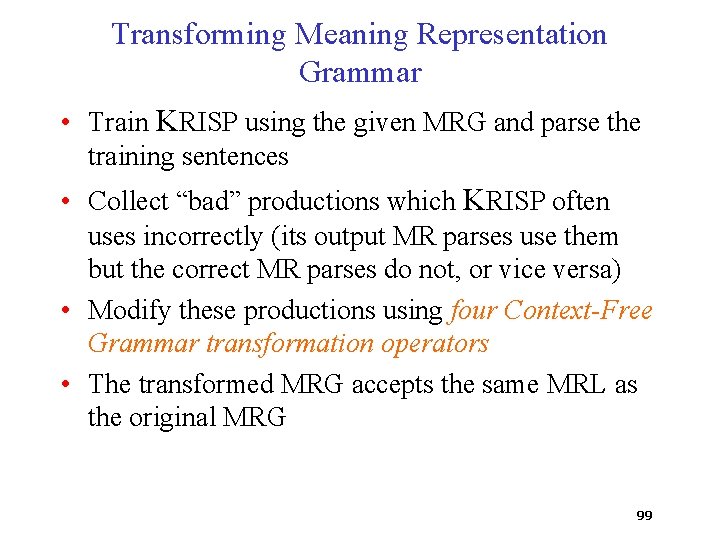

Transforming Meaning Representation Grammar
• Train KRISP using the given MRG and parse the training sentences
• Collect “bad” productions which KRISP often uses incorrectly (its output MR parses use them but the correct MR parses do not, or vice versa)
• Modify these productions using four Context-Free Grammar transformation operators
• The transformed MRG accepts the same MRL as the original MRG
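The collection of "bad" productions can be sketched as a count of disagreements between the parser's output and the correct parse. This is an illustration only: each parse is represented simply as a list of production strings, and the function name `bad_productions` and the `min_count` threshold for "often" are assumptions not given on the slide.

```python
from collections import Counter


def bad_productions(output_parses, correct_parses, min_count=2):
    """Productions that often appear in exactly one of the two parses.

    output_parses[i] and correct_parses[i] are the productions used in
    the parser's MR parse and in the correct MR parse of sentence i.
    """
    mismatches = Counter()
    for output, correct in zip(output_parses, correct_parses):
        # Symmetric difference: used by one parse but not the other.
        for production in set(output) ^ set(correct):
            mismatches[production] += 1
    return [p for p, n in mismatches.most_common() if n >= min_count]
```

The symmetric difference covers both cases named in the slide: productions the output uses but the correct parse does not, and the reverse.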

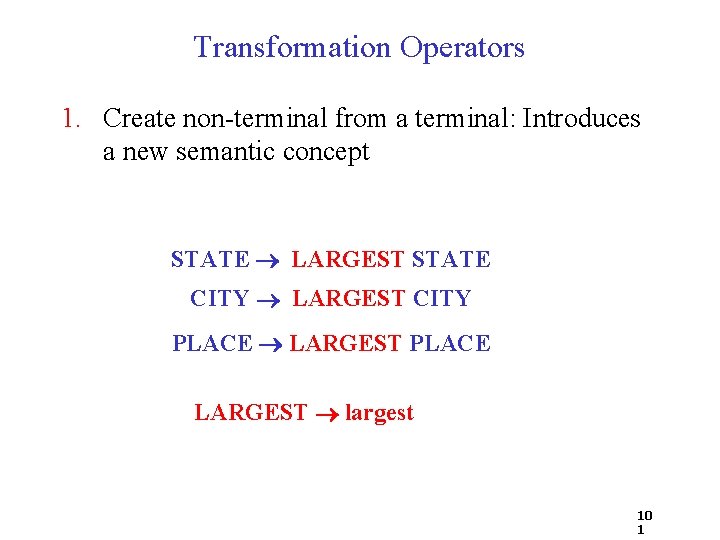

Transformation Operators
1. Create non-terminal from a terminal: Introduces a new semantic concept
Bad productions: STATE → largest STATE, CITY → largest CITY, PLACE → largest PLACE
New production: LARGEST → largest
Transformed productions: STATE → LARGEST STATE, CITY → LARGEST CITY, PLACE → LARGEST PLACE
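A minimal sketch of this operator, assuming a grammar is represented as a list of `(lhs, rhs)` pairs with `rhs` a tuple of symbols. The representation and the function name `create_nonterminal` are illustrative choices, not the dissertation's implementation.

```python
def create_nonterminal(productions, terminal, new_nt):
    """Replace a terminal with a new non-terminal that derives it."""
    transformed = []
    for lhs, rhs in productions:
        new_rhs = tuple(new_nt if symbol == terminal else symbol for symbol in rhs)
        transformed.append((lhs, new_rhs))
    # The new non-terminal expands only to the original terminal,
    # so the grammar still generates the same language.
    transformed.append((new_nt, (terminal,)))
    return transformed
```

Applied to the three bad productions with `terminal="largest"` and `new_nt="LARGEST"`, this reproduces the transformed productions listed on the slide.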

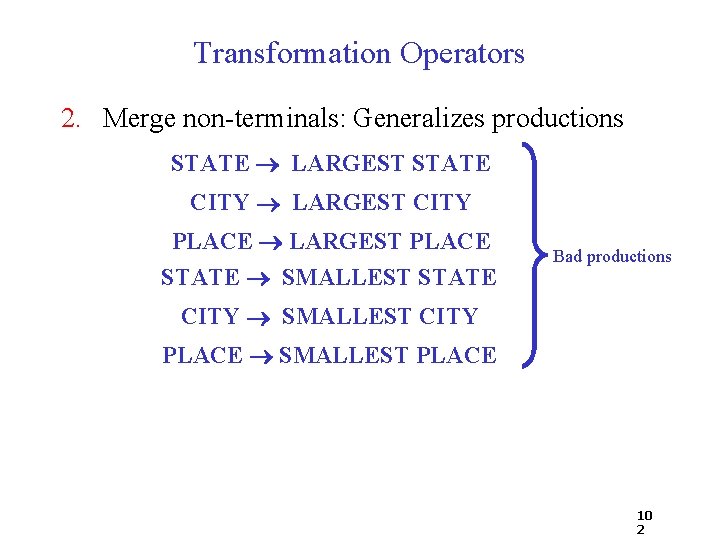

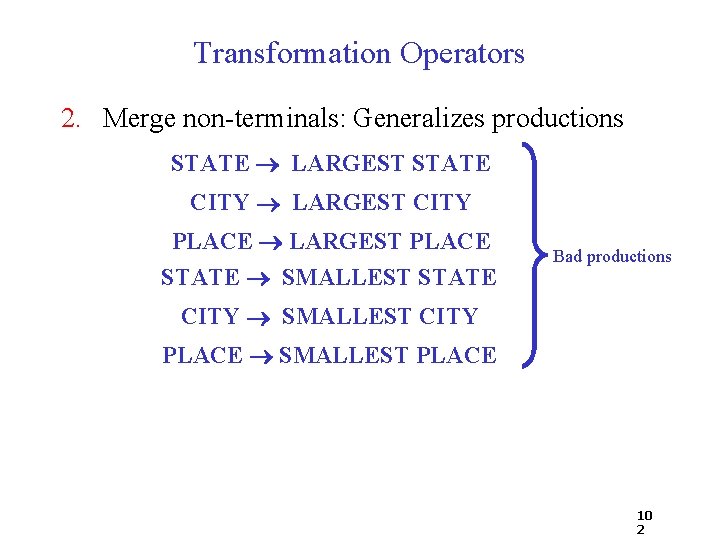

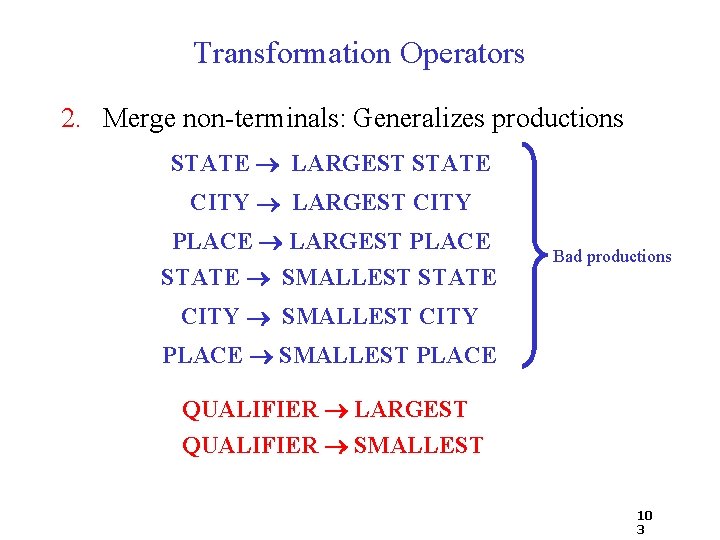

Transformation Operators
2. Merge non-terminals: Generalizes productions
Bad productions: STATE → LARGEST STATE, CITY → LARGEST CITY, PLACE → LARGEST PLACE, STATE → SMALLEST STATE, CITY → SMALLEST CITY, PLACE → SMALLEST PLACE
New productions: QUALIFIER → LARGEST, QUALIFIER → SMALLEST
Transformed productions: STATE → QUALIFIER STATE, CITY → QUALIFIER CITY, PLACE → QUALIFIER PLACE
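A sketch of the merge operator under the same assumed representation (a list of `(lhs, rhs)` pairs with tuple right-hand sides). The function name `merge_nonterminals` is hypothetical.

```python
def merge_nonterminals(productions, to_merge, new_nt):
    """Replace several non-terminals on right-hand sides with one new one."""
    merged = []
    for lhs, rhs in productions:
        new_rhs = tuple(new_nt if symbol in to_merge else symbol for symbol in rhs)
        production = (lhs, new_rhs)
        if production not in merged:  # merged productions can coincide
            merged.append(production)
    # The new non-terminal expands to each of the merged non-terminals.
    for nt in to_merge:
        merged.append((new_nt, (nt,)))
    return merged
```

With `to_merge=["LARGEST", "SMALLEST"]` and `new_nt="QUALIFIER"`, the six bad productions collapse into three, plus the two new QUALIFIER productions.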

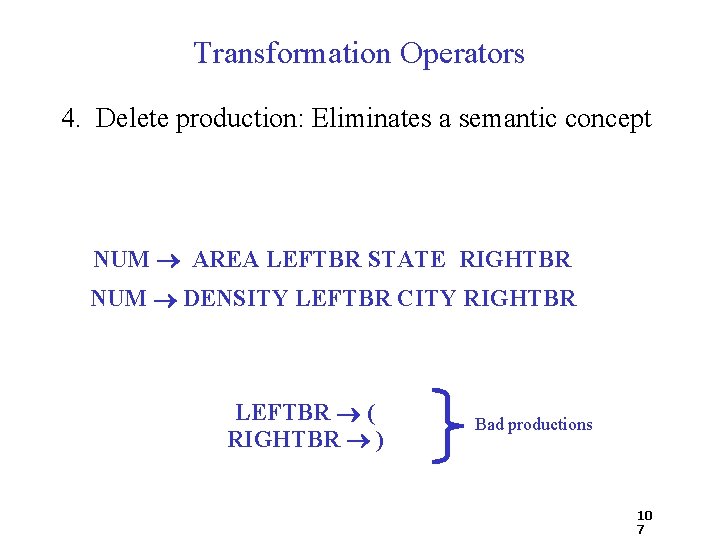

Transformation Operators
3. Combine non-terminals: Combines the concepts
Bad productions: CITY → SMALLEST MAJOR CITY, LAKE → SMALLEST MAJOR LAKE
New production: SMALLEST_MAJOR → SMALLEST MAJOR
Transformed productions: CITY → SMALLEST_MAJOR CITY, LAKE → SMALLEST_MAJOR LAKE
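A sketch of the combine operator, again assuming productions are `(lhs, rhs)` pairs with tuple right-hand sides. The function name `combine_nonterminals` is hypothetical.

```python
def combine_nonterminals(productions, sequence, new_nt):
    """Replace an adjacent run of symbols with a single new non-terminal."""
    sequence = tuple(sequence)
    width = len(sequence)
    combined = []
    for lhs, rhs in productions:
        new_rhs = []
        i = 0
        while i < len(rhs):
            if tuple(rhs[i:i + width]) == sequence:
                new_rhs.append(new_nt)  # collapse the matched run
                i += width
            else:
                new_rhs.append(rhs[i])
                i += 1
        combined.append((lhs, tuple(new_rhs)))
    # The new non-terminal expands back to the original run of symbols.
    combined.append((new_nt, sequence))
    return combined
```

With `sequence=("SMALLEST", "MAJOR")` and `new_nt="SMALLEST_MAJOR"`, the two bad productions become the transformed productions shown on the slide.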

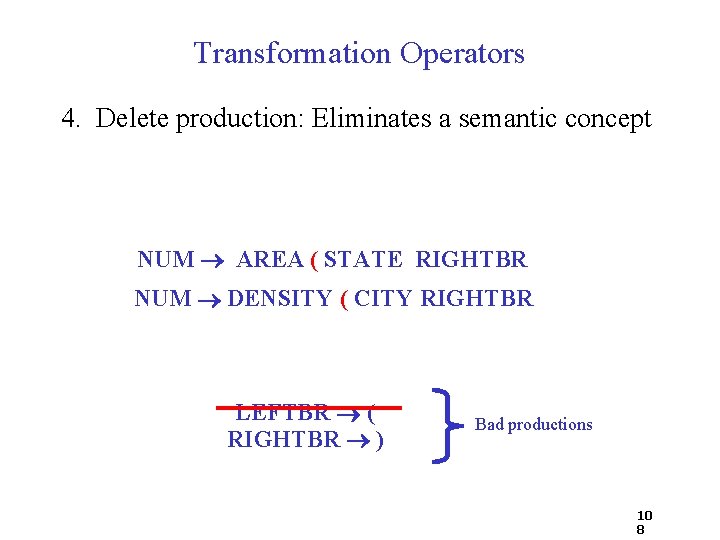

Transformation Operators
4. Delete production: Eliminates a semantic concept
Original productions: NUM → AREA LEFTBR STATE RIGHTBR, NUM → DENSITY LEFTBR CITY RIGHTBR
Bad productions: LEFTBR → (, RIGHTBR → )
After deleting LEFTBR → (: NUM → AREA ( STATE RIGHTBR, NUM → DENSITY ( CITY RIGHTBR
After deleting RIGHTBR → ): NUM → AREA ( STATE ), NUM → DENSITY ( CITY )
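A sketch of the delete operator under the same assumed `(lhs, rhs)` representation. It inlines the deleted production's right-hand side wherever its left-hand side occurs, and it assumes the deleted production is the only one for that non-terminal. The function name `delete_production` is hypothetical.

```python
def delete_production(productions, target):
    """Remove a production and inline its right-hand side elsewhere."""
    target_lhs, target_rhs = target
    remaining = []
    for lhs, rhs in productions:
        if (lhs, rhs) == target:
            continue  # drop the deleted production itself
        new_rhs = []
        for symbol in rhs:
            if symbol == target_lhs:
                new_rhs.extend(target_rhs)  # substitute its expansion
            else:
                new_rhs.append(symbol)
        remaining.append((lhs, tuple(new_rhs)))
    return remaining
```

Deleting `LEFTBR → (` and then `RIGHTBR → )` walks through the same two steps as the slides and leaves only the two NUM productions with literal brackets.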

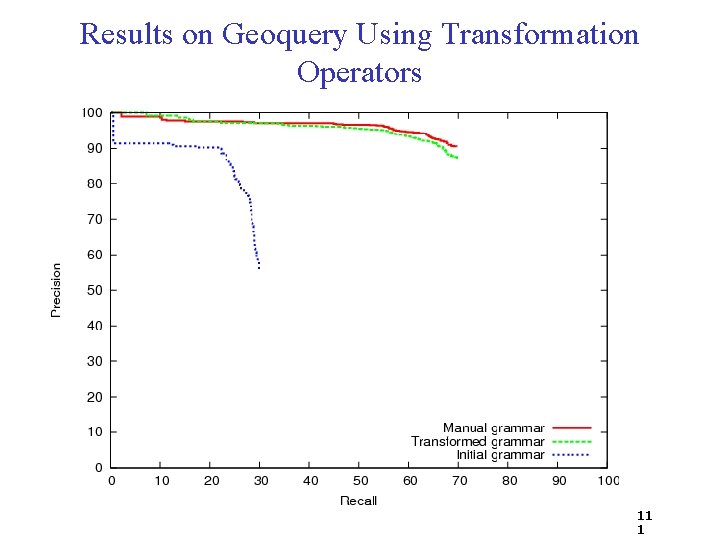

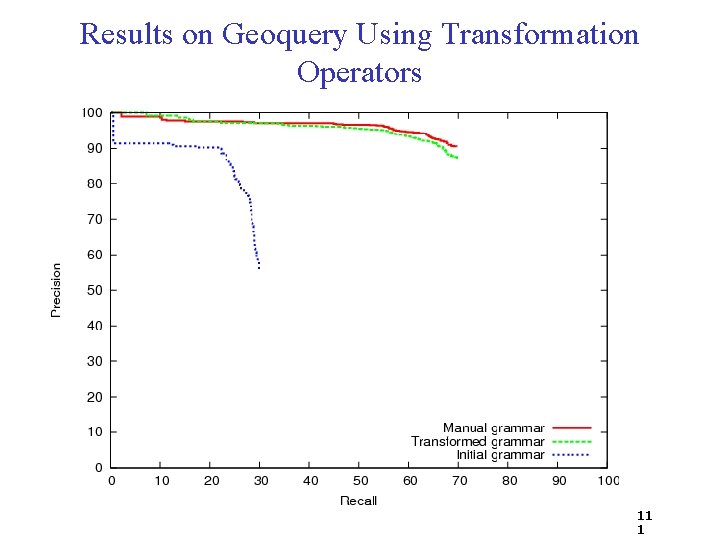

MRG Transformation Algorithm
• A heuristic search is used to find a good MRG among all possible MRGs
• All possible instances of each type of operator are applied, then the training examples are re-parsed and the semantic parser is re-trained
• Two iterations were sufficient for convergence of performance

Results on Geoquery Using Transformation Operators

Rest of the Dissertation
• Utilizing More Supervision
– Utilize syntactic parses using a tree kernel
– Utilize Semantically Augmented Parse Trees [Ge & Mooney, 2005]
Not much improvement in performance
• Meaning representation macros to transform MRG
• Ensembles of semantic parsers
– Simple majority ensemble of KRISP, WASP and SCISSOR achieves the best overall performance

Outline
• KRISP: A Supervised Learning System
• Utilizing Weaker Forms of Supervision
– Semi-supervision
– Ambiguous supervision
• Transforming meaning representation grammar
• Directions for Future Work
• Conclusions

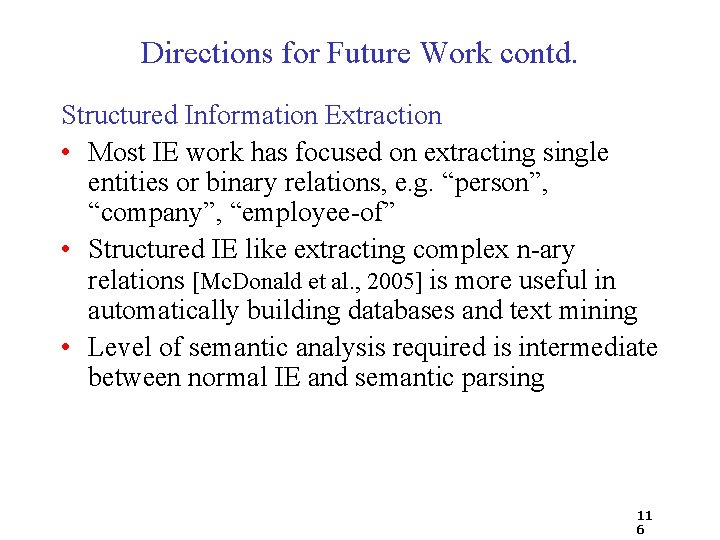

Directions for Future Work
• Improve KRISP’s semantic parsing framework
– Do not make independence assumption
– Allow words to overlap
Will increase complexity of the system
Example derivation with production probabilities for “Which rivers run through the states bordering Texas?”:
ANSWER → answer(RIVER) 0.89
RIVER → TRAVERSE(STATE) 0.92
TRAVERSE → traverse 0.91
STATE → NEXT_TO(STATE) 0.81
NEXT_TO → next_to 0.95
STATE → STATEID 0.98
STATEID → ‘texas’ 0.99
• Better kernels:
– Dependency tree kernels
– Use word categories or domain-specific word ontology
– Noise resistant kernel
• Learn from perceptual contexts
– Combine with a vision-based system to map real-world perceptual contexts into symbolic MRs

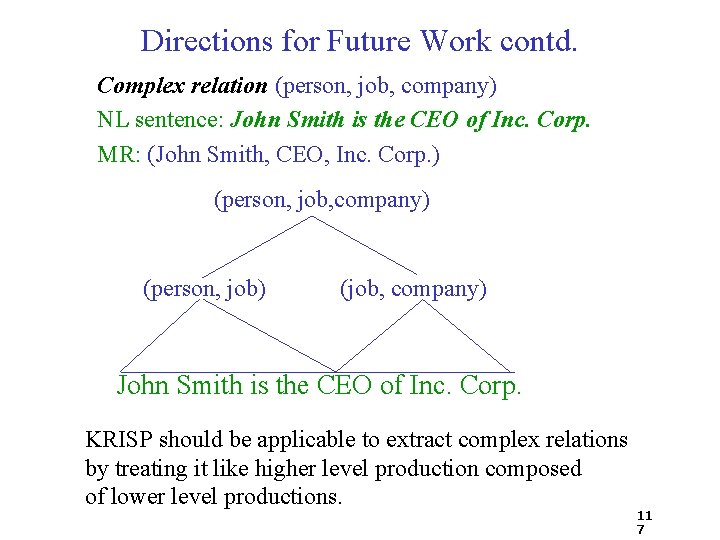

Directions for Future Work contd.
Structured Information Extraction
• Most IE work has focused on extracting single entities or binary relations, e.g. “person”, “company”, “employee-of”
• Structured IE, such as extracting complex n-ary relations [McDonald et al., 2005], is more useful in automatically building databases and text mining
• Level of semantic analysis required is intermediate between normal IE and semantic parsing

Directions for Future Work contd.
Complex relation: (person, job, company)
NL sentence: John Smith is the CEO of Inc. Corp.
MR: (John Smith, CEO, Inc. Corp.)
(Figure: (person, job, company) shown over (person, job) and (job, company) for the sentence.)
KRISP should be applicable to extracting complex relations by treating each one as a higher-level production composed of lower-level productions.

Directions for Future Work contd.
Broaden the applicability of semantic parsers to open-domain text
• Difficult to construct one MRL for the open domain
• But a suitable MRL may be constructed by narrowing down the meaning of open-domain natural language based on the actions expected from the computer
• Will need help from open-domain techniques such as word-sense disambiguation and anaphora resolution

Conclusions
• A new string-kernel-based approach for learning semantic parsers, more robust to noisy input
• Extension for semi-supervised semantic parsing to utilize unannotated training data
• Learns from a more general and weaker form of supervision: ambiguous supervision
• Transforms meaning representation grammar to improve semantic parsing
• In the future, the scope and applicability of semantic parsing can be broadened

Thank You! Questions?