On Kernels, Margins, and Low-dimensional Mappings, or Kernels versus Features

- Slides: 17

On Kernels, Margins, and Low-dimensional Mappings, or Kernels versus Features
Nina Balcan (CMU), Avrim Blum (CMU), Santosh Vempala (MIT)

Generic problem
- Given a set of images, we want to learn a linear separator to distinguish men from women.
- Problem: the pixel representation is no good.

Old style advice:
- Pick a better set of features!
- But this seems ad hoc. Not scientific.

New style advice:
- Use a kernel! $K(x, y) = \phi(x) \cdot \phi(y)$, where $\phi$ is an implicit, high-dimensional mapping.
- Sounds more scientific. Many algorithms can be "kernelized". Use the "magic" of the implicit high-dimensional space. You don't pay for it if a large-margin separator exists.

E.g., $K(x, y) = (x \cdot y + 1)^m$, with $\phi$: ($n$-dimensional space) $\to$ ($n^m$-dimensional space).
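The implicit map can be made concrete in a small case. The check below assumes $n = 2$ and $m = 2$. Expanding $(x \cdot y + 1)^2$ gives an explicit 6-coordinate $\phi$ whose dot product reproduces the kernel. The names `K`, `phi`, and `dot` are illustrative only.

```python
import math
from typing import List, Sequence


def K(x: Sequence[float], y: Sequence[float], m: int = 2) -> float:
    """Polynomial kernel K(x, y) = (x . y + 1)^m, evaluated without ever building phi."""
    return (sum(a * b for a, b in zip(x, y)) + 1) ** m


def phi(x: Sequence[float]) -> List[float]:
    """Explicit feature map for n = 2, m = 2, read off from expanding (x . y + 1)^2."""
    x1, x2 = x
    r2 = math.sqrt(2)
    return [x1 * x1, x2 * x2, r2 * x1 * x2, r2 * x1, r2 * x2, 1.0]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


x, y = [1.0, 2.0], [3.0, -1.0]
print(K(x, y), dot(phi(x), phi(y)))  # both are 4, up to floating-point rounding
```

For general $n$ and $m$, this explicit map has $\binom{n+m}{m}$ coordinates, one per monomial of degree at most $m$. That is roughly $n^m/m!$ for large $n$. Evaluating the kernel, by contrast, stays a single $n$-dimensional dot product.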

Main point of this work: the new method can be viewed as a way of conducting the old method.
- Given a kernel [as a black-box program $K(x, y)$] and access to typical inputs [samples from $D$],
- Claim: we can run $K$ and reverse-engineer an explicit (small) set of features, such that if $K$ is good [$\exists$ large-margin separator in $\phi$-space for $D$, $c$], then this is a good feature set [$\exists$ almost-as-good separator].

"You give me a kernel, I give you a set of features."

E.g., sample $z_1, \ldots, z_d$ from $D$. Given $x$, define $x_i = K(x, z_i)$.

Implications:
- Practical: an alternative to kernelizing the algorithm.
- Conceptual: view the kernel as a (principled) way of doing feature generation. View it as a similarity function, rather than as the "magic power of an implicit high-dimensional space".
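The feature construction $x_i = K(x, z_i)$ can be sketched in a few lines. This sketch makes three assumptions: the kernel is the polynomial example with $m = 2$, "samples from $D$" are stood in for by standard Gaussian points in $\mathbb{R}^3$, and `mapping1`, `poly_kernel`, and `landmarks` are hypothetical names. The key property is that $K$ is only ever called as a black box.

```python
import random
from typing import Callable, List, Sequence

Vector = Sequence[float]
Kernel = Callable[[Vector, Vector], float]


def poly_kernel(x: Vector, y: Vector, m: int = 2) -> float:
    """The example kernel K(x, y) = (x . y + 1)^m."""
    return (sum(a * b for a, b in zip(x, y)) + 1) ** m


def mapping1(K: Kernel, landmarks: List[Vector]) -> Callable[[Vector], List[float]]:
    """Mapping #1: x -> (K(x, z_1), ..., K(x, z_d)), using K only as a black box."""
    def F(x: Vector) -> List[float]:
        return [K(x, z) for z in landmarks]
    return F


# Hypothetical stand-in for "samples from D": standard Gaussian points in R^3.
rng = random.Random(0)
landmarks = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(5)]

F = mapping1(poly_kernel, landmarks)
features = F([0.5, -1.0, 2.0])  # an explicit 5-dimensional feature vector
```

Any ordinary linear learner can then be trained on vectors like `features`. No kernelized version of the algorithm is needed.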

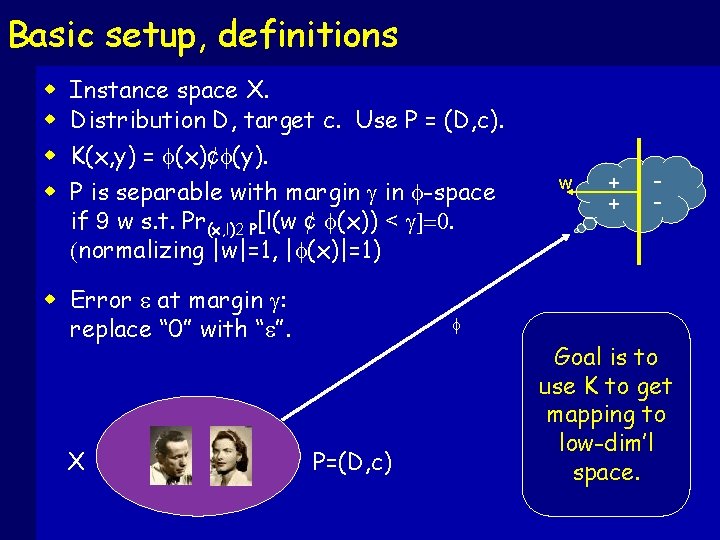

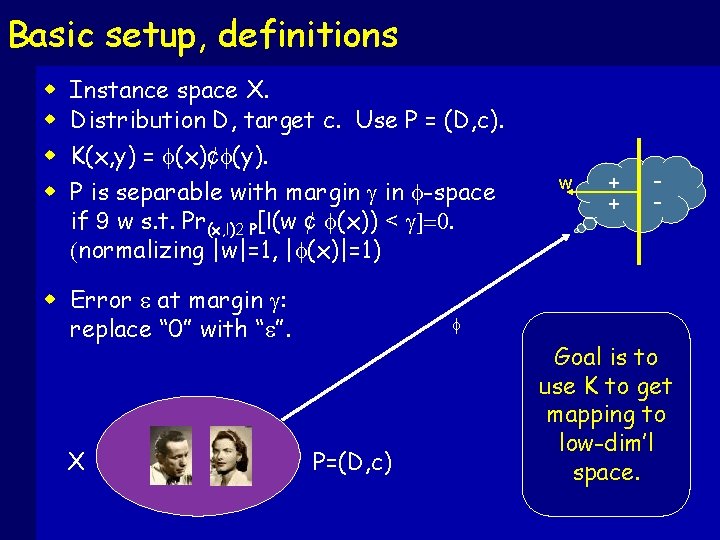

Basic setup, definitions
- Instance space $X$. Distribution $D$, target $c$. Write $P = (D, c)$.
- $K(x, y) = \phi(x) \cdot \phi(y)$.
- $P$ is separable with margin $\gamma$ in $\phi$-space if $\exists w$ s.t. $\Pr_{(x,\ell) \in P}[\ell\,(w \cdot \phi(x)) < \gamma] = 0$ (normalizing $|w| = 1$, $|\phi(x)| = 1$).
- Error $\epsilon$ at margin $\gamma$: replace "$= 0$" with "$\le \epsilon$".

Goal: use $K$ to get a mapping to a low-dimensional space.
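The "error at margin $\gamma$" definition can be estimated on a finite labeled sample. This sketch assumes the $\phi(x)$ vectors are available explicitly. That assumption is only for illustration, since in the talk's setting we usually have only $K$. Labels are taken in $\{+1, -1\}$, and `unit` and `error_at_margin` are hypothetical helper names.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def unit(v: Vector) -> List[float]:
    """Scale v to length 1 (the slide's normalization |w| = 1, |phi(x)| = 1)."""
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def error_at_margin(w: Vector, sample: List[Tuple[Vector, int]], gamma: float) -> float:
    """Fraction of (phi_x, label) pairs with label * (w . phi_x) < gamma, after normalizing."""
    w_hat = unit(w)
    bad = 0
    for phi_x, label in sample:
        score = sum(a * b for a, b in zip(w_hat, unit(phi_x)))
        if label * score < gamma:
            bad += 1
    return bad / len(sample)


sample = [([1.0, 0.0], +1), ([0.0, 1.0], -1), ([1.0, 0.9], -1)]
print(error_at_margin([1.0, -1.0], sample, gamma=0.5))  # 1/3: only the third point fails
```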

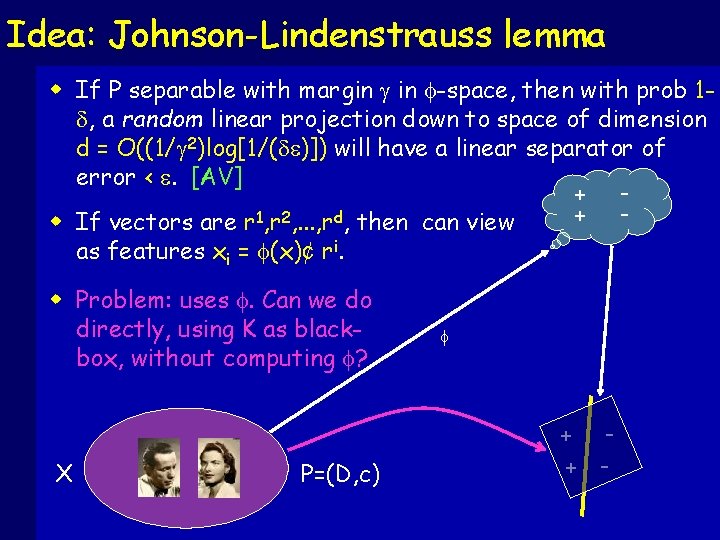

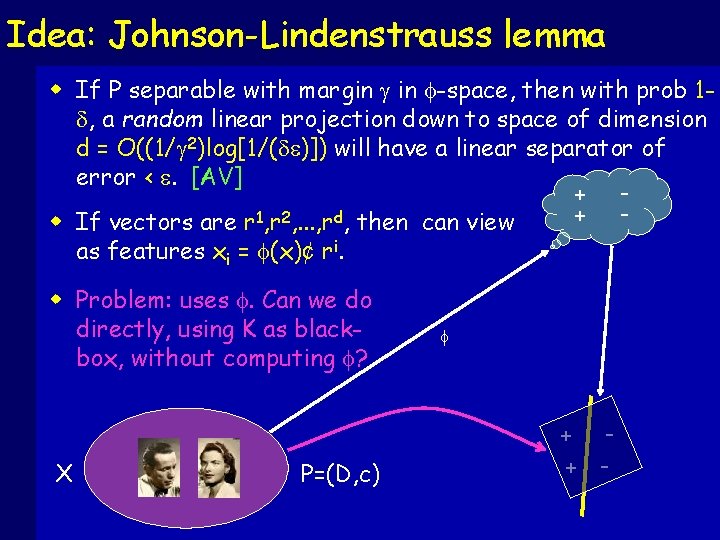

Idea: Johnson-Lindenstrauss lemma
- If $P$ is separable with margin $\gamma$ in $\phi$-space, then with probability $1 - \delta$, a random linear projection down to a space of dimension $d = O\big((1/\gamma^2)\log[1/(\delta\epsilon)]\big)$ will have a linear separator of error $< \epsilon$. [AV]
- If the projection vectors are $r_1, r_2, \ldots, r_d$, we can view the result as features $x_i = \phi(x) \cdot r_i$.
- Problem: this uses $\phi$. Can we do it directly, using $K$ as a black box, without computing $\phi$?
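The projection step can be sketched as follows. This assumes $\phi(x)$ is available as an explicit vector in $\mathbb{R}^N$, which is exactly the assumption the last bullet objects to. Gaussian entries with variance $1/d$ are one standard choice of random projection; the slide does not say which distribution [AV] uses. The function names are hypothetical.

```python
import math
import random
from typing import List, Sequence

Vector = Sequence[float]


def random_directions(N: int, d: int, seed: int = 0) -> List[List[float]]:
    """d random vectors r_1..r_d in R^N with i.i.d. N(0, 1/d) entries."""
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(d)
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(N)] for _ in range(d)]


def jl_features(phi_x: Vector, R: List[List[float]]) -> List[float]:
    """Features x_i = phi(x) . r_i, i.e. the random projection of phi(x)."""
    return [sum(a * b for a, b in zip(phi_x, r)) for r in R]


R = random_directions(N=50, d=2000, seed=1)
v = [1.0] + [0.0] * 49            # a unit vector in R^50
proj = jl_features(v, R)
print(sum(p * p for p in proj))  # close to |v|^2 = 1
```

The $1/\sqrt{d}$ scaling makes squared lengths correct in expectation. Dot products, and hence margins, are then preserved up to small additive error with high probability.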

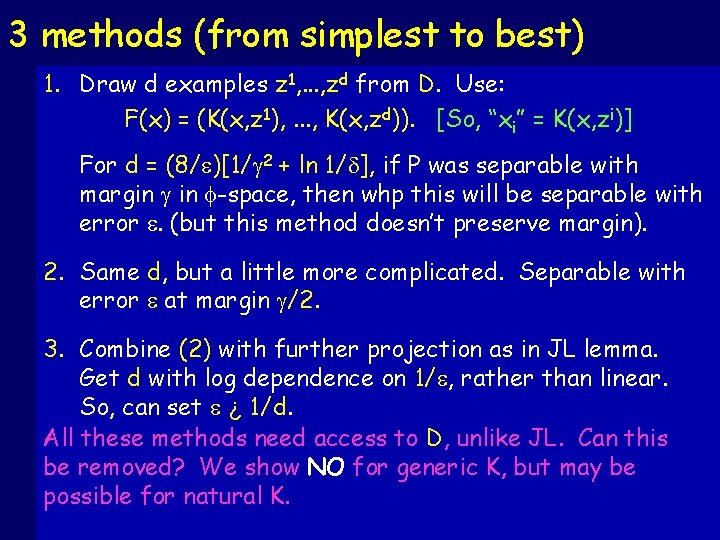

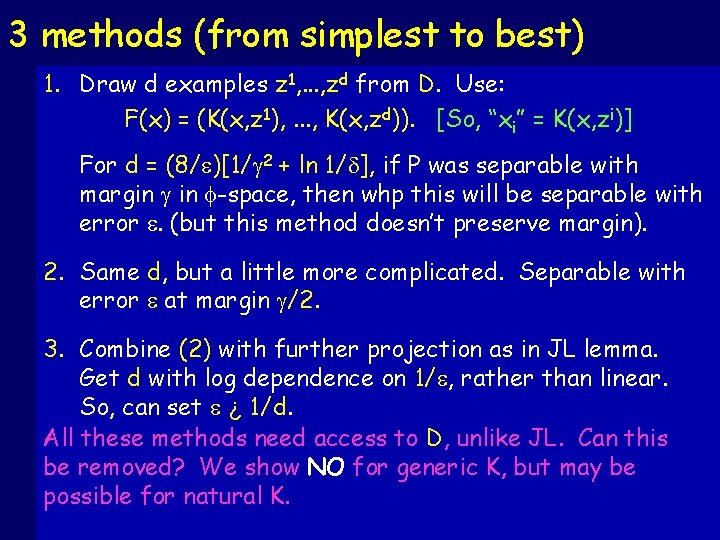

3 methods (from simplest to best)
1. Draw $d$ examples $z_1, \ldots, z_d$ from $D$. Use $F(x) = (K(x, z_1), \ldots, K(x, z_d))$. [So, $x_i = K(x, z_i)$.] For $d = (8/\epsilon)[1/\gamma^2 + \ln(1/\delta)]$, if $P$ was separable with margin $\gamma$ in $\phi$-space, then whp this will be separable with error $\epsilon$ (but this method doesn't preserve the margin).
2. Same $d$, but a little more complicated. Separable with error $\epsilon$ at margin $\gamma/2$.
3. Combine (2) with a further projection as in the JL lemma. This gives $d$ with logarithmic rather than linear dependence on $1/\epsilon$. So, we can set $\epsilon \ll 1/d$.

All these methods need access to $D$, unlike JL. Can this be removed? We show NO for generic $K$, but it may be possible for natural $K$.
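The sample-size formula for methods 1 and 2 is easy to evaluate. The helper `landmark_count` below is a hypothetical name; it simply rounds the slide's expression up to an integer.

```python
import math


def landmark_count(eps: float, gamma: float, delta: float) -> int:
    """d = (8/eps) * (1/gamma^2 + ln(1/delta)), rounded up (methods 1 and 2)."""
    return math.ceil((8.0 / eps) * (1.0 / gamma ** 2 + math.log(1.0 / delta)))


d = landmark_count(eps=0.1, gamma=0.1, delta=0.05)
print(d)  # 8240
```

Because $d \ge 8/(\epsilon\gamma^2)$, the product $\epsilon d$ is always at least $8/\gamma^2 \ge 8$ (margins are at most 1 under the unit normalization). So methods 1 and 2 can never reach $\epsilon \ll 1/d$; that is the gap method 3 addresses.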

Actually, the argument is pretty easy... (though we did try a lot of things first that didn't work...)

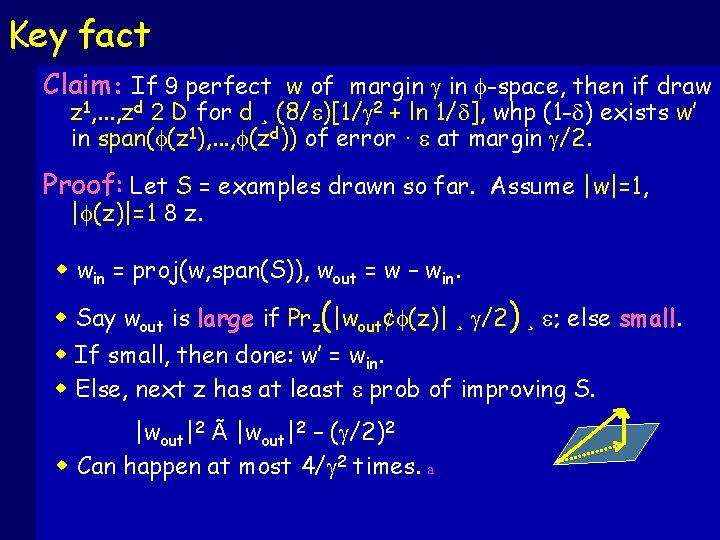

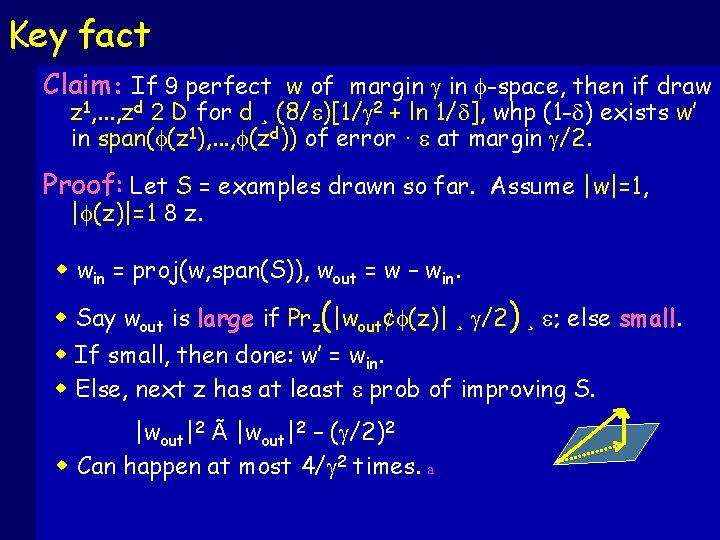

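Key fact
Claim: If $\exists$ a perfect $w$ of margin $\gamma$ in $\phi$-space, then if we draw $z_1, \ldots, z_d$ from $D$ for $d \ge (8/\epsilon)[1/\gamma^2 + \ln(1/\delta)]$, whp ($1 - \delta$) there exists $w'$ in $\mathrm{span}(\phi(z_1), \ldots, \phi(z_d))$ of error $\le \epsilon$ at margin $\gamma/2$.

Proof: Let $S$ = examples drawn so far. Assume $|w| = 1$, $|\phi(z)| = 1\ \forall z$.
- $w_{in} = \mathrm{proj}(w, \mathrm{span}(S))$, $w_{out} = w - w_{in}$.
- Say $w_{out}$ is large if $\Pr_z(|w_{out} \cdot \phi(z)| \ge \gamma/2) \ge \epsilon$; else small.
- If small, then done: $w' = w_{in}$.
- Else, the next $z$ has at least $\epsilon$ probability of improving $S$: adding it shrinks $|w_{out}|^2$ by at least $(\gamma/2)^2$.
- Since $|w_{out}|^2 \le |w|^2 = 1$ to begin with, this can happen at most $4/\gamma^2$ times.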
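The two proof steps that the slide asserts can be checked directly. The derivation below is a sketch using only the slide's assumptions: $|w| = 1$, $|\phi(z)| = 1$, and $\ell\,(w \cdot \phi(x)) \ge \gamma$ on all of $P$. The symbols $\phi_\perp$ and $u$ are notation introduced here. Step (i) shows that when $w_{out}$ is small, $w_{in}$ fails the margin-$\gamma/2$ test only on probability mass $< \epsilon$. Rescaling $w_{in}$ to unit length only helps, since $|w_{in}| \le 1$. Step (ii) shows that each "large" $z$ removes at least $(\gamma/2)^2$ from $|w_{out}|^2$.

```latex
\begin{aligned}
&\textbf{(i)}\ \ \ell\,(w \cdot \phi(x)) \ge \gamma,\ \
  w \cdot \phi(x) = w_{in} \cdot \phi(x) + w_{out} \cdot \phi(x),\ \
  |w_{out} \cdot \phi(x)| < \gamma/2
  \;\Longrightarrow\; \ell\,(w_{in} \cdot \phi(x)) > \gamma/2. \\[6pt]
&\textbf{(ii)}\ \ \phi_\perp(z) = \phi(z) - \mathrm{proj}(\phi(z), \mathrm{span}(S)),\ \
  u = \phi_\perp(z)/|\phi_\perp(z)|,\ \ w_{out} \perp \mathrm{span}(S) \\
&\qquad \Longrightarrow\; w_{out} \cdot \phi(z) = w_{out} \cdot \phi_\perp(z)
  \ \text{and}\ |\phi_\perp(z)| \le 1
  \;\Longrightarrow\; |w_{out} \cdot u| \ge |w_{out} \cdot \phi(z)| \ge \gamma/2, \\
&\qquad w_{out}^{\text{new}} = w_{out} - (w_{out} \cdot u)\,u
  \;\Longrightarrow\; |w_{out}^{\text{new}}|^2 = |w_{out}|^2 - (w_{out} \cdot u)^2
  \le |w_{out}|^2 - (\gamma/2)^2.
\end{aligned}
```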

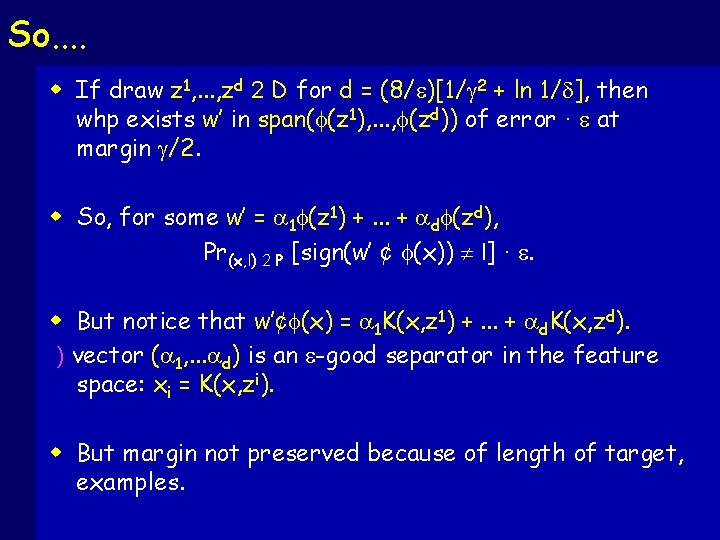

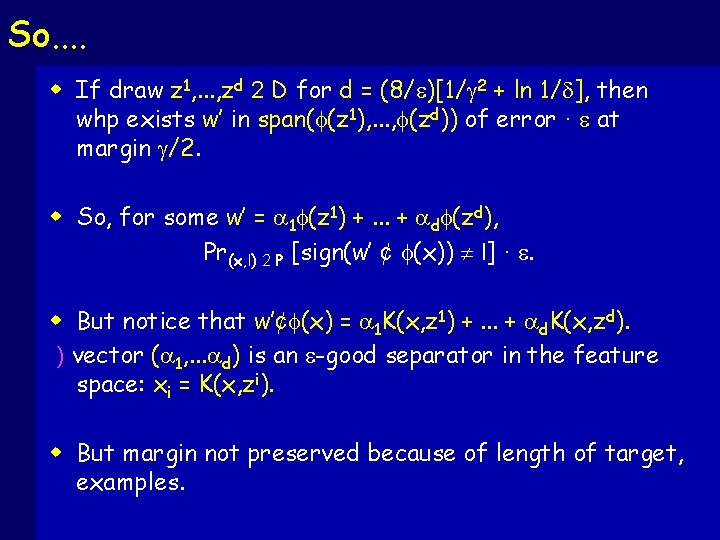

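So...
- If we draw $z_1, \ldots, z_d$ from $D$ for $d = (8/\epsilon)[1/\gamma^2 + \ln(1/\delta)]$, then whp there exists $w'$ in $\mathrm{span}(\phi(z_1), \ldots, \phi(z_d))$ of error $\le \epsilon$ at margin $\gamma/2$.
- So, for some $w' = \alpha_1 \phi(z_1) + \ldots + \alpha_d \phi(z_d)$, $\Pr_{(x,\ell) \in P}[\mathrm{sign}(w' \cdot \phi(x)) \ne \ell] \le \epsilon$.
- But notice that $w' \cdot \phi(x) = \alpha_1 K(x, z_1) + \ldots + \alpha_d K(x, z_d)$.
  $\Rightarrow$ the vector $(\alpha_1, \ldots, \alpha_d)$ is an $\epsilon$-good separator in the feature space $x_i = K(x, z_i)$.
- But the margin is not preserved, because of the lengths of the target and the examples.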
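Both of the last two bullets can be seen from the derivation below. It assumes the slide's normalization $|\phi(\cdot)| = 1$, so that $|K(x, z_i)| \le 1$ by Cauchy-Schwarz. $F(x)$ denotes the mapping-#1 feature vector and $\alpha = (\alpha_1, \ldots, \alpha_d)$.

```latex
w' \cdot \phi(x)
  = \Big(\sum_{i=1}^{d} \alpha_i \phi(z_i)\Big) \cdot \phi(x)
  = \sum_{i=1}^{d} \alpha_i \, \phi(z_i) \cdot \phi(x)
  = \sum_{i=1}^{d} \alpha_i K(x, z_i)
  = \alpha \cdot F(x),
\qquad
|F(x)| = \Big(\sum_{i=1}^{d} K(x, z_i)^2\Big)^{1/2} \le \sqrt{d}.
```

Hence, at any point where $\ell\,(w' \cdot \phi(x)) \ge \gamma/2$, the normalized margin of $\alpha$ in feature space is only guaranteed to be at least $\gamma / (2|\alpha|\sqrt{d})$. The argument gives no control over $|\alpha|$, which can be large when the $\phi(z_i)$ are nearly linearly dependent.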

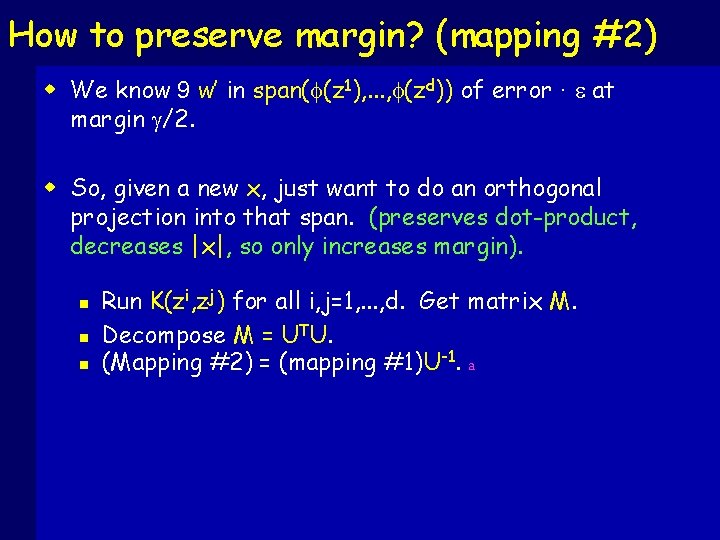

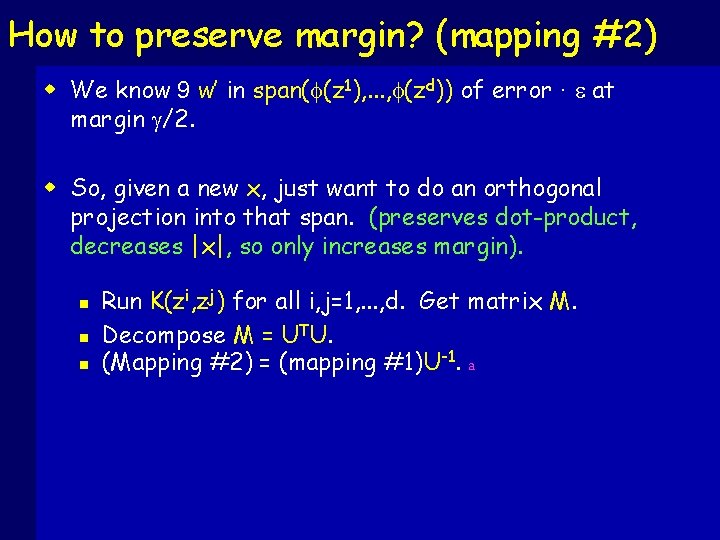

How to preserve margin? (mapping #2)
- We know $\exists w'$ in $\mathrm{span}(\phi(z_1), \ldots, \phi(z_d))$ of error $\le \epsilon$ at margin $\gamma/2$.
- So, given a new $x$, we just want to do an orthogonal projection of $\phi(x)$ into that span. This preserves the dot product with $w'$ and decreases $|\phi(x)|$, so it can only increase the margin.
  - Run $K(z_i, z_j)$ for all $i, j = 1, \ldots, d$. Get matrix $M$.
  - Decompose $M = U^T U$.
  - (Mapping #2) = (mapping #1) $U^{-1}$.
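A pure-Python sketch of mapping #2 follows. The slide only says to decompose $M = U^T U$. Cholesky factorization is one such decomposition and is assumed here, which requires the $\phi(z_i)$ to be linearly independent. With repeated or dependent landmarks, one would drop them or use an eigendecomposition instead.

Writing $\Phi$ for the matrix whose columns are the $\phi(z_i)$, the identity $M = U^T U$ makes the columns of $\Phi U^{-1}$ an orthonormal basis of their span. So $F(x)\,U^{-1}$ is the coordinate vector of the projection of $\phi(x)$ in that basis. All names are hypothetical.

```python
import math
from typing import Callable, List, Sequence

Vector = Sequence[float]
Kernel = Callable[[Vector, Vector], float]


def cholesky_upper(M: List[List[float]]) -> List[List[float]]:
    """Upper-triangular U with M = U^T U; requires M symmetric positive definite."""
    d = len(M)
    U = [[0.0] * d for _ in range(d)]
    for i in range(d):
        s = M[i][i] - sum(U[k][i] ** 2 for k in range(i))
        if s <= 0.0:
            raise ValueError("Gram matrix is not positive definite")
        U[i][i] = math.sqrt(s)
        for j in range(i + 1, d):
            U[i][j] = (M[i][j] - sum(U[k][i] * U[k][j] for k in range(i))) / U[i][i]
    return U


def mapping2(K: Kernel, landmarks: List[Vector]) -> Callable[[Vector], List[float]]:
    """Mapping #2: y = F(x) U^{-1}, with F = mapping #1 and Gram matrix M = U^T U."""
    d = len(landmarks)
    M = [[K(zi, zj) for zj in landmarks] for zi in landmarks]
    U = cholesky_upper(M)

    def G(x: Vector) -> List[float]:
        F = [K(x, z) for z in landmarks]  # mapping #1
        # Solve y U = F, i.e. U^T y = F, by forward substitution (U^T is lower triangular).
        y = [0.0] * d
        for i in range(d):
            y[i] = (F[i] - sum(U[j][i] * y[j] for j in range(i))) / U[i][i]
        return y

    return G


def linear(a: Vector, b: Vector) -> float:
    """Linear kernel, used here so the expected projection is easy to check by hand."""
    return sum(p * q for p, q in zip(a, b))


G = mapping2(linear, [[1.0, 0.0], [1.0, 1.0]])
print(G([3.0, 4.0]))
```

With the linear kernel and two landmarks spanning $\mathbb{R}^2$, the map reproduces dot products exactly. With fewer landmarks it returns a genuine projection, so lengths can only shrink.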

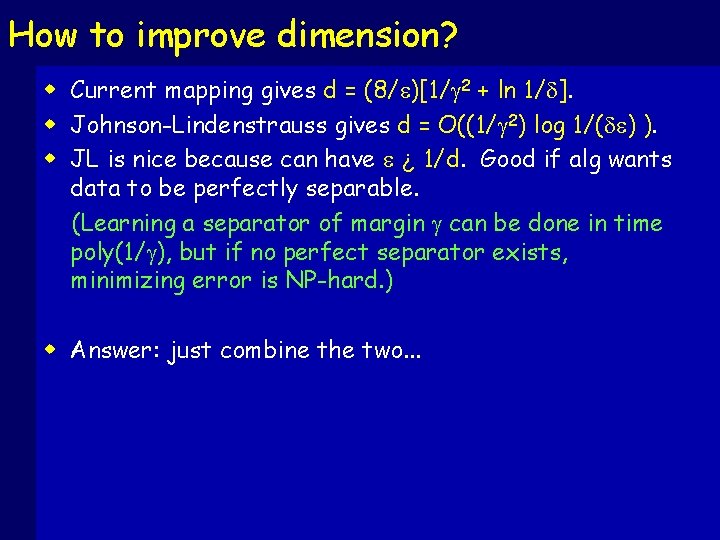

How to improve dimension?
- The current mapping gives $d = (8/\epsilon)[1/\gamma^2 + \ln(1/\delta)]$.
- Johnson-Lindenstrauss gives $d = O\big((1/\gamma^2)\log[1/(\delta\epsilon)]\big)$.
- JL is nice because it allows $\epsilon \ll 1/d$. This is good if the algorithm wants the data to be perfectly separable. (Learning a separator of margin $\gamma$ can be done in time $\mathrm{poly}(1/\gamma)$, but if no perfect separator exists, minimizing error is NP-hard.)
- Answer: just combine the two...

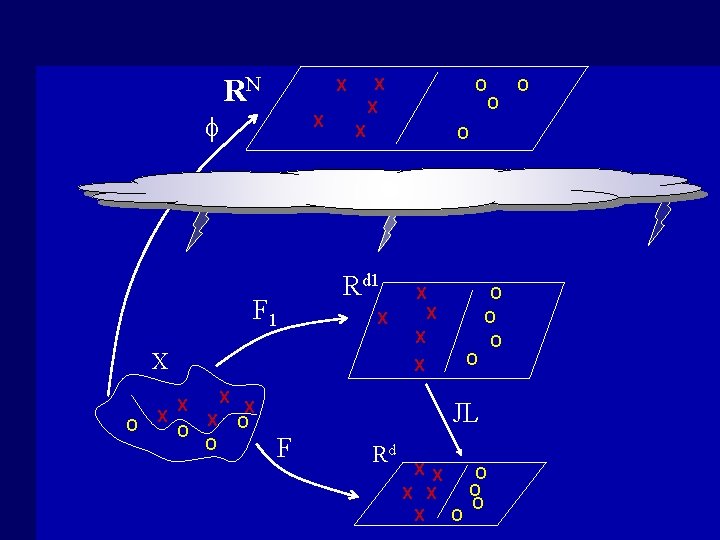

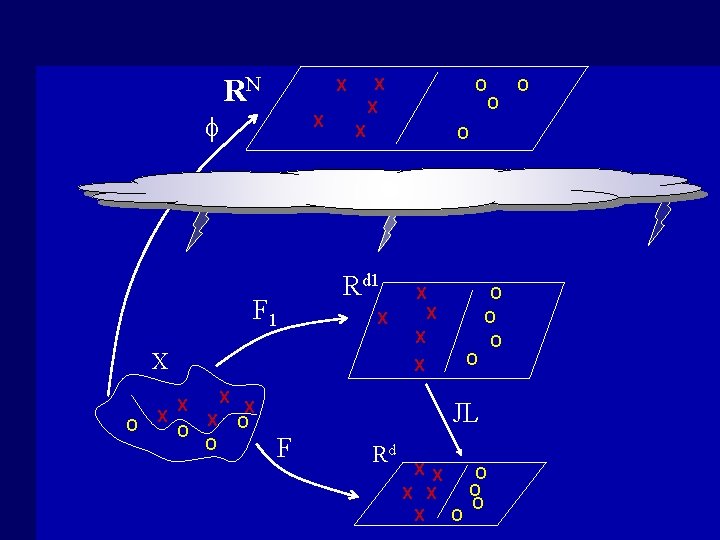

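[Figure: points of the two classes (X's and O's) in $\mathbb{R}^N$ are mapped by $F_1$ into $\mathbb{R}^{d_1}$, then by a JL projection into $\mathbb{R}^d$; the composite map is $F$.]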

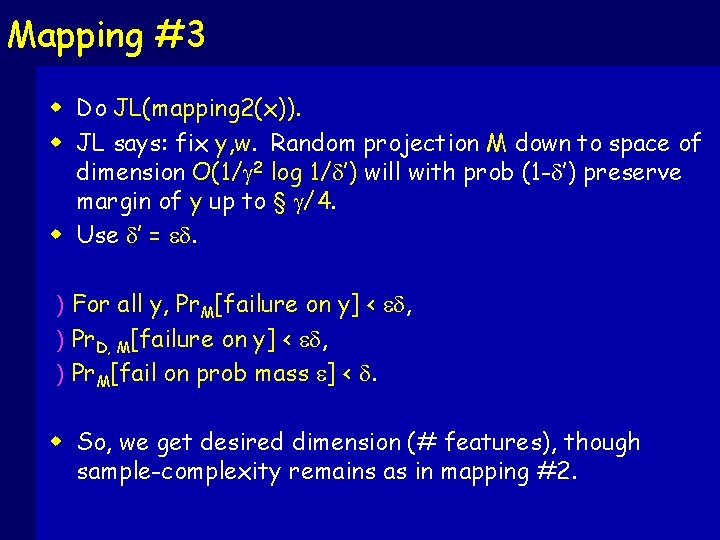

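Mapping #3
- Do JL(mapping2($x$)).
- JL says: fix $y$, $w$. A random projection $M$ down to a space of dimension $O\big((1/\gamma^2)\log(1/\delta')\big)$ will, with probability $1 - \delta'$, preserve the margin of $y$ up to $\pm\gamma/4$.
- Use $\delta' = \epsilon\delta$.
  $\Rightarrow$ For all $y$, $\Pr_M[\text{failure on } y] < \epsilon\delta$,
  $\Rightarrow$ $\Pr_{D,M}[\text{failure on } y] < \epsilon\delta$,
  $\Rightarrow$ $\Pr_M[\text{fail on probability mass} \ge \epsilon] < \delta$ (by Markov's inequality).
- So, we get the desired dimension (# features), though the sample complexity remains as in mapping #2.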
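Mapping #3 can be sketched as a composition of any feature map with a random projection. This sketch assumes a Gaussian random matrix for the projection. The slide calls it $M$; it is called `R` here to avoid a clash with the Gram matrix of mapping #2. The constant hidden in the $O(\cdot)$ is not given on the slide, so `jl_target_dim` takes it as an explicit parameter `c`. All names are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = Sequence[float]
FeatureMap = Callable[[Vector], List[float]]


def jl_target_dim(gamma: float, eps: float, delta: float, c: float) -> int:
    """k = c * (1/gamma^2) * ln(1/delta') with delta' = eps * delta; c is an unspecified constant."""
    return math.ceil(c / gamma ** 2 * math.log(1.0 / (eps * delta)))


def mapping3(mapping2: FeatureMap, d1: int, k: int, seed: int = 0) -> FeatureMap:
    """Mapping #3: x -> R . mapping2(x) for a random k x d1 matrix R with N(0, 1/k) entries."""
    rng = random.Random(seed)
    R = [[rng.gauss(0.0, 1.0) / math.sqrt(k) for _ in range(d1)] for _ in range(k)]

    def F3(x: Vector) -> List[float]:
        v = mapping2(x)  # d1-dimensional output of mapping #2
        return [sum(r * vj for r, vj in zip(row, v)) for row in R]

    return F3
```

In practice `mapping2` would be the map built from the kernel and the landmarks; any function returning `d1` numbers works for the sketch. Only the output dimension improves. The landmarks still have to be drawn in the numbers required by mapping #2.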

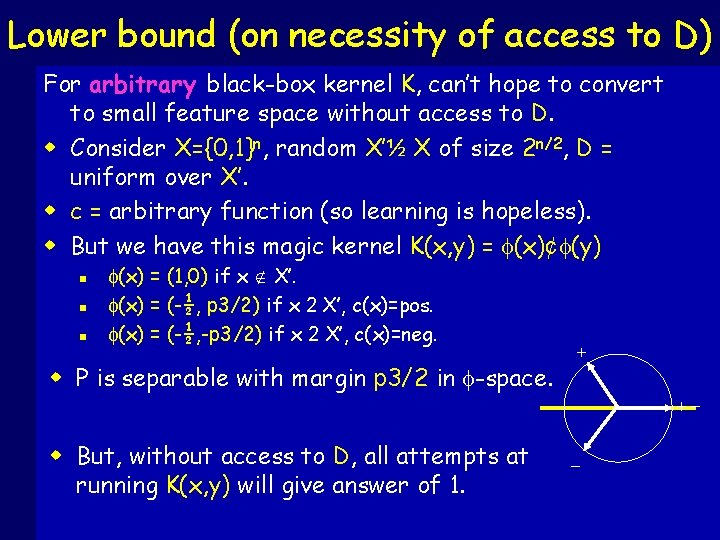

Lower bound (on necessity of access to $D$)
For an arbitrary black-box kernel $K$, we can't hope to convert to a small feature space without access to $D$.
- Consider $X = \{0,1\}^n$, a random $X' \subset X$ of size $2^{n/2}$, and $D$ = uniform over $X'$.
- $c$ = arbitrary function (so learning is hopeless).
- But we have this magic kernel $K(x, y) = \phi(x) \cdot \phi(y)$:
  - $\phi(x) = (1, 0)$ if $x \notin X'$.
  - $\phi(x) = (-\tfrac{1}{2}, \tfrac{\sqrt{3}}{2})$ if $x \in X'$, $c(x)$ = pos.
  - $\phi(x) = (-\tfrac{1}{2}, -\tfrac{\sqrt{3}}{2})$ if $x \in X'$, $c(x)$ = neg.
- $P$ is separable with margin $\sqrt{3}/2$ in $\phi$-space.
- But, without access to $D$, all attempts at running $K(x, y)$ will give an answer of 1, since a point chosen without samples from $D$ lands in $X'$ only with probability $2^{-n/2}$.
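The construction can be simulated at small scale. This sketch assumes $n = 16$, so $X'$ holds $2^8 = 256$ of the $2^{16}$ points. Random labels stand in for the "arbitrary" target $c$, and all names are hypothetical. It checks both halves of the argument. On $D$, the direction $w = (0, 1)$ has margin $\sqrt{3}/2$. Kernel queries made without samples from $D$ almost always return 1.

```python
import math
import random
from typing import Dict, Set, Tuple

Point = Tuple[int, ...]


def build_instance(n: int, seed: int = 0):
    """Random X' of size 2^(n/2) in {0,1}^n (n even), arbitrary labels c, and the slide's kernel."""
    rng = random.Random(seed)
    X_prime: Set[Point] = set()
    while len(X_prime) < 2 ** (n // 2):
        X_prime.add(tuple(rng.randint(0, 1) for _ in range(n)))
    c: Dict[Point, int] = {x: rng.choice([+1, -1]) for x in sorted(X_prime)}

    def phi(x: Point) -> Tuple[float, float]:
        if x not in X_prime:
            return (1.0, 0.0)
        return (-0.5, math.sqrt(3) / 2) if c[x] > 0 else (-0.5, -math.sqrt(3) / 2)

    def K(x: Point, y: Point) -> float:
        (a1, a2), (b1, b2) = phi(x), phi(y)
        return a1 * b1 + a2 * b2

    return X_prime, c, phi, K


X_prime, c, phi, K = build_instance(n=16, seed=0)
# On D (uniform over X'), w = (0, 1) has margin sqrt(3)/2 on every point.
margins = [c[x] * phi(x)[1] for x in X_prime]
# Without D, queries are uniform points of {0,1}^16; almost all kernel values are 1.
rng = random.Random(1)
queries = [tuple(rng.randint(0, 1) for _ in range(16)) for _ in range(1000)]
ones = sum(K(queries[i], queries[i - 1]) == 1.0 for i in range(1, len(queries)))
```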

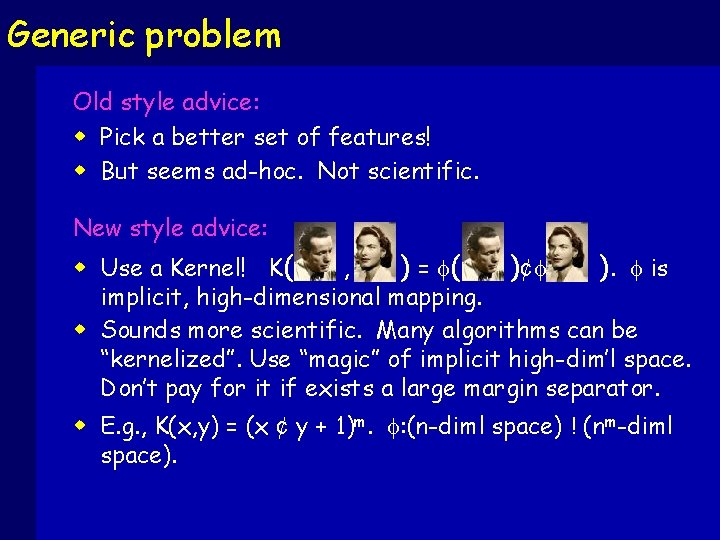

Open Problems
- For specific, natural kernels, like $K(x, y) = (1 + x \cdot y)^m$, is there an efficient (probability distribution over) mappings that is good for any $P = (c, D)$ for which the kernel is good?
- I.e., an efficient analog of JL for these kernels.
- Or, at least, can these mappings be constructed with less sample complexity (fewer accesses to $D$)?