Learning for Semantic Parsing Using Statistical Syntactic Parsing Techniques

- Slides: 90

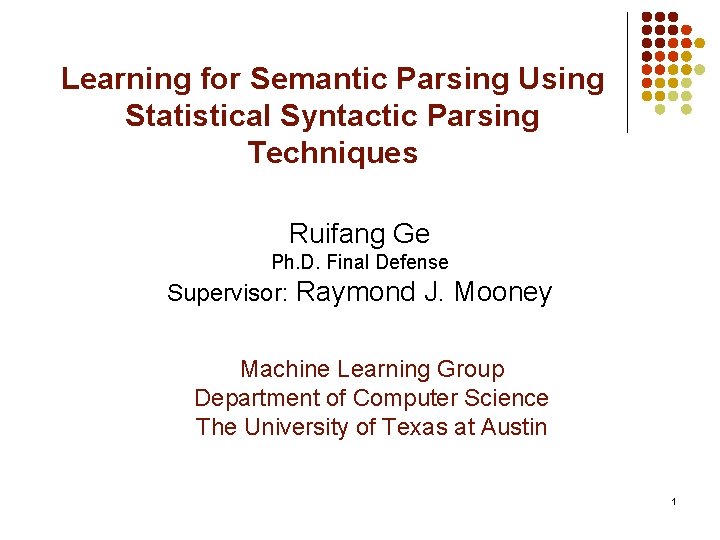

Learning for Semantic Parsing Using Statistical Syntactic Parsing Techniques. Ruifang Ge, Ph.D. Final Defense. Supervisor: Raymond J. Mooney. Machine Learning Group, Department of Computer Science, The University of Texas at Austin.

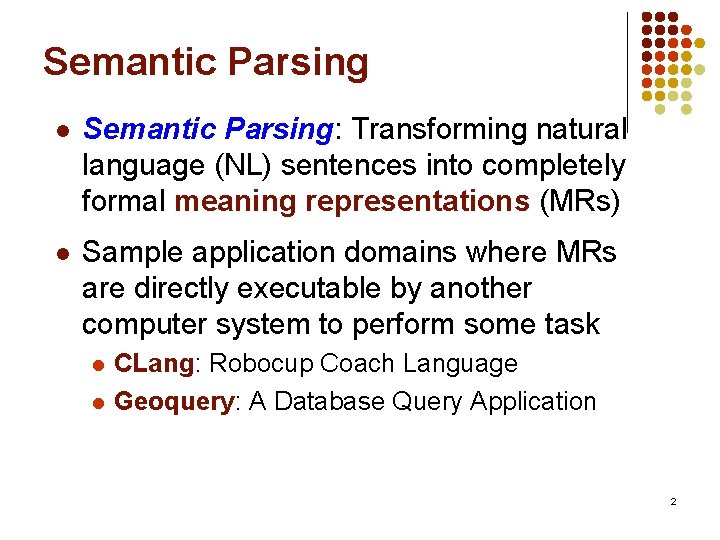

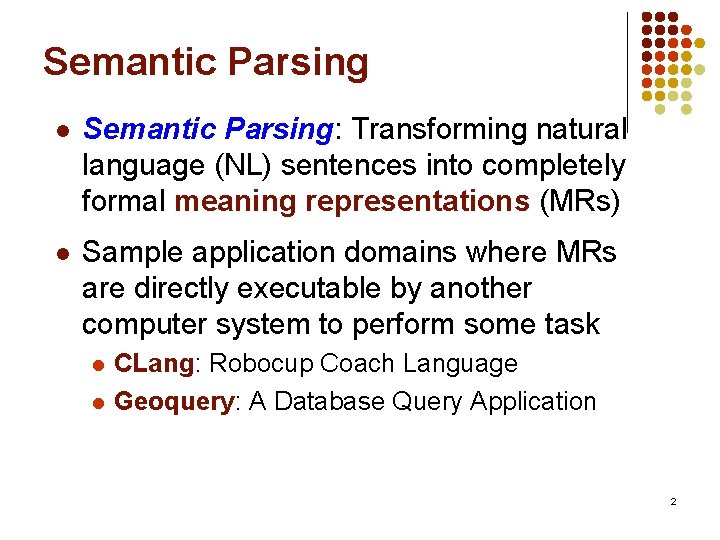

Semantic Parsing
- Semantic parsing: transforming natural language (NL) sentences into completely formal meaning representations (MRs)
- Sample application domains where MRs are directly executable by another computer system to perform some task:
  - CLang: RoboCup Coach Language
  - Geoquery: a database query application

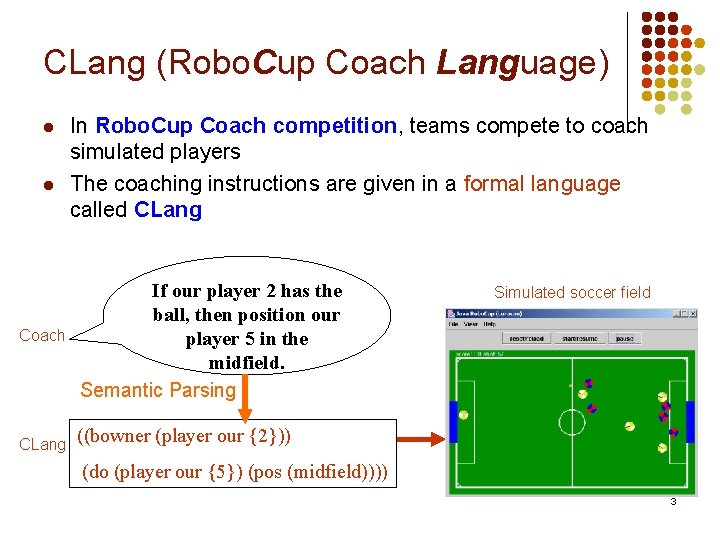

CLang (RoboCup Coach Language)
- In the RoboCup Coach competition, teams compete to coach simulated players.
- The coaching instructions are given in a formal language called CLang.
- Example (coach → semantic parsing → simulated soccer field):
  - NL: "If our player 2 has the ball, then position our player 5 in the midfield."
  - CLang: `((bowner (player our {2})) (do (player our {5}) (pos (midfield))))`

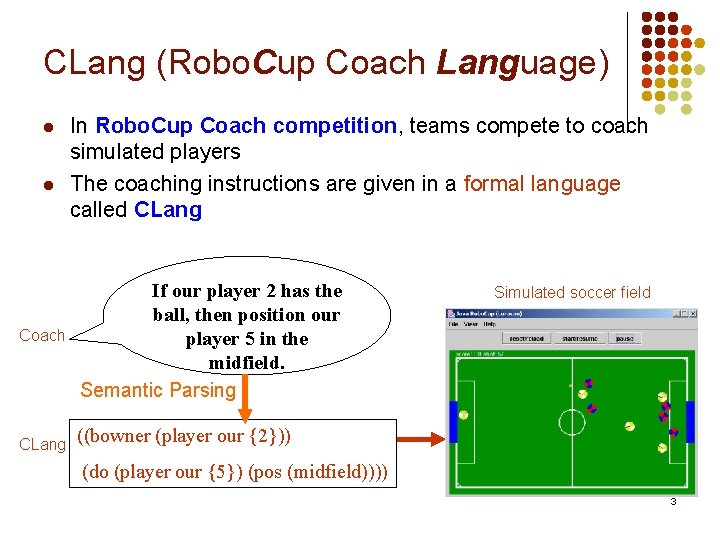

Geoquery: A Database Query Application
- Query application for a U.S. geography database [Zelle & Mooney, 1996]
- Example (user → semantic parsing → query → database):
  - NL: "What are the rivers in Texas?"
  - Query: `answer(x1, (river(x1), loc(x1, x2), equal(x2, stateid(texas))))`
  - Answer: Angelina, Blanco, …

Motivation for Semantic Parsing
- Theoretically, it addresses the question of how people interpret language.
- Practical applications:
  - Question answering
  - Natural language interfaces
  - Knowledge acquisition
  - Reasoning

Motivating Example
- NL: "If our player 2 has the ball, our player 4 should stay in our half."
- MR: `((bowner (player our {2})) (do our {4} (pos (half our))))` (bowner: ball owner; pos: position)
- Semantic parsing is a compositional process: sentence structures are needed for building meaning representations.

Syntax-Based Approaches
- Meaning composition follows the tree structure of a syntactic parse: the meaning of a constituent is composed from the meanings of its sub-constituents.
- Hand-built approaches (Woods, 1970; Warren and Pereira, 1982)
- Learned approaches:
  - Miller et al. (1996): conceptually simple sentences
  - Zettlemoyer & Collins (2005): hand-built Combinatory Categorial Grammar (CCG) template rules

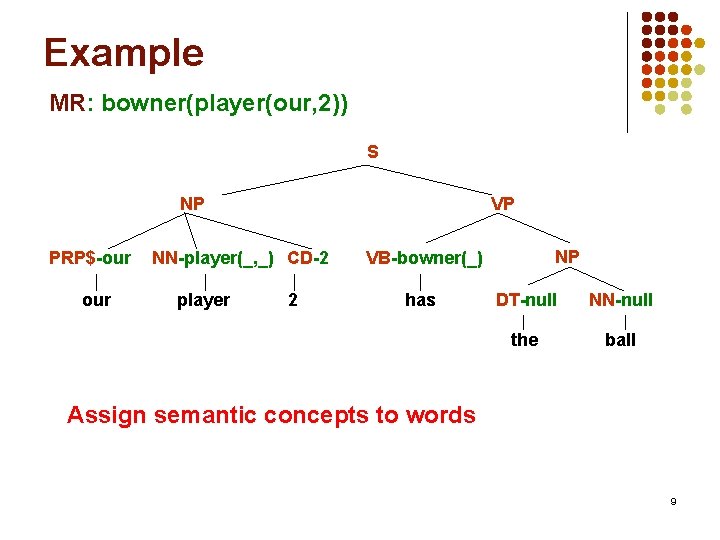

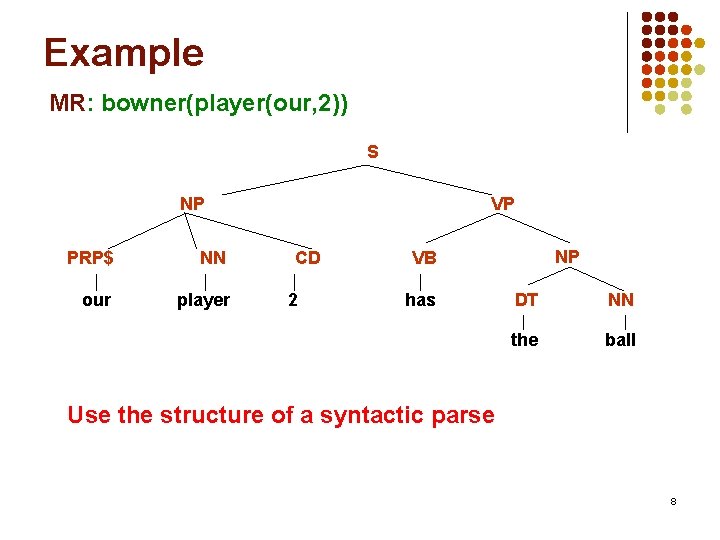

Example MR: bowner(player(our, 2))
- Use the structure of a syntactic parse:
  `(S (NP (PRP$ our) (NN player) (CD 2)) (VP (VB has) (NP (DT the) (NN ball))))`

Example MR: bowner(player(our, 2))
- Assign semantic concepts to words:
  `(S (NP (PRP$-our our) (NN-player(_, _) player) (CD-2 2)) (VP (VB-bowner(_) has) (NP (DT-null the) (NN-null ball))))`

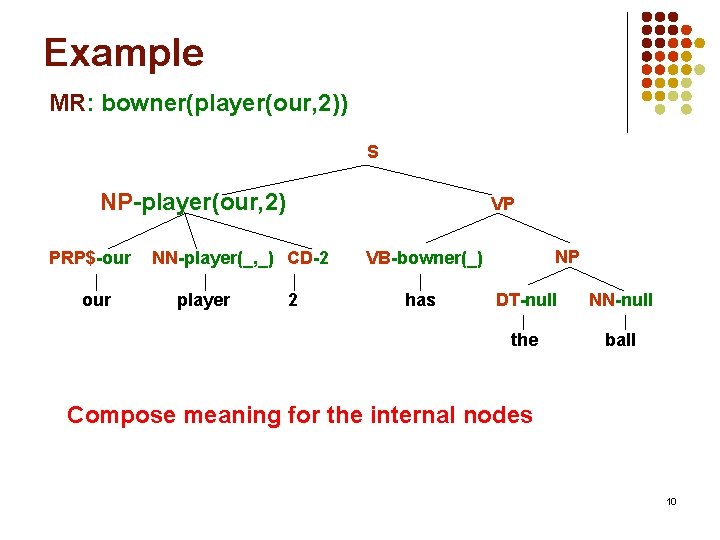

Example MR: bowner(player(our, 2))
- Compose meaning for the internal nodes (NP):
  `(S (NP-player(our, 2) (PRP$-our our) (NN-player(_, _) player) (CD-2 2)) (VP (VB-bowner(_) has) (NP (DT-null the) (NN-null ball))))`

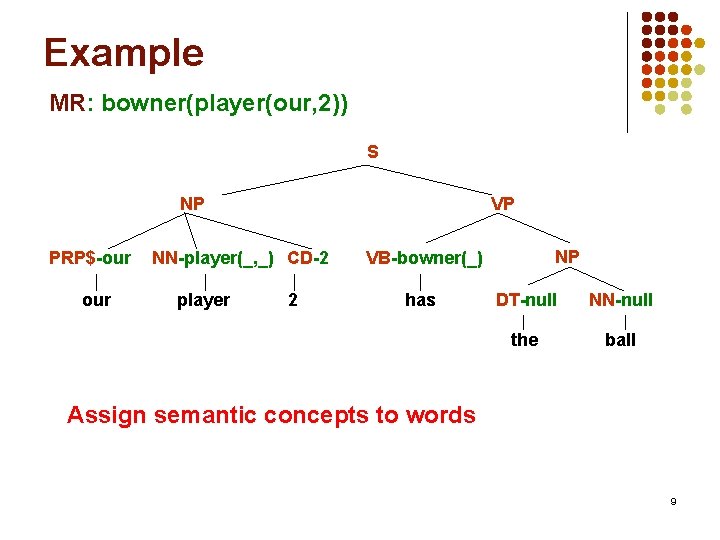

Example MR: bowner(player(our, 2))
- Compose meaning for the internal nodes (VP):
  `(S (NP-player(our, 2) (PRP$-our our) (NN-player(_, _) player) (CD-2 2)) (VP-bowner(_) (VB-bowner(_) has) (NP-null (DT-null the) (NN-null ball))))`

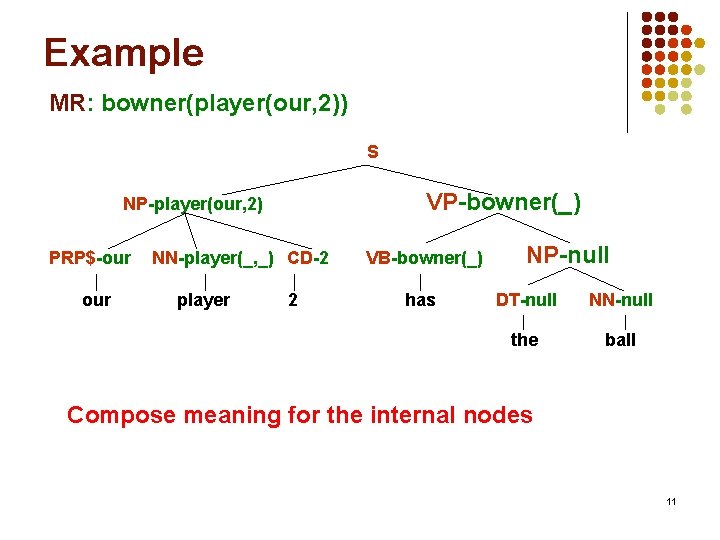

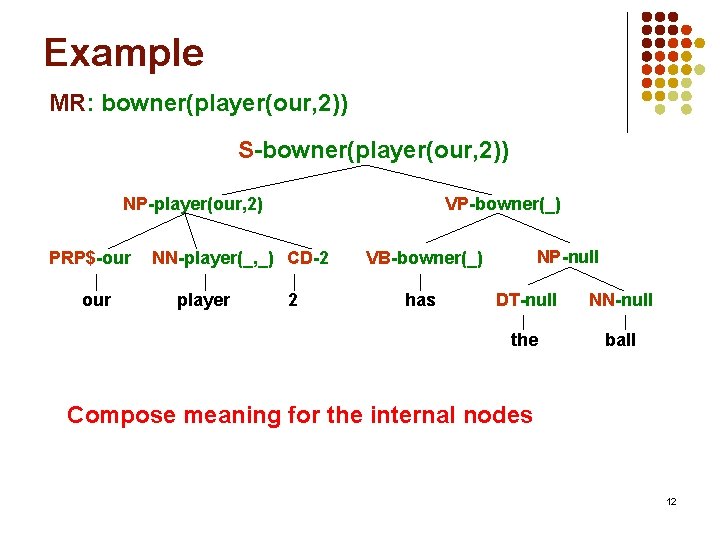

Example MR: bowner(player(our, 2))
- Compose meaning for the internal nodes (S):
  `(S-bowner(player(our, 2)) (NP-player(our, 2) (PRP$-our our) (NN-player(_, _) player) (CD-2 2)) (VP-bowner(_) (VB-bowner(_) has) (NP-null (DT-null the) (NN-null ball))))`
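
The bottom-up composition walked through above can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not code from the thesis: the word meanings in `LEXICON` are hypothetical Python strings or functions (`None` for null words), and a node's meaning is obtained by applying its single function-valued child to the remaining non-null child meanings in left-to-right order.

```python
from typing import Callable, Optional, Union

# A meaning is a finished string, a function still waiting for arguments,
# or None for words with null meaning.
Meaning = Optional[Union[str, Callable[..., str]]]

# Hypothetical word-level meanings for the example sentence.
LEXICON = {
    "our": "our",
    "player": lambda team, num: f"player({team}, {num})",
    "2": "2",
    "has": lambda owner: f"bowner({owner})",
    "the": None,
    "ball": None,
}


def compose(tree) -> Meaning:
    """Compose the meaning of a (label, children) tree; leaves are word strings."""
    if isinstance(tree, str):
        return LEXICON[tree]
    _label, children = tree
    meanings = [m for m in (compose(c) for c in children) if m is not None]
    functions = [m for m in meanings if callable(m)]
    args = [m for m in meanings if not callable(m)]
    if not functions:
        # No functor: pass up the first non-null meaning (enough for this example).
        return args[0] if args else None
    if not args:
        # Nothing to apply yet, e.g. VP-bowner(_): pass the function up unchanged.
        return functions[0]
    return functions[0](*args)


parse = ("S", [
    ("NP", [("PRP$", ["our"]), ("NN", ["player"]), ("CD", ["2"])]),
    ("VP", [("VB", ["has"]), ("NP", [("DT", ["the"]), ("NN", ["ball"])])]),
])

print(compose(parse))  # bowner(player(our, 2))
```

Functions stand in for the `_` placeholders on the slides: `player(_, _)` becomes a two-argument function and `bowner(_)` a one-argument function that waits at the VP until the subject NP supplies its argument.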

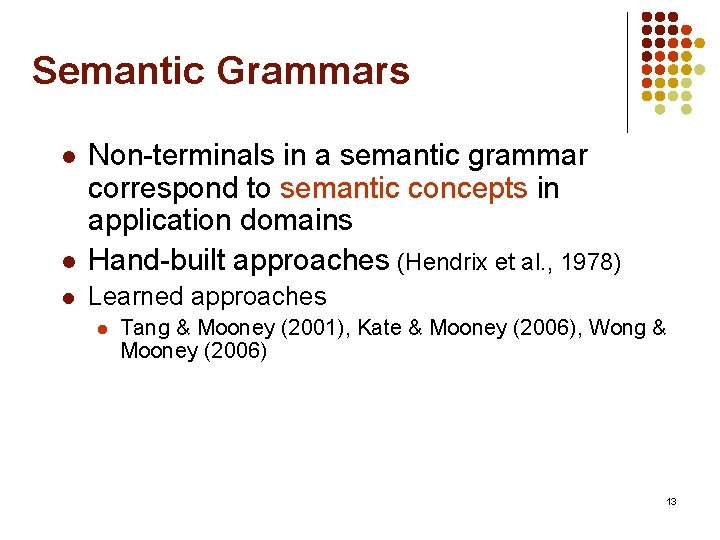

Semantic Grammars
- Non-terminals in a semantic grammar correspond to semantic concepts in application domains.
- Hand-built approaches (Hendrix et al., 1978)
- Learned approaches: Tang & Mooney (2001), Kate & Mooney (2006), Wong & Mooney (2006)

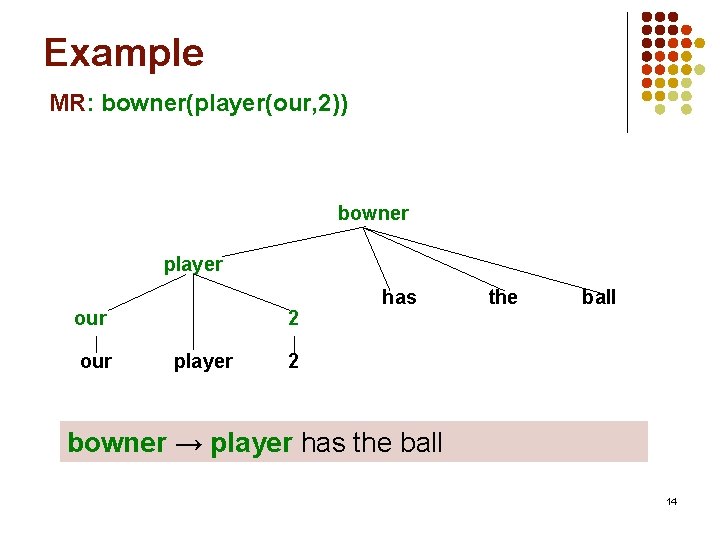

Example MR: bowner(player(our, 2))
- Semantic-grammar parse, whose non-terminals are domain concepts: `(bowner (player (our our) player (2 2)) has the ball)`
- Sample rule: bowner → player has the ball

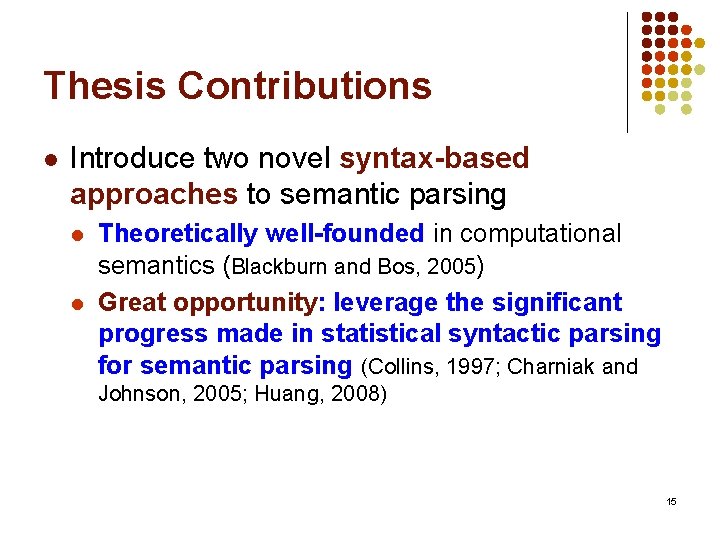

Thesis Contributions
- Introduce two novel syntax-based approaches to semantic parsing
  - Theoretically well-founded in computational semantics (Blackburn and Bos, 2005)
  - Great opportunity: leverage the significant progress made in statistical syntactic parsing for semantic parsing (Collins, 1997; Charniak and Johnson, 2005; Huang, 2008)

Thesis Contributions
- SCISSOR: a novel integrated syntactic-semantic parser
- SYNSEM: exploits an existing syntactic parser to produce disambiguated parse trees that drive meaning composition
- Investigate when knowledge of syntax can help

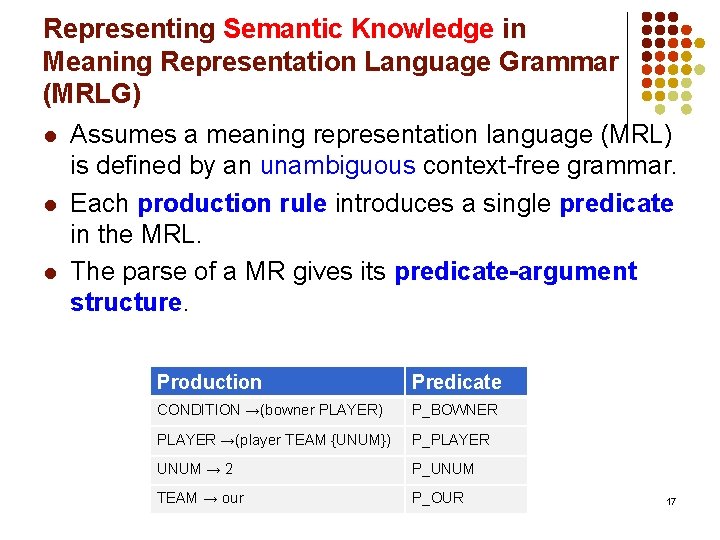

Representing Semantic Knowledge in a Meaning Representation Language Grammar (MRLG)
- Assumes a meaning representation language (MRL) is defined by an unambiguous context-free grammar.
- Each production rule introduces a single predicate in the MRL.
- The parse of an MR gives its predicate-argument structure.

| Production | Predicate |
|---|---|
| CONDITION → (bowner PLAYER) | P_BOWNER |
| PLAYER → (player TEAM {UNUM}) | P_PLAYER |
| UNUM → 2 | P_UNUM |
| TEAM → our | P_OUR |
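
To make the MRLG idea concrete, here is a small Python sketch that parses the CLang condition `(bowner (player our {2}))` and prints the predicate-argument structure implied by the productions in the table. The symbol-to-predicate mapping in `PREDICATES` and the handling of `{...}` number sets are our own simplifying assumptions, not the thesis implementation.

```python
import re

# Hypothetical mapping from MRL symbols to the predicates their productions introduce.
PREDICATES = {"bowner": "P_BOWNER", "player": "P_PLAYER", "our": "P_OUR"}


def tokenize(mr: str) -> list[str]:
    return re.findall(r"[(){}]|[^\s(){}]+", mr)


def parse(tokens: list[str], i: int = 0):
    """Return ((predicate, [argument nodes]), next index) for the MR starting at tokens[i]."""
    tok = tokens[i]
    if tok == "(":  # (symbol ARG ...): one production, hence one predicate
        pred, i = PREDICATES[tokens[i + 1]], i + 2
        args = []
        while tokens[i] != ")":
            arg, i = parse(tokens, i)
            args.append(arg)
        return (pred, args), i + 1
    if tok == "{":  # {UNUM}: collapsed to a single P_UNUM node in this sketch
        end = tokens.index("}", i)
        return ("P_UNUM", []), end + 1
    return (PREDICATES[tok], []), i + 1  # terminal production such as TEAM -> our


def render(node) -> str:
    pred, args = node
    return pred if not args else f"{pred}({', '.join(render(a) for a in args)})"


tree, _ = parse(tokenize("(bowner (player our {2}))"))
print(render(tree))  # P_BOWNER(P_PLAYER(P_OUR, P_UNUM))
```

Because the grammar is unambiguous, each MR has exactly one such tree, which is what lets the predicates serve as semantic labels later on.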

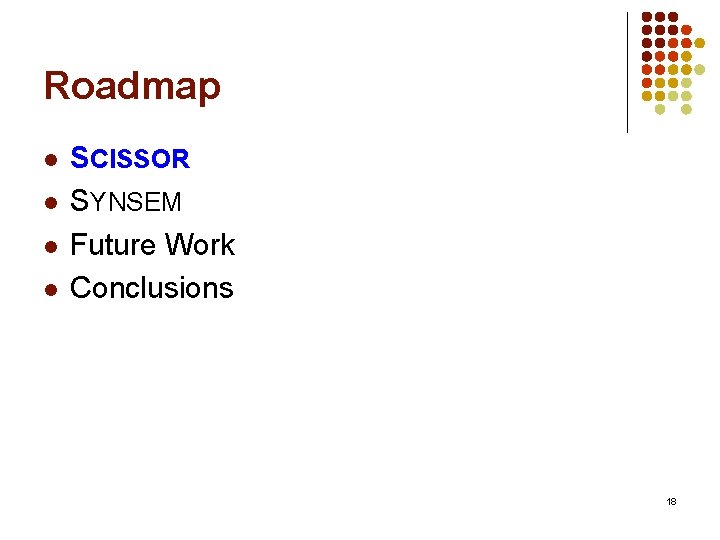

Roadmap
- SCISSOR
- SYNSEM
- Future Work
- Conclusions

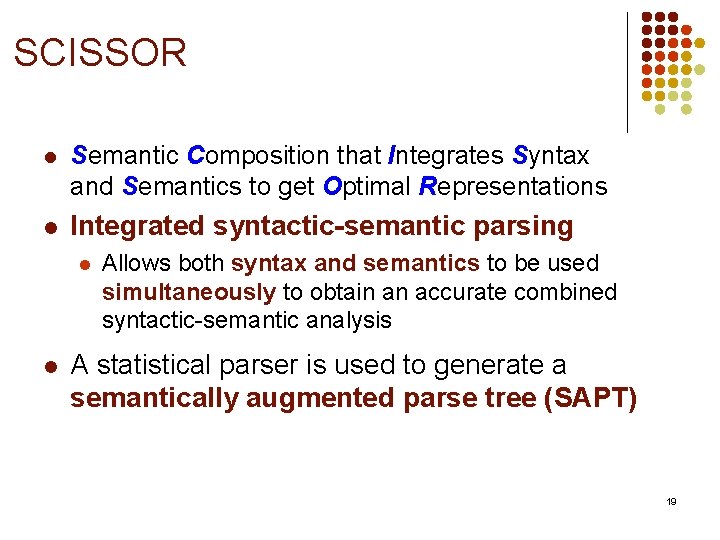

SCISSOR
- Semantic Composition that Integrates Syntax and Semantics to get Optimal Representations
- Integrated syntactic-semantic parsing:
  - Allows both syntax and semantics to be used simultaneously to obtain an accurate combined syntactic-semantic analysis
  - A statistical parser is used to generate a semantically augmented parse tree (SAPT)

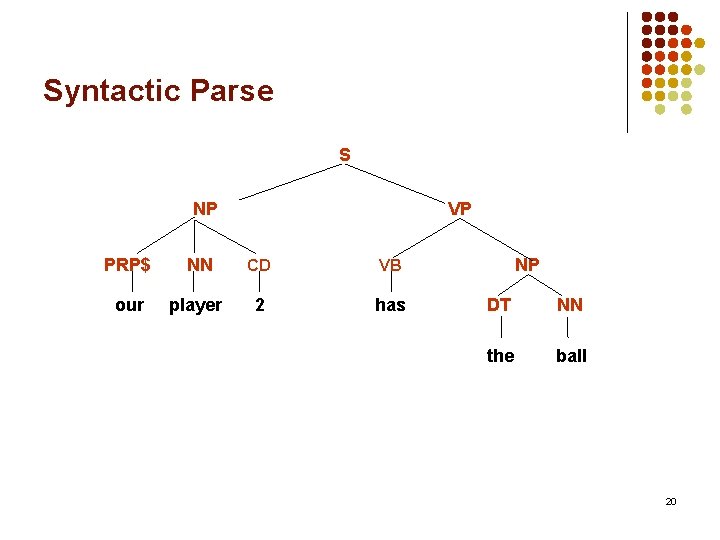

Syntactic Parse
`(S (NP (PRP$ our) (NN player) (CD 2)) (VP (VB has) (NP (DT the) (NN ball))))`

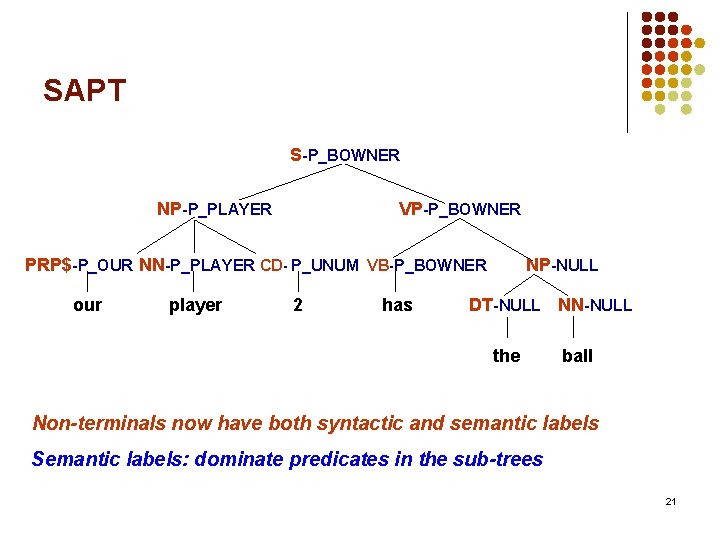

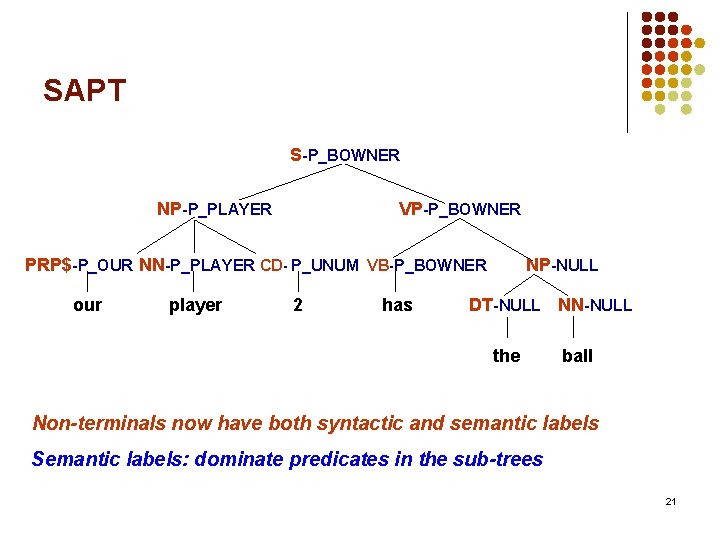

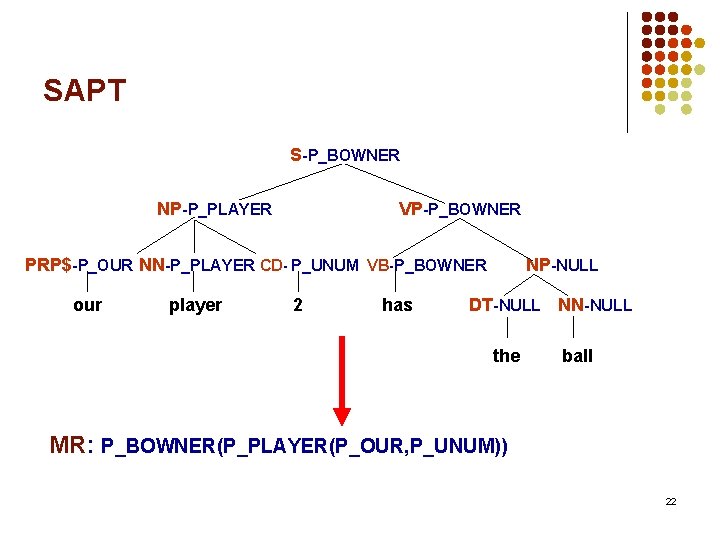

SAPT
`(S-P_BOWNER (NP-P_PLAYER (PRP$-P_OUR our) (NN-P_PLAYER player) (CD-P_UNUM 2)) (VP-P_BOWNER (VB-P_BOWNER has) (NP-NULL (DT-NULL the) (NN-NULL ball))))`
- Non-terminals now have both syntactic and semantic labels.
- Semantic labels: the dominant predicate of each subtree.
- MR: P_BOWNER(P_PLAYER(P_OUR, P_UNUM))
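
The MR can be read off the SAPT mechanically. The Python sketch below assumes, for illustration only, that a node's head child is the first child sharing its semantic label and that every other non-NULL child becomes an argument in surface order. SCISSOR's actual composition follows the MRL grammar, so in general argument order need not match word order; the `Node` class and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    syn: str                  # syntactic label, e.g. "NP"
    sem: str                  # semantic label, e.g. "P_PLAYER" or "NULL"
    word: str = ""            # set on preterminals only
    children: list = field(default_factory=list)


def collect(node: Node):
    """Return (predicate, argument MRs) for the head chain rooted at node."""
    if not node.children:
        return node.sem, []
    pred, args, head_seen = None, [], False
    for child in node.children:
        if not head_seen and child.sem == node.sem:
            # The head child shares the parent's semantic label.
            pred, head_args = collect(child)
            args.extend(head_args)
            head_seen = True
        elif child.sem != "NULL":
            # Any other non-NULL child supplies one argument.
            args.append(compose_mr(child))
    return pred, args


def compose_mr(node: Node) -> str:
    pred, args = collect(node)
    return pred if not args else f"{pred}({', '.join(args)})"


sapt = Node("S", "P_BOWNER", children=[
    Node("NP", "P_PLAYER", children=[
        Node("PRP$", "P_OUR", "our"),
        Node("NN", "P_PLAYER", "player"),
        Node("CD", "P_UNUM", "2"),
    ]),
    Node("VP", "P_BOWNER", children=[
        Node("VB", "P_BOWNER", "has"),
        Node("NP", "NULL", children=[Node("DT", "NULL", "the"), Node("NN", "NULL", "ball")]),
    ]),
])

print(compose_mr(sapt))  # P_BOWNER(P_PLAYER(P_OUR, P_UNUM))
```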

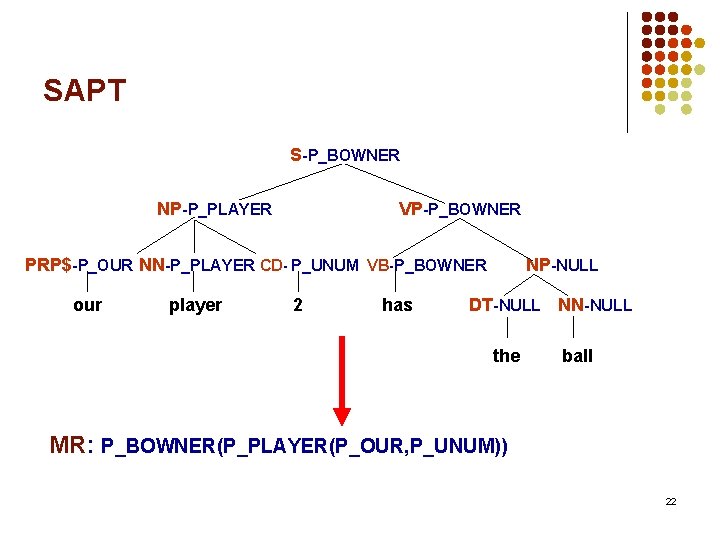

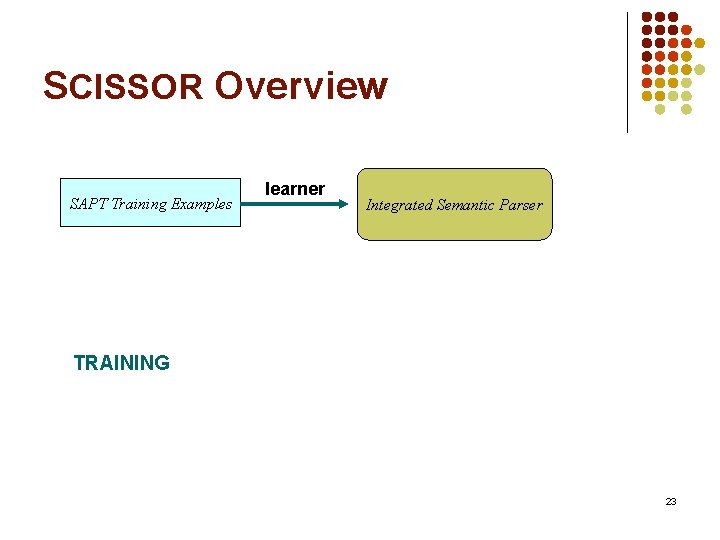

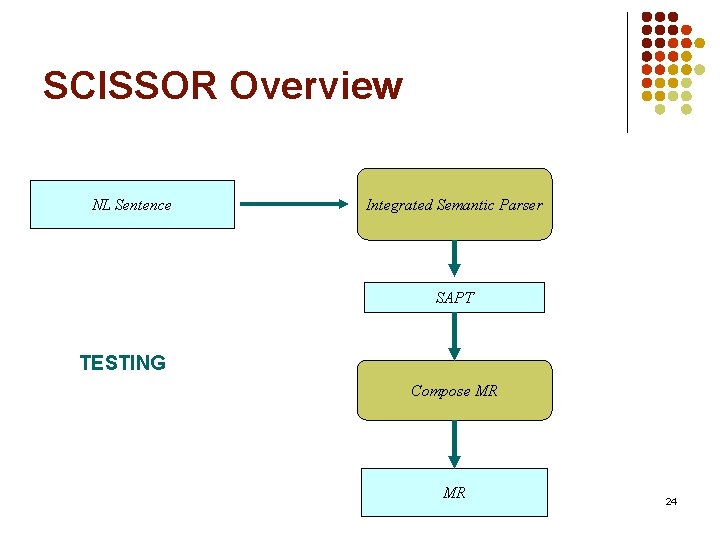

SCISSOR Overview
- Training: SAPT training examples → learner → integrated semantic parser
- Testing: NL sentence → integrated semantic parser → SAPT → compose MR → MR

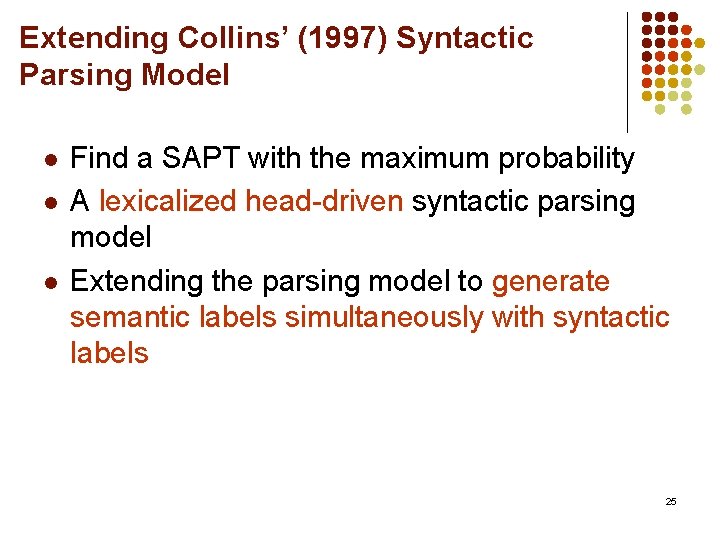

Extending Collins' (1997) Syntactic Parsing Model
- Find the SAPT with the maximum probability
- A lexicalized, head-driven syntactic parsing model
- Extend the parsing model to generate semantic labels simultaneously with syntactic labels

Why Extend Collins' (1997) Syntactic Parsing Model
- Suitable for incorporating semantic knowledge:
  - Head dependency: predicate-argument relation
  - Syntactic subcategorization: the set of arguments that a predicate appears with
- Bikel (2004) implementation: easily extendable

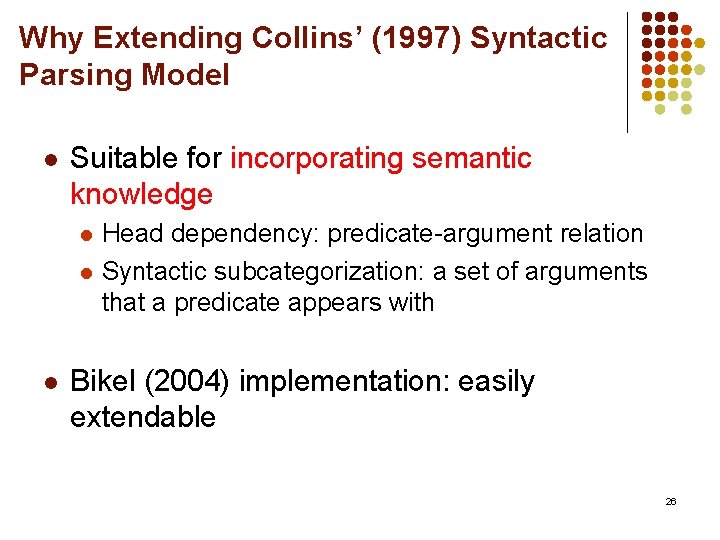

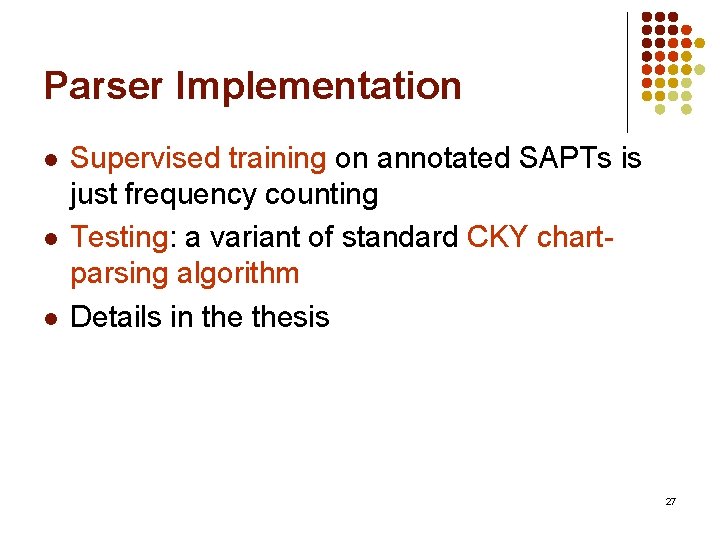

Parser Implementation
- Supervised training on annotated SAPTs is just frequency counting
- Testing: a variant of the standard CKY chart-parsing algorithm
- Details in thesis
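
For readers unfamiliar with CKY, the sketch below is a plain Viterbi CKY parser in Python over a toy probabilistic grammar whose labels combine a syntactic and a semantic part. It is not Collins' lexicalized head-driven model and not the thesis' variant; every rule and probability is invented, and the word "2" is dropped so the toy grammar can stay binary.

```python
# Toy PCFG in Chomsky normal form over combined syntactic-semantic labels.
# All rules and probabilities are invented for illustration.
LEXICAL = {
    "our": [("PRP$-P_OUR", 1.0)],
    "player": [("NN-P_PLAYER", 1.0)],
    "has": [("VB-P_BOWNER", 0.7), ("VB-NULL", 0.3)],
    "the": [("DT-NULL", 1.0)],
    "ball": [("NN-NULL", 1.0)],
}
BINARY = [  # (parent, left child, right child, probability)
    ("NP-P_PLAYER", "PRP$-P_OUR", "NN-P_PLAYER", 1.0),
    ("NP-NULL", "DT-NULL", "NN-NULL", 1.0),
    ("VP-P_BOWNER", "VB-P_BOWNER", "NP-NULL", 1.0),
    ("VP-NULL", "VB-NULL", "NP-NULL", 1.0),
    ("S-P_BOWNER", "NP-P_PLAYER", "VP-P_BOWNER", 0.9),
    ("S-P_PLAYER", "NP-P_PLAYER", "VP-NULL", 0.1),
]


def viterbi_cky(words):
    n = len(words)
    # chart[i][j] maps a label to (best probability, backpointer) for words[i:j].
    chart = [[{} for _ in range(n + 1)] for _ in range(n + 1)]
    for i, w in enumerate(words):
        for label, p in LEXICAL[w]:
            chart[i][i + 1][label] = (p, w)
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):
                for parent, left, right, p in BINARY:
                    if left in chart[i][k] and right in chart[k][j]:
                        score = p * chart[i][k][left][0] * chart[k][j][right][0]
                        if score > chart[i][j].get(parent, (0.0, None))[0]:
                            chart[i][j][parent] = (score, (k, left, right))
    return chart


def build_tree(chart, i, j, label):
    """Follow backpointers to recover the best tree for label over words[i:j]."""
    _, back = chart[i][j][label]
    if isinstance(back, str):
        return (label, back)
    k, left, right = back
    return (label, build_tree(chart, i, k, left), build_tree(chart, k, j, right))


words = "our player has the ball".split()
chart = viterbi_cky(words)
best = max(chart[0][len(words)].items(), key=lambda kv: kv[1][0])
print(best[0], round(best[1][0], 2))  # S-P_BOWNER 0.63
print(build_tree(chart, 0, len(words), best[0]))
```

Because syntactic and semantic labels are fused, the same chart search that picks the best syntactic analysis also picks the semantic labels, which is the point of integrated parsing.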

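Smoothing
- Each label in a SAPT is the combination of a syntactic label and a semantic label
- This increases data sparsity
- Break the parameters down (H: head child label; P: parent label; w: head word):

$$P_h(H \mid P, w) = P_h(H_{\mathrm{syn}}, H_{\mathrm{sem}} \mid P, w) = P_h(H_{\mathrm{syn}} \mid P, w) \times P_h(H_{\mathrm{sem}} \mid P, w, H_{\mathrm{syn}})$$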
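
The decomposition above is just the chain rule. The Python sketch below uses invented counts to check that, with unsmoothed relative-frequency estimates, the factored form equals the joint estimate. The point of factoring is that each smaller factor can be smoothed separately; the sketch itself implements no smoothing, and the event tuples are hypothetical.

```python
from collections import Counter
from fractions import Fraction

# Invented head-generation events: (parent label P, head word w, H_syn, H_sem).
events = [
    ("NP", "player", "NN", "P_PLAYER"),
    ("NP", "player", "NN", "P_PLAYER"),
    ("NP", "player", "NN", "NULL"),
    ("NP", "player", "NNP", "P_PLAYER"),
]

joint = Counter(events)
ctx = Counter((p, w) for p, w, _, _ in events)
ctx_syn = Counter((p, w, hs) for p, w, hs, _ in events)


def p_joint(hs, hm, p, w):
    return Fraction(joint[(p, w, hs, hm)], ctx[(p, w)])


def p_syn(hs, p, w):
    return Fraction(ctx_syn[(p, w, hs)], ctx[(p, w)])


def p_sem(hm, p, w, hs):
    return Fraction(joint[(p, w, hs, hm)], ctx_syn[(p, w, hs)])


# Relative frequencies: both sides agree exactly.
lhs = p_joint("NN", "P_PLAYER", "NP", "player")
rhs = p_syn("NN", "NP", "player") * p_sem("P_PLAYER", "NP", "player", "NN")
print(lhs, rhs)  # 1/2 1/2
```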

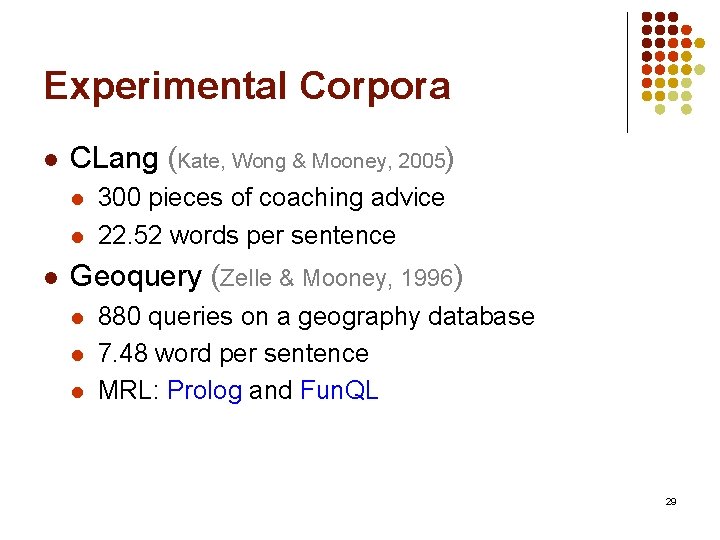

Experimental Corpora
- CLang (Kate, Wong & Mooney, 2005)
  - 300 pieces of coaching advice
  - 22.52 words per sentence
- Geoquery (Zelle & Mooney, 1996)
  - 880 queries on a geography database
  - 7.48 words per sentence
  - MRL: Prolog and FunQL

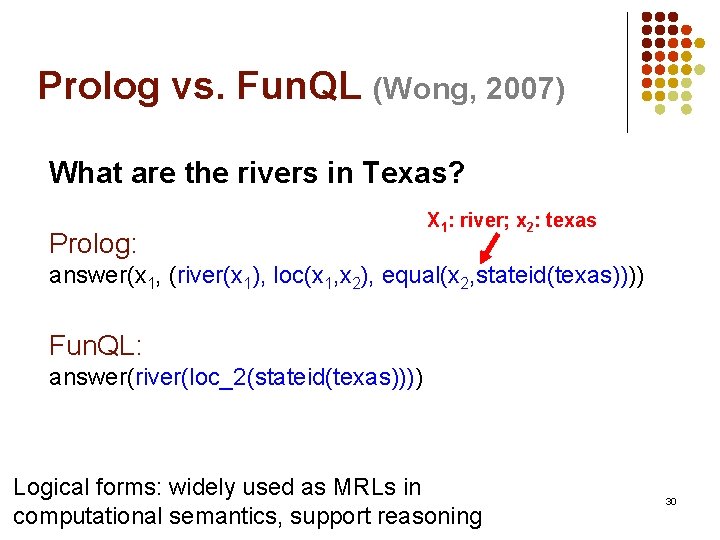

Prolog vs. FunQL (Wong, 2007)
- NL: "What are the rivers in Texas?"
- Prolog (x1: river; x2: texas): `answer(x1, (river(x1), loc(x1, x2), equal(x2, stateid(texas))))`; the conjuncts may appear in flexible order
- FunQL: `answer(river(loc_2(stateid(texas))))`; strict order
- Logical forms are widely used as MRLs in computational semantics and support reasoning
- The flexible order of Prolog leads to better generalization
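
To make the FunQL/Prolog contrast concrete, the Python sketch below evaluates both forms of "What are the rivers in Texas?" against a tiny invented fact base. FunQL is modeled as nested set-valued functions with a fixed nesting order; the Prolog form is modeled as a conjunction of constraints whose order can be permuted without changing the answer. The fact base and function bodies are illustrative assumptions, not the Geoquery database or its evaluator.

```python
# Tiny invented fact base (not the Geoquery database).
RIVERS = {"angelina", "blanco", "arkansas"}
LOC = {("angelina", "texas"), ("blanco", "texas"), ("arkansas", "kansas"),
       ("austin", "texas")}


# FunQL: predicates are functions over sets of entities, applied in a fixed nesting order.
def stateid(name):
    return {name}


def loc_2(places):
    return {x for (x, y) in LOC if y in places}


def river(entities):
    return entities & RIVERS


def answer(entities):
    return entities


funql = answer(river(loc_2(stateid("texas"))))

# Prolog-style: answer(x1, (river(x1), loc(x1, x2), equal(x2, stateid(texas)))).
# The conjuncts are constraints, so their order does not change the answer.
ENTITIES = RIVERS | {x for pair in LOC for x in pair}
conjuncts = [
    lambda x1, x2: x1 in RIVERS,         # river(x1)
    lambda x1, x2: (x1, x2) in LOC,      # loc(x1, x2)
    lambda x1, x2: x2 == "texas",        # equal(x2, stateid(texas))
]


def prolog_answer(goals):
    return {x1 for x1 in ENTITIES for x2 in ENTITIES if all(g(x1, x2) for g in goals)}


print(sorted(funql))  # ['angelina', 'blanco']
print(prolog_answer(conjuncts) == prolog_answer(conjuncts[::-1]) == funql)  # True
```

Comparing retrieved answer sets like this is also how Geoquery correctness is judged in the experiments: an output MR counts as correct if it retrieves the same answers as the gold MR.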

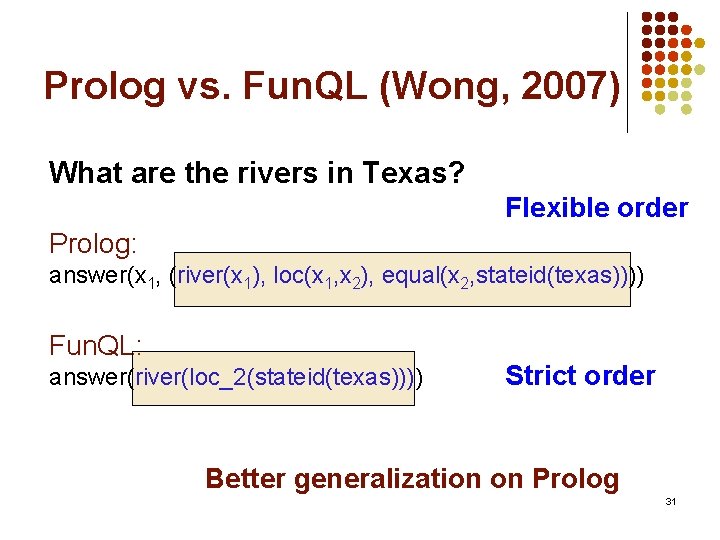

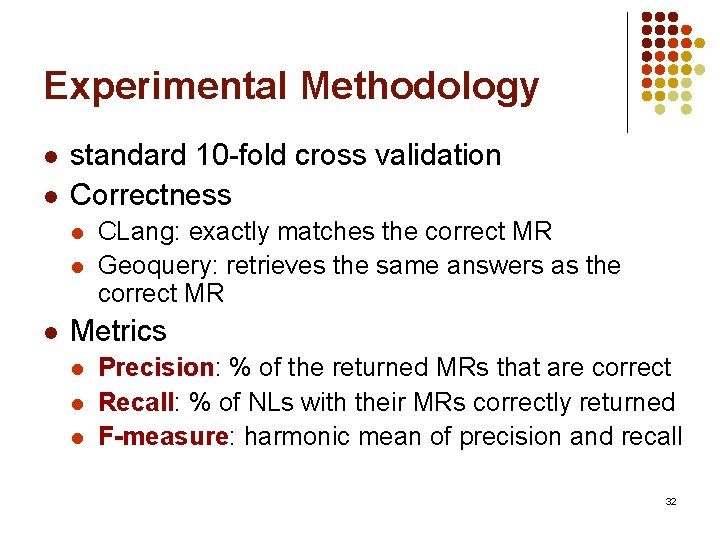

Experimental Methodology
- Standard 10-fold cross validation
- Correctness:
  - CLang: the output exactly matches the correct MR
  - Geoquery: the output retrieves the same answers as the correct MR
- Metrics:
  - Precision: % of the returned MRs that are correct
  - Recall: % of NL sentences whose MRs are correctly returned
  - F-measure: harmonic mean of precision and recall
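
The three metrics can be written down directly. In this Python sketch a parser may decline to return an MR for some sentences, which is why precision and recall have different denominators. The round-robin fold split is just one simple way to set up 10-fold cross validation and is our assumption, not necessarily how the thesis partitions the data.

```python
def precision(num_correct: int, num_returned: int) -> float:
    """Percentage of returned MRs that are correct."""
    return 100.0 * num_correct / num_returned


def recall(num_correct: int, num_sentences: int) -> float:
    """Percentage of test sentences whose MR is correctly returned."""
    return 100.0 * num_correct / num_sentences


def f_measure(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


def k_fold_splits(n: int, k: int = 10) -> list[list[int]]:
    """Round-robin assignment of example indices to k test folds."""
    return [list(range(i, n, k)) for i in range(k)]


# Hypothetical fold: 100 sentences, 80 MRs returned, 72 of them correct.
p, r = precision(72, 80), recall(72, 100)
print(p, r, f_measure(p, r))            # 90.0 72.0 80.0
print(round(f_measure(89.5, 73.7), 1))  # 80.8 (SCISSOR on CLang)
```

The second line reproduces SCISSOR's CLang F-measure from its reported precision and recall.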

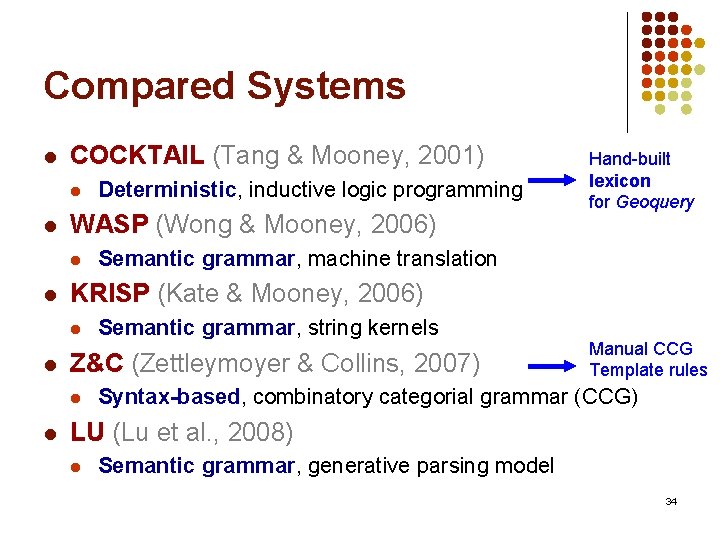

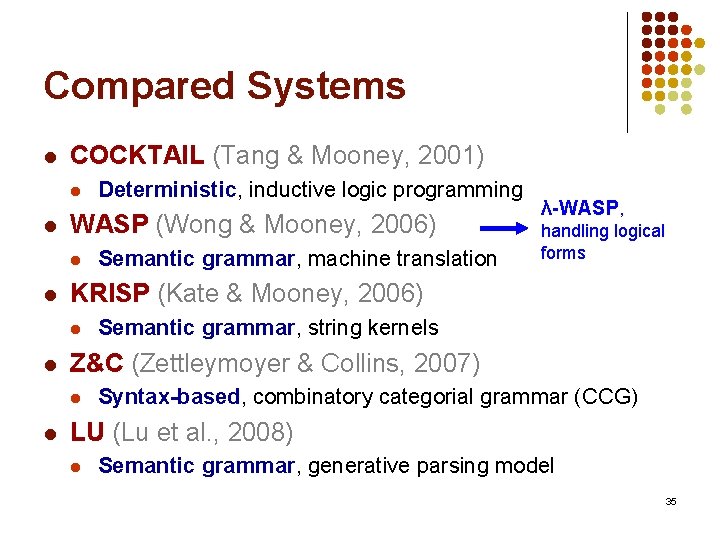

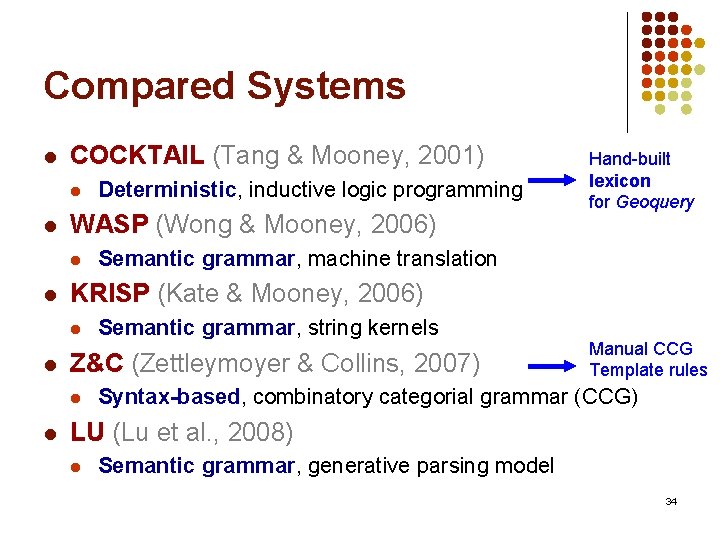

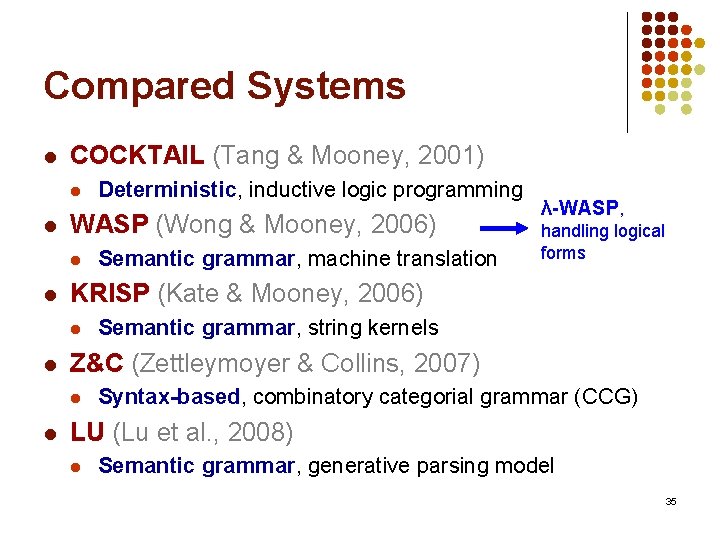

Compared Systems
- COCKTAIL (Tang & Mooney, 2001): deterministic, inductive logic programming
- WASP (Wong & Mooney, 2006): semantic grammar, machine translation
  - λ-WASP: extension for handling logical forms
- KRISP (Kate & Mooney, 2006): semantic grammar, string kernels
- Z&C (Zettlemoyer & Collins, 2007): syntax-based, combinatory categorial grammar (CCG)
  - Hand-built lexicon for Geoquery; manual CCG template rules
- LU (Lu et al., 2008): semantic grammar, generative parsing model

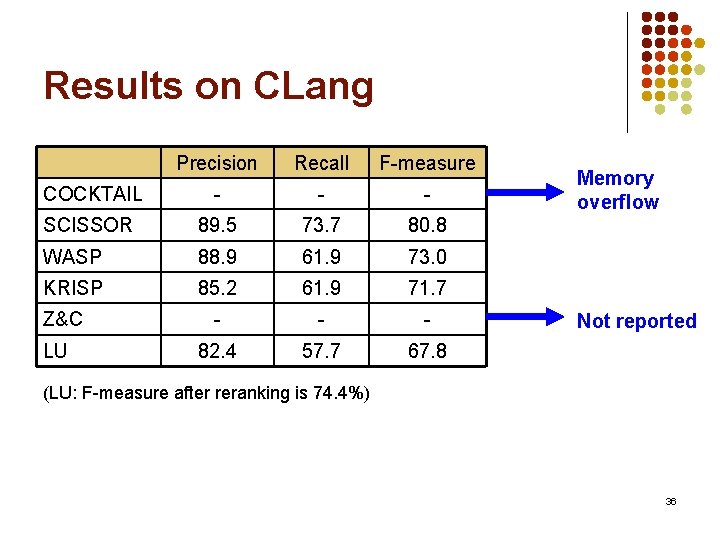

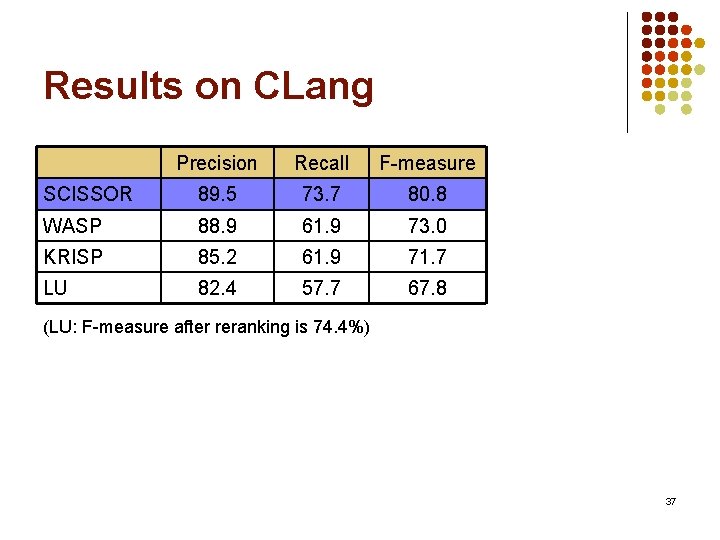

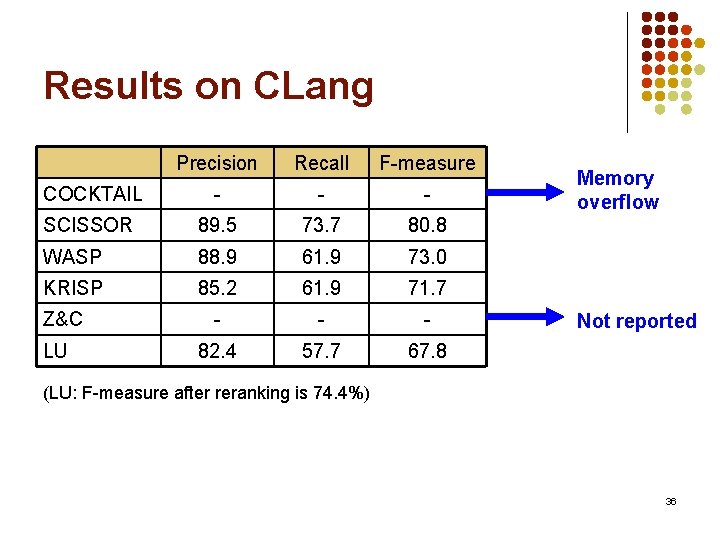

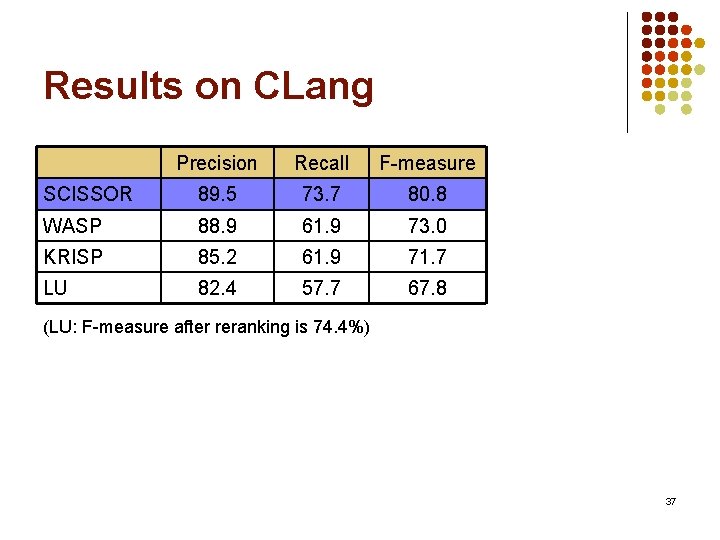

Results on CLang

| System | Precision | Recall | F-measure |
|---|---|---|---|
| SCISSOR | 89.5 | 73.7 | 80.8 |
| WASP | 88.9 | 61.9 | 73.0 |
| KRISP | 85.2 | 61.9 | 71.7 |
| LU | 82.4 | 57.7 | 67.8 |

- COCKTAIL: memory overflow; Z&C: not reported
- LU: F-measure after reranking is 74.4%

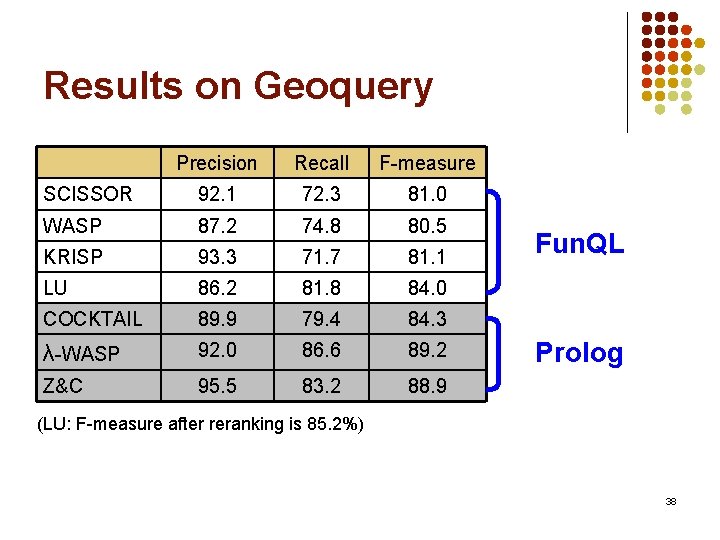

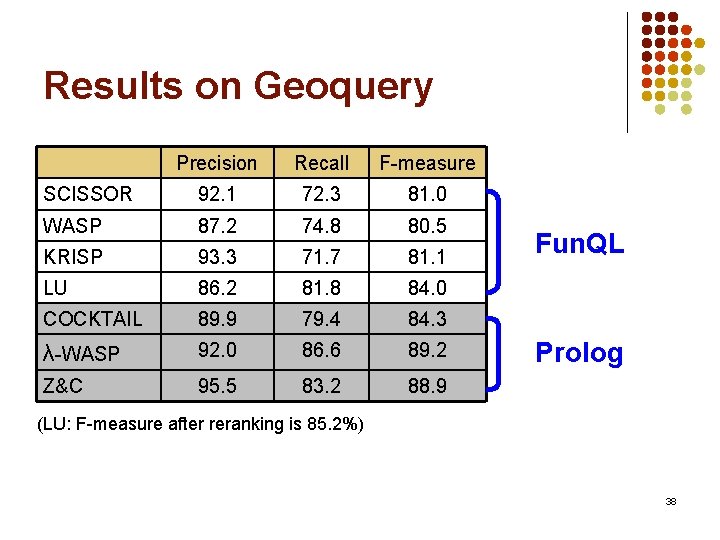

Results on Geoquery Precision Recall F-measure SCISSOR 92. 1 72. 3 81. 0 WASP 87. 2 74. 8 80. 5 KRISP 93. 3 71. 7 81. 1 LU 86. 2 81. 8 84. 0 COCKTAIL 89. 9 79. 4 84. 3 λ-WASP 92. 0 86. 6 89. 2 Z&C 95. 5 83. 2 88. 9 Fun. QL Prolog (LU: F-measure after reranking is 85. 2%) 38

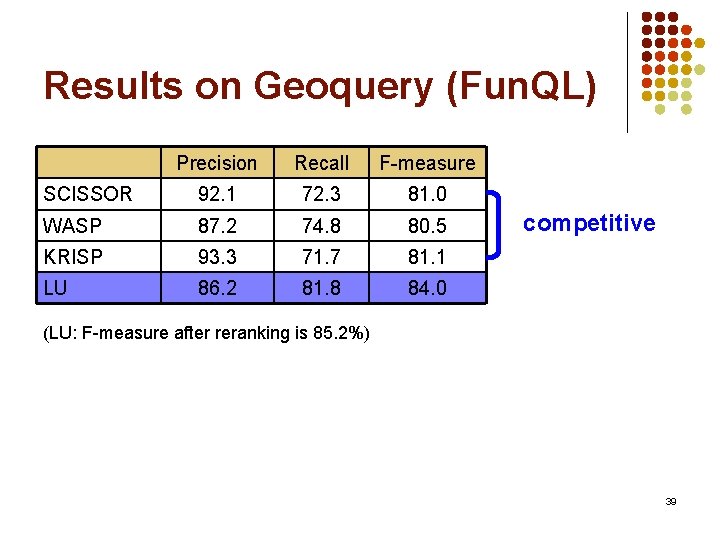

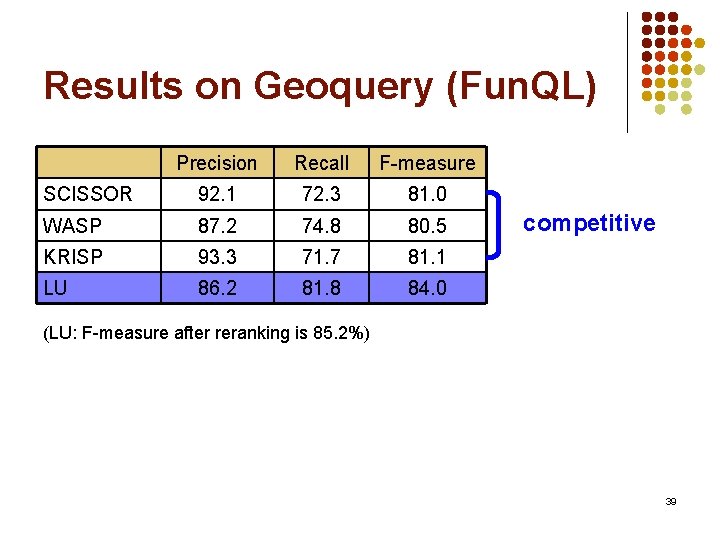

Results on Geoquery (Fun. QL) Precision Recall F-measure SCISSOR 92. 1 72. 3 81. 0 WASP 87. 2 74. 8 80. 5 KRISP 93. 3 71. 7 81. 1 LU 86. 2 81. 8 84. 0 competitive (LU: F-measure after reranking is 85. 2%) 39

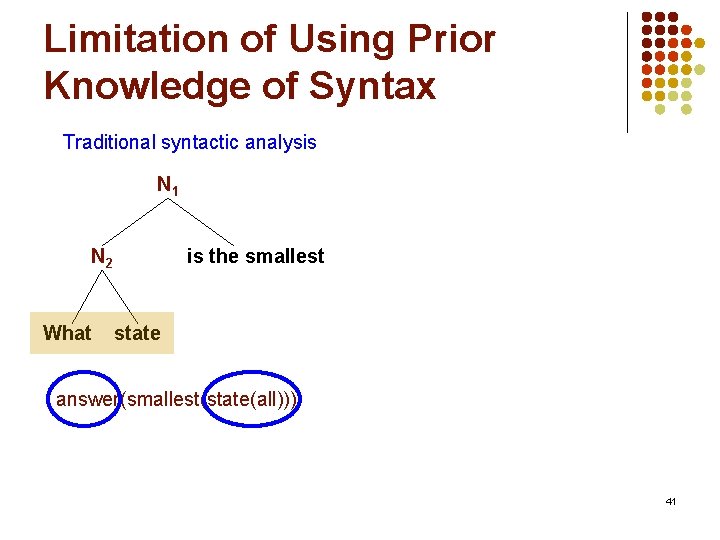

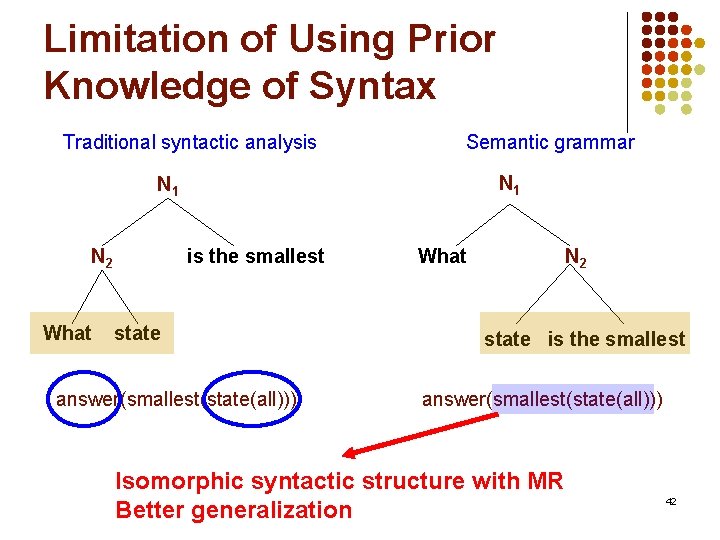

Why Knowledge of Syntax Does Not Help
- Geoquery: 7.48 words per sentence
- Short sentences:
  - Sentence structure can feasibly be learned from NL sentences paired with MRs
  - Gain from knowledge of syntax vs. loss of flexibility

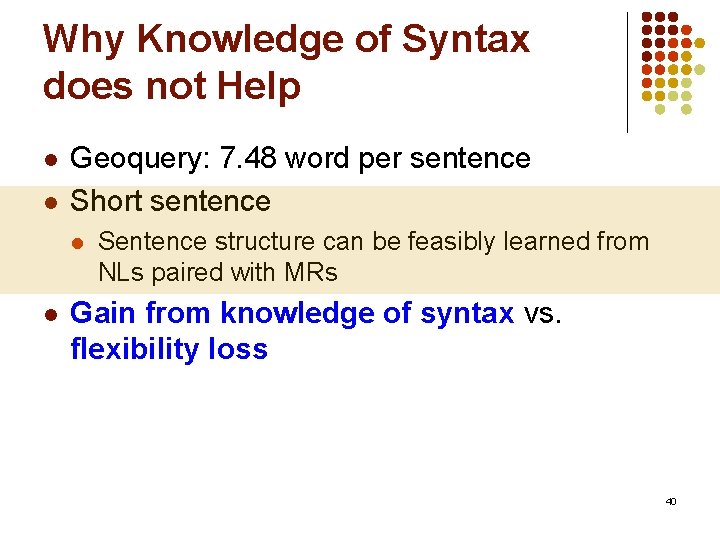

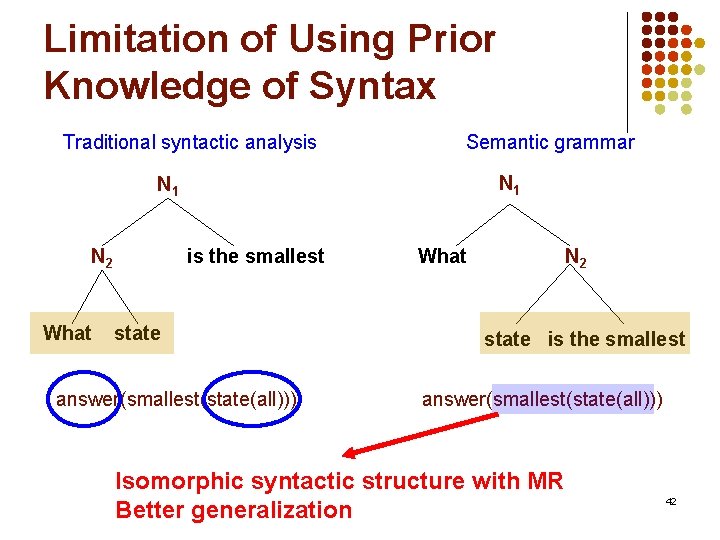

Limitation of Using Prior Knowledge of Syntax Traditional syntactic analysis N 1 N 2 What is the smallest state answer(smallest(state(all))) 41

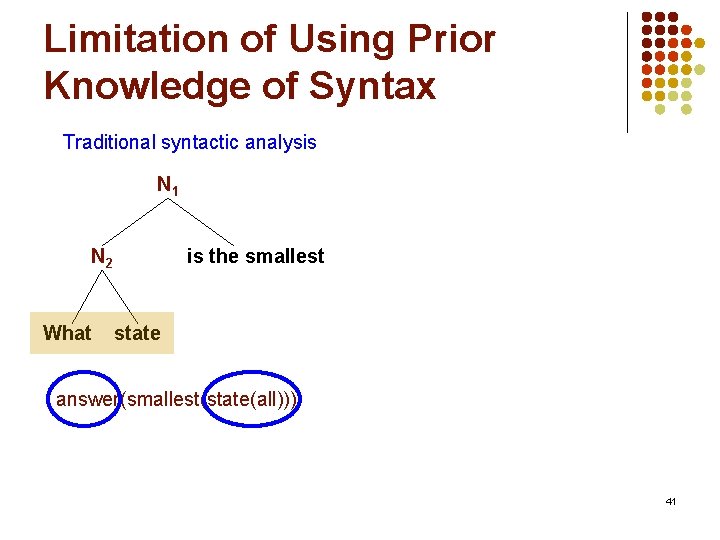

Limitation of Using Prior Knowledge of Syntax Traditional syntactic analysis Semantic grammar N 1 N 2 What is the smallest state answer(smallest(state(all))) What N 2 state is the smallest answer(smallest(state(all))) Isomorphic syntactic structure with MR Better generalization 42

Why Prior Knowledge of Syntax Does Not Help
- Geoquery: 7.48 words per sentence
- Short sentences:
  - Sentence structure can feasibly be learned from NL sentences paired with MRs
  - Gain from knowledge of syntax vs. loss of flexibility
- LU vs. WASP and KRISP:
  - Decomposed model for semantic grammar

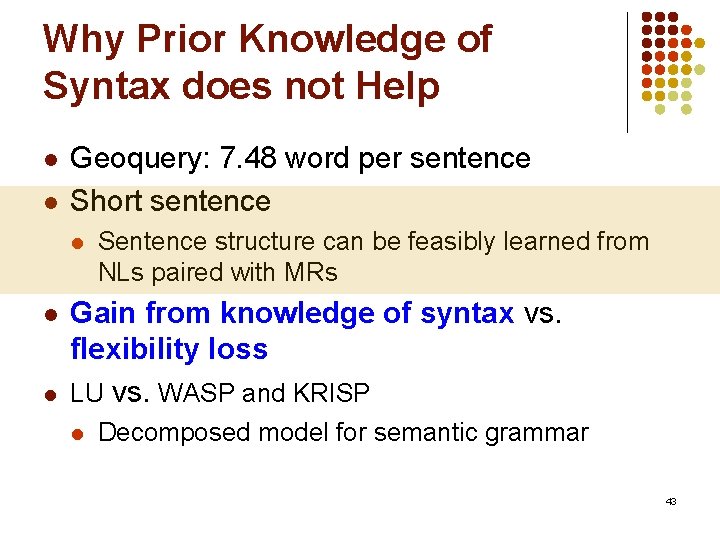

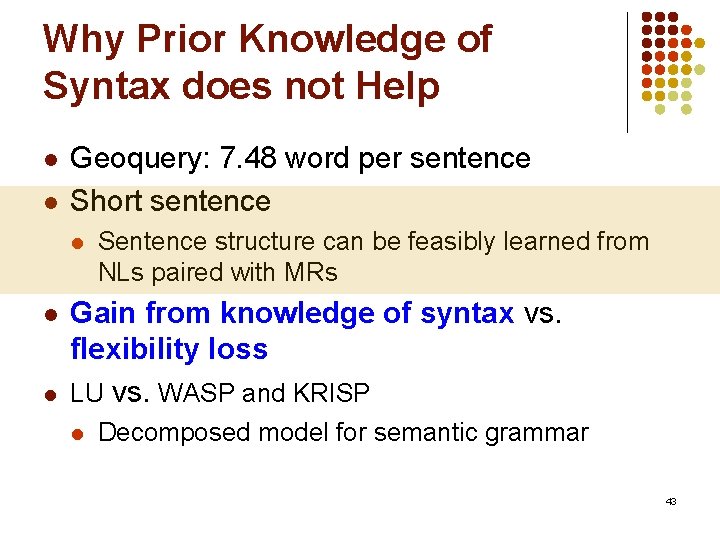

Detailed CLang Results on Sentence Length
- Sentence-length bins (share of sentences): 0-10 (7%), 11-20 (33%), 21-30 (46%), 31-40 (13%)

SCISSOR Summary
- Integrated syntactic-semantic parsing approach
- Learns accurate semantic interpretations by utilizing the SAPT annotations
- Knowledge of syntax improves performance on long sentences

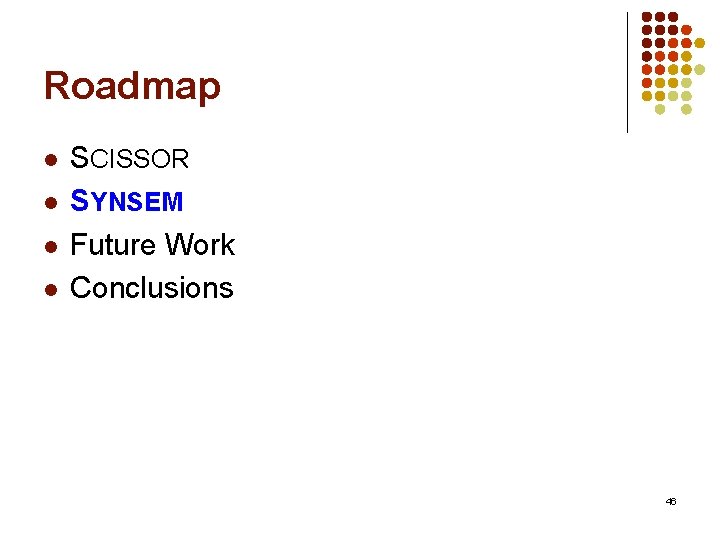

Roadmap
- SCISSOR
- SYNSEM
- Future Work
- Conclusions

SYNSEM Motivation
- SCISSOR requires extra SAPT annotation for training
- It must learn both syntax and semantics from the same limited training corpus
- High-performance syntactic parsers trained on existing large corpora are available (Collins, 1997; Charniak & Johnson, 2005)

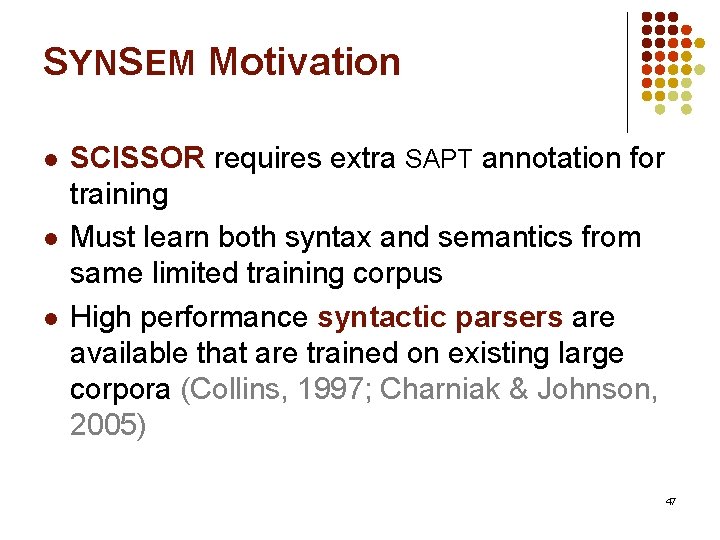

SCISSOR Requires SAPT Annotation
`(S-P_BOWNER (NP-P_PLAYER (PRP$-P_OUR our) (NN-P_PLAYER player) (CD-P_UNUM 2)) (VP-P_BOWNER (VB-P_BOWNER has) (NP-NULL (DT-NULL the) (NN-NULL ball))))`
- Time consuming. Automate it!

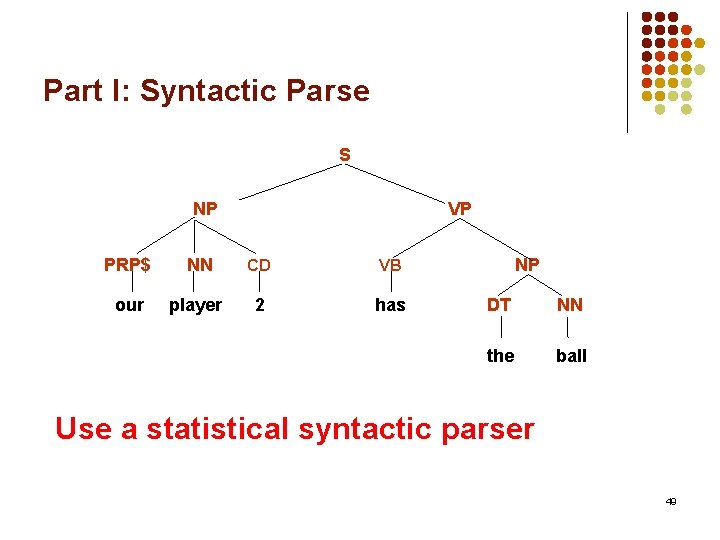

Part I: Syntactic Parse
`(S (NP (PRP$ our) (NN player) (CD 2)) (VP (VB has) (NP (DT the) (NN ball))))`
- Use a statistical syntactic parser

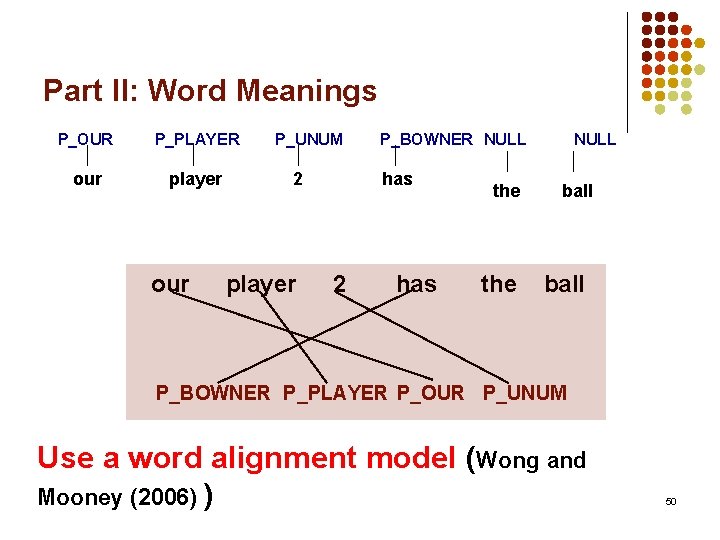

Part II: Word Meanings
- Word/predicate alignment: our → P_OUR, player → P_PLAYER, 2 → P_UNUM, has → P_BOWNER, the → NULL, ball → NULL
- Use a word alignment model (Wong and Mooney, 2006)

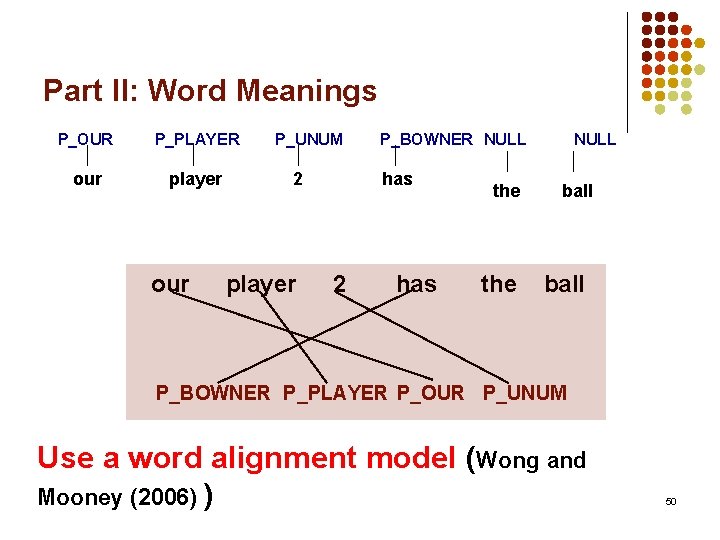

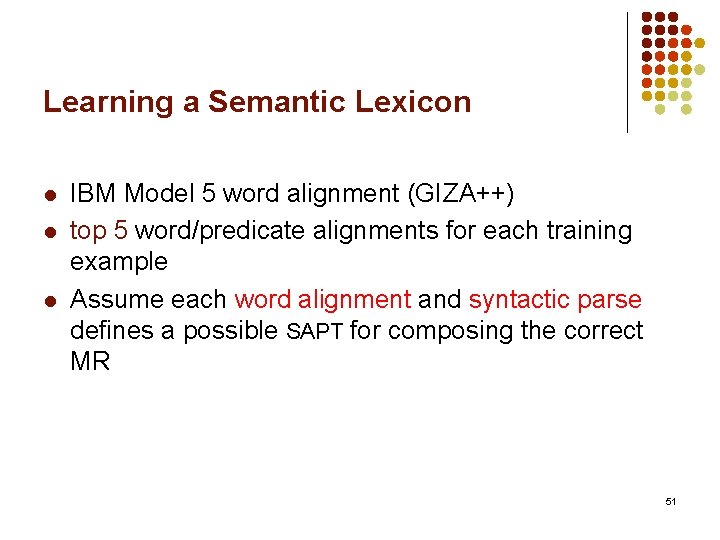

Learning a Semantic Lexicon
- IBM Model 5 word alignment (GIZA++)
- Top 5 word/predicate alignments for each training example
- Assume each word alignment and syntactic parse defines a possible SAPT for composing the correct MR
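
The lexicon-collection step can be pictured as counting word/predicate pairs across the top-ranked alignments of each example. This Python sketch assumes the alignments are already available as lists of (word, predicate) pairs; the data format and the function name `lexicon_from_alignments` are hypothetical, and nothing here calls GIZA++.

```python
from collections import Counter


def lexicon_from_alignments(examples, top_k=5):
    """Count word/predicate pairs over the top-k alignments of each training example.

    Each item of `examples` is a ranked list of alignments, and each alignment is
    a list of (word, predicate) pairs.
    """
    counts = Counter()
    for ranked_alignments in examples:
        for alignment in ranked_alignments[:top_k]:
            counts.update(alignment)
    return counts


# One training example with two competing (invented) alignments.
a1 = [("our", "P_OUR"), ("player", "P_PLAYER"), ("2", "P_UNUM"), ("has", "P_BOWNER")]
a2 = [("our", "P_OUR"), ("player", "P_PLAYER"), ("2", "P_UNUM"), ("ball", "P_BOWNER")]
lexicon = lexicon_from_alignments([[a1, a2]])
print(lexicon[("our", "P_OUR")], lexicon[("has", "P_BOWNER")])  # 2 1
```

Keeping several alignments rather than only the best one leaves room for the later parameter estimation to decide between competing word meanings such as has/P_BOWNER and ball/P_BOWNER.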

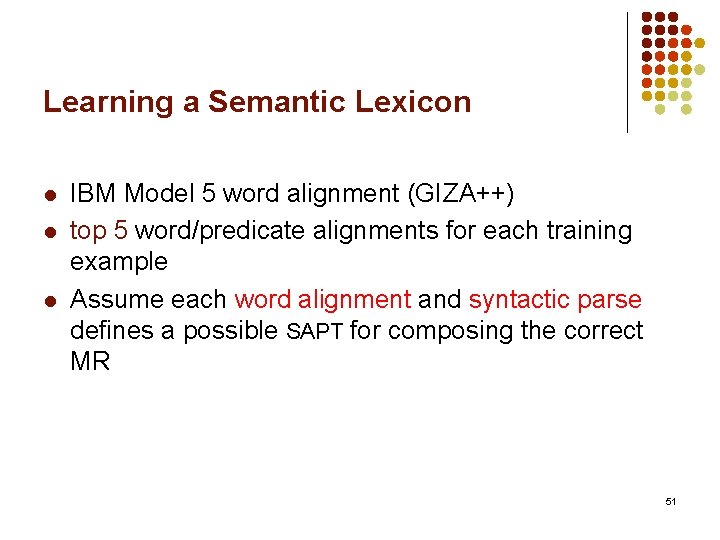

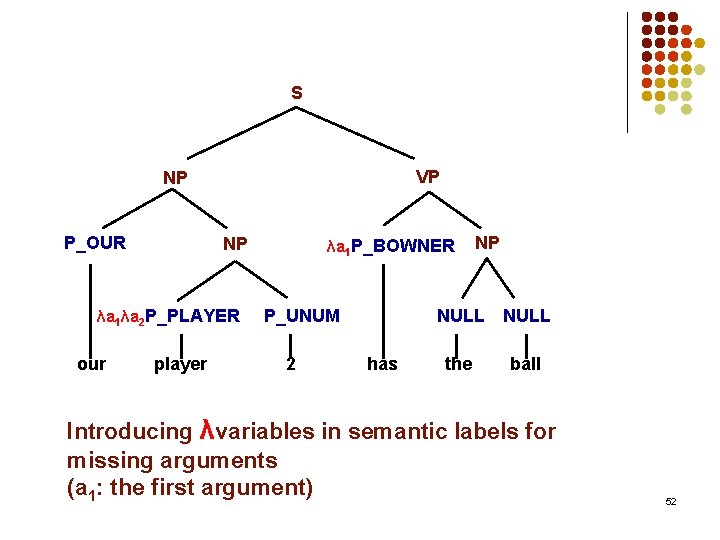

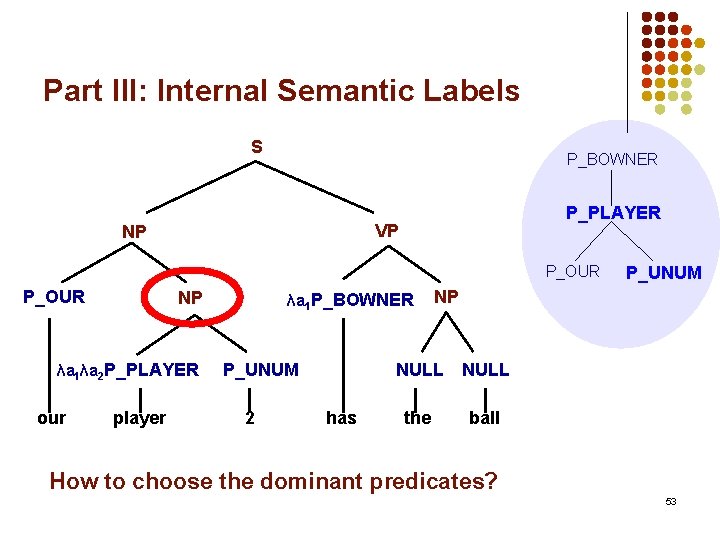

Introducing λ-variables in semantic labels for missing arguments (a1: the first argument):
`(S (NP our:P_OUR (NP player:λa1λa2 P_PLAYER 2:P_UNUM)) (VP has:λa1 P_BOWNER (NP:NULL the ball)))`

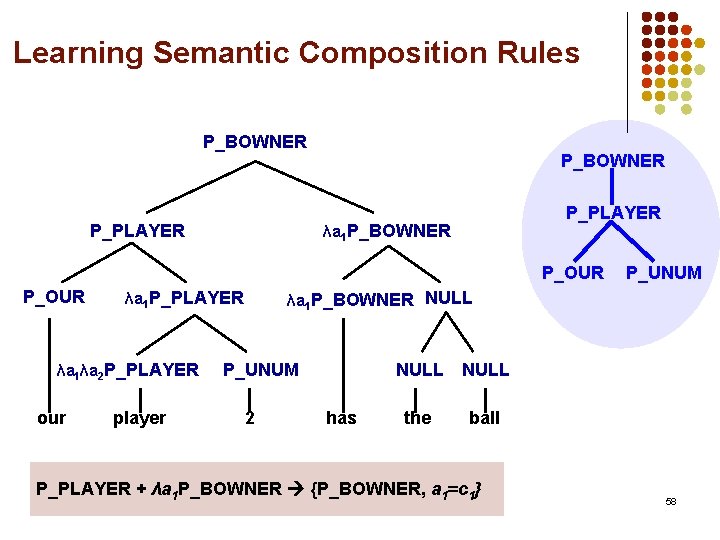

Part III: Internal Semantic Labels
- The words now carry predicates (our: P_OUR; player: λa1λa2 P_PLAYER; 2: P_UNUM; has: λa1 P_BOWNER; the ball: NULL), and the target MR is P_BOWNER(P_PLAYER(P_OUR, P_UNUM))
- How do we choose the dominant predicates for the internal nodes?

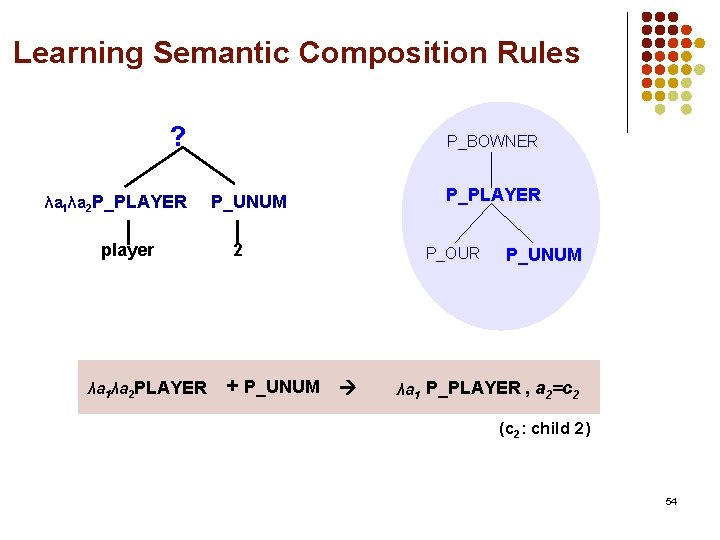

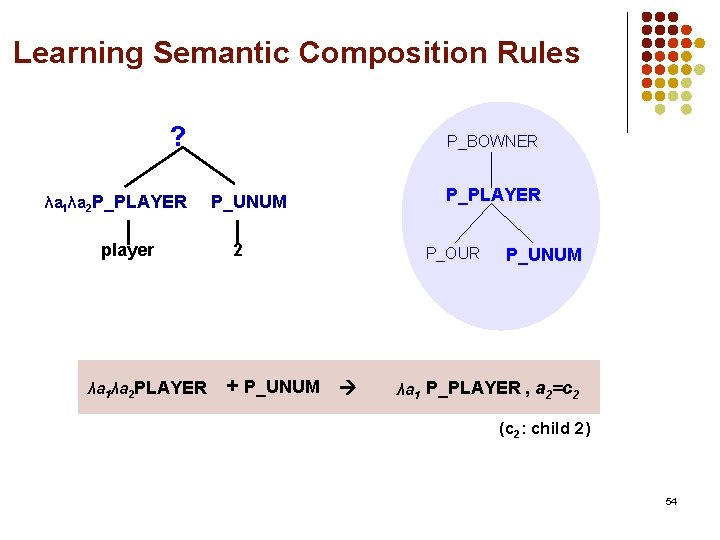

Learning Semantic Composition Rules
- Target MR structure: P_BOWNER(P_PLAYER(P_OUR, P_UNUM))
- Node covering "player 2": λa1λa2 P_PLAYER + P_UNUM → {λa1 P_PLAYER, a2=c2} (c2: child 2)

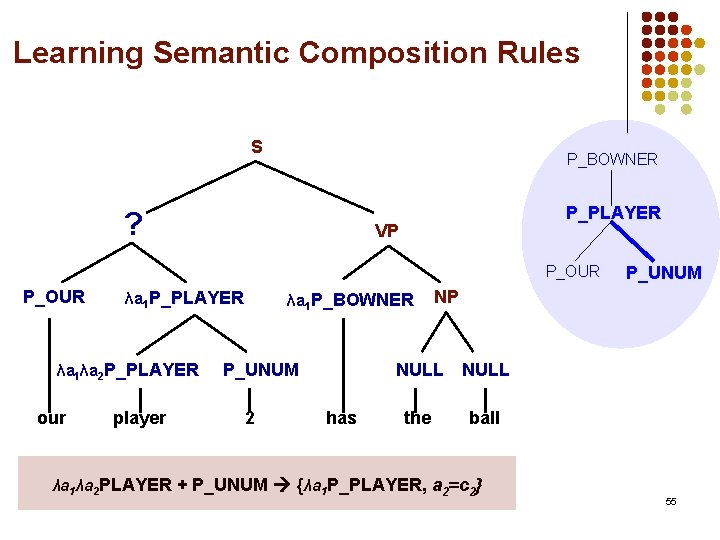

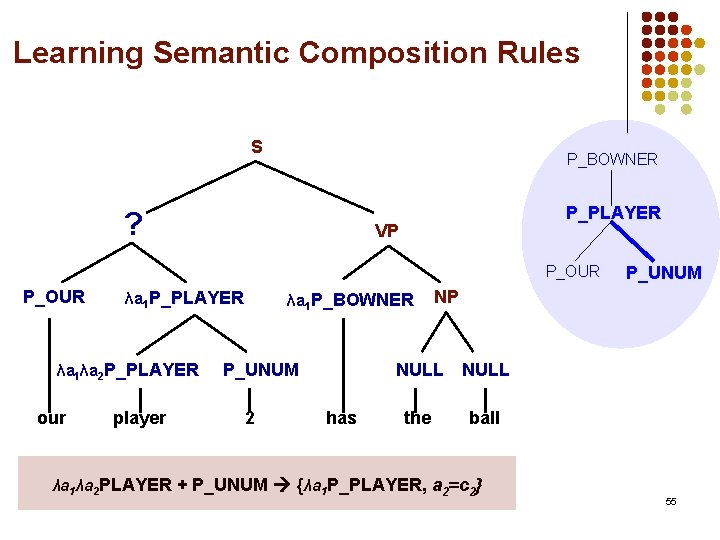

Learning Semantic Composition Rules
- The node covering "player 2" is now labeled λa1 P_PLAYER, by the rule λa1λa2 P_PLAYER + P_UNUM → {λa1 P_PLAYER, a2=c2}
- Next: the node covering "our player 2" (?)

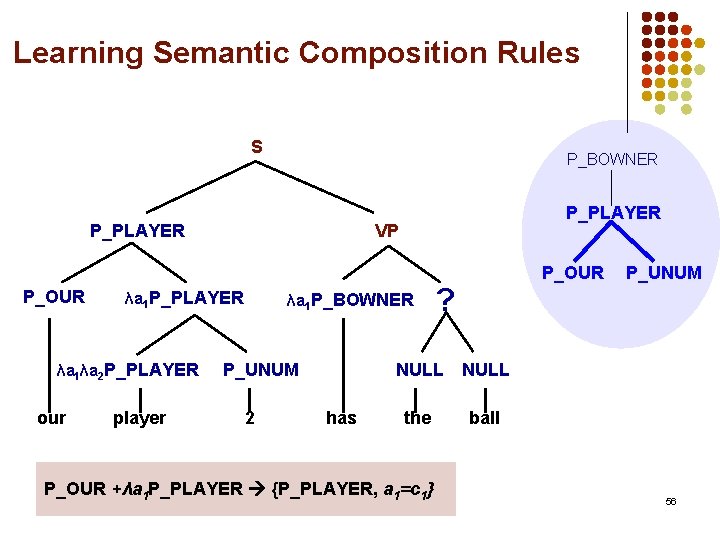

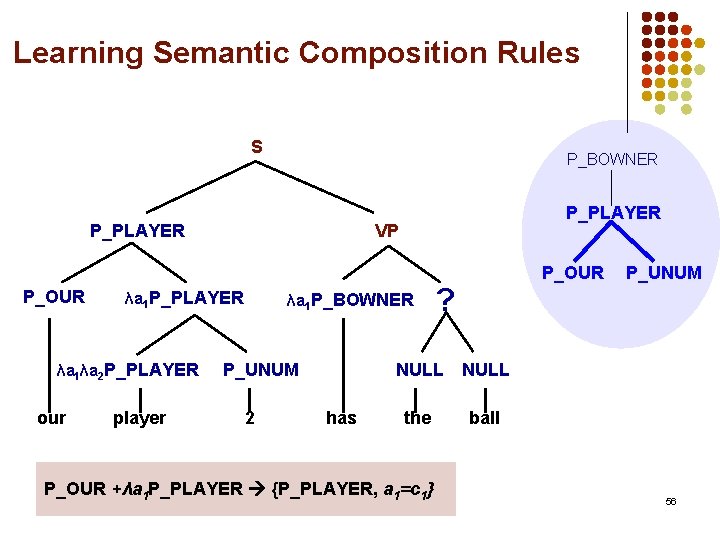

Learning Semantic Composition Rules
- Node covering "our player 2": P_OUR + λa1 P_PLAYER → {P_PLAYER, a1=c1}

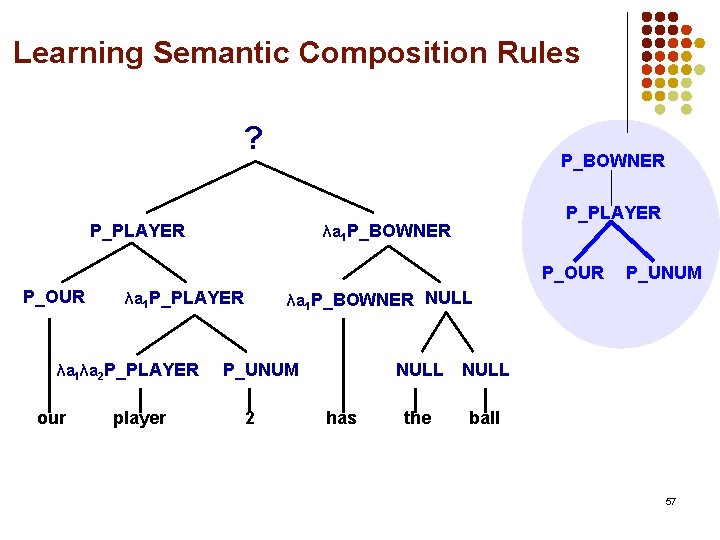

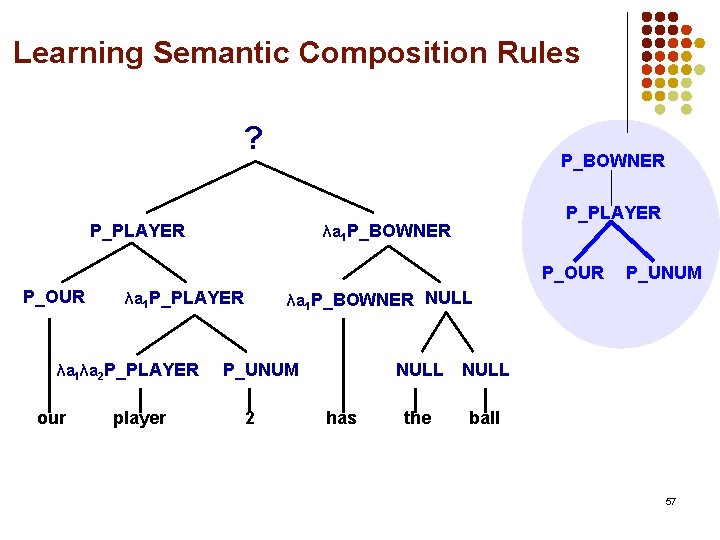

Learning Semantic Composition Rules
- VP "has the ball": λa1 P_BOWNER (the NULL noun phrase contributes nothing)
- Next: the root S (?)

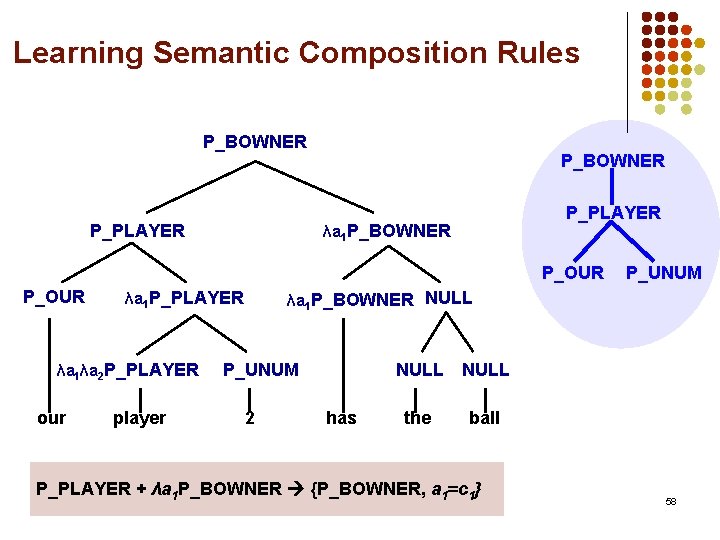

Learning Semantic Composition Rules
- Root S: P_PLAYER + λa1 P_BOWNER → {P_BOWNER, a1=c1}
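
The three rule-learning steps above can be replayed with a small Python sketch. A semantic label is modeled as a predicate plus a tuple of open λ-arguments; combining a functor with a complete sibling fills one open argument and records a rule in the slide notation. The `Sem` class, the `combine` function, and the binary-only restriction are illustrative assumptions, and the sketch does not show how SYNSEM decides which child fills which slot (here the slot is supplied by hand, as read off the target MR).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sem:
    pred: str
    open_args: tuple = ()   # missing arguments, e.g. ("a1", "a2") for λa1λa2 P_PLAYER
    filled: tuple = ()      # (slot, Sem) pairs already bound

    def label(self) -> str:
        lambdas = "".join(f"λ{a}" for a in self.open_args)
        return f"{lambdas} {self.pred}" if lambdas else self.pred

    def mr(self) -> str:
        if not self.filled:
            return self.pred
        args = [s.mr() for _, s in sorted(self.filled, key=lambda pair: pair[0])]
        return f"{self.pred}({', '.join(args)})"


def combine(children, functor_idx, slot, rules):
    """Fill `slot` of children[functor_idx] with its (complete) sibling; record the rule."""
    functor = children[functor_idx]
    arg_idx = 1 - functor_idx  # binary nodes only in this sketch
    argument = children[arg_idx]
    assert slot in functor.open_args and not argument.open_args
    result = Sem(functor.pred,
                 tuple(a for a in functor.open_args if a != slot),
                 functor.filled + ((slot, argument),))
    lhs = " + ".join(c.label() for c in children)
    rules.append(f"{lhs} → {{{result.label()}, {slot}=c{arg_idx + 1}}}")
    return result


rules = []
our, unum = Sem("P_OUR"), Sem("P_UNUM")
player = Sem("P_PLAYER", ("a1", "a2"))
bowner = Sem("P_BOWNER", ("a1",))   # label of the VP "has the ball"

np_inner = combine([player, unum], 0, "a2", rules)   # "player 2"
np = combine([our, np_inner], 1, "a1", rules)         # "our player 2"
s = combine([np, bowner], 1, "a1", rules)             # whole sentence

print(*rules, sep="\n")
print(s.mr())  # P_BOWNER(P_PLAYER(P_OUR, P_UNUM))
```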

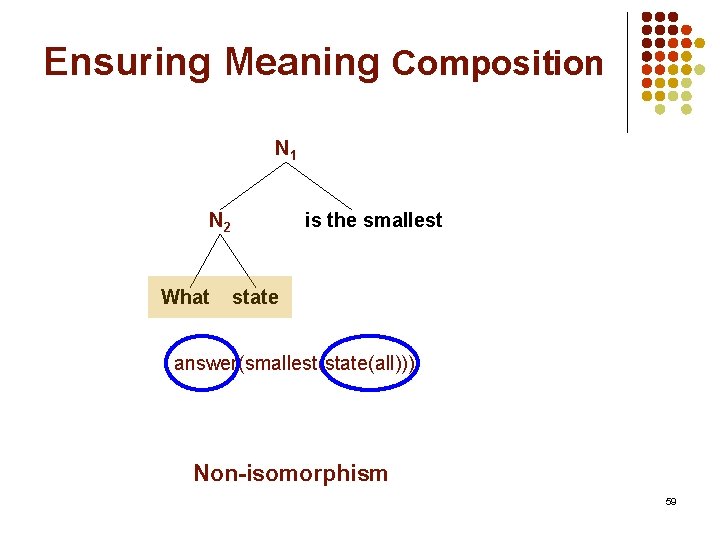

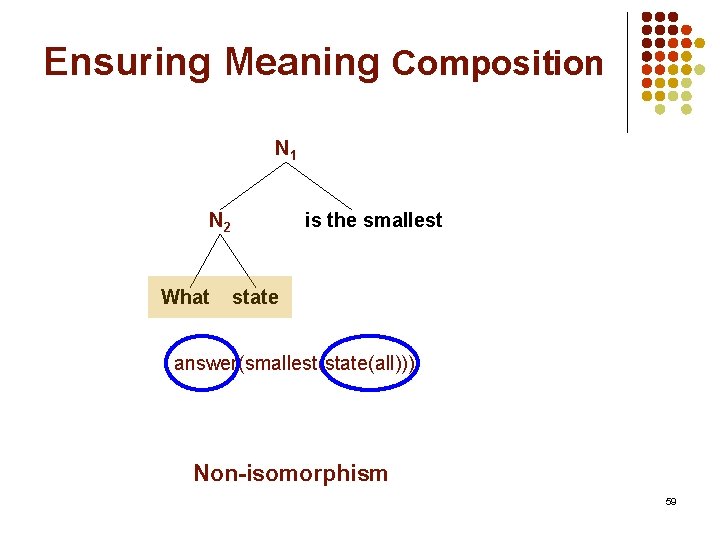

Ensuring Meaning Composition
- "What is the smallest state" (constituents N1, N2) vs. MR `answer(smallest(state(all)))`: the syntactic parse and the MR are not isomorphic

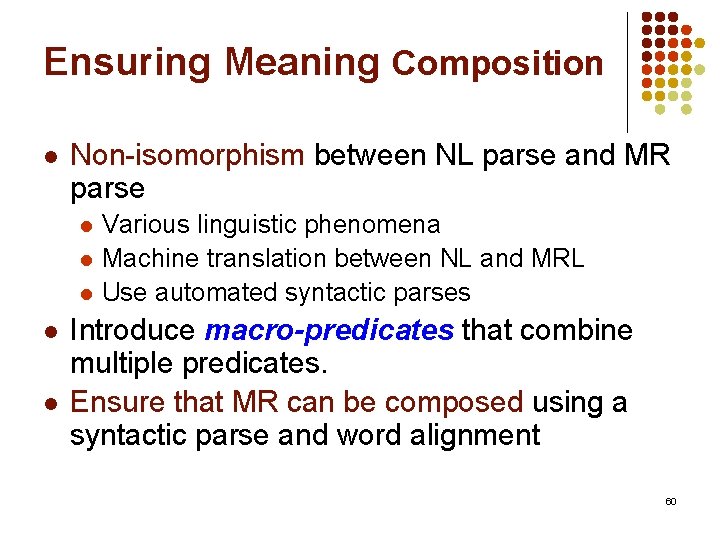

Ensuring Meaning Composition
- Non-isomorphism between the NL parse and the MR parse:
  - Various linguistic phenomena
  - Machine translation between NL and MRL
  - Use of automated syntactic parses
- Introduce macro-predicates that combine multiple predicates
- Ensure that the MR can be composed using a syntactic parse and word alignment

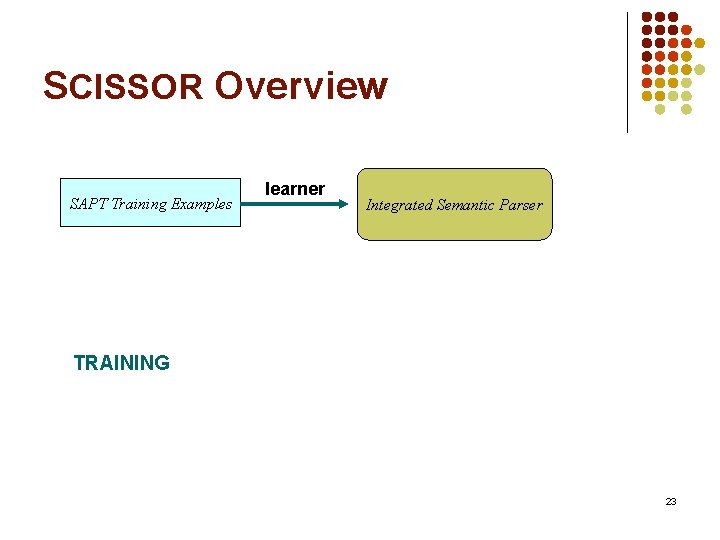

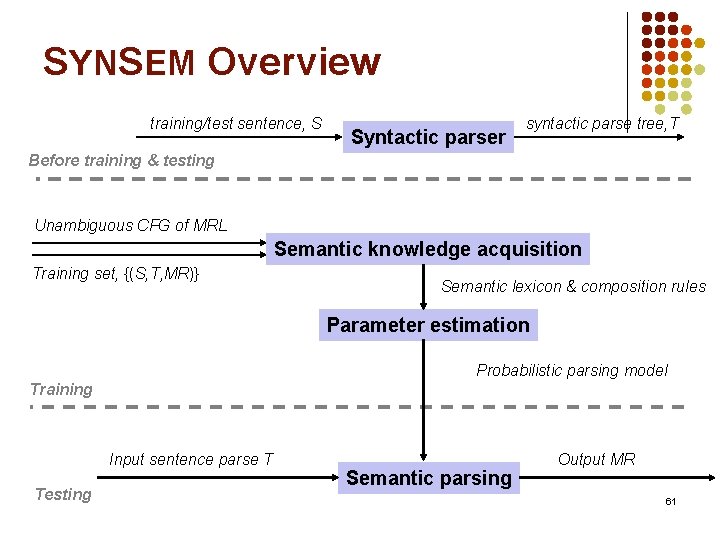

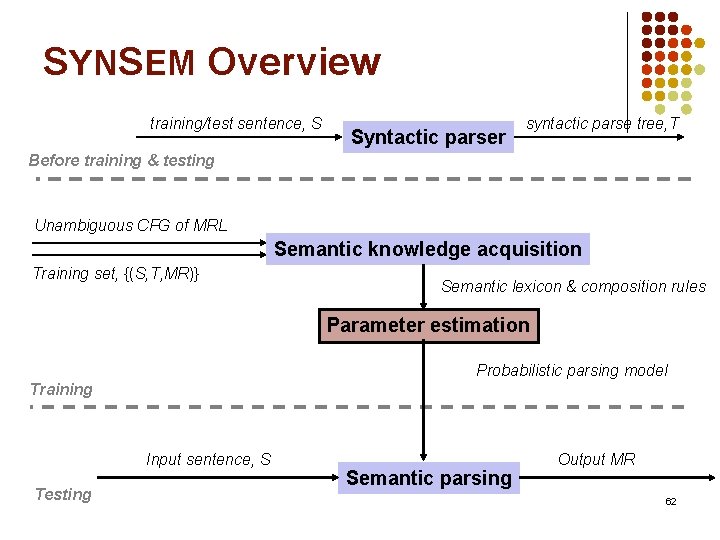

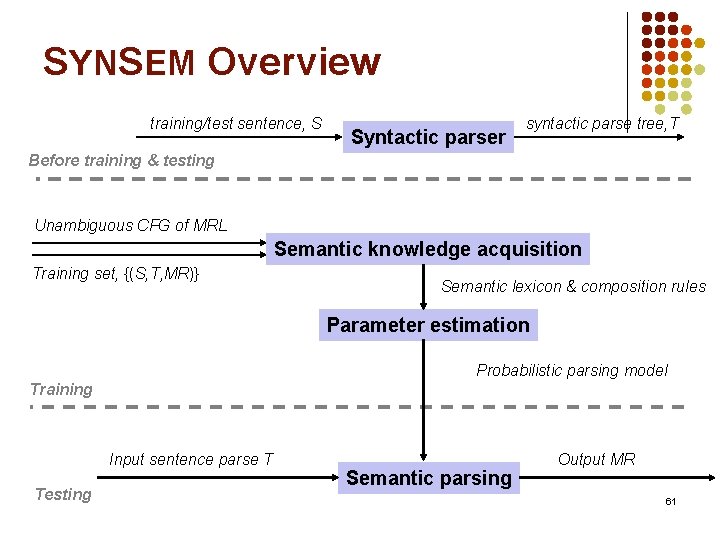

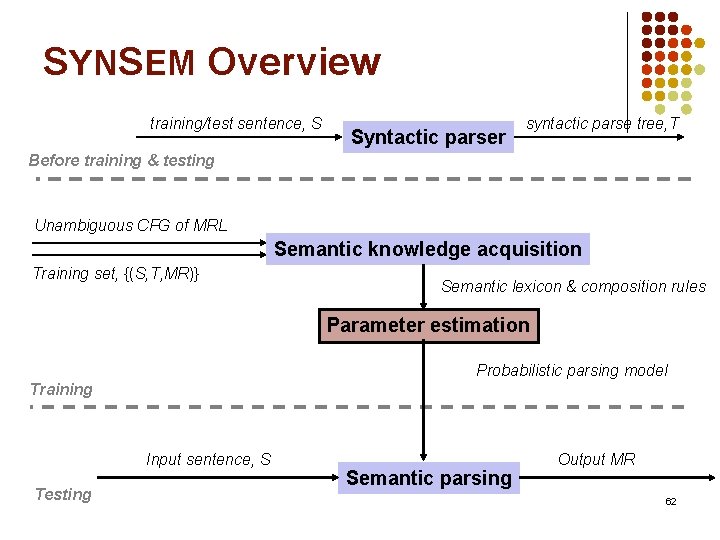

SYNSEM Overview
- Before training and testing: a syntactic parser produces a syntactic parse tree T for each training/test sentence S
- Training: semantic knowledge acquisition uses the unambiguous CFG of the MRL and the training set {(S, T, MR)} to learn a semantic lexicon and composition rules; parameter estimation then yields a probabilistic parsing model
- Testing: semantic parsing maps an input sentence S and its parse T to an output MR

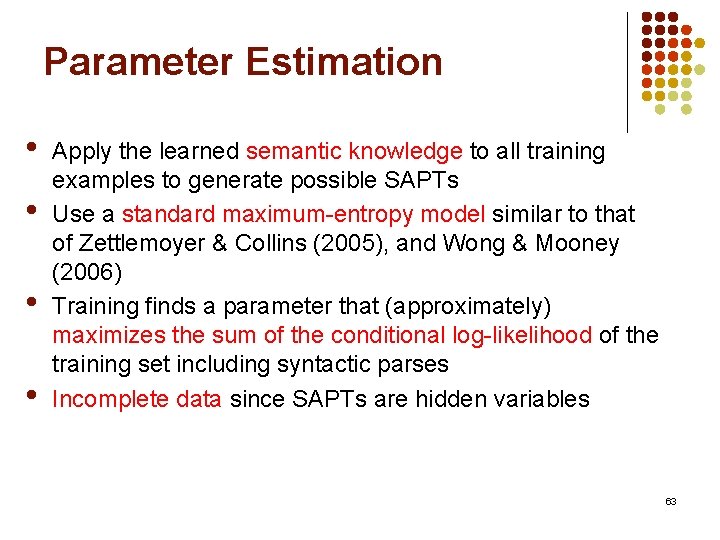

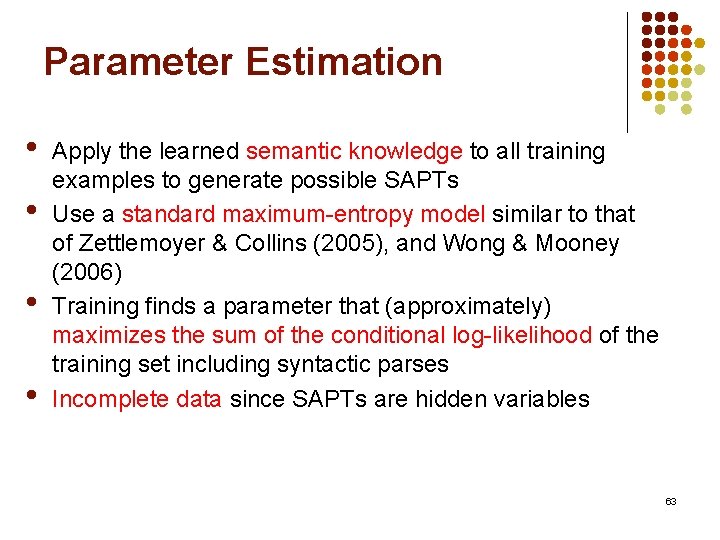

Parameter Estimation
- Apply the learned semantic knowledge to all training examples to generate possible SAPTs
- Use a standard maximum-entropy model similar to those of Zettlemoyer & Collins (2005) and Wong & Mooney (2006)
- Training finds parameters that (approximately) maximize the sum of the conditional log-likelihoods of the training examples, conditioned on their syntactic parses
- The data are incomplete, since SAPTs are hidden variables
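
For a set of training examples, the objective above can be sketched as follows. This is a generic log-linear (maximum-entropy) model in which the hidden SAPT is summed out over all derivations that yield the correct MR. The feature-dictionary format and the function names are hypothetical, and the optimizer that adjusts the weights is not shown.

```python
import math


def log_sum_exp(xs):
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def score(weights, features):
    """Linear score w . f(d) for one derivation."""
    return sum(weights.get(name, 0.0) * value for name, value in features.items())


def conditional_log_likelihood(weights, examples):
    """Sum over examples of log P(correct MR | sentence, syntactic parse).

    Each example is (derivations, correct_ids): a feature dict for every candidate
    derivation (SAPT) and the indices of those that yield the correct MR. The SAPT
    is hidden, so the probabilities of all correct derivations are summed.
    """
    total = 0.0
    for derivations, correct_ids in examples:
        scores = [score(weights, f) for f in derivations]
        total += log_sum_exp([scores[i] for i in correct_ids]) - log_sum_exp(scores)
    return total


# Invented example: three candidate SAPTs, the first two yield the correct MR.
example = ([{"uni:has/P_BOWNER": 1}, {"uni:ball/P_BOWNER": 1, "rule:r1": 1}, {"rule:r2": 1}],
           [0, 1])
print(conditional_log_likelihood({}, [example]))  # log(2/3), about -0.405
```

With all weights at zero every derivation is equally likely, so the objective is simply the log of the fraction of derivations that yield the correct MR.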

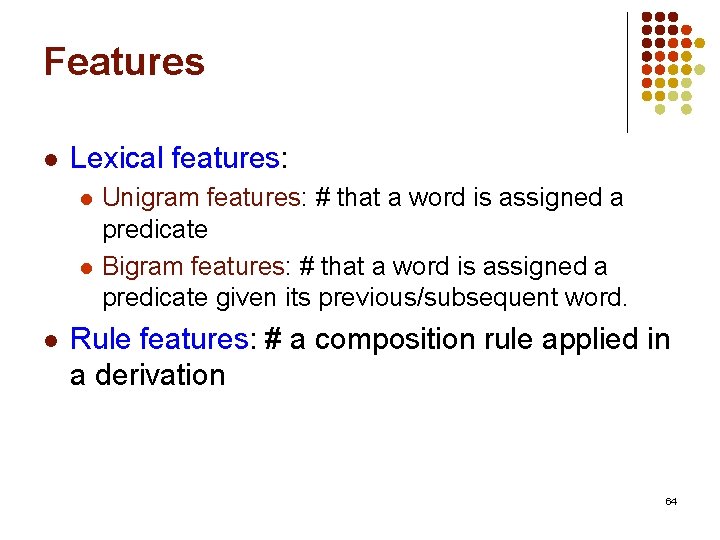

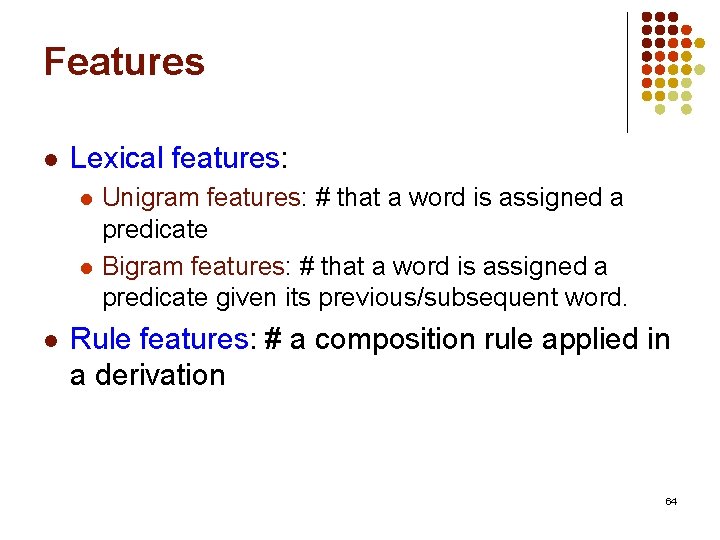

Features
- Lexical features:
  - Unigram features: number of times a word is assigned a predicate
  - Bigram features: number of times a word is assigned a predicate given its previous/subsequent word
- Rule features: number of times a composition rule is applied in a derivation
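
The three feature types can be counted from a single derivation as in the Python sketch below. The tuple-based feature names, the sentence-boundary tokens `<s>` and `</s>`, and counting NULL assignments as ordinary lexical features are our own assumptions.

```python
from collections import Counter


def extract_features(words, predicates, rules):
    """Count lexical and rule features for one derivation.

    `predicates[i]` is the predicate assigned to `words[i]` (or "NULL");
    `rules` lists the composition rules applied in the derivation.
    """
    feats = Counter()
    for i, (word, pred) in enumerate(zip(words, predicates)):
        feats[("unigram", word, pred)] += 1
        prev_word = words[i - 1] if i > 0 else "<s>"
        next_word = words[i + 1] if i + 1 < len(words) else "</s>"
        feats[("bigram-prev", prev_word, word, pred)] += 1
        feats[("bigram-next", word, next_word, pred)] += 1
    for rule in rules:
        feats[("rule", rule)] += 1
    return feats


words = "our player 2 has the ball".split()
preds = ["P_OUR", "P_PLAYER", "P_UNUM", "P_BOWNER", "NULL", "NULL"]
rules = ["λa1λa2 P_PLAYER + P_UNUM", "P_OUR + λa1 P_PLAYER", "P_PLAYER + λa1 P_BOWNER"]
feats = extract_features(words, preds, rules)
print(feats[("bigram-prev", "2", "has", "P_BOWNER")])  # 1
```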

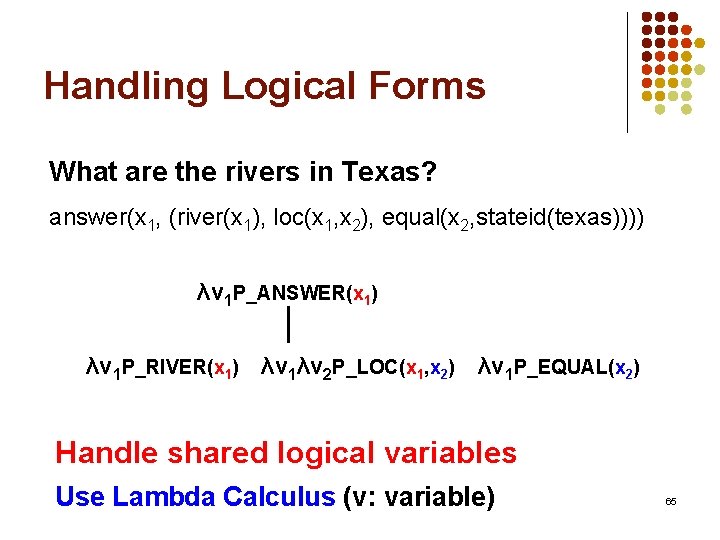

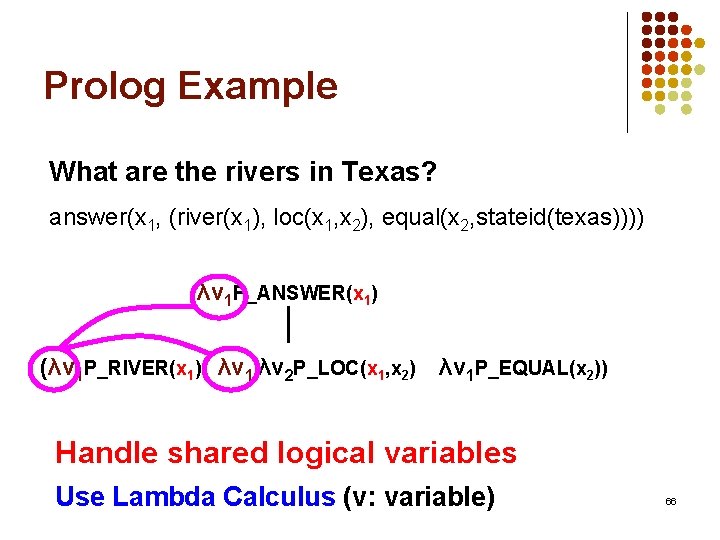

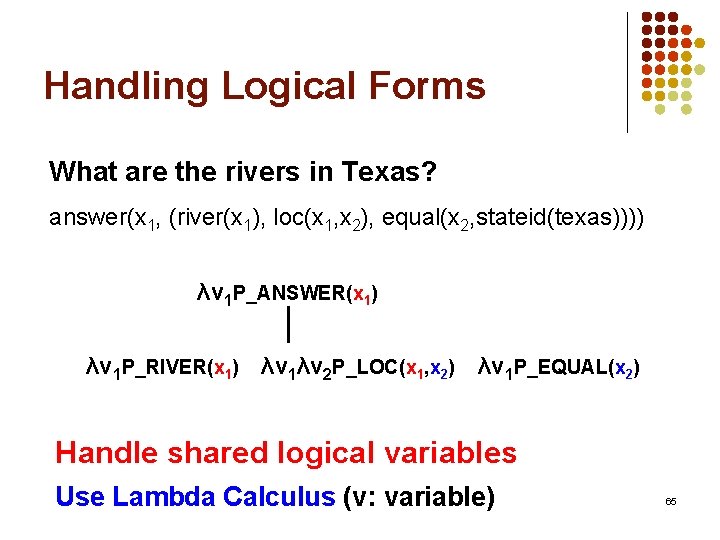

Handling Logical Forms
- NL: "What are the rivers in Texas?"
- MR: `answer(x1, (river(x1), loc(x1, x2), equal(x2, stateid(texas))))`
- Word-level labels: λv1 P_ANSWER(x1), λv1 P_RIVER(x1), λv1λv2 P_LOC(x1, x2), λv1 P_EQUAL(x2)
- Handle shared logical variables using lambda calculus (v: variable)

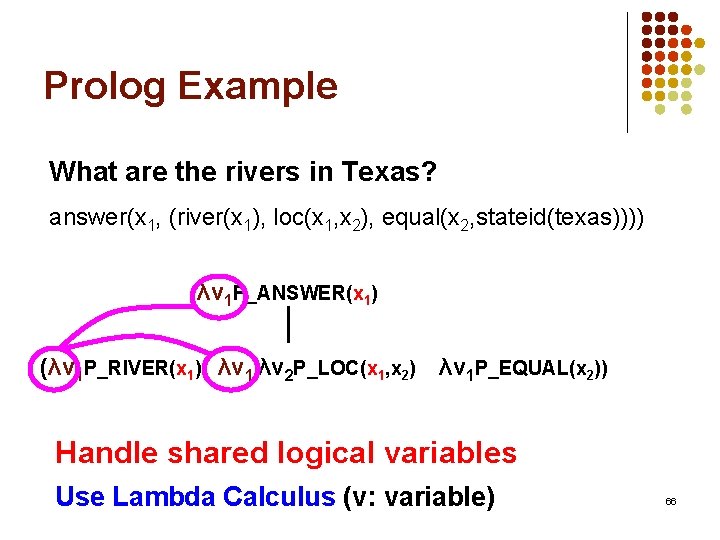

Prolog Example
- NL: "What are the rivers in Texas?"
- MR: `answer(x1, (river(x1), loc(x1, x2), equal(x2, stateid(texas))))`
- Labels: λv1 P_ANSWER(x1) applied to the conjunction (λv1 P_RIVER(x1), λv1λv2 P_LOC(x1, x2), λv1 P_EQUAL(x2))
- Handle shared logical variables using lambda calculus (v: variable)

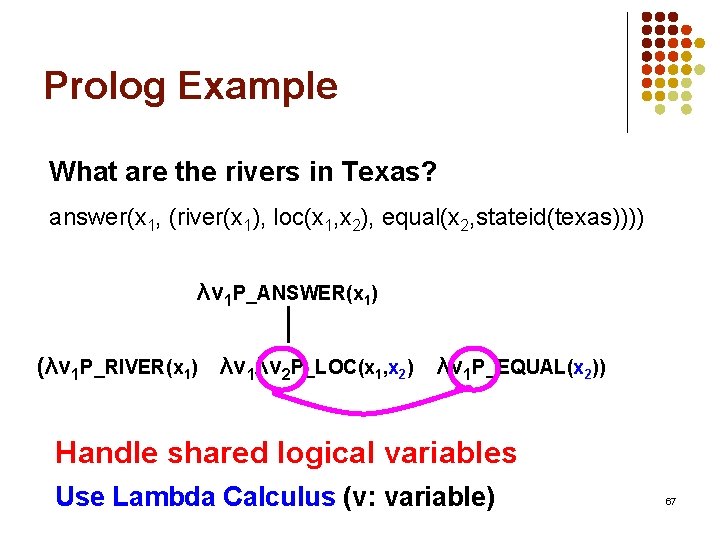

Prolog Example What are the rivers in Texas? answer(x 1, (river(x 1), loc(x 1, x 2), equal(x 2, stateid(texas)))) λv 1 P_ANSWER(x 1) (λv 1 P_RIVER(x 1) λv 1λv 2 P_LOC(x 1, x 2) λv 1 P_EQUAL(x 2)) Handle shared logical variables Use Lambda Calculus (v: variable) 67

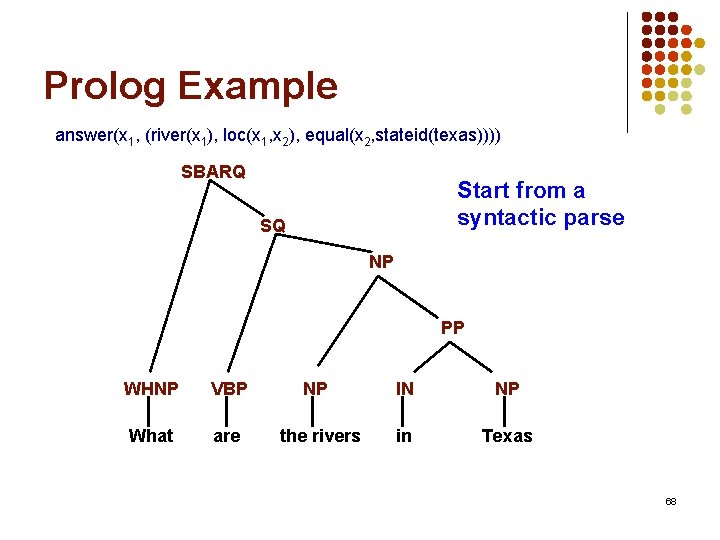

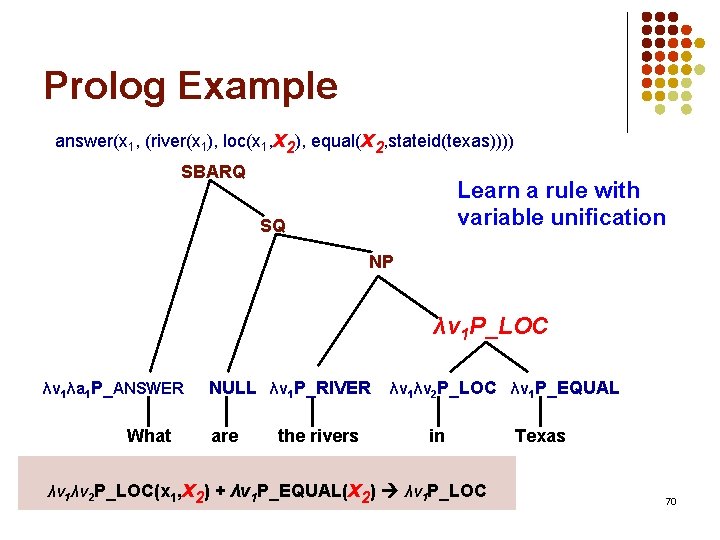

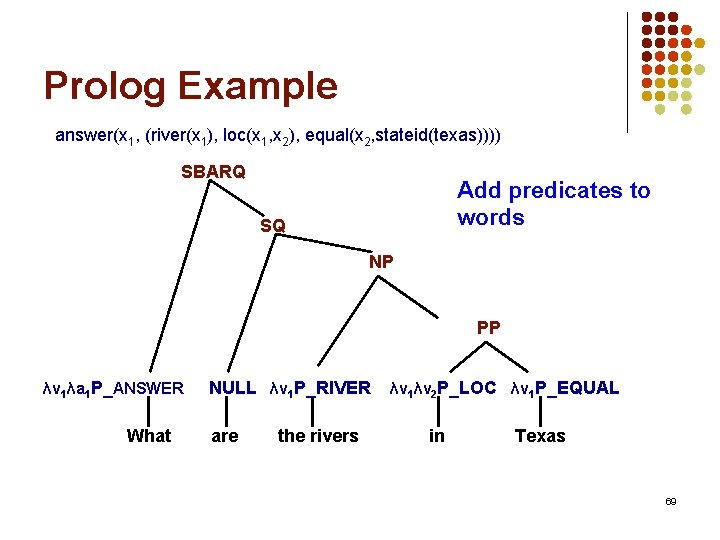

Prolog Example
- MR: `answer(x1, (river(x1), loc(x1, x2), equal(x2, stateid(texas))))`
- Start from a syntactic parse: `(SBARQ (WHNP What) (SQ (VBP are) (NP (NP the rivers) (PP (IN in) (NP Texas)))))`

Prolog Example
- MR: `answer(x1, (river(x1), loc(x1, x2), equal(x2, stateid(texas))))`
- Add predicates to words: What: λv1λa1 P_ANSWER; are: NULL; rivers: λv1 P_RIVER; in: λv1λv2 P_LOC; Texas: λv1 P_EQUAL

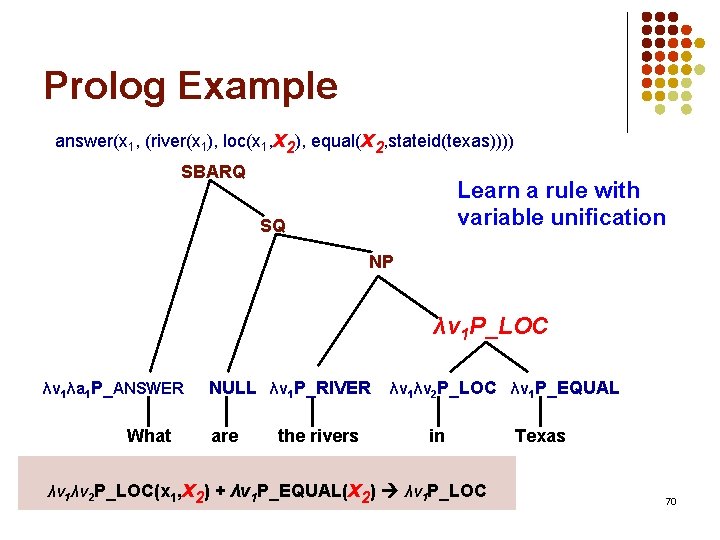

Prolog Example
- MR: `answer(x1, (river(x1), loc(x1, x2), equal(x2, stateid(texas))))`
- Learn a rule with variable unification for the PP "in Texas": λv1λv2 P_LOC(x1, x2) + λv1 P_EQUAL(x2) → λv1 P_LOC
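
The variable-unification step can be sketched in Python by treating each word meaning as a list of open λ-variables plus a conjunction of atoms. The `LF` representation, the `?` prefix marking variables, and rendering the top-level `answer` wrapper directly (rather than composing it from λv1λa1 P_ANSWER) are illustrative assumptions, not the thesis implementation.

```python
from dataclasses import dataclass


@dataclass
class LF:
    params: list   # open λ-variables, e.g. ["?l1", "?l2"] for λv1λv2 loc(v1, v2)
    atoms: list    # conjunction of (predicate, argument-tuple) pairs


def substitute(atoms, old, new):
    return [(p, tuple(new if a == old else a for a in args)) for p, args in atoms]


def compose(f, g, f_slot, close):
    """Unify g's first λ-variable with f's variable at f_slot and conjoin the bodies.

    If `close` is True, the unified variable is no longer exposed as a λ-variable.
    """
    shared = f.params[f_slot]
    g_atoms = substitute(g.atoms, g.params[0], shared)
    params = [v for i, v in enumerate(f.params) if not (close and i == f_slot)]
    return LF(params + g.params[1:], f.atoms + g_atoms)


def to_prolog(lf):
    """Render λv. body as answer(x1, (body)), renaming variables to x1, x2, ..."""
    names = {lf.params[0]: "x1"}

    def rename(a):
        if a.startswith("?"):
            names.setdefault(a, f"x{len(names) + 1}")
            return names[a]
        return a

    body = ", ".join(f"{p}({', '.join(rename(a) for a in args)})" for p, args in lf.atoms)
    return f"answer({names[lf.params[0]]}, ({body}))"


river = LF(["?r"], [("river", ("?r",))])
loc = LF(["?l1", "?l2"], [("loc", ("?l1", "?l2"))])
equal = LF(["?e"], [("equal", ("?e", "stateid(texas)"))])

in_texas = compose(loc, equal, f_slot=1, close=True)          # λv1 P_LOC
rivers_in_texas = compose(river, in_texas, f_slot=0, close=False)
print(to_prolog(rivers_in_texas))
# answer(x1, (river(x1), loc(x1, x2), equal(x2, stateid(texas))))
```

The first `compose` call mirrors the learned rule λv1λv2 P_LOC(x1, x2) + λv1 P_EQUAL(x2) → λv1 P_LOC: x2 is shared with `equal` and closed, while x1 stays open so that `river` can later unify with it.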

Experimental Results
- CLang
- Geoquery (Prolog)

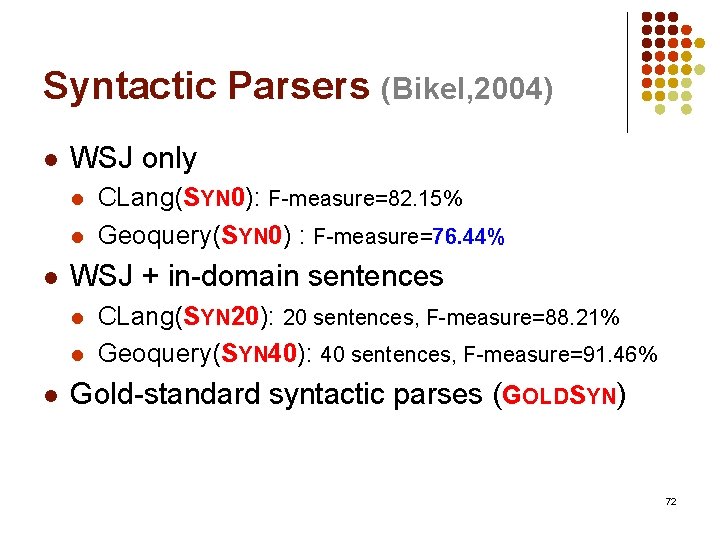

Syntactic Parsers (Bikel, 2004)
- Trained on WSJ only:
  - CLang (SYN0): F-measure = 82.15%
  - Geoquery (SYN0): F-measure = 76.44%
- Trained on WSJ + in-domain sentences:
  - CLang (SYN20): 20 sentences, F-measure = 88.21%
  - Geoquery (SYN40): 40 sentences, F-measure = 91.46%
- Gold-standard syntactic parses (GOLDSYN)

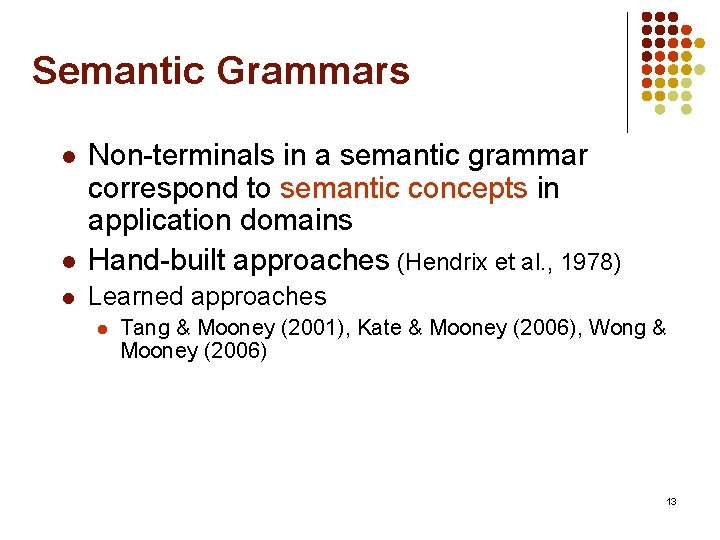

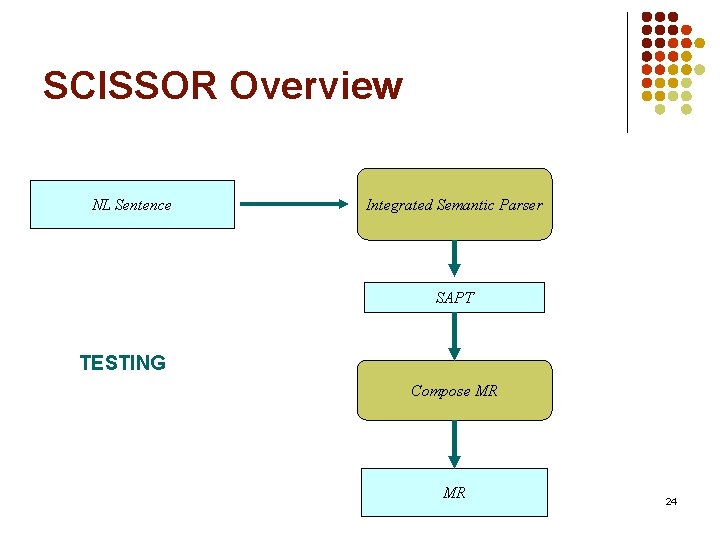

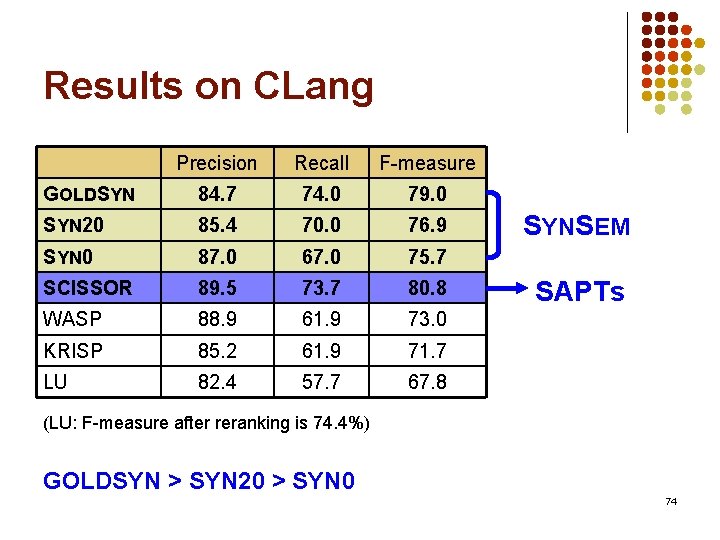

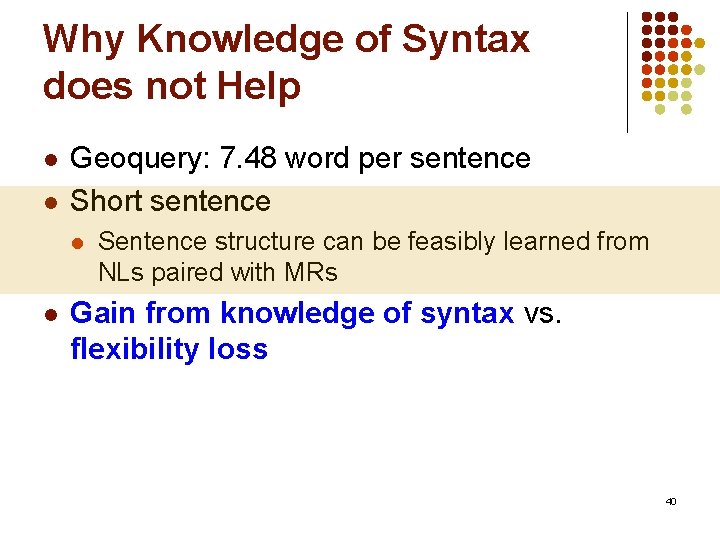

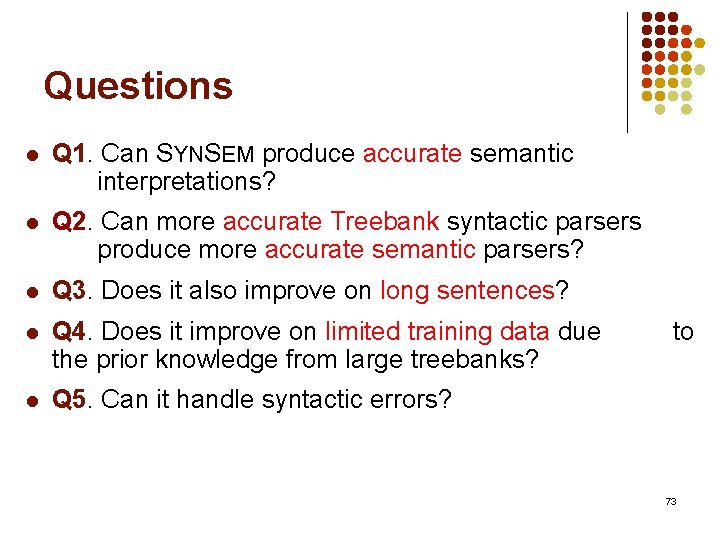

Questions
- Q1. Can SYNSEM produce accurate semantic interpretations?
- Q2. Can more accurate Treebank syntactic parsers produce more accurate semantic parsers?
- Q3. Does it also improve on long sentences?
- Q4. Does it improve on limited training data due to the prior knowledge from large treebanks?
- Q5. Can it handle syntactic errors?

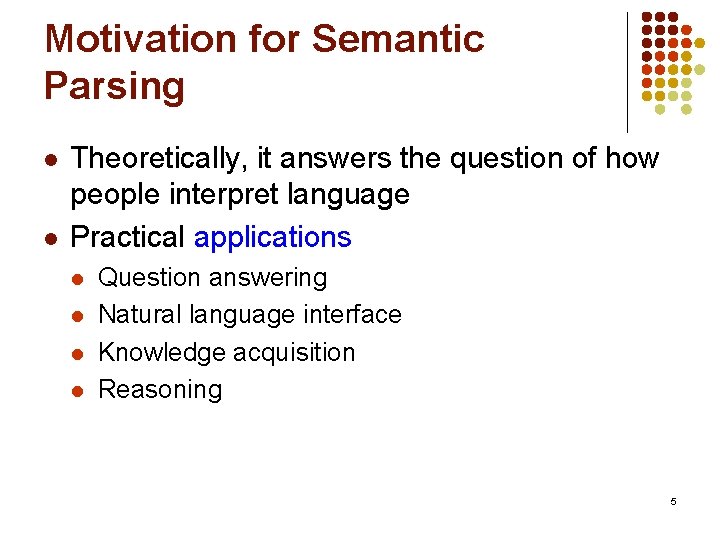

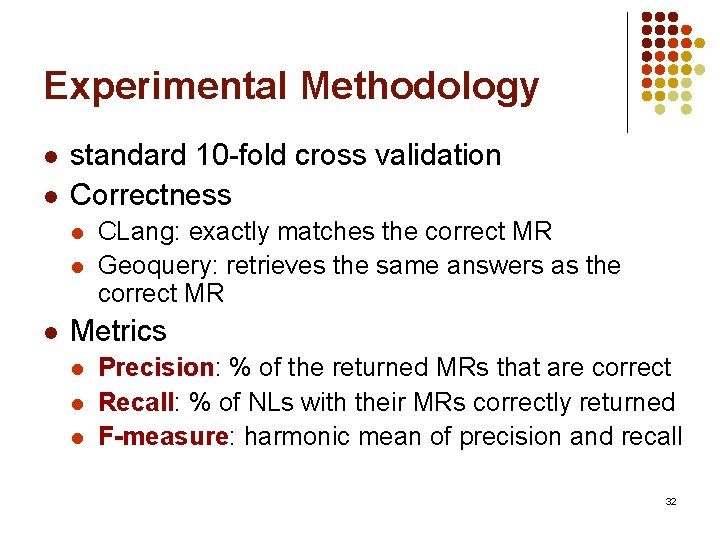

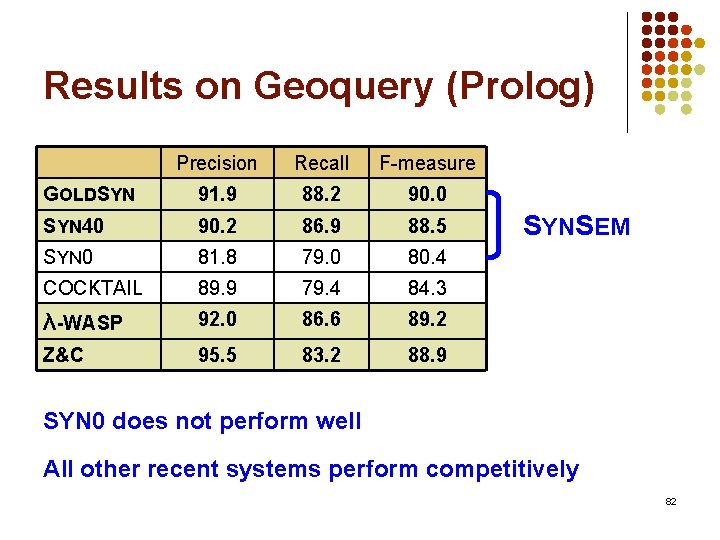

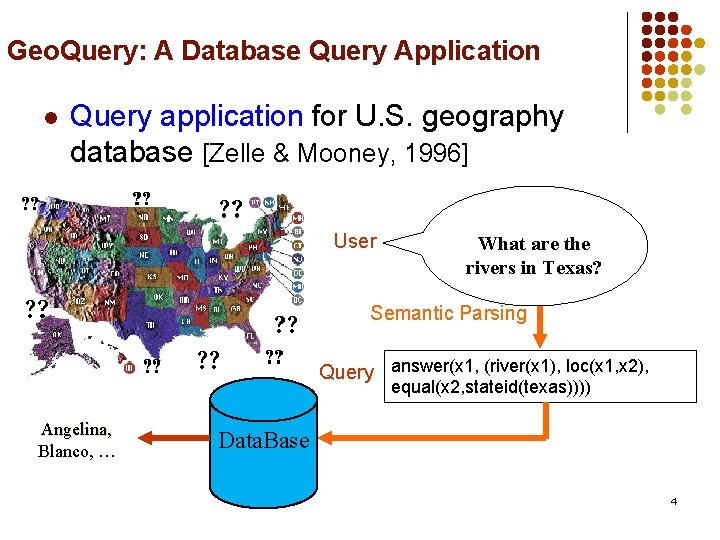

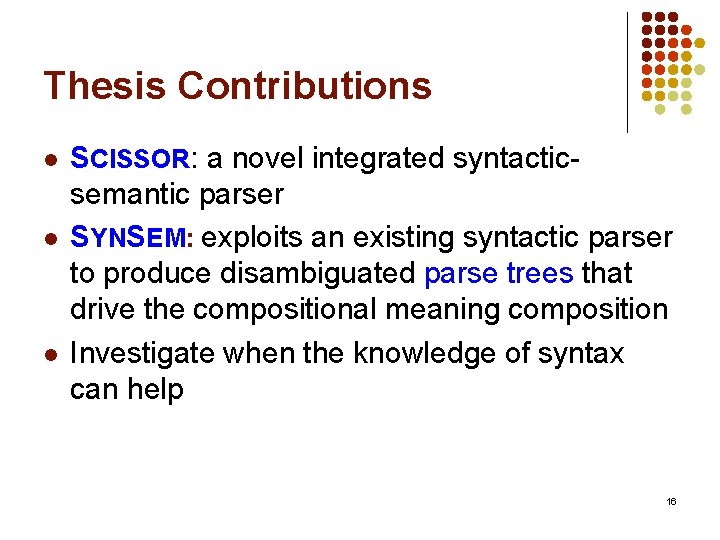

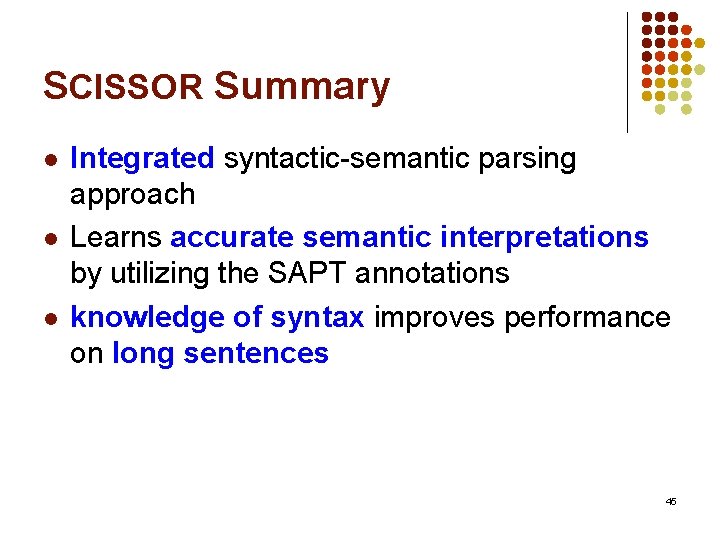

Results on CLang

| System | Precision | Recall | F-measure |
|---|---|---|---|
| SYNSEM (GOLDSYN) | 84.7 | 74.0 | 79.0 |
| SYNSEM (SYN20) | 85.4 | 70.0 | 76.9 |
| SYNSEM (SYN0) | 87.0 | 67.0 | 75.7 |
| SCISSOR (uses SAPTs) | 89.5 | 73.7 | 80.8 |
| WASP | 88.9 | 61.9 | 73.0 |
| KRISP | 85.2 | 61.9 | 71.7 |
| LU | 82.4 | 57.7 | 67.8 |

- LU: F-measure after reranking is 74.4%
- GOLDSYN > SYN20 > SYN0

![Questions l Q 1 Can Syn Sem produce accurate semantic interpretations yes l Q Questions l Q 1. Can Syn. Sem produce accurate semantic interpretations? [yes] l Q](https://slidetodoc.com/presentation_image_h/a9c978ee0ae1501cc9ab9e8c86581159/image-75.jpg)

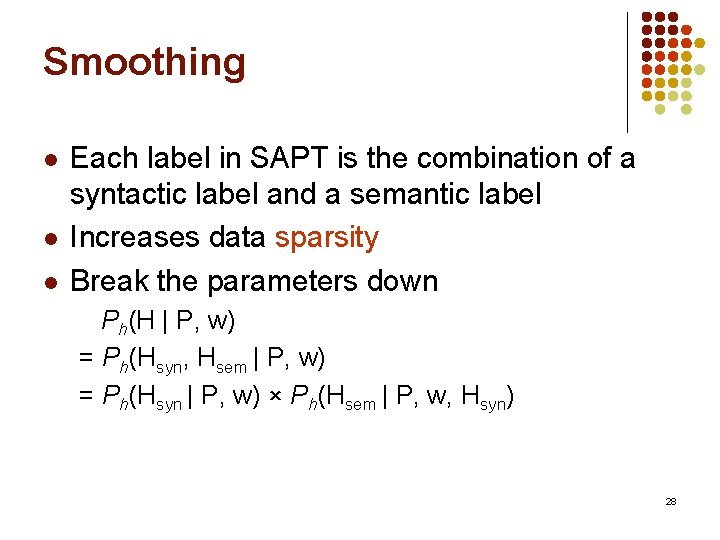

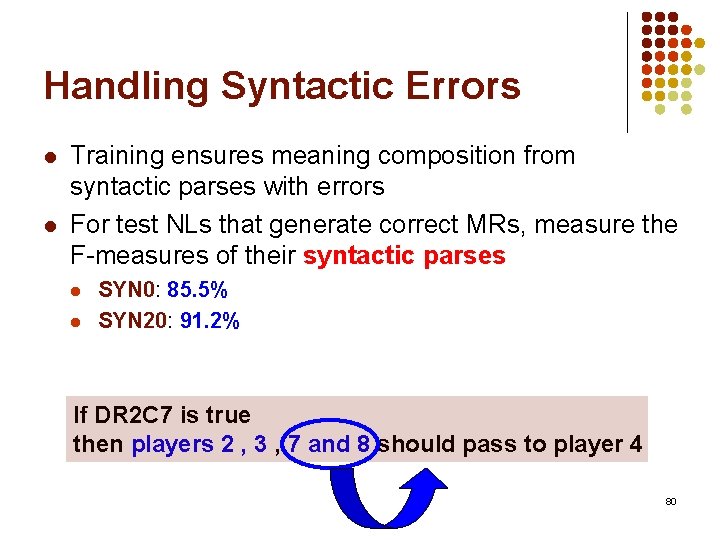

Questions
- Q1. Can SYNSEM produce accurate semantic interpretations? [yes]
- Q2. Can more accurate Treebank syntactic parsers produce more accurate semantic parsers? [yes]
- Q3. Does it also improve on long sentences?

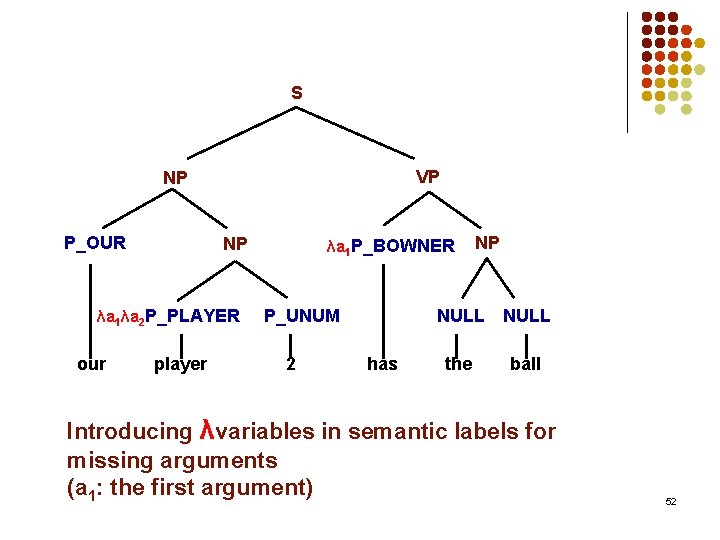

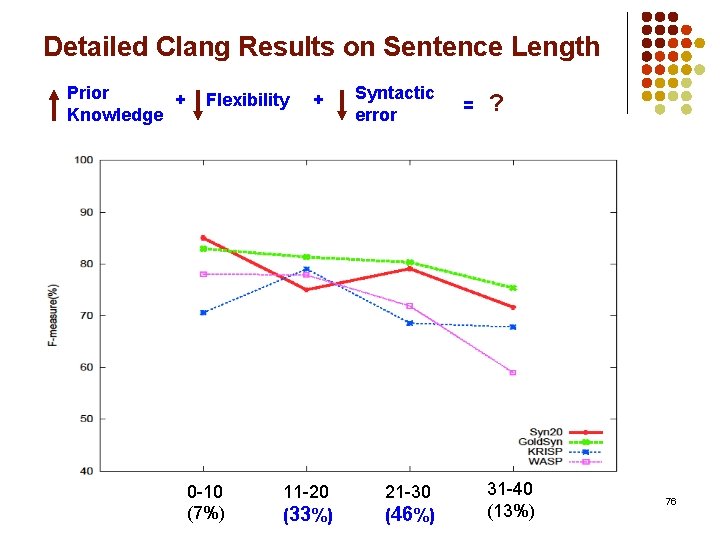

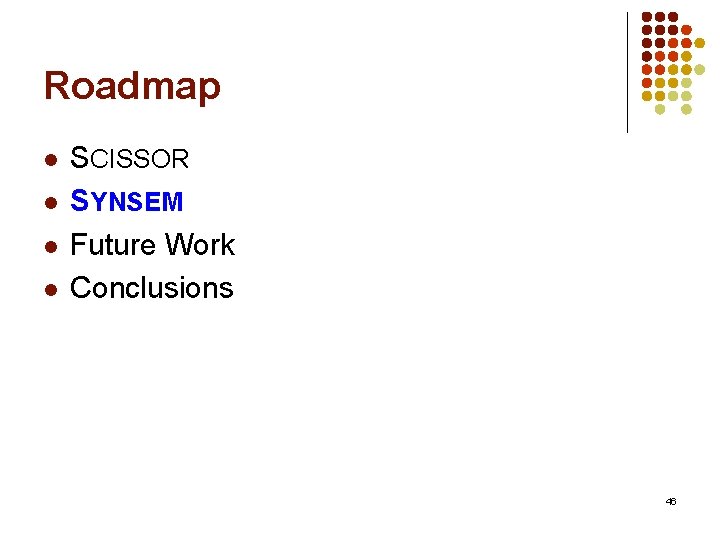

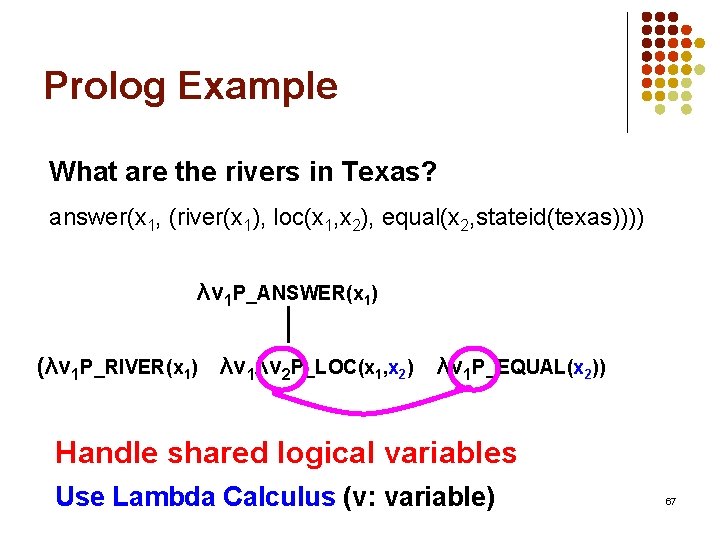

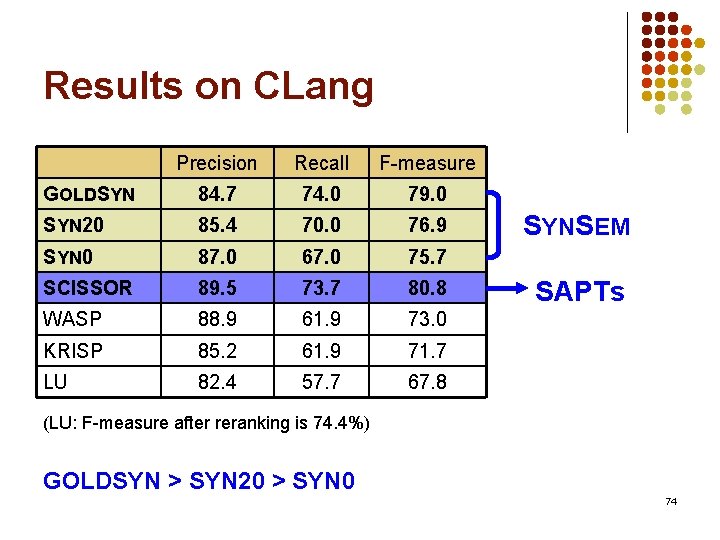

Detailed CLang Results on Sentence Length
- Sentence-length bins (share of sentences): 0-10 (7%), 11-20 (33%), 21-30 (46%), 31-40 (13%)
- Prior knowledge + flexibility + syntactic error = ?

![Questions l Q 1 Can Syn Sem produce accurate semantic interpretations yes l Q Questions l Q 1. Can Syn. Sem produce accurate semantic interpretations? [yes] l Q](https://slidetodoc.com/presentation_image_h/a9c978ee0ae1501cc9ab9e8c86581159/image-77.jpg)

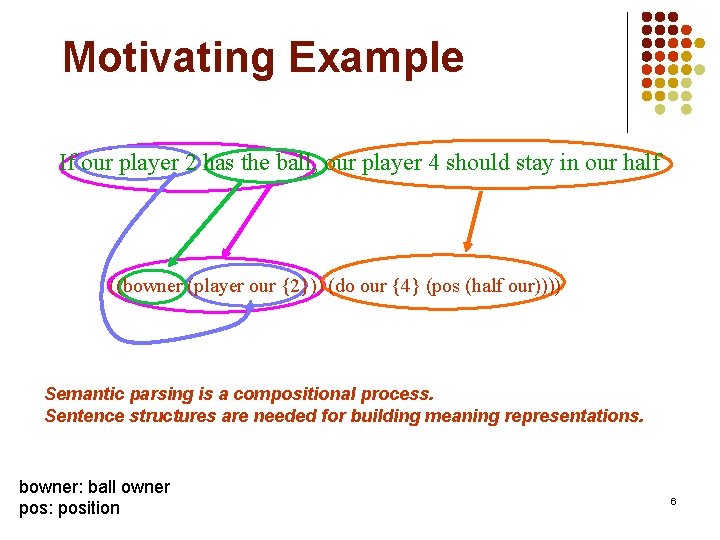

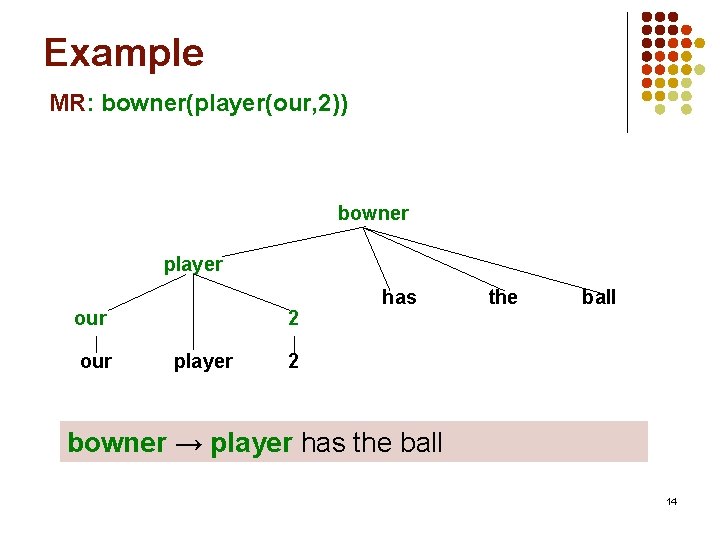

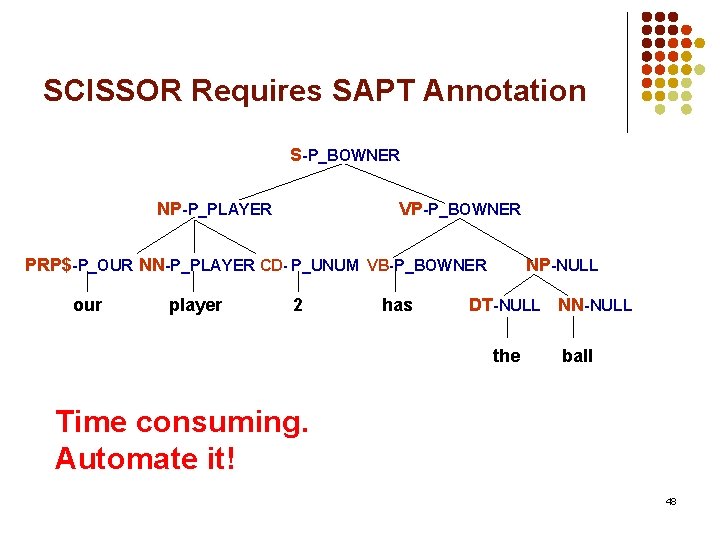

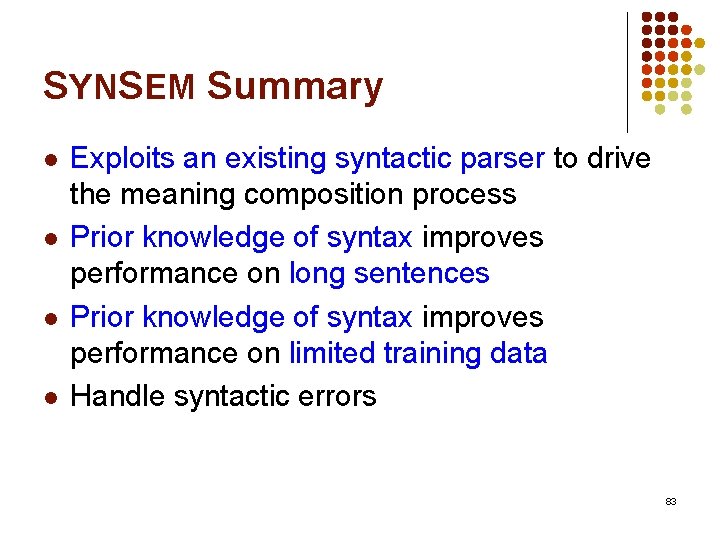

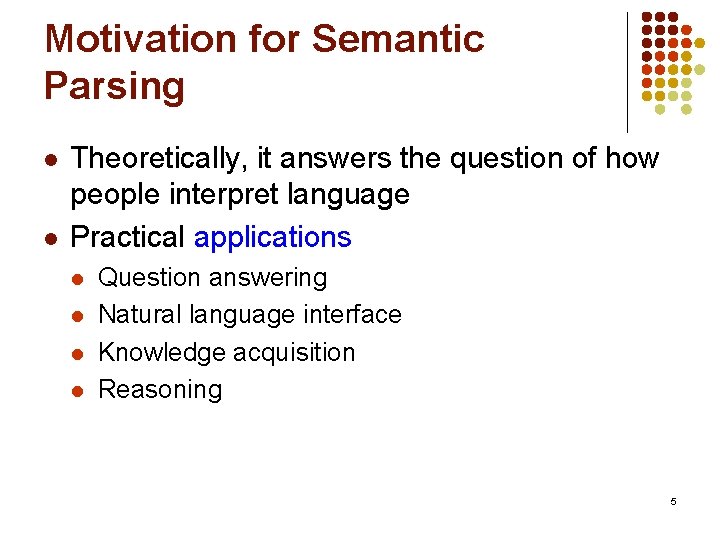

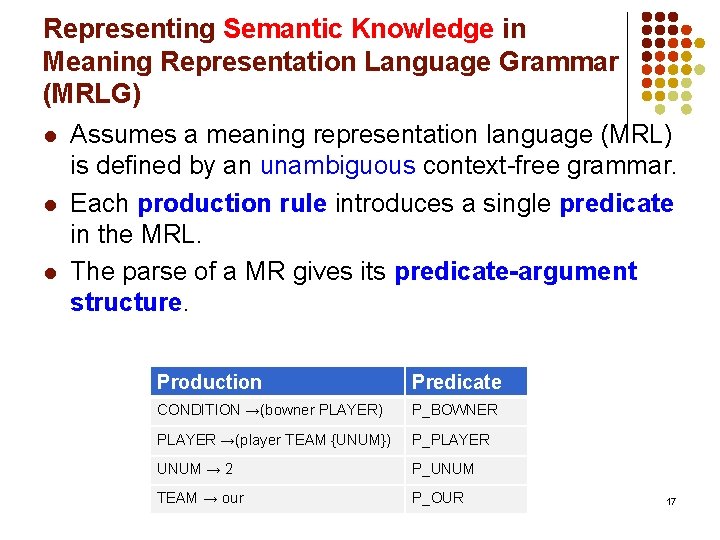

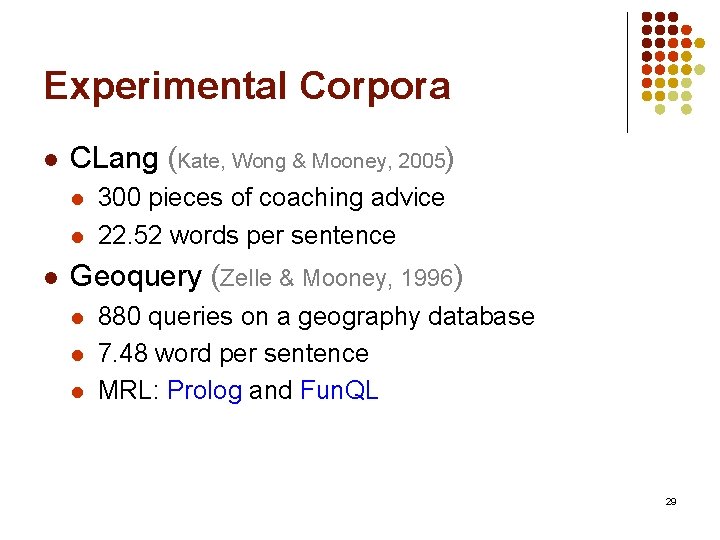

Questions
- Q1. Can SYNSEM produce accurate semantic interpretations? [yes]
- Q2. Can more accurate Treebank syntactic parsers produce more accurate semantic parsers? [yes]
- Q3. Does it also improve on long sentences? [yes]
- Q4. Does it improve on limited training data due to the prior knowledge from large treebanks?

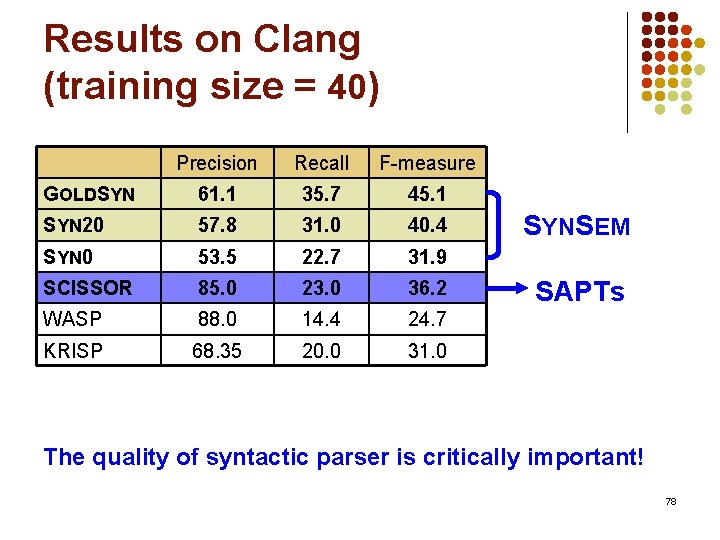

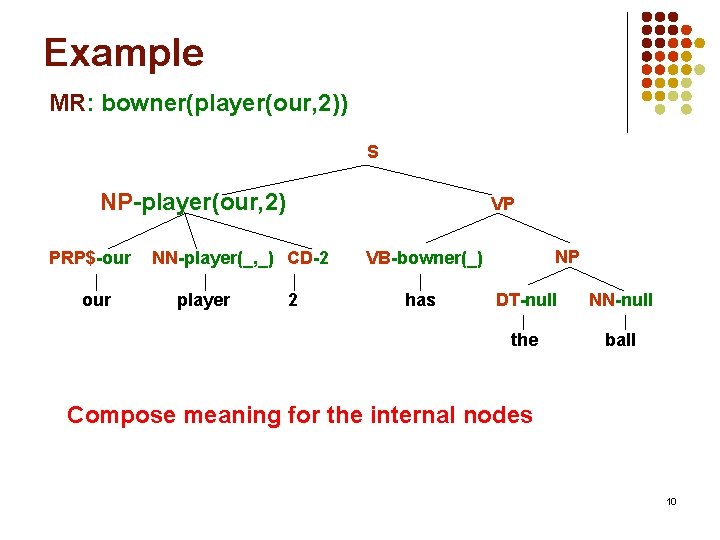

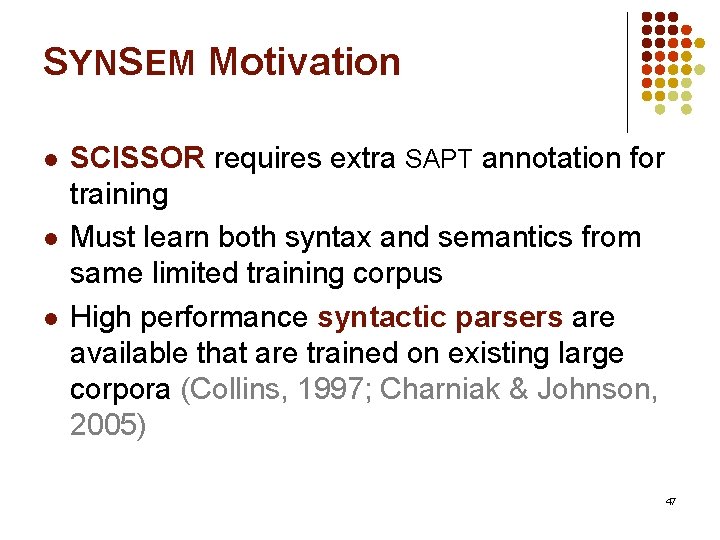

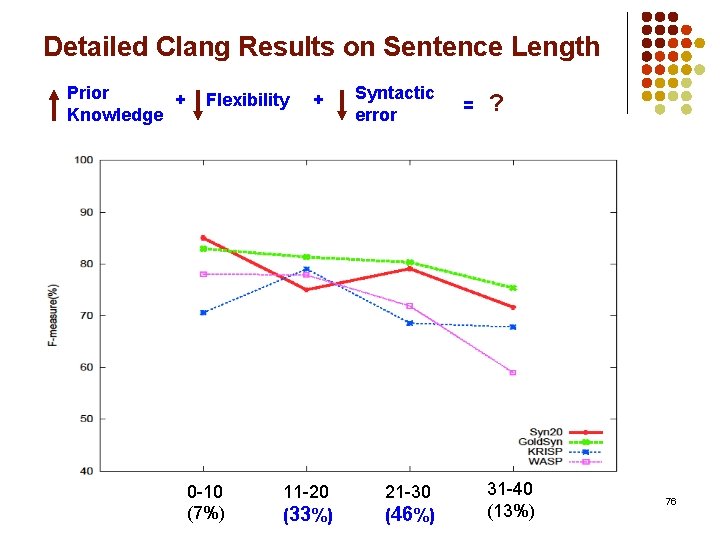

Results on CLang (training size = 40)

| System | Precision | Recall | F-measure |
|---|---|---|---|
| SYNSEM (GOLDSYN) | 61.1 | 35.7 | 45.1 |
| SYNSEM (SYN20) | 57.8 | 31.0 | 40.4 |
| SYNSEM (SYN0) | 53.5 | 22.7 | 31.9 |
| SCISSOR (uses SAPTs) | 85.0 | 23.0 | 36.2 |
| WASP | 88.0 | 14.4 | 24.7 |
| KRISP | 68.35 | 20.0 | 31.0 |

- The quality of the syntactic parser is critically important!

![Questions l Q 1 Can Syn Sem produce accurate semantic interpretations yes l Q Questions l Q 1. Can Syn. Sem produce accurate semantic interpretations? [yes] l Q](https://slidetodoc.com/presentation_image_h/a9c978ee0ae1501cc9ab9e8c86581159/image-79.jpg)

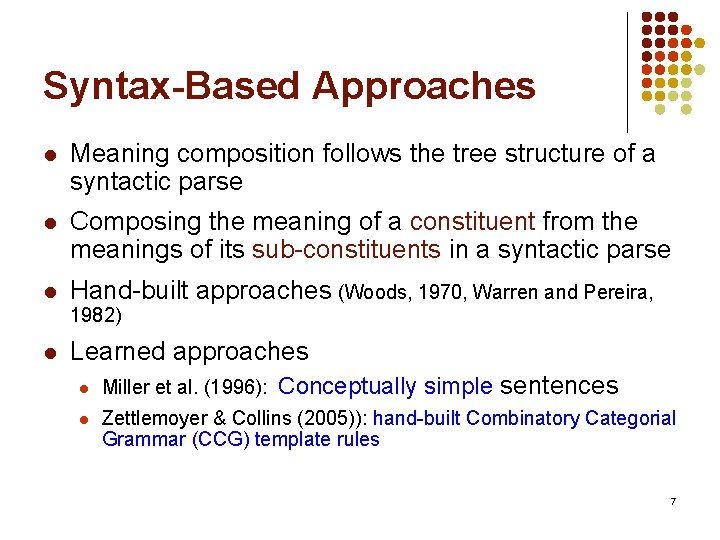

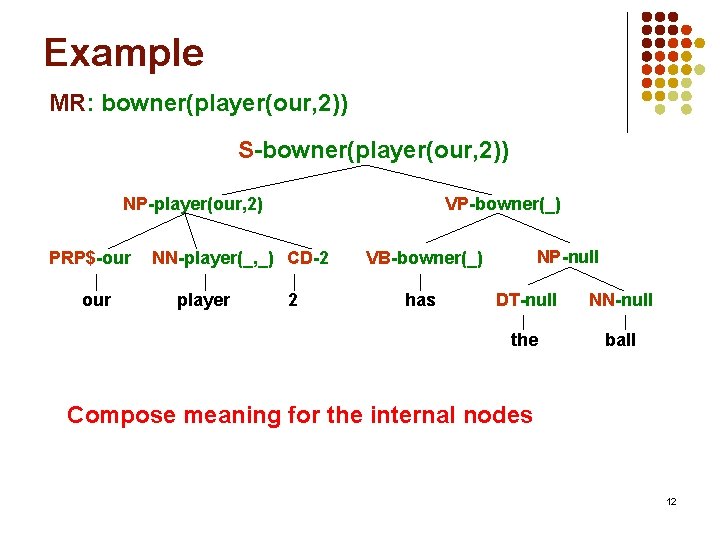

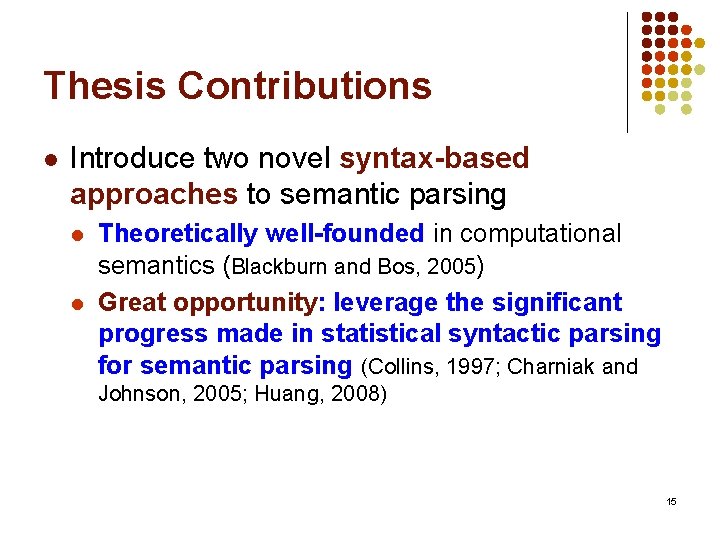

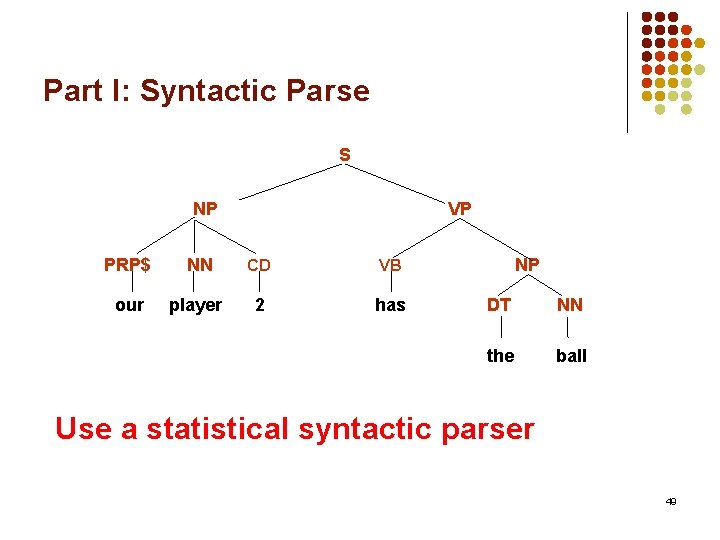

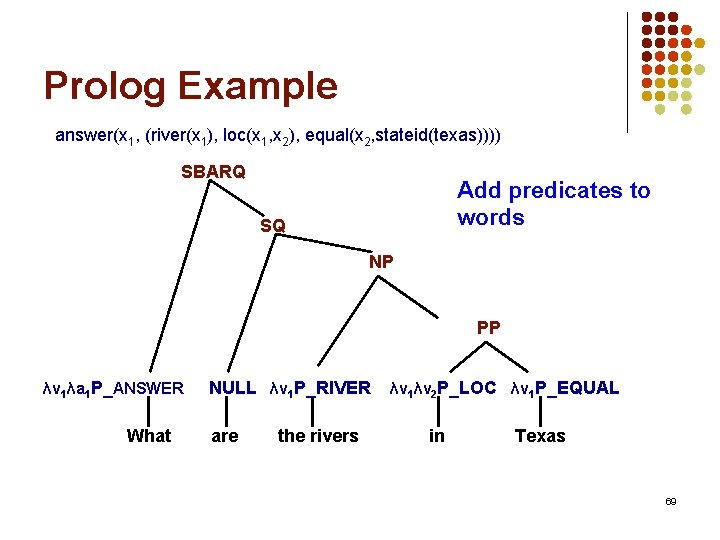

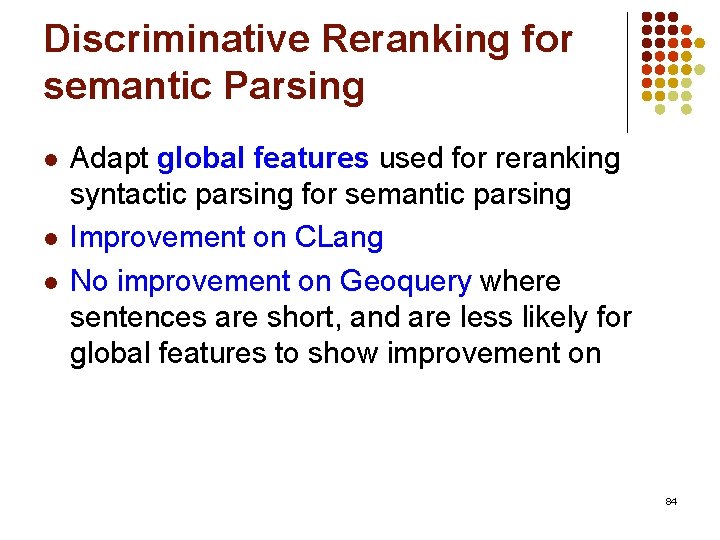

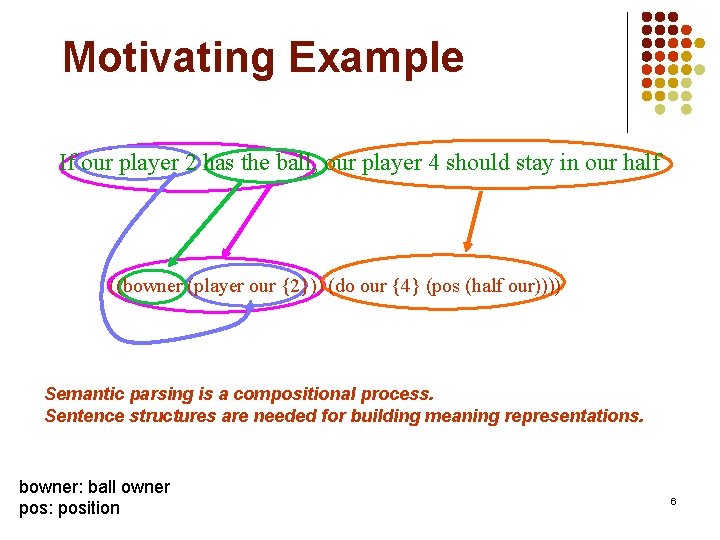

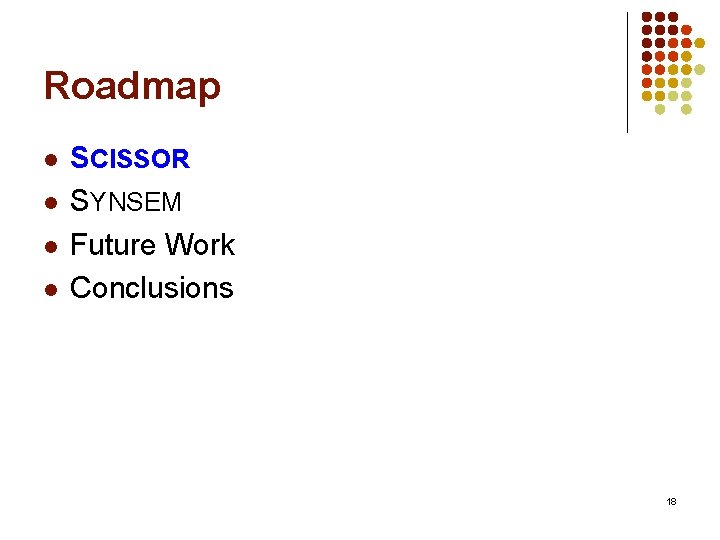

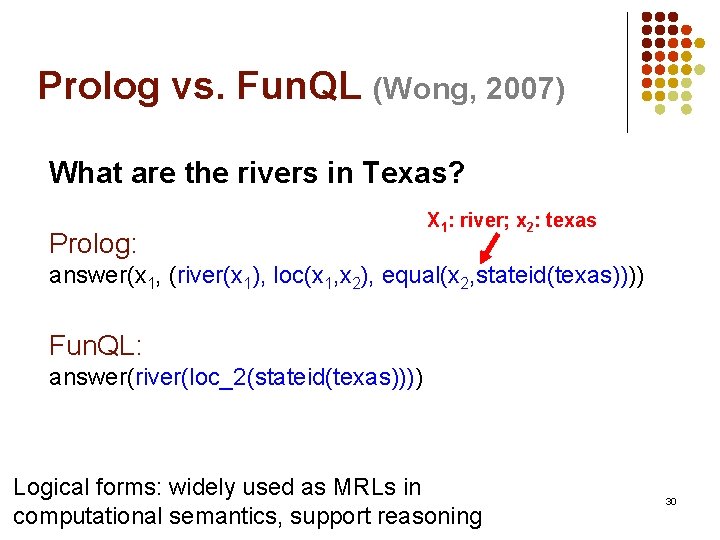

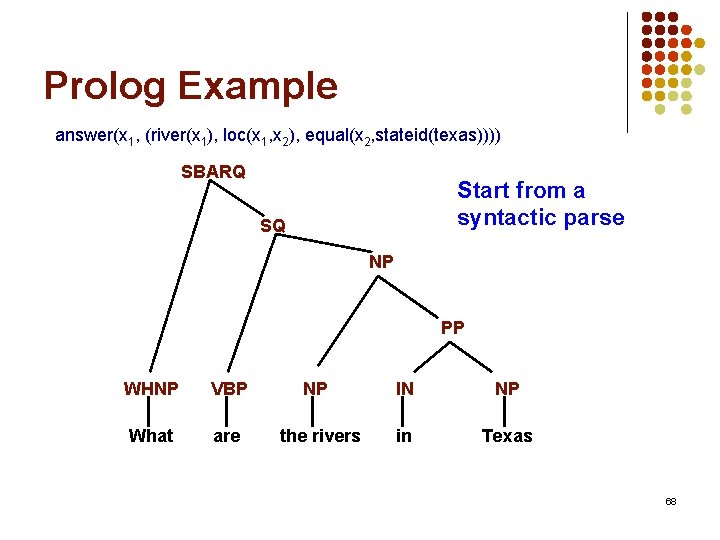

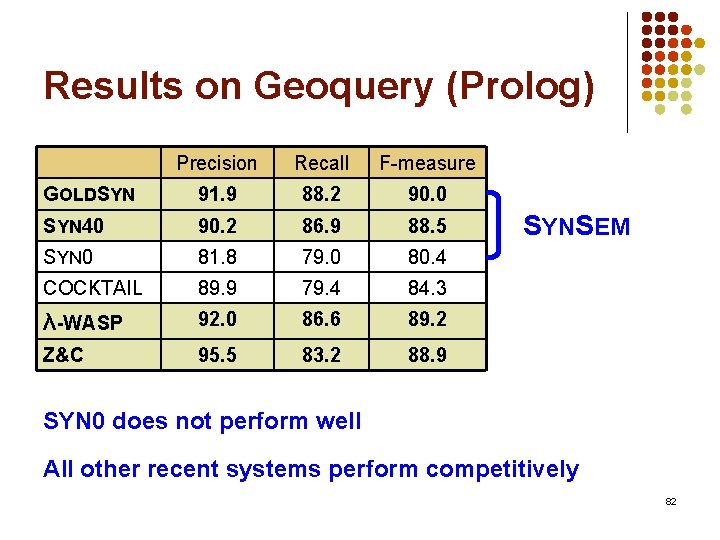

Questions
- Q1. Can SYNSEM produce accurate semantic interpretations? [yes]
- Q2. Can more accurate Treebank syntactic parsers produce more accurate semantic parsers? [yes]
- Q3. Does it also improve on long sentences? [yes]
- Q4. Does it improve on limited training data due to the prior knowledge from large treebanks? [yes]
- Q5. Can it handle syntactic errors?

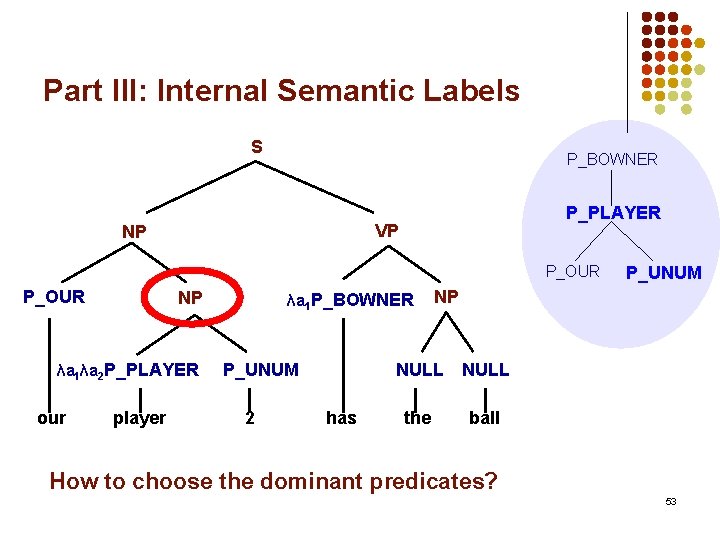

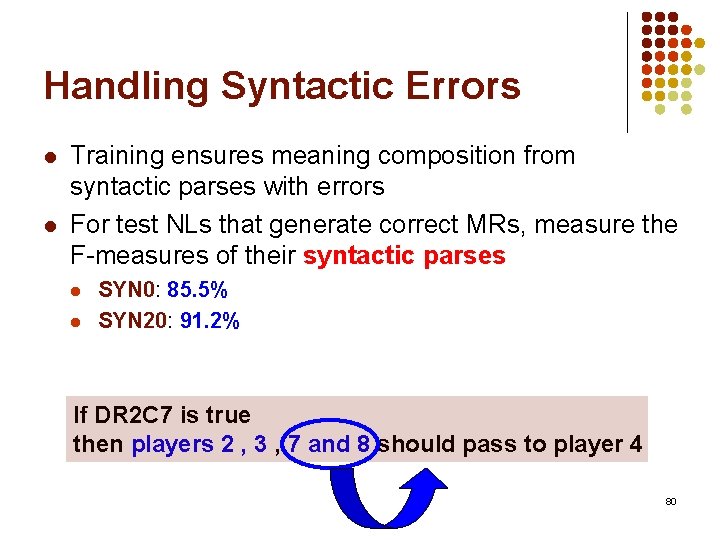

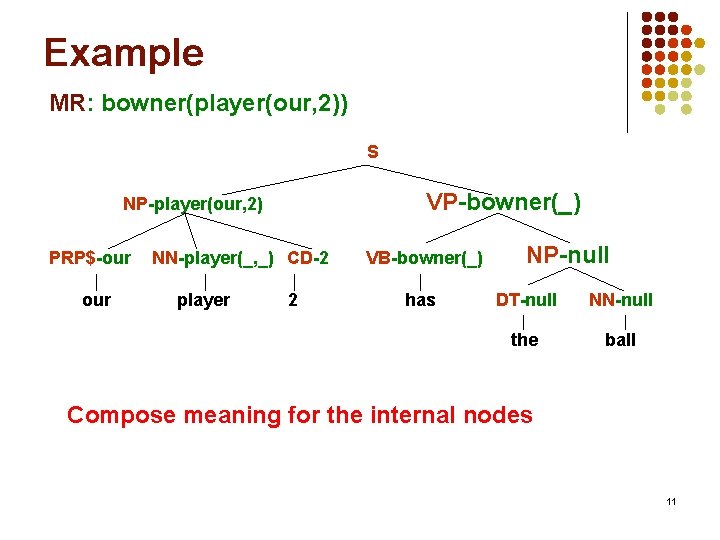

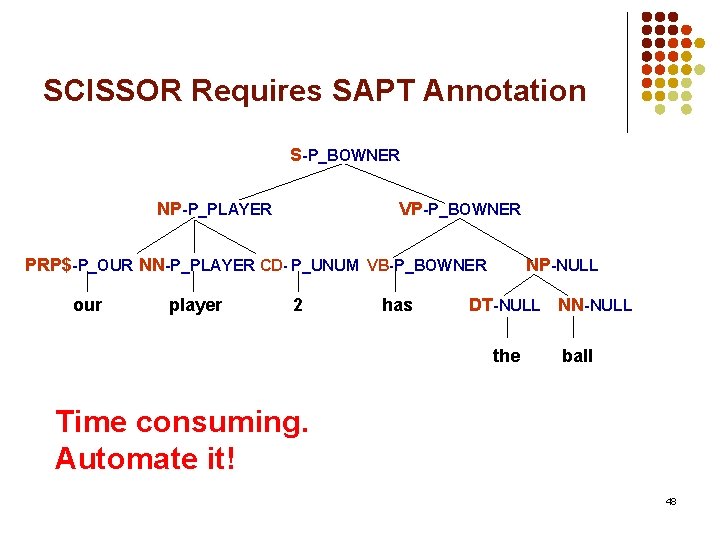

Handling Syntactic Errors
- Training ensures meaning composition from syntactic parses with errors
- For test sentences that yield correct MRs, the F-measures of their syntactic parses are:
  - SYN0: 85.5%
  - SYN20: 91.2%
- Example: "If DR2C7 is true then players 2, 3, 7 and 8 should pass to player 4"

![Questions l Q 1 Can Syn Sem produce accurate semantic interpretations yes l Q Questions l Q 1. Can Syn. Sem produce accurate semantic interpretations? [yes] l Q](https://slidetodoc.com/presentation_image_h/a9c978ee0ae1501cc9ab9e8c86581159/image-81.jpg)

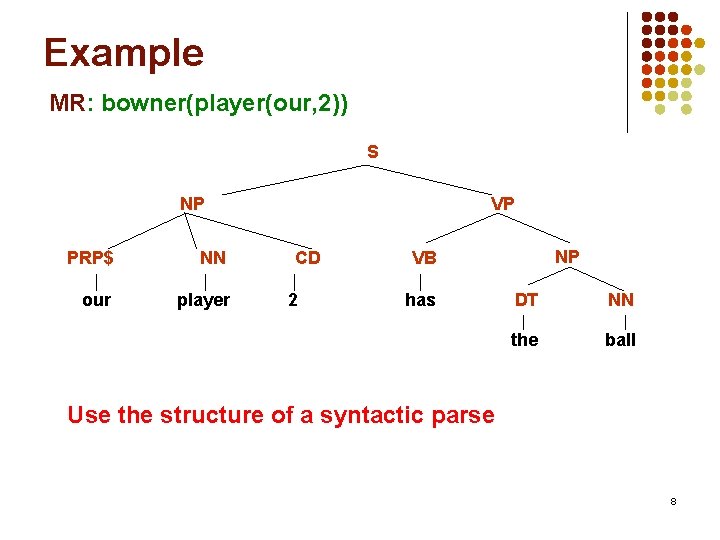

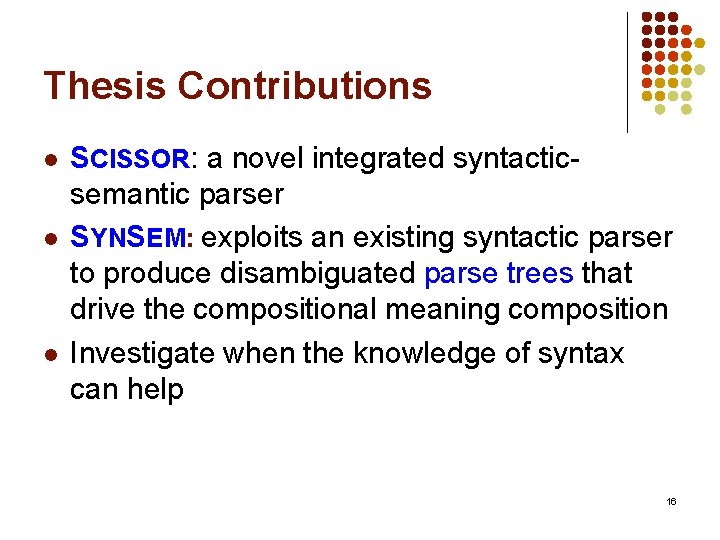

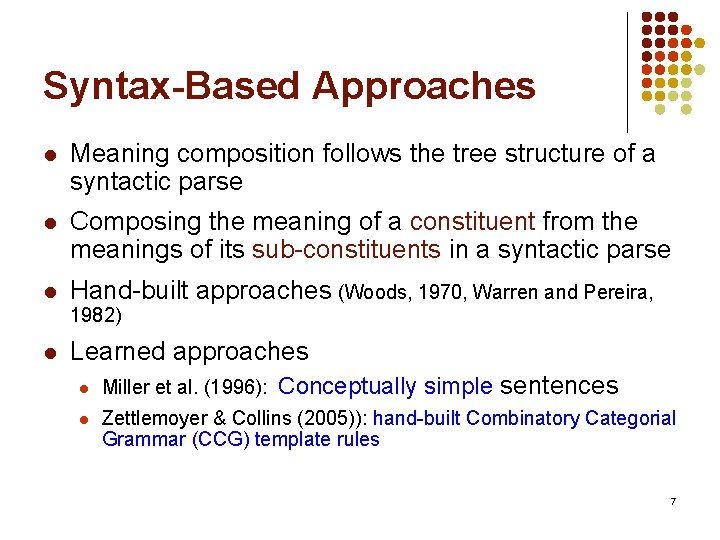

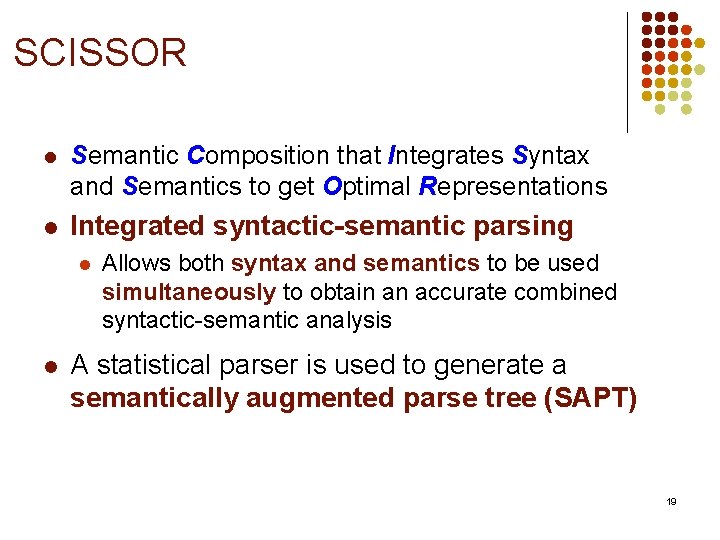

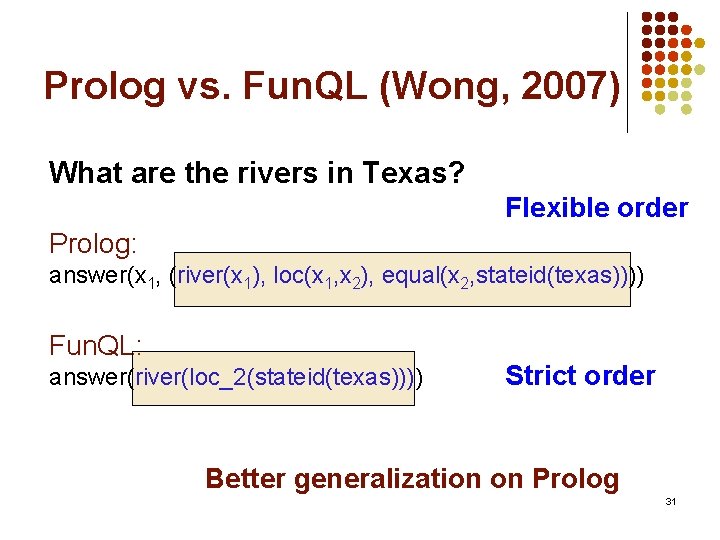

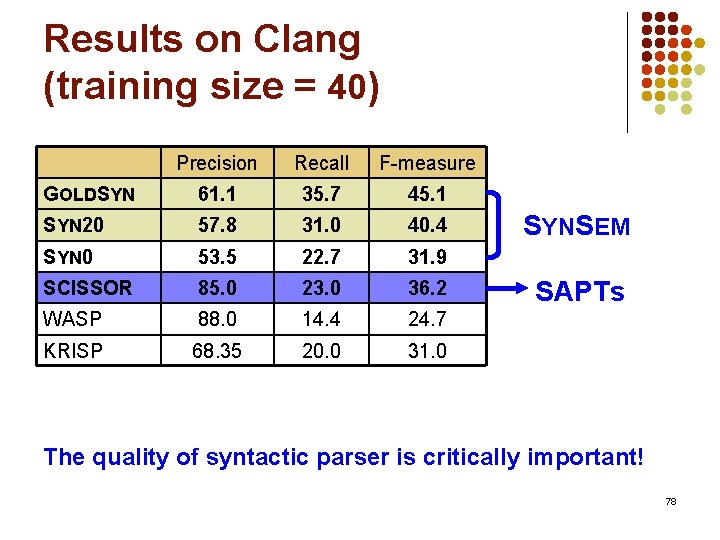

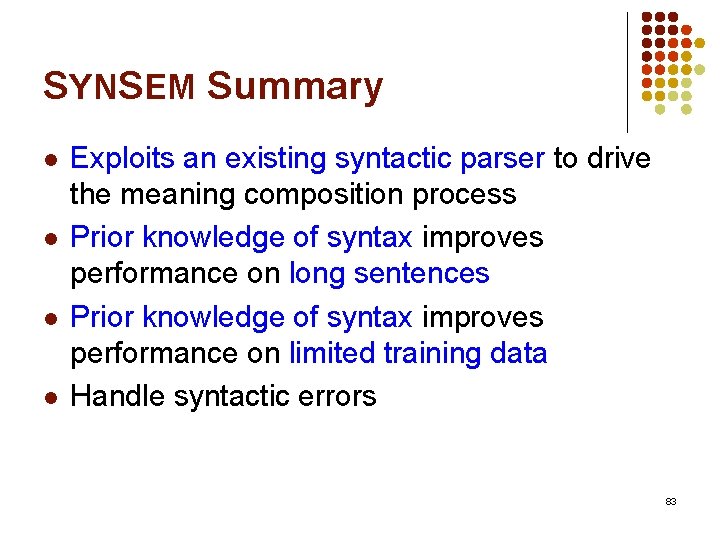

Questions
- Q1. Can SYNSEM produce accurate semantic interpretations? [yes]
- Q2. Can more accurate Treebank syntactic parsers produce more accurate semantic parsers? [yes]
- Q3. Does it also improve on long sentences? [yes]
- Q4. Does it improve on limited training data due to the prior knowledge of large treebanks? [yes]
- Q5. Is it robust to syntactic errors? [yes]

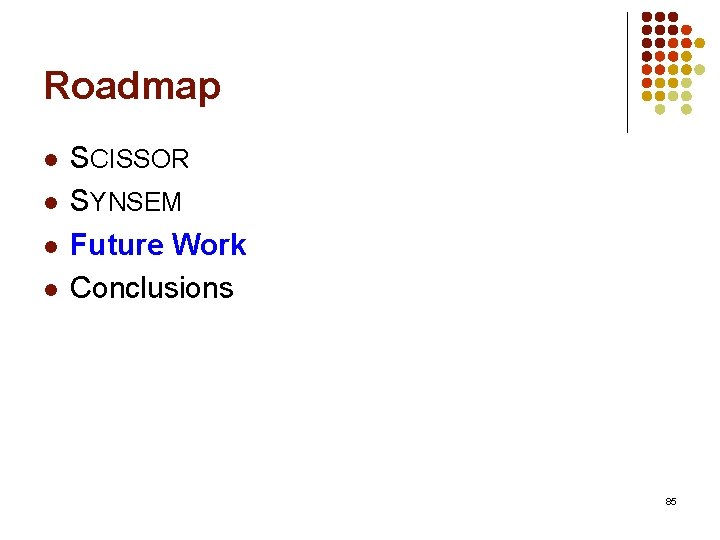

Results on Geoquery (Prolog)

| System | Precision | Recall | F-measure |
|---|---|---|---|
| SYNSEM (GOLDSYN) | 91.9 | 88.2 | 90.0 |
| SYNSEM (SYN40) | 90.2 | 86.9 | 88.5 |
| SYNSEM (SYN0) | 81.8 | 79.0 | 80.4 |
| COCKTAIL | 89.9 | 79.4 | 84.3 |
| λ-WASP | 92.0 | 86.6 | 89.2 |
| Z&C | 95.5 | 83.2 | 88.9 |

- SYN0 does not perform well
- All other recent systems perform competitively

SYNSEM Summary
- Exploits an existing syntactic parser to drive the meaning composition process
- Prior knowledge of syntax improves performance on long sentences
- Prior knowledge of syntax improves performance on limited training data
- Handles syntactic errors

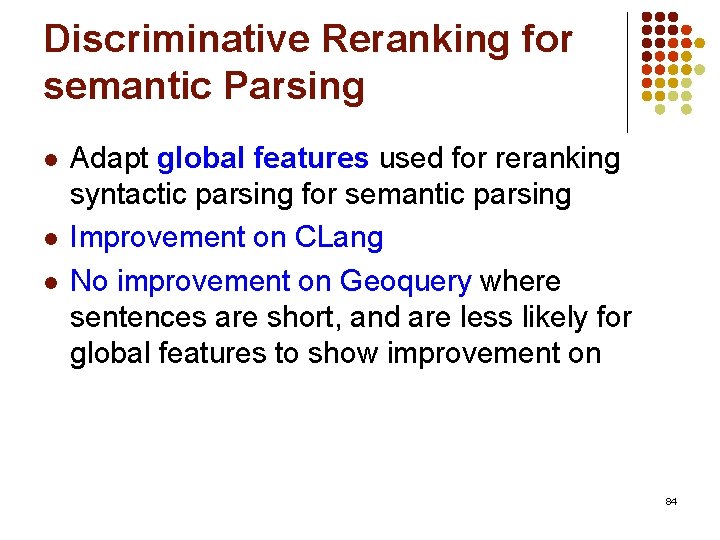

Discriminative Reranking for Semantic Parsing
- Adapt global features used for reranking syntactic parses to semantic parsing
- Improvement on CLang
- No improvement on Geoquery, where sentences are short and global features are less likely to help

Roadmap
- SCISSOR
- SYNSEM
- Future Work
- Conclusions

Future Work
- Improve SCISSOR:
  - Discriminative SCISSOR (Finkel et al., 2008)
  - Handling logical forms
  - SCISSOR without extra annotation (Klein and Manning, 2002, 2004)
- Improve SYNSEM:
  - Utilizing syntactic parsers with improved accuracy and in other syntactic formalisms

Future Work
- Utilizing wide-coverage semantic representations (Curran et al., 2007)
  - Better generalizations for syntactic variations
- Utilizing semantic role labeling (Gildea and Palmer, 2002)
  - Provides a layer of correlated semantic information

Roadmap
- SCISSOR
- SYNSEM
- Future Work
- Conclusions

Conclusions
- SCISSOR: a novel integrated syntactic-semantic parser.
- SYNSEM: exploits an existing syntactic parser to produce disambiguated parse trees that drive meaning composition.
- Both produce accurate semantic interpretations.
- Using knowledge of syntax improves performance on long sentences.
- SYNSEM also improves performance on limited training data.

Thank you!
- Questions?