Cache Coherence Techniques for Multicore Processors Dissertation Defense


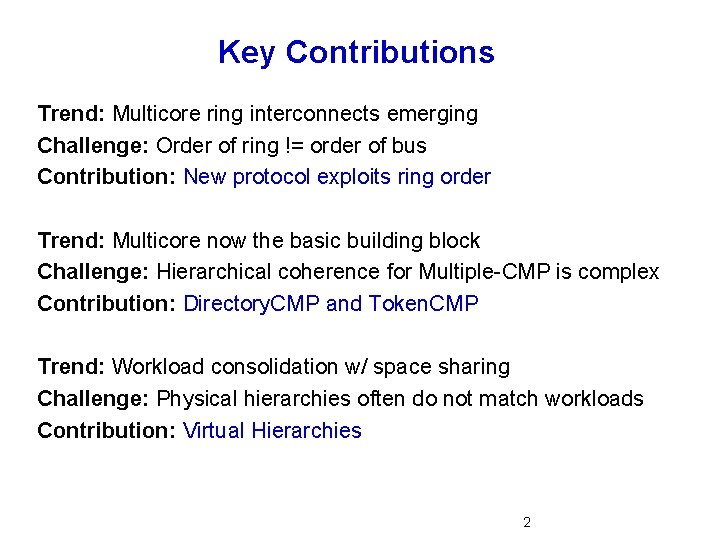

Mike Marty, 12/19/2007

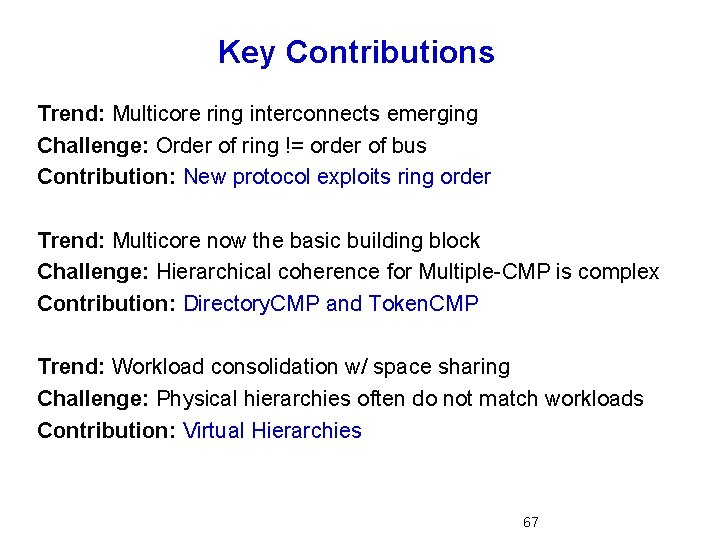

Key Contributions

- Trend: Multicore ring interconnects emerging
  - Challenge: Order of ring != order of bus
  - Contribution: New protocol exploits ring order
- Trend: Multicore now the basic building block
  - Challenge: Hierarchical coherence for Multiple-CMP is complex
  - Contribution: DirectoryCMP and TokenCMP
- Trend: Workload consolidation w/ space sharing
  - Challenge: Physical hierarchies often do not match workloads
  - Contribution: Virtual Hierarchies

Outline

- Introduction and Motivation
  - Multicore Trends
- Virtual Hierarchies
  - Focus of presentation
- Multiple-CMP Coherence
- Ring-based Coherence
- Conclusion

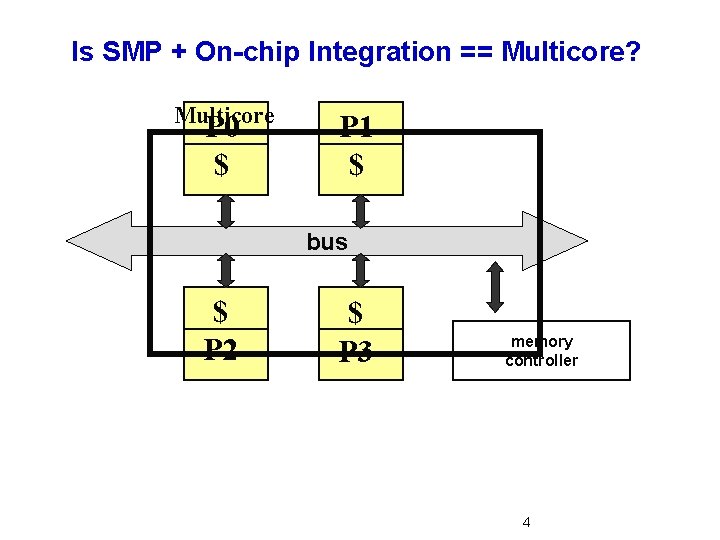

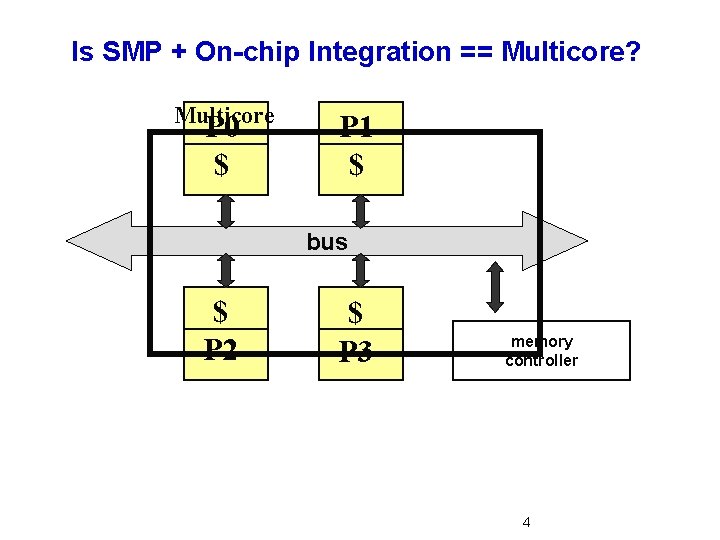

Is SMP + On-chip Integration == Multicore?

(Figure: a multicore with P0-P3, each with a private cache ($), connected by a bus to a memory controller.)

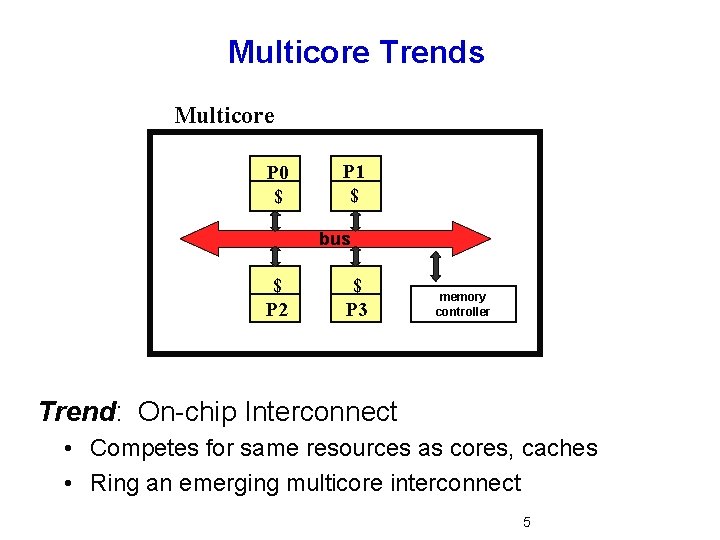

Multicore Trends

(Figure: bus-based multicore with P0-P3, private caches, and a memory controller.)

Trend: On-chip Interconnect
- Competes for same resources as cores, caches
- Ring an emerging multicore interconnect

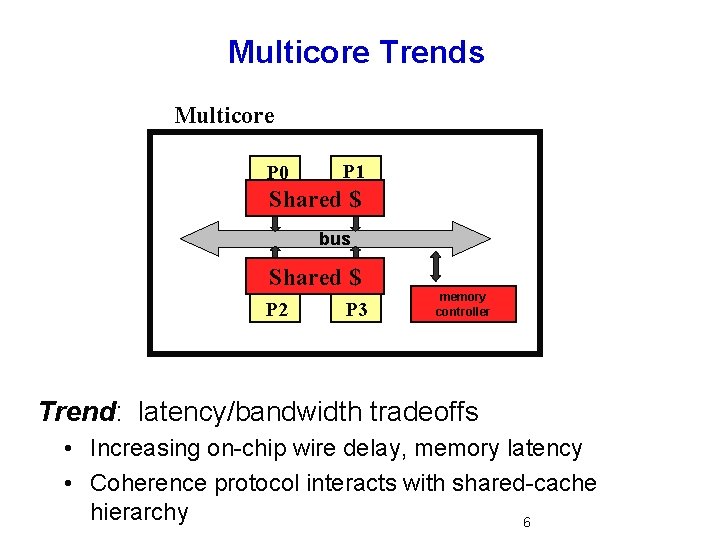

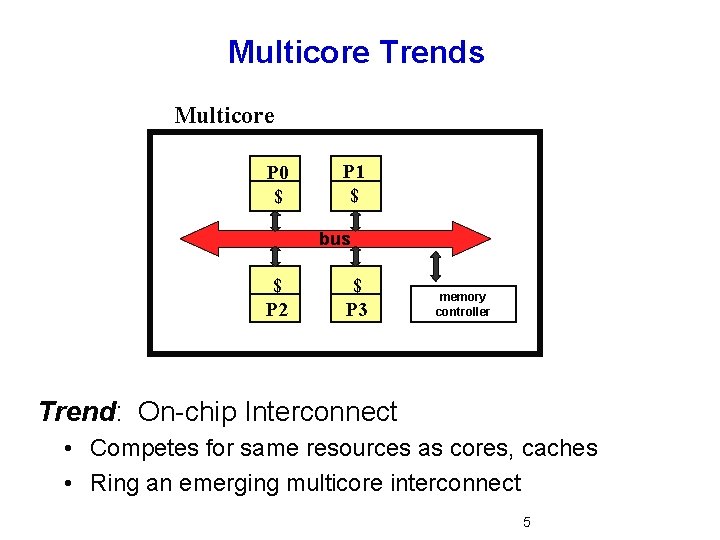

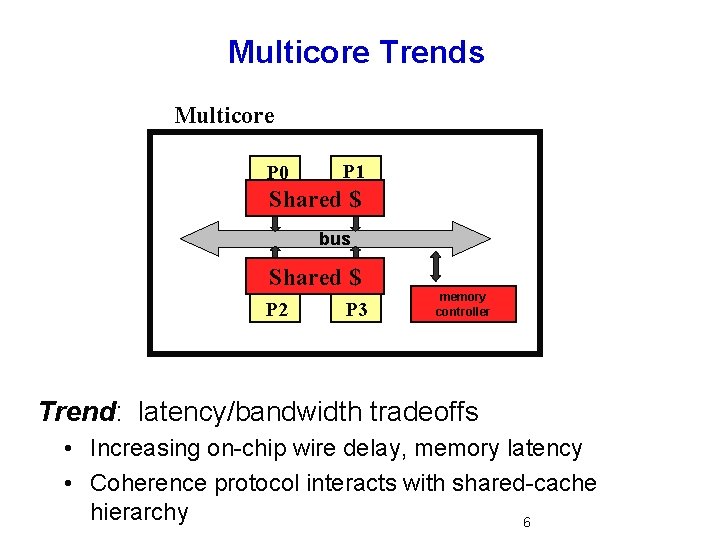

Multicore Trends

(Figure: multicore with P0-P3, private caches, and shared caches on a bus to the memory controller.)

Trend: latency/bandwidth tradeoffs
- Increasing on-chip wire delay, memory latency
- Coherence protocol interacts with shared-cache hierarchy

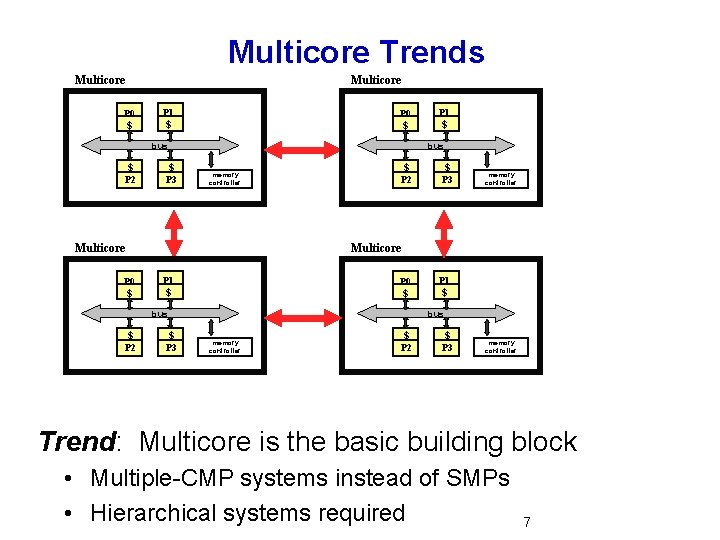

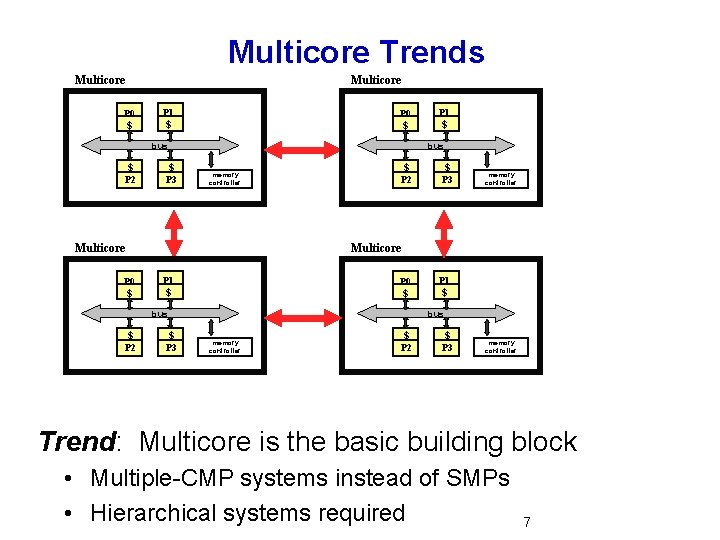

Multicore Trends

(Figure: several bus-based multicores, each with P0-P3, caches, and a memory controller, combined into one system.)

Trend: Multicore is the basic building block
- Multiple-CMP systems instead of SMPs
- Hierarchical systems required

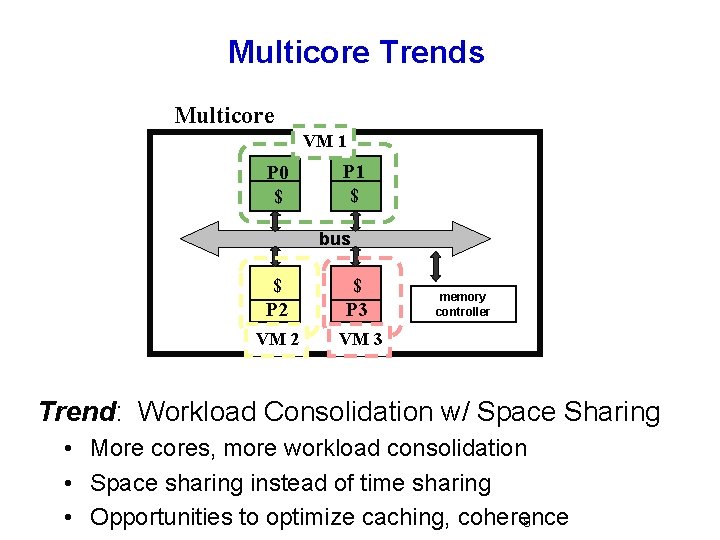

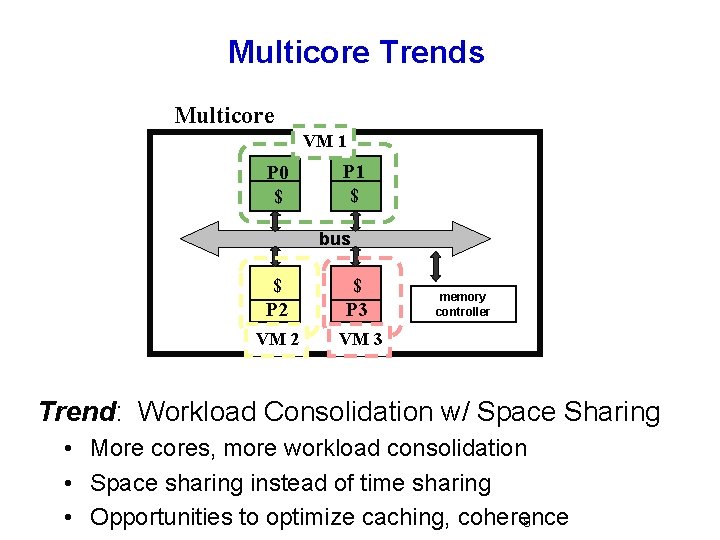

Multicore Trends

(Figure: a multicore with P0-P3 space-shared among VM 1, VM 2, and VM 3.)

Trend: Workload Consolidation w/ Space Sharing
- More cores, more workload consolidation
- Space sharing instead of time sharing
- Opportunities to optimize caching, coherence


Outline

- Introduction and Motivation
- Virtual Hierarchies [ISCA 2007, IEEE Micro Top Pick 2008]
  - Focus of presentation
- Multiple-CMP Coherence
- Ring-based Coherence
- Conclusion

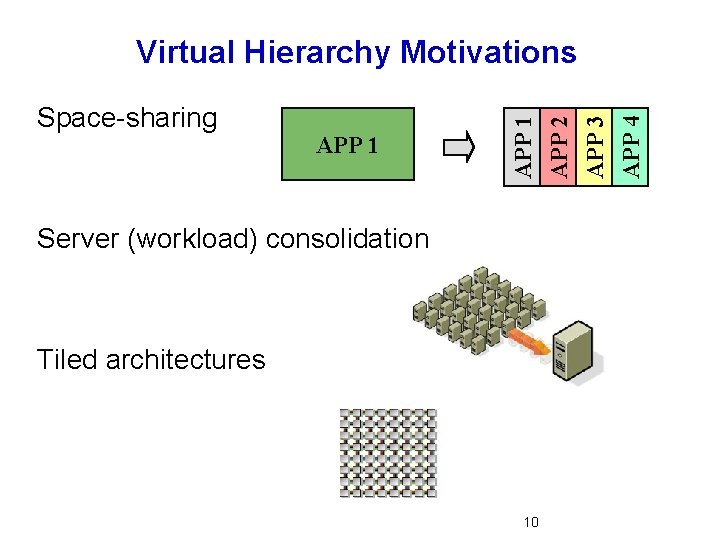

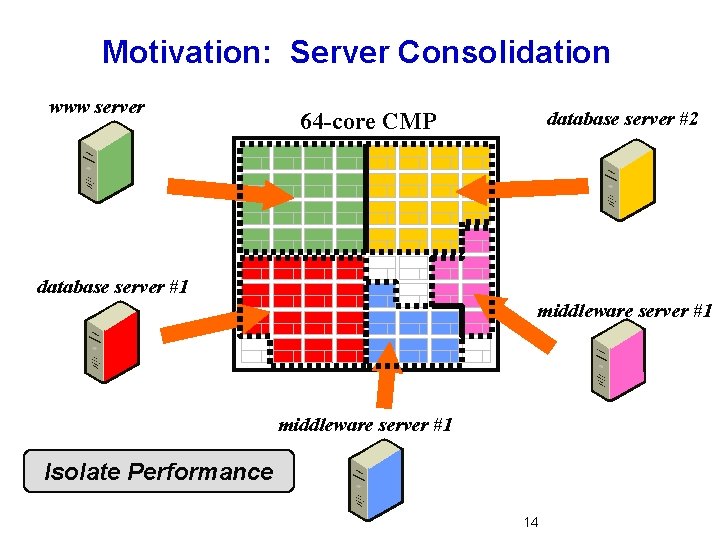

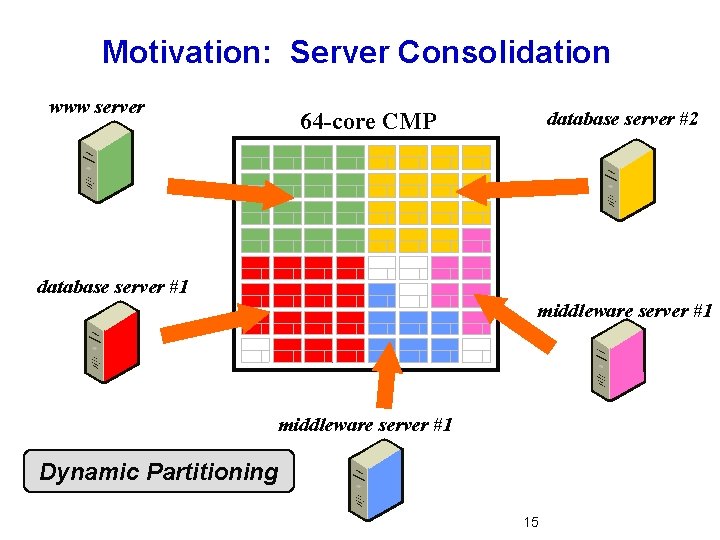

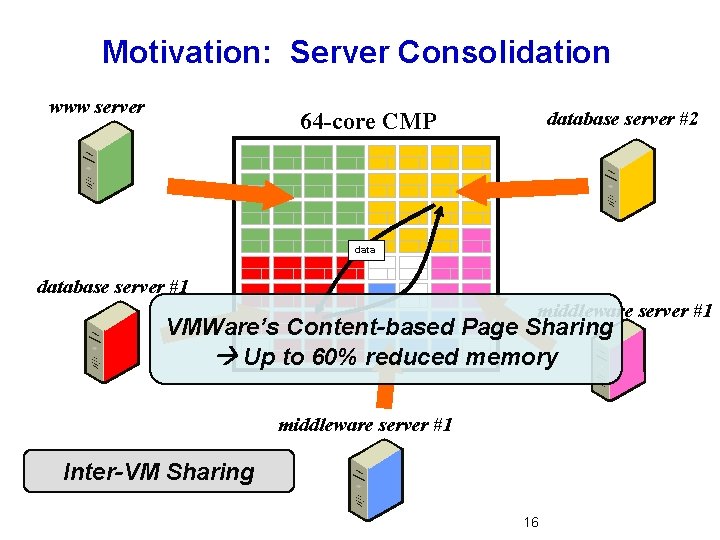

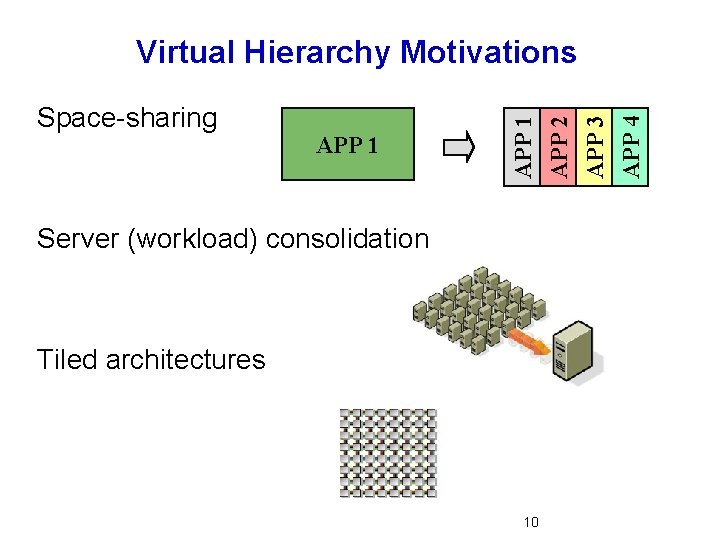

Virtual Hierarchy Motivations

(Figure: space sharing, with APP 1-APP 4 each on its own region of the chip.)

- Server (workload) consolidation
- Tiled architectures

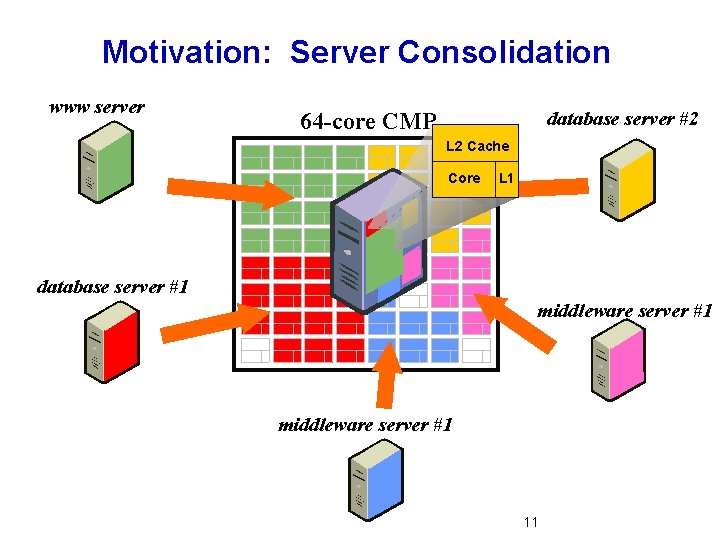

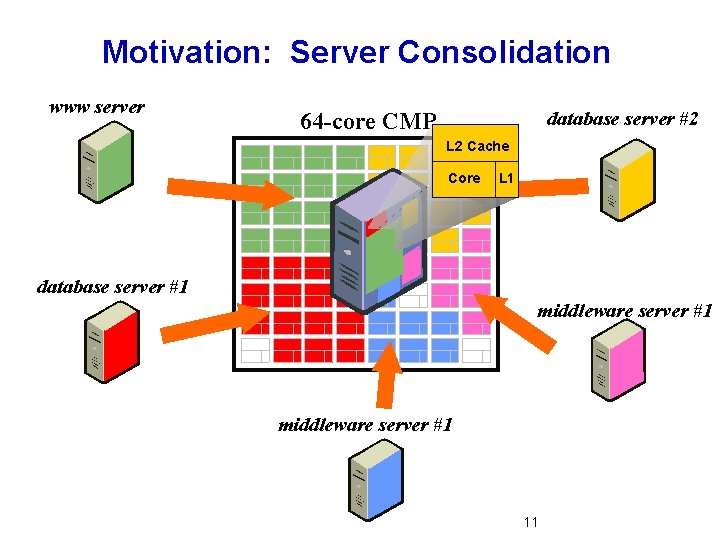

Motivation: Server Consolidation

(Figure: a 64-core CMP, each tile with a core, L1, and L2 cache, running a www server, database server #1, database server #2, and middleware server #1.)

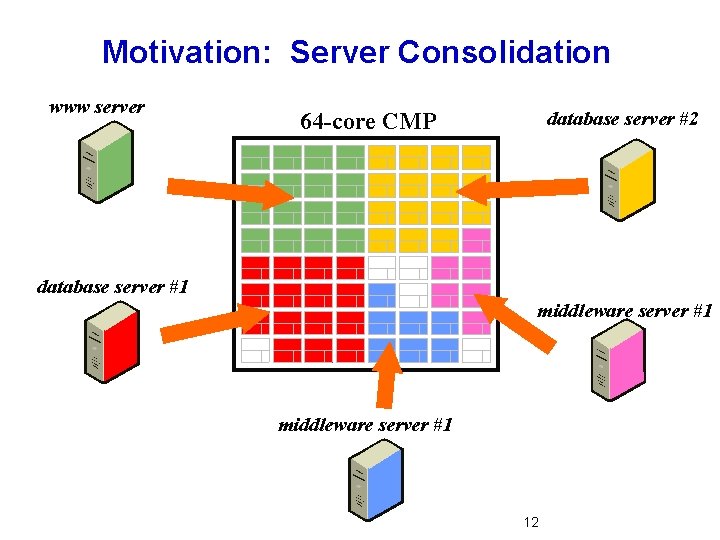

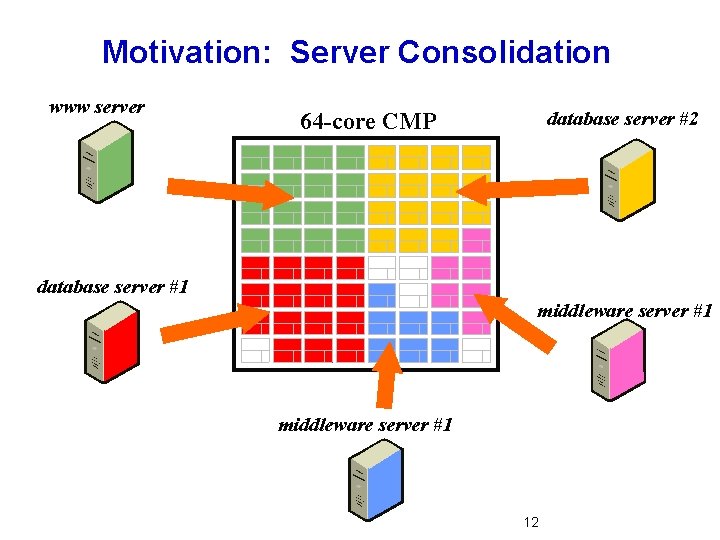

Motivation: Server Consolidation

(Figure: the www server, database servers #1 and #2, and middleware server #1 each mapped to a region of the 64-core CMP.)

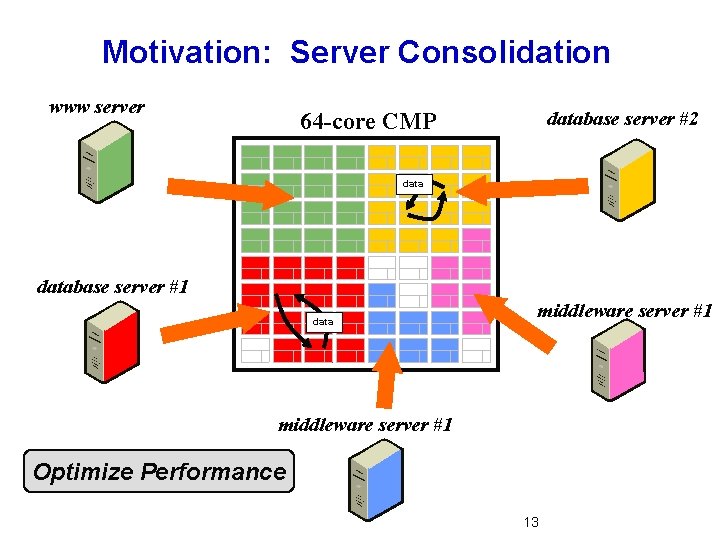

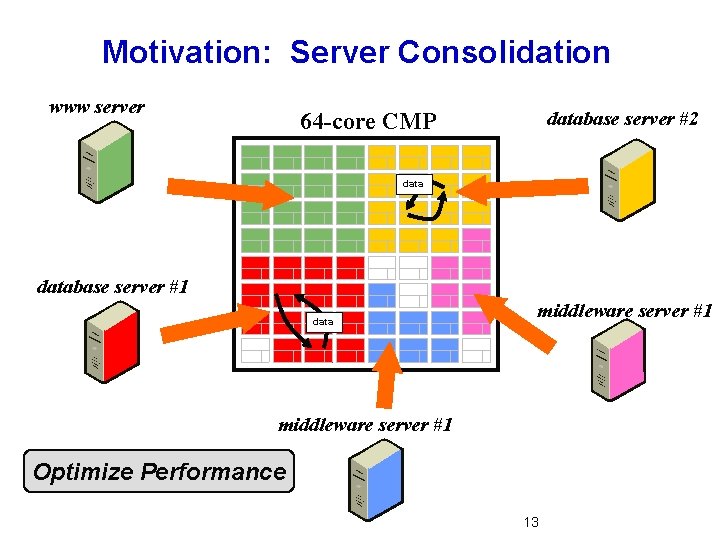

Motivation: Server Consolidation

(Figure: the consolidated servers on the 64-core CMP, highlighting database server #1's data.)

Goal: Optimize Performance

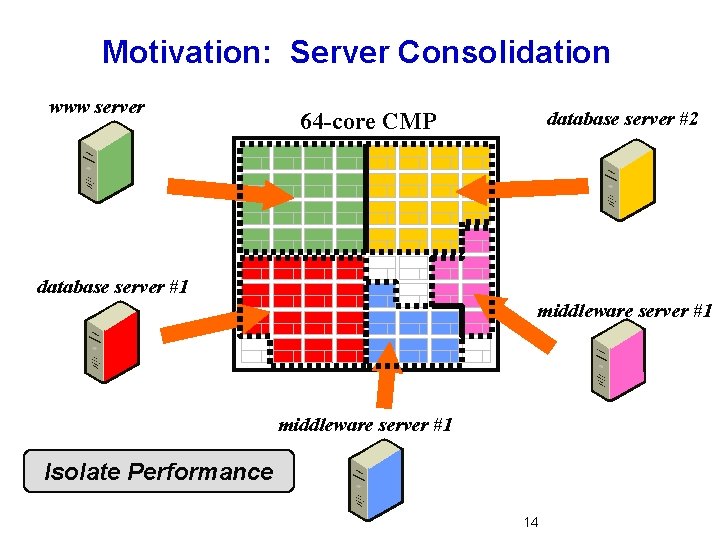

Motivation: Server Consolidation

(Figure: the consolidated servers on the 64-core CMP.)

Goal: Isolate Performance

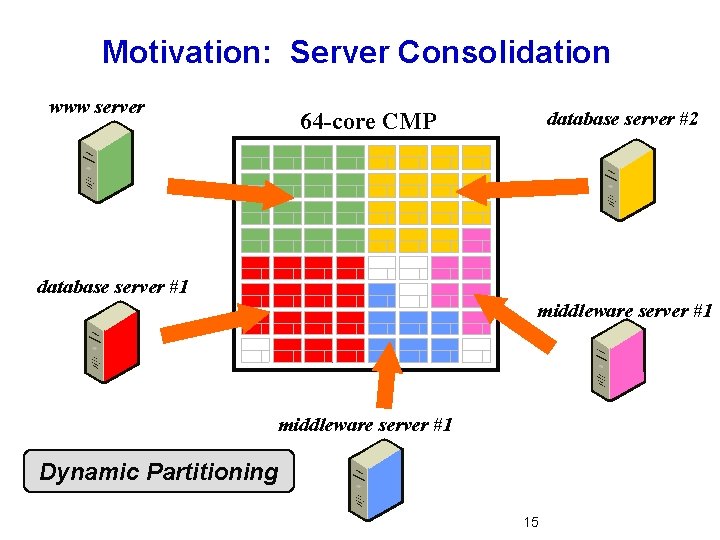

Motivation: Server Consolidation

(Figure: the consolidated servers on the 64-core CMP.)

Goal: Dynamic Partitioning

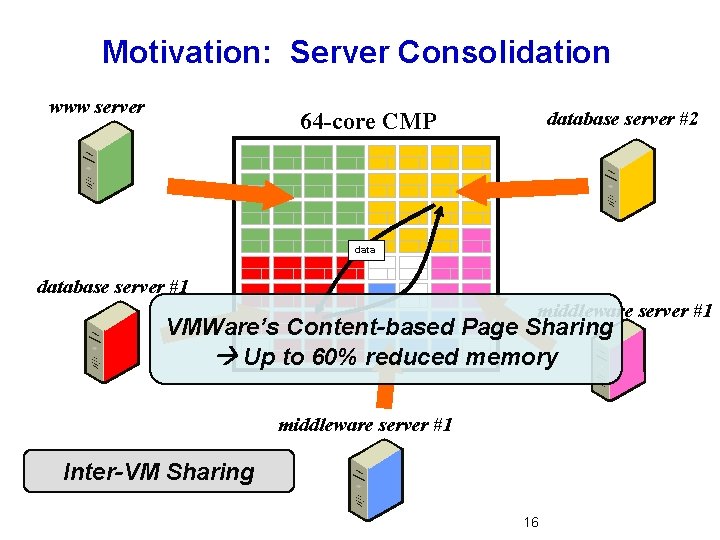

Motivation: Server Consolidation

(Figure: the consolidated servers on the 64-core CMP, with a second instance of middleware server #1.)

Goal: Inter-VM Sharing
- VMware's content-based page sharing: up to 60% reduced memory

Outline

- Introduction and Motivation
- Virtual Hierarchies
  - Expanded Motivation
  - Non-hierarchical approaches
  - Proposed Virtual Hierarchies
  - Evaluation
  - Related Work
- Ring-based and Multiple-CMP Coherence
- Conclusion

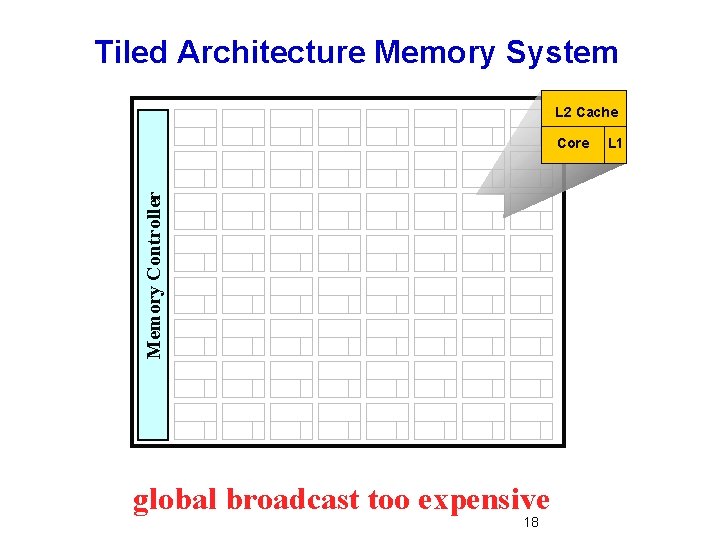

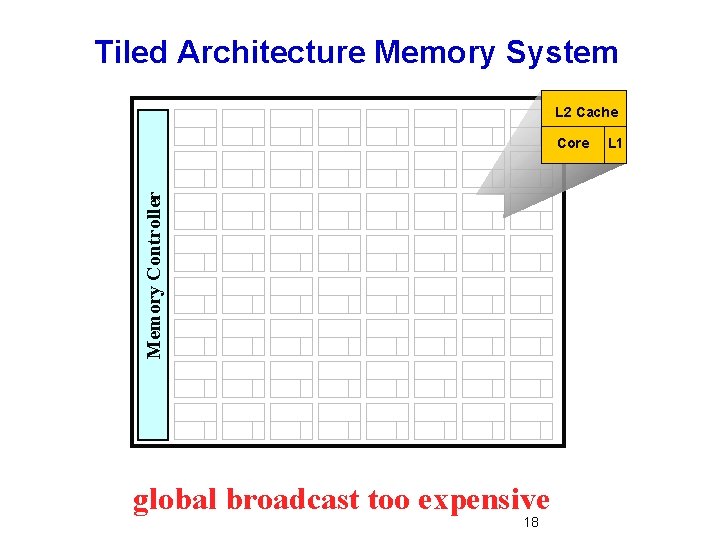

Tiled Architecture Memory System

(Figure: tiles, each with a core, L1, and L2 cache, plus memory controllers.)

- Global broadcast too expensive

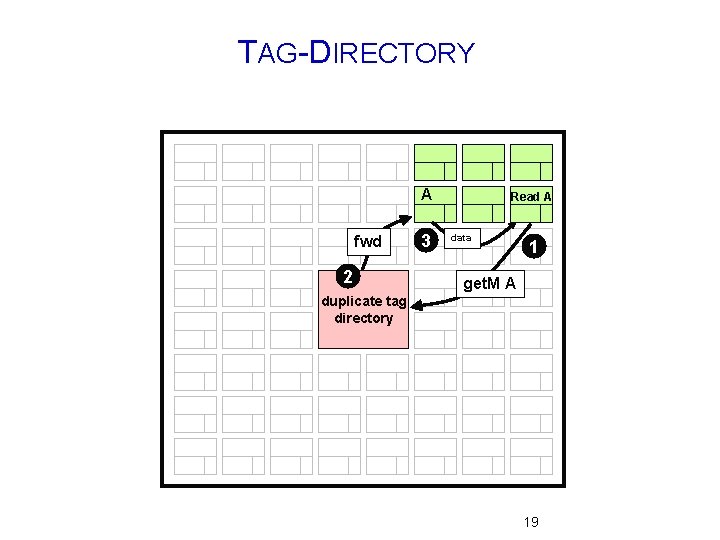

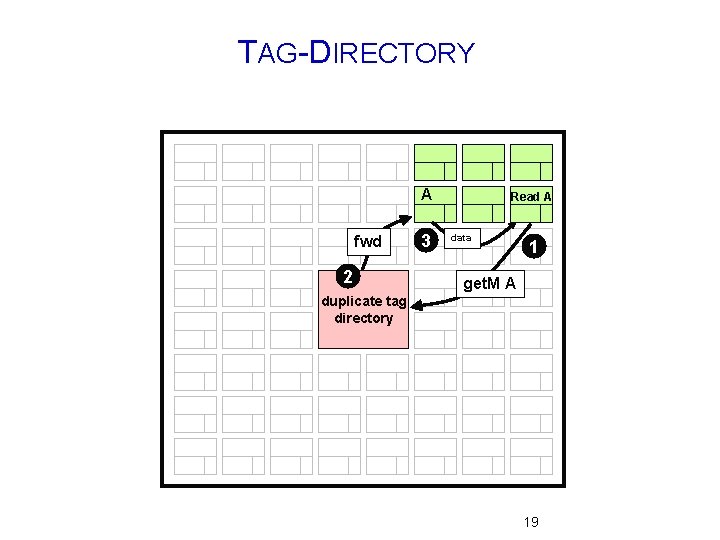

TAG-DIRECTORY

(Figure: a duplicate tag directory handling a request for block A, labeled "Read A"; messages: 1 getM A, 2 fwd, 3 data.)

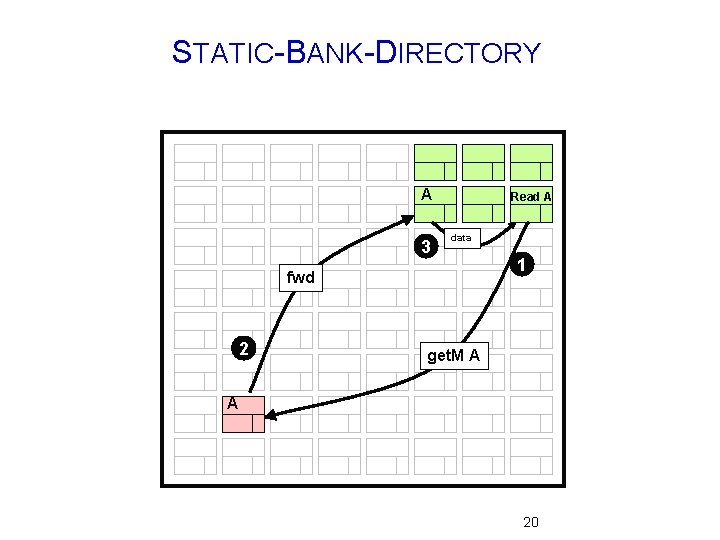

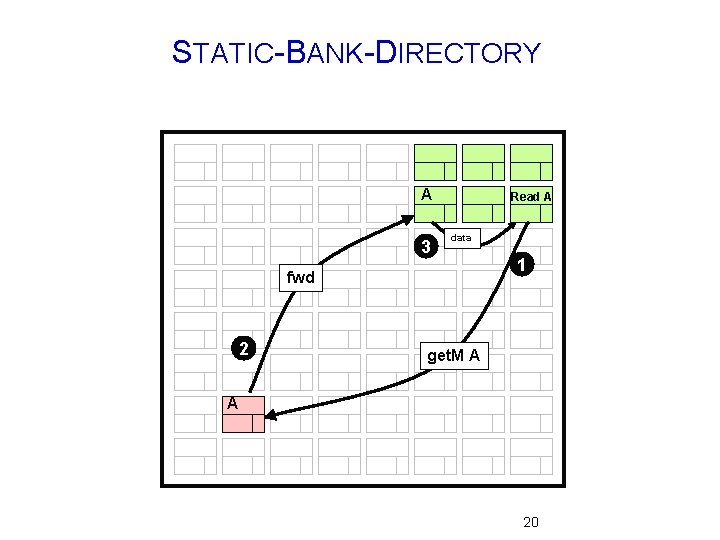

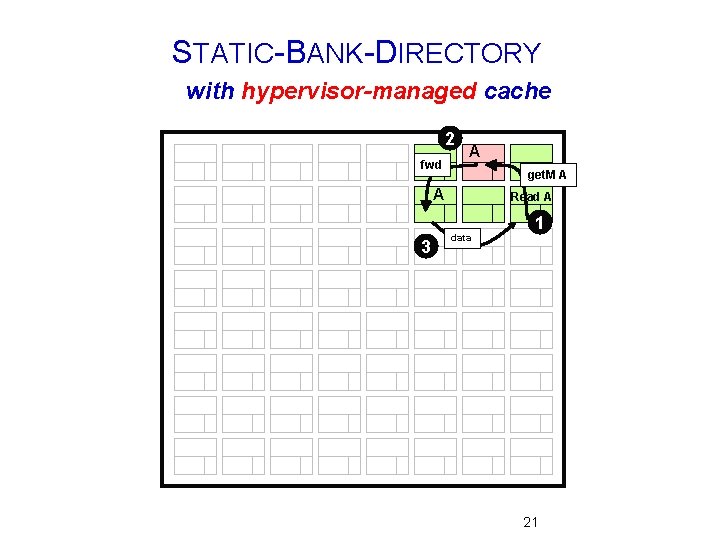

STATIC-BANK-DIRECTORY

(Figure: a request for block A ("Read A") handled by A's static home bank; messages: 1 getM A, 2 fwd, 3 data.)

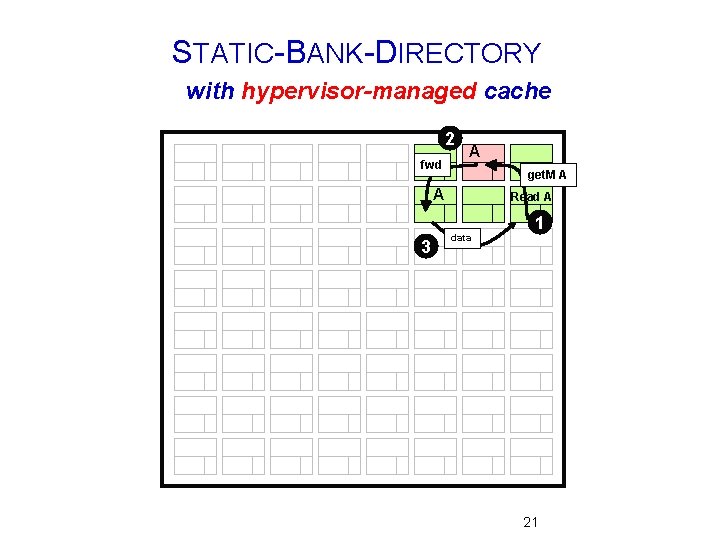

STATIC-BANK-DIRECTORY with hypervisor-managed cache

(Figure: the same request for block A ("Read A"); messages: 1 getM A, 2 fwd, 3 data.)

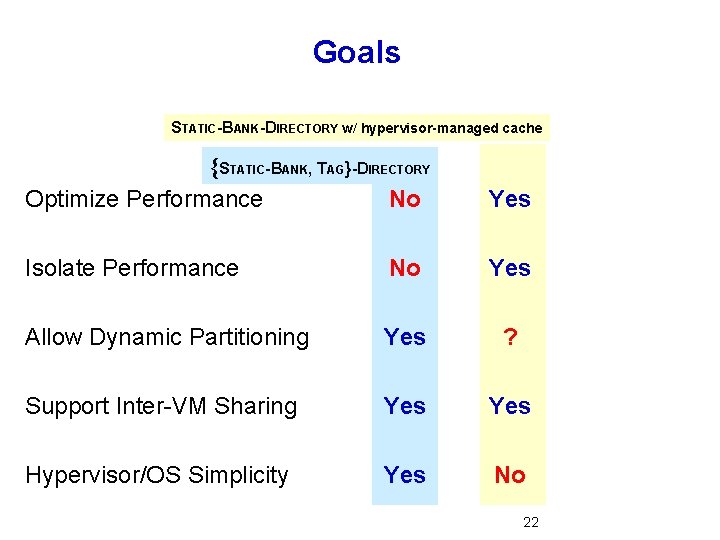

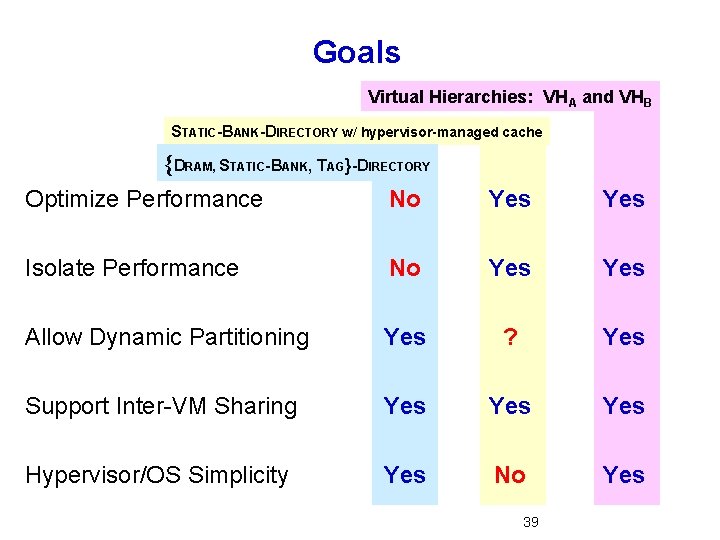

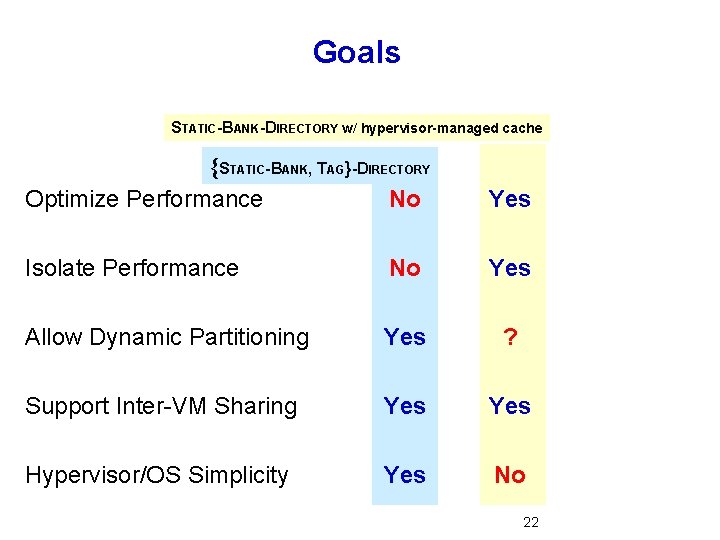

Goals

| Goal | {STATIC-BANK, TAG}-DIRECTORY | STATIC-BANK-DIRECTORY w/ hypervisor-managed cache |
|---|---|---|
| Optimize Performance | No | Yes |
| Isolate Performance | No | Yes |
| Allow Dynamic Partitioning | Yes | ? |
| Support Inter-VM Sharing | Yes | |
| Hypervisor/OS Simplicity | Yes | No |

Outline

- Introduction and Motivation
- Virtual Hierarchies
  - Expanded Motivation
  - Non-hierarchical approaches
  - Proposed Virtual Hierarchies
  - Evaluation
  - Related Work
- Ring-based and Multiple-CMP Coherence
- Conclusion

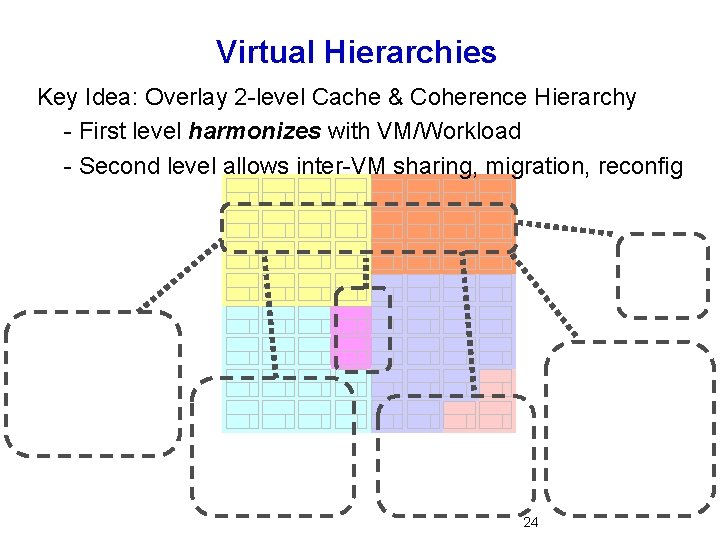

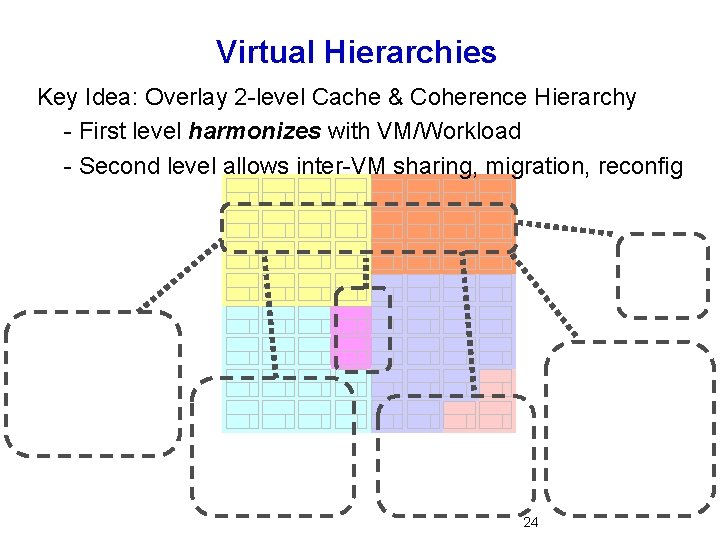

Virtual Hierarchies

Key Idea: Overlay 2-level Cache & Coherence Hierarchy
- First level harmonizes with VM/Workload
- Second level allows inter-VM sharing, migration, reconfig

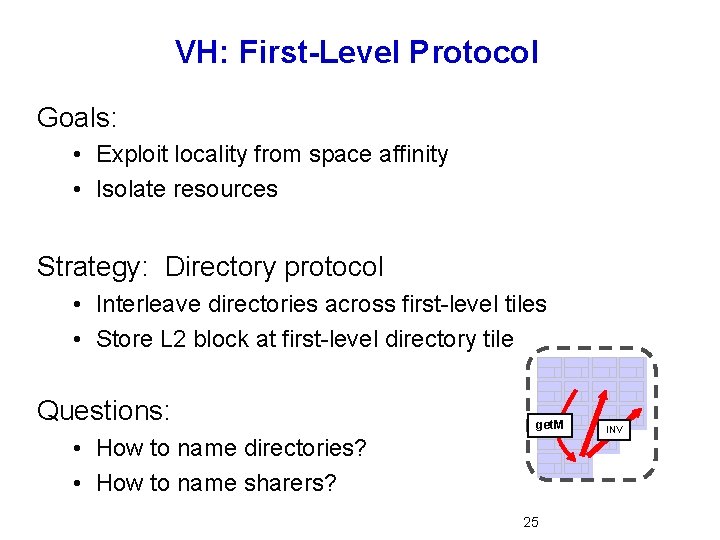

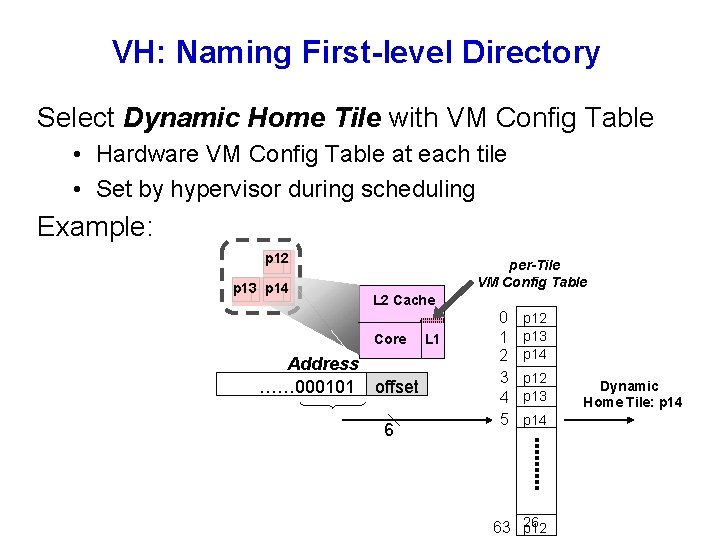

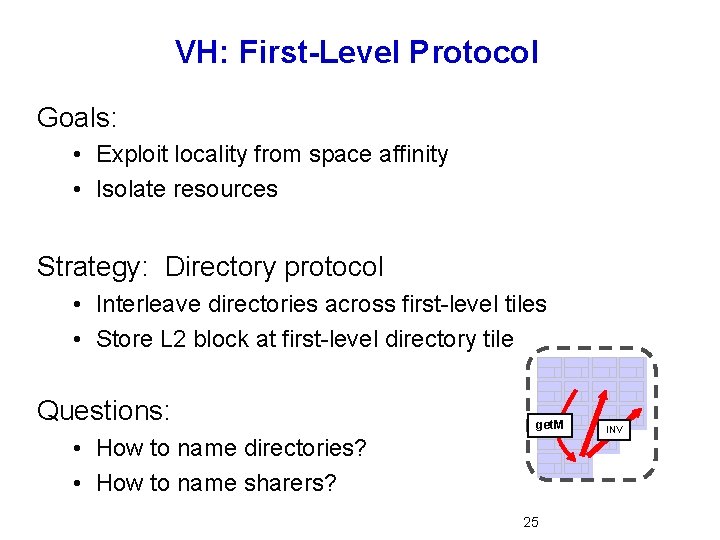

VH: First-Level Protocol

Goals:
- Exploit locality from space affinity
- Isolate resources

Strategy: Directory protocol
- Interleave directories across first-level tiles
- Store L2 block at first-level directory tile

Questions:
- How to name directories?
- How to name sharers?

(Figure: a getM and the resulting INV messages.)

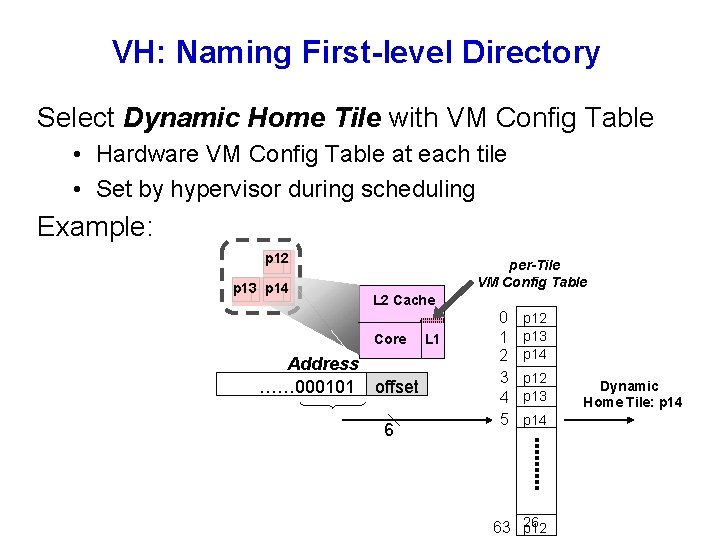

VH: Naming First-level Directory

Select Dynamic Home Tile with VM Config Table
- Hardware VM Config Table at each tile
- Set by hypervisor during scheduling

Example: a VM on tiles p12, p13, and p14. The address bits above the 6-bit block offset (…000101) index the per-tile VM Config Table (entries 0-63, holding p12, p13, p14, …), and the selected entry names the Dynamic Home Tile: p14.
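
The table lookup on this slide can be sketched in a few lines of Python. This is a minimal model under stated assumptions, not the hardware design. It uses the 6-bit block offset and 64-entry table shown above, and it assumes the hypervisor fills the table round-robin with the VM's tiles. The function names are hypothetical.

```python
from typing import List

BLOCK_OFFSET_BITS = 6  # 64-byte blocks, matching the 6-bit offset on the slide
TABLE_INDEX_BITS = 6   # 64-entry VM Config Table per tile (entries 0-63)


def fill_vm_config_table(vm_tiles: List[int], entries: int = 1 << TABLE_INDEX_BITS) -> List[int]:
    """Hypothetical hypervisor policy: spread the VM's tiles round-robin over all entries."""
    return [vm_tiles[i % len(vm_tiles)] for i in range(entries)]


def dynamic_home_tile(address: int, vm_config_table: List[int]) -> int:
    """Return the tile acting as first-level directory for `address`."""
    index = (address >> BLOCK_OFFSET_BITS) & ((1 << TABLE_INDEX_BITS) - 1)
    return vm_config_table[index]


# The slide's example: a VM on tiles p12, p13, p14 and index bits ...000101 (entry 5).
table = fill_vm_config_table([12, 13, 14])
address = (0xABC << 12) | (0b000101 << BLOCK_OFFSET_BITS) | 0x2A
print(dynamic_home_tile(address, table))  # 14
```

With round-robin filling, entry 5 holds the third tile (p14), which agrees with the slide. Under this assumed policy, rescheduling a VM amounts to rewriting each affected tile's table with the new tile list.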

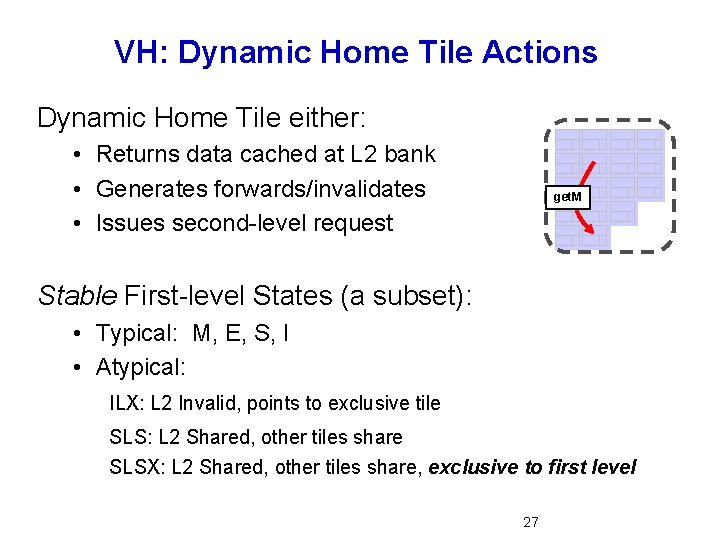

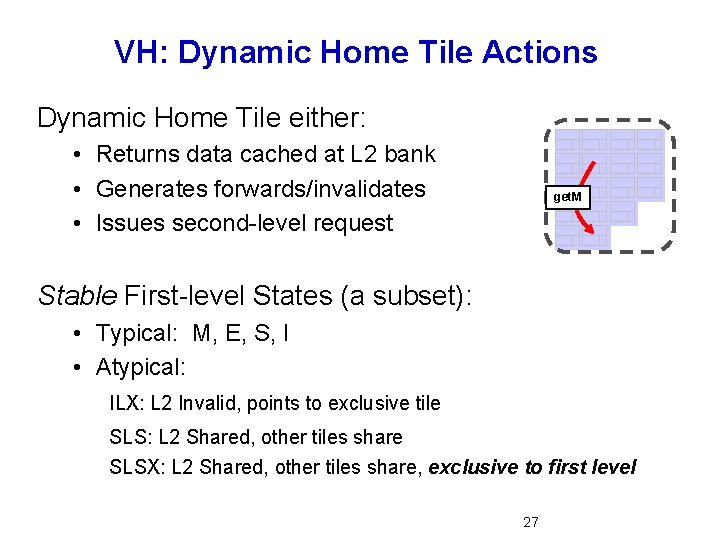

VH: Dynamic Home Tile Actions

Dynamic Home Tile either:
- Returns data cached at L2 bank
- Generates forwards/invalidates
- Issues second-level request

Stable First-level States (a subset):
- Typical: M, E, S, I
- Atypical:
  - ILX: L2 Invalid, points to exclusive tile
  - SLS: L2 Shared, other tiles share
  - SLSX: L2 Shared, other tiles share, exclusive to first level

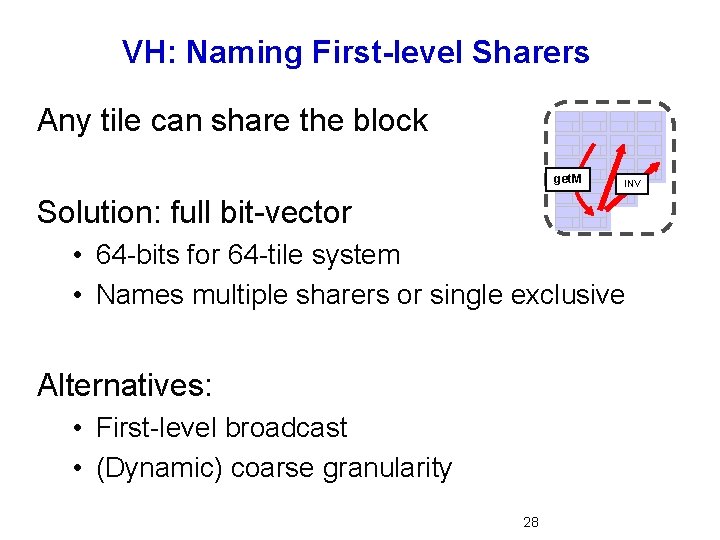

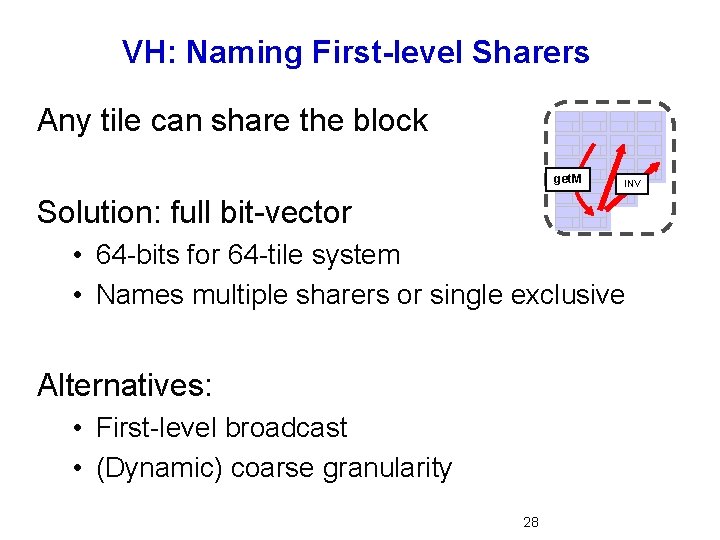

VH: Naming First-level Sharers

Any tile can share the block (figure: a getM triggering INVs).

Solution: full bit-vector
- 64 bits for 64-tile system
- Names multiple sharers or single exclusive

Alternatives:
- First-level broadcast
- (Dynamic) coarse granularity
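
A full bit-vector of this kind can be modeled with a Python integer used as a 64-bit mask. The sketch below is illustrative only. The class and method names are hypothetical, and it models one block at its first-level directory.

```python
NUM_TILES = 64  # one bit per tile in a 64-tile system


class SharerVector:
    """Full-map sharer vector for one block at its first-level directory (sketch)."""

    def __init__(self) -> None:
        self.bits = 0

    def _check(self, tile: int) -> None:
        if not 0 <= tile < NUM_TILES:
            raise ValueError(f"tile {tile} out of range")

    def add_sharer(self, tile: int) -> None:
        self._check(tile)
        self.bits |= 1 << tile

    def set_exclusive(self, tile: int) -> None:
        # The same 64 bits can also name a single exclusive tile.
        self._check(tile)
        self.bits = 1 << tile

    def sharers(self) -> list:
        return [t for t in range(NUM_TILES) if (self.bits >> t) & 1]

    def on_getm(self, requester: int) -> list:
        """Return the tiles that must receive INV, then record the requester as exclusive."""
        targets = [t for t in self.sharers() if t != requester]
        self.set_exclusive(requester)
        return targets


v = SharerVector()
for tile in (3, 12, 40):
    v.add_sharer(tile)
print(v.on_getm(12))  # [3, 40]
print(v.sharers())    # [12]
```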

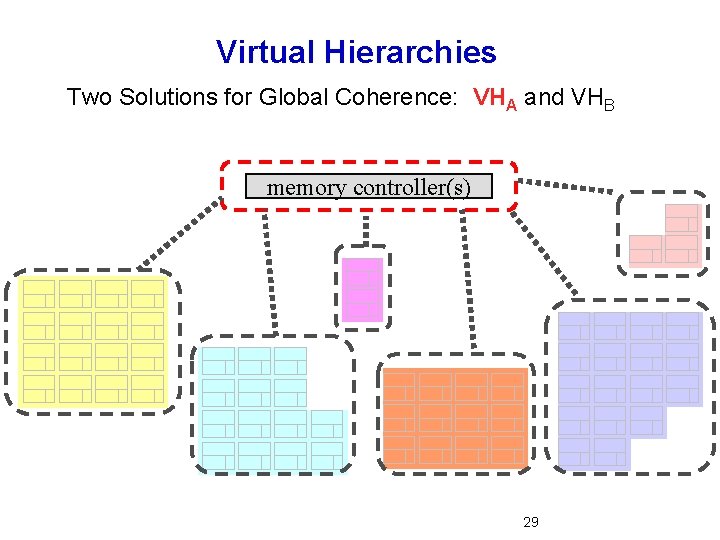

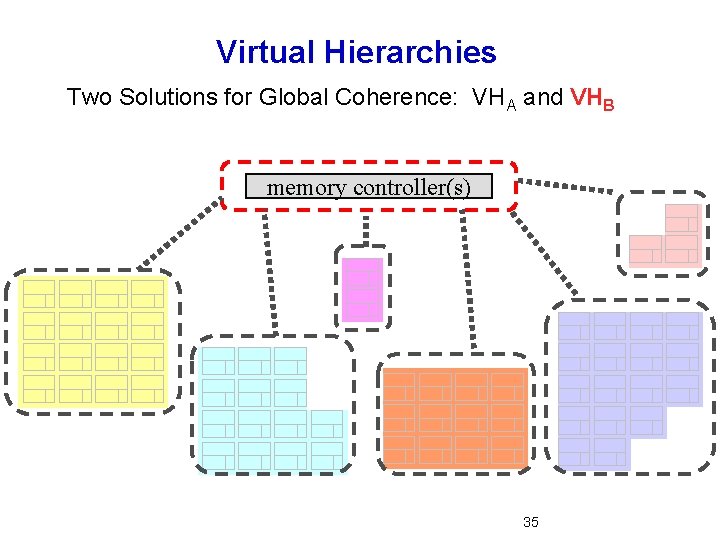

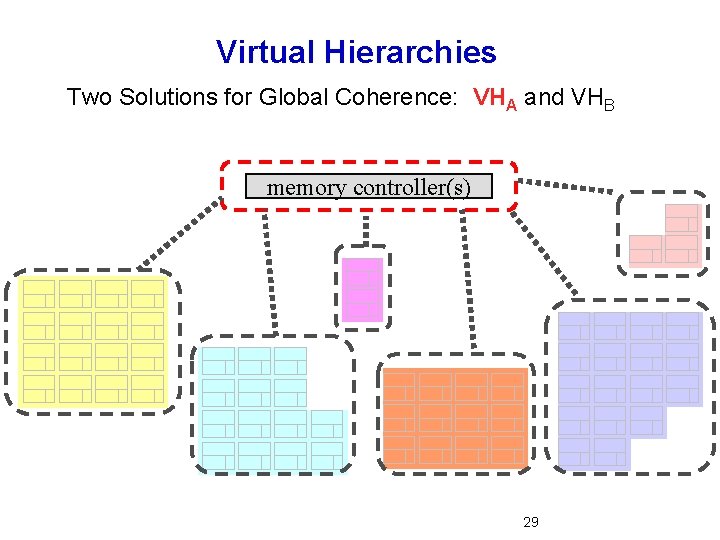

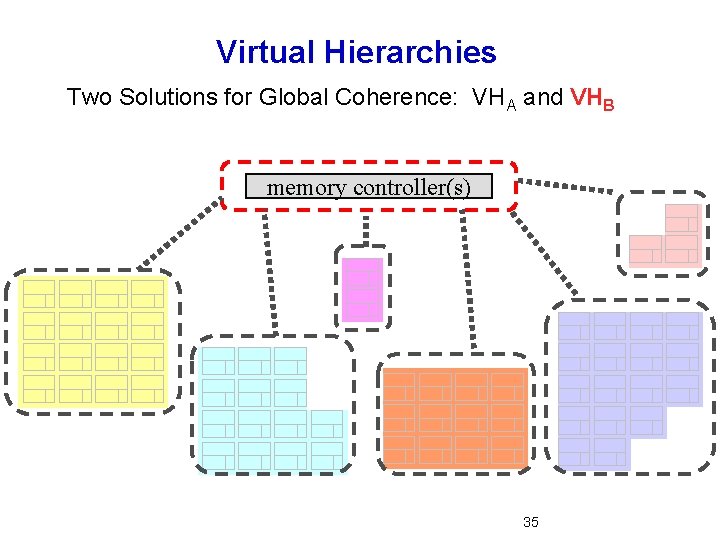

Virtual Hierarchies

Two Solutions for Global Coherence: VHA and VHB

(Figure: second-level coherence through the memory controller(s).)

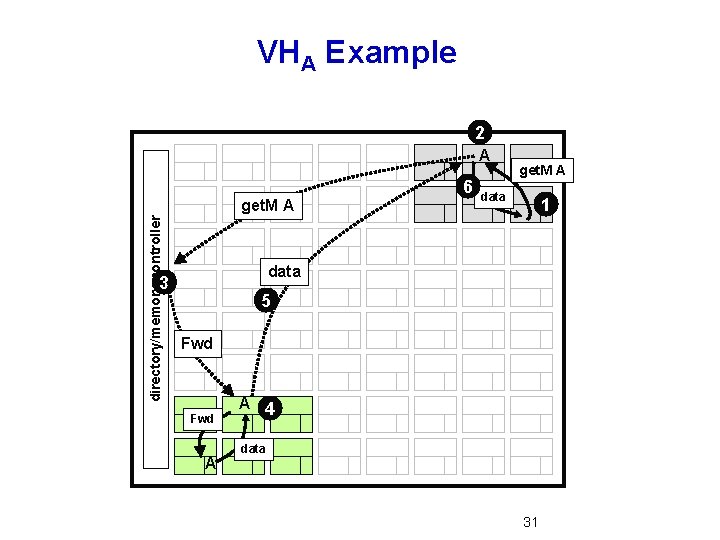

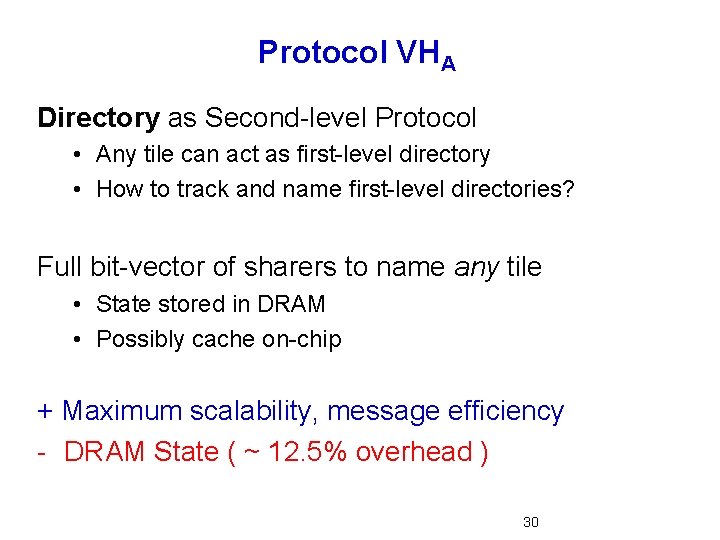

Protocol VHA

Directory as Second-level Protocol
- Any tile can act as first-level directory
- How to track and name first-level directories?

Full bit-vector of sharers to name any tile
- State stored in DRAM
- Possibly cache on-chip

Pro: Maximum scalability, message efficiency
Con: DRAM state (~12.5% overhead)
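
The ~12.5% DRAM overhead is consistent with storing one 64-bit sharer vector per block, assuming 64-byte blocks. The block size is inferred from the 6-bit block offset in the VM Config Table example. This is a back-of-the-envelope check, not a derivation taken from the dissertation.

```latex
\text{overhead}
  = \frac{\text{sharer-vector bits per block}}{\text{data bits per block}}
  = \frac{64}{64 \times 8}
  = \frac{64}{512}
  = 12.5\%
```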

VHA Example

(Figure: a getM A request resolved in six steps via the directory/memory controller, with Fwd A and data messages.)

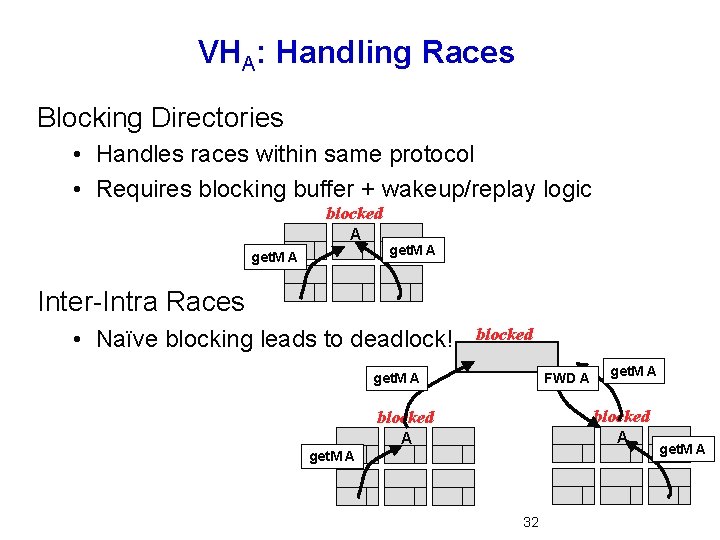

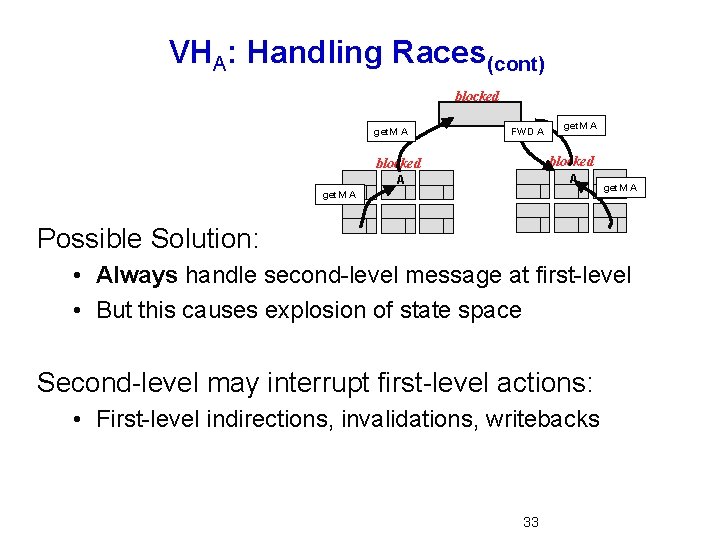

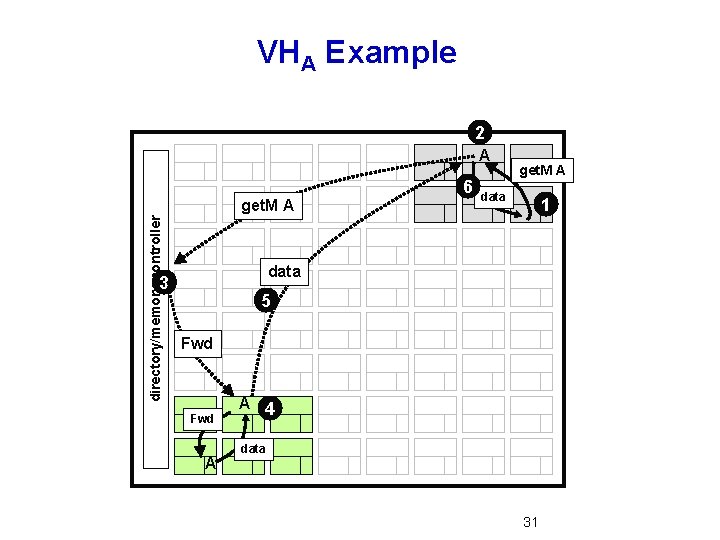

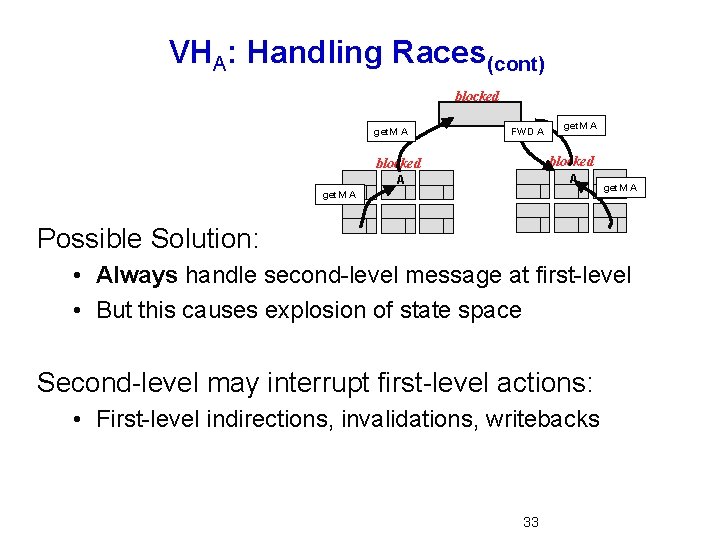

VHA: Handling Races

Blocking Directories
- Handles races within same protocol
- Requires blocking buffer + wakeup/replay logic

Inter-Intra Races
- Naïve blocking leads to deadlock!

(Figure: two directories each blocked on block A, with getM A and FWD A messages waiting on each other.)

VHA: Handling Races (cont.)

(Figure: the same blocked getM A / FWD A scenario.)

Possible Solution:
- Always handle second-level message at first-level
- But this causes explosion of state space

Second-level may interrupt first-level actions:
- First-level indirections, invalidations, writebacks

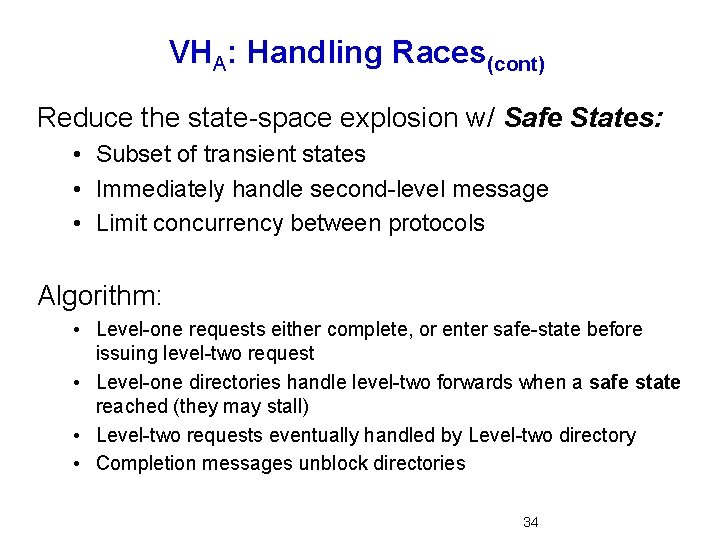

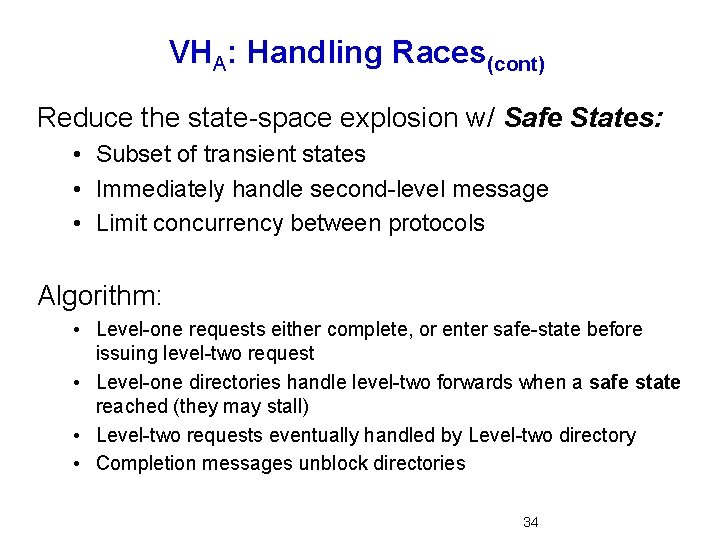

VHA: Handling Races (cont.)

Reduce the state-space explosion w/ Safe States:
- Subset of transient states
- Immediately handle second-level message
- Limit concurrency between protocols

Algorithm:
- Level-one requests either complete, or enter a safe state before issuing a level-two request
- Level-one directories handle level-two forwards when a safe state is reached (they may stall)
- Level-two requests eventually handled by level-two directory
- Completion messages unblock directories
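
A toy Python model can illustrate the safe-state rule. A level-one directory in a safe state handles a level-two forward immediately. Otherwise it queues the forward until a transition reaches a safe state. The state names, and which of them count as safe, are hypothetical placeholders, because the slides do not list the actual safe states. The model also ignores message ordering and completion messages.

```python
from collections import deque

# Hypothetical state names; SAFE_STATES stands in for the protocol's real set of
# stable states plus the "safe" subset of transient states.
SAFE_STATES = {"M", "S", "I", "SAFE_WAIT_L2"}


class LevelOneDirectory:
    def __init__(self) -> None:
        self.state = "I"
        self.deferred = deque()   # level-two forwards waiting for a safe state
        self.handled = []         # (message, state it was handled in)

    def receive_level_two_forward(self, msg: str) -> None:
        if self.state in SAFE_STATES:
            self.handled.append((msg, self.state))
        else:
            self.deferred.append(msg)  # stall instead of adding new transient states

    def transition(self, new_state: str) -> None:
        self.state = new_state
        # Drain stalled forwards as soon as a safe state is reached.
        while self.deferred and self.state in SAFE_STATES:
            self.handled.append((self.deferred.popleft(), self.state))


d = LevelOneDirectory()
d.transition("INVALIDATING_SHARERS")  # first-level invalidations in flight: not safe
d.receive_level_two_forward("Fwd A")  # stalled
d.transition("SAFE_WAIT_L2")          # safe state entered before issuing a level-two request
print(d.handled)  # [('Fwd A', 'SAFE_WAIT_L2')]
```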

Virtual Hierarchies

Two Solutions for Global Coherence: VHA and VHB

(Figure: second-level coherence through the memory controller(s).)

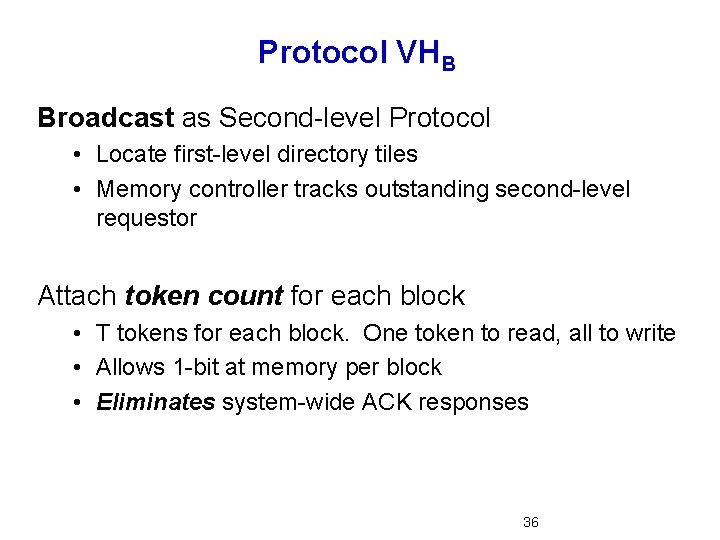

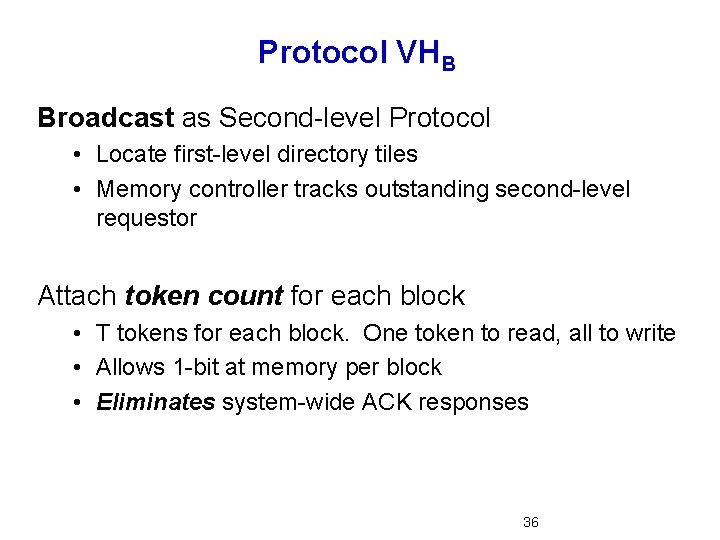

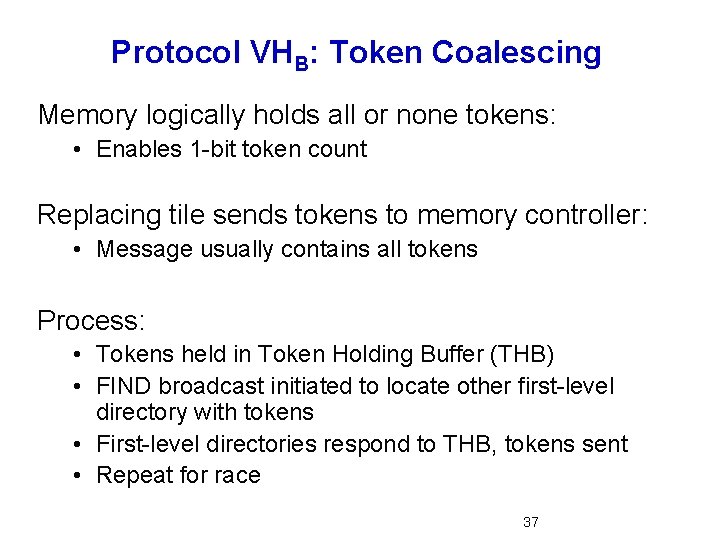

Protocol VHB

Broadcast as Second-level Protocol
- Locate first-level directory tiles
- Memory controller tracks outstanding second-level requestor

Attach token count for each block
- T tokens for each block. One token to read, all to write
- Allows 1-bit at memory per block
- Eliminates system-wide ACK responses
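
The per-block token rule reduces to three small checks. This is a sketch only. T = 64 is an assumed value (the slide only says T), and the helper names are hypothetical rather than taken from the GEMS implementation.

```python
T = 64  # tokens per block; assumed value, the slide only says "T"


def can_read(tokens_held: int) -> bool:
    return tokens_held >= 1          # one token suffices to read


def can_write(tokens_held: int, total: int = T) -> bool:
    return tokens_held == total      # all tokens are needed to write


def memory_token_bit(tokens_at_memory: int, total: int = T) -> int:
    """Memory holds all or none of a block's tokens, so one bit records which."""
    if tokens_at_memory not in (0, total):
        raise ValueError("memory must hold all tokens or none")
    return int(tokens_at_memory == total)
```

A writer can proceed only once it holds every token, so no other cache can still hold a readable copy. This explains why system-wide ACK responses become unnecessary.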

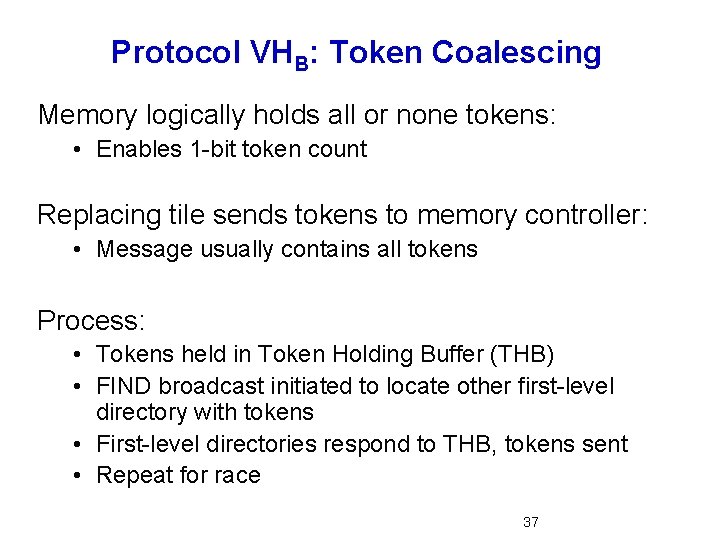

Protocol VHB: Token Coalescing

Memory logically holds all or none tokens:
- Enables 1-bit token count

Replacing tile sends tokens to memory controller:
- Message usually contains all tokens

Process:
- Tokens held in Token Holding Buffer (THB)
- FIND broadcast initiated to locate other first-level directory with tokens
- First-level directories respond to THB, tokens sent
- Repeat for race
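
The coalescing process can be sketched sequentially in Python. The sketch assumes tokens are conserved and that no new race occurs while the FIND broadcast is outstanding. The slide's "repeat for race" case is flagged in a comment but not modeled. Tile IDs, token counts, and the function name are illustrative.

```python
from typing import Dict


def coalesce_tokens(replacement_tokens: int, first_level_dirs: Dict[int, int], total: int) -> int:
    """Collect a block's tokens in the Token Holding Buffer (THB); return FIND rounds used.

    first_level_dirs maps a (hypothetical) tile ID to the tokens that tile still holds.
    """
    thb = replacement_tokens            # tokens sent by the replacing tile
    find_rounds = 0
    while thb < total:
        find_rounds += 1                # FIND broadcast to locate other first-level dirs
        responders = {t: n for t, n in first_level_dirs.items() if n > 0}
        if not responders:
            # Tokens still in flight: the real protocol repeats after a race.
            raise RuntimeError("tokens missing; repeat FIND after the race resolves")
        for tile, n in responders.items():
            thb += n                    # directory responds to the THB with its tokens
            first_level_dirs[tile] = 0
    if thb != total:
        raise RuntimeError("token count exceeds T; conservation violated")
    return find_rounds                  # THB now holds all tokens: memory bit can be set


print(coalesce_tokens(64, {}, 64))              # 0: replacement carried all tokens
print(coalesce_tokens(60, {5: 3, 9: 1}, 64))    # 1: one FIND round collects the rest
```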

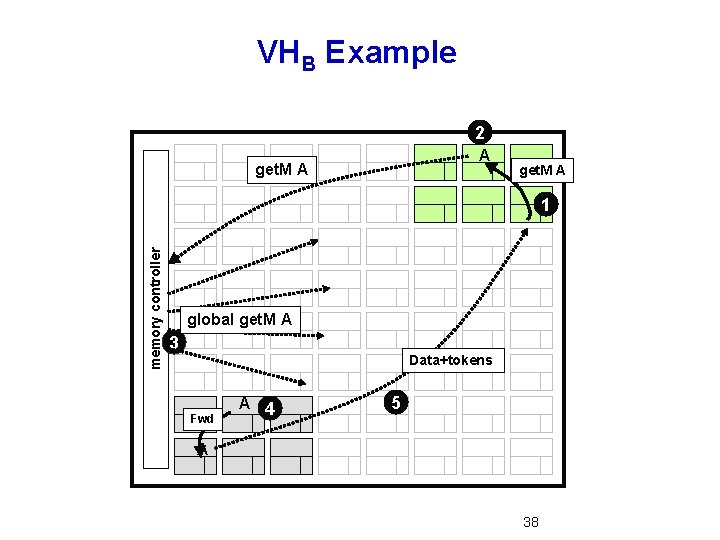

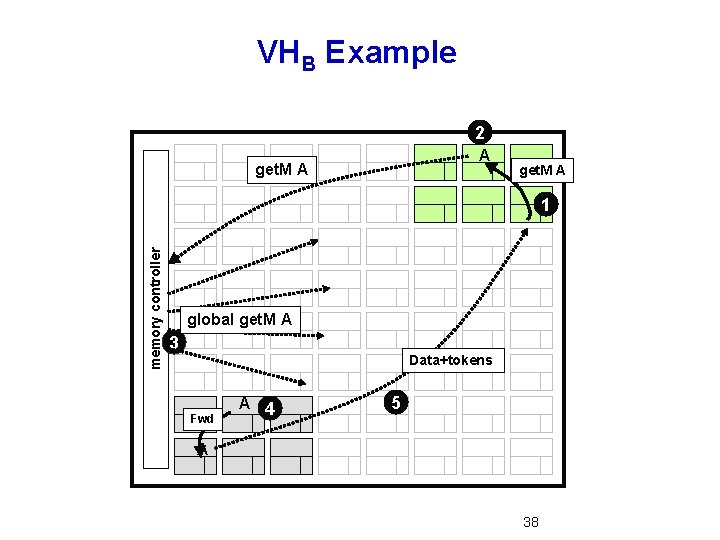

VHB Example

(Figure: a five-step getM A involving a global getM A to the memory controller, a Fwd A, and a Data+tokens response.)

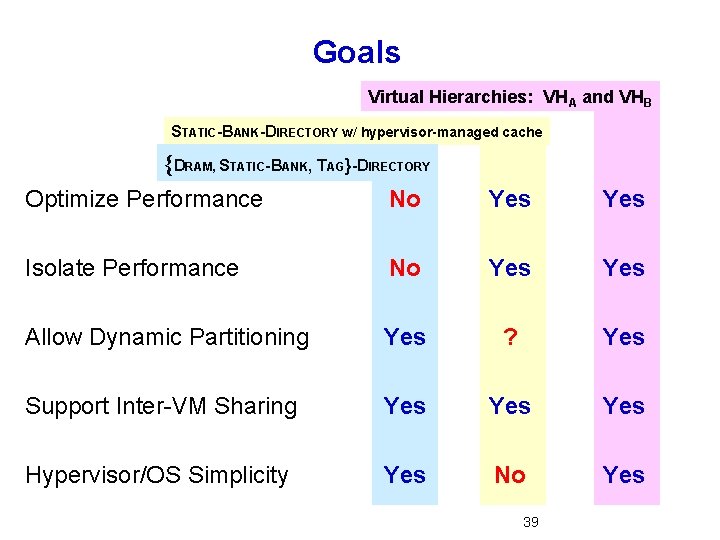

Goals

| Goal | {DRAM, STATIC-BANK, TAG}-DIRECTORY | STATIC-BANK-DIRECTORY w/ hypervisor-managed cache | Virtual Hierarchies: VHA and VHB |
|---|---|---|---|
| Optimize Performance | No | Yes | Yes |
| Isolate Performance | No | Yes | Yes |
| Allow Dynamic Partitioning | Yes | ? | Yes |
| Support Inter-VM Sharing | Yes | | Yes |
| Hypervisor/OS Simplicity | Yes | No | Yes |

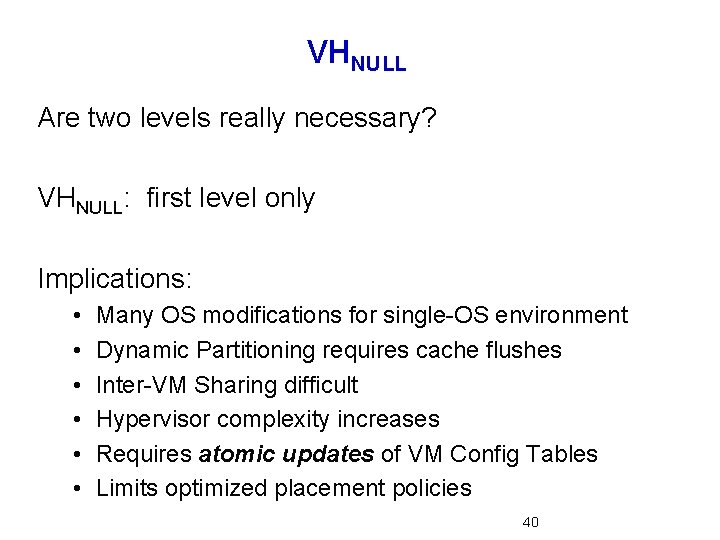

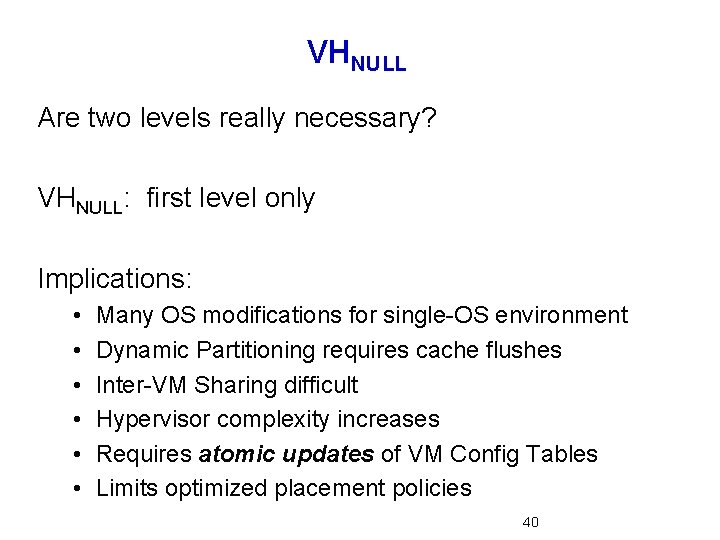

VHNULL

Are two levels really necessary? VHNULL: first level only

Implications:
- Many OS modifications for single-OS environment
- Dynamic Partitioning requires cache flushes
- Inter-VM Sharing difficult
- Hypervisor complexity increases
  - Requires atomic updates of VM Config Tables
  - Limits optimized placement policies

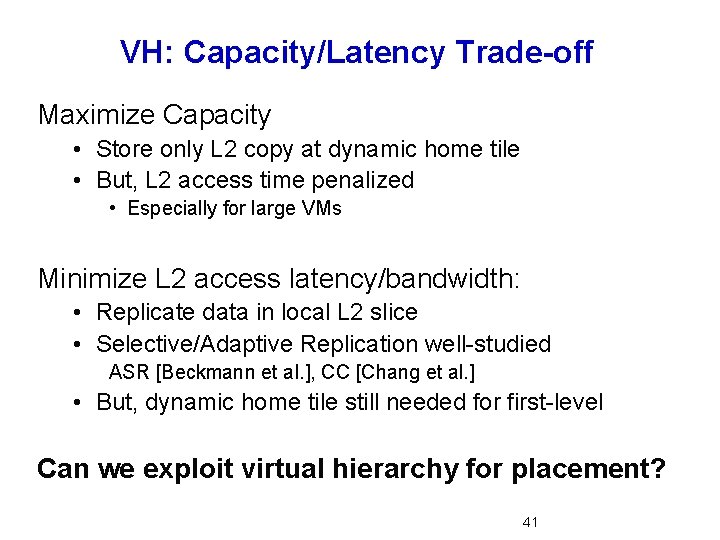

VH: Capacity/Latency Trade-off

Maximize Capacity
- Store only L2 copy at dynamic home tile
- But, L2 access time penalized
- Especially for large VMs

Minimize L2 access latency/bandwidth:
- Replicate data in local L2 slice
- Selective/Adaptive Replication well-studied: ASR [Beckmann et al.], CC [Chang et al.]
- But, dynamic home tile still needed for first-level

Can we exploit virtual hierarchy for placement?

VH: Data Placement Optimization Policy

Data from memory placed in tile's local L2 bank
- Tag not allocated at dynamic home tile

Use second-level coherence on first sharing miss
- Then allocate tag at dynamic home tile for future sharing misses

Benefits:
- Private data allocates in tile's local L2 bank
- Overhead of replicating data reduced
- Fast, first-level sharing for widely shared data
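
The policy can be sketched as a toy Python model. The model tracks which blocks live only in a requester's local L2 bank and which have a tag at their dynamic home tile. It assumes a single VM and abstracts away the actual first- and second-level messages. The class and method names are hypothetical.

```python
from typing import Dict, Set


class PlacementModel:
    """Toy model of the data placement policy (not the simulator's code)."""

    def __init__(self) -> None:
        self.local_l2: Dict[int, Set[int]] = {}  # tile -> blocks in its local L2 bank
        self.home_tags: Set[int] = set()          # blocks with a tag at their dynamic home tile

    def access_miss(self, tile: int, block: int) -> str:
        if block in self.home_tags:
            # Tag already allocated: fast first-level sharing via the dynamic home tile.
            return "first-level"
        if any(block in blocks for t, blocks in self.local_l2.items() if t != tile):
            # First sharing miss: resolved with second-level coherence, then a tag
            # is allocated at the dynamic home tile for future sharing misses.
            self.home_tags.add(block)
            return "second-level"
        # Data from memory goes to the requester's local L2 bank; no home-tile tag.
        self.local_l2.setdefault(tile, set()).add(block)
        return "memory"


m = PlacementModel()
print(m.access_miss(3, 0x40))  # memory
print(m.access_miss(5, 0x40))  # second-level
print(m.access_miss(7, 0x40))  # first-level
```

A block touched by only one tile never acquires a home-tile tag in this model. That corresponds to the slide's point that private data stays in the tile's local L2 bank.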

Outline

- Introduction and Motivation
- Virtual Hierarchies
  - Expanded Motivation
  - Non-hierarchical approaches
  - Proposed Virtual Hierarchies
  - Evaluation
  - Related Work
- Ring-based and Multiple-CMP Coherence
- Conclusion

VH Evaluation Methods

Wisconsin GEMS

Target System: 64-core tiled CMP
- In-order SPARC cores
- 1 MB, 16-way L2 cache per tile, 10-cycle access
- 2D mesh interconnect, 16-byte links, 5-cycle link latency
- Eight on-chip memory controllers, 275-cycle DRAM latency

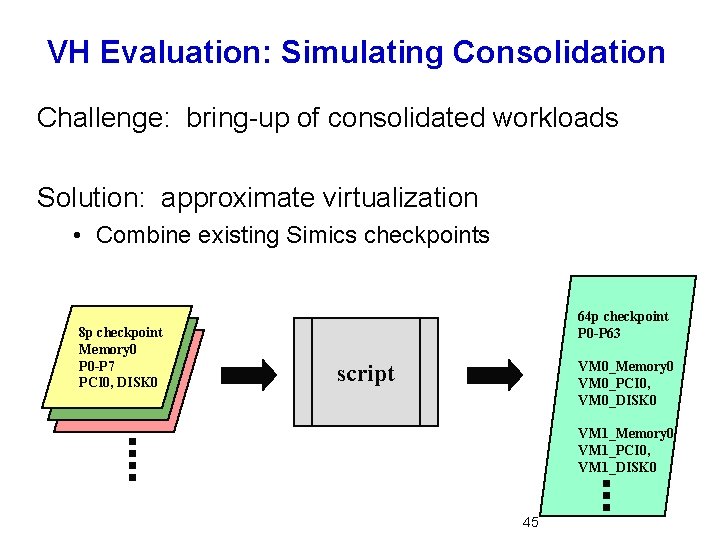

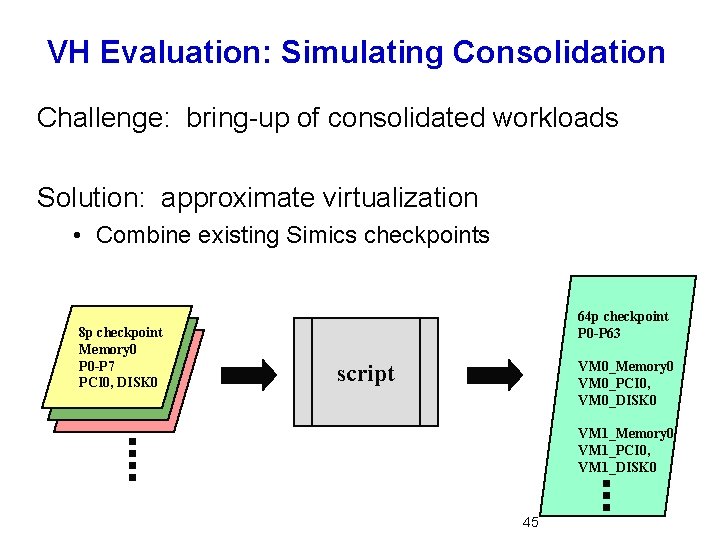

VH Evaluation: Simulating Consolidation

Challenge: bring-up of consolidated workloads

Solution: approximate virtualization
- Combine existing Simics checkpoints

(Figure: a script combines 8p checkpoints (Memory0, P0-P7, PCI0, DISK0) into a 64p checkpoint (P0-P63) with per-VM copies such as VM0_Memory0, VM0_PCI0, VM0_DISK0, VM1_Memory0, VM1_PCI0, VM1_DISK0, and so on.)

VH Evaluation: Simulating Consolidation

At simulation-time, Ruby handles mapping:
- Converts <Processor ID, 32-bit Address> to <36-bit address>
- Schedules VMs to adjacent cores by sending Simics requests to appropriate L1 controllers
- Memory controllers evenly interleaved

Bottom-line:
- Static scheduling
- No hypervisor execution simulated
- No content-based page sharing
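
One possible way to convert the (Processor ID, 32-bit Address) pair to 36 bits is to place a VM ID in the four extra address bits. That would allow up to 16 VMs, enough for the 16x4p configuration. The slides do not say how Ruby actually forms the 36-bit address. This encoding, the block-interleaved controller choice, and the helper names are all assumptions for illustration.

```python
ADDR_BITS = 32
VM_ID_BITS = 4            # assumed encoding: 36 - 32 spare bits -> at most 16 VMs
NUM_MEM_CONTROLLERS = 8   # eight on-chip memory controllers in the target system
BLOCK_OFFSET_BITS = 6     # assumed 64-byte blocks


def to_36bit_address(proc_id: int, addr32: int, procs_per_vm: int) -> int:
    """Hypothetical mapping: prefix the guest address with the VM ID."""
    if not 0 <= addr32 < (1 << ADDR_BITS):
        raise ValueError("expected a 32-bit guest address")
    vm_id = proc_id // procs_per_vm       # VMs occupy adjacent processor IDs
    if vm_id >= (1 << VM_ID_BITS):
        raise ValueError("too many VMs for a 4-bit VM ID")
    return (vm_id << ADDR_BITS) | addr32


def memory_controller(addr36: int) -> int:
    """Even interleaving across controllers at block granularity (assumed)."""
    return (addr36 >> BLOCK_OFFSET_BITS) % NUM_MEM_CONTROLLERS


# 8x8p: processor 9 belongs to VM 1, so the same guest address lands in VM 1's space.
print(hex(to_36bit_address(9, 0x1000, 8)))  # 0x100001000
```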

VH Evaluation: Workloads

OLTP, SPECjbb, Apache, Zeus
- Separate instance of Solaris for each VM

Homogeneous Consolidation
- Simulate same-size workload N times
- Unit of work identical across all workloads
- (each workload staggered by 1,000+ instructions)

Heterogeneous Consolidation
- Simulate different-size, different workloads
- Cycles-per-Transaction for each workload

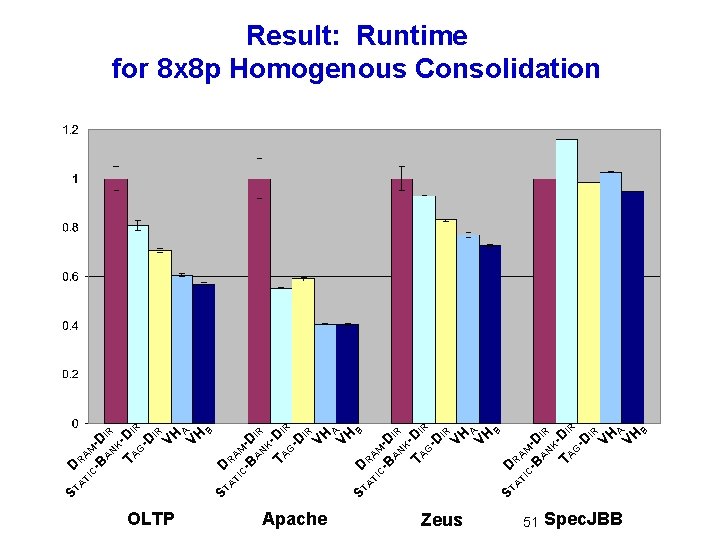

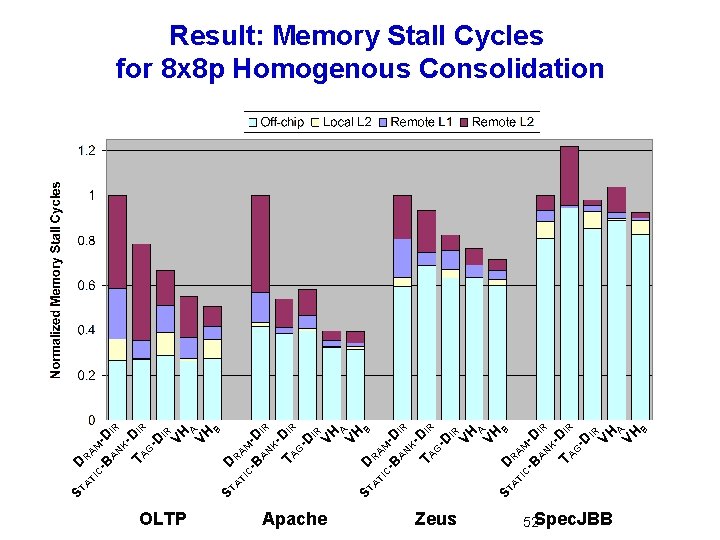

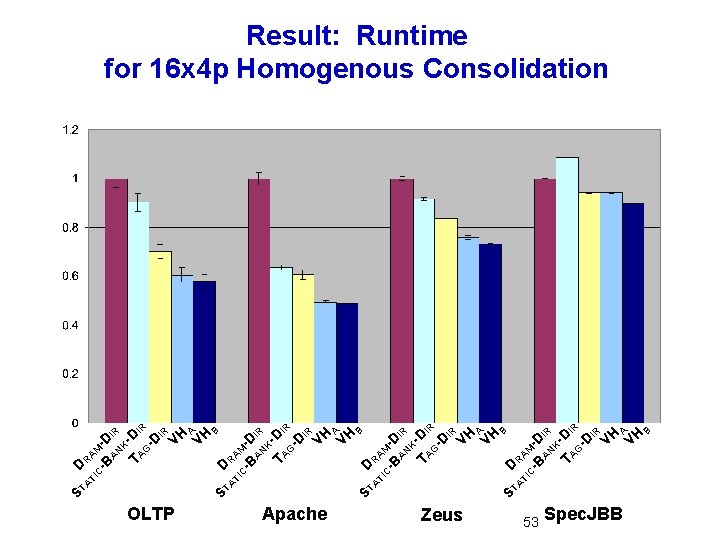

VH Evaluation: Baseline Protocols

DRAM-DIRECTORY:
- 1 MB directory cache per controller
- Each tile nominally private, but replication limited

TAG-DIRECTORY:
- 3-cycle central tag directory (1024 ways), non-pipelined
- Replication limited

STATIC-BANK-DIRECTORY:
- Home tiles interleave by frame address
- Home tile stores only L2 copy

VH Evaluation: VHA and VHB Protocols

VHA
- Based on DirectoryCMP implementation
- Dynamic Home Tile stores only L2 copy

VHB with optimizations
- Private data placement optimization policy (shared data stored at home tile, private data is not)
- Can violate inclusiveness (evict L2 tag w/ sharers)
- Memory data returned directly to requestor

Micro-benchmark: Sharing Latency

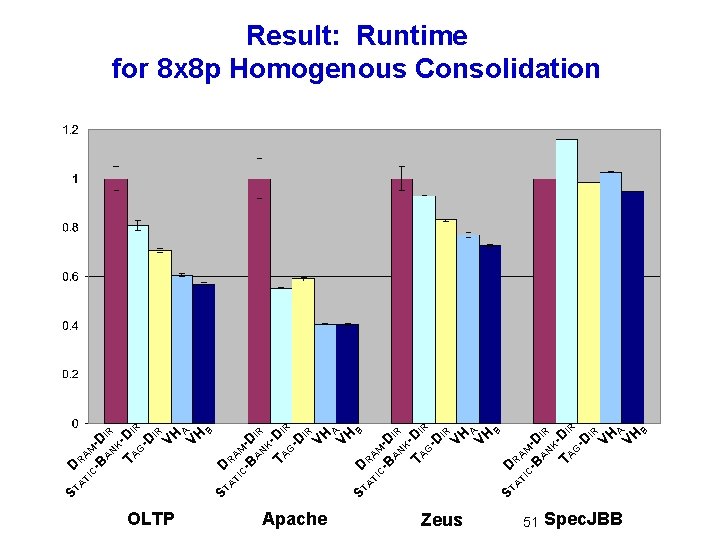

Result: Runtime for 8x8p Homogeneous Consolidation

(Chart: runtime of DRAM-DIR, STATIC-BANK-DIR, TAG-DIR, VHA, and VHB on OLTP, Apache, Zeus, and SPECjbb.)

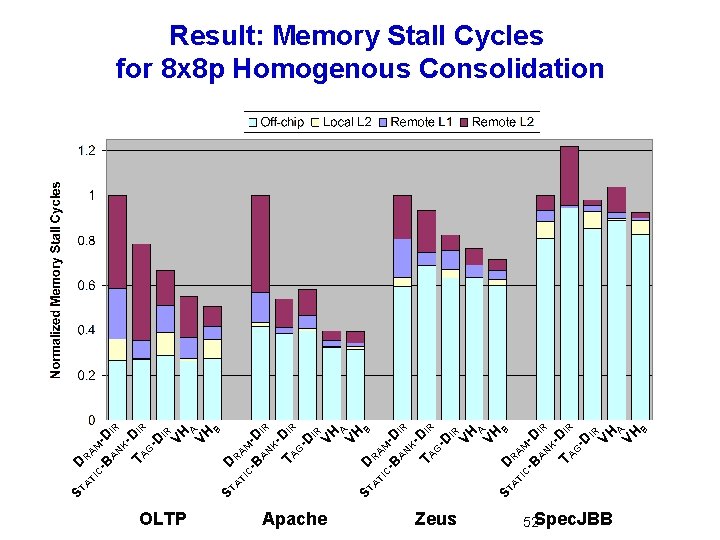

Result: Memory Stall Cycles for 8x8p Homogeneous Consolidation

(Chart: memory stall cycles of DRAM-DIR, STATIC-BANK-DIR, TAG-DIR, VHA, and VHB on OLTP, Apache, Zeus, and SPECjbb.)

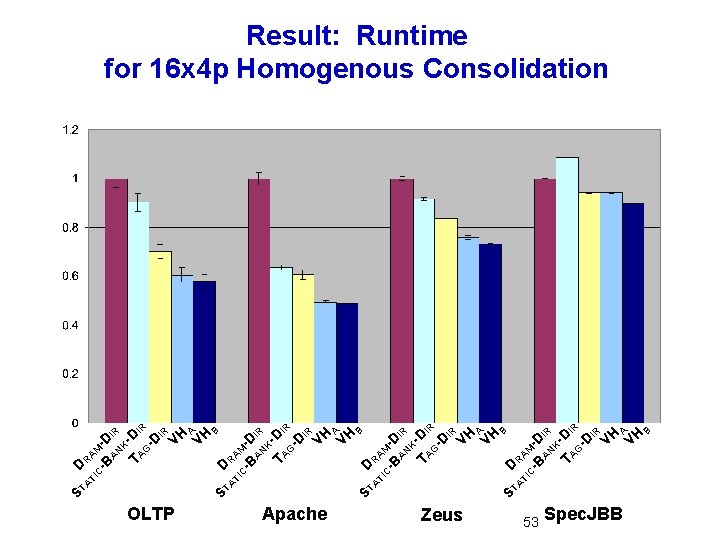

Result: Runtime for 16x4p Homogeneous Consolidation

(Chart: runtime of DRAM-DIR, STATIC-BANK-DIR, TAG-DIR, VHA, and VHB on OLTP, Apache, Zeus, and SPECjbb.)

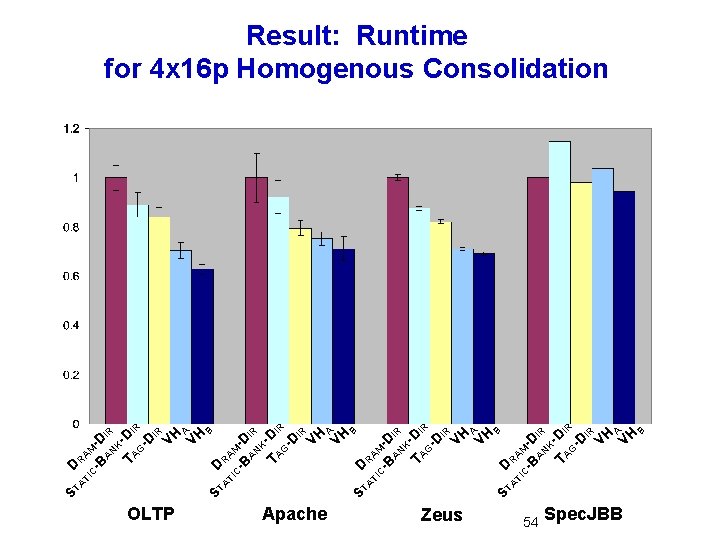

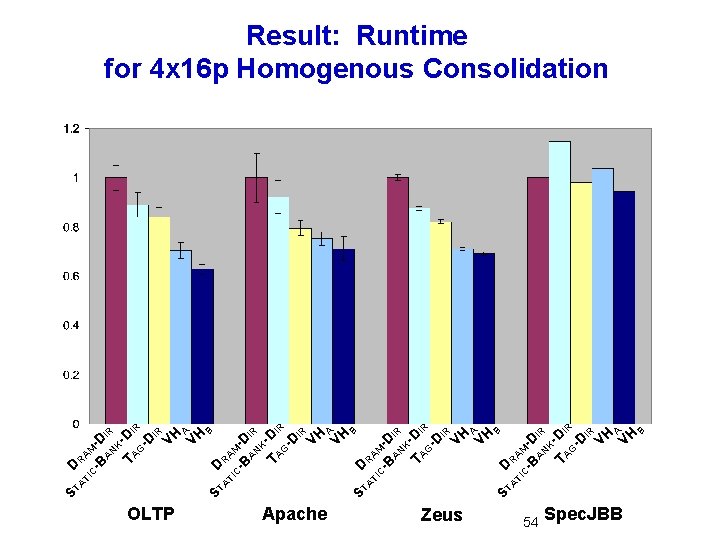

Result: Runtime for 4x16p Homogeneous Consolidation

(Chart: runtime of DRAM-DIR, STATIC-BANK-DIR, TAG-DIR, VHA, and VHB on OLTP, Apache, Zeus, and SPECjbb.)

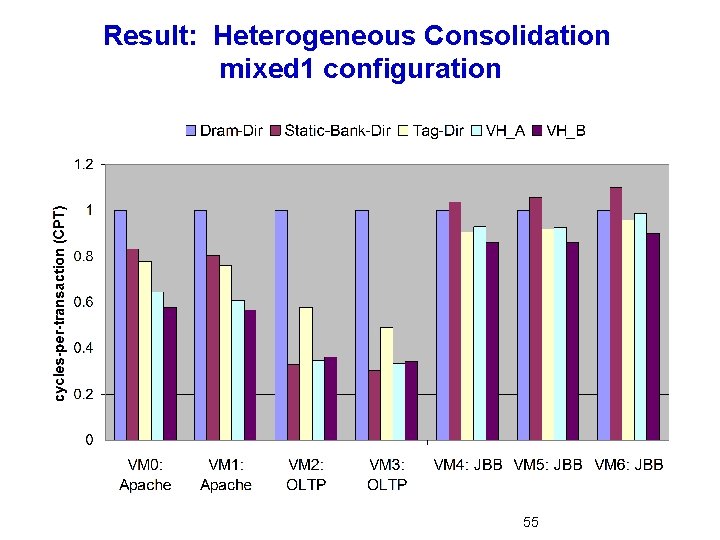

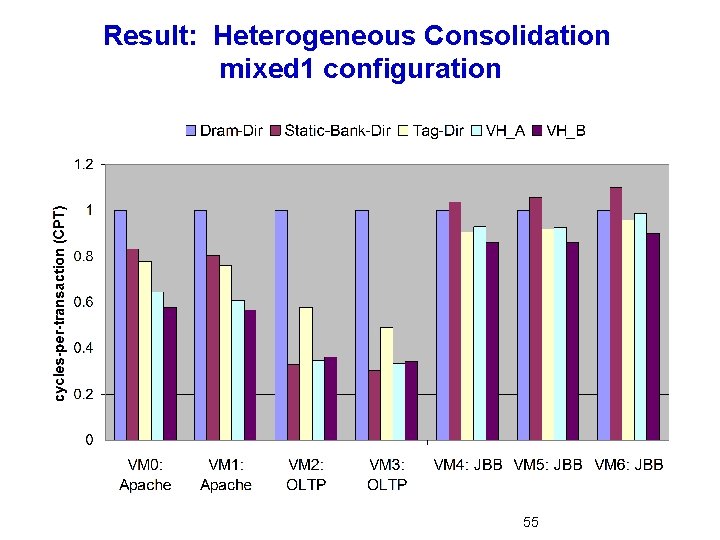

Result: Heterogeneous Consolidation, mixed 1 configuration

Result: Heterogeneous Consolidation, mixed 2 configuration

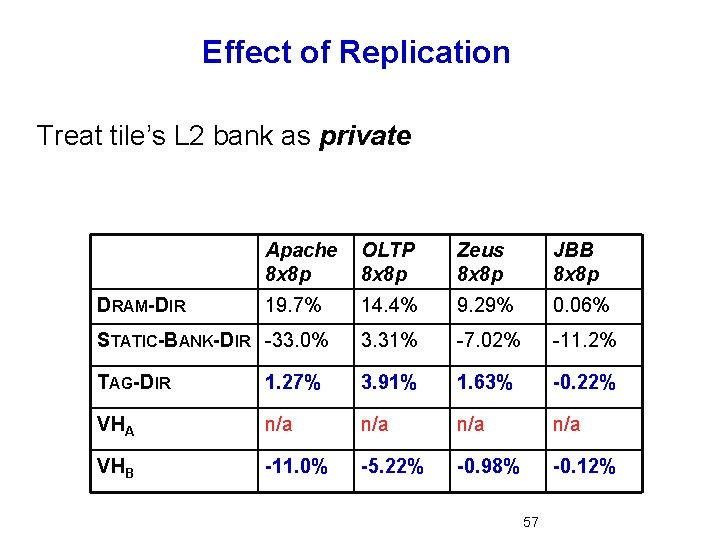

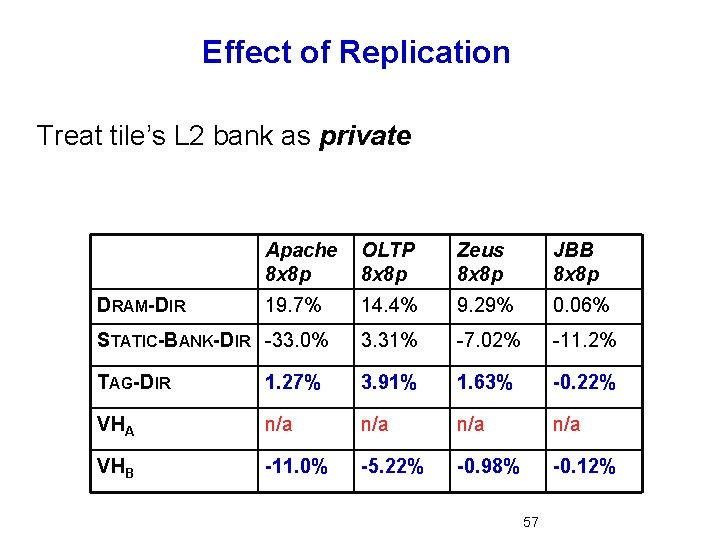

Effect of Replication: treat tile's L2 bank as private

| Protocol | Apache 8x8p | OLTP 8x8p | Zeus 8x8p | JBB 8x8p |
|---|---|---|---|---|
| STATIC-BANK-DIR | 19.7% | 14.4% | 9.29% | 0.06% |
| TAG-DIR | -33.0% | 3.31% | -7.02% | -11.2% |
| VHA | 1.27% | 3.91% | 1.63% | -0.22% |
| VHB | n/a | n/a | n/a | n/a |
| DRAM-DIR | -11.0% | -5.22% | -0.98% | -0.12% |

Outline

- Introduction and Motivation
- Virtual Hierarchies
  - Expanded Motivation
  - Non-hierarchical approaches
  - Proposed Virtual Hierarchies
  - Evaluation
  - Related Work
- Ring-based and Multiple-CMP Coherence
- Conclusion

Virtual Hierarchies: Related Work

Commercial systems usually support partitioning

Sun (Starfire and others)
- Physical partitioning
- No coherence between partitions

IBM's LPAR
- Logical partitions, time-slicing of processors
- Global coherence, but doesn't optimize space-sharing

Virtual Hierarchies: Related Work

Systems Approaches to Space Affinity:
- Cellular Disco, Managing L2 via OS [Cho et al.]

Shared L2 Cache Partitioning
- Way-based, replacement-based
- Molecular Caches (~VHNULL)

Cache Organization and Replication
- D-NUCA, NuRapid, Cooperative Caching, ASR

Quality-of-Service
- Virtual Private Caches [Nesbit et al.]
- More

Virtual Hierarchies: Related Work

Coherence protocol implementations
- Token coherence w/ multicast
- Multicast snooping

Two-level directory
- Compaq Piranha
- Pruning caches [Scott et al.]

Summary: Virtual Hierarchies

Contribution: Virtual Hierarchy Idea
- Alternative to physical hard-wired hierarchies
- Optimize for space sharing and workload consolidation

Contribution: VHA and VHB implementations
- Two-level virtual hierarchy implementations

Published in ISCA 2007 and 2008 Top Picks


Outline

- Introduction and Motivation
- Virtual Hierarchies
- Ring-based Coherence [MICRO 2006]
  - Skip, 5-minute versions, or 15-minute versions?
- Multiple-CMP Coherence [HPCA 2005]
  - Skip, 5-minute versions, or 15-minute versions?
- Conclusion

Contribution: Ring-based Coherence

Problem: Order of Bus != Order of Ring
- Cannot apply bus-based snooping protocols

Existing Solutions
- Use unbounded retries to handle contention
- Use a performance-costly ordering point

Contribution: RING-ORDER
- Exploits round-robin order of ring
- Fast and stable performance

Appears in MICRO 2006

Contribution: Multiple-CMP Coherence

Hierarchy now the default, increases complexity
- Most prior hierarchical protocols use bus-based nodes

Contribution: DirectoryCMP
- Two-level directory protocol

Contribution: TokenCMP
- Extend token coherence to Multiple-CMPs
- Flat for correctness, hierarchical for performance

Appears in HPCA 2005

Other Research and Contributions

Wisconsin GEMS
- ISCA '05 tutorial, CMP development, release, support

Amdahl's Law in the Multicore Era
- Mark D. Hill and Michael R. Marty, to appear IEEE Computer

ASR: Adaptive Selective Replication for CMP Caches
- Beckmann et al., MICRO 2006

LogTM-SE: Decoupling Hardware Transactional Memory from Caches
- Yen et al., HPCA 2007

Key Contributions

- Trend: Multicore ring interconnects emerging
  - Challenge: Order of ring != order of bus
  - Contribution: New protocol exploits ring order
- Trend: Multicore now the basic building block
  - Challenge: Hierarchical coherence for Multiple-CMP is complex
  - Contribution: DirectoryCMP and TokenCMP
- Trend: Workload consolidation w/ space sharing
  - Challenge: Physical hierarchies often do not match workloads
  - Contribution: Virtual Hierarchies

Backup Slides

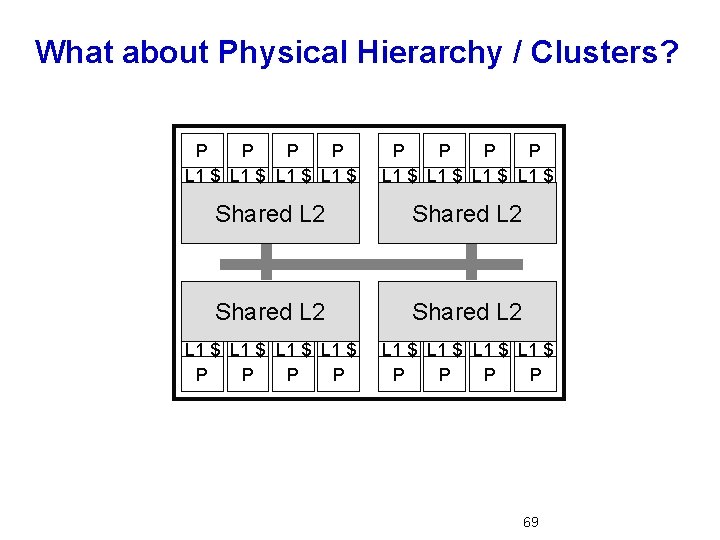

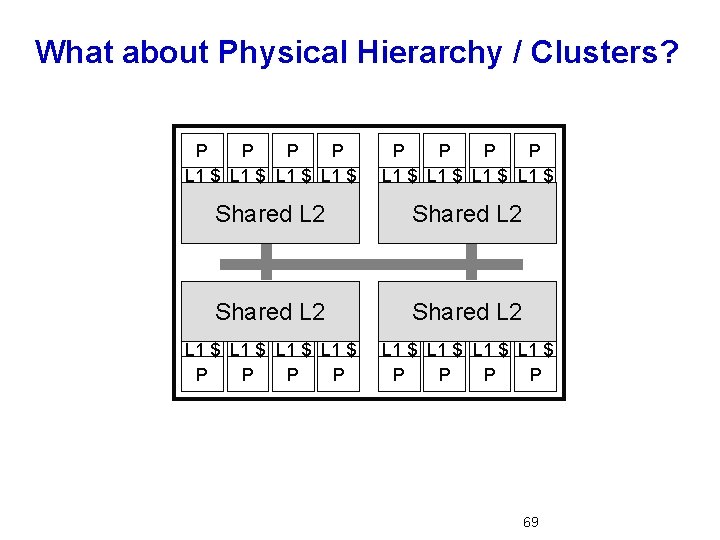

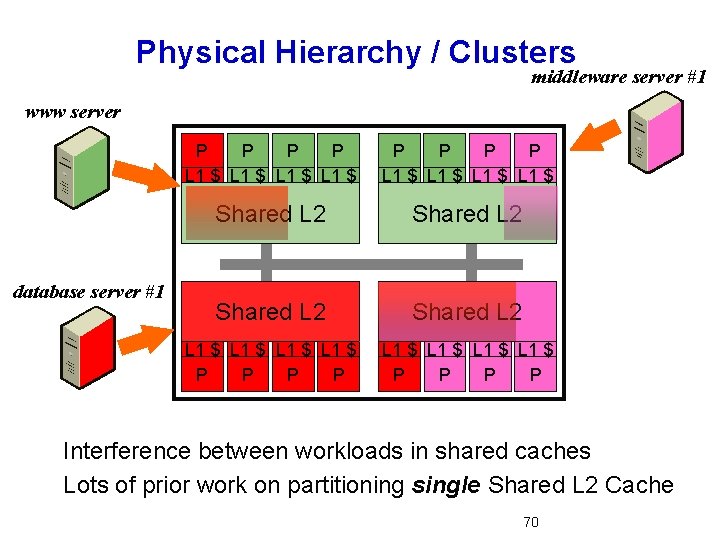

What about Physical Hierarchy / Clusters?

(Figure: a cluster of processors (P) with L1 caches ($) sharing one L2.)

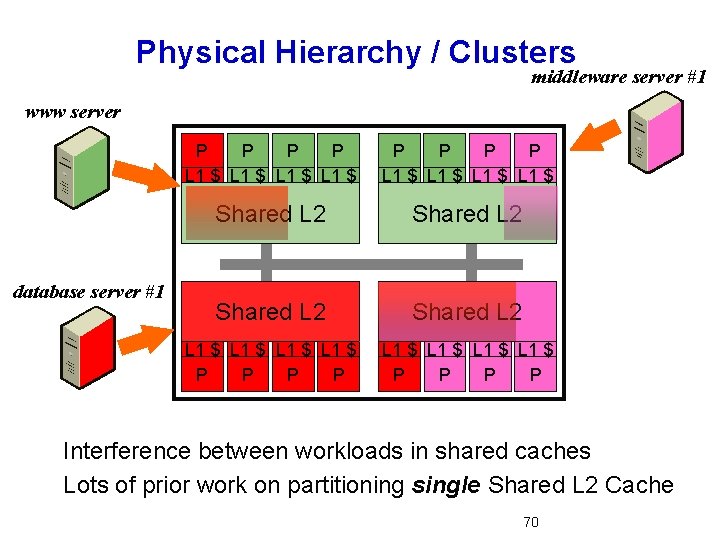

Physical Hierarchy / Clusters

(Figure: middleware server #1, the www server, and database server #1 running on one cluster whose L1 caches share a single L2.)

- Interference between workloads in shared caches
- Lots of prior work on partitioning single Shared L2 Cache

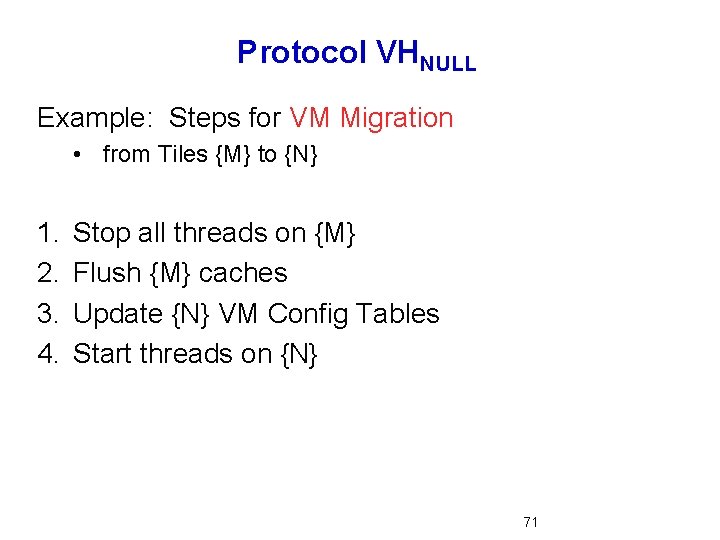

Protocol VHNULL

Example: Steps for VM Migration from Tiles {M} to {N}
1. Stop all threads on {M}
2. Flush {M} caches
3. Update {N} VM Config Tables
4. Start threads on {N}

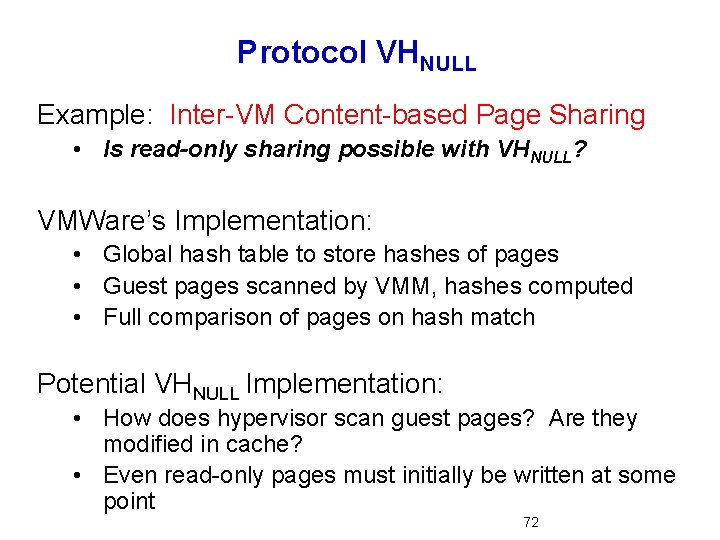

Protocol VHNULL

Example: Inter-VM Content-based Page Sharing
- Is read-only sharing possible with VHNULL?

VMware's Implementation:
- Global hash table to store hashes of pages
- Guest pages scanned by VMM, hashes computed
- Full comparison of pages on hash match

Potential VHNULL Implementation:
- How does hypervisor scan guest pages? Are they modified in cache?
- Even read-only pages must initially be written at some point

5-minute Ring Coherence

Ring Interconnect

Why?
- Short, fast point-to-point links
- Fewer (data) ports
- Less complex than packet-switched
- Simple, distributed arbitration
- Exploitable ordering for coherence

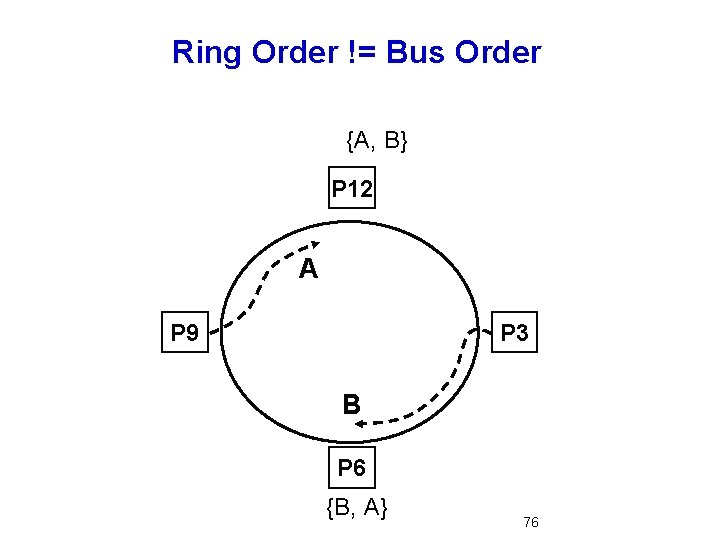

Cache Coherence for a Ring

- Ring is broadcast and offers ordering
- Apply existing bus-based snooping protocols?
- NO!
- Order properties of ring are different

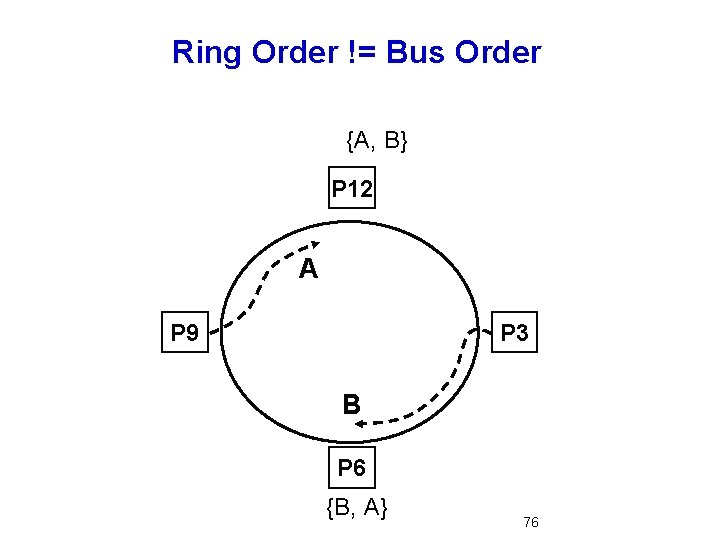

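Ring Order != Bus Order

(Figure: a ring including P3, P6, P9, and P12, with requests A and B injected at different nodes; one node observes the order {A, B}, another observes {B, A}.)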
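
A small Python simulation shows why a ring cannot be treated like a bus. Two requests are injected at different nodes in the same cycle. Each node snoops them in order of hop distance, so different nodes see different orders. The injection points (P12 and P6), the ring direction, and the uniform per-hop timing are assumptions for illustration.

```python
NUM_NODES = 12  # P1..P12


def hops(src: int, dst: int, n: int = NUM_NODES) -> int:
    """Distance on a unidirectional ring (assumed direction P12 -> P1 -> P2 ...)."""
    return (dst - src) % n


def observed_order(node: int, injected: dict) -> list:
    """Order in which `node` sees requests that all entered the ring in the same cycle."""
    return sorted(injected, key=lambda name: hops(injected[name], node))


injected = {"A": 12, "B": 6}  # hypothetical injection points for requests A and B
print(observed_order(3, injected))  # ['A', 'B']
print(observed_order(9, injected))  # ['B', 'A']
```

On a bus every snooper would report the same order. Here P3 and P9 disagree, which is why bus-based snooping protocols cannot be applied to a ring unchanged.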

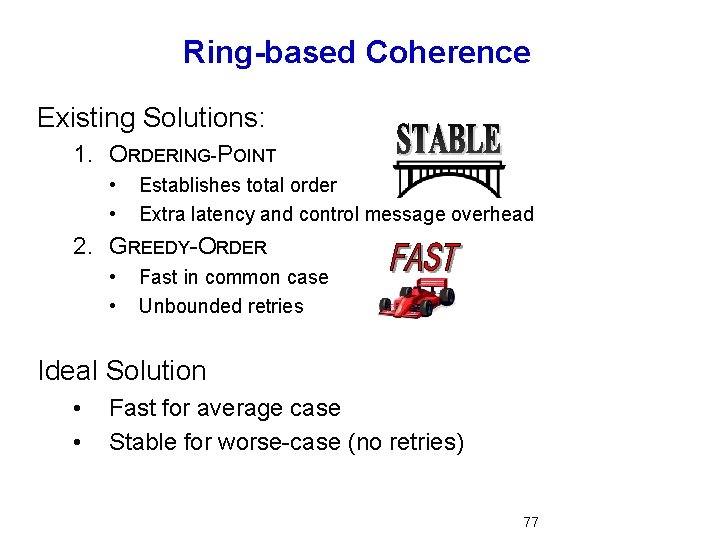

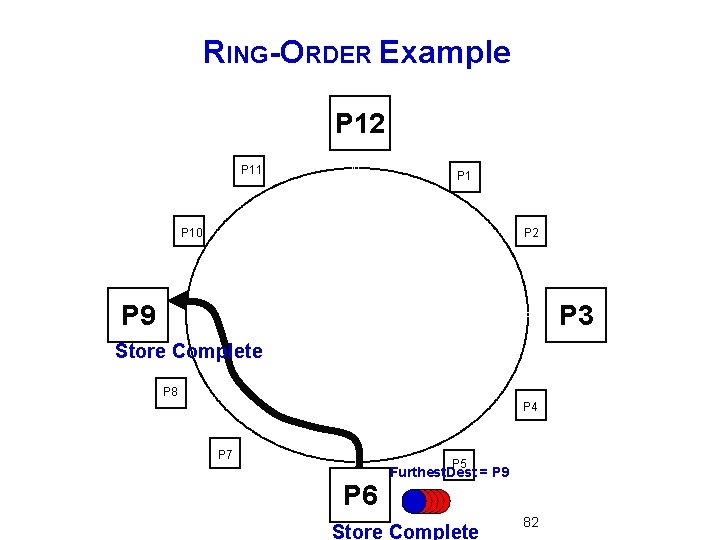

Ring-based Coherence

Existing Solutions:
1. ORDERING-POINT
   - Establishes total order
   - Extra latency and control message overhead
2. GREEDY-ORDER
   - Fast in common case
   - Unbounded retries

Ideal Solution
- Fast for average case
- Stable for worst case (no retries)

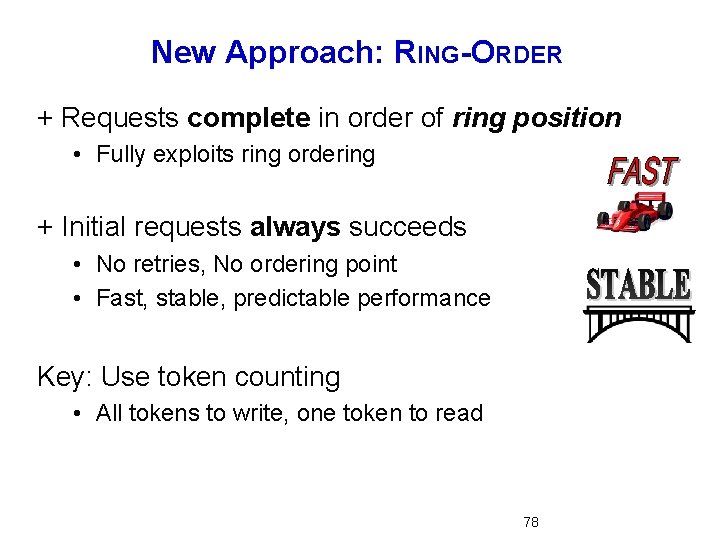

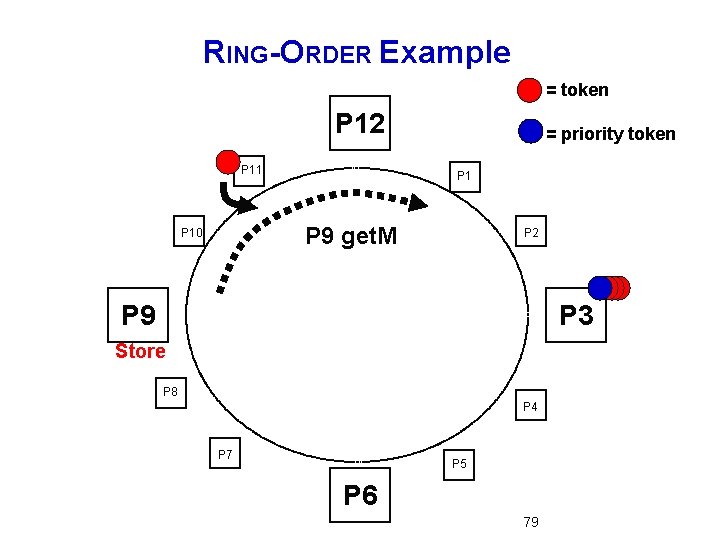

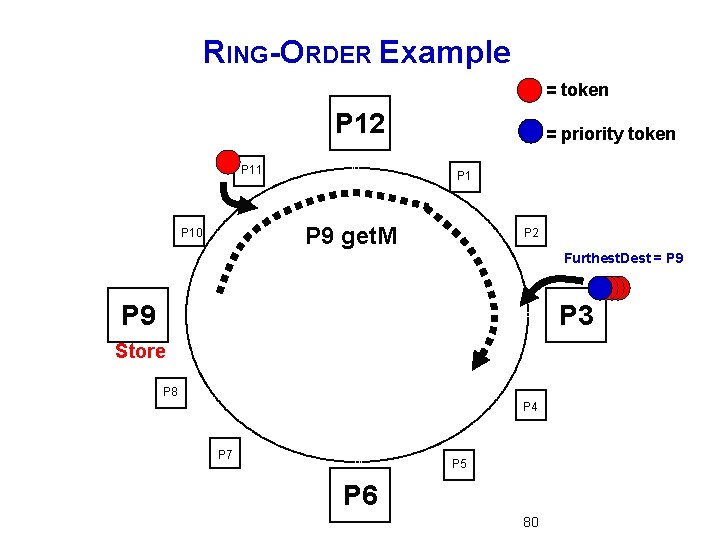

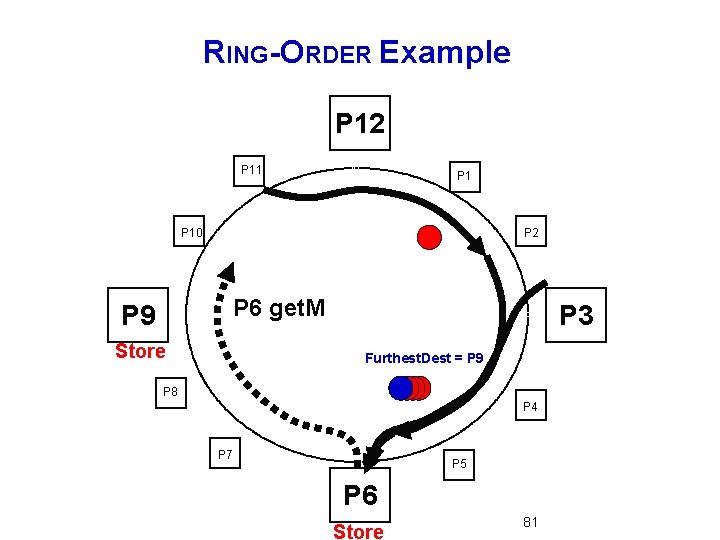

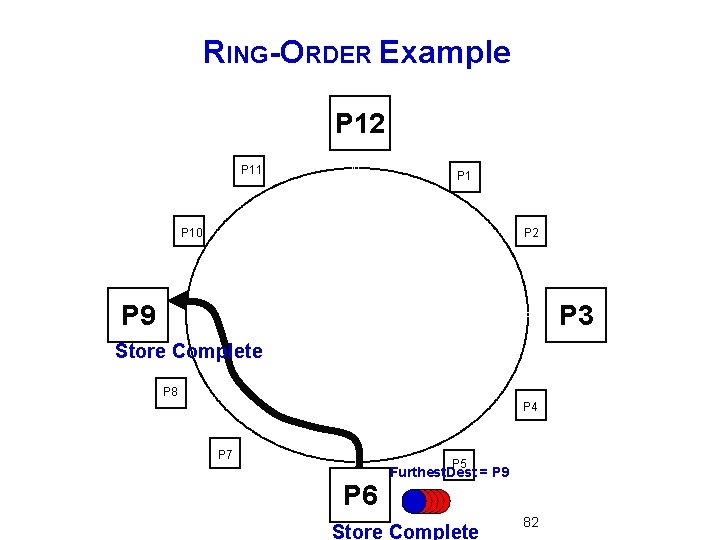

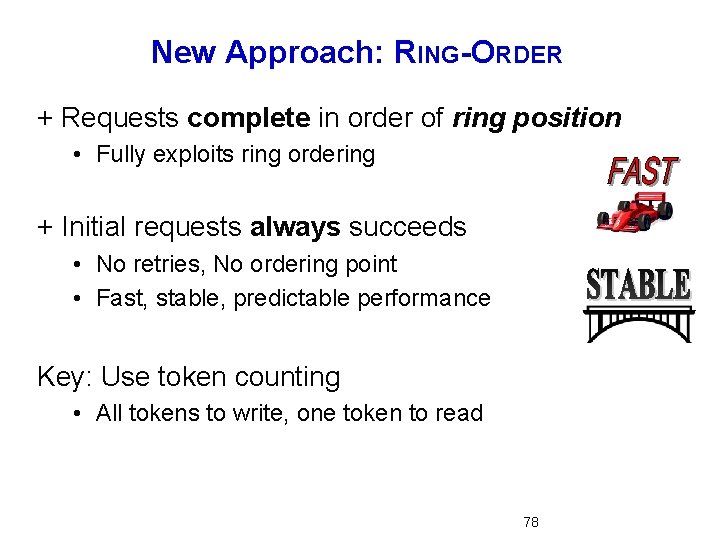

New Approach: RING-ORDER

+ Requests complete in order of ring position
  - Fully exploits ring ordering
+ Initial requests always succeed
  - No retries, no ordering point
  - Fast, stable, predictable performance

Key: Use token counting
- All tokens to write, one token to read

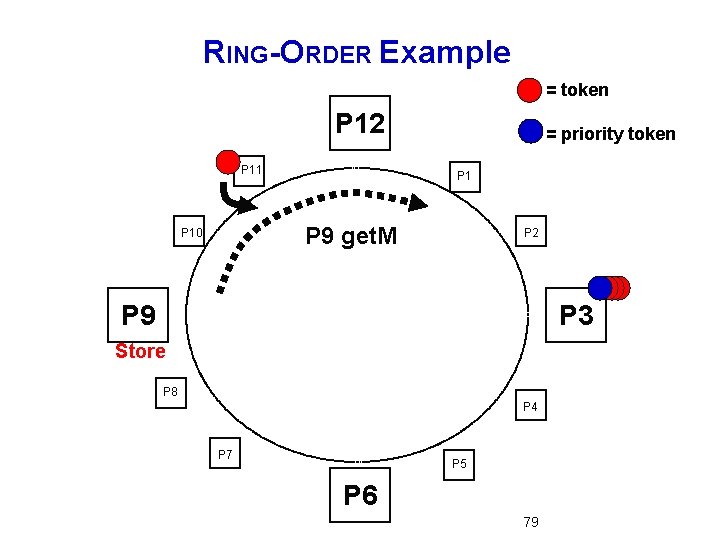

RING-ORDER Example

(Figure: a 12-node ring, P1-P12, with tokens and the priority token marked; P9 issues a getM for a Store.)

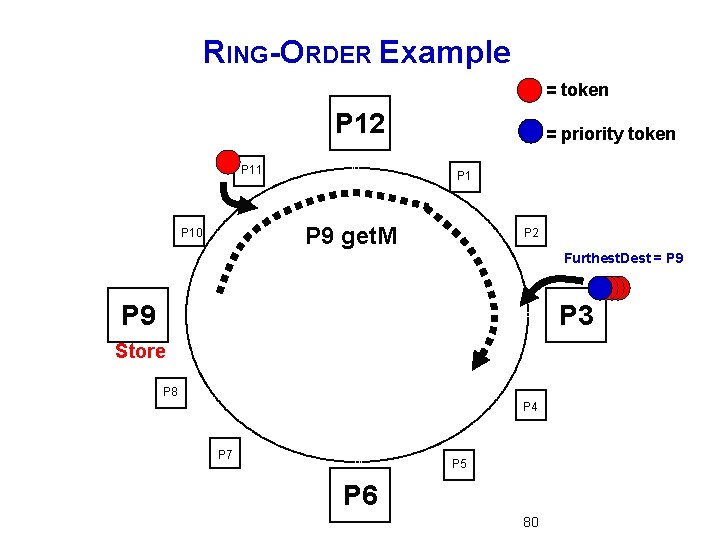

RING-ORDER Example

(Figure: the same ring with P9's getM outstanding; FurthestDest = P9.)

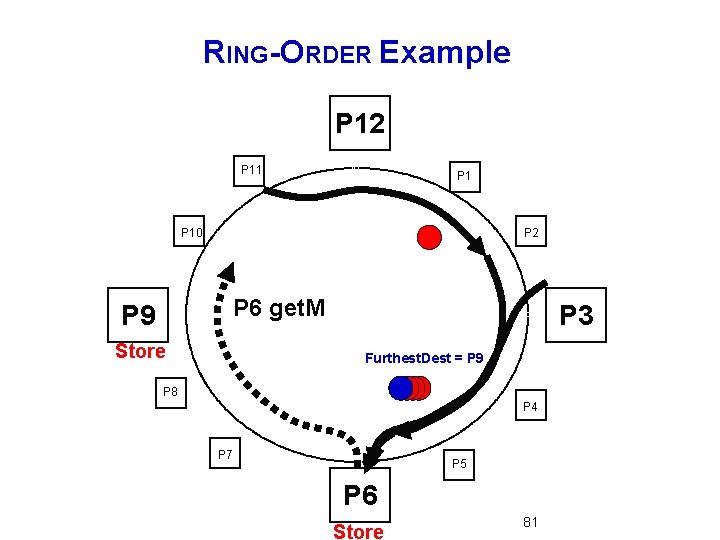

RING-ORDER Example

(Figure: P6 also has a Store and issues a getM while P9's Store is pending; FurthestDest = P9.)

RING-ORDER Example

(Figure: the Stores at P9 and P6 both complete; FurthestDest = P9.)

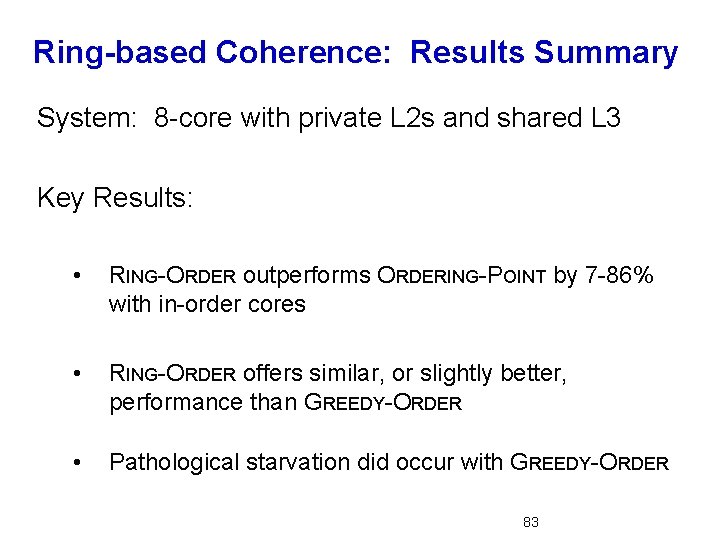

Ring-based Coherence: Results Summary

System: 8-core with private L2s and shared L3

Key Results:
- RING-ORDER outperforms ORDERING-POINT by 7-86% with in-order cores
- RING-ORDER offers similar, or slightly better, performance than GREEDY-ORDER
- Pathological starvation did occur with GREEDY-ORDER

5-minute Multiple-CMP Coherence

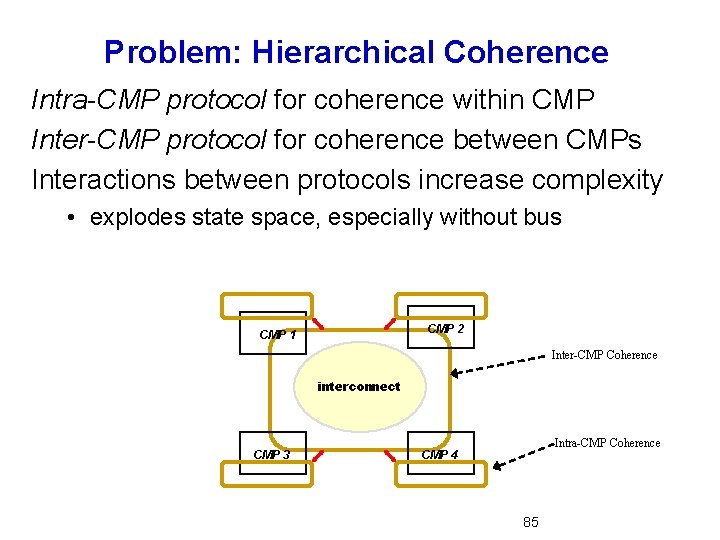

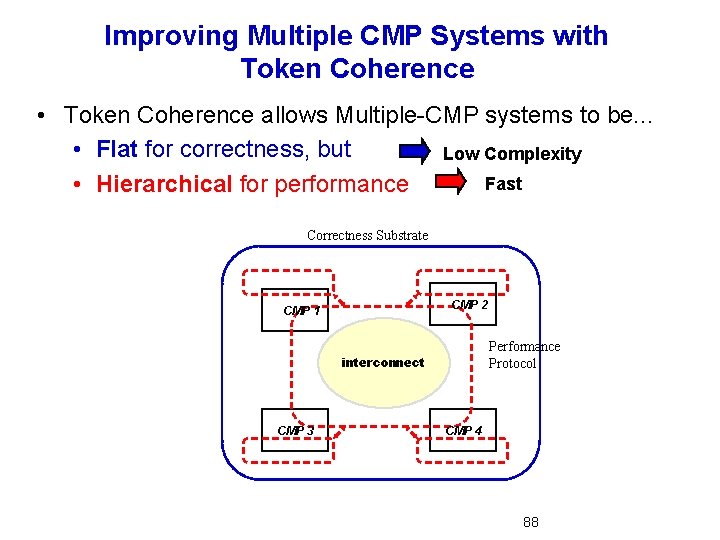

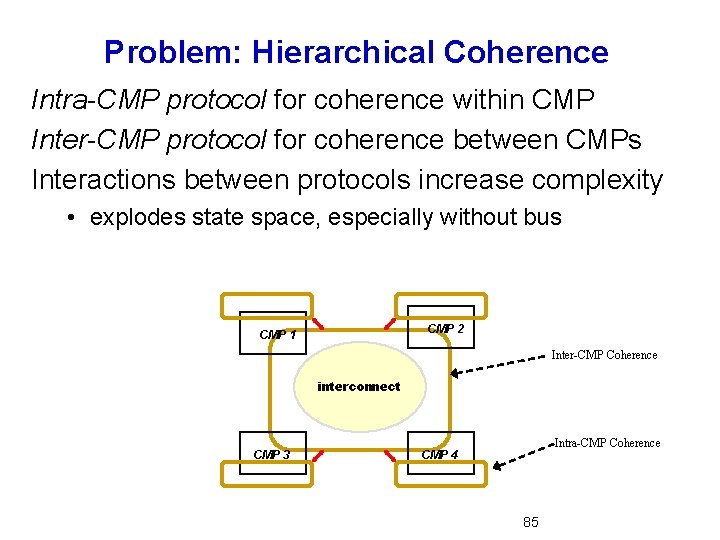

Problem: Hierarchical Coherence

- Intra-CMP protocol for coherence within CMP
- Inter-CMP protocol for coherence between CMPs
- Interactions between protocols increase complexity
  - Explodes state space, especially without bus

(Figure: CMP 1-CMP 4 connected by an interconnect, with intra-CMP coherence inside each chip and inter-CMP coherence between chips.)

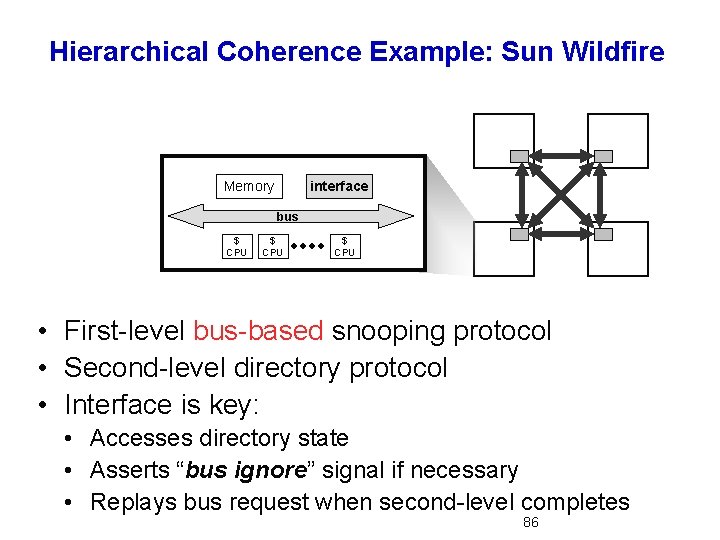

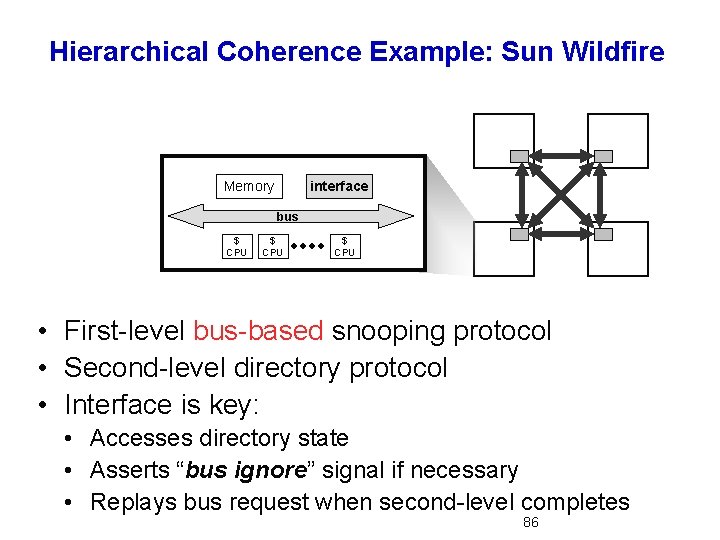

Hierarchical Coherence Example: Sun Wildfire

(Figure: CPUs with caches ($) on a bus, attached to memory and the coherence interface.)

- First-level bus-based snooping protocol
- Second-level directory protocol
- Interface is key:
  - Accesses directory state
  - Asserts "bus ignore" signal if necessary
  - Replays bus request when second-level completes

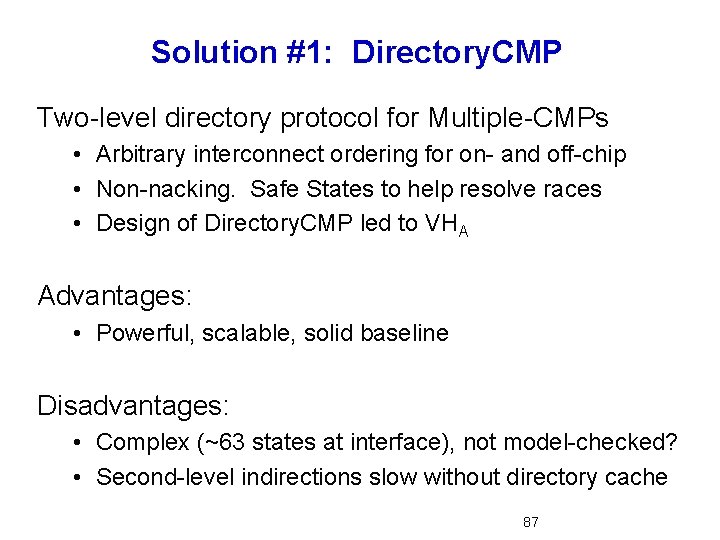

Solution #1: DirectoryCMP

Two-level directory protocol for Multiple-CMPs
- Arbitrary interconnect ordering for on- and off-chip
- Non-nacking. Safe States to help resolve races
- Design of DirectoryCMP led to VHA

Advantages:
- Powerful, scalable, solid baseline

Disadvantages:
- Complex (~63 states at interface), not model-checked?
- Second-level indirections slow without directory cache

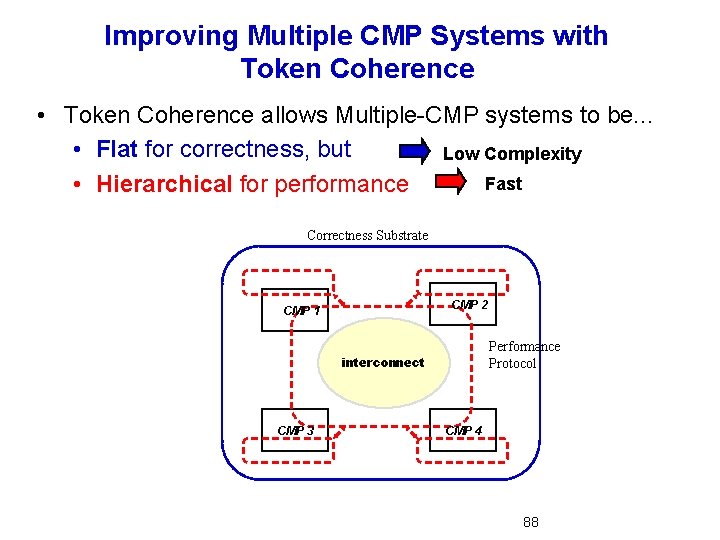

Improving Multiple CMP Systems with Token Coherence

Token Coherence allows Multiple-CMP systems to be...
- Flat for correctness (low complexity), but
- Hierarchical for performance (fast)

(Figure: a correctness substrate spanning CMP 1-CMP 4 and the interconnect, with a performance protocol layered on top.)

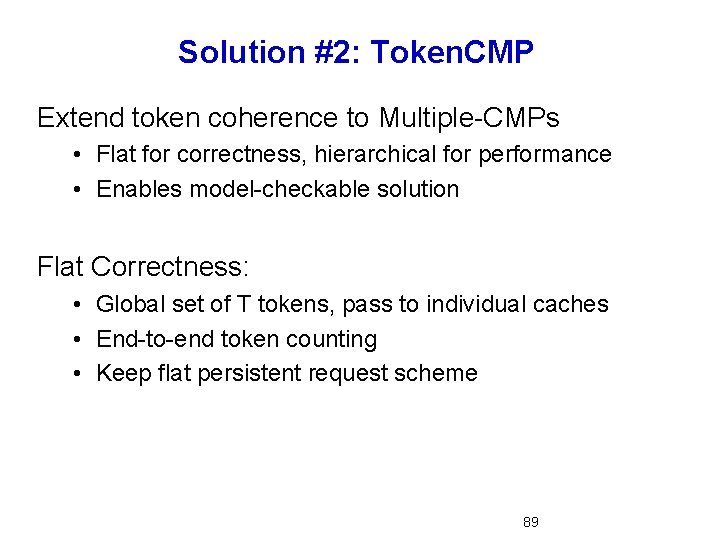

Solution #2: TokenCMP

Extend token coherence to Multiple-CMPs
- Flat for correctness, hierarchical for performance
- Enables model-checkable solution

Flat Correctness:
- Global set of T tokens, pass to individual caches
- End-to-end token counting
- Keep flat persistent request scheme
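
Flat correctness means token counts are checked end to end, per cache, regardless of which CMP a cache sits in. Below is a sketch of that bookkeeping under several assumptions: a single block, T = 16, and hypothetical holder names. The performance policies that decide how requests find tokens are deliberately left out.

```python
from typing import Dict, Tuple

Holder = Tuple[str, str]  # e.g. ("CMP1", "L1-P0"); the CMP name is only a label here


class TokenLedger:
    """Flat, end-to-end token counting for one block across all CMPs (sketch)."""

    def __init__(self, total: int, initial: Dict[Holder, int]) -> None:
        if sum(initial.values()) != total:
            raise ValueError("initial distribution must hold exactly T tokens")
        self.total = total
        self.tokens = dict(initial)

    def transfer(self, src: Holder, dst: Holder, n: int) -> None:
        if not 0 < n <= self.tokens.get(src, 0):
            raise ValueError("cannot send tokens that are not held")
        self.tokens[src] -= n
        self.tokens[dst] = self.tokens.get(dst, 0) + n

    def conserved(self) -> bool:
        return sum(self.tokens.values()) == self.total

    def can_write(self, holder: Holder) -> bool:
        return self.tokens.get(holder, 0) == self.total


ledger = TokenLedger(16, {("MEM", "memory"): 16})
ledger.transfer(("MEM", "memory"), ("CMP1", "L1-P0"), 16)   # writer on CMP1 gets all T
ledger.transfer(("CMP1", "L1-P0"), ("CMP3", "L1-P2"), 1)    # reader on CMP3 gets one
print(ledger.can_write(("CMP1", "L1-P0")), ledger.conserved())  # False True
```

The checks never consult the CMP field. This illustrates "flat for correctness": the hierarchy can shape which transfers a performance policy chooses, but safety rests only on the global token count.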

TokenCMP Performance Policies

TokenCMPA:
- Two-level broadcast
- L2 broadcasts off-chip on miss
- Local cache responds if it has extra tokens
- Responses from off-chip carry extra tokens

TokenCMPB:
- On-chip broadcast on L2 miss only (local indirection)

TokenCMPC: Extra states for further filtering

TokenCMPA-PRED: persistent request prediction

M-CMP Coherence: Summary of Results

System: Four 4-core CMPs

Notable Results:
- TokenCMP 2-32% faster than DirectoryCMP w/ in-order cores
- TokenCMPA, TokenCMPB, TokenCMPC all perform similarly
- Persistent request prediction greatly helps Zeus
- TokenCMP gains diminished with out-of-order cores