ECE 526 Network Processing Systems Design Network Processor

- Slides: 21

ECE 526 – Network Processing Systems Design
Network Processor Architecture and Scalability
Chapter 13, 14: D. E. Comer

NP Architectures
• Last class:
  ─ Key requirements of a network processor: flexibility and scalability
  ─ Optimized instruction set and parallel processing using multiprocessors
• This class:
  ─ Internal organization of NP:
    • Computation, storage and communication
    • Operating support
    • Content addressable memory (CAM)
  ─ NP scaling issues
Ning Weng, ECE 526

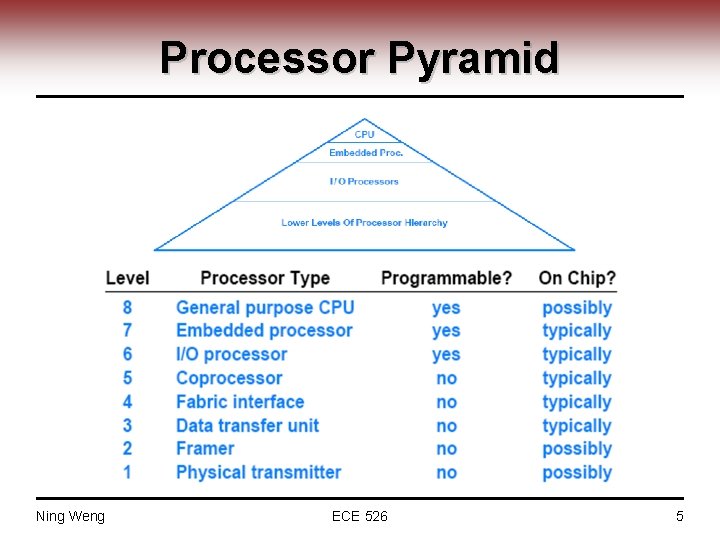

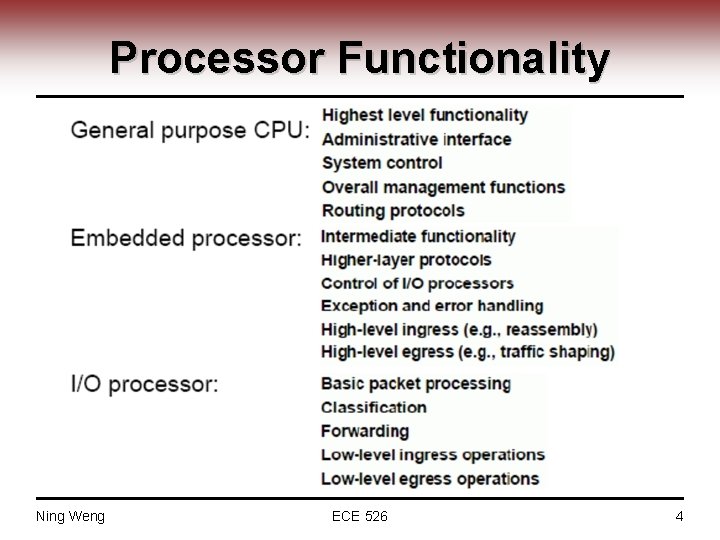

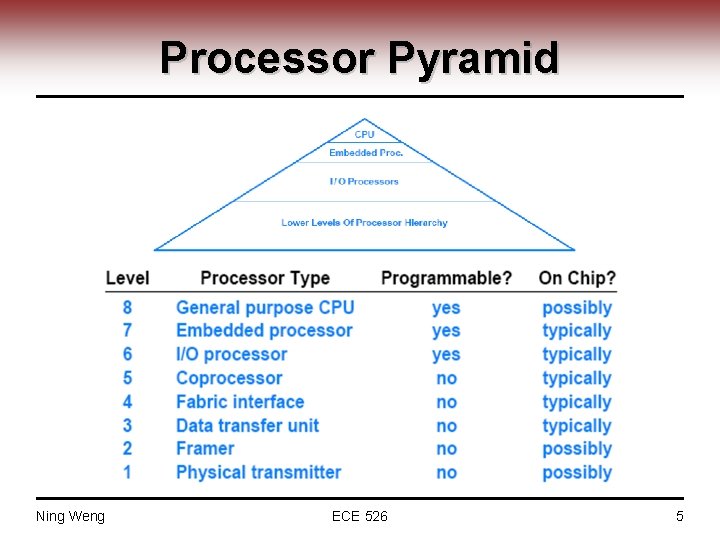

NP Architectures
• NP architecture characteristics
  ─ Computation
    • Processor hierarchy
    • Special-purpose functional units
  ─ Storage
    • Memory hierarchy
    • Content addressable memory (CAM)
  ─ Communication
    • Internal buses
    • External interfaces
  ─ Operation support
    • Concurrent/parallel execution support
    • Programming models
    • Dispatch mechanisms

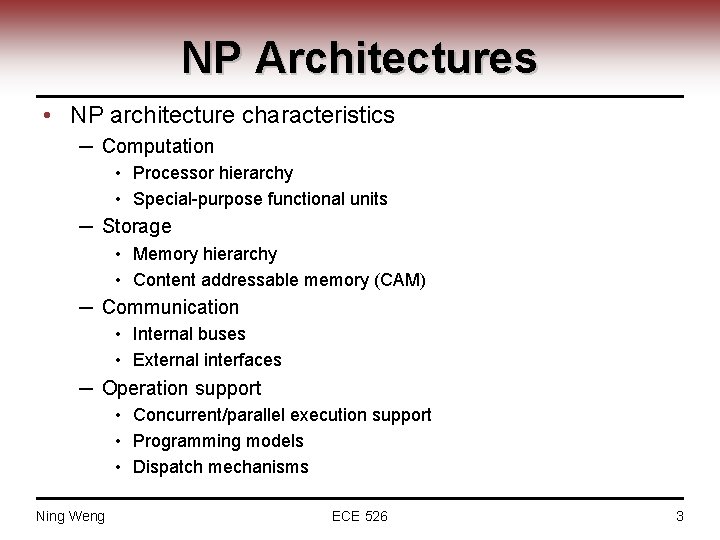

Processor Functionality

Processor Pyramid

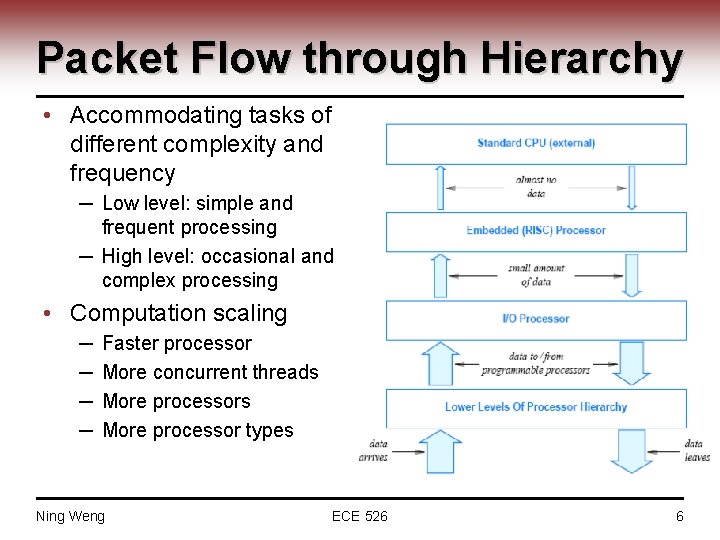

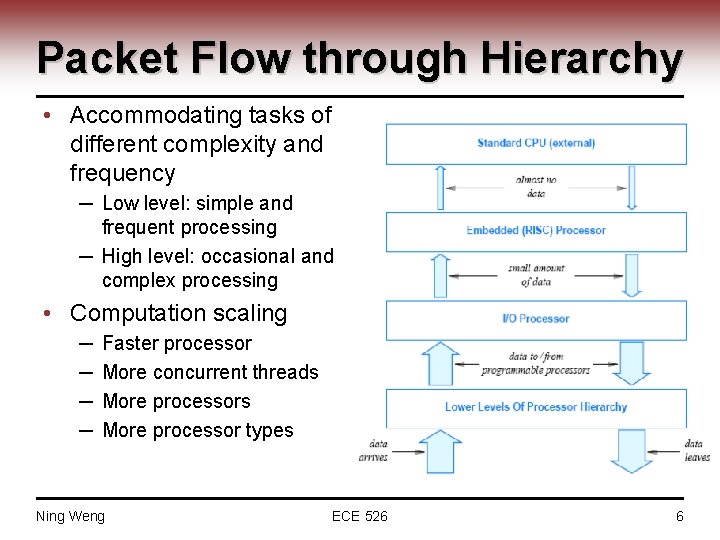

Packet Flow through Hierarchy
• Accommodating tasks of different complexity and frequency
  ─ Low level: simple and frequent processing
  ─ High level: occasional and complex processing
• Computation scaling
  ─ Faster processors
  ─ More concurrent threads
  ─ More processor types

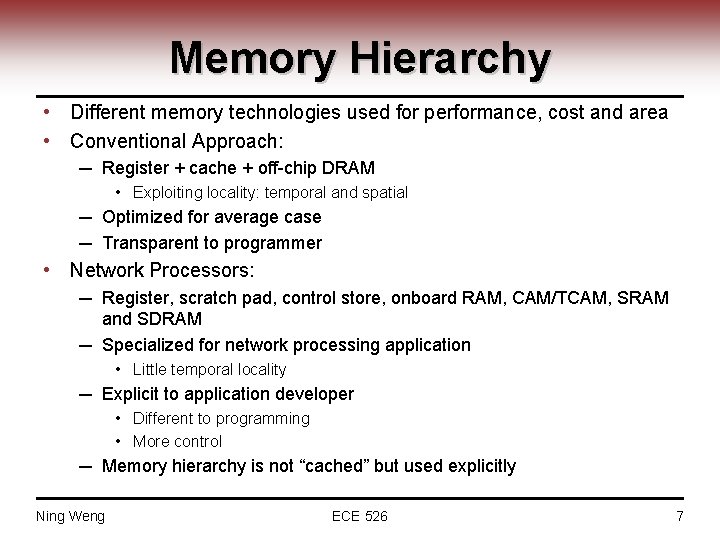

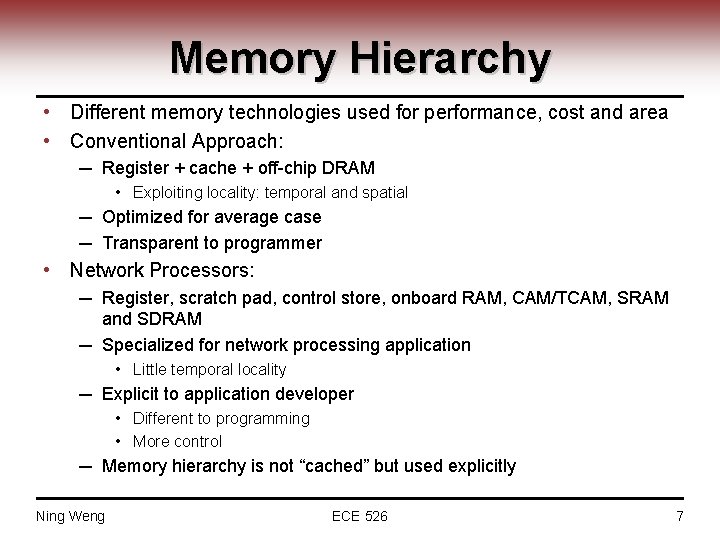

Memory Hierarchy
• Different memory technologies are used to balance performance, cost and area
• Conventional approach:
  ─ Register + cache + off-chip DRAM
    • Exploits locality: temporal and spatial
  ─ Optimized for the average case
  ─ Transparent to the programmer
• Network processors:
  ─ Register, scratch pad, control store, onboard RAM, CAM/TCAM, SRAM and SDRAM
  ─ Specialized for network processing applications
    • Little temporal locality
  ─ Explicit to the application developer
    • More difficult to program
    • More control
  ─ Memory hierarchy is not "cached" but used explicitly

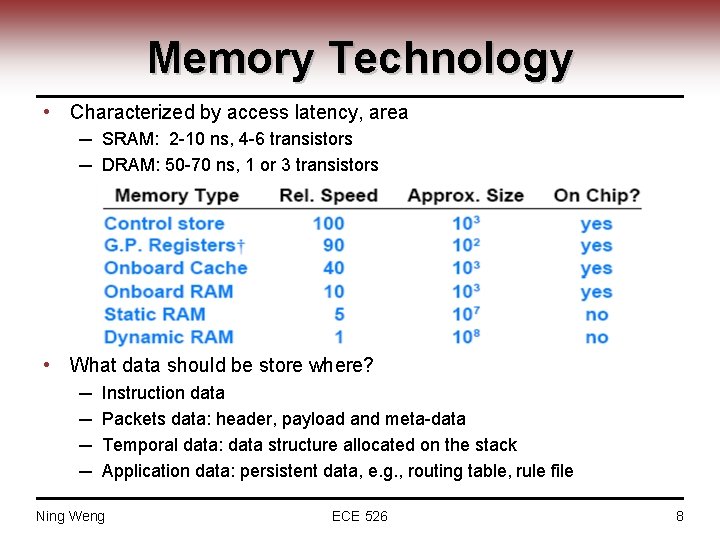

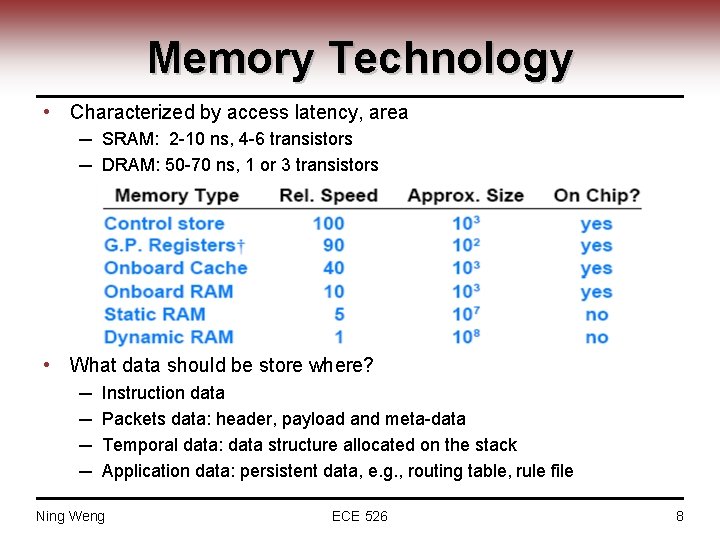

Memory Technology
• Characterized by access latency and area
  ─ SRAM: 2-10 ns, 4-6 transistors per bit
  ─ DRAM: 50-70 ns, 1 or 3 transistors per bit
• What data should be stored where?
  ─ Instruction data
  ─ Packet data: header, payload and meta-data
  ─ Temporary data: data structures allocated on the stack
  ─ Application data: persistent data, e.g., routing table, rule file

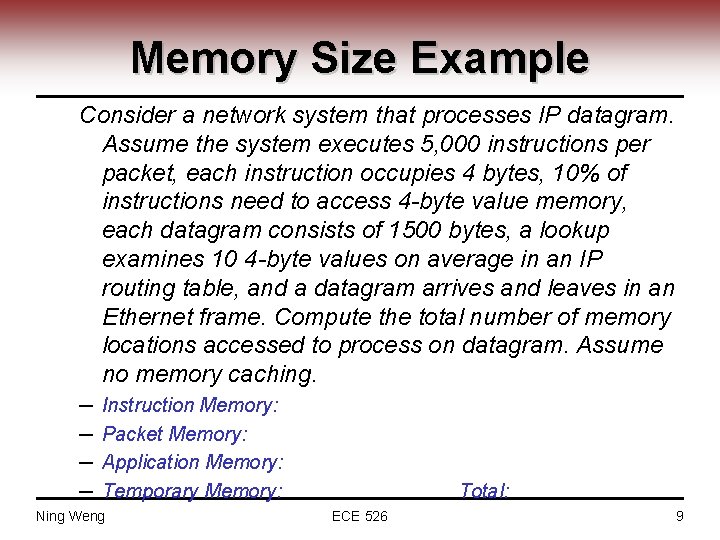

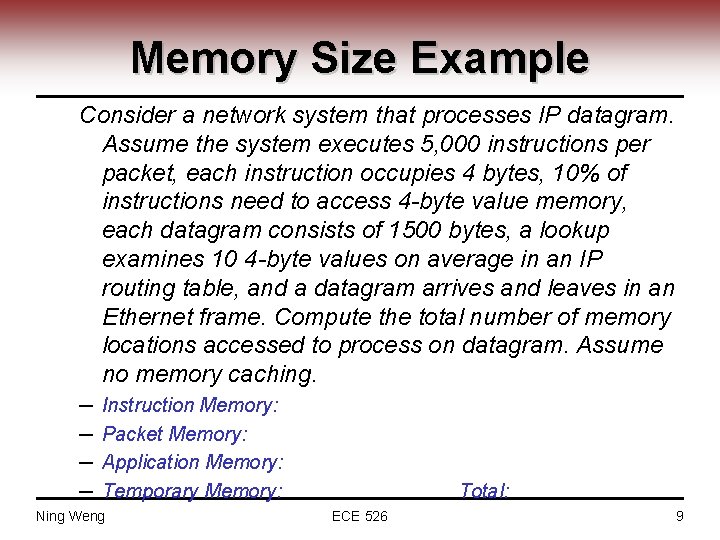

Memory Size Example
Consider a network system that processes IP datagrams. Assume the system executes 5,000 instructions per packet, each instruction occupies 4 bytes, 10% of instructions access a 4-byte value in memory, each datagram consists of 1500 bytes, a lookup examines ten 4-byte values on average in an IP routing table, and a datagram arrives and leaves in an Ethernet frame. Compute the total number of memory locations accessed to process one datagram. Assume no memory caching.
  ─ Instruction memory:
  ─ Packet memory:
  ─ Application memory:
  ─ Temporary memory:
  ─ Total:
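One possible accounting for this exercise can be sketched in Python. It is not the official solution. It rests on several assumptions that the slide does not state: a "memory location" is one 4-byte word, the Ethernet frame adds a 14-byte header (CRC and preamble are assumed to be handled by the MAC hardware), the frame is written to packet memory once on arrival and read once on departure, and the 10% data accesses all go to temporary memory. The function and constant names are hypothetical.

```python
import math

WORD_BYTES = 4                  # assumption: one memory location = one 4-byte word
INSTRUCTIONS_PER_PACKET = 5000  # from the exercise
DATA_ACCESS_PERCENT = 10        # from the exercise
DATAGRAM_BYTES = 1500           # from the exercise
ETHERNET_HEADER_BYTES = 14      # assumption: CRC/preamble never reach packet memory
LOOKUP_WORDS = 10               # from the exercise


def memory_accesses_per_datagram():
    """Count 4-byte memory accesses per datagram under the stated assumptions."""
    # One fetch per executed instruction (no caching).
    instruction = INSTRUCTIONS_PER_PACKET
    # Assumption: the 10% data accesses all touch temporary (stack) data.
    temporary = INSTRUCTIONS_PER_PACKET * DATA_ACCESS_PERCENT // 100
    # Routing table lookup examines ten 4-byte values.
    application = LOOKUP_WORDS
    # Assumption: frame is written once on arrival and read once on departure.
    frame_words = math.ceil((DATAGRAM_BYTES + ETHERNET_HEADER_BYTES) / WORD_BYTES)
    packet = 2 * frame_words
    return {
        "instruction": instruction,
        "packet": packet,
        "application": application,
        "temporary": temporary,
        "total": instruction + packet + application + temporary,
    }


if __name__ == "__main__":
    for name, count in memory_accesses_per_datagram().items():
        print(f"{name:12s} {count}")
```

Under these assumptions instruction fetches dominate the total, so changing the packet-memory or framing assumptions shifts the result only slightly.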

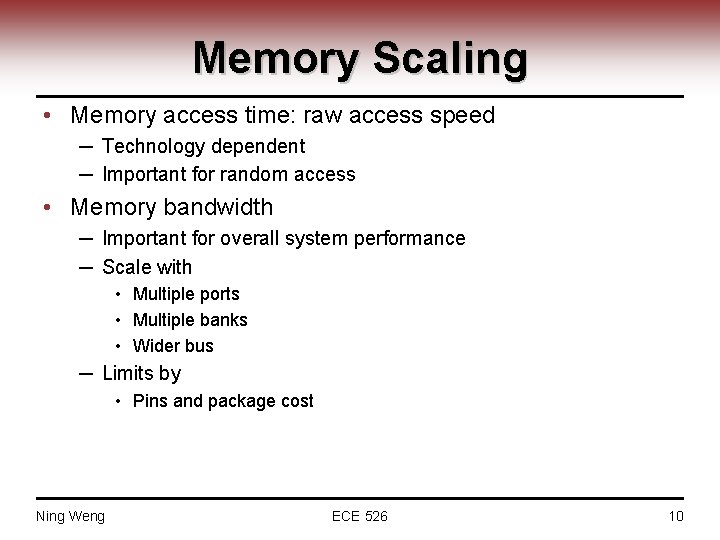

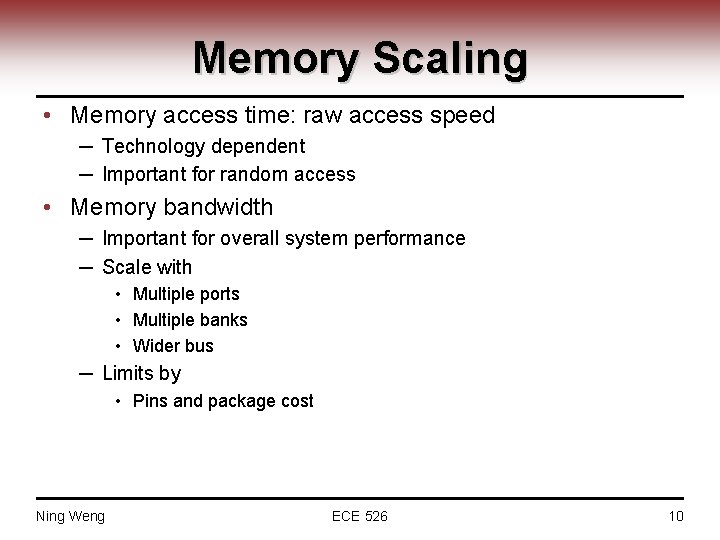

Memory Scaling
• Memory access time: raw access speed
  ─ Technology dependent
  ─ Important for random access
• Memory bandwidth
  ─ Important for overall system performance
  ─ Scales with
    • Multiple ports
    • Multiple banks
    • Wider bus
  ─ Limited by
    • Pins and package cost

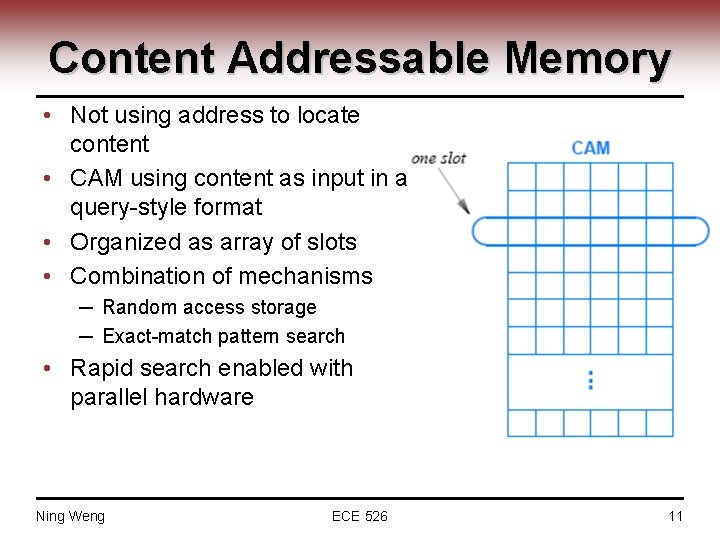

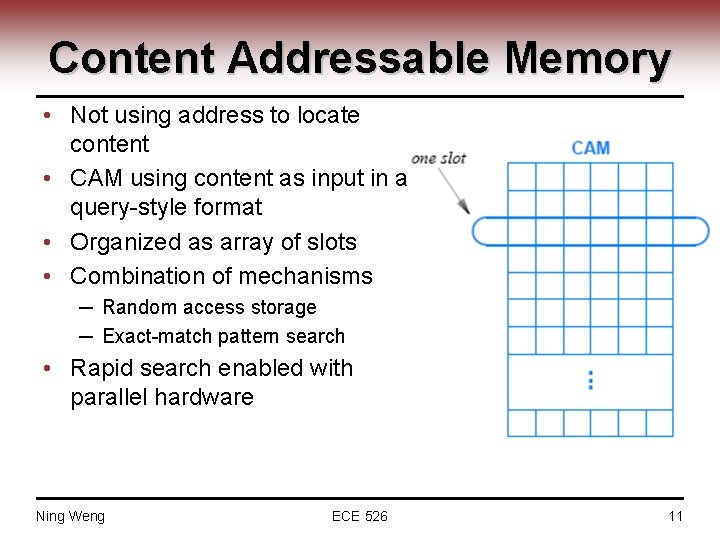

Content Addressable Memory
• Does not use an address to locate content
• Takes content as input, in a query-style format
• Organized as an array of slots
• Combination of mechanisms
  ─ Random access storage
  ─ Exact-match pattern search
• Rapid search enabled with parallel hardware

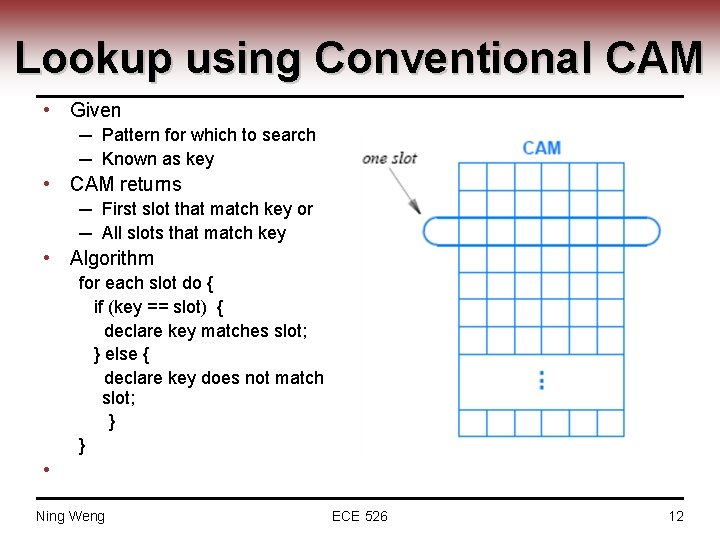

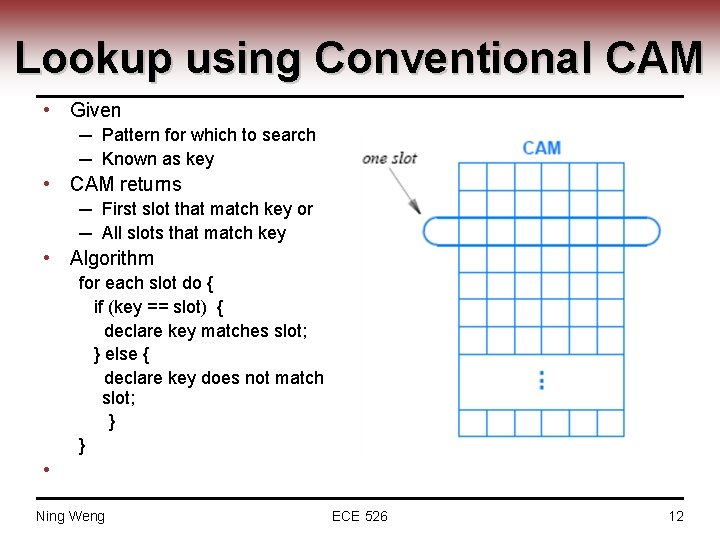

Lookup using Conventional CAM
• Given
  ─ Pattern for which to search
  ─ Known as key
• CAM returns
  ─ First slot that matches key, or
  ─ All slots that match key
• Algorithm
    for each slot do {
        if (key == slot) {
            declare key matches slot;
        } else {
            declare key does not match slot;
        }
    }
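The exact-match algorithm above can be modeled in software with a short Python sketch. The function name `cam_lookup` and the `all_matches` flag are hypothetical. Real CAM hardware compares every slot in parallel, while this loop visits slots one after another and only models the result.

```python
def cam_lookup(slots, key, all_matches=False):
    """Software model of a conventional (binary) CAM lookup.

    slots: list of stored values, one per CAM slot.
    key:   the pattern to search for.
    Returns the index of the first matching slot (or None), or the list of
    all matching indices when all_matches is True.
    """
    # Hardware tests all slots at once; the comprehension tests them in order.
    matches = [index for index, slot in enumerate(slots) if slot == key]
    if all_matches:
        return matches
    return matches[0] if matches else None


if __name__ == "__main__":
    slots = [0x0A, 0x1F, 0x0A]
    print(cam_lookup(slots, 0x0A))                    # first matching slot
    print(cam_lookup(slots, 0x0A, all_matches=True))  # every matching slot
    print(cam_lookup(slots, 0x33))                    # no slot matches
```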

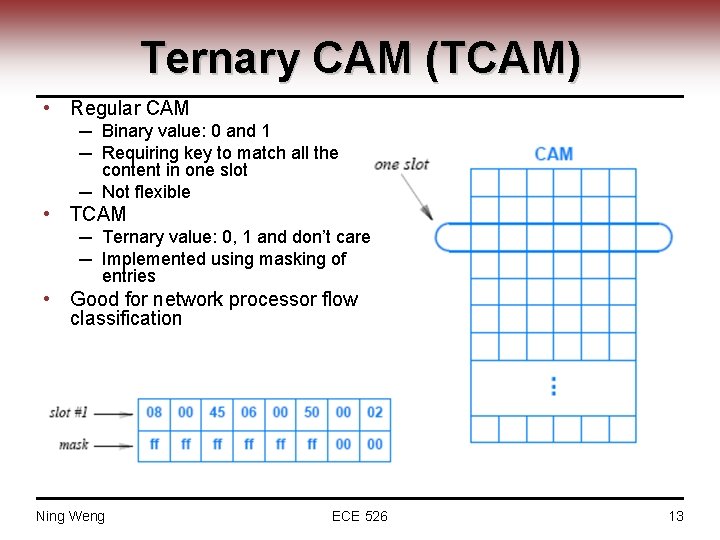

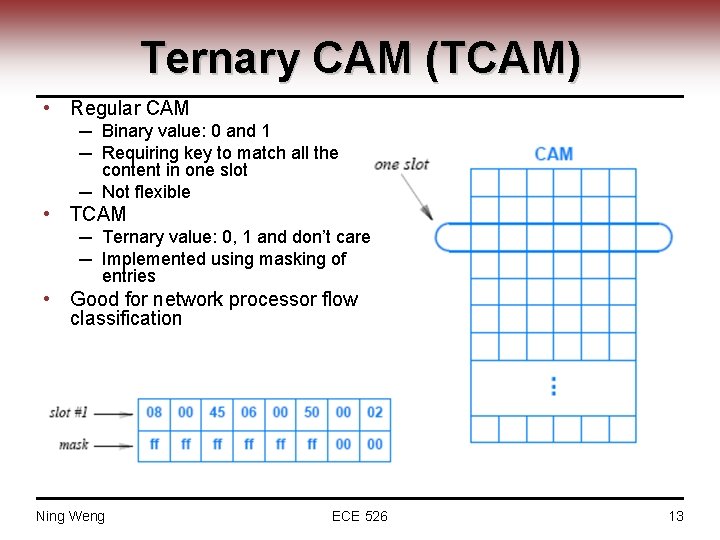

Ternary CAM (TCAM)
• Regular CAM
  ─ Binary values: 0 and 1
  ─ Requires key to match all the content in one slot
  ─ Not flexible
• TCAM
  ─ Ternary values: 0, 1 and don't care
  ─ Implemented using masking of entries
• Good for network processor flow classification

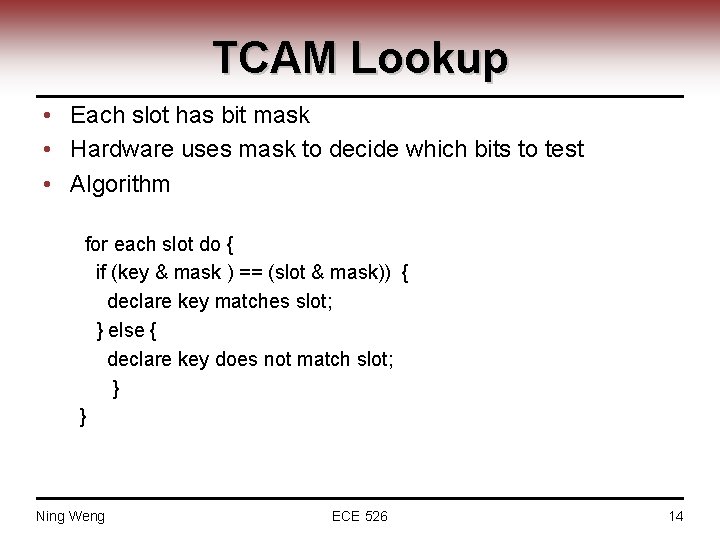

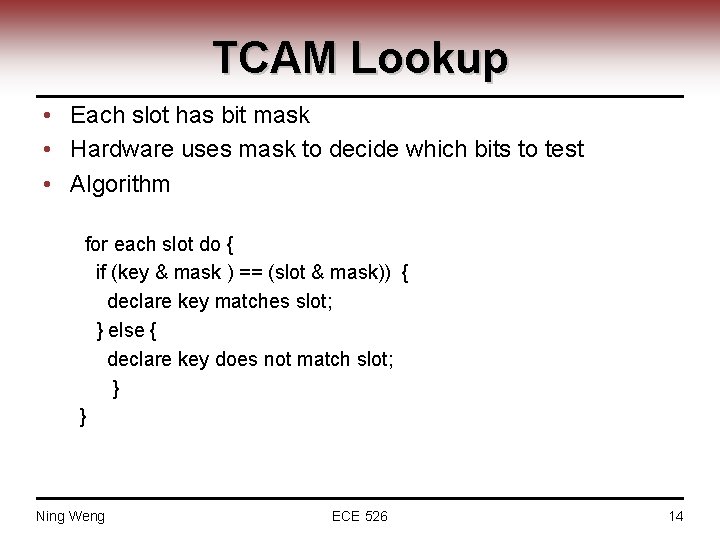

TCAM Lookup
• Each slot has a bit mask
• Hardware uses the mask to decide which bits to test
• Algorithm
    for each slot do {
        if ((key & mask) == (slot & mask)) {
            declare key matches slot;
        } else {
            declare key does not match slot;
        }
    }
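The masked comparison can be sketched in Python as follows. This is a sequential software model of what the hardware does in parallel. The `TcamSlot` type, its field names, and the example values are hypothetical, chosen only to show how mask bits set to 0 act as "don't care" positions.

```python
from typing import List, NamedTuple, Optional


class TcamSlot(NamedTuple):
    value: int  # stored pattern
    mask: int   # 1 bits are compared, 0 bits are "don't care"
    info: str   # additional information associated with the slot


def tcam_lookup(slots: List[TcamSlot], key: int) -> Optional[int]:
    """Return the index of the first slot whose masked bits equal the key's."""
    for index, slot in enumerate(slots):
        if (key & slot.mask) == (slot.value & slot.mask):
            return index
    return None


if __name__ == "__main__":
    table = [
        TcamSlot(0x08000000, 0xFFFF0000, "flow A"),   # upper 16 bits must match
        TcamSlot(0x08060000, 0xFFFF0000, "flow B"),
        TcamSlot(0x00000000, 0x00000000, "default"),  # all bits are don't care
    ]
    for key in (0x08001234, 0x08060001, 0x86DD0000):
        hit = tcam_lookup(table, key)
        print(hex(key), "->", table[hit].info)
```

Placing the all-don't-care slot last makes it a catch-all, because the model returns the first match in slot order.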

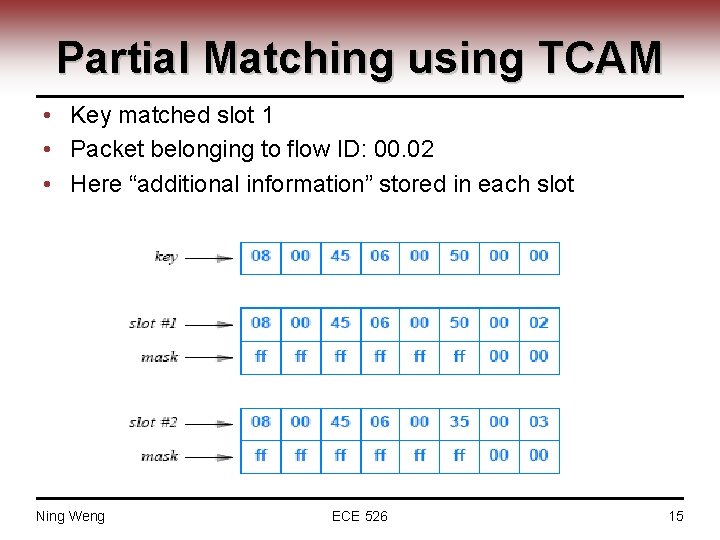

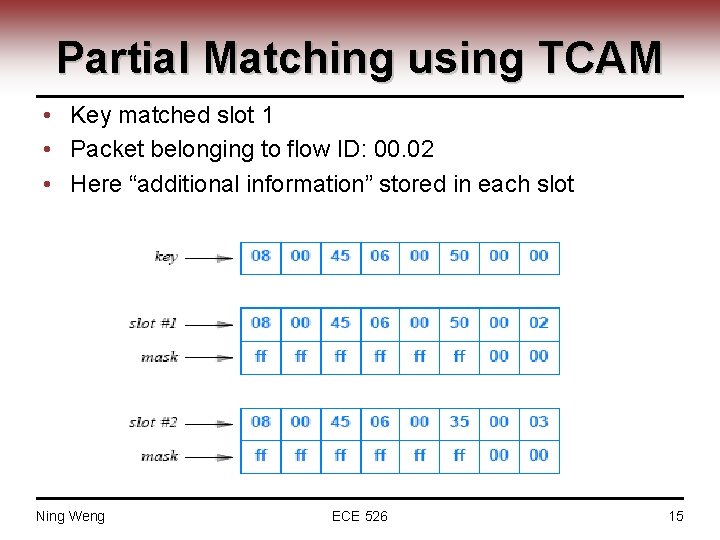

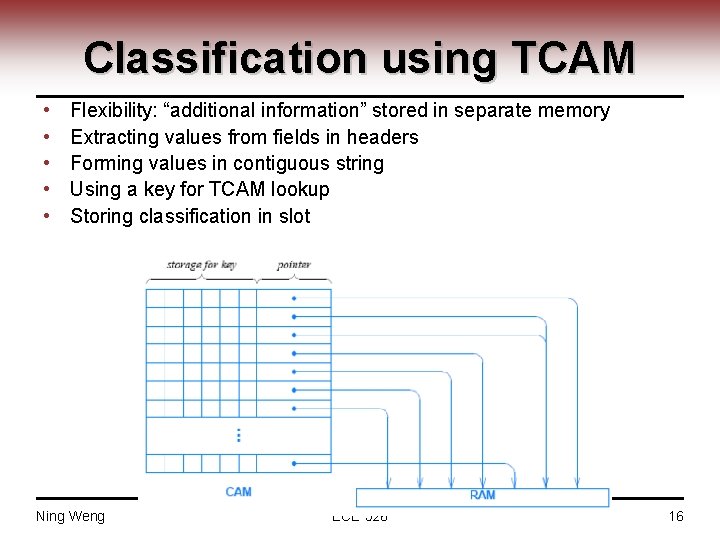

Partial Matching using TCAM
• Key matched slot 1
• Packet belongs to flow ID: 00.02
• Here "additional information" is stored in each slot

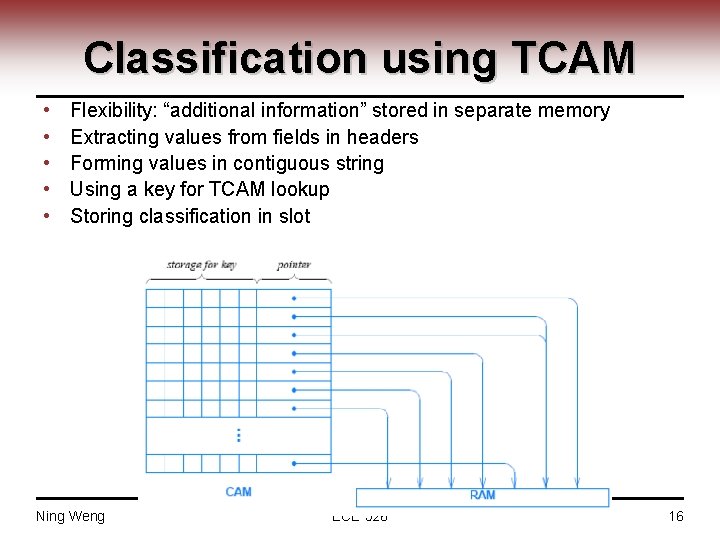

Classification using TCAM
• Flexibility: "additional information" stored in separate memory
• Extracting values from fields in headers
• Forming values in contiguous string
• Using a key for TCAM lookup
• Storing classification in slot
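The classification steps listed above can be sketched in Python: extract header fields, pack them into one contiguous key, look the key up, and use the matching slot index to read the classification from a separate table. The choice of fields (IP protocol number and destination port), the rules, and the class names are illustrative assumptions, not taken from the slides.

```python
def make_key(protocol: int, dst_port: int) -> int:
    """Pack an 8-bit protocol and a 16-bit port into one contiguous 24-bit key."""
    return ((protocol & 0xFF) << 16) | (dst_port & 0xFFFF)


# TCAM contents: (value, mask) per slot; mask bits set to 0 are "don't care".
RULES = [
    (make_key(6, 80), 0xFFFFFF),  # TCP (protocol 6), destination port 80
    (make_key(17, 0), 0xFF0000),  # UDP (protocol 17), any port
    (0, 0x000000),                # everything else
]

# Separate memory indexed by slot number, holding the classification result.
CLASSES = ["web", "udp", "default"]


def classify(protocol: int, dst_port: int):
    """Return the class of the first matching rule, or None if nothing matches."""
    key = make_key(protocol, dst_port)
    for index, (value, mask) in enumerate(RULES):
        if (key & mask) == (value & mask):
            return CLASSES[index]
    return None


if __name__ == "__main__":
    print(classify(6, 80))   # TCP to port 80
    print(classify(17, 53))  # any UDP packet
    print(classify(6, 22))   # falls through to the catch-all rule
```

Keeping the results in `CLASSES` rather than in the rules mirrors the "separate memory" option: the classification can be changed without rewriting the TCAM entries.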

Communication
• Internal interfaces: channels between processing elements, memories
  ─ Internal bus
  ─ Hardware FIFO: sequential access
  ─ Transfer register: random access
  ─ Onboard shared memory: shared random access
• External interfaces
  ─ Memory interfaces: accesses to larger off-chip memory
  ─ Direct I/O interfaces: e.g., access to link interfaces
  ─ Bus interfaces: accesses to other devices, e.g., control CPU
  ─ Switching fabric interface
    • Access to switching fabric
    • Several standards (e.g., CSIX by NP Forum)

Communication Cost Example
• Consider a second-generation network system that forwards IP datagrams. The system has 16 interfaces, each connected to an OC-192 line (data rate of 10 Gbps). These 16 interfaces are interconnected with a shared communication channel. The packet size ranges from 40 bytes to 1500 bytes. What aggregate bandwidth is needed on the communication channel for the two design scenarios:
  ─ Every bit of a packet transfers through the shared communication channel.
  ─ Only a 4-byte packet memory address transfers through the shared communication channel.
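The two scenarios can be worked through with a short Python sketch. It assumes that every line runs at its full 10 Gbps, that each packet crosses the shared channel exactly once, and that framing overhead is ignored. None of these assumptions is stated on the slide, and the function names are hypothetical.

```python
INTERFACES = 16
LINE_RATE_BPS = 10_000_000_000  # 10 Gbps per OC-192 line, as given in the example
ADDRESS_BYTES = 4


def full_packet_bandwidth_bps() -> float:
    """Scenario 1: every bit of every packet crosses the shared channel."""
    return INTERFACES * LINE_RATE_BPS


def address_only_bandwidth_bps(packet_bytes: int) -> float:
    """Scenario 2: only a 4-byte address per packet crosses the shared channel."""
    packets_per_second = INTERFACES * LINE_RATE_BPS / (packet_bytes * 8)
    return packets_per_second * ADDRESS_BYTES * 8


if __name__ == "__main__":
    print(f"all bits:         {full_packet_bandwidth_bps() / 1e9:.2f} Gbps")
    for size in (40, 1500):
        gbps = address_only_bandwidth_bps(size) / 1e9
        print(f"address only, {size:4d}-byte packets: {gbps:.3f} Gbps")
```

Under these assumptions the first design needs 160 Gbps, while the second needs between about 0.43 Gbps (1500-byte packets) and 16 Gbps (40-byte packets). The smallest packet size sets the worst case because it produces the highest packet rate.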

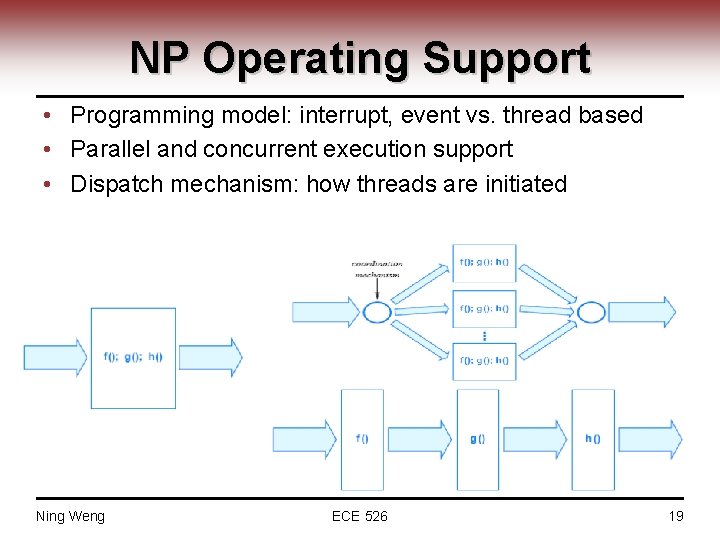

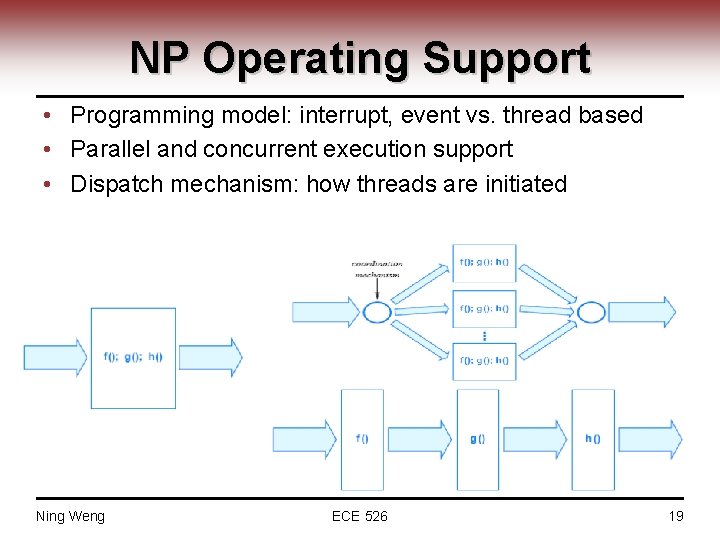

NP Operating Support
• Programming model: interrupt, event vs. thread based
• Parallel and concurrent execution support
• Dispatch mechanism: how threads are initiated

Summary
• NP scaling by
  ─ Heterogeneous multiprocessors structured hierarchically
  ─ Mixed memory technologies explicitly available to programmer
  ─ Different communication mechanisms
  ─ Operating support important to achieve high system performance
• NP scaling limited by
  ─ Physical space: chip area (less than 400 mm²)
  ─ Pin limits and packaging technology
  ─ Power consumption and heat dissipation

For Next Class and Reminder
• Read Comer: chapters 15 and 16
• Homework solution online by Friday
• Midterm: 10/6
• Project
  ─ Topic finalized 10/5 (group leader: email me)
  ─ Proposal presentation 10/22