Programming Massively Parallel Processors Chapter 2 GPU Computing

- Slides: 12

Programming Massively Parallel Processors, Chapter 2: GPU Computing History

© David Kirk/NVIDIA and Wen-mei W. Hwu, 2007-2010. ECE 408, University of Illinois, Urbana-Champaign

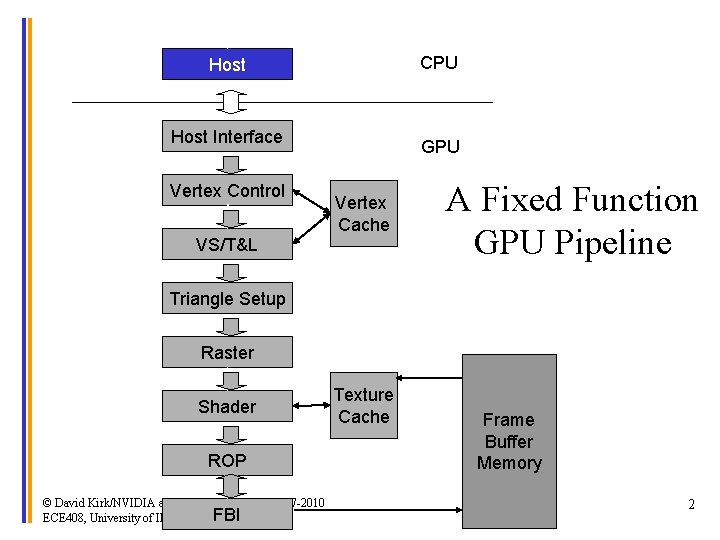

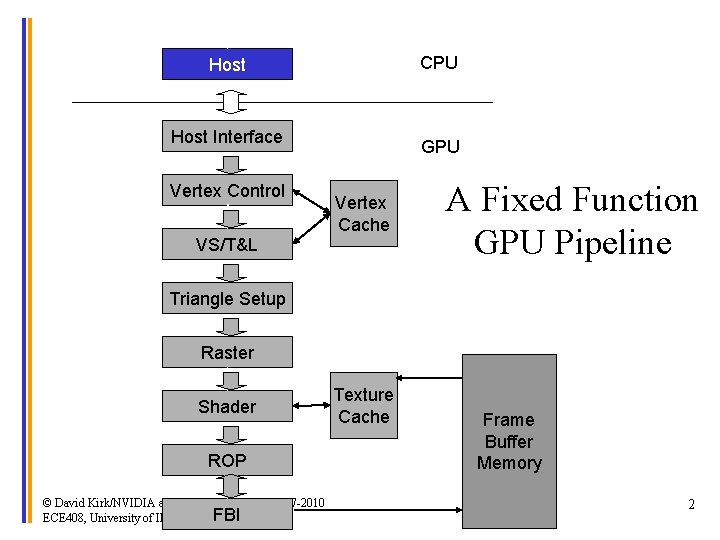

A Fixed Function GPU Pipeline

Figure blocks, from host to memory: CPU (host), Host Interface, Vertex Control (with Vertex Cache), VS/T&L, Triangle Setup, Raster, Shader (with Texture Cache), ROP, FBI, Frame Buffer Memory. Everything after the CPU is part of the GPU.

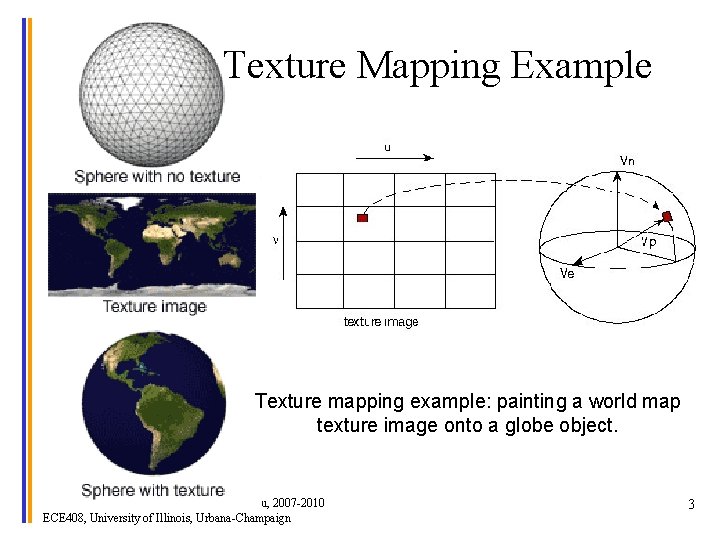

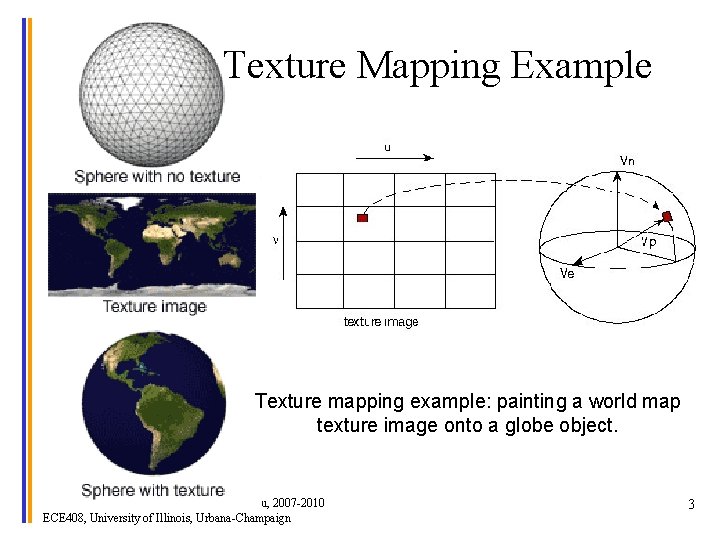

Texture Mapping Example

Texture mapping example: painting a world map texture image onto a globe object.
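As a rough illustration of what "painting a world map onto a globe" involves, the sketch below maps a point on a unit sphere to texture coordinates and looks up the nearest texel. It is not taken from the slides: the equirectangular layout, the nearest-neighbour sampling, and the names `sphere_to_uv`, `sample_nearest`, and `world_map` are all assumptions chosen for the example.

```python
import math

def sphere_to_uv(x, y, z):
    """Map a point on the unit sphere to (u, v) in [0, 1] (assumed equirectangular layout)."""
    lon = math.atan2(z, x)                      # longitude in [-pi, pi]
    lat = math.asin(max(-1.0, min(1.0, y)))     # latitude in [-pi/2, pi/2]
    u = (lon + math.pi) / (2.0 * math.pi)
    v = (lat + math.pi / 2.0) / math.pi
    return u, v

def sample_nearest(texture, u, v):
    """Nearest-neighbour lookup in a row-major 2D texture (a list of rows)."""
    height = len(texture)
    width = len(texture[0])
    col = min(int(u * width), width - 1)        # clamp u == 1.0 to the last column
    row = min(int(v * height), height - 1)      # clamp v == 1.0 to the last row
    return texture[row][col]

# Hypothetical 2x4 "world map": row 0 holds southern texels, row 1 northern texels.
world_map = [
    ["S0", "S1", "S2", "S3"],
    ["N0", "N1", "N2", "N3"],
]

south_pole_texel = sample_nearest(world_map, *sphere_to_uv(0.0, -1.0, 0.0))
equator_texel = sample_nearest(world_map, *sphere_to_uv(1.0, 0.0, 0.0))
```

A real pipeline would typically interpolate texture coordinates across each triangle and filter between neighbouring texels; the sketch only shows the per-point lookup idea.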

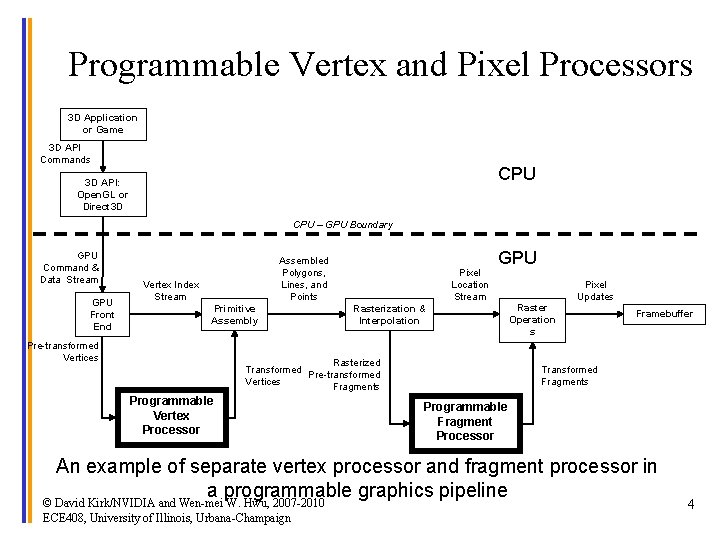

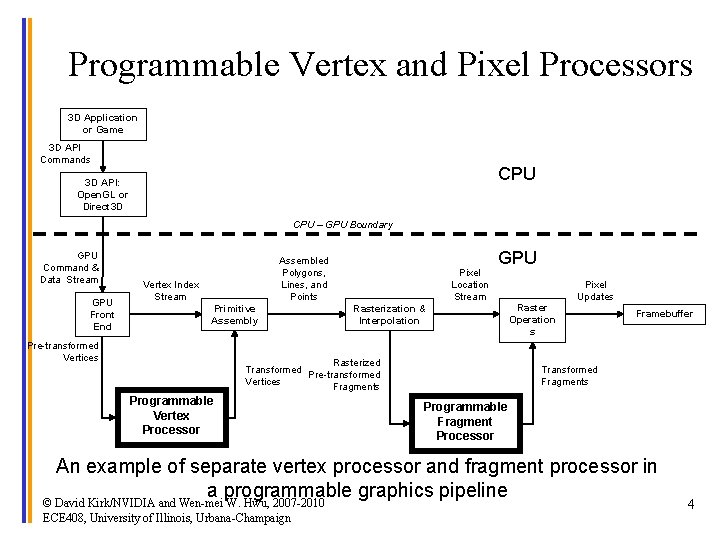

Programmable Vertex and Pixel Processors

An example of separate vertex processor and fragment processor in a programmable graphics pipeline.

Figure blocks: 3D Application or Game; 3D API: OpenGL or Direct3D (CPU side); CPU-GPU boundary; GPU Front End; Programmable Vertex Processor; Primitive Assembly; Rasterization & Interpolation; Programmable Fragment Processor; Raster Operations; Framebuffer.

Figure stream labels: 3D API Commands; GPU Command & Data Stream; Vertex Index Stream; Pre-transformed Vertices; Transformed Vertices; Assembled Polygons, Lines, and Points; Pixel Location Stream; Rasterized Pre-transformed Fragments; Transformed Fragments; Pixel Updates.

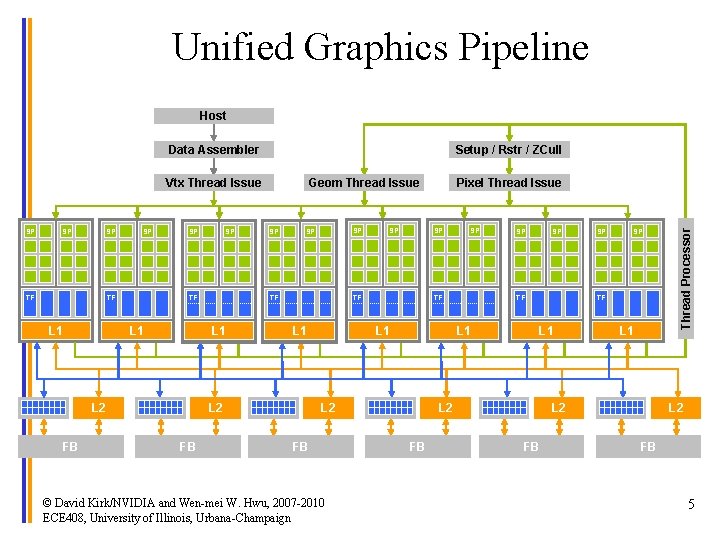

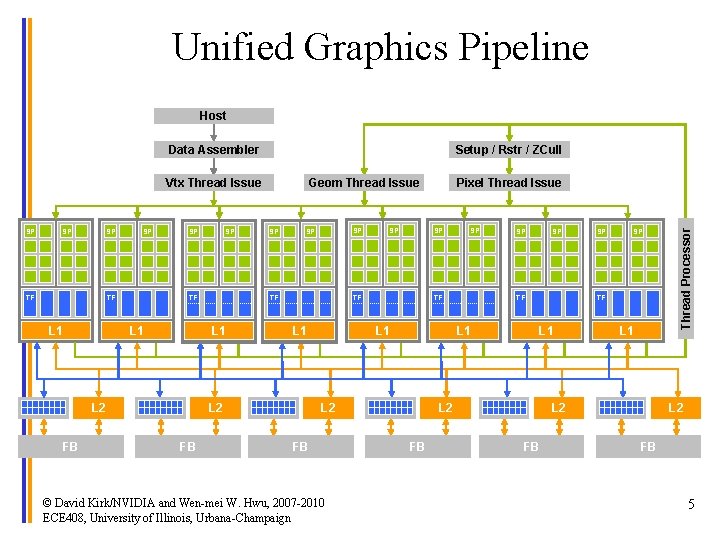

Unified Graphics Pipeline

Figure blocks: Host; Data Assembler; Setup / Rstr / ZCull; Vtx Thread Issue; Geom Thread Issue; Pixel Thread Issue; Thread Processor; an array of SP units grouped with TF and L1 blocks; L2 and FB partitions.

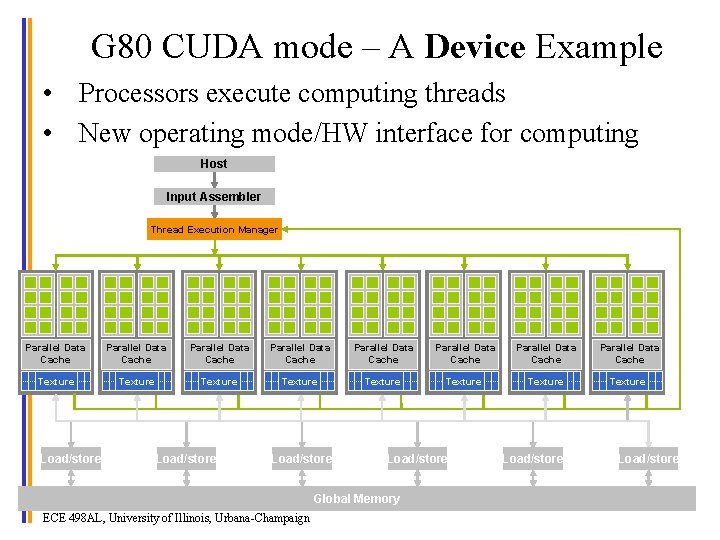

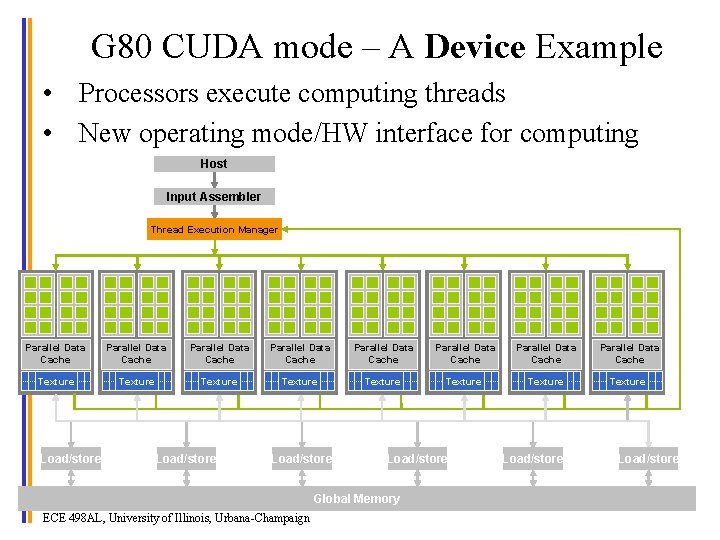

G80 CUDA mode: A Device Example

- Processors execute computing threads
- New operating mode/HW interface for computing

Figure blocks: Host; Input Assembler; Thread Execution Manager; Parallel Data Cache and Texture units; Load/store; Global Memory.

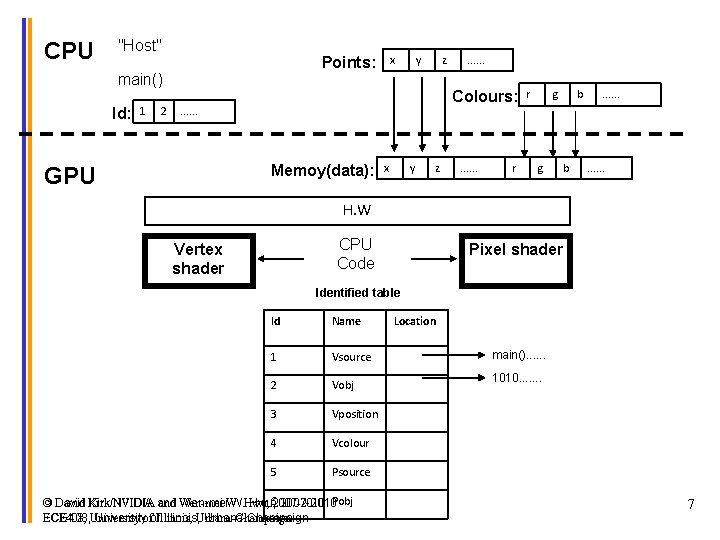

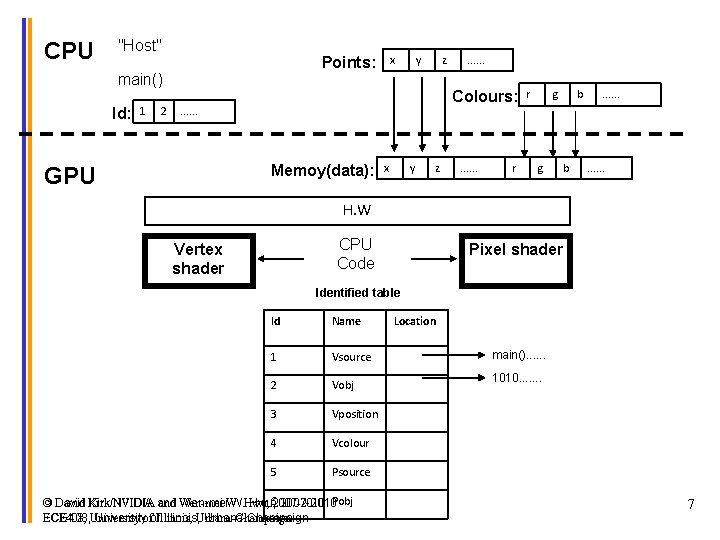

Figure: a CPU "Host" running main() and the CPU code, with memory (data) holding points (x, y, z) and colours (r, g, b), next to the GPU hardware (H.W) running a vertex shader and a pixel shader. An identifier table in the figure maps Id to Name: 1 Vsource, 2 Vobj, 3 Vposition, 4 Vcolour, 5 Psource, 6 Pobj, each with a Location.

CUDA General Purpose Computation using GPU

What is (Historical) GPGPU?

- General Purpose computation using GPU and graphics API in applications other than 3D graphics
  - GPU accelerates critical path of application
- Data parallel algorithms leverage GPU attributes
  - Large data arrays, streaming throughput
  - Fine-grain SIMD parallelism
  - Low-latency floating point (FP) computation
- Applications (see GPGPU.org)
  - Game effects (FX) physics, image processing
  - Physical modeling, computational engineering, matrix algebra, convolution, correlation, sorting

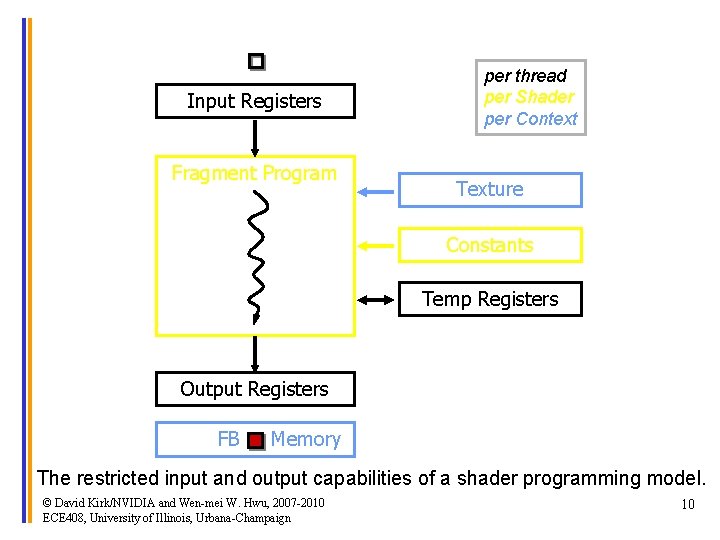

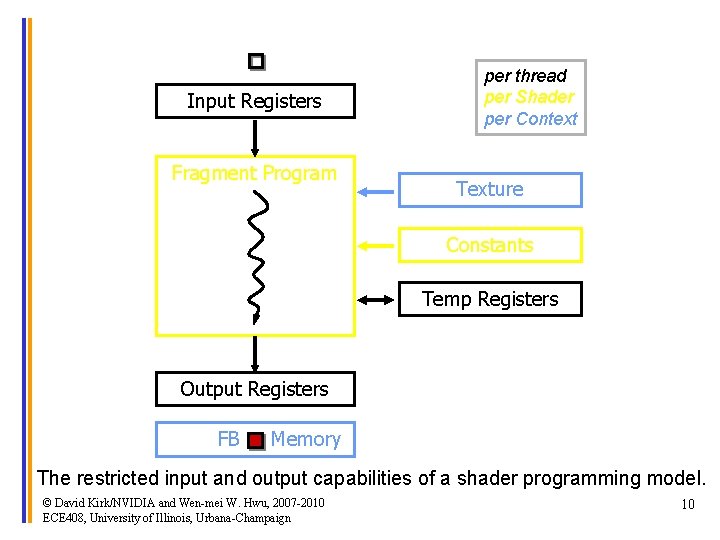

The restricted input and output capabilities of a shader programming model.

Figure blocks: Input Registers; Fragment Program; Texture; Constants; Temp Registers; Output Registers; FB Memory. Scope labels in the figure: per thread, per Shader, per Context.

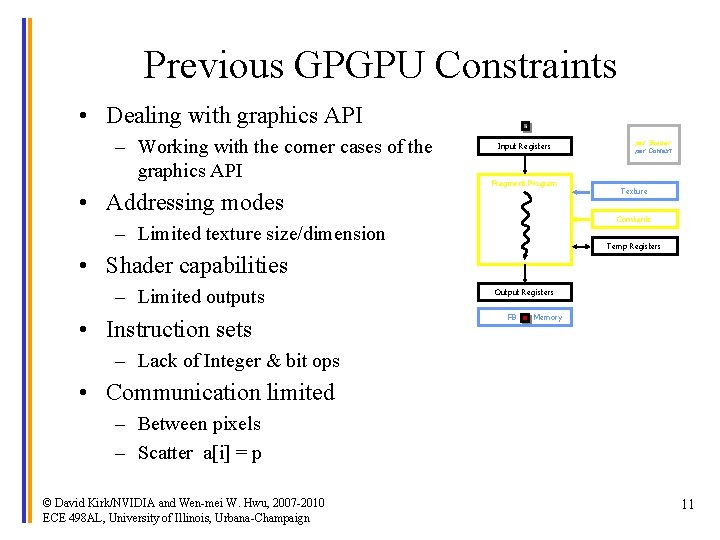

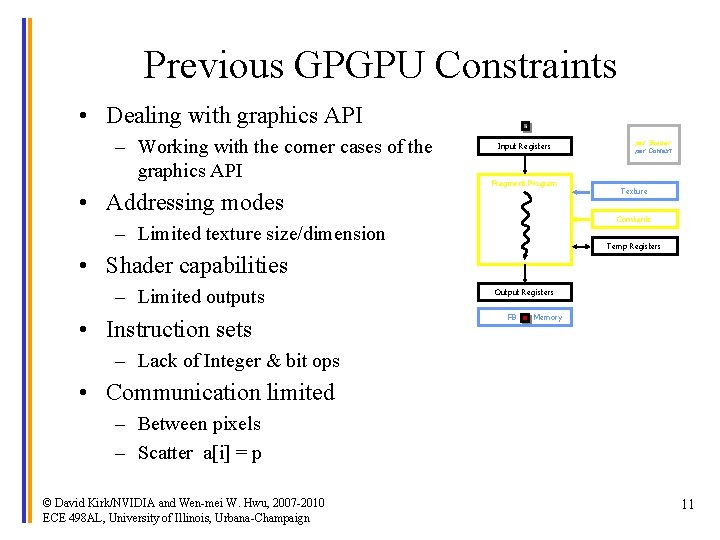

Previous GPGPU Constraints

- Dealing with graphics API
  - Working with the corner cases of the graphics API
- Addressing modes
  - Limited texture size/dimension
- Shader capabilities
  - Limited outputs
- Instruction sets
  - Lack of Integer & bit ops
- Communication limited
  - Between pixels
  - Scatter a[i] = p
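The scatter limitation can be made concrete with a small emulation. The sketch below is an assumption-laden model, not code from the slides: `run_fragment_pass` and `gather_program` are hypothetical names, and the "fragment program" is just a Python function that may read any input (gather) but returns a value only for its own output pixel. A scatter `a[i] = p` has no direct equivalent in that model, so the example re-expresses it as a gather through an inverse index map, which works here only because the index list is a permutation prepared ahead of time.

```python
def run_fragment_pass(fragment_program, textures, num_pixels):
    """Run one program per output pixel; each call can only produce its own pixel's value."""
    return [fragment_program(pixel, textures) for pixel in range(num_pixels)]

def gather_program(pixel, textures):
    # Gather: read from a computed address, write to the fixed location `pixel`.
    return textures["data"][textures["index"][pixel]]

data = [10, 20, 30, 40]
index = [2, 0, 3, 1]

# Gather is allowed: gathered[i] == data[index[i]].
gathered = run_fragment_pass(gather_program, {"data": data, "index": index}, 4)

# Scatter, written the way ordinary CPU code would do it: scattered[index[i]] = data[i].
scattered = [None] * len(data)
for i, target in enumerate(index):
    scattered[target] = data[i]

# The same result as a gather, using an inverse map built outside the shader model.
inverse = [0] * len(index)
for i, target in enumerate(index):
    inverse[target] = i
scatter_as_gather = run_fragment_pass(gather_program, {"data": data, "index": inverse}, 4)
```

When the write addresses are data dependent or collide, no such precomputed inverse exists, which is one way to read the "Scatter a[i] = p" bullet above.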

CUDA

- "Compute Unified Device Architecture"
- General purpose programming model
  - User kicks off batches of threads on the GPU
  - GPU = dedicated super-threaded, massively data parallel co-processor
- Targeted software stack
  - Compute oriented drivers, language, and tools
- Driver for loading computation programs into GPU
  - Standalone Driver, optimized for computation
  - Interface designed for compute: graphics-free API
  - Data sharing with OpenGL buffer objects
  - Guaranteed maximum download & readback speeds
  - Explicit GPU memory management
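To give a feel for "kicking off batches of threads", here is a serial Python emulation of the idea. It is not CUDA and does not use any CUDA API: `launch` and `vector_add_kernel` are hypothetical names, the grouping of threads into fixed-size blocks is an assumption added for the example, and the loops run one "thread" at a time where a GPU would run many concurrently.

```python
def launch(kernel, num_blocks, threads_per_block, *args):
    """Emulate launching a batch of threads: call the kernel once per (block, thread) pair."""
    for block_idx in range(num_blocks):
        for thread_idx in range(threads_per_block):
            kernel(block_idx, threads_per_block, thread_idx, *args)

def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Each emulated thread handles exactly one element, selected by its global index."""
    i = block_idx * block_dim + thread_idx
    if i < len(out):                 # extra threads in the last block do nothing
        out[i] = a[i] + b[i]

a = [1.0, 2.0, 3.0, 4.0, 5.0]
b = [10.0, 20.0, 30.0, 40.0, 50.0]
out = [0.0] * len(a)

threads_per_block = 4
num_blocks = (len(a) + threads_per_block - 1) // threads_per_block  # round up
launch(vector_add_kernel, num_blocks, threads_per_block, a, b, out)
```

The point of the sketch is the programming style: the host describes the work for a single thread and asks for enough threads to cover the data, with no graphics API involved.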