Packet Scheduling for Deep Packet Inspection on MultiCore

- Slides: 14

Packet Scheduling for Deep Packet Inspection on Multi-Core Architectures

- Authors: Terry Nelms, Mustaque Ahamad
- Publisher: ANCS 2010
- Presenter: Li-Hsien, Hsu
- Date: 4/11/2012

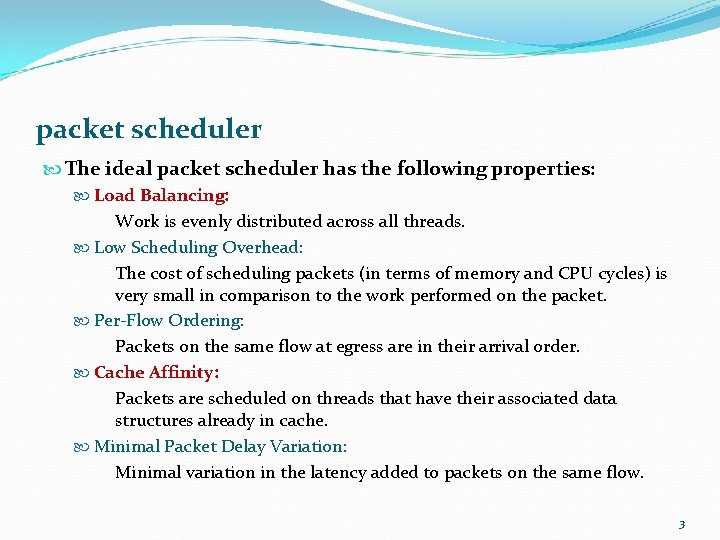

Packet scheduler

The ideal packet scheduler has the following properties:

- Load Balancing: Work is evenly distributed across all threads.
- Low Scheduling Overhead: The cost of scheduling packets (in terms of memory and CPU cycles) is very small in comparison to the work performed on the packet.
- Per-Flow Ordering: Packets on the same flow at egress are in their arrival order.
- Cache Affinity: Packets are scheduled on threads that have their associated data structures already in cache.
- Minimal Packet Delay Variation: Minimal variation in the latency added to packets on the same flow.

DPI packet schedulers

- Direct Hash (DH): for comparison purposes
- Packet Handoff (PH): maximizes load balancing
- Last Flow Bundle (LFB): maximizes cache affinity

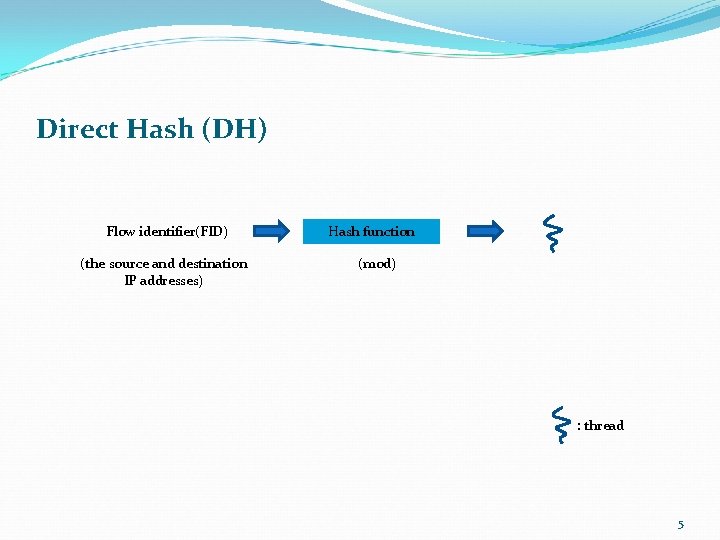

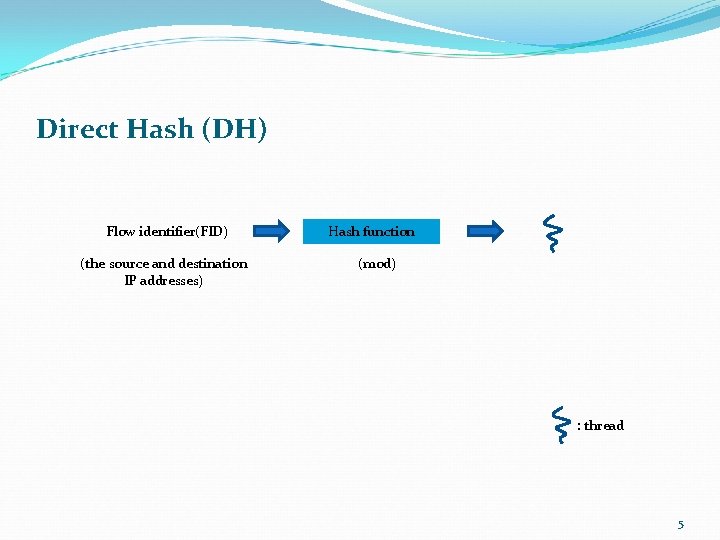

Direct Hash (DH)

Diagram labels: the flow identifier (FID, the source and destination IP addresses) is passed through a hash function (mod), which selects the thread.

Direct Hash (DH)

The advantage of DH is:

- Cache Affinity: Packets on a flow are always processed by the same thread.

The disadvantage of DH is:

- Load Imbalance: There is no control over how packets are distributed.
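
The DH mapping can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the choice of CRC32 as the hash, the direction-independent FID (sorting the two addresses), and the names `flow_id`, `direct_hash`, and `thread_load` are all assumptions made for the example.

```python
import zlib
from collections import Counter


def flow_id(src_ip, dst_ip):
    # Assumption: both directions of a flow share one FID, so sort the addresses.
    a, b = sorted((src_ip, dst_ip))
    return f"{a}|{b}".encode()


def direct_hash(src_ip, dst_ip, num_threads):
    # hash(FID) mod number of threads selects the processing thread.
    return zlib.crc32(flow_id(src_ip, dst_ip)) % num_threads


def thread_load(packets, num_threads):
    # Count how many packets each thread receives for a list of (src, dst) pairs.
    return Counter(direct_hash(s, d, num_threads) for s, d in packets)


# One heavy flow plus a few light ones: the heavy flow's packets all land on
# a single thread, which is the load imbalance described above.
heavy = [("10.0.0.1", "10.0.0.2")] * 90
light = [("10.0.1.%d" % i, "10.0.2.%d" % i) for i in range(10)]
load = thread_load(heavy + light, num_threads=4)
```

Because the thread is a pure function of the FID, no scheduler state is needed, but nothing can move work away from the thread that owns the heavy flow.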

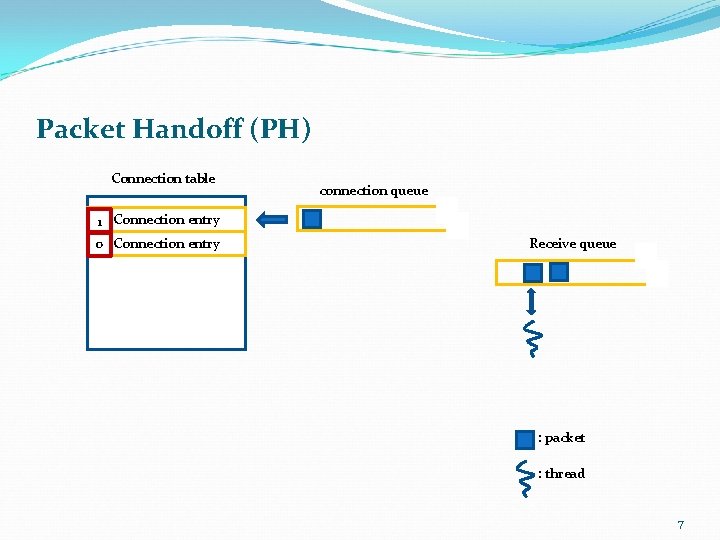

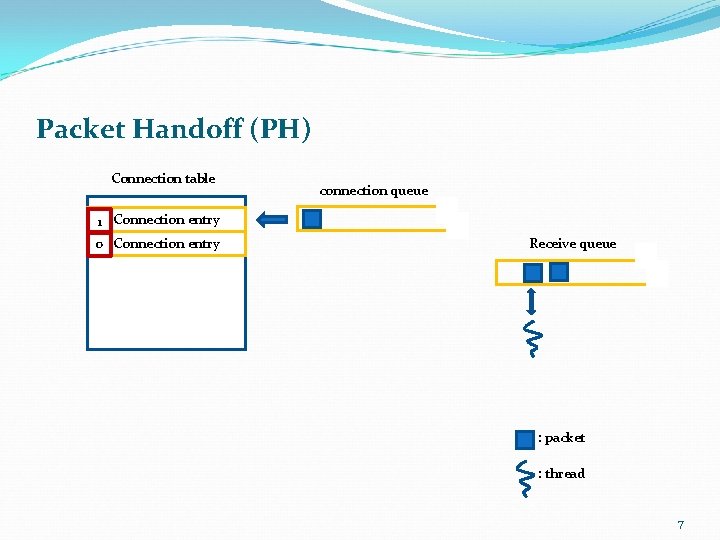

Packet Handoff (PH)

Diagram labels: receive queue, connection table, connection entries (marked 1 and 0), connection queue. Legend: packet, thread.

Packet Handoff (PH)

The advantage of PH is:

- Load Balancing: Threads pull packets from a queue (i.e., from the receive queue, RQ) when they are not busy. If n threads are processing packets and there are at least n packets in the receive queue that map to different connection entries, no threads will be idle.

The disadvantage of PH is:

- Cache Affinity: There is no mapping of packets that are part of the same flow to the same thread.
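
One way to read the PH diagram is that each connection entry carries a busy flag and a connection queue: a thread that pulls a packet for a connection already being processed hands the packet off to the owning thread instead of waiting. The sketch below follows that reading. It is an assumption about the mechanism rather than the authors' code, and the names `ConnectionEntry`, `PacketHandoff`, `handle`, and `inspect` are hypothetical.

```python
import threading
from collections import deque


class ConnectionEntry:
    def __init__(self):
        self.lock = threading.Lock()
        self.busy = False     # assumed meaning of the 1/0 mark in the diagram
        self.queue = deque()  # connection queue of handed-off packets


class PacketHandoff:
    def __init__(self):
        self.table = {}       # connection table: FID -> ConnectionEntry
        self.table_lock = threading.Lock()

    def _entry(self, fid):
        with self.table_lock:
            entry = self.table.get(fid)
            if entry is None:
                entry = self.table[fid] = ConnectionEntry()
            return entry

    def handle(self, fid, packet, inspect):
        """Called by a worker for a packet pulled from the receive queue.

        Returns False if the packet was handed off to the owning thread,
        True if this caller processed it (and any handed-off packets).
        """
        entry = self._entry(fid)
        with entry.lock:
            if entry.busy:
                entry.queue.append(packet)  # hand off, then go pull more work
                return False
            entry.busy = True
        while True:
            inspect(fid, packet)            # DPI work happens outside the lock
            with entry.lock:
                if not entry.queue:
                    entry.busy = False
                    return True
                packet = entry.queue.popleft()
```

Under this reading, per-flow ordering is preserved because only one thread at a time processes a given connection, while any idle thread can take the next packet from the receive queue.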

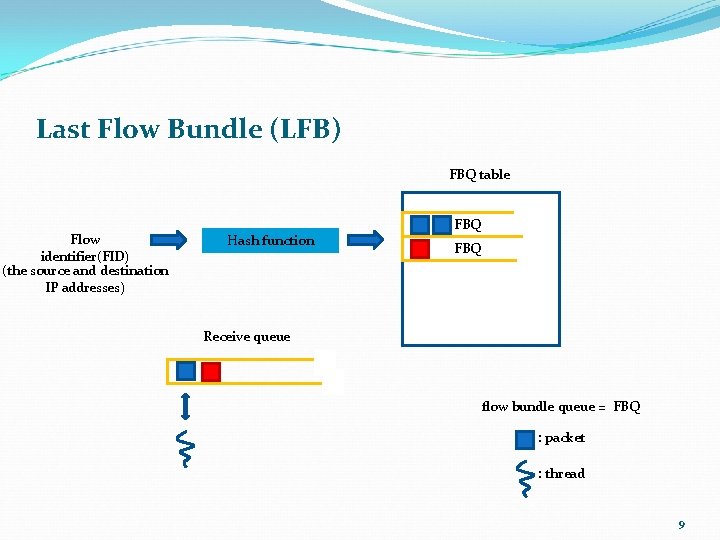

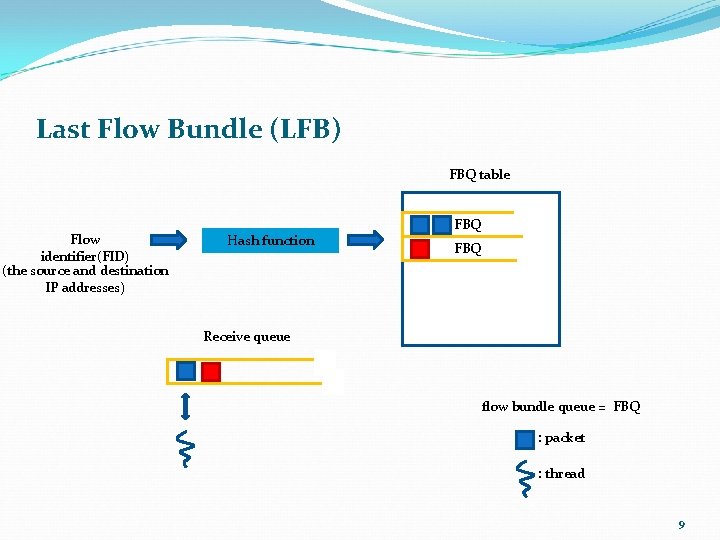

Last Flow Bundle (LFB)

Diagram labels: receive queue, flow identifier (FID, the source and destination IP addresses), hash function, FBQ table, FBQ (flow bundle queue). Legend: packet, thread.

Last Flow Bundle (LFB)

The advantage of LFB is:

- Cache Affinity: Packets that map to the same FBQ and are in the system at the same time are processed by a single thread.

The disadvantage of LFB is:

- Increased Packet Delay Variation: Packets on the same flow are intentionally processed together for improved efficiency. However, this causes packets in a packet train to jump ahead of older packets in the queue, resulting in higher latency for those older packets.
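
The reordering effect can be seen in a small, deliberately simplified model: a single worker, all packets already in the system, and each bundle drained completely before the next one is started. This is a sketch under those assumptions, not the LFB algorithm as published; `lfb_service_order`, `crc_bundle`, and the `bundle_of` parameter are hypothetical names.

```python
import zlib
from collections import deque


def crc_bundle(fid, num_fbqs):
    # Assumed hash: CRC32 of the FID modulo the number of flow bundle queues.
    return zlib.crc32(fid.encode()) % num_fbqs


def lfb_service_order(arrivals, num_fbqs, bundle_of=None):
    """arrivals: list of (packet_id, fid) in arrival order.

    Returns the order in which one worker would service the packets when
    packets mapping to the same FBQ are processed together.
    """
    if bundle_of is None:
        bundle_of = lambda fid: crc_bundle(fid, num_fbqs)
    fbqs = [deque() for _ in range(num_fbqs)]
    pending = deque()  # FBQ indices, ordered by their oldest waiting packet
    for pkt in arrivals:
        idx = bundle_of(pkt[1])
        if not fbqs[idx]:
            pending.append(idx)
        fbqs[idx].append(pkt)
    served = []
    while pending:
        idx = pending.popleft()
        while fbqs[idx]:  # drain the whole bundle on one thread
            served.append(fbqs[idx].popleft())
    return served


arrivals = [(1, "A"), (2, "B"), (3, "A"), (4, "B"), (5, "A")]
order = lfb_service_order(arrivals, 2, bundle_of=lambda fid: {"A": 0, "B": 1}[fid])
# order == [(1, "A"), (3, "A"), (5, "A"), (2, "B"), (4, "B")]
```

Packets 3 and 5 are serviced before the older packet 2, so flow B sees extra latency even though order within each flow is unchanged.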

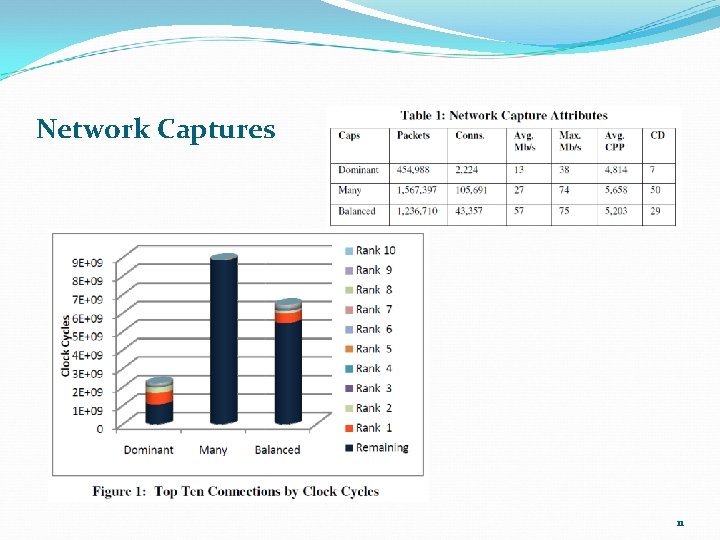

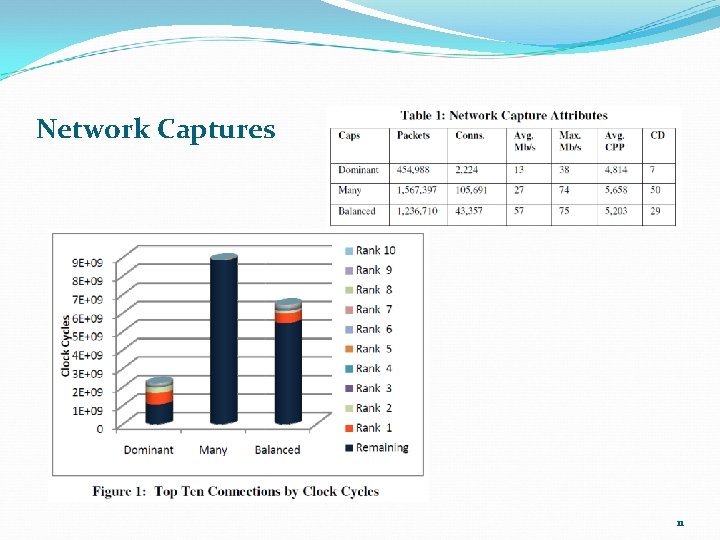

Network Captures

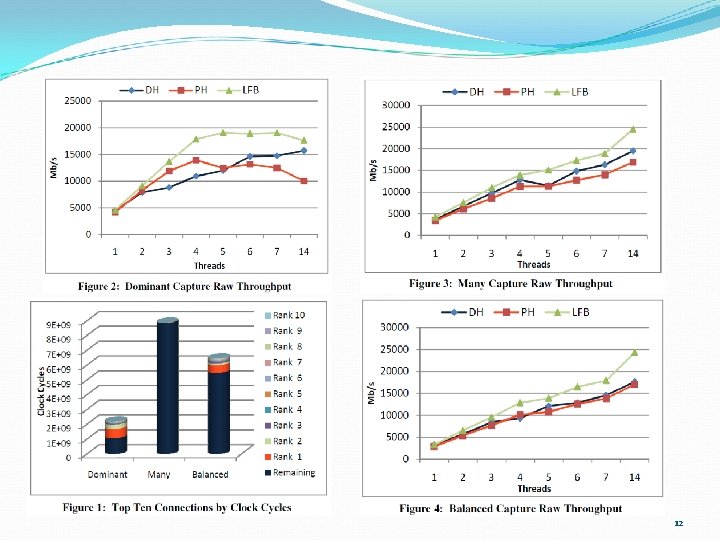

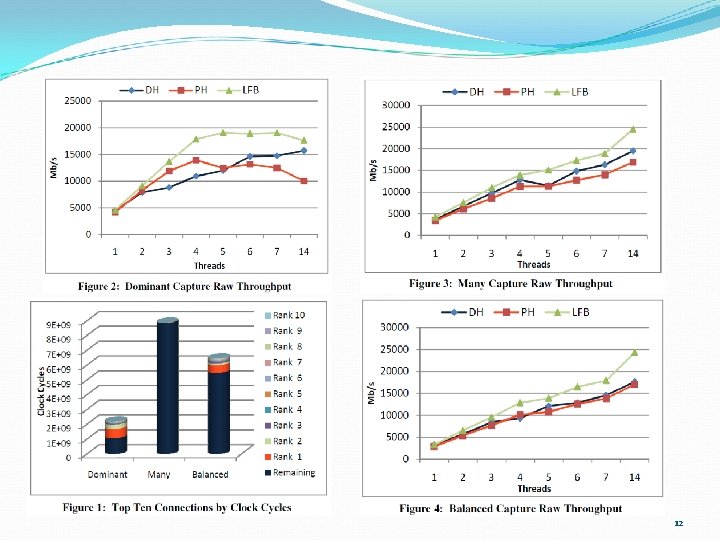

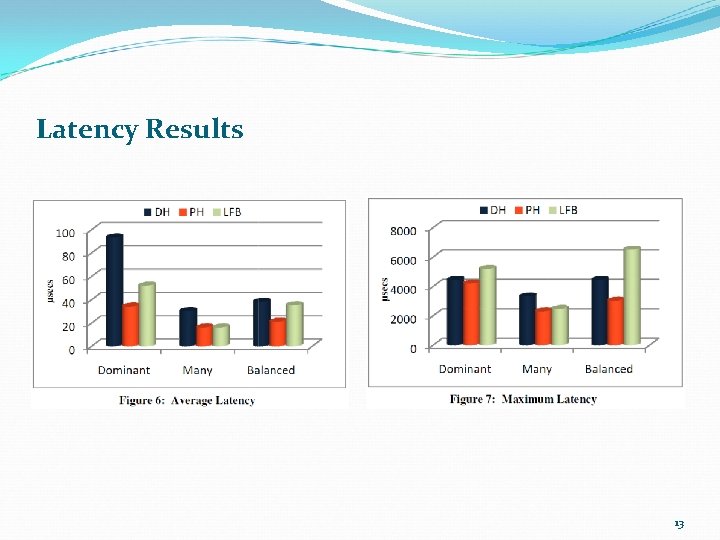

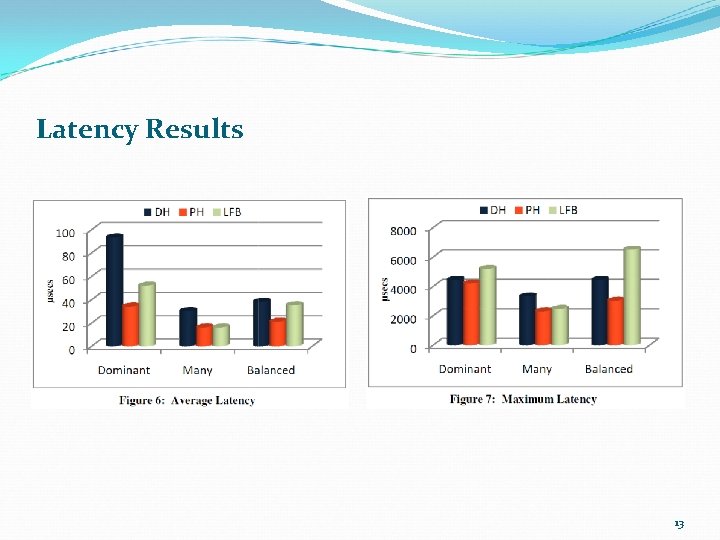

Latency Results

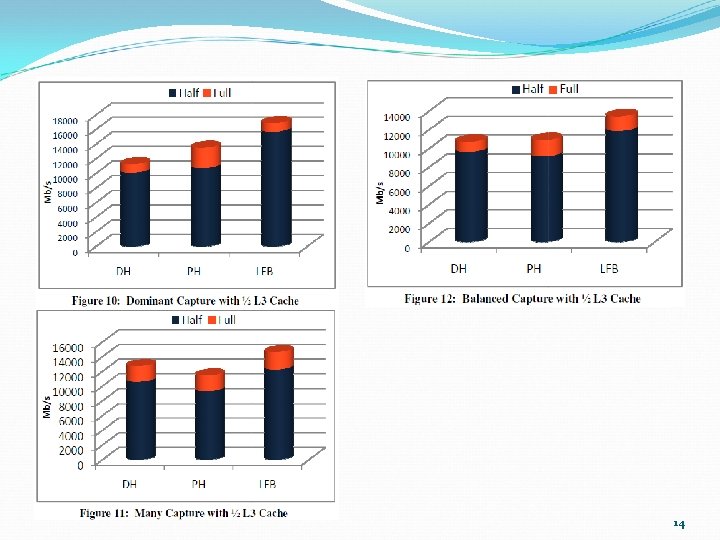

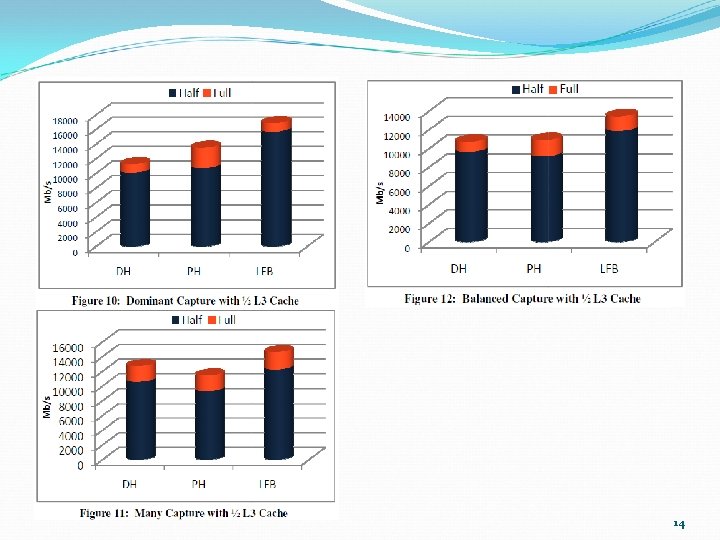
