
- Slides: 20

Multiprocessor cache coherence

Caching: terms and definitions
- cache line, line size, cache size
- degree of associativity
  - direct-mapped, set-associative, and fully associative
- placement, replacement, location (tags)
- hits and misses
- clean vs. dirty entries
- write-through vs. write-back
- multi-level inclusion

Cache line, line size, and cache size
- caches hold multi-byte lines
- the bytes in a line come from sequential locations in memory (called a memory block)
- the number of bytes in a line is usually a multiple of the bus width
- lines are identified by “tags”
- cache size = # lines * # bytes per line
  - tags are not included in cache size

Degree of associativity
- how many “ways” or places can we store a block from memory in the cache?
  - direct-mapped => 1 “way” or place to store a given block
  - set-associative => a block can go in any of the n “ways” of the one set its index selects
  - fully associative => a block from memory can be stored in any line in the cache

Placement
- when we bring a block in from memory, where do we put it?
  - use the address of the first byte of the block
  - break it into offset, index and tag
  - remove the log2(# bytes in block) low-order bits for the offset
  - use the middle log2(# lines per way) bits to select the line -> called the “index”
  - the remaining high-order bits are the tag
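The address breakdown above can be sketched in a few lines of Python. This is a minimal illustration, not taken from the slides: the function name `split_address` and its parameters are hypothetical, and it assumes both the line size and the number of lines per way are powers of two.

```python
def split_address(addr, line_bytes, lines_per_way):
    """Split a byte address into (tag, index, offset).

    Assumption: line_bytes and lines_per_way are powers of two.
    """
    offset_bits = line_bytes.bit_length() - 1     # log2(# bytes in block)
    index_bits = lines_per_way.bit_length() - 1   # log2(# lines per way)
    offset = addr & (line_bytes - 1)              # low-order bits
    index = (addr >> offset_bits) & (lines_per_way - 1)  # middle bits
    tag = addr >> (offset_bits + index_bits)      # remaining high-order bits
    return tag, index, offset


# 16-byte lines and 64 lines per way: 4 offset bits, 6 index bits.
tag, index, offset = split_address(0x12345678, line_bytes=16, lines_per_way=64)
print(hex(tag), index, offset)
```

With 16-byte lines and 64 lines per way, the low 4 bits are the offset, the next 6 bits are the index, and everything above bit 9 is the tag.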

Replacement
- placement selects the same line number in each way
- if one way has an empty line at that location, use it
- if all ways have valid lines at that location, one will need to be victimized
- use LRU, clock, random, ...

Locating a block: Tags
- how do we know whether a given block is in cache?
- calculate the index and tag from the address
- check the tags for that index in each way
- separate memory for tag array
- circuitry for tag comparisons
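Tag lookup and victim selection can be combined in a toy software model. The sketch below is an assumption-laden illustration rather than a description of real hardware: the class name `SetAssociativeCache` is hypothetical, it tracks tags only (no data), it uses LRU as the replacement policy, and a dictionary lookup stands in for the parallel tag comparators.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy model: stores tags only, with LRU replacement within each set."""

    def __init__(self, num_sets, ways, line_bytes):
        self.num_sets = num_sets
        self.ways = ways
        self.line_bytes = line_bytes
        # One OrderedDict per index; keys are tags, ordered oldest to newest use.
        self.sets = [OrderedDict() for _ in range(num_sets)]

    def access(self, addr):
        """Return True on a hit, False on a miss (the block is then filled)."""
        block = addr // self.line_bytes
        index = block % self.num_sets
        tag = block // self.num_sets
        lines = self.sets[index]
        if tag in lines:                   # tag match in some way: hit
            lines.move_to_end(tag)         # mark as most recently used
            return True
        if len(lines) >= self.ways:        # every way is valid at this index
            lines.popitem(last=False)      # victimize the least recently used
        lines[tag] = True
        return False


cache = SetAssociativeCache(num_sets=4, ways=2, line_bytes=16)
hits = [cache.access(a) for a in (0, 64, 0, 128, 64)]
print(hits)  # [False, False, True, False, False]
```

Addresses 0, 64 and 128 all map to index 0, so the third distinct block forces a replacement in a 2-way cache; the block at 64 is the least recently used at that point and is the one evicted.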

Hit vs. miss
- hit == block is in the cache; miss == it is not
- read hit / miss: process wants to read one or more bytes in the block
- write hit / miss: similarly, for a write
- we want high hit rates

Clean vs. dirty
- has the value in the cache been modified since it was placed in the cache?
- one bit per line
- similarly, one bit for valid / not valid

Write-through vs. write-back
- write-through: on a write, update the cache and also write to memory
  - more traffic to memory
  - no need to stall on replacement
- write-back: hold writes in cache, mark the line dirty
  - less memory traffic
  - coalesces multiple writes to the line
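The traffic difference can be made concrete with a small counting sketch. The function `memory_writes` is hypothetical, and it rests on simplifying assumptions: the line is already in the cache, all stores go to that one line, and the line is evicted exactly once at the end. It counts memory write transactions, not bytes (a write-back transfers a whole line).

```python
def memory_writes(writes_to_line, policy):
    """Count memory write transactions for repeated stores to one cached line.

    Assumptions: the line is already cached and is evicted once at the end.
    """
    if policy == "write-through":
        return writes_to_line                   # every store also goes to memory
    if policy == "write-back":
        return 1 if writes_to_line > 0 else 0   # one write of the dirty line on eviction
    raise ValueError(f"unknown policy: {policy}")


print(memory_writes(8, "write-through"))  # 8
print(memory_writes(8, "write-back"))     # 1
```

The write-back case shows the coalescing effect: eight stores to the same line cost a single write to memory when the dirty line is replaced.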

Multi-level inclusion (MLI)
- L1 (the child) holds a subset of L2 (the parent)
- L2 holds a subset of main memory
- affects servicing of misses & invalidations
  - see 6.3.1, pp. 232-233 in text
- constrains the organizations we can build if we want MLI
  - can switch to MLE, though
- ensures there will be an allocated line in the parent with the same contents as the child (if clean) or which can receive a dirty line from the child when it is replaced

Uniprocessor MLI
- assuming write-back caches
- Ap, Bp are the parent’s associativity and line size, respectively; Ac and Bc are the child’s
- we are constrained to: Ap >= (Bp / Bc) Ac
- the parent’s associativity must at least cover the ratio of the line sizes

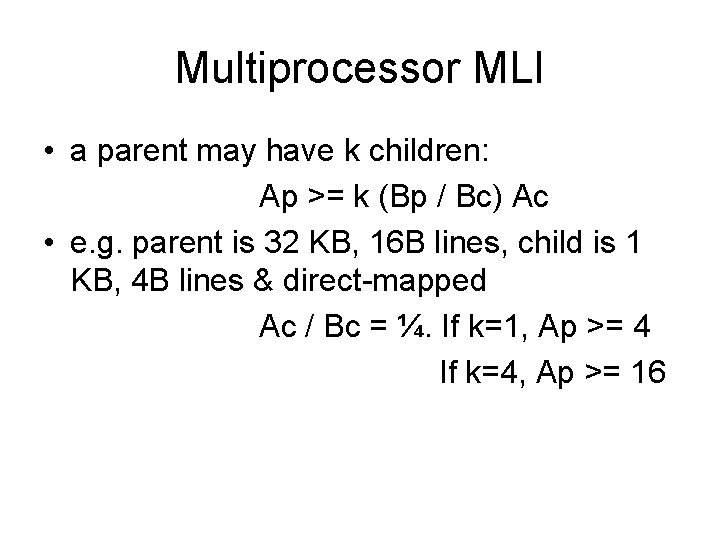

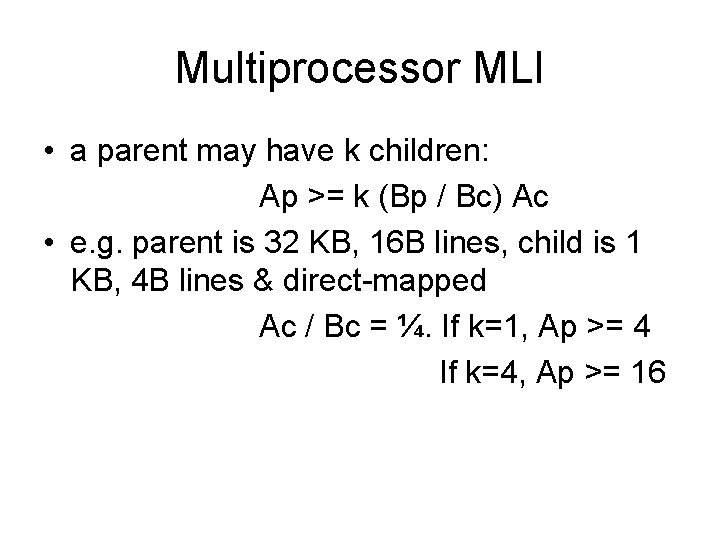

Multiprocessor MLI
- a parent may have k children: Ap >= k (Bp / Bc) Ac
- e.g. parent is 32 KB with 16 B lines; child is 1 KB, direct-mapped, with 4 B lines
  - Ac = 1, Bc = 4, Bp = 16, so Ac / Bc = ¼ and (Bp / Bc) Ac = 4
  - if k = 1, Ap >= 4
  - if k = 4, Ap >= 16
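The bound is easy to check mechanically. The helper below is a hypothetical sketch (the name `min_parent_associativity` and its parameter names are not from the slides); it evaluates Ap >= k (Bp / Bc) Ac with exact fractions and rounds up to the next integer.

```python
import math
from fractions import Fraction


def min_parent_associativity(a_child, b_child, b_parent, k=1):
    """Smallest integer Ap satisfying Ap >= k * (Bp / Bc) * Ac."""
    bound = k * Fraction(b_parent, b_child) * a_child
    return math.ceil(bound)


# Slide example: direct-mapped child (Ac = 1), 4 B child lines, 16 B parent lines.
print(min_parent_associativity(1, 4, 16, k=1))  # 4
print(min_parent_associativity(1, 4, 16, k=4))  # 16
```

Note that the cache capacities (32 KB and 1 KB) do not appear in the bound; only the associativities, line sizes and number of children do.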

Coherence
- MLI makes it easy to keep an L1 / L2 pair consistent, or “coherent”
- what about multiple caches in a multiprocessor or multi-core system?

Shared memory machines

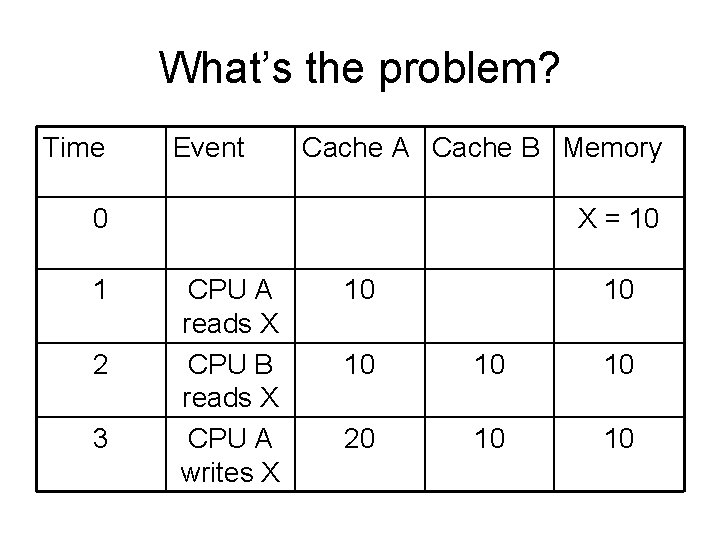

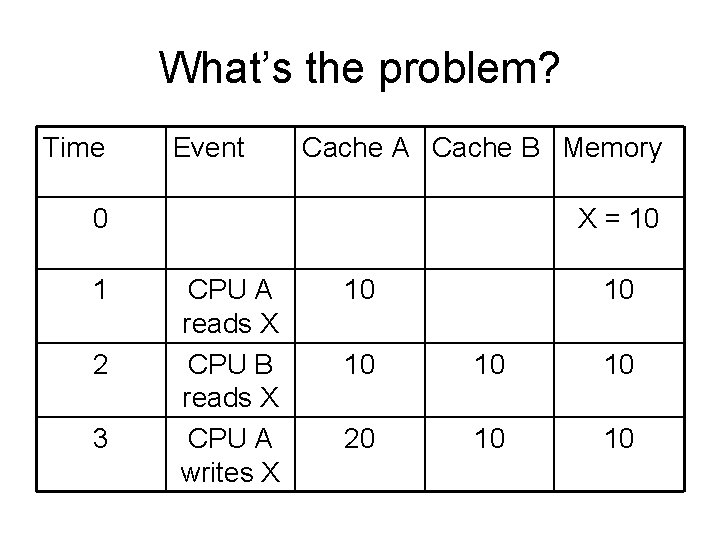

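What’s the problem?

| Time | Event | Cache A | Cache B | Memory |
|------|-------|---------|---------|--------|
| 0 | X = 10 | | | 10 |
| 1 | CPU A reads X | 10 | | |
| 2 | CPU B reads X | | 10 | |
| 3 | CPU A writes X | 20 | 10 | 10 |

After time 3, cache A holds 20 while cache B and memory still hold the stale value 10.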
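The same sequence of events can be replayed in a short simulation. This is a deliberately broken model written for illustration: the class `PrivateCache` is hypothetical, each cache is write-back, and there is no coherence protocol connecting the two caches.

```python
class PrivateCache:
    """Write-back cache with no coherence protocol (deliberately broken)."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}                     # address -> cached value

    def read(self, addr):
        if addr not in self.lines:          # miss: fetch the value from memory
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value            # held in the cache; memory not updated


memory = {"X": 10}                          # time 0: X = 10
cache_a, cache_b = PrivateCache(memory), PrivateCache(memory)
cache_a.read("X")                           # time 1: CPU A reads X
cache_b.read("X")                           # time 2: CPU B reads X
cache_a.write("X", 20)                      # time 3: CPU A writes X
stale = cache_b.read("X")
print(cache_a.read("X"), stale, memory["X"])  # 20 10 10
```

CPU B's read hits in its own cache and returns 10 even though CPU A has already written 20, which is exactly the behavior a coherence mechanism must prevent.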

Coherence (formally)
- determines the value returned by a read
- coherent memory system:
  - if P writes to X and then reads X, with no writes to X by other processors in between, the read should return the value written by P
  - if P1 writes to X and then P2 reads from X, and the read and write are “sufficiently” separated in time, the read should return the value written by P1
  - writes to the same location are serialized: two writes to the same location by any two processors are seen in the same order by all processors

Consistency
- determines when a written value will be available to be read
  - “sufficiently separated” in the previous slide
- various consistency models are possible
  - later

Think / group / share
- how can we ensure coherence in a shared-memory multiprocessor?

Reading assignment
- section 7.3 on pages 281 to 290 in Baer
  - covers synchronization
- example 1, pages 269 to 270