Programming Massively Parallel Processors Lecture Slides for Chapter

![Linear Addressing • Given: __shared__ float shared[256]; float foo = shared[base. Index + s Linear Addressing • Given: __shared__ float shared[256]; float foo = shared[base. Index + s](https://slidetodoc.com/presentation_image_h/8e56a5006351544151ae9136a94d4576/image-39.jpg)

![A simplementation • Assume we have already loaded array into __shared__ float partial. Sum[] A simplementation • Assume we have already loaded array into __shared__ float partial. Sum[]](https://slidetodoc.com/presentation_image_h/8e56a5006351544151ae9136a94d4576/image-44.jpg)

- Slides: 57

Programming Massively Parallel Processors Lecture Slides for Chapter 6: Performance Considerations © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 1

Threading Hardware © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 2

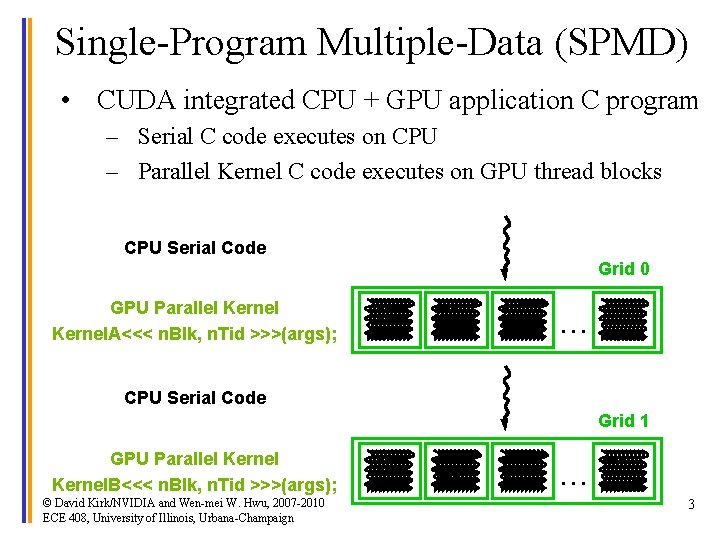

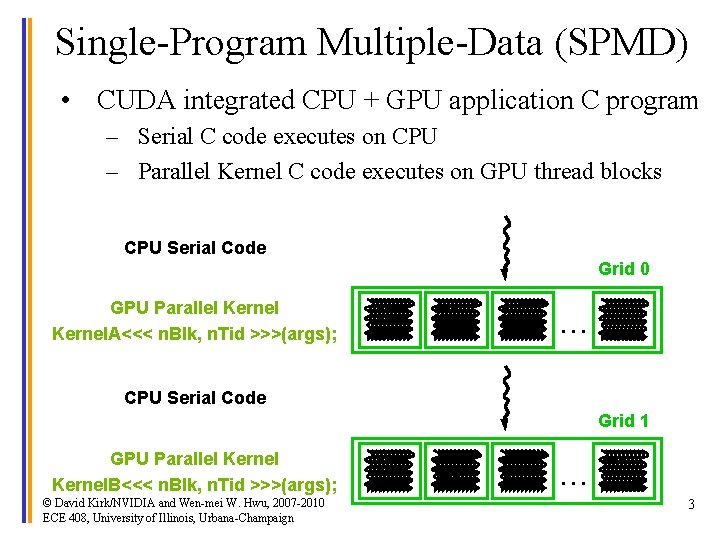

Single-Program Multiple-Data (SPMD) • CUDA integrated CPU + GPU application C program – Serial C code executes on CPU – Parallel Kernel C code executes on GPU thread blocks CPU Serial Code Grid 0 GPU Parallel Kernel. A<<< n. Blk, n. Tid >>>(args); . . . CPU Serial Code Grid 1 GPU Parallel Kernel. B<<< n. Blk, n. Tid >>>(args); © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign . . . 3

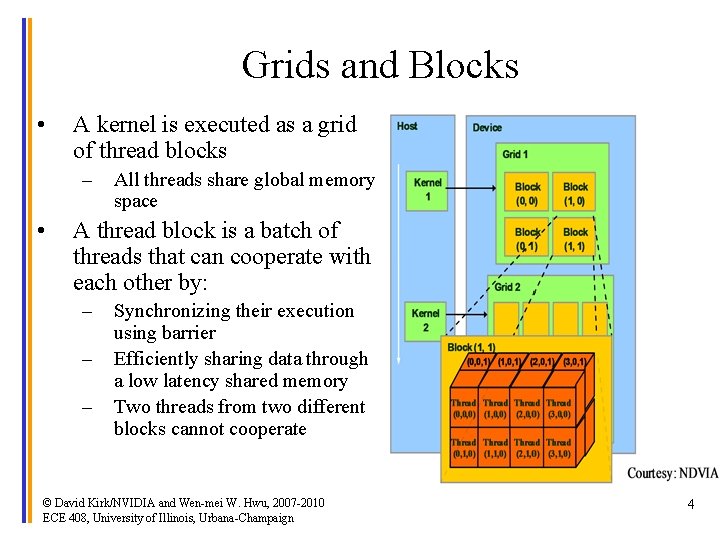

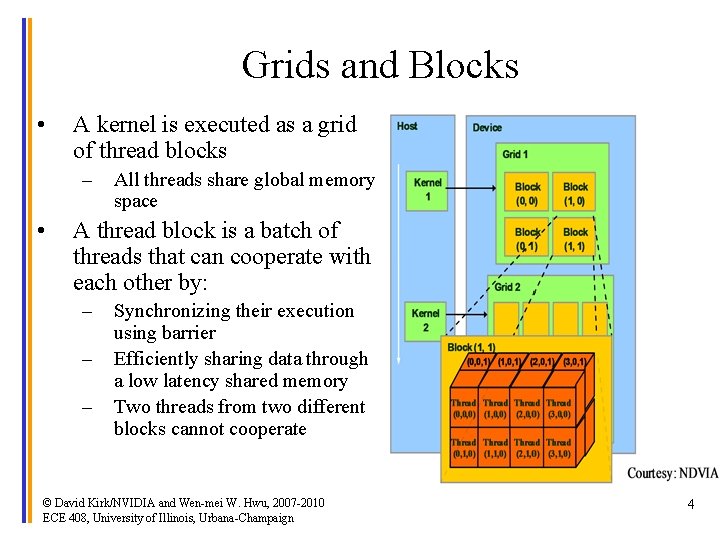

Grids and Blocks • A kernel is executed as a grid of thread blocks – • All threads share global memory space A thread block is a batch of threads that can cooperate with each other by: – – – Synchronizing their execution using barrier Efficiently sharing data through a low latency shared memory Two threads from two different blocks cannot cooperate © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 4

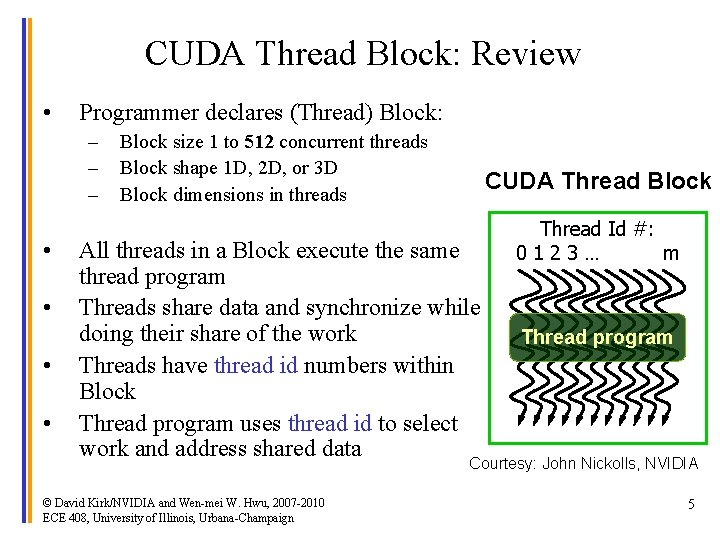

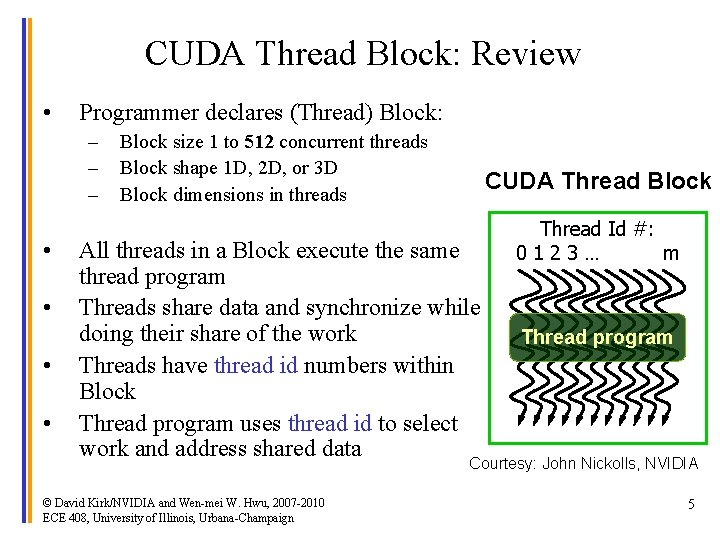

CUDA Thread Block: Review • Programmer declares (Thread) Block: – – – • • Block size 1 to 512 concurrent threads Block shape 1 D, 2 D, or 3 D Block dimensions in threads CUDA Thread Block All threads in a Block execute the same thread program Threads share data and synchronize while doing their share of the work Threads have thread id numbers within Block Thread program uses thread id to select work and address shared data Thread Id #: 0123… m Thread program Courtesy: John Nickolls, NVIDIA © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 5

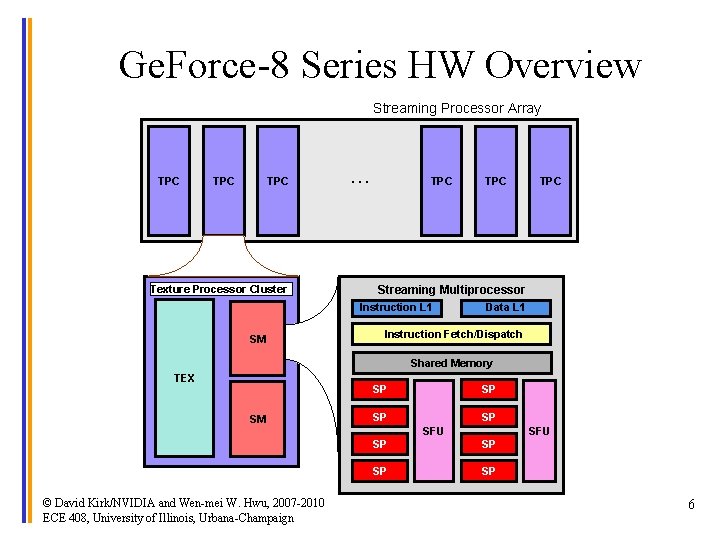

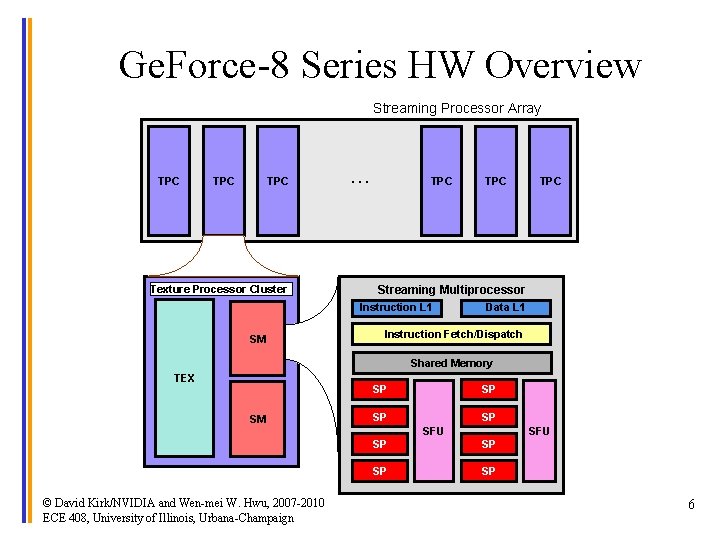

Ge. Force-8 Series HW Overview Streaming Processor Array TPC TPC Texture Processor Cluster … TPC Streaming Multiprocessor Instruction L 1 SM TPC Data L 1 Instruction Fetch/Dispatch Shared Memory TEX SP SM SP SP SP SFU © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign SFU SP SP 6

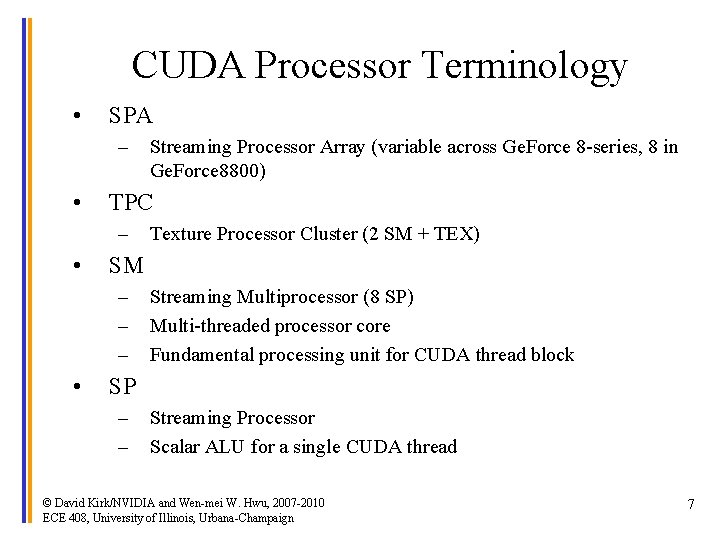

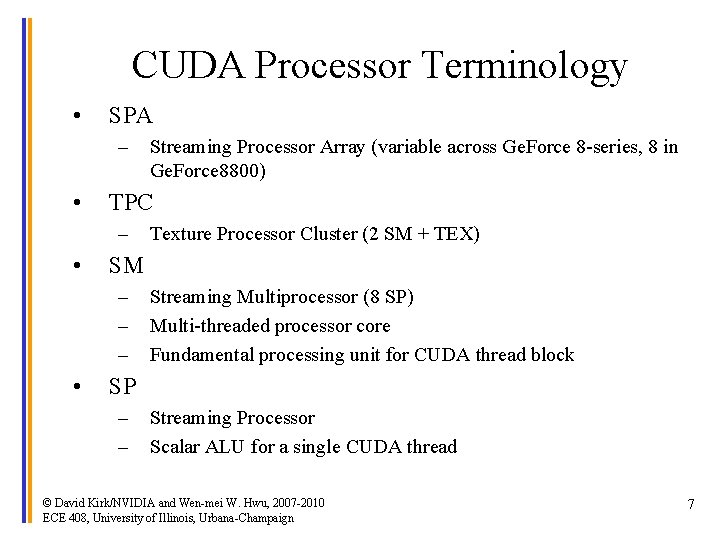

CUDA Processor Terminology • SPA – • TPC – • Texture Processor Cluster (2 SM + TEX) SM – – – • Streaming Processor Array (variable across Ge. Force 8 -series, 8 in Ge. Force 8800) Streaming Multiprocessor (8 SP) Multi-threaded processor core Fundamental processing unit for CUDA thread block SP – – Streaming Processor Scalar ALU for a single CUDA thread © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 7

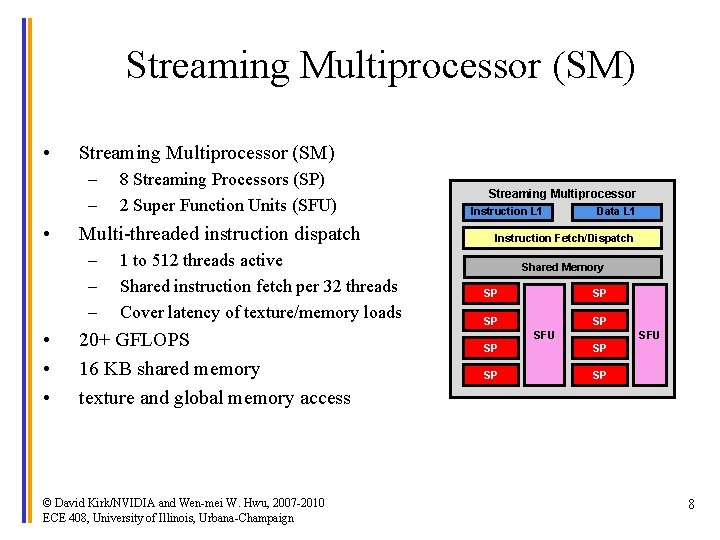

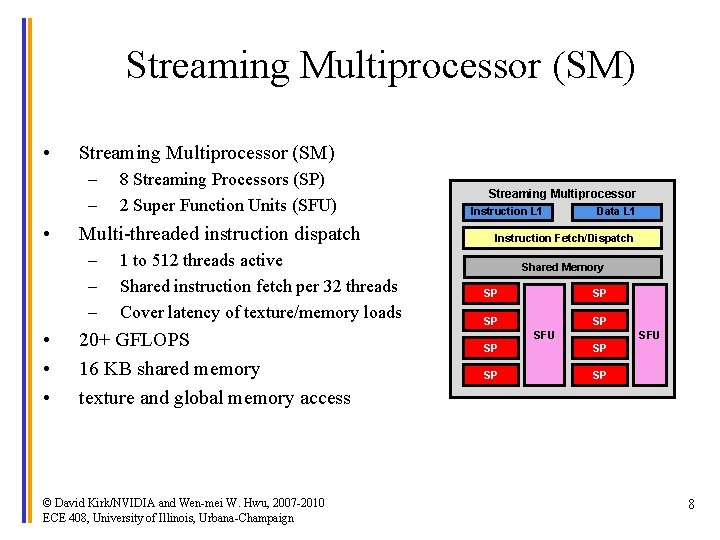

Streaming Multiprocessor (SM) • Streaming Multiprocessor (SM) – – • Multi-threaded instruction dispatch – – – • • • 8 Streaming Processors (SP) 2 Super Function Units (SFU) 1 to 512 threads active Shared instruction fetch per 32 threads Cover latency of texture/memory loads 20+ GFLOPS 16 KB shared memory texture and global memory access © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign Streaming Multiprocessor Instruction L 1 Data L 1 Instruction Fetch/Dispatch Shared Memory SP SP SFU SP SP 8

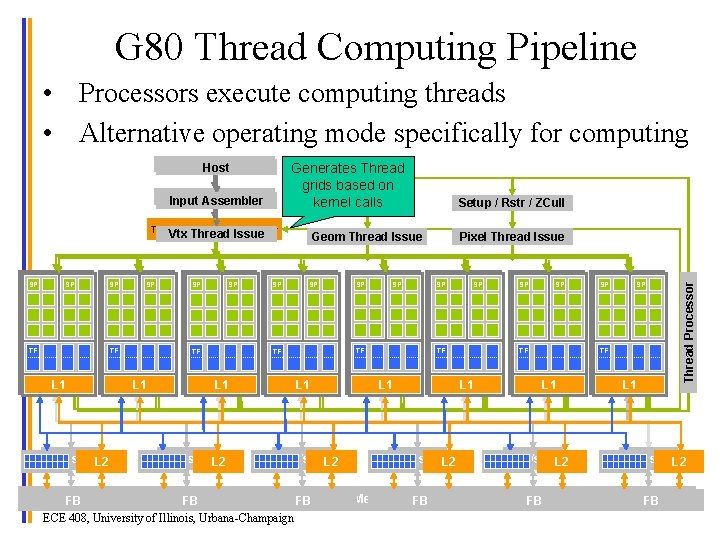

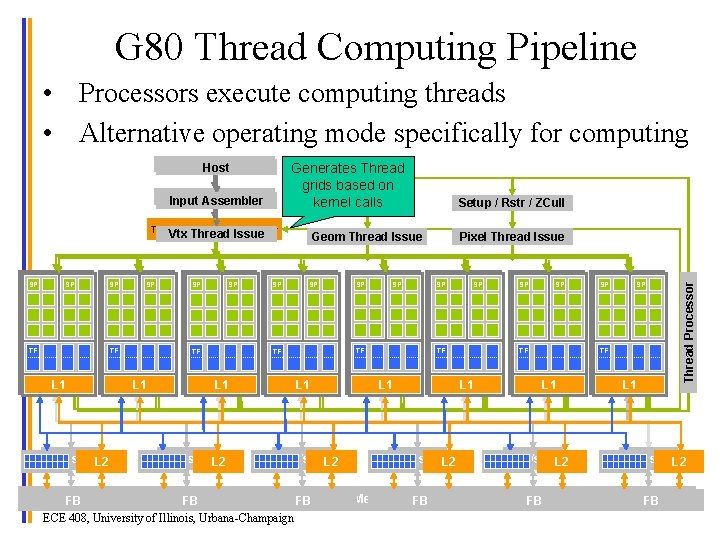

G 80 Thread Computing Pipeline • Processors execute computing threads • Alternative operating mode specifically for computing Generates Thread grids based on kernel calls Input Assembler Thread Execution Manager Vtx Thread Issue SP SP SP Setup / Rstr / ZCull Geom Thread Issue SP SP Pixel Thread Issue SP SP SP Parallel Data TF Cache Parallel Data TF Cache Texture L 1 Texture L 1 Texture L 1 Load/store L 2 FB Kirk/NVIDIA and Wen-mei FB FB Global Memory © David W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign FB Thread Processor Host Load/store L 2 FB FB 9

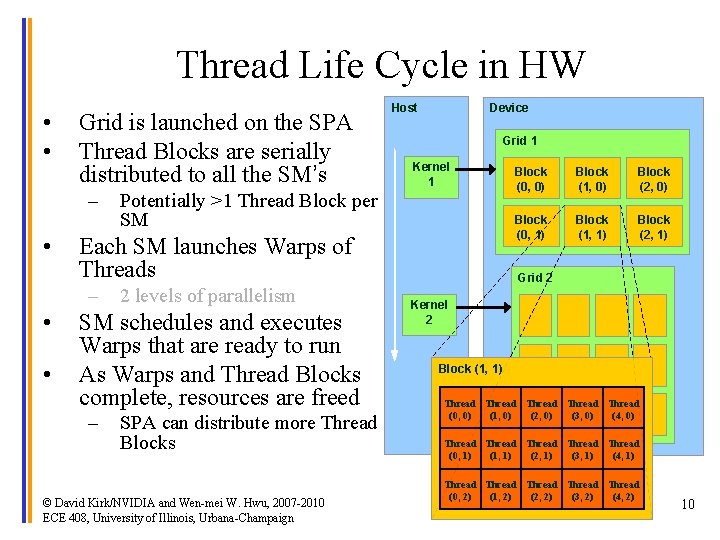

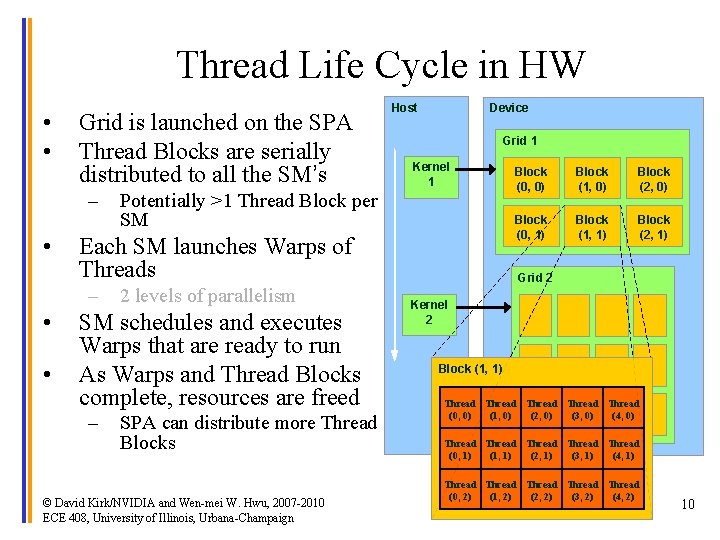

Thread Life Cycle in HW • • Grid is launched on the SPA Thread Blocks are serially distributed to all the SM’s – • • Device Grid 1 Kernel 1 Potentially >1 Thread Block per SM Each SM launches Warps of Threads – • Host 2 levels of parallelism SM schedules and executes Warps that are ready to run As Warps and Thread Blocks complete, resources are freed – SPA can distribute more Thread Blocks © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign Block (0, 0) Block (1, 0) Block (2, 0) Block (0, 1) Block (1, 1) Block (2, 1) Grid 2 Kernel 2 Block (1, 1) Thread Thread (0, 0) (1, 0) (2, 0) (3, 0) (4, 0) Thread Thread (0, 1) (1, 1) (2, 1) (3, 1) (4, 1) Thread Thread (0, 2) (1, 2) (2, 2) (3, 2) (4, 2) 10

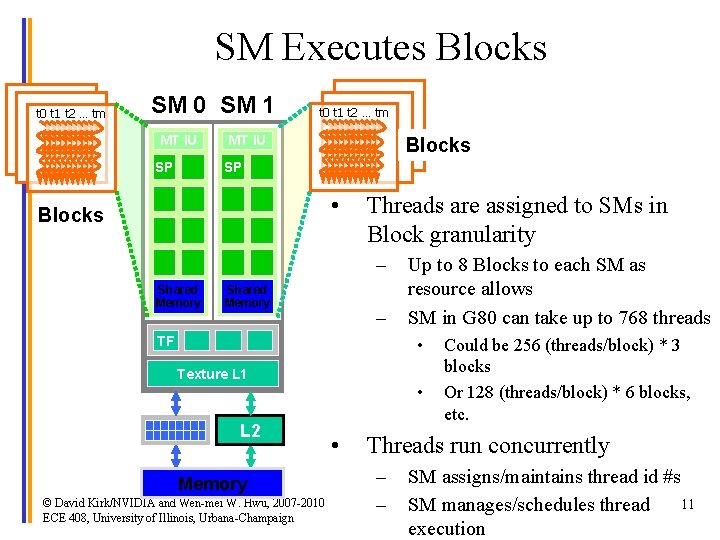

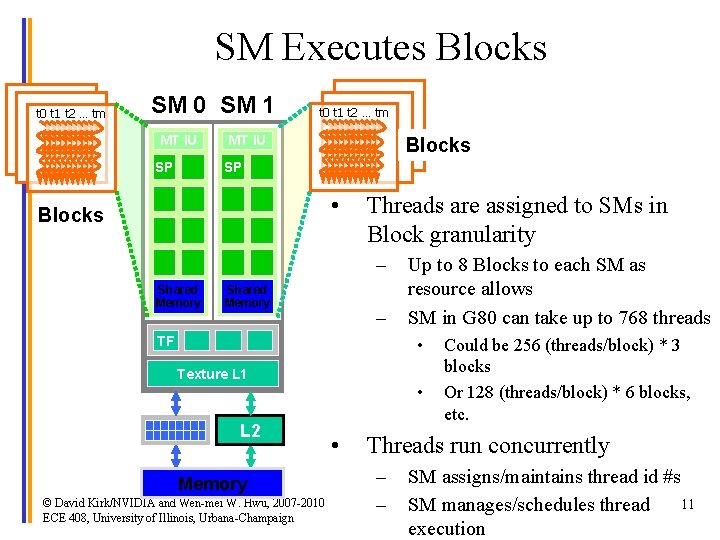

SM Executes Blocks t 0 t 1 t 2 … tm SM 0 SM 1 MT IU SP t 0 t 1 t 2 … tm MT IU Blocks SP • Blocks Threads are assigned to SMs in Block granularity – Shared Memory – TF Up to 8 Blocks to each SM as resource allows SM in G 80 can take up to 768 threads • Texture L 1 L 2 Memory © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign • • Could be 256 (threads/block) * 3 blocks Or 128 (threads/block) * 6 blocks, etc. Threads run concurrently – – SM assigns/maintains thread id #s 11 SM manages/schedules thread execution

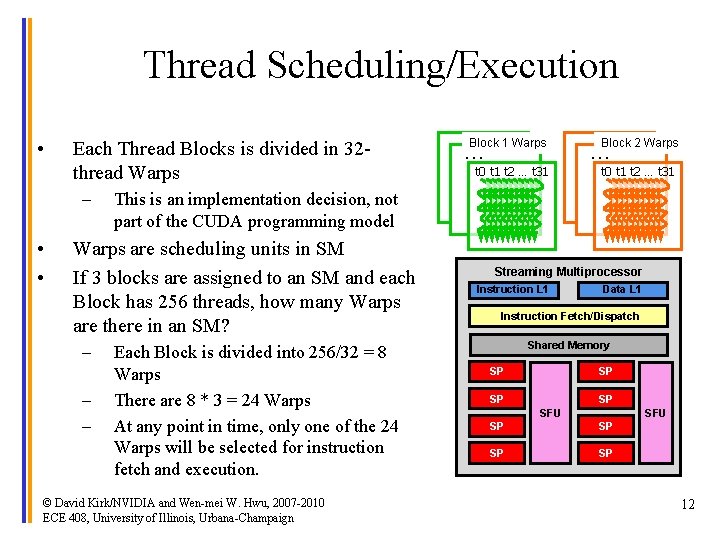

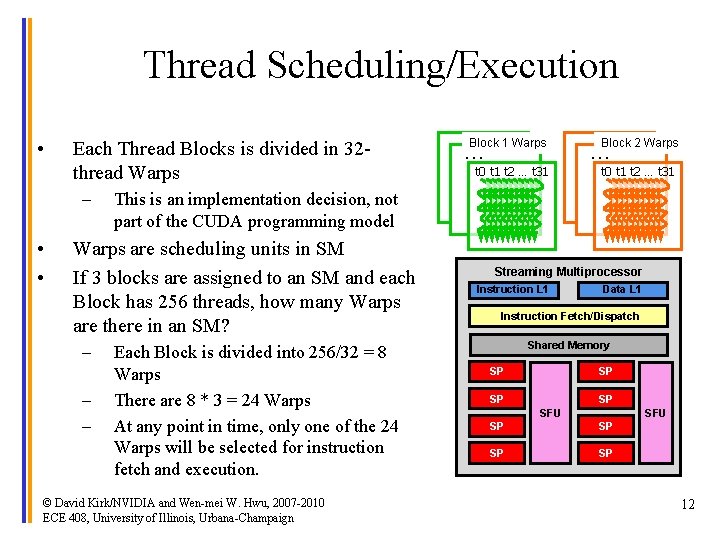

Thread Scheduling/Execution • Each Thread Blocks is divided in 32 thread Warps – • • This is an implementation decision, not part of the CUDA programming model Warps are scheduling units in SM If 3 blocks are assigned to an SM and each Block has 256 threads, how many Warps are there in an SM? – – – Each Block is divided into 256/32 = 8 Warps There are 8 * 3 = 24 Warps At any point in time, only one of the 24 Warps will be selected for instruction fetch and execution. © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign Block 1 Warps … t 0 t 1 t 2 … t 31 … …Block 2 Warps t 0 t 1 t 2 … t 31 … Streaming Multiprocessor Instruction L 1 Data L 1 Instruction Fetch/Dispatch Shared Memory SP SP SFU SP SP 12

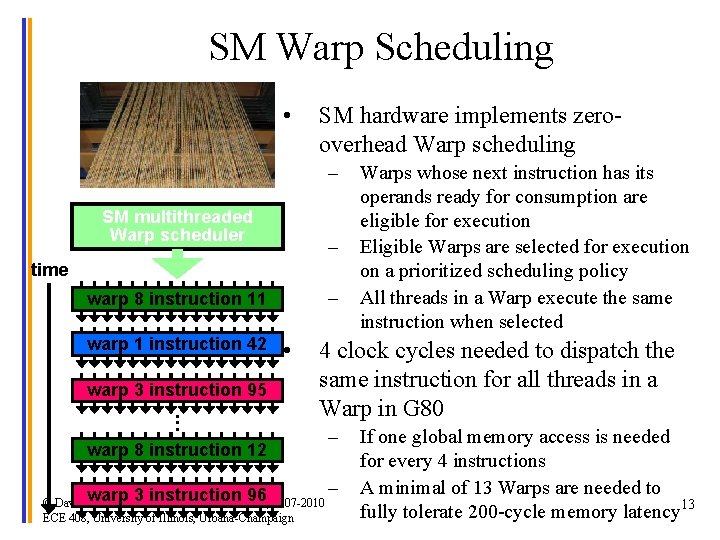

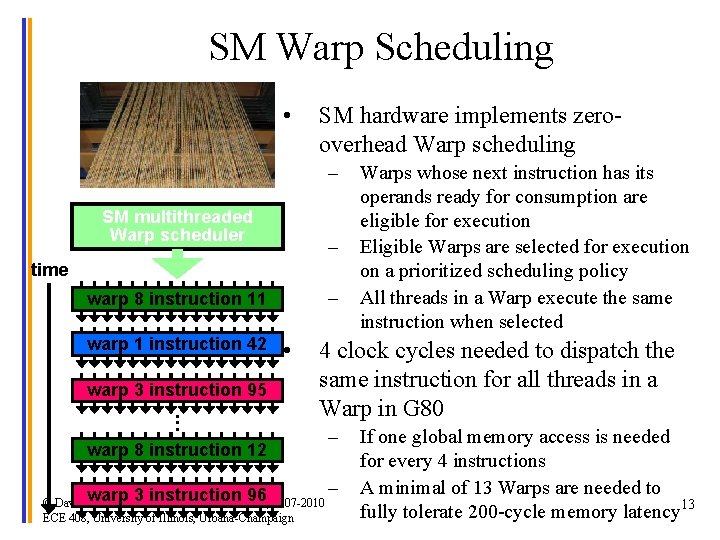

SM Warp Scheduling • SM hardware implements zerooverhead Warp scheduling – SM multithreaded Warp scheduler – time – warp 8 instruction 11 warp 1 instruction 42 warp 3 instruction 95. . . warp 8 instruction 12 warp 3 instruction 96 • Warps whose next instruction has its operands ready for consumption are eligible for execution Eligible Warps are selected for execution on a prioritized scheduling policy All threads in a Warp execute the same instruction when selected 4 clock cycles needed to dispatch the same instruction for all threads in a Warp in G 80 © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign – – If one global memory access is needed for every 4 instructions A minimal of 13 Warps are needed to fully tolerate 200 -cycle memory latency 13

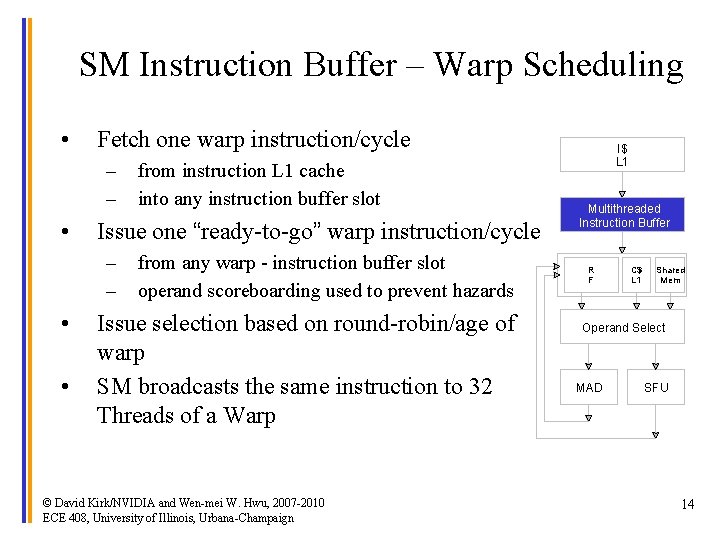

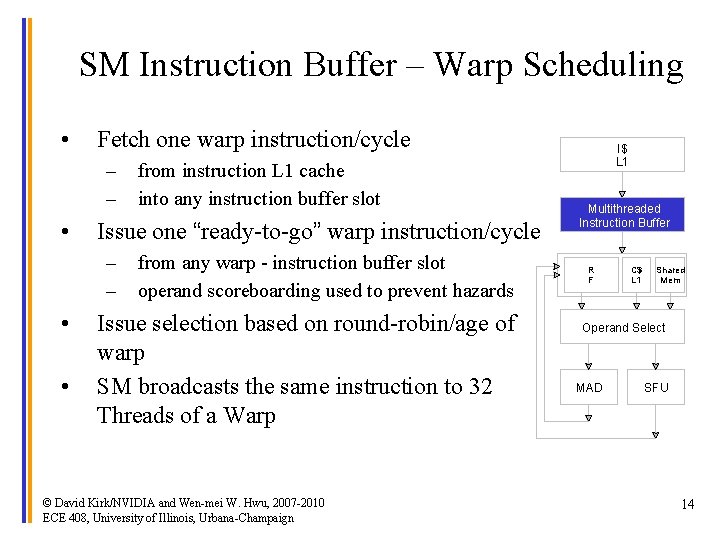

SM Instruction Buffer – Warp Scheduling • Fetch one warp instruction/cycle – – • Issue one “ready-to-go” warp instruction/cycle – – • • from instruction L 1 cache into any instruction buffer slot from any warp - instruction buffer slot operand scoreboarding used to prevent hazards Issue selection based on round-robin/age of warp SM broadcasts the same instruction to 32 Threads of a Warp © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign I$ L 1 Multithreaded Instruction Buffer R F C$ L 1 Shared Mem Operand Select MAD SFU 14

Scoreboarding • All register operands of all instructions in the Instruction Buffer are scoreboarded – – – • Instruction becomes ready after the needed values are deposited prevents hazards cleared instructions are eligible for issue Decoupled Memory/Processor pipelines – – any thread can continue to issue instructions until scoreboarding prevents issue allows Memory/Processor ops to proceed in shadow of other waiting Memory/Processor ops © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 15

Granularity Considerations • For Matrix Multiplication, should I use 4 X 4, 8 X 8, 16 X 16 or 32 X 32 tiles? – For 4 X 4, we have 16 threads per block, Since each SM can take up to 768 threads, the thread capacity allows 48 blocks. However, each SM can only take up to 8 blocks, thus there will be only 128 threads in each SM! • There are 8 warps but each warp is only half full. – For 8 X 8, we have 64 threads per Block. Since each SM can take up to 768 threads, it could take up to 12 Blocks. However, each SM can only take up to 8 Blocks, only 512 threads will go into each SM! • There are 16 warps available for scheduling in each SM • Each warp spans four slices in the y dimension – For 16 X 16, we have 256 threads per Block. Since each SM can take up to 768 threads, it can take up to 3 Blocks and achieve full capacity unless other resource considerations overrule. • There are 24 warps available for scheduling in each SM • Each warp spans two slices in the y dimension – For 32 X 32, we have 1024 threads per Block. Not even one can fit into an SM! © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 16

Memory Hardware © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 17

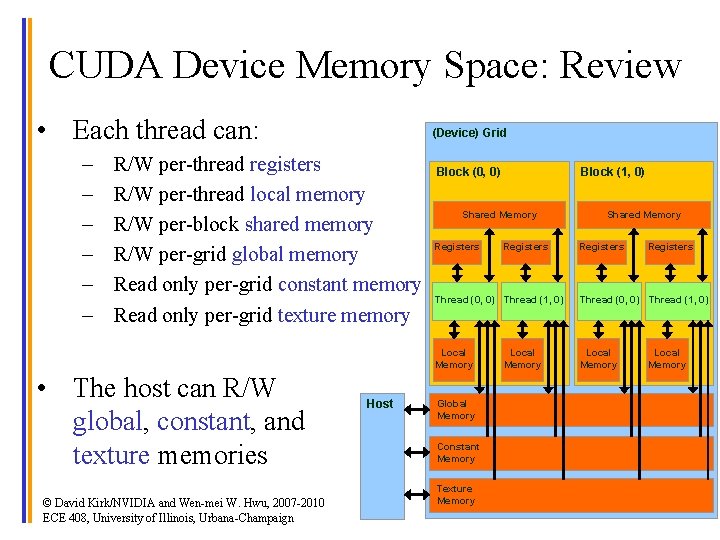

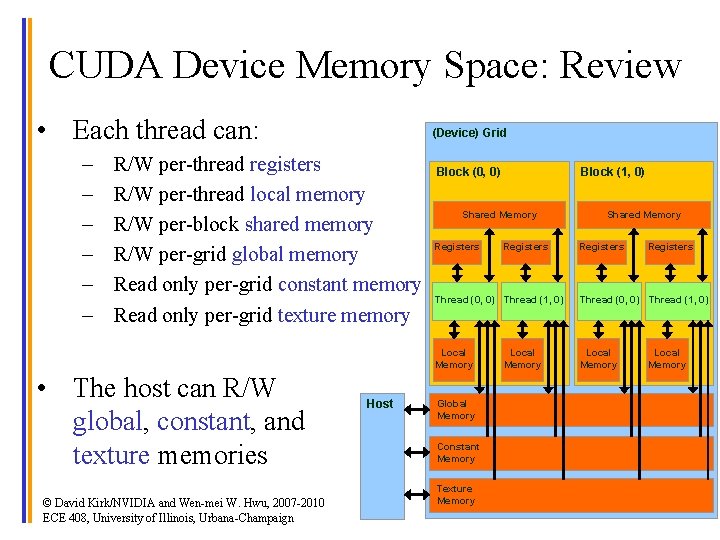

CUDA Device Memory Space: Review • Each thread can: – – – (Device) Grid R/W per-thread registers R/W per-thread local memory R/W per-block shared memory R/W per-grid global memory Read only per-grid constant memory Read only per-grid texture memory • The host can R/W global, constant, and texture memories © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign Host Block (0, 0) Block (1, 0) Shared Memory Registers Thread (0, 0) Thread (1, 0) Local Memory Global Memory Constant Memory Texture Memory 18

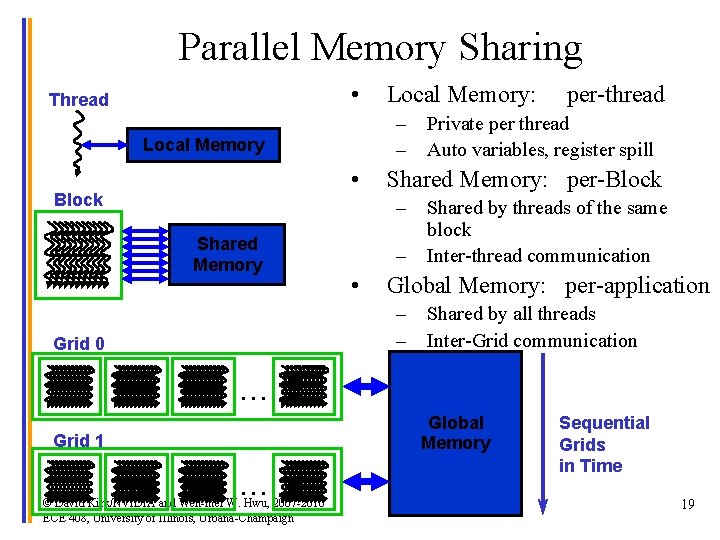

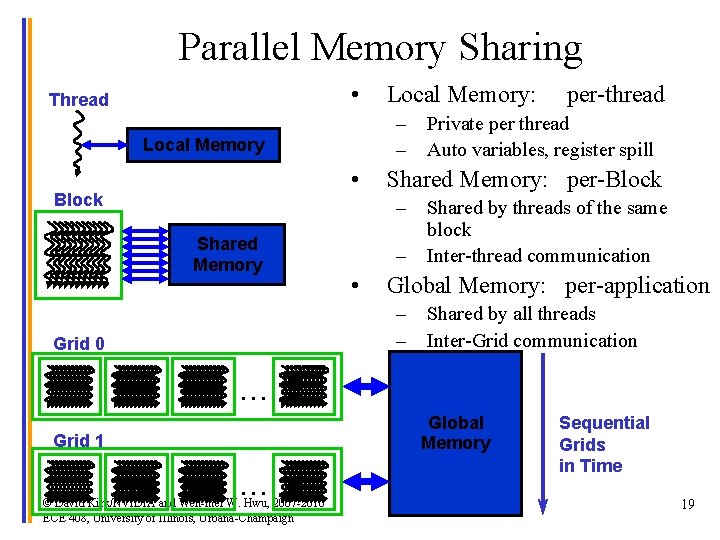

Parallel Memory Sharing • Thread – – Local Memory • Block Local Memory: – • Shared by threads of the same block Inter-thread communication Global Memory: per-application – – Grid 0 Private per thread Auto variables, register spill Shared Memory: per-Block – Shared Memory per-thread Shared by all threads Inter-Grid communication . . . Global Memory Grid 1. . . © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign Sequential Grids in Time 19

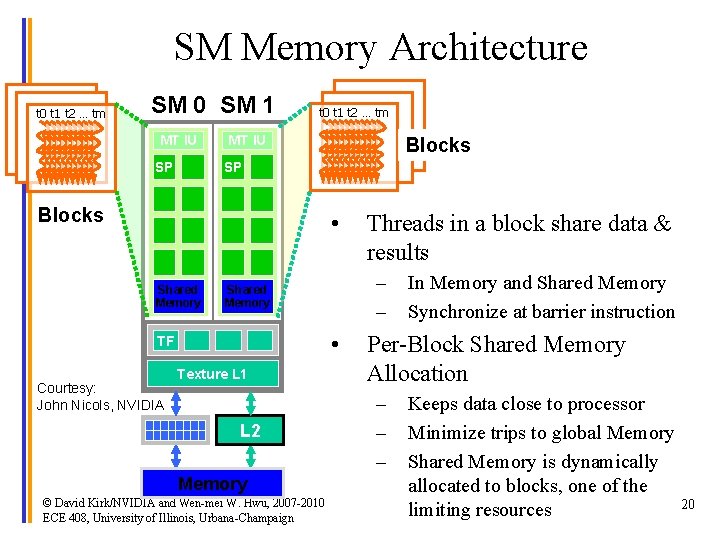

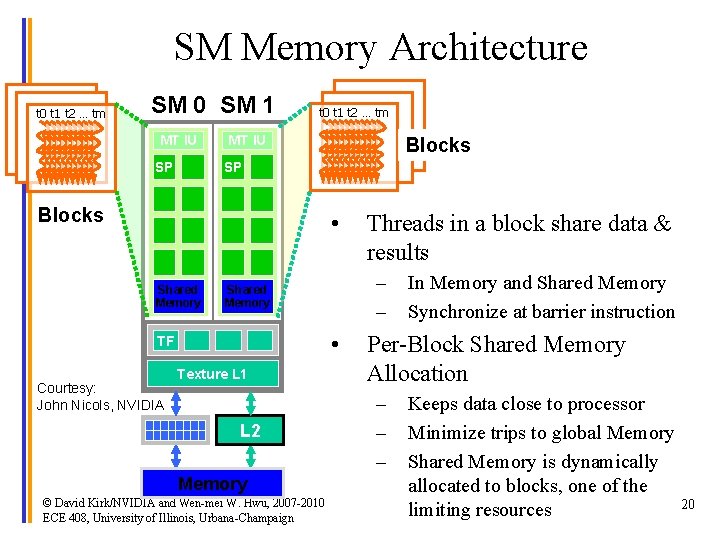

SM Memory Architecture t 0 t 1 t 2 … tm SM 0 SM 1 MT IU SP t 0 t 1 t 2 … tm MT IU Blocks SP Blocks • Shared Memory Courtesy: John Nicols, NVIDIA – – Shared Memory • TF Texture L 1 L 2 Memory © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign Threads in a block share data & results In Memory and Shared Memory Synchronize at barrier instruction Per-Block Shared Memory Allocation – – – Keeps data close to processor Minimize trips to global Memory Shared Memory is dynamically allocated to blocks, one of the 20 limiting resources

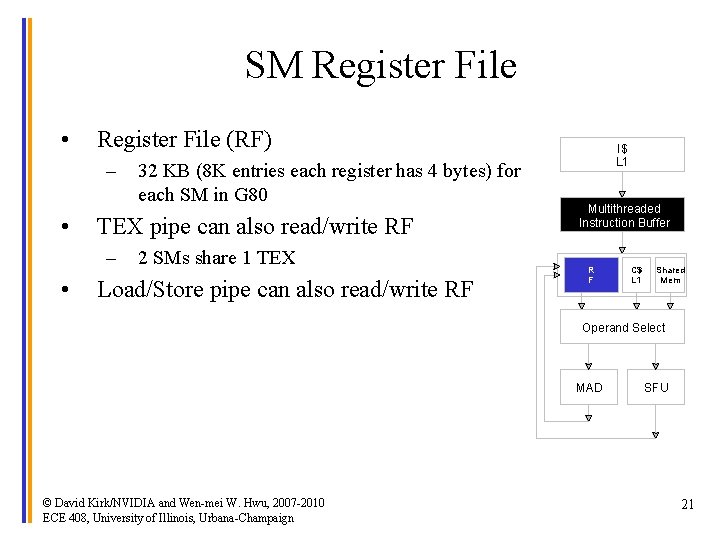

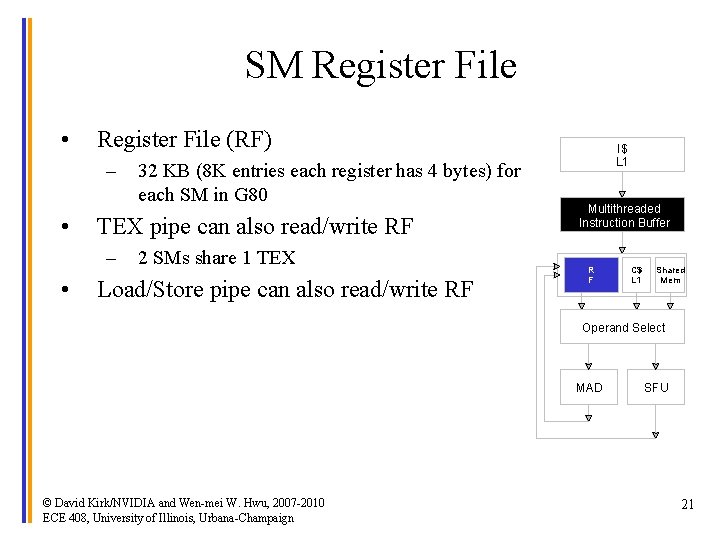

SM Register File • Register File (RF) – • TEX pipe can also read/write RF – • 32 KB (8 K entries each register has 4 bytes) for each SM in G 80 2 SMs share 1 TEX Load/Store pipe can also read/write RF I$ L 1 Multithreaded Instruction Buffer R F C$ L 1 Shared Mem Operand Select MAD © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign SFU 21

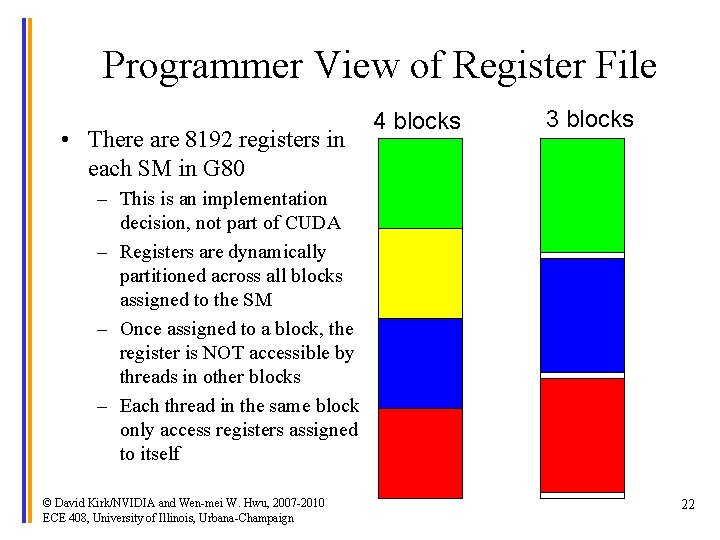

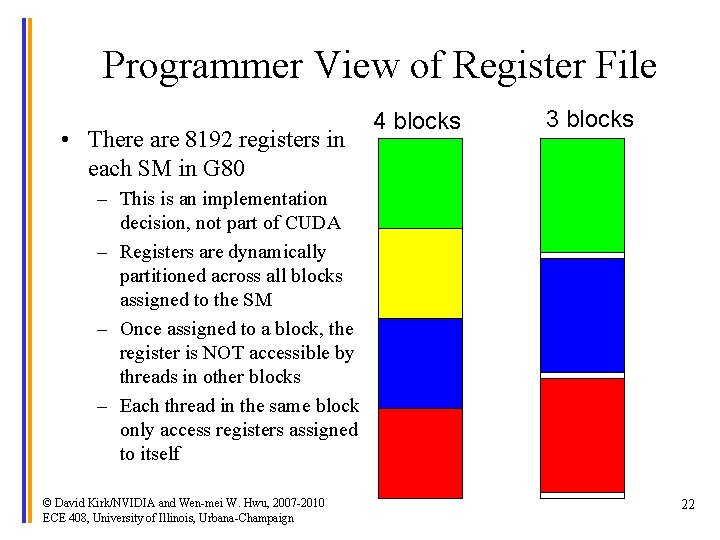

Programmer View of Register File • There are 8192 registers in each SM in G 80 4 blocks 3 blocks – This is an implementation decision, not part of CUDA – Registers are dynamically partitioned across all blocks assigned to the SM – Once assigned to a block, the register is NOT accessible by threads in other blocks – Each thread in the same block only access registers assigned to itself © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 22

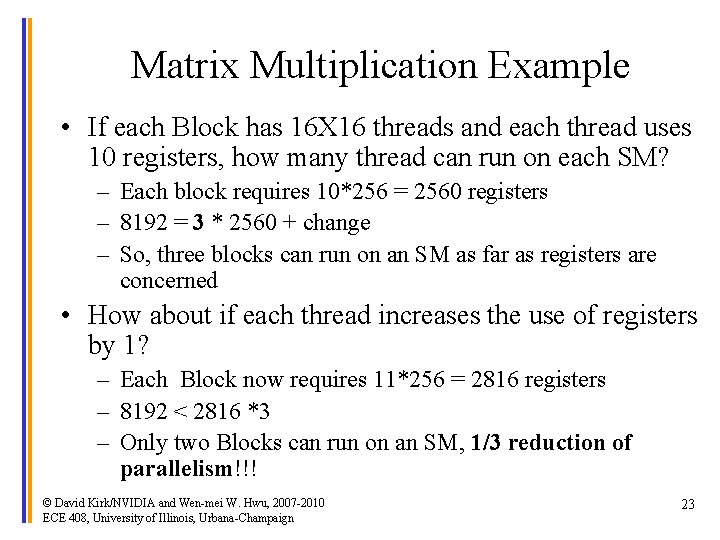

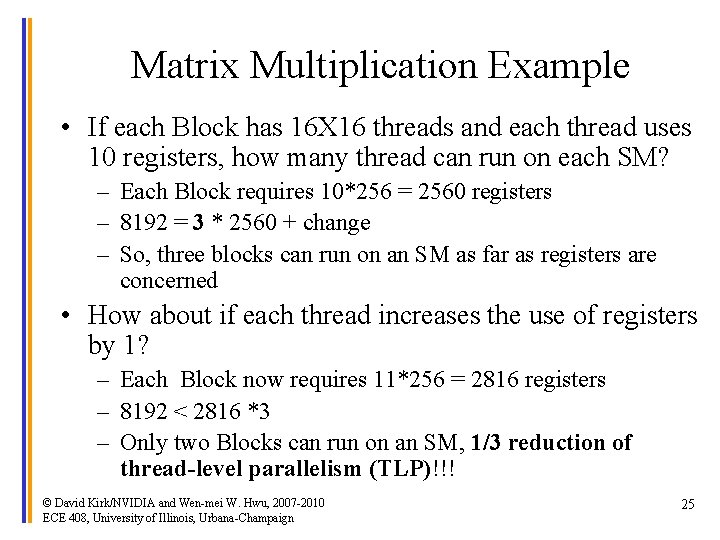

Matrix Multiplication Example • If each Block has 16 X 16 threads and each thread uses 10 registers, how many thread can run on each SM? – Each block requires 10*256 = 2560 registers – 8192 = 3 * 2560 + change – So, three blocks can run on an SM as far as registers are concerned • How about if each thread increases the use of registers by 1? – Each Block now requires 11*256 = 2816 registers – 8192 < 2816 *3 – Only two Blocks can run on an SM, 1/3 reduction of parallelism!!! © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 23

More on Dynamic Partitioning • Dynamic partitioning gives more flexibility to compilers/programmers – One can run a smaller number of threads that require many registers each or a large number of threads that require few registers each • This allows for finer grain threading than traditional CPU threading models. – The compiler can tradeoff between instruction-level parallelism and thread level parallelism © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 24

Matrix Multiplication Example • If each Block has 16 X 16 threads and each thread uses 10 registers, how many thread can run on each SM? – Each Block requires 10*256 = 2560 registers – 8192 = 3 * 2560 + change – So, three blocks can run on an SM as far as registers are concerned • How about if each thread increases the use of registers by 1? – Each Block now requires 11*256 = 2816 registers – 8192 < 2816 *3 – Only two Blocks can run on an SM, 1/3 reduction of thread-level parallelism (TLP)!!! © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 25

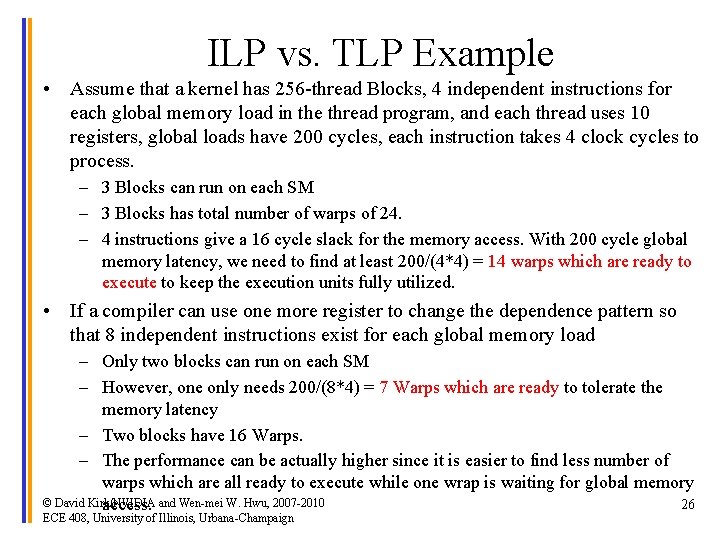

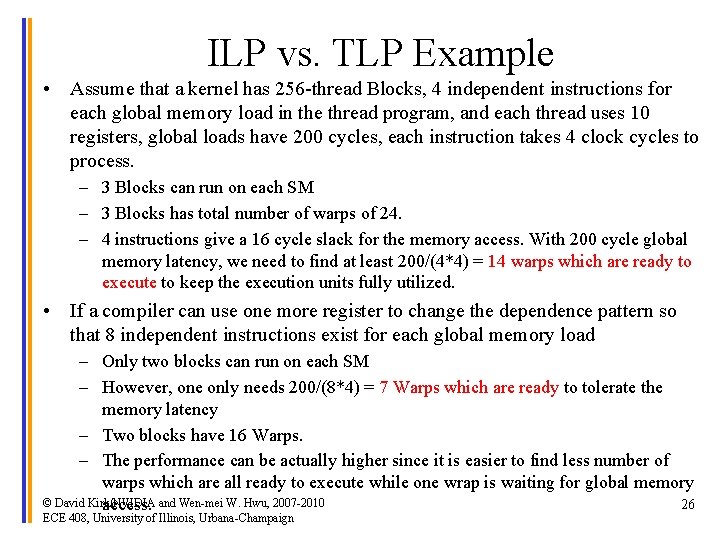

ILP vs. TLP Example • Assume that a kernel has 256 -thread Blocks, 4 independent instructions for each global memory load in the thread program, and each thread uses 10 registers, global loads have 200 cycles, each instruction takes 4 clock cycles to process. – 3 Blocks can run on each SM – 3 Blocks has total number of warps of 24. – 4 instructions give a 16 cycle slack for the memory access. With 200 cycle global memory latency, we need to find at least 200/(4*4) = 14 warps which are ready to execute to keep the execution units fully utilized. • If a compiler can use one more register to change the dependence pattern so that 8 independent instructions exist for each global memory load – Only two blocks can run on each SM – However, one only needs 200/(8*4) = 7 Warps which are ready to tolerate the memory latency – Two blocks have 16 Warps. – The performance can be actually higher since it is easier to find less number of warps which are all ready to execute while one wrap is waiting for global memory © David Kirk/NVIDIA 26 access. and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign

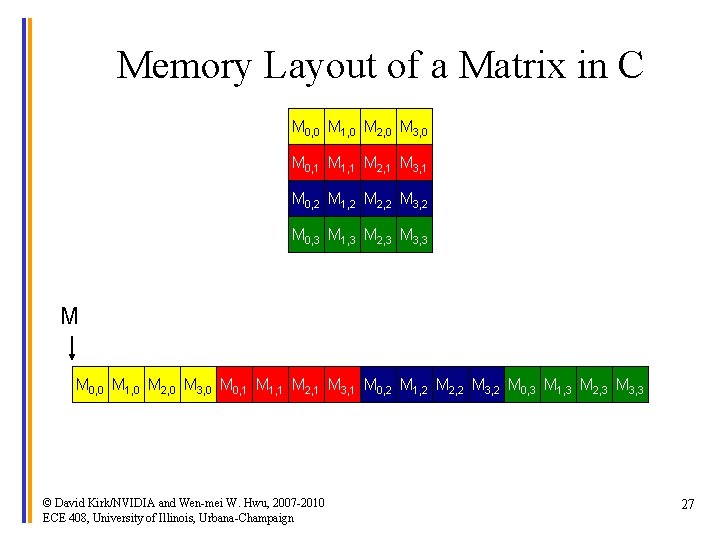

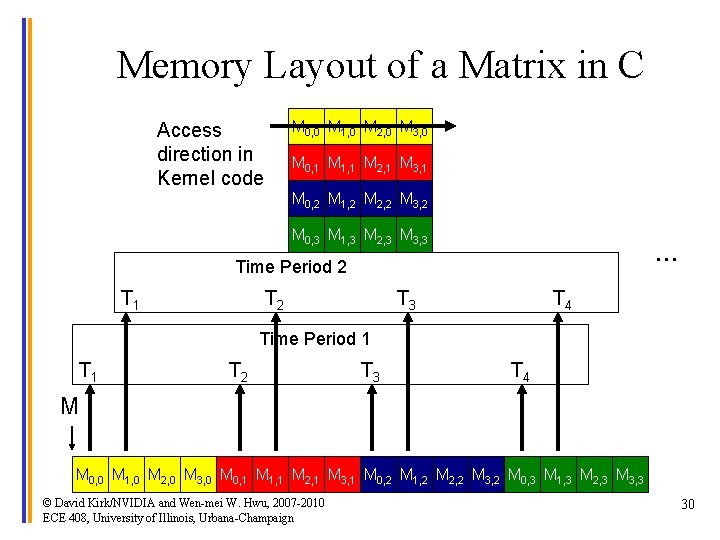

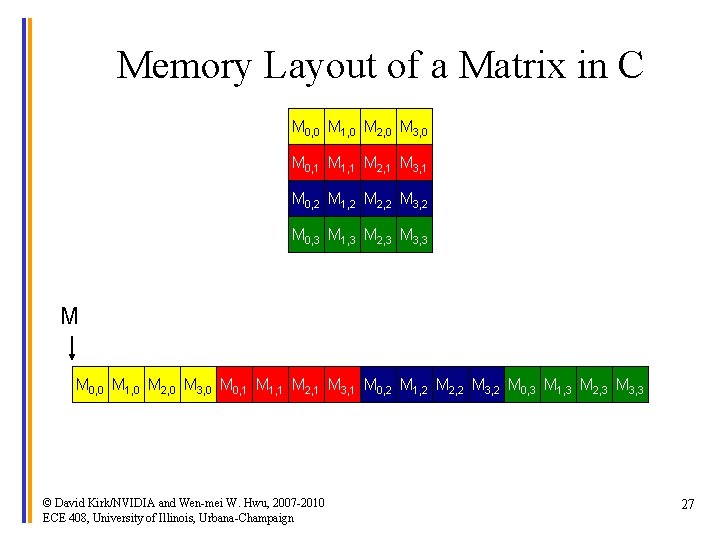

Memory Layout of a Matrix in C M 0, 0 M 1, 0 M 2, 0 M 3, 0 M 0, 1 M 1, 1 M 2, 1 M 3, 1 M 0, 2 M 1, 2 M 2, 2 M 3, 2 M 0, 3 M 1, 3 M 2, 3 M 3, 3 M M 0, 0 M 1, 0 M 2, 0 M 3, 0 M 0, 1 M 1, 1 M 2, 1 M 3, 1 M 0, 2 M 1, 2 M 2, 2 M 3, 2 M 0, 3 M 1, 3 M 2, 3 M 3, 3 © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 27

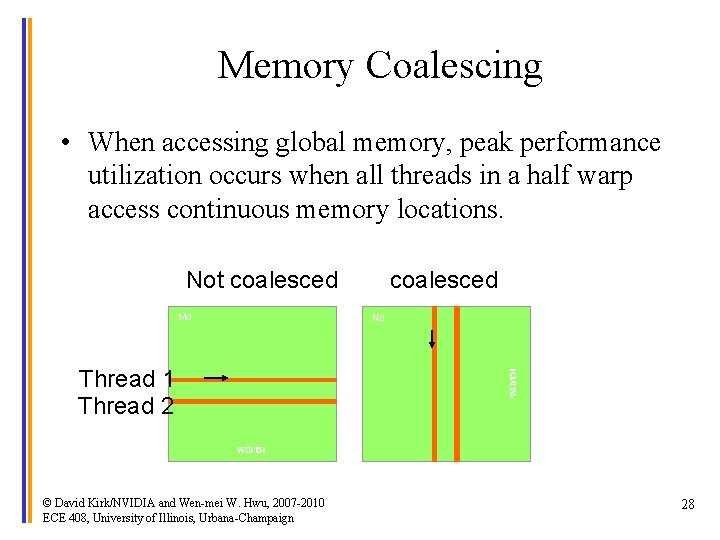

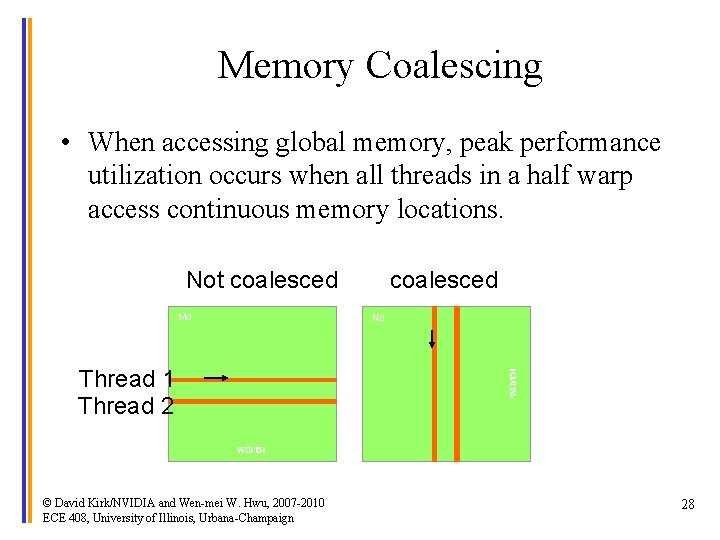

Memory Coalescing • When accessing global memory, peak performance utilization occurs when all threads in a half warp access continuous memory locations. Not coalesced Md coalesced Nd WIDTH Thread 1 Thread 2 WIDTH © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 28

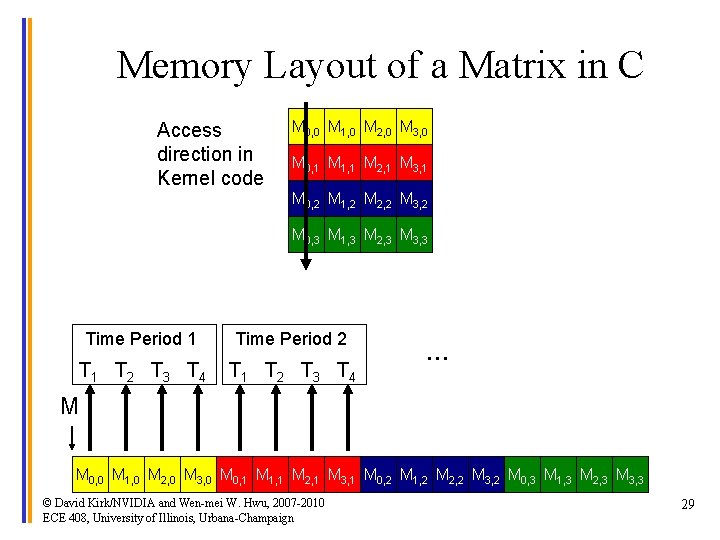

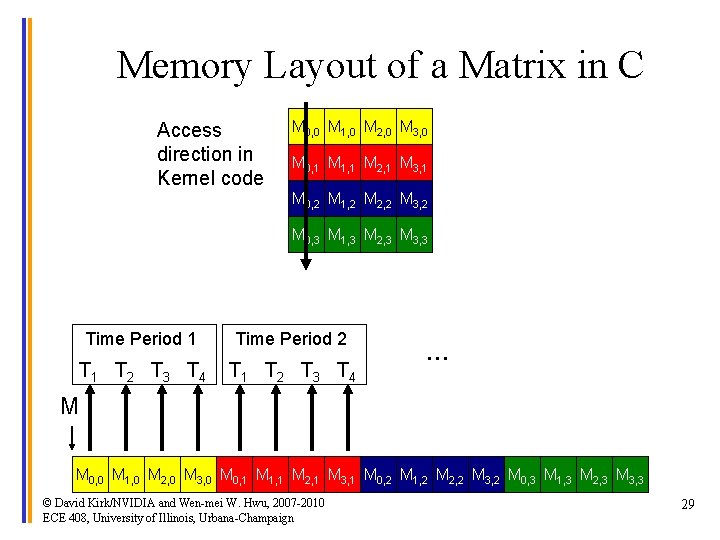

Memory Layout of a Matrix in C Access direction in Kernel code M 0, 0 M 1, 0 M 2, 0 M 3, 0 M 0, 1 M 1, 1 M 2, 1 M 3, 1 M 0, 2 M 1, 2 M 2, 2 M 3, 2 M 0, 3 M 1, 3 M 2, 3 M 3, 3 Time Period 1 Time Period 2 T 1 T 2 T 3 T 4 … M M 0, 0 M 1, 0 M 2, 0 M 3, 0 M 0, 1 M 1, 1 M 2, 1 M 3, 1 M 0, 2 M 1, 2 M 2, 2 M 3, 2 M 0, 3 M 1, 3 M 2, 3 M 3, 3 © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 29

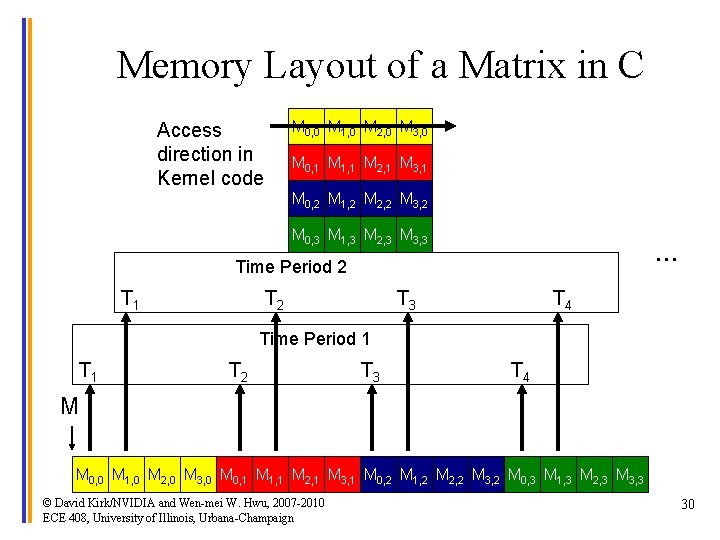

Memory Layout of a Matrix in C M 0, 0 M 1, 0 M 2, 0 M 3, 0 Access direction in Kernel code M 0, 1 M 1, 1 M 2, 1 M 3, 1 M 0, 2 M 1, 2 M 2, 2 M 3, 2 M 0, 3 M 1, 3 M 2, 3 M 3, 3 … Time Period 2 T 1 T 2 T 3 T 4 Time Period 1 T 2 T 3 T 4 M M 0, 0 M 1, 0 M 2, 0 M 3, 0 M 0, 1 M 1, 1 M 2, 1 M 3, 1 M 0, 2 M 1, 2 M 2, 2 M 3, 2 M 0, 3 M 1, 3 M 2, 3 M 3, 3 © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 30

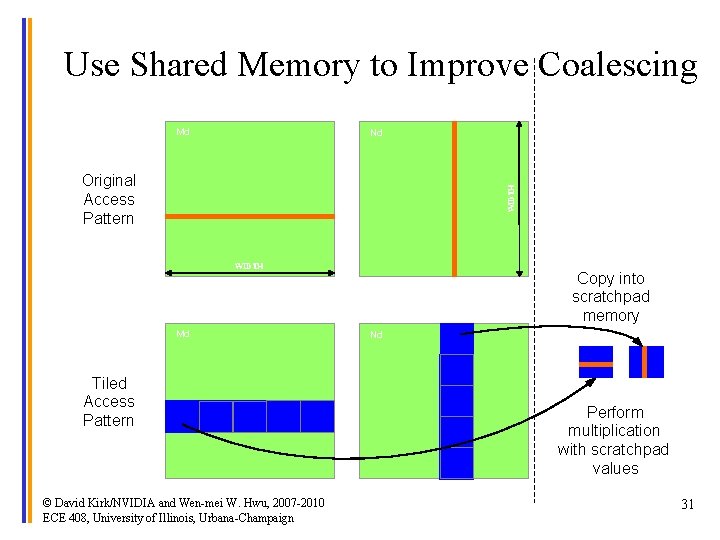

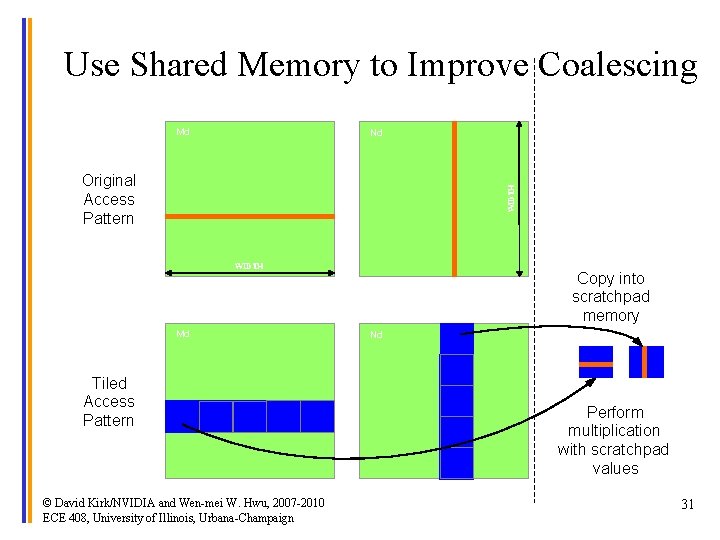

Use Shared Memory to Improve Coalescing Md Nd WIDTH Original Access Pattern WIDTH Md Tiled Access Pattern © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign Copy into scratchpad memory Nd Perform multiplication with scratchpad values 31

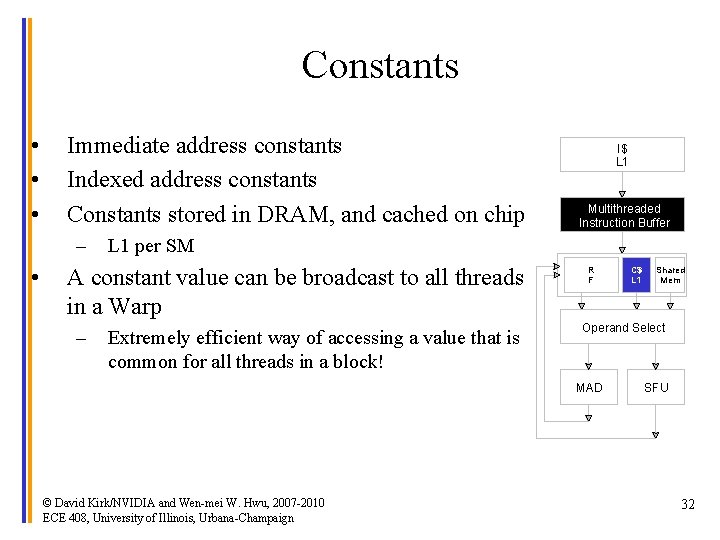

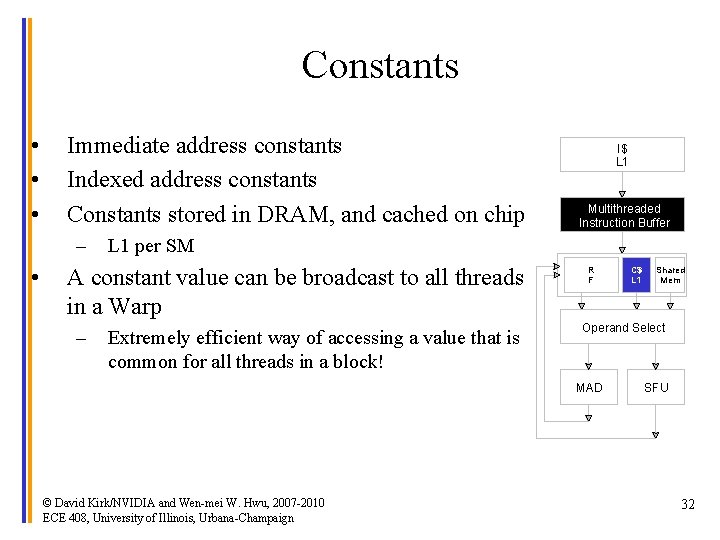

Constants • • • Immediate address constants Indexed address constants Constants stored in DRAM, and cached on chip – • Multithreaded Instruction Buffer L 1 per SM A constant value can be broadcast to all threads in a Warp – I$ L 1 Extremely efficient way of accessing a value that is common for all threads in a block! R F Shared Mem Operand Select MAD © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign C$ L 1 SFU 32

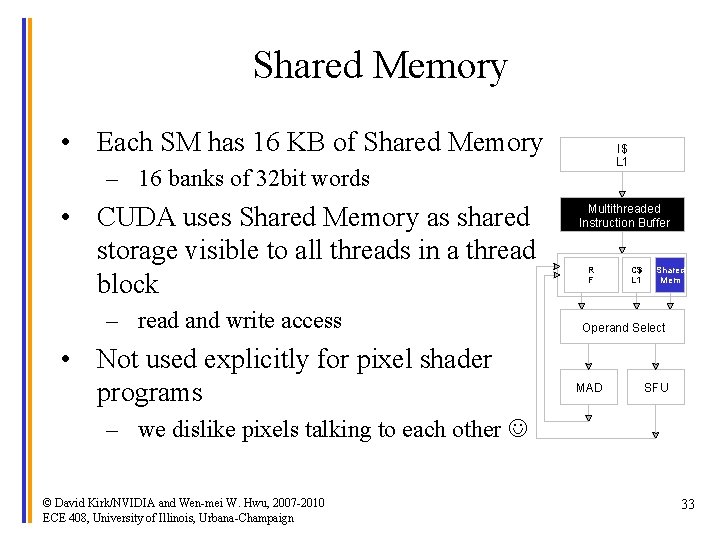

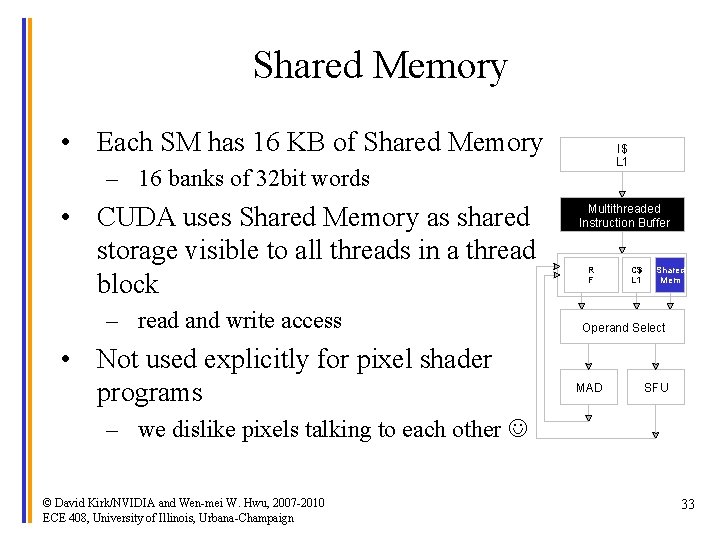

Shared Memory • Each SM has 16 KB of Shared Memory I$ L 1 – 16 banks of 32 bit words • CUDA uses Shared Memory as shared storage visible to all threads in a thread block – read and write access • Not used explicitly for pixel shader programs Multithreaded Instruction Buffer R F C$ L 1 Shared Mem Operand Select MAD SFU – we dislike pixels talking to each other © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 33

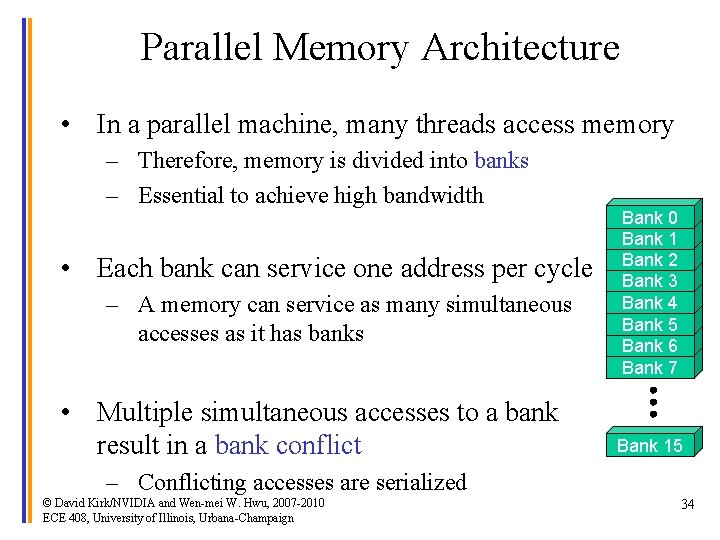

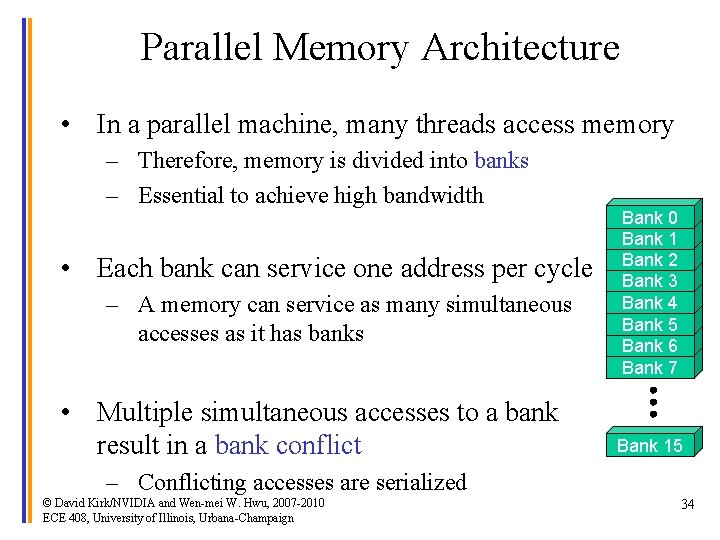

Parallel Memory Architecture • In a parallel machine, many threads access memory – Therefore, memory is divided into banks – Essential to achieve high bandwidth • Each bank can service one address per cycle – A memory can service as many simultaneous accesses as it has banks • Multiple simultaneous accesses to a bank result in a bank conflict Bank 0 Bank 1 Bank 2 Bank 3 Bank 4 Bank 5 Bank 6 Bank 7 Bank 15 – Conflicting accesses are serialized © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 34

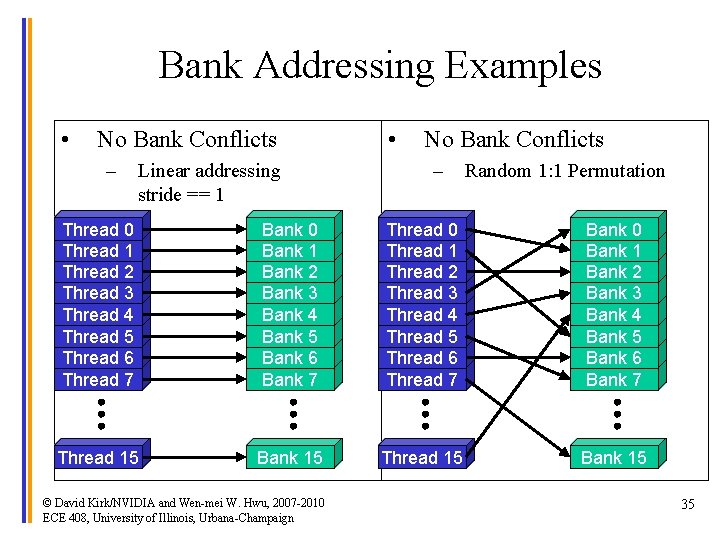

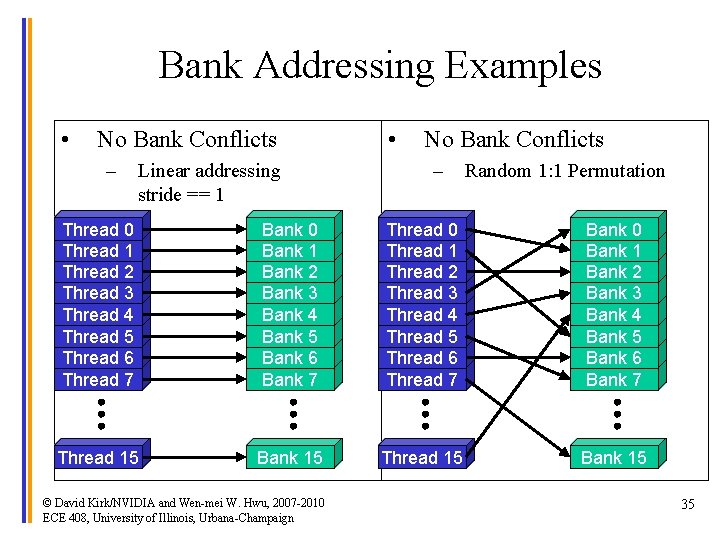

Bank Addressing Examples • No Bank Conflicts – Linear addressing stride == 1 • No Bank Conflicts – Random 1: 1 Permutation Thread 0 Thread 1 Thread 2 Thread 3 Thread 4 Thread 5 Thread 6 Thread 7 Bank 0 Bank 1 Bank 2 Bank 3 Bank 4 Bank 5 Bank 6 Bank 7 Thread 15 Bank 15 © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 35

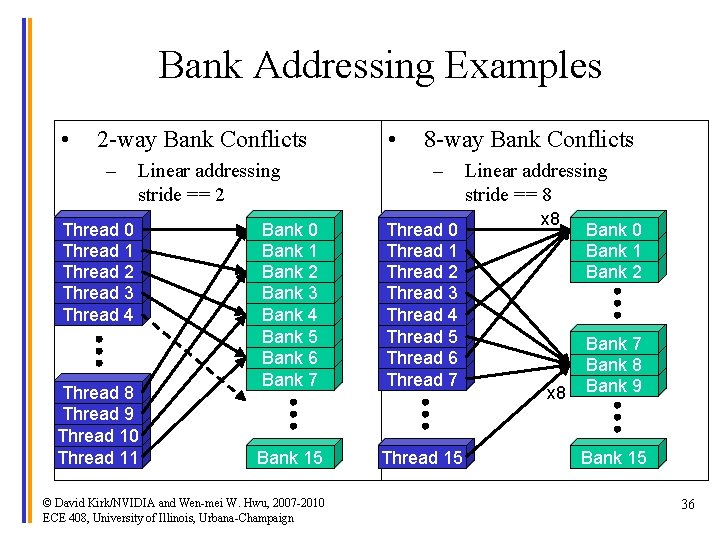

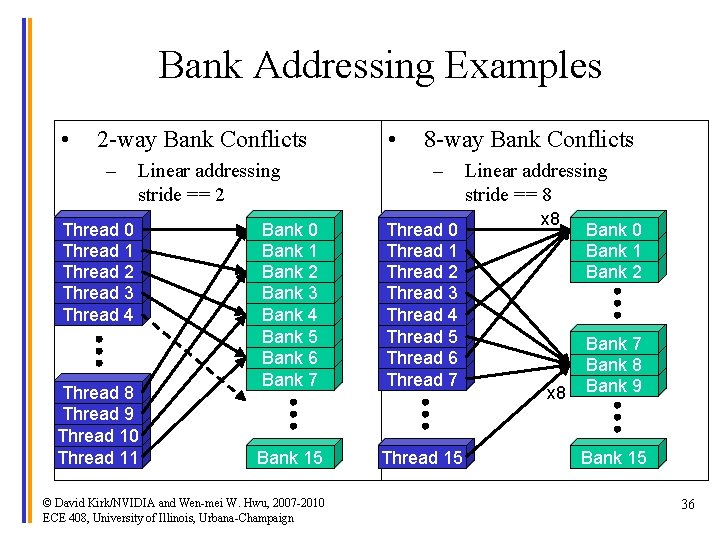

Bank Addressing Examples • 2 -way Bank Conflicts – Linear addressing stride == 2 Thread 0 Thread 1 Thread 2 Thread 3 Thread 4 Thread 8 Thread 9 Thread 10 Thread 11 • 8 -way Bank Conflicts – Bank 0 Bank 1 Bank 2 Bank 3 Bank 4 Bank 5 Bank 6 Bank 7 Thread 0 Thread 1 Thread 2 Thread 3 Thread 4 Thread 5 Thread 6 Thread 7 Bank 15 Thread 15 © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign Linear addressing stride == 8 x 8 Bank 0 Bank 1 Bank 2 Bank 7 Bank 8 Bank 9 Bank 15 36

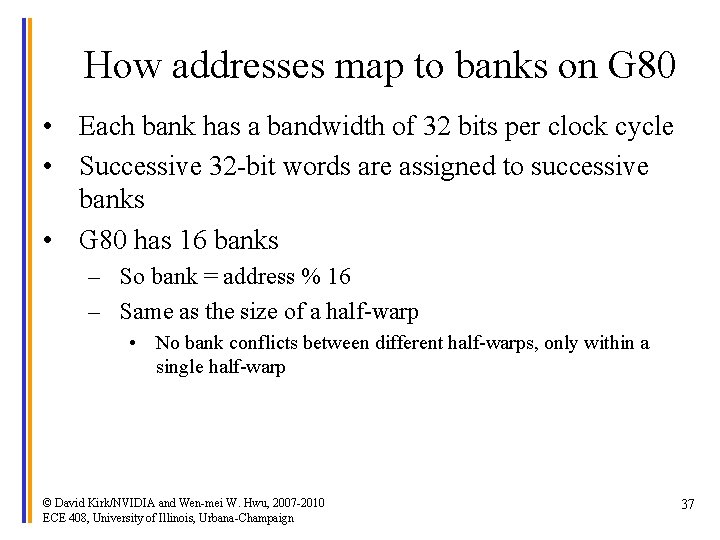

How addresses map to banks on G 80 • Each bank has a bandwidth of 32 bits per clock cycle • Successive 32 -bit words are assigned to successive banks • G 80 has 16 banks – So bank = address % 16 – Same as the size of a half-warp • No bank conflicts between different half-warps, only within a single half-warp © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 37

Shared memory bank conflicts • Shared memory is as fast as registers if there are no bank conflicts • The fast case: – – • If all threads of a half-warp access different banks, there is no bank conflict If all threads of a half-warp access the identical address, there is no bank conflict (broadcast) The slow case: – – – Bank Conflict: multiple threads in the same half-warp access the same bank Must serialize the accesses Cost = max # of simultaneous accesses to a single bank © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 38

![Linear Addressing Given shared float shared256 float foo sharedbase Index s Linear Addressing • Given: __shared__ float shared[256]; float foo = shared[base. Index + s](https://slidetodoc.com/presentation_image_h/8e56a5006351544151ae9136a94d4576/image-39.jpg)

Linear Addressing • Given: __shared__ float shared[256]; float foo = shared[base. Index + s * thread. Idx. x]; s=1 Thread 0 Thread 1 Bank 0 Bank 1 Thread 2 Thread 3 Bank 2 Bank 3 Thread 4 Bank 4 Thread 5 Thread 6 Bank 5 Bank 6 Thread 7 Bank 7 Thread 15 Bank 15 s=3 • This is only bank-conflict-free if s shares no common factors with the number of banks – 16 on G 80, so s must be odd © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign Thread 0 Thread 1 Bank 0 Bank 1 Thread 2 Thread 3 Bank 2 Bank 3 Thread 4 Bank 4 Thread 5 Thread 6 Bank 5 Bank 6 Thread 7 Bank 7 Thread 15 Bank 1539

Control Flow © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 40

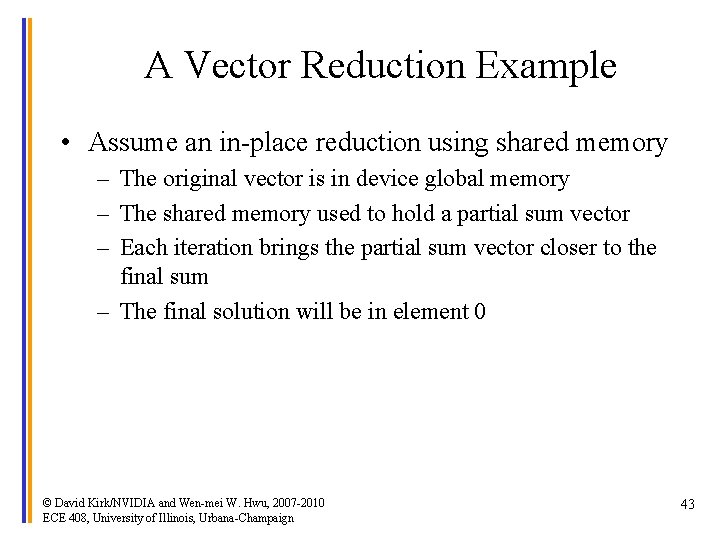

Control Flow Instructions • Main performance concern with branching is divergence – – Threads within a single warp take different paths Different execution paths are serialized in G 80 • • The control paths taken by the threads in a warp are traversed one at a time until there is no more. A common case: avoid divergence when branch condition is a function of thread ID – Example with divergence: • If (thread. Idx. x > 2) { } • This creates two different control paths for threads in a block • Branch granularity < warp size; threads 0, 1 and 2 follow different path than the rest of the threads in the first warp – Example without divergence: • If (thread. Idx. x / WARP_SIZE > 2) { } • Also creates two different control paths for threads in a block • Branch granularity is a whole multiple of warp size; all threads in any given warp follow the same path © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 41

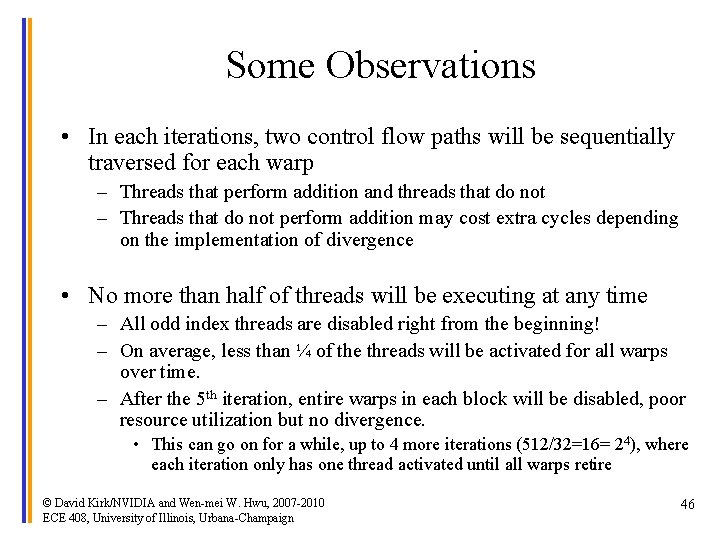

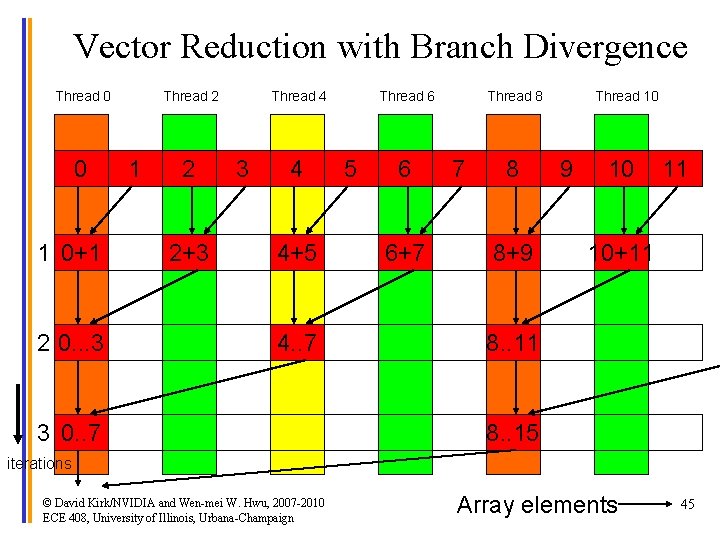

Parallel Reduction • Given an array of values, “reduce” them to a single value in parallel • Examples – sum reduction: sum of all values in the array – Max reduction: maximum of all values in the array • Typically parallel implementation: – Recursively halve # threads, add two values per thread – Takes log(n) steps for n elements, requires n/2 threads © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 42

A Vector Reduction Example • Assume an in-place reduction using shared memory – The original vector is in device global memory – The shared memory used to hold a partial sum vector – Each iteration brings the partial sum vector closer to the final sum – The final solution will be in element 0 © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 43

![A simplementation Assume we have already loaded array into shared float partial Sum A simplementation • Assume we have already loaded array into __shared__ float partial. Sum[]](https://slidetodoc.com/presentation_image_h/8e56a5006351544151ae9136a94d4576/image-44.jpg)

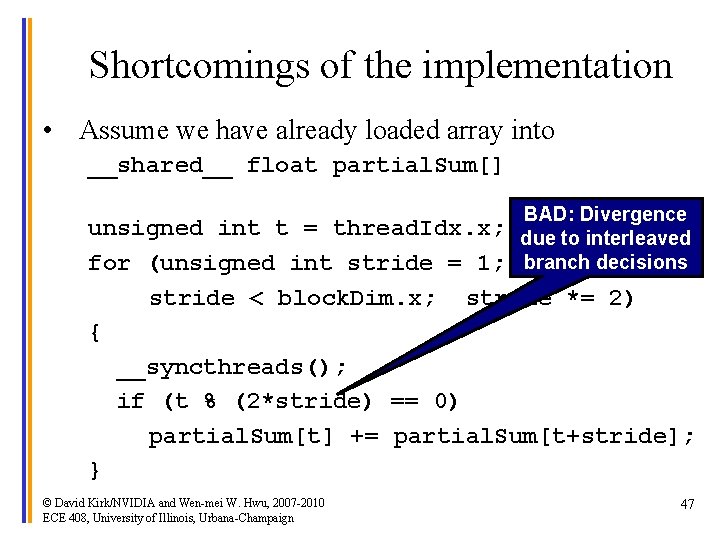

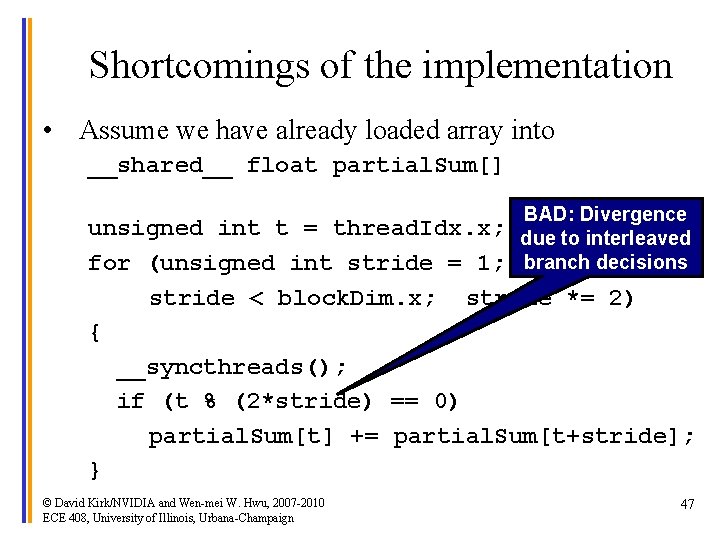

A simplementation • Assume we have already loaded array into __shared__ float partial. Sum[] unsigned int t = thread. Idx. x; for (unsigned int stride = 1; stride < block. Dim. x; stride *= 2) { __syncthreads(); if (t % (2*stride) == 0) partial. Sum[t] += partial. Sum[t+stride]; } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 44

Vector Reduction with Branch Divergence Thread 0 0 1 0+1 2 0. . . 3 Thread 2 1 2 2+3 Thread 4 3 4 4+5 4. . 7 3 0. . 7 Thread 6 5 6 6+7 Thread 8 7 8 8+9 Thread 10 9 10 11 10+11 8. . 15 iterations © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign Array elements 45

Some Observations • In each iterations, two control flow paths will be sequentially traversed for each warp – Threads that perform addition and threads that do not – Threads that do not perform addition may cost extra cycles depending on the implementation of divergence • No more than half of threads will be executing at any time – All odd index threads are disabled right from the beginning! – On average, less than ¼ of the threads will be activated for all warps over time. – After the 5 th iteration, entire warps in each block will be disabled, poor resource utilization but no divergence. • This can go on for a while, up to 4 more iterations (512/32=16= 24), where each iteration only has one thread activated until all warps retire © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 46

Shortcomings of the implementation • Assume we have already loaded array into __shared__ float partial. Sum[] BAD: Divergence unsigned int t = thread. Idx. x; due to interleaved for (unsigned int stride = 1; branch decisions stride < block. Dim. x; stride *= 2) { __syncthreads(); if (t % (2*stride) == 0) partial. Sum[t] += partial. Sum[t+stride]; } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 47

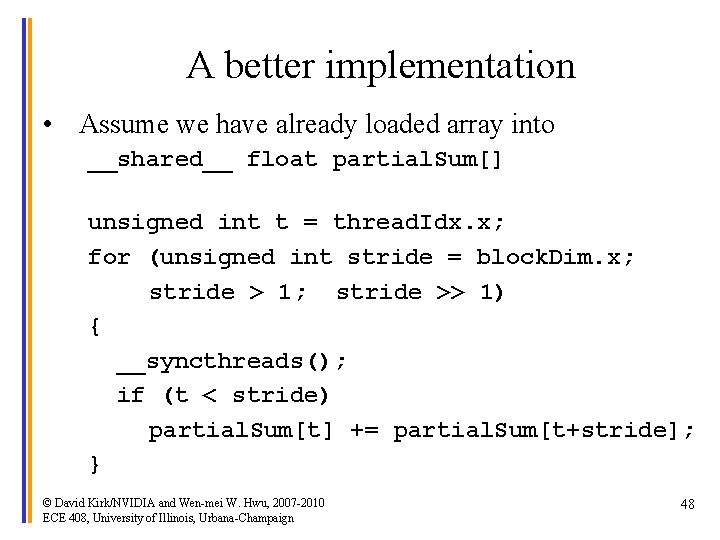

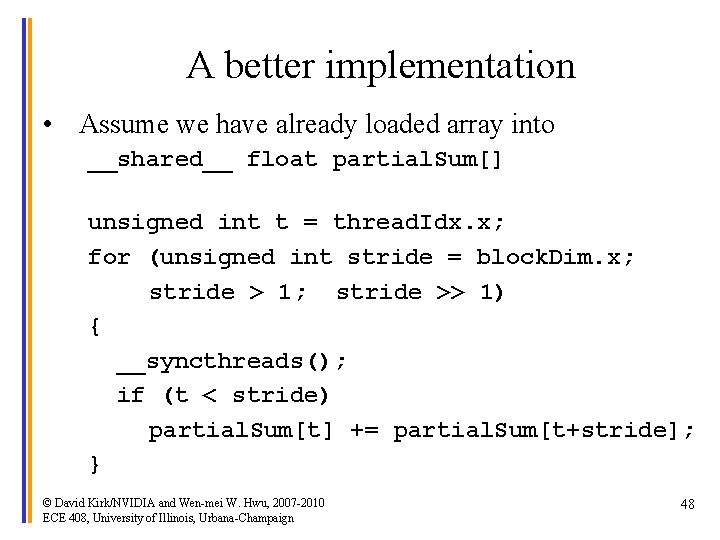

A better implementation • Assume we have already loaded array into __shared__ float partial. Sum[] unsigned int t = thread. Idx. x; for (unsigned int stride = block. Dim. x; stride > 1; stride >> 1) { __syncthreads(); if (t < stride) partial. Sum[t] += partial. Sum[t+stride]; } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 48

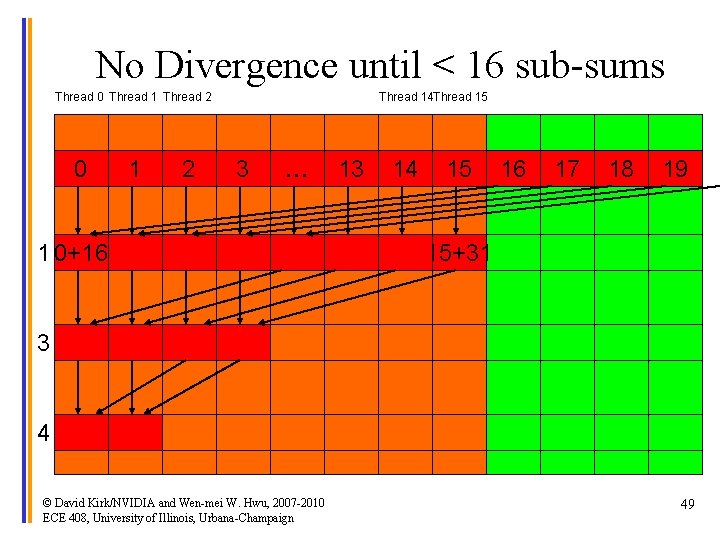

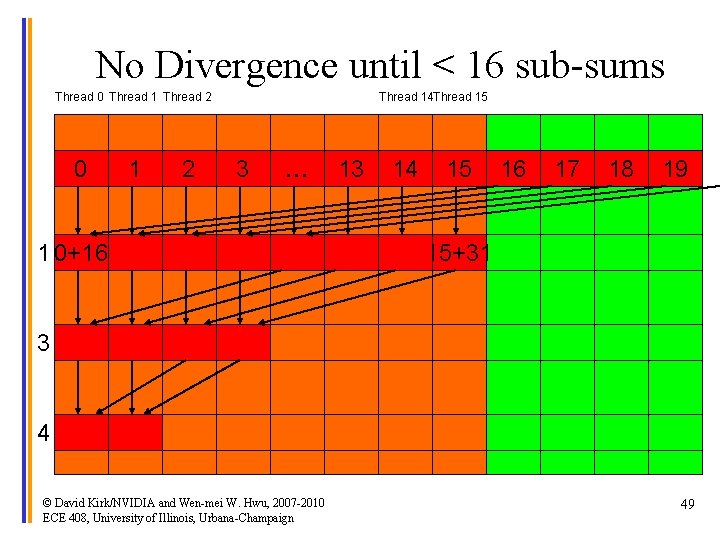

No Divergence until < 16 sub-sums Thread 0 Thread 1 Thread 2 0 1 2 Thread 14 Thread 15 3 … 1 0+16 13 14 15 16 17 18 19 15+31 3 4 © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 49

Registers, ILP and Instruction Mix © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 50

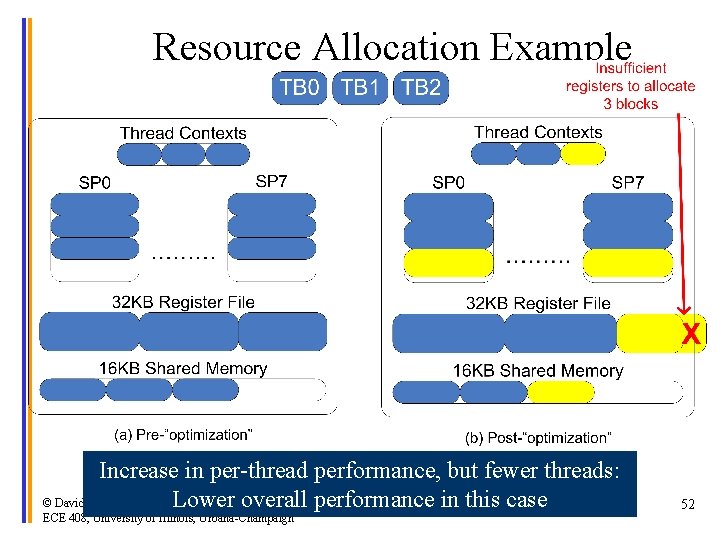

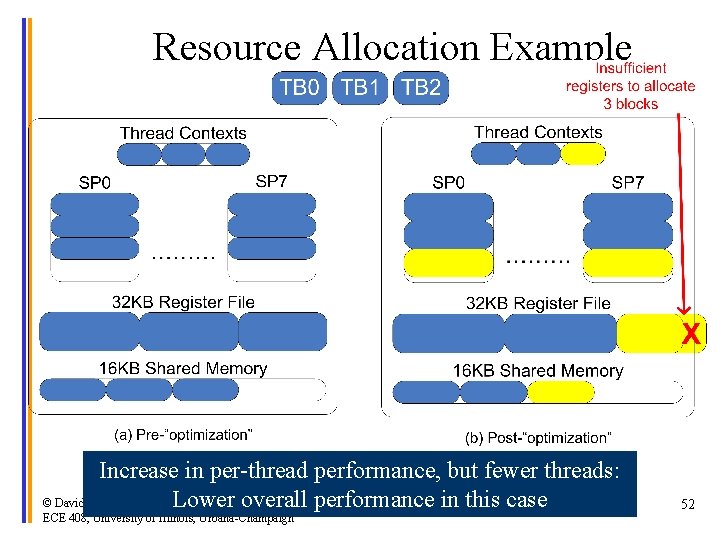

More on Dynamic Partitioning • Dynamic partitioning of SM resources gives more flexibility to compilers/programmers – One can run a smaller number of threads that require many registers each or a large number of threads that require few registers each • This allows for finer grain threading than traditional CPU threading models. – The compiler can tradeoff between instruction-level parallelism and thread level parallelism © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 51

Resource Allocation Example Increase in per-thread performance, but fewer threads: © David Kirk/NVIDIA and. Lower Wen-mei W. overall Hwu, 2007 -2010 performance in this case ECE 408, University of Illinois, Urbana-Champaign 52

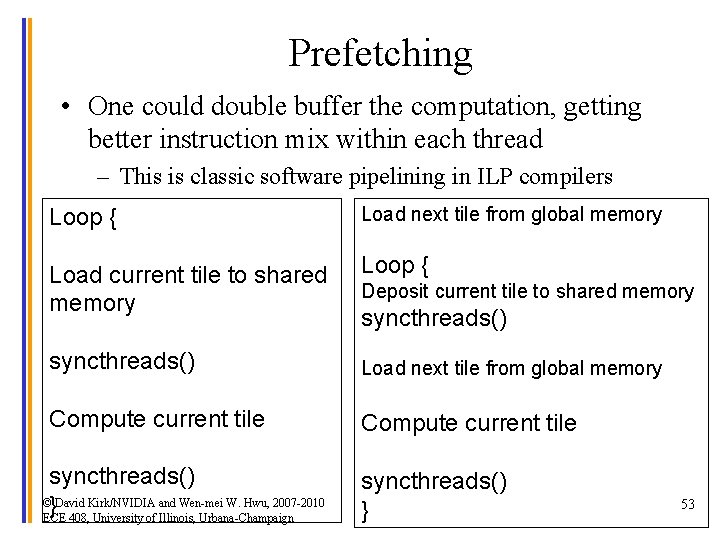

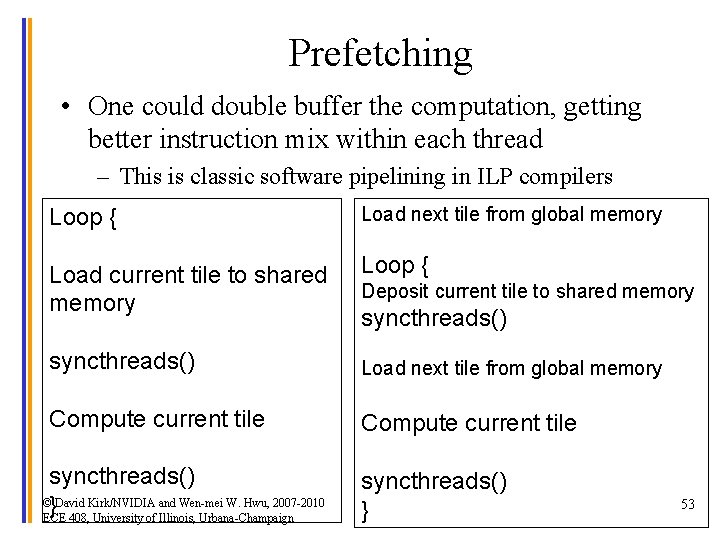

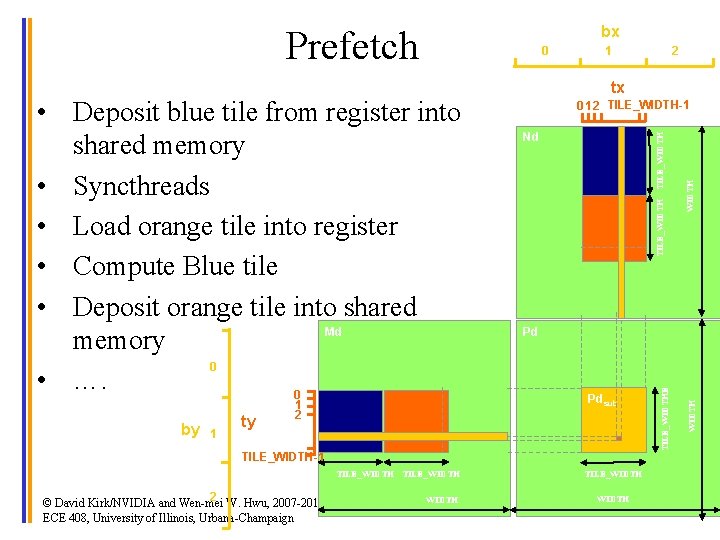

Prefetching • One could double buffer the computation, getting better instruction mix within each thread – This is classic software pipelining in ILP compilers Loop { Load next tile from global memory Load current tile to shared memory Loop { syncthreads() Load next tile from global memory Compute current tile syncthreads() ©}David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign syncthreads() } Deposit current tile to shared memory syncthreads() 53

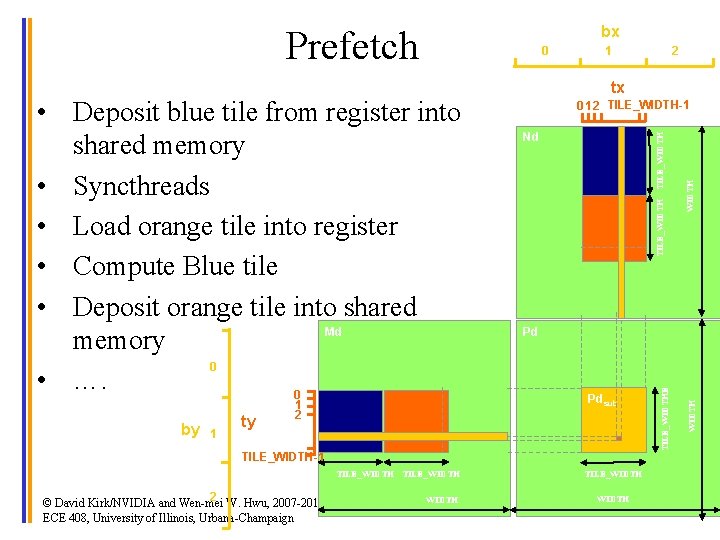

bx Prefetch 0 1 ty tx TILE_WIDTH Nd Pd Pdsub TILE_WIDTH-1 TILE_WIDTH 2 © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign WIDTH 0 1 2 TILE_WIDTH-1 WIDTH by 1 2 2 TILE_WIDTHE • Deposit blue tile from register into shared memory • Syncthreads • Load orange tile into register • Compute Blue tile • Deposit orange tile into shared Md memory 0 • …. 0 1 TILE_WIDTH 54

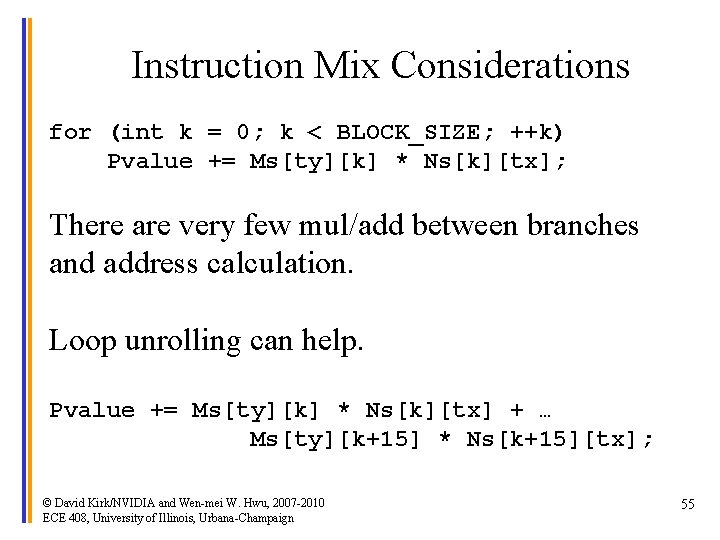

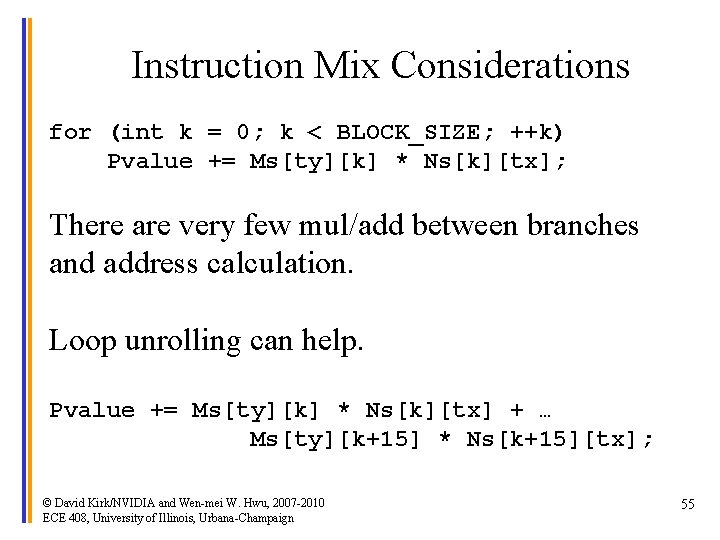

Instruction Mix Considerations for (int k = 0; k < BLOCK_SIZE; ++k) Pvalue += Ms[ty][k] * Ns[k][tx]; There are very few mul/add between branches and address calculation. Loop unrolling can help. Pvalue += Ms[ty][k] * Ns[k][tx] + … Ms[ty][k+15] * Ns[k+15][tx]; © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 55

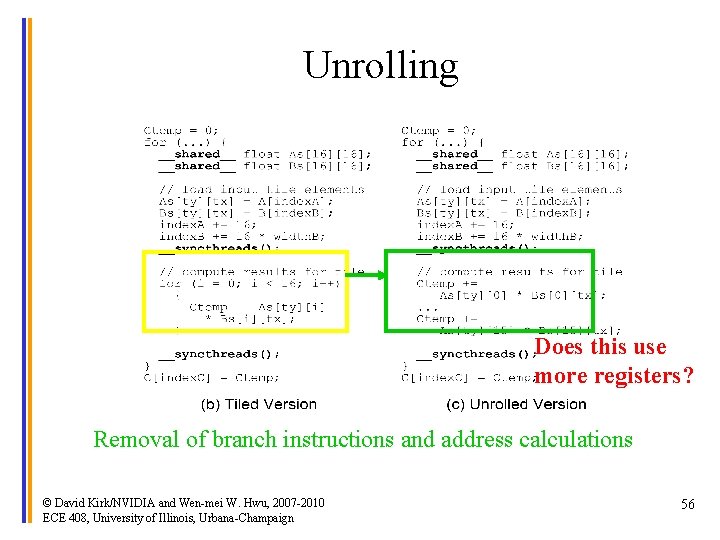

Unrolling Does this use more registers? Removal of branch instructions and address calculations © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 56

Major G 80 Performance Detractors • Long-latency operations – Avoid stalls by executing other threads • Stalls and bubbles in the pipeline – Barrier synchronization – Branch divergence • Shared resource saturation – Global memory bandwidth – Local memory capacity © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 57