Human Error, Chapter 4, Text



Human Error • "The man who makes no mistakes does not usually make anything." • William Connor Magee

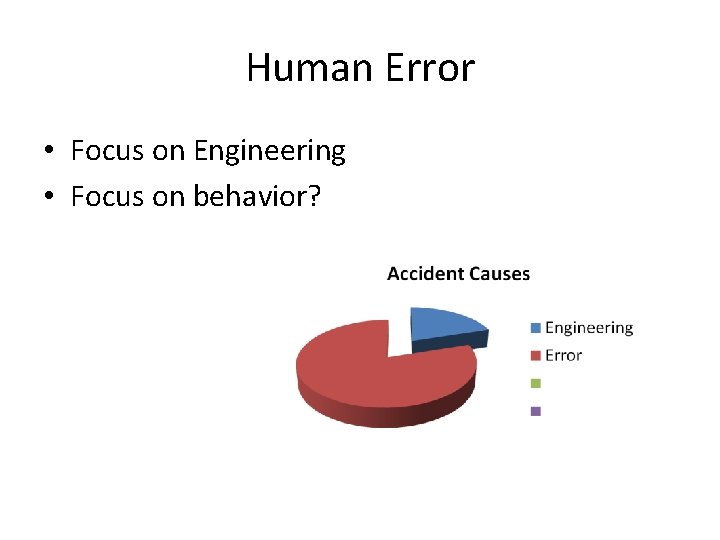

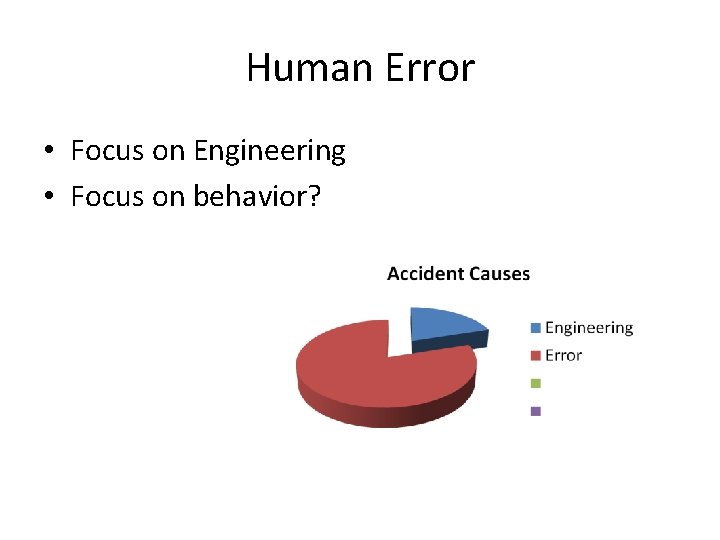

Human Error • Focus on Engineering • Focus on behavior?

Human Error • Human error vs. human reliability: the same thing?

Human Error • Cognitive factors: – Attention – Control Mechanisms

Human Errors • To Err is Human • Why?

Human Errors • Errors • Acting on errors • Learning

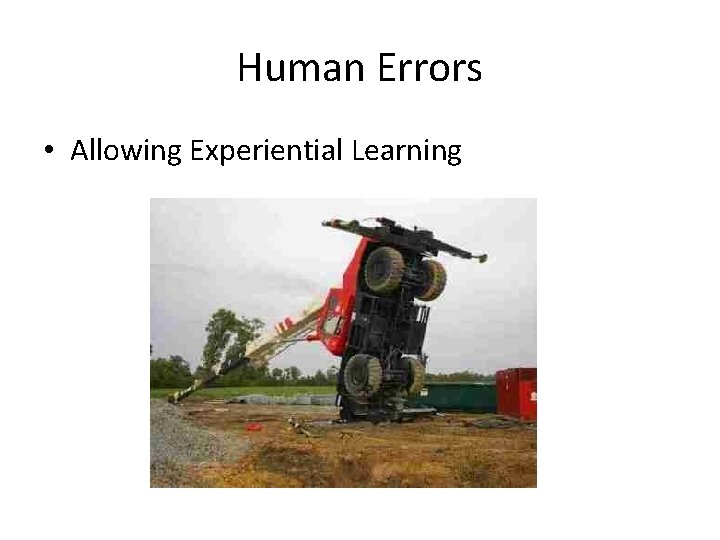

Human Errors • Allowing Experiential Learning

Human Errors • Simulators

Human Errors • Time to learn by experience • Cost of learning by experience • Schedule

Human Error • Simulators for High Risk Technologies

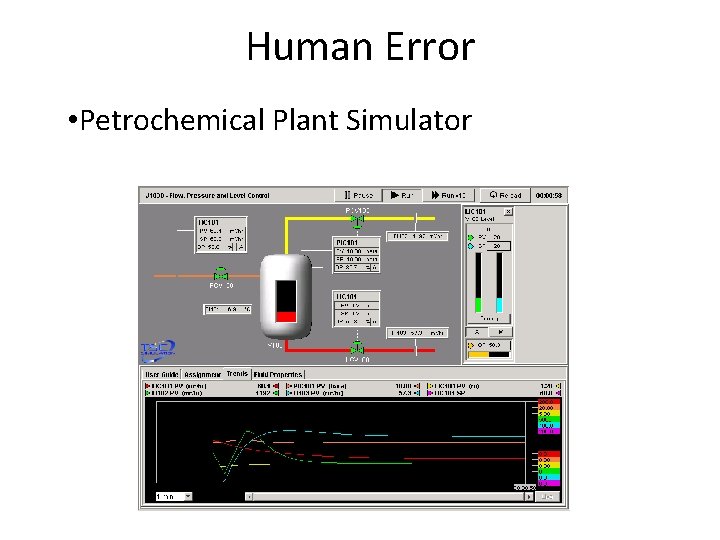

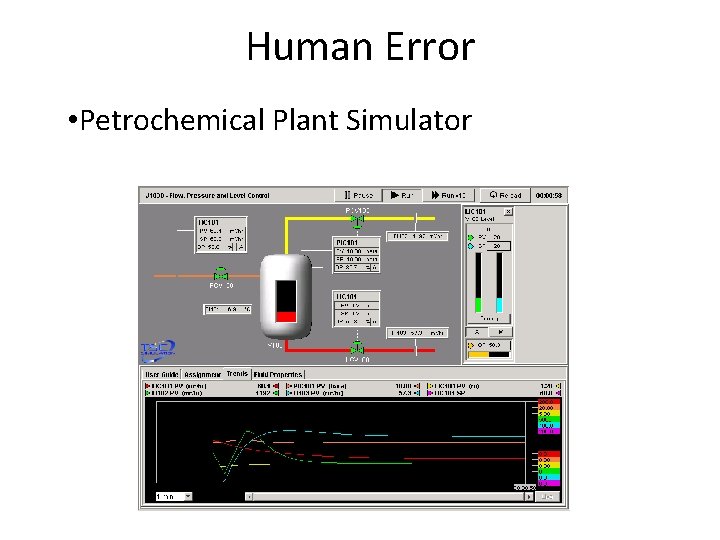

Human Error • Petrochemical Plant Simulator

Human Errors • Good at detecting some errors • Proceduralize everything to eliminate errors (right?) – (Which culture is this?)

Human Errors • Teams at Odds:

Human Errors

Human Errors • Humans in Complex Systems – Needed?

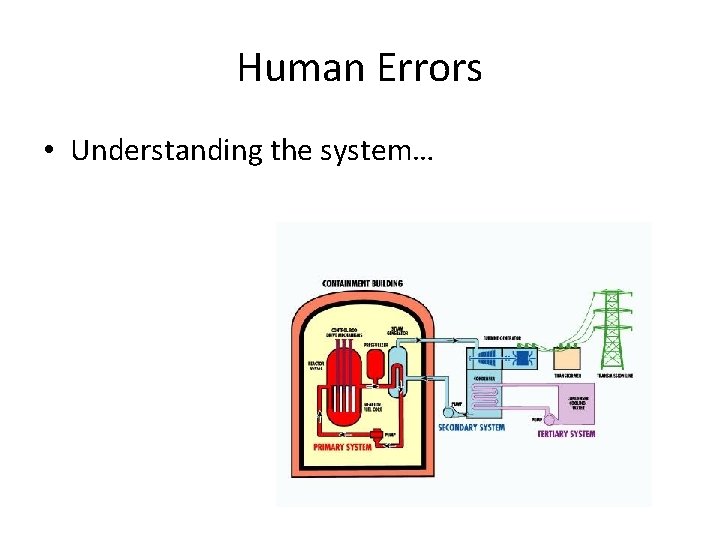

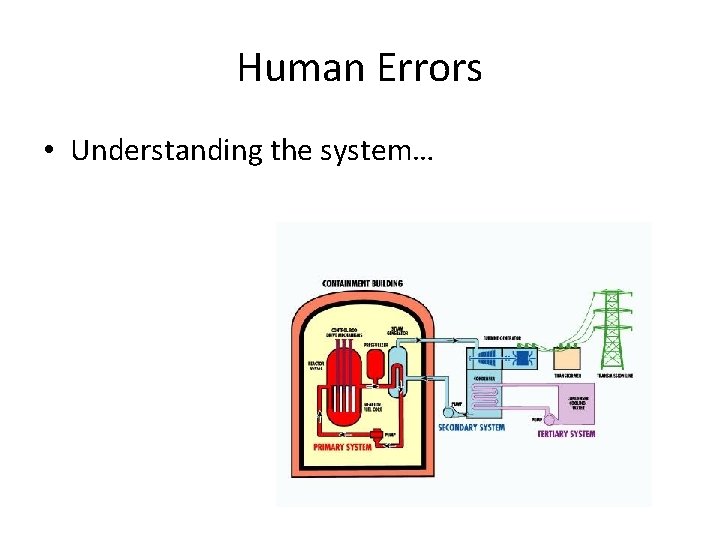

Human Errors • Understanding the system…

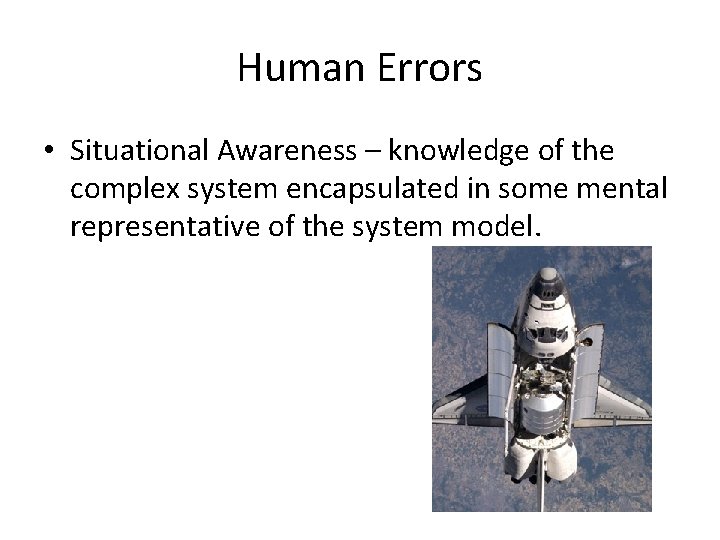

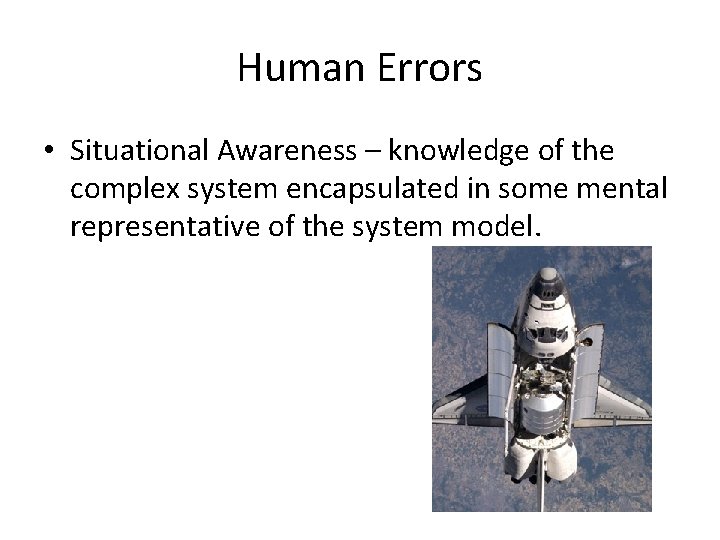

Human Errors • Situational Awareness – knowledge of the complex system, held in a mental representation (model) of that system.

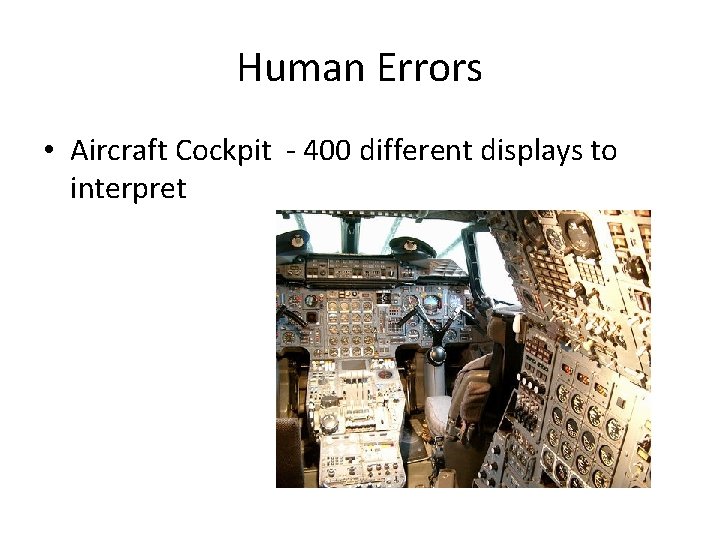

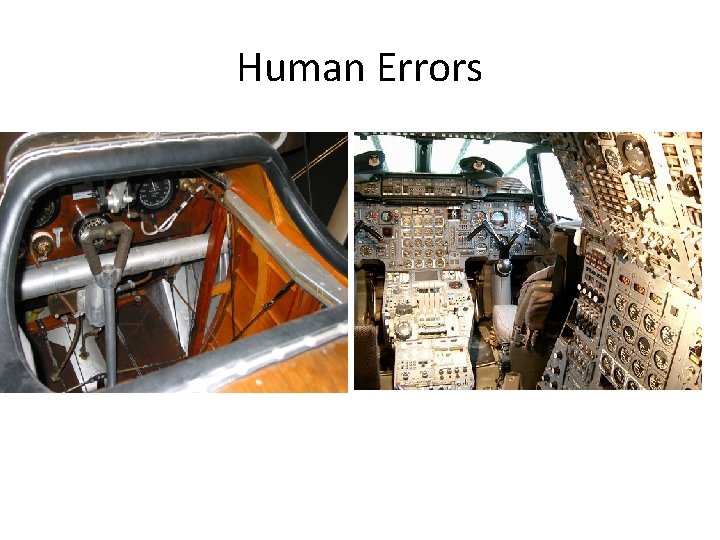

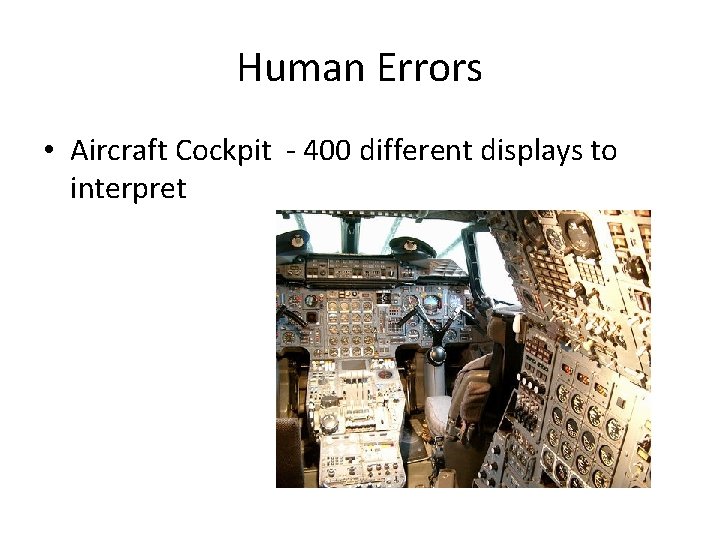

Human Errors • Aircraft Cockpit - 400 different displays to interpret

Human Errors

Human Errors • Identify errors • Assess risks • Take steps (if needed) • Monitor control measures

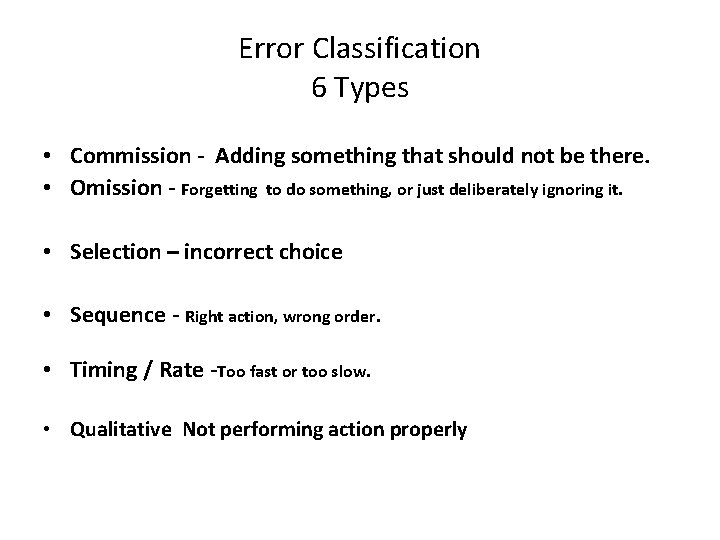

Error Classification: 6 Types • Commission – adding something that should not be there • Omission – forgetting to do something, or deliberately ignoring it • Selection – incorrect choice • Sequence – right action, wrong order • Timing/Rate – too fast or too slow • Qualitative – not performing the action properly
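The first three types can be detected mechanically by comparing a written procedure with the actions actually logged. Below is a minimal Python sketch, assuming each step is identified by a name and appears at most once in the procedure; the function `classify_deviations` and the example steps are hypothetical. Selection, timing/rate and qualitative errors need more data than step names (which option was chosen, durations, quality checks), so the sketch does not detect them.

```python
from enum import Enum


class ErrorType(Enum):
    COMMISSION = "commission"    # adding something that should not be there
    OMISSION = "omission"        # leaving out a required step
    SELECTION = "selection"      # incorrect choice
    SEQUENCE = "sequence"        # right action, wrong order
    TIMING = "timing/rate"       # too fast or too slow
    QUALITATIVE = "qualitative"  # action not performed properly


def classify_deviations(expected, observed):
    """Compare a procedure (ordered step names) with the actions actually taken.

    Hypothetical helper: only omission, commission and sequence errors can be
    found from step names alone; the other three types need extra data.
    """
    findings = []
    expected_set, observed_set = set(expected), set(observed)

    # Omission: a required step never shows up in the log.
    for step in expected:
        if step not in observed_set:
            findings.append((ErrorType.OMISSION, step))

    # Commission: an action that is not part of the procedure.
    for action in observed:
        if action not in expected_set:
            findings.append((ErrorType.COMMISSION, action))

    # Sequence: the planned steps that were done appear in a different order.
    done_in_plan_order = [s for s in expected if s in observed_set]
    done_in_log_order = [a for a in observed if a in expected_set]
    if done_in_plan_order != done_in_log_order:
        findings.append((ErrorType.SEQUENCE, tuple(done_in_log_order)))

    return findings


procedure = ["isolate power", "lock out", "open panel", "replace fuse", "close panel"]
log = ["lock out", "isolate power", "open panel", "replace fuse", "test circuit"]
for error_type, detail in classify_deviations(procedure, log):
    print(error_type.value, "->", detail)
```

Running it flags the missing `close panel` (omission), the unplanned `test circuit` (commission) and the swapped first two steps (sequence).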

Errors • Slips – skill based • Lapses – skill based, unconscious, mental • Mistakes – rule or knowledge based • Violations – deliberate deviations from standard practice

Skill-Based Errors • Repetitions – action performed more than required • Wrong objects – action on the wrong object • Intrusions – unintended actions • Omissions – actions left out • Text 4.3, page 116

Mistakes • Rule-based mistakes – actions governed by a hierarchy of rules – general to specific: • If <condition>, then <action>

FAA Analyses – Controllers • Most common – forgetting a step • Second – miscommunication • Least common – supervision

Knowledge-Based Mistakes • New task • Relates task to other tasks • Decisions based on learning? • Improvisation

Kegworth Airline Accident

Herald of Free Enterprise Ship

King's Cross Underground Station

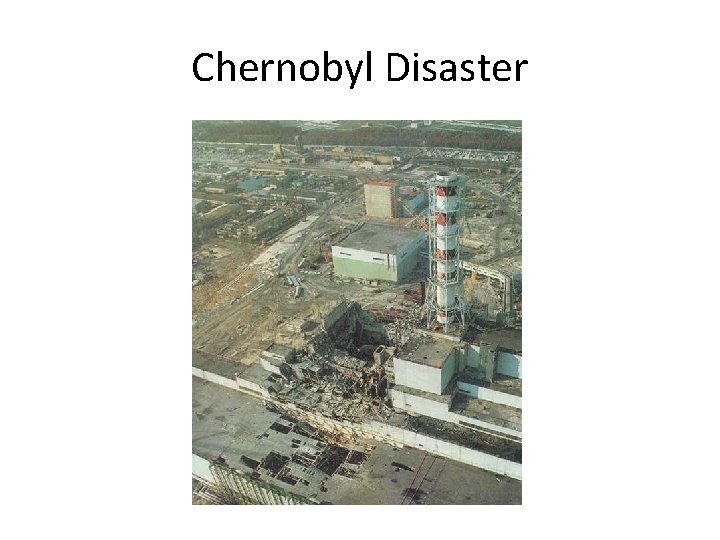

Chernobyl Disaster

Break

Error Reduction • Institute a system for recording near misses • Objectively analyze all incidents • Involve personnel at all levels • Joint problem solving • Changes should be controlled and monitored

Management of Human Errors • Create a human factors database • Reassess operators' performance regularly • Study operators' habits routinely • Introduce computer-based displays of information • Be aware of groupthink

Group Think • Groupthink is a type of thought exhibited by group members who try to minimize conflict and reach consensus without critically testing, analyzing, and evaluating ideas.

Group Think • Loudmouth wins?

General Failures • Hardware defects • Design failures • Poor maintenance procedures • Error-enforcing conditions • Poor housekeeping • System goals incompatible with safety • Communication failures • Inadequate training • Inadequate defenses

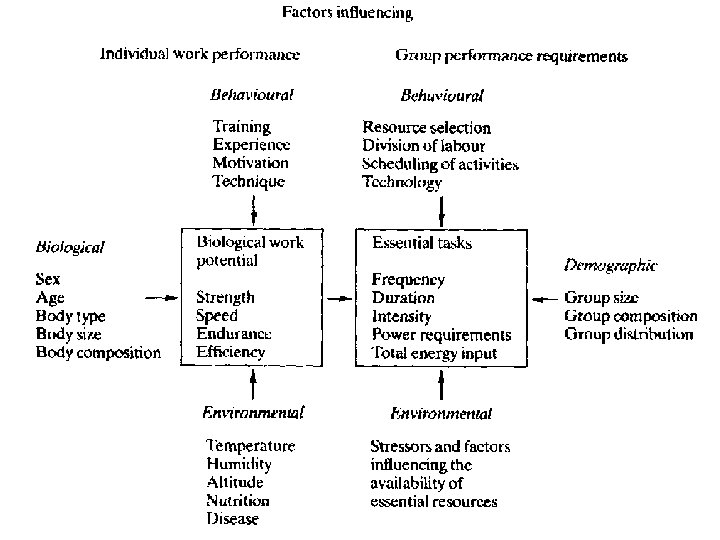

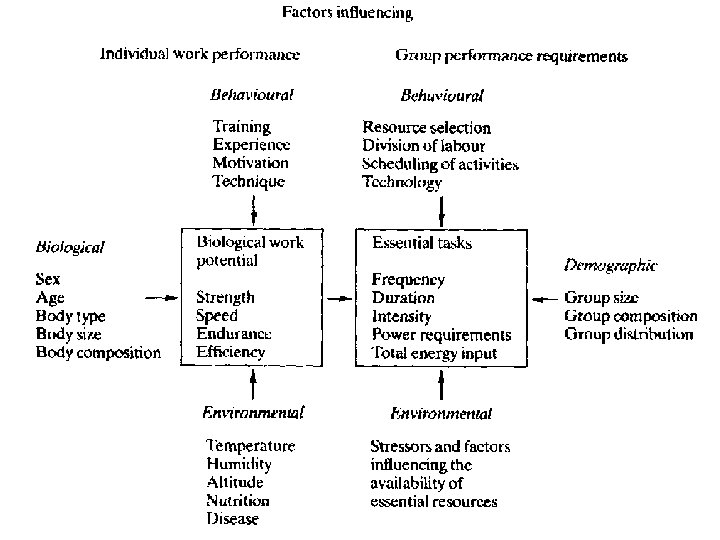

Human Factors • Human dimensions • Working Environment • Effects of systems of work on humans

Human Factors • Ergonomics • Design equipment and work to match humans • Enhance effectiveness • Design work systems around humans • Reduce fatigue, stress…

Human & Machine Performance • Speed • Consistency • Complex activity • Memory • Reasoning • Computation • Input • Overload • Dexterity • Text 4.11, page 132

Allocation of Function • Humans are better at: – Sensing very low-level stimuli – Detecting signals against high noise – Recognizing complex patterns – Remembering (storing) large amounts of information – Retrieving relevant information – Drawing on experience – Selecting alternative modes of operation – Reasoning – Applying principles to varied problems – Making estimates – Concentrating on important activities – Adapting physical responses • Text 4.12, page 133

• Machines are better at: – Stimuli outside the human range – Applying deductive reasoning – Monitoring for prescribed events – Storing encoded information – Retrieving encoded information – Processing repetitive events – Counting physical quantities • Text 4.12, page 133

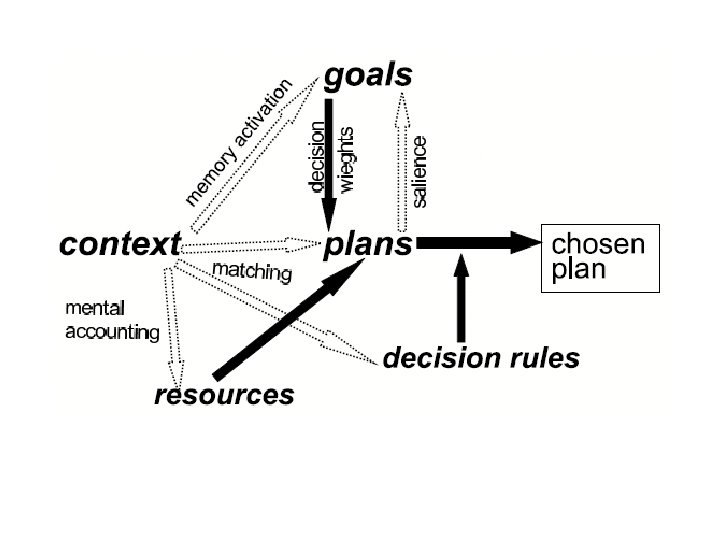

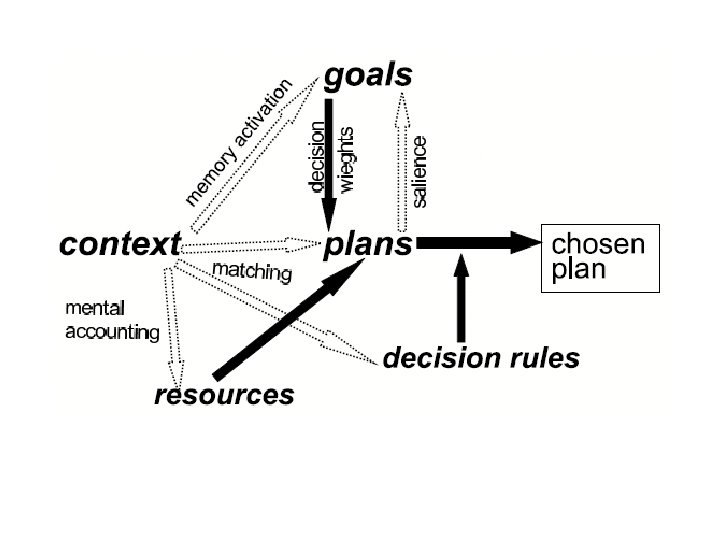

Machine–Human Interface • Displays/warnings • Human receptors • Memory • Effectors • Human behavior • Figure 4.3, page 136

Human Limitations • Sense organ limits • Interpretation • Posture • Layout • Comfort • Fatigue • Text 4.14, page 137

Analog vs. Digital Displays • Precise readings not needed • Best for warnings • Setting and tracking • Text 4.15, page 139

Qualitative Displays • Attract immediate attention • Represent States • Provide model of system

Control Design • Feedback – to the human • Resistance – guard against inadvertent motion • Size – force required to move • Weight – relative to operator • Texture – slip, grip and glare • Coding – shape or size • Location – e.g. foot pedals on cars • Text 4.17, page 140

Decision-Making Biases • Great weight given to early information – primacy • Less information from sources • More information – more accurate? • Seeking more information – is it absorbed? • Is all information reliable? • Focus on critical attributes • Text 4.18, page 141

Predictive Human Error Analysis • Objectives: – Represent human–machine systems – Determine what can go wrong – Assess consequences – Generate strategies • Section 4.7.3, page 146
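The four objectives map naturally onto a worksheet: one row per task step and credible error, a consequence rating, and a strategy column that is filled in last. The sketch below is a minimal Python illustration of that idea, not the book's procedure; the `PheaRow` fields, the 1–5 severity scale, the cut-off value and the tank-filling example are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class PheaRow:
    """One line of a hypothetical PHEA worksheet."""
    task_step: str       # part of the human-machine system being represented
    error: str           # what can go wrong (e.g. an error type from the classification)
    consequence: int     # assumed severity scale: 1 (minor) .. 5 (catastrophic)
    recoverable: bool    # can the error be noticed and corrected before harm occurs?
    strategy: str = ""   # error-reduction measure; empty until one is generated


def needs_strategy(rows, severity_cutoff=3):
    """Return severe, unrecoverable errors that still have no reduction strategy,
    most severe first. The cut-off of 3 is an arbitrary example value."""
    open_rows = [
        r for r in rows
        if r.consequence >= severity_cutoff and not r.recoverable and not r.strategy
    ]
    return sorted(open_rows, key=lambda r: r.consequence, reverse=True)


worksheet = [
    PheaRow("open inlet valve", "omission", 2, True),
    PheaRow("monitor tank level", "timing: checked too late", 5, False),
    PheaRow("close inlet valve", "selection: wrong valve closed", 4, False,
            strategy="label and color-code valves"),
    PheaRow("record final level", "qualitative: misread gauge", 3, False),
]

for row in needs_strategy(worksheet):
    print(row.consequence, row.task_step, "-", row.error)
```

Only the late level check and the misread gauge are reported: the valve omission is minor and recoverable, and the wrong-valve selection already has a strategy.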

FAA Tracer Lite • Excel spreadsheet

Performance Influencing Factors (PIFs) • Corporate factors – management • Process factors – technology • Machine interface – controls, displays • Environmental factors – work patterns • Equipment factors – PPE, tools • Individual factors – experience, knowledge • Text, page 147
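The slide lists the factor groups but not how to combine them. One established approach, the Success Likelihood Index Method (SLIM), rates a task on each factor, weights the factors by importance, and sums the weighted ratings. Below is a minimal Python sketch of that weighted sum, assuming ratings on a 1 (worst) to 9 (best) scale; the function name, weights and ratings are invented for illustration. Converting the index into an error probability needs calibration tasks with known error rates and is left out.

```python
def success_likelihood_index(weights, ratings, best=9):
    """Weighted sum of PIF ratings (SLIM-style), plus the weakest factor.

    weights: relative importance of each PIF (any positive numbers; normalized here)
    ratings: how favorable each PIF is for the task, 1 (worst) .. best
    """
    total = sum(weights.values())
    normalized = {factor: w / total for factor, w in weights.items()}
    sli = sum(normalized[f] * ratings[f] for f in normalized)

    # Weighted shortfall from the best rating = the most the index could gain
    # if that factor were improved to "best"; the largest marks the weakest factor.
    shortfall = {f: normalized[f] * (best - ratings[f]) for f in normalized}
    weakest = max(shortfall, key=shortfall.get)
    return sli, weakest


# Hypothetical weights and ratings for one task, one entry per PIF group.
weights = {"corporate": 2, "process": 1, "machine_interface": 3,
           "environmental": 1, "equipment": 1, "individual": 2}
ratings = {"corporate": 6, "process": 5, "machine_interface": 3,
           "environmental": 7, "equipment": 8, "individual": 4}

sli, weakest = success_likelihood_index(weights, ratings)
print(f"SLI = {sli:.1f}, weakest factor: {weakest}")
```

With these made-up numbers the index is 4.9, and the poorly rated, heavily weighted machine interface is the factor worth improving first.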

Reminders for Reducing Maintenance and Other Errors • Conspicuousness • Contiguity • Context • Check • Comprehensiveness • Confirmation • Text 4.23, page 149

Conclusions • Human error is natural • Control the worst consequences of human error