High-Performance Computing

José Nelson Amaral, Department of Computing Science, University of Alberta (amaral@cs.ualberta.ca)

MACI - University of Alberta - April 2001

Why High-Performance Computing?

- Many important problems cannot yet be solved, even with the fastest machines available.
- Faster computers enable the formulation of more interesting questions.
- When a problem is solved, researchers find bigger problems to tackle!

Grand Challenges

- weather forecasting
- economic modeling
- computer-aided design
- drug design
- exploring the origins of the universe
- searching for extra-terrestrial life
- computer vision

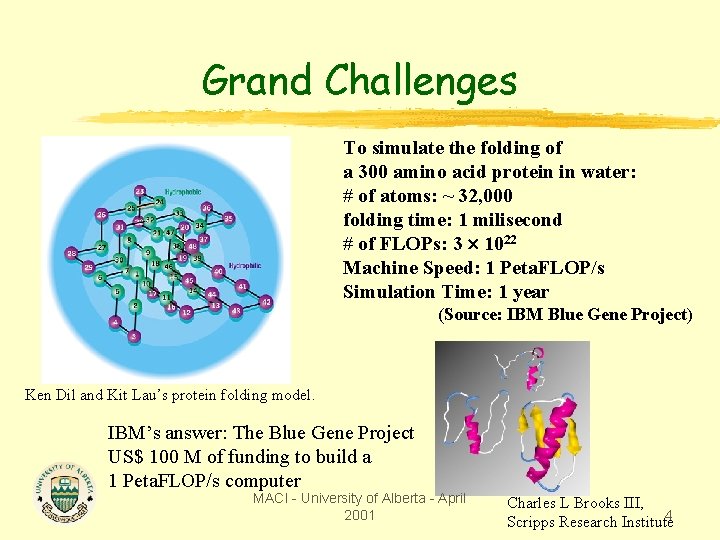

Grand Challenges

To simulate the folding of a 300-amino-acid protein in water:

- Number of atoms: ~32,000
- Folding time: 1 millisecond
- Number of FLOPs: 3 × 10^22
- Machine speed: 1 PetaFLOP/s
- Simulation time: 1 year

(Source: IBM Blue Gene Project)

Ken Dill and Kit Lau’s protein folding model. (Charles L. Brooks III, Scripps Research Institute)

IBM’s answer: the Blue Gene Project, with US$100 M of funding to build a 1 PetaFLOP/s computer.
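The one-year figure follows directly from the other two numbers on the slide. A minimal arithmetic check, assuming a 365-day year (the variable names are only illustrative):

```python
# Back-of-the-envelope check of the slide's estimate.
total_flops = 3e22        # floating-point operations quoted on the slide
machine_speed = 1e15      # 1 PetaFLOP/s, in FLOP/s

seconds = total_flops / machine_speed
SECONDS_PER_YEAR = 365 * 24 * 3600   # assumption: non-leap year
years = seconds / SECONDS_PER_YEAR

print(f"{seconds:.1e} s = {years:.2f} years")   # 3.0e+07 s = 0.95 years
```

So a sustained PetaFLOP/s machine would need roughly a year of uninterrupted computing for a single folding event.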

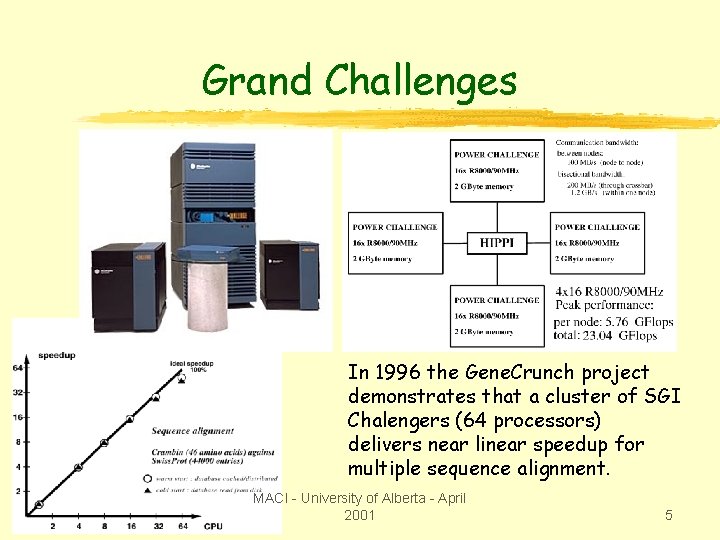

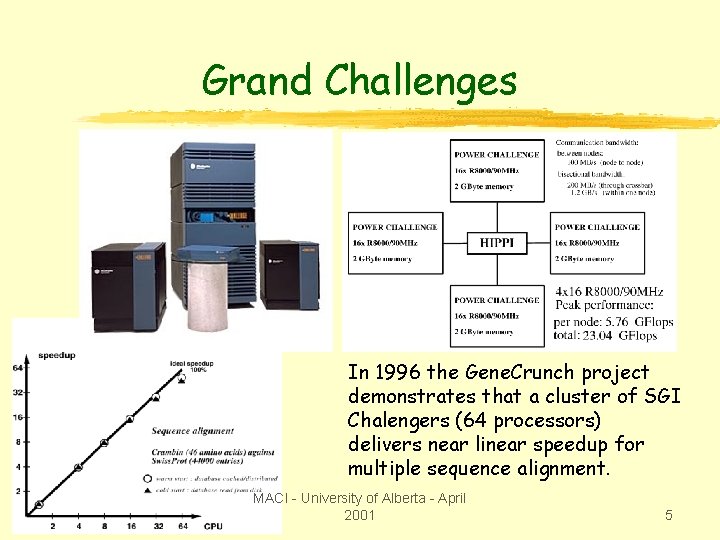

Grand Challenges

In 1996 the GeneCrunch project demonstrated that a cluster of SGI Challenge machines (64 processors) delivers near-linear speedup for multiple sequence alignment.

Commercial Applications

In October 2000, SGI and ESI (France) revealed a crash simulator to be used for the future BMW 5 Series.

- Sustained performance: 12 GFLOPS
- Processors: 96 × 400 MHz MIPS
- Machine: SGI Origin 3000 series

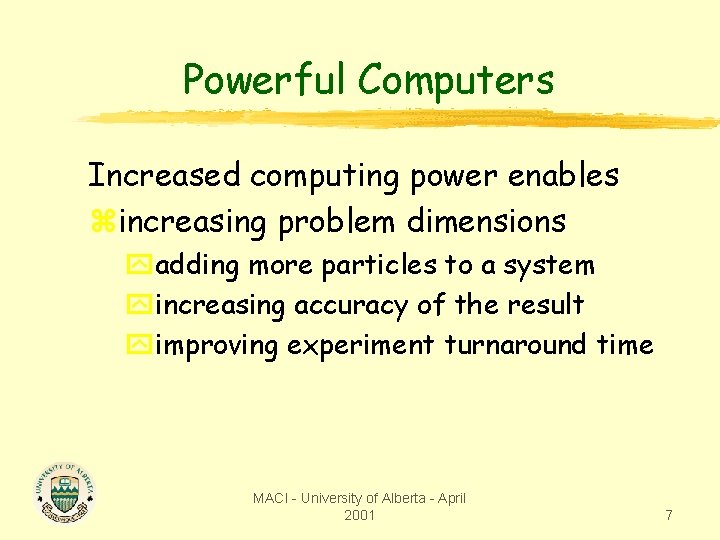

Powerful Computers

Increased computing power enables:

- increasing problem dimensions
- adding more particles to a system
- increasing accuracy of the result
- improving experiment turnaround time

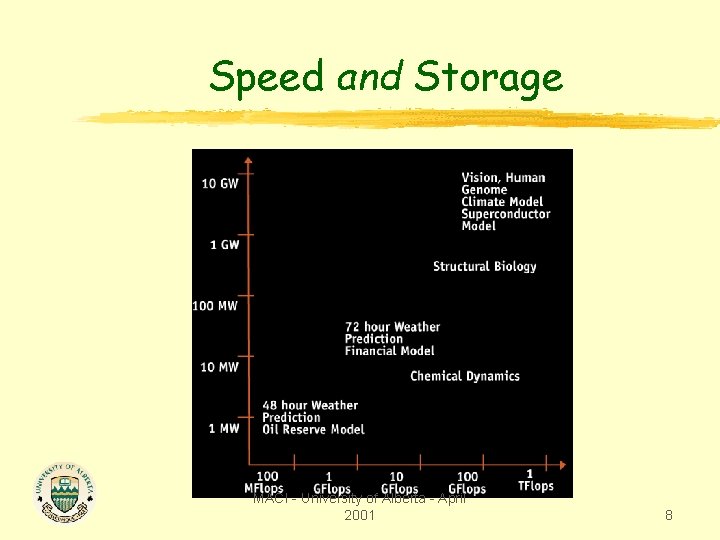

Speed and Storage

Solution?

Instead of using a single processor, use multiple processors:

- combine their efforts to solve a problem
- benefit from the aggregate of their processing speed, memory, cache and disk storage

This Talk

- Motivation
- Parallel Machine Organizations
- Cluster Computing
- Programming Models
- Cache Coherence and Memory Consistency
- The Top 500: Who is the fastest?
- Processor Architecture: What is new?
- The Role of Compilers
- Speedup and Scalability
- Final Remarks

[Figure: timeline with the years 1980, 1988, 1990, 1994, 1998 and 2000]

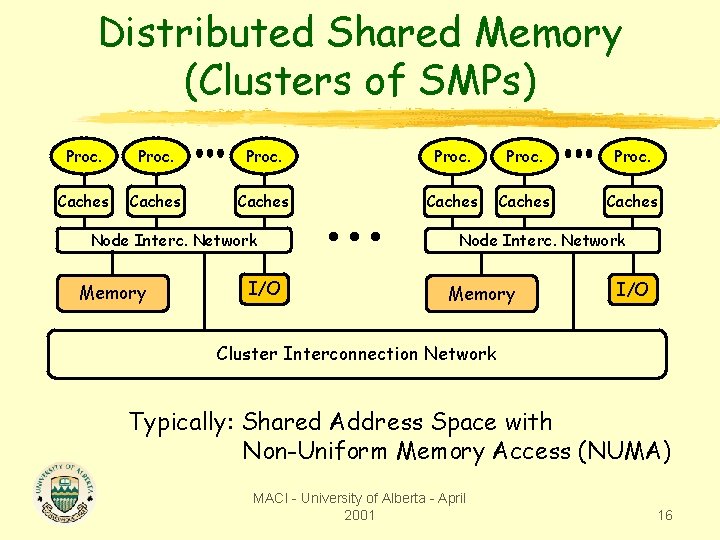

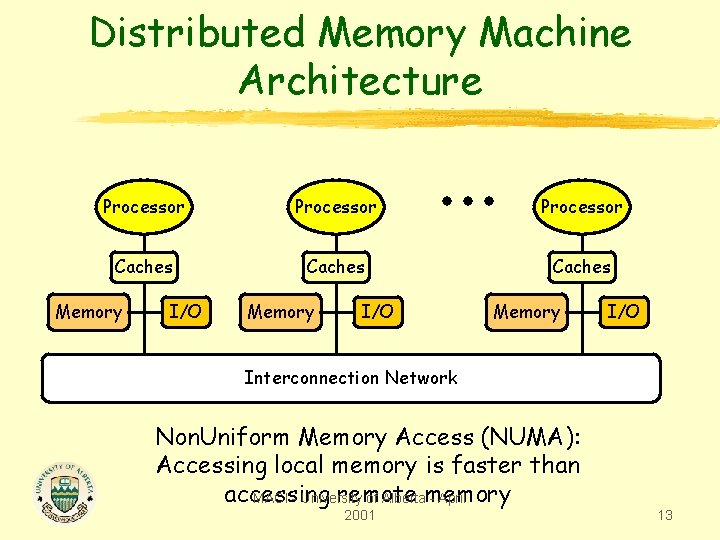

Distributed Memory Machine Architecture

[Diagram: nodes, each with a processor, caches, memory and I/O, connected by an interconnection network]

Non-Uniform Memory Access (NUMA): accessing local memory is faster than accessing remote memory.

Centralized Shared Memory Multiprocessor

[Diagram: processors with caches connected through an interconnection network to main memory and the I/O system]

Centralized Shared Memory Multiprocessor

[Diagram: processors with caches on one side of the interconnection network, memory modules and the I/O controller on the other]

Uniform Memory Access (UMA): the “Dance Hall Approach”

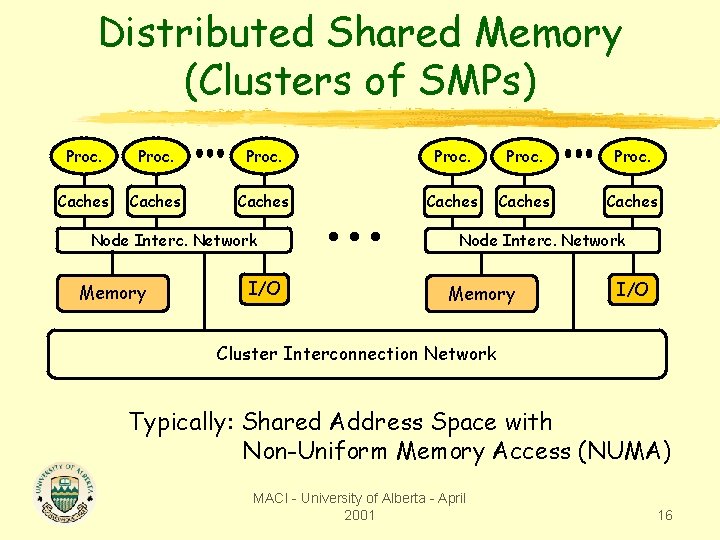

Distributed Shared Memory (Clusters of SMPs)

[Diagram: nodes, each with processors, caches, a node interconnection network, memory and I/O, joined by a cluster interconnection network]

Typically: shared address space with Non-Uniform Memory Access (NUMA)

What’s Next?

What is Next in High-Performance Computing? (Gordon Bell and Jim Gray, Comm. of ACM, Feb 2002)

Thesis:

1. Clusters are becoming ubiquitous, and even traditional data centers are migrating to clusters;
2. Grid communities are beginning to provide significant advantages for addressing parallel problems and sharing vast numbers of files.

“Dark Side of Clusters: Clusters perform poorly on applications that require large shared memory.”

Beowulf

Project started at NASA in 1993 with the goal of: “Implementing a 1 GFLOPS workstation costing less than US$50,000 using commercial off-the-shelf (COTS) hardware and software.”

- In 1994 a US$40,000 cluster with 16 Intel 486s reached the goal.
- In 1997 a Beowulf cluster won the Gordon Bell performance/price Prize.
- In June 2001, 28 Beowulfs were in the Top 500 fastest computers in the world.

“The Dark Side of Clusters”

What is Next in High-Performance Computing? (Gordon Bell and Jim Gray, Comm. of ACM, Feb 2002)

“Clusters perform poorly on applications that require large shared memory.”

- PAP = Peak Advertised Performance
- RAP = Real Application Performance

Shared memory computers deliver a RAP of 30-50% of the PAP, while clusters deliver 5-15% of the PAP.

Non-Shared Address Space

- Clusters require an explicit message-passing programming model.
- MPI is the most widely used parallel programming model today.
- PVM is used in some engineering departments.

Large and Expensive Clusters

ASCI White

- 8,192 PowerPC processors
- 6 TB of memory
- 160 TB of disk space
- 12.3 Teraops (peak)
- 28 tractor trailers to transport (July 2000)
- Supplier: IBM
- Client: USA Department of Energy
- Main application: simulated testing of the nuclear weapons stockpile

Programming Model Requirements

- What data can be named by the threads?
- What operations can be performed on the named data?
- What ordering exists among these operations?

Programming Model Requirements

- Naming:
  - Global Physical Address Space
  - Independent Local Physical Address Spaces
- Ordering:
  - Mutual Exclusion
  - Events
  - Communication vs. Synchronization

Parallel Framework

- Layers:
  - Programming Model:
    - Multiprogramming: lots of jobs, no communication
    - Shared address space: communicate via memory
    - Message passing: send and receive messages

Message Passing Model

- Communicate through explicit I/O operations
  - Essentially NUMA, but integrated at the I/O devices rather than the memory system
- Send specifies a local buffer + the receiving process on the remote computer
- Receive specifies the sending process on the remote computer + a local buffer to place the data
  - Synch: when send completes, when buffer free, when request accepted, receive waits for send
- Send+receive => memory-memory copy, where each supplies a local address, AND does pair-wise synchronization!

Shared Address Model Summary

- Each processor can name every physical location in the machine
- Each process can name all data it shares with other processes
- Data transfer via load and store
- Data size: byte, word, ... or cache blocks
- Uses virtual memory to map virtual addresses to local or remote physical addresses
- Memory hierarchy model applies: communication moves data to the local processor cache (as a load moves data from memory to cache)

Shared Address/Memory Multiprocessor Model

- Communicate via load and store
  - Oldest and most popular model
- Process: a virtual address space and one or more threads of control
  - Multiple processes can overlap (share), but all threads share the process address space
- Writes to the shared address space by one thread are visible to reads by other threads

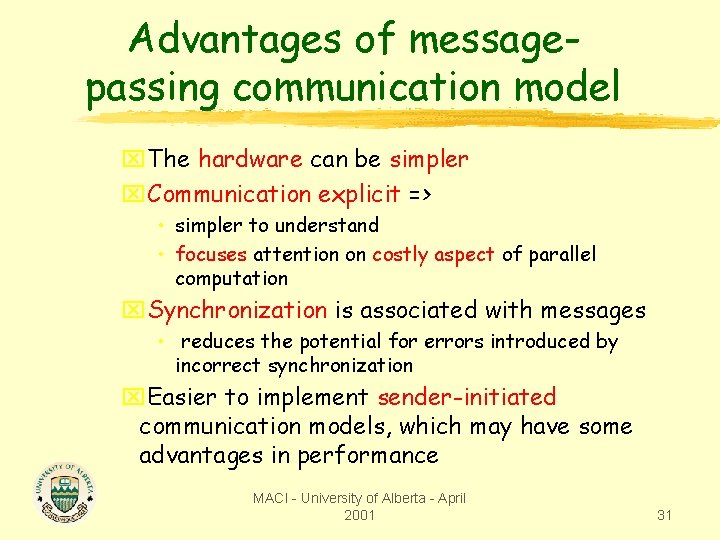

Advantages of shared-memory communication model

- Compatibility with SMP hardware
- Ease of programming
  - for complex communication patterns; or
  - for dynamic communication patterns
- Uses familiar SMP model
  - attention only on performance-critical accesses
- Lower communication overhead
  - better use of BW for small items
  - memory mapping implements protection in hardware
- HW-controlled caching
  - reduces remote communication by caching all data, both shared and private

Advantages of message-passing communication model

- The hardware can be simpler
- Communication is explicit =>
  - simpler to understand
  - focuses attention on the costly aspect of parallel computation
- Synchronization is associated with messages
  - reduces the potential for errors introduced by incorrect synchronization
- Easier to implement sender-initiated communication models, which may have some advantages in performance

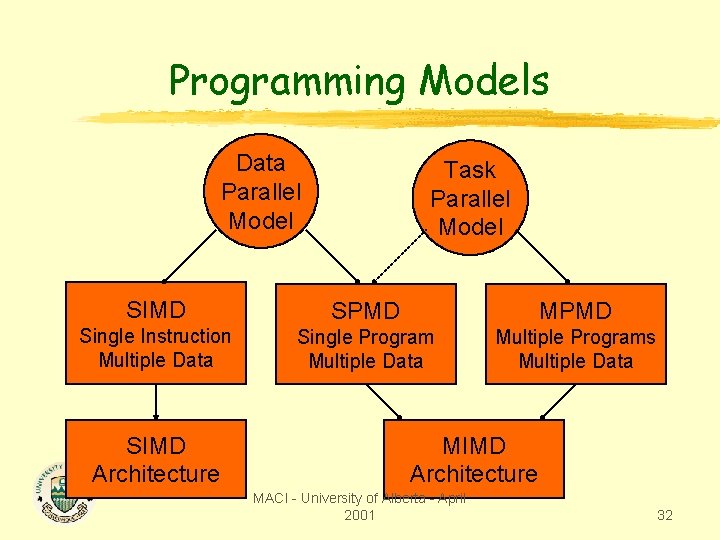

Programming Models

[Diagram relating the Data Parallel Model and the Task Parallel Model to SIMD, SPMD and MPMD, and to the SIMD architecture]

- SIMD: Single Instruction Multiple Data
- SPMD: Single Program Multiple Data
- MPMD: Multiple Programs Multiple Data

OpenMP (1)

- OpenMP gives programmers a “simple” and portable interface for developing shared-memory parallel programs
- OpenMP supports C/C++ and Fortran on “all” architectures, including Unix platforms and Windows NT platforms
- may become the industry standard

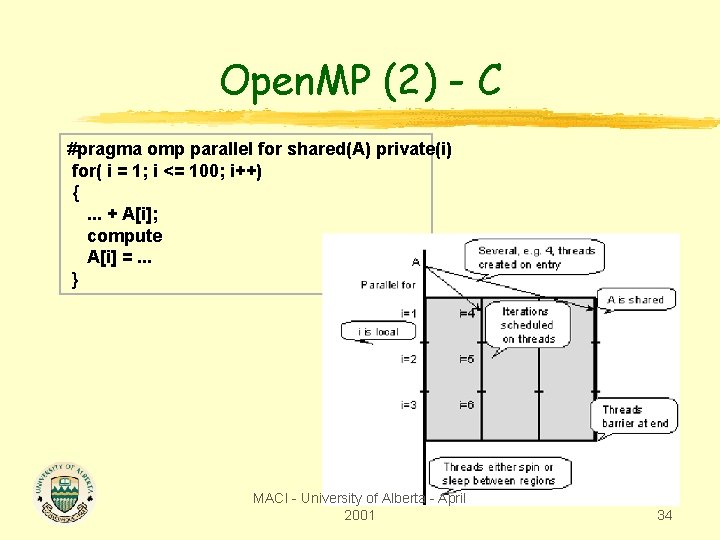

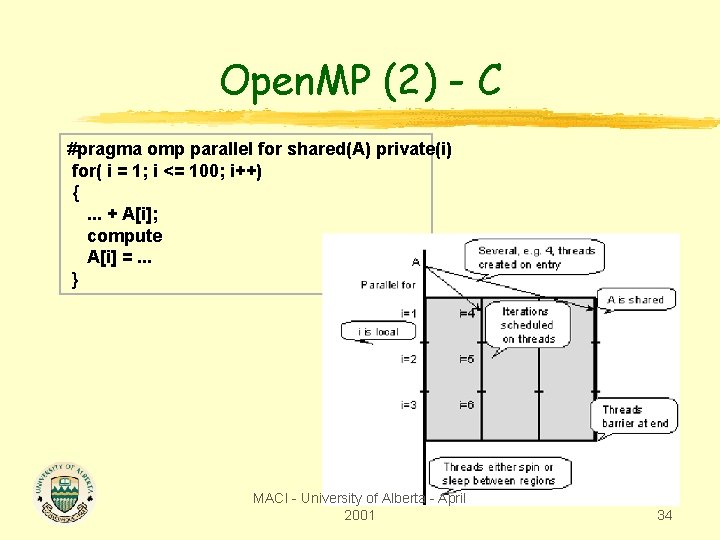

OpenMP (2) - C

    #pragma omp parallel for shared(A) private(i)
    for( i = 1; i <= 100; i++ ) {
        ... + A[i];
        compute A[i] = ...
    }

OpenMP (3) - Fortran

    c$omp parallel do schedule(static)
    c$omp&shared(omega, error, uold, u)
    c$omp&private(i, j, resid)
    c$omp&reduction(+: error)
          do j = 2, m-1
            do i = 2, n-1
              resid = calcerror(uold, i, j)
              u(i, j) = uold(i, j) - omega * resid
              error = error + resid*resid
            end do
          end do
    c$omp end parallel do

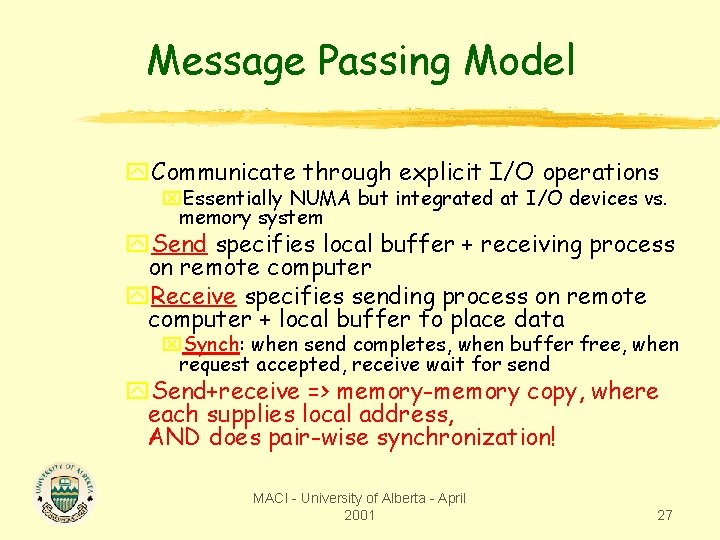

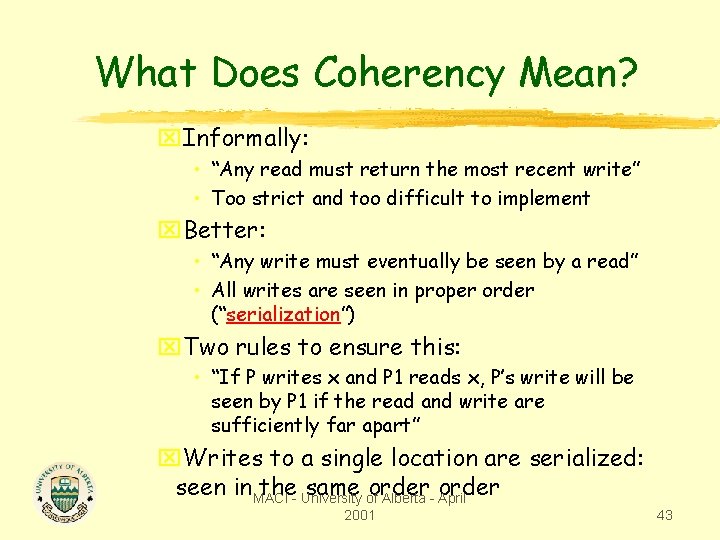

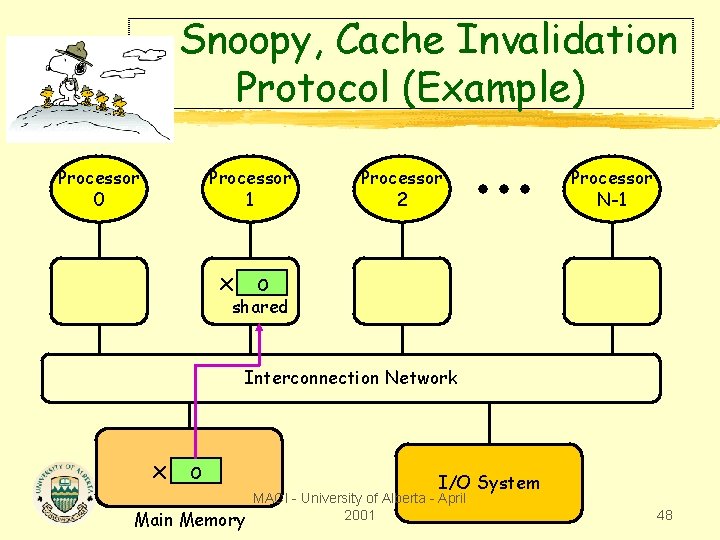

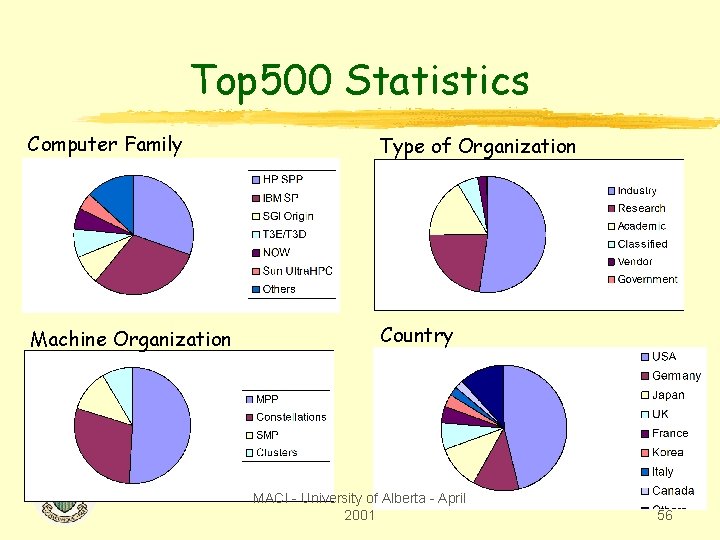

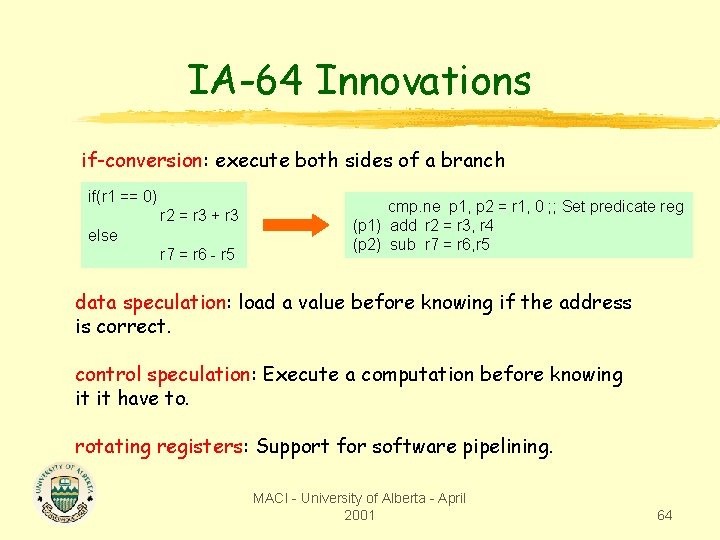

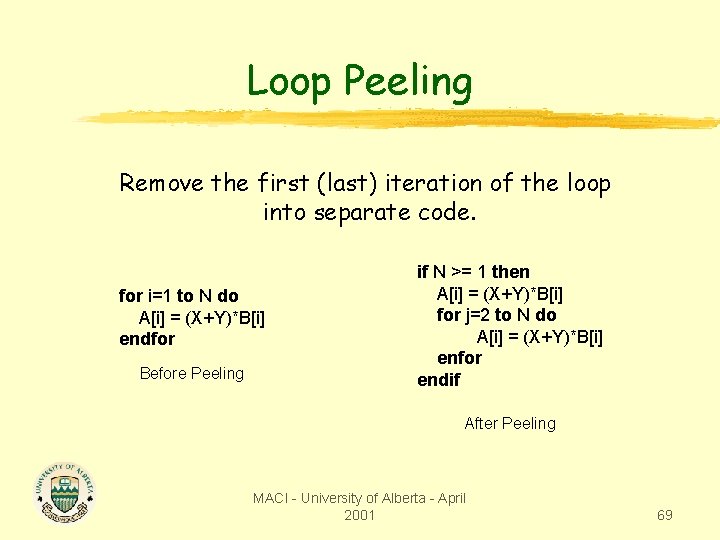

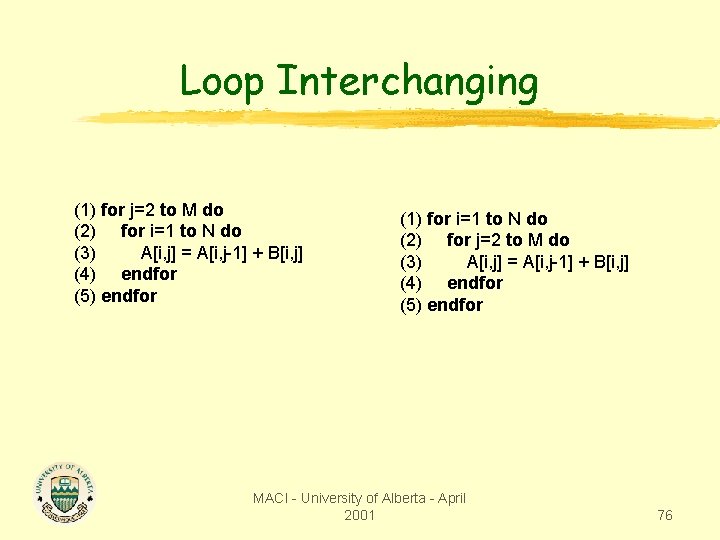

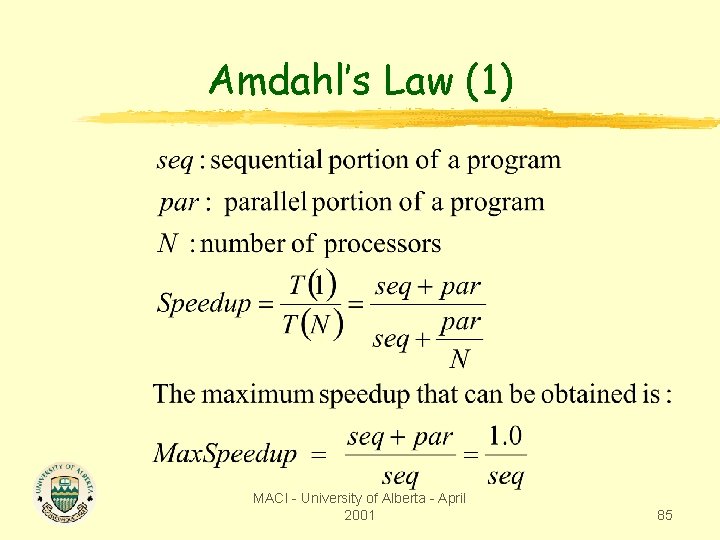

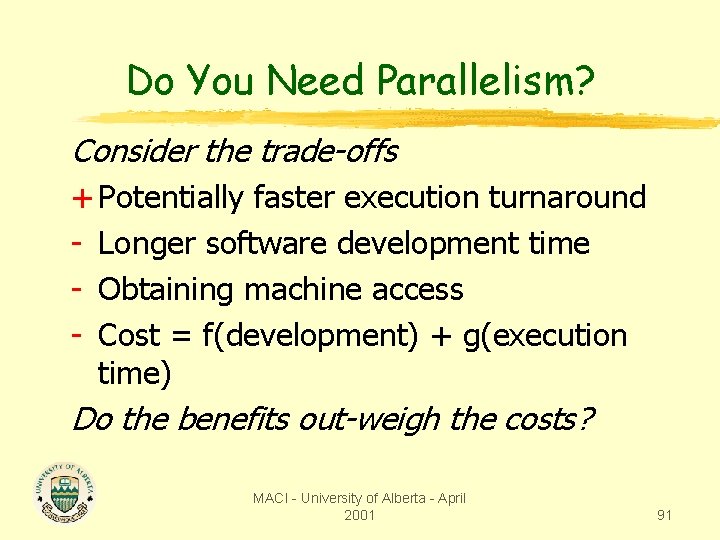

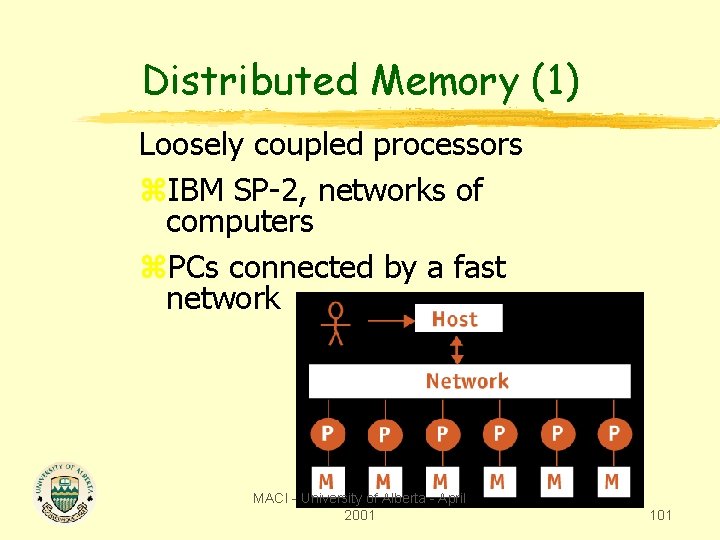

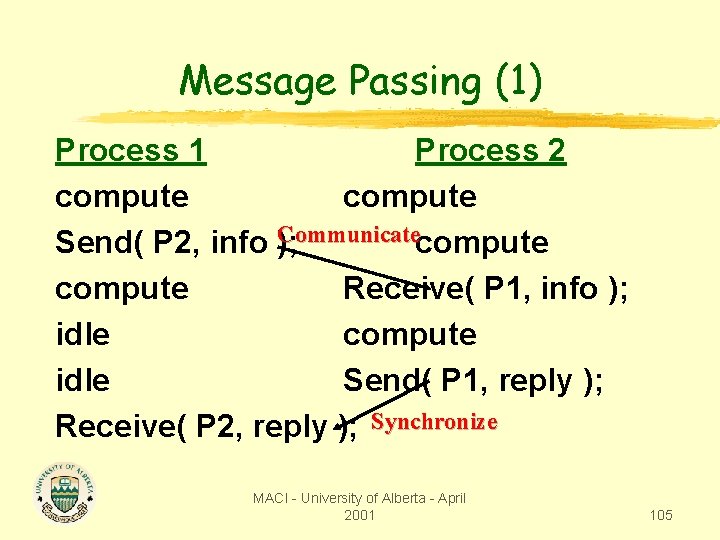

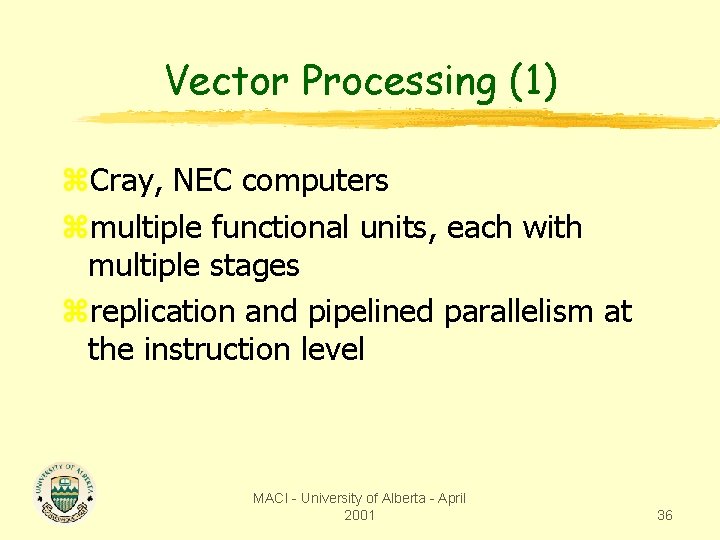

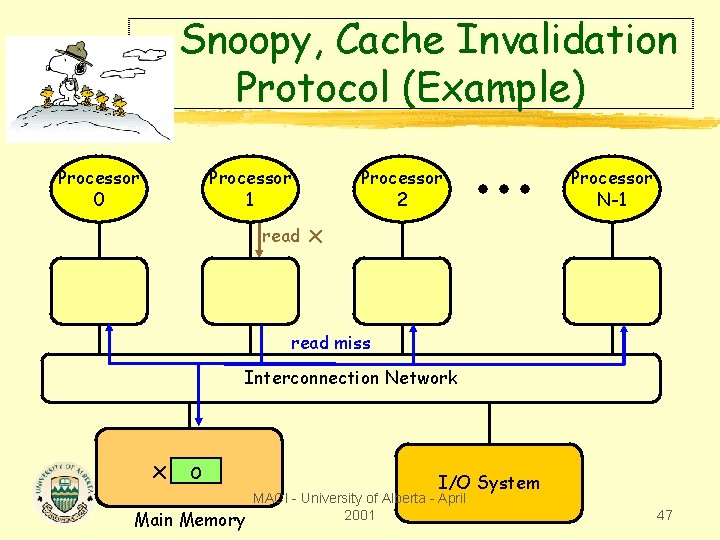

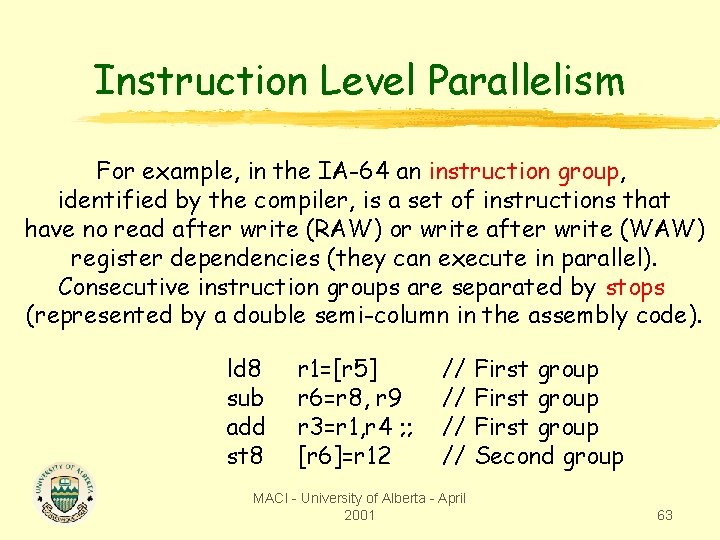

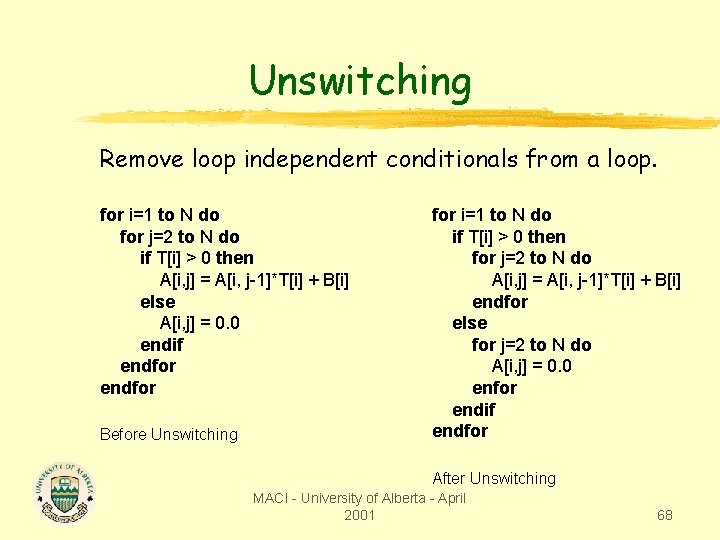

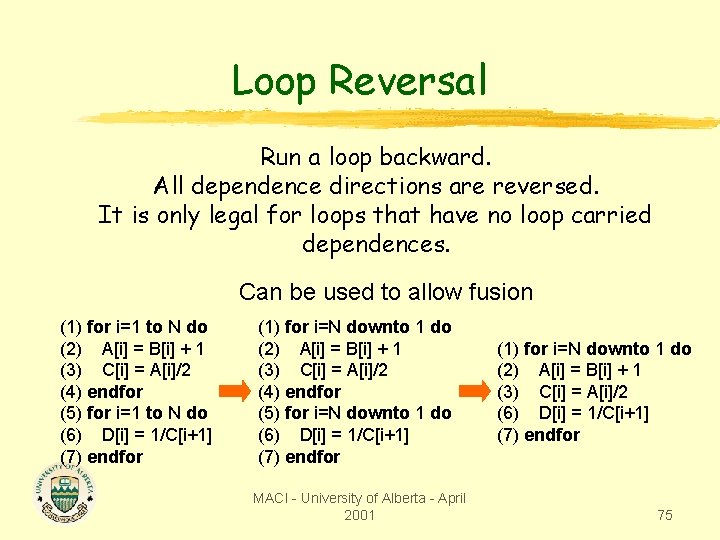

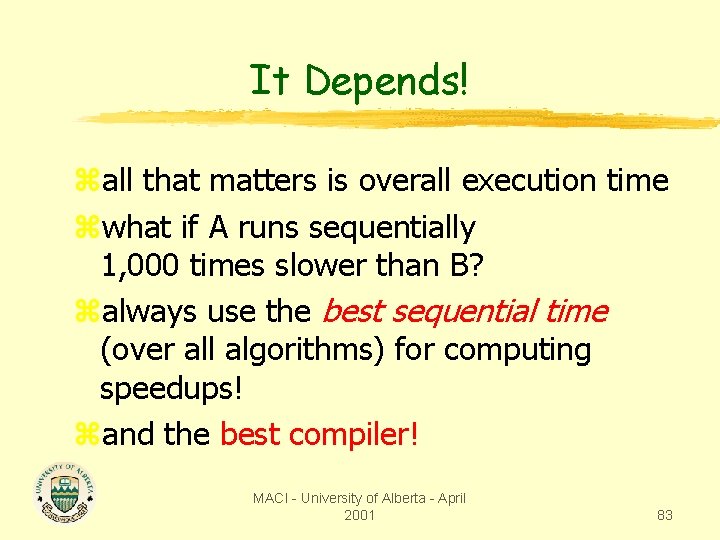

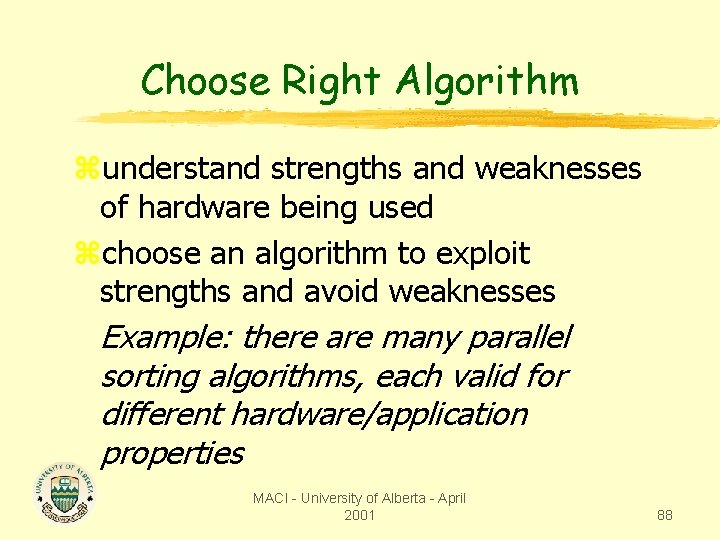

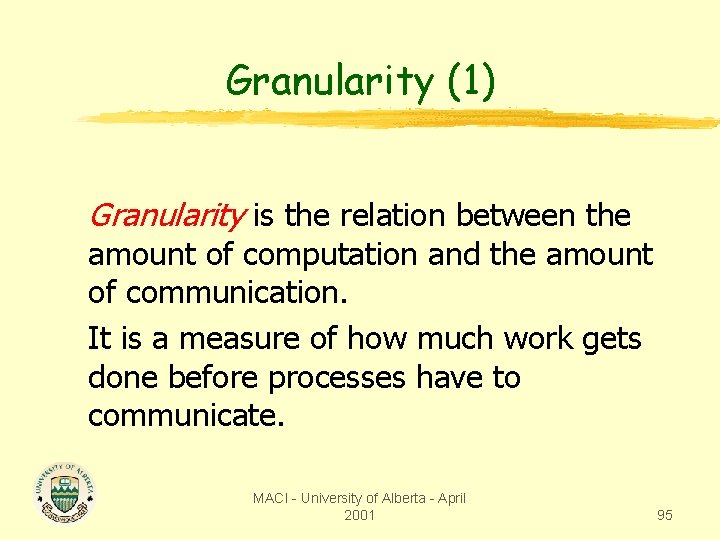

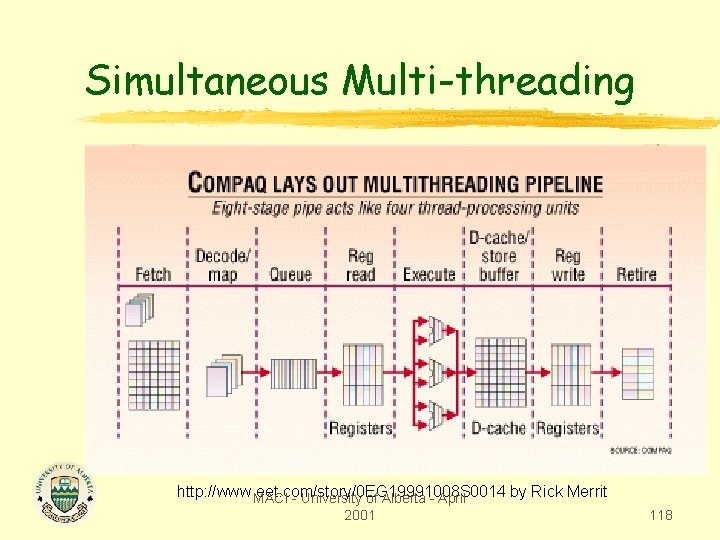

Vector Processing (1)

- Cray, NEC computers
- multiple functional units, each with multiple stages
- replication and pipelined parallelism at the instruction level

![Vector Processing 2 for i0 iN i Ai Bi Ci Mult Vector Processing (2) for( i=0; i<=N; i++ ) A[i] = B[i] * C[i]; Mult](https://slidetodoc.com/presentation_image_h2/172abff91a1edbf30307044ff6a1ef14/image-37.jpg)

Vector Processing (2)

    for( i=0; i<=N; i++ )
        A[i] = B[i] * C[i];

[Diagram: successive multiplications overlapped in a three-stage multiplier pipeline (Mult 1, Mult 2, Mult 3)]
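To see why overlapping the three multiplier stages pays off, a simple cycle-count model can help. This is only a sketch: it assumes one cycle per stage, no stalls, and one new element entering the pipeline per cycle; the function names are hypothetical.

```python
def unpipelined_cycles(n_elements, stages=3):
    """Each multiplication occupies the whole unit for `stages` cycles."""
    return stages * n_elements


def pipelined_cycles(n_elements, stages=3):
    """The first result appears after `stages` cycles, then one per cycle."""
    if n_elements == 0:
        return 0
    return stages + (n_elements - 1)


# For a long vector the pipelined unit approaches one result per cycle.
n = 1000
ratio = unpipelined_cycles(n) / pipelined_cycles(n)
```

Under these assumptions the ratio tends toward the number of stages (3 here) as the vector grows.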

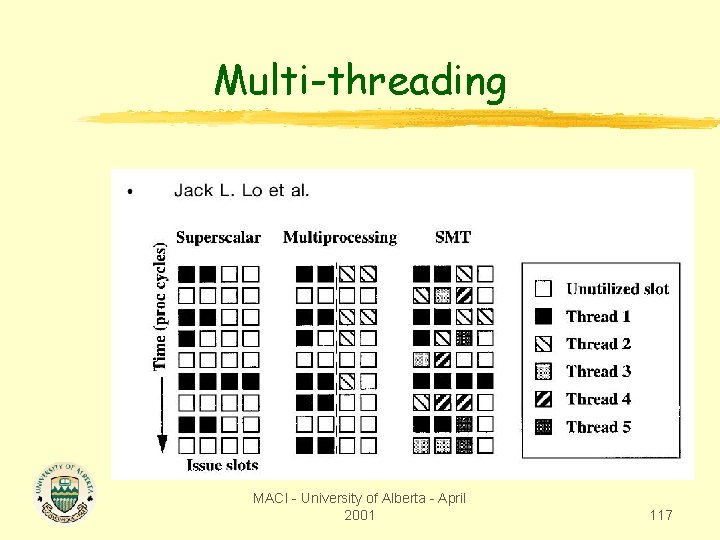

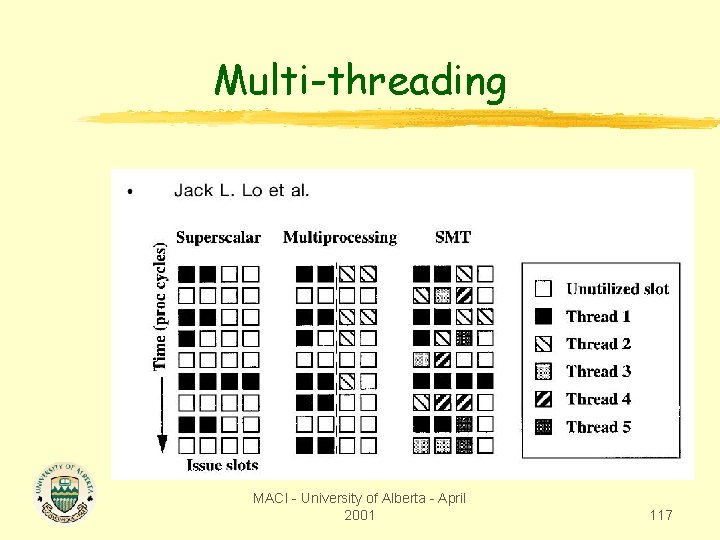

Multi-threading

- OS-level multi-threading: P-threads
- Programming language-level multi-threading: Java
- Fine-grain multi-threading: Threaded-C, Cilk, TAM
- Hardware-supported multi-threading: Tera
- Instruction-level multi-threading: Simultaneous Multi-threading (Compaq-Intel)

Other Issues: Debugging

Debugging parallel programs can be frustrating:

- non-deterministic execution
- probe effect
- difficult to “stop” a parallel program
- multiple core files
- difficult to visualize parallel activity
- tools are barely adequate

Other Issues: Performance Tuning

- Use available performance tuning tools (perfex, Speedshop on SGI) to know where the program spends time.
- Re-tune code for performance when hardware changes.

Other Issues: Fault Tolerance

Consider a job running on 40 processors for a week; then there is a power outage, losing all the work.

Long-running jobs must be able to save a program’s state and then be able to restart from that state. This is called check-pointing.
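A minimal sketch of the check-pointing idea, assuming the program's whole state fits in a small dictionary and can be serialized with `pickle`. The function `run`, the `stop_after` parameter (which simulates the outage) and the file layout are hypothetical, chosen only for illustration.

```python
import os
import pickle
import tempfile


def run(total_steps, checkpoint_path, stop_after=None):
    """Sum 0..total_steps-1, saving state after every step.

    If a checkpoint file exists, the computation resumes from it.
    `stop_after` simulates a power outage at that step (returns None).
    """
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path, "rb") as f:
            state = pickle.load(f)          # restart from the saved state
    else:
        state = {"step": 0, "acc": 0}       # fresh start

    while state["step"] < total_steps:
        if stop_after is not None and state["step"] >= stop_after:
            return None                     # simulated outage
        state["acc"] += state["step"]
        state["step"] += 1
        # Write to a temporary file, then rename, so that an outage during
        # the write cannot leave a half-written checkpoint behind.
        tmp_path = checkpoint_path + ".tmp"
        with open(tmp_path, "wb") as f:
            pickle.dump(state, f)
        os.replace(tmp_path, checkpoint_path)
    return state["acc"]


path = os.path.join(tempfile.mkdtemp(), "job.ckpt")
first = run(10, path, stop_after=4)   # "outage" after four steps
second = run(10, path)                # resumes at step 4 and finishes
```

A real job would checkpoint far less often than every step, since writing state has its own cost; the interval is a trade-off between lost work and checkpoint overhead.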

What Does Coherency Mean?

- Informally:
  - “Any read must return the most recent write”
  - Too strict and too difficult to implement
- Better:
  - “Any write must eventually be seen by a read”
  - All writes are seen in proper order (“serialization”)
- Two rules to ensure this:
  - “If P writes x and P1 reads x, P’s write will be seen by P1 if the read and write are sufficiently far apart”
  - Writes to a single location are serialized: seen in the same order

Potential HW Coherency Solutions

- Snooping Solution (Snoopy Bus):
  - Send all requests for data to all processors
  - Each processor snoops to see if it has a copy
  - Requires broadcast
  - Works well with a bus (natural broadcast medium)
  - Preferred scheme for small-scale machines
- Directory-Based Schemes:
  - Keep track of what is being shared in one centralized place
  - Distributed memory => distributed directory
  - Sends point-to-point requests
  - Scales better than snooping

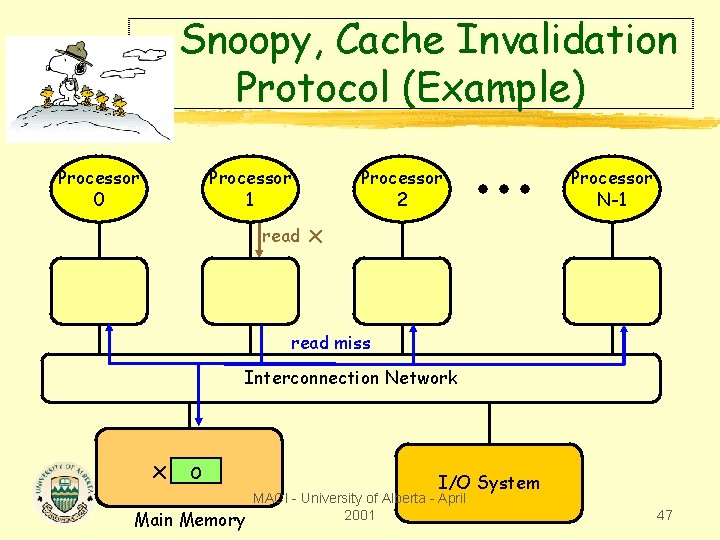

Basic Snoopy Protocols

- Write Invalidate Protocol:
  - Multiple readers, single writer
  - Write to shared data: an invalidate is sent to all caches, which snoop and invalidate any copies
  - Read miss:
    - Write-through: memory is always up-to-date
    - Write-back: snoop in caches to find the most recent copy
- Write Broadcast Protocol:
  - Write to shared data: broadcast on the bus; processors snoop and update any copies
  - Read miss: memory is always up-to-date
- Write serialization: the bus serializes requests!
  - The bus is the single point of arbitration

Basic Snoopy Protocols

- Write Invalidate versus Broadcast:
  - Invalidate requires one transaction per write-run
  - Invalidate uses spatial locality: one transaction per block
  - Broadcast has lower latency between write and read

Snoopy, Cache Invalidation Protocol (Example)

[Diagram sequence: Processors 0 to N-1 with their caches, an interconnection network, main memory holding x = 0, and the I/O system]

1. Processor 1 reads x: read miss.
2. Processor 1's cache holds x = 0 in the shared state.
3. Processor 2 reads x: read miss.
4. The caches of Processor 1 and Processor 2 both hold x = 0 in the shared state.
5. Processor 1 writes x: an invalidate is sent over the interconnection network.
6. Processor 1's cache holds x = 1 in the exclusive state; main memory still holds x = 0.
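The sequence in these example slides can be replayed with a toy model. This is a sketch under simplifying assumptions, not a full protocol: one word per cache line, write-back caches, only the states shared, exclusive and invalid, and a class name (`SnoopyBus`) invented for the illustration.

```python
class SnoopyBus:
    """Toy write-invalidate, write-back snoopy protocol (one word per line)."""

    def __init__(self, n_procs, memory):
        self.memory = dict(memory)
        # One cache per processor: address -> [state, value]
        self.caches = [dict() for _ in range(n_procs)]

    def read(self, p, addr):
        line = self.caches[p].get(addr)
        if line is None:                       # read miss goes on the bus
            for cache in self.caches:          # every cache snoops the request
                other = cache.get(addr)
                if other is not None and other[0] == "exclusive":
                    self.memory[addr] = other[1]   # owner writes back
                    other[0] = "shared"            # and downgrades its copy
            line = ["shared", self.memory[addr]]
            self.caches[p][addr] = line
        return line[1]

    def write(self, p, addr, value):
        for q, cache in enumerate(self.caches):    # broadcast an invalidate
            if q != p:
                cache.pop(addr, None)
        self.caches[p][addr] = ["exclusive", value]  # memory is now stale

    def state(self, p, addr):
        line = self.caches[p].get(addr)
        return line[0] if line is not None else "invalid"


bus = SnoopyBus(4, {"x": 0})
bus.read(1, "x")        # read miss, Processor 1 gets x = 0 (shared)
bus.read(2, "x")        # read miss, Processor 2 gets x = 0 (shared)
bus.write(1, "x", 1)    # invalidates Processor 2's copy
```

After the write, Processor 1 holds the only valid copy and main memory is stale; a later read miss by another processor forces the write-back, which is the "snoop in caches to find most recent copy" case from the protocol slide.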

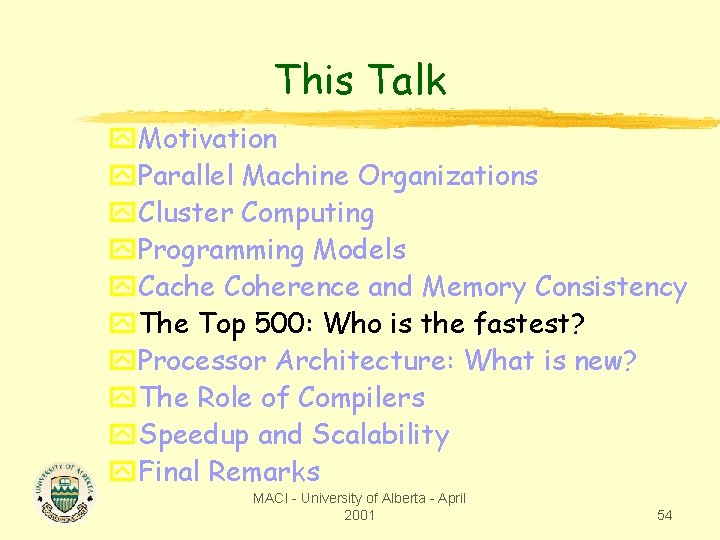

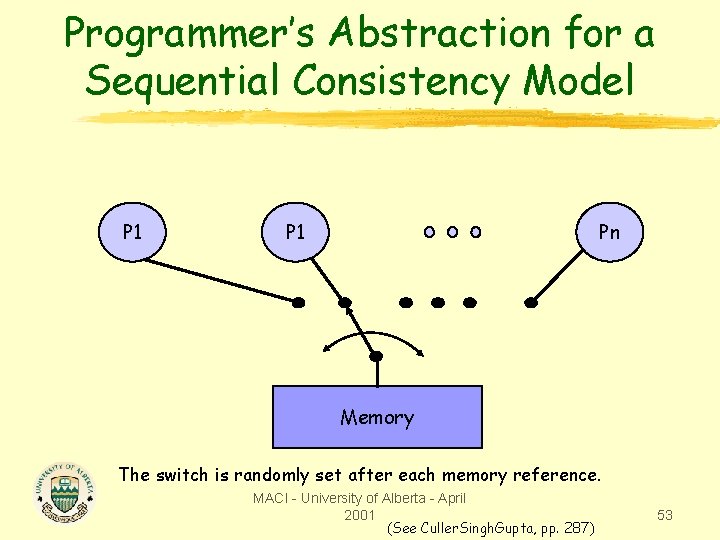

Programmer’s Abstraction for a Sequential Consistency Model

[Diagram: processors P1 to Pn connected to a single memory through a switch]

The switch is randomly set after each memory reference.

(See Culler, Singh and Gupta, p. 287)
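The switch abstraction says that every execution is some interleaving of the processors' memory references, each processor's own order being preserved. A small sketch that enumerates all such interleavings for a classic two-processor test (the two programs and all names here are illustrative assumptions, not taken from the slides):

```python
def interleavings(a, b):
    """Yield every merge of a and b that keeps each sequence's own order."""
    if not a:
        yield list(b)
        return
    if not b:
        yield list(a)
        return
    for rest in interleavings(a[1:], b):
        yield [a[0]] + rest
    for rest in interleavings(a, b[1:]):
        yield [b[0]] + rest


def execute(schedule):
    """Run one interleaving against a single shared memory."""
    memory = {"x": 0, "y": 0}
    registers = {}
    for op, target, source in schedule:
        if op == "store":
            memory[target] = source          # store a constant to memory
        else:
            registers[target] = memory[source]   # load memory into a register
    return registers["r1"], registers["r2"]


# P1: x = 1; r1 = y        P2: y = 1; r2 = x
P1 = [("store", "x", 1), ("load", "r1", "y")]
P2 = [("store", "y", 1), ("load", "r2", "x")]

outcomes = {execute(s) for s in interleavings(P1, P2)}
```

Of the six interleavings, none yields `r1 == 0 and r2 == 0`: under sequential consistency at least one store precedes the other processor's load. Hardware with weaker memory models can produce that outcome, which is why the consistency model matters to the programmer.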


![Top 500 November 10 2001 Rmax Maximal LINPACK Performance Achieved GFLOPS Rpeak Top 500 (November 10, 2001) Rmax = Maximal LINPACK Performance Achieved [GFLOPS] Rpeak =](https://slidetodoc.com/presentation_image_h2/172abff91a1edbf30307044ff6a1ef14/image-55.jpg)

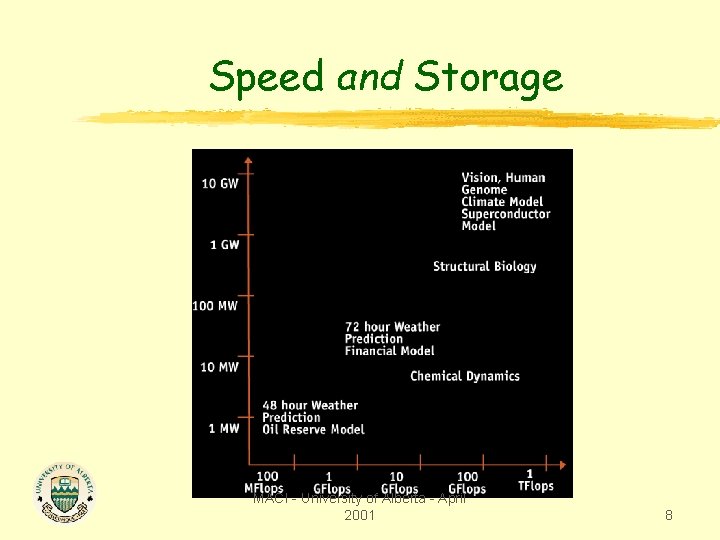

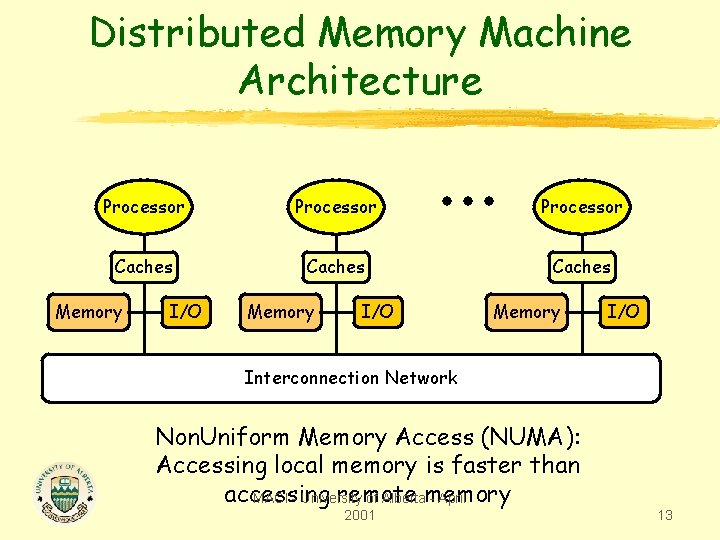

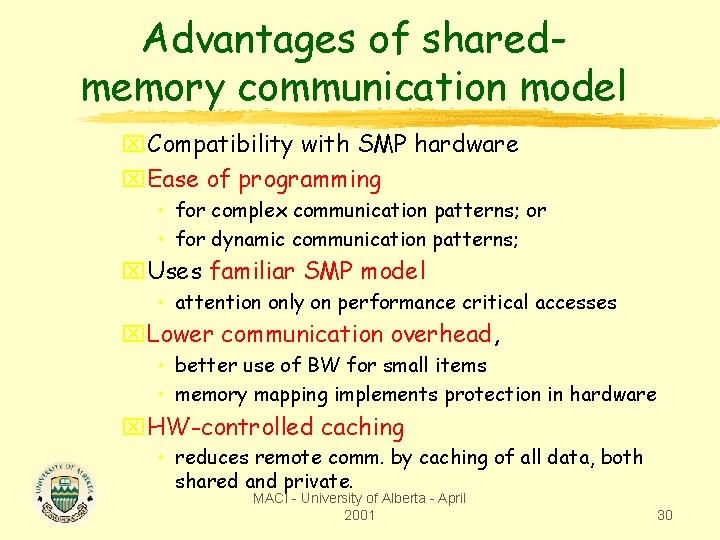

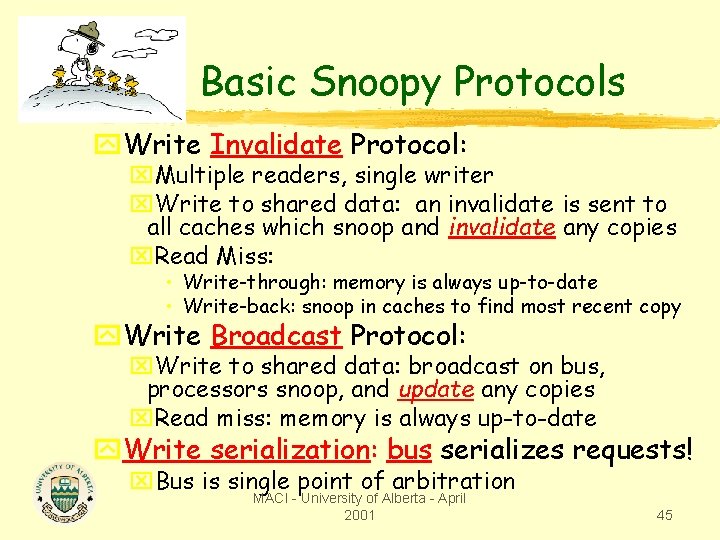

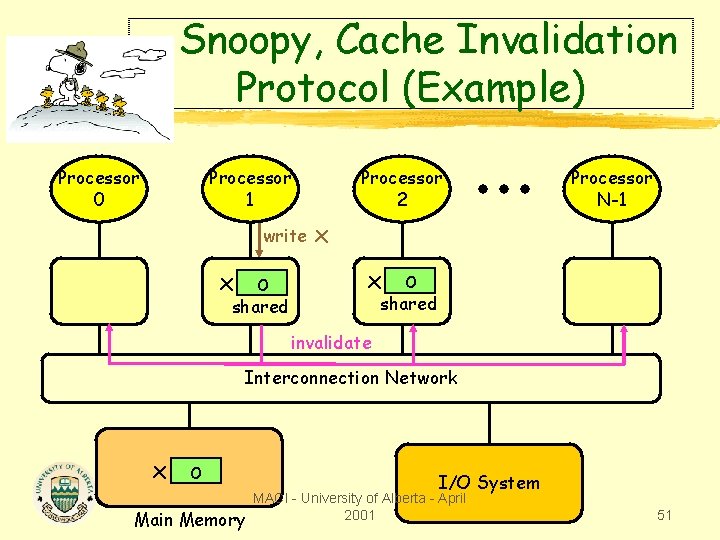

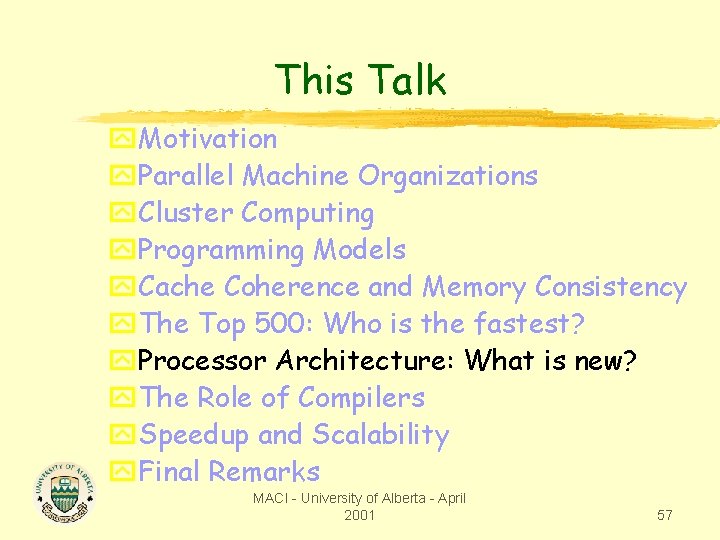

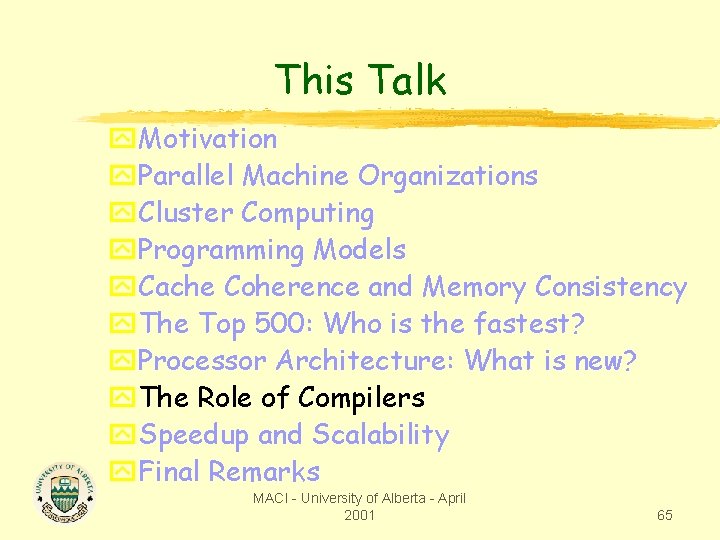

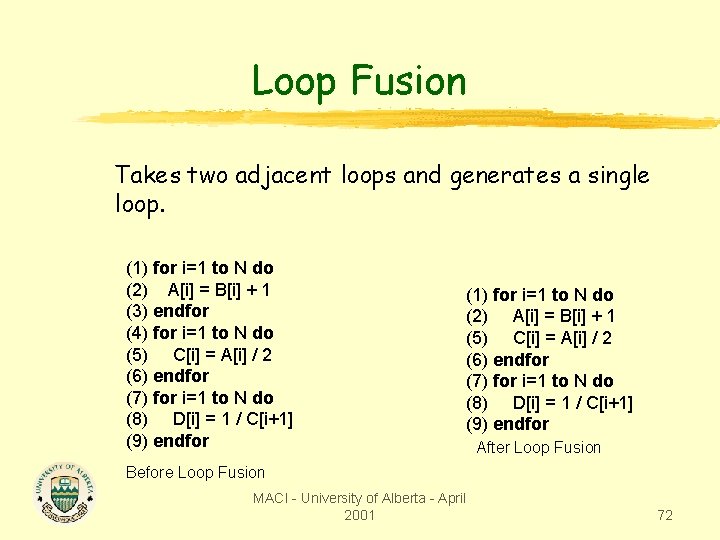

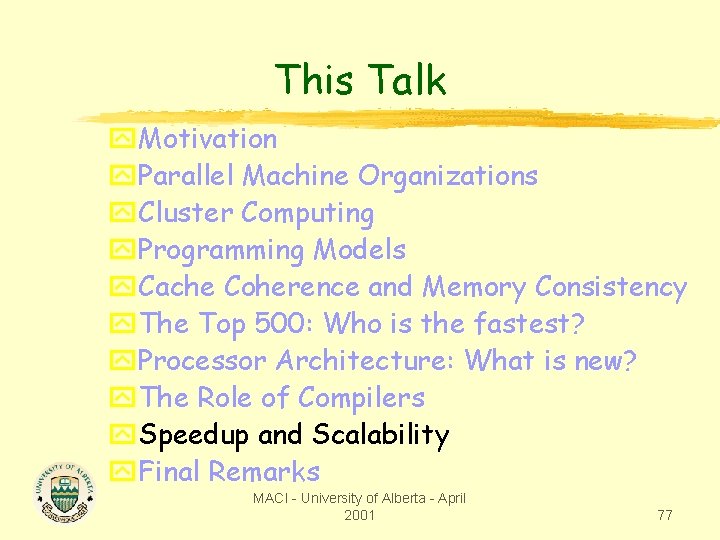

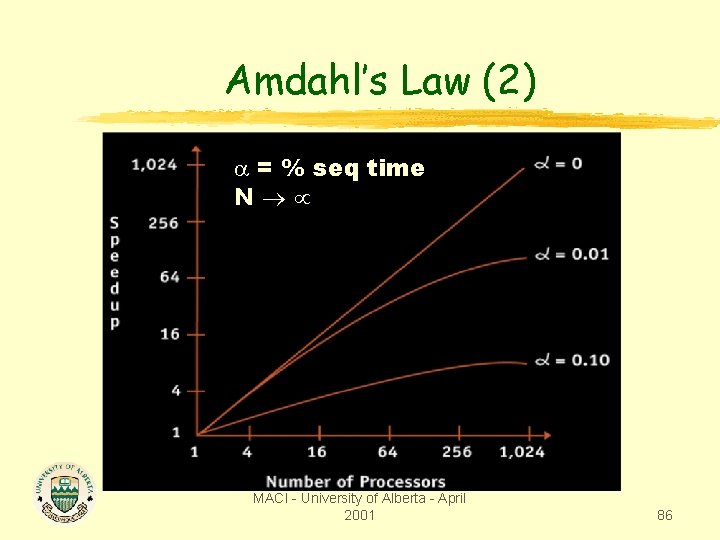

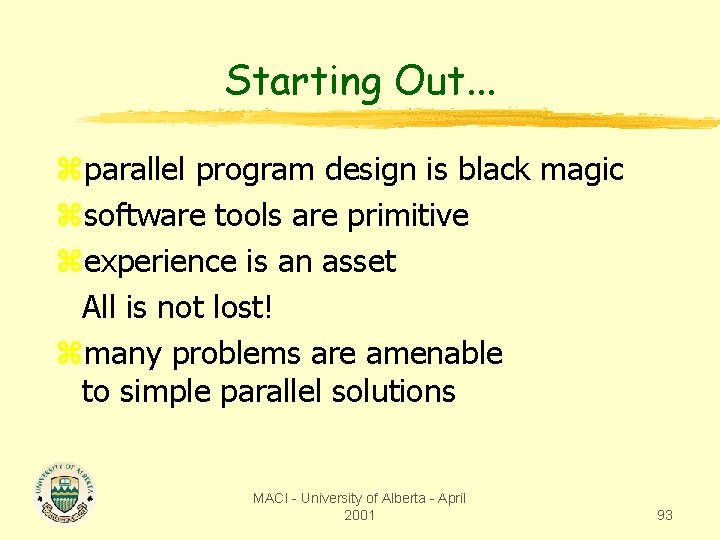

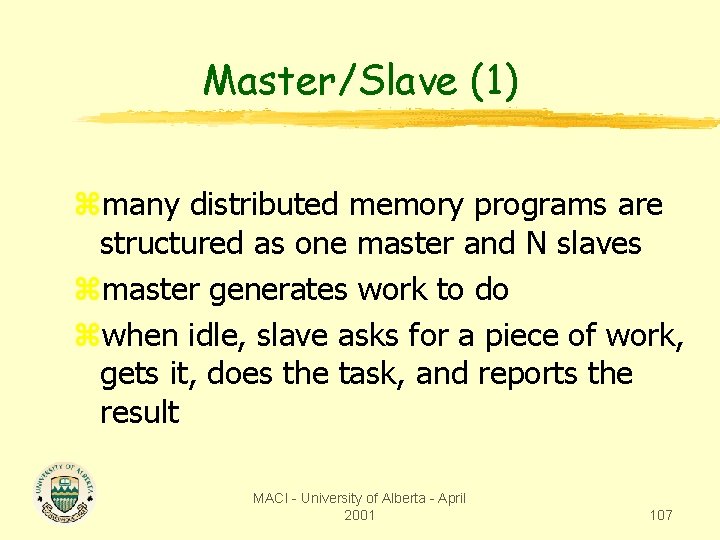

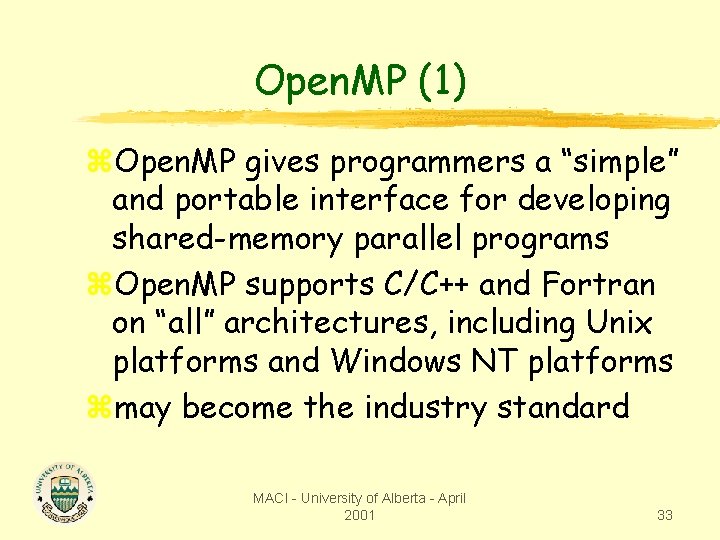

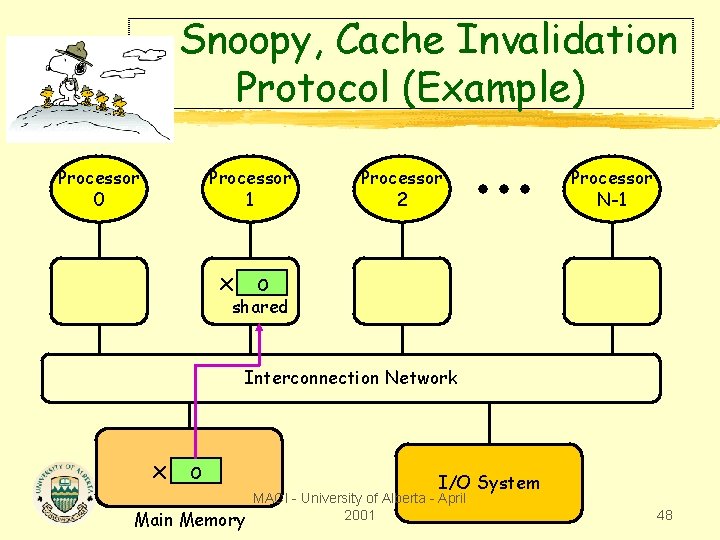

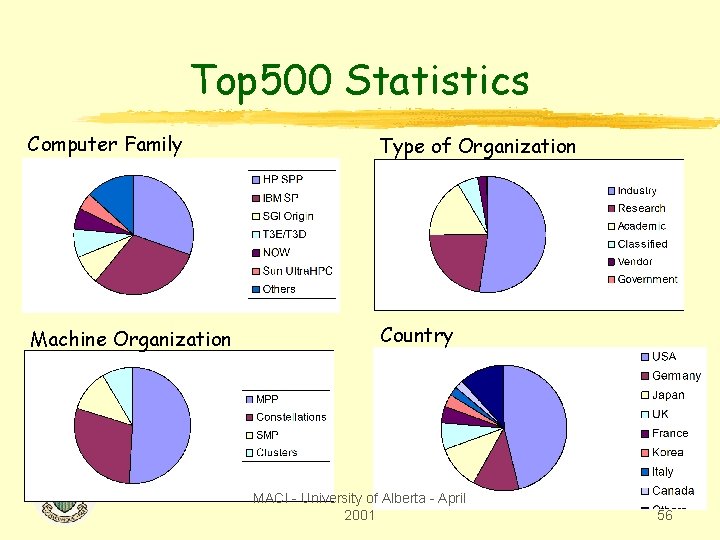

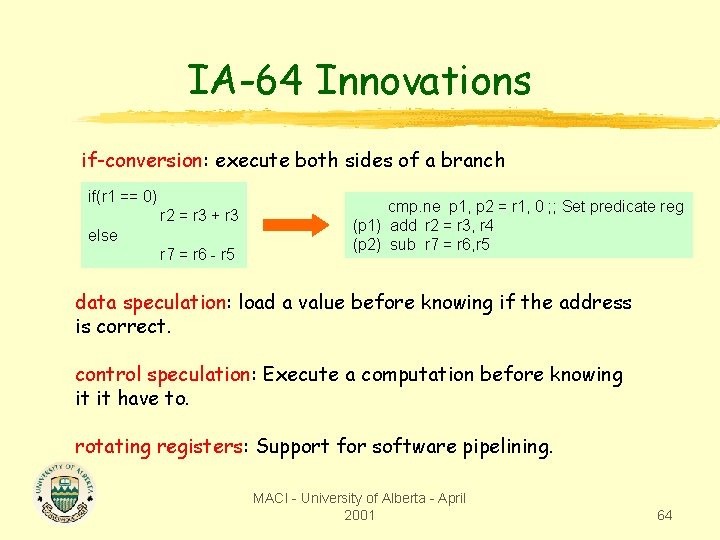

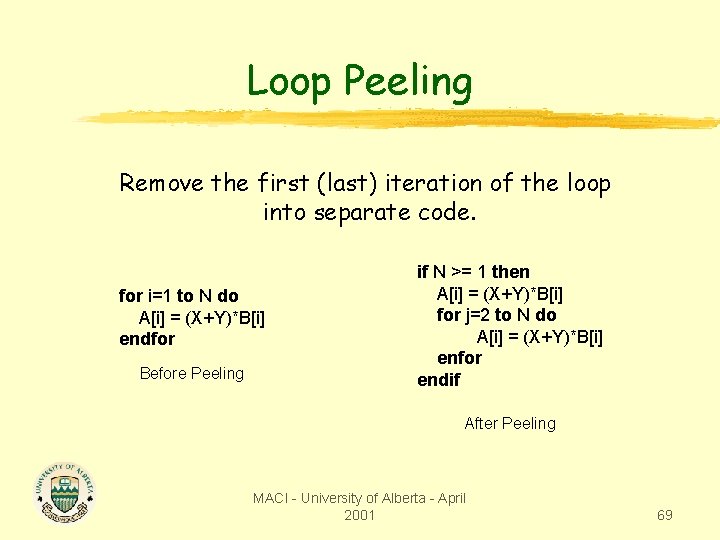

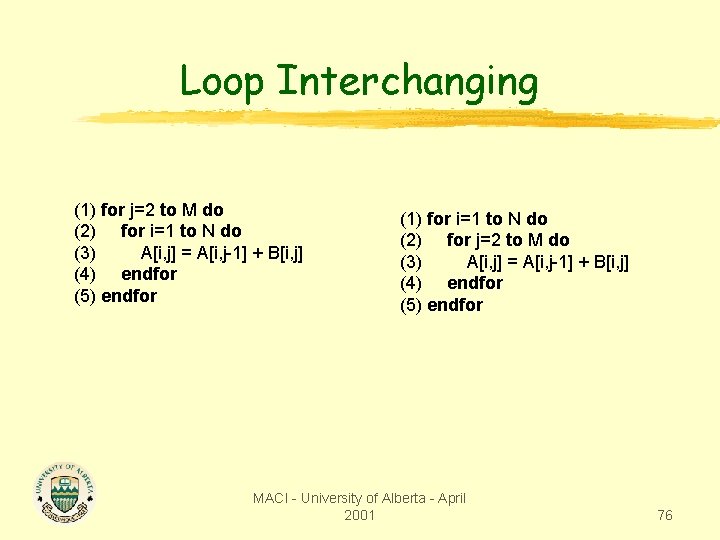

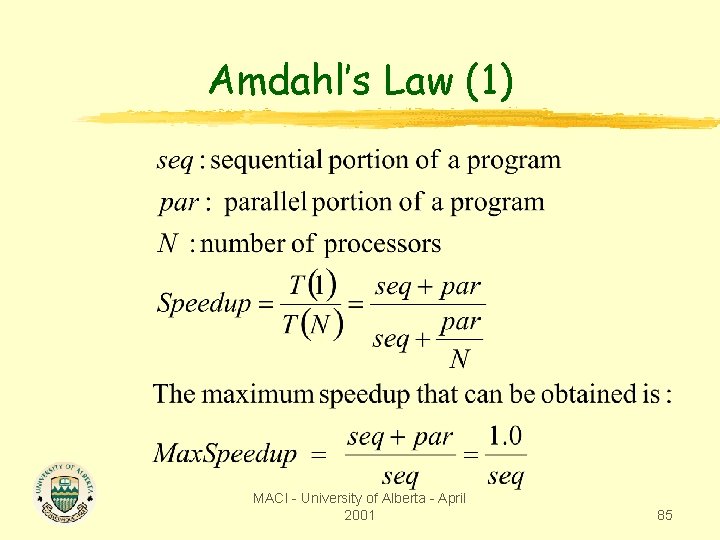

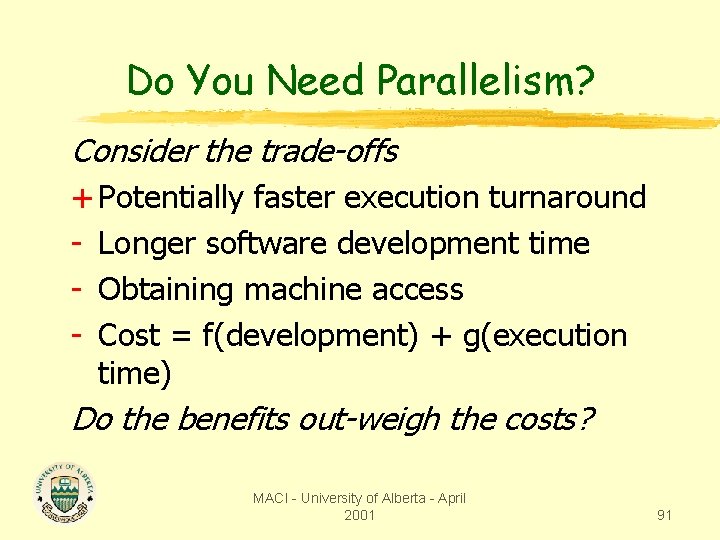

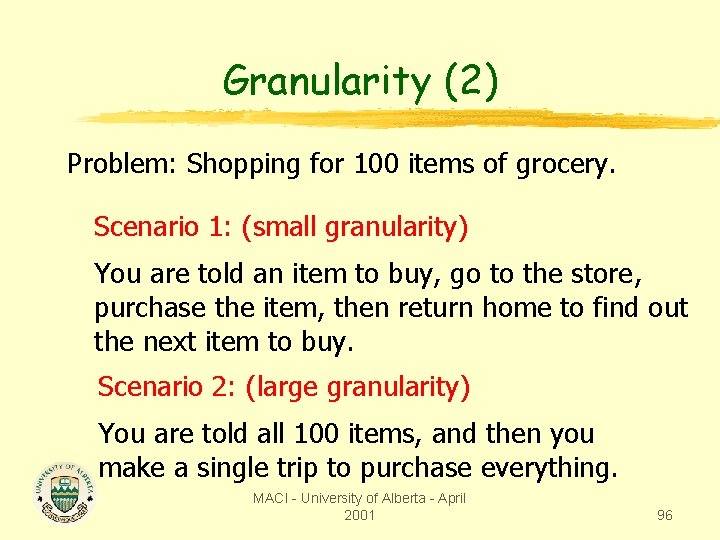

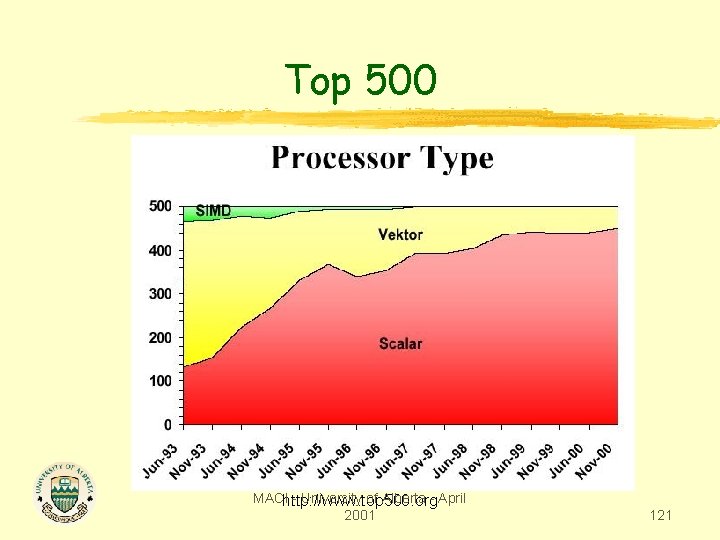

Top 500 (November 10, 2001)

- Rmax = Maximal LINPACK performance achieved [GFLOPS]
- Rpeak = Theoretical peak performance [GFLOPS]

Canada appears in ranks 123, 144, 183, 255, 266, 280, 308, 311, 315, 414, 419.

Top 500 Statistics

[Charts: Computer Family, Type of Organization, Machine Organization, Country]

Intel Architecture 64

Itanium, the first one, is out… but we are still waiting for McKinley...

Will we get Yamhill (Intel’s Plan B) instead?

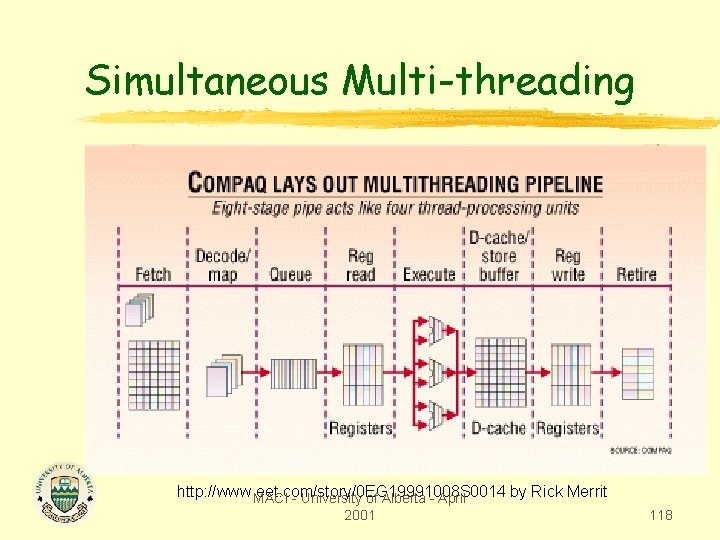

Alpha is gone... So is Compaq!

EV8 is scrapped. Compaq designers split between AMD and Intel.

Intel converts to the Simultaneous Multithreading religion, but renames it: Hyperthreading!!

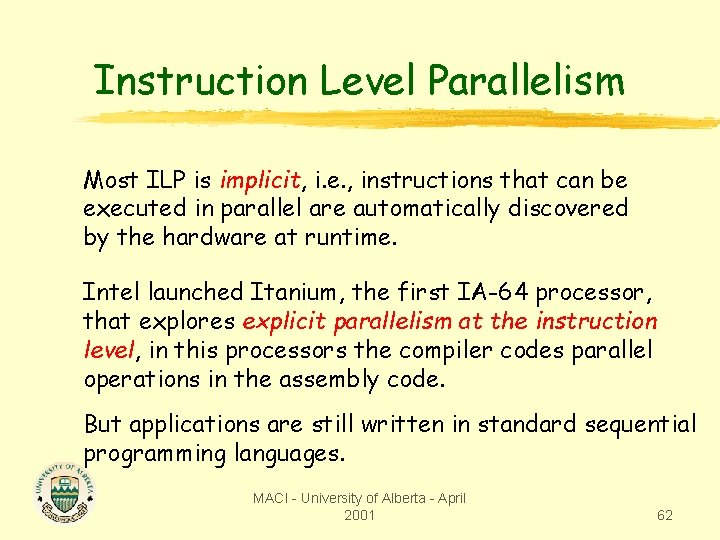

IBM’s POWER4 is the 2001/2002 winner

- Best floating-point and integer performance available in the market.
- Highest memory bandwidth in the industry.
- Well-integrated cache coherence mechanism.

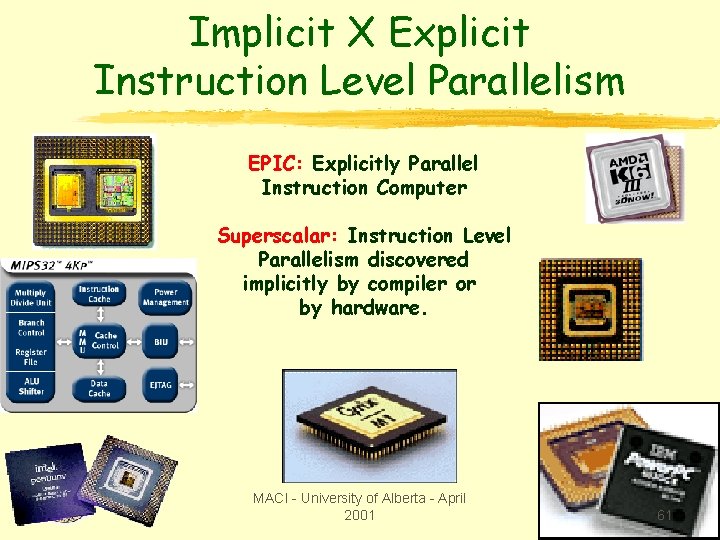

Implicit vs. Explicit Instruction Level Parallelism

- EPIC: Explicitly Parallel Instruction Computing
- Superscalar: instruction-level parallelism discovered implicitly by compiler or by hardware.

Instruction Level Parallelism

Most ILP is implicit, i.e., instructions that can be executed in parallel are automatically discovered by the hardware at runtime.

Intel launched Itanium, the first IA-64 processor, which exploits explicit parallelism at the instruction level: in this processor the compiler encodes parallel operations in the assembly code.

But applications are still written in standard sequential programming languages.

Instruction Level Parallelism

For example, in the IA-64 an instruction group, identified by the compiler, is a set of instructions that have no read-after-write (RAW) or write-after-write (WAW) register dependencies (they can execute in parallel). Consecutive instruction groups are separated by stops (represented by a double semicolon in the assembly code).

    ld8 r1=[r5]          // First group
    sub r6=r8, r9 ;;
    add r3=r1, r4        // Second group
    st8 [r6]=r12

IA-64 Innovations

- if-conversion: execute both sides of a branch

      if (r1 == 0) r2 = r3 + r4
      else         r7 = r6 - r5

      cmp.eq p1, p2 = r1, 0 ;;    // Set predicate registers
      (p1) add r2 = r3, r4
      (p2) sub r7 = r6, r5

- data speculation: load a value before knowing if the address is correct.
- control speculation: execute a computation before knowing whether it has to be executed.
- rotating registers: support for software pipelining.

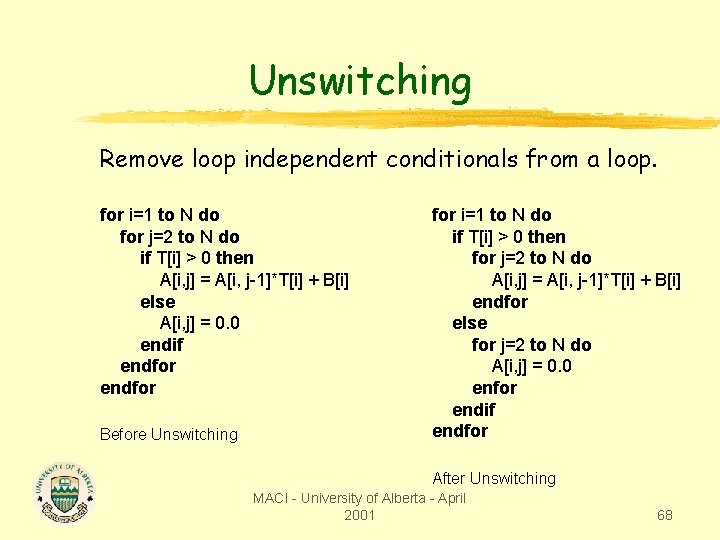

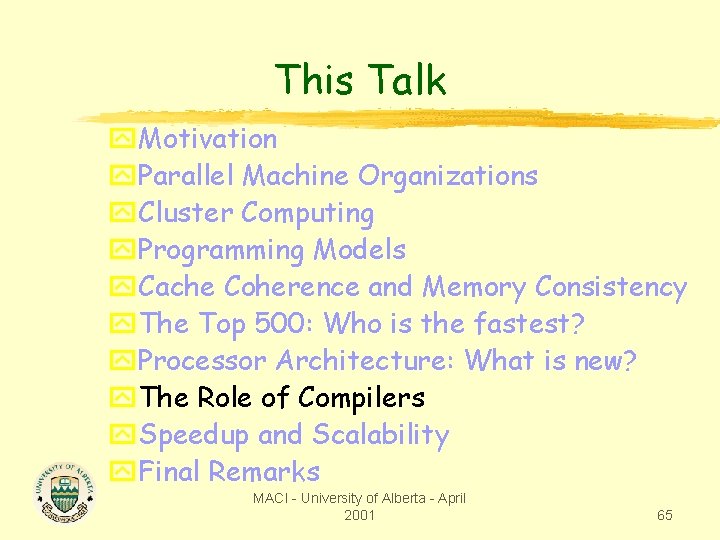

Above and Below the Line

    for(n=0 ; …)
      for(f=0 ; …)
        for(t=0 ; …)
          for(x=0 ; …)
            for(y=0 ; …)
              for(z=0 ; …) { … }

[Diagram: a line drawn through the loop nest, with the labels “Above the line”, “Below the line”, “Application Level Parallelism” and “Automatic Parallelism”]

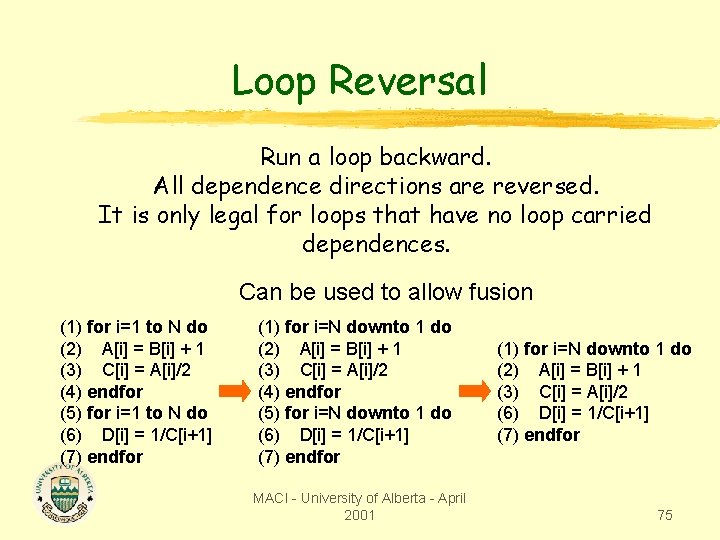

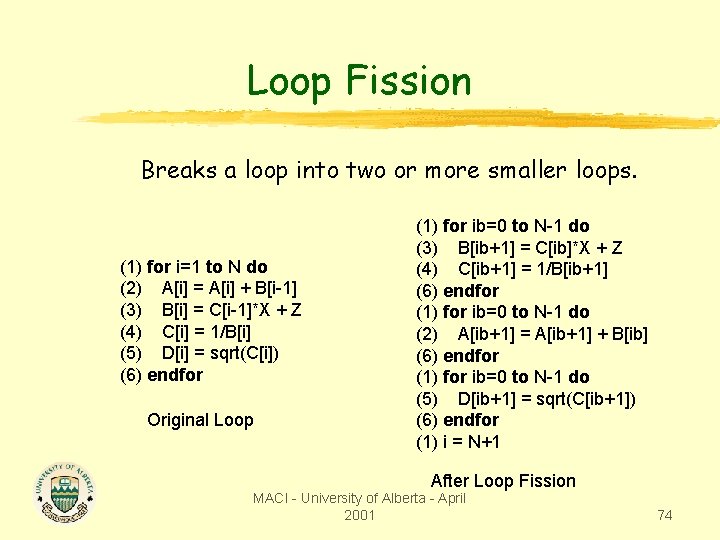

Some Common Loop Optimizations

- Unswitching
- Loop Peeling
- Loop Alignment
- Index Set Splitting
- Scalar Expansion
- Loop Fusion
- Loop Fission
- Loop Reversal
- Loop Interchange

Unswitching

Remove loop-independent conditionals from a loop.

Before Unswitching:

    for i=1 to N do
      for j=2 to N do
        if T[i] > 0 then
          A[i, j] = A[i, j-1]*T[i] + B[i]
        else
          A[i, j] = 0.0
        endif
      endfor
    endfor

After Unswitching:

    for i=1 to N do
      if T[i] > 0 then
        for j=2 to N do
          A[i, j] = A[i, j-1]*T[i] + B[i]
        endfor
      else
        for j=2 to N do
          A[i, j] = 0.0
        endfor
      endif
    endfor

Loop Peeling

Move the first (or last) iteration of the loop into separate code.

Before Peeling:

    for i=1 to N do
      A[i] = (X+Y)*B[i]
    endfor

After Peeling:

    if N >= 1 then
      A[1] = (X+Y)*B[1]
      for i=2 to N do
        A[i] = (X+Y)*B[i]
      endfor
    endif

Index Set Splitting

Divides the index set into two portions.

Before Set Splitting:

    for i=1 to 100 do
      A[i] = B[i] + C[i]
      if i > 10 then
        D[i] = A[i] + A[i-10]
      endif
    endfor

After Set Splitting:

    for i=1 to 10 do
      A[i] = B[i] + C[i]
    endfor
    for i=11 to 100 do
      A[i] = B[i] + C[i]
      D[i] = A[i] + A[i-10]
    endfor

Scalar Expansion

Breaks anti-dependence relations by expanding, or promoting, a scalar into an array.

Before Scalar Expansion:

    for i=1 to N do
      T = A[i] + B[i]
      C[i] = T + 1/T
    endfor

After Scalar Expansion:

    if N >= 1 then
      allocate Tx(1:N)
      for i=1 to N do
        Tx[i] = A[i] + B[i]
        C[i] = Tx[i] + 1/Tx[i]
      endfor
      T = Tx[N]
    endif
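A quick way to convince oneself that scalar expansion preserves the result is to run both versions side by side. The sketch below transliterates the pseudocode into Python with 0-based lists; the function names are hypothetical, and both functions return the final value of `T` as well, because the transformed code must restore it.

```python
def before_expansion(A, B):
    """Original loop: every iteration reuses the single scalar T."""
    n = len(A)
    C = [0.0] * n
    T = None
    for i in range(n):
        T = A[i] + B[i]
        C[i] = T + 1 / T
    return C, T


def after_expansion(A, B):
    """Expanded loop: each iteration owns Tx[i], so iterations are independent."""
    n = len(A)
    C = [0.0] * n
    T = None
    if n >= 1:
        Tx = [0.0] * n                 # "allocate Tx(1:N)"
        for i in range(n):
            Tx[i] = A[i] + B[i]
            C[i] = Tx[i] + 1 / Tx[i]
        T = Tx[n - 1]                  # restore the scalar's final value
    return C, T


A = [1.0, 2.0, 3.0]
B = [0.5, 0.5, 0.5]
same = before_expansion(A, B) == after_expansion(A, B)
```

The price of the transformation is the extra array; in exchange, no iteration has to wait for another one to finish using `T`.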

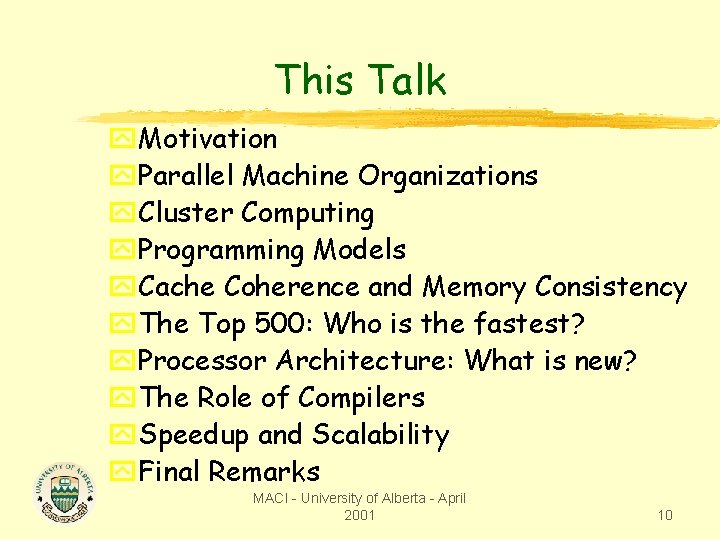

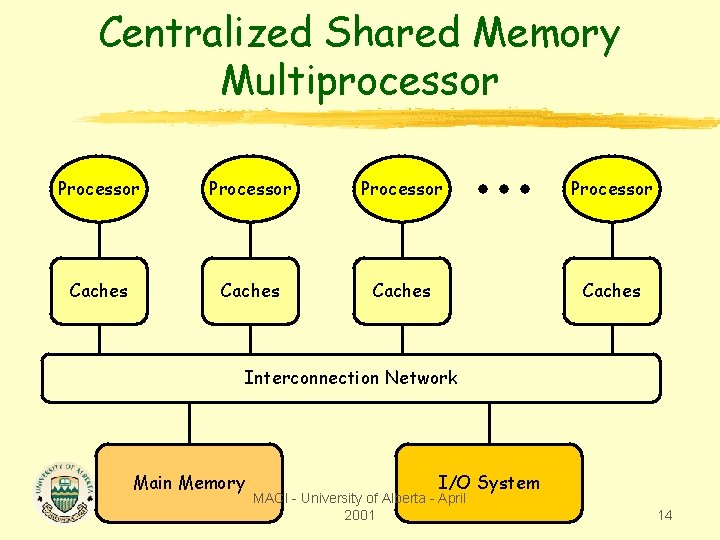

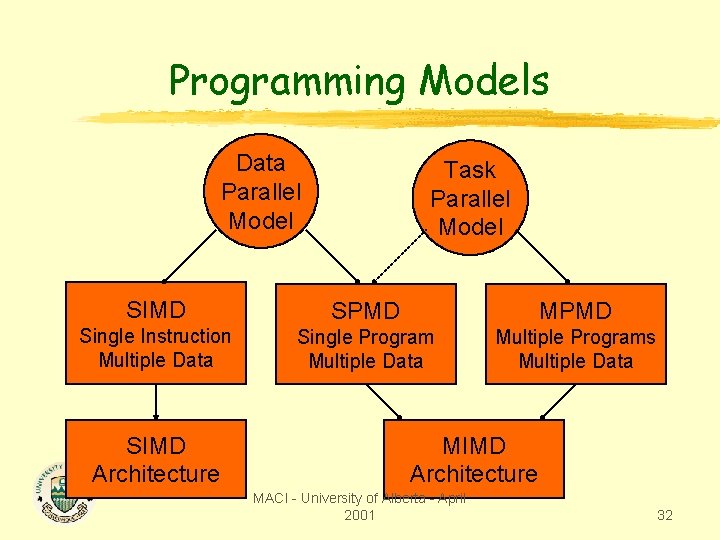

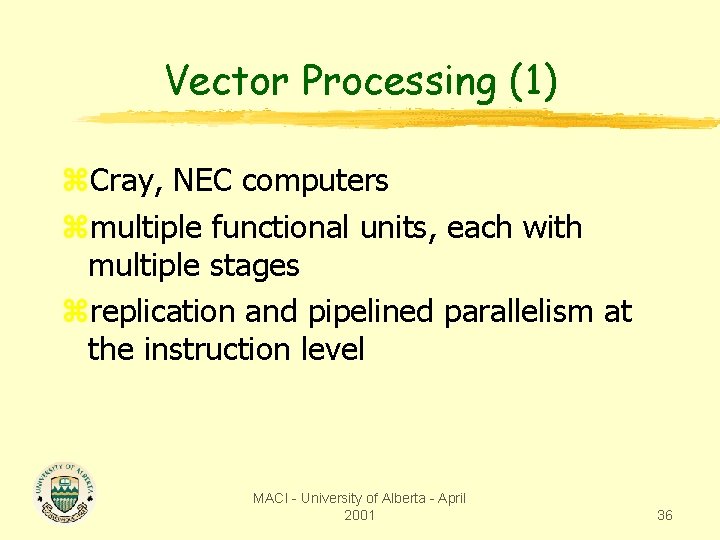

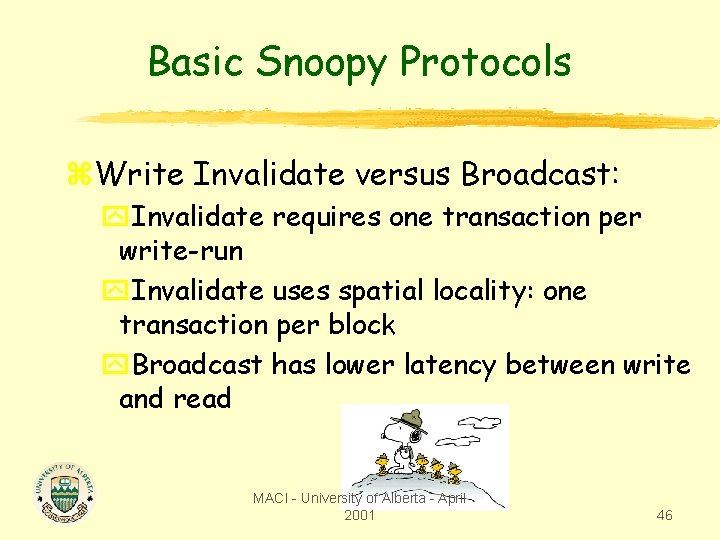

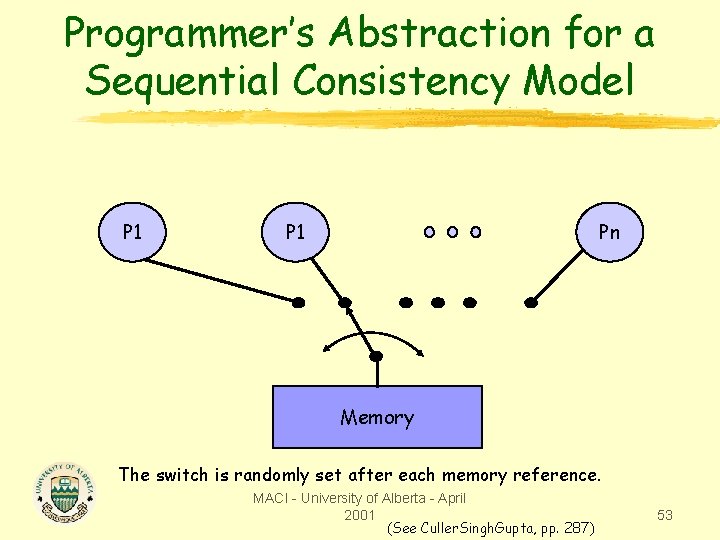

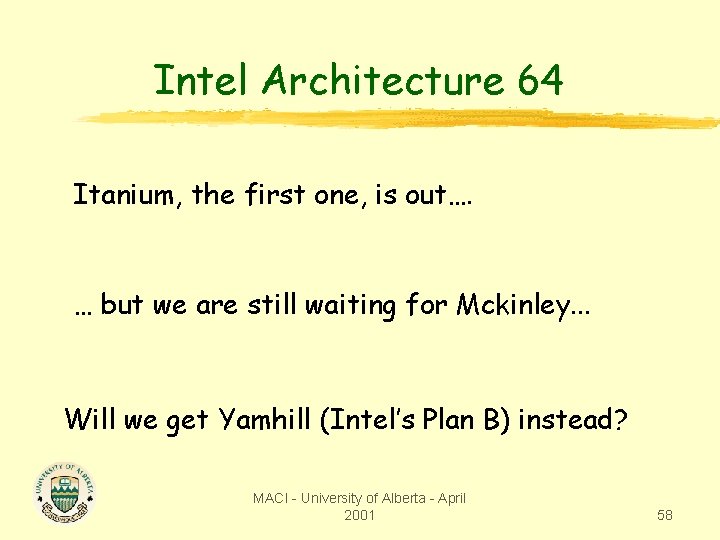

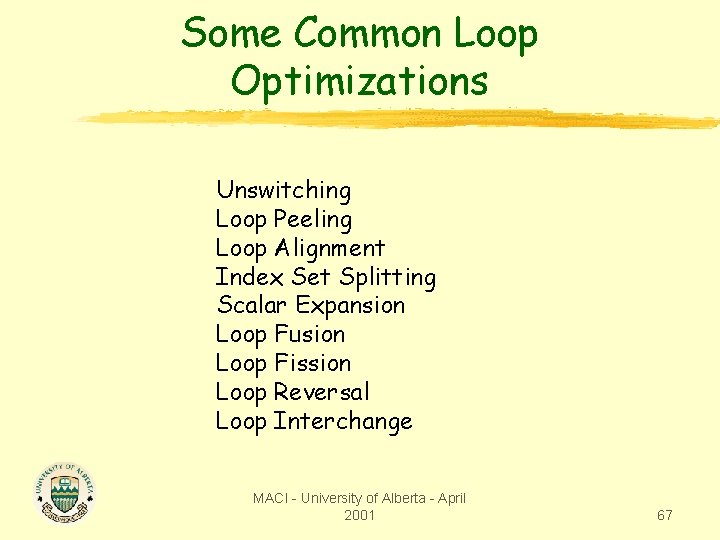

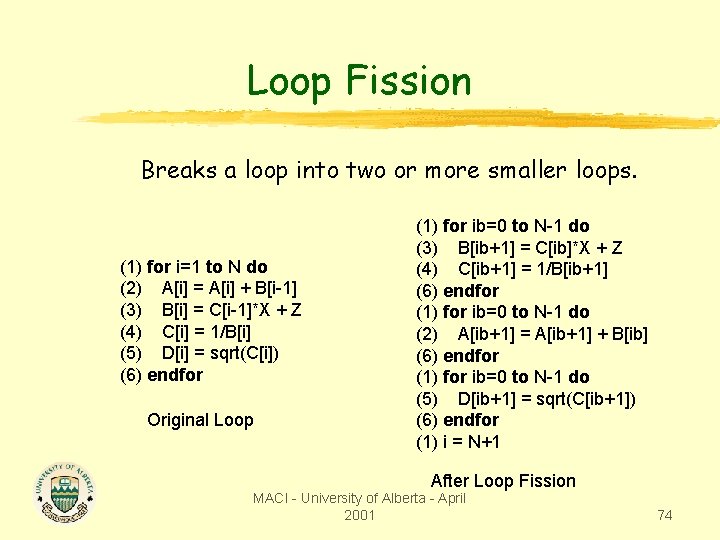

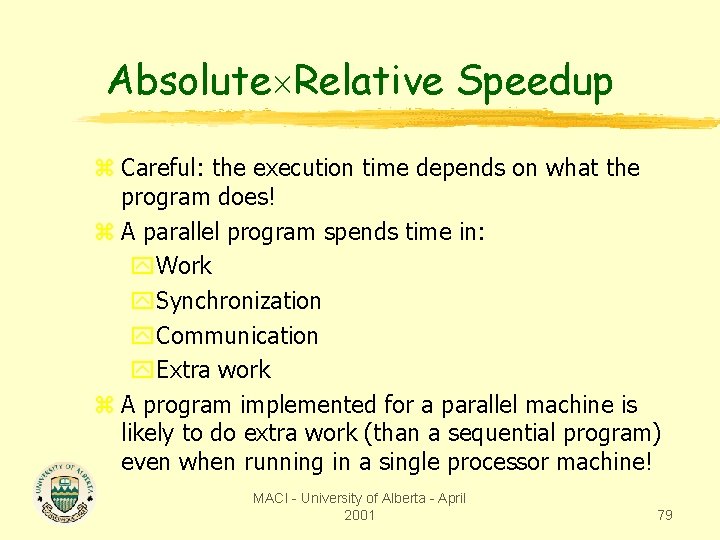

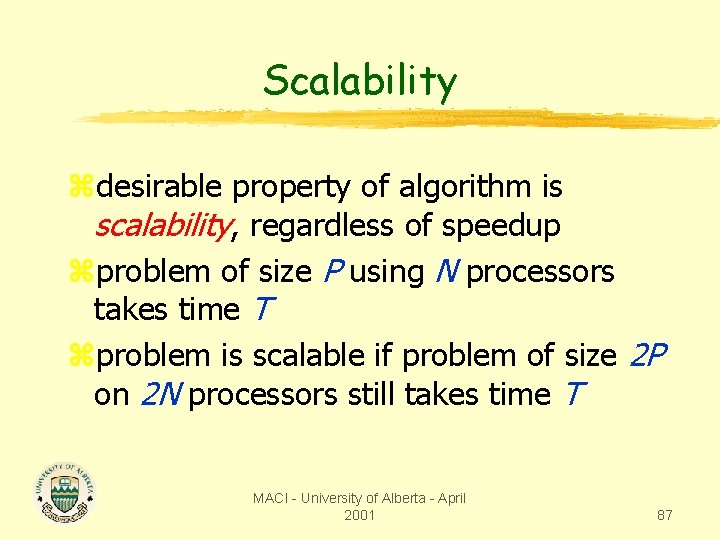

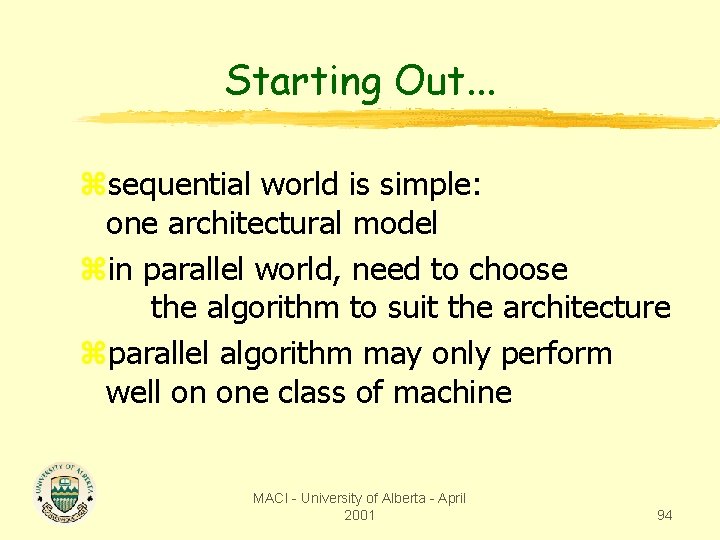

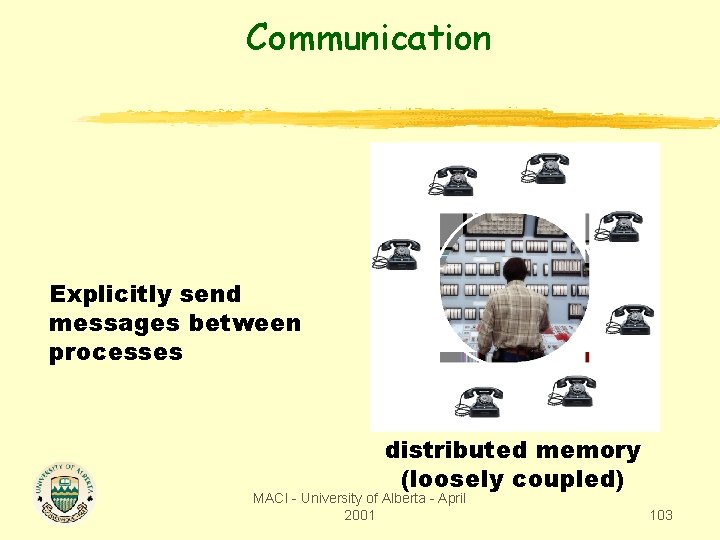

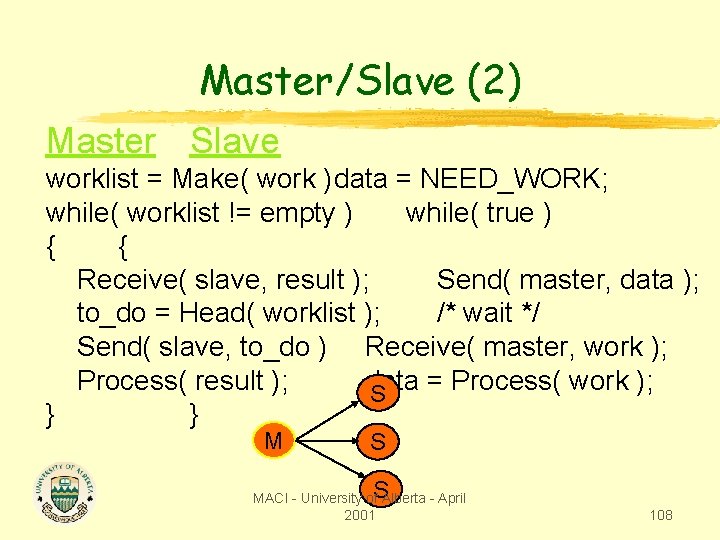

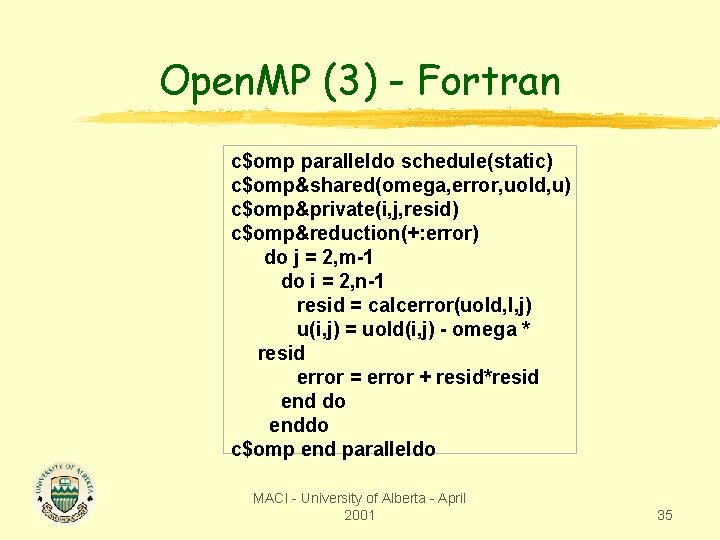

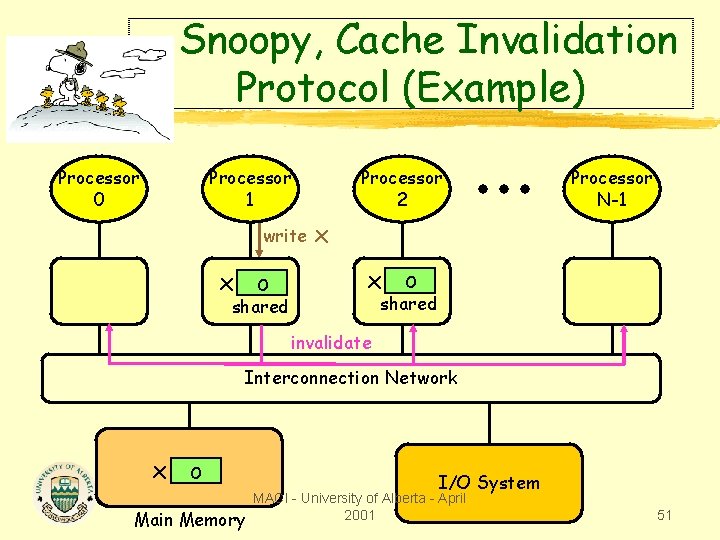

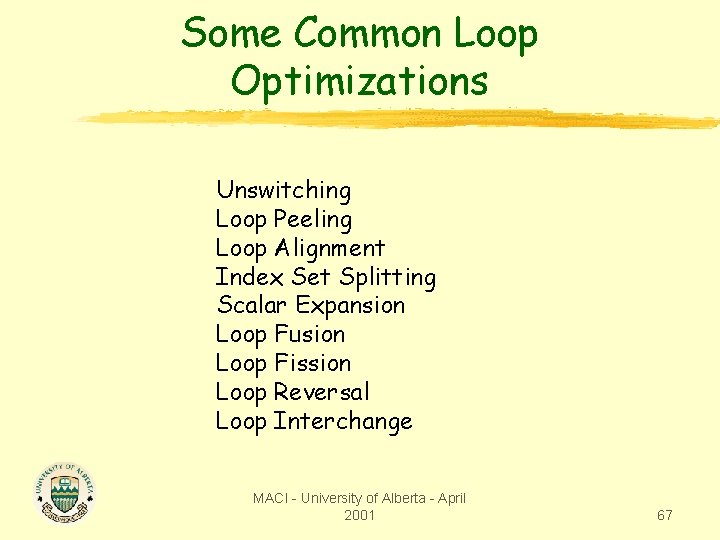

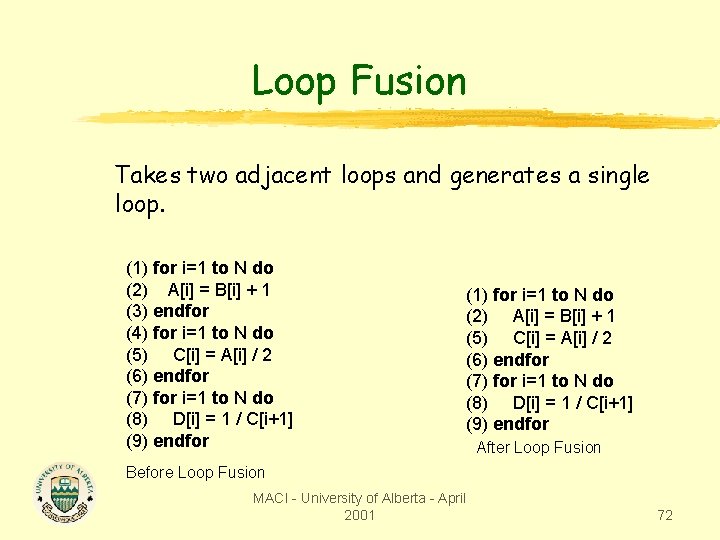

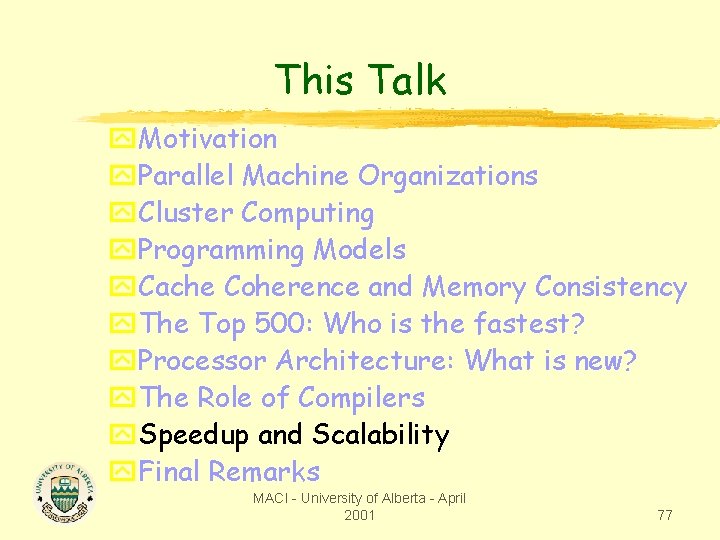

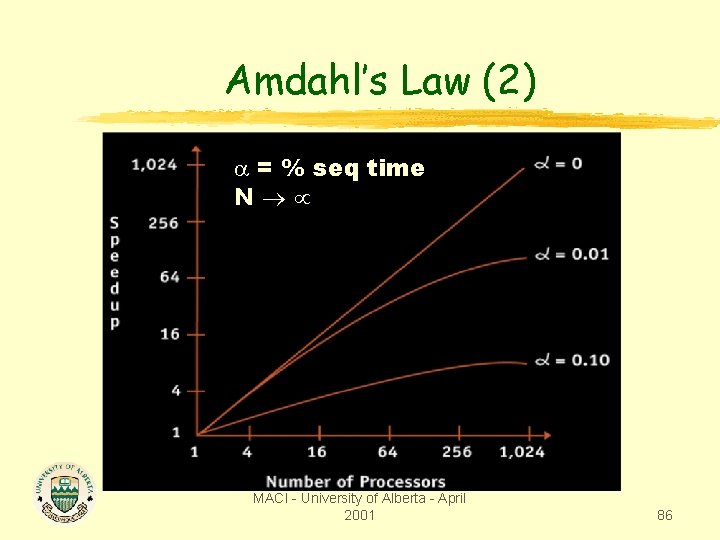

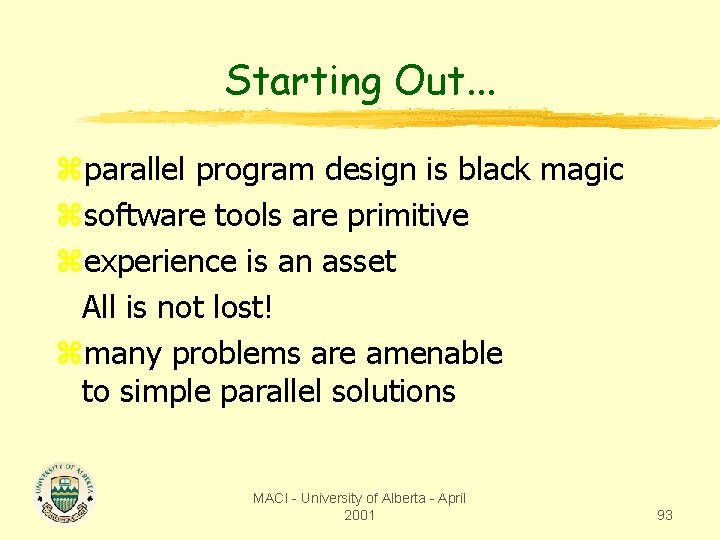

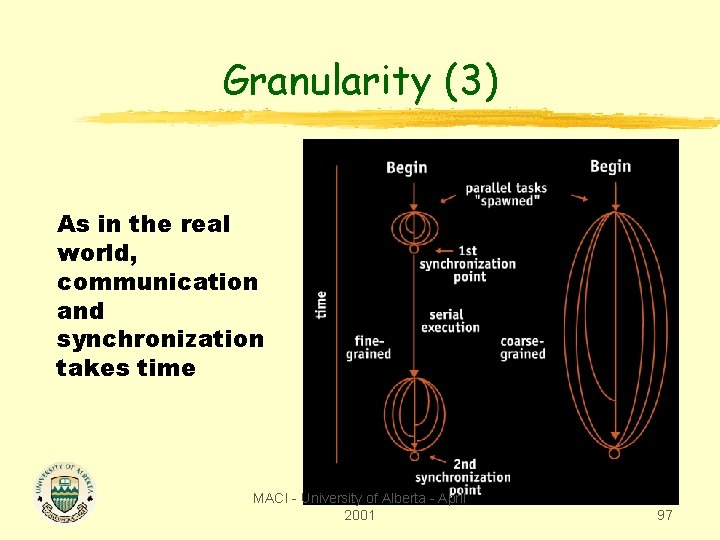

Loop Fusion

Takes two adjacent loops and generates a single loop.

Before Loop Fusion:

    (1) for i=1 to N do
    (2)   A[i] = B[i] + 1
    (3) endfor
    (4) for i=1 to N do
    (5)   C[i] = A[i] / 2
    (6) endfor
    (7) for i=1 to N do
    (8)   D[i] = 1 / C[i+1]
    (9) endfor

After Loop Fusion:

    (1) for i=1 to N do
    (2)   A[i] = B[i] + 1
    (5)   C[i] = A[i] / 2
    (6) endfor
    (7) for i=1 to N do
    (8)   D[i] = 1 / C[i+1]
    (9) endfor

![Loop Fusion Another Example 2 A1 B1 1 1 for i2 to Loop Fusion (Another Example) (2) A[1] = B[1] + 1 (1) for i=2 to](https://slidetodoc.com/presentation_image_h2/172abff91a1edbf30307044ff6a1ef14/image-73.jpg)

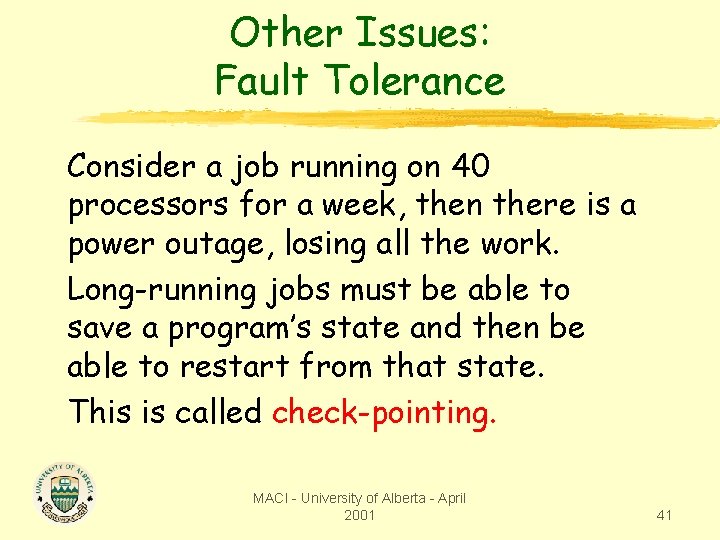

Loop Fusion (Another Example)

Original loops:

    (1) for i=1 to 99 do
    (2)   A[i] = B[i] + 1
    (3) endfor
    (4) for i=1 to 98 do
    (5)   C[i] = A[i+1] * 2
    (6) endfor

First iteration of the first loop peeled:

    (2) A[1] = B[1] + 1
    (1) for i=2 to 99 do
    (2)   A[i] = B[i] + 1
    (3) endfor
    (4) for i=1 to 98 do
    (5)   C[i] = A[i+1] * 2
    (6) endfor

Fused:

    (1) i = 1
    (2) A[i] = B[i] + 1
        for ib=0 to 97 do
    (1)   i = ib+2
    (2)   A[i] = B[i] + 1
    (4)   i = ib+1
    (5)   C[i] = A[i+1] * 2
    (6) endfor

Loop Fission

Breaks a loop into two or more smaller loops.

Original Loop:

    (1) for i=1 to N do
    (2)   A[i] = A[i] + B[i-1]
    (3)   B[i] = C[i-1]*X + Z
    (4)   C[i] = 1/B[i]
    (5)   D[i] = sqrt(C[i])
    (6) endfor

After Loop Fission:

    (1) for ib=0 to N-1 do
    (3)   B[ib+1] = C[ib]*X + Z
    (4)   C[ib+1] = 1/B[ib+1]
    (6) endfor
    (1) for ib=0 to N-1 do
    (2)   A[ib+1] = A[ib+1] + B[ib]
    (6) endfor
    (1) for ib=0 to N-1 do
    (5)   D[ib+1] = sqrt(C[ib+1])
    (6) endfor
    (1) i = N+1

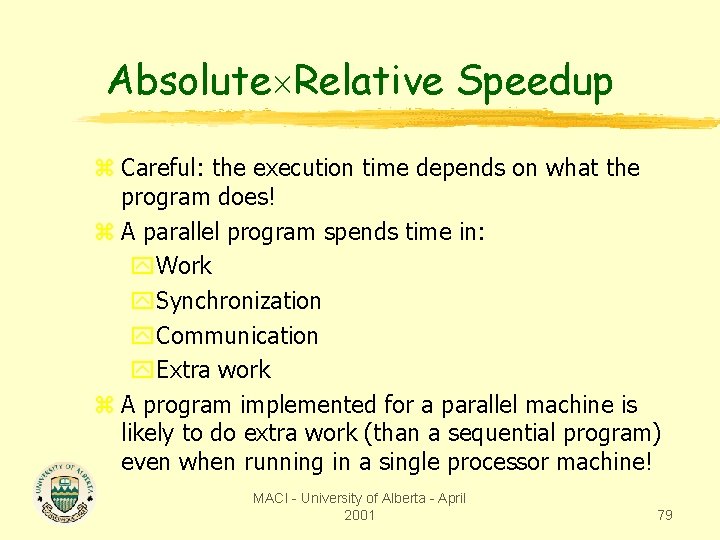

Loop Reversal

Run a loop backward. All dependence directions are reversed. It is only legal for loops that have no loop-carried dependences. Can be used to allow fusion.

Original loops:

    (1) for i=1 to N do
    (2)   A[i] = B[i] + 1
    (3)   C[i] = A[i]/2
    (4) endfor
    (5) for i=1 to N do
    (6)   D[i] = 1/C[i+1]
    (7) endfor

Both loops reversed:

    (1) for i=N downto 1 do
    (2)   A[i] = B[i] + 1
    (3)   C[i] = A[i]/2
    (4) endfor
    (5) for i=N downto 1 do
    (6)   D[i] = 1/C[i+1]
    (7) endfor

Reversed loops fused:

    (1) for i=N downto 1 do
    (2)   A[i] = B[i] + 1
    (3)   C[i] = A[i]/2
    (6)   D[i] = 1/C[i+1]
    (7) endfor
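Why reversal is needed before fusing these two loops can be checked numerically. The sketch below transliterates the pseudocode into Python; arrays are padded so that indices 1..N+1 match the slides (index 0 is unused), and the function names and test data are assumptions made for the illustration.

```python
def unfused(B, C_init, N):
    """Reference result: the two original loops, run one after the other."""
    A = [0.0] * (N + 2)
    C = list(C_init)
    D = [0.0] * (N + 2)
    for i in range(1, N + 1):
        A[i] = B[i] + 1
        C[i] = A[i] / 2
    for i in range(1, N + 1):
        D[i] = 1 / C[i + 1]
    return D


def fused_forward(B, C_init, N):
    """Illegal fusion: D[i] reads C[i+1] before iteration i+1 has written it."""
    A = [0.0] * (N + 2)
    C = list(C_init)
    D = [0.0] * (N + 2)
    for i in range(1, N + 1):
        A[i] = B[i] + 1
        C[i] = A[i] / 2
        D[i] = 1 / C[i + 1]
    return D


def fused_reversed(B, C_init, N):
    """Fusion after reversal: C[i+1] was written in the previous iteration."""
    A = [0.0] * (N + 2)
    C = list(C_init)
    D = [0.0] * (N + 2)
    for i in range(N, 0, -1):
        A[i] = B[i] + 1
        C[i] = A[i] / 2
        D[i] = 1 / C[i + 1]
    return D


N = 4
B = [0.0, 1.0, 2.0, 3.0, 4.0, 0.0]   # B[1..N]; other slots are padding
C_init = [9.0] * (N + 2)             # old contents of C, including C[N+1]
reference = unfused(B, C_init, N)
```

With this data `fused_reversed` reproduces the reference while `fused_forward` does not, since its first iteration already divides by the stale value of `C[2]`.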

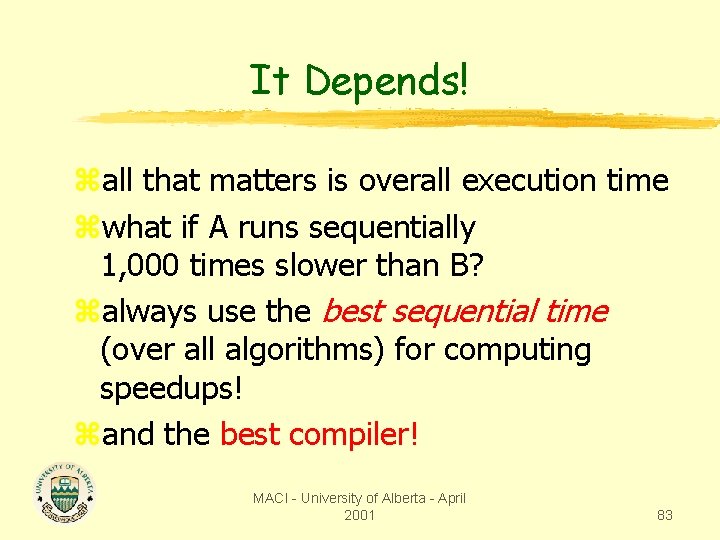

Loop Interchanging

    (1) for j=2 to M do
    (2)   for i=1 to N do
    (3)     A[i, j] = A[i, j-1] + B[i, j]
    (4)   endfor
    (5) endfor

becomes, after interchanging the loops:

    (1) for i=1 to N do
    (2)   for j=2 to M do
    (3)     A[i, j] = A[i, j-1] + B[i, j]
    (4)   endfor
    (5) endfor
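The only dependence in this nest runs along `j` (each element needs its left neighbour in the same row), so either loop order respects it. A small sketch that checks the two orders against each other, using 0-based nested lists instead of the slides' 1-based arrays (names and data are illustrative):

```python
def j_outer(A, B):
    """Loop order of the first nest: j outside, i inside."""
    A = [row[:] for row in A]            # work on a copy
    n_rows, n_cols = len(A), len(A[0])
    for j in range(1, n_cols):
        for i in range(n_rows):
            A[i][j] = A[i][j - 1] + B[i][j]
    return A


def i_outer(A, B):
    """Interchanged order: i outside, j inside."""
    A = [row[:] for row in A]
    n_rows, n_cols = len(A), len(A[0])
    for i in range(n_rows):
        for j in range(1, n_cols):
            A[i][j] = A[i][j - 1] + B[i][j]
    return A


A0 = [[1, 0, 0], [2, 0, 0]]
B0 = [[0, 10, 20], [0, 30, 40]]
result = i_outer(A0, B0)
```

Which order is faster depends on the memory layout: in a row-major language the `i`-outer version walks each row contiguously, while the `j`-outer version leaves the inner loop free of dependences, which suits vectorization.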


Speedup

- The goal is to use N processors to make a program run N times faster.
- Speedup is the factor by which the program’s speed improves.

Absolute and Relative Speedup

- Careful: the execution time depends on what the program does!
- A parallel program spends time in:
  - Work
  - Synchronization
  - Communication
  - Extra work
- A program implemented for a parallel machine is likely to do extra work (compared with a sequential program) even when running on a single-processor machine!

Absolute and Relative Speedup

- When talking about execution time, ask what algorithm is implemented!

Speedup

Which is Better?

- Programs A and B solve the same problem using different algorithms.
- Both are run on a 100-processor computer.
- Program A gets a 90-fold speedup.
- Program B gets a 10-fold speedup.

Which one would you prefer to use?

It Depends!

- All that matters is overall execution time.
- What if A runs sequentially 1,000 times slower than B?
- Always use the best sequential time (over all algorithms) for computing speedups!
- And the best compiler!
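Plugging the slide's numbers into the definition makes the point concrete. A minimal sketch, taking B's sequential time as the unit of time (the variable and function names are illustrative):

```python
def parallel_time(sequential_time, speedup):
    """Execution time on the parallel machine, given a relative speedup."""
    return sequential_time / speedup


t_b_seq = 1.0                  # B's sequential time (unit of time)
t_a_seq = 1000 * t_b_seq       # A is 1,000 times slower sequentially

t_a_par = parallel_time(t_a_seq, 90)   # about 11.1 time units
t_b_par = parallel_time(t_b_seq, 10)   # 0.1 time units

# Absolute speedup: measured against the best sequential program (B).
absolute_speedup_a = t_b_seq / t_a_par   # 0.09, i.e. a slowdown
absolute_speedup_b = t_b_seq / t_b_par   # 10
```

Despite its 90-fold relative speedup, A on 100 processors is still about 11 times slower than B on a single processor.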

Superlinear Speedups

- Sometimes N processors can achieve a speedup > N.
- Usually the result of improving an inferior sequential algorithm.
- Can legitimately occur because of cache and memory effects.

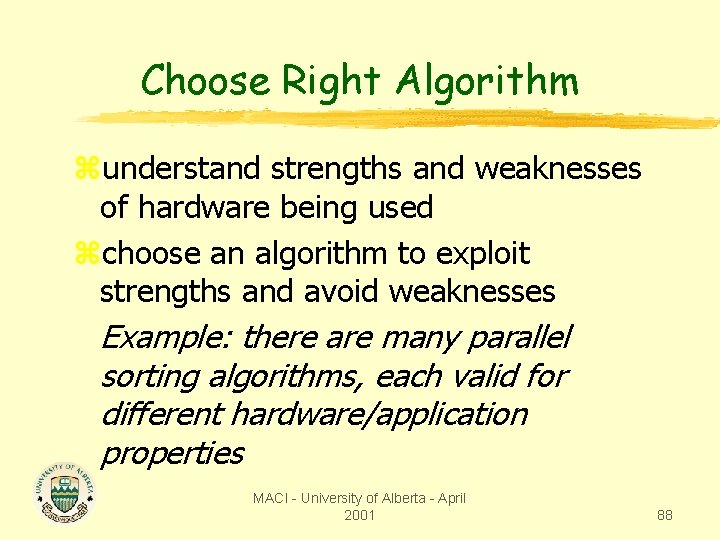

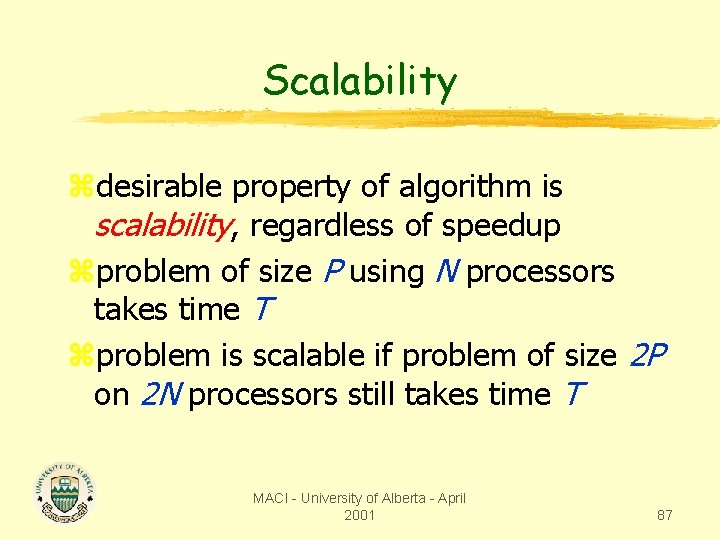

Amdahl’s Law (1)

Amdahl’s Law (2)

[Formula: speedup in terms of the sequential fraction of the time (% seq time) and the number of processors N]
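The formula on this slide did not survive extraction. In its standard textbook form, Amdahl's Law gives the speedup on N processors as 1 / (s + (1 - s) / N), where s is the fraction of the sequential execution time that cannot be parallelized. A minimal sketch of that standard form (not necessarily the exact notation used on the slide; the function name is hypothetical):

```python
def amdahl_speedup(seq_fraction, n_procs):
    """Standard Amdahl's Law: the sequential fraction is not sped up,
    the remaining fraction is divided evenly among n_procs processors."""
    return 1.0 / (seq_fraction + (1.0 - seq_fraction) / n_procs)


# With 10% sequential time, 100 processors give well under a 10-fold speedup.
s10_on_100 = amdahl_speedup(0.10, 100)     # about 9.17
upper_bound = 1.0 / 0.10                   # limit as n_procs grows: 10
```

The sequential fraction caps the achievable speedup at 1/s no matter how many processors are added.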

Scalability

- A desirable property of an algorithm is scalability, regardless of speedup.
- A problem of size P using N processors takes time T.
- The problem is scalable if a problem of size 2P on 2N processors still takes time T.

Choose Right Algorithm

- Understand the strengths and weaknesses of the hardware being used.
- Choose an algorithm to exploit the strengths and avoid the weaknesses.

Example: there are many parallel sorting algorithms, each valid for different hardware/application properties.

The Reality Is...

Parallel programming is hard!

- software: more issues to be addressed beyond the sequential case
- hardware: need to understand the machine architecture

The Reward Is...

- high performance: the motivation in the first place!

Do You Need Parallelism?

Consider the trade-offs:

- (+) Potentially faster execution turnaround
- (-) Longer software development time
- (-) Obtaining machine access
- (-) Cost = f(development) + g(execution time)

Do the benefits outweigh the costs?

Resistance to Parallelism

- software inertia: cost of converting existing software
- hardware inertia: waiting for faster machines
- lack of education
- limited access to resources

Starting Out...

- parallel program design is black magic
- software tools are primitive
- experience is an asset

All is not lost!

- many problems are amenable to simple parallel solutions

Starting Out...

- the sequential world is simple: one architectural model
- in the parallel world, you need to choose the algorithm to suit the architecture
- a parallel algorithm may only perform well on one class of machine

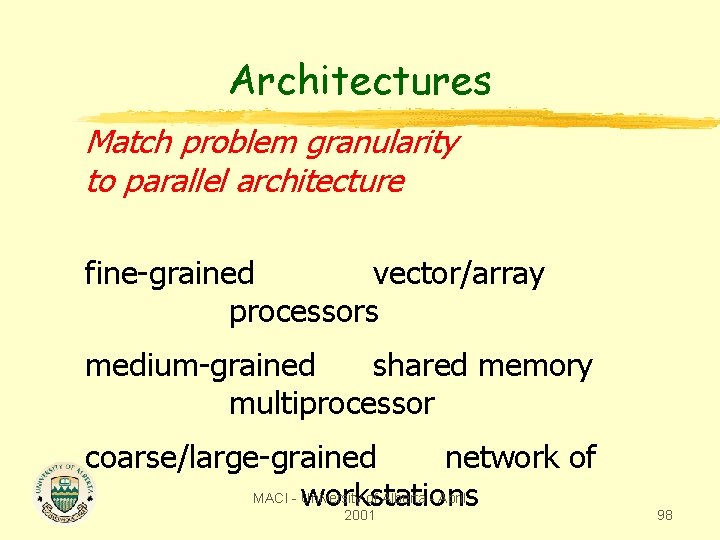

Granularity (1)

Granularity is the relation between the amount of computation and the amount of communication. It is a measure of how much work gets done before processes have to communicate.

Granularity (2)

Problem: shopping for 100 grocery items.

- Scenario 1 (small granularity): you are told an item to buy, go to the store, purchase the item, then return home to find out the next item to buy.
- Scenario 2 (large granularity): you are told all 100 items, and then you make a single trip to purchase everything.

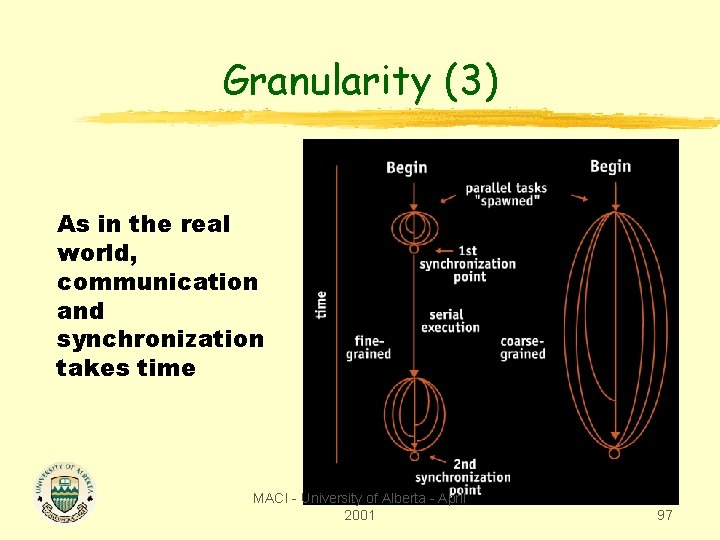

Granularity (3)
As in the real world, communication and synchronization take time.

Architectures
Match problem granularity to parallel architecture:
- fine-grained: vector/array processors
- medium-grained: shared memory multiprocessor
- coarse/large-grained: network of workstations

Program Design
1) Identify the hardware platforms available
2) Identify parallelism in the application
3) Choose the right type of algorithmic parallelism to match the architecture
4) Implement the algorithm, being wary of performance and correctness issues

Vector Processing (3)
- this class of machines is very effective at striding through arrays
- some parallelism can be automatically detected by the compiler
- there is a “right” way and a “wrong” way to code loops to allow parallelism
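The slide does not say what makes a loop the “wrong” kind. One common obstacle, assumed here for illustration, is a loop-carried dependence, where iteration i needs the result of iteration i-1. The sketch below runs each loop forward and backward. A loop that gives the same answer in any order is a candidate for vector execution; one that does not is order-sensitive. The function names are hypothetical.

```python
def multiply_independent(b, c, order):
    """A[i] = B[i] * C[i]: each iteration touches only element i."""
    a = [0] * len(b)
    for i in order:
        a[i] = b[i] * c[i]
    return a

def running_sum_dependent(b, order):
    """A[i] = A[i-1] + B[i]: iteration i reads what iteration i-1 wrote."""
    a = [0] * len(b)
    for i in order:
        previous = a[i - 1] if i > 0 else 0
        a[i] = previous + b[i]
    return a

B = [1, 2, 3, 4]
C = [10, 20, 30, 40]
forward = [0, 1, 2, 3]
backward = [3, 2, 1, 0]

print(multiply_independent(B, C, forward))   # [10, 40, 90, 160]
print(multiply_independent(B, C, backward))  # [10, 40, 90, 160]
print(running_sum_dependent(B, forward))     # [1, 3, 6, 10]
print(running_sum_dependent(B, backward))    # [1, 2, 3, 4]
```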

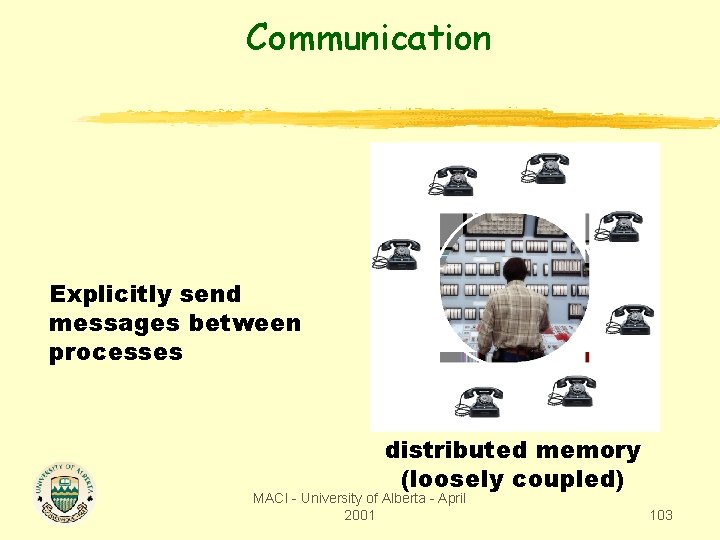

Distributed Memory (1)
Loosely coupled processors
- IBM SP-2, networks of computers
- PCs connected by a fast network

Distributed Memory (2)
- communication between processes by sending messages

Overhead of parallelism includes:
- cost of preparing a message
- cost of sending a message
- cost of waiting for a message

Communication
Distributed memory (loosely coupled): explicitly send messages between processes.

Synchronization

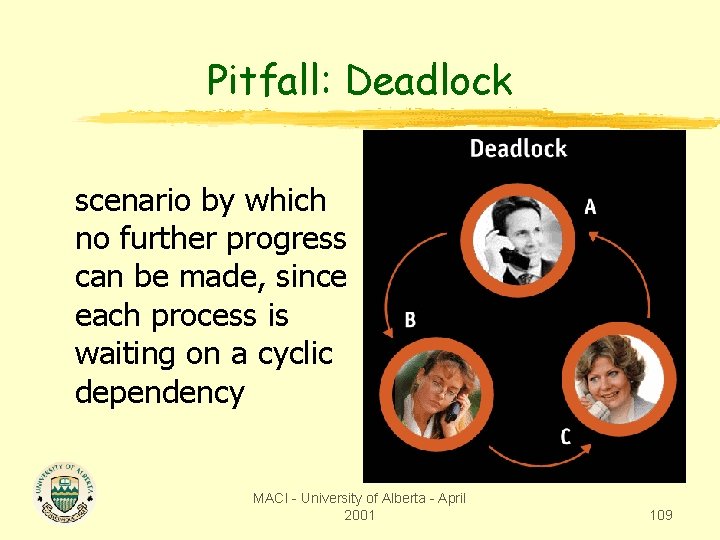

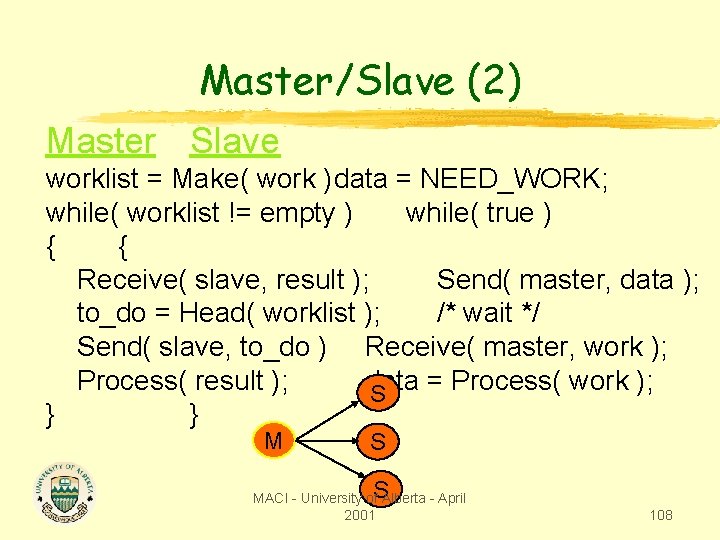

Message Passing (1)

| Process 1 | Process 2 |
| --- | --- |
| compute | compute |
| Send( P2, info ); | compute |
| | Receive( P1, info ); |
| idle | compute |
| idle | Send( P1, reply ); |
| Receive( P2, reply ); | |

The matching Send/Receive pairs are where the two processes communicate and synchronize.
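The exchange on this slide can be imitated on a single machine. The sketch below is not PVM or MPI: it assumes Python threads stand in for the two processes and `queue.Queue` objects stand in for the network. The names `to_p1`, `to_p2`, and `log` are illustrative.

```python
import queue
import threading

to_p1 = queue.Queue()  # messages addressed to process 1
to_p2 = queue.Queue()  # messages addressed to process 2
log = []

def process_1():
    info = sum(range(10))   # compute
    to_p2.put(info)         # Send( P2, info )
    reply = to_p1.get()     # Receive( P2, reply ): idle until P2 answers
    log.append(("P1 got reply", reply))

def process_2():
    factor = 2              # compute
    info = to_p2.get()      # Receive( P1, info )
    reply = info * factor   # compute
    to_p1.put(reply)        # Send( P1, reply )

threads = [threading.Thread(target=process_1),
           threading.Thread(target=process_2)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(log)  # [('P1 got reply', 90)]
```

The blocking `get()` calls play the role of Receive: process 1 cannot continue until the reply arrives, which is the idle time shown on the slide.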

Message Passing (2)
Two popular message passing libraries:
- PVM (Parallel Virtual Machine)
- MPI (Message Passing Interface)

MPI will likely be the industry standard. Both are easy to use, but verbose.

Master/Slave (1)
- many distributed memory programs are structured as one master and N slaves
- the master generates work to do
- when idle, a slave asks for a piece of work, gets it, does the task, and reports the result

Master/Slave (2)

Master:

    worklist = Make( work );
    while( worklist != empty )
    {
        Receive( slave, result );
        to_do = Head( worklist );
        Send( slave, to_do );
        Process( result );
    }

Slave:

    data = NEED_WORK;
    while( true )
    {
        Send( master, data );
        /* wait */
        Receive( master, work );
        data = Process( work );
    }

[Diagram: one master (M) connected to several slaves (S)]
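A runnable variant of the master/slave pseudocode, again assuming threads and queues as stand-ins for processes and messages. The slide's loop never tells the slaves to stop, so this sketch adds a `STOP` sentinel. That sentinel, the `run` helper, and squaring as the "work" are assumptions made for illustration.

```python
import queue
import threading

NEED_WORK = object()  # sentinel: "no result yet, please send work"
STOP = object()       # assumed sentinel (not on the slide): "no work left"

def master(worklist, to_master, inboxes):
    pending = list(worklist)
    results = []
    active = len(inboxes)
    while active > 0:
        worker_id, result = to_master.get()         # Receive( slave, result )
        if result is not NEED_WORK:
            results.append(result)                  # Process( result )
        if pending:
            inboxes[worker_id].put(pending.pop(0))  # Send( slave, to_do )
        else:
            inboxes[worker_id].put(STOP)
            active -= 1
    return results

def worker(worker_id, to_master, inbox):
    data = NEED_WORK
    while True:
        to_master.put((worker_id, data))            # Send( master, data )
        work = inbox.get()                          # Receive( master, work )
        if work is STOP:
            return
        data = work * work                          # Process( work )

def run(worklist, n_workers=3):
    to_master = queue.Queue()
    inboxes = [queue.Queue() for _ in range(n_workers)]
    threads = [threading.Thread(target=worker, args=(i, to_master, inboxes[i]))
               for i in range(n_workers)]
    for t in threads:
        t.start()
    results = master(worklist, to_master, inboxes)
    for t in threads:
        t.join()
    return sorted(results)  # arrival order varies from run to run

squares = run([1, 2, 3, 4, 5, 6, 7])
print(squares)  # [1, 4, 9, 16, 25, 36, 49]
```

Because an idle worker asks for the next item, faster workers naturally take on more items.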

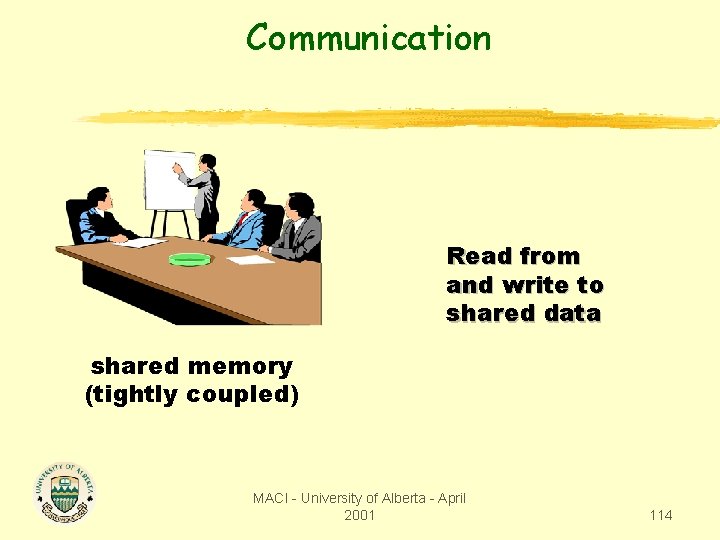

Pitfall: Deadlock
A scenario in which no further progress can be made, because each process is waiting on another in a cyclic dependency.
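A small illustration of such a cycle in message-passing terms: if both peers call Receive before Send, each waits for a message the other never gets to send. The sketch assumes threads and queues as stand-ins for processes, and uses a timeout only so that the demonstration terminates. A truly blocking Receive would hang forever. The `exchange` helper is hypothetical.

```python
import queue
import threading

def exchange(order_a, order_b, timeout=1.0):
    """Run peers A and B; each order is 'send_first' or 'recv_first'."""
    to_a, to_b = queue.Queue(), queue.Queue()
    outcome = {}

    def peer(name, inbox, outbox, order):
        if order == "send_first":
            outbox.put("hello from " + name)
        try:
            inbox.get(timeout=timeout)   # Receive: wait for the other peer
        except queue.Empty:
            outcome[name] = "stuck"      # a blocking Receive would never return
            return
        if order == "recv_first":
            outbox.put("hello from " + name)
        outcome[name] = "ok"

    threads = [threading.Thread(target=peer, args=("A", to_a, to_b, order_a)),
               threading.Thread(target=peer, args=("B", to_b, to_a, order_b))]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return outcome

deadlocked = exchange("recv_first", "recv_first")  # A waits for B, B waits for A
fixed = exchange("send_first", "recv_first")       # the cycle is broken
print(deadlocked, fixed)
```

In this sketch, letting one side send first is enough to break the cycle.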

Pitfall: Deadlock
A real-world problem!

Pitfall: Load Balancing
- need to assign a roughly equal portion of work to each processor
- load imbalances can result in poor speedups
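The effect of imbalance can be estimated with a simple model: the parallel run finishes only when the most heavily loaded processor finishes. The sketch below ignores communication cost, and the work units are invented for illustration.

```python
def speedup(work_per_processor):
    """Serial time is the total work; parallel time is the largest share."""
    serial_time = sum(work_per_processor)
    parallel_time = max(work_per_processor)
    return serial_time / parallel_time

balanced = speedup([25, 25, 25, 25])    # every processor does a quarter
imbalanced = speedup([70, 10, 10, 10])  # one processor does most of the work

print(balanced)              # 4.0
print(round(imbalanced, 2))  # 1.43
```

With the same total work on four processors, the imbalanced split yields well under half the speedup of the balanced one.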

Shared Memory (1)
Tightly coupled multiprocessors
- SGI Origin 2400, Sun E10000
- Classified by memory access times
  - Same (SMP - symmetric multiprocessor)
  - Different (NUMA - non-uniform memory access)

[Diagram: processors (P) attached to a shared memory]

Shared Memory (2)
- communicate between processes by reading and writing shared variables (instead of sending and receiving messages)

Overhead of parallelism includes:
- contention for shared resources
- protecting the integrity of shared resources

Communication
Shared memory (tightly coupled): read from and write to shared data.

Synchronization
May have to prevent simultaneous access!

| Process 1 | Process 2 |
| --- | --- |
| B = BankBalance; | B = BankBalance; |
| B = B + 100; | B = B + 150; |
| BankBalance = B; | BankBalance = B; |
| Print Statement; | Print Statement; |

What is the value of BankBalance?

Pitfall: Shared Data Access
Need to restrict access to data! Avoid race conditions!

| Process 1 | Process 2 |
| --- | --- |
| Lock( access ); | Lock( access ); |
| B = BankBalance; | B = BankBalance; |
| B = B + 100; | B = B + 150; |
| BankBalance = B; | BankBalance = B; |
| Unlock( access ); | Unlock( access ); |
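The bank balance example can be worked through in code. The first part replays one bad interleaving by hand, so the lost update is reproducible. The second part uses `threading.Lock` as the `access` lock. The starting balance of 1000 and the `Account` class are assumptions for illustration.

```python
import threading

# Part 1: one unlucky interleaving without a lock, replayed step by step.
bank_balance = 1000
b1 = bank_balance         # Process 1: B = BankBalance
b2 = bank_balance         # Process 2: B = BankBalance (before P1 writes back)
bank_balance = b1 + 100   # Process 1 writes 1100
bank_balance = b2 + 150   # Process 2 overwrites with 1150; the +100 is lost
lost_update_balance = bank_balance

# Part 2: the same deposits, with the read-modify-write protected by a lock.
class Account:
    def __init__(self, balance):
        self.balance = balance
        self.access = threading.Lock()

    def deposit(self, amount):
        with self.access:      # Lock( access ) ... Unlock( access )
            b = self.balance
            b = b + amount
            self.balance = b

account = Account(1000)
threads = [threading.Thread(target=account.deposit, args=(amount,))
           for amount in (100, 150)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(lost_update_balance, account.balance)  # 1150 1250
```

With the lock, the two deposits can happen in either order, but each read-modify-write completes before the other begins, so both amounts are always counted.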

Multi-threading

Simultaneous Multi-threading
Source: http://www.eet.com/story/0EG19991008S0014 by Rick Merritt

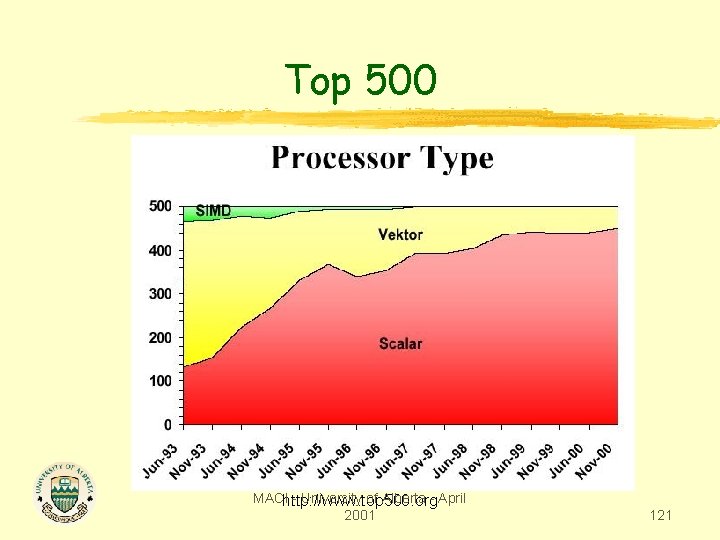

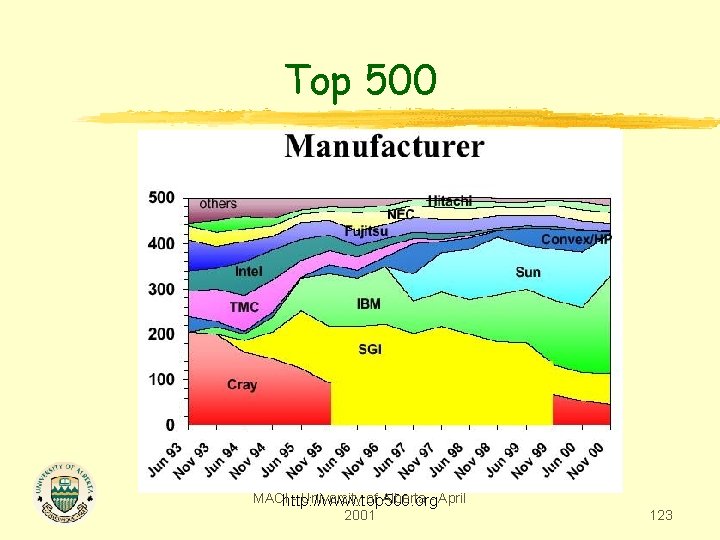

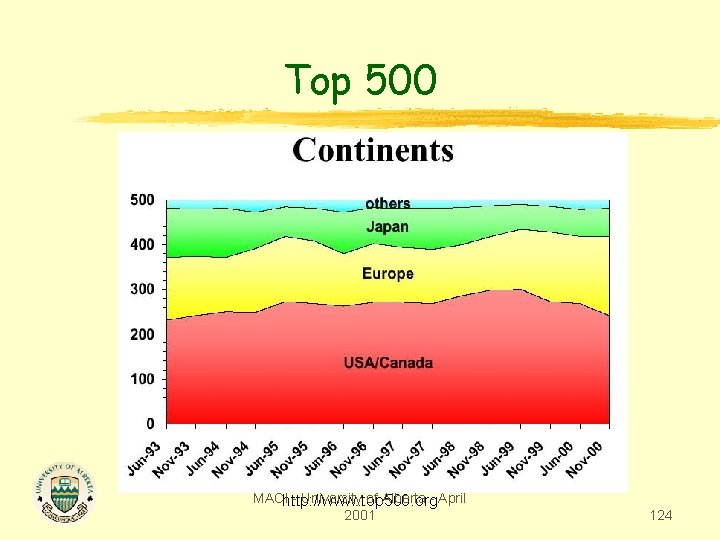

Top 500 (source: http://www.top500.org)

Top 500 (source: http://www.top500.org)

Top 500 (source: http://www.top500.org)

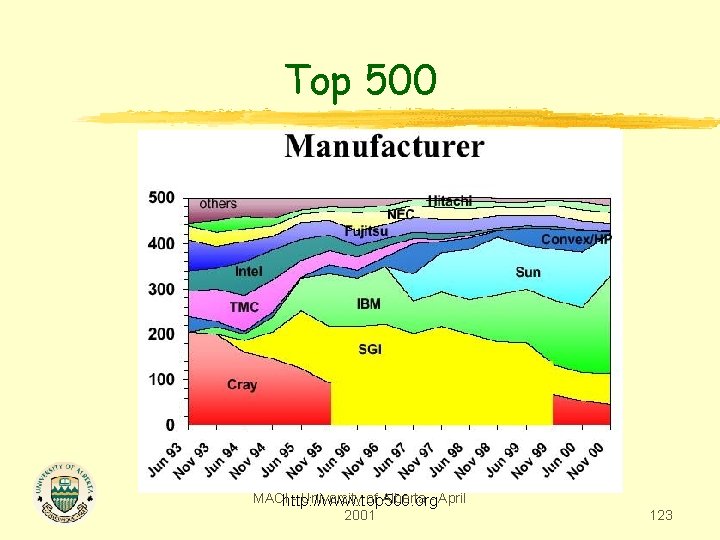

Top 500 (source: http://www.top500.org)

Top 500 (source: http://www.top500.org)

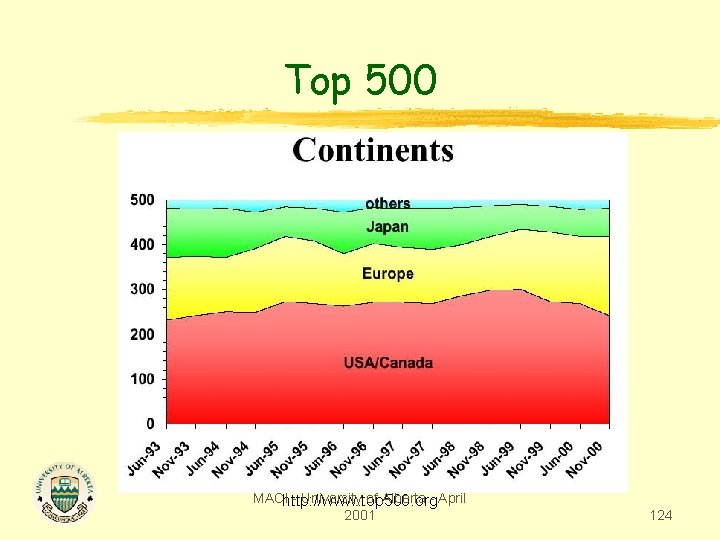

Top 500 (source: http://www.top500.org)

Top 500 (source: http://www.top500.org)

Conclusions
- some problems require extensive computational resources
- parallelism allows you to decrease experiment turnaround time
- the tools are adequate but still have a long way to go
- “simple” parallelism gets some performance, but maximum performance requires effort
- performance is commensurate with effort!

Reminders
- understand your computational needs
- understand the hardware and software resources available to you
- match parallelism to the architecture
- maximize utilization, don’t waste cycles!
- granularity, granularity
- develop, test and debug on small data sets before trying large ones
- be wary of the many pitfalls

We Want You!
Get parallel! Become part of MACI.
For help with parallel programming, contact Research.Support@ualberta.ca