
The LCG Service Challenges: Ramping up the LCG Service

Jamie Shiers, CERN-IT-GD-SC, April 2005

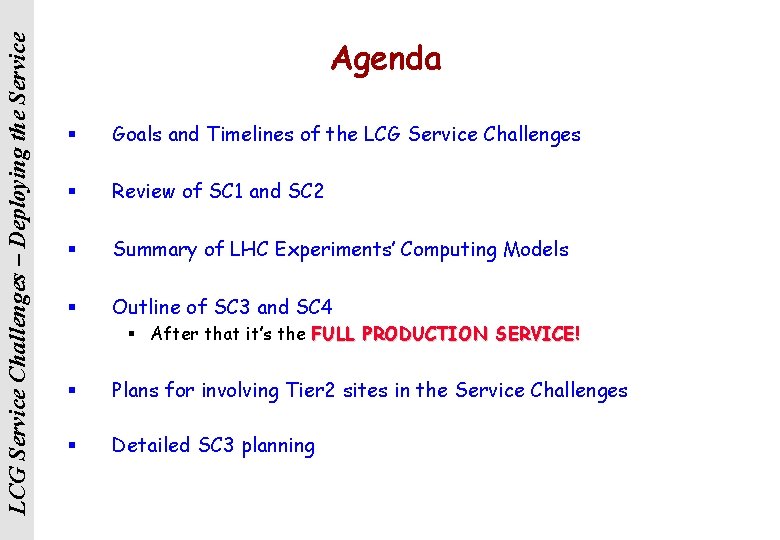

LCG Service Challenges – Deploying the Service

Agenda
- Goals and timelines of the LCG Service Challenges
- Review of SC1 and SC2
- Summary of LHC experiments’ computing models
- Outline of SC3 and SC4
  - After that it’s the FULL PRODUCTION SERVICE!
- Plans for involving Tier 2 sites in the Service Challenges
- Detailed SC3 planning

Acknowledgements / Disclaimer
- These slides are the work of many people
  - LCG Storage Management Workshop, Service Challenge Meetings etc.
  - Plus relevant presentations I found with Google!
- The work behind the slides is that of many more…
  - All those involved in the Service Challenges at the many sites…
- I will use “LCG” in the most generic sense…
  - Because that’s what I know / understand…

Partial Contribution List
- James Casey: SC1/2 review
- Jean-Philippe Baud: DPM and LFC
- Sophie Lemaitre: LFC deployment
- Peter Kunszt: FiReMan
- Michael Ernst: dCache
- gLite FTS: Gavin McCance
- ALICE: Latchezar Betev
- ATLAS: Miguel Branco
- CMS: Lassi Tuura
- LHCb: atsareg@in2p3.fr

LCG Service Challenges - Overview
- LHC will enter production (physics) in April 2007
  - Will generate an enormous volume of data
  - Will require huge amount of processing power
- LCG ‘solution’ is a world-wide Grid
  - Many components understood, deployed, tested…
- But…
  - Unprecedented scale
  - Humungous challenge of getting large numbers of institutes and individuals, all with existing, sometimes conflicting commitments, to work together
- LCG must be ready at full production capacity, functionality and reliability in less than 2 years from now
  - Issues include h/w acquisition, personnel hiring and training, vendor rollout schedules etc.
- Should not limit ability of physicists to exploit performance of detectors nor LHC’s physics potential
  - Whilst being stable, reliable and easy to use

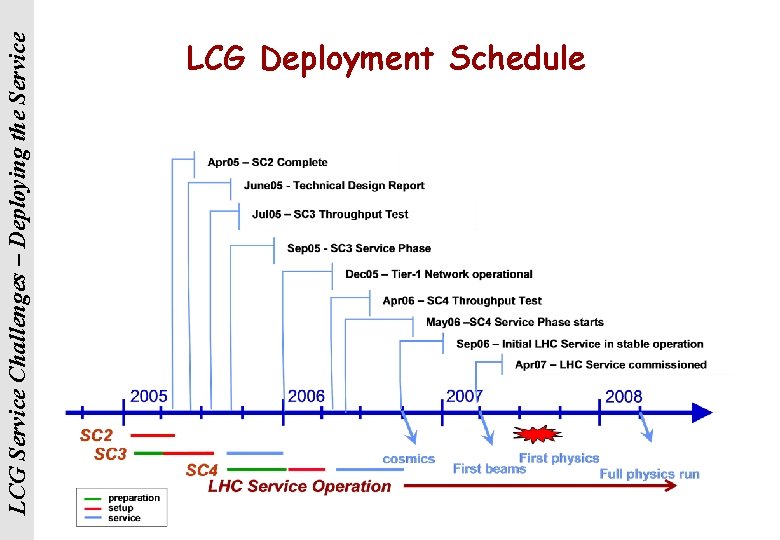

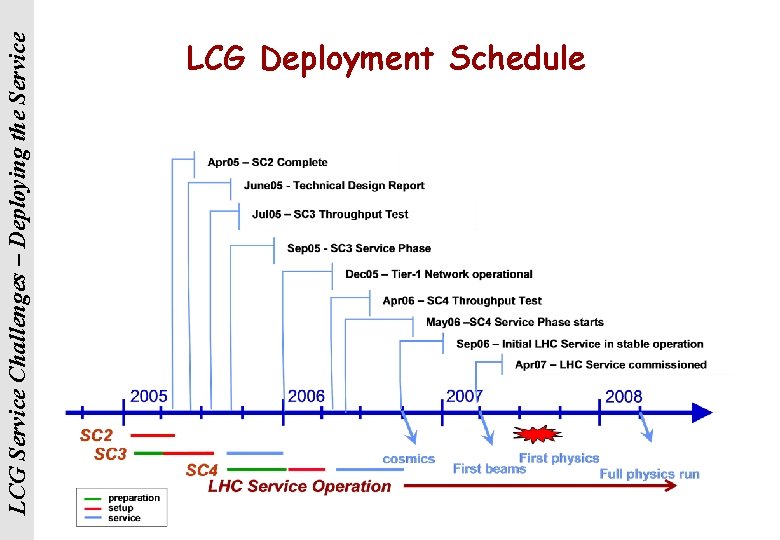

LCG Deployment Schedule

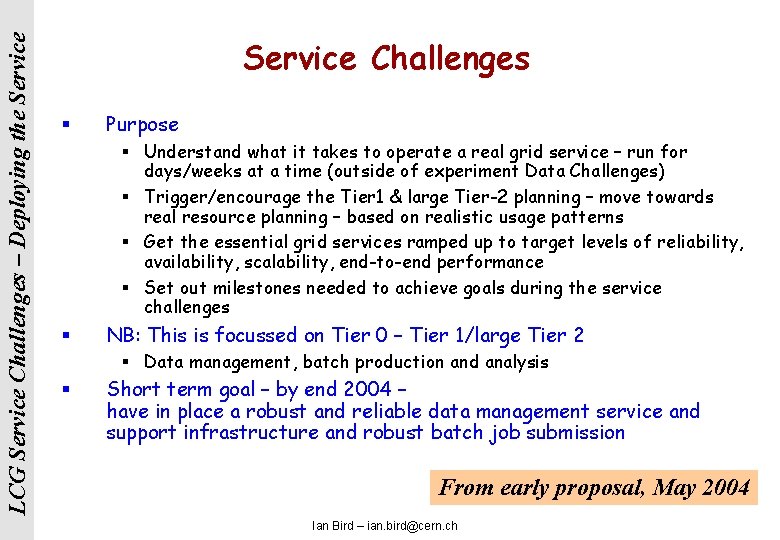

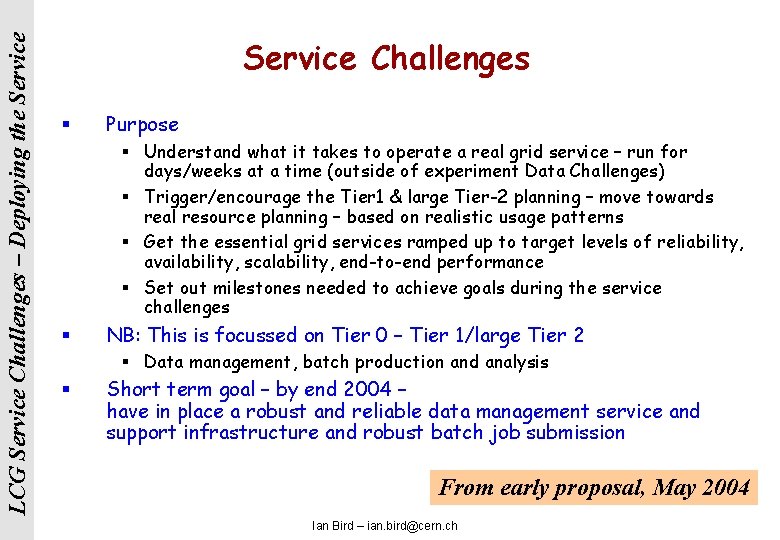

Service Challenges
- Purpose
  - Understand what it takes to operate a real grid service: run for days/weeks at a time (outside of experiment Data Challenges)
  - Trigger/encourage the Tier 1 & large Tier 2 planning: move towards real resource planning, based on realistic usage patterns
  - Get the essential grid services ramped up to target levels of reliability, availability, scalability, end-to-end performance
  - Set out milestones needed to achieve goals during the service challenges
- NB: This is focussed on Tier 0 – Tier 1/large Tier 2
  - Data management, batch production and analysis
- Short term goal, by end 2004: have in place a robust and reliable data management service and support infrastructure and robust batch job submission

From early proposal, May 2004. Ian Bird, ian.bird@cern.ch

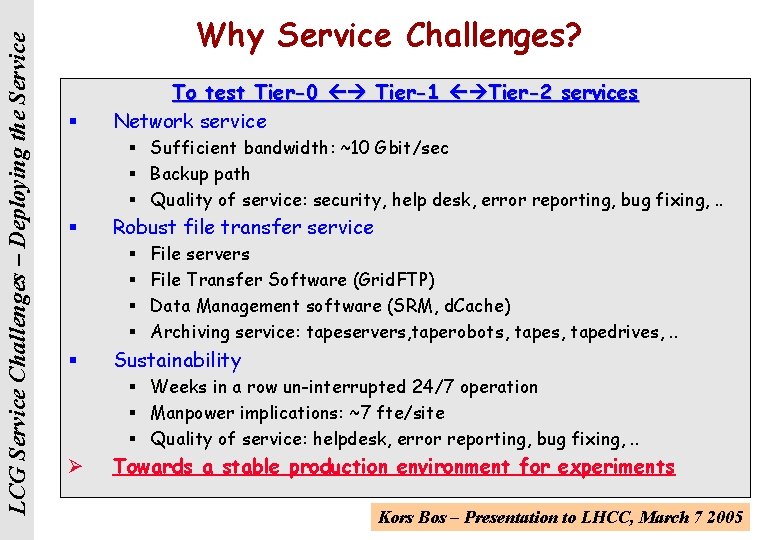

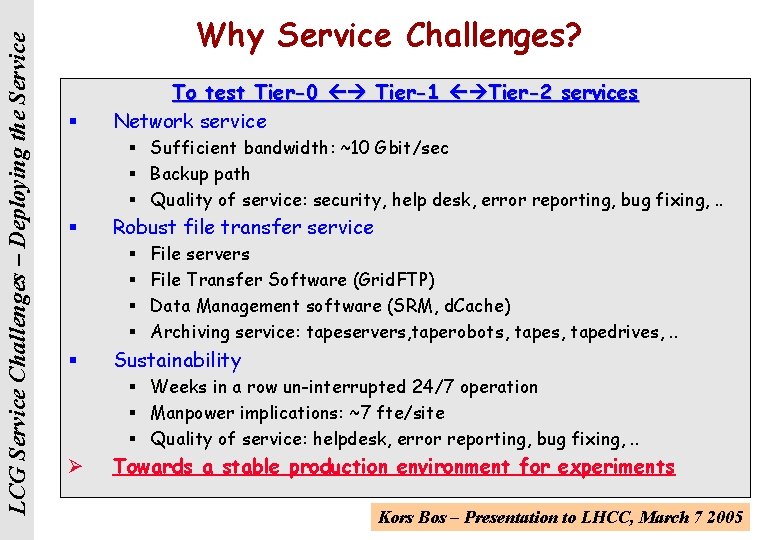

Why Service Challenges?

To test Tier-0, Tier-1 and Tier-2 services
- Network service
  - Sufficient bandwidth: ~10 Gbit/sec
  - Backup path
  - Quality of service: security, help desk, error reporting, bug fixing, …
- Robust file transfer service
  - File servers
  - File transfer software (GridFTP)
  - Data management software (SRM, dCache)
  - Archiving service: tape servers, tape robots, tape drives, …
- Sustainability
  - Weeks in a row of un-interrupted 24/7 operation
  - Manpower implications: ~7 fte/site
  - Quality of service: helpdesk, error reporting, bug fixing, …
- Towards a stable production environment for experiments

Kors Bos, presentation to LHCC, March 7 2005

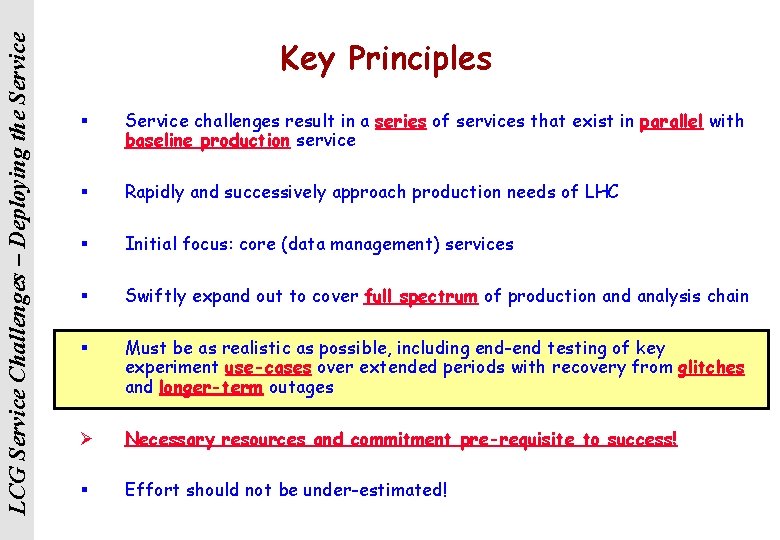

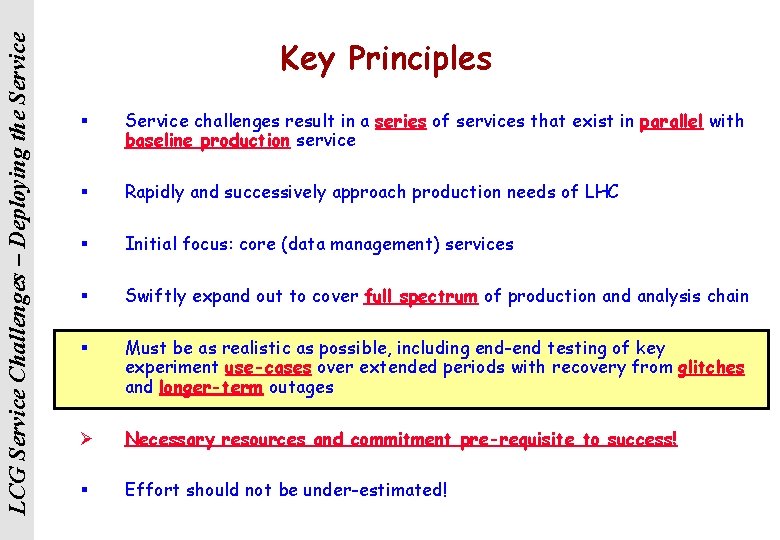

Key Principles
- Service challenges result in a series of services that exist in parallel with baseline production service
- Rapidly and successively approach production needs of LHC
- Initial focus: core (data management) services
- Swiftly expand out to cover full spectrum of production and analysis chain
- Must be as realistic as possible, including end-to-end testing of key experiment use-cases over extended periods with recovery from glitches and longer-term outages
- Necessary resources and commitment are a pre-requisite to success!
  - Effort should not be under-estimated!

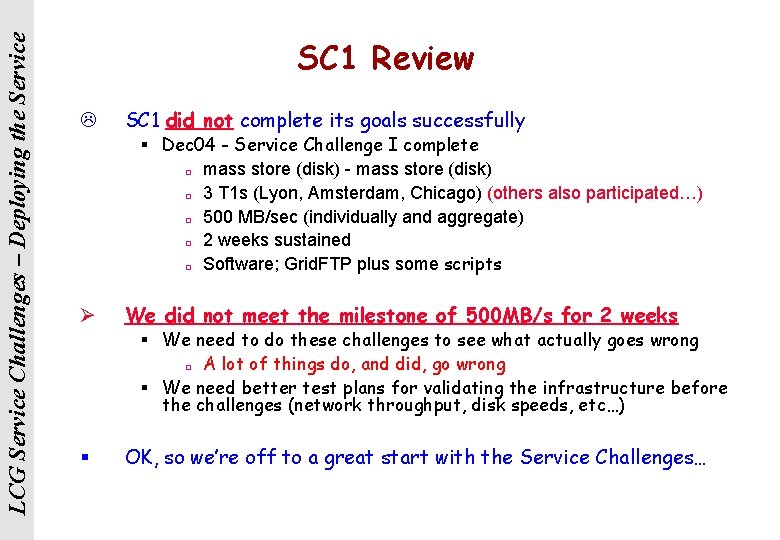

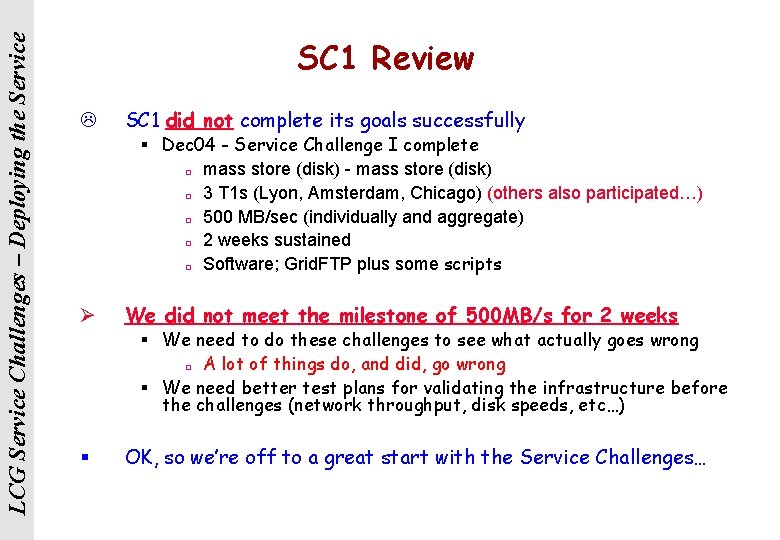

SC1 Review
- SC1 did not complete its goals successfully
- Dec 04: Service Challenge I complete
  - mass store (disk) to mass store (disk)
  - 3 T1s (Lyon, Amsterdam, Chicago) (others also participated…)
  - 500 MB/sec (individually and aggregate)
  - 2 weeks sustained
  - Software: GridFTP plus some scripts
- We did not meet the milestone of 500 MB/s for 2 weeks
- We need to do these challenges to see what actually goes wrong
  - A lot of things do, and did, go wrong
- We need better test plans for validating the infrastructure before the challenges (network throughput, disk speeds, etc…)
- OK, so we’re off to a great start with the Service Challenges…

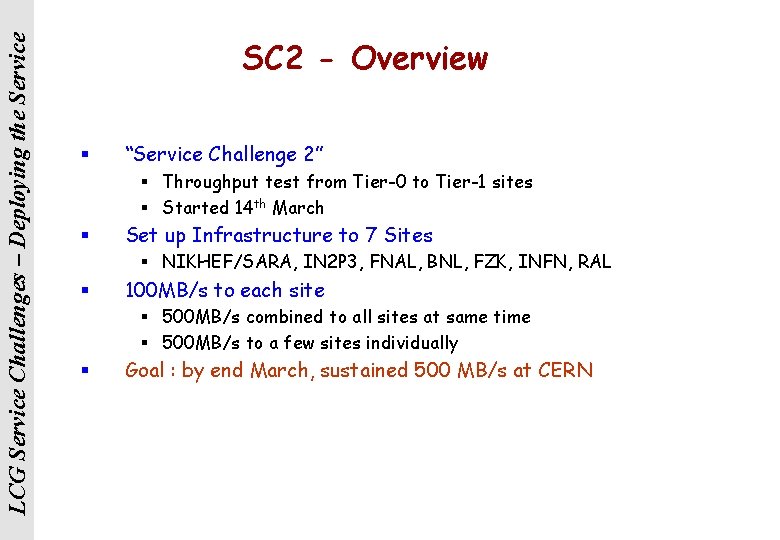

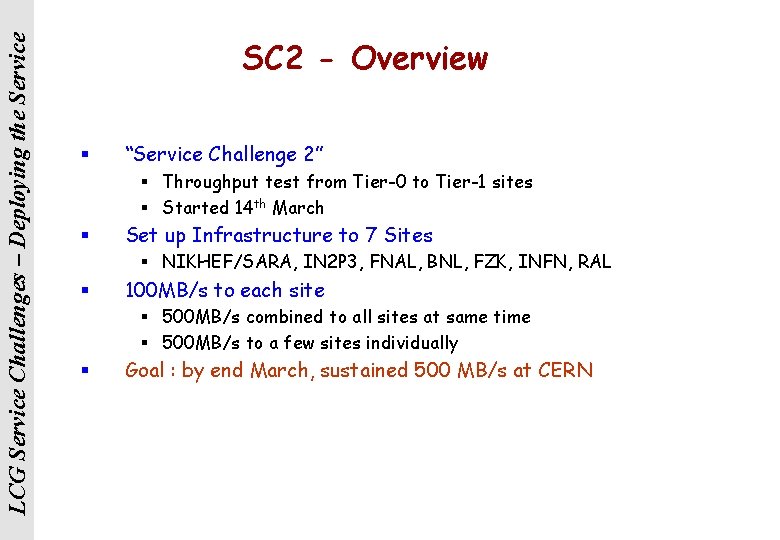

SC2 - Overview
- “Service Challenge 2”
  - Throughput test from Tier-0 to Tier-1 sites
  - Started 14th March
- Set up infrastructure to 7 sites
  - NIKHEF/SARA, IN2P3, FNAL, BNL, FZK, INFN, RAL
- 100 MB/s to each site
  - 500 MB/s combined to all sites at same time
  - 500 MB/s to a few sites individually
- Goal: by end March, sustained 500 MB/s at CERN

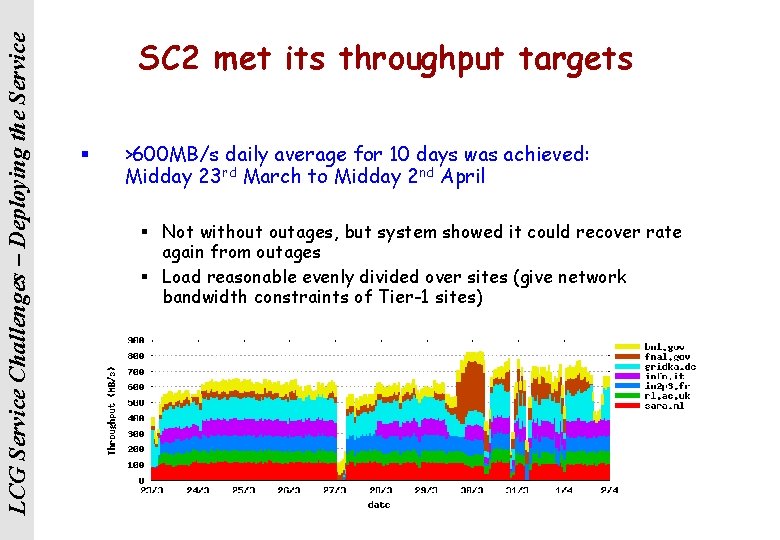

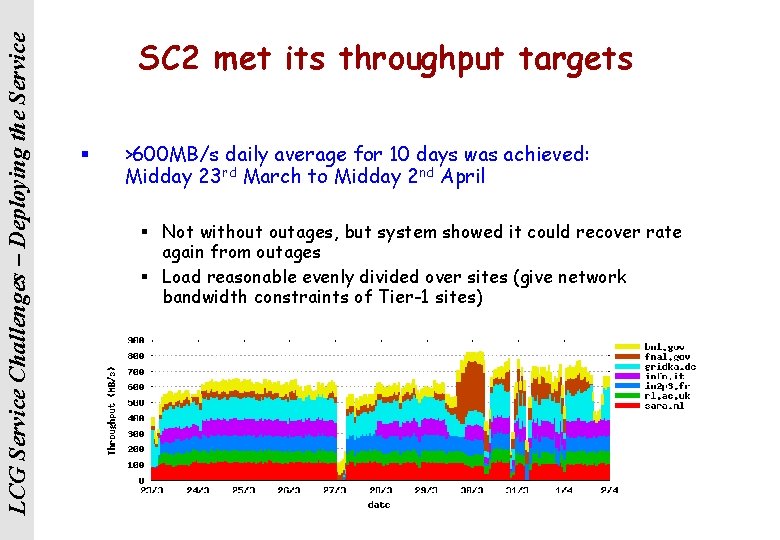

SC2 met its throughput targets
- >600 MB/s daily average for 10 days was achieved: midday 23rd March to midday 2nd April
  - Not without outages, but the system showed it could recover its rate again after outages
  - Load reasonably evenly divided over sites (given network bandwidth constraints of Tier-1 sites)

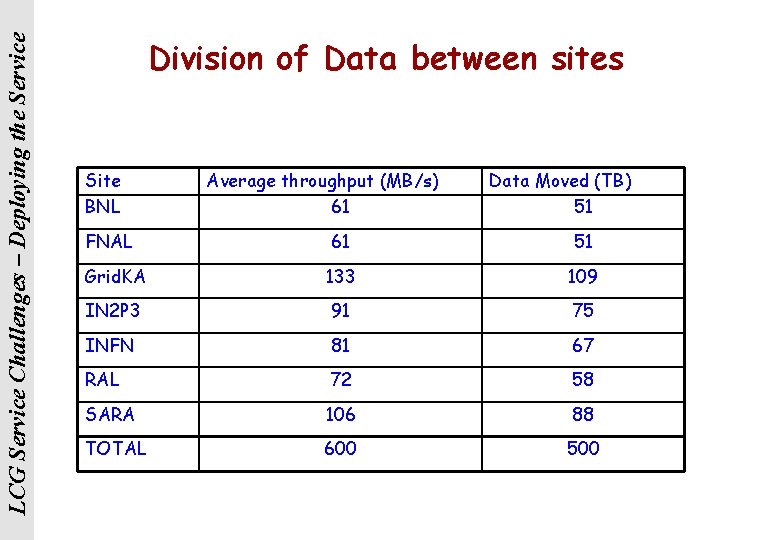

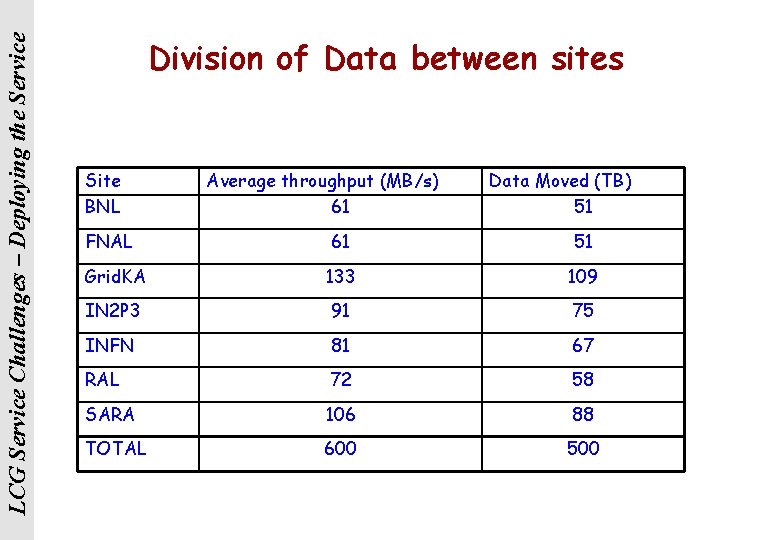

Division of Data between sites

| Site | Average throughput (MB/s) | Data moved (TB) |
| --- | --- | --- |
| BNL | 61 | 51 |
| FNAL | 61 | 51 |
| GridKA | 133 | 109 |
| IN2P3 | 91 | 75 |
| INFN | 81 | 67 |
| RAL | 72 | 58 |
| SARA | 106 | 88 |
| TOTAL | 600 | 500 |
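As a rough cross-check of the per-site figures, the sketch below sums both columns and derives the averaging period implied by each row (data moved divided by average throughput). It assumes decimal units (1 TB = 10^6 MB), which the slide does not state, and the names used are illustrative only.

```python
# Per-site SC2 figures as listed on the slide: (average MB/s, data moved in TB).
SC2_SITES = {
    "BNL": (61, 51),
    "FNAL": (61, 51),
    "GridKA": (133, 109),
    "IN2P3": (91, 75),
    "INFN": (81, 67),
    "RAL": (72, 58),
    "SARA": (106, 88),
}

MB_PER_TB = 1_000_000      # assumption: decimal units
SECONDS_PER_DAY = 86_400


def implied_days(rate_mb_s: float, moved_tb: float) -> float:
    """Averaging period (in days) for which rate * time equals the data moved."""
    return moved_tb * MB_PER_TB / rate_mb_s / SECONDS_PER_DAY


total_rate = sum(rate for rate, _ in SC2_SITES.values())
total_moved = sum(moved for _, moved in SC2_SITES.values())

if __name__ == "__main__":
    print(f"sum of site rates: {total_rate} MB/s, sum of data moved: {total_moved} TB")
    for site, (rate, moved) in SC2_SITES.items():
        print(f"{site:7s} {implied_days(rate, moved):4.1f} days")
```

The column sums (605 MB/s and 499 TB) agree with the rounded TOTAL row, and under the decimal-unit assumption each row implies an averaging period of a little under 10 days.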

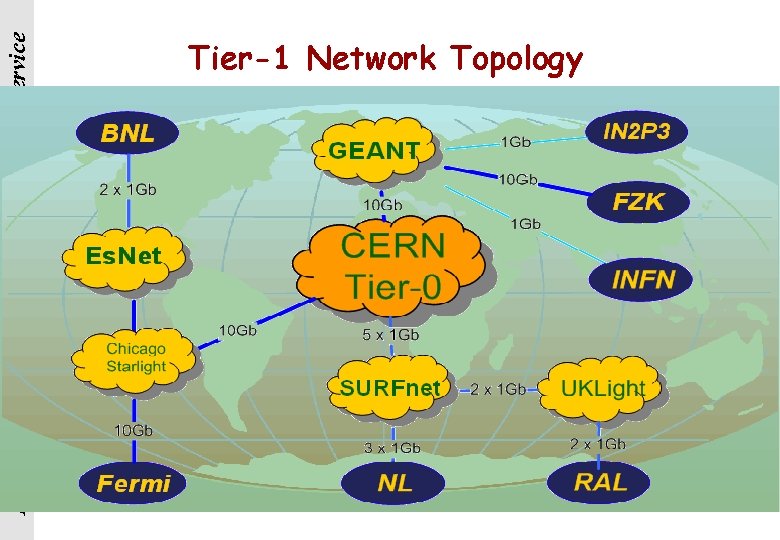

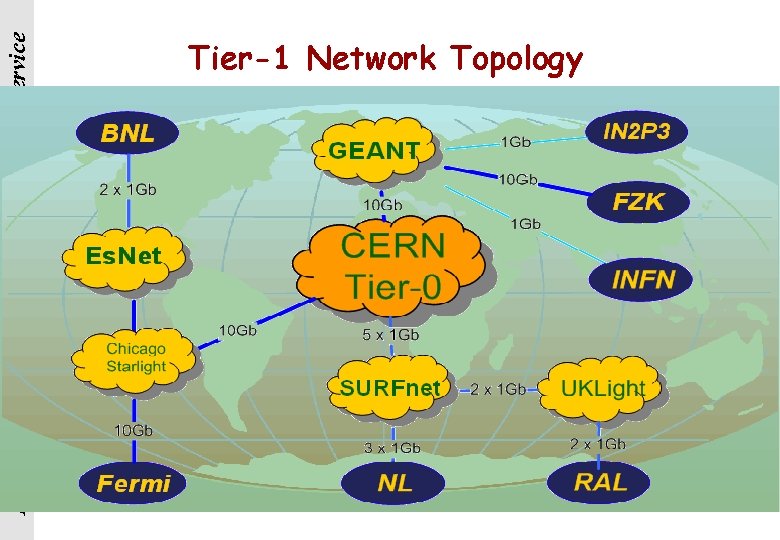

Tier-1 Network Topology

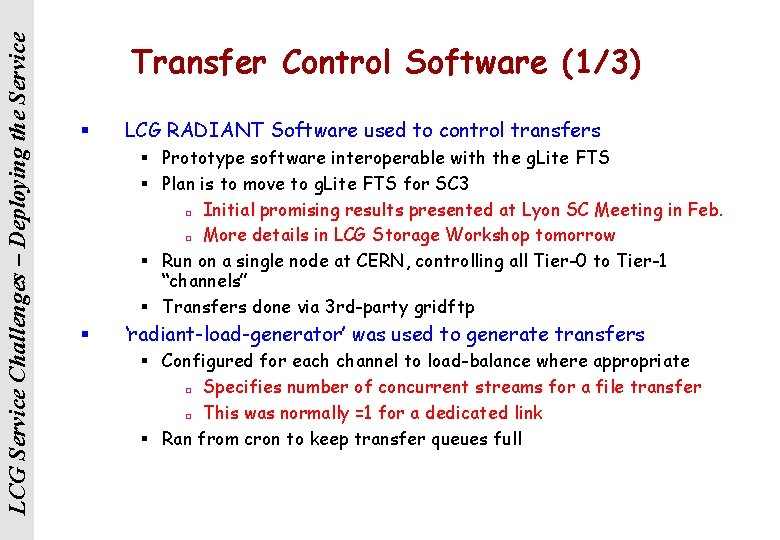

Transfer Control Software (1/3)
- LCG RADIANT software used to control transfers
  - Prototype software interoperable with the gLite FTS
  - Plan is to move to gLite FTS for SC3
    - Initial promising results presented at Lyon SC Meeting in Feb.
    - More details in LCG Storage Workshop tomorrow
- Run on a single node at CERN, controlling all Tier-0 to Tier-1 “channels”
  - Transfers done via 3rd-party gridftp
- ‘radiant-load-generator’ was used to generate transfers
  - Configured for each channel to load-balance where appropriate
    - Specifies number of concurrent streams for a file transfer
    - This was normally =1 for a dedicated link
  - Ran from cron to keep transfer queues full
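The "keep transfer queues full" behaviour can be illustrated with a small sketch. This is not the radiant-load-generator code: the `Channel` class, its fields, and the `top_up` and `run_once` functions are hypothetical names, and the target queue depth is an assumed tuning parameter. It only shows the idea of a periodic, cron-style pass that tops up each Tier-0 to Tier-1 channel.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List


@dataclass
class Channel:
    """Hypothetical model of one Tier-0 -> Tier-1 transfer channel."""
    site: str
    target_queue_depth: int          # assumed tuning parameter
    streams_per_file: int = 1        # the slide notes 1 was normal on a dedicated link
    queued: List[str] = field(default_factory=list)


def top_up(channel: Channel, source_files: Iterator[str]) -> int:
    """Queue new transfers until the channel reaches its target depth.

    Returns the number of transfers added in this pass.
    """
    added = 0
    while len(channel.queued) < channel.target_queue_depth:
        try:
            channel.queued.append(next(source_files))
        except StopIteration:
            break  # nothing left to schedule
        added += 1
    return added


def run_once(channels: List[Channel], source_files: Iterator[str]) -> Dict[str, int]:
    """One scheduling pass over all channels, as a cron job might perform."""
    return {ch.site: top_up(ch, source_files) for ch in channels}
```

Running `run_once` at a fixed interval means a channel whose queue has drained is refilled on the next pass, while a channel that is already full is left alone.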

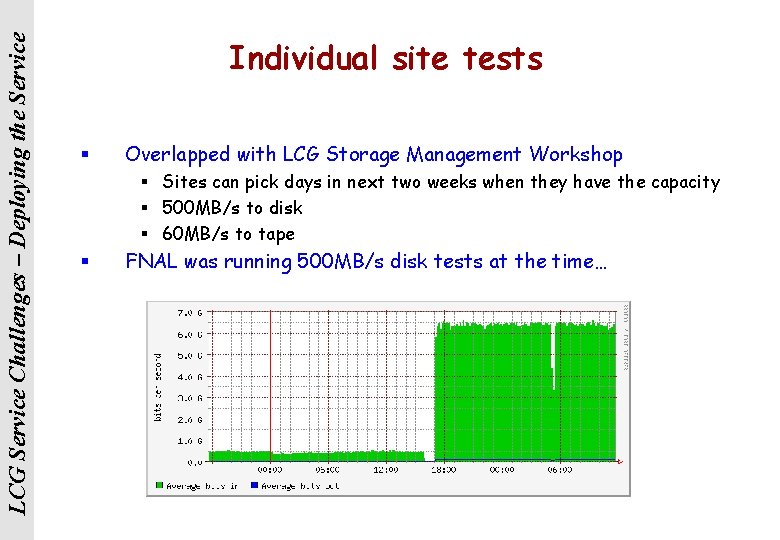

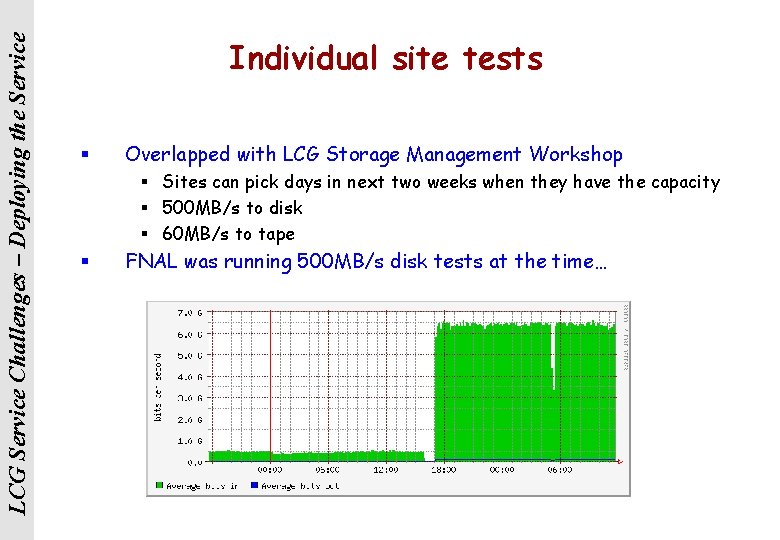

Individual site tests
- Overlapped with LCG Storage Management Workshop
- Sites can pick days in next two weeks when they have the capacity
  - 500 MB/s to disk
  - 60 MB/s to tape
- FNAL was running 500 MB/s disk tests at the time…

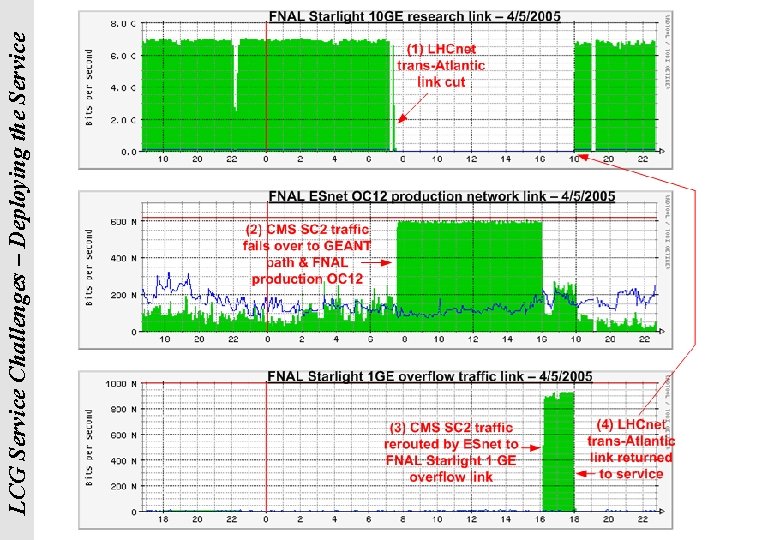

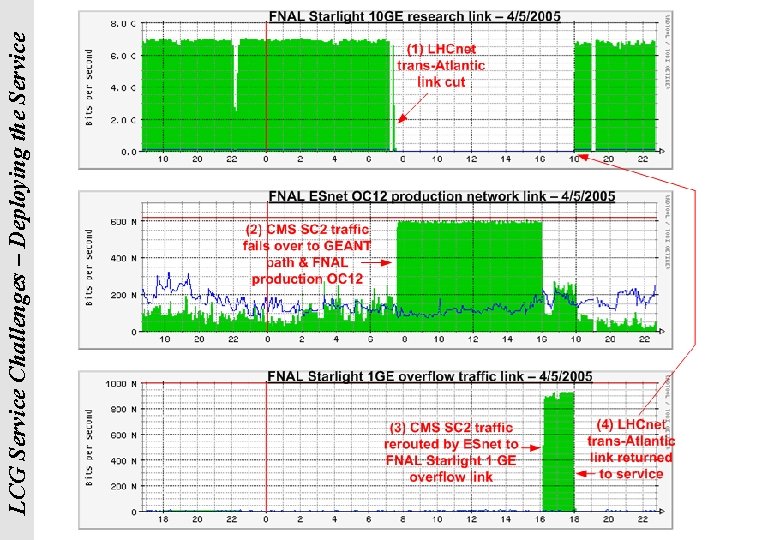

FNAL Failover

It shows:
- that when the StarLight link was cut, our transfers fell back to our ESnet connection,
- when our network folks rerouted it to our 1 GE link,
- when StarLight came back.

SC2 Summary
- SC2 met its throughput goals, and with more sites than originally planned!
  - A big improvement from SC1
  - But we still don’t have something we can call a service
- Monitoring is better
  - We see outages when they happen, and we understand why they happen
    - First step towards operations guides
- Some advances in infrastructure and software will happen before SC3
  - gLite transfer software
  - SRM service more widely deployed
- We have to understand how to incorporate these elements

SC1/2 - Conclusions
- Setting up the infrastructure and achieving reliable transfers, even at much lower data rates than needed for LHC, is complex and requires a lot of technical work + coordination
- Even within one site, people are working very hard & are stressed. Stressed people do not work at their best. Far from clear how this scales to SC3/SC4, let alone to LHC production phase
- Compound this with the multi-site / multi-partner issue, together with time zones etc., and you have a large “non-technical” component to an already tough problem (example of technical problem follows…)
- But… the end point is fixed (time + functionality)
- We should be careful not to over-complicate the problem or potential solutions
- And not forget there is still a humungous amount to do…
  - (much more than we’ve done…)

Computing Model Summary - Goals
- Present key features of LHC experiments’ Computing Models in a consistent manner
- Highlight the commonality
- Emphasize the key differences
- Define these ‘parameters’ in a central place (LCG web)
  - Update with change-log as required
- Use these parameters as input to requirements for Service Challenges
- To enable partners (T0/T1 sites, experiments, network providers) to have a clear understanding of what is required of them
- Define precise terms and ‘factors’

LHC Computing Models

Summary of Key Characteristics of LHC Computing Models

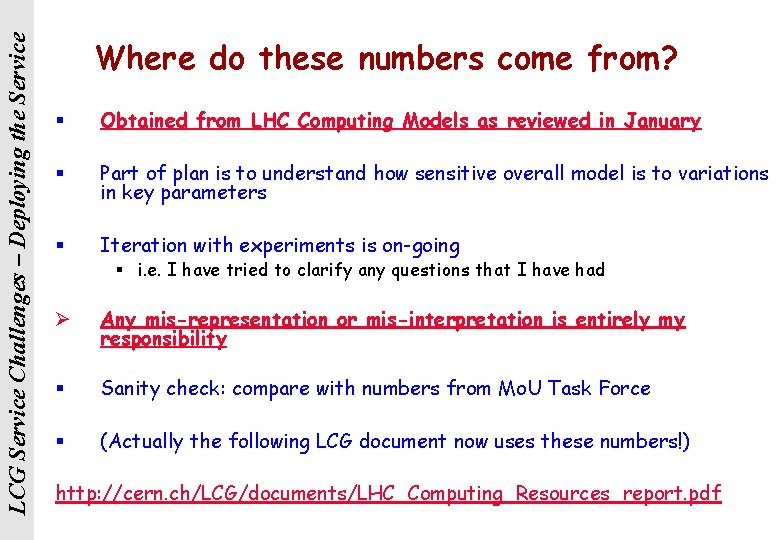

Where do these numbers come from?
- Obtained from LHC Computing Models as reviewed in January
- Part of plan is to understand how sensitive overall model is to variations in key parameters
- Iteration with experiments is on-going
  - i.e. I have tried to clarify any questions that I have had
- Any mis-representation or mis-interpretation is entirely my responsibility
- Sanity check: compare with numbers from MoU Task Force
  - (Actually the following LCG document now uses these numbers!)

http://cern.ch/LCG/documents/LHC_Computing_Resources_report.pdf

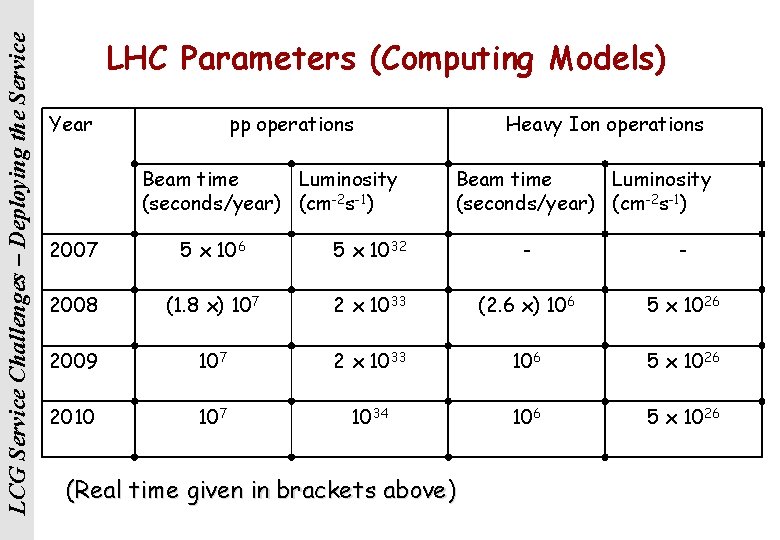

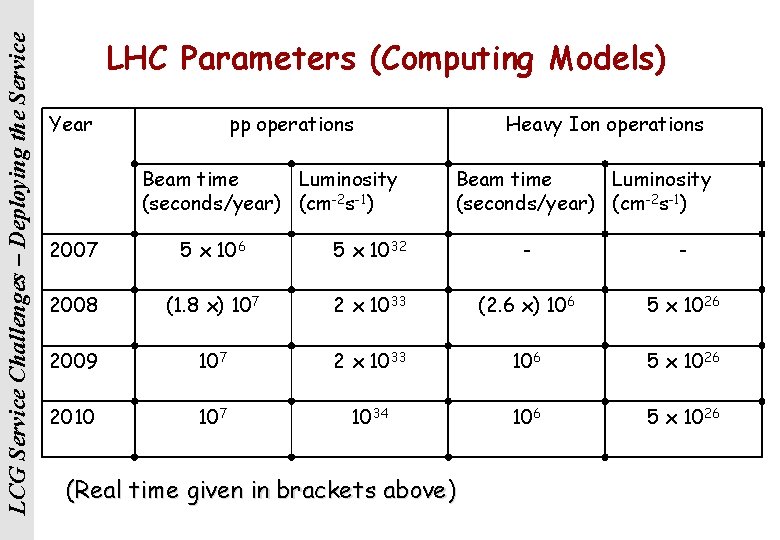

LHC Parameters (Computing Models)

| Year | pp beam time (seconds/year) | pp luminosity (cm^-2 s^-1) | Heavy ion beam time (seconds/year) | Heavy ion luminosity (cm^-2 s^-1) |
| --- | --- | --- | --- | --- |
| 2007 | 5 x 10^6 | 5 x 10^32 | - | - |
| 2008 | (1.8 x) 10^7 | 2 x 10^33 | (2.6 x) 10^6 | 5 x 10^26 |
| 2009 | 10^7 | 2 x 10^33 | 10^6 | 5 x 10^26 |
| 2010 | 10^7 | 10^34 | 10^6 | 5 x 10^26 |

(Real time given in brackets above)

LHC Schedule – “Chamonix” workshop
- First collisions: two months after first turn on in August 2007
- 32 weeks of operation, 16 weeks of shutdown, 4 weeks commissioning = 140 days physics / year (5 lunar months)

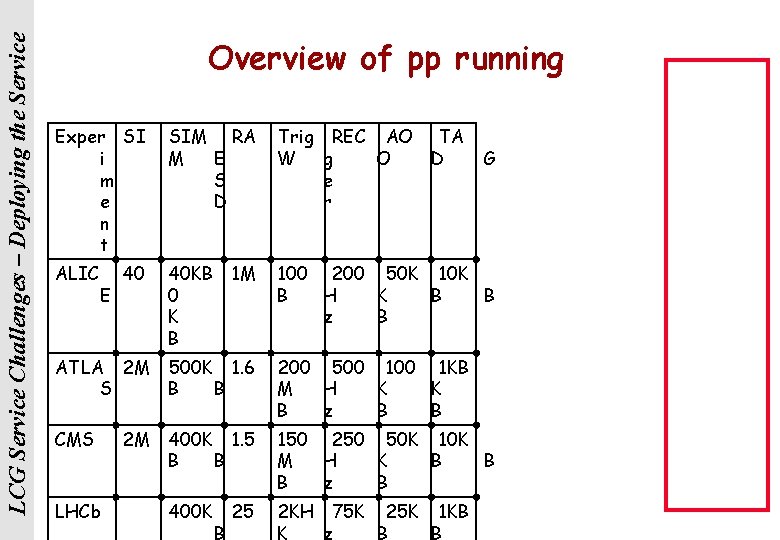

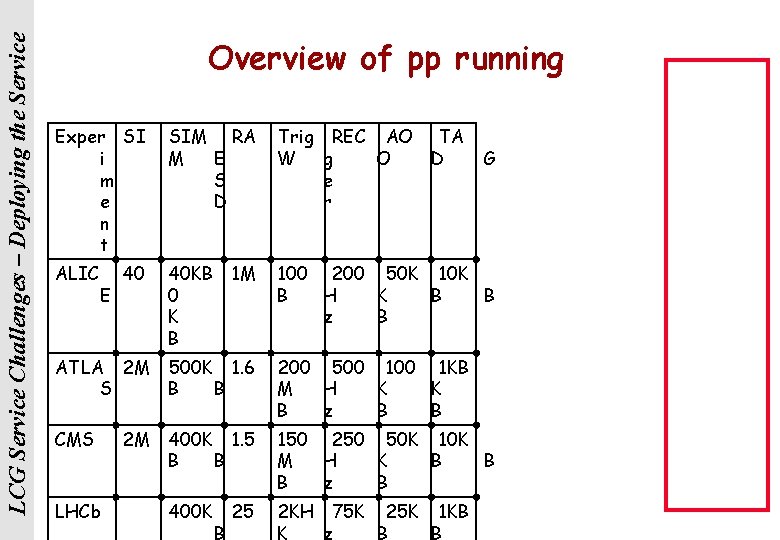

Overview of pp running

| Experiment | SIM | SIMESD | RAW | Trigger | RECO | AOD | TAG |
| --- | --- | --- | --- | --- | --- | --- | --- |
| ALICE | 400 KB | 40 KB | 1 MB | 100 Hz | 200 KB | 50 KB | 10 KB |
| ATLAS | 2 MB | 500 KB | 1.6 MB | 200 Hz | 500 KB | | 1 KB |
| CMS | 2 MB | 400 KB | 1.5 MB | 150 Hz | 250 KB | 50 KB | 10 KB |
| LHCb | 400 KB | | 25 KB | 2 kHz | 75 KB | 25 KB | 1 KB |
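To see what these per-event sizes mean in practice, the sketch below multiplies the RAW event size by the trigger rate to get a RAW data rate, then scales by the nominal 10^7 seconds of pp beam time per year quoted in the LHC parameters. The inputs are the values read from this slide (ALICE 1 MB at 100 Hz, ATLAS 1.6 MB at 200 Hz, CMS 1.5 MB at 150 Hz, LHCb 25 KB at 2 kHz); decimal units and the function names are assumptions made for the illustration.

```python
# RAW event size (MB) and trigger rate (Hz) for pp running, as read from the slide.
PP_RAW = {
    "ALICE": (1.0, 100),
    "ATLAS": (1.6, 200),
    "CMS": (1.5, 150),
    "LHCb": (0.025, 2000),
}

BEAM_SECONDS_PER_YEAR = 1e7   # nominal pp beam time per year
MB_PER_PB = 1e9               # assumption: decimal units


def raw_rate_mb_s(event_size_mb: float, trigger_hz: float) -> float:
    """RAW data rate out of the detector while beam is on."""
    return event_size_mb * trigger_hz


def raw_volume_pb(event_size_mb: float, trigger_hz: float) -> float:
    """RAW data volume accumulated over one nominal year of pp running."""
    return raw_rate_mb_s(event_size_mb, trigger_hz) * BEAM_SECONDS_PER_YEAR / MB_PER_PB


if __name__ == "__main__":
    for experiment, (size_mb, rate_hz) in PP_RAW.items():
        print(f"{experiment:6s} {raw_rate_mb_s(size_mb, rate_hz):6.0f} MB/s "
              f"{raw_volume_pb(size_mb, rate_hz):5.2f} PB/year")
```

With these inputs the four experiments together produce about 695 MB/s of RAW data during pp running, or roughly 7 PB per nominal year.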

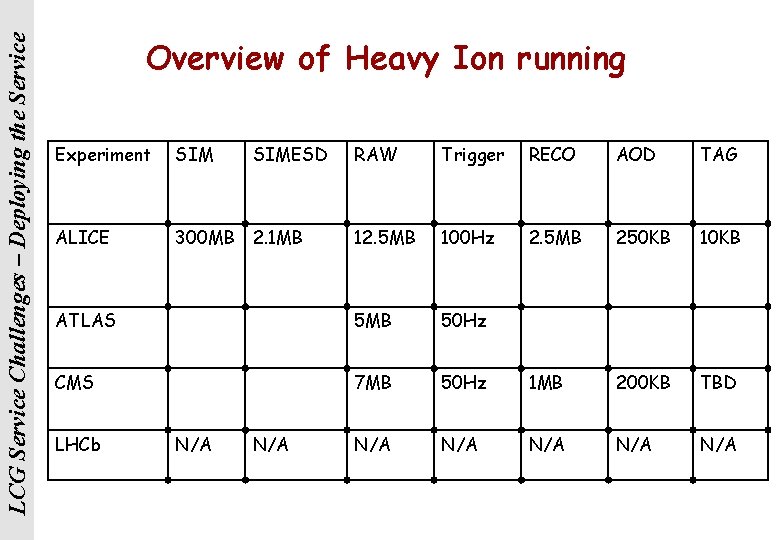

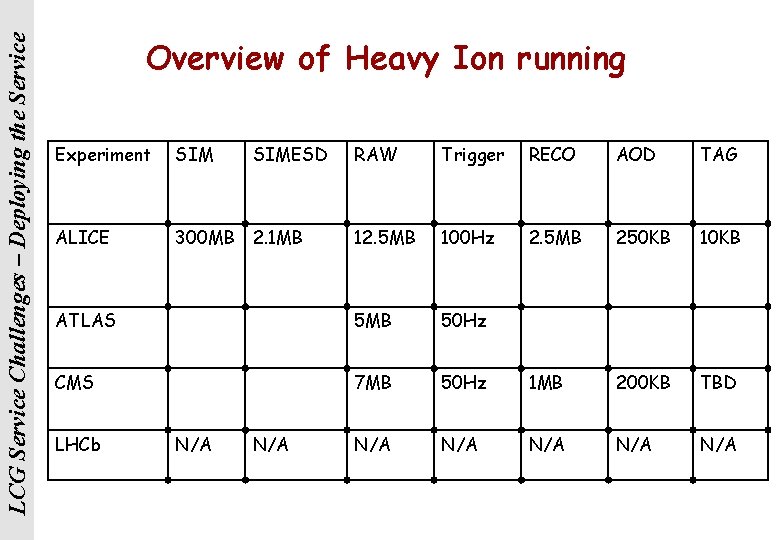

Overview of Heavy Ion running

| Experiment | SIM | SIMESD | RAW | Trigger | RECO | AOD | TAG |
| --- | --- | --- | --- | --- | --- | --- | --- |
| ALICE | 300 MB | 2.1 MB | 12.5 MB | 100 Hz | 2.5 MB | 250 KB | 10 KB |
| ATLAS | | | 5 MB | 50 Hz | | | |
| CMS | | | 7 MB | 50 Hz | 1 MB | 200 KB | TBD |
| LHCb | | | N/A | N/A | N/A | N/A | N/A |

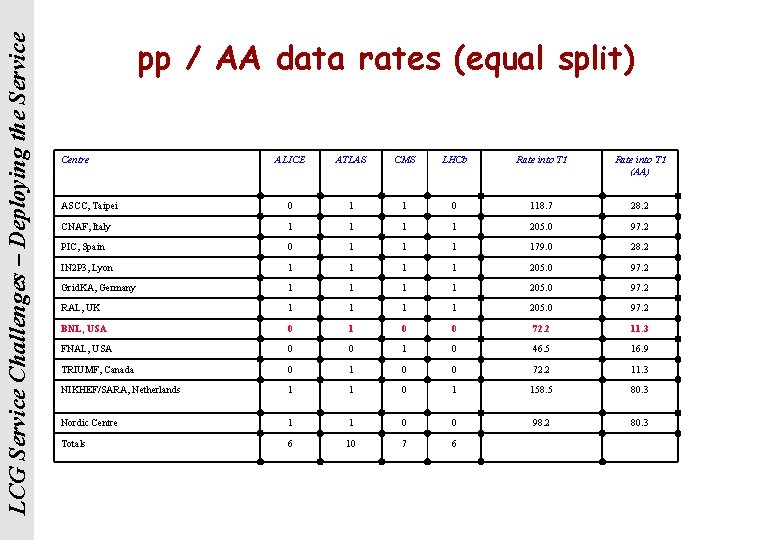

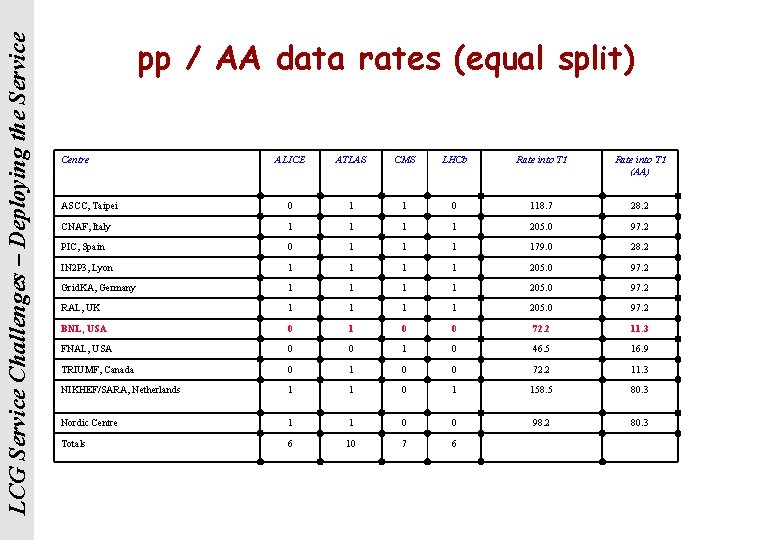

pp / AA data rates (equal split)

| Centre | ALICE | ATLAS | CMS | LHCb | Rate into T1 (pp) | Rate into T1 (AA) |
| --- | --- | --- | --- | --- | --- | --- |
| ASCC, Taipei | 0 | 1 | 1 | 0 | 118.7 | 28.2 |
| CNAF, Italy | 1 | 1 | 1 | 1 | 205.0 | 97.2 |
| PIC, Spain | 0 | 1 | 1 | 1 | 179.0 | 28.2 |
| IN2P3, Lyon | 1 | 1 | 1 | 1 | 205.0 | 97.2 |
| GridKA, Germany | 1 | 1 | 1 | 1 | 205.0 | 97.2 |
| RAL, UK | 1 | 1 | 1 | 1 | 205.0 | 97.2 |
| BNL, USA | 0 | 1 | 0 | 0 | 72.2 | 11.3 |
| FNAL, USA | 0 | 0 | 1 | 0 | 46.5 | 16.9 |
| TRIUMF, Canada | 0 | 1 | 0 | 0 | 72.2 | 11.3 |
| NIKHEF/SARA, Netherlands | 1 | 1 | 0 | 1 | 158.5 | 80.3 |
| Nordic Centre | 1 | 1 | 0 | 0 | 98.2 | 80.3 |
| Totals | 6 | 10 | 7 | 6 | | |
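The per-site pp rates on this slide are consistent with each experiment contributing a fixed share to every Tier 1 that serves it. The sketch below rebuilds the pp column from four per-experiment shares. The ATLAS and CMS shares are read directly from the single-experiment rows (BNL and FNAL); the ALICE and LHCb shares are inferred here by subtraction and are not stated on the slide. Units are as on the slide, which does not give them explicitly.

```python
# Per-experiment pp share delivered to each Tier 1 that serves the experiment.
PP_SHARE = {
    "ATLAS": 72.2,  # BNL row (ATLAS only)
    "CMS": 46.5,    # FNAL row (CMS only)
    "ALICE": 26.0,  # inferred: Nordic Centre (98.2) minus the ATLAS share
    "LHCb": 60.3,   # inferred: PIC (179.0) minus the ATLAS and CMS shares
}

# Experiments served by each Tier 1 and the pp rate quoted on the slide.
TIER1 = {
    "ASCC": (["ATLAS", "CMS"], 118.7),
    "CNAF": (["ALICE", "ATLAS", "CMS", "LHCb"], 205.0),
    "PIC": (["ATLAS", "CMS", "LHCb"], 179.0),
    "IN2P3": (["ALICE", "ATLAS", "CMS", "LHCb"], 205.0),
    "GridKA": (["ALICE", "ATLAS", "CMS", "LHCb"], 205.0),
    "RAL": (["ALICE", "ATLAS", "CMS", "LHCb"], 205.0),
    "BNL": (["ATLAS"], 72.2),
    "FNAL": (["CMS"], 46.5),
    "TRIUMF": (["ATLAS"], 72.2),
    "NIKHEF/SARA": (["ALICE", "ATLAS", "LHCb"], 158.5),
    "Nordic": (["ALICE", "ATLAS"], 98.2),
}


def pp_rate(experiments):
    """Sum of the per-experiment shares for one Tier 1, rounded as on the slide."""
    return round(sum(PP_SHARE[e] for e in experiments), 1)


# Number of Tier 1 centres serving each experiment (the 'Totals' row).
T1_COUNT = {
    exp: sum(exp in served for served, _ in TIER1.values()) for exp in PP_SHARE
}

if __name__ == "__main__":
    for site, (served, quoted) in TIER1.items():
        print(f"{site:12s} computed {pp_rate(served):6.1f}  quoted {quoted:6.1f}")
    print(T1_COUNT)
```

Every computed rate matches the quoted one, and the per-experiment Tier 1 counts reproduce the totals of 6, 10, 7 and 6.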

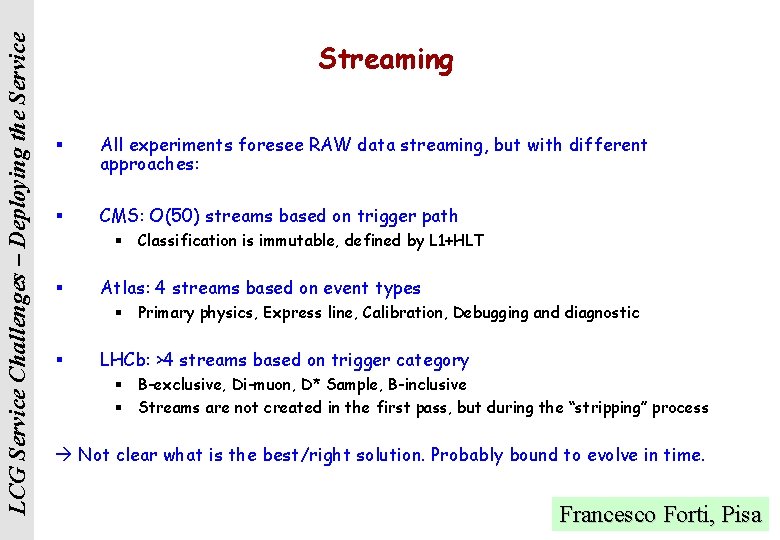

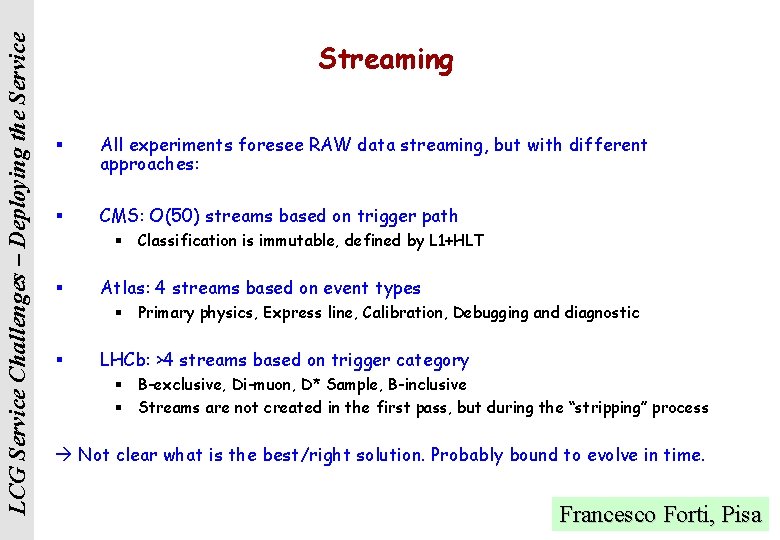

Streaming
- All experiments foresee RAW data streaming, but with different approaches:
  - CMS: O(50) streams based on trigger path
    - Classification is immutable, defined by L1+HLT
  - ATLAS: 4 streams based on event types
    - Primary physics, Express line, Calibration, Debugging and diagnostic
  - LHCb: >4 streams based on trigger category
    - B-exclusive, Di-muon, D* Sample, B-inclusive
    - Streams are not created in the first pass, but during the “stripping” process
- Not clear what is the best/right solution. Probably bound to evolve in time.

Francesco Forti, Pisa

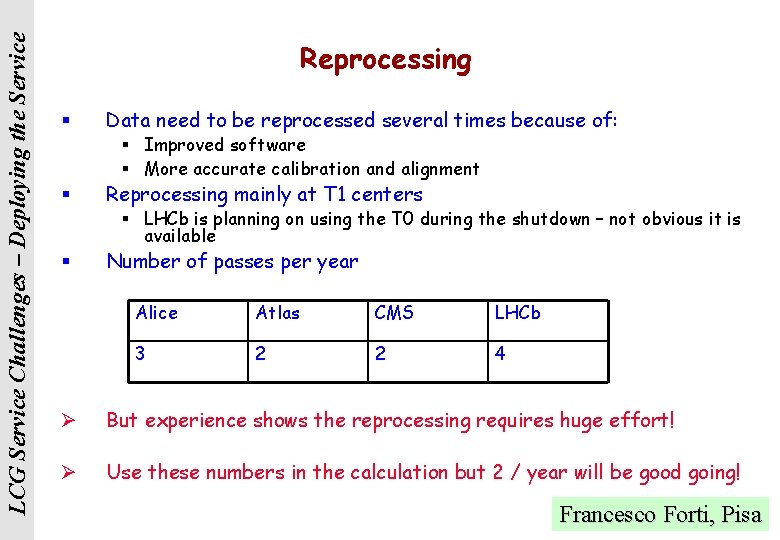

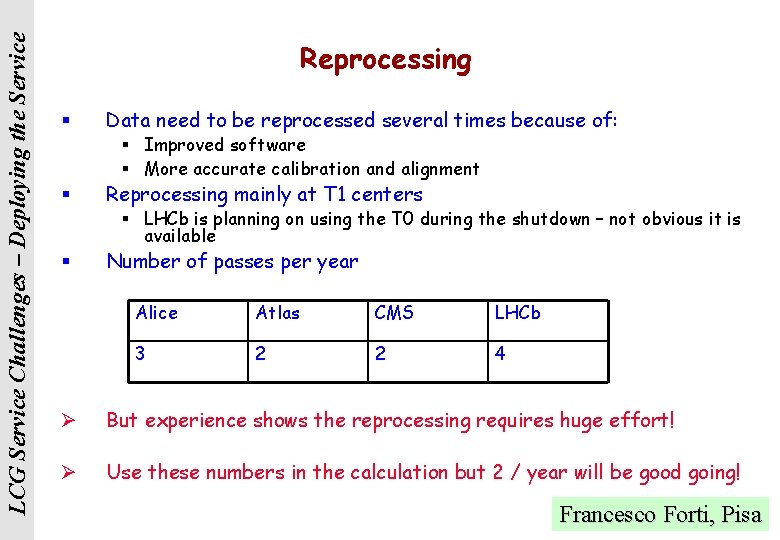

Reprocessing
- Data need to be reprocessed several times because of:
  - Improved software
  - More accurate calibration and alignment
- Reprocessing mainly at T1 centers
  - LHCb is planning on using the T0 during the shutdown; not obvious it is available
- Number of passes per year:

| ALICE | ATLAS | CMS | LHCb |
| --- | --- | --- | --- |
| 3 | 2 | 2 | 4 |

- But experience shows the reprocessing requires huge effort!
- Use these numbers in the calculation but 2 / year will be good going!

Francesco Forti, Pisa

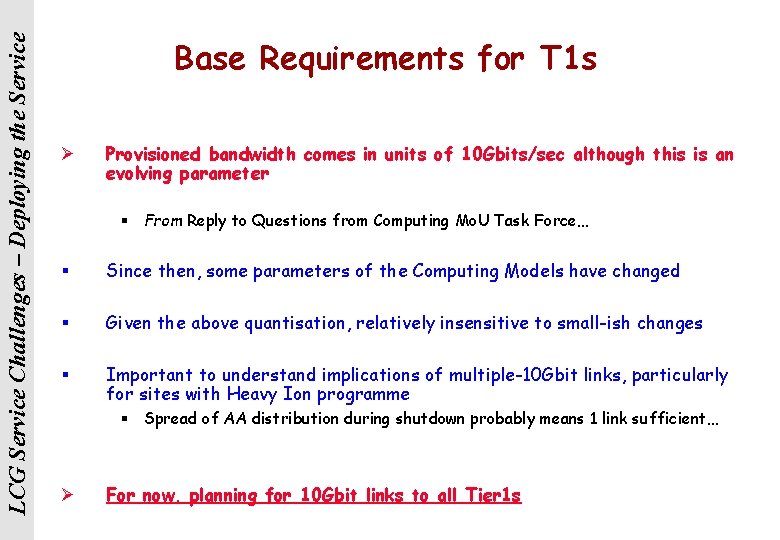

Base Requirements for T1s
- Provisioned bandwidth comes in units of 10 Gbits/sec although this is an evolving parameter
  - From Reply to Questions from Computing MoU Task Force…
- Since then, some parameters of the Computing Models have changed
- Given the above quantisation, relatively insensitive to small-ish changes
- Important to understand implications of multiple 10 Gbit links, particularly for sites with Heavy Ion programme
  - Spread of AA distribution during shutdown probably means 1 link sufficient…
- For now, planning for 10 Gbit links to all Tier 1s

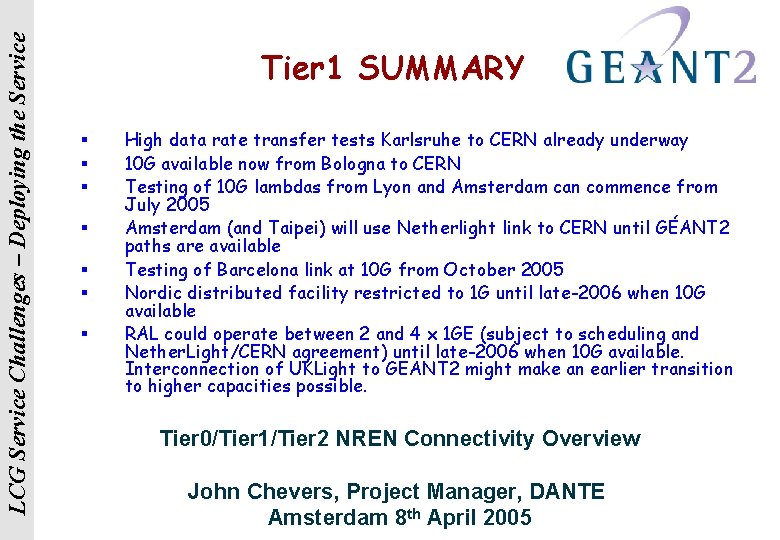

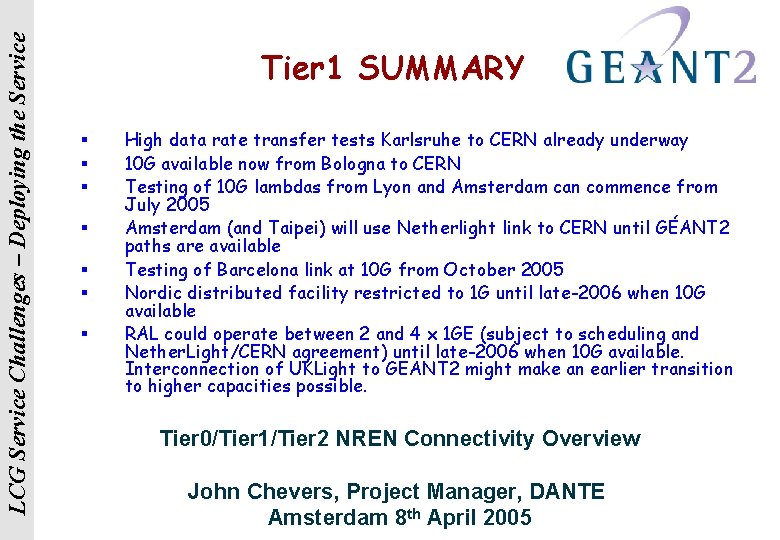

Tier 1 SUMMARY
- High data rate transfer tests Karlsruhe to CERN already underway
- 10G available now from Bologna to CERN
- Testing of 10G lambdas from Lyon and Amsterdam can commence from July 2005
- Amsterdam (and Taipei) will use NetherLight link to CERN until GÉANT2 paths are available
- Testing of Barcelona link at 10G from October 2005
- Nordic distributed facility restricted to 1G until late 2006 when 10G available
- RAL could operate between 2 and 4 x 1GE (subject to scheduling and NetherLight/CERN agreement) until late 2006 when 10G available. Interconnection of UKLight to GÉANT2 might make an earlier transition to higher capacities possible.

Tier 0/Tier 1/Tier 2 NREN Connectivity Overview. John Chevers, Project Manager, DANTE. Amsterdam, 8th April 2005

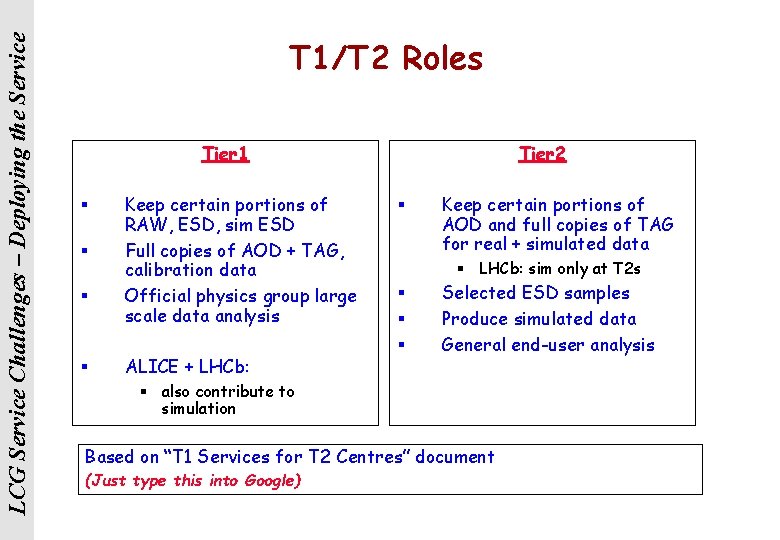

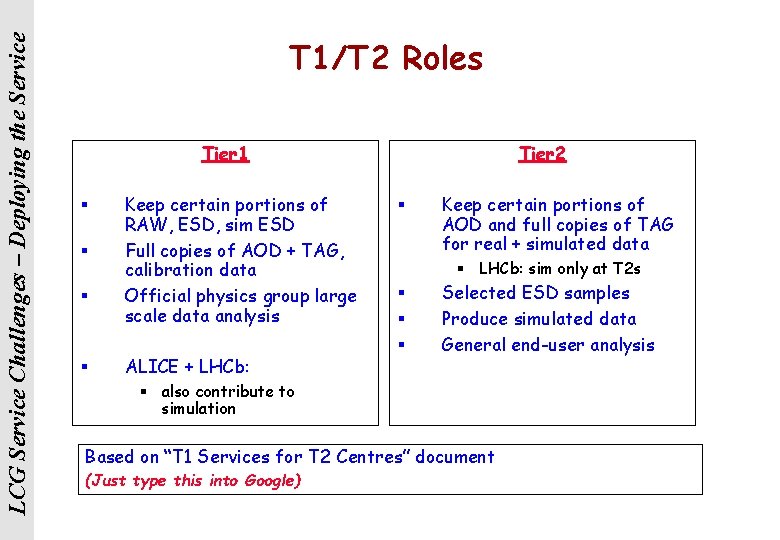

T1/T2 Roles

Tier 1
- Keep certain portions of RAW, ESD, sim ESD
- Full copies of AOD + TAG, calibration data
- Official physics group large scale data analysis
- ALICE + LHCb:
  - also contribute to simulation

Tier 2
- Keep certain portions of AOD and full copies of TAG for real + simulated data
  - LHCb: sim only at T2s
- Selected ESD samples
- Produce simulated data
- General end-user analysis

Based on “T1 Services for T2 Centres” document (just type this into Google)

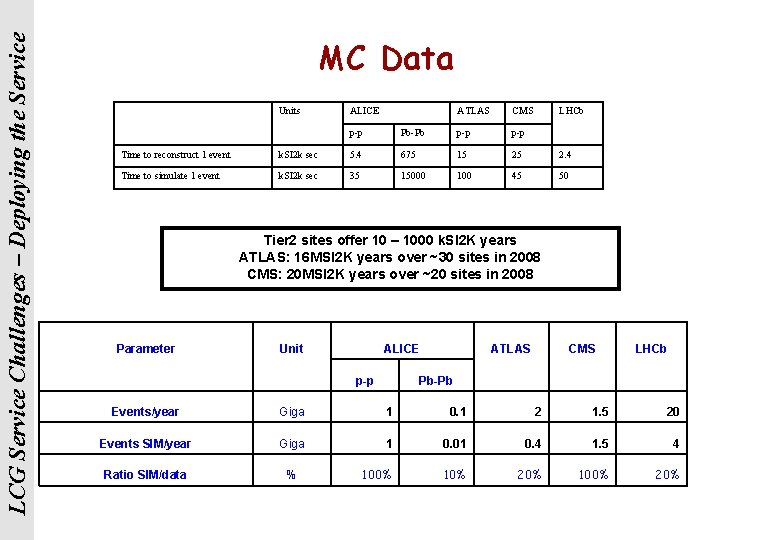

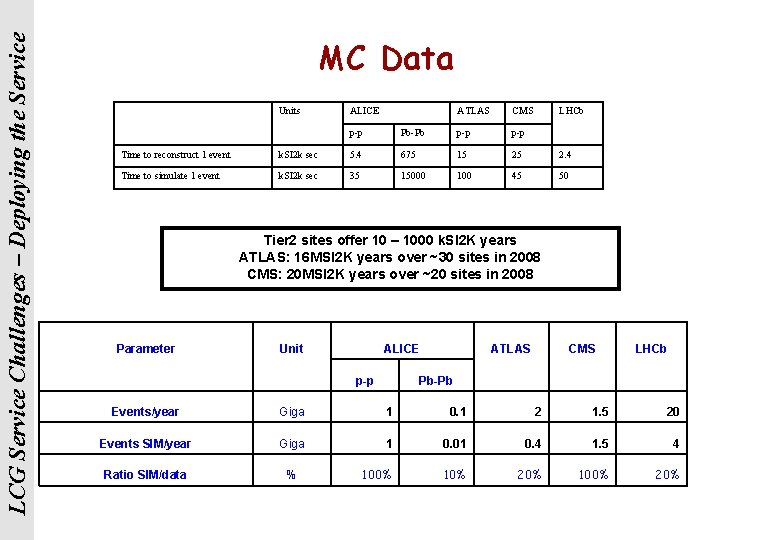

MC Data

| Parameter | Unit | ALICE p-p | ALICE Pb-Pb | ATLAS | CMS | LHCb |
| --- | --- | --- | --- | --- | --- | --- |
| Time to reconstruct 1 event | kSI2k sec | 5.4 | 675 | 15 | 25 | 2.4 |
| Time to simulate 1 event | kSI2k sec | 35 | 15000 | 100 | 45 | 50 |

- Tier 2 sites offer 10 – 1000 kSI2K years
- ATLAS: 16 MSI2K years over ~30 sites in 2008
- CMS: 20 MSI2K years over ~20 sites in 2008

| Parameter | Unit | ALICE p-p | ALICE Pb-Pb | ATLAS | CMS | LHCb |
| --- | --- | --- | --- | --- | --- | --- |
| Events/year | Giga | 1 | 0.1 | 2 | 1.5 | 20 |
| Events SIM/year | Giga | 1 | 0.01 | 0.4 | 1.5 | 4 |
| Ratio SIM/data | % | 100% | 10% | 20% | 100% | 20% |
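The simulation figures on this slide translate into a yearly CPU requirement by multiplying the time to simulate one event by the number of simulated events per year. The sketch below does that arithmetic and expresses the result in kSI2k years. It assumes a 365-day year, and the function and variable names are illustrative.

```python
SECONDS_PER_YEAR = 365 * 86_400  # assumption: 365-day year

# (kSI2k sec to simulate one event, simulated events per year in units of 1e9),
# both taken from the slide.
SIMULATION = {
    "ALICE p-p": (35, 1),
    "ALICE Pb-Pb": (15000, 0.01),
    "ATLAS": (100, 0.4),
    "CMS": (45, 1.5),
    "LHCb": (50, 4),
}


def sim_cpu_ksi2k_years(ksi2k_sec_per_event: float, giga_events: float) -> float:
    """CPU needed to simulate one year's worth of events, in kSI2k years."""
    return ksi2k_sec_per_event * giga_events * 1e9 / SECONDS_PER_YEAR


if __name__ == "__main__":
    for name, (cost, events) in SIMULATION.items():
        print(f"{name:12s} {sim_cpu_ksi2k_years(cost, events):8.0f} kSI2k years")
```

Under these assumptions ATLAS simulation needs about 1.3 MSI2k years per year of running, which can be set against the 16 MSI2K years quoted for ATLAS Tier 2 sites in 2008.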

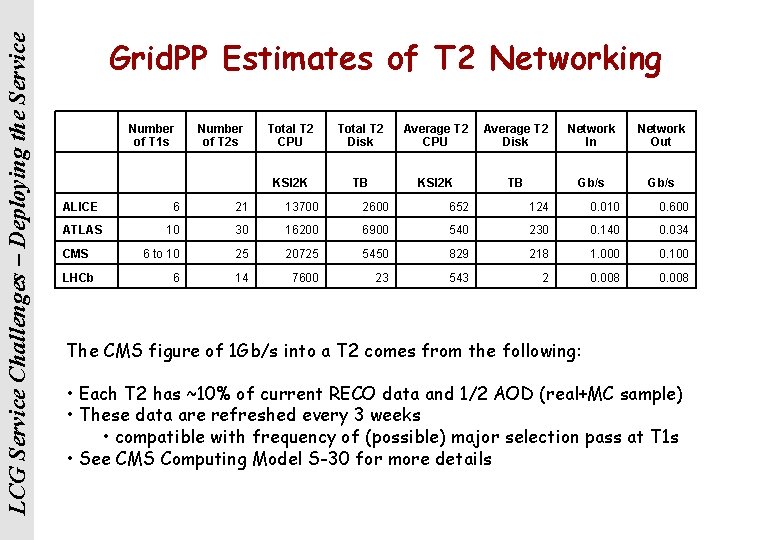

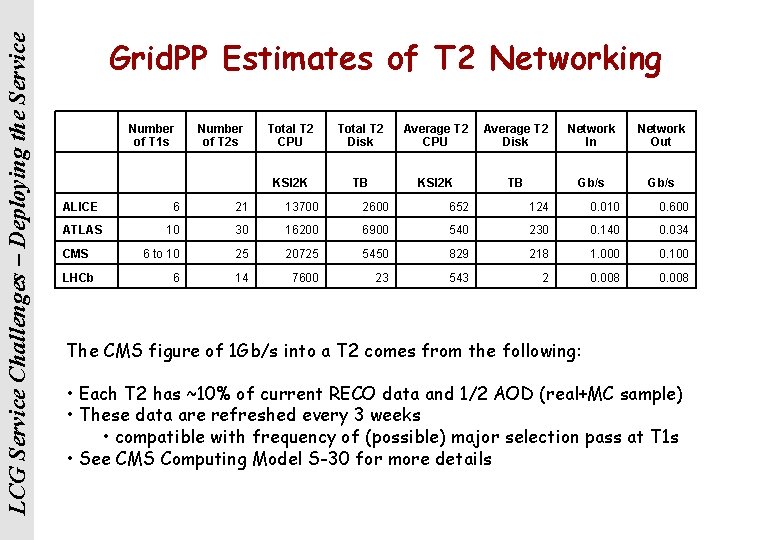

GridPP Estimates of T2 Networking

| Experiment | Number of T1s | Number of T2s | Total T2 CPU (KSI2K) | Total T2 Disk (TB) | Average T2 CPU (KSI2K) | Average T2 Disk (TB) | Network In (Gb/s) | Network Out (Gb/s) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| ALICE | 6 | 21 | 13700 | 2600 | 652 | 124 | 0.010 | 0.600 |
| ATLAS | 10 | 30 | 16200 | 6900 | 540 | 230 | 0.140 | 0.034 |
| CMS | 6 to 10 | 25 | 20725 | 5450 | 829 | 218 | 1.000 | 0.100 |
| LHCb | 6 | 14 | 7600 | 23 | 543 | 2 | 0.008 | |

The CMS figure of 1 Gb/s into a T2 comes from the following:
- Each T2 has ~10% of current RECO data and 1/2 AOD (real + MC sample)
- These data are refreshed every 3 weeks
  - compatible with frequency of (possible) major selection pass at T1s
- See CMS Computing Model S-30 for more details

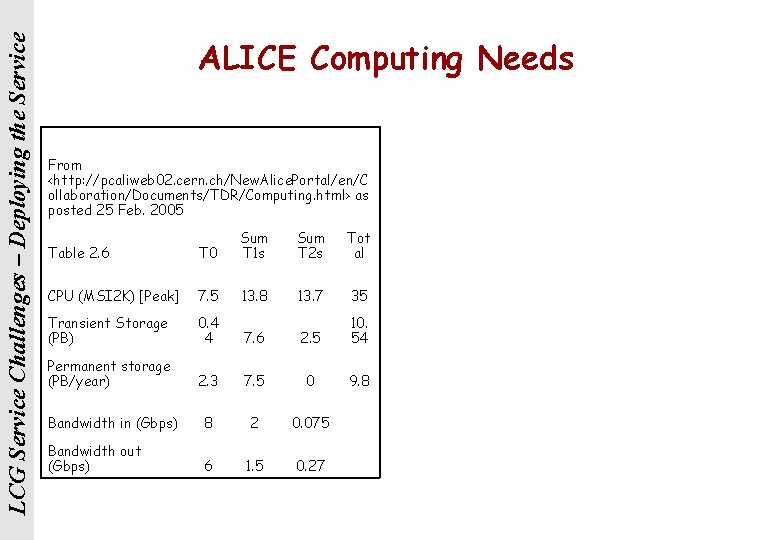

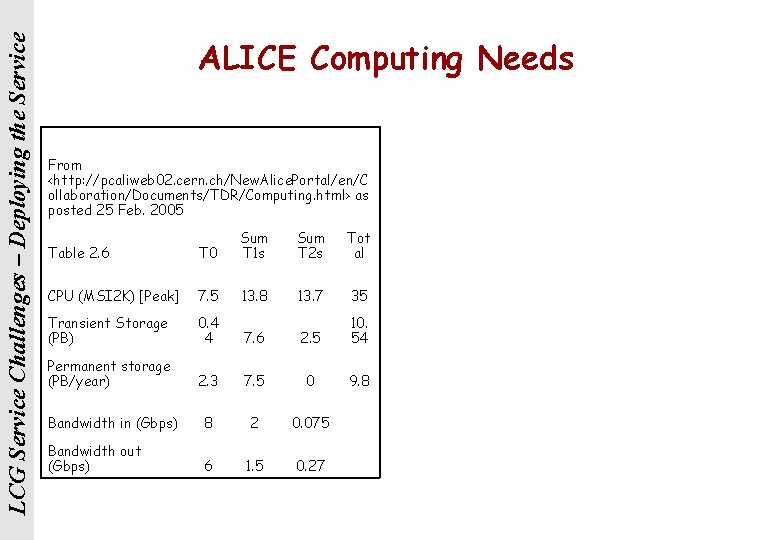

ALICE Computing Needs

From http://pcaliweb02.cern.ch/NewAlicePortal/en/Collaboration/Documents/TDR/Computing.html as posted 25 Feb. 2005, Table 2.6

| | T0 | Sum T1s | Sum T2s | Total |
| --- | --- | --- | --- | --- |
| CPU (MSI2K) [Peak] | 7.5 | 13.8 | 13.7 | 35 |
| Transient storage (PB) | 0.44 | 7.6 | 2.5 | 10.54 |
| Permanent storage (PB/year) | 2.3 | 7.5 | 0 | 9.8 |
| Bandwidth in (Gbps) | 8 | 2 | 0.075 | |
| Bandwidth out (Gbps) | 6 | 1.5 | 0.27 | |

Service Challenge 3

Goals and Timeline for Service Challenge 3

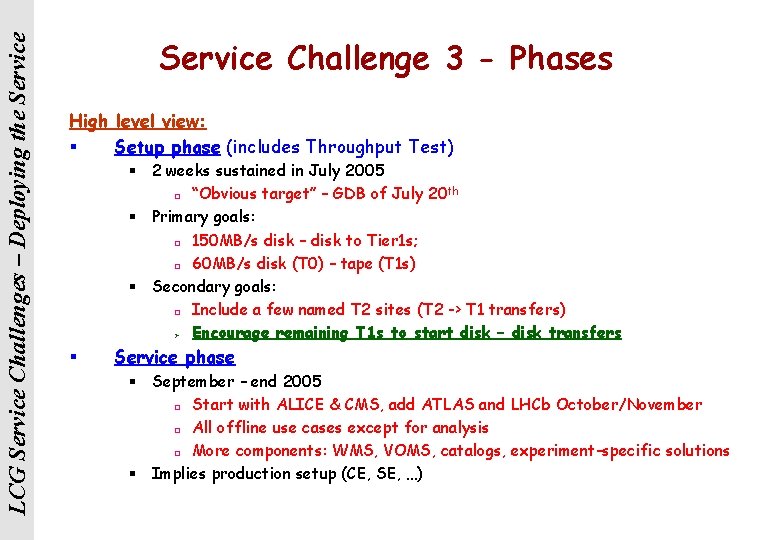

Service Challenge 3 - Phases

High level view:
- Setup phase (includes Throughput Test)
  - 2 weeks sustained in July 2005
    - “Obvious target”: GDB of July 20th
  - Primary goals:
    - 150 MB/s disk – disk to Tier 1s;
    - 60 MB/s disk (T0) – tape (T1s)
  - Secondary goals:
    - Include a few named T2 sites (T2 -> T1 transfers)
    - Encourage remaining T1s to start disk – disk transfers
- Service phase
  - September – end 2005
    - Start with ALICE & CMS, add ATLAS and LHCb October/November
    - All offline use cases except for analysis
    - More components: WMS, VOMS, catalogs, experiment-specific solutions
  - Implies production setup (CE, SE, …)

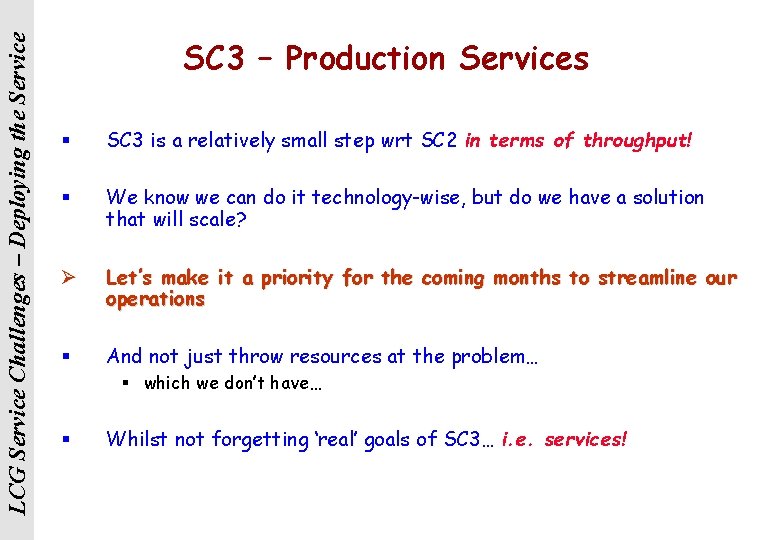

SC3 – Production Services
- SC3 is a relatively small step wrt SC2 in terms of throughput!
- We know we can do it technology-wise, but do we have a solution that will scale?
- Let’s make it a priority for the coming months to streamline our operations
  - And not just throw resources at the problem…
    - which we don’t have…
- Whilst not forgetting ‘real’ goals of SC3… i.e. services!

SC3 Preparation Workshop
- This (proposed) workshop will focus on very detailed technical planning for the whole SC3 exercise.
- It is intended to be as interactive as possible, i.e. not presentations to an audience largely in a different (wireless) world.
- There will be sessions devoted to specific experiment issues, Tier 1 issues, Tier 2 issues as well as the general service infrastructure.
- Planning for SC3 has already started and will continue prior to the workshop.
- This is an opportunity to get together to iron out concerns and issues that cannot easily be solved by e-mail, phone conferences and/or other meetings prior to the workshop.

SC3 Preparation W/S Agenda
- 4 x 1/2 days devoted to experiments
  - in B160 1-009, phone conferencing possible
- 1 day focussing on T1/T2 issues together with output of above
  - in 513 1-024, VRVS available
- Dates are 13 – 15 June (Monday – Wednesday)
  - Even though conference room booked tentatively in February, little flexibility in dates even then!

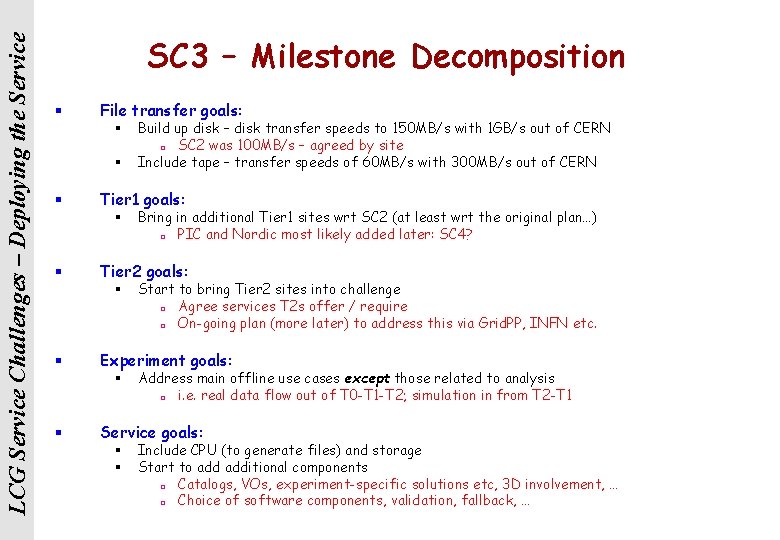

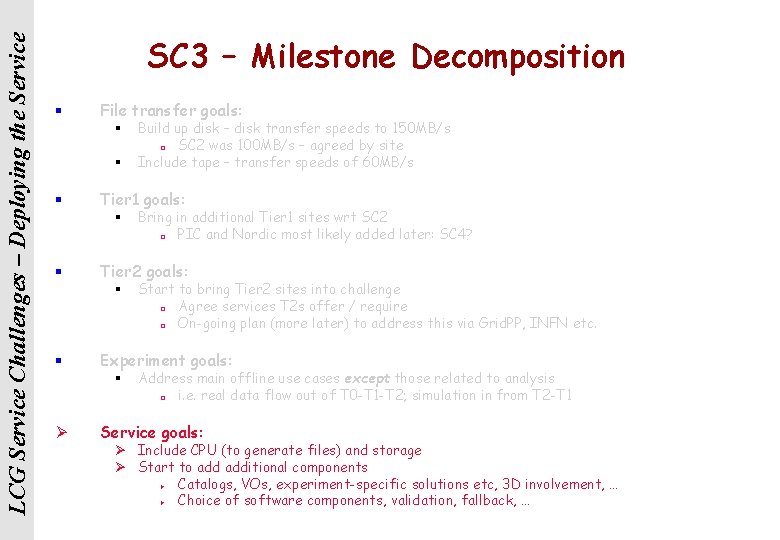

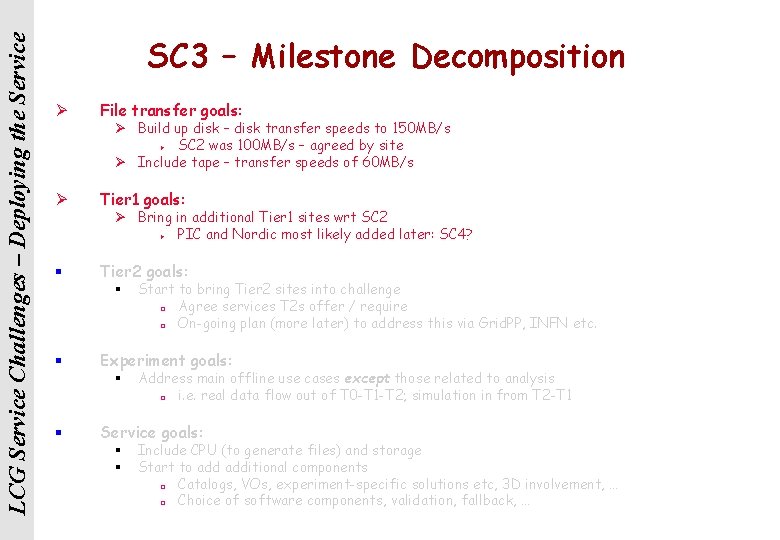

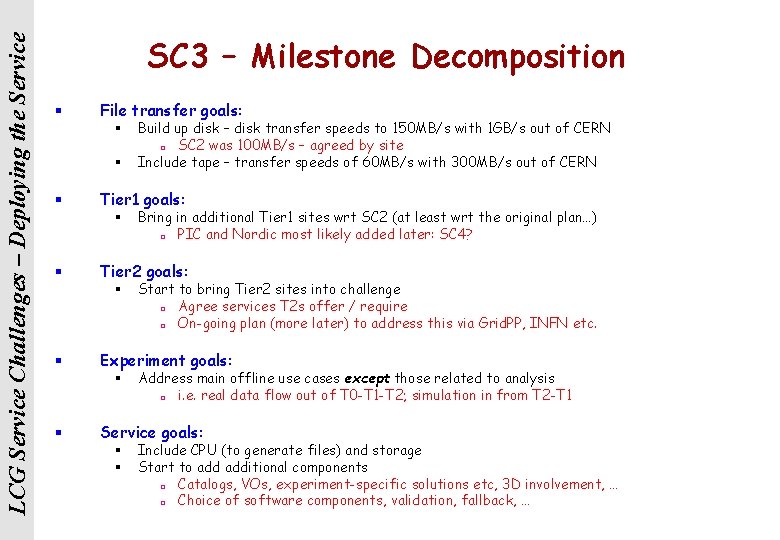

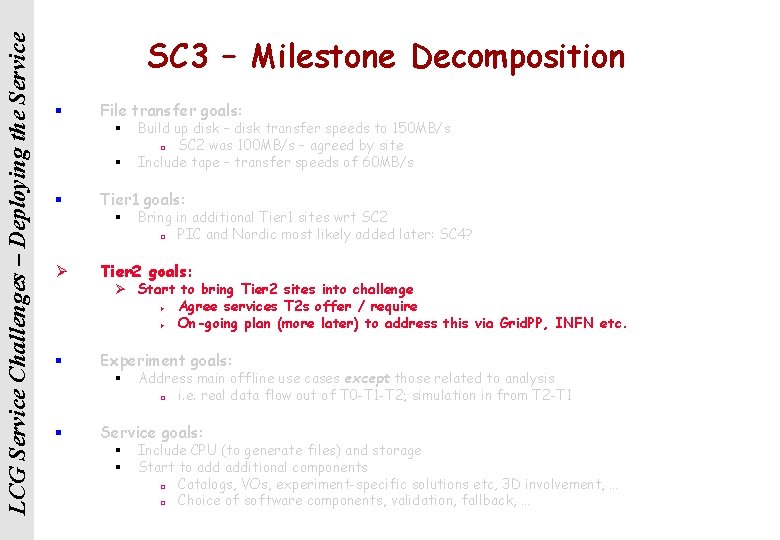

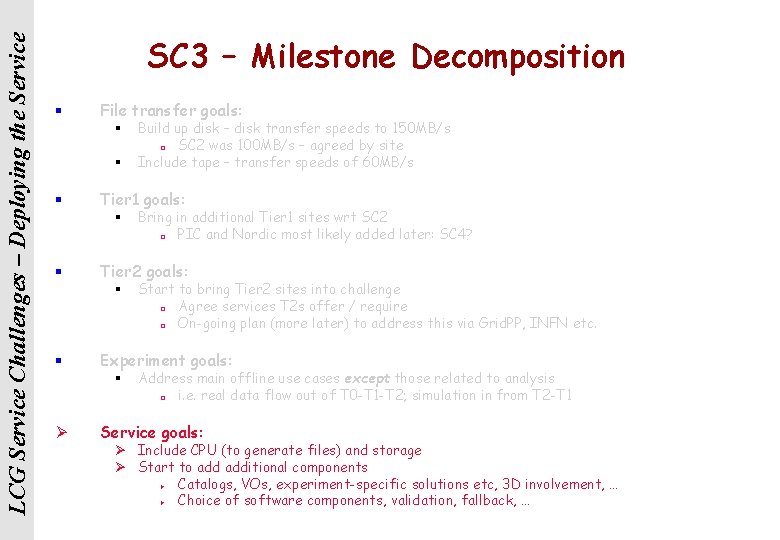

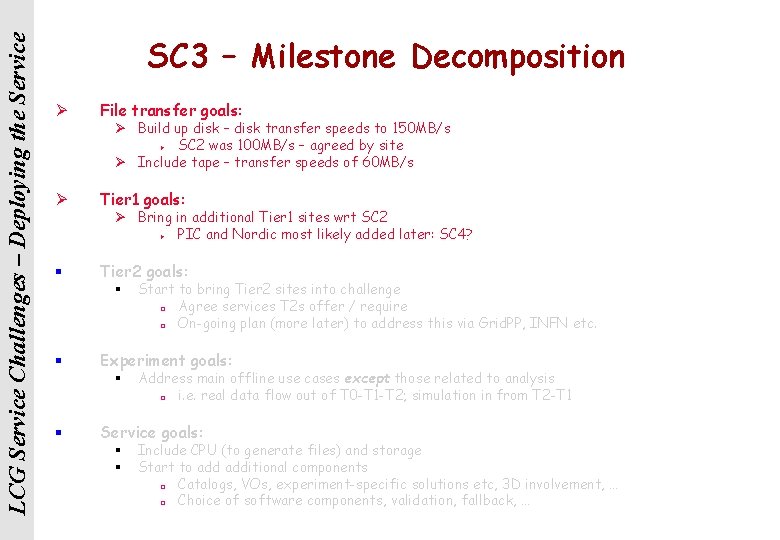

SC3 – Milestone Decomposition
- File transfer goals:
  - Build up disk – disk transfer speeds to 150 MB/s with 1 GB/s out of CERN
    - SC2 was 100 MB/s – agreed by site
  - Include tape – transfer speeds of 60 MB/s with 300 MB/s out of CERN
- Tier 1 goals:
  - Bring in additional Tier 1 sites wrt SC2 (at least wrt the original plan…)
    - PIC and Nordic most likely added later: SC4?
- Tier 2 goals:
  - Start to bring Tier 2 sites into challenge
    - Agree services T2s offer / require
    - On-going plan (more later) to address this via GridPP, INFN etc.
- Experiment goals:
  - Address main offline use cases except those related to analysis
    - i.e. real data flow out of T0-T1-T2; simulation in from T2-T1
- Service goals:
  - Include CPU (to generate files) and storage
  - Start to add additional components
    - Catalogs, VOs, experiment-specific solutions etc., 3D involvement, …
    - Choice of software components, validation, fallback, …

SC3 – Experiment Goals
- Meetings on-going to discuss goals of SC3 and experiment involvement
- Focus on:
  - First demonstrate robust infrastructure;
  - Add ‘simulated’ experiment-specific usage patterns;
  - Add experiment-specific components;
  - Run experiments’ offline frameworks but don’t preserve data;
    - Exercise primary Use Cases except analysis (SC4)
  - Service phase: data is preserved…
- Has significant implications on resources beyond file transfer services
  - Storage; CPU; Network… Both at CERN and participating sites (T1/T2)
  - May have different partners for experiment-specific tests (e.g. not all T1s)
- In effect, experiments’ usage of SC during service phase = data challenge
- Must be exceedingly clear on goals / responsibilities during each phase!

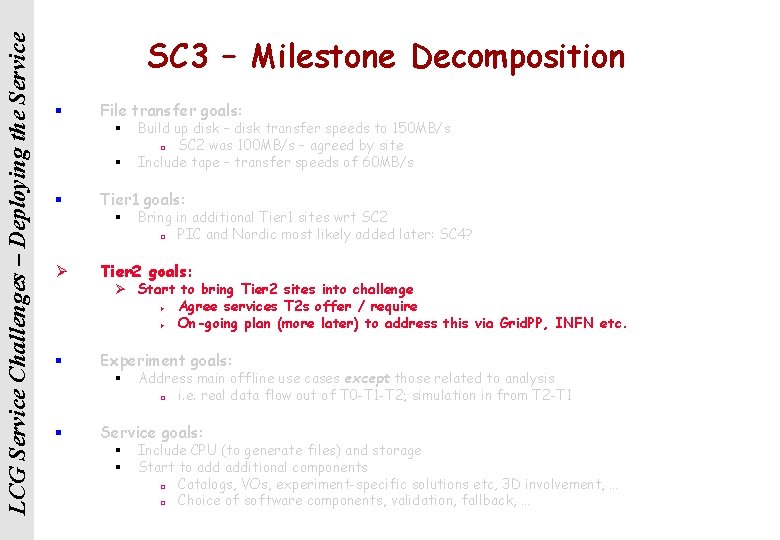

SC3 – Milestone Decomposition
- File transfer goals:
  - Build up disk – disk transfer speeds to 150 MB/s
    - SC2 was 100 MB/s – agreed by site
  - Include tape – transfer speeds of 60 MB/s
- Tier 1 goals:
  - Bring in additional Tier 1 sites wrt SC2
    - PIC and Nordic most likely added later: SC4?
- Tier 2 goals:
  - Start to bring Tier 2 sites into challenge
    - Agree services T2s offer / require
    - On-going plan (more later) to address this via GridPP, INFN etc.
- Experiment goals:
  - Address main offline use cases except those related to analysis
    - i.e. real data flow out of T0-T1-T2; simulation in from T2-T1
- Service goals:
  - Include CPU (to generate files) and storage
  - Start to add additional components
    - Catalogs, VOs, experiment-specific solutions etc., 3D involvement, …
    - Choice of software components, validation, fallback, …

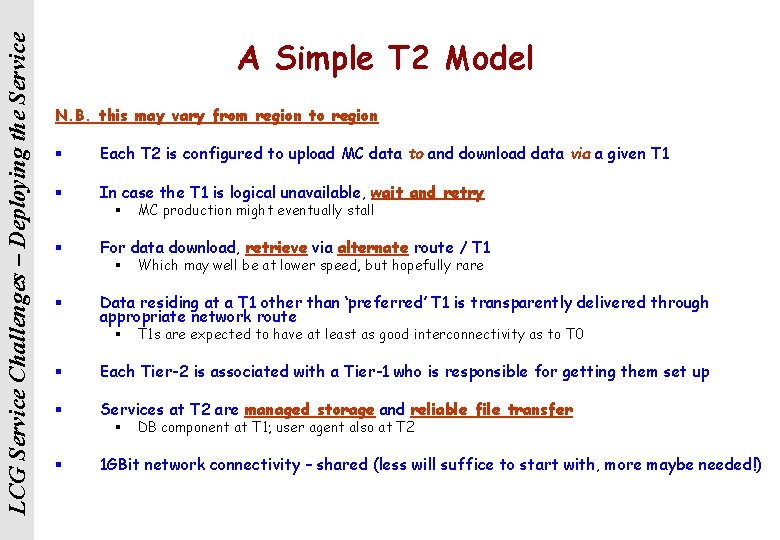

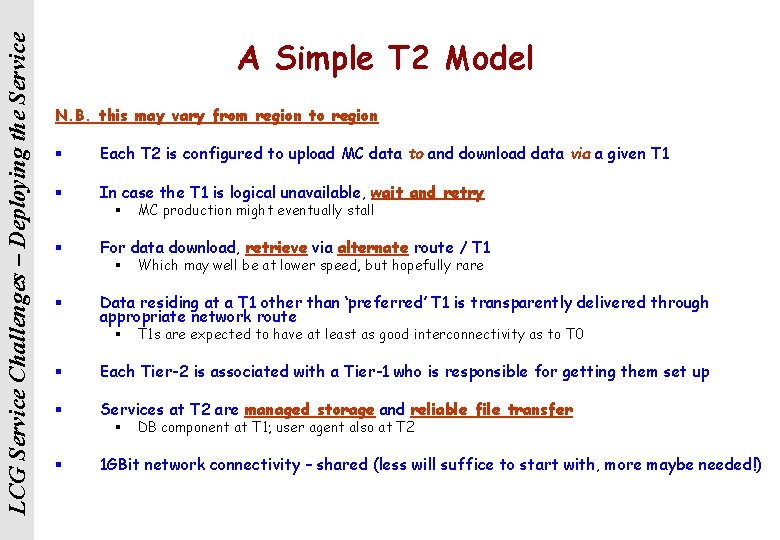

A Simple T2 Model

N.B. this may vary from region to region
- Each T2 is configured to upload MC data to, and download data via, a given T1
- In case the T1 is logically unavailable, wait and retry
  - MC production might eventually stall
- For data download, retrieve via alternate route / T1
  - Which may well be at lower speed, but hopefully rare
- Data residing at a T1 other than the ‘preferred’ T1 is transparently delivered through appropriate network route
  - T1s are expected to have at least as good interconnectivity as to T0
- Each Tier-2 is associated with a Tier-1 which is responsible for getting it set up
- Services at T2 are managed storage and reliable file transfer
  - DB component at T1; user agent also at T2
- 1 GBit network connectivity – shared (less will suffice to start with, more may be needed!)
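The download rule in this model (use the preferred T1, wait and retry if it is unavailable, then fall back to an alternate route) can be sketched as follows. This is an illustration only and not part of any LCG software: `download`, `TransferError`, the `fetch` callback and the retry parameters are all hypothetical, and the caller supplies the function that performs the actual transfer.

```python
import time
from typing import Callable, Sequence


class TransferError(Exception):
    """Raised by the (hypothetical) transfer function when a T1 cannot serve a file."""


def download(
    filename: str,
    preferred_t1: str,
    alternate_t1s: Sequence[str],
    fetch: Callable[[str, str], bytes],
    retries: int = 3,
    wait_seconds: float = 60.0,
) -> bytes:
    """Try the preferred T1 with retries, then each alternate T1 once."""
    for attempt in range(retries):
        try:
            return fetch(preferred_t1, filename)
        except TransferError:
            if attempt < retries - 1:
                time.sleep(wait_seconds)  # wait and retry the preferred T1
    for t1 in alternate_t1s:
        try:
            return fetch(t1, filename)    # alternate route, possibly slower
        except TransferError:
            continue
    raise TransferError(f"{filename}: no Tier 1 could deliver the file")
```

Uploads of MC data would follow only the first loop: with no alternate destination, production simply stalls until the associated T1 returns.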

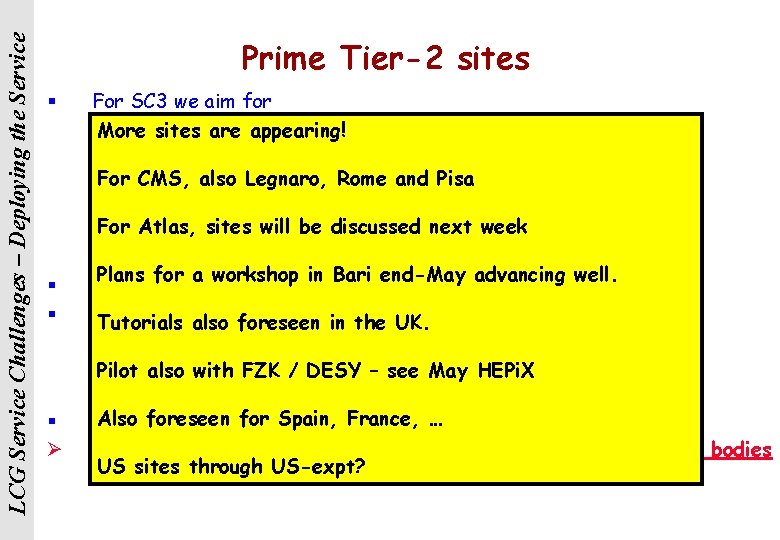

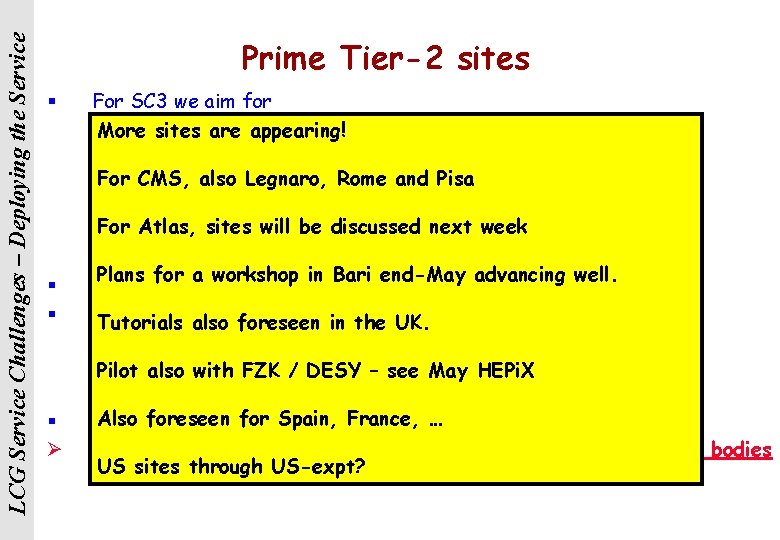

Prime Tier-2 sites
- For SC3 we aim for:
  - DESY – FZK (CMS + ATLAS)
  - Lancaster – RAL (ATLAS)
  - London – RAL (CMS)
  - ScotGrid – RAL (LHCb)
  - Torino – CNAF (ALICE)
  - US sites – FNAL (CMS)
- Responsibility between T1 and T2 (+ experiments)
  - CERN’s role limited
    - Develop a manual “how to connect as a T2”
    - Provide relevant s/w + installation guides
    - Assist in workshops, training etc.
- Other interested parties: Prague, Warsaw, Moscow, …
- Also attacking larger scale problem through national / regional bodies
  - GridPP, INFN, HEPiX, US-ATLAS, US-CMS

| Site | Tier 1 | Experiment |
| --- | --- | --- |
| Bari, Italy | CNAF, Italy | CMS |
| Turin, Italy | CNAF, Italy | ALICE |
| DESY, Germany | FZK, Germany | ATLAS, CMS |
| Lancaster, UK | RAL, UK | ATLAS |
| London, UK | RAL, UK | CMS |
| ScotGrid, UK | RAL, UK | LHCb |
| US Tier 2s | BNL / FNAL | ATLAS / CMS |

- More sites are appearing!
  - For CMS, also Legnaro, Rome and Pisa
  - For ATLAS, sites will be discussed next week
- Plans for a workshop in Bari end-May advancing well
  - Tutorials also foreseen in the UK
- Pilot also with FZK / DESY: see May HEPiX
- Also foreseen for Spain, France, …
  - US sites through US-expt?

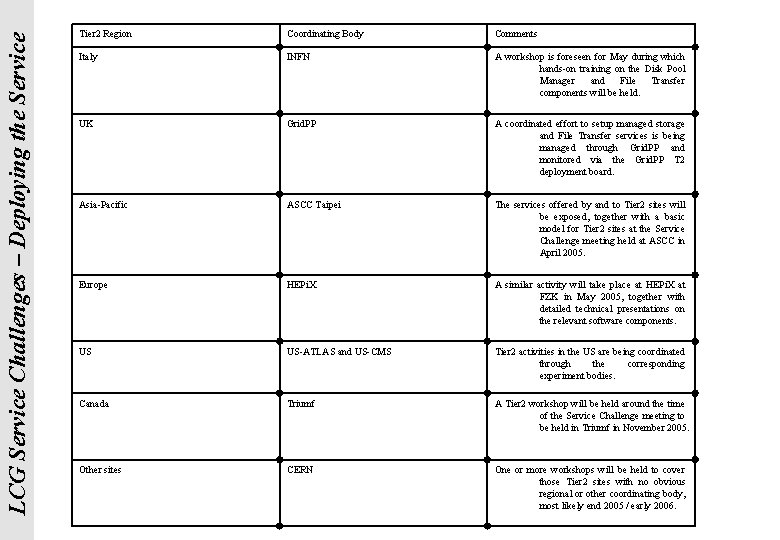

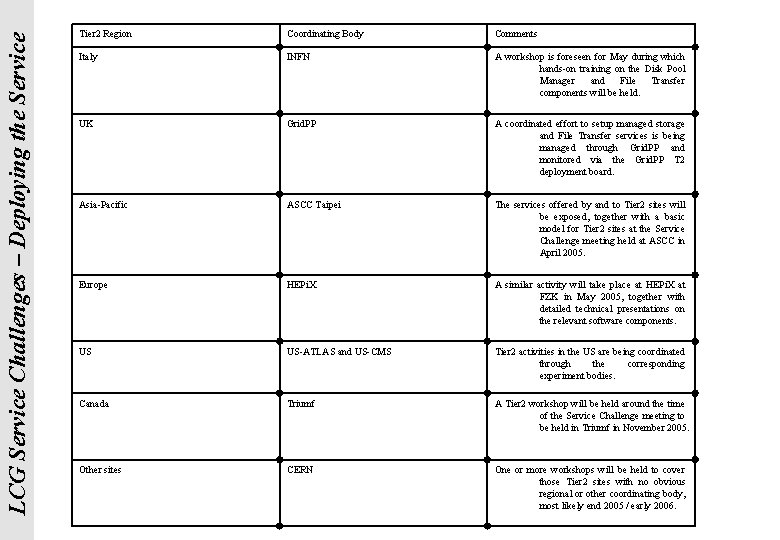

Tier 2

| Region | Coordinating Body | Comments |
| --- | --- | --- |
| Italy | INFN | A workshop is foreseen for May during which hands-on training on the Disk Pool Manager and File Transfer components will be held. |
| UK | GridPP | A coordinated effort to set up managed storage and File Transfer services is being managed through GridPP and monitored via the GridPP T2 deployment board. |
| Asia-Pacific | ASCC Taipei | The services offered by and to Tier 2 sites will be exposed, together with a basic model for Tier 2 sites, at the Service Challenge meeting held at ASCC in April 2005. |
| Europe | HEPiX | A similar activity will take place at HEPiX at FZK in May 2005, together with detailed technical presentations on the relevant software components. |
| US | US-ATLAS and US-CMS | Tier 2 activities in the US are being coordinated through the corresponding experiment bodies. |
| Canada | TRIUMF | A Tier 2 workshop will be held around the time of the Service Challenge meeting to be held at TRIUMF in November 2005. |
| Other sites | CERN | One or more workshops will be held to cover those Tier 2 sites with no obvious regional or other coordinating body, most likely end 2005 / early 2006. |

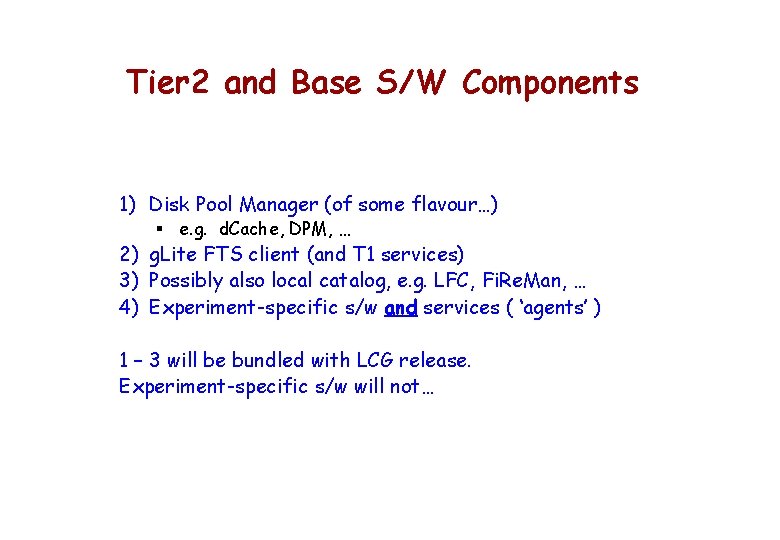

Tier 2 and Base S/W Components
1) Disk Pool Manager (of some flavour…)
   - e.g. dCache, DPM, …
2) gLite FTS client (and T1 services)
3) Possibly also local catalog, e.g. LFC, FiReMan, …
4) Experiment-specific s/w and services (‘agents’)

1 – 3 will be bundled with LCG release. Experiment-specific s/w will not…

Tier 2 Software Components

Overview of dCache, DPM, gLite FTS + LFC and FiReMan

File Transfer Software SC3

Gavin McCance – JRA1 Data Management Cluster

LCG Storage Management Workshop, April 6, 2005, CERN

Reliable File Transfer Requirements
- LCG created a set of requirements based on the Robust Data Transfer Service Challenge
- LCG and gLite teams translated this into a detailed architecture and design document for the software and the service
- A prototype (radiant) was created to test out the architecture and was used in SC1 and SC2
  - Architecture and design have worked well for SC2
- gLite FTS (“File Transfer Service”) is an instantiation of the same architecture and design, and is the candidate for use in SC3
  - Current version of FTS and SC2 radiant software are interoperable

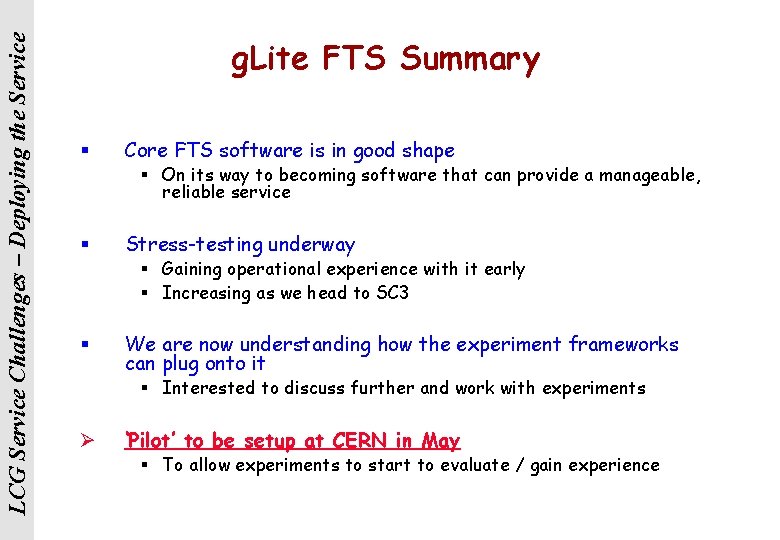

gLite FTS Summary
- Core FTS software is in good shape
  - On its way to becoming software that can provide a manageable, reliable service
- Stress-testing underway
  - Gaining operational experience with it early
  - Increasing as we head to SC3
- We are now understanding how the experiment frameworks can plug onto it
  - Interested to discuss further and work with experiments
- ‘Pilot’ to be set up at CERN in May
  - To allow experiments to start to evaluate / gain experience

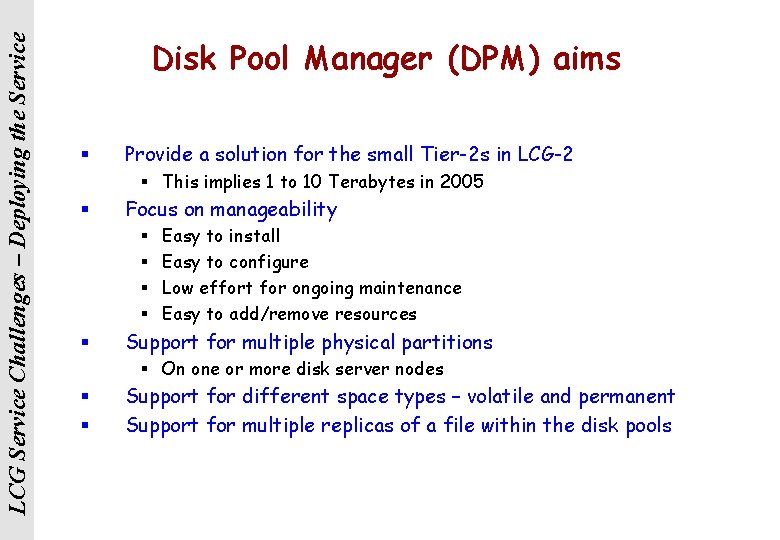

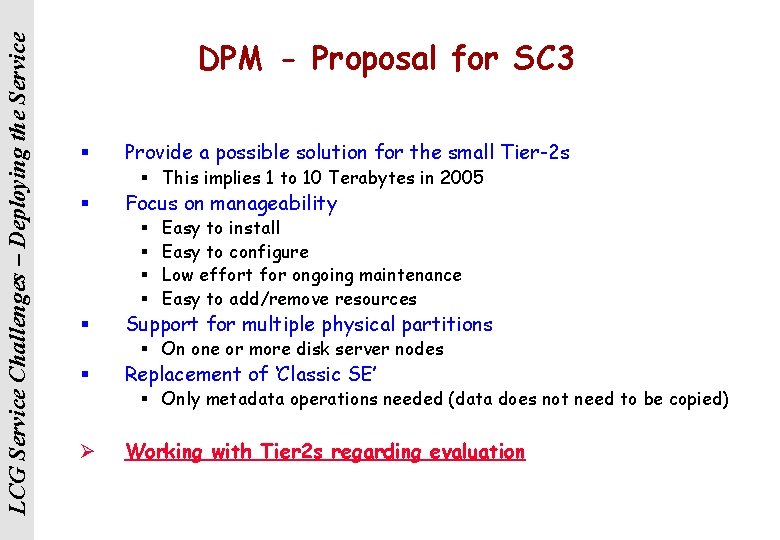

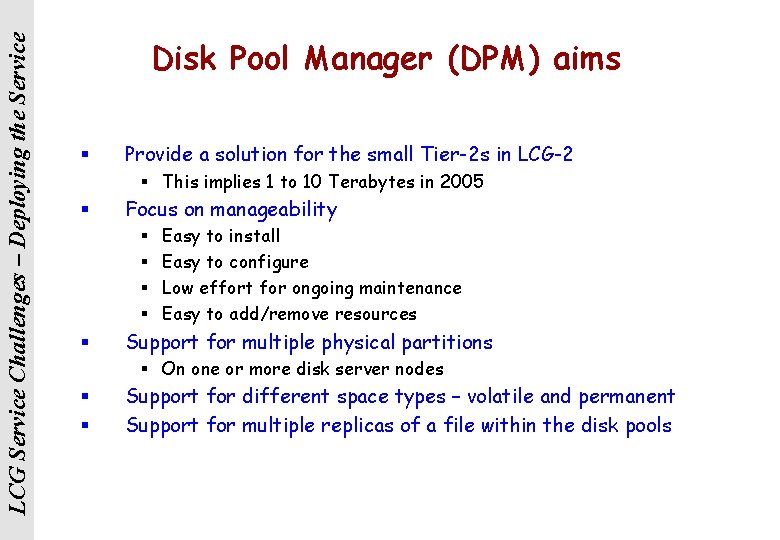

Disk Pool Manager (DPM) aims
- Provide a solution for the small Tier-2s in LCG-2
  - This implies 1 to 10 Terabytes in 2005
- Focus on manageability
  - Easy to install
  - Easy to configure
  - Low effort for ongoing maintenance
  - Easy to add/remove resources
- Support for multiple physical partitions
  - On one or more disk server nodes
- Support for different space types: volatile and permanent
- Support for multiple replicas of a file within the disk pools

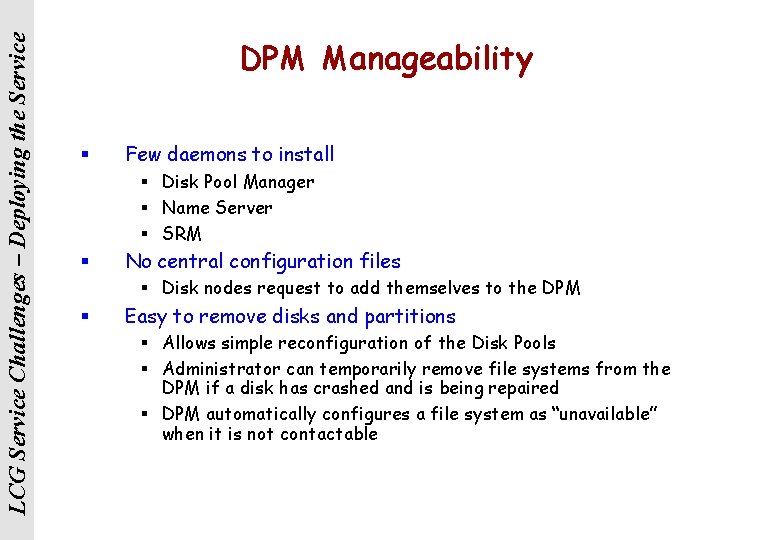

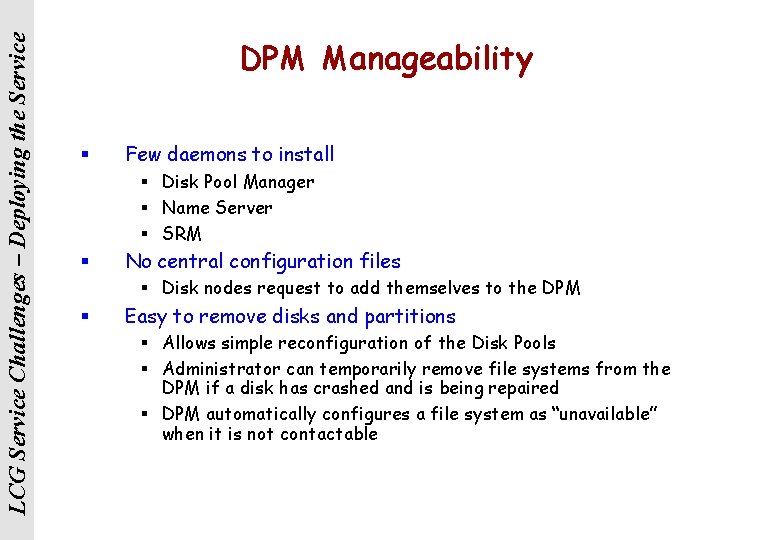

DPM Manageability
- Few daemons to install
  - Disk Pool Manager
  - Name Server
  - SRM
- No central configuration files
  - Disk nodes request to add themselves to the DPM
- Easy to remove disks and partitions
  - Allows simple reconfiguration of the Disk Pools
  - Administrator can temporarily remove file systems from the DPM if a disk has crashed and is being repaired
  - DPM automatically configures a file system as “unavailable” when it is not contactable

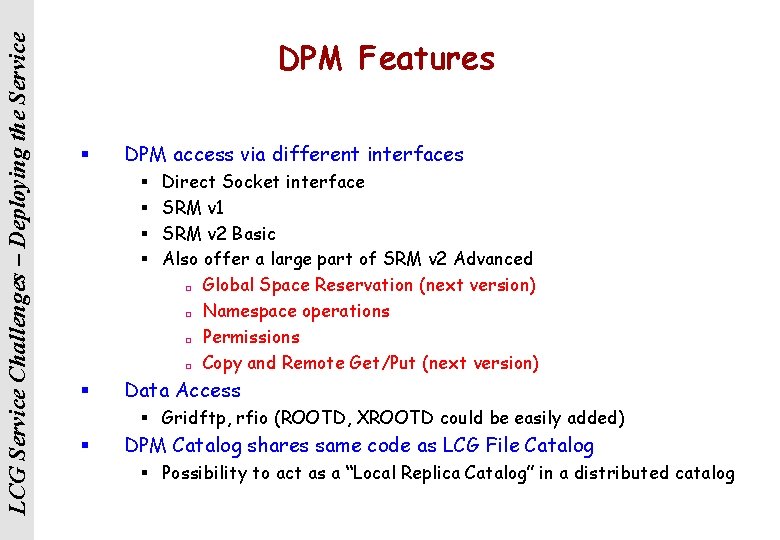

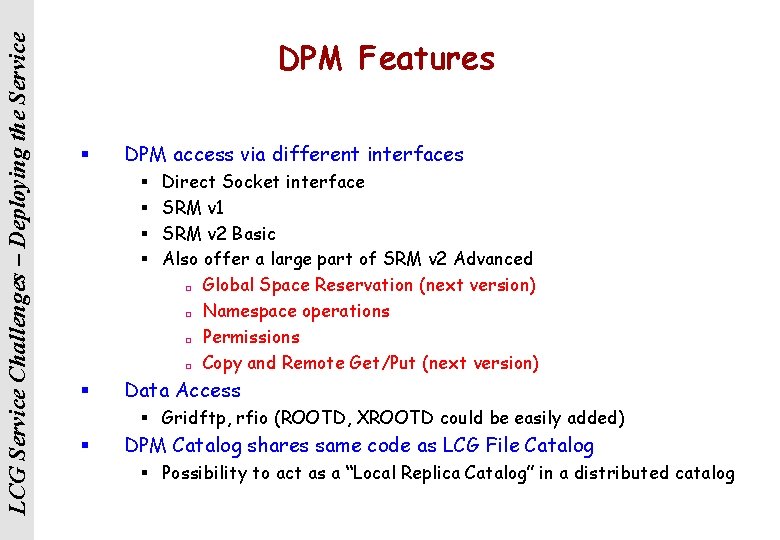

DPM Features
- DPM access via different interfaces
  - Direct socket interface
  - SRM v1
  - SRM v2 Basic
  - Also offer a large part of SRM v2 Advanced
    - Global Space Reservation (next version)
    - Namespace operations
    - Permissions
    - Copy and Remote Get/Put (next version)
- Data access
  - GridFTP, rfio (ROOTD, XROOTD could be easily added)
- DPM Catalog shares same code as LCG File Catalog
  - Possibility to act as a “Local Replica Catalog” in a distributed catalog

DPM Status
- DPNS, DPM, SRM v1 and SRM v2 (without Copy or global space reservation) have been tested for 4 months
- The secure version has been tested for 6 weeks
- GsiFTP has been modified to interface to the DPM
- RFIO interface is in final stage of development

DPM - Proposal for SC3
- Provide a possible solution for the small Tier-2s
  - This implies 1 to 10 Terabytes in 2005
- Focus on manageability
  - Easy to install
  - Easy to configure
  - Low effort for ongoing maintenance
  - Easy to add/remove resources
- Support for multiple physical partitions
  - On one or more disk server nodes
- Replacement of ‘Classic SE’
  - Only metadata operations needed (data does not need to be copied)
- Working with Tier 2s regarding evaluation

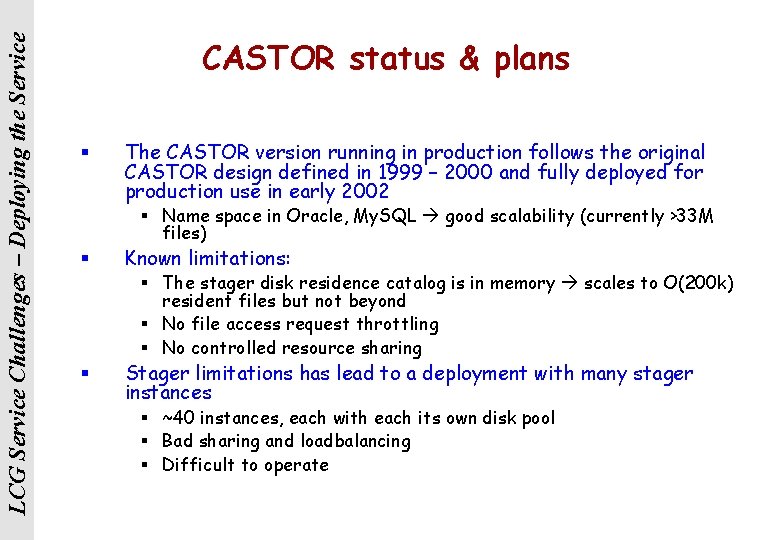

CASTOR status & plans
- The CASTOR version running in production follows the original CASTOR design defined in 1999 – 2000 and fully deployed for production use in early 2002
  - Name space in Oracle, MySQL: good scalability (currently >33M files)
- Known limitations:
  - The stager disk residence catalog is in memory: scales to O(200k) resident files but not beyond
  - No file access request throttling
  - No controlled resource sharing
- Stager limitations have led to a deployment with many stager instances
  - ~40 instances, each with its own disk pool
  - Bad sharing and load balancing
  - Difficult to operate

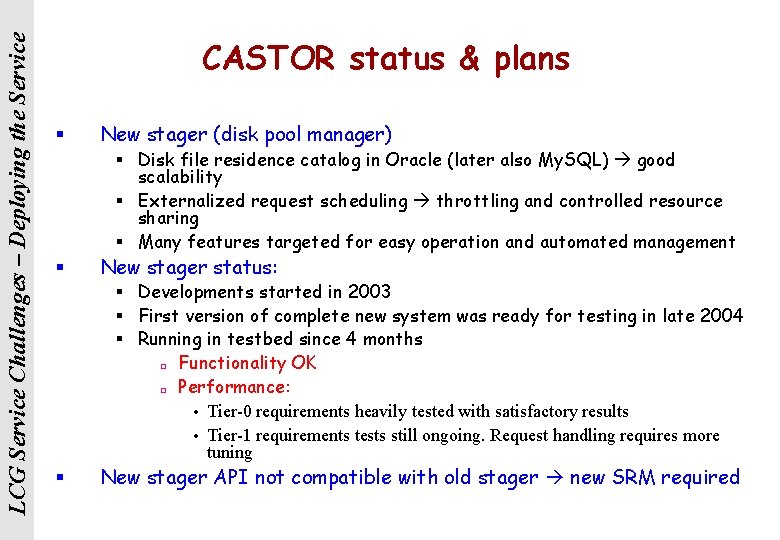

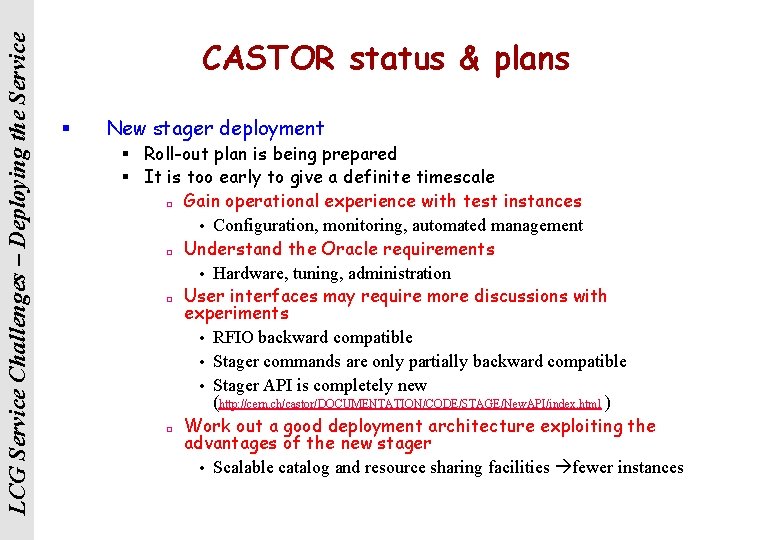

CASTOR status & plans
- New stager (disk pool manager)
  - Disk file residence catalog in Oracle (later also MySQL): good scalability
  - Externalized request scheduling: throttling and controlled resource sharing
  - Many features targeted for easy operation and automated management
- New stager status:
  - Developments started in 2003
  - First version of complete new system was ready for testing in late 2004
  - Running in testbed for 4 months
    - Functionality OK
    - Performance: Tier-0 requirements heavily tested with satisfactory results; Tier-1 requirements tests still ongoing; request handling requires more tuning
  - New stager API not compatible with old stager: new SRM required

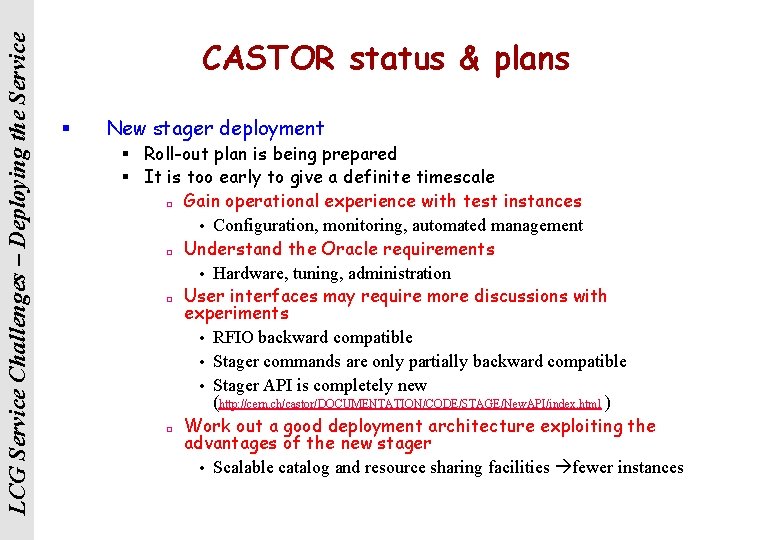

LCG Service Challenges – Deploying the Service CASTOR status & plans § New stager deployment § Roll-out plan is being prepared § It is too early to give a definite timescale ¨ Gain operational experience with test instances: configuration, monitoring, automated management ¨ Understand the Oracle requirements: hardware, tuning, administration ¨ User interfaces may require more discussions with experiments: RFIO is backward compatible; stager commands are only partially backward compatible; stager API is completely new (http://cern.ch/castor/DOCUMENTATION/CODE/STAGE/NewAPI/index.html) ¨ Work out a good deployment architecture exploiting the advantages of the new stager: scalable catalog and resource sharing facilities, hence fewer instances

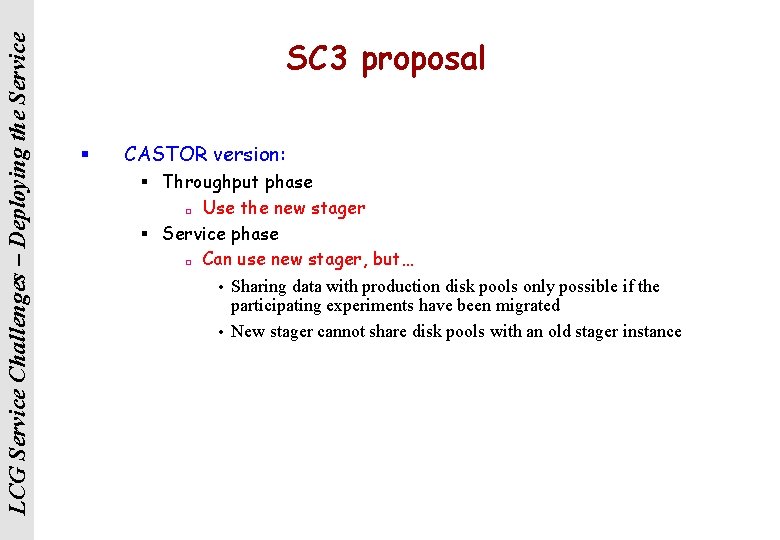

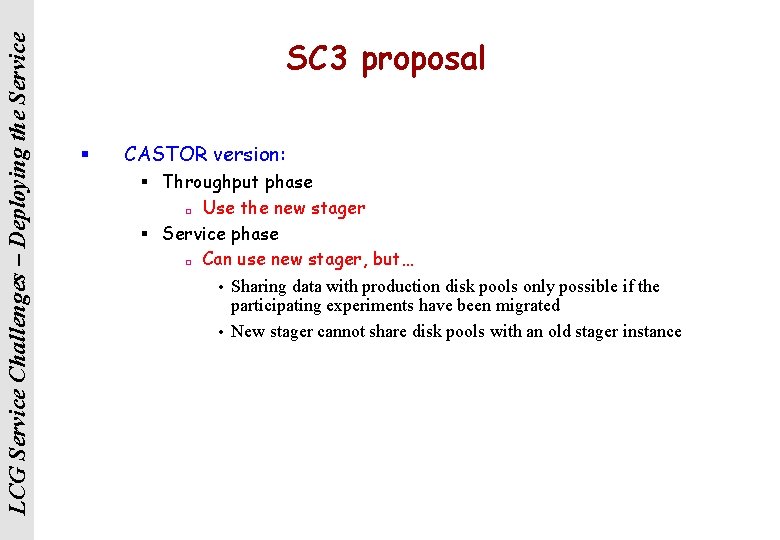

LCG Service Challenges – Deploying the Service SC3 proposal § CASTOR version: § Throughput phase ¨ Use the new stager § Service phase ¨ Can use new stager, but… sharing data with production disk pools is only possible if the participating experiments have been migrated; the new stager cannot share disk pools with an old stager instance

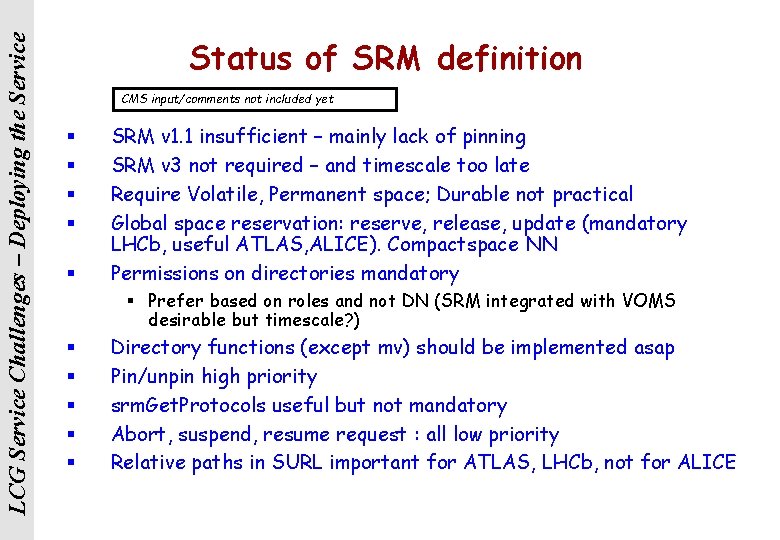

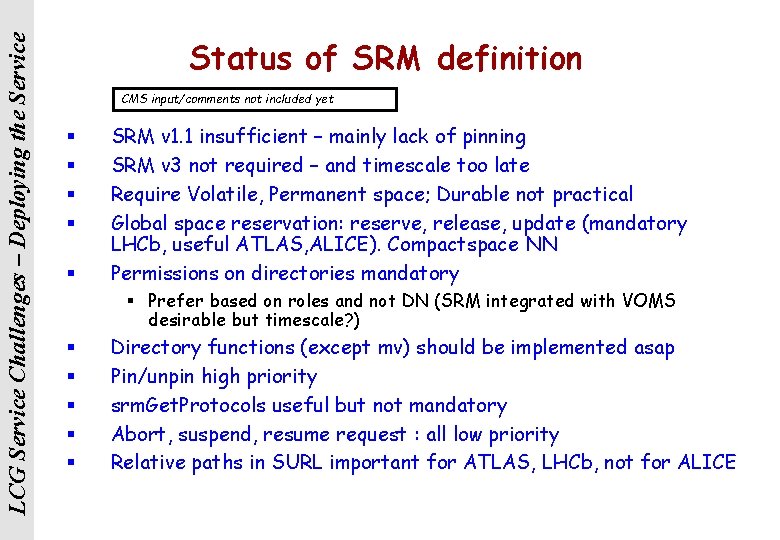

LCG Service Challenges – Deploying the Service Status of SRM definition (CMS input/comments not included yet) § SRM v1.1 insufficient – mainly lack of pinning § SRM v3 not required – and timescale too late § Require Volatile, Permanent space; Durable not practical § Global space reservation: reserve, release, update (mandatory LHCb, useful ATLAS, ALICE). Compactspace NN § Permissions on directories mandatory § Prefer based on roles and not DN (SRM integrated with VOMS desirable but timescale?) § Directory functions (except mv) should be implemented asap § Pin/unpin high priority § srmGetProtocols useful but not mandatory § Abort, suspend, resume request: all low priority § Relative paths in SURL important for ATLAS, LHCb, not for ALICE

LCG Service Challenges – Deploying the Service SC3 – Milestone Decomposition § File transfer goals: § Build up disk – disk transfer speeds to 150 MB/s ¨ SC2 was 100 MB/s – agreed by site § Include tape – transfer speeds of 60 MB/s § Tier 1 goals: § Bring in additional Tier 1 sites wrt SC2 ¨ PIC and Nordic most likely added later: SC4? § Tier 2 goals: § Start to bring Tier 2 sites into challenge ¨ Agree services T2s offer / require ¨ On-going plan (more later) to address this via GridPP, INFN etc. § Experiment goals: § Address main offline use cases except those related to analysis ¨ i.e. real data flow out of T0-T1-T2; simulation in from T2-T1 Ø Service goals: Ø Include CPU (to generate files) and storage Ø Start to add additional components Ø Catalogs, VOs, experiment-specific solutions etc, 3D involvement, … Ø Choice of software components, validation, fallback, …
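
To give a feel for what the sustained transfer targets above mean in volume terms, here is a minimal back-of-envelope sketch. It assumes decimal units (1 TB = 10^6 MB) and a rate held constant for a full 24 hours; the function name and the labels are illustrative only and do not come from the slides.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_volume_tb(rate_mb_per_s: float) -> float:
    """Data moved in one day at a sustained rate, in decimal terabytes.

    Assumes 1 TB = 10**6 MB and that the rate is held for the full 24 hours.
    """
    return rate_mb_per_s * SECONDS_PER_DAY / 1_000_000


# Rates quoted on the slide; the labels are just for printing.
targets = [
    ("SC2 disk-disk", 100),
    ("SC3 disk-disk", 150),
    ("SC3 tape", 60),
]

for label, rate in targets:
    print(f"{label}: {rate} MB/s -> {daily_volume_tb(rate):.2f} TB/day")
```

Under these assumptions the 150 MB/s disk – disk goal corresponds to roughly 13 TB per day per site, and the 60 MB/s tape goal to roughly 5 TB per day.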

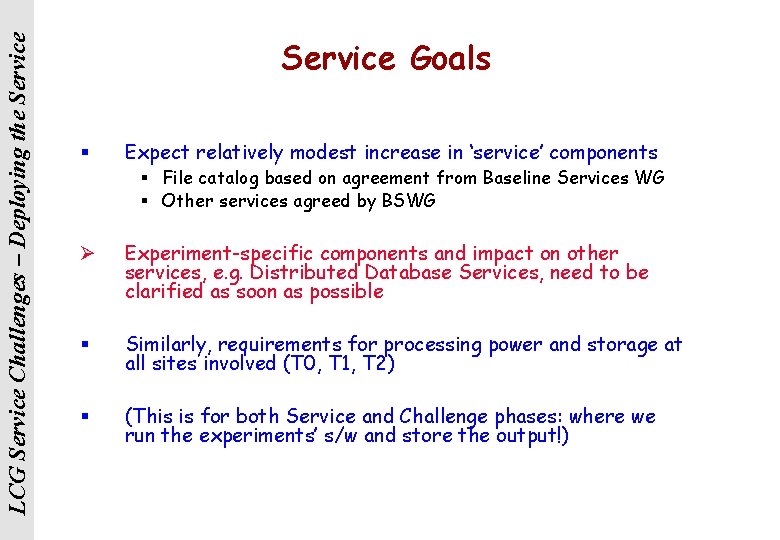

LCG Service Challenges – Deploying the Service Goals § Expect relatively modest increase in ‘service’ components § File catalog based on agreement from Baseline Services WG § Other services agreed by BSWG Ø Experiment-specific components and impact on other services, e.g. Distributed Database Services, need to be clarified as soon as possible § Similarly, requirements for processing power and storage at all sites involved (T0, T1, T2) § (This is for both Service and Challenge phases: where we run the experiments’ s/w and store the output!)

LCG Service Challenges – Deploying the Service SC3 – Milestone Decomposition Ø File transfer goals: Ø Build up disk – disk transfer speeds to 150 MB/s Ø SC2 was 100 MB/s – agreed by site Ø Include tape – transfer speeds of 60 MB/s Ø Tier 1 goals: Ø Bring in additional Tier 1 sites wrt SC2 Ø PIC and Nordic most likely added later: SC4? § Tier 2 goals: § Start to bring Tier 2 sites into challenge ¨ Agree services T2s offer / require ¨ On-going plan (more later) to address this via GridPP, INFN etc. § Experiment goals: § Address main offline use cases except those related to analysis ¨ i.e. real data flow out of T0-T1-T2; simulation in from T2-T1 § Service goals: § Include CPU (to generate files) and storage § Start to add additional components ¨ Catalogs, VOs, experiment-specific solutions etc, 3D involvement, … ¨ Choice of software components, validation, fallback, …

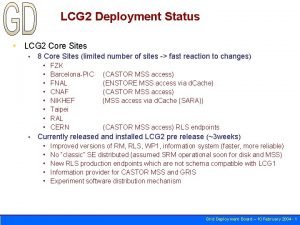

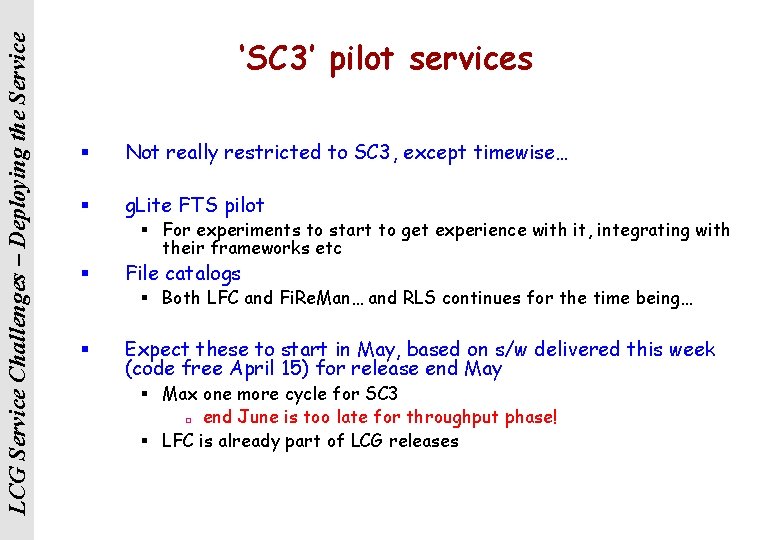

LCG Service Challenges – Deploying the Service ‘SC3’ pilot services § Not really restricted to SC3, except timewise… § gLite FTS pilot § For experiments to start to get experience with it, integrating with their frameworks etc § File catalogs § Both LFC and FiReMan… and RLS continues for the time being… § Expect these to start in May, based on s/w delivered this week (code freeze April 15) for release end May § Max one more cycle for SC3 ¨ end June is too late for throughput phase! § LFC is already part of LCG releases

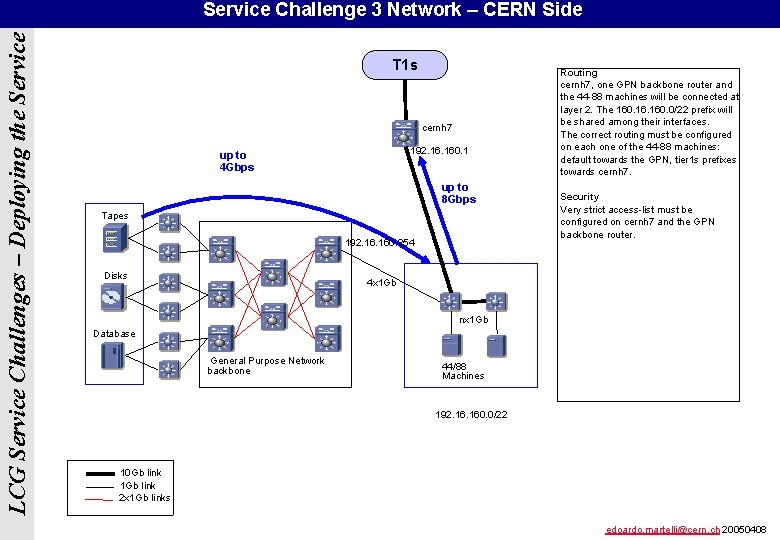

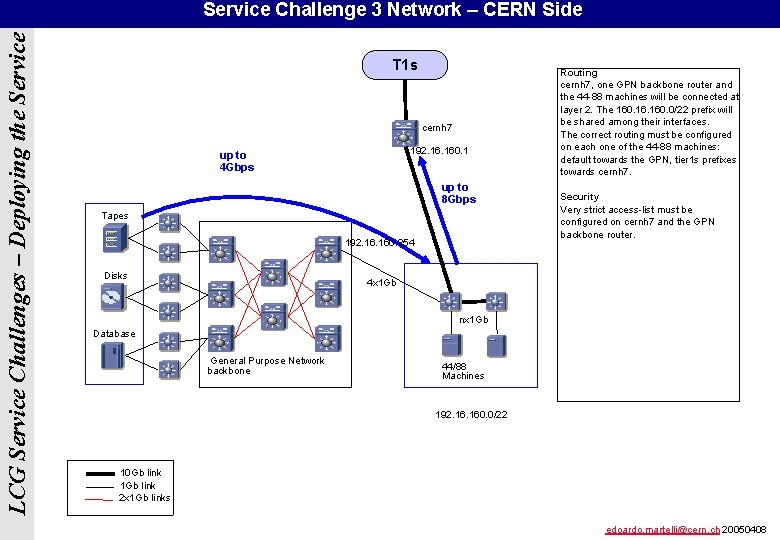

LCG Service Challenges – Deploying the Service Challenge 3 Network – CERN Side § Routing: cernh7, one GPN backbone router and the 44-88 machines will be connected at layer 2. The 160. 0/22 prefix will be shared among their interfaces. The correct routing must be configured on each one of the 44-88 machines: default towards the GPN, Tier-1 prefixes towards cernh7. § Security: very strict access-lists must be configured on cernh7 and the GPN backbone router. [Network diagram labels: T1s, cernh7, 192. 160. 1, up to 4 Gbps, up to 8 Gbps, Tapes, 192. 160. 254, Disks, 4 x 1 Gb, n x 1 Gb, Database, General Purpose Network backbone, 44/88 Machines, 192. 160. 0/22; legend: 10 Gb link, 1 Gb link, 2 x 1 Gb links] (edoardo.martelli@cern.ch, 20050408)
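
The routing rule on this slide (default towards the GPN, Tier-1 prefixes towards cernh7) can be sketched as a simple next-hop decision. This is only an illustration of the policy, not the actual host configuration: the two prefixes below are hypothetical placeholders taken from the RFC 5737 documentation ranges, and `next_hop` is an invented helper name.

```python
import ipaddress

# Hypothetical Tier-1 prefixes (RFC 5737 documentation ranges), standing in
# for the real Tier-1 site networks, which the slide does not list.
TIER1_PREFIXES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def next_hop(destination: str) -> str:
    """Return the gateway a transfer node would use for a destination.

    Traffic to any Tier-1 prefix goes via cernh7; everything else follows
    the default route towards the General Purpose Network (GPN).
    """
    addr = ipaddress.ip_address(destination)
    if any(addr in prefix for prefix in TIER1_PREFIXES):
        return "cernh7"
    return "GPN"


print(next_hop("192.0.2.17"))   # inside a (hypothetical) Tier-1 prefix
print(next_hop("203.0.113.5"))  # not a Tier-1 prefix, so default route
```

On a real machine the same policy would be expressed as static routes rather than application code; the sketch only makes explicit which traffic is meant to use the dedicated link.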

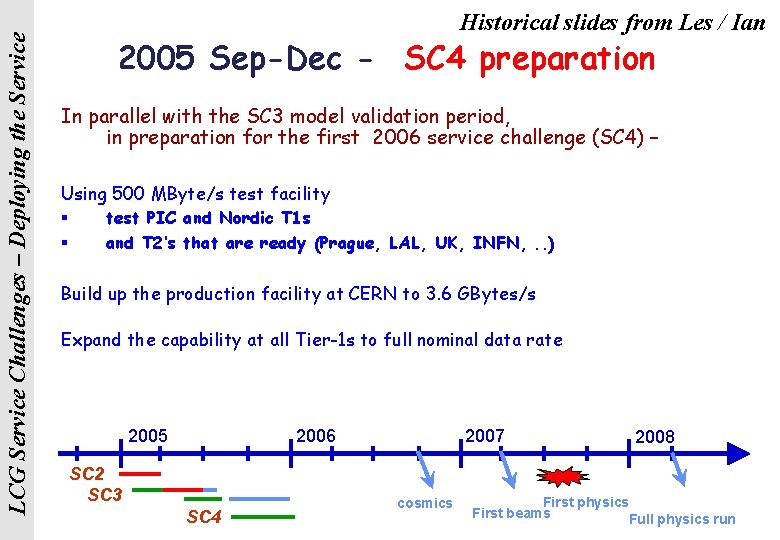

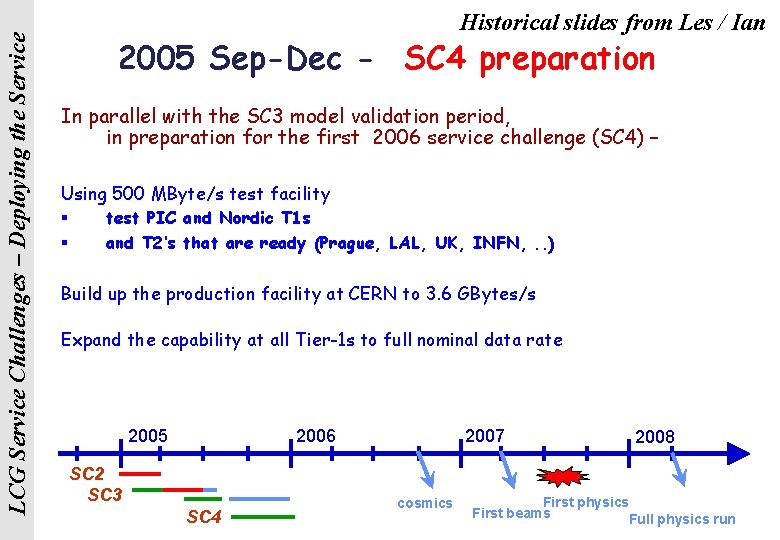

LCG Service Challenges – Deploying the Service Historical slides from Les / Ian 2005 Sep-Dec - SC4 preparation § In parallel with the SC3 model validation period, in preparation for the first 2006 service challenge (SC4), using the 500 MByte/s test facility: § test PIC and Nordic T1s § and T2s that are ready (Prague, LAL, UK, INFN, ...) § Build up the production facility at CERN to 3.6 GBytes/s § Expand the capability at all Tier-1s to full nominal data rate [Timeline 2005-2008: SC2, SC3, SC4, cosmics, first beams, first physics, full physics run]

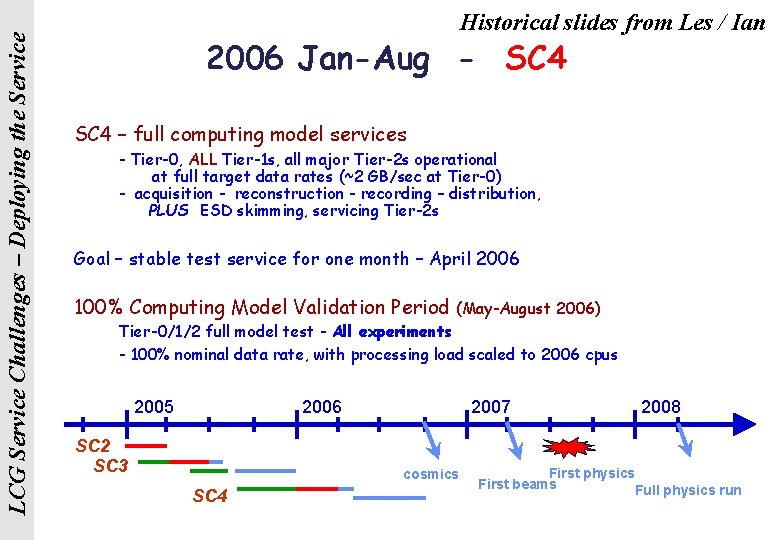

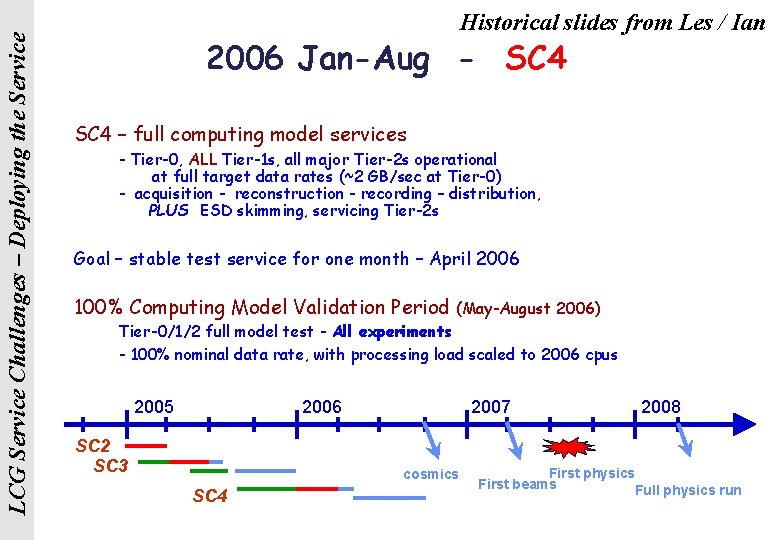

LCG Service Challenges – Deploying the Service Historical slides from Les / Ian 2006 Jan-Aug - SC4 § SC4 – full computing model services: Tier-0, ALL Tier-1s, all major Tier-2s operational at full target data rates (~2 GB/sec at Tier-0) § Acquisition, reconstruction, recording, distribution, PLUS ESD skimming, servicing Tier-2s § Goal – stable test service for one month – April 2006 § 100% Computing Model Validation Period (May-August 2006) § Tier-0/1/2 full model test - all experiments - 100% nominal data rate, with processing load scaled to 2006 CPUs [Timeline 2005-2008: SC2, SC3, SC4, cosmics, first beams, first physics, full physics run]

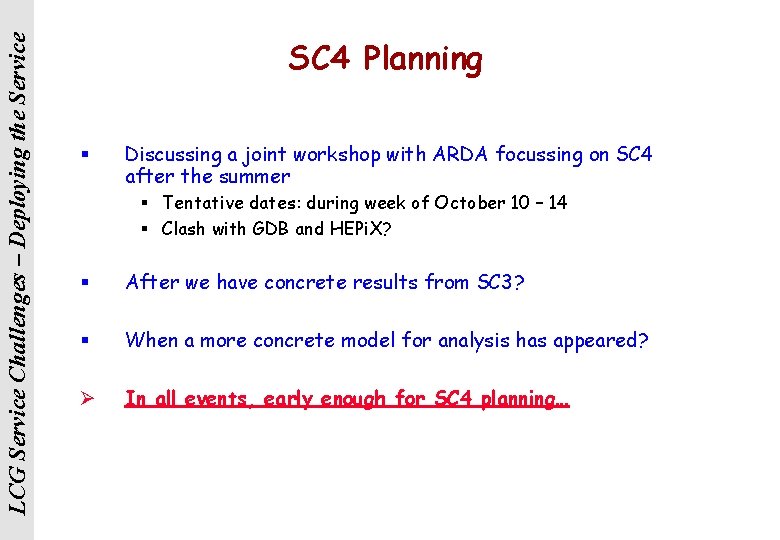

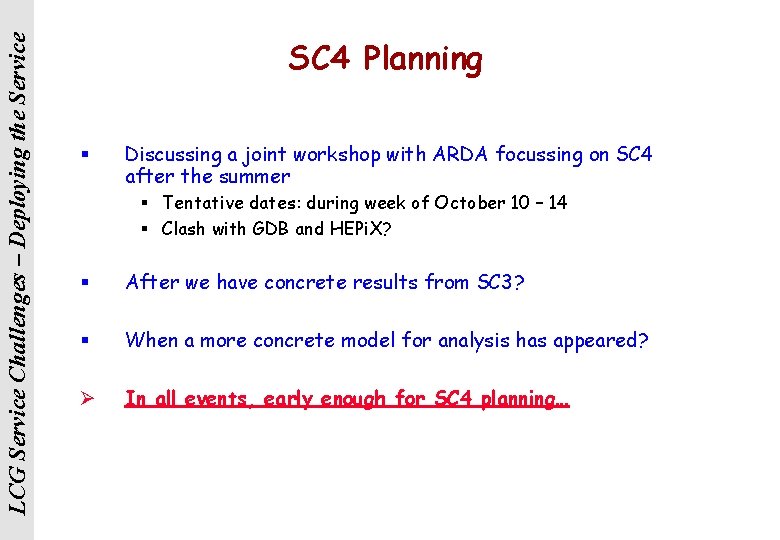

LCG Service Challenges – Deploying the Service SC4 Planning § Discussing a joint workshop with ARDA focussing on SC4 after the summer § Tentative dates: during week of October 10 – 14 § Clash with GDB and HEPiX? § After we have concrete results from SC3? § When a more concrete model for analysis has appeared? Ø In all events, early enough for SC4 planning…

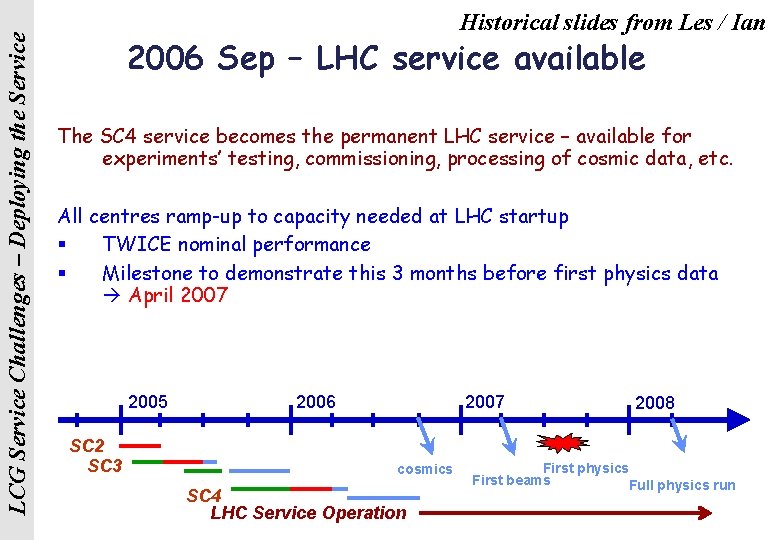

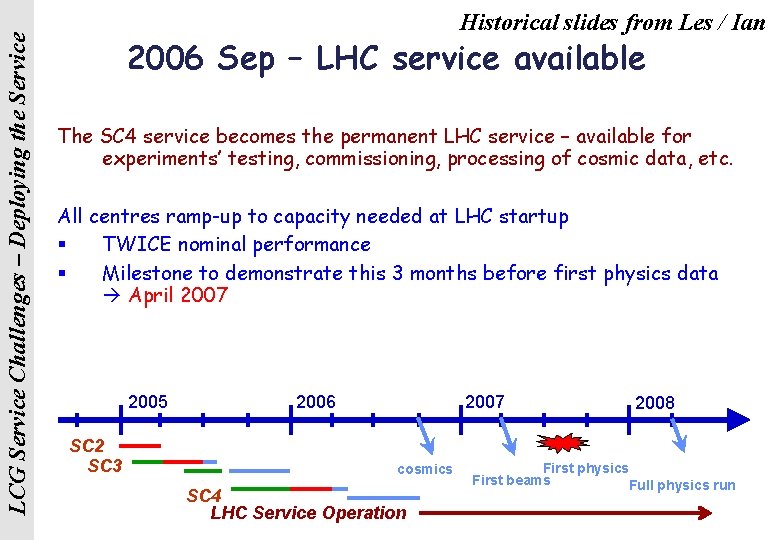

LCG Service Challenges – Deploying the Service Historical slides from Les / Ian 2006 Sep – LHC service available § The SC4 service becomes the permanent LHC service – available for experiments’ testing, commissioning, processing of cosmic data, etc. § All centres ramp up to capacity needed at LHC startup § TWICE nominal performance § Milestone to demonstrate this 3 months before first physics data – April 2007 [Timeline 2005-2008: SC2, SC3, SC4, LHC Service Operation, cosmics, first beams, first physics, full physics run]

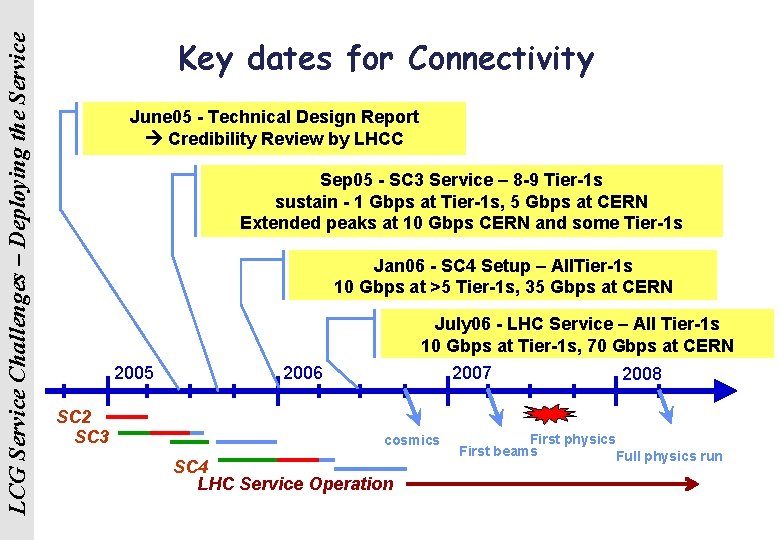

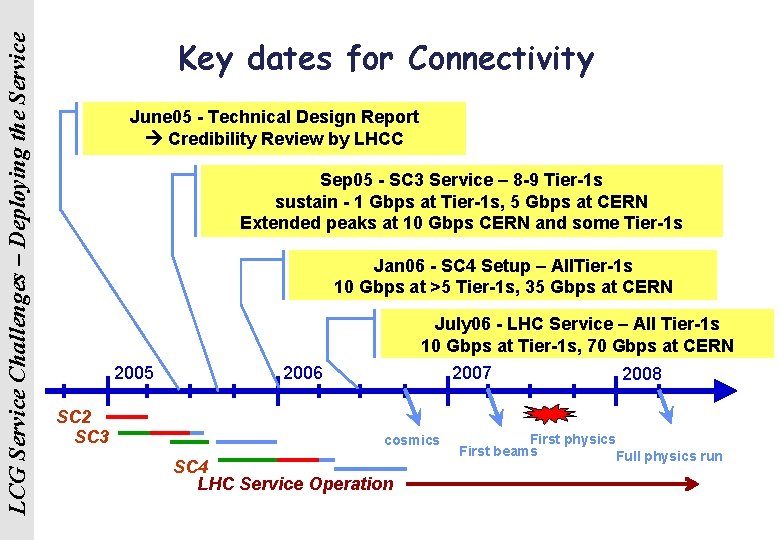

LCG Service Challenges – Deploying the Service Key dates for Connectivity § June 05 - Technical Design Report, Credibility Review by LHCC § Sep 05 - SC3 Service – 8-9 Tier-1s sustain 1 Gbps at Tier-1s, 5 Gbps at CERN; extended peaks at 10 Gbps at CERN and some Tier-1s § Jan 06 - SC4 Setup – all Tier-1s; 10 Gbps at >5 Tier-1s, 35 Gbps at CERN § July 06 - LHC Service – all Tier-1s; 10 Gbps at Tier-1s, 70 Gbps at CERN [Timeline 2005-2008: SC2, SC3, SC4, LHC Service Operation, cosmics, first beams, first physics, full physics run]
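
Because the connectivity milestones are quoted in Gbps while the transfer goals elsewhere in the talk are quoted in MB/s, a small unit conversion helps when comparing the two. The sketch below assumes decimal units (1 Gbps = 1000 Mbit/s, 8 bits per byte) and ignores protocol overhead, so the results are upper bounds on usable throughput; the function name and the milestone table layout are illustrative.

```python
def gbps_to_mb_per_s(gbps: float) -> float:
    """Convert a link rate in gigabits per second to megabytes per second.

    Decimal units, no allowance for protocol overhead.
    """
    return gbps * 1000 / 8


# (date, location, Gbps) as quoted on the slide.
milestones = [
    ("Sep 05", "Tier-1s", 1),
    ("Sep 05", "CERN", 5),
    ("Jan 06", "CERN", 35),
    ("July 06", "Tier-1s", 10),
    ("July 06", "CERN", 70),
]

for date, where, gbps in milestones:
    print(f"{date} {where}: {gbps} Gbps = {gbps_to_mb_per_s(gbps):.0f} MB/s")
```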

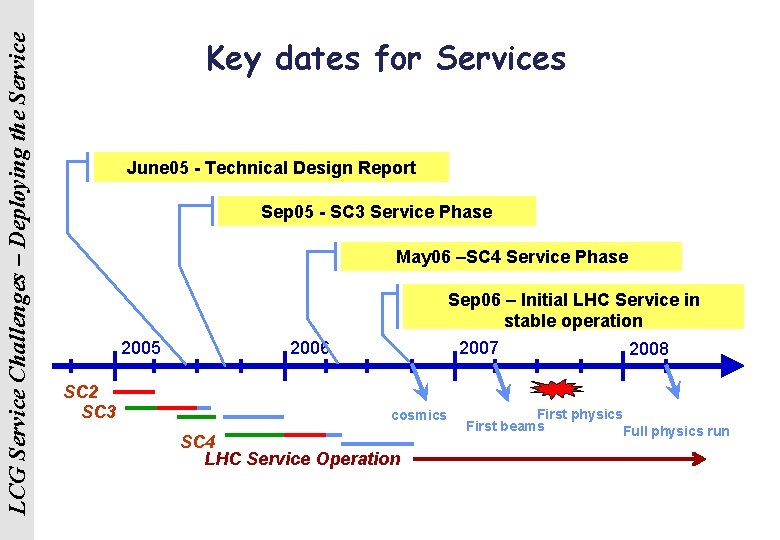

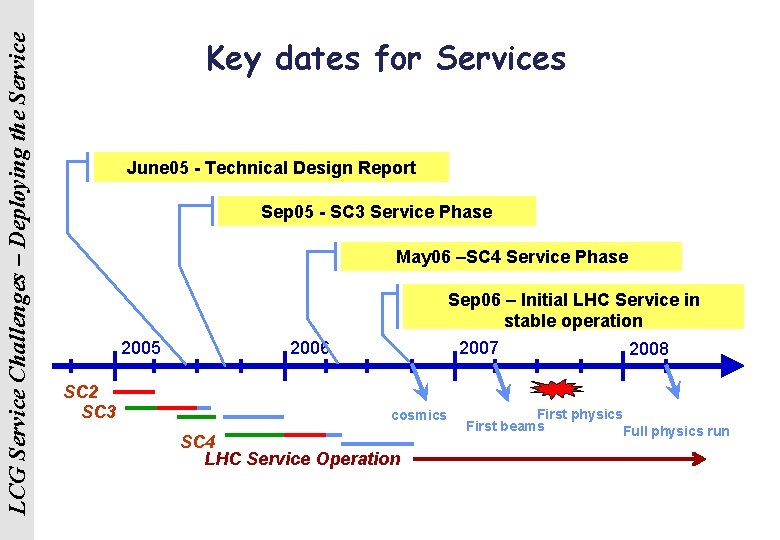

LCG Service Challenges – Deploying the Service Key dates for Services § June 05 - Technical Design Report § Sep 05 - SC3 Service Phase § May 06 – SC4 Service Phase § Sep 06 – Initial LHC Service in stable operation [Timeline 2005-2008: SC2, SC3, SC4, LHC Service Operation, cosmics, first beams, first physics, full physics run]

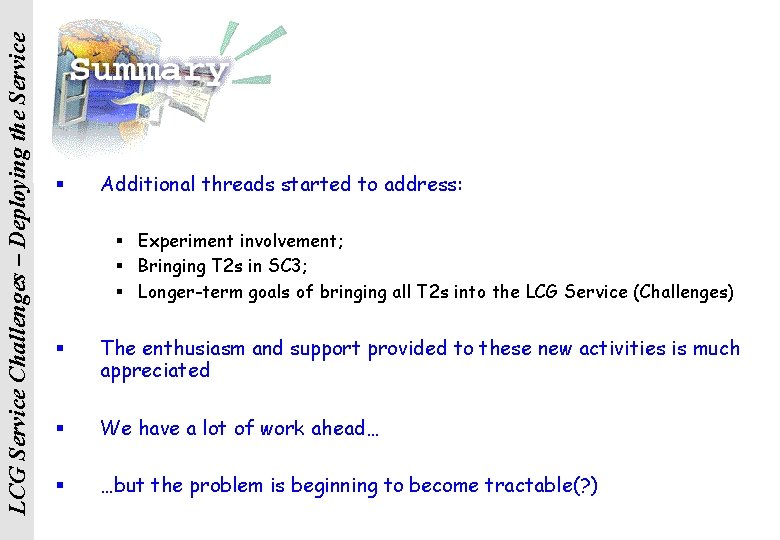

LCG Service Challenges – Deploying the Service § Additional threads started to address: § Experiment involvement; § Bringing T2s into SC3; § Longer-term goals of bringing all T2s into the LCG Service (Challenges) § The enthusiasm and support provided to these new activities is much appreciated § We have a lot of work ahead… § …but the problem is beginning to become tractable(?)

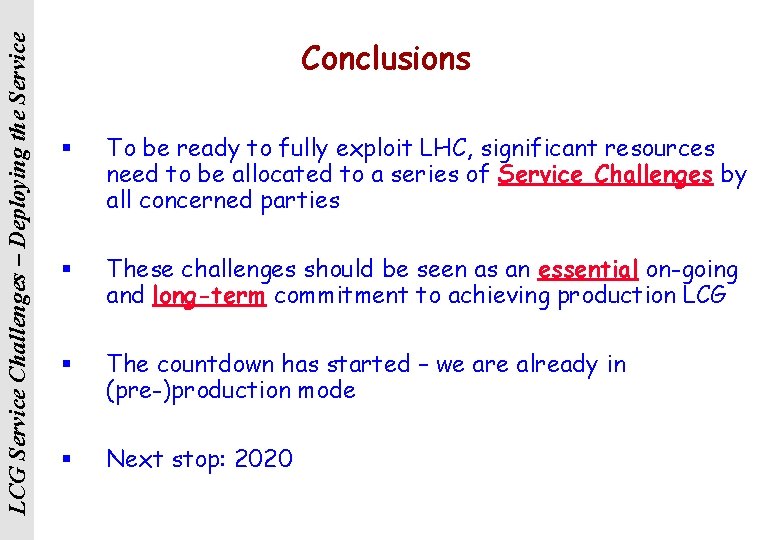

LCG Service Challenges – Deploying the Service Conclusions § To be ready to fully exploit LHC, significant resources need to be allocated to a series of Service Challenges by all concerned parties § These challenges should be seen as an essential on-going and long-term commitment to achieving production LCG § The countdown has started – we are already in (pre-)production mode § Next stop: 2020

Ramping project invictus

Ramping project invictus Cngrid

Cngrid Lcg

Lcg Lcg database

Lcg database Lcg projects

Lcg projects Lcg webcast

Lcg webcast Lcg random

Lcg random Lcg test

Lcg test Lcg

Lcg Challenges of service innovation and design

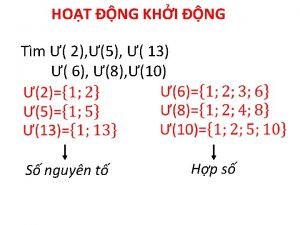

Challenges of service innovation and design Số nguyên tố là số gì

Số nguyên tố là số gì Thiếu nhi thế giới liên hoan

Thiếu nhi thế giới liên hoan Tỉ lệ cơ thể trẻ em

Tỉ lệ cơ thể trẻ em Tia chieu sa te

Tia chieu sa te Các châu lục và đại dương trên thế giới

Các châu lục và đại dương trên thế giới Thế nào là hệ số cao nhất

Thế nào là hệ số cao nhất Hệ hô hấp

Hệ hô hấp Tư thế ngồi viết

Tư thế ngồi viết Cái miệng nó xinh thế chỉ nói điều hay thôi

Cái miệng nó xinh thế chỉ nói điều hay thôi Hình ảnh bộ gõ cơ thể búng tay

Hình ảnh bộ gõ cơ thể búng tay đặc điểm cơ thể của người tối cổ

đặc điểm cơ thể của người tối cổ Cách giải mật thư tọa độ

Cách giải mật thư tọa độ Chụp phim tư thế worms-breton

Chụp phim tư thế worms-breton Tư thế ngồi viết

Tư thế ngồi viết ưu thế lai là gì

ưu thế lai là gì Chó sói

Chó sói Thẻ vin

Thẻ vin Thể thơ truyền thống

Thể thơ truyền thống Các châu lục và đại dương trên thế giới

Các châu lục và đại dương trên thế giới Từ ngữ thể hiện lòng nhân hậu

Từ ngữ thể hiện lòng nhân hậu Diễn thế sinh thái là

Diễn thế sinh thái là Thứ tự các dấu thăng giáng ở hóa biểu

Thứ tự các dấu thăng giáng ở hóa biểu Làm thế nào để 102-1=99

Làm thế nào để 102-1=99 Chúa yêu trần thế alleluia

Chúa yêu trần thế alleluia Lời thề hippocrates

Lời thề hippocrates Hổ sinh sản vào mùa nào

Hổ sinh sản vào mùa nào đại từ thay thế

đại từ thay thế Quá trình desamine hóa có thể tạo ra

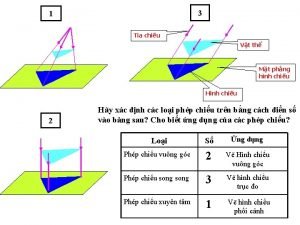

Quá trình desamine hóa có thể tạo ra Vẽ hình chiếu vuông góc của vật thể sau

Vẽ hình chiếu vuông góc của vật thể sau Công của trọng lực

Công của trọng lực Thế nào là mạng điện lắp đặt kiểu nổi

Thế nào là mạng điện lắp đặt kiểu nổi Các loại đột biến cấu trúc nhiễm sắc thể

Các loại đột biến cấu trúc nhiễm sắc thể Bổ thể

Bổ thể Vẽ hình chiếu đứng bằng cạnh của vật thể

Vẽ hình chiếu đứng bằng cạnh của vật thể Phản ứng thế ankan

Phản ứng thế ankan Môn thể thao bắt đầu bằng chữ đua

Môn thể thao bắt đầu bằng chữ đua Khi nào hổ mẹ dạy hổ con săn mồi

Khi nào hổ mẹ dạy hổ con săn mồi điện thế nghỉ

điện thế nghỉ Một số thể thơ truyền thống

Một số thể thơ truyền thống Thế nào là sự mỏi cơ

Thế nào là sự mỏi cơ Trời xanh đây là của chúng ta thể thơ

Trời xanh đây là của chúng ta thể thơ Service provider and service consumer

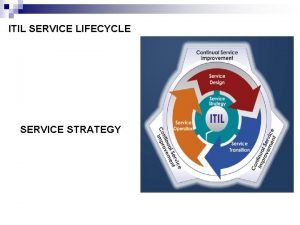

Service provider and service consumer Itil service lifecycle stages

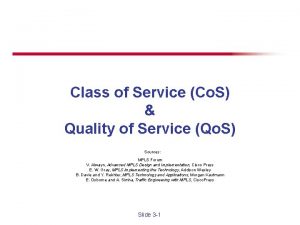

Itil service lifecycle stages Class of service vs quality of service

Class of service vs quality of service Service desk improvement initiatives

Service desk improvement initiatives Itil 7 step improvement process

Itil 7 step improvement process New service development

New service development Adp service portal

Adp service portal Adequate service examples

Adequate service examples Service owner vs service manager

Service owner vs service manager Itil service lifecycle model

Itil service lifecycle model Challenges for more able pupils

Challenges for more able pupils Challenges of parenting

Challenges of parenting Mathematical challenges for more able pupils

Mathematical challenges for more able pupils The challenges faced

The challenges faced Taha hussein challenges

Taha hussein challenges Challenges facing global managers

Challenges facing global managers Adapting to challenges of the micro environment

Adapting to challenges of the micro environment Security and ethical challenges

Security and ethical challenges Linda r greene

Linda r greene Structuring organizations for today's challenges

Structuring organizations for today's challenges Unexpected challenges 1995

Unexpected challenges 1995 Challenges of international business in emerging markets

Challenges of international business in emerging markets Challenges faced by company secretary

Challenges faced by company secretary