UCDavis ecs 251 Fall 2007 Operating System Models

![UCDavis, ecs 251 Fall 2007 Anti-Spam sfelixwu@gmail. com l subject: [ecs 251_f 2007]… l UCDavis, ecs 251 Fall 2007 Anti-Spam sfelixwu@gmail. com l subject: [ecs 251_f 2007]… l](https://slidetodoc.com/presentation_image_h2/1b05f6adf9852b209564be5395ac405a/image-21.jpg)

![UCDavis, ecs 251 Fall 2007 struct dirent { ino_t d_ino; char d_name[NAME_MAX+1]; }; struct UCDavis, ecs 251 Fall 2007 struct dirent { ino_t d_ino; char d_name[NAME_MAX+1]; }; struct](https://slidetodoc.com/presentation_image_h2/1b05f6adf9852b209564be5395ac405a/image-54.jpg)

- Slides: 193

UCDavis, ecs 251 Fall 2007: Operating System Models #1: File Systems Dr. S. Felix Wu Computer Science Department University of California, Davis http: //www. cs. ucdavis. edu/~wu/ sfelixwu@gmail. com 09/27/2007 File Systems 1

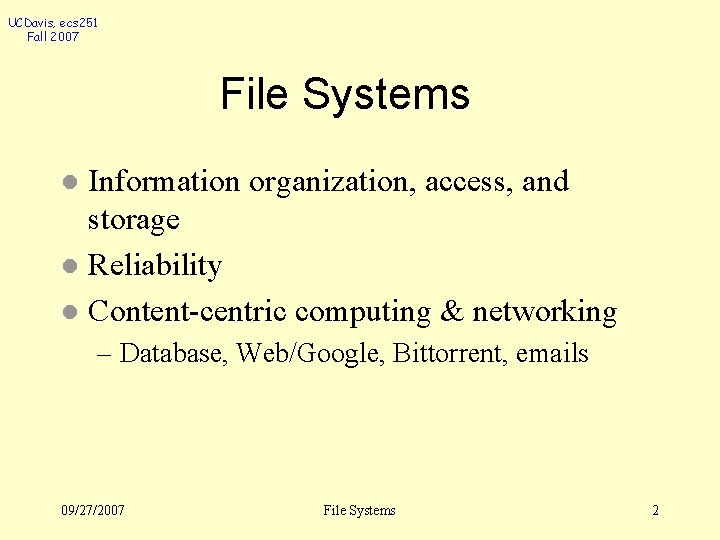

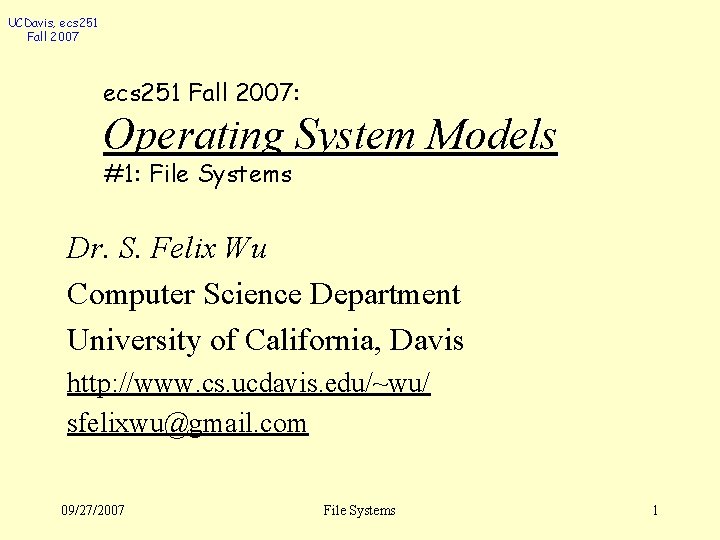

UCDavis, ecs 251 Fall 2007 File Systems Information organization, access, and storage l Reliability l Content-centric computing & networking l – Database, Web/Google, Bittorrent, emails 09/27/2007 File Systems 2

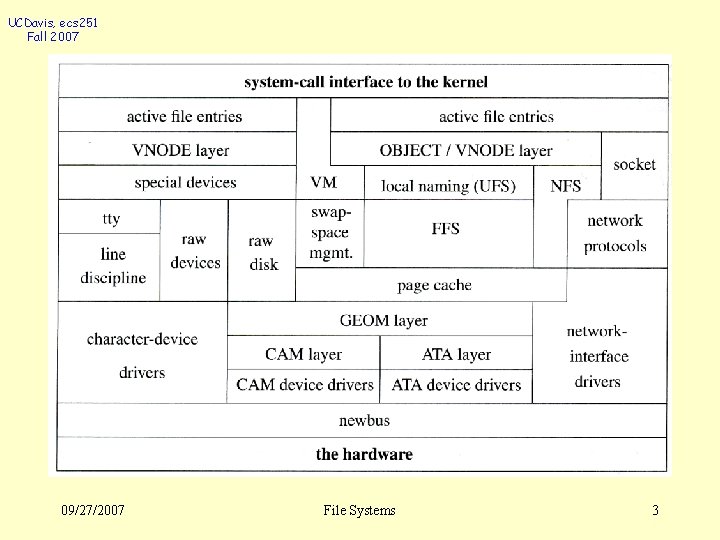

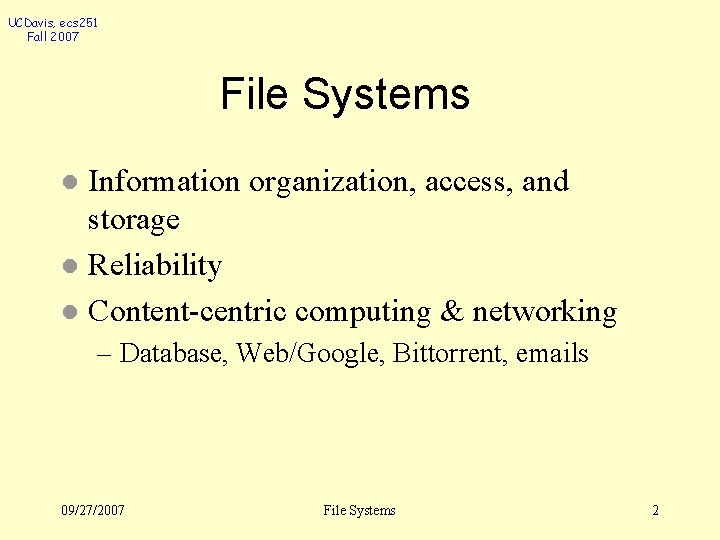

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 3

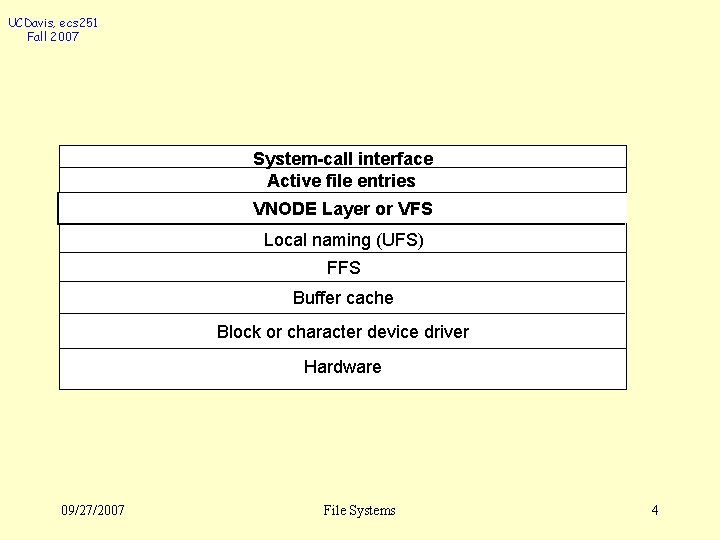

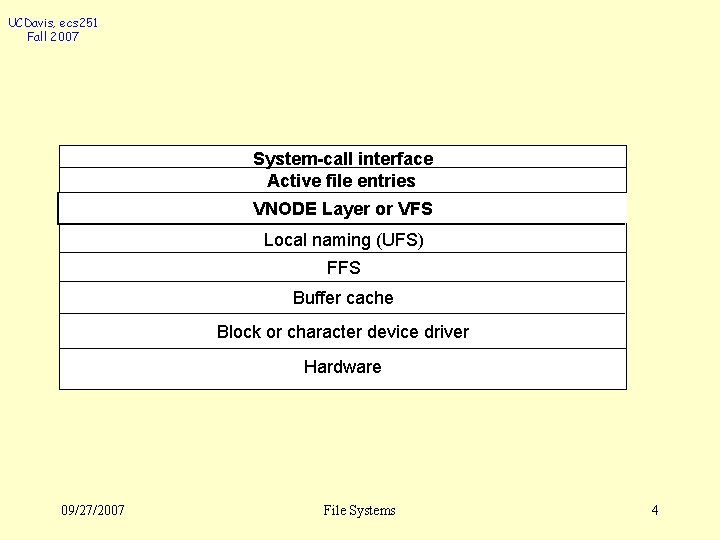

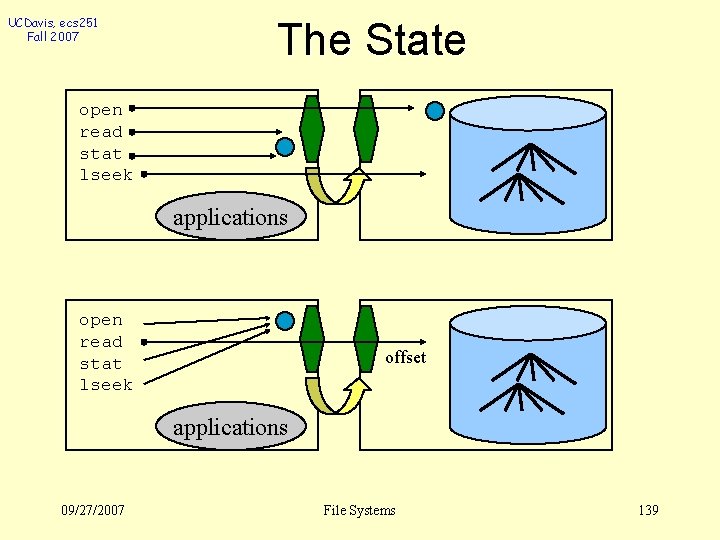

UCDavis, ecs 251 Fall 2007 System-call interface Active file entries VNODE Layer or VFS Local naming (UFS) FFS Buffer cache Block or character device driver Hardware 09/27/2007 File Systems 4

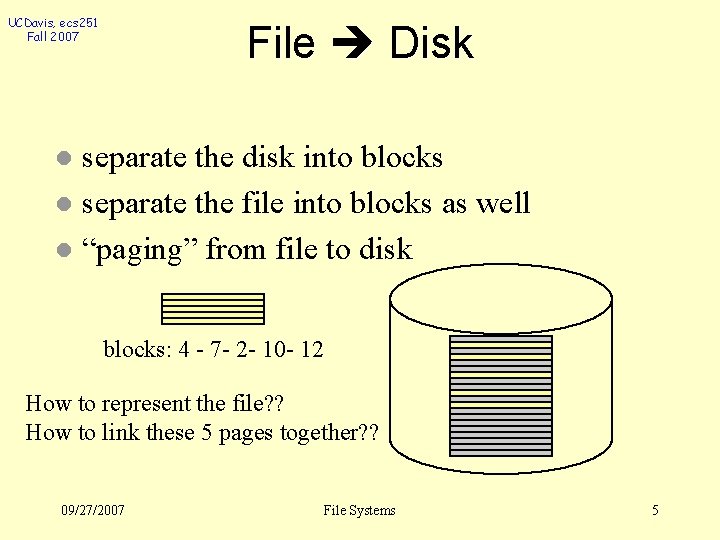

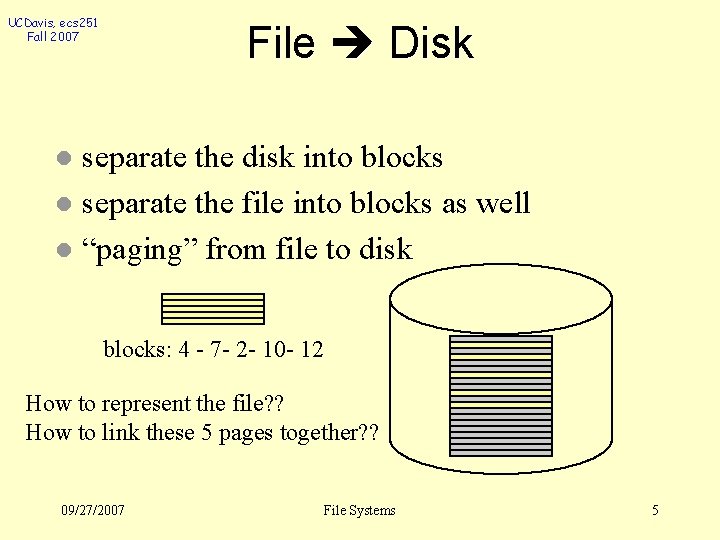

UCDavis, ecs 251 Fall 2007 File Disk separate the disk into blocks l separate the file into blocks as well l “paging” from file to disk l blocks: 4 - 7 - 2 - 10 - 12 How to represent the file? ? How to link these 5 pages together? ? 09/27/2007 File Systems 5

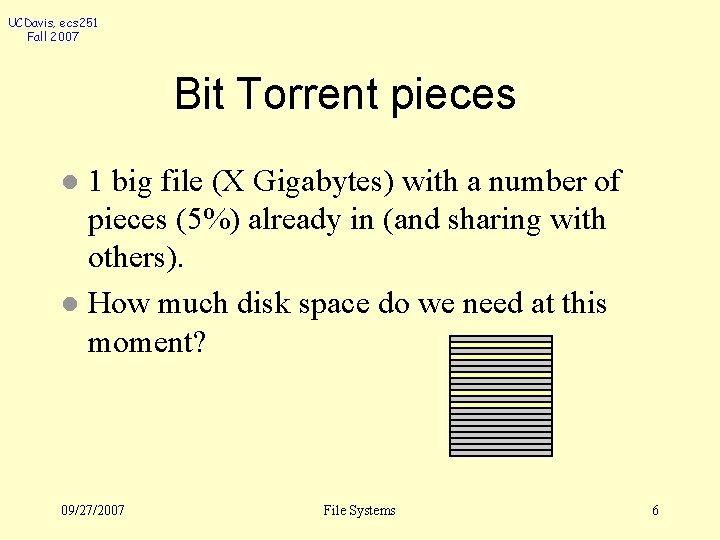

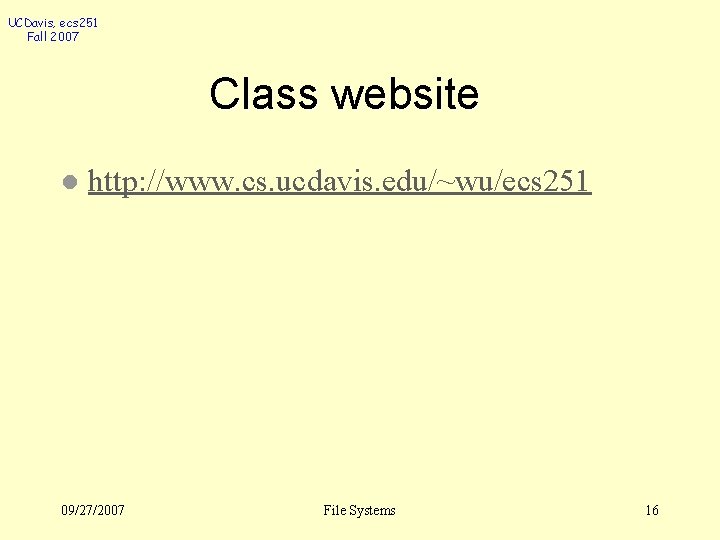

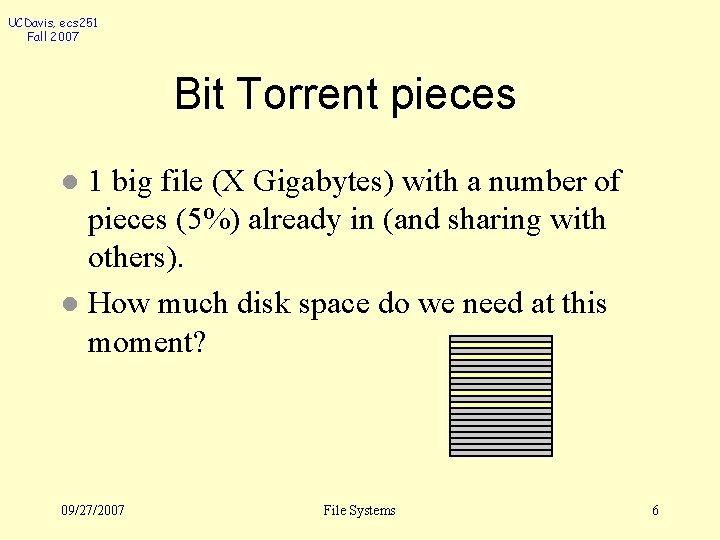

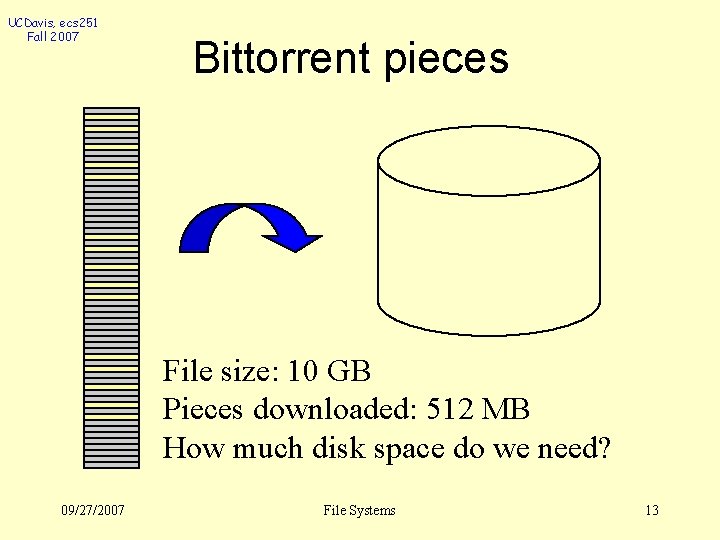

UCDavis, ecs 251 Fall 2007 Bit Torrent pieces 1 big file (X Gigabytes) with a number of pieces (5%) already in (and sharing with others). l How much disk space do we need at this moment? l 09/27/2007 File Systems 6

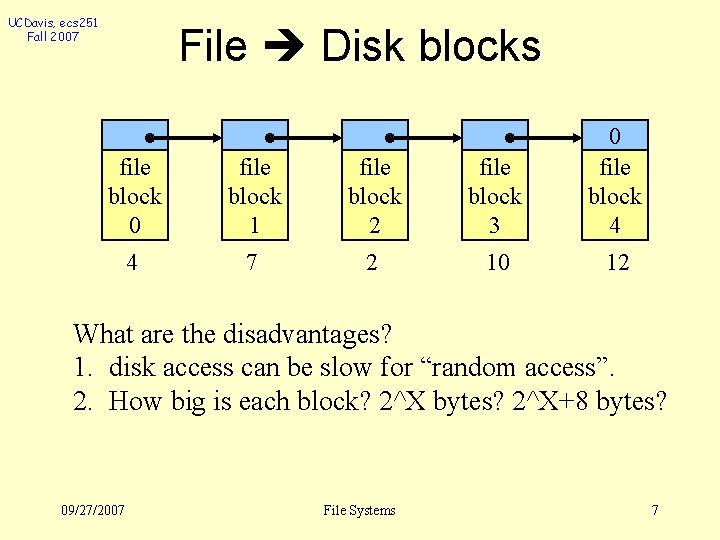

UCDavis, ecs 251 Fall 2007 File Disk blocks file block 0 file block 1 file block 2 file block 3 0 file block 4 4 7 2 10 12 What are the disadvantages? 1. disk access can be slow for “random access”. 2. How big is each block? 2^X bytes? 2^X+8 bytes? 09/27/2007 File Systems 7

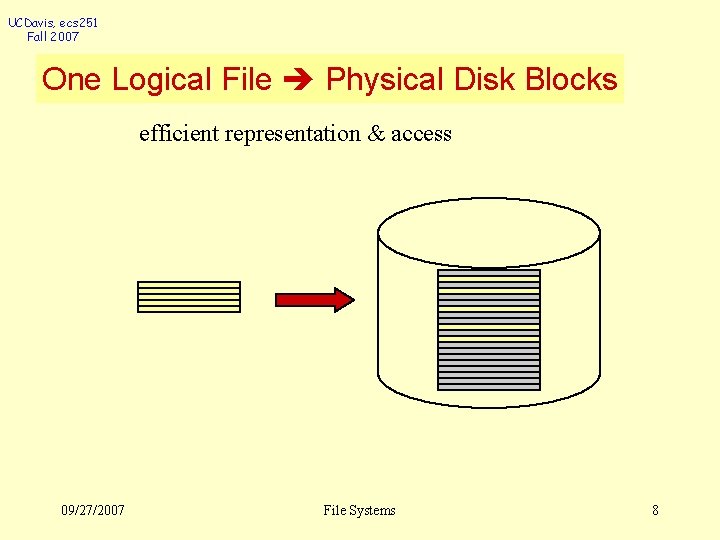

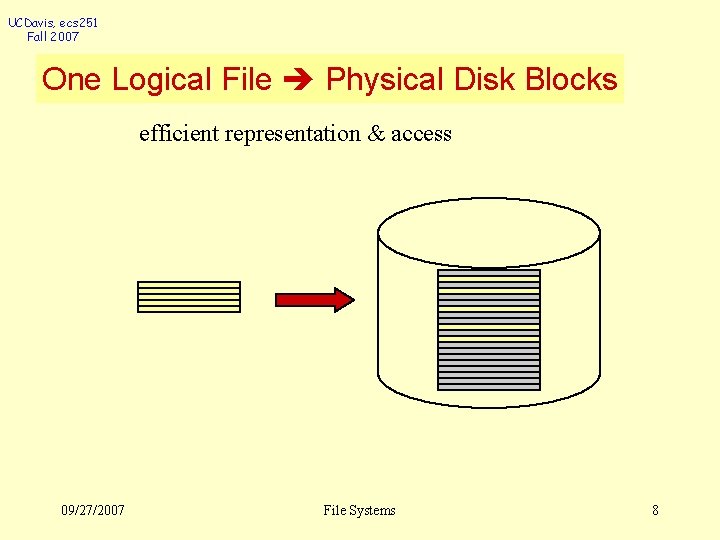

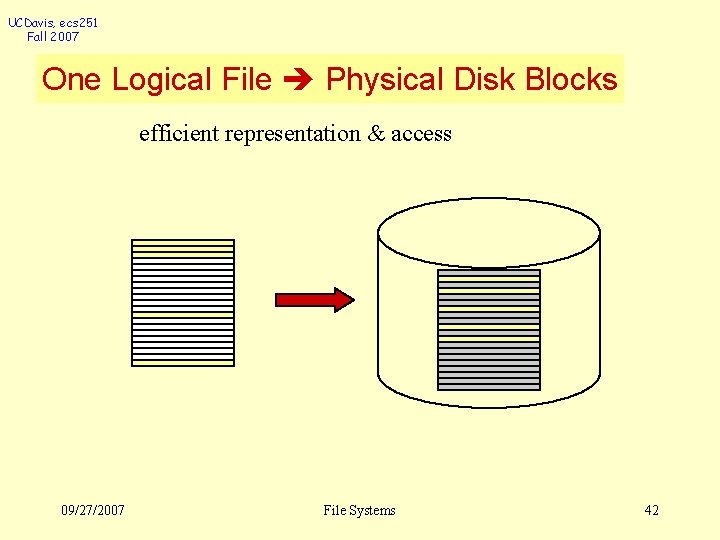

UCDavis, ecs 251 Fall 2007 One Logical File Physical Disk Blocks efficient representation & access 09/27/2007 File Systems 8

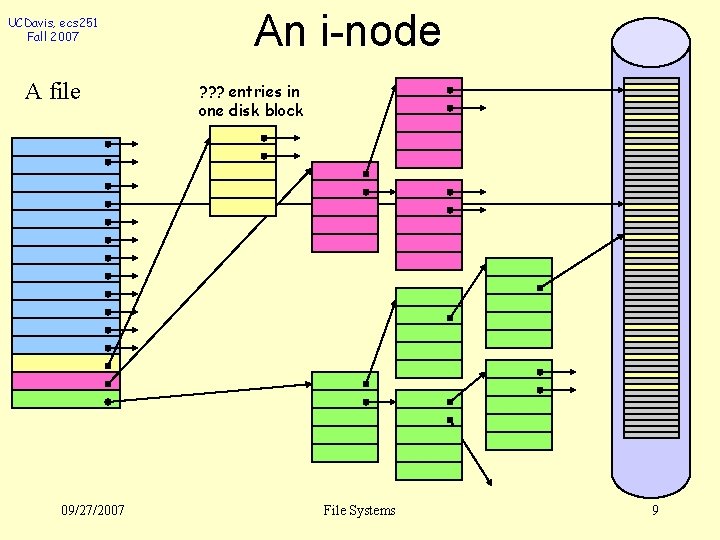

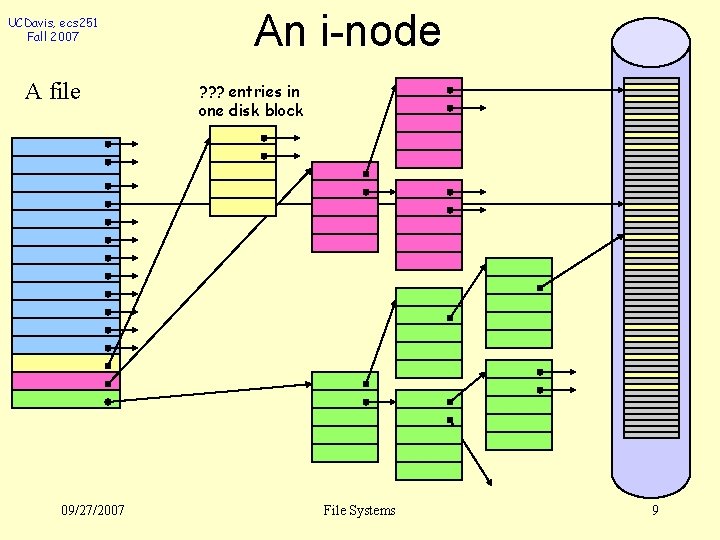

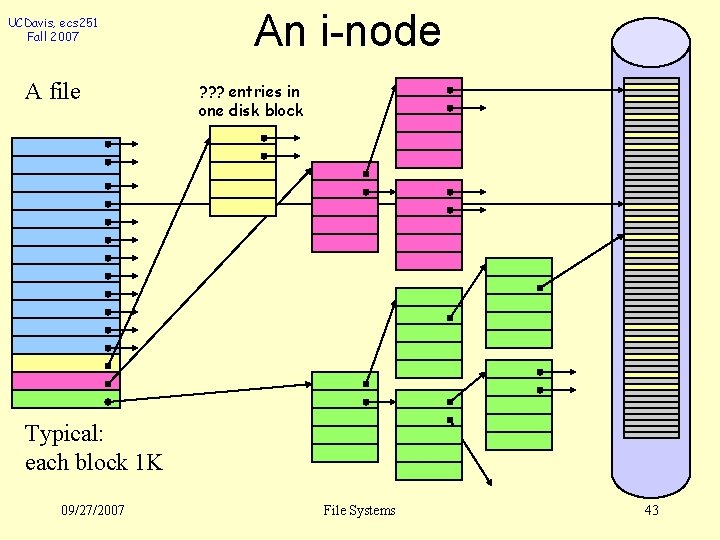

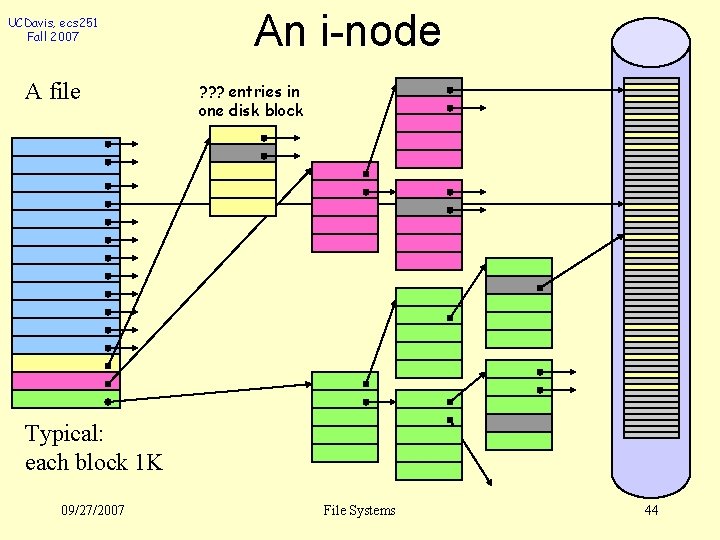

UCDavis, ecs 251 Fall 2007 A file 09/27/2007 An i-node ? ? ? entries in one disk block File Systems 9

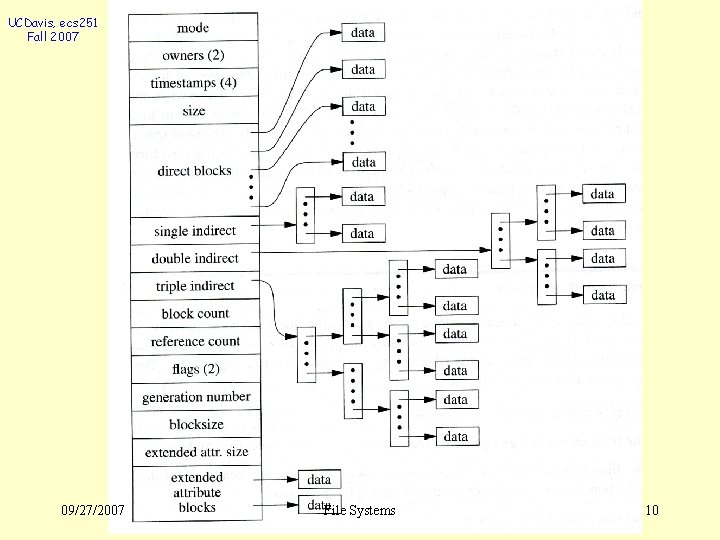

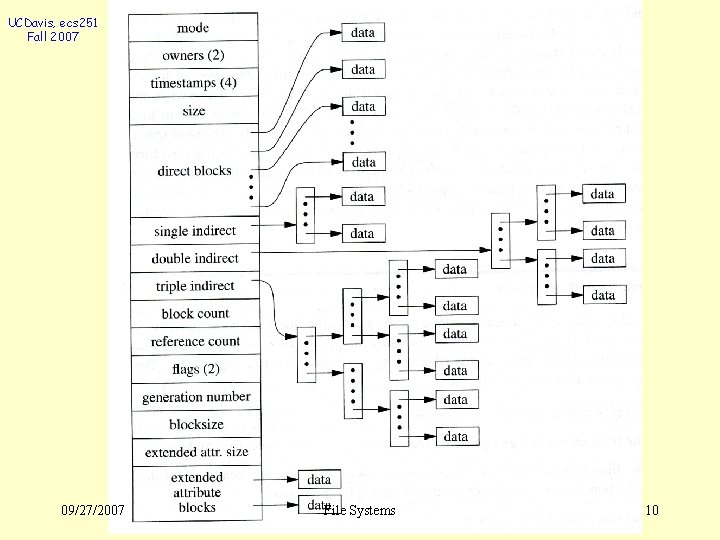

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 10

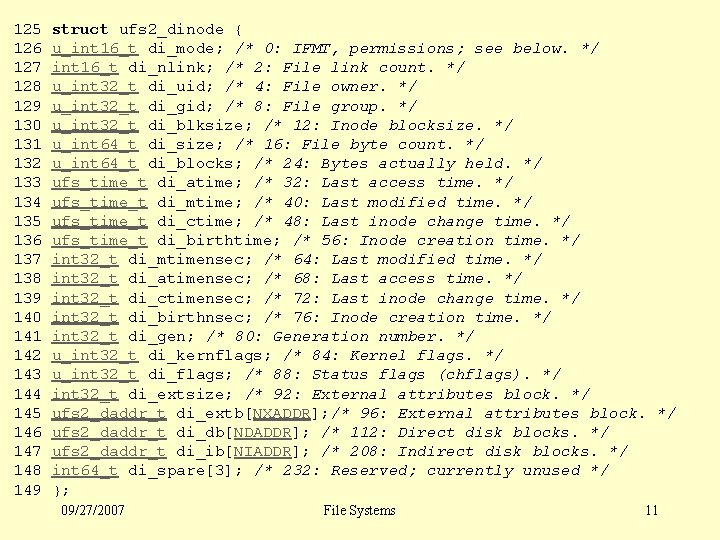

UCDavis, ecs 251 125 struct Fall 2007 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 ufs 2_dinode { u_int 16_t di_mode; /* 0: IFMT, permissions; see below. */ int 16_t di_nlink; /* 2: File link count. */ u_int 32_t di_uid; /* 4: File owner. */ u_int 32_t di_gid; /* 8: File group. */ u_int 32_t di_blksize; /* 12: Inode blocksize. */ u_int 64_t di_size; /* 16: File byte count. */ u_int 64_t di_blocks; /* 24: Bytes actually held. */ ufs_time_t di_atime; /* 32: Last access time. */ ufs_time_t di_mtime; /* 40: Last modified time. */ ufs_time_t di_ctime; /* 48: Last inode change time. */ ufs_time_t di_birthtime; /* 56: Inode creation time. */ int 32_t di_mtimensec; /* 64: Last modified time. */ int 32_t di_atimensec; /* 68: Last access time. */ int 32_t di_ctimensec; /* 72: Last inode change time. */ int 32_t di_birthnsec; /* 76: Inode creation time. */ int 32_t di_gen; /* 80: Generation number. */ u_int 32_t di_kernflags; /* 84: Kernel flags. */ u_int 32_t di_flags; /* 88: Status flags (chflags). */ int 32_t di_extsize; /* 92: External attributes block. */ ufs 2_daddr_t di_extb[NXADDR]; /* 96: External attributes block. */ ufs 2_daddr_t di_db[NDADDR]; /* 112: Direct disk blocks. */ ufs 2_daddr_t di_ib[NIADDR]; /* 208: Indirect disk blocks. */ int 64_t di_spare[3]; /* 232: Reserved; currently unused */ }; 09/27/2007 File Systems 11

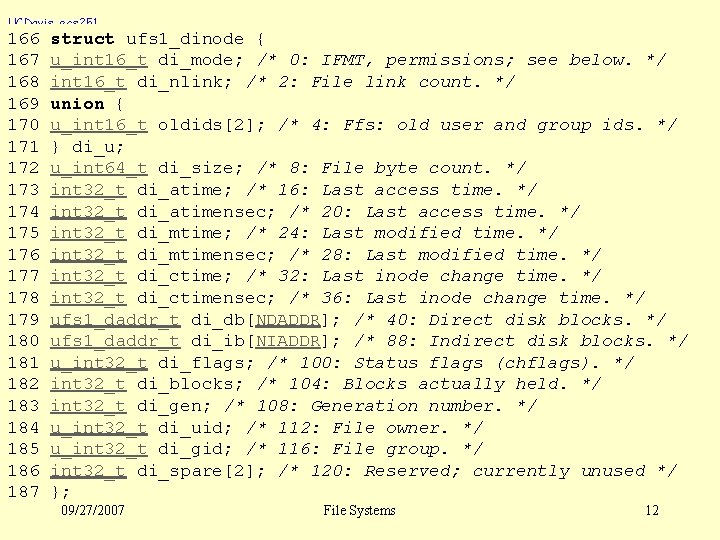

UCDavis, ecs 251 Fall 2007 166 struct 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 ufs 1_dinode { u_int 16_t di_mode; /* 0: IFMT, permissions; see below. */ int 16_t di_nlink; /* 2: File link count. */ union { u_int 16_t oldids[2]; /* 4: Ffs: old user and group ids. */ } di_u; u_int 64_t di_size; /* 8: File byte count. */ int 32_t di_atime; /* 16: Last access time. */ int 32_t di_atimensec; /* 20: Last access time. */ int 32_t di_mtime; /* 24: Last modified time. */ int 32_t di_mtimensec; /* 28: Last modified time. */ int 32_t di_ctime; /* 32: Last inode change time. */ int 32_t di_ctimensec; /* 36: Last inode change time. */ ufs 1_daddr_t di_db[NDADDR]; /* 40: Direct disk blocks. */ ufs 1_daddr_t di_ib[NIADDR]; /* 88: Indirect disk blocks. */ u_int 32_t di_flags; /* 100: Status flags (chflags). */ int 32_t di_blocks; /* 104: Blocks actually held. */ int 32_t di_gen; /* 108: Generation number. */ u_int 32_t di_uid; /* 112: File owner. */ u_int 32_t di_gid; /* 116: File group. */ int 32_t di_spare[2]; /* 120: Reserved; currently unused */ }; 09/27/2007 File Systems 12

UCDavis, ecs 251 Fall 2007 Bittorrent pieces File size: 10 GB Pieces downloaded: 512 MB How much disk space do we need? 09/27/2007 File Systems 13

UCDavis, ecs 251 Fall 2007 Welcome to ecs 251 09/27/2007 File Systems 14

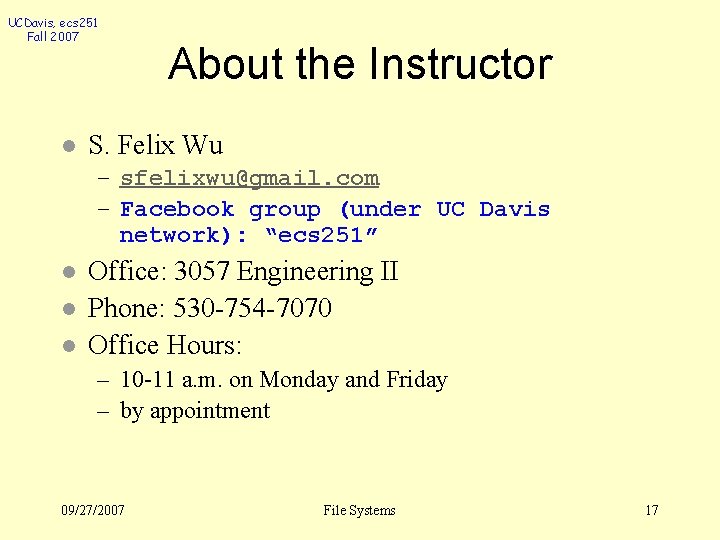

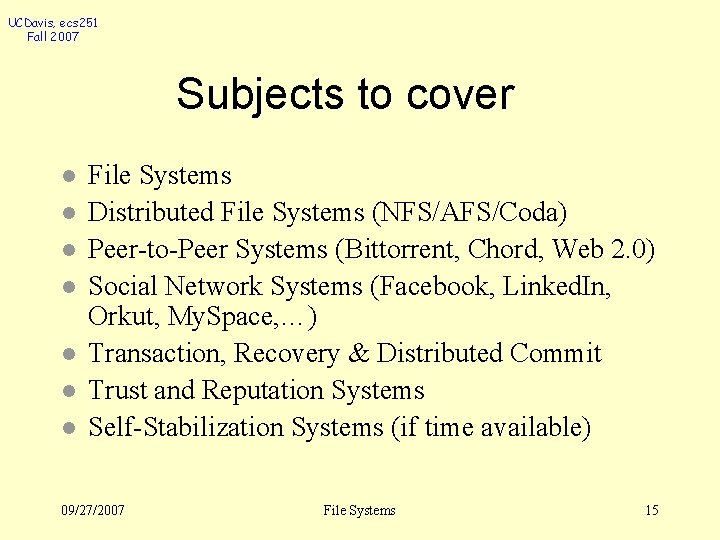

UCDavis, ecs 251 Fall 2007 Subjects to cover l l l l File Systems Distributed File Systems (NFS/AFS/Coda) Peer-to-Peer Systems (Bittorrent, Chord, Web 2. 0) Social Network Systems (Facebook, Linked. In, Orkut, My. Space, …) Transaction, Recovery & Distributed Commit Trust and Reputation Systems Self-Stabilization Systems (if time available) 09/27/2007 File Systems 15

UCDavis, ecs 251 Fall 2007 Class website l http: //www. cs. ucdavis. edu/~wu/ecs 251 09/27/2007 File Systems 16

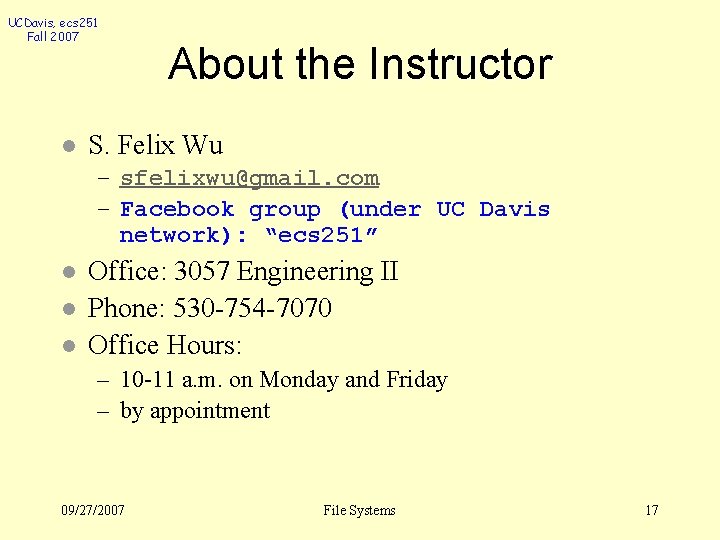

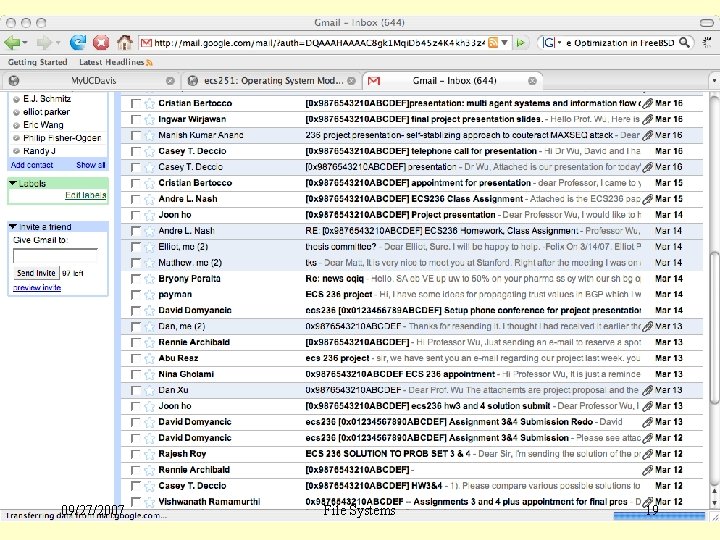

UCDavis, ecs 251 Fall 2007 l About the Instructor S. Felix Wu – sfelixwu@gmail. com – Facebook group (under UC Davis network): “ecs 251” l l l Office: 3057 Engineering II Phone: 530 -754 -7070 Office Hours: – 10 -11 a. m. on Monday and Friday – by appointment 09/27/2007 File Systems 17

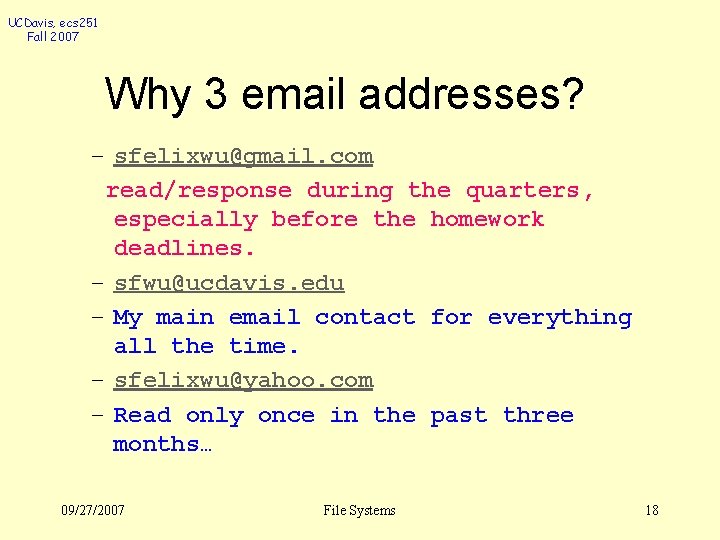

UCDavis, ecs 251 Fall 2007 Why 3 email addresses? – sfelixwu@gmail. com read/response during the quarters, especially before the homework deadlines. – sfwu@ucdavis. edu – My main email contact for everything all the time. – sfelixwu@yahoo. com – Read only once in the past three months… 09/27/2007 File Systems 18

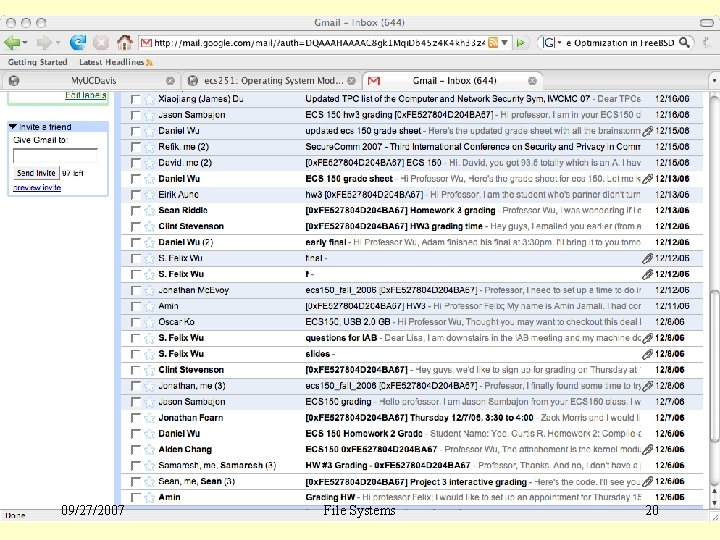

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 19

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 20

![UCDavis ecs 251 Fall 2007 AntiSpam sfelixwugmail com l subject ecs 251f 2007 l UCDavis, ecs 251 Fall 2007 Anti-Spam sfelixwu@gmail. com l subject: [ecs 251_f 2007]… l](https://slidetodoc.com/presentation_image_h2/1b05f6adf9852b209564be5395ac405a/image-21.jpg)

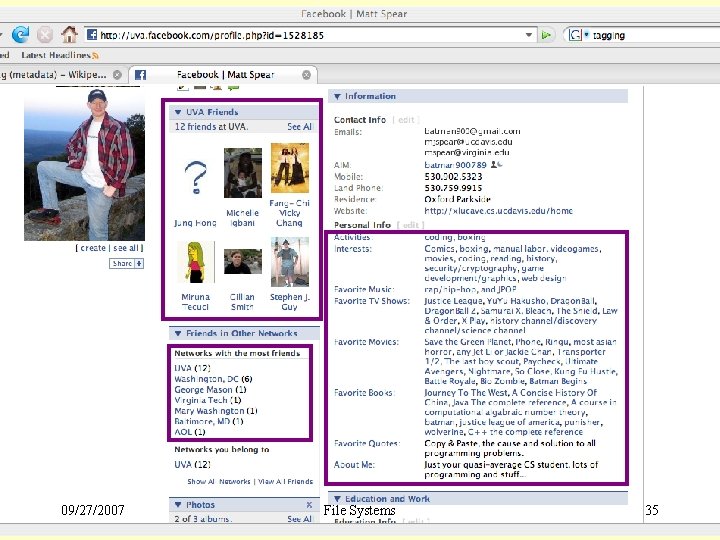

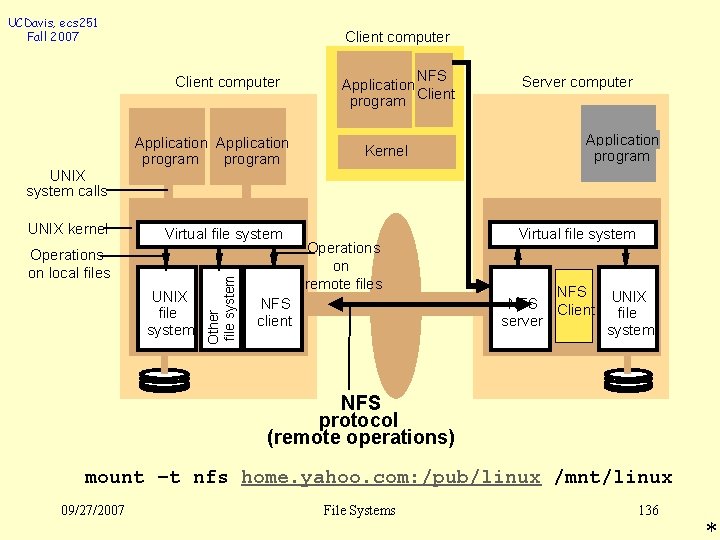

UCDavis, ecs 251 Fall 2007 Anti-Spam sfelixwu@gmail. com l subject: [ecs 251_f 2007]… l l ecs 251_f 2007_0927 is the cyber social link between the instructor and the students in ecs 251, Fall 2007. 09/27/2007 File Systems 21

UCDavis, ecs 251 Fall 2007 Textbook l A set of papers on these subjects 09/27/2007 File Systems 22

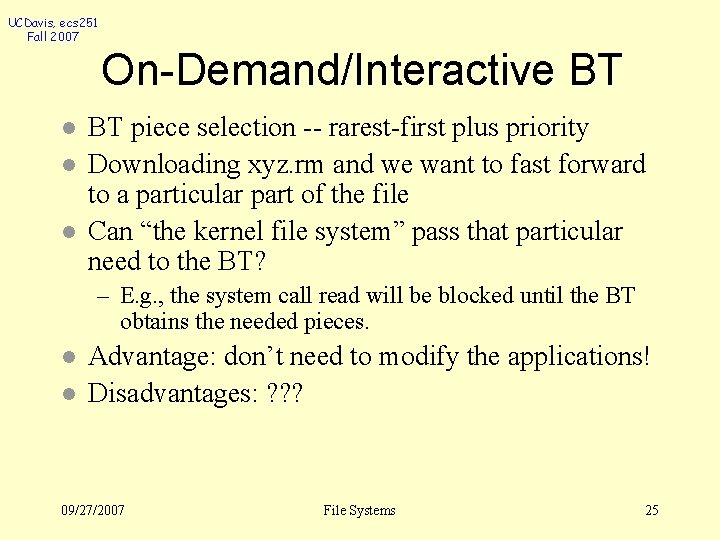

UCDavis, ecs 251 Fall 2007 Requirements Midterm: 30% (11/01/2007) l Final Project: 70% (1~2 persons) l – Pre-proposal (10%, 10/18/2007) – Proposal/Design/Prototype (30%, 11/20/2007) – Final Demo & Report (30%, 12/11/2007) 09/27/2007 File Systems 23

UCDavis, ecs 251 Fall 2007 l Projects (10/02/2007) On-demand/interactive Bittorrent – Kernel, BT client, & applications l l Transaction-oriented File System P 2 P-X over Internet – X = Facebook, Google, e. Bay, ud-DFS, Data Center l “Internet-less information system” – MANET-based systems l Social network information crawling 09/27/2007 File Systems 24

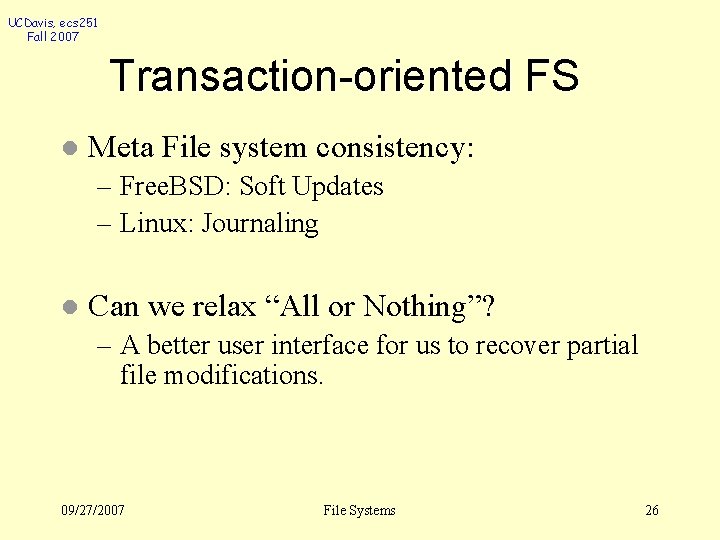

UCDavis, ecs 251 Fall 2007 On-Demand/Interactive BT l l l BT piece selection -- rarest-first plus priority Downloading xyz. rm and we want to fast forward to a particular part of the file Can “the kernel file system” pass that particular need to the BT? – E. g. , the system call read will be blocked until the BT obtains the needed pieces. l l Advantage: don’t need to modify the applications! Disadvantages: ? ? ? 09/27/2007 File Systems 25

UCDavis, ecs 251 Fall 2007 Transaction-oriented FS l Meta File system consistency: – Free. BSD: Soft Updates – Linux: Journaling l Can we relax “All or Nothing”? – A better user interface for us to recover partial file modifications. 09/27/2007 File Systems 26

UCDavis, ecs 251 Fall 2007 P 2 P over X Facebook l Google l e. Bay l Mega. Upload l l Problems with centralized service providers? ? ? 09/27/2007 File Systems 27

UCDavis, ecs 251 Fall 2007 Dace. Book Davis version of Face. Book l Peer-to-Peer social networking l Community gateways to other social networks such as Facebook l l Socially here, information here 09/27/2007 File Systems 28

UCDavis, ecs 251 Fall 2007 Internet-less Information Sharing Ad Hoc Collaboration for Content Sharing in the Infrastructure-less Heterogeneous Network 09/27/2007 File Systems 29

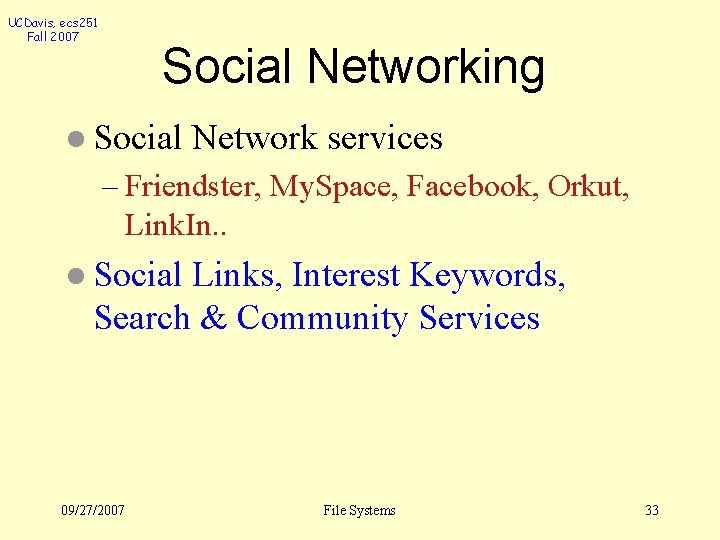

UCDavis, ecs 251 Fall 2007 Infrastructure-Less “No IP address, DNS, or Web servers” l Purely P 2 P from Layers 3 to 7 l 09/27/2007 File Systems 30

UCDavis, ecs 251 Fall 2007 Social Network Crawling 09/27/2007 File Systems 31

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 32

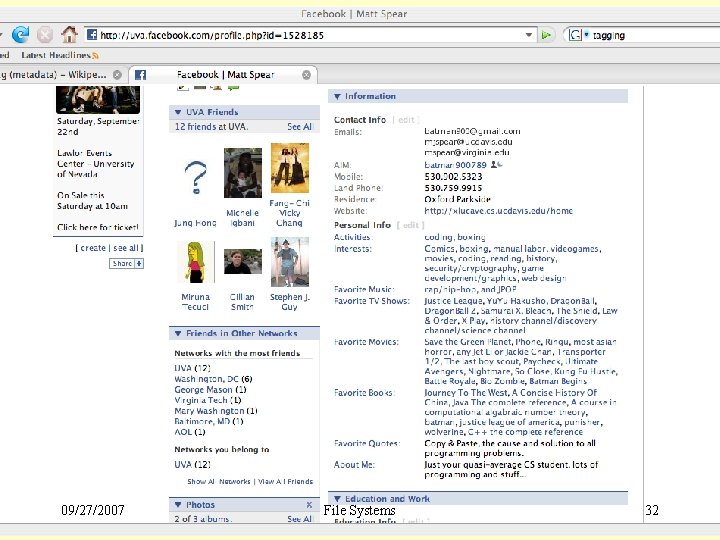

UCDavis, ecs 251 Fall 2007 Social Networking l Social Network services – Friendster, My. Space, Facebook, Orkut, Link. In. . l Social Links, Interest Keywords, Search & Community Services 09/27/2007 File Systems 33

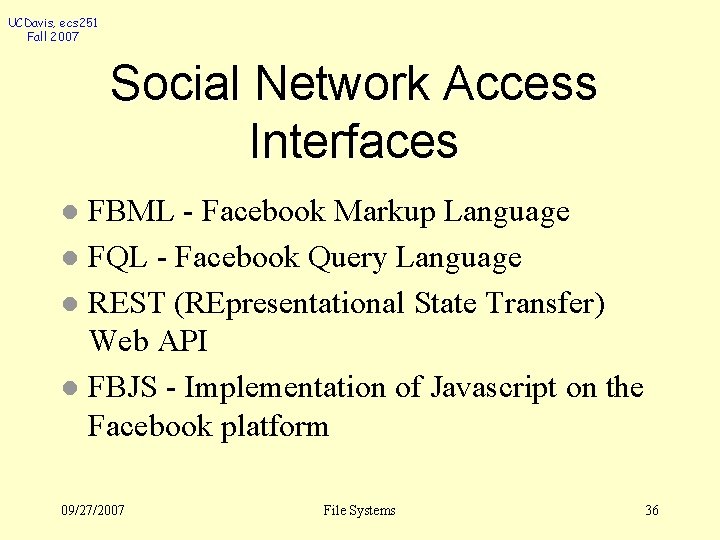

UCDavis, ecs 251 Fall 2007 Social Networks: # of Users http: //en. wikipedia. org/wiki/List_of_social_networking_sites l l l l Face. Book Friendster Hi 5 Link. In My. Space Orkut Yahoo! 360 o … among many others. 09/27/2007 File Systems ~34 M ~47 M ~50 M ~12 M ~200 M ~47 M ~4 M 34

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 35

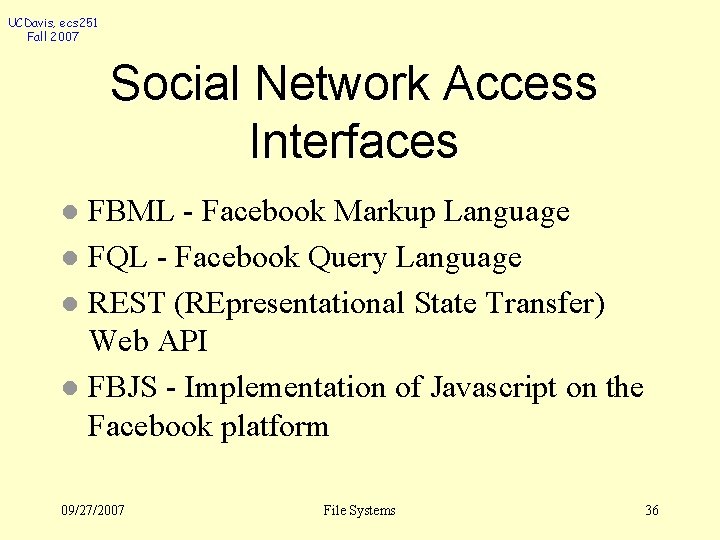

UCDavis, ecs 251 Fall 2007 Social Network Access Interfaces FBML - Facebook Markup Language l FQL - Facebook Query Language l REST (REpresentational State Transfer) Web API l FBJS - Implementation of Javascript on the Facebook platform l 09/27/2007 File Systems 36

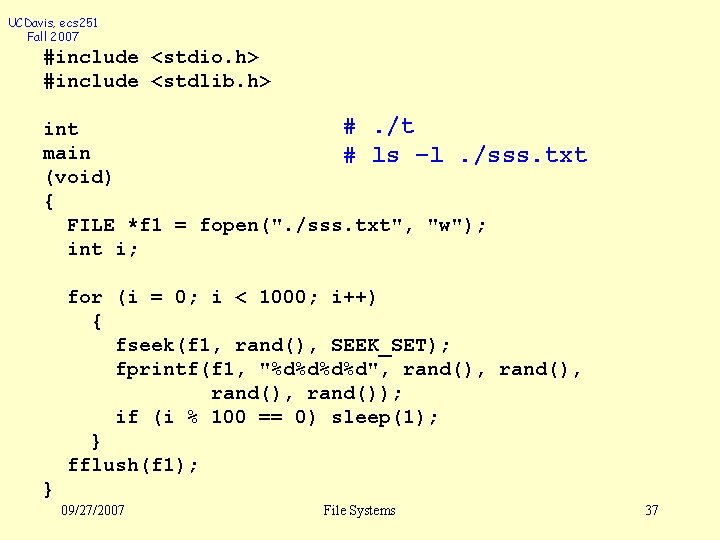

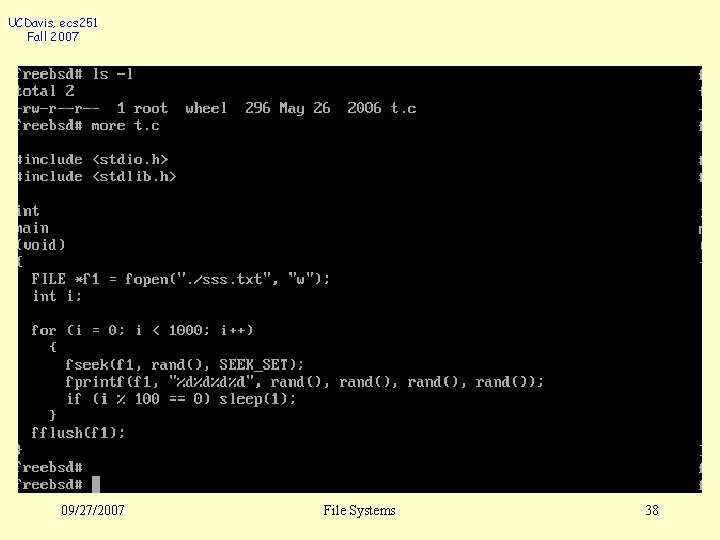

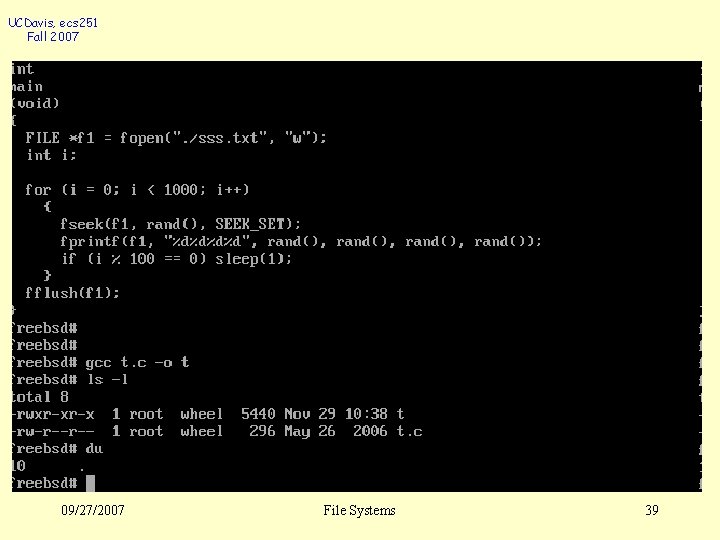

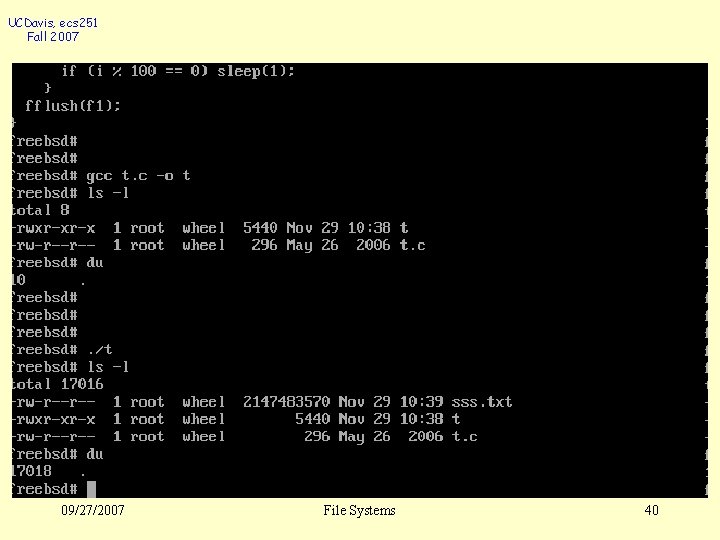

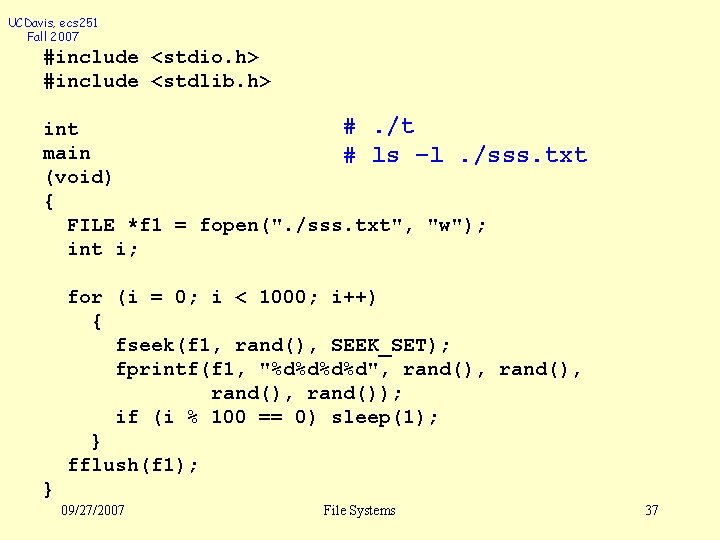

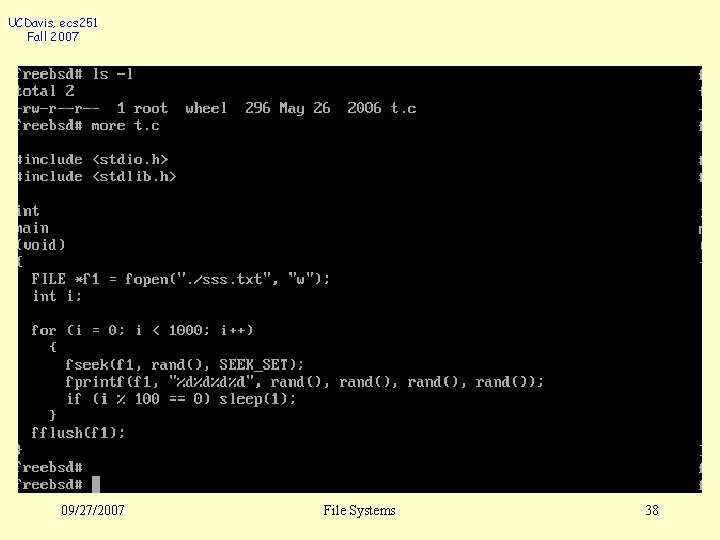

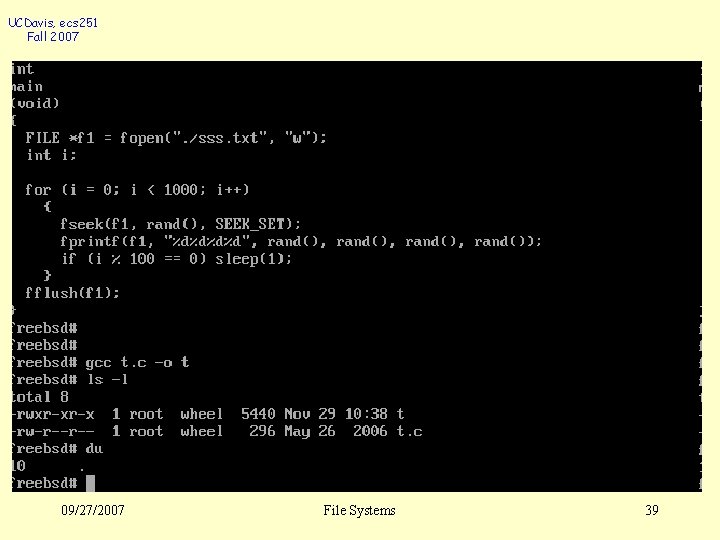

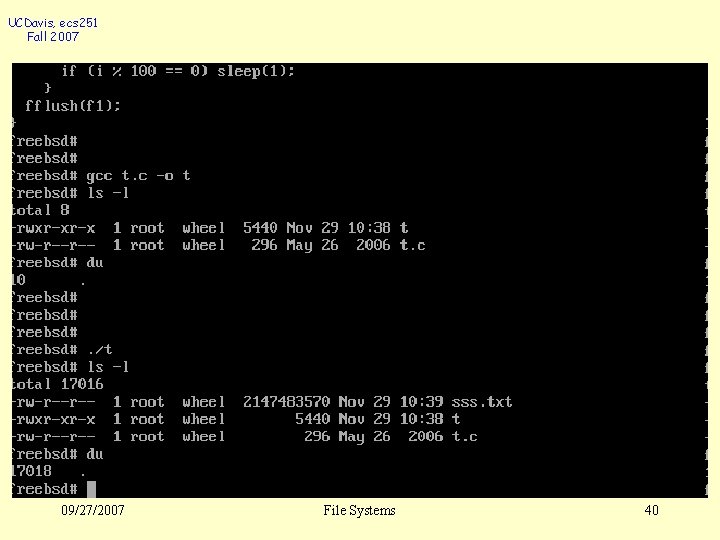

UCDavis, ecs 251 Fall 2007 #include <stdio. h> #include <stdlib. h> #. /t int main # ls –l. /sss. txt (void) { FILE *f 1 = fopen(". /sss. txt", "w"); int i; for (i = 0; i < 1000; i++) { fseek(f 1, rand(), SEEK_SET); fprintf(f 1, "%d%d", rand(), rand()); if (i % 100 == 0) sleep(1); } fflush(f 1); } 09/27/2007 File Systems 37

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 38

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 39

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 40

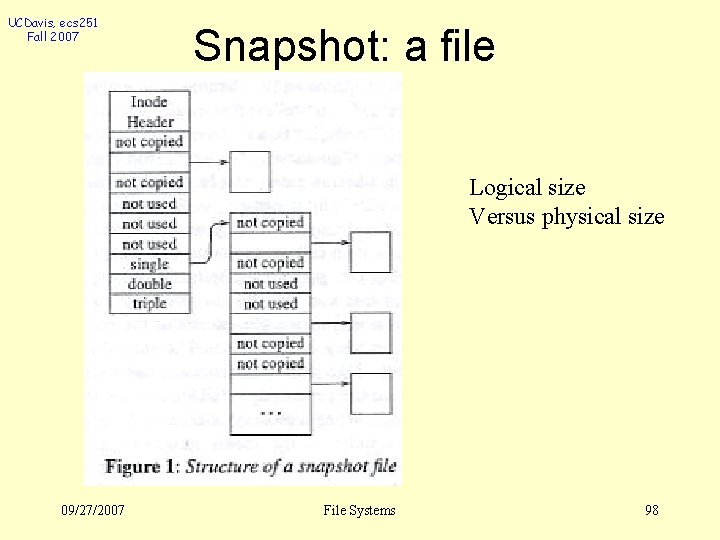

UCDavis, ecs 251 Fall 2007 di_size vs. di_blocks Logical l Physical l fstat l du l 09/27/2007 File Systems 41

UCDavis, ecs 251 Fall 2007 One Logical File Physical Disk Blocks efficient representation & access 09/27/2007 File Systems 42

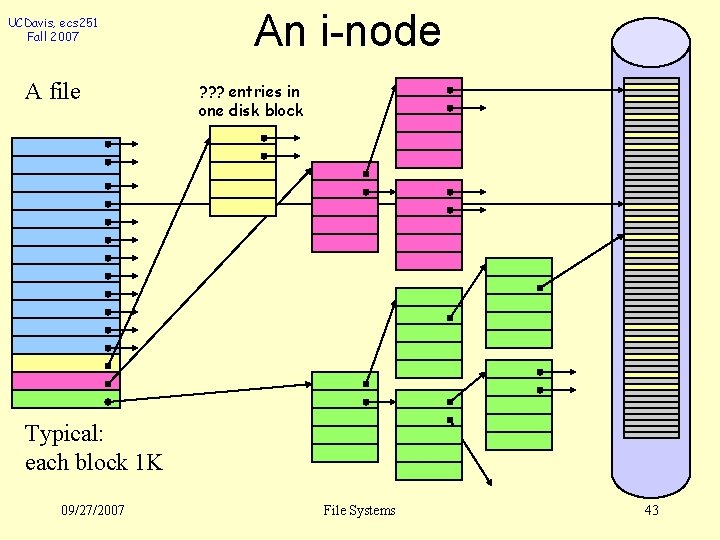

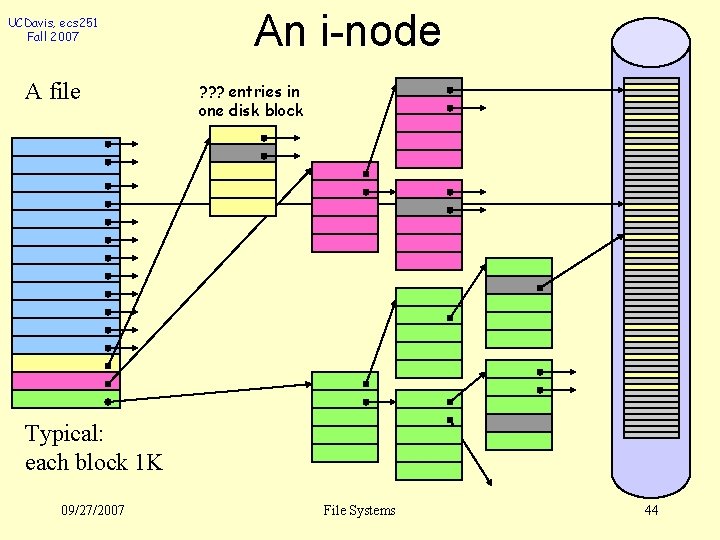

UCDavis, ecs 251 Fall 2007 A file An i-node ? ? ? entries in one disk block Typical: each block 1 K 09/27/2007 File Systems 43

UCDavis, ecs 251 Fall 2007 A file An i-node ? ? ? entries in one disk block Typical: each block 1 K 09/27/2007 File Systems 44

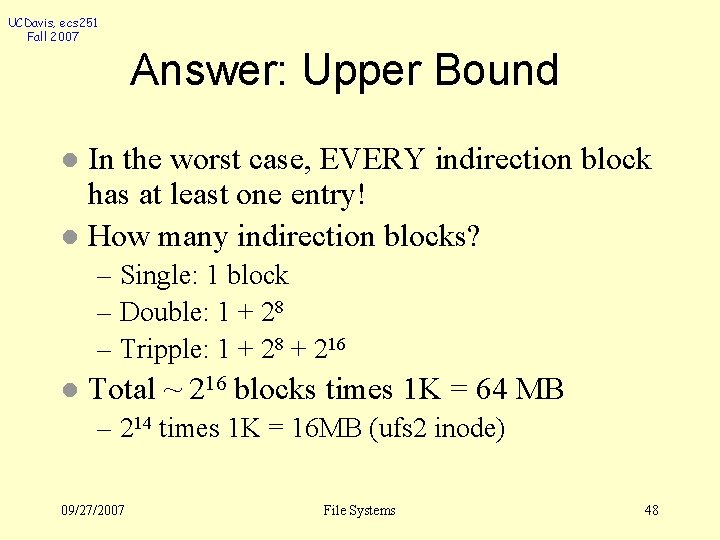

UCDavis, ecs 251 Fall 2007 l l i-node How many disk blocks can a FS have? How many levels of i-node indirection will be necessary to store a file of 2 G bytes? (I. e. , 0, 1, 2 or 3) What is the largest possible file size in i-node? What is the size of the i-node itself for a file of 10 GB with only 512 MB downloaded? 09/27/2007 File Systems 45

UCDavis, ecs 251 Fall 2007 l Answer How many disk blocks can a FS have? – 264 or 232: Pointer (to blocks) size is 8/4 bytes. l How many levels of i-node indirection will be necessary to store a file of 2 G (231) bytes? (I. e. , 0, 1, 2 or 3) – 12*210 + 28 *2 10 + 28 *28 *2 10 >? 231 l What is the largest possible file size in i-node? – 12*210 + 28 *2 10 + 28 *28 *2 10 – 264 – 1 – 232 * 210 You need to consider three issues and find the minimum! 09/27/2007 File Systems 46

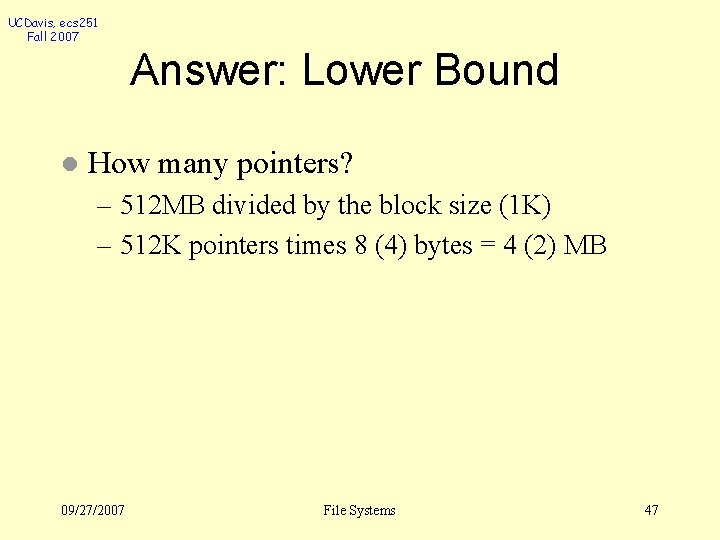

UCDavis, ecs 251 Fall 2007 Answer: Lower Bound l How many pointers? – 512 MB divided by the block size (1 K) – 512 K pointers times 8 (4) bytes = 4 (2) MB 09/27/2007 File Systems 47

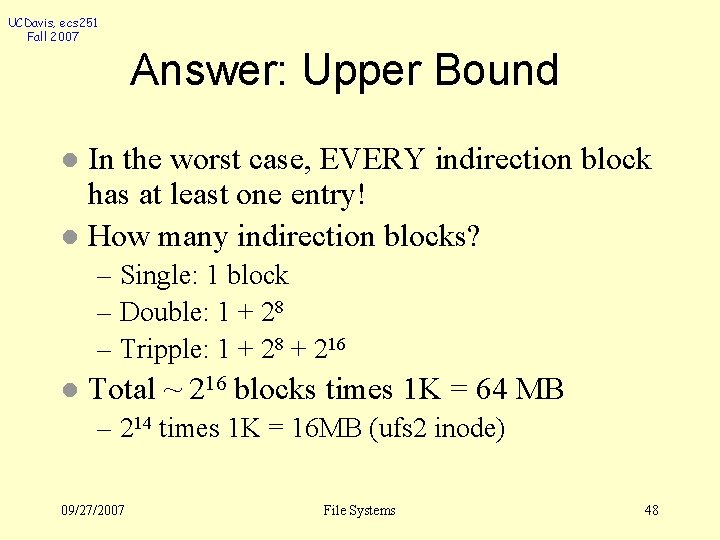

UCDavis, ecs 251 Fall 2007 Answer: Upper Bound In the worst case, EVERY indirection block has at least one entry! l How many indirection blocks? l – Single: 1 block – Double: 1 + 28 – Tripple: 1 + 28 + 216 l Total ~ 216 blocks times 1 K = 64 MB – 214 times 1 K = 16 MB (ufs 2 inode) 09/27/2007 File Systems 48

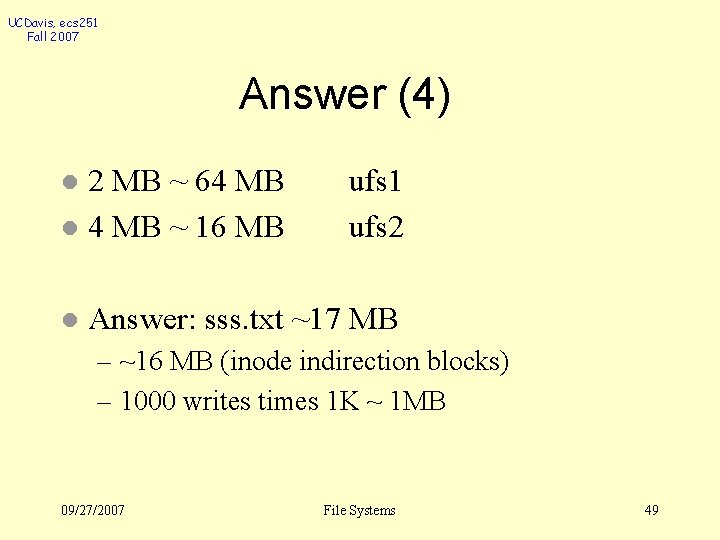

UCDavis, ecs 251 Fall 2007 Answer (4) l 2 MB ~ 64 MB l 4 MB ~ 16 MB ufs 1 ufs 2 l Answer: sss. txt ~17 MB – ~16 MB (inode indirection blocks) – 1000 writes times 1 K ~ 1 MB 09/27/2007 File Systems 49

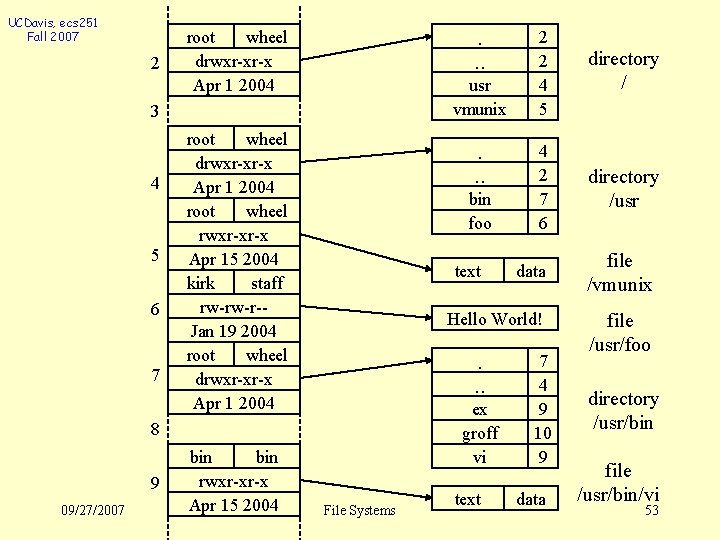

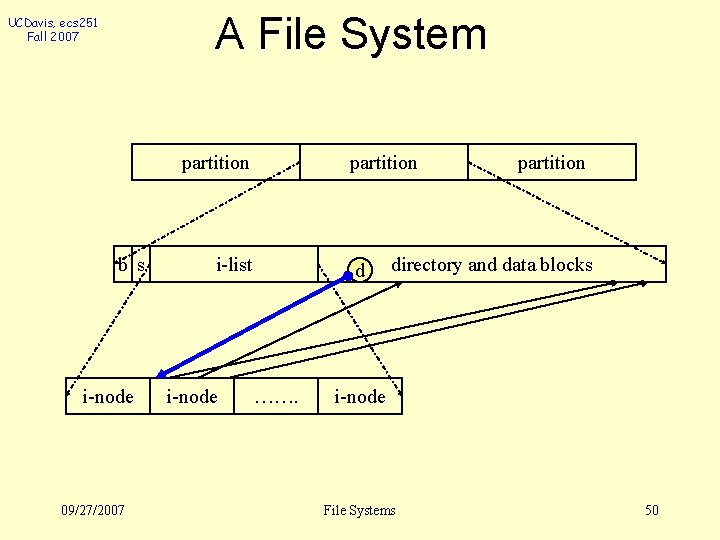

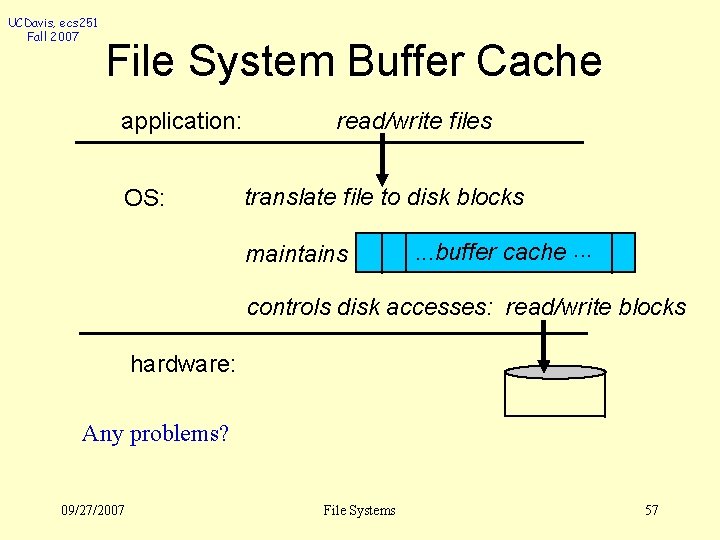

A File System UCDavis, ecs 251 Fall 2007 partition b s i-node 09/27/2007 partition i-list i-node d ……. partition directory and data blocks i-node File Systems 50

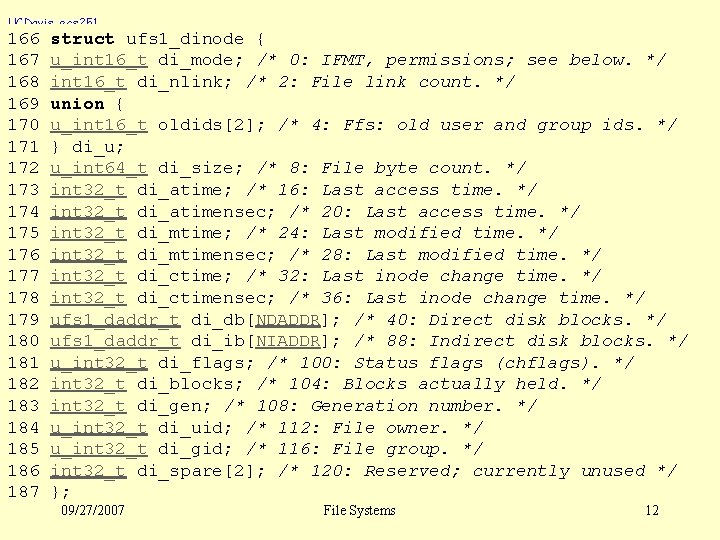

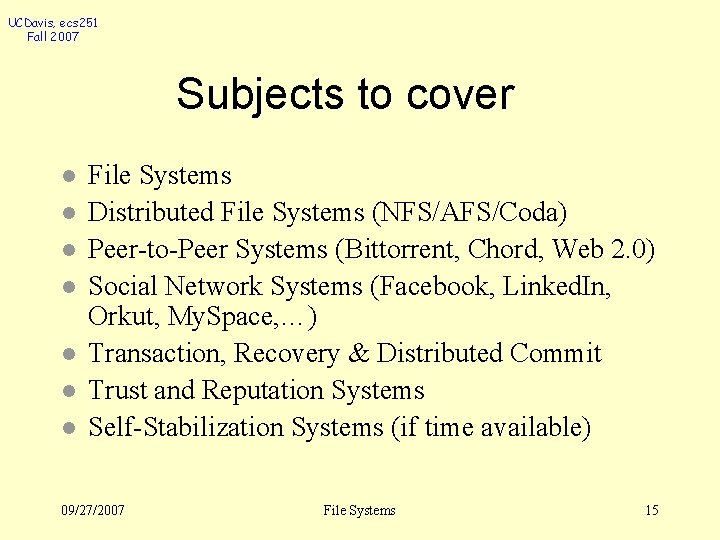

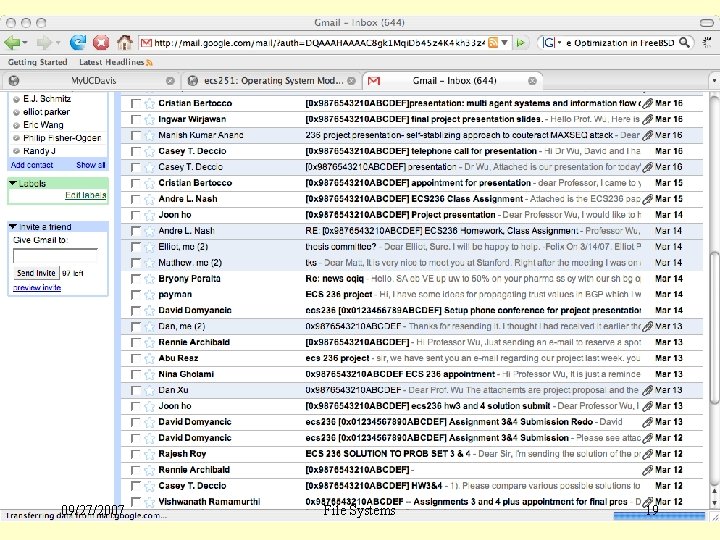

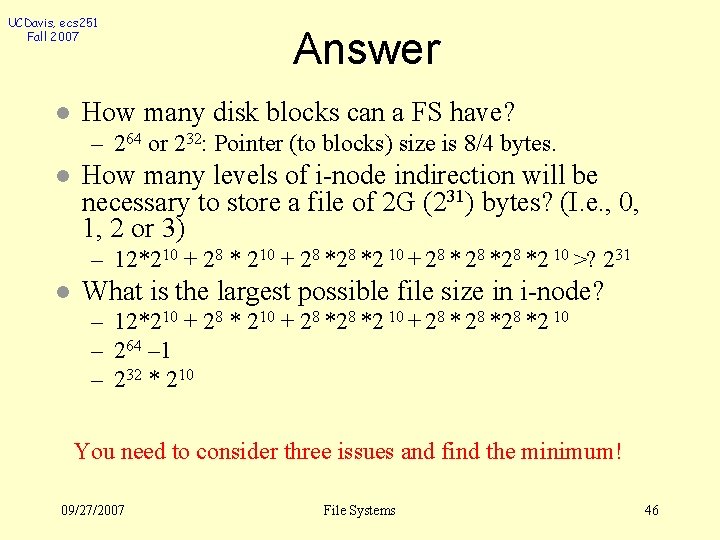

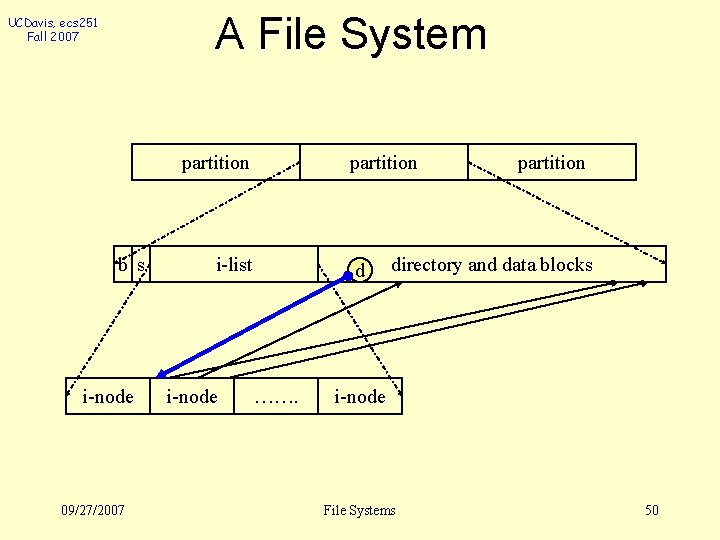

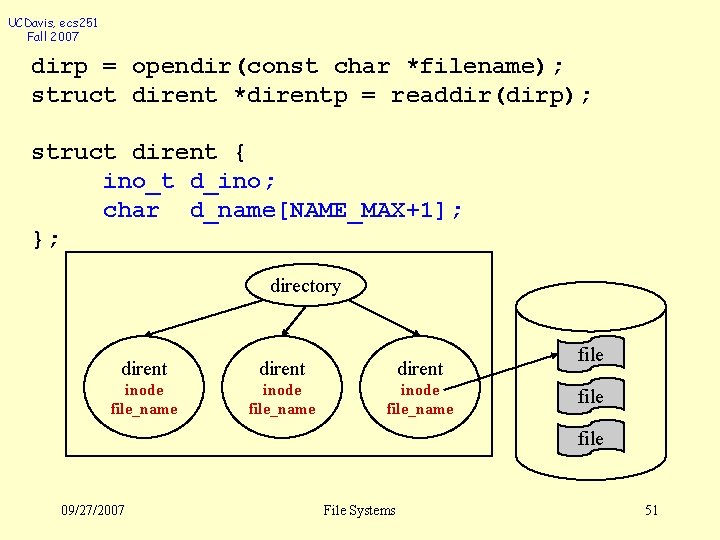

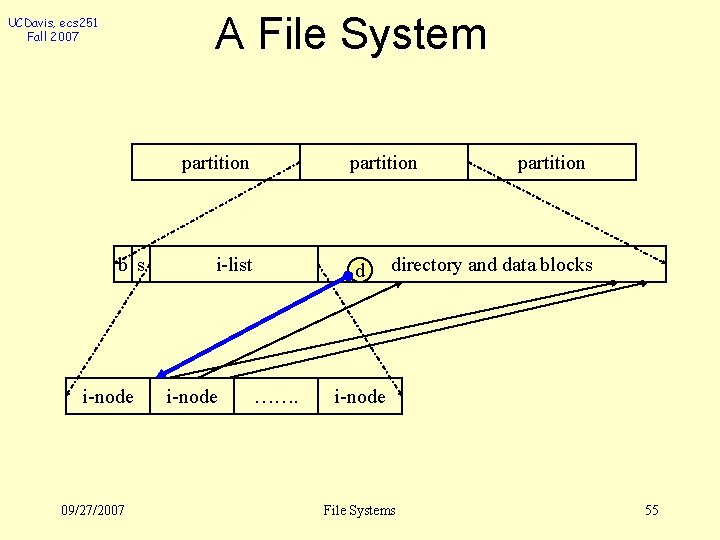

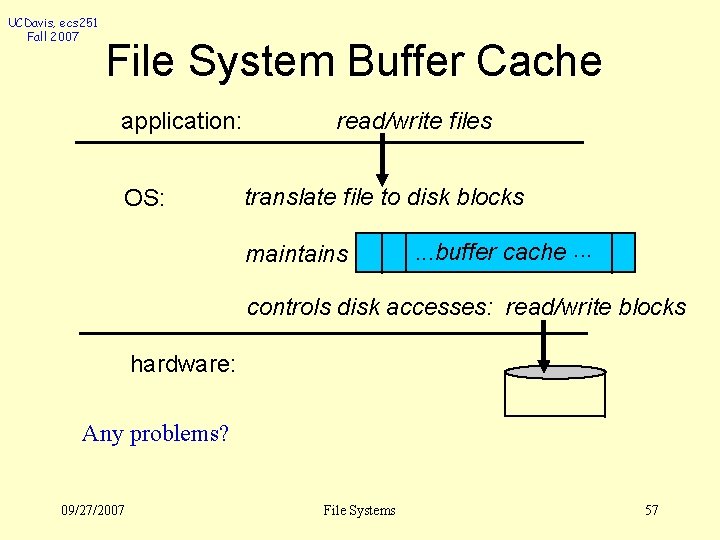

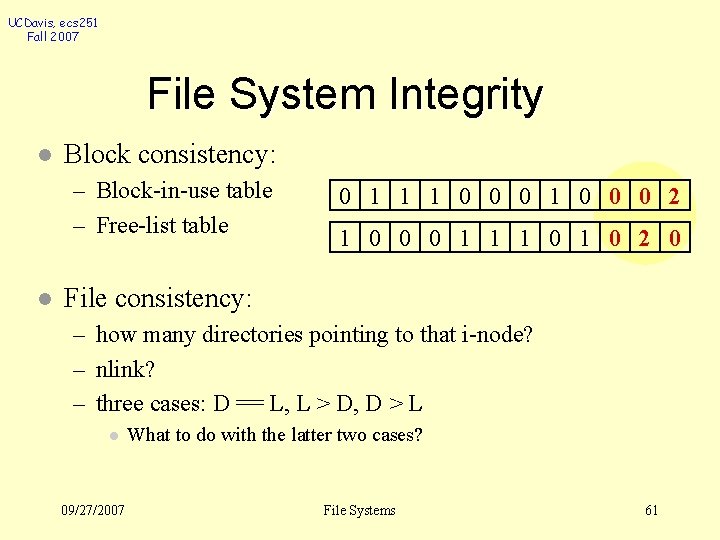

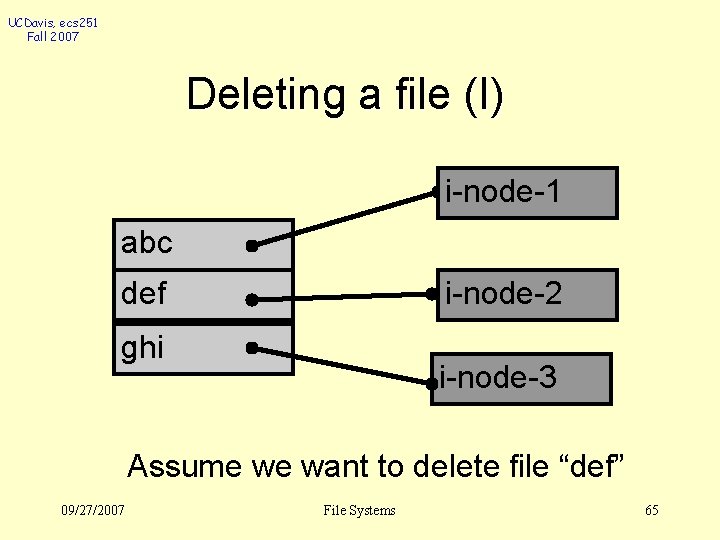

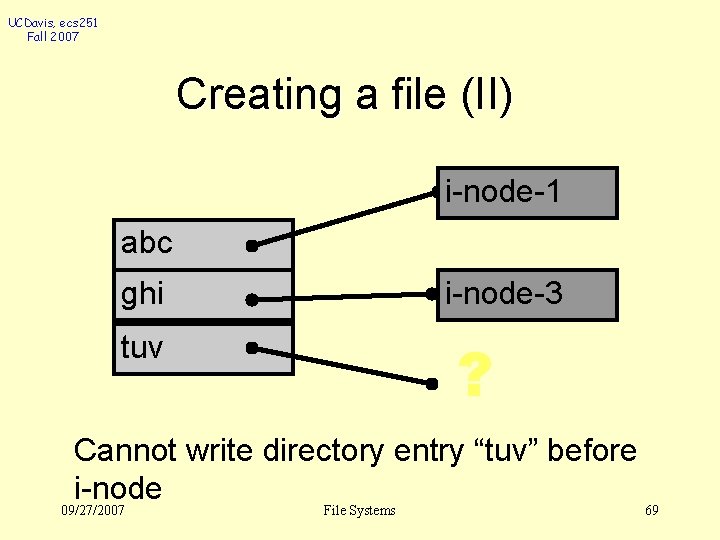

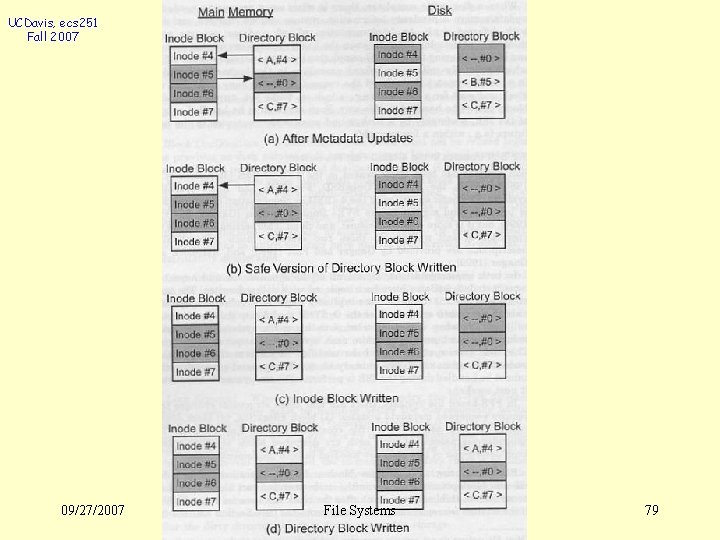

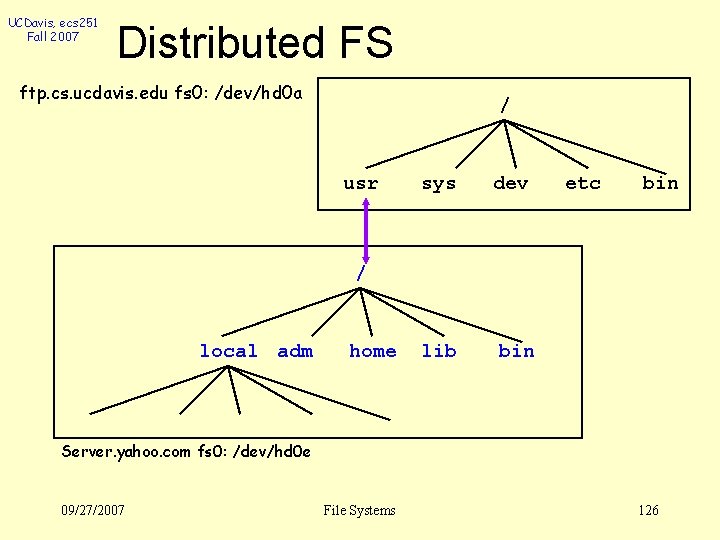

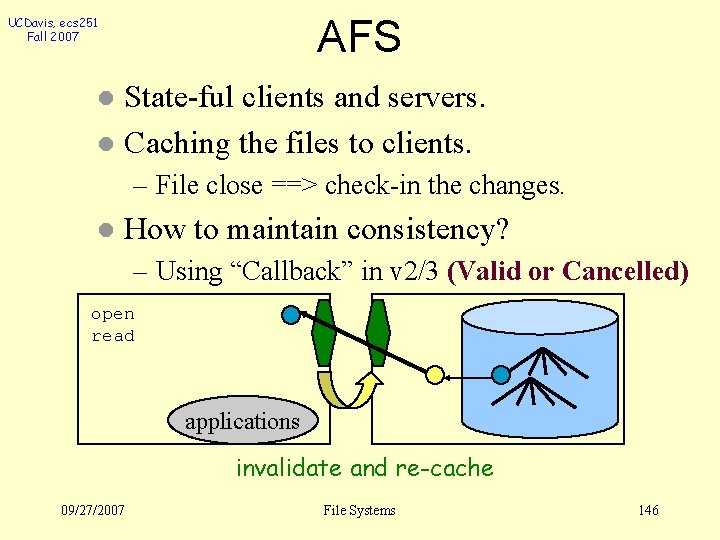

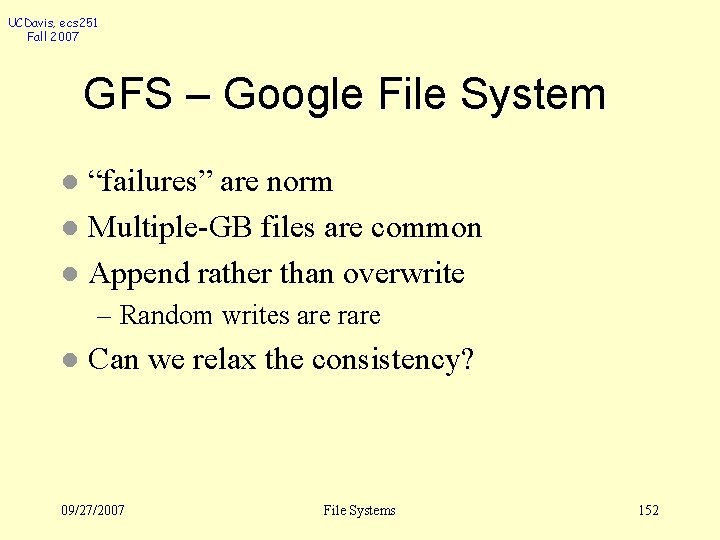

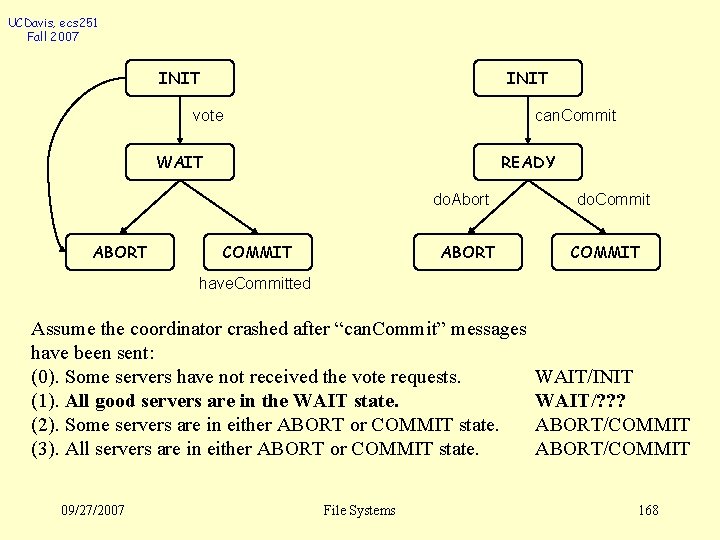

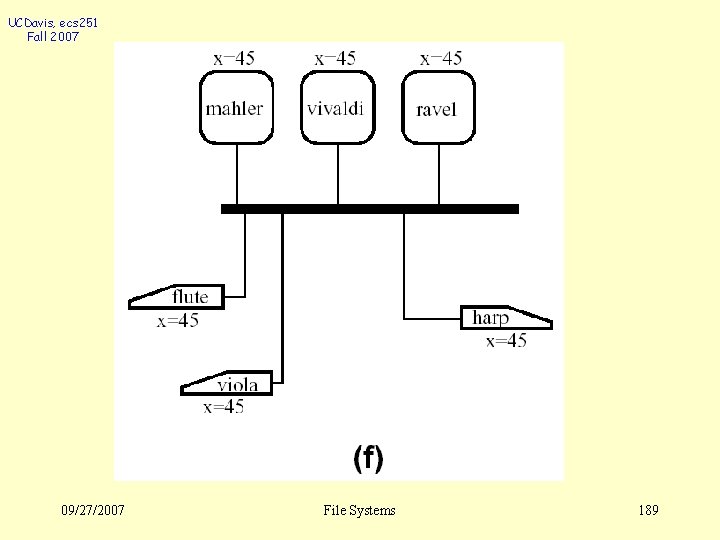

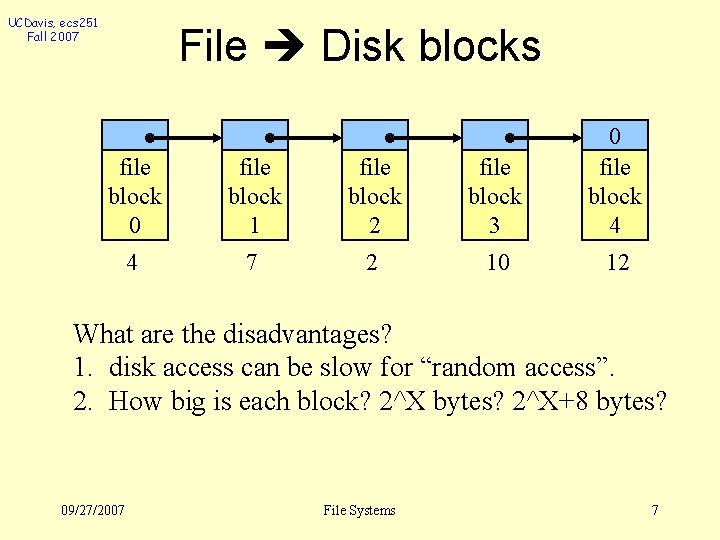

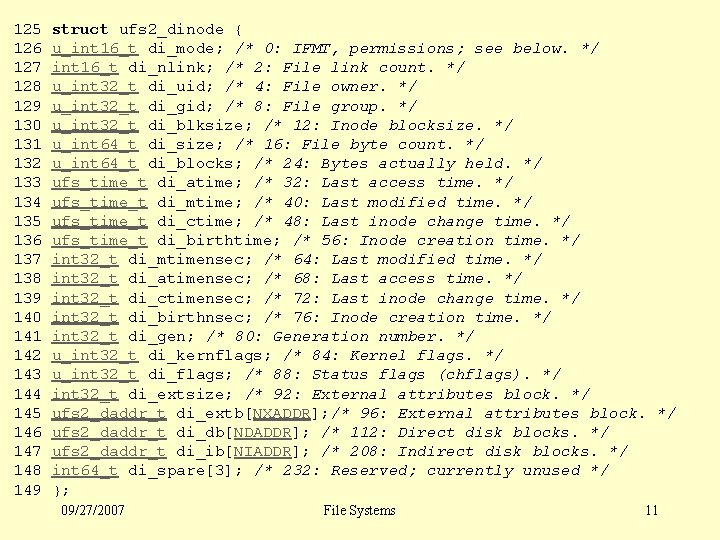

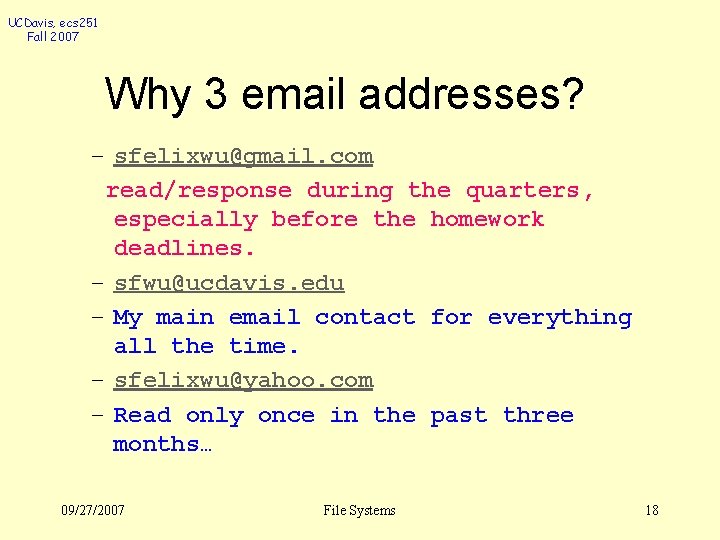

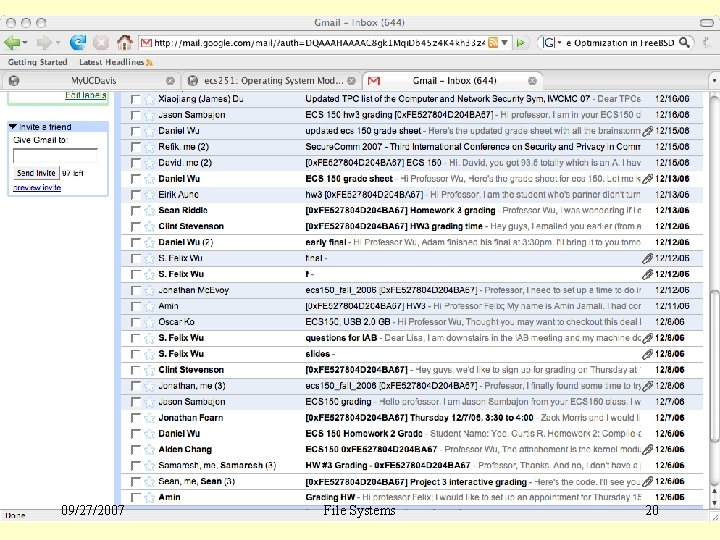

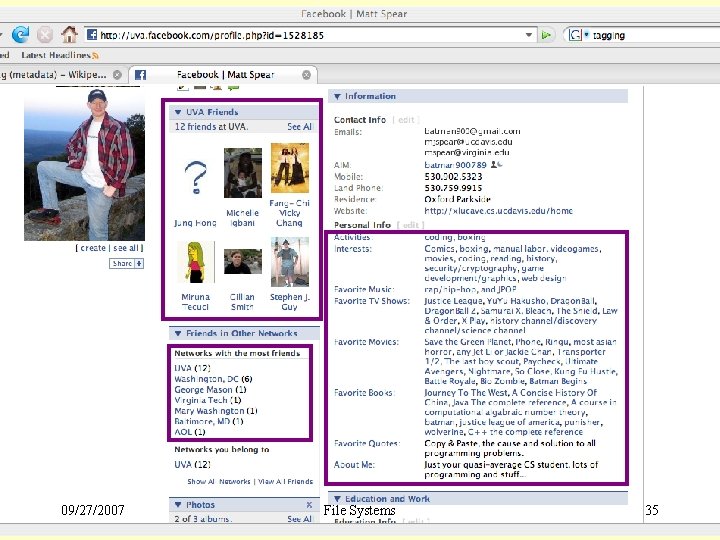

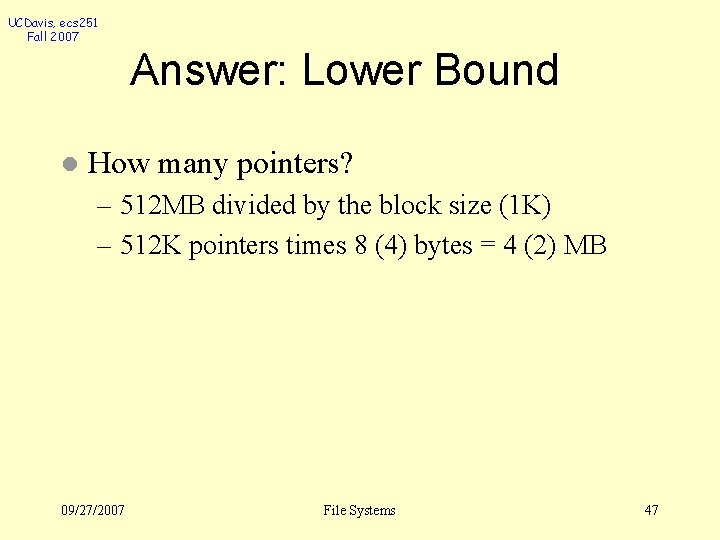

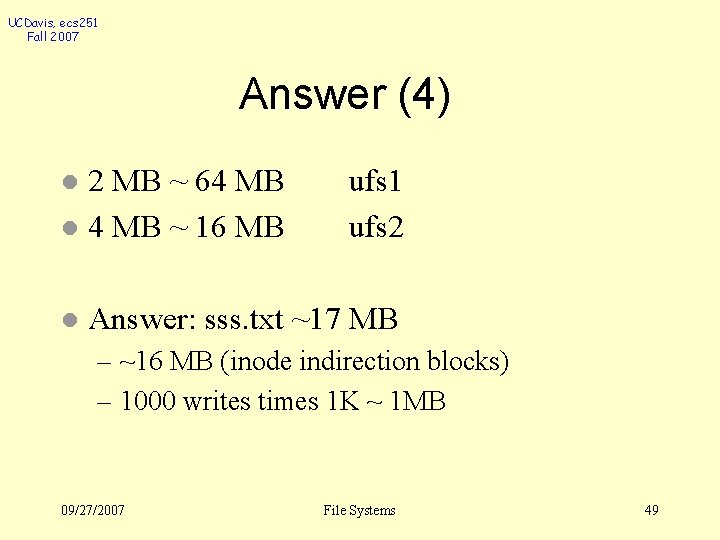

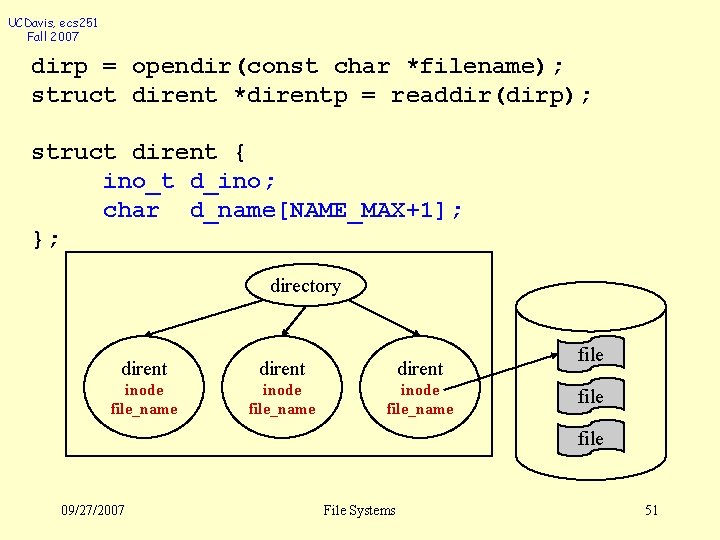

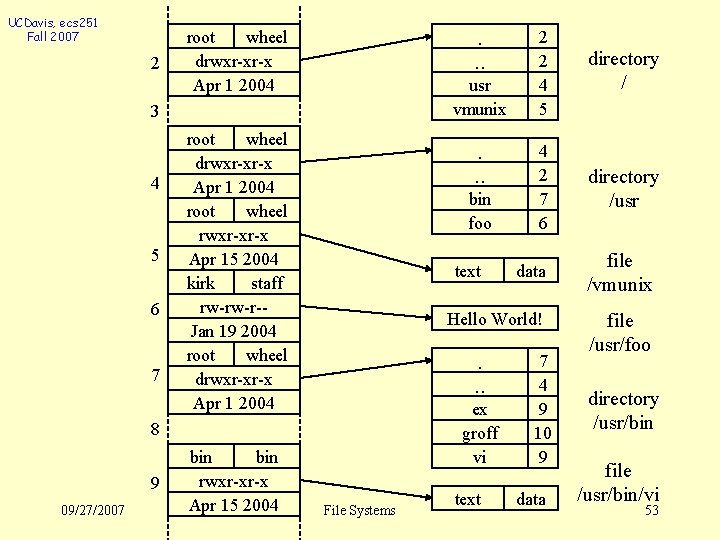

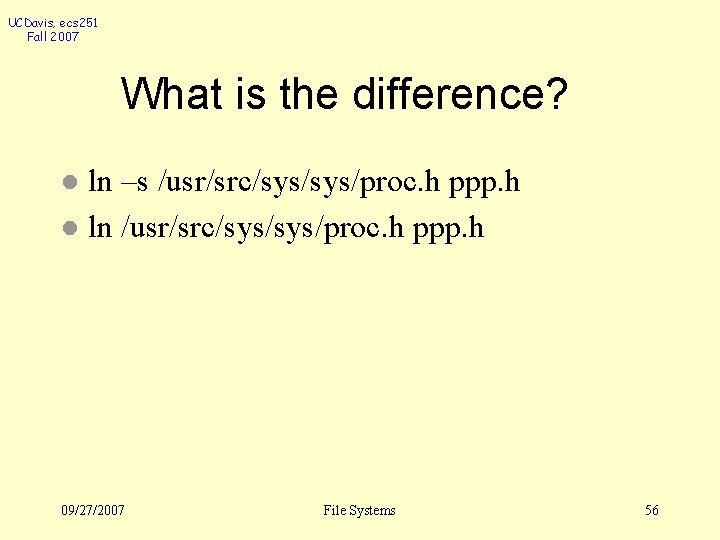

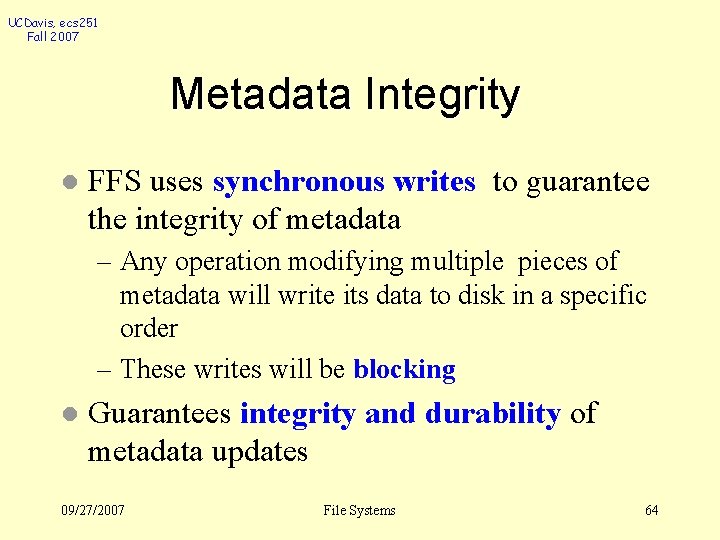

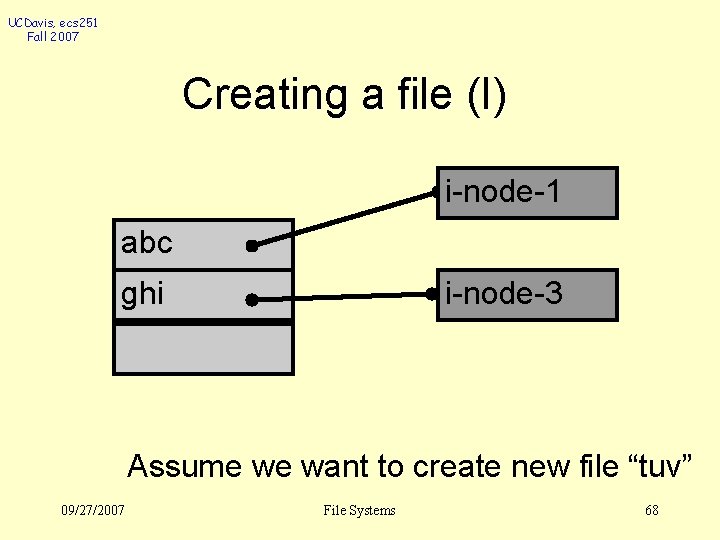

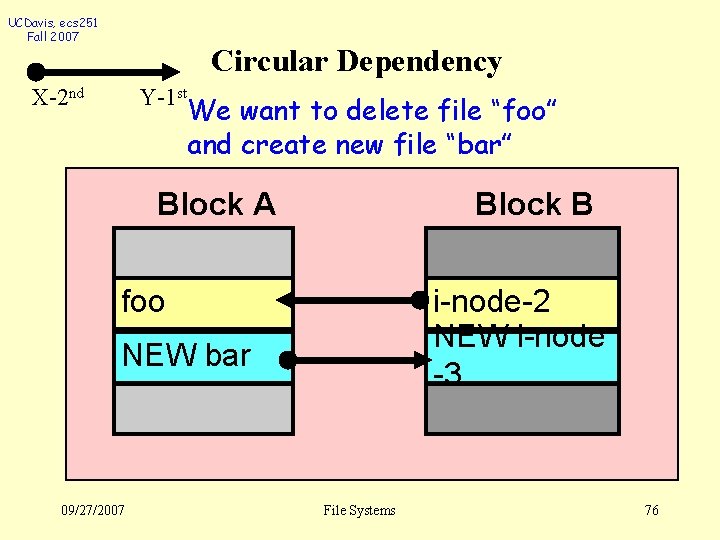

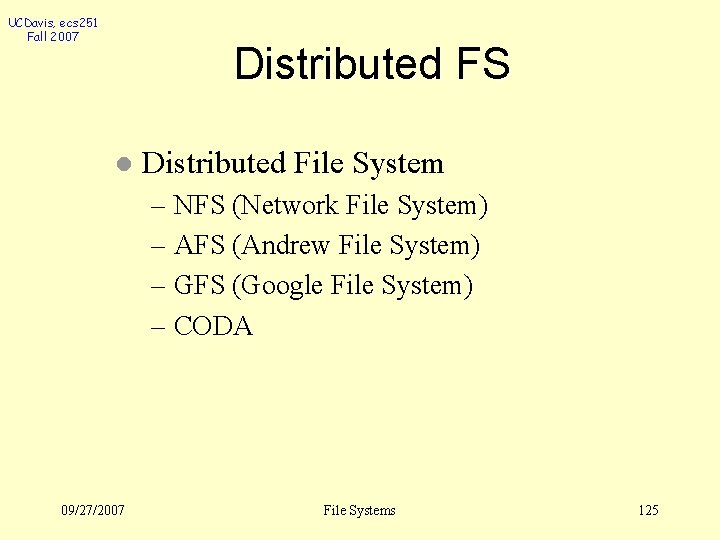

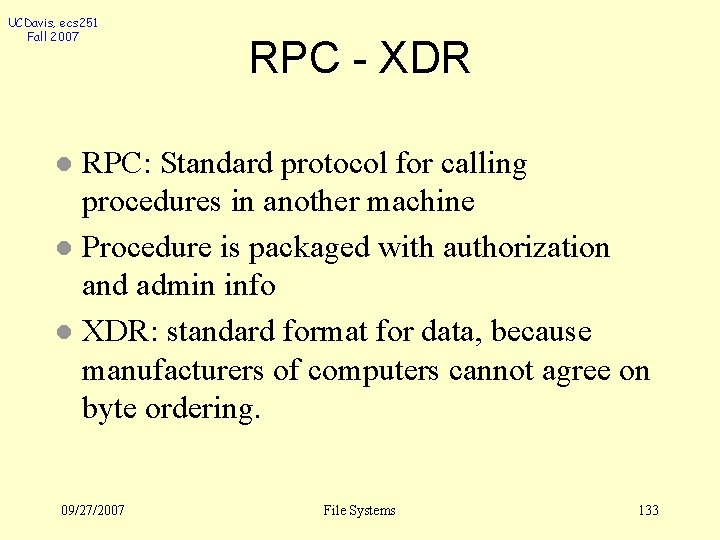

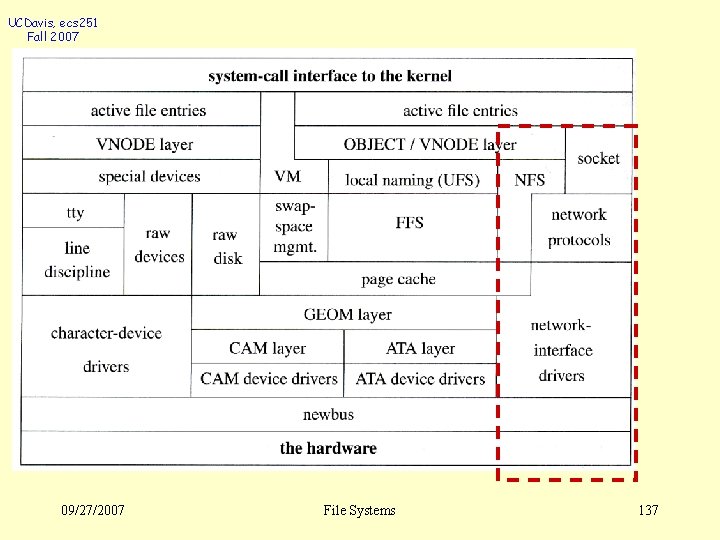

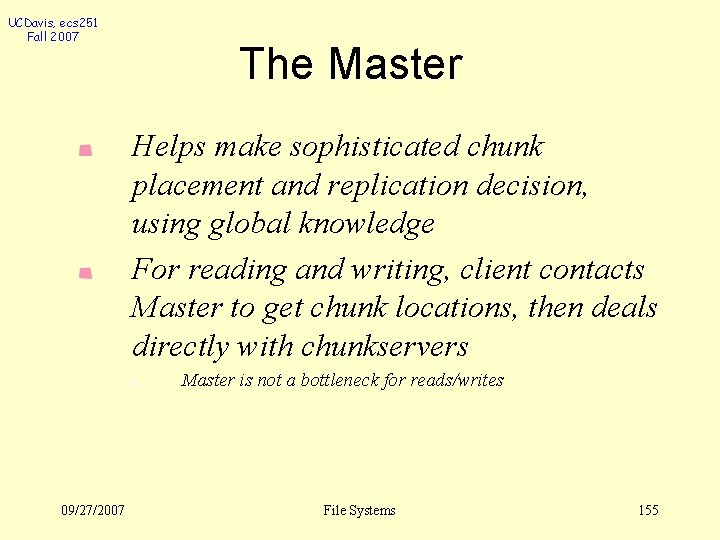

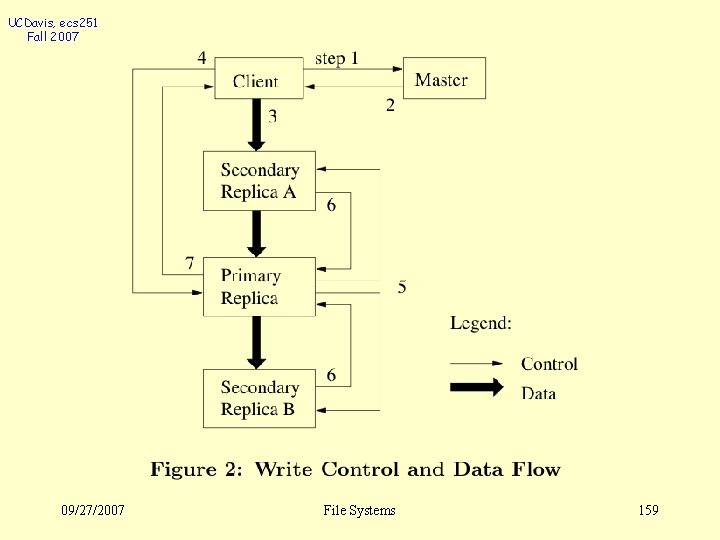

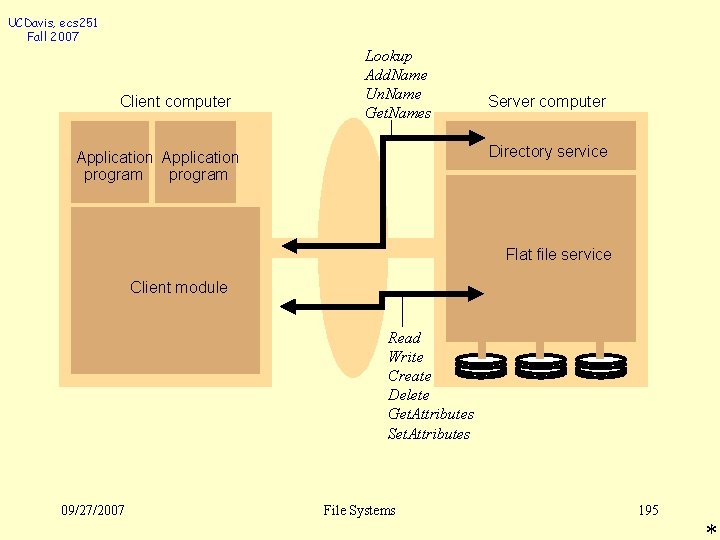

UCDavis, ecs 251 Fall 2007 dirp = opendir(const char *filename); struct dirent *direntp = readdir(dirp); struct dirent { ino_t d_ino; char d_name[NAME_MAX+1]; }; directory dirent inode file_name file 09/27/2007 File Systems 51

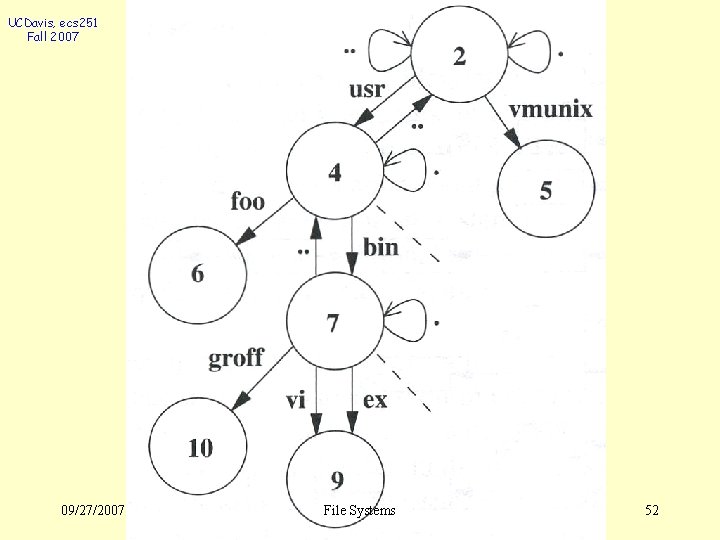

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 52

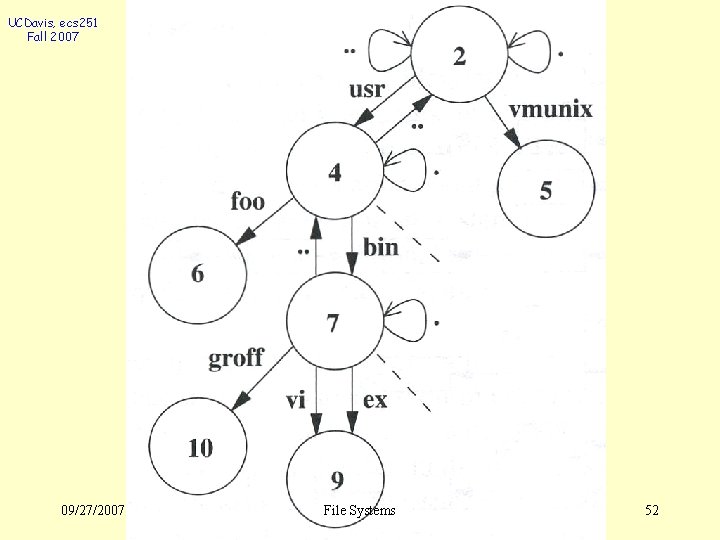

UCDavis, ecs 251 Fall 2007 2 . . . root wheel drwxr-xr-x Apr 1 2004 usr vmunix 3 4 5 6 7 root wheel drwxr-xr-x Apr 1 2004 root wheel rwxr-xr-x Apr 15 2004 kirk staff rw-rw-r-Jan 19 2004 root wheel drwxr-xr-x Apr 1 2004 . . . bin foo text 9 09/27/2007 directory / 4 2 7 6 directory /usr data Hello World! . . . ex groff vi 8 bin rwxr-xr-x Apr 15 2004 2 2 4 5 File Systems text 7 4 9 10 9 data file /vmunix file /usr/foo directory /usr/bin file /usr/bin/vi 53

![UCDavis ecs 251 Fall 2007 struct dirent inot dino char dnameNAMEMAX1 struct UCDavis, ecs 251 Fall 2007 struct dirent { ino_t d_ino; char d_name[NAME_MAX+1]; }; struct](https://slidetodoc.com/presentation_image_h2/1b05f6adf9852b209564be5395ac405a/image-54.jpg)

UCDavis, ecs 251 Fall 2007 struct dirent { ino_t d_ino; char d_name[NAME_MAX+1]; }; struct stat {… short …}; nlinks; directory dirent inode file_name file 09/27/2007 File Systems 54

A File System UCDavis, ecs 251 Fall 2007 partition b s i-node 09/27/2007 partition i-list i-node d ……. partition directory and data blocks i-node File Systems 55

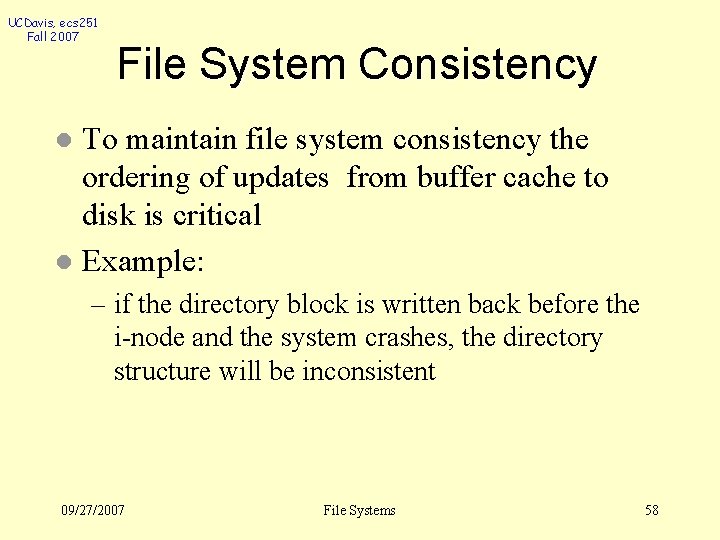

UCDavis, ecs 251 Fall 2007 What is the difference? ln –s /usr/src/sys/proc. h ppp. h l ln /usr/src/sys/proc. h ppp. h l 09/27/2007 File Systems 56

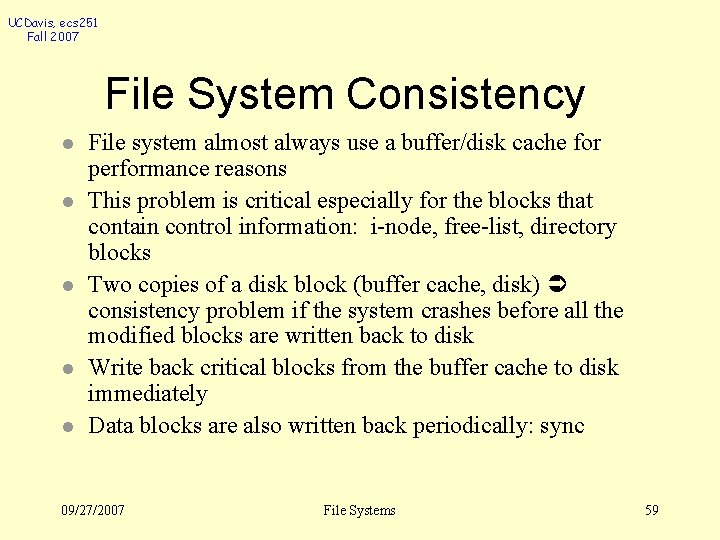

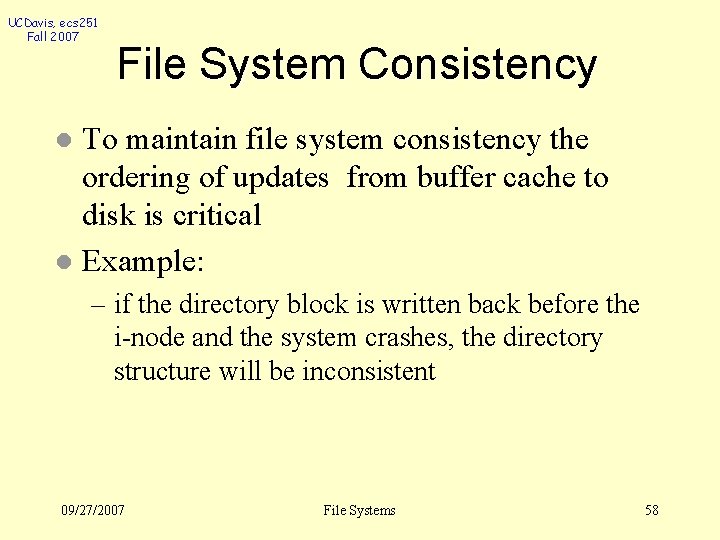

UCDavis, ecs 251 Fall 2007 File System Buffer Cache application: OS: read/write files translate file to disk blocks maintains . . . buffer cache. . . controls disk accesses: read/write blocks hardware: Any problems? 09/27/2007 File Systems 57

UCDavis, ecs 251 Fall 2007 File System Consistency To maintain file system consistency the ordering of updates from buffer cache to disk is critical l Example: l – if the directory block is written back before the i-node and the system crashes, the directory structure will be inconsistent 09/27/2007 File Systems 58

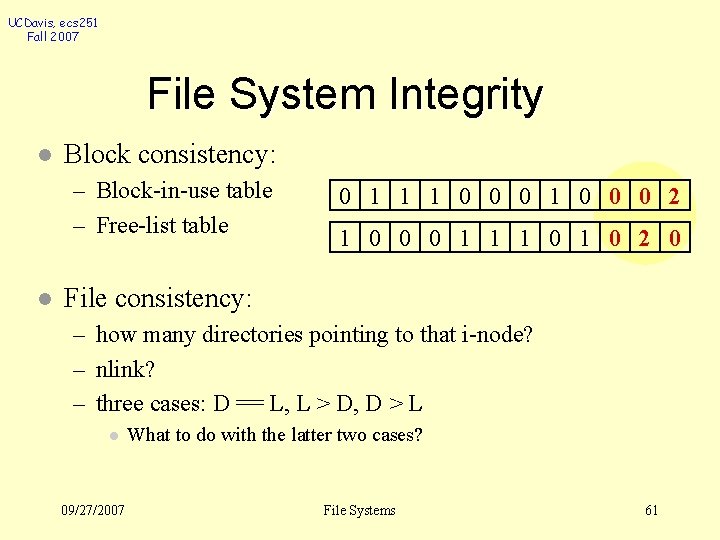

UCDavis, ecs 251 Fall 2007 File System Consistency l l l File system almost always use a buffer/disk cache for performance reasons This problem is critical especially for the blocks that contain control information: i-node, free-list, directory blocks Two copies of a disk block (buffer cache, disk) consistency problem if the system crashes before all the modified blocks are written back to disk Write back critical blocks from the buffer cache to disk immediately Data blocks are also written back periodically: sync 09/27/2007 File Systems 59

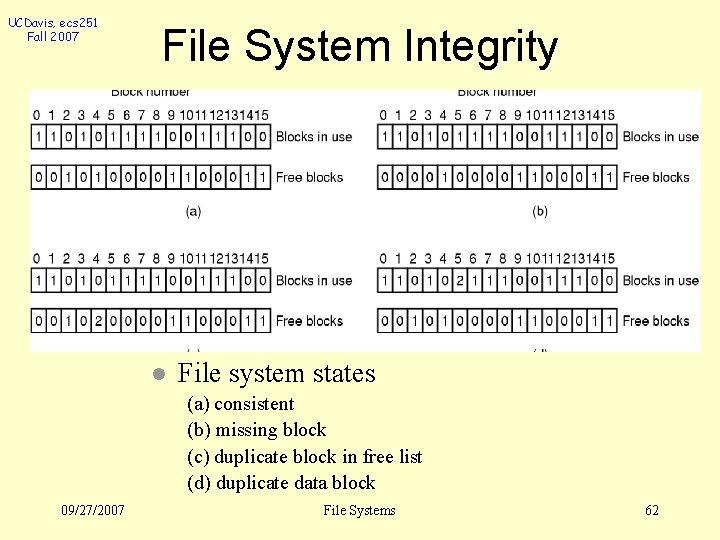

UCDavis, ecs 251 Fall 2007 Two Strategies l Prevention – Use un-buffered I/O when writing i-nodes or pointer blocks – Use buffered I/O for other writes and force sync every 30 seconds l Detect and Fix – Detect the inconsistency – Fix them according to the “rules” – Fsck (File System Checker) 09/27/2007 File Systems 60

UCDavis, ecs 251 Fall 2007 File System Integrity l Block consistency: – Block-in-use table – Free-list table l 0 1 1 1 0 0 0 2 1 0 0 0 1 1 1 0 2 0 File consistency: – how many directories pointing to that i-node? – nlink? – three cases: D == L, L > D, D > L l 09/27/2007 What to do with the latter two cases? File Systems 61

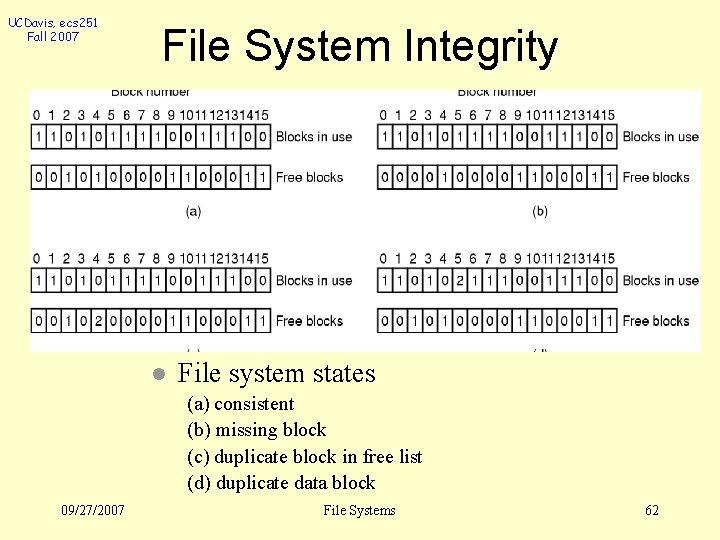

UCDavis, ecs 251 Fall 2007 File System Integrity l File system states (a) consistent (b) missing block (c) duplicate block in free list (d) duplicate data block 09/27/2007 File Systems 62

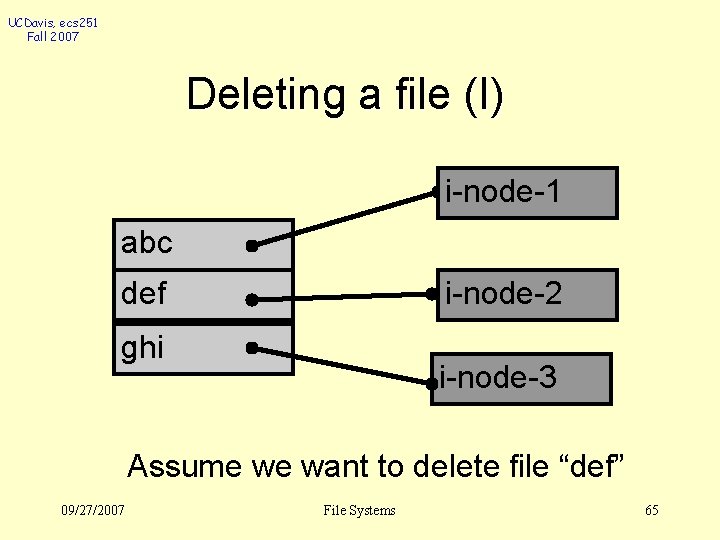

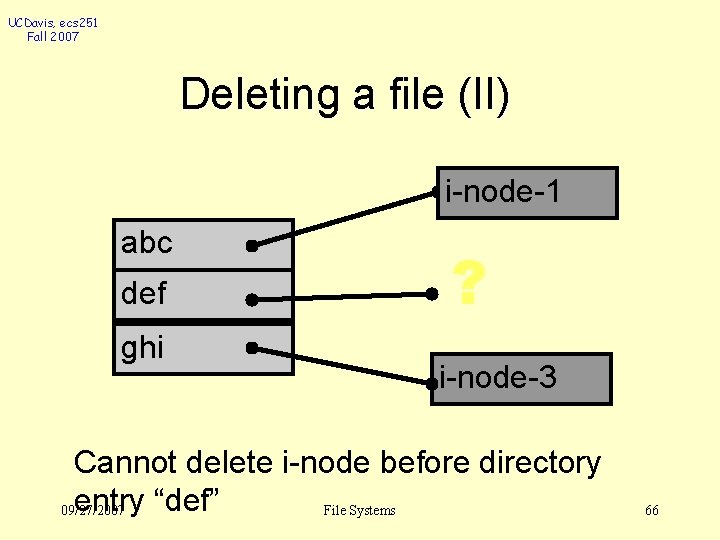

UCDavis, ecs 251 Fall 2007 Metadata Operations l Metadata operations modify the structure of the file system – Creating, deleting, or renaming files, directories, or special files – Directory & I-node l Data must be written to disk in such a way that the file system can be recovered to a consistent state after a system crash 09/27/2007 File Systems 63

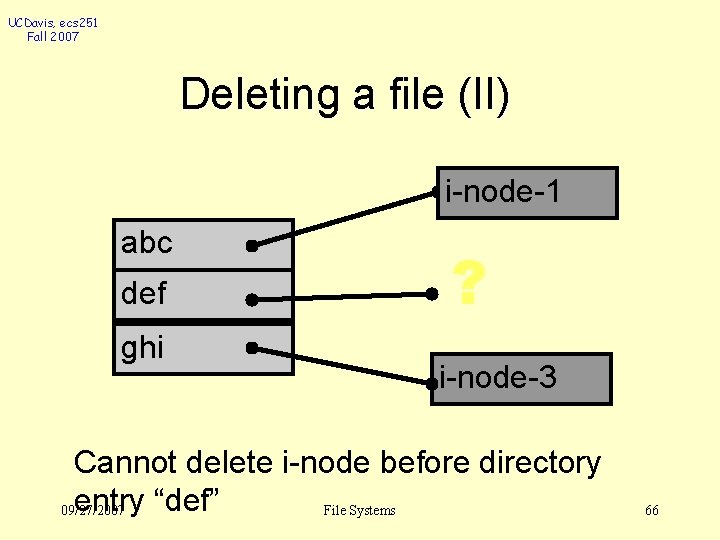

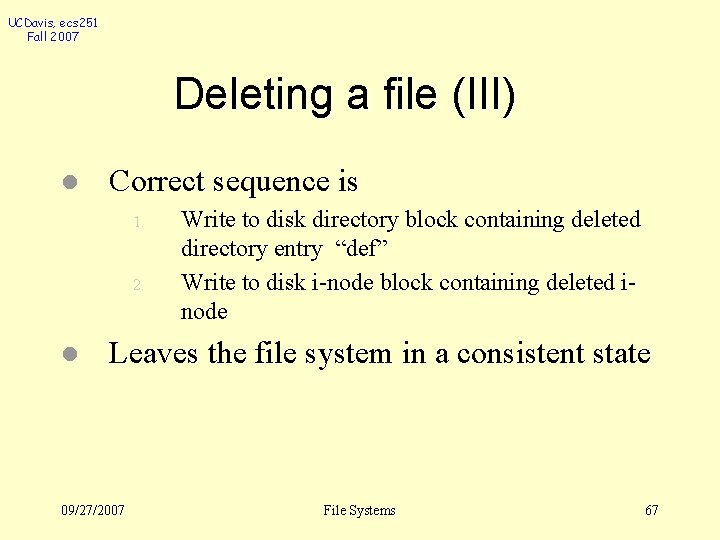

UCDavis, ecs 251 Fall 2007 Metadata Integrity l FFS uses synchronous writes to guarantee the integrity of metadata – Any operation modifying multiple pieces of metadata will write its data to disk in a specific order – These writes will be blocking l Guarantees integrity and durability of metadata updates 09/27/2007 File Systems 64

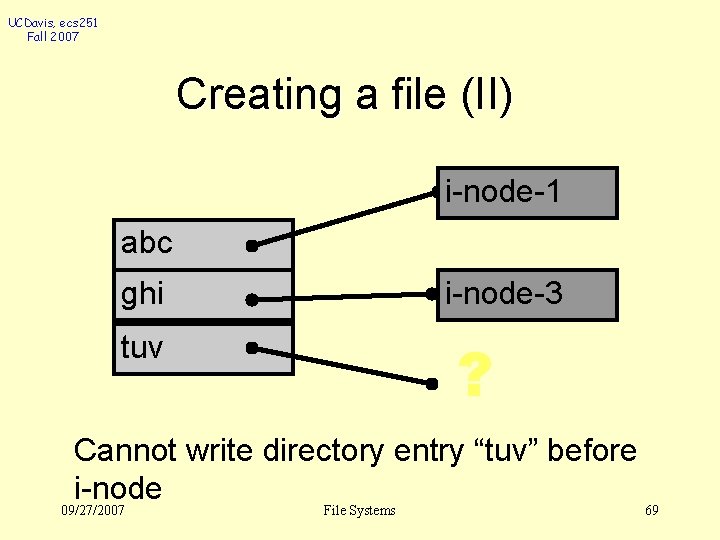

UCDavis, ecs 251 Fall 2007 Deleting a file (I) i-node-1 abc def i-node-2 ghi i-node-3 Assume we want to delete file “def” 09/27/2007 File Systems 65

UCDavis, ecs 251 Fall 2007 Deleting a file (II) i-node-1 abc def ghi ? i-node-3 Cannot delete i-node before directory entry “def” 09/27/2007 File Systems 66

UCDavis, ecs 251 Fall 2007 Deleting a file (III) l Correct sequence is 1. 2. l Write to disk directory block containing deleted directory entry “def” Write to disk i-node block containing deleted inode Leaves the file system in a consistent state 09/27/2007 File Systems 67

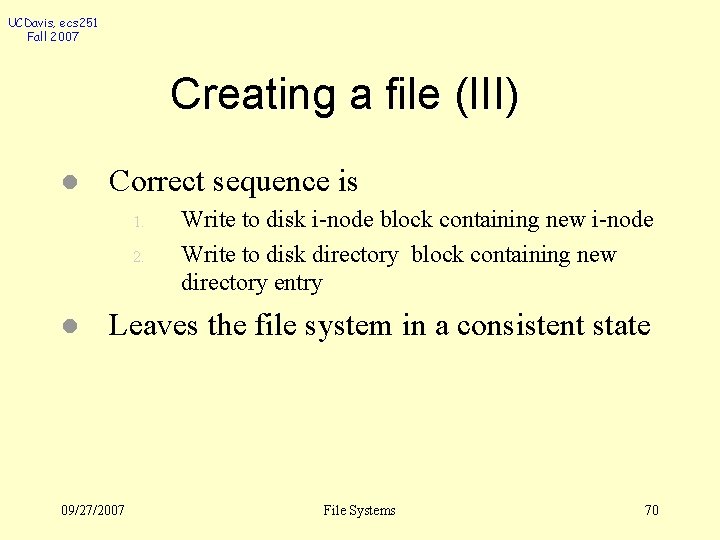

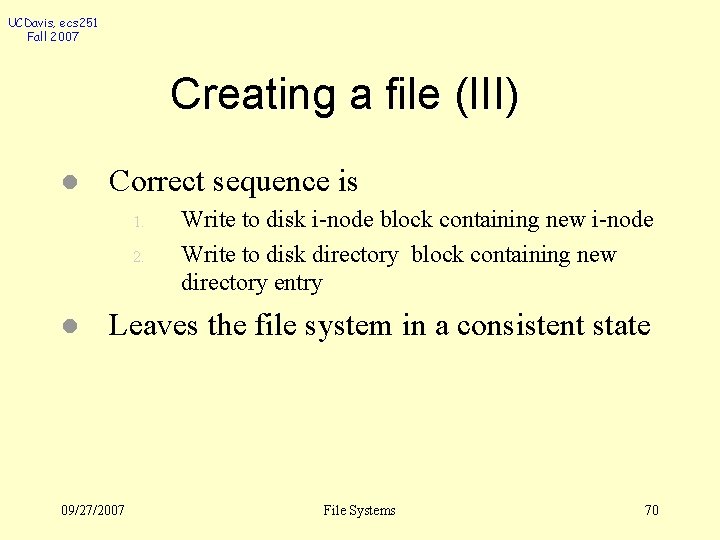

UCDavis, ecs 251 Fall 2007 Creating a file (I) i-node-1 abc ghi i-node-3 Assume we want to create new file “tuv” 09/27/2007 File Systems 68

UCDavis, ecs 251 Fall 2007 Creating a file (II) i-node-1 abc ghi i-node-3 tuv ? Cannot write directory entry “tuv” before i-node 09/27/2007 File Systems 69

UCDavis, ecs 251 Fall 2007 Creating a file (III) l Correct sequence is 1. 2. l Write to disk i-node block containing new i-node Write to disk directory block containing new directory entry Leaves the file system in a consistent state 09/27/2007 File Systems 70

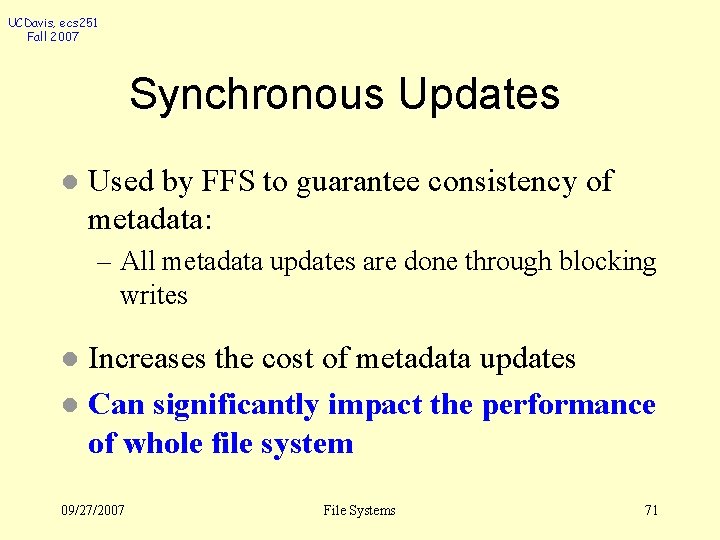

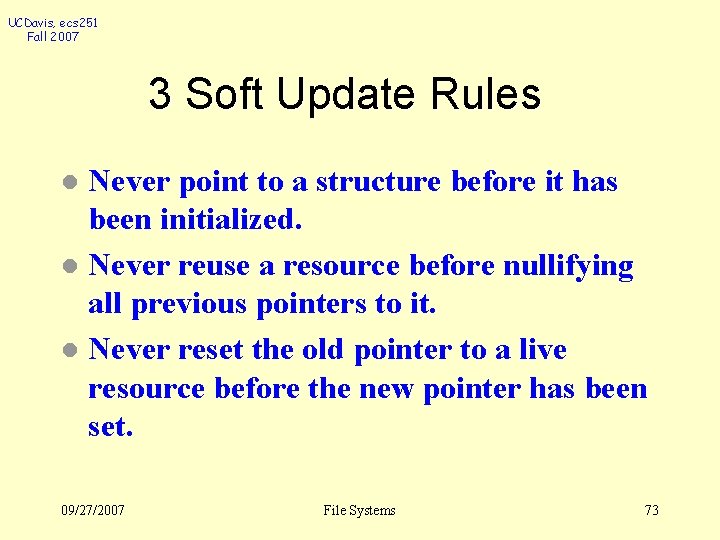

UCDavis, ecs 251 Fall 2007 Synchronous Updates l Used by FFS to guarantee consistency of metadata: – All metadata updates are done through blocking writes Increases the cost of metadata updates l Can significantly impact the performance of whole file system l 09/27/2007 File Systems 71

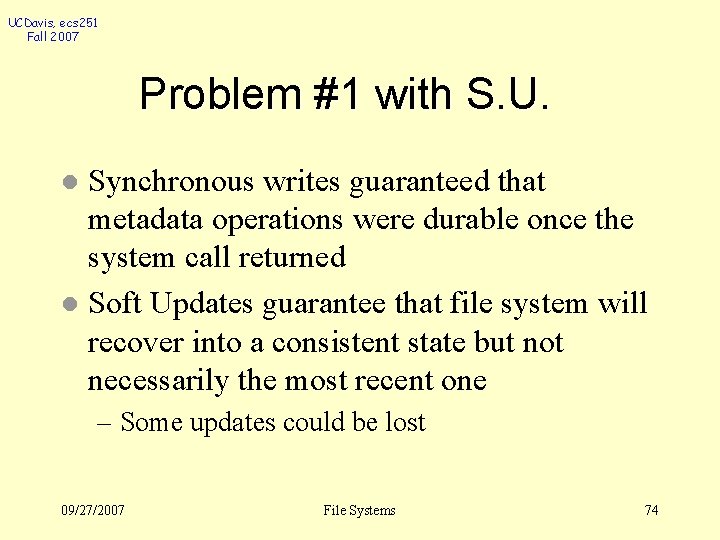

UCDavis, ecs 251 Fall 2007 SOFT UPDATES Use delayed writes (write back) l Maintain dependency information about cached pieces of metadata: l This i-node must be updated before/after this directory entry l Guarantee that metadata blocks are written to disk in the required order 09/27/2007 File Systems 72

UCDavis, ecs 251 Fall 2007 3 Soft Update Rules Never point to a structure before it has been initialized. l Never reuse a resource before nullifying all previous pointers to it. l Never reset the old pointer to a live resource before the new pointer has been set. l 09/27/2007 File Systems 73

UCDavis, ecs 251 Fall 2007 Problem #1 with S. U. Synchronous writes guaranteed that metadata operations were durable once the system call returned l Soft Updates guarantee that file system will recover into a consistent state but not necessarily the most recent one l – Some updates could be lost 09/27/2007 File Systems 74

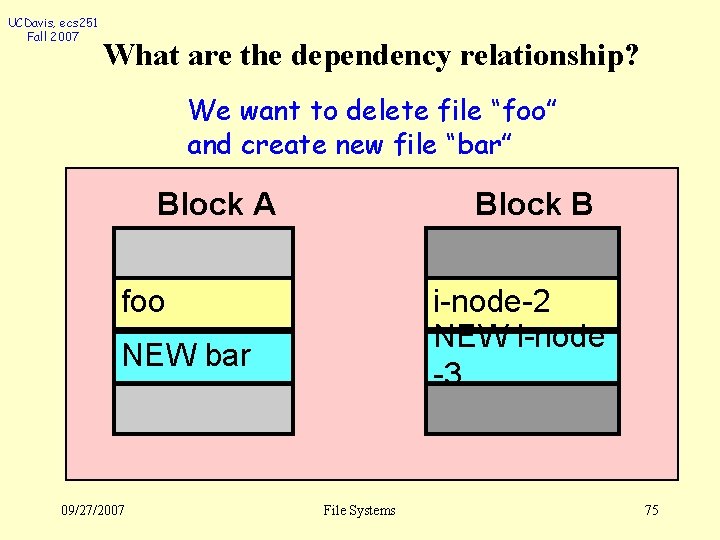

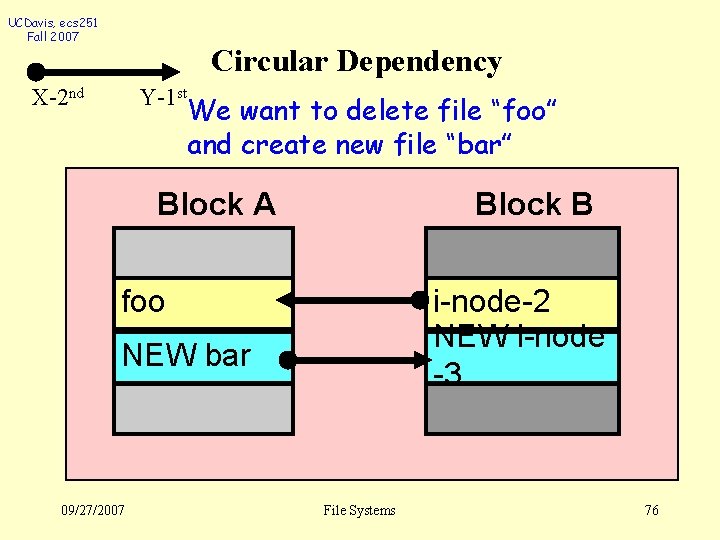

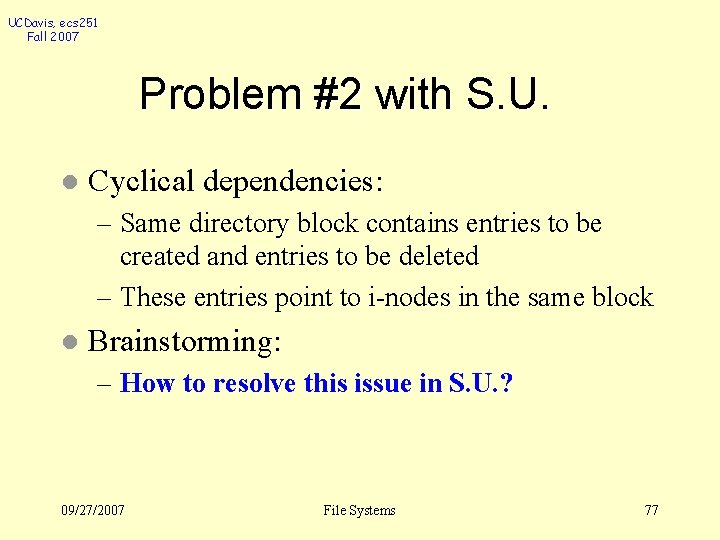

UCDavis, ecs 251 Fall 2007 What are the dependency relationship? We want to delete file “foo” and create new file “bar” Block A Block B foo i-node-2 NEW i-node -3 NEW bar 09/27/2007 File Systems 75

UCDavis, ecs 251 Fall 2007 Circular Dependency X-2 nd Y-1 st We want to delete file “foo” and create new file “bar” Block A Block B foo i-node-2 NEW i-node -3 NEW bar 09/27/2007 File Systems 76

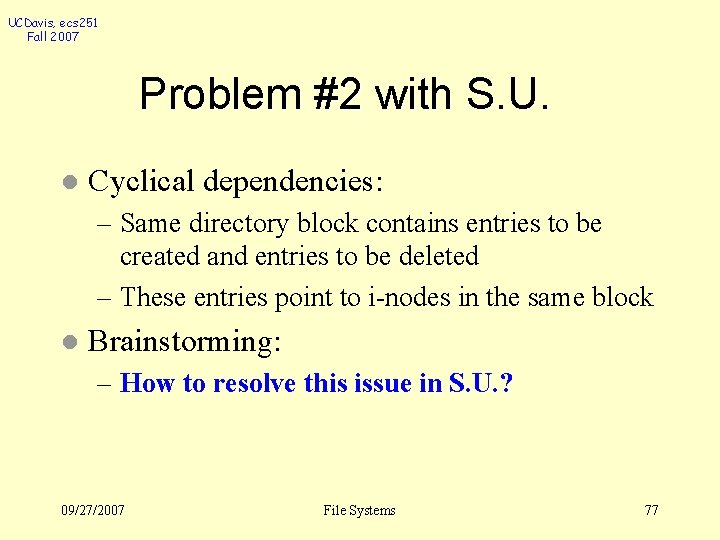

UCDavis, ecs 251 Fall 2007 Problem #2 with S. U. l Cyclical dependencies: – Same directory block contains entries to be created and entries to be deleted – These entries point to i-nodes in the same block l Brainstorming: – How to resolve this issue in S. U. ? 09/27/2007 File Systems 77

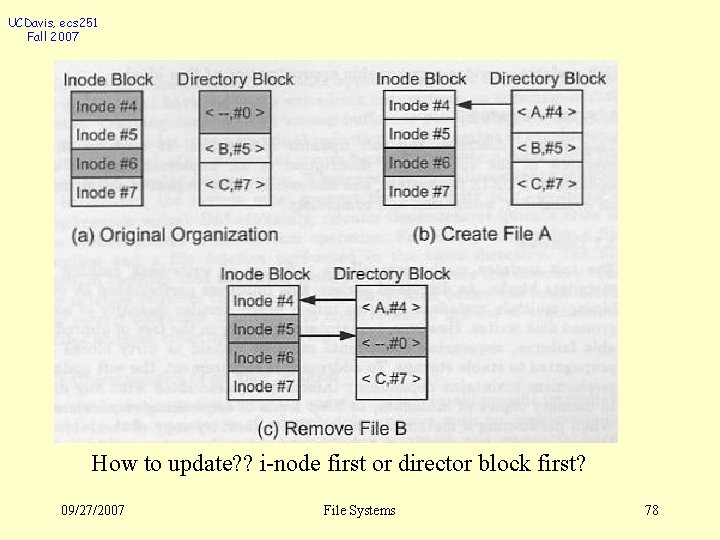

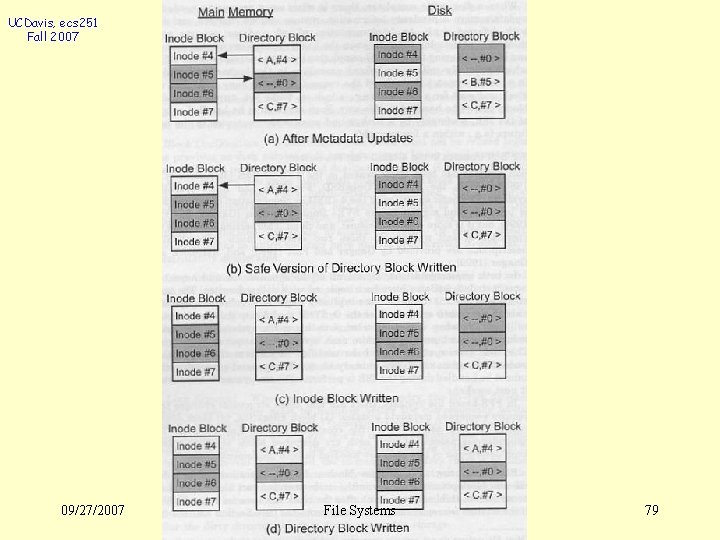

UCDavis, ecs 251 Fall 2007 How to update? ? i-node first or director block first? 09/27/2007 File Systems 78

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 79

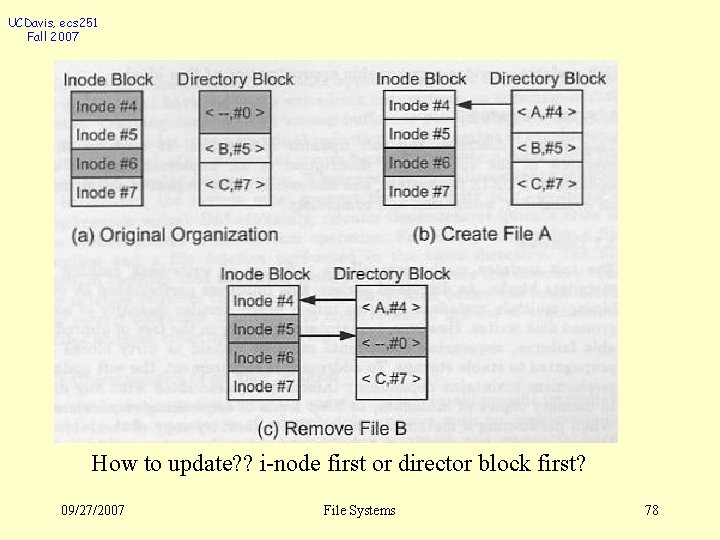

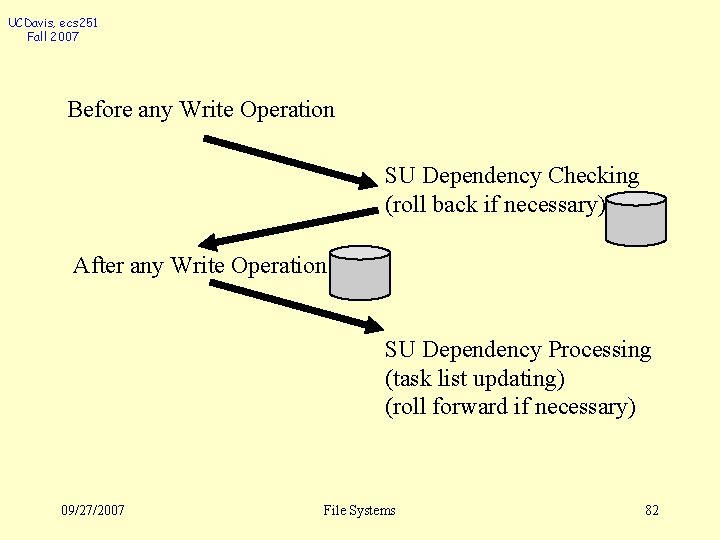

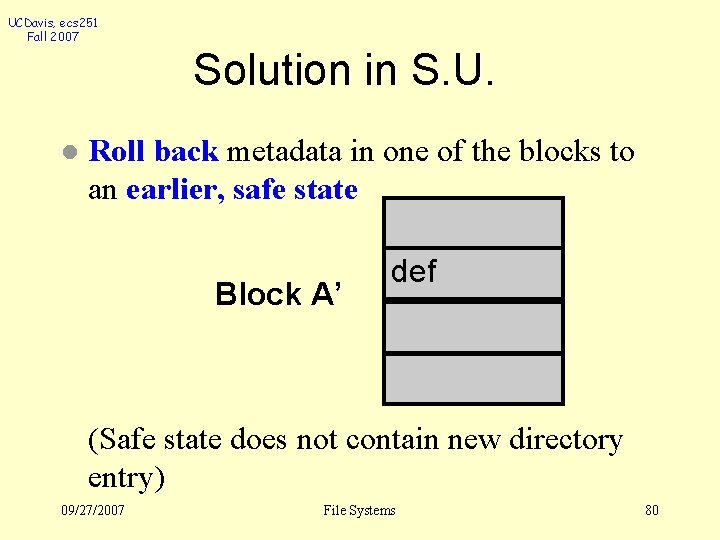

UCDavis, ecs 251 Fall 2007 Solution in S. U. l Roll back metadata in one of the blocks to an earlier, safe state Block A’ def (Safe state does not contain new directory entry) 09/27/2007 File Systems 80

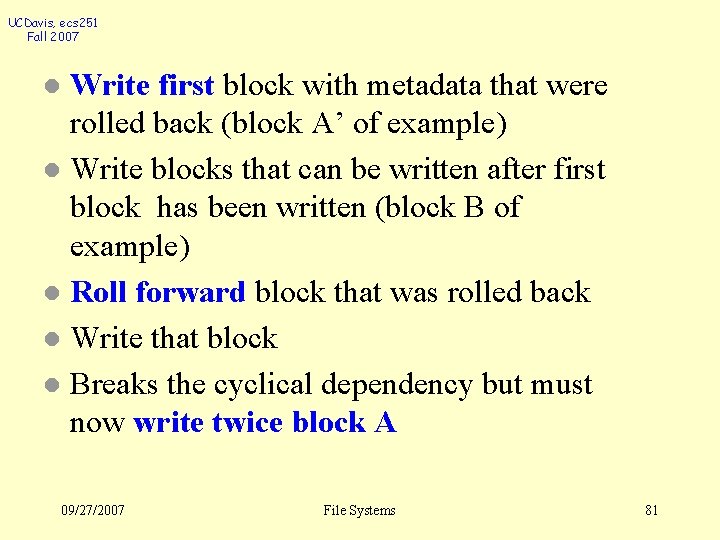

UCDavis, ecs 251 Fall 2007 Write first block with metadata that were rolled back (block A’ of example) l Write blocks that can be written after first block has been written (block B of example) l Roll forward block that was rolled back l Write that block l Breaks the cyclical dependency but must now write twice block A l 09/27/2007 File Systems 81

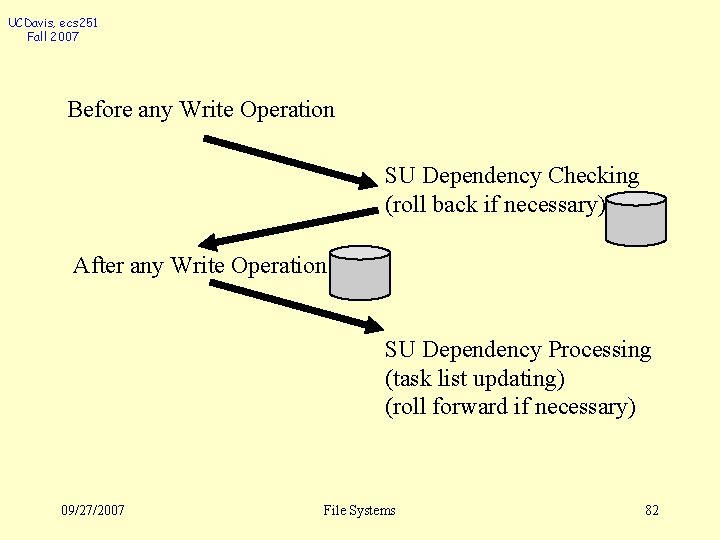

UCDavis, ecs 251 Fall 2007 Before any Write Operation SU Dependency Checking (roll back if necessary) After any Write Operation SU Dependency Processing (task list updating) (roll forward if necessary) 09/27/2007 File Systems 82

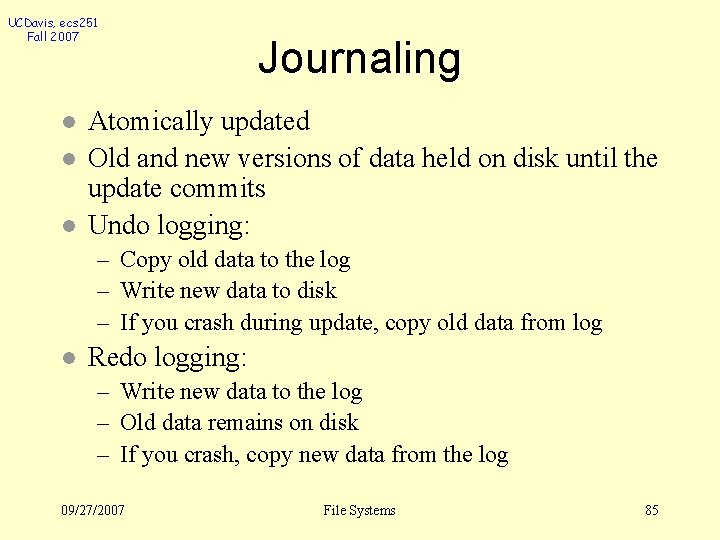

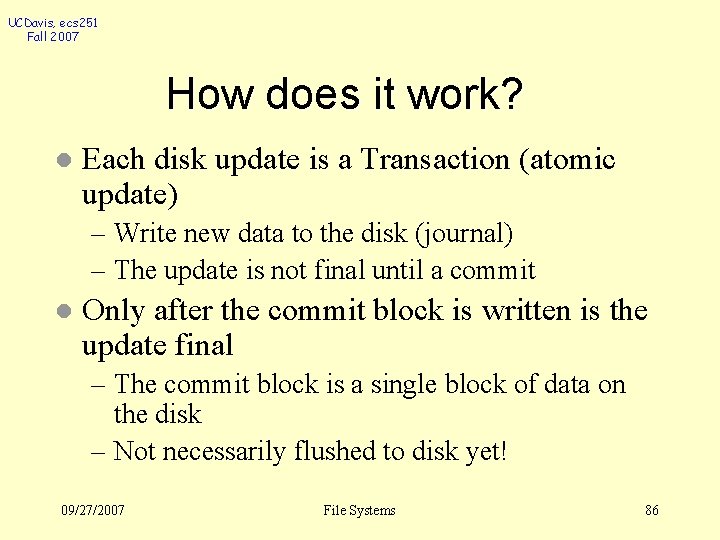

UCDavis, ecs 251 Fall 2007 l two most popular approaches for improving the performance of metadata operations and recovery: – Journaling – Soft Updates Journaling systems record metadata operations on an auxiliary log l Soft Updates uses ordered writes l 09/27/2007 File Systems 83

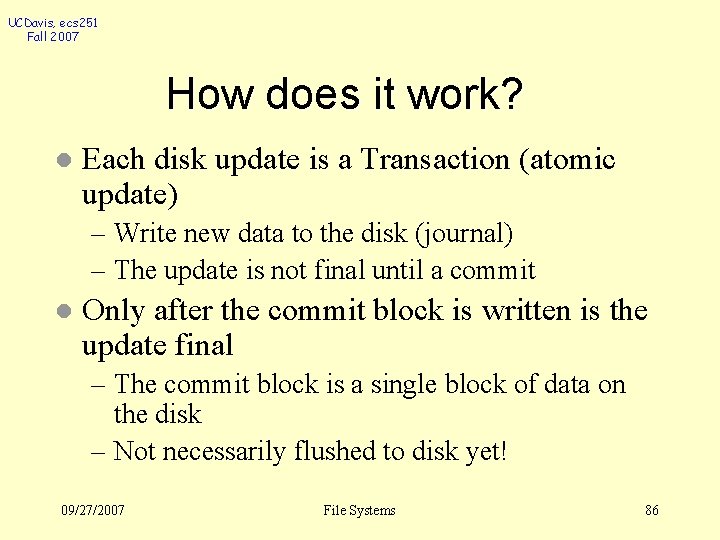

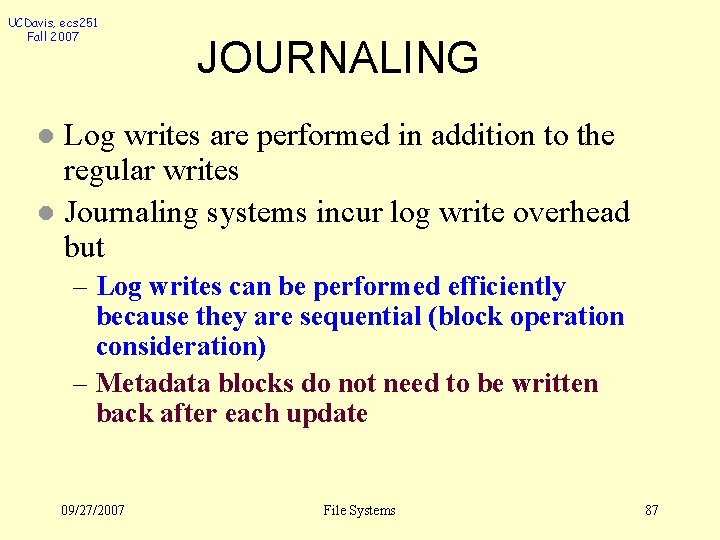

UCDavis, ecs 251 Fall 2007 JOURNALING Journaling systems maintain an auxiliary log that records all meta-data operations l Write-ahead logging ensures that the log is written to disk before any blocks containing data modified by the corresponding operations. l – After a crash, can replay the log to bring the file system to a consistent state 09/27/2007 File Systems 84

UCDavis, ecs 251 Fall 2007 l l l Journaling Atomically updated Old and new versions of data held on disk until the update commits Undo logging: – Copy old data to the log – Write new data to disk – If you crash during update, copy old data from log l Redo logging: – Write new data to the log – Old data remains on disk – If you crash, copy new data from the log 09/27/2007 File Systems 85

UCDavis, ecs 251 Fall 2007 How does it work? l Each disk update is a Transaction (atomic update) – Write new data to the disk (journal) – The update is not final until a commit l Only after the commit block is written is the update final – The commit block is a single block of data on the disk – Not necessarily flushed to disk yet! 09/27/2007 File Systems 86

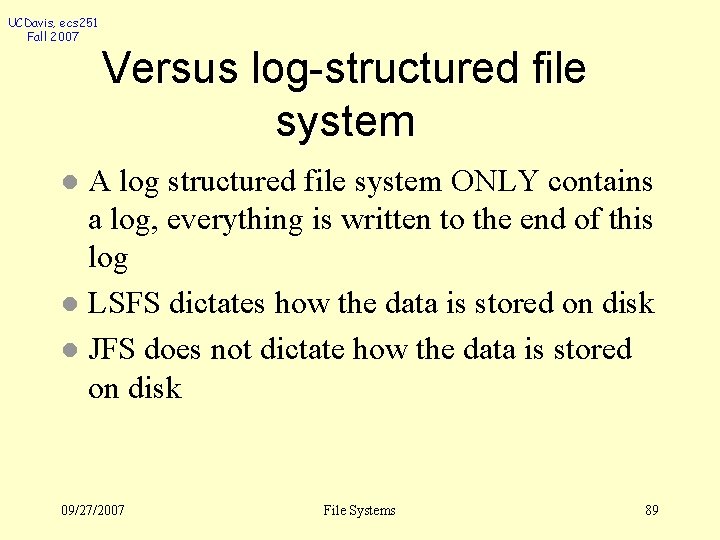

UCDavis, ecs 251 Fall 2007 JOURNALING Log writes are performed in addition to the regular writes l Journaling systems incur log write overhead but l – Log writes can be performed efficiently because they are sequential (block operation consideration) – Metadata blocks do not need to be written back after each update 09/27/2007 File Systems 87

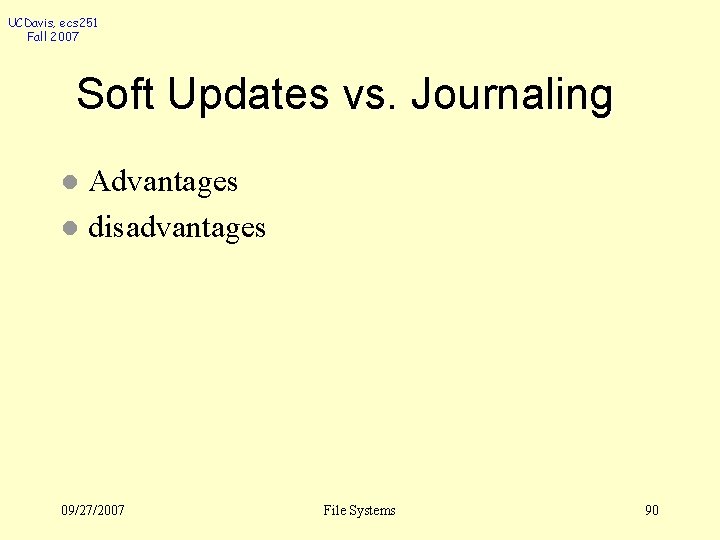

UCDavis, ecs 251 Fall 2007 l JOURNALING Journaling systems can provide – same durability semantics as FFS if log is forced to disk after each meta-data operation – the laxer semantics of Soft Updates if log writes are buffered until entire buffers are full 09/27/2007 File Systems 88

UCDavis, ecs 251 Fall 2007 Versus log-structured file system A log structured file system ONLY contains a log, everything is written to the end of this log l LSFS dictates how the data is stored on disk l JFS does not dictate how the data is stored on disk l 09/27/2007 File Systems 89

UCDavis, ecs 251 Fall 2007 Soft Updates vs. Journaling Advantages l disadvantages l 09/27/2007 File Systems 90

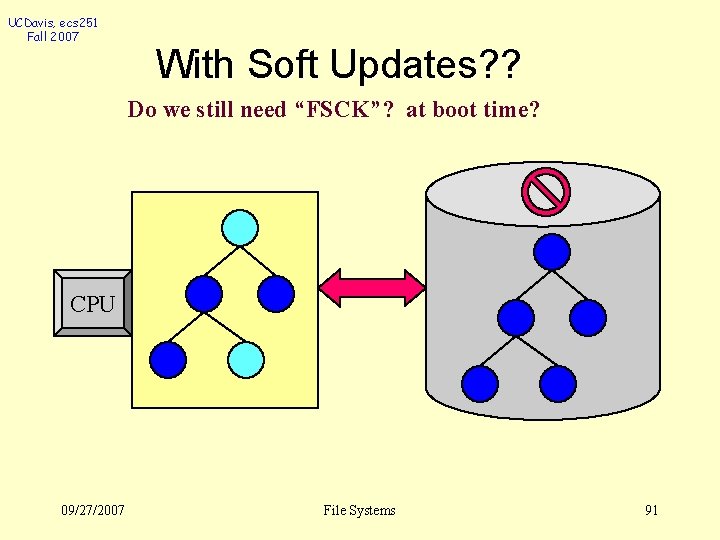

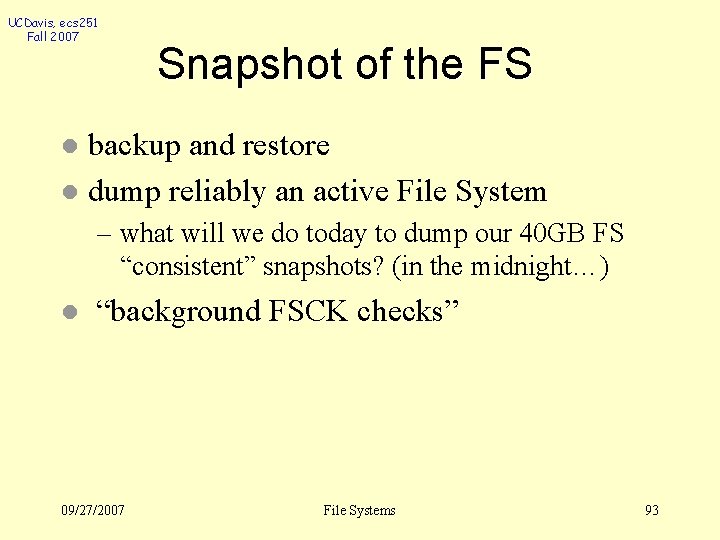

UCDavis, ecs 251 Fall 2007 With Soft Updates? ? Do we still need “FSCK”? at boot time? CPU 09/27/2007 File Systems 91

UCDavis, ecs 251 Fall 2007 Recover the Missing Resources l In the background, in an active FS… – We don’t want to wait for the lengthy FSCK process to complete… l A related issue: – the virus scanning process – what happens if we get a new virus signature? 09/27/2007 File Systems 92

UCDavis, ecs 251 Fall 2007 Snapshot of the FS backup and restore l dump reliably an active File System l – what will we do today to dump our 40 GB FS “consistent” snapshots? (in the midnight…) l “background FSCK checks” 09/27/2007 File Systems 93

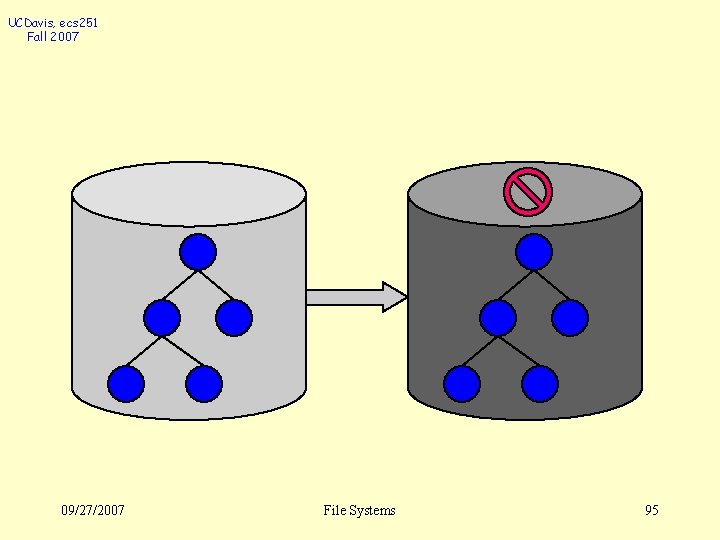

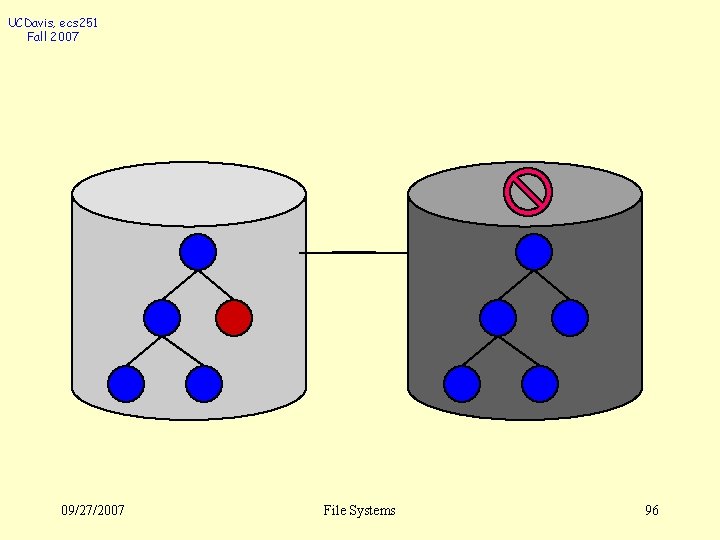

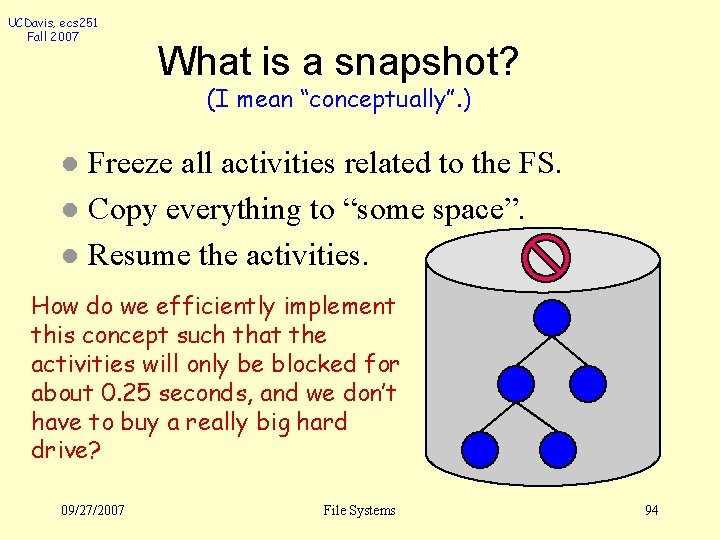

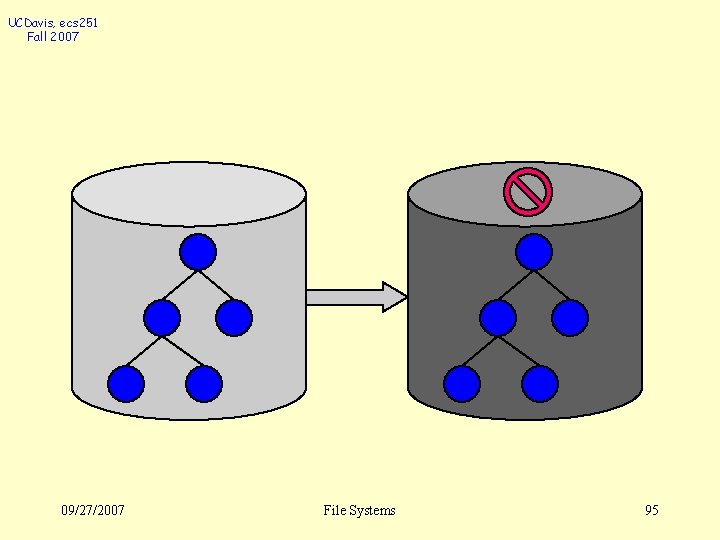

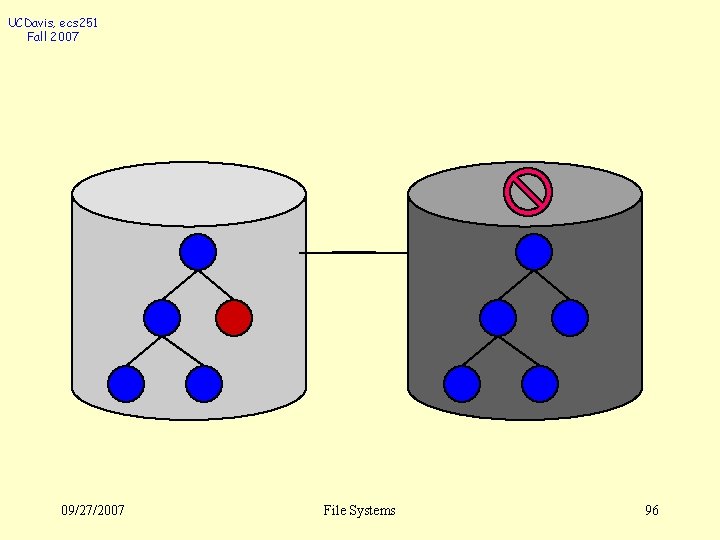

UCDavis, ecs 251 Fall 2007 What is a snapshot? (I mean “conceptually”. ) Freeze all activities related to the FS. l Copy everything to “some space”. l Resume the activities. l How do we efficiently implement this concept such that the activities will only be blocked for about 0. 25 seconds, and we don’t have to buy a really big hard drive? 09/27/2007 File Systems 94

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 95

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 96

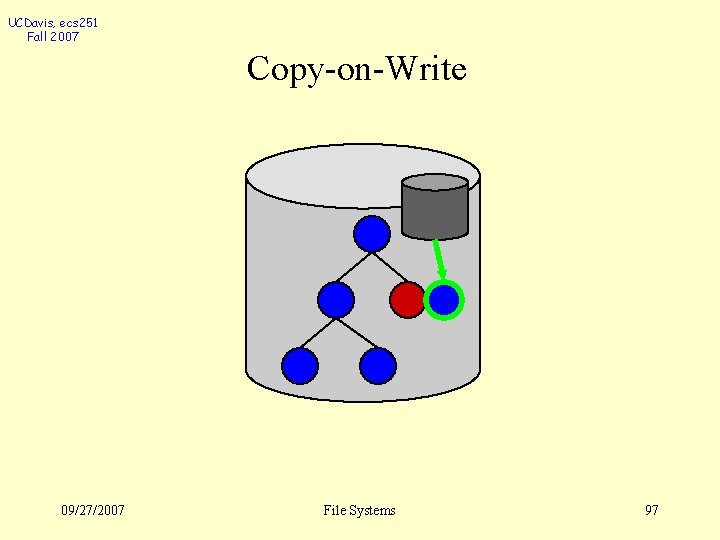

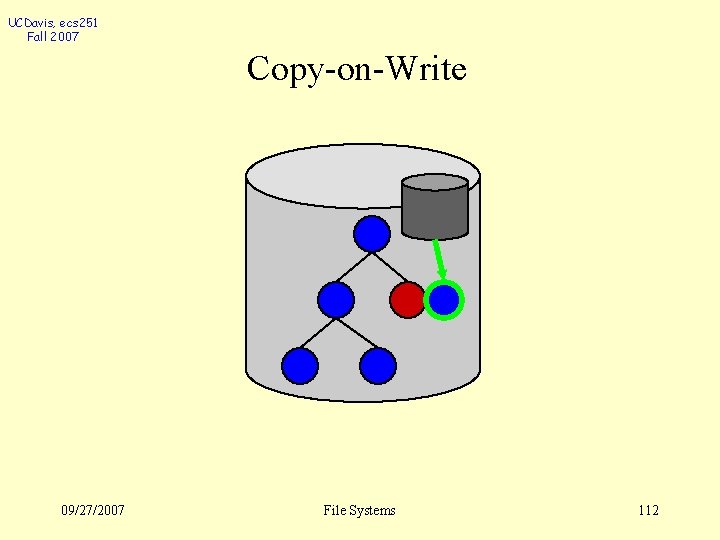

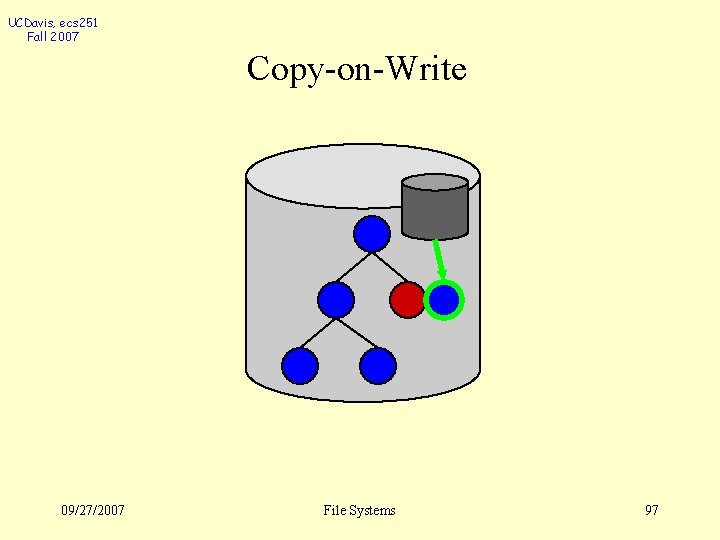

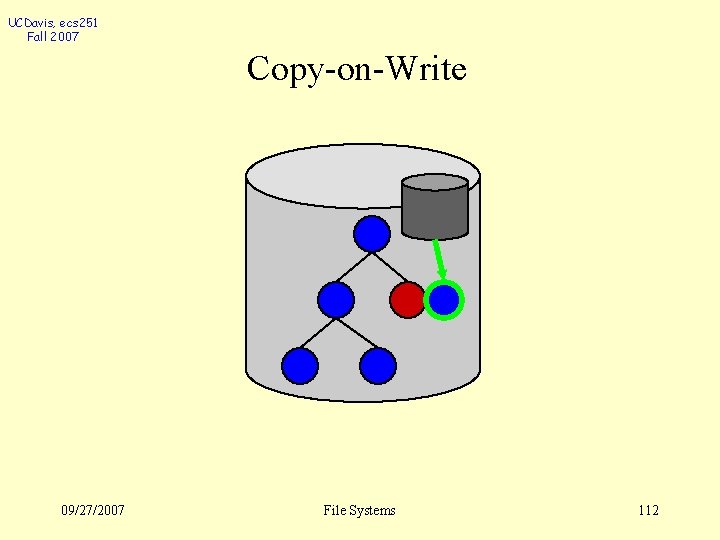

UCDavis, ecs 251 Fall 2007 Copy-on-Write 09/27/2007 File Systems 97

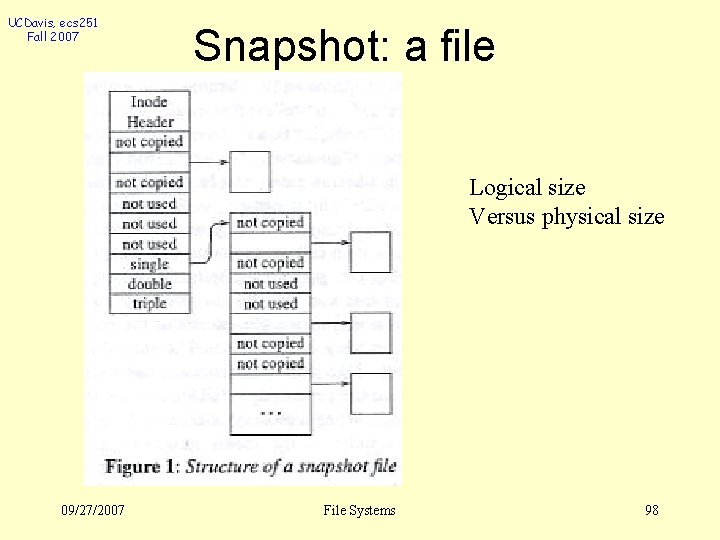

UCDavis, ecs 251 Fall 2007 Snapshot: a file Logical size Versus physical size 09/27/2007 File Systems 98

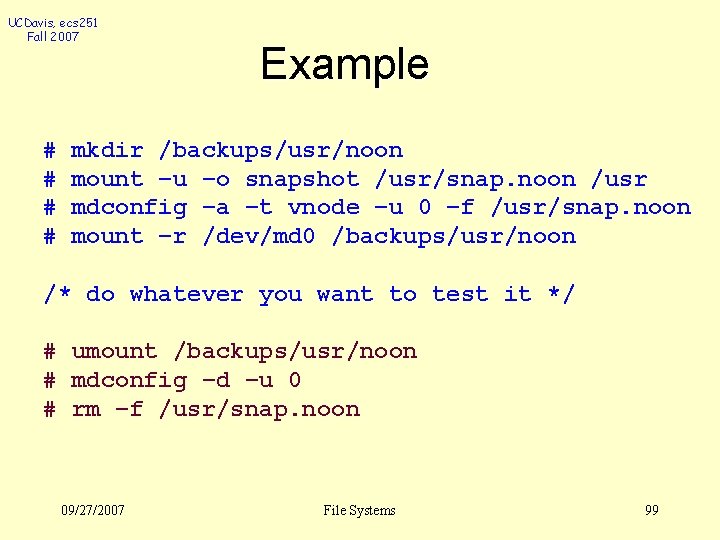

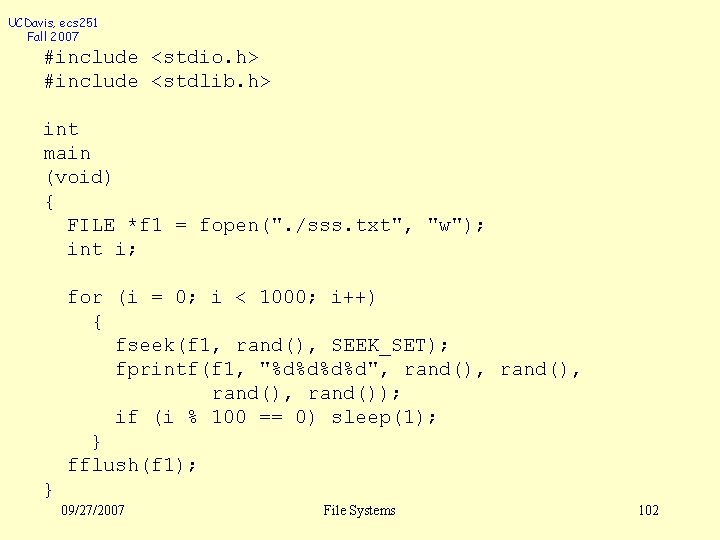

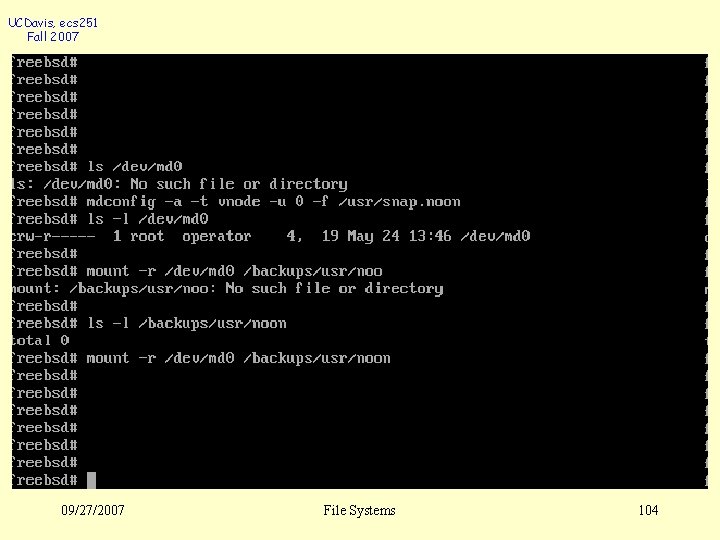

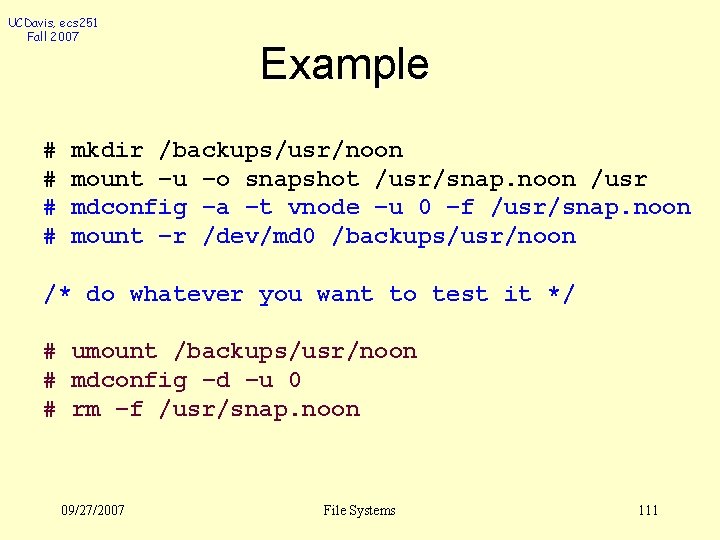

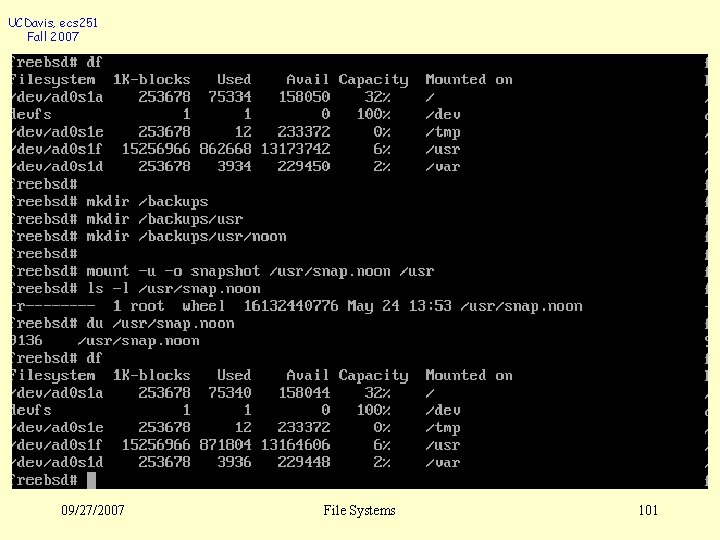

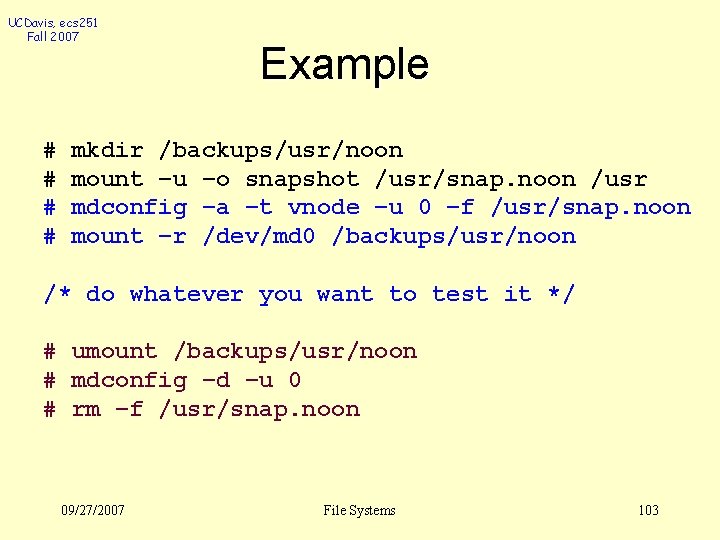

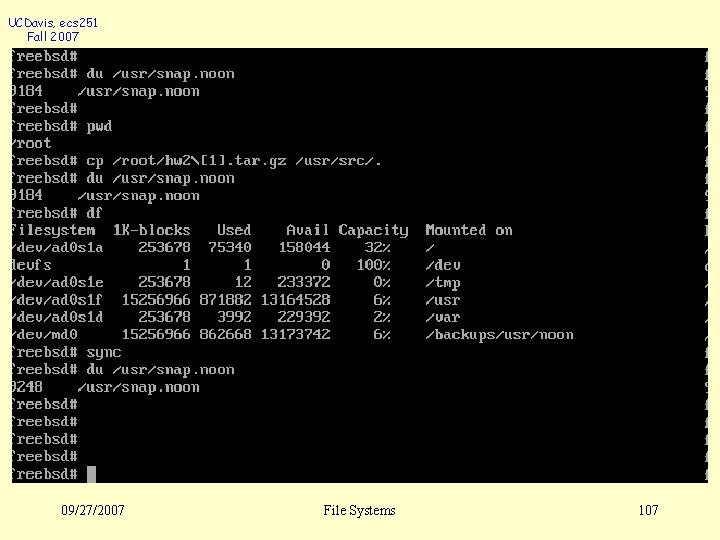

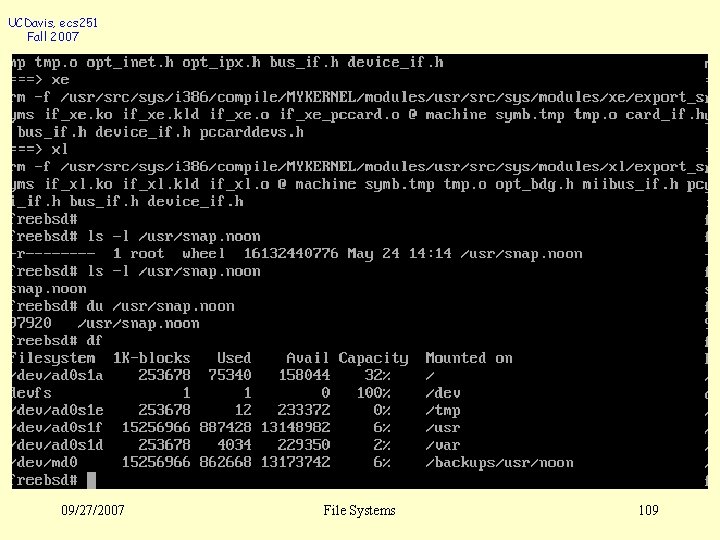

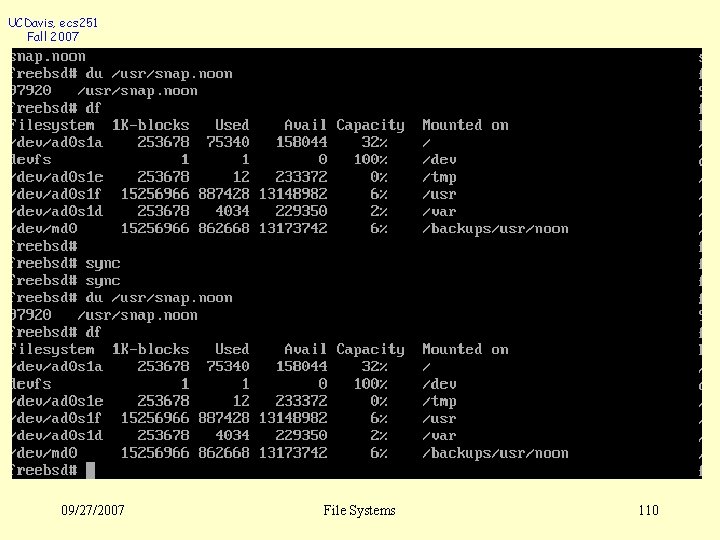

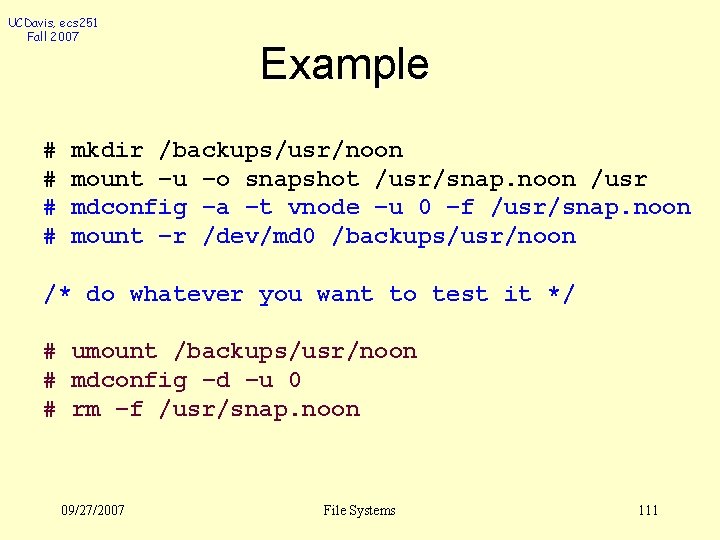

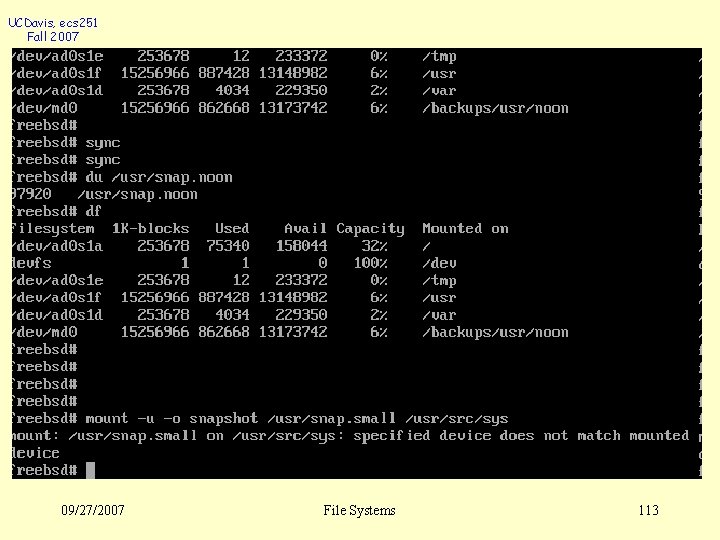

UCDavis, ecs 251 Fall 2007 # # Example mkdir /backups/usr/noon mount –u –o snapshot /usr/snap. noon /usr mdconfig –a –t vnode –u 0 –f /usr/snap. noon mount –r /dev/md 0 /backups/usr/noon /* do whatever you want to test it */ # umount /backups/usr/noon # mdconfig –d –u 0 # rm –f /usr/snap. noon 09/27/2007 File Systems 99

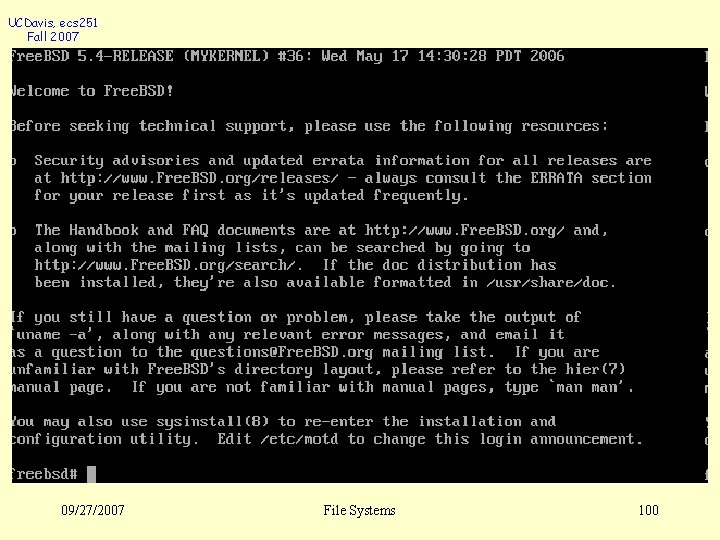

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 100

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 101

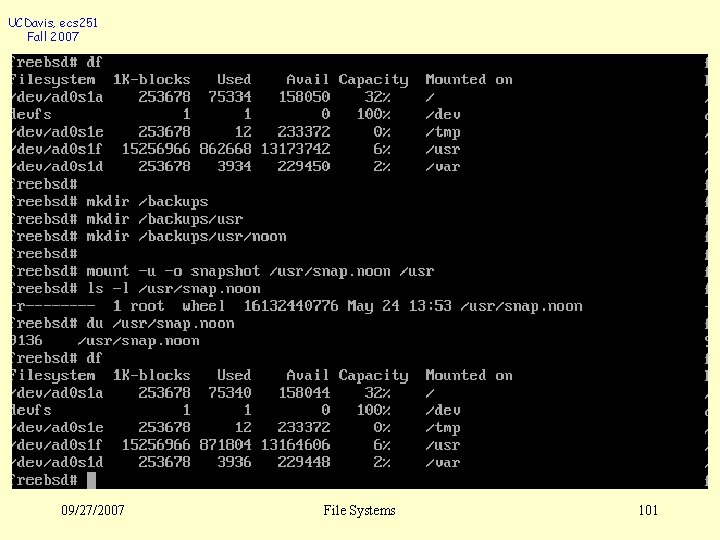

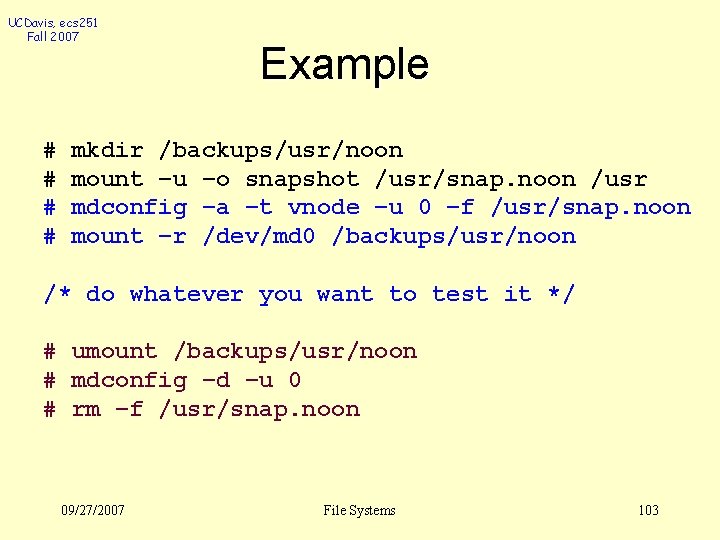

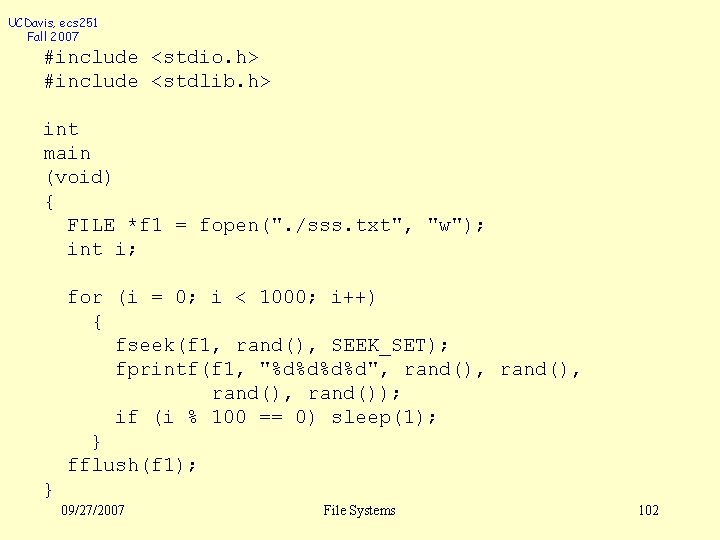

UCDavis, ecs 251 Fall 2007 #include <stdio. h> #include <stdlib. h> int main (void) { FILE *f 1 = fopen(". /sss. txt", "w"); int i; for (i = 0; i < 1000; i++) { fseek(f 1, rand(), SEEK_SET); fprintf(f 1, "%d%d", rand(), rand()); if (i % 100 == 0) sleep(1); } fflush(f 1); } 09/27/2007 File Systems 102

UCDavis, ecs 251 Fall 2007 # # Example mkdir /backups/usr/noon mount –u –o snapshot /usr/snap. noon /usr mdconfig –a –t vnode –u 0 –f /usr/snap. noon mount –r /dev/md 0 /backups/usr/noon /* do whatever you want to test it */ # umount /backups/usr/noon # mdconfig –d –u 0 # rm –f /usr/snap. noon 09/27/2007 File Systems 103

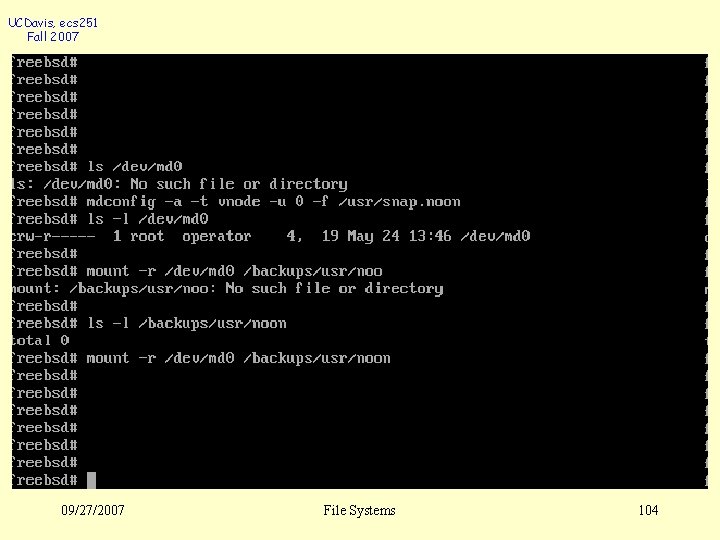

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 104

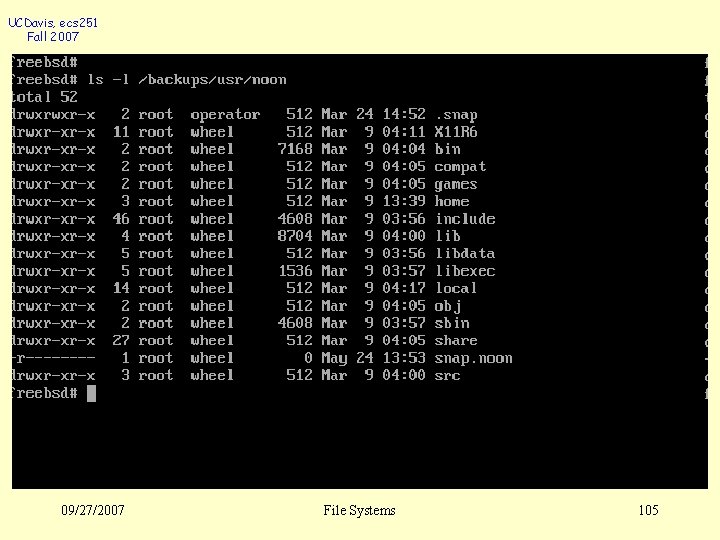

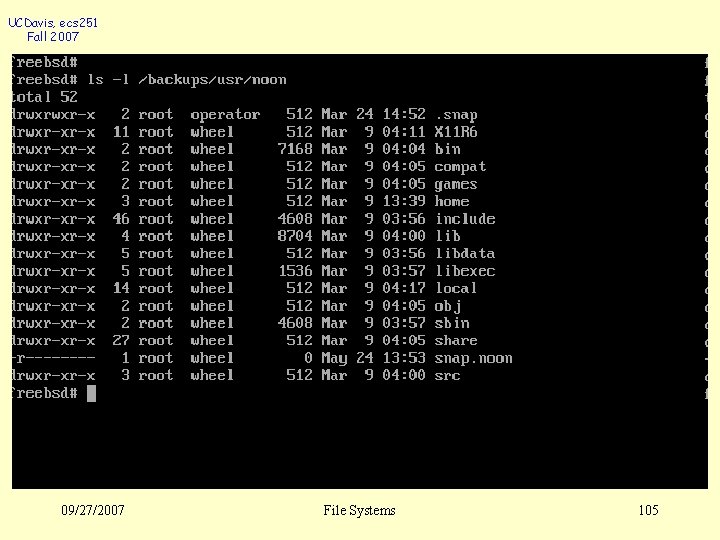

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 105

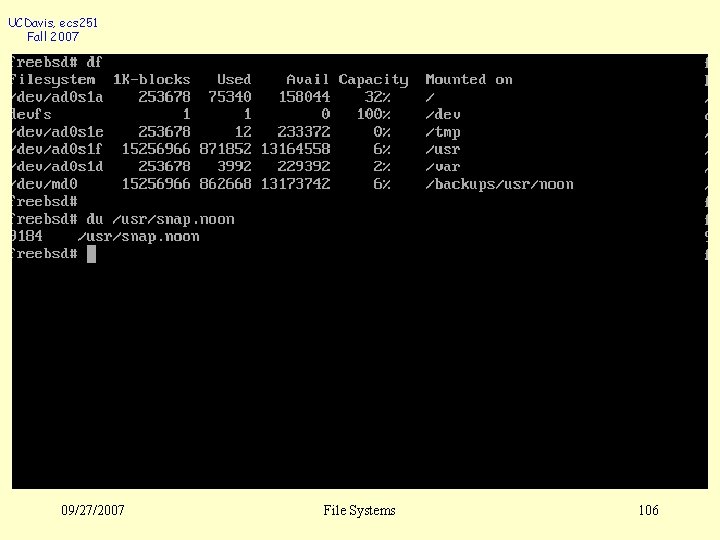

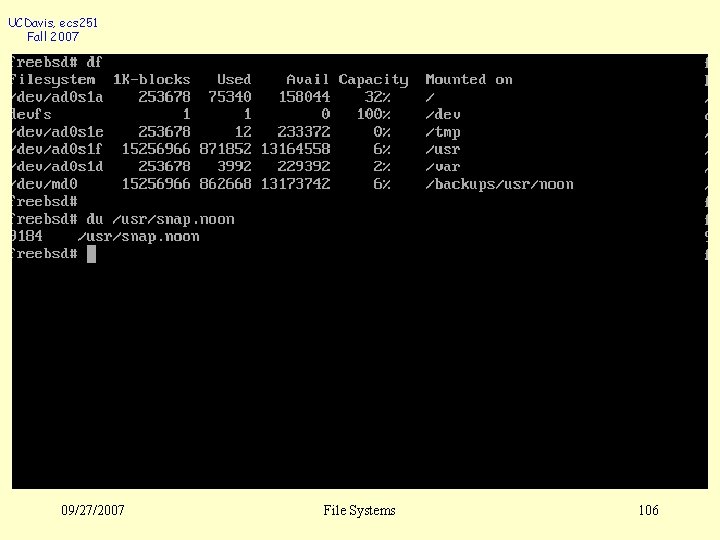

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 106

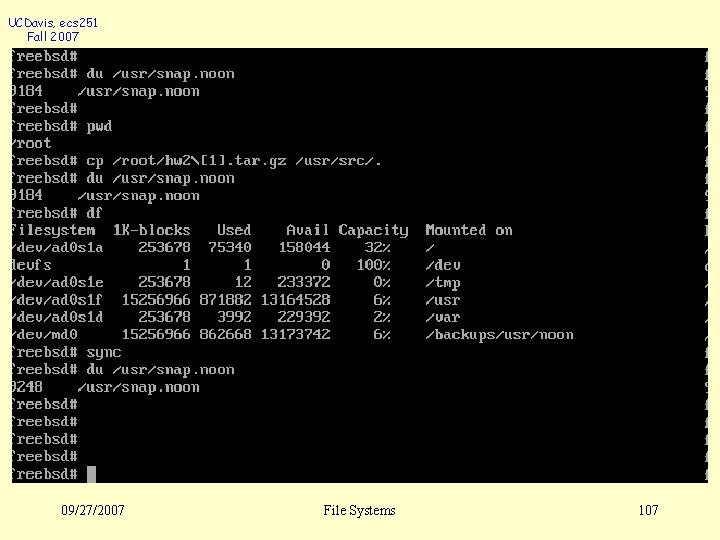

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 107

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 108

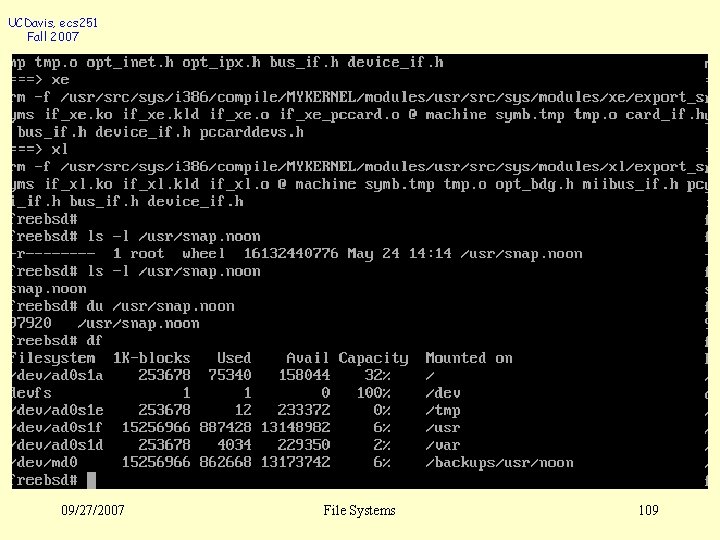

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 109

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 110

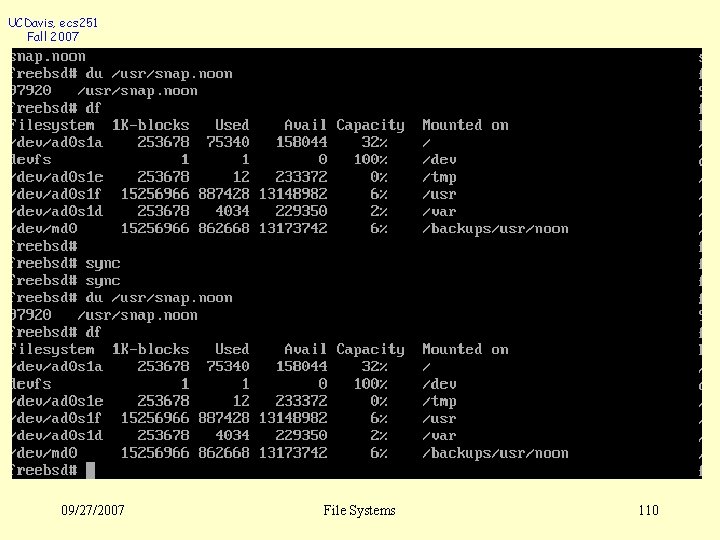

UCDavis, ecs 251 Fall 2007 # # Example mkdir /backups/usr/noon mount –u –o snapshot /usr/snap. noon /usr mdconfig –a –t vnode –u 0 –f /usr/snap. noon mount –r /dev/md 0 /backups/usr/noon /* do whatever you want to test it */ # umount /backups/usr/noon # mdconfig –d –u 0 # rm –f /usr/snap. noon 09/27/2007 File Systems 111

UCDavis, ecs 251 Fall 2007 Copy-on-Write 09/27/2007 File Systems 112

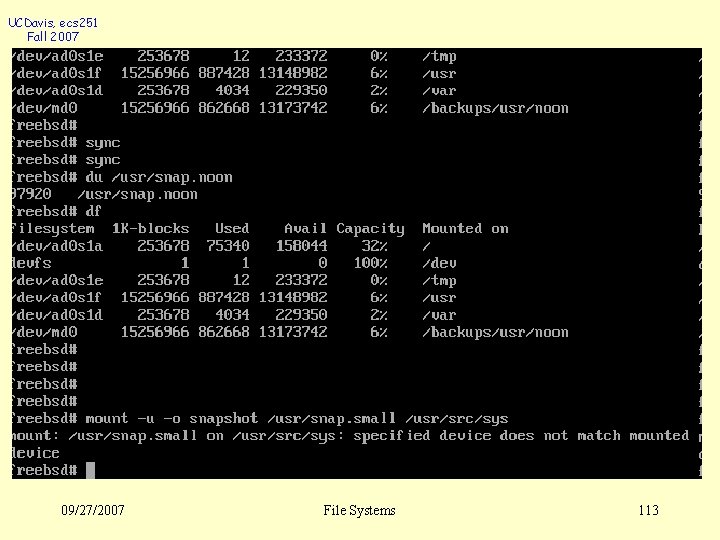

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 113

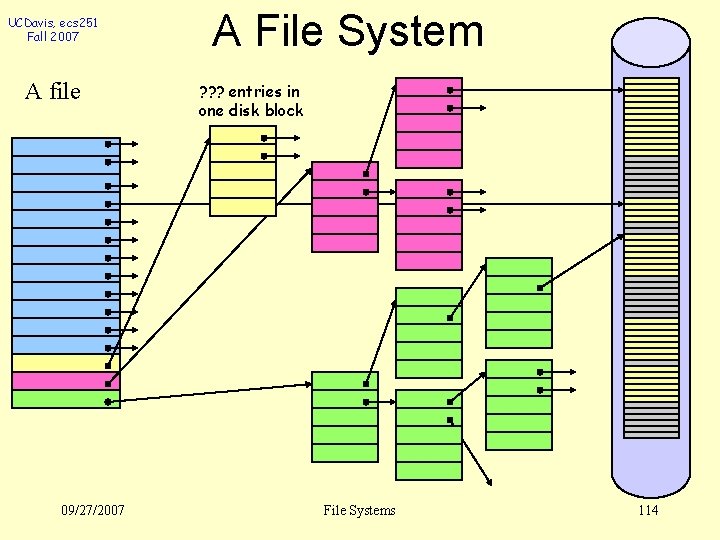

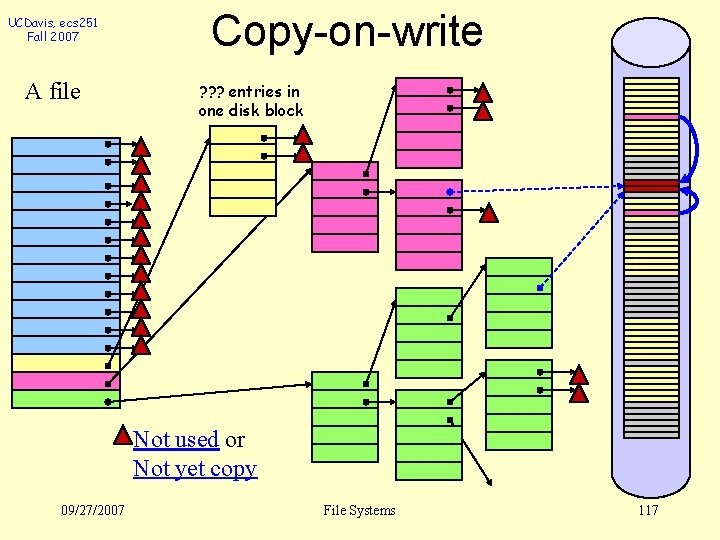

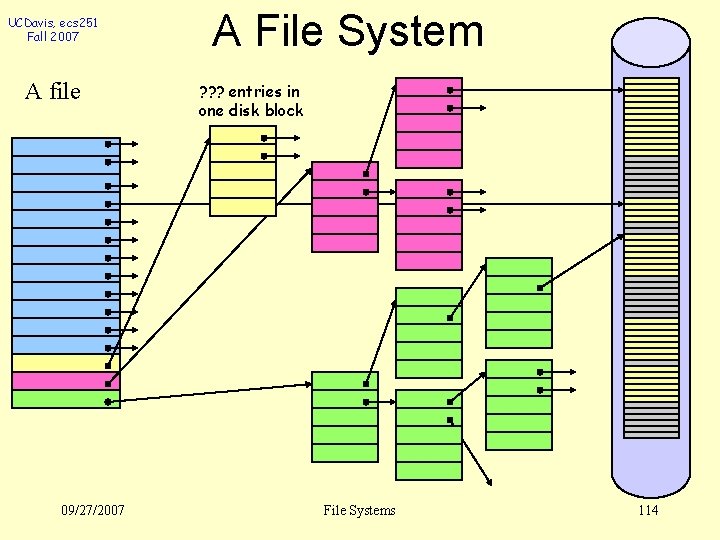

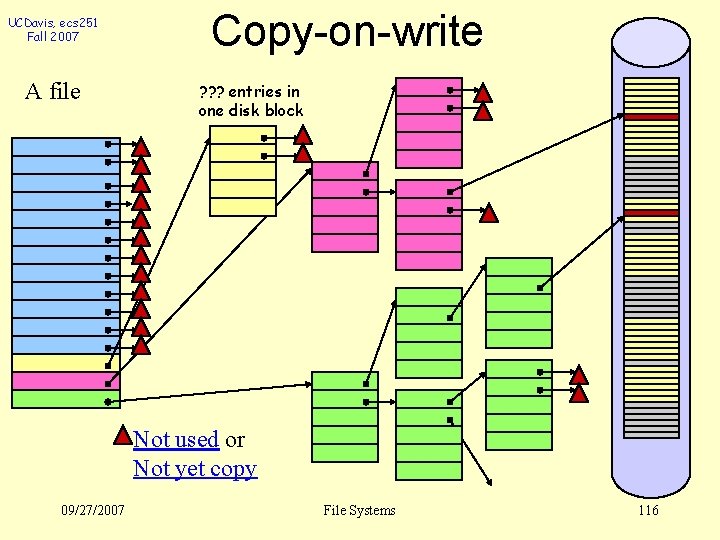

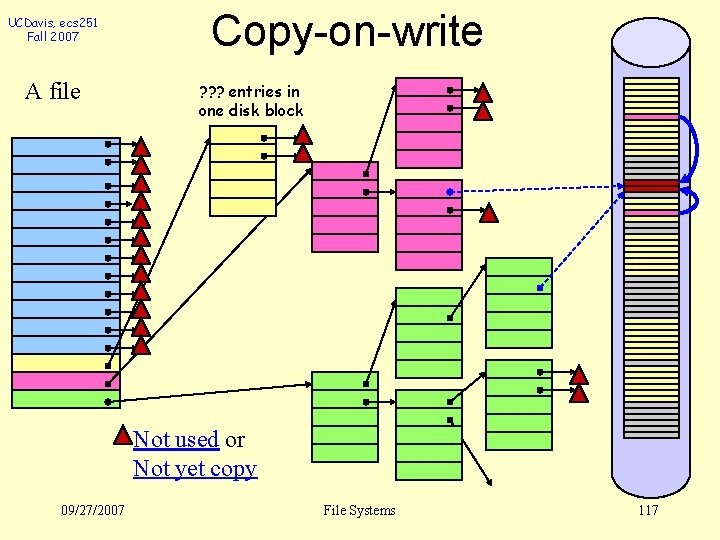

UCDavis, ecs 251 Fall 2007 A file 09/27/2007 A File System ? ? ? entries in one disk block File Systems 114

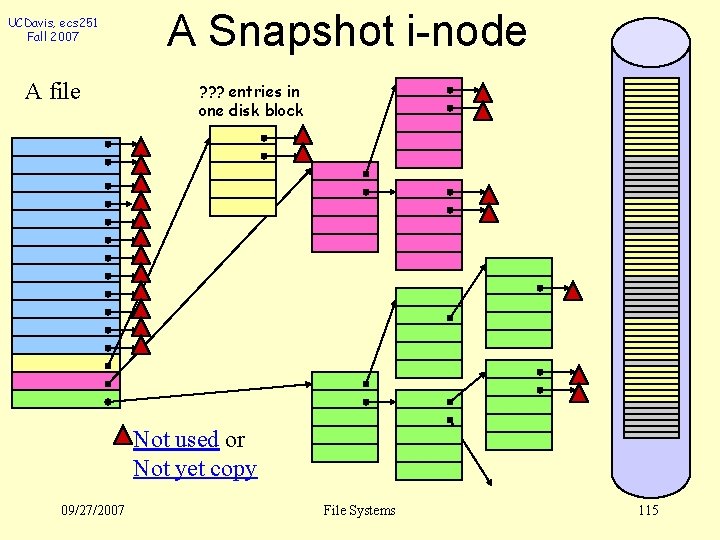

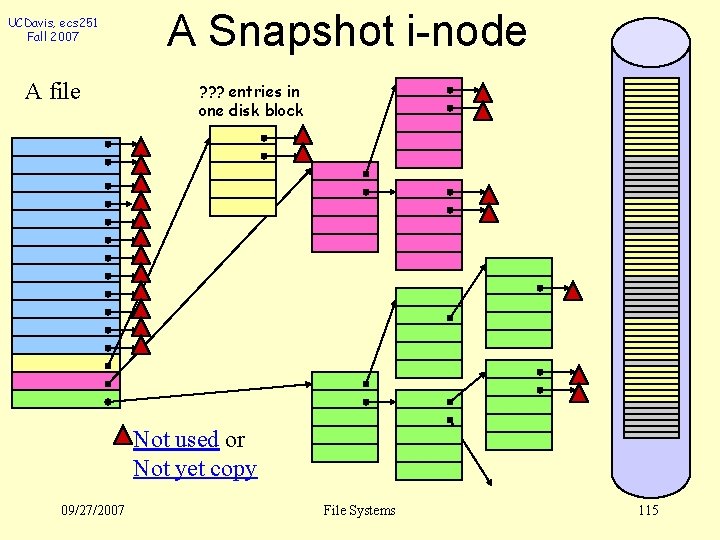

UCDavis, ecs 251 Fall 2007 A file A Snapshot i-node ? ? ? entries in one disk block Not used or Not yet copy 09/27/2007 File Systems 115

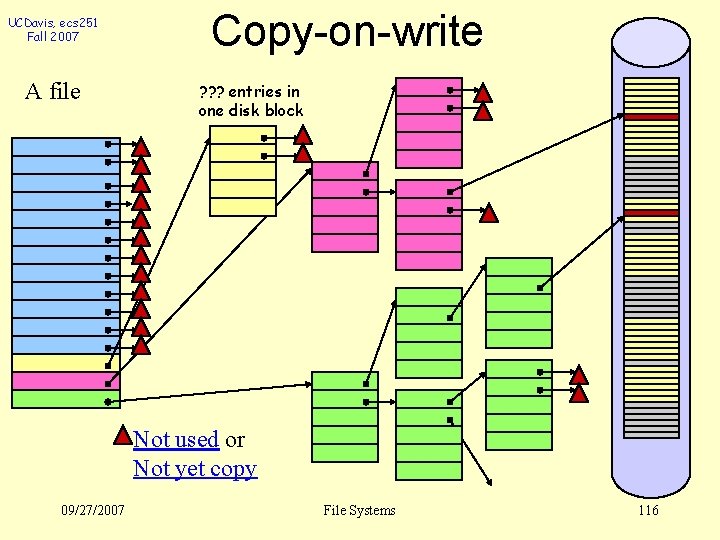

UCDavis, ecs 251 Fall 2007 A file Copy-on-write ? ? ? entries in one disk block Not used or Not yet copy 09/27/2007 File Systems 116

UCDavis, ecs 251 Fall 2007 A file Copy-on-write ? ? ? entries in one disk block Not used or Not yet copy 09/27/2007 File Systems 117

UCDavis, ecs 251 Fall 2007 Multiple Snapshots about 20 snapshots l Interactions/sharing among snapshots l 09/27/2007 File Systems 118

UCDavis, ecs 251 Fall 2007 Snapshot of the FS backup and restore l dump reliably an active File System l – what will we do today to dump our 40 GB FS “consistent” snapshots? (in the midnight…) l “background FSCK checks” 09/27/2007 File Systems 119

UCDavis, ecs 251 Fall 2007 Transaction-based FS Performance versus consistency l “Atomic Writes” on Multiple Blocks l – See the paper titled “Atomic Writes for Data Integrity and Consistency in Shared Storage Devices for Clusters” by Okun and Barak, FGCS, vol. 20, pages 539 -547, 2004. – Modify SCSI handling 09/27/2007 File Systems 120

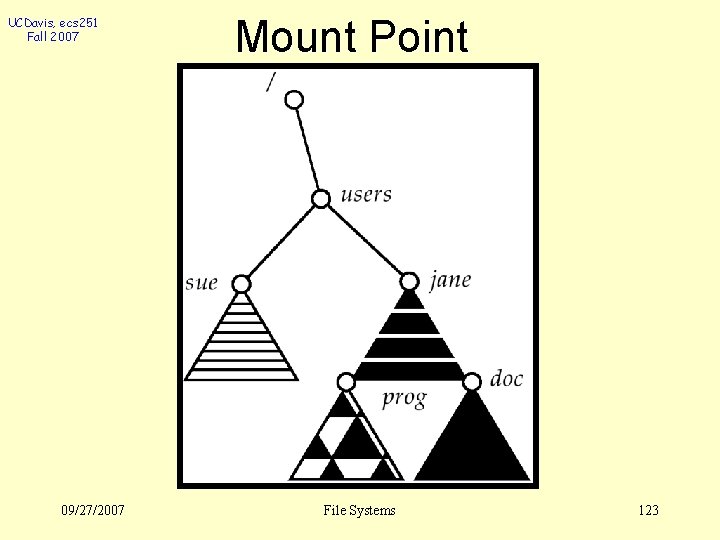

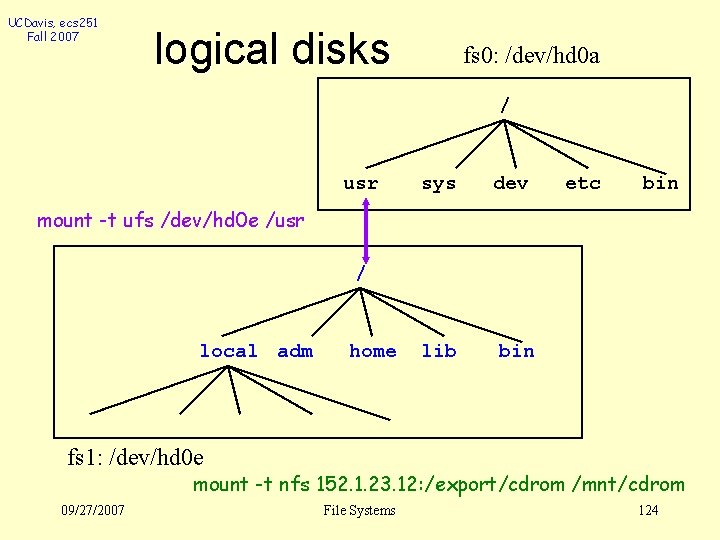

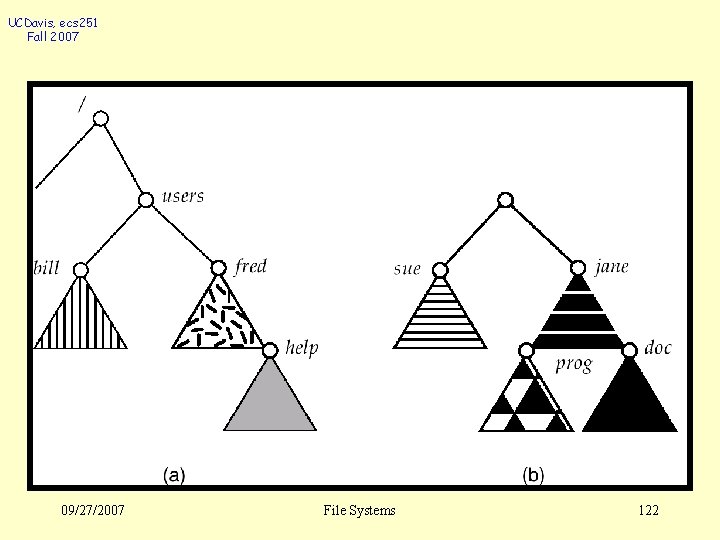

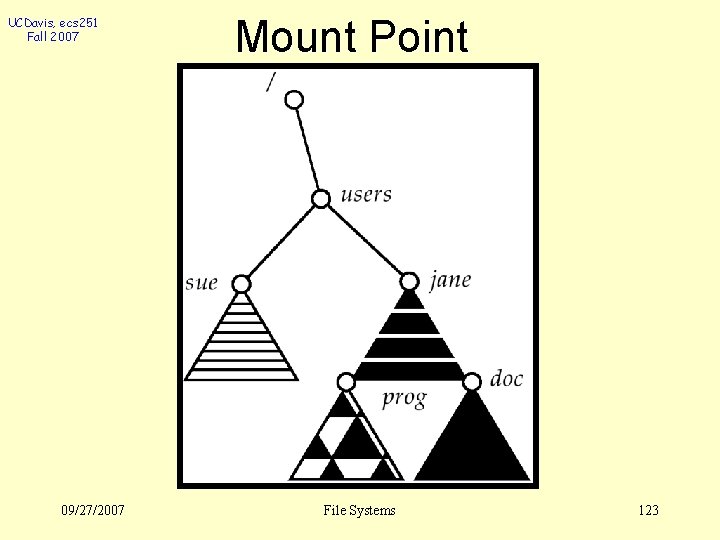

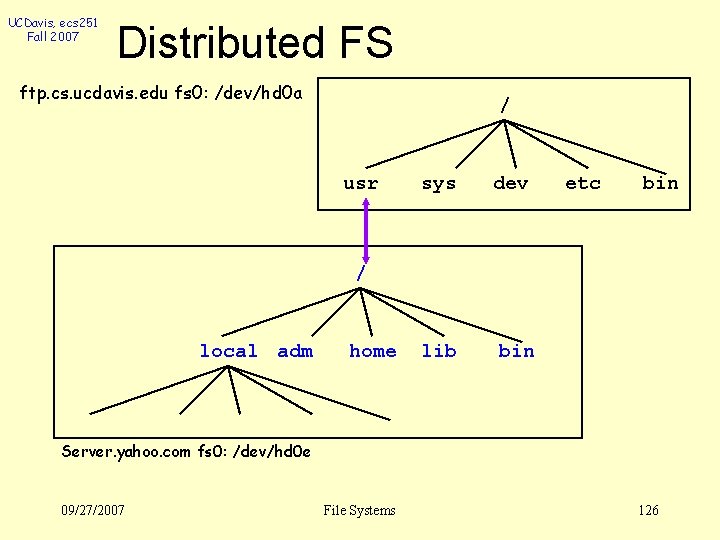

UCDavis, ecs 251 Fall 2007 File System Mounting A file system must be mounted before it can be accessed. l A unmounted file system is mounted at a mount point. l 09/27/2007 File Systems 121

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 122

UCDavis, ecs 251 Fall 2007 09/27/2007 Mount Point File Systems 123

UCDavis, ecs 251 Fall 2007 logical disks fs 0: /dev/hd 0 a / usr sys dev lib bin etc bin mount -t ufs /dev/hd 0 e /usr / local adm home fs 1: /dev/hd 0 e mount -t nfs 152. 1. 23. 12: /export/cdrom /mnt/cdrom 09/27/2007 File Systems 124

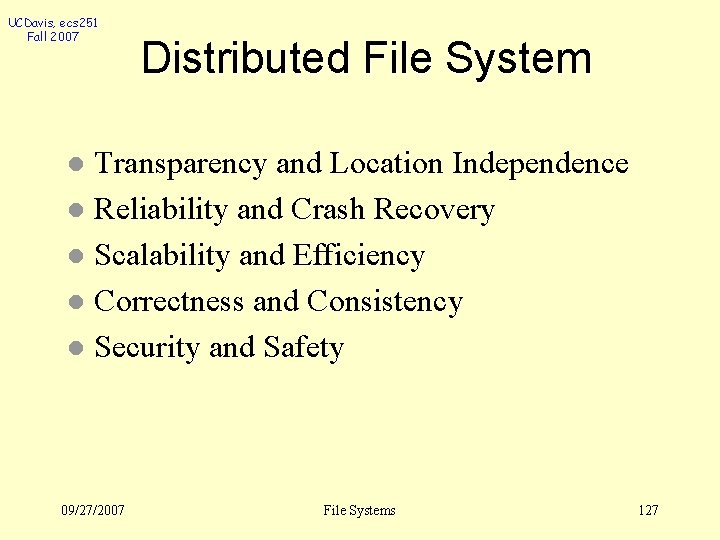

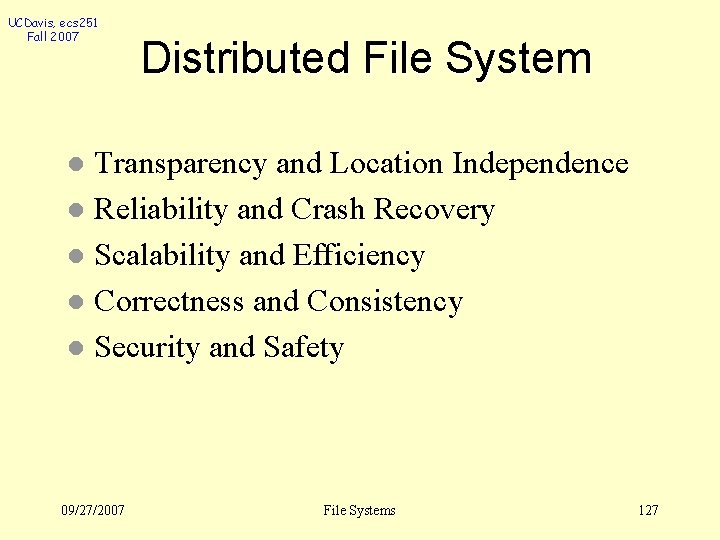

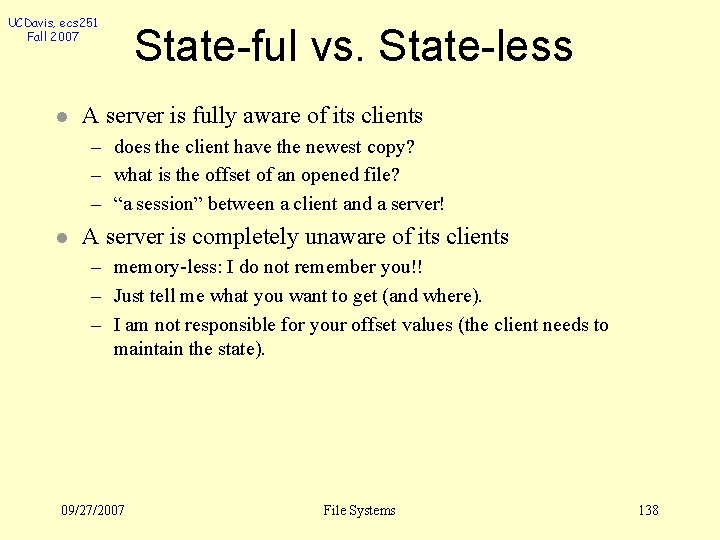

UCDavis, ecs 251 Fall 2007 Distributed FS l Distributed File System – NFS (Network File System) – AFS (Andrew File System) – GFS (Google File System) – CODA 09/27/2007 File Systems 125

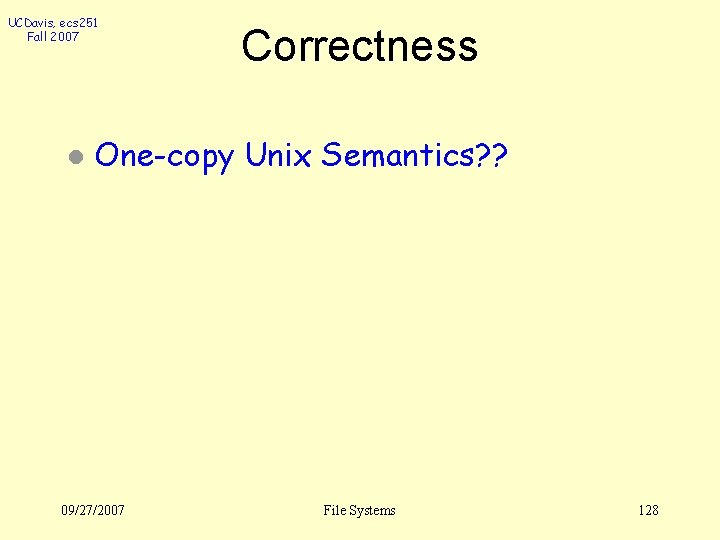

UCDavis, ecs 251 Fall 2007 Distributed FS ftp. cs. ucdavis. edu fs 0: /dev/hd 0 a / usr sys dev lib bin etc bin / local adm home Server. yahoo. com fs 0: /dev/hd 0 e 09/27/2007 File Systems 126

UCDavis, ecs 251 Fall 2007 Distributed File System Transparency and Location Independence l Reliability and Crash Recovery l Scalability and Efficiency l Correctness and Consistency l Security and Safety l 09/27/2007 File Systems 127

UCDavis, ecs 251 Fall 2007 l Correctness One-copy Unix Semantics? ? 09/27/2007 File Systems 128

UCDavis, ecs 251 Fall 2007 l Correctness One-copy Unix Semantics – every modification to every byte of a file has to be immediately and permanently visible to every client. 09/27/2007 File Systems 129

UCDavis, ecs 251 Fall 2007 l Correctness One-copy Unix Semantics – every modification to every byte of a file has to be immediately and permanently visible to every client. – Conceptually FS sequent access Make sense in a local file system l Single processor versus shared memory l l Is this necessary? 09/27/2007 File Systems 130

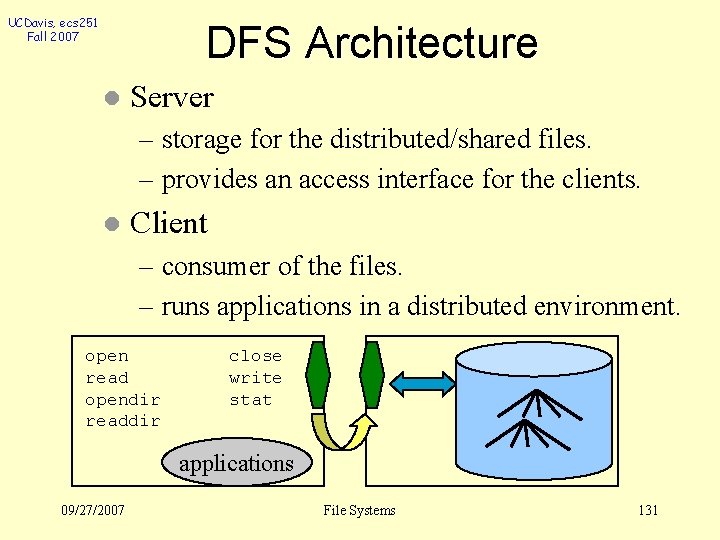

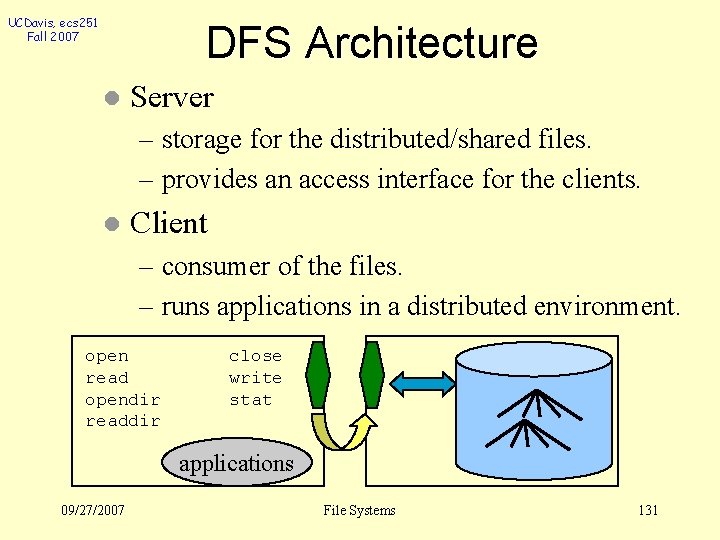

UCDavis, ecs 251 Fall 2007 DFS Architecture l Server – storage for the distributed/shared files. – provides an access interface for the clients. l Client – consumer of the files. – runs applications in a distributed environment. open read opendir readdir close write stat applications 09/27/2007 File Systems 131

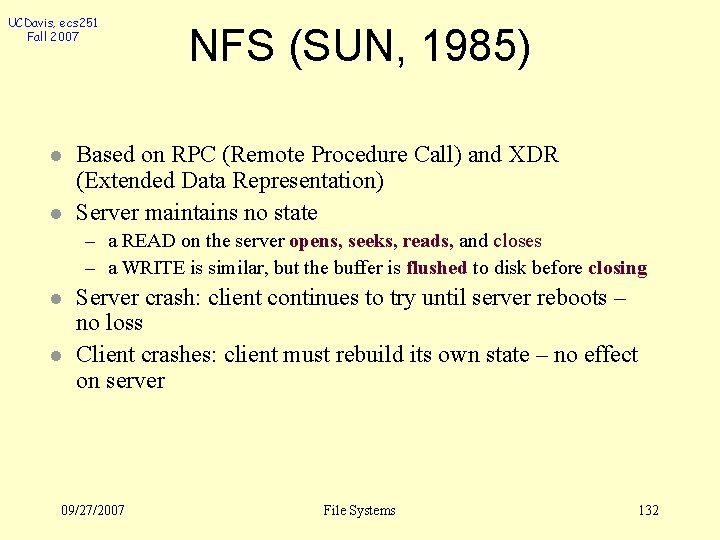

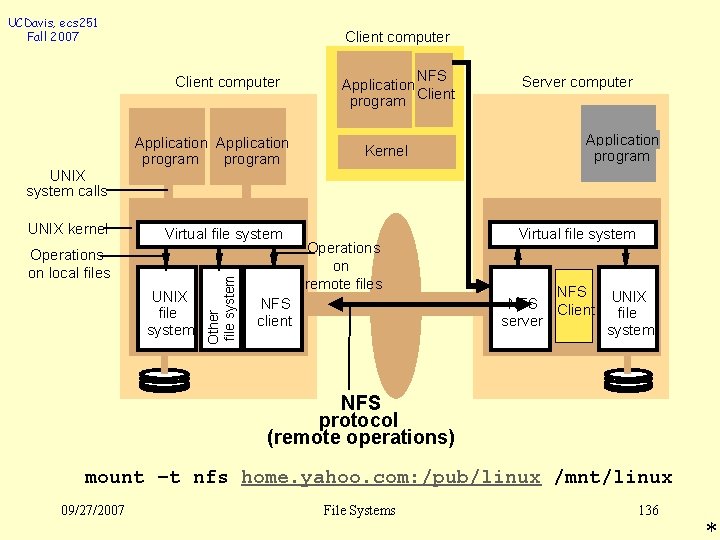

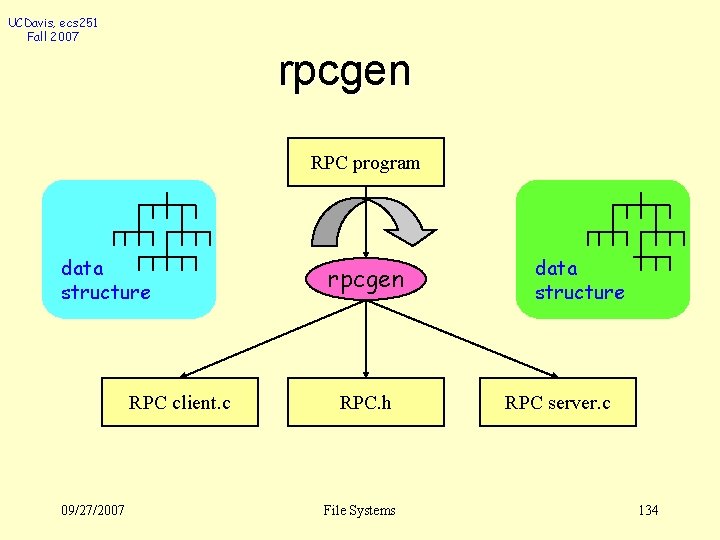

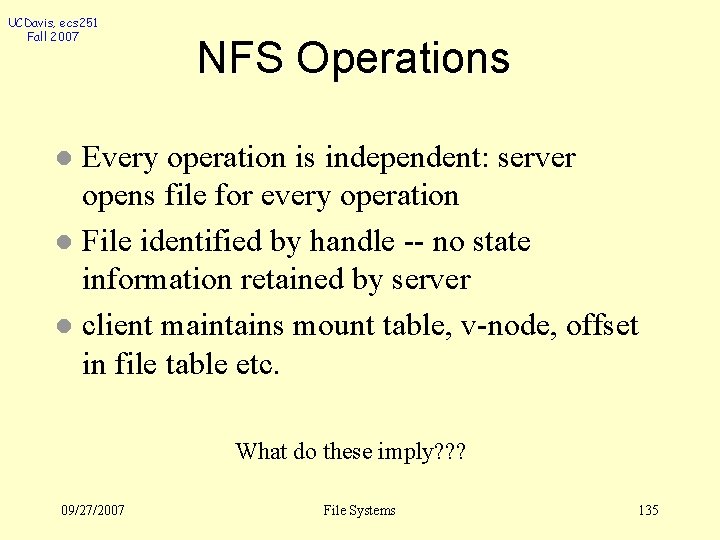

UCDavis, ecs 251 Fall 2007 l l NFS (SUN, 1985) Based on RPC (Remote Procedure Call) and XDR (Extended Data Representation) Server maintains no state – a READ on the server opens, seeks, reads, and closes – a WRITE is similar, but the buffer is flushed to disk before closing l l Server crash: client continues to try until server reboots – no loss Client crashes: client must rebuild its own state – no effect on server 09/27/2007 File Systems 132

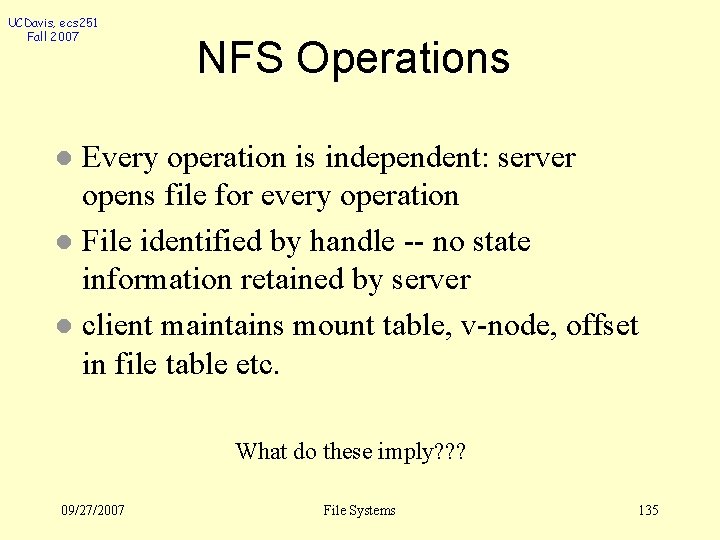

UCDavis, ecs 251 Fall 2007 RPC - XDR RPC: Standard protocol for calling procedures in another machine l Procedure is packaged with authorization and admin info l XDR: standard format for data, because manufacturers of computers cannot agree on byte ordering. l 09/27/2007 File Systems 133

UCDavis, ecs 251 Fall 2007 rpcgen RPC program data structure RPC client. c 09/27/2007 rpcgen RPC. h File Systems data structure RPC server. c 134

UCDavis, ecs 251 Fall 2007 NFS Operations Every operation is independent: server opens file for every operation l File identified by handle -- no state information retained by server l client maintains mount table, v-node, offset in file table etc. l What do these imply? ? ? 09/27/2007 File Systems 135

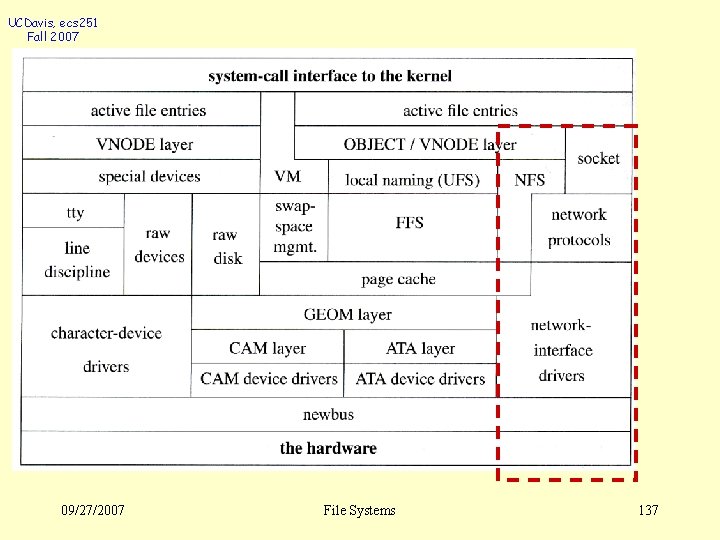

UCDavis, ecs 251 Fall 2007 Client computer Application program NFS Application program Client Kernel Server computer Application program UNIX system calls Virtual file system Operations on local files UNIX file system Other file system UNIX kernel Operations on remote files NFS client Virtual file system NFS Client server UNIX file system NFS protocol (remote operations) mount –t nfs home. yahoo. com: /pub/linux /mnt/linux 09/27/2007 File Systems 136 *

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 137

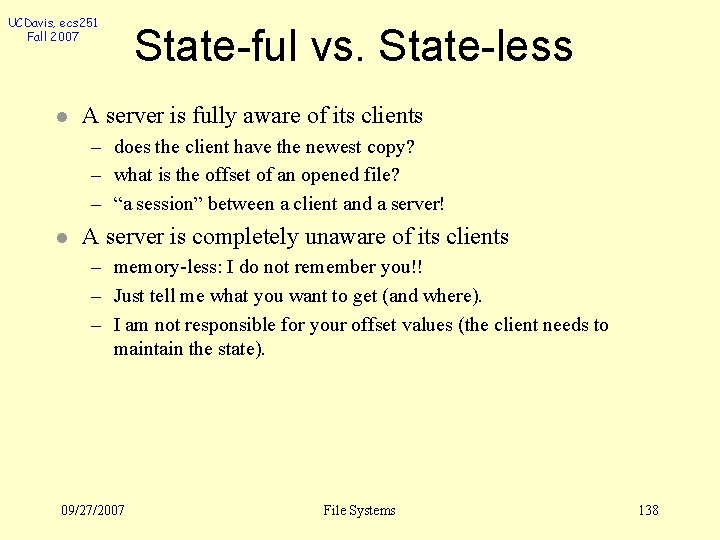

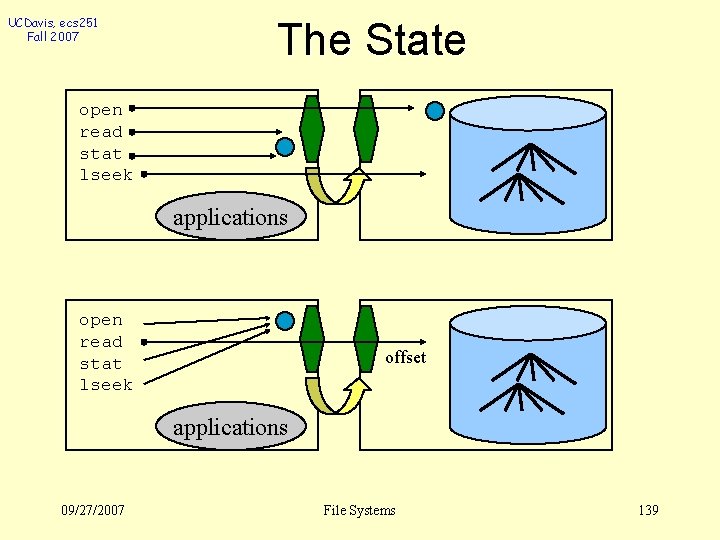

UCDavis, ecs 251 Fall 2007 l State-ful vs. State-less A server is fully aware of its clients – does the client have the newest copy? – what is the offset of an opened file? – “a session” between a client and a server! l A server is completely unaware of its clients – memory-less: I do not remember you!! – Just tell me what you want to get (and where). – I am not responsible for your offset values (the client needs to maintain the state). 09/27/2007 File Systems 138

UCDavis, ecs 251 Fall 2007 The State open read stat lseek applications open read stat lseek offset applications 09/27/2007 File Systems 139

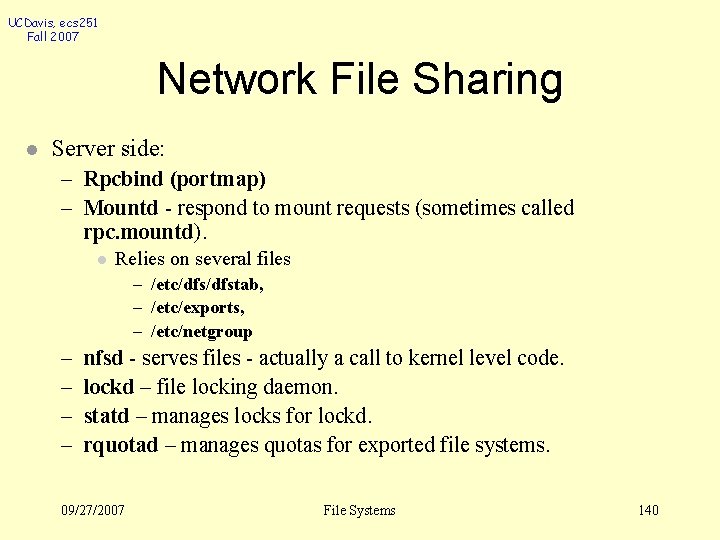

UCDavis, ecs 251 Fall 2007 Network File Sharing l Server side: – Rpcbind (portmap) – Mountd - respond to mount requests (sometimes called rpc. mountd). l Relies on several files – /etc/dfstab, – /etc/exports, – /etc/netgroup – – nfsd - serves files - actually a call to kernel level code. lockd – file locking daemon. statd – manages locks for lockd. rquotad – manages quotas for exported file systems. 09/27/2007 File Systems 140

UCDavis, ecs 251 Fall 2007 Network File Sharing l Client Side – biod - client side caching daemon – mount must understand the hostname: directory convention. – Filesystem entries in /etc/[v]fstab tell the client what filesystems to mount. 09/27/2007 File Systems 141

UCDavis, ecs 251 Fall 2007 l Unix file semantics NFS: – open a file with read-write mode – later, the server’s copy becomes read-only mode – now, the application tries to write it!! 09/27/2007 File Systems 142

UCDavis, ecs 251 Fall 2007 l Problems with NFS Performance not scaleable: – maybe it is OK for a local office. – will be horrible with large scale systems. 09/27/2007 File Systems 143

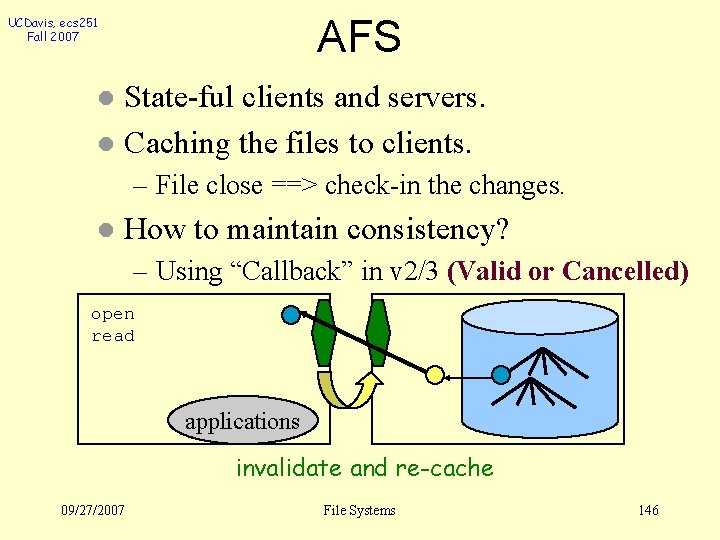

UCDavis, ecs 251 Fall 2007 l Similar to UNIX file caching for local files: – pages (blocks) from disk are held in a main memory buffer cache until the space is required for newer pages. Read-ahead and delayed-write optimisations. – For local files, writes are deferred to next sync event (30 second intervals) – Works well in local context, where files are always accessed through the local cache, but in the remote case it doesn't offer necessary synchronization guarantees to clients. l NFS v 3 servers offers two strategies for updating the disk: – write-through - altered pages are written to disk as soon as they are received at the server. When a write() RPC returns, the NFS client knows that the page is on the disk. – delayed commit - pages are held only in the cache until a commit() call is received for the relevant file. This is the default mode used by NFS v 3 clients. A commit() is issued by the client whenever a file is closed. 09/27/2007 File Systems 144 *

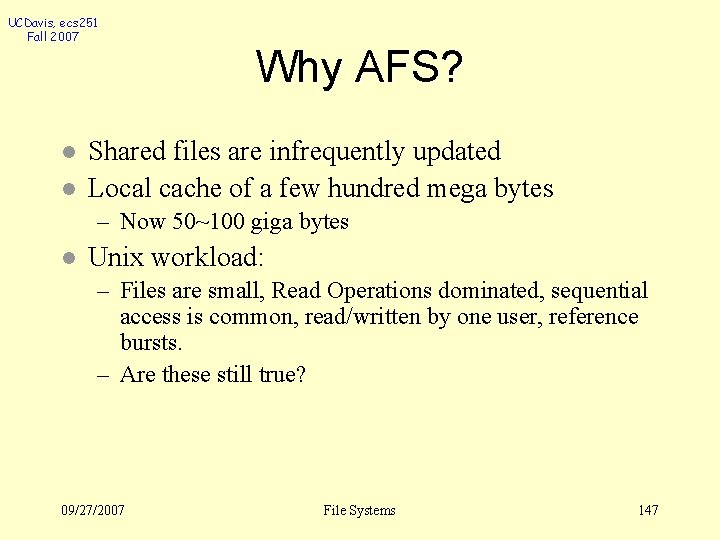

UCDavis, ecs 251 Fall 2007 l Server caching does nothing to reduce RPC traffic between client and server – further optimisation is essential to reduce server load in large networks – NFS client module caches the results of read, write, getattr, lookup and readdir operations – synchronization of file contents (one-copy semantics) is not guaranteed when two or more clients are sharing the same file. l Timestamp-based validity check – reduces inconsistency, but doesn't eliminate it – validity condition for cache entries at the client: (T - Tc < t) v (Tmclient = Tmserver) – t is configurable (per file) but is typically set to 3 seconds for files and 30 secs. for directories – it remains difficult to write distributed applications that share files with NFS 09/27/2007 File Systems t freshness guarantee Tc time when cache entry was last validated Tm time when block was last updated at server T current time 145 *

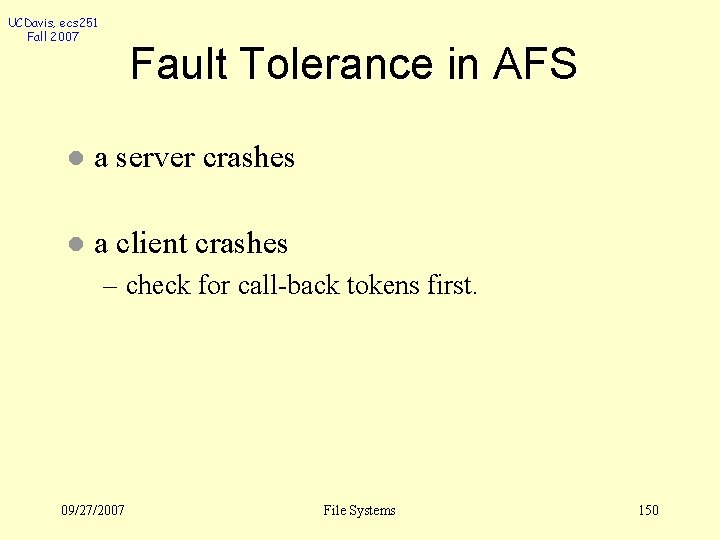

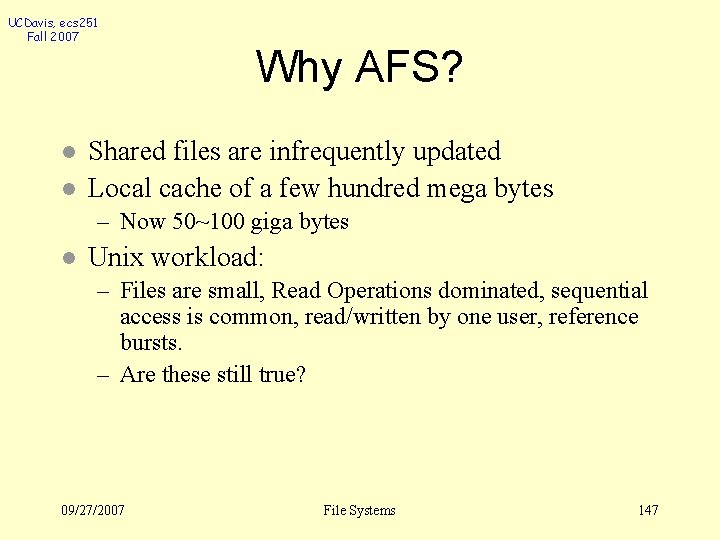

AFS UCDavis, ecs 251 Fall 2007 State-ful clients and servers. l Caching the files to clients. l – File close ==> check-in the changes. l How to maintain consistency? – Using “Callback” in v 2/3 (Valid or Cancelled) open read applications invalidate and re-cache 09/27/2007 File Systems 146

UCDavis, ecs 251 Fall 2007 l l Why AFS? Shared files are infrequently updated Local cache of a few hundred mega bytes – Now 50~100 giga bytes l Unix workload: – Files are small, Read Operations dominated, sequential access is common, read/written by one user, reference bursts. – Are these still true? 09/27/2007 File Systems 147

UCDavis, ecs 251 Fall 2007 Fault Tolerance in AFS l a server crashes l a client crashes – check for call-back tokens first. 09/27/2007 File Systems 150

UCDavis, ecs 251 Fall 2007 Problems with AFS Availability l what happens if call-back itself is lost? ? l 09/27/2007 File Systems 151

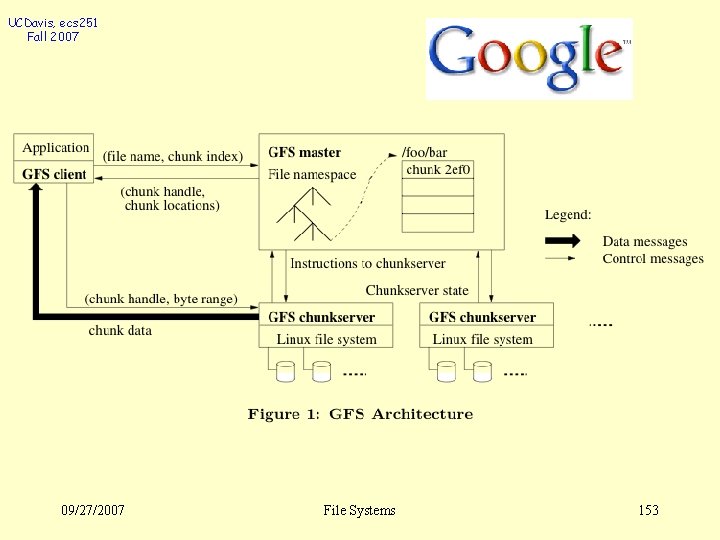

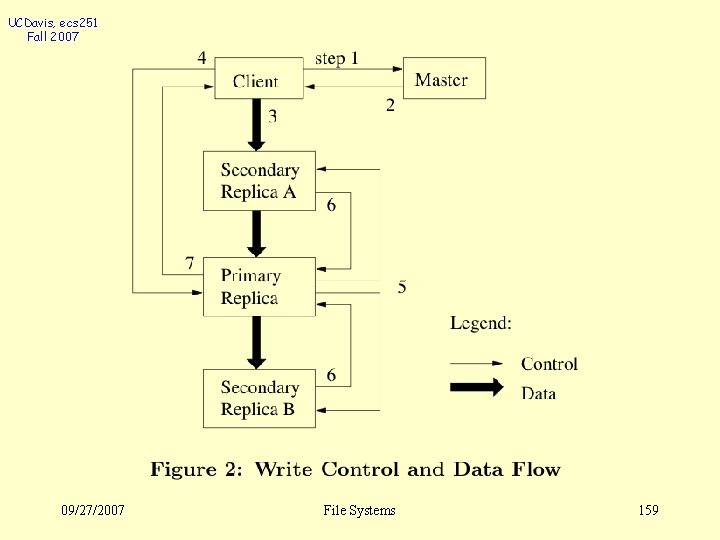

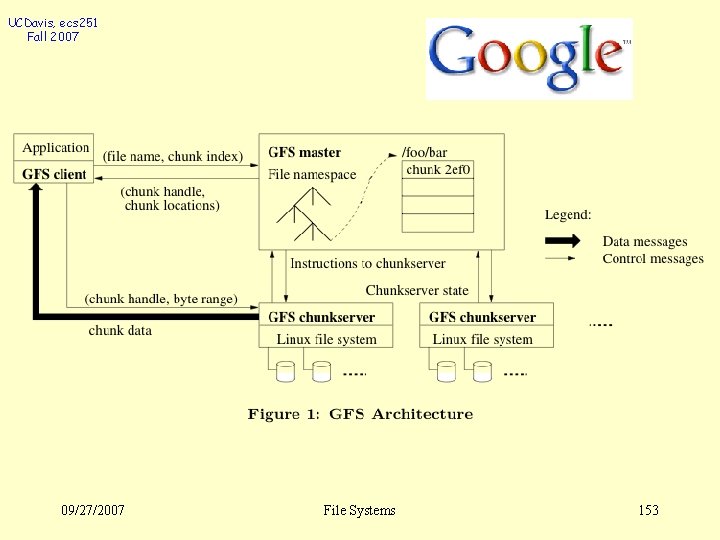

UCDavis, ecs 251 Fall 2007 GFS – Google File System “failures” are norm l Multiple-GB files are common l Append rather than overwrite l – Random writes are rare l Can we relax the consistency? 09/27/2007 File Systems 152

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 153

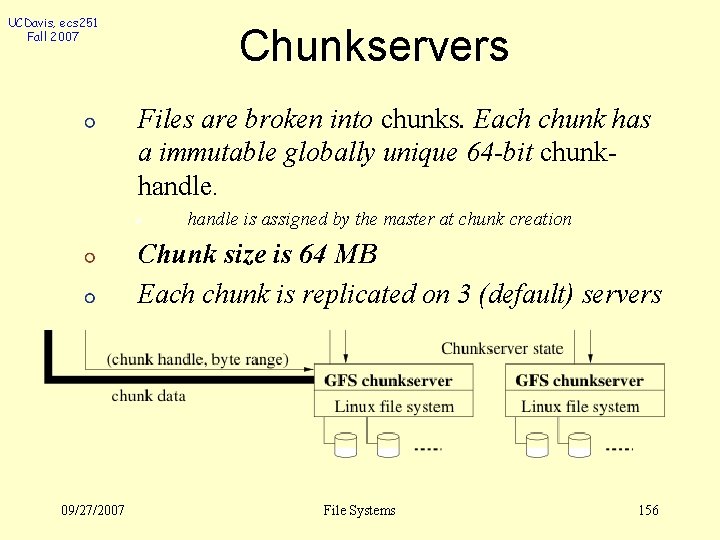

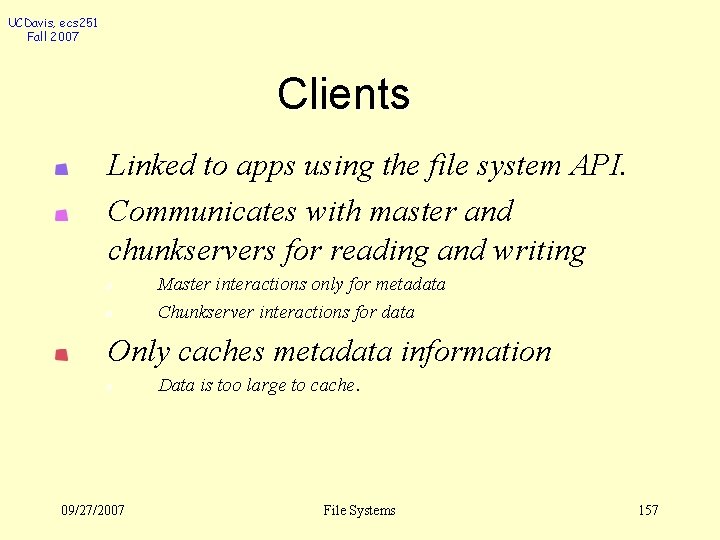

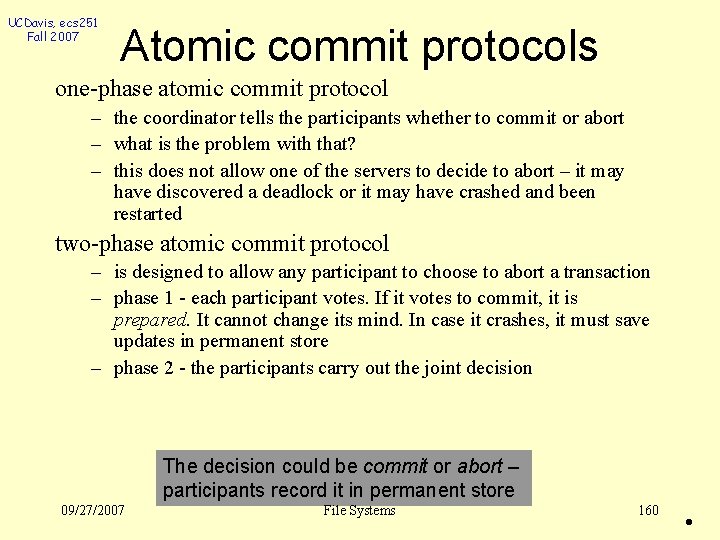

UCDavis, ecs 251 Fall 2007 The Master Maintains all file system metadata. names space, access control info, file to chunk mappings, chunk (including replicas) location, etc. Periodically communicates with chunkservers in Heart. Beat messages to give instructions and check state 09/27/2007 File Systems 154

UCDavis, ecs 251 Fall 2007 The Master Helps make sophisticated chunk placement and replication decision, using global knowledge For reading and writing, client contacts Master to get chunk locations, then deals directly with chunkservers Master is not a bottleneck for reads/writes 09/27/2007 File Systems 155

UCDavis, ecs 251 Fall 2007 Chunkservers Files are broken into chunks. Each chunk has a immutable globally unique 64 -bit chunkhandle is assigned by the master at chunk creation Chunk size is 64 MB Each chunk is replicated on 3 (default) servers 09/27/2007 File Systems 156

UCDavis, ecs 251 Fall 2007 Clients Linked to apps using the file system API. Communicates with master and chunkservers for reading and writing Master interactions only for metadata Chunkserver interactions for data Only caches metadata information Data is too large to cache. 09/27/2007 File Systems 157

UCDavis, ecs 251 Fall 2007 Chunk Locations Master does not keep a persistent record of locations of chunks and replicas. Polls chunkservers at startup, and when new chunkservers join/leave for this. Stays up to date by controlling placement of new chunks and through Heart. Beat messages (when monitoring chunkservers) 09/27/2007 File Systems 158

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 159

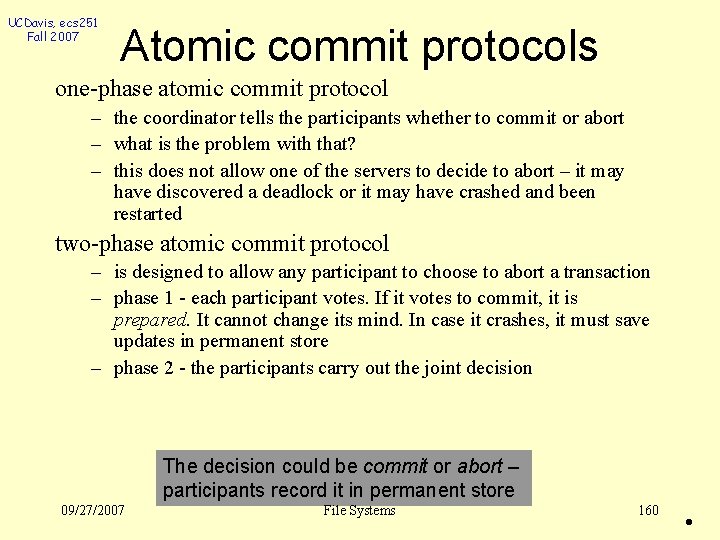

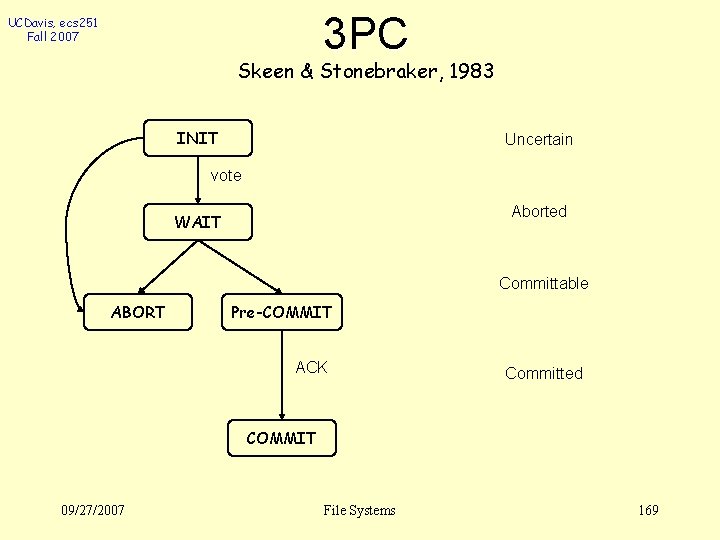

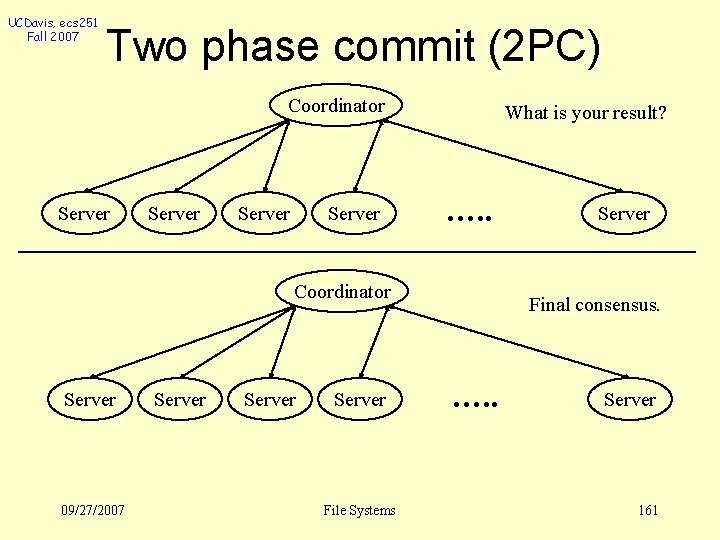

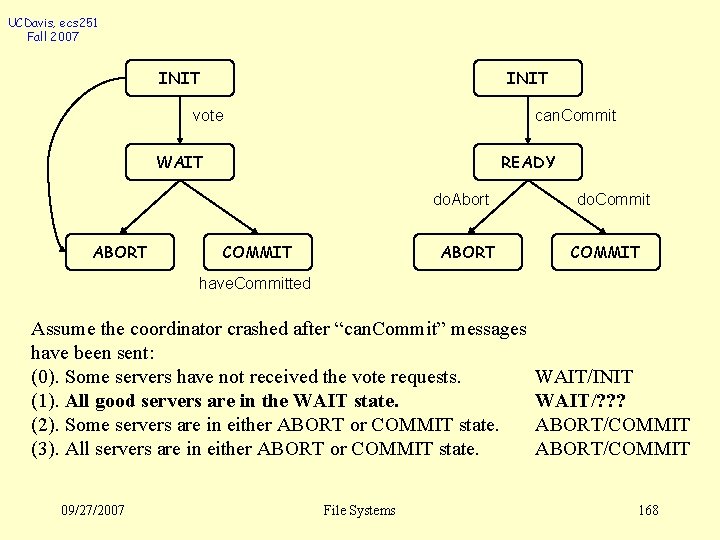

UCDavis, ecs 251 Fall 2007 Atomic commit protocols one-phase atomic commit protocol – the coordinator tells the participants whether to commit or abort – what is the problem with that? – this does not allow one of the servers to decide to abort – it may have discovered a deadlock or it may have crashed and been restarted two-phase atomic commit protocol – is designed to allow any participant to choose to abort a transaction – phase 1 - each participant votes. If it votes to commit, it is prepared. It cannot change its mind. In case it crashes, it must save updates in permanent store – phase 2 - the participants carry out the joint decision The decision could be commit or abort – participants record it in permanent store 09/27/2007 File Systems 160 •

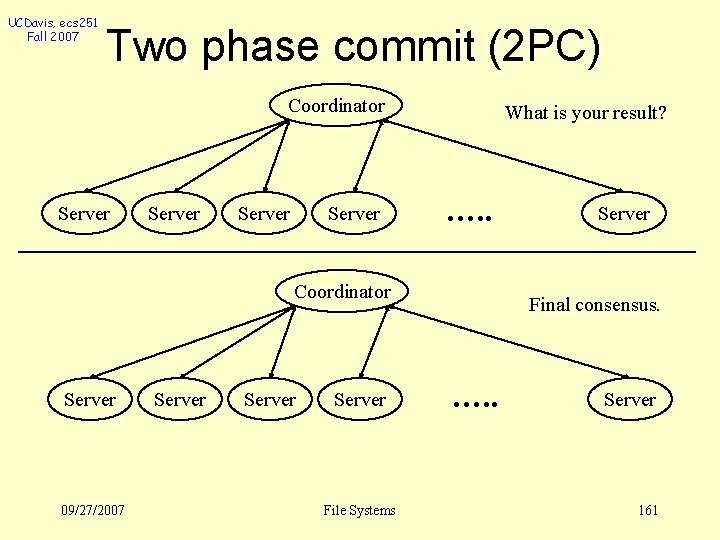

UCDavis, ecs 251 Fall 2007 Two phase commit (2 PC) Coordinator Server What is your result? …. . Coordinator Server 09/27/2007 Server File Systems Server Final consensus. …. . Server 161

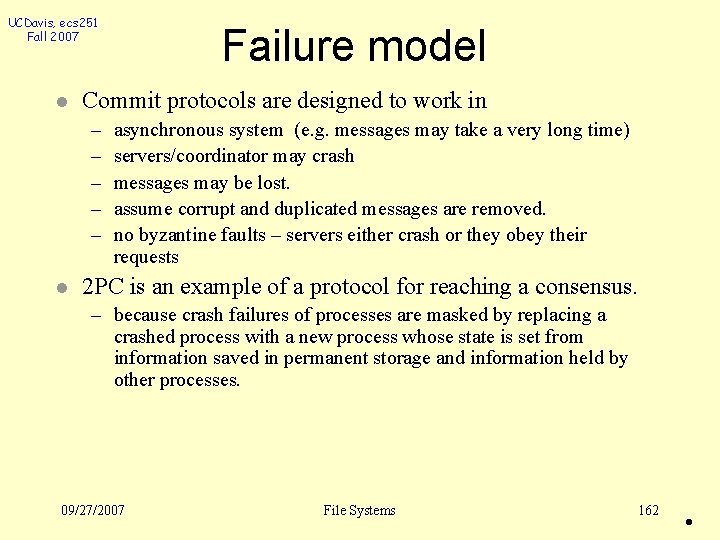

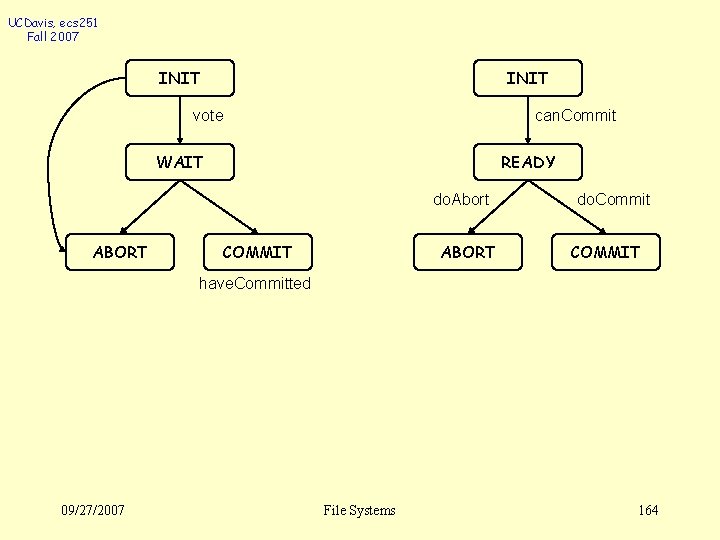

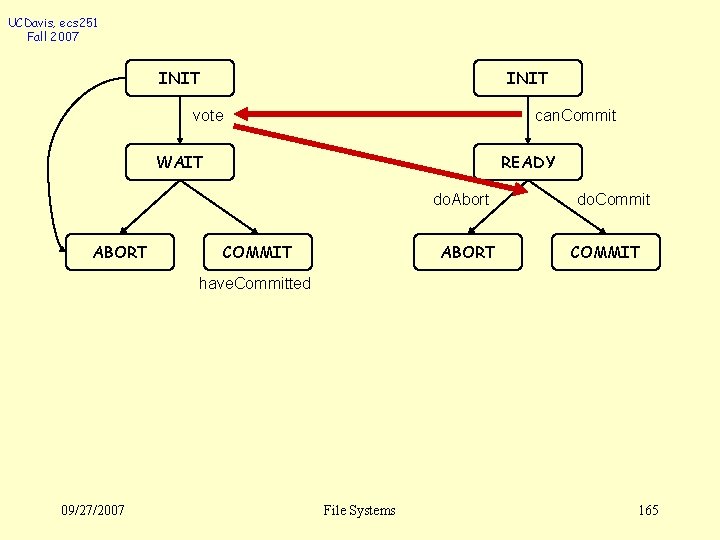

UCDavis, ecs 251 Fall 2007 l Commit protocols are designed to work in – – – l Failure model asynchronous system (e. g. messages may take a very long time) servers/coordinator may crash messages may be lost. assume corrupt and duplicated messages are removed. no byzantine faults – servers either crash or they obey their requests 2 PC is an example of a protocol for reaching a consensus. – because crash failures of processes are masked by replacing a crashed process with a new process whose state is set from information saved in permanent storage and information held by other processes. 09/27/2007 File Systems 162 •

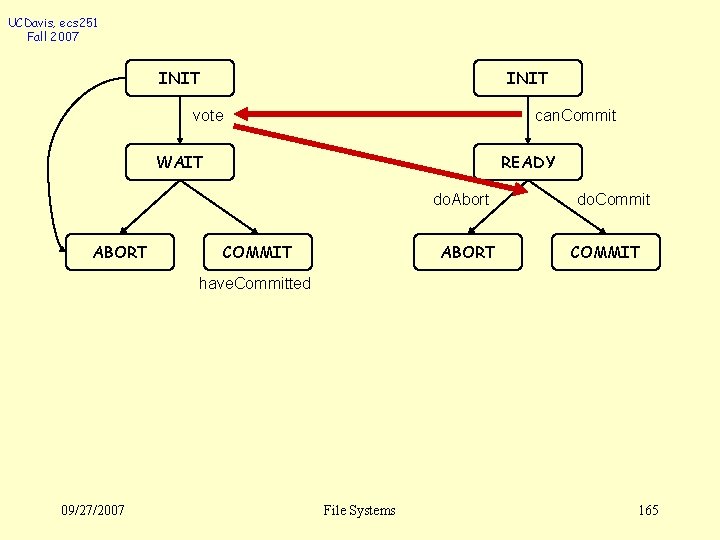

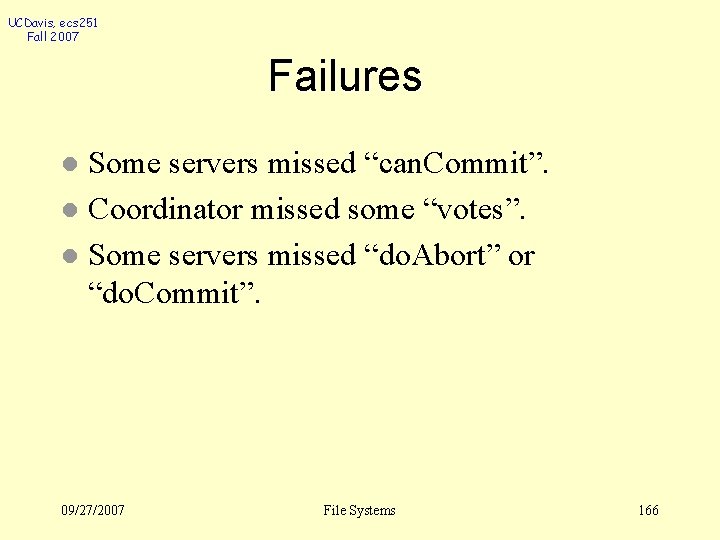

UCDavis, ecs 251 Fall 2007 l 2 PC – voting phase: coordinator asks all servers if they can commit l if yes, server records updates in permanent storage and then votes – completion phase: coordinator tells all servers to commit or abort 09/27/2007 File Systems 163 •

UCDavis, ecs 251 Fall 2007 INIT vote can. Commit WAIT READY do. Abort ABORT COMMIT ABORT do. Commit COMMIT have. Committed 09/27/2007 File Systems 164

UCDavis, ecs 251 Fall 2007 INIT vote can. Commit WAIT READY do. Abort ABORT COMMIT ABORT do. Commit COMMIT have. Committed 09/27/2007 File Systems 165

UCDavis, ecs 251 Fall 2007 Failures Some servers missed “can. Commit”. l Coordinator missed some “votes”. l Some servers missed “do. Abort” or “do. Commit”. l 09/27/2007 File Systems 166

UCDavis, ecs 251 Fall 2007 Failures/Crashes Some servers crashed b/a “can. Commit”. l Coordinator crashed b/a receiving some “votes”. l Some servers crashes b/a receiving “do. Abort” or “do. Commit”. l 09/27/2007 File Systems 167

UCDavis, ecs 251 Fall 2007 INIT vote can. Commit WAIT READY do. Abort ABORT COMMIT ABORT do. Commit COMMIT have. Committed Assume the coordinator crashed after “can. Commit” messages have been sent: (0). Some servers have not received the vote requests. (1). All good servers are in the WAIT state. (2). Some servers are in either ABORT or COMMIT state. (3). All servers are in either ABORT or COMMIT state. 09/27/2007 File Systems WAIT/INIT WAIT/? ? ? ABORT/COMMIT 168

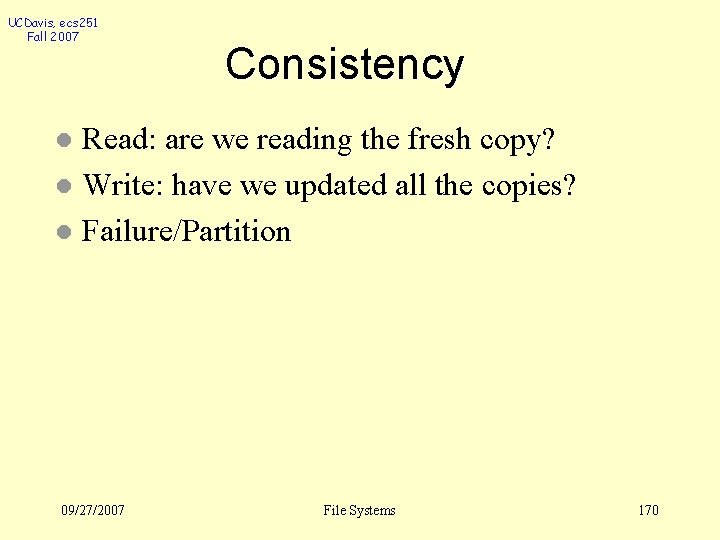

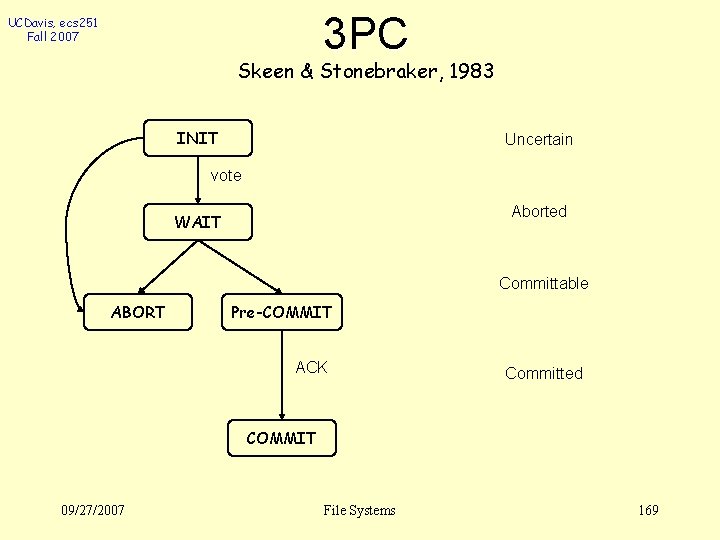

3 PC UCDavis, ecs 251 Fall 2007 Skeen & Stonebraker, 1983 INIT Uncertain vote Aborted WAIT Committable ABORT Pre-COMMIT ACK Committed COMMIT 09/27/2007 File Systems 169

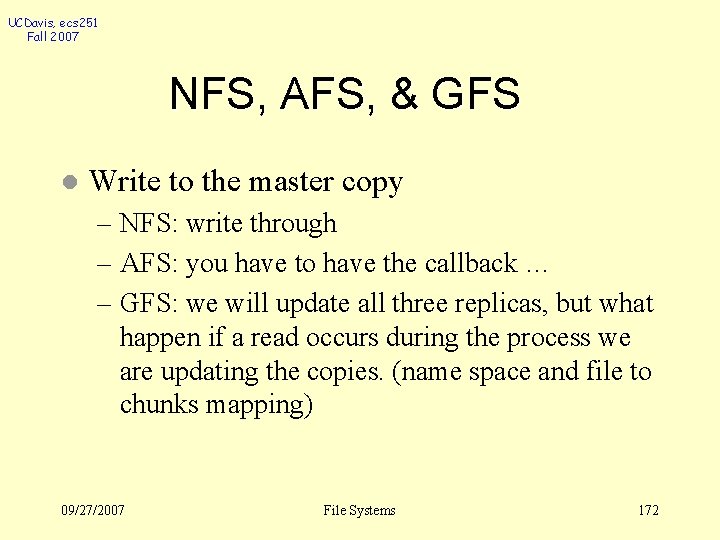

UCDavis, ecs 251 Fall 2007 Consistency Read: are we reading the fresh copy? l Write: have we updated all the copies? l Failure/Partition l 09/27/2007 File Systems 170

UCDavis, ecs 251 Fall 2007 NFS, AFS, & GFS l Read the newest copy – NFS: as long as we don’t cache… – AFS: the read callback might be broken… – GFS: we can check the master… 09/27/2007 File Systems 171

UCDavis, ecs 251 Fall 2007 NFS, AFS, & GFS l Write to the master copy – NFS: write through – AFS: you have to have the callback … – GFS: we will update all three replicas, but what happen if a read occurs during the process we are updating the copies. (name space and file to chunks mapping) 09/27/2007 File Systems 172

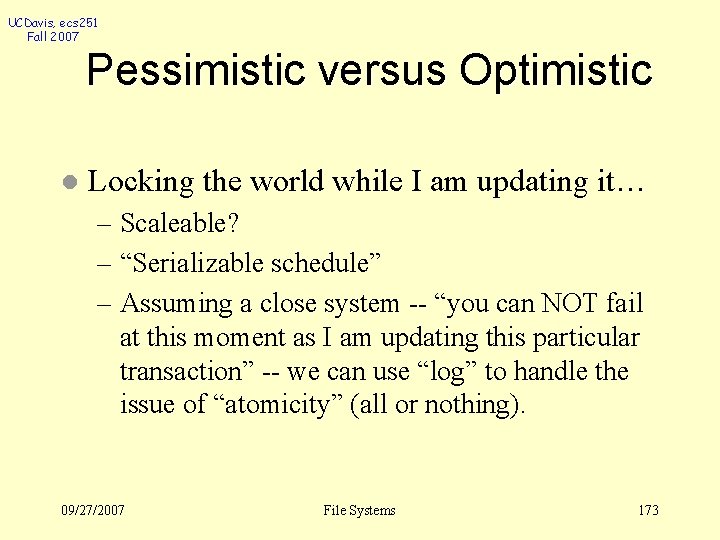

UCDavis, ecs 251 Fall 2007 Pessimistic versus Optimistic l Locking the world while I am updating it… – Scaleable? – “Serializable schedule” – Assuming a close system -- “you can NOT fail at this moment as I am updating this particular transaction” -- we can use “log” to handle the issue of “atomicity” (all or nothing). 09/27/2007 File Systems 173

UCDavis, ecs 251 Fall 2007 Soft Update l Create X (t 1) and Delete Y (t 2) l T 1, T 2, T 1 l l We will enforce it when resolve the problem of circular dependency! 09/27/2007 File Systems 174

UCDavis, ecs 251 Fall 2007 SU & Background FSCK Soft Update guarantees that the File System will always in a “consistent” state at all time. l Essentially, Soft Update prevents any chances of inconsistency! l 09/27/2007 File Systems 175

UCDavis, ecs 251 Fall 2007 An Alternative Approach “Optimistic” l The chance for certain bad things to occur is very small (depending on what you are doing). l And, it is very expensive to pessimistically prevent the probability. l “Let it happen, and we try to detect and recover from that…” l 09/27/2007 File Systems 176

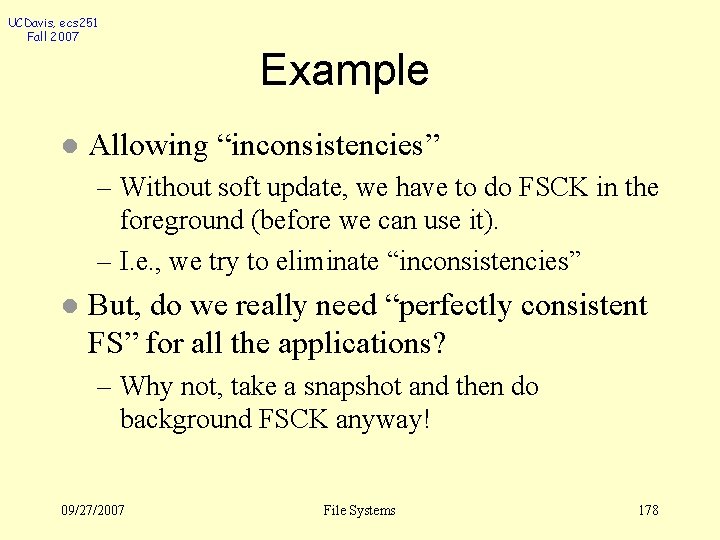

UCDavis, ecs 251 Fall 2007 The Optimistic Approach Regular Execution with “recording” l Conflict Detection based on the “recorded” l Conflict resolution l 09/27/2007 File Systems 177

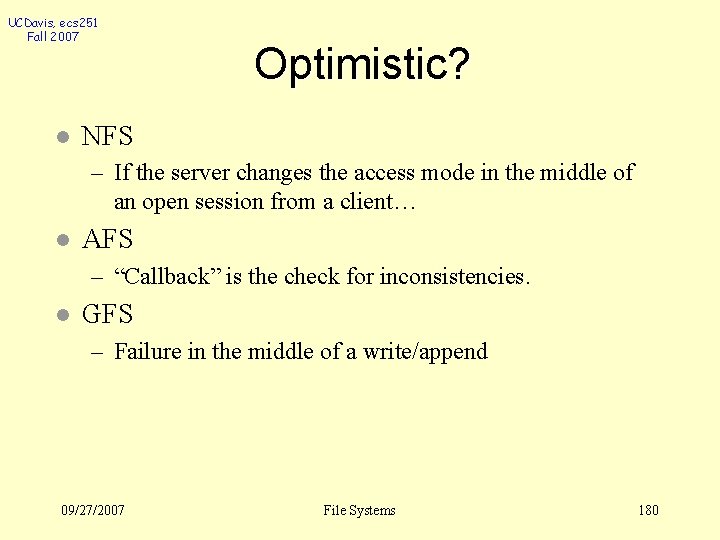

UCDavis, ecs 251 Fall 2007 Example l Allowing “inconsistencies” – Without soft update, we have to do FSCK in the foreground (before we can use it). – I. e. , we try to eliminate “inconsistencies” l But, do we really need “perfectly consistent FS” for all the applications? – Why not, take a snapshot and then do background FSCK anyway! 09/27/2007 File Systems 178

UCDavis, ecs 251 Fall 2007 Optimistic? NFS l AFS l GFS l 09/27/2007 File Systems 179

UCDavis, ecs 251 Fall 2007 l Optimistic? NFS – If the server changes the access mode in the middle of an open session from a client… l AFS – “Callback” is the check for inconsistencies. l GFS – Failure in the middle of a write/append 09/27/2007 File Systems 180

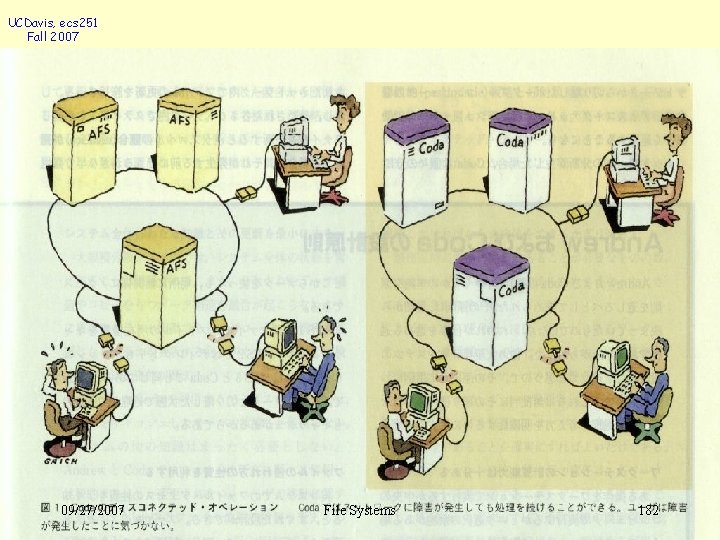

UCDavis, ecs 251 Fall 2007 l CODA Server Replication: – if one server goes down, I can get another. l Disconnected Operation: – if all go down, I will use my own cache. 09/27/2007 File Systems 181

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 182

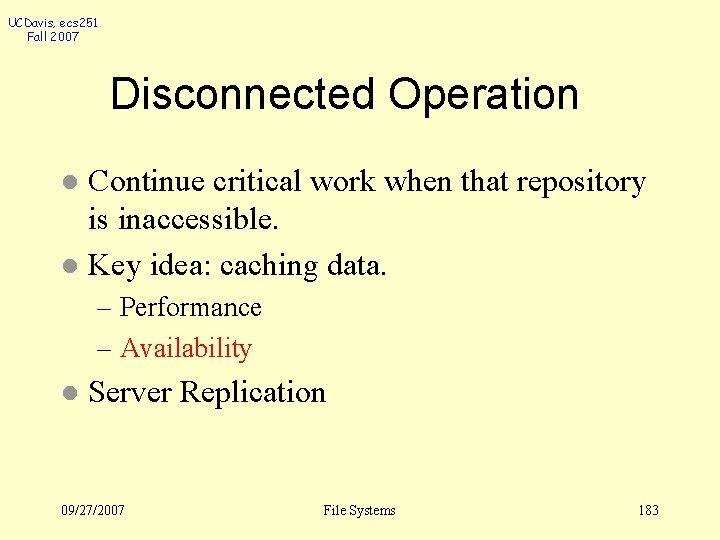

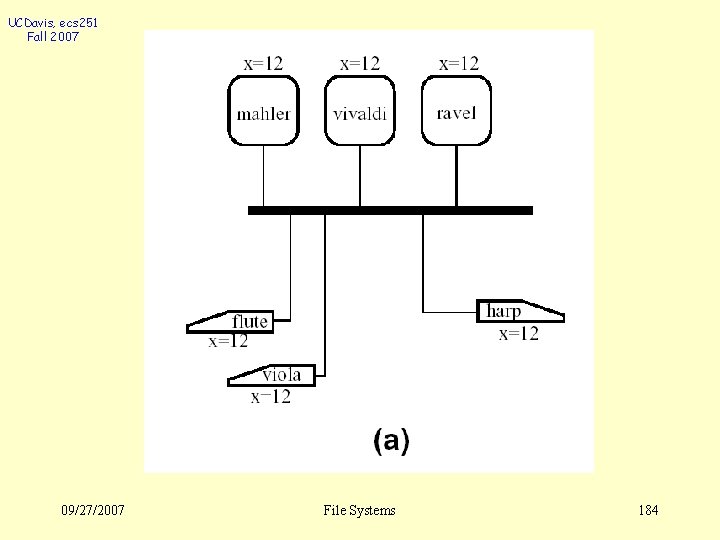

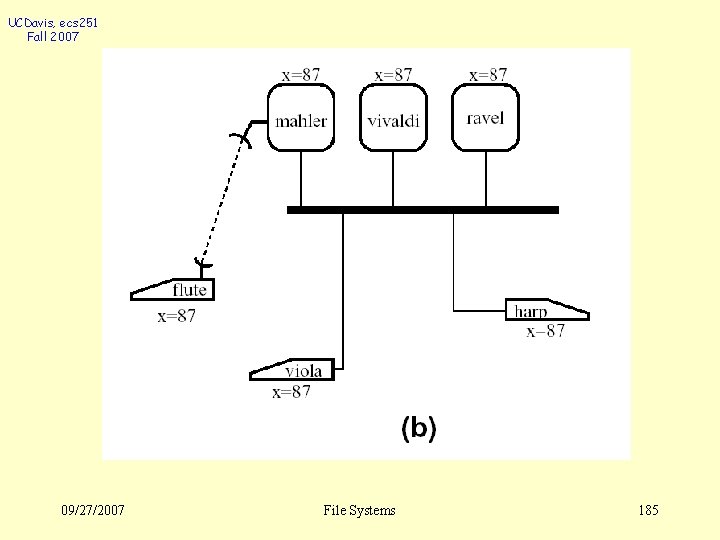

UCDavis, ecs 251 Fall 2007 Disconnected Operation Continue critical work when that repository is inaccessible. l Key idea: caching data. l – Performance – Availability l Server Replication 09/27/2007 File Systems 183

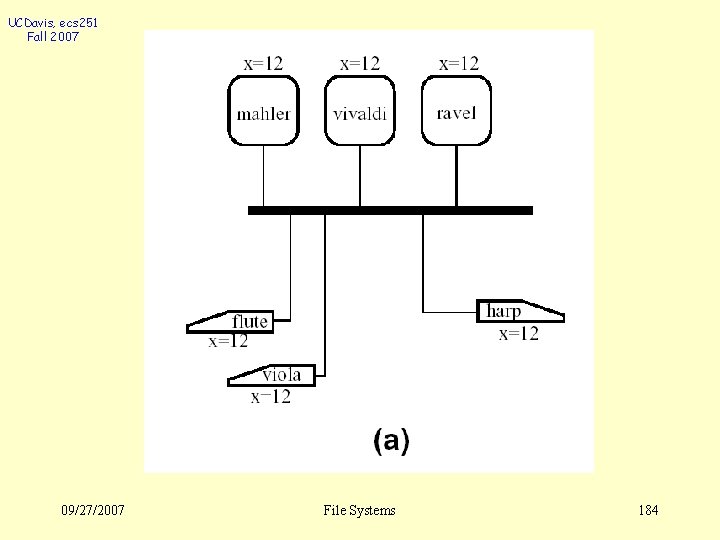

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 184

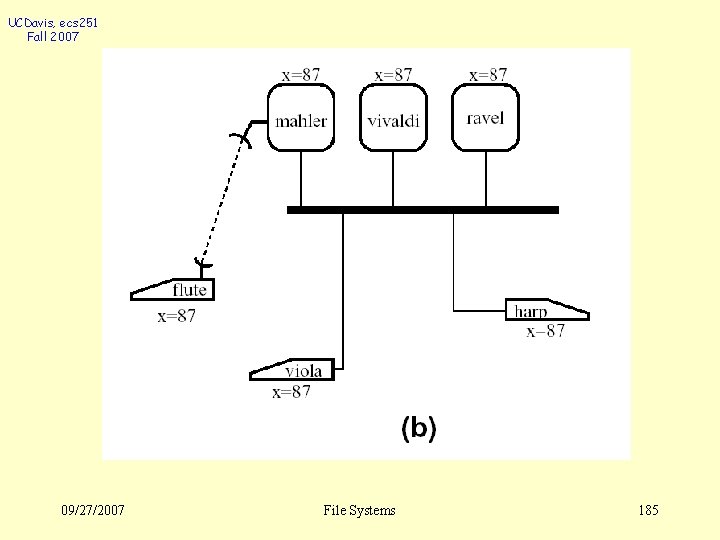

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 185

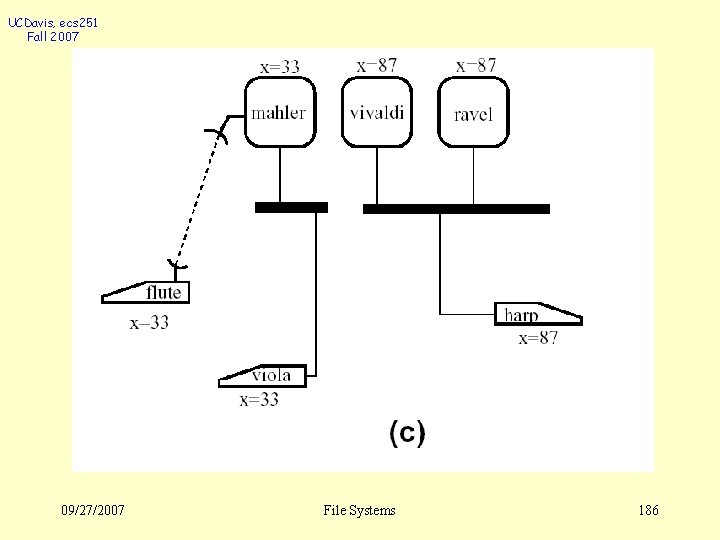

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 186

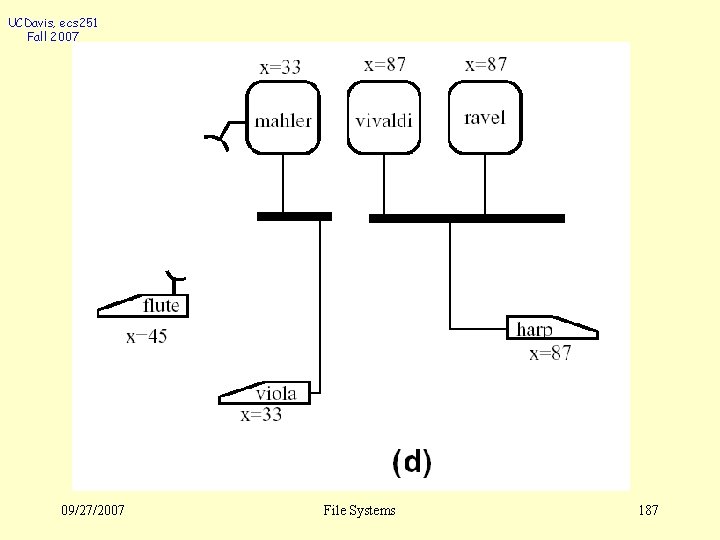

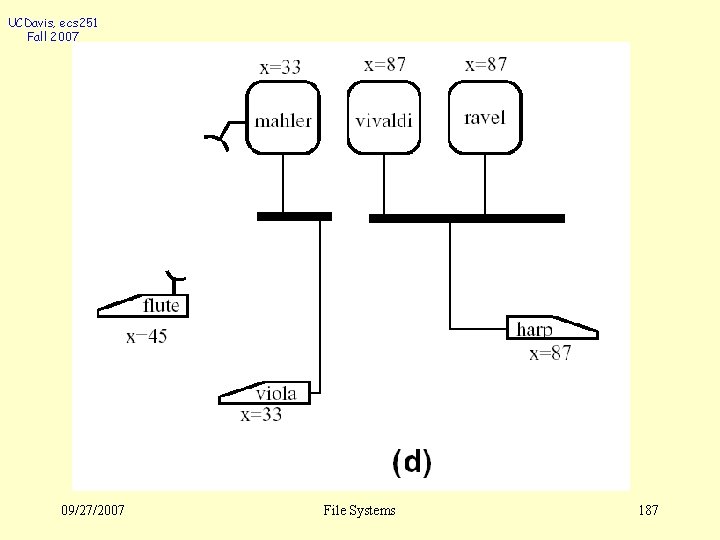

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 187

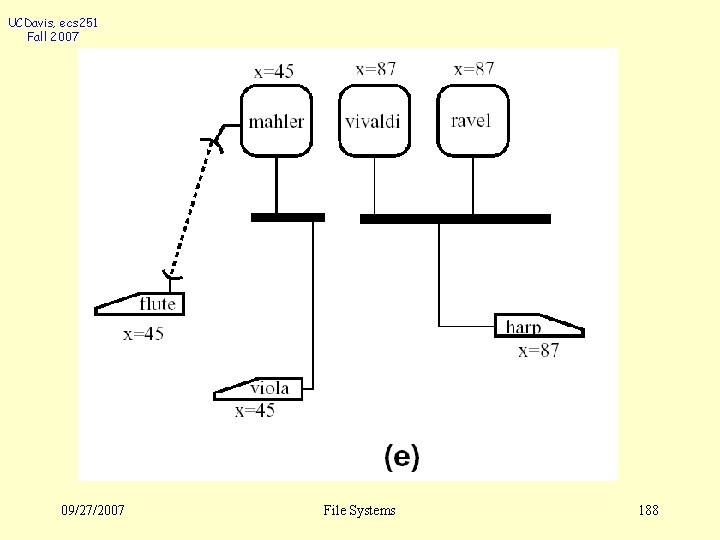

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 188

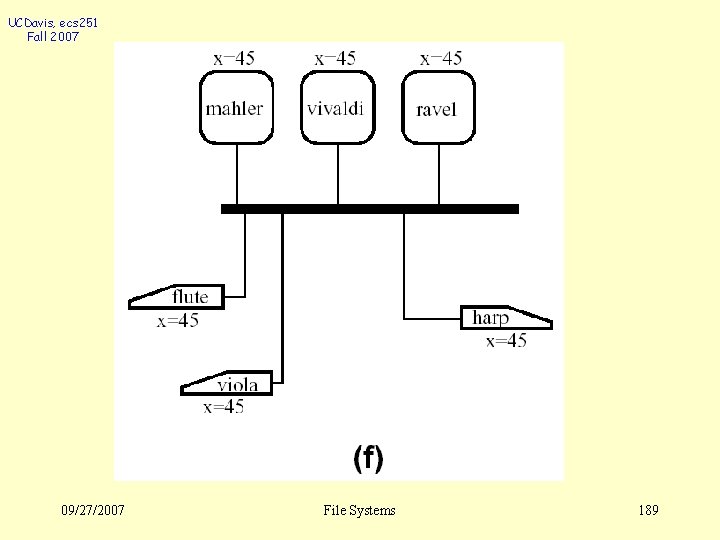

UCDavis, ecs 251 Fall 2007 09/27/2007 File Systems 189

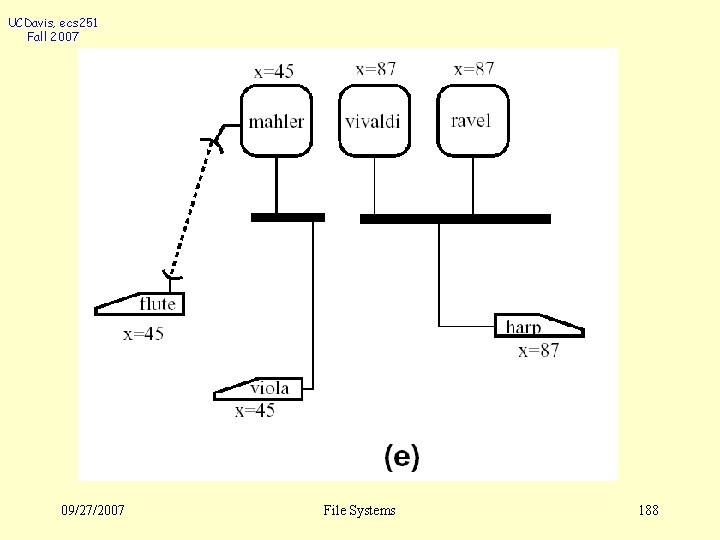

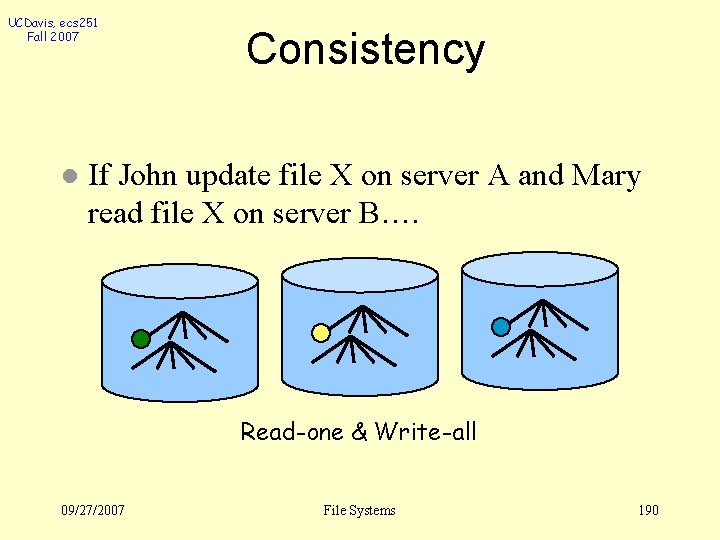

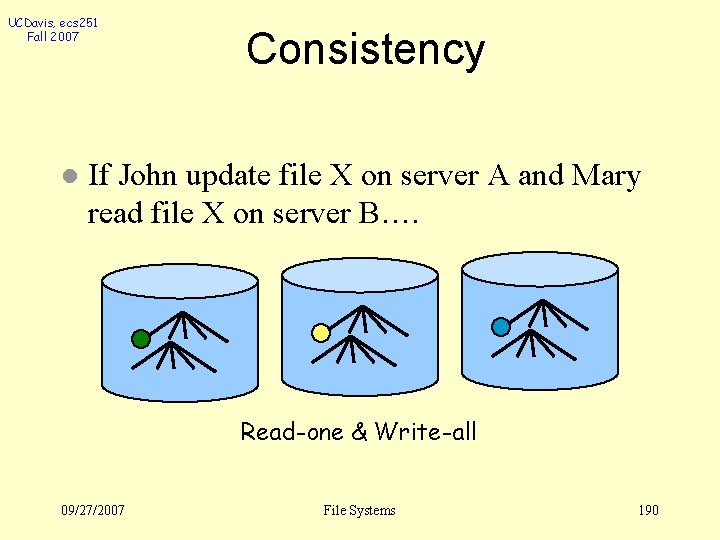

UCDavis, ecs 251 Fall 2007 l Consistency If John update file X on server A and Mary read file X on server B…. Read-one & Write-all 09/27/2007 File Systems 190

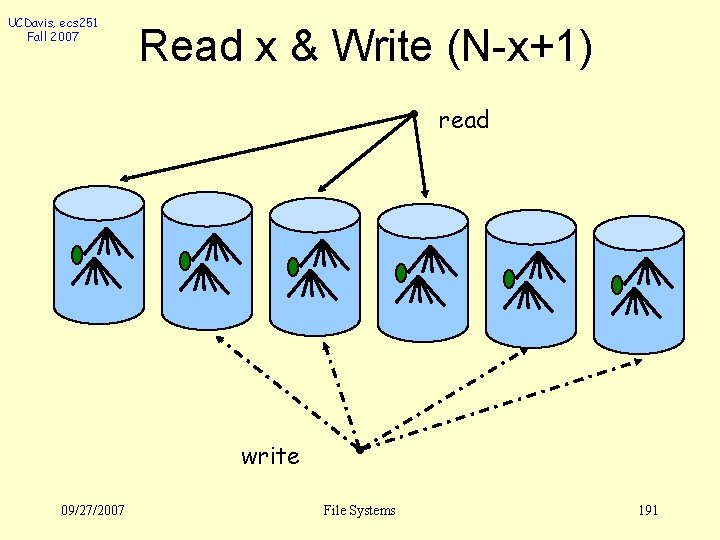

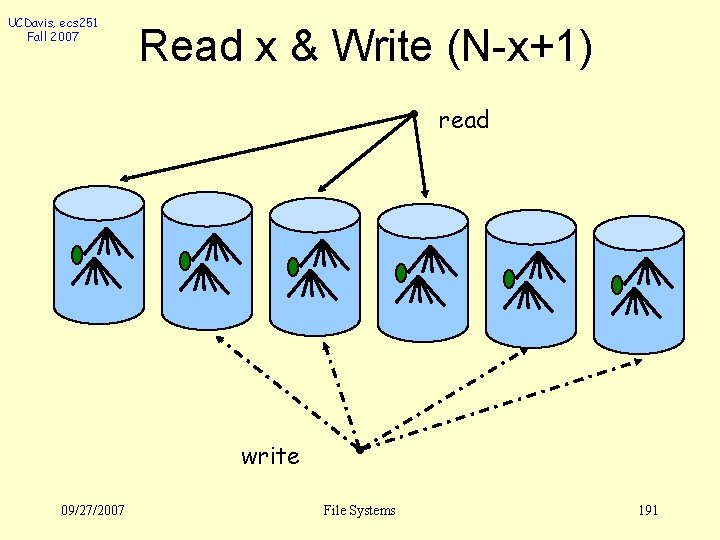

UCDavis, ecs 251 Fall 2007 Read x & Write (N-x+1) read write 09/27/2007 File Systems 191

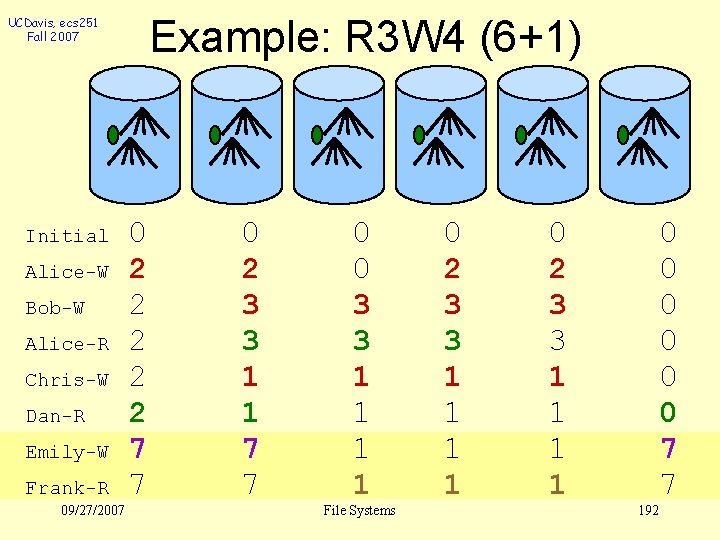

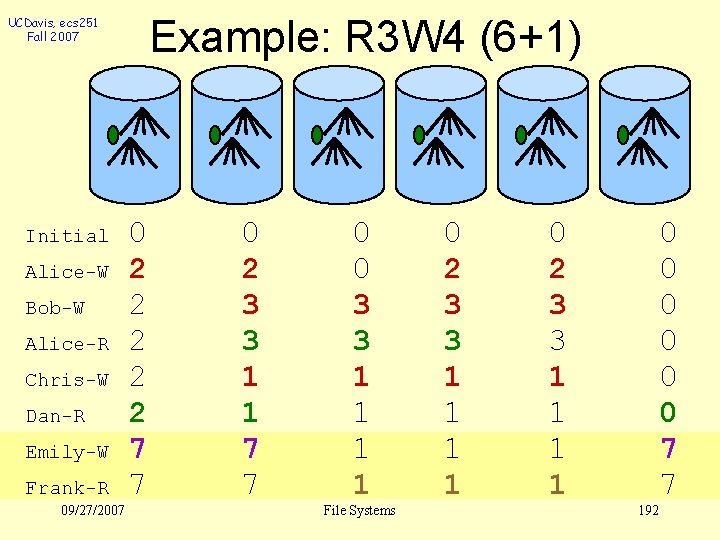

Example: R 3 W 4 (6+1) UCDavis, ecs 251 Fall 2007 Initial Alice-W Bob-W Alice-R Chris-W Dan-R Emily-W Frank-R 09/27/2007 0 2 2 2 7 7 0 2 3 3 1 1 7 7 0 0 3 3 1 1 File Systems 0 2 3 3 1 1 1 1 192 0 0 0 7 7

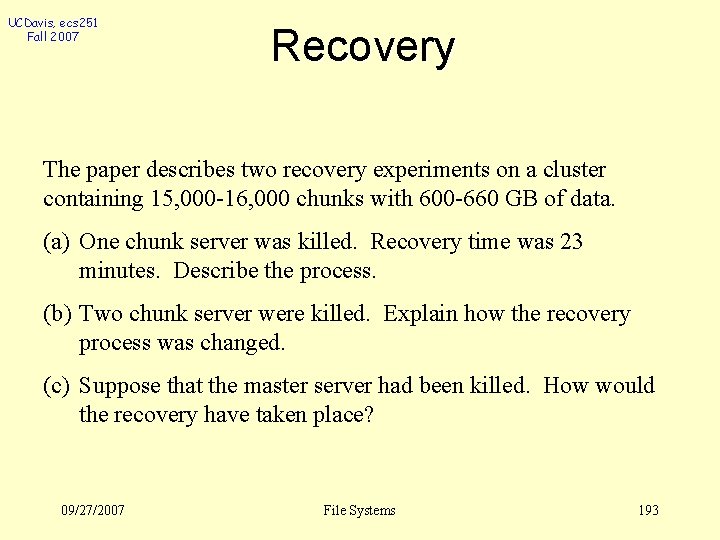

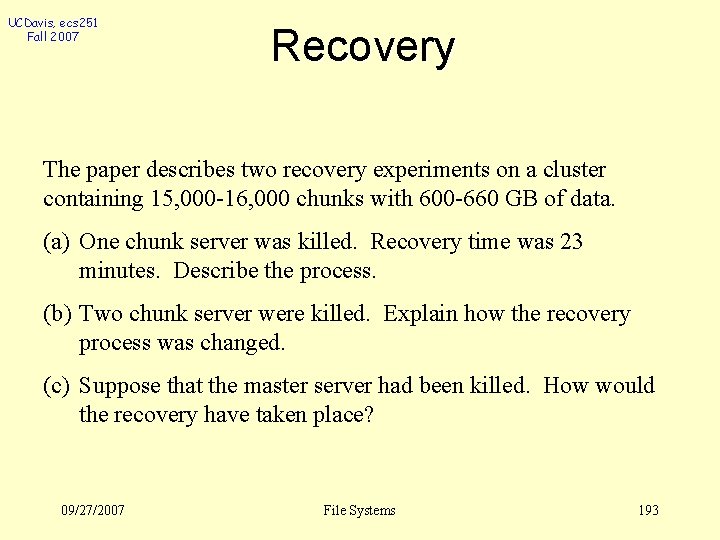

UCDavis, ecs 251 Fall 2007 Recovery The paper describes two recovery experiments on a cluster containing 15, 000 -16, 000 chunks with 600 -660 GB of data. (a) One chunk server was killed. Recovery time was 23 minutes. Describe the process. (b) Two chunk server were killed. Explain how the recovery process was changed. (c) Suppose that the master server had been killed. How would the recovery have taken place? 09/27/2007 File Systems 193

UCDavis, ecs 251 Fall 2007 Consistency Model (a) (b) (c) (d) What is a consistency model? How does the consistency model used by the Google File System differ from the model that might be used by a general purpose file system? How does the file system guarantee correctness of the namespace? Explain what happens, from a consistency viewpoint, when an application attempts to append to a file. 09/27/2007 File Systems 194

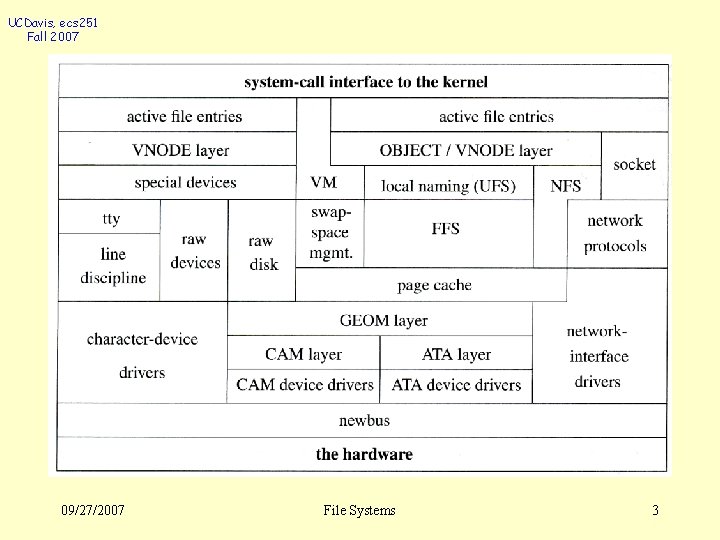

UCDavis, ecs 251 Fall 2007 Client computer Lookup Add. Name Un. Name Get. Names Server computer Directory service Application program Flat file service Client module Read Write Create Delete Get. Attributes Set. Attributes 09/27/2007 File Systems 195