- Slides: 49

Operating Systems Processes and Threads

Process

- Process: a program in execution; process execution must progress in sequential fashion
- A process includes:
  - program counter
  - stack
  - data section

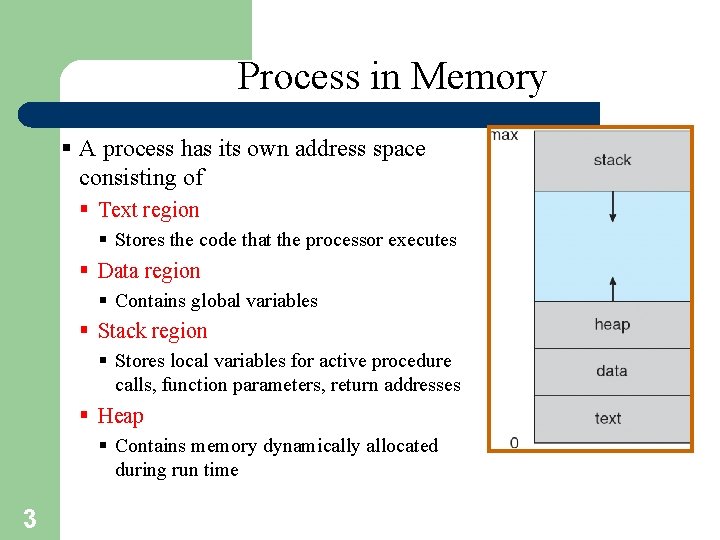

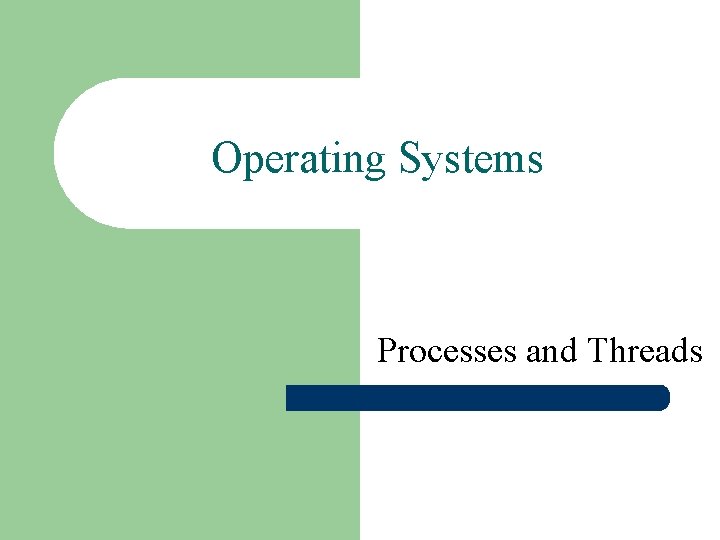

Process in Memory

- A process has its own address space consisting of:
  - Text region: stores the code that the processor executes
  - Data region: contains global variables
  - Stack region: stores local variables for active procedure calls, function parameters, and return addresses
  - Heap: contains memory dynamically allocated during run time

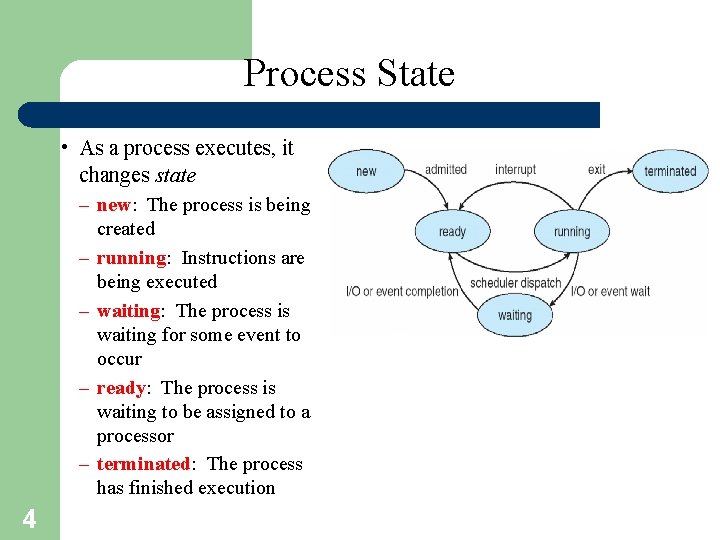

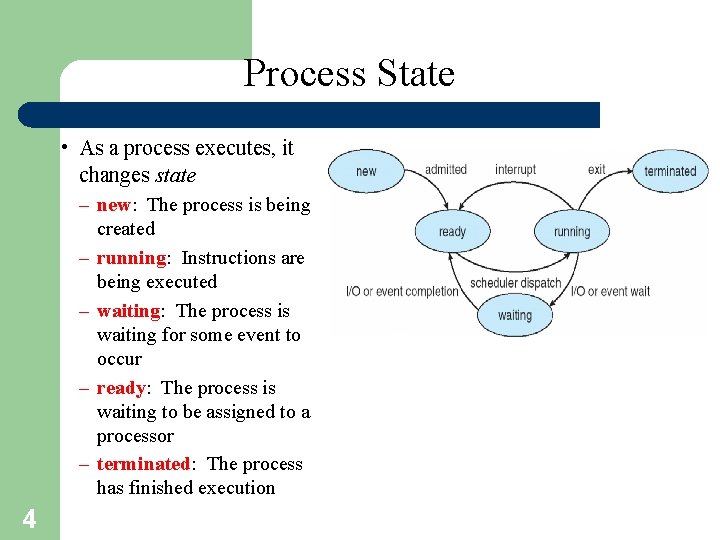

Process State

- As a process executes, it changes state:
  - new: the process is being created
  - running: instructions are being executed
  - waiting: the process is waiting for some event to occur
  - ready: the process is waiting to be assigned to a processor
  - terminated: the process has finished execution

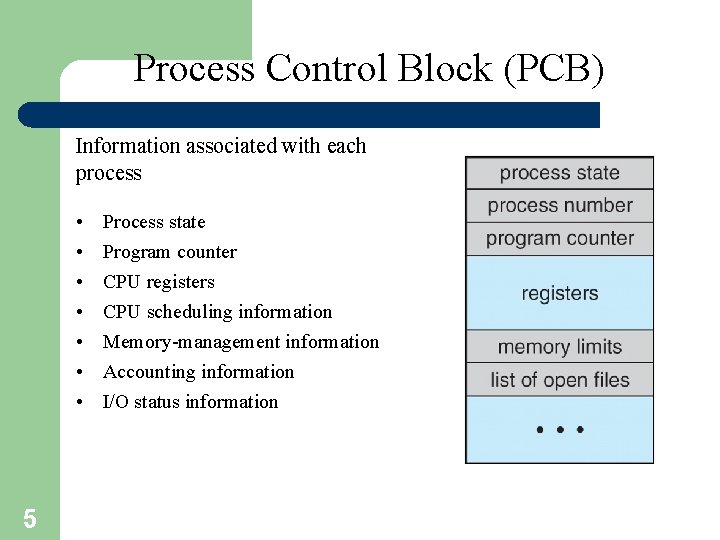

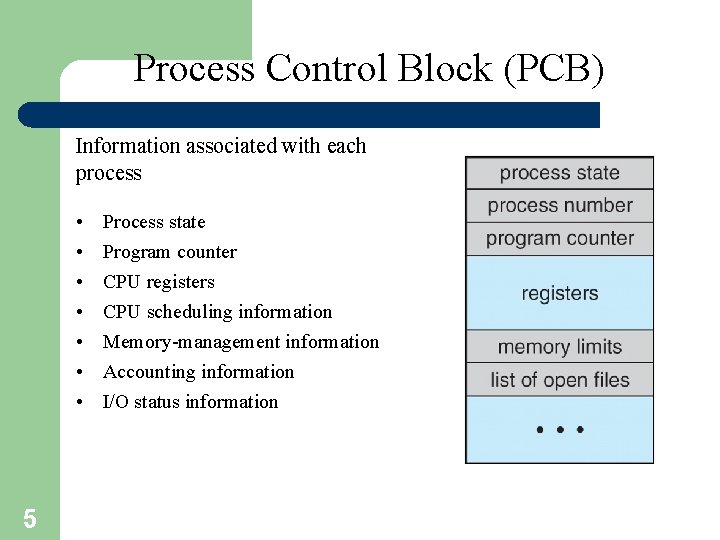

Process Control Block (PCB)

- Information associated with each process:
  - Process state
  - Program counter
  - CPU registers
  - CPU scheduling information
  - Memory-management information
  - Accounting information
  - I/O status information

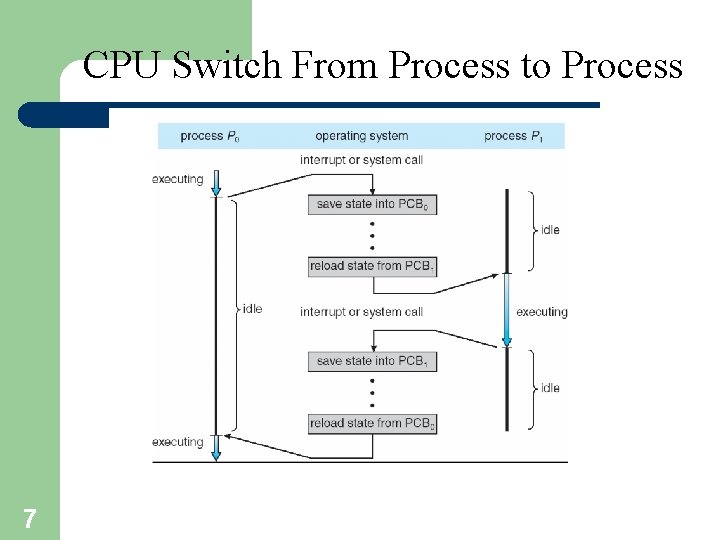

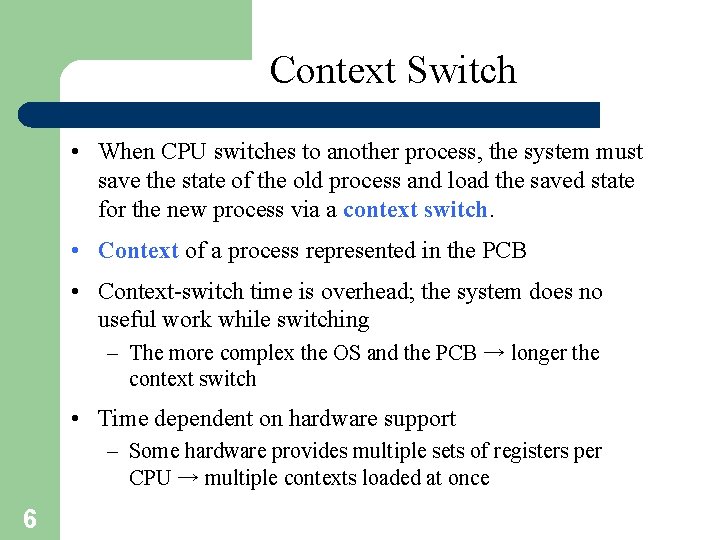

Context Switch

- When the CPU switches to another process, the system must save the state of the old process and load the saved state of the new process via a context switch
- The context of a process is represented in the PCB
- Context-switch time is overhead; the system does no useful work while switching
  - The more complex the OS and the PCB → the longer the context switch
- Time is dependent on hardware support
  - Some hardware provides multiple sets of registers per CPU → multiple contexts loaded at once

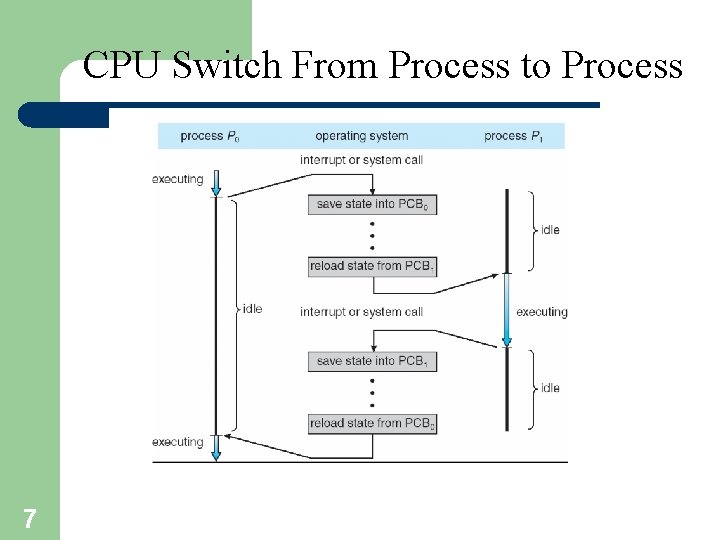

CPU Switch From Process to Process

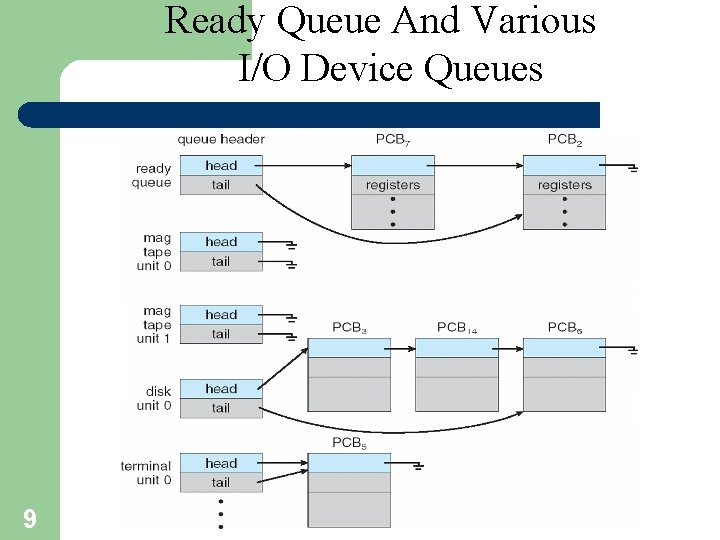

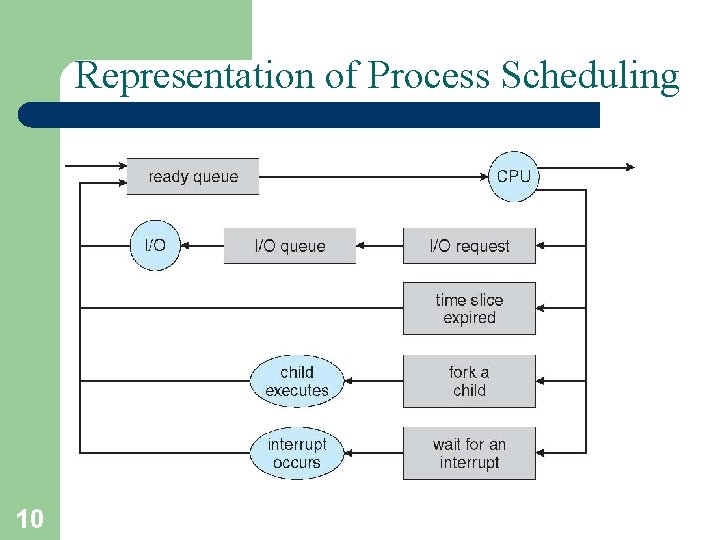

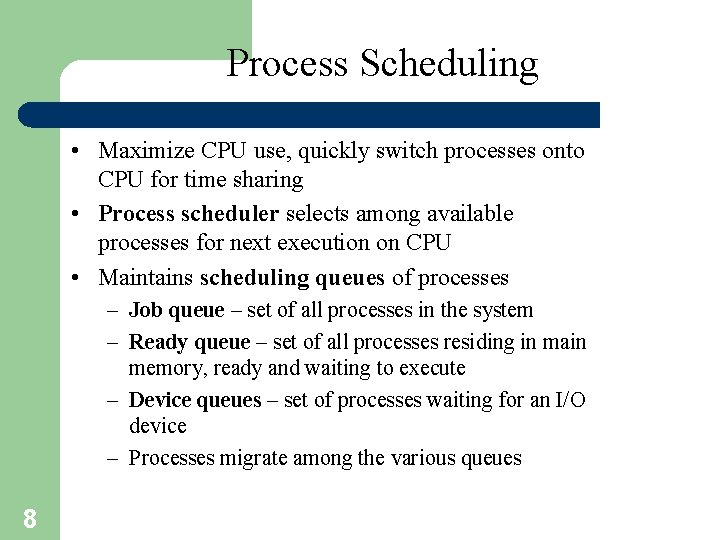

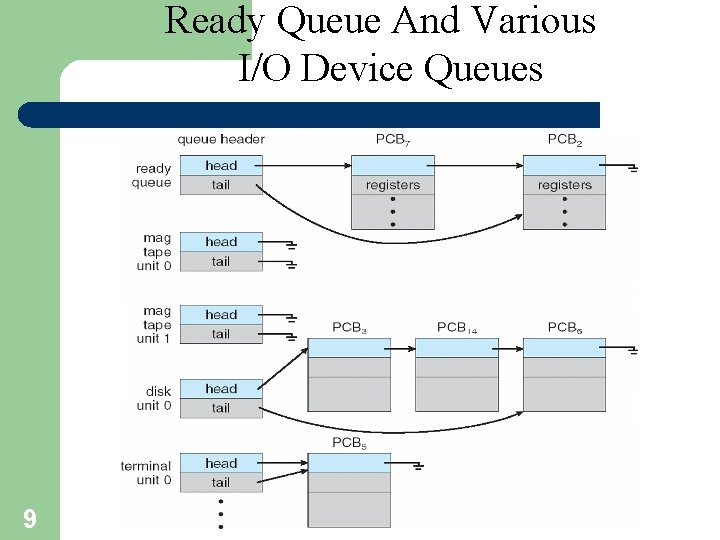

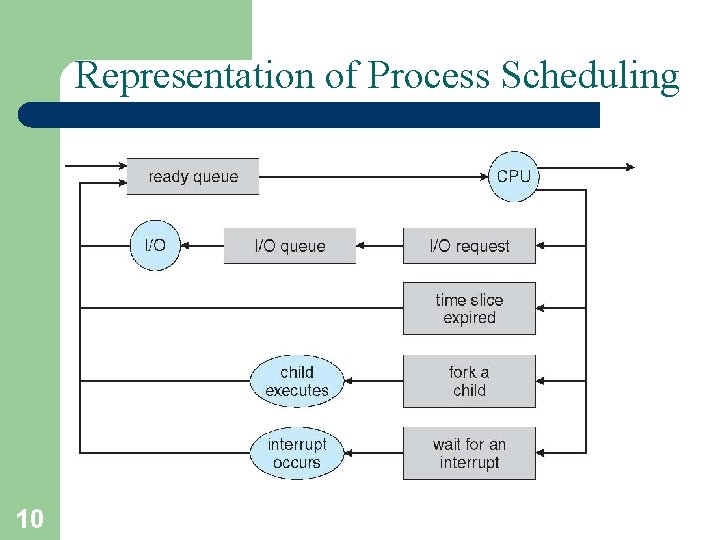

Process Scheduling

- Maximize CPU use; quickly switch processes onto the CPU for time sharing
- The process scheduler selects among available processes for next execution on the CPU
- Maintains scheduling queues of processes:
  - Job queue: set of all processes in the system
  - Ready queue: set of all processes residing in main memory, ready and waiting to execute
  - Device queues: set of processes waiting for an I/O device
  - Processes migrate among the various queues

Ready Queue And Various I/O Device Queues

Representation of Process Scheduling

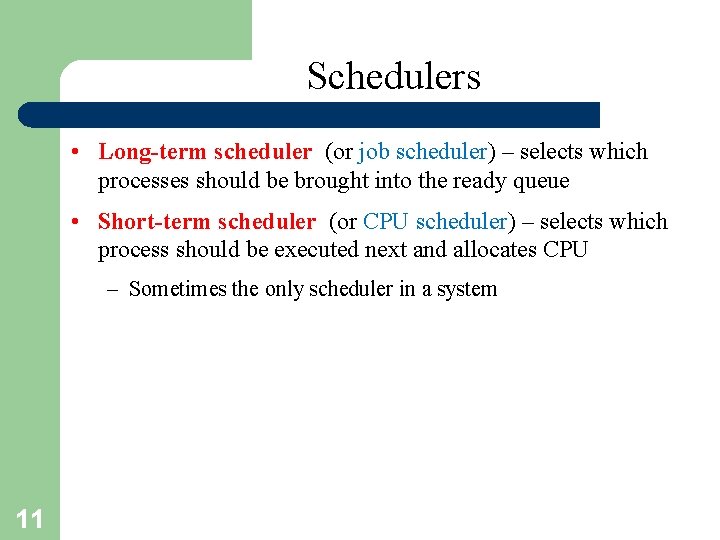

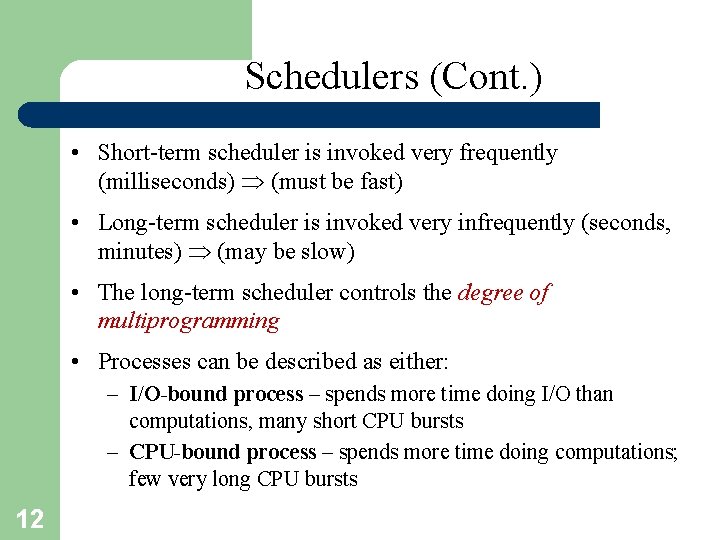

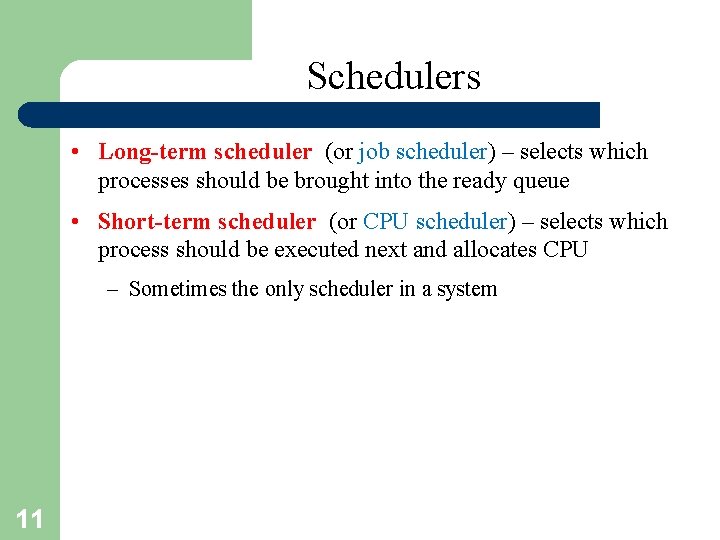

Schedulers

- Long-term scheduler (or job scheduler): selects which processes should be brought into the ready queue
- Short-term scheduler (or CPU scheduler): selects which process should be executed next and allocates the CPU
  - Sometimes the only scheduler in a system

Schedulers (Cont.)

- The short-term scheduler is invoked very frequently (every few milliseconds), so it must be fast
- The long-term scheduler is invoked very infrequently (seconds, minutes), so it may be slow
- The long-term scheduler controls the degree of multiprogramming
- Processes can be described as either:
  - I/O-bound process: spends more time doing I/O than computations; many short CPU bursts
  - CPU-bound process: spends more time doing computations; few very long CPU bursts

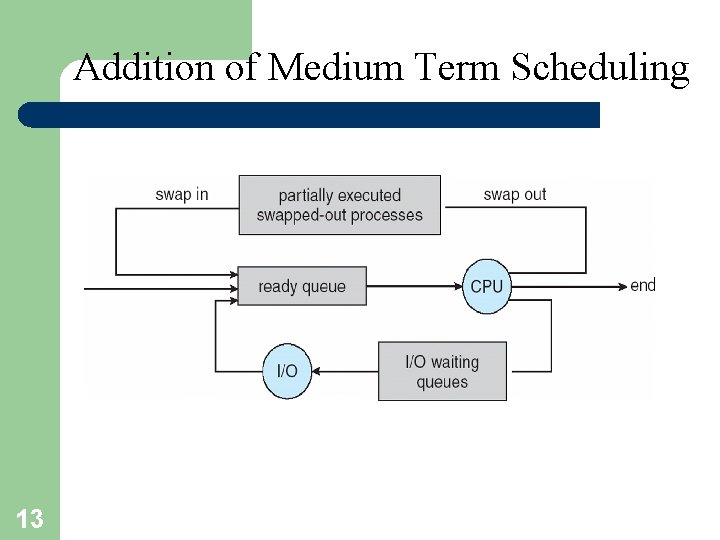

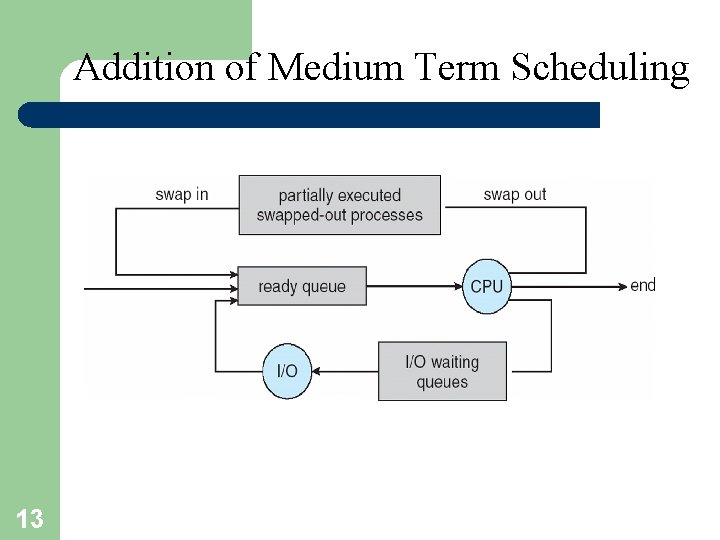

Addition of Medium Term Scheduling

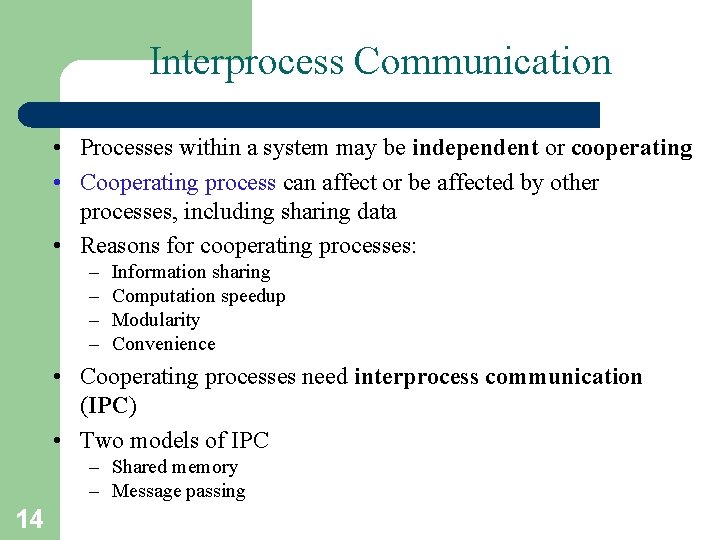

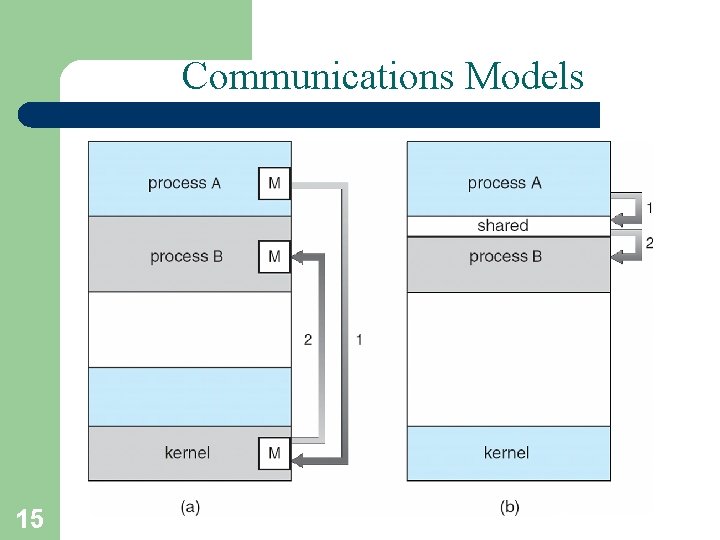

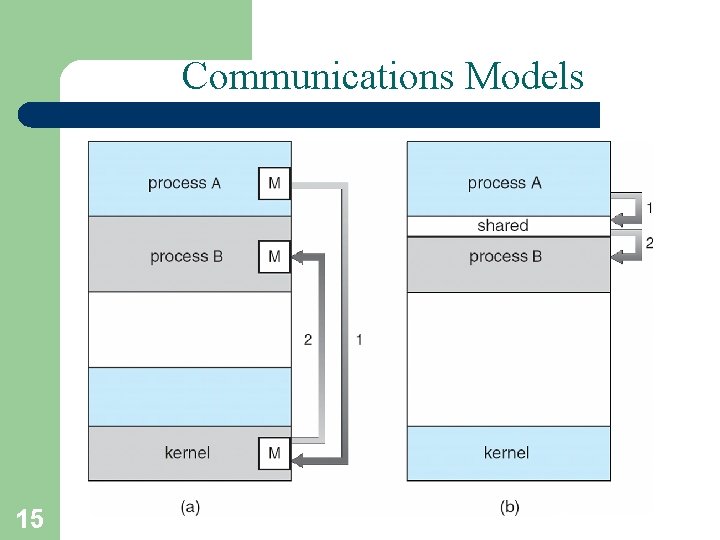

Interprocess Communication

- Processes within a system may be independent or cooperating
- A cooperating process can affect or be affected by other processes, including sharing data
- Reasons for cooperating processes:
  - Information sharing
  - Computation speedup
  - Modularity
  - Convenience
- Cooperating processes need interprocess communication (IPC)
- Two models of IPC:
  - Shared memory
  - Message passing

Communications Models

Cooperating Processes

- An independent process cannot affect or be affected by the execution of another process
- A cooperating process can affect or be affected by the execution of another process
- Advantages of process cooperation:
  - Information sharing
  - Computation speed-up
  - Modularity
  - Convenience

Producer-Consumer Problem

- Paradigm for cooperating processes: a producer process produces information that is consumed by a consumer process
  - unbounded-buffer places no practical limit on the size of the buffer
  - bounded-buffer assumes that there is a fixed buffer size

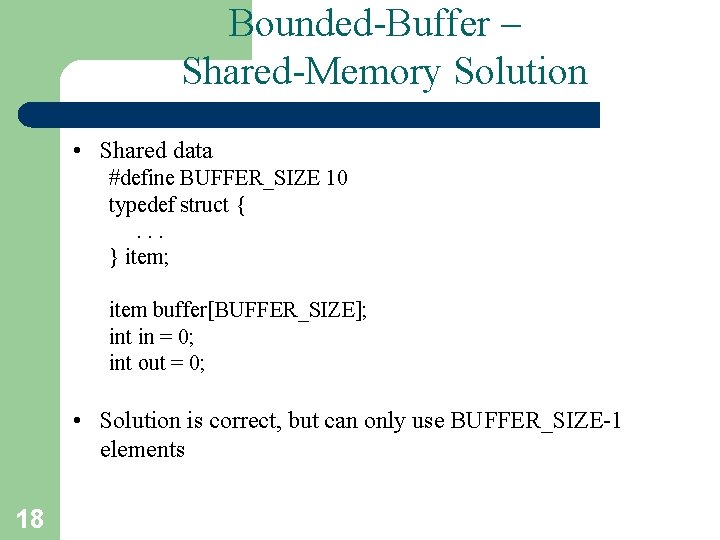

Bounded-Buffer – Shared-Memory Solution

- Shared data:

      #define BUFFER_SIZE 10
      typedef struct { ... } item;

      item buffer[BUFFER_SIZE];
      int in = 0;
      int out = 0;

- The solution is correct, but can only use BUFFER_SIZE-1 elements

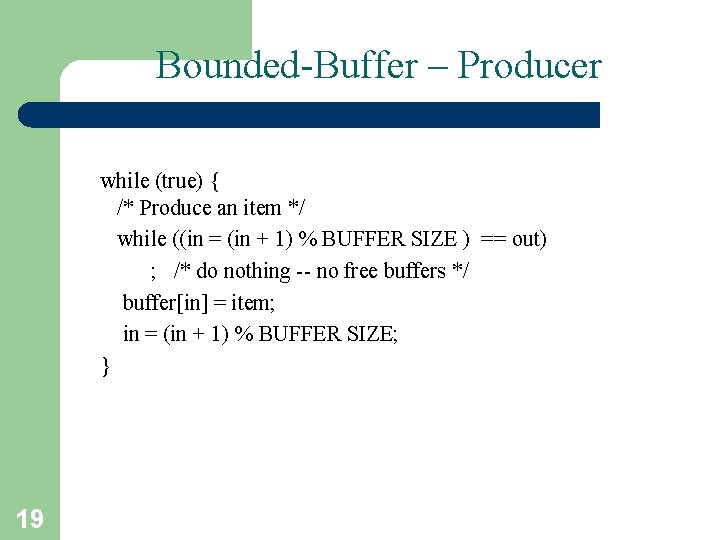

Bounded-Buffer – Producer

    item next_produced;   /* holds the newly produced item */

    while (true) {
        /* produce an item in next_produced */
        while (((in + 1) % BUFFER_SIZE) == out)
            ;   /* do nothing -- no free buffers */
        buffer[in] = next_produced;
        in = (in + 1) % BUFFER_SIZE;
    }

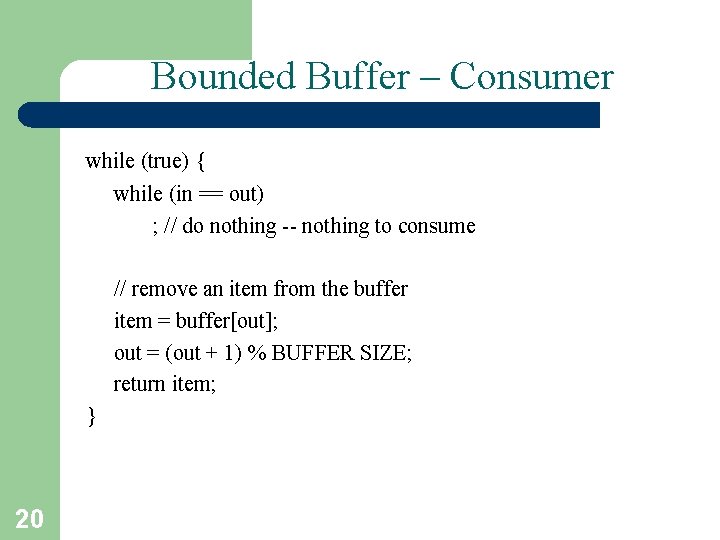

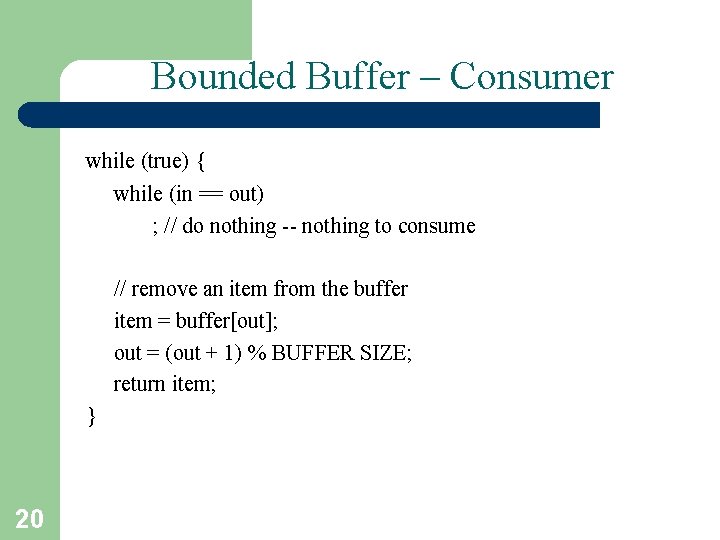

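Bounded-Buffer – Consumer

    item next_consumed;   /* holds the item taken from the buffer */

    while (true) {
        while (in == out)
            ;   /* do nothing -- nothing to consume */
        /* remove an item from the buffer */
        next_consumed = buffer[out];
        out = (out + 1) % BUFFER_SIZE;
        /* consume the item in next_consumed */
    }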

Interprocess Communication – Message Passing

- Mechanism for processes to communicate and to synchronize their actions
- Message system: processes communicate with each other without resorting to shared variables
- The IPC facility provides two operations:
  - send(message): message size fixed or variable
  - receive(message)
- If P and Q wish to communicate, they need to:
  - establish a communication link between them
  - exchange messages via send/receive
- Implementation of the communication link:
  - physical (e.g., shared memory, hardware bus)
  - logical (e.g., logical properties)

Threads

- A thread is a flow of execution through the process code, with its own:
  - program counter,
  - system registers, and
  - stack.
- A thread is also called a lightweight process.
- Threads provide a way to improve application performance through parallelism.
- Threads represent a software approach to improving operating system performance by reducing overhead; in other respects a thread is equivalent to a classical process.

Threads (Cont.)

- Each thread belongs to exactly one process
- No thread can exist outside a process
- Each thread represents a separate flow of control
- Threads have been used successfully in implementing network servers and web servers
- They also provide a suitable foundation for parallel execution of applications on shared-memory multiprocessors

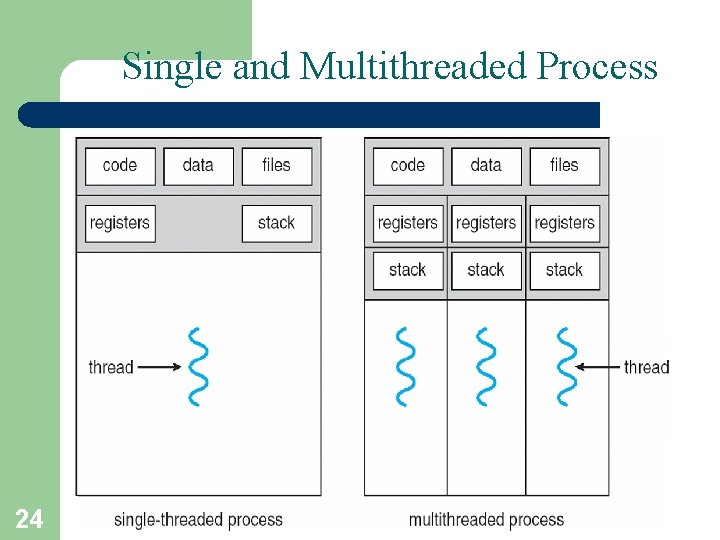

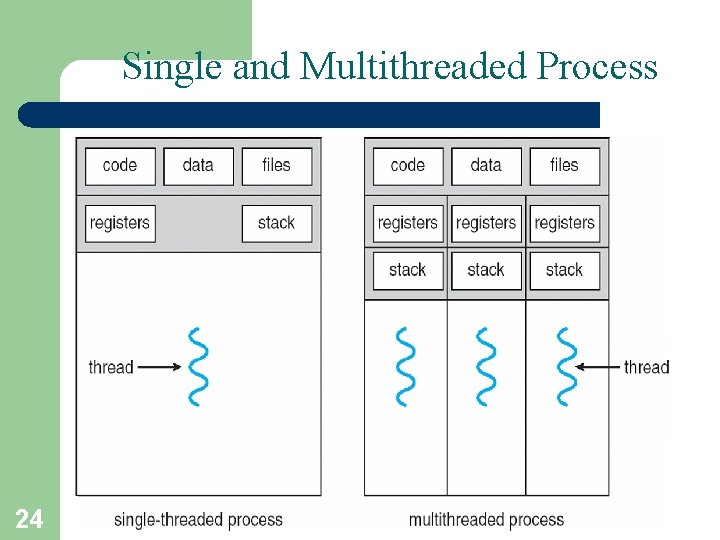

Single and Multithreaded Process

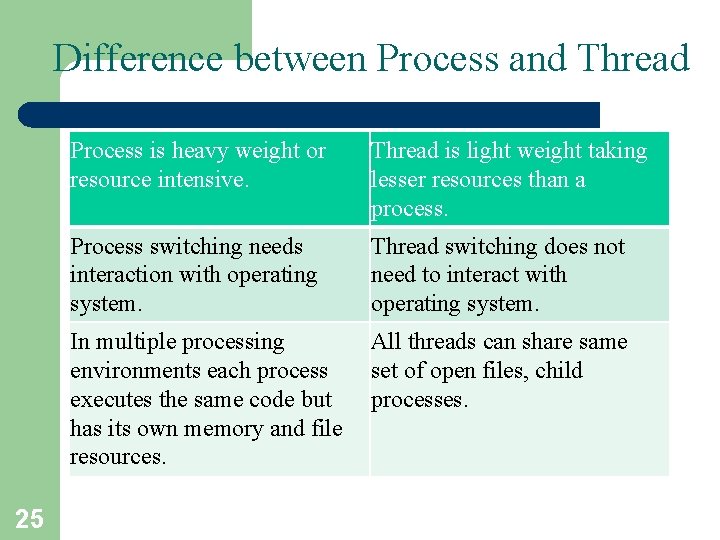

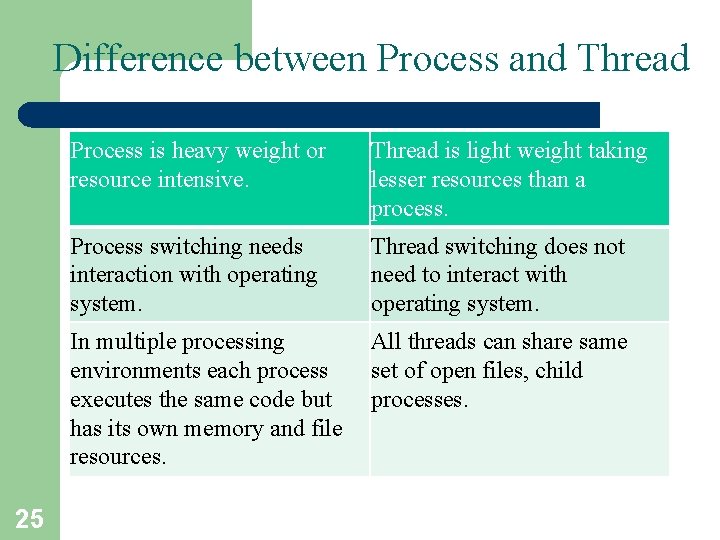

Difference between Process and Thread

| Process | Thread |
|---|---|
| A process is heavyweight, or resource intensive. | A thread is lightweight, taking fewer resources than a process. |
| Process switching needs interaction with the operating system. | Thread switching does not need to interact with the operating system (this holds for user-level threads). |
| In multiprocessing environments, each process executes the same code but has its own memory and file resources. | All threads can share the same set of open files and child processes. |

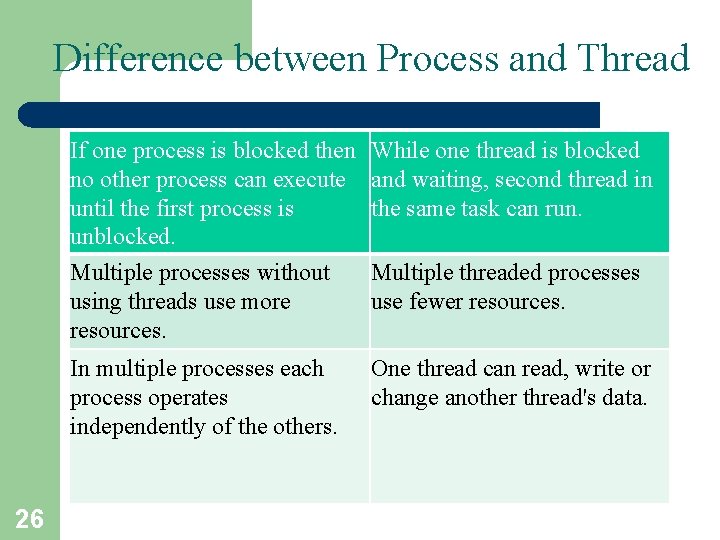

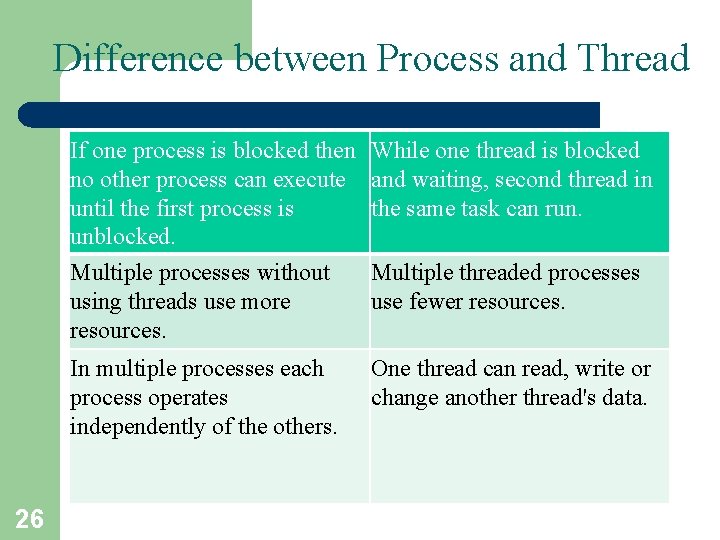

Difference between Process and Thread (Cont.)

| Process | Thread |
|---|---|
| If a single-threaded process is blocked, no other work in that process can proceed until it is unblocked. | While one thread is blocked and waiting, a second thread in the same task can run. |
| Multiple processes without using threads use more resources. | Multithreaded processes use fewer resources. |
| In multiple processes, each process operates independently of the others. | One thread can read, write or change another thread's data. |

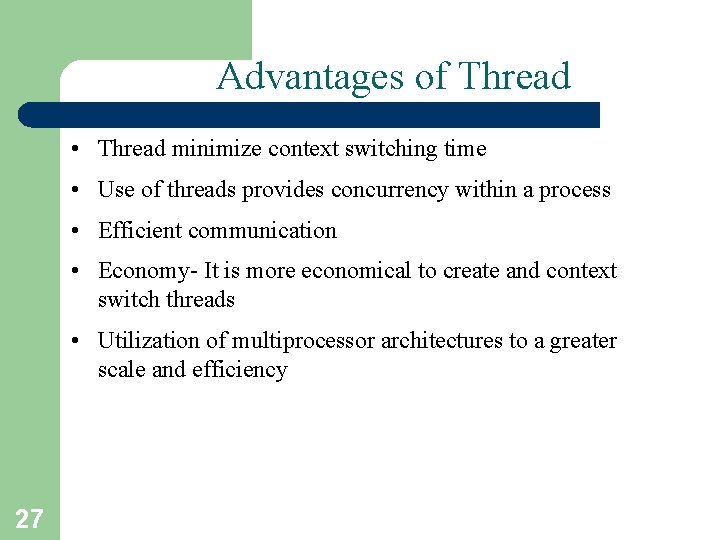

Advantages of Thread

- Threads minimize context switching time
- Use of threads provides concurrency within a process
- Efficient communication
- Economy: it is more economical to create and context switch threads
- Utilization of multiprocessor architectures to a greater scale and efficiency

Types of Thread

- Threads are implemented in the following two ways:
  - User Level Threads: threads managed by the user (application)
  - Kernel Level Threads: threads managed by the operating system, acting on the kernel, the operating system core

User Level Threads

- In this case, the application manages the threads; the kernel is not aware of their existence.
- The thread library contains code for creating and destroying threads, for passing messages and data between threads, for scheduling thread execution, and for saving and restoring thread contexts.
- The application begins with a single thread and begins running in that thread.

User Level Threads

- Advantages
  - Thread switching does not require Kernel mode privileges.
  - User level threads can run on any operating system.
  - Scheduling can be application specific in the user level thread.
  - User level threads are fast to create and manage.
- Disadvantages
  - In a typical operating system, most system calls are blocking.
  - A multithreaded application cannot take advantage of multiprocessing.

Kernel Level Threads

- In this case, thread management is done by the Kernel. There is no thread management code in the application area. Kernel threads are supported directly by the operating system. Any application can be programmed to be multithreaded. All of the threads within an application are supported within a single process.
- The Kernel maintains context information for the process as a whole and for individual threads within the process. Scheduling by the Kernel is done on a thread basis. The Kernel performs thread creation, scheduling and management in Kernel space. Kernel threads are generally slower to create and manage than user threads.

Kernel Level Threads

- Advantages
  - The Kernel can simultaneously schedule multiple threads from the same process on multiple processors.
  - If one thread in a process is blocked, the Kernel can schedule another thread of the same process.
  - Kernel routines themselves can be multithreaded.
- Disadvantages
  - Kernel threads are generally slower to create and manage than user threads.
  - Transfer of control from one thread to another within the same process requires a mode switch to the Kernel.

Multithreading Models

- Some operating systems provide a combined user level thread and Kernel level thread facility. Solaris is a good example of this combined approach.
- In a combined system, multiple threads within the same application can run in parallel on multiple processors, and a blocking system call need not block the entire process.
- Multithreading models are of three types:
  - Many to many relationship.
  - Many to one relationship.
  - One to one relationship.

Multithreading Models

- Many-to-One: maps many user-level threads to one kernel thread
- One-to-One: maps each user-level thread to a kernel thread
- Many-to-Many: multiplexes many user-level threads onto a smaller or equal number of kernel threads

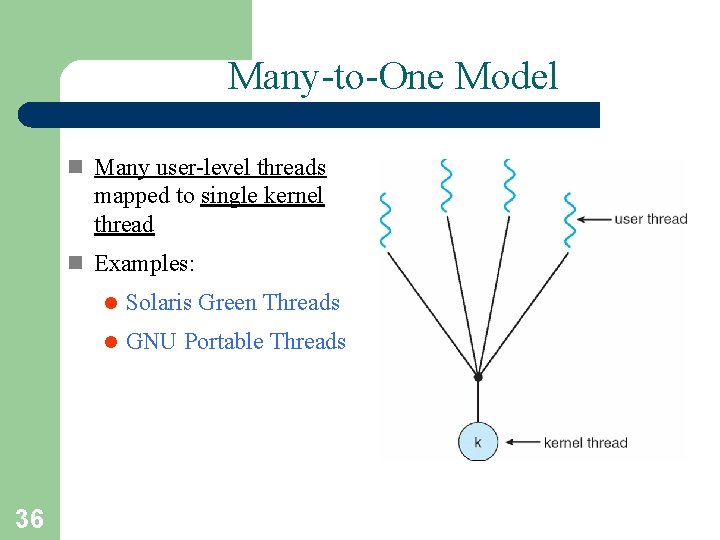

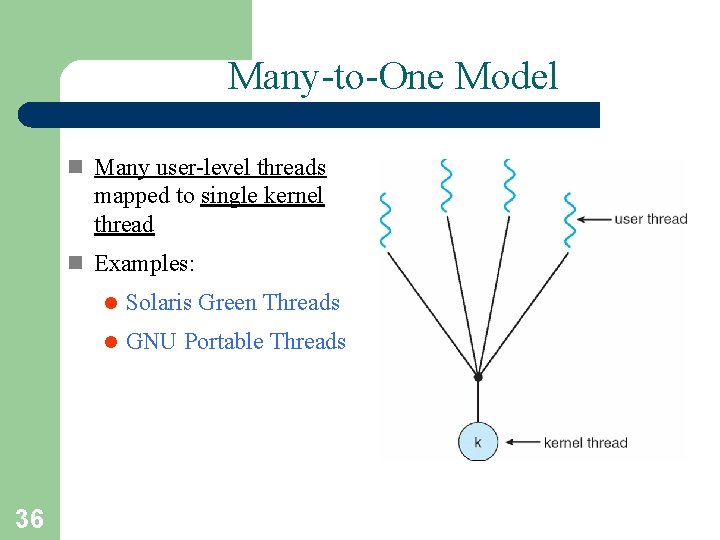

Many-to-One Model

- The many-to-one model maps many user level threads to one Kernel level thread
- Thread management is done in user space
- When a thread makes a blocking system call, the entire process is blocked
- Only one thread can access the Kernel at a time, so multiple threads are unable to run in parallel on multiprocessors
- User level thread libraries implemented on an operating system that does not support Kernel threads use the many-to-one model

Many-to-One Model

- Many user-level threads mapped to a single kernel thread
- Examples:
  - Solaris Green Threads
  - GNU Portable Threads

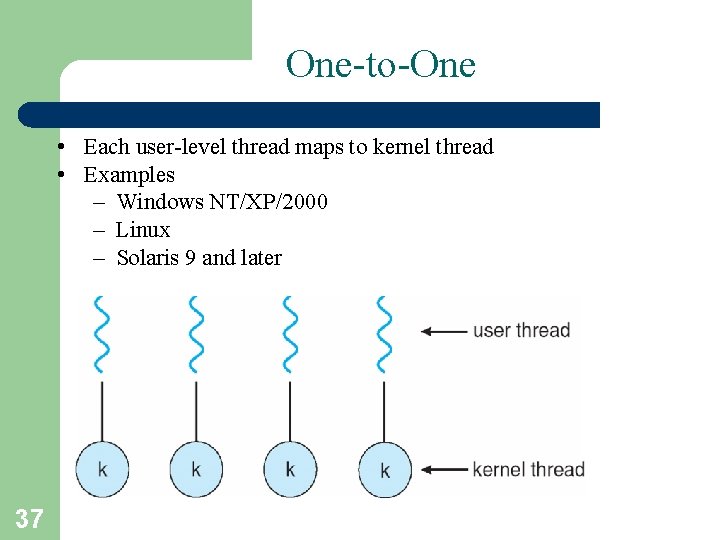

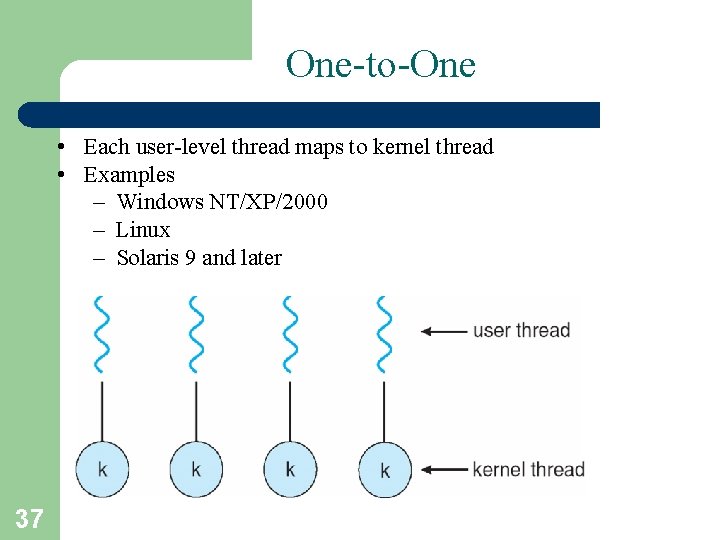

One-to-One

- Each user-level thread maps to a kernel thread
- Examples:
  - Windows NT/XP/2000
  - Linux
  - Solaris 9 and later

One-to-One

- There is a one-to-one relationship between user level threads and kernel level threads
- This model provides more concurrency than the many-to-one model
- It also allows another thread to run when a thread makes a blocking system call
- It supports multiple threads executing in parallel on multiprocessors
- The disadvantage of this model is that creating a user thread requires creating the corresponding Kernel thread
- OS/2, Windows NT and Windows 2000 use the one-to-one relationship model

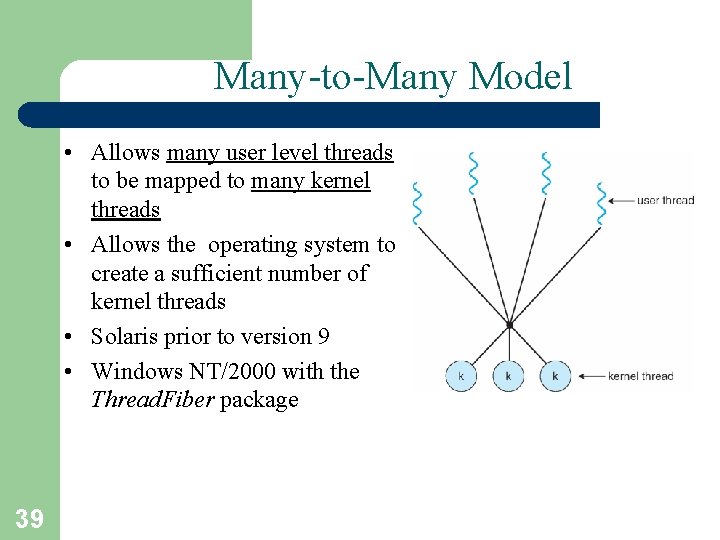

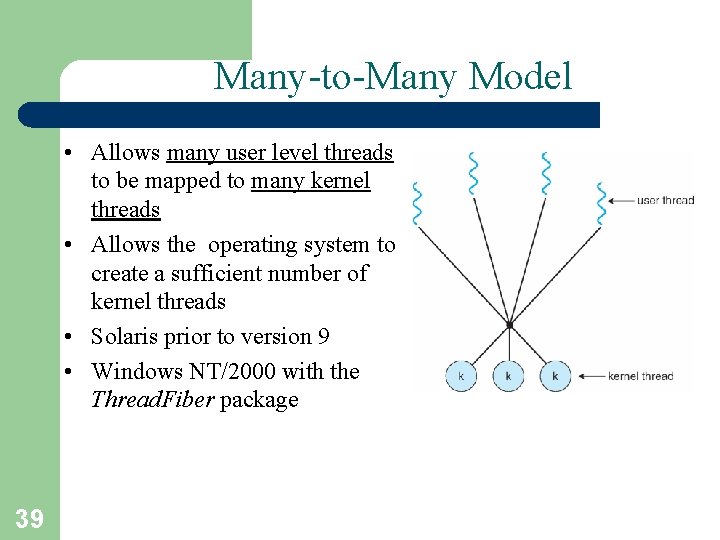

Many-to-Many Model

- Allows many user level threads to be mapped to many kernel threads
- Allows the operating system to create a sufficient number of kernel threads
- Examples:
  - Solaris prior to version 9
  - Windows NT/2000 with the ThreadFiber package

Many-to-Many Model

- In this model, many user level threads are multiplexed onto a smaller or equal number of Kernel threads
- The number of Kernel threads may be specific to either a particular application or a particular machine
- In this model, developers can create as many user threads as necessary, and the corresponding Kernel threads can run in parallel on a multiprocessor

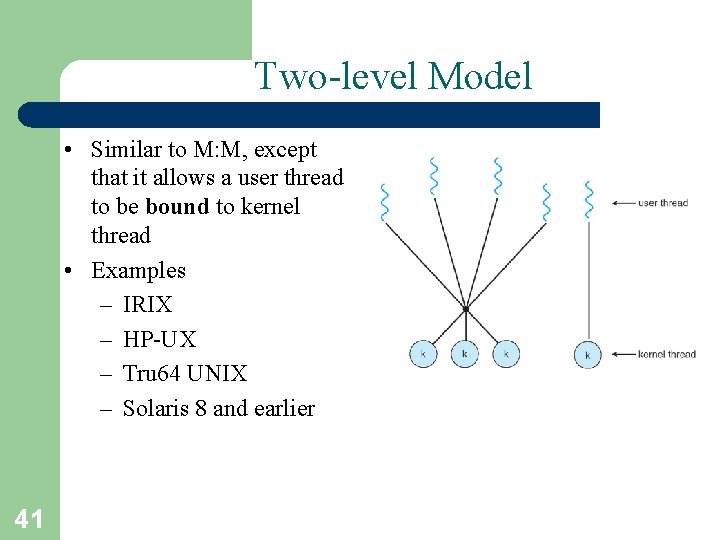

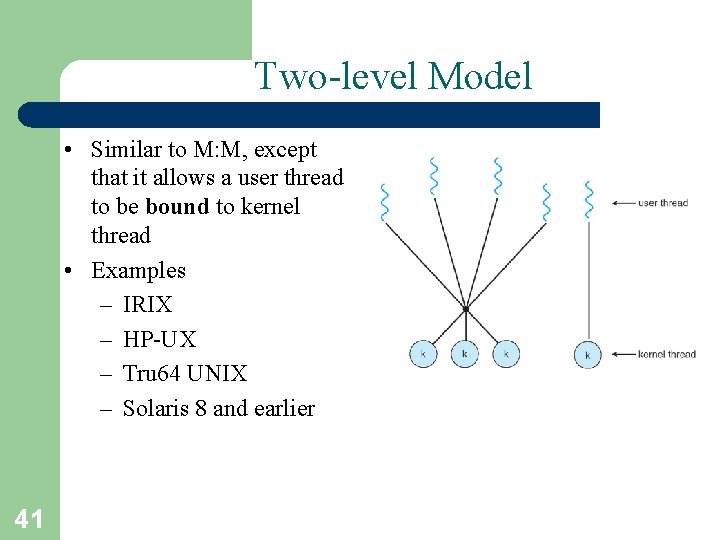

Two-level Model

- Similar to M:M, except that it allows a user thread to be bound to a kernel thread
- Examples:
  - IRIX
  - HP-UX
  - Tru64 UNIX
  - Solaris 8 and earlier

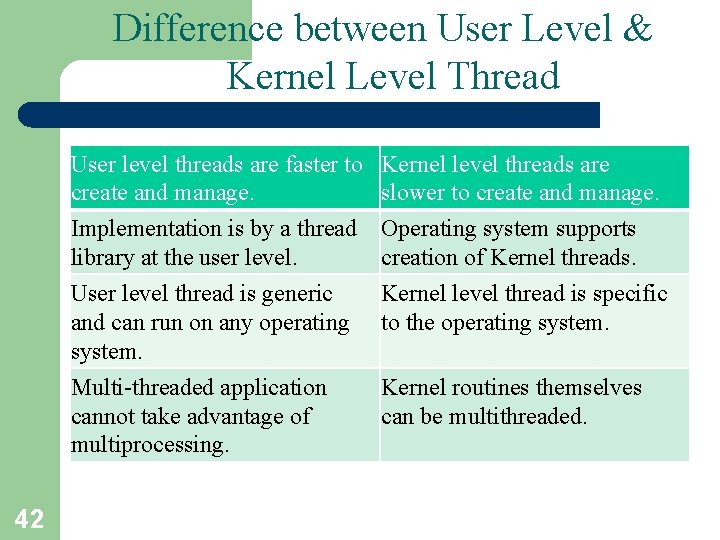

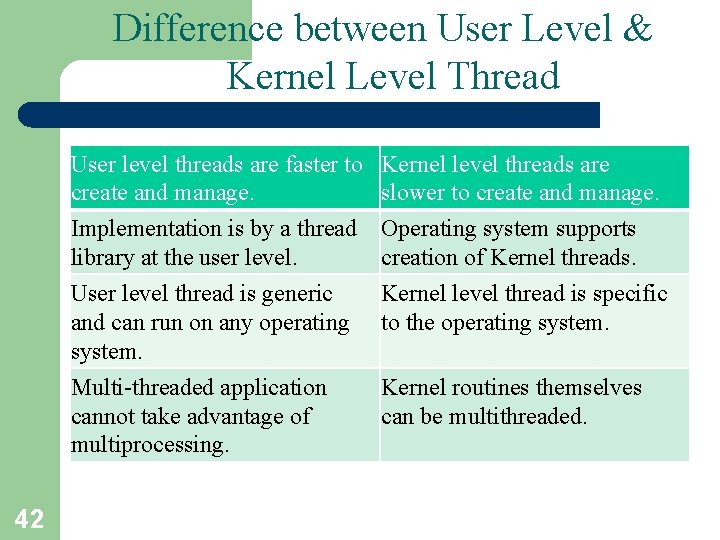

Difference between User Level & Kernel Level Thread

| User Level Threads | Kernel Level Threads |
|---|---|
| User level threads are faster to create and manage. | Kernel level threads are slower to create and manage. |
| Implementation is by a thread library at the user level. | The operating system supports creation of Kernel threads. |
| A user level thread is generic and can run on any operating system. | A Kernel level thread is specific to the operating system. |
| A multithreaded application cannot take advantage of multiprocessing. | Kernel routines themselves can be multithreaded. |

User-Level Threads

- Thread management done by a user-level threads library
- Examples:
  - POSIX Pthreads
  - Mach C-threads
  - Solaris threads
  - Java threads

User-Level Threads

- Thread library entirely executed in user mode
- Cheap to manage threads
  - Create: set up a stack
  - Destroy: free up memory
- Context switch requires few instructions
  - Just save CPU registers
  - Done based on program logic
- A blocking system call blocks all peer threads

Kernel-Level Threads

- Kernel is aware of and schedules threads
- A blocking system call will not block all peer threads
- Expensive to manage threads
- Expensive context switch
- Kernel intervention

Kernel Threads

- Supported by the Kernel
- Examples: newer versions of
  - Windows
  - UNIX
  - Linux

Linux Threads

- Linux refers to them as tasks rather than threads.
- Thread creation is done through the clone() system call.
- Unlike fork(), clone() allows a child task to share the address space of the parent task (process).

Pthreads

- A POSIX standard (IEEE 1003.1c) API for thread creation and synchronization.
- The API specifies the behavior of the thread library; the implementation is up to the developers of the library.
- POSIX Pthreads may be provided as either a user or kernel library, as an extension to the POSIX standard.
- Common in UNIX operating systems.

LWP Advantages

- Cheap user-level thread management
- A blocking system call will not suspend the whole process
- LWPs are transparent to the application
- LWPs can be easily mapped to different CPUs