UCDavis ecs 150 Fall 2007: Operating System

- Slides: 136

UCDavis, ecs 150 Fall 2007: Operating System

ecs 150 Fall 2007 #4: Memory Management (chapter 5)

Dr. S. Felix Wu, Computer Science Department, University of California, Davis
http://www.cs.ucdavis.edu/~wu/
sfelixwu@gmail.com

10/25/2007, ecs 150, Fall 2007

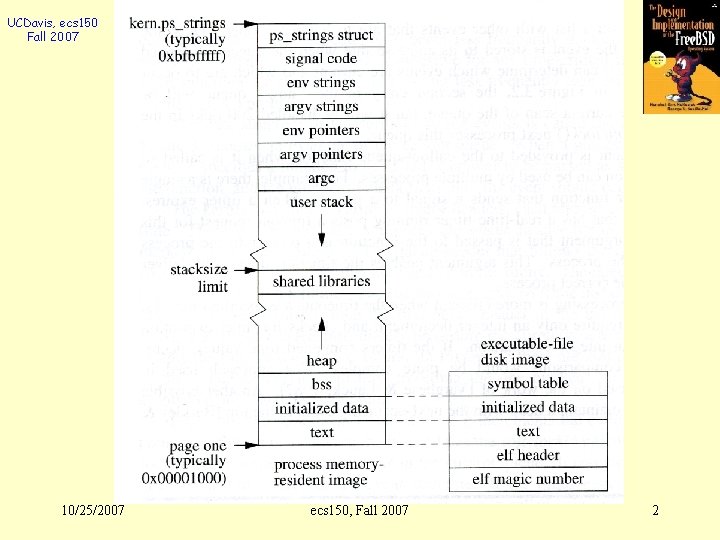

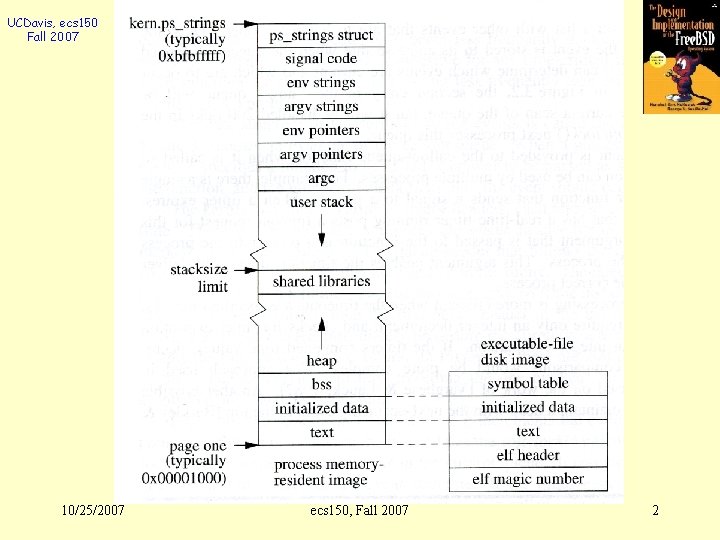


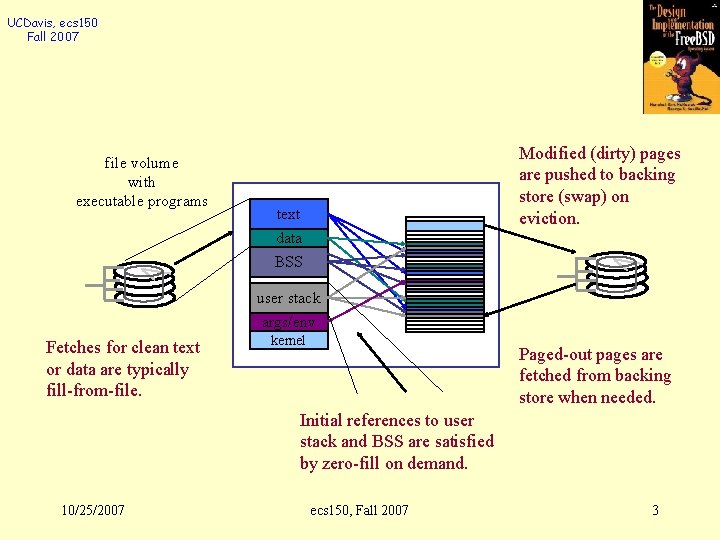

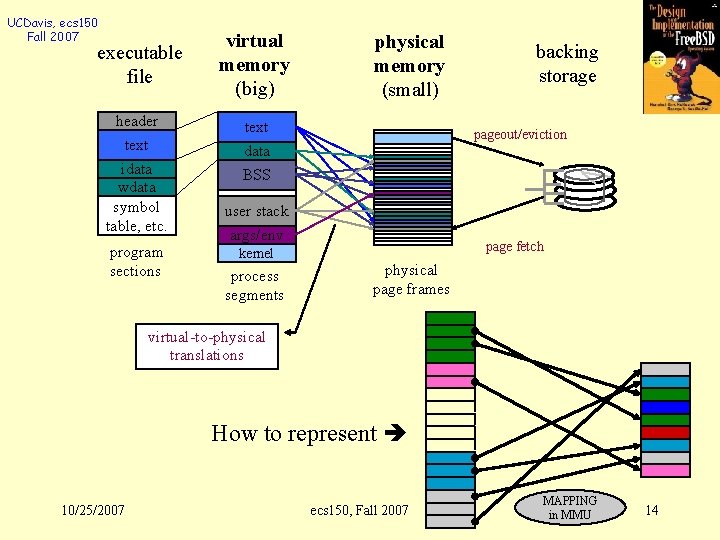

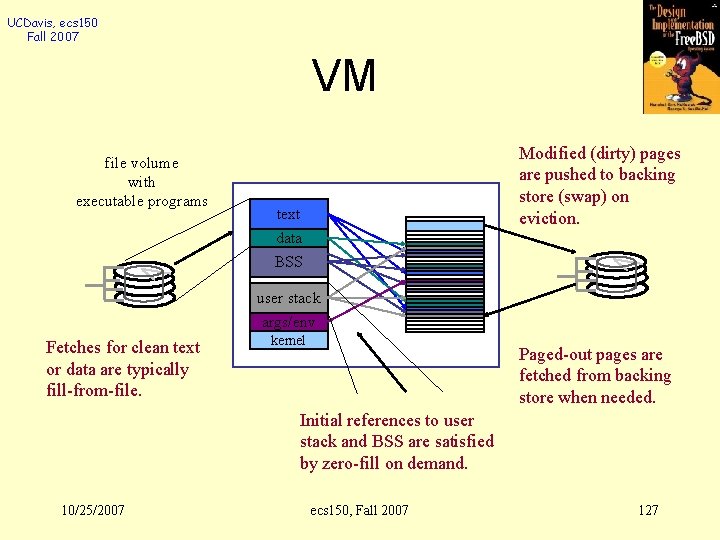

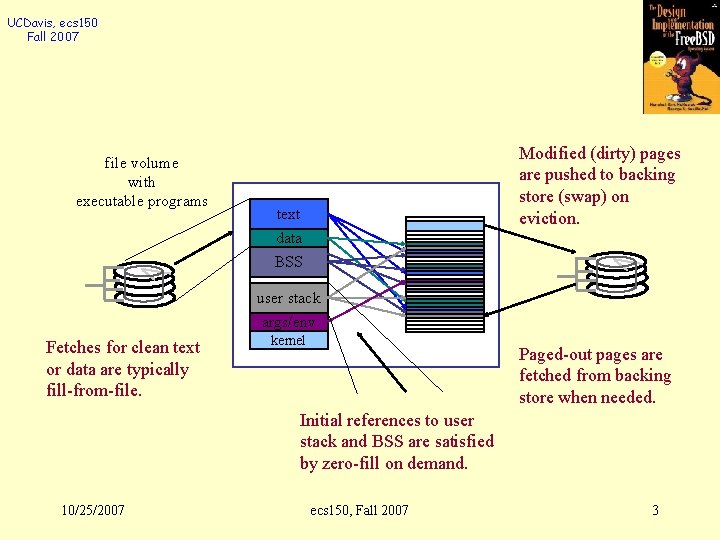

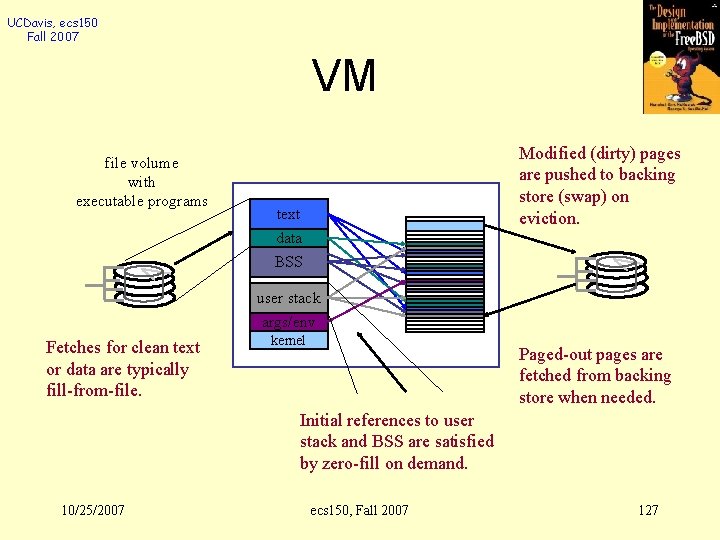

(Figure: a file volume with executable programs backing a process address space made of text, data, BSS, user stack, args/env, and kernel.)

- Fetches for clean text or data are typically fill-from-file.
- Modified (dirty) pages are pushed to backing store (swap) on eviction.
- Paged-out pages are fetched from backing store when needed.
- Initial references to user stack and BSS are satisfied by zero-fill on demand.

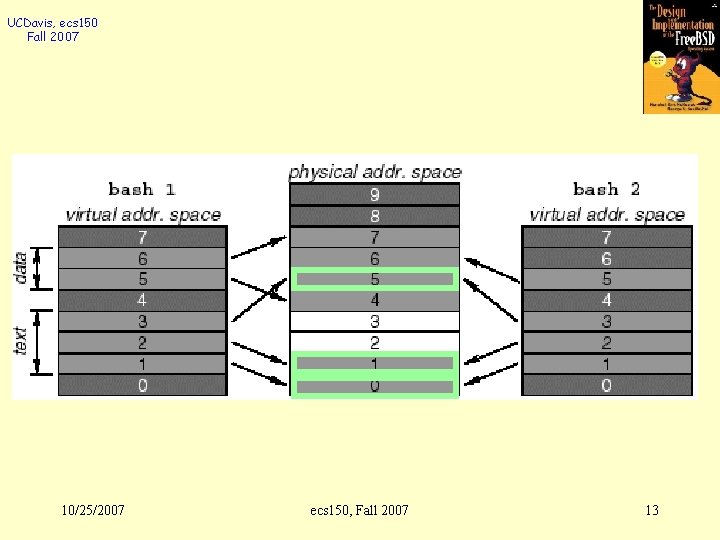

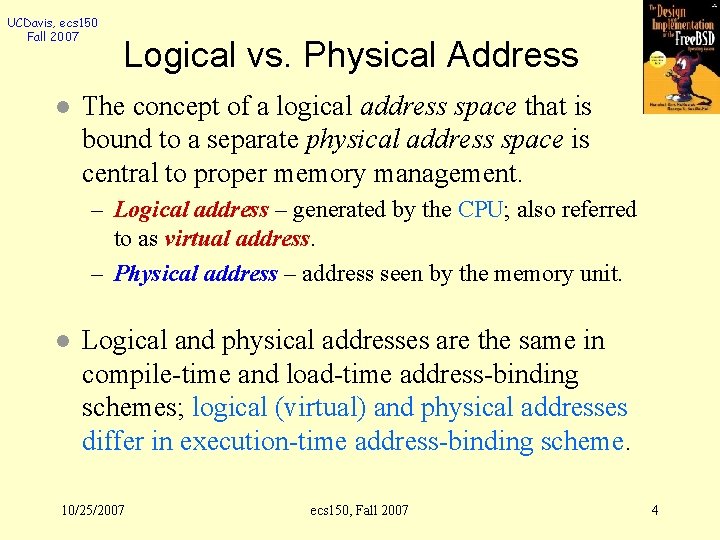

Logical vs. Physical Address

- The concept of a logical address space that is bound to a separate physical address space is central to proper memory management.
  - Logical address: generated by the CPU; also referred to as a virtual address.
  - Physical address: the address seen by the memory unit.
- Logical and physical addresses are the same in compile-time and load-time address-binding schemes; logical (virtual) and physical addresses differ in the execution-time address-binding scheme.

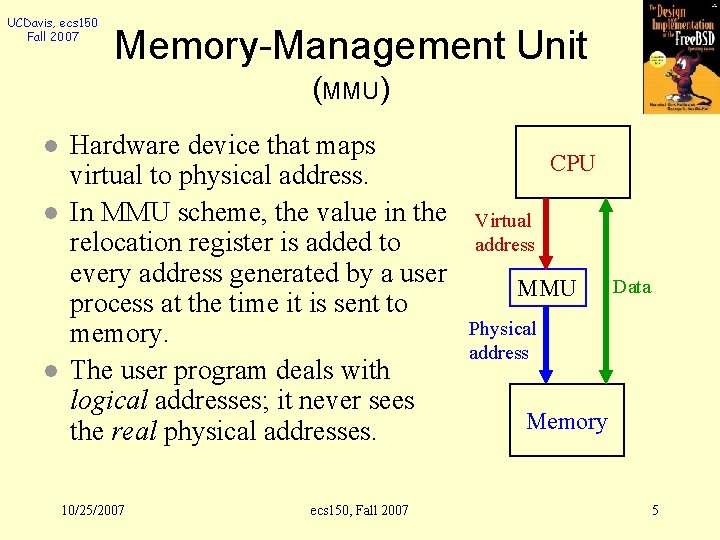

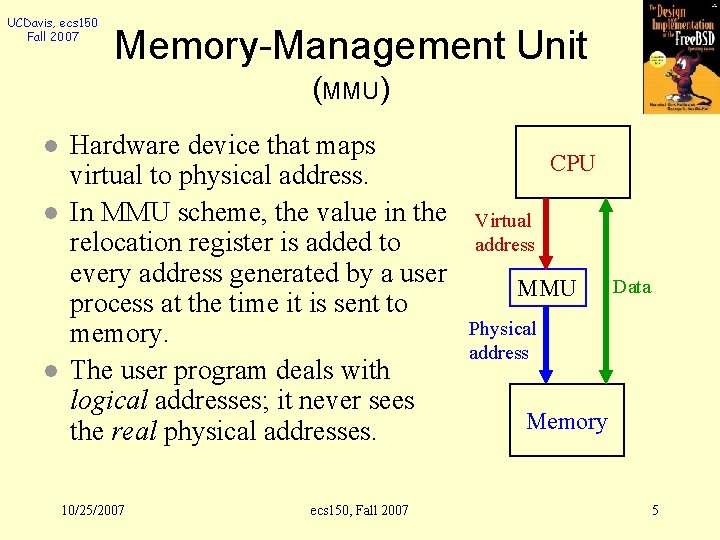

Memory-Management Unit (MMU)

- A hardware device that maps virtual to physical addresses.
- In the MMU scheme, the value in the relocation register is added to every address generated by a user process at the time it is sent to memory.
- The user program deals with logical addresses; it never sees the real physical addresses.

(Figure: CPU → virtual address → MMU → physical address → memory, with data flowing between memory and the CPU.)
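The relocation-register scheme can be sketched in a few lines of C. This is a minimal illustration, not how any particular MMU is built: `mmu_regs` and `mmu_translate` are hypothetical names, and the limit register is an added assumption (the slide mentions only the relocation register).

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-process MMU state. */
typedef struct {
    uint32_t relocation; /* added to every logical address */
    uint32_t limit;      /* assumed: size of the logical address space */
} mmu_regs;

/* Translate a logical address; returns false if it lies outside [0, limit).
 * A real MMU would raise a trap to the OS instead of returning false. */
bool mmu_translate(const mmu_regs *mmu, uint32_t logical, uint32_t *physical)
{
    assert(mmu != NULL && physical != NULL);
    if (logical >= mmu->limit)
        return false;
    *physical = mmu->relocation + logical;   /* the user program never sees this value */
    return true;
}
```

With a relocation register of 14000, logical address 346 maps to physical address 14346.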

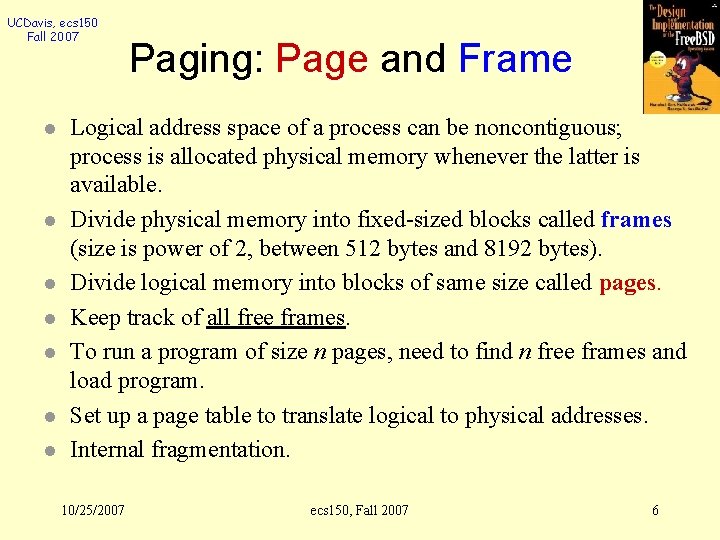

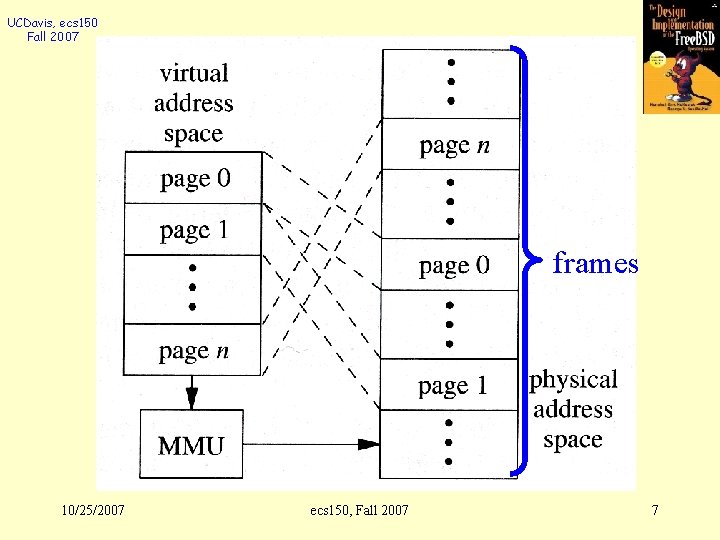

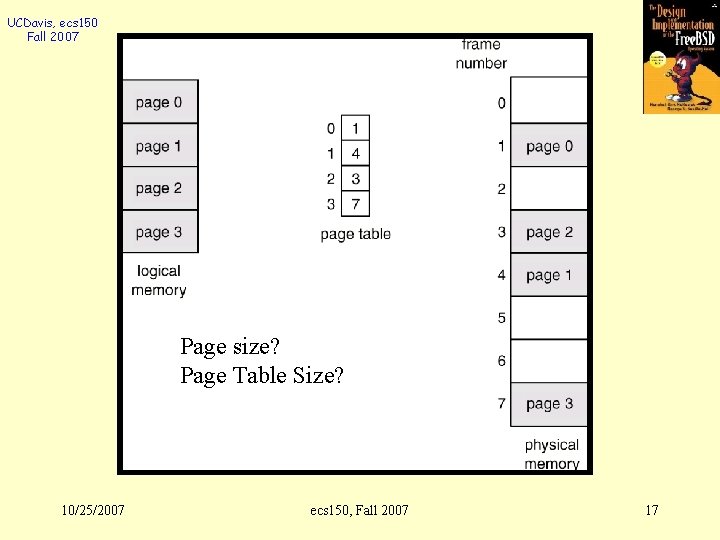

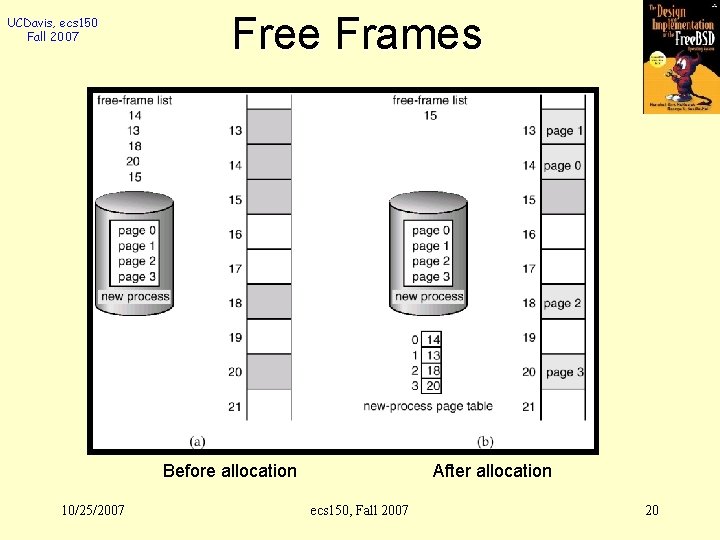

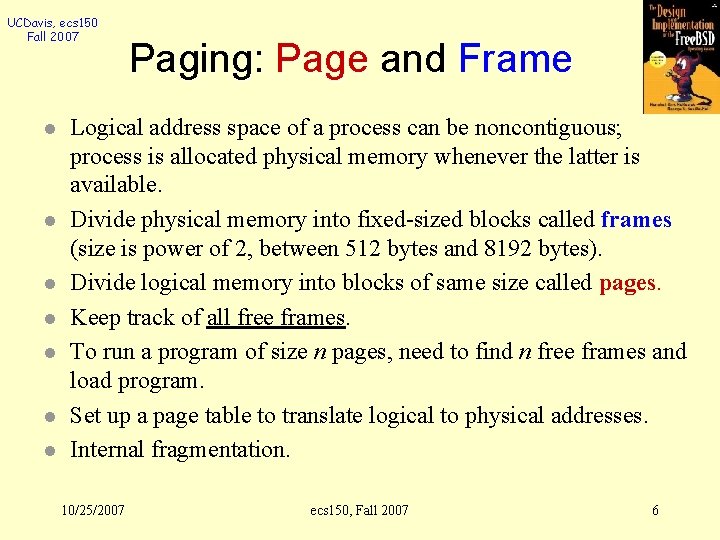

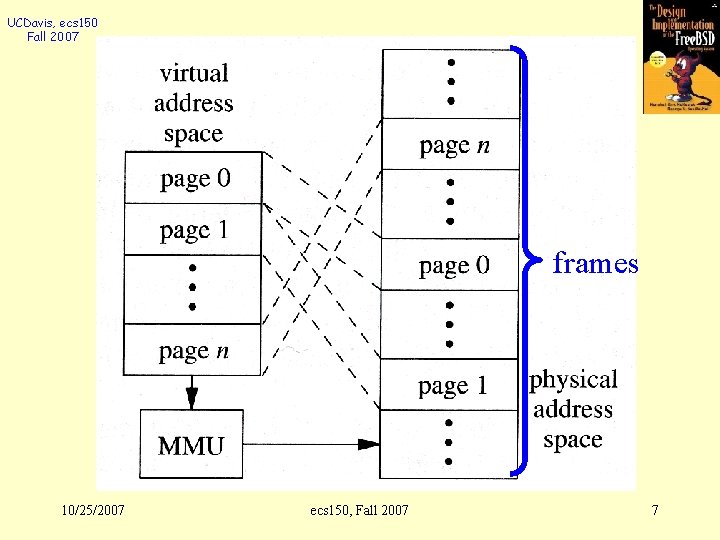

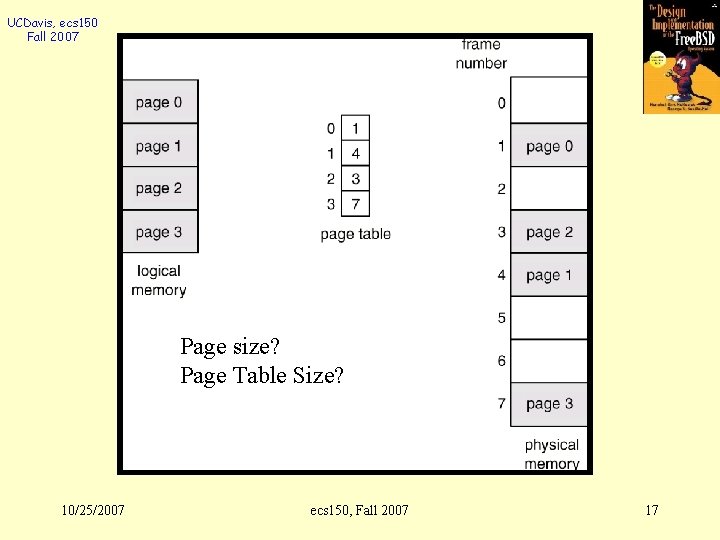

Paging: Page and Frame

- The logical address space of a process can be noncontiguous; a process is allocated physical memory whenever the latter is available.
- Divide physical memory into fixed-size blocks called frames (the size is a power of 2, between 512 bytes and 8192 bytes).
- Divide logical memory into blocks of the same size called pages.
- Keep track of all free frames.
- To run a program of size n pages, find n free frames and load the program.
- Set up a page table to translate logical to physical addresses.
- Paging still has internal fragmentation (the last page of a process is usually only partly used).

(Figure: frames.)

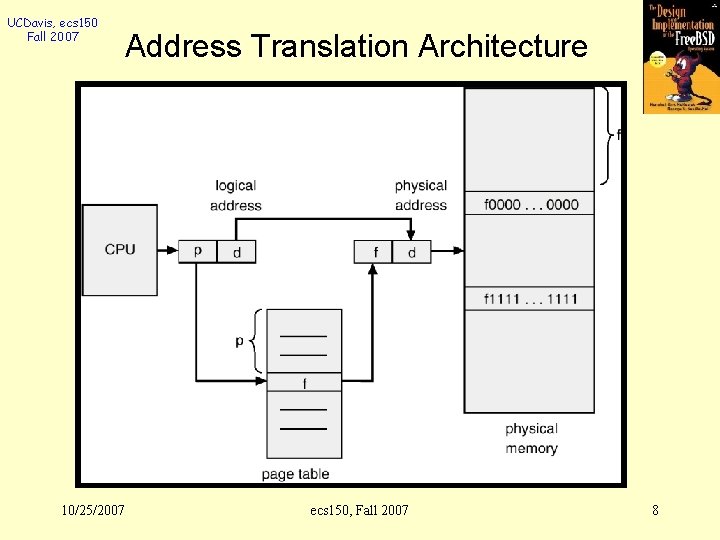

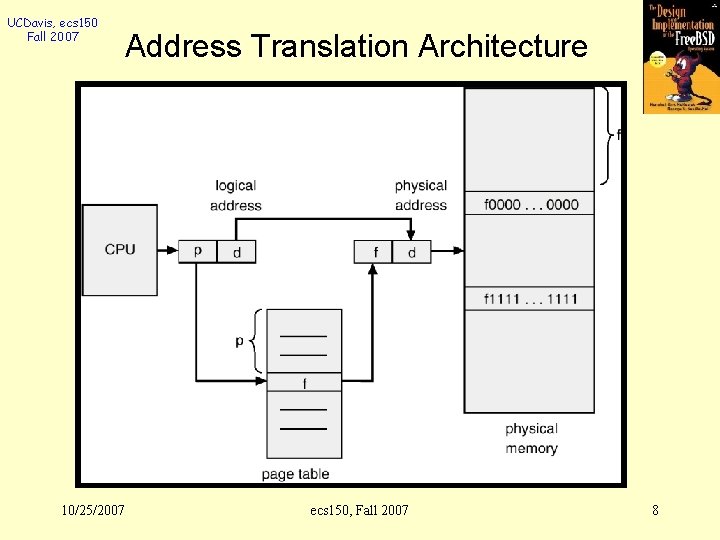

Address Translation Architecture

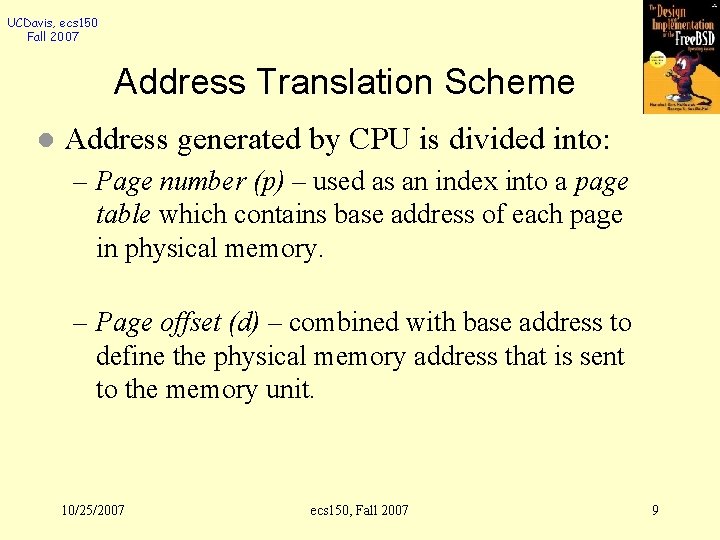

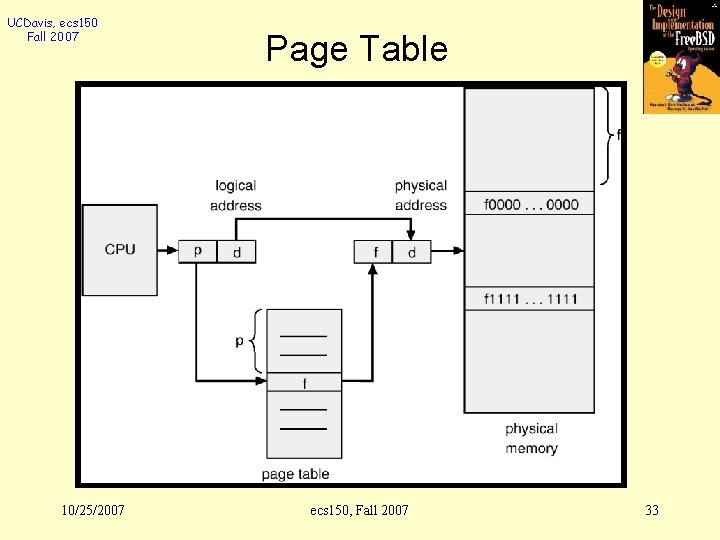

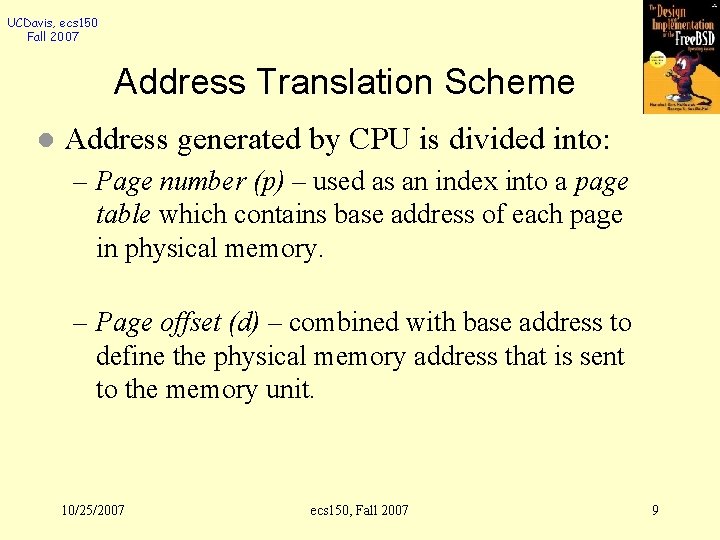

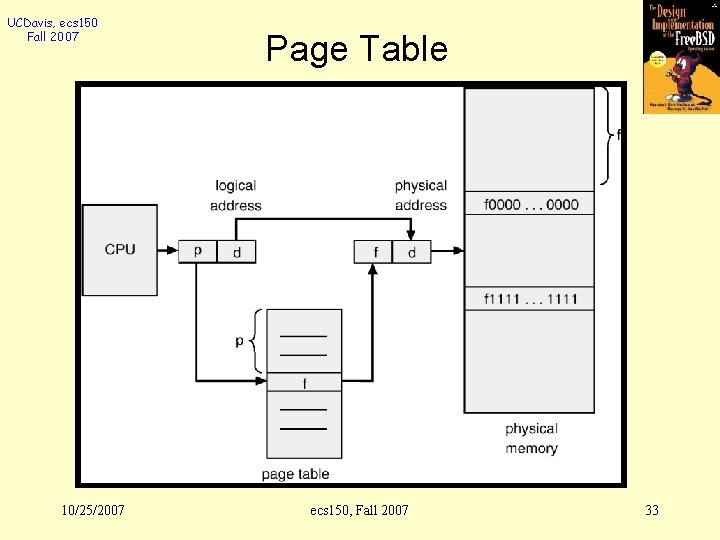

Address Translation Scheme

- An address generated by the CPU is divided into:
  - Page number (p): used as an index into a page table, which contains the base address of each page in physical memory.
  - Page offset (d): combined with the base address to define the physical memory address that is sent to the memory unit.
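The split into p and d is just a shift and a mask when the page size is a power of 2. A minimal sketch, assuming 32-bit logical addresses, 4 KB pages, and a flat array as the page table (no valid bits, no faults); `page_number`, `page_offset`, and `translate` are illustrative names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT  12u                     /* assumed 4 KB pages */
#define PAGE_SIZE   (1u << PAGE_SHIFT)
#define OFFSET_MASK (PAGE_SIZE - 1u)

/* p: the high-order bits index the page table. */
static inline uint32_t page_number(uint32_t vaddr) { return vaddr >> PAGE_SHIFT; }
/* d: the low-order bits are carried over unchanged. */
static inline uint32_t page_offset(uint32_t vaddr) { return vaddr & OFFSET_MASK; }

/* page_table[p] holds the frame number for page p. */
uint32_t translate(const uint32_t *page_table, size_t npages, uint32_t vaddr)
{
    uint32_t p = page_number(vaddr);
    assert(p < npages);                      /* out of range: would be a fault */
    uint32_t frame = page_table[p];
    return (frame << PAGE_SHIFT) | page_offset(vaddr);
}
```

For example, address 0x2ABC has p = 2 and d = 0xABC; if page 2 lives in frame 1, the physical address is 0x1ABC.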

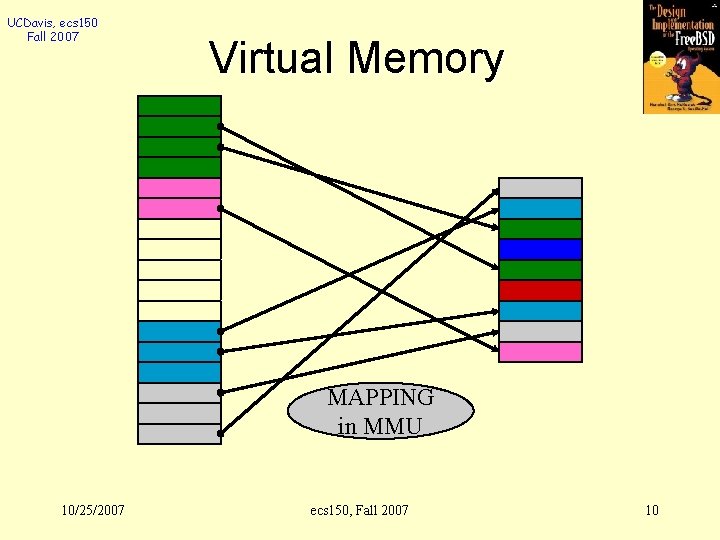

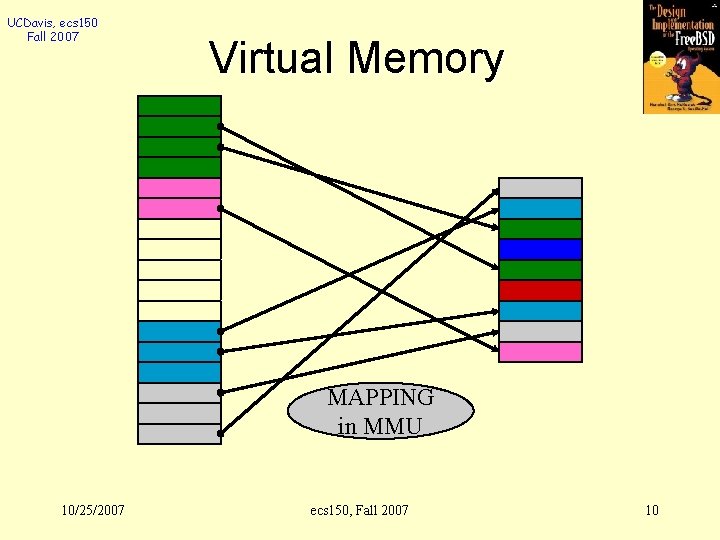

Virtual Memory (figure: MAPPING in MMU)

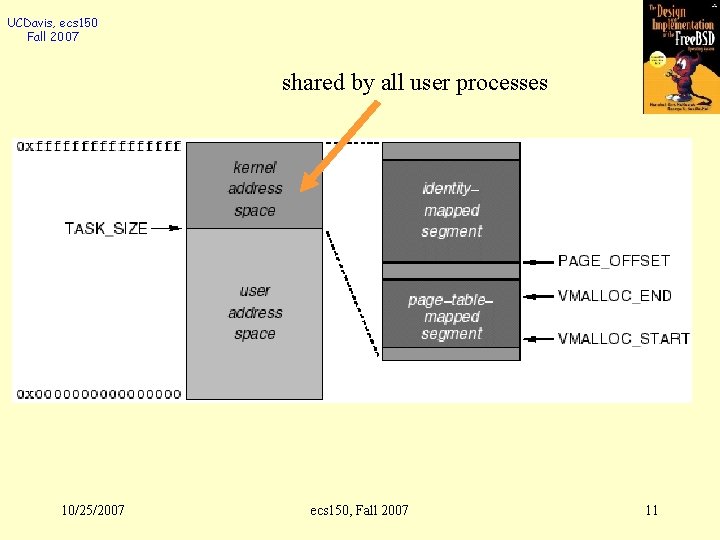

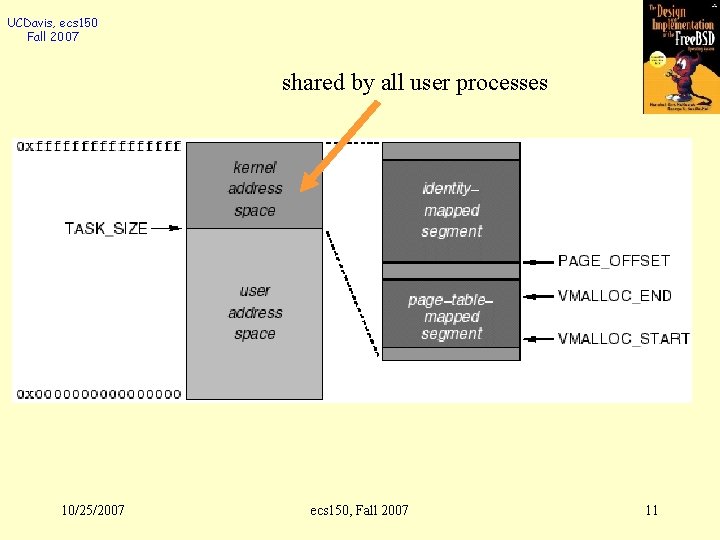

(Figure annotation: shared by all user processes.)

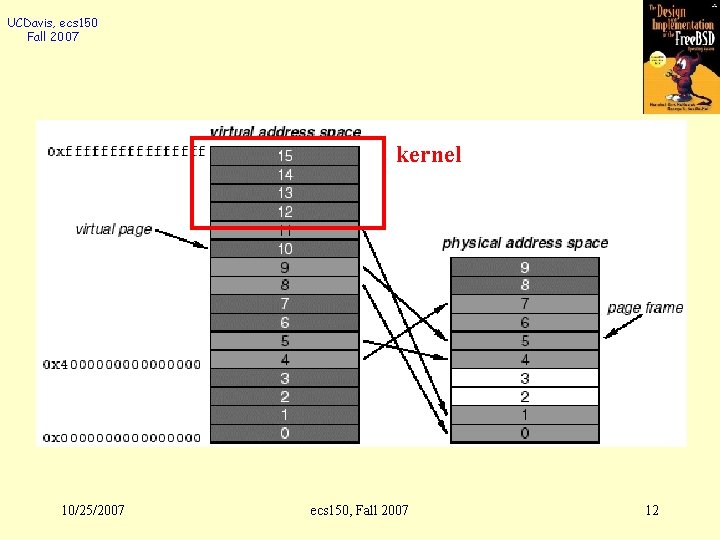

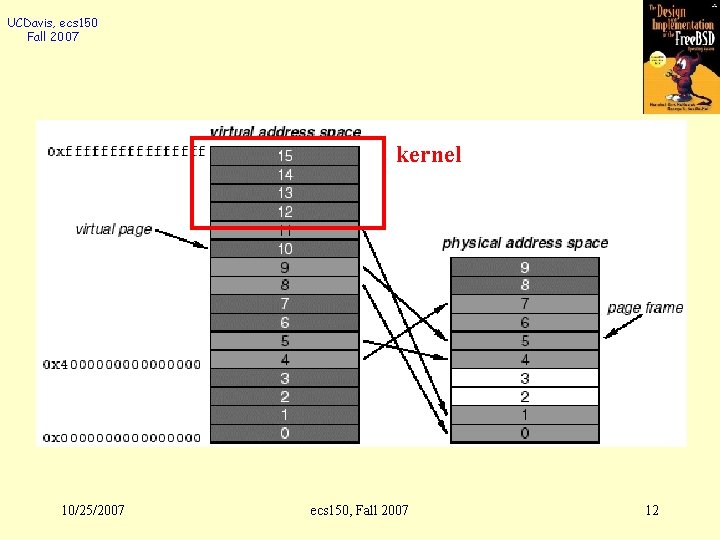

(Figure annotation: kernel.)

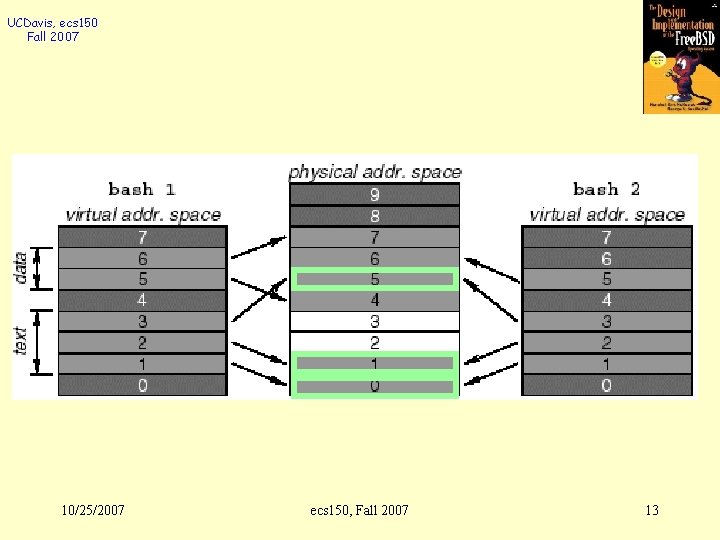


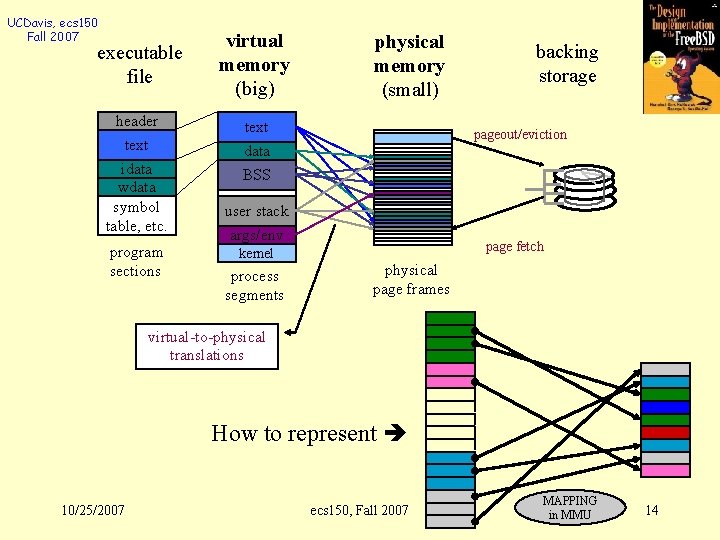

(Figure: an executable file (header, text, idata, wdata, symbol table, etc.) supplies the program sections of a big virtual memory (text, data, BSS, user stack, args/env, kernel). Process segments are mapped by virtual-to-physical translations onto a small physical memory of page frames, with page fetch from, and pageout/eviction to, backing storage.)

How do we represent the MAPPING in the MMU?

Paging

- Advantages?
- Disadvantages?

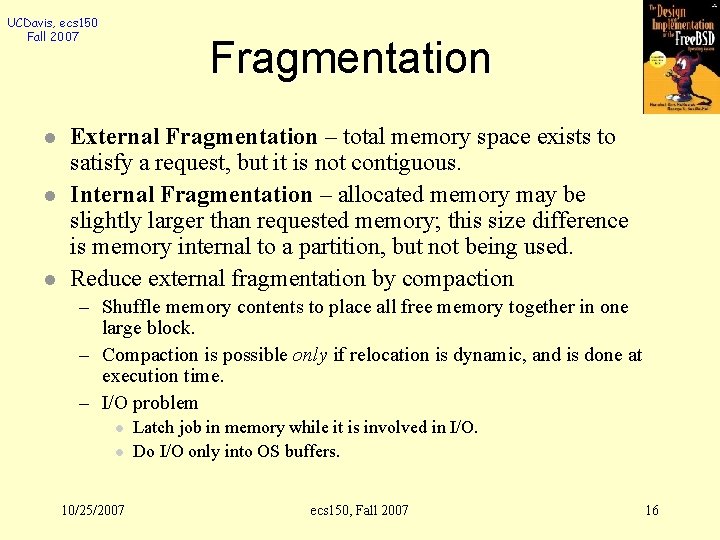

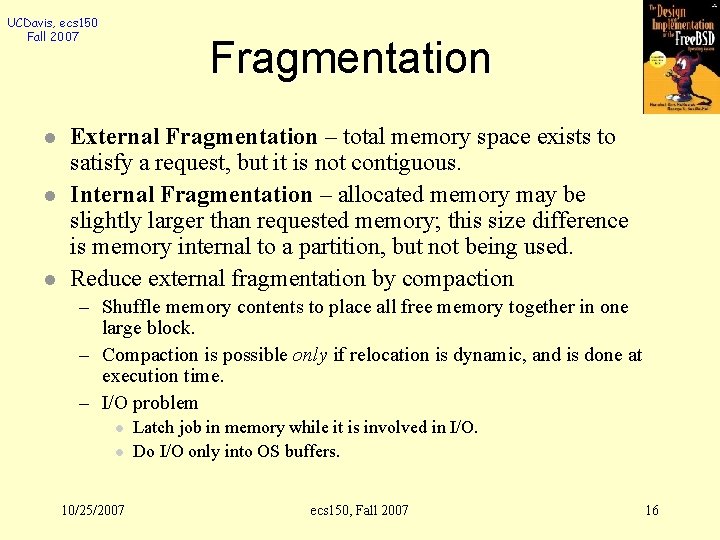

Fragmentation

- External fragmentation: total memory space exists to satisfy a request, but it is not contiguous.
- Internal fragmentation: allocated memory may be slightly larger than requested memory; this size difference is memory internal to a partition that is not being used.
- Reduce external fragmentation by compaction:
  - Shuffle memory contents to place all free memory together in one large block.
  - Compaction is possible only if relocation is dynamic and is done at execution time.
  - I/O problem:
    - Latch the job in memory while it is involved in I/O.
    - Do I/O only into OS buffers.

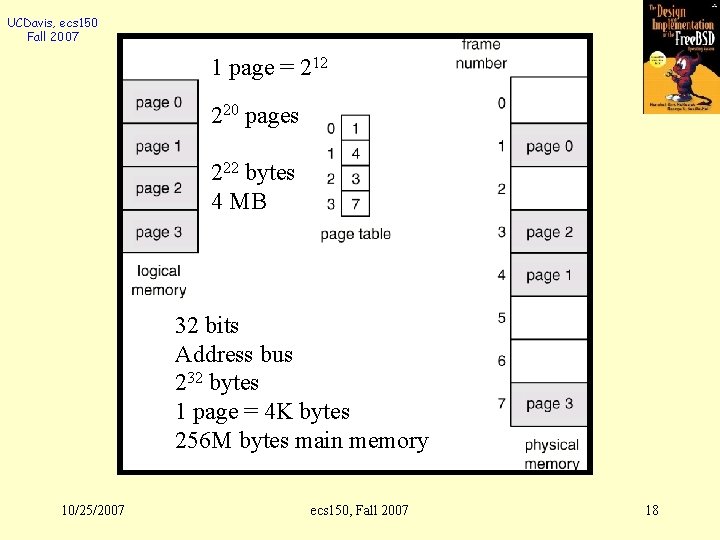

Page Size? Page Table Size?

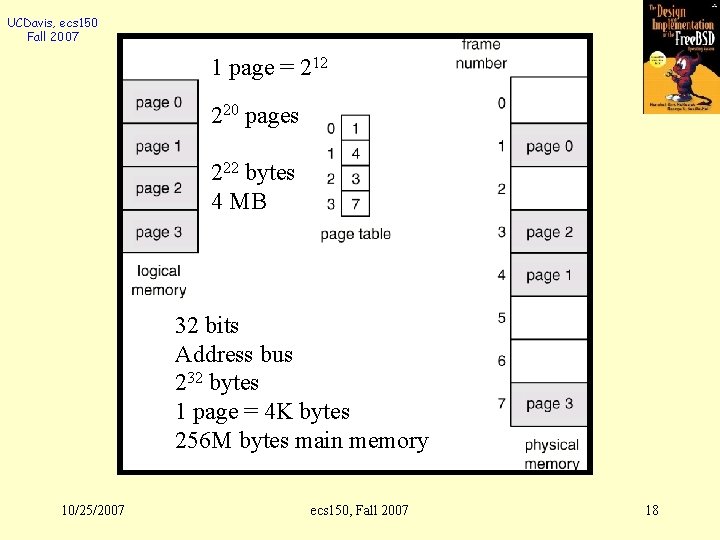

(Figure: page-table sizing.)

- 32-bit address bus: 2^32 bytes of address space
- 1 page = 2^12 bytes = 4 KB, so there are 2^20 pages
- 2^22 bytes = 4 MB (2^20 entries × 4 bytes)
- 256 MB main memory
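The numbers above follow from a short calculation, assuming 4-byte page-table entries (an assumption; the slide does not state the entry size):

$$
\frac{2^{32}\ \text{bytes}}{2^{12}\ \text{bytes/page}} = 2^{20}\ \text{pages},\qquad
2^{20}\ \text{entries} \times 2^{2}\ \text{bytes/entry} = 2^{22}\ \text{bytes} = 4\ \text{MB},\qquad
\frac{2^{22}}{2^{28}} = \frac{1}{64}.
$$

So, under that assumption, a single flat page table would occupy 1/64 of the 256 MB (2^28-byte) main memory, per process.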

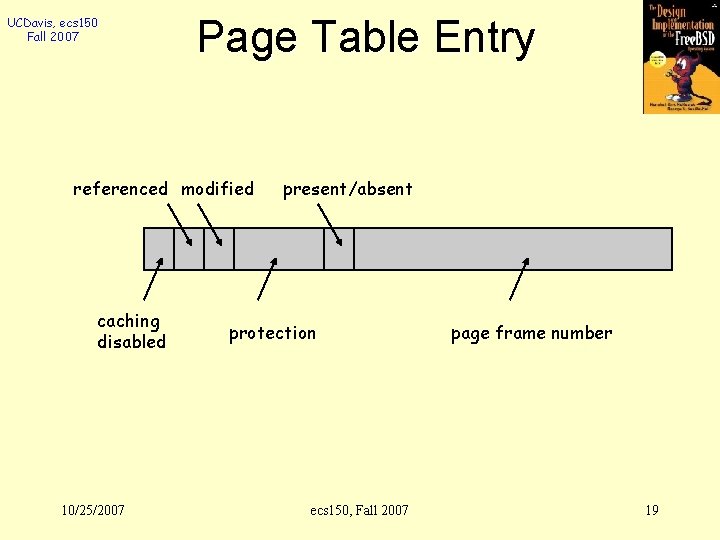

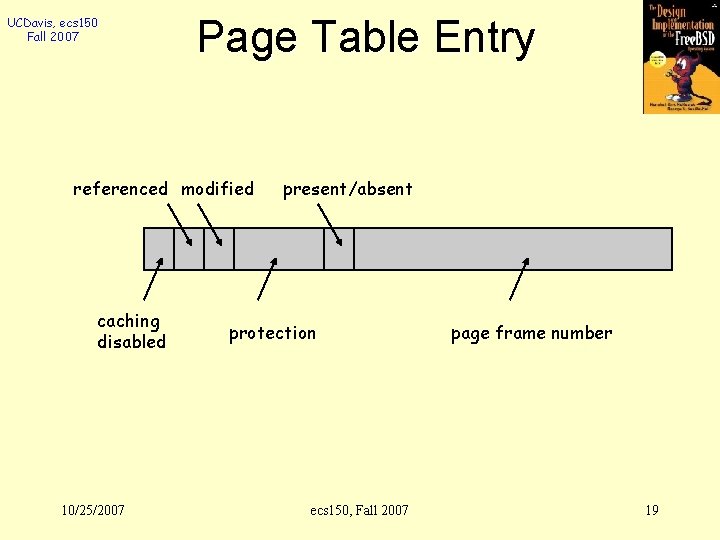

Page Table Entry

(Figure: the fields of a page table entry: caching disabled, referenced, modified, protection, present/absent, page frame number.)
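One way to picture these fields is as bits packed into a 32-bit word. The layout below is hypothetical and chosen only for illustration (it is not any real CPU's PTE format); the macro and function names are likewise invented.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical 32-bit PTE layout:
 *   bit 0      present/absent
 *   bits 1-3   protection (read, write, execute)
 *   bit 4      modified (dirty)
 *   bit 5      referenced
 *   bit 6      caching disabled
 *   bits 12-31 page frame number
 */
typedef uint32_t pte_t;

#define PTE_PRESENT     (1u << 0)
#define PTE_READ        (1u << 1)
#define PTE_WRITE       (1u << 2)
#define PTE_EXEC        (1u << 3)
#define PTE_MODIFIED    (1u << 4)
#define PTE_REFERENCED  (1u << 5)
#define PTE_NOCACHE     (1u << 6)
#define PTE_FRAME_SHIFT 12u

static inline pte_t pte_make(uint32_t frame, uint32_t flags)
{
    assert(frame < (1u << 20));          /* frame number must fit in 20 bits */
    assert(flags < (1u << PTE_FRAME_SHIFT));
    return (frame << PTE_FRAME_SHIFT) | flags;
}

static inline uint32_t pte_frame(pte_t pte) { return pte >> PTE_FRAME_SHIFT; }
static inline bool pte_has(pte_t pte, uint32_t flag) { return (pte & flag) != 0; }
```

In this sketch the hardware would set `PTE_REFERENCED` on every access and `PTE_MODIFIED` on every write, which is what the replacement algorithms later in the lecture read.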

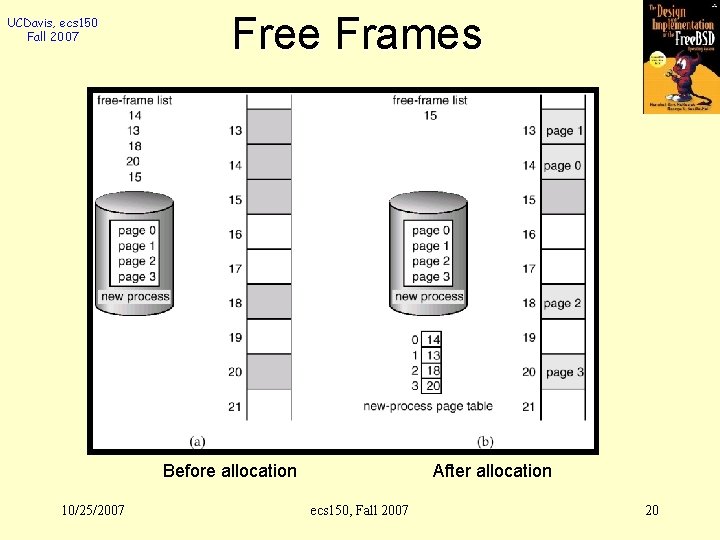

Free Frames (figure: before allocation / after allocation)

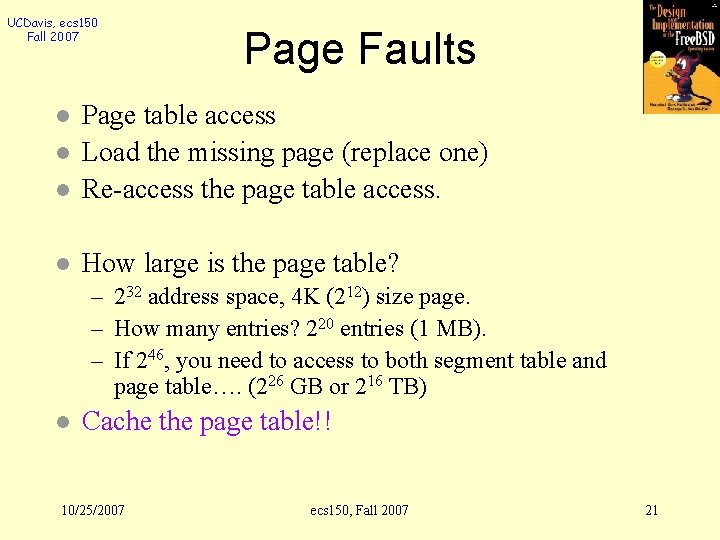

Page Faults

- Page table access.
- Load the missing page (replacing one if necessary).
- Re-access the page table.
- How large is the page table?
  - 2^32 address space, 4 KB (2^12-byte) pages.
  - How many entries? 2^20 (1 M) entries.
  - With a 2^46-byte address space (2^16 GB, i.e., 64 TB), you need to access both a segment table and a page table…
- Cache the page table!!

Page Faults

- Hardware trap: /usr/src/sys/i386/trap.c
- VM page fault handler vm_fault(): /usr/src/sys/vm/vm_fault.c

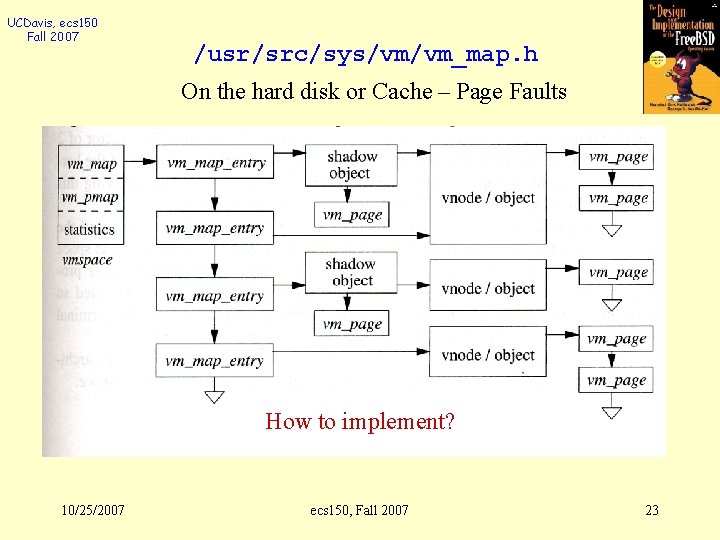

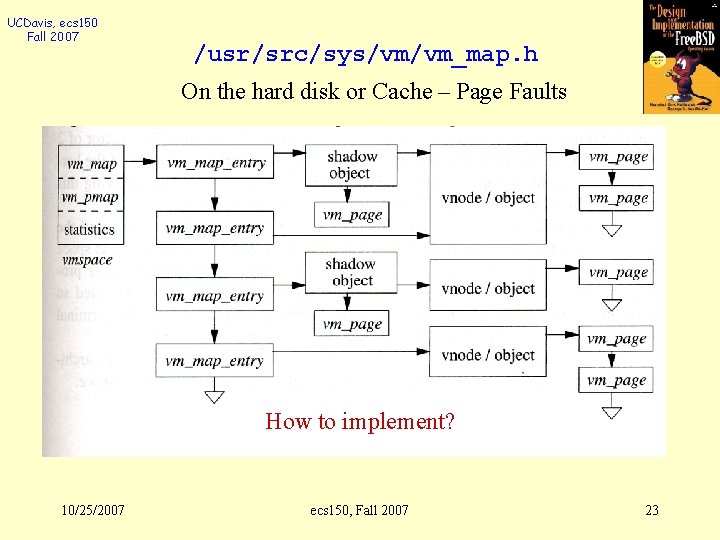

/usr/src/sys/vm/vm_map.h

(Figure: a page is either on the hard disk or in the cache; a miss causes a page fault.)

How to implement?

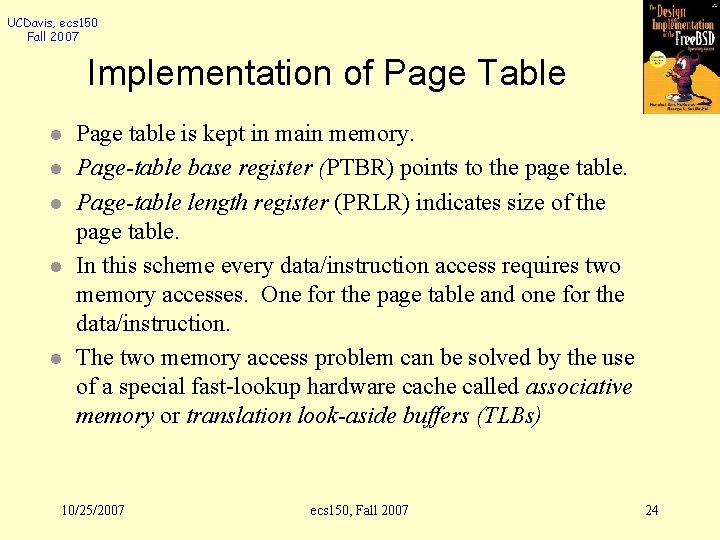

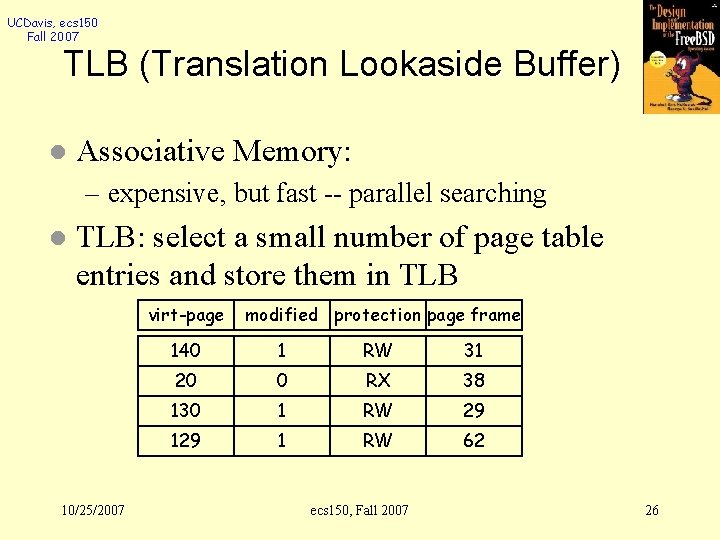

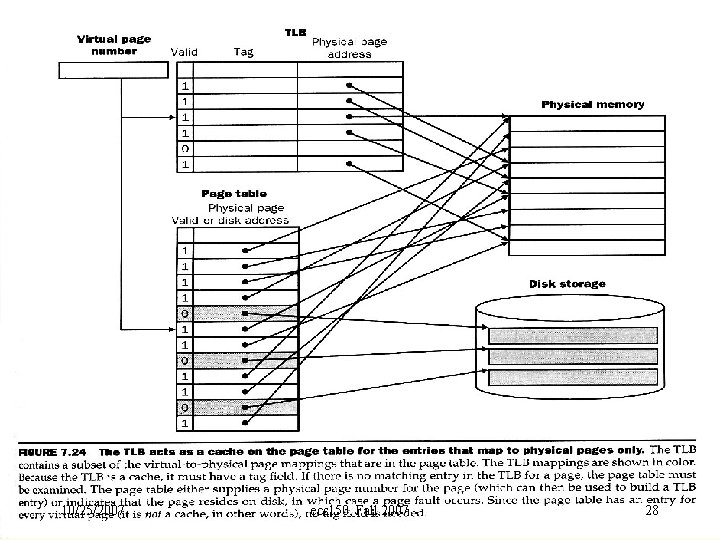

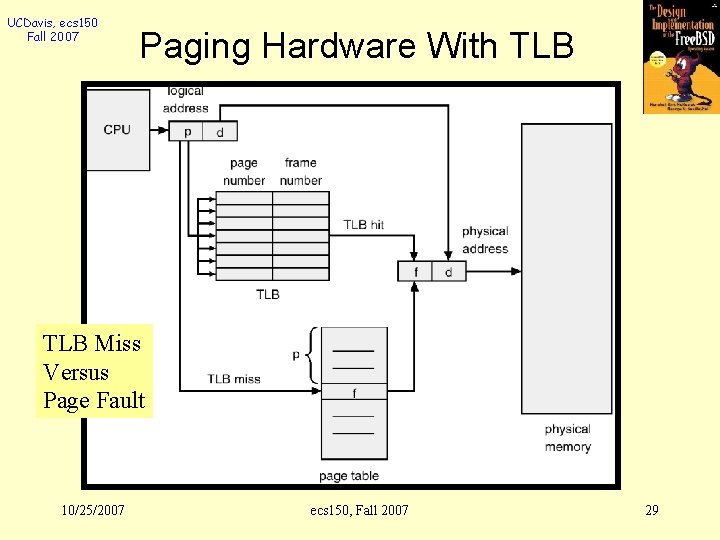

Implementation of Page Table

- The page table is kept in main memory.
- The page-table base register (PTBR) points to the page table.
- The page-table length register (PTLR) indicates the size of the page table.
- In this scheme every data/instruction access requires two memory accesses: one for the page table and one for the data/instruction.
- The two-memory-access problem can be solved by the use of a special fast-lookup hardware cache called associative memory or translation look-aside buffers (TLBs).

Two Issues

- Virtual address access overhead
- The size of the page table

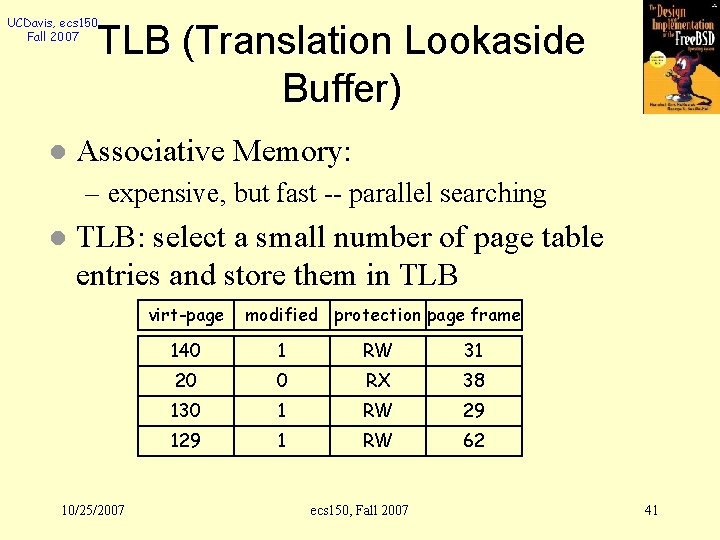

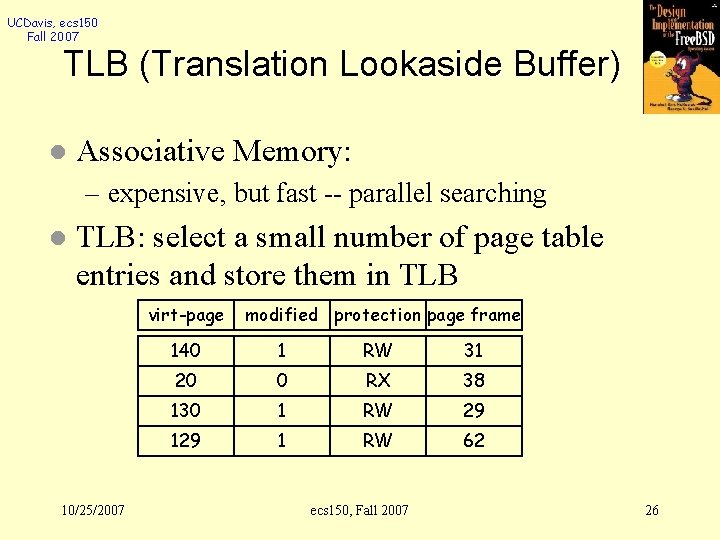

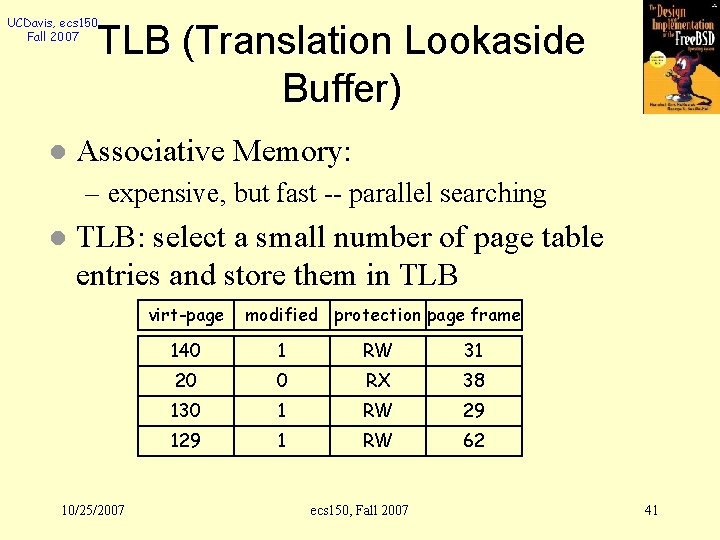

TLB (Translation Lookaside Buffer)

- Associative memory:
  - expensive, but fast: parallel searching
- TLB: select a small number of page table entries and store them in the TLB

| Virtual page | Modified | Protection | Page frame |
|---|---|---|---|
| 140 | 1 | RW | 31 |
| 20 | 0 | RX | 38 |
| 130 | 1 | RW | 29 |
| … | 1 | RW | 62 |

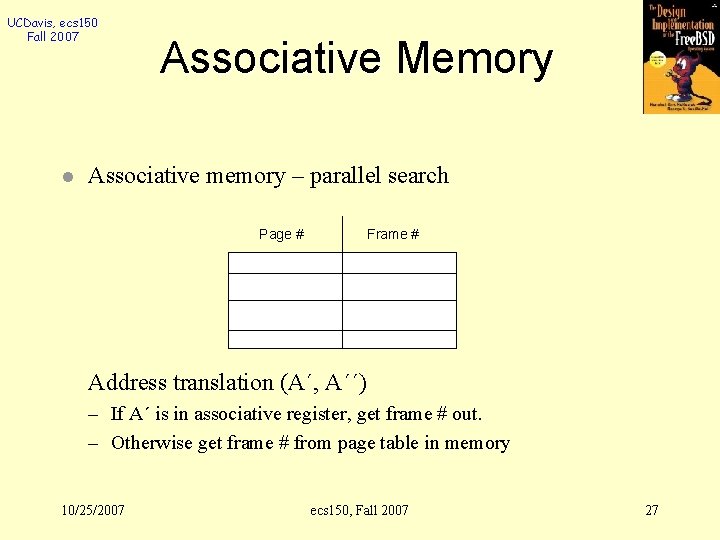

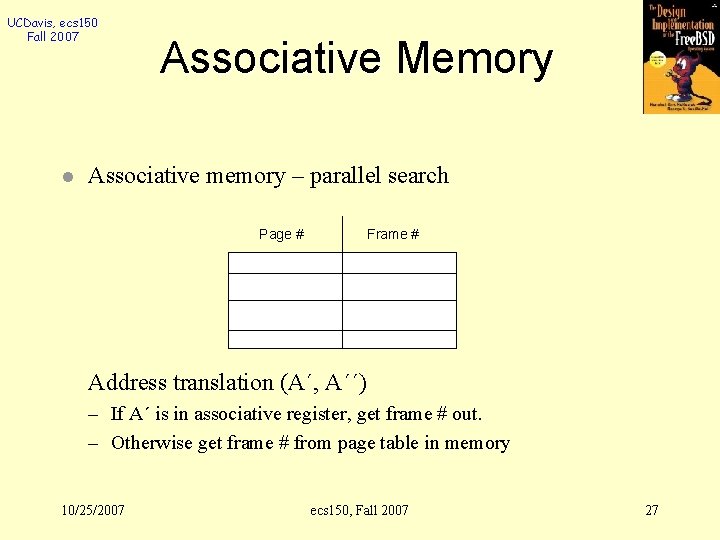

Associative Memory

- Associative memory: parallel search over (Page #, Frame #) pairs.
- Address translation (A´, A´´):
  - If A´ is in an associative register, get the frame # out.
  - Otherwise, get the frame # from the page table in memory.
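The lookup rule above can be modeled in software. In hardware all TLB entries are compared in parallel; the loop below only reproduces the result. The TLB size, the round-robin refill policy, and all names are assumptions for illustration, and page faults are not modeled.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define TLB_ENTRIES 4            /* assumed, tiny for illustration */

typedef struct {
    bool     valid;
    uint32_t page;               /* A': virtual page number */
    uint32_t frame;              /* frame number */
} tlb_entry;

typedef struct {
    tlb_entry e[TLB_ENTRIES];
    size_t    next;              /* round-robin refill slot (assumed policy) */
    unsigned  hits, misses;
} tlb;

/* If A' is in the TLB, return its frame; otherwise read the page table in
 * memory (the extra memory access) and cache the translation. */
uint32_t tlb_translate(tlb *t, const uint32_t *page_table, size_t npages, uint32_t page)
{
    for (size_t i = 0; i < TLB_ENTRIES; i++) {
        if (t->e[i].valid && t->e[i].page == page) {
            t->hits++;
            return t->e[i].frame;
        }
    }
    t->misses++;
    assert(page < npages);
    uint32_t frame = page_table[page];
    t->e[t->next] = (tlb_entry){ true, page, frame };
    t->next = (t->next + 1) % TLB_ENTRIES;
    return frame;
}
```

Only the first access to a page pays for the page-table read; repeated accesses hit in the TLB.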

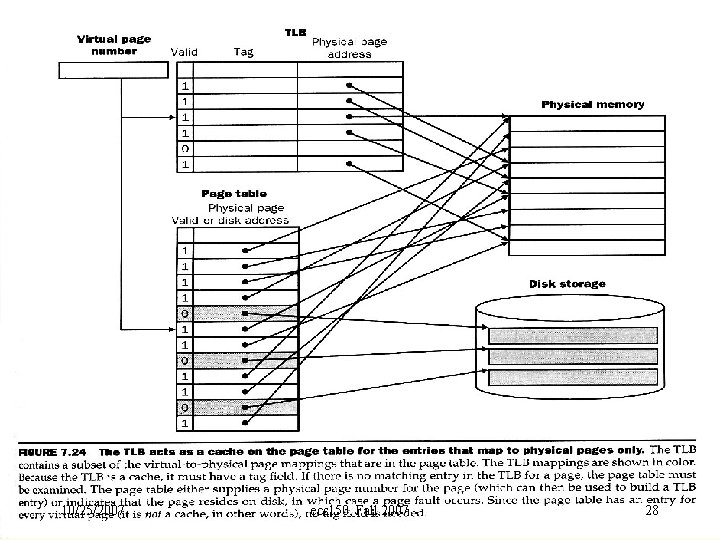


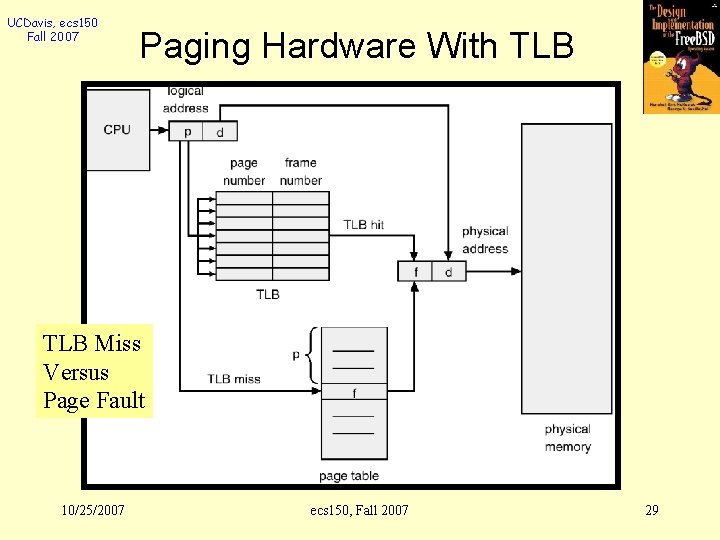

Paging Hardware With TLB

TLB Miss Versus Page Fault

Hardware or Software

- The TLB is part of the MMU (hardware):
  - automated page table entry (PTE) update, or
  - the OS handles TLB misses.
- Why software??
  - Reduce hardware complexity.
  - Flexibility in paging/TLB content management for different applications.

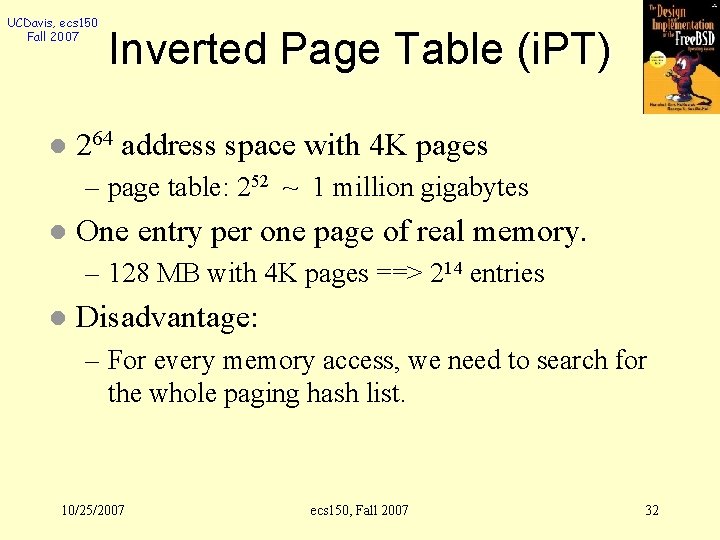

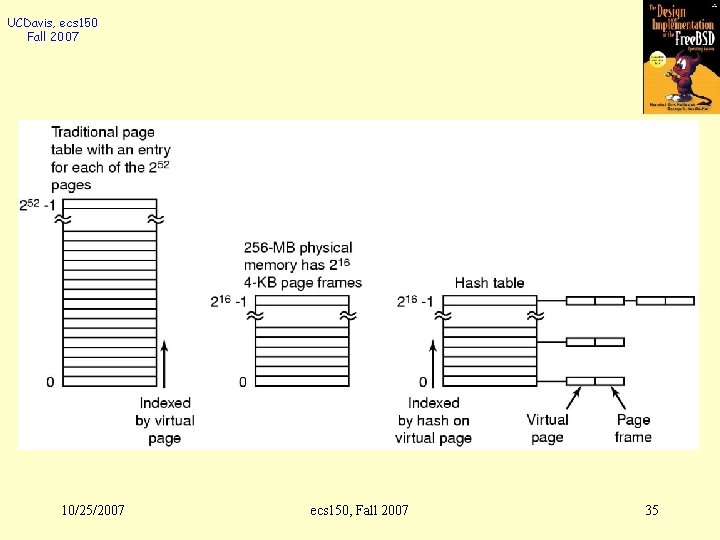

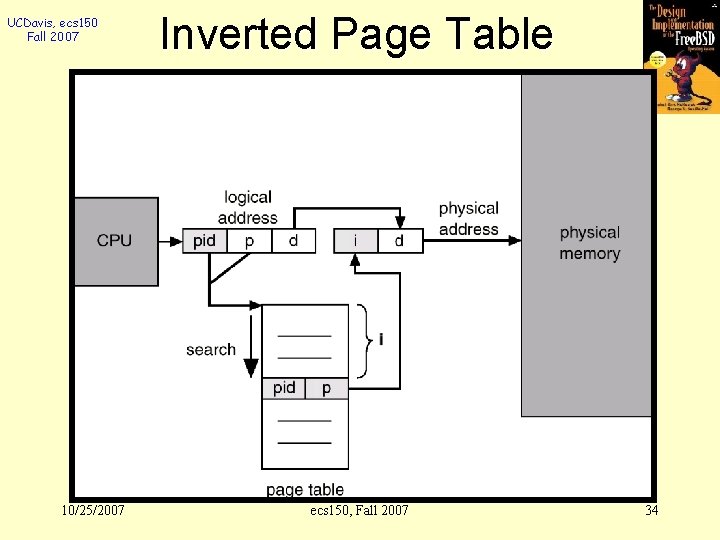

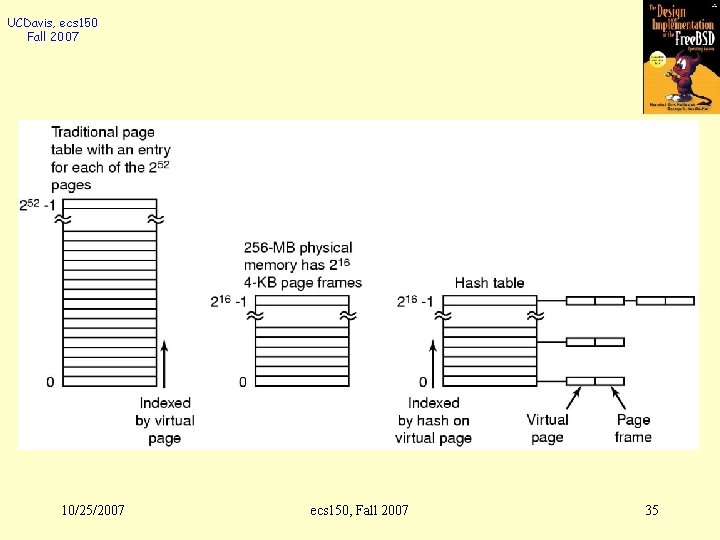


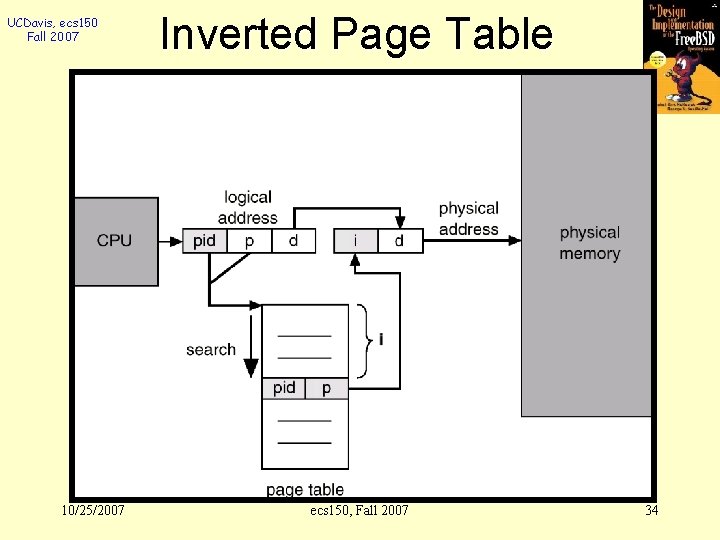

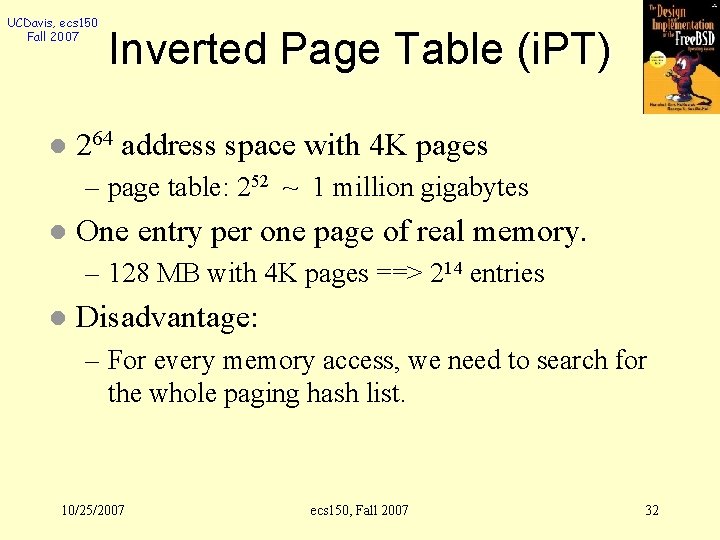

Inverted Page Table (iPT)

- 2^64 address space with 4 KB pages:
  - a conventional page table would need 2^52 entries, on the order of millions of gigabytes.
- One entry per page of real memory.
  - 128 MB with 4 KB pages ==> 2^15 entries.
- Disadvantage:
  - For every memory access, we may need to search the whole table (or a whole hash chain) for the matching entry.
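The search cost is easiest to see in code. A minimal sketch, assuming each entry records the owning process id and virtual page number (the usual <pid, page> pair; the slide does not spell out the entry format). The index of the matching entry is the frame number, which is why lookup needs a search rather than a direct index. Names are illustrative.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One entry per physical frame: which (process, virtual page) occupies it. */
typedef struct {
    int      pid;   /* -1 marks a free frame (assumed convention) */
    uint64_t vpn;   /* virtual page number stored in this frame */
} ipt_entry;

/* Return the frame holding (pid, vpn), or -1 (page fault).
 * Worst case: nframes comparisons on every memory access. */
long ipt_lookup(const ipt_entry *ipt, size_t nframes, int pid, uint64_t vpn)
{
    assert(ipt != NULL);
    for (size_t f = 0; f < nframes; f++)
        if (ipt[f].pid == pid && ipt[f].vpn == vpn)
            return (long)f;
    return -1;
}
```

The table size now scales with physical memory (2^15 entries for 128 MB) instead of with the 2^52-page virtual space, at the price of this search.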

(Figure: Page Table.)

(Figure: Inverted Page Table.)


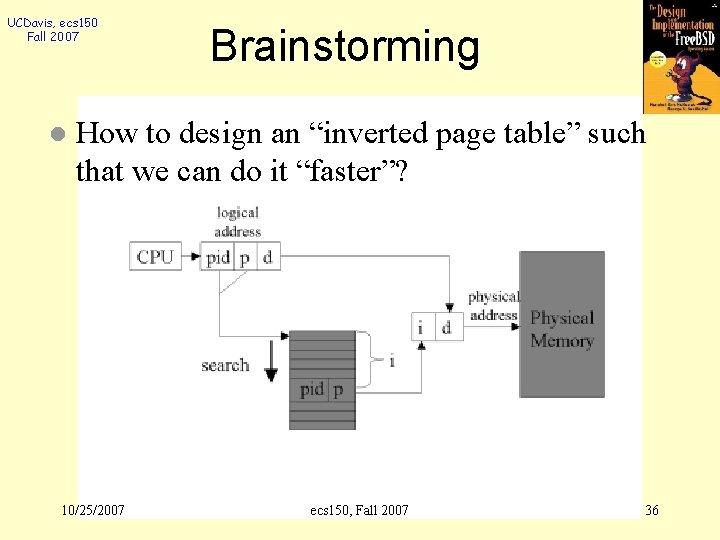

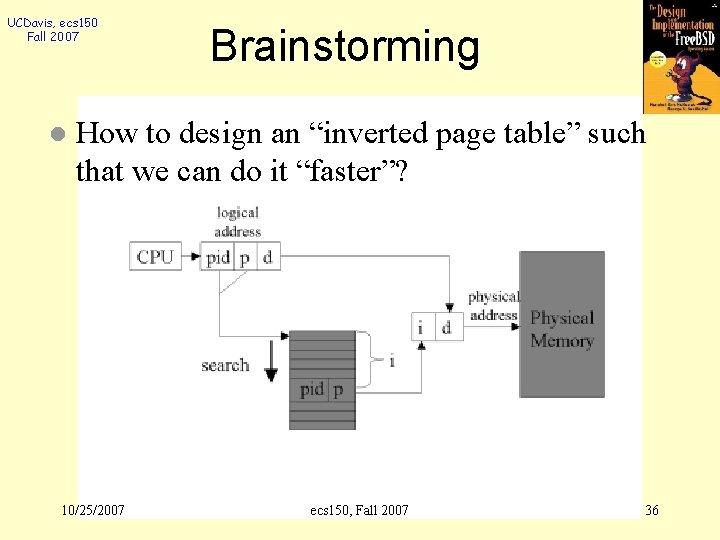

Brainstorming

- How can we design an “inverted page table” so that lookups are “faster”?

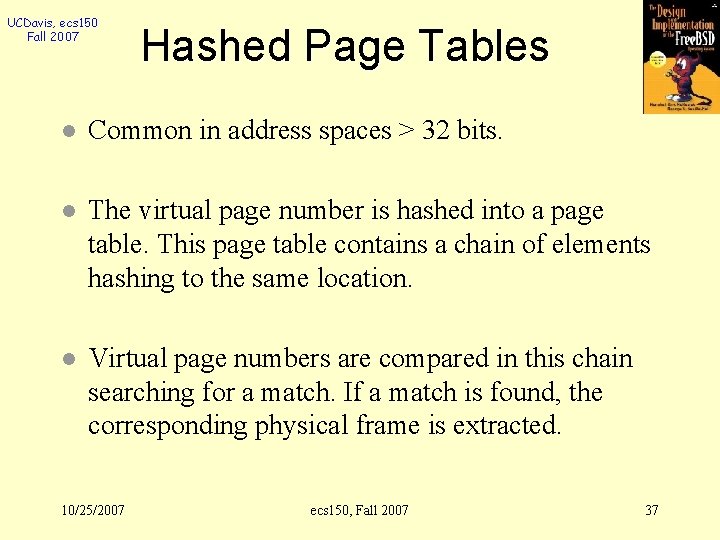

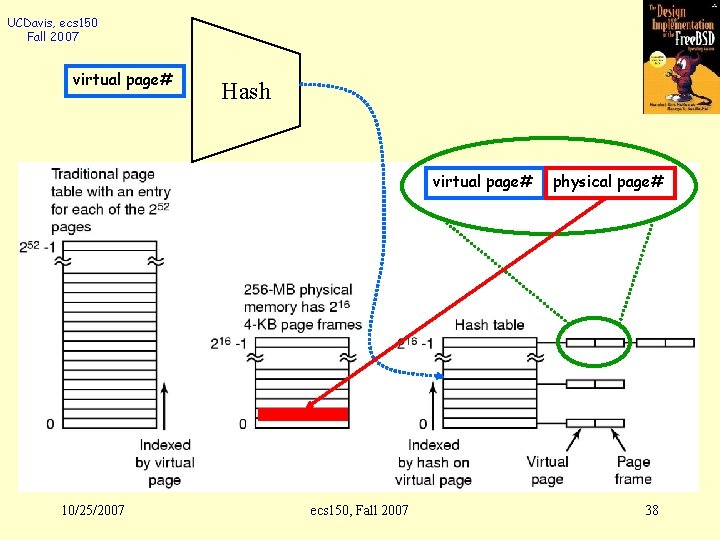

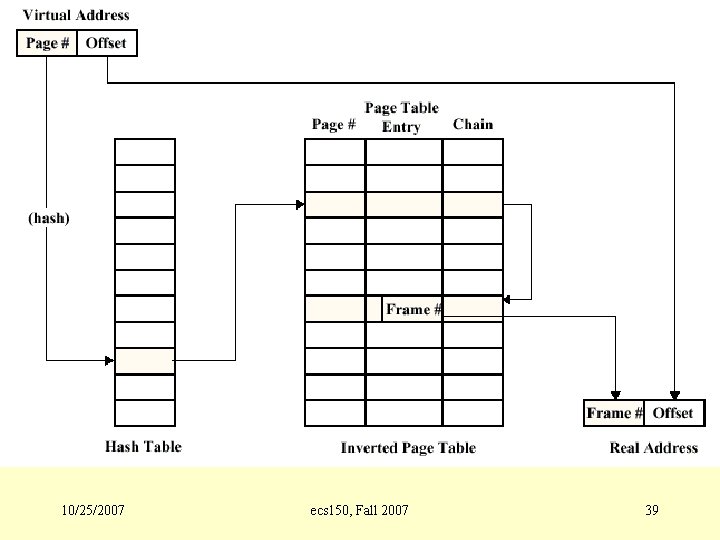

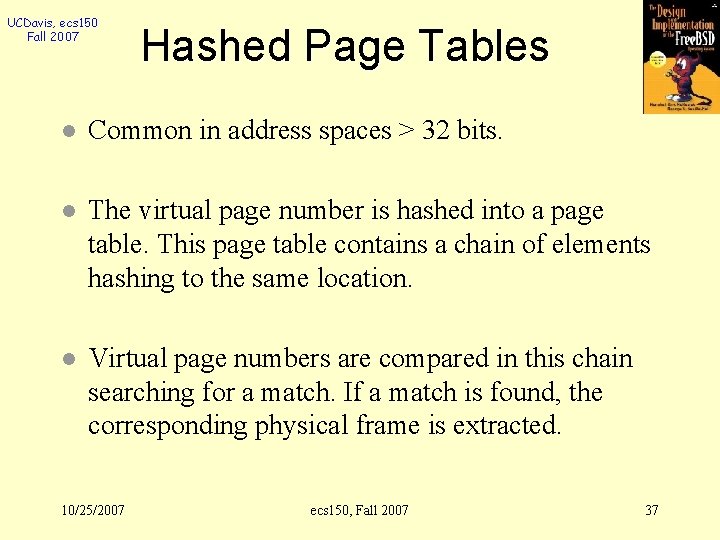

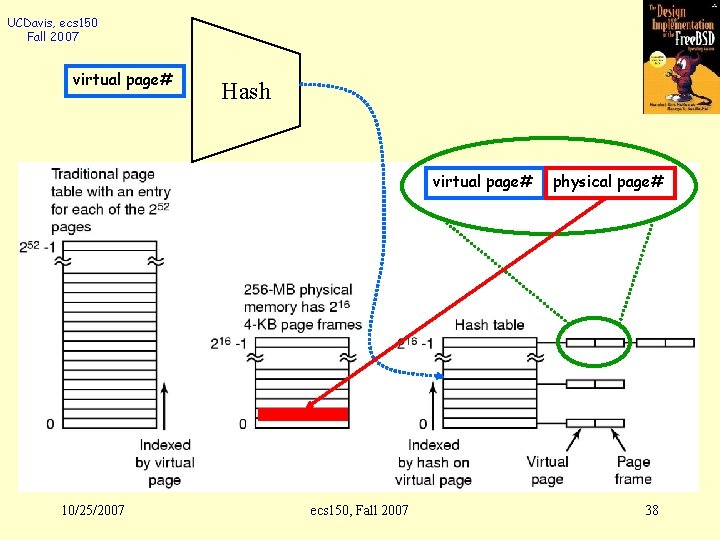

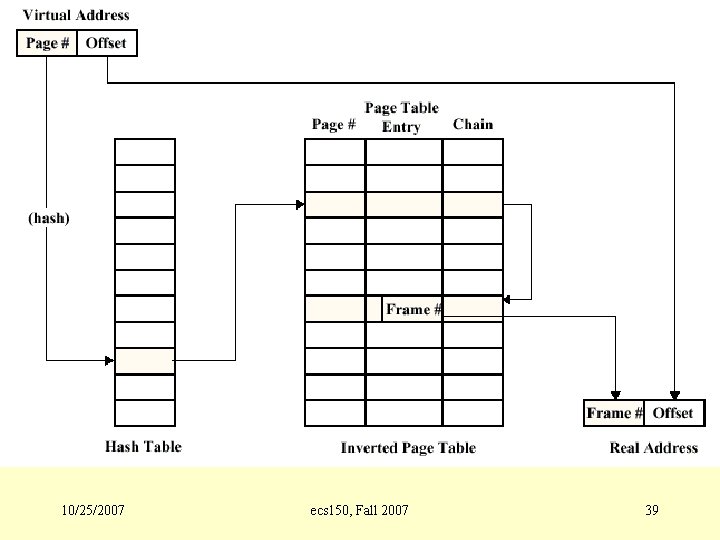

Hashed Page Tables

- Common in address spaces > 32 bits.
- The virtual page number is hashed into a page table. This page table contains a chain of elements hashing to the same location.
- Virtual page numbers are compared in this chain, searching for a match. If a match is found, the corresponding physical frame is extracted.
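A minimal sketch of a hashed page table with separate chaining. The bucket count and the hash function (low bits of the virtual page number) are assumptions; real systems use their own hash functions and entry formats.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define HASH_BUCKETS 64u            /* assumed table size */

typedef struct hpt_node {
    uint64_t vpn;                   /* virtual page number */
    uint64_t frame;                 /* physical frame number */
    struct hpt_node *next;          /* next element hashing to the same bucket */
} hpt_node;

typedef struct { hpt_node *bucket[HASH_BUCKETS]; } hashed_pt;

/* Hypothetical hash: low bits of the virtual page number. */
static size_t hpt_hash(uint64_t vpn) { return (size_t)(vpn % HASH_BUCKETS); }

/* Add a vpn -> frame mapping at the head of its bucket's chain. */
int hpt_insert(hashed_pt *t, uint64_t vpn, uint64_t frame)
{
    assert(t != NULL);
    hpt_node *n = malloc(sizeof *n);
    if (n == NULL)
        return -1;
    size_t h = hpt_hash(vpn);
    n->vpn = vpn;
    n->frame = frame;
    n->next = t->bucket[h];
    t->bucket[h] = n;
    return 0;
}

/* Walk the chain comparing virtual page numbers; on a match store the frame. */
int hpt_lookup(const hashed_pt *t, uint64_t vpn, uint64_t *frame)
{
    for (const hpt_node *n = t->bucket[hpt_hash(vpn)]; n != NULL; n = n->next) {
        if (n->vpn == vpn) {
            *frame = n->frame;
            return 1;
        }
    }
    return 0;                       /* no mapping: page fault */
}

/* Free every chain. */
void hpt_destroy(hashed_pt *t)
{
    for (size_t h = 0; h < HASH_BUCKETS; h++) {
        hpt_node *n = t->bucket[h];
        while (n != NULL) {
            hpt_node *next = n->next;
            free(n);
            n = next;
        }
        t->bucket[h] = NULL;
    }
}
```

Virtual pages 5, 69, and 133 all hash to bucket 5 here, so a lookup walks that bucket's chain comparing page numbers, as the slide describes.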

(Figure: virtual page# → hash → chain of (virtual page#, physical page#) entries.)


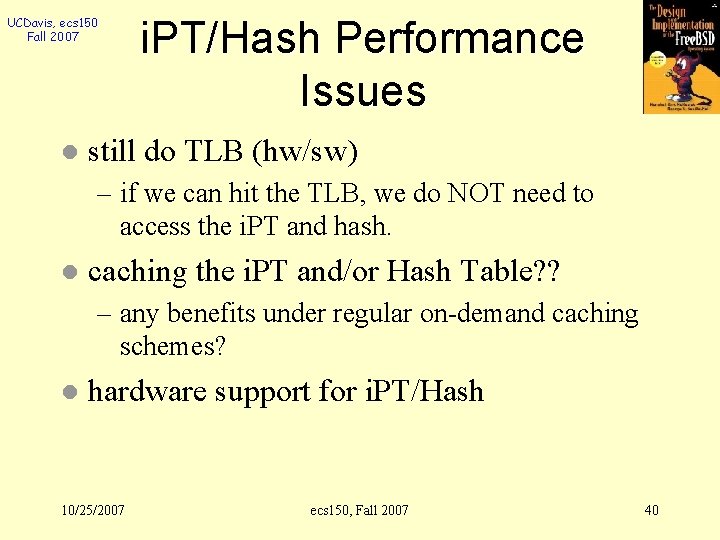

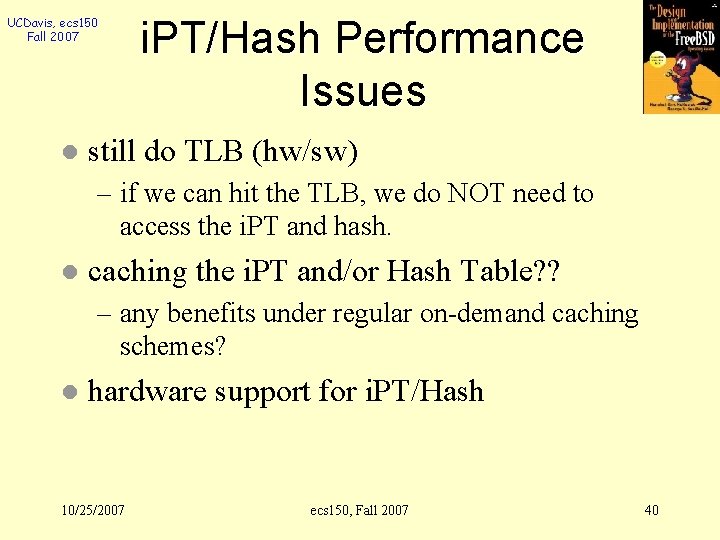

iPT/Hash Performance Issues

- Still use a TLB (hardware or software):
  - if we hit in the TLB, we do NOT need to access the iPT and hash table.
- Caching the iPT and/or hash table??
  - Any benefits under regular on-demand caching schemes?
- Hardware support for iPT/hash.


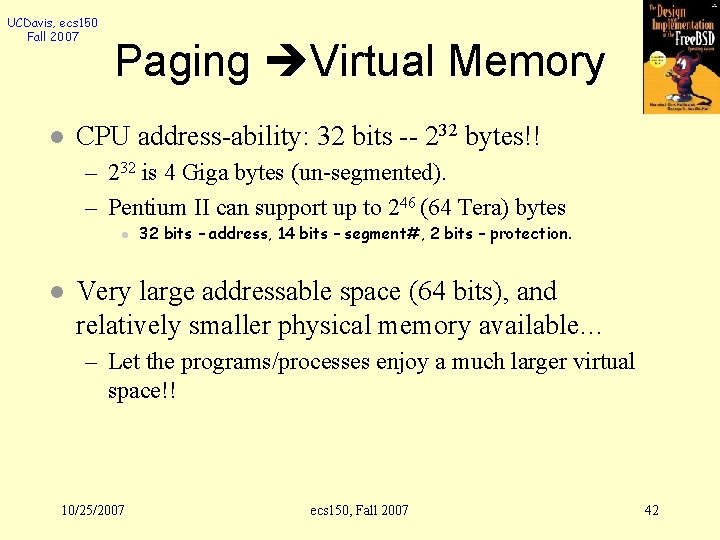

Paging Virtual Memory

- CPU addressability: 32 bits, i.e., 2^32 bytes!!
  - 2^32 bytes is 4 gigabytes (unsegmented).
  - The Pentium II can support up to 2^46 (64 tera) bytes:
    - 32 bits of address, 14 bits of segment #, 2 bits of protection.
- A very large addressable space (64 bits) and relatively small physical memory:
  - Let programs/processes enjoy a much larger virtual space!!

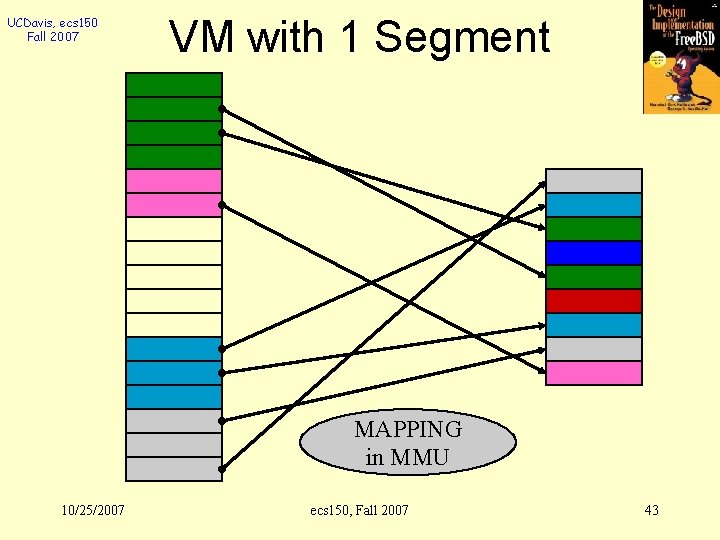

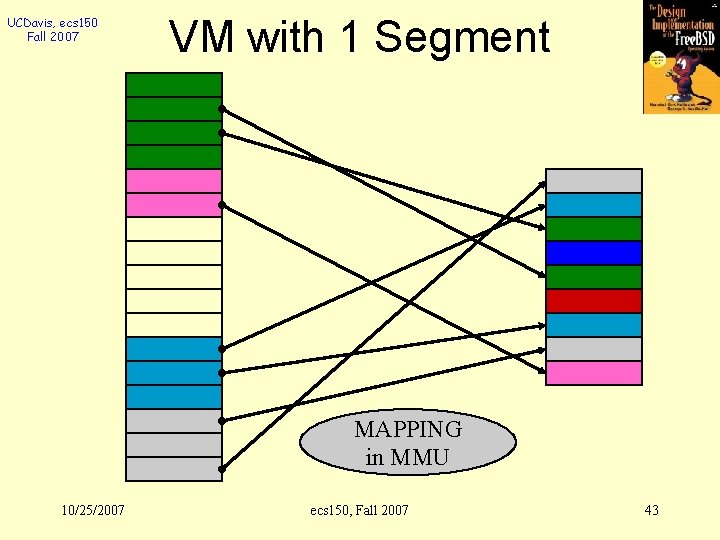

VM with 1 Segment (figure: MAPPING in MMU)

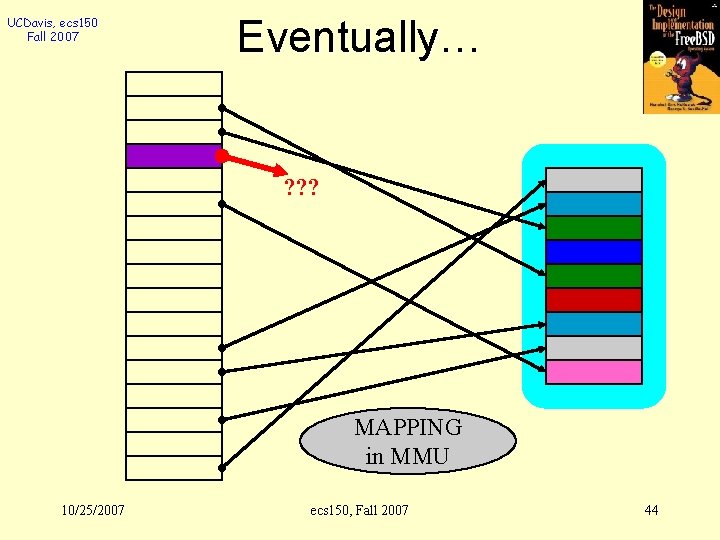

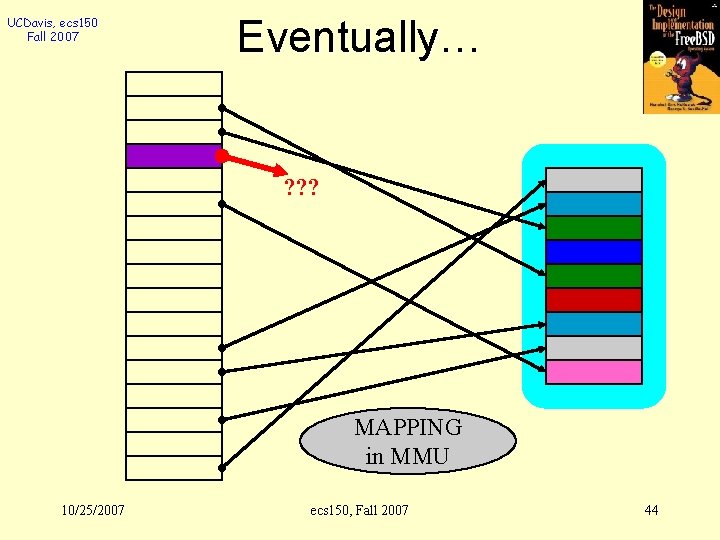

Eventually…??? (figure: MAPPING in MMU)

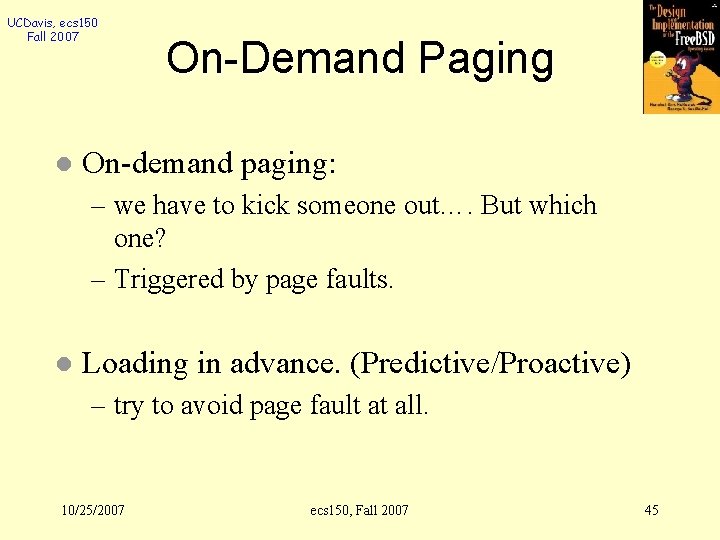

On-Demand Paging

- On-demand paging:
  - we have to kick someone out… but which one?
  - Triggered by page faults.
- Loading in advance (predictive/proactive):
  - try to avoid page faults altogether.

Demand Paging

- On a page fault the OS:
  - Saves user registers and process state.
  - Determines that the exception was a page fault.
  - Finds a free page frame.
  - Issues a read from disk into the free page frame.
  - Waits for the seek and rotational latency while the page is transferred into memory.
  - Restores process state and resumes execution.

Page Replacement

1. Find the location of the desired page on disk.
2. Find a free frame:
   - If there is a free frame, use it.
   - If there is no free frame, use a page replacement algorithm to select a victim frame.
3. Read the desired page into the (newly) free frame. Update the page and frame tables.
4. Restart the process.

Page Replacement Algorithms

- Goal: minimize the page-fault rate.

Page Replacement

- Optimal
- FIFO
- Least Recently Used (LRU)
- Not Recently Used (NRU)
- Second Chance
- Clock Paging

Optimal

- Estimate each page's next reference time in the future.
- Select (evict) the page whose next reference is the farthest away.

LRU

- An implementation issue:
  - we need to keep track of the last modification or access time for each page;
  - timestamp: 32 bits.
- How can LRU be implemented efficiently?

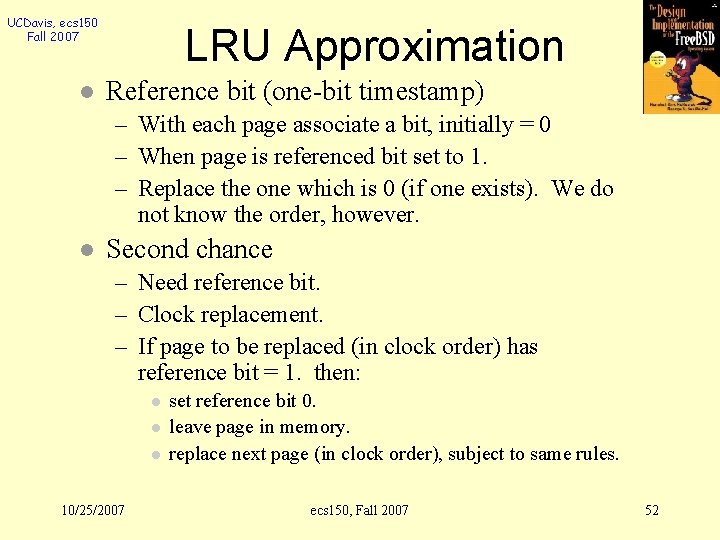

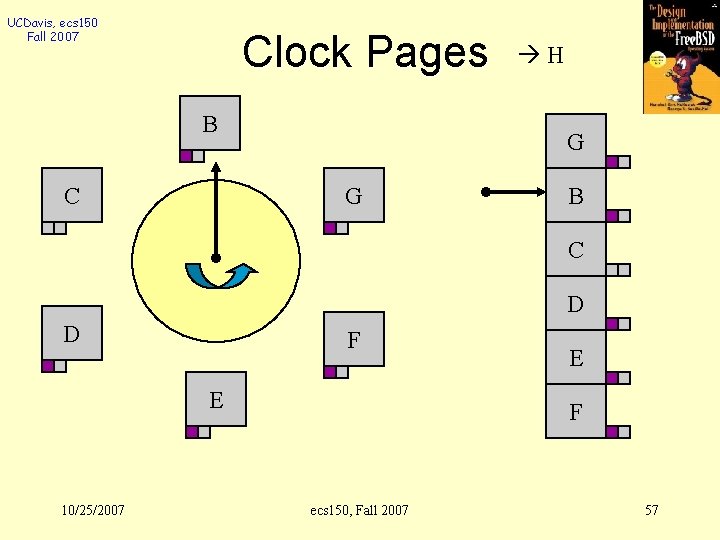

LRU Approximation

- Reference bit (a one-bit timestamp):
  - With each page associate a bit, initially 0.
  - When the page is referenced, the bit is set to 1.
  - Replace a page whose bit is 0 (if one exists). We do not know the order, however.
- Second chance:
  - Needs the reference bit.
  - Clock replacement.
  - If the page to be replaced (in clock order) has reference bit = 1, then:
    - set the reference bit to 0,
    - leave the page in memory, and
    - replace the next page (in clock order), subject to the same rules.

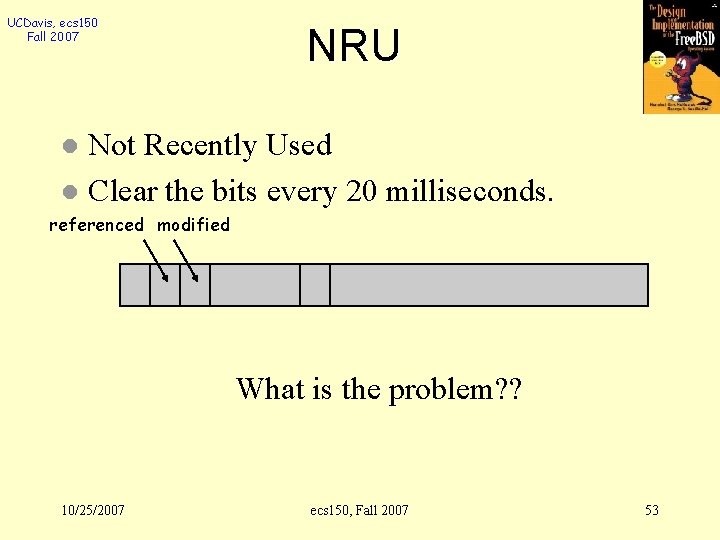

NRU: Not Recently Used

- Clear the referenced bits every 20 milliseconds.
- (Figure: referenced and modified bits for each page.)
- What is the problem??
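NRU is commonly described as sorting pages into four classes by their referenced (R) and modified (M) bits and evicting from the lowest nonempty class. A minimal sketch under that description; picking the first page found within a class is a simplification (descriptions of NRU often pick one at random), and all names are illustrative.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct {
    bool referenced;   /* R: cleared periodically (every 20 ms on the slide) */
    bool modified;     /* M: cleared only when the page is written back */
} page_bits;

/* Class 0: !R !M, 1: !R M, 2: R !M, 3: R M. */
static int nru_class(page_bits p) { return 2 * p.referenced + p.modified; }

/* Index of a page in the lowest nonempty class (first one found). */
size_t nru_victim(const page_bits *pages, size_t n)
{
    assert(n > 0);
    size_t best = 0;
    for (size_t i = 1; i < n; i++)
        if (nru_class(pages[i]) < nru_class(pages[best]))
            best = i;
    return best;
}

/* The periodic refresh: forget which pages were recently used. */
void nru_clear_referenced(page_bits *pages, size_t n)
{
    for (size_t i = 0; i < n; i++)
        pages[i].referenced = false;
}
```

The victim choice depends on when the periodic clearing last ran, which is one way into the slide's question.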

Page Replacement??

- Efficient approximation of LRU
- No periodic refreshing
- How to do that?

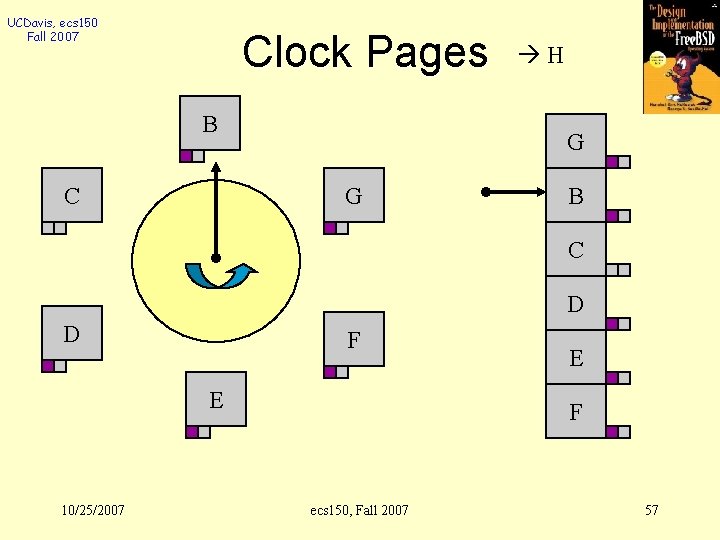

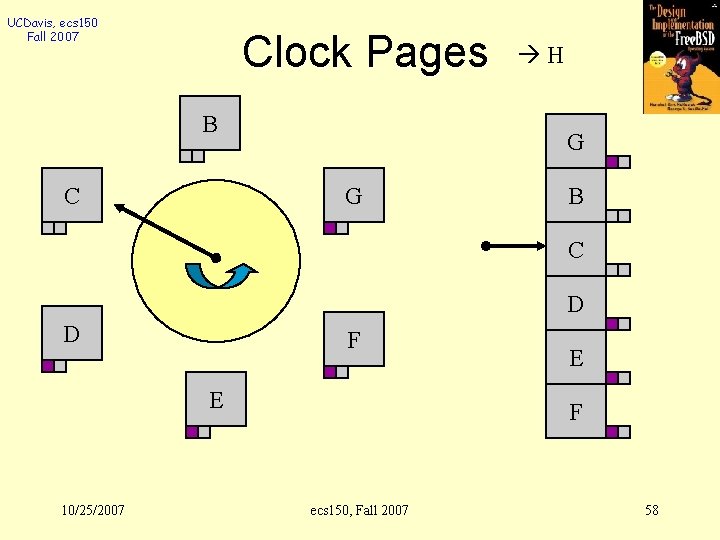

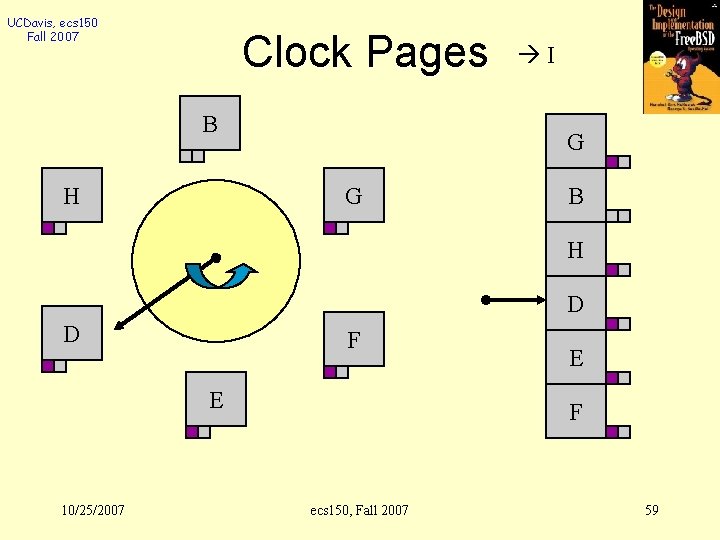

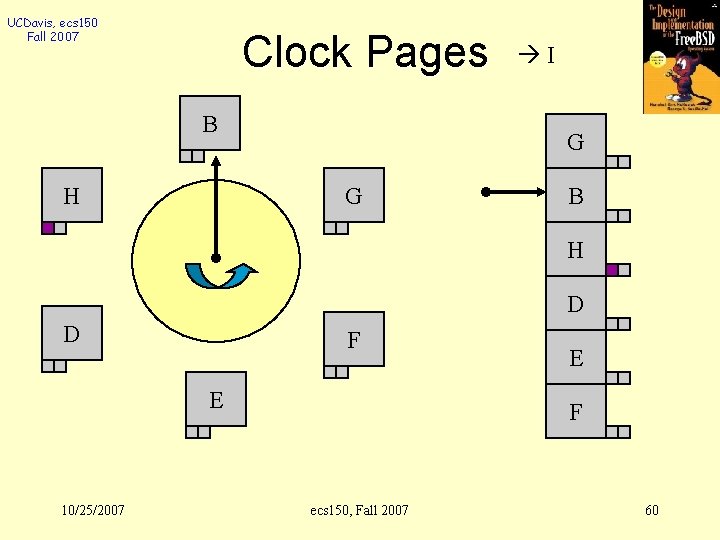

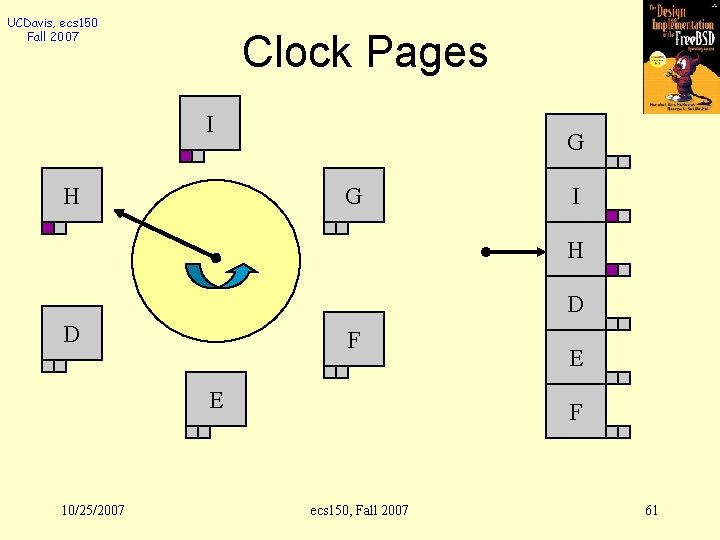

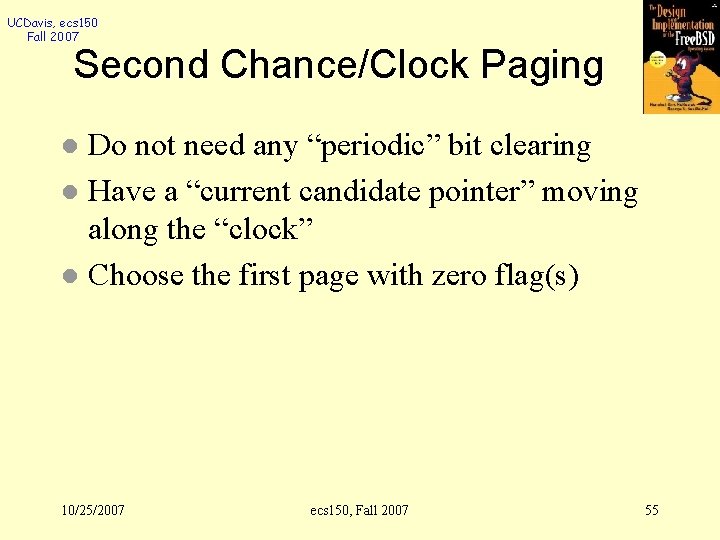

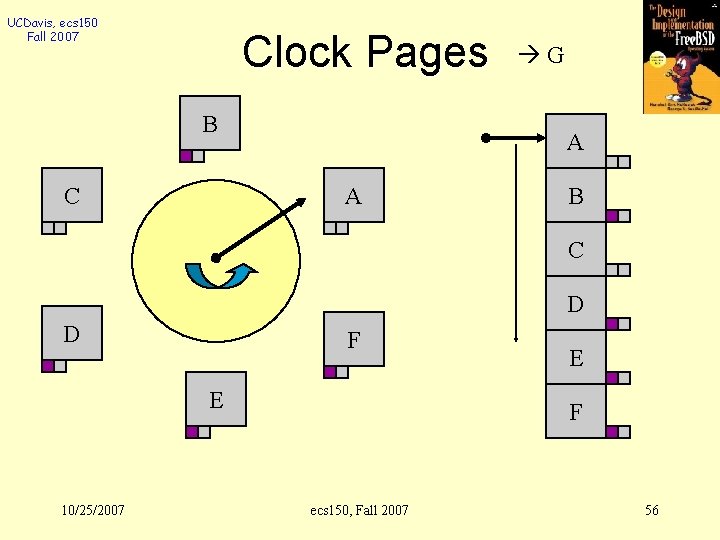

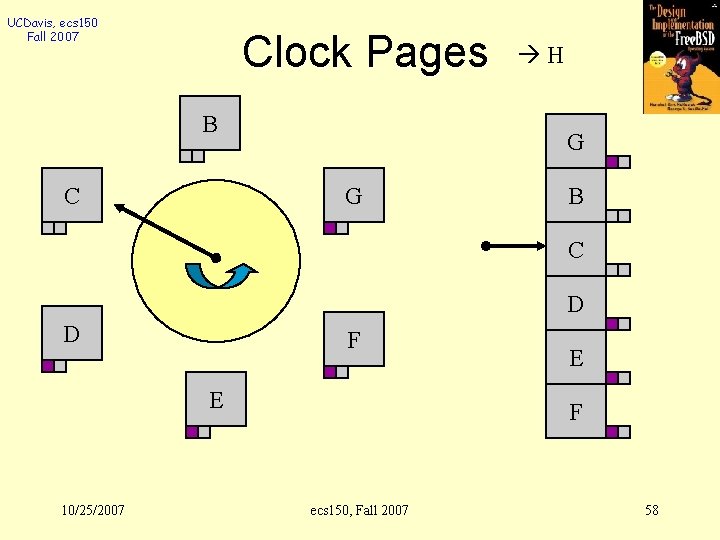

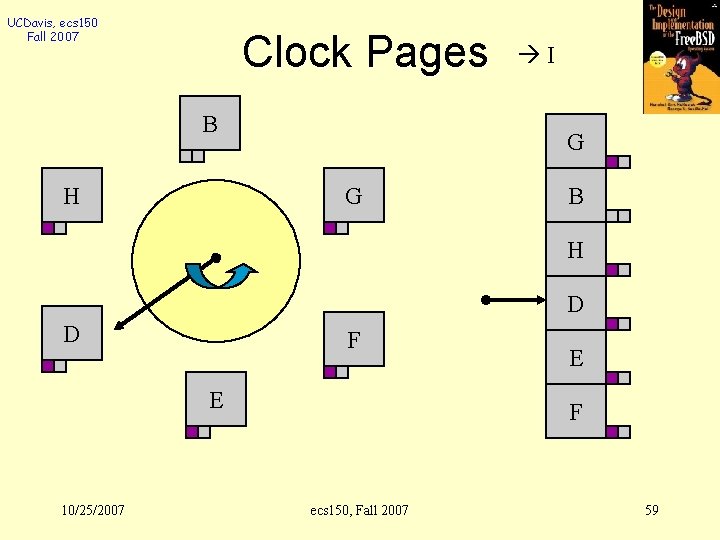

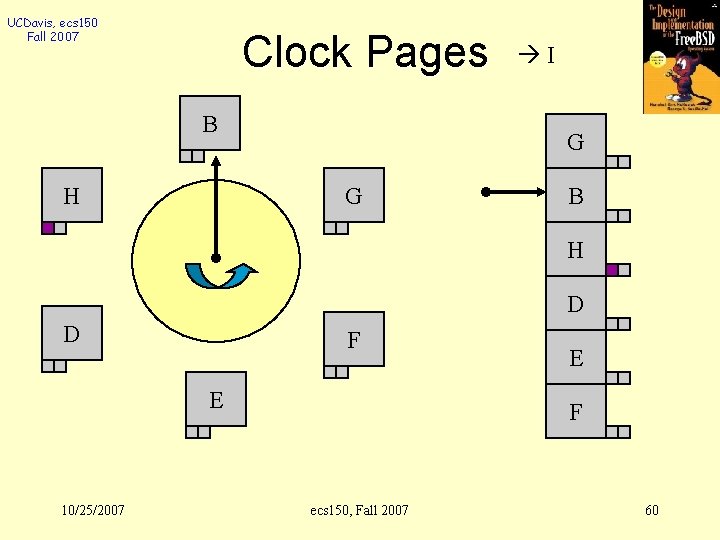

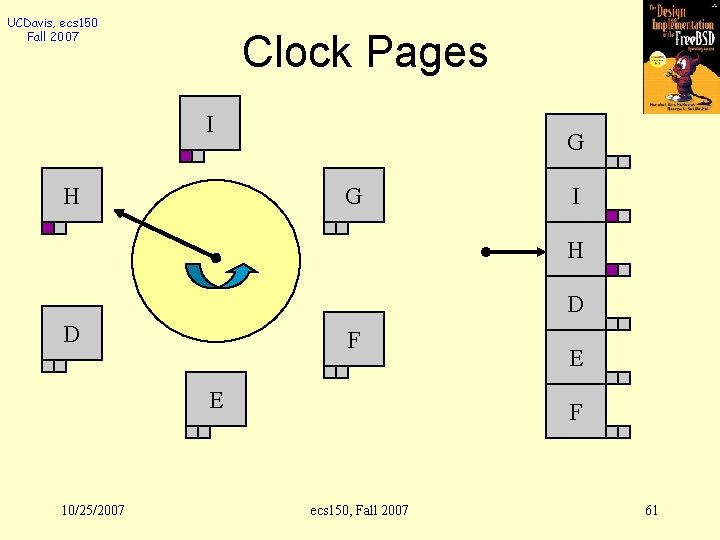

Second Chance/Clock Paging

- Does not need any “periodic” bit clearing.
- Has a “current candidate pointer” moving along the “clock”.
- Chooses the first page with zero flag(s).
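The hand movement can be sketched as a single victim-selection function. The fixed maximum of 8 frames and the structure and function names are assumptions; the caller is expected to load the new page into the returned frame and set its reference bit.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct {
    int    page[8];    /* resident page per frame (assumed max 8 frames) */
    bool   ref[8];     /* reference bit per frame */
    size_t nframes;
    size_t hand;       /* current candidate pointer */
} clock_state;

/* Sweep from the hand: a frame with ref == 1 gets a second chance (its bit is
 * cleared); the first frame with ref == 0 is the victim. Bits are cleared only
 * as the hand passes, so no periodic clearing is needed. Terminates within one
 * full sweep because every bit it passes becomes 0. */
size_t clock_select_victim(clock_state *c)
{
    assert(c->nframes > 0 && c->nframes <= 8);
    for (;;) {
        size_t f = c->hand;
        c->hand = (c->hand + 1) % c->nframes;
        if (!c->ref[f])
            return f;
        c->ref[f] = false;     /* second chance */
    }
}
```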

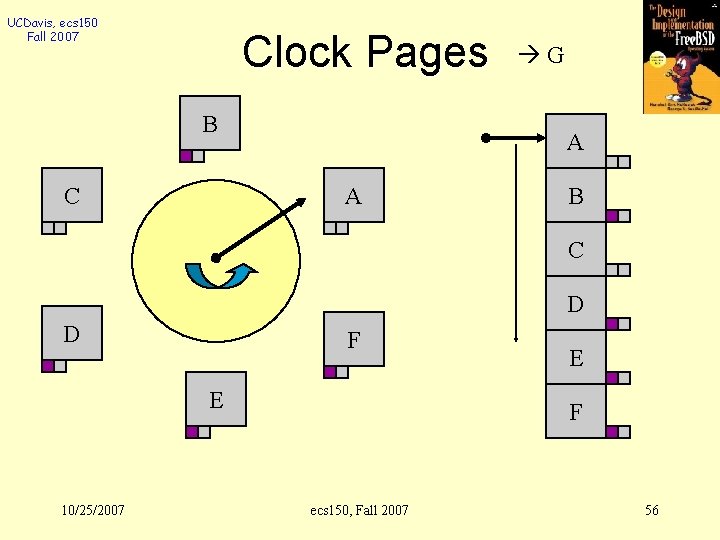

Clock Pages (figure: pages A, B, C, D, E, F in the clock; page G arrives)

Clock Pages (figure: pages G, B, C, D, E, F in the clock; page H arrives)


Clock Pages (figure: pages G, B, H, D, E, F in the clock; page I arrives)


Clock Pages (figure: pages G, I, H, D, E, F in the clock)

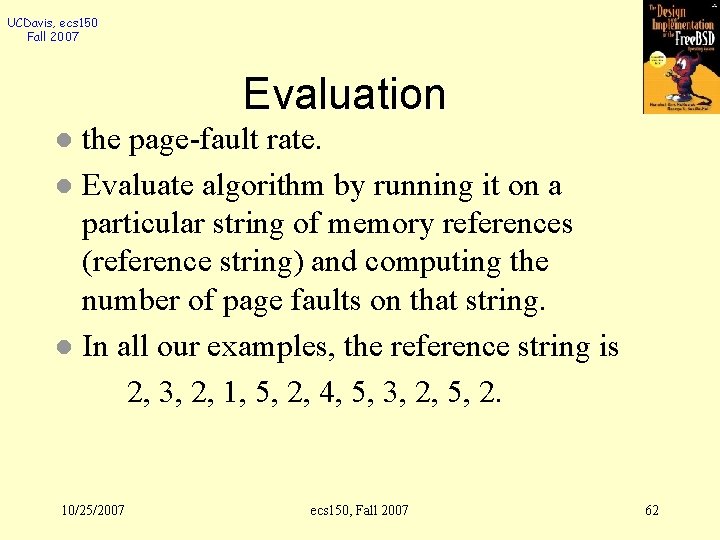

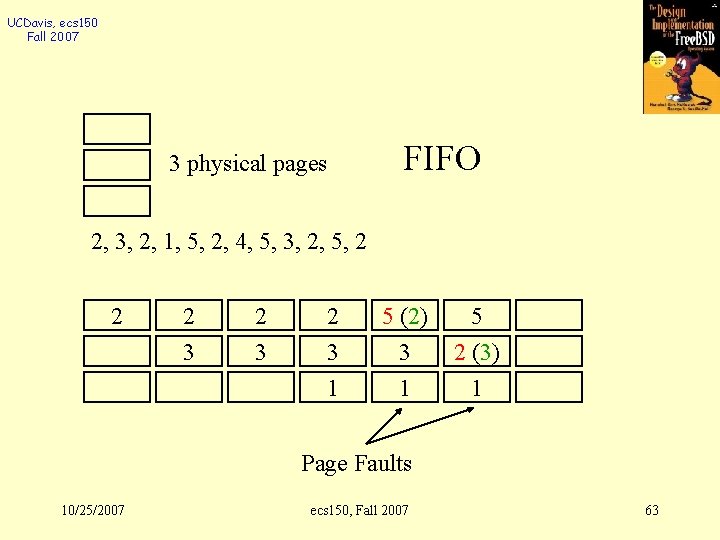

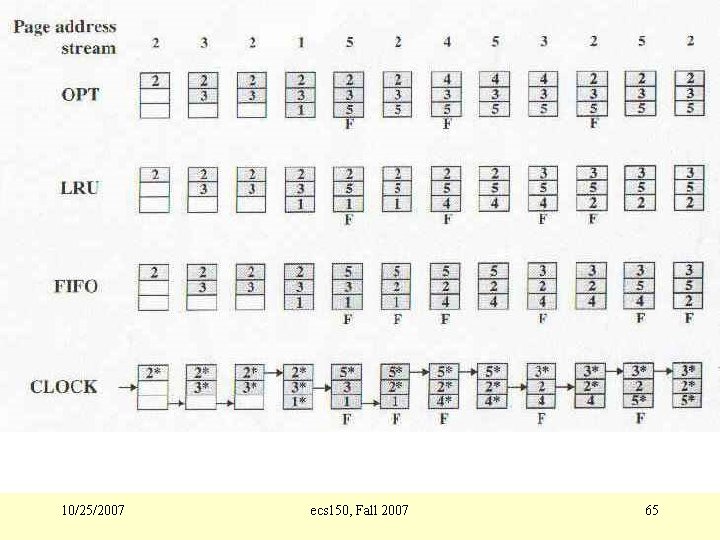

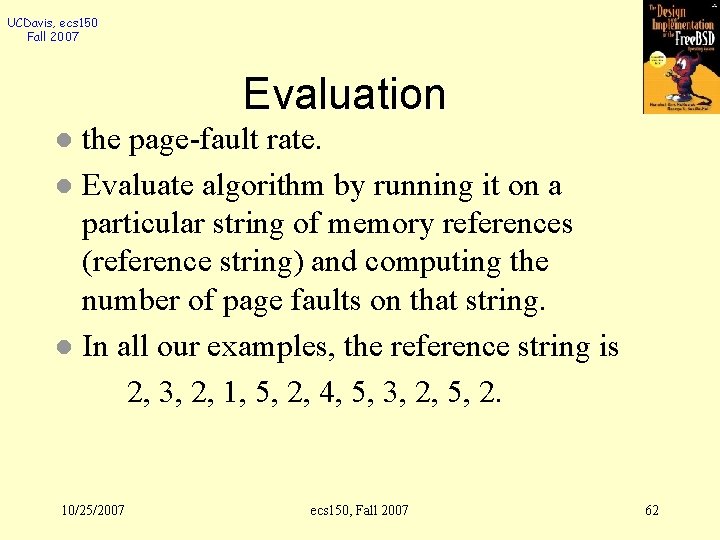

Evaluation

- Goal: a low page-fault rate.
- Evaluate an algorithm by running it on a particular string of memory references (a reference string) and computing the number of page faults on that string.
- In all our examples, the reference string is 2, 3, 2, 1, 5, 2, 4, 5, 3, 2, 5, 2.

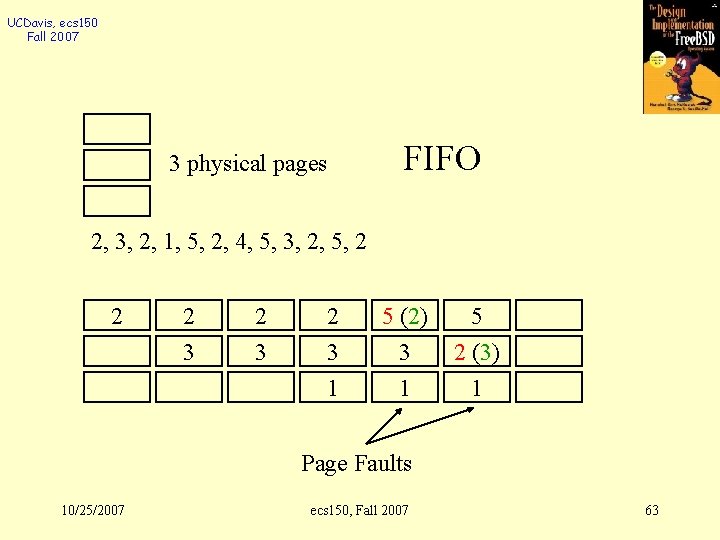

FIFO with 3 physical pages, reference string 2, 3, 2, 1, 5, 2, 4, 5, 3, 2, 5, 2

(Figure: frame contents after each page fault: [2], [2 3], [2 3 1], [5 3 1] (2 evicted), [5 2 1] (3 evicted), …; Page Faults.)

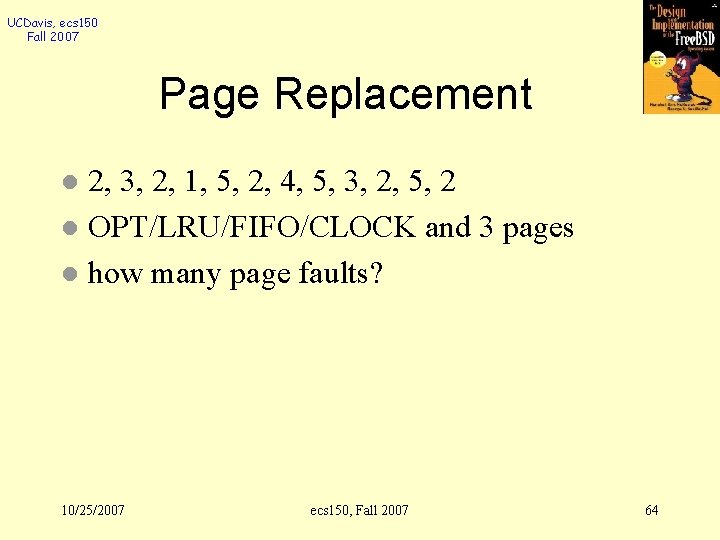

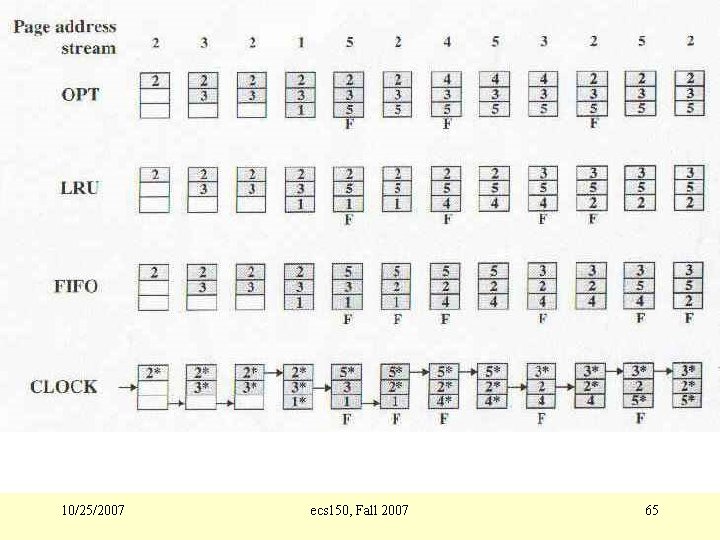

Page Replacement

- Reference string: 2, 3, 2, 1, 5, 2, 4, 5, 3, 2, 5, 2
- OPT/LRU/FIFO/CLOCK with 3 pages
- How many page faults?
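The exercise can be checked with a small simulator. This is a sketch under stated conventions: FIFO evicts the oldest load, LRU the oldest use, OPT the page whose next use is farthest away (ties go to the lowest frame), and CLOCK sets a page's reference bit to 1 both when it is loaded and when it is hit. Other CLOCK conventions (for example, a bit of 0 on load) can give a different count. `count_faults` and `policy` are illustrative names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAXF 16                                 /* assumed upper bound on frames */

typedef enum { FIFO, LRU, OPT, CLOCK } policy;

/* Index of page in frames[0..n), or -1. */
static int find(const int *frames, size_t n, int page)
{
    for (size_t i = 0; i < n; i++)
        if (frames[i] == page) return (int)i;
    return -1;
}

/* Count page faults for refs[0..len) with nf frames. */
int count_faults(policy pol, const int *refs, size_t len, size_t nf)
{
    int frames[MAXF];
    size_t stamp[MAXF];                         /* FIFO: load time; LRU: last use */
    bool ref[MAXF];                             /* CLOCK: reference bits */
    size_t used = 0, hand = 0;
    int faults = 0;
    assert(nf > 0 && nf <= MAXF);

    for (size_t t = 0; t < len; t++) {
        int i = find(frames, used, refs[t]);
        if (i >= 0) {                           /* hit */
            if (pol == LRU) stamp[i] = t;
            if (pol == CLOCK) ref[i] = true;
            continue;
        }
        faults++;
        size_t v;                               /* frame to (re)fill */
        if (used < nf) {
            v = used++;                         /* a free frame is available */
        } else if (pol == CLOCK) {
            while (ref[hand]) { ref[hand] = false; hand = (hand + 1) % nf; }
            v = hand;
        } else if (pol == OPT) {
            size_t best_next = 0;
            v = 0;
            for (size_t f = 0; f < nf; f++) {   /* farthest next use wins */
                size_t next = len;              /* len means "never used again" */
                for (size_t u = t + 1; u < len; u++)
                    if (refs[u] == frames[f]) { next = u; break; }
                if (next > best_next) { best_next = next; v = f; }
            }
        } else {                                /* FIFO and LRU: smallest stamp */
            v = 0;
            for (size_t f = 1; f < nf; f++)
                if (stamp[f] < stamp[v]) v = f;
        }
        frames[v] = refs[t];
        stamp[v] = t;
        ref[v] = true;
        if (pol == CLOCK) hand = (v + 1) % nf;
    }
    return faults;
}
```

Under these conventions, the reference string above with 3 frames gives 6 faults for OPT, 7 for LRU, 8 for CLOCK, and 9 for FIFO.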


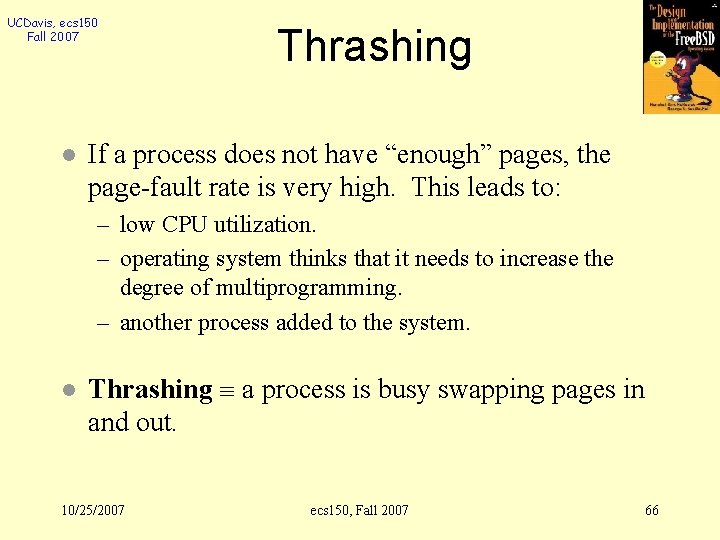

Thrashing

- If a process does not have “enough” pages, the page-fault rate is very high. This leads to:
  - low CPU utilization;
  - the operating system thinking that it needs to increase the degree of multiprogramming;
  - another process being added to the system.
- Thrashing: a process is busy swapping pages in and out.

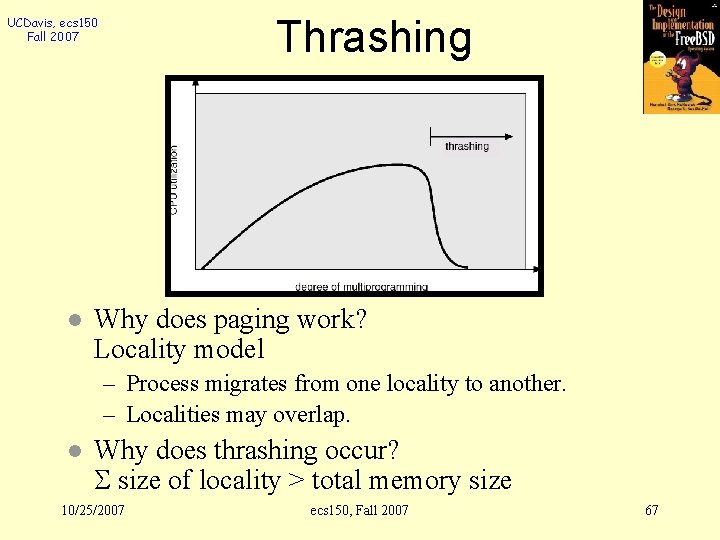

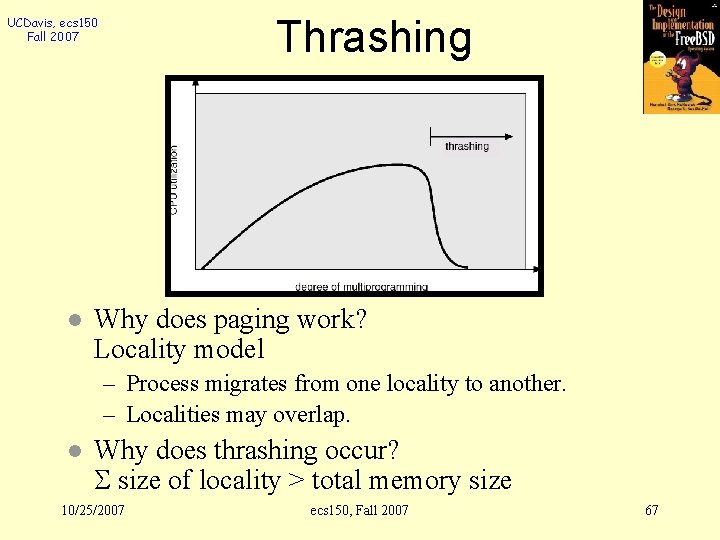

Thrashing

- Why does paging work? The locality model:
  - A process migrates from one locality to another.
  - Localities may overlap.
- Why does thrashing occur? Size of locality > total memory size.

How to Handle Thrashing?

- Brainstorming!!

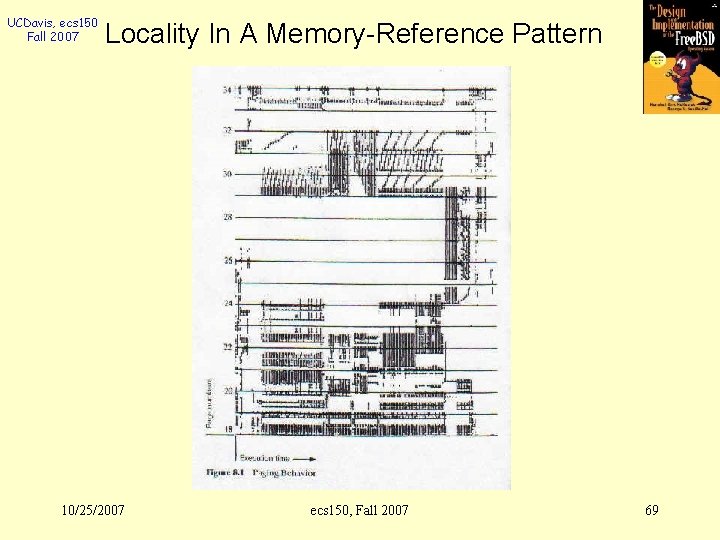

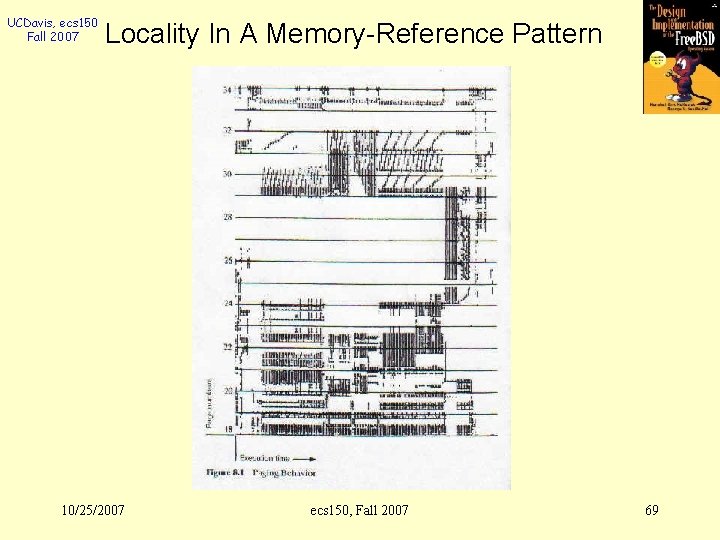

Locality in a Memory-Reference Pattern

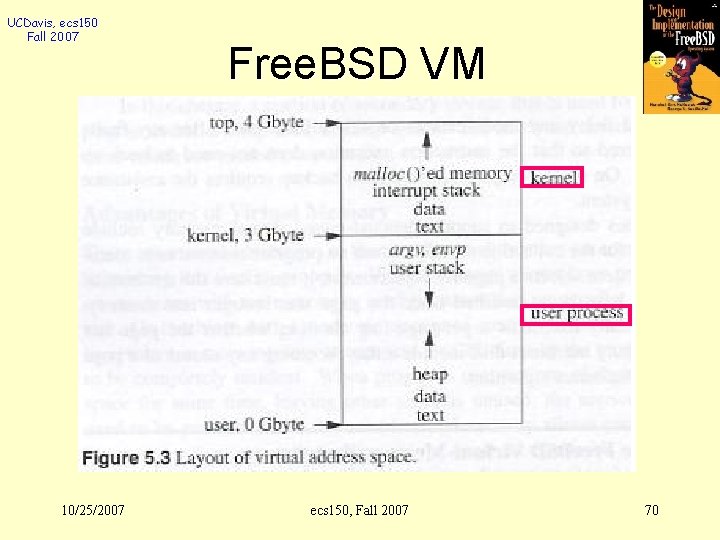

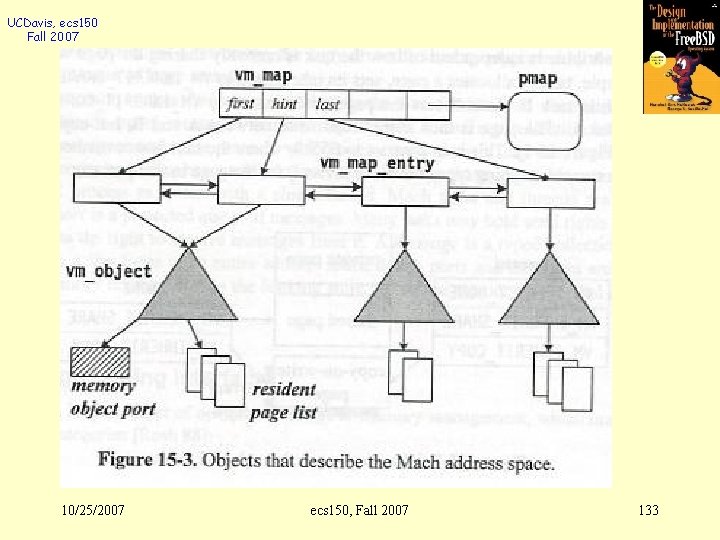

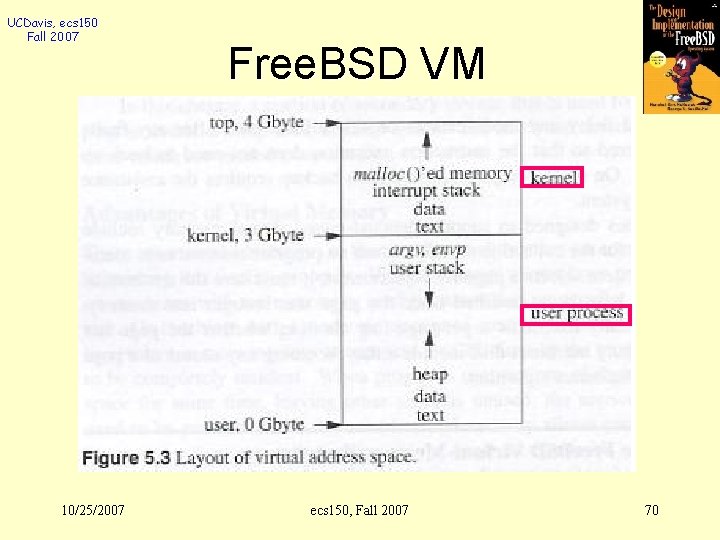

FreeBSD VM

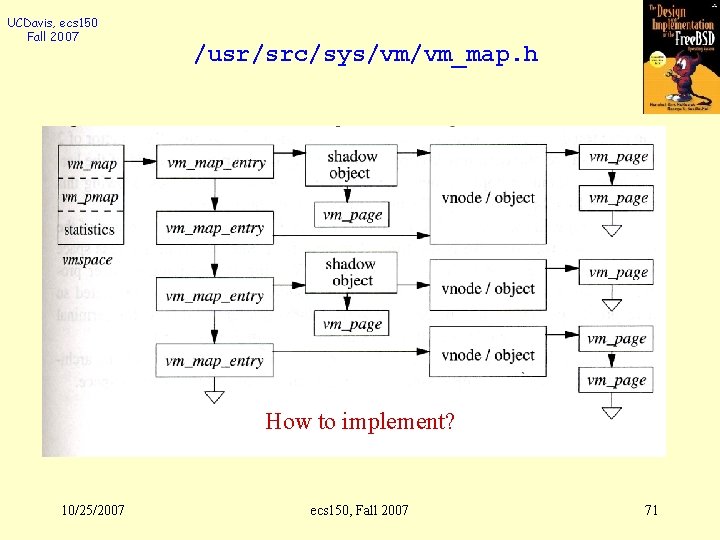

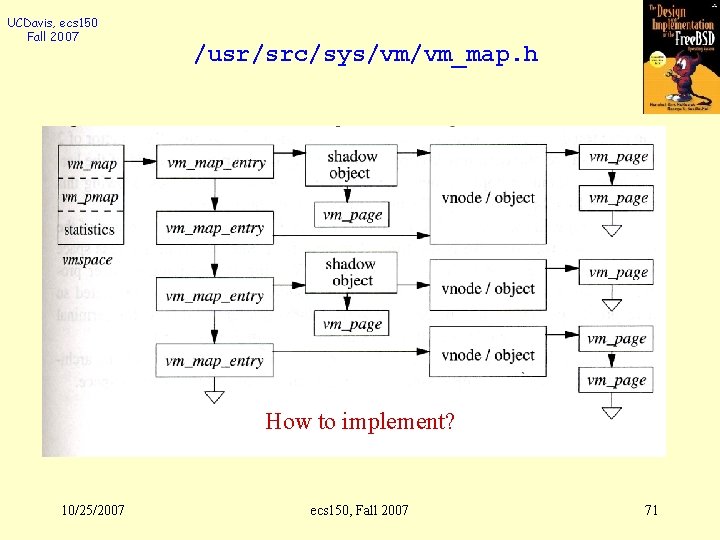

/usr/src/sys/vm/vm_map.h

How to implement?

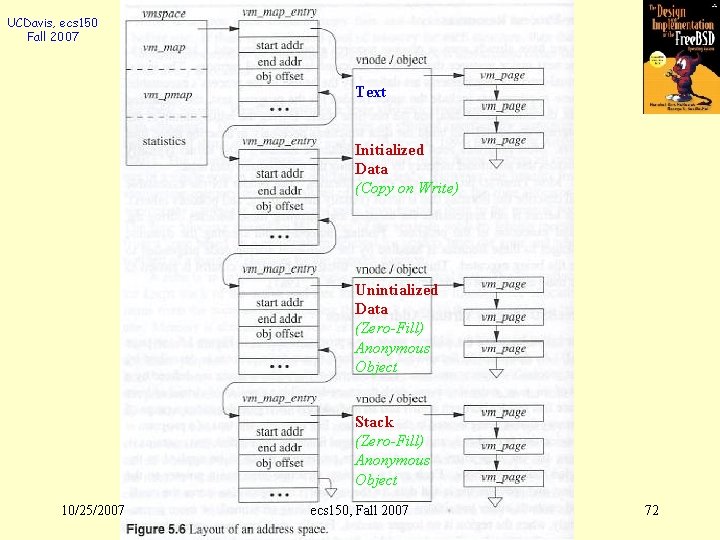

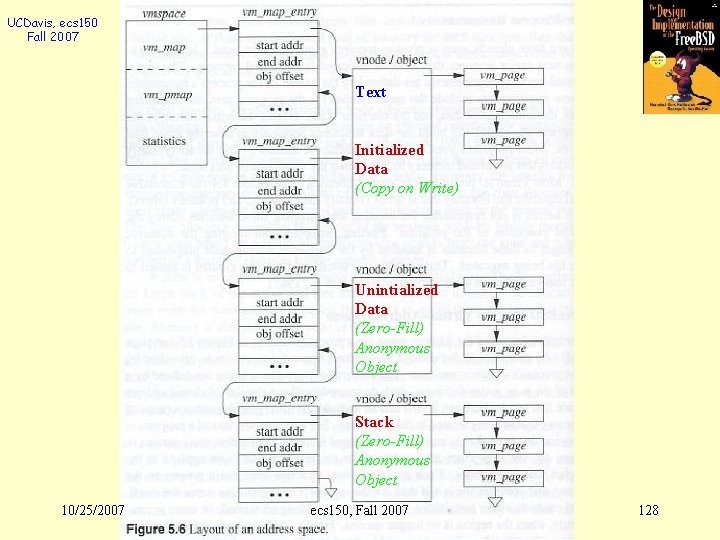

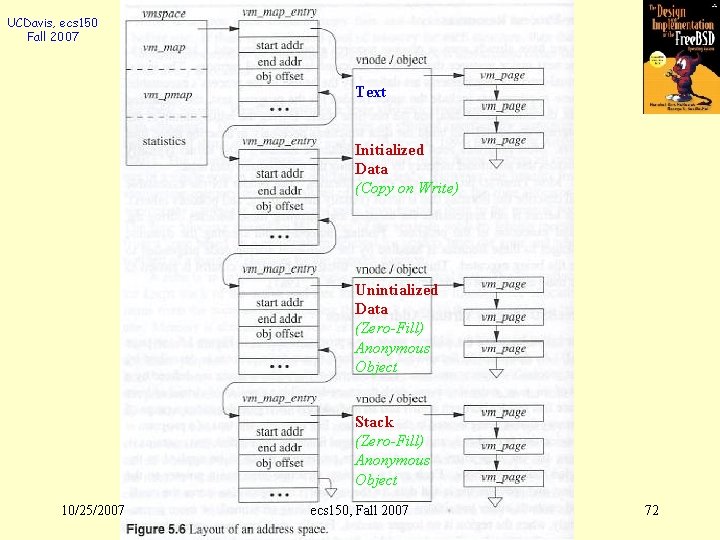

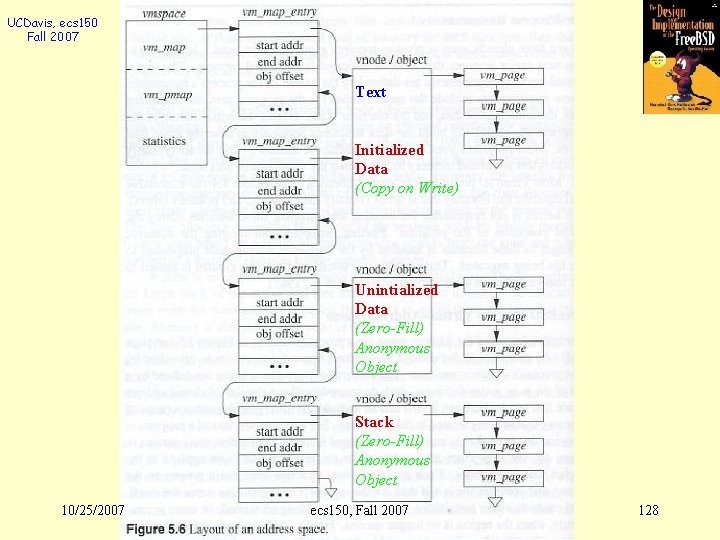

(Figure: process segments and their backing objects: Text; Initialized Data (Copy on Write); Uninitialized Data (Zero-Fill), Anonymous Object; Stack (Zero-Fill), Anonymous Object.)

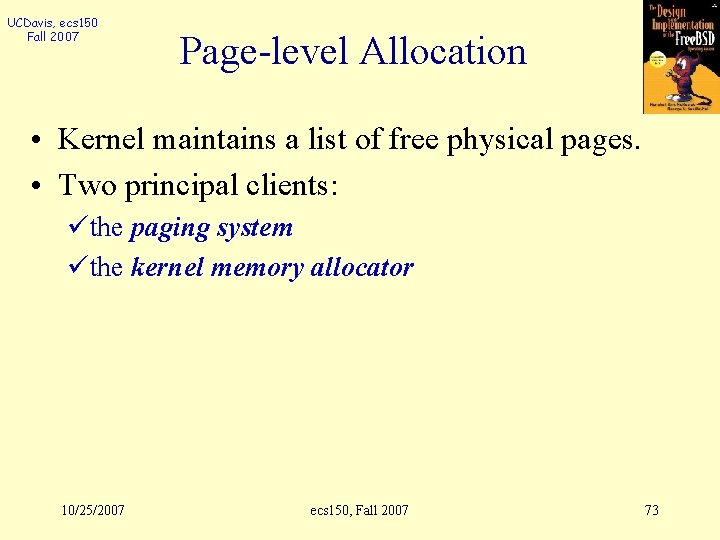

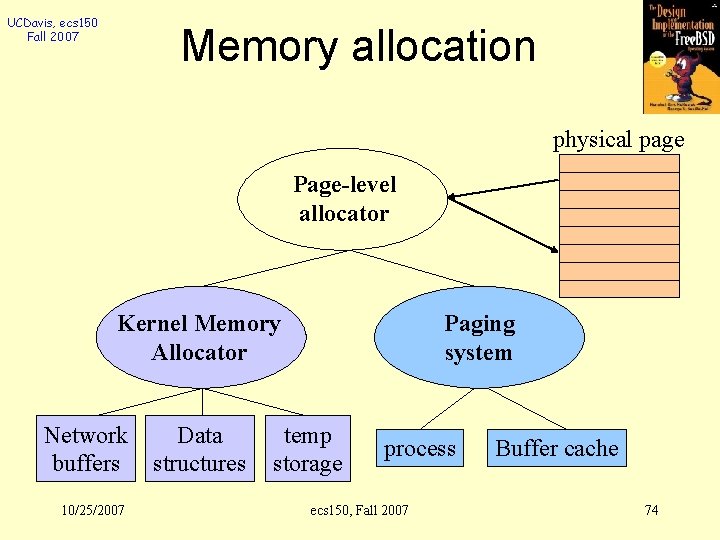

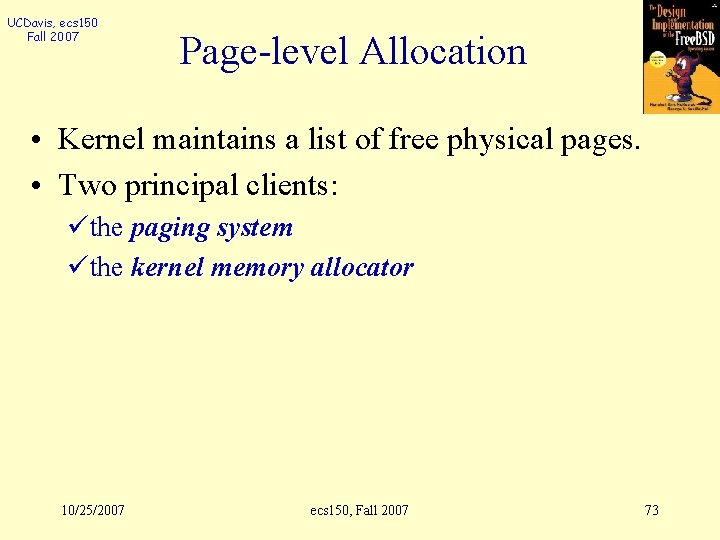

Page-level Allocation

- The kernel maintains a list of free physical pages.
- Two principal clients:
  - the paging system
  - the kernel memory allocator

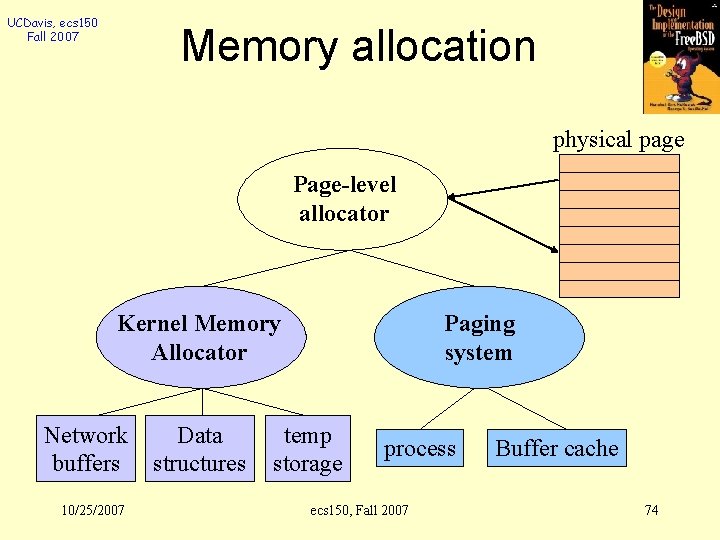

Memory Allocation

(Figure: the page-level allocator hands out physical pages to the paging system (process pages, buffer cache) and to the kernel memory allocator (network buffers, data structures, temp storage).)

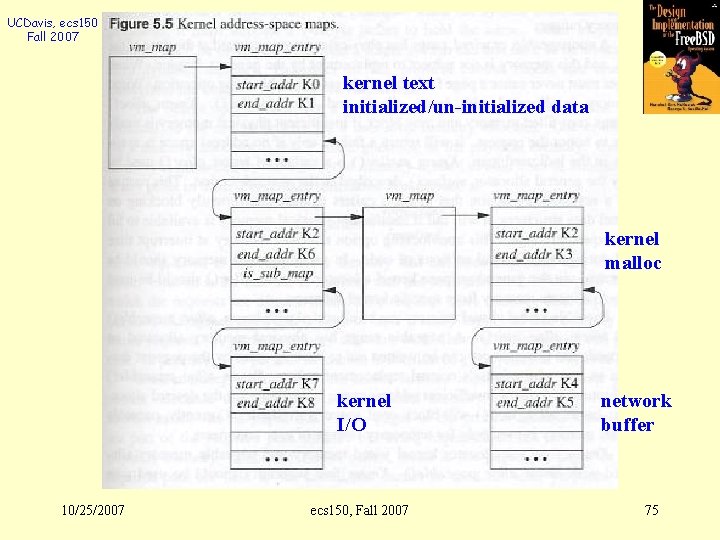

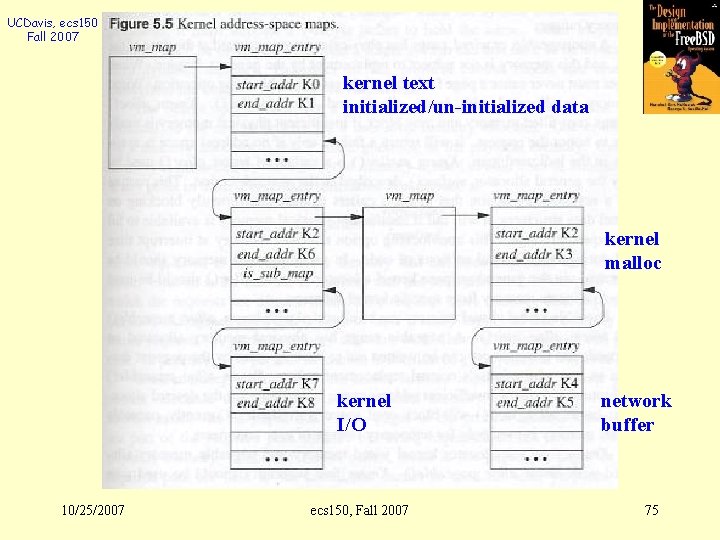

(Figure: kernel text, initialized/un-initialized data, kernel malloc, kernel I/O, network buffer.)

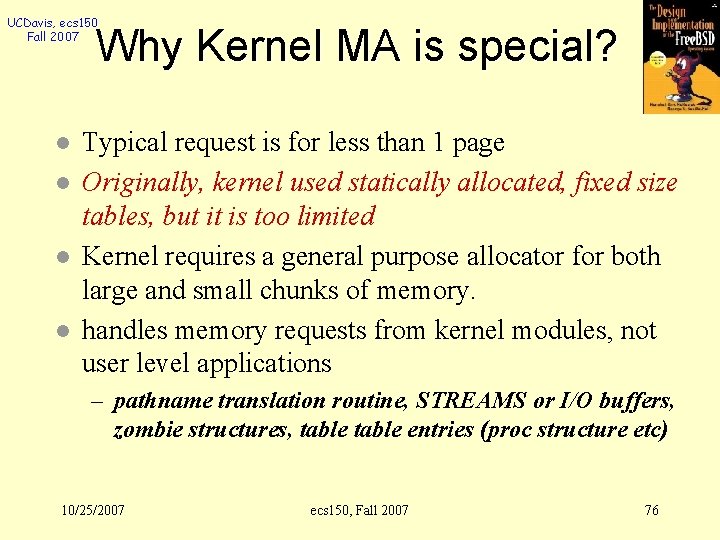

Why Is the Kernel Memory Allocator (KMA) Special?

- A typical request is for less than 1 page.
- Originally, the kernel used statically allocated, fixed-size tables, but this is too limited.
- The kernel requires a general-purpose allocator for both large and small chunks of memory.
- It handles memory requests from kernel modules, not user-level applications:
  - pathname translation routine, STREAMS or I/O buffers, zombie structures, table entries (proc structure, etc.)

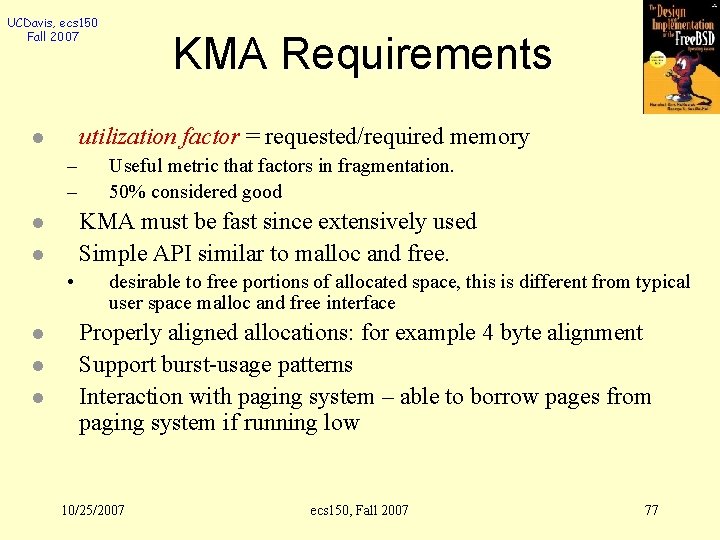

KMA Requirements

- Utilization factor = requested/required memory:
  - a useful metric that factors in fragmentation;
  - 50% is considered good.
- The KMA must be fast, since it is used extensively.
- Simple API, similar to malloc and free.
  - It is desirable to be able to free portions of allocated space; this differs from the typical user-space malloc and free interface.
- Properly aligned allocations: for example, 4-byte alignment.
- Support burst-usage patterns.
- Interaction with the paging system: able to borrow pages from the paging system if running low.

KMA Schemes

- Resource Map Allocator
- Simple Power-of-Two Free Lists
- The McKusick-Karels Allocator
  - FreeBSD
- The Buddy System
  - Linux
- SVR4 Lazy Buddy Allocator
- Mach-OSF/1 Zone Allocator
- Solaris Slab Allocator
  - FreeBSD, Linux, Solaris

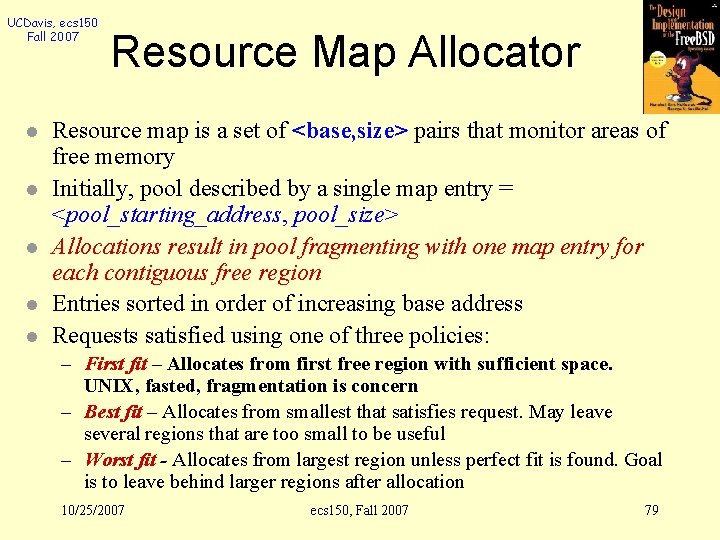

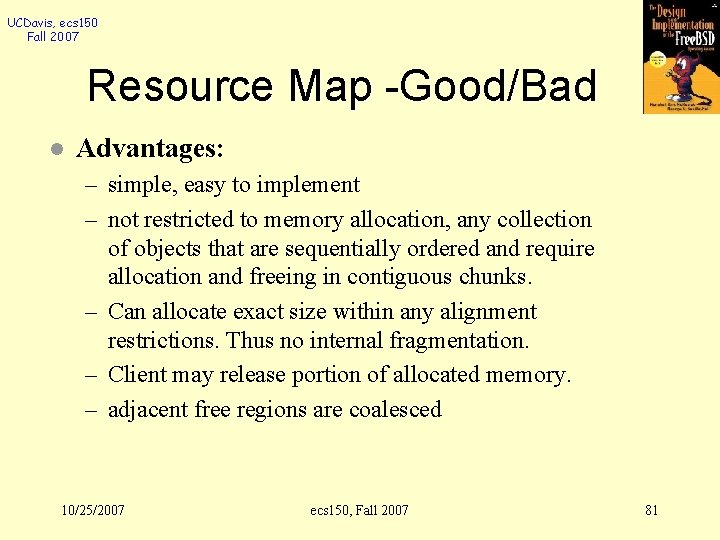

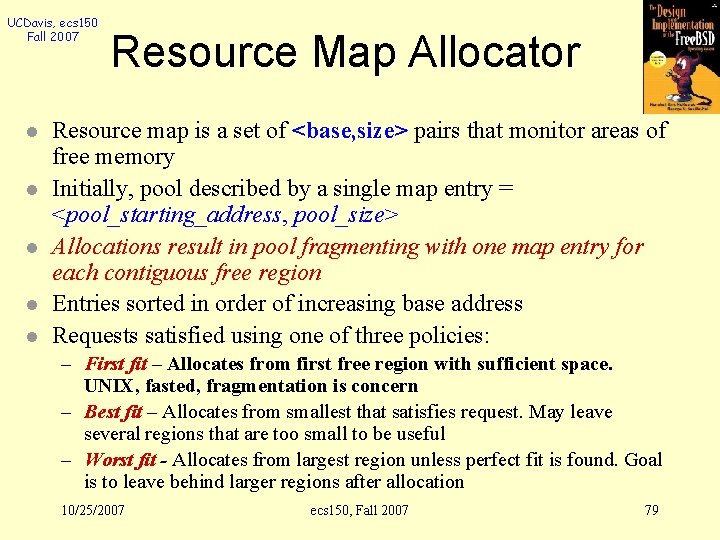

Resource Map Allocator

- A resource map is a set of <base, size> pairs that monitor areas of free memory.
- Initially, the pool is described by a single map entry = <pool_starting_address, pool_size>.
- Allocations fragment the pool, with one map entry for each contiguous free region.
- Entries are sorted in order of increasing base address.
- Requests are satisfied using one of three policies:
  - First fit: allocates from the first free region with sufficient space. Used in UNIX; fastest, but fragmentation is a concern.
  - Best fit: allocates from the smallest region that satisfies the request. May leave several regions that are too small to be useful.
  - Worst fit: allocates from the largest region unless a perfect fit is found. The goal is to leave behind larger regions after allocation.

Interface: offset_t rmalloc(size) and void rmfree(base, size)

- Initial map: <0, 1024>
- After rmalloc(256), rmalloc(320), rmfree(256, 128): <256, 128> <576, 448>
- After rmfree(128, 128): <128, 256> <576, 448>
- (Figure: a later, more fragmented map: <128, 32> <288, 64> <544, 128> <832, 32>)
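A first-fit resource map with coalescing can be sketched as below. The map is a fixed-size table sorted by base address. `rminit` is hypothetical, and `rmalloc`/`rmfree` take an explicit map argument, so their signatures differ from the slide's interface. The sketch reproduces the example above.

```c
#include <assert.h>
#include <stddef.h>

#define RM_MAX 32                         /* assumed map capacity (static table) */

typedef struct { long base, size; } rm_entry;
typedef struct { rm_entry e[RM_MAX]; size_t n; } rmap;   /* sorted by base */

void rminit(rmap *m, long base, long size)
{
    m->e[0] = (rm_entry){ base, size };
    m->n = 1;
}

/* First fit: carve the request from the front of the first big-enough region.
 * Returns the allocated base, or -1 if no region is large enough. */
long rmalloc(rmap *m, long size)
{
    for (size_t i = 0; i < m->n; i++) {
        if (m->e[i].size >= size) {
            long base = m->e[i].base;
            m->e[i].base += size;
            m->e[i].size -= size;
            if (m->e[i].size == 0) {      /* region used up: delete its entry */
                for (size_t j = i; j + 1 < m->n; j++) m->e[j] = m->e[j + 1];
                m->n--;
            }
            return base;
        }
    }
    return -1;
}

/* Insert <base, size> in address order, coalescing with adjacent free regions. */
void rmfree(rmap *m, long base, long size)
{
    size_t i = 0;
    while (i < m->n && m->e[i].base < base) i++;   /* first entry above base */
    int join_prev = i > 0 && m->e[i - 1].base + m->e[i - 1].size == base;
    int join_next = i < m->n && base + size == m->e[i].base;

    if (join_prev && join_next) {                  /* fills the gap between two */
        m->e[i - 1].size += size + m->e[i].size;
        for (size_t j = i; j + 1 < m->n; j++) m->e[j] = m->e[j + 1];
        m->n--;
    } else if (join_prev) {
        m->e[i - 1].size += size;
    } else if (join_next) {
        m->e[i].base = base;
        m->e[i].size += size;
    } else {
        assert(m->n < RM_MAX);                     /* static map would overflow */
        for (size_t j = m->n; j > i; j--) m->e[j] = m->e[j - 1];
        m->e[i] = (rm_entry){ base, size };
        m->n++;
    }
}
```

The linear scans in both functions and the shifting of entries to keep the map sorted are the costs listed under the disadvantages on the following slides.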

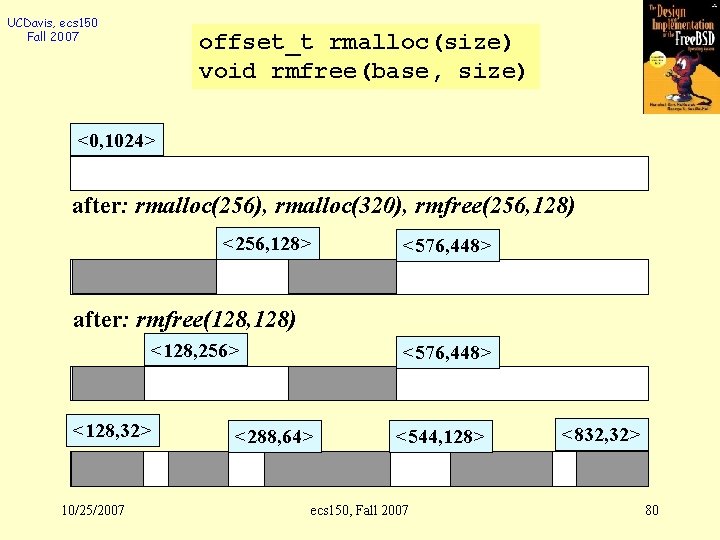

Resource Map: Good/Bad

- Advantages:
  - Simple, easy to implement.
  - Not restricted to memory allocation: works for any collection of objects that are sequentially ordered and require allocation and freeing in contiguous chunks.
  - Can allocate the exact size within any alignment restrictions, so there is no internal fragmentation.
  - A client may release a portion of allocated memory.
  - Adjacent free regions are coalesced.

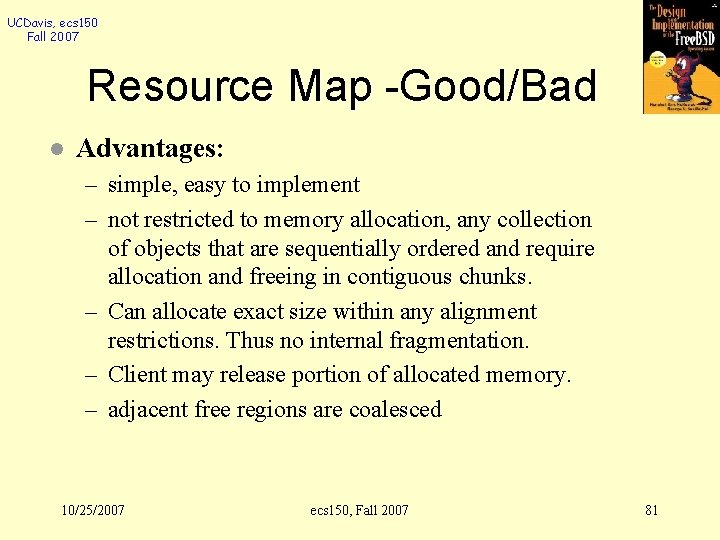

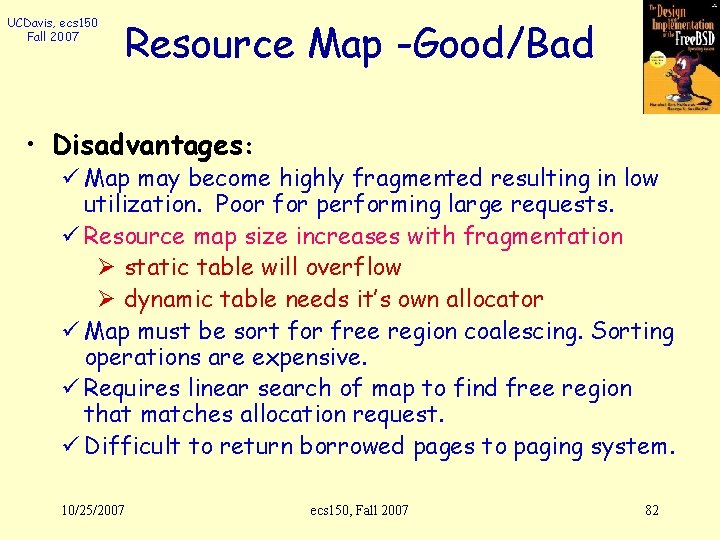

Resource Map: Good/Bad

- Disadvantages:
  - The map may become highly fragmented, resulting in low utilization. Poor for performing large requests.
  - The resource map size increases with fragmentation:
    - a static table will overflow;
    - a dynamic table needs its own allocator.
  - The map must be sorted for free-region coalescing. Sorting operations are expensive.
  - Requires a linear search of the map to find a free region that matches an allocation request.
  - Difficult to return borrowed pages to the paging system.

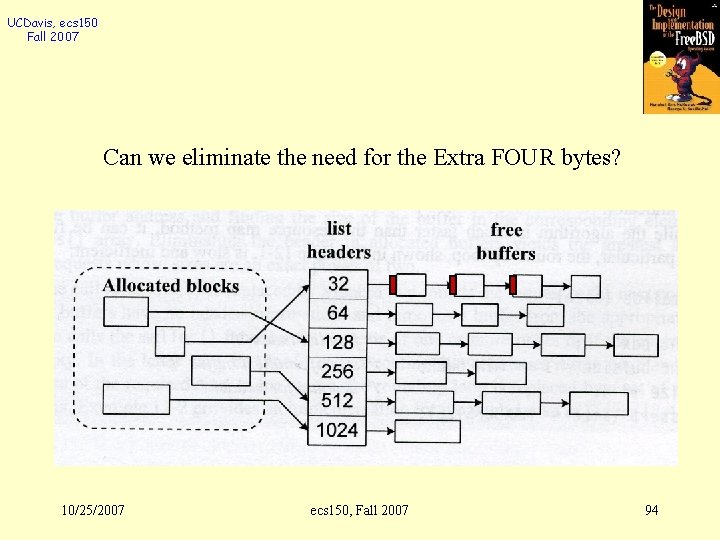

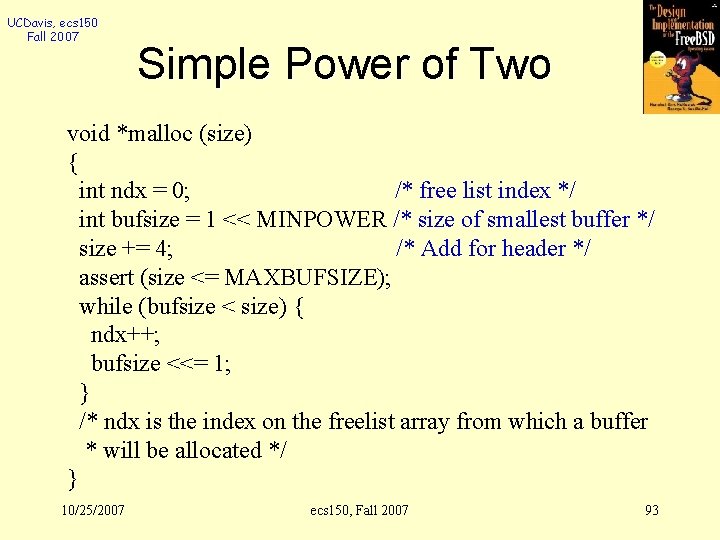

Simple Power of Twos

- Has been used to implement malloc() and free() in the user-level C library (libc).
- Do you know how it is implemented?

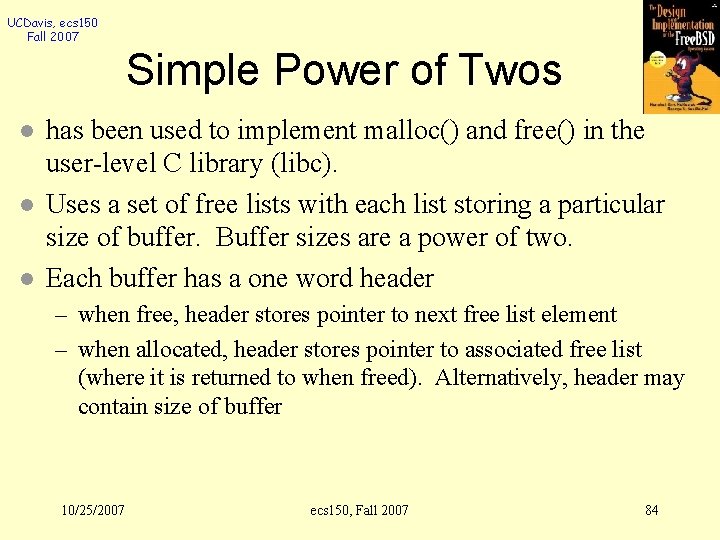

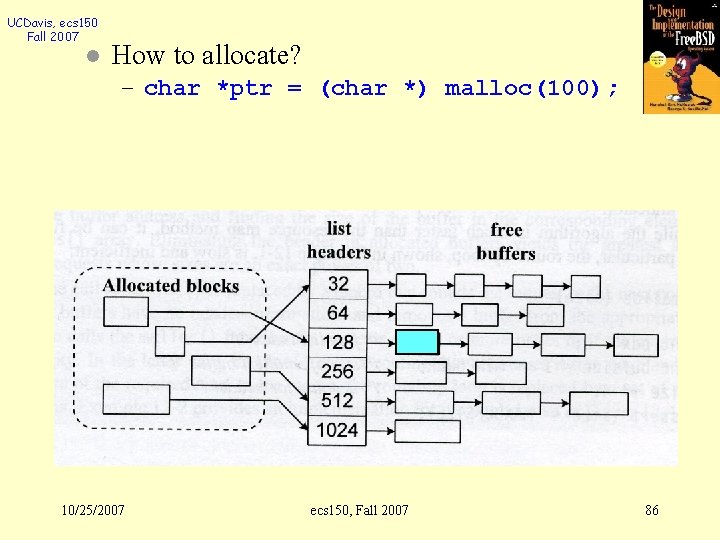

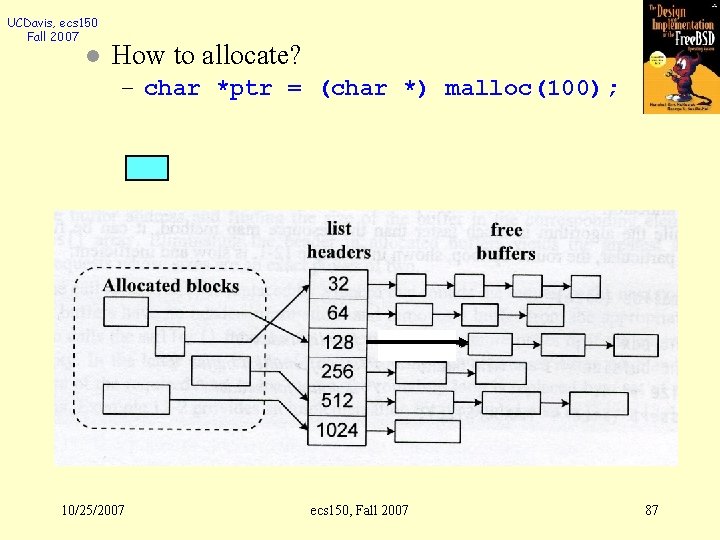

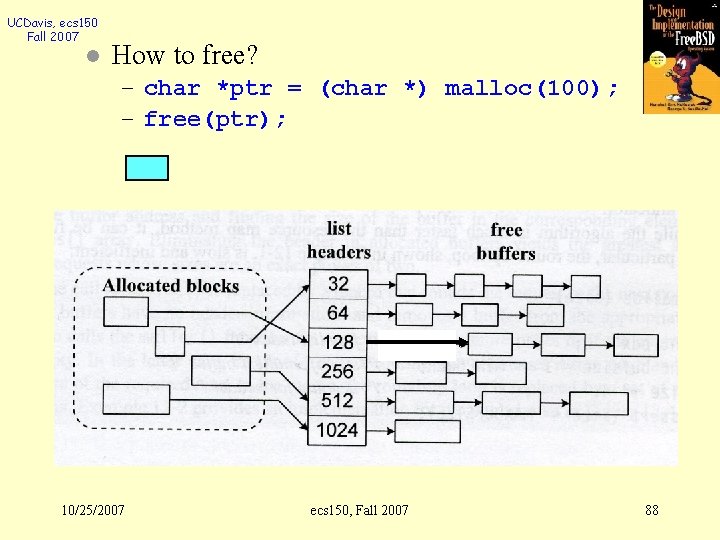

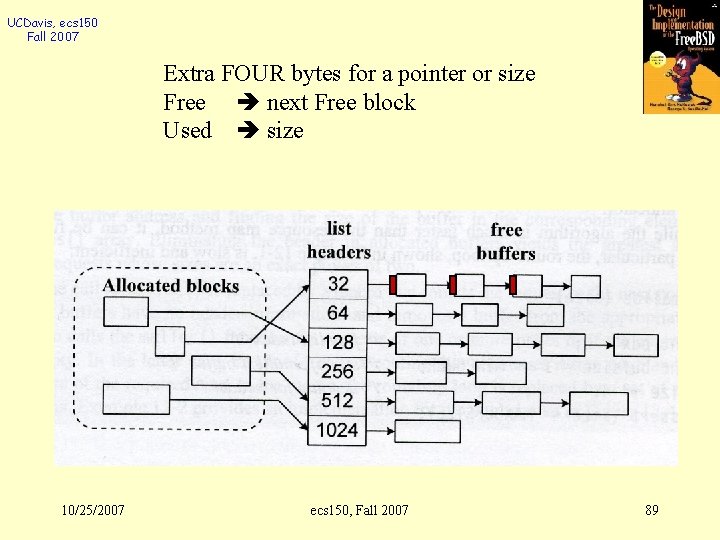

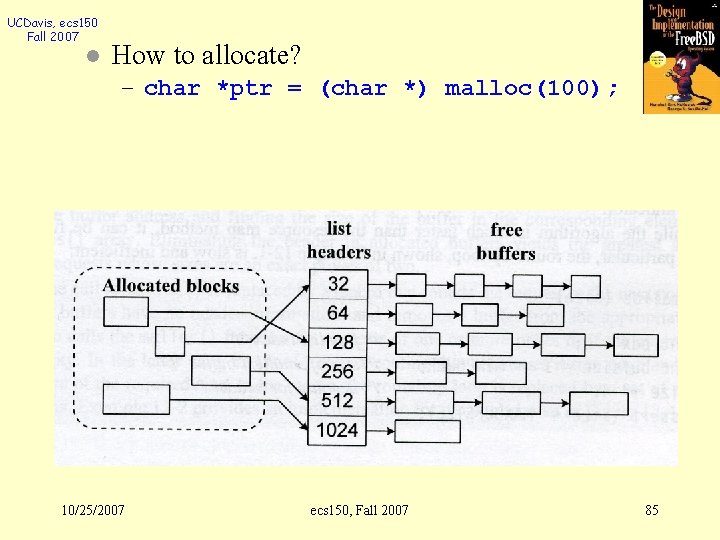

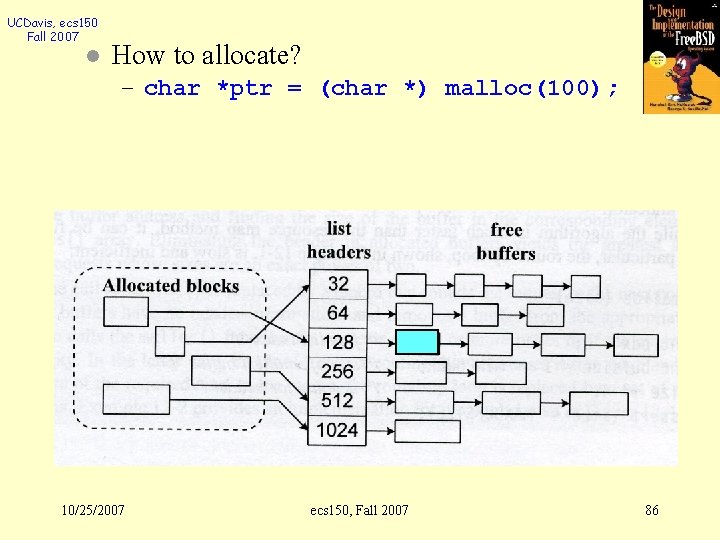

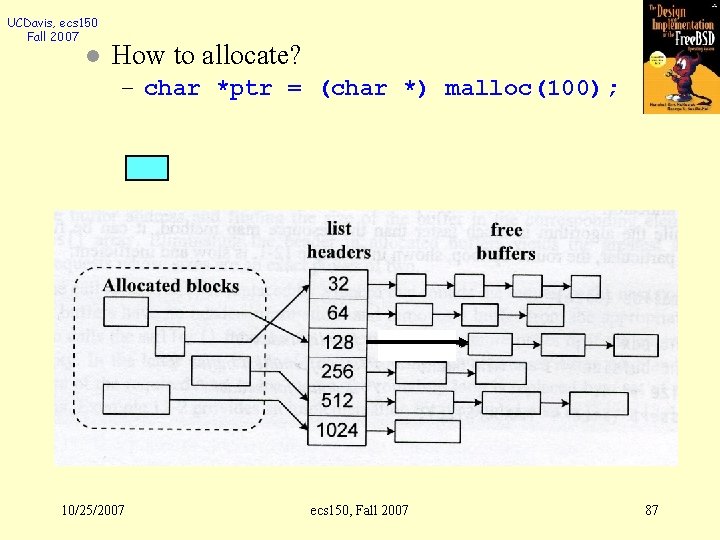

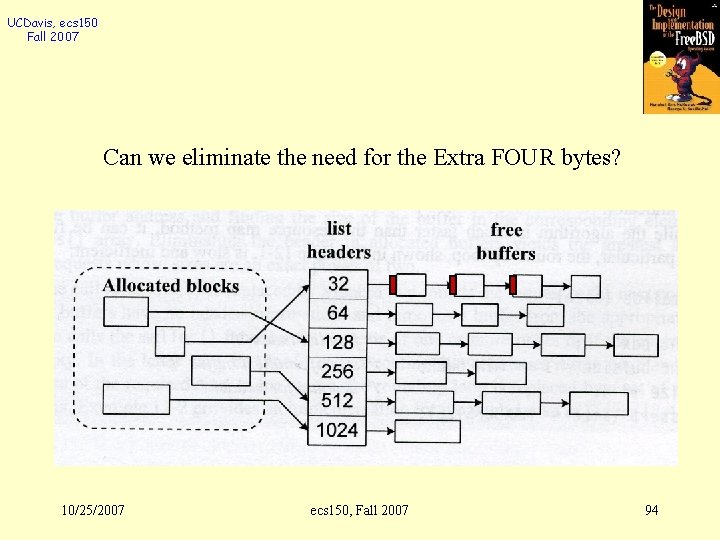

Simple Power of Twos

- Has been used to implement malloc() and free() in the user-level C library (libc).
- Uses a set of free lists, with each list storing buffers of one particular size. Buffer sizes are powers of two.
- Each buffer has a one-word header:
  - when free, the header stores a pointer to the next free list element;
  - when allocated, the header stores a pointer to the associated free list (where the buffer is returned when freed). Alternatively, the header may contain the size of the buffer.

How to allocate?

- char *ptr = (char *) malloc(100);



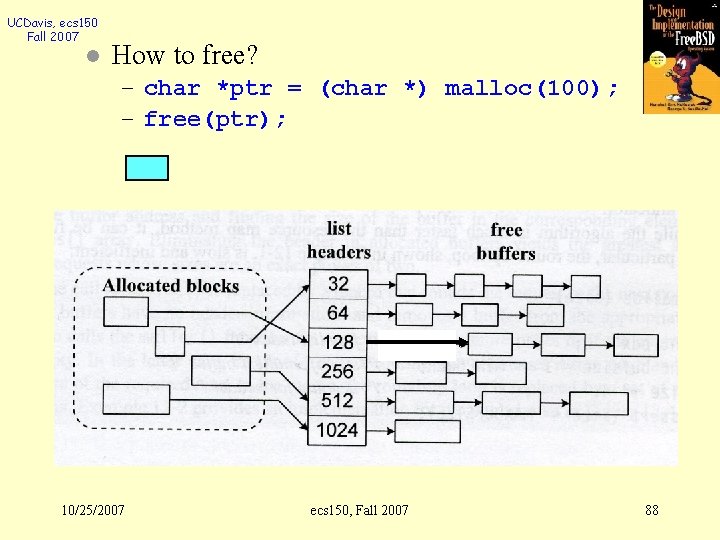

How to free?

- char *ptr = (char *) malloc(100);
- free(ptr);

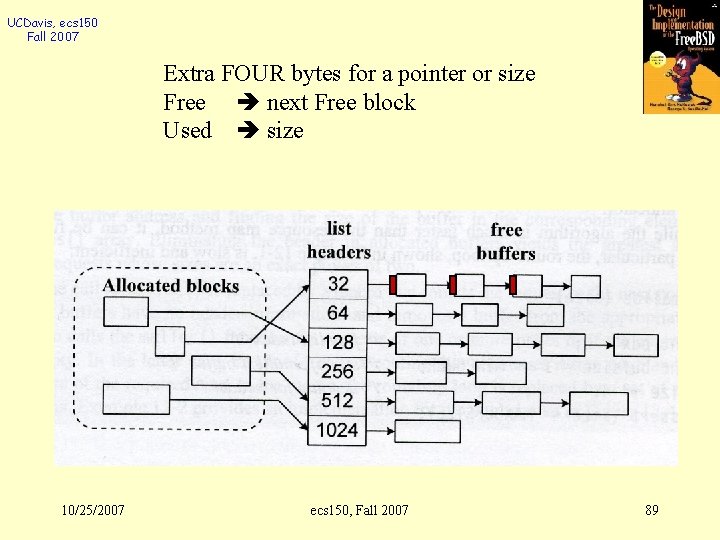

Extra FOUR bytes for a pointer or size

(Figure: the header of a free block holds next; the header of a used block holds size.)

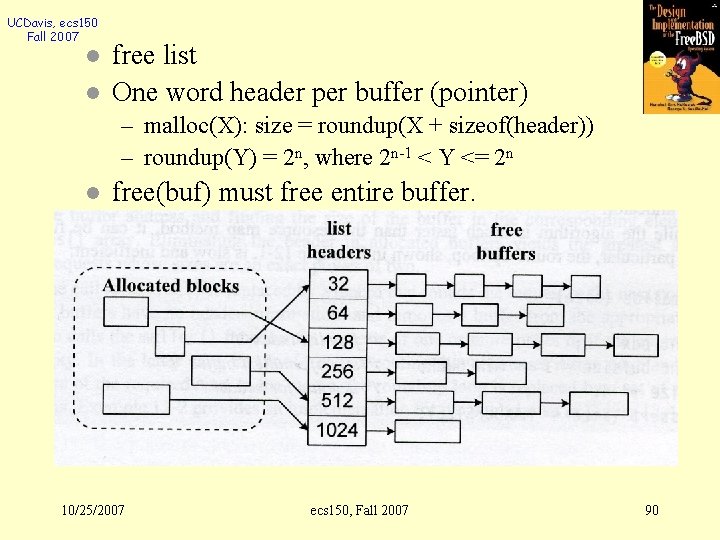

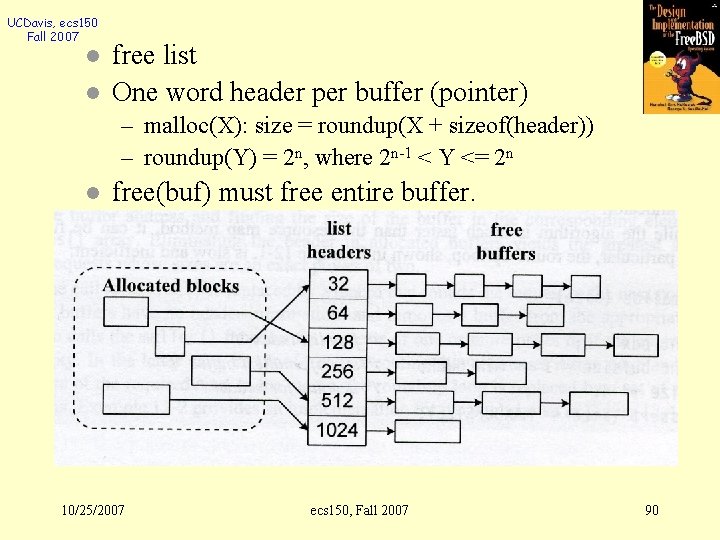

Free List

- One-word header per buffer (pointer):
  - malloc(X): size = roundup(X + sizeof(header))
  - roundup(Y) = 2^n, where 2^(n-1) < Y <= 2^n
- free(buf) must free the entire buffer.
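The rounding rule can be written directly. A minimal sketch: `roundup_pow2` and `power_of_two_block` are illustrative names, and the header is assumed to be one pointer (`sizeof(void *)`: 4 bytes on the 32-bit machines the slides assume, 8 on most current ones).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* roundup(Y) = 2^n with 2^(n-1) < Y <= 2^n, for Y >= 1. */
size_t roundup_pow2(size_t y)
{
    assert(y >= 1 && y <= (SIZE_MAX >> 1) + 1);   /* result must be representable */
    size_t p = 1;
    while (p < y)
        p <<= 1;
    return p;
}

/* Buffer size handed out for malloc(request) with a one-word header. */
size_t power_of_two_block(size_t request)
{
    return roundup_pow2(request + sizeof(void *));
}
```

malloc(100) therefore consumes a 128-byte buffer, while malloc(128), already a power of two, is pushed up to 256 bytes by the header; this is the waste described as a drawback two slides later.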

- Simple and reasonably fast:
  - eliminates linear searches and fragmentation;
  - bounded time for allocations when buffers are available.
- Familiar API.
- Simple to share buffers between kernel modules, since freeing a buffer does not require knowing its size.

- Rounding requests to a power of 2 results in wasted memory and poor utilization.
  - This is aggravated by requiring buffer headers, since it is not unusual for memory requests to already be a power of two.
- No provision for coalescing free buffers, since buffer sizes are generally fixed.
- No provision for borrowing pages from the paging system, although some implementations do this.
- No provision for returning unused buffers to the page allocator.

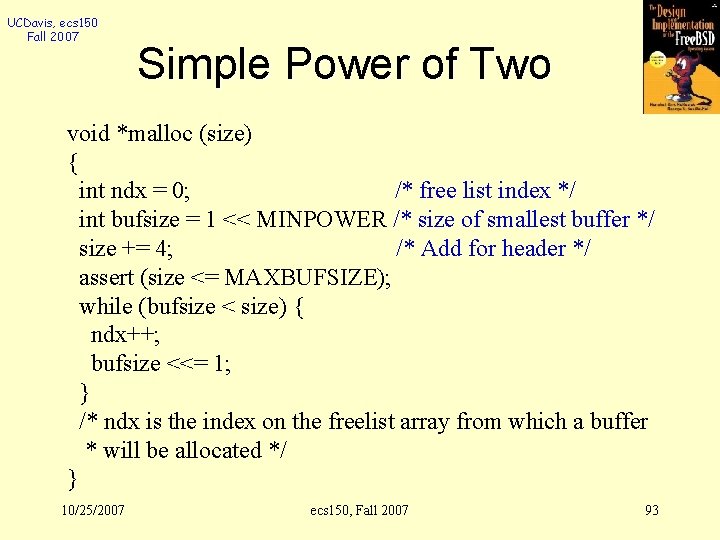

Simple Power of Two

```c
void *malloc(size_t size)
{
    int ndx = 0;                       /* free list index */
    size_t bufsize = 1 << MINPOWER;    /* size of smallest buffer */

    size += 4;                         /* add room for the 4-byte header */
    assert(size <= MAXBUFSIZE);
    while (bufsize < size) {
        ndx++;
        bufsize <<= 1;
    }
    /* ndx is the index into the freelist array from which a buffer
     * will be allocated (the rest of the allocation is not shown) */
}
```
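A complete version of the fragment above might look like the following sketch. Assumptions: the names `pt_malloc`/`pt_free` are hypothetical (chosen to avoid clashing with libc), fresh buffers are carved from a static arena standing in for pages obtained from the page allocator, and the header is a union that links free buffers or, once allocated, points back at the owning free list, as the earlier slide describes.

```c
#include <assert.h>
#include <stddef.h>

#define MINPOWER   4                                   /* smallest buffer: 16 bytes (assumed) */
#define NLISTS     8                                   /* buffers of 16 .. 2048 bytes (assumed) */
#define MAXBUFSIZE ((size_t)1 << (MINPOWER + NLISTS - 1))

typedef union header {
    union header  *next;                               /* while free: next free buffer */
    union header **list;                               /* while allocated: owning free list */
} header;

static header *freelist[NLISTS];
static union {                                         /* stand-in for pages from the page allocator */
    void *align_ptr;
    long double align_ld;
    unsigned char bytes[64 * 1024];
} arena;
static size_t arena_used;

void *pt_malloc(size_t size)
{
    int ndx = 0;
    size_t bufsize = (size_t)1 << MINPOWER;

    size += sizeof(header);                            /* room for the one-word header */
    if (size > MAXBUFSIZE)
        return NULL;
    while (bufsize < size) { ndx++; bufsize <<= 1; }

    header *h = freelist[ndx];
    if (h != NULL) {
        freelist[ndx] = h->next;                       /* reuse a freed buffer */
    } else {
        if (arena_used + bufsize > sizeof arena.bytes)
            return NULL;
        h = (header *)(arena.bytes + arena_used);      /* carve a fresh buffer */
        arena_used += bufsize;
    }
    h->list = &freelist[ndx];                          /* remember where it goes back */
    return h + 1;                                      /* caller's memory follows the header */
}

void pt_free(void *p)
{
    if (p == NULL)
        return;
    header *h = (header *)p - 1;
    header **list = h->list;                           /* no size needed: header names the list */
    assert(list >= freelist && list < freelist + NLISTS);
    h->next = *list;
    *list = h;
}
```

Because every buffer size is a power of two of at least 16 bytes, each carved buffer starts at a multiple of 16 within the arena, which keeps the pointer-sized header aligned.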

Can we eliminate the need for the extra FOUR bytes?

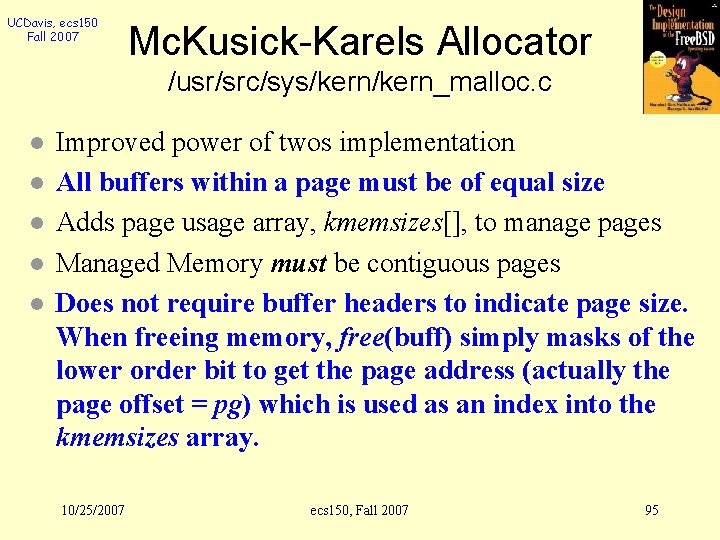

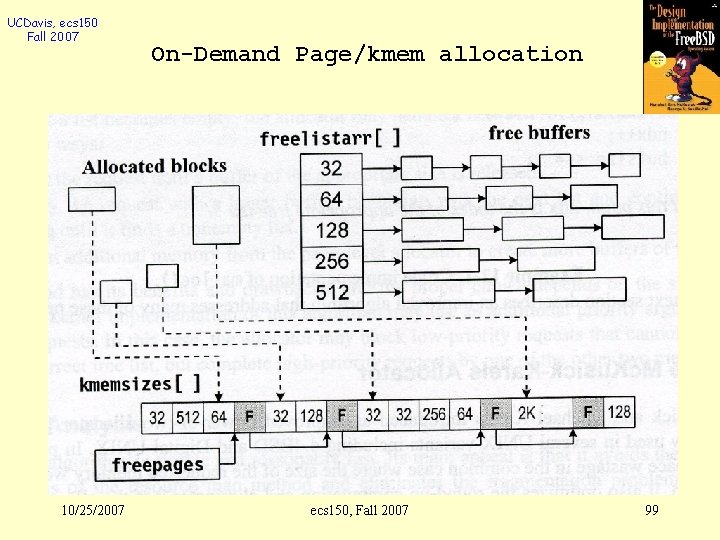

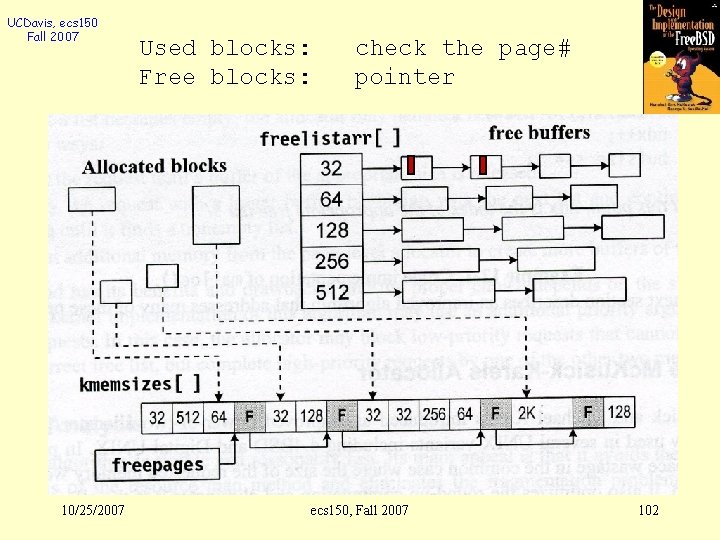

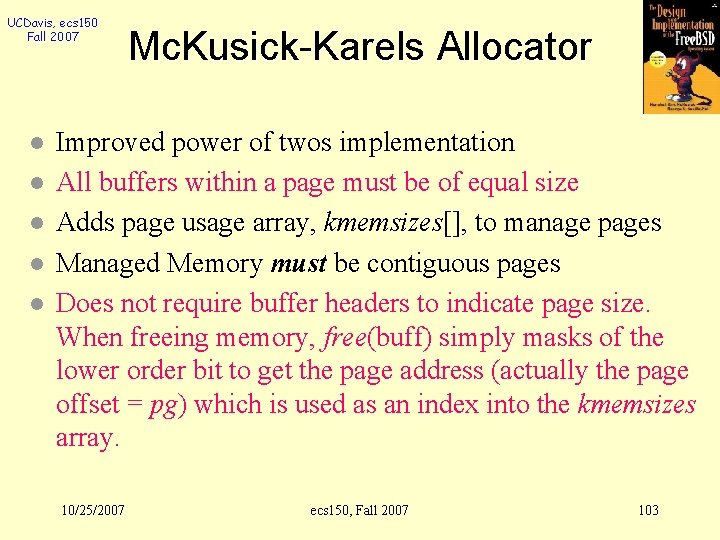

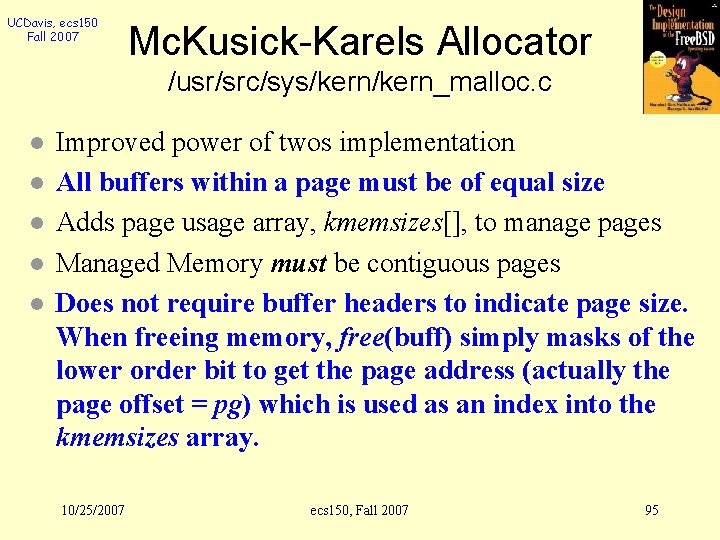

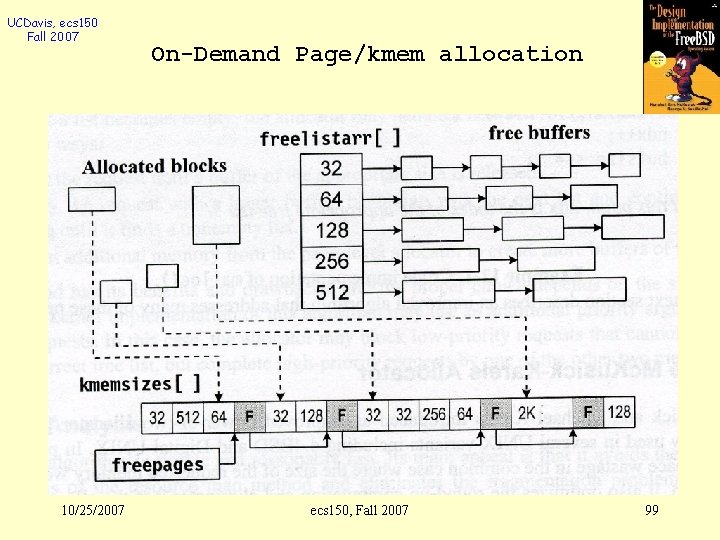

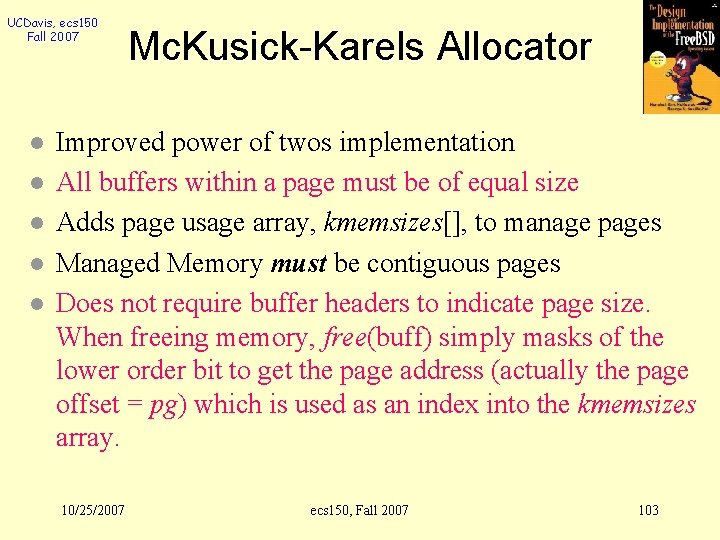

McKusick-Karels Allocator (/usr/src/sys/kern_malloc.c)

- An improved power-of-twos implementation.
- All buffers within a page must be of equal size.
- Adds a page usage array, kmemsizes[], to manage pages.
- Managed memory must be contiguous pages.
- Does not require buffer headers to indicate the buffer size. When freeing memory, free(buff) simply masks off the lower-order bits to get the page address (actually the page offset = pg), which is used as an index into the kmemsizes array.
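A minimal sketch of the kmemsizes[] idea, not FreeBSD's actual code: a small static region of contiguous pages (the 4 KB page size, the 16-page region, and the names `mk_alloc`, `mk_free`, and `mk_size_of` are assumptions). Each page is carved into buffers of one size, and the size is recovered on free from the page index instead of from a header. The page index is computed by subtracting the region base and shifting, which is equivalent to masking off the low bits when the region is page-aligned.

```c
#include <assert.h>
#include <stddef.h>

#define PAGE_SHIFT 12                       /* 4 KB pages (assumed) */
#define PAGE_SIZE  ((size_t)1 << PAGE_SHIFT)
#define NPAGES     16                       /* managed region: 16 contiguous pages (assumed) */

static union { void *align; unsigned char bytes[NPAGES * PAGE_SIZE]; } kmem;
static size_t kmemsizes[NPAGES];            /* kmemsizes[pg]: buffer size used in page pg */
static size_t next_page;                    /* next unused page; pages are never returned here */
static void *freelist[PAGE_SHIFT + 1];      /* freelist[n]: free buffers of 2^n bytes */

/* Smallest n with 2^n >= size. */
static unsigned log2_roundup(size_t size)
{
    unsigned n = 0;
    while (((size_t)1 << n) < size)
        n++;
    return n;
}

void *mk_alloc(size_t size)
{
    assert(size <= PAGE_SIZE);
    if (size < sizeof(void *))
        size = sizeof(void *);              /* a free buffer must hold a next pointer */
    unsigned n = log2_roundup(size);        /* no header: a 256-byte request stays 256 */
    size_t bsize = (size_t)1 << n;

    if (freelist[n] == NULL) {              /* carve a fresh page into equal-size buffers */
        if (next_page == NPAGES)
            return NULL;
        size_t pg = next_page++;
        kmemsizes[pg] = bsize;
        unsigned char *base = kmem.bytes + pg * PAGE_SIZE;
        for (size_t off = 0; off < PAGE_SIZE; off += bsize) {
            *(void **)(base + off) = freelist[n];
            freelist[n] = base + off;
        }
    }
    void *p = freelist[n];
    freelist[n] = *(void **)p;
    return p;
}

/* The buffer size comes from the page it lives in, not from a header. */
size_t mk_size_of(const void *p)
{
    size_t pg = (size_t)((const unsigned char *)p - kmem.bytes) >> PAGE_SHIFT;
    assert(pg < next_page);
    return kmemsizes[pg];
}

void mk_free(void *p)
{
    unsigned n = log2_roundup(mk_size_of(p));
    *(void **)p = freelist[n];
    freelist[n] = p;
}
```

With 4 KB pages, one page holds 16 buffers of 256 bytes or 64 buffers of 64 bytes, matching the figures on the next slides.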


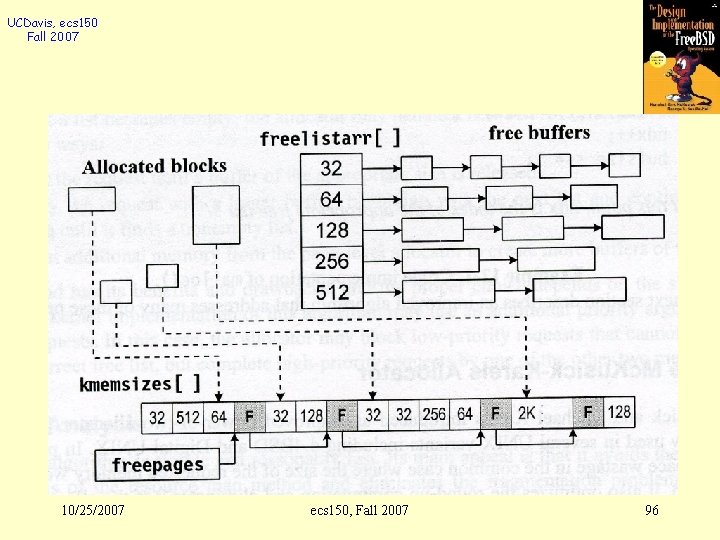

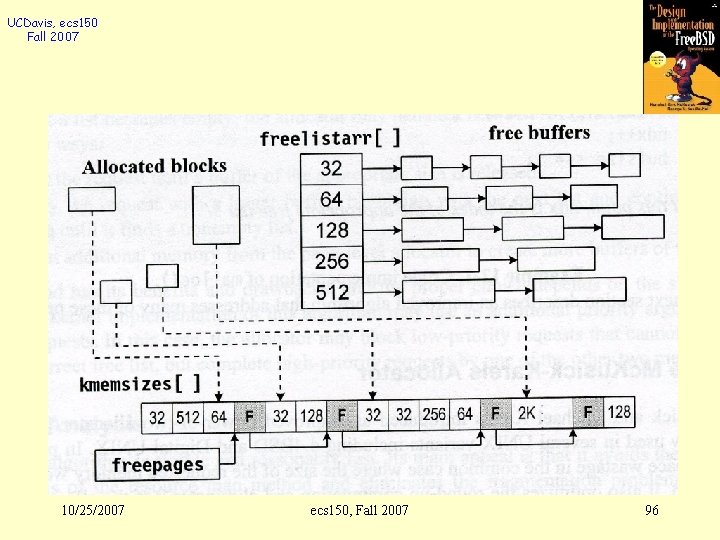

(Figure: 1 page = 2^12 (4 KB) bytes, divided into 16 separate 2^8-byte blocks.)

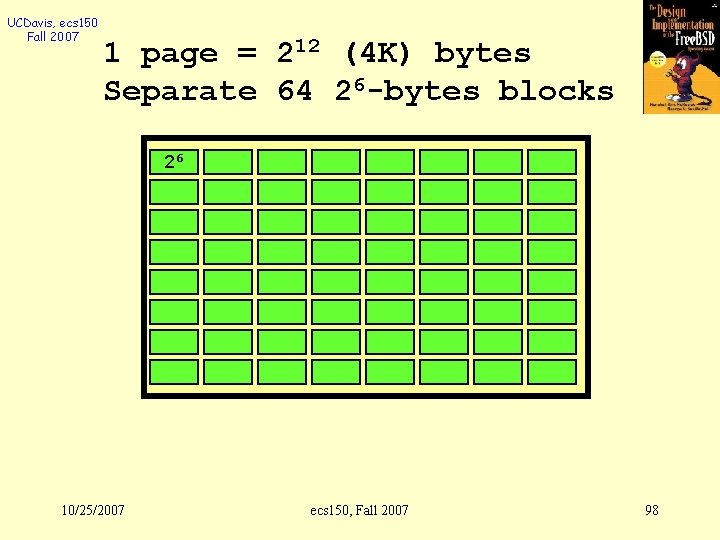

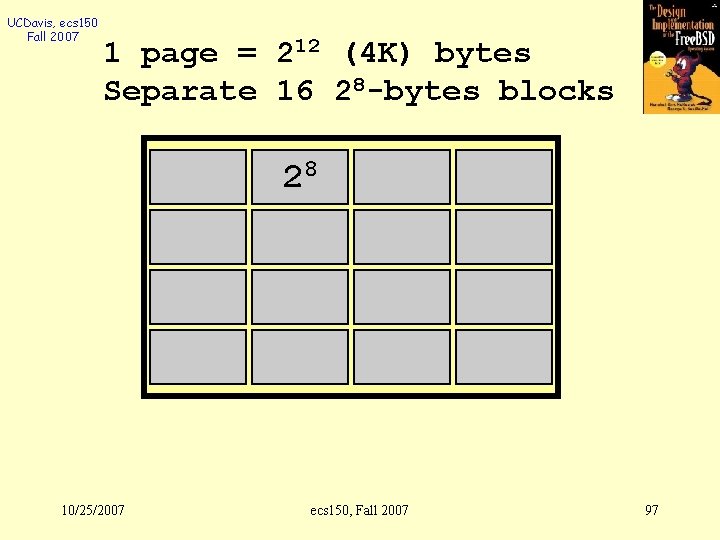

(Figure: 1 page = 2^12 (4 KB) bytes, divided into 64 separate 2^6-byte blocks.)

On-Demand Page/kmem Allocation

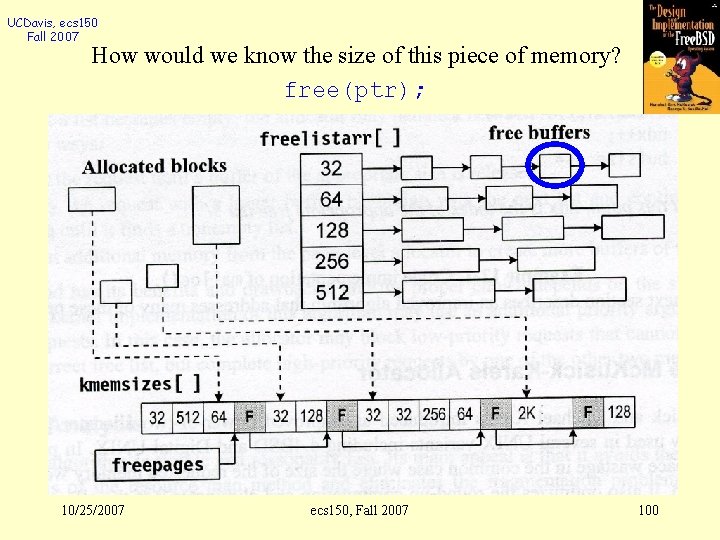

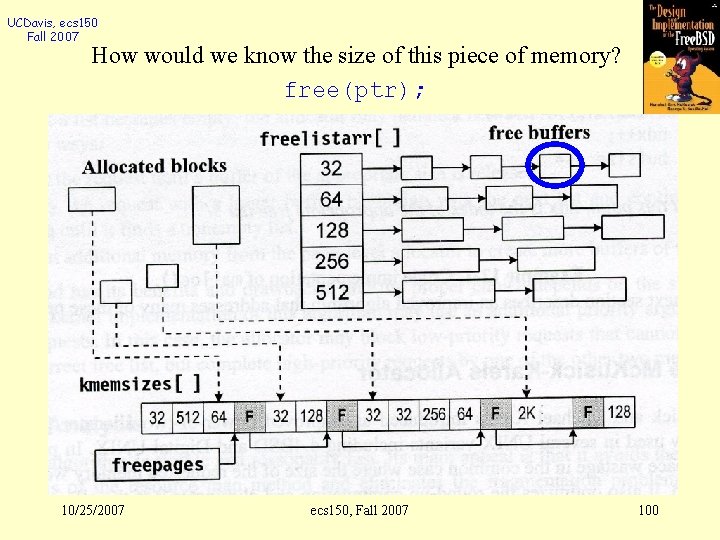

How would we know the size of this piece of memory?

- free(ptr);

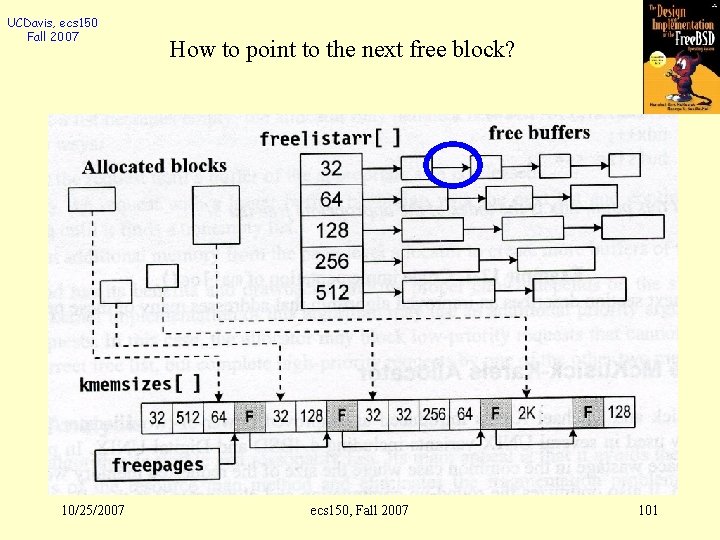

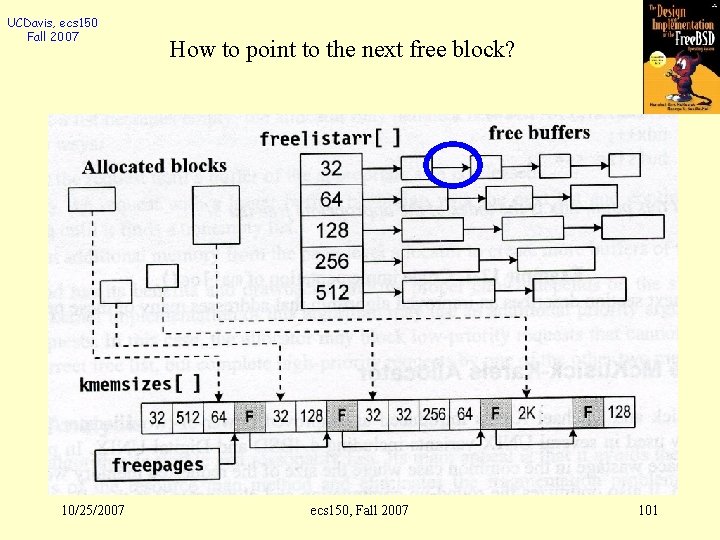

How to point to the next free block?

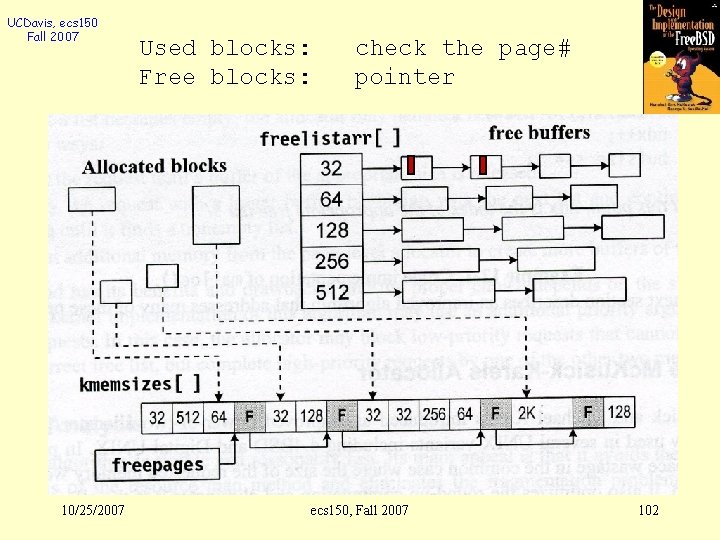

(Figure: used blocks and free blocks; check the page# pointer.)


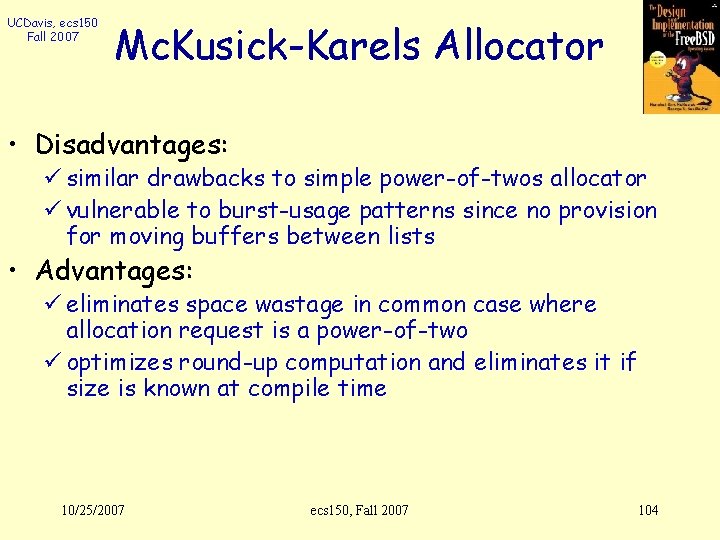

McKusick-Karels Allocator

- Disadvantages:
  - Similar drawbacks to the simple power-of-twos allocator.
  - Vulnerable to burst-usage patterns, since there is no provision for moving buffers between lists.
- Advantages:
  - Eliminates space wastage in the common case where the allocation request is a power of two.
  - Optimizes the round-up computation, and eliminates it if the size is known at compile time.

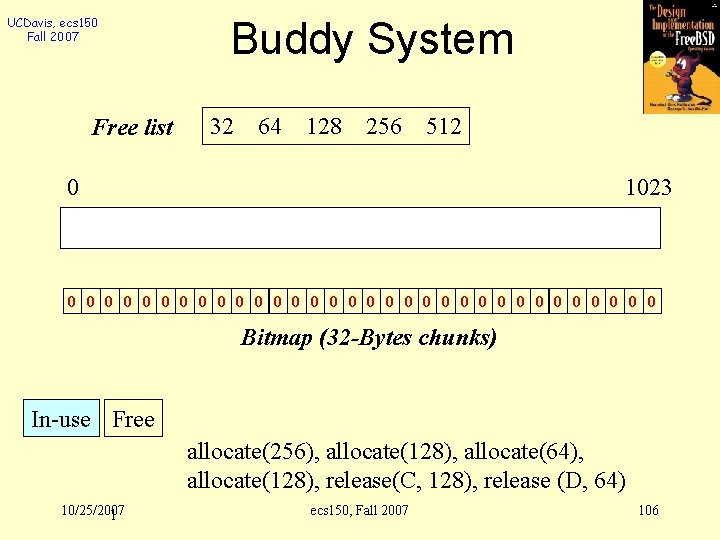

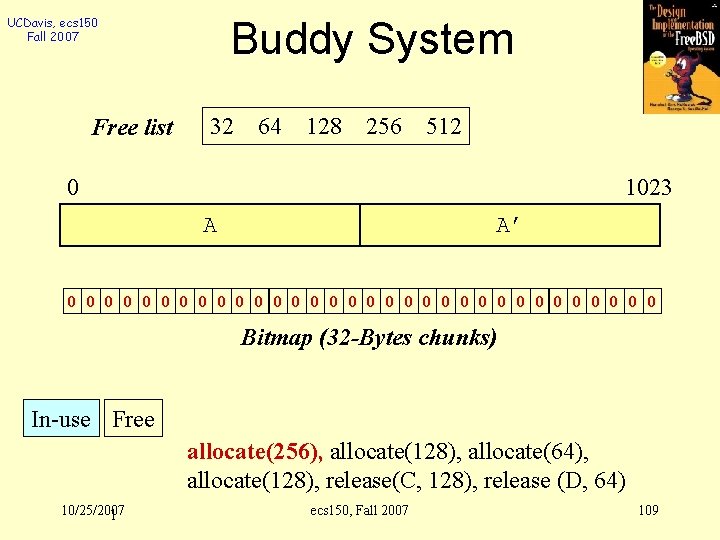

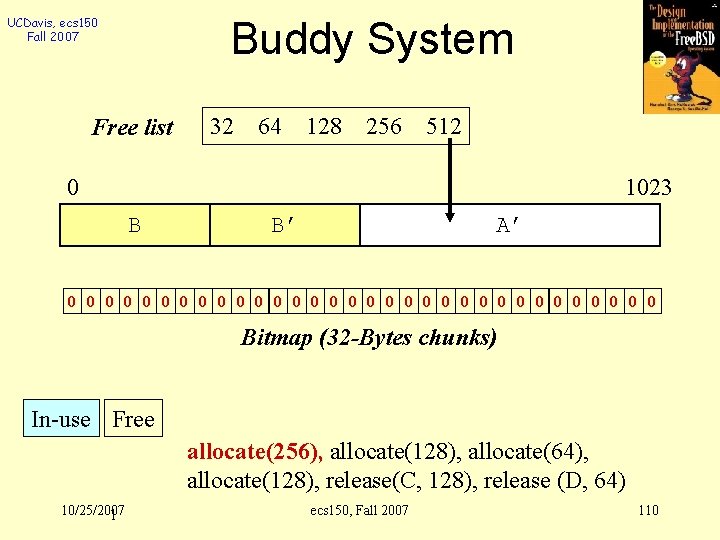

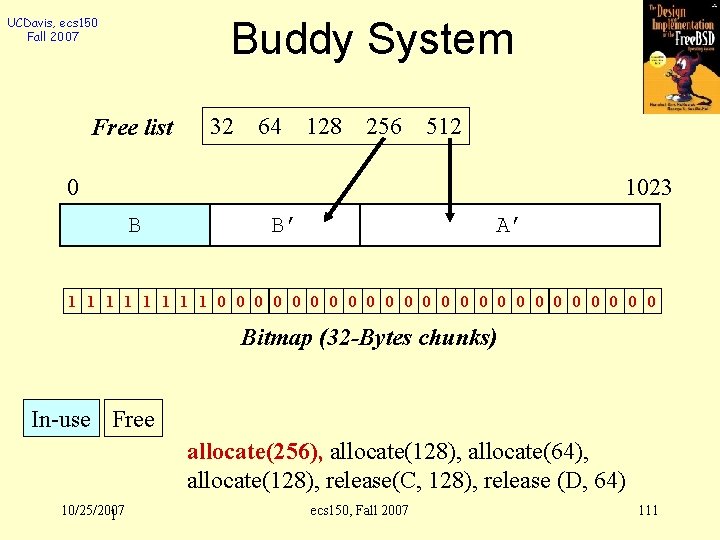

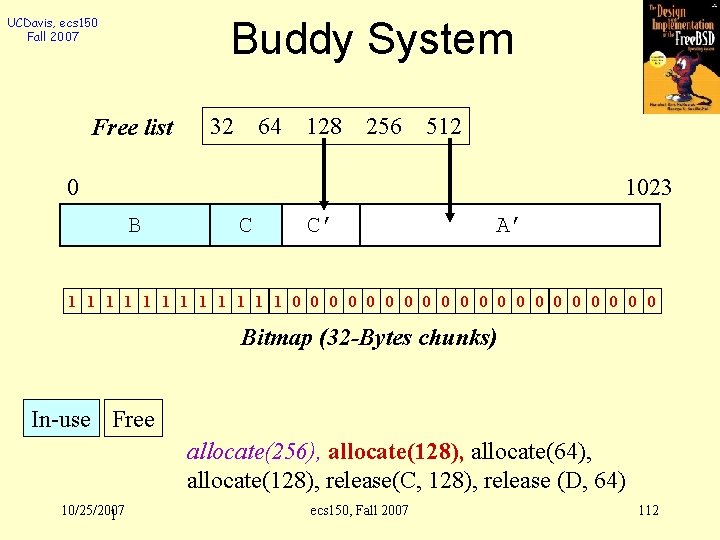

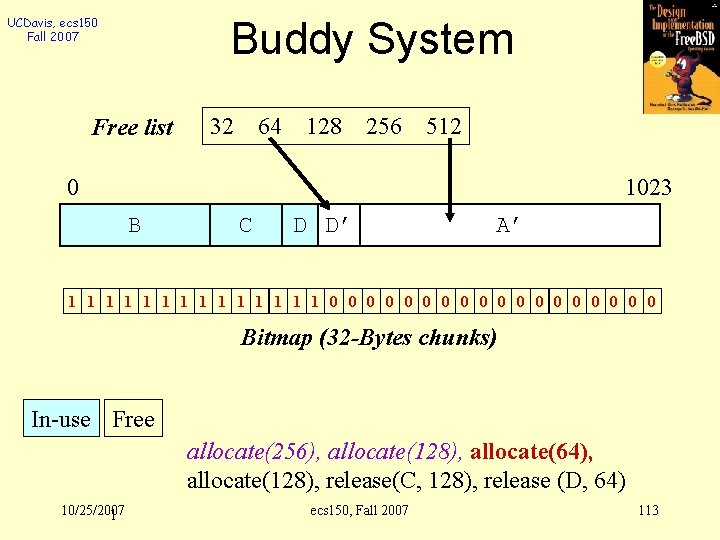

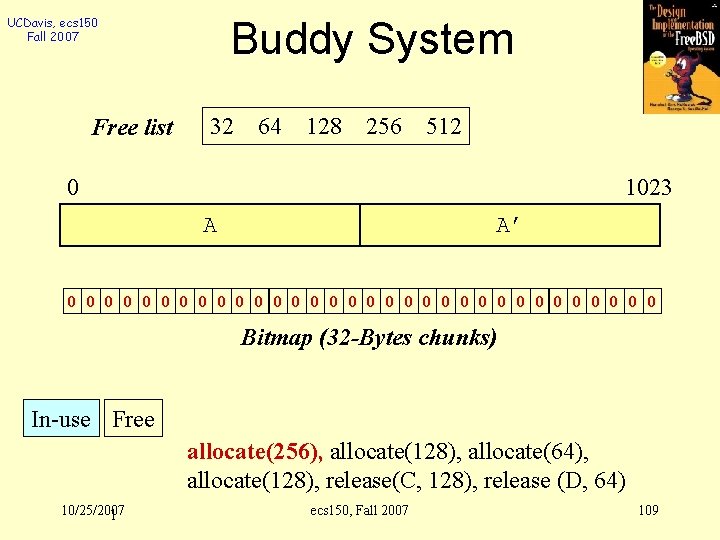

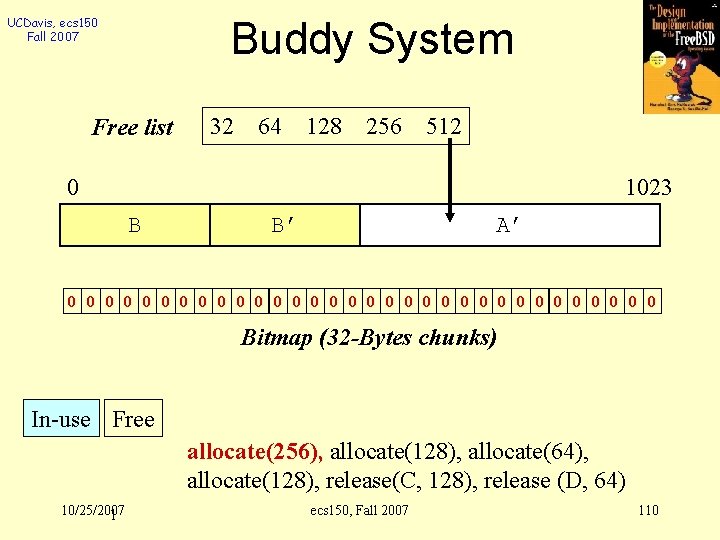

The Buddy System

- Another interesting power-of-2 memory allocator, used in the Linux kernel.

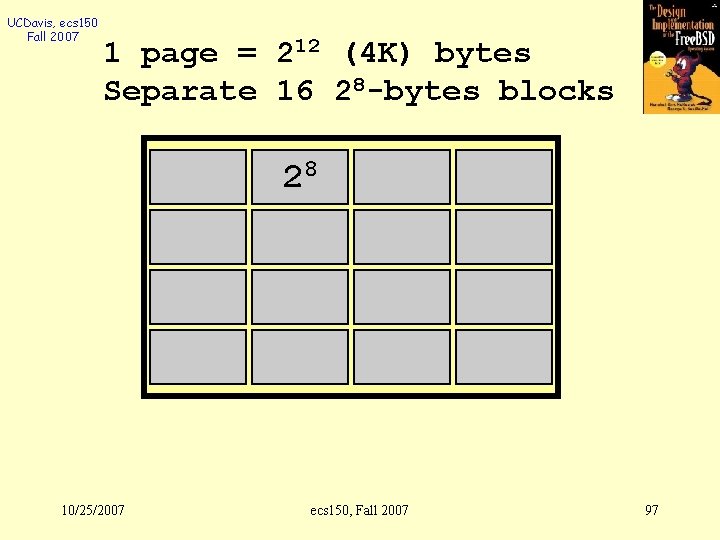

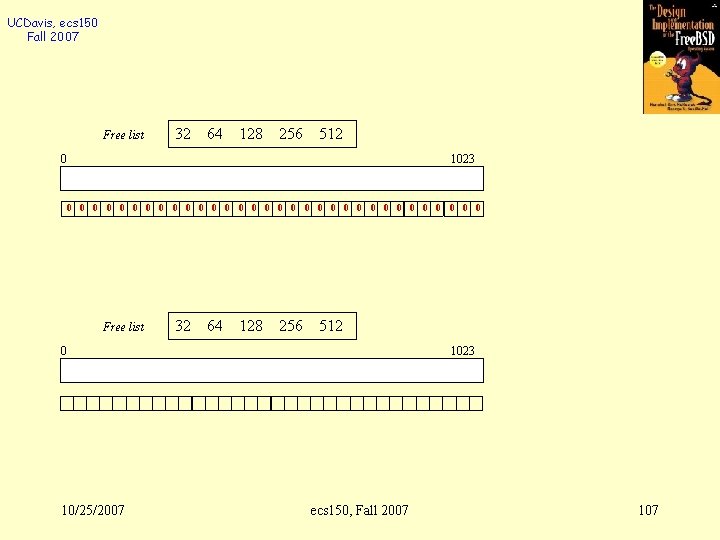

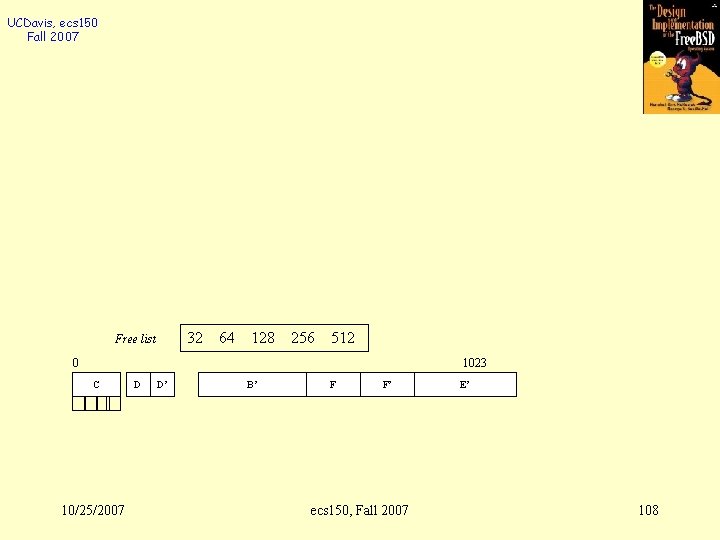

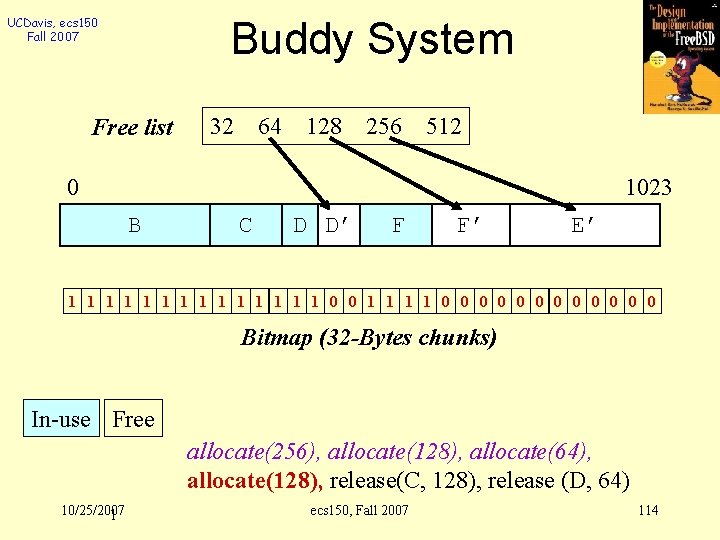

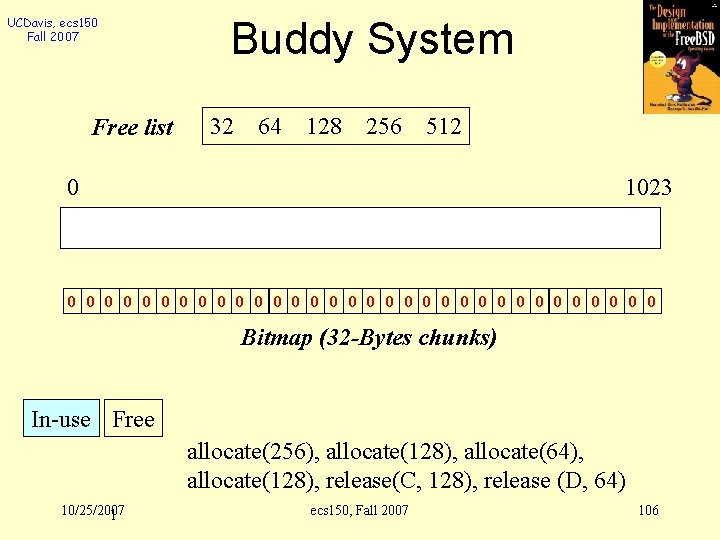

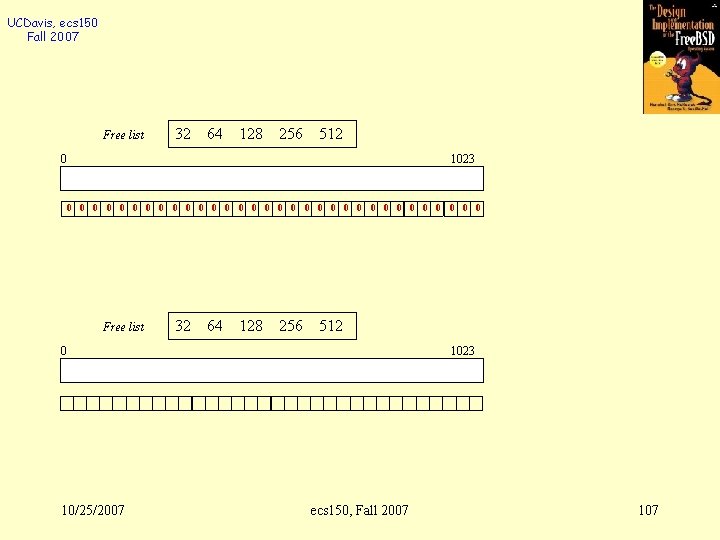

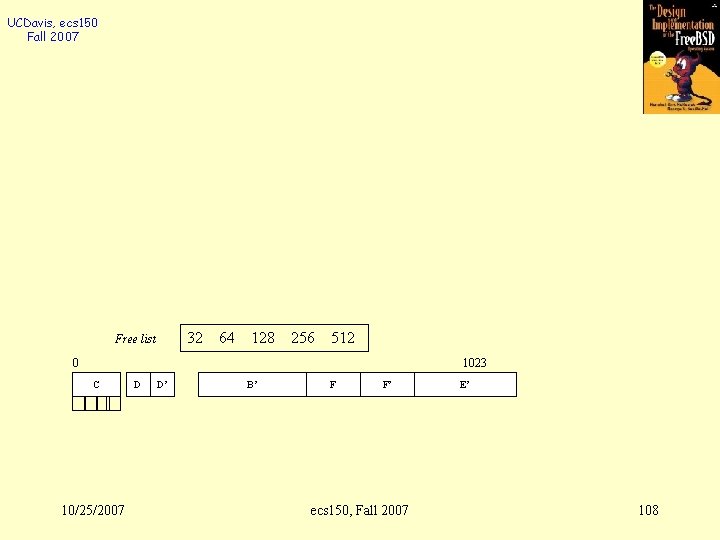

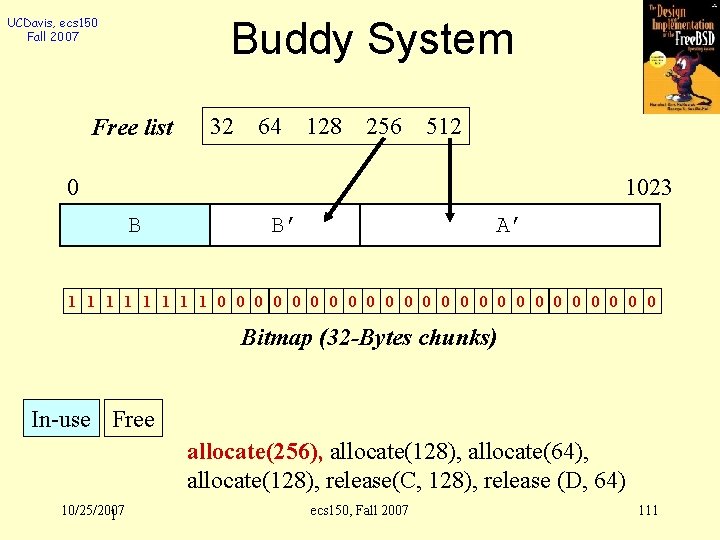

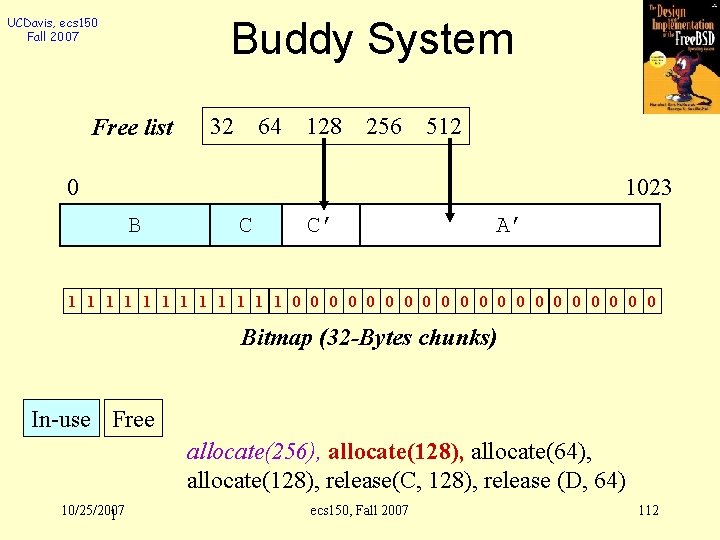

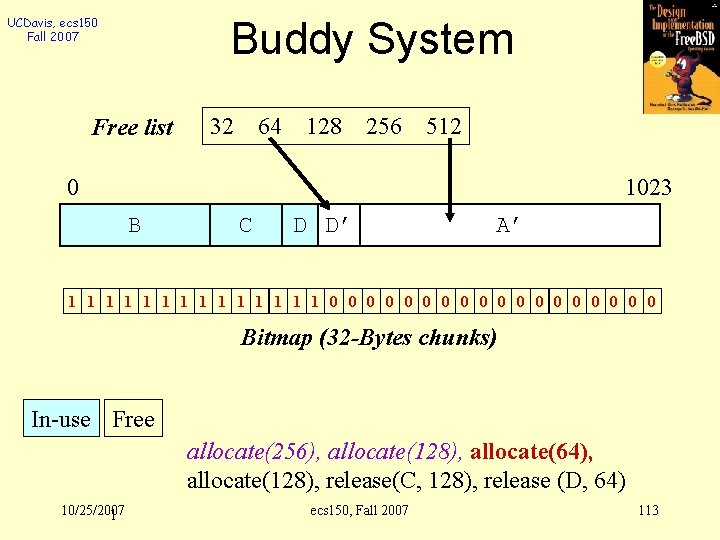

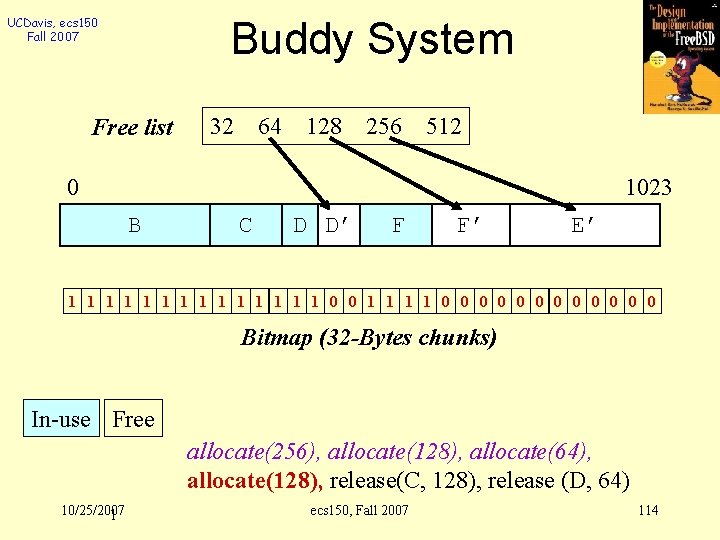

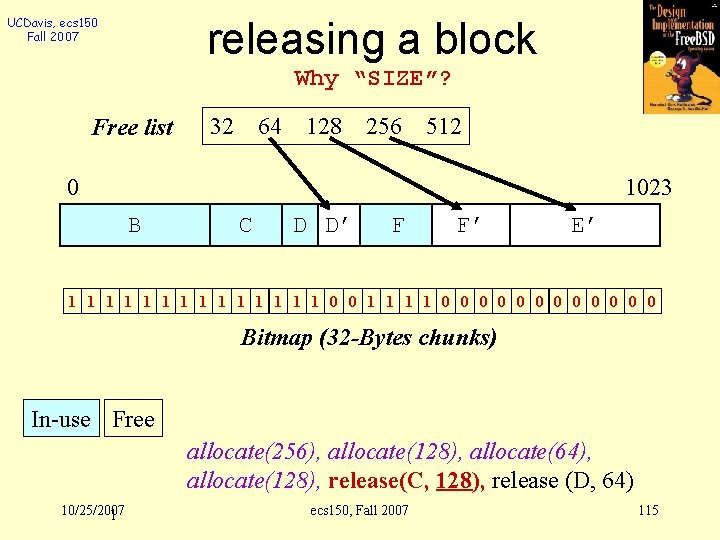

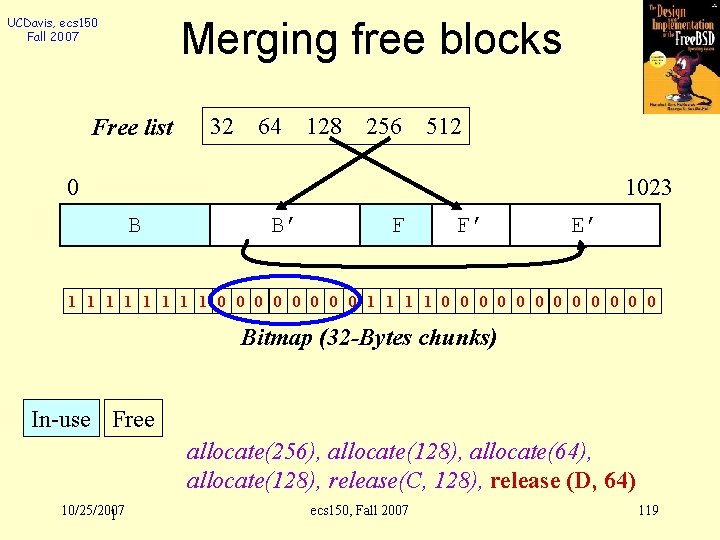

Buddy System

(Figure: a 1024-byte pool, addresses 0 to 1023, with free lists for 32-, 64-, 128-, 256-, and 512-byte blocks and a bitmap with one bit per 32-byte chunk marking it in-use or free.)

Operations: allocate(256), allocate(128), allocate(64), allocate(128), release(C, 128), release(D, 64).

(Figure: initial state: free lists for 32 to 512 bytes, the whole pool 0 to 1023 free, all bitmap bits 0.)

(Figure: resulting blocks C, D, D’, B’, F, F’, E’.)

Buddy System (figure: the pool is split into buddies A and A’.)

Buddy System (figure: A is split into B and B’; A’ stays free.)

Buddy System (figure: B is allocated for allocate(256) and marked in-use in the bitmap; B’ and A’ are free.)

Buddy System (figure: B’ is split into C and C’; C is allocated for allocate(128).)

Buddy System (figure: C’ is split into D and D’; D is allocated for allocate(64).)

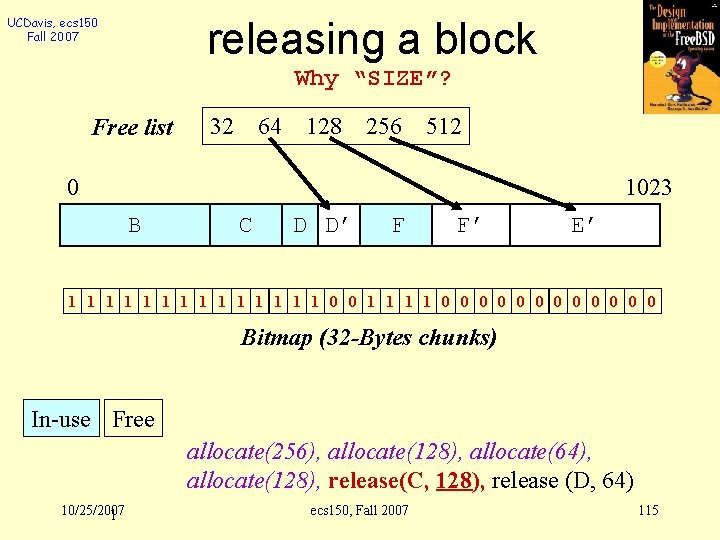

Buddy System (figure: A’ is split into E and E’, and E into F and F’; F is allocated for allocate(128). Blocks: B, C, D, D’, F, F’, E’.)

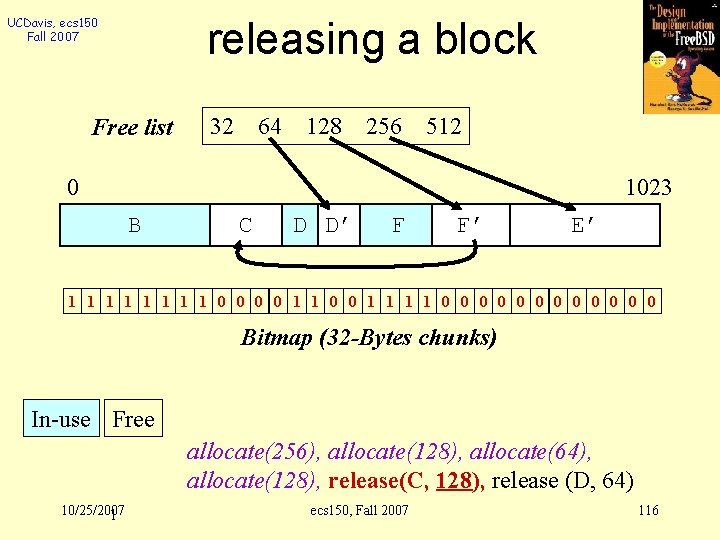

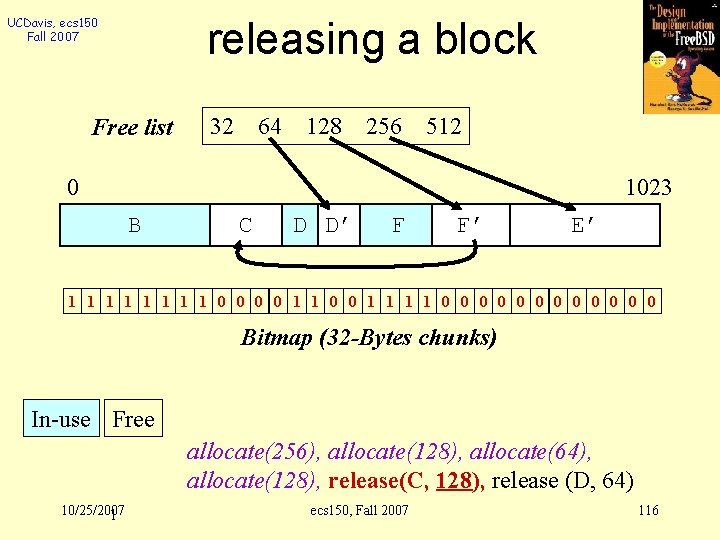

Releasing a block: why “SIZE”? (figure: blocks B, C, D, D’, F, F’, E’.)

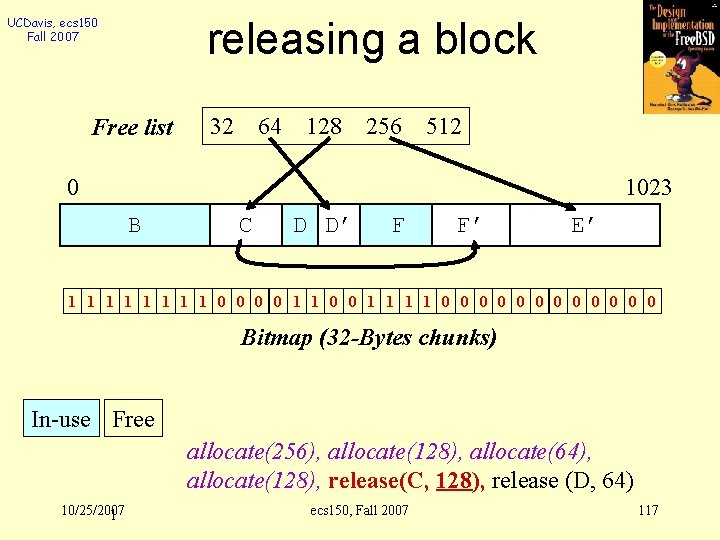

releasing a block
[Figure: blocks B, C, D, D’, F, F’, E’ with their free lists and bitmap.]

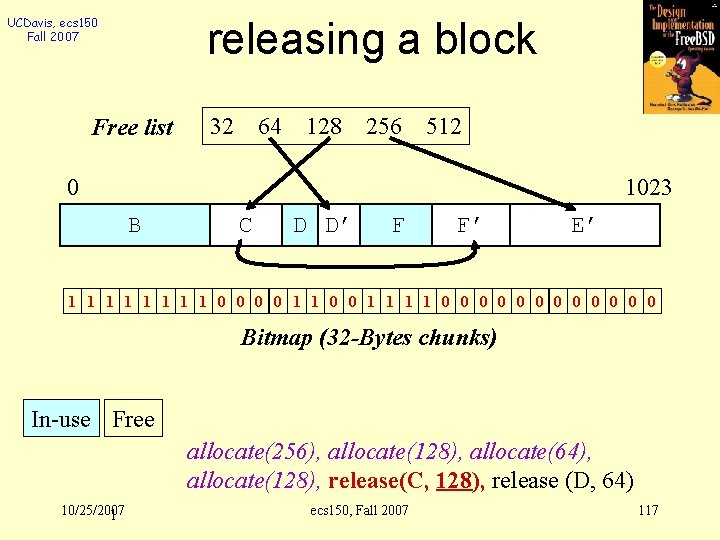

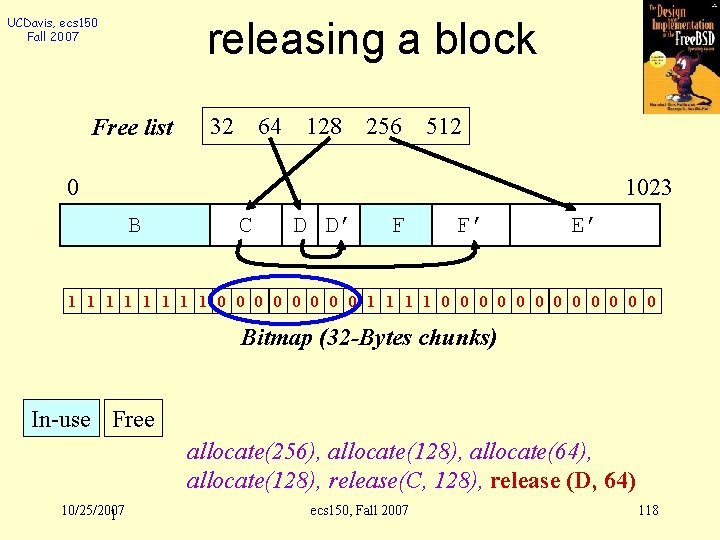

releasing a block
[Figure: blocks B, C, D, D’, F, F’, E’ with their free lists and bitmap.]

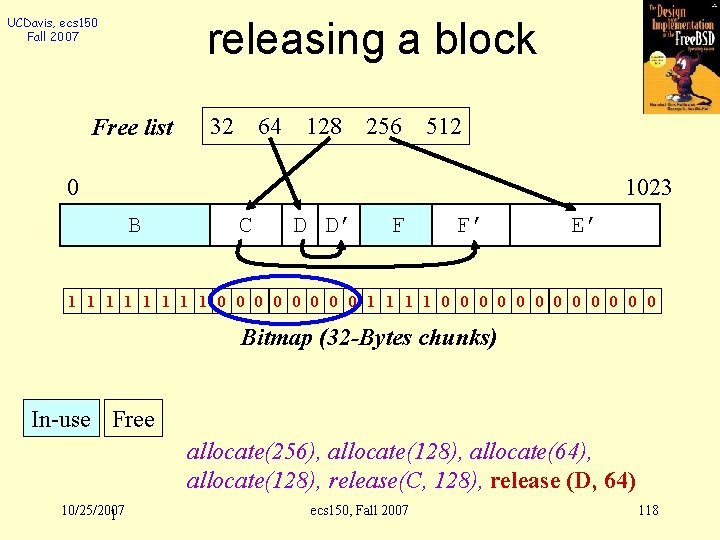

releasing a block
[Figure: blocks B, C, D, D’, F, F’, E’, with the bitmap updated for the released block.]

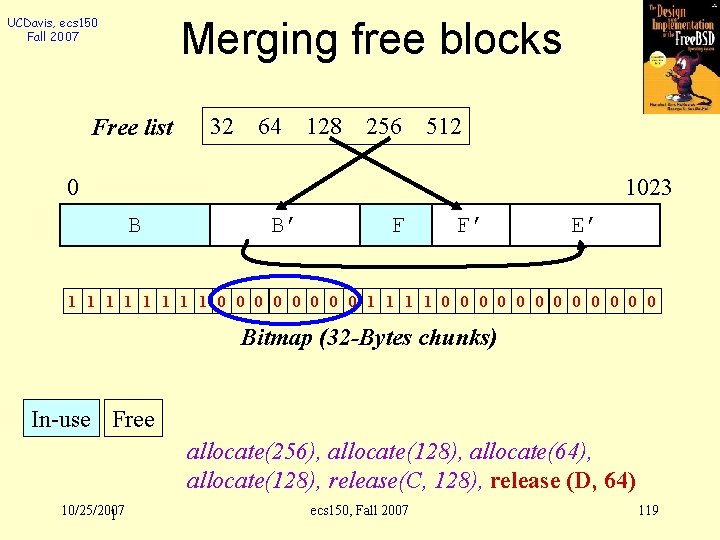

Merging free blocks
[Figure: the freed buddies have merged back, leaving B, B’, F, F’ and E’.]
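The split-and-merge walk on the buddy-system slides can be reproduced with a small simulator. What follows is a minimal sketch in C, not the lecture's or any kernel's implementation. The names `buddy_init`, `buddy_alloc`, `buddy_free` and `buddy_is_free` are hypothetical. The free lists are modeled as one flag per possible block rather than as linked lists. The sketch also suggests one plausible answer to the “Why SIZE?” question. A block here carries no header, so release needs the size for two things: picking the right free list, and locating the buddy. The buddy's offset differs from the block's only in the bit equal to the block size (`off ^ size`).

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define ARENA_SIZE 1024u  /* addresses 0..1023, as in the figures */
#define MIN_BLOCK  32u    /* smallest block size on a free list */
#define LEVELS     6      /* block sizes 32, 64, 128, 256, 512, 1024 */

/* free_blk[k][i] is true when the block of size MIN_BLOCK << k that starts
 * at offset i * (MIN_BLOCK << k) is on the level-k free list. */
static bool free_blk[LEVELS][ARENA_SIZE / MIN_BLOCK];

/* Smallest level whose block size is >= size (sizes round up to 2^k). */
static int level_of(size_t size) {
    int k = 0;
    while ((MIN_BLOCK << k) < size) k++;
    return k;
}

void buddy_init(void) {
    for (int k = 0; k < LEVELS; k++)
        for (size_t i = 0; i < ARENA_SIZE / MIN_BLOCK; i++)
            free_blk[k][i] = false;
    free_blk[LEVELS - 1][0] = true;  /* the whole arena is one free block */
}

/* Returns the block's offset, or -1 if no free block is large enough. */
long buddy_alloc(size_t size) {
    assert(size > 0 && size <= ARENA_SIZE);
    int want = level_of(size);
    for (int k = want; k < LEVELS; k++) {               /* smallest fit first */
        size_t bs = MIN_BLOCK << k;
        for (size_t i = 0; i < ARENA_SIZE / bs; i++) {  /* lowest address first */
            if (!free_blk[k][i]) continue;
            free_blk[k][i] = false;
            size_t off = i * bs;
            /* Split down to the requested size: keep the lower half each
             * time and put the upper half (its buddy) on a free list. */
            for (int lvl = k - 1; lvl >= want; lvl--) {
                size_t half = MIN_BLOCK << lvl;
                free_blk[lvl][(off + half) / half] = true;
            }
            return (long)off;
        }
    }
    return -1;
}

/* The caller passes the size back: the block has no header recording it. */
void buddy_free(long off, size_t size) {
    int k = level_of(size);
    size_t o = (size_t)off;
    assert(o % (MIN_BLOCK << k) == 0);
    while (k < LEVELS - 1) {
        size_t bs = MIN_BLOCK << k;
        size_t buddy = o ^ bs;               /* differs only in the size bit */
        if (!free_blk[k][buddy / bs]) break; /* buddy in use or split: stop */
        free_blk[k][buddy / bs] = false;     /* unlink the buddy and merge */
        o &= ~bs;                            /* merged block = lower half */
        k++;
    }
    free_blk[k][o / (MIN_BLOCK << k)] = true;
}

bool buddy_is_free(long off, size_t size) {
    int k = level_of(size);
    return free_blk[k][(size_t)off / (MIN_BLOCK << k)];
}
```

Replaying the slide sequence, the four allocations return offsets 0 (B), 256 (C), 384 (D) and 512 (F). `release(C, 128)` cannot merge, because C's buddy C’ is split. `release(D, 64)` then merges D with D’, and the result merges with C, re-forming the 256-byte B’ at offset 256. That matches the “Merging free blocks” slide.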

sizeof(struct proc)?

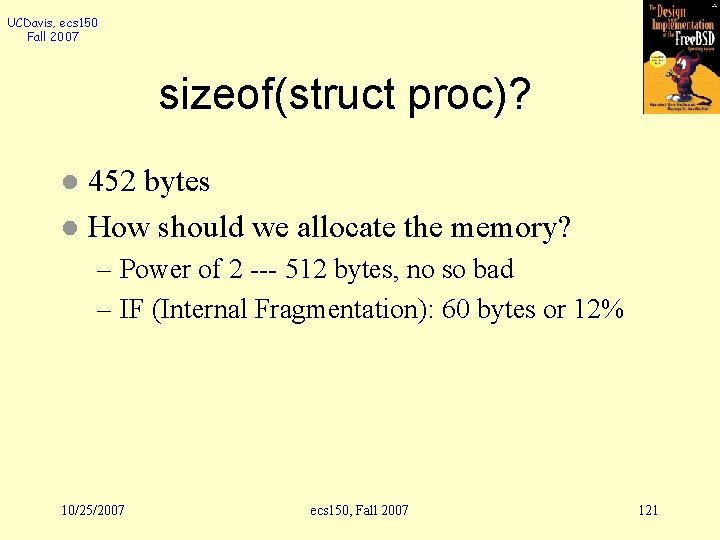

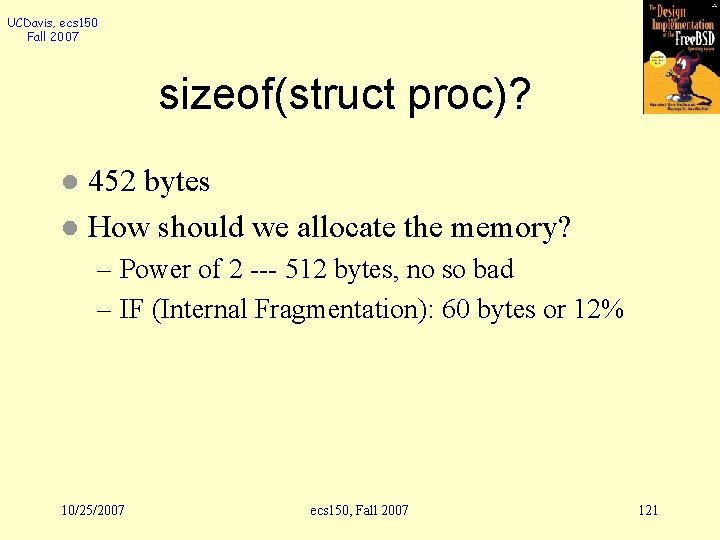

sizeof(struct proc)? 452 bytes
- How should we allocate the memory?
  - Power of 2: 512 bytes, not so bad
  - IF (internal fragmentation): 60 bytes, or about 12% of the block

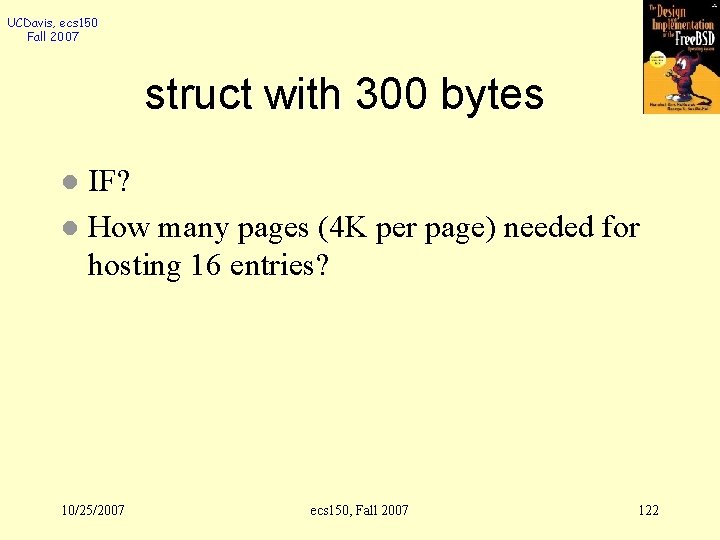

struct with 300 bytes: IF?
- How many pages (4 KB per page) are needed to host 16 entries?

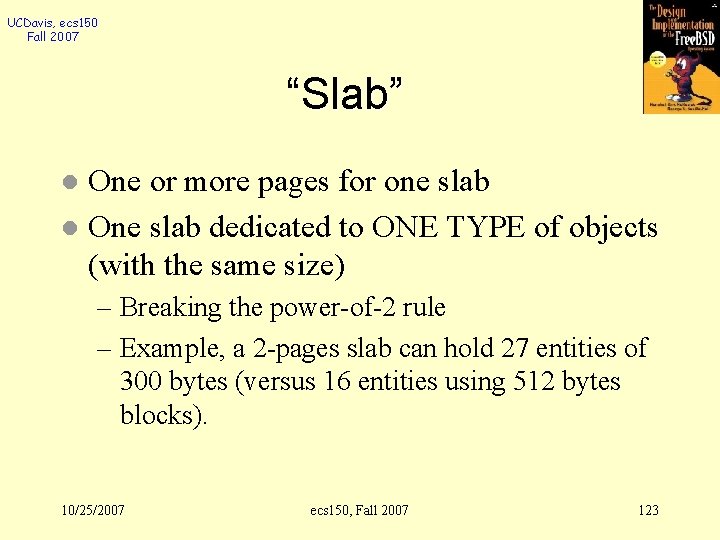

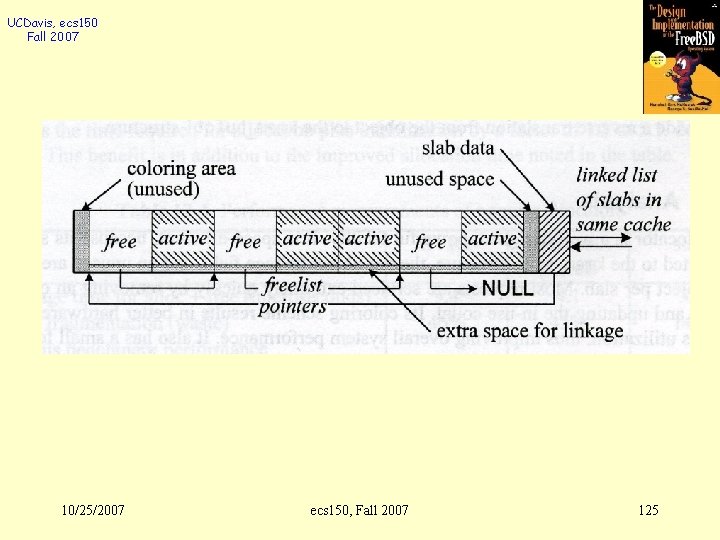

“Slab”
- One or more pages per slab
- One slab is dedicated to ONE TYPE of object (all of the same size)
  - Breaks the power-of-2 rule
  - Example: a 2-page slab can hold 27 entities of 300 bytes (versus 16 entities in 512-byte blocks)

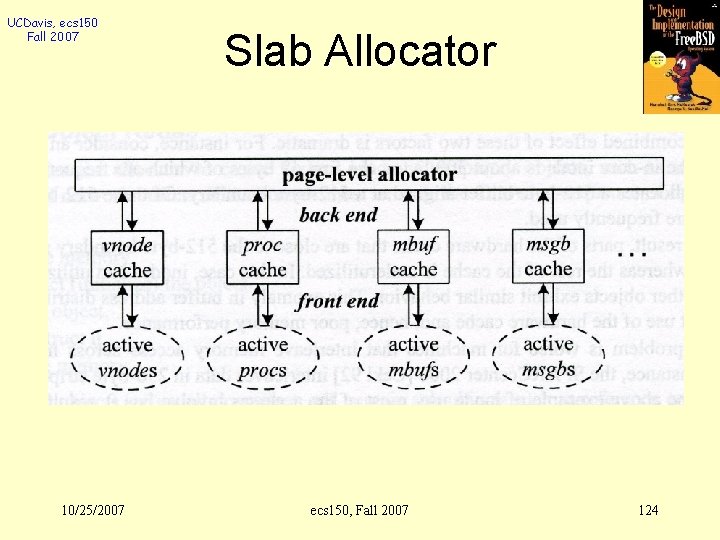
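The numbers on the `struct proc`, 300-byte struct and slab slides can be checked directly. Below is a short sketch, assuming 4 KB pages as on the slide. It ignores any per-slab bookkeeping, which in a real slab allocator could lower the count slightly. The function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define PAGE_BYTES 4096u  /* "4 K per page", as on the slide */

/* Power-of-two block that a buddy-style allocator would hand out. */
size_t pow2_block(size_t obj) {
    size_t b = 1;
    while (b < obj) b <<= 1;
    return b;
}

/* Internal fragmentation: bytes of the block the object leaves unused. */
size_t internal_frag(size_t obj) {
    return pow2_block(obj) - obj;
}

/* Pages needed for n objects stored one per power-of-two block. */
size_t pages_pow2(size_t obj, size_t n) {
    size_t bytes = n * pow2_block(obj);
    return (bytes + PAGE_BYTES - 1) / PAGE_BYTES;  /* round up */
}

/* Objects that fit in an npages-page slab packed at their exact size
 * (ignores slab bookkeeping). */
size_t slab_capacity(size_t obj, size_t npages) {
    assert(obj > 0);
    return npages * PAGE_BYTES / obj;
}
```

Results:
- `internal_frag(452)` is 60 bytes, or 60/512 (about 12%).
- `internal_frag(300)` is 212 bytes (about 41%).
- `pages_pow2(300, 16)` is 2.
- `slab_capacity(300, 2)` is 27, compared with 16 for `slab_capacity(512, 2)`.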

Slab Allocator

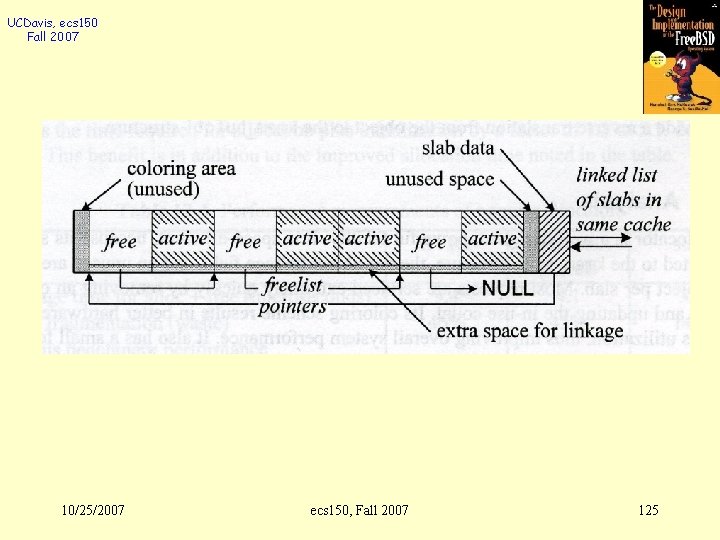


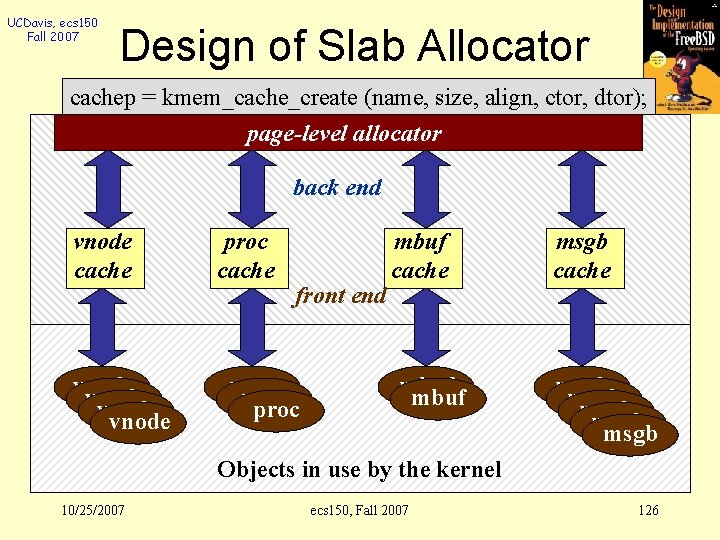

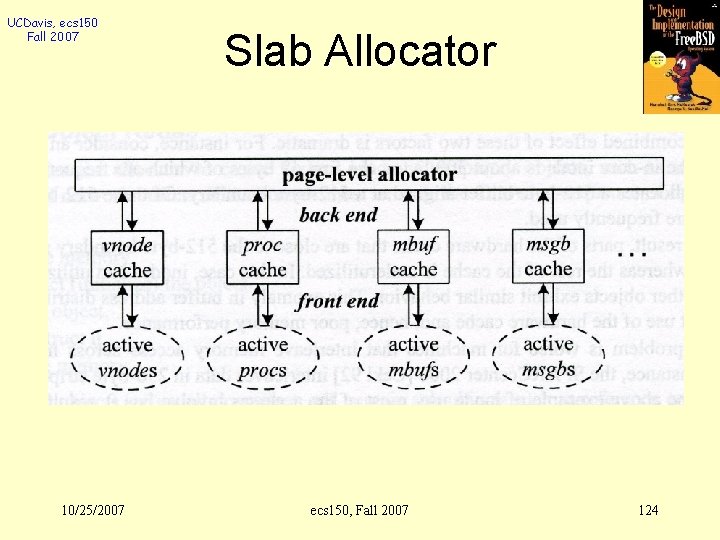

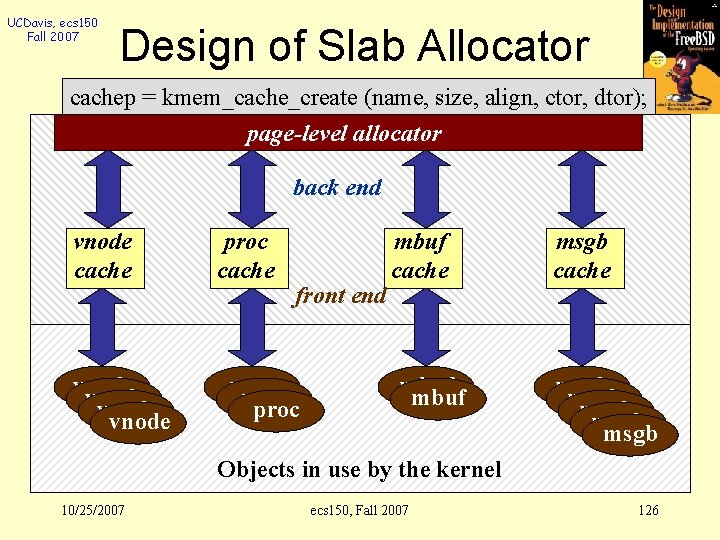

Design of Slab Allocator
`cachep = kmem_cache_create(name, size, align, ctor, dtor);`
[Figure: the page-level allocator is the back end; the vnode, proc, mbuf and msgb caches form the front end, each handing out its own type of object (vnode, proc, mbuf, msgb) in use by the kernel.]
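To make the front-end/back-end split concrete, here is a user-space sketch of one object cache. It borrows the idea behind `kmem_cache_create`: one cache per object type, with objects constructed once and kept in their constructed state between uses. It is not that API. The following are all assumptions of this sketch:
- the names `cache_create`, `cache_alloc` and `cache_free`;
- the fixed two-page slab size;
- `malloc` standing in for the page-level allocator;
- the omission of `align` and `dtor`.

Each slot carries a free-list header and is rounded up for alignment, so this version fits fewer than 27 300-byte objects into a two-page slab. Slabs are also never returned to the back end.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define PAGE_BYTES 4096u
#define SLAB_PAGES 2u      /* hypothetical: every slab is two pages */

/* One slot per object; the free-list link sits in front of the object so
 * that a freed object keeps its constructed contents. */
struct slot {
    struct slot *next;
    max_align_t obj[];     /* object storage, suitably aligned */
};

struct cache {             /* one cache per object type */
    const char *name;
    size_t stride;         /* header + object size rounded for alignment */
    void (*ctor)(void *obj);
    struct slot *free;     /* constructed objects not currently in use */
    size_t nslabs;
};

struct cache *cache_create(const char *name, size_t size, void (*ctor)(void *)) {
    assert(size > 0);
    struct cache *c = calloc(1, sizeof *c);
    if (c == NULL) return NULL;
    size_t a = sizeof(max_align_t);
    c->name = name;
    c->stride = sizeof(struct slot) + (size + a - 1) / a * a;
    c->ctor = ctor;
    return c;
}

/* Back end: take a slab from the page-level allocator (malloc stands in
 * for it), carve it into slots, and construct each object once. */
static int cache_grow(struct cache *c) {
    size_t bytes = SLAB_PAGES * PAGE_BYTES;
    char *slab = malloc(bytes);
    if (slab == NULL) return -1;
    for (size_t off = 0; off + c->stride <= bytes; off += c->stride) {
        struct slot *s = (struct slot *)(slab + off);
        if (c->ctor) c->ctor(s->obj);
        s->next = c->free;
        c->free = s;
    }
    c->nslabs++;
    return 0;
}

/* Front end: hand out an already-constructed object. */
void *cache_alloc(struct cache *c) {
    /* Grow when empty; a slab too small for even one object adds nothing. */
    if (c->free == NULL && (cache_grow(c) != 0 || c->free == NULL))
        return NULL;
    struct slot *s = c->free;
    c->free = s->next;
    return s->obj;
}

/* The caller returns the object in its constructed state; it goes back on
 * the free list without being destroyed. */
void cache_free(struct cache *c, void *obj) {
    struct slot *s = (struct slot *)((char *)obj - offsetof(struct slot, obj));
    s->next = c->free;
    c->free = s;
}
```

Because the free-list link lives outside the object, an object that is freed and reallocated keeps its contents. That is the property that lets a constructor run once per object rather than once per allocation.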

VM
[Figure: process address space (text, data, BSS, user stack, args/env, kernel) alongside a file volume with executable programs.]
Modified (dirty) pages are pushed to backing store (swap) on eviction. Fetches for clean text or data are typically fill-from-file. Paged-out pages are fetched from backing store when needed. Initial references to user stack and BSS are satisfied by zero-fill on demand.

[Figure: address-space regions and the objects backing them]
- Text
- Initialized Data (copy-on-write)
- Uninitialized Data (zero-fill): anonymous object
- Stack (zero-fill): anonymous object

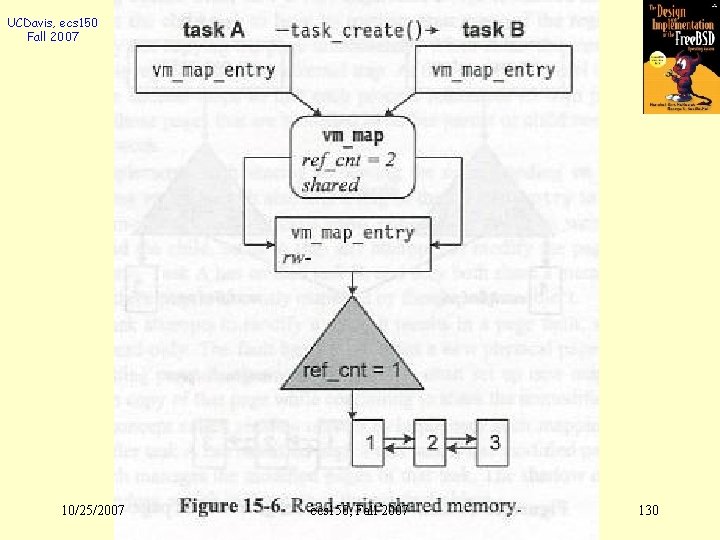

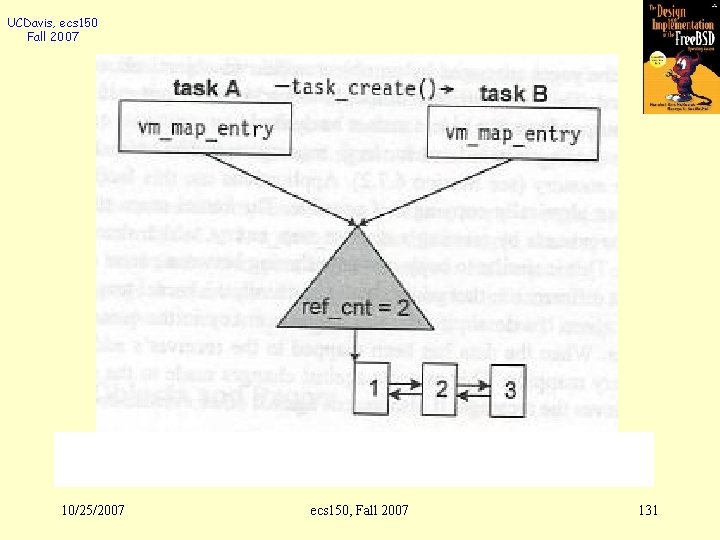

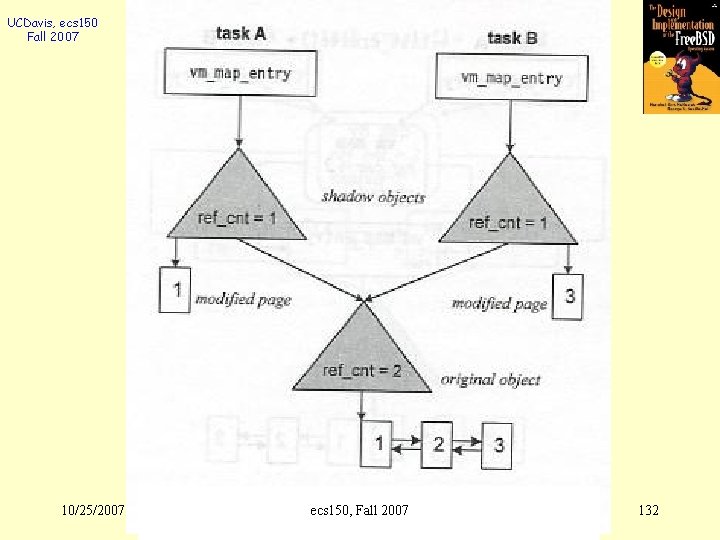
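Zero-fill on demand is visible from user space. A private anonymous mapping is the same kind of memory the figure shows behind uninitialized data and the stack, and it reads as zeros before anything is written to it. This is a small sketch, assuming a system that provides `MAP_ANONYMOUS` (Linux and the BSDs do). The function name is hypothetical.

```c
#define _DEFAULT_SOURCE   /* exposes MAP_ANONYMOUS on glibc */
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>

/* Map n bytes of private anonymous memory (no file behind it) and report
 * whether every byte reads as zero: 1 = yes, 0 = no, -1 = mmap failed. */
int anon_is_zero_filled(size_t n) {
    assert(n > 0);
    unsigned char *p = mmap(NULL, n, PROT_READ | PROT_WRITE,
                            MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED) return -1;
    int zero = 1;
    /* The first access to each page is a fault the kernel satisfies with
     * zero-filled memory; nothing is read from a file. */
    for (size_t i = 0; i < n; i++) {
        if (p[i] != 0) { zero = 0; break; }
    }
    munmap(p, n);
    return zero;
}
```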

“mmap”
- Memory-mapped file
  - Read/write versus direct memory access
  - Sharing a file among multiple processes

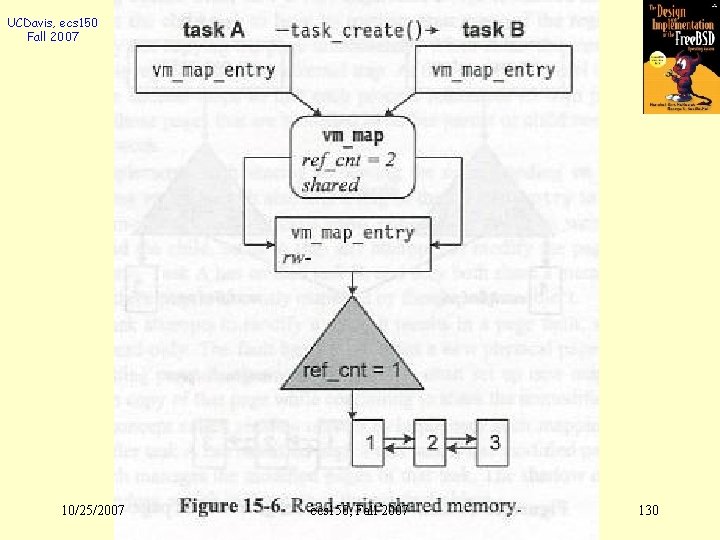
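Here is the “direct memory access” side of that comparison. After `mmap`, the file's bytes are read through an ordinary pointer instead of being copied into a buffer by `read()`. This is a minimal POSIX sketch; `count_byte_mmap` is a hypothetical name.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

/* Count how often byte ch occurs in the file at path, reading the file's
 * contents through a memory mapping instead of read() calls. */
long count_byte_mmap(const char *path, unsigned char ch) {
    assert(path != NULL);
    int fd = open(path, O_RDONLY);
    if (fd < 0) return -1;
    struct stat st;
    if (fstat(fd, &st) < 0) { close(fd); return -1; }
    if (st.st_size == 0) { close(fd); return 0; }  /* mmap rejects length 0 */
    size_t len = (size_t)st.st_size;
    const unsigned char *p = mmap(NULL, len, PROT_READ, MAP_PRIVATE, fd, 0);
    close(fd);                        /* the mapping outlives the descriptor */
    if (p == MAP_FAILED) return -1;
    long n = 0;
    for (size_t i = 0; i < len; i++)  /* plain memory accesses, no copying */
        n += (p[i] == ch);
    munmap((void *)p, len);
    return n;
}
```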


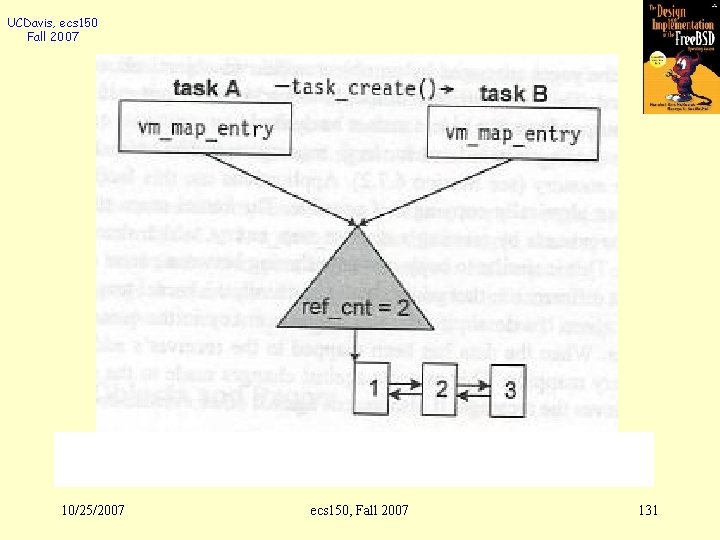


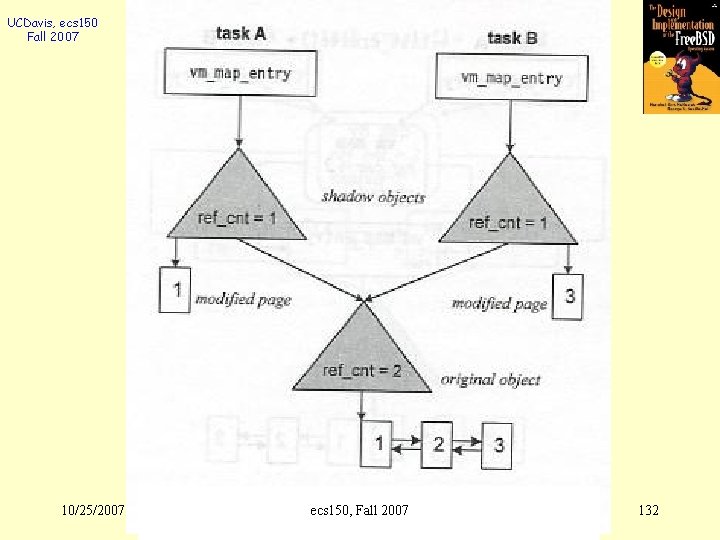


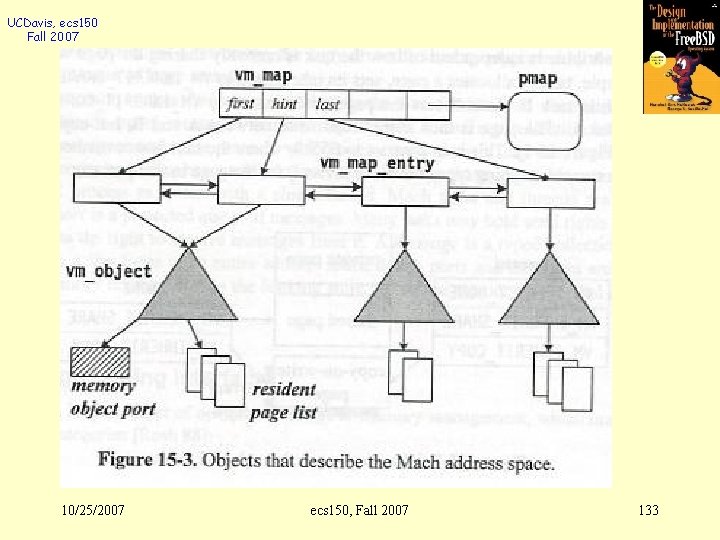


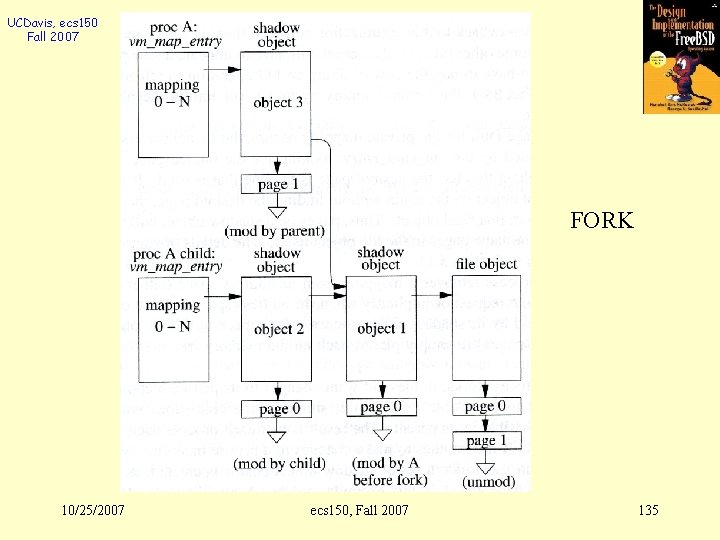

“mmap”
- Memory-mapped file
  - Read/write versus direct memory access
  - Sharing a file among multiple processes
- Two modes: shared or private
  - Applications?

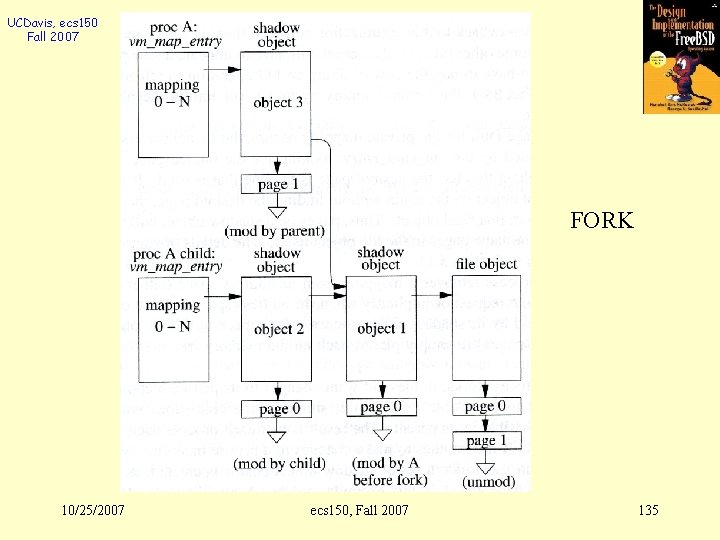
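The two modes differ in where stores go:
- With `MAP_SHARED`, writes through the mapping change the file and are seen by every process that maps it.
- With `MAP_PRIVATE`, the first write to a page gives the process its own copy-on-write copy and leaves the file alone. This is also what lets a debugger patch the private text pages of a process without modifying the executable.

Here is a sketch, assuming POSIX `mmap`, `msync` and `pread`; `write_through_mapping` is a hypothetical name.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <unistd.h>

/* Map the first len bytes of the file at path with flag MAP_SHARED or
 * MAP_PRIVATE, store ch at offset 0 through the mapping, then return the
 * byte the file itself now holds at offset 0 (or -1 on error). */
int write_through_mapping(const char *path, size_t len, int flag, char ch) {
    assert(len > 0);
    int fd = open(path, O_RDWR);
    if (fd < 0) return -1;
    char *p = mmap(NULL, len, PROT_READ | PROT_WRITE, flag, fd, 0);
    if (p == MAP_FAILED) { close(fd); return -1; }
    p[0] = ch;       /* MAP_PRIVATE: copy-on-write gives us our own page */
    if (flag == MAP_SHARED)
        msync(p, len, MS_SYNC);       /* push the change to the file */
    munmap(p, len);
    char c;
    ssize_t r = pread(fd, &c, 1, 0);  /* what does the file hold now? */
    close(fd);
    return r == 1 ? (unsigned char)c : -1;
}
```

On a file containing "hello", writing 'J' through a private mapping leaves the file starting with 'h'. Writing 'J' through a shared mapping changes it to "Jello".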

FORK

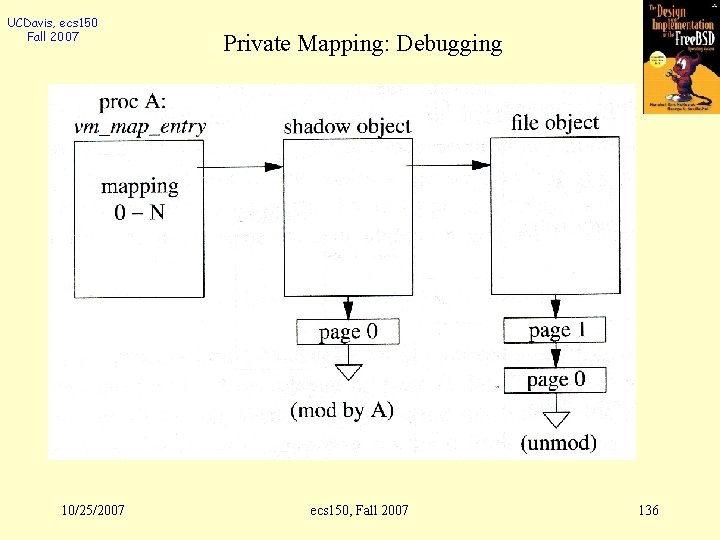

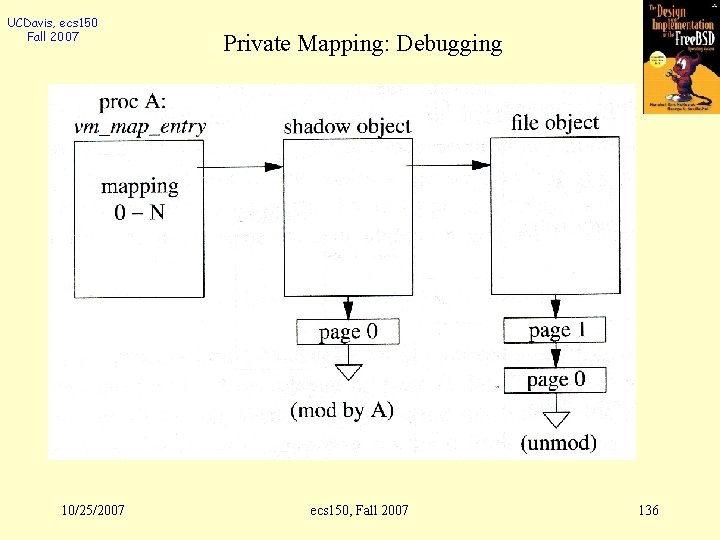
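After `fork`, parent and child start with identical address spaces. A copy-on-write implementation shares the pages and copies one only when either side writes to it. From the program's point of view the result is indistinguishable from a full copy, as this POSIX sketch shows. The names `child_sees_after_write` and `counter` are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

static int counter = 42;   /* lives in the initialized-data segment */

/* Fork a child that stores new_value into counter and exits with the value
 * it then sees; return that value (or -1 on error). */
int child_sees_after_write(int new_value) {
    assert(new_value >= 0 && new_value <= 255);  /* exit status is 8 bits */
    pid_t pid = fork();
    if (pid < 0) return -1;
    if (pid == 0) {
        counter = new_value;  /* with copy-on-write, this write copies the page */
        _exit(counter);
    }
    int status;
    if (waitpid(pid, &status, 0) < 0 || !WIFEXITED(status)) return -1;
    return WEXITSTATUS(status);
}
```

The child sees its own write, while the parent's `counter` is still 42 afterwards.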

Private Mapping: Debugging