
Multiprocessor Operating Systems

Motivation for Multiprocessors
• Enhanced performance
  – Concurrent execution of tasks for increased throughput (parallelism between processes)
  – Exploiting concurrency within tasks (parallelism within a process)
• Fault tolerance
  – Graceful degradation in the face of failures

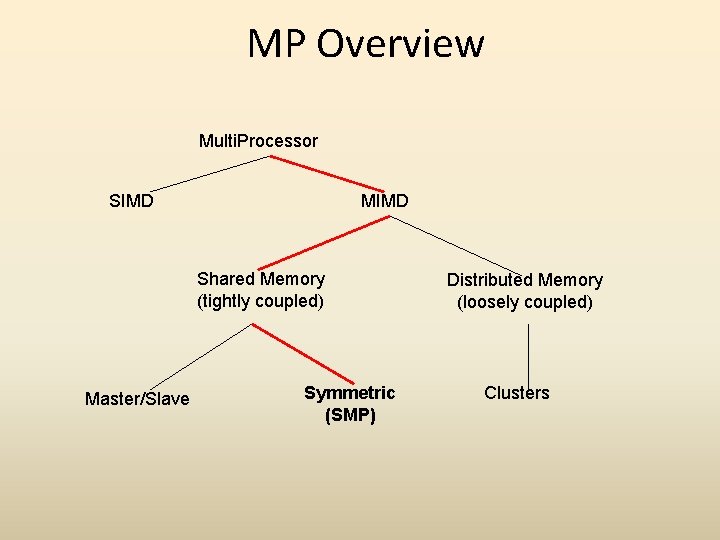

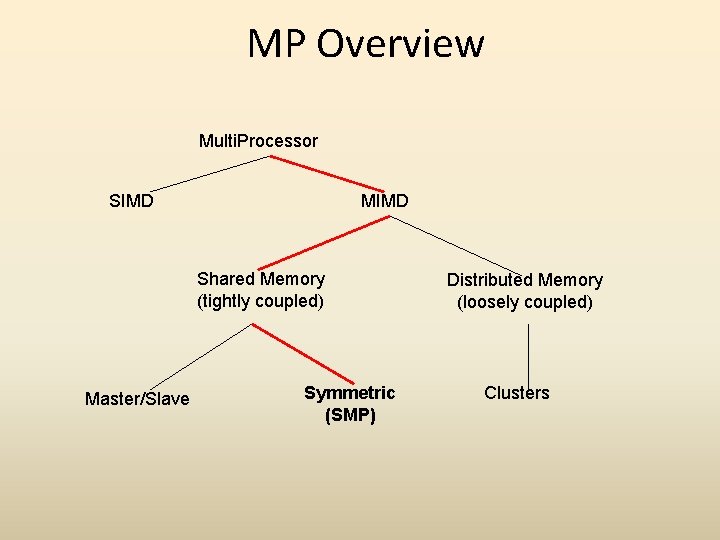

Basic MP Architectures (Flynn's taxonomy)
• Single Instruction Single Data (SISD): conventional uniprocessor designs
• Single Instruction Multiple Data (SIMD): vector and array processors
• Multiple Instruction Single Data (MISD): not implemented in practice
• Multiple Instruction Multiple Data (MIMD): conventional MP designs

MIMD Classifications
• Tightly coupled system: all processors share the same global memory and have the same address space (a typical SMP system)
  – Main memory is used for IPC and synchronization
• Loosely coupled system: memory is partitioned and attached to each processor, e.g. hypercubes and clusters (multicomputers)
  – Message passing is used for IPC and synchronization

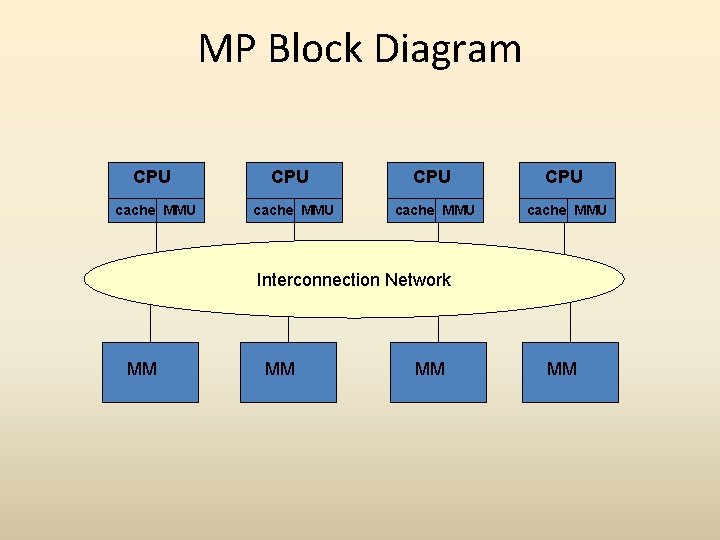

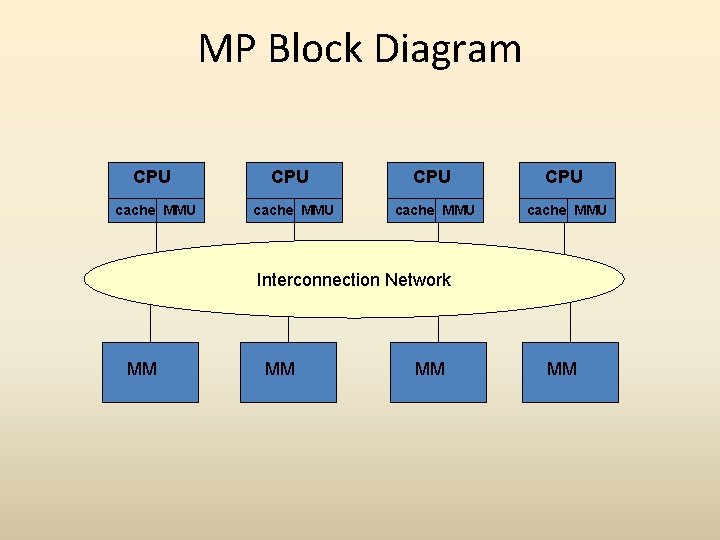

MP Block Diagram: CPUs, each with a cache and an MMU, connected through an interconnection network to the main memory (MM) modules.

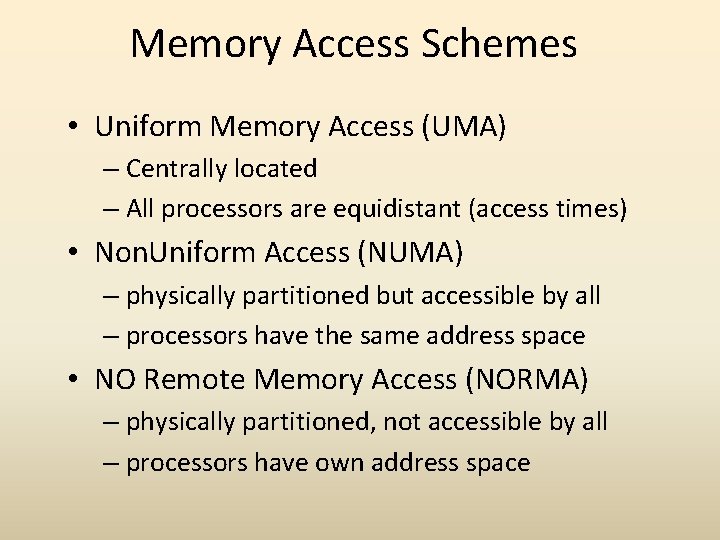

Memory Access Schemes
• Uniform Memory Access (UMA)
  – Memory is centrally located
  – All processors are equidistant (equal access times)
• Non-Uniform Memory Access (NUMA)
  – Memory is physically partitioned but accessible by all processors
  – Processors share the same address space
• No Remote Memory Access (NORMA)
  – Memory is physically partitioned and not accessible by all processors
  – Each processor has its own address space
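
The practical difference between UMA and NUMA can be summarized as an effective memory access time. As a hedged illustration, let $f$ be the fraction of accesses served by local memory; the 100 ns, 300 ns, and 80% figures are assumed values for the example, not measurements of any particular machine:

$$
T_{\text{eff}} = f\,t_{\text{local}} + (1-f)\,t_{\text{remote}}
= 0.8 \times 100\,\text{ns} + 0.2 \times 300\,\text{ns} = 140\,\text{ns}
$$

Under UMA, $t_{\text{local}} = t_{\text{remote}}$, so data placement does not affect $T_{\text{eff}}$; under NUMA, the OS can lower $T_{\text{eff}}$ by raising $f$, that is, by allocating a process's memory on the node where it runs.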

Other Details of MP
• Interconnection technology
  – Bus
  – Crossbar switch
  – Multistage interconnection network
• Caching: the cache coherence problem
  – Write-update
  – Write-invalidate
  – Bus snooping

MP OS Structure - 1
• Separate supervisor
  – Each processor has its own copy of the kernel
  – Some data is shared for interaction
  – Dedicated I/O devices and file systems
  – Good fault tolerance
  – Poor support for concurrency

MP OS Structure - 2
• Master/slave configuration
  – The master monitors the status of the other processors (slaves) and assigns work to them
  – The slaves are a schedulable pool of resources for the master
  – The master can become a bottleneck
  – Poor fault tolerance

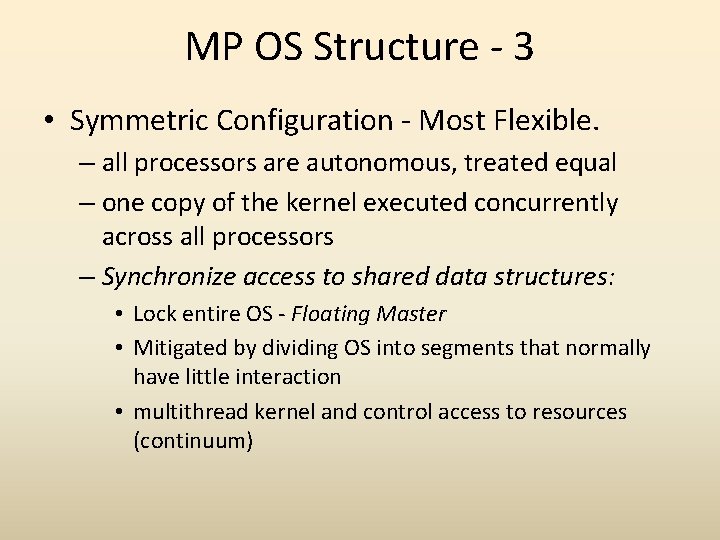

MP OS Structure - 3
• Symmetric configuration: the most flexible
  – All processors are autonomous and treated equally
  – One copy of the kernel executes concurrently across all processors
  – Access to shared data structures must be synchronized:
    • Lock the entire OS (floating master)
    • Reduce contention by dividing the OS into segments that normally have little interaction
    • Multithread the kernel and control access to individual resources (a continuum of lock granularity)

MP Overview
• Multiprocessor
  – SIMD
  – MIMD
    • Shared memory (tightly coupled)
      – Master/slave
      – Symmetric (SMP)
    • Distributed memory (loosely coupled)
      – Clusters

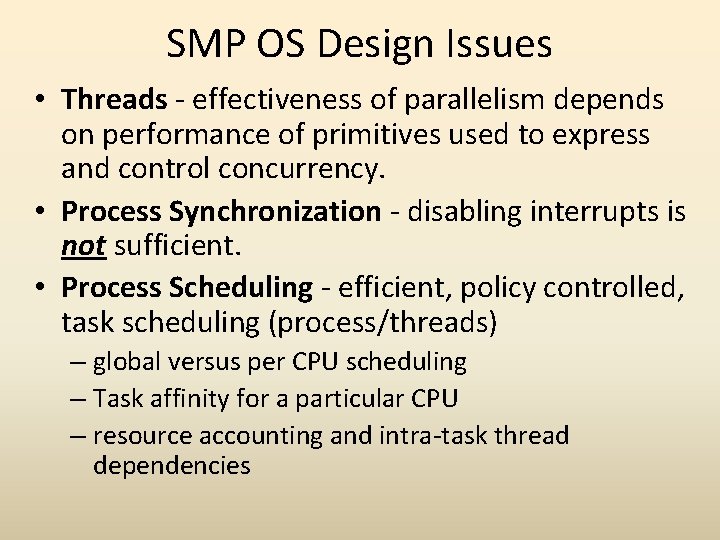

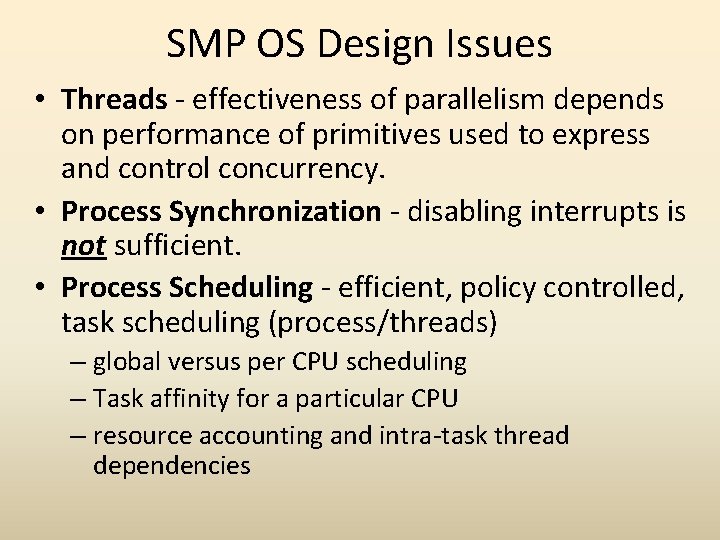

SMP OS Design Issues
• Threads: the effectiveness of parallelism depends on the performance of the primitives used to express and control concurrency
• Process synchronization: disabling interrupts is not sufficient, because it only prevents preemption on the local CPU; code running on other CPUs can still access shared data at the same time
• Process scheduling: efficient, policy-controlled scheduling of tasks (processes/threads)
  – Global versus per-CPU scheduling
  – Task affinity for a particular CPU
  – Resource accounting and intra-task thread dependencies

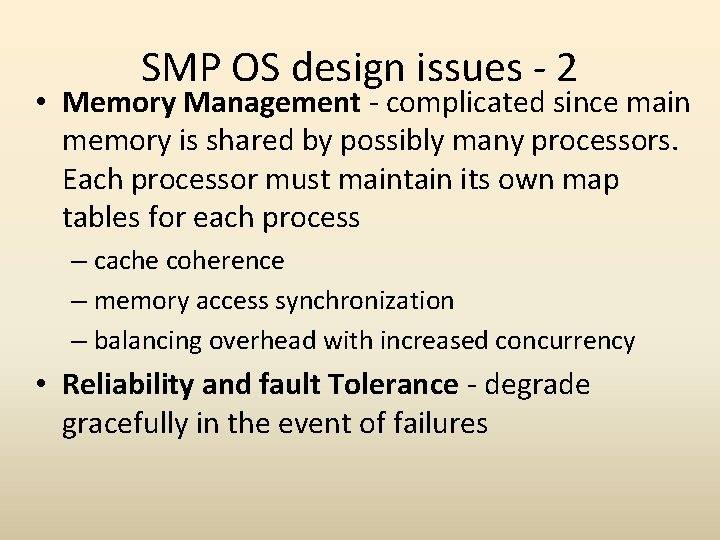

SMP OS Design Issues - 2
• Memory management: complicated because main memory is shared by possibly many processors; each processor must maintain its own map tables for each process
  – Cache coherence
  – Memory access synchronization
  – Balancing overhead against increased concurrency
• Reliability and fault tolerance: degrade gracefully in the event of failures

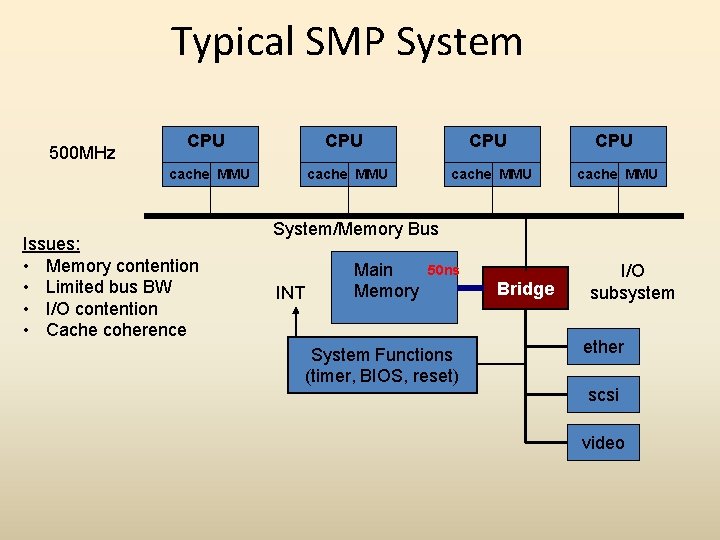

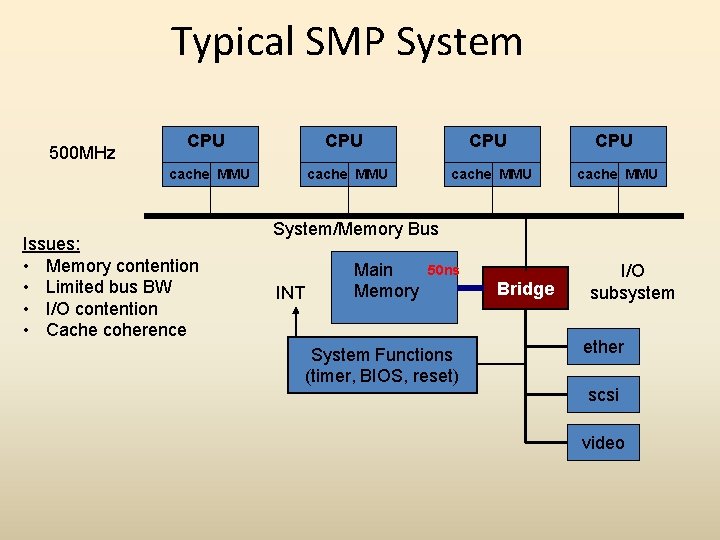

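Typical SMP System
Diagram: 500 MHz CPUs, each with a cache and an MMU, attached to a shared system/memory bus together with interrupt (INT) logic, main memory (50 ns access time), system functions (timer, BIOS, reset), and a bridge to the I/O subsystem (Ethernet, SCSI, video).
Issues:
• Memory contention
• Limited bus bandwidth
• I/O contention
• Cache coherence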
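
The figures in this diagram show why memory contention and cache behavior matter. With a 500 MHz clock and a 50 ns main memory:

$$
t_{\text{cycle}} = \frac{1}{500 \times 10^{6}\,\text{Hz}} = 2\,\text{ns},
\qquad
\frac{50\,\text{ns}}{2\,\text{ns/cycle}} = 25\ \text{cycles}
$$

So each cache miss that goes to main memory stalls a CPU for roughly 25 cycles, before any extra delay from other CPUs competing for the shared bus is added.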

Some Definitions
• Parallelism: the degree to which a multiprocessor application achieves parallel execution
• Concurrency: the maximum parallelism an application can achieve with unlimited processors
• System concurrency: the kernel recognizes multiple threads of control in a program
• User concurrency: user-space threads (coroutines) provide a natural programming model for concurrent applications; this concurrency is not supported by the system

Processes and Threads
• Process: encompasses
  – a set of threads (computational entities)
  – a collection of resources
• Thread: a dynamic object representing an execution path and computational state
  – Each thread has its own computational state: PC, stack, user registers, and private data
  – The remaining resources are shared among the threads in a process

Threads
• The effectiveness of parallel computing depends on the performance of the primitives used to express and control parallelism
• Threads separate the notion of execution from the process abstraction
• Useful for expressing the intrinsic concurrency of a program, regardless of the resulting performance
• Three types: user threads, kernel threads, and lightweight processes (LWPs)

User-Level Threads
• User-level threads are supported by a user-level (thread) library
  – Benefits:
    • No kernel modifications required
    • Flexible and low cost
  – Drawbacks:
    • A thread cannot block without blocking the entire process
    • No parallelism (the threads are not recognized by the kernel)

Kernel-Level Threads
• Kernel-level threads: the kernel directly supports multiple threads of control in a process; the thread is the basic scheduling entity
  – Benefits:
    • Coordination between scheduling and synchronization
    • Less overhead than a process
    • Suitable for parallel applications
  – Drawbacks:
    • More expensive than user-level threads
    • Generality leads to greater overhead

Lightweight Processes (LWPs)
• A kernel-supported user thread
• Each LWP is bound to one kernel thread
  – A kernel thread need not be bound to an LWP
• LWPs are scheduled by the kernel
• User threads are scheduled onto LWPs by the library
• Multiple LWPs per process

First-Class Threads (Psyche OS)
• Thread operations in user space:
  – create, destroy, synchronize, context switch
• Kernel threads implement a virtual processor
• Coarse-grained in the kernel: preemptive scheduling
• Communication between the kernel and the threads library:
  – Shared data structures
  – Software interrupts (user upcalls or signals), for example for scheduling decisions and preemption warnings
  – A kernel scheduler interface that allows dissimilar thread packages to coordinate

Scheduler Activations
• An activation:
  – serves as the execution context for a running thread
  – notifies the thread of kernel events (upcalls)
  – provides space for the kernel to save the processor context of the current user thread when the kernel stops it
• The kernel is responsible for processor allocation, so preemption is done by the kernel
• The thread package is responsible for scheduling threads on the available processors (activations)

Support for Threading
• BSD:
  – Process model only; 4.4BSD enhancements
• Solaris provides:
  – user threads, kernel threads, and LWPs
• Mach supports:
  – kernel threads and tasks; thread libraries provide the semantics of user threads, LWPs, and kernel threads
• Digital UNIX: extends Mach to provide the usual UNIX semantics
  – Pthreads library

Mach
• Two abstractions:
  – Task: a static object consisting of an address space and system resources called port rights
  – Thread: the fundamental execution unit; runs in the context of a task
    • Zero or more threads per task
    • Kernel-schedulable
    • Kernel stack
    • Computational state
• Processor sets: the available processors are divided into nonintersecting sets
  – Permits dedicating processor sets to one or more tasks

Mach C-thread Implementations
• Coroutine-based: multiplexes user threads onto a single-threaded task
• Thread-based: one-to-one mapping from cthreads to Mach threads (the default)
• Task-based: one Mach task per cthread

Digital UNIX
• Based on the Mach 2.5 kernel
• Provides the complete UNIX programmer's interface
• 4.3BSD code and ULTRIX code ported to Mach
  – u-area replaced by utask and uthread
  – proc structure retained

Digital UNIX Threads
• Signals are divided into synchronous and asynchronous signals
• Global signal mask
• Each thread can define its own handlers for synchronous signals
• Global handlers for asynchronous signals

Pthreads Library
• One Mach thread per pthread
• Implements asynchronous I/O
  – A separate thread is created to perform the synchronous I/O; it in turn signals the original thread
• The library includes signal handling, scheduling functions, and synchronization primitives

Mach Continuations
• Address the problem of excessive kernel stack memory requirements
• Process model versus interrupt model
  – A kernel stack per thread (process model) versus a kernel stack per processor (interrupt model)
• When it blocks, the thread is first responsible for saving any required state (the thread structure allows up to 28 bytes)
• It then indicates a function to be invoked when it is unblocked (the continuation function)
• Advantage: the stack can be transferred between threads, eliminating copy overhead

Thank You