Chapter 7 Multicores Multiprocessors and Clusters ta tm

- Slides: 75

Chapter 7 Multicores (多核心), Multiprocessors (多處理器 ), and Clusters (叢集)

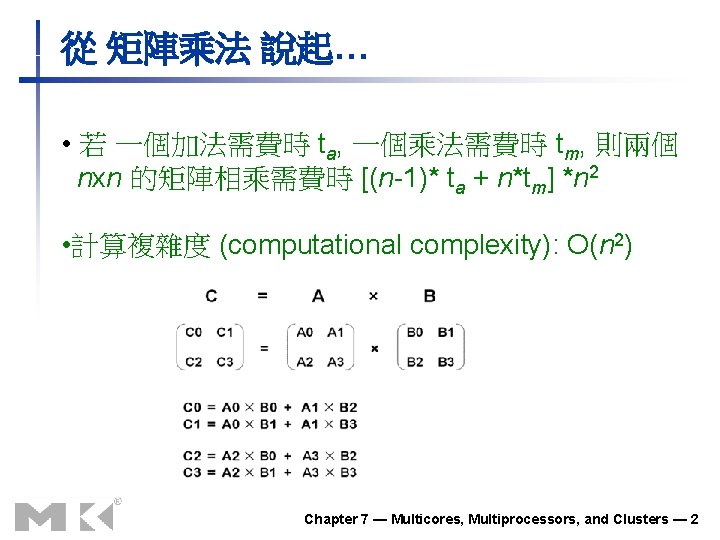

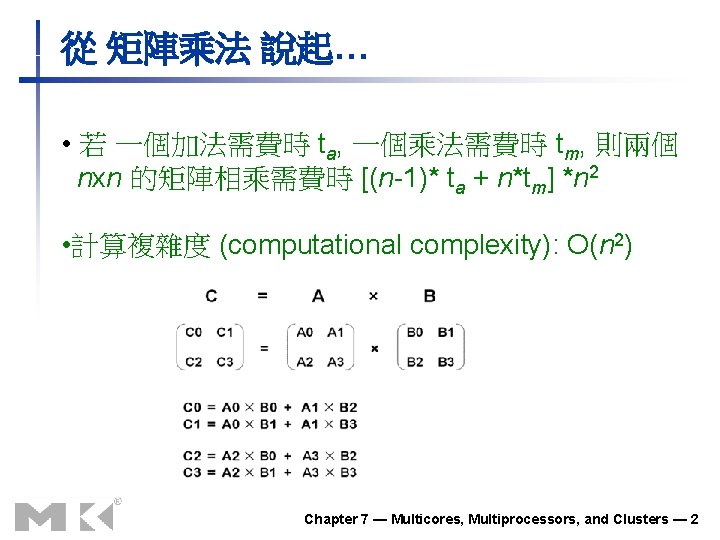

從 矩陣乘法 說起… • 若 一個加法需費時 ta, 一個乘法需費時 tm, 則兩個 nxn 的矩陣相乘需費時 [(n-1)* ta + n*tm] *n 2 • 計算複雜度 (computational complexity): O(n 2) Chapter 7 — Multicores, Multiprocessors, and Clusters — 2

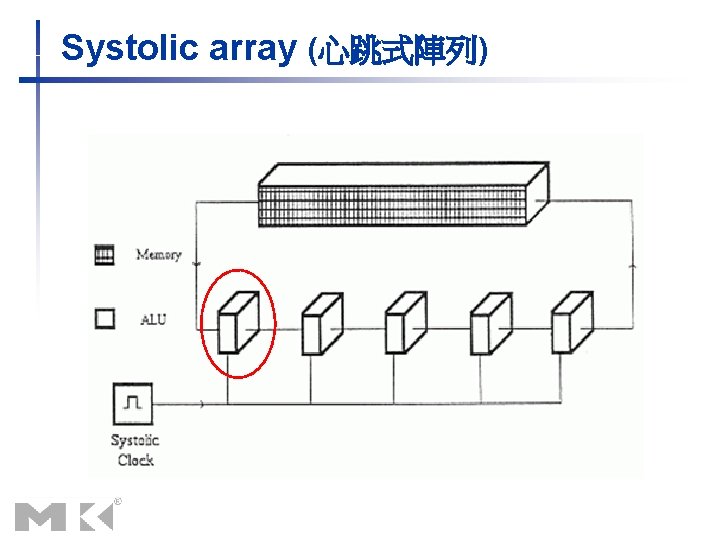

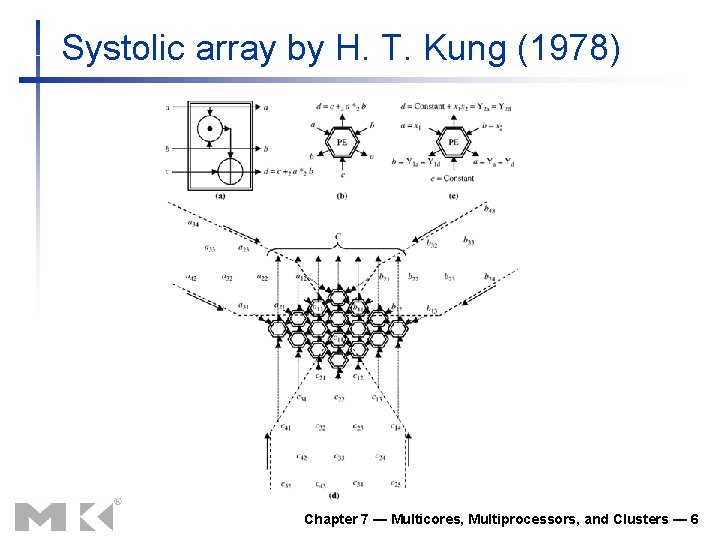

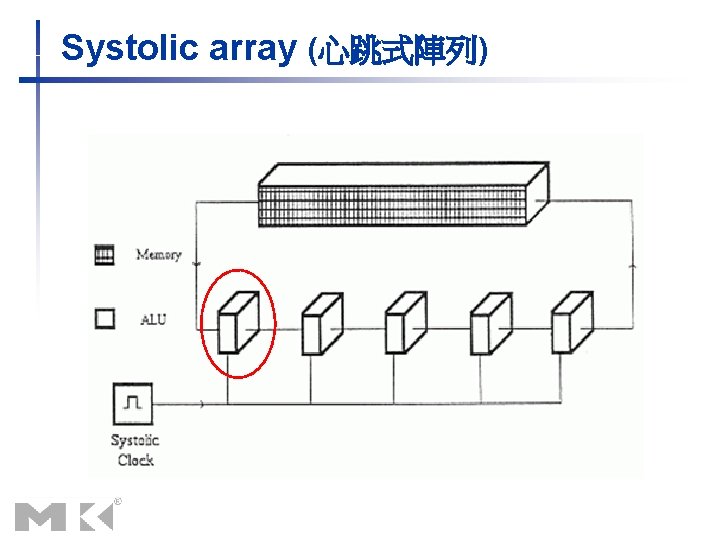

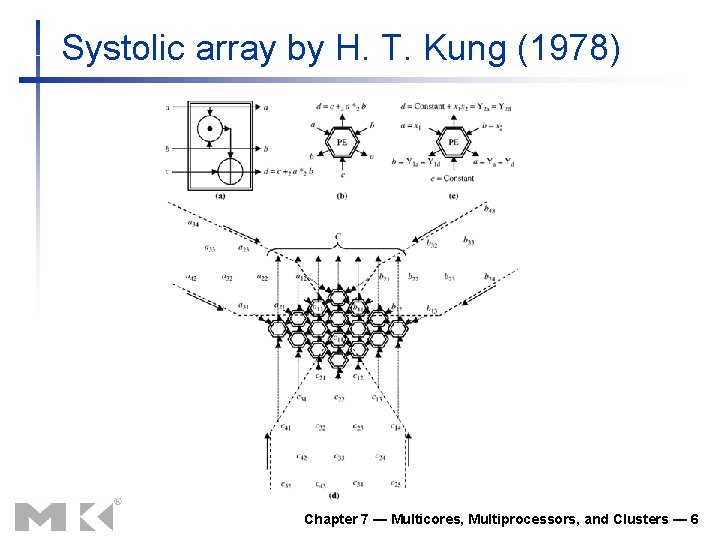

Systolic array (心跳式陣列)

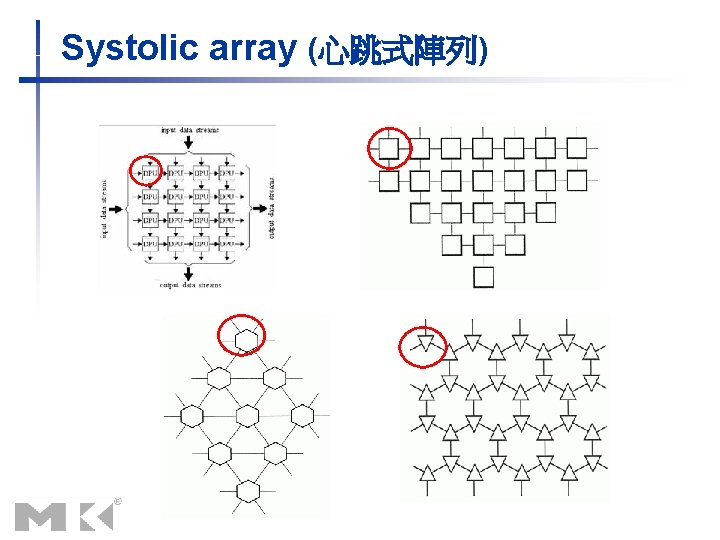

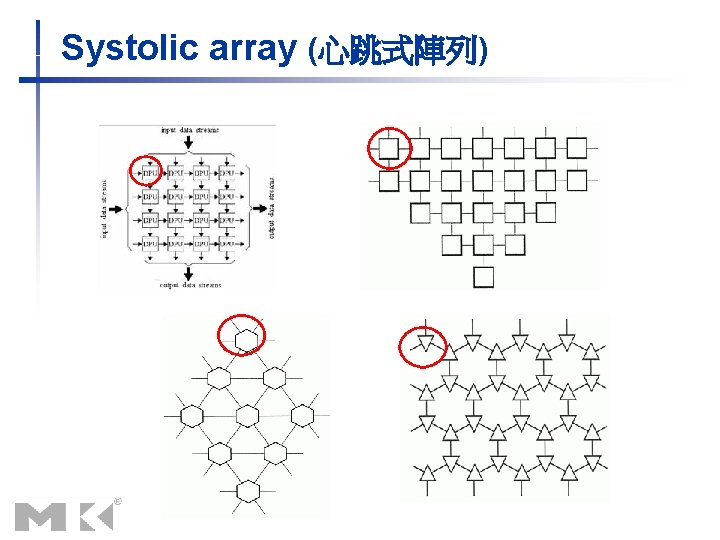

Systolic array (心跳式陣列)

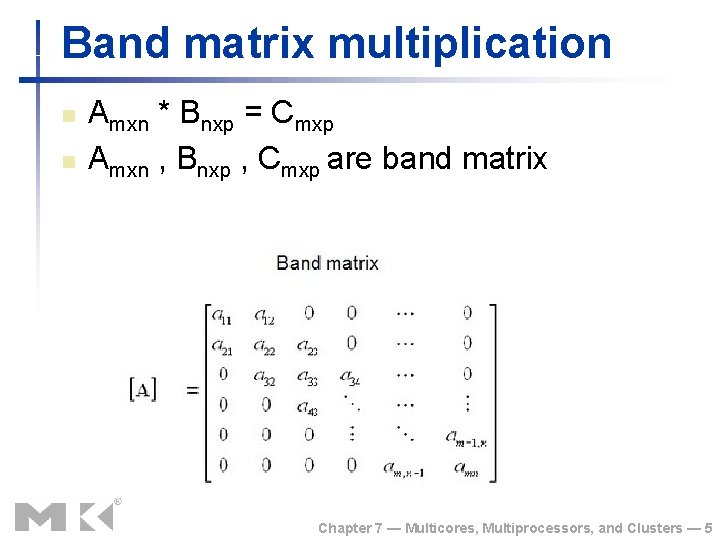

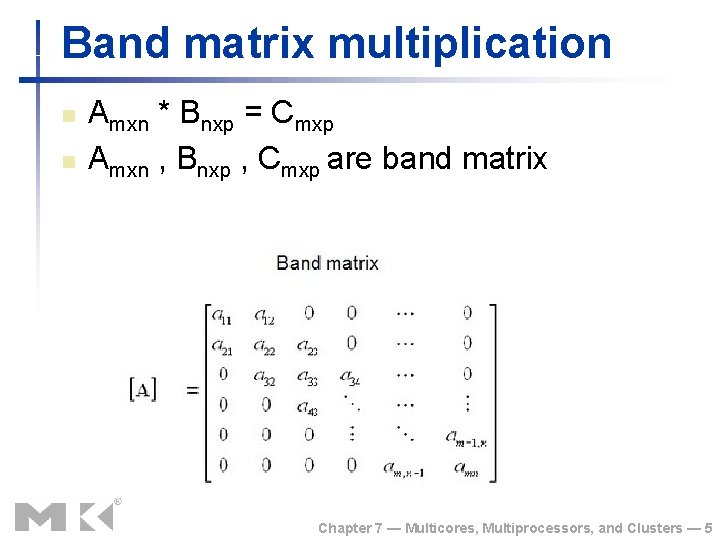

Band matrix multiplication n n Amxn * Bnxp = Cmxp Amxn , Bnxp , Cmxp are band matrix Chapter 7 — Multicores, Multiprocessors, and Clusters — 5

Systolic array by H. T. Kung (1978) Chapter 7 — Multicores, Multiprocessors, and Clusters — 6

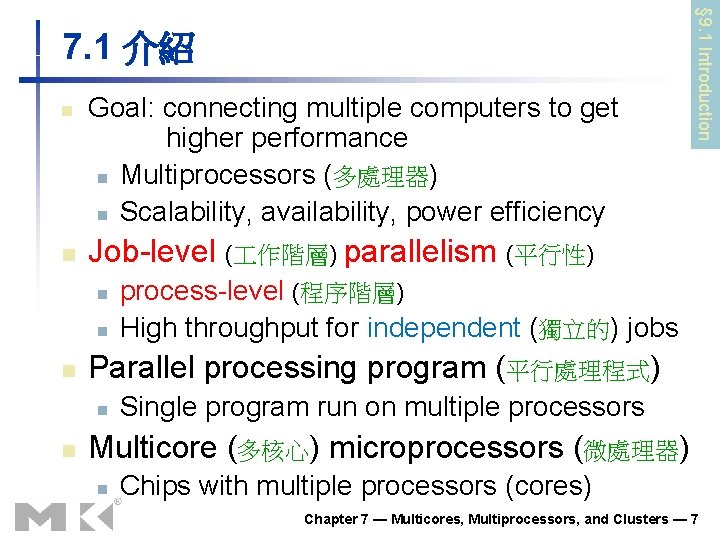

n n Goal: connecting multiple computers to get higher performance n Multiprocessors (多處理器) n Scalability, availability, power efficiency Job-level ( 作階層) parallelism (平行性) n n n process-level (程序階層) High throughput for independent (獨立的) jobs Parallel processing program (平行處理程式) n n § 9. 1 Introduction 7. 1 介紹 Single program run on multiple processors Multicore (多核心) microprocessors (微處理器) n Chips with multiple processors (cores) Chapter 7 — Multicores, Multiprocessors, and Clusters — 7

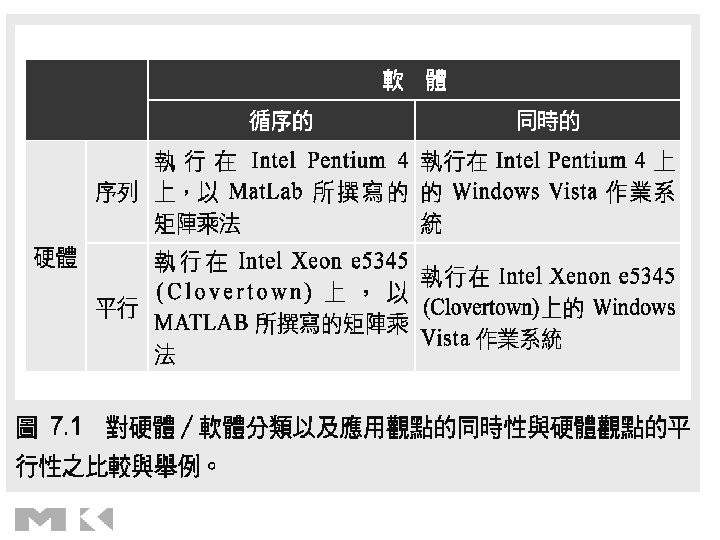

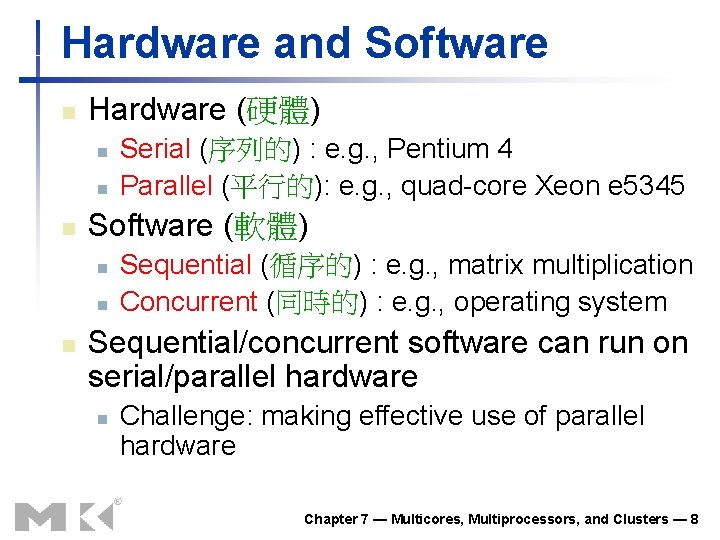

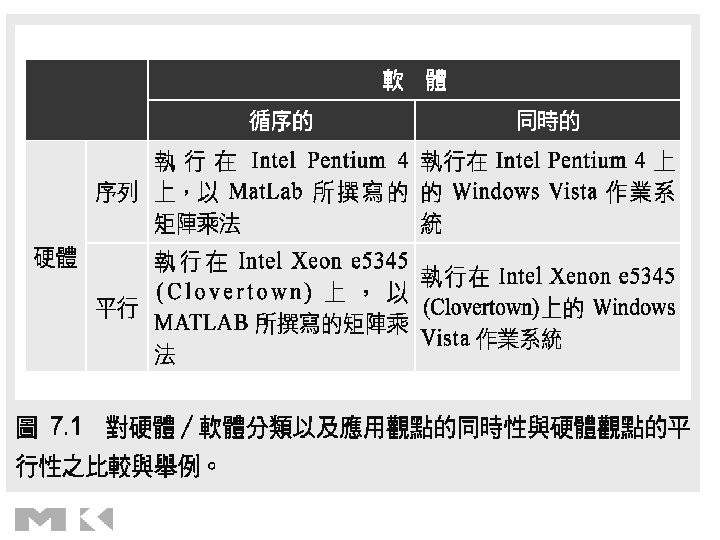

Hardware and Software n Hardware (硬體) n n n Software (軟體) n n n Serial (序列的) : e. g. , Pentium 4 Parallel (平行的): e. g. , quad-core Xeon e 5345 Sequential (循序的) : e. g. , matrix multiplication Concurrent (同時的) : e. g. , operating system Sequential/concurrent software can run on serial/parallel hardware n Challenge: making effective use of parallel hardware Chapter 7 — Multicores, Multiprocessors, and Clusters — 8

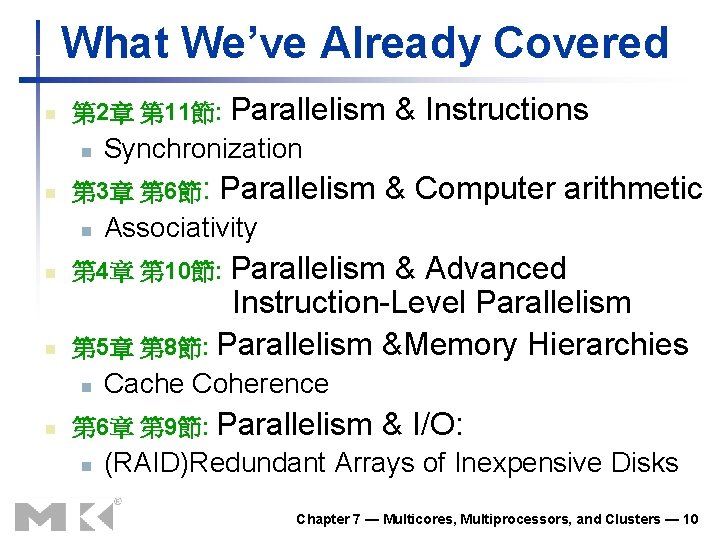

What We’ve Already Covered n 第 2章 第 11節: Parallelism & Instructions n n 第 3章 第 6節: Parallelism & Computer arithmetic n n n Associativity 第 4章 第 10節: Parallelism & Advanced Instruction-Level Parallelism 第 5章 第 8節: Parallelism &Memory Hierarchies n n Synchronization Cache Coherence 第 6章 第 9節: Parallelism & I/O: n (RAID)Redundant Arrays of Inexpensive Disks Chapter 7 — Multicores, Multiprocessors, and Clusters — 10

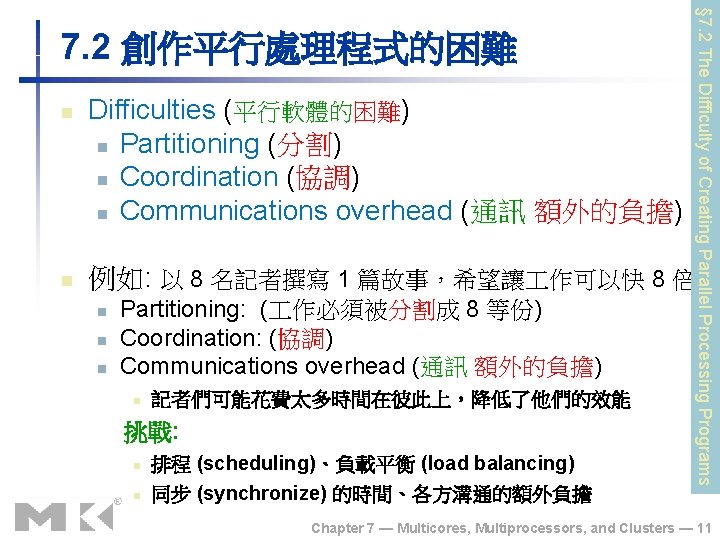

n n Difficulties (平行軟體的困難) n Partitioning (分割) n Coordination (協調) n Communications overhead (通訊 額外的負擔) § 7. 2 The Difficulty of Creating Parallel Processing Programs 7. 2 創作平行處理程式的困難 例如: 以 8 名記者撰寫 1 篇故事,希望讓 作可以快 8 倍 n n n Partitioning: ( 作必須被分割成 8 等份) Coordination: (協調) Communications overhead (通訊 額外的負擔) n 記者們可能花費太多時間在彼此上,降低了他們的效能 挑戰: n n 排程 (scheduling)、負載平衡 (load balancing) 同步 (synchronize) 的時間、各方溝通的額外負擔 Chapter 7 — Multicores, Multiprocessors, and Clusters — 11

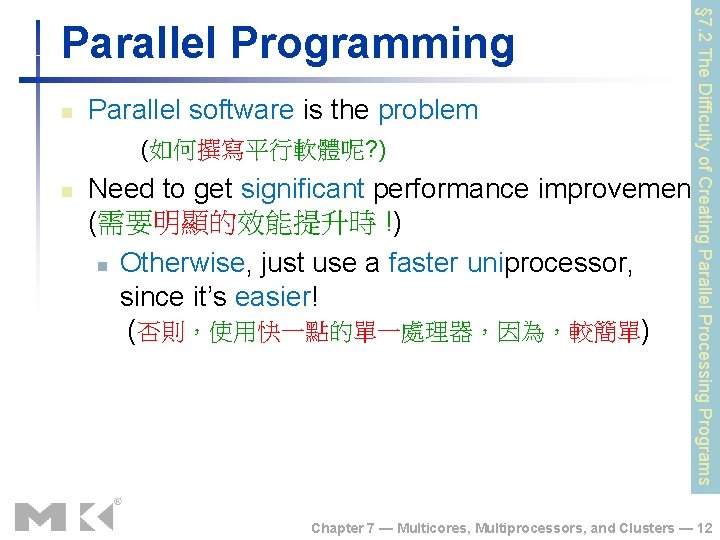

n Parallel software is the problem (如何撰寫平行軟體呢? ) n § 7. 2 The Difficulty of Creating Parallel Processing Programs Parallel Programming Need to get significant performance improvement (需要明顯的效能提升時 !) n Otherwise, just use a faster uniprocessor, since it’s easier! (否則,使用快一點的單一處理器,因為,較簡單) Chapter 7 — Multicores, Multiprocessors, and Clusters — 12

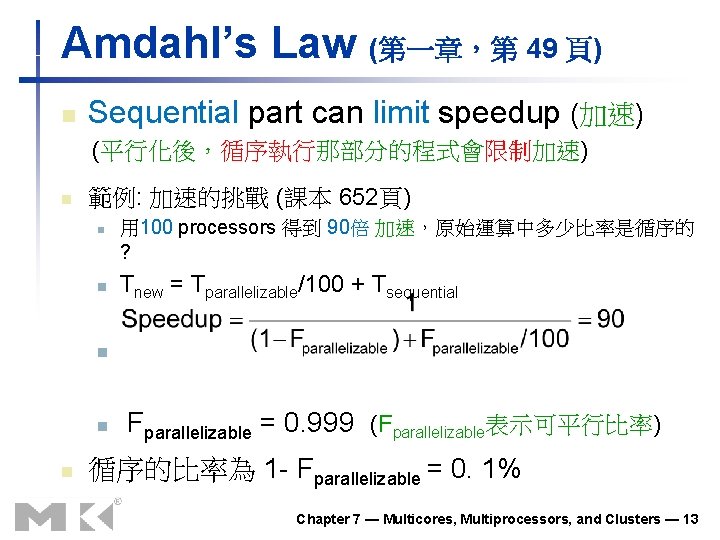

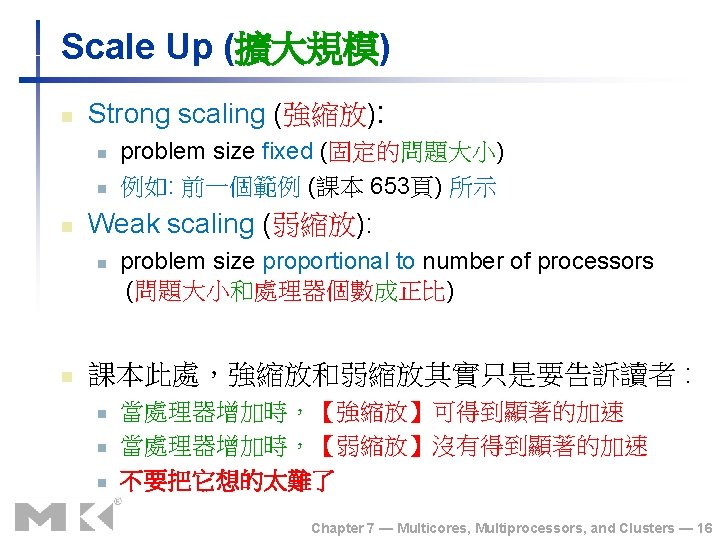

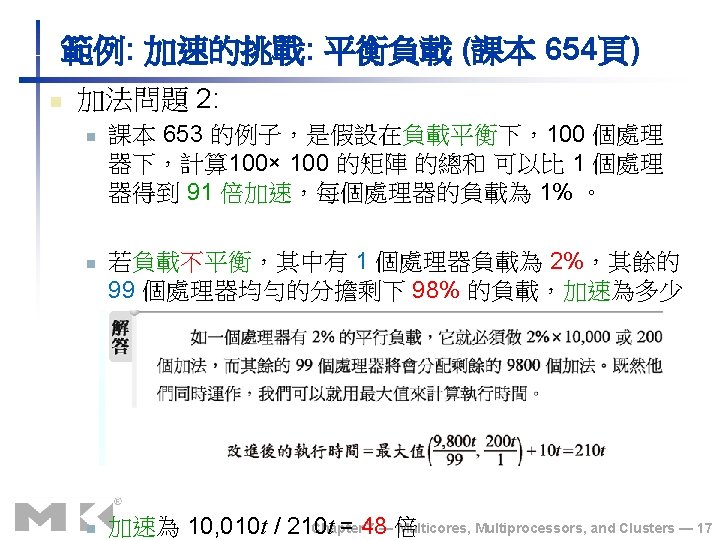

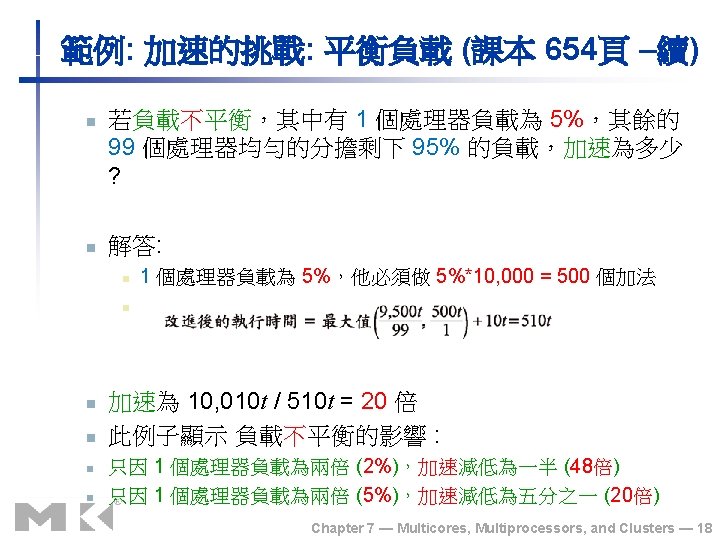

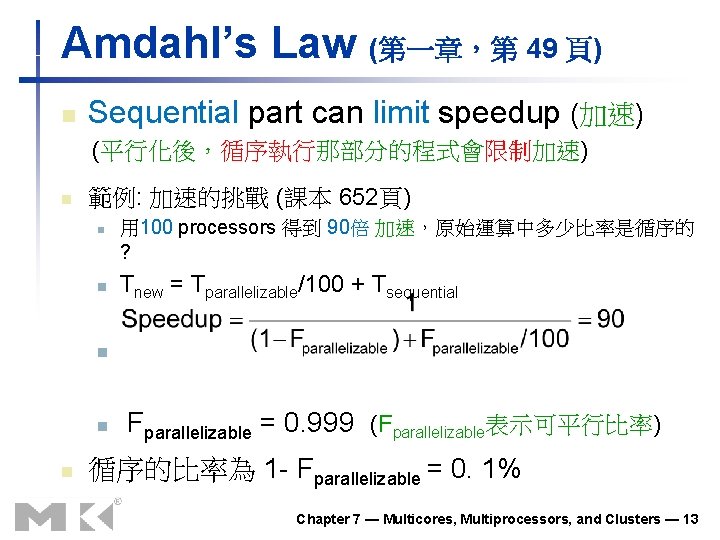

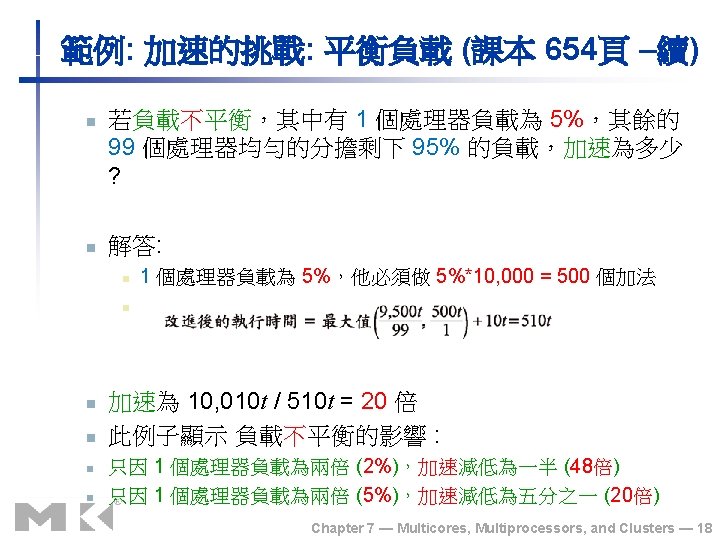

Amdahl’s Law (第一章,第 49 頁) n Sequential part can limit speedup (加速) (平行化後,循序執行那部分的程式會限制加速) n 範例: 加速的挑戰 (課本 652頁) n n 用 100 processors 得到 90倍 加速,原始運算中多少比率是循序的 ? n Tnew = Tparallelizable/100 + Tsequential n n Fparallelizable = 0. 999 (Fparallelizable表示可平行比率) 循序的比率為 1 - Fparallelizable = 0. 1% Chapter 7 — Multicores, Multiprocessors, and Clusters — 13

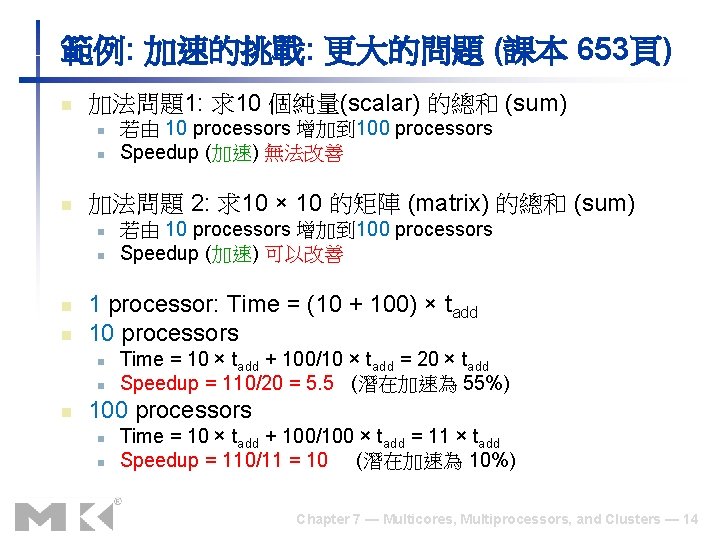

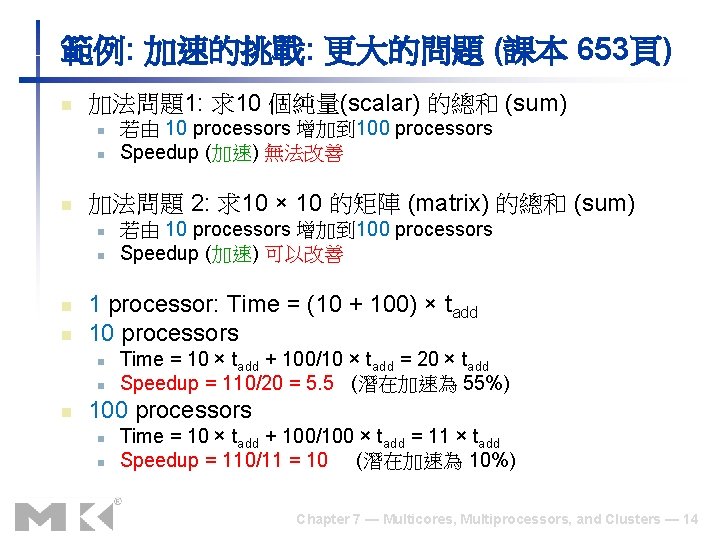

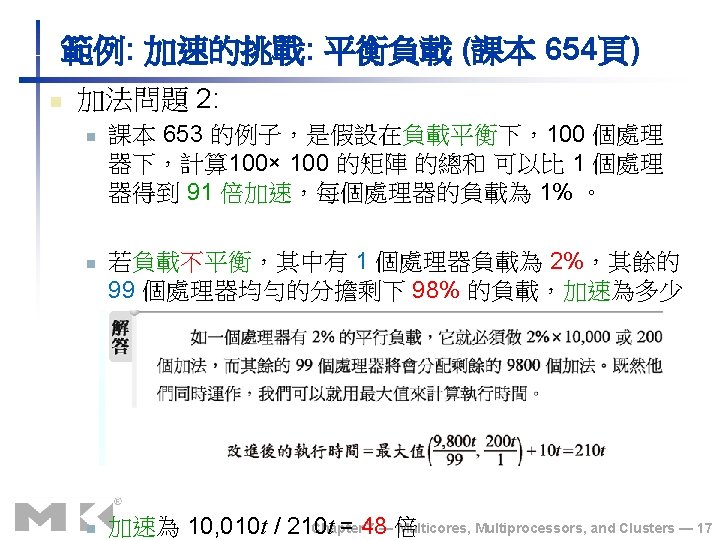

範例: 加速的挑戰: 更大的問題 (課本 653頁) n 加法問題1: 求10 個純量(scalar) 的總和 (sum) n n n 加法問題 2: 求10 × 10 的矩陣 (matrix) 的總和 (sum) n n 若由 10 processors 增加到 100 processors Speedup (加速) 可以改善 1 processor: Time = (10 + 100) × tadd 10 processors n n n 若由 10 processors 增加到 100 processors Speedup (加速) 無法改善 Time = 10 × tadd + 100/10 × tadd = 20 × tadd Speedup = 110/20 = 5. 5 (潛在加速為 55%) 100 processors n n Time = 10 × tadd + 100/100 × tadd = 11 × tadd Speedup = 110/11 = 10 (潛在加速為 10%) Chapter 7 — Multicores, Multiprocessors, and Clusters — 14

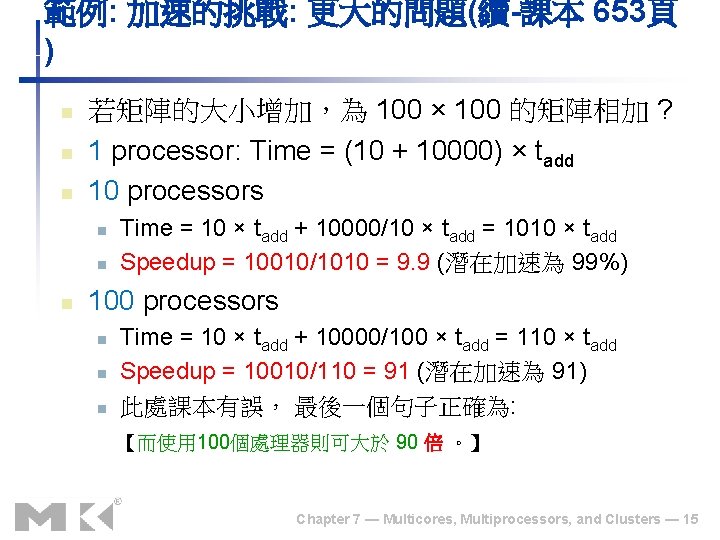

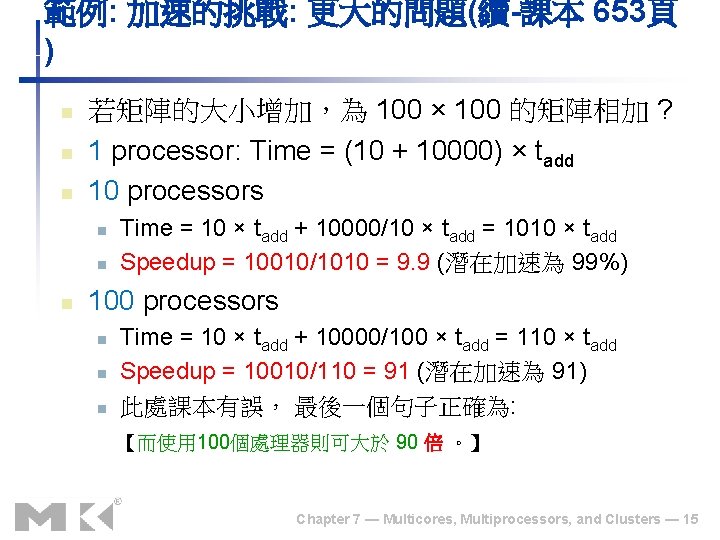

範例: 加速的挑戰: 更大的問題(續-課本 653頁 ) n n n 若矩陣的大小增加,為 100 × 100 的矩陣相加 ? 1 processor: Time = (10 + 10000) × tadd 10 processors n n n Time = 10 × tadd + 10000/10 × tadd = 1010 × tadd Speedup = 10010/1010 = 9. 9 (潛在加速為 99%) 100 processors n n n Time = 10 × tadd + 10000/100 × tadd = 110 × tadd Speedup = 10010/110 = 91 (潛在加速為 91) 此處課本有誤, 最後一個句子正確為: 【而使用 100個處理器則可大於 90 倍 。】 Chapter 7 — Multicores, Multiprocessors, and Clusters — 15

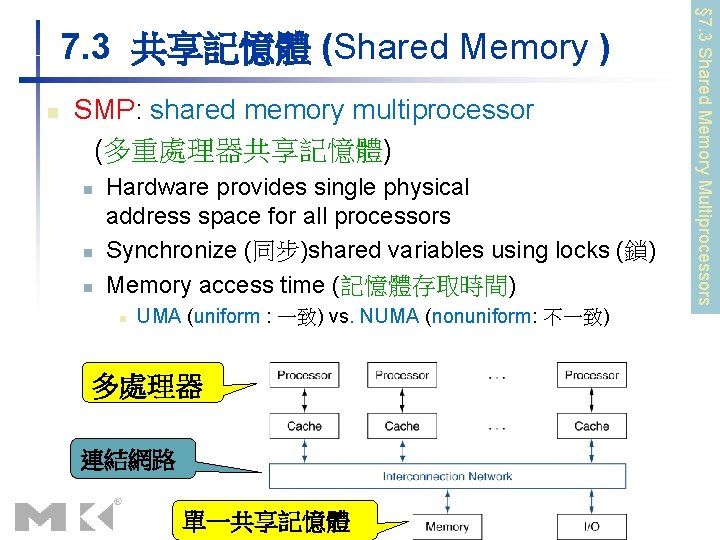

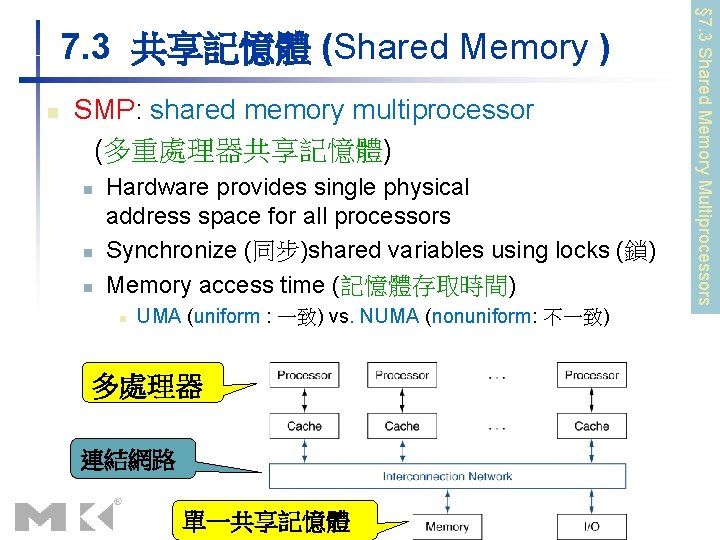

SMP: shared memory multiprocessor (多重處理器共享記憶體) n n Hardware provides single physical address space for all processors Synchronize (同步)shared variables using locks (鎖) Memory access time (記憶體存取時間) n UMA (uniform : 一致) vs. NUMA (nonuniform: 不一致) 多處理器 連結網路 單一共享記憶體 § 7. 3 Shared Memory Multiprocessors 7. 3 共享記憶體 (Shared Memory )

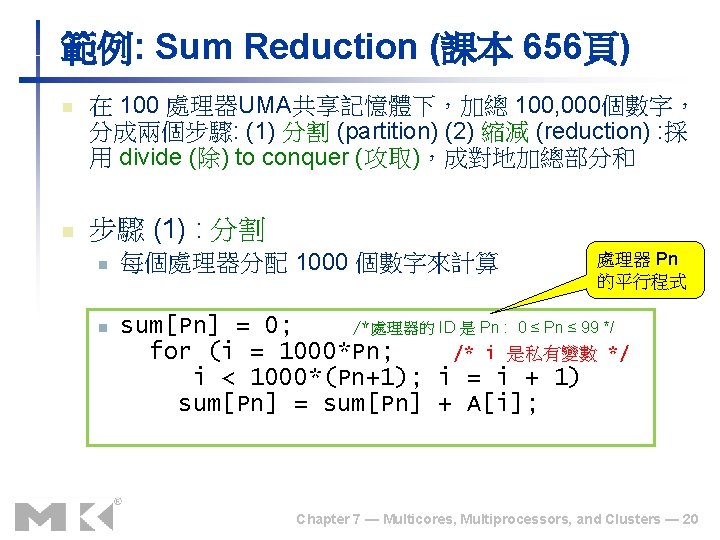

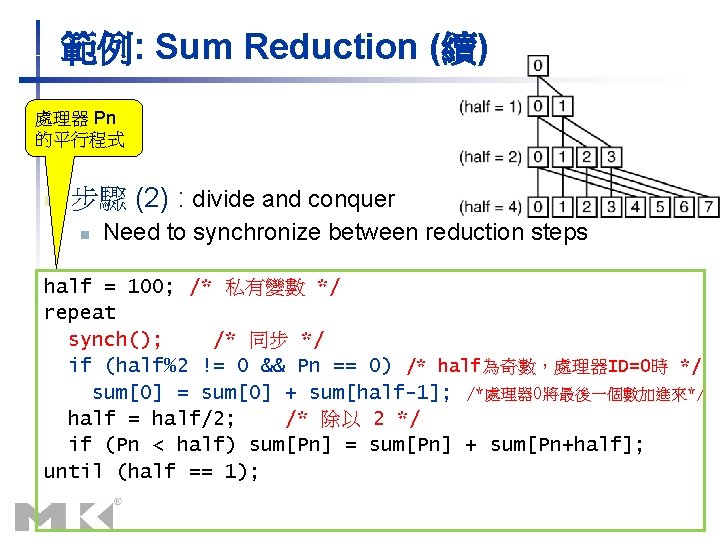

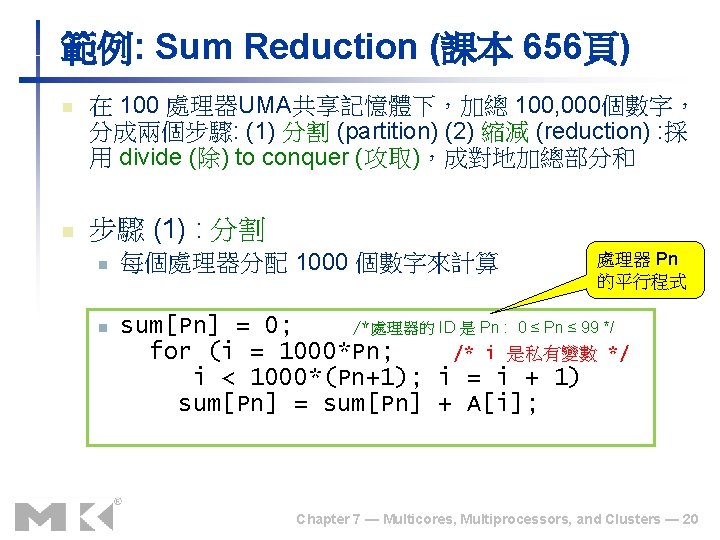

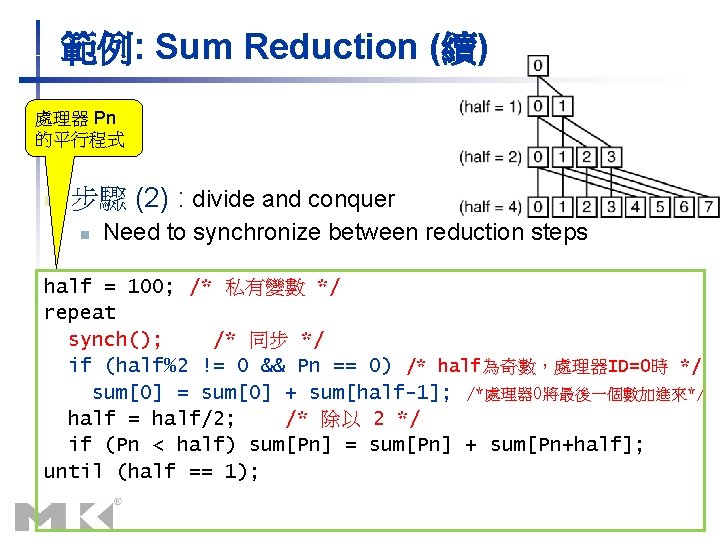

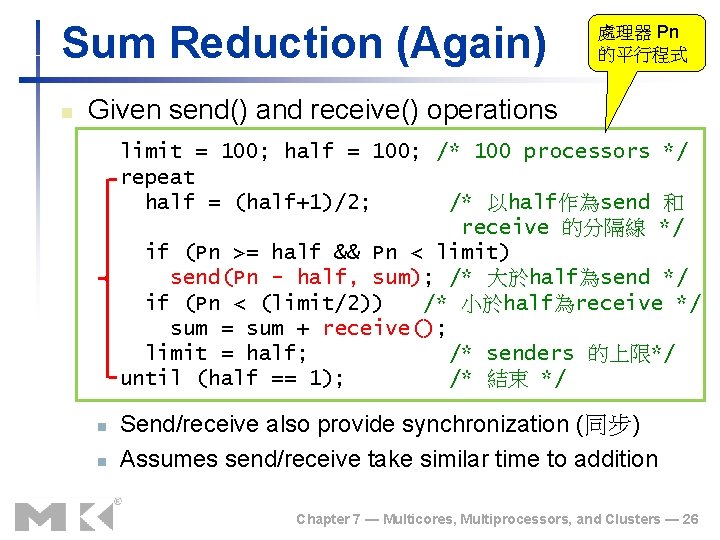

範例: Sum Reduction (續) 處理器 Pn 的平行程式 n 步驟 (2) : divide and conquer n Need to synchronize between reduction steps half = 100; /* 私有變數 */ repeat synch(); /* 同步 */ if (half%2 != 0 && Pn == 0) /* half為奇數,處理器ID=0時 */ sum[0] = sum[0] + sum[half-1]; /*處理器 0將最後一個數加進來*/ half = half/2; /* 除以 2 */ if (Pn < half) sum[Pn] = sum[Pn] + sum[Pn+half]; until (half == 1);

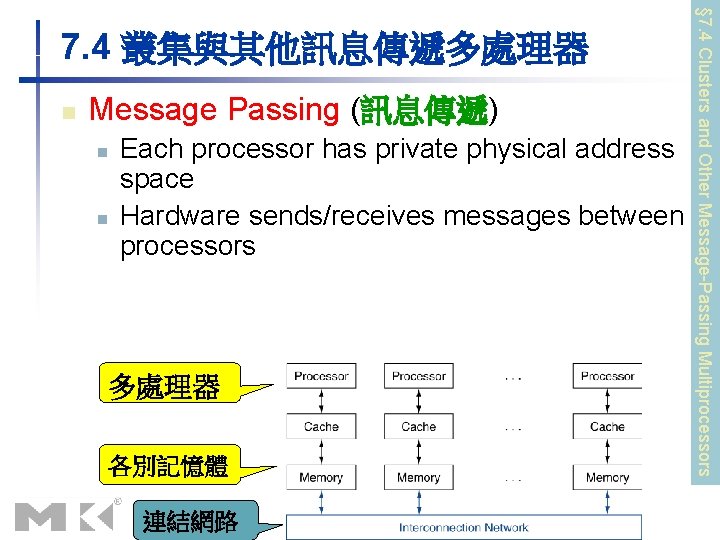

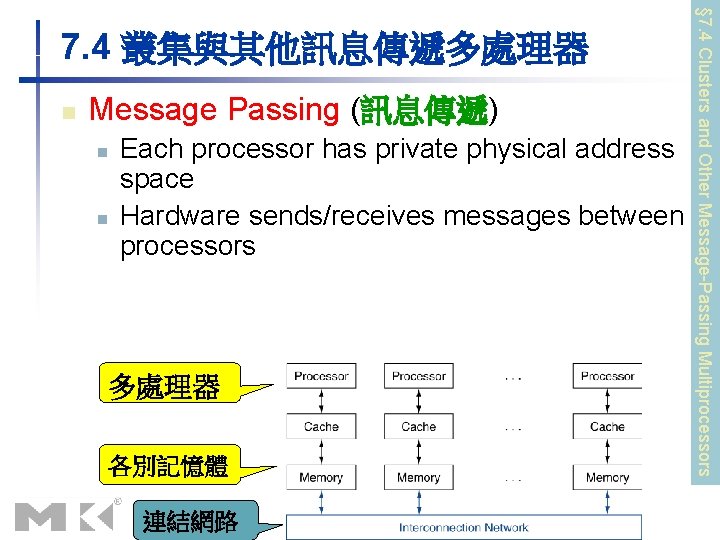

n Message Passing (訊息傳遞) n n Each processor has private physical address space Hardware sends/receives messages between processors 多處理器 各別記憶體 連結網路 § 7. 4 Clusters and Other Message-Passing Multiprocessors 7. 4 叢集與其他訊息傳遞多處理器

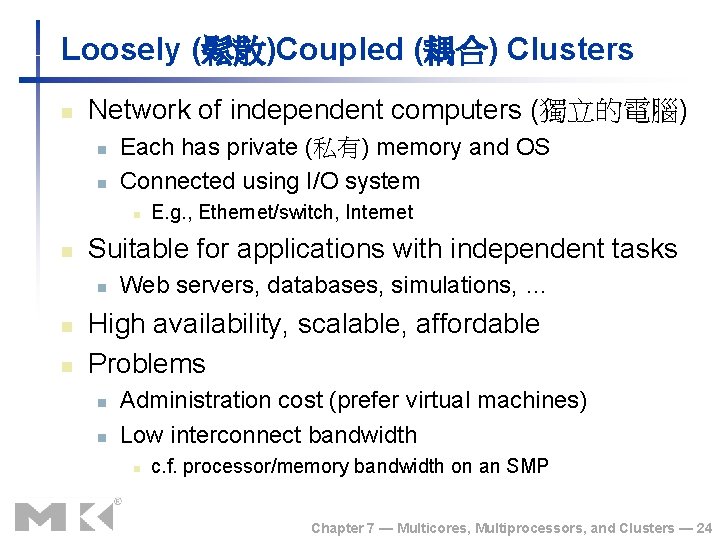

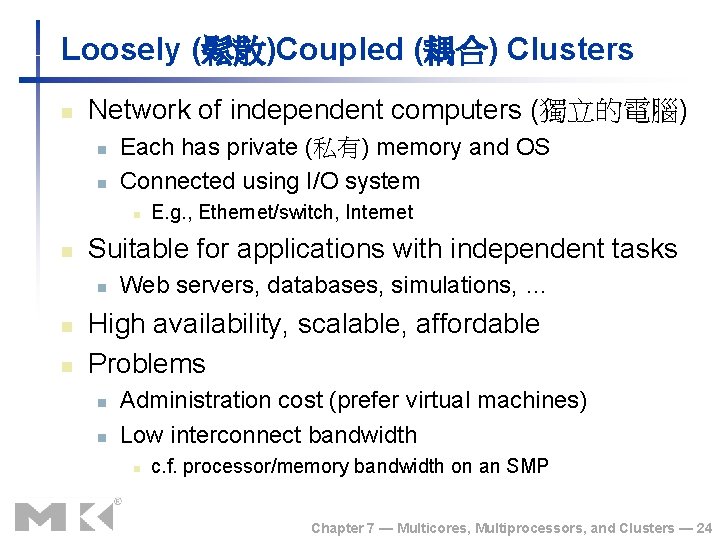

Loosely (鬆散)Coupled (耦合) Clusters n Network of independent computers (獨立的電腦) n n Each has private (私有) memory and OS Connected using I/O system n n Suitable for applications with independent tasks n n n E. g. , Ethernet/switch, Internet Web servers, databases, simulations, … High availability, scalable, affordable Problems n n Administration cost (prefer virtual machines) Low interconnect bandwidth n c. f. processor/memory bandwidth on an SMP Chapter 7 — Multicores, Multiprocessors, and Clusters — 24

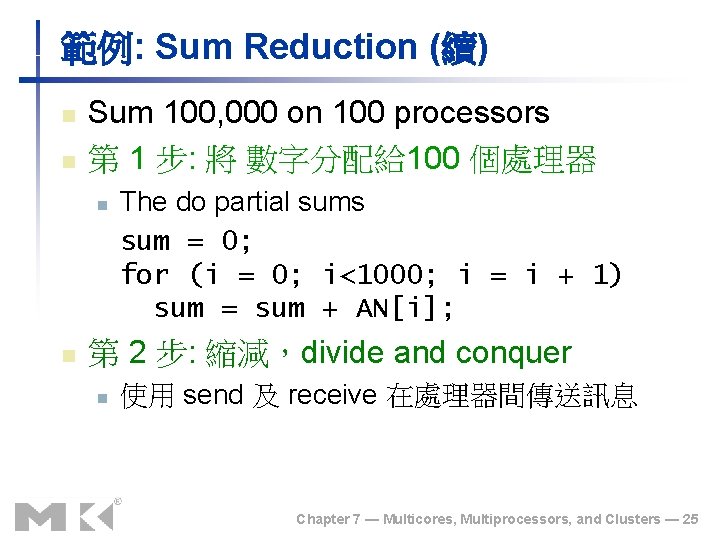

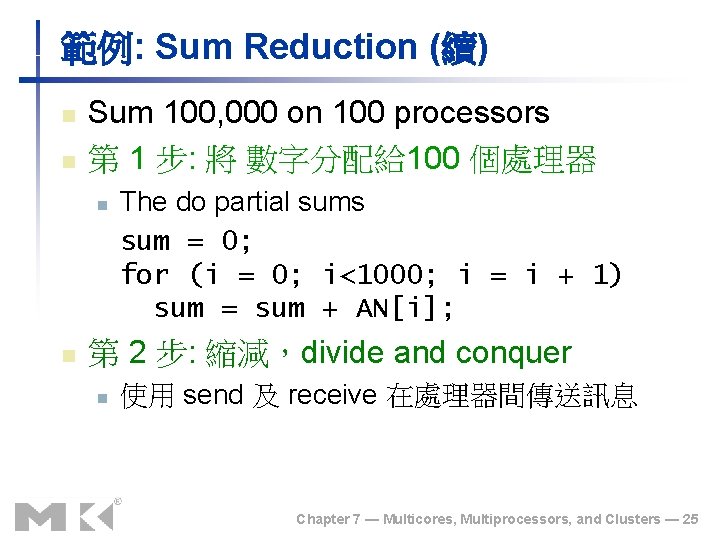

範例: Sum Reduction (續) n n Sum 100, 000 on 100 processors 第 1 步: 將 數字分配給 100 個處理器 n n The do partial sums sum = 0; for (i = 0; i<1000; i = i + 1) sum = sum + AN[i]; 第 2 步: 縮減,divide and conquer n 使用 send 及 receive 在處理器間傳送訊息 Chapter 7 — Multicores, Multiprocessors, and Clusters — 25

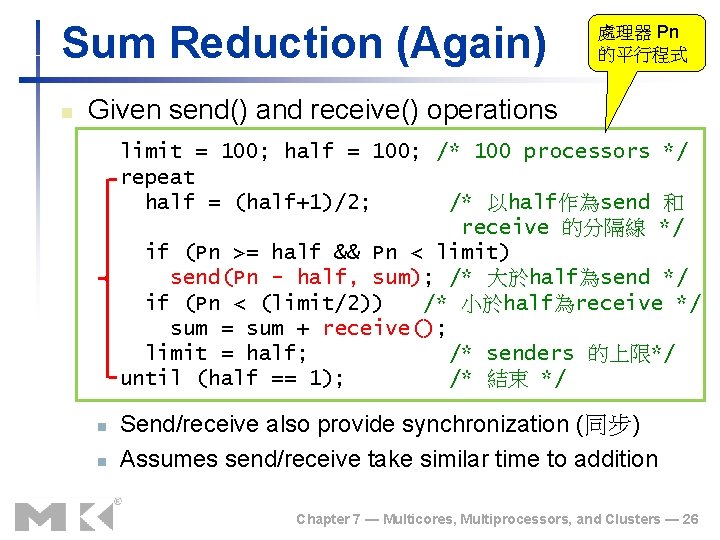

Sum Reduction (Again) n 處理器 Pn 的平行程式 Given send() and receive() operations limit = 100; half = 100; /* 100 processors */ repeat half = (half+1)/2; /* 以half作為send 和 receive 的分隔線 */ if (Pn >= half && Pn < limit) send(Pn - half, sum); /* 大於half為send */ if (Pn < (limit/2)) /* 小於half為receive */ sum = sum + receive(); limit = half; /* senders 的上限*/ until (half == 1); /* 結束 */ n n Send/receive also provide synchronization (同步) Assumes send/receive take similar time to addition Chapter 7 — Multicores, Multiprocessors, and Clusters — 26

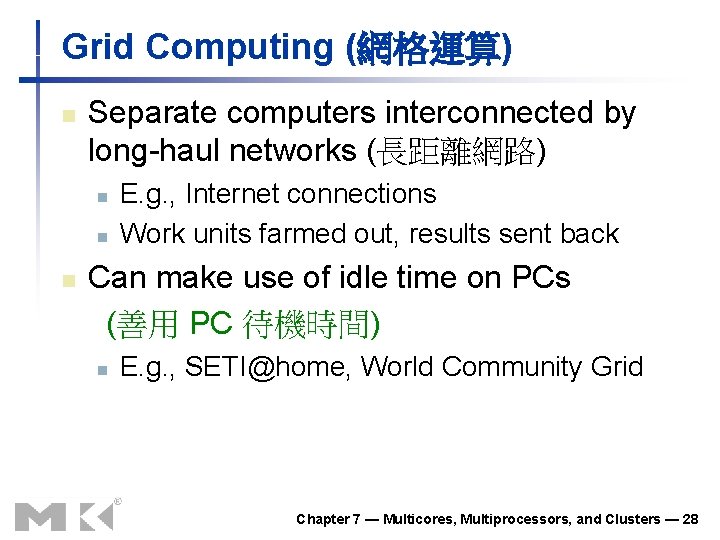

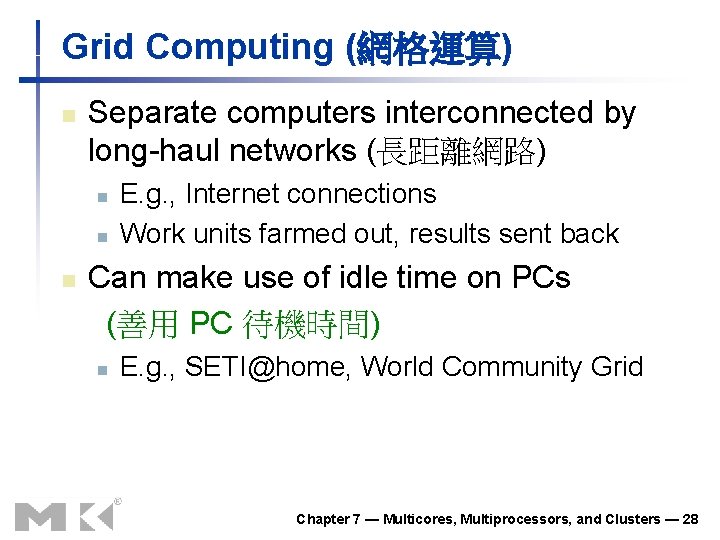

Grid Computing (網格運算) n Separate computers interconnected by long-haul networks (長距離網路) n n E. g. , Internet connections Work units farmed out, results sent back Can make use of idle time on PCs (善用 PC 待機時間) n n E. g. , SETI@home, World Community Grid Chapter 7 — Multicores, Multiprocessors, and Clusters — 28

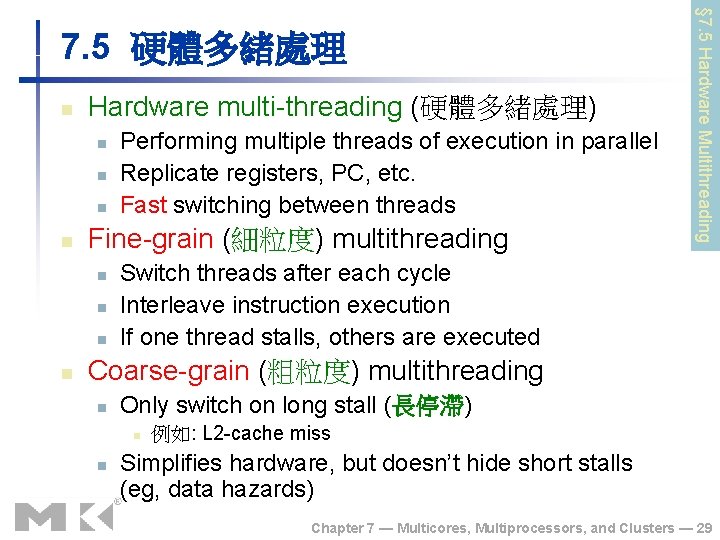

n Hardware multi-threading (硬體多緒處理) n n Fine-grain (細粒度) multithreading n n Performing multiple threads of execution in parallel Replicate registers, PC, etc. Fast switching between threads § 7. 5 Hardware Multithreading 7. 5 硬體多緒處理 Switch threads after each cycle Interleave instruction execution If one thread stalls, others are executed Coarse-grain (粗粒度) multithreading n Only switch on long stall (長停滯) n n 例如: L 2 -cache miss Simplifies hardware, but doesn’t hide short stalls (eg, data hazards) Chapter 7 — Multicores, Multiprocessors, and Clusters — 29

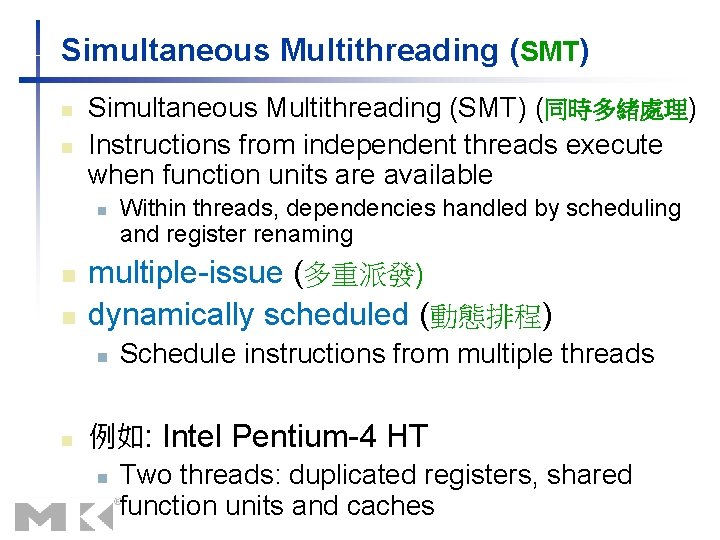

Simultaneous Multithreading (SMT) n n Simultaneous Multithreading (SMT) (同時多緒處理) Instructions from independent threads execute when function units are available n n n multiple-issue (多重派發) dynamically scheduled (動態排程) n n Within threads, dependencies handled by scheduling and register renaming Schedule instructions from multiple threads 例如: Intel Pentium-4 HT n Two threads: duplicated registers, shared function units and caches

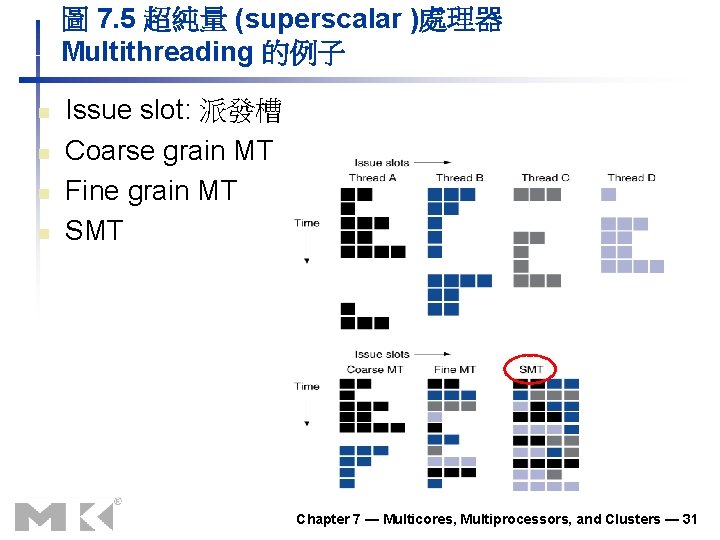

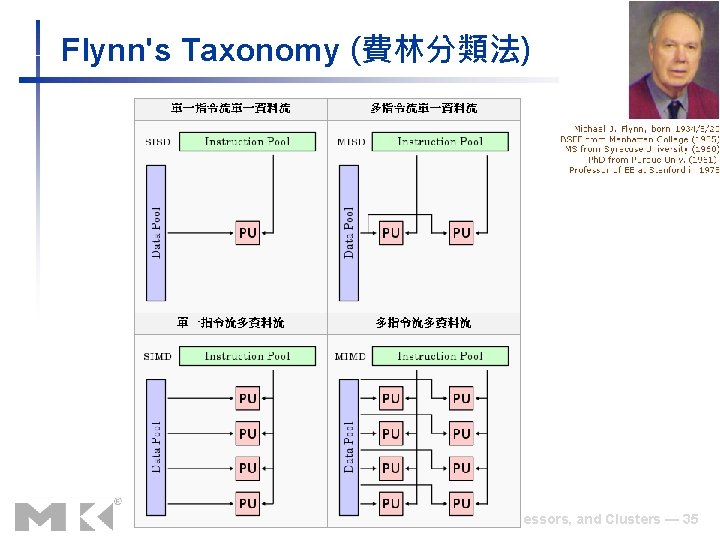

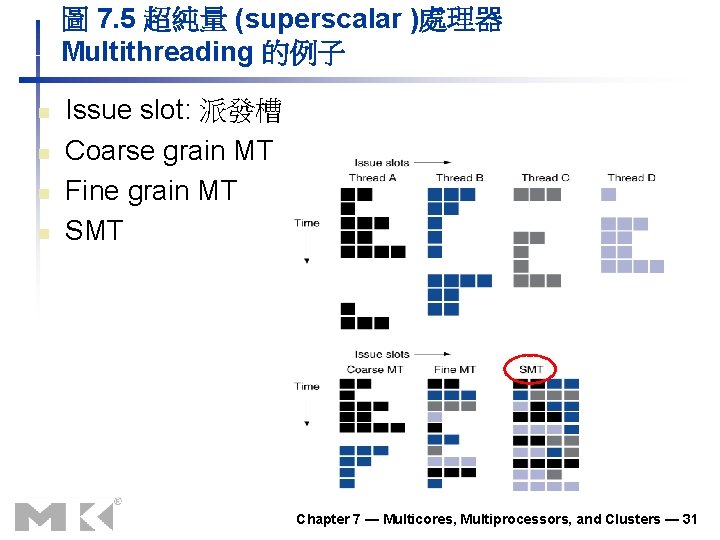

圖 7. 5 超純量 (superscalar )處理器 Multithreading 的例子 n n Issue slot: 派發槽 Coarse grain MT Fine grain MT SMT Chapter 7 — Multicores, Multiprocessors, and Clusters — 31

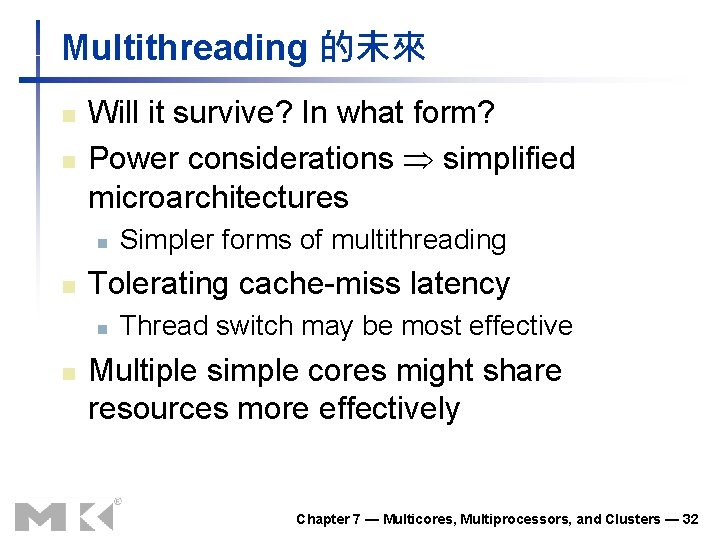

Multithreading 的未來 n n Will it survive? In what form? Power considerations simplified microarchitectures n n Tolerating cache-miss latency n n Simpler forms of multithreading Thread switch may be most effective Multiple simple cores might share resources more effectively Chapter 7 — Multicores, Multiprocessors, and Clusters — 32

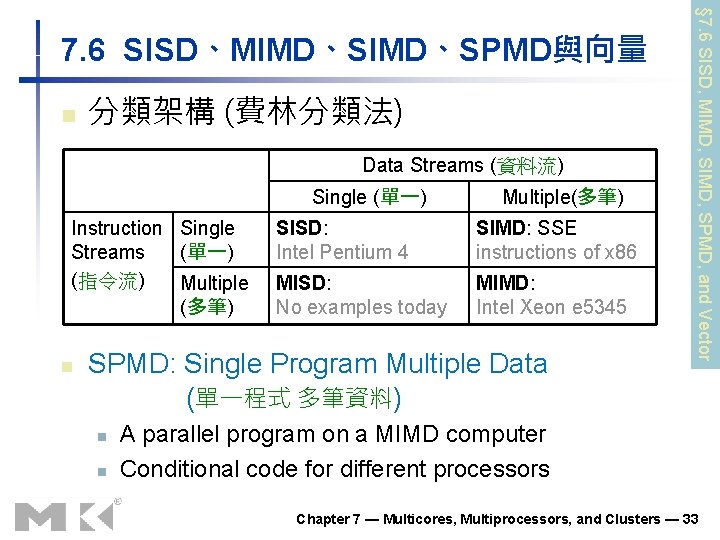

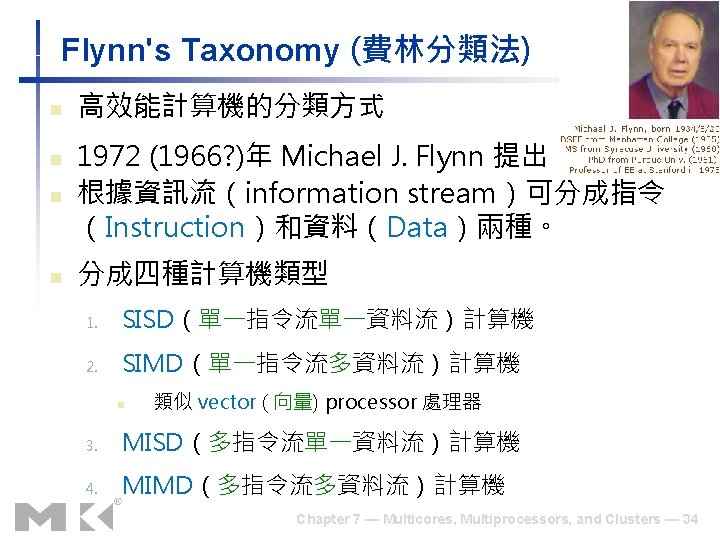

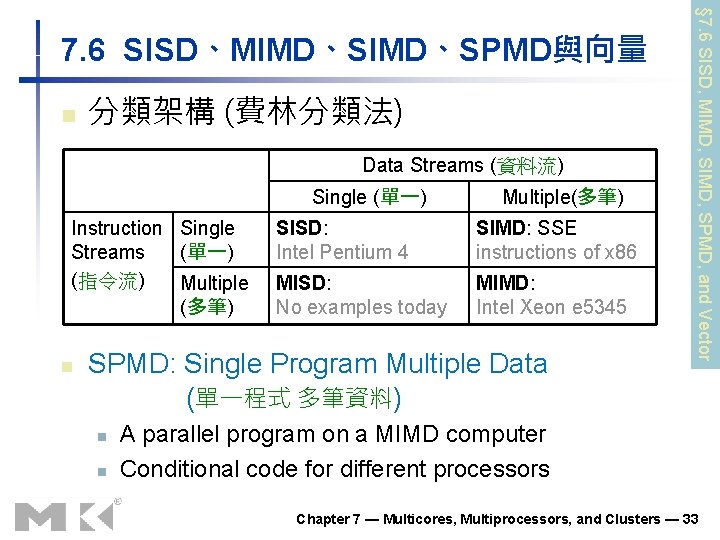

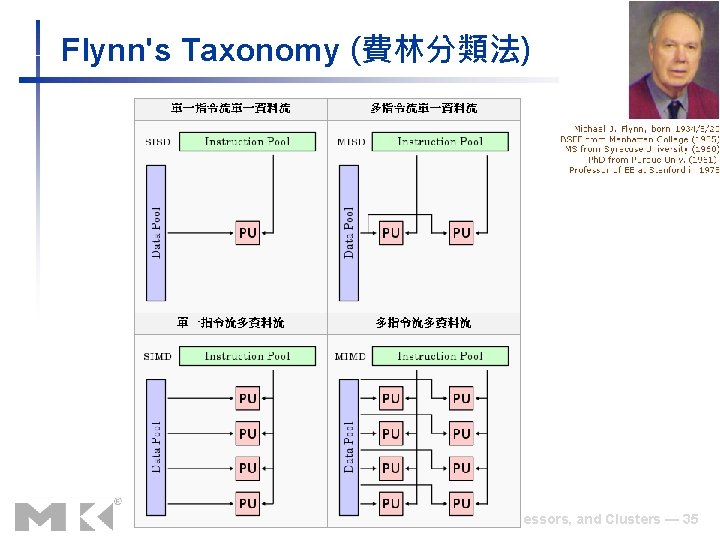

n 分類架構 (費林分類法) Data Streams (資料流) Single (單一) Instruction Single (單一) Streams (指令流) Multiple (多筆) Multiple(多筆) SISD: Intel Pentium 4 SIMD: SSE instructions of x 86 MISD: No examples today MIMD: Intel Xeon e 5345 SPMD: Single Program Multiple Data (單一程式 多筆資料) n n n § 7. 6 SISD, MIMD, SPMD, and Vector 7. 6 SISD、MIMD、SPMD與向量 A parallel program on a MIMD computer Conditional code for different processors Chapter 7 — Multicores, Multiprocessors, and Clusters — 33

Flynn's Taxonomy (費林分類法) Chapter 7 — Multicores, Multiprocessors, and Clusters — 35

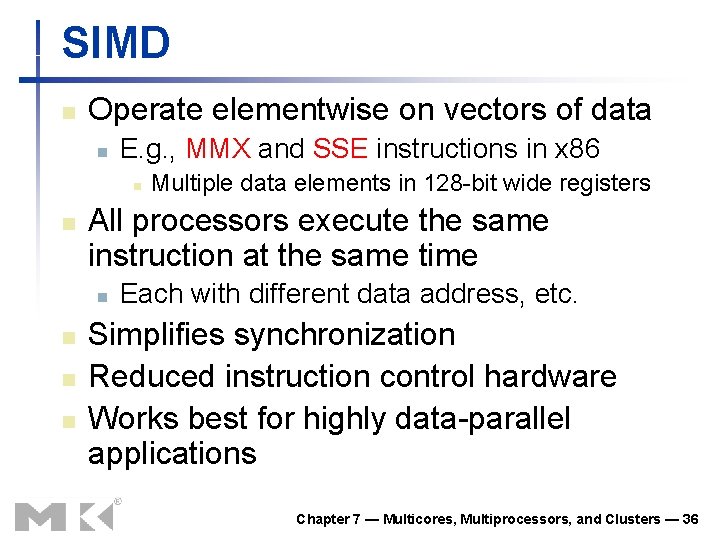

SIMD n Operate elementwise on vectors of data n E. g. , MMX and SSE instructions in x 86 n n All processors execute the same instruction at the same time n n Multiple data elements in 128 -bit wide registers Each with different data address, etc. Simplifies synchronization Reduced instruction control hardware Works best for highly data-parallel applications Chapter 7 — Multicores, Multiprocessors, and Clusters — 36

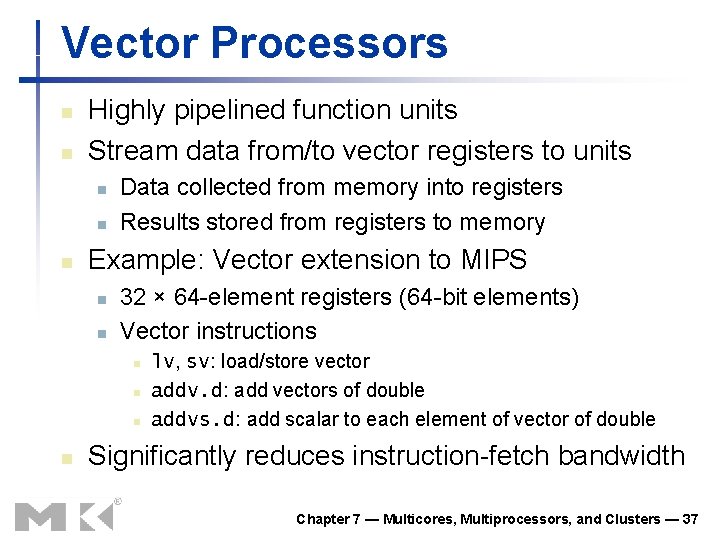

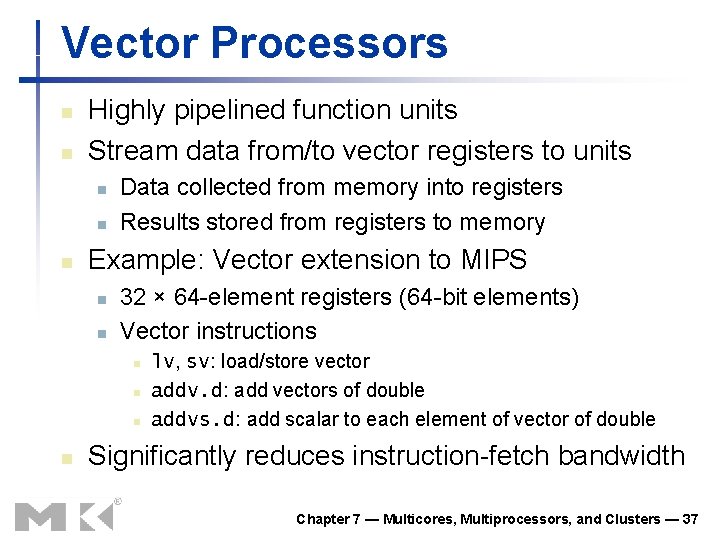

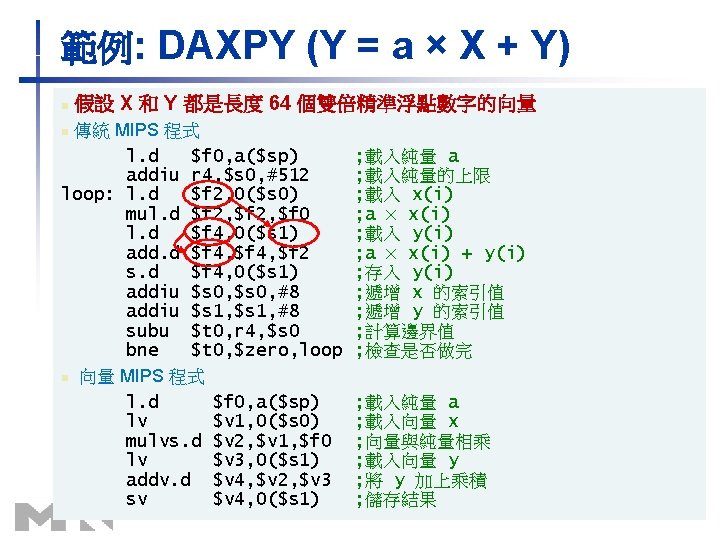

Vector Processors n n Highly pipelined function units Stream data from/to vector registers to units n n n Data collected from memory into registers Results stored from registers to memory Example: Vector extension to MIPS n n 32 × 64 -element registers (64 -bit elements) Vector instructions n n lv, sv: load/store vector addv. d: add vectors of double addvs. d: add scalar to each element of vector of double Significantly reduces instruction-fetch bandwidth Chapter 7 — Multicores, Multiprocessors, and Clusters — 37

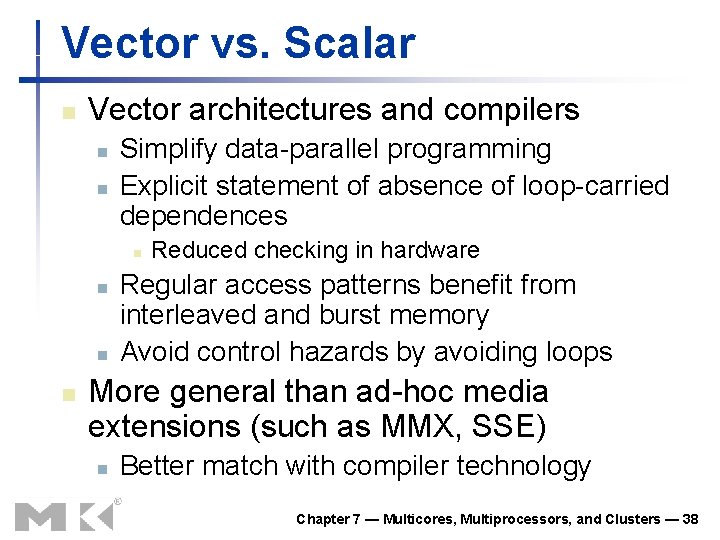

Vector vs. Scalar n Vector architectures and compilers n n Simplify data-parallel programming Explicit statement of absence of loop-carried dependences n n Reduced checking in hardware Regular access patterns benefit from interleaved and burst memory Avoid control hazards by avoiding loops More general than ad-hoc media extensions (such as MMX, SSE) n Better match with compiler technology Chapter 7 — Multicores, Multiprocessors, and Clusters — 38

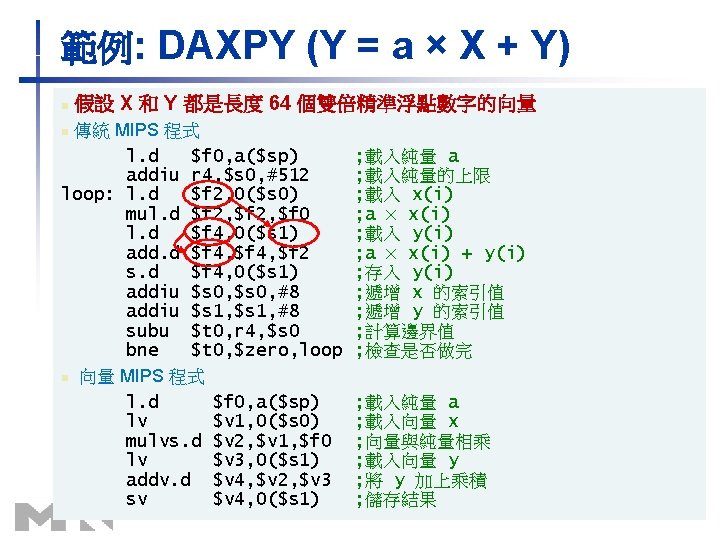

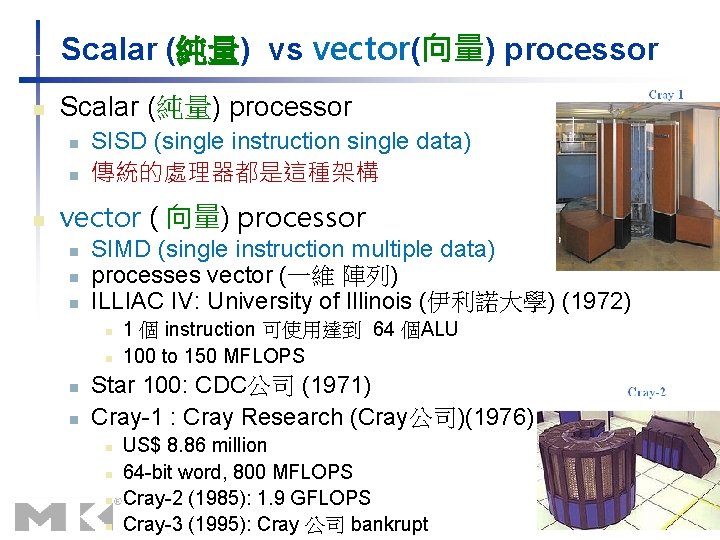

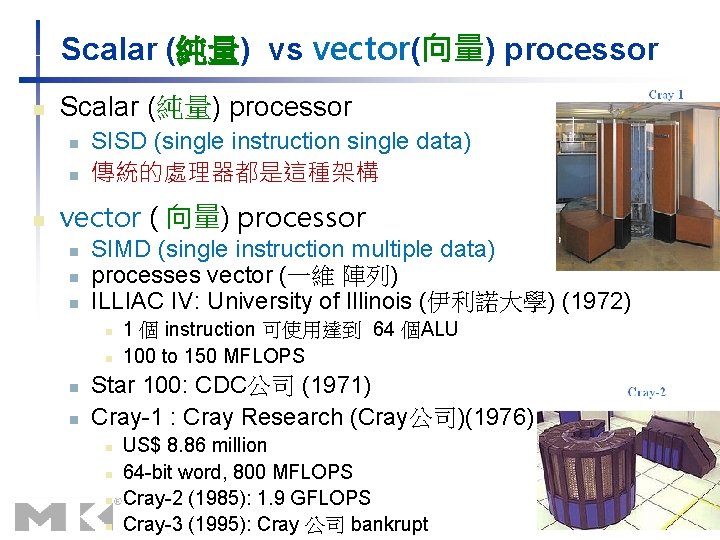

Scalar (純量) vs vector(向量) processor n Scalar (純量) processor n n n SISD (single instruction single data) 傳統的處理器都是這種架構 vector ( 向量) processor n n n SIMD (single instruction multiple data) processes vector (一維 陣列) ILLIAC IV: University of Illinois (伊利諾大學) (1972) n n 1 個 instruction 可使用達到 64 個ALU 100 to 150 MFLOPS Star 100: CDC公司 (1971) Cray-1 : Cray Research (Cray公司)(1976) n n US$ 8. 86 million 64 -bit word, 800 MFLOPS Cray-2 (1985): 1. 9 GFLOPS Cray-3 (1995): Cray 公司 bankrupt

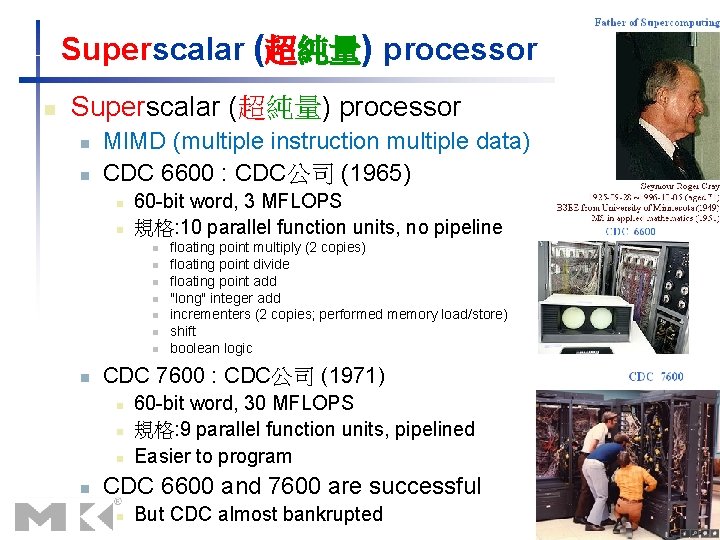

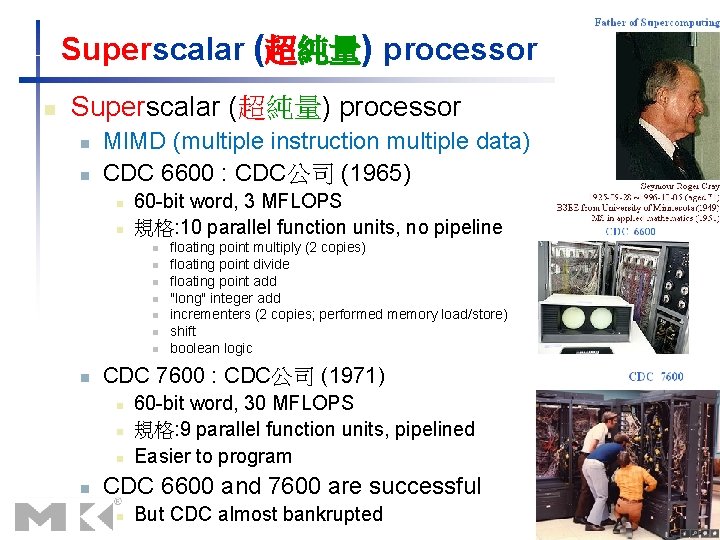

Superscalar (超純量) processor n n MIMD (multiple instruction multiple data) CDC 6600 : CDC公司 (1965) n n 60 -bit word, 3 MFLOPS 規格: 10 parallel function units, no pipeline n n n n CDC 7600 : CDC公司 (1971) n n floating point multiply (2 copies) floating point divide floating point add "long" integer add incrementers (2 copies; performed memory load/store) shift boolean logic 60 -bit word, 30 MFLOPS 規格: 9 parallel function units, pipelined Easier to program CDC 6600 and 7600 are successful n But CDC almost bankrupted

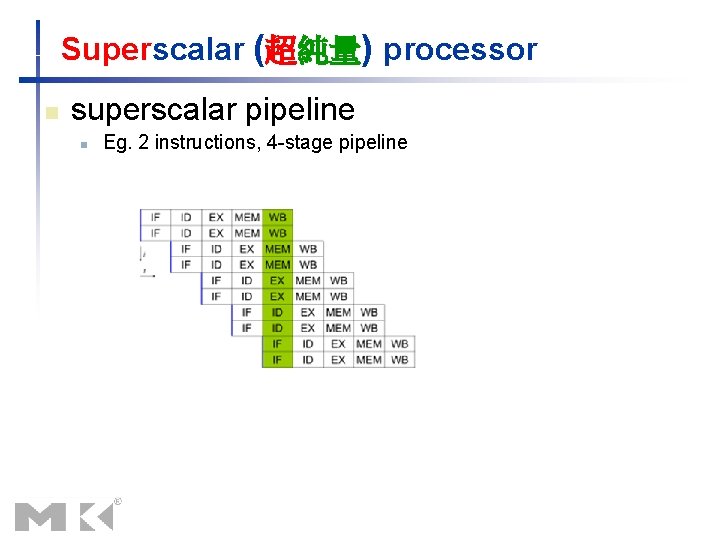

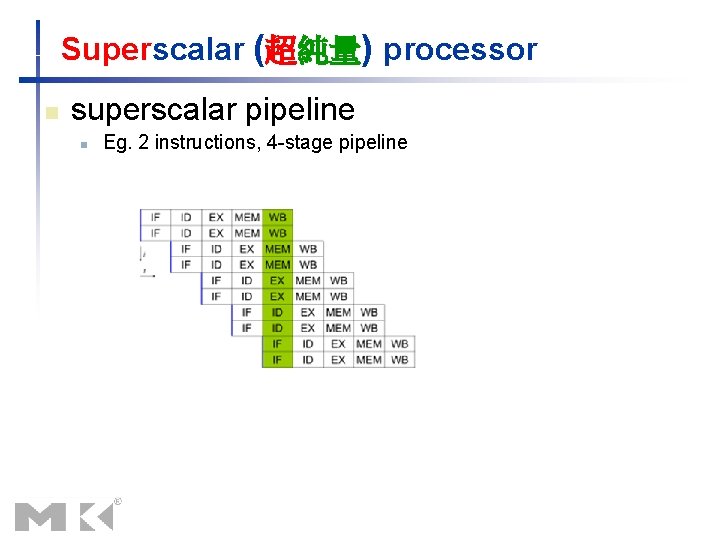

Superscalar (超純量) processor n superscalar pipeline n Eg. 2 instructions, 4 -stage pipeline

Superscalar (超純量) microprocessor n Intel 公司 n n n AMD公司 n n i 960 CA (1988) P 5 Pentium (1993) AMD 29000 -series 29050 (1990) essentially all general-purpose CPUs developed since about 1998 are superscalar Chapter 7 — Multicores, Multiprocessors, and Clusters — 43

RISC: Reduced instruction set computing (精簡指令集運算) n CISC: complex instruction set computing (複雜指令集運算) n n complex instructions with various addressing modes relatively few registers 例如: Intel Pentium RISC: Reduced instruction set computing (精簡指令集運算) n n Uniform instruction format Many identical general purpose registers Instruction is simple to be easily pipelined n high frequencies to achieve clock throughput 例如: MIPS, SPARC, Power. PC, ARM… Chapter 7 — Multicores, Multiprocessors, and Clusters — 44

VLIW: Very Long Instruction Word (超長指令字) n n VLIW is a type of MIMD Josh Fisher at Yale University in 1980 s n VLIW CPUs use software (compiler) to decide which operations can run in parallel n n Superscalar CPUs use hardware to decide which operations can run in parallel VLIW may also refer to Variable Length Instruction Word n 例如: Intel i 860, (64 -bit ) Chapter 7 — Multicores, Multiprocessors, and Clusters — 45

n Early video cards n n Frame buffer memory with address generation for video output 3 D graphics processing n 早期高階顯示卡很昂貴 n n 大多由 Silicon Graphics (SGI) 公司生產 Moore’s Law lower cost, higher density 3 D graphics cards for PCs and game consoles Graphics Processing Units (GPU) 圖形處理晶片) n n § 7. 7 Introduction to Graphics Processing Units 7. 7 圖形處理器介紹 Processors oriented to 3 D graphics tasks Vertex/pixel processing, shading, texture mapping, rasterization Chapter 7 — Multicores, Multiprocessors, and Clusters — 46

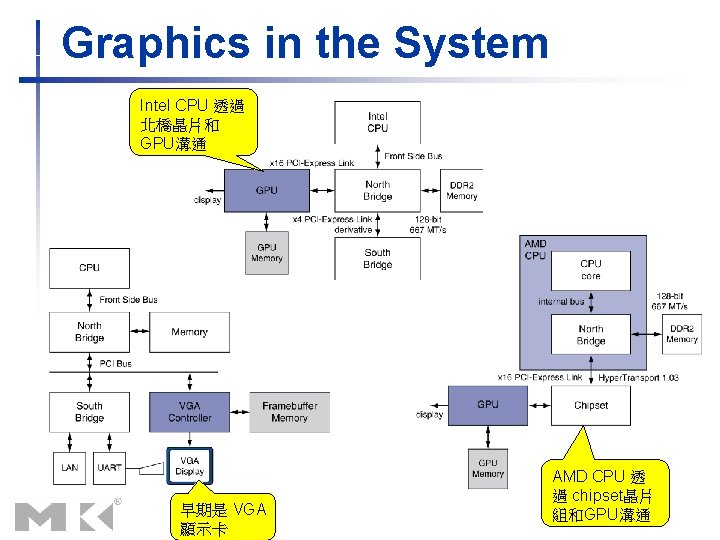

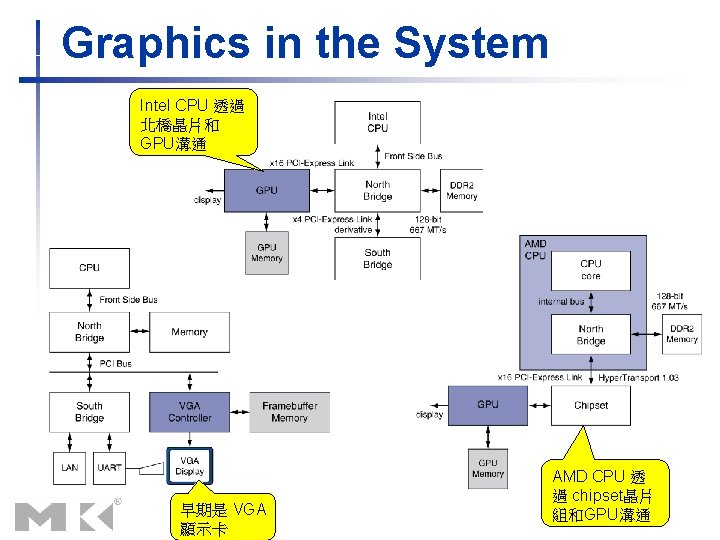

Graphics in the System Intel CPU 透過 北橋晶片和 GPU溝通 早期是 VGA 顯示卡 AMD CPU 透 過 chipset晶片 組和GPU溝通

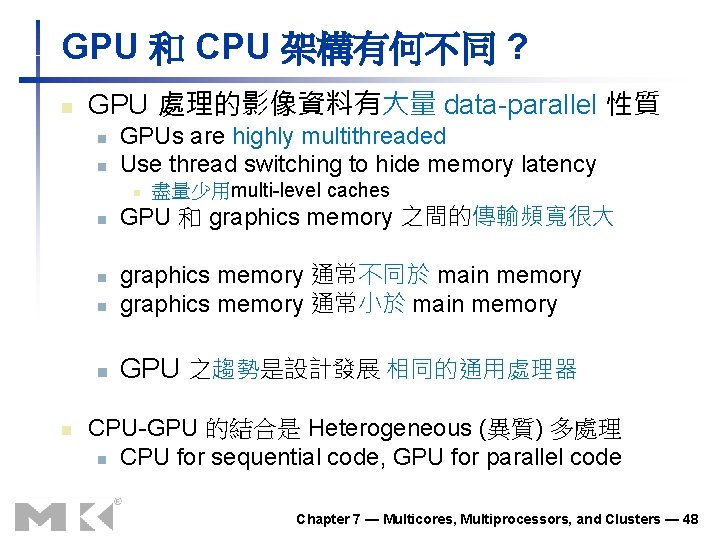

GPU 和 CPU 架構有何不同 ? n GPU 處理的影像資料有大量 data-parallel 性質 n n GPUs are highly multithreaded Use thread switching to hide memory latency n n GPU 和 graphics memory 之間的傳輸頻寬很大 n graphics memory 通常不同於 main memory graphics memory 通常小於 main memory n GPU 之趨勢是設計發展 相同的通用處理器 n n 盡量少用multi-level caches CPU-GPU 的結合是 Heterogeneous (異質) 多處理 n CPU for sequential code, GPU for parallel code Chapter 7 — Multicores, Multiprocessors, and Clusters — 48

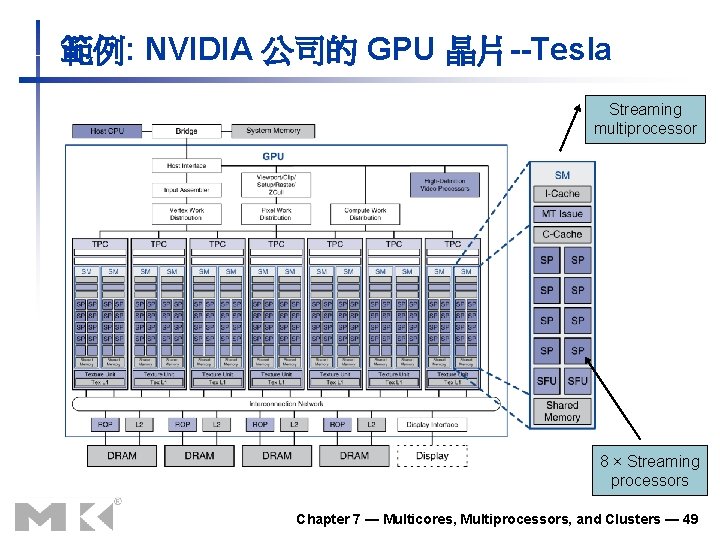

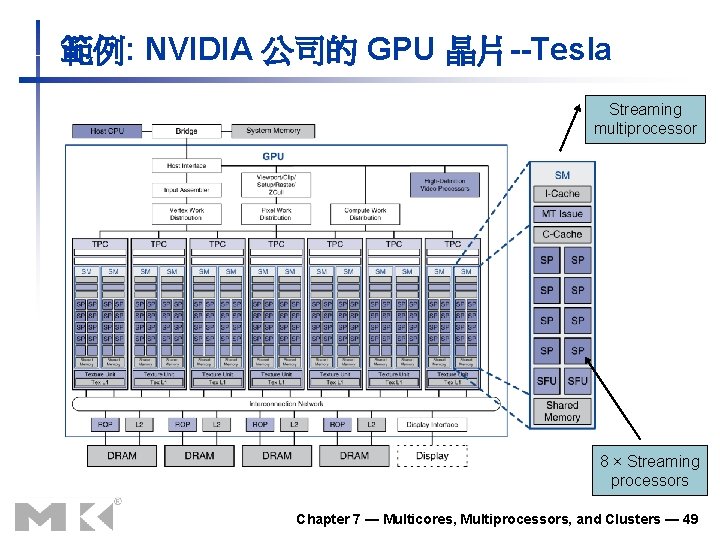

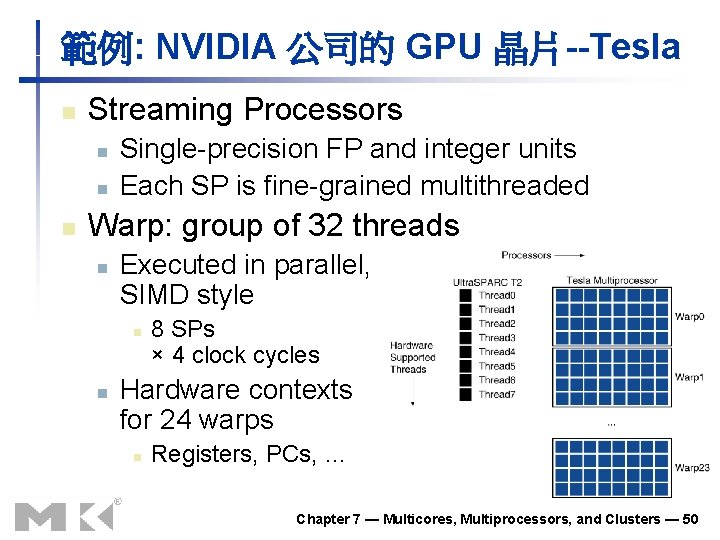

範例: NVIDIA 公司的 GPU 晶片--Tesla Streaming multiprocessor 8 × Streaming processors Chapter 7 — Multicores, Multiprocessors, and Clusters — 49

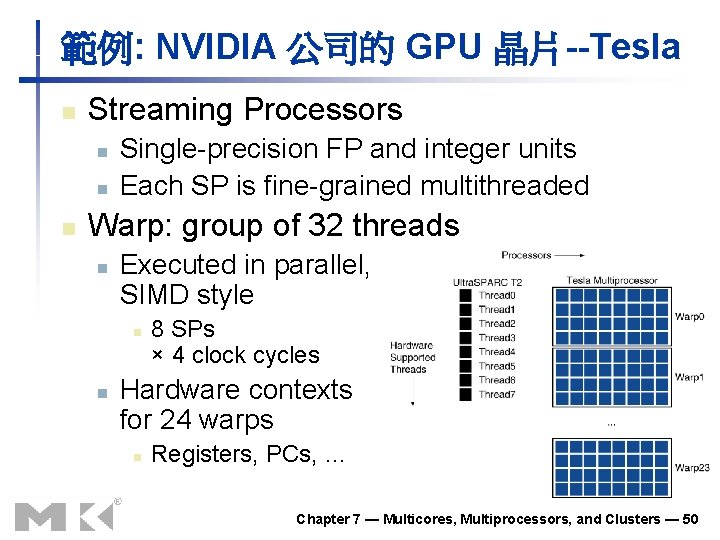

範例: NVIDIA 公司的 GPU 晶片--Tesla n Streaming Processors n n n Single-precision FP and integer units Each SP is fine-grained multithreaded Warp: group of 32 threads n Executed in parallel, SIMD style n n 8 SPs × 4 clock cycles Hardware contexts for 24 warps n Registers, PCs, … Chapter 7 — Multicores, Multiprocessors, and Clusters — 50

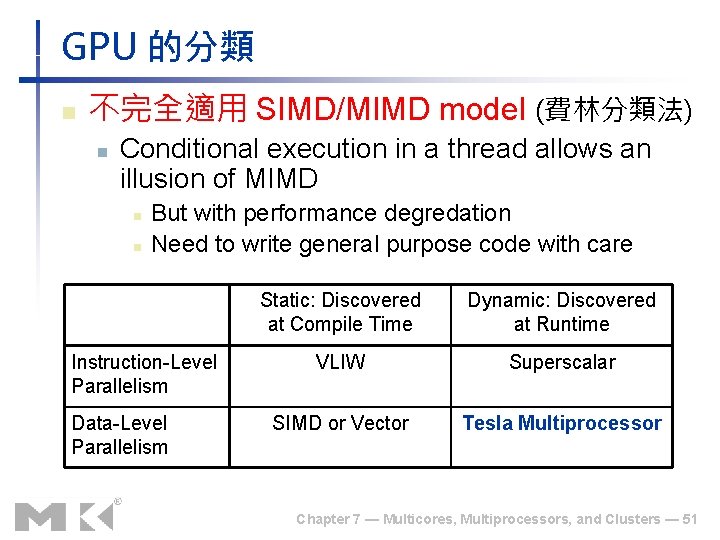

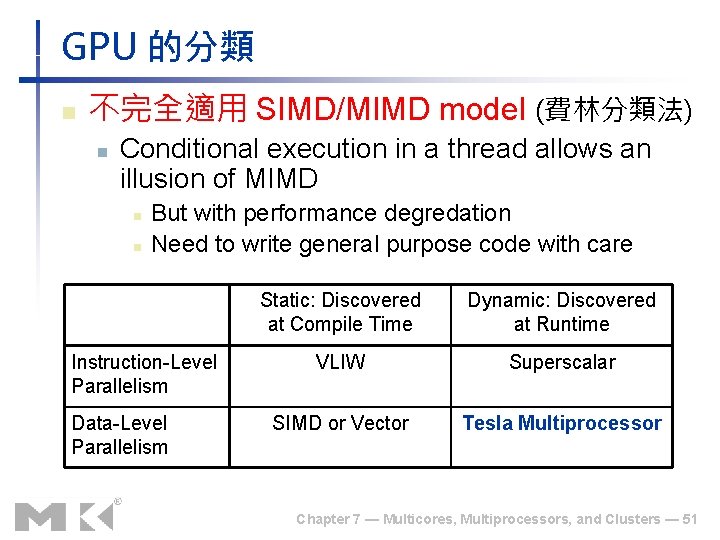

GPU 的分類 n 不完全適用 SIMD/MIMD model (費林分類法) n Conditional execution in a thread allows an illusion of MIMD n n But with performance degredation Need to write general purpose code with care Instruction-Level Parallelism Data-Level Parallelism Static: Discovered at Compile Time Dynamic: Discovered at Runtime VLIW Superscalar SIMD or Vector Tesla Multiprocessor Chapter 7 — Multicores, Multiprocessors, and Clusters — 51

GPU 的編程介面 n Programming languages/APIs n n Direct. X: 微軟 Open. GL C for Graphics (Cg), High Level Shader Language (HLSL) Compute Unified Device Architecture (CUDA) Chapter 7 — Multicores, Multiprocessors, and Clusters — 52

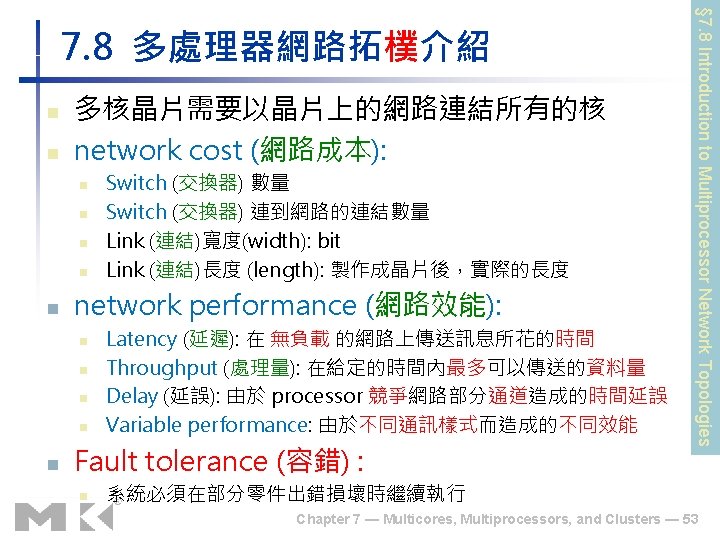

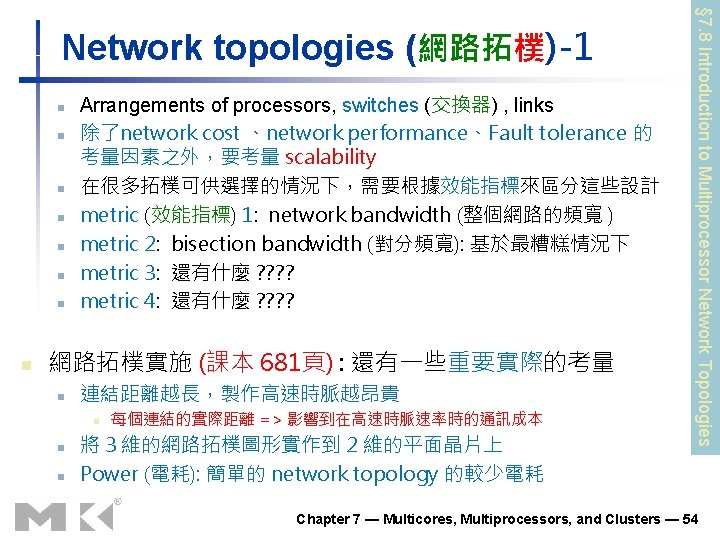

n n n n Arrangements of processors, switches (交換器) , links 除了network cost 、network performance、Fault tolerance 的 考量因素之外,要考量 scalability 在很多拓樸可供選擇的情況下,需要根據效能指標來區分這些設計 metric (效能指標) 1: network bandwidth (整個網路的頻寬 ) metric 2: bisection bandwidth (對分頻寬): 基於最糟糕情況下 metric 3: 還有什麼 ? ? metric 4: 還有什麼 ? ? 網路拓樸實施 (課本 681頁) : 還有一些重要實際的考量 n 連結距離越長,製作高速時脈越昂貴 n n n 每個連結的實際距離 => 影響到在高速時脈速率時的通訊成本 將 3 維的網路拓樸圖形實作到 2 維的平面晶片上 Power (電耗): 簡單的 network topology 的較少電耗 § 7. 8 Introduction to Multiprocessor Network Topologies Network topologies (網路拓樸)-1 Chapter 7 — Multicores, Multiprocessors, and Clusters — 54

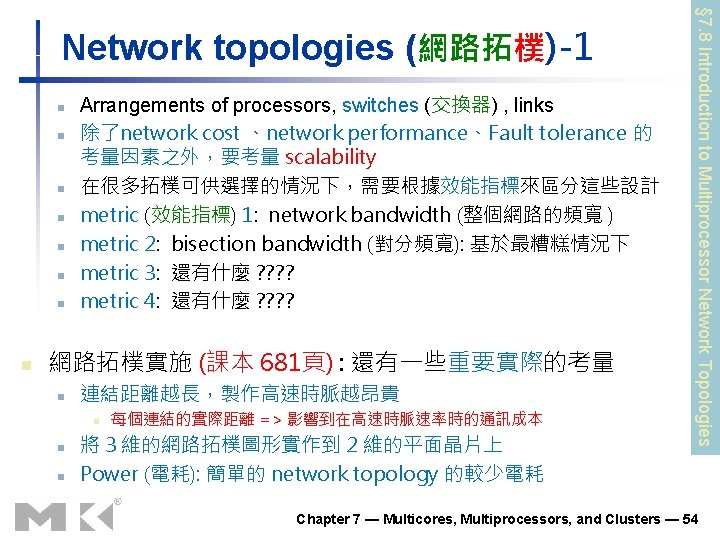

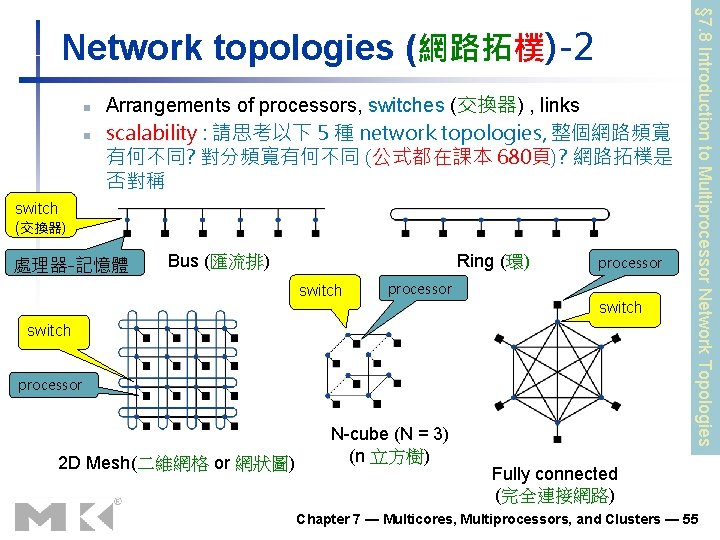

n n switch (交換器) Arrangements of processors, switches (交換器) , links scalability : 請思考以下 5 種 network topologies, 整個網路頻寬 有何不同? 對分頻寬有何不同 (公式都在課本 680頁)? 網路拓樸是 否對稱 處理器-記憶體 Bus (匯流排) Ring (環) switch processor 2 D Mesh(二維網格 or 網狀圖) N-cube (N = 3) (n 立方樹) § 7. 8 Introduction to Multiprocessor Network Topologies Network topologies (網路拓樸)-2 Fully connected (完全連接網路) Chapter 7 — Multicores, Multiprocessors, and Clusters — 55

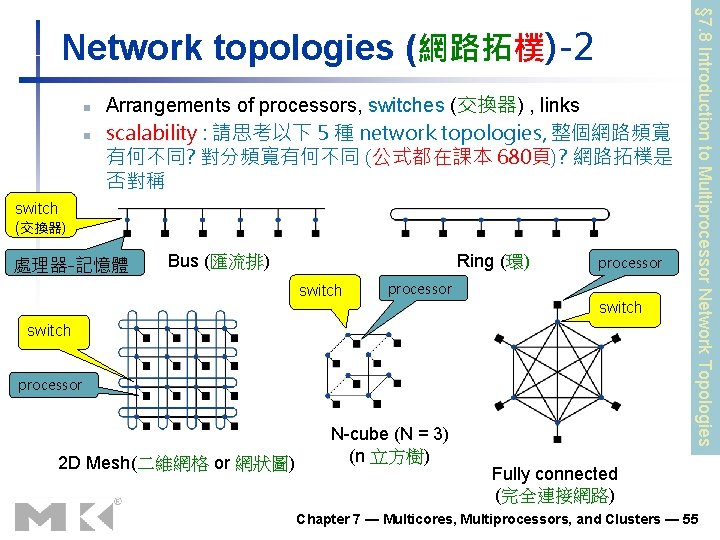

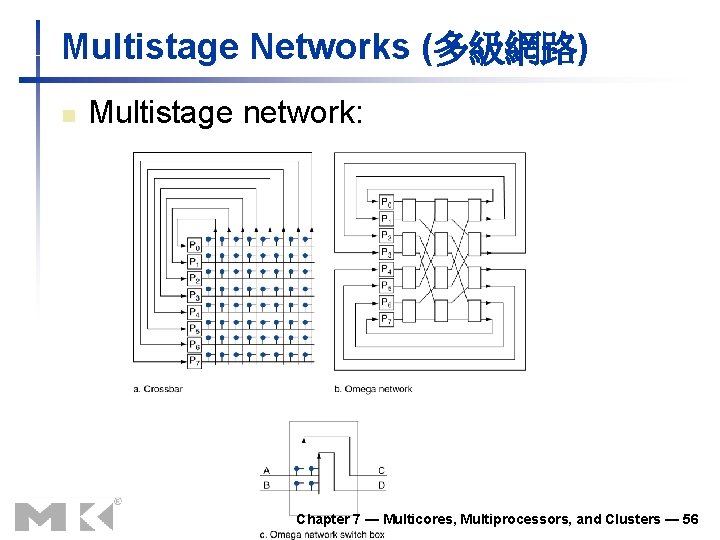

Multistage Networks (多級網路) n Multistage network: Chapter 7 — Multicores, Multiprocessors, and Clusters — 56

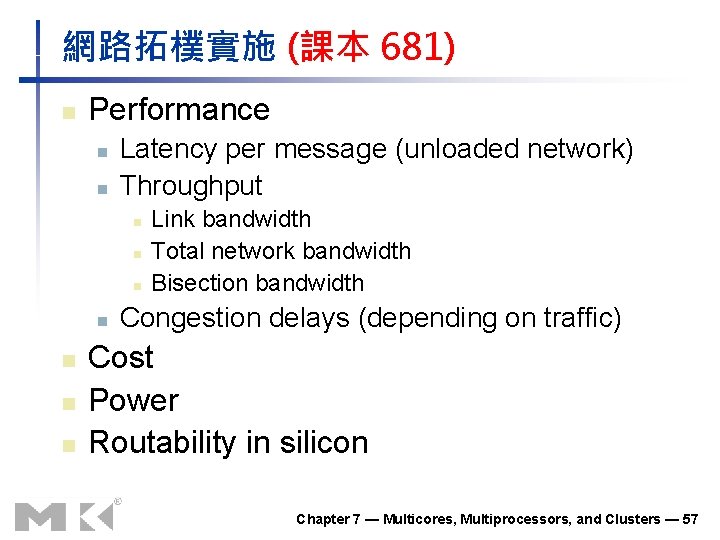

網路拓樸實施 (課本 681) n Performance n n Latency per message (unloaded network) Throughput n n n n Link bandwidth Total network bandwidth Bisection bandwidth Congestion delays (depending on traffic) Cost Power Routability in silicon Chapter 7 — Multicores, Multiprocessors, and Clusters — 57

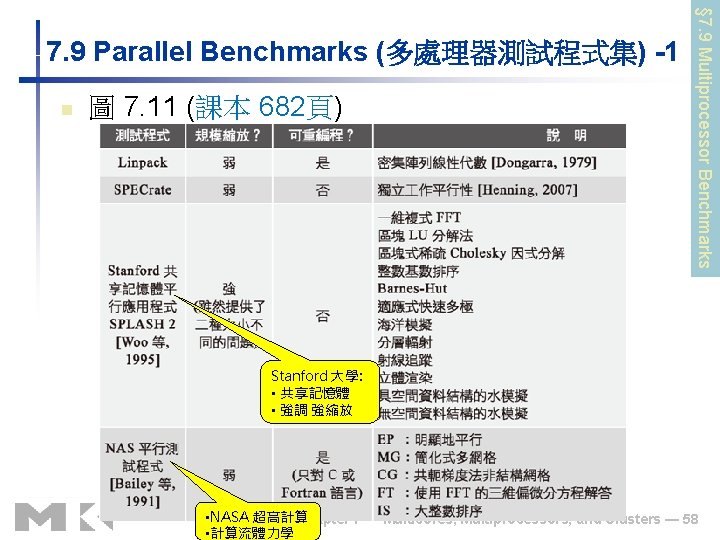

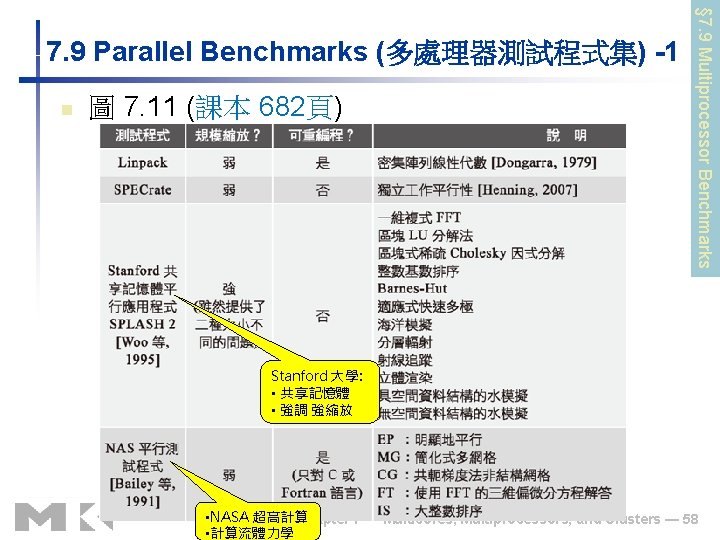

n 圖 7. 11 (課本 682頁) § 7. 9 Multiprocessor Benchmarks 7. 9 Parallel Benchmarks (多處理器測試程式集) -1 Stanford 大學: • 共享記憶體 • 強調 強縮放 • NASA 超高計算 Chapter 7 — Multicores, Multiprocessors, and Clusters — 58 • 計算流體力學

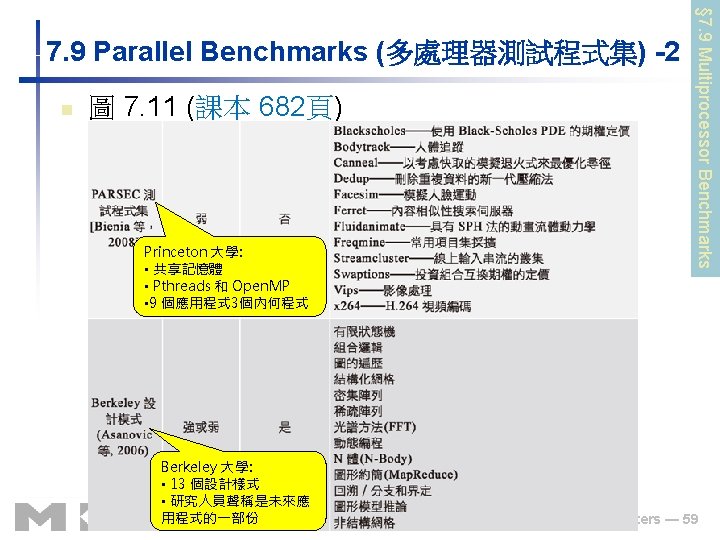

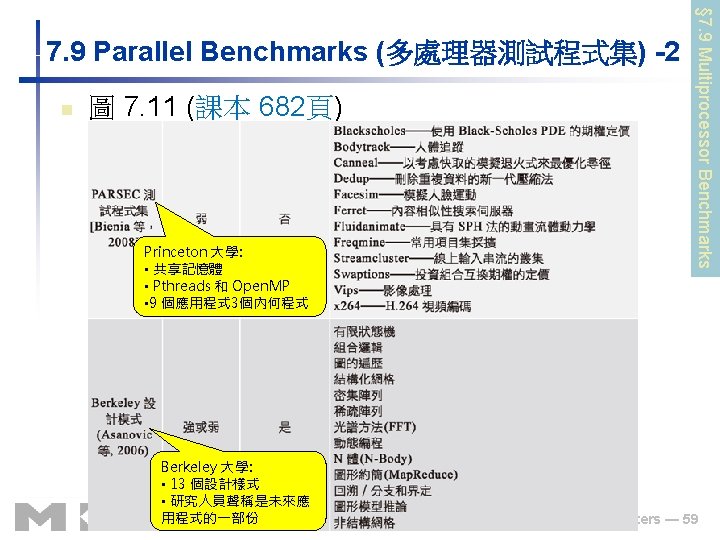

n 圖 7. 11 (課本 682頁) Princeton 大學: • 共享記憶體 • Pthreads 和 Open. MP • 9 個應用程式 3個內何程式 § 7. 9 Multiprocessor Benchmarks 7. 9 Parallel Benchmarks (多處理器測試程式集) -2 Berkeley 大學: • 13 個設計樣式 • 研究人員聲稱是未來應 用程式的一部份 Chapter 7 — Multicores, Multiprocessors, and Clusters — 59

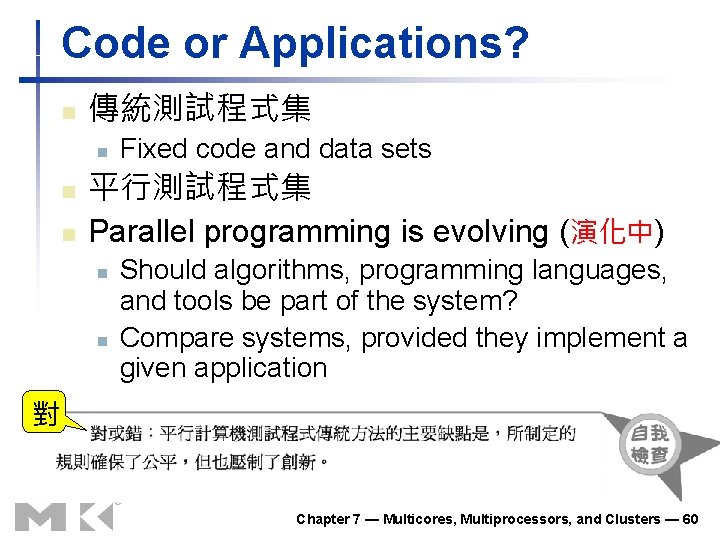

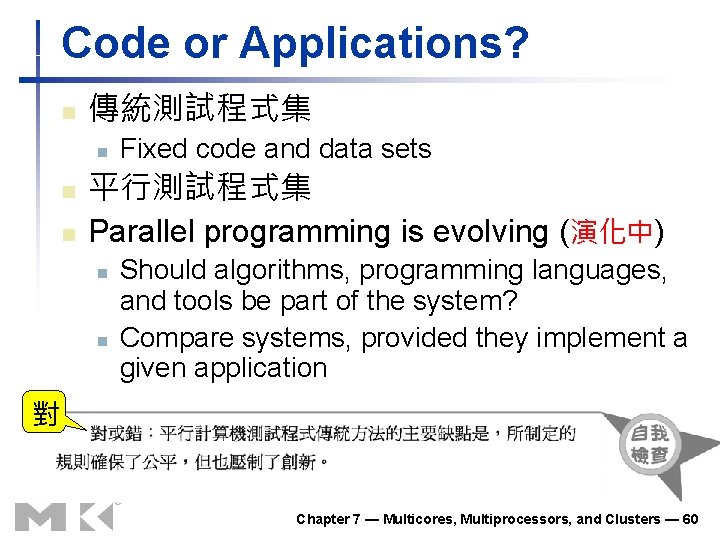

Code or Applications? n 傳統測試程式集 n n n Fixed code and data sets 平行測試程式集 Parallel programming is evolving (演化中) n n Should algorithms, programming languages, and tools be part of the system? Compare systems, provided they implement a given application 對 Chapter 7 — Multicores, Multiprocessors, and Clusters — 60

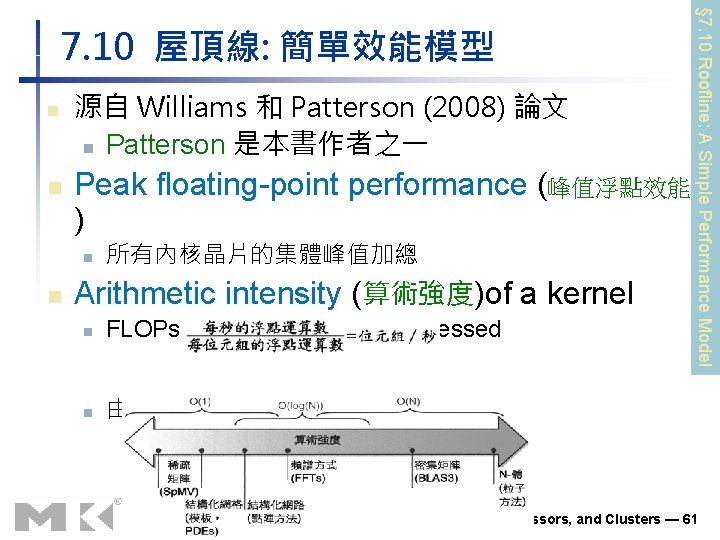

n n 源自 Williams 和 Patterson (2008) 論文 n Patterson 是本書作者之一 Peak floating-point performance (峰值浮點效能 ) n n 所有內核晶片的集體峰值加總 Arithmetic intensity (算術強度)of a kernel n FLOPs per byte of memory accessed n 由Berkeley 設計模式測試 (圖 7. 11)得到 § 7. 10 Roofline: A Simple Performance Model 7. 10 屋頂線: 簡單效能模型 Chapter 7 — Multicores, Multiprocessors, and Clusters — 61

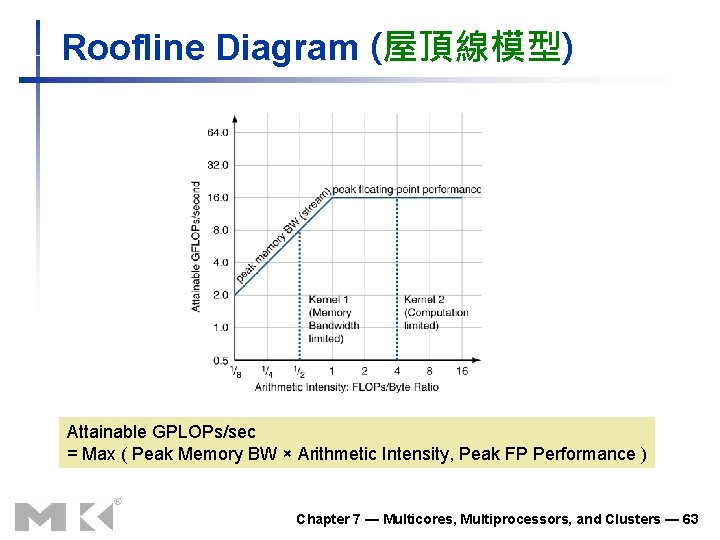

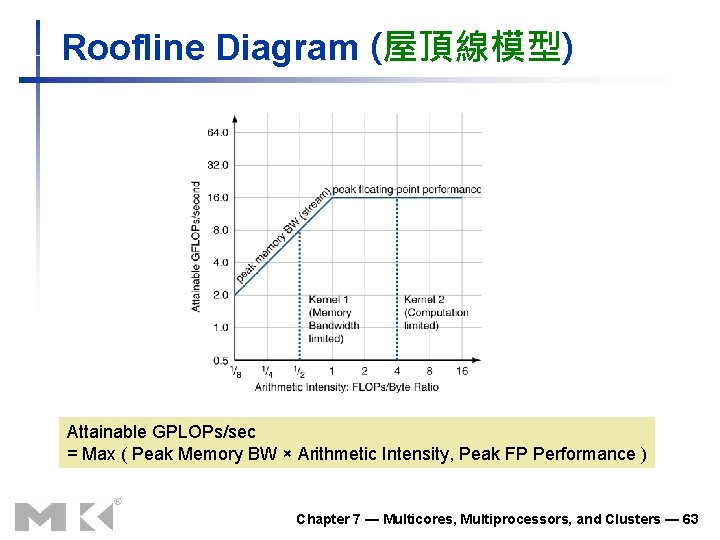

Roofline Diagram (屋頂線模型) Attainable GPLOPs/sec = Max ( Peak Memory BW × Arithmetic Intensity, Peak FP Performance ) Chapter 7 — Multicores, Multiprocessors, and Clusters — 63

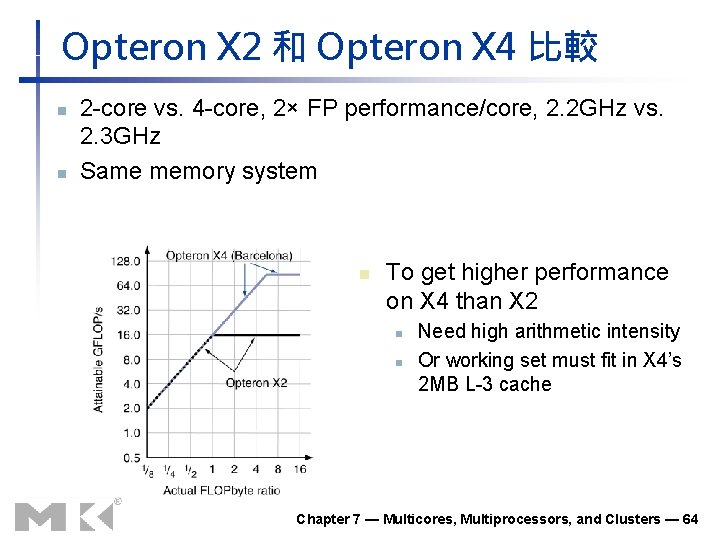

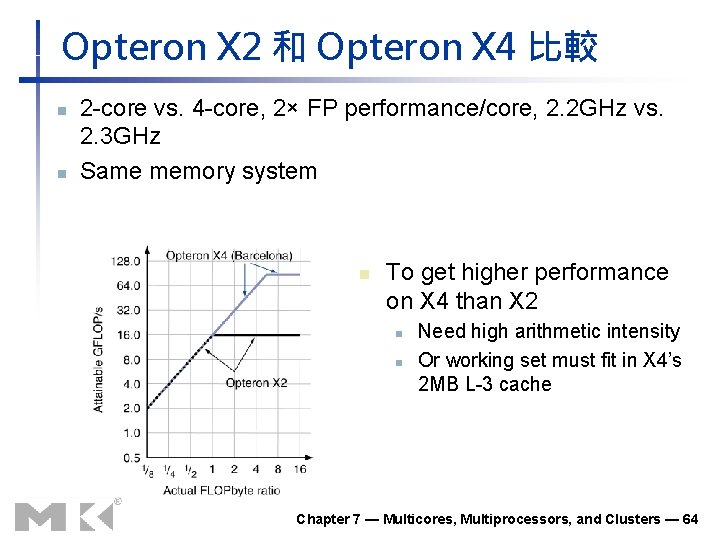

Opteron X 2 和 Opteron X 4 比較 n n 2 -core vs. 4 -core, 2× FP performance/core, 2. 2 GHz vs. 2. 3 GHz Same memory system n To get higher performance on X 4 than X 2 n n Need high arithmetic intensity Or working set must fit in X 4’s 2 MB L-3 cache Chapter 7 — Multicores, Multiprocessors, and Clusters — 64

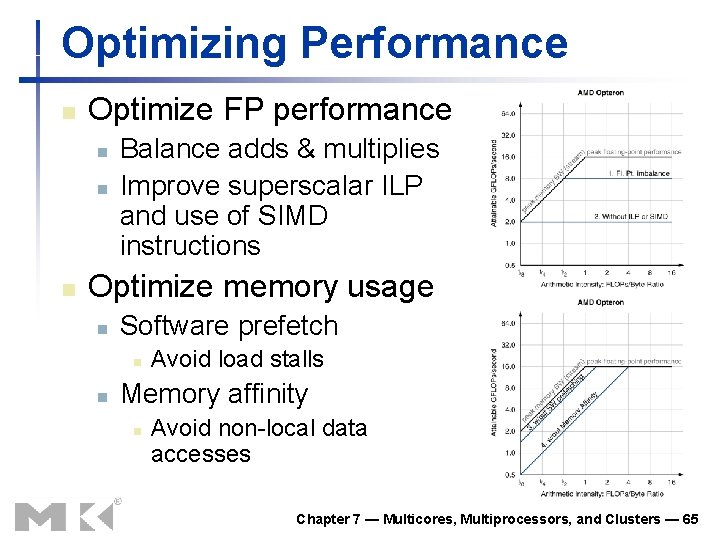

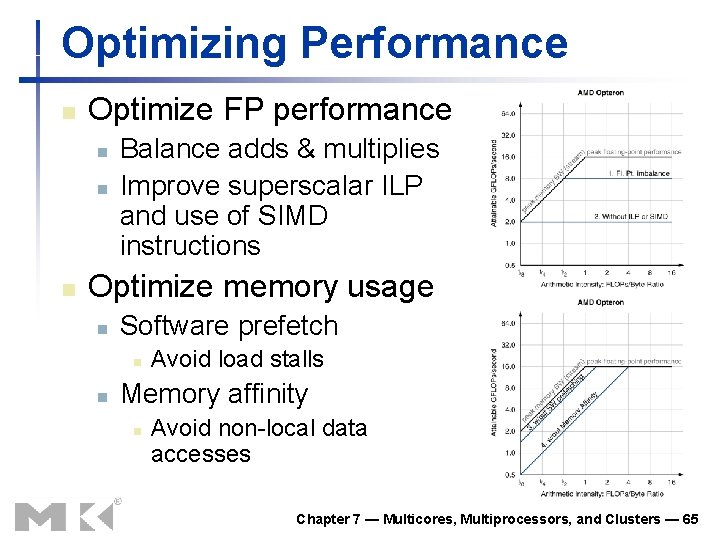

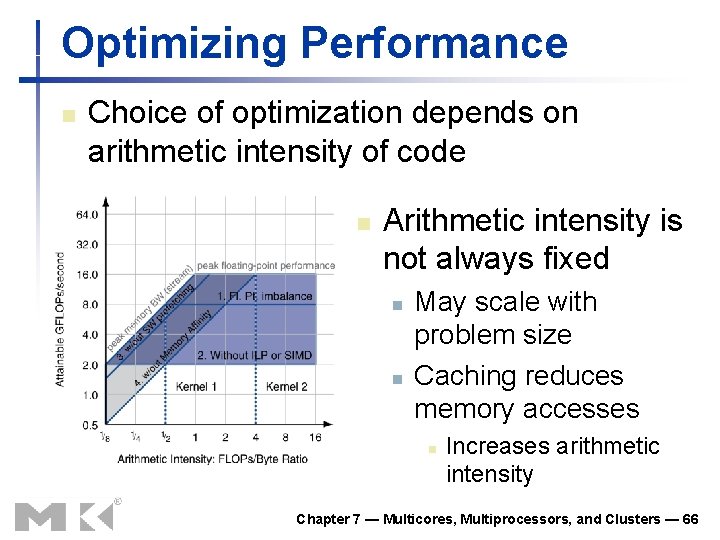

Optimizing Performance n Optimize FP performance n n n Balance adds & multiplies Improve superscalar ILP and use of SIMD instructions Optimize memory usage n Software prefetch n n Avoid load stalls Memory affinity n Avoid non-local data accesses Chapter 7 — Multicores, Multiprocessors, and Clusters — 65

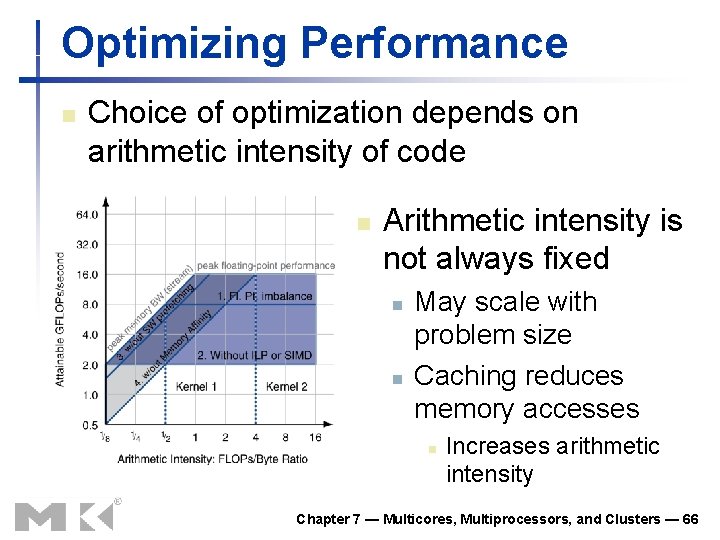

Optimizing Performance n Choice of optimization depends on arithmetic intensity of code n Arithmetic intensity is not always fixed n n May scale with problem size Caching reduces memory accesses n Increases arithmetic intensity Chapter 7 — Multicores, Multiprocessors, and Clusters — 66

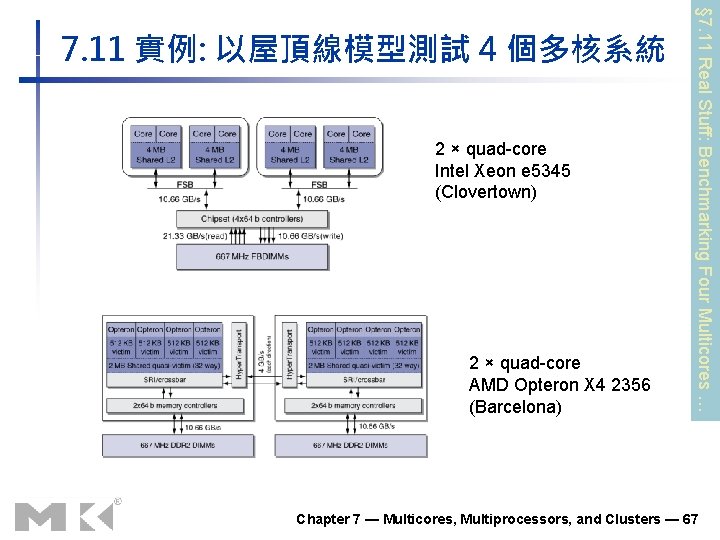

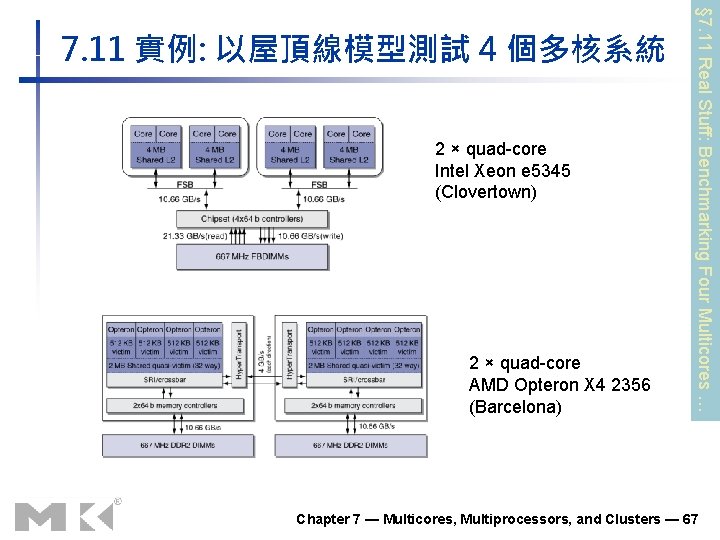

2 × quad-core Intel Xeon e 5345 (Clovertown) 2 × quad-core AMD Opteron X 4 2356 (Barcelona) § 7. 11 Real Stuff: Benchmarking Four Multicores … 7. 11 實例: 以屋頂線模型測試 4 個多核系統 Chapter 7 — Multicores, Multiprocessors, and Clusters — 67

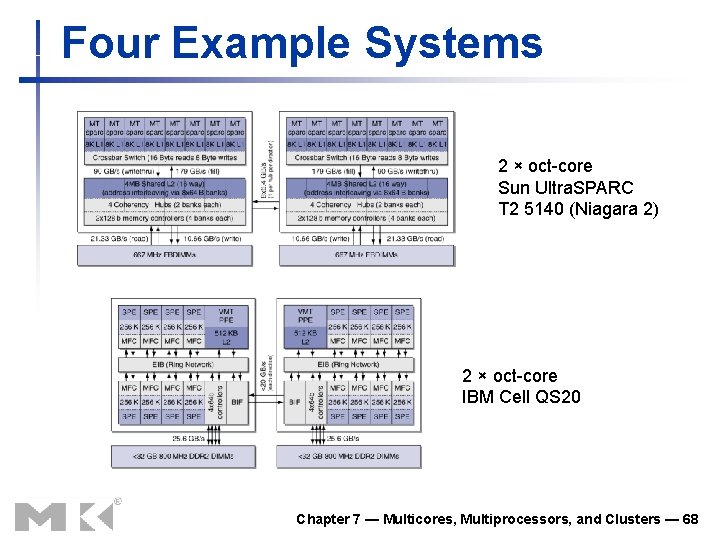

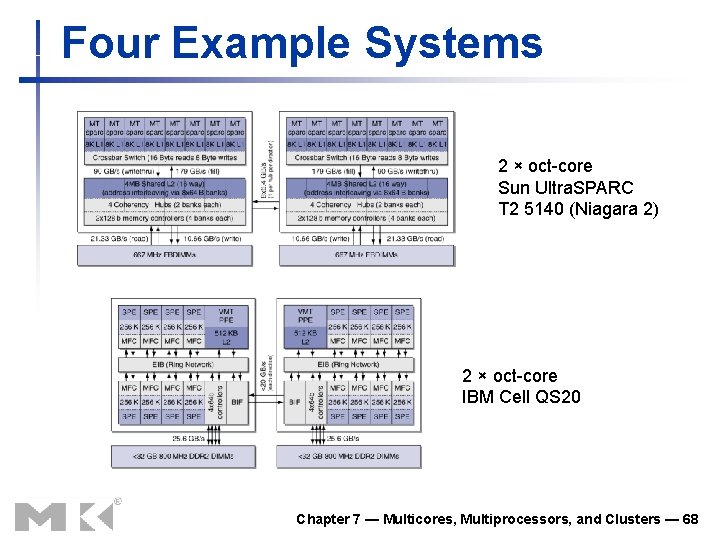

Four Example Systems 2 × oct-core Sun Ultra. SPARC T 2 5140 (Niagara 2) 2 × oct-core IBM Cell QS 20 Chapter 7 — Multicores, Multiprocessors, and Clusters — 68

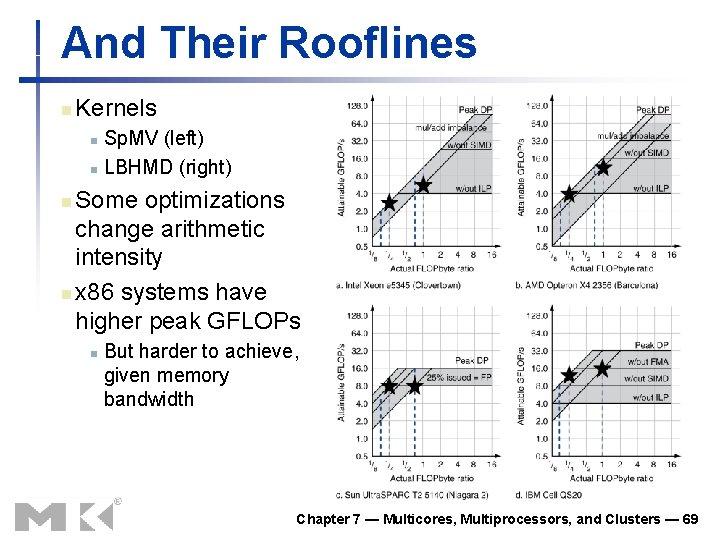

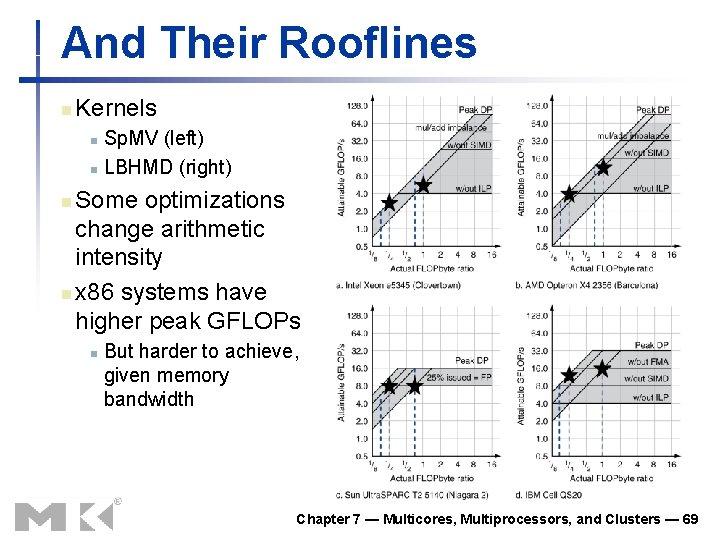

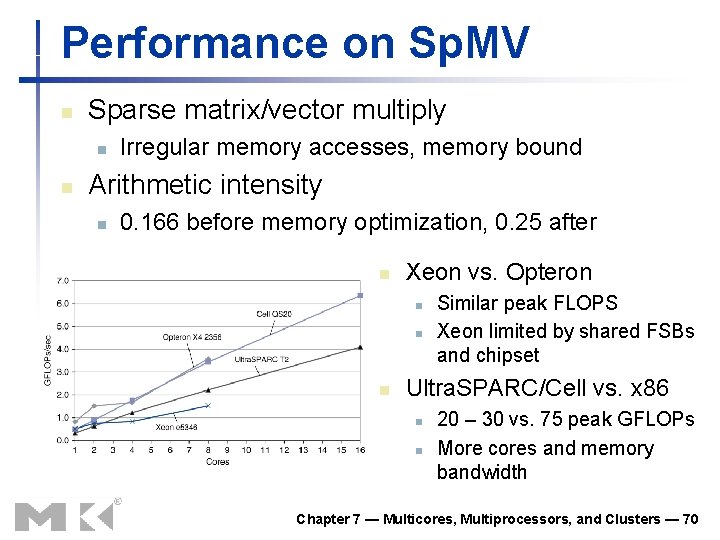

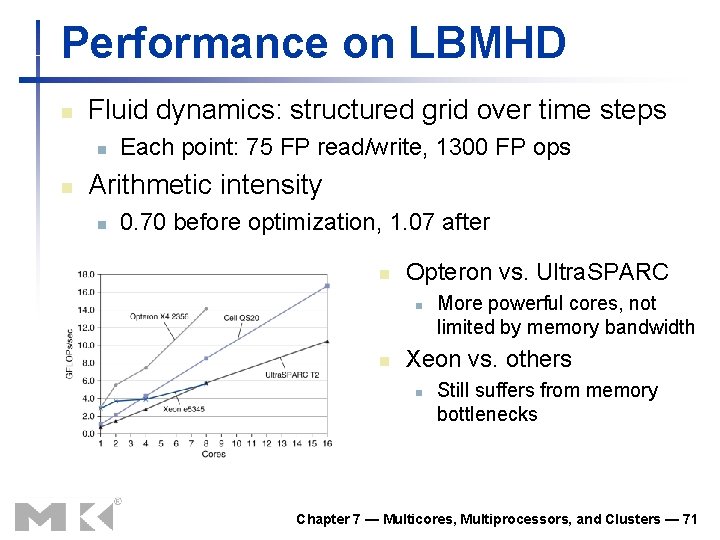

And Their Rooflines n Kernels Sp. MV (left) n LBHMD (right) n Some optimizations change arithmetic intensity n x 86 systems have higher peak GFLOPs n n But harder to achieve, given memory bandwidth Chapter 7 — Multicores, Multiprocessors, and Clusters — 69

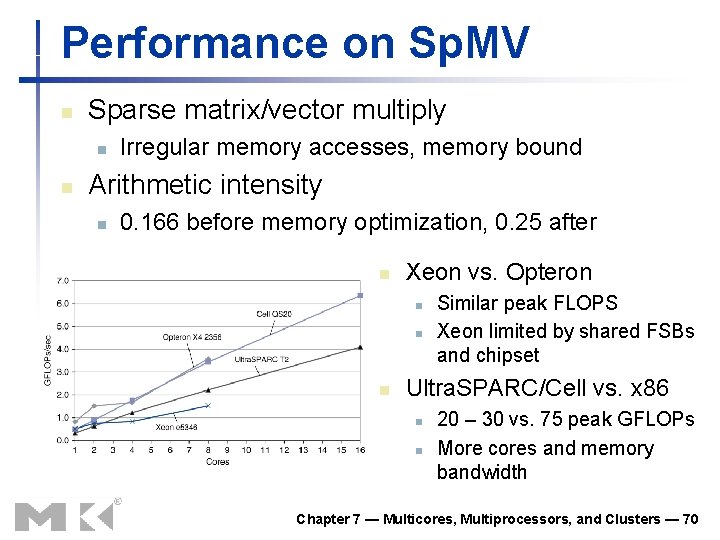

Performance on Sp. MV n Sparse matrix/vector multiply n n Irregular memory accesses, memory bound Arithmetic intensity n 0. 166 before memory optimization, 0. 25 after n Xeon vs. Opteron n Similar peak FLOPS Xeon limited by shared FSBs and chipset Ultra. SPARC/Cell vs. x 86 n n 20 – 30 vs. 75 peak GFLOPs More cores and memory bandwidth Chapter 7 — Multicores, Multiprocessors, and Clusters — 70

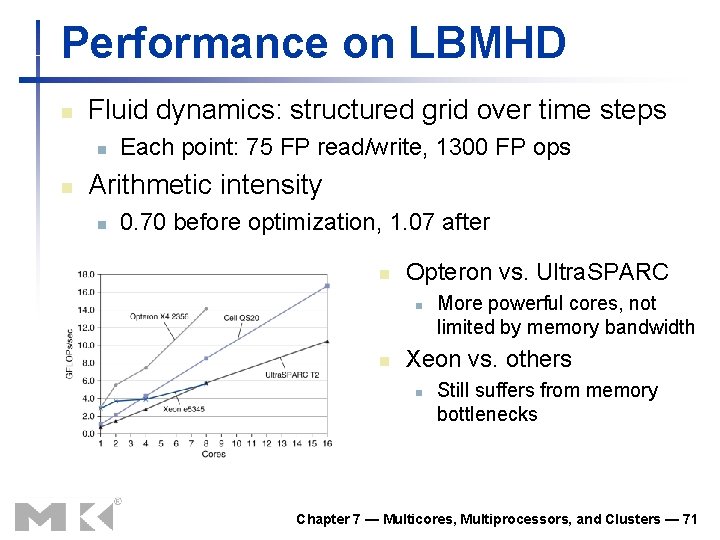

Performance on LBMHD n Fluid dynamics: structured grid over time steps n n Each point: 75 FP read/write, 1300 FP ops Arithmetic intensity n 0. 70 before optimization, 1. 07 after n Opteron vs. Ultra. SPARC n n More powerful cores, not limited by memory bandwidth Xeon vs. others n Still suffers from memory bottlenecks Chapter 7 — Multicores, Multiprocessors, and Clusters — 71

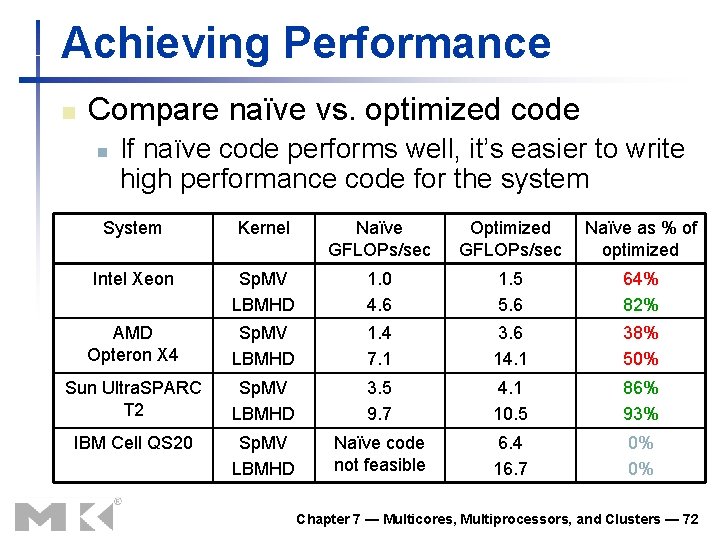

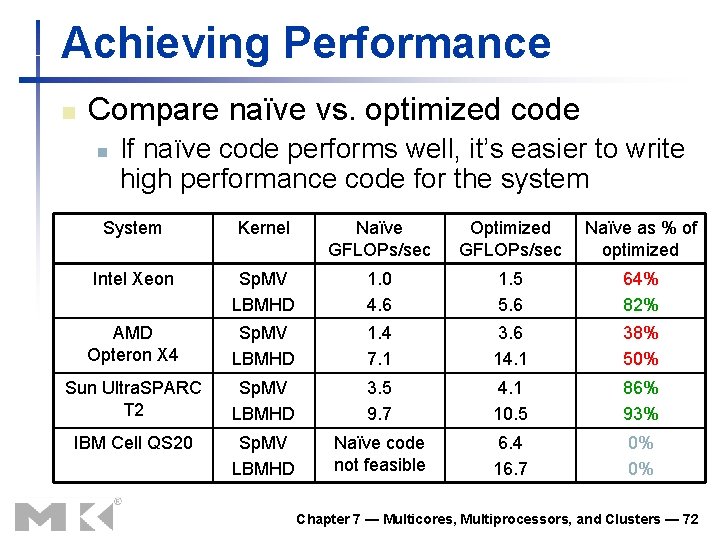

Achieving Performance n Compare naïve vs. optimized code n If naïve code performs well, it’s easier to write high performance code for the system System Kernel Naïve GFLOPs/sec Optimized GFLOPs/sec Naïve as % of optimized Intel Xeon Sp. MV LBMHD 1. 0 4. 6 1. 5 5. 6 64% 82% AMD Opteron X 4 Sp. MV LBMHD 1. 4 7. 1 3. 6 14. 1 38% 50% Sun Ultra. SPARC T 2 Sp. MV LBMHD 3. 5 9. 7 4. 1 10. 5 86% 93% IBM Cell QS 20 Sp. MV LBMHD Naïve code not feasible 6. 4 16. 7 0% 0% Chapter 7 — Multicores, Multiprocessors, and Clusters — 72

n Fallacy (謬誤): 似是而非 的觀念 1. § 7. 12 Fallacies and Pitfalls 7. 12 謬誤與陷阱 Amdahl’s Law doesn’t apply to parallel computers n n Since we can achieve linear speedup But only on applications with weak scaling => 錯 1. Peak performance tracks observed performance n n n Marketers like this approach! But compare Xeon with others in example Need to be aware of bottlenecks => 錯 Chapter 7 — Multicores, Multiprocessors, and Clusters — 73

Pitfalls n Pitfall (陷阱): 容易犯的錯誤 1. n Not developing the software to take account of a multiprocessor architecture 例如: using a single lock for a shared composite resource n n Serializes accesses, even if they could be done in parallel (雖然有些地方可以很容易平行化,卻以循序製作 ) Use finer-granularity locking Chapter 7 — Multicores, Multiprocessors, and Clusters — 74

n n Goal: higher performance by using multiple processors Difficulties n n n Many reasons for optimism n n n Developing parallel software Devising appropriate architectures § 7. 13 Concluding Remarks Changing software and application environment Chip-level multiprocessors with lower latency, higher bandwidth interconnect An ongoing challenge for computer architects! Chapter 7 — Multicores, Multiprocessors, and Clusters — 75