
Operating Systems CSE 411
Multi-processor Operating Systems
Dec. 8, 2006 - Lecture 30
Instructor: Bhuvan Urgaonkar

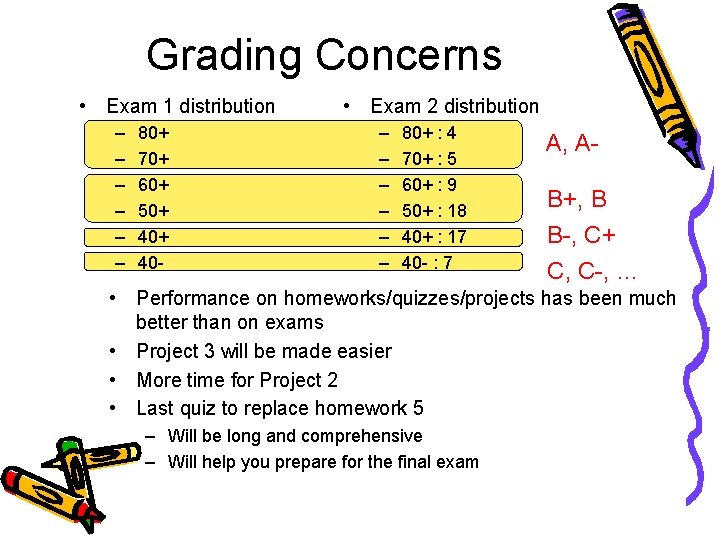

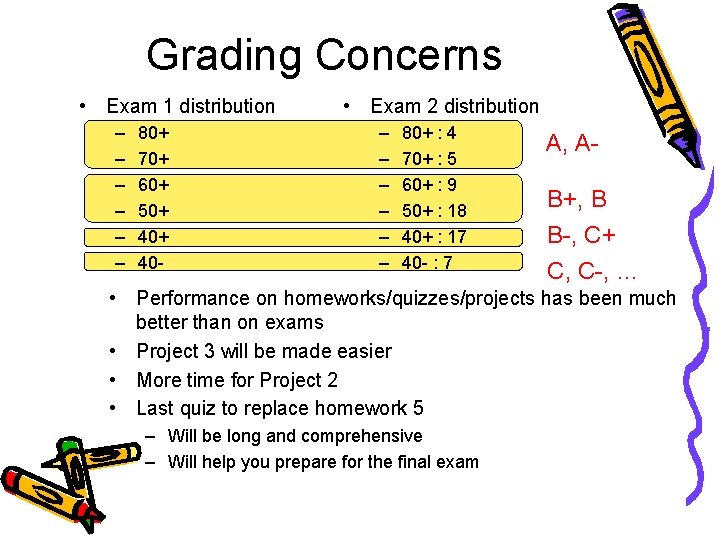

Grading Concerns
• Exam 1 distribution
  – 80+, 70+, 60+, 50+, 40-
• Exam 2 distribution
  – 80+: 4
  – 70+: 5
  – 60+: 9
  – 50+: 18
  – 40+: 17
  – 40-: 7
  – A, AB+, B B-, C+ C, C-, …
• Performance on homeworks/quizzes/projects has been much better than on exams
• Project 3 will be made easier
• More time for Project 2
• Last quiz to replace homework 5
  – Will be long and comprehensive
  – Will help you prepare for the final exam

Multi-processor Computers
• Asymmetric
  – Master processor runs the kernel
  – Other processors run user code
• Symmetric
  – Each processor is self-scheduling
    • Single run queue or per-processor run queues
  – Scheduling and synchronization are more complex

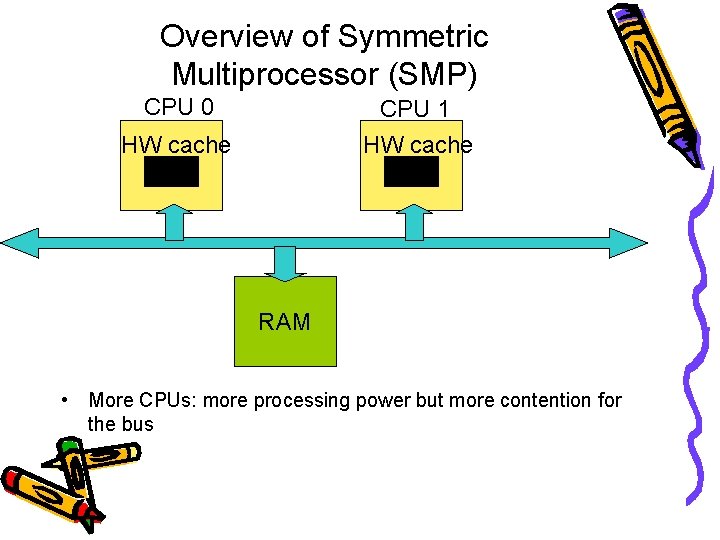

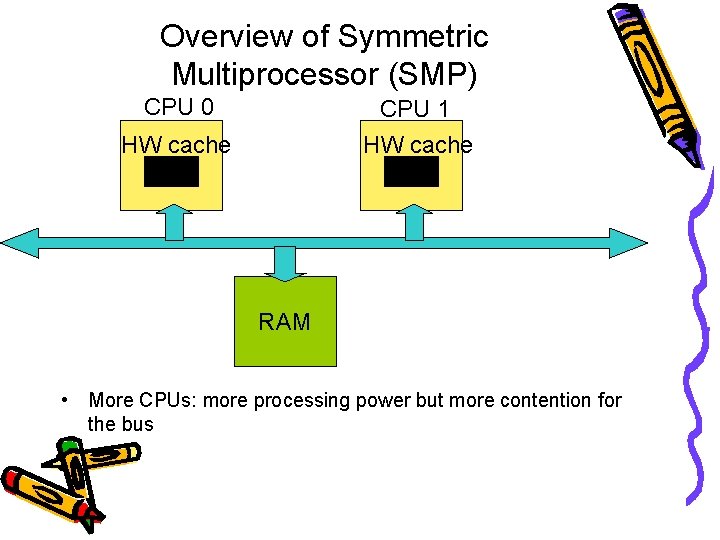

Overview of Symmetric Multiprocessor (SMP)
[Figure: CPU 0, CPU 1, HW cache, RAM]
• More CPUs: more processing power, but more contention for the bus

Hardware features of Modern SMPs
• Common memory
  – All CPUs are connected to a common bus
  – A hardware circuit called a memory arbiter is inserted between the bus and each RAM chip
    • Serializes accesses to memory
    • There is actually an arbiter even in uni-processors
      – Why? Remember DMA
• Hardware support for cache synchronization
  – The contents of the caches and memory are kept consistent at the hardware level, just as in a uni-processor
  – Cache snooping: whenever a CPU modifies its cache, the hardware must check whether the same data is held in another cache and, if so, notify that cache of the update
• Note: implemented in hardware, not of concern to the kernel

Hardware features of Modern SMPs (contd.)
• Distributed interrupt processing
  – Being able to deliver interrupts to any CPU is crucial to exploiting the parallelism of the SMP architecture
  – Special hardware routes interrupts to the right processors
  – Interprocessor interrupts

OS Issues for SMP
• How should the CPU scheduler work?
• How should synchronization work?

CPU Scheduling in a Multiprocessor
• Kernel and user code can now run on N processors
  – A multi-threaded process may span multiple CPUs
• Uni-processor: the scheduler picks a process to run
• Multi-processor: the scheduler picks a process and the CPU on which it will run
• A process may move from one CPU to another during its lifetime
• Two main factors affecting scheduler design
  – Cache affinity: would like to run a process on the same CPU it ran on last
  – Load balancing: would like to keep all CPUs equally busy
  – These are often at odds with each other: Why?

Proportional Fair Scheduling in SMPs
• What will happen if we have two threads with weights 1 and 10 and a lottery scheduler on a dual-processor?
• Not all combinations of weights are feasible!
• Given k threads with weights w_1, …, w_k, and p processors, can you think of a feasibility criterion?

Simultaneous Multi-threading (SMT)
• Idea: create multiple virtual processors on a physical processor
  – Illusion provided by hardware
  – Called hyper-threading in Intel machines
  – The OS sees the machine as an SMP
  – Each virtual processor has its own set of registers and interrupt handling; the cache is shared
• Why: possibility of better parallelism, better utilization
• How does it concern the OS?
  – From a correctness point of view: it does not
  – From an efficiency point of view: the scheduler could exploit it to do better load balancing

Synchronization in SMPs

Synchronization building blocks: Atomic Instructions
• An instruction that is atomic on a uni-processor would not be atomic on an SMP unless special care is taken
  – In particular, any atomic instruction needs to write to a memory location
  – Recall TestAndSet, Swap
• Need special support from the hardware
  – Hardware needs to ensure that when a CPU writes to a memory location as part of an atomic instruction, another CPU cannot access the same memory location until the first CPU's read-modify-write is complete
• Given the above hardware support, OS support for synchronization of user processes is the same as in uni-processors
  – Semaphores, monitors
• Kernel synchronization raises some special considerations
  – Both in uni- and multi-processors

Kernel synchronization

A little background (mostly revision)
• Recall: a kernel is a “server” that answers requests issued in two possible ways
  – A process causes an exception (e.g., page fault, system call)
  – An external device sends an interrupt
• Definition: Kernel Control Path (KCP)
  – The set of instructions executed in kernel mode to handle a kernel request
  – Similar to a process, except much more rudimentary
    • No descriptor of any kind
  – Most modern kernels are “re-entrant” => multiple KCPs may be executing simultaneously
• Synchronization problems can occur if two KCPs update the same data
  – How to synchronize KCP access to shared data/resources?

How to synchronize KCP access to shared data?
• Can use semaphores or monitors
• Not the best solution in multi-processors
• Consider two KCPs running on different CPUs
  – If the time to update a shared data structure is very short, then semaphores may be overkill: blocking and later waking the waiting KCP can cost more than the update itself
• Solution: spin locks
  – Busy-wait instead of blocking!
  – The kernel programmer must decide when to use spin locks versus semaphores
  – Spin locks are useless in uni-processors: Why?

Two other mechanisms for easily achieving kernel synchronization
• Non-preemptible kernel design
  – A KCP cannot be preempted by another one
    • Sufficient only for certain KCPs, such as those that have no synchronization issues with interrupt handlers
  – Not enough for multi-processors, since multiple CPUs can concurrently access the same data structure
  – Adopted by many OSes, including versions of Linux up to 2.4
  – Linux 2.6 is preemptible: faster dispatch times for user processes
• Interrupt disabling
  – Disable all hardware interrupts before entering a critical section and re-enable them right after leaving it
  – Works for uni-processors in certain situations
    • The critical section should not incur an exception whose handler has synchronization issues with it
    • The CS should not get blocked
      – What if the CS incurs a page fault?
  – Does not work for multi-processors
    • Interrupts must be