
- Slides: 30

Operating Systems CSE 411
Kernel synchronization, deadlocks
Dec. 11, 2006 - Lecture 31
Instructor: Bhuvan Urgaonkar

Simultaneous Multi-threading (SMT)
• Idea: Create multiple virtual processors on a physical processor
  – Illusion provided by hardware
  – Called hyper-threading in Intel machines
  – The OS sees the machine as an SMP
  – Each virtual processor has its own set of registers and interrupt handling; the cache is shared
• Why: Possibility of better parallelism, better utilization
• How does it concern the OS?
  – From a correctness point of view: it does not
  – From an efficiency point of view: the scheduler could exploit it to do better load balancing

Synchronization in SMPs & Kernel Synchronization

Synchronization building blocks: Atomic Instructions
• An atomic instruction for a uni-processor would not be atomic on an SMP unless special care is taken
  – In particular, any atomic instruction needs to write to a memory location
  – Recall TestAndSet, Swap
• Need special support from the hardware
  – Hardware needs to ensure that when a CPU writes to a memory location as part of an atomic instruction, another CPU cannot write to the same memory location until the first CPU has finished its write
• Given the above hardware support, OS support for synchronization of user processes is the same as in uni-processors
  – Semaphores, monitors
• Kernel synchronization raises some special considerations
  – Both in uni- and multi-processors

Kernel Control Path
• Recall: A kernel is a “server” that answers requests issued in two possible ways
  – A process causes an exception (e.g., page fault, system call)
  – An external device sends an interrupt
• Definition: Kernel Control Path (KCP)
  – The set of instructions executed in kernel mode to handle a kernel request
  – Similar to a process, except much more rudimentary
    • No descriptor of any kind
  – Most modern kernels are “re-entrant” => multiple KCPs may be executing simultaneously
• Synchronization problems can occur if two KCPs update the same data
  – How to synchronize KCP access to shared data/resources?

Two easy-to-achieve mechanisms for kernel synchronization
• Non-preemptible kernel design
  – A KCP cannot be pre-empted by another one
    • Useful only for certain KCPs, such as those that have no synch. issues with interrupt handlers
  – Not enough for multi-processors, since multiple CPUs can concurrently access the same data structure
  – Adopted by many OSes, including versions of Linux up to 2.4
  – Linux 2.6 is pre-emptible: faster dispatch times for user processes
• Interrupt disabling
  – Disable all hardware interrupts before entering a critical section and re-enable them right after leaving it
  – Works for uni-processors in certain situations
    • The critical section should not incur an exception whose handler has synchronization issues with it
    • The CS should not get blocked
      – What if the CS incurs a page fault?
  – Does not work for multi-processors

How to synchronize KCP access to shared data?
• Can use semaphores or monitors
• Not always the best solution in multi-processors
• Consider two KCPs running on different CPUs
  – If the time to update a shared data structure is very short, then semaphores may be overkill
• Solution: Spin Locks
  – Do busy wait instead of getting blocked!
  – The kernel designers must decide when to use spin locks versus semaphores
  – Spin locks are useless in uni-processors: Why?
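The busy-wait idea can be sketched in user space. This is only an illustration, not kernel code: Python has no TestAndSet instruction, so a non-blocking `Lock.acquire(blocking=False)` stands in for the atomic test-and-set, and the names `SpinLock`, `worker`, `run`, and `counter` are hypothetical.

```python
import threading


class SpinLock:
    """Busy-wait lock; a non-blocking acquire stands in for TestAndSet."""

    def __init__(self):
        self._flag = threading.Lock()

    def acquire(self):
        # Spin (busy wait) until the atomic "test and set" succeeds.
        while not self._flag.acquire(blocking=False):
            pass

    def release(self):
        self._flag.release()


counter = 0
lock = SpinLock()


def worker(iterations):
    global counter
    for _ in range(iterations):
        lock.acquire()
        counter += 1          # very short critical section
        lock.release()


def run(num_threads=4, iterations=1000):
    """Start the workers, wait for them, and return the final counter."""
    global counter
    counter = 0
    threads = [threading.Thread(target=worker, args=(iterations,))
               for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter
```

The waiting thread never sleeps; it keeps retrying. That only pays off when the holder is running on another CPU and will release the lock very soon, which is the situation the slide describes.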

Deadlocks

The Deadlock Problem
• A set of blocked processes each holding a resource and waiting to acquire a resource held by another process in the set.
• Example
  – System has 2 disk drives.
  – P1 and P2 each hold one disk drive and each needs another one.
• Example
  – Semaphores A and B, initialized to 1

        P0            P1
        wait(A);      wait(B);
        wait(B);      wait(A);
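The semaphore example can be reproduced without hanging the program. In the sketch below, two barriers force the bad interleaving (both processes hold their first semaphore before either tries the second), and the second `wait` gives up after a timeout instead of blocking forever. The barriers, the timeout value, and the names `process`, `demo`, and `got_second` are assumptions made for the demonstration.

```python
import threading

A = threading.Semaphore(1)
B = threading.Semaphore(1)
both_hold_first = threading.Barrier(2)
both_tried_second = threading.Barrier(2)
got_second = {}


def process(name, first, second):
    first.acquire()                       # wait(first)
    both_hold_first.wait()                # force the bad interleaving
    # wait(second), but give up after a timeout instead of blocking forever
    got_second[name] = second.acquire(timeout=0.2)
    both_tried_second.wait()              # nobody releases before both have tried
    if got_second[name]:
        second.release()
    first.release()


def demo():
    """Run P0 and P1 once and report whether each obtained its second semaphore."""
    got_second.clear()
    p0 = threading.Thread(target=process, args=("P0", A, B))
    p1 = threading.Thread(target=process, args=("P1", B, A))
    p0.start()
    p1.start()
    p0.join()
    p1.join()
    return dict(got_second)
```

Calling `demo()` reports `False` for both P0 and P1: each holds one semaphore and waits for the other. Without the timeout, both would block forever.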

Bridge Crossing Example
• Traffic only in one direction.
• Each section of a bridge can be viewed as a resource.
• If a deadlock occurs, it can be resolved if one car backs up (preempt resources and rollback).
• Several cars may have to be backed up if a deadlock occurs.
• Starvation is possible.

Deadlock and starvation
• Deadlock implies starvation but not the other way around
• Recall Dining Philosophers

System Model
• Resource types R1, R2, ..., Rm
  – CPU cycles, memory space, I/O devices
• Each resource type Ri has Wi instances.
• Each process utilizes a resource as follows:
  – request
  – use
  – release

Deadlock Characterization
Deadlock can arise if four conditions hold simultaneously.
Note: these are necessary conditions, but not sufficient.
• Mutual exclusion: only one process at a time can use a resource.
• No preemption: a resource can be released only voluntarily by the process holding it, after that process has completed its task.
• Circular wait: there exists a set {P0, P1, …, Pn} of waiting processes such that P0 is waiting for a resource that is held by P1, P1 is waiting for a resource that is held by P2, …, Pn–1 is waiting for a resource that is held by Pn, and Pn is waiting for a resource that is held by P0
  => Hold and wait: a process holding at least one resource is waiting to acquire additional resources held by other processes.

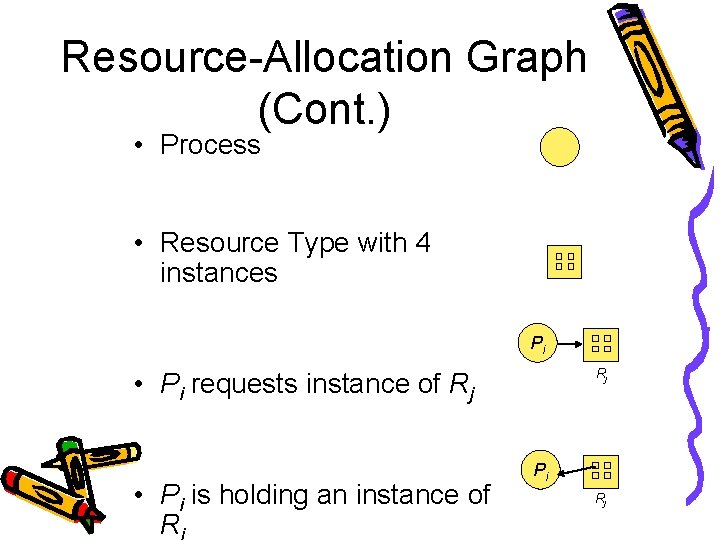

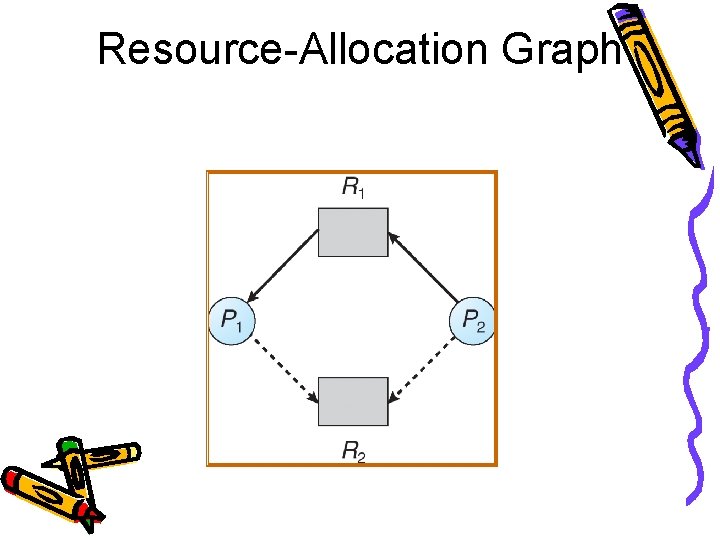

Resource-Allocation Graph
A set of vertices V and a set of edges E.
• V is partitioned into two types:
  – P = {P1, P2, …, Pn}, the set consisting of all the processes in the system.
  – R = {R1, R2, …, Rm}, the set consisting of all resource types in the system.
• request edge – directed edge Pi → Rj
• assignment edge – directed edge Rj → Pi

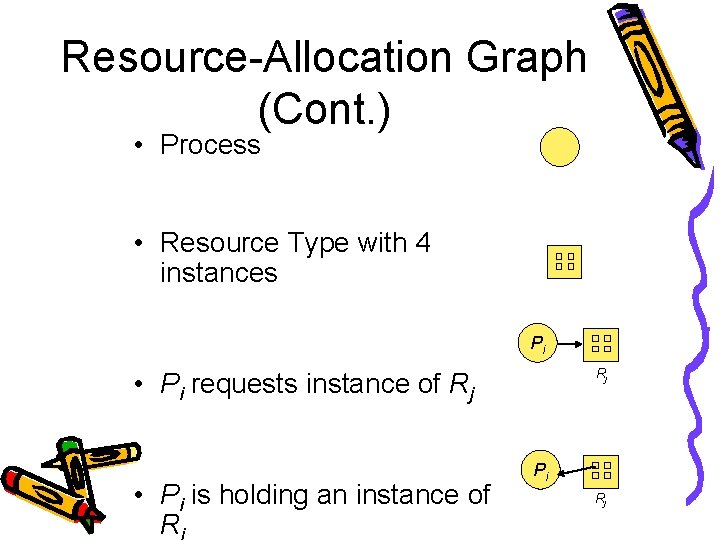

Resource-Allocation Graph (Cont.)
• Process
• Resource type with 4 instances
• Pi requests an instance of Rj (request edge Pi → Rj)
• Pi is holding an instance of Rj (assignment edge Rj → Pi)

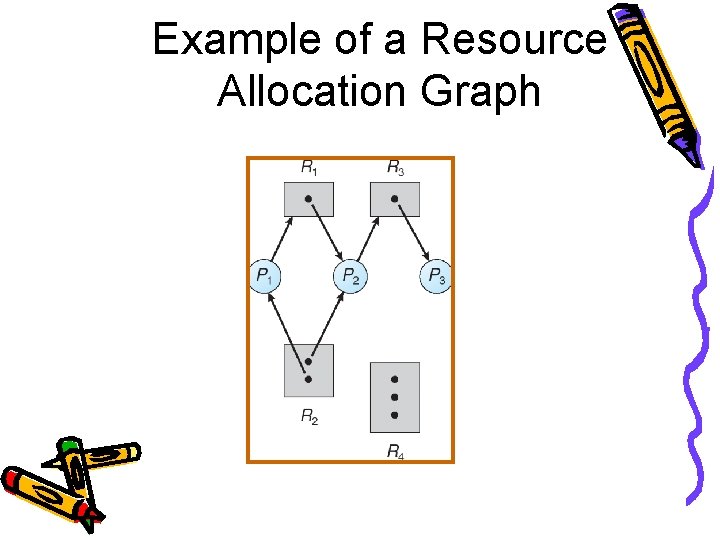

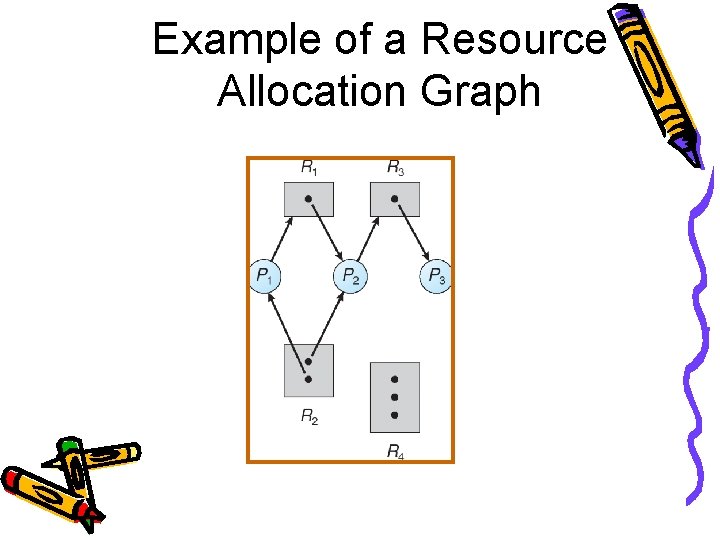

Example of a Resource Allocation Graph

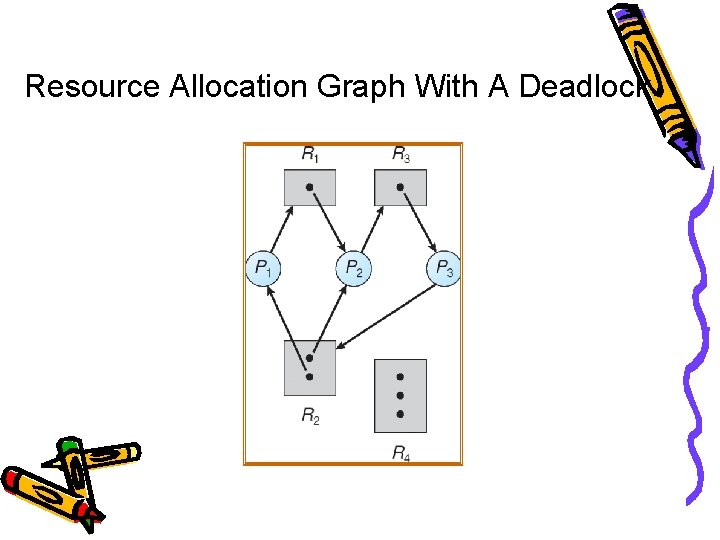

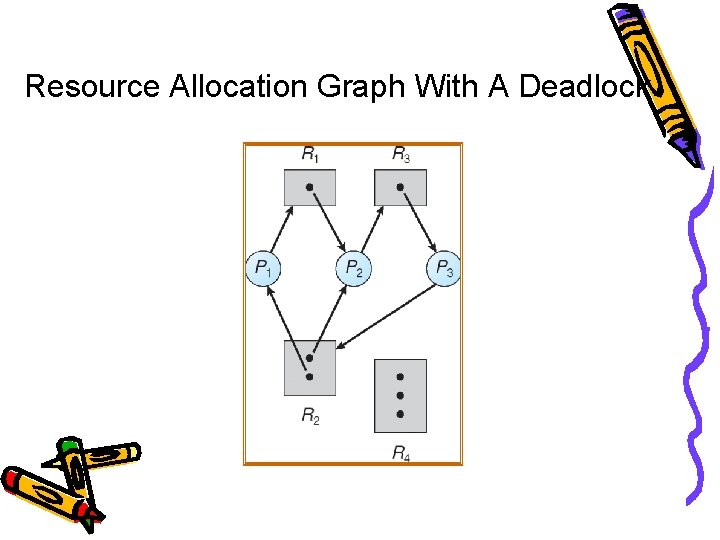

Resource Allocation Graph With A Deadlock

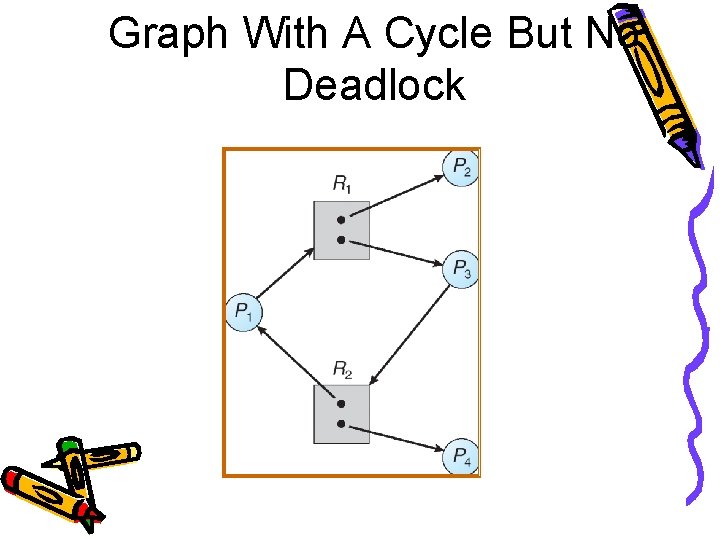

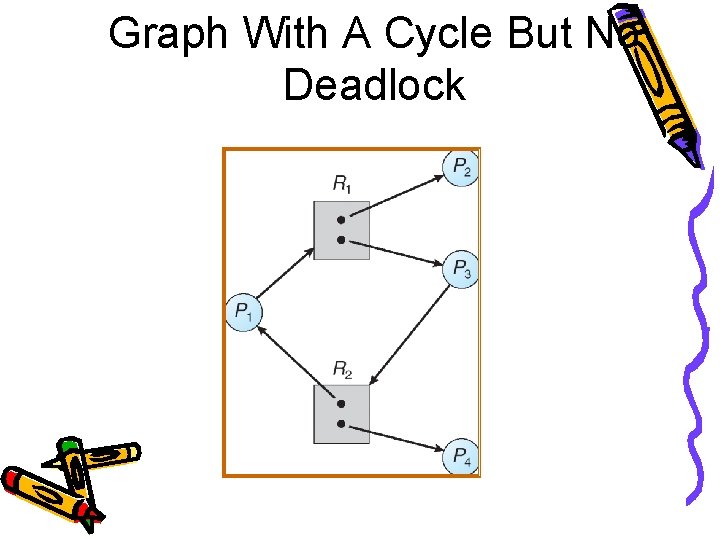

Graph With A Cycle But No Deadlock

Basic Facts
• If graph contains no cycles ⇒ no deadlock.
• If graph contains a cycle ⇒
  – if only one instance per resource type, then deadlock.
  – if several instances per resource type, possibility of deadlock.

Methods for Handling Deadlocks
• Ensure that the system will never enter a deadlock state
  – Deadlock prevention and deadlock avoidance
• Allow the system to enter a deadlock state and then recover
  – Deadlock detection and recovery
• Ignore the problem and pretend that deadlocks never occur in the system; used by most operating systems, including UNIX
  – Some call it the “Ostrich” approach - why?

Plan for remaining classes
• Penultimate class
  – A little history of operating systems
    • Significant events, important contributions, standards
  – What is happening now and what is likely to happen in the future
    • What is hot and what is not
  – Where could you (as a student) go from here?
    • Related fields
• Last class
  – Revision of entire course
  – Tips for final
• Both classes
  – Questions about the course
  – Feedback!

Deadlock Prevention
Restrain the ways requests can be made.
• Mutual Exclusion – not required for sharable resources; must hold for nonsharable resources.
• Hold and Wait – must guarantee that whenever a process requests a resource, it does not hold any other resources.
  – Require process to request and be allocated all its resources before it begins execution, or allow process to request resources only when the process has none.
  – Low resource utilization; starvation possible.
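One way to picture the hold-and-wait rule is an allocator that grants a request only as a whole, and only to a process that currently holds nothing. The sketch below is a minimal illustration under those assumptions; the class `Allocator`, its methods, and the resource names are hypothetical, and a refused request simply returns `False` where a real system would make the process wait.

```python
class Allocator:
    """Grants a request only as a whole, and only to a process that holds nothing."""

    def __init__(self, free_units):
        self.free = dict(free_units)      # resource type -> free instances
        self.held = {}                    # process -> {resource type: count}

    def request_all(self, pid, wanted):
        if self.held.get(pid):
            raise RuntimeError(f"{pid} must release its resources before requesting more")
        if any(self.free.get(r, 0) < n for r, n in wanted.items()):
            return False                  # grant nothing rather than a partial set
        for r, n in wanted.items():
            self.free[r] -= n
        self.held[pid] = dict(wanted)
        return True

    def release_all(self, pid):
        for r, n in self.held.pop(pid, {}).items():
            self.free[r] += n
```

Because no process ever waits while holding something, the hold-and-wait condition cannot arise. The cost noted on the slide is visible too: a process keeps every resource for its whole run, even the ones it needs only briefly.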

Deadlock Prevention (Cont.)
• No Preemption
  – If a process that is holding some resources requests another resource that cannot be immediately allocated to it, then all resources currently being held are released.
  – Preempted resources are added to the list of resources for which the process is waiting.
  – Process will be restarted only when it can regain its old resources, as well as the new ones that it is requesting.
• Circular Wait – impose a total ordering of all resource types, and require that each process requests resources in an increasing order of enumeration.
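The circular-wait rule can be sketched with ranked locks: every lock gets a fixed position in a global order, and a helper always acquires in increasing rank regardless of the order the caller names them. The names `RankedLock`, `acquire_in_order`, `release_all`, and the ranks 1 and 2 are assumptions chosen for the example.

```python
import threading


class RankedLock:
    """A lock with a fixed position in the global resource ordering."""

    def __init__(self, rank):
        self.rank = rank
        self.lock = threading.Lock()


def acquire_in_order(*locks):
    """Acquire locks in increasing rank, whatever order the caller listed them in."""
    ordered = sorted(locks, key=lambda l: l.rank)
    for l in ordered:
        l.lock.acquire()
    return ordered


def release_all(ordered):
    for l in reversed(ordered):
        l.lock.release()


A = RankedLock(1)
B = RankedLock(2)
total = 0


def worker(first, second, rounds):
    global total
    for _ in range(rounds):
        held = acquire_in_order(first, second)
        total += 1                        # critical section needing both locks
        release_all(held)


def run(rounds=500):
    """Two threads name the locks in opposite orders, yet both finish."""
    global total
    total = 0
    t0 = threading.Thread(target=worker, args=(A, B, rounds))
    t1 = threading.Thread(target=worker, args=(B, A, rounds))
    t0.start()
    t1.start()
    t0.join()
    t1.join()
    return total
```

This is the same A/B scenario as the earlier semaphore example, except that both threads end up taking A before B, so no cycle of waiting processes can form.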

Deadlock Avoidance
Requires that the system has some additional a priori information available.
• Simplest and most useful model requires that each process declare the maximum number of resources of each type that it may need.
• The deadlock-avoidance algorithm dynamically examines the resource-allocation state to ensure that there can never be a circular-wait condition.
• Resource-allocation state is defined by the number of available and allocated resources, and the maximum demands of the processes.

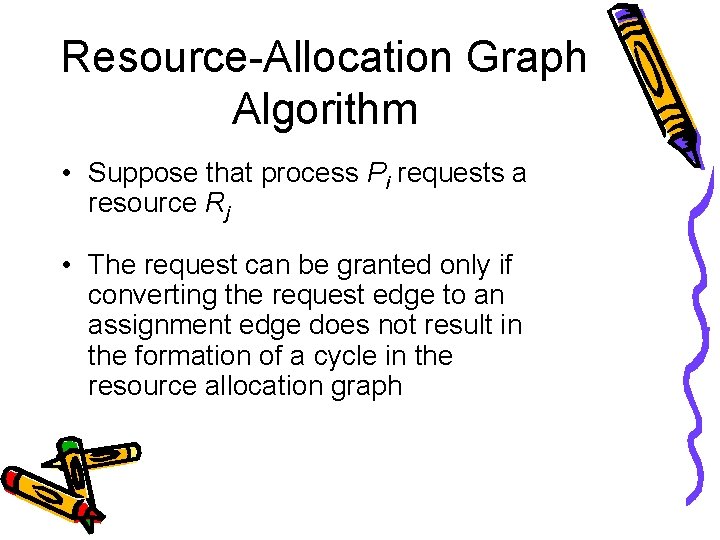

Avoidance algorithms
• Single instance of a resource type. Use a resource-allocation graph
• Multiple instances of a resource type. Use the banker’s algorithm
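The slides name the banker's algorithm without spelling it out. The sketch below shows its safety check as it is commonly described: a state is safe if the processes can be ordered so that each one's remaining need fits into what is available once the earlier ones have finished and returned their allocations. The function name `safe_sequence` and the example numbers are illustrative assumptions, not taken from the lecture.

```python
def safe_sequence(available, allocation, maximum):
    """Return a safe order of process indices, or None if the state is unsafe."""
    n = len(allocation)
    # Remaining need = declared maximum minus what is already allocated.
    need = [[m - a for m, a in zip(maximum[i], allocation[i])] for i in range(n)]
    work = list(available)
    finished = [False] * n
    order = []
    progress = True
    while progress:
        progress = False
        for i in range(n):
            if not finished[i] and all(x <= w for x, w in zip(need[i], work)):
                # Pretend process i runs to completion and returns what it holds.
                work = [w + a for w, a in zip(work, allocation[i])]
                finished[i] = True
                order.append(i)
                progress = True
    return order if all(finished) else None


# Illustrative state: 5 processes, 3 resource types (assumed numbers).
available = [3, 3, 2]
allocation = [[0, 1, 0], [2, 0, 0], [3, 0, 2], [2, 1, 1], [0, 0, 2]]
maximum = [[7, 5, 3], [3, 2, 2], [9, 0, 2], [2, 2, 2], [4, 3, 3]]
```

For this state, `safe_sequence(available, allocation, maximum)` returns `[1, 3, 4, 0, 2]`, so the state is safe. A request would be granted only if the state that results from granting it still passes this check.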

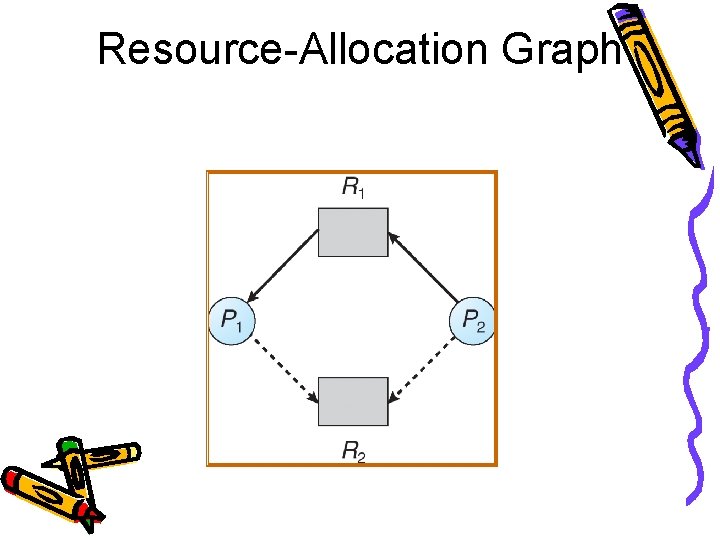

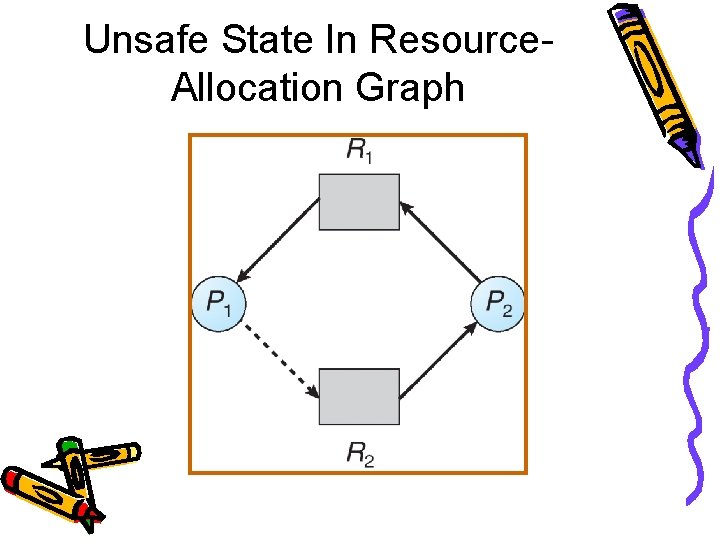

Resource-Allocation Graph Scheme
• Claim edge Pi → Rj indicates that process Pi may request resource Rj; represented by a dashed line.
• Claim edge converts to request edge when a process requests a resource.
• Request edge converted to an assignment edge when the resource is allocated to the process.
• When a resource is released by a process, assignment edge reconverts to a claim edge.
• Resources must be claimed a priori in the system.

Resource-Allocation Graph

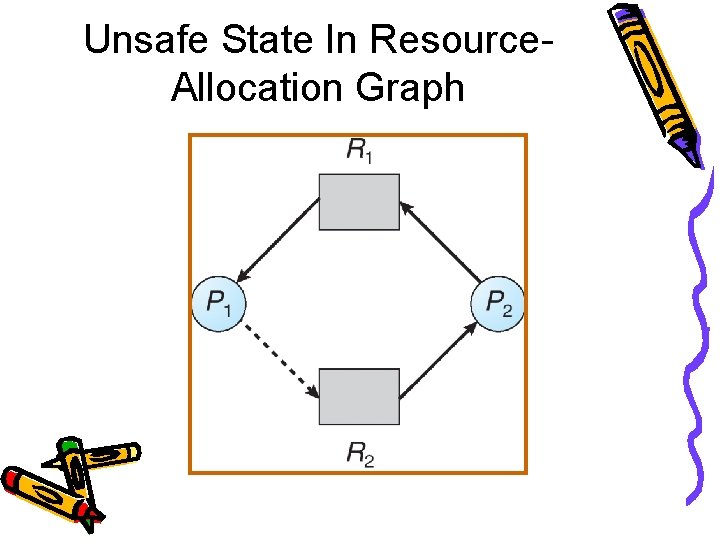

Unsafe State In Resource-Allocation Graph

Resource-Allocation Graph Algorithm
• Suppose that process Pi requests a resource Rj
• The request can be granted only if converting the request edge to an assignment edge does not result in the formation of a cycle in the resource allocation graph
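The grant rule can be sketched for single-instance resources. The code below keeps claim edges and assignment edges as sets of pairs, tentatively converts the requested edge, and refuses the request if the resulting graph has a cycle. It is a simplification: pending request edges are not tracked separately, and the names `has_cycle` and `try_grant` are hypothetical.

```python
def has_cycle(edges):
    """edges: set of (src, dst) pairs. True if the directed graph has a cycle."""
    succ = {}
    for src, dst in edges:
        succ.setdefault(src, []).append(dst)
    state = {}                            # node -> "active" or "done"

    def visit(node):
        state[node] = "active"
        for nxt in succ.get(node, []):
            if state.get(nxt) == "active":
                return True               # back edge: cycle found
            if nxt not in state and visit(nxt):
                return True
        state[node] = "done"
        return False

    return any(n not in state and visit(n) for n in list(succ))


def try_grant(claims, assignments, process, resource):
    """Grant the request only if no cycle would result.

    claims: set of (process, resource) claim edges
    assignments: set of (resource, process) assignment edges
    Returns True and updates both sets if granted, else leaves them unchanged.
    """
    if any(r == resource for r, _ in assignments):
        return False                      # the single instance is already held
    new_assignments = assignments | {(resource, process)}
    new_claims = claims - {(process, resource)}
    if has_cycle(new_assignments | new_claims):
        return False                      # granting would make the state unsafe
    assignments.add((resource, process))
    claims.discard((process, resource))
    return True
```

For example, suppose P1 and P2 both claim R1 and R2, and R1 is already assigned to P1. A request by P2 for R2 is refused even though R2 is free, because the claim edges P1 → R2 and P2 → R1 would close a cycle. A request by P1 for R2 is granted.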

Deadlock Detection
• Allow system to enter deadlock state
• Detection algorithm
• Recovery scheme
  – Terminate (deadlocked) process(es)
  – Preempt resources
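Detection followed by recovery through termination can be sketched on a wait-for graph, where an edge Pi → Pj means Pi waits for a resource held by Pj. This representation and the victim policy (terminate the process with the smallest name) are assumptions for the example; the slides do not prescribe either.

```python
def find_cycle(wait_for):
    """Return a list of processes forming a cycle in the wait-for graph, or None."""
    state = {}                            # process -> "active" or "done"
    path = []

    def visit(p):
        state[p] = "active"
        path.append(p)
        for q in wait_for.get(p, ()):
            if state.get(q) == "active":
                return path[path.index(q):]   # the cycle starts at q
            if q not in state:
                found = visit(q)
                if found:
                    return found
        state[p] = "done"
        path.pop()
        return None

    for p in list(wait_for):
        if p not in state:
            found = visit(p)
            if found:
                return found
    return None


def recover(wait_for):
    """Terminate one process per detected cycle until no deadlock remains."""
    graph = {p: set(qs) for p, qs in wait_for.items()}
    victims = []
    while True:
        cycle = find_cycle(graph)
        if cycle is None:
            return victims
        victim = min(cycle)               # hypothetical policy: smallest name first
        victims.append(victim)
        graph.pop(victim, None)           # the victim no longer waits for anyone
        for qs in graph.values():
            qs.discard(victim)            # and nobody waits for it any more
```

With P1 → P2 → P3 → P1 and P4 → P1, `recover` terminates P1 only: that breaks the cycle, and P4 was merely blocked behind it rather than part of the deadlock.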