Multiprocessors: why multiprocessors, CPUs and accelerators

- Slides: 36

Multiprocessors

- Why multiprocessors?
- CPUs and accelerators.
- Multiprocessor performance analysis.

© 2008 Wayne Wolf, Overheads for Computers as Components, 2nd ed.

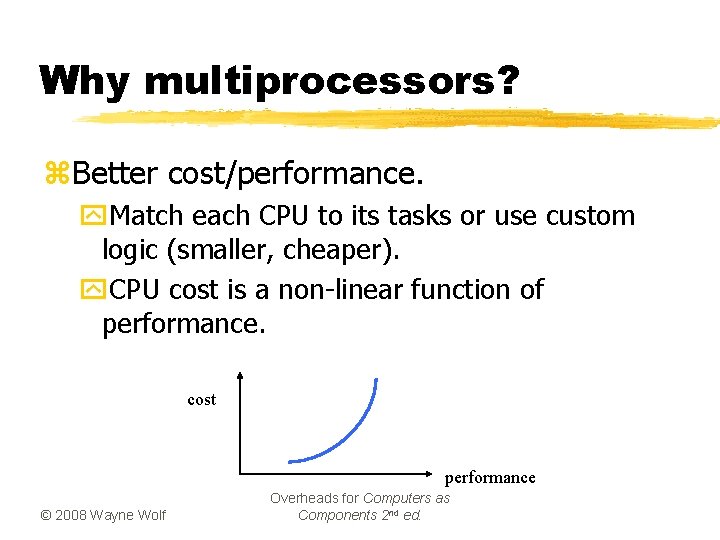

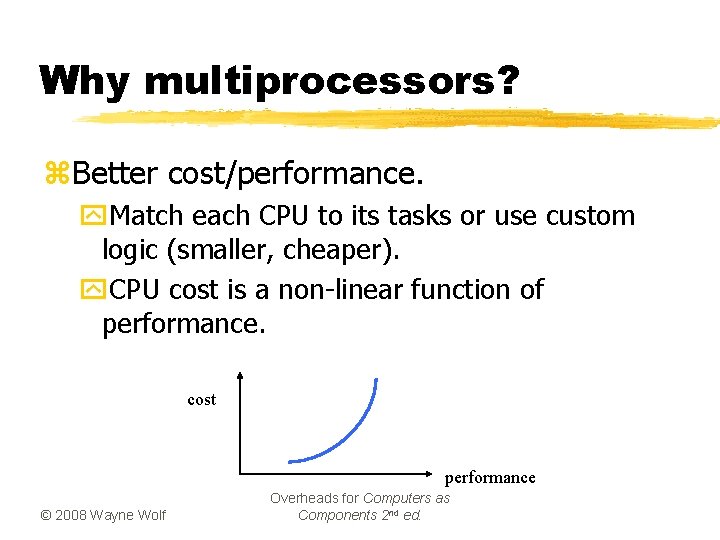

Why multiprocessors?

- Better cost/performance.
  - Match each CPU to its tasks, or use custom logic (smaller, cheaper).
  - CPU cost is a non-linear function of performance.

[Figure: cost versus performance]

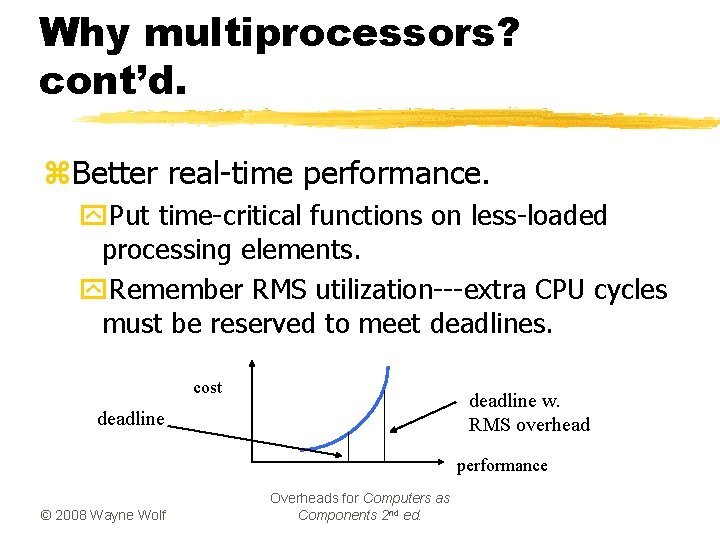

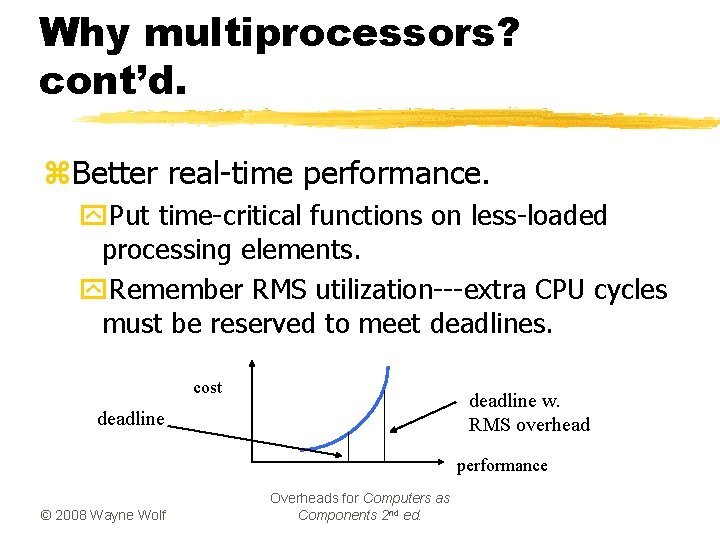

Why multiprocessors? (cont’d)

- Better real-time performance.
  - Put time-critical functions on less-loaded processing elements.
  - Remember rate-monotonic scheduling (RMS) utilization: extra CPU cycles must be reserved to meet deadlines.

[Figure: cost versus performance, marking the deadline and the deadline with RMS overhead]
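The RMS remark can be made concrete. The slide does not state a formula, so as an assumption the sketch below uses the standard Liu and Layland utilization bound for rate-monotonic scheduling, $U \le n(2^{1/n} - 1)$ for $n$ periodic tasks. The function name `rms_utilization_bound` is illustrative.

```python
def rms_utilization_bound(n: int) -> float:
    """Liu-Layland bound: n periodic tasks are guaranteed schedulable
    under RMS if their total utilization does not exceed this value.
    (Assumed here; the slide only says cycles must be reserved.)"""
    if n < 1:
        raise ValueError("need at least one task")
    return n * (2 ** (1 / n) - 1)


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        bound = rms_utilization_bound(n)
        print(f"{n} tasks: guaranteed up to {bound:.3f} utilization, "
              f"{1 - bound:.3f} held in reserve")
```

As the task count grows, the bound falls toward ln 2 (about 0.69), so a single heavily shared CPU may need to leave a sizable fraction of its cycles unused. The bound is sufficient rather than necessary, so it is a conservative estimate of that reserve.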

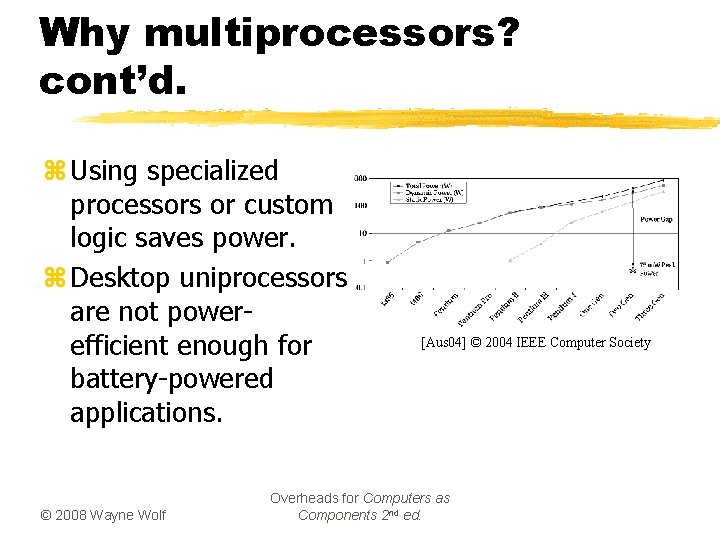

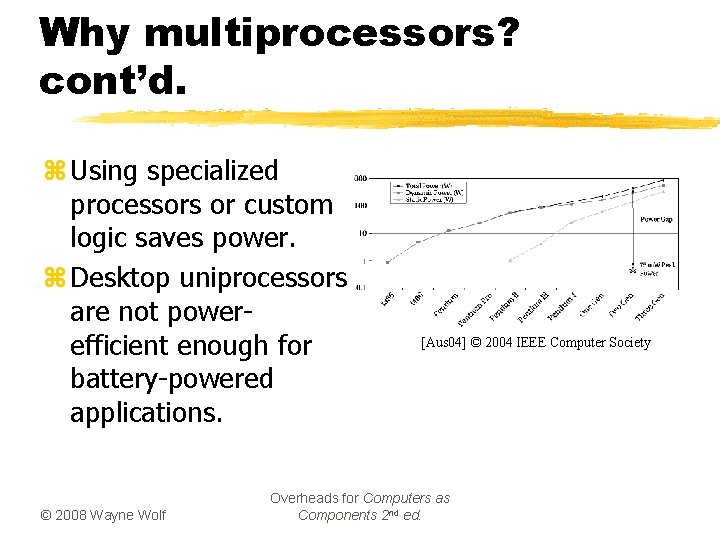

Why multiprocessors? (cont’d)

- Using specialized processors or custom logic saves power.
- Desktop uniprocessors are not power-efficient enough for battery-powered applications.

[Figure credit: [Aus 04] © 2004 IEEE Computer Society]

Why multiprocessors? (cont’d)

- Good for processing I/O in real-time.
- May consume less energy.
- May be better at streaming data.
- May not be able to do all the work on even the largest single CPU.

Accelerated systems

- Use an additional computational unit dedicated to some functions:
  - Hardwired logic.
  - Extra CPU.
- Hardware/software co-design: joint design of hardware and software architectures.

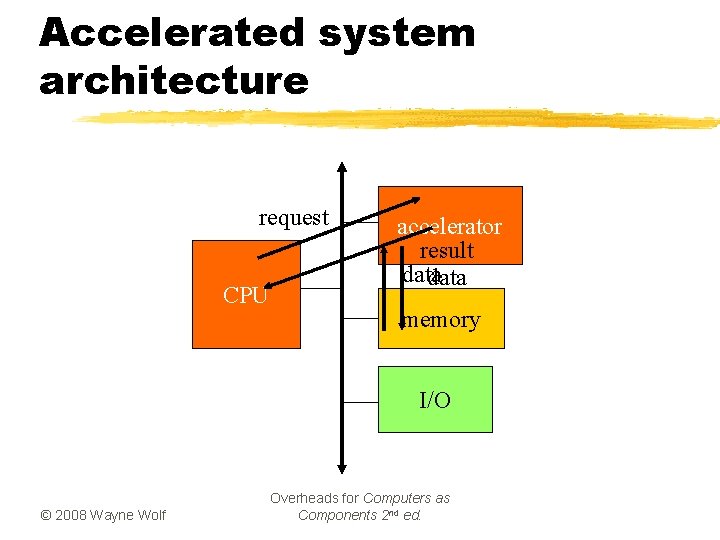

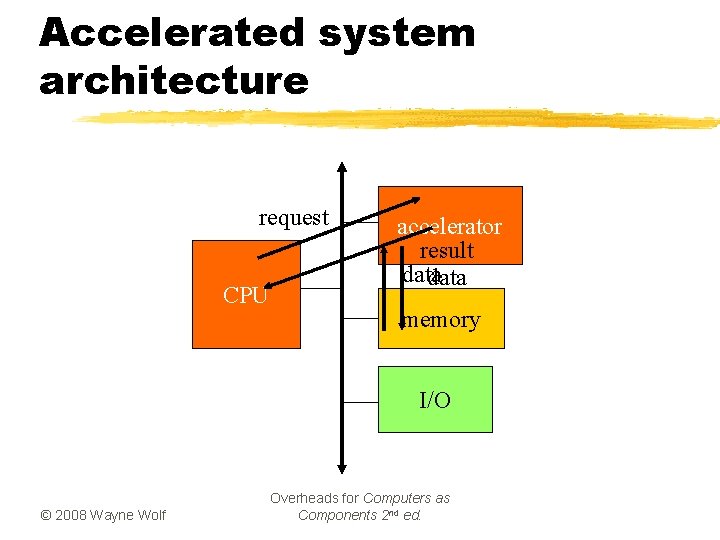

Accelerated system architecture

[Figure: block diagram with CPU, accelerator, memory, and I/O; labeled connections: request, result, data]

Accelerator vs. coprocessor

- A coprocessor executes instructions.
  - Instructions are dispatched by the CPU.
- An accelerator appears as a device on the bus.
  - The accelerator is controlled by registers.

Accelerator implementations

- Application-specific integrated circuit (ASIC).
- Field-programmable gate array (FPGA).
- Standard component.
  - Example: graphics processor.

System design tasks

- Design a heterogeneous multiprocessor architecture.
  - Processing element (PE): CPU, accelerator, etc.
- Program the system.

Accelerated system design

- First, determine that the system really needs to be accelerated.
  - How much faster is the accelerator on the core function?
  - How much data transfer overhead is there?
- Design the accelerator itself.
- Design the CPU interface to the accelerator.

Accelerated system platforms

- Several off-the-shelf boards are available for acceleration in PCs:
  - FPGA-based core;
  - PC bus interface.

Accelerator/CPU interface

- The accelerator's registers give the CPU its control interface.
- Data registers can be used for small data objects.
- The accelerator may include special-purpose read/write logic.
  - Especially valuable for large data transfers.

System integration and debugging

- Try to debug the CPU/accelerator interface separately from the accelerator core.
- Build scaffolding to test the accelerator.
- Hardware/software co-simulation can be useful.

Caching problems

- Main memory provides the primary data transfer mechanism to the accelerator.
- Programs must ensure that caching does not invalidate main memory data. A bad sequence:
  - CPU reads location S.
  - Accelerator writes location S.
  - CPU writes location S.

Synchronization

- As with cache, main memory writes to shared memory may cause invalidation:
  - CPU reads S.
  - Accelerator writes S.
  - CPU reads S.
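The read, write, read hazard listed above can be reproduced with a toy model. This is a minimal sketch, assuming a CPU-side cache that the accelerator bypasses by writing straight to main memory; the class `CpuCache` and its `invalidate` method are hypothetical names, not part of any real platform API.

```python
class CpuCache:
    """Toy CPU cache in front of a shared main memory (a dict)."""

    def __init__(self, memory: dict):
        self.memory = memory
        self.lines = {}  # address -> cached value

    def read(self, addr):
        # On a miss, fill the line from main memory; on a hit, use the copy.
        if addr not in self.lines:
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]

    def invalidate(self, addr):
        # Drop the cached copy so the next read goes back to main memory.
        self.lines.pop(addr, None)


def demo():
    memory = {"S": 1}
    cache = CpuCache(memory)

    first = cache.read("S")    # CPU reads S (now cached)
    memory["S"] = 42           # accelerator writes S directly to memory
    stale = cache.read("S")    # CPU reads S again: served from the cache

    cache.invalidate("S")      # software fix: invalidate before re-reading
    fresh = cache.read("S")
    return first, stale, fresh


if __name__ == "__main__":
    print(demo())
```

In this model the second read returns the old value until the line is invalidated, which is why the program, not just the hardware, has to manage shared locations.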

Multiprocessor performance analysis

- Effects of parallelism (and lack of it):
  - Processes.
  - CPU and bus.
  - Multiple processors.

Accelerator speedup

- The critical parameter is speedup: how much faster is the system with the accelerator?
- Must take into account:
  - Accelerator execution time.
  - Data transfer time.
  - Synchronization with the master CPU.

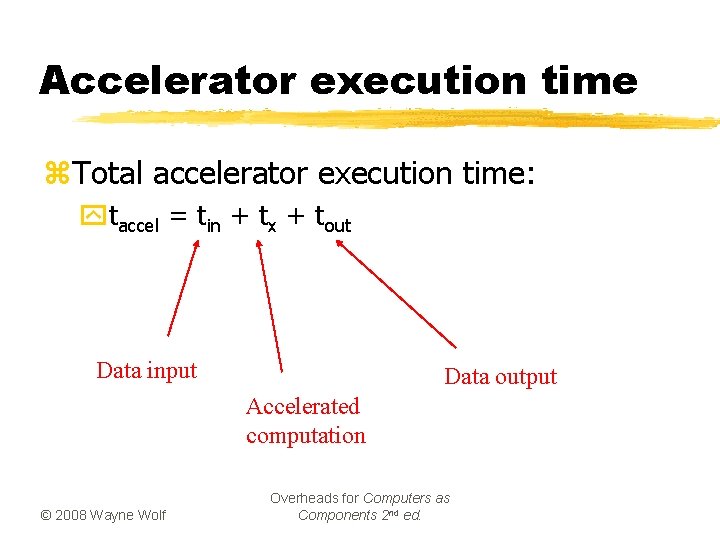

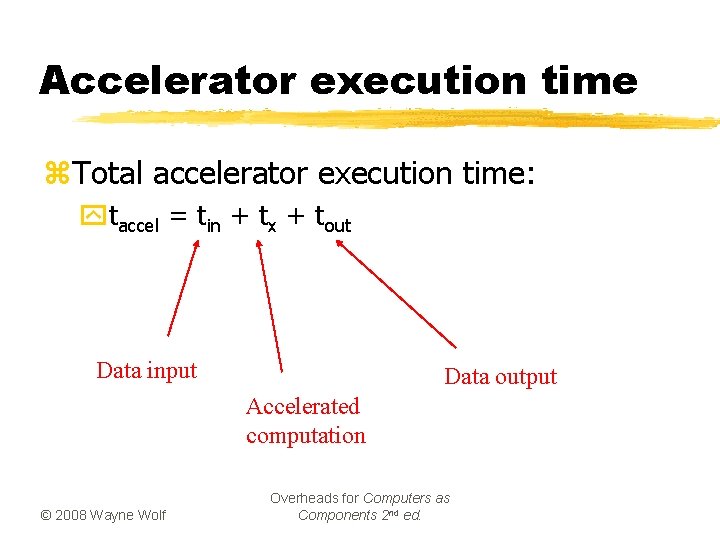

Accelerator execution time

- Total accelerator execution time:
  - $t_{accel} = t_{in} + t_x + t_{out}$
  - $t_{in}$: data input; $t_x$: accelerated computation; $t_{out}$: data output.

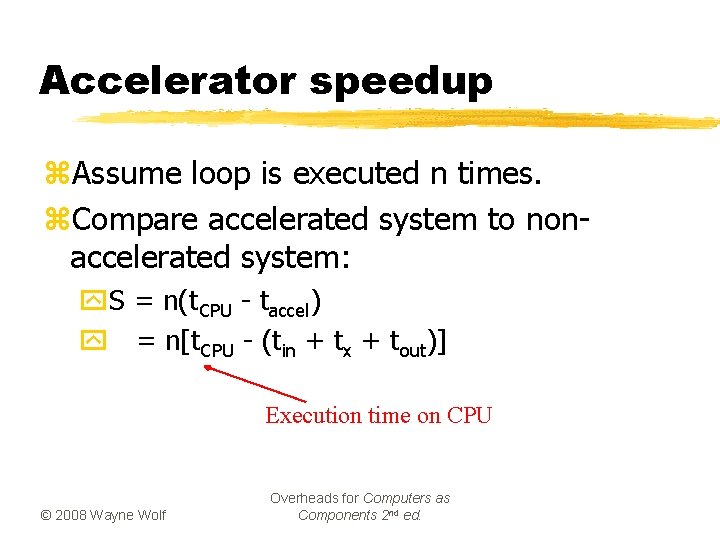

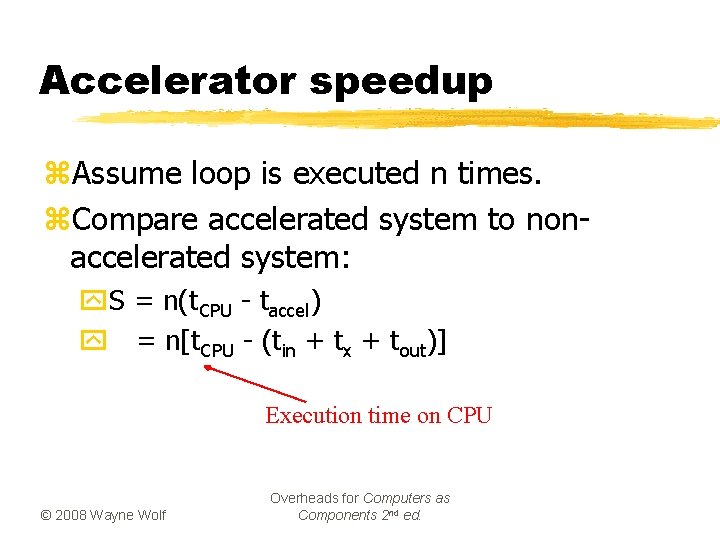

Accelerator speedup

- Assume the loop is executed $n$ times.
- Compare the accelerated system to the non-accelerated system:
  - $S = n(t_{CPU} - t_{accel}) = n[t_{CPU} - (t_{in} + t_x + t_{out})]$
  - $t_{CPU}$: execution time on the CPU.
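The formula above is easy to evaluate when deciding whether an accelerator is worthwhile. The following is a sketch only: the function names and the sample timings are hypothetical, and all times are assumed to be in the same unit.

```python
def accelerator_time(t_in: float, t_x: float, t_out: float) -> float:
    """t_accel = t_in + t_x + t_out: input transfer, computation, output transfer."""
    return t_in + t_x + t_out


def speedup(n: int, t_cpu: float, t_in: float, t_x: float, t_out: float) -> float:
    """S = n * (t_CPU - t_accel): time saved over n loop iterations.

    A negative result means the transfers cost more than the accelerator saves.
    """
    return n * (t_cpu - accelerator_time(t_in, t_x, t_out))


if __name__ == "__main__":
    # Hypothetical timings: a fast core that is still worth using...
    print(speedup(n=100, t_cpu=50, t_in=5, t_x=10, t_out=5))
    # ...and the same core swamped by data transfer overhead.
    print(speedup(n=100, t_cpu=50, t_in=30, t_x=10, t_out=30))
```

Note that $S$ as defined here is a time difference rather than a ratio, so the sign of the result is what shows whether acceleration pays off.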

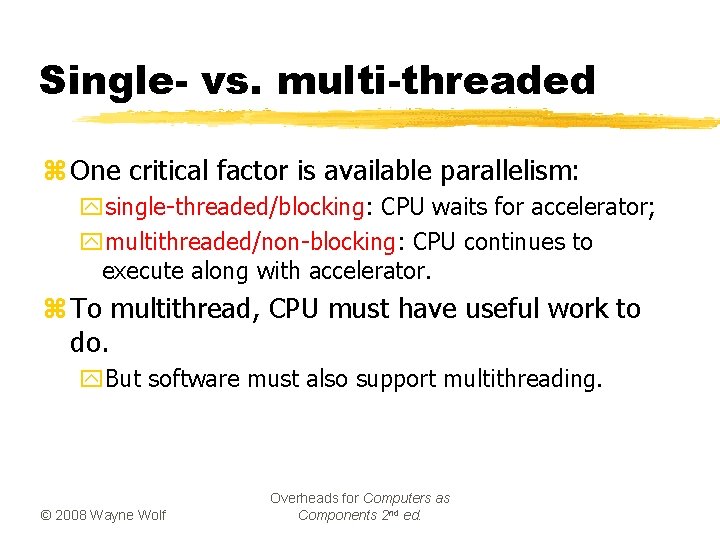

Single- vs. multi-threaded

- One critical factor is available parallelism:
  - single-threaded/blocking: CPU waits for accelerator;
  - multithreaded/non-blocking: CPU continues to execute along with accelerator.
- To multithread, the CPU must have useful work to do.
  - But software must also support multithreading.

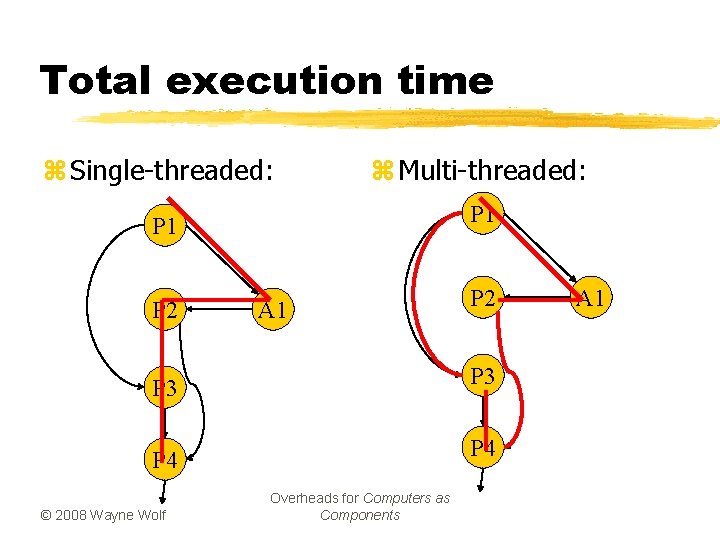

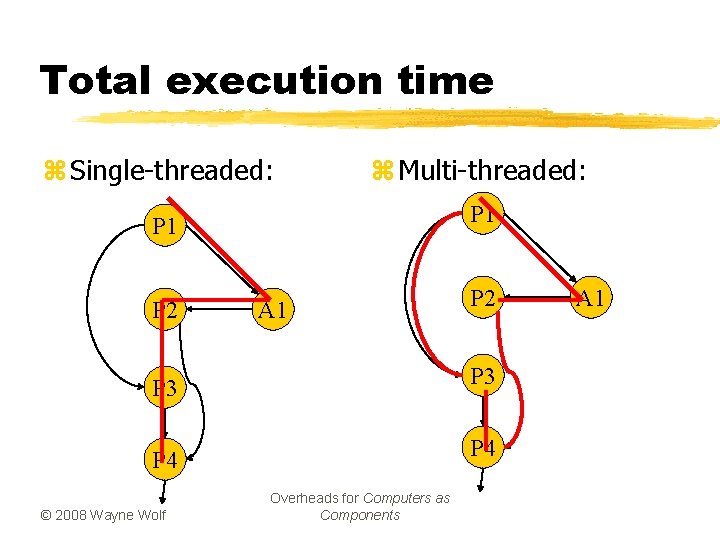

Total execution time

[Figure: two task graphs over processes P1, P2, P3, P4 and accelerator task A1, one single-threaded and one multi-threaded]

Execution time analysis

- Single-threaded:
  - Count execution time of all component processes.
- Multi-threaded:
  - Find longest path through execution.
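Both rules can be written down directly for a task graph. The sketch below is illustrative: the graph shape (P1 first, then P2 and A1 in parallel, then P3, then P4) and the durations are assumptions, since the original figure is not recoverable here.

```python
from functools import lru_cache


def single_threaded_time(durations: dict) -> float:
    """Blocking case: every process runs in turn, so times simply add up."""
    return sum(durations.values())


def multi_threaded_time(durations: dict, preds: dict) -> float:
    """Non-blocking case: total time is the longest path through the graph."""

    @lru_cache(maxsize=None)
    def finish(node):
        # A node starts once all of its predecessors have finished.
        start = max((finish(p) for p in preds.get(node, ())), default=0)
        return start + durations[node]

    return max(finish(node) for node in durations)


# Hypothetical durations and dependencies.
DURATIONS = {"P1": 2, "P2": 3, "A1": 5, "P3": 2, "P4": 1}
PREDS = {"P2": ("P1",), "A1": ("P1",), "P3": ("P2", "A1"), "P4": ("P3",)}

if __name__ == "__main__":
    print(single_threaded_time(DURATIONS))        # sum of all durations
    print(multi_threaded_time(DURATIONS, PREDS))  # P1 -> A1 -> P3 -> P4
```

With these numbers, P2 is hidden behind the longer accelerator task A1, so only A1 contributes to the multi-threaded total.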

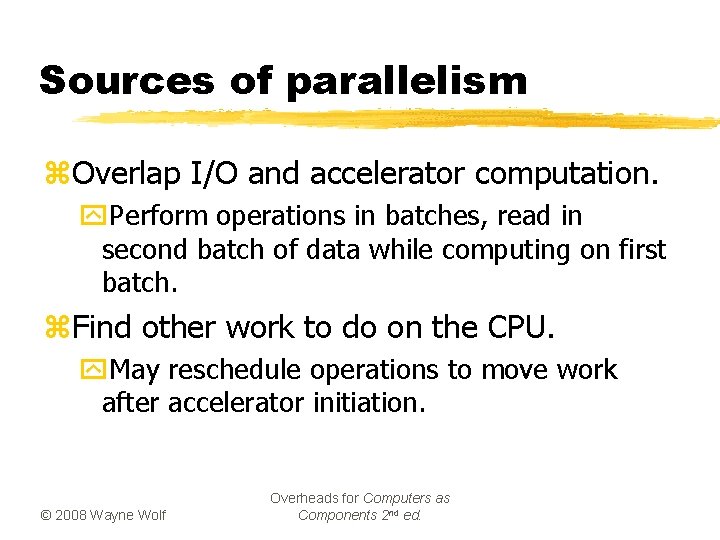

Sources of parallelism

- Overlap I/O and accelerator computation.
  - Perform operations in batches; read in the second batch of data while computing on the first batch.
- Find other work to do on the CPU.
  - May reschedule operations to move work after accelerator initiation.
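The benefit of reading the next batch while computing on the current one can be estimated with a simple model. This sketch assumes two buffers, a fixed read time and compute time per batch, and no output transfer; the function names and numbers are hypothetical.

```python
def sequential_time(batches: int, t_read: float, t_compute: float) -> float:
    """No overlap: each batch is read, then computed, before the next begins."""
    return batches * (t_read + t_compute)


def overlapped_time(batches: int, t_read: float, t_compute: float) -> float:
    """Double buffering: batch i+1 is read while batch i is being computed.

    The first read and the last compute cannot be hidden; in between, each
    step costs whichever of the two activities is slower.
    """
    if batches == 0:
        return 0.0
    return t_read + (batches - 1) * max(t_read, t_compute) + t_compute


if __name__ == "__main__":
    print(sequential_time(4, t_read=2, t_compute=3))
    print(overlapped_time(4, t_read=2, t_compute=3))
```

The model shows that overlap hides the cheaper of the two activities, so the gain is largest when read and compute times are roughly balanced.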

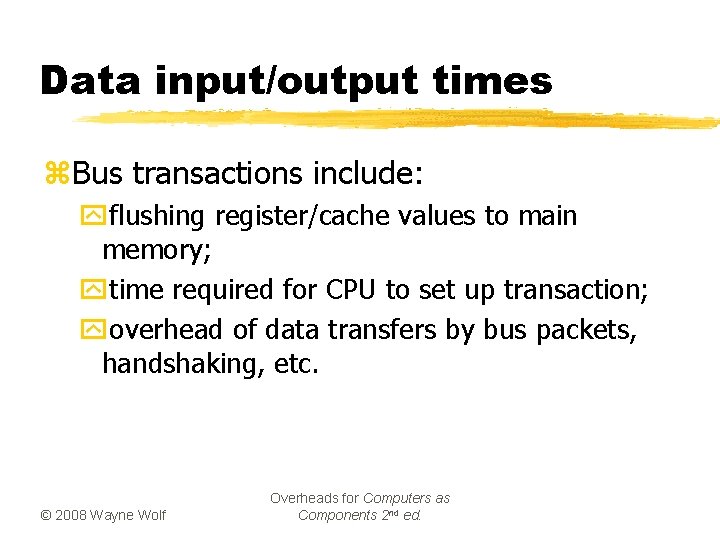

Data input/output times

- Bus transactions include:
  - flushing register/cache values to main memory;
  - time required for CPU to set up transaction;
  - overhead of data transfers by bus packets, handshaking, etc.

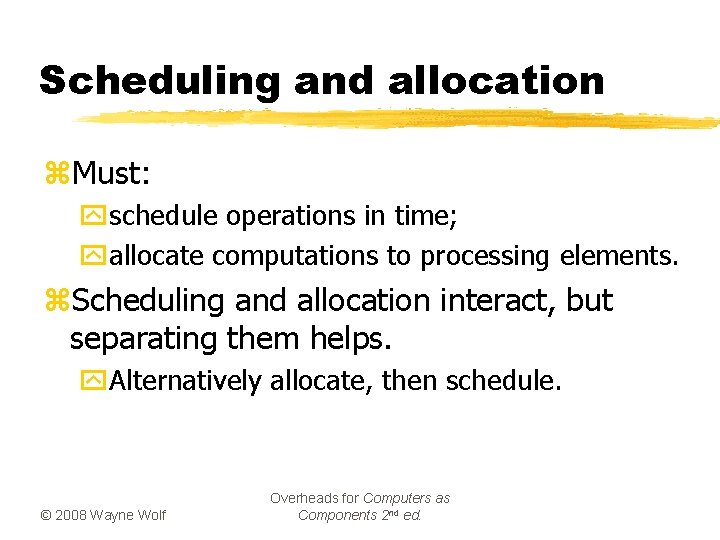

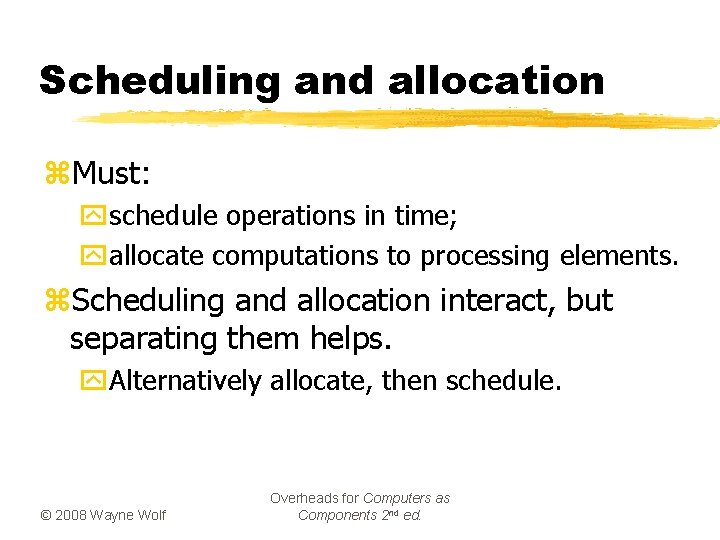

Scheduling and allocation

- Must:
  - schedule operations in time;
  - allocate computations to processing elements.
- Scheduling and allocation interact, but separating them helps.
  - Alternatively allocate, then schedule.

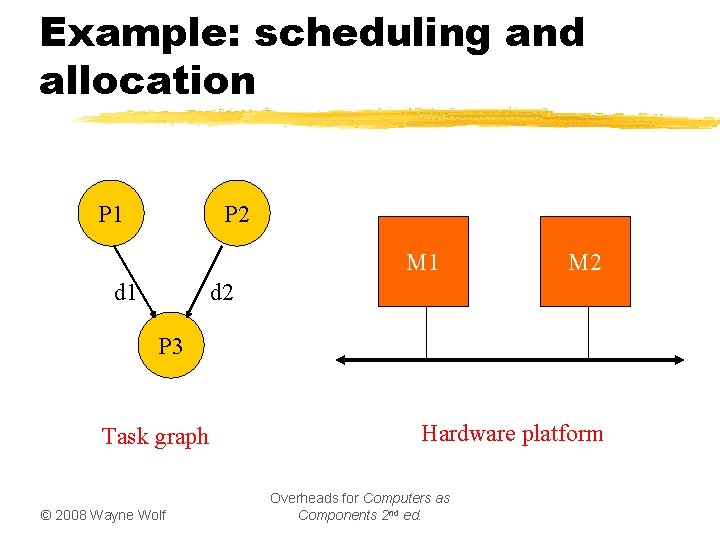

Example: scheduling and allocation

[Figure: task graph in which P1 sends d1 and P2 sends d2 to P3; hardware platform with processing elements M1 and M2]

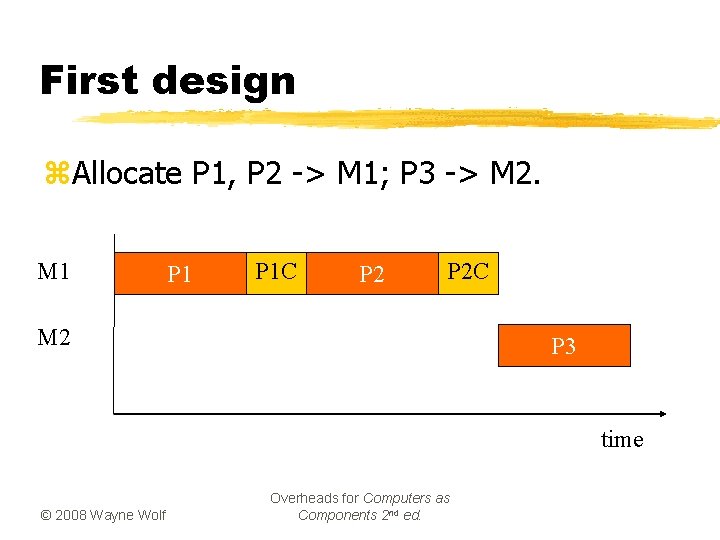

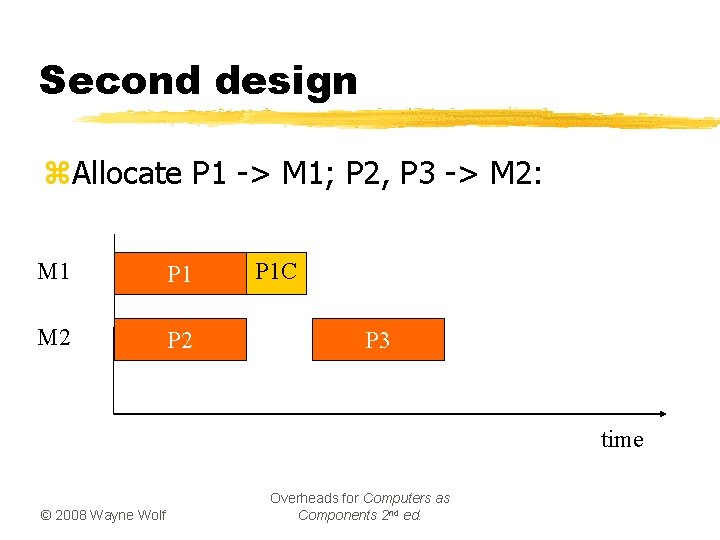

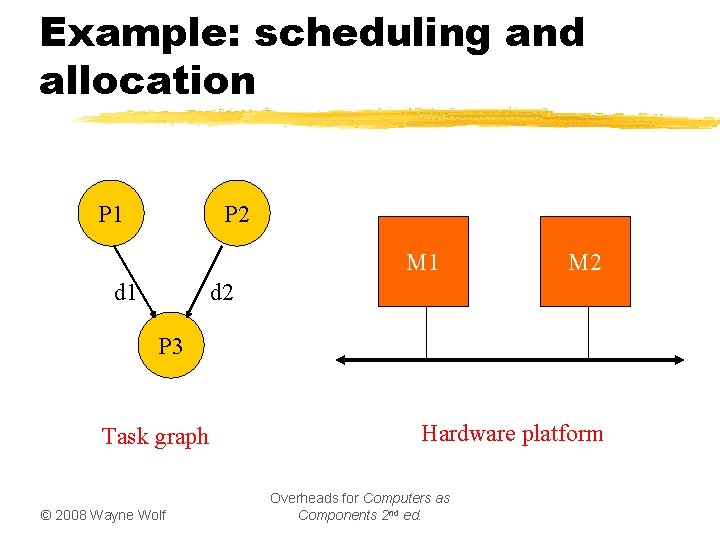

First design

- Allocate P1, P2 -> M1; P3 -> M2.

[Figure: schedule over time for M1 and M2, showing P1, P2, their communication slots (P1C, P2C), and P3]

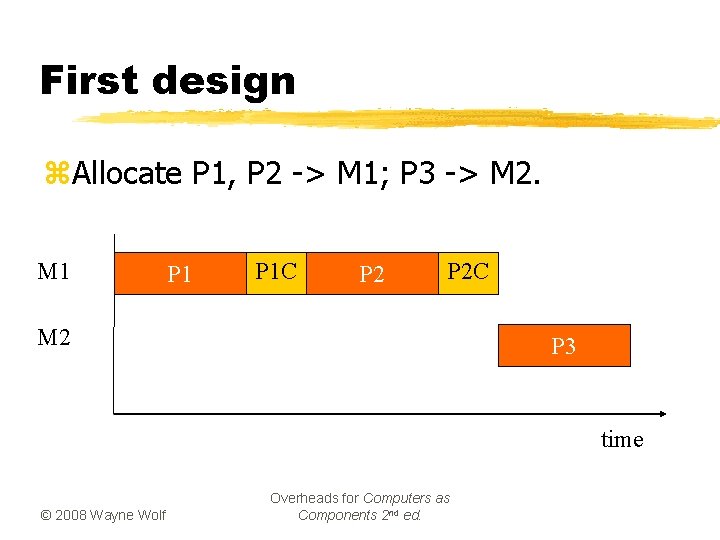

Second design

- Allocate P1 -> M1; P2, P3 -> M2.

[Figure: schedule over time for M1 and M2, showing P1, its communication slot (P1C), and P3]

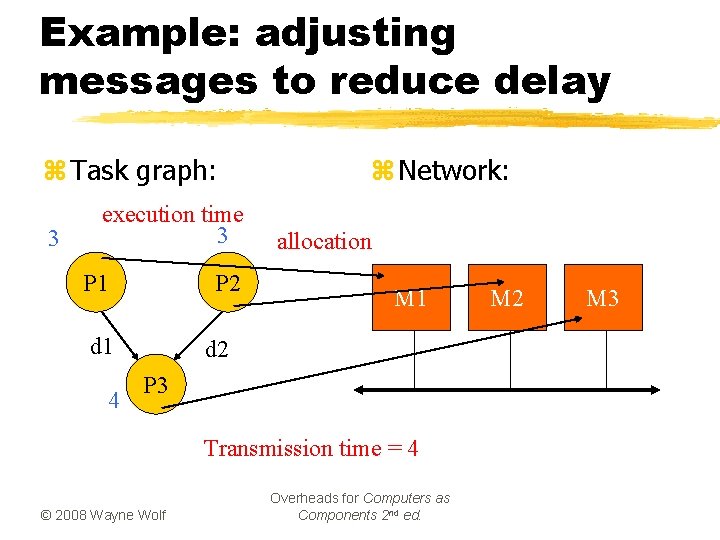

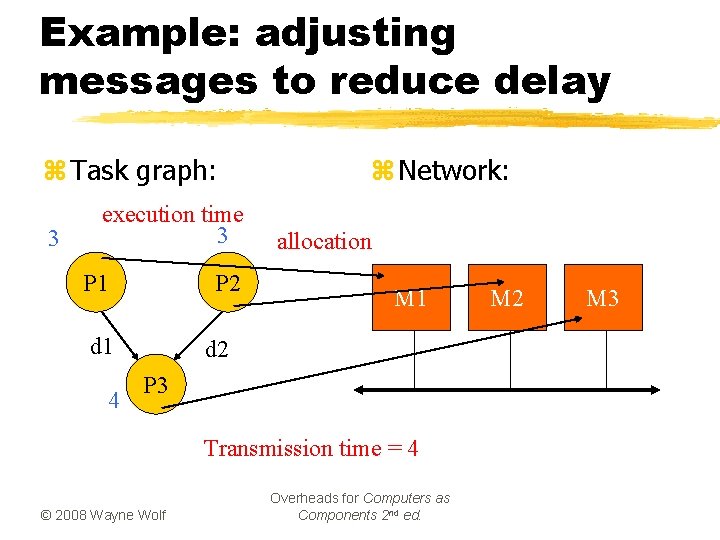

Example: adjusting messages to reduce delay

- Task graph: P1 (execution time 3) sends d1 and P2 (execution time 3) sends d2 to P3 (execution time 4).
- Network: processing elements M1, M2, and M3, with one process allocated to each; transmission time = 4.

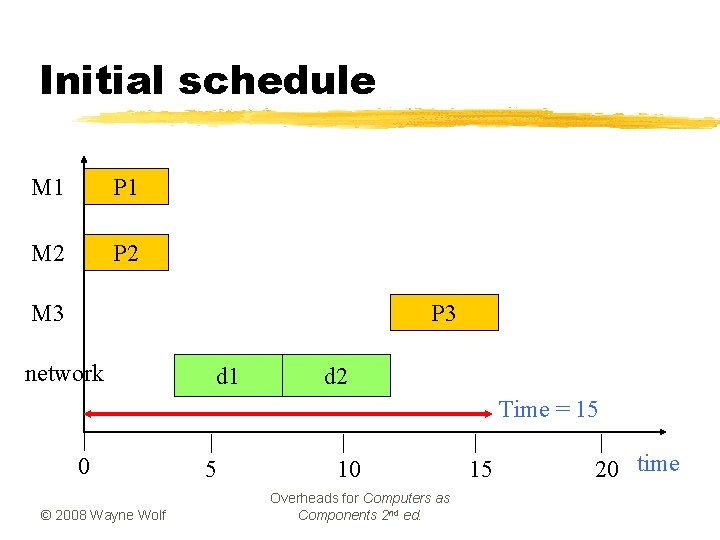

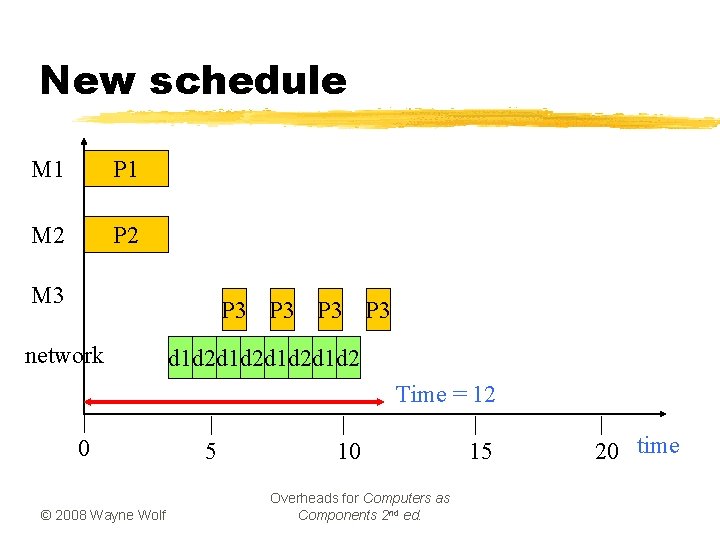

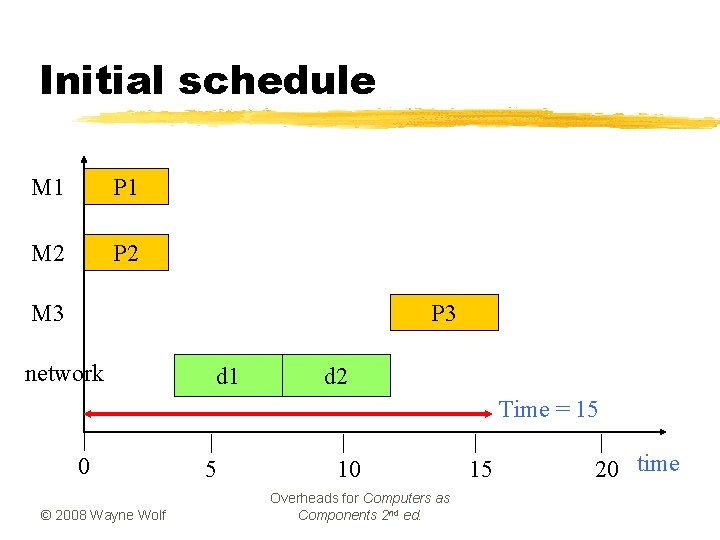

Initial schedule

[Figure: schedule with rows M1, M2, M3, and network (d1, then d2); time axis from 0 to 20]

Time = 15

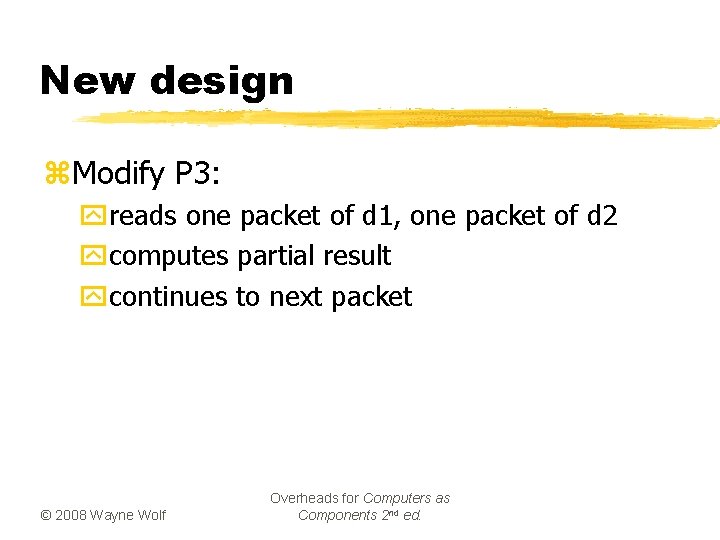

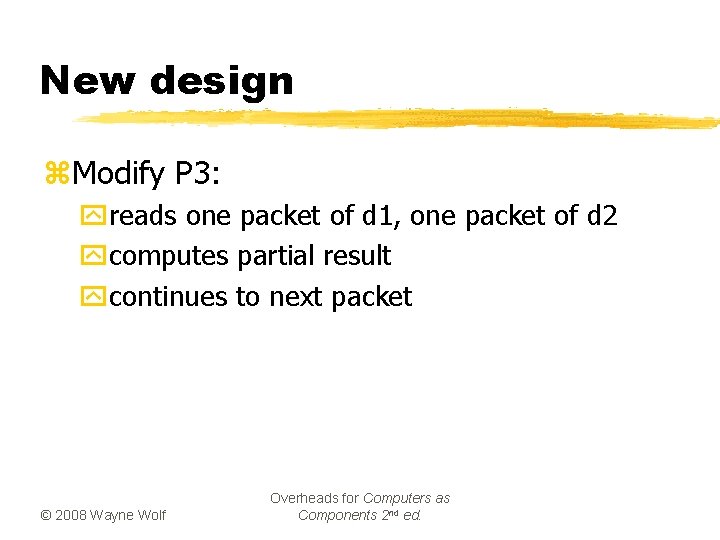

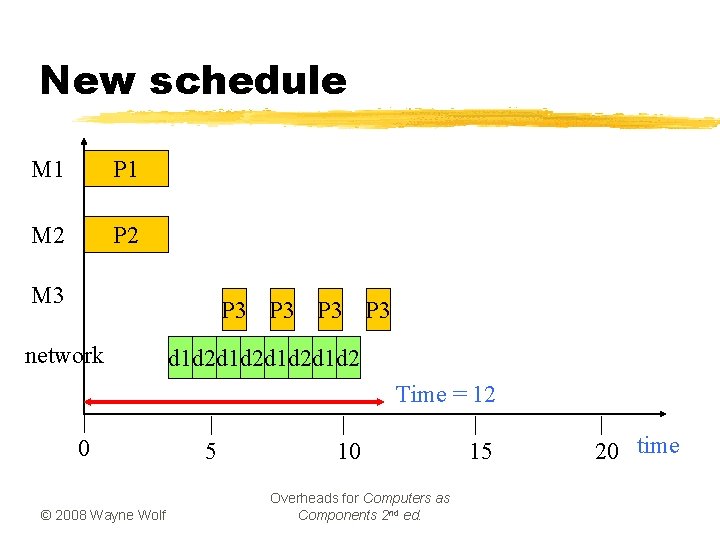

New design

- Modify P3 so that it:
  - reads one packet of d1 and one packet of d2;
  - computes a partial result;
  - continues to the next packet.

New schedule

[Figure: schedule with rows M1 (P1), M2 (P2), M3 (P3 in several pieces), and network (alternating d1 and d2 packets); time axis from 0 to 20]

Time = 12
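The two totals, 15 and 12, can be checked with a small model of the example. This is a sketch under stated assumptions: P1 and P2 run in parallel on separate processing elements, the network carries one packet at a time, each message splits evenly into packets, and P3's work splits evenly across them. The packet count of 4 is an assumption chosen for illustration, not a value stated on the slides.

```python
def finish_time(t_p1, t_p2, t_p3, t_msg, packets):
    """Completion time of P3 when d1 and d2 are each sent in `packets` pieces."""
    t_packet = t_msg / packets   # network time for one packet
    t_partial = t_p3 / packets   # P3 work per pair of packets
    network_free = max(t_p1, t_p2)  # sending starts once both producers finish
    p3_free = 0.0
    for _ in range(packets):
        # One packet of d1, then one packet of d2, share the network.
        network_free += 2 * t_packet
        # P3 computes a partial result once the pair has arrived
        # and its previous partial result is done.
        p3_free = max(p3_free, network_free) + t_partial
    return p3_free


if __name__ == "__main__":
    # Whole messages, as in the initial schedule.
    print(finish_time(3, 3, 4, 4, packets=1))
    # Packetized messages, as in the new design (packet count assumed).
    print(finish_time(3, 3, 4, 4, packets=4))
```

In this model the network stays busy until time 11 in both cases; packetizing helps because most of P3's computation overlaps with the transfers instead of starting after them.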

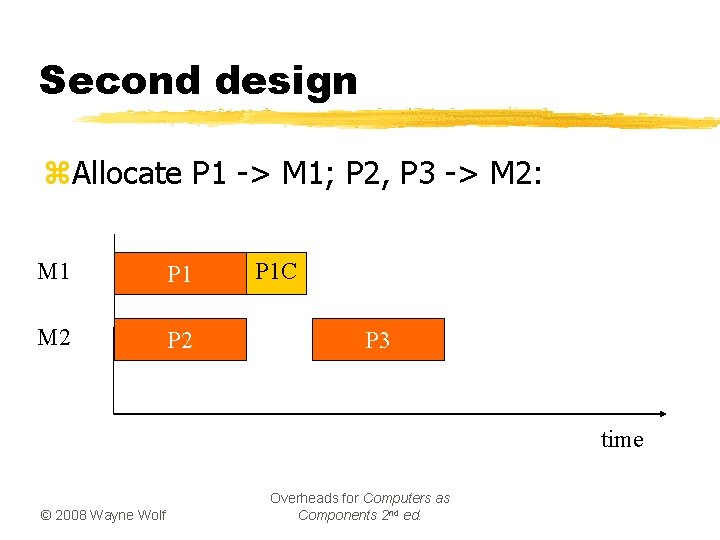

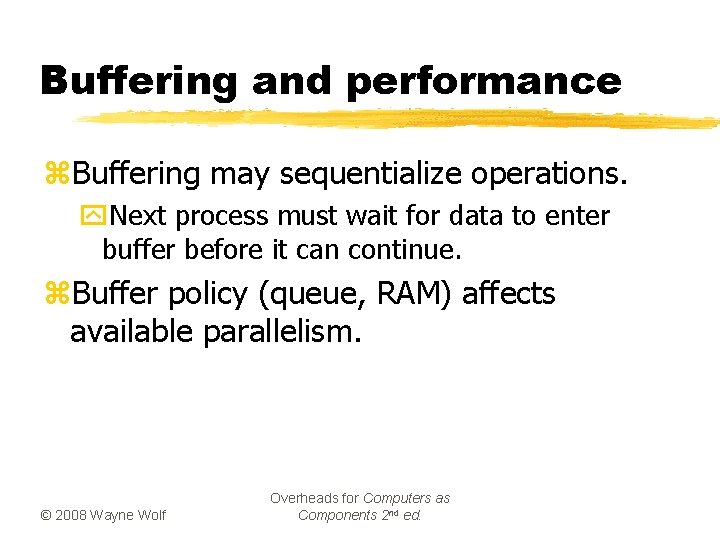

Buffering and performance

- Buffering may sequentialize operations.
  - The next process must wait for data to enter the buffer before it can continue.
- Buffer policy (queue, RAM) affects available parallelism.

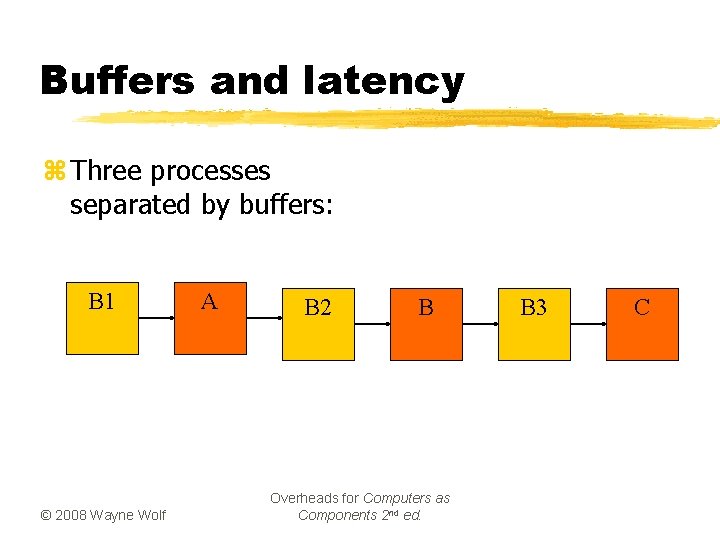

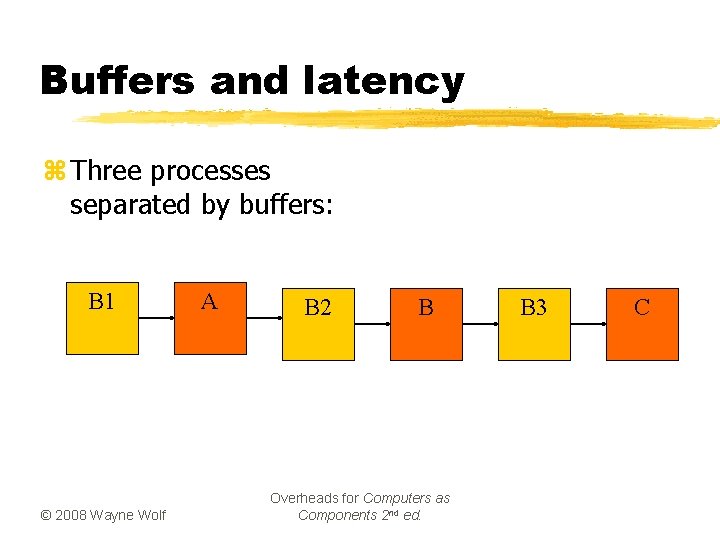

Buffers and latency

- Three processes separated by buffers: B1 -> A -> B2 -> B -> B3 -> C

![Buffers and latency schedules](https://slidetodoc.com/presentation_image_h2/035a9708fd474708cf3d83b0489705bc/image-36.jpg)

Buffers and latency schedules

- A[0] A[1] … B[0] B[1] … C[0] C[1] …
  - Must wait for all of A before getting any B.
- A[0] B[0] C[0] A[1] B[1] C[1] …
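The difference between the two schedules shows up in how long the first output of C takes to appear. The sketch below assumes all three processes share one processor and that each takes a fixed time per data item; the function names and sample values are hypothetical.

```python
def first_output_latency_batched(n: int, t_a: float, t_b: float, t_c: float) -> float:
    """Schedule A[0] A[1] ... B[0] B[1] ... C[0] ...:
    all n items pass through A, then all through B, before C[0] runs."""
    return n * t_a + n * t_b + t_c


def first_output_latency_interleaved(t_a: float, t_b: float, t_c: float) -> float:
    """Schedule A[0] B[0] C[0] A[1] ...: item 0 flows straight through."""
    return t_a + t_b + t_c


def total_time(n: int, t_a: float, t_b: float, t_c: float) -> float:
    """Total work is the same under either schedule on a single processor."""
    return n * (t_a + t_b + t_c)


if __name__ == "__main__":
    n, t_a, t_b, t_c = 4, 1, 1, 1
    print(first_output_latency_batched(n, t_a, t_b, t_c))
    print(first_output_latency_interleaved(t_a, t_b, t_c))
    print(total_time(n, t_a, t_b, t_c))
```

Under these assumptions the interleaved schedule does not reduce total time, but its latency to the first result no longer grows with the number of items, and each buffer only needs to hold one item at a time.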