MACHINE LEARNING FOR IMPROVED RISK STRATIFICATION OF NCD

- Slides: 77

MACHINE LEARNING FOR IMPROVED RISK STRATIFICATION OF NCD PATIENTS IN ESTONIA
Big Data and Machine Learning in Health Care
Marvin Ploetz, Philip Docena, Ojaswi Pandey, Aakash Mohpal
23 April 2019

Objectives
• Propose an alternative, machine-learning-based approach to patient risk stratification for enhanced care management (ECM) in Estonia
• Illustrate the use and applicability of machine learning to other areas of work relevant to the Estonian Health Insurance Fund (EHIF)

Overview
• Big Data and Machine Learning in Health Care
• Machine Learning Basics
• Context of ECM
• Research Question
• Data Overview & Sample Construction
• Feature Engineering
• Evaluation & Modelling Choices
• Results
• Conclusions

Big Data and Machine Learning in Health Care

Big Data and Machine Learning in Health Care
• Data: vital signs, activity data, behavioral data, nutritional data, EMRs, clinical notes, medical images, genome data
• Stakeholders:
  • Patients and providers
  • Payers: claims and billing; approvals and denials; population health and risk
  • Other stakeholders: public health; pharma companies and drug discovery
• Take advantage of massive amounts of data to provide the right intervention to the right patient at the right time
• Personalized care for the patient
• Potential benefits for all agents in the health care system: patient, provider, payer, management

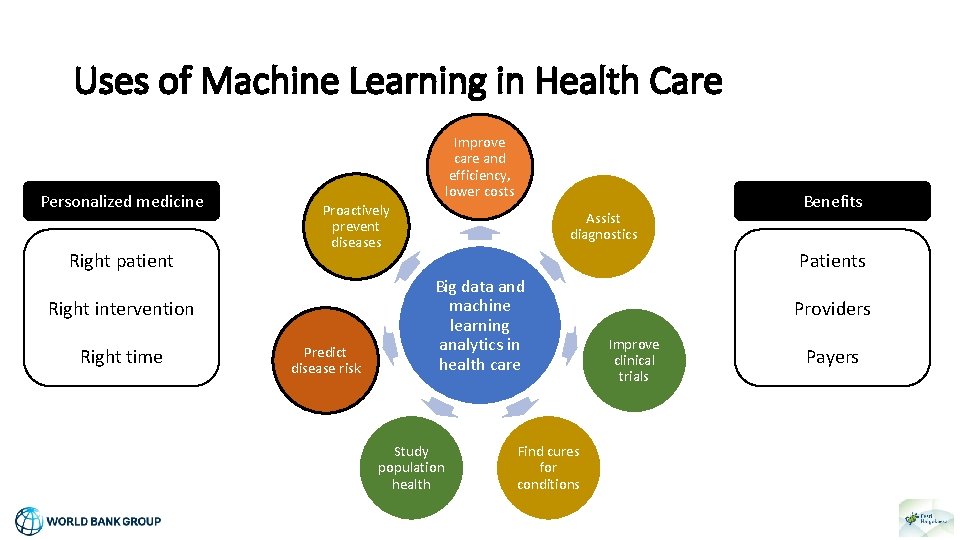

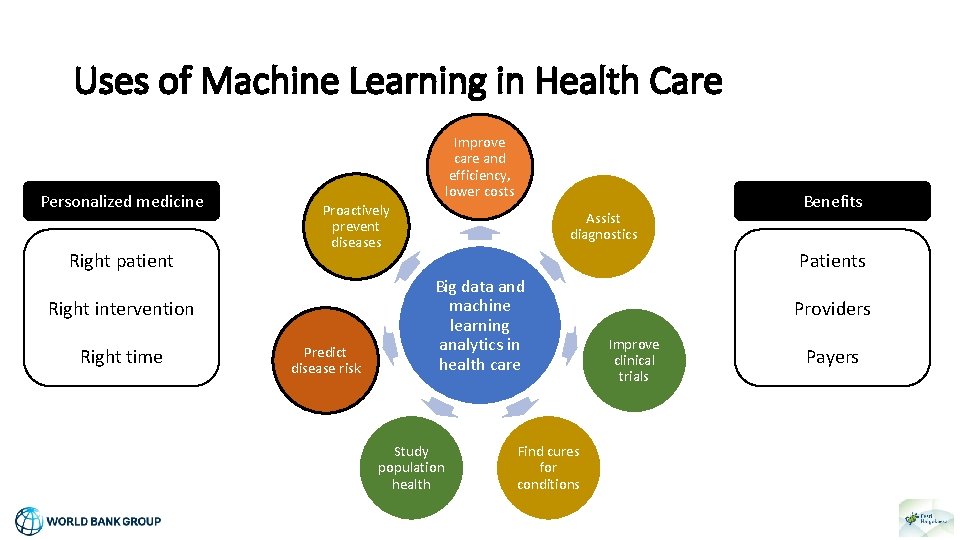

Uses of Machine Learning in Health Care
• Personalized medicine: right patient, right intervention, right time
• Improve care and efficiency, lower costs
• Proactively prevent diseases; predict disease risk; assist diagnostics
• Study population health; find cures for conditions; improve clinical trials
• Benefits for patients, providers and payers

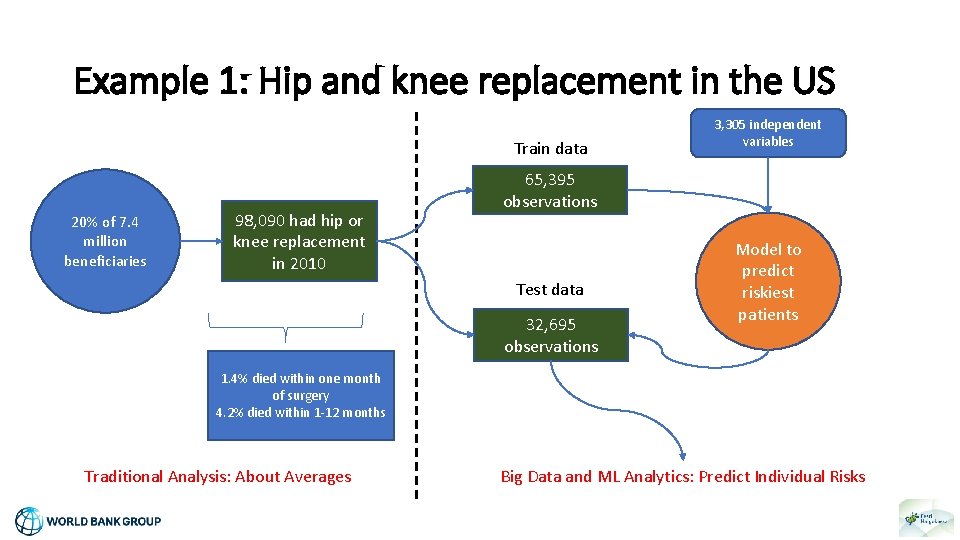

Example 1: Hip and knee replacement in the US
• Osteoarthritis: a common and painful chronic condition
• Often requires replacement of hips and knees
• More than 500,000 Medicare beneficiaries receive replacements each year
• Medical costs: roughly $15,000 per surgery
• Medical benefits accrue over time, since the first months after surgery are painful and often spent in disability
• Therefore, a joint replacement only makes sense if the patient lives long enough to enjoy it; if the patient dies soon after, the surgery may be futile and painful
• Prediction/classification problem: can we predict which surgeries will be futile using only data available at the time of surgery?

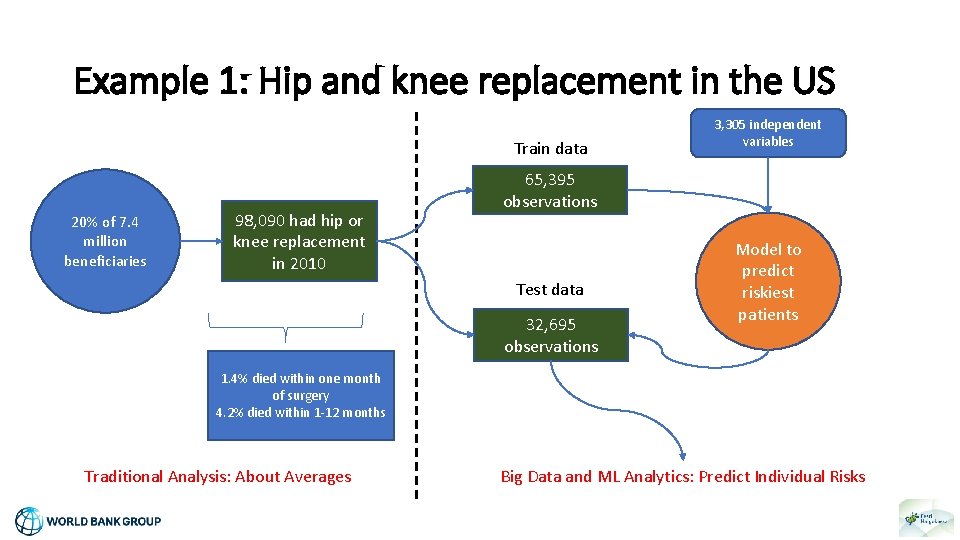

Example 1: Hip and knee replacement in the US
• Data: 20% of 7.4 million beneficiaries; 98,090 had a hip or knee replacement in 2010; 3,305 independent variables
• Train data: 65,395 observations; test data: 32,695 observations
• Outcome: 1.4% died within one month of surgery; 4.2% died within 1-12 months
• Model to predict the riskiest patients
• Traditional analysis: about averages. Big data and ML analytics: predict individual risks

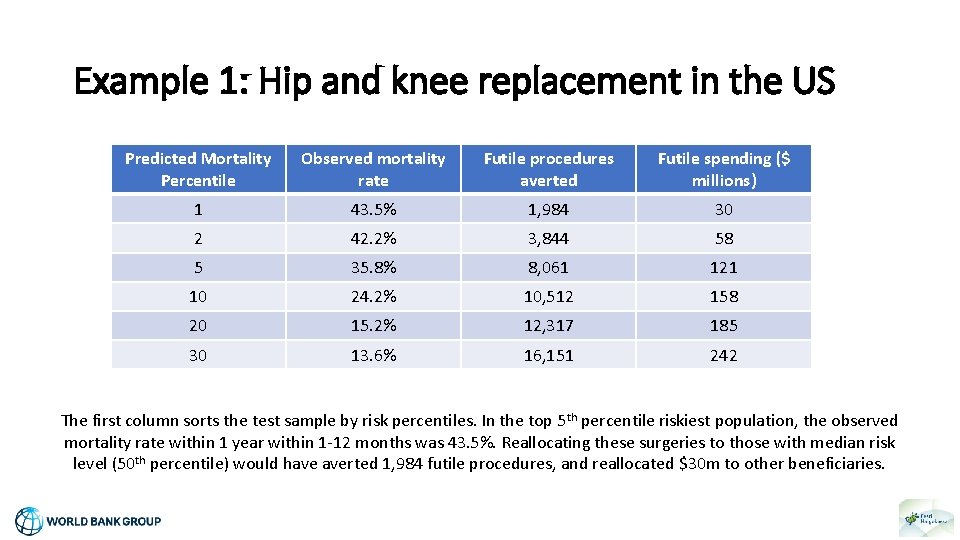

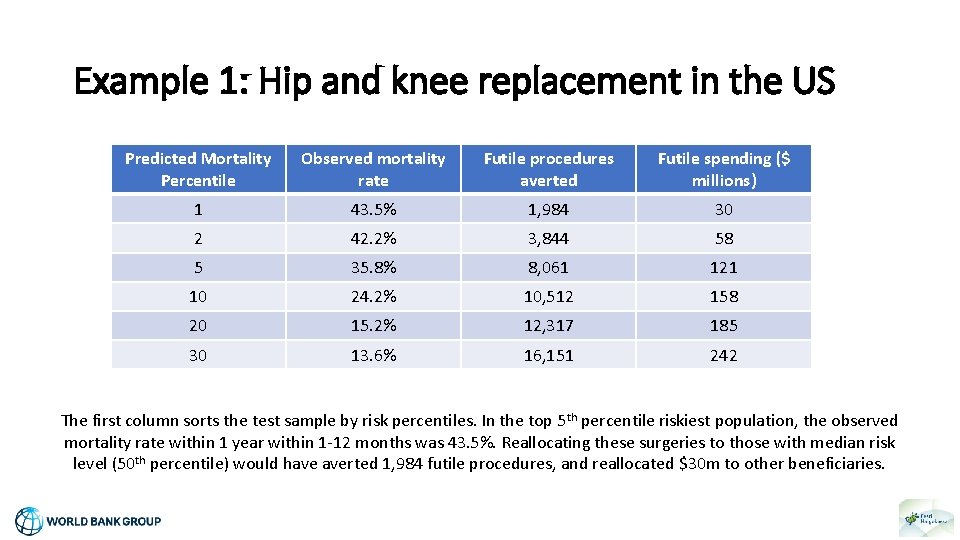

Example 1: Hip and knee replacement in the US

| Predicted mortality percentile | Observed mortality rate | Futile procedures averted | Futile spending ($ millions) |
|---|---|---|---|
| 1 | 43.5% | 1,984 | 30 |
| 2 | 42.2% | 3,844 | 58 |
| 5 | 35.8% | 8,061 | 121 |
| 10 | 24.2% | 10,512 | 158 |
| 20 | 15.2% | 12,317 | 185 |
| 30 | 13.6% | 16,151 | 242 |

The first column sorts the test sample by predicted risk percentile. In the riskiest 1% of the population, the observed mortality rate within 1-12 months was 43.5%. Reallocating these surgeries to patients at the median risk level (50th percentile) would have averted 1,984 futile procedures and reallocated $30 million to other beneficiaries.
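The futile-spending column is consistent with multiplying the procedures averted by the roughly $15,000 cost per surgery quoted above. A minimal check in Python, assuming exactly $15,000 per procedure (the slide only says "roughly"):

```python
# Futile procedures averted at each predicted-mortality percentile (from the table above)
procedures_averted = {1: 1984, 2: 3844, 5: 8061, 10: 10512, 20: 12317, 30: 16151}
COST_PER_SURGERY = 15_000  # assumption: the slide quotes "roughly $15,000"

# Futile spending in $ millions, rounded to whole millions as in the table
futile_spending_musd = {
    pct: round(n * COST_PER_SURGERY / 1e6) for pct, n in procedures_averted.items()
}
print(futile_spending_musd)  # {1: 30, 2: 58, 5: 121, 10: 158, 20: 185, 30: 242}
```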

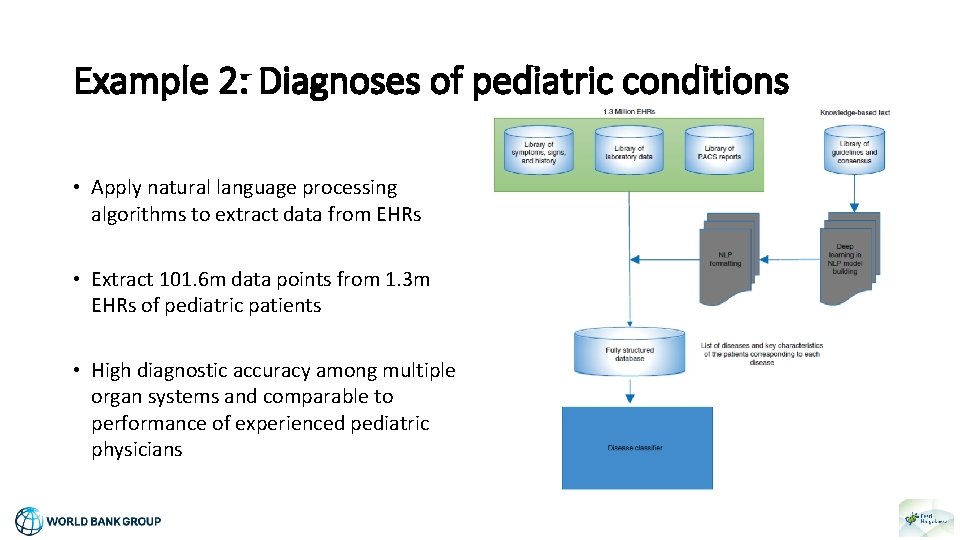

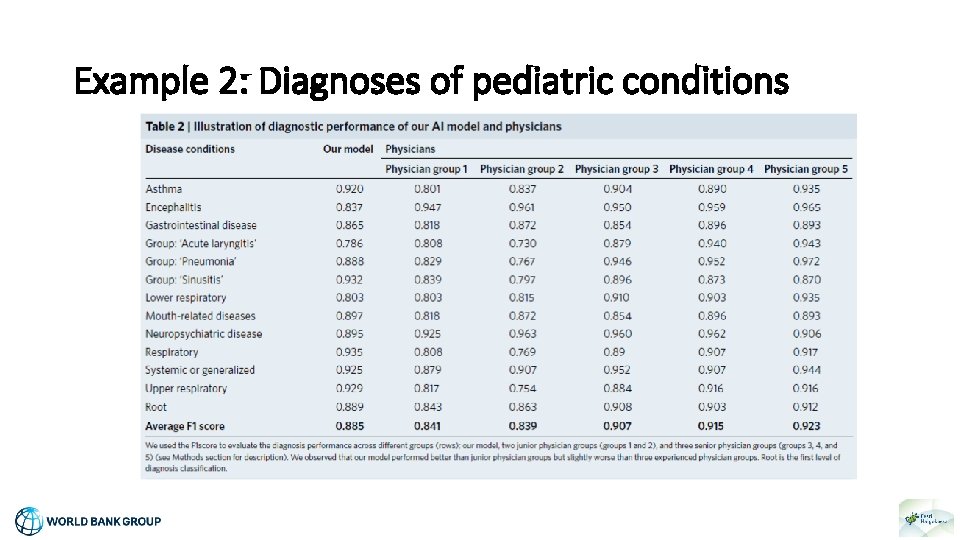

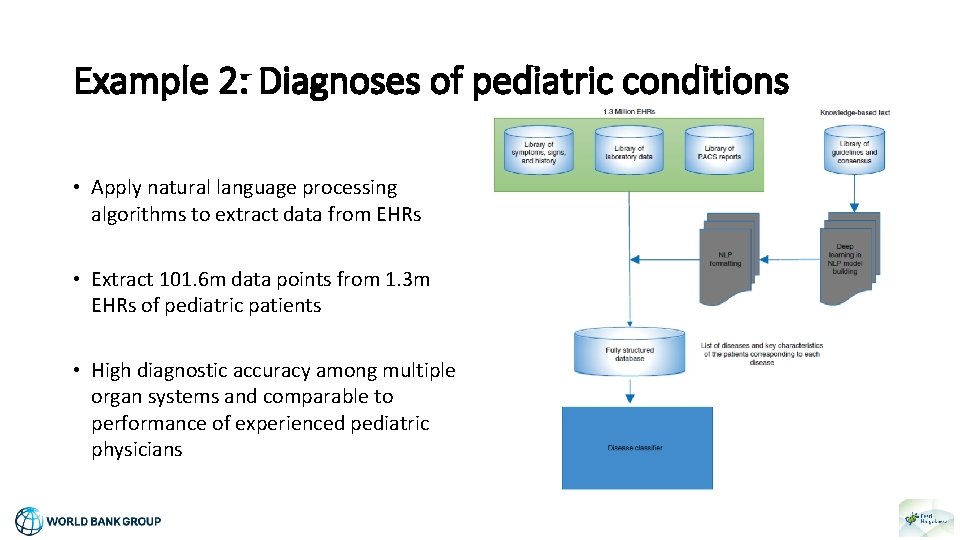

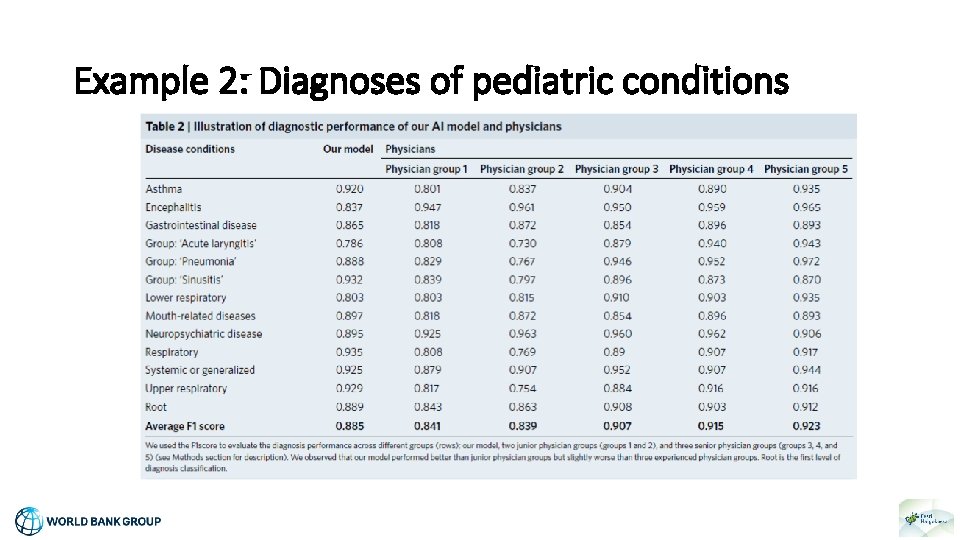

Example 2: Diagnoses of pediatric conditions
• Apply natural language processing algorithms to extract data from EHRs
• Extract 101.6 million data points from 1.3 million EHRs of pediatric patients
• High diagnostic accuracy across multiple organ systems, comparable to the performance of experienced pediatric physicians

Example 2: Diagnoses of pediatric conditions

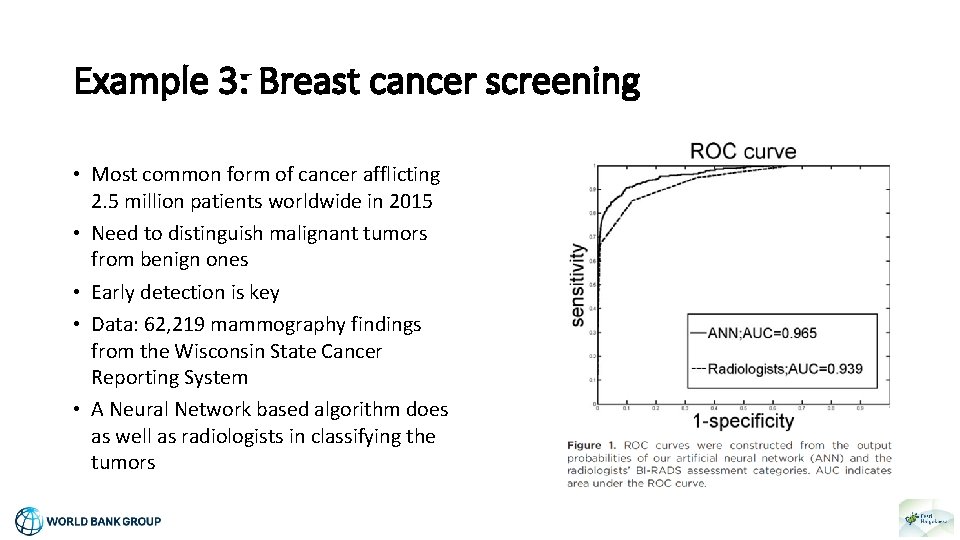

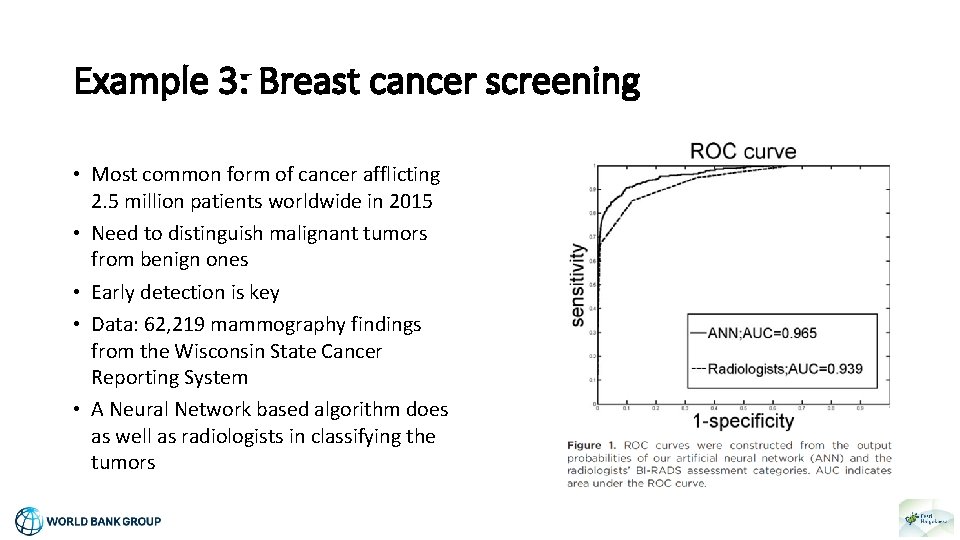

Example 3: Breast cancer screening
• The most common form of cancer, afflicting 2.5 million patients worldwide in 2015
• Need to distinguish malignant tumors from benign ones
• Early detection is key
• Data: 62,219 mammography findings from the Wisconsin State Cancer Reporting System
• A neural-network-based algorithm performs as well as radiologists in classifying the tumors

Machine Learning Basics

Definition of Big Data
• Collection of large and complex data sets that are difficult to process using common database management tools or traditional data processing applications
• Not only about size: finding insights from complex, noisy, heterogeneous, and longitudinal data sets
• Includes capturing, storing, searching, sharing and analyzing the data
• The three Vs of big data: Volume, Variety, Velocity

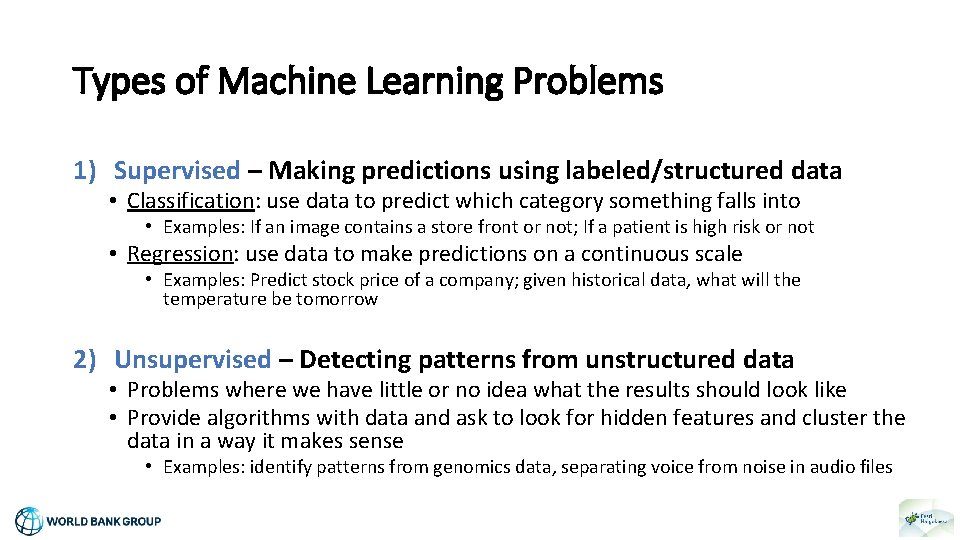

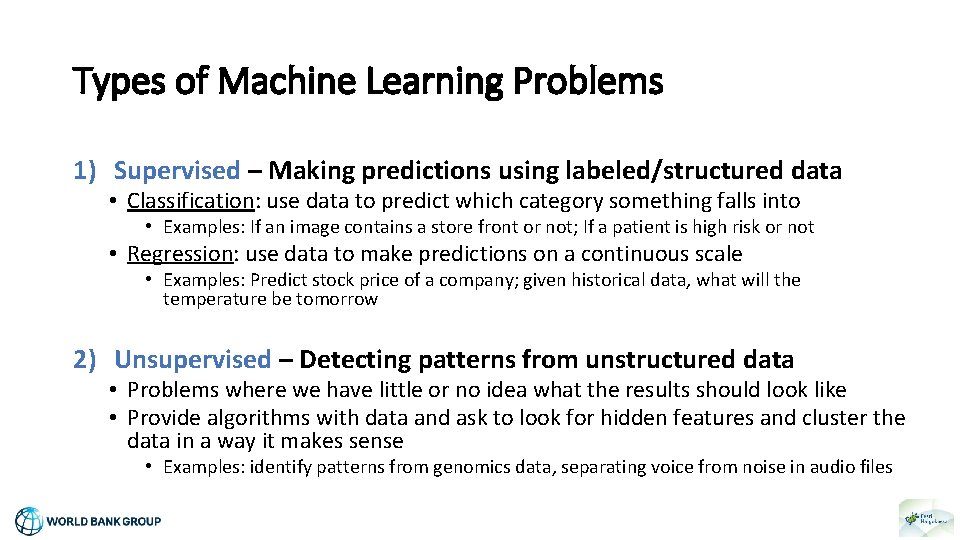

Types of Machine Learning Problems
1) Supervised: making predictions using labeled data
• Classification: use data to predict which category something falls into
  • Examples: whether an image contains a storefront or not; whether a patient is high risk or not
• Regression: use data to make predictions on a continuous scale
  • Examples: predict the stock price of a company; given historical data, predict tomorrow's temperature
2) Unsupervised: detecting patterns in unlabeled data
• Problems where we have little or no idea what the results should look like
• Provide algorithms with data and ask them to find hidden features and cluster the data in a meaningful way
• Examples: identifying patterns in genomics data, separating voice from noise in audio files
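As a rough illustration of the three problem types, the sketch below uses scikit-learn on synthetic data (the datasets and model choices are assumptions for illustration, not part of the original analysis). Note that the clustering step never sees the labels; they are used only afterwards to check the result.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs, make_classification, make_regression
from sklearn.linear_model import LinearRegression, LogisticRegression
from sklearn.metrics import adjusted_rand_score

# Supervised classification: labels are categories (e.g. high risk vs. not)
X_clf, y_clf = make_classification(n_samples=200, n_features=5, random_state=0)
clf = LogisticRegression().fit(X_clf, y_clf)
print("classification accuracy:", clf.score(X_clf, y_clf))

# Supervised regression: labels are continuous values
X_reg, y_reg = make_regression(n_samples=200, n_features=5, noise=1.0, random_state=0)
reg = LinearRegression().fit(X_reg, y_reg)
print("regression R^2:", reg.score(X_reg, y_reg))

# Unsupervised clustering: fit() receives no labels; the groups are inferred
X_unl, true_groups = make_blobs(n_samples=200, centers=[[-10, -10], [10, 10]], random_state=0)
km = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X_unl)
# true_groups is used only here, to check how well the clusters match the hidden groups
print("agreement with hidden groups:", adjusted_rand_score(true_groups, km.labels_))
```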

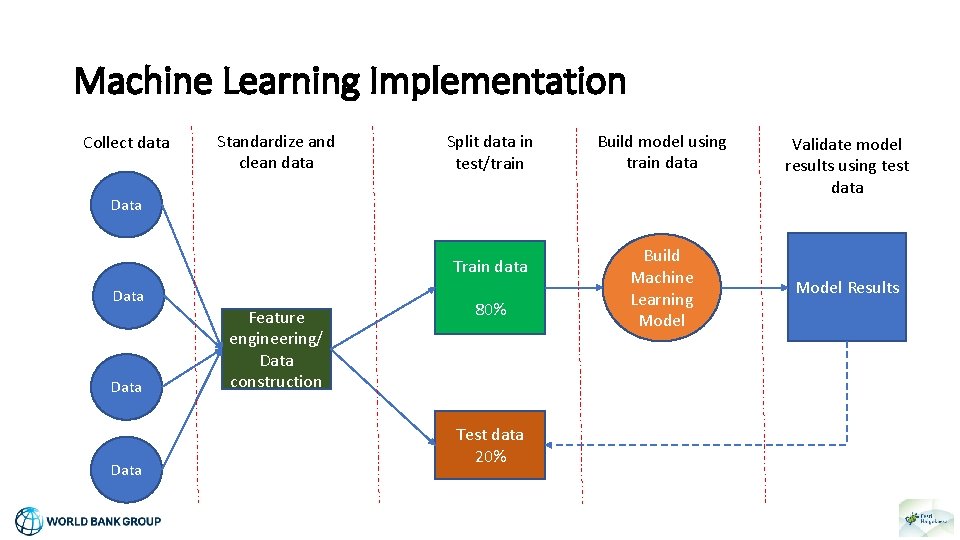

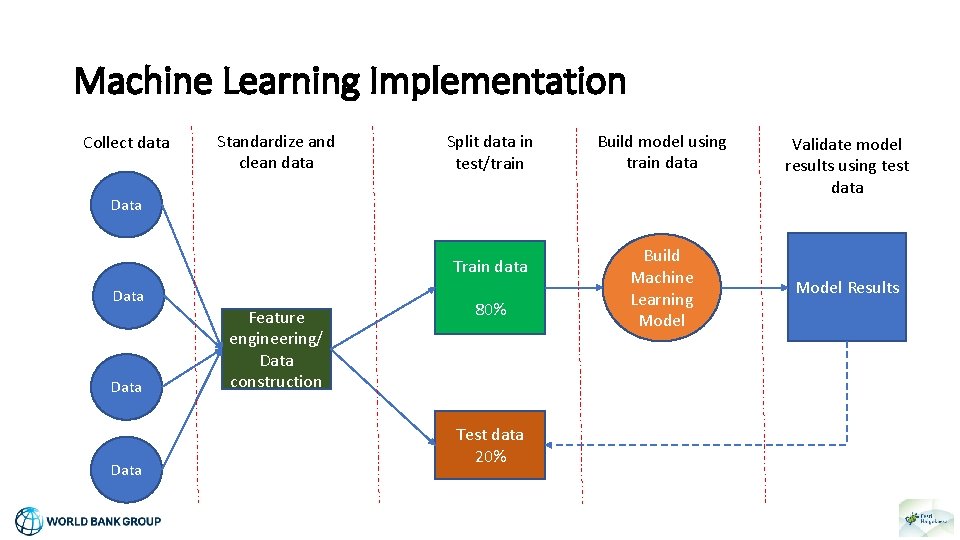

Machine Learning Implementation
1. Collect data
2. Standardize and clean data; feature engineering/data construction
3. Split data into train (80%) and test (20%) sets
4. Build the machine learning model using the train data
5. Validate model results using the test data
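The 80/20 split step might look like this in scikit-learn. The data here are random placeholders, and stratifying on the outcome is an assumption that keeps the rare positive class at a similar rate in both sets:

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 10))             # placeholder feature matrix
y = (rng.random(1000) < 0.075).astype(int)  # placeholder outcome, ~7.5% positives

# 80% train / 20% test; stratify=y keeps the positive rate similar in both parts
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)
print(len(y_train), len(y_test), y_train.mean().round(3), y_test.mean().round(3))
```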

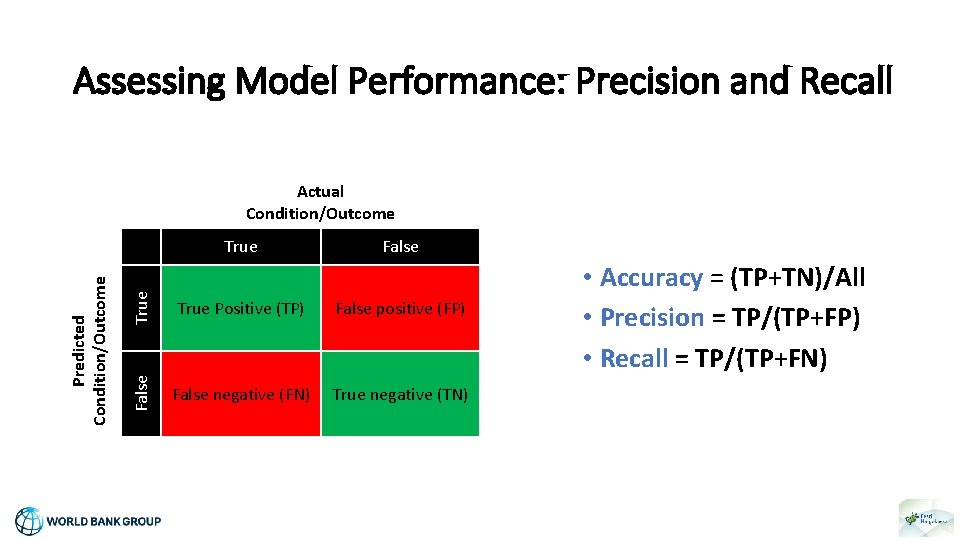

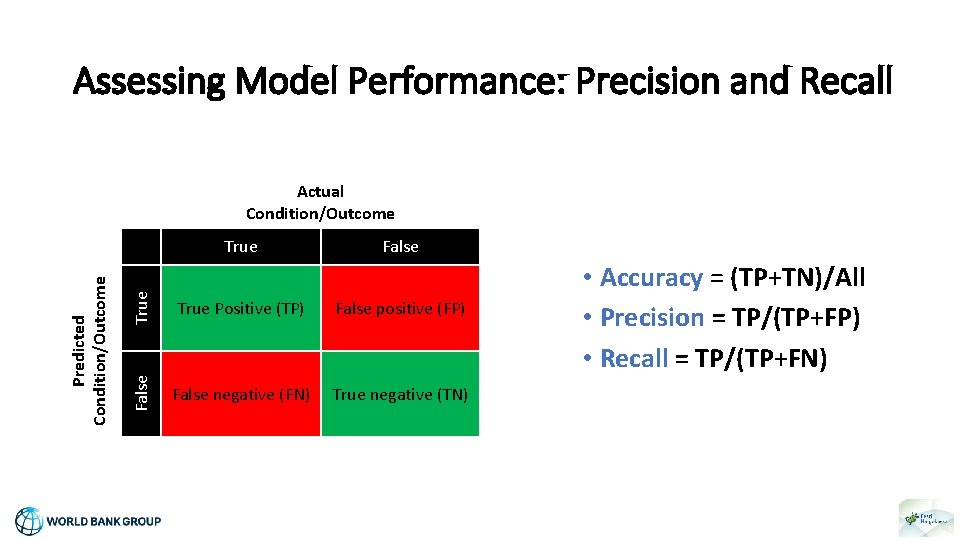

Assessing Model Performance: Precision and Recall

| | Actual: True | Actual: False |
|---|---|---|
| Predicted: True | True positive (TP) | False positive (FP) |
| Predicted: False | False negative (FN) | True negative (TN) |

• Accuracy = (TP + TN) / All
• Precision = TP / (TP + FP)
• Recall = TP / (TP + FN)
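A small sketch with made-up labels showing how the three formulas map onto scikit-learn's `confusion_matrix`, `accuracy_score`, `precision_score` and `recall_score`:

```python
import math

from sklearn.metrics import accuracy_score, confusion_matrix, precision_score, recall_score

y_true = [1, 1, 1, 0, 0, 0, 0, 1, 0, 0]  # actual outcome (1 = admission), made up
y_pred = [1, 0, 1, 0, 1, 0, 0, 1, 0, 0]  # predicted outcome, made up

# For binary labels, ravel() returns the counts in the order TN, FP, FN, TP
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

accuracy = (tp + tn) / (tp + tn + fp + fn)
precision = tp / (tp + fp)
recall = tp / (tp + fn)

# The hand-computed formulas agree with scikit-learn's metric functions
assert math.isclose(accuracy, accuracy_score(y_true, y_pred))
assert math.isclose(precision, precision_score(y_true, y_pred))
assert math.isclose(recall, recall_score(y_true, y_pred))
print(tp, fp, fn, tn, accuracy, precision, recall)
```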

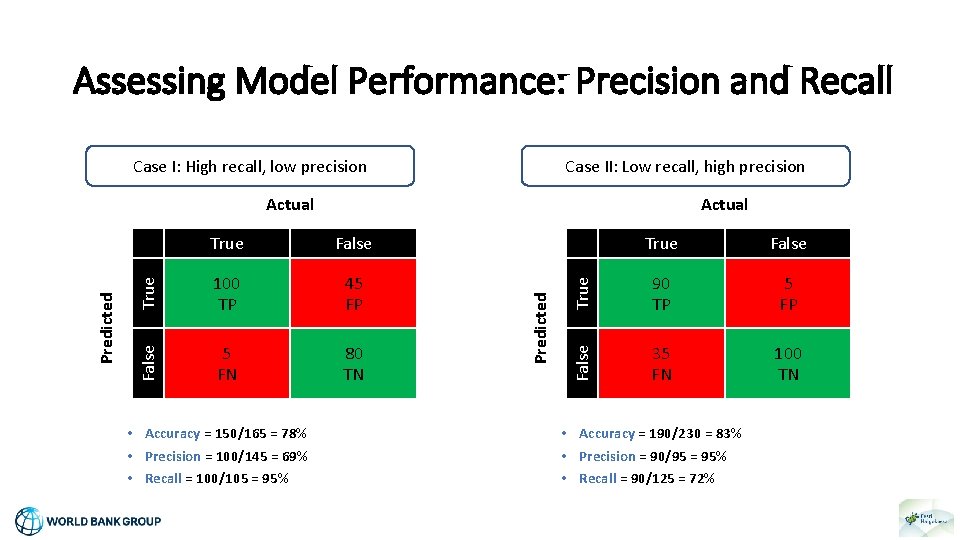

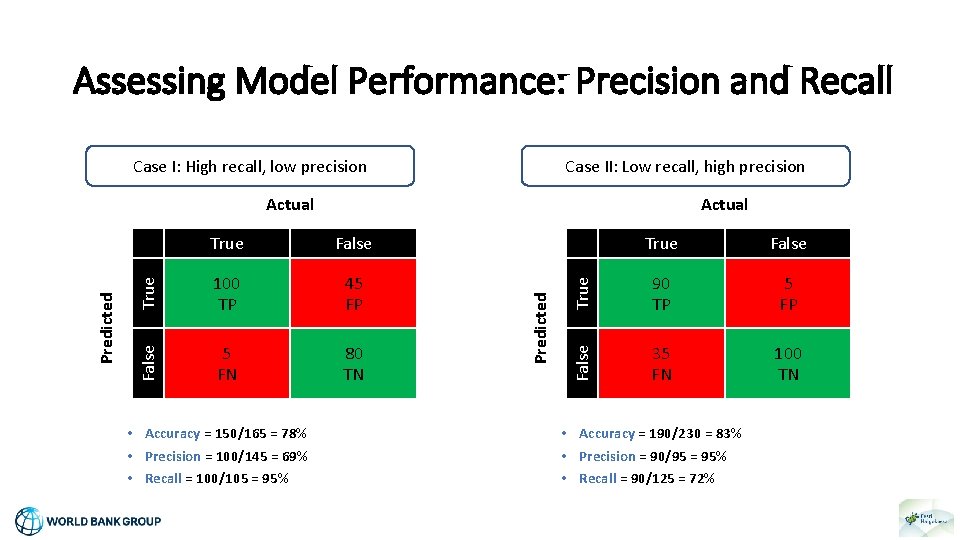

Assessing Model Performance: Precision and Recall

Case I: High recall, low precision

| | Actual: True | Actual: False |
|---|---|---|
| Predicted: True | 100 TP | 45 FP |
| Predicted: False | 5 FN | 80 TN |

• Accuracy = 180/230 = 78%
• Precision = 100/145 = 69%
• Recall = 100/105 = 95%

Case II: Low recall, high precision

| | Actual: True | Actual: False |
|---|---|---|
| Predicted: True | 90 TP | 5 FP |
| Predicted: False | 35 FN | 100 TN |

• Accuracy = 190/230 = 83%
• Precision = 90/95 = 95%
• Recall = 90/125 = 72%
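The two cases can be checked with plain arithmetic; the counts are taken from the confusion matrices above:

```python
def metrics(tp, fp, fn, tn):
    """Return accuracy, precision and recall from confusion-matrix counts."""
    total = tp + fp + fn + tn
    return (tp + tn) / total, tp / (tp + fp), tp / (tp + fn)

cases = {
    "Case I (high recall, low precision)": dict(tp=100, fp=45, fn=5, tn=80),
    "Case II (low recall, high precision)": dict(tp=90, fp=5, fn=35, tn=100),
}
for name, counts in cases.items():
    acc, prec, rec = metrics(**counts)
    print(f"{name}: accuracy={acc:.0%}, precision={prec:.0%}, recall={rec:.0%}")
```

Both cases cover the same 230 patients, so the difference lies entirely in how the errors are split between false positives and false negatives.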

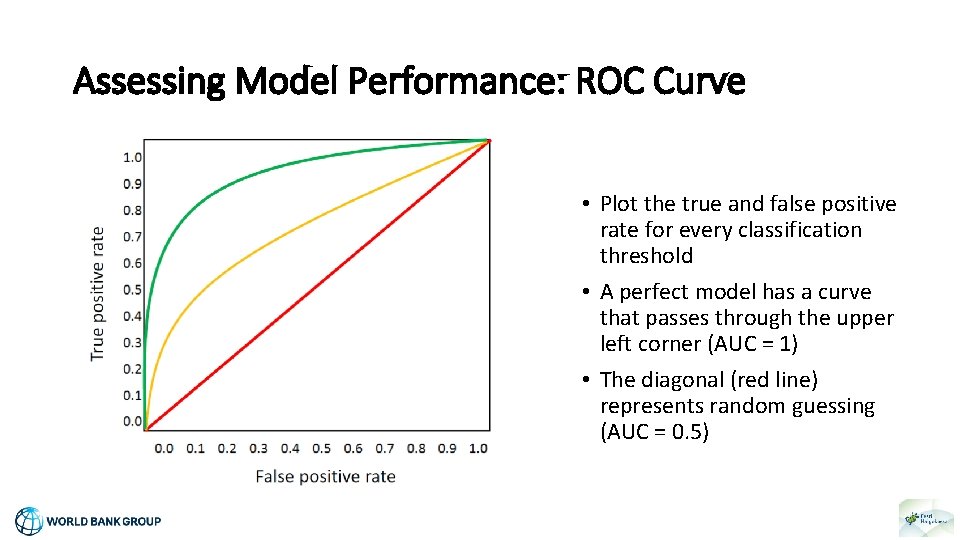

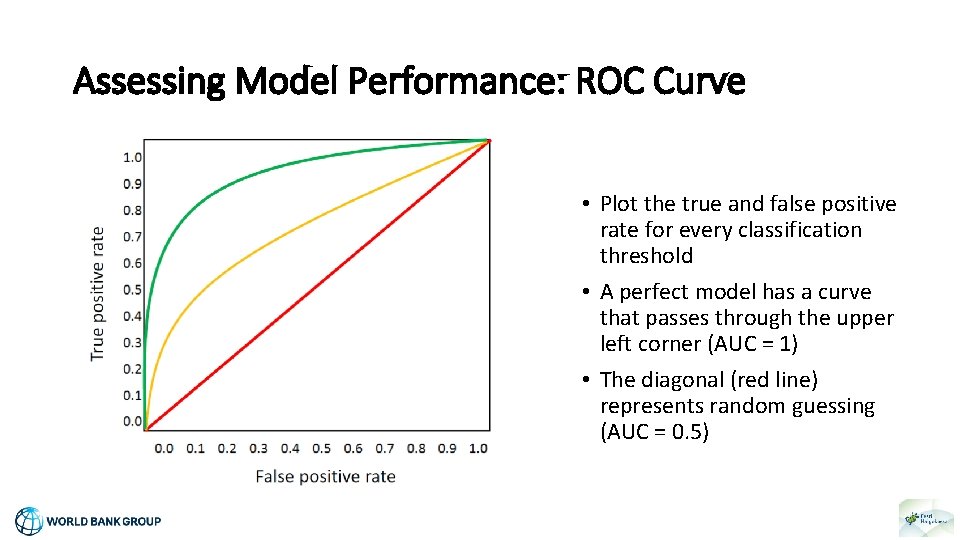

Assessing Model Performance: ROC Curve
• Plots the true positive rate (recall) against the false positive rate for every classification threshold
• A perfect model has a curve that passes through the upper-left corner (AUC = 1)
• The diagonal (red line) represents random guessing (AUC = 0.5)
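A minimal scikit-learn sketch of the ROC curve and AUC on made-up scores, including the two reference cases described above (a constant score behaves like random guessing):

```python
import numpy as np
from sklearn.metrics import roc_auc_score, roc_curve

y_true = np.array([0, 0, 1, 1, 0, 1, 0, 1])
scores = np.array([0.1, 0.4, 0.35, 0.8, 0.2, 0.7, 0.6, 0.9])  # made-up predicted probabilities

# One (false positive rate, true positive rate) point per distinct threshold
fpr, tpr, thresholds = roc_curve(y_true, scores)
auc = roc_auc_score(y_true, scores)
print("AUC:", auc)  # 14 of the 16 positive/negative pairs are ranked correctly

# Reference points: a constant score gives AUC = 0.5 (random guessing);
# scores that rank every positive above every negative give AUC = 1
print(roc_auc_score(y_true, np.full(len(y_true), 0.5)))
print(roc_auc_score(y_true, y_true.astype(float)))
```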

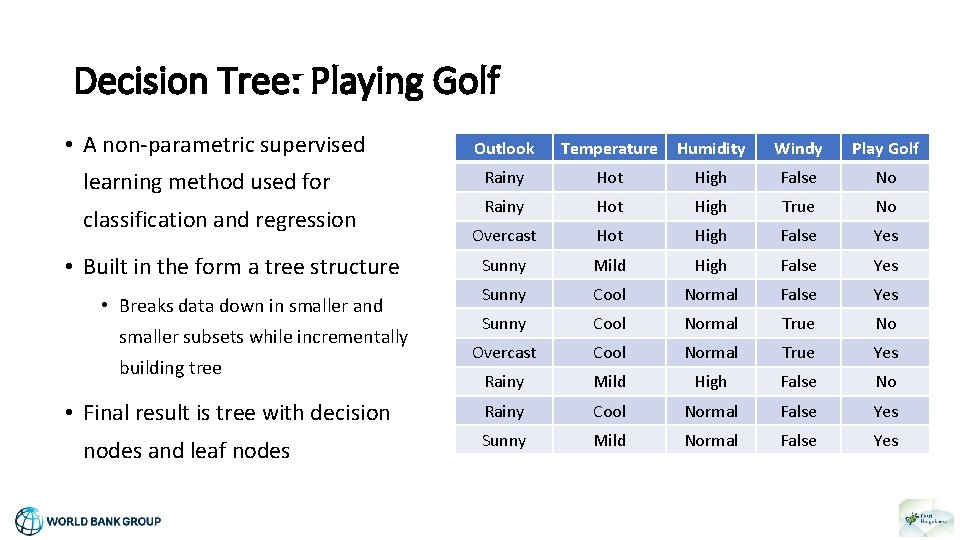

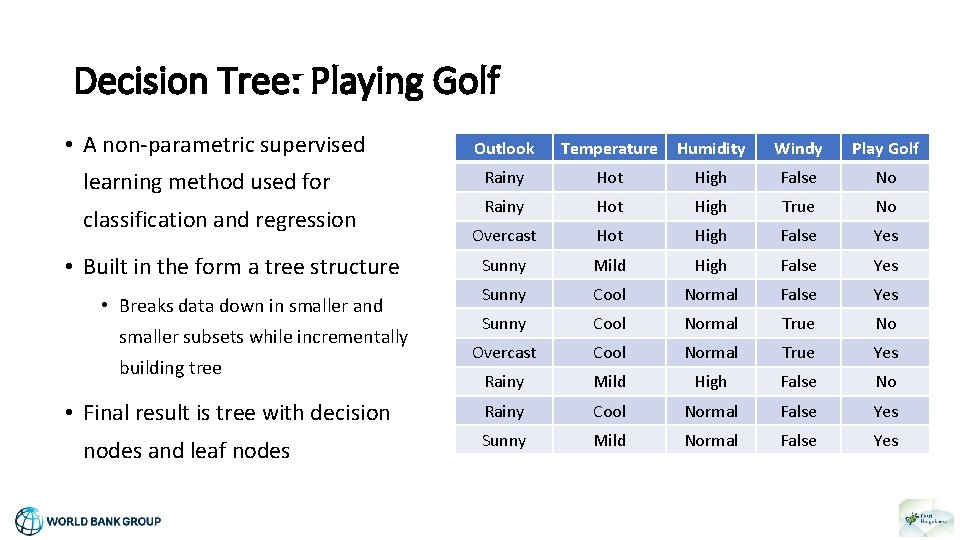

Decision Tree: Playing Golf
• A non-parametric supervised learning method used for classification and regression
• Built in the form of a tree structure
• Breaks the data down into smaller and smaller subsets while incrementally building the tree
• The final result is a tree with decision nodes and leaf nodes

| Outlook | Temperature | Humidity | Windy | Play Golf |
|---|---|---|---|---|
| Rainy | Hot | High | False | No |
| Rainy | Hot | High | True | No |
| Overcast | Hot | High | False | Yes |
| Sunny | Mild | High | False | Yes |
| Sunny | Cool | Normal | True | No |
| Overcast | Cool | Normal | True | Yes |
| Rainy | Mild | High | False | No |
| Rainy | Cool | Normal | False | Yes |
| Sunny | Mild | Normal | False | Yes |

Decision Tree: Playing Golf
• Outlook = Rainy -> No Golf
• Outlook = Overcast -> Play Golf
• Outlook = Sunny -> split on Windy:
  • Windy = False -> Play Golf
  • Windy = True -> No Golf
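The golf example can be reproduced with scikit-learn's `DecisionTreeClassifier` on the nine rows in the table (one-hot encoding the categorical columns is a choice made for this sketch). A fully grown tree fits all nine rows, while the simplified tree drawn on the slide misclassifies one of them (the rainy, cool, normal-humidity, non-windy day, labeled Yes):

```python
import pandas as pd
from sklearn.tree import DecisionTreeClassifier, export_text

golf = pd.DataFrame(
    [
        ("Rainy", "Hot", "High", False, "No"),
        ("Rainy", "Hot", "High", True, "No"),
        ("Overcast", "Hot", "High", False, "Yes"),
        ("Sunny", "Mild", "High", False, "Yes"),
        ("Sunny", "Cool", "Normal", True, "No"),
        ("Overcast", "Cool", "Normal", True, "Yes"),
        ("Rainy", "Mild", "High", False, "No"),
        ("Rainy", "Cool", "Normal", False, "Yes"),
        ("Sunny", "Mild", "Normal", False, "Yes"),
    ],
    columns=["Outlook", "Temperature", "Humidity", "Windy", "PlayGolf"],
)

# One-hot encode the categorical predictors (scikit-learn trees need numeric input)
X = pd.get_dummies(golf.drop(columns="PlayGolf")).astype(int)
y = golf["PlayGolf"]

tree = DecisionTreeClassifier(criterion="entropy", random_state=0).fit(X, y)
print(export_text(tree, feature_names=list(X.columns)))
print("fully grown tree, training accuracy:", tree.score(X, y))

def slide_tree(row):
    """The simplified tree drawn on the slide."""
    if row.Outlook == "Rainy":
        return "No"
    if row.Outlook == "Overcast":
        return "Yes"
    return "No" if row.Windy else "Yes"  # Sunny: split on Windy

slide_acc = (golf.apply(slide_tree, axis=1) == golf["PlayGolf"]).mean()
print("slide tree, training accuracy:", slide_acc)
```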

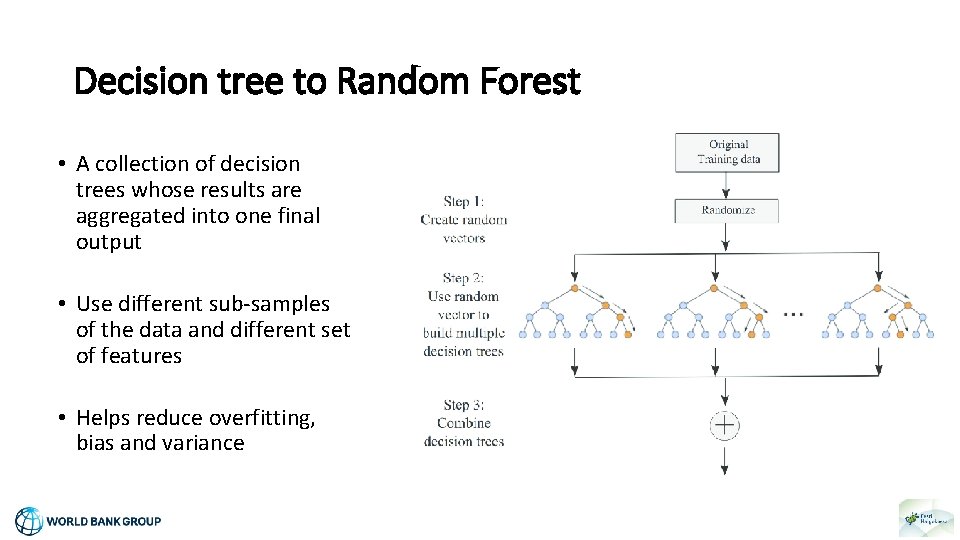

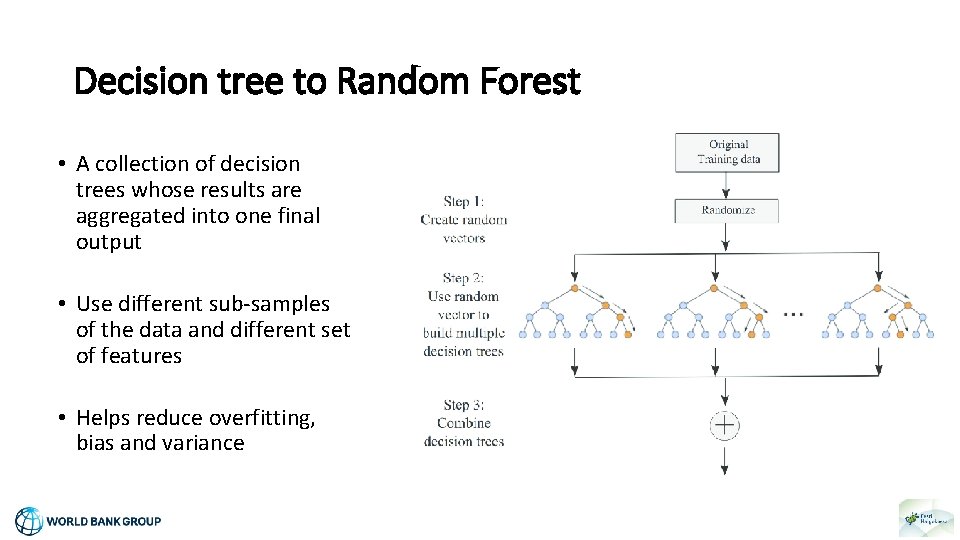

Decision Tree to Random Forest
• A collection of decision trees whose results are aggregated into one final output
• Each tree uses a different sub-sample of the data and a different set of features
• Helps reduce overfitting, mainly by reducing variance
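A short sketch of the aggregation step with scikit-learn's `RandomForestClassifier` on synthetic data: each tree is fitted on a bootstrap sample with a random subset of features considered at each split, and the forest's predicted probability is the average of the trees' probabilities.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=500, n_features=20, random_state=0)

# bootstrap=True: each tree sees a resampled set of rows;
# max_features="sqrt": each split considers a random subset of the features
rf = RandomForestClassifier(n_estimators=50, max_features="sqrt", bootstrap=True, random_state=0)
rf.fit(X, y)

# Aggregation: the forest's probability is the mean of the individual trees' probabilities
per_tree = np.stack([t.predict_proba(X) for t in rf.estimators_])
print(np.allclose(rf.predict_proba(X), per_tree.mean(axis=0)))
```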

Context of ECM

A Big Challenge of the Estonian Healthcare System
• Changes in the demand for health care due to population ageing and the rise of non-communicable diseases
• Chronic conditions are the driving force behind the need for better care integration
• Low coverage of preventive services and a considerable share of avoidable specialist and hospital care
• Opportunity to improve the management of specific patient groups at the PHC level -> care management for empaneled patients
• Predicting which patients will experience breakdowns in care coordination -> risk stratification of patients

Risk Stratification Until Now
• No actual prediction analysis done
• Involvement of providers to gain trust/understanding
• Behavioral and social criteria are key, but sparsely available -> use insider knowledge of doctors

Screening steps (flowchart):
1. DM/Hypertension/Hyperlipidemia? No -> not eligible; Yes -> step 2
2. Minimum and maximum number/combination of CVD/Respiratory/Mental Health/Functional Impairment? No -> not eligible; Yes -> step 3
3. Dominant/complex condition (cancer, schizophrenia, rare disease, etc.)? Yes -> not eligible; No -> step 4
4. Review by GPs (behavioral & social factors, information not in data)? Yes -> ECM candidate; No -> not eligible
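The screening steps can be written as an ordered rule-based function. This is a sketch only: the record fields are hypothetical, and the minimum and maximum number of condition groups are placeholders because the slide does not give the actual thresholds.

```python
from dataclasses import dataclass, field

@dataclass
class Patient:
    # Hypothetical fields for illustration; not EHIF's data schema
    has_dm_htn_or_hyperlipidemia: bool
    # Subset of {"CVD", "respiratory", "mental health", "functional impairment"}
    comorbidity_groups: set = field(default_factory=set)
    has_dominant_complex_condition: bool = False  # cancer, schizophrenia, rare disease, ...
    gp_approves: bool = False  # outcome of the GP review of behavioral/social factors

# Placeholder bounds: the slide mentions a min. and max. but gives no values
MIN_GROUPS, MAX_GROUPS = 1, 3

def ecm_candidate(p: Patient) -> bool:
    """Apply the screening steps in order; failing any step means 'not eligible'."""
    if not p.has_dm_htn_or_hyperlipidemia:
        return False
    if not MIN_GROUPS <= len(p.comorbidity_groups) <= MAX_GROUPS:
        return False
    if p.has_dominant_complex_condition:
        return False
    return p.gp_approves

print(ecm_candidate(Patient(True, {"CVD"}, False, True)))  # True
```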

Enhanced Care Management So Far in Estonia
• Successful enhanced care management pilot with 15 GPs and fewer than 1,000 patients to assess the feasibility and acceptability of enhanced care management
• Commitment of the Estonian Health Insurance Fund (EHIF) to scale up the care management pilot
• Model for risk stratification: clinical algorithm + provider intuition
• Need for a better risk-stratification approach?

Research Question

The Prediction Problem
• Target patients: who benefits from care management?
  • A combination of disease, social and behavioral factors…
• Objective of ECM: ultimately improve health outcomes for patients with cardiovascular, respiratory, and mental disease
• What is the right proxy prediction variable in the data?
  • There is no single relevant adverse event (e.g., death, hospital admission, health complication, high healthcare spending)
  • Some discussion on how to choose the dependent variable… -> Unplanned hospital admissions have a large negative impact on patients' lives, are costly and relatively frequent. Some are also avoidable…

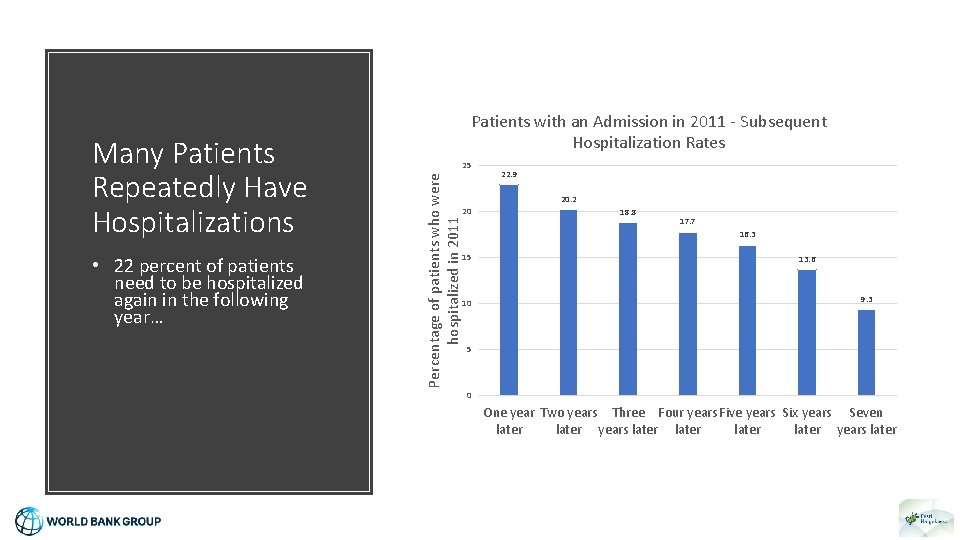

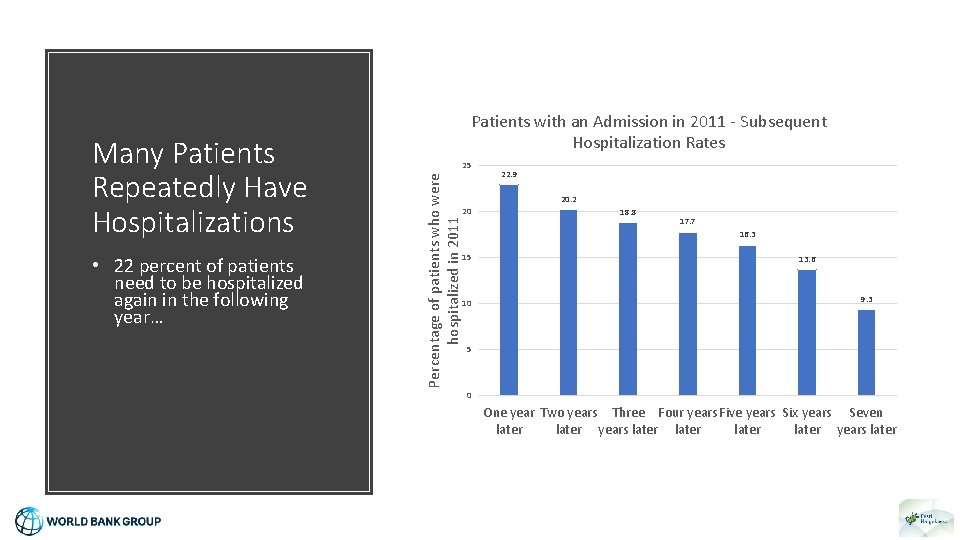

Many Patients Repeatedly Have Hospitalizations
• Nearly 23 percent of patients need to be hospitalized again in the following year…

Figure: Patients with an admission in 2011, subsequent hospitalization rates (% of patients hospitalized in 2011)

| Years later | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| Hospitalization rate (%) | 22.9 | 20.2 | 18.8 | 17.7 | 16.3 | 13.6 | 9.3 |

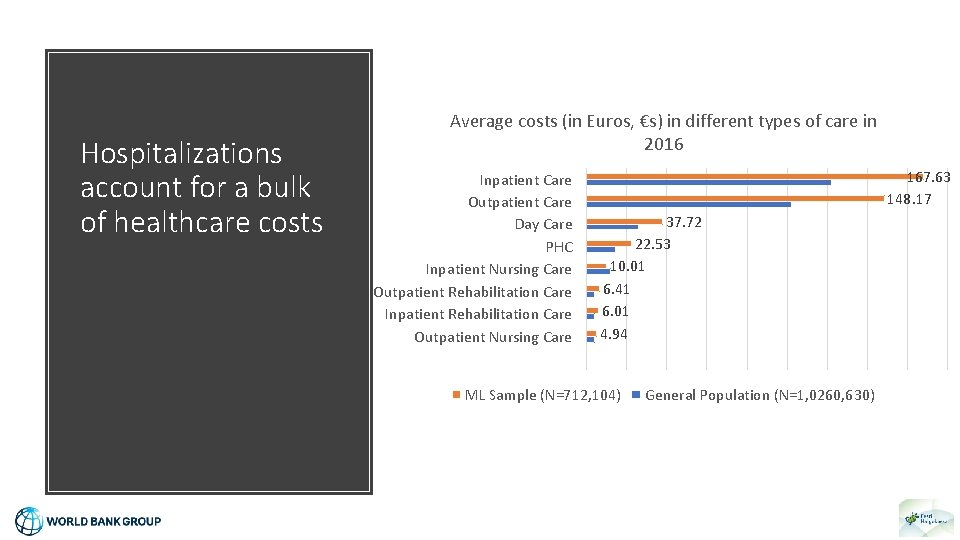

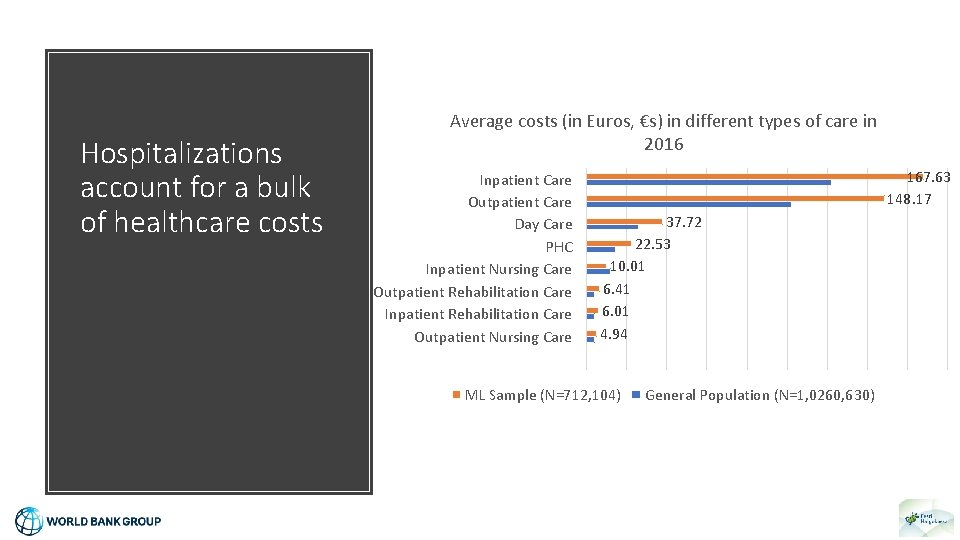

Hospitalizations account for the bulk of healthcare costs
Figure: Average costs (in €) in different types of care in 2016, ML sample (N=712,104) vs. general population (N=1,260,630). Chart values by type of care: Inpatient Care 167.63; Outpatient Care 148.17; Day Care 37.72; PHC 22.53; Inpatient Nursing Care 10.01; Outpatient Rehabilitation Care 6.41; Inpatient Rehabilitation Care 6.01; Outpatient Nursing Care 4.94

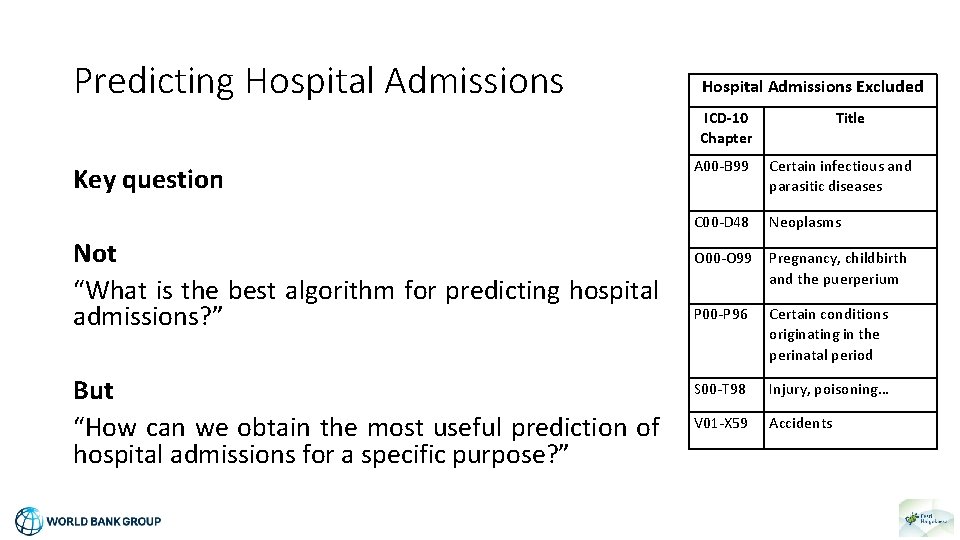

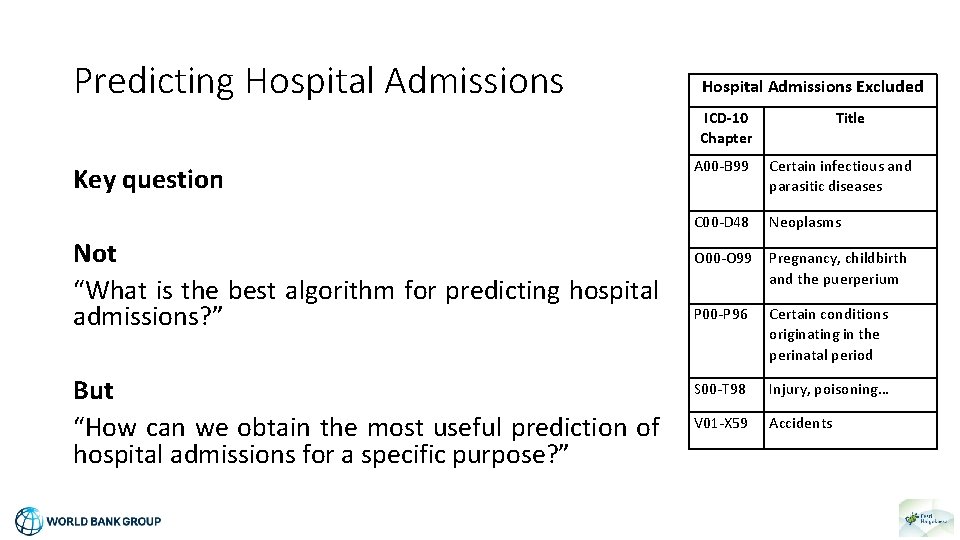

Predicting Hospital Admissions
• Hospital admissions are the main (avoidable) adverse health event
• But predicting hospitalizations is a hard problem
  • Social factors matter a lot; patients may have many contacts with the healthcare system, or none at all…
• Tradeoff in choosing which hospitalizations we want to predict
  • Admissions due to specific conditions vs. hospitalizations in general

Predicting Hospital Admissions
Key question: not "What is the best algorithm for predicting hospital admissions?" but "How can we obtain the most useful prediction of hospital admissions for a specific purpose?"

Excluded ICD-10 chapters:

| ICD-10 chapter | Title |
|---|---|
| A00-B99 | Certain infectious and parasitic diseases |
| C00-D48 | Neoplasms |
| O00-O99 | Pregnancy, childbirth and the puerperium |
| P00-P96 | Certain conditions originating in the perinatal period |
| S00-T98 | Injury, poisoning… |
| V01-X59 | Accidents |
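One way to apply the chapter exclusions to admission diagnoses is to compare the three-character ICD-10 category against each excluded block. The function name, and the assumption that the exclusion is checked on a single diagnosis code per admission, are hypothetical:

```python
# Excluded ICD-10 chapters as (first, last) three-character category blocks
EXCLUDED_RANGES = [
    ("A00", "B99"),  # Certain infectious and parasitic diseases
    ("C00", "D48"),  # Neoplasms
    ("O00", "O99"),  # Pregnancy, childbirth and the puerperium
    ("P00", "P96"),  # Certain conditions originating in the perinatal period
    ("S00", "T98"),  # Injury, poisoning…
    ("V01", "X59"),  # Accidents
]

def is_excluded(icd10_code: str) -> bool:
    """True if the diagnosis falls in an excluded chapter.

    Uses the three-character category (letter + two digits), e.g. 'C50' for 'C50.9'.
    Plain string comparison works because every category has the same format.
    """
    category = icd10_code.strip().upper()[:3]
    return any(lo <= category <= hi for lo, hi in EXCLUDED_RANGES)

print([is_excluded(c) for c in ("I10", "C50.9", "D49", "S72.0")])  # [False, True, False, True]
```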

Data Overview & Sample Construction

Administrative Claims Data (in Estonia)
• Very reliable
• High-quality data available as of 2007/2008
• Comprehensive coding requirements for providers
• Reporting lag of data is 2 weeks on average
• No information on clinical outcomes (e.g., test results)
• Limited information on social conditions and behavioral characteristics
• Need for a lot of feature engineering to create "meaningful" variables at the patient level

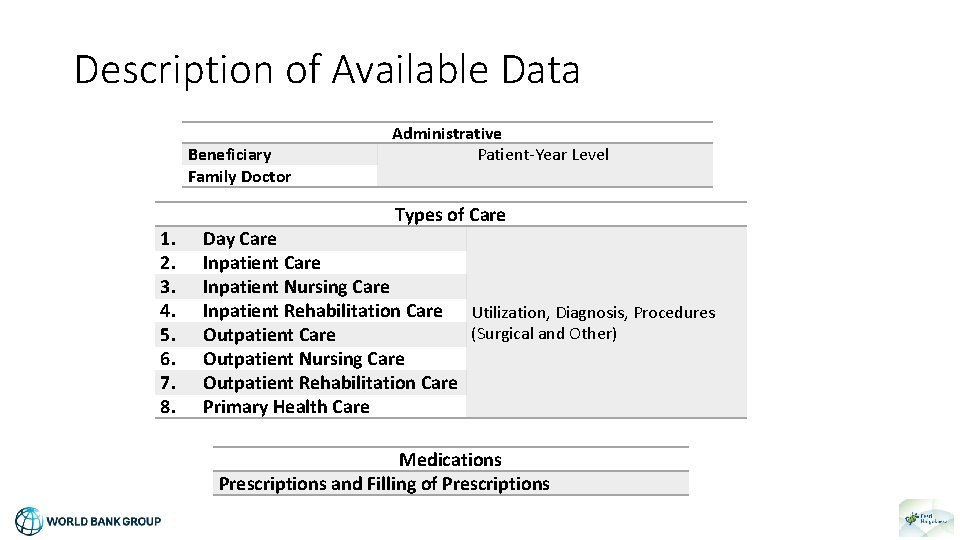

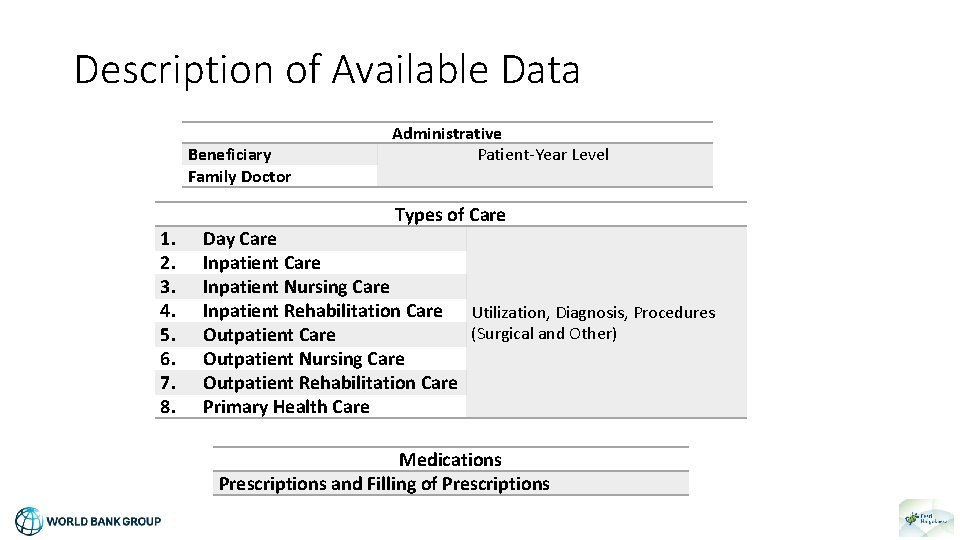

Description of Available Data
• Levels: beneficiary, family doctor, administrative, patient-year
• Types of care, each with utilization, diagnoses and procedures (surgical and other):
  1. Day Care
  2. Inpatient Care
  3. Inpatient Nursing Care
  4. Inpatient Rehabilitation Care
  5. Outpatient Care
  6. Outpatient Nursing Care
  7. Outpatient Rehabilitation Care
  8. Primary Health Care
• Medications: prescriptions and filling of prescriptions

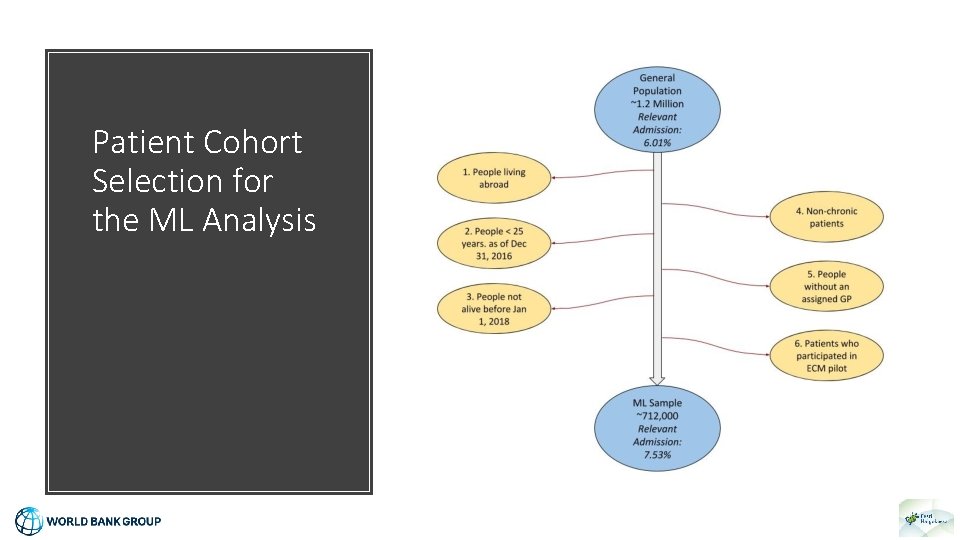

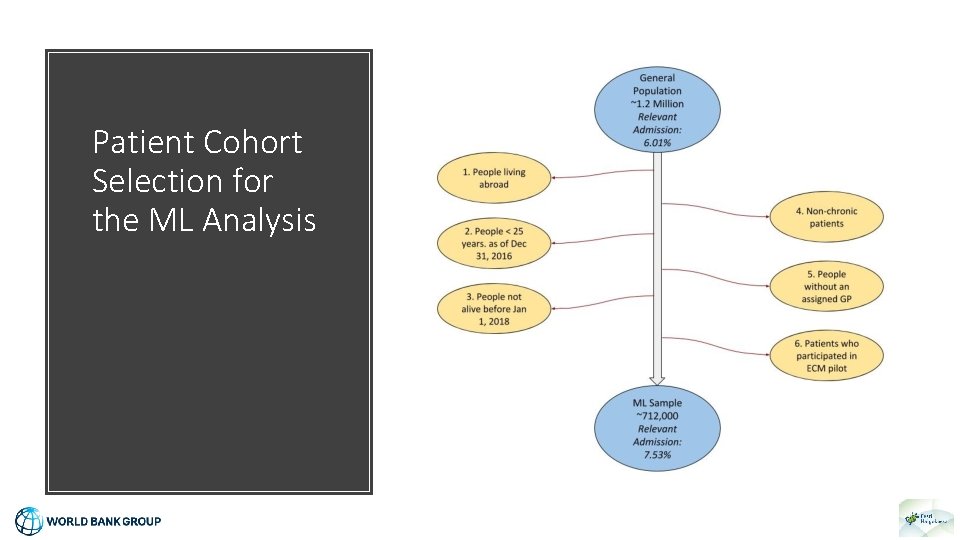

Patient Cohort Selection for the ML Analysis

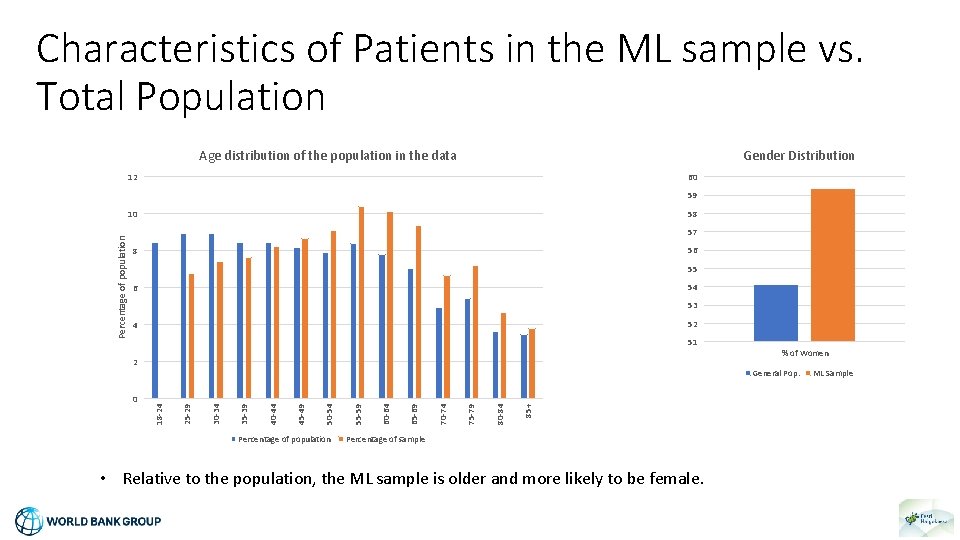

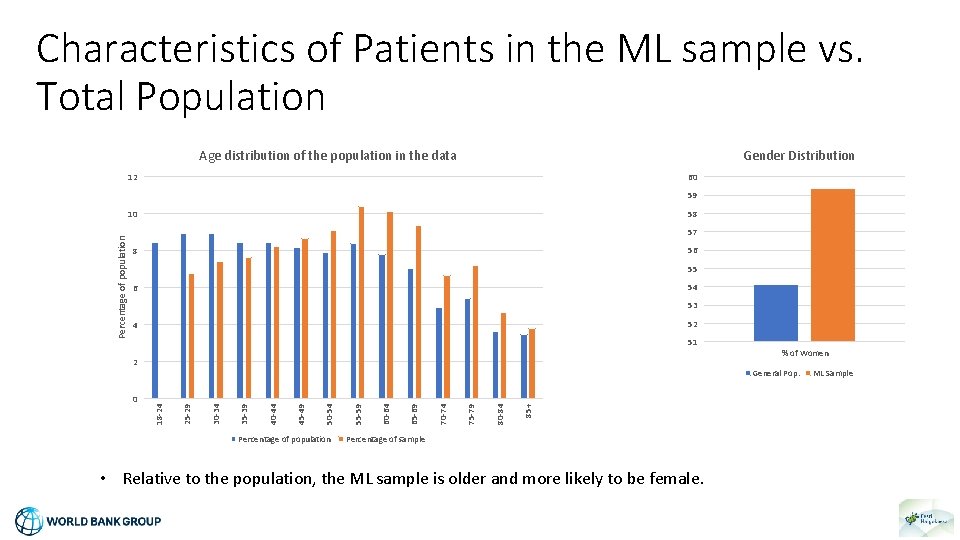

Characteristics of Patients in the ML Sample vs. Total Population
Figure: Gender distribution (% of women) and age distribution (percentage of population by age group, 18-24 to 85+), general population vs. ML sample
• Relative to the population, the ML sample is older and more likely to be female.

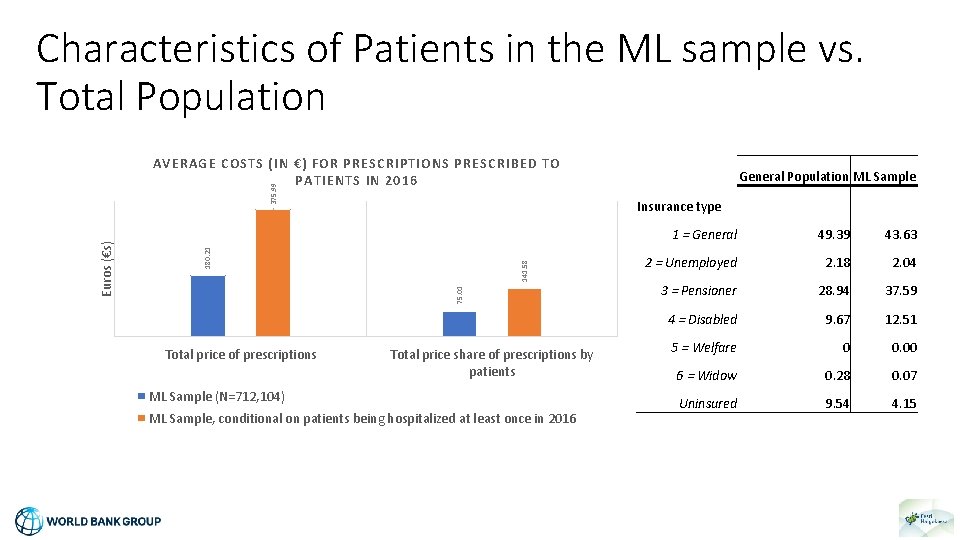

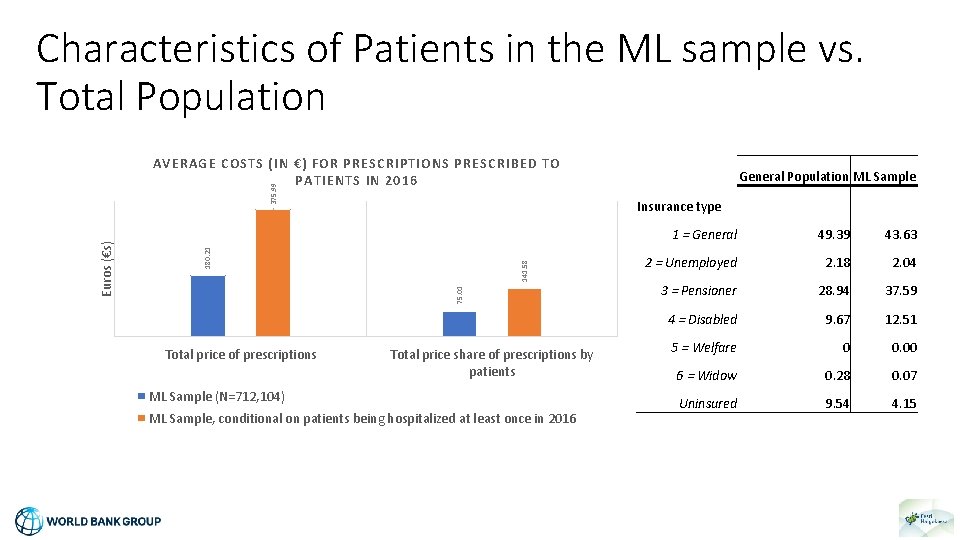

Characteristics of Patients in the ML Sample vs. Total Population
Figure: Average costs (in €) for prescriptions prescribed to patients in 2016 (total price of prescriptions and patients' share of the total price), general population vs. ML sample

Insurance type (% of patients):

| Insurance type | ML sample (N=712,104) | ML sample, patients hospitalized at least once in 2016 |
|---|---|---|
| 1 = General | 49.39 | 43.63 |
| 2 = Unemployed | 2.18 | 2.04 |
| 3 = Pensioner | 28.94 | 37.59 |
| 4 = Disabled | 9.67 | 12.51 |
| 5 = Welfare | 0 | 0.00 |
| 6 = Widow | 0.28 | 0.07 |
| Uninsured | 9.54 | 4.15 |

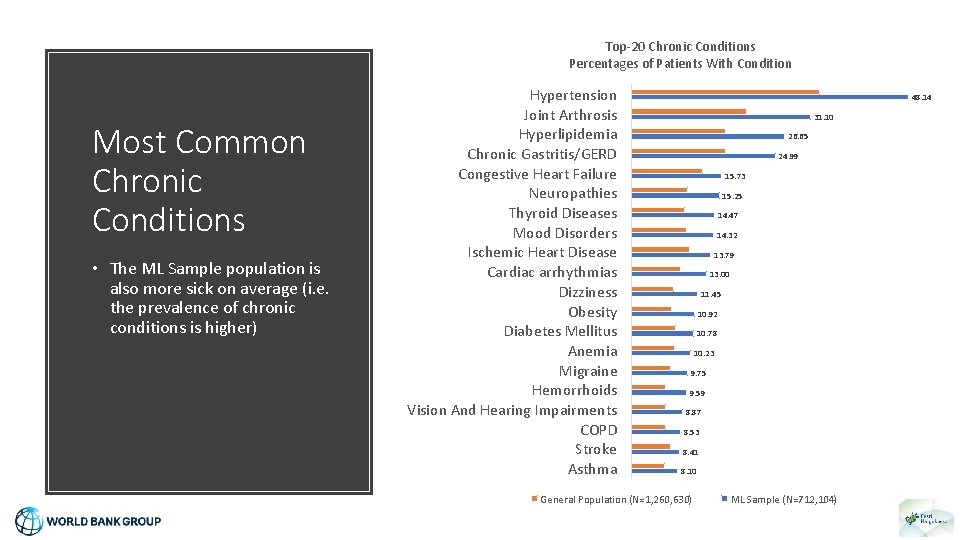

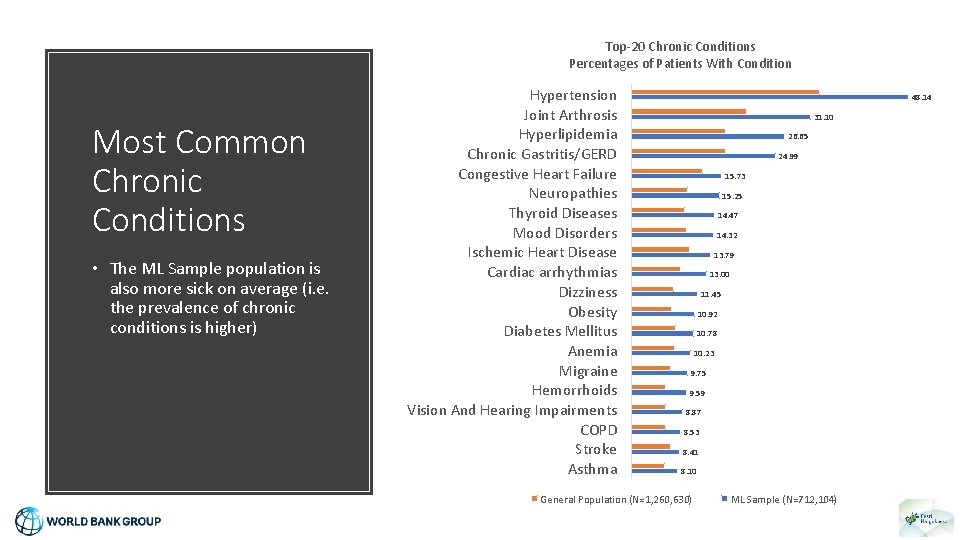

Most Common Chronic Conditions
• The ML sample population is also sicker on average (i.e., the prevalence of chronic conditions is higher)

Figure: Top-20 chronic conditions, percentage of patients with each condition, general population (N=1,260,630) vs. ML sample (N=712,104)

| Chronic condition | % of patients with condition |
|---|---|
| Hypertension | 48.14 |
| Joint Arthrosis | 31.10 |
| Hyperlipidemia | 26.65 |
| Chronic Gastritis/GERD | 24.99 |
| Congestive Heart Failure | 15.73 |
| Neuropathies | 15.25 |
| Thyroid Diseases | 14.47 |
| Mood Disorders | 14.32 |
| Ischemic Heart Disease | 13.79 |
| Cardiac arrhythmias | 13.00 |
| Dizziness | 11.45 |
| Obesity | 10.92 |
| Diabetes Mellitus | 10.78 |
| Anemia | 10.23 |
| Migraine | 9.75 |
| Hemorrhoids | 9.59 |
| Vision And Hearing Impairments | 8.87 |
| COPD | 8.53 |
| Stroke | 8.41 |
| Asthma | 8.10 |

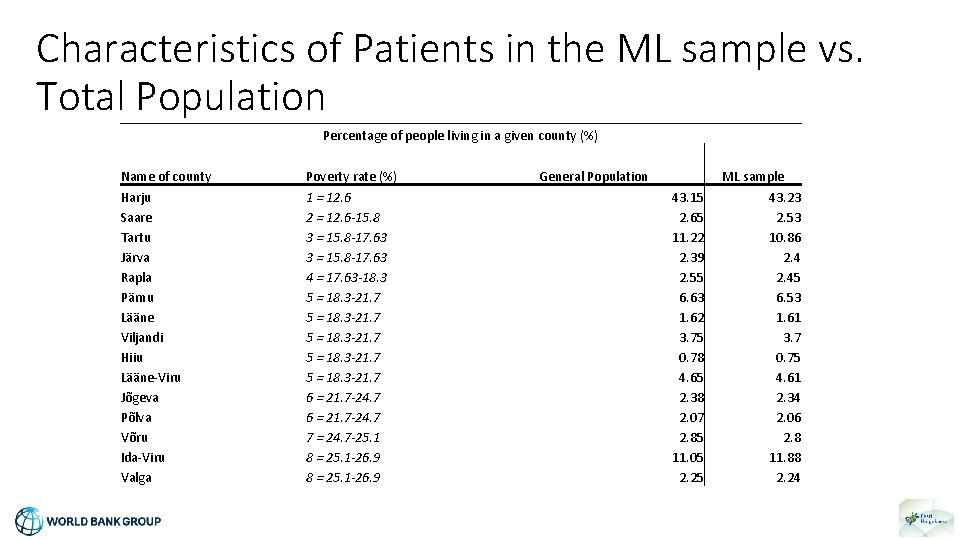

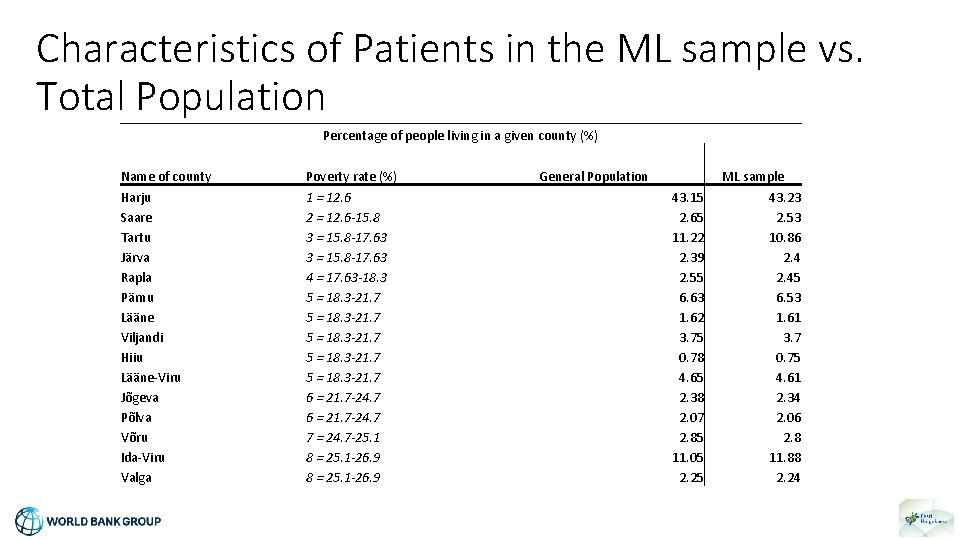

Characteristics of Patients in the ML Sample vs. Total Population
Percentage of people living in a given county (%):

| County | General population | ML sample |
|---|---|---|
| Harju | 43.15 | 43.23 |
| Saare | 2.65 | 2.53 |
| Tartu | 11.22 | 10.86 |
| Järva | 2.39 | 2.45 |
| Rapla | 2.55 | - |
| Pärnu | 6.63 | 6.53 |
| Lääne | 1.62 | 1.61 |
| Viljandi | 3.75 | 3.7 |
| Hiiu | 0.78 | 0.75 |
| Lääne-Viru | 4.65 | 4.61 |
| Jõgeva | 2.38 | 2.34 |
| Põlva | 2.07 | 2.06 |
| Võru | 2.85 | 2.8 |
| Ida-Viru | 11.05 | 11.88 |
| Valga | 2.25 | 2.24 |

Poverty rate (%) categories: 1 = 12.6; 2 = 12.6-15.8; 3 = 15.8-17.63; 4 = 17.63-18.3; 5 = 18.3-21.7; 6 = 21.7-24.7; 7 = 24.7-25.1; 8 = 25.1-26.9

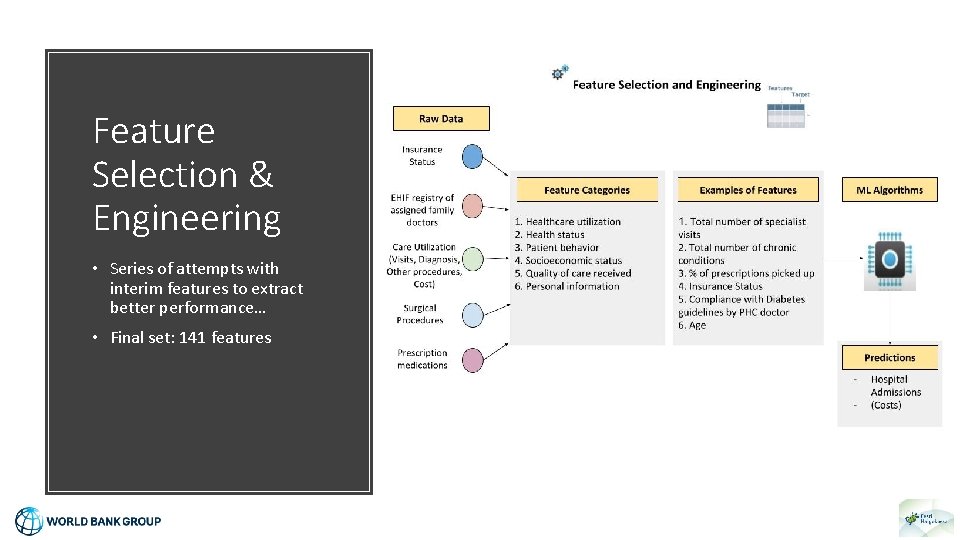

Feature Selection & Engineering

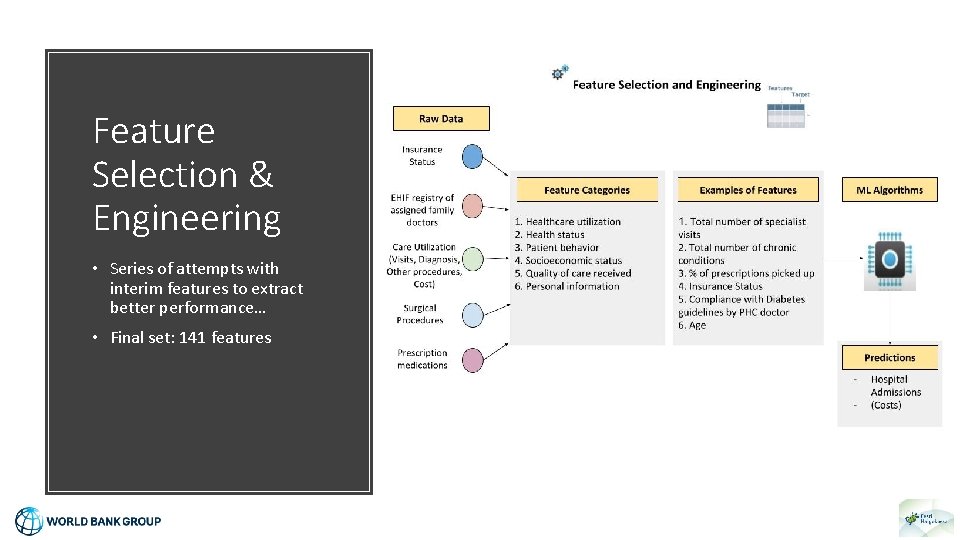

Feature Selection & Engineering
• A series of attempts with interim feature sets to improve performance…
• Final set: 141 features

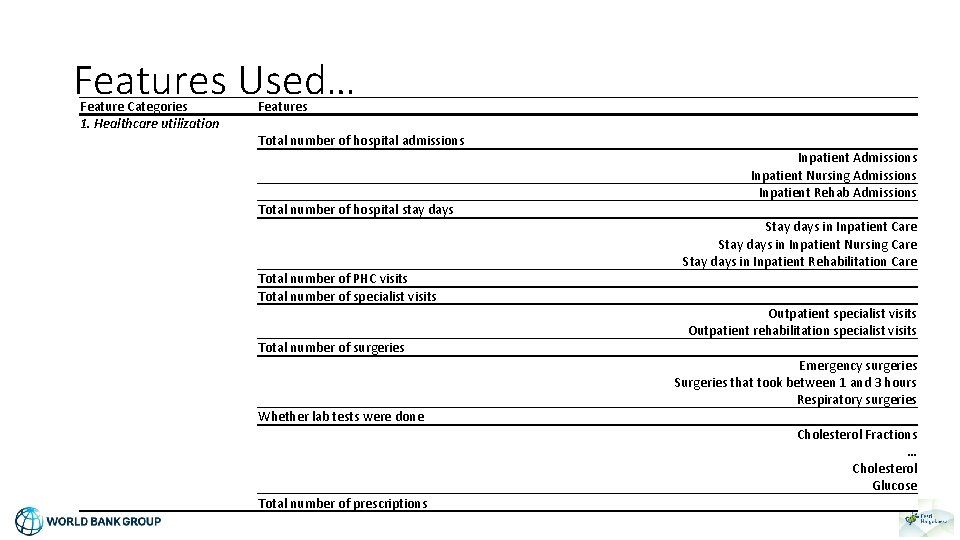

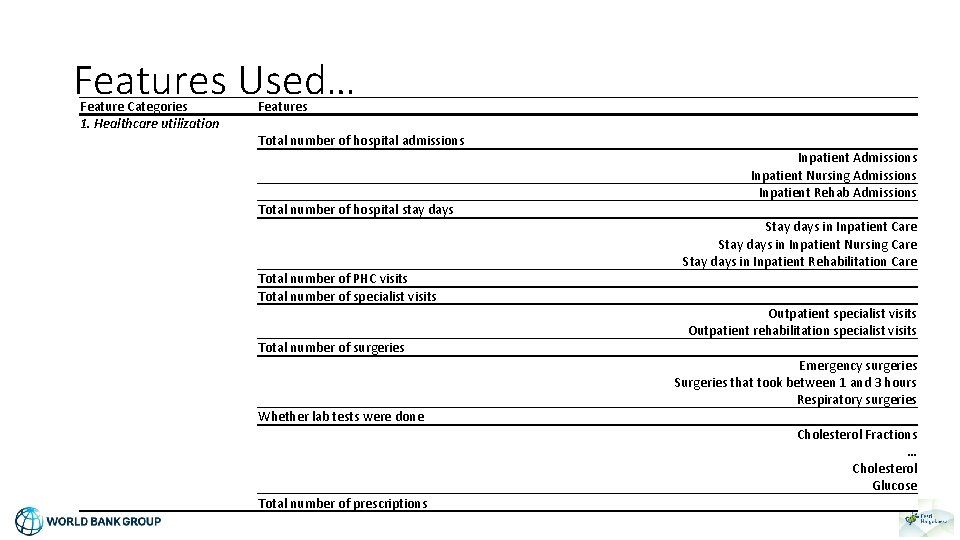

Features Used…
Feature category 1: Healthcare utilization
• Total number of hospital admissions
  • Inpatient admissions
  • Inpatient nursing admissions
  • Inpatient rehab admissions
• Total number of hospital stay days
  • Stay days in inpatient care
  • Stay days in inpatient nursing care
  • Stay days in inpatient rehabilitation care
• Total number of PHC visits
• Total number of specialist visits
  • Outpatient rehabilitation specialist visits
• Total number of surgeries
  • Emergency surgeries
  • Surgeries that took between 1 and 3 hours
  • Respiratory surgeries
• Whether lab tests were done
  • Cholesterol fractions
  • …
  • Cholesterol
  • Glucose
• Total number of prescriptions

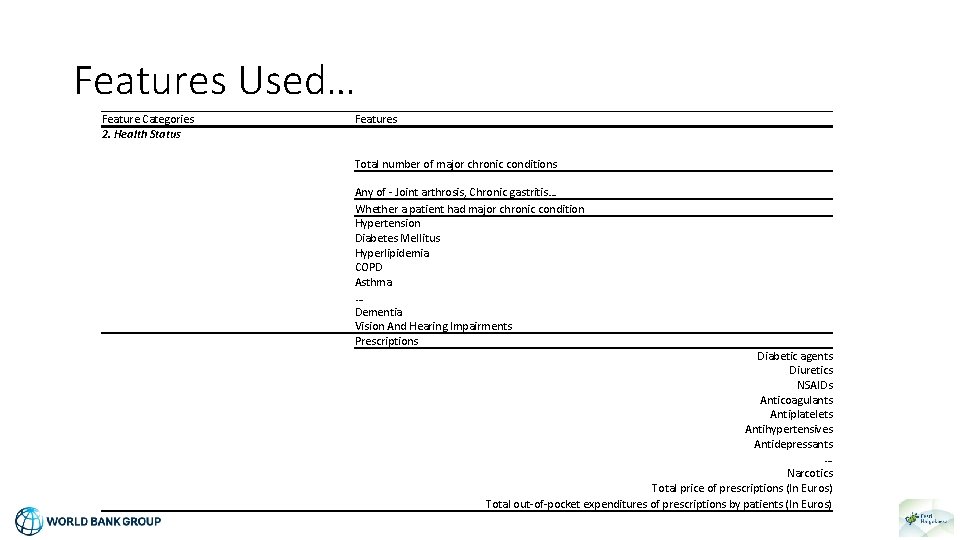

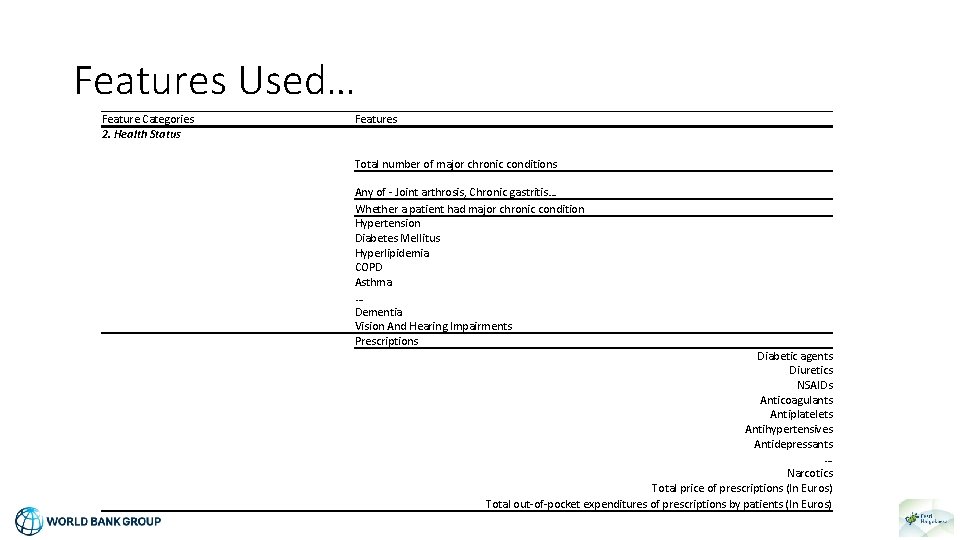

Features Used…
Feature category 2: Health status
• Whether a patient had a major chronic condition: Hypertension, Diabetes Mellitus, Hyperlipidemia, COPD, Asthma, …, Dementia, Vision and Hearing Impairments
• Any of: Joint arthrosis, Chronic gastritis…
• Total number of major chronic conditions
• Prescriptions: Diabetic agents, Diuretics, NSAIDs, Anticoagulants, Antiplatelets, Antihypertensives, Antidepressants, …, Narcotics
• Total price of prescriptions (in Euros)
• Total out-of-pocket expenditures on prescriptions by patients (in Euros)

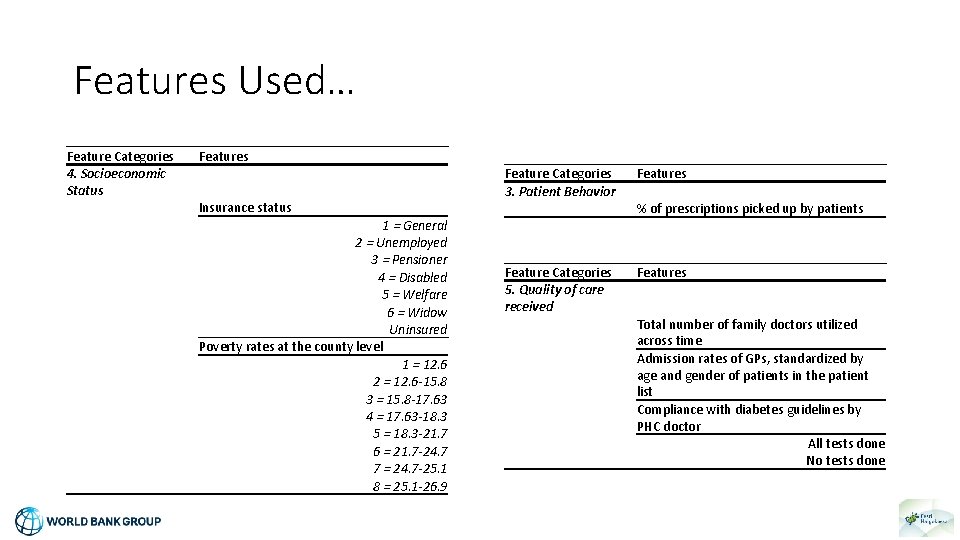

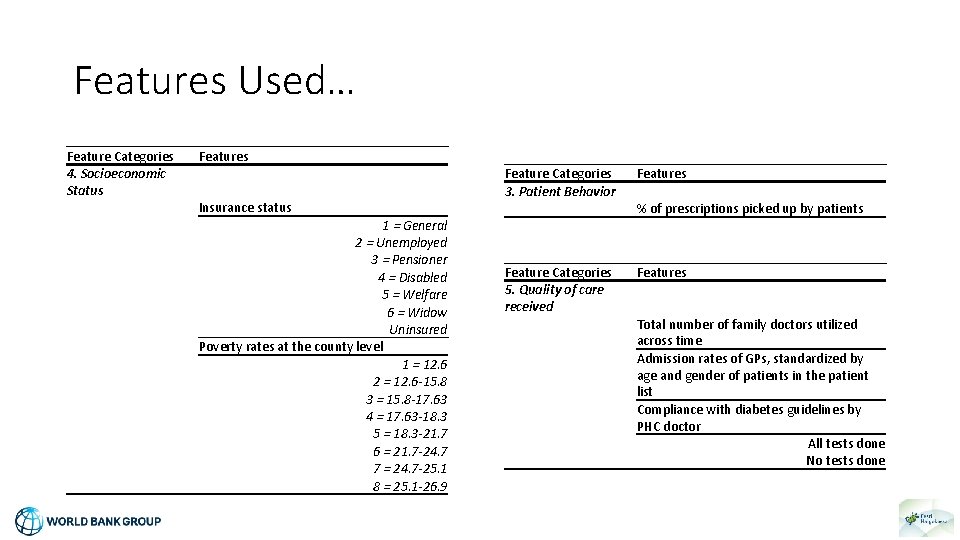

Features Used…
Feature category 3: Patient behavior
• % of prescriptions picked up by patients
Feature category 4: Socioeconomic status
• Insurance status: 1 = General, 2 = Unemployed, 3 = Pensioner, 4 = Disabled, 5 = Welfare, 6 = Widow, Uninsured
• Poverty rates at the county level: 1 = 12.6, 2 = 12.6-15.8, 3 = 15.8-17.63, 4 = 17.63-18.3, 5 = 18.3-21.7, 6 = 21.7-24.7, 7 = 24.7-25.1, 8 = 25.1-26.9
Feature category 5: Quality of care received
• Total number of family doctors utilized across time
• Admission rates of GPs, standardized by age and gender of patients in the patient list
• Compliance with diabetes guidelines by PHC doctor: all tests done, no tests done
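Claims data arrive at the episode level, so features like these have to be aggregated to one row per patient. A minimal pandas sketch; the table layouts and column names (`patient_id`, `care_type`, `stay_days`, `filled`) are hypothetical, not the EHIF schema:

```python
import pandas as pd

# Hypothetical claim-level records (one row per episode of care)
claims = pd.DataFrame({
    "patient_id": [1, 1, 1, 2, 2, 3],
    "care_type": ["inpatient", "phc", "phc", "inpatient", "inpatient", "outpatient"],
    "stay_days": [5, 0, 0, 3, 7, 0],
})
# Hypothetical prescription records, flagged by whether the patient filled them
rx = pd.DataFrame({
    "patient_id": [1, 1, 2, 3, 3, 3],
    "filled": [True, False, True, True, True, False],
})

is_inpatient = claims["care_type"].eq("inpatient")
by_patient = claims["patient_id"]

features = pd.DataFrame({
    # Healthcare utilization: counts and stay days per patient
    "n_inpatient_admissions": is_inpatient.groupby(by_patient).sum(),
    "inpatient_stay_days": claims["stay_days"].where(is_inpatient, 0).groupby(by_patient).sum(),
    "n_phc_visits": claims["care_type"].eq("phc").groupby(by_patient).sum(),
    # Patient behavior: % of prescriptions picked up
    "pct_prescriptions_filled": rx.groupby("patient_id")["filled"].mean() * 100,
}).fillna(0)  # patients missing from one table get 0
print(features)
```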

Getting to Know the Data: Diagnoses and Admissions
Figure panels: single diagnoses (DGN) and pairs of diagnoses
• Afib (Atrial Fibrillation and Flutter), Chf (Congestive Heart Failure), Htn (Hypertension), and Ischemic Htd (Ischemic Heart Disease) are strong indicators of potential admissions in the following year (2017)
• Patient groups with these conditions have a non-trivial likelihood (~10%) of hospital admission
• This likelihood increases to ~20-30% with one hospital admission in 2016, and to more than 50% with three or more admissions in 2016
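Conditional admission rates like those above come from simple group-by aggregations. The sketch below uses a hypothetical patient-level table with made-up values, so its numbers do not reproduce the ~10%, ~20-30% and >50% figures:

```python
import pandas as pd

# Hypothetical patient-level table: 2016 features and 2017 outcome (made-up values)
df = pd.DataFrame({
    "has_chf": [1, 1, 0, 0, 1, 0, 0, 1],
    "admissions_2016": [0, 1, 0, 3, 2, 0, 1, 3],
    "admitted_2017": [0, 1, 0, 1, 0, 0, 0, 1],
})

# Share of patients admitted in 2017, by diagnosis flag
by_dx = df.groupby("has_chf")["admitted_2017"].mean()

# ...and by number of 2016 admissions, with 3 or more pooled into "3+"
prior = df["admissions_2016"].clip(upper=3).map({0: "0", 1: "1", 2: "2", 3: "3+"})
by_prior = df.groupby(prior)["admitted_2017"].mean()
print(by_dx, by_prior, sep="\n\n")
```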

Evaluation & Modelling Choices

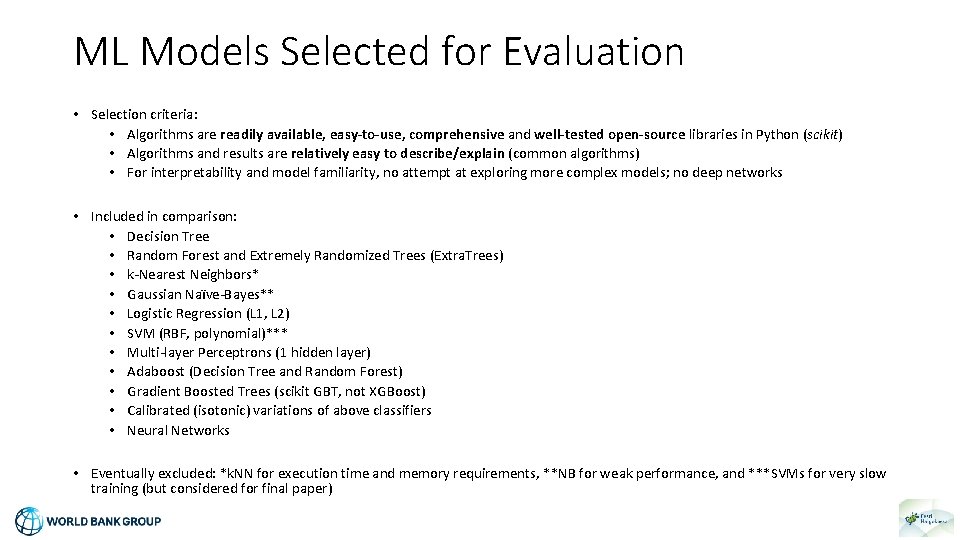

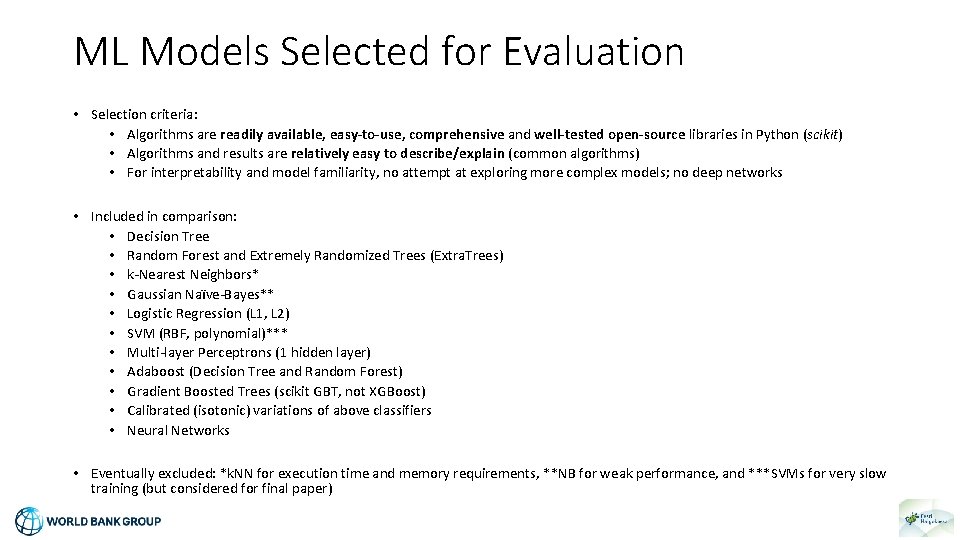

ML Models Selected for Evaluation
• Selection criteria:
  • Algorithms are readily available in easy-to-use, comprehensive and well-tested open-source Python libraries (scikit-learn)
  • Algorithms and results are relatively easy to describe/explain (common algorithms)
  • For interpretability and model familiarity, no attempt at exploring more complex models; no deep networks
• Included in comparison:
  • Decision Tree
  • Random Forest and Extremely Randomized Trees (ExtraTrees)
  • k-Nearest Neighbors*
  • Gaussian Naïve Bayes**
  • Logistic Regression (L1, L2)
  • SVM (RBF, polynomial)***
  • Multi-layer Perceptrons (1 hidden layer)
  • AdaBoost (Decision Tree and Random Forest)
  • Gradient Boosted Trees (scikit-learn GBT, not XGBoost)
  • Calibrated (isotonic) variations of the above classifiers
  • Neural Networks
• Eventually excluded: *kNN for execution time and memory requirements, **NB for weak performance, and ***SVMs for very slow training (but considered for the final paper)
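A sketch of how part of the candidate set could be instantiated in scikit-learn. The hyperparameters are illustrative assumptions (the slides do not list them), and only one AdaBoost and one calibrated variant are shown:

```python
from sklearn.calibration import CalibratedClassifierCV
from sklearn.datasets import make_classification
from sklearn.ensemble import (AdaBoostClassifier, ExtraTreesClassifier,
                              GradientBoostingClassifier, RandomForestClassifier)
from sklearn.linear_model import LogisticRegression
from sklearn.neural_network import MLPClassifier
from sklearn.tree import DecisionTreeClassifier

# Illustrative settings only; the actual values came from the grid search
candidates = {
    "decision_tree": DecisionTreeClassifier(max_depth=5),
    "random_forest": RandomForestClassifier(n_estimators=100),
    "extra_trees": ExtraTreesClassifier(n_estimators=100),
    "logit_l1": LogisticRegression(penalty="l1", solver="liblinear"),
    "logit_l2": LogisticRegression(penalty="l2", max_iter=1000),
    "mlp_1_hidden": MLPClassifier(hidden_layer_sizes=(50,), max_iter=500),
    "adaboost_tree": AdaBoostClassifier(DecisionTreeClassifier(max_depth=1)),
    "gbt": GradientBoostingClassifier(),
    # Isotonic calibration wrapped around one of the classifiers above
    "rf_isotonic": CalibratedClassifierCV(RandomForestClassifier(n_estimators=100),
                                          method="isotonic", cv=3),
}

# Synthetic stand-in data with ~7.5% positives
X, y = make_classification(n_samples=1000, weights=[0.925], random_state=0)
for name, model in candidates.items():
    model.fit(X, y)
    print(name, model.predict_proba(X[:3]).round(2))
```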

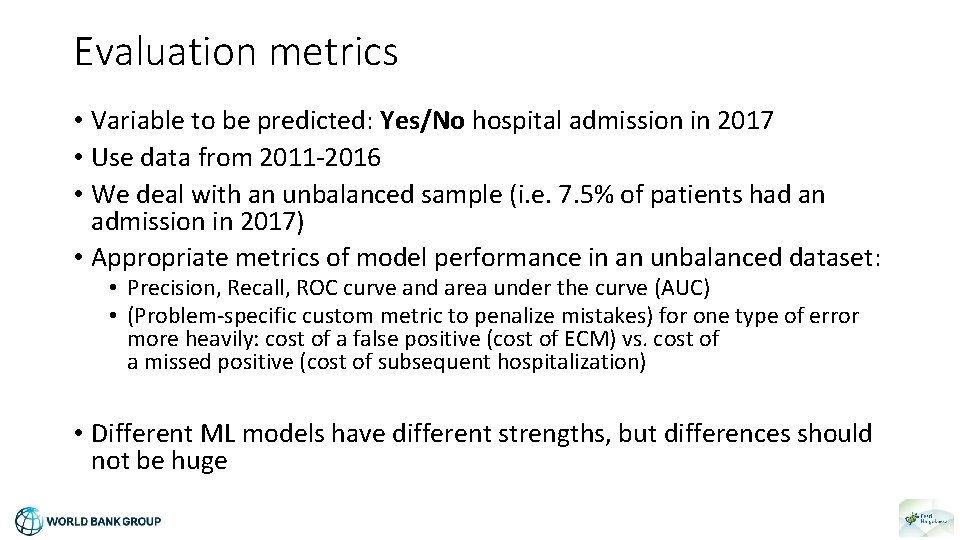

Evaluation metrics
• Variable to be predicted: yes/no hospital admission in 2017
• Use data from 2011-2016
• We deal with an unbalanced sample (i.e., 7.5% of patients had an admission in 2017)
• Appropriate metrics of model performance in an unbalanced dataset:
  • Precision, recall, ROC curve and area under the curve (AUC)
  • A problem-specific custom metric that penalizes one type of error more heavily: the cost of a false positive (cost of ECM) vs. the cost of a missed positive (cost of a subsequent hospitalization)
• Different ML models have different strengths, but differences should not be huge
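Why accuracy is not on the list: with about 7.5% positives, a model that never predicts an admission is about 92.5% accurate but has zero recall. A sketch on simulated labels:

```python
import numpy as np
from sklearn.metrics import accuracy_score, recall_score, roc_auc_score

rng = np.random.default_rng(0)
y = (rng.random(100_000) < 0.075).astype(int)  # simulated outcomes, ~7.5% admissions

# A trivial "model" that predicts no admission for everyone
y_pred = np.zeros_like(y)
print("accuracy:", accuracy_score(y, y_pred))  # about 0.925, looks good
print("recall:", recall_score(y, y_pred))      # 0.0, misses every admission
print("ROC-AUC:", roc_auc_score(y, np.zeros(len(y))))  # 0.5, no better than chance
```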

Intuitive Interpretation of Metrics
• Precision is the probability that a patient whom the algorithm classifies as having a hospital admission will actually have one.
• Recall is the probability that a patient who is going to have a hospital admission is classified as such by the algorithm.
• Which one is more important?
  • It depends a lot on the application. There is a tradeoff between maximizing one or the other…
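The tradeoff comes from the classification threshold: lowering it flags more patients, which raises recall and usually lowers precision. A toy example with eight made-up scores:

```python
import numpy as np

y_true = np.array([1, 1, 1, 0, 0, 0, 0, 0])
scores = np.array([0.9, 0.6, 0.4, 0.7, 0.35, 0.2, 0.1, 0.05])  # made-up predicted probabilities

def precision_recall_at(threshold):
    """Precision and recall when patients with score >= threshold are flagged."""
    y_pred = scores >= threshold
    tp = np.sum(y_pred & (y_true == 1))
    fp = np.sum(y_pred & (y_true == 0))
    fn = np.sum(~y_pred & (y_true == 1))
    return tp / (tp + fp), tp / (tp + fn)

for t in (0.3, 0.5, 0.8):
    p, r = precision_recall_at(t)
    print(f"threshold={t}: precision={p:.2f}, recall={r:.2f}")
# threshold=0.3: precision=0.60, recall=1.00
# threshold=0.5: precision=0.67, recall=0.67
# threshold=0.8: precision=1.00, recall=0.33
```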

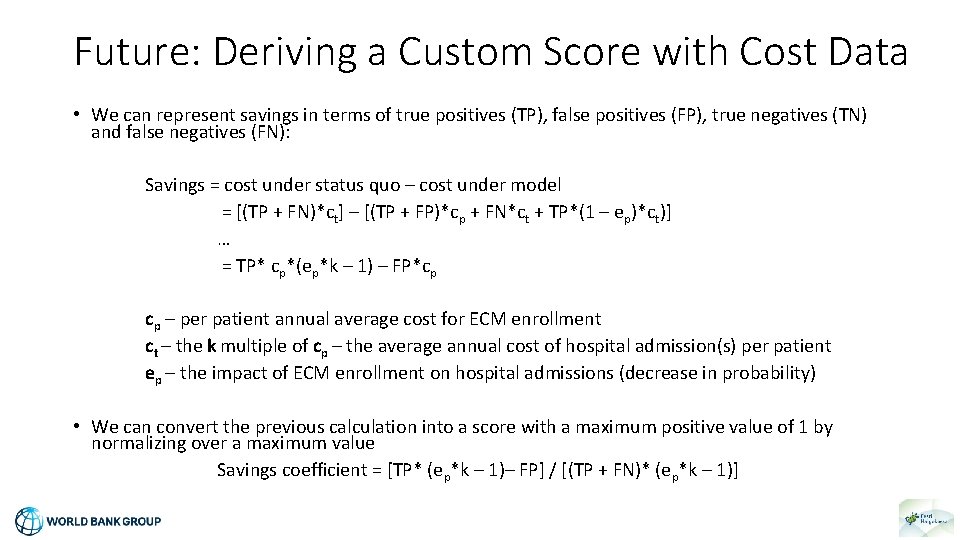

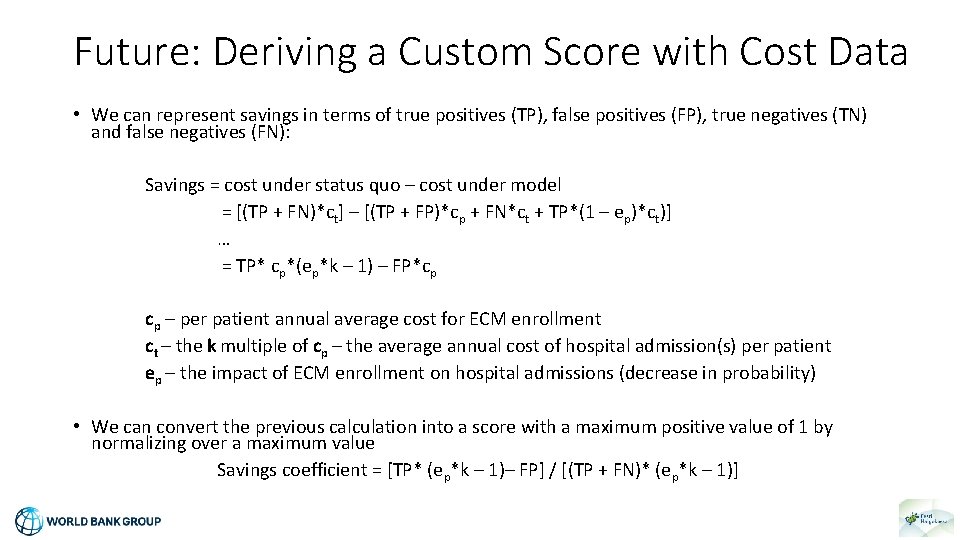

Future: Deriving a Custom Score with Cost Data
• We can express savings in terms of true positives (TP), false positives (FP), true negatives (TN) and false negatives (FN):

$$
\begin{aligned}
\text{Savings} &= \text{cost under status quo} - \text{cost under model} \\
&= (TP + FN)\,c_t - \left[(TP + FP)\,c_p + FN\,c_t + TP\,(1 - e_p)\,c_t\right] \\
&= TP\,e_p\,c_t - (TP + FP)\,c_p \\
&= TP\,c_p\,(e_p k - 1) - FP\,c_p
\end{aligned}
$$

where
• $c_p$: per-patient annual average cost of ECM enrollment
• $c_t = k\,c_p$: average annual cost of hospital admission(s) per patient, expressed as a multiple $k$ of $c_p$
• $e_p$: impact of ECM enrollment on hospital admissions (decrease in probability)

• We can convert this into a score with a maximum value of 1 by dividing by the maximum possible savings, $(TP + FN)\,c_p\,(e_p k - 1)$, which is reached when every actual positive is flagged ($FN = 0$) and there are no false positives ($FP = 0$):

$$
\text{Savings coefficient} = \frac{TP\,(e_p k - 1) - FP}{(TP + FN)\,(e_p k - 1)}
$$

Future: Custom Evaluation Score Based on Cost Data
• A hypothetical exercise (not all the benefits of ECM are captured)
• Hypothetical cost/savings assumptions (based on historical data and references from the literature):
  • ECM prevention-to-treatment cost ratio is 1:30
  • Impact of ECM enrollment on hospital admissions (decrease in probability) is 10%, 15%, or 20%
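The savings formula and the normalized coefficient translate directly into code. A sketch using the slide's hypothetical cost ratio (k = 30) and ECM effects (10%, 15%, 20%); the confusion-matrix counts and the cost `cp` in the check are made up for illustration:

```python
def savings(tp, fp, fn, cp, k, ep):
    """Savings = cost under status quo - cost under the model."""
    ct = k * cp  # average annual hospitalization cost per patient
    status_quo = (tp + fn) * ct
    with_model = (tp + fp) * cp + fn * ct + tp * (1 - ep) * ct
    return status_quo - with_model

def savings_coefficient(tp, fp, fn, k, ep):
    """Savings divided by the best case (every positive flagged, no false positives)."""
    gain = ep * k - 1  # net benefit per true positive, in units of cp
    return (tp * gain - fp) / ((tp + fn) * gain)

K = 30  # hypothetical 1:30 prevention-to-treatment cost ratio
for ep in (0.10, 0.15, 0.20):
    # Made-up counts: 700 true positives, 3000 false positives, 300 false negatives
    print(ep, round(savings_coefficient(tp=700, fp=3000, fn=300, k=K, ep=ep), 3))
```

Each true positive yields a net benefit of $(e_p k - 1)\,c_p$, so enrollment only pays off when $e_p k > 1$, i.e. $e_p$ above about 3.3% at a 1:30 ratio. With these illustrative counts the coefficient is negative at 10% and 15% and positive at 20%, because the false positives outweigh the gains at the lower effect sizes.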

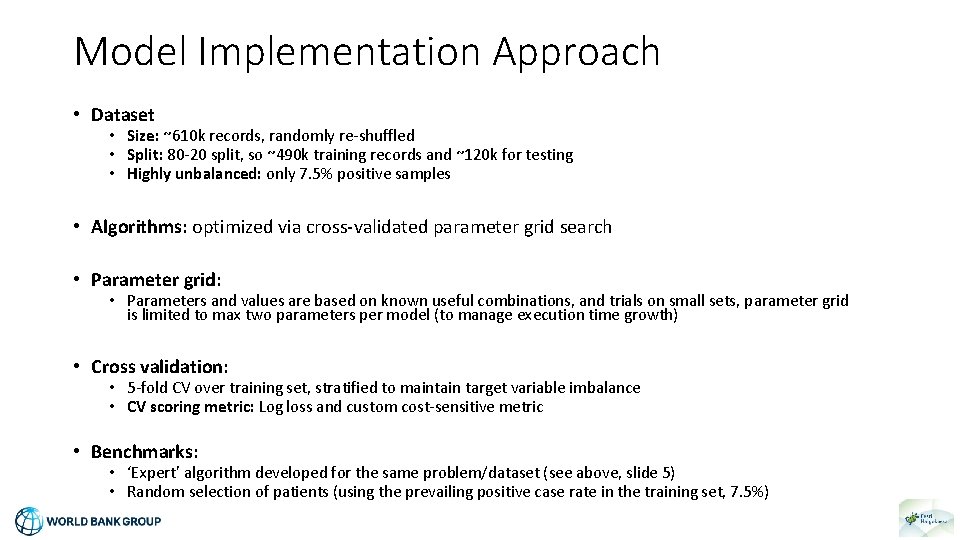

Model Implementation Approach
• Dataset
  • Size: ~610k records, randomly reshuffled
  • Split: 80-20, so ~490k training records and ~120k test records
  • Highly unbalanced: only 7.5% positive samples
• Algorithms: optimized via cross-validated parameter grid search
• Parameter grid:
  • Parameters and values are based on known useful combinations and trials on small sets; the grid is limited to at most two parameters per model (to manage the growth in execution time)
• Cross-validation:
  • 5-fold CV over the training set, stratified to maintain the target variable imbalance
  • CV scoring metric: log loss and a custom cost-sensitive metric
• Benchmarks:
  • 'Expert' algorithm developed for the same problem/dataset (see above, slide 5)
  • Random selection of patients (using the prevailing positive case rate in the training set, 7.5%)
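The tuning setup can be sketched with scikit-learn's `GridSearchCV` and `StratifiedKFold`. The synthetic data, the random forest and the parameter values are placeholders; the custom cost-sensitive metric is not shown (it could be wrapped with `sklearn.metrics.make_scorer`).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, StratifiedKFold, train_test_split

# Synthetic stand-in for the patient data, ~7.5% positives
X, y = make_classification(n_samples=2000, n_features=20, weights=[0.925], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# At most two tuned parameters per model; the values are illustrative
param_grid = {"n_estimators": [50, 100], "min_samples_leaf": [1, 20]}
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)  # keeps the class ratio per fold
search = GridSearchCV(RandomForestClassifier(random_state=0), param_grid,
                      scoring="neg_log_loss", cv=cv)
search.fit(X_train, y_train)
print(search.best_params_, "CV log loss:", -search.best_score_)

# Random benchmark: flag each test patient with the training set's positive rate
rng = np.random.default_rng(0)
random_pred = (rng.random(len(y_test)) < y_train.mean()).astype(int)
```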

First Results

Precision and Recall for Log Loss Models
• ML models outperform the benchmark on precision, but lag behind on recall
• ML models have difficulty identifying all positive cases (i.e., patients with an admission). Most positive samples receive low predicted probabilities of being a positive case, a typical consequence of highly unbalanced datasets.
• But classification above the 50% threshold is highly accurate (few false alarms)

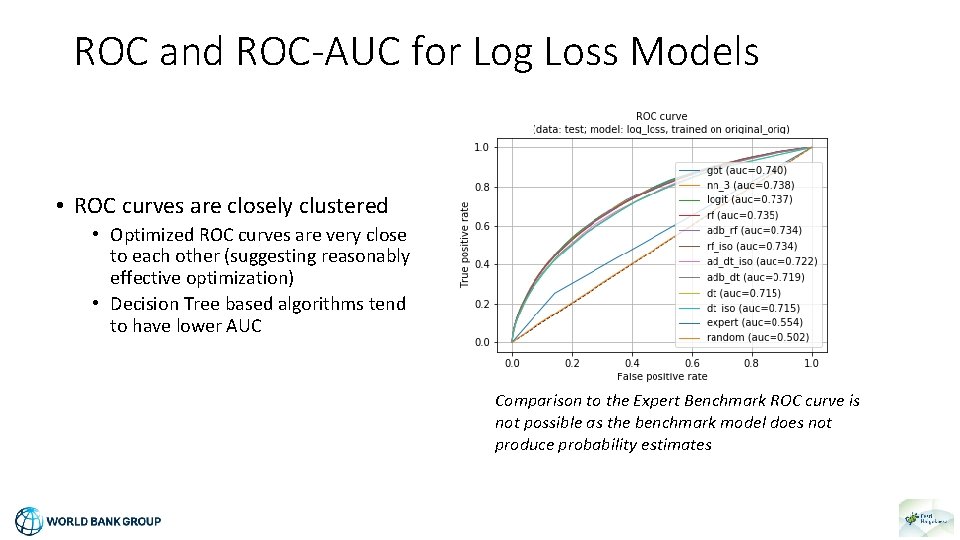

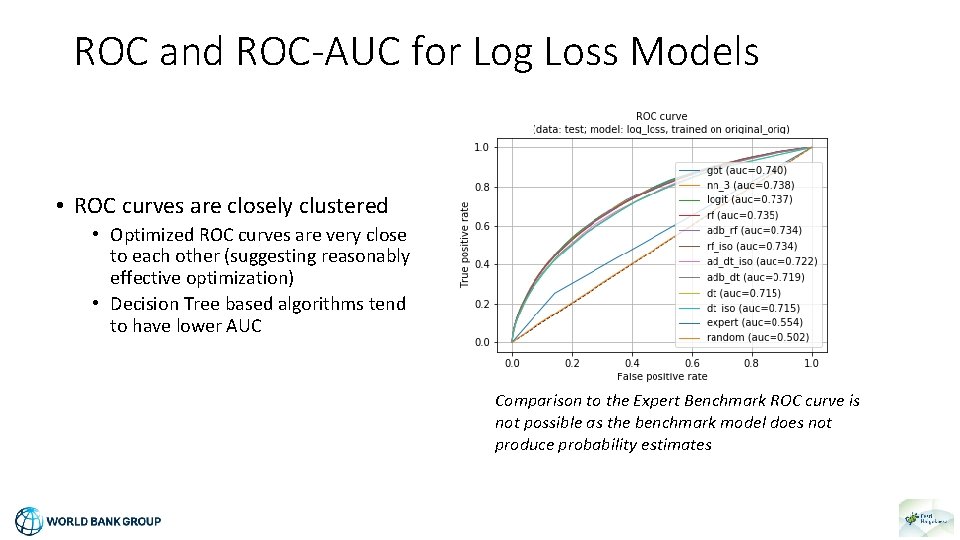

ROC and ROC-AUC for Log Loss Models
• ROC curves are closely clustered
• Optimized ROC curves are very close to each other (suggesting reasonably effective optimization)
• Decision-tree-based algorithms tend to have lower AUC
• Comparison to the expert benchmark: an ROC curve is not possible, as the benchmark model does not produce probability estimates

Summary So Far…
• Performance of the ML models is promising, in line with known expectations (AUC close to 0.75), and beats the benchmark on precision
• But there is a clear weakness on recall (i.e., patients with a high chance of a hospital admission are not detected consistently)
• Results on the original dataset have room for improvement
• Why the sub-par classification capacity?

Next Round of Results…

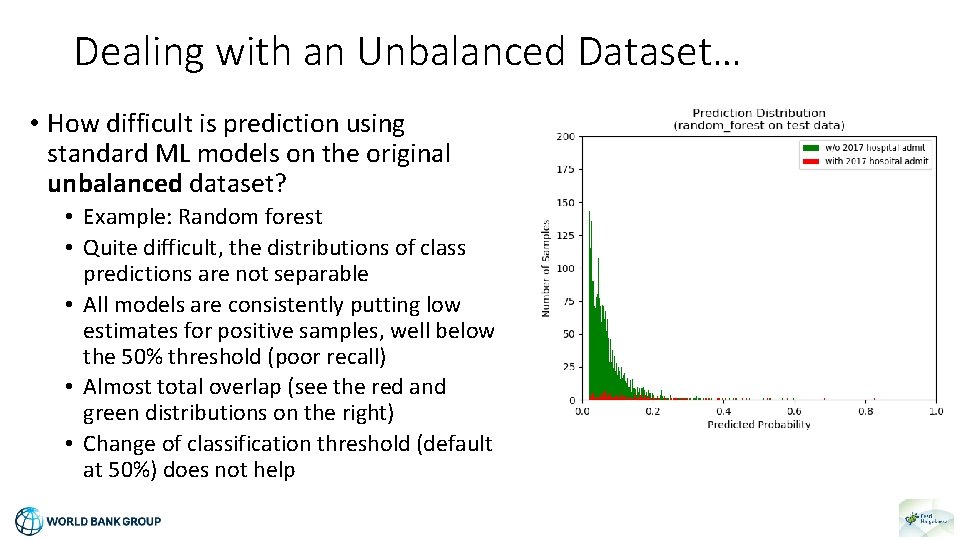

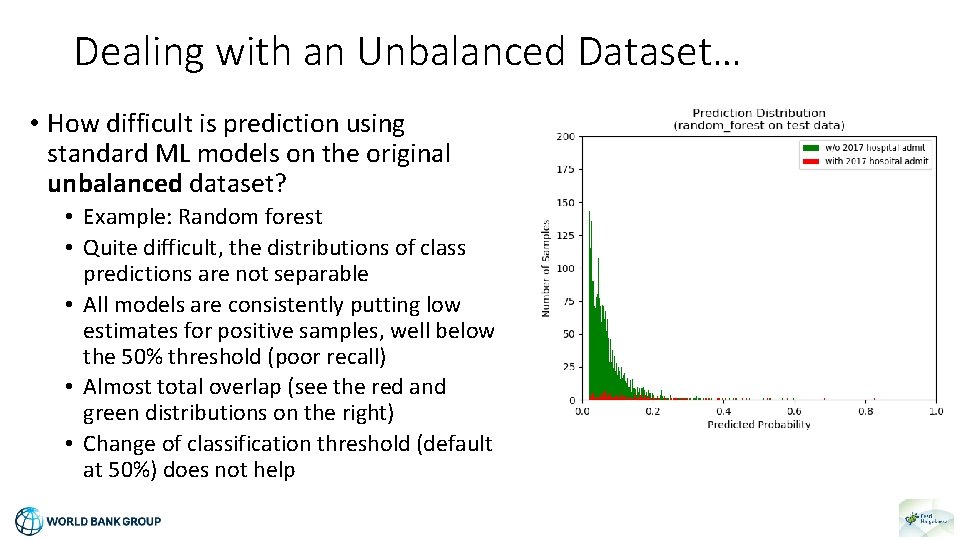

Dealing with an Unbalanced Dataset…
• How difficult is prediction using standard ML models on the original unbalanced dataset?
• Example: random forest
  • Quite difficult: the distributions of class predictions are not separable
  • All models consistently assign low estimates to positive samples, well below the 50% threshold (poor recall)
  • Almost total overlap (see the red and green distributions on the right)
  • Changing the classification threshold (default 50%) does not help

Dealing with an Unbalanced Dataset…
• Improve results via:
  • better features (possible, as a follow-up phase)
  • more complex models (possible, as a follow-up phase)
  • or influencing training directly?
• Rebalancing techniques (e.g., under-sampling the majority class) could be applied during training
  • The overall goal is to identify more positive cases, at an acceptable expense of false positives, subject to tradeoff factors
  • So accurate prediction of probabilities is not the main goal
• Consider some amount of rebalancing on the training set only
  • Retain the full set of the minority class and reduce the majority class to reach a target ratio
  • There is no hard rule on a single most effective rebalancing ratio, so several trials are needed
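Random under-sampling of the majority class, applied to the training set only, can be written in a few lines of NumPy. The function name and the `ratio` parameter (negatives kept per positive) are hypothetical choices for this sketch, and the training data are random placeholders:

```python
import numpy as np

def undersample_majority(X, y, ratio, seed=0):
    """Keep every positive (minority) row and a random subset of negatives.

    ratio: number of negatives to keep per positive (1.0 gives a 50/50 set).
    Apply to the training set only; the test set keeps its natural imbalance.
    """
    rng = np.random.default_rng(seed)
    pos = np.flatnonzero(y == 1)
    neg = np.flatnonzero(y == 0)
    n_neg = min(len(neg), int(round(ratio * len(pos))))
    keep = np.concatenate([pos, rng.choice(neg, size=n_neg, replace=False)])
    rng.shuffle(keep)
    return X[keep], y[keep]

rng = np.random.default_rng(1)
X_train = rng.normal(size=(10_000, 5))                 # placeholder features
y_train = (rng.random(10_000) < 0.075).astype(int)    # placeholder outcome, ~7.5% positives

for ratio in (1.0, 2.0, 4.0):  # several trials, since no single ratio is known to be best
    Xr, yr = undersample_majority(X_train, y_train, ratio)
    print(ratio, len(yr), round(yr.mean(), 3))
```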

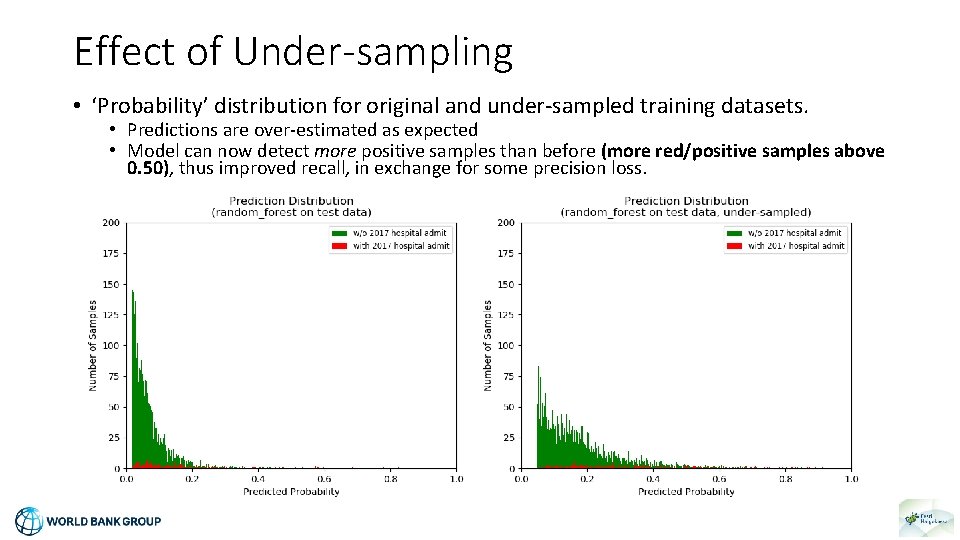

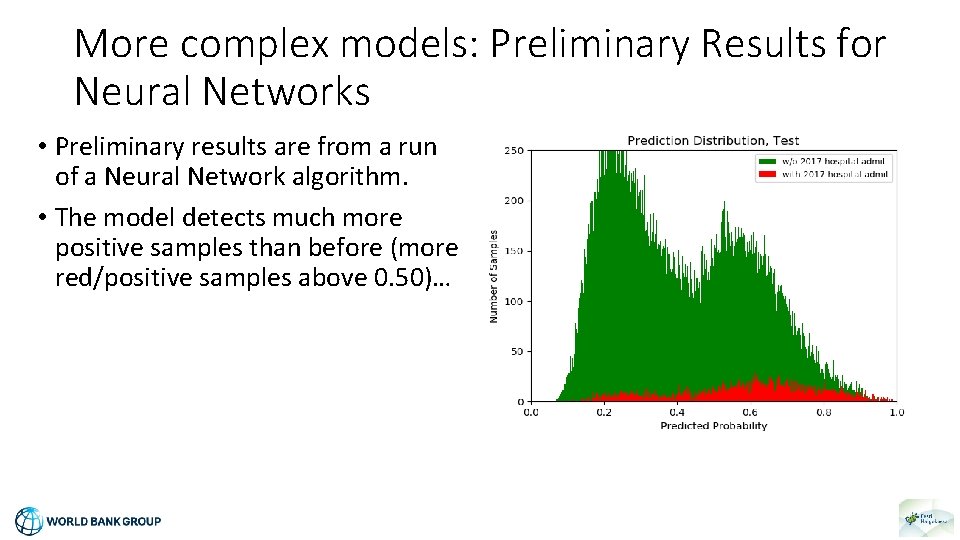

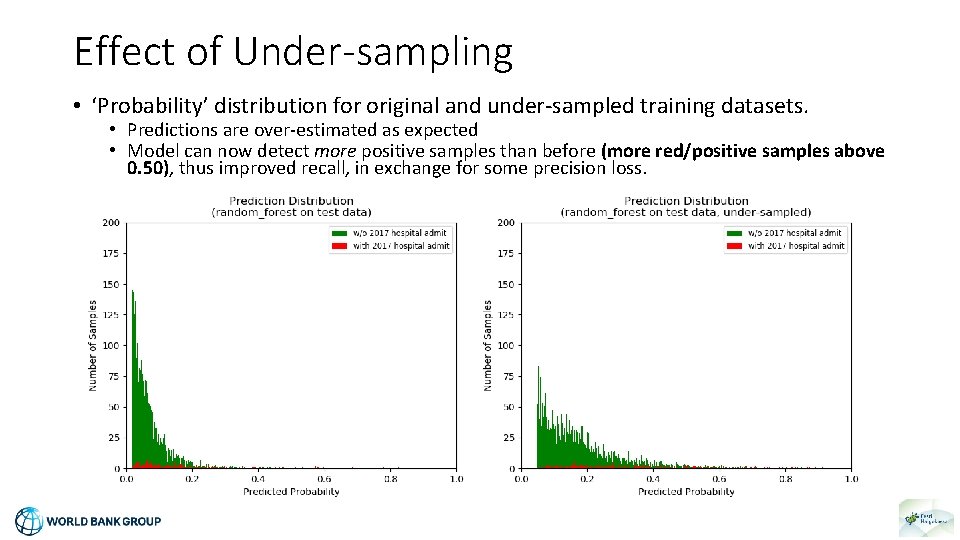

Effect of Under-sampling
• 'Probability' distributions for the original and under-sampled training datasets
• Predictions are over-estimated, as expected
• The model can now detect more positive samples than before (more red/positive samples above 0.50), improving recall in exchange for some loss of precision

Results for Models Based on Resampling

Next Round of Results…

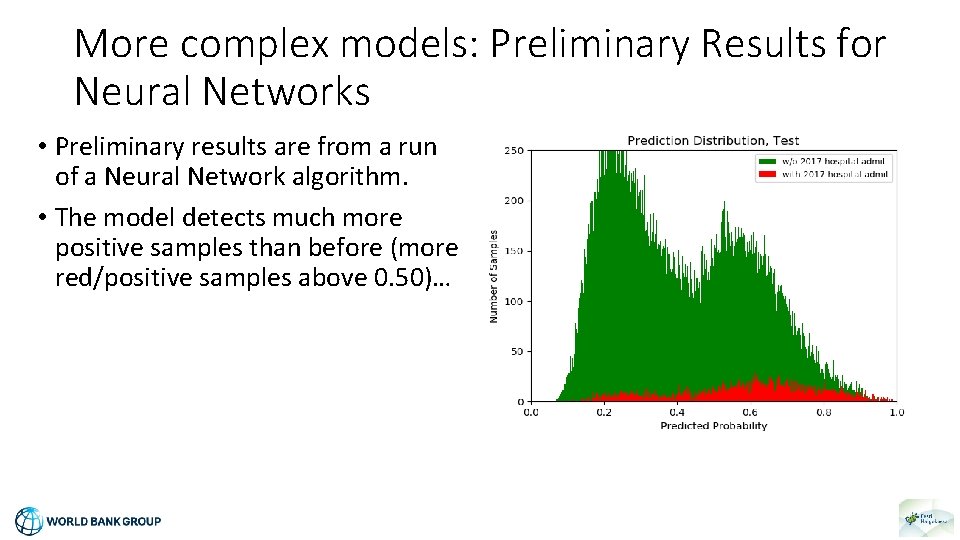

More complex models: Preliminary Results for Neural Networks
• Preliminary results are from a run of a neural network algorithm.
• A neural network is a model made of layers of interconnected units ("neurons") that learn weighted, non-linear transformations of the input features; its design is loosely inspired by the way the human brain operates.

More complex models: Preliminary Results for Neural Networks
• Preliminary results are from a run of a neural network algorithm.
• The model detects many more positive samples than before (more red/positive samples above 0.50)…

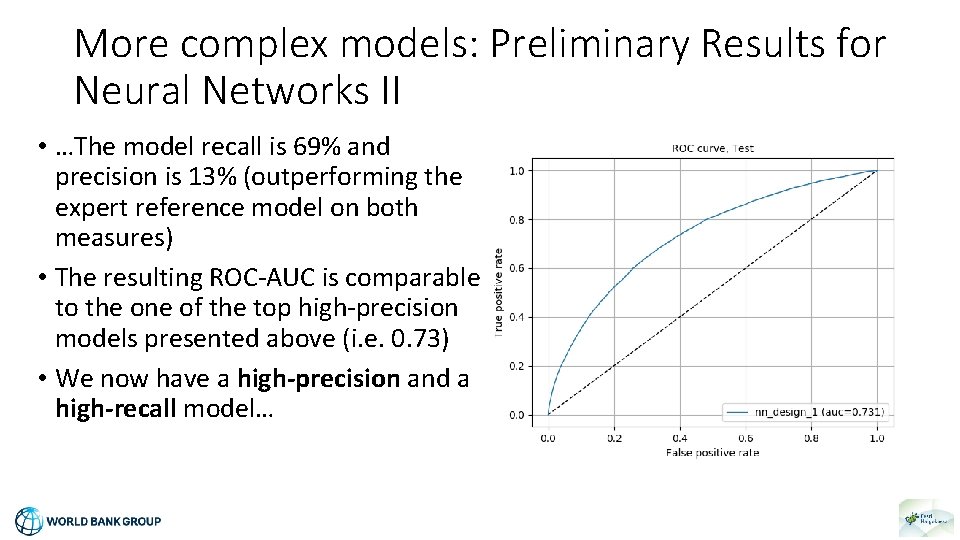

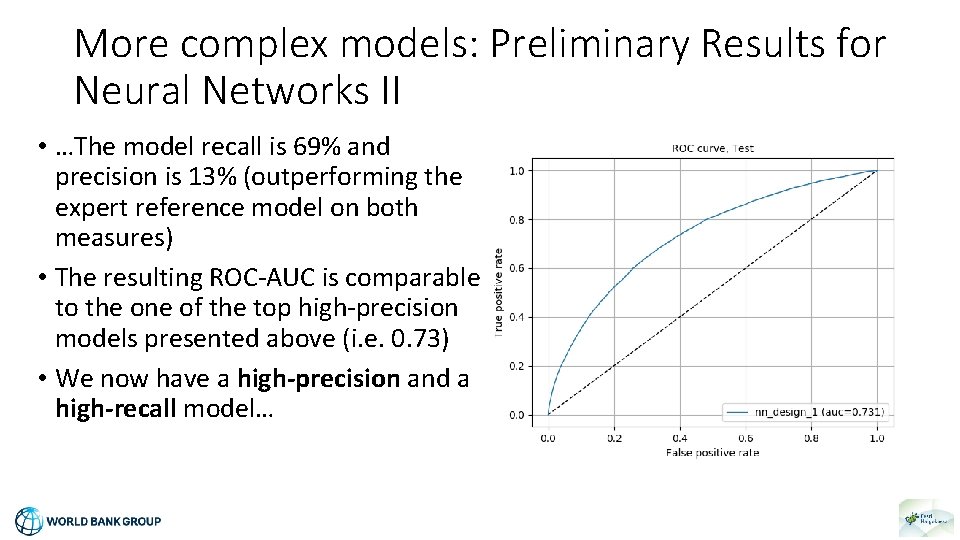

More complex models: Preliminary Results for Neural Networks II
• …The model's recall is 69% and its precision is 13% (outperforming the expert reference model on both measures)
• The resulting ROC-AUC is comparable to that of the top high-precision models presented above (i.e., 0.73)
• We now have a high-precision and a high-recall model…

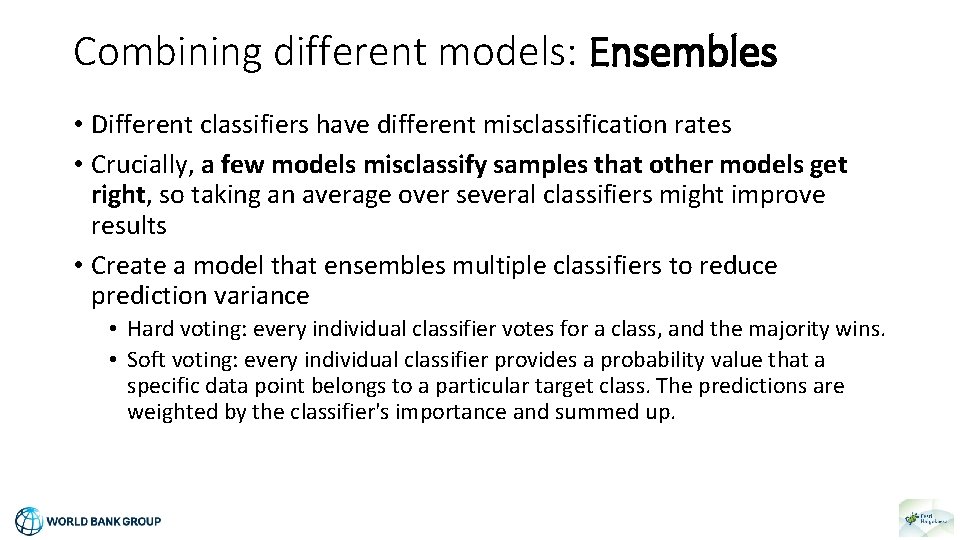

Combining different models: Ensembles
• Different classifiers have different misclassification rates
• Crucially, some models misclassify samples that other models get right, so averaging over several classifiers might improve results
• Create a model that ensembles multiple classifiers to reduce prediction variance
  • Hard voting: every individual classifier votes for a class, and the majority wins.
  • Soft voting: every individual classifier provides a probability that a specific data point belongs to a particular target class. The predictions are weighted by each classifier's importance and summed, and the class with the highest total wins.
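scikit-learn's `VotingClassifier` implements both schemes. A sketch on synthetic data (the members and weights are illustrative); note that scikit-learn takes a weighted average of the probabilities rather than a weighted sum, which selects the same class:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.neural_network import MLPClassifier

X, y = make_classification(n_samples=1000, weights=[0.9], random_state=0)

members = [
    ("rf", RandomForestClassifier(n_estimators=100, random_state=0)),
    ("logit", LogisticRegression(max_iter=1000)),
    ("mlp", MLPClassifier(hidden_layer_sizes=(20,), max_iter=1000, random_state=0)),
]
weights = [2, 1, 1]  # illustrative "importance" of each classifier

soft = VotingClassifier(members, voting="soft", weights=weights).fit(X, y)
hard = VotingClassifier(members, voting="hard").fit(X, y)

# Soft voting = weighted average of the fitted members' class probabilities, then argmax
manual = np.average([m.predict_proba(X) for m in soft.estimators_], axis=0, weights=weights)
print(np.allclose(soft.predict_proba(X), manual))
print((soft.predict(X) == manual.argmax(axis=1)).all())
```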

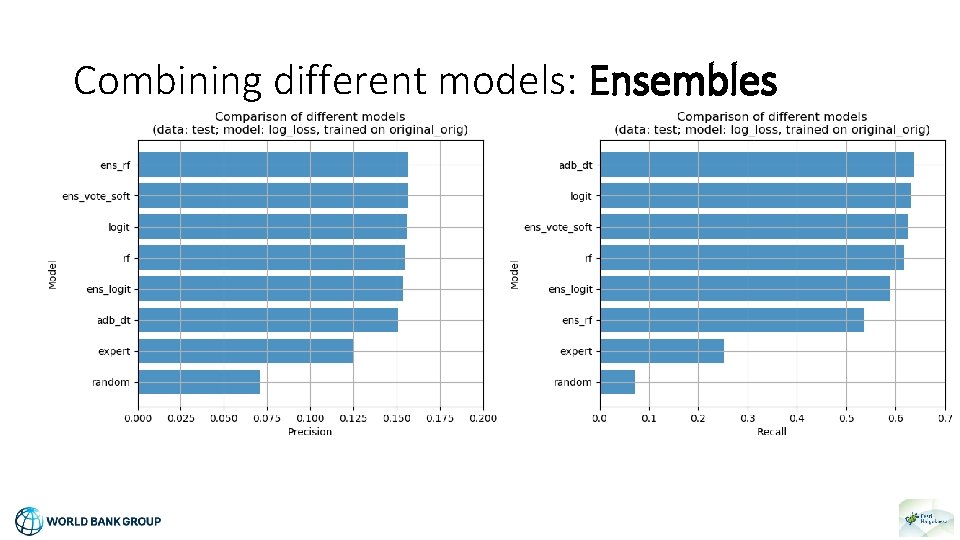

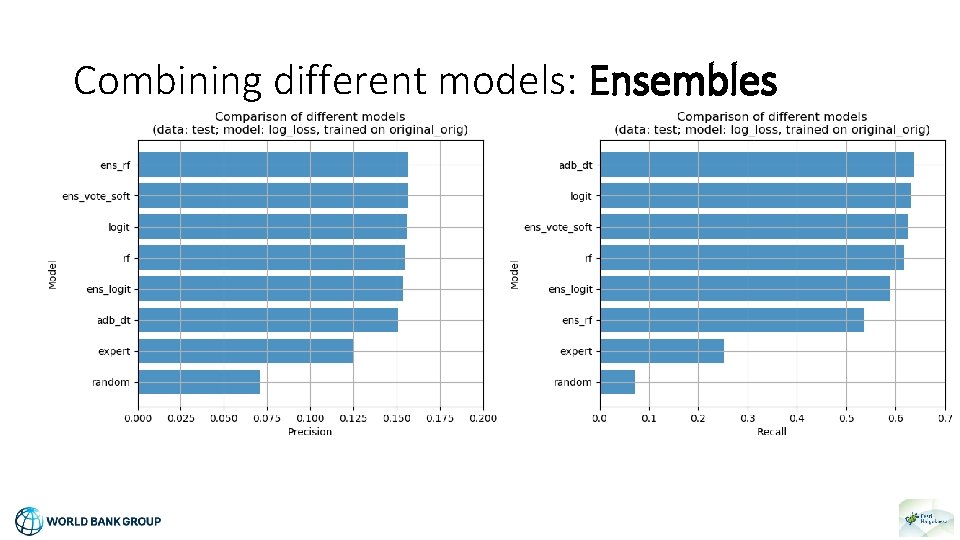

Combining different models: Ensembles

Combining different models: Ensembles
• A soft voting ensemble model
• Ensembling brings a small gain in this sample, but no significant advantage.

Conclusions ML vs. the Old Approach

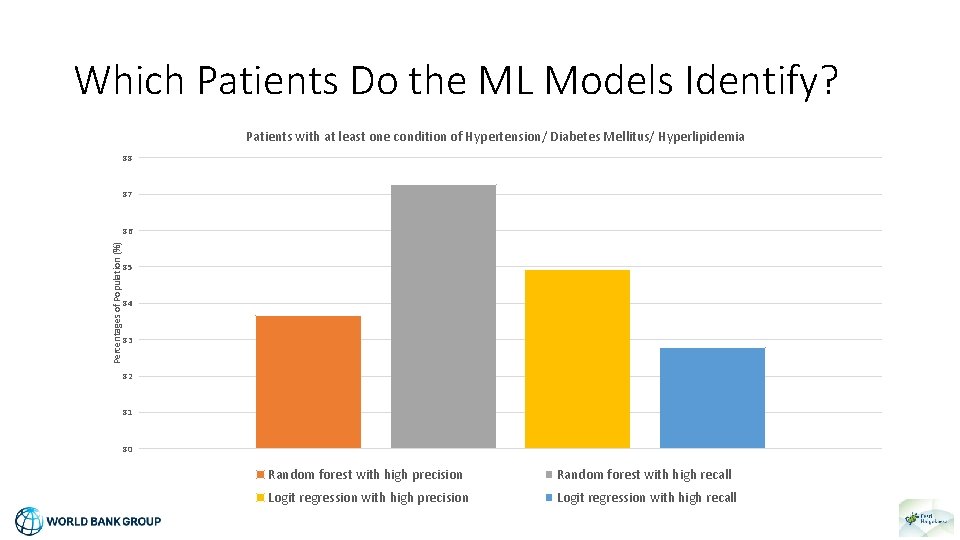

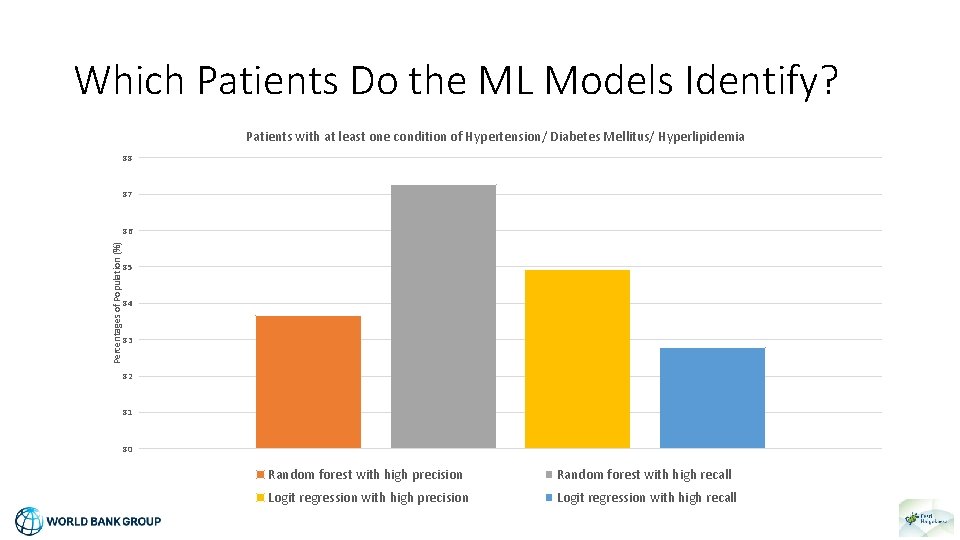

Which Patients Do the ML Models Identify?
Figure: Percentage of identified patients (%) with at least one of Hypertension/Diabetes Mellitus/Hyperlipidemia, by model: random forest with high precision, random forest with high recall, logistic regression with high precision, logistic regression with high recall

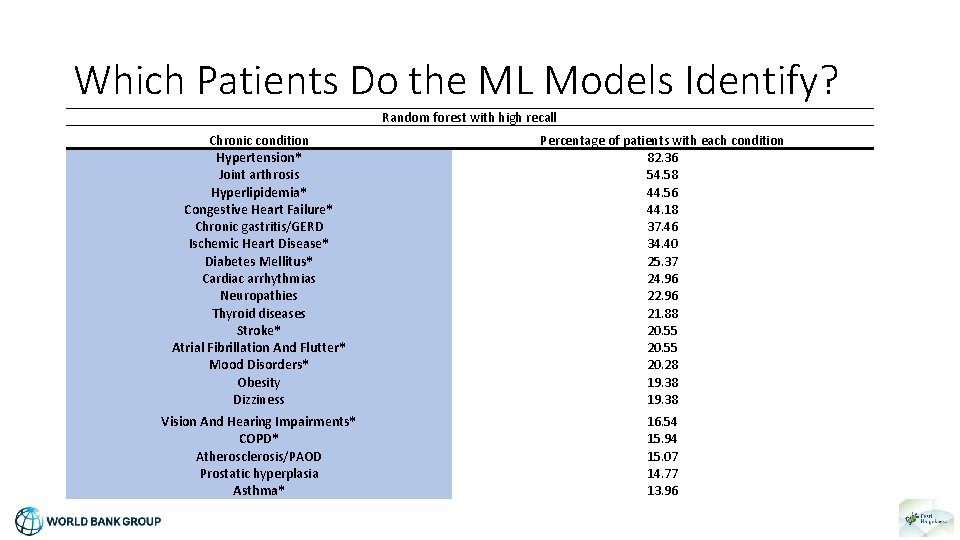

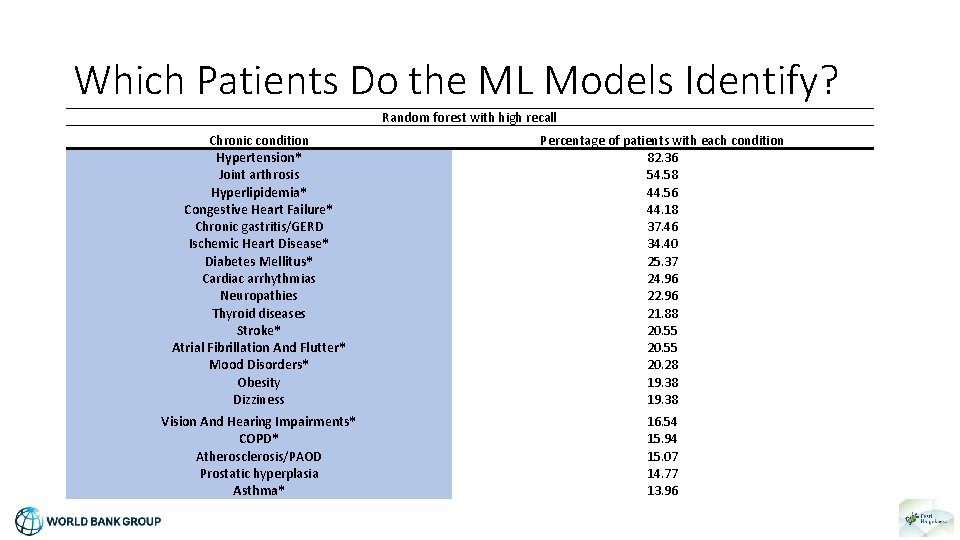

Which Patients Do the ML Models Identify?
Random forest with high recall:

| Chronic condition | Percentage of patients with each condition |
|---|---|
| Hypertension* | 82.36 |
| Joint arthrosis | 54.58 |
| Hyperlipidemia* | 44.56 |
| Congestive Heart Failure* | 44.18 |
| Chronic gastritis/GERD | 37.46 |
| Ischemic Heart Disease* | 34.40 |
| Diabetes Mellitus* | 25.37 |
| Cardiac arrhythmias | 24.96 |
| Neuropathies | 22.96 |
| Thyroid diseases | 21.88 |
| Stroke* | 20.55 |
| Atrial Fibrillation And Flutter* | 20.28 |
| Mood Disorders* | 19.38 |
| Obesity | - |
| Dizziness | - |
| Vision And Hearing Impairments* | 16.54 |
| COPD* | 15.94 |
| Atherosclerosis/PAOD | 15.07 |
| Prostatic hyperplasia | 14.77 |
| Asthma* | 13.96 |

Comparisons – Old Approach and the Literature
• The ML models are better than the old approach at predicting hospital admissions (but this is only one aspect/goal of ECM)
• Results are comparable to the best-performing results in the literature -> Johns Hopkins Adjusted Clinical Groups (the leading proprietary risk stratification tool)
  • Haas et al.; Risk-Stratification Methods for Identifying Patients for Care Coordination; The American Journal of Managed Care (September 2013)
• Predicting hospital admissions still remains a hard problem…

How to use ML techniques for ECM?
• Use ML instead of the old approach, or combine them?
  • Both approaches have advantages and disadvantages, mainly interpretability vs. prediction performance
  • What is the objective of ECM?
  • How long is ECM enrollment going to last for a patient?
• Use ML in addition to the old algorithm?
• Implement ML predictions for purposes other than hospital admissions
• Use dashboards as a chance to give more frequent feedback to GPs
• Move from retrospective feedback to forward-looking information sharing for better decision making by care teams

Some More Observations
• Improving the ML models: additional data on the social status and conditions of patients is key
• Updating the models with newly available information (every 3, 6 or 12 months) can improve performance considerably
• More trials and evaluations offer more chances for model improvement
• Implementation: the analysis was carried out in Python. All code will be made available and can be adapted.
• Data cleaning and preparation is a lengthy process…
• Running the more advanced models takes time; the availability of multiple/scalable computing resources is key…

Other Potential ML Applications at EHIF
• Predicting costs per patient and which patients will be the high-cost patients in the next year
• Predicting volumes of care services at different providers
• Predicting which patients on a waiting list can benefit the most from a given surgery (see the example above)
• Unsupervised machine learning: identifying provider fraud and outlier providers (in terms of their performance or their care provision)
• …