Regularized risk minimization Usman Roshan


Supervised learning for two classes

- We are given n training samples $(x_i, y_i)$, $i = 1, \dots, n$, drawn i.i.d. from a probability distribution $P(x, y)$.
- Each $x_i$ is a d-dimensional vector ($x_i \in \mathbb{R}^d$) and $y_i$ is +1 or -1.
- Our problem is to learn a function $f(x)$ for predicting the labels of test samples $x'_i \in \mathbb{R}^d$, $i = 1, \dots, n'$, also drawn i.i.d. from $P(x, y)$.


Loss function

- Loss function: $c(x, y, f(x))$
- Maps to $[0, \infty)$
- Examples:

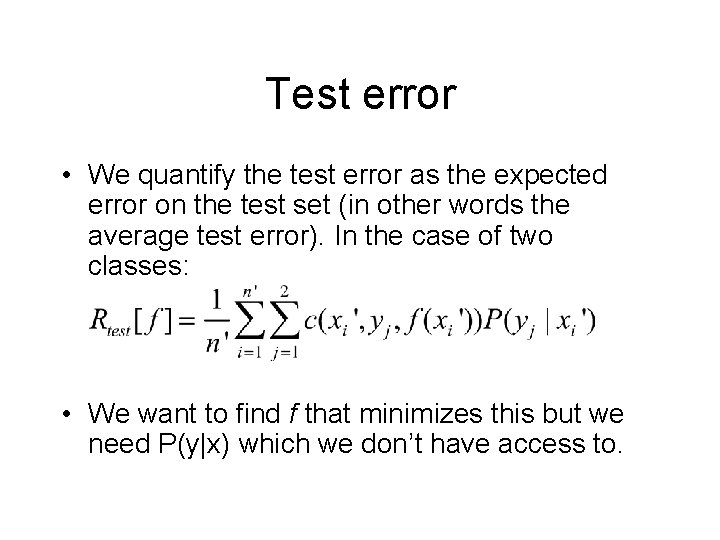

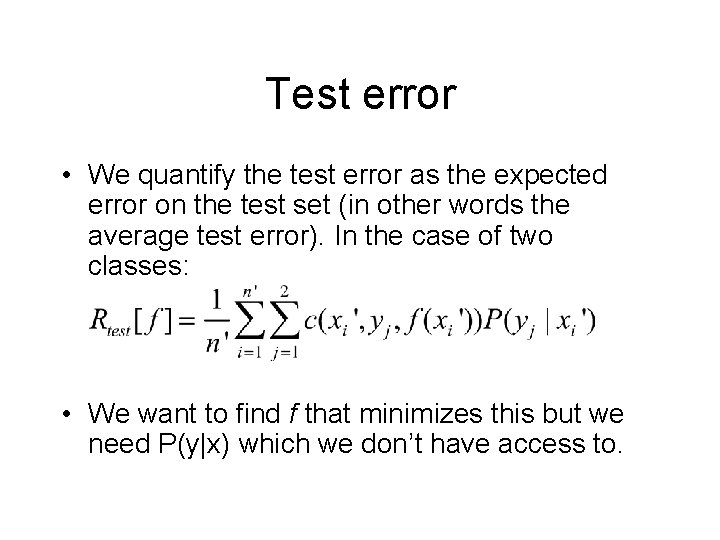
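The slide's examples survive only as images, so the following is a minimal sketch assuming three standard choices (0-1, squared, and hinge loss) rather than a transcription. Each hypothetical helper takes labels $y \in \{-1, +1\}$ and predictions $f(x)$ and returns one value in $[0, \infty)$ per sample.

```python
import numpy as np

# Hypothetical helpers: each maps (y, f(x)) to a non-negative loss per sample.

def zero_one_loss(y, fx):
    """1 if sign(f(x)) disagrees with y, else 0 (f(x) = 0 is counted as an error)."""
    return (y * fx <= 0).astype(float)

def squared_loss(y, fx):
    """(y - f(x))^2, the loss used in least-squares regression."""
    return (y - fx) ** 2

def hinge_loss(y, fx):
    """max(0, 1 - y f(x)), the loss used by the SVM."""
    return np.maximum(0.0, 1.0 - y * fx)

y = np.array([1, -1, 1, -1])
fx = np.array([2.0, -0.5, -0.3, 0.4])
for loss in (zero_one_loss, squared_loss, hinge_loss):
    print(loss.__name__, loss(y, fx))
```

Note that the squared loss penalizes the first sample even though it is classified correctly with a large margin, while the 0-1 and hinge losses do not.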

Test error

- We quantify the test error as the expected error on the test set (in other words, the average test error). In the case of two classes:
- We want to find the f that minimizes this, but computing it requires $P(y \mid x)$, which we don't have access to.

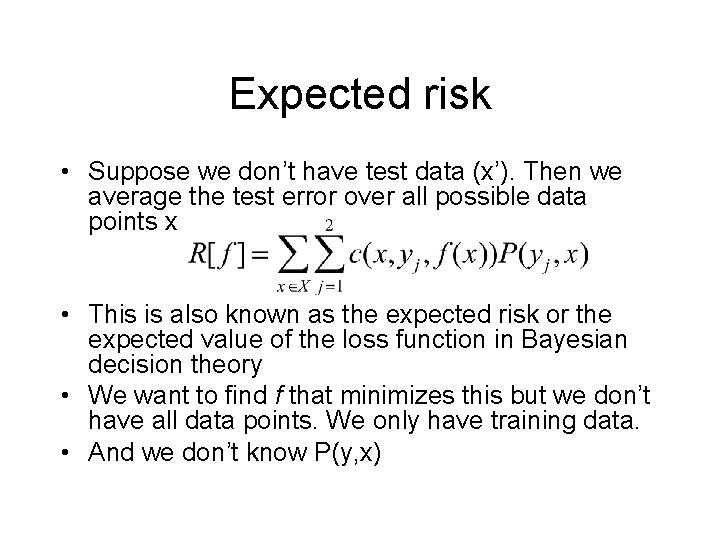

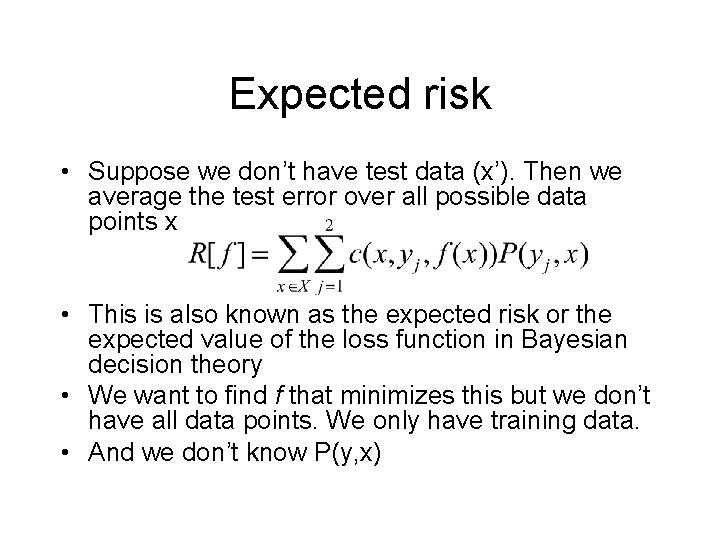
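The formulas above exist only as images. Following the definition in Schölkopf and Smola's *Learning with Kernels* (our reconstruction, not a transcription of the slide), the test error averages the loss over the label distribution at each test point:

$$R_{\text{test}}[f] = \frac{1}{n'} \sum_{i=1}^{n'} \int c(x'_i, y, f(x'_i)) \, dP(y \mid x'_i)$$

For two classes, $y \in \{-1, +1\}$, the integral becomes a two-term sum, which makes the dependence on $P(y \mid x)$ explicit:

$$R_{\text{test}}[f] = \frac{1}{n'} \sum_{i=1}^{n'} \sum_{y \in \{-1, +1\}} c(x'_i, y, f(x'_i)) \, P(y \mid x'_i)$$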

Expected risk

- Suppose we don't have test data ($x'$). Then we average the loss over all possible data points $(x, y)$, weighted by $P(x, y)$.
- This is also known as the expected risk, or the expected value of the loss function in Bayesian decision theory.
- We want to find the f that minimizes this, but we don't have all data points; we only have training data.
- And we don't know $P(x, y)$.

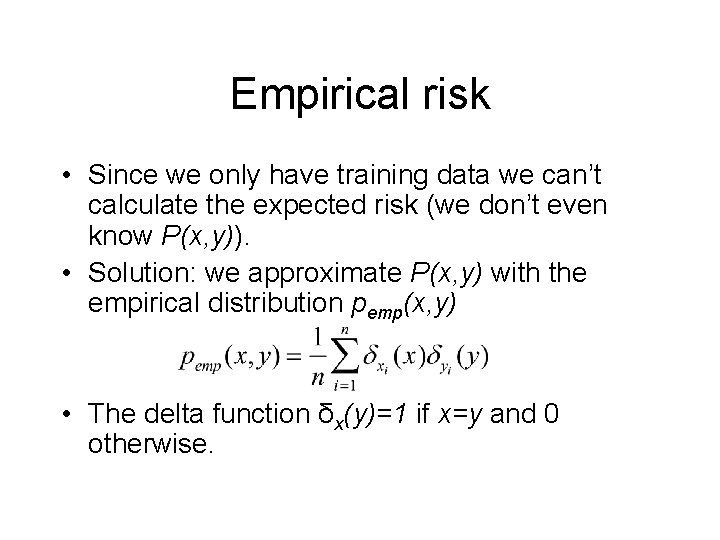

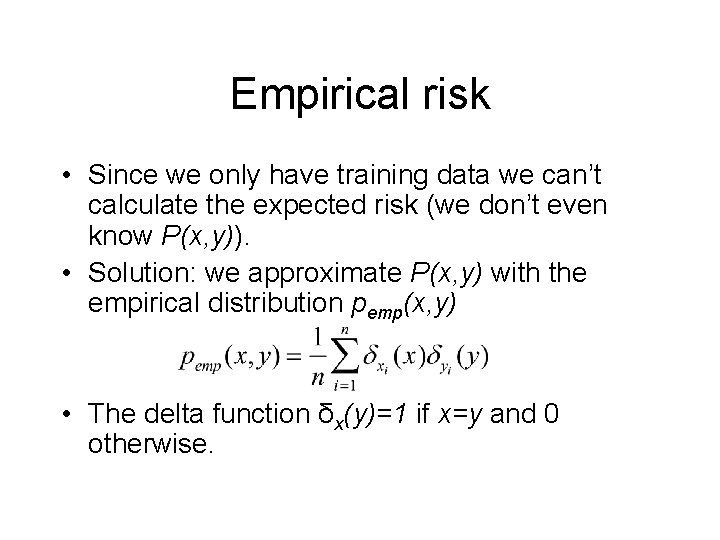
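In the same notation (again our reconstruction of the image, assuming the standard definition), the expected risk is

$$R[f] = \mathbb{E}\left[c(x, y, f(x))\right] = \int c(x, y, f(x)) \, dP(x, y)$$

Compared with the test error, the average over a fixed set of test points is replaced by an integral over all $x$, so the full joint distribution $P(x, y)$ is needed, not just $P(y \mid x)$.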

Empirical risk

- Since we only have training data, we can't calculate the expected risk (we don't even know $P(x, y)$).
- Solution: we approximate $P(x, y)$ with the empirical distribution $p_{\text{emp}}(x, y)$.
- The delta function $\delta_x(y) = 1$ if $x = y$ and 0 otherwise.

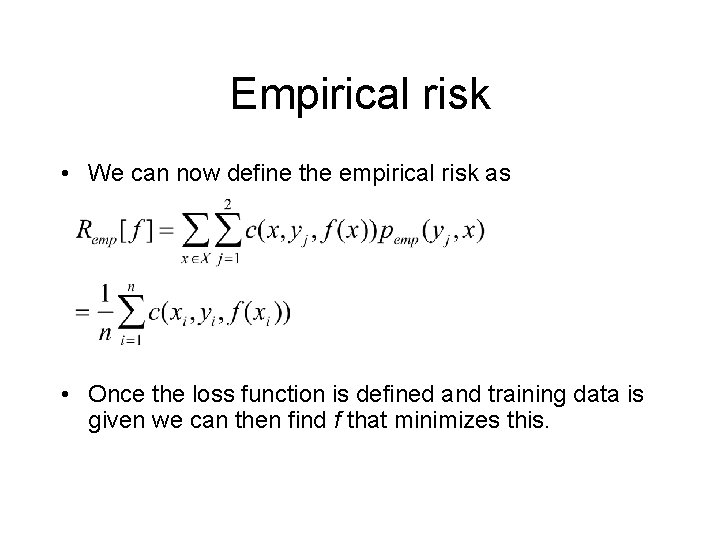

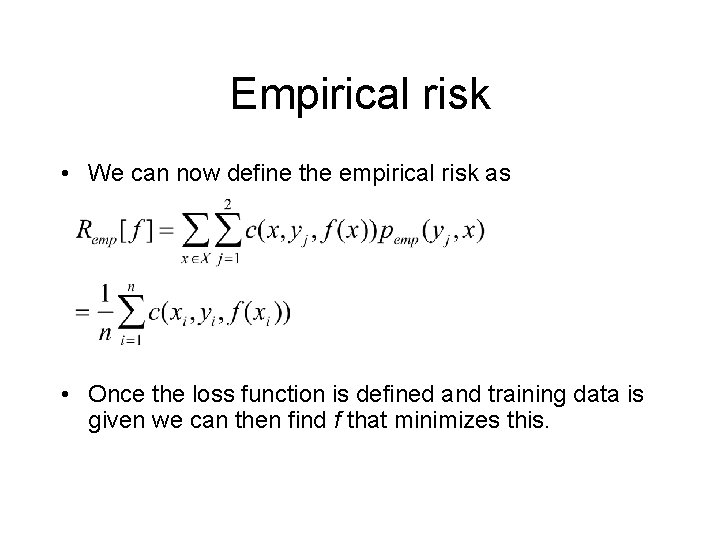
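Written out with the delta function defined on this slide (a sketch of the standard construction; the slide's own formula is in the image), the empirical distribution puts mass $1/n$ on each training pair:

$$p_{\text{emp}}(x, y) = \frac{1}{n} \sum_{i=1}^{n} \delta_{x_i}(x) \, \delta_{y_i}(y)$$

Substituting it for $P(x, y)$ turns the average over all data points into a sum in which only the training pairs survive:

$$\sum_{x, y} c(x, y, f(x)) \, p_{\text{emp}}(x, y) = \frac{1}{n} \sum_{i=1}^{n} c(x_i, y_i, f(x_i))$$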

Empirical risk

- We can now define the empirical risk as
- Once the loss function is defined and the training data is given, we can find the f that minimizes this.

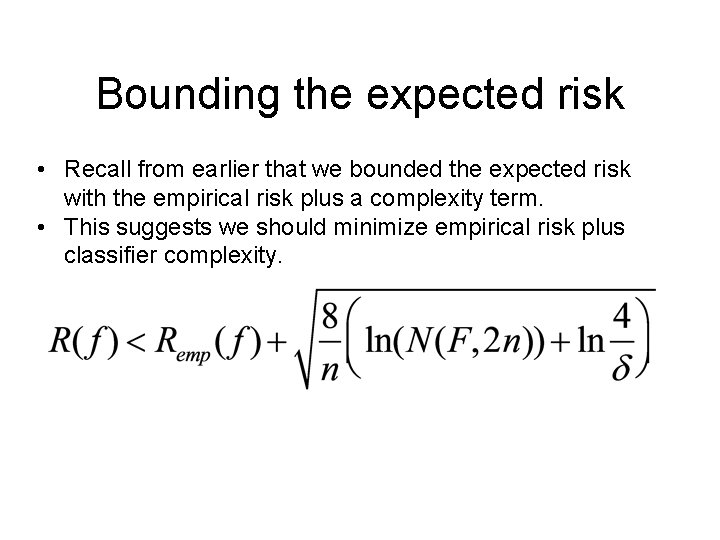

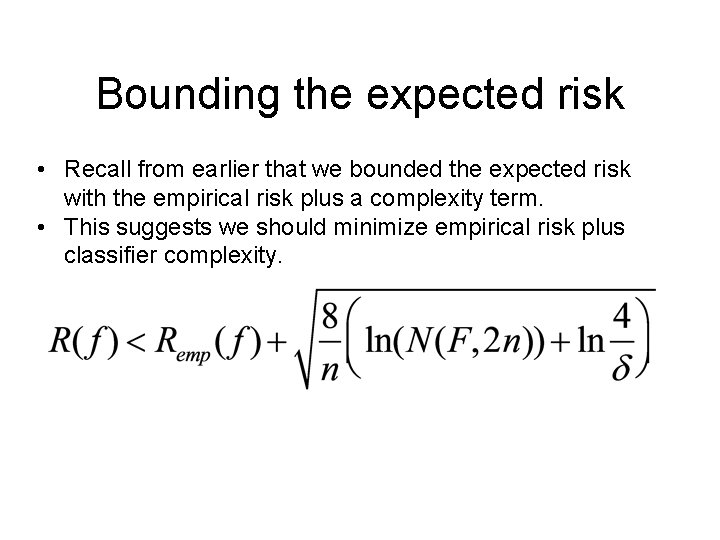
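A minimal sketch of computing the empirical risk and picking the better of two candidate classifiers. The helper names, the toy data, and the use of the hinge loss are our own assumptions; we also assume the loss depends on $x$ only through $f(x)$, so it is passed as `loss(y, f(x))`.

```python
import numpy as np

def empirical_risk(loss, f, X, y):
    """R_emp[f] = (1/n) * sum_i c(x_i, y_i, f(x_i)), with c given as loss(y, f(x))."""
    fx = np.array([f(x) for x in X])
    return float(np.mean(loss(y, fx)))

def hinge_loss(y, fx):
    return np.maximum(0.0, 1.0 - y * fx)

# Tiny (hypothetical) training set and two candidate linear classifiers f(x) = w . x
X = np.array([[2.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
y = np.array([1, -1, 1])
candidates = {"w1": np.array([1.0, -1.0]), "w2": np.array([1.0, 1.0])}

# Bind each w as a default argument so every lambda keeps its own weights
risks = {name: empirical_risk(hinge_loss, lambda x, w=w: float(x @ w), X, y)
         for name, w in candidates.items()}
best = min(risks, key=risks.get)
print(risks, "->", best)
```

In practice f ranges over a continuous family (e.g., all $w \in \mathbb{R}^d$) and the minimization is done by an optimizer rather than by enumeration.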

Bounding the expected risk

- Recall from earlier that we bounded the expected risk by the empirical risk plus a complexity term.
- This suggests we should minimize the empirical risk plus classifier complexity.

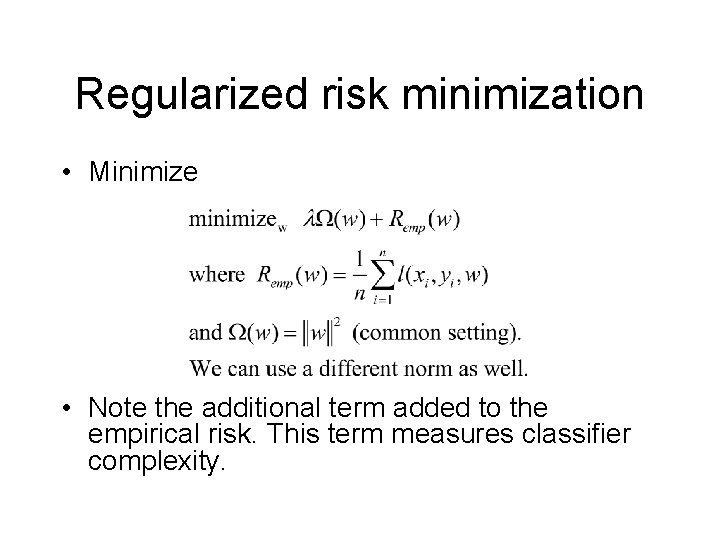

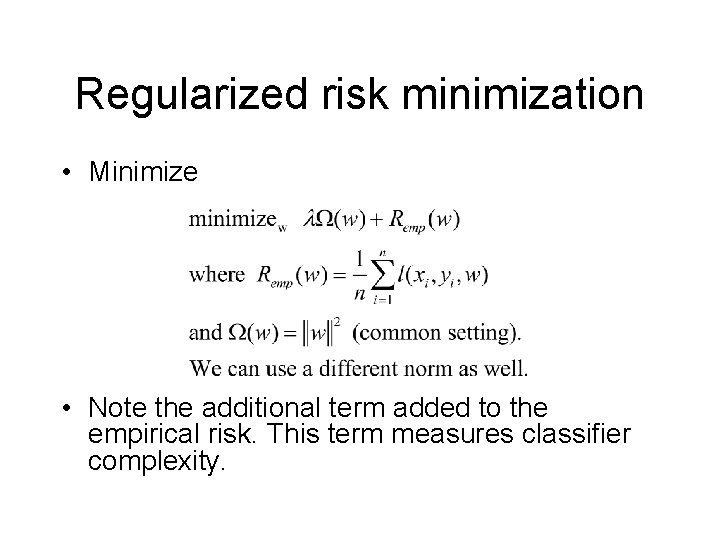
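Schematically, and without claiming this is the exact bound derived earlier in the course, bounds of this kind (e.g., VC-type bounds) state that with probability at least $1 - \delta$ over the draw of the $n$ training samples,

$$R[f] \le R_{\text{emp}}[f] + \Phi(h, n, \delta)$$

where $h$ measures the capacity of the function class (for example its VC dimension) and $\Phi$ is a placeholder for the specific complexity term. Since $\Phi$ is usually hard to compute or optimize directly, regularized risk minimization replaces it with a tractable penalty $\lambda \Omega[f]$.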

Regularized risk minimization

- Minimize
- Note the additional term added to the empirical risk: it measures classifier complexity.

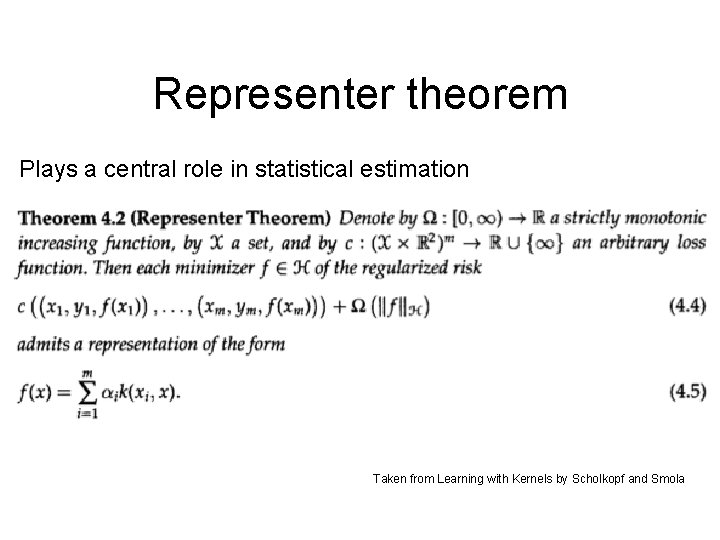

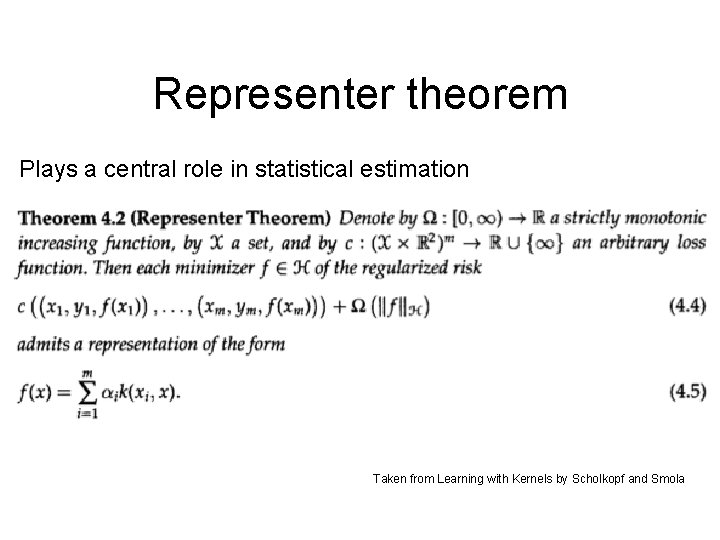
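A small sketch of why the extra term matters, assuming a linear classifier, the hinge loss, and the complexity term $\Omega[f] = \tfrac{1}{2}\|w\|^2$ (the slide's exact formula is in the image). Scaling $w$ up can drive the empirical risk to zero, but the regularizer then grows, so among classifiers with zero training loss the smaller-norm one is preferred.

```python
import numpy as np

def empirical_hinge_risk(w, X, y):
    """(1/n) * sum_i max(0, 1 - y_i w . x_i)."""
    return float(np.mean(np.maximum(0.0, 1.0 - y * (X @ w))))

def regularized_risk(w, X, y, lam):
    """Empirical risk plus lam * Omega[f], with Omega assumed to be 0.5 * ||w||^2."""
    return empirical_hinge_risk(w, X, y) + lam * 0.5 * float(w @ w)

# Hypothetical 1-D data: one positive and one negative point
X = np.array([[1.0], [-1.0]])
y = np.array([1, -1])
lam = 0.1
for scale in (0.5, 1.0, 10.0):
    w = np.array([scale])
    print(scale, empirical_hinge_risk(w, X, y), regularized_risk(w, X, y, lam))
```

Both `scale=1.0` and `scale=10.0` separate the data with zero hinge loss, but only the first has a small regularized risk.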

Representer theorem

- Plays a central role in statistical estimation.
- Taken from *Learning with Kernels* by Schölkopf and Smola.

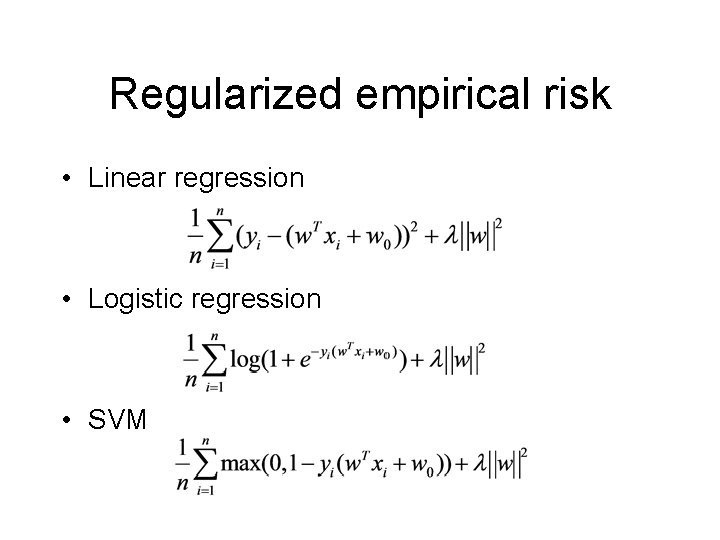

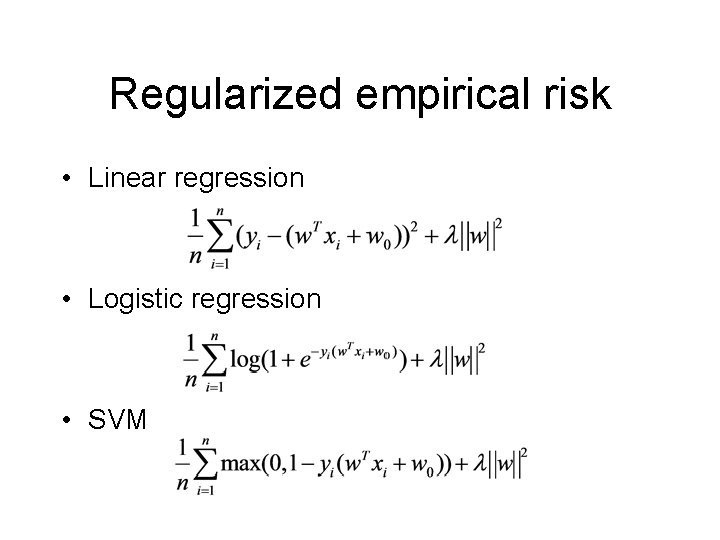
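Roughly, the representer theorem says that when the regularizer is an increasing function of the RKHS norm $\|f\|$, a minimizer of the regularized risk can be written as $f(x) = \sum_{i=1}^{n} \alpha_i k(x_i, x)$. As a concrete check we chose kernel ridge regression (squared loss, regularizer $\lambda \|f\|^2$; this example is ours, not the slide's). Substituting the expansion gives $\frac{1}{n}\|y - K\alpha\|^2 + \lambda \alpha^\top K \alpha$, which is minimized by $\alpha = (K + n\lambda I)^{-1} y$. With a linear kernel, the resulting $w = X^\top \alpha$ should match ridge regression solved directly over all $w \in \mathbb{R}^d$.

```python
import numpy as np

def fit_kernel_ridge(K, y, lam):
    """Coefficients alpha of f = sum_i alpha_i k(x_i, .) minimizing
    (1/n) * sum_i (y_i - f(x_i))^2 + lam * ||f||_H^2."""
    n = K.shape[0]
    return np.linalg.solve(K + n * lam * np.eye(n), y)

rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
y = rng.normal(size=20)
lam = 0.1

# Linear kernel k(x, z) = x . z, so f(x) = sum_i alpha_i (x_i . x) = (X^T alpha) . x
K = X @ X.T
alpha = fit_kernel_ridge(K, y, lam)
w_from_alpha = X.T @ alpha

# Ridge regression solved directly over all w in R^3 (the "primal" problem)
n, d = X.shape
w_primal = np.linalg.solve(X.T @ X + n * lam * np.eye(d), X.T @ y)

print(np.allclose(w_from_alpha, w_primal))
```

The unrestricted minimizer lies in the span of the training points, which is what the theorem predicts; for nonlinear kernels only the $n$ coefficients $\alpha_i$ need to be found.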

Regularized empirical risk

- Linear regression
- Logistic regression
- SVM

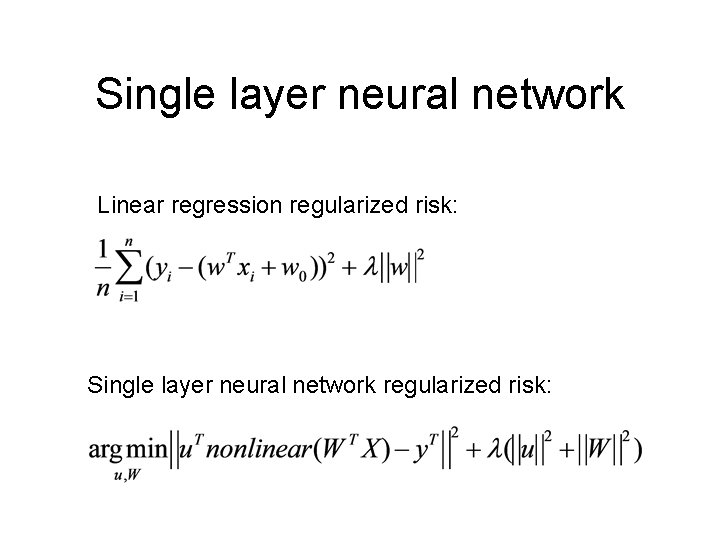

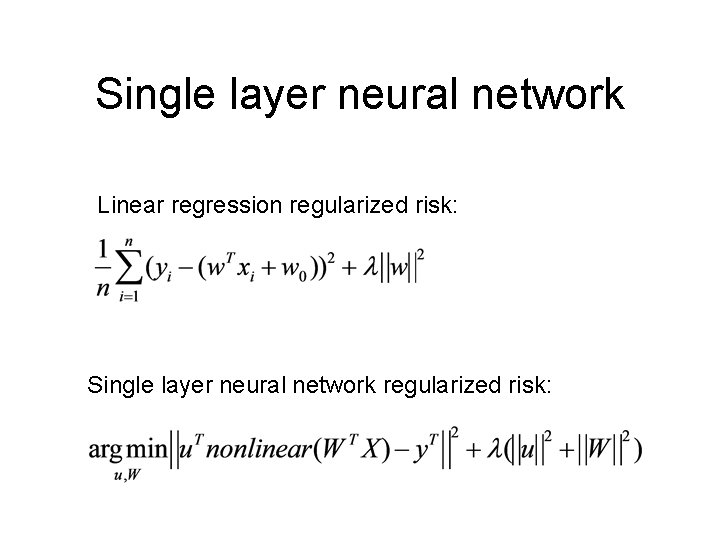
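The three objectives differ only in the loss. A sketch for a linear $f(x) = w \cdot x$ with labels in $\{-1, +1\}$, assuming the convention "mean loss $+ \lambda\|w\|^2$" with no bias term; the slides may scale the terms differently (e.g., $\tfrac{\lambda}{2}\|w\|^2$ or a sum instead of a mean).

```python
import numpy as np

# Assumed convention: mean loss over the training set + lam * ||w||^2, no bias term.

def linear_regression_risk(w, X, y, lam):
    return np.mean((y - X @ w) ** 2) + lam * (w @ w)

def logistic_regression_risk(w, X, y, lam):
    # log(1 + exp(-y w.x)) computed stably as logaddexp(0, -y w.x)
    return np.mean(np.logaddexp(0.0, -y * (X @ w))) + lam * (w @ w)

def svm_risk(w, X, y, lam):
    return np.mean(np.maximum(0.0, 1.0 - y * (X @ w))) + lam * (w @ w)

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))
y = np.array([1, -1, 1, 1, -1])
w = rng.normal(size=3)
for risk in (linear_regression_risk, logistic_regression_risk, svm_risk):
    print(risk.__name__, risk(w, X, y, lam=0.1))
```

At $w = 0$ the three risks are $1$, $\log 2$, and $1$, since every prediction is 0.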

Single-layer neural network

- Linear regression regularized risk:
- Single-layer neural network regularized risk:

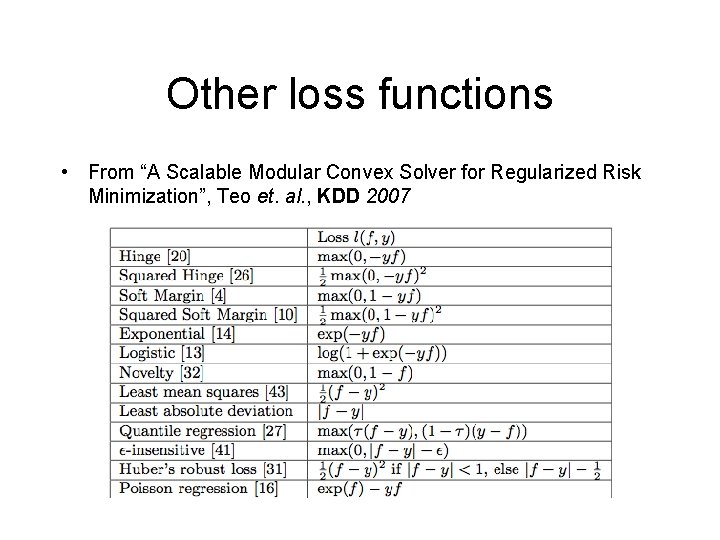

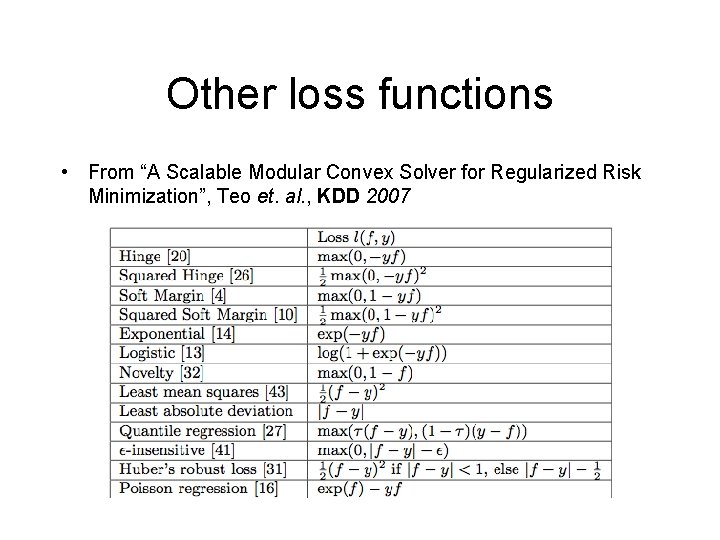
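The slide's formulas are only in the images, so this is an assumption: a single-layer network with a sigmoid output $f(x) = \sigma(w \cdot x)$, squared loss, and an $L_2$ penalty, with the $\pm 1$ labels mapped to targets in $\{0, 1\}$ so they lie in the sigmoid's range. Compared with linear regression, the only change is the nonlinearity, which adds a factor $\sigma(1 - \sigma)$ to the gradient. All function names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def nn_risk(w, X, t, lam):
    """Mean squared error of a sigmoid output unit plus lam * ||w||^2; targets t in {0, 1}."""
    return np.mean((t - sigmoid(X @ w)) ** 2) + lam * (w @ w)

def nn_risk_grad(w, X, t, lam):
    s = sigmoid(X @ w)
    # Chain rule: d/dw (t - s)^2 = -2 (t - s) * s (1 - s) * x
    coeff = -2.0 * (t - s) * s * (1.0 - s)
    return X.T @ coeff / len(t) + 2.0 * lam * w

def train(X, y, lam=0.01, lr=0.5, iters=2000):
    t = (y + 1) / 2                           # map labels {-1, +1} -> {0, 1}
    w = np.zeros(X.shape[1])
    for _ in range(iters):
        w -= lr * nn_risk_grad(w, X, t, lam)  # plain gradient descent
    return w

rng = np.random.default_rng(1)
X = rng.normal(size=(50, 3))
y = np.where(X @ np.array([1.0, -2.0, 0.5]) > 0, 1, -1)
w = train(X, y)
t = (y + 1) / 2
print("risk at w=0:", nn_risk(np.zeros(3), X, t, 0.01),
      "after training:", nn_risk(w, X, t, 0.01))
```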

Other loss functions

- From “A Scalable Modular Convex Solver for Regularized Risk Minimization”, Teo et al., KDD 2007.

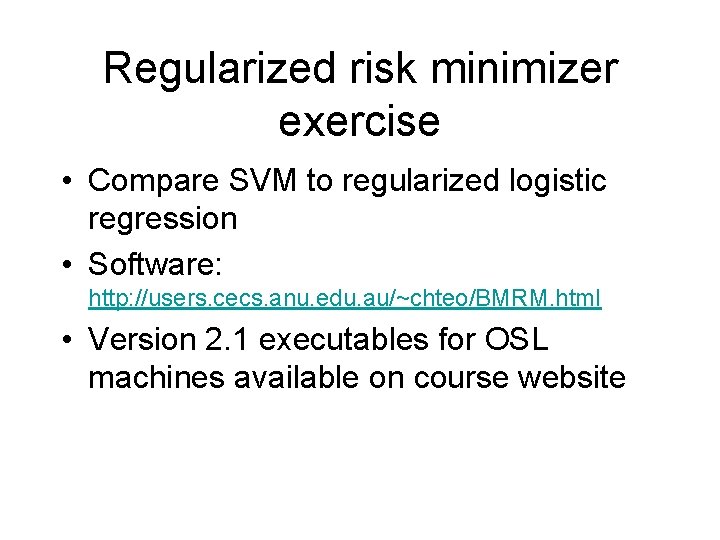

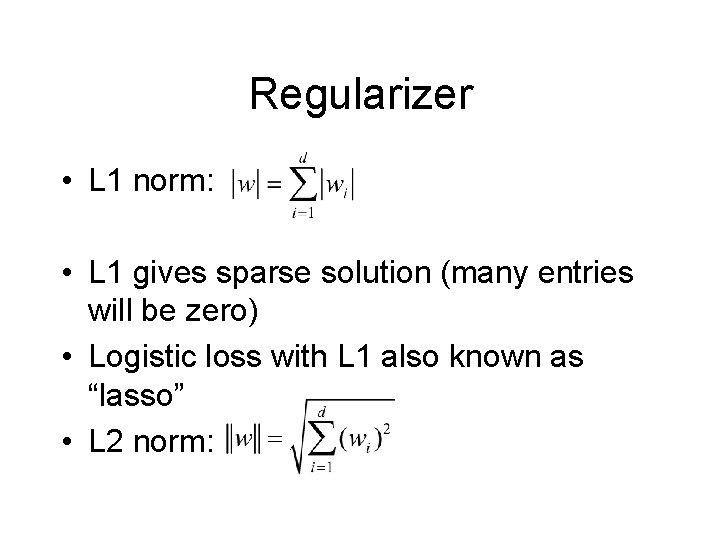
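The table itself is only an image, so we can't confirm its exact list. As a sketch, here are three standard regression losses of this kind, written in terms of the residual $r = y - f(x)$; the parameter names `eps`, `delta`, and `tau` and their default values are our own choices.

```python
import numpy as np

# Standard regression losses in terms of the residual r = y - f(x).
# Parameter names and defaults are our own, not taken from the paper's table.

def eps_insensitive_loss(y, fx, eps=0.1):
    """max(0, |y - f(x)| - eps): residuals smaller than eps cost nothing."""
    return np.maximum(0.0, np.abs(y - fx) - eps)

def huber_loss(y, fx, delta=1.0):
    """Quadratic for |r| <= delta, linear beyond it (robust to outliers)."""
    r = np.abs(y - fx)
    return np.where(r <= delta, 0.5 * r ** 2, delta * (r - 0.5 * delta))

def quantile_loss(y, fx, tau=0.9):
    """Pinball loss: tau * r if r >= 0, else (tau - 1) * r."""
    r = y - fx
    return np.maximum(tau * r, (tau - 1.0) * r)

y = np.array([1.0, 1.0, 1.0])
fx = np.array([1.05, 1.5, 3.0])
for loss in (eps_insensitive_loss, huber_loss, quantile_loss):
    print(loss.__name__, loss(y, fx))
```

All three are convex in $f(x)$, which is what allows a generic convex solver to handle them with the same machinery.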

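Regularizer

- L1 norm: $\|w\|_1 = \sum_{j=1}^{d} |w_j|$
- L1 gives a sparse solution (many entries will be exactly zero).
- Squared loss with L1 is known as the “lasso”; the same penalty with logistic loss gives L1-regularized (sometimes called lasso) logistic regression.
- L2 norm: $\|w\|_2 = \sqrt{\sum_{j=1}^{d} w_j^2}$, usually used in squared form, $\|w\|_2^2$, as a regularizer.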
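A sketch illustrating the sparsity claim on synthetic data where only 3 of 10 features matter. We assume squared loss, solve the L1 problem with proximal gradient descent (ISTA, our choice of solver; its soft-thresholding step is what produces exact zeros), and solve the L2 problem in closed form. The data, the value of `lam`, and all helper names are hypothetical.

```python
import numpy as np

def soft_threshold(z, t):
    """Proximal operator of t * ||.||_1: shrinks toward 0 and clips small entries to exactly 0."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def lasso_ista(X, y, lam, iters=2000):
    """Minimize (1/(2n))||y - Xw||^2 + lam * ||w||_1 by proximal gradient (ISTA)."""
    n, d = X.shape
    step = n / np.linalg.norm(X, 2) ** 2  # 1 / Lipschitz constant of the smooth part
    w = np.zeros(d)
    for _ in range(iters):
        grad = X.T @ (X @ w - y) / n
        w = soft_threshold(w - step * grad, step * lam)
    return w

def ridge(X, y, lam):
    """Minimize (1/(2n))||y - Xw||^2 + (lam/2) * ||w||_2^2 in closed form."""
    n, d = X.shape
    return np.linalg.solve(X.T @ X / n + lam * np.eye(d), X.T @ y / n)

rng = np.random.default_rng(0)
n, d = 100, 10
X = rng.normal(size=(n, d))
w_true = np.zeros(d)
w_true[:3] = [3.0, -2.0, 1.5]             # only the first 3 features matter
y = X @ w_true + 0.1 * rng.normal(size=n)

w_l1 = lasso_ista(X, y, lam=0.1)
w_l2 = ridge(X, y, lam=0.1)
print("exact zeros, L1:", int(np.sum(w_l1 == 0)))
print("exact zeros, L2:", int(np.sum(w_l2 == 0)))
```

The L2 penalty shrinks every coefficient but leaves them all nonzero, while the L1 penalty sets the irrelevant ones to exactly zero.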

Regularized risk minimizer exercise

- Compare SVM to regularized logistic regression.
- Software: http://users.cecs.anu.edu.au/~chteo/BMRM.html
- Version 2.1 executables for OSL machines available on the course website.
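The exercise uses BMRM. As a stand-in that needs only NumPy (this is not BMRM and does not use its bundle method), here is a sketch that trains both models by plain (sub)gradient descent on "mean loss $+ \lambda\|w\|^2$" and compares training accuracy. The two-blob data set, the step size, and the function names are hypothetical.

```python
import numpy as np

def train_linear(X, y, loss, lam=0.01, lr=0.1, iters=1000):
    """(Sub)gradient descent on mean(loss) + lam * ||w||^2 for f(x) = w . x."""
    w = np.zeros(X.shape[1])
    for _ in range(iters):
        m = y * (X @ w)                       # margins y_i f(x_i)
        if loss == "hinge":
            # subgradient of max(0, 1 - m) with respect to f(x): -y where m < 1
            g = np.where(m < 1.0, -y, 0.0)
        elif loss == "logistic":
            # derivative of log(1 + exp(-m)) w.r.t. f(x) is -y / (1 + exp(m)),
            # written with tanh to avoid overflow in exp
            g = -y * 0.5 * (1.0 - np.tanh(m / 2.0))
        else:
            raise ValueError(f"unknown loss: {loss}")
        w -= lr * (X.T @ g / len(y) + 2.0 * lam * w)
    return w

def accuracy(w, X, y):
    return float(np.mean(np.sign(X @ w) == y))

# Hypothetical data: two Gaussian blobs, plus a constant feature acting as a bias
rng = np.random.default_rng(0)
n = 200
y = np.where(rng.random(n) < 0.5, 1.0, -1.0)
X = rng.normal(size=(n, 2)) + 1.5 * y[:, None]
X = np.hstack([X, np.ones((n, 1))])

for loss in ("hinge", "logistic"):
    w = train_linear(X, y, loss)
    print(loss, "training accuracy:", accuracy(w, X, y))
```

On well-separated data like this the two losses usually give similar decision boundaries; differences tend to show up near the boundary and in how outliers are weighted.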