Empirical risk minimization

Usman Roshan




Supervised learning for two classes • We are given n training samples (xi, yi) for i = 1, ..., n, drawn i.i.d. from a probability distribution P(x, y). • Each xi is a d-dimensional vector (xi in Rd) and yi is +1 or -1. • Our problem is to learn a function f(x) for predicting the labels of test samples x'i in Rd for i = 1, ..., n', also drawn i.i.d. from P(x, y).


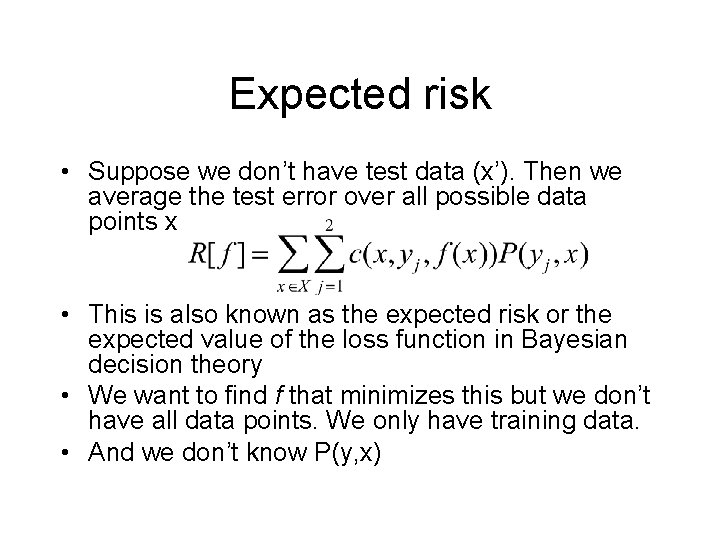

Loss function • Loss function: c(x, y, f(x)) • Maps to [0, inf] • Examples:

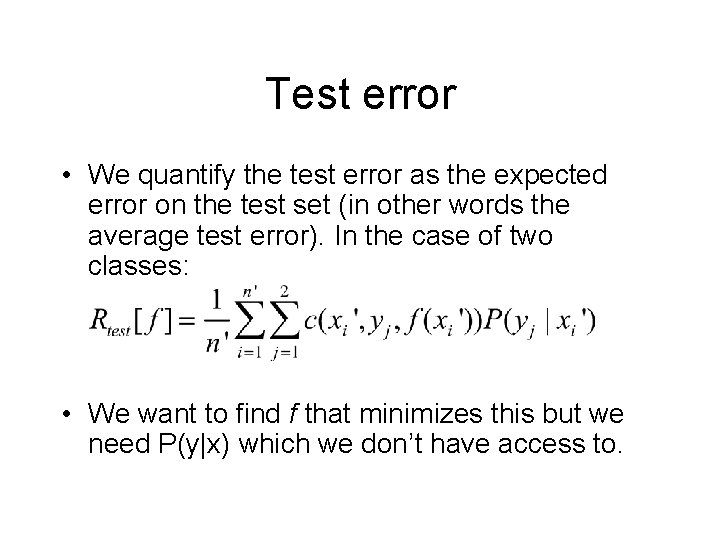
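The concrete examples are in the slide image. As a hedged sketch, here are four losses commonly used with labels y in {-1, +1}, written as Python functions with the signature c(x, y, f(x)). Which of these the slide actually lists is an assumption, and the function names are hypothetical.

```python
import math

# Hypothetical helper names; each takes (x, y, fx) with fx = f(x) and y in {-1, +1}.
# x is unused by these particular losses but kept to match c(x, y, f(x)).

def zero_one_loss(x, y, fx):
    """1 if the sign of f(x) disagrees with y (fx == 0 counts as an error), else 0."""
    return 0.0 if y * fx > 0 else 1.0

def squared_loss(x, y, fx):
    """(y - f(x))^2, the usual regression loss."""
    return (y - fx) ** 2

def hinge_loss(x, y, fx):
    """max(0, 1 - y f(x)), the loss used by support vector machines."""
    return max(0.0, 1.0 - y * fx)

def logistic_loss(x, y, fx):
    """log(1 + exp(-y f(x))), computed without overflow for large |y f(x)|."""
    z = -y * fx
    if z > 0:
        # log(1 + e^z) = z + log(1 + e^-z), which avoids computing e^z for large z
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))

# All four are non-negative; compare them on one correctly classified point.
for loss in (zero_one_loss, squared_loss, hinge_loss, logistic_loss):
    print(loss.__name__, loss(None, 1, 0.5))
```

Note that the 0-1 loss ignores how confident f is, while the hinge and logistic losses keep penalizing points with small margin y f(x).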

Test error • We quantify the test error as the expected loss on the test set: the loss averaged over the test points and, for each test point, over its possible labels. In the case of two classes: • We want to find the f that minimizes this, but computing it requires P(y|x), which we don't have access to.

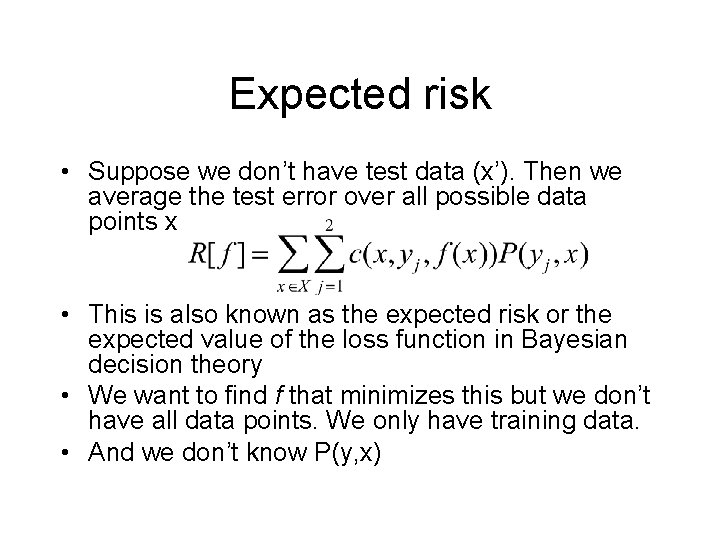
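A reconstruction of the two-class test error in standard notation, assuming the slide image follows the usual definition: each test point x'i contributes its loss averaged over both labels, weighted by P(y|x'i).

$$
R_{\text{test}}(f) = \frac{1}{n'} \sum_{i=1}^{n'} \sum_{y \in \{-1,+1\}} c\big(x'_i, y, f(x'_i)\big)\, P(y \mid x'_i)
$$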

Expected risk • Suppose we don't have test data (x'). Then we average the test error over all possible data points x. • This is also known as the expected risk, or the expected value of the loss function in Bayesian decision theory. • We want to find the f that minimizes this, but we don't have all data points; we only have training data. • And we don't know P(x, y).

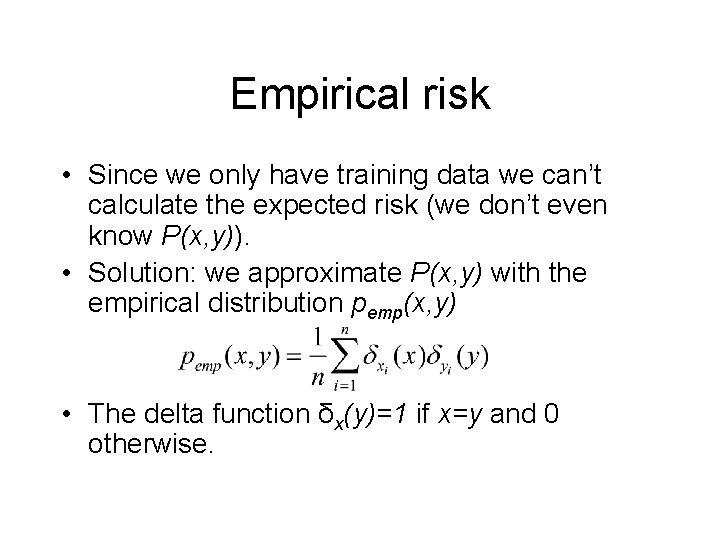

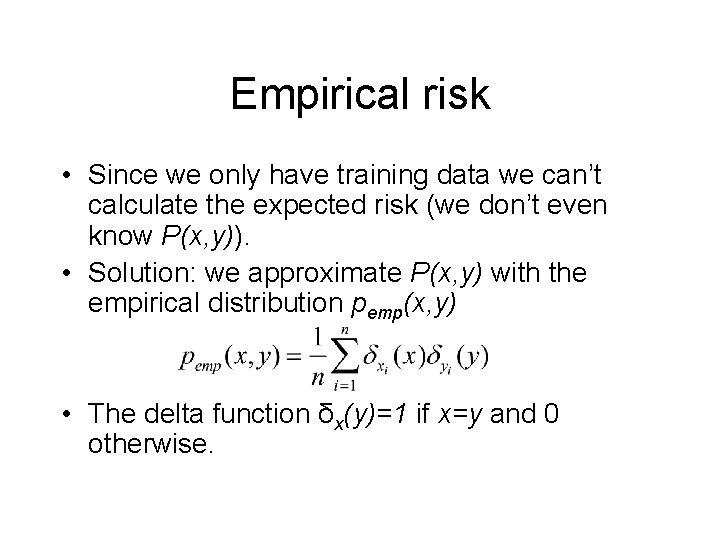
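Written out, assuming the slide uses the standard definition, the expected risk replaces the average over test points with an expectation over the whole distribution P(x, y):

$$
R(f) = \mathbb{E}_{(x,y)\sim P}\big[c\big(x, y, f(x)\big)\big] = \int \sum_{y \in \{-1,+1\}} c\big(x, y, f(x)\big)\, P(x, y)\, dx
$$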

Empirical risk • Since we only have training data, we can't calculate the expected risk (we don't even know P(x, y)). • Solution: we approximate P(x, y) with the empirical distribution pemp(x, y), which puts mass 1/n on each training sample. • The delta function δx(y) = 1 if x = y and 0 otherwise.

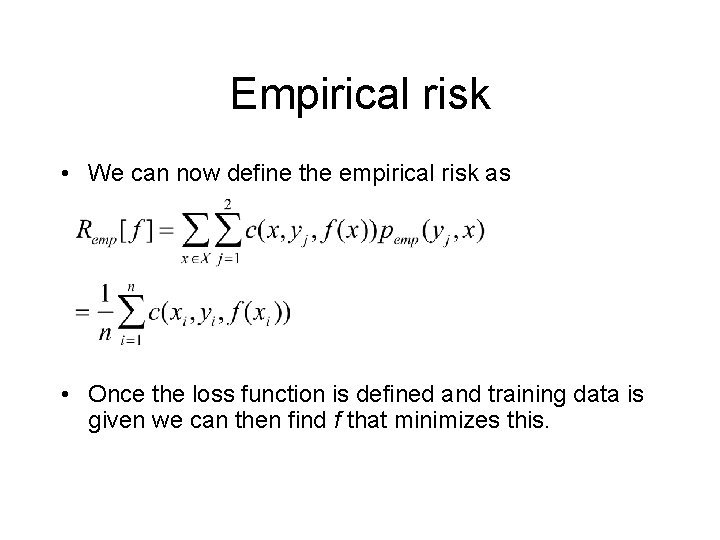

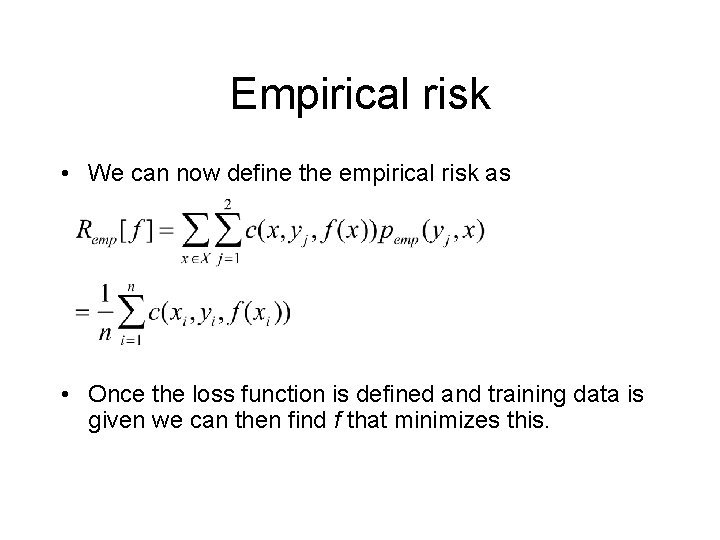
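A sketch of the plug-in step, assuming the standard form of the empirical distribution built from the delta function defined above. Because pemp is nonzero only at the training pairs, the integral in the expected risk collapses to a sum over the training data:

$$
p_{\text{emp}}(x, y) = \frac{1}{n} \sum_{i=1}^{n} \delta_{x_i}(x)\, \delta_{y_i}(y)
$$

$$
\sum_{(x,y)} c\big(x, y, f(x)\big)\, p_{\text{emp}}(x, y) = \frac{1}{n} \sum_{i=1}^{n} c\big(x_i, y_i, f(x_i)\big)
$$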

Empirical risk • We can now define the empirical risk as • Once the loss function is defined and the training data are given, we can find the f that minimizes this.

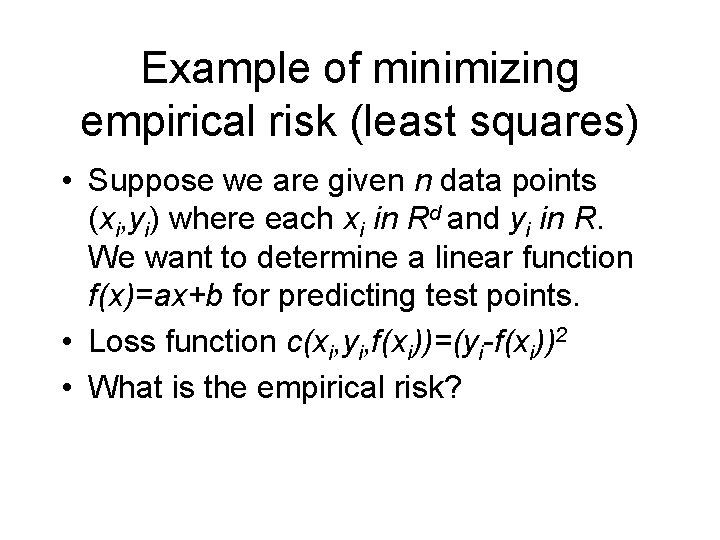

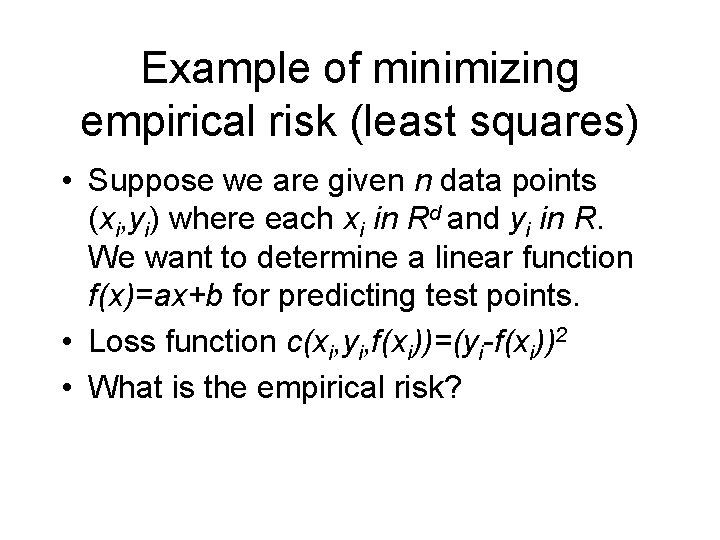

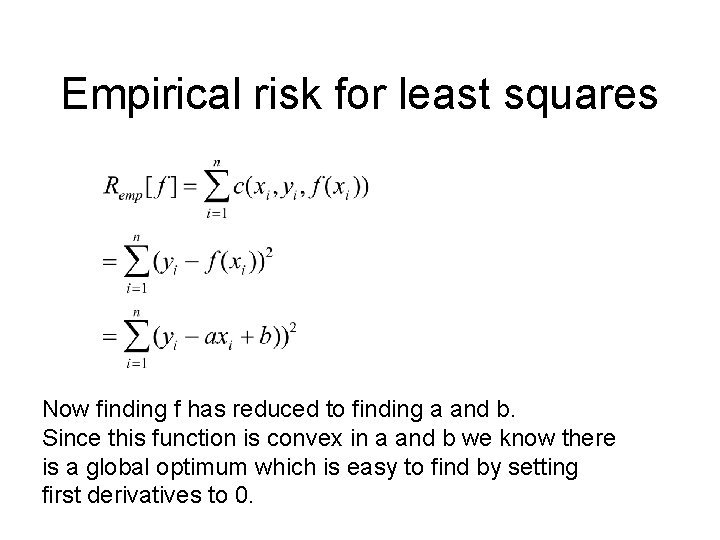
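Computing the empirical risk is just averaging the loss over the training pairs. A minimal sketch, where `empirical_risk`, the threshold classifier, and the four-point dataset are hypothetical, chosen only to show the arithmetic:

```python
def empirical_risk(f, loss, X, Y):
    """R_emp(f) = (1/n) * sum_i c(x_i, y_i, f(x_i)) over the n training pairs."""
    if len(X) != len(Y) or len(X) == 0:
        raise ValueError("need the same, non-zero number of samples and labels")
    return sum(loss(x, y, f(x)) for x, y in zip(X, Y)) / len(X)

# Hypothetical example: a fixed threshold classifier on 1-D inputs.
def threshold_classifier(x):
    return 1 if x > 0.5 else -1

# 0-1 loss for a classifier that outputs labels in {-1, +1}.
def zero_one_loss(x, y, fx):
    return 0.0 if fx == y else 1.0

X_train = [0.1, 0.4, 0.6, 0.9]
y_train = [-1, 1, 1, -1]
risk = empirical_risk(threshold_classifier, zero_one_loss, X_train, y_train)
print(risk)
```

Here the classifier misclassifies x = 0.4 and x = 0.9, so two of the four losses are 1 and the empirical risk is 2/4.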

Example of minimizing empirical risk (least squares) • Suppose we are given n data points (xi, yi) where each xi in Rd and yi in R. We want to determine a linear function f(x) = aᵀx + b (with a in Rd and b in R) for predicting test points. • Loss function c(xi, yi, f(xi)) = (yi − f(xi))² • What is the empirical risk?

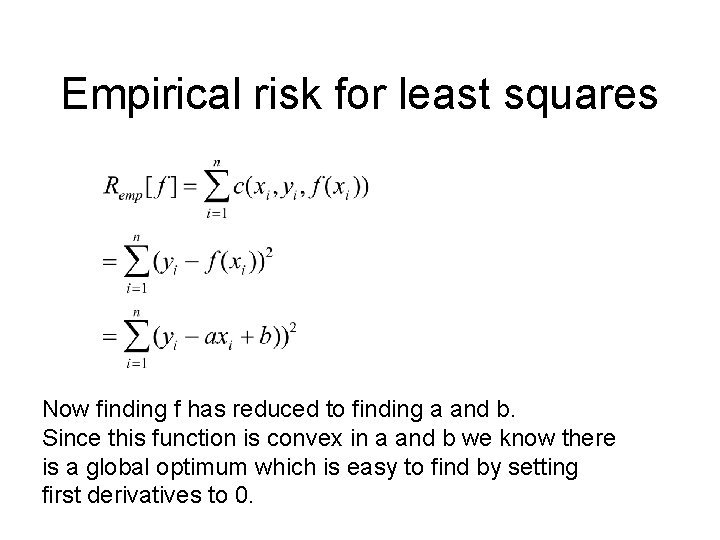
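Plugging the squared loss into the empirical risk gives the following (a reconstruction; the slide image may write it differently), together with the first-derivative conditions used on the next slide:

$$
R_{\text{emp}}(a, b) = \frac{1}{n} \sum_{i=1}^{n} \big(y_i - a^\top x_i - b\big)^2
$$

$$
\frac{\partial R_{\text{emp}}}{\partial a} = -\frac{2}{n} \sum_{i=1}^{n} \big(y_i - a^\top x_i - b\big)\, x_i = 0, \qquad
\frac{\partial R_{\text{emp}}}{\partial b} = -\frac{2}{n} \sum_{i=1}^{n} \big(y_i - a^\top x_i - b\big) = 0
$$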

Empirical risk for least squares • Finding f now reduces to finding a and b. Since the empirical risk is convex in a and b, any point where its first derivatives are 0 is a global minimum, so the optimum is easy to find by setting the first derivatives to 0.

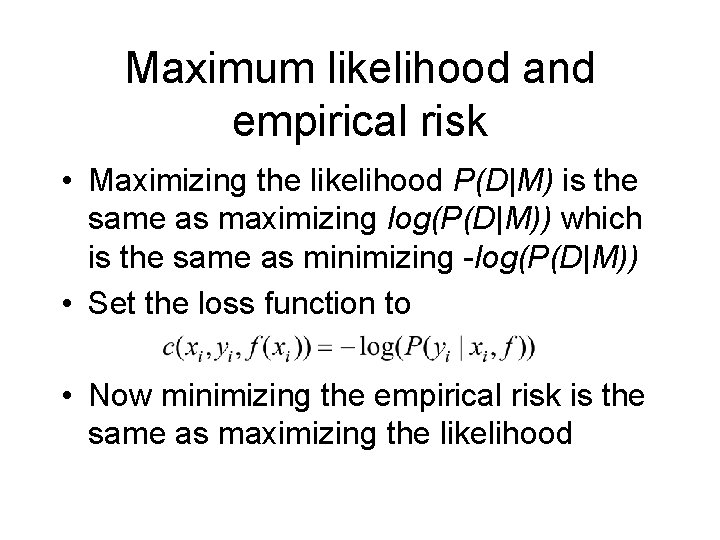

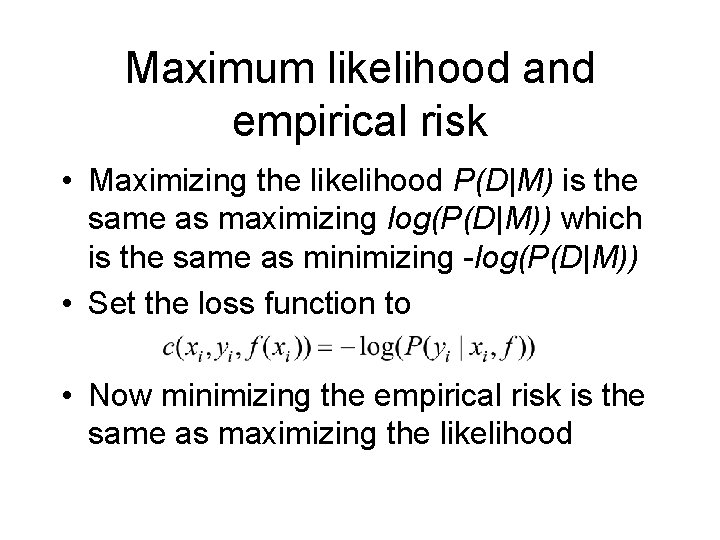
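A minimal NumPy sketch of the closed-form solution, assuming ZᵀZ is invertible (for example, n > d and the inputs are not collinear). Setting the derivatives to zero gives the normal equations (ZᵀZ)θ = Zᵀy, where Z appends a column of ones to the data matrix and θ stacks a and b. The function name `least_squares_fit` and the toy data are hypothetical.

```python
import numpy as np

def least_squares_fit(X, y):
    """Minimize R_emp(a, b) = (1/n) sum_i (y_i - a^T x_i - b)^2 via the normal equations."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    Z = np.hstack([X, np.ones((X.shape[0], 1))])  # extra column of ones carries the offset b
    theta = np.linalg.solve(Z.T @ Z, Z.T @ y)     # assumes Z^T Z is invertible
    return theta[:-1], theta[-1]                  # a (length d), b (scalar)

# Noise-free toy data generated from a = (2, -3), b = 1, so the fit should recover them.
X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]])
y = X @ np.array([2.0, -3.0]) + 1.0
a, b = least_squares_fit(X, y)
print(a, b, np.mean((y - X @ a - b) ** 2))
```

In practice `np.linalg.lstsq` is the more robust choice when ZᵀZ may be singular or ill-conditioned.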

Maximum likelihood and empirical risk • Because log is monotonically increasing, maximizing the likelihood P(D|M) is the same as maximizing log(P(D|M)), which is the same as minimizing −log(P(D|M)). • Set the loss function to • Now minimizing the empirical risk is the same as maximizing the likelihood.

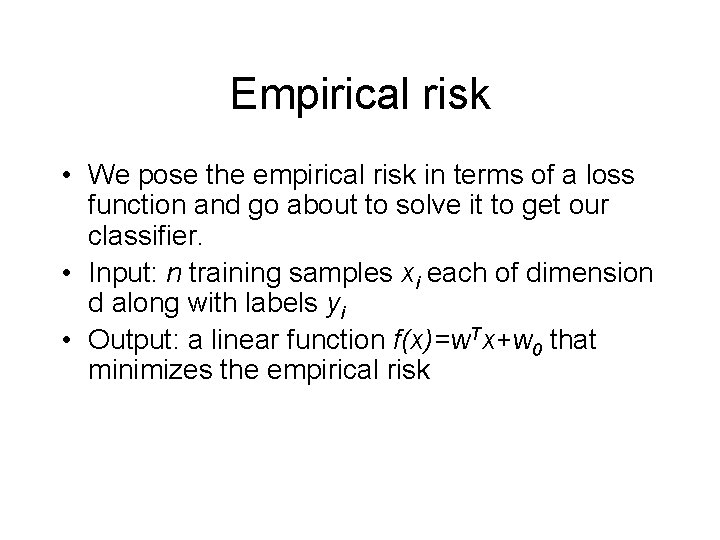

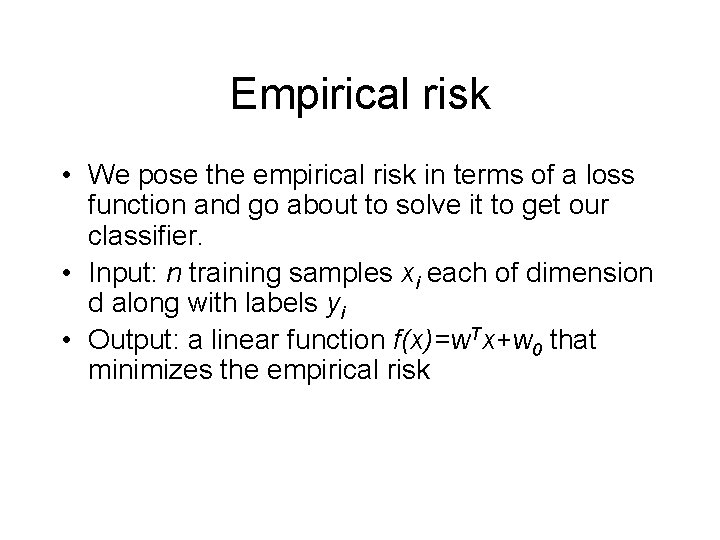
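A sketch of the correspondence, assuming the model M is the predictor f, the data D are the n i.i.d. training pairs, and the likelihood is the conditional likelihood of the labels. Choosing the negative log-likelihood as the loss makes the empirical risk equal to −(1/n) log P(D|M); since 1/n is a positive constant, minimizing one maximizes the other.

$$
c\big(x, y, f(x)\big) = -\log p(y \mid x, f)
\quad\Longrightarrow\quad
R_{\text{emp}}(f) = -\frac{1}{n}\sum_{i=1}^{n} \log p(y_i \mid x_i, f) = -\frac{1}{n} \log \prod_{i=1}^{n} p(y_i \mid x_i, f)
$$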

Empirical risk • We express the empirical risk in terms of a loss function and minimize it to obtain our classifier. • Input: n training samples xi, each of dimension d, along with labels yi • Output: a linear function f(x) = wᵀx + w0 that minimizes the empirical risk

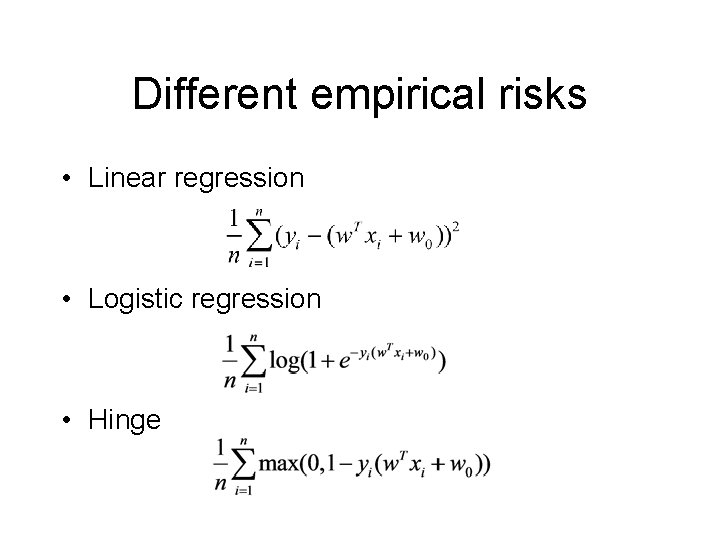

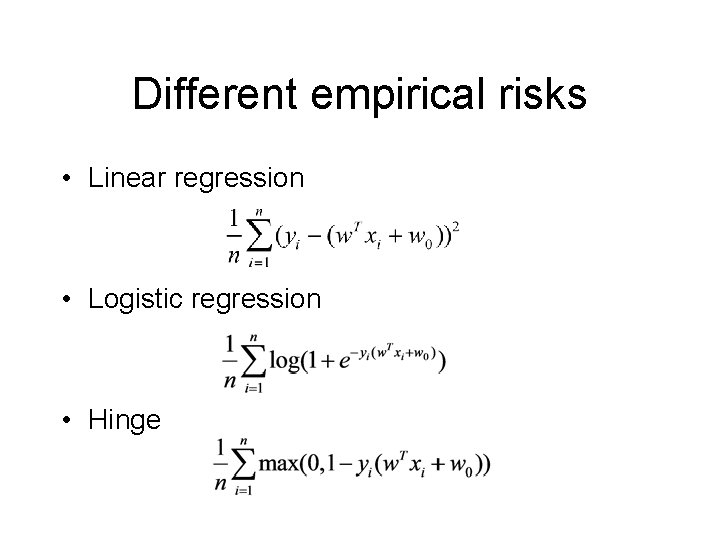

Empirical risk examples • Linear regression • How about logistic regression?

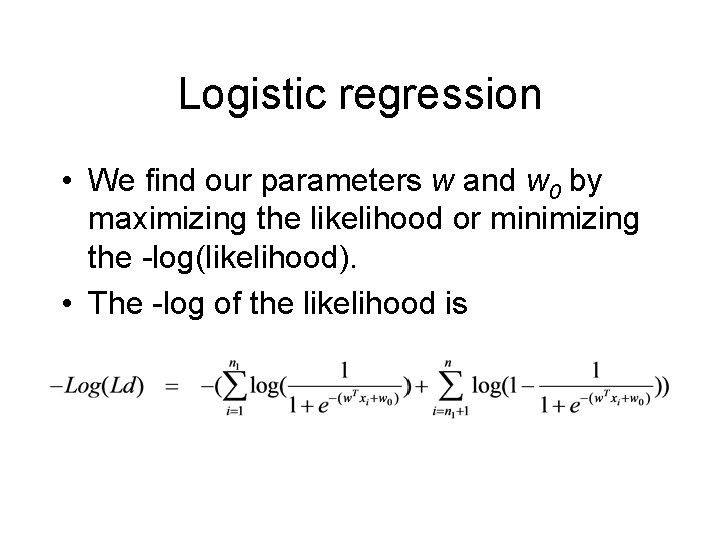

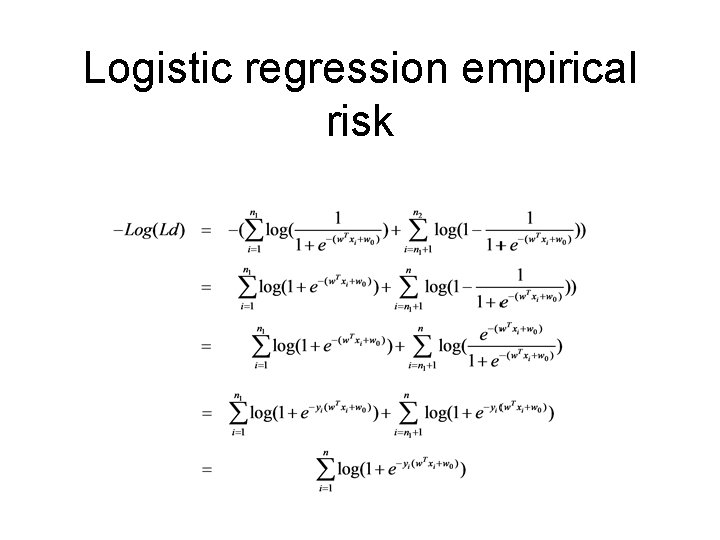

Logistic regression • In the logistic regression model: • Let y = +1 denote cases and y = -1 denote controls. • The sample likelihood of the training data is given by

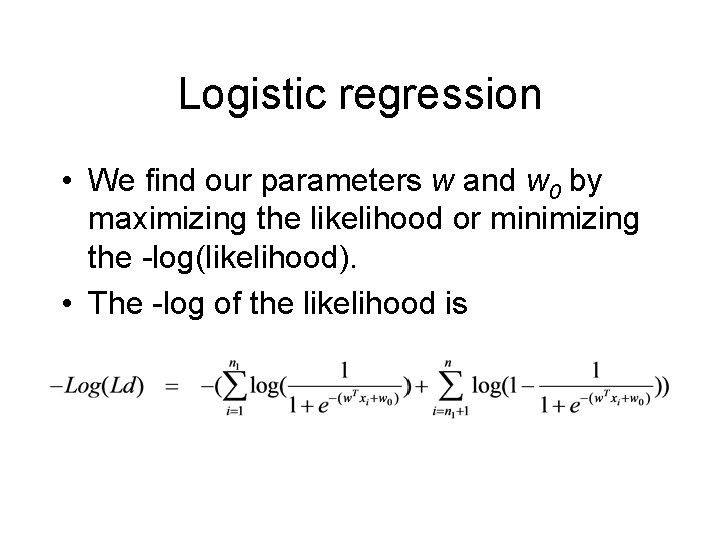
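A reconstruction in the standard form, assuming the slide uses labels in {-1, +1}. With P(y = +1|x) = 1/(1 + exp(−(wᵀx + w0))), the complement is P(y = −1|x) = 1/(1 + exp(wᵀx + w0)), so both cases fit one compact expression, and the i.i.d. likelihood is the product over the training samples:

$$
P(y \mid x) = \frac{1}{1 + e^{-y\,(w^\top x + w_0)}}, \qquad y \in \{-1, +1\}
$$

$$
L(w, w_0) = \prod_{i=1}^{n} \frac{1}{1 + e^{-y_i (w^\top x_i + w_0)}}
$$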

Logistic regression • We find our parameters w and w0 by maximizing the likelihood or, equivalently, minimizing −log(likelihood). • The −log of the likelihood is

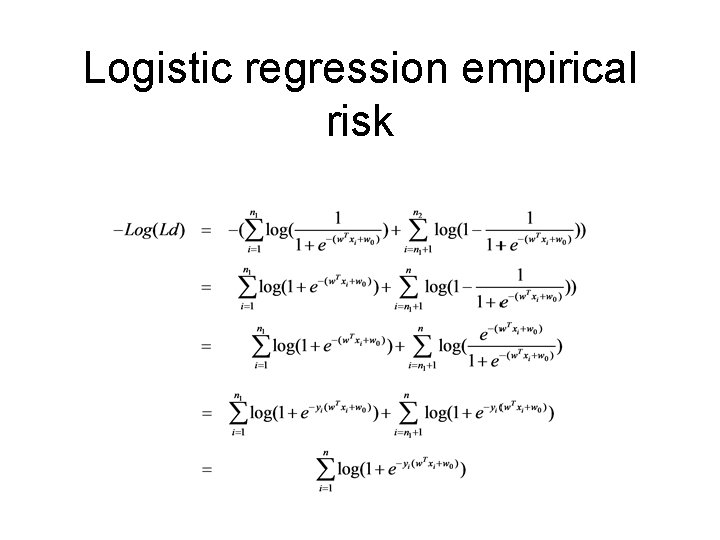
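Under the compact model P(y|x) = 1/(1 + exp(−y(wᵀx + w0))) for y in {-1, +1}, taking −log turns the product into a sum, and dividing by n gives the empirical risk with the logistic loss (a reconstruction; the slide images may arrange it differently):

$$
-\log L(w, w_0) = \sum_{i=1}^{n} \log\!\big(1 + e^{-y_i (w^\top x_i + w_0)}\big),
\qquad
R_{\text{emp}}(w, w_0) = \frac{1}{n}\sum_{i=1}^{n} \log\!\big(1 + e^{-y_i (w^\top x_i + w_0)}\big)
$$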

Logistic regression empirical risk

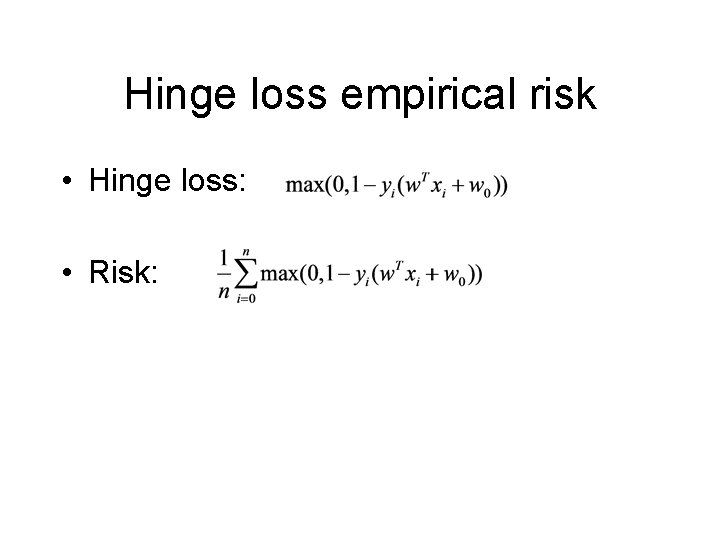

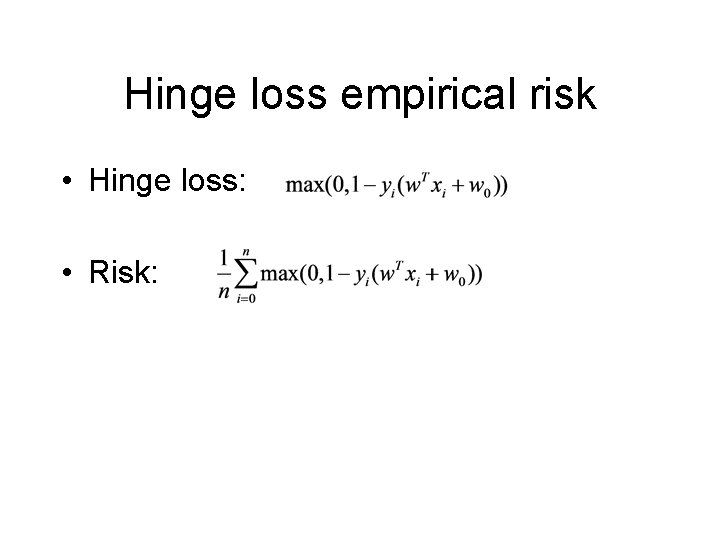
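The logistic empirical risk has no closed-form minimizer, but it is convex and smooth, so plain gradient descent works. A minimal NumPy sketch, assuming batch gradient descent with a fixed step size (not necessarily the optimizer used in the course); the function names, step size, and toy data are hypothetical. The gradient uses d/dm log(1 + e^(−m)) = −1/(1 + e^m) with margin m = y(wᵀx + w0).

```python
import numpy as np

def logistic_risk(w, w0, X, y):
    """R_emp(w, w0) = (1/n) sum_i log(1 + exp(-y_i (w^T x_i + w0)))."""
    margins = y * (X @ w + w0)
    return np.mean(np.logaddexp(0.0, -margins))  # stable log(1 + exp(-m))

def fit_logistic(X, y, lr=0.5, steps=500):
    """Minimize the logistic empirical risk by batch gradient descent from w = 0, w0 = 0."""
    n, d = X.shape
    w, w0 = np.zeros(d), 0.0
    for _ in range(steps):
        margins = y * (X @ w + w0)
        # y_i * d/dm log(1 + exp(-m)) = -y_i / (1 + exp(m_i)), computed stably
        coef = -y * np.exp(-np.logaddexp(0.0, margins))
        w -= lr * (X.T @ coef) / n   # gradient with respect to w
        w0 -= lr * coef.mean()       # gradient with respect to w0
    return w, w0

def predict(Xq, w, w0):
    """Label +1 where w^T x + w0 >= 0, else -1."""
    return np.where(Xq @ w + w0 >= 0, 1, -1)

# Toy 1-D data that is not linearly separable, so the minimizer is finite.
X = np.array([[-2.0], [-1.0], [-0.5], [0.5], [1.0], [2.0]])
y = np.array([-1.0, -1.0, 1.0, -1.0, 1.0, 1.0])
w, w0 = fit_logistic(X, y)
print(logistic_risk(np.zeros(1), 0.0, X, y), logistic_risk(w, w0, X, y))
```

At w = 0, w0 = 0 every margin is 0 and the risk is log 2; descent lowers it from there.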

Hinge loss empirical risk • Hinge loss: • Risk:
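In the standard form (assumed here, since the slide's formulas are not reproduced), the hinge loss is zero once a point is classified with margin at least 1, and grows linearly otherwise:

$$
c\big(x, y, f(x)\big) = \max\big(0,\, 1 - y f(x)\big),
\qquad
R_{\text{emp}}(w, w_0) = \frac{1}{n}\sum_{i=1}^{n} \max\big(0,\, 1 - y_i (w^\top x_i + w_0)\big)
$$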

Different empirical risks • Linear regression • Logistic regression • Hinge

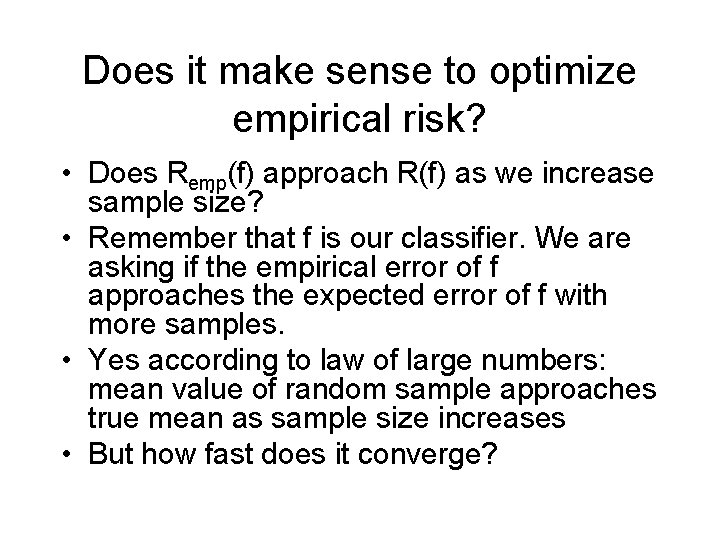

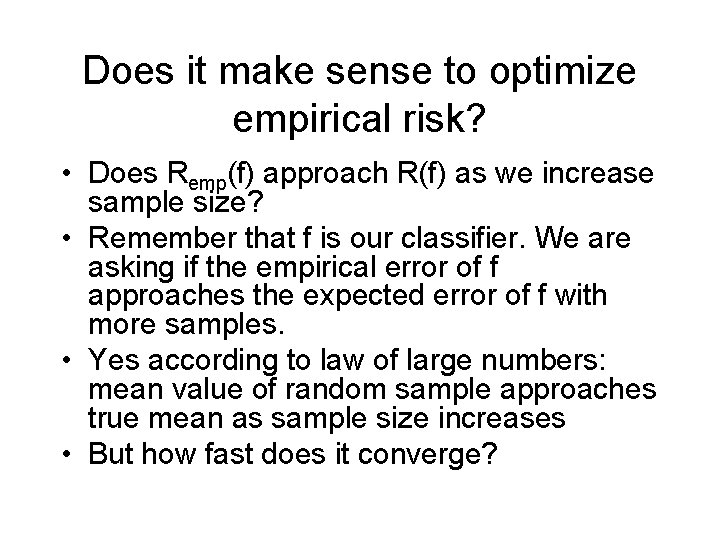

Does it make sense to optimize empirical risk? • Does Remp(f) approach R(f) as we increase the sample size? • Remember that f is our classifier. We are asking whether the empirical error of f approaches the expected error of f with more samples. • Yes, according to the law of large numbers: the mean of a random sample approaches the true mean as the sample size increases. • But how fast does it converge?
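A small simulation makes the law-of-large-numbers argument concrete. It assumes a hypothetical distribution where x is uniform on [0, 1], the clean label is +1 when x > 0.5 and −1 otherwise, and each label is flipped with probability 0.1. The classifier f is fixed before any data are drawn and predicts the clean label, so its expected 0-1 risk is exactly R(f) = 0.1.

```python
import random

def sample(n, rng, flip_prob=0.1):
    """Draw n pairs (x, y): x ~ Uniform(0, 1), y = clean label flipped with prob flip_prob."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = 1 if x > 0.5 else -1
        if rng.random() < flip_prob:
            y = -y
        data.append((x, y))
    return data

def f(x):
    """A fixed classifier, chosen before seeing any data."""
    return 1 if x > 0.5 else -1

def empirical_risk(data):
    """Fraction of samples misclassified by f (0-1 loss)."""
    return sum(f(x) != y for x, y in data) / len(data)

rng = random.Random(0)  # fixed seed for reproducibility
results = {n: empirical_risk(sample(n, rng)) for n in (10, 100, 1_000, 10_000, 100_000)}
for n, r in results.items():
    print(f"n={n:>6}  R_emp(f)={r:.4f}  |R_emp(f) - R(f)|={abs(r - 0.1):.4f}")
```

The gap to 0.1 typically shrinks roughly like 1/√n, which is the rate the next slide quantifies.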

Chernoff bounds • Suppose we have i.i.d. trials Xi, where each Xi = ½|f(xi) − yi| (with f(xi), yi in {−1, +1}) is 1 if f misclassifies xi and 0 otherwise • Let m be the true mean of X • Then

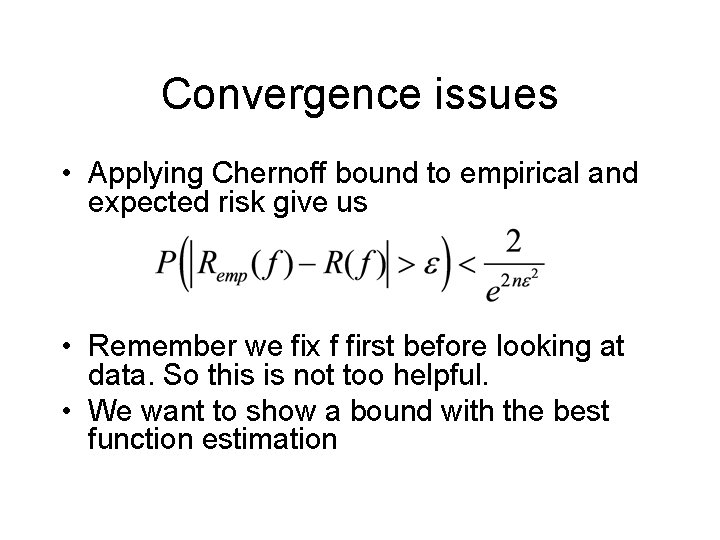

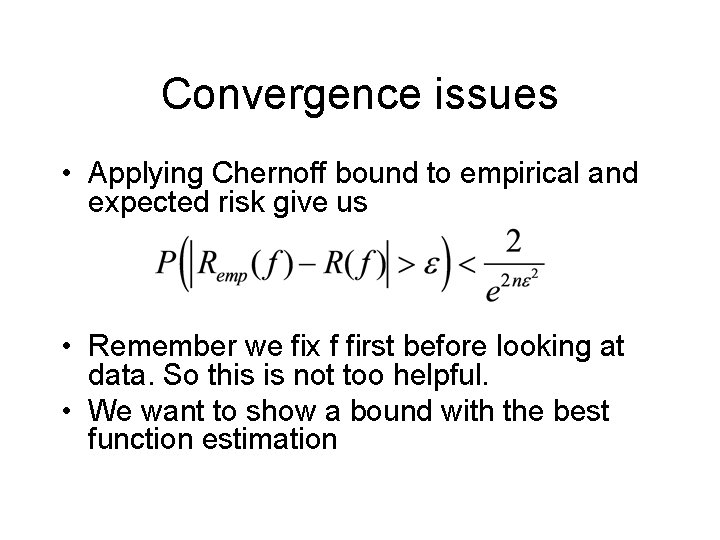
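A standard form of this bound (often stated as Hoeffding's inequality) for i.i.d. variables Xi taking values in [0, 1], such as the 0-1 loss of f on each sample; the exact constants on the slide may differ. When Xi is the 0-1 loss of a fixed f, the sample mean is Remp(f) and m = R(f):

$$
P\left(\left|\frac{1}{n}\sum_{i=1}^{n} X_i - m\right| \ge \epsilon\right) \le 2\, e^{-2 n \epsilon^2}
\qquad\Longrightarrow\qquad
P\big(|R_{\text{emp}}(f) - R(f)| \ge \epsilon\big) \le 2\, e^{-2 n \epsilon^2}
$$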

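Convergence issues • Applying the Chernoff bound to the empirical and expected risk gives us • Remember that this requires fixing f before looking at the data. The f chosen by empirical risk minimization depends on the training data, so this bound is not too helpful on its own. • We want a bound that also holds for the function selected by minimizing the empirical risk, i.e., one that holds uniformly over all functions in F.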

Bound on empirical risk minimization • In other words, bound: • With some work we can show that where N(F, 2n) measures the size of the function space F: it is the maximum number of distinct labelings that functions in F can produce on 2n data points. Since there are at most 2²ⁿ binary labelings of 2n points, the maximum value of N(F, 2n) is 2²ⁿ.

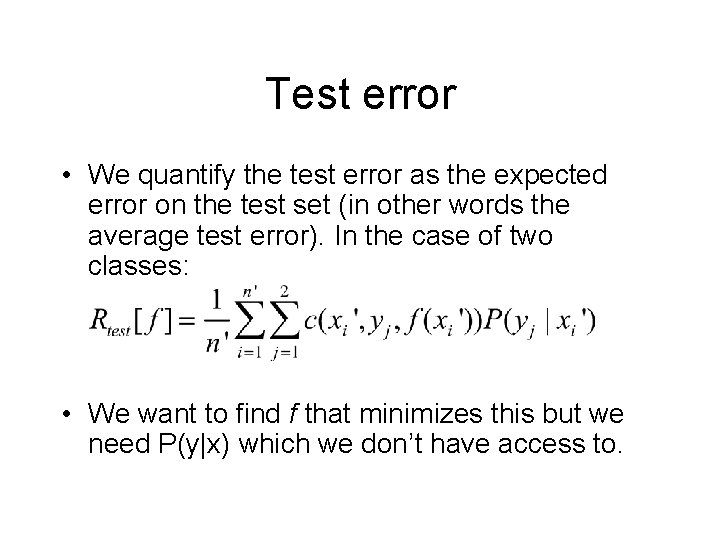

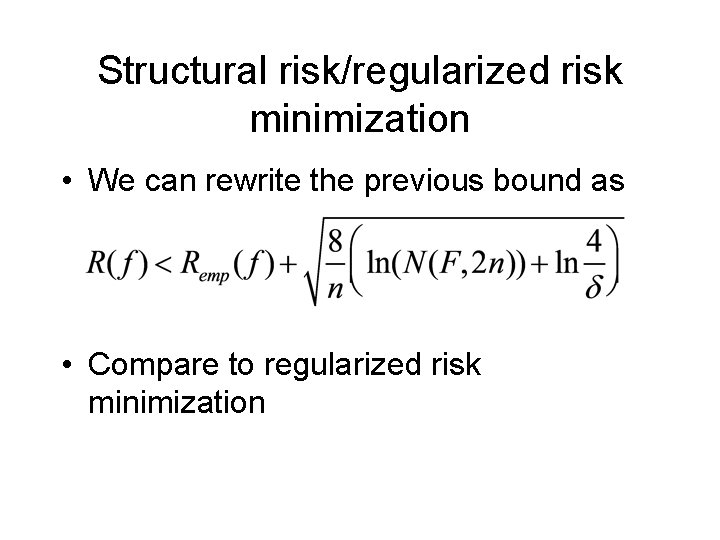
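To see why the size of F matters, the sketch below swaps in the simpler finite-class bound (Hoeffding plus a union bound over |F| functions), whose capacity term is ε = √((ln|F| + ln(2/δ)) / (2n)). This is not the slide's N(F, 2n) bound, whose constants differ; substituting N = 2²ⁿ for |F| only illustrates the trend. The function name and δ = 0.05 are hypothetical choices.

```python
import math

def capacity_term(n, log_num_functions, delta=0.05):
    """eps solving 2 * N * exp(-2 n eps^2) = delta, i.e. sqrt((ln N + ln(2/delta)) / (2n))."""
    return math.sqrt((log_num_functions + math.log(2 / delta)) / (2 * n))

for n in (100, 1_000, 10_000):
    small_class = capacity_term(n, math.log(1_000))        # a hypothetical class of 1000 functions
    all_labelings = capacity_term(n, 2 * n * math.log(2))  # ln(2^(2n)): every labeling of 2n points
    print(f"n={n:>6}  |F|=1000: {small_class:.3f}   N=2^(2n): {all_labelings:.3f}")
```

For a fixed-size class the term shrinks as n grows, but when ln N grows linearly in n (as it does for 2²ⁿ) the term stays above √(ln 2) ≈ 0.83 no matter how much data we have, so the bound says nothing useful.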

Structural risk/regularized risk minimization • We can rewrite the previous bound as • Compare to regularized risk minimization
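The general shape of the comparison, assuming the bound is rearranged as an upper bound on the expected risk. Here Φ is a hypothetical name for the capacity term, which grows with the size of F (for example with N(F, 2n)) and shrinks with n; Ω is a regularizer (for a linear f, a common choice is ‖w‖²) and λ > 0 sets its weight:

$$
R(f) \le R_{\text{emp}}(f) + \Phi(F, n, \delta) \quad \text{for all } f \in F, \text{ with probability at least } 1 - \delta
$$

$$
R_{\text{reg}}(f) = R_{\text{emp}}(f) + \lambda\, \Omega(f)
$$

Both add a complexity penalty to the empirical risk: structural risk minimization picks the function class that minimizes the bound, while regularized risk minimization penalizes complex functions within a single class.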