
Predicting Good Probabilities With Supervised Learning
Alexandru Niculescu-Mizil, Rich Caruana
Cornell University

What are good probabilities?
- Ideally, if the model predicts 0.75 for an example, then the conditional probability that the example is positive, given the available attributes, is 0.75.
- In practice, good probabilities mean:
  - Good calibration: of all the cases for which the model predicts 0.75, 75% are positive.
  - Low Brier score (squared error).
  - Low cross-entropy (log-loss).
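The two scores listed above can be computed directly from predicted probabilities and 0/1 labels. This is a minimal Python sketch, assuming binary labels coded as 0 and 1; the function names `brier_score` and `log_loss` and the clipping constant `eps` are illustrative choices, not taken from the talk.

```python
import math
from typing import Sequence


def brier_score(probs: Sequence[float], labels: Sequence[int]) -> float:
    """Mean squared difference between the predicted probability and the 0/1 label."""
    if len(probs) != len(labels) or not probs:
        raise ValueError("probs and labels must be non-empty and of equal length")
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(probs)


def log_loss(probs: Sequence[float], labels: Sequence[int], eps: float = 1e-15) -> float:
    """Mean negative log-likelihood (cross-entropy) of the 0/1 labels."""
    if len(probs) != len(labels) or not probs:
        raise ValueError("probs and labels must be non-empty and of equal length")
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip so that log(0) never occurs
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


# Four cases predicted at 0.75 (three of them positive) and one negative predicted at 0.1.
probs = [0.75, 0.75, 0.75, 0.75, 0.1]
labels = [1, 1, 1, 0, 0]
print(round(brier_score(probs, labels), 4))  # 0.152
print(round(log_loss(probs, labels), 4))     # 0.4709
```

Lower is better for both scores. A model that assigns probability 1 to every true label scores 0 on each (up to the clipping in `log_loss`).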

Why good probabilities?
- Intelligibility.
- The classifier is part of a larger system:
  - Speech recognition
  - Handwriting recognition
- The classifier is used for decision making:
  - Cost-sensitive decisions
  - Medical applications
  - Meteorology
  - Risk analysis

What did we do?
- We analyzed the predictions made by ten supervised learning algorithms.
- For the analysis we used eight binary classification problems.
- Limitations:
  - Only binary problems; no multiclass.
  - No high-dimensional problems (all have fewer than 200 attributes).
  - Only moderately sized training sets.

Questions addressed in this talk
- Which models are well calibrated and which are not?
- Can we fix the models that are not well calibrated?
- Which learning algorithm makes the best probabilistic predictions?

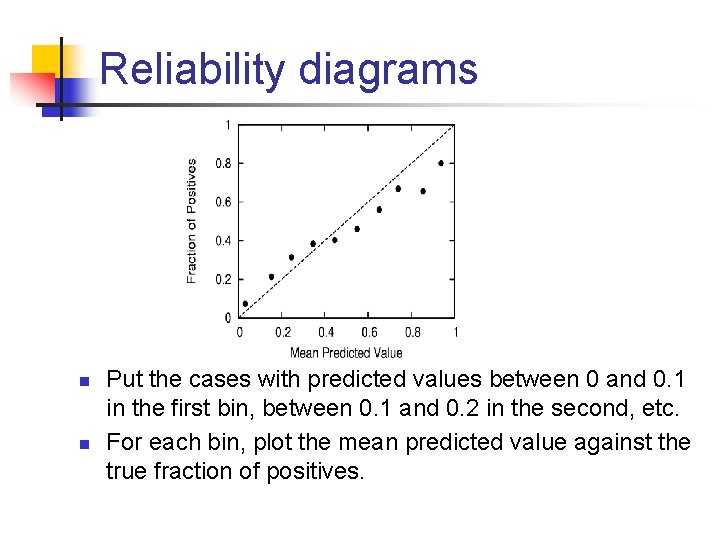

Reliability diagrams
- Put the cases with predicted values between 0 and 0.1 in the first bin, between 0.1 and 0.2 in the second, and so on.
- For each bin, plot the mean predicted value against the true fraction of positives.
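The binning procedure above can be sketched in a few lines of Python. This assumes ten equal-width, half-open bins [i/10, (i+1)/10), with a prediction of exactly 1.0 placed in the last bin; the slide does not say how boundary values are assigned, and the name `reliability_bins` is hypothetical. Each returned pair (mean predicted value, fraction of positives) is one point on the diagram.

```python
from typing import List, Sequence, Tuple


def reliability_bins(
    probs: Sequence[float], labels: Sequence[int], n_bins: int = 10
) -> List[Tuple[int, float, float]]:
    """Group predictions into equal-width bins on [0, 1].

    Returns (bin_index, mean_predicted_value, fraction_of_positives) for each non-empty bin.
    """
    sums = [0.0] * n_bins
    positives = [0] * n_bins
    counts = [0] * n_bins
    for p, y in zip(probs, labels):
        # Bin i holds predictions in [i/n_bins, (i+1)/n_bins); p == 1.0 goes into the last bin.
        i = min(int(p * n_bins), n_bins - 1)
        sums[i] += p
        positives[i] += y
        counts[i] += 1
    return [
        (i, sums[i] / counts[i], positives[i] / counts[i])
        for i in range(n_bins)
        if counts[i] > 0
    ]


for idx, mean_pred, frac_pos in reliability_bins([0.05, 0.15, 0.12, 0.95, 0.91], [0, 0, 1, 1, 1]):
    print(idx, round(mean_pred, 3), frac_pos)
```

A well-calibrated model yields points close to the diagonal, where the mean prediction equals the fraction of positives. Empty bins are skipped because they have no mean to plot.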

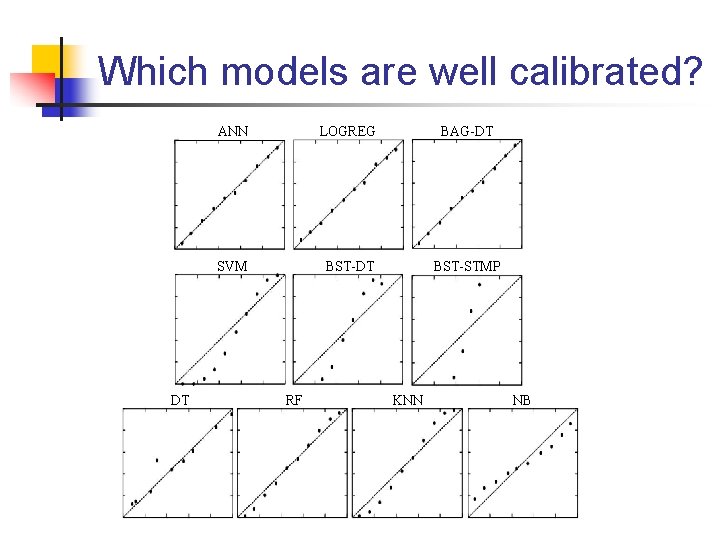

Which models are well calibrated?
[Figure panels: DT, ANN, LOGREG, BAG-DT, SVM, BST-DT, BST-STMP, RF, KNN, NB]


Can we fix the models that are not well calibrated?
- Platt Scaling
  - Method used by Platt to obtain calibrated probabilities from SVMs [Platt '99].
  - Converts the outputs by passing them through a sigmoid.
  - The sigmoid is fitted using an independent calibration set.
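A minimal sketch of Platt Scaling in plain Python, assuming the sigmoid form from Platt's paper, P(y = 1 | f) = 1 / (1 + exp(A·f + B)), where f is the uncalibrated model output on the calibration set. A and B are fitted by minimizing log-loss with Newton's method plus a simple backtracking line search; the smoothed targets (N+ + 1)/(N+ + 2) and 1/(N- + 2) follow Platt's suggestion for limiting overfitting. The function names are hypothetical, and this is not necessarily the optimizer used in the talk.

```python
import math
from typing import List, Sequence, Tuple


def platt_probability(a: float, b: float, score: float) -> float:
    """P(y = 1 | score) = 1 / (1 + exp(a * score + b)), evaluated without overflow."""
    z = a * score + b
    if z >= 0:
        e = math.exp(-z)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(z))


def _neg_log_likelihood(a: float, b: float, scores: Sequence[float], targets: List[float]) -> float:
    eps = 1e-15
    total = 0.0
    for f, t in zip(scores, targets):
        p = min(max(platt_probability(a, b, f), eps), 1.0 - eps)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total


def fit_platt(scores: Sequence[float], labels: Sequence[int], max_iter: int = 100) -> Tuple[float, float]:
    """Fit (a, b) on a held-out calibration set by Newton's method on the log-loss."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    # Smoothed targets instead of hard 0/1 labels.
    t_pos = (n_pos + 1.0) / (n_pos + 2.0)
    t_neg = 1.0 / (n_neg + 2.0)
    targets = [t_pos if y == 1 else t_neg for y in labels]

    # Start from a = 0 and b = log prior odds, so every case gets the smoothed base rate.
    a, b = 0.0, math.log((n_neg + 1.0) / (n_pos + 1.0))
    loss = _neg_log_likelihood(a, b, scores, targets)
    for _ in range(max_iter):
        # Gradient and Hessian w.r.t. (a, b); for z = a*f + b, dLoss/dz = t - p.
        g_a = g_b = h_aa = h_ab = h_bb = 0.0
        for f, t in zip(scores, targets):
            p = platt_probability(a, b, f)
            w = p * (1.0 - p)
            g_a += (t - p) * f
            g_b += t - p
            h_aa += w * f * f
            h_ab += w * f
            h_bb += w
        h_aa += 1e-12  # tiny ridge keeps the 2x2 system solvable
        h_bb += 1e-12
        det = h_aa * h_bb - h_ab * h_ab
        step_a = (h_bb * g_a - h_ab * g_b) / det
        step_b = (h_aa * g_b - h_ab * g_a) / det

        # Backtracking: shrink the Newton step until the loss does not increase.
        scale = 1.0
        while True:
            new_a, new_b = a - scale * step_a, b - scale * step_b
            new_loss = _neg_log_likelihood(new_a, new_b, scores, targets)
            if new_loss <= loss or scale < 1e-10:
                break
            scale *= 0.5
        if new_loss > loss:
            break  # no further descent possible
        improvement = loss - new_loss
        a, b, loss = new_a, new_b, new_loss
        if improvement < 1e-12:
            break
    return a, b


a, b = fit_platt([-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0], [0, 0, 0, 1, 0, 1, 1, 1])
print(a, b, platt_probability(a, b, 1.0))
```

Because of the sign convention, A comes out negative when larger scores indicate the positive class. Calibrated probabilities for new cases then come from `platt_probability(a, b, score)`.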

Can we fix the models that are not well calibrated?
- Isotonic Regression [Robertson et al. '88]
  - More general calibration method, used by Zadrozny and Elkan [Zadrozny & Elkan '01, '02].
  - Converts the outputs by passing them through a general isotonic (monotonically increasing) function.
  - The isotonic function is fitted using an independent calibration set.
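A common way to fit the isotonic function is the pair-adjacent violators (PAV) algorithm. The Python sketch below assumes that tied scores are pooled before fitting and that new scores are mapped through a step function (no interpolation between steps); the names `fit_isotonic` and `isotonic_predict` are hypothetical.

```python
from bisect import bisect_right
from typing import List, Sequence, Tuple


def fit_isotonic(scores: Sequence[float], labels: Sequence[int]) -> Tuple[List[float], List[float]]:
    """Fit a non-decreasing step function with the pair-adjacent violators algorithm.

    Returns (thresholds, values): a score s maps to values[i] for the last i with thresholds[i] <= s.
    """
    # 1. Sort by score and pool tied scores, so equal inputs always get equal outputs.
    points: List[list] = []  # [score, label_sum, count]
    for s, y in sorted(zip(scores, labels)):
        if points and points[-1][0] == s:
            points[-1][1] += y
            points[-1][2] += 1
        else:
            points.append([s, float(y), 1])

    # 2. PAV: merge adjacent blocks whenever an earlier block has a higher mean than a later one.
    blocks: List[list] = []  # [first_score, label_sum, count]
    for s, total, count in points:
        blocks.append([s, total, count])
        # mean(previous) > mean(last), compared by cross-multiplying the positive counts
        while len(blocks) > 1 and blocks[-2][1] * blocks[-1][2] > blocks[-1][1] * blocks[-2][2]:
            _, t, c = blocks.pop()
            blocks[-1][1] += t
            blocks[-1][2] += c

    thresholds = [blk[0] for blk in blocks]
    values = [blk[1] / blk[2] for blk in blocks]
    return thresholds, values


def isotonic_predict(thresholds: List[float], values: List[float], score: float) -> float:
    """Step-function lookup; scores below the first threshold get the first value."""
    i = bisect_right(thresholds, score) - 1
    return values[max(i, 0)]


th, vals = fit_isotonic([0.1, 0.2, 0.3, 0.4, 0.5, 0.6], [0, 1, 0, 0, 1, 1])
print(th, vals, isotonic_predict(th, vals, 0.35))
```

The step function keeps the sketch short; interpolating between block values would also give a monotone mapping. Either way the fitted function has one free value per block rather than two sigmoid parameters, which is consistent with Isotonic Regression needing a larger calibration set to avoid overfitting.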

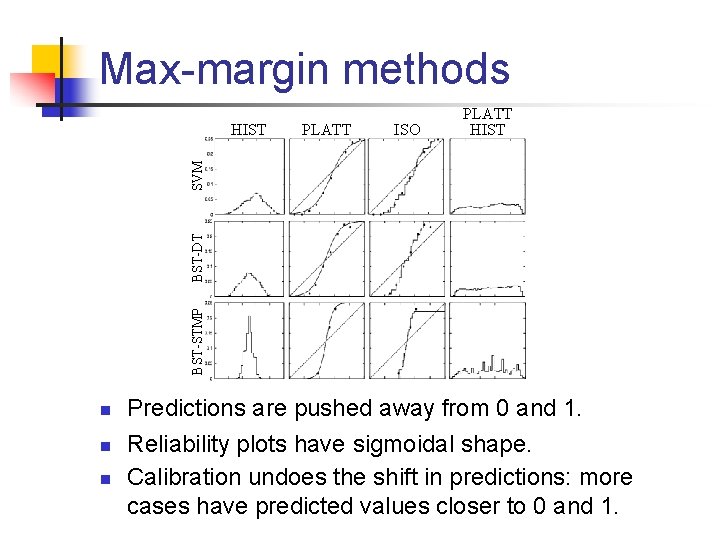

Max-margin methods
[Figure panels: BST-STMP, BST-DT, SVM; HIST, PLATT, ISO]
- Predictions are pushed away from 0 and 1.
- Reliability plots have a sigmoidal shape.
- Calibration undoes the shift in predictions: more cases have predicted values closer to 0 and 1.

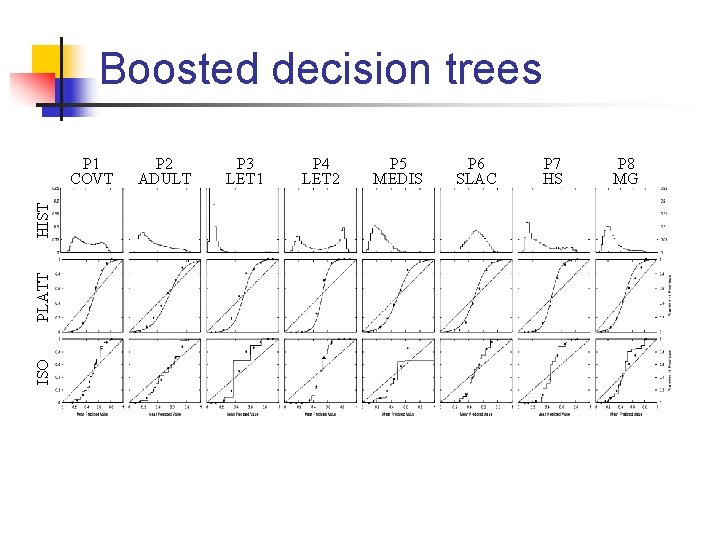

Boosted decision trees
[Figure panels: HIST, PLATT, ISO for problems P1 COVT, P2 ADULT, P3 LET1, P4 LET2, P5 MEDIS, P6 SLAC, P7 HS, P8 MG]

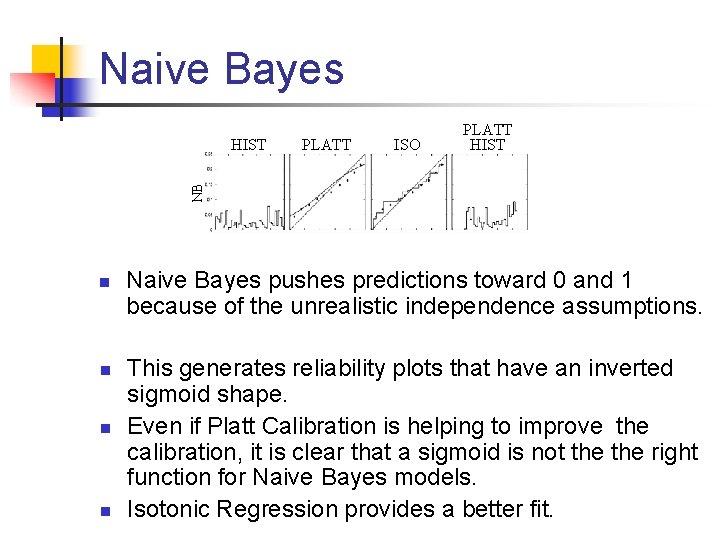

Naive Bayes
[Figure panels: NB; HIST, PLATT, ISO]
- Naive Bayes pushes predictions toward 0 and 1 because of its unrealistic independence assumptions. This produces reliability plots with an inverted sigmoid shape.
- Even though Platt Scaling improves calibration, a sigmoid is clearly not the right function for Naive Bayes models. Isotonic Regression provides a better fit.
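A toy illustration of why the independence assumption pushes Naive Bayes toward 0 and 1, assuming a hypothetical feature whose likelihood ratio P(x | +) / P(x | -) is 3 and a prior of 0.5. If the same evidence appears as three perfectly correlated copies of that feature, Naive Bayes multiplies the likelihood ratio three times, even though the copies add no new information. The function name `naive_bayes_posterior` is hypothetical.

```python
from typing import Sequence


def naive_bayes_posterior(prior_pos: float, likelihood_ratios: Sequence[float]) -> float:
    """Posterior P(y = 1 | x) when every feature is treated as independent evidence.

    Each entry is a likelihood ratio P(x_j | y = 1) / P(x_j | y = 0).
    """
    odds = prior_pos / (1.0 - prior_pos)
    for lr in likelihood_ratios:
        odds *= lr  # independence assumption: the evidence of every feature is multiplied in
    return odds / (1.0 + odds)


one_copy = naive_bayes_posterior(0.5, [3.0])                # single feature
three_copies = naive_bayes_posterior(0.5, [3.0, 3.0, 3.0])  # same feature counted three times
print(round(one_copy, 4), round(three_copies, 4))  # 0.75 0.9643
```

The correct posterior stays at 0.75 because the copies are redundant, but Naive Bayes reports about 0.96, which is the kind of overconfidence that bends its reliability plot into an inverted sigmoid.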

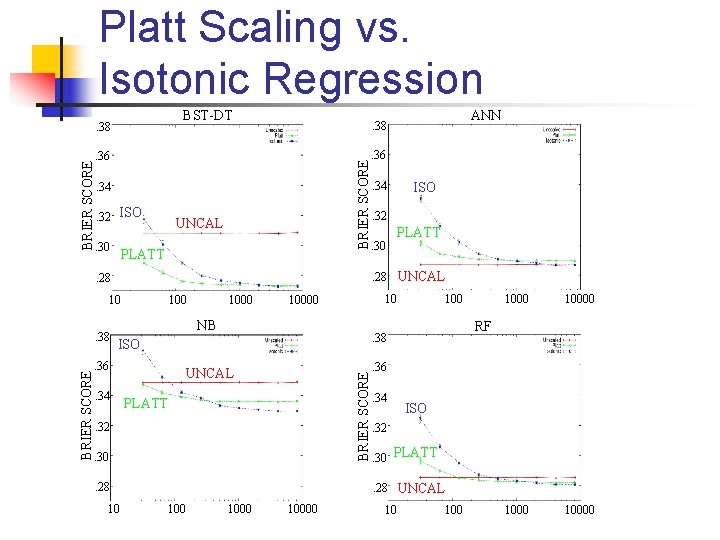

Platt Scaling vs. Isotonic Regression
[Figure panels: BST-DT, NB, RF, ANN; Brier score (about .28 to .38) against calibration set size (10 to 10000) for UNCAL, PLATT, ISO]


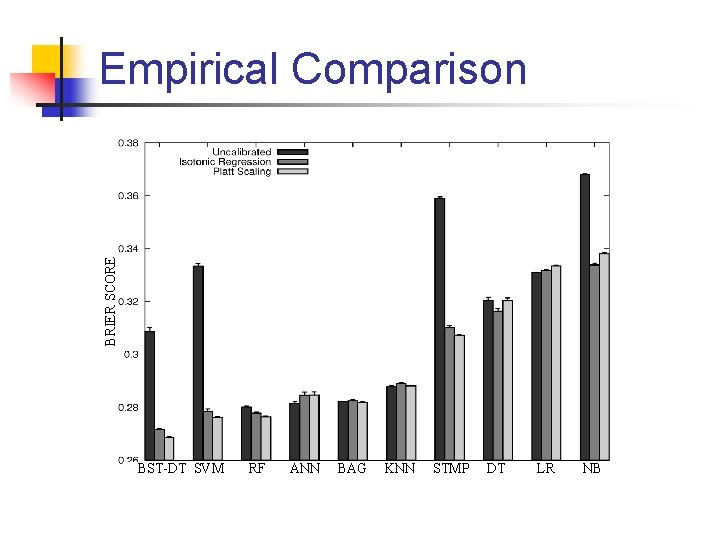

Empirical Comparison
[Figure: Brier score for BST-DT, SVM, RF, ANN, BAG, KNN, STMP, DT, LR, NB]

Summary and Conclusions
- We examined the quality of the probabilities predicted by ten supervised learning algorithms.
- Neural nets, bagged trees, and logistic regression have well-calibrated predictions.
- Max-margin methods such as boosting and SVMs push the predicted values away from 0 and 1, which yields a sigmoid-shaped reliability diagram.
- Learning algorithms such as Naive Bayes distort the probabilities in the opposite way, pushing them closer to 0 and 1.

Summary and Conclusions
- We examined two methods to calibrate the predictions.
- Max-margin methods and Naive Bayes benefit a lot from calibration, while well-calibrated methods do not.
- Platt Scaling is more effective when the calibration set is small, but Isotonic Regression is more powerful when there is enough data to prevent it from overfitting.
- The methods that predict the best probabilities are calibrated boosted trees, calibrated random forests, calibrated SVMs, uncalibrated bagged trees, and uncalibrated neural nets.

Thank you! Questions?

- Slides: 19