
- Slides: 9

Representation learning Usman Roshan

Representation learning

- Given input data X of dimensions n × d, we want to learn a new data matrix X’ of dimensions n × d’.
- The objective is to achieve better classification.
- Most methods attempt to find a new representation in which the data is linearly separable.

Methods

- Dimensionality reduction
  - Linear discriminant analysis aims to maximize the signal-to-noise ratio (between-class separation relative to within-class scatter)
- Feature selection
  - Univariate methods such as signal-to-noise ranking
  - Multivariate, classification-based methods
- Kernels
  - Implicit dot products in a different feature space
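As a small illustration of the kernel idea above, the following sketch checks that a degree-2 polynomial kernel on 2-D inputs gives the same value as an ordinary dot product in an explicit 3-D feature space. The choice of kernel and the names `poly2_kernel` and `poly2_features` are assumptions made for illustration; the slides do not name a specific kernel.

```python
import math

def poly2_kernel(x, z):
    """Implicit computation: square of the dot product in the input space."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2

def poly2_features(x):
    """Explicit feature map whose dot product reproduces poly2_kernel."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)

# Two illustrative points (hypothetical values).
x, z = (1.0, 2.0), (3.0, -1.0)

implicit = poly2_kernel(x, z)
explicit = sum(a * b for a, b in zip(poly2_features(x), poly2_features(z)))

print(implicit, explicit)  # the two values agree up to floating-point error
```

The kernel never builds the 3-D vectors, which is what makes the approach practical when the feature space is very large.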

Methods

- Neural networks
  - Solving XOR with one hidden layer
  - In fact, a network with a single hidden layer can approximate any continuous function on a compact domain, given enough hidden units: the universal approximation theorem of Cybenko (1989) and Hornik (1991)
- K-means-based feature learning
  - Applied to image recognition (Coates et al. 2011)
  - Protein sequence classification (Melman and Roshan 2017)
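The XOR claim can be made concrete with a hand-built network. This is a minimal sketch, assuming a step activation and hand-picked weights (neither comes from the slides): two hidden units compute OR and AND, and in that new two-dimensional representation XOR becomes linearly separable.

```python
def step(z):
    """Threshold activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0

def xor_net(x1, x2):
    # Hidden unit 1 fires when at least one input is on (logical OR).
    h_or = step(x1 + x2 - 0.5)
    # Hidden unit 2 fires only when both inputs are on (logical AND).
    h_and = step(x1 + x2 - 1.5)
    # In the (h_or, h_and) representation a single linear threshold
    # separates the classes: output is "OR and not AND".
    return step(h_or - h_and - 0.5)

xor_table = {(a, b): xor_net(a, b) for a in (0, 1) for b in (0, 1)}
print(xor_table)
```

No single linear threshold on the raw inputs (x1, x2) can produce this table, which is why the hidden layer is needed.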

Methods

- Deep convolutional neural networks (CNNs)
  - Several layers of convolution, pooling, and dense layers (the dense layers usually at the end)
  - ImageNet classification with deep CNNs (Krizhevsky et al. 2012)
- Random hyperplanes
  - Extreme learning machine
  - Random bit regression
  - Deep networks with random weights

Theory

- What do we know theoretically about creating a space in which the input data is linearly separable?
- Thomas Cover (1965):
  - Every set of n points has a trivial n-dimensional linear representation: simply assign each point to one of the n vertices of an (n−1)-dimensional simplex.
- But what about creating a new linear space suitable for classification? (We want generalization to test examples, not just linear separability on the training data.)
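The trivial representation can be checked directly. In this sketch, which assumes the simplex vertices are taken to be the standard basis vectors (a one-hot encoding of the point index), every one of the 2^n possible labelings is separated by the weight vector w = y. The helper names are hypothetical.

```python
from itertools import product

def one_hot(i, n):
    """Vertex i of the simplex: the i-th standard basis vector in R^n."""
    return [1.0 if j == i else 0.0 for j in range(n)]

def separates(w, points, labels):
    """True if sign(w . x) matches the label for every point."""
    return all(
        sum(w_j * x_j for w_j, x_j in zip(w, x)) * y > 0
        for x, y in zip(points, labels)
    )

n = 4
points = [one_hot(i, n) for i in range(n)]

# For each labeling y in {-1, +1}^n, use the labels themselves as weights.
all_separable = all(
    separates(list(labels), points, labels)
    for labels in product((-1, 1), repeat=n)
)
print(all_separable)
```

The representation memorizes the training labels and says nothing about an unseen point, which is the generalization concern raised in the last bullet.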

Random hyperplanes: what do we know?

- Johnson-Lindenstrauss lemma:
  - A random projection of n points into O(log n / ε²) dimensions preserves all pairwise distances up to a factor of 1 ± ε with high probability
- Is the margin preserved?
- First, what can we say about the probability that a random hyperplane places two points on opposite sides of the plane? In other words, that their predictions are opposite.
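The distance-preservation statement can be observed empirically. The sketch below assumes a Gaussian random projection with entries drawn from N(0, 1/k), which is one common construction and not necessarily the one the slides have in mind; the sizes `n`, `d`, `k` and the seed are arbitrary illustrative choices.

```python
import math
import random

def random_projection(points, k, rng):
    """Project points from R^d to R^k with a k x d Gaussian matrix."""
    d = len(points[0])
    # Entries are N(0, 1/k), so squared lengths are preserved in expectation.
    R = [[rng.gauss(0.0, 1.0) / math.sqrt(k) for _ in range(d)] for _ in range(k)]
    return [[sum(r_j * x_j for r_j, x_j in zip(row, x)) for row in R] for x in points]

def dist(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((a_i - b_i) ** 2 for a_i, b_i in zip(a, b)))

rng = random.Random(0)
n, d, k = 10, 500, 200
X = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
Z = random_projection(X, k, rng)

# Ratio of projected distance to original distance for every pair of points.
ratios = [
    dist(Z[i], Z[j]) / dist(X[i], X[j])
    for i in range(n)
    for j in range(i + 1, n)
]
print(min(ratios), max(ratios))  # both should be close to 1
```

Increasing `k` tightens the spread of the ratios around 1, at the cost of a larger representation.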

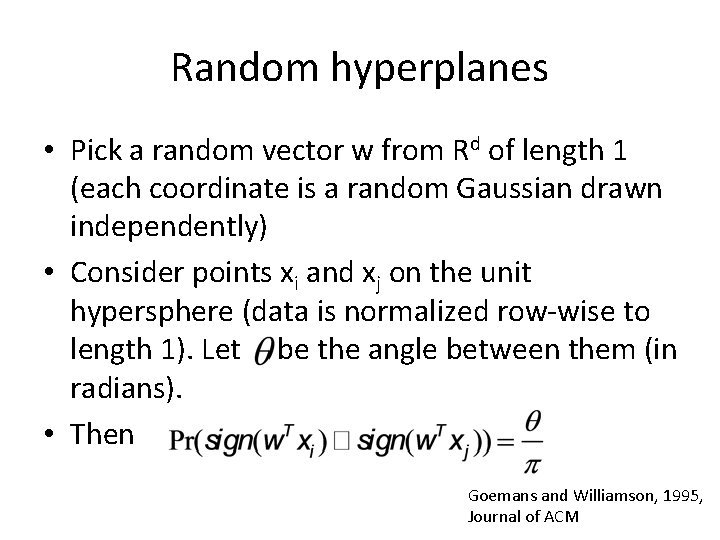

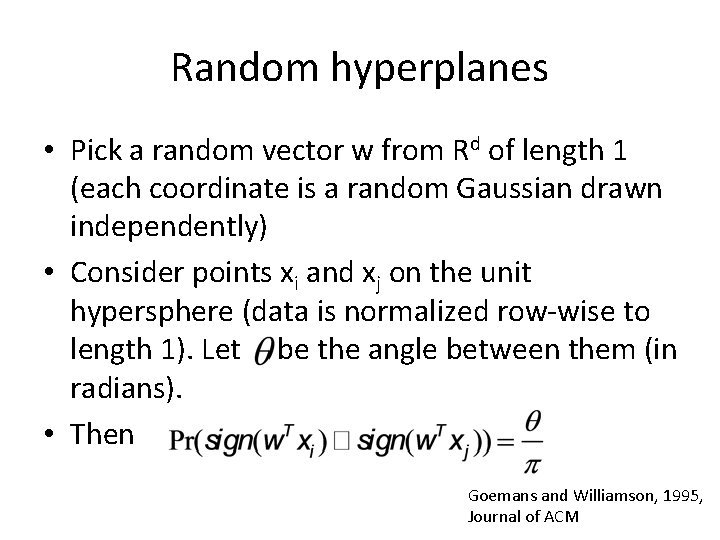

Random hyperplanes

- Pick a random vector $w \in \mathbb{R}^d$ of length 1 (draw each coordinate independently from a standard Gaussian, then normalize).
- Consider points $x_i$ and $x_j$ on the unit hypersphere (the data is normalized row-wise to length 1). Let $\theta$ be the angle between them (in radians).
- Then $\Pr[\operatorname{sign}(w^T x_i) \neq \operatorname{sign}(w^T x_j)] = \theta / \pi$ (Goemans and Williamson, 1995, Journal of the ACM).
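The Goemans and Williamson result says that a random hyperplane through the origin separates two unit vectors with probability θ/π, where θ is the angle between them. A quick Monte Carlo check, offered as a sketch with an arbitrary angle of π/3, dimension 3, and a fixed seed (all illustrative assumptions):

```python
import math
import random

def split_probability(xi, xj, trials, rng):
    """Estimate Pr[sign(w.xi) != sign(w.xj)] for a random Gaussian w."""
    d = len(xi)
    disagreements = 0
    for _ in range(trials):
        # Normalizing w to length 1 would not change the signs, so it is skipped.
        w = [rng.gauss(0.0, 1.0) for _ in range(d)]
        side_i = sum(a * b for a, b in zip(w, xi)) > 0
        side_j = sum(a * b for a, b in zip(w, xj)) > 0
        disagreements += side_i != side_j
    return disagreements / trials

theta = math.pi / 3
xi = [1.0, 0.0, 0.0]
xj = [math.cos(theta), math.sin(theta), 0.0]  # unit vector at angle theta from xi

estimate = split_probability(xi, xj, trials=20000, rng=random.Random(0))
print(estimate, theta / math.pi)  # the estimate should be near theta / pi
```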

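Random hyperplanes

- Based on the previous theorem:
  - Normalized vectors with a small angle between them will have a short Hamming distance between their sign codes (one bit per random hyperplane); with k hyperplanes the expected Hamming distance is kθ/π, where θ is the angle between the two vectors
- Balcan et al. 2006 and Shi et al. 2012 show that random projections preserve the margin up to a degree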
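To see the Hamming-distance consequence, the following sketch encodes each vector as one bit per random hyperplane and compares a nearby pair with a far-apart pair. The number of hyperplanes `k`, the 2-D vectors, and the helper names are hypothetical choices for illustration.

```python
import math
import random

def sign_code(x, hyperplanes):
    """One bit per hyperplane: 1 if x lies on its positive side, else 0."""
    return [1 if sum(w_j * x_j for w_j, x_j in zip(w, x)) > 0 else 0 for w in hyperplanes]

def hamming(a, b):
    """Number of positions at which two bit codes differ."""
    return sum(u != v for u, v in zip(a, b))

rng = random.Random(0)
k, d = 2000, 2
hyperplanes = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(k)]

base = [1.0, 0.0]
near = [math.cos(math.pi / 10), math.sin(math.pi / 10)]  # angle pi/10 from base
far = [0.0, 1.0]                                          # angle pi/2 from base

h_near = hamming(sign_code(base, hyperplanes), sign_code(near, hyperplanes))
h_far = hamming(sign_code(base, hyperplanes), sign_code(far, hyperplanes))

# Fractions of differing bits; expected values are theta / pi, i.e. 0.1 and 0.5.
print(h_near / k, h_far / k)
```

The bit codes act as a new representation in which Hamming distance tracks the angle between the original vectors.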