Introduction to formal models of argumentation Henry Prakken

- Slides: 166

Introduction to formal models of argumentation. Henry Prakken, Dundee (Scotland), September 4th, 2014

What is argumentation?

- Giving reasons to support claims that are open to doubt
- Defending these claims against attack
- NB: Inference + dialogue

Why study argumentation?

- In linguistics:
  - Argumentation is a form of language use
- In Artificial Intelligence:
  - Our applications have humans in the loop
  - We want to model rational reasoning but with standards of rationality that are attainable by humans
  - Argumentation is natural for humans
  - Trade-off between rationality and naturalness
- In Multi-Agent Systems:
  - Argumentation is a form of communication

Today: formal models of argumentation

- Abstract argumentation
- Argumentation as inference
  - Frameworks for structured argumentation
    - Deductive vs. defeasible inferences
  - Argument schemes
- Argumentation as dialogue

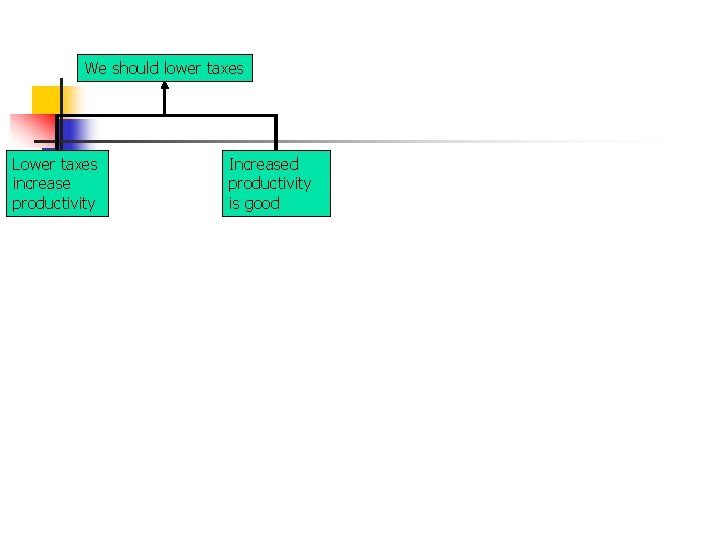

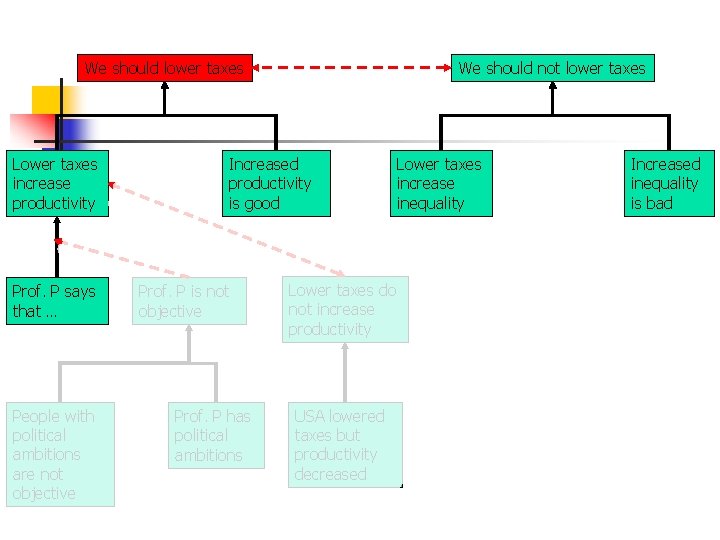

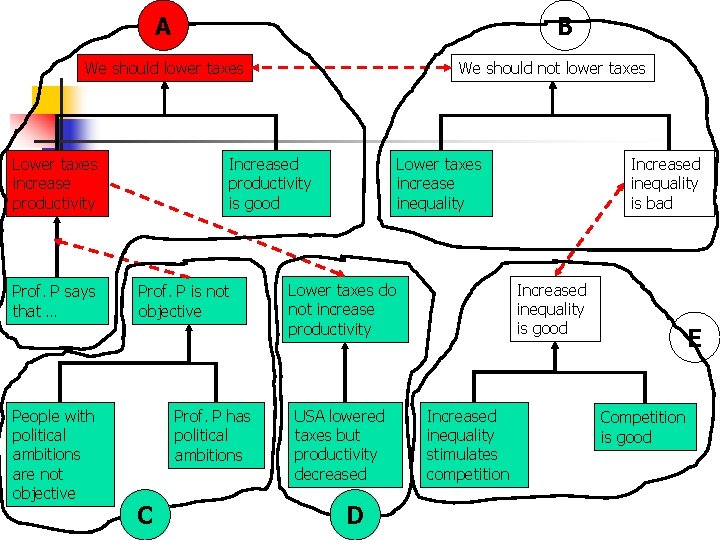

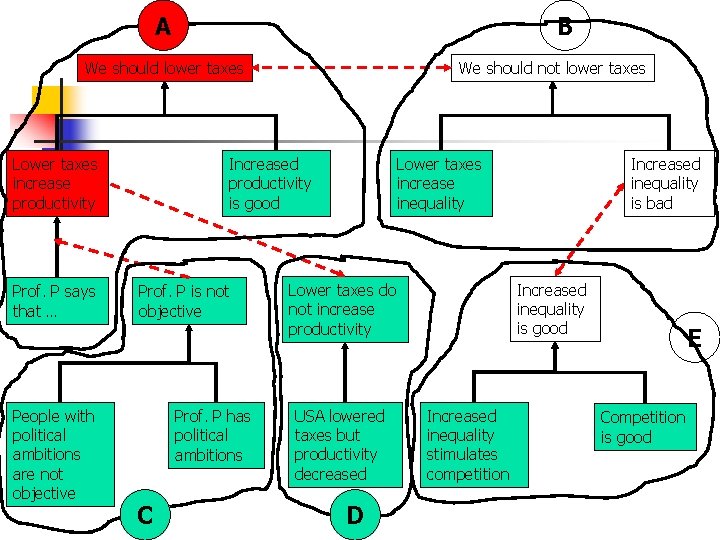

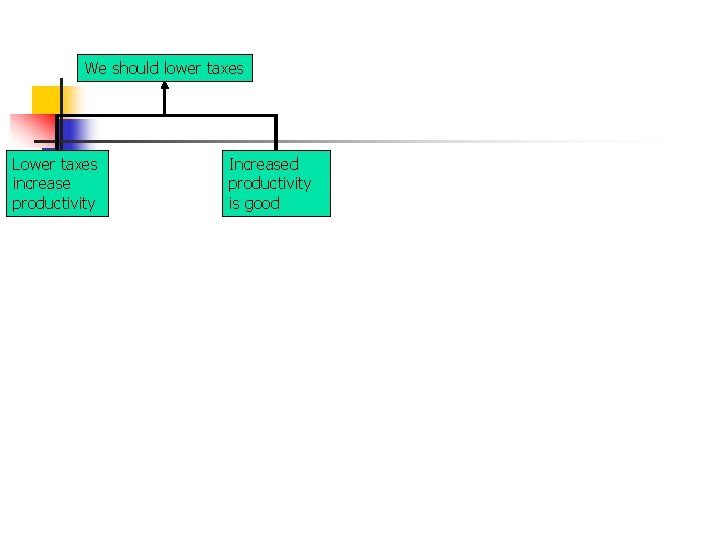

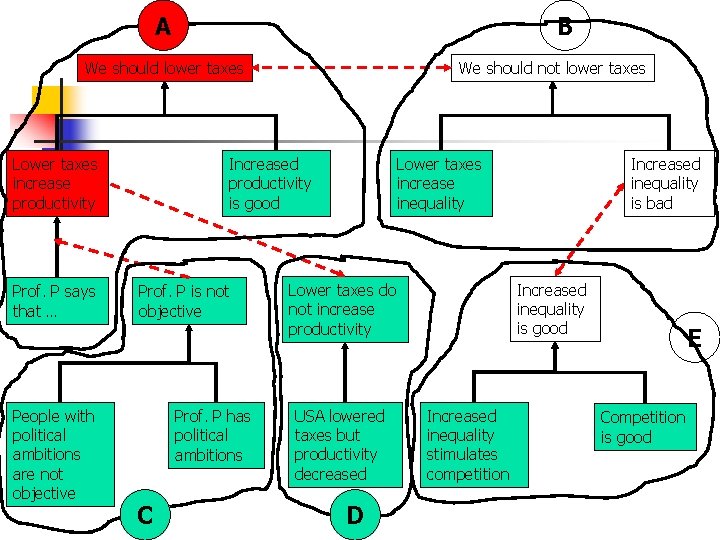

- Premise: Lower taxes increase productivity
- Premise: Increased productivity is good
- Conclusion: We should lower taxes

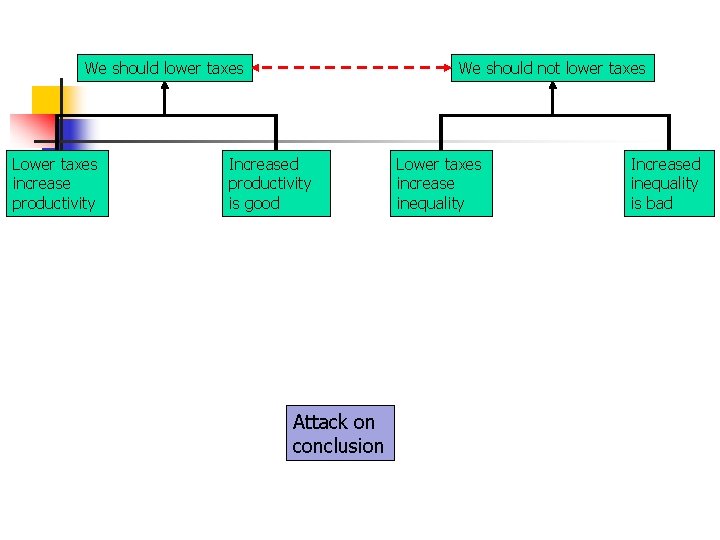

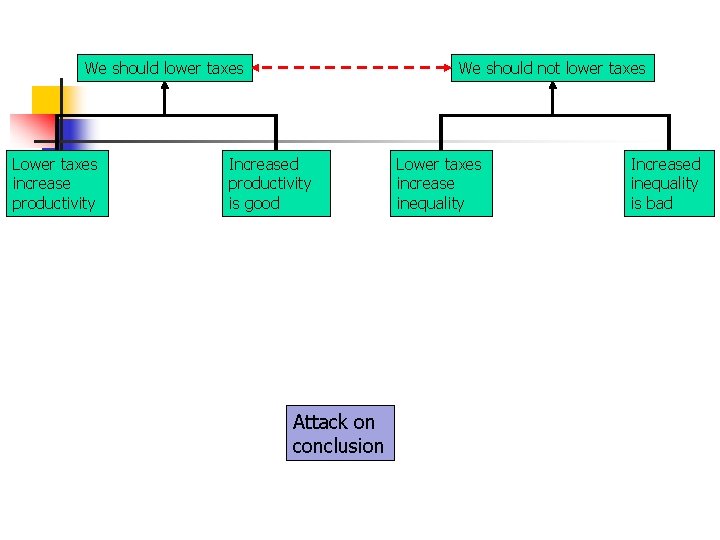

Attack on conclusion

- Argument: Lower taxes increase productivity; increased productivity is good. So: we should lower taxes.
- Counterargument: Lower taxes increase inequality; increased inequality is bad. So: we should not lower taxes.

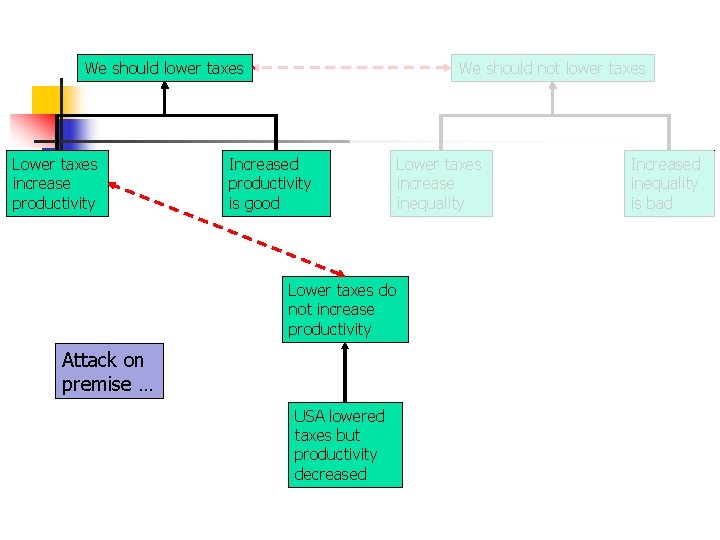

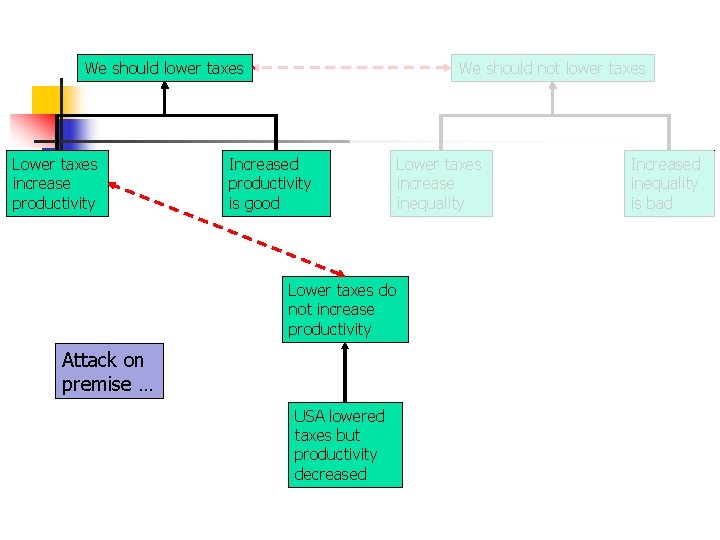

Attack on premise …

- Argument: Lower taxes increase productivity; increased productivity is good. So: we should lower taxes.
- Counterargument: Lower taxes increase inequality; increased inequality is bad. So: we should not lower taxes.
- Attack on the premise "Lower taxes increase productivity": USA lowered taxes but productivity decreased. So: lower taxes do not increase productivity.

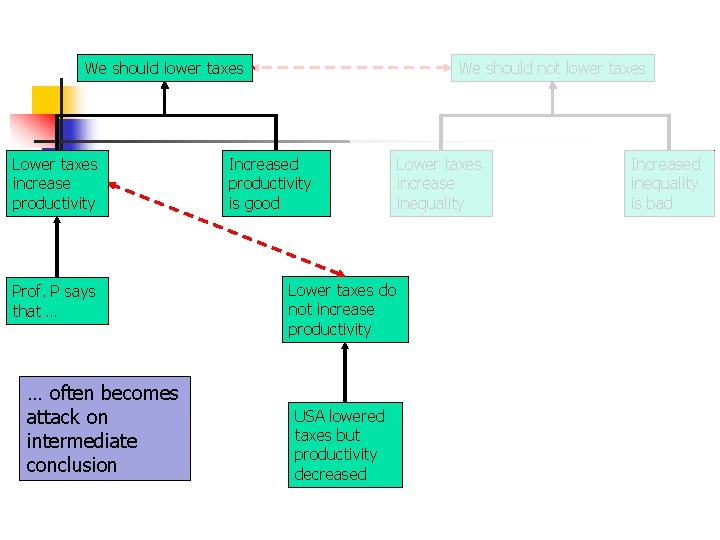

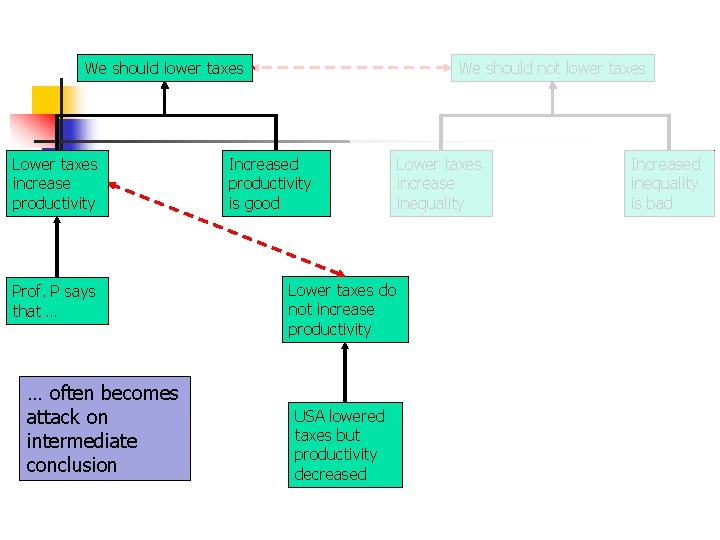

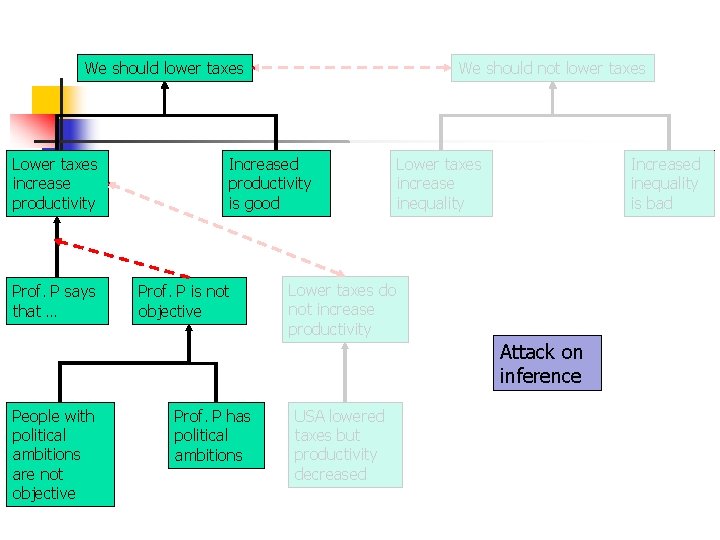

… often becomes attack on intermediate conclusion

- Argument: Prof. P says that … So: lower taxes increase productivity. Increased productivity is good. So: we should lower taxes.
- Counterargument: Lower taxes increase inequality; increased inequality is bad. So: we should not lower taxes.
- Attack on the intermediate conclusion "Lower taxes increase productivity": USA lowered taxes but productivity decreased. So: lower taxes do not increase productivity.

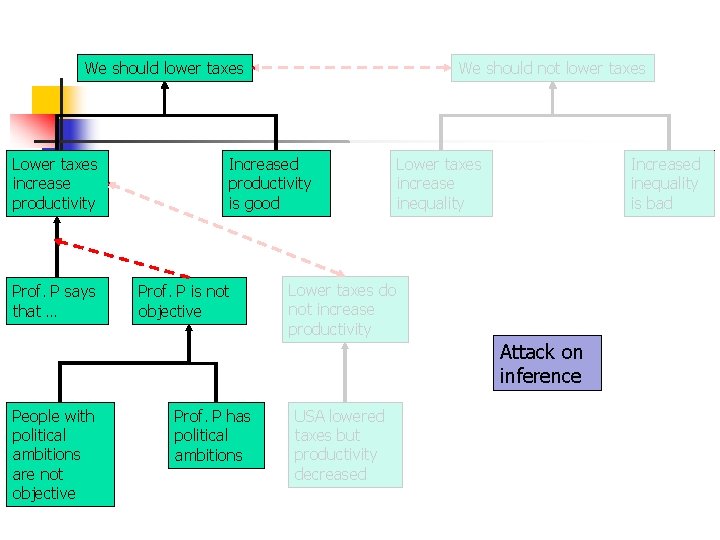

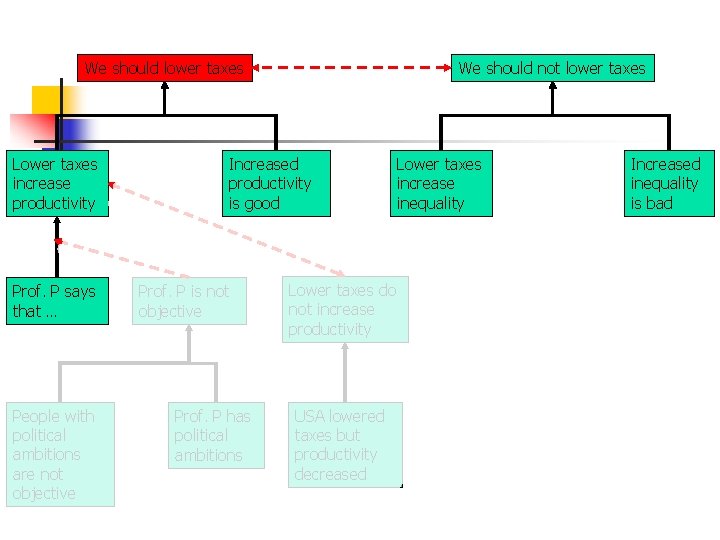

Attack on inference

- Argument: Prof. P says that … So: lower taxes increase productivity. Increased productivity is good. So: we should lower taxes.
- Counterargument: Lower taxes increase inequality; increased inequality is bad. So: we should not lower taxes.
- Attack on the intermediate conclusion: USA lowered taxes but productivity decreased. So: lower taxes do not increase productivity.
- Attack on the inference from "Prof. P says that …": Prof. P has political ambitions; people with political ambitions are not objective. So: Prof. P is not objective.

[Figure: the argument graph built so far: the argument for lowering taxes (supported by what Prof. P says), the counterargument from inequality, the attack on "Lower taxes increase productivity", and the attack on Prof. P's objectivity]

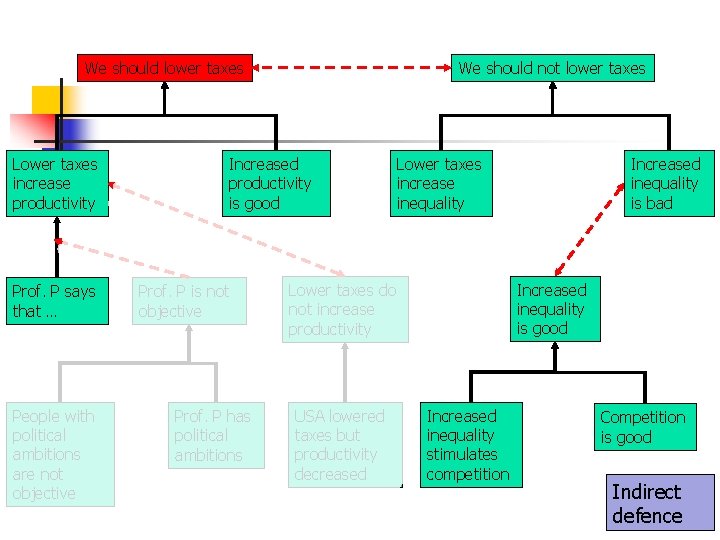

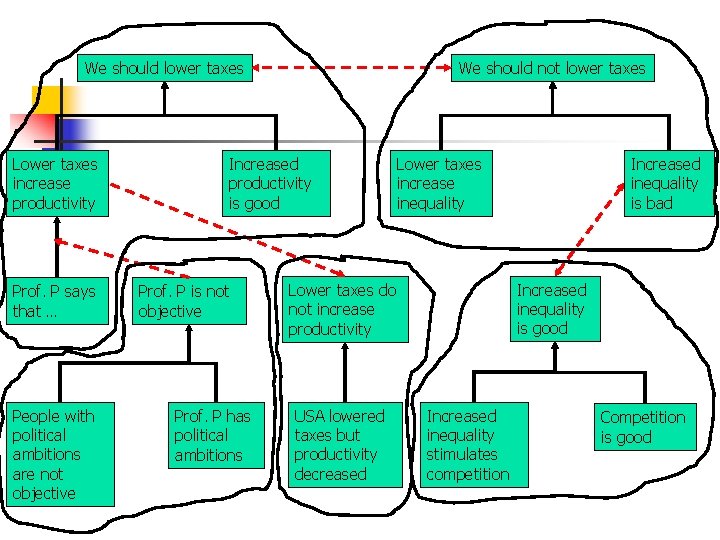

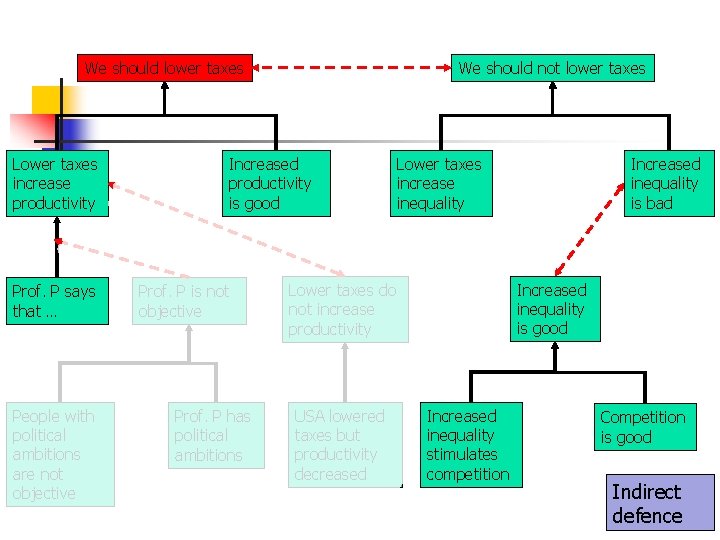

Indirect defence

- Argument: Prof. P says that … So: lower taxes increase productivity. Increased productivity is good. So: we should lower taxes.
- Counterargument: Lower taxes increase inequality; increased inequality is bad. So: we should not lower taxes.
- Attack on the intermediate conclusion: USA lowered taxes but productivity decreased. So: lower taxes do not increase productivity.
- Attack on the inference from "Prof. P says that …": Prof. P has political ambitions; people with political ambitions are not objective. So: Prof. P is not objective.
- Indirect defence, attacking "Increased inequality is bad": Increased inequality stimulates competition; competition is good. So: increased inequality is good.

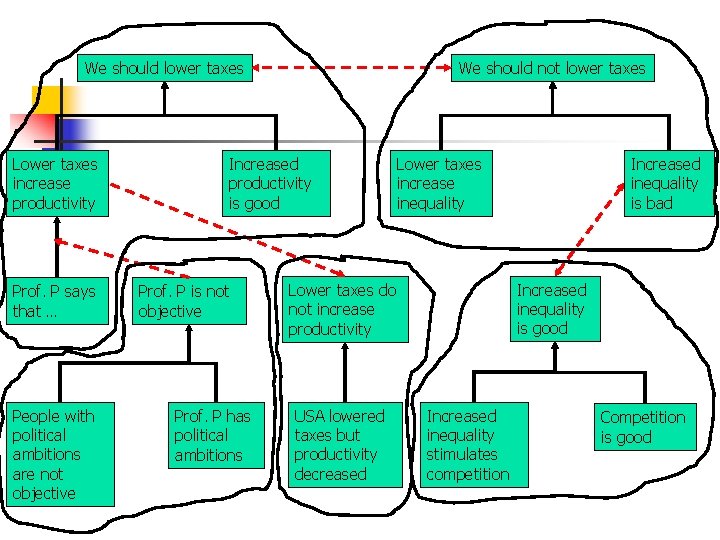

[Figure: the complete argument graph of the tax example, including the indirect defence from "Increased inequality stimulates competition" and "Competition is good"]

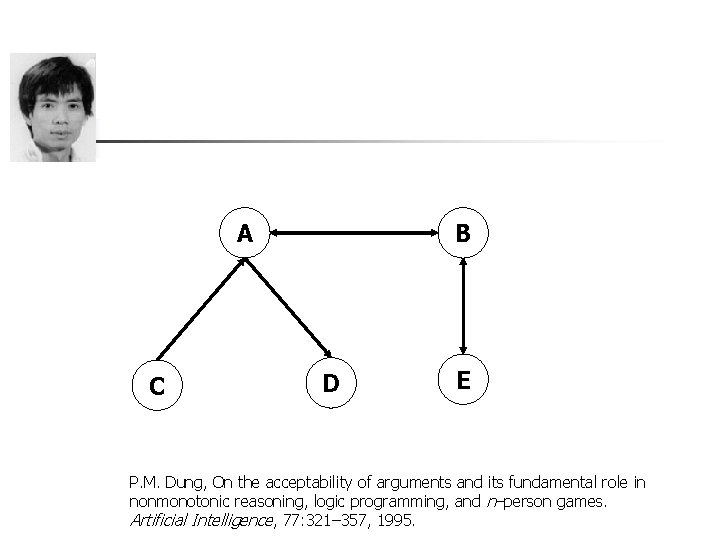

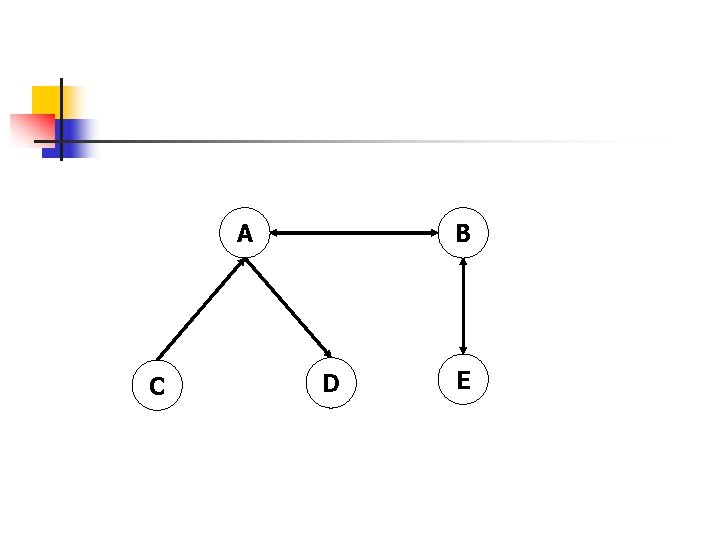

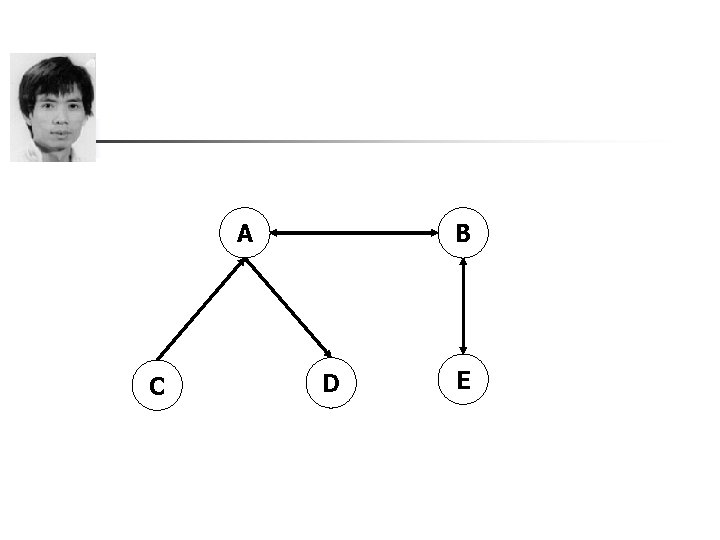

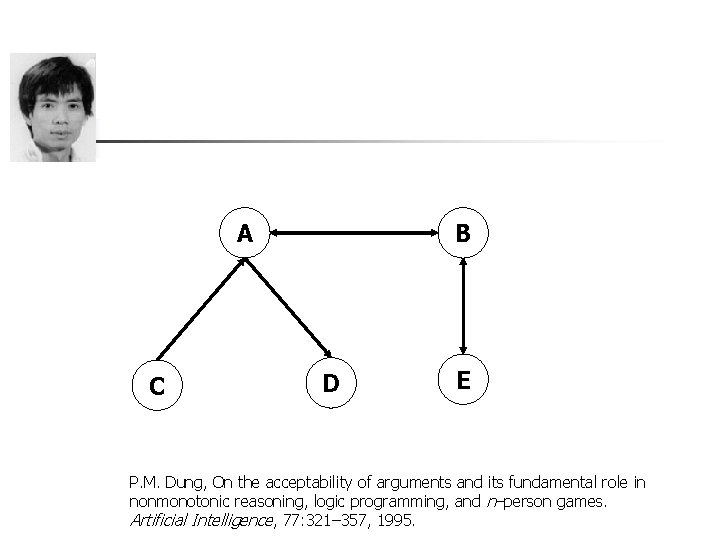

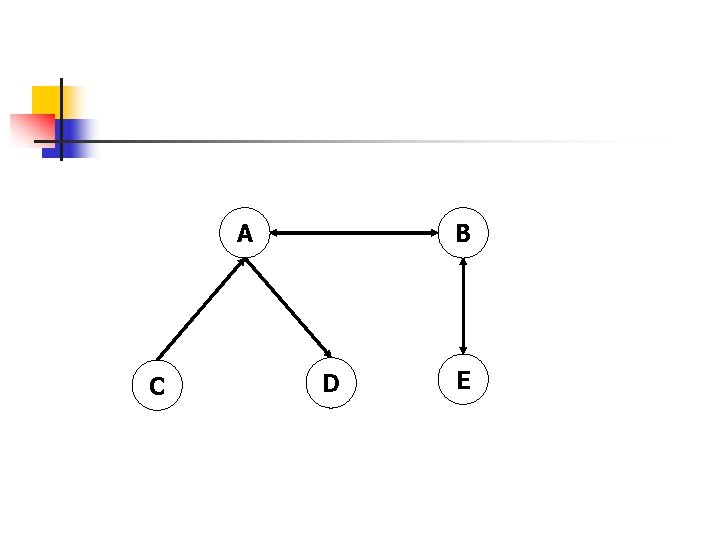

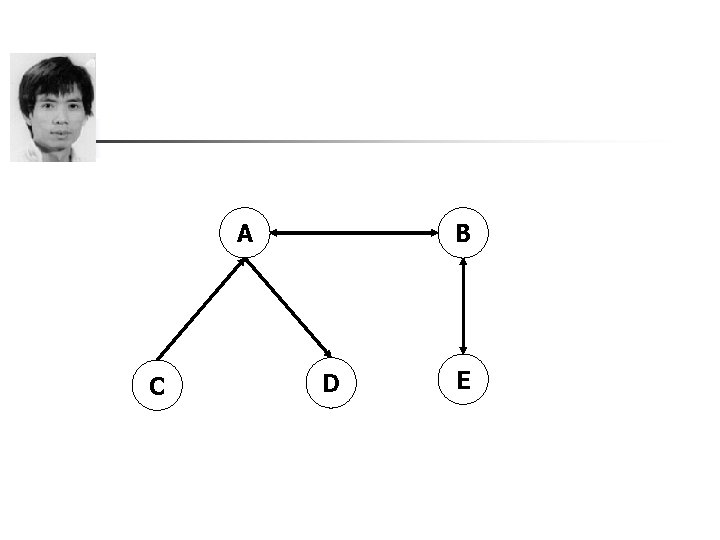

[Figure: abstract attack graph over arguments A, B, C, D, E]

P. M. Dung, On the acceptability of arguments and its fundamental role in nonmonotonic reasoning, logic programming, and n-person games. Artificial Intelligence 77: 321–357, 1995.

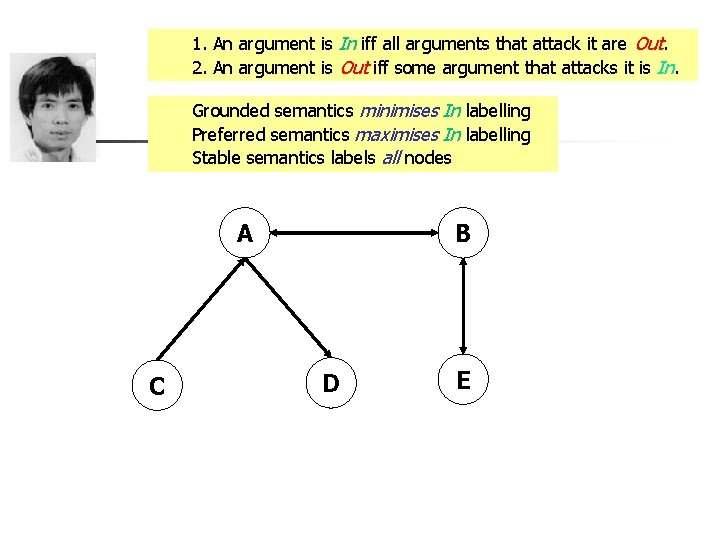

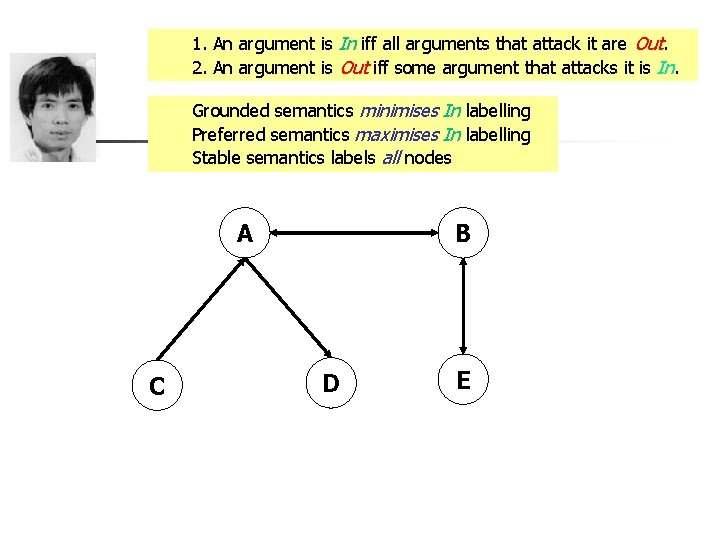

1. An argument is In iff all arguments that attack it are Out.
2. An argument is Out iff some argument that attacks it is In.

- Stable semantics labels all nodes
- Grounded semantics minimises In labelling
- Preferred semantics maximises In labelling

[Figure: attack graph over arguments A, B, C, D, E]

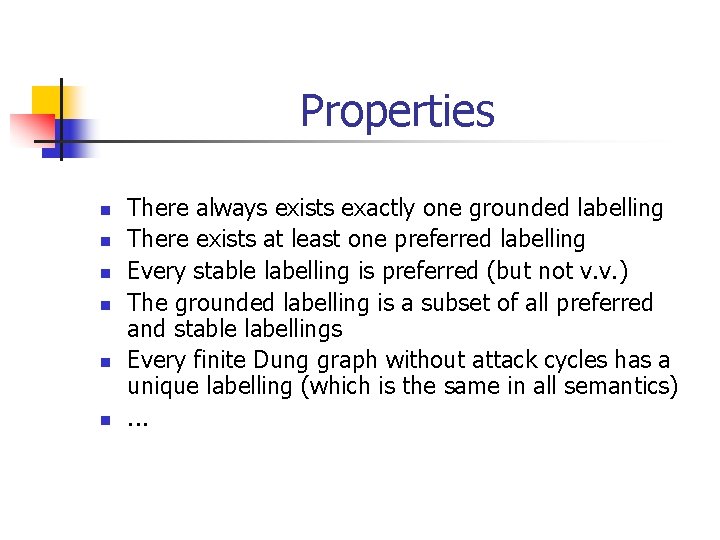

Properties

- There always exists exactly one grounded labelling
- There exists at least one preferred labelling
- Every stable labelling is preferred (but not vice versa)
- The In set of the grounded labelling is a subset of the In set of every preferred and stable labelling
- Every finite Dung graph without attack cycles has a unique labelling (which is the same in all semantics)
- …
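The two labelling rules and the three semantics can be checked by brute force on small graphs. The following is a minimal Python sketch, not an efficient algorithm. The function names and both example graphs are assumptions made for illustration, and arguments that are neither In nor Out are labelled `undec`.

```python
from itertools import product

def complete_labellings(args, attacks):
    """All labellings that satisfy rules 1 and 2; other arguments are 'undec'."""
    attackers = {a: [x for (x, y) in attacks if y == a] for a in args}
    found = []
    for labels in product(("in", "out", "undec"), repeat=len(args)):
        lab = dict(zip(args, labels))
        legal = all(
            (lab[a] == "in") == all(lab[b] == "out" for b in attackers[a])
            and (lab[a] == "out") == any(lab[b] == "in" for b in attackers[a])
            for a in args
        )
        if legal:
            found.append(lab)
    return found

def in_set(lab):
    return frozenset(a for a, label in lab.items() if label == "in")

def grounded(labs):
    # The grounded labelling is the one with the smallest In set.
    return min(labs, key=lambda lab: len(in_set(lab)))

def preferred(labs):
    # Preferred labellings have a subset-maximal In set.
    return [l for l in labs if not any(in_set(l) < in_set(m) for m in labs)]

def stable(labs):
    # Stable labellings label every argument In or Out.
    return [l for l in labs if "undec" not in l.values()]

# Hypothetical example: A and B attack each other, B attacks C.
ARGS = ["A", "B", "C"]
ATTACKS = {("A", "B"), ("B", "A"), ("B", "C")}
LABS = complete_labellings(ARGS, ATTACKS)

# Hypothetical odd cycle X -> Y -> Z -> X.
CYCLE = complete_labellings(["X", "Y", "Z"], {("X", "Y"), ("Y", "Z"), ("Z", "X")})
```

On the first graph every stable labelling is also preferred; on the odd cycle there is a preferred labelling but no stable one, which illustrates the "not vice versa" part.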

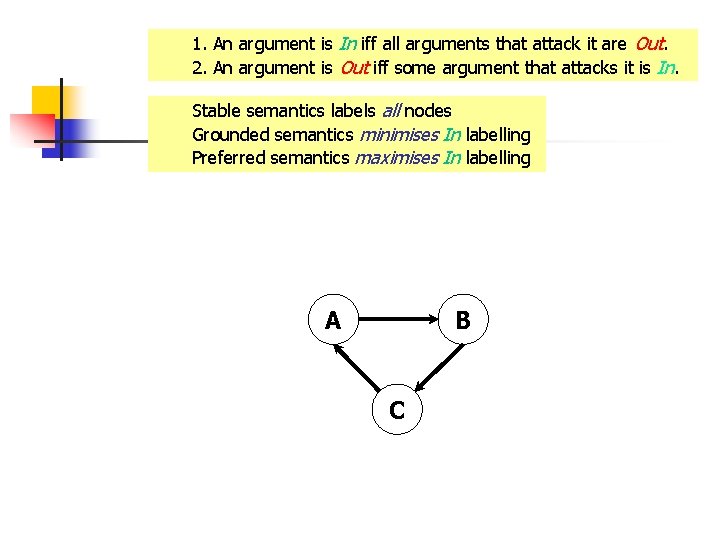

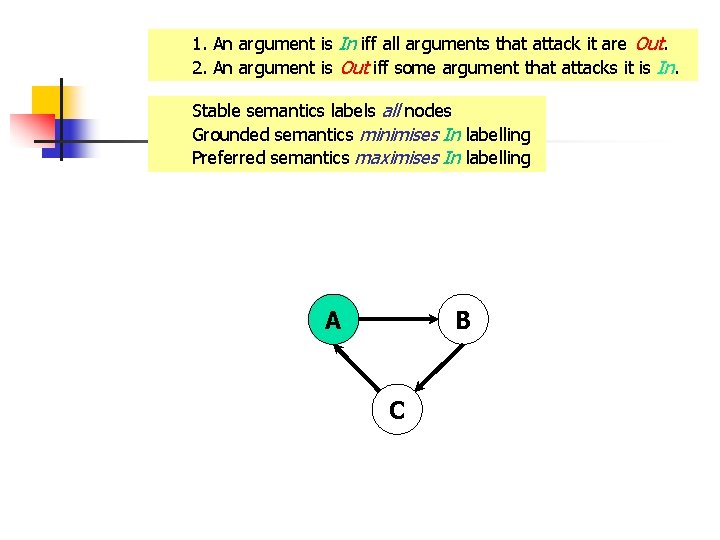

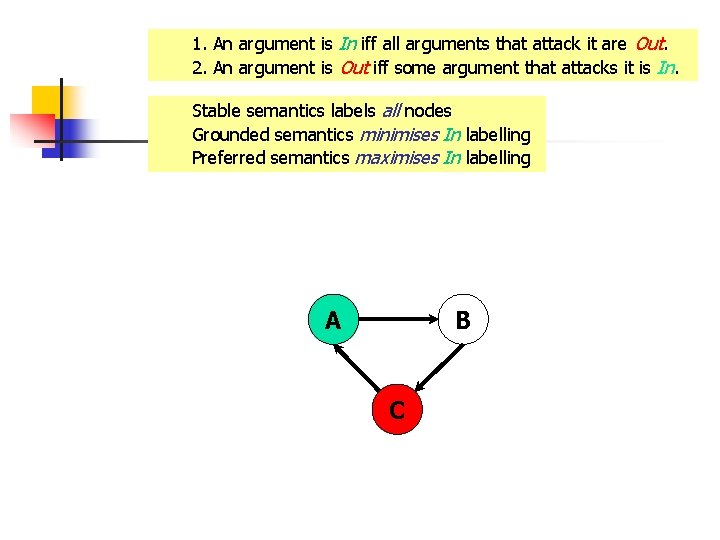

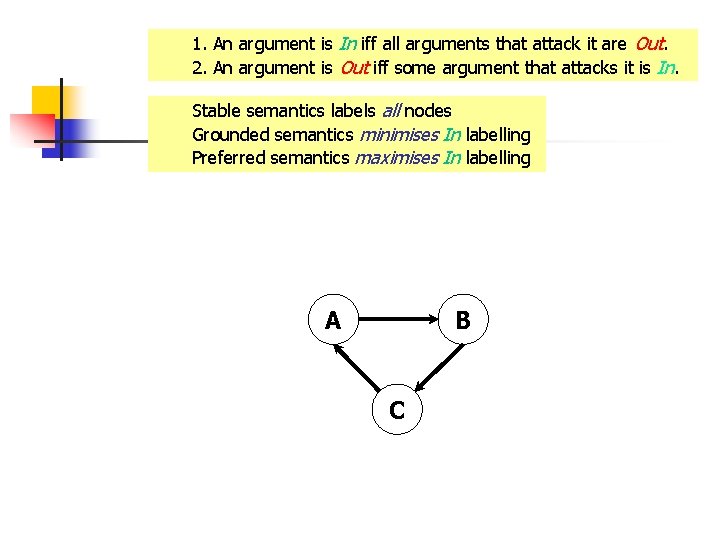

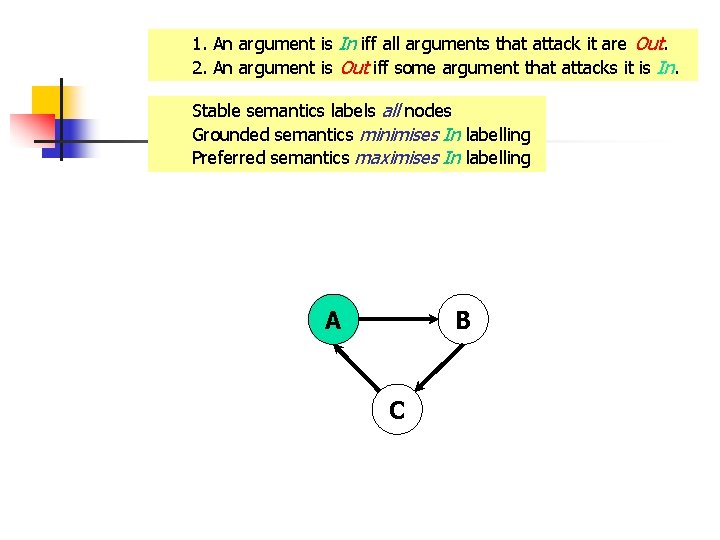

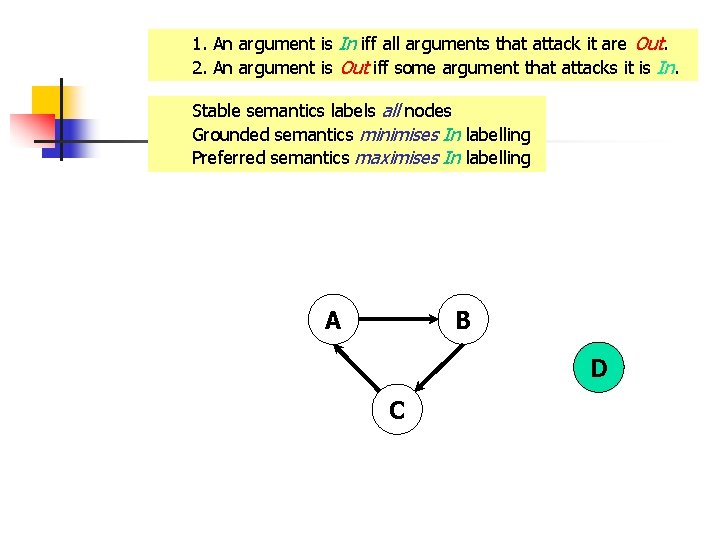

1. An argument is In iff all arguments that attack it are Out.
2. An argument is Out iff some argument that attacks it is In.

- Stable semantics labels all nodes
- Grounded semantics minimises In labelling
- Preferred semantics maximises In labelling

[Figure: attack graph over arguments A, B, C]

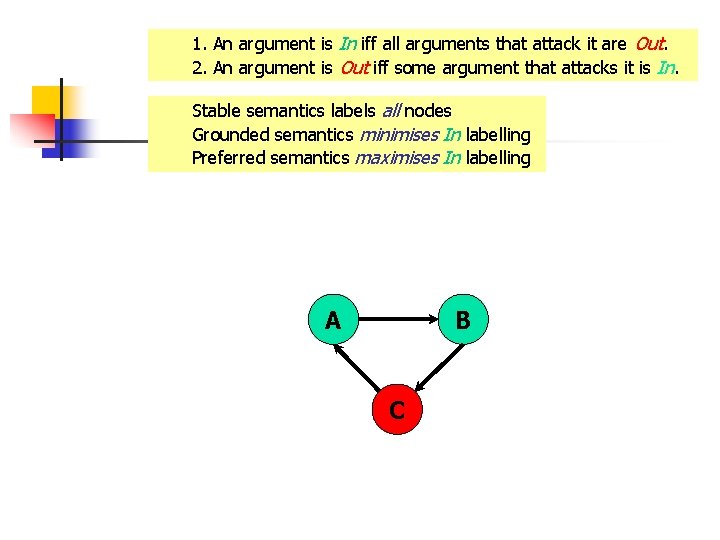

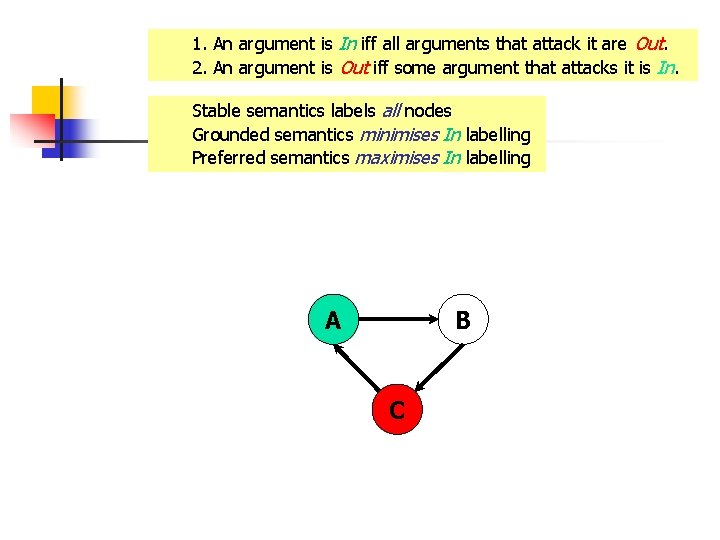


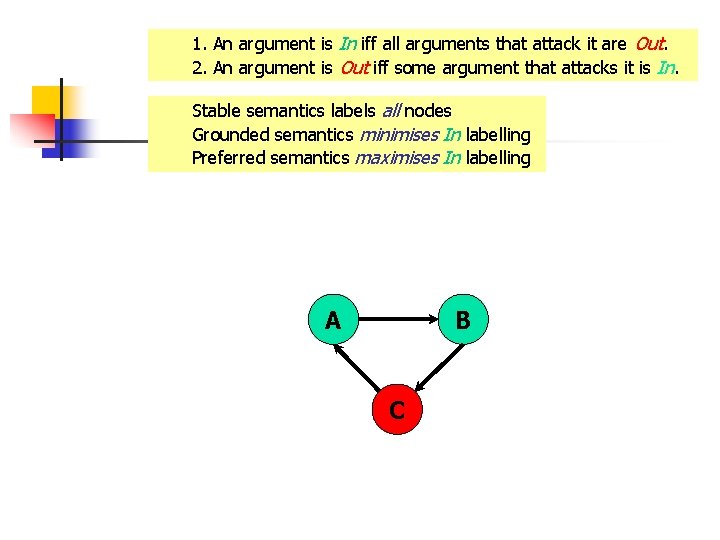


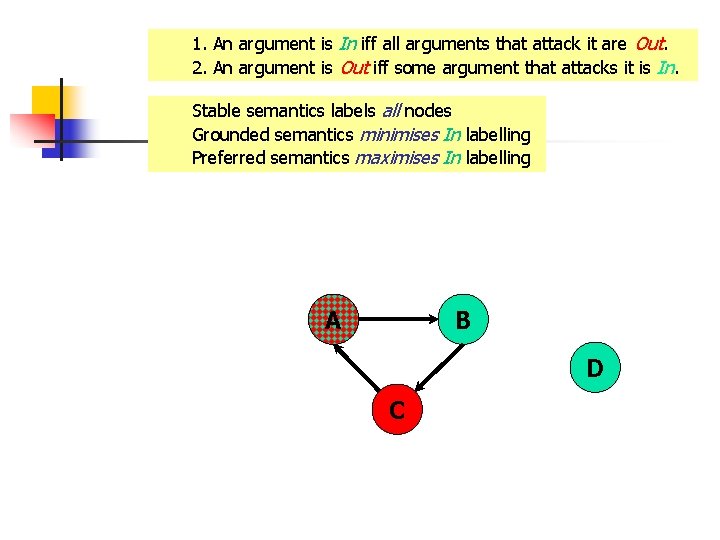

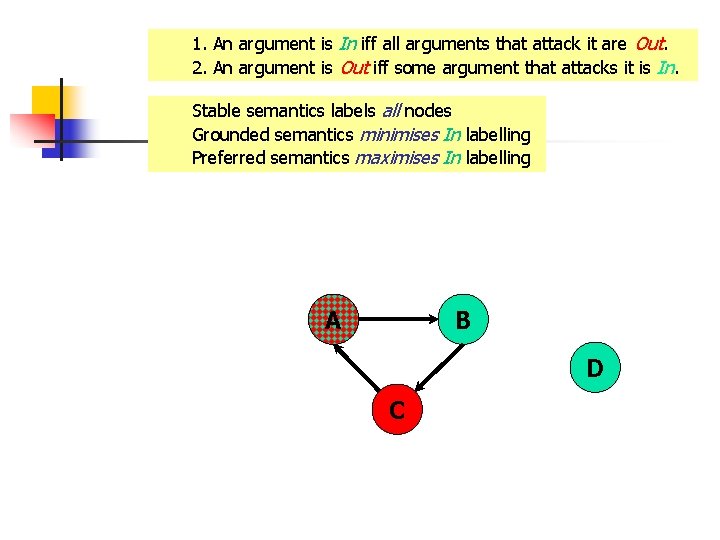

1. An argument is In iff all arguments that attack it are Out.
2. An argument is Out iff some argument that attacks it is In.

- Stable semantics labels all nodes
- Grounded semantics minimises In labelling
- Preferred semantics maximises In labelling

[Figure: attack graph over arguments A, B, C, D]

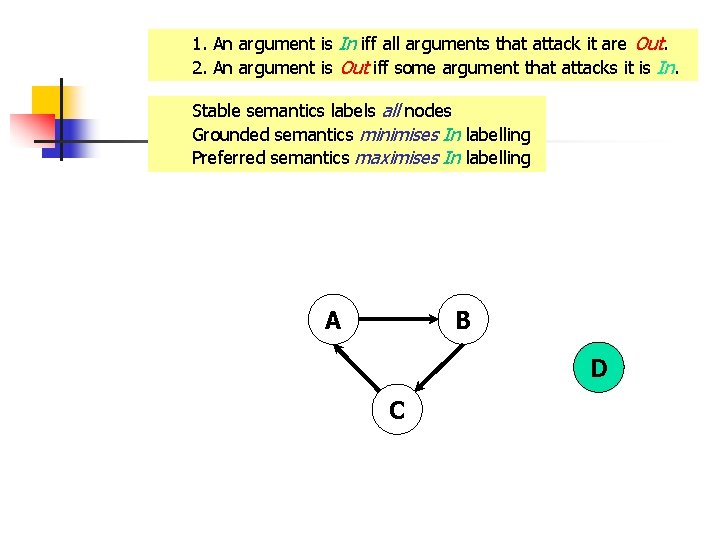


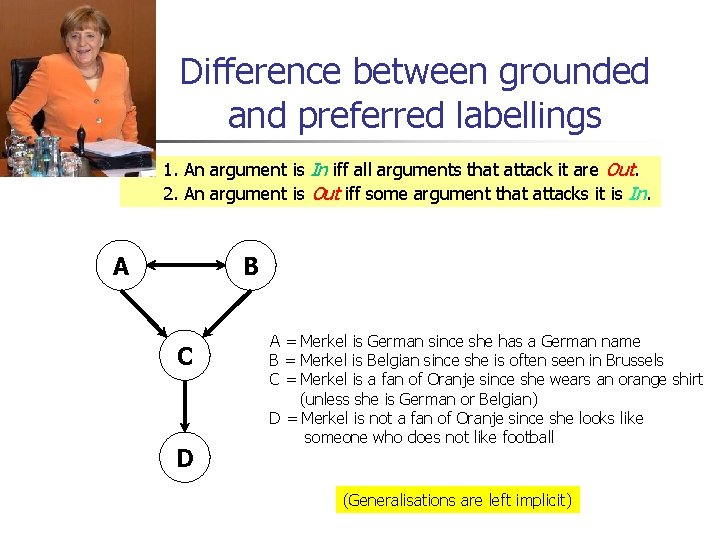

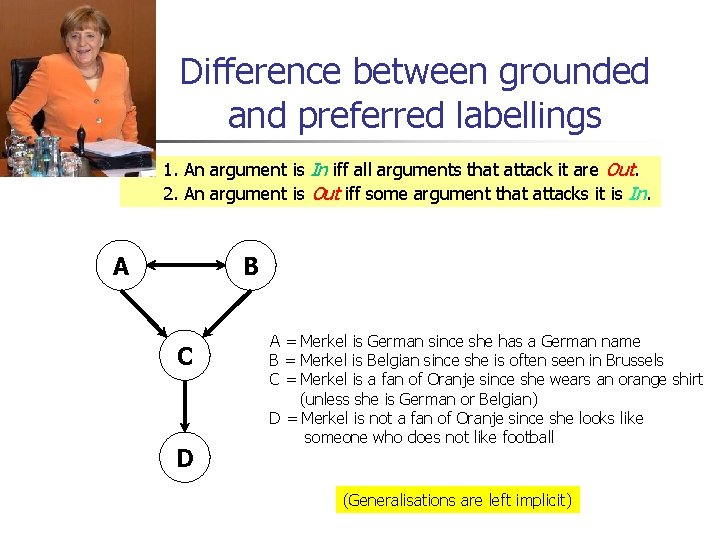

Difference between grounded and preferred labellings

1. An argument is In iff all arguments that attack it are Out.
2. An argument is Out iff some argument that attacks it is In.

[Figure: attack graph over arguments A, B, C, D]

- A = Merkel is German since she has a German name
- B = Merkel is Belgian since she is often seen in Brussels
- C = Merkel is a fan of Oranje since she wears an orange shirt (unless she is German or Belgian)
- D = Merkel is not a fan of Oranje since she looks like someone who does not like football

(Generalisations are left implicit)

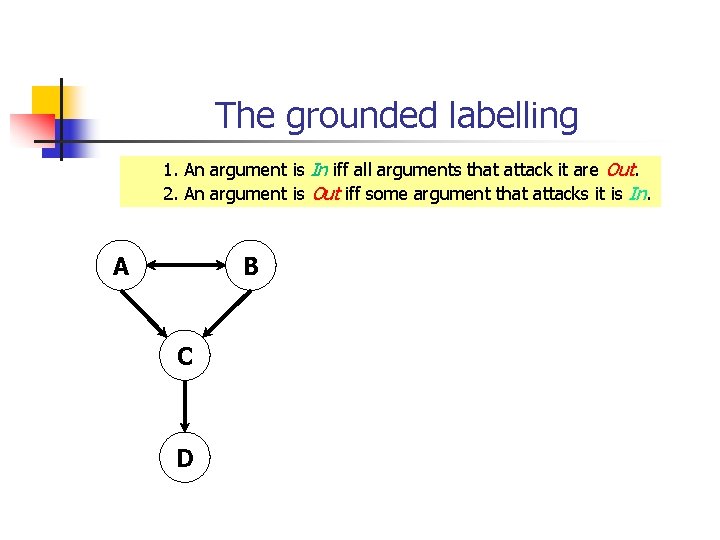

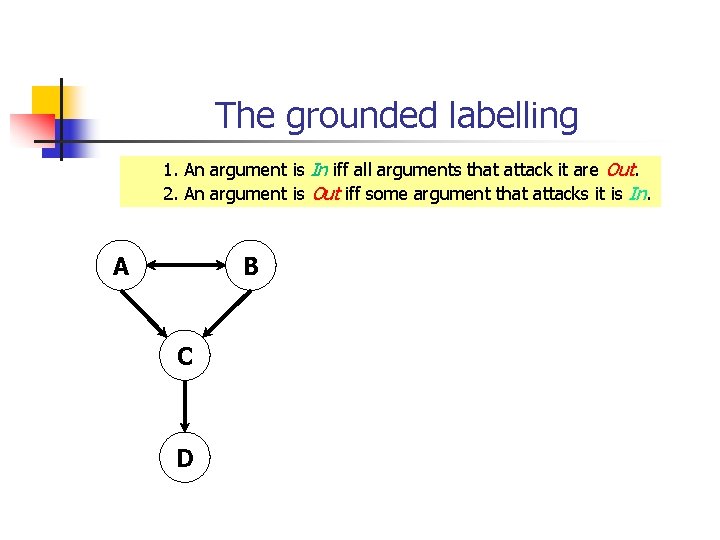

The grounded labelling

1. An argument is In iff all arguments that attack it are Out.
2. An argument is Out iff some argument that attacks it is In.

[Figure: the grounded labelling of the graph over A, B, C, D]

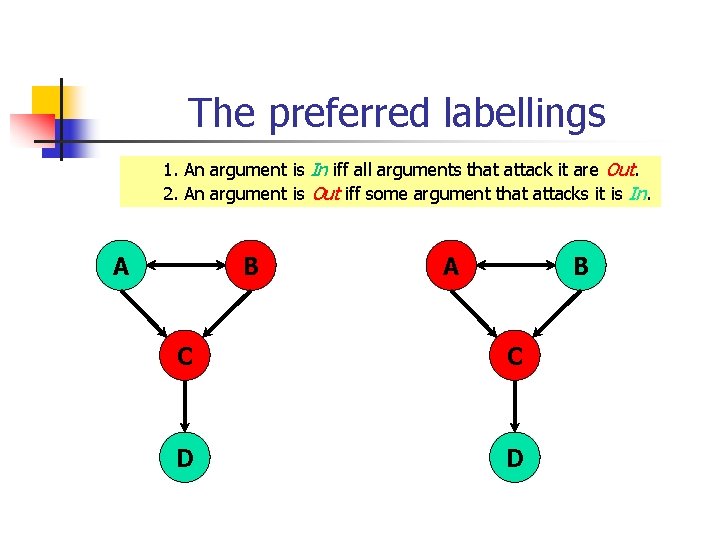

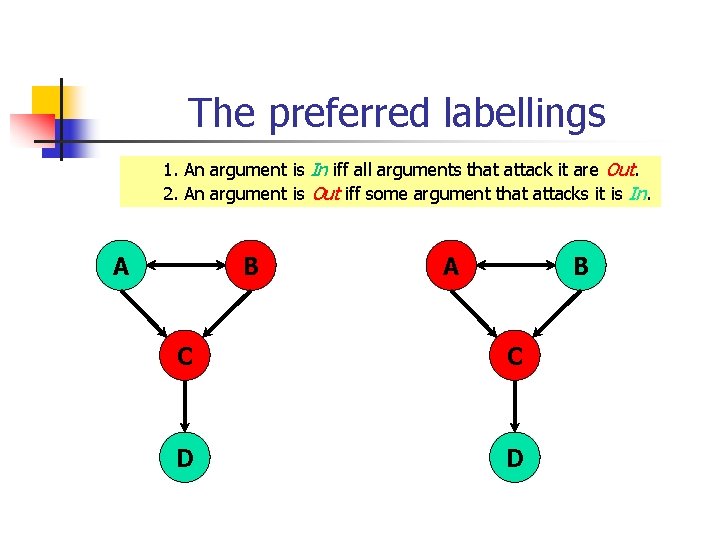

The preferred labellings

1. An argument is In iff all arguments that attack it are Out.
2. An argument is Out iff some argument that attacks it is In.

[Figure: the preferred labellings of the graph over A, B, C, D]

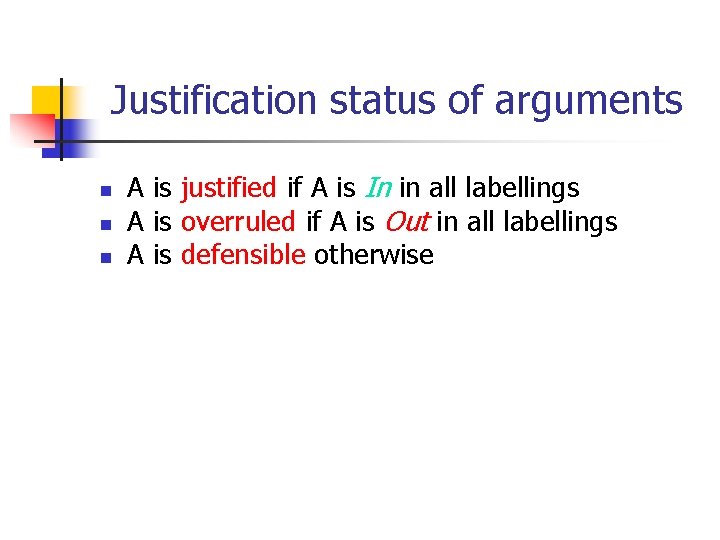

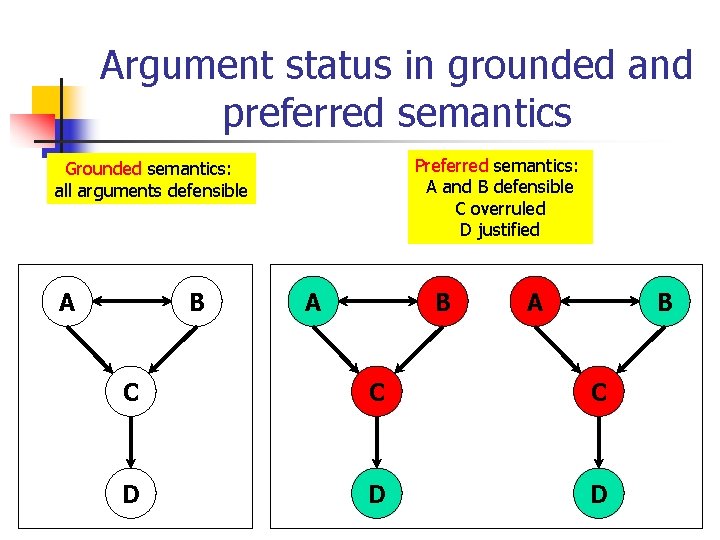

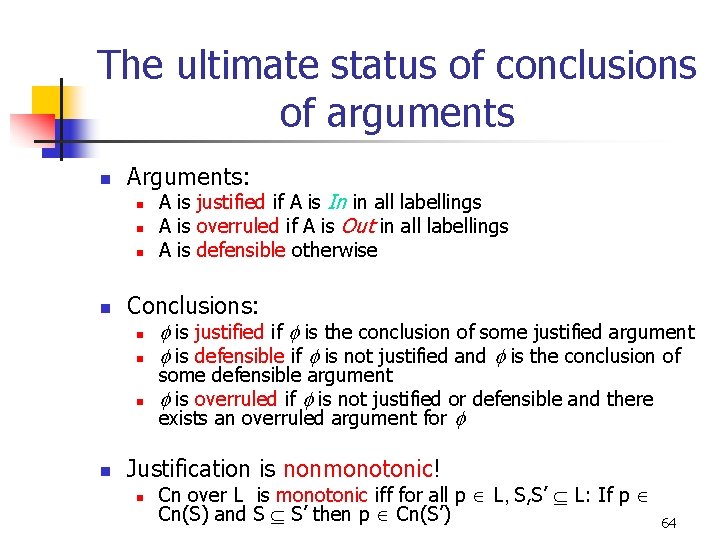

Justification status of arguments

- A is justified if A is In in all labellings
- A is overruled if A is Out in all labellings
- A is defensible otherwise

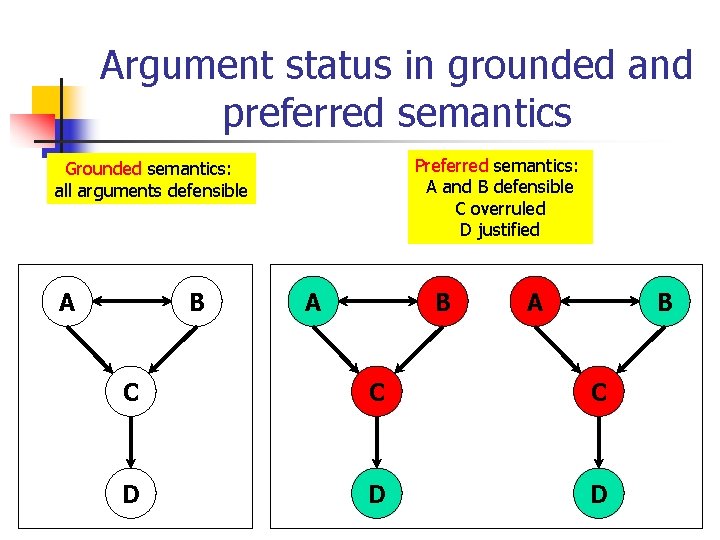

Argument status in grounded and preferred semantics

- Grounded semantics: all arguments defensible
- Preferred semantics:
  - A and B defensible
  - C overruled
  - D justified

[Figure: labelled attack graphs over A, B, C, D]
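The statuses listed for the Merkel example can be reproduced mechanically. The sketch below is illustrative only. The attack graph is an assumption reconstructed from the descriptions of A to D (A and B attack each other, both attack C, and C and D attack each other), and all function names are hypothetical.

```python
from itertools import product

# Assumed attack graph for the Merkel example.
ARGS = ["A", "B", "C", "D"]
ATTACKS = {("A", "B"), ("B", "A"), ("A", "C"), ("B", "C"), ("C", "D"), ("D", "C")}

def complete_labellings(args, attacks):
    """All labellings satisfying the In and Out rules; the rest is 'undec'."""
    attackers = {a: [x for (x, y) in attacks if y == a] for a in args}
    found = []
    for labels in product(("in", "out", "undec"), repeat=len(args)):
        lab = dict(zip(args, labels))
        if all((lab[a] == "in") == all(lab[b] == "out" for b in attackers[a])
               and (lab[a] == "out") == any(lab[b] == "in" for b in attackers[a])
               for a in args):
            found.append(lab)
    return found

def in_set(lab):
    return frozenset(a for a, label in lab.items() if label == "in")

def status(arg, labs):
    """Justified if In in all labellings, overruled if Out in all, else defensible."""
    labels = {lab[arg] for lab in labs}
    if labels == {"in"}:
        return "justified"
    if labels == {"out"}:
        return "overruled"
    return "defensible"

LABS = complete_labellings(ARGS, ATTACKS)
GROUNDED = [min(LABS, key=lambda l: len(in_set(l)))]          # minimal In set
PREFERRED = [l for l in LABS
             if not any(in_set(l) < in_set(m) for m in LABS)]  # maximal In sets

GROUNDED_STATUS = {a: status(a, GROUNDED) for a in ARGS}
PREFERRED_STATUS = {a: status(a, PREFERRED) for a in ARGS}
print("grounded: ", GROUNDED_STATUS)
print("preferred:", PREFERRED_STATUS)
```

Under the assumed graph, the grounded labelling leaves every argument undecided, while both preferred labellings agree that D is In and C is Out.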

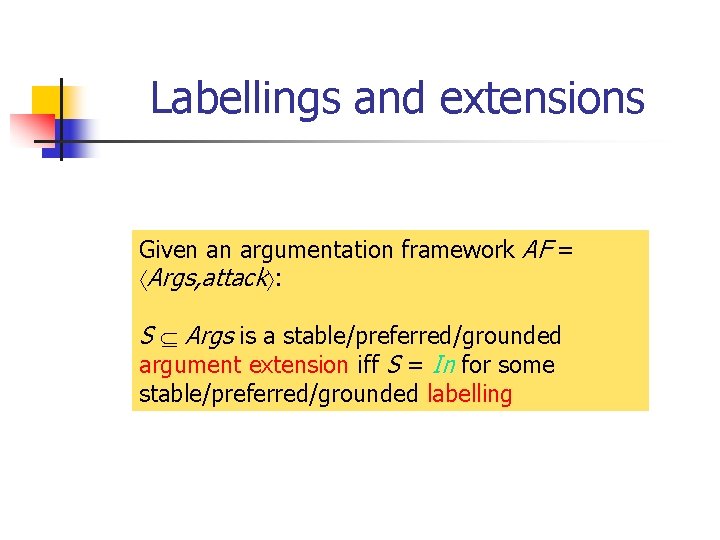

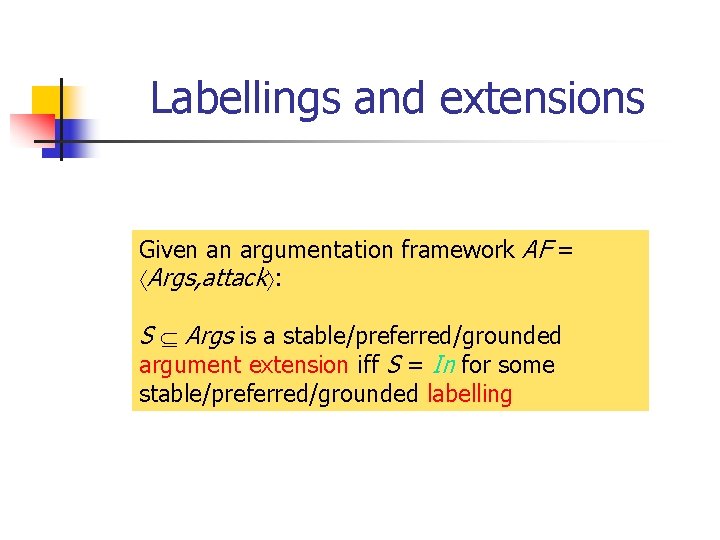

Labellings and extensions

Given an argumentation framework AF = ⟨Args, attack⟩: S ⊆ Args is a stable/preferred/grounded argument extension iff S = In for some stable/preferred/grounded labelling

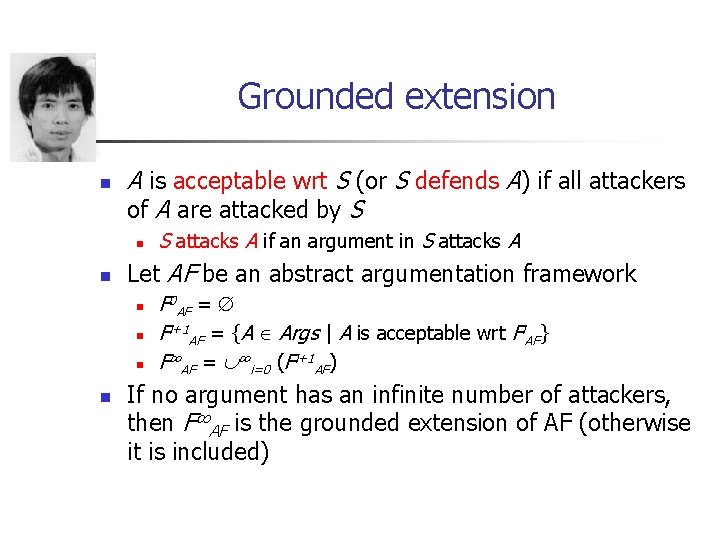

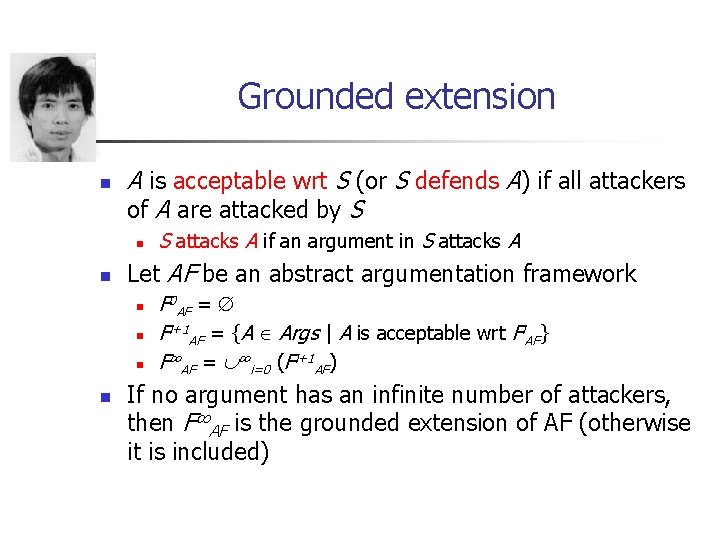

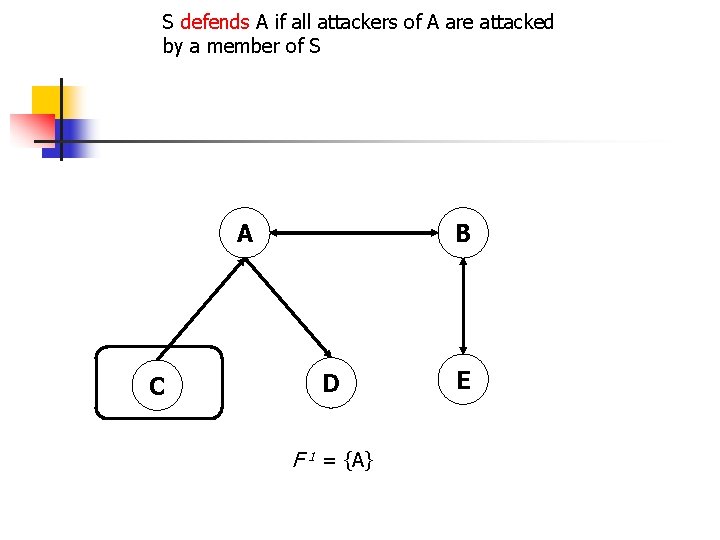

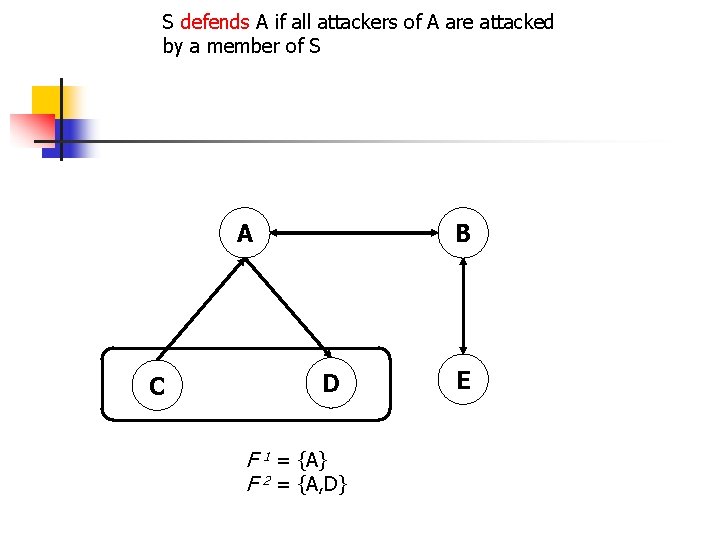

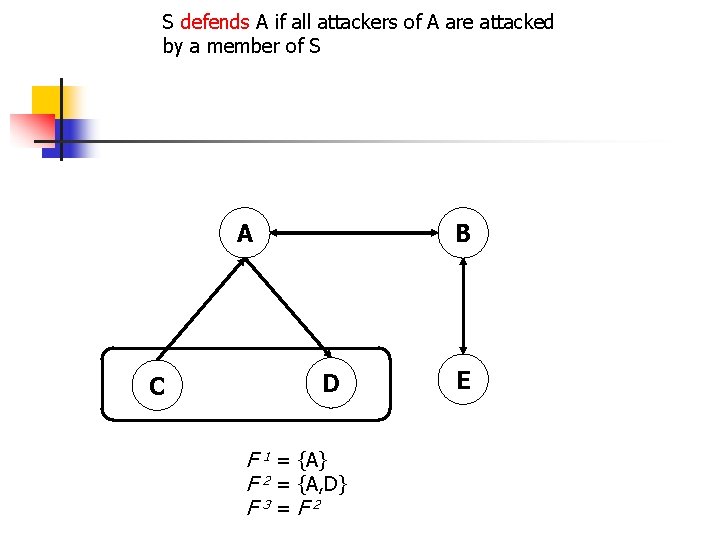

Grounded extension

- A is acceptable wrt S (or S defends A) if all attackers of A are attacked by S
  - S attacks A if an argument in S attacks A
- Let AF be an abstract argumentation framework
  - $F^{0}_{AF} = \emptyset$
  - $F^{i+1}_{AF} = \{A \in Args \mid A \text{ is acceptable wrt } F^{i}_{AF}\}$
  - $F^{\infty}_{AF} = \bigcup_{i=0}^{\infty} F^{i+1}_{AF}$
- If no argument has an infinite number of attackers, then $F^{\infty}_{AF}$ is the grounded extension of AF (otherwise it is included in the grounded extension)

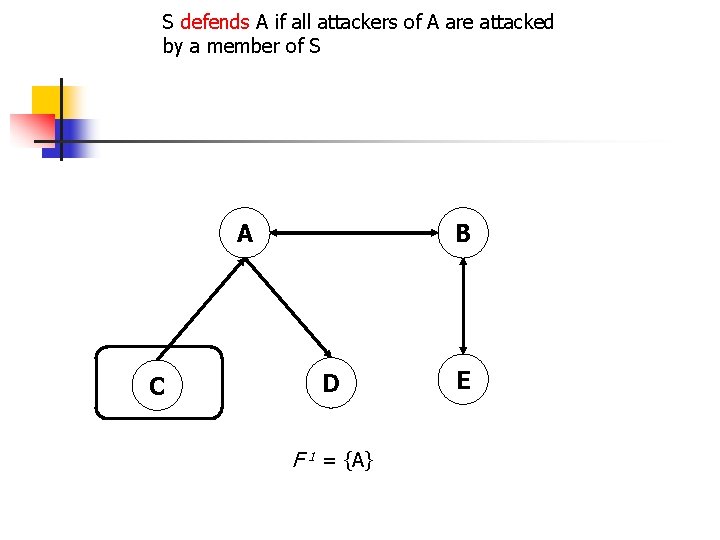

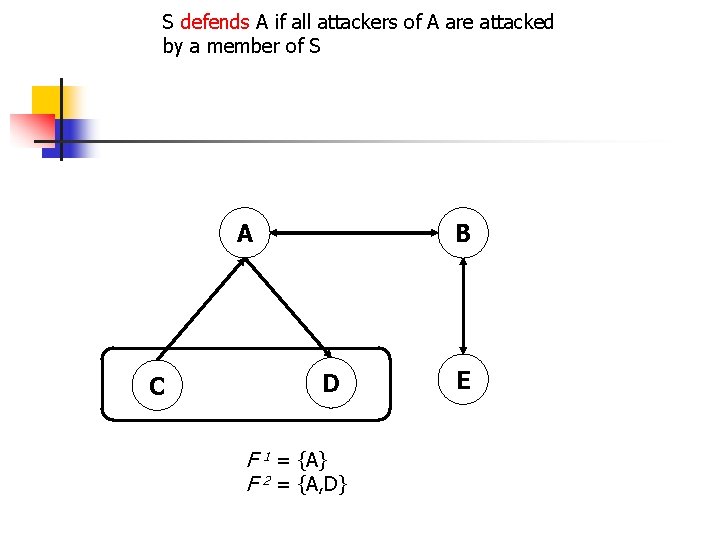

S defends A if all attackers of A are attacked by a member of S

[Figure: attack graph over arguments A, B, C, D, E]

- F1 = {A}

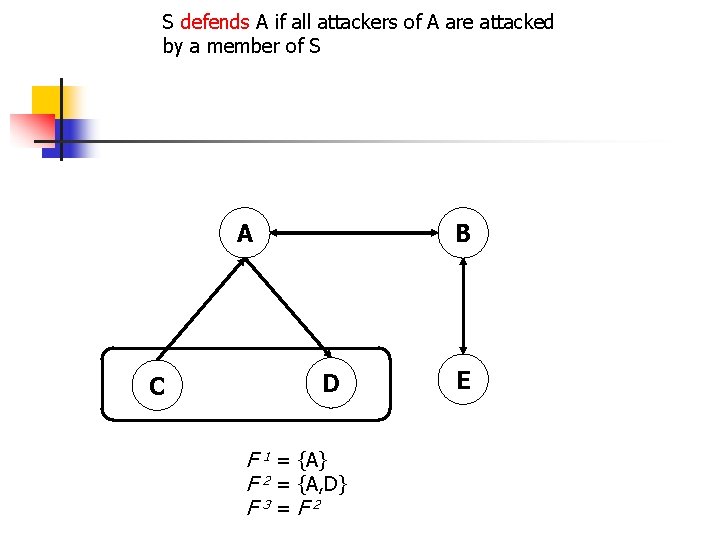

S defends A if all attackers of A are attacked by a member of S

[Figure: attack graph over arguments A, B, C, D, E]

- F1 = {A}
- F2 = {A, D}

S defends A if all attackers of A are attacked by a member of S

[Figure: attack graph over arguments A, B, C, D, E]

- F1 = {A}
- F2 = {A, D}
- F3 = F2
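The iteration F1, F2, F3 = F2 can be sketched in a few lines of Python. This is an illustration for finite graphs only. The attack relation below is a hypothetical graph chosen so that it reproduces the sequence {A}, {A, D}; it is not necessarily the graph shown on the slide, and the function names are assumptions.

```python
def characteristic(S, args, attacks):
    """F(S): the arguments all of whose attackers are attacked by a member of S."""
    def attacked_by_S(x):
        return any((s, x) in attacks for s in S)
    return {a for a in args
            if all(attacked_by_S(b) for b in args if (b, a) in attacks)}

def grounded_extension(args, attacks):
    """Iterate F from the empty set until a fixed point is reached."""
    S, trace = set(), []
    while True:
        nxt = characteristic(S, args, attacks)
        if nxt == S:
            return S, trace
        trace.append(nxt)
        S = nxt

# Hypothetical graph: A attacks B, B attacks D, C and E attack each other.
ARGS = ["A", "B", "C", "D", "E"]
ATTACKS = {("A", "B"), ("B", "D"), ("C", "E"), ("E", "C")}

EXTENSION, TRACE = grounded_extension(ARGS, ATTACKS)
print(TRACE)      # the successive sets F1, F2, ...
print(EXTENSION)  # the fixed point
```

C and E never enter the result because neither is defended against the other by an argument that is already accepted.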

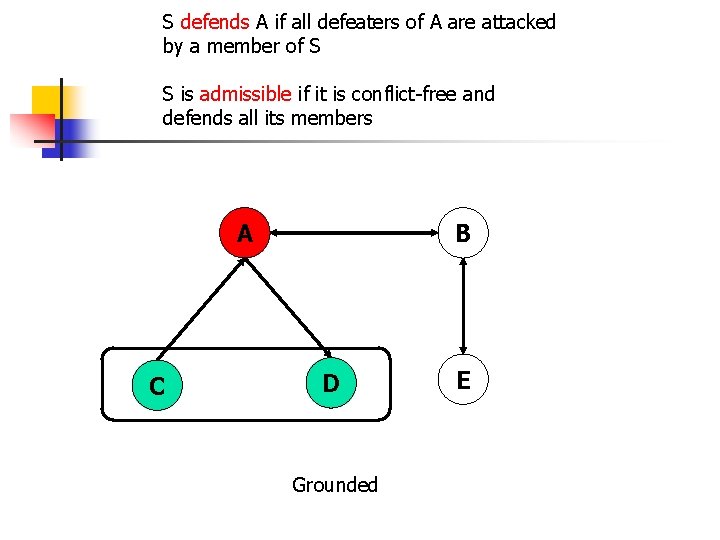

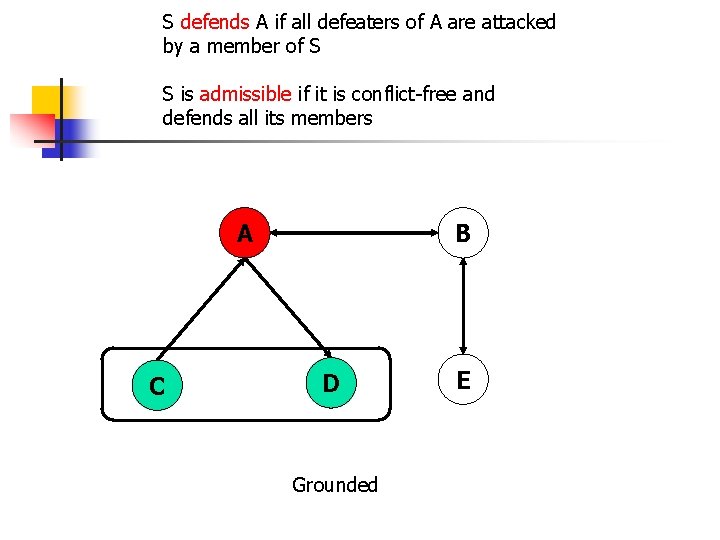

- S defends A if all attackers of A are attacked by a member of S
- S is admissible if it is conflict-free and defends all its members

[Figure: attack graph over arguments A, B, C, D, E, showing the grounded extension]

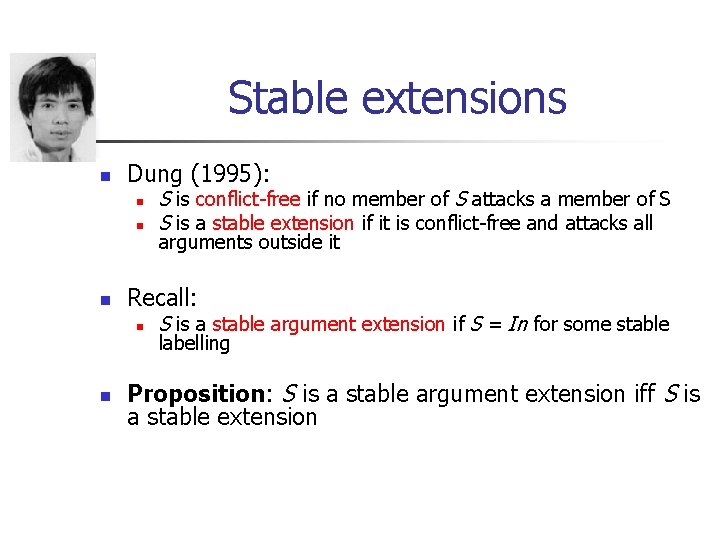

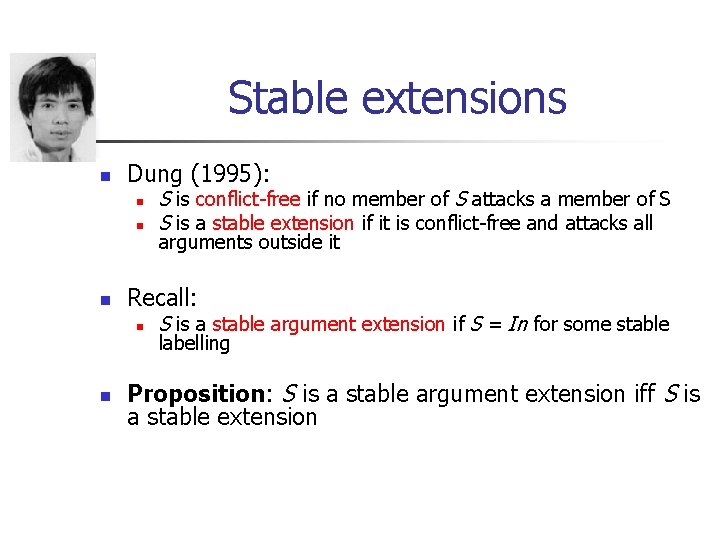

Stable extensions

- Dung (1995):
  - S is conflict-free if no member of S attacks a member of S
  - S is a stable extension if it is conflict-free and attacks all arguments outside it
- Recall:
  - S is a stable argument extension if S = In for some stable labelling
- Proposition: S is a stable argument extension iff S is a stable extension
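Dung's definition of a stable extension translates directly into a brute-force check over all subsets of arguments. The following sketch assumes a small finite framework; the function names and both example graphs are hypothetical.

```python
from itertools import combinations

def conflict_free(S, attacks):
    """No member of S attacks a member of S."""
    return not any((a, b) in attacks for a in S for b in S)

def is_stable(S, args, attacks):
    """S is conflict-free and attacks every argument outside it."""
    outside = [x for x in args if x not in S]
    return conflict_free(S, attacks) and all(
        any((s, x) in attacks for s in S) for x in outside)

def stable_extensions(args, attacks):
    """Enumerate all subsets (exponential; only suitable for small examples)."""
    return [frozenset(S)
            for k in range(len(args) + 1)
            for S in combinations(args, k)
            if is_stable(set(S), args, attacks)]

# Hypothetical example: A and B attack each other, B attacks C.
EXT = stable_extensions(["A", "B", "C"], {("A", "B"), ("B", "A"), ("B", "C")})

# Hypothetical odd cycle X -> Y -> Z -> X: no stable extension exists.
NONE = stable_extensions(["X", "Y", "Z"], {("X", "Y"), ("Y", "Z"), ("Z", "X")})
```

The odd cycle shows that, unlike grounded and preferred extensions, stable extensions are not guaranteed to exist.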

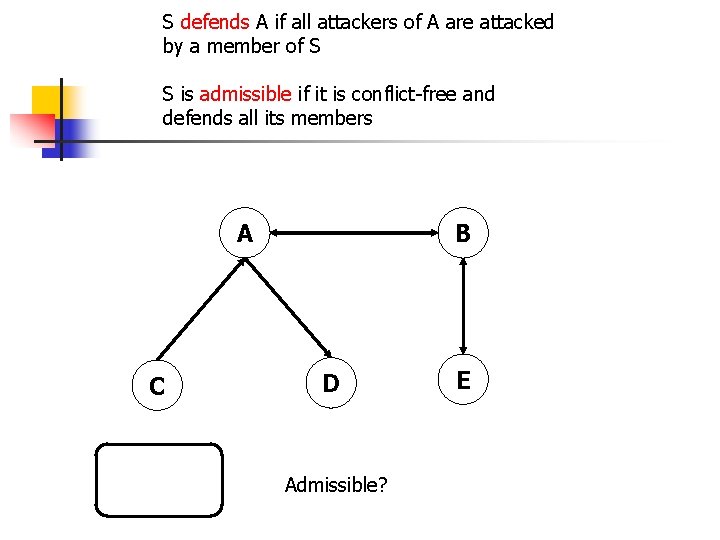

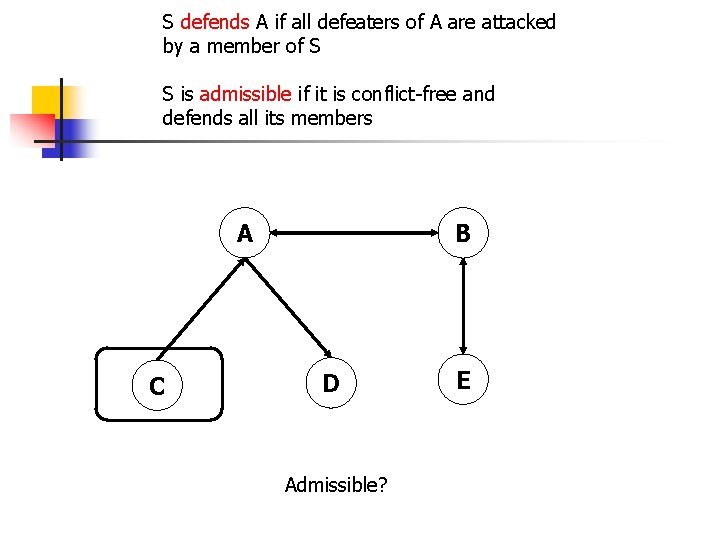

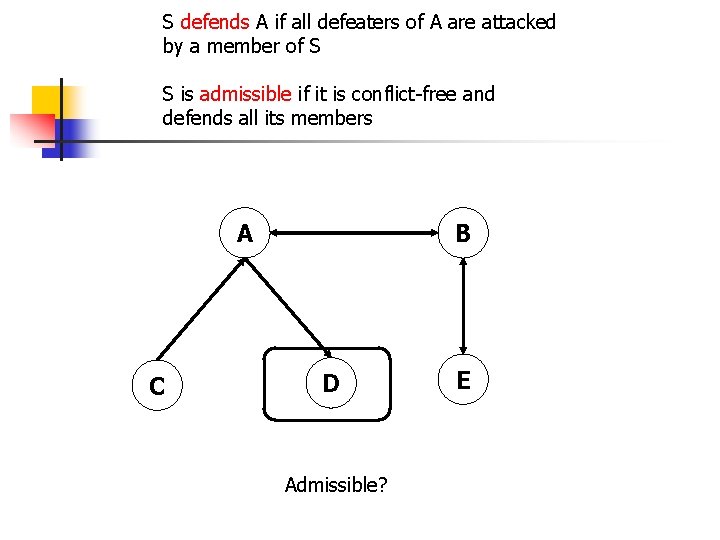

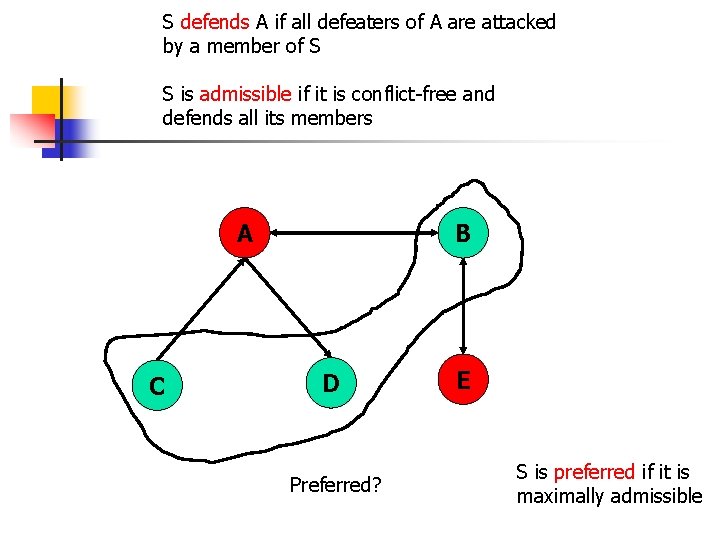

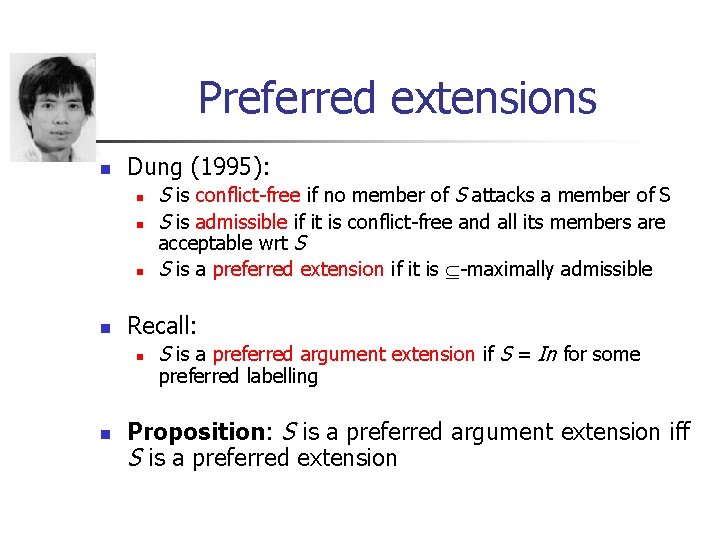

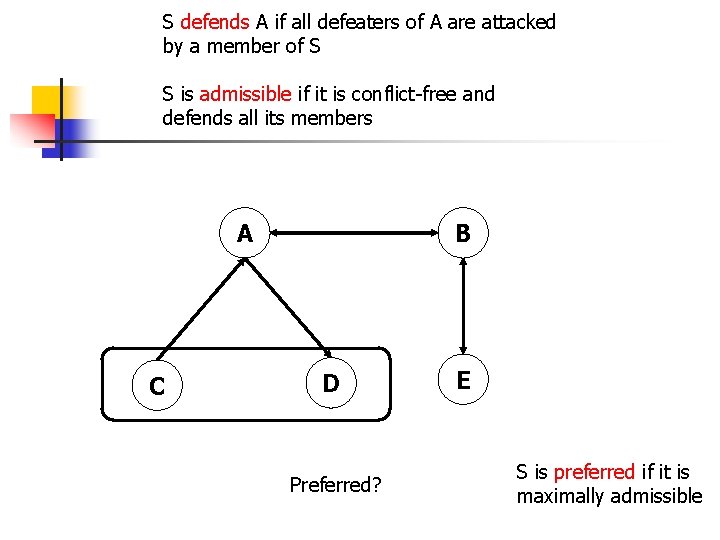

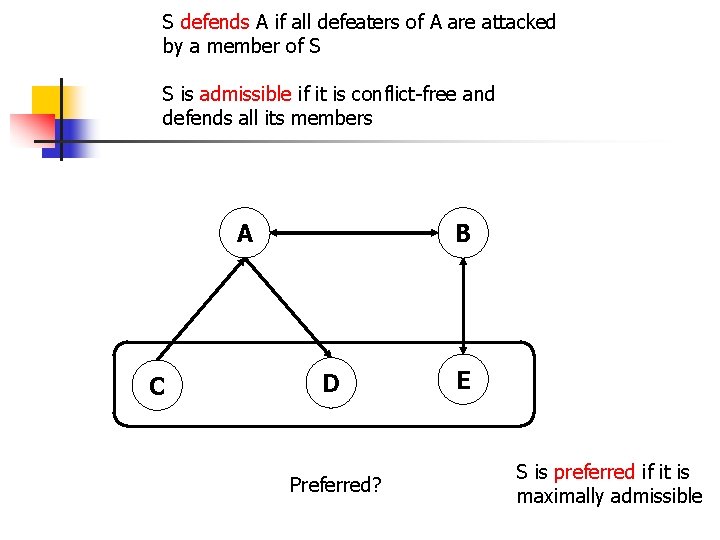

Preferred extensions

- Dung (1995):
  - S is conflict-free if no member of S attacks a member of S
  - S is admissible if it is conflict-free and all its members are acceptable wrt S
  - S is a preferred extension if it is ⊆-maximally admissible
- Recall:
  - S is a preferred argument extension if S = In for some preferred labelling
- Proposition: S is a preferred argument extension iff S is a preferred extension
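Admissibility and ⊆-maximality can likewise be checked by enumeration. The sketch below is a naive illustration under the assumption of a small finite framework; the example graph and all names are hypothetical.

```python
from itertools import combinations

def conflict_free(S, attacks):
    return not any((a, b) in attacks for a in S for b in S)

def defends(S, a, attacks):
    """Every attacker of a is attacked by some member of S."""
    attackers = {x for (x, y) in attacks if y == a}
    return all(any((s, b) in attacks for s in S) for b in attackers)

def admissible(S, attacks):
    return conflict_free(S, attacks) and all(defends(S, a, attacks) for a in S)

def preferred_extensions(args, attacks):
    """The subset-maximal admissible sets."""
    adm = [frozenset(S)
           for k in range(len(args) + 1)
           for S in combinations(args, k)
           if admissible(set(S), attacks)]
    return [S for S in adm if not any(S < T for T in adm)]

# Hypothetical graph: A attacks B, B attacks D, C and E attack each other.
ARGS = ["A", "B", "C", "D", "E"]
ATTACKS = {("A", "B"), ("B", "D"), ("C", "E"), ("E", "C")}
PREF = preferred_extensions(ARGS, ATTACKS)
```

Here {D} on its own is not admissible, since D does not defend itself against B, but {A, D} is, and it extends to two preferred extensions depending on whether C or E is chosen.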

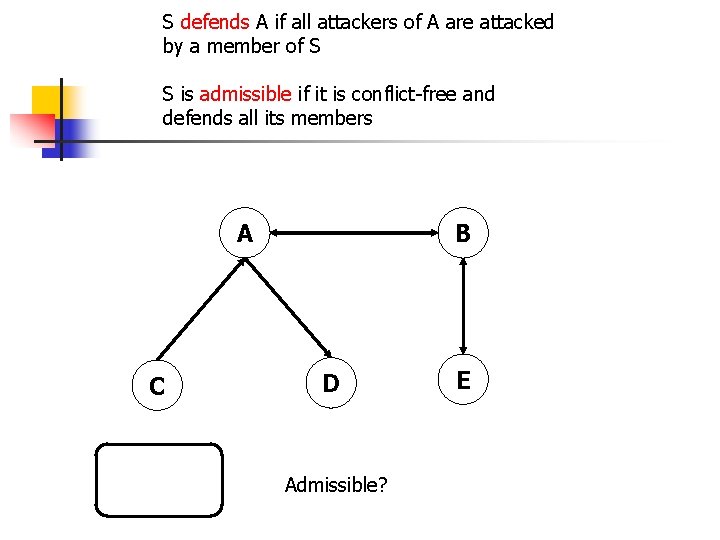

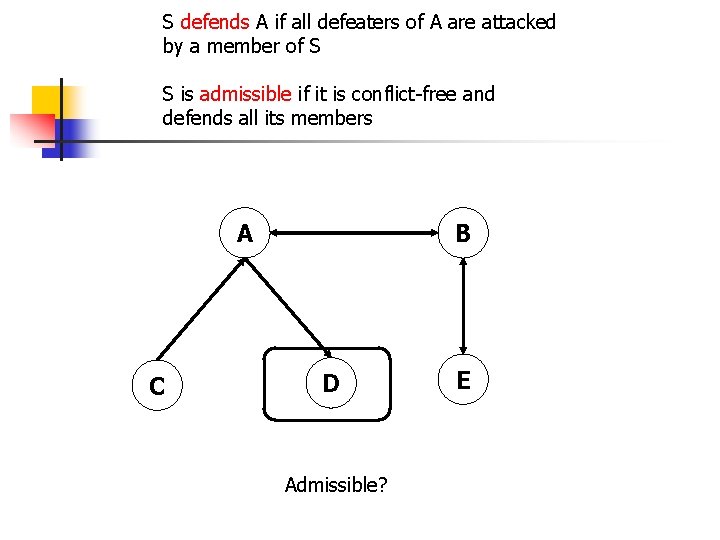

- S defends A if all attackers of A are attacked by a member of S
- S is admissible if it is conflict-free and defends all its members

[Figure: attack graph over arguments A, B, C, D, E with a highlighted set. Admissible?]

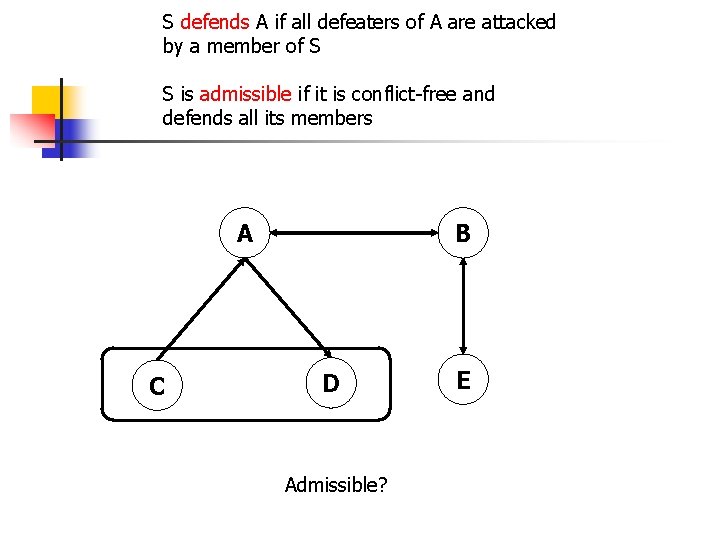

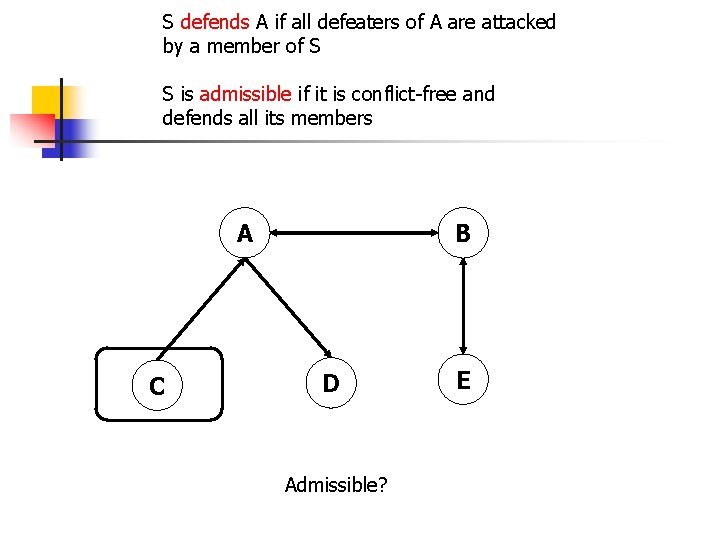

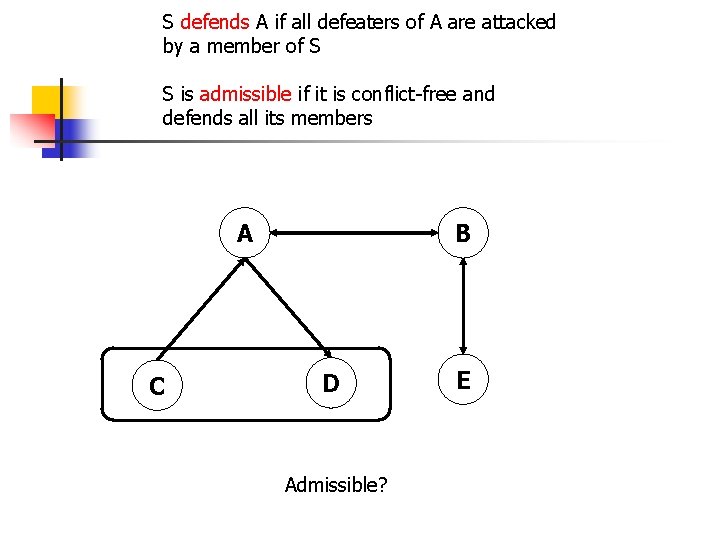

- S defends A if all attackers of A are attacked by a member of S
- S is admissible if it is conflict-free and defends all its members

[Figure: attack graph over arguments A, B, C, D, E with a highlighted set. Admissible?]


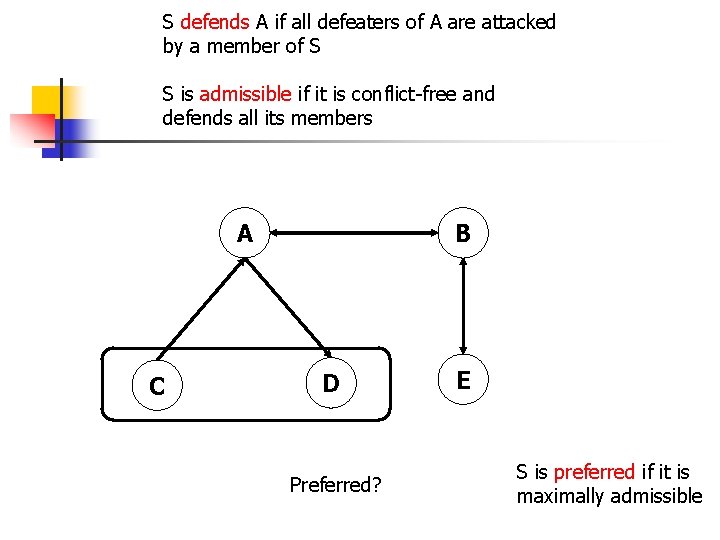

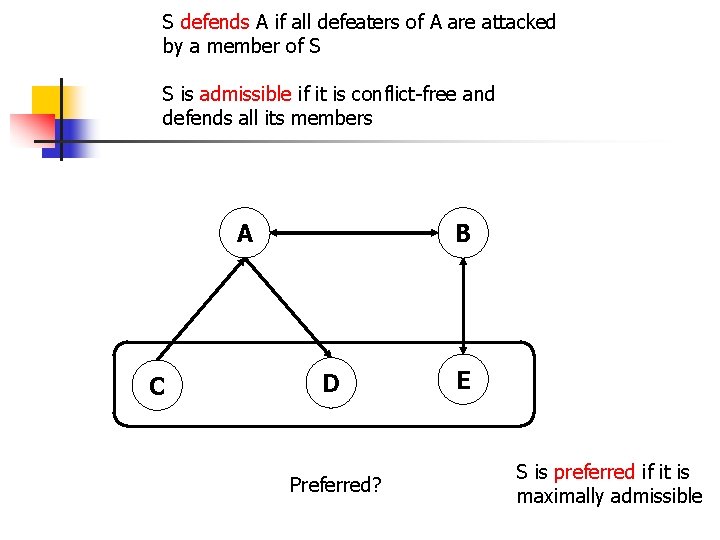

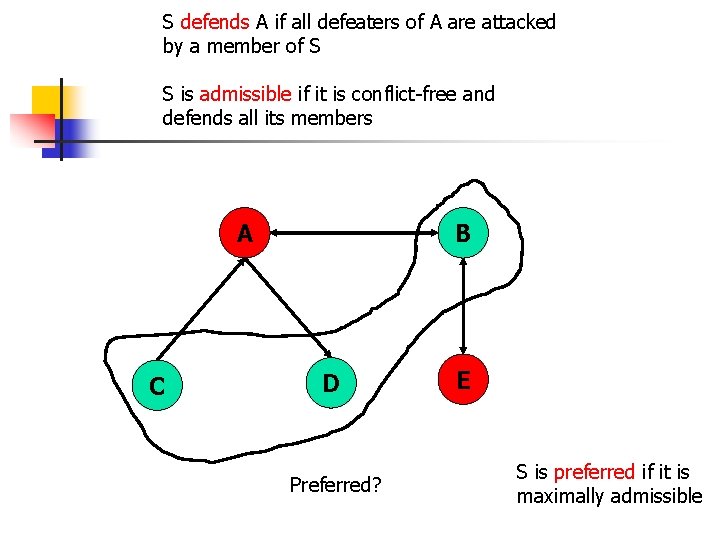

- S defends A if all attackers of A are attacked by a member of S
- S is admissible if it is conflict-free and defends all its members
- S is preferred if it is maximally admissible

[Figure: attack graph over arguments A, B, C, D, E with a highlighted set. Preferred?]


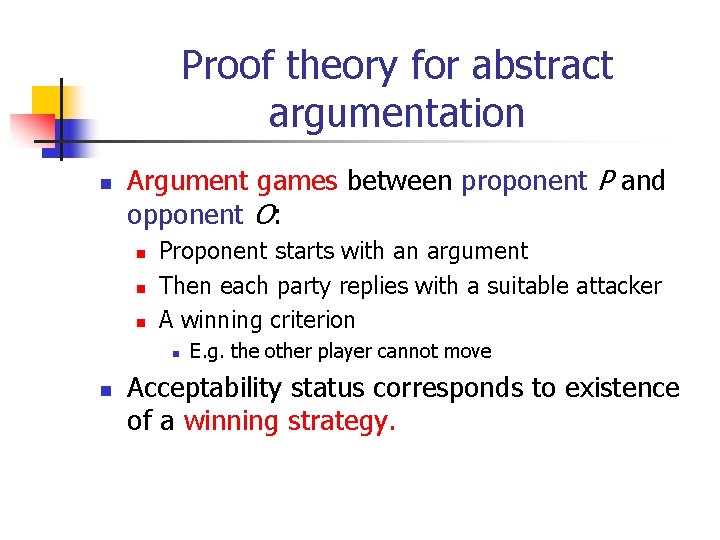

Proof theory for abstract argumentation

- Argument games between proponent P and opponent O:
  - Proponent starts with an argument
  - Then each party replies with a suitable attacker
  - A winning criterion
    - E.g. the other player cannot move
- Acceptability status corresponds to existence of a winning strategy.

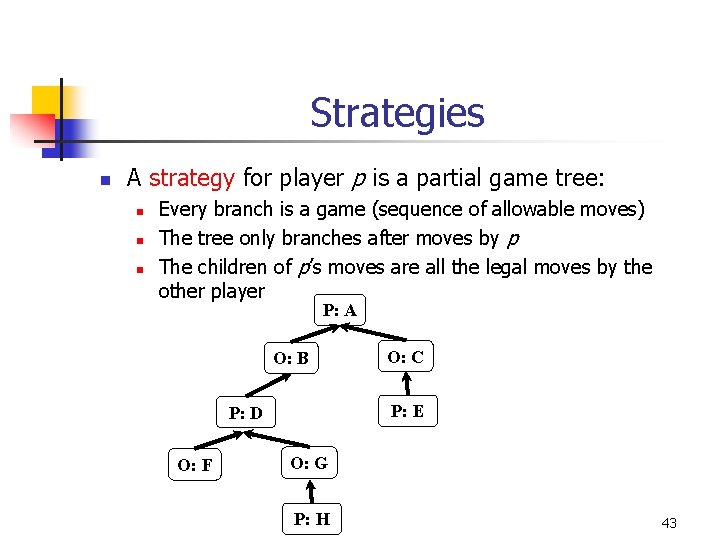

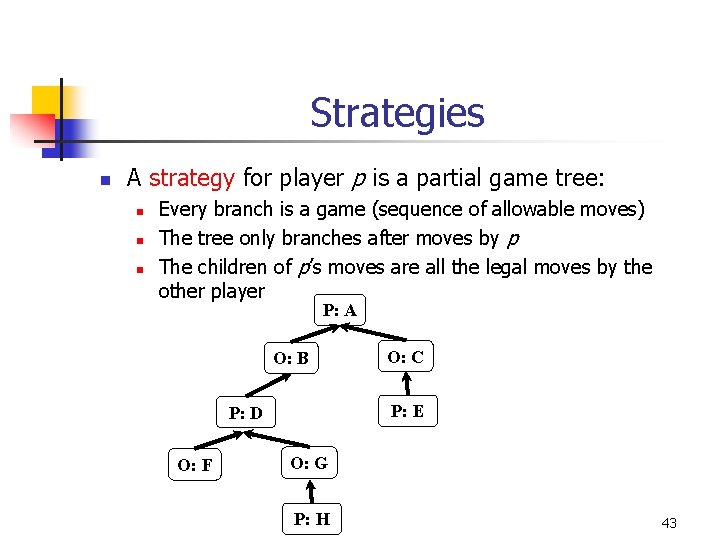

Strategies

- A strategy for player p is a partial game tree:
  - Every branch is a game (sequence of allowable moves)
  - The tree only branches after moves by p
  - The children of p's moves are all the legal moves by the other player

[Figure: example game tree with moves P: A, O: B, P: E, P: D, O: F, O: C, O: G, P: H]

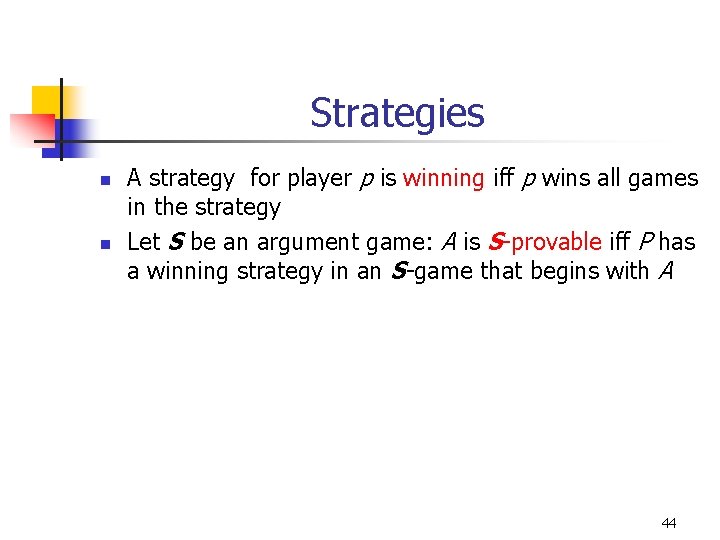

Strategies

- A strategy for player p is winning iff p wins all games in the strategy
- Let S be an argument game: A is S-provable iff P has a winning strategy in an S-game that begins with A

The G-game for grounded semantics:

- A sound and complete game:
  - Each move must reply to the previous move
  - Proponent cannot repeat his moves
  - Proponent moves strict attackers, opponent moves attackers
  - A player wins iff the other player cannot move
- Proposition: A is in the grounded extension iff A is G-provable
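The rules of the G-game can be turned into a recursive search for a winning strategy for the proponent. The sketch below is illustrative and makes two assumptions: a "strict attacker" of X is read as an argument that attacks X without being attacked by X, and the example attack graph is a hypothetical six-argument graph (F and B attack A, E attacks F and B, C attacks B, D attacks C).

```python
def g_provable(arg, args, attacks):
    """True iff P has a winning strategy in a G-game that begins with arg."""
    def attackers(x):
        return [y for y in args if (y, x) in attacks]

    def strict_attackers(x):
        # Assumed reading: y attacks x and x does not attack y back.
        return [y for y in attackers(x) if (x, y) not in attacks]

    def p_wins(last_p_move, p_moves):
        # P must have a winning reply to every attacker O can move.
        for o_move in attackers(last_p_move):
            replies = [c for c in strict_attackers(o_move) if c not in p_moves]
            if not any(p_wins(c, p_moves | {c}) for c in replies):
                return False  # O has a move that P cannot answer successfully
        return True  # O cannot move, or all of O's moves are answered

    return p_wins(arg, frozenset([arg]))

# Hypothetical attack graph.
ARGS = ["A", "B", "C", "D", "E", "F"]
ATTACKS = {("F", "A"), ("B", "A"), ("E", "F"), ("E", "B"), ("C", "B"), ("D", "C")}

PROVABLE = {a for a in ARGS if g_provable(a, ARGS, ATTACKS)}
print(sorted(PROVABLE))
```

Because the proponent may not repeat moves within a game, every branch is finite on a finite graph, so the recursion terminates. In this example P answers both F and B with E; replying to B with C would lose, because O can then move D.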

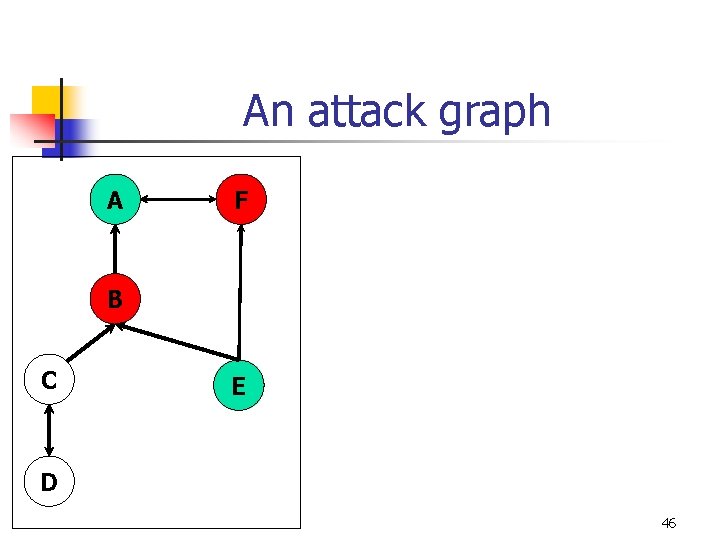

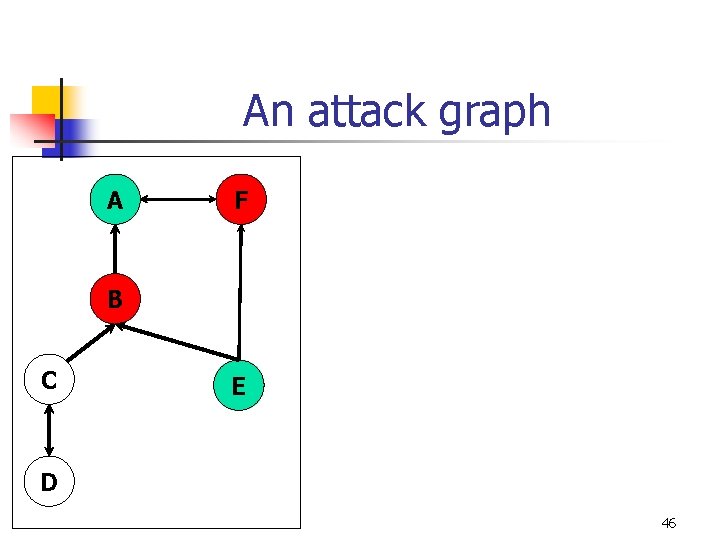

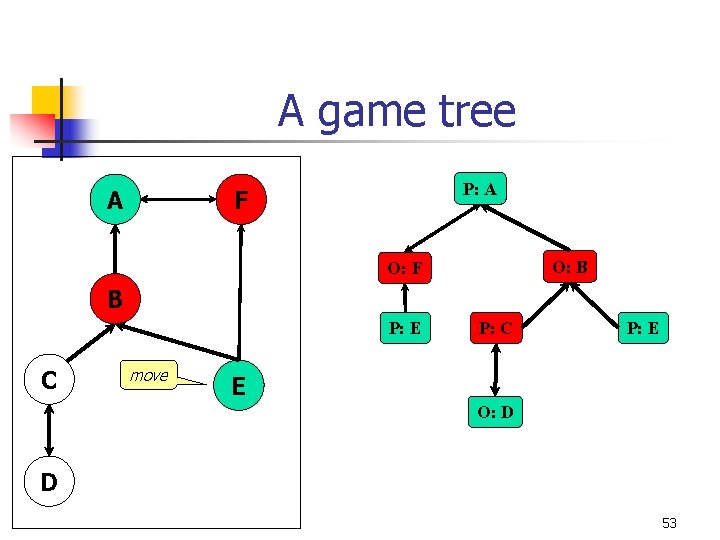

An attack graph

[Figure: attack graph over arguments A, B, C, D, E, F]

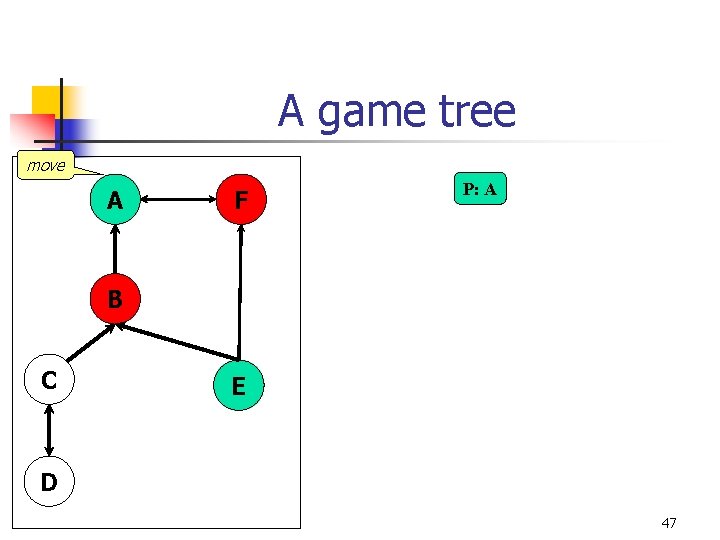

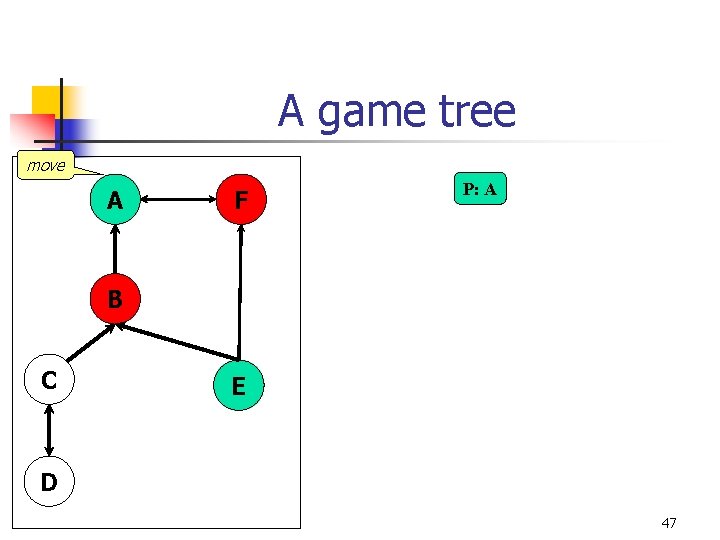

A game tree

[Figure: attack graph over A to F next to the game tree so far. Moves shown: P: A]

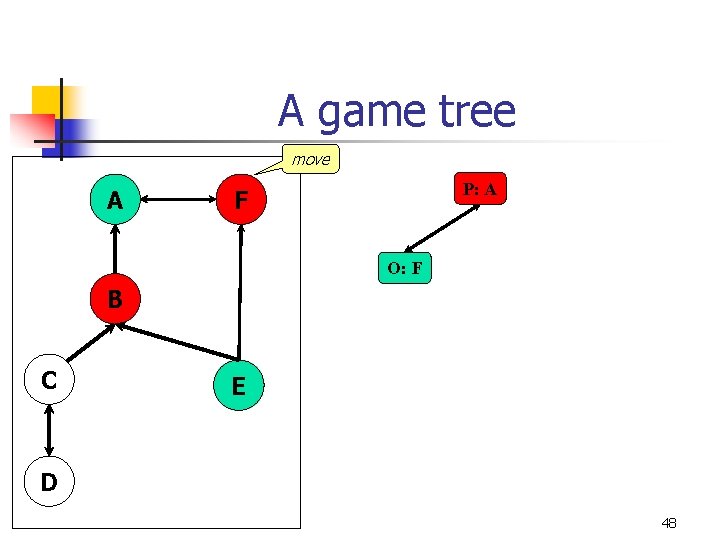

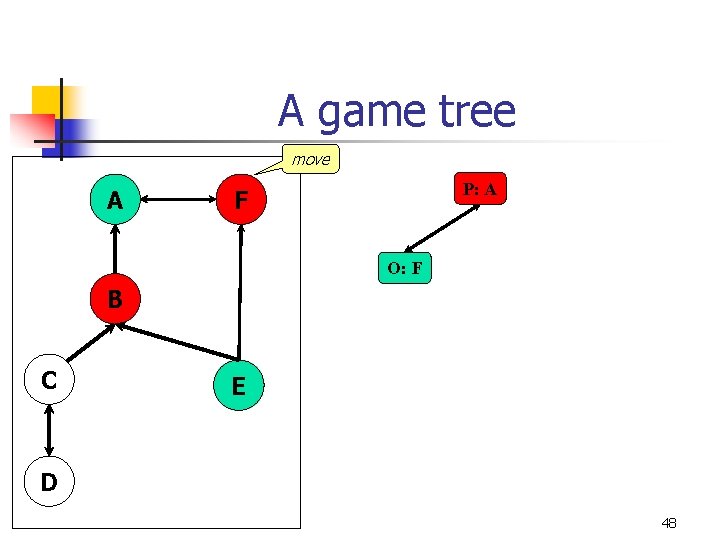

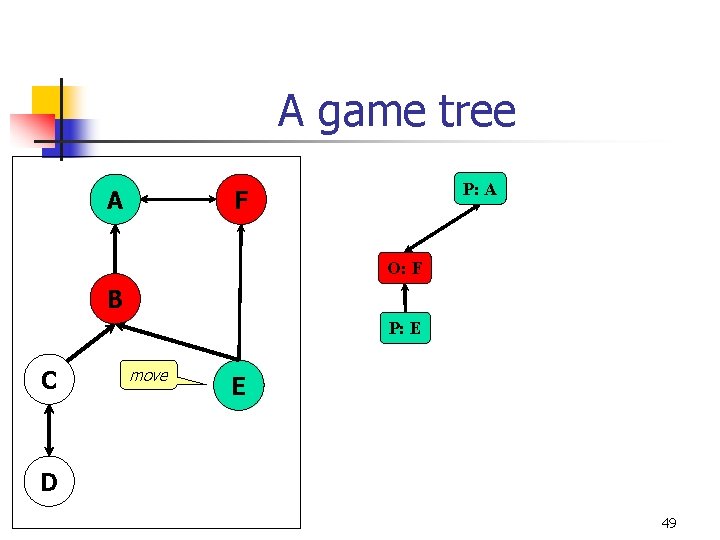

A game tree

[Figure: attack graph over A to F next to the game tree so far. Moves shown: P: A, O: F]

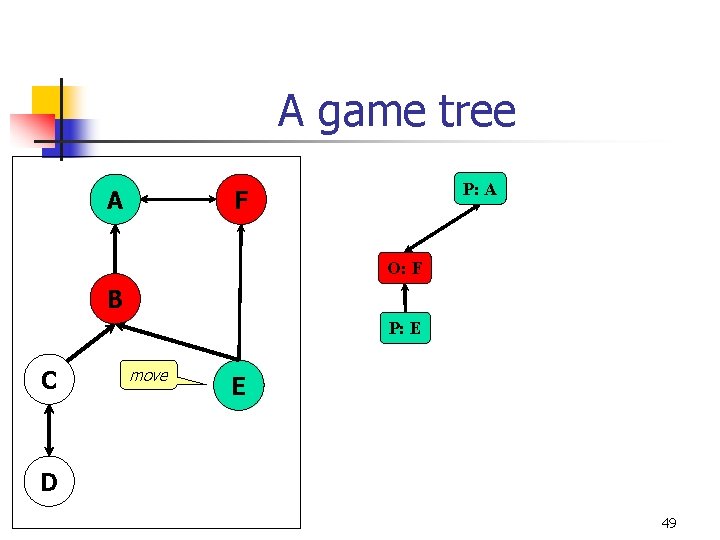

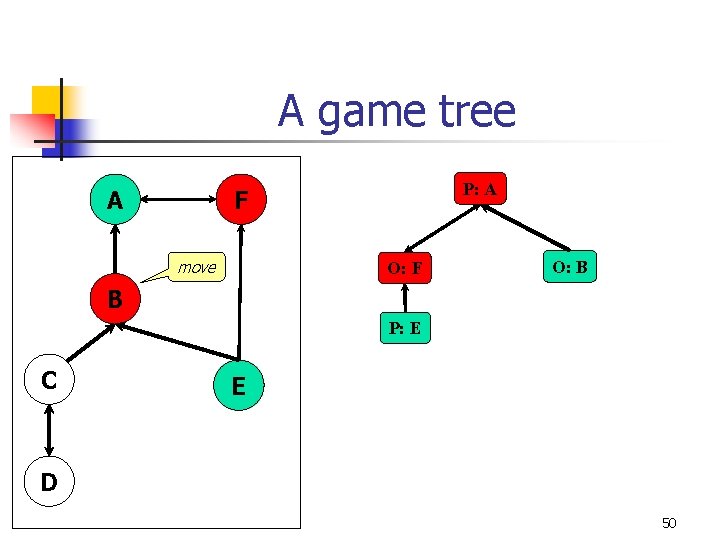

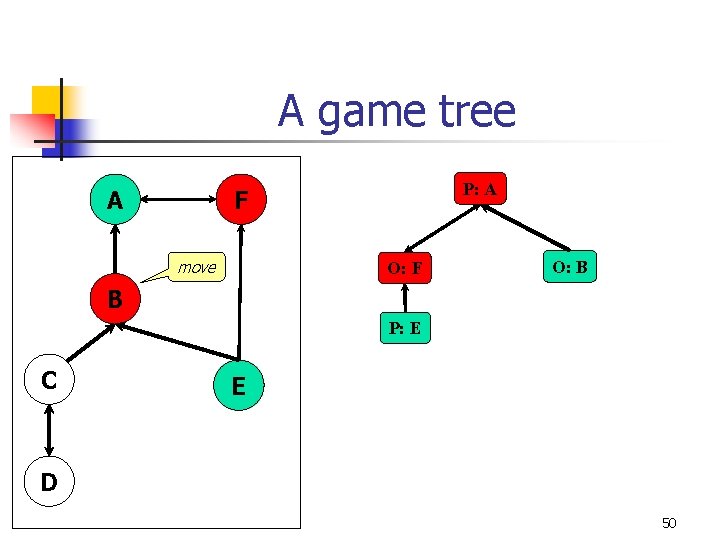

A game tree

[Figure: attack graph over A to F next to the game tree so far. Moves shown: P: A, O: F, P: E]

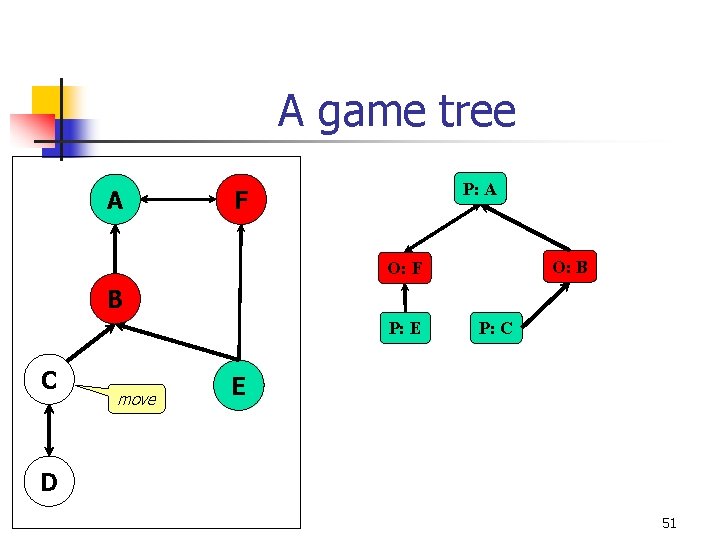

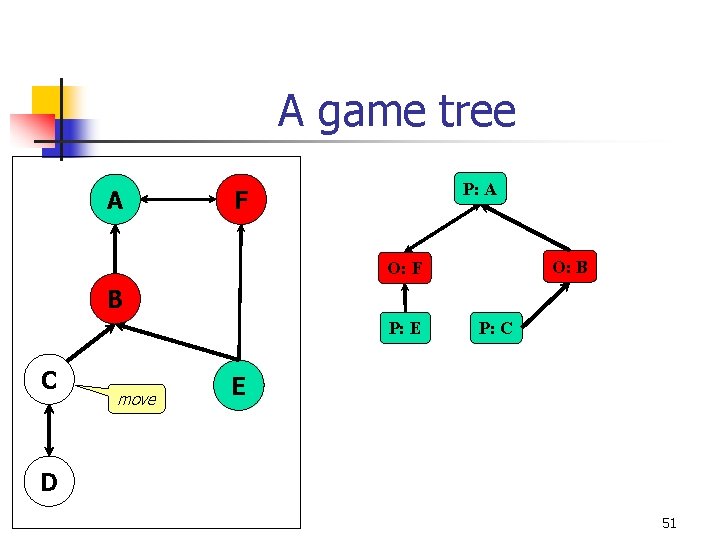

A game tree

[Figure: attack graph over A to F next to the game tree so far. Moves shown: P: A, O: F, O: B, P: E]

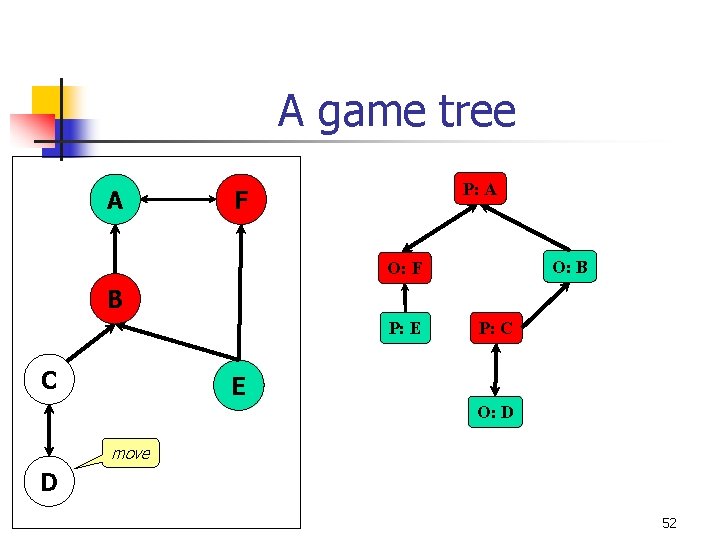

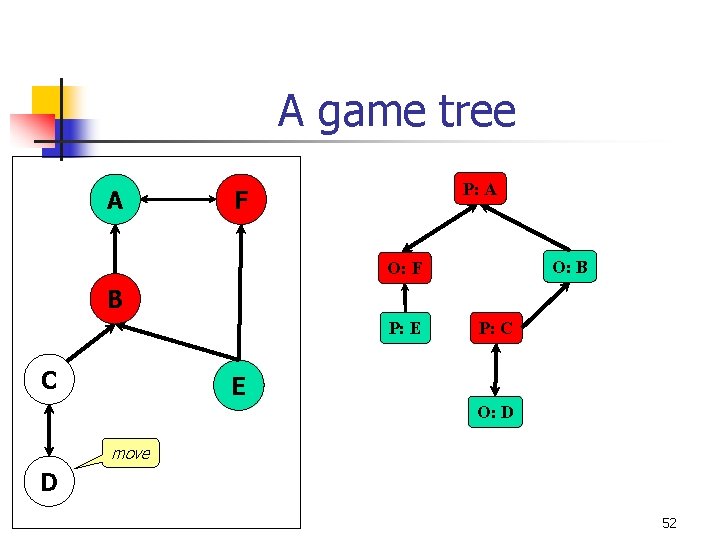

A game tree

[Figure: attack graph over A to F next to the game tree so far. Moves shown: P: A, O: B, O: F, P: E, P: C]

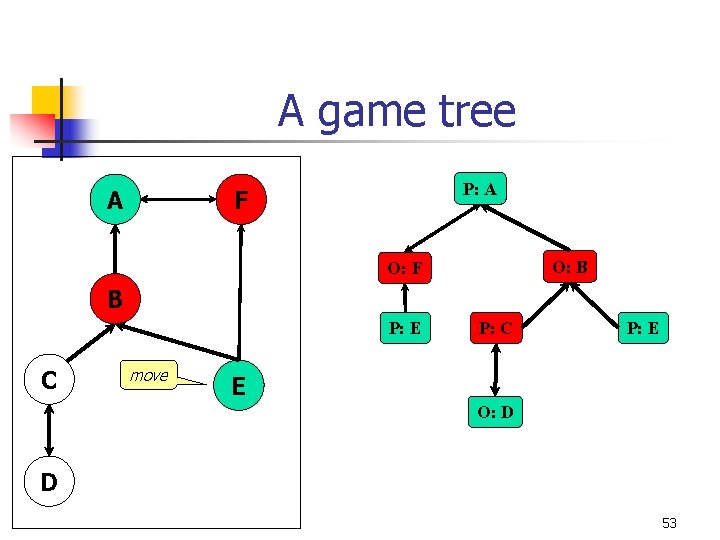

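A game tree

[Figure: attack graph over A to F next to the game tree so far. Moves shown: P: A, O: B, O: F, P: E, P: C, O: D]

A game tree

[Figure: attack graph over A to F next to the complete game tree. Moves shown: P: A, O: B, O: F, P: E, P: C, P: E, O: D]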

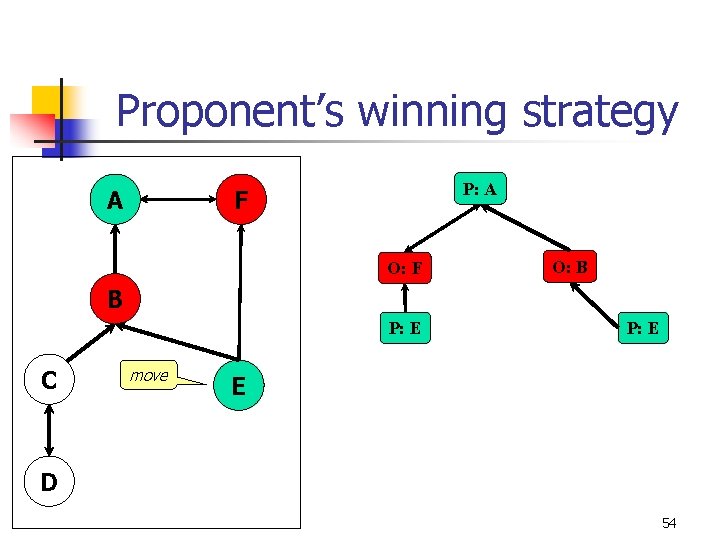

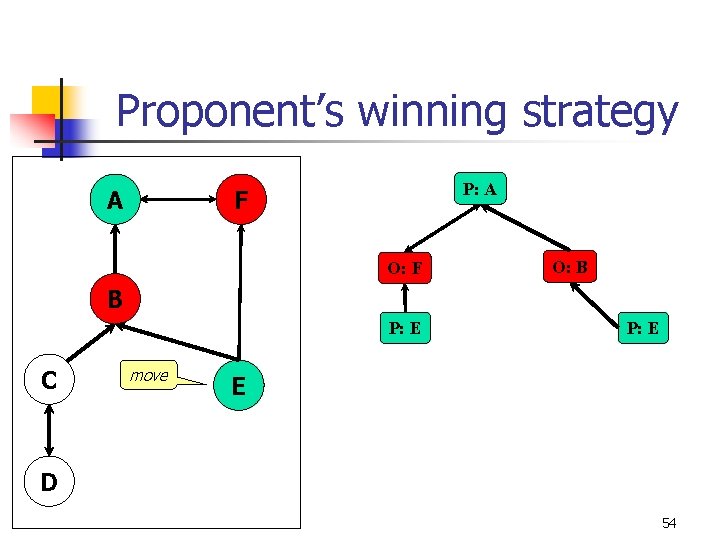

Proponent's winning strategy

[Figure: attack graph over A to F next to the winning strategy. Moves shown: P: A, O: B, P: E, P: E]

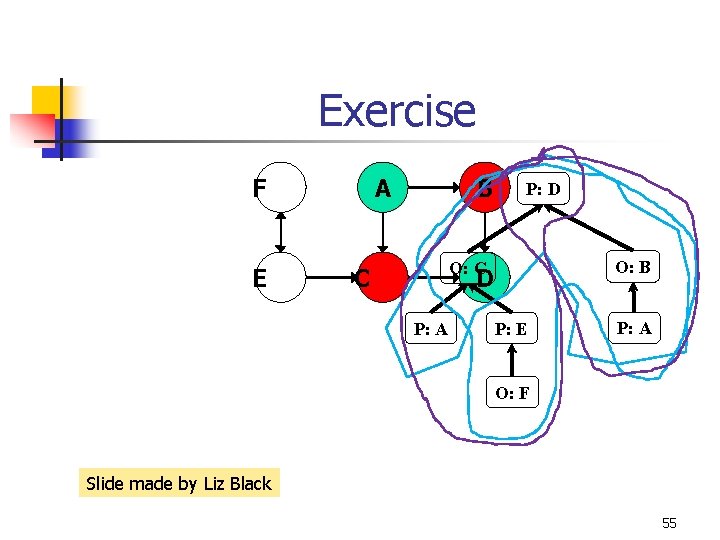

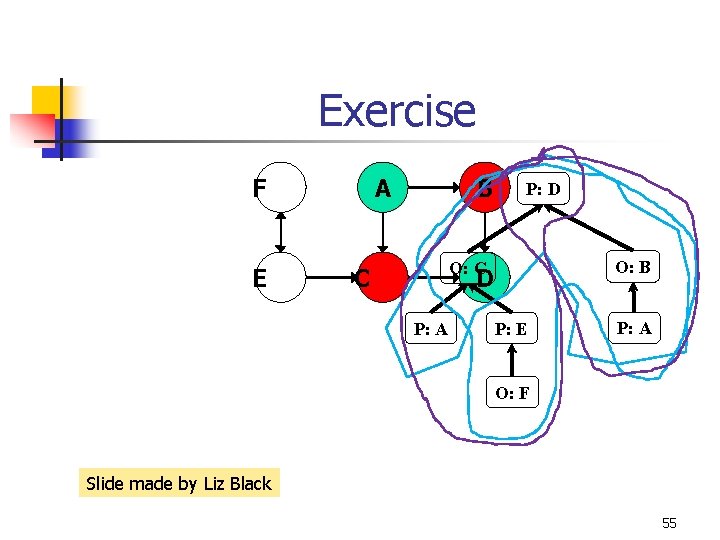

Exercise

[Figure: attack graph over arguments A, B, C, D, E, F and a game tree with moves P: D, O: B, O: C, P: A, P: E, P: A, O: F]

Slide made by Liz Black

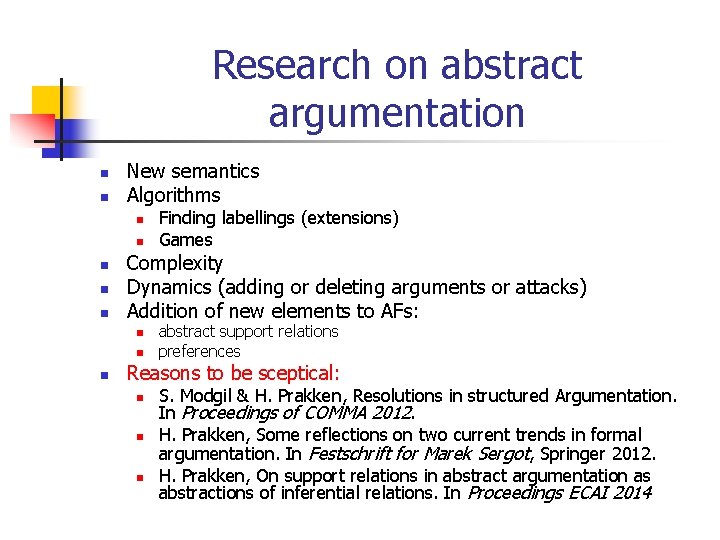

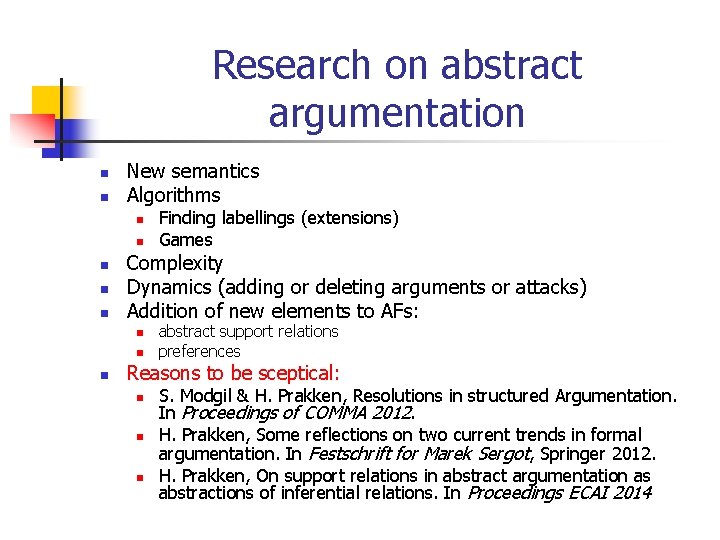

Research on abstract argumentation

- New semantics
- Algorithms
  - Finding labellings (extensions)
  - Games
- Complexity
- Dynamics (adding or deleting arguments or attacks)
- Addition of new elements to AFs:
  - abstract support relations
  - preferences
- Reasons to be sceptical:
  - S. Modgil & H. Prakken, Resolutions in structured argumentation. In Proceedings of COMMA 2012.
  - H. Prakken, Some reflections on two current trends in formal argumentation. In Festschrift for Marek Sergot, Springer 2012.
  - H. Prakken, On support relations in abstract argumentation as abstractions of inferential relations. In Proceedings of ECAI 2014.

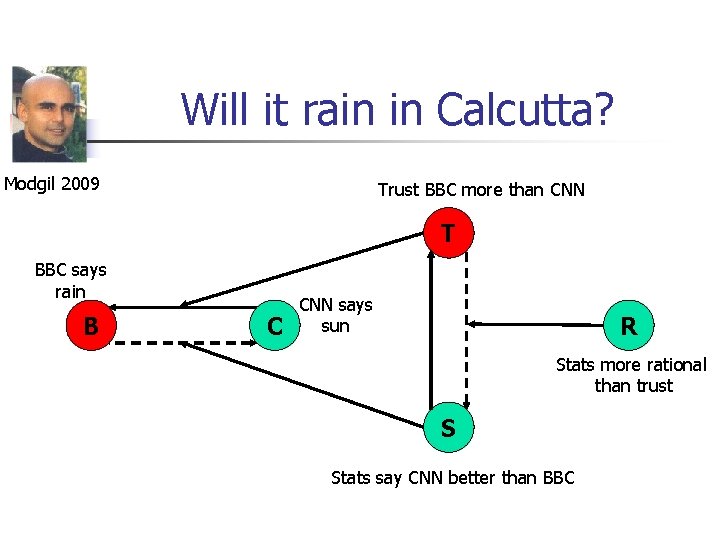

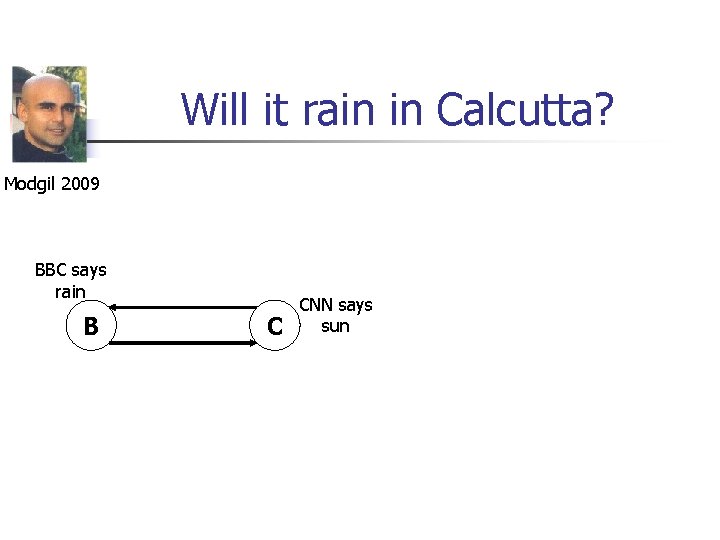

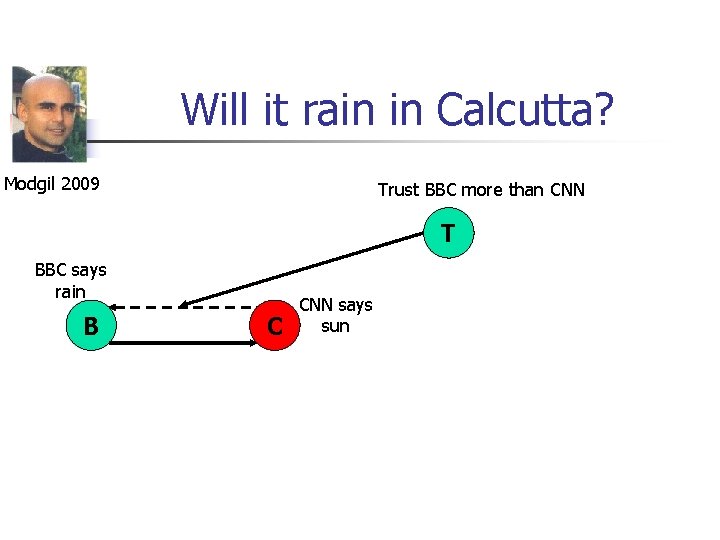

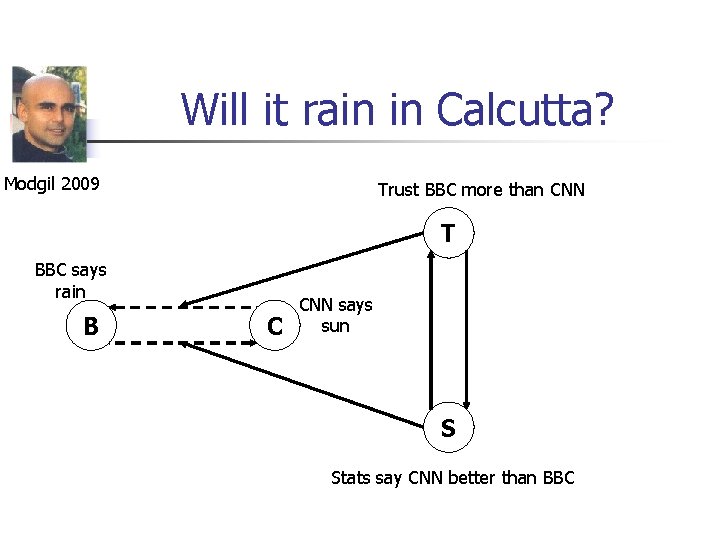

Arguing about attack relations

- Standards for determining defeat relations are often:
  - Domain-specific
  - Defeasible and conflicting
- So determining these standards is argumentation!
- Recently Modgil (AIJ 2009) has extended Dung's abstract approach
  - Arguments can also attack attack relations

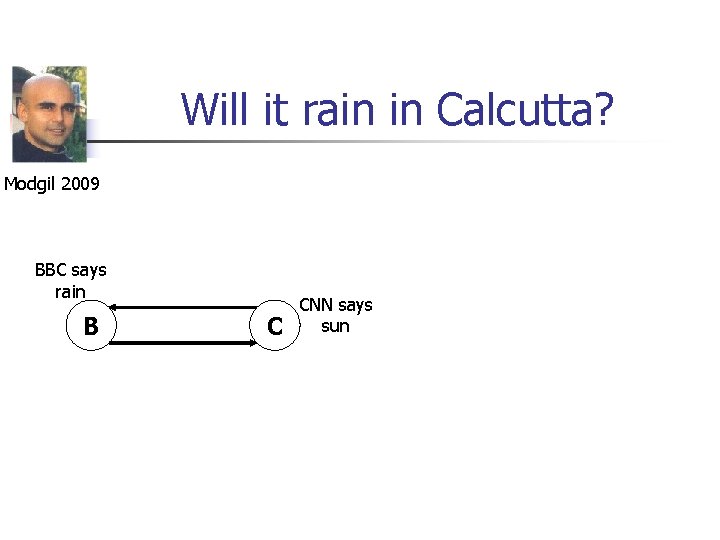

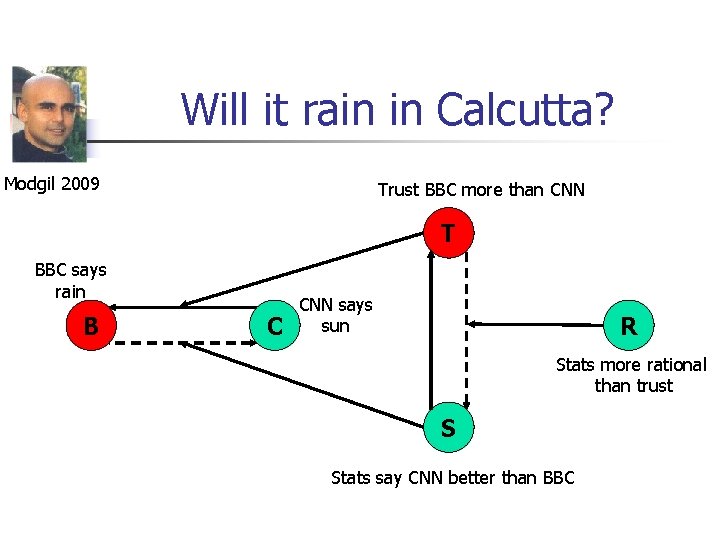

Will it rain in Calcutta? (Modgil 2009)

- B: BBC says rain
- C: CNN says sun

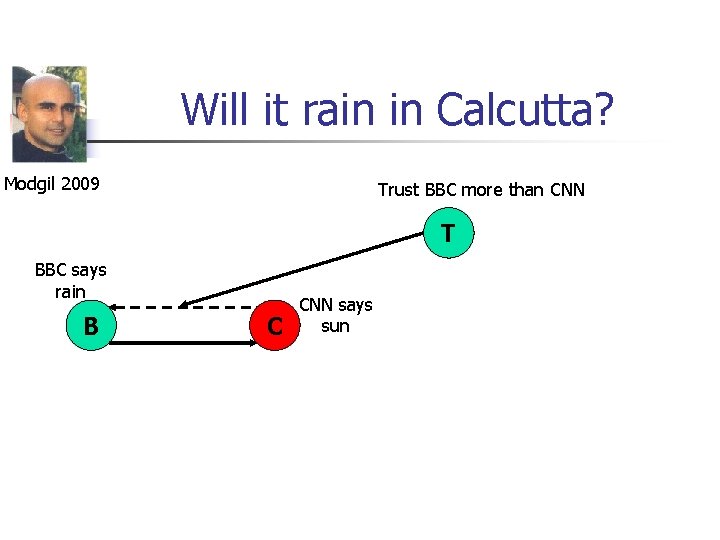

Will it rain in Calcutta? (Modgil 2009)

- B: BBC says rain
- C: CNN says sun
- T: Trust BBC more than CNN

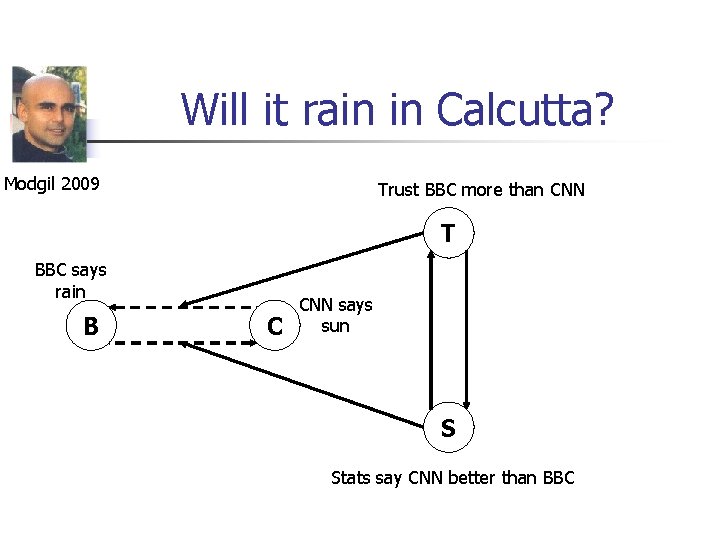

Will it rain in Calcutta? (Modgil 2009)

- B: BBC says rain
- C: CNN says sun
- T: Trust BBC more than CNN
- S: Stats say CNN better than BBC

Will it rain in Calcutta? (Modgil 2009)

- B: BBC says rain
- C: CNN says sun
- T: Trust BBC more than CNN
- S: Stats say CNN better than BBC
- R: Stats more rational than trust

[Figure: abstract attack graph over arguments A, B, C, D, E]

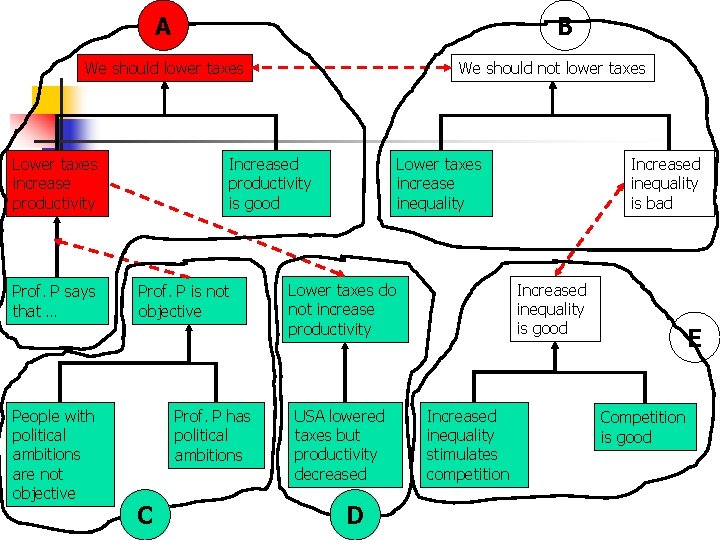

[Figure: the argument graph of the tax example, with its arguments labelled A, B, C, D and E]

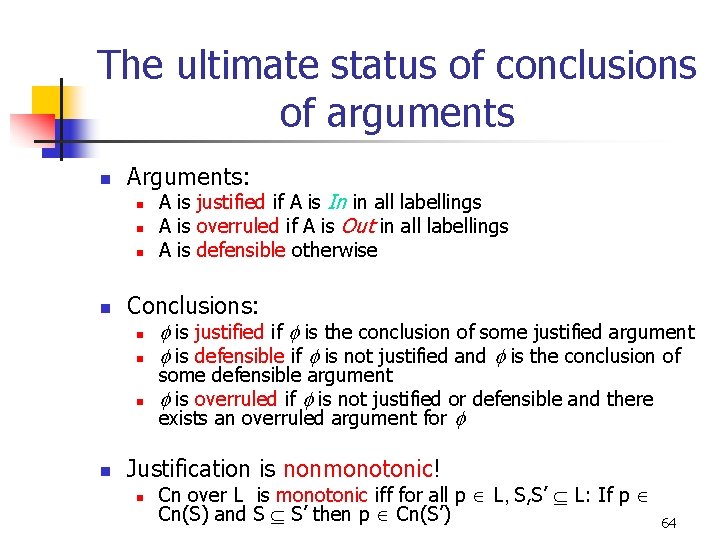

The ultimate status of conclusions of arguments

- Arguments:
  - A is justified if A is In in all labellings
  - A is overruled if A is Out in all labellings
  - A is defensible otherwise
- Conclusions:
  - φ is justified if φ is the conclusion of some justified argument
  - φ is defensible if φ is not justified and φ is the conclusion of some defensible argument
  - φ is overruled if φ is not justified or defensible and there exists an overruled argument for φ
- Justification is nonmonotonic!
  - Cn over L is monotonic iff for all p ∈ L and S, S' ⊆ L: if p ∈ Cn(S) and S ⊆ S' then p ∈ Cn(S')

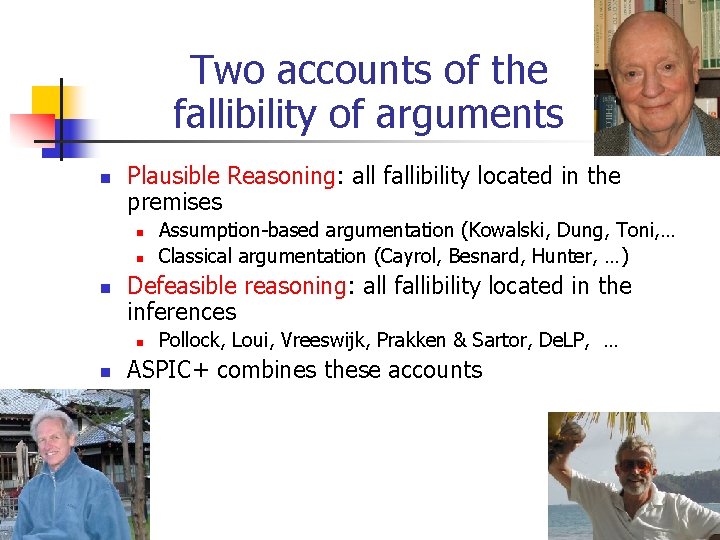

Two accounts of the fallibility of arguments

- Plausible reasoning: all fallibility located in the premises
  - Assumption-based argumentation (Kowalski, Dung, Toni, …)
  - Classical argumentation (Cayrol, Besnard, Hunter, …)
- Defeasible reasoning: all fallibility located in the inferences
  - Pollock, Loui, Vreeswijk, Prakken & Sartor, DeLP, …
- ASPIC+ combines these accounts

“Nonmonotonic” v. “Defeasible”

- Nonmonotonicity is a property of consequence notions
- Defeasibility is a property of inference rules
  - An inference rule is defeasible if there are situations in which its conclusion does not have to be accepted even though all its premises must be accepted.

Rationality postulates for structured argumentation

- Extensions should be closed under subarguments
- Their conclusion sets should be:
  - Consistent
  - Closed under deductive inference

M. Caminada & L. Amgoud, On the evaluation of argumentation formalisms. Artificial Intelligence 171 (2007): 286–310

The ‘base logic’ approach (Hunter, COMMA 2010)

- Adopt a single base logic
- Define arguments as consequence in the adopted base logic
- Then the structure of arguments is given by the base logic

Classical argumentation (Besnard, Hunter, …)

- Assume a possibly inconsistent KB in the language of classical logic
- Arguments are classical proofs from consistent (and subset-minimal) subsets of the KB
- Various notions of attack
- Possibly add preferences to determine which attacks result in defeat
  - E.g. Modgil & Prakken, AIJ 2013.
- Approach recently abstracted to Tarskian abstract logics
  - Amgoud & Besnard (2009-2013)

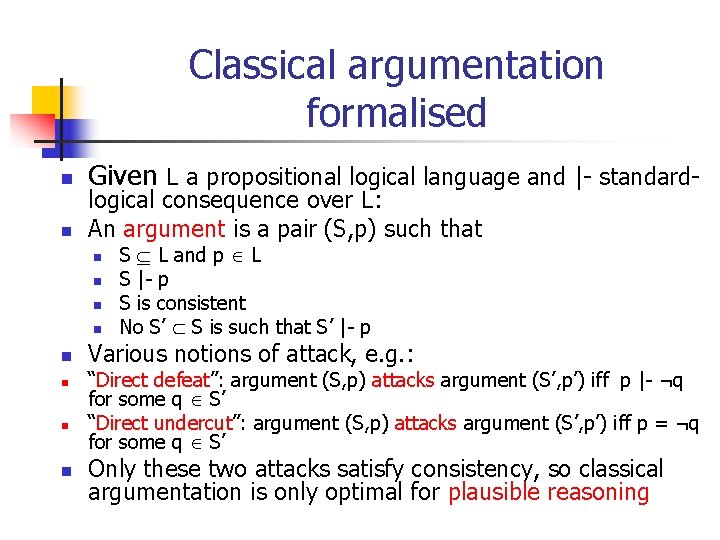

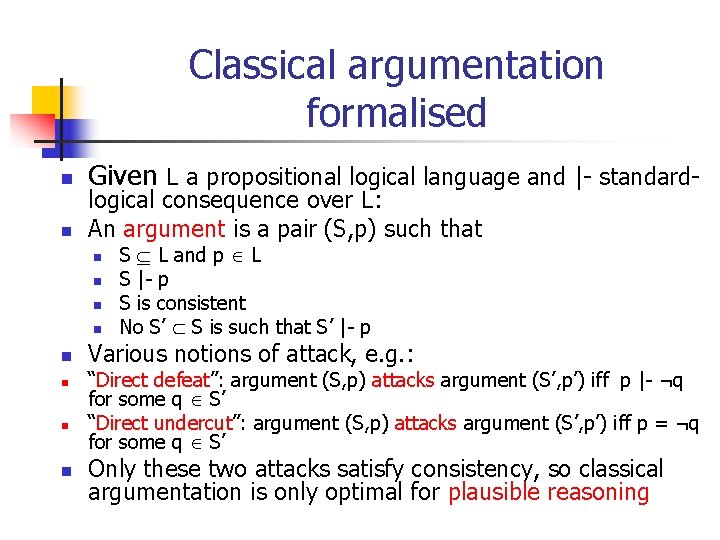

Classical argumentation formalised

- Given L a propositional logical language and |- standard logical consequence over L:
- An argument is a pair (S, p) such that
  - S ⊆ L and p ∈ L
  - S |- p
  - S is consistent
  - No S' ⊂ S is such that S' |- p
- Various notions of attack, e.g.:
  - “Direct defeat”: argument (S, p) attacks argument (S', p') iff p |- ¬q for some q ∈ S'
  - “Direct undercut”: argument (S, p) attacks argument (S', p') iff p = ¬q for some q ∈ S'
- Only these two attacks satisfy consistency, so classical argumentation is only optimal for plausible reasoning

Modelling default reasoning in classical argumentation

- Quakers are usually pacifist
- Republicans are usually not pacifist
- Nixon was a Quaker and a Republican

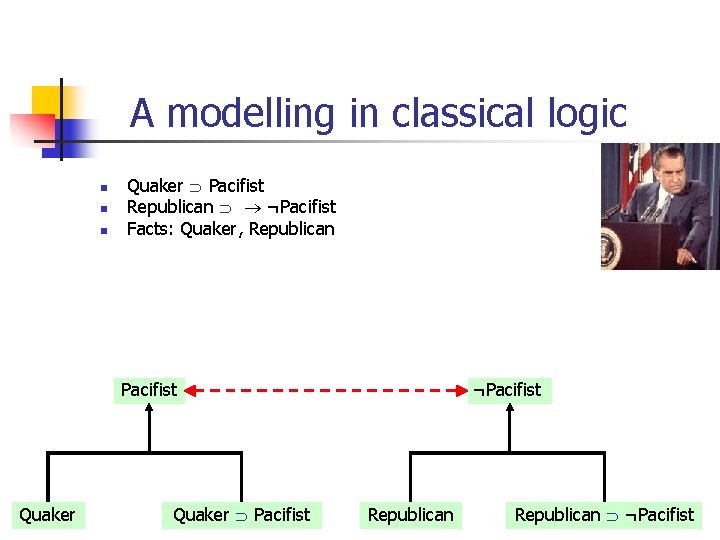

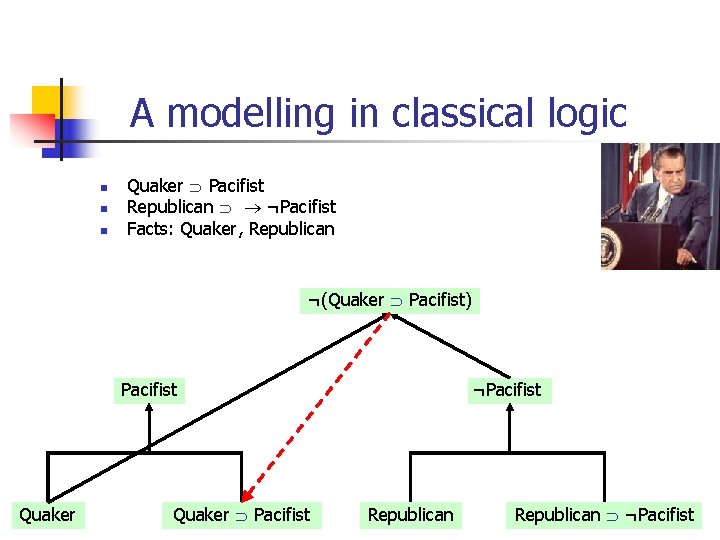

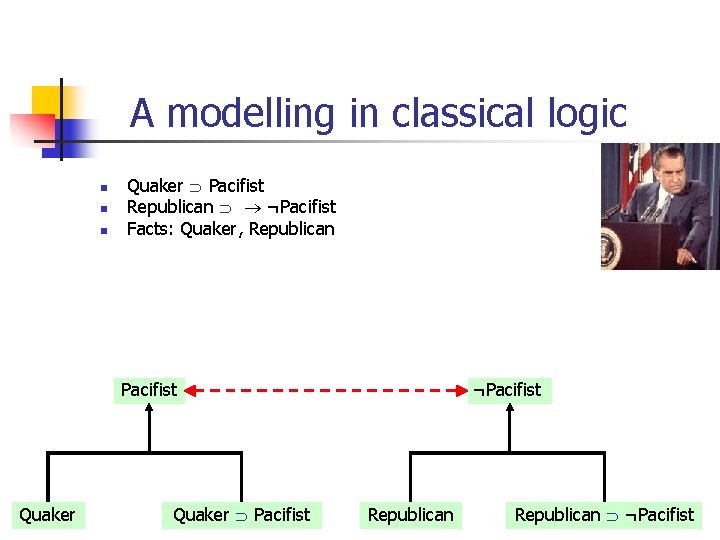

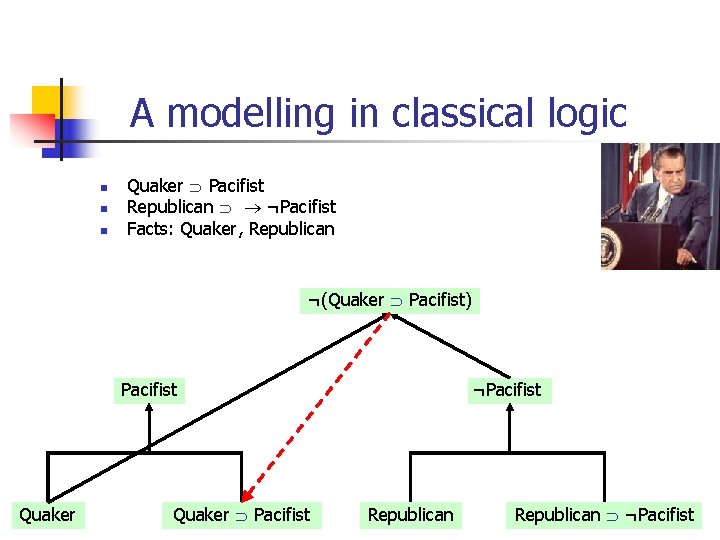

A modelling in classical logic

- Quaker ⊃ Pacifist
- Republican ⊃ ¬Pacifist
- Facts: Quaker, Republican

[Figure: an argument for Pacifist from Quaker and Quaker ⊃ Pacifist, and an argument for ¬Pacifist from Republican and Republican ⊃ ¬Pacifist]

A modelling in classical logic

- Quaker ⊃ Pacifist
- Republican ⊃ ¬Pacifist
- Facts: Quaker, Republican

[Figure: the same two arguments, with a further conclusion ¬(Quaker ⊃ Pacifist)]
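Classical arguments, understood as consistent and subset-minimal sets of premises that entail a conclusion, can be enumerated by brute force for a knowledge base of this size. The sketch below uses truth tables; the tuple encoding of formulas and all function names are assumptions made for illustration, and the knowledge base is the Nixon modelling with material implication.

```python
from itertools import combinations, product

# Assumed encoding: ("var", name), ("not", f), ("imp", f, g)
def atoms(f):
    if f[0] == "var":
        return {f[1]}
    return set().union(*(atoms(g) for g in f[1:]))

def holds(f, v):
    if f[0] == "var":
        return v[f[1]]
    if f[0] == "not":
        return not holds(f[1], v)
    if f[0] == "imp":
        return (not holds(f[1], v)) or holds(f[2], v)
    raise ValueError(f"unknown connective: {f[0]}")

def valuations(formulas):
    names = sorted(set().union(*(atoms(f) for f in formulas)))
    for bits in product([False, True], repeat=len(names)):
        yield dict(zip(names, bits))

def consistent(S):
    return any(all(holds(f, v) for f in S) for v in valuations(S))

def entails(S, p):
    return all(holds(p, v) for v in valuations(list(S) + [p])
               if all(holds(f, v) for f in S))

def arguments(kb, p):
    """Supports S such that S is consistent, S |- p and S is subset-minimal."""
    supports = []
    for k in range(len(kb) + 1):          # smaller supports are found first
        for S in map(frozenset, combinations(kb, k)):
            if (consistent(S) and entails(S, p)
                    and not any(T < S for T in supports)):
                supports.append(S)
    return supports

Q, R, P = ("var", "Quaker"), ("var", "Republican"), ("var", "Pacifist")
Q_IMP_P = ("imp", Q, P)
R_IMP_NOT_P = ("imp", R, ("not", P))
KB = [Q, R, Q_IMP_P, R_IMP_NOT_P]

FOR_PACIFIST = arguments(KB, P)
AGAINST_RULE = arguments(KB, ("not", Q_IMP_P))
```

The second query shows why material implication is problematic for defaults: the facts together with the Republican rule yield an argument against the Quaker rule itself.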

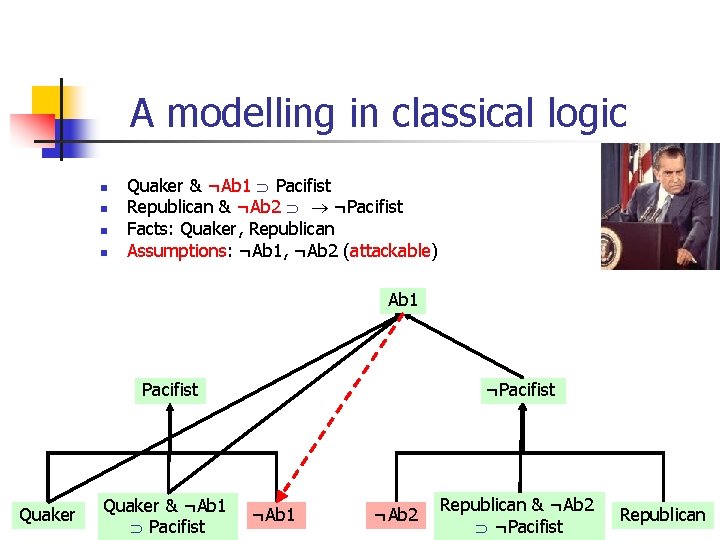

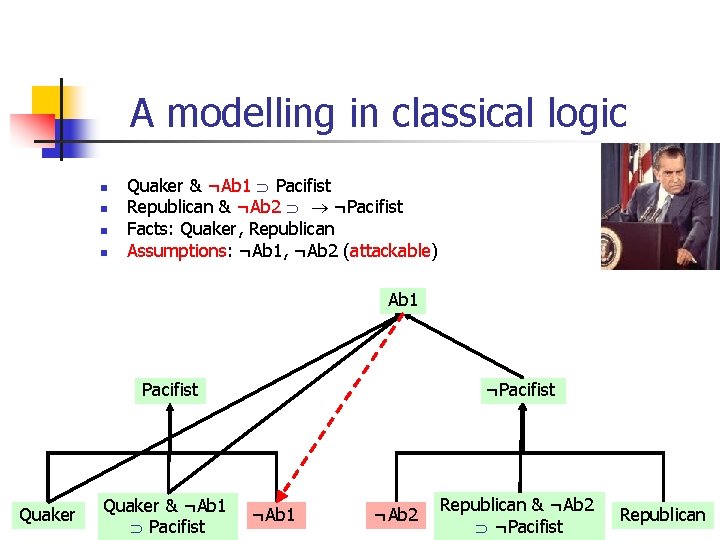

A modelling in classical logic

- Quaker & ¬Ab1 ⊃ Pacifist
- Republican & ¬Ab2 ⊃ ¬Pacifist
- Facts: Quaker, Republican
- Assumptions: ¬Ab1, ¬Ab2 (attackable)

[Figure: arguments for Pacifist (from Quaker, ¬Ab1 and Quaker & ¬Ab1 ⊃ Pacifist) and for ¬Pacifist (from Republican, ¬Ab2 and Republican & ¬Ab2 ⊃ ¬Pacifist), with a further conclusion Ab1]

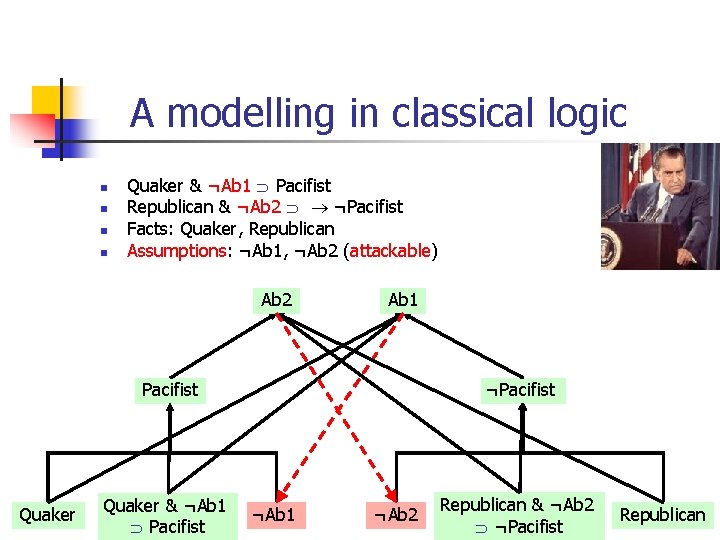

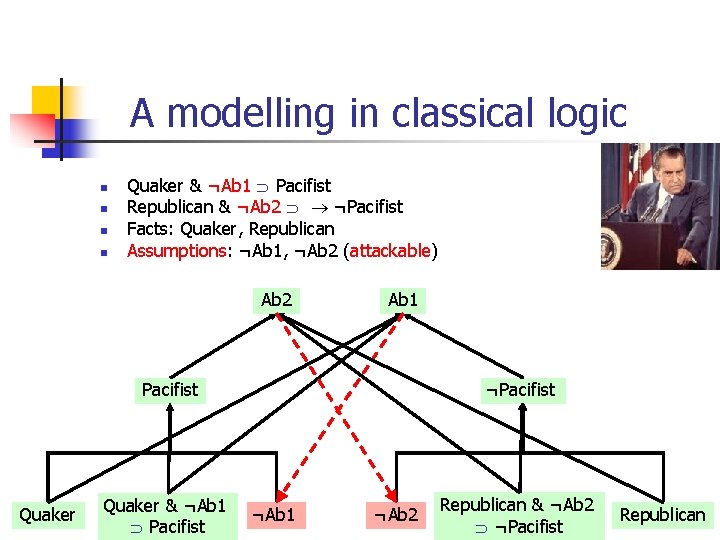

A modelling in classical logic

- Quaker & ¬Ab1 ⊃ Pacifist
- Republican & ¬Ab2 ⊃ ¬Pacifist
- Facts: Quaker, Republican
- Assumptions: ¬Ab1, ¬Ab2 (attackable)

[Figure: the same arguments, with further conclusions Ab1 and Ab2]

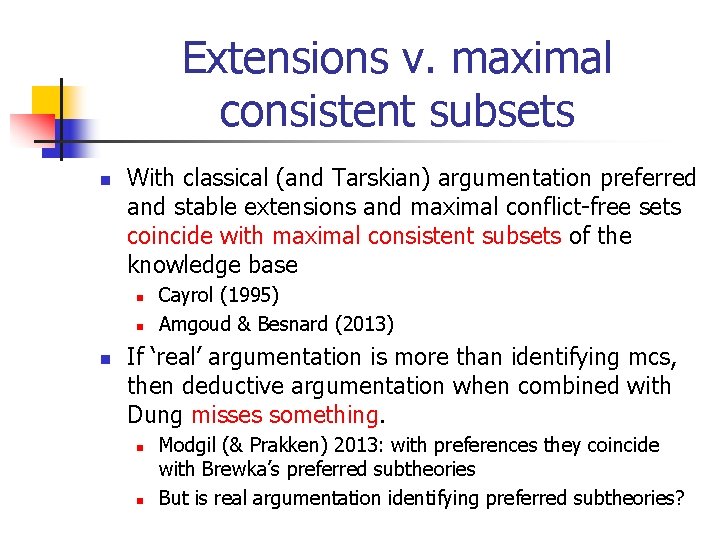

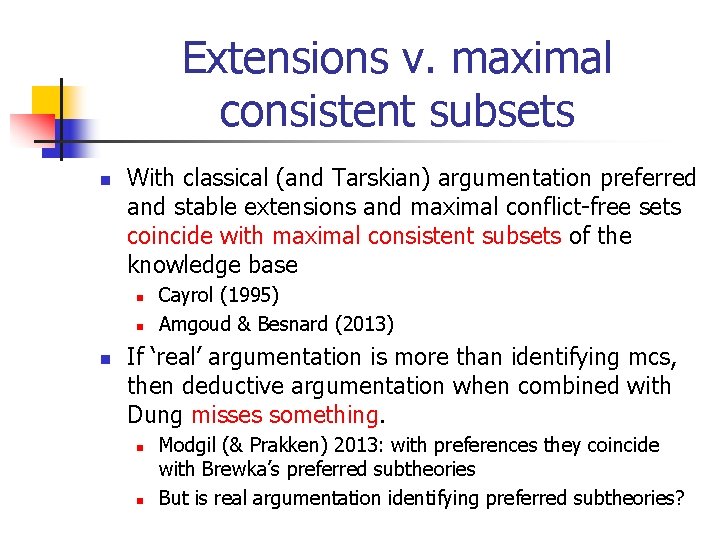

Extensions v. maximal consistent subsets

- With classical (and Tarskian) argumentation preferred and stable extensions and maximal conflict-free sets coincide with maximal consistent subsets (mcs) of the knowledge base
  - Cayrol (1995)
  - Amgoud & Besnard (2013)
- If ‘real’ argumentation is more than identifying mcs, then deductive argumentation when combined with Dung misses something.
  - Modgil (& Prakken) 2013: with preferences they coincide with Brewka's preferred subtheories
  - But is real argumentation identifying preferred subtheories?

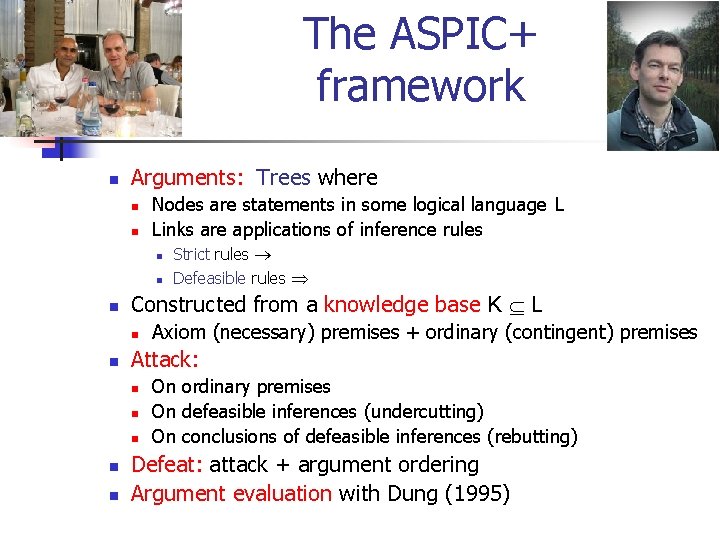

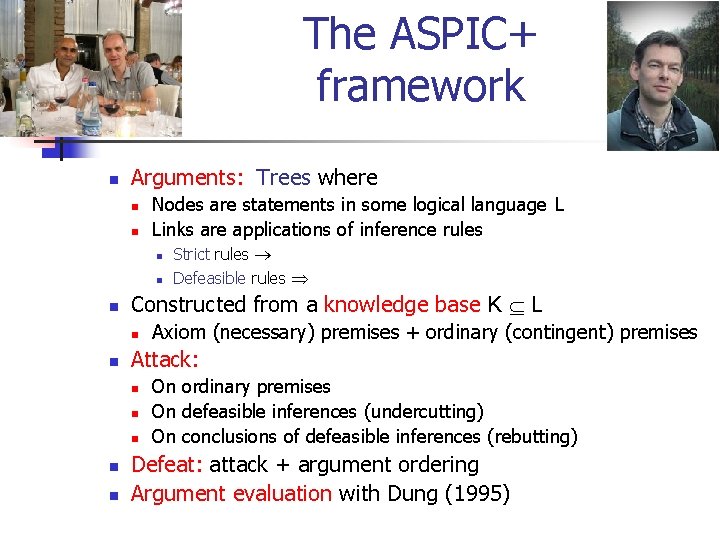

The ASPIC+ framework

- Arguments: Trees where
  - Nodes are statements in some logical language L
  - Links are applications of inference rules
    - Strict rules
    - Defeasible rules
  - Constructed from a knowledge base K ⊆ L
    - Axiom (necessary) premises + ordinary (contingent) premises
- Attack:
  - On ordinary premises
  - On defeasible inferences (undercutting)
  - On conclusions of defeasible inferences (rebutting)
- Defeat: attack + argument ordering
- Argument evaluation with Dung (1995)

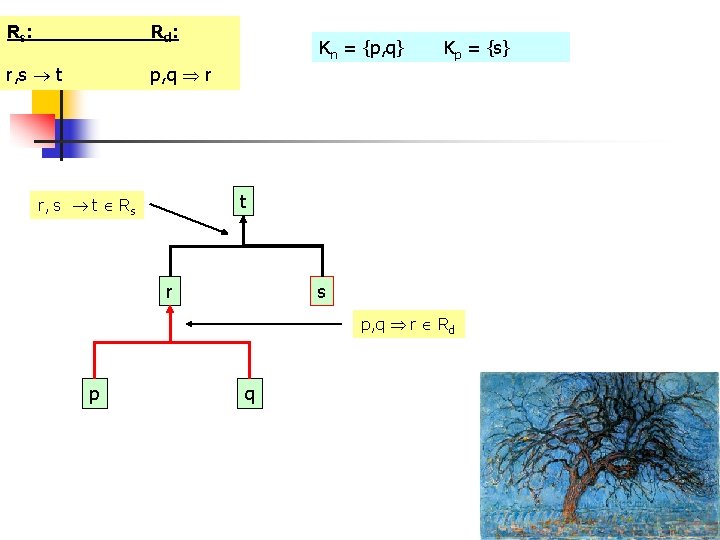

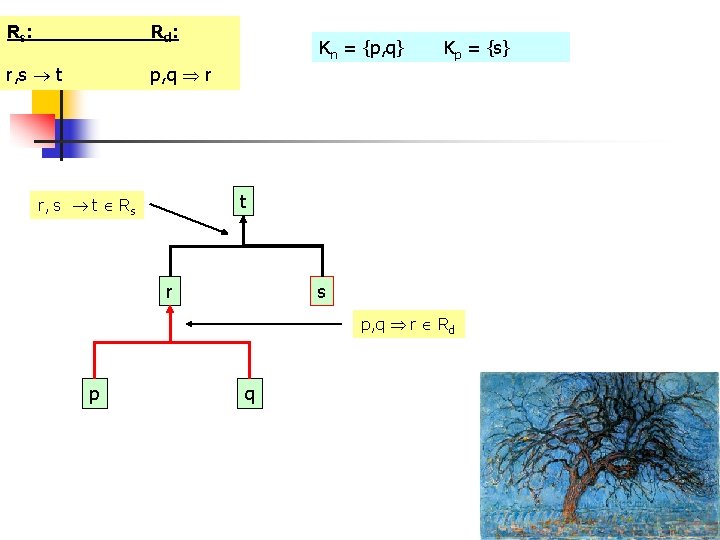

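- Rs: r, s → t
- Rd: p, q ⇒ r
- Kn = {p, q}
- Kp = {s}

[Figure: argument tree for t, built from r and s with the strict rule r, s → t, where r is built from p and q with the defeasible rule p, q ⇒ r]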

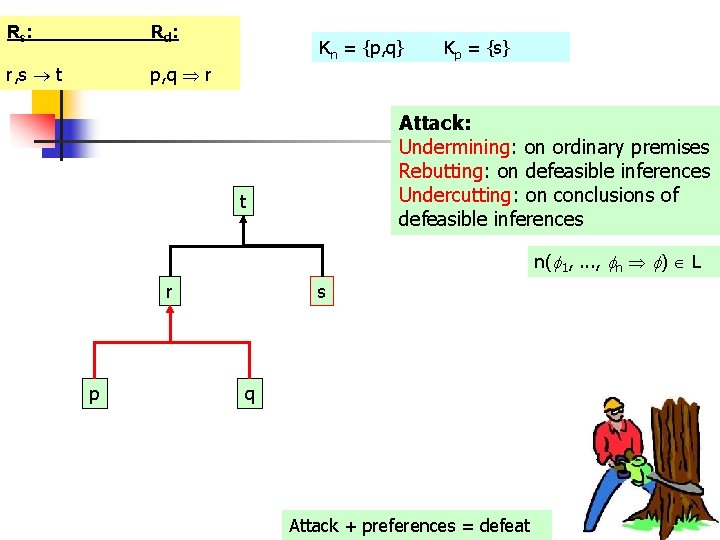

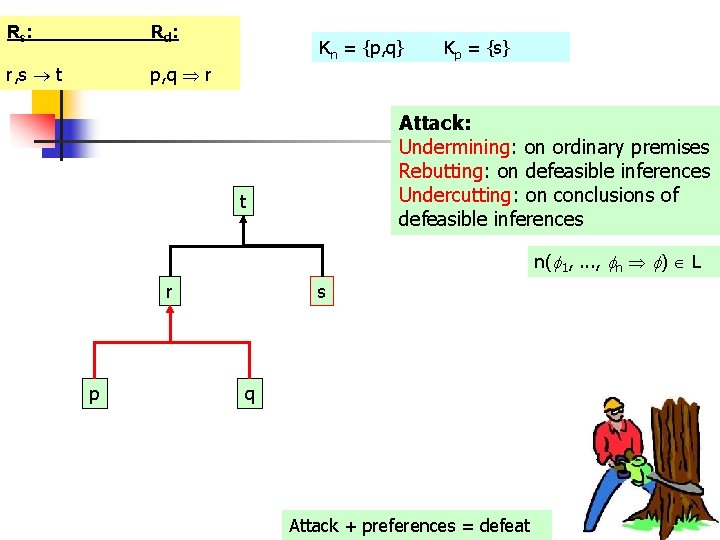

- Rs: r, s → t
- Rd: p, q ⇒ r
- Kn = {p, q}
- Kp = {s}

Attack:

- Undermining: on ordinary premises
- Undercutting: on defeasible inferences
- Rebutting: on conclusions of defeasible inferences

Rule names: n(φ1, ..., φn ⇒ φ) ∈ L

Attack + preferences = defeat

[Figure: the argument tree for t over r, s, p and q, with its attackable points marked]
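How arguments are built from a knowledge base and rules, and where they can be attacked, can be sketched as follows. This is a simplified illustration, not the ASPIC+ definitions in full: it assumes the rule set is acyclic (so forward chaining terminates), reads the example as a strict rule r, s → t and a defeasible rule p, q ⇒ r, and uses hypothetical rule names `s1` and `d1`.

```python
from itertools import product

STRICT = [("s1", ("r", "s"), "t")]        # r, s -> t   (assumed reading)
DEFEASIBLE = [("d1", ("p", "q"), "r")]    # p, q => r   (assumed reading)
AXIOMS = {"p", "q"}                       # Kn
ORDINARY = {"s"}                          # Kp

def build_arguments():
    """Forward chaining; an argument is (conclusion, rule name or None, subarguments)."""
    args = {(f, None, ()) for f in AXIOMS | ORDINARY}
    changed = True
    while changed:
        changed = False
        for name, antecedents, conclusion in STRICT + DEFEASIBLE:
            options = [[a for a in args if a[0] == ant] for ant in antecedents]
            for subs in product(*options):
                new = (conclusion, name, subs)
                if new not in args:
                    args.add(new)
                    changed = True
    return args

def subarguments(arg):
    yield arg
    for sub in arg[2]:
        yield from subarguments(sub)

def attack_points(arg):
    """Where arg can be attacked: ordinary premises, defeasible rules, their conclusions."""
    defeasible_names = {name for name, _, _ in DEFEASIBLE}
    points = set()
    for conclusion, rule, _ in subarguments(arg):
        if rule is None and conclusion in ORDINARY:
            points.add(("undermine", conclusion))
        elif rule in defeasible_names:
            points.add(("undercut", rule))
            points.add(("rebut", conclusion))
    return points

ARGUMENTS = build_arguments()
TOP = next(a for a in ARGUMENTS if a[0] == "t")
POINTS = attack_points(TOP)
```

The argument for t cannot be rebutted on t itself, because t is derived with a strict rule; it can only be attacked on the ordinary premise s, on the application of `d1`, or on the intermediate conclusion r.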

Consistency in ASPIC+ (with symmetric negation)

For any S ⊆ L:

- S is (directly) consistent iff S does not contain two formulas φ and –φ.
- …

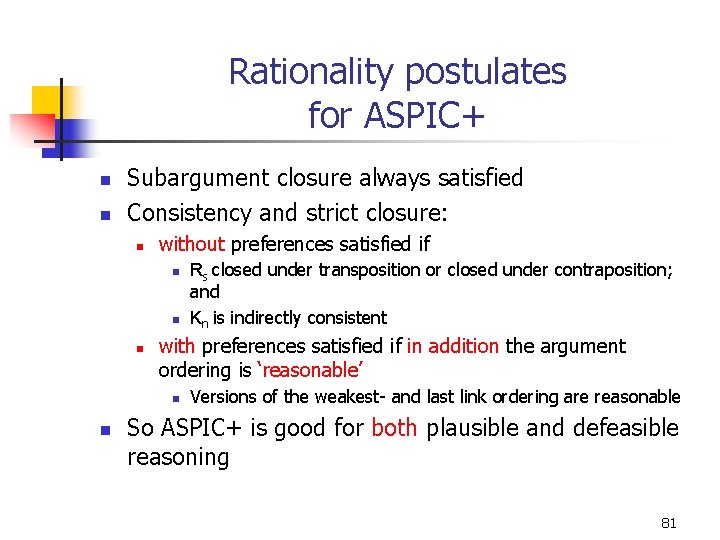

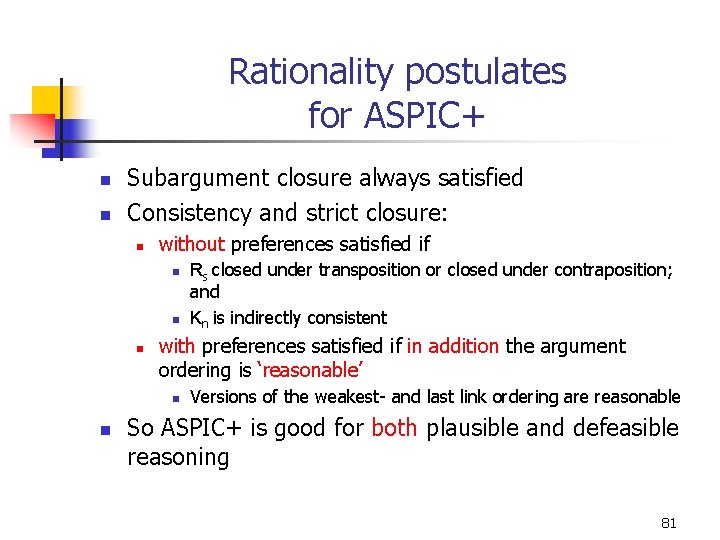

Rationality postulates for ASPIC+

- Subargument closure always satisfied
- Consistency and strict closure:
  - without preferences satisfied if
    - Rs closed under transposition or closed under contraposition; and
    - Kn is indirectly consistent
  - with preferences satisfied if in addition the argument ordering is ‘reasonable’
    - Versions of the weakest- and last-link ordering are reasonable
- So ASPIC+ is good for both plausible and defeasible reasoning

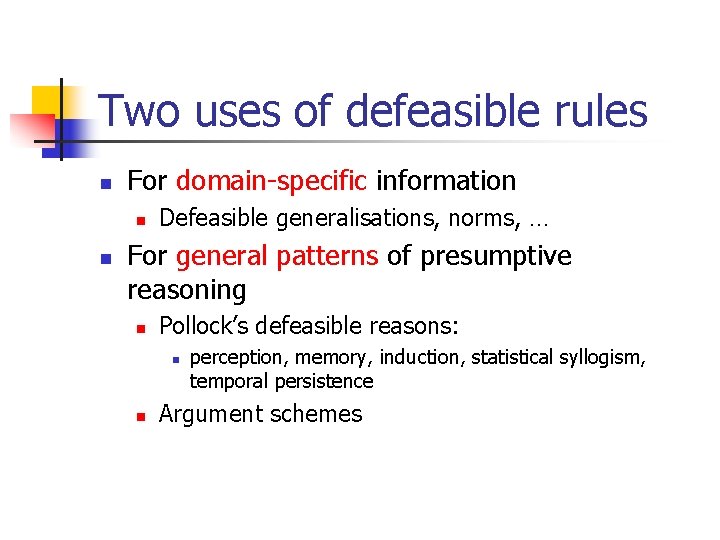

Two uses of defeasible rules

- For domain-specific information
  - Defeasible generalisations, norms, …
- For general patterns of presumptive reasoning
  - Pollock's defeasible reasons:
    - perception, memory, induction, statistical syllogism, temporal persistence
  - Argument schemes

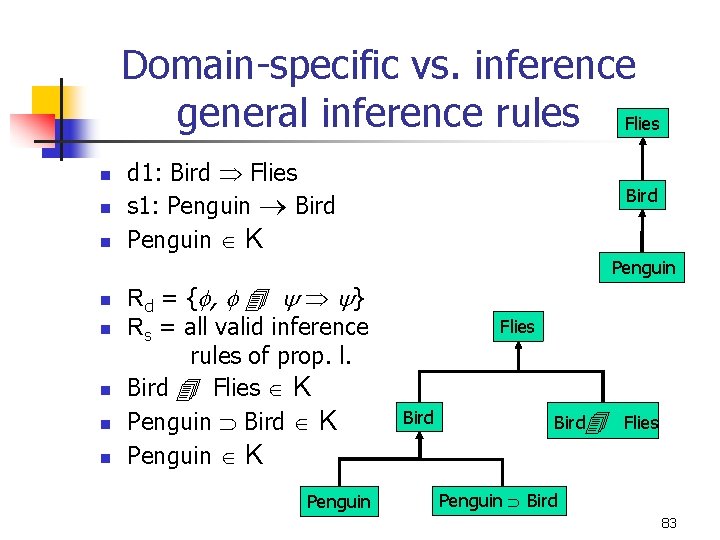

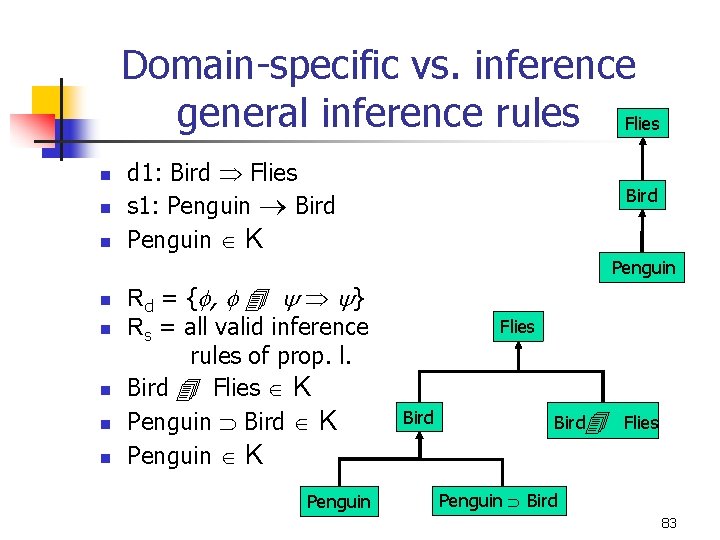

Domain-specific vs. general inference rules

- Domain-specific rules:
  - d1: Bird ⇒ Flies
  - s1: Penguin → Bird
  - Penguin ∈ K
- General inference rules:
  - Rd = { , }
  - Rs = all valid inference rules of propositional logic
  - Bird Flies ∈ K
  - Penguin Bird ∈ K
  - Penguin ∈ K

[Figure: the argument for Flies from Penguin via Bird, once for each modelling]

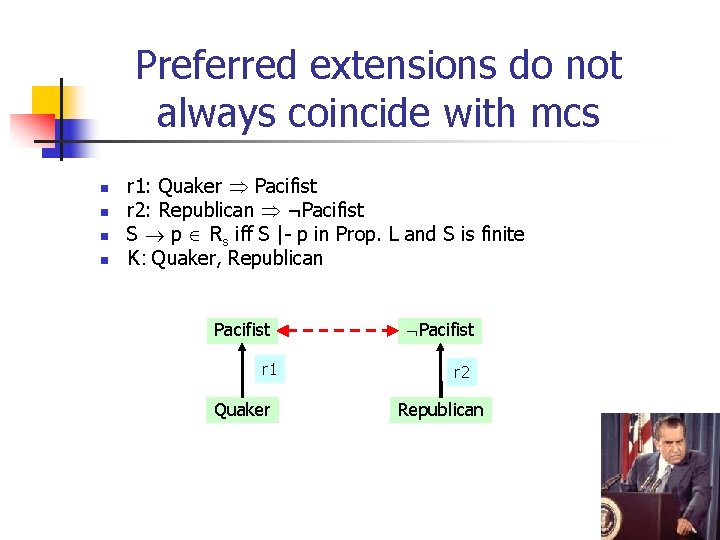

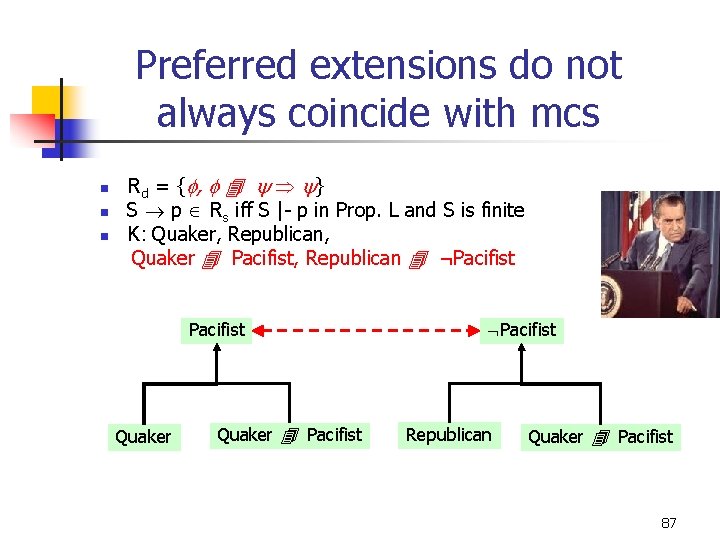

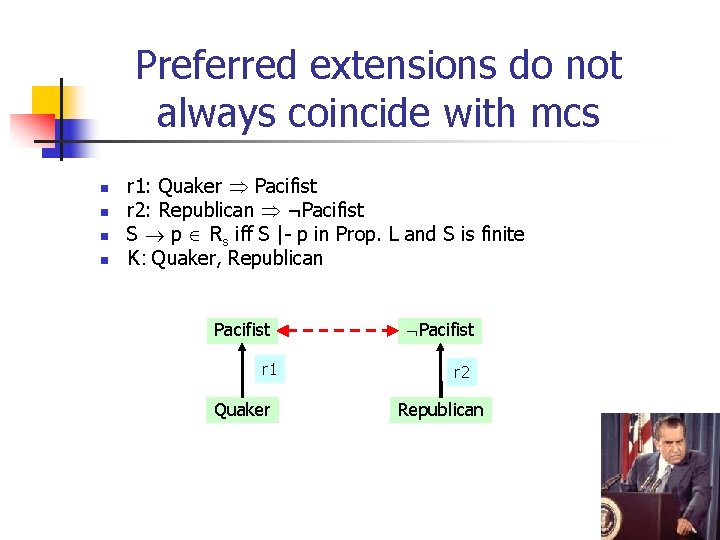

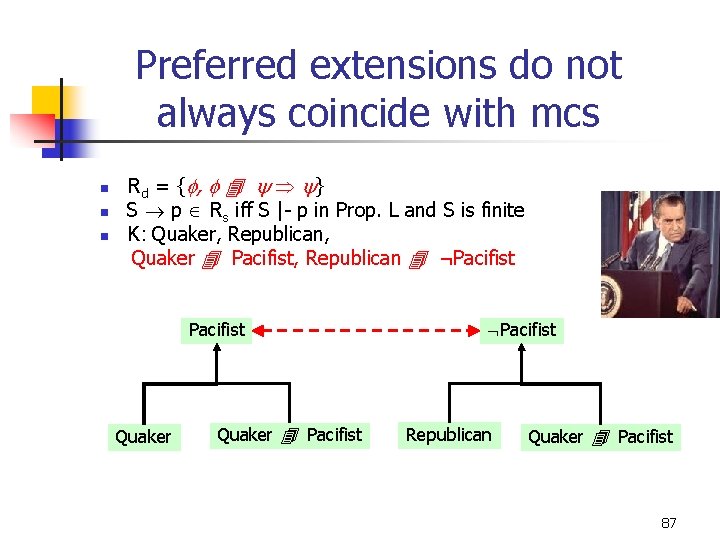

Preferred extensions do not always coincide with mcs

- r1: Quaker ⇒ Pacifist
- r2: Republican ⇒ ¬Pacifist
- S → p ∈ Rs iff S |- p in propositional logic and S is finite
- K: Quaker, Republican

[Figure: an argument for Pacifist from Quaker with r1, and a conflicting argument from Republican with r2]

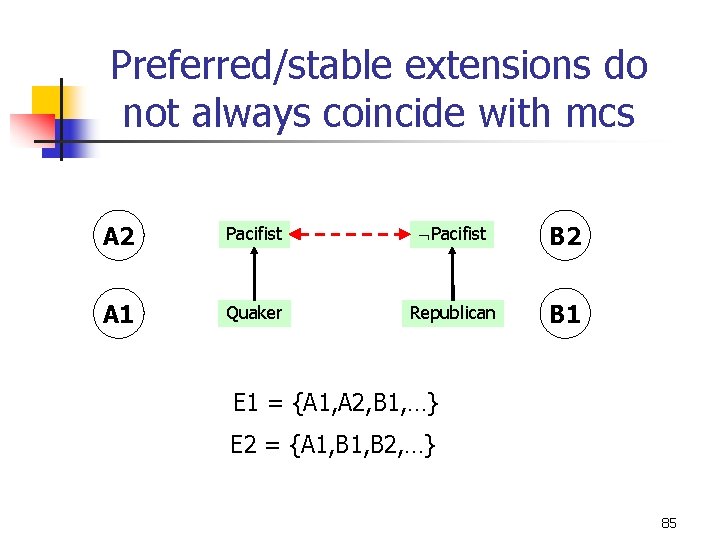

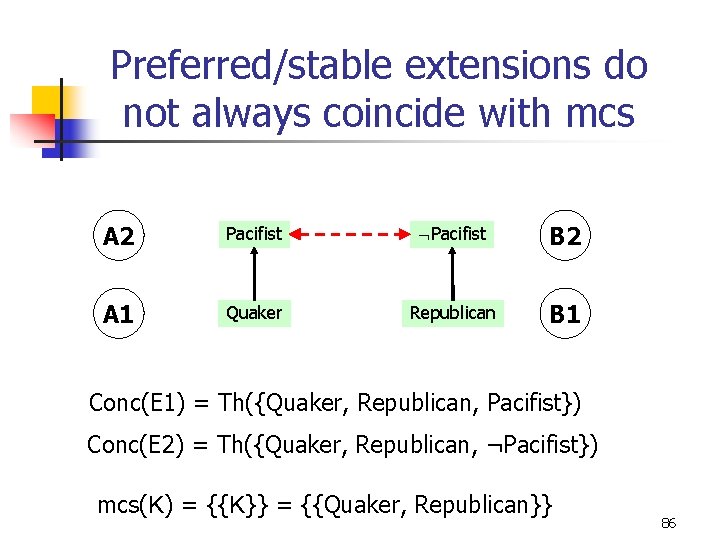

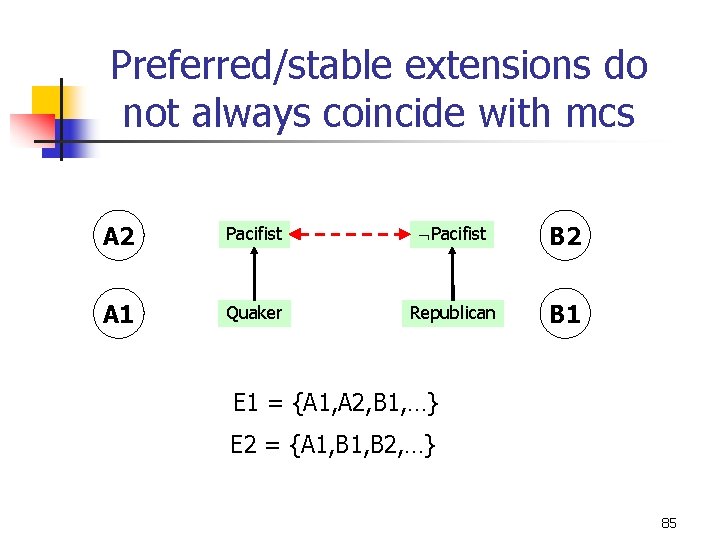

Preferred/stable extensions do not always coincide with mcs

[Figure: arguments A1 (Quaker), A2 (Pacifist), B1 (Republican) and B2]

- E1 = {A1, A2, B1, …}
- E2 = {A1, B2, …}

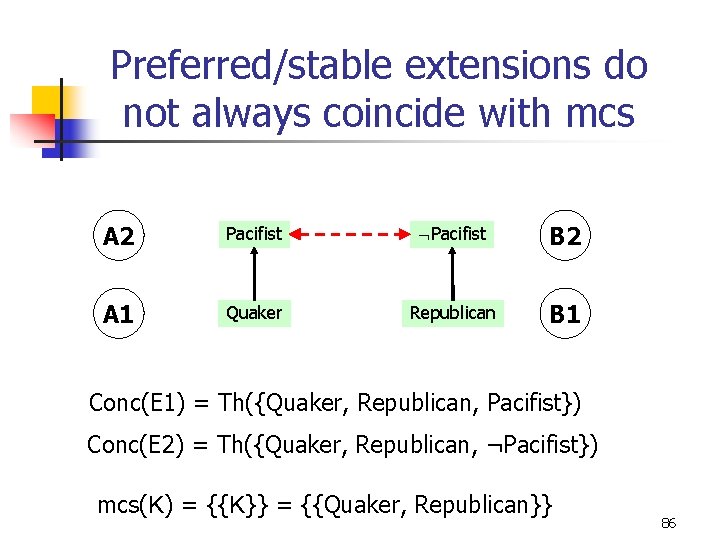

Preferred/stable extensions do not always coincide with mcs

[Figure: arguments A1 (Quaker), A2 (Pacifist), B1 (Republican) and B2]

- Conc(E1) = Th({Quaker, Republican, Pacifist})
- Conc(E2) = Th({Quaker, Republican, ¬Pacifist})
- mcs(K) = {K} = {{Quaker, Republican}}

Preferred extensions do not always coincide with mcs

- Rd = { , }
- S → p ∈ Rs iff S |- p in propositional logic and S is finite
- K: Quaker, Republican, Quaker Pacifist, Republican ¬Pacifist

[Figure: an argument over Quaker, Pacifist and Republican]

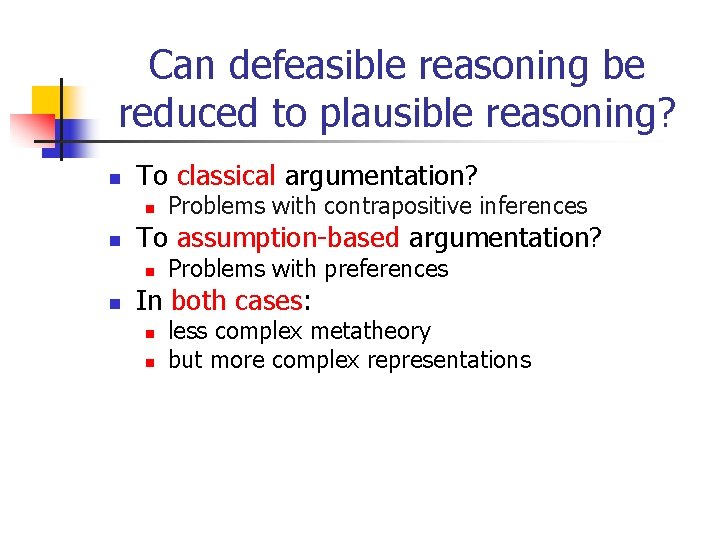

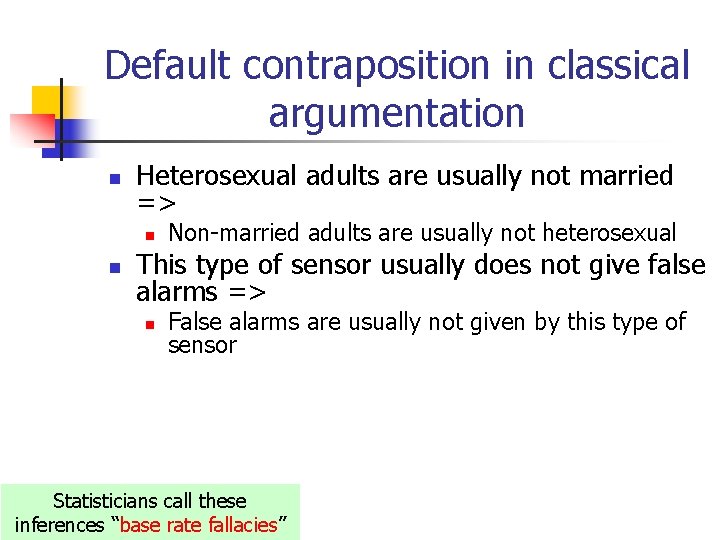

Can defeasible reasoning be reduced to plausible reasoning?

- To classical argumentation?
  - Problems with contrapositive inferences
- To assumption-based argumentation?
  - Problems with preferences
- In both cases:
  - less complex metatheory
  - but more complex representations

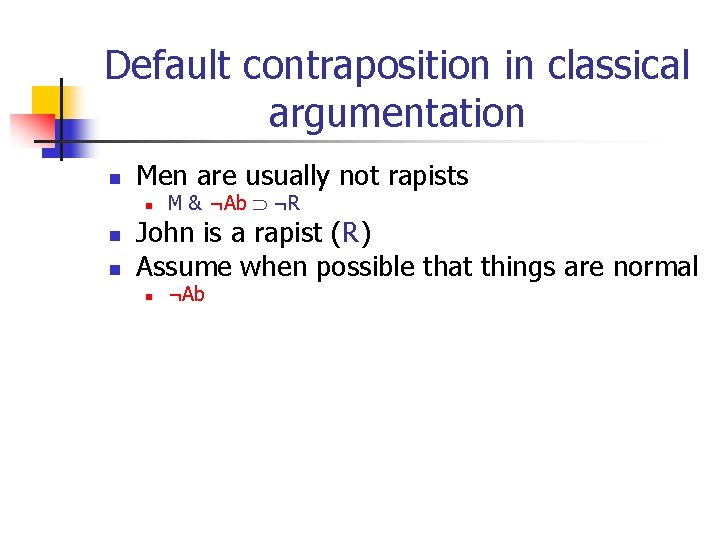

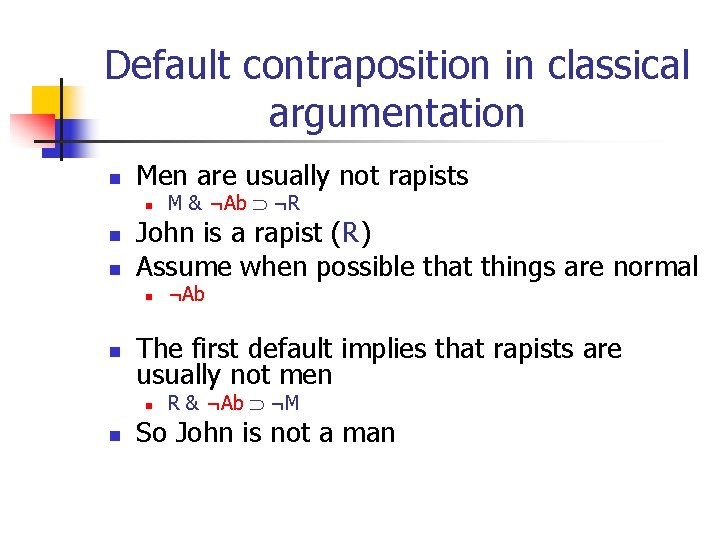

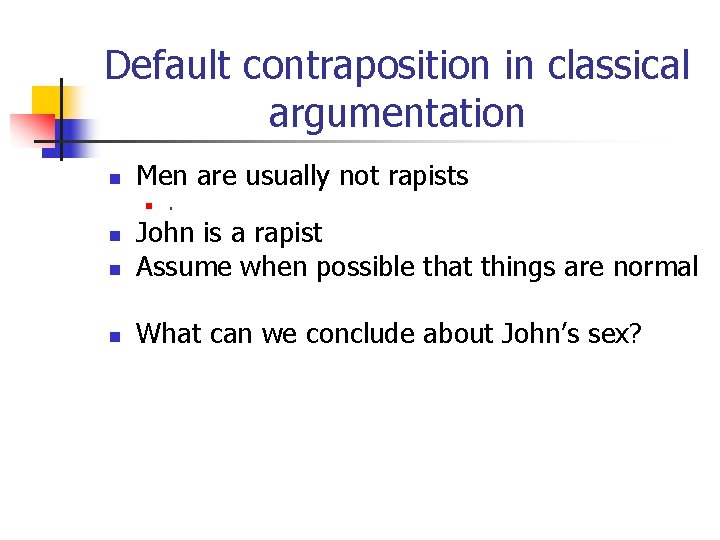

Default contraposition in classical argumentation

- Men are usually not rapists
- John is a rapist
- Assume when possible that things are normal
- What can we conclude about John's sex?

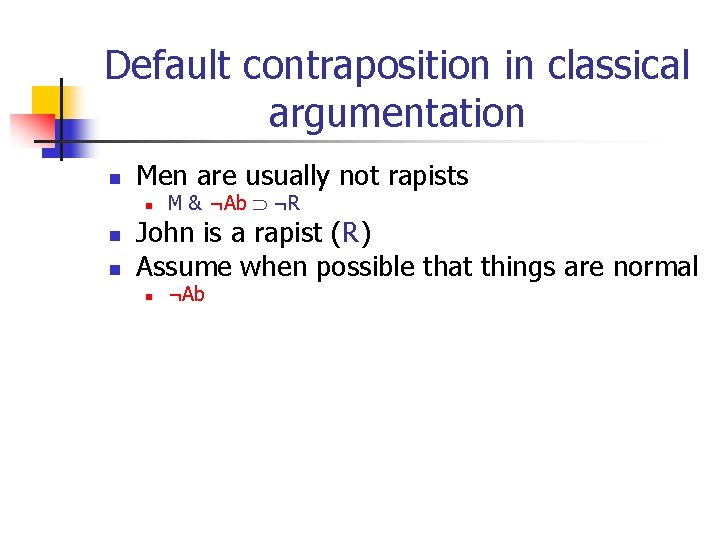

Default contraposition in classical argumentation

- Men are usually not rapists
  - M & ¬Ab ⊃ ¬R
- John is a rapist (R)
- Assume when possible that things are normal
  - ¬Ab

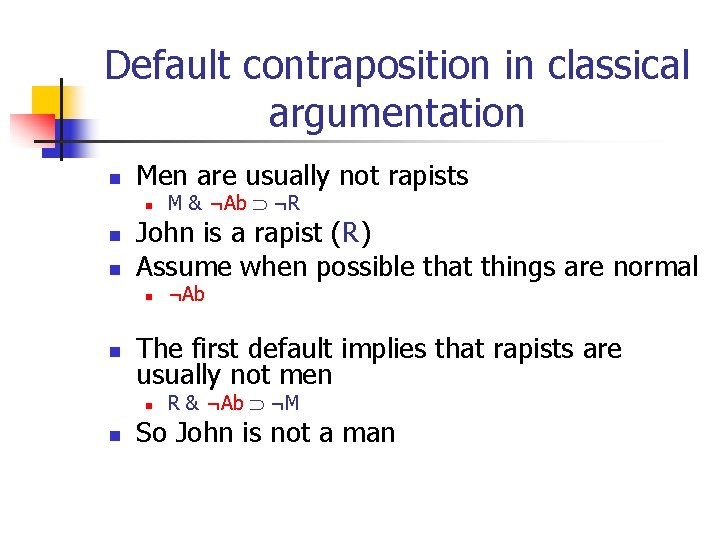

Default contraposition in classical argumentation

- Men are usually not rapists
  - M & ¬Ab ⊃ ¬R
- John is a rapist (R)
- Assume when possible that things are normal
  - ¬Ab
- The first default implies that rapists are usually not men
  - R & ¬Ab ⊃ ¬M
- So John is not a man
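That the contraposed default follows classically from the original one can be confirmed with a truth table. The short check below reads the default as the material implication M & ¬Ab ⊃ ¬R; the function names are hypothetical.

```python
from itertools import product

def implies(a, b):
    return (not a) or b

def contraposition_follows():
    """Every valuation satisfying M & ¬Ab ⊃ ¬R also satisfies R & ¬Ab ⊃ ¬M."""
    for M, R, Ab in product([False, True], repeat=3):
        default = implies(M and not Ab, not R)
        contraposed = implies(R and not Ab, not M)
        if default and not contraposed:
            return False
    return True

def john_is_a_man_possible():
    """Is there a valuation with the default, R and ¬Ab in which M is true?"""
    return any(implies(M and not Ab, not R) and R and not Ab and M
               for M, R, Ab in product([False, True], repeat=3))

FOLLOWS = contraposition_follows()
MAN_POSSIBLE = john_is_a_man_possible()
```

So once ¬Ab is assumed, classical logic leaves no valuation in which John is both a rapist and a man, which is exactly the unwanted conclusion.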

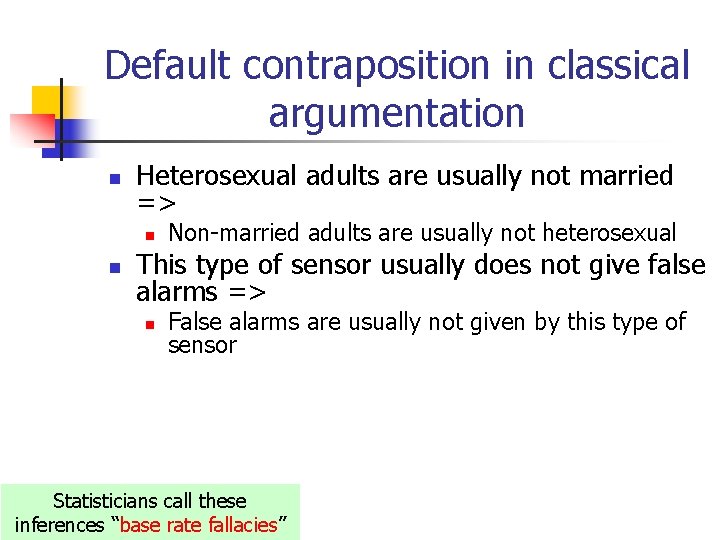

Default contraposition in classical argumentation

- Heterosexual adults are usually not married =>
  - Non-married adults are usually not heterosexual
- This type of sensor usually does not give false alarms =>
  - False alarms are usually not given by this type of sensor
- Statisticians call these inferences “base rate fallacies”

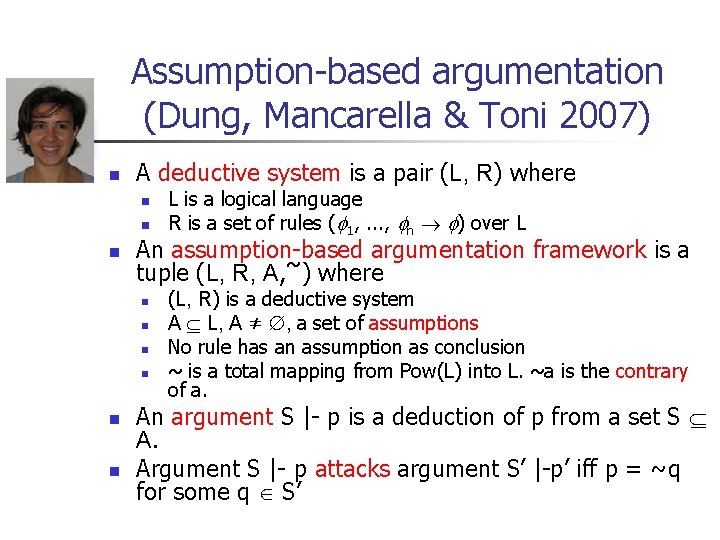

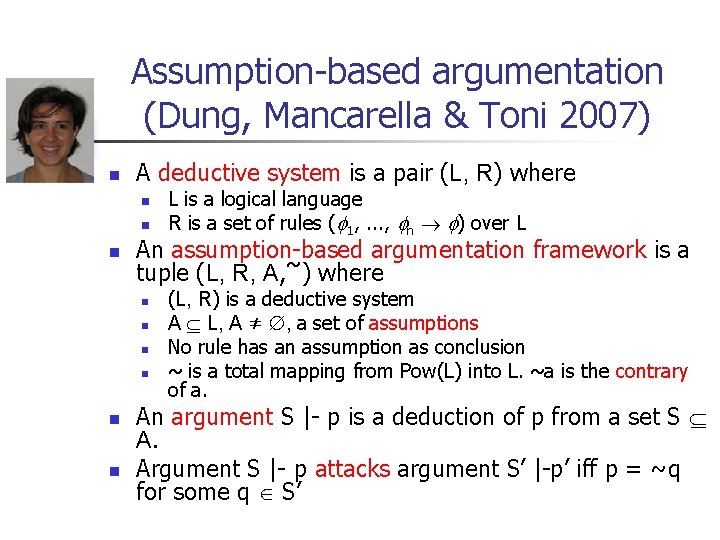

Assumption-based argumentation (Dung, Mancarella & Toni 2007)

- A deductive system is a pair (L, R) where
  - L is a logical language
  - R is a set of rules (φ1, ..., φn → φ) over L
- An assumption-based argumentation framework is a tuple (L, R, A, ~) where
  - (L, R) is a deductive system
  - A ⊆ L, A ≠ ∅, a set of assumptions
  - No rule has an assumption as conclusion
  - ~ is a total mapping from Pow(L) into L. ~a is the contrary of a.
- An argument S |- p is a deduction of p from a set S ⊆ A.
- Argument S |- p attacks argument S' |- p' iff p = ~q for some q ∈ S'

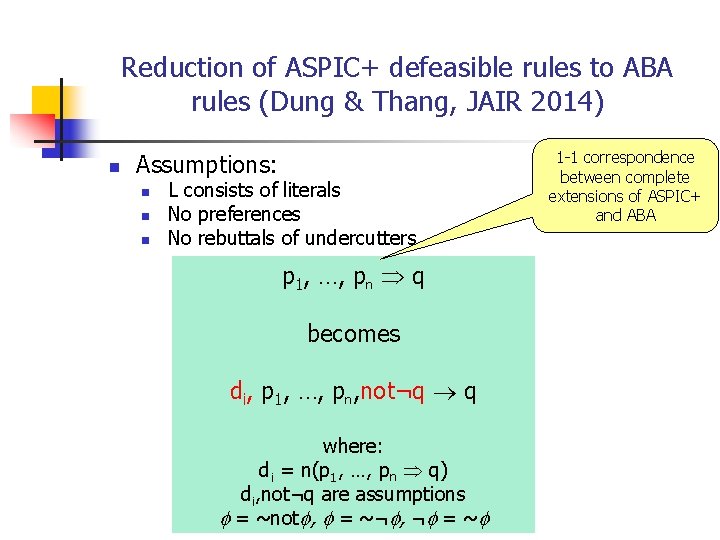

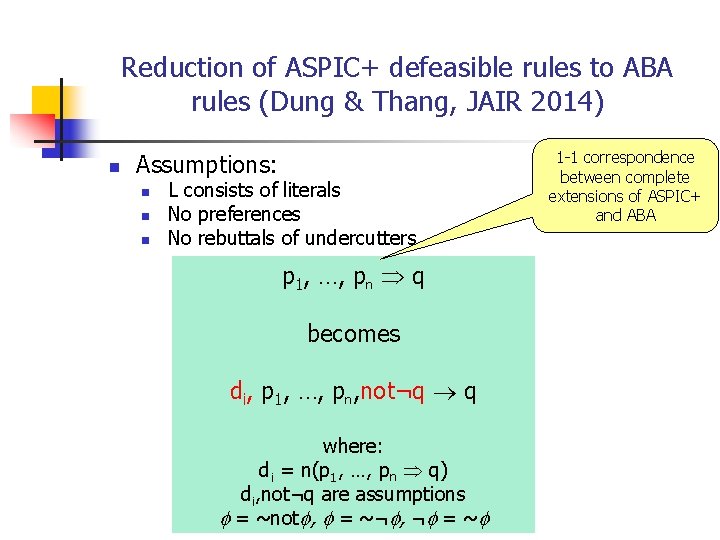

Reduction of ASPIC+ defeasible rules to ABA rules (Dung & Thang, JAIR 2014)

- Assumptions:
  - L consists of literals
  - No preferences
  - No rebuttals of undercutters
- p1, …, pn ⇒ q becomes di, p1, …, pn, not¬q → q, where:
  - di = n(p1, …, pn ⇒ q)
  - di, not¬q are assumptions
  - φ = ~notφ, φ = ~¬φ, ¬φ = ~φ
- 1-1 correspondence between complete extensions of ASPIC+ and ABA

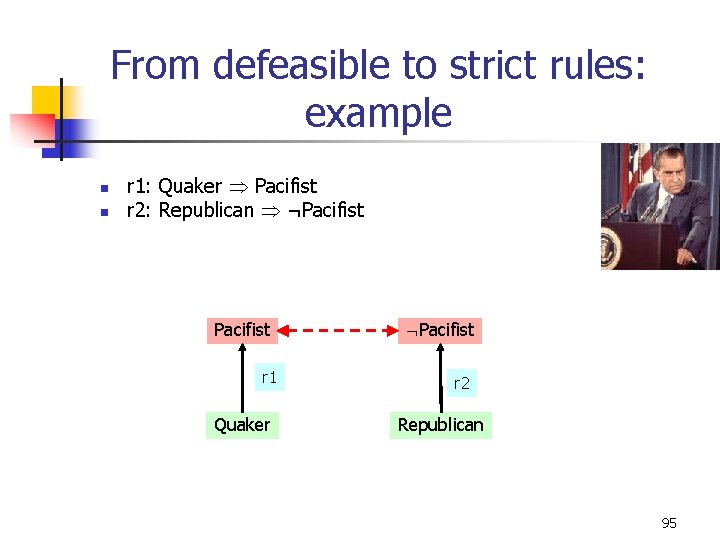

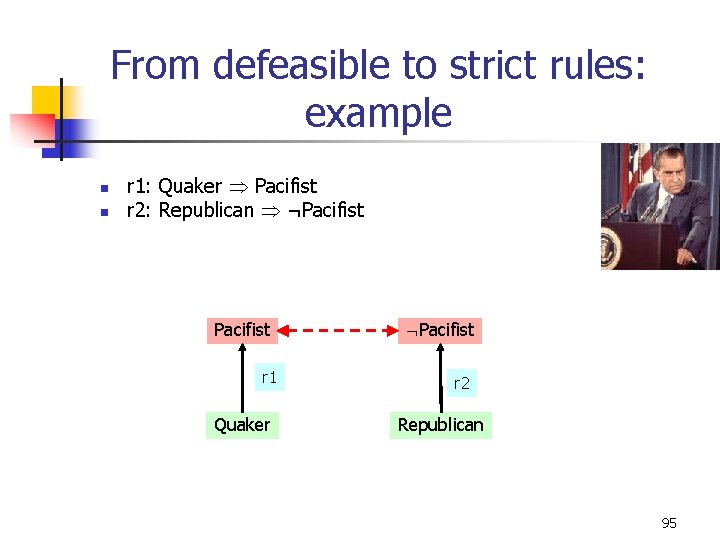

From defeasible to strict rules: example

- r1: Quaker ⇒ Pacifist
- r2: Republican ⇒ ¬Pacifist

[Figure: an argument for Pacifist from Quaker with r1, and a conflicting argument from Republican with r2]

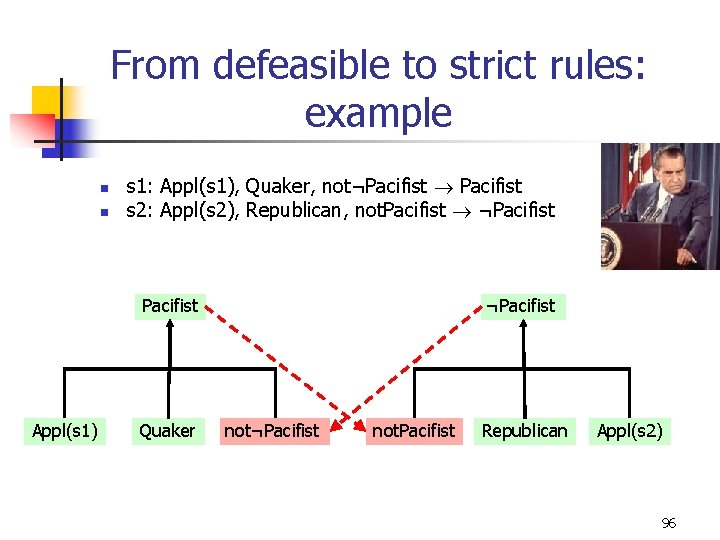

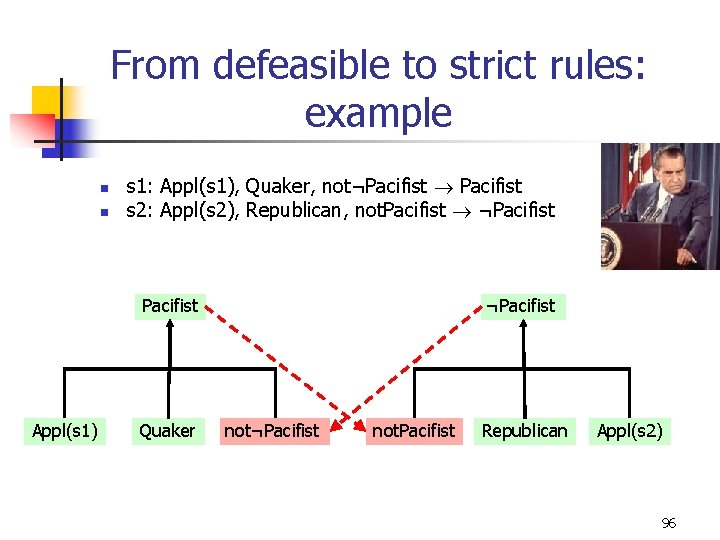

From defeasible to strict rules: example

- s1: Appl(s1), Quaker, not¬Pacifist → Pacifist
- s2: Appl(s2), Republican, notPacifist → ¬Pacifist

[Figure: an argument for Pacifist from Appl(s1), Quaker and not¬Pacifist, and an argument for ¬Pacifist from Appl(s2), Republican and notPacifist]

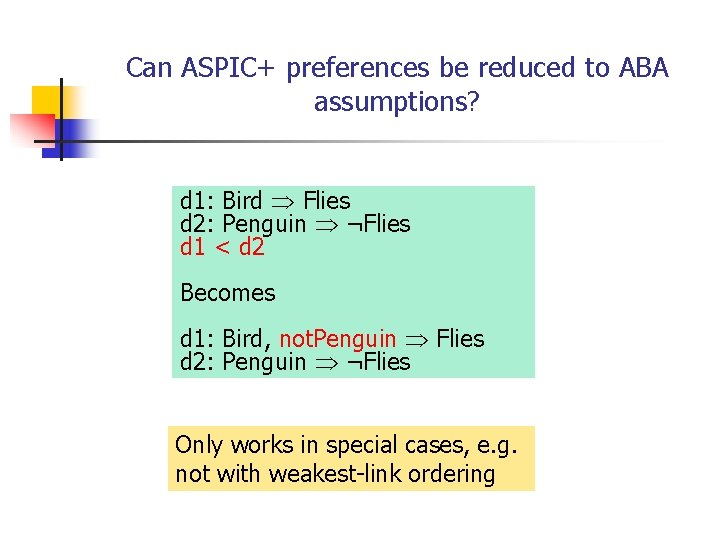

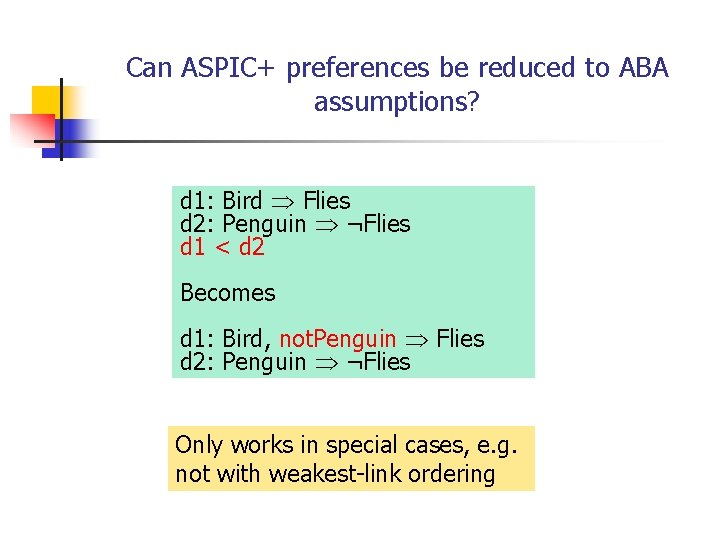

Can ASPIC+ preferences be reduced to ABA assumptions?

- d1: Bird ⇒ Flies
- d2: Penguin ⇒ ¬Flies
- d1 < d2

Becomes

- d1: Bird, notPenguin → Flies
- d2: Penguin → ¬Flies

Only works in special cases, e.g. not with weakest-link ordering

[Figure: the argument graph of the tax example, with its arguments labelled A, B, C, D and E]

[Figure: abstract attack graph over arguments A, B, C, D, E]

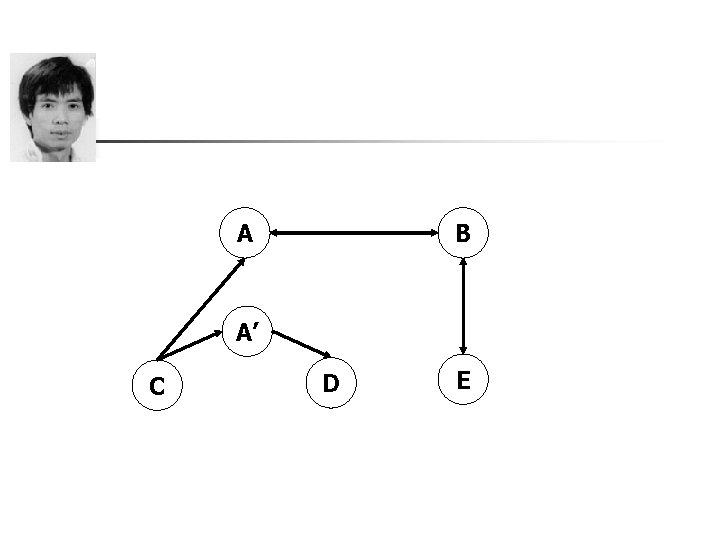

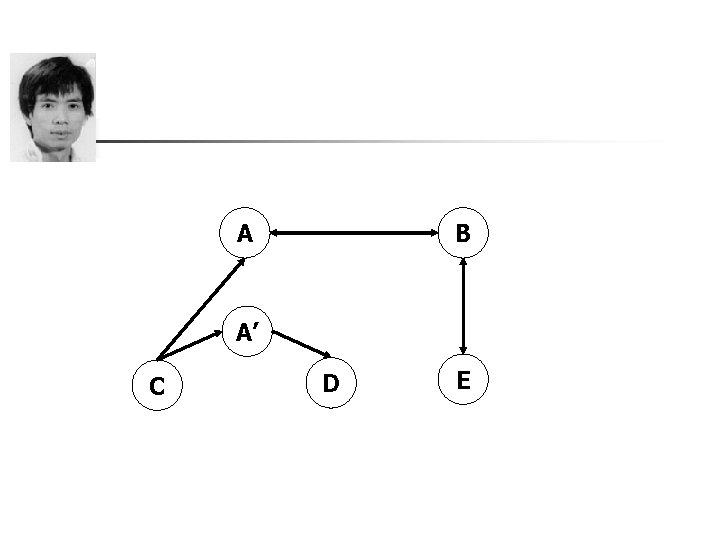

[Figure: attack graph over arguments A, A', B, C, D, E]

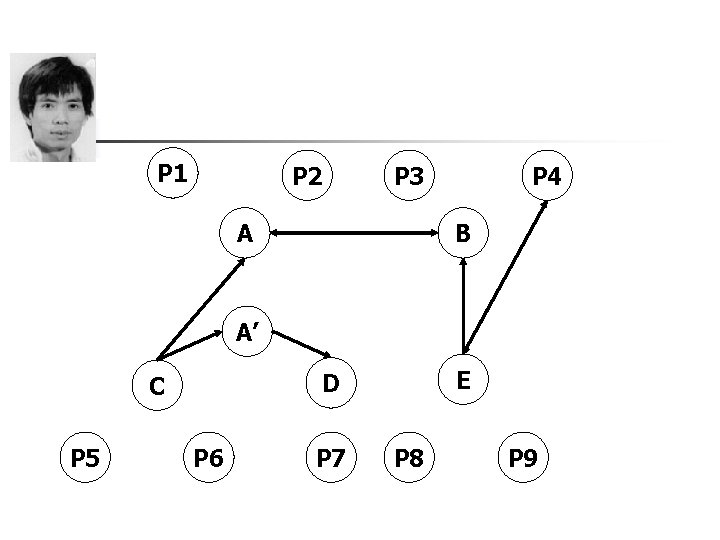

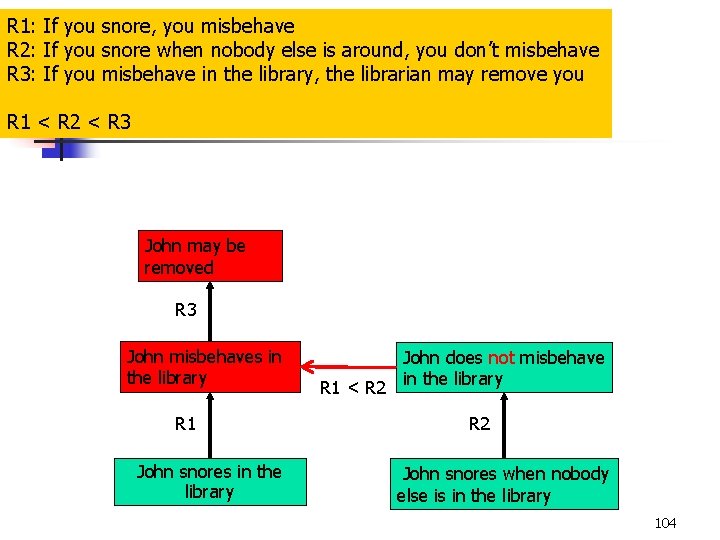

[Figure: attack graph over arguments A, A', B, C, D, E together with premises P1 to P9]

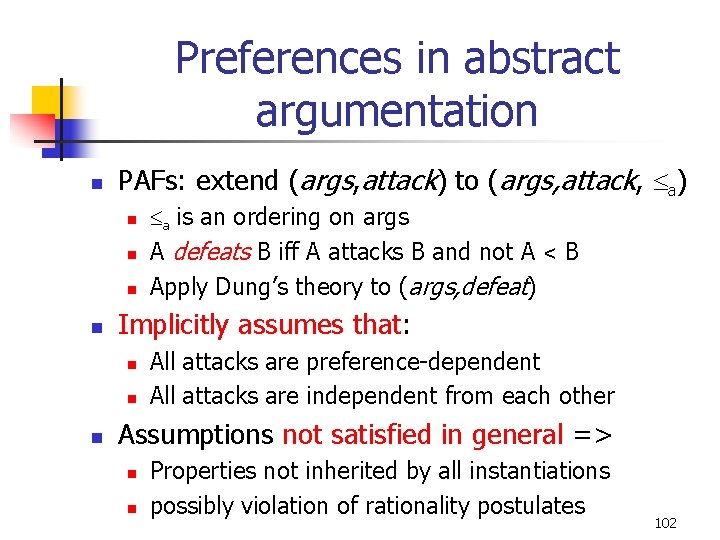

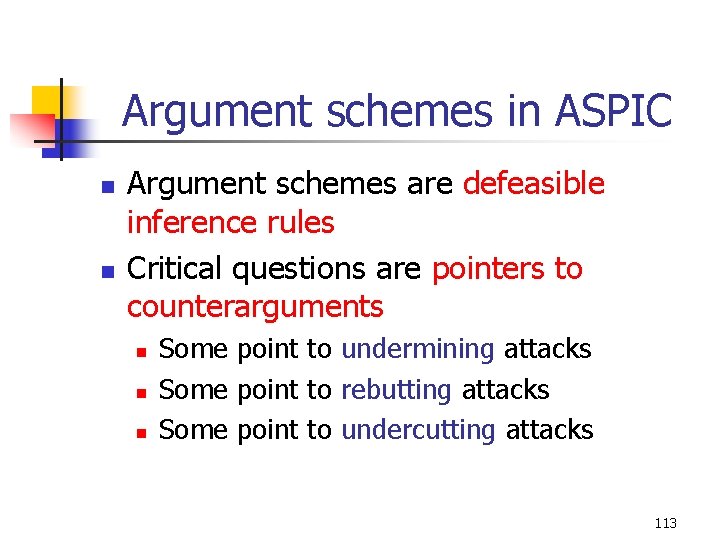

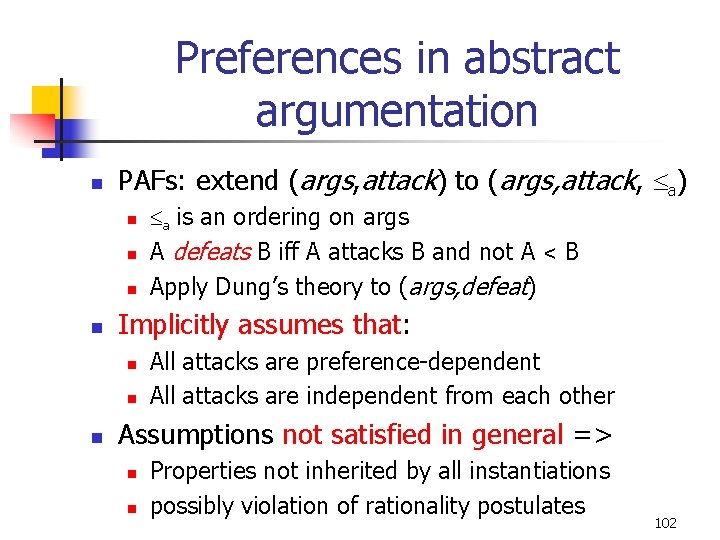

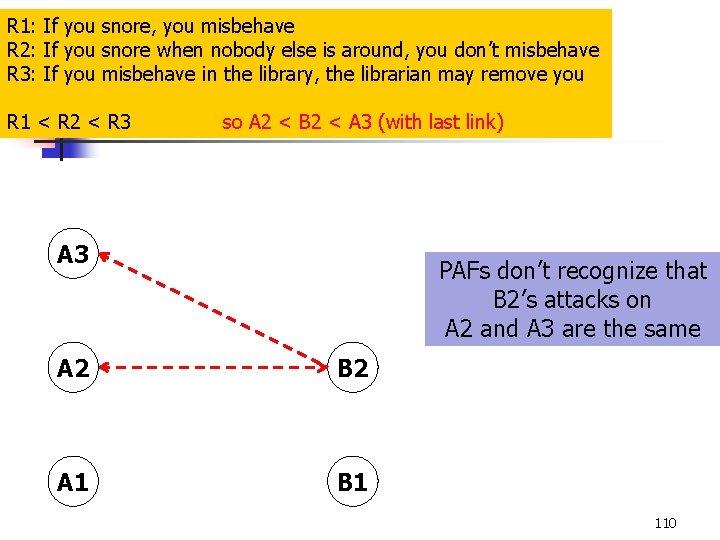

Preferences in abstract argumentation

- PAFs: extend (args, attack) to (args, attack, ≤)
  - ≤ is an ordering on args
  - A defeats B iff A attacks B and not A < B
  - Apply Dung's theory to (args, defeat)
- Implicitly assumes that:
  - All attacks are preference-dependent
  - All attacks are independent from each other
- Assumptions not satisfied in general =>
  - Properties not inherited by all instantiations
  - possibly violation of rationality postulates

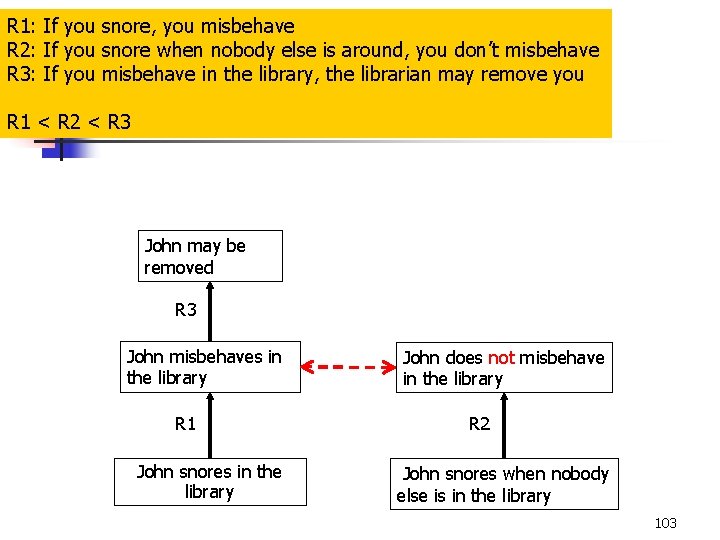

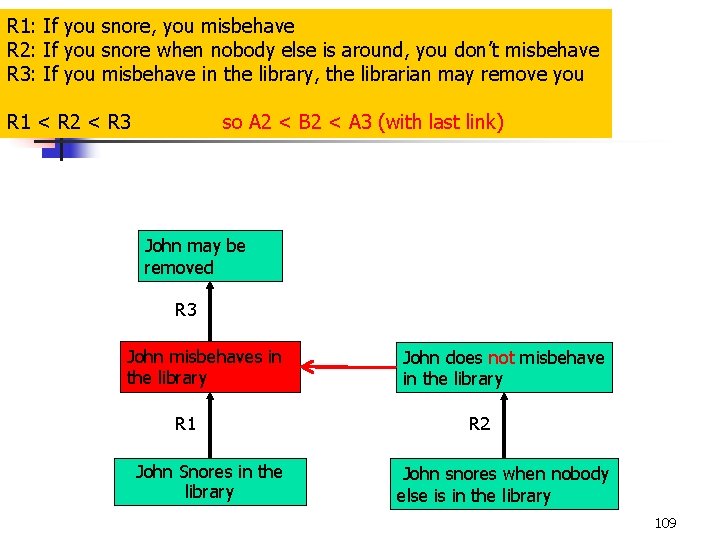

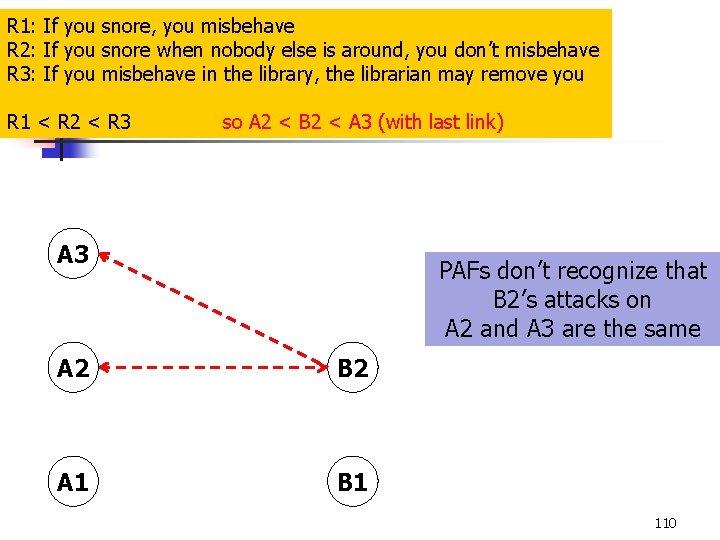

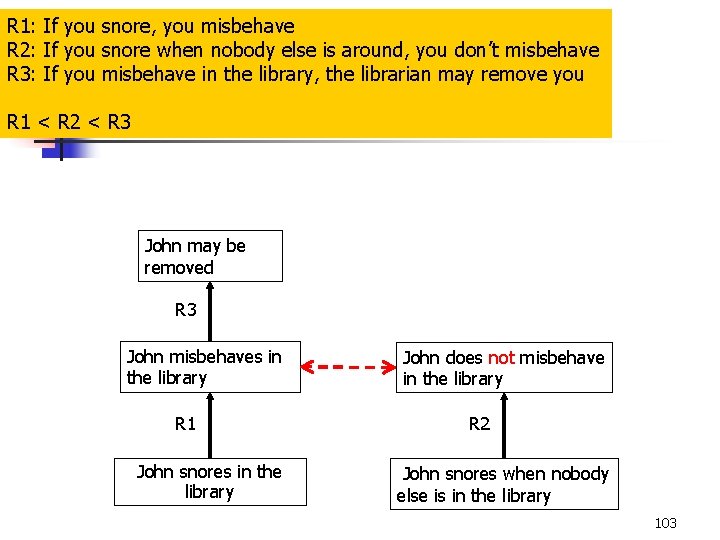

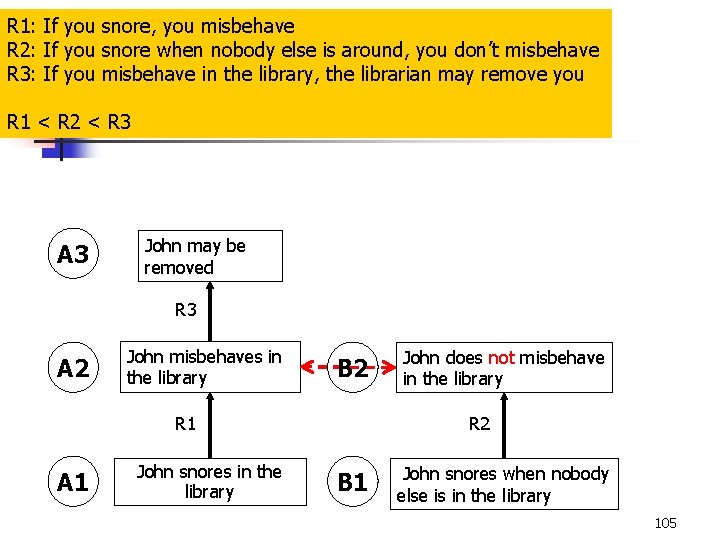

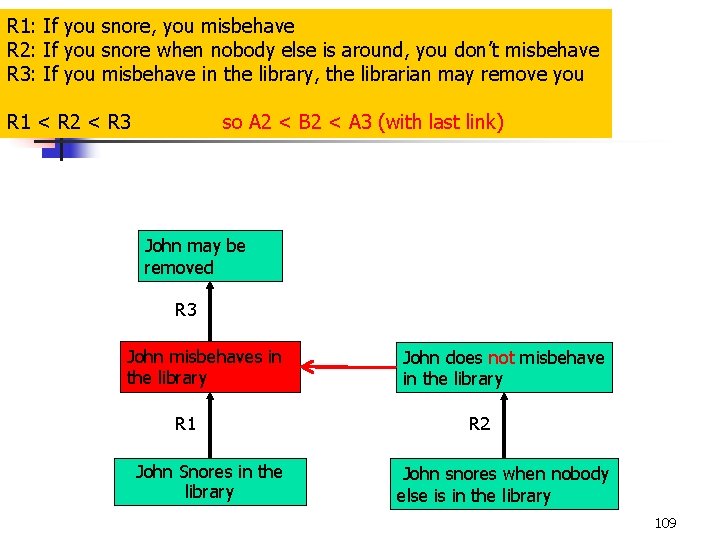

- R1: If you snore, you misbehave
- R2: If you snore when nobody else is around, you don't misbehave
- R3: If you misbehave in the library, the librarian may remove you
- R1 < R2 < R3

[Figure: two conflicting arguments]

- John snores in the library. So (R1): John misbehaves in the library. So (R3): John may be removed.
- John snores when nobody else is in the library. So (R2): John does not misbehave in the library.

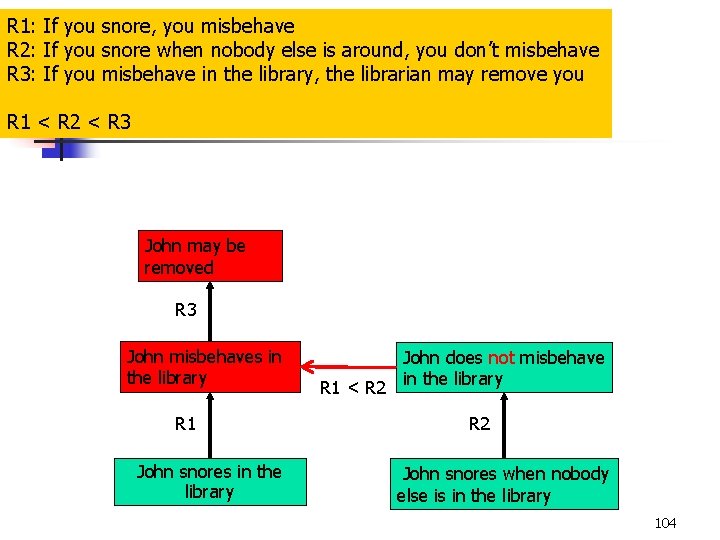

[Figure: the same two arguments built with R1, R2 and R3; the conflict between "John misbehaves in the library" and "John does not misbehave in the library" is resolved with R1 < R2]

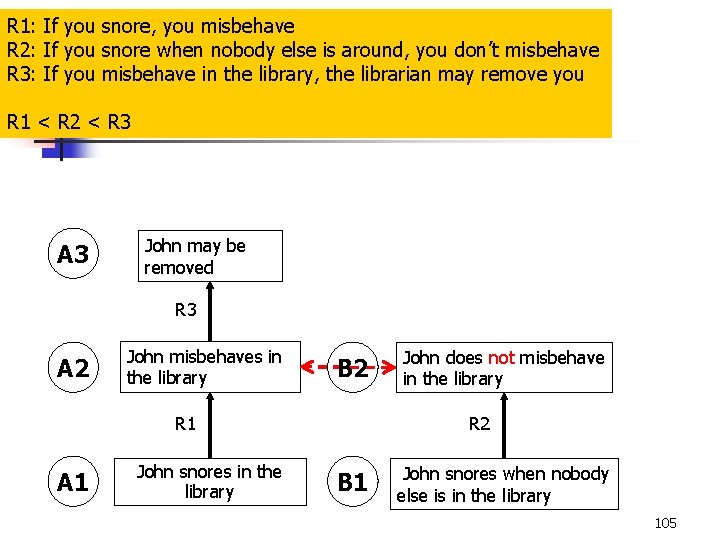

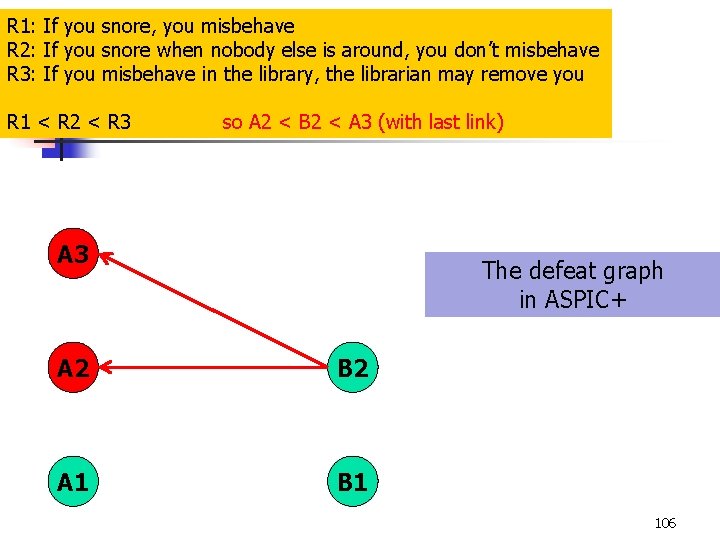

[Figure: the same two arguments, with their subarguments named]

- A1: John snores in the library
- A2: John misbehaves in the library (from A1 with R1)
- A3: John may be removed (from A2 with R3)
- B1: John snores when nobody else is in the library
- B2: John does not misbehave in the library (from B1 with R2)

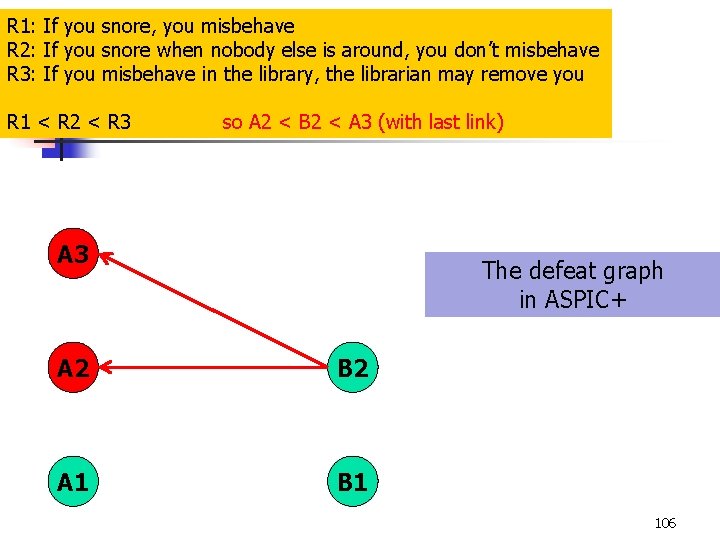

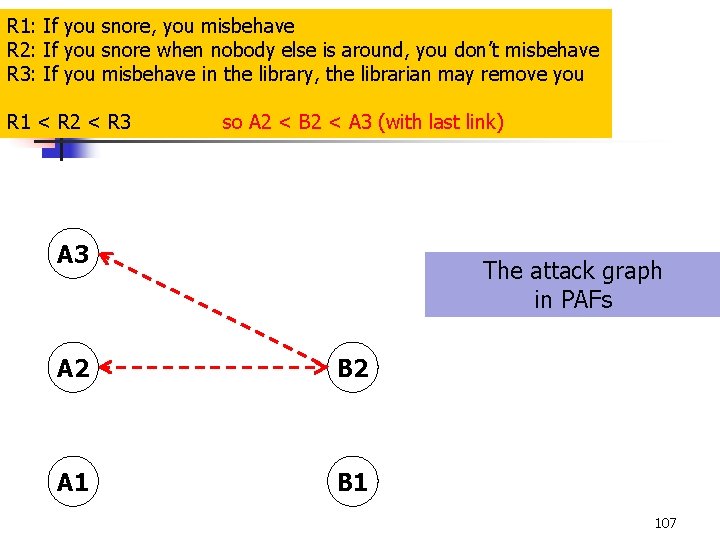

R1 < R2 < R3, so A2 < B2 < A3 (with last link)

The defeat graph in ASPIC+

[Figure: defeat graph over A1, A2, A3, B1, B2]

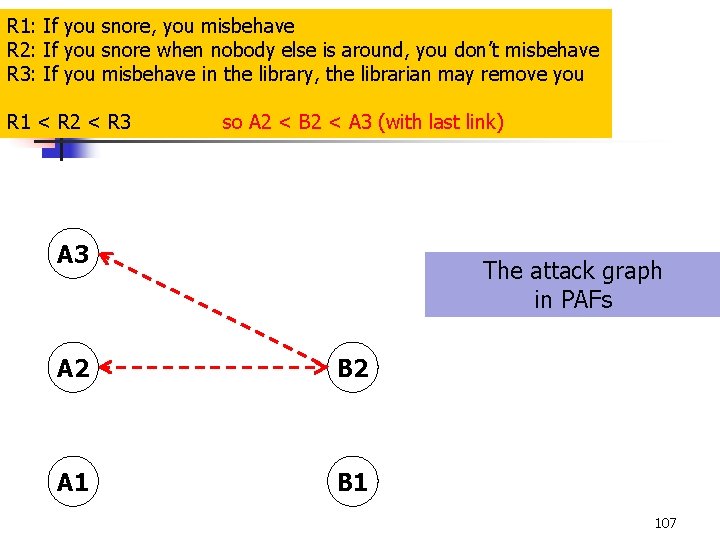

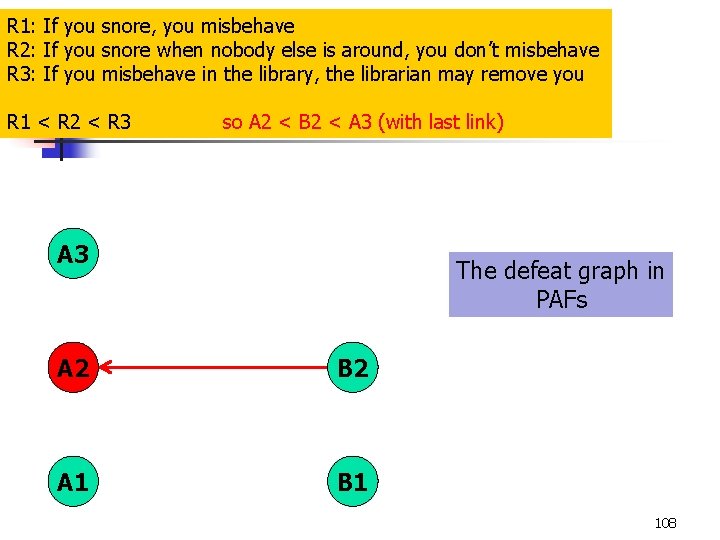

R1 < R2 < R3, so A2 < B2 < A3 (with last link)

The attack graph in PAFs

[Figure: attack graph over A1, A2, A3, B1, B2]

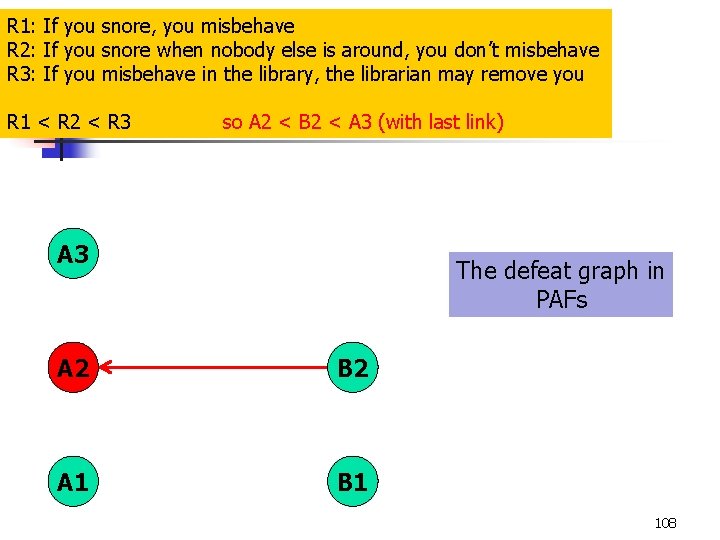

R1 < R2 < R3, so A2 < B2 < A3 (with last link)

The defeat graph in PAFs

[Figure: defeat graph over A1, A2, A3, B1, B2]

[Figure: the two arguments about John in full, as before]

PAFs don't recognize that B2's attacks on A2 and A3 are the same

[Figure: graph over A1, A2, A3, B1, B2]
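The difference between the two ways of turning attacks into defeats can be made concrete. In the sketch below the attack relation is an assumption reconstructed from the library example: A2 and B2 attack each other, and B2 attacks A3 on its subargument A2. The ordering A2 < B2 < A3 is taken from the text; the function names are hypothetical.

```python
ARGS = ["A1", "A2", "A3", "B1", "B2"]

# (attacker, attacked argument, subargument on which the attack takes place)
ATTACKS = [("A2", "B2", "B2"), ("B2", "A2", "A2"), ("B2", "A3", "A2")]

# (x, y) means x < y; only the pairs needed here are listed.
WEAKER = {("A2", "B2"), ("B2", "A3")}

def paf_defeats():
    # PAF: compare the attacker with the attacked argument as a whole.
    return {(x, y) for (x, y, _) in ATTACKS if (x, y) not in WEAKER}

def aspic_defeats():
    # ASPIC+: compare the attacker with the subargument that is actually attacked.
    return {(x, y) for (x, y, target) in ATTACKS if (x, target) not in WEAKER}

def grounded(args, defeats):
    """Least fixed point of the characteristic function over the defeat relation."""
    S = set()
    while True:
        nxt = {a for a in args
               if all(any((s, b) in defeats for s in S)
                      for b in args if (b, a) in defeats)}
        if nxt == S:
            return S
        S = nxt

PAF_GROUNDED = grounded(ARGS, paf_defeats())
ASPIC_GROUNDED = grounded(ARGS, aspic_defeats())
print("PAF:   ", sorted(PAF_GROUNDED))
print("ASPIC+:", sorted(ASPIC_GROUNDED))
```

Under these assumptions the PAF reading accepts A3 while rejecting its own subargument A2, so the extension is not closed under subarguments; the ASPIC+ reading rejects both.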

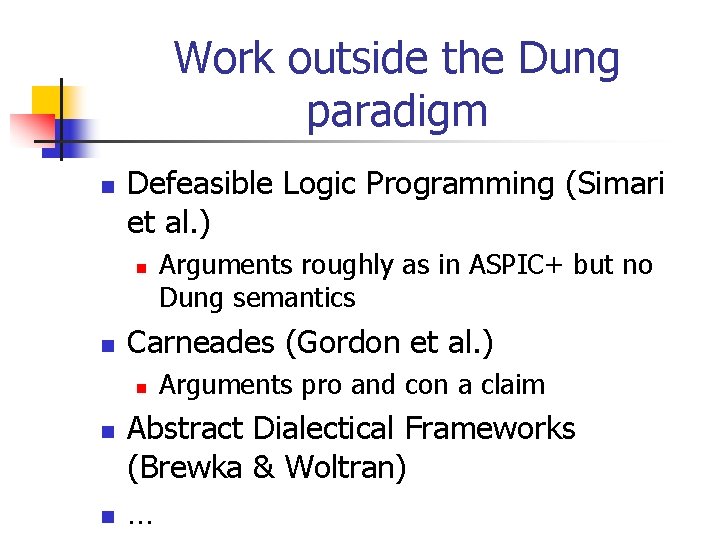

Work outside the Dung paradigm
- Defeasible Logic Programming (Simari et al.)
  - Arguments roughly as in ASPIC+ but no Dung semantics
- Carneades (Gordon et al.)
  - Arguments pro and con a claim
- Abstract Dialectical Frameworks (Brewka & Woltran)
- …

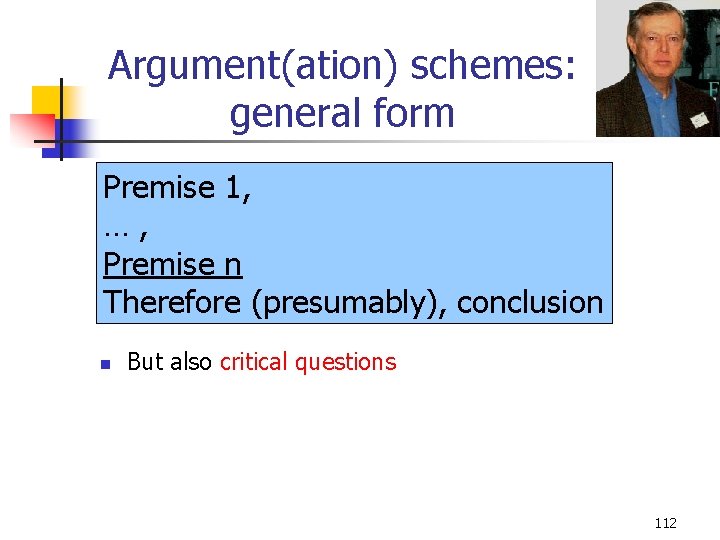

Argument(ation) schemes: general form
Premise 1, …, Premise n
Therefore (presumably), conclusion
- But also critical questions

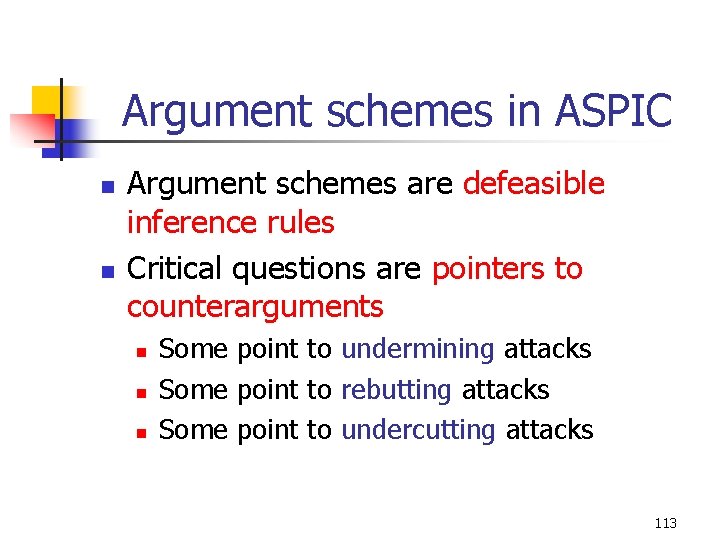

Argument schemes in ASPIC
- Argument schemes are defeasible inference rules
- Critical questions are pointers to counterarguments
  - Some point to undermining attacks
  - Some point to rebutting attacks
  - Some point to undercutting attacks

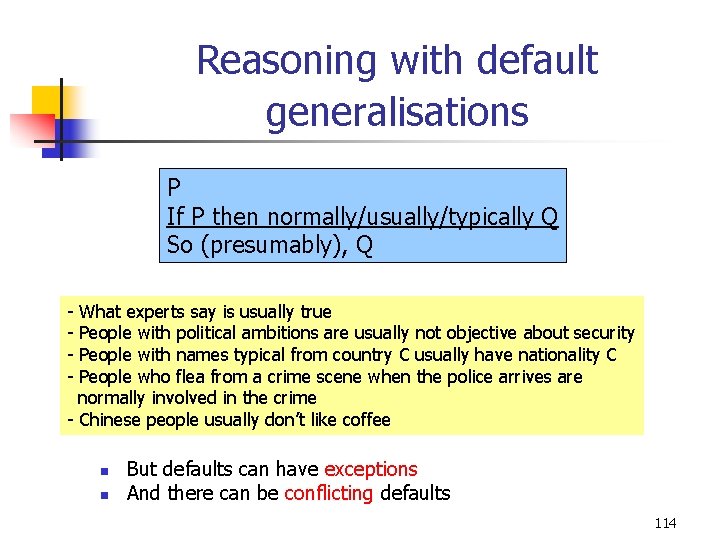

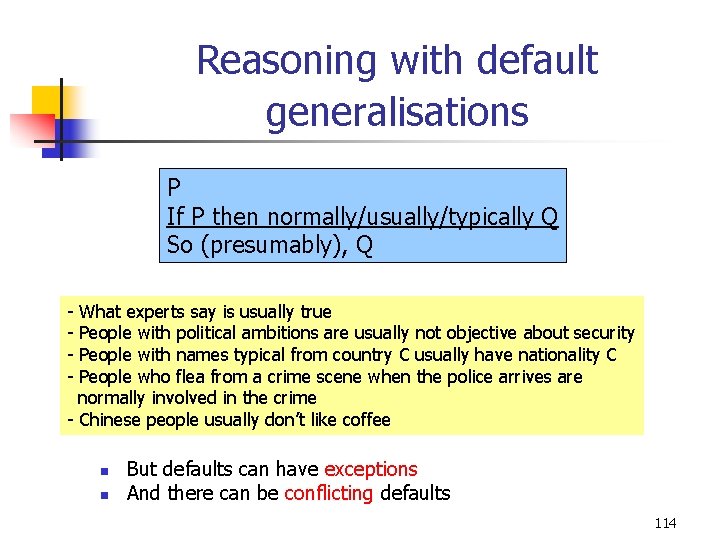

Reasoning with default generalisations
P
If P then normally/usually/typically Q
So (presumably), Q
- What experts say is usually true
- People with political ambitions are usually not objective about security
- People with names typical of country C usually have nationality C
- People who flee from a crime scene when the police arrive are normally involved in the crime
- Chinese people usually don’t like coffee
- But defaults can have exceptions
- And there can be conflicting defaults

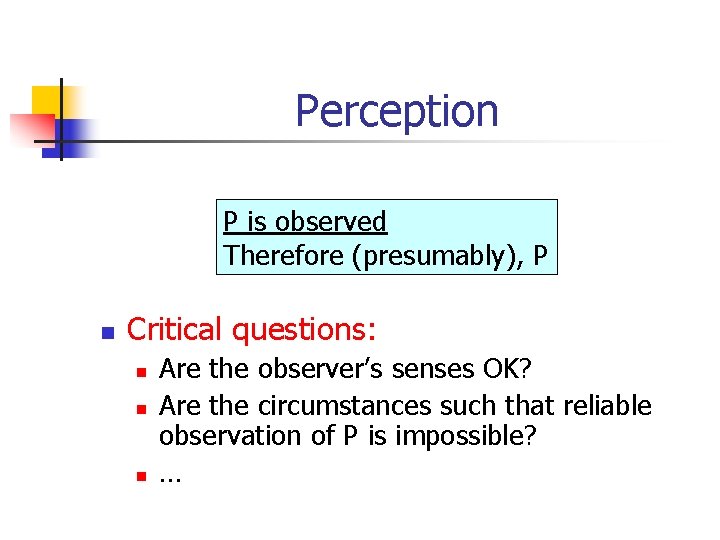

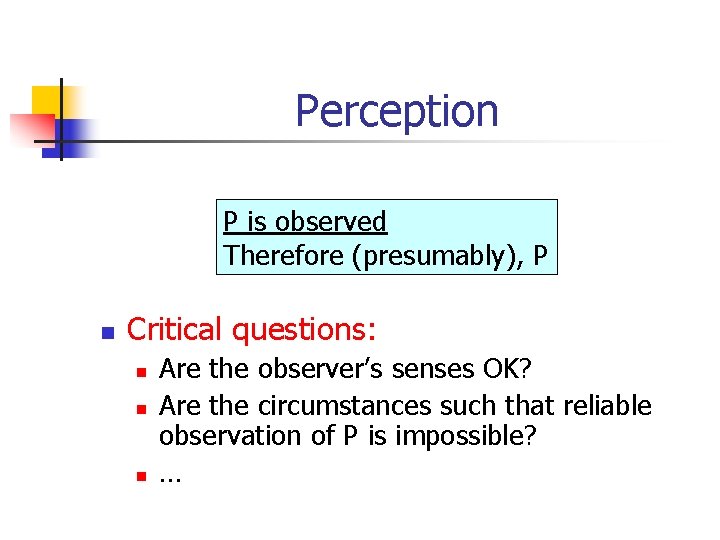

Perception
P is observed
Therefore (presumably), P
- Critical questions:
  - Are the observer’s senses OK?
  - Are the circumstances such that reliable observation of P is impossible?
  - …

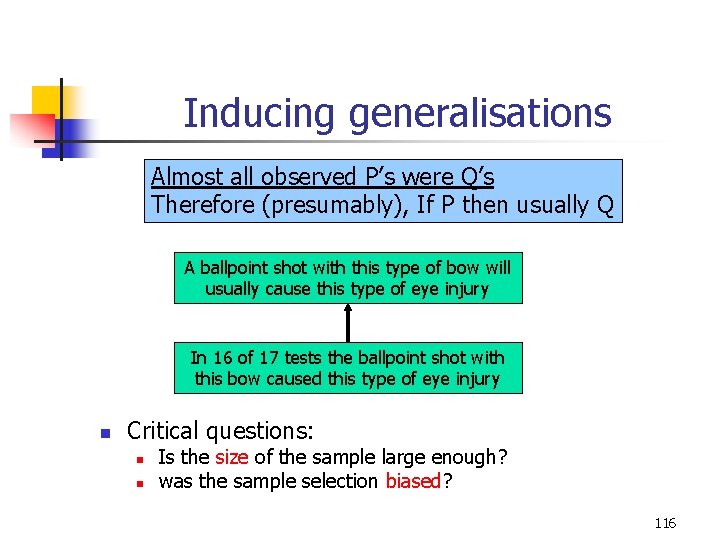

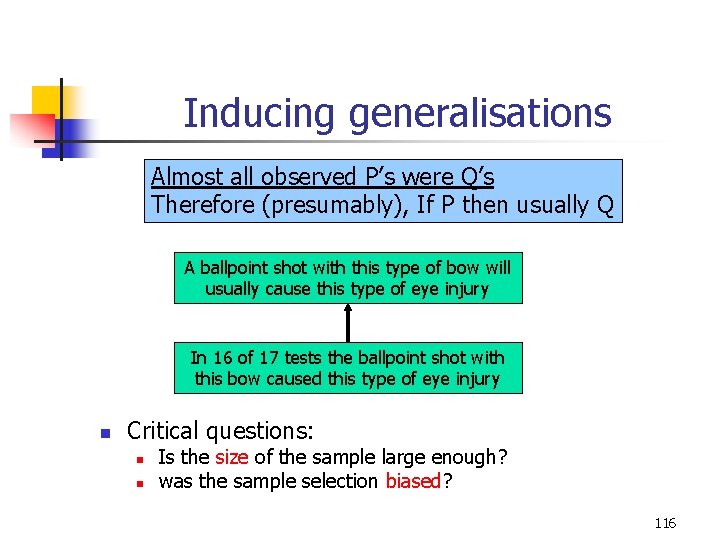

Inducing generalisations
Almost all observed P’s were Q’s
Therefore (presumably), If P then usually Q
Example:
In 16 of 17 tests the ballpoint shot with this bow caused this type of eye injury
Therefore (presumably), a ballpoint shot with this type of bow will usually cause this type of eye injury
- Critical questions:
  - Is the size of the sample large enough?
  - Was the sample selection biased?

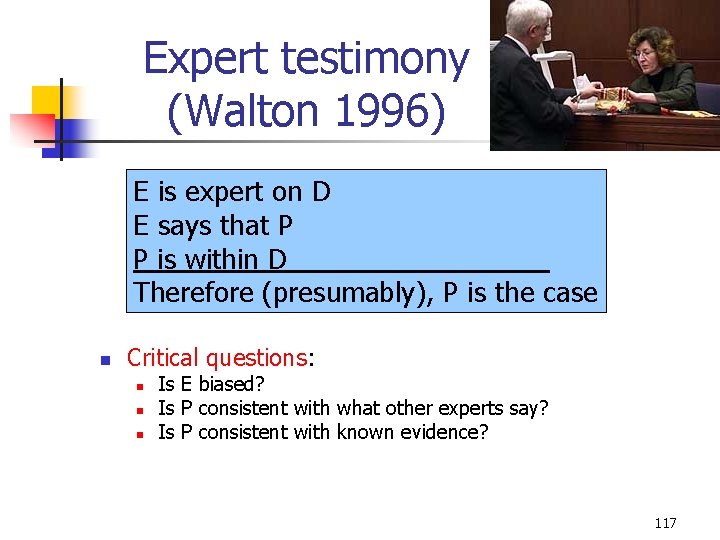

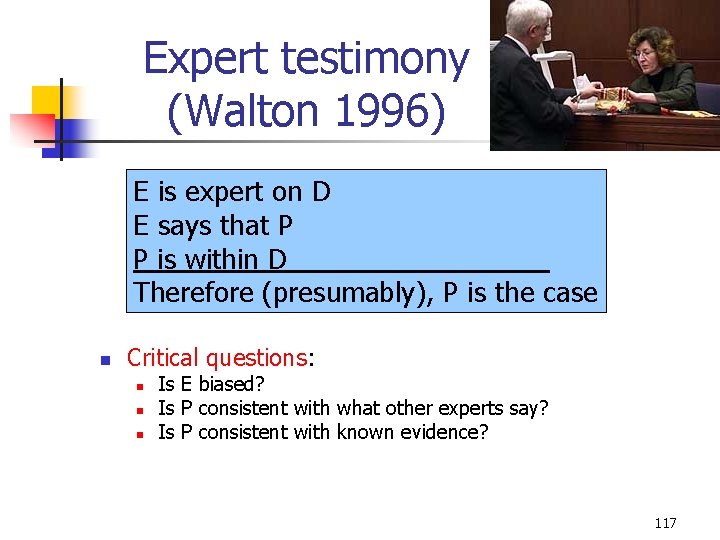

Expert testimony (Walton 1996)
E is expert on D
E says that P
P is within D
Therefore (presumably), P is the case
- Critical questions:
  - Is E biased?
  - Is P consistent with what other experts say?
  - Is P consistent with known evidence?
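A scheme with its critical questions can be represented as a small data structure. The sketch below is one possible encoding, not a fixed format: the class names `Scheme` and `CriticalQuestion` are hypothetical, and the attack type attached to each question is an assumed classification, since the slide does not say which question points to which kind of attack.

```python
from dataclasses import dataclass, field


@dataclass
class CriticalQuestion:
    text: str
    attack_type: str  # "undermine", "rebut" or "undercut" (assumed classification)


@dataclass
class Scheme:
    name: str
    premises: list
    conclusion: str
    questions: list = field(default_factory=list)

    def instantiate(self, **bindings):
        """Fill the scheme variables and return (premises, conclusion)."""
        premises = [p.format(**bindings) for p in self.premises]
        return premises, self.conclusion.format(**bindings)

    def challenges(self, **bindings):
        """Return the instantiated critical questions with their attack types."""
        return [(q.text.format(**bindings), q.attack_type) for q in self.questions]


EXPERT_TESTIMONY = Scheme(
    name="expert testimony",
    premises=["{E} is expert on {D}", "{E} says that {P}", "{P} is within {D}"],
    conclusion="{P} is the case",
    questions=[
        # The attack types below are assumptions made for illustration.
        CriticalQuestion("Is {E} biased?", "undercut"),
        CriticalQuestion("Is {P} consistent with what other experts say?", "rebut"),
        CriticalQuestion("Is {P} consistent with known evidence?", "rebut"),
    ],
)

premises, conclusion = EXPERT_TESTIMONY.instantiate(
    E="E", D="eye injuries", P="this type of injury is usually caused by a fall")
print(premises, conclusion)
```

Treating the scheme as data keeps the defeasible rule and its pointers to counterarguments in one place, so a reasoner could look up which attacks to try against an instantiated argument.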

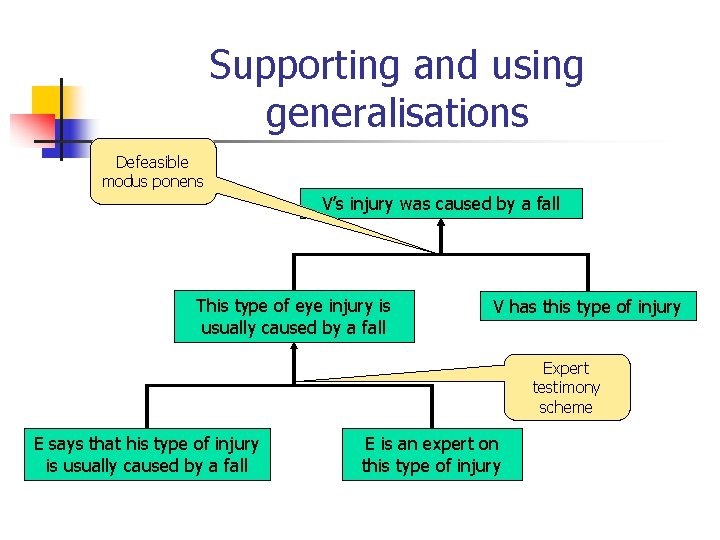

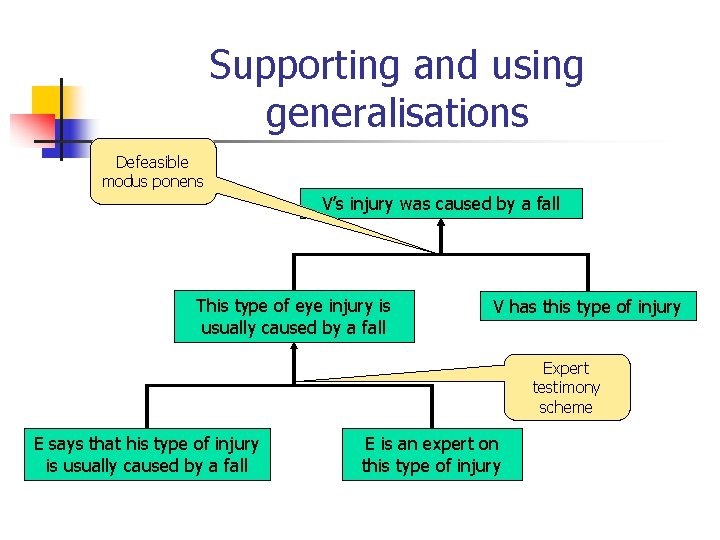

Supporting and using generalisations
V’s injury was caused by a fall (by defeasible modus ponens)
- V has this type of injury
- This type of eye injury is usually caused by a fall (by the expert testimony scheme)
  - E says that this type of injury is usually caused by a fall
  - E is an expert on this type of injury

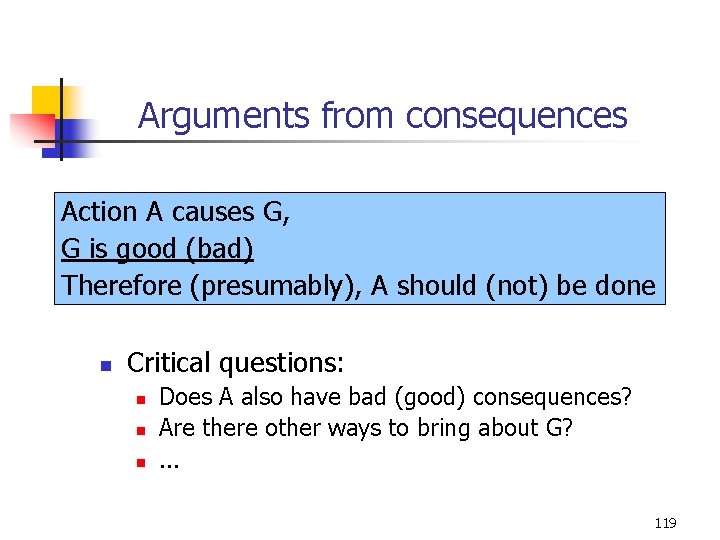

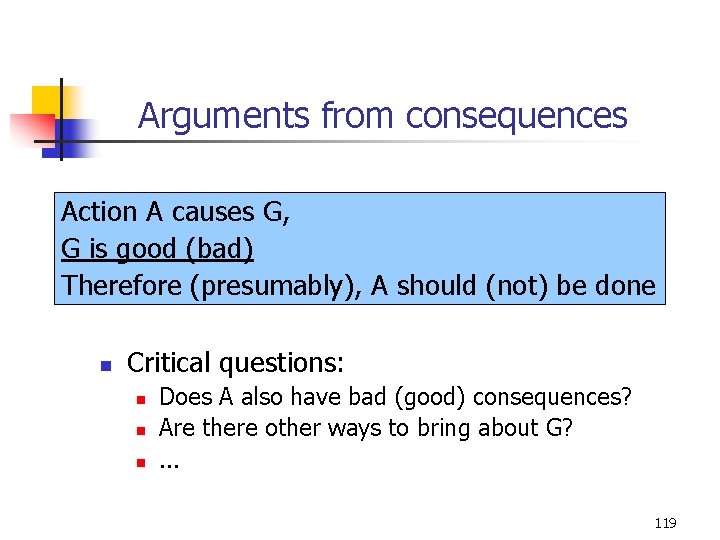

Arguments from consequences
Action A causes G
G is good (bad)
Therefore (presumably), A should (not) be done
- Critical questions:
  - Does A also have bad (good) consequences?
  - Are there other ways to bring about G?
  - ...

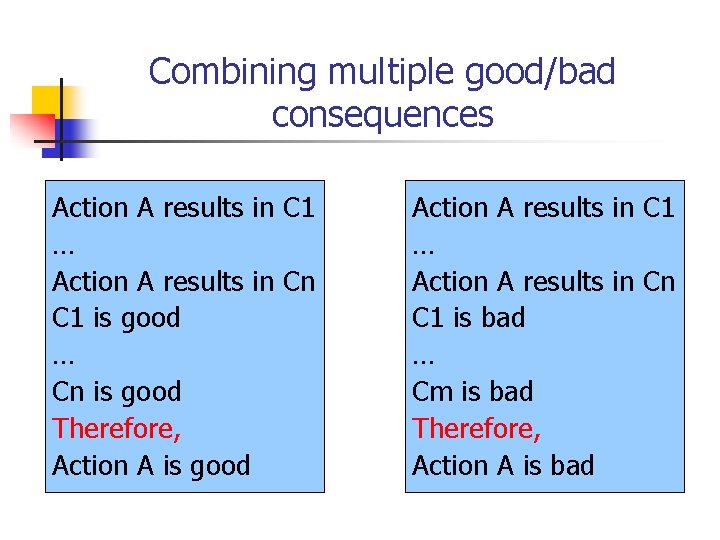

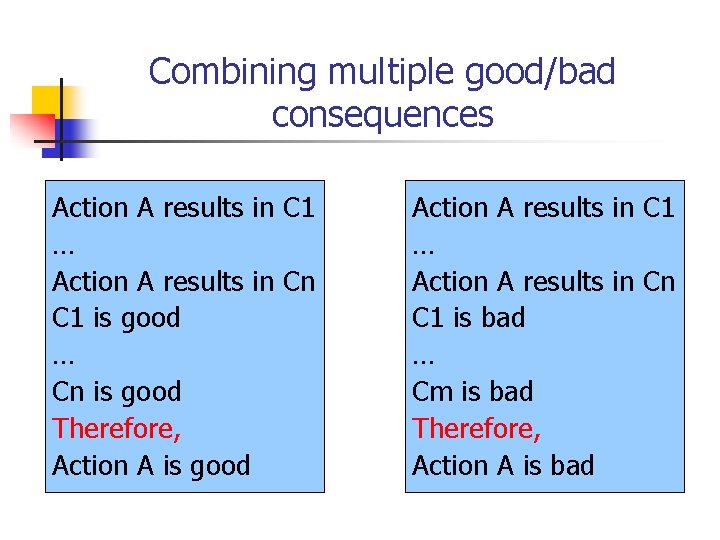

Combining multiple good/bad consequences
Action A results in C1 … Action A results in Cn
C1 is good … Cn is good
Therefore, Action A is good

Action A results in C1 … Action A results in Cm
C1 is bad … Cm is bad
Therefore, Action A is bad

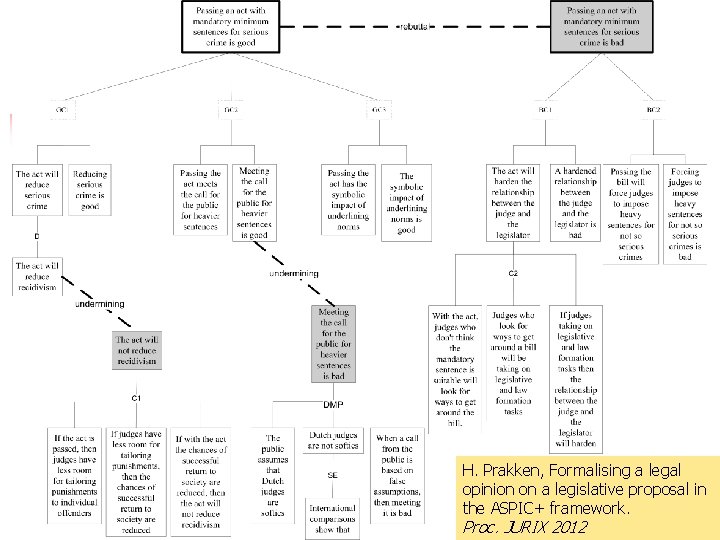

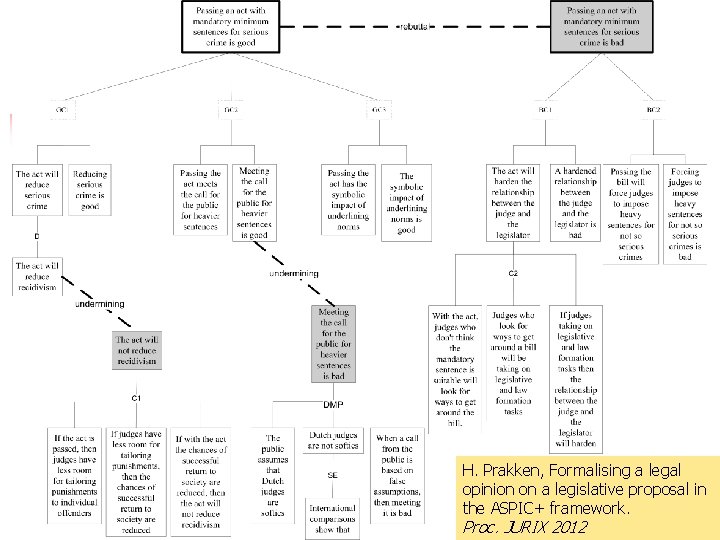

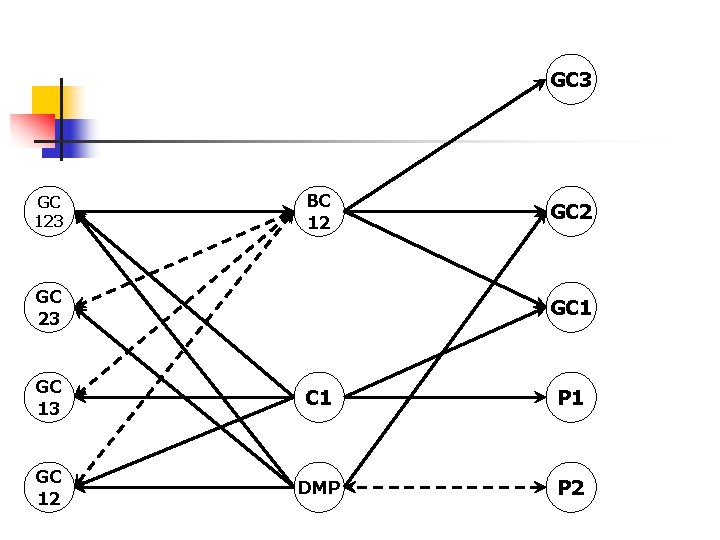

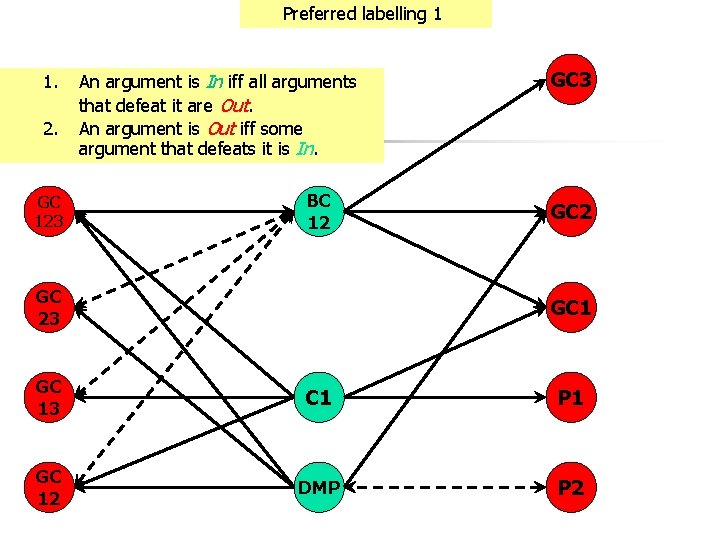

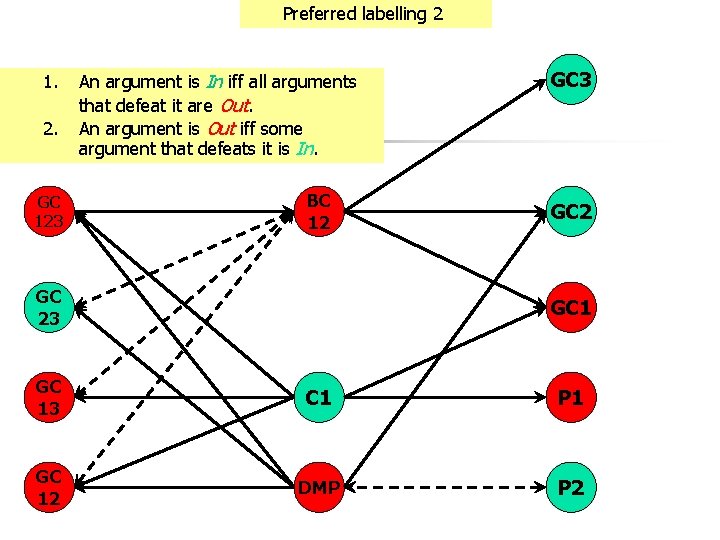

H. Prakken, Formalising a legal opinion on a legislative proposal in the ASPIC+ framework. Proc. JURIX 2012

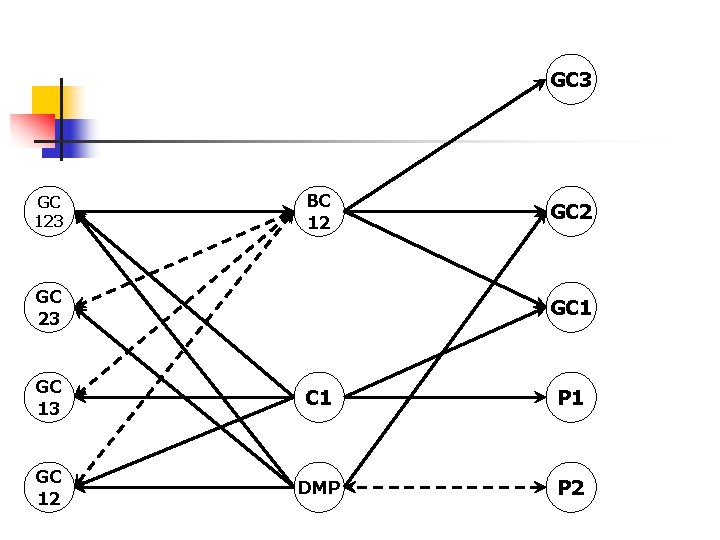

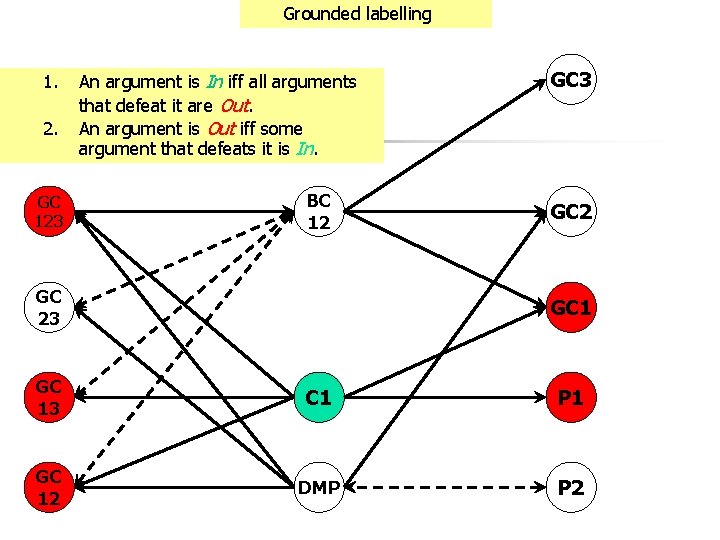

[Figure: defeat graph over the arguments GC3, GC123, BC12, GC23, GC2, GC13, C1, P1, GC12, DMP, P2]

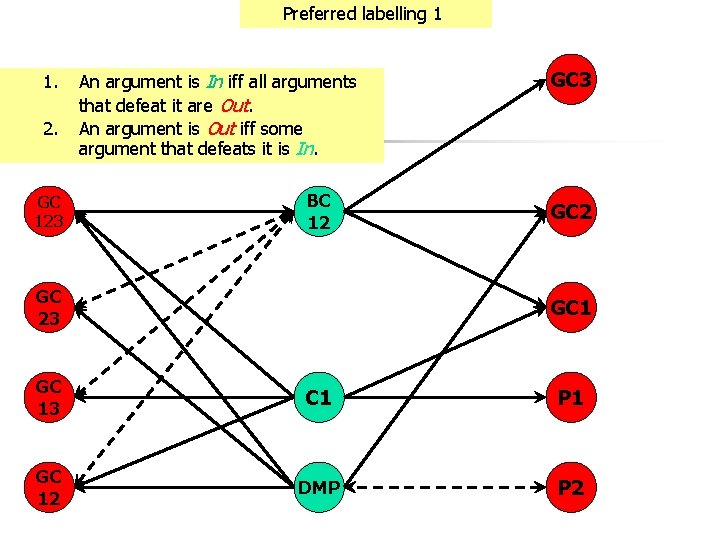

Preferred labelling 1
1. An argument is In iff all arguments that defeat it are Out.
2. An argument is Out iff some argument that defeats it is In.
[Figure: labelled defeat graph over GC123, BC12, GC23, GC2, GC13, C1, P1, GC12, DMP, P2]

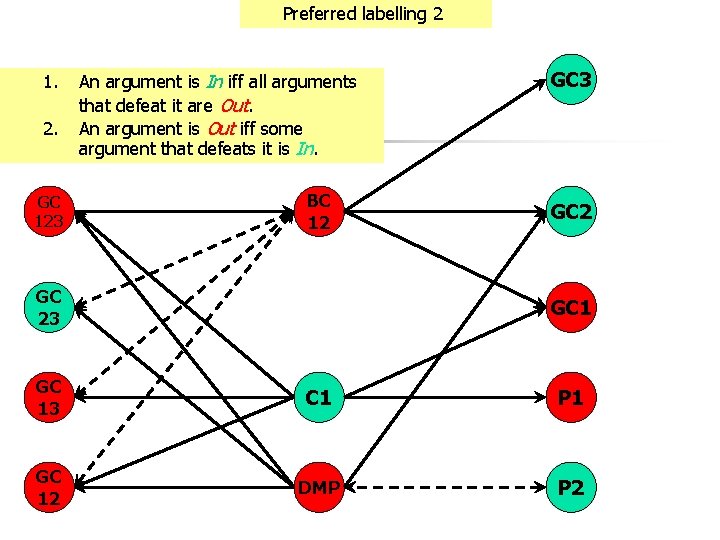

Preferred labelling 2
1. An argument is In iff all arguments that defeat it are Out.
2. An argument is Out iff some argument that defeats it is In.
[Figure: labelled defeat graph over GC123, BC12, GC23, GC2, GC13, C1, P1, GC12, DMP, P2]

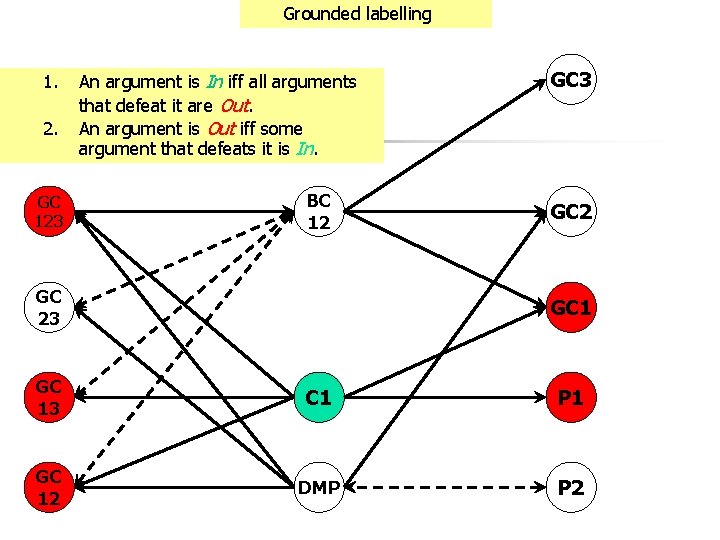

Grounded labelling
1. An argument is In iff all arguments that defeat it are Out.
2. An argument is Out iff some argument that defeats it is In.
[Figure: labelled defeat graph over GC123, BC12, GC23, GC2, GC13, C1, P1, GC12, DMP, P2]

Summary
- A formal metatheory of structured argumentation is emerging
- Better understanding needed of philosophical underpinnings and practical applicability
  - Not all argumentation can be naturally reduced to plausible reasoning
  - The ‘one base logic’ approach is only suitable for plausible reasoning
- Important research issues:
  - Aggregation of arguments
  - Relation with probability theory

Interaction
- Argument games verify status of argument (or statement) given a single theory (knowledge base)
- But real argumentation dialogues have
  - Distributed information
  - Dynamics
  - Real players!
  - Richer communication languages

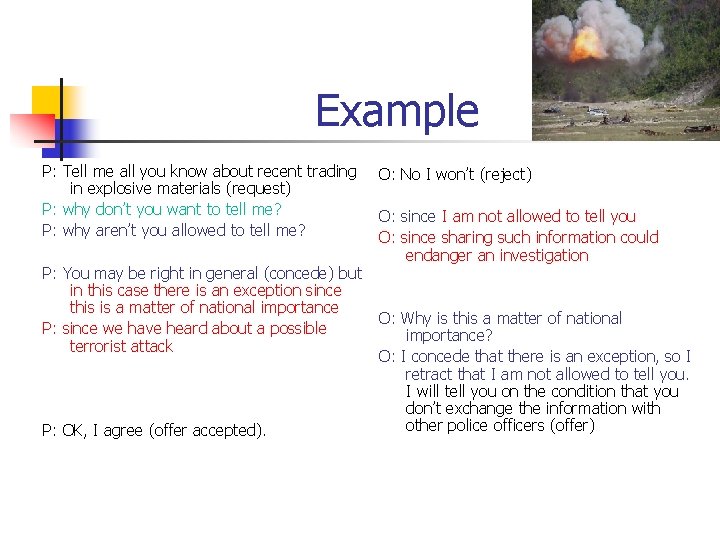

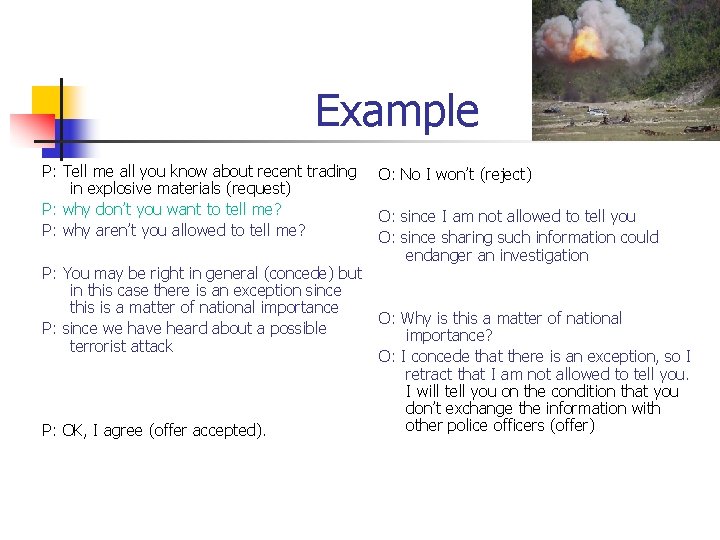

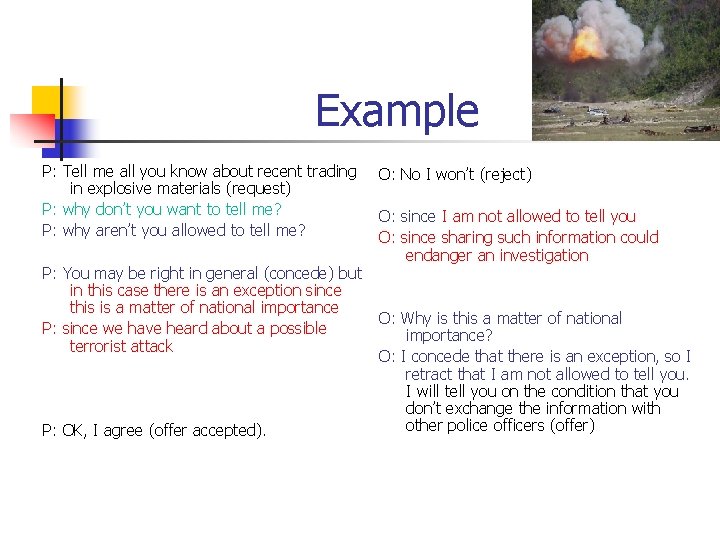

Example
P: Tell me all you know about recent trading in explosive materials (request)
O: No I won’t (reject)
P: Why don’t you want to tell me?
O: Since I am not allowed to tell you
P: Why aren’t you allowed to tell me?
O: Since sharing such information could endanger an investigation
P: You may be right in general (concede) but in this case there is an exception since this is a matter of national importance
O: Why is this a matter of national importance?
P: Since we have heard about a possible terrorist attack
O: I concede that there is an exception, so I retract that I am not allowed to tell you. I will tell you on the condition that you don’t exchange the information with other police officers (offer)
P: OK, I agree (offer accepted).


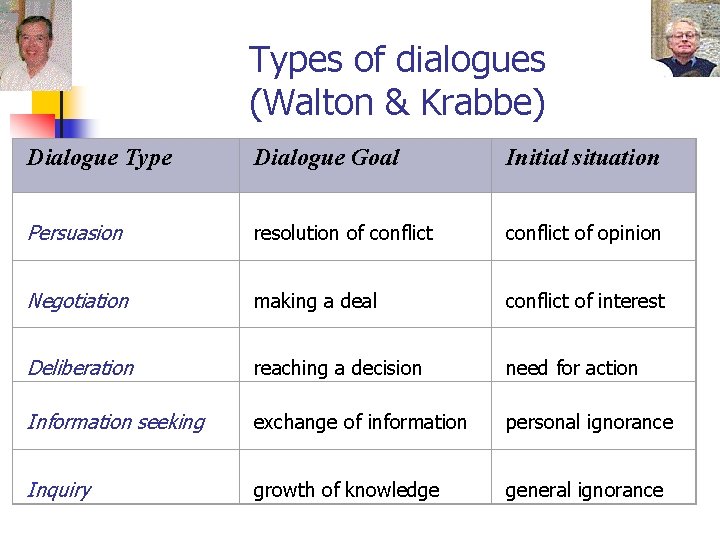

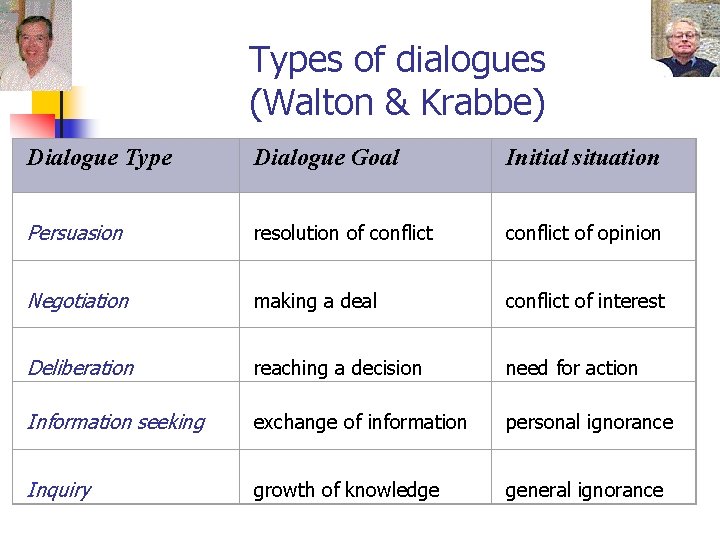

Types of dialogues (Walton & Krabbe)

| Dialogue type | Dialogue goal | Initial situation |
|---|---|---|
| Persuasion | resolution of conflict | conflict of opinion |
| Negotiation | making a deal | conflict of interest |
| Deliberation | reaching a decision | need for action |
| Information seeking | exchange of information | personal ignorance |
| Inquiry | growth of knowledge | general ignorance |

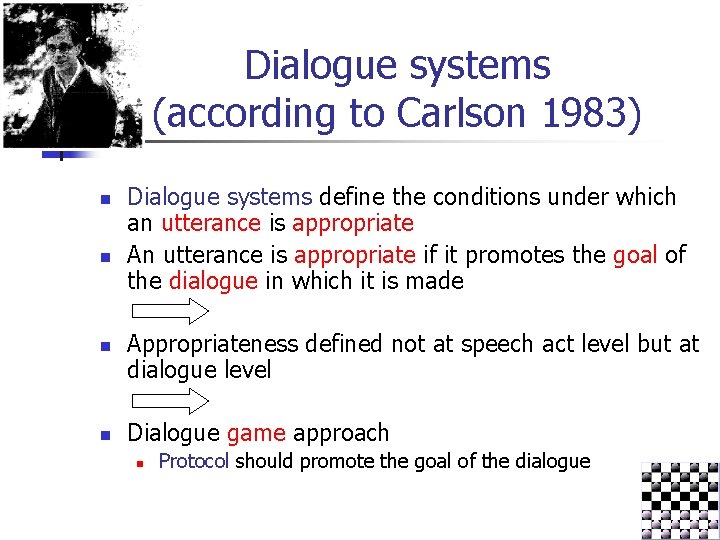

Dialogue systems (according to Carlson 1983)
- Dialogue systems define the conditions under which an utterance is appropriate
- An utterance is appropriate if it promotes the goal of the dialogue in which it is made
- Appropriateness defined not at speech act level but at dialogue level
- Dialogue game approach
  - Protocol should promote the goal of the dialogue

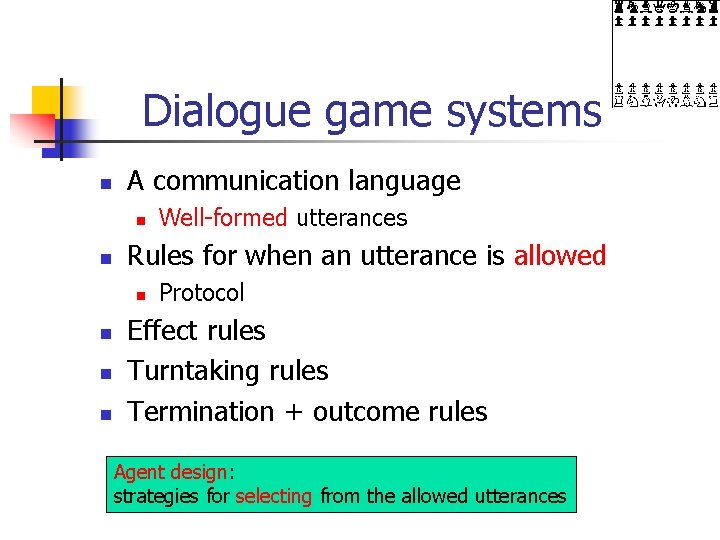

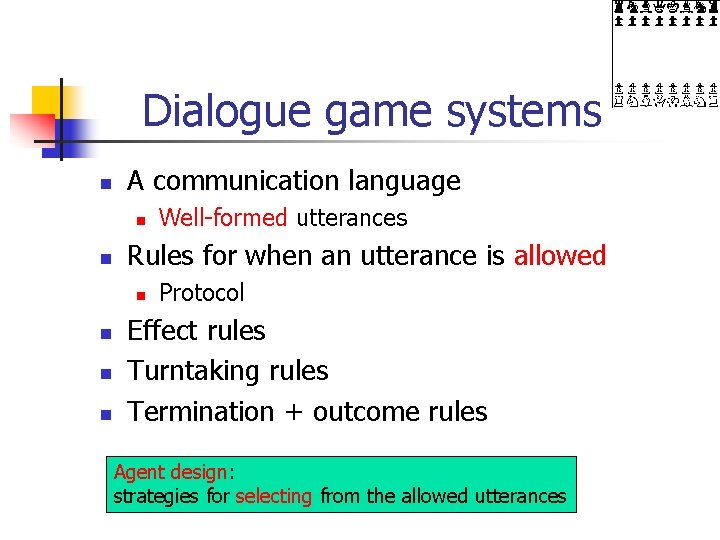

Dialogue game systems
- A communication language
  - Well-formed utterances
- Rules for when an utterance is allowed
  - Protocol
- Effect rules
- Turntaking rules
- Termination + outcome rules
- Agent design: strategies for selecting from the allowed utterances

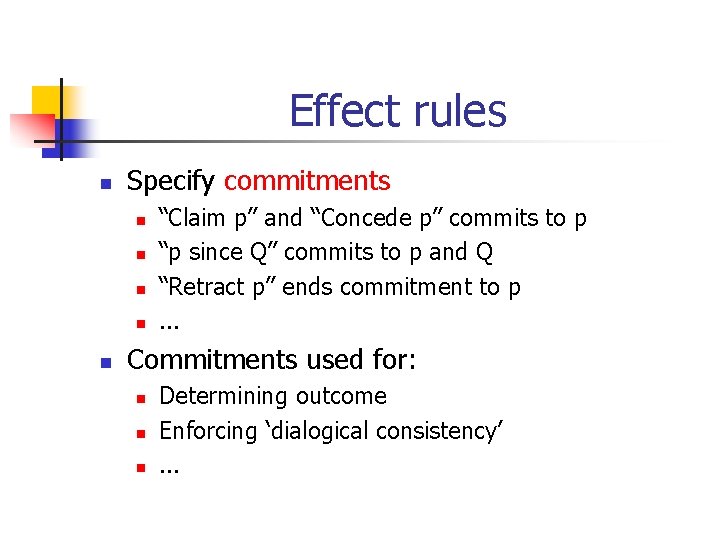

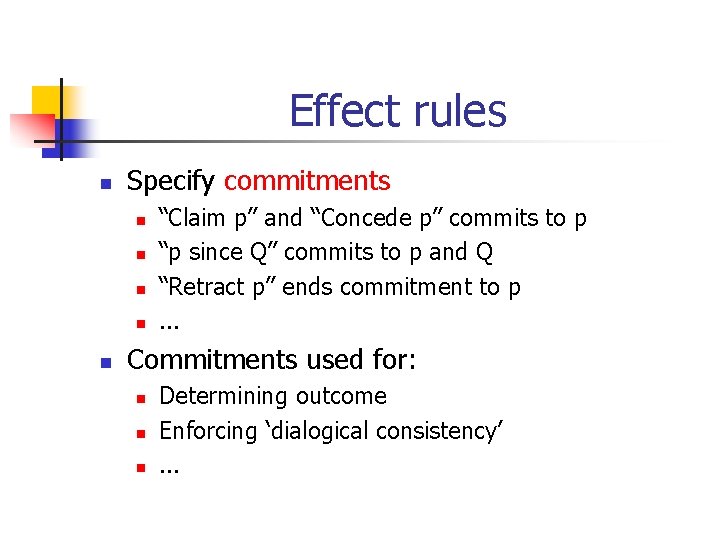

Effect rules
- Specify commitments
  - “Claim p” and “Concede p” commits to p
  - “p since Q” commits to p and Q
  - “Retract p” ends commitment to p
  - ...
- Commitments used for:
  - Determining outcome
  - Enforcing ‘dialogical consistency’
  - ...
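The effect rules listed on this slide translate directly into updates of a commitment store. The following is a minimal sketch; the class name `CommitmentStore`, the act labels and the treatment of all other speech acts as having no effect are assumptions made for illustration.

```python
class CommitmentStore:
    """Tracks each speaker's publicly declared commitments."""

    def __init__(self):
        self.commitments = {}  # speaker -> set of propositions

    def update(self, speaker, act, p, premises=()):
        store = self.commitments.setdefault(speaker, set())
        if act in ("claim", "concede"):
            store.add(p)            # "Claim p" and "Concede p" commit to p
        elif act == "since":
            store.add(p)            # "p since Q" commits to p ...
            store.update(premises)  # ... and to every element of Q
        elif act == "retract":
            store.discard(p)        # "Retract p" ends commitment to p
        # Assumption: any other act (e.g. "why") leaves commitments unchanged.
        return store


cs = CommitmentStore()
cs.update("O", "claim", "not allowed to tell")
cs.update("P", "why", "not allowed to tell")
cs.update("O", "since", "not allowed to tell",
          ["telling endangers investigation",
           "what endangers an investigation is not allowed"])
cs.update("O", "retract", "not allowed to tell")
print(cs.commitments)
```

After the retraction O is still committed to the two premises, which is the kind of state a protocol could inspect when determining the outcome or enforcing dialogical consistency.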

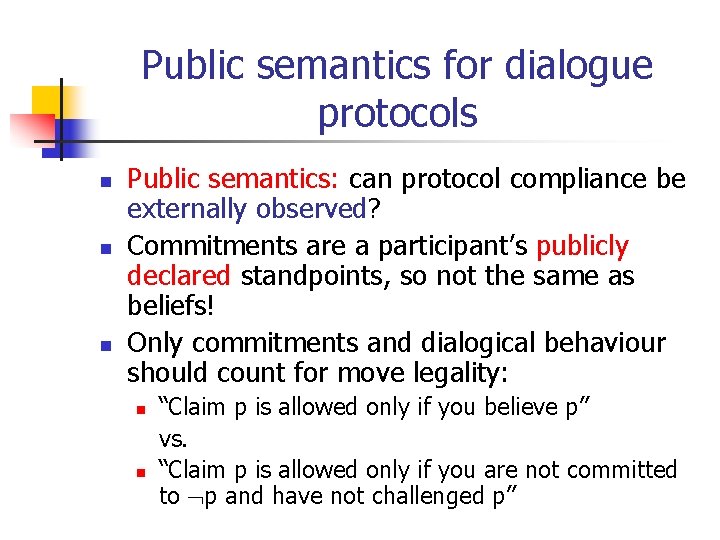

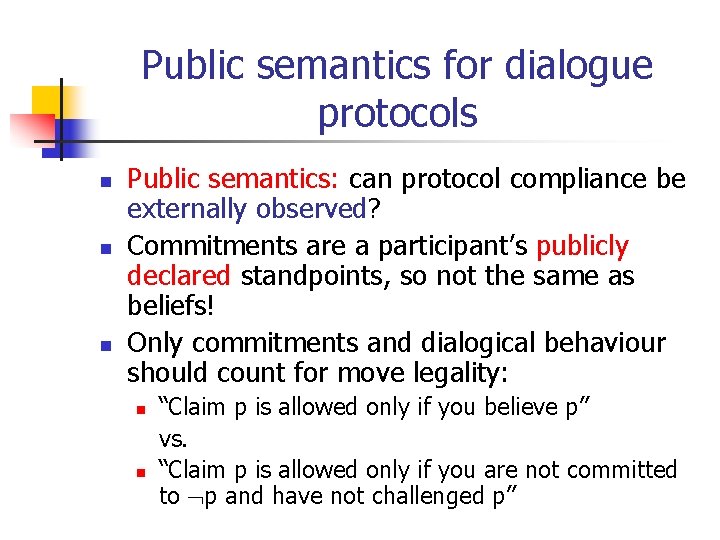

Public semantics for dialogue protocols
- Public semantics: can protocol compliance be externally observed?
- Commitments are a participant’s publicly declared standpoints, so not the same as beliefs!
- Only commitments and dialogical behaviour should count for move legality:
  - “Claim p is allowed only if you believe p” vs.
  - “Claim p is allowed only if you are not committed to p and have not challenged p”
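The second legality rule can be checked by an outside observer because it only mentions commitments and earlier moves. A hedged sketch, in which the function name `claim_is_legal` and the encoding of the dialogue history as `(speaker, act, content)` tuples are assumptions:

```python
def claim_is_legal(speaker, p, commitments, history):
    """Public rule: 'claim p' is allowed only if the speaker is not
    committed to p and has not challenged p earlier in the dialogue.

    commitments: dict mapping speaker -> set of propositions
    history: list of (speaker, act, content) tuples observed so far
    """
    committed = p in commitments.get(speaker, set())
    challenged = (speaker, "why", p) in history
    return not committed and not challenged


commitments = {"P": {"q"}, "O": set()}
history = [("P", "claim", "q"), ("O", "why", "q")]

print(claim_is_legal("P", "q", commitments, history))  # P is already committed to q
print(claim_is_legal("O", "q", commitments, history))  # O has challenged q
print(claim_is_legal("O", "r", commitments, history))  # nothing blocks this claim
```

The belief-based rule cannot be written this way: it would need access to the speaker's private beliefs, which neither the commitments nor the history contain.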

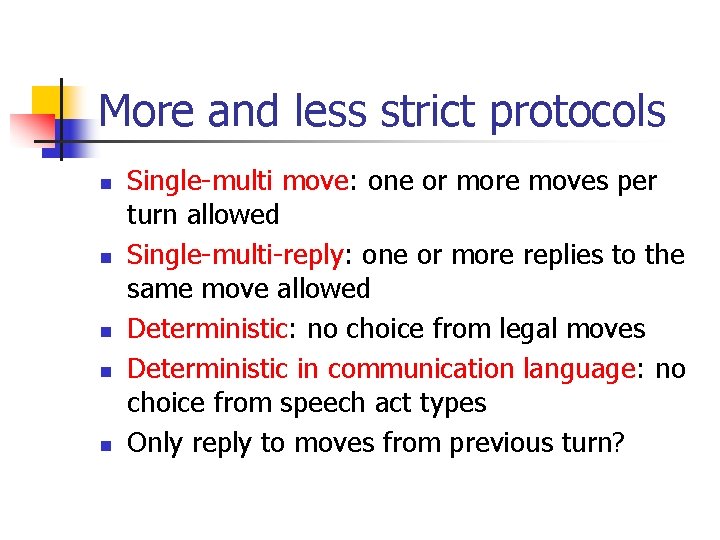

More and less strict protocols
- Single-multi move: one or more moves per turn allowed
- Single-multi-reply: one or more replies to the same move allowed
- Deterministic: no choice from legal moves
- Deterministic in communication language: no choice from speech act types
- Only reply to moves from previous turn?

Some properties that can be studied
- Correspondence with players’ beliefs
  - If union of beliefs implies p, can/will agreement on p result?
  - If players agree on p, does union of beliefs imply p?
  - Disregarding vs. assuming player strategies

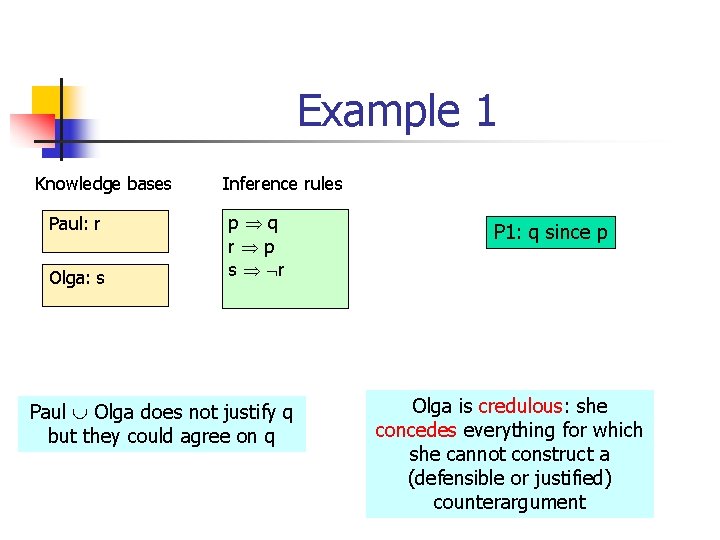

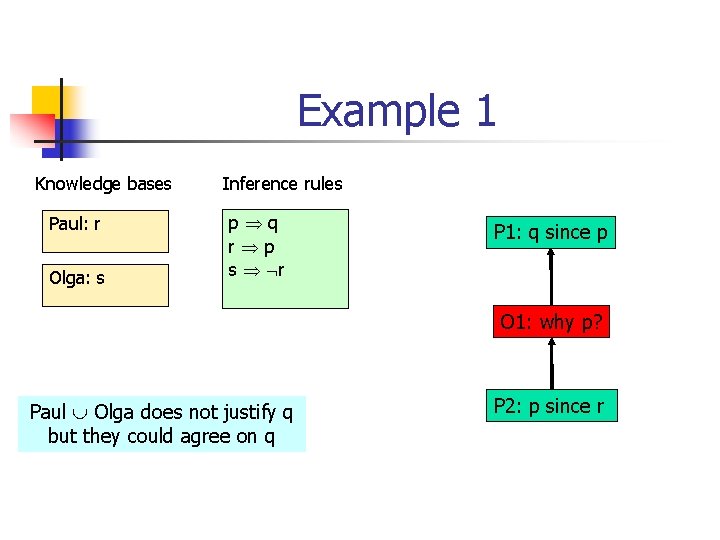

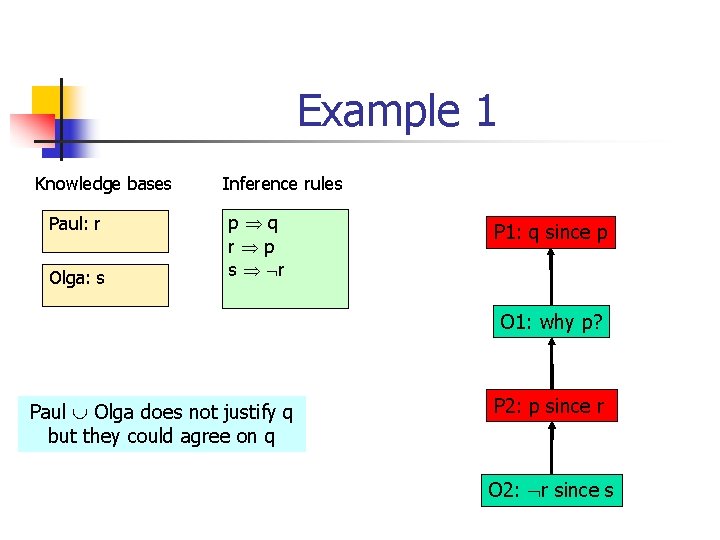

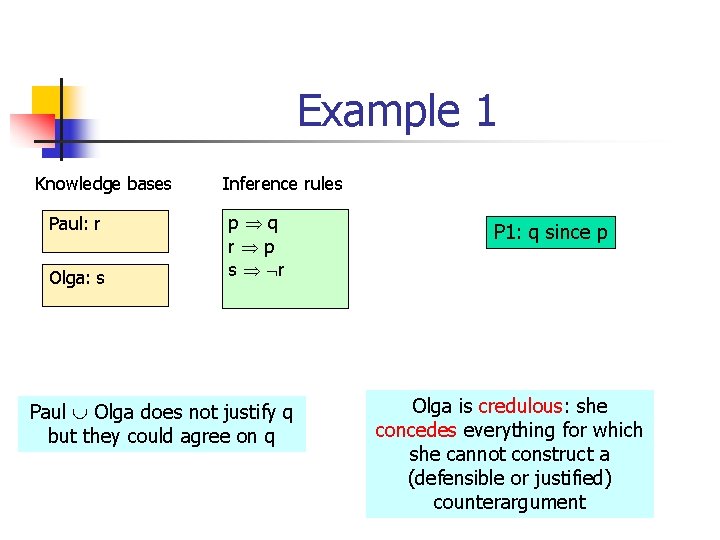

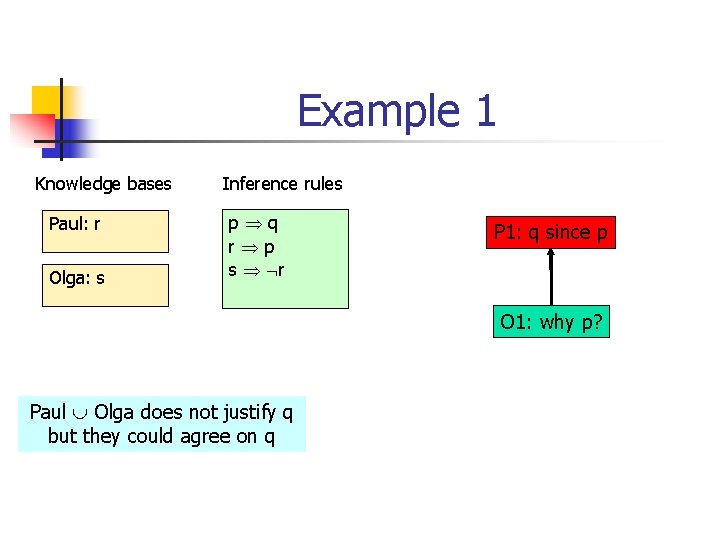

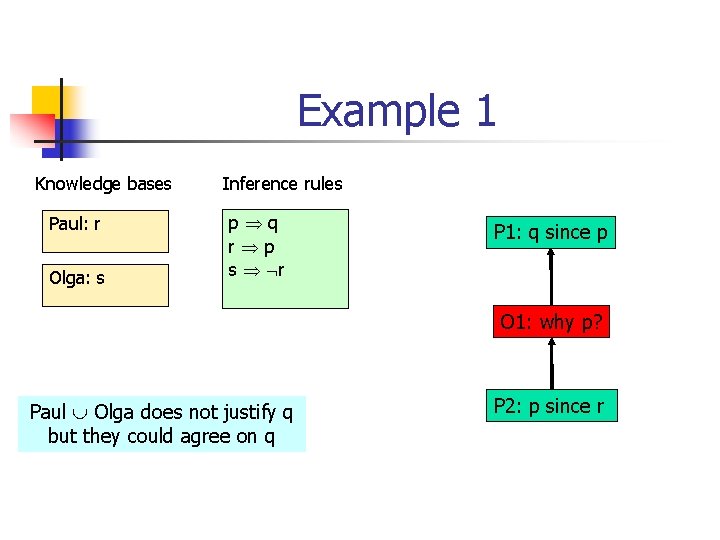

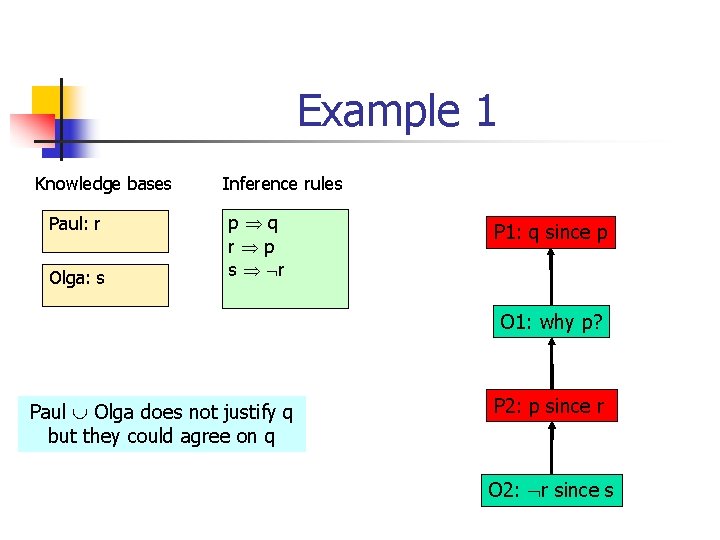

Example 1 Knowledge bases Paul: r Olga: s Inference rules p q r p s r Paul Olga does not justify q but they could agree on q P 1: q since p Olga is credulous: she concedes everything for which she cannot construct a (defensible or justified) counterargument

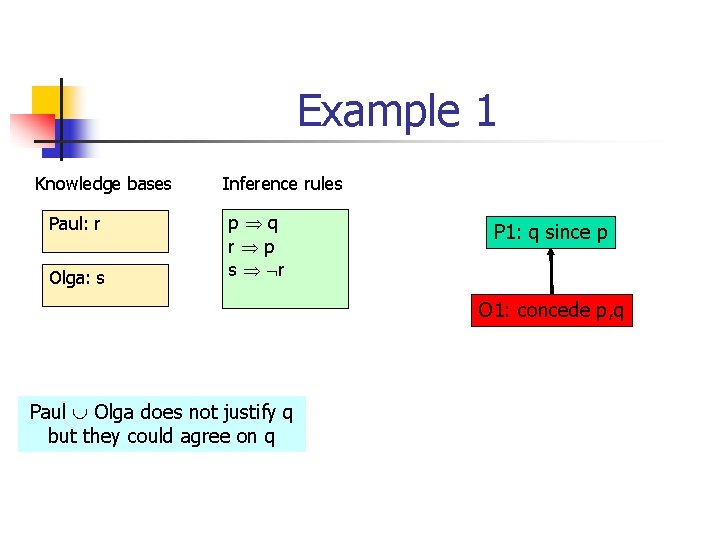

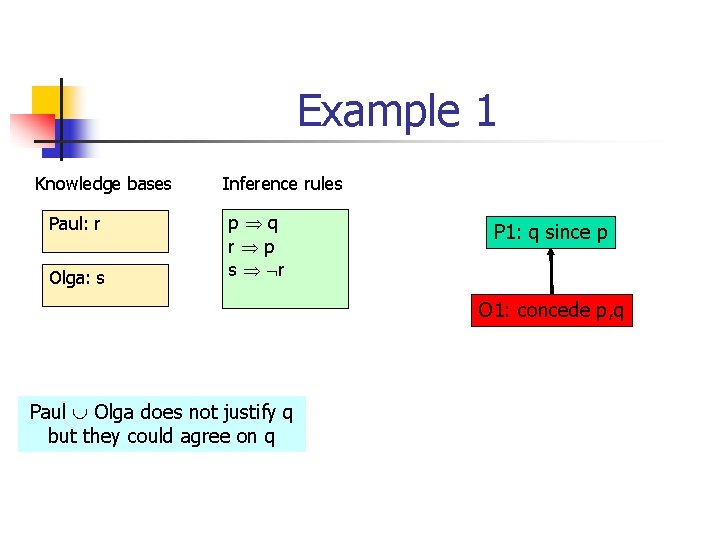

Example 1 Knowledge bases Paul: r Olga: s Inference rules p q r p s r P 1: q since p O 1: concede p, q Paul Olga does not justify q but they could agree on q

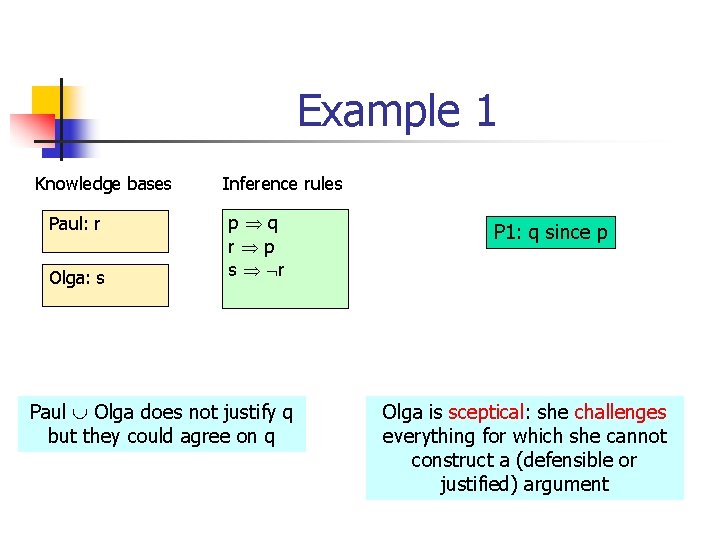

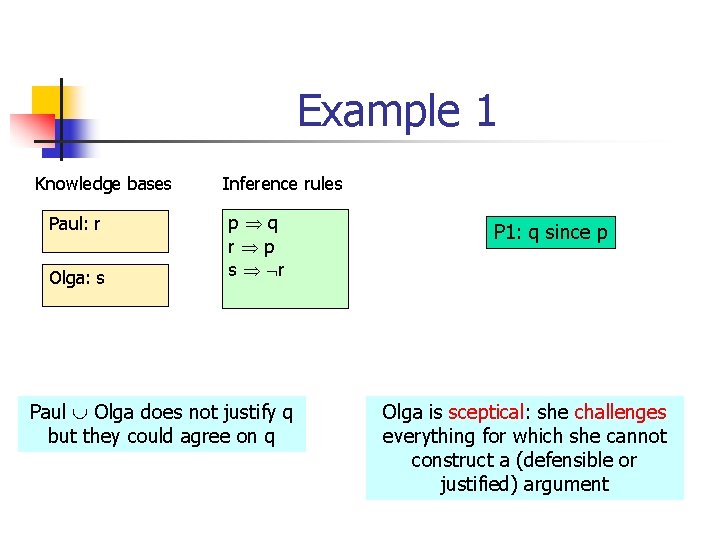

Example 1 Knowledge bases Paul: r Olga: s Inference rules p q r p s r Paul Olga does not justify q but they could agree on q P 1: q since p Olga is sceptical: she challenges everything for which she cannot construct a (defensible or justified) argument

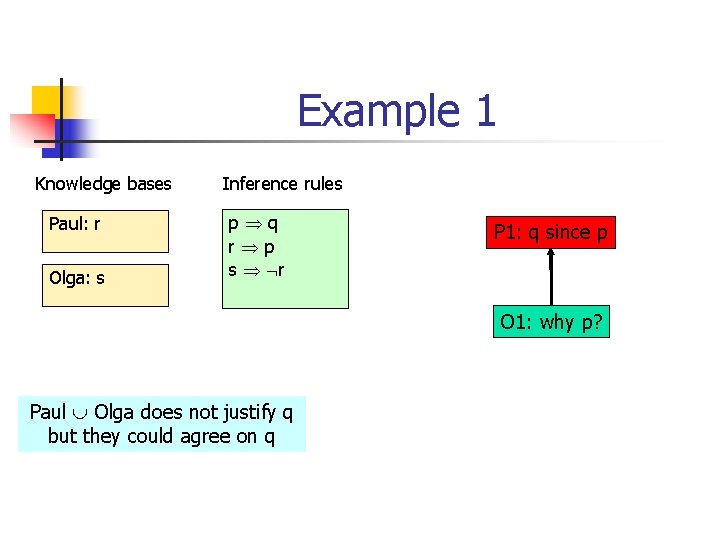

Example 1 Knowledge bases Paul: r Olga: s Inference rules p q r p s r P 1: q since p O 1: why p? Paul Olga does not justify q but they could agree on q

Example 1 Knowledge bases Paul: r Olga: s Inference rules p q r p s r P 1: q since p O 1: why p? Paul Olga does not justify q but they could agree on q P 2: p since r

Example 1 Knowledge bases Paul: r Olga: s Inference rules p q r p s r P 1: q since p O 1: why p? Paul Olga does not justify q but they could agree on q P 2: p since r O 2: r since s
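Why the joint knowledge base does not straightforwardly justify q can be seen with a naive forward-chaining sketch. It assumes that the arrows and the negation sign lost from this slide read p ⇒ q, r ⇒ p and s ⇒ ¬r, writes ¬r as `-r`, and ignores preferences and argument status altogether; `closure` and `conflicts` are illustrative names.

```python
# Assumed reading of the inference rules: p => q, r => p, s => not-r.
RULES = [({"p"}, "q"), ({"r"}, "p"), ({"s"}, "-r")]


def closure(facts, rules):
    """Apply the rules until nothing new can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for body, head in rules:
            if body <= derived and head not in derived:
                derived.add(head)
                changed = True
    return derived


def conflicts(literals):
    """Atoms that occur both positively and negated."""
    return {x for x in literals if not x.startswith("-") and "-" + x in literals}


paul, olga = {"r"}, {"s"}

print(sorted(closure(paul, RULES)))          # Paul alone derives p and q
print(sorted(closure(olga, RULES)))          # Olga alone derives -r
print(conflicts(closure(paul | olga, RULES)))  # the union contains r and -r
```

Paul's only route to q runs through r, and in the union r is contradicted by Olga's argument for ¬r. Whether q ends up justified then depends on the argumentation semantics and preferences, which this sketch does not model.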

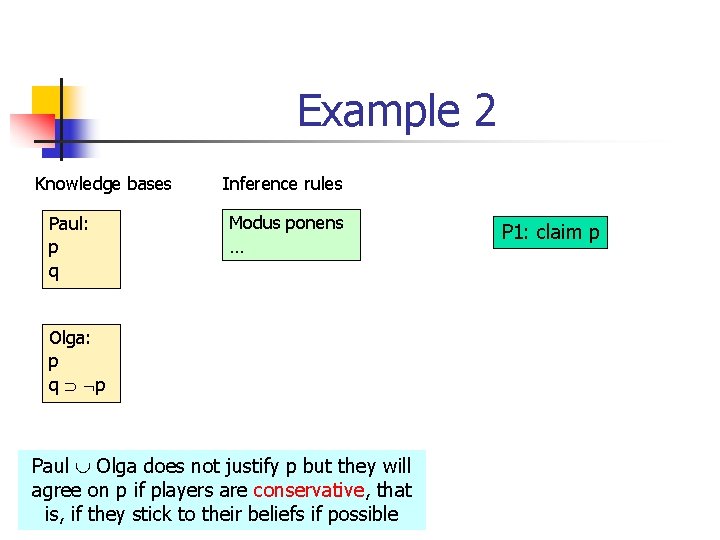

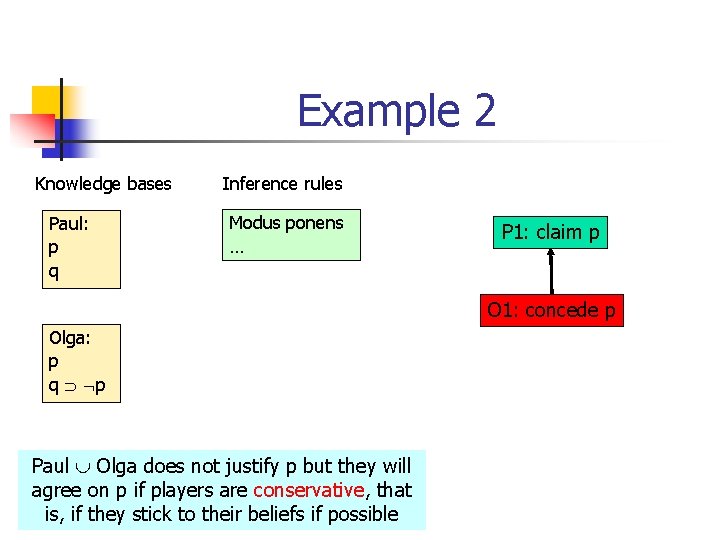

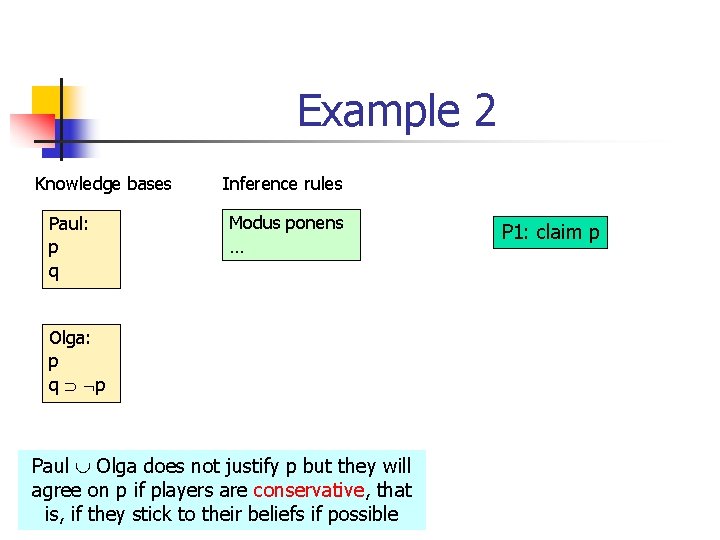

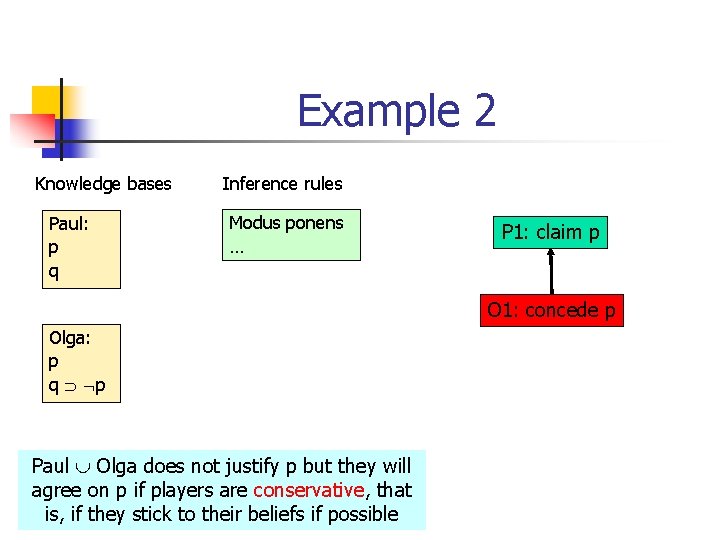

Example 2
Knowledge bases: Paul: p, q; Olga: p, q → ¬p
Inference rules: Modus ponens, …
Paul ∪ Olga does not justify p, but they will agree on p if players are conservative, that is, if they stick to their beliefs if possible
P1: claim p
O1: concede p

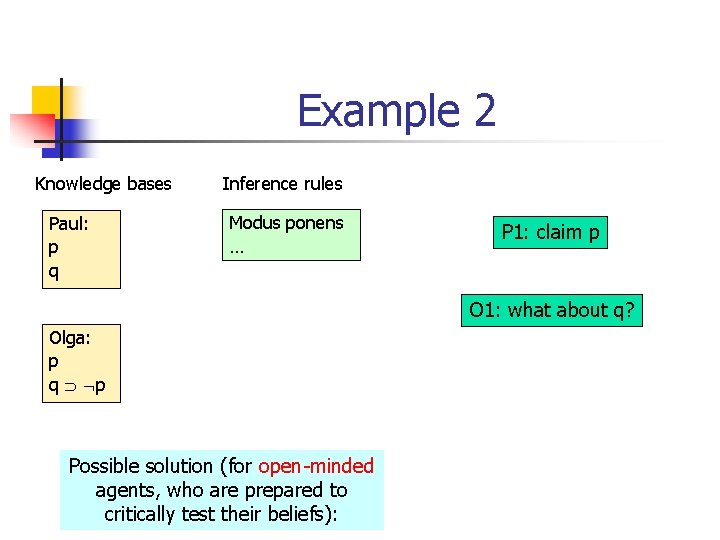

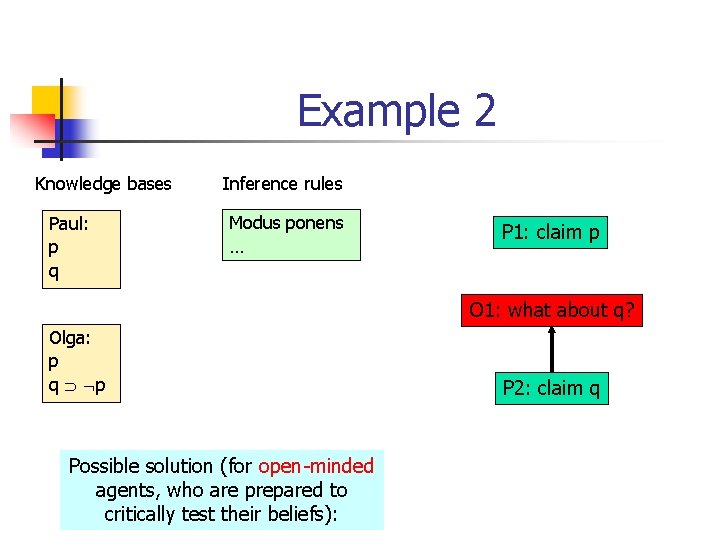

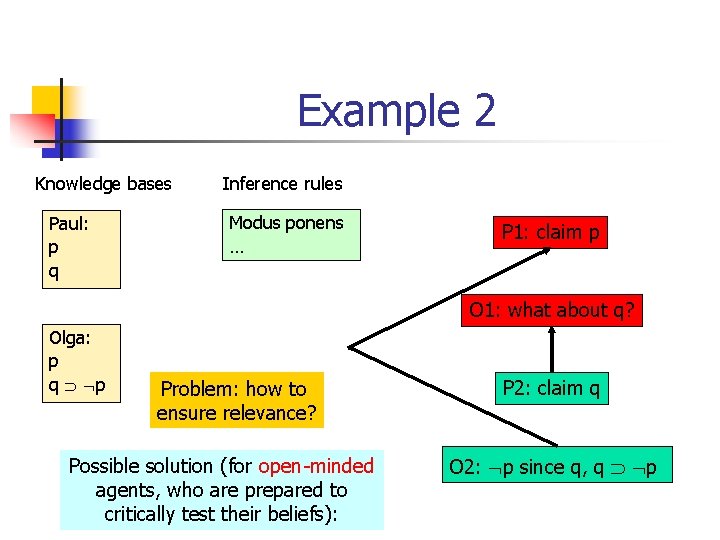

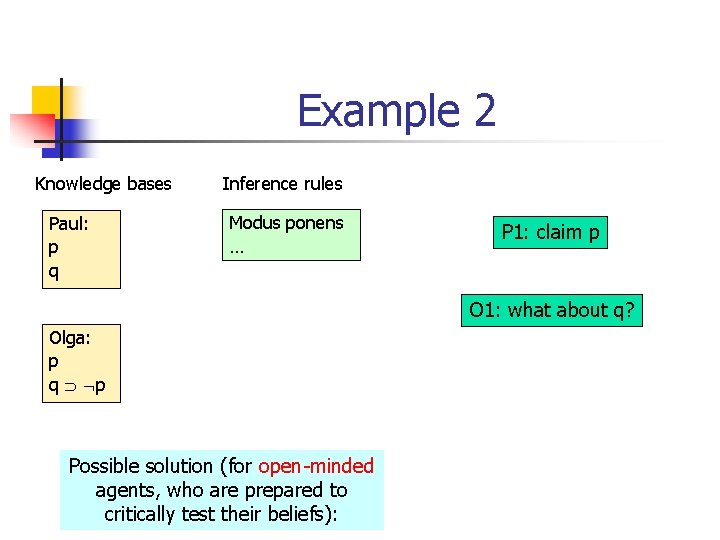

Example 2 Knowledge bases Paul: p q Inference rules Modus ponens … P 1: claim p O 1: what about q? Olga: p q p Possible solution (for open-minded agents, who are prepared to critically test their beliefs):

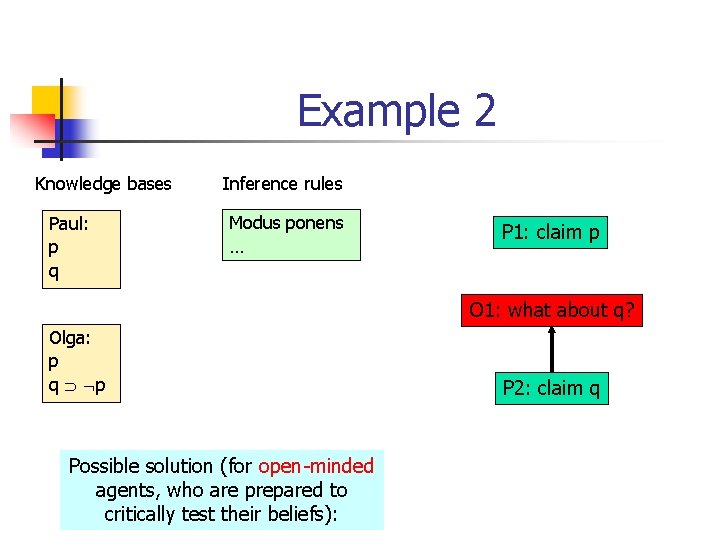

Example 2 Knowledge bases Paul: p q Inference rules Modus ponens … P 1: claim p O 1: what about q? Olga: p q p Possible solution (for open-minded agents, who are prepared to critically test their beliefs): P 2: claim q

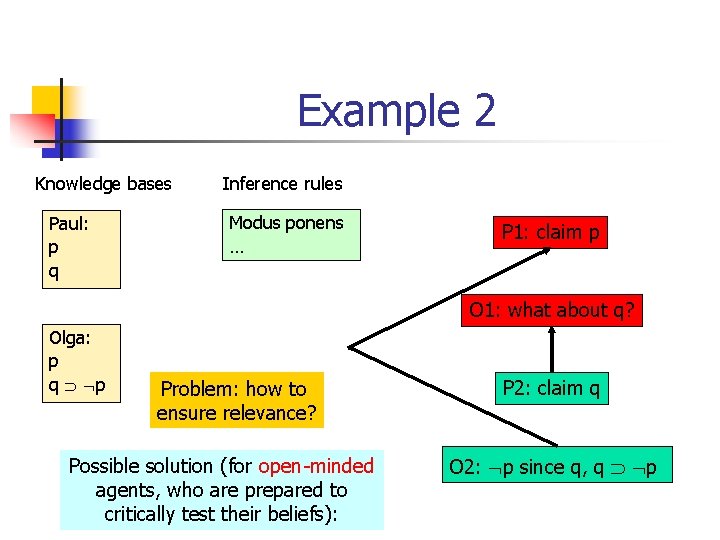

Example 2 Knowledge bases Paul: p q Inference rules Modus ponens … P 1: claim p O 1: what about q? Olga: p q p Problem: how to ensure relevance? Possible solution (for open-minded agents, who are prepared to critically test their beliefs): P 2: claim q O 2: p since q, q p

Pieter Dijkstra, Automated Support of Regulated Data Exchange. A Multi-Agent Systems Approach. PhD thesis, Faculty of Law, University of Groningen (2012)

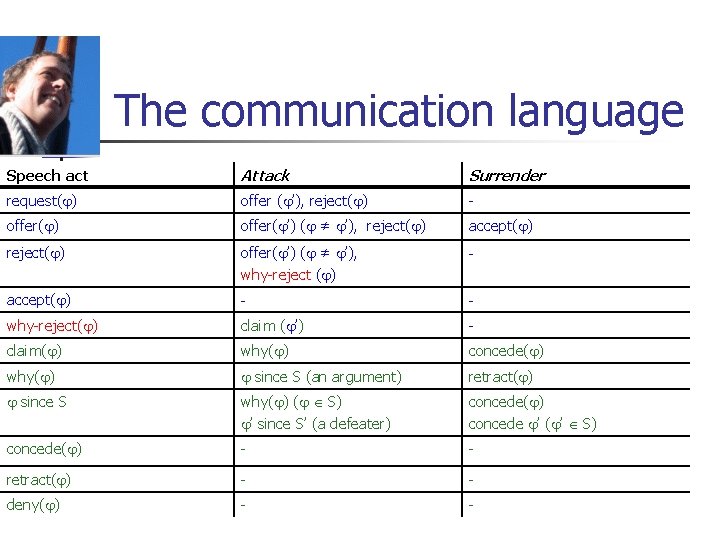

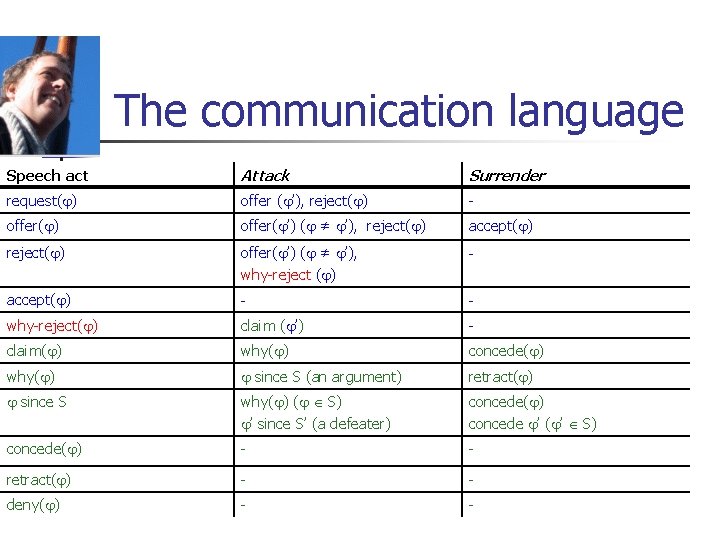

The communication language

| Speech act | Attack | Surrender |
|---|---|---|
| request(φ) | offer(φ’), reject(φ) | - |
| offer(φ) | offer(φ’) (φ ≠ φ’), reject(φ) | accept(φ) |
| reject(φ) | offer(φ’) (φ ≠ φ’), why-reject(φ) | - |
| accept(φ) | - | - |
| why-reject(φ) | claim(φ’) | - |
| claim(φ) | why(φ) | concede(φ) |
| why(φ) | φ since S (an argument) | retract(φ) |
| φ since S | why(ψ) (ψ ∈ S), φ’ since S’ (a defeater) | concede(φ), concede φ’ (φ’ ∈ S) |
| concede(φ) | - | - |
| retract(φ) | - | - |
| deny(φ) | - | - |
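The reply structure of the table can be encoded as a lookup from a speech act to its attacking and surrendering replies. The sketch below keeps only the speech act types and leaves out the content conditions (such as φ ≠ φ’ or membership in S); the names `REPLIES` and `reply_kind` are hypothetical.

```python
# Reply table by speech act type only; content conditions are omitted.
REPLIES = {
    "request":    {"attack": {"offer", "reject"},     "surrender": set()},
    "offer":      {"attack": {"offer", "reject"},     "surrender": {"accept"}},
    "reject":     {"attack": {"offer", "why-reject"}, "surrender": set()},
    "accept":     {"attack": set(),                   "surrender": set()},
    "why-reject": {"attack": {"claim"},               "surrender": set()},
    "claim":      {"attack": {"why"},                 "surrender": {"concede"}},
    "why":        {"attack": {"since"},               "surrender": {"retract"}},
    "since":      {"attack": {"why", "since"},        "surrender": {"concede"}},
    "concede":    {"attack": set(),                   "surrender": set()},
    "retract":    {"attack": set(),                   "surrender": set()},
    "deny":       {"attack": set(),                   "surrender": set()},
}


def reply_kind(target_act, reply_act):
    """Return 'attack', 'surrender', or None if the reply is not in the table."""
    entry = REPLIES[target_act]
    if reply_act in entry["attack"]:
        return "attack"
    if reply_act in entry["surrender"]:
        return "surrender"
    return None


# The opening of the negotiation example, as (target, reply) pairs.
for target, reply in [("request", "reject"), ("reject", "why-reject"),
                      ("why-reject", "claim"), ("offer", "accept")]:
    print(target, "->", reply, ":", reply_kind(target, reply))
```

A full protocol would add the content checks and the turntaking and termination rules on top of this table; the lookup only answers whether a reply type is available at all.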

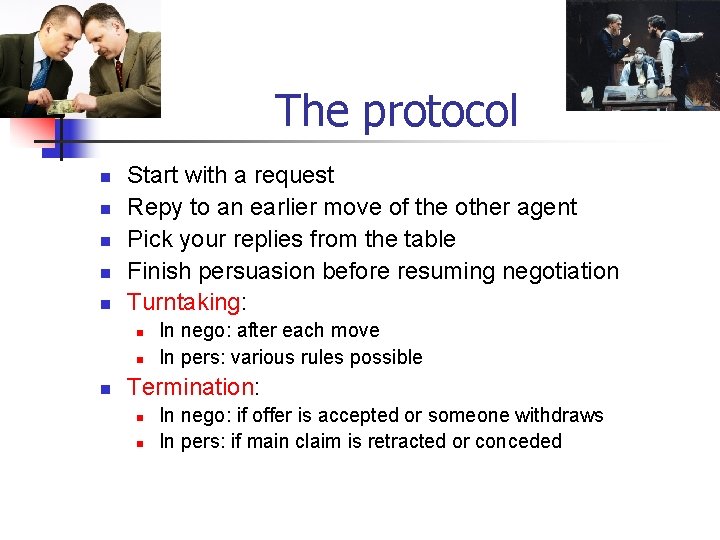

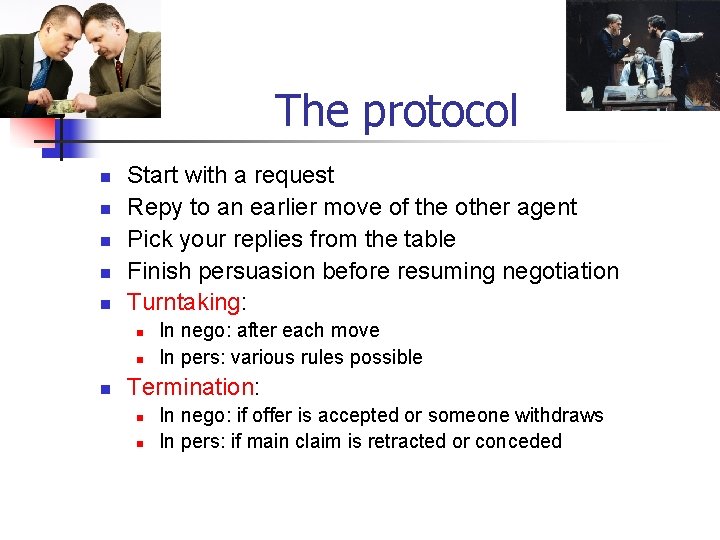

The protocol
- Start with a request
- Reply to an earlier move of the other agent
- Pick your replies from the table
- Finish persuasion before resuming negotiation
- Turntaking:
  - In negotiation: after each move
  - In persuasion: various rules possible
- Termination:
  - In negotiation: if offer is accepted or someone withdraws
  - In persuasion: if main claim is retracted or conceded

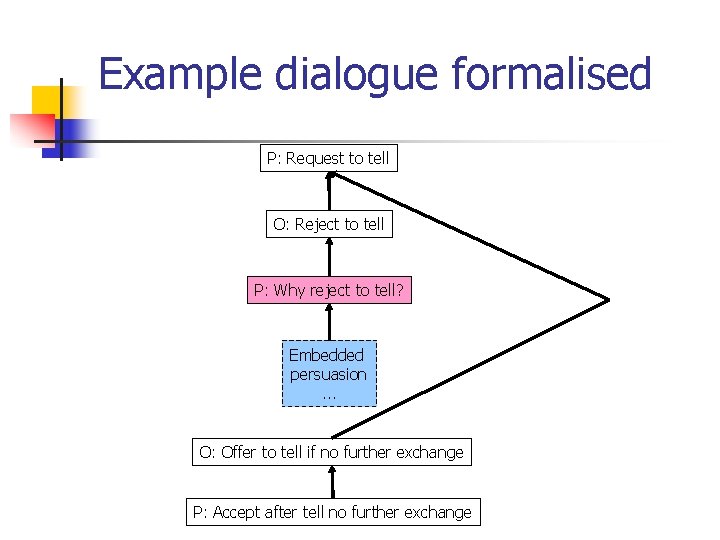

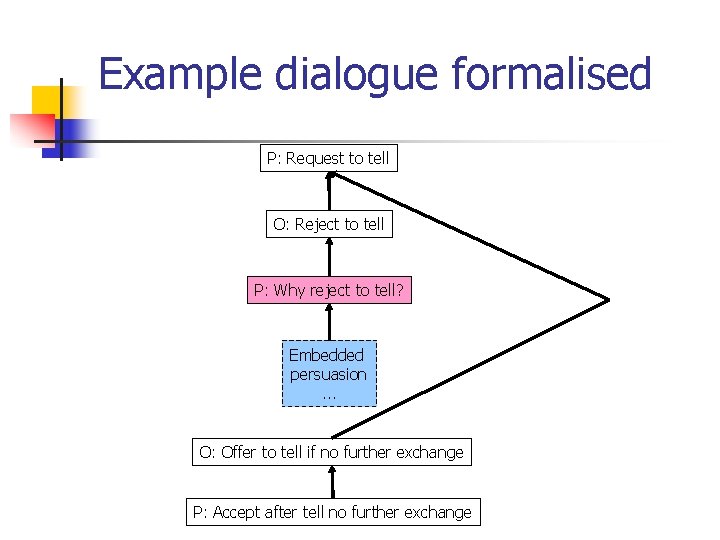

Example dialogue formalised
P: Request to tell
O: Reject to tell
P: Why reject to tell?
Embedded persuasion ...
O: Offer to tell if no further exchange
P: Accept after tell no further exchange

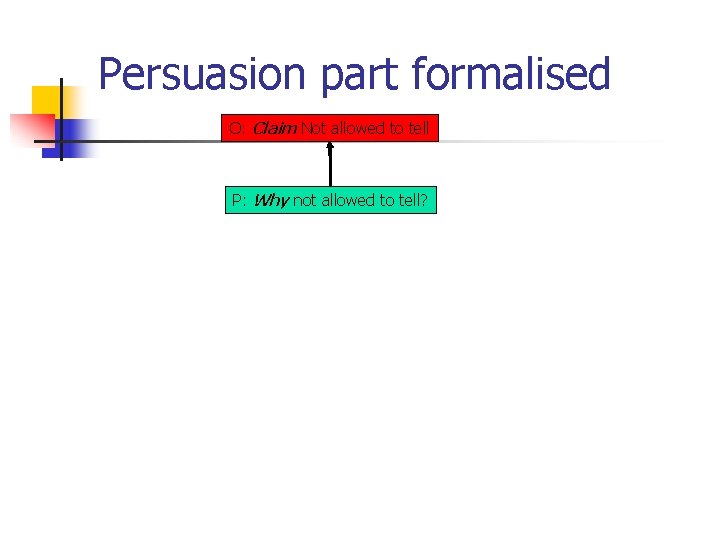

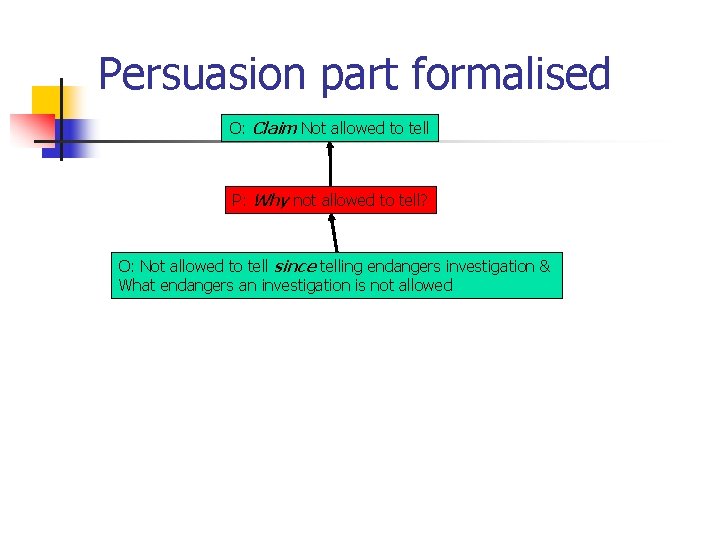

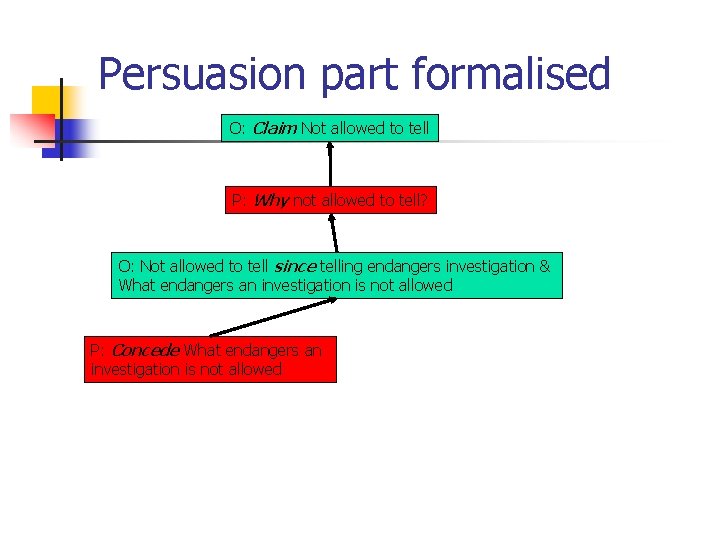

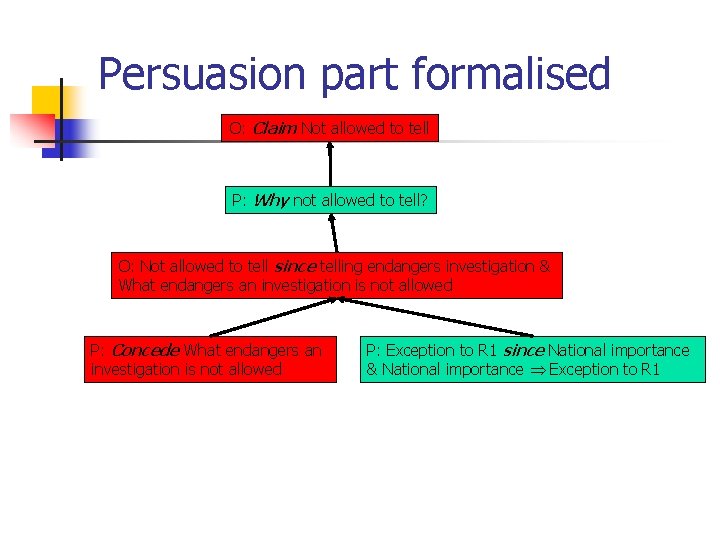

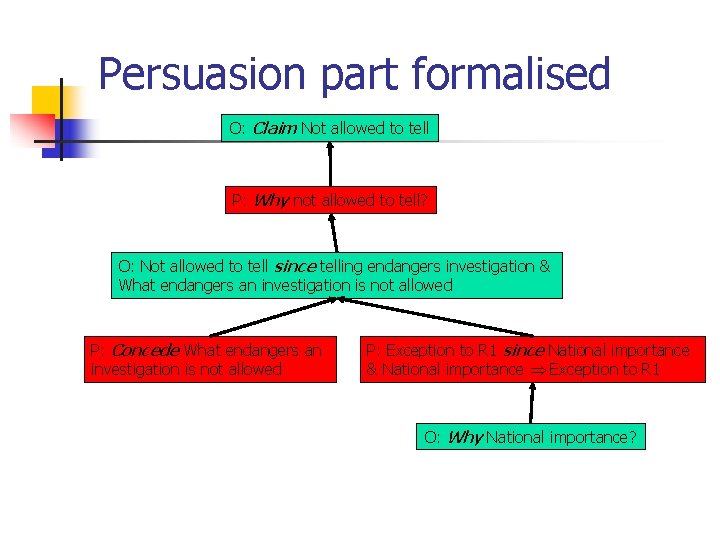

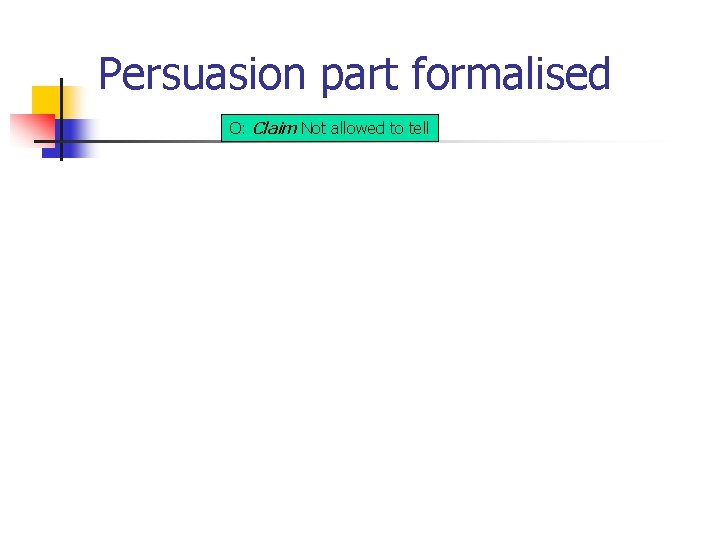

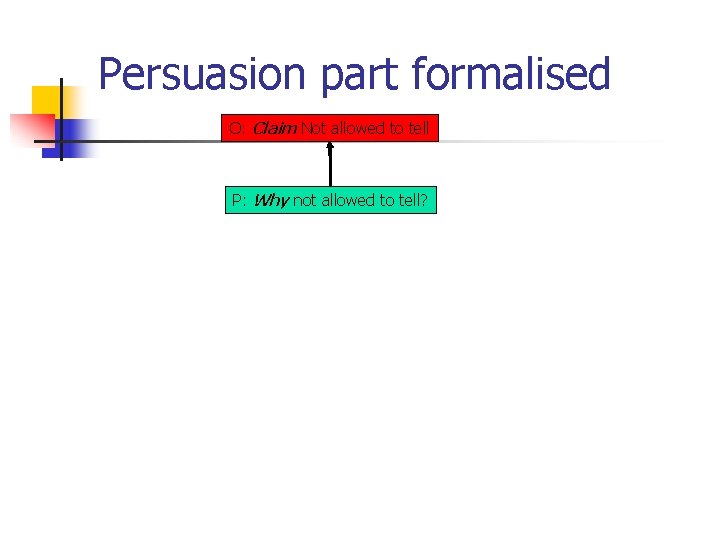

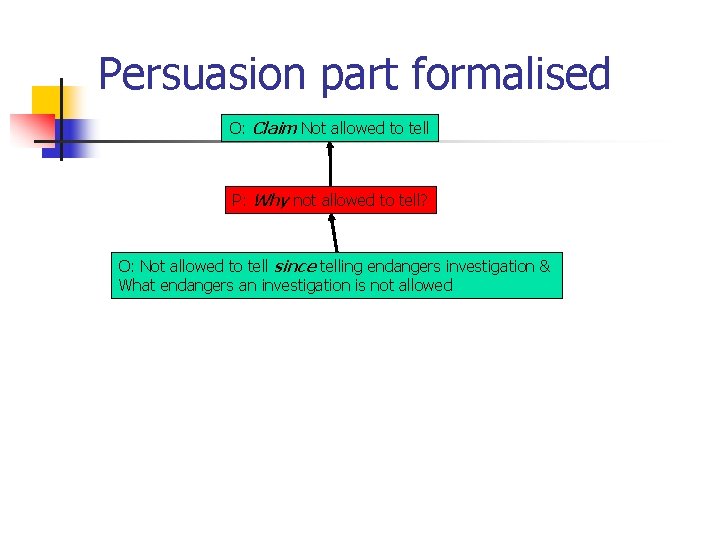

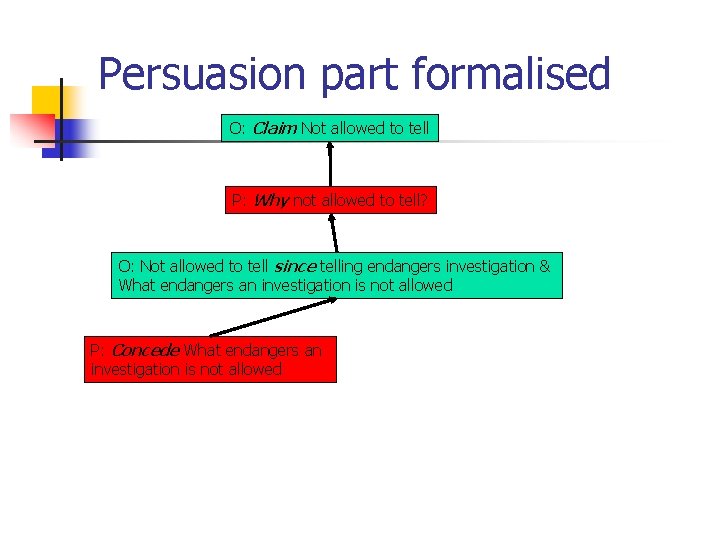

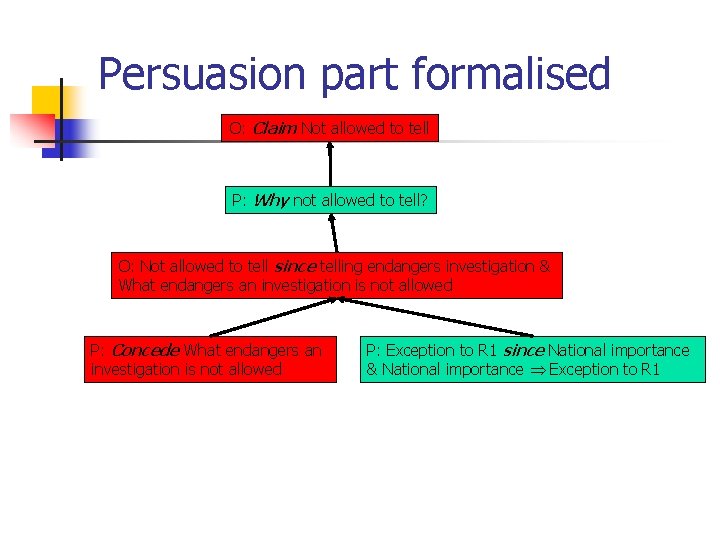

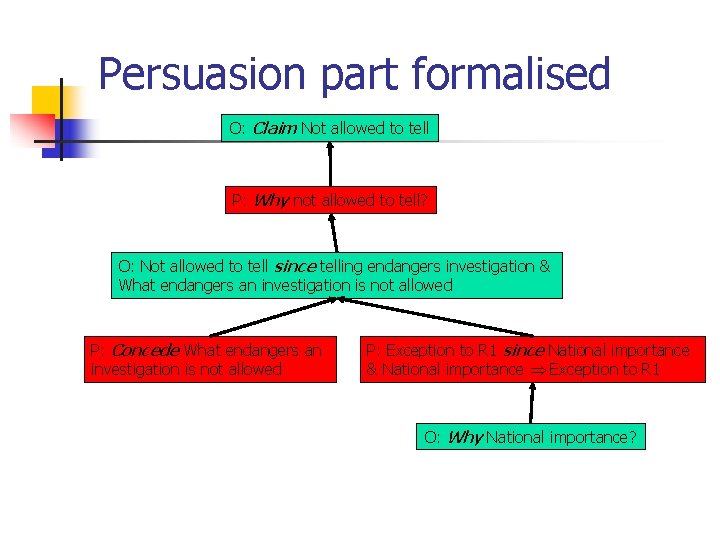

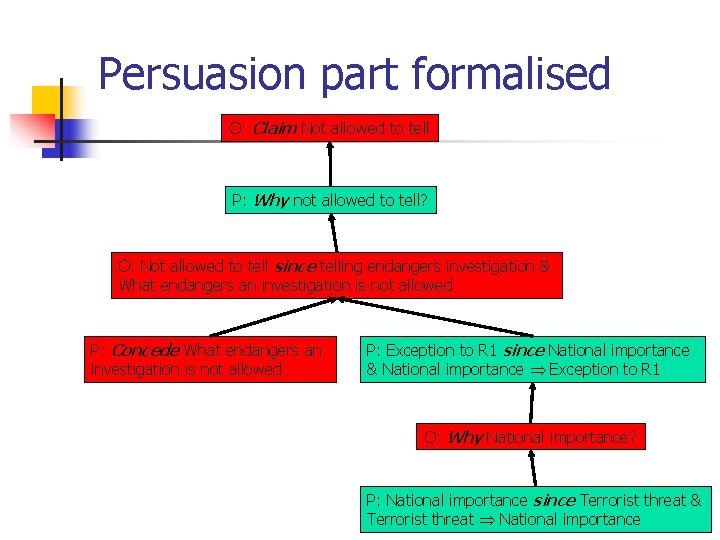

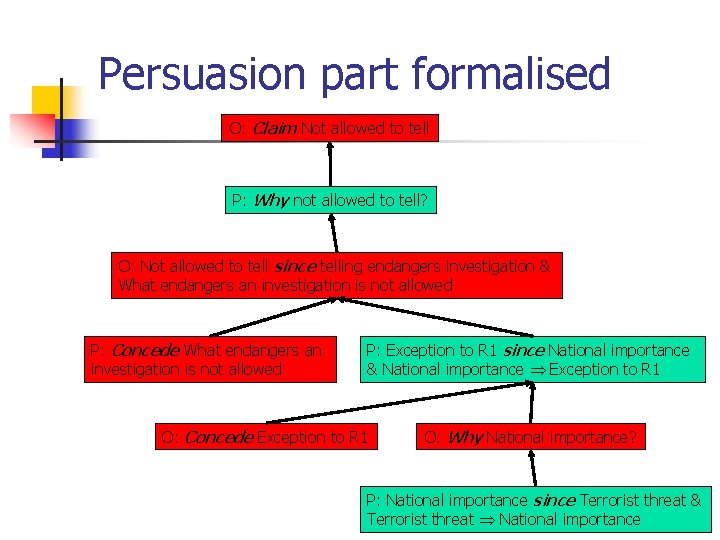

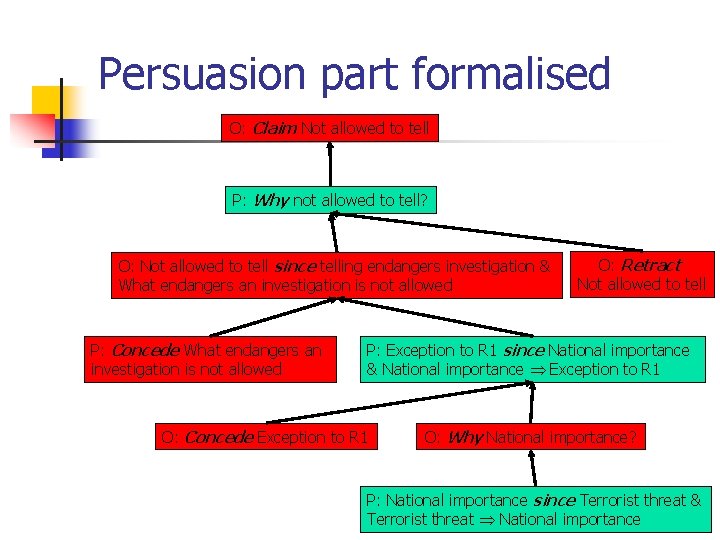


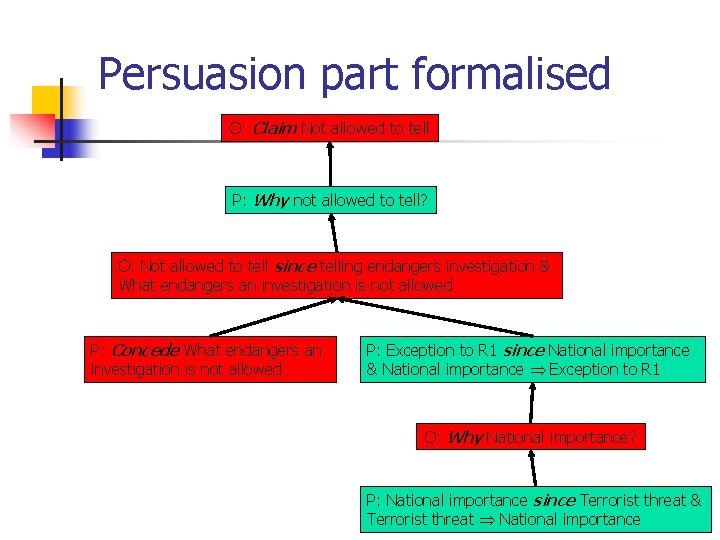


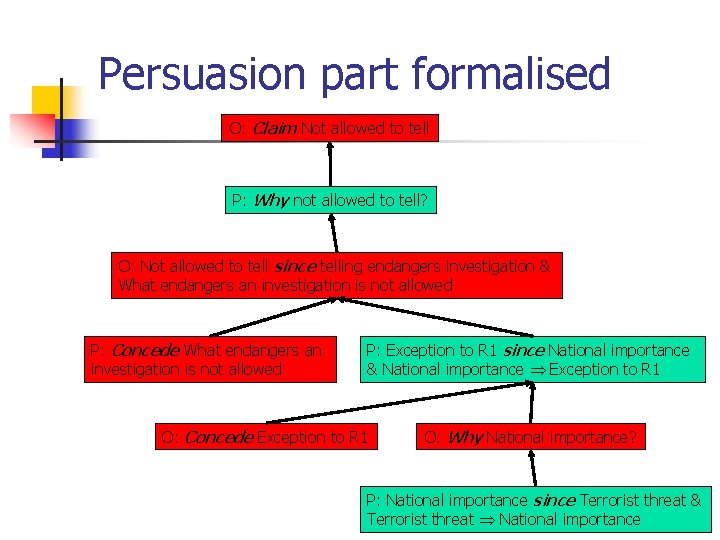

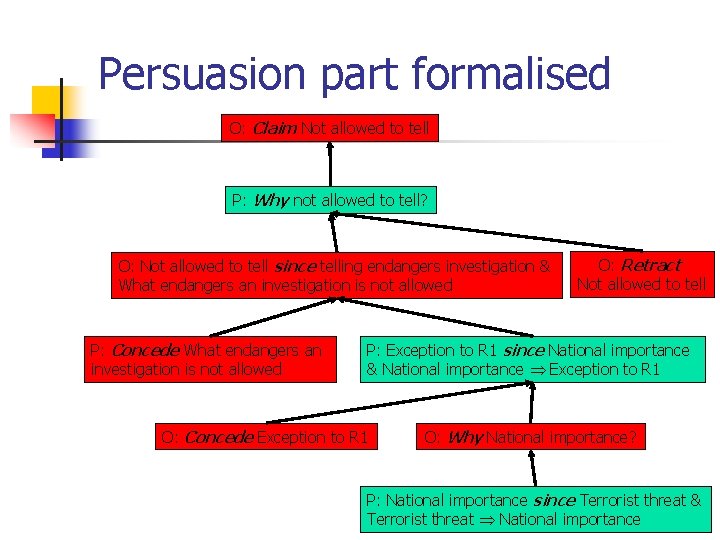


Persuasion part formalised
O: Claim Not allowed to tell
P: Why not allowed to tell?
O: Not allowed to tell since Telling endangers investigation & What endangers an investigation is not allowed
P: Concede What endangers an investigation is not allowed
P: Exception to R1 since National importance & National importance ⇒ Exception to R1
O: Why National importance?
P: National importance since Terrorist threat & Terrorist threat ⇒ National importance
O: Concede Exception to R1
O: Retract Not allowed to tell

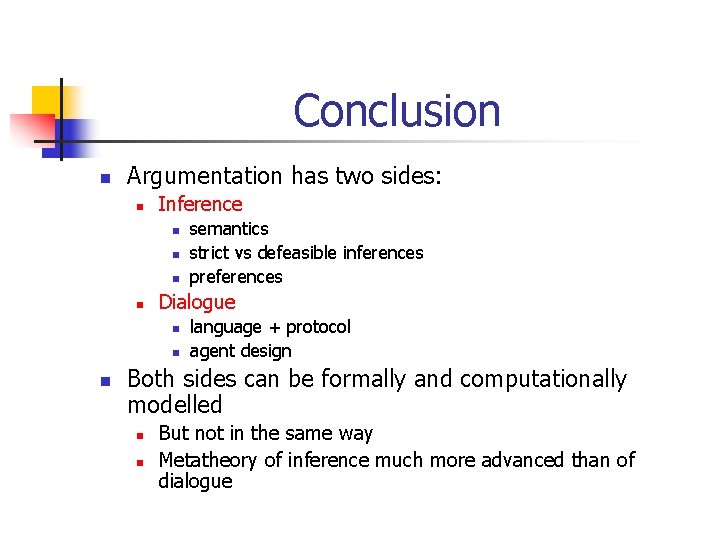

Conclusion
- Argumentation has two sides:
  - Inference
    - semantics
    - strict vs defeasible inferences
    - preferences
  - Dialogue
    - language + protocol
    - agent design
- Both sides can be formally and computationally modelled
  - But not in the same way
  - Metatheory of inference much more advanced than of dialogue

Reading (1)
- Collections
  - T.J.M. Bench-Capon & P.E. Dunne (eds.), Artificial Intelligence 171 (2007), Special issue on Argumentation in Artificial Intelligence.
  - I. Rahwan & G.R. Simari (eds.), Argumentation in Artificial Intelligence. Berlin: Springer, 2009.
  - A. Hunter (ed.), Argument and Computation 5 (2014), special issue on Tutorials on Structured Argumentation.
- Abstract argumentation
  - P.M. Dung, On the acceptability of arguments and its fundamental role in nonmonotonic reasoning, logic programming and n-person games. Artificial Intelligence 77 (1995): 321-357.
  - P. Baroni, M.W.A. Caminada & M. Giacomin, An introduction to argumentation semantics. The Knowledge Engineering Review 26 (2011): 365-410.

Reading (2)
- Classical and Tarskian argumentation
  - Ph. Besnard & A. Hunter, Elements of Argumentation. Cambridge, MA: MIT Press, 2008.
  - N. Gorogiannis & A. Hunter, Instantiating abstract argumentation with classical logic arguments: postulates and properties. Artificial Intelligence 175 (2011): 1479-1497.
  - L. Amgoud & Ph. Besnard, Logical limits of abstract argumentation frameworks. Journal of Applied Non-Classical Logics 23 (2013): 229-267.
- ASPIC+
  - H. Prakken, An abstract framework for argumentation with structured arguments. Argument and Computation 1 (2010): 93-124.
  - S. Modgil & H. Prakken, A general account of argumentation with preferences. Artificial Intelligence 195 (2013): 361-397.

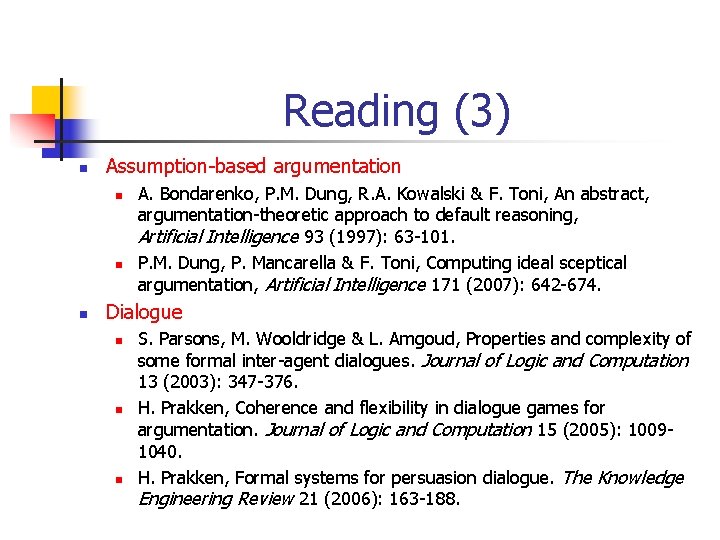

Reading (3)
- Assumption-based argumentation
  - A. Bondarenko, P.M. Dung, R.A. Kowalski & F. Toni, An abstract, argumentation-theoretic approach to default reasoning. Artificial Intelligence 93 (1997): 63-101.
  - P.M. Dung, P. Mancarella & F. Toni, Computing ideal sceptical argumentation. Artificial Intelligence 171 (2007): 642-674.
- Dialogue
  - S. Parsons, M. Wooldridge & L. Amgoud, Properties and complexity of some formal inter-agent dialogues. Journal of Logic and Computation 13 (2003): 347-376.
  - H. Prakken, Coherence and flexibility in dialogue games for argumentation. Journal of Logic and Computation 15 (2005): 1009-1040.
  - H. Prakken, Formal systems for persuasion dialogue. The Knowledge Engineering Review 21 (2006): 163-188.

Introduction to argumentation

Introduction to argumentation Four opinion writers roe j.d.

Four opinion writers roe j.d. Argumentation og retorik

Argumentation og retorik Types of arguments

Types of arguments Mishela ivanova

Mishela ivanova English syntax and argumentation 연습문제 답

English syntax and argumentation 연습문제 답 Forvægt og bagvægt

Forvægt og bagvægt Tierversuche argumentation

Tierversuche argumentation Erlrterung

Erlrterung Argumentation einleitung

Argumentation einleitung Stilistisk term

Stilistisk term Gutachterliche stellungnahme aufbau

Gutachterliche stellungnahme aufbau For and against essay linking words

For and against essay linking words Argumentation

Argumentation Free will defense theodizee

Free will defense theodizee Logos argumentation

Logos argumentation Linking words for arguments

Linking words for arguments These beispiel argument

These beispiel argument Semi modals

Semi modals Lenguaje inculto formal ejemplos

Lenguaje inculto formal ejemplos It is intentional organized and structured

It is intentional organized and structured Unit 3 formal informal and nonformal education

Unit 3 formal informal and nonformal education Contoh falsafah

Contoh falsafah Contoh kerangka karangan formal

Contoh kerangka karangan formal How formal education differs from als

How formal education differs from als Maksud komunikasi sehala

Maksud komunikasi sehala Falasi tidak formal

Falasi tidak formal Pengkayaan kerja

Pengkayaan kerja Aap1 educação formal e não formal

Aap1 educação formal e não formal Fungsi manajemen paud

Fungsi manajemen paud Cloud storage models & communication apis

Cloud storage models & communication apis An introduction to variational methods for graphical models

An introduction to variational methods for graphical models A revealing introduction to hidden markov models

A revealing introduction to hidden markov models What are spreadsheet models

What are spreadsheet models An introduction to probabilistic graphical models

An introduction to probabilistic graphical models A revealing introduction to hidden markov models

A revealing introduction to hidden markov models The basic value proposition of community providers is:

The basic value proposition of community providers is: Introduction in email

Introduction in email An introduction to formal languages and automata

An introduction to formal languages and automata Formal writing introduction

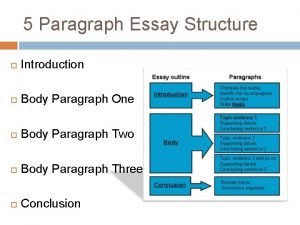

Formal writing introduction Body paragraph

Body paragraph Gauer henry reflex schwimmen

Gauer henry reflex schwimmen Henry harutunian

Henry harutunian King henry doesn't usually drink chocolate milk

King henry doesn't usually drink chocolate milk Energy stored in inductor

Energy stored in inductor Prince henry the navigator ap world history

Prince henry the navigator ap world history Henry weinberg equation

Henry weinberg equation King henry doesn't usually drink chocolate milk

King henry doesn't usually drink chocolate milk Henry jenkins transmedia storytelling

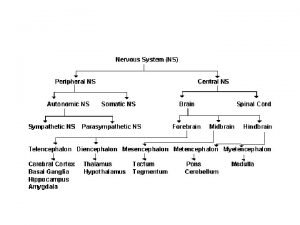

Henry jenkins transmedia storytelling Basal ganglia telencephalon

Basal ganglia telencephalon King henry died unexpectedly drinking chocolate

King henry died unexpectedly drinking chocolate King henry viii family tree

King henry viii family tree Henry, duke of cornwall

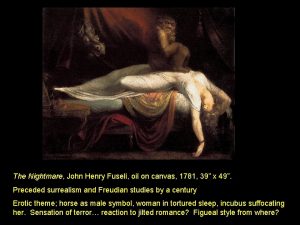

Henry, duke of cornwall The nightmare john henry fuseli

The nightmare john henry fuseli Henry rathbone and clara harris

Henry rathbone and clara harris International measurement system

International measurement system Year of goodbyes chapter 9

Year of goodbyes chapter 9 Henry corrigan-gibbs

Henry corrigan-gibbs Cactus o henry

Cactus o henry What did henry bessemer invent

What did henry bessemer invent Metaphors in patrick henry's speech

Metaphors in patrick henry's speech Alexis brown

Alexis brown Henry mintzberg strategy safari

Henry mintzberg strategy safari Metric system

Metric system Ley de henry solubilidad

Ley de henry solubilidad Ley de henry solubilidad

Ley de henry solubilidad Principle of management

Principle of management Henry the navigator map

Henry the navigator map Winifred ward

Winifred ward Pemikiran pendidikan kritis henry giroux

Pemikiran pendidikan kritis henry giroux Metaphors in speech to the virginia convention

Metaphors in speech to the virginia convention Parallelism in give me liberty speech

Parallelism in give me liberty speech Quando observamos da praia um veleiro

Quando observamos da praia um veleiro Parallelism grammar

Parallelism grammar King henry ate chocolate

King henry ate chocolate King henry chocolate milk story

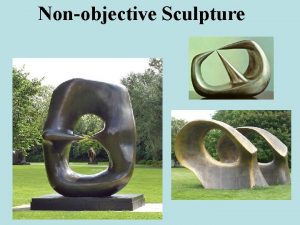

King henry chocolate milk story Non objective sculpture

Non objective sculpture Patrick henry international airport

Patrick henry international airport What were the motives for european exploration

What were the motives for european exploration Henry maslow

Henry maslow Khdudcm

Khdudcm King henry drank chocolate milk

King henry drank chocolate milk Conversions using the ladder method

Conversions using the ladder method King henry died formula

King henry died formula K.h.d d.c.m conversion chart

K.h.d d.c.m conversion chart Henry ford paternalistic leadership

Henry ford paternalistic leadership Angelo buono 2000

Angelo buono 2000 Principios de jenkins

Principios de jenkins Mr. utterson highly disapproves of henry jekyll’s ______.

Mr. utterson highly disapproves of henry jekyll’s ______. Dr henry lindner

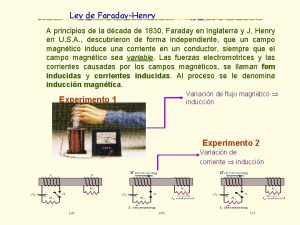

Dr henry lindner Ley de faraday-henry

Ley de faraday-henry Dr jean-yves henry avis

Dr jean-yves henry avis Soal transaksi perusahaan jasa

Soal transaksi perusahaan jasa Teoria de henry murray

Teoria de henry murray Henry ford famous inventions

Henry ford famous inventions Emergent-strategy-definition

Emergent-strategy-definition Why is patrick henry important

Why is patrick henry important Atty. agustin patricio

Atty. agustin patricio Why did henry viii behead his wives

Why did henry viii behead his wives Jackson high school hours

Jackson high school hours Henry iv themes

Henry iv themes Henry hudson lifespan

Henry hudson lifespan What did henry hudson sail for

What did henry hudson sail for Henry hudson 2nd voyage

Henry hudson 2nd voyage When did henry hudson die

When did henry hudson die Henry grady international cotton exposition

Henry grady international cotton exposition Henry giroux biografía

Henry giroux biografía What were henry ford's accomplishments

What were henry ford's accomplishments How did henry ford spend his money

How did henry ford spend his money Henry clay's american system worksheet

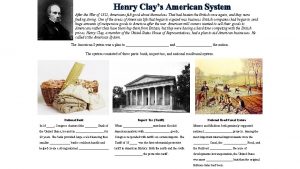

Henry clay's american system worksheet Chandler cowles

Chandler cowles Henry cavendish

Henry cavendish Gamg plank

Gamg plank English language paper 1 rosie answers

English language paper 1 rosie answers K h d u d c m

K h d u d c m French royal family tree

French royal family tree Njera

Njera Jenkins fandom theory

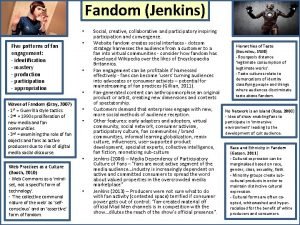

Jenkins fandom theory Fandom jenkins

Fandom jenkins Expository essay example

Expository essay example Magellan achievements

Magellan achievements Why was henry hudson exploring the arctic ocean?

Why was henry hudson exploring the arctic ocean? Examination day author

Examination day author Examination day by henry slesar

Examination day by henry slesar Spain and portugal

Spain and portugal Ellen white biography

Ellen white biography Colonie masters in nursing programs near me

Colonie masters in nursing programs near me Ley de dalton

Ley de dalton Kua tawhiti ke to haerenga mai

Kua tawhiti ke to haerenga mai Henry classification system

Henry classification system Consumer behaviour meaning

Consumer behaviour meaning Mobile wad of henry

Mobile wad of henry Colin henry wilson

Colin henry wilson Henry remak

Henry remak Henry molaison

Henry molaison Aoura lights

Aoura lights Henry lindner

Henry lindner William sydney porter born

William sydney porter born Henry disapproves of stealing jelly beans from his sister's

Henry disapproves of stealing jelly beans from his sister's Henry moseley periodic table contribution

Henry moseley periodic table contribution Samuel de champlain exploration route

Samuel de champlain exploration route O henry twenty years after

O henry twenty years after Henry norwest

Henry norwest A retrieved reformation by o henry

A retrieved reformation by o henry Super bifido plus probiotic benefits

Super bifido plus probiotic benefits O.henry facts

O.henry facts William henry gates iii was born on 28 october 1955

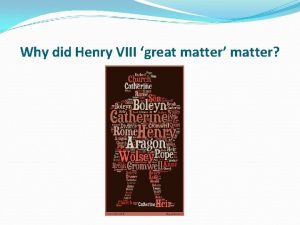

William henry gates iii was born on 28 october 1955 Henry viii great matter

Henry viii great matter Cisco ip phone 7965 voicemail

Cisco ip phone 7965 voicemail Henry hudson birth and death

Henry hudson birth and death Tall tale characters

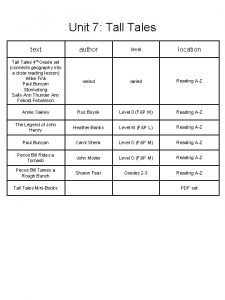

Tall tale characters Tyra henry

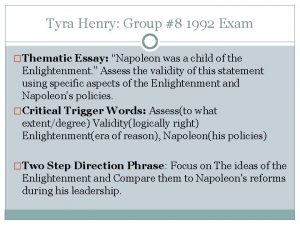

Tyra henry Henry vii

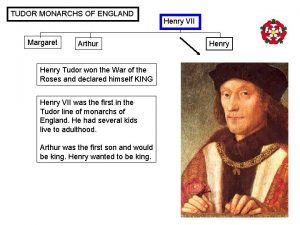

Henry vii Henry legge

Henry legge Discrepancy

Discrepancy The walking cactus

The walking cactus Chapter 9 great gatsby

Chapter 9 great gatsby Symbols in chapter 9 of the great gatsby

Symbols in chapter 9 of the great gatsby Great gatsby chapter 9 summary

Great gatsby chapter 9 summary Henry ford teoria

Henry ford teoria Teori organisasi klasik henry fayol

Teori organisasi klasik henry fayol Henry darcy

Henry darcy Henry gauthier-villars

Henry gauthier-villars Dr henry rosenberg

Dr henry rosenberg Henry mancini quotes

Henry mancini quotes Qumica

Qumica Claustral complex

Claustral complex