System Support for DataIntensive Applications Katherine Yelick U

- Slides: 64

System Support for Data-Intensive Applications Katherine Yelick U. C. Berkeley, EECS Slide 1

The “Post PC” Generation Two technologies will likely dominate: 1) Mobile Consumer Electronic Devices – e. g. , PDA, Cell phone, wearable computers, with cameras, recorders, sensors – make the computing “invisible” through reliability and simple interfaces 2) Infrastructure to Support such Devices – e. g. , successor to Big Fat Web Servers, Database Servers – make these “utilities” with reliability and new economic models Slide 2

Open Research Issues • Human-computer interaction – uniformity across devices • Distributed computing – coordination across independent devices • Power – low power designs and renewable power sources • Information retrieval – finding useful information amidst a flood of data • Scalability – Scaling devices down – Scaling services up • Reliability and maintainability Slide 3

The problem space: big data • Big demand for enormous amounts of data – today: enterprise and internet applications » online applications: e-commerce, mail, web, archives » enterprise decision-support, data mining databases – future: richer data and more of it » computational & storage back-ends for mobile devices » more multimedia content » more use of historical data to provide better services • Two key application domains: – storage: public, private, and institutional data – search: building static indexes, dynamic discovery Slide 4

Reliability/Performance Trade-off • Techniques for reliability: – High level languages with strong types » avoid memory leaks, wild pointers, etc. » C vs. Java – Redundant storage, computation, etc. » adds storage and bandwidth overhead • Techniques for performance: – Optimize for a specific machine » e. g. , cache or memory hierarchy – Minimize redundancy • These two goals work against each other Slide 5

Specific Projects • ISTORE – A reliable, scalable, maintainable storage system • Data-intensive applications for “backend” servers – Modeling the real world – Storing and finding information • Titanium – A high level language (Java) with high performance – A domain-specific language and optimizing compiler • Sparsity – Optimization using partial program input Slide 6

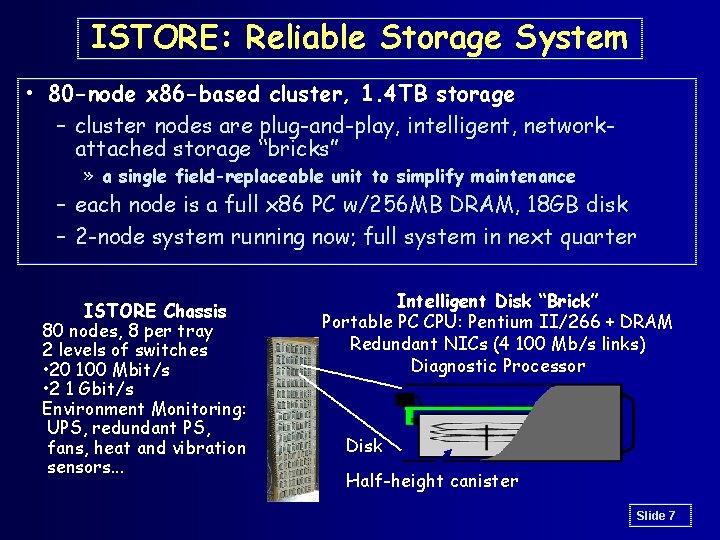

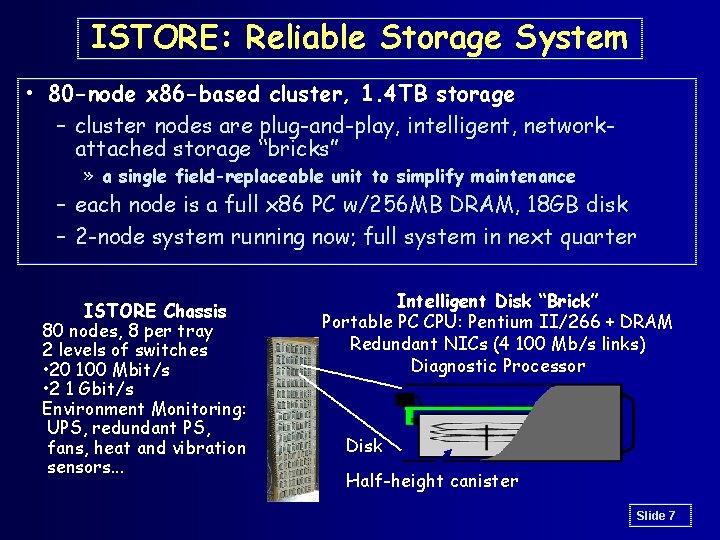

ISTORE: Reliable Storage System • 80 -node x 86 -based cluster, 1. 4 TB storage – cluster nodes are plug-and-play, intelligent, networkattached storage “bricks” » a single field-replaceable unit to simplify maintenance – each node is a full x 86 PC w/256 MB DRAM, 18 GB disk – 2 -node system running now; full system in next quarter ISTORE Chassis 80 nodes, 8 per tray 2 levels of switches • 20 100 Mbit/s • 2 1 Gbit/s Environment Monitoring: UPS, redundant PS, fans, heat and vibration sensors. . . Intelligent Disk “Brick” Portable PC CPU: Pentium II/266 + DRAM Redundant NICs (4 100 Mb/s links) Diagnostic Processor Disk Half-height canister Slide 7

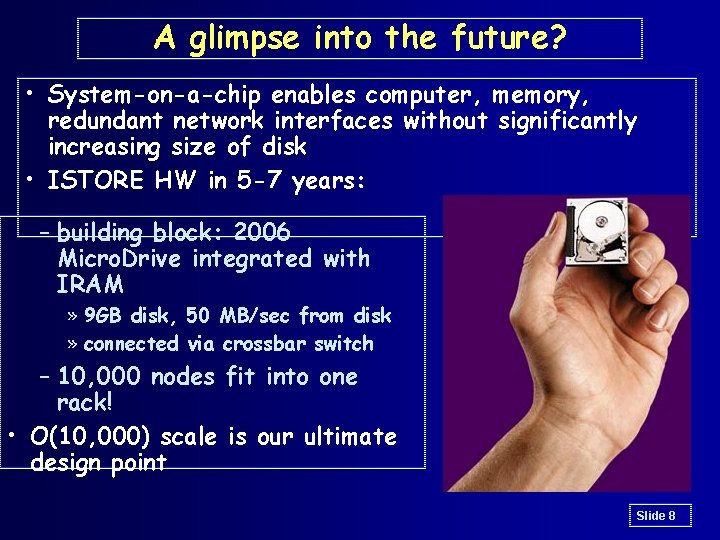

A glimpse into the future? • System-on-a-chip enables computer, memory, redundant network interfaces without significantly increasing size of disk • ISTORE HW in 5 -7 years: – building block: 2006 Micro. Drive integrated with IRAM » 9 GB disk, 50 MB/sec from disk » connected via crossbar switch – 10, 000 nodes fit into one rack! • O(10, 000) scale is our ultimate design point Slide 8

Specific Projects • ISTORE – A reliable, scalable, maintainable storage system • Data-intensive applications for “backend” servers – Modeling the real world – Storing and finding information • Titanium – A high level language (Java) with high performance – A domain-specific language and optimizing compiler • Sparsity – Optimization using partial program input Slide 9

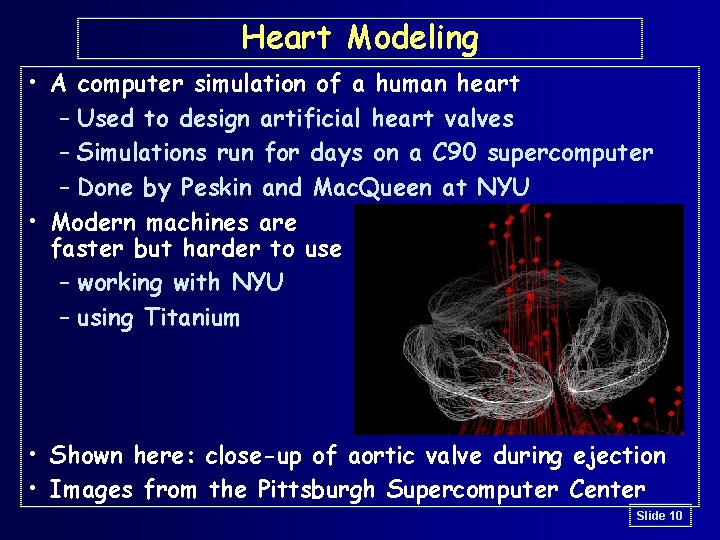

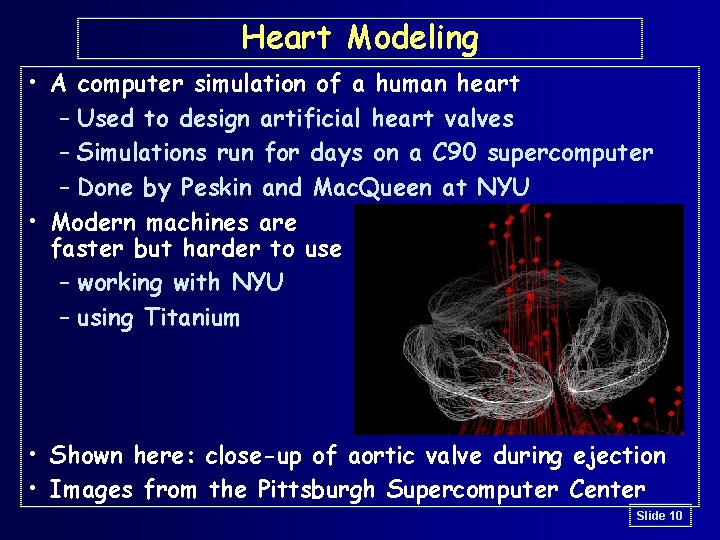

Heart Modeling • A computer simulation of a human heart – Used to design artificial heart valves – Simulations run for days on a C 90 supercomputer – Done by Peskin and Mac. Queen at NYU • Modern machines are faster but harder to use – working with NYU – using Titanium • Shown here: close-up of aortic valve during ejection • Images from the Pittsburgh Supercomputer Center Slide 10

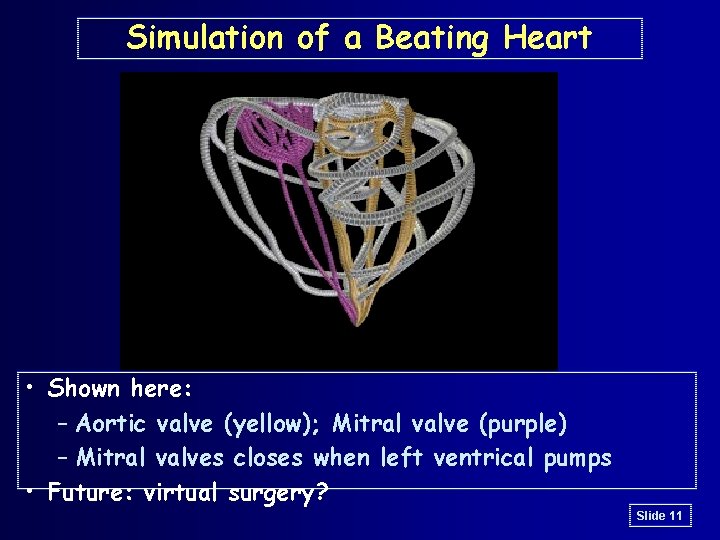

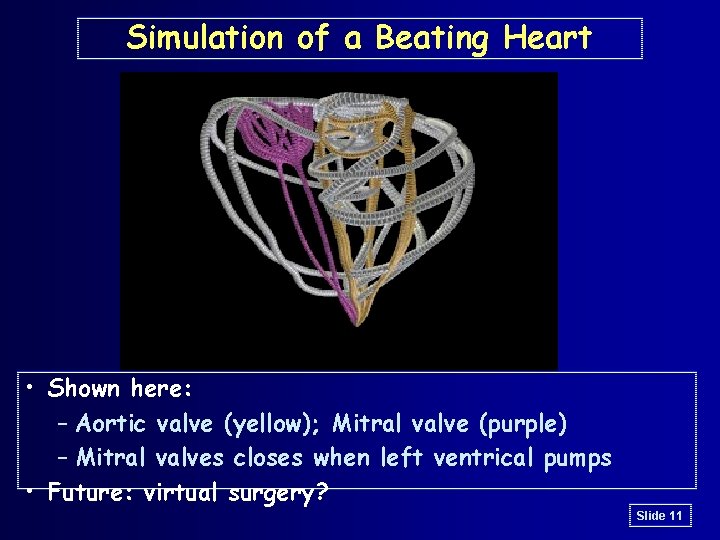

Simulation of a Beating Heart • Shown here: – Aortic valve (yellow); Mitral valve (purple) – Mitral valves closes when left ventrical pumps • Future: virtual surgery? Slide 11

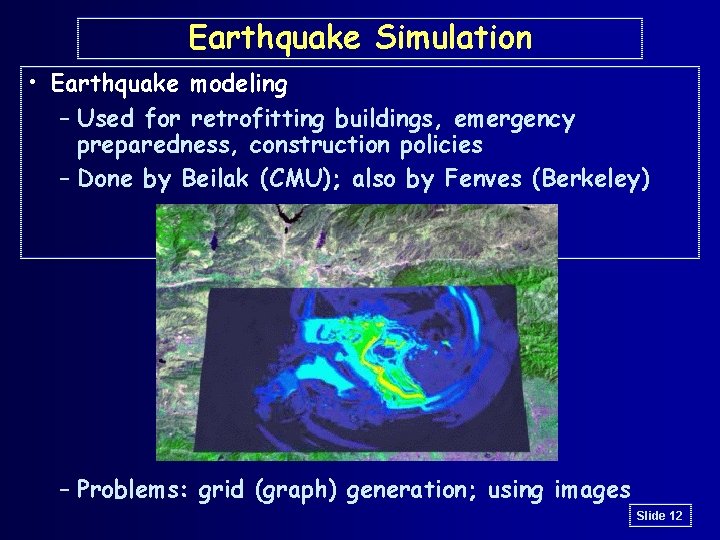

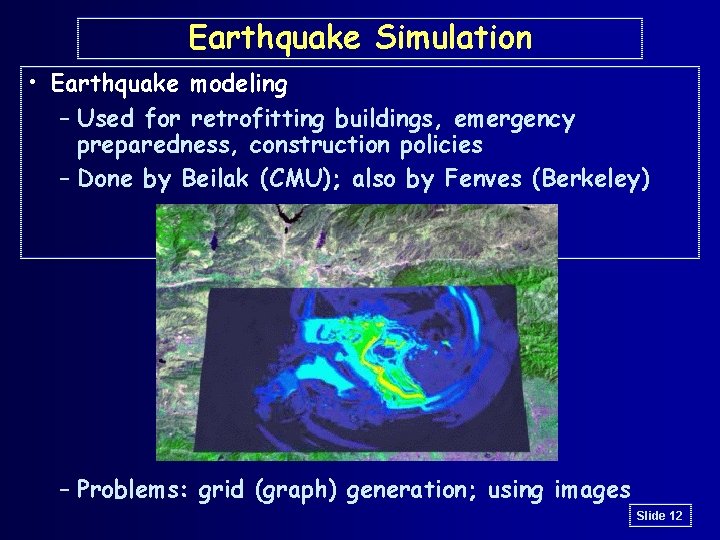

Earthquake Simulation • Earthquake modeling – Used for retrofitting buildings, emergency preparedness, construction policies – Done by Beilak (CMU); also by Fenves (Berkeley) – Problems: grid (graph) generation; using images Slide 12

Earthquake Simuation • Movie shows a simulated aftershock following the 1994 Northridge earthquake in California • Future: sensors everywhere; tied to central system Slide 13

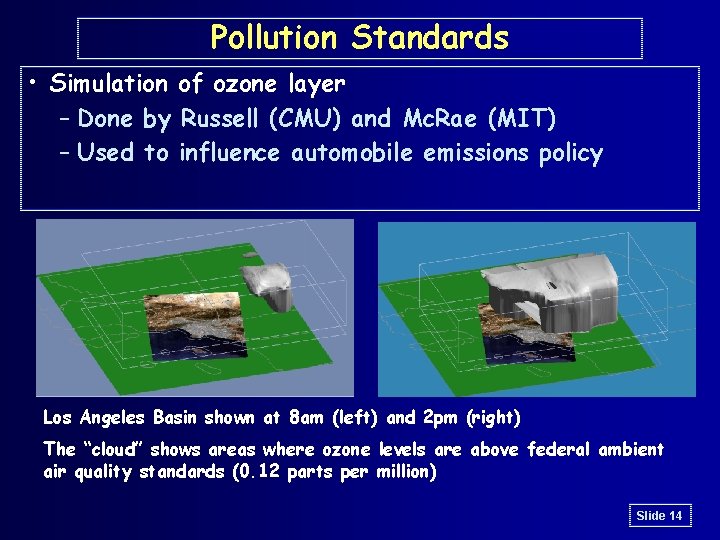

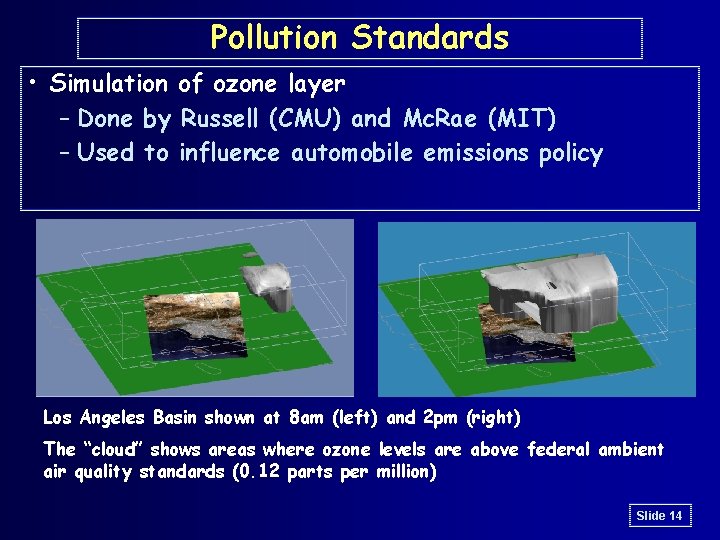

Pollution Standards • Simulation of ozone layer – Done by Russell (CMU) and Mc. Rae (MIT) – Used to influence automobile emissions policy Los Angeles Basin shown at 8 am (left) and 2 pm (right) The “cloud” shows areas where ozone levels are above federal ambient air quality standards (0. 12 parts per million) Slide 14

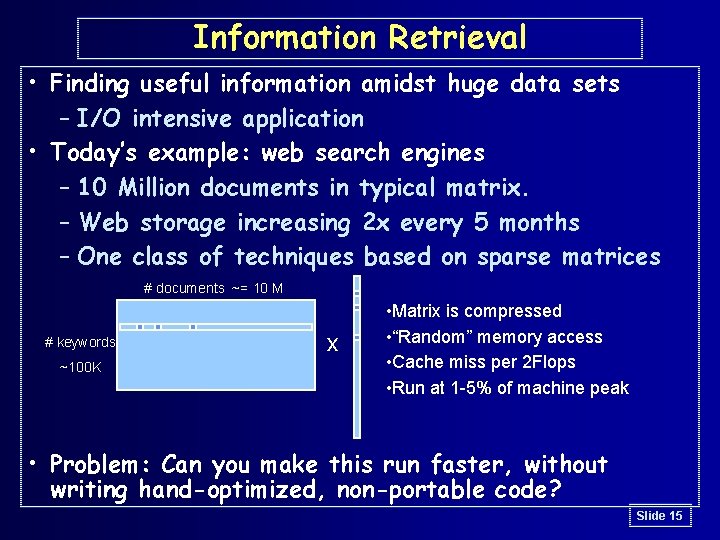

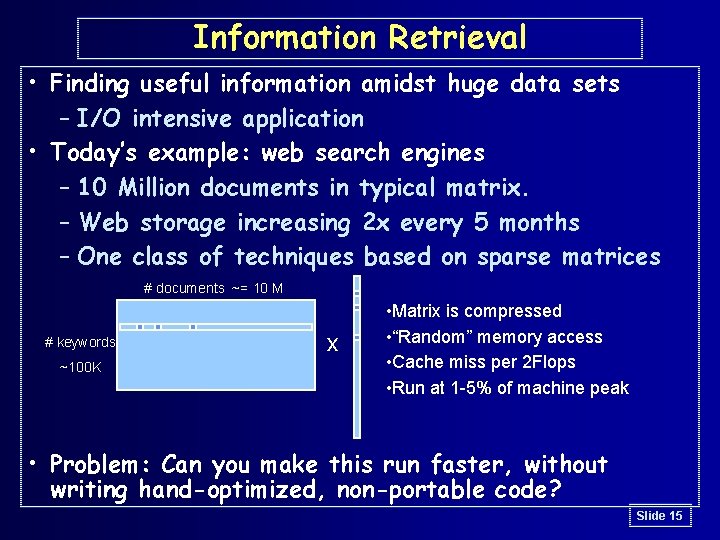

Information Retrieval • Finding useful information amidst huge data sets – I/O intensive application • Today’s example: web search engines – 10 Million documents in typical matrix. – Web storage increasing 2 x every 5 months – One class of techniques based on sparse matrices # documents ~= 10 M # keywords ~100 K x • Matrix is compressed • “Random” memory access • Cache miss per 2 Flops • Run at 1 -5% of machine peak • Problem: Can you make this run faster, without writing hand-optimized, non-portable code? Slide 15

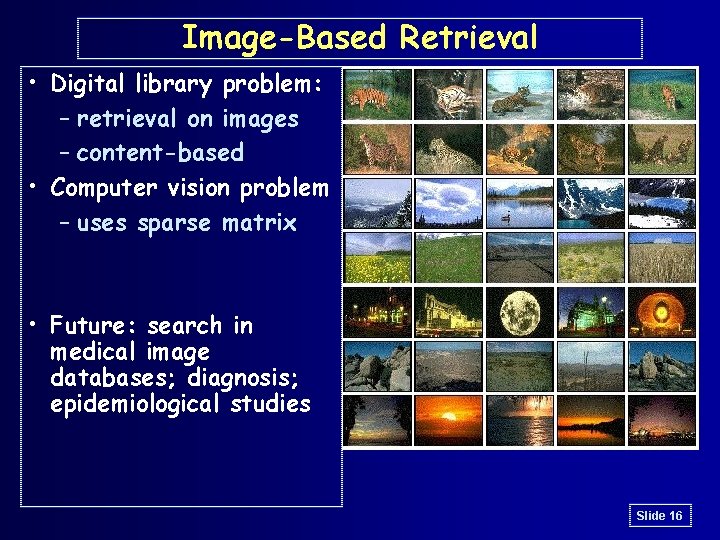

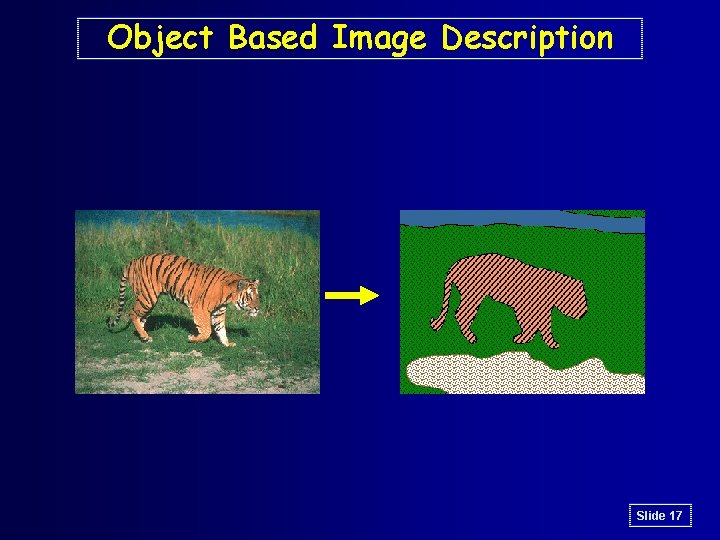

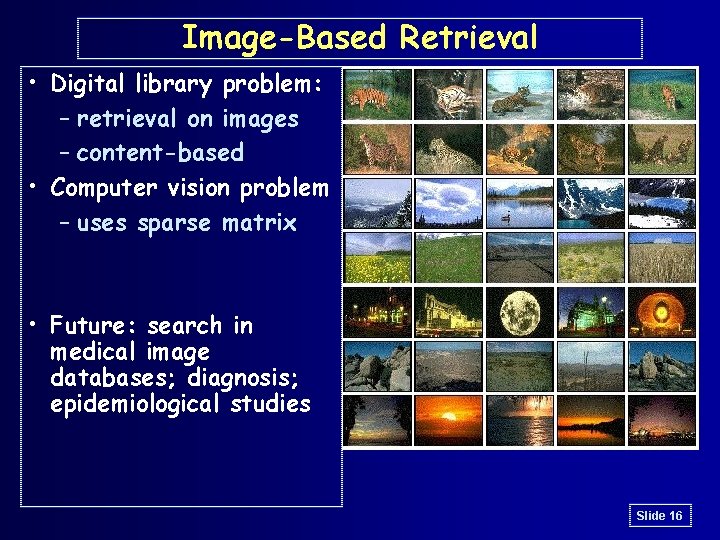

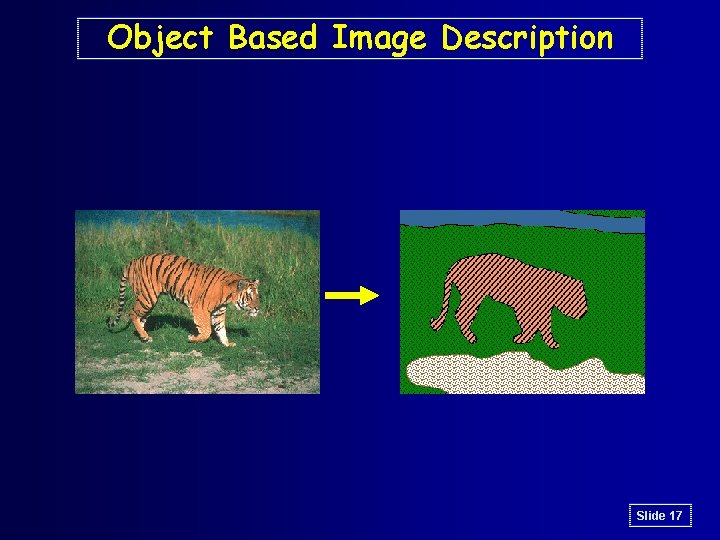

Image-Based Retrieval • Digital library problem: – retrieval on images – content-based • Computer vision problem – uses sparse matrix • Future: search in medical image databases; diagnosis; epidemiological studies Slide 16

Object Based Image Description Slide 17

Specific Projects • ISTORE – A reliable, scalable, maintainable storage system • Data-intensive applications for “backend” servers – Modeling the real world – Storing and finding information • Titanium – A high level language (Java) with high performance – A domain-specific language and optimizing compiler • Sparsity – Optimization using partial program input Slide 18

Titanium Goals • Help programmers write reliable software – Retain safety properties of Java – Extend to parallel programming constructs • Performance – Sequential code comparable to C/C++/Fortran – Parallel performance comparable to MPI • Portability • How? – Domain-specific language and compiler – No JVM – Optimizing compiler – Explicit parallelism and other language constructs for high performance Slide 19

Titanium Overview: Sequential Object-oriented language based on Java with: • Immutable classes – user-definable non-reference types for performance • Unordered loops – compiler is free to run iteration in any order – useful for cache optimizations and others • Operator overloading – by demand from our user community • Multidimensional arrays – points and index sets as first-class values – specific to an application domain: scientific computing with block-structured grids Slide 20

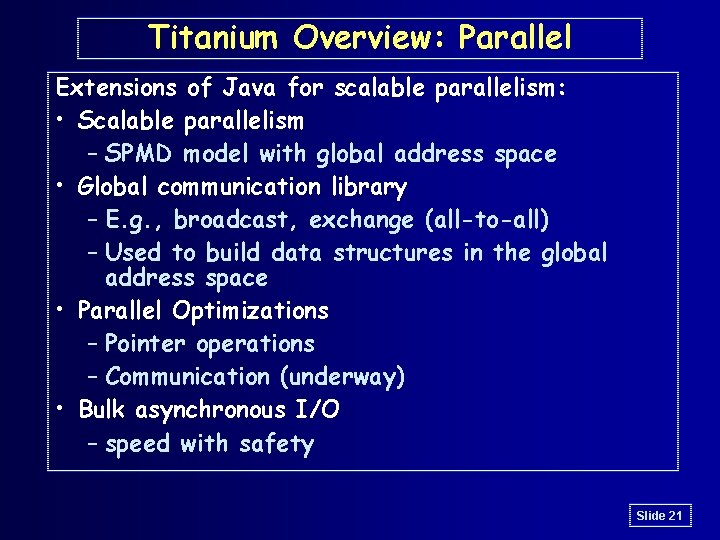

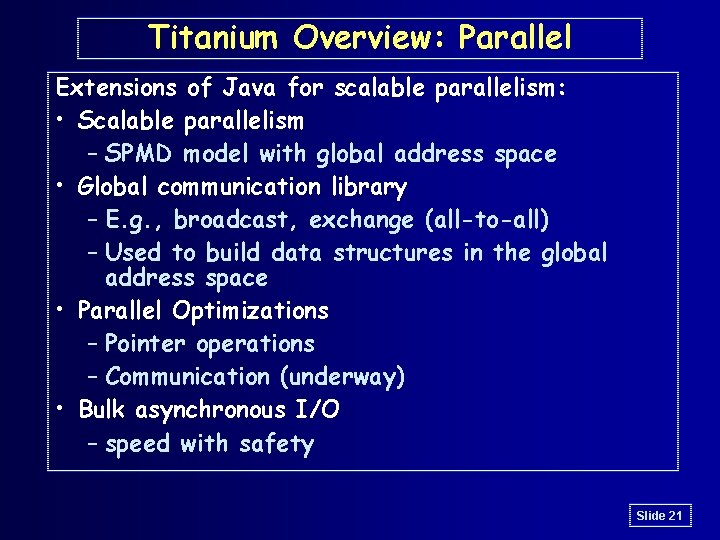

Titanium Overview: Parallel Extensions of Java for scalable parallelism: • Scalable parallelism – SPMD model with global address space • Global communication library – E. g. , broadcast, exchange (all-to-all) – Used to build data structures in the global address space • Parallel Optimizations – Pointer operations – Communication (underway) • Bulk asynchronous I/O – speed with safety Slide 21

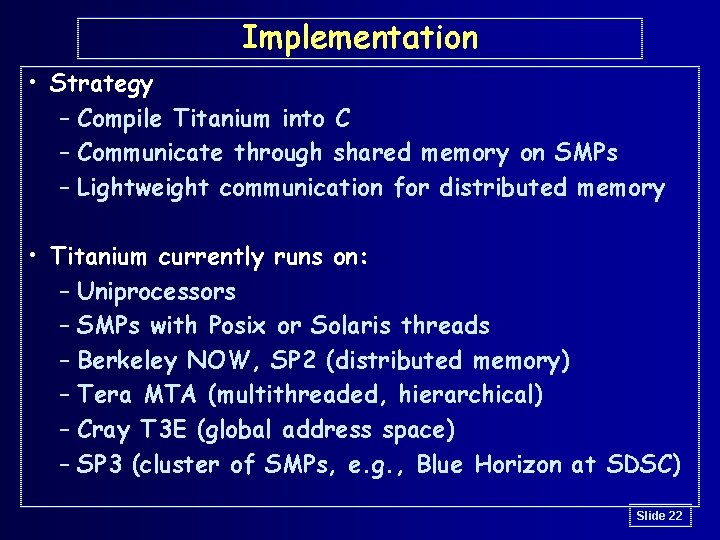

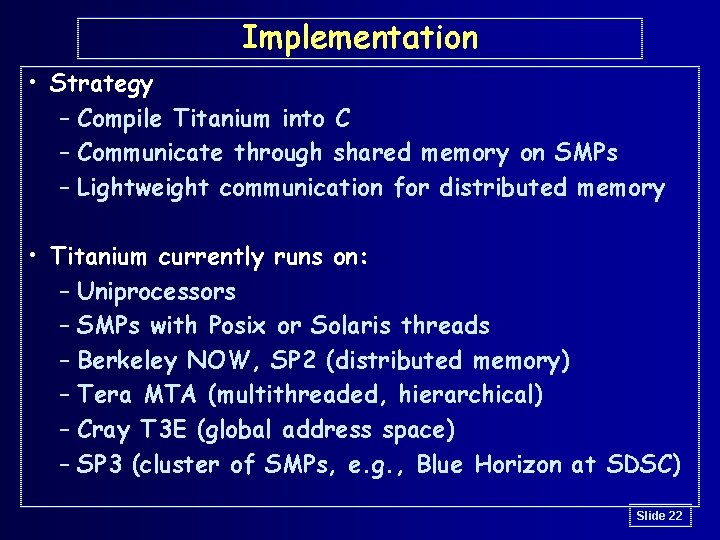

Implementation • Strategy – Compile Titanium into C – Communicate through shared memory on SMPs – Lightweight communication for distributed memory • Titanium currently runs on: – Uniprocessors – SMPs with Posix or Solaris threads – Berkeley NOW, SP 2 (distributed memory) – Tera MTA (multithreaded, hierarchical) – Cray T 3 E (global address space) – SP 3 (cluster of SMPs, e. g. , Blue Horizon at SDSC) Slide 22

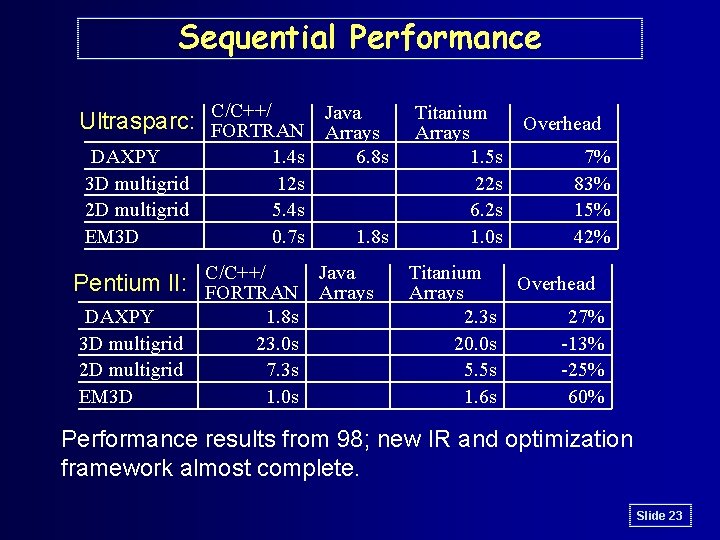

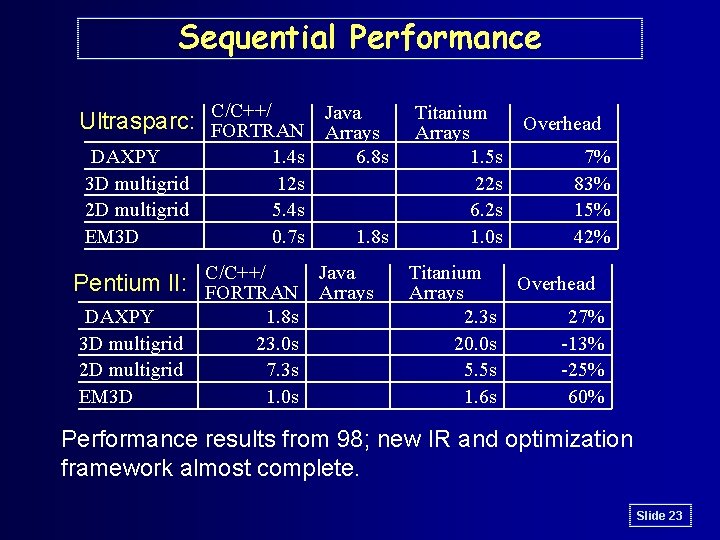

Sequential Performance Java Ultrasparc: C/C++/ FORTRAN Arrays DAXPY 3 D multigrid 2 D multigrid EM 3 D 1. 4 s 12 s 5. 4 s 0. 7 s 6. 8 s 1. 8 s Java Pentium II: C/C++/ FORTRAN Arrays DAXPY 3 D multigrid 2 D multigrid EM 3 D 1. 8 s 23. 0 s 7. 3 s 1. 0 s Titanium Arrays 1. 5 s 22 s 6. 2 s 1. 0 s Titanium Arrays 2. 3 s 20. 0 s 5. 5 s 1. 6 s Overhead 7% 83% 15% 42% Overhead 27% -13% -25% 60% Performance results from 98; new IR and optimization framework almost complete. Slide 23

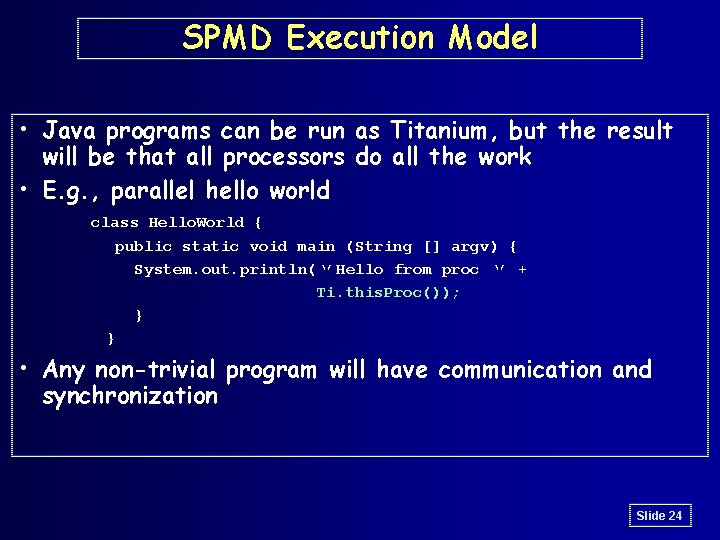

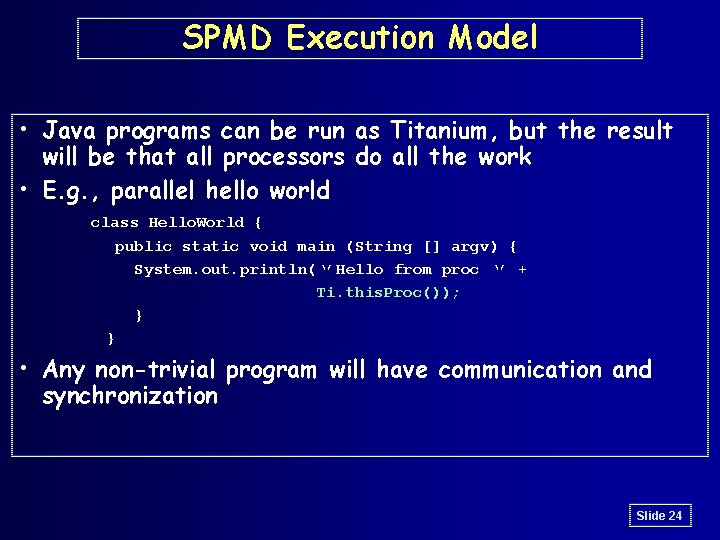

SPMD Execution Model • Java programs can be run as Titanium, but the result will be that all processors do all the work • E. g. , parallel hello world class Hello. World { public static void main (String [] argv) { System. out. println(‘’Hello from proc ‘’ + Ti. this. Proc()); } } • Any non-trivial program will have communication and synchronization Slide 24

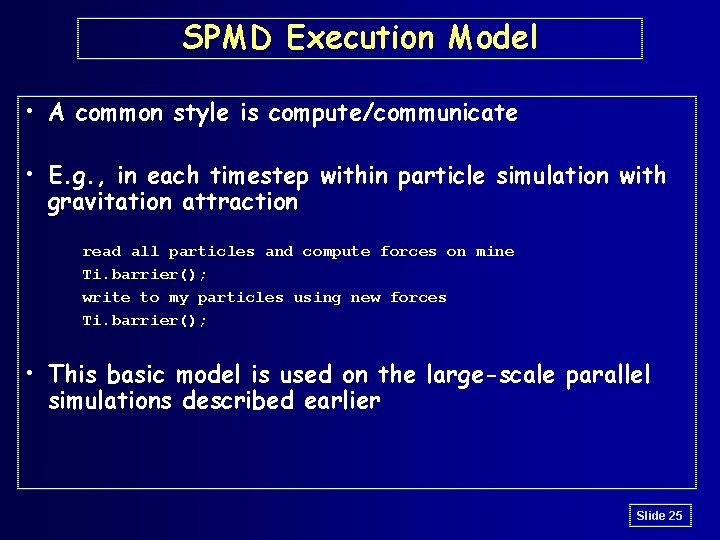

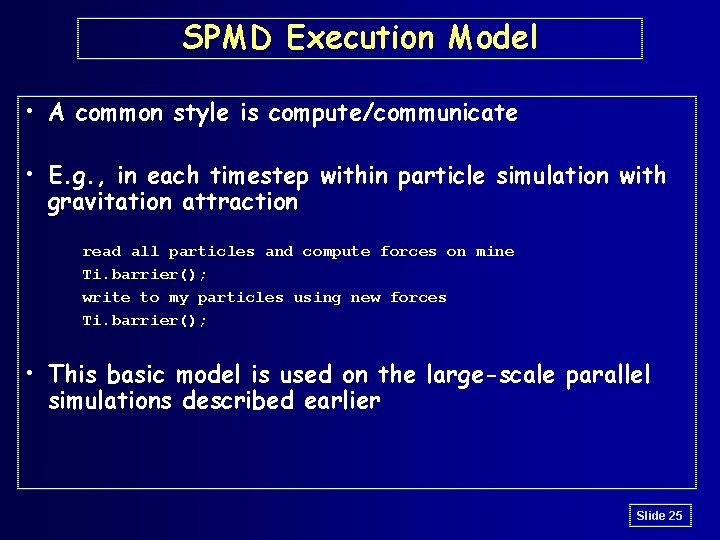

SPMD Execution Model • A common style is compute/communicate • E. g. , in each timestep within particle simulation with gravitation attraction read all particles and compute forces on mine Ti. barrier(); write to my particles using new forces Ti. barrier(); • This basic model is used on the large-scale parallel simulations described earlier Slide 25

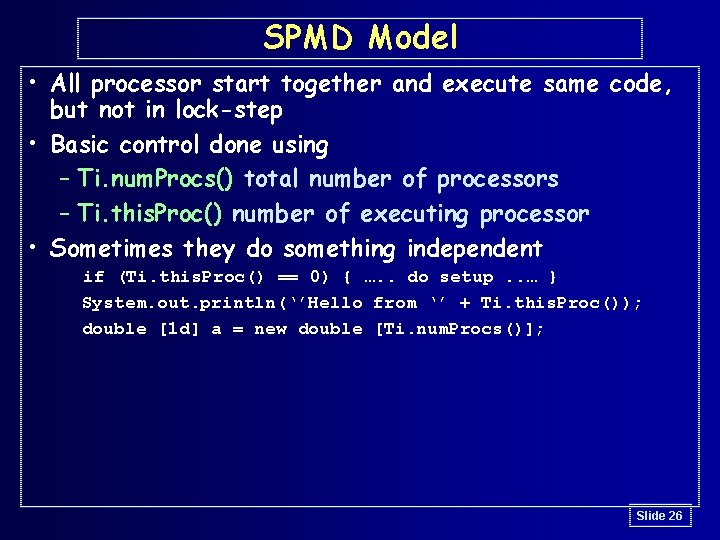

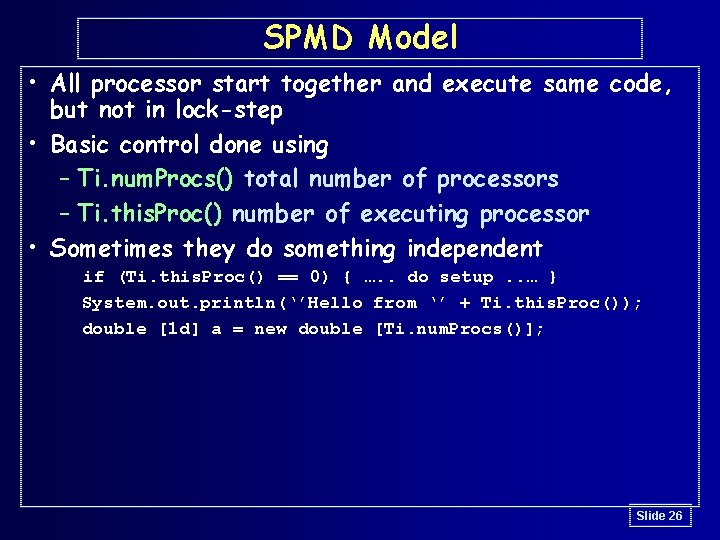

SPMD Model • All processor start together and execute same code, but not in lock-step • Basic control done using – Ti. num. Procs() total number of processors – Ti. this. Proc() number of executing processor • Sometimes they do something independent if (Ti. this. Proc() == 0) { …. . do setup. . … } System. out. println(‘’Hello from ‘’ + Ti. this. Proc()); double [1 d] a = new double [Ti. num. Procs()]; Slide 26

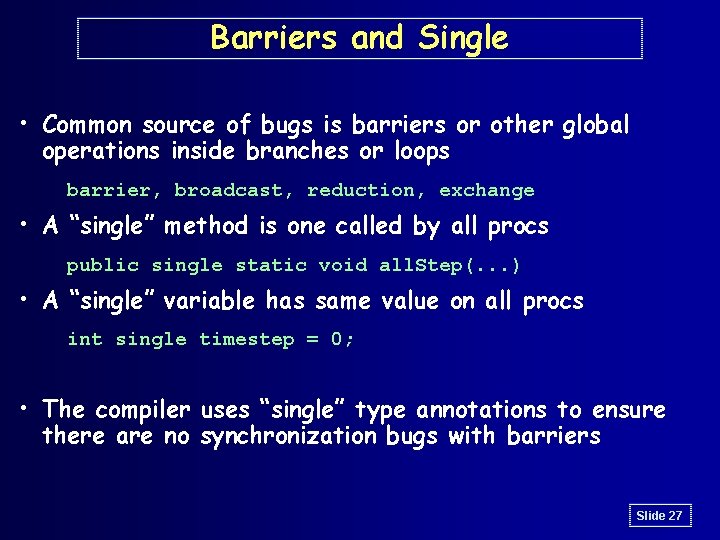

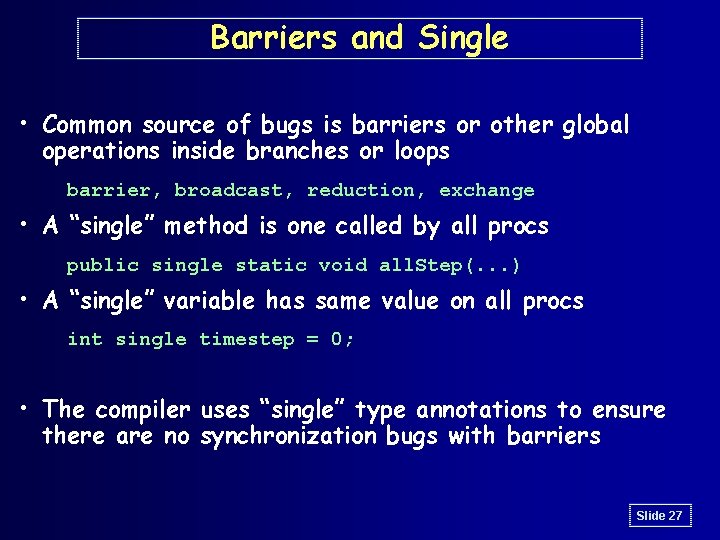

Barriers and Single • Common source of bugs is barriers or other global operations inside branches or loops barrier, broadcast, reduction, exchange • A “single” method is one called by all procs public single static void all. Step(. . . ) • A “single” variable has same value on all procs int single timestep = 0; • The compiler uses “single” type annotations to ensure there are no synchronization bugs with barriers Slide 27

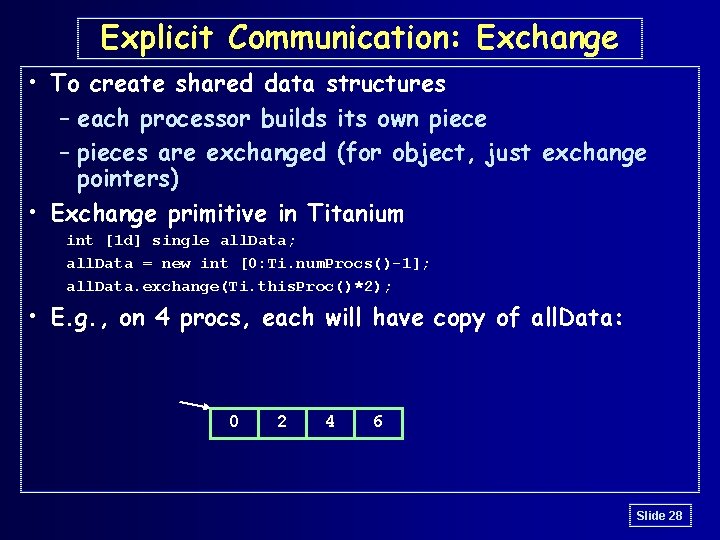

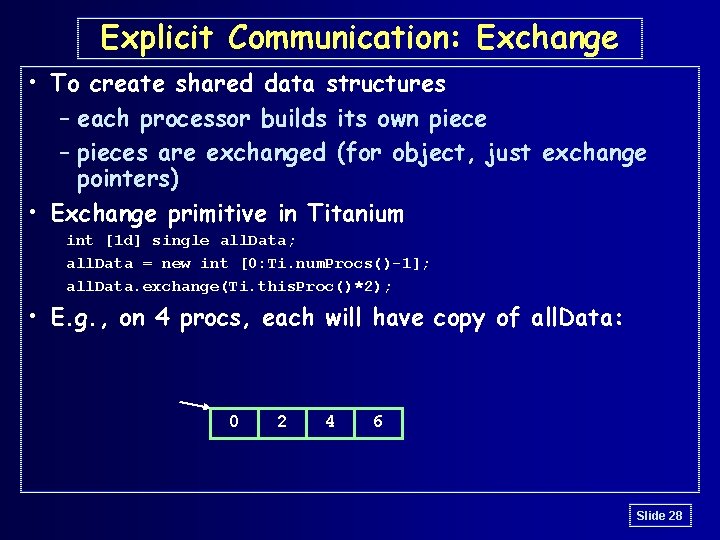

Explicit Communication: Exchange • To create shared data structures – each processor builds its own piece – pieces are exchanged (for object, just exchange pointers) • Exchange primitive in Titanium int [1 d] single all. Data; all. Data = new int [0: Ti. num. Procs()-1]; all. Data. exchange(Ti. this. Proc()*2); • E. g. , on 4 procs, each will have copy of all. Data: 0 2 4 6 Slide 28

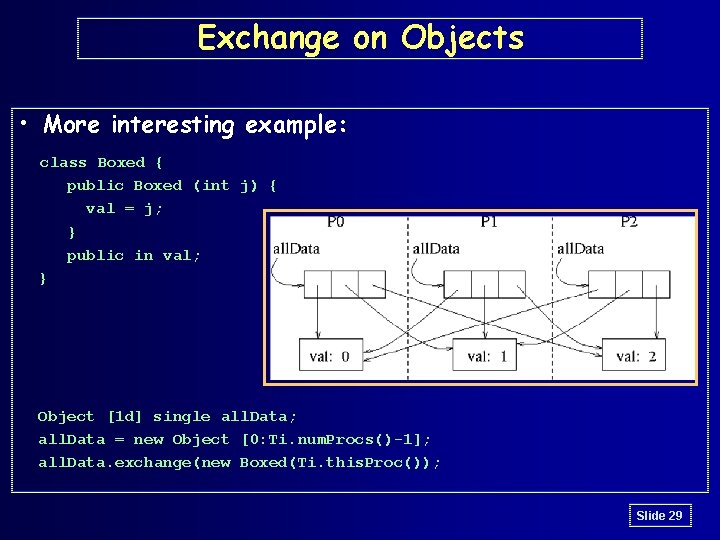

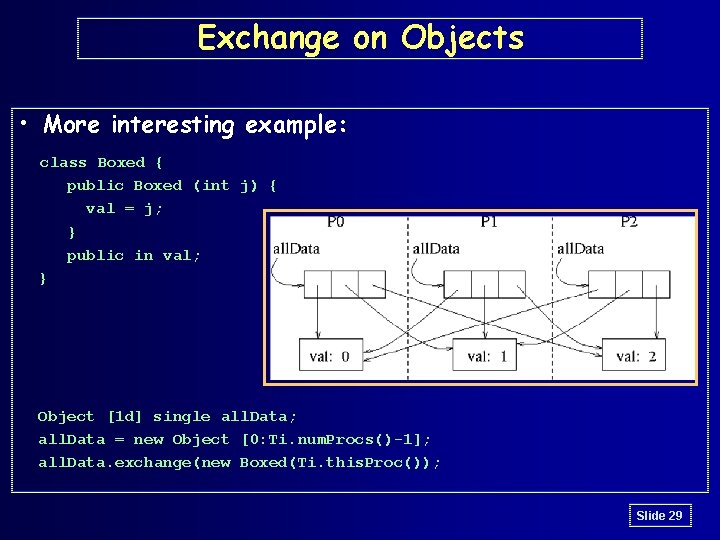

Exchange on Objects • More interesting example: class Boxed { public Boxed (int j) { val = j; } public in val; } Object [1 d] single all. Data; all. Data = new Object [0: Ti. num. Procs()-1]; all. Data. exchange(new Boxed(Ti. this. Proc()); Slide 29

Use of Global / Local • As seen, references (pointers) may be remote – easy to port shared-memory programs • Global pointers are more expensive than local – True even when data is on the same processor – Use local declarations in critical sections • Costs of global: – space (processor number + memory address) – dereference time (check to see if local) • May declare references as local Slide 30

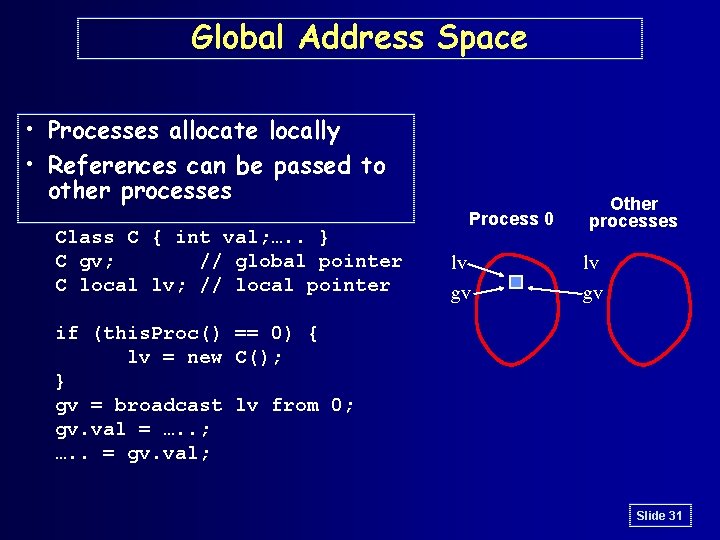

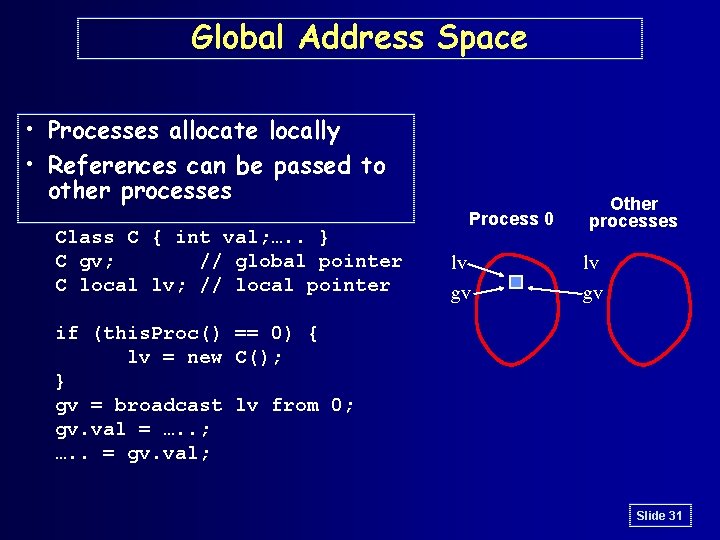

Global Address Space • Processes allocate locally • References can be passed to other processes Class C { int val; …. . } C gv; // global pointer C local lv; // local pointer Process 0 Other processes lv lv gv gv if (this. Proc() == 0) { lv = new C(); } gv = broadcast lv from 0; gv. val = …. . ; …. . = gv. val; Slide 31

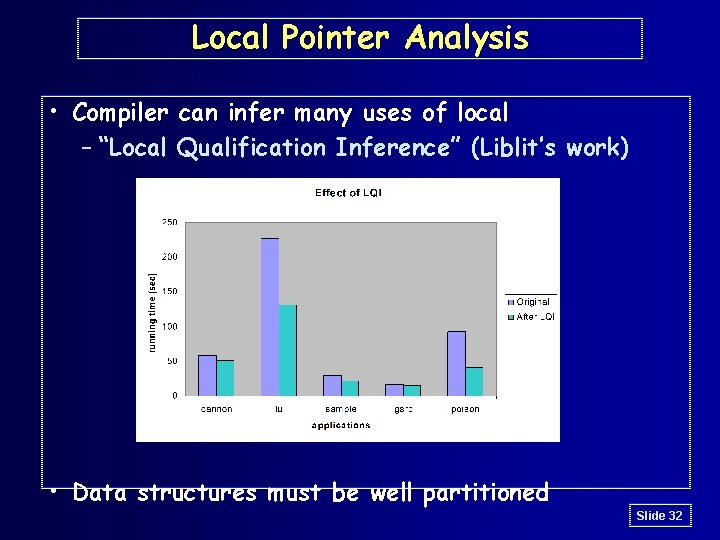

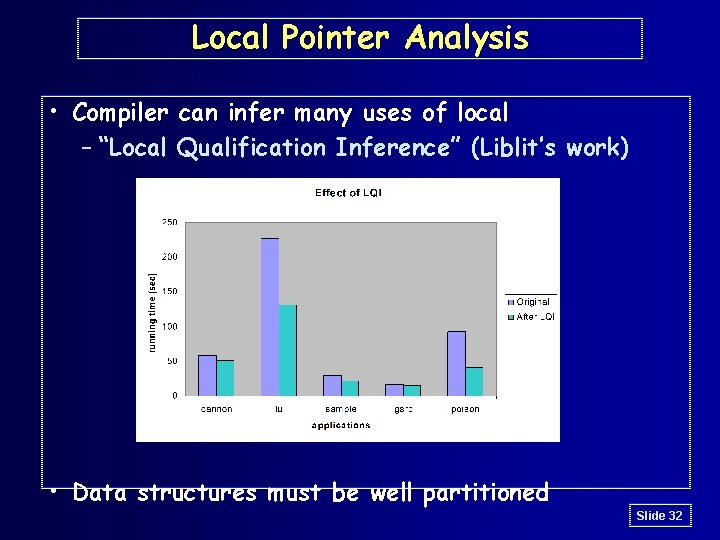

Local Pointer Analysis • Compiler can infer many uses of local – “Local Qualification Inference” (Liblit’s work) • Data structures must be well partitioned Slide 32

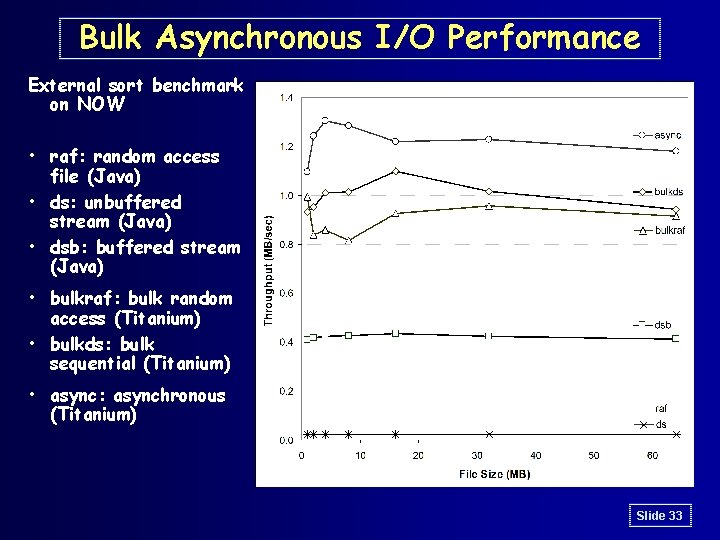

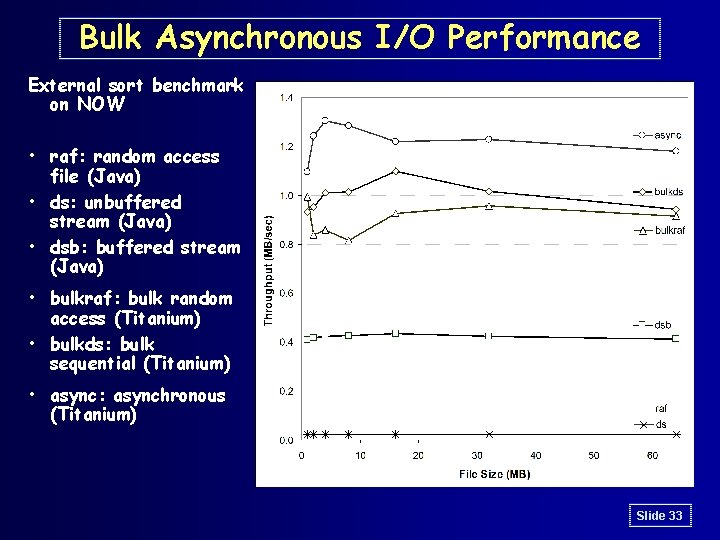

Bulk Asynchronous I/O Performance External sort benchmark on NOW • raf: random access file (Java) • ds: unbuffered stream (Java) • dsb: buffered stream (Java) • bulkraf: bulk random access (Titanium) • bulkds: bulk sequential (Titanium) • async: asynchronous (Titanium) Slide 33

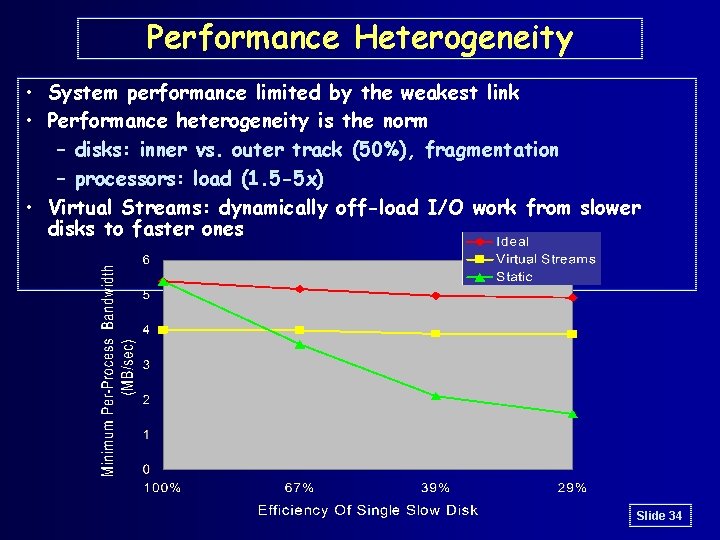

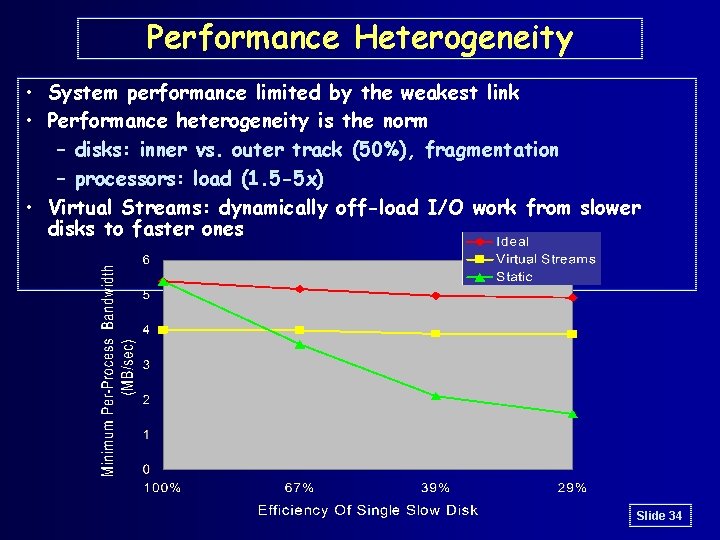

Performance Heterogeneity • System performance limited by the weakest link • Performance heterogeneity is the norm – disks: inner vs. outer track (50%), fragmentation – processors: load (1. 5 -5 x) • Virtual Streams: dynamically off-load I/O work from slower disks to faster ones Slide 34

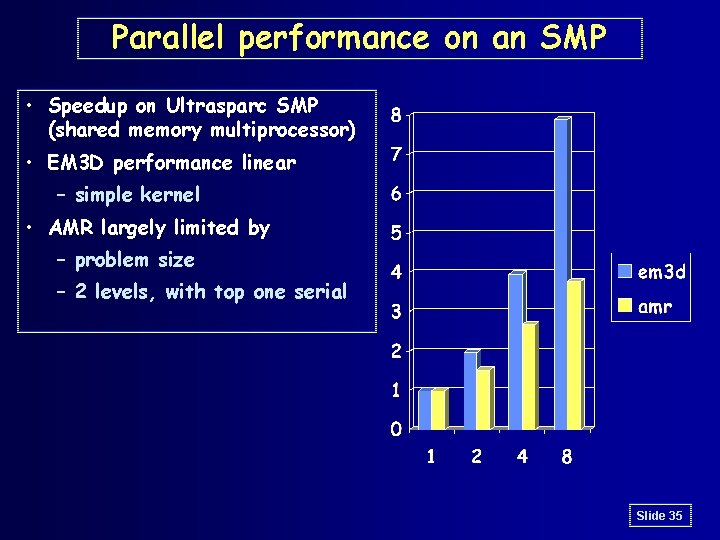

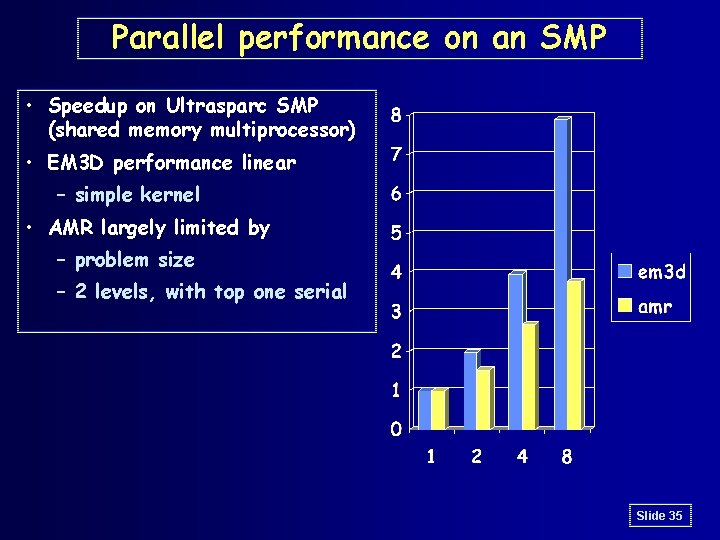

Parallel performance on an SMP • Speedup on Ultrasparc SMP (shared memory multiprocessor) • EM 3 D performance linear – simple kernel • AMR largely limited by – problem size – 2 levels, with top one serial Slide 35

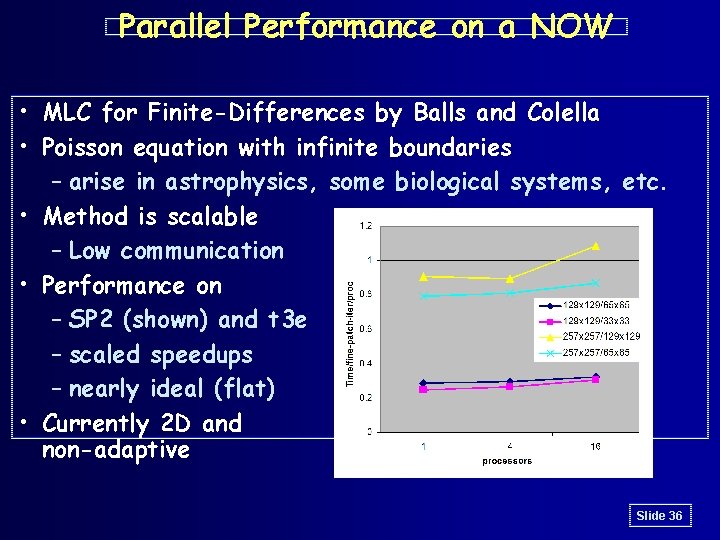

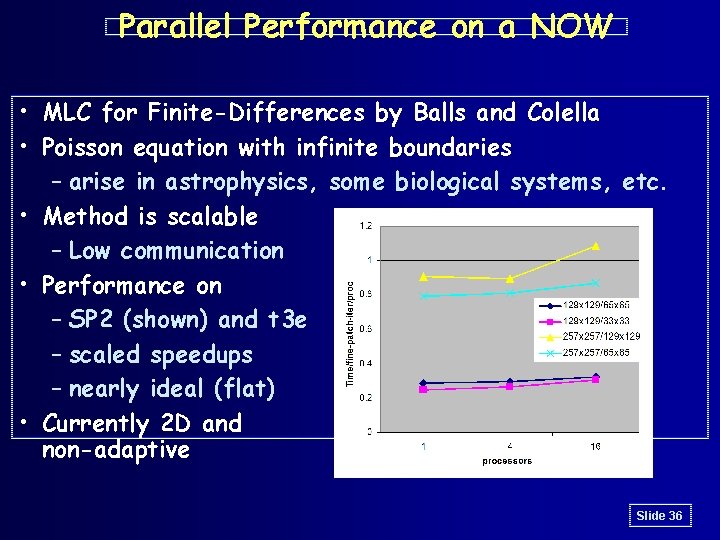

Parallel Performance on a NOW • MLC for Finite-Differences by Balls and Colella • Poisson equation with infinite boundaries – arise in astrophysics, some biological systems, etc. • Method is scalable – Low communication • Performance on – SP 2 (shown) and t 3 e – scaled speedups – nearly ideal (flat) • Currently 2 D and non-adaptive Slide 36

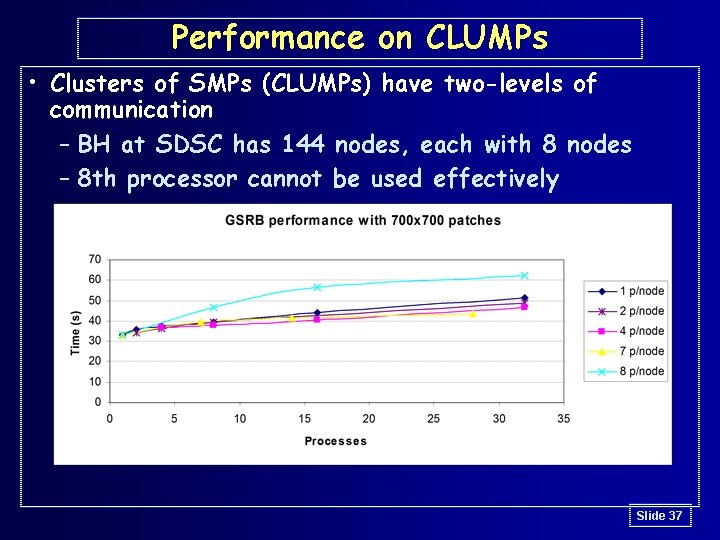

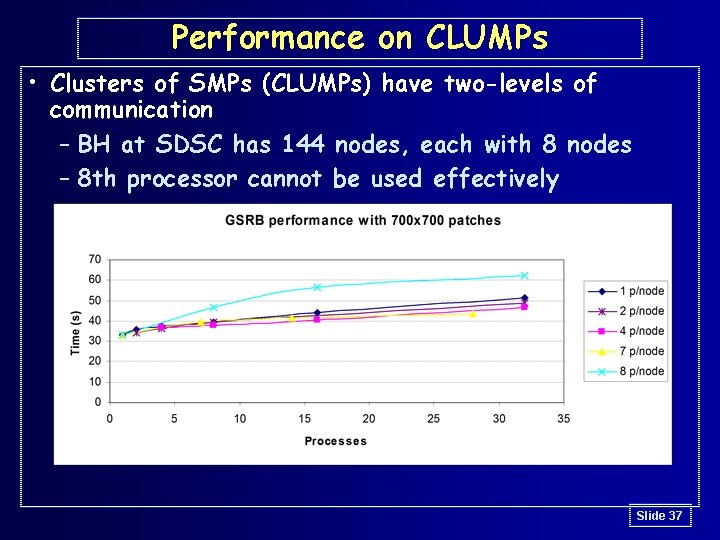

Performance on CLUMPs • Clusters of SMPs (CLUMPs) have two-levels of communication – BH at SDSC has 144 nodes, each with 8 nodes – 8 th processor cannot be used effectively Slide 37

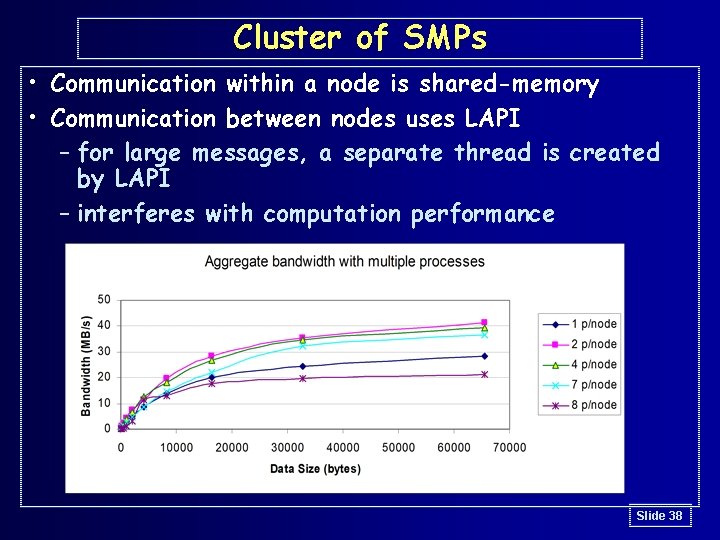

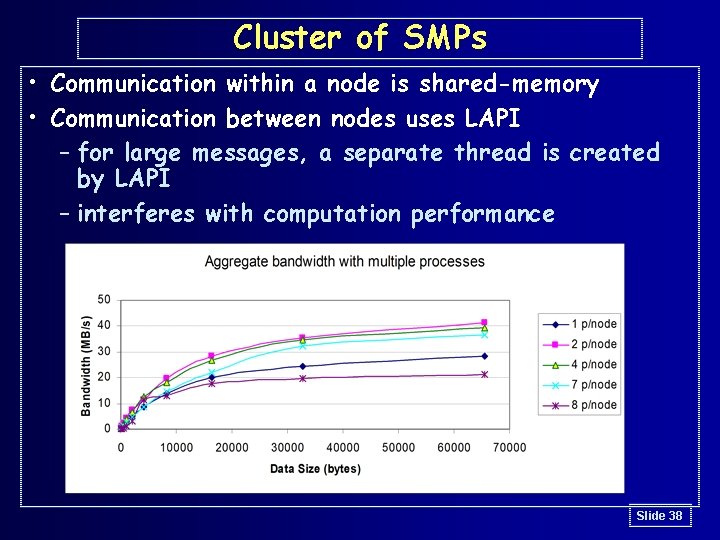

Cluster of SMPs • Communication within a node is shared-memory • Communication between nodes uses LAPI – for large messages, a separate thread is created by LAPI – interferes with computation performance Slide 38

Optimizing Parallel Programs • Would like compiler to introduce asynchronous communication, which is a form of possible reordering • Hardware also reorders – out-of-order execution – write buffered with read by-pass – non-FIFO write buffers • Software already reorders too – register allocation – any code motion • System provides enforcement primitives – volatile: at the language level not well-defined – tend to be heavy weight, unpredictable • Can the compiler hide all this? Slide 39

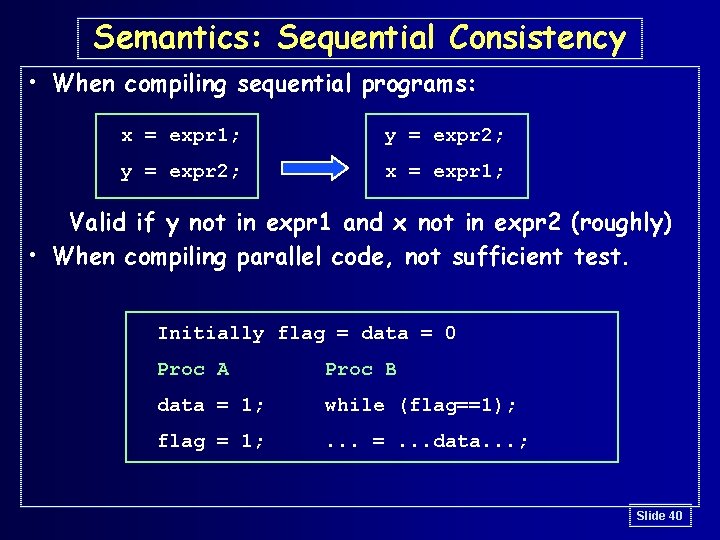

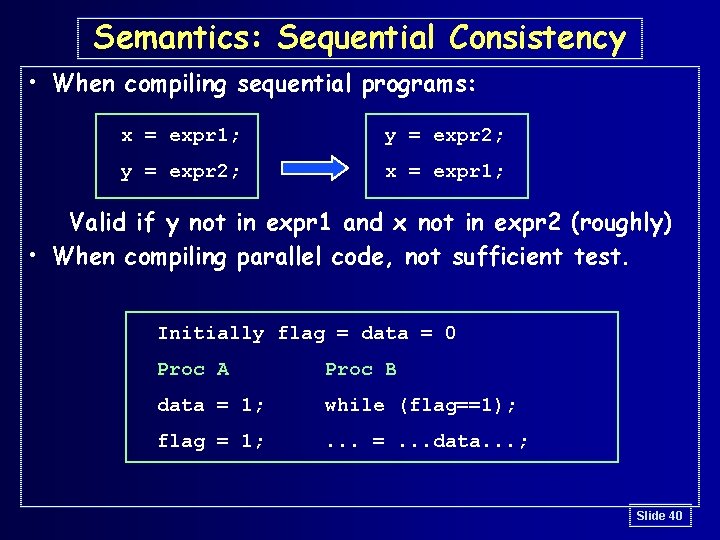

Semantics: Sequential Consistency • When compiling sequential programs: x = expr 1; y = expr 2; x = expr 1; Valid if y not in expr 1 and x not in expr 2 (roughly) • When compiling parallel code, not sufficient test. Initially flag = data = 0 Proc A Proc B data = 1; while (flag==1); flag = 1; . . . =. . . data. . . ; Slide 40

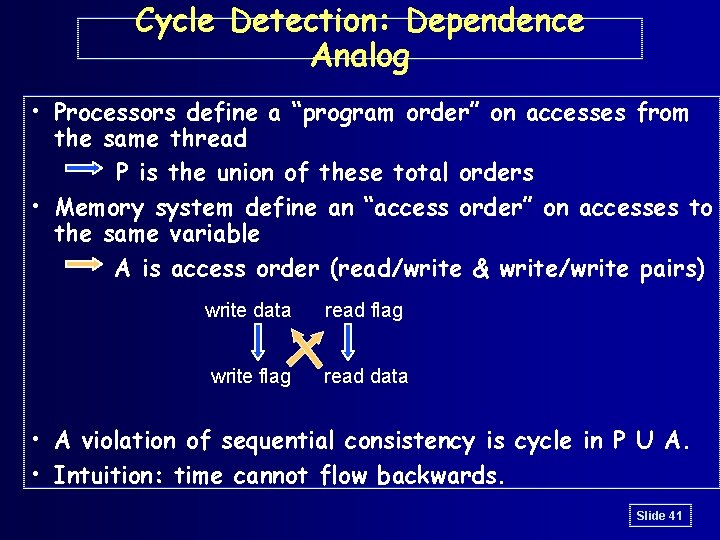

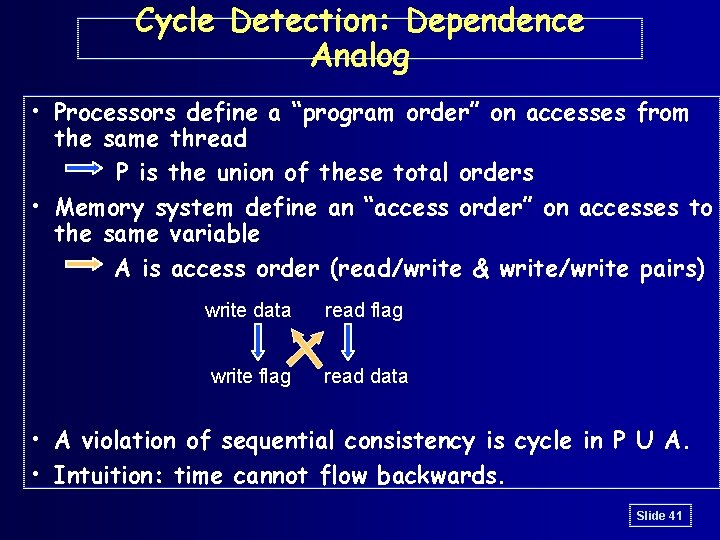

Cycle Detection: Dependence Analog • Processors define a “program order” on accesses from the same thread P is the union of these total orders • Memory system define an “access order” on accesses to the same variable A is access order (read/write & write/write pairs) write data read flag write flag read data • A violation of sequential consistency is cycle in P U A. • Intuition: time cannot flow backwards. Slide 41

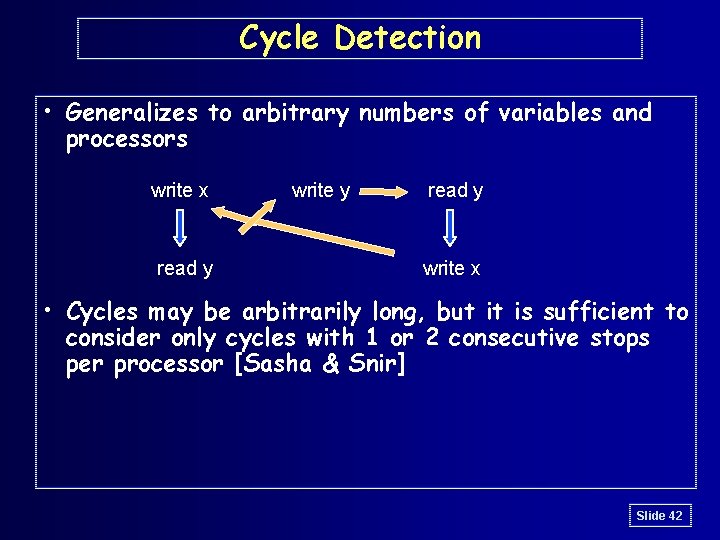

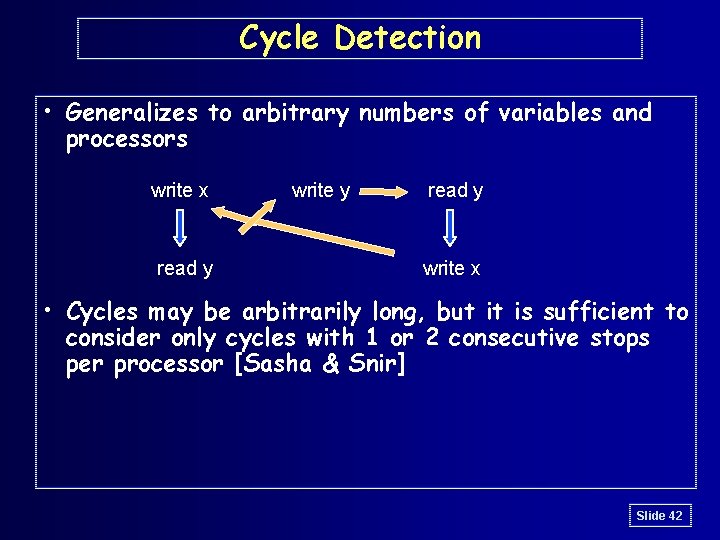

Cycle Detection • Generalizes to arbitrary numbers of variables and processors write x read y write y read y write x • Cycles may be arbitrarily long, but it is sufficient to consider only cycles with 1 or 2 consecutive stops per processor [Sasha & Snir] Slide 42

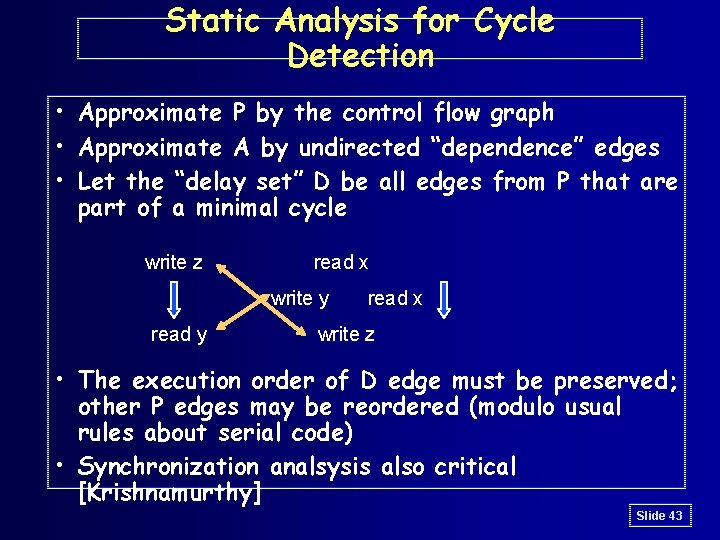

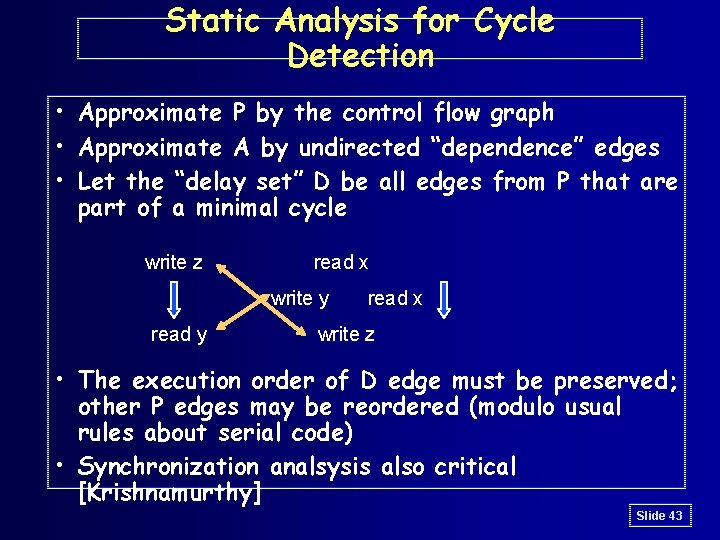

Static Analysis for Cycle Detection • Approximate P by the control flow graph • Approximate A by undirected “dependence” edges • Let the “delay set” D be all edges from P that are part of a minimal cycle write z read x write y read x write z • The execution order of D edge must be preserved; other P edges may be reordered (modulo usual rules about serial code) • Synchronization analsysis also critical [Krishnamurthy] Slide 43

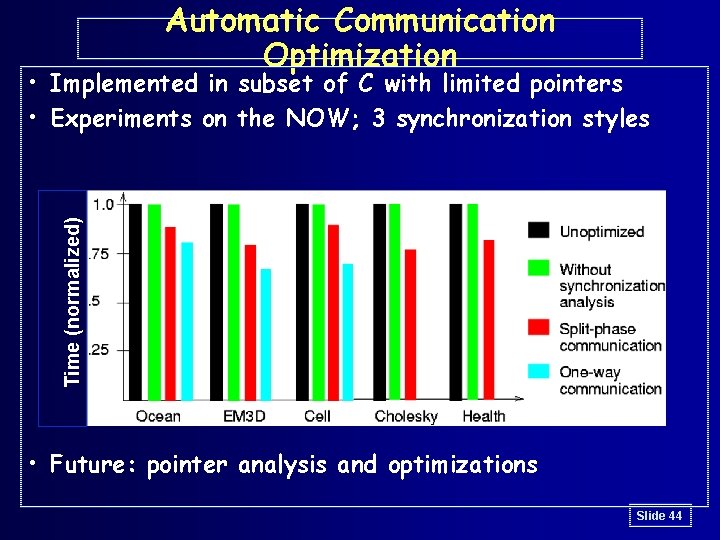

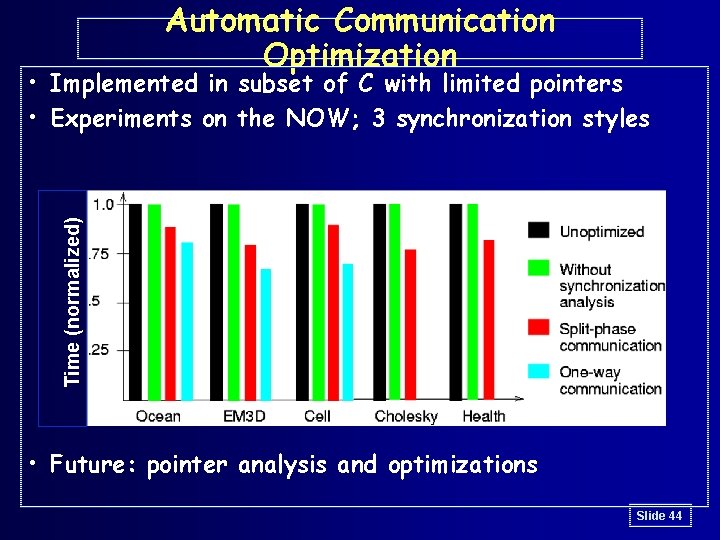

Automatic Communication Optimization Time (normalized) • Implemented in subset of C with limited pointers • Experiments on the NOW; 3 synchronization styles • Future: pointer analysis and optimizations Slide 44

Specific Projects • ISTORE – A reliable, scalable, maintainable storage system • Data-intensive applications for “backend” servers – Modeling the real world – Storing and finding information • Titanium – A high level language (Java) with high performance – A domain-specific language and optimizing compiler • Sparsity – Optimization using partial program input Slide 45

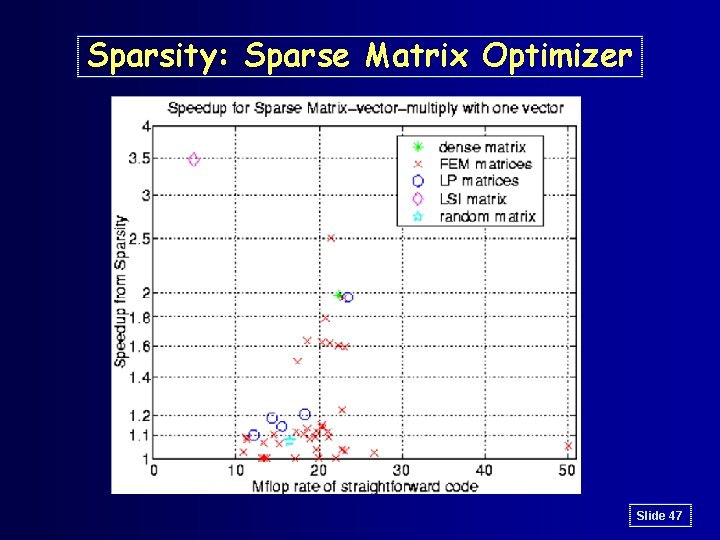

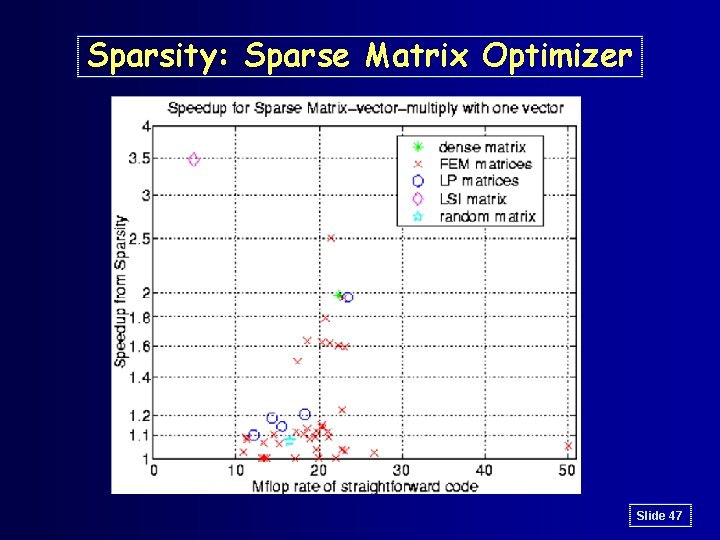

Sparsity: Sparse Matrix Optimizer • Several data mining or web search algorithms use sparse matrix-vector multiplication – use for documents, images, video, etc. – irregular, indirect memory patterns perform poorly on memory hierarchies • Performance improvements possible, but depend on: – sparsity structure, e. g. , keywords within documents – machine parameters without analytical models • Good news: – operation repeated many times on similar matrix – Sparsity: automatic code generator based on matrix structure and machine Slide 46

Sparsity: Sparse Matrix Optimizer Slide 47

Summary • Future – small devices + larger servers – reliability increasingly important • Reliability techniques include – hardware: redundancy, monitoring – software: better languages, many others • Performance trades off against safety in languages – use of domain-specific features (e. g. , Titanium) Slide 48

Backup Slides Slide 49

The Big Motivators for Programming Systems Research • Ease of Programming – Hardware costs -> 0 – Software costs -> infinity • Correctness – Increasing reliance on software increases cost of software errors (medical, financial, etc. ) • Performance – Increasing machine complexity – New languages and applications » Enabling Java; network packet filters Slide 50

The Real Scalability Problems: AME • Availability – systems should continue to meet quality of service goals despite hardware and software failures and extreme load • Maintainability – systems should require only minimal ongoing human administration, regardless of scale or complexity • Evolutionary Growth – systems should evolve gracefully in terms of performance, maintainability, and availability as they are grown/upgraded/expanded • These are problems at today’s scales, and will only get worse as systems grow Slide 51

Research Principles • Redundancy everywhere, no single point of failure • Performance secondary to AME – Performance robustness over peak performance – Dedicate resources to AME » biological systems use > 50% of resources on maintenance – Optimizations viewed as AME-enablers » e. g. , use of (slower) safe languages like Java with static and dynamic optimizations • Introspection – reactive techniques to detect and adapt to failures, workload variations, and system evolution – proactive techniques to anticipate and avert problems before they happen Slide 52

Outline • Motivation • Hardware Techniques – general techniques – ISTORE projects • Software Techniques • Availability Benchmarks • Conclusions Slide 53

Hardware techniques • Fully shared-nothing cluster organization – truly scalable architecture, automatic redundancy – tolerates partial hardware failure • No Central Processor Unit: distribute processing with storage – Most storage servers limited by speed of CPUs; why does this make sense? – Amortize sheet metal, power, cooling infrastructure for disk to add processor, memory, and network • On-demand network partitioning/isolation – Applications must tolerate these anyway – Allows testing, repair of online system Slide 54

Hardware techniques • Heavily instrumented hardware – sensors for temp, vibration, humidity, power • Independent diagnostic processor on each node – remote control of power, console, boot code – collects, stores, processes environmental data – connected via independent network • Built-in fault injection capabilities – Used for proactive hardware introspection » automated detection of flaky components » controlled testing of error-recovery mechanisms – Important for AME benchmarking Slide 55

ISTORE-2 Hardware Proposal • Smaller disks – replace 3. 5” disks with 2. 5” or 1” drives » 340 MB available now in 1”, 1 GB next year (? ) • Smaller, more highly integrated processors – E. g. , Transmeta Crusoe includes processor and Northbridge (interface) functionality in 1 Watt – Xilinx FPGA for Southbridge, diagnostic proc, etc. • Larger scale – Roughly 1000 nodes, depending on support » ISTORE-1 built with donated disks, memory, processors » Paid for network, board design, enclosures (discounted) Slide 56

Outline • Motivation • Hardware Techniques • Software Techniques – general techniques – Titanium: a high performance Java dialect – Sparsity: using dynamic information – Virtual streams: performance robustness • Availability Benchmarks • Conclusions Slide 57

Software techniques • Fault tolerant data structures – Application controls replication, checkpointing, and consistency policy – Self-scrubbing used to identify software errors that have corrupted application state • Encourage use of safe languages – Type safety and automatic memory management avoid a host of application errors – Use of static and dynamic information to meet performance needs • Runtime adaptation to performance heterogeneity – e. g. , outer vs. inner track (1. 5 X), fragmentation – Evolution of systems adds to this problem Slide 58

Software Techniques • Reactive introspection – Use statistical techniques to identify normal behavior and detect deviations from it » e. g. , network activity, response time, program counter (? ) – Semi-automatic response to abnormal behavior » initially, rely on human administrator » eventually, system learns to set response parameters • Proactive introspection – Continuous online self-testing » in deployed systems! » goal is to shake out bugs in failure response code on isolated subset » use of fault-injection and stress testing Slide 59

Techniques for Safe Languages Titanium: A high performance dialect of Java • Scalable parallelism – A global address space, but not shared memory – For tightly-coupled applications, e. g. , mining – Safe, region-based memory management • Scalar performance enhancements, some specific to application domain – immutable classes (avoids indirection) – multidimensional arrays with subarrays • Application domains – scientific computing on grids » typically +/-20% of C++/F in this domain – data mining in progress Slide 60

Use of Static Information • Titanium compiler performs parallel optimizations – communication overlap (40%) and aggregation • Uses two new analyses – synchronization analysis: the parallel analog to control flow analysis » identifies code segments that may execute in parallel – shared variable analysis: the parallel analog to dependence analysis » recognize when reordering can be observed by another processor » necessary for any code motion or use of relaxed memory models in hardware => missed or illegal optimizations Slide 61

Conclusions • Two key applications domains – Storage: loosely coupled – Search: tightly coupled, computation important • Key challenges to future servers are: – Availability, Maintainability, and Evolutionary growth • Use of self-monitoring to satisfy AME goals – Proactive and reactive techniques • Use of static techniques for high performance and reliable software – Titanium extension of Java • Use of dynamic information for performance robustness – Sparsity and Virtual Streams • Availability benchmarks a powerful tool? Slide 62

Projects and Participants ISTORE: iram. cs. berkeley. edu/istore With James Beck, Aaron Brown, Daniel Hettena, David Oppenheimer, Randi Thomas, Noah Treuhaft, David Patterson, John Kubiatowicz Titanium: www. cs. berkeley. edu/projects/titanium With Greg Balls, Dan Bonachea, David Gay, Ben Liblit, Chang-Sun Lin, Peter Mc. Quorquodale, Carleton Miyamoto, Geoff Pike, Alex Aiken, Phil Colella, Susan Graham, Paul Hilfinger Sparsity: www. cs. berkeley. edu/~ejim/sparsity With Eun-Jin Im Slide 63

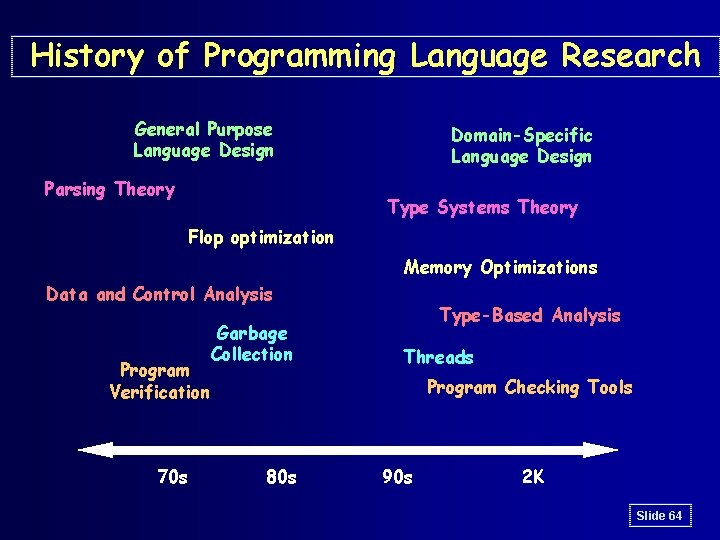

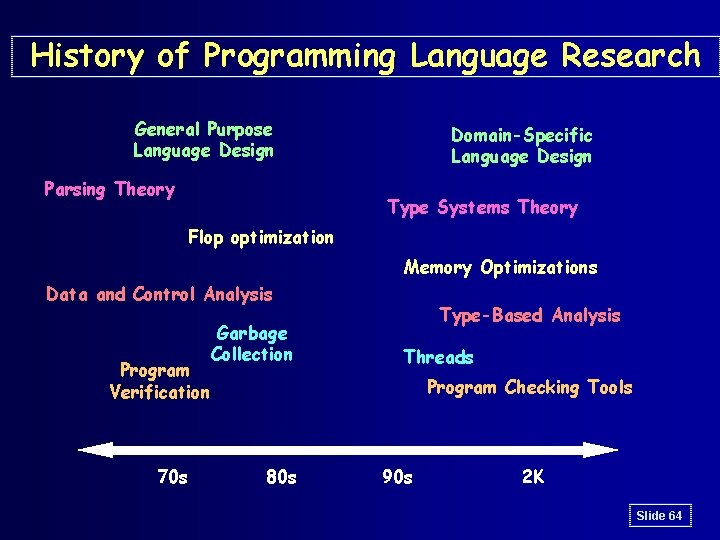

History of Programming Language Research General Purpose Language Design Parsing Theory Domain-Specific Language Design Type Systems Theory Flop optimization Memory Optimizations Data and Control Analysis Program Verification 70 s Garbage Collection Type-Based Analysis Threads Program Checking Tools 80 s 90 s 2 K Slide 64