PGAS Applications: What, Where and Why? Kathy Yelick

- Slides: 40

PGAS Applications: What, Where and Why? Kathy Yelick, Professor of Electrical Engineering and Computer Sciences, University of California at Berkeley; Associate Laboratory Director for Computing Sciences, Lawrence Berkeley National Laboratory

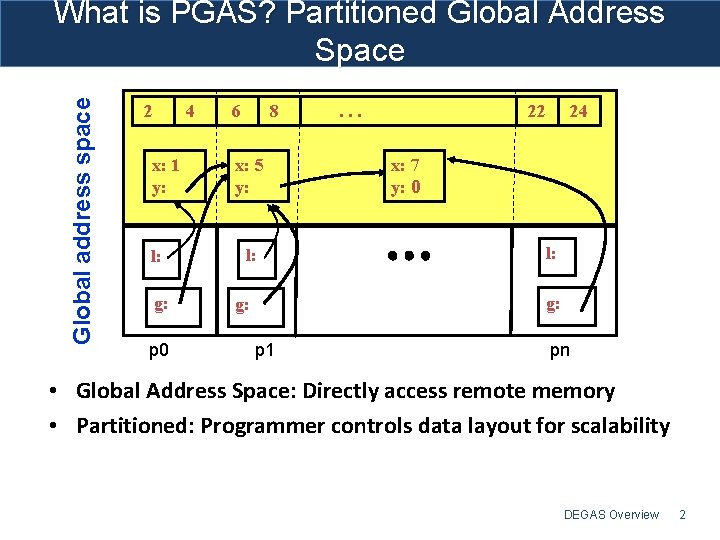

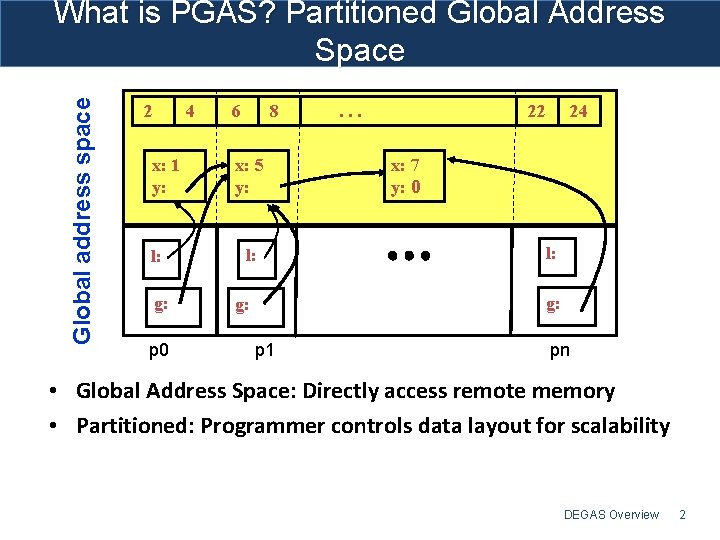

What is PGAS? Partitioned Global Address Space

[Figure: a global address space spanning processes p0, p1, …, pn; each process holds private variables (x, y, l, g) and owns one partition of the shared space]

- Global address space: directly access remote memory
- Partitioned: the programmer controls data layout for scalability

(DEGAS Overview)

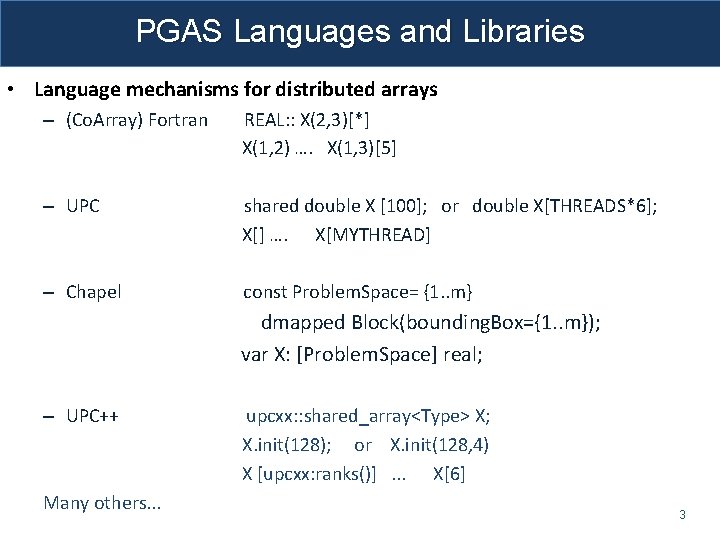

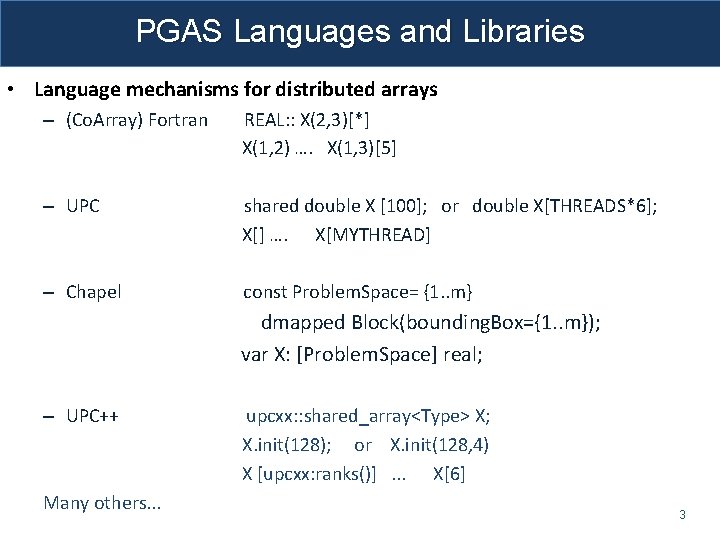

PGAS Languages and Libraries

Language mechanisms for distributed arrays:
- (Co-Array) Fortran: `REAL :: X(2,3)[*]` … `X(1,2)` … `X(1,3)[5]`
- UPC: `shared double X[100];` or `shared double X[THREADS*6];` … `X[…]` … `X[MYTHREAD]`
- Chapel: `const ProblemSpace = {1..m} dmapped Block(boundingBox={1..m}); var X: [ProblemSpace] real;`
- UPC++: `upcxx::shared_array<Type> X; X.init(128);` or `X.init(128, 4);` … `X[upcxx::ranks()]` … `X[6]`
- Many others…

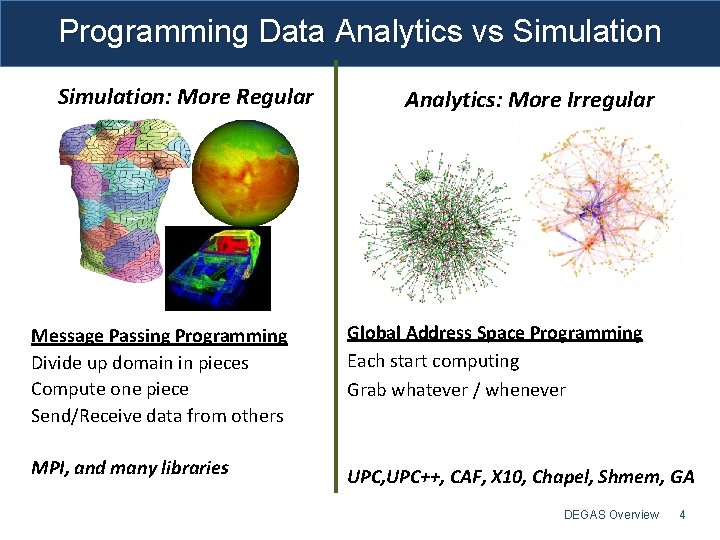

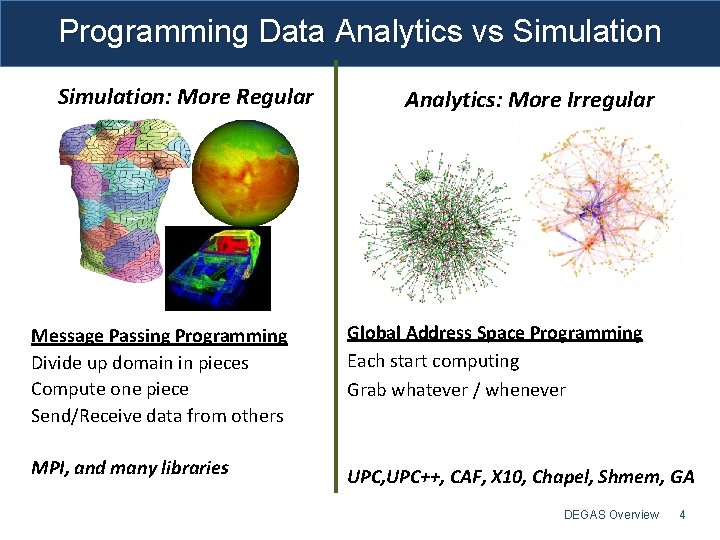

Programming Data Analytics vs. Simulation

- Simulation is more regular. Message-passing programming: divide up the domain into pieces, compute one piece, send/receive data from others (MPI and many libraries).
- Analytics is more irregular. Global-address-space programming: each process starts computing and grabs whatever data it needs, whenever it needs it (UPC, UPC++, CAF, X10, Chapel, SHMEM, GA).

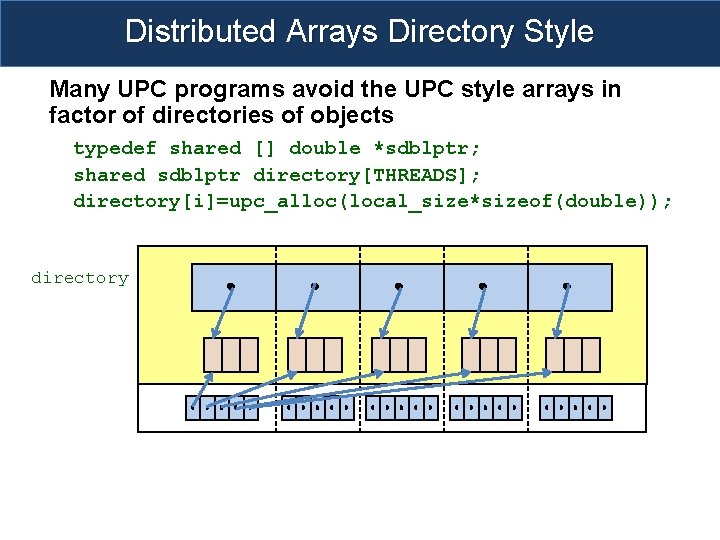

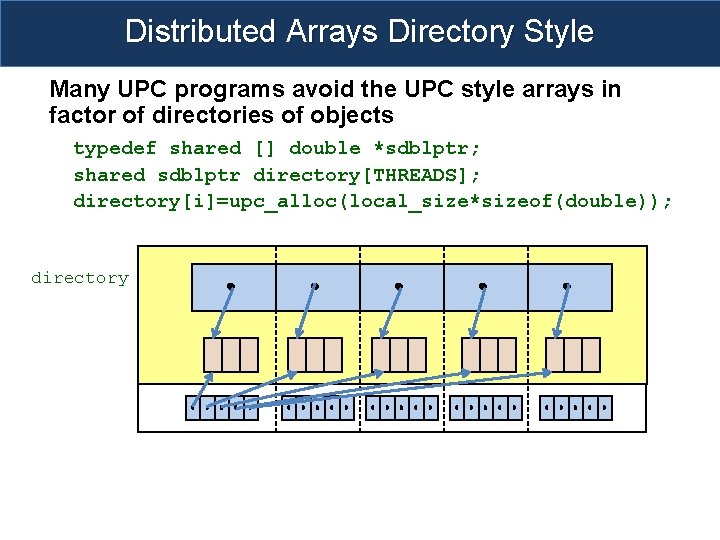

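Distributed Arrays, Directory Style

Many UPC programs avoid UPC-style shared arrays in favor of directories of objects:
- `typedef shared [] double *sdblptr;` declares a pointer to shared doubles that all have affinity to a single thread
- `shared sdblptr directory[THREADS];` holds one such pointer per thread
- `directory[i] = upc_alloc(local_size*sizeof(double));` allocates the block for thread i (`upc_alloc` allocates space with affinity to the calling thread)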

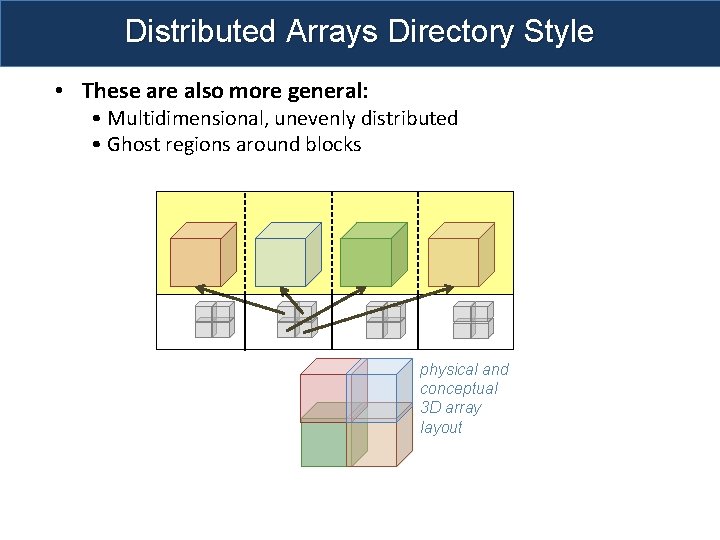

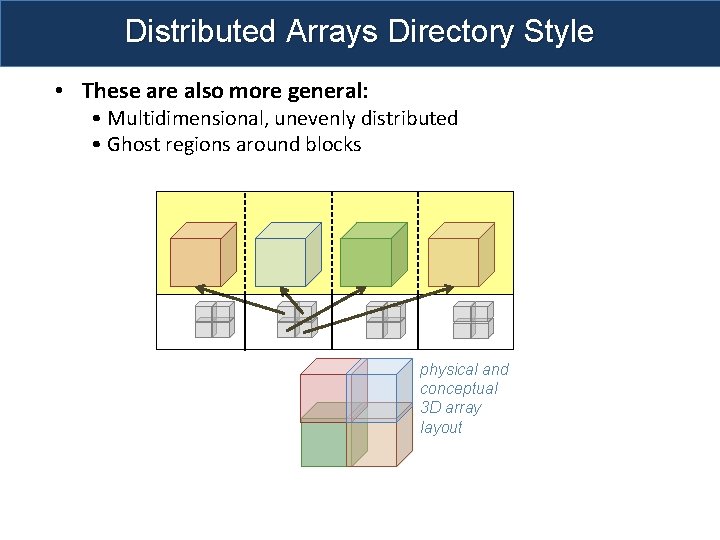

Distributed Arrays, Directory Style

- These are also more general:
  - Multidimensional, unevenly distributed
  - Ghost regions around blocks

[Figure: physical and conceptual 3D array layout]

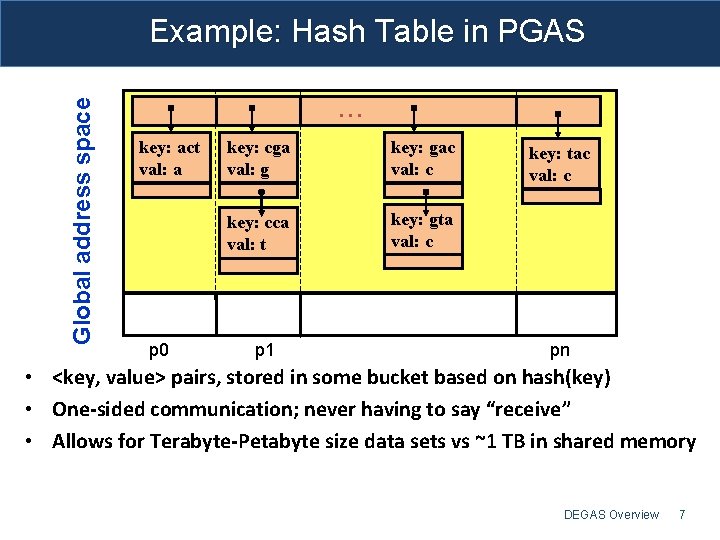

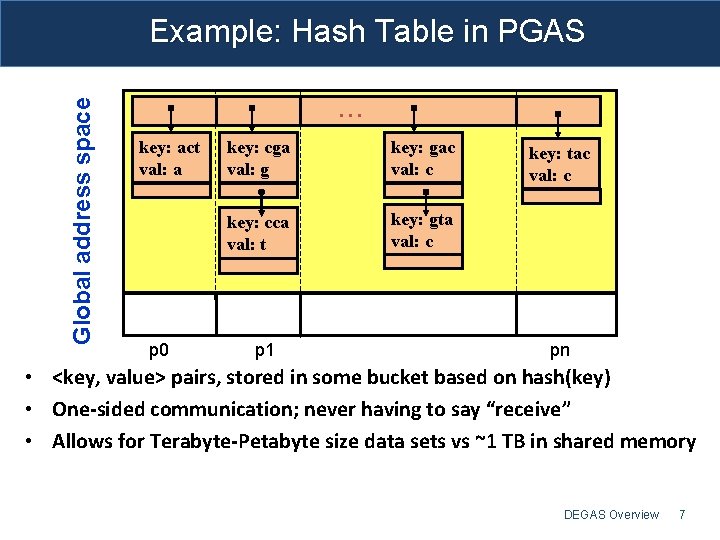

Example: Hash Table in PGAS

[Figure: <key, value> entries such as (act, a), (cga, g), (gac, c), (cca, t), (gta, c), (tac, c) held in buckets spread across processes p0, p1, …, pn in the global address space]

- <key, value> pairs are stored in some bucket based on hash(key)
- One-sided communication: never having to say "receive"
- Allows for terabyte- to petabyte-size data sets, vs. ~1 TB in shared memory

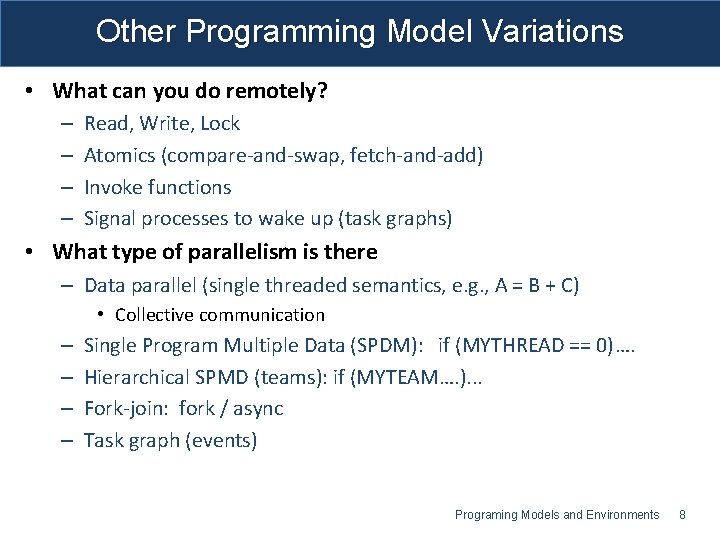

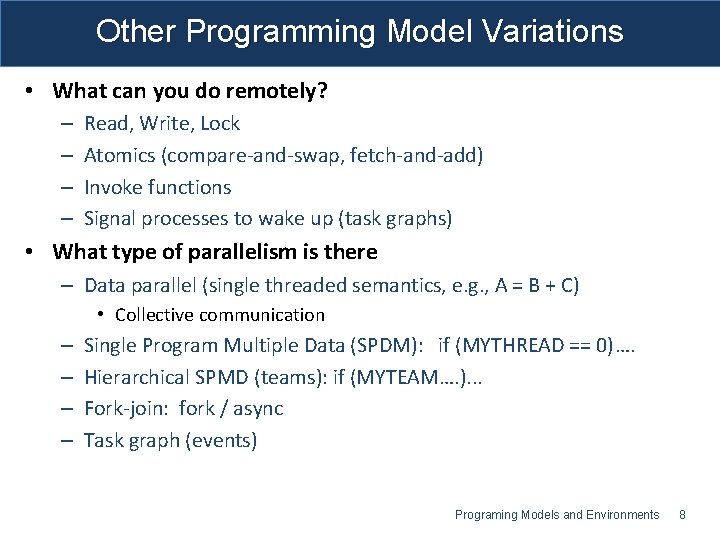

Other Programming Model Variations

- What can you do remotely?
  - Read, write, lock
  - Atomics (compare-and-swap, fetch-and-add)
  - Invoke functions
  - Signal processes to wake up (task graphs)
- What type of parallelism is there?
  - Data parallel (single-threaded semantics, e.g., A = B + C); collective communication
  - Single Program Multiple Data (SPMD): if (MYTHREAD == 0) …
  - Hierarchical SPMD (teams): if (MYTEAM …) …
  - Fork-join: fork / async
  - Task graph (events)

Where is PGAS programming used?

1. Asynchronous fine-grained reads/writes/atomics

De novo Genome Assembly

- DNA sequence consists of 4 bases: A/C/G/T
- Read: short fragment of DNA
- De novo assembly: construct a genome (chromosomes) from a collection of reads

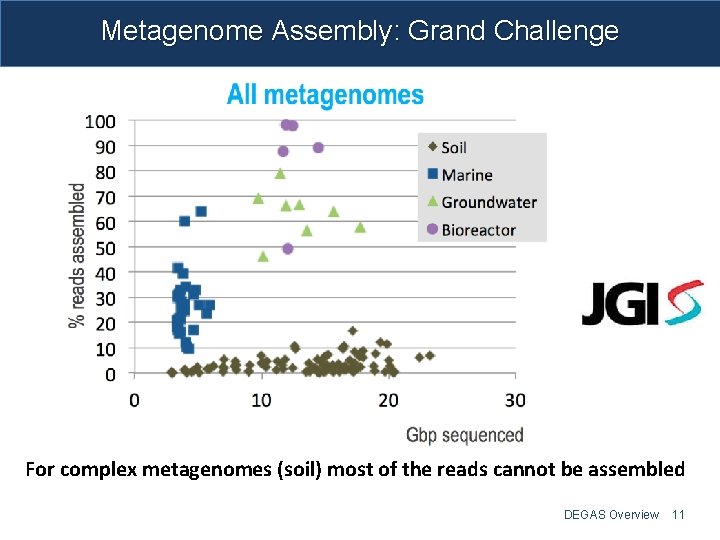

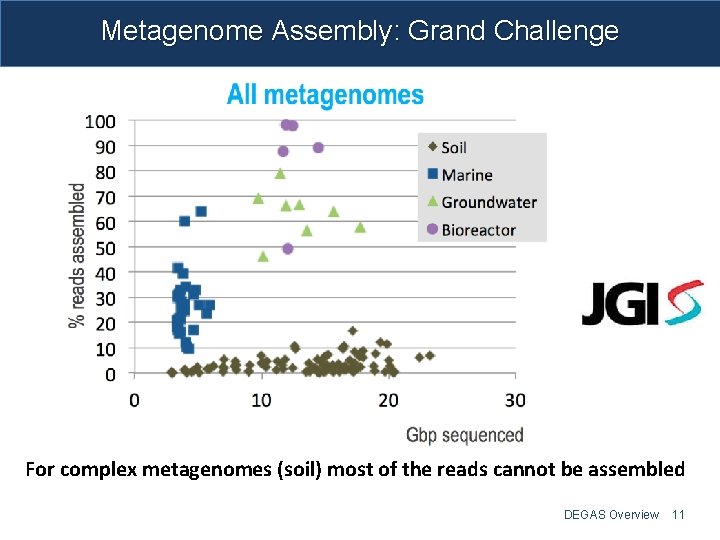

Metagenome Assembly: Grand Challenge

For complex metagenomes (soil), most of the reads cannot be assembled.

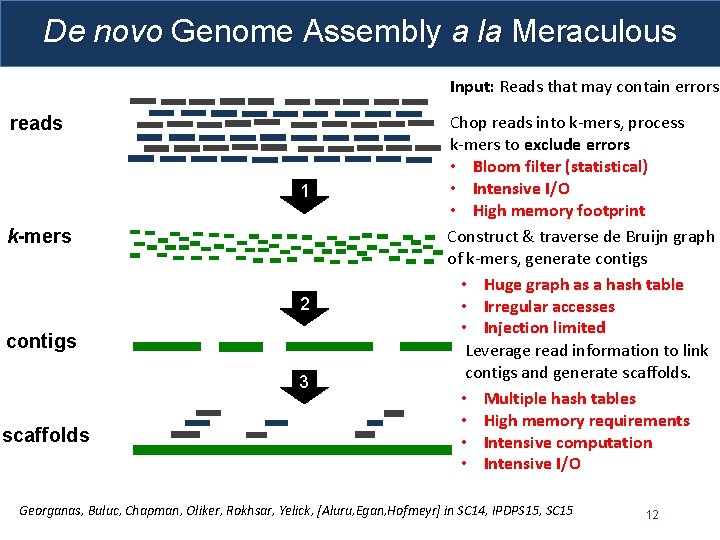

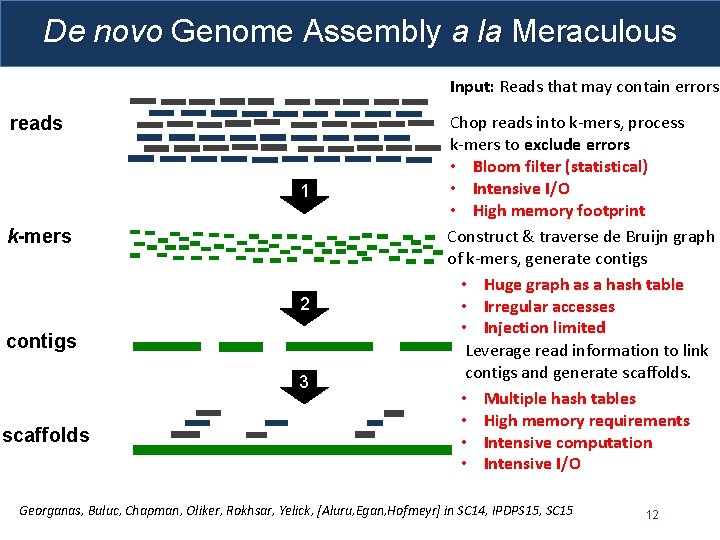

De novo Genome Assembly à la Meraculous

Input: reads that may contain errors. Pipeline: reads → (1) k-mers → (2) contigs → (3) scaffolds.

1. Chop reads into k-mers; process k-mers to exclude errors
   - Bloom filter (statistical)
   - Intensive I/O
   - High memory footprint
2. Construct and traverse the de Bruijn graph of k-mers; generate contigs
   - Huge graph as a hash table
   - Irregular accesses
   - Injection limited
3. Leverage read information to link contigs and generate scaffolds
   - Multiple hash tables
   - High memory requirements
   - Intensive computation
   - Intensive I/O

Georganas, Buluç, Chapman, Oliker, Rokhsar, Yelick [Aluru, Egan, Hofmeyr], in SC14, IPDPS15, SC15

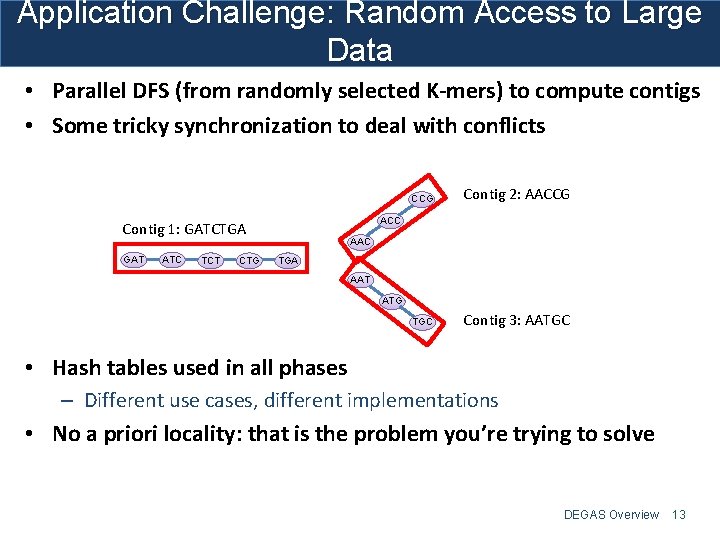

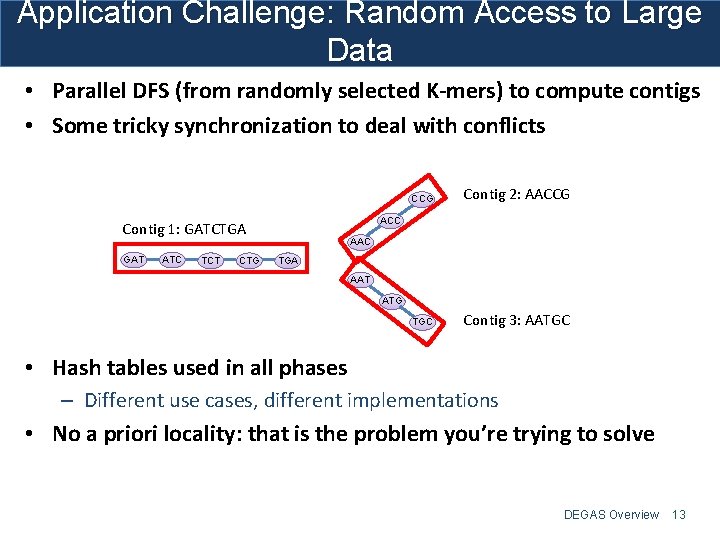

Application Challenge: Random Access to Large Data

- Parallel DFS (from randomly selected k-mers) to compute contigs
- Some tricky synchronization to deal with conflicts
- Hash tables used in all phases: different use cases, different implementations
- No a priori locality: that is the problem you're trying to solve

[Figure: de Bruijn graph of 3-mers (GAT, ATC, TCT, CTG, TGA, AAC, ACC, CCG, AAT, ATG, TGC) traversed into Contig 1: GATCTGA, Contig 2: AACCG, Contig 3: AATGC]

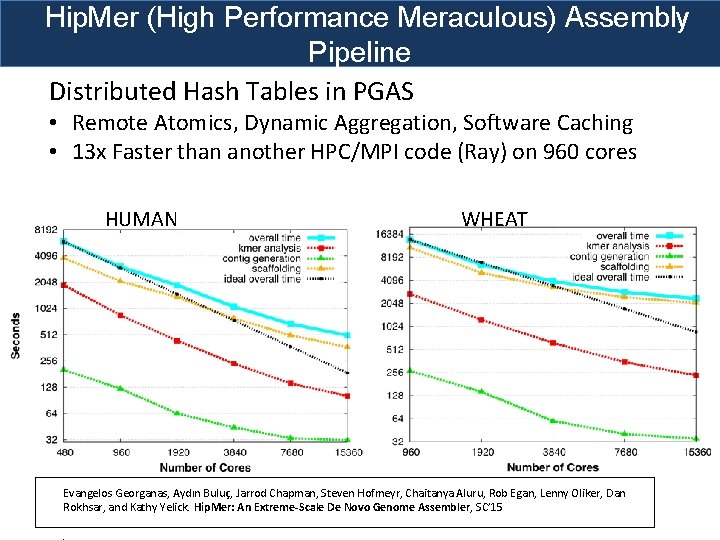

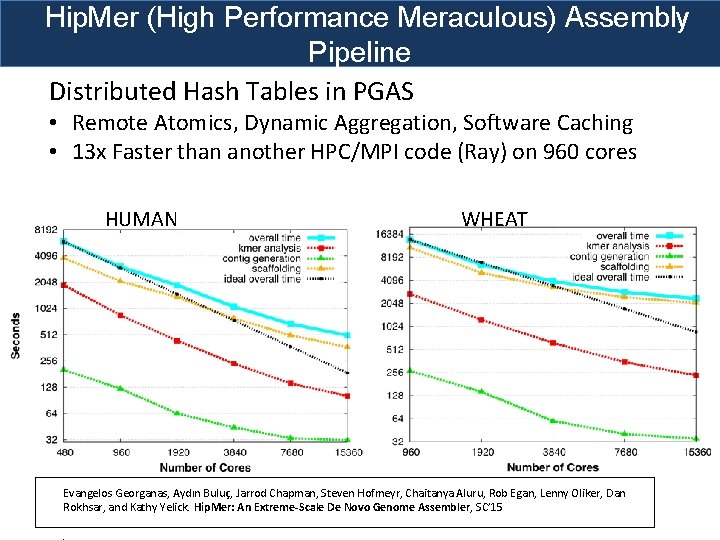

HipMer (High Performance Meraculous) Assembly Pipeline

- Distributed hash tables in PGAS
- Remote atomics, dynamic aggregation, software caching
- 13x faster than another HPC/MPI code (Ray) on 960 cores

[Figure panels: Human, Wheat]

Evangelos Georganas, Aydın Buluç, Jarrod Chapman, Steven Hofmeyr, Chaitanya Aluru, Rob Egan, Lenny Oliker, Dan Rokhsar, and Kathy Yelick. HipMer: An Extreme-Scale De Novo Genome Assembler, SC'15

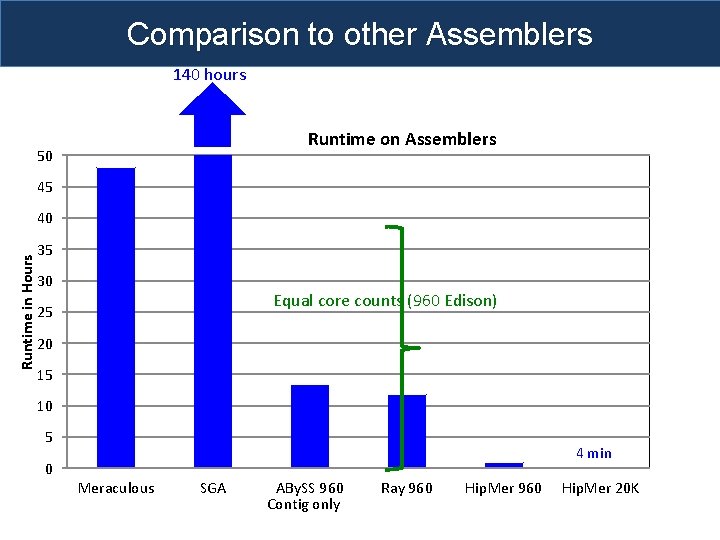

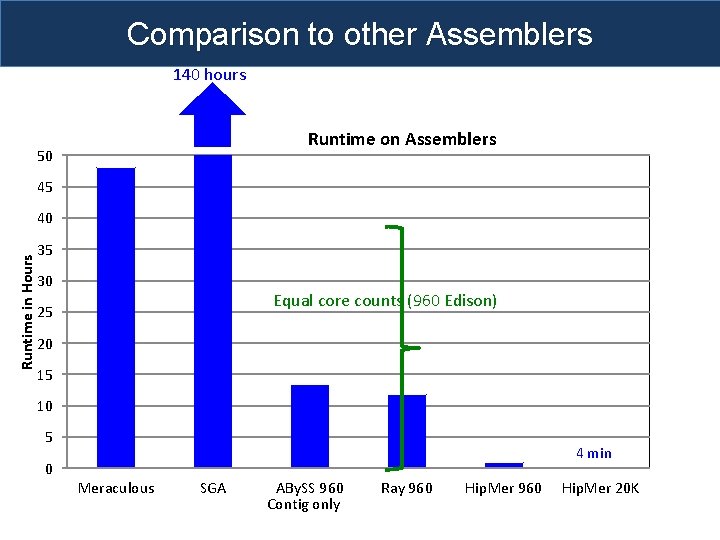

Comparison to other Assemblers

[Figure: runtime in hours of Meraculous, SGA, ABySS (960 cores, contig only), Ray (960 cores), and HipMer (20K cores), at equal core counts (960) on Edison where applicable; annotated values 140 hours and 4 min]

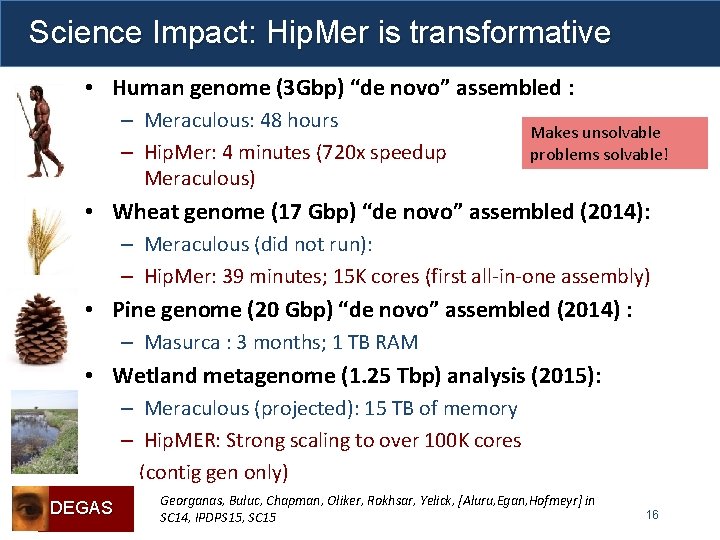

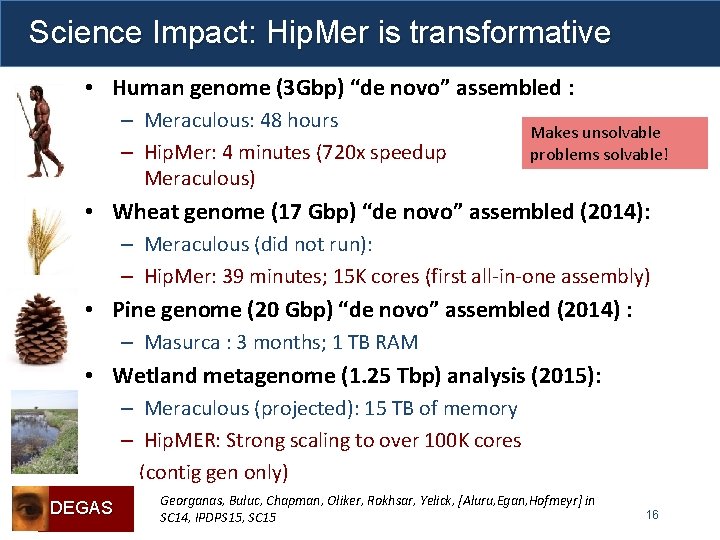

Science Impact: HipMer is transformative

- Human genome (3 Gbp) "de novo" assembled:
  - Meraculous: 48 hours
  - HipMer: 4 minutes (720x speedup relative to Meraculous)
- Wheat genome (17 Gbp) "de novo" assembled (2014):
  - Meraculous: did not run
  - HipMer: 39 minutes on 15K cores (first all-in-one assembly)
- Pine genome (20 Gbp) "de novo" assembled (2014):
  - MaSuRCA: 3 months; 1 TB RAM
- Wetland metagenome (1.25 Tbp) analysis (2015):
  - Meraculous (projected): 15 TB of memory
  - HipMer: strong scaling to over 100K cores (contig generation only)

Makes unsolvable problems solvable!
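As a quick check on the headline number, the 720x speedup is the ratio of the two human-genome runtimes quoted above, converted to a common unit:

$$
\frac{48\ \text{h}}{4\ \text{min}} = \frac{48 \times 60\ \text{min}}{4\ \text{min}} = \frac{2880}{4} = 720
$$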

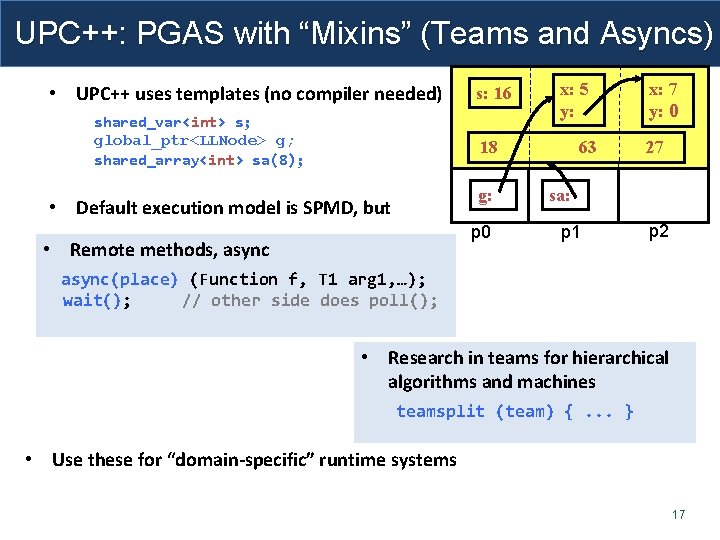

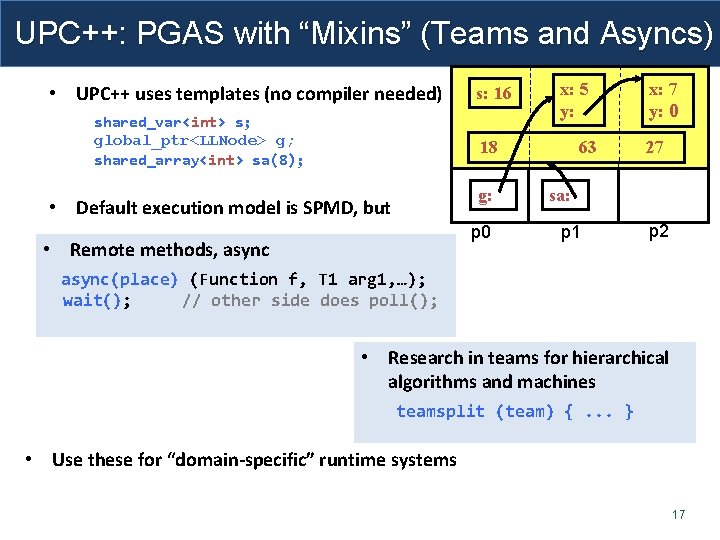

UPC++: PGAS with "Mixins" (Teams and Asyncs)

- UPC++ uses templates (no special compiler needed): `shared_var<int> s;` `global_ptr<LLNode> g;` `shared_array<int> sa(8);`
- Default execution model is SPMD, but there are also:
  - Remote methods, async: `async(place)(Function f, T1 arg1, …); wait(); // other side does poll();`
  - Research in teams for hierarchical algorithms and machines: `teamsplit (team) { … }`
- Use these for "domain-specific" runtime systems

[Figure: shared variable s, global pointer g, and shared array sa in the global address space across p0, p1, p2, alongside private variables x and y]

Where is PGAS programming used?

1. Asynchronous fine-grained reads/writes/atomics (aggregation and software caching when possible)
2. Strided irregular updates (adds) to distributed matrix

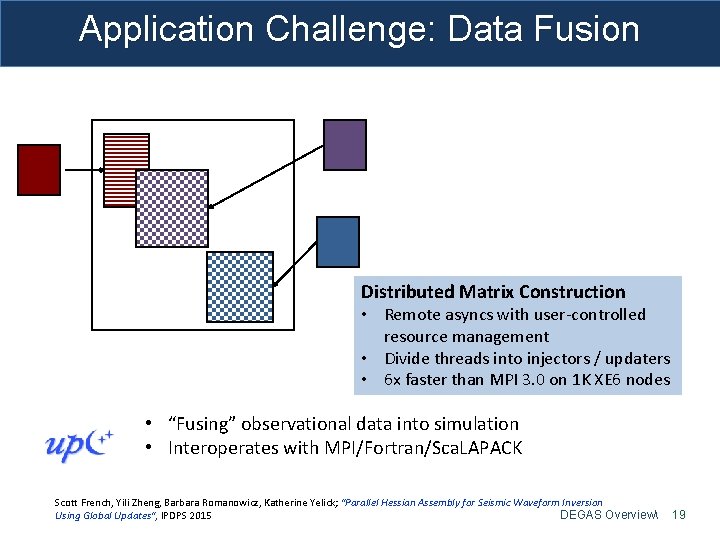

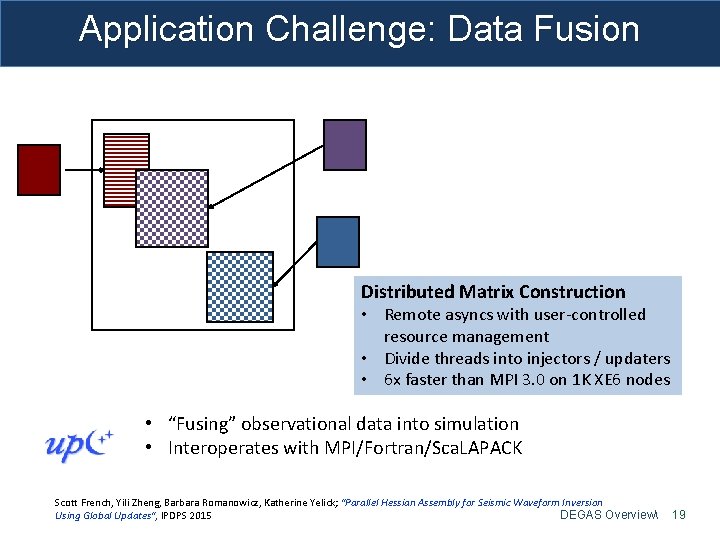

Application Challenge: Data Fusion (Distributed Matrix Construction)

- Remote asyncs with user-controlled resource management
- Divide threads into injectors / updaters
- 6x faster than MPI 3.0 on 1K XE6 nodes
- "Fusing" observational data into simulation
- Interoperates with MPI/Fortran/ScaLAPACK

Scott French, Yili Zheng, Barbara Romanowicz, Katherine Yelick; "Parallel Hessian Assembly for Seismic Waveform Inversion Using Global Updates", IPDPS 2015

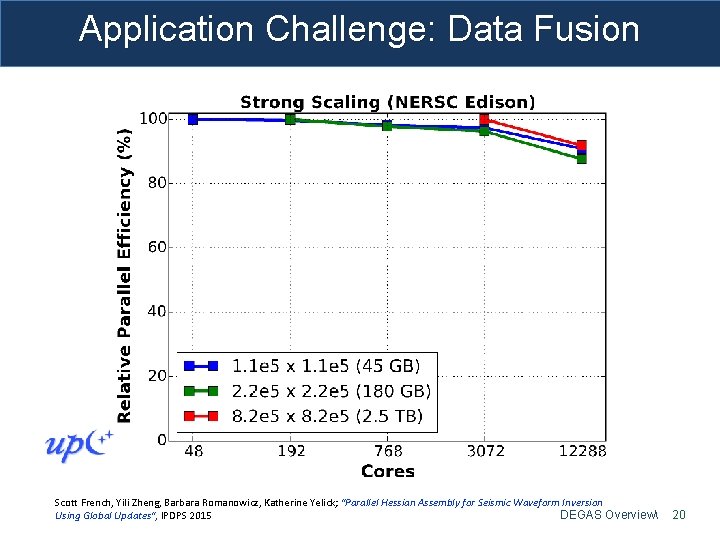

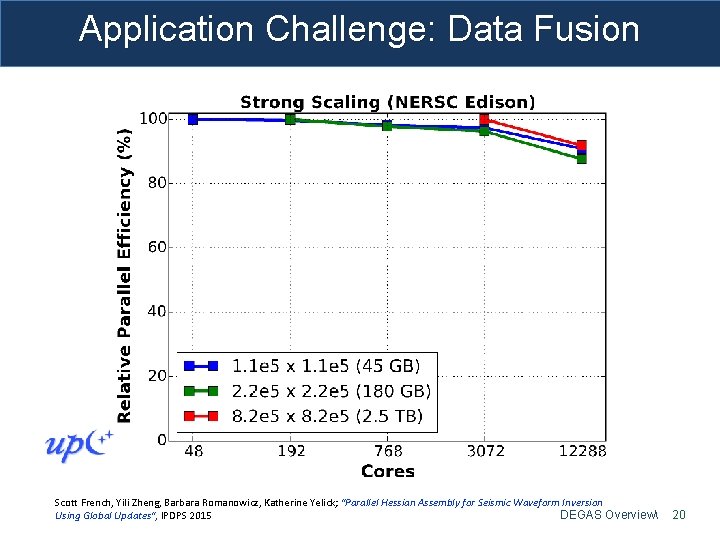

Application Challenge: Data Fusion

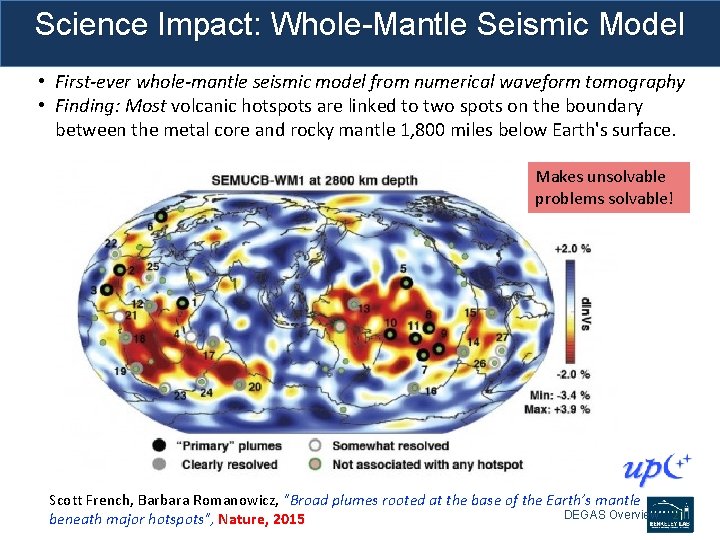

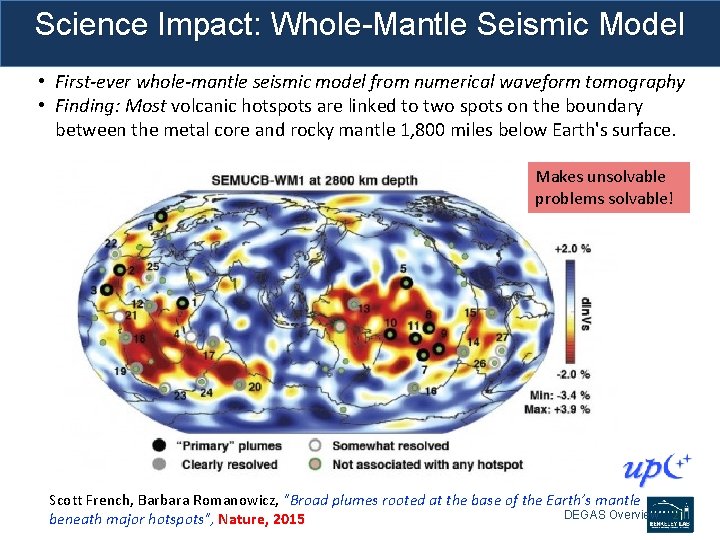

Science Impact: Whole-Mantle Seismic Model

- First-ever whole-mantle seismic model from numerical waveform tomography
- Finding: most volcanic hotspots are linked to two spots on the boundary between the metal core and the rocky mantle, 1,800 miles below Earth's surface

Makes unsolvable problems solvable!

Scott French, Barbara Romanowicz, "Broad plumes rooted at the base of the Earth's mantle beneath major hotspots", Nature, 2015

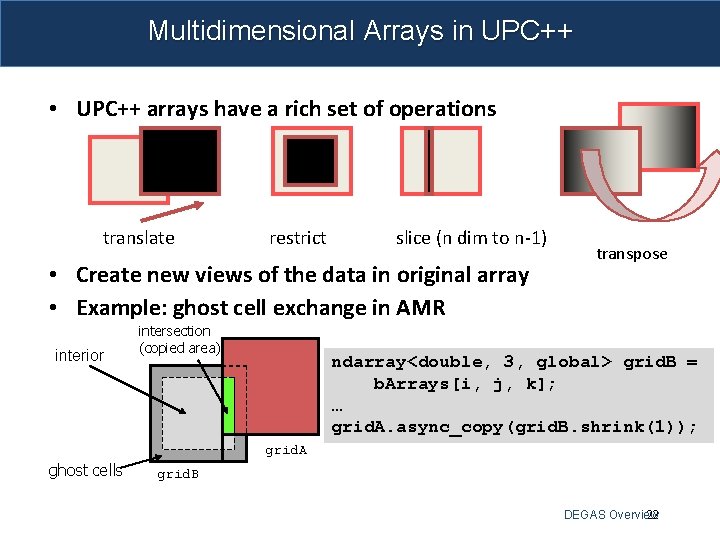

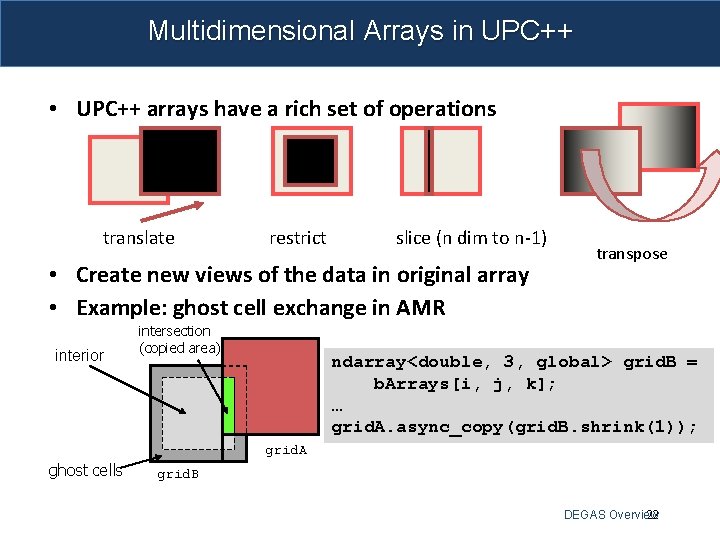

Multidimensional Arrays in UPC++

- UPC++ arrays have a rich set of operations: translate, restrict, slice (n dim to n-1), transpose
- These create new views of the data in the original array
- Example: ghost cell exchange in AMR: `ndarray<double, 3, global> gridB = bArrays[i, j, k]; … gridA.async_copy(gridB.shrink(1));`

[Figure: gridA with its ghost cells and the interior of gridB; their intersection is the copied area]

Where is PGAS programming used?

1. Asynchronous fine-grained reads/writes/atomics (aggregation and software caching when possible)
2. Strided irregular updates (adds) to distributed matrix
3. Dynamic work stealing

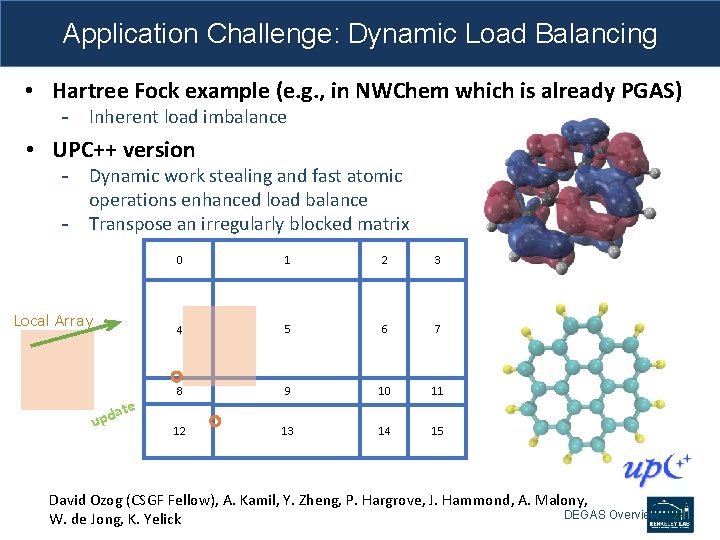

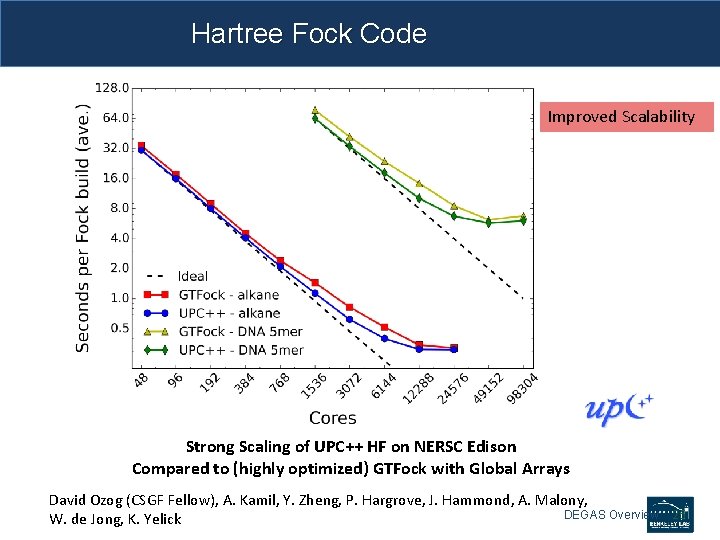

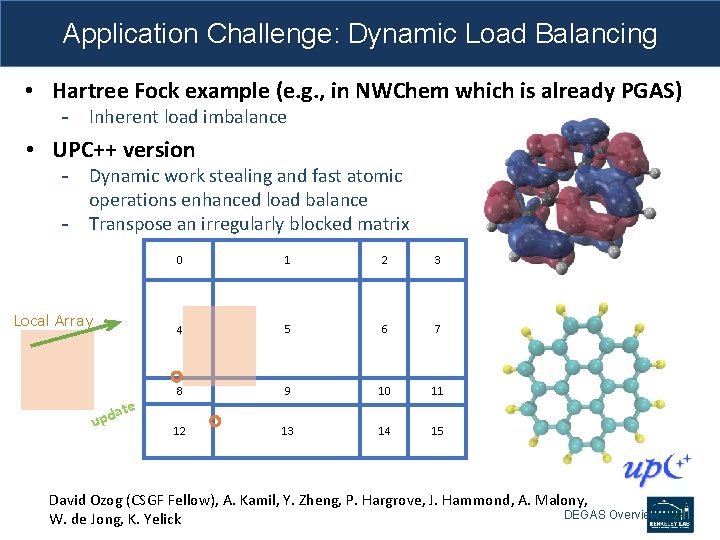

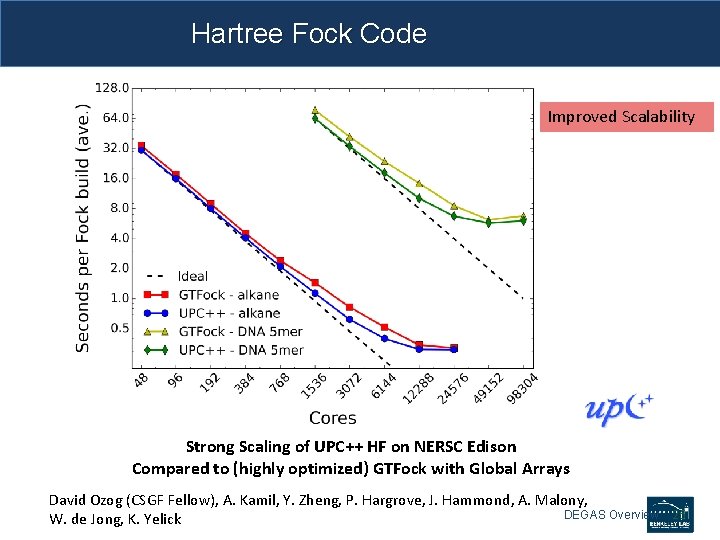

Application Challenge: Dynamic Load Balancing

- Hartree-Fock example (e.g., in NWChem, which is already PGAS)
  - Inherent load imbalance
- UPC++ version
  - Dynamic work stealing and fast atomic operations enhanced load balance
  - Transpose an irregularly blocked matrix

[Figure: local array update, blocks numbered 0 to 15]

David Ozog (CSGF Fellow), A. Kamil, Y. Zheng, P. Hargrove, J. Hammond, A. Malony, W. de Jong, K. Yelick

Hartree-Fock Code: Improved Scalability

[Figure: strong scaling of UPC++ HF on NERSC Edison, compared to (highly optimized) GTFock with Global Arrays]

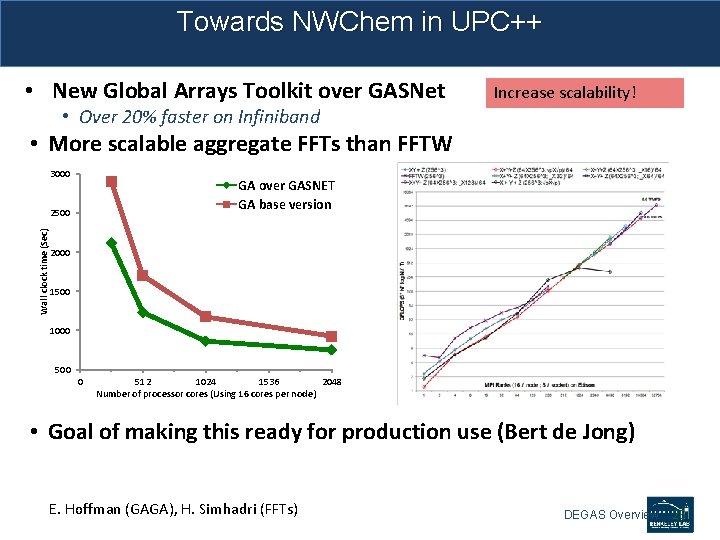

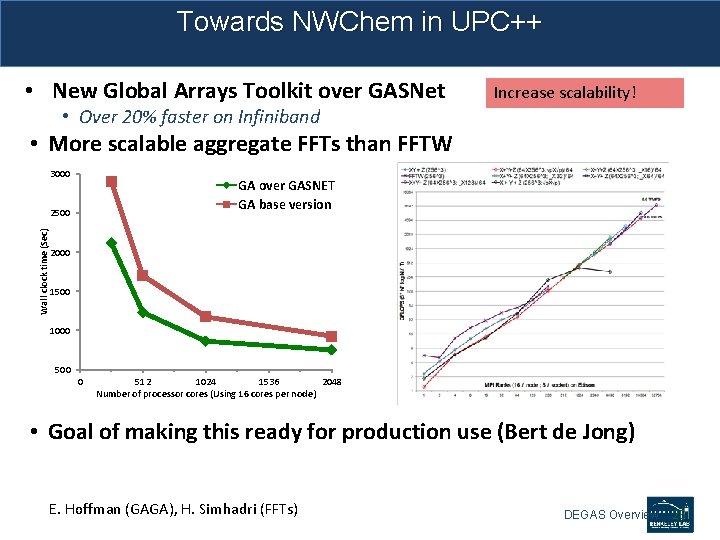

Towards NWChem in UPC++

- New Global Arrays Toolkit over GASNet: increase scalability!
- Over 20% faster on InfiniBand
- More scalable aggregate FFTs than FFTW
- Goal of making this ready for production use (Bert de Jong)

[Figure: wall-clock time (s) of GA over GASNet vs. the GA base version on 512 to 2048 processor cores (16 cores per node)]

E. Hoffman (GAGA), H. Simhadri (FFTs)

Where is PGAS programming used?

1. Asynchronous fine-grained reads/writes/atomics (aggregation and software caching when possible)
2. Strided irregular updates (adds) to distributed matrix
3. Dynamic work stealing
4. Hierarchical algorithms / one programming model

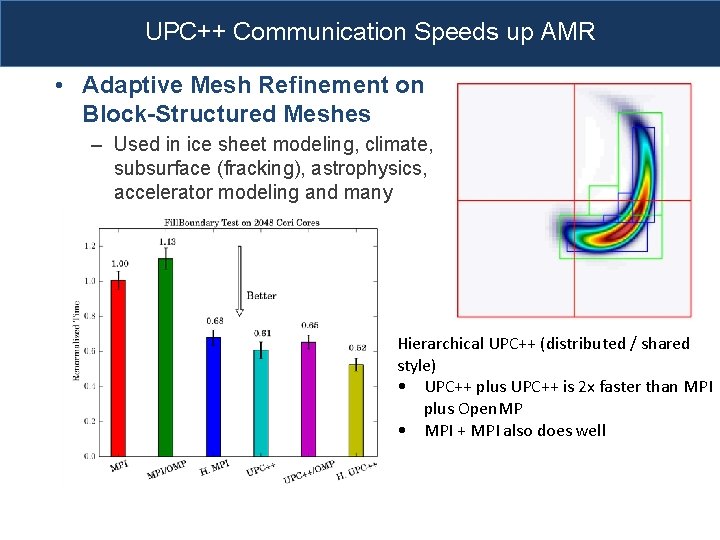

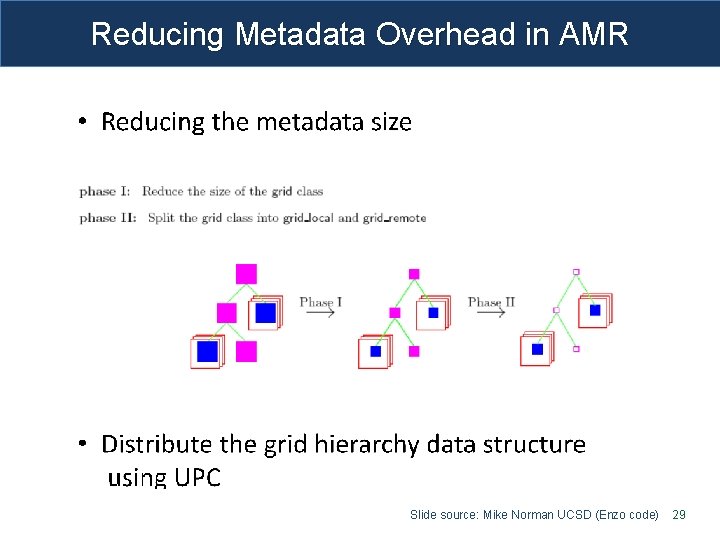

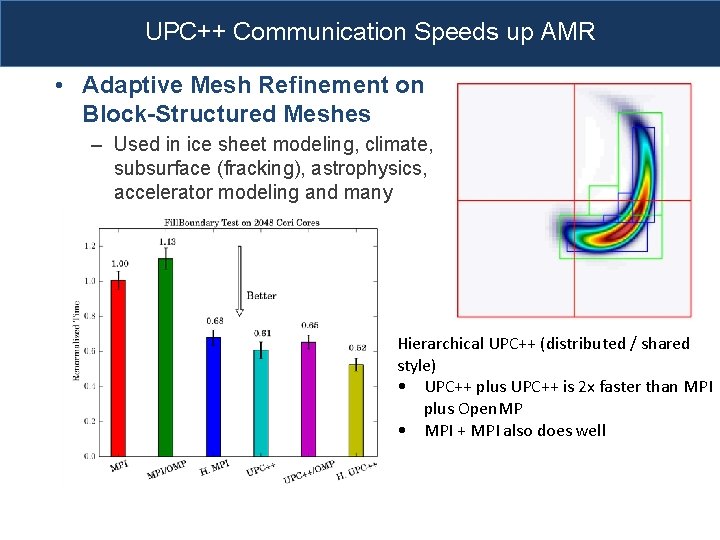

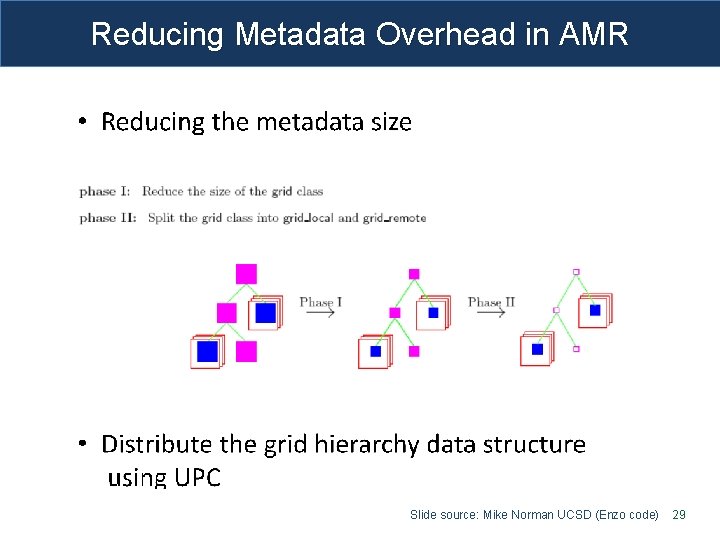

UPC++ Communication Speeds Up AMR

- Adaptive Mesh Refinement on block-structured meshes
  - Used in ice sheet modeling, climate, subsurface (fracking), astrophysics, accelerator modeling, and many more
- Hierarchical UPC++ (distributed / shared style)
  - UPC++ plus UPC++ is 2x faster than MPI plus OpenMP
  - MPI + MPI also does well

Reducing Metadata Overhead in AMR

Slide source: Mike Norman, UCSD (Enzo code)

Where is PGAS programming used?

1. Asynchronous fine-grained reads/writes/atomics (aggregation and software caching when possible)
2. Strided irregular updates (adds) to distributed matrix
3. Dynamic work stealing
4. Hierarchical algorithms / one programming model
5. Task Graph Scheduling (UPC++)

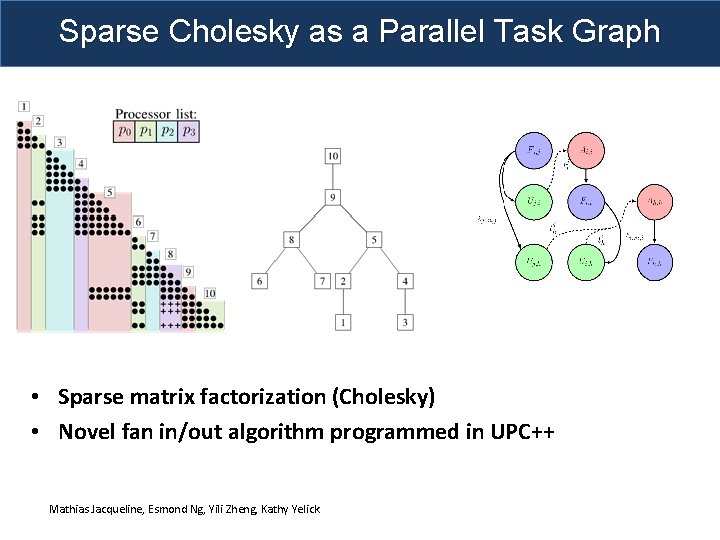

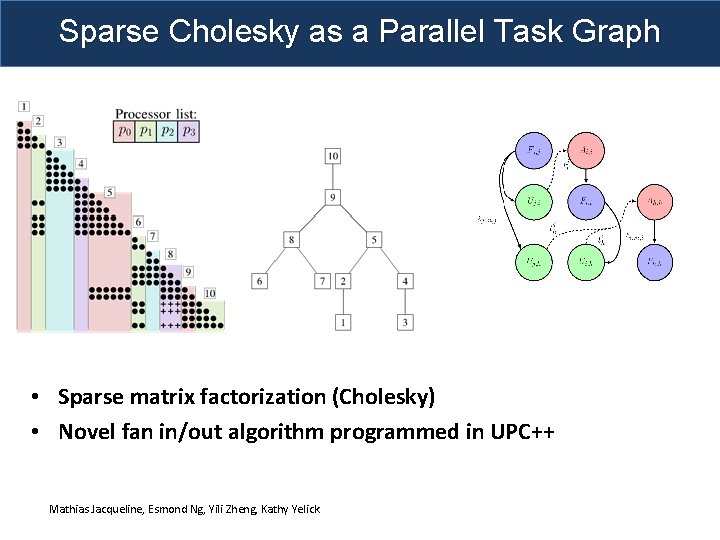

Sparse Cholesky as a Parallel Task Graph

- Sparse matrix factorization (Cholesky)
- Novel fan-in/fan-out algorithm programmed in UPC++

Mathias Jacquelin, Esmond Ng, Yili Zheng, Kathy Yelick

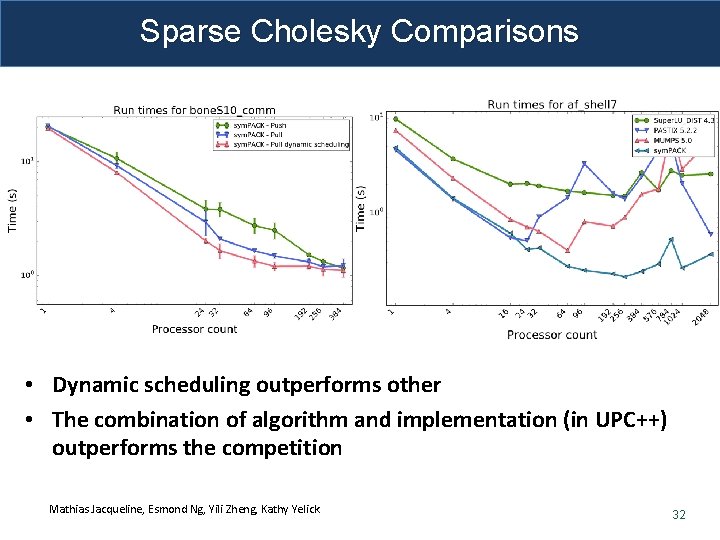

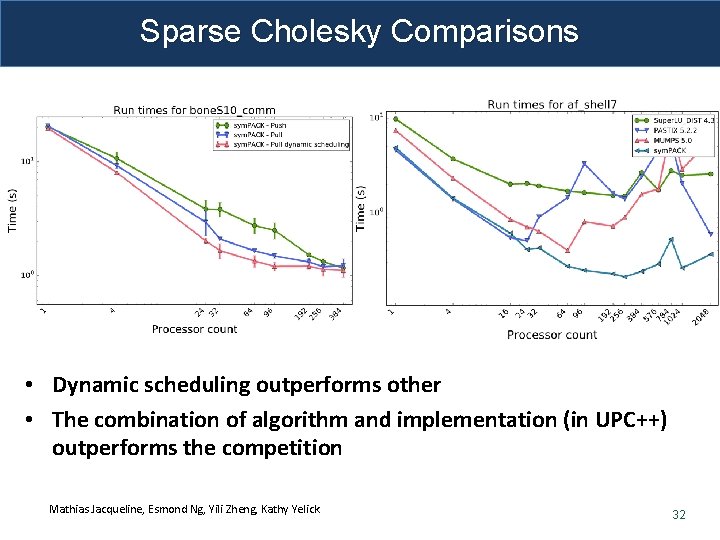

Sparse Cholesky Comparisons

- Dynamic scheduling outperforms the alternatives
- The combination of algorithm and implementation (in UPC++) outperforms the competition

Where is PGAS programming used?

1. Asynchronous fine-grained reads/writes/atomics (aggregation and software caching when possible)
2. Strided irregular updates (adds) to distributed matrix
3. Dynamic work stealing
4. Hierarchical algorithms / one programming model
5. Task Graph Scheduling (UPC++)
6. Dynamic runtimes (CHARM++, Legion, HPX)

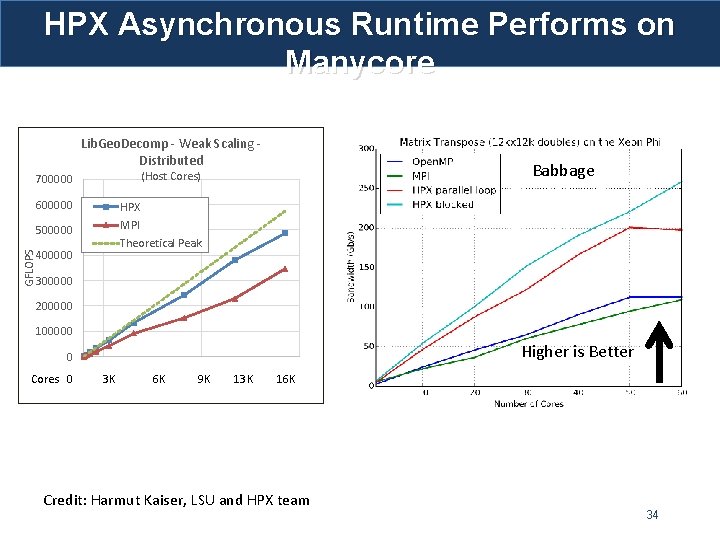

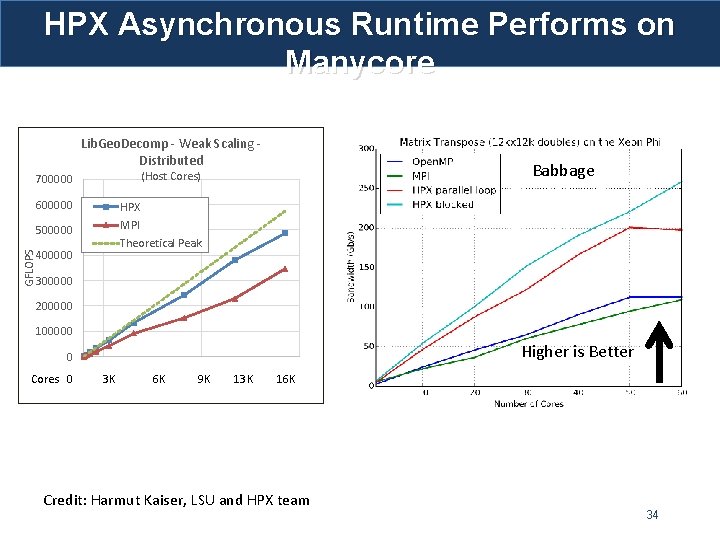

HPX Asynchronous Runtime Performs on Manycore

[Figure: LibGeoDecomp weak scaling, distributed (host cores): GFLOPS of HPX and MPI vs. theoretical peak on Babbage, against number of localities (16 cores each), up to 16K cores; higher is better]

Credit: Hartmut Kaiser, LSU, and the HPX team

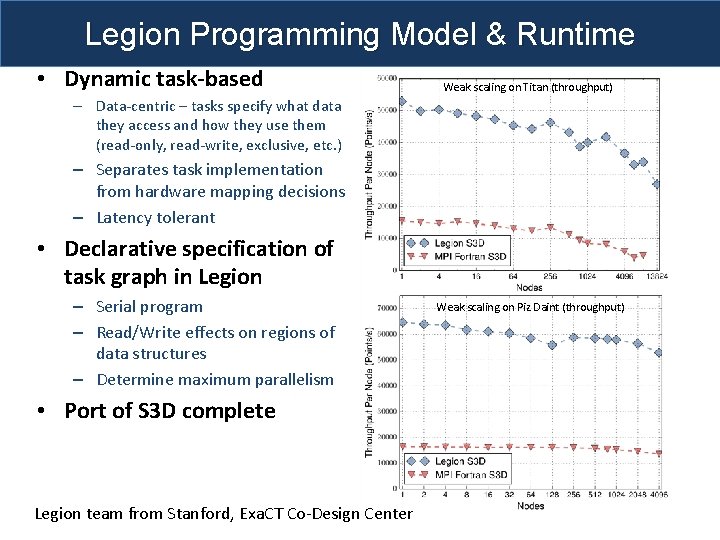

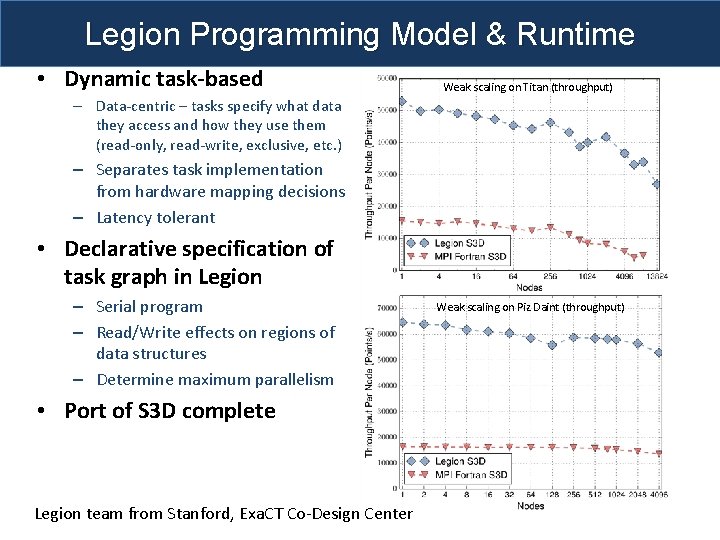

Legion Programming Model & Runtime

- Dynamic task-based
  - Data-centric: tasks specify what data they access and how they use them (read-only, read-write, exclusive, etc.)
  - Separates task implementation from hardware mapping decisions
  - Latency tolerant
- Declarative specification of the task graph in Legion
  - Serial program
  - Read/write effects on regions of data structures
  - Determine maximum parallelism
- Port of S3D complete

[Figure panels: weak scaling on Titan (throughput); weak scaling on Piz Daint (throughput)]

Legion team from Stanford, ExaCT Co-Design Center

Why is PGAS used? (Besides Application Characteristics)

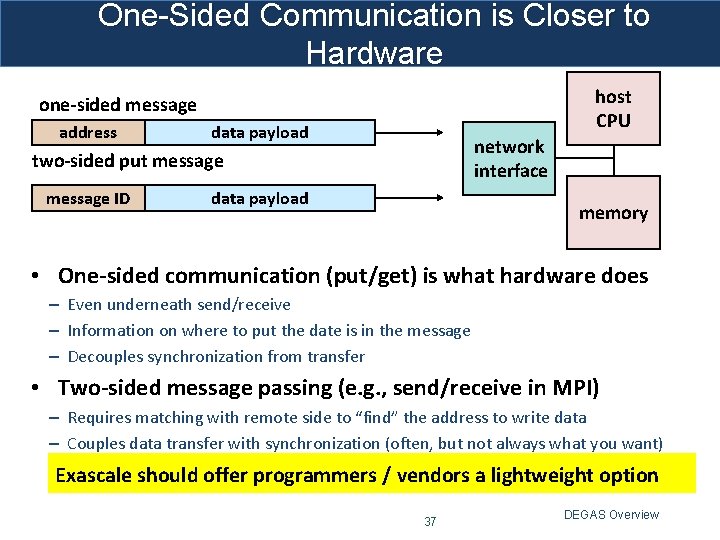

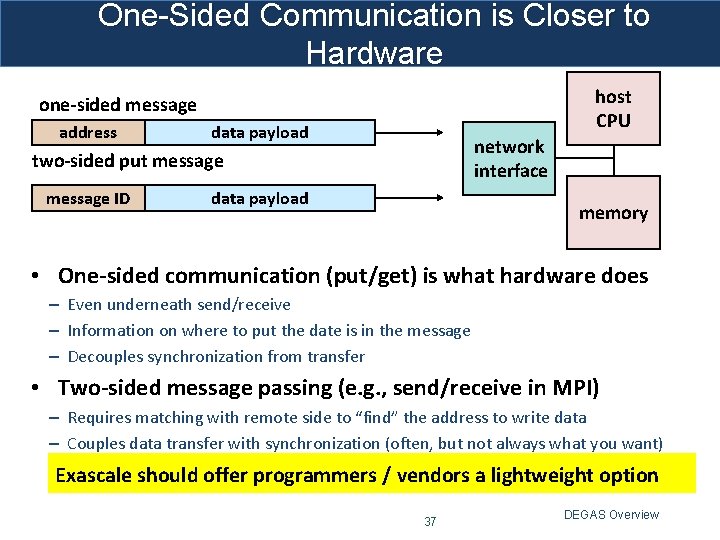

One-Sided Communication is Closer to Hardware

[Figure: a one-sided put message carries an address and data payload; a two-sided message carries a message ID and data payload; both shown relative to the host CPU, network interface, and memory]

- One-sided communication (put/get) is what hardware does
  - Even underneath send/receive
  - Information on where to put the data is in the message
  - Decouples synchronization from transfer
- Two-sided message passing (e.g., send/receive in MPI)
  - Requires matching with the remote side to "find" the address to write data
  - Couples data transfer with synchronization (often, but not always, what you want)

Exascale should offer programmers / vendors a lightweight option.

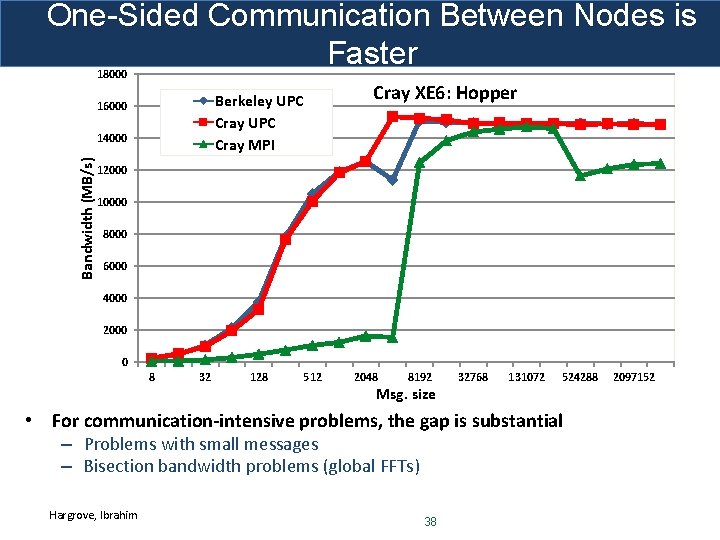

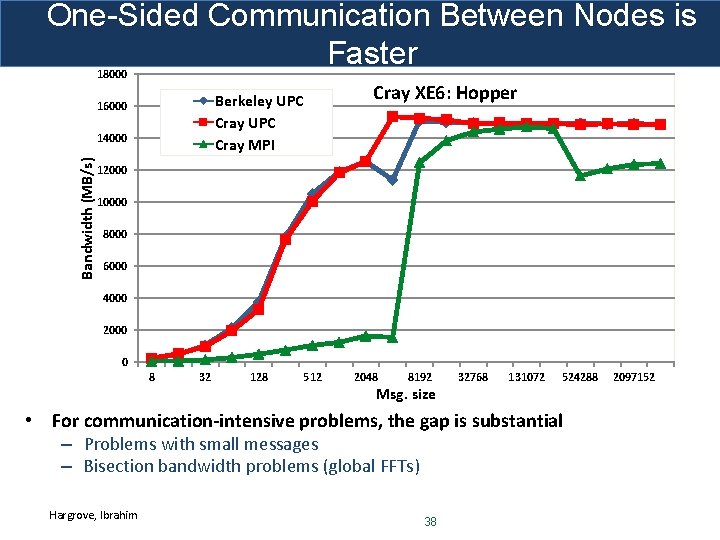

One-Sided Communication Between Nodes is Faster

[Figure: bandwidth (MB/s) vs. message size (8 to 2,097,152) for Berkeley UPC and Cray MPI on the Cray XE6 (Hopper). Credit: Hargrove, Ibrahim]

- For communication-intensive problems, the gap is substantial:
  - Problems with small messages
  - Bisection bandwidth problems (global FFTs)

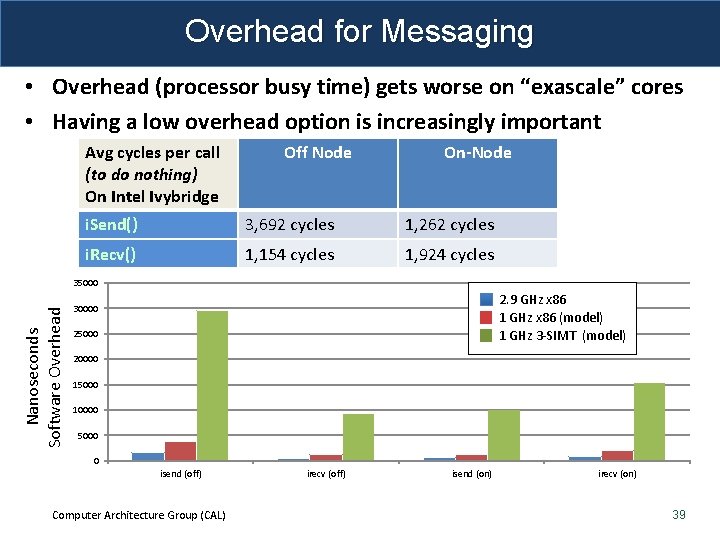

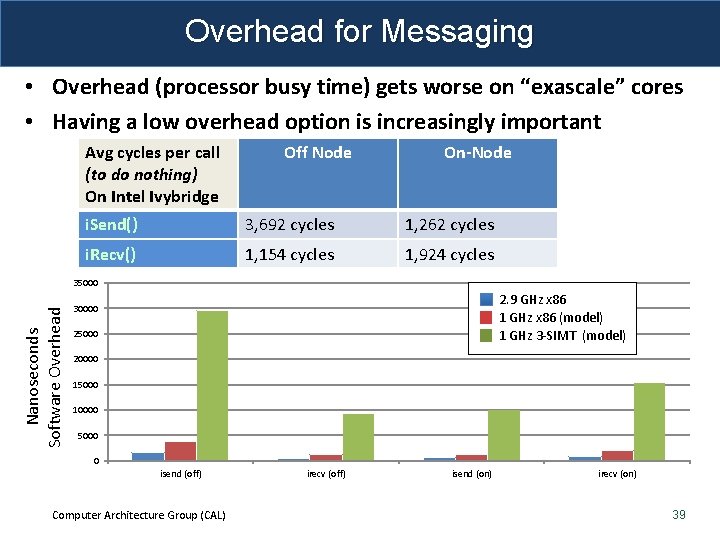

Overhead for Messaging

- Overhead (processor busy time) gets worse on "exascale" cores
- Having a low-overhead option is increasingly important

Average cycles per call (to do nothing) on Intel Ivy Bridge:

| Call | Off node | On node |
|---|---|---|
| iSend() | 3,692 cycles | 1,262 cycles |
| iRecv() | 1,154 cycles | 1,924 cycles |

[Figure: software overhead (ns) of isend/irecv, off node and on node, for a 2.9 GHz x86, a 1 GHz x86 (model), and a 1 GHz 3-SIMT core (model). Credit: Computer Architecture Group (CAL)]
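Cycle counts convert to time as $t = \text{cycles} / f$. As a worked example, assuming the off-node `iSend()` count from the table was measured on a 2.9 GHz core:

$$
t = \frac{3692\ \text{cycles}}{2.9 \times 10^{9}\ \text{cycles/s}} \approx 1.27 \times 10^{-6}\ \text{s} \approx 1273\ \text{ns}
$$

If the same cycle count carried over unchanged to a 1 GHz core (a simplifying assumption; the figure's 1 GHz results come from a model), the call would take about 3692 ns, which is why per-message overhead weighs more heavily on slower, simpler cores.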

Summary

- Successful PGAS applications are mostly asynchronous:
  1. Asynchronous fine-grained reads/writes/atomics (aggregation and software caching when possible)
  2. Strided irregular updates (adds) to distributed matrix
  3. Dynamic work stealing
  4. Hierarchical algorithms / one programming model
  5. Task Graph Scheduling (UPC++)
  6. Dynamic runtimes (CHARM++, Legion, HPX)
- Exascale architecture trends