IS 4800 Empirical Research Methods for Information Science

- Slides: 65

Class Notes, Feb. 29, 2012. Instructor: Prof. Carole Hafner, 446 WVH, hafner@ccs.neu.edu, Tel: 617-373-5116. Course Web site: www.ccs.neu.edu/course/is4800sp12/

Oral Presentation of Study Results

Presenting your research ■ A research project should “tell a story” ■ A brief presentation (20 min or less) is more difficult than a longer one – selectivity is critical ■ The T-shaped talk and the U-shaped talk ■ Rehearse for timing

Oral Presentation ■ Main concepts and ideas ■ Do not go into great detail on experimental methods – BUT give enough so people understand what you did ■ Focus on motivation, results, implications ■ If listeners want details, they can read the paper or ask questions

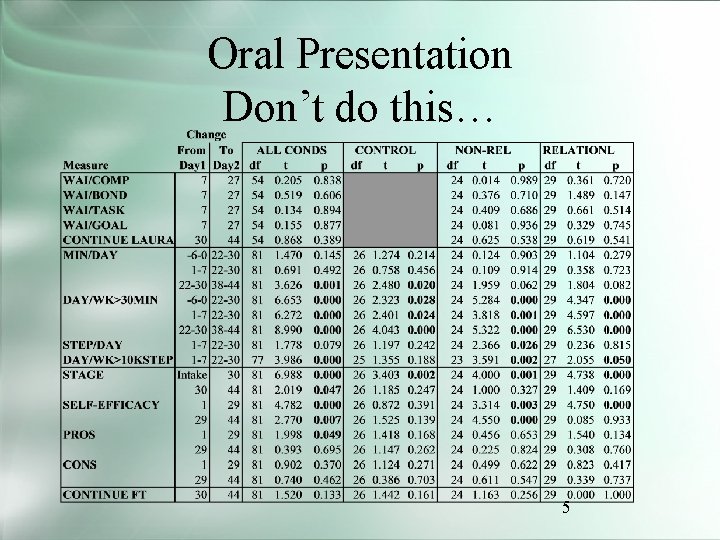

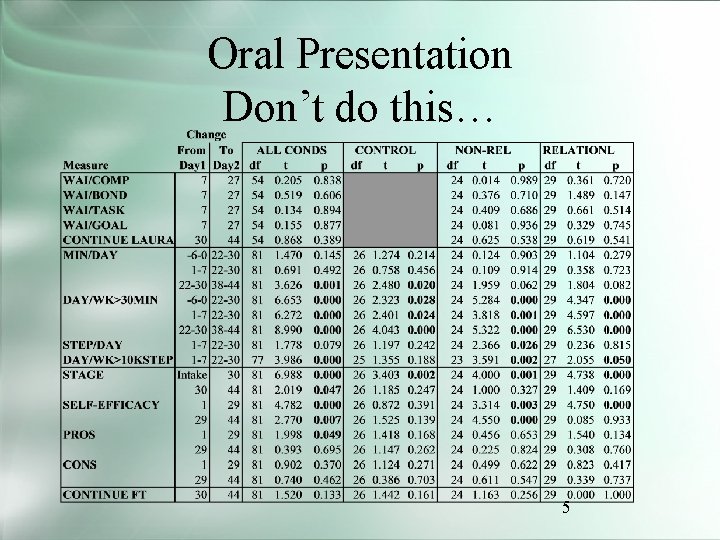

Oral Presentation ■ Don’t do this…

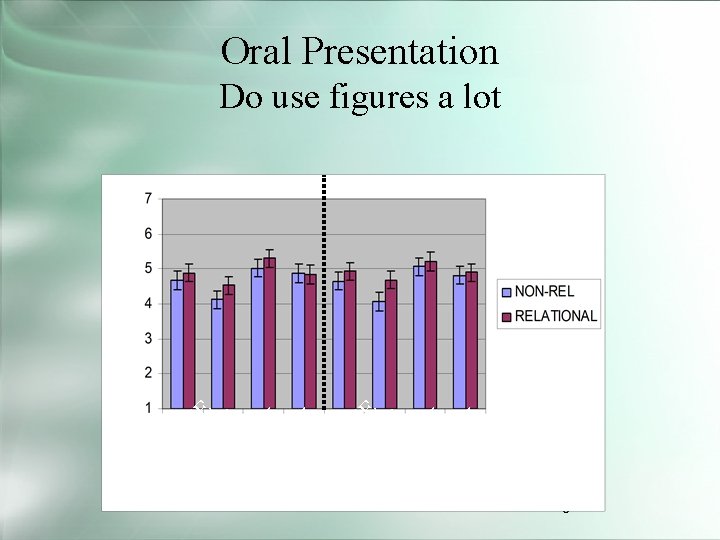

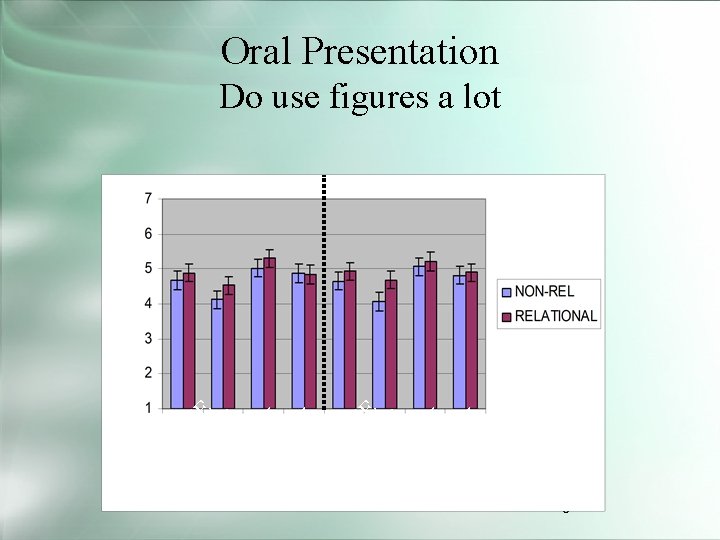

Oral Presentation ■ Do use figures a lot [Figure: example chart comparing Week 1 and Week 4; labels: Composite, Bond, Task, Goal]

Oral Presentation Guide for Visuals ■ Visuals should be exhibits that you talk about ■ Do not put lots of text on charts ■ Do not simply read your charts aloud as your presentation ■ Use interactivity, video, and images to keep your audience awake

Common Questions ■ How did you evaluate that? ■ How did you measure that? ■ How did you control for extraneous variable X? ■ Why didn’t you use statistic Y? ■ Isn’t that a biased sample? ■ What was your control group? ■ How did you do study procedure Z?

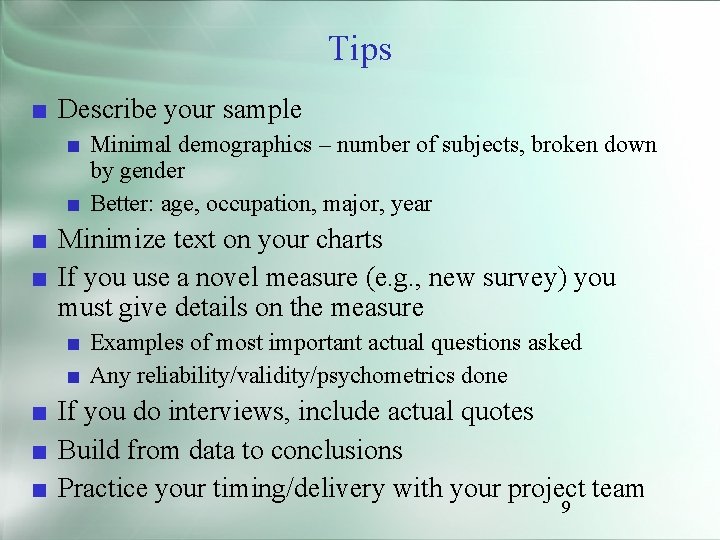

Tips ■ Describe your sample • Minimal demographics – number of subjects, broken down by gender • Better: age, occupation, major, year ■ Minimize text on your charts ■ If you use a novel measure (e.g., a new survey), you must give details on the measure • Examples of the most important actual questions asked • Any reliability/validity/psychometrics done ■ If you do interviews, include actual quotes ■ Build from data to conclusions ■ Practice your timing/delivery with your project team
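
A minimal sketch of the “describe your sample” tip, assuming participant records are stored as a list of dictionaries with hypothetical `gender` and `age` fields (the records are made up, not from the notes). It reports N, the gender breakdown, and the mean and standard deviation of age.

```python
from collections import Counter
from statistics import mean, stdev

# Hypothetical participant records; field names and values are illustrative only.
participants = [
    {"id": "P01", "gender": "female", "age": 21},
    {"id": "P02", "gender": "male", "age": 23},
    {"id": "P03", "gender": "female", "age": 20},
    {"id": "P04", "gender": "female", "age": 24},
    {"id": "P05", "gender": "male", "age": 22},
]

def describe_sample(records):
    """Return N, counts by gender, and the mean and sample SD of age."""
    ages = [r["age"] for r in records]
    return {
        "N": len(records),
        "by_gender": Counter(r["gender"] for r in records),
        "age_M": mean(ages),
        "age_SD": stdev(ages),  # sample standard deviation (n - 1 denominator)
    }

summary = describe_sample(participants)
genders = ", ".join(f"{n} {g}" for g, n in summary["by_gender"].most_common())
print(f"N = {summary['N']} ({genders}); age M = {summary['age_M']:.1f}, SD = {summary['age_SD']:.2f}")
```

With these records it prints `N = 5 (3 female, 2 male); age M = 22.0, SD = 1.58`.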

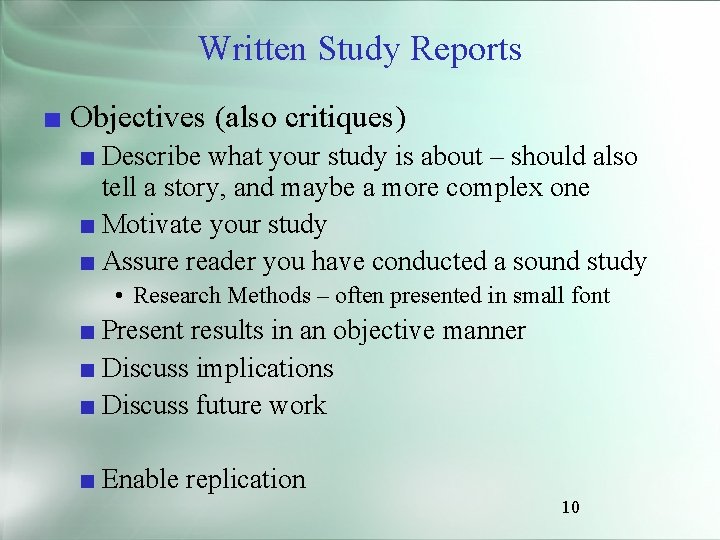

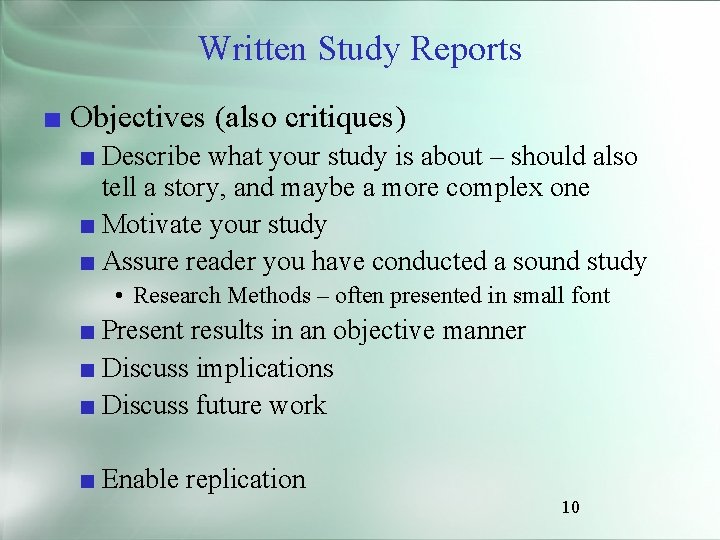

Written Study Reports ■ Objectives (also critiques) ■ Describe what your study is about – it should also tell a story, maybe a more complex one ■ Motivate your study ■ Assure the reader you have conducted a sound study • Research Methods – often presented in small font ■ Present results in an objective manner ■ Discuss implications ■ Discuss future work ■ Enable replication

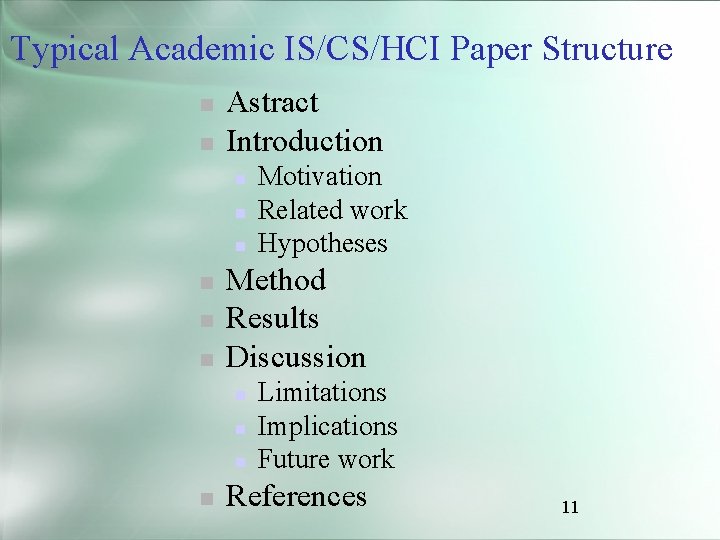

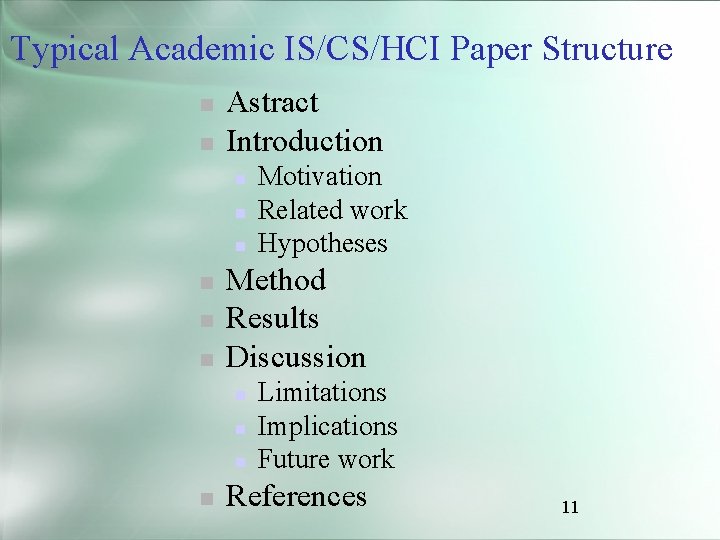

Typical Academic IS/CS/HCI Paper Structure ■ Abstract ■ Introduction • Motivation • Related work • Hypotheses ■ Method ■ Results ■ Discussion • Limitations • Implications • Future work ■ References

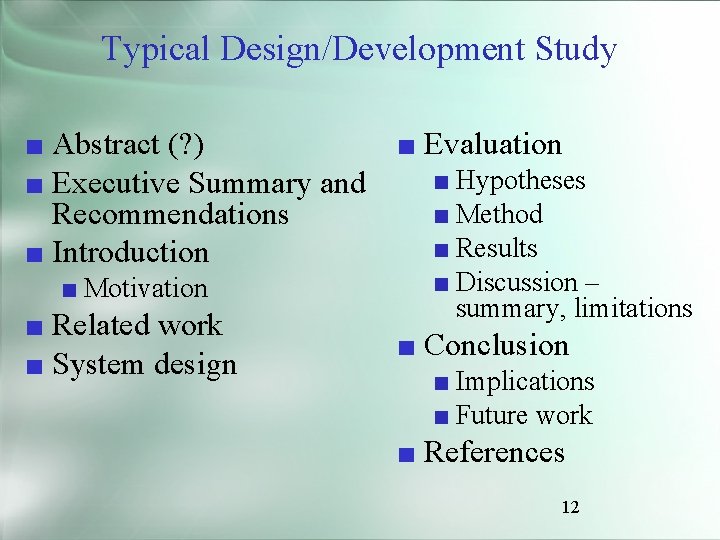

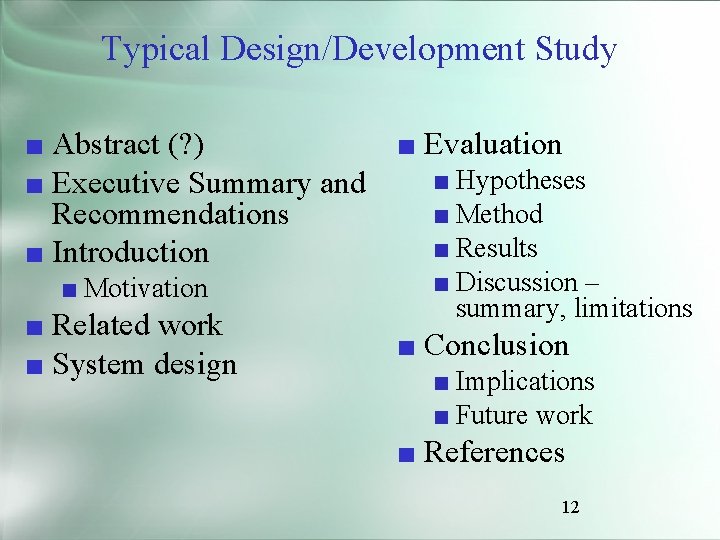

Typical Design/Development Study ■ Abstract (?) ■ Executive Summary and Recommendations ■ Introduction ■ Motivation ■ Related work ■ System design ■ Evaluation ■ Hypotheses ■ Method ■ Results ■ Discussion – summary, limitations ■ Conclusion ■ Implications ■ Future work ■ References

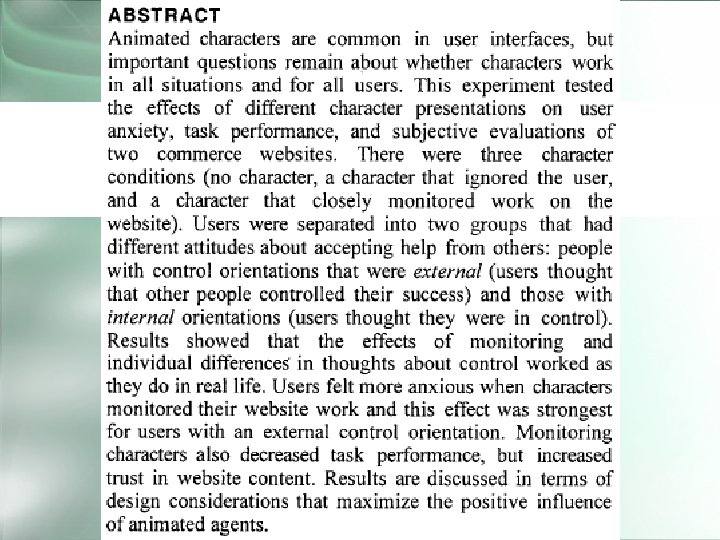

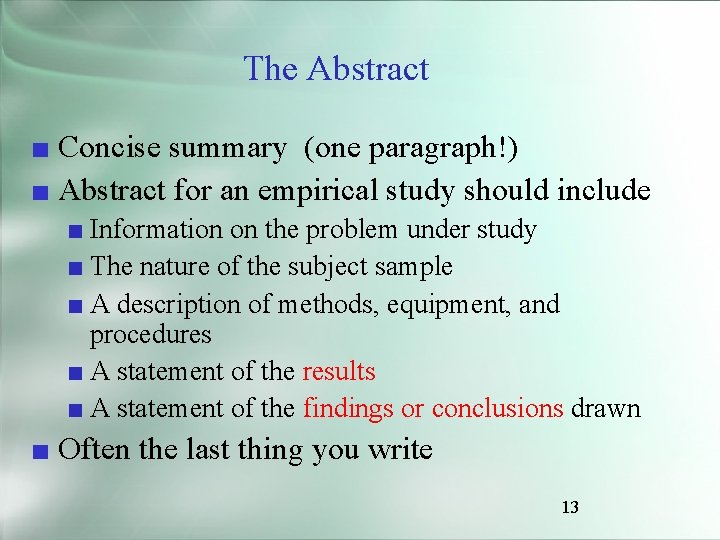

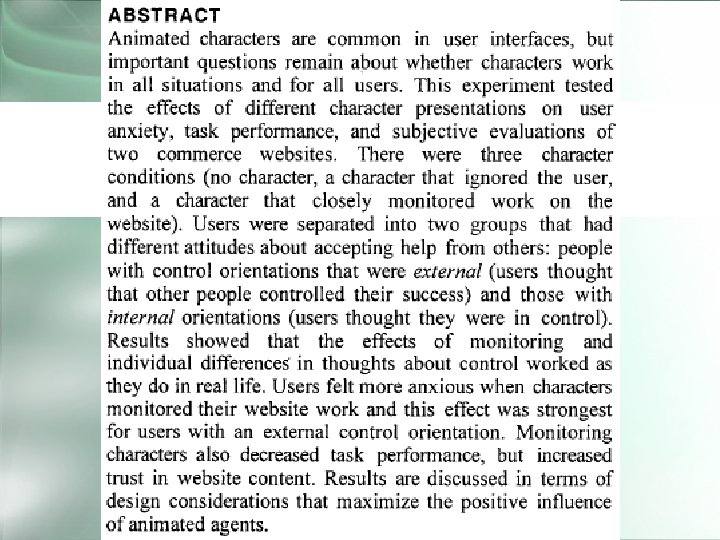

The Abstract ■ Concise summary (one paragraph!) ■ An abstract for an empirical study should include • Information on the problem under study • The nature of the subject sample • A description of methods, equipment, and procedures • A statement of the results • A statement of the findings or conclusions drawn ■ Often the last thing you write

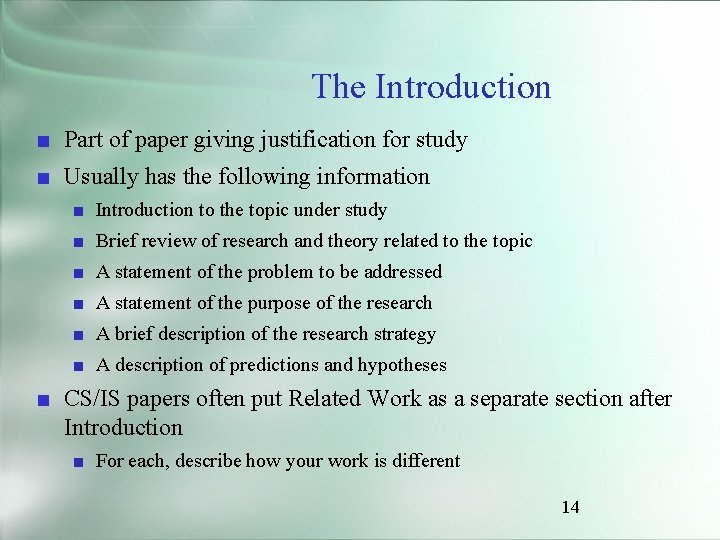

The Introduction ■ Part of the paper giving justification for the study ■ Usually has the following information • Introduction to the topic under study • Brief review of research and theory related to the topic • A statement of the problem to be addressed • A statement of the purpose of the research • A brief description of the research strategy • A description of predictions and hypotheses ■ CS/IS papers often put Related Work in a separate section after the Introduction • For each related work, describe how your work is different

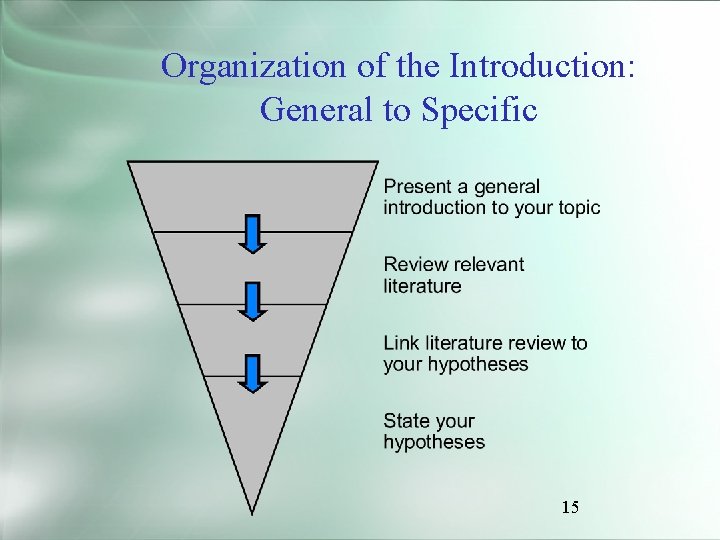

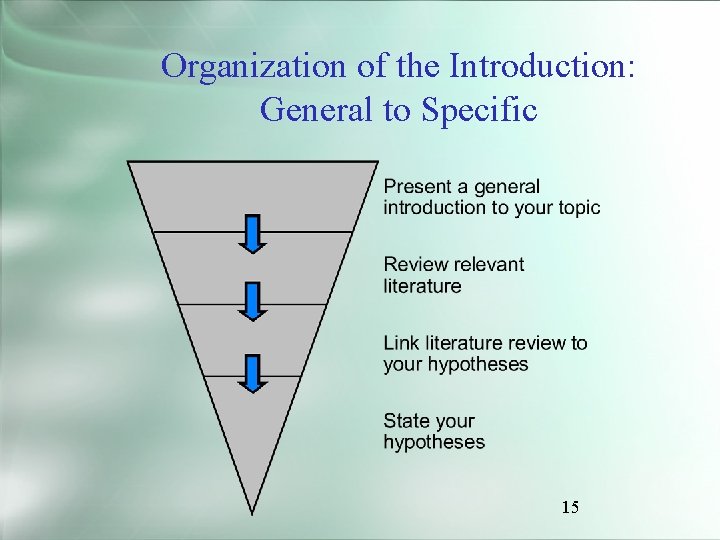

Organization of the Introduction: General to Specific

The Method Section ■ Includes information on exactly how a study was carried out ■ Subsections ■ Participants or subjects • Describe in detail the participant or subject sample • Human participants go in a Participants subsection, and animal subjects in a Subjects subsection ■ Apparatus or materials • Describe in detail any equipment or materials used • Equipment is usually described in an Apparatus subsection and written materials in a Materials subsection

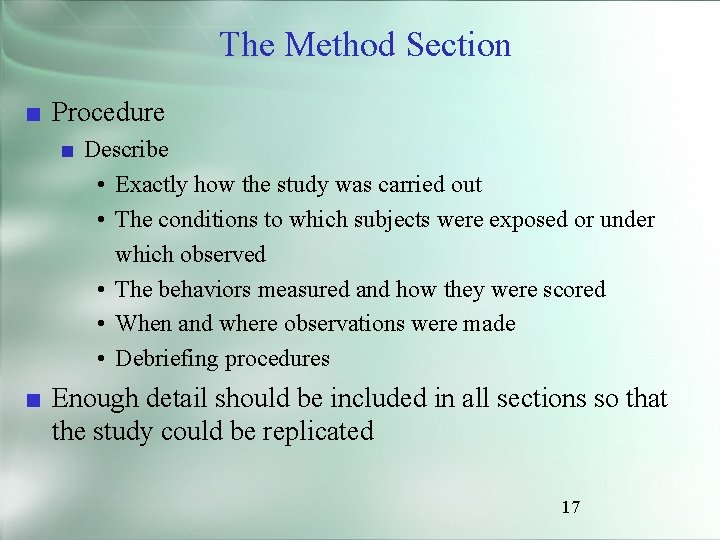

The Method Section ■ Procedure ■ Describe • Exactly how the study was carried out • The conditions to which subjects were exposed or under which observed • The behaviors measured and how they were scored • When and where observations were made • Debriefing procedures ■ Enough detail should be included in all sections so that the study could be replicated

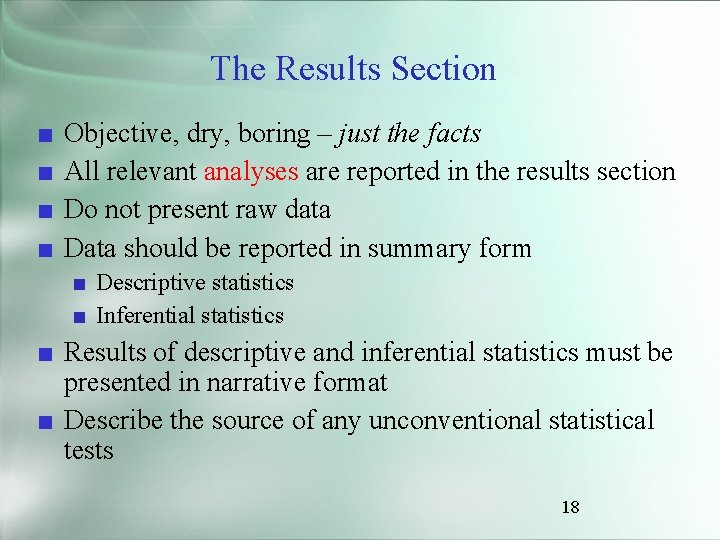

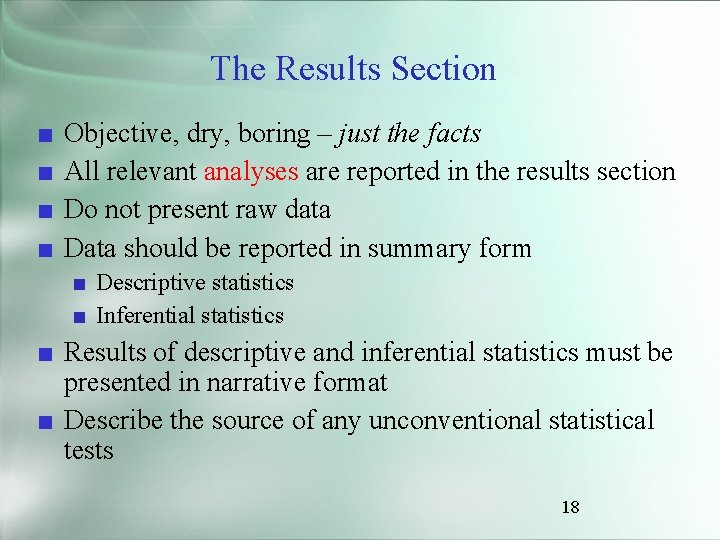

The Results Section ■ Objective, dry, boring – just the facts ■ All relevant analyses are reported in the Results section ■ Do not present raw data ■ Data should be reported in summary form • Descriptive statistics • Inferential statistics ■ Results of descriptive and inferential statistics must be presented in narrative format ■ Describe the source of any unconventional statistical tests
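
A minimal sketch of reducing raw data to summary form and stating it in a sentence, assuming two hypothetical interface conditions and made-up task times (not real study data). Only M, SD, and N reach the report; the raw lists stay in your data files.

```python
from statistics import mean, stdev

# Hypothetical raw task times (seconds) for two interface conditions; not real study data.
raw = {
    "menu": [41.0, 38.5, 45.2, 39.9, 43.4],
    "toolbar": [35.1, 33.8, 37.0, 36.4, 34.2],
}

def describe(values):
    """Reduce raw scores to summary form: (M, SD, N), with SD using n - 1."""
    return mean(values), stdev(values), len(values)

for condition, times in raw.items():
    m, sd, n = describe(times)
    # Narrative-style sentence built from the summary, not the raw numbers
    print(f"Mean task time in the {condition} condition was {m:.1f} s (SD = {sd:.2f}, N = {n}).")
```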

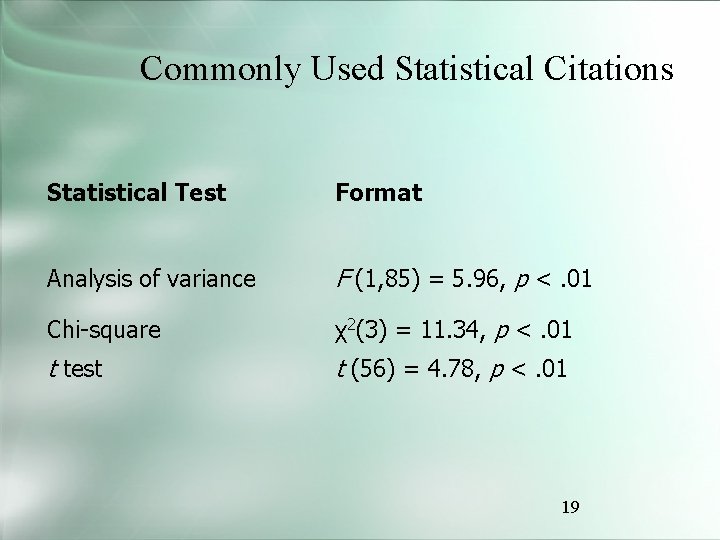

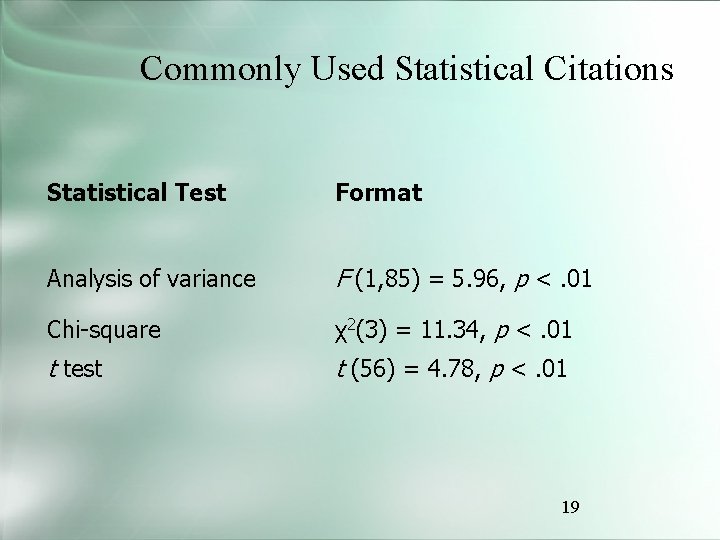

Commonly Used Statistical Citations

| Statistical Test | Format |
|---|---|
| Analysis of variance | F(1, 85) = 5.96, p < .01 |
| Chi-square | χ²(3) = 11.34, p < .01 |
| t test | t(56) = 4.78, p < .01 |
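
The citation format in the table can be produced mechanically once the analysis is done. This sketch assumes you already have the statistic, its degrees of freedom, and the p value; the helper names `format_p` and `format_stat` are hypothetical. The threshold style mirrors the “p < .01” examples above, although current APA style recommends exact p values where possible.

```python
def format_p(p, thresholds=(0.001, 0.01, 0.05)):
    """Report p against the smallest conventional threshold it falls below
    (e.g. 'p < .01'); otherwise report the exact value with two decimals."""
    for t in thresholds:
        if p < t:
            return "p < " + f"{t:g}".lstrip("0")
    return "p = " + f"{p:.2f}".lstrip("0")

def format_stat(symbol, df, value, p):
    """Build a citation such as 't(56) = 4.78, p < .001'; df is a tuple."""
    df_text = ", ".join(str(d) for d in df)
    return f"{symbol}({df_text}) = {value:.2f}, {format_p(p)}"

# Hypothetical values, for illustration only
print(format_stat("t", (56,), 4.78, 0.00002))  # t(56) = 4.78, p < .001
print(format_stat("F", (2, 40), 3.10, 0.056))  # F(2, 40) = 3.10, p = .06
print(format_stat("χ²", (3,), 12.50, 0.006))   # χ²(3) = 12.50, p < .01
```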

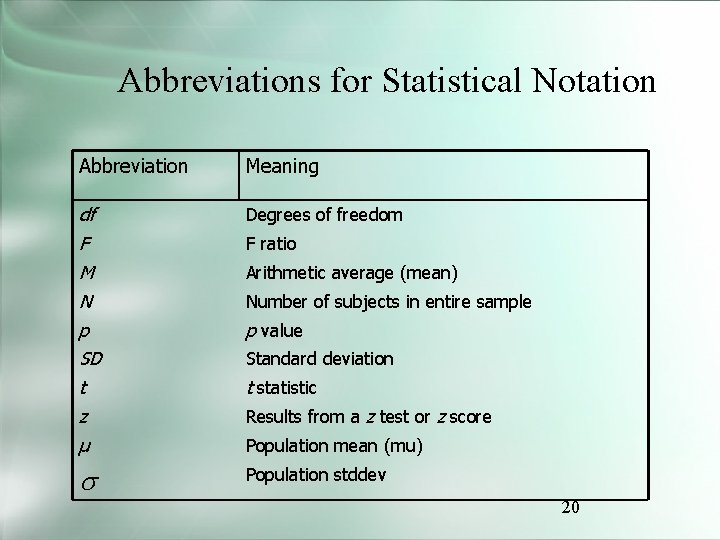

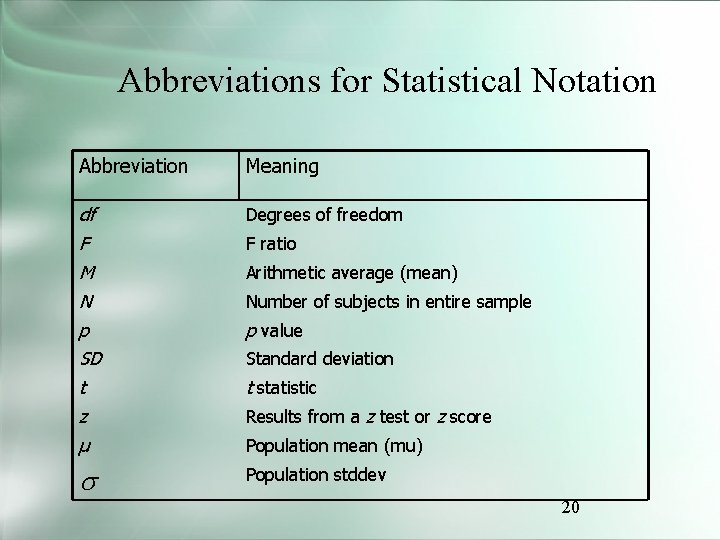

Abbreviations for Statistical Notation

| Abbreviation | Meaning |
|---|---|
| df | Degrees of freedom |
| F | F ratio |
| M | Arithmetic average (mean) |
| N | Number of subjects in entire sample |
| p | p value |
| SD | Standard deviation |
| t | t statistic |
| z | Results from a z test or z score |
| μ | Population mean (mu) |
| σ | Population standard deviation (sigma) |
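
The difference between a sample SD and the population standard deviation σ is only the denominator. Python's standard `statistics` module provides both: `stdev` divides by n - 1 and `pstdev` divides by n. The scores below are made up for illustration.

```python
from statistics import pstdev, stdev

scores = [4, 7, 6, 5, 8]  # hypothetical scores

sd = stdev(scores)      # SD: sample standard deviation, divides by n - 1
sigma = pstdev(scores)  # σ: population standard deviation, divides by n
print(f"SD = {sd:.2f}, sigma = {sigma:.2f}")  # SD = 1.58, sigma = 1.41
```

When your subjects are a sample used to estimate a population, SD (the n - 1 version) is the value to report.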

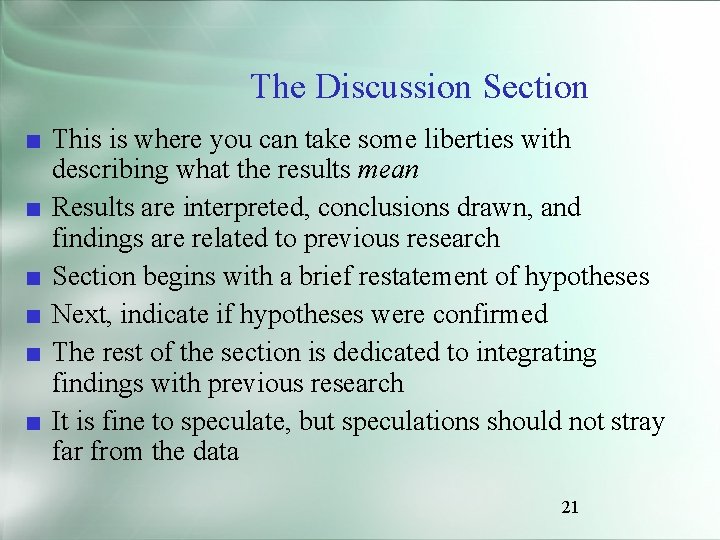

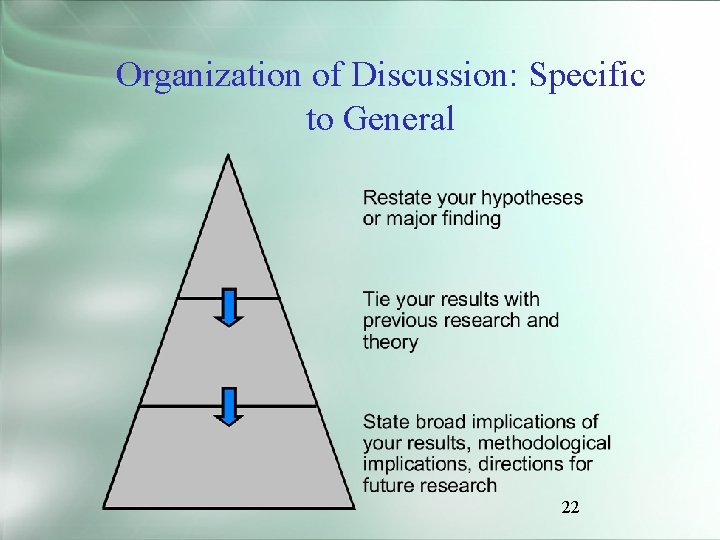

The Discussion Section ■ This is where you can take some liberties with describing what the results mean ■ Results are interpreted, conclusions drawn, and findings are related to previous research ■ Section begins with a brief restatement of hypotheses ■ Next, indicate if hypotheses were confirmed ■ The rest of the section is dedicated to integrating findings with previous research ■ It is fine to speculate, but speculations should not stray far from the data

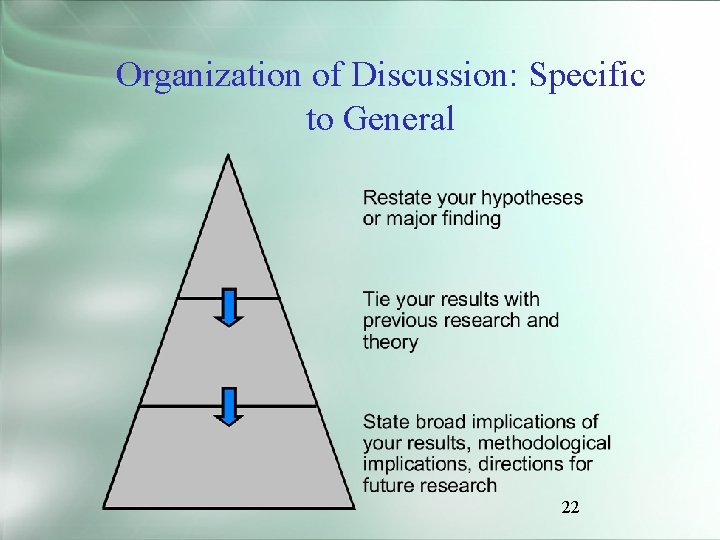

Organization of Discussion: Specific to General

Example

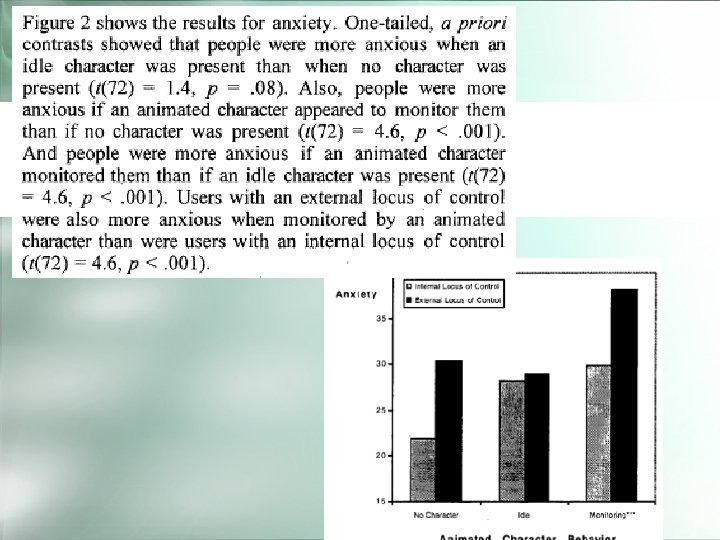

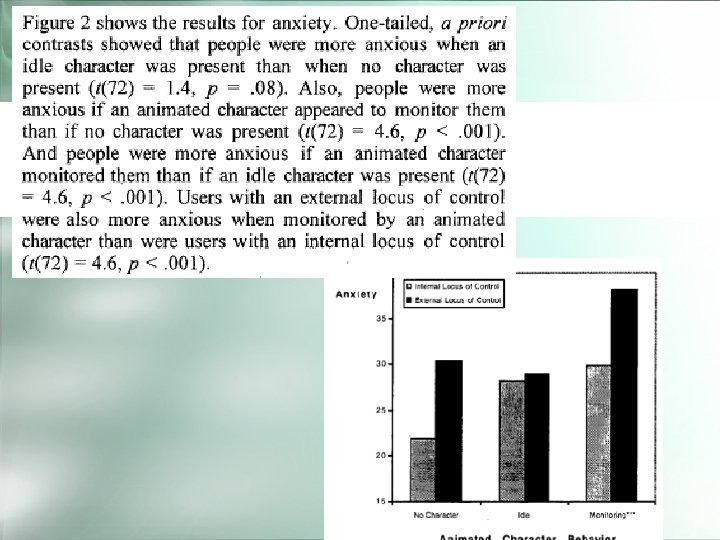

Citations ■ Liberally cite previous and related work ■ If you copy passages, you must cite them and, depending on length, format them to show they are quoted ■ Suggest using EndNote, BibTeX, or similar

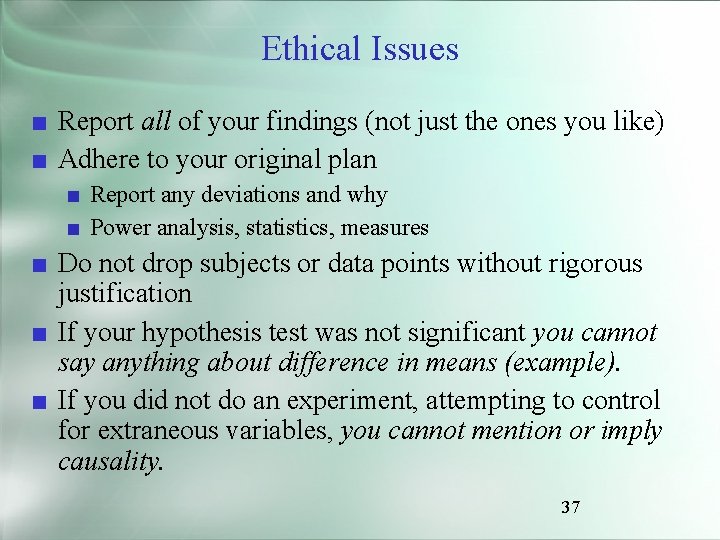

Ethical Issues ■ Report all of your findings (not just the ones you like) ■ Adhere to your original plan • Report any deviations and why • Power analysis, statistics, measures ■ Do not drop subjects or data points without rigorous justification ■ If your hypothesis test was not significant, you cannot claim a difference between conditions (e.g., a difference in means) ■ If you did not run an experiment that controls for extraneous variables, you cannot state or imply causality
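
A planned power analysis can be sketched with the standard normal approximation for comparing two group means. This is a simplification and an assumption of this sketch, not a method from the notes: exact t-based calculators such as G*Power give slightly larger sample sizes, and the expected effect size d has to come from pilot data or prior work.

```python
import math
from statistics import NormalDist

def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate subjects needed per group for a two-sided comparison of two
    means: n = 2 * ((z_(1 - alpha/2) + z_power) / d) ** 2, rounded up.
    d is the expected standardized effect size (Cohen's d)."""
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)

for d in (0.2, 0.5, 0.8):  # conventional small, medium, large effects
    print(f"d = {d}: about {n_per_group(d)} per group")
```

Under this approximation a medium effect (d = 0.5) needs about 63 subjects per group at α = .05 and 80% power, which is one reason small usability studies often cannot detect modest differences.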

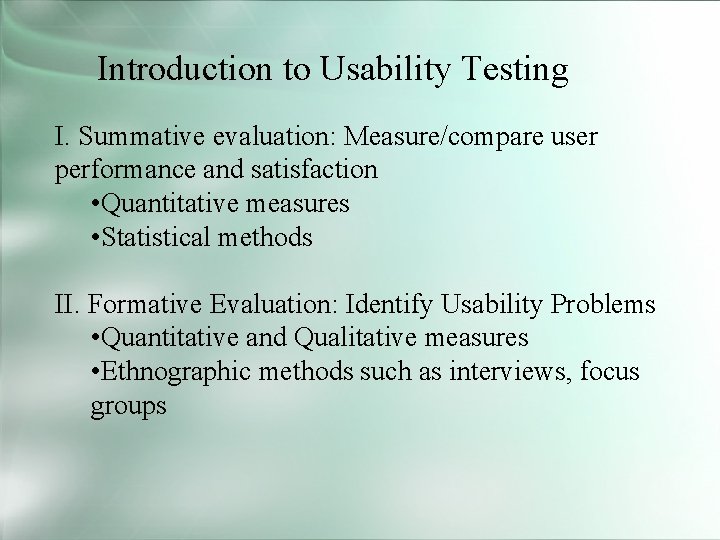

Introduction to Usability Testing I. Summative evaluation: Measure/compare user performance and satisfaction • Quantitative measures • Statistical methods II. Formative Evaluation: Identify Usability Problems • Quantitative and Qualitative measures • Ethnographic methods such as interviews, focus groups

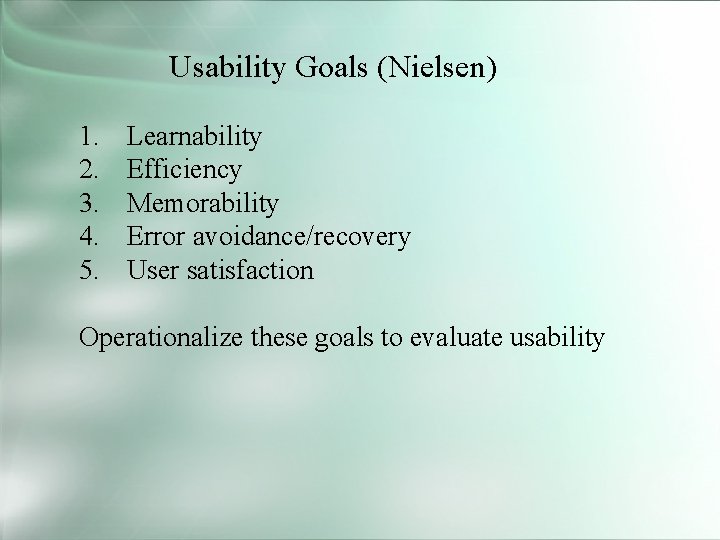

Usability Goals (Nielsen) 1. Learnability 2. Efficiency 3. Memorability 4. Error avoidance/recovery 5. User satisfaction ■ Operationalize these goals to evaluate usability

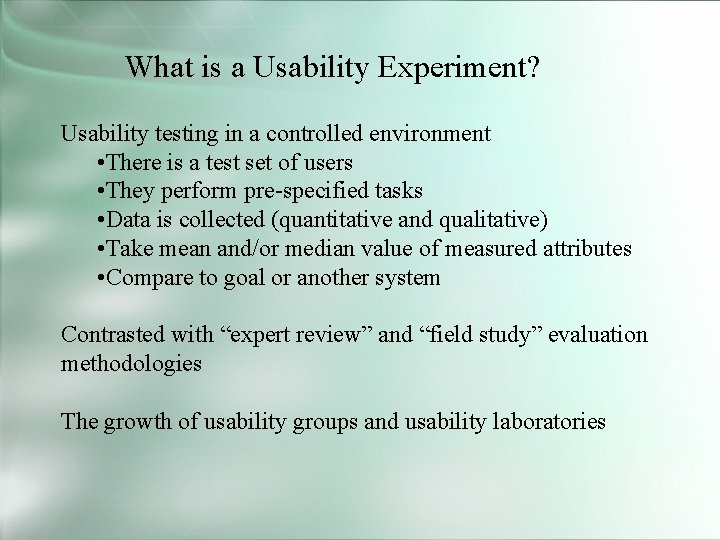

What is a Usability Experiment? ■ Usability testing in a controlled environment • There is a test set of users • They perform pre-specified tasks • Data is collected (quantitative and qualitative) • Take the mean and/or median value of measured attributes • Compare to a goal or another system ■ Contrasted with “expert review” and “field study” evaluation methodologies ■ The growth of usability groups and usability laboratories
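
Whether to compare the mean or the median against a goal matters when one user is very slow. A minimal sketch with made-up task times and a hypothetical 60-second goal:

```python
from statistics import mean, median

# Hypothetical task-completion times (seconds) for seven test users
times = [52, 48, 61, 55, 140, 50, 58]
GOAL_SECONDS = 60  # hypothetical usability goal: typical user finishes within 60 s

m, md = mean(times), median(times)
print(f"mean = {m:.1f} s, median = {md} s")
for label, value in (("mean", m), ("median", md)):
    print(f"{label} {'meets' if value <= GOAL_SECONDS else 'misses'} the {GOAL_SECONDS} s goal")
```

Here the single 140-second user pulls the mean (66.3 s) above the goal while the median (55 s) meets it, so the goal statement should say which statistic it refers to.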

Experimental factors ■ Subjects • representative • sufficient sample ■ Variables • independent variable (IV): characteristic changed to produce different conditions, e.g., interface style, number of menu items • dependent variable (DV): characteristics measured in the experiment, e.g., time taken, number of errors

Experimental factors (cont.) ■ Hypothesis • prediction of outcome, framed in terms of IV and DV • null hypothesis: states no difference between conditions; the aim is to reject it ■ Experimental design • within-groups design: each subject performs the experiment under each condition; transfer of learning possible; less costly and less likely to suffer from user variation • between-groups design: each subject performs under only one condition; no transfer of learning; more users required; user variation can bias results
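
The two designs call for different analyses. A minimal sketch, assuming interval-scale scores such as error counts (the numbers are hypothetical): a within-groups design uses a paired t statistic on per-subject differences, and a between-groups design uses an independent-samples t statistic (Student's pooled-variance version here). Only t and df are computed; a p value needs the t distribution, for example via SciPy's `ttest_rel` and `ttest_ind`.

```python
import math
from statistics import mean, stdev, variance

def paired_t(a, b):
    """Within-groups: each subject is measured under both conditions.
    t = mean(diff) / (SD(diff) / sqrt(n)), df = n - 1."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / math.sqrt(n)), n - 1

def independent_t(a, b):
    """Between-groups: different subjects in each condition.
    Student's t with pooled variance (assumes equal population variances)."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled * (1 / na + 1 / nb)), na + nb - 2

# Hypothetical error counts for five users on the old and new interface
old = [12, 15, 11, 14, 13]
new = [10, 13, 10, 11, 11]

# Analyze the same numbers as paired (same users) vs. as two independent groups
print("paired:      t = %.2f, df = %d" % paired_t(old, new))
print("independent: t = %.2f, df = %d" % independent_t(old, new))
```

With identical numbers the paired analysis gives t = 6.32 against 2.24 for independent groups, because differences between users cancel out; this is the sense in which the within-groups design is less likely to suffer from user variation.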

Summative Analysis ■ What to measure? (and its relationship to a usability goal) • Total task time • User “think time” (dead time?) • Time spent not moving toward goal • Ratio of successful actions/errors • Commands used/not used • Frequency of user expression of confusion, frustration, satisfaction • Frequency of reference to manuals/help system • Percent of time such reference provided the needed answer
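
Several of these measures can be computed from a timestamped interaction log. This sketch assumes a hypothetical log format of (seconds since task start, event name) pairs and a hypothetical 10-second threshold for counting a pause as “think time”; neither comes from the notes, and a real study would define both operationally in its protocol.

```python
# Hypothetical log for one user on one task: (seconds since start, event name).
# Event names are made up for illustration, not taken from any logging tool.
log = [
    (0.0, "start"),
    (4.5, "action_ok"),
    (15.0, "action_ok"),
    (17.0, "error"),
    (30.0, "help_opened"),
    (33.0, "action_ok"),
    (35.5, "action_ok"),
    (40.0, "done"),
]

THINK_GAP = 10.0  # hypothetical: pauses longer than this count as "think time"

def summarize_task(events):
    """Compute a few summative measures from one task's event log."""
    total = events[-1][0] - events[0][0]
    gaps = [later[0] - earlier[0] for earlier, later in zip(events, events[1:])]
    think = sum(g for g in gaps if g > THINK_GAP)
    kinds = [name for _, name in events]
    ok, errors = kinds.count("action_ok"), kinds.count("error")
    return {
        "total_task_time": total,
        "think_time": think,
        "success_error_ratio": ok / errors if errors else float("inf"),
        "help_references": kinds.count("help_opened"),
    }

print(summarize_task(log))
```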

Measuring User Performance ■ Measuring learnability • Time to complete a set of tasks • Learnability/efficiency trade-off ■ Measuring efficiency • Time to complete a set of tasks • How to define and locate “experienced” users ■ Measuring memorability • The most difficult, since “casual” users are hard to find for experiments • Memory quizzes may be misleading

Measuring User Performance (cont.) ■ Measuring user satisfaction • Likert scale (agree or disagree) • Semantic differential scale • Physiological measures of stress ■ Measuring errors • Classification of minor vs. serious
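
A Likert-style satisfaction questionnaire is usually scored per participant by averaging item responses. This sketch assumes a 1-5 agree/disagree scale and three hypothetical items; reverse-scoring negatively worded items is a common practice that is added here as an assumption, not something the notes prescribe.

```python
from statistics import mean

SCALE_MAX = 5  # assumes a 1-5 agree/disagree scale (5 = strongly agree)

# Hypothetical satisfaction items; True marks a negatively worded item to reverse-score
ITEMS = {
    "The system was easy to use": False,
    "I felt frustrated using the system": True,
    "I would use this system again": False,
}

def satisfaction_score(responses):
    """Mean item score for one participant, reverse-scoring negative items
    so that a higher number always means more satisfied."""
    scored = [
        (SCALE_MAX + 1 - responses[item]) if negative else responses[item]
        for item, negative in ITEMS.items()
    ]
    return mean(scored)

answers = {
    "The system was easy to use": 4,
    "I felt frustrated using the system": 2,
    "I would use this system again": 5,
}
print(round(satisfaction_score(answers), 2))  # 4.33
```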

Reliability and Validity ■ Reliability means repeatability; statistical significance is a measure of reliability ■ Validity means whether the results will transfer to a real-life situation; it depends on matching the users, tasks, and environment ■ Reliability is difficult to achieve because of high variability in individual user performance

Formative Evaluation ■ What is a Usability Problem? • Unclear: the planned method for using the system is not readily understood or remembered (information design level) • Error-prone: the design leads users to stray from the correct operation of the system (any design level) • Mechanism overhead: the mechanism design creates awkward work flow patterns that slow down or distract users • Environment clash: the design of the system does not fit well with the users’ overall work processes (any design level); example: an incomplete transaction cannot be saved

Qualitative methods for collecting usability problems ■ Thinking aloud studies • Difficult to conduct • Experimenter prompting, non-directive • Alternatives: constructive interaction, coaching method, retrospective testing • Output: notes on what users did and expressed: goals, confusions or misunderstandings, errors, reactions expressed ■ Questionnaires • Should be usability-tested beforehand ■ Focus groups, interviews

Observational Methods - Think Aloud ■ User observed performing task ■ User asked to describe what he is doing and why, what he thinks is happening, etc. ■ Advantages • simplicity: requires little expertise • can provide useful insight • can show how the system is actually used ■ Disadvantages • subjective • selective • act of describing may alter task performance

Observational Methods - Cooperative evaluation ■ Variation on think aloud ■ User collaborates in evaluation ■ Both user and evaluator can ask each other questions throughout ■ Additional advantages • less constrained and easier to use • user is encouraged to criticize system • clarification possible

Observational Methods - Protocol analysis ■ Paper and pencil: cheap, limited to writing speed ■ Audio: good for think aloud, difficult to match with other protocols ■ Video: accurate and realistic, needs special equipment, obtrusive ■ Computer logging: automatic and unobtrusive, large amounts of data difficult to analyze ■ User notebooks: coarse and subjective, useful insights, good for longitudinal studies ■ Mixed use in practice ■ Transcription of audio and video is difficult and requires skill ■ Some automatic support tools available

Query Techniques - Interviews ■ Analyst questions user on a one-to-one basis, usually based on prepared questions ■ Informal, subjective, and relatively cheap ■ Advantages • can be varied to suit context • issues can be explored more fully • can elicit user views and identify unanticipated problems ■ Disadvantages • very subjective • time consuming

Query Techniques - Questionnaires ■ Set of fixed questions given to users ■ Advantages • quick and reaches large user group • can be analyzed more rigorously ■ Disadvantages • less flexible • less probing

Laboratory studies: Pros and Cons ■ Advantages: • specialist equipment available • uninterrupted environment ■ Disadvantages: • lack of context • difficult to observe several users cooperating ■ Appropriate: • if the actual system location is dangerous or impractical • to allow controlled manipulation of use

Steps in a usability experiment 1. The planning phase 2. The execution phase 3. Data collection techniques 4. Data analysis

The planning phase ■ Who, what, where, when, and how much? • Who are the test users, and how will they be recruited? • Who are the experimenters? • When, where, and how long will the test take? • What equipment/software is needed? • How much will the experiment cost? ■ Prepare a detailed test protocol • What test tasks? (written task sheets) • What user aids? (written manual) • What data will be collected? (include questionnaire) • How will results be analyzed/evaluated? ■ Pilot test the protocol with a few users

Detailed Test Protocol ■ What tasks? ■ Criteria for completion? ■ User aids ■ What will users be asked to do (thinking aloud studies)? ■ Interaction with experimenter ■ What data will be collected? ■ All materials to be given to users as part of the test, including a detailed description of the tasks

Execution phase ■ Prepare environment, materials, software ■ Introduction should include: • purpose (evaluating software) • voluntary and confidential • explain all procedures: recording, question-handling • invite questions ■ During the experiment • give user written task description(s), one at a time • only one experimenter should talk ■ De-briefing

Execution phase: ethics of human experimentation applied to usability testing ■ Users feel exposed using unfamiliar tools and making errors ■ Guidelines: • Reassure users that individual results will not be revealed • Reassure users that they can stop at any time • Provide a comfortable environment • Don’t laugh at users or refer to them as subjects or guinea pigs • Don’t volunteer help, but don’t allow the user to struggle too long • In de-briefing: answer all questions, reveal any deception, thank users for helping

Execution Phase: Designing Test Tasks ■ Tasks should: • be representative • cover the most important parts of the UI • not take too long to complete • be goal or result oriented (possibly with a scenario) • not be frivolous or humorous (unless part of the product goal) ■ The first task should build confidence ■ The last task should create a sense of accomplishment

Data collection - usability labs and equipment ■ Pad and paper: the only absolutely necessary data collection tool! ■ Observation areas (for other experimenters, developers, customer reps, etc.): should be shown to users ■ Videotape (may be overrated): users must sign a release ■ Video display capture ■ Portable usability labs ■ Usability kiosks

Analysis of data ■ Before you start to do any statistics: • look at the data • save the original data ■ Choice of statistical technique depends on • type of data • information required ■ Type of data • discrete: finite number of values • continuous: any value
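
A minimal sketch of matching the summary to the type of data, using made-up values: a discrete variable (task completed: yes/no) is summarized as a frequency table, a continuous one (task time) as M and SD. The raw lists are only read, never modified, in keeping with saving the original data.

```python
from collections import Counter
from statistics import mean, stdev

# Hypothetical raw data for six users; the lists are read but never modified
completed = ["yes", "no", "yes", "yes", "no", "yes"]  # discrete: finite set of values
task_time = [40.0, 38.0, 45.0, 39.0, 48.0, 42.0]      # continuous: any value (seconds)

def summarize(values, discrete):
    """Frequency table for discrete data; mean and SD for continuous data."""
    if discrete:
        return dict(sorted(Counter(values).items()))
    return {"M": mean(values), "SD": stdev(values)}

print(summarize(completed, discrete=True))  # {'no': 2, 'yes': 4}
print(summarize(task_time, discrete=False))
```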

Testing usability in the field 1. Direct observation in actual use • discover new uses • take notes, don’t help, chat later 2. Logging actual use • objective, not intrusive • great for identifying errors • shows which features are/are not used • privacy concerns
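
Logged use can answer “which features are/are not used” with a simple count. A minimal sketch with a hypothetical feature inventory and usage log; a real field log would also need the privacy safeguards the slide mentions.

```python
from collections import Counter

# Hypothetical feature inventory and usage log (one entry per feature invocation)
ALL_FEATURES = {"search", "export", "filter", "share", "undo"}
usage_log = ["search", "filter", "search", "undo", "search", "filter"]

counts = Counter(usage_log)
never_used = sorted(ALL_FEATURES - set(counts))

print(counts.most_common())       # [('search', 3), ('filter', 2), ('undo', 1)]
print("never used:", never_used)  # never used: ['export', 'share']
```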

Testing Usability in the Field (cont.) 3. Questionnaires and interviews with real users • ask users to recall critical incidents • questionnaires must be short and easy to return 4. Focus groups • 6–9 users • skilled moderator with pre-planned script • computer conferencing? 5. On-line direct feedback mechanisms • initiated by users • may signal change in user needs • trust but verify 6. Bulletin boards and user groups

Field Studies: Pros and Cons ■ Advantages: • natural environment • context retained (though observation may alter it) • longitudinal studies possible ■ Disadvantages: • distractions • noise ■ Appropriate: • for “beta testing” • where context is crucial • for longitudinal studies