IS 4800 Empirical Research Methods for Information Science

- Slides: 53

Class Notes, Feb 8, 2012 Instructor: Prof. Carole Hafner, 446 WVH hafner@ccs.neu.edu Tel: 617-373-5116 Course Web site: www.ccs.neu.edu/course/is4800sp12/

Outline ■ Assignment 2: Relational Agents for Patient Education study ■ Assignment 3: Descriptive Statistics Report ■ Review for test ■ Team project 1 ■ Survey research – (cont. ) ■ Questionnaire construction ■ Composite measures ■ Validity and reliability

Assignment 2 Points to mention ■ Respect for persons ■ Subjects can opt out; verbal and written consent obtained ■ Refusal will not impact medical care (voluntary) ■ Study described in detail in recruitment letter (informed) ■ Gives procedures to ensure confidentiality ■ Participants given number to call if they have concerns ■ Beneficence ■ Little or no risk ■ Potential for significant public benefit. What benefits? • Benefit to all diabetes patients • Use of relational agents for educating elderly/minority/low-literacy people ■ Justice ■ Participants may benefit personally (health + $) ■ Minority patients in urban areas have a 3x higher rate of low health literacy and therefore represent a class that would benefit the most.

Assignment 2 more points to mention ■ Data safety & monitoring plan ■ Independent oversight ensures plan is followed ■ Provides extra protection for poor/minority patients (justice) ■ Point of Study Subjects section ■ Document inclusion/exclusion criteria ■ Demonstrate there is a sufficient sample size ■ Shows disabled are not over-burdened (justice) ■ HIPAA issues ■ Use of data to pre-select without consent ■ “Opt-out” initial consent process ■ Use of phone interview to collect more data

Assignment 3 ■ Results were disappointing ■ Frequency tables are only meaningful for categorical measures (gender and job category) unless you create intervals for numeric data. ■ Histograms are meaningful for numeric measures (experience, call time, customer satisfaction) ■ Crosstabs – apparently could not figure out how to get percentages ■ Most were able to get the scatter plot ■ About half did the Custom Tables ■ Grade of B for all the requested stats plus a minimal discussion
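The two points that caused trouble (intervals for numeric data, and percentages in a crosstab) can be sketched in a few lines of Python. This is only an illustration: the function names, the interval width, and the data are invented here and are not part of the assignment or of the statistics package used in class.

```python
from collections import Counter


def binned_frequencies(values, width):
    """Count numeric values in equal-width intervals [low, low + width)."""
    counts = Counter((v // width) * width for v in values)
    return {(low, low + width): counts[low] for low in sorted(counts)}


def crosstab_row_percents(pairs):
    """Row percentages for a two-way table built from (row, column) pairs."""
    cells = Counter(pairs)
    row_totals = Counter(row for row, _ in pairs)
    return {(row, col): 100.0 * n / row_totals[row]
            for (row, col), n in cells.items()}


# Hypothetical data, invented for illustration only.
experience_years = [1, 2, 2, 4, 7, 8, 11, 12]
gender_by_job = [("F", "agent"), ("F", "manager"), ("M", "agent"), ("M", "agent")]

print(binned_frequencies(experience_years, 5))
print(crosstab_row_percents(gender_by_job))
```

Row percentages answer "of the respondents in this row, what share falls in each column?"; dividing by column totals instead gives column percentages.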

3. Types of Questionnaire Items • Restricted (close-ended) – Respondents are given a list of alternatives and check the desired alternative • Open-Ended – Respondents are asked to answer a question in their own words • Partially Open-Ended – An “Other” alternative is added to a restricted item, allowing the respondent to write in an alternative

Types of Questionnaire Items • Rating Scale – Respondents circle a number on a scale (e.g., 0 to 10) or check a point on a line that best reflects their opinions – Two factors need to be considered • Number of points on the scale • How to label (“anchor”) the scale (e.g., endpoints only or each point) • Ranking question

Types of Questionnaire Items – A Likert Scale is a scale used to assess attitudes • Respondents indicate their degree of agreement or disagreement with a series of statements • I am happy. Disagree 1 2 3 4 5 6 7 Agree – A Semantic Differential Scale allows participants to provide a rating within a bipolar space • How are you feeling right now? Sad 1 2 3 4 5 6 7 Happy

Sample Survey Questions http://www.custominsight.com/survey-question-types.asp

Composite Measures

Psychological Concepts aka “Constructs” ■ Constructs are general codifications of experience and observations. ■ Observe differences in social standing -> concept of social status. ■ Observe differences in religious commitment -> concept of religiosity ■ Most psychological constructs have no ultimate definitions ■ Constructs are ad hoc summaries of experience and observations

Composite Measures ■ Indexes (aka “scales”) provide an ordinal ranking of respondents with respect to a construct of interest (e.g., liking of computers) ■ Usually assessed through a series of related questions.

Composite measures ■ It is seldom possible to arrive at a single question that adequately represents a complex variable. ■ Any single item is likely to misrepresent some respondents (e.g., church-going) ■ A single item may not provide enough variation for your purposes. ■ Single items give crude assessments; several items give a more comprehensive and accurate assessment.

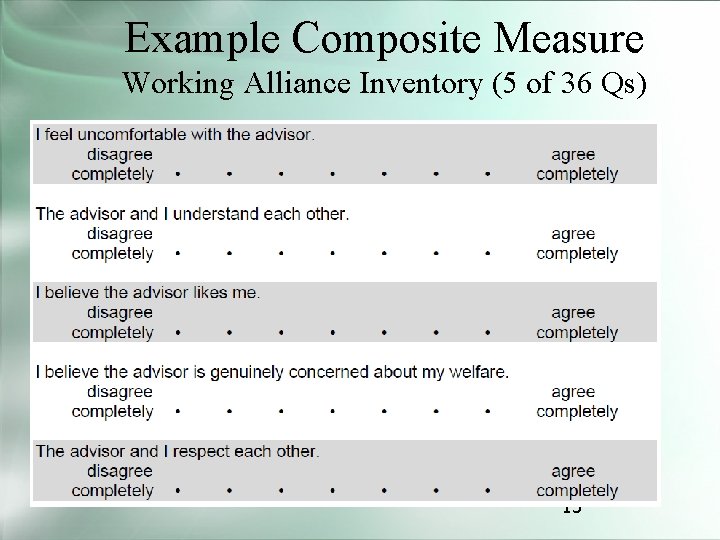

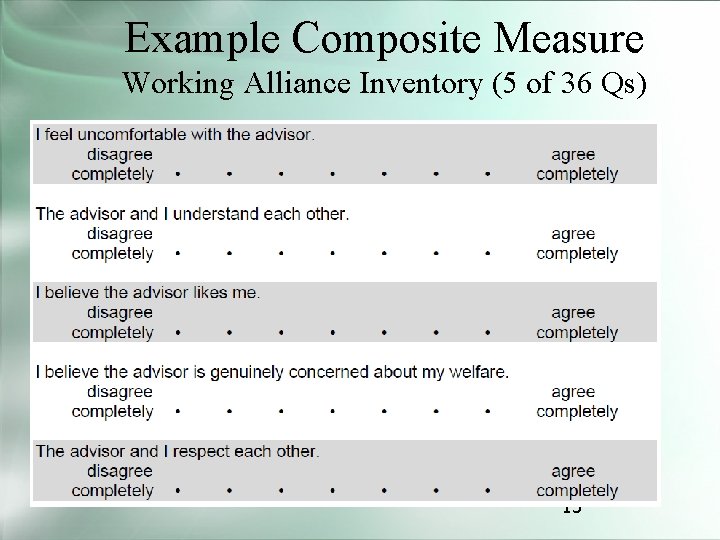

Example Composite Measure: Working Alliance Inventory (5 of 36 Qs)

Operationalization ■ The process of specifying empirical observations that are indicators of the concept of interest ■ Begin by enumerating all the subdimensions (“factors”) of the concept ■ Review previous research ■ Use common sense

Example: religiosity ■ Subdimensions/indicators/factors ■ Ritual involvement • E.g., going to church ■ Ideological involvement • Acceptance of religious beliefs ■ Intellectual involvement • Extent of knowledge about religion ■ Experiential involvement • Range of religious experiences ■ Consequential involvement • Extent to which religion guides social decisions ■ (there are many others)

Discriminant indicators ■ Also think about related measures which should not be indicators of your construct ■ In particular if you will be measuring another related variable, make sure none of your indicators include any attributes of it. ■ Example ■ Want to study the relationship between religiosity and attitudes towards war => including a question about adherence to “peace on earth” doctrine is not a good idea.

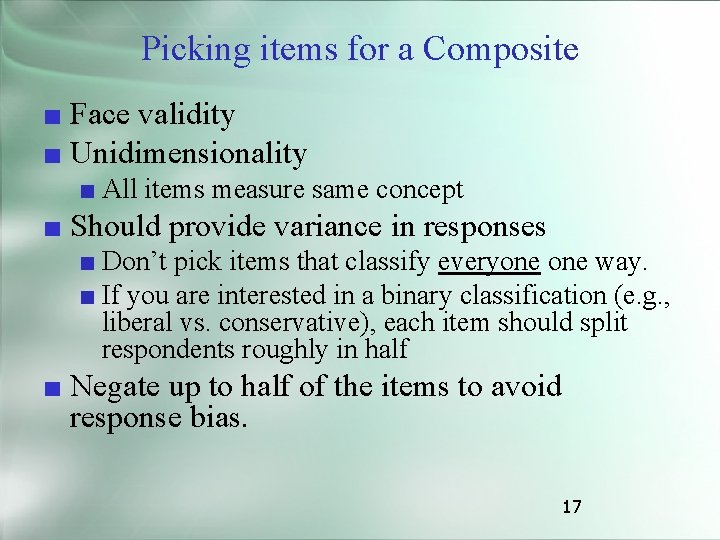

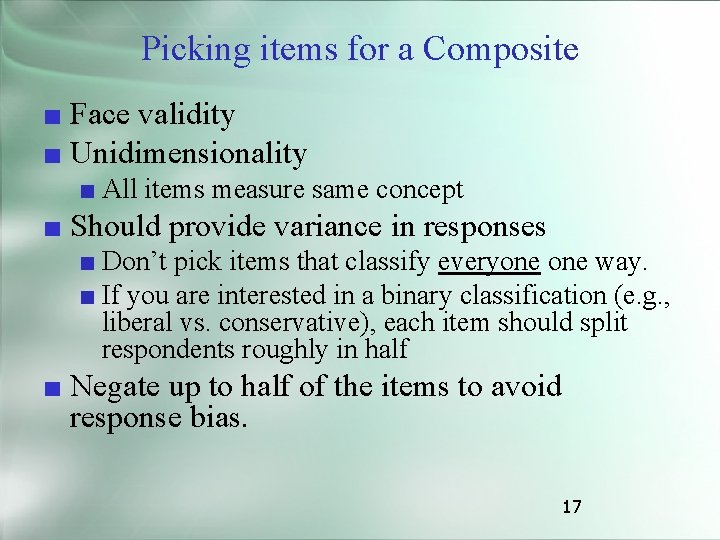

Picking items for a Composite ■ Face validity ■ Unidimensionality ■ All items measure same concept ■ Should provide variance in responses ■ Don’t pick items that classify everyone the same way. ■ If you are interested in a binary classification (e.g., liberal vs. conservative), each item should split respondents roughly in half ■ Negate up to half of the items to avoid response bias.

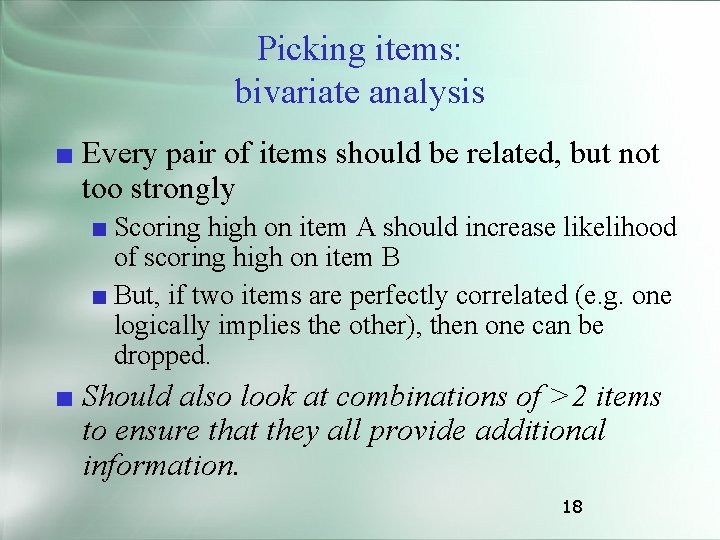

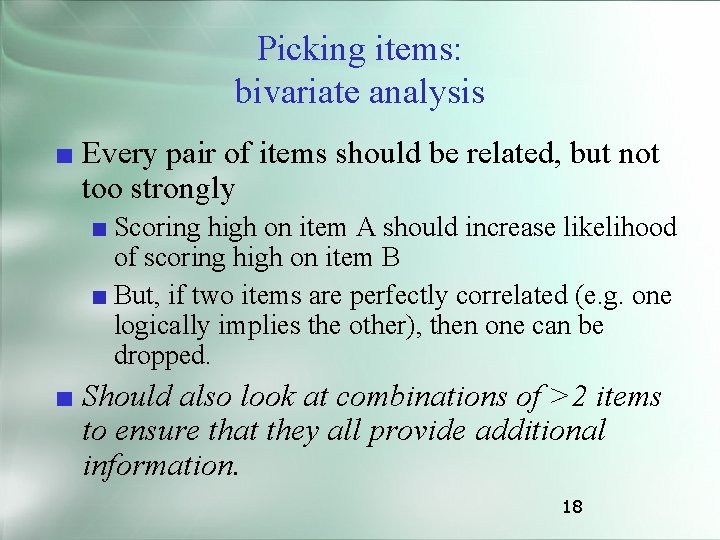

Picking items: bivariate analysis ■ Every pair of items should be related, but not too strongly ■ Scoring high on item A should increase likelihood of scoring high on item B ■ But, if two items are perfectly correlated (e.g., one logically implies the other), then one can be dropped. ■ Should also look at combinations of >2 items to ensure that they all provide additional information.
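One way to run the pairwise check is to compute the Pearson correlation for every pair of candidate items and flag pairs that look unrelated or redundant. The sketch below is illustrative only: the cutoffs `low=0.2` and `high=0.9` are assumptions made for this example, not values from the lecture, and the responses are invented.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length score lists (needs nonzero variance)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def flag_item_pairs(items, low=0.2, high=0.9):
    """Label each item pair 'unrelated', 'ok', or 'redundant' (cutoffs are assumed)."""
    report = {}
    for (name_a, a), (name_b, b) in combinations(items.items(), 2):
        r = pearson(a, b)
        if r >= high:
            label = "redundant"   # nearly perfectly correlated: one item can be dropped
        elif r < low:
            label = "unrelated"   # may not measure the same concept
        else:
            label = "ok"
        report[(name_a, name_b)] = label
    return report


# Hypothetical responses from five people to three candidate items.
items = {
    "q1": [1, 2, 3, 4, 5],
    "q2": [2, 4, 6, 8, 10],  # rises in lockstep with q1
    "q3": [2, 1, 4, 3, 5],
}
print(flag_item_pairs(items))
```

Pairwise correlations do not cover the last bullet: checking combinations of more than two items needs a multivariate technique such as factor analysis.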

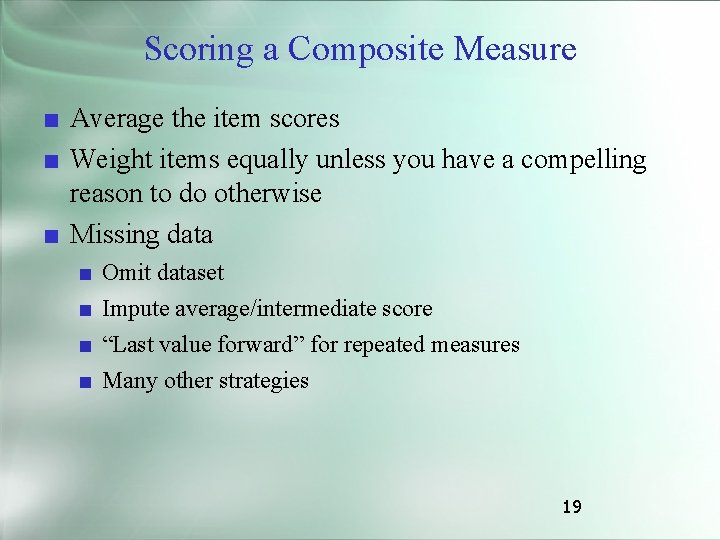

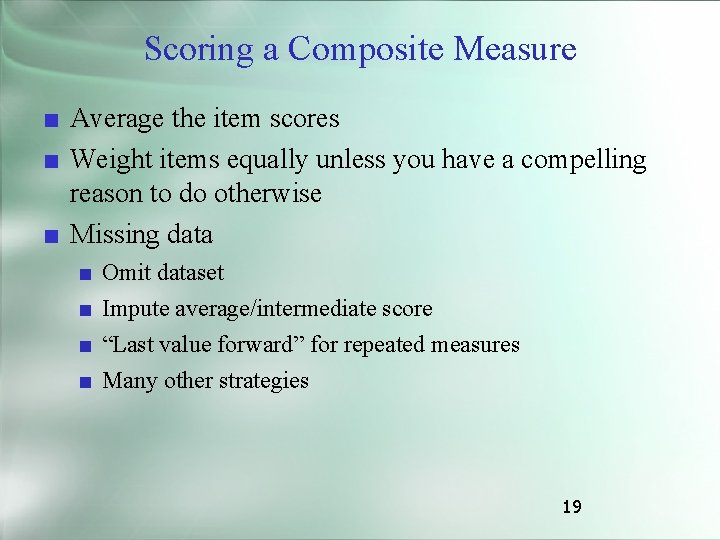

Scoring a Composite Measure ■ Average the item scores ■ Weight items equally unless you have a compelling reason to do otherwise ■ Missing data • Omit dataset • Impute average/intermediate score • “Last value forward” for repeated measures • Many other strategies
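A minimal scoring sketch, assuming a 1 to 7 response scale, equal weights, and negated items that must be reverse-coded before averaging. Missing answers (`None`) are skipped, which amounts to imputing the respondent's own average on the answered items; that is only one of the strategies listed above. The item names are hypothetical.

```python
def score_composite(responses, reversed_items=(), scale_min=1, scale_max=7):
    """Average a respondent's item scores with equal weights.

    responses: dict mapping item name to score, or None if unanswered.
    reversed_items: names of negated items, which are reverse-coded first.
    Returns None if the respondent answered nothing.
    """
    scored = []
    for item, value in responses.items():
        if value is None:
            continue  # skip missing answers
        if item in reversed_items:
            value = scale_min + scale_max - value  # on a 1..7 scale: 1 <-> 7, 2 <-> 6, ...
        scored.append(value)
    if not scored:
        return None
    return sum(scored) / len(scored)


# Hypothetical respondent: q2 is a negated item, q3 was left blank.
print(score_composite({"q1": 6, "q2": 2, "q3": None}, reversed_items={"q2"}))
```

Omitting the whole dataset for a respondent with any missing item would instead be a check such as `None in responses.values()` before scoring.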

5. Example “NU Husky Fanatic” 1. What are some factors? 2. What are some items per factor? 20

Designing a Composite Measure ■ Literature Review (previous measures, theoretical concepts) ■ Brainstorm on Factors ■ Brainstorm on Items ■ Preliminary Validity/Reliability testing ■ Factor analysis ■ Reliability testing ■ Validity testing

Validity and Reliability ■ Reliability of a measure ■ Validity of a measure ■ Especially composite measures of constructs ■ Validity of claims about association of IV and DV ■ Internal ■ External

Internal Validity ■ INTERNAL VALIDITY is the degree to which your design tests what it was intended to test ■ In an experiment, internal validity means showing the observed difference in the dependent variable is truly caused by changes in the independent variable ■ In correlational research, internal validity means that observed differences in the value of the criterion variable are truly related to changes in the predictor variable ■ Internal validity is threatened by extraneous and confounding variables ■ Internal validity must be considered during the design phase of research

External Validity ■ EXTERNAL VALIDITY is the degree to which results generalize beyond your sample and research setting ■ External validity is threatened by the use of a highly controlled laboratory setting, restricted populations, pretests, demand characteristics, experimenter bias, and subject selection bias (such as volunteer bias) ■ Steps taken to increase internal validity may decrease external validity and vice versa ■ Internal validity may be more important in basic research; external validity, in applied research

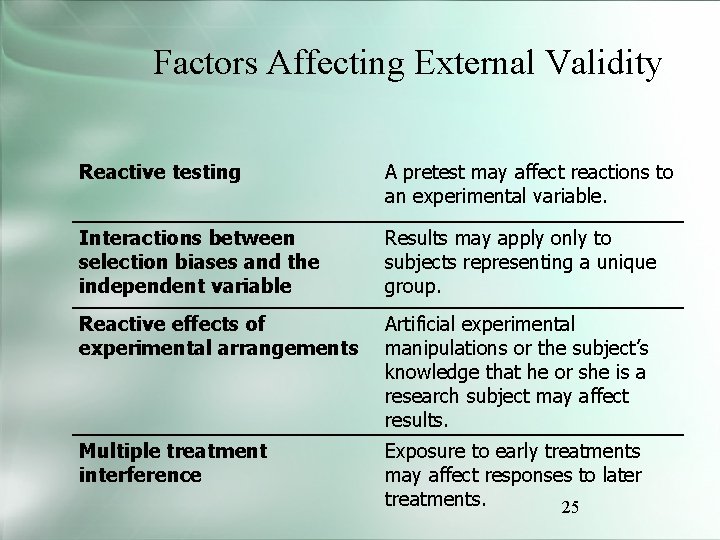

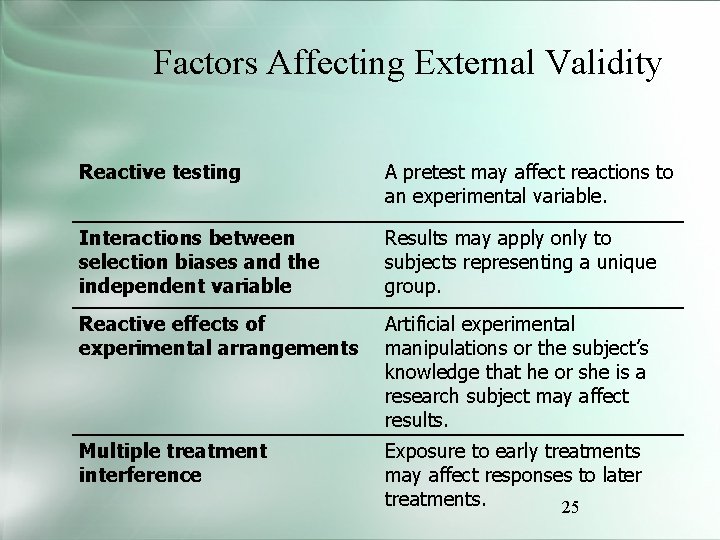

Factors Affecting External Validity ■ Reactive testing: A pretest may affect reactions to an experimental variable. ■ Interactions between selection biases and the independent variable: Results may apply only to subjects representing a unique group. ■ Reactive effects of experimental arrangements: Artificial experimental manipulations or the subject’s knowledge that he or she is a research subject may affect results. ■ Multiple treatment interference: Exposure to early treatments may affect responses to later treatments.

Internal vs. External Validity of a study ■ Internal: ■ Appropriate methods (well designed) ■ Conducted properly ■ Data analyzed correctly ■ Correct inference ■ Replicability: could someone else conduct your study and get the same result? ■ External: ■ Generalizability

Extraneous and Confounding Variables (impact on internal validity) ■ Extraneous variable – influences the DV. ■ Confounding variable – influences BOTH the IV and DV. Example: ice cream sales and drowning deaths both rise in hot weather. ■ The most dangerous type of extraneous variable ■ Must be considered during design of a study

Examples ■ Confounding variable (very difficult to address) ■ A study of the effect of larger vs. smaller monitors on performance. Larger monitors have better speakers (correlation w/IV). Perhaps the performance difference is due to the speakers. ■ Other extraneous variable (can be addressed by sample restriction, matched group assignment, statistical methods) ■ Task time on 2 word processors: typing skill. Can control by only using subjects with one skill level, matching skill levels among groups, or multivariate analysis.

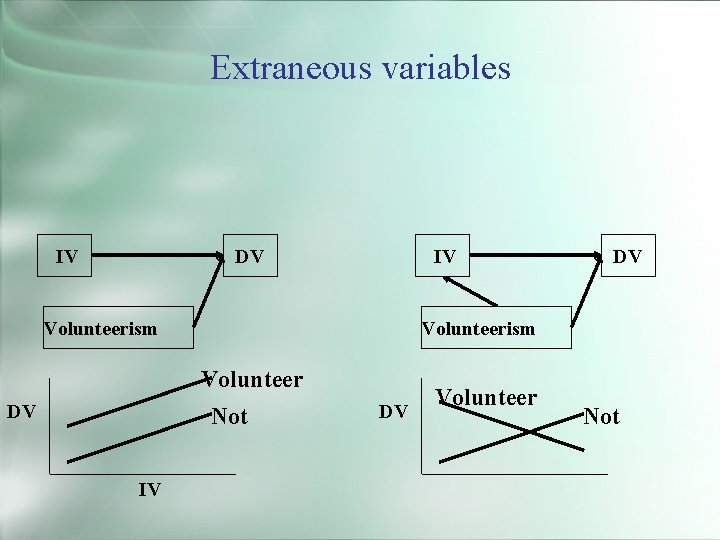

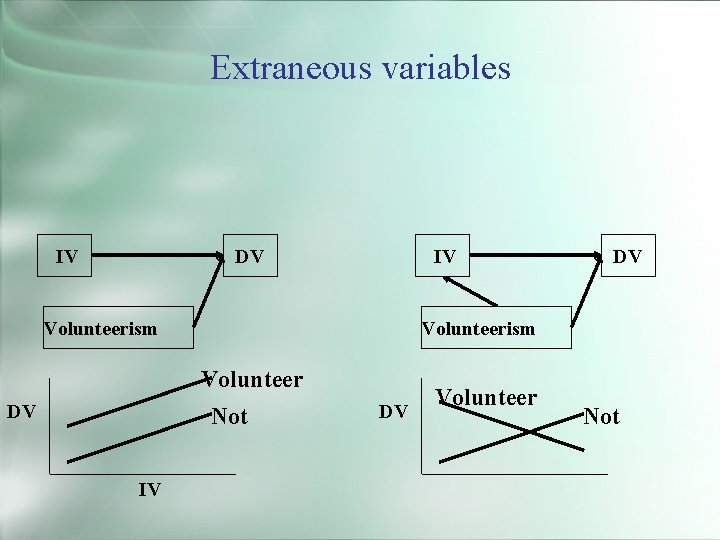

Extraneous variables (figure: diagrams relating IV and DV, with volunteerism, volunteer vs. not, as the extraneous variable)

Example: ■ You want to evaluate a new sensor to detect whether people are happy or not. ■ You hire actors and randomly assign them to act happy or sad, and test your sensors on them. ■ What kind of validity (internal/external) might be challenged?

Example: ■ You conduct the “Conversational Agents to Promote Health Literacy” study by assigning the first 30 patients who volunteer to the intervention group, and the next 30 to the control group. ■ What kind of validity (internal/external) might be challenged?
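For comparison with assignment by sign-up order, here is a minimal sketch of random assignment. The patient IDs, the group sizes, and the function name `randomize_groups` are hypothetical and are not taken from the study protocol.

```python
import random


def randomize_groups(participants, seed=None):
    """Shuffle participants and split them into two equal-sized groups."""
    rng = random.Random(seed)        # a seed makes the assignment reproducible
    shuffled = list(participants)    # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical IDs for 60 volunteers, listed in order of arrival.
volunteers = list(range(1, 61))
intervention, control = randomize_groups(volunteers, seed=2012)
print(len(intervention), len(control))
```

With shuffling, arrival order no longer determines group membership, so any trait that goes with volunteering early is spread across both groups by chance rather than concentrated in one.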

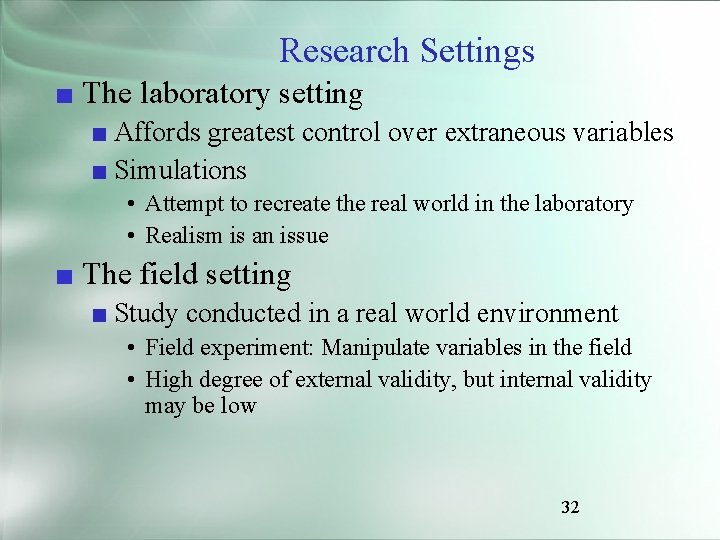

Research Settings ■ The laboratory setting ■ Affords greatest control over extraneous variables ■ Simulations • Attempt to recreate the real world in the laboratory • Realism is an issue ■ The field setting ■ Study conducted in a real world environment • Field experiment: Manipulate variables in the field • High degree of external validity, but internal validity may be low

Validating a Composite Measure

What is a validated measure? ■ Has reliability ■ Has validity ■ For psychological measures, these are collectively referred to as a measure’s “psychometrics”.

Measure Reliability ■ A reliable measure produces similar results when repeated measurements are made under identical conditions ■ Reliability can be established in several ways • Test-retest reliability: Administer the same test twice • Parallel-forms reliability: Alternate forms of the same test used • Split-half reliability: Parallel forms are included on one test and later separated for comparison

Reliability ■ For surveys, this also encompasses internal consistency: ■ Do all of the questions address the same underlying construct of interest? ■ That is, do scores covary? ■ A standard measure is Cronbach’s alpha • 0 = no correlation • 1 = scores always covary in the same way • 0.7 used as conventional threshold
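Cronbach’s alpha can be computed from the item variances and the variance of each respondent’s total score, using the standard formula alpha = k/(k-1) × (1 − sum of item variances / variance of totals), where k is the number of items. The sketch below uses Python’s `statistics.variance` (sample variance); the function name and the response data are invented for illustration.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of items, each a list with one score per respondent."""
    k = len(item_scores)
    totals = [sum(answers) for answers in zip(*item_scores)]  # total score per respondent
    sum_item_variances = sum(variance(item) for item in item_scores)
    return k / (k - 1) * (1 - sum_item_variances / variance(totals))


# Hypothetical responses: three items, four respondents.
items = [
    [1, 2, 3, 4],
    [2, 2, 4, 5],
    [1, 3, 3, 5],
]
alpha = cronbach_alpha(items)
print(round(alpha, 2), "meets the 0.7 convention" if alpha >= 0.7 else "below 0.7")
```

Negated items should be reverse-coded before computing alpha; otherwise they covary negatively with the rest and pull the value down.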

Increasing the Reliability of a Questionnaire ■ Check to be sure the items on your questionnaire are clearly written and appropriate for those who will complete your questionnaire ■ Increase the number of items on your questionnaire ■ Standardize the conditions under which the test is administered (e.g., timing procedures, lighting, ventilation, instructions) ■ Make sure you score your questionnaire carefully, eliminating scoring errors

Volunteer Bias ■ How can it affect external validity? ■ Characteristics of volunteers? ■ How do you address volunteer bias?

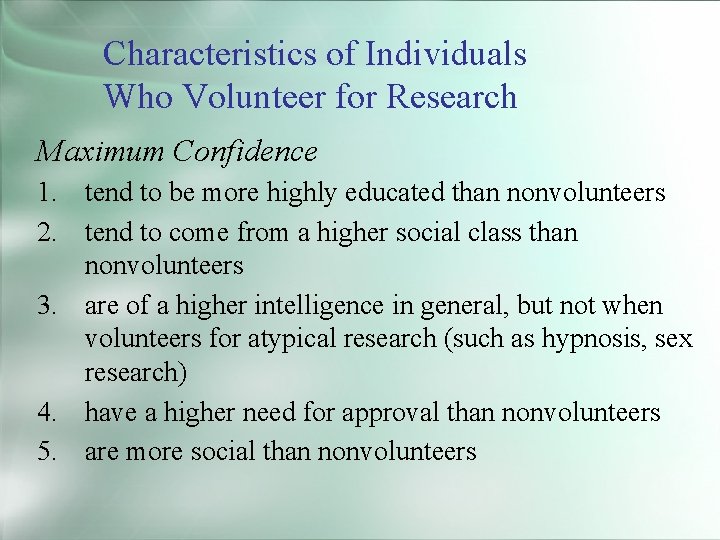

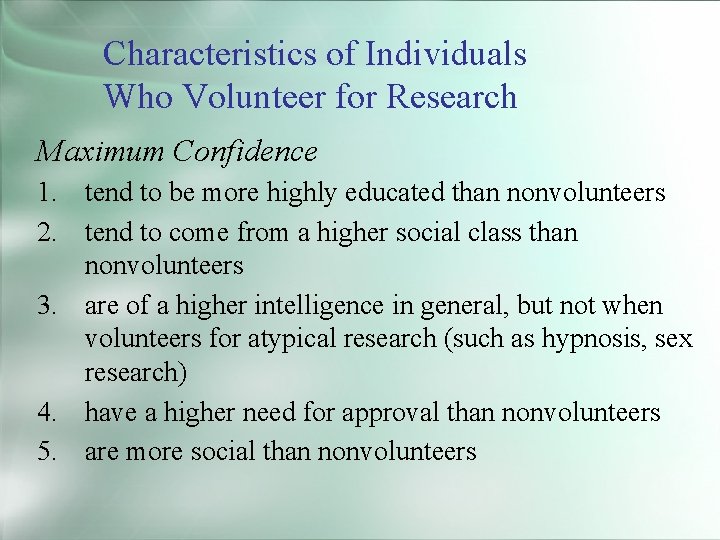

Characteristics of Individuals Who Volunteer for Research. Maximum Confidence: Volunteers 1. tend to be more highly educated than nonvolunteers 2. tend to come from a higher social class than nonvolunteers 3. are of a higher intelligence in general, but not when volunteering for atypical research (such as hypnosis, sex research) 4. have a higher need for approval than nonvolunteers 5. are more social than nonvolunteers

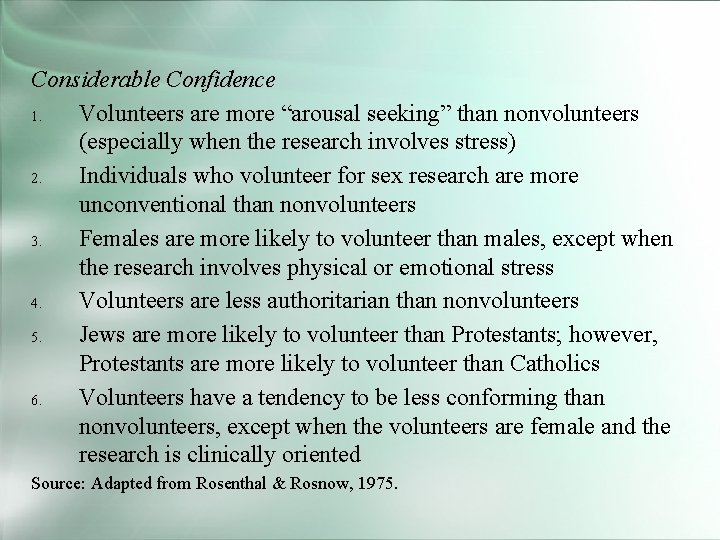

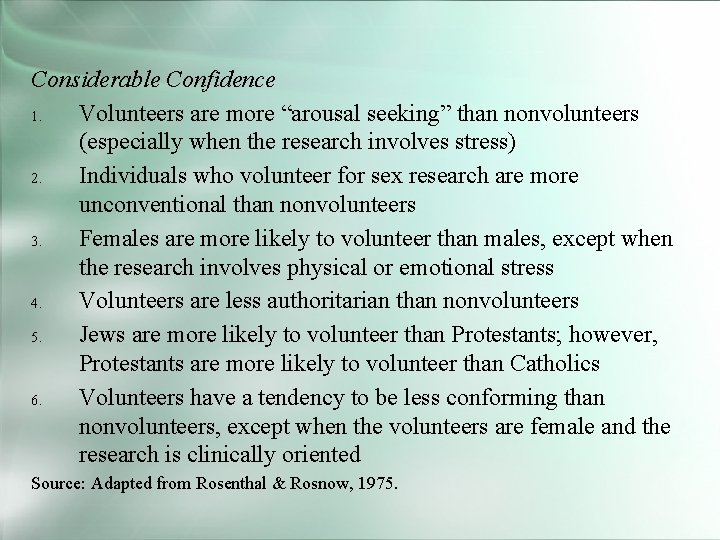

Considerable Confidence 1. Volunteers are more “arousal seeking” than nonvolunteers (especially when the research involves stress) 2. Individuals who volunteer for sex research are more unconventional than nonvolunteers 3. Females are more likely to volunteer than males, except when the research involves physical or emotional stress 4. Volunteers are less authoritarian than nonvolunteers 5. Jews are more likely to volunteer than Protestants; however, Protestants are more likely to volunteer than Catholics 6. Volunteers have a tendency to be less conforming than nonvolunteers, except when the volunteers are female and the research is clinically oriented Source: Adapted from Rosenthal & Rosnow, 1975.

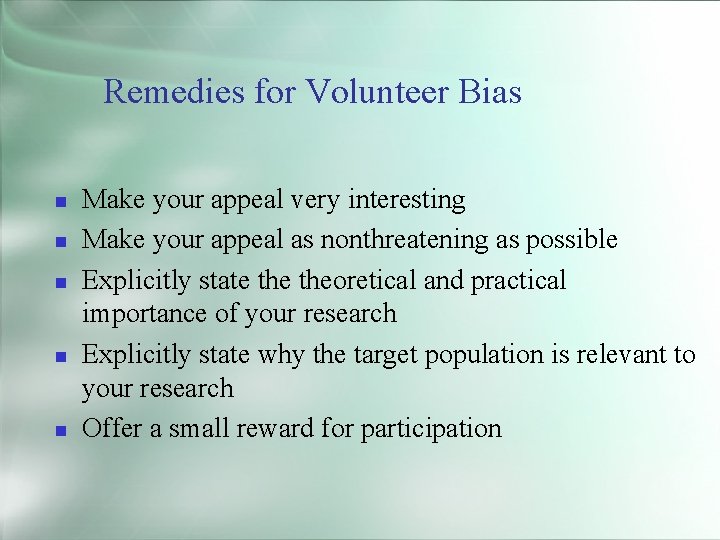

Remedies for Volunteer Bias ■ Make your appeal very interesting ■ Make your appeal as nonthreatening as possible ■ Explicitly state theoretical and practical importance of your research ■ Explicitly state why the target population is relevant to your research ■ Offer a small reward for participation

■ Have a high-status person make the appeal for participants ■ Avoid research that is physically or psychologically stressful ■ Have someone known to participants make the appeal ■ Use public or private commitment to volunteering when appropriate

Ecological Validity ■ The degree to which a measure corresponds to what happens in the real world. ■ Example: ■ Assessing productivity/day in the lab vs. ■ Assessing productivity/day in the office

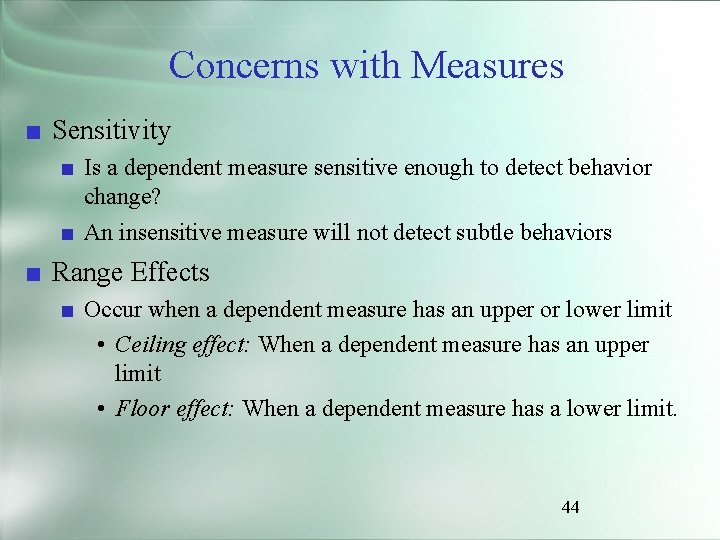

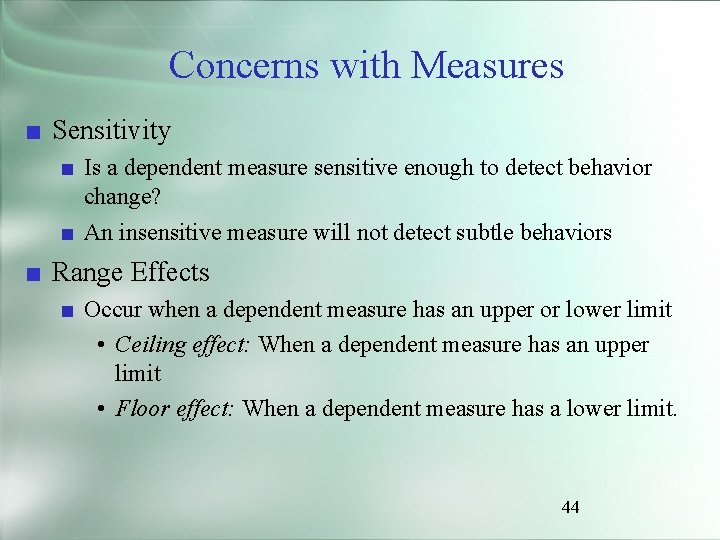

Concerns with Measures ■ Sensitivity ■ Is a dependent measure sensitive enough to detect behavior change? ■ An insensitive measure will not detect subtle behaviors ■ Range Effects ■ Occur when a dependent measure has an upper or lower limit • Ceiling effect: When a dependent measure has an upper limit • Floor effect: When a dependent measure has a lower limit.
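A rough screen for range effects is to ask what share of scores sits at or near a scale limit. The sketch below is a heuristic, not a standard test: the `tolerance` and `share` cutoffs are assumptions chosen for illustration, and the scores are invented.

```python
def range_effect(scores, scale_min, scale_max, tolerance=0.5, share=0.8):
    """Return 'ceiling', 'floor', or 'none' based on how many scores hug a limit."""
    n = len(scores)
    near_top = sum(s >= scale_max - tolerance for s in scores) / n
    near_bottom = sum(s <= scale_min + tolerance for s in scores) / n
    if near_top >= share:
        return "ceiling"
    if near_bottom >= share:
        return "floor"
    return "none"


# Hypothetical scores on a 1-to-7 scale.
print(range_effect([6.8, 7.0, 6.9, 7.0], 1, 7))
print(range_effect([2.0, 4.5, 6.0, 3.0], 1, 7))
```

When nearly every score is pinned at a limit, differences between conditions have no room to show up, whatever the manipulation did.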

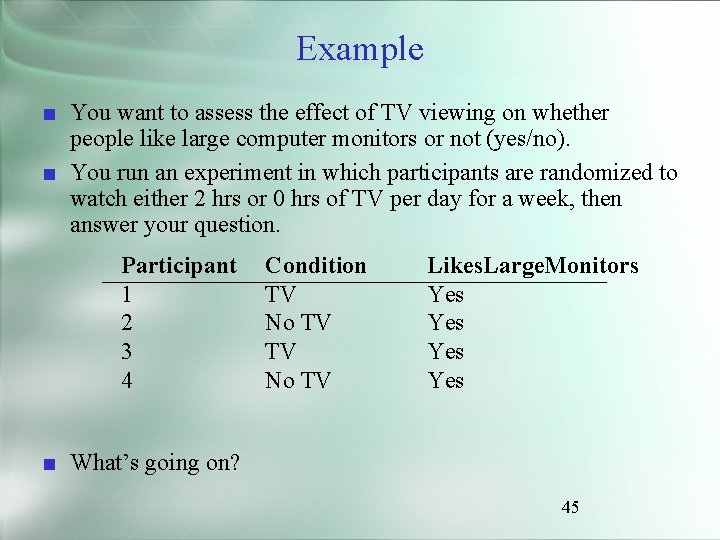

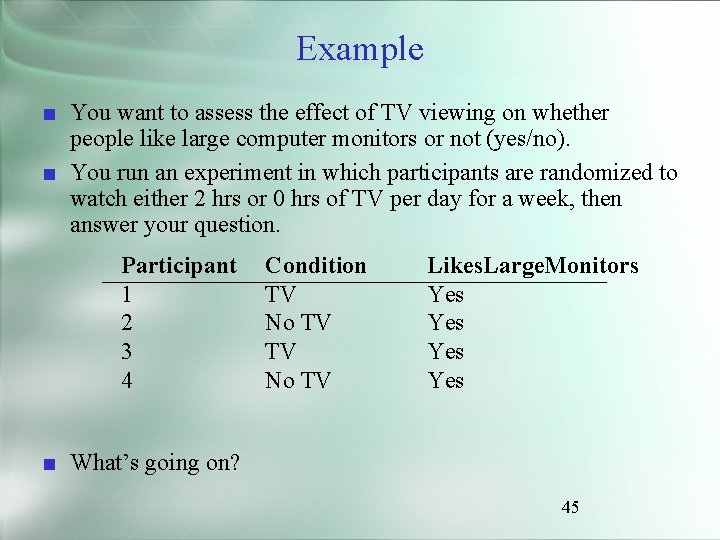

Example ■ You want to assess the effect of TV viewing on whether people like large computer monitors or not (yes/no). ■ You run an experiment in which participants are randomized to watch either 2 hrs or 0 hrs of TV per day for a week, then answer your question. (Table with columns Participant, Condition, LikesLargeMonitors; surviving entries: Participant 1 2 3 4; Condition TV, No TV; LikesLargeMonitors Yes, Yes) ■ What’s going on?

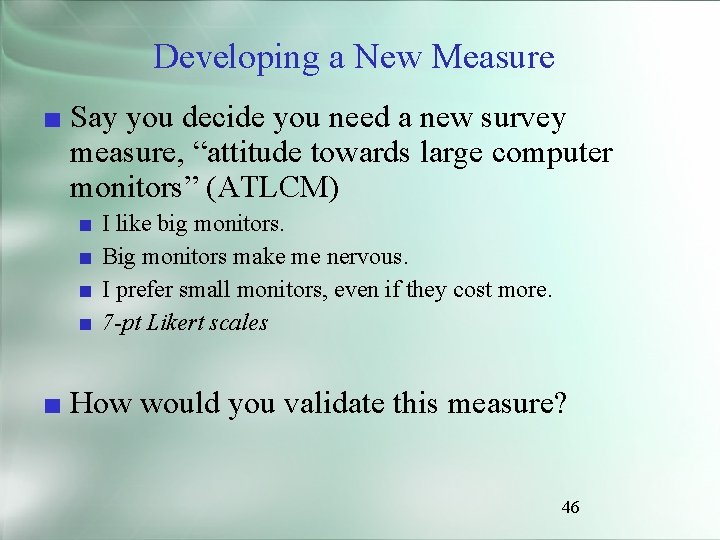

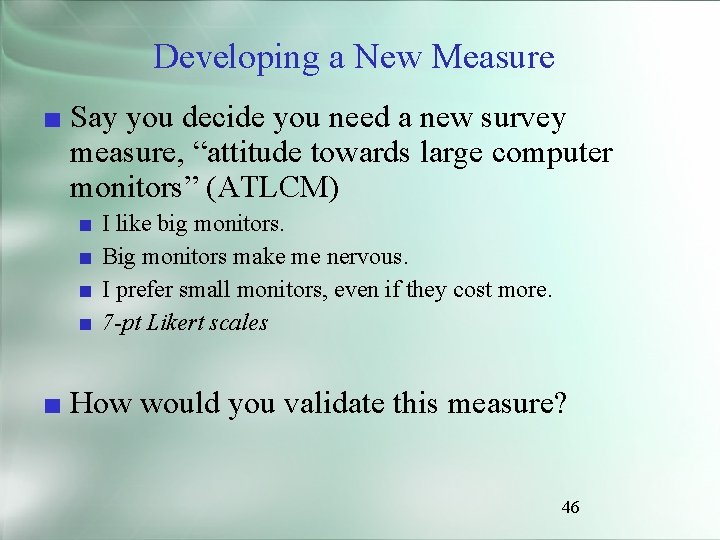

Developing a New Measure ■ Say you decide you need a new survey measure, “attitude towards large computer monitors” (ATLCM) ■ I like big monitors. ■ Big monitors make me nervous. ■ I prefer small monitors, even if they cost more. ■ 7-pt Likert scales ■ How would you validate this measure?

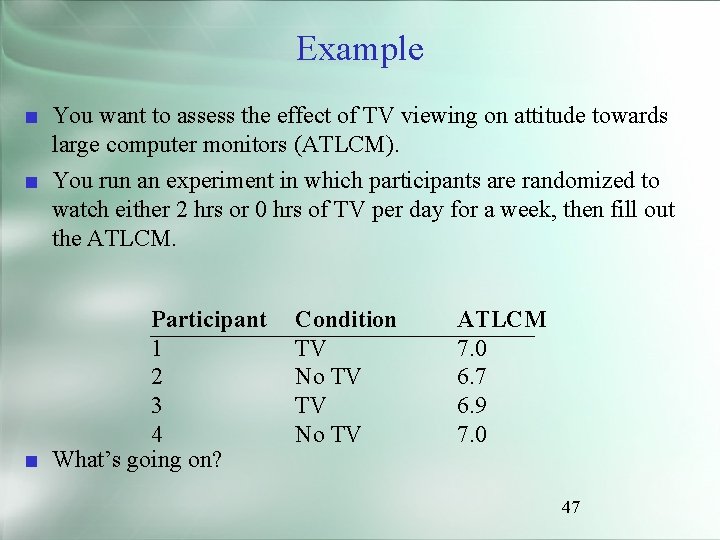

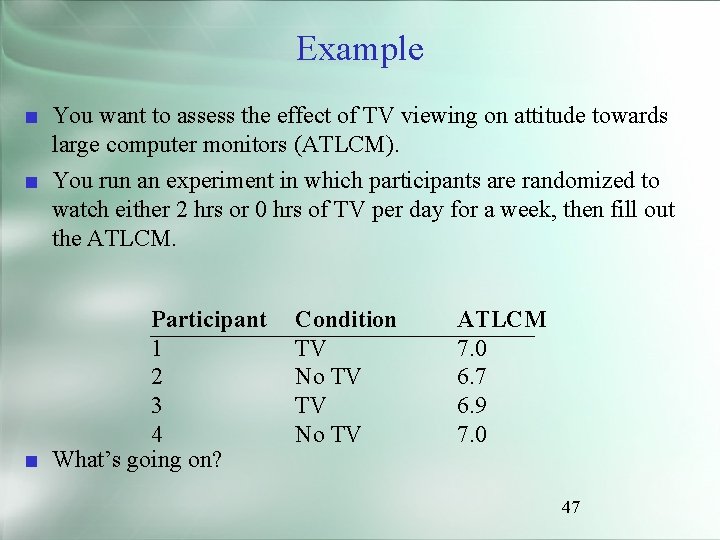

Example ■ You want to assess the effect of TV viewing on attitude towards large computer monitors (ATLCM). ■ You run an experiment in which participants are randomized to watch either 2 hrs or 0 hrs of TV per day for a week, then fill out the ATLCM. (Table with columns Participant, Condition, ATLCM; surviving entries: Participant 1 2 3 4; Condition TV, No TV; ATLCM 7.0 6.7 6.9 7.0) ■ What’s going on?

Measure Validity – A valid measure measures what you intend it to measure – Very important when using psychological tests (e.g., intelligence, aptitude, (un)favorable attitude) – Validity can be established in a variety of ways • Face validity: Assessment of adequacy of content. Least powerful method • Content validity: How adequately does a variable sample the full range of behavior it is intended to measure?

Measure Validity • Criterion-related validity: How adequately does a test score match some criterion score? Takes two forms – Concurrent validity: Does test score correlate highly with score from a measure with known validity? – Predictive validity: Does test predict behavior known to be associated with the behavior being measured?

Measure Validity • Construct validity: Do the results of a test correlate with what is theoretically known about the construct being evaluated? – Convergent validity (subtype): measures of constructs that should be related to each other are in fact related – Discriminant validity (subtype): measures of constructs that should not be related are in fact unrelated
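Convergent and discriminant evidence is often examined with correlations: the new measure should correlate with a measure of a construct it ought to be related to, and should not correlate with a measure of a construct it ought to be unrelated to. The sketch below assumes the expected relationship is positive; the cutoffs `high=0.5` and `low=0.3` and all scores are illustrative assumptions, not values from the lecture.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length score lists (needs nonzero variance)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def validity_evidence(new, related, unrelated, high=0.5, low=0.3):
    """Correlate a new measure with a related and an unrelated measure."""
    r_convergent = pearson(new, related)
    r_discriminant = pearson(new, unrelated)
    return {
        "convergent_r": r_convergent,
        "discriminant_r": r_discriminant,
        "convergent_ok": r_convergent >= high,        # should be clearly related
        "discriminant_ok": abs(r_discriminant) <= low,  # should be close to zero
    }


# Hypothetical scores for five respondents.
new_measure = [1, 2, 3, 4, 5]
related_measure = [2, 3, 5, 4, 6]
unrelated_measure = [4, 2, 3, 2, 4]
print(validity_evidence(new_measure, related_measure, unrelated_measure))
```

Which measures count as "related" or "unrelated" has to come from theory or prior evidence about the construct; the code only checks the correlations once that choice is made.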

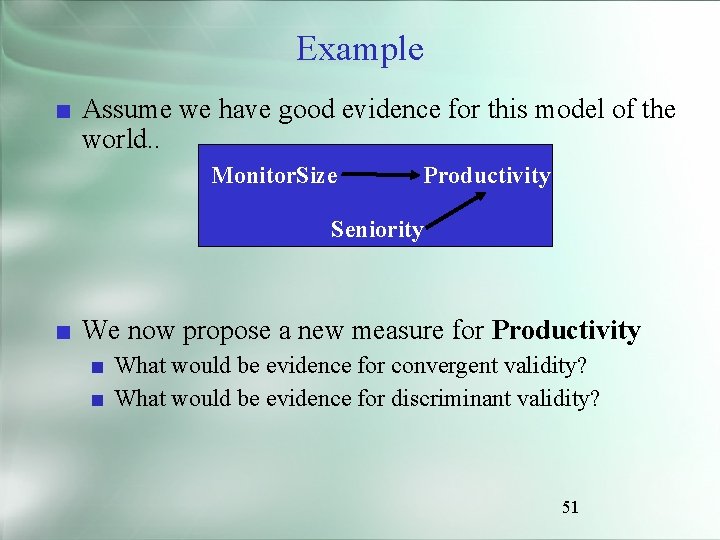

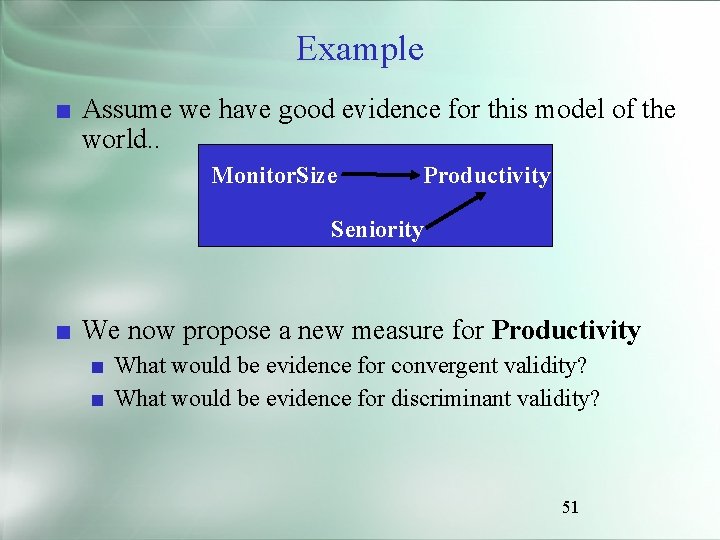

Example ■ Assume we have good evidence for this model of the world (diagram relating MonitorSize, Productivity, and Seniority) ■ We now propose a new measure for Productivity ■ What would be evidence for convergent validity? ■ What would be evidence for discriminant validity?

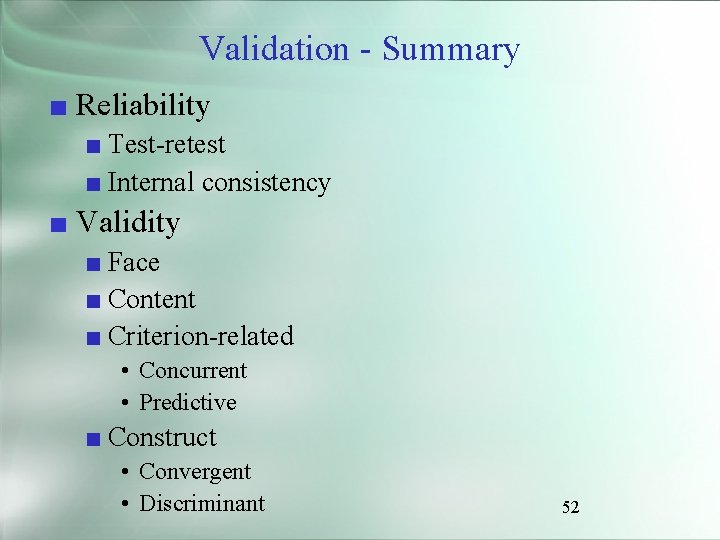

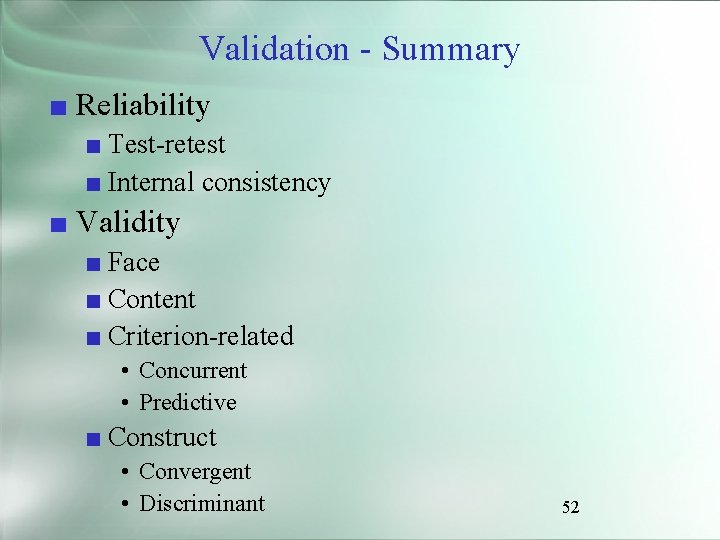

Validation - Summary ■ Reliability ■ Test-retest ■ Internal consistency ■ Validity ■ Face ■ Content ■ Criterion-related • Concurrent • Predictive ■ Construct • Convergent • Discriminant

Sampling ■ You should obtain a representative sample ■ The sample closely matches the characteristics of the population ■ A biased sample occurs when your sample characteristics don’t match population characteristics ■ Biased samples often produce misleading or inaccurate results ■ Biased samples usually stem from inadequate sampling procedures