Context-sensitive Languages

- Slides: 54

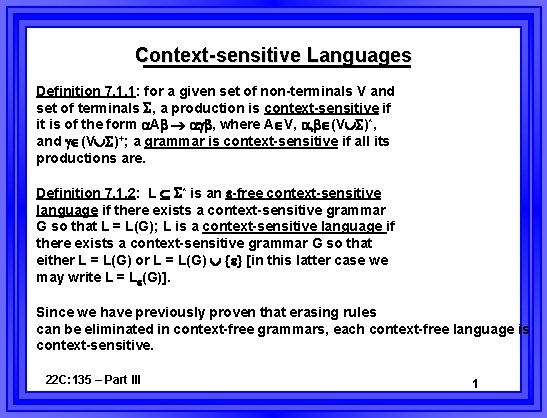

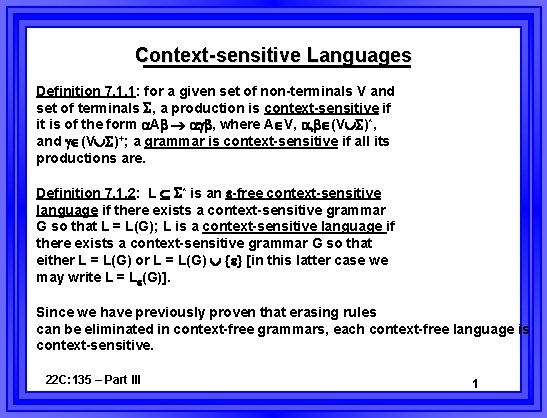

Context-sensitive Languages

Definition 7.1.1: for a given set of non-terminals $V$ and set of terminals $\Sigma$, a production is context-sensitive if it is of the form $\alpha A \beta \to \alpha \gamma \beta$, where $A \in V$, $\alpha, \beta \in (V \cup \Sigma)^*$, and $\gamma \in (V \cup \Sigma)^+$; a grammar is context-sensitive if all its productions are.

Definition 7.1.2: $L \subseteq \Sigma^*$ is an $\varepsilon$-free context-sensitive language if there exists a context-sensitive grammar $G$ so that $L = L(G)$; $L$ is a context-sensitive language if there exists a context-sensitive grammar $G$ so that either $L = L(G)$ or $L = L(G) \cup \{\varepsilon\}$ [in this latter case we may write $L = L_\varepsilon(G)$].

Since we have previously proven that erasing rules can be eliminated in context-free grammars, each context-free language is context-sensitive.

22C:135 – Part III
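Definition 7.1.1 can be checked mechanically for a single production. The Python sketch below is an illustration, not something from the text: it assumes every grammar symbol is one character (so $X_a$ of Example 7.1.2 is written `X`) and that the nonterminals are supplied as a set. A production qualifies when some nonterminal $A$ on its left side can be isolated as $\alpha A \beta$ with the right side equal to $\alpha\gamma\beta$ for a non-empty $\gamma$.

```python
def is_context_sensitive(lhs, rhs, nonterminals):
    """True if lhs -> rhs has the form alpha A beta -> alpha gamma beta,
    with A a nonterminal and gamma non-empty (Definition 7.1.1).
    Assumes every grammar symbol is a single character."""
    for i, symbol in enumerate(lhs):
        if symbol not in nonterminals:
            continue
        alpha, beta = lhs[:i], lhs[i + 1:]
        # gamma is whatever sits between the shared contexts alpha and beta
        gamma_len = len(rhs) - len(alpha) - len(beta)
        if gamma_len >= 1 and rhs.startswith(alpha) and rhs.endswith(beta):
            return True
    return False


N = {"S", "D", "A", "X", "Y", "Z"}
print(is_context_sensitive("DA", "XA", N))    # DA -> X_a A (Example 7.1.2)
print(is_context_sensitive("XZ", "XXD", N))   # X_a Z -> X_a X_a D
print(is_context_sensitive("Da", "aaD", N))   # Da -> aaD (Example 7.1.1)
```

The last call returns `False`: $Da \to aaD$ cannot be read as one nonterminal rewritten in a fixed context, which is why a non-erasing grammar such as Example 7.1.1 has to be converted before it is context-sensitive.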

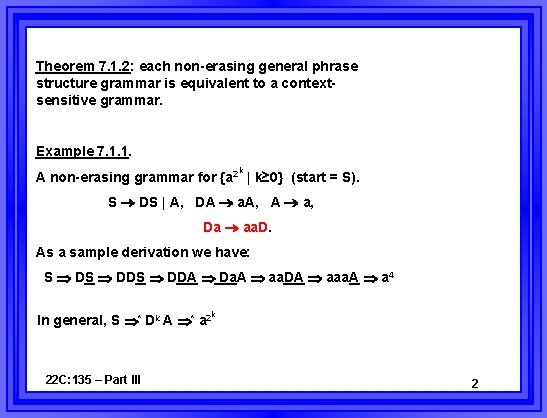

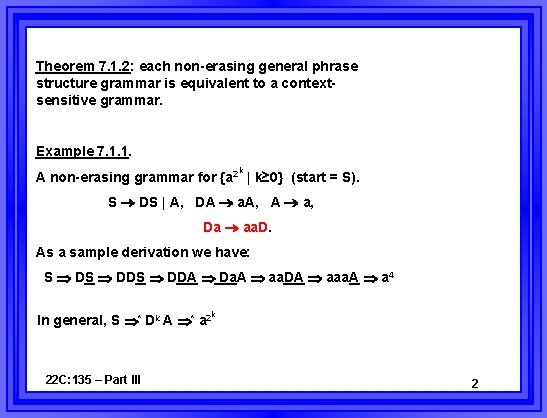

Theorem 7.1.2: each non-erasing general phrase structure grammar is equivalent to a context-sensitive grammar.

Example 7.1.1. A non-erasing grammar for $\{a^{2^k} \mid k \ge 0\}$ (start $= S$):
$S \to DS \mid A$, $DA \to aA$, $A \to a$, $Da \to aaD$.

As a sample derivation we have
$S \Rightarrow^* DDS \Rightarrow DDA \Rightarrow DaA \Rightarrow aaDA \Rightarrow aaaA \Rightarrow a^4$.
In general, $S \Rightarrow^* D^k A \Rightarrow^* a^{2^k}$.
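Because no production of Example 7.1.1 shortens a sentential form, every terminal string of length at most $n$ can be reached through sentential forms of length at most $n$ only. The Python sketch below (an illustration; the name `words_up_to` is ours) explores those forms breadth-first and recovers the first few members of $\{a^{2^k}\}$. Uppercase letters are treated as nonterminals.

```python
from collections import deque

# Example 7.1.1 (start S); uppercase letters are nonterminals.
PRODUCTIONS = [("S", "DS"), ("S", "A"), ("DA", "aA"), ("A", "a"), ("Da", "aaD")]


def words_up_to(max_len, productions=PRODUCTIONS, start="S"):
    """All terminal strings of length <= max_len derivable from start.
    Sound only for non-erasing grammars: no rule shortens a sentential
    form, so forms longer than max_len can be discarded."""
    seen = {start}
    queue = deque([start])
    words = set()
    while queue:
        form = queue.popleft()
        if form.islower():              # no nonterminals left: a terminal word
            words.add(form)
            continue
        for lhs, rhs in productions:
            pos = form.find(lhs)
            while pos != -1:            # apply the rule at every occurrence of lhs
                nxt = form[:pos] + rhs + form[pos + len(lhs):]
                if len(nxt) <= max_len and nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
                pos = form.find(lhs, pos + 1)
    return words


print(sorted(words_up_to(8), key=len))
```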

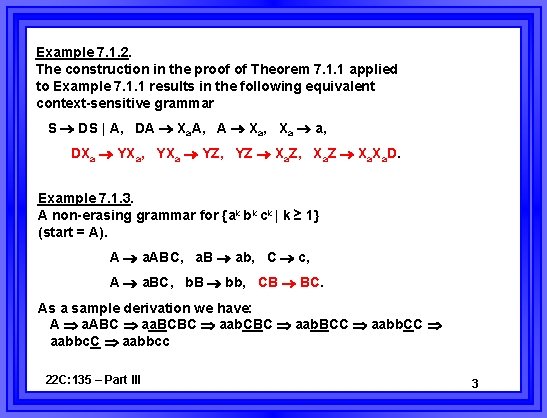

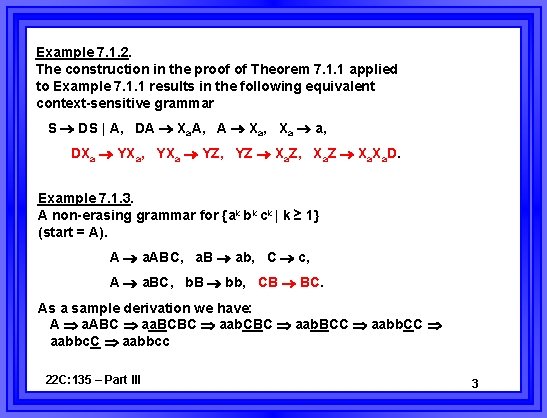

Example 7.1.2. The construction in the proof of Theorem 7.1.1 applied to Example 7.1.1 results in the following equivalent context-sensitive grammar, in which the nonterminal $X_a$ stands in for the terminal $a$:
$S \to DS \mid A$, $DA \to X_a A$, $A \to X_a$, $X_a \to a$, $DX_a \to YX_a$, $YX_a \to YZ$, $YZ \to X_a Z$, $X_a Z \to X_a X_a D$.

Example 7.1.3. A non-erasing grammar for $\{a^k b^k c^k \mid k \ge 1\}$ (start $= A$):
$A \to aABC$, $A \to aBC$, $aB \to ab$, $bB \to bb$, $C \to c$, $CB \to BC$.

As a sample derivation we have
$A \Rightarrow aABC \Rightarrow aaBCBC \Rightarrow^* aabBCC \Rightarrow^* aabbcC \Rightarrow aabbcc$.

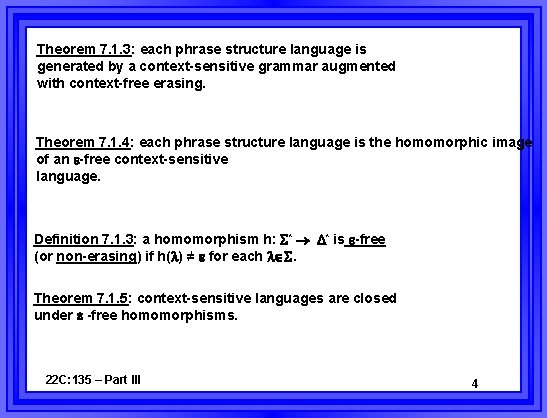

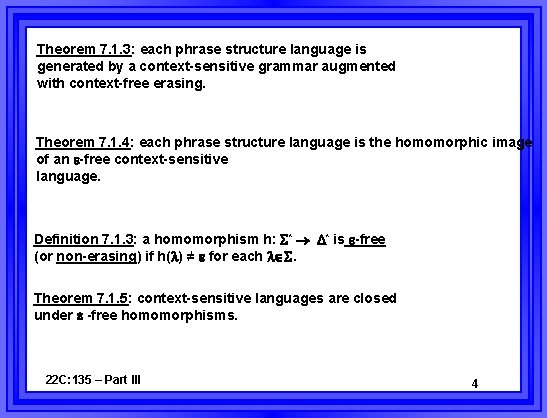

Theorem 7.1.3: each phrase structure language is generated by a context-sensitive grammar augmented with context-free erasing rules.

Theorem 7.1.4: each phrase structure language is the homomorphic image of an $\varepsilon$-free context-sensitive language.

Definition 7.1.3: a homomorphism $h: \Sigma^* \to \Delta^*$ is $\varepsilon$-free (or non-erasing) if $h(\sigma) \ne \varepsilon$ for each $\sigma \in \Sigma$.

Theorem 7.1.5: context-sensitive languages are closed under $\varepsilon$-free homomorphisms.
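A small Python illustration of Definition 7.1.3 (the helper names are ours): a homomorphism is determined by the images of single symbols, and it is $\varepsilon$-free exactly when none of those images is empty.

```python
def apply_hom(h, word):
    """Extend a symbol map h (dict: symbol -> string) to the homomorphism
    h(s1 s2 ... sn) = h(s1) h(s2) ... h(sn)."""
    return "".join(h[symbol] for symbol in word)


def is_epsilon_free(h):
    """Definition 7.1.3: no symbol is mapped to the empty string."""
    return all(image != "" for image in h.values())


h1 = {"a": "0", "b": "11"}   # epsilon-free
h2 = {"a": "0", "b": ""}     # erases b
print(apply_hom(h1, "abb"), is_epsilon_free(h1))   # 01111 True
print(apply_hom(h2, "abb"), is_epsilon_free(h2))   # 0 False
```

For an $\varepsilon$-free $h$ every image satisfies $|h(w)| \ge |w|$, whereas an erasing map like `h2` can shrink strings arbitrarily; Theorem 7.1.4 shows that allowing such erasure takes us from context-sensitive languages to all phrase structure languages.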

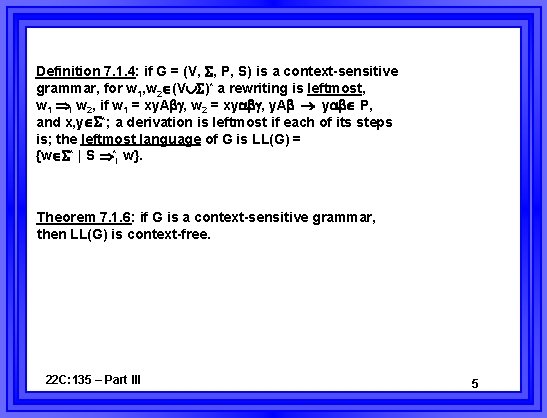

Definition 7.1.4: if $G = (V, \Sigma, P, S)$ is a context-sensitive grammar, for $w_1, w_2 \in (V \cup \Sigma)^*$ a rewriting is leftmost, $w_1 \Rightarrow_l w_2$, if $w_1 = xyA\beta\delta$, $w_2 = xy\gamma\beta\delta$, $yA\beta \to y\gamma\beta \in P$, $x, y \in \Sigma^*$, and $\delta \in (V \cup \Sigma)^*$; a derivation is leftmost if each of its steps is; the leftmost language of $G$ is $LL(G) = \{w \in \Sigma^* \mid S \Rightarrow_l^* w\}$.

Theorem 7.1.6: if $G$ is a context-sensitive grammar, then $LL(G)$ is context-free.

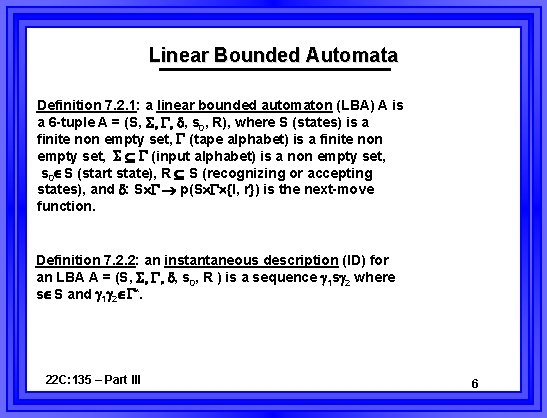

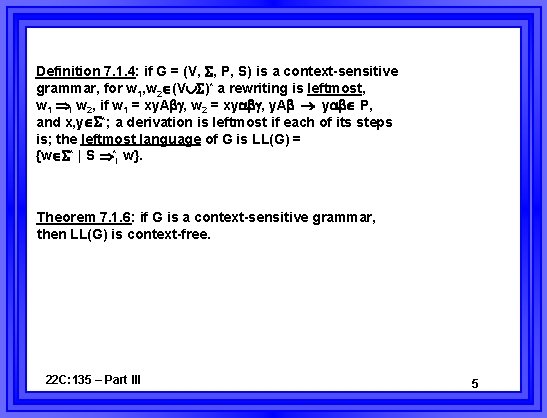

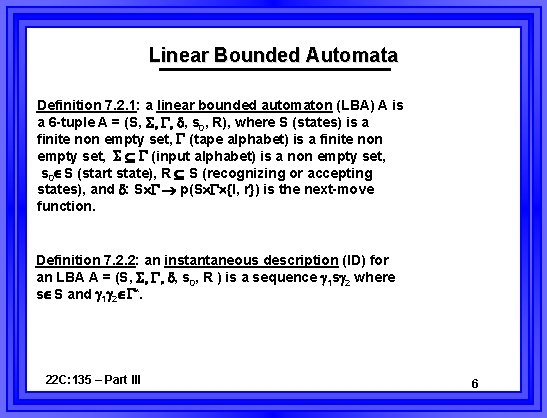

Linear Bounded Automata

Definition 7.2.1: a linear bounded automaton (LBA) $A$ is a 6-tuple $A = (S, \Sigma, \Gamma, \delta, s_0, R)$, where $S$ (states) is a finite non-empty set, $\Gamma$ (tape alphabet) is a finite non-empty set, $\Sigma \subseteq \Gamma$ (input alphabet) is a non-empty set, $s_0 \in S$ (start state), $R \subseteq S$ (recognizing or accepting states), and $\delta: S \times \Gamma \to \mathcal{P}(S \times \Gamma \times \{l, r\})$ is the next-move function.

Definition 7.2.2: an instantaneous description (ID) for an LBA $A = (S, \Sigma, \Gamma, \delta, s_0, R)$ is a sequence $\alpha_1 s \alpha_2$ where $s \in S$ and $\alpha_1 \alpha_2 \in \Gamma^*$.

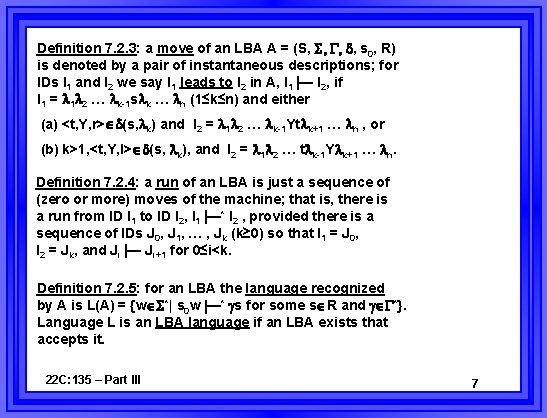

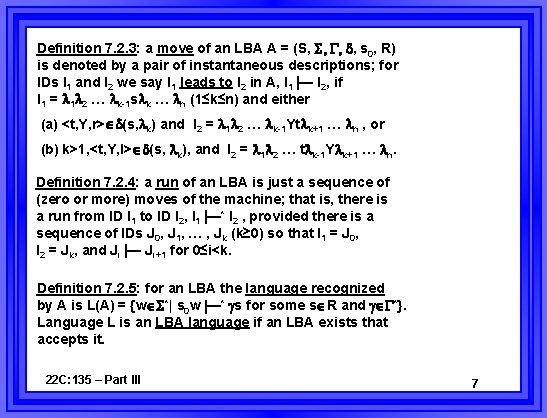

Definition 7.2.3: a move of an LBA $A = (S, \Sigma, \Gamma, \delta, s_0, R)$ is denoted by a pair of instantaneous descriptions; for IDs $I_1$ and $I_2$ we say $I_1$ leads to $I_2$ in $A$, $I_1 \vdash I_2$, if $I_1 = \sigma_1 \sigma_2 \cdots \sigma_{k-1} s \sigma_k \cdots \sigma_n$ ($1 \le k \le n$) and either
(a) $\langle t, Y, r\rangle \in \delta(s, \sigma_k)$ and $I_2 = \sigma_1 \sigma_2 \cdots \sigma_{k-1} Y t \sigma_{k+1} \cdots \sigma_n$, or
(b) $k > 1$, $\langle t, Y, l\rangle \in \delta(s, \sigma_k)$, and $I_2 = \sigma_1 \cdots \sigma_{k-2}\, t\, \sigma_{k-1} Y \sigma_{k+1} \cdots \sigma_n$.

Definition 7.2.4: a run of an LBA is just a sequence of (zero or more) moves of the machine; that is, there is a run from ID $I_1$ to ID $I_2$, $I_1 \vdash^* I_2$, provided there is a sequence of IDs $J_0, J_1, \ldots, J_k$ ($k \ge 0$) so that $I_1 = J_0$, $I_2 = J_k$, and $J_i \vdash J_{i+1}$ for $0 \le i < k$.

Definition 7.2.5: for an LBA $A$ the language recognized by $A$ is $L(A) = \{w \in \Sigma^* \mid s_0 w \vdash^* \alpha s \text{ for some } s \in R \text{ and } \alpha \in \Gamma^*\}$. Language $L$ is an LBA language if an LBA exists that accepts it.

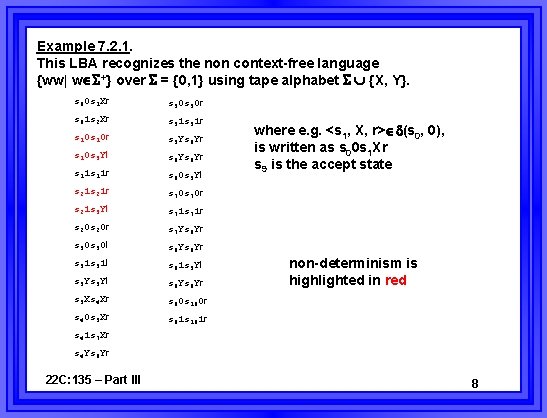

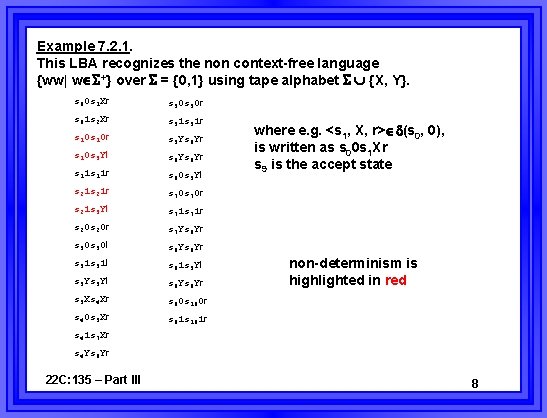

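Example 7.2.1. This LBA recognizes the non-context-free language $\{ww \mid w \in \Sigma^+\}$ over $\Sigma = \{0, 1\}$ using tape alphabet $\Sigma \cup \{X, Y\}$. A transition such as $\langle s_1, X, r\rangle \in \delta(s_0, 0)$ is written $s_0 0 \to s_1 X r$, and an abbreviated entry such as $s_1 0 \to r$ keeps the state and the symbol and moves right. $s_9$ is the accept state; the non-deterministic choices (highlighted in red on the slide) are at $s_1$ on 0 and at $s_2$ on 1.

| state | transitions |
|---|---|
| $s_0$ | $s_0 0 \to s_1 X r$; $s_0 1 \to s_2 X r$ |
| $s_1$ | $s_1 0 \to r$; $s_1 0 \to s_3 Y l$; $s_1 1 \to r$ |
| $s_2$ | $s_2 0 \to r$; $s_2 1 \to r$; $s_2 1 \to s_3 Y l$ |
| $s_3$ | $s_3 0 \to l$; $s_3 1 \to l$; $s_3 Y \to l$; $s_3 X \to s_4 X r$ |
| $s_4$ | $s_4 0 \to s_5 X r$; $s_4 1 \to s_7 X r$; $s_4 Y \to s_9 Y r$ |
| $s_5$ | $s_5 0 \to r$; $s_5 1 \to r$; $s_5 Y \to s_6 Y r$ |
| $s_6$ | $s_6 Y \to r$; $s_6 0 \to s_3 Y l$ |
| $s_7$ | $s_7 0 \to r$; $s_7 1 \to r$; $s_7 Y \to s_8 Y r$ |
| $s_8$ | $s_8 Y \to r$; $s_8 1 \to s_3 Y l$ |
| $s_9$ | $s_9 Y \to r$; $s_9 0 \to s_{10} 0 r$; $s_9 1 \to s_{10} 1 r$ |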
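An LBA has only finitely many IDs on a given input (its head never leaves the input, except for stepping just past the right end, after which no move applies), so acceptance can be decided by a breadth-first search over IDs. The Python sketch below (names ours) encodes Example 7.2.1, reading an abbreviated entry such as $s_1 0 \to r$ as "stay in $s_1$, keep the 0, move right", and including $s_3 Y \to l$, which state 3 of the pseudocode version ("while {0, 1, Y} left") requires. Acceptance follows Definition 7.2.5: an accepting state with the head past the last symbol.

```python
from collections import deque

# (state, scanned symbol) -> set of (next state, symbol written, move)
DELTA = {
    ("s0", "0"): {("s1", "X", "r")}, ("s0", "1"): {("s2", "X", "r")},
    ("s1", "0"): {("s1", "0", "r"), ("s3", "Y", "l")}, ("s1", "1"): {("s1", "1", "r")},
    ("s2", "0"): {("s2", "0", "r")}, ("s2", "1"): {("s2", "1", "r"), ("s3", "Y", "l")},
    ("s3", "0"): {("s3", "0", "l")}, ("s3", "1"): {("s3", "1", "l")},
    ("s3", "Y"): {("s3", "Y", "l")}, ("s3", "X"): {("s4", "X", "r")},
    ("s4", "0"): {("s5", "X", "r")}, ("s4", "1"): {("s7", "X", "r")},
    ("s4", "Y"): {("s9", "Y", "r")},
    ("s5", "0"): {("s5", "0", "r")}, ("s5", "1"): {("s5", "1", "r")},
    ("s5", "Y"): {("s6", "Y", "r")},
    ("s6", "Y"): {("s6", "Y", "r")}, ("s6", "0"): {("s3", "Y", "l")},
    ("s7", "0"): {("s7", "0", "r")}, ("s7", "1"): {("s7", "1", "r")},
    ("s7", "Y"): {("s8", "Y", "r")},
    ("s8", "Y"): {("s8", "Y", "r")}, ("s8", "1"): {("s3", "Y", "l")},
    ("s9", "Y"): {("s9", "Y", "r")}, ("s9", "0"): {("s10", "0", "r")},
    ("s9", "1"): {("s10", "1", "r")},
}
ACCEPTING = {"s9"}


def lba_accepts(w, delta=DELTA, start="s0", accepting=ACCEPTING):
    """Breadth-first search over IDs (tape, state, head). head == len(tape)
    means the state sits after the last symbol (an ID of the form alpha s)."""
    start_id = (w, start, 0)
    seen, queue = {start_id}, deque([start_id])
    while queue:
        tape, state, k = queue.popleft()
        if k == len(tape):              # no further moves from alpha s
            if state in accepting:
                return True
            continue
        for new_state, write, move in delta.get((state, tape[k]), ()):
            if move == "l" and k == 0:
                continue                # no left move from the first cell (k > 1)
            new_tape = tape[:k] + write + tape[k + 1:]
            nxt = (new_tape, new_state, k + 1 if move == "r" else k - 1)
            if nxt not in seen:         # finitely many IDs, so the search ends
                seen.add(nxt)
                queue.append(nxt)
    return False


for w in ["0101", "11", "0110", "011"]:
    print(w, lba_accepts(w))
```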

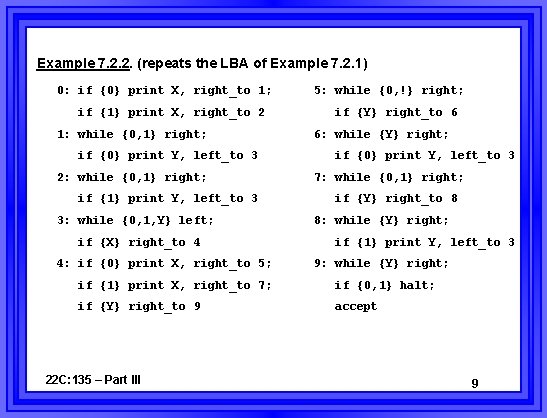

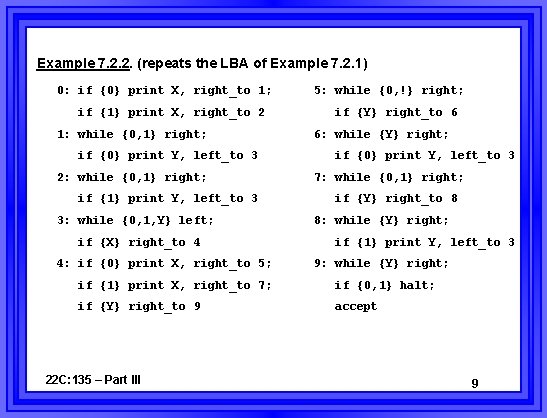

Example 7.2.2. (repeats the LBA of Example 7.2.1 as pseudocode)

    0: if {0} print X, right_to 1; if {1} print X, right_to 2
    1: while {0, 1} right; if {0} print Y, left_to 3
    2: while {0, 1} right; if {1} print Y, left_to 3
    3: while {0, 1, Y} left; if {X} right_to 4
    4: if {0} print X, right_to 5; if {1} print X, right_to 7; if {Y} right_to 9
    5: while {0, 1} right; if {Y} right_to 6
    6: while {Y} right; if {0} print Y, left_to 3
    7: while {0, 1} right; if {Y} right_to 8
    8: while {Y} right; if {1} print Y, left_to 3
    9: while {Y} right; if {0, 1} halt; accept

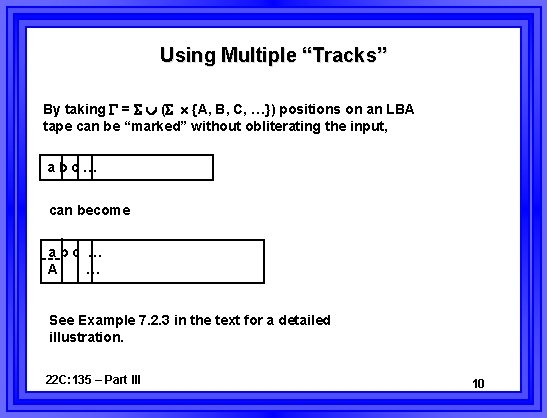

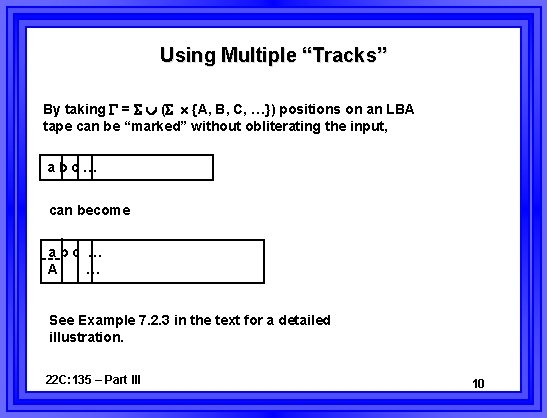

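Using Multiple “Tracks”

By taking $\Gamma = \Sigma \cup (\Sigma \times \{A, B, C, \ldots\})$, positions on an LBA tape can be “marked” without obliterating the input: a tape holding $abc\ldots$ still holds $abc\ldots$ on its first track, with a mark such as $A$ recorded on a second track beneath one of its positions. See Example 7.2.3 in the text for a detailed illustration.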

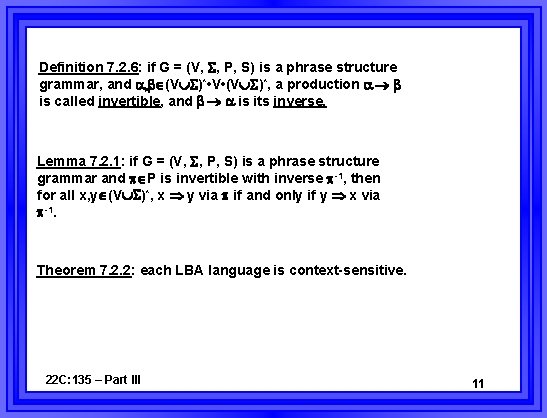

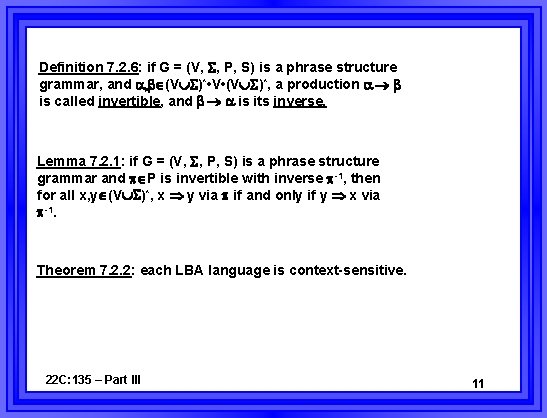

Definition 7.2.6: if $G = (V, \Sigma, P, S)$ is a phrase structure grammar and $\beta \in (V \cup \Sigma)^* \cdot V \cdot (V \cup \Sigma)^*$, a production $\alpha \to \beta$ is called invertible, and $\beta \to \alpha$ is its inverse.

Lemma 7.2.1: if $G = (V, \Sigma, P, S)$ is a phrase structure grammar and $\pi \in P$ is invertible with inverse $\pi^{-1}$, then for all $x, y \in (V \cup \Sigma)^*$, $x \Rightarrow y$ via $\pi$ if and only if $y \Rightarrow x$ via $\pi^{-1}$.

Theorem 7.2.2: each LBA language is context-sensitive.

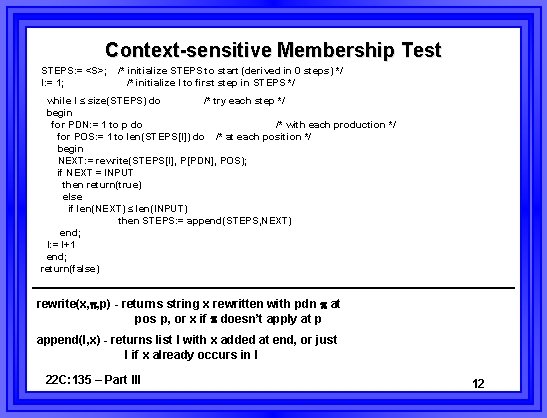

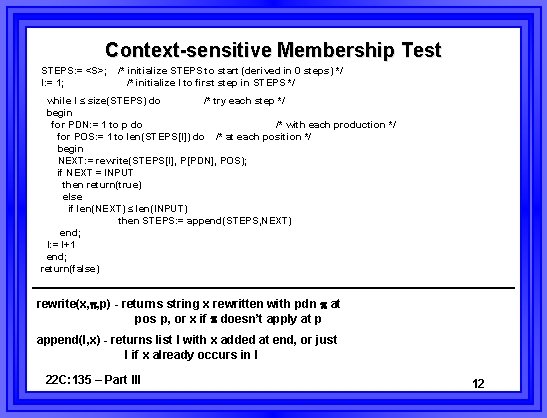

Context-sensitive Membership Test

    STEPS := <S>;                 /* initialize STEPS to start (derived in 0 steps) */
    I := 1;                       /* initialize I to first step in STEPS */
    while I ≤ size(STEPS) do      /* try each step */
    begin
      for PDN := 1 to p do                    /* with each production */
        for POS := 1 to len(STEPS[I]) do      /* at each position */
        begin
          NEXT := rewrite(STEPS[I], P[PDN], POS);
          if NEXT = INPUT then return(true)
          else if len(NEXT) ≤ len(INPUT) then STEPS := append(STEPS, NEXT)
        end;
      I := I+1
    end;
    return(false)

Here rewrite(x, π, p) returns string x rewritten with production π at position p, or x if π doesn't apply at p; append(l, x) returns list l with x added at the end, or just l if x already occurs in l.
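A Python rendering of the membership test, offered as a sketch: `cs_member` follows the pseudocode step for step, the hypothetical helper `rewrite_all` plays the role of `rewrite` by returning every one-step rewrite of a form at once, and a set makes the duplicate check of `append` cheap. Symbols are assumed to be single characters, and the test grammar is Example 7.1.3. The length bound is what guarantees termination: a non-contracting grammar never shortens a sentential form, and only finitely many strings have length at most $|w|$.

```python
def rewrite_all(form, lhs, rhs):
    """Every string obtained by applying lhs -> rhs at one position of form."""
    results = []
    pos = form.find(lhs)
    while pos != -1:
        results.append(form[:pos] + rhs + form[pos + len(lhs):])
        pos = form.find(lhs, pos + 1)
    return results


def cs_member(word, productions, start):
    steps = [start]                   # STEPS, in the order forms were found
    known = {start}                   # duplicate check used by append
    i = 0
    while i < len(steps):             # try each step
        for lhs, rhs in productions:  # with each production
            for nxt in rewrite_all(steps[i], lhs, rhs):   # at each position
                if nxt == word:
                    return True
                if len(nxt) <= len(word) and nxt not in known:
                    known.add(nxt)
                    steps.append(nxt)
        i += 1
    return False


# Example 7.1.3: {a^k b^k c^k | k >= 1}, start symbol A
ABC = [("A", "aABC"), ("A", "aBC"), ("aB", "ab"), ("bB", "bb"), ("C", "c"), ("CB", "BC")]
print(cs_member("aabbcc", ABC, "A"), cs_member("aabbc", ABC, "A"))
```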

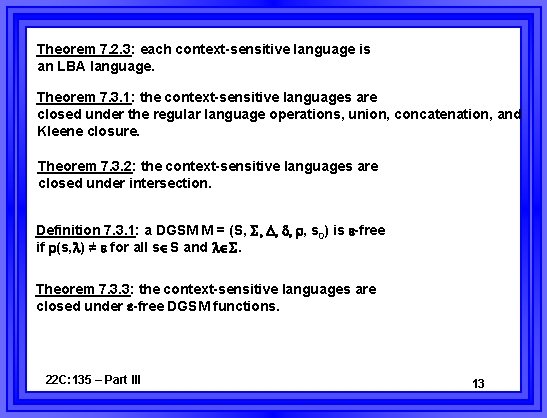

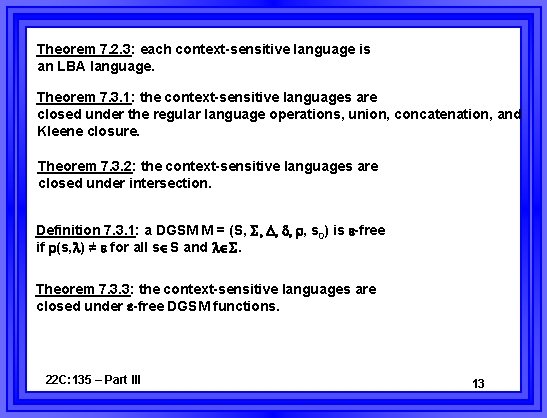

Theorem 7.2.3: each context-sensitive language is an LBA language.

Theorem 7.3.1: the context-sensitive languages are closed under the regular language operations: union, concatenation, and Kleene closure.

Theorem 7.3.2: the context-sensitive languages are closed under intersection.

Definition 7.3.1: a DGSM $M$ with states $S$, input alphabet $\Sigma$, start state $s_0$, and output function $\lambda$ is $\varepsilon$-free if $\lambda(s, \sigma) \ne \varepsilon$ for all $s \in S$ and $\sigma \in \Sigma$.

Theorem 7.3.3: the context-sensitive languages are closed under $\varepsilon$-free DGSM functions.

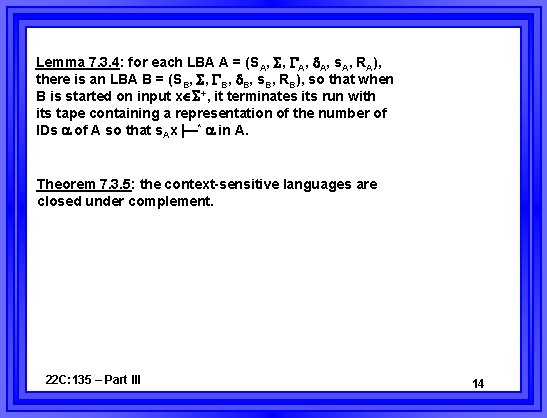

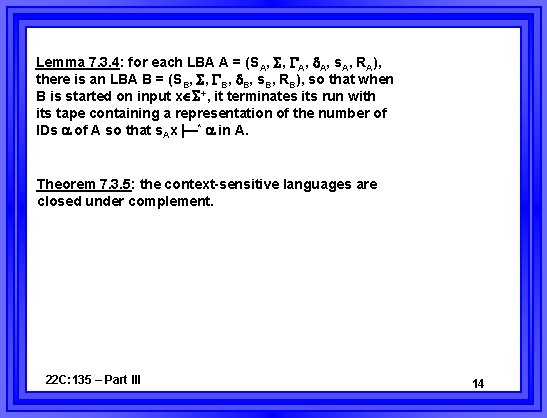

Lemma 7.3.4: for each LBA $A = (S_A, \Sigma, \Gamma_A, \delta_A, s_A, R_A)$, there is an LBA $B = (S_B, \Sigma, \Gamma_B, \delta_B, s_B, R_B)$ so that when $B$ is started on input $x \in \Sigma^+$, it terminates its run with its tape containing a representation of the number of IDs $\beta$ of $A$ such that $s_A x \vdash^* \beta$ in $A$.

Theorem 7.3.5: the context-sensitive languages are closed under complement.

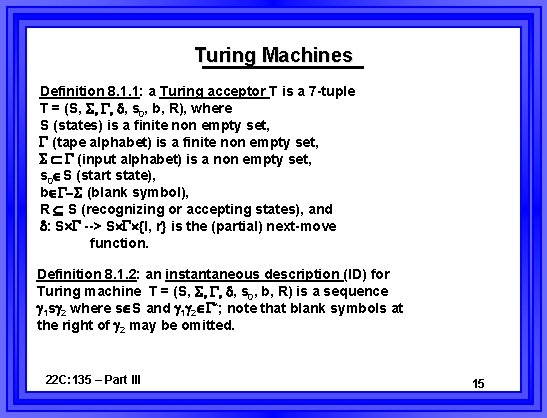

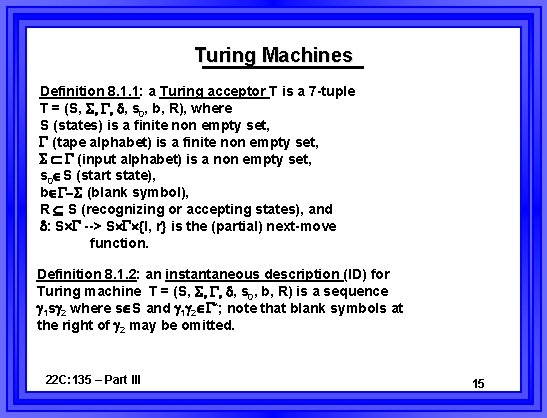

Turing Machines

Definition 8.1.1: a Turing acceptor $T$ is a 7-tuple $T = (S, \Sigma, \Gamma, \delta, s_0, b, R)$, where $S$ (states) is a finite non-empty set, $\Gamma$ (tape alphabet) is a finite non-empty set, $\Sigma \subseteq \Gamma$ (input alphabet) is a non-empty set, $s_0 \in S$ (start state), $b \in \Gamma$ (blank symbol), $R \subseteq S$ (recognizing or accepting states), and $\delta: S \times \Gamma \to S \times \Gamma \times \{l, r\}$ is the (partial) next-move function.

Definition 8.1.2: an instantaneous description (ID) for Turing machine $T = (S, \Sigma, \Gamma, \delta, s_0, b, R)$ is a sequence $\alpha_1 s \alpha_2$ where $s \in S$ and $\alpha_1 \alpha_2 \in \Gamma^*$; note that blank symbols at the right of $\alpha_2$ may be omitted.

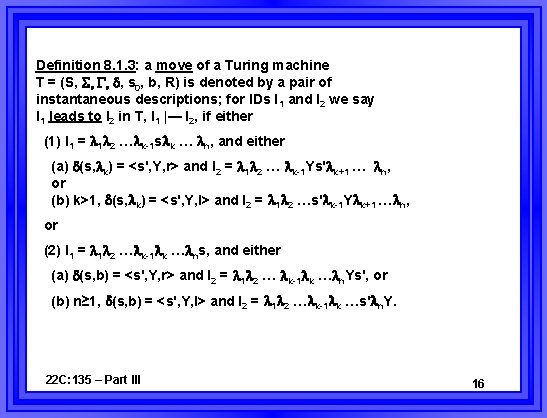

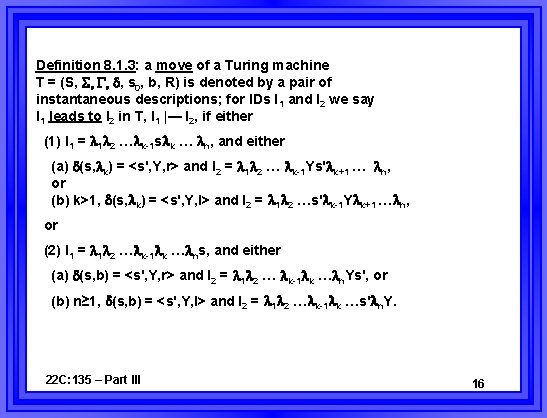

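Definition 8.1.3: a move of a Turing machine $T = (S, \Sigma, \Gamma, \delta, s_0, b, R)$ is denoted by a pair of instantaneous descriptions; for IDs $I_1$ and $I_2$ we say $I_1$ leads to $I_2$ in $T$, $I_1 \vdash I_2$, if either
(1) $I_1 = \sigma_1 \sigma_2 \cdots \sigma_{k-1} s \sigma_k \cdots \sigma_n$ ($1 \le k \le n$), and either (a) $\delta(s, \sigma_k) = \langle s', Y, r\rangle$ and $I_2 = \sigma_1 \cdots \sigma_{k-1} Y s' \sigma_{k+1} \cdots \sigma_n$, or (b) $k > 1$, $\delta(s, \sigma_k) = \langle s', Y, l\rangle$ and $I_2 = \sigma_1 \cdots \sigma_{k-2}\, s' \sigma_{k-1} Y \sigma_{k+1} \cdots \sigma_n$, or
(2) $I_1 = \sigma_1 \sigma_2 \cdots \sigma_n s$, and either (a) $\delta(s, b) = \langle s', Y, r\rangle$ and $I_2 = \sigma_1 \cdots \sigma_n Y s'$, or (b) $n \ge 1$, $\delta(s, b) = \langle s', Y, l\rangle$ and $I_2 = \sigma_1 \cdots \sigma_{n-1}\, s' \sigma_n Y$.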

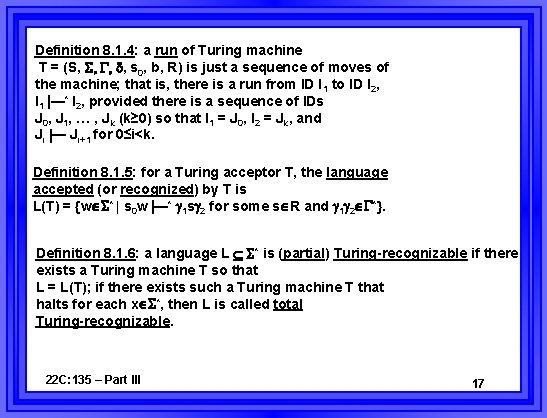

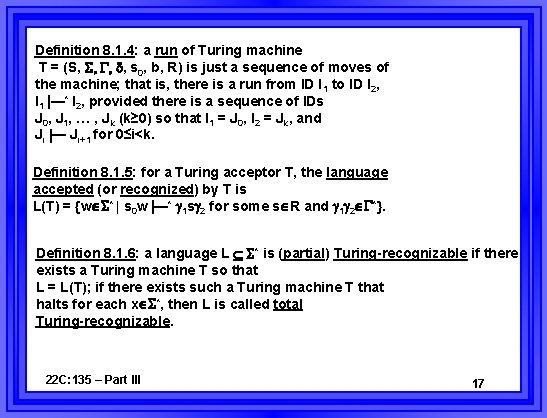

Definition 8.1.4: a run of Turing machine $T = (S, \Sigma, \Gamma, \delta, s_0, b, R)$ is just a sequence of moves of the machine; that is, there is a run from ID $I_1$ to ID $I_2$, $I_1 \vdash^* I_2$, provided there is a sequence of IDs $J_0, J_1, \ldots, J_k$ ($k \ge 0$) so that $I_1 = J_0$, $I_2 = J_k$, and $J_i \vdash J_{i+1}$ for $0 \le i < k$.

Definition 8.1.5: for a Turing acceptor $T$, the language accepted (or recognized) by $T$ is $L(T) = \{w \in \Sigma^* \mid s_0 w \vdash^* \alpha_1 s \alpha_2 \text{ for some } s \in R \text{ and } \alpha_1 \alpha_2 \in \Gamma^*\}$.

Definition 8.1.6: a language $L \subseteq \Sigma^*$ is (partial) Turing-recognizable if there exists a Turing machine $T$ so that $L = L(T)$; if there exists such a Turing machine $T$ that halts for each $x \in \Sigma^*$, then $L$ is called total Turing-recognizable.

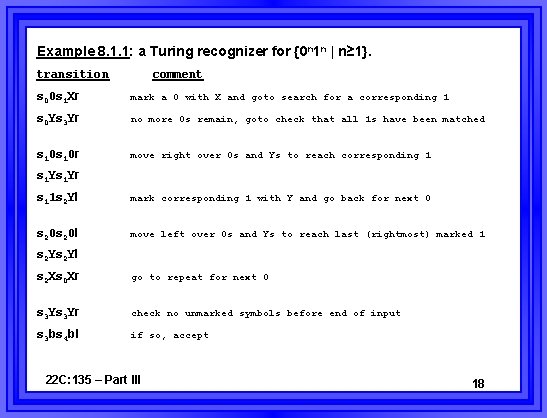

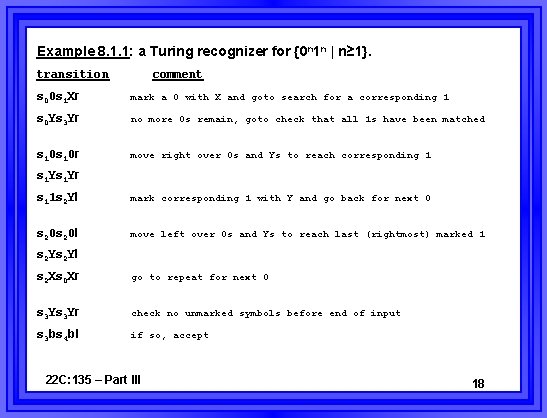

Example 8.1.1: a Turing recognizer for $\{0^n 1^n \mid n \ge 1\}$.

| transition | comment |
|---|---|
| $s_0 0 \to s_1 X r$ | mark a 0 with X and go search for a corresponding 1 |
| $s_0 Y \to s_3 Y r$ | no more 0s remain; go check that all 1s have been matched |
| $s_1 0 \to r$, $s_1 Y \to r$ | move right over 0s and Ys to reach the corresponding 1 |
| $s_1 1 \to s_2 Y l$ | mark the corresponding 1 with Y and go back for the next 0 |
| $s_2 0 \to l$, $s_2 Y \to l$ | move left over 0s and Ys to reach the last (rightmost) X |
| $s_2 X \to s_0 X r$ | go repeat for the next 0 |
| $s_3 Y \to r$ | check there are no unmarked symbols before the end of the input |
| $s_3 b \to s_4 b l$ | if so, accept |
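To check the machine on concrete inputs, here is a minimal deterministic Turing-machine simulator in Python, written as a sketch (names ours). It stores the transitions of Example 8.1.1 in a dictionary and writes the blank as `b`; treating $s_4$ as the accepting state is our reading of the comment "if so, accept", and `max_steps` is only a safety net.

```python
# Example 8.1.1: (state, scanned symbol) -> (next state, symbol written, move)
DELTA = {
    ("s0", "0"): ("s1", "X", "r"), ("s0", "Y"): ("s3", "Y", "r"),
    ("s1", "0"): ("s1", "0", "r"), ("s1", "Y"): ("s1", "Y", "r"),
    ("s1", "1"): ("s2", "Y", "l"),
    ("s2", "0"): ("s2", "0", "l"), ("s2", "Y"): ("s2", "Y", "l"),
    ("s2", "X"): ("s0", "X", "r"),
    ("s3", "Y"): ("s3", "Y", "r"), ("s3", "b"): ("s4", "b", "l"),
}
ACCEPTING = {"s4"}  # assumption: "if so, accept" means s4 is the accepting state


def tm_accepts(w, delta=DELTA, start="s0", accepting=ACCEPTING, max_steps=10_000):
    """Simulate a deterministic Turing acceptor on input w ('b' is the blank).
    Accepts as soon as an accepting state is entered (Definition 8.1.5)."""
    tape, state, head = list(w), start, 0
    for _ in range(max_steps):
        if state in accepting:
            return True
        if head == len(tape):
            tape.append("b")            # the tape is blank right of the input
        step = delta.get((state, tape[head]))
        if step is None:
            return False                # no move defined: halt without accepting
        state, tape[head], direction = step
        if direction == "r":
            head += 1
        elif head > 0:
            head -= 1
        else:
            return False                # no left move from the first cell (k > 1)
    raise RuntimeError("step limit reached")


for w in ["01", "0011", "001", "0101"]:
    print(repr(w), tm_accepts(w))
```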

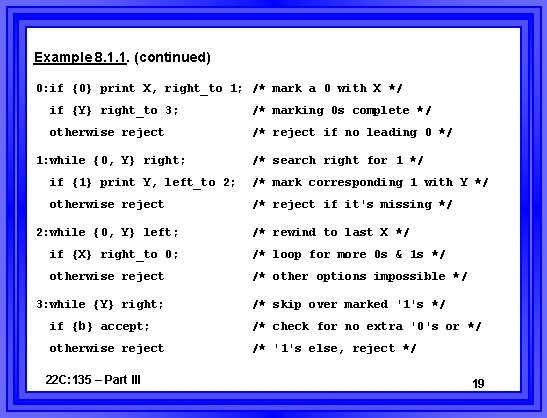

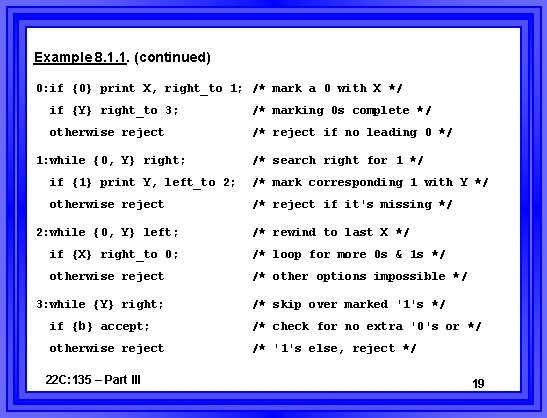

Example 8.1.1 (continued):

    0: if {0} print X, right_to 1;     /* mark a 0 with X */
       if {Y} right_to 3;              /* marking 0s complete */
       otherwise reject                /* reject if no leading 0 */
    1: while {0, Y} right;             /* search right for 1 */
       if {1} print Y, left_to 2;      /* mark corresponding 1 with Y */
       otherwise reject                /* reject if it's missing */
    2: while {0, Y} left;              /* rewind to last X */
       if {X} right_to 0;              /* loop for more 0s & 1s */
       otherwise reject                /* other options impossible */
    3: while {Y} right;                /* skip over marked '1's */
       if {b} accept;                  /* check for no extra '0's or */
       otherwise reject                /* '1's; else, reject */

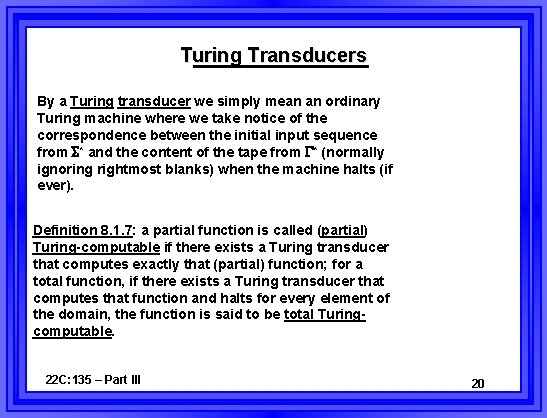

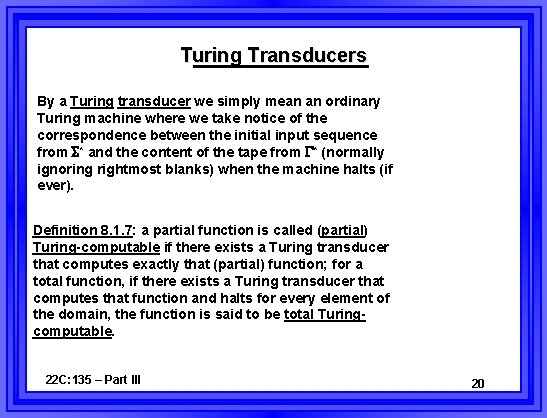

Turing Transducers

By a Turing transducer we simply mean an ordinary Turing machine where we take notice of the correspondence between the initial input sequence from $\Sigma^*$ and the content of the tape from $\Gamma^*$ (normally ignoring rightmost blanks) when the machine halts (if ever).

Definition 8.1.7: a partial function is called (partial) Turing-computable if there exists a Turing transducer that computes exactly that (partial) function; for a total function, if there exists a Turing transducer that computes that function and halts for every element of the domain, the function is said to be total Turing-computable.

Unary Representation of Nat

By the literal interpretation of Definition 8.1.7, Turing machines can compute only string-to-string functions. For functions on non-string domains, Turing-computability is understood to be with respect to a “simple” representation of the domain in terms of strings. For the natural numbers Nat = {0, 1, 2, …} we use the unary representation, namely, $n \in \mathrm{Nat} \mapsto 0^n 1 \in \{0, 1\}^*$. Since we will consider functions of several arguments, we will also need to encode tuples from Nat by concatenating the representations of the constituents, e.g., $\langle n, m\rangle \mapsto 0^n 1 0^m 1 \in \{0, 1\}^*$.
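The unary encoding and its inverse are short to state in code. In this Python sketch (function names ours), decoding relies on the fact that every component ends with exactly one 1, so splitting after each 1 and counting 0s recovers the tuple.

```python
def encode_nat(n):
    """Unary representation of n in Nat: 0^n 1."""
    return "0" * n + "1"


def encode_tuple(*ns):
    """<n1, ..., nk>  ->  0^n1 1 0^n2 1 ... 0^nk 1."""
    return "".join(encode_nat(n) for n in ns)


def decode_tuple(s):
    """Inverse of encode_tuple: split at the 1s and count the 0s in each block."""
    if not s.endswith("1") or set(s) - {"0", "1"}:
        raise ValueError("not a unary tuple encoding: " + repr(s))
    return tuple(len(block) for block in s[:-1].split("1"))


print(encode_tuple(2, 0, 3))        # 00110001
print(decode_tuple("00110001"))     # (2, 0, 3)
```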

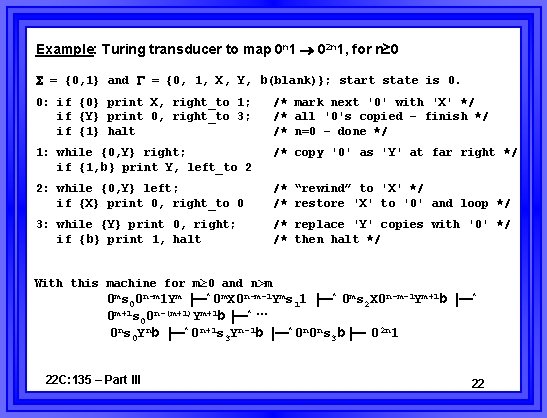

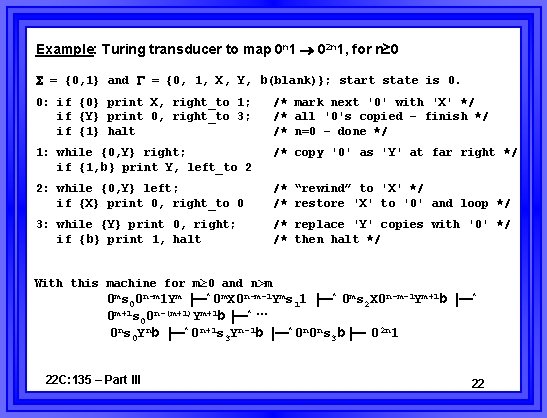

Example: Turing transducer to map $0^n 1 \mapsto 0^{2n} 1$, for $n \ge 0$; $\Sigma = \{0, 1\}$ and $\Gamma = \{0, 1, X, Y, b \text{ (blank)}\}$; the start state is 0.

    0: if {0} print X, right_to 1;     /* mark next '0' with 'X' */
       if {Y} print 0, right_to 3;     /* all '0's copied – finish */
       if {1} halt                     /* n=0 – done */
    1: while {0, Y} right;
       if {1, b} print Y, left_to 2    /* copy '0' as 'Y' at far right */
    2: while {0, Y} left;              /* "rewind" to 'X' */
       if {X} print 0, right_to 0      /* restore 'X' to '0' and loop */
    3: while {Y} print 0, right;       /* replace 'Y' copies with '0' */
       if {b} print 1, halt            /* then halt */

With this machine, for $0 \le m < n$ (writing $\beta$ for the symbol after the $Y$s: $\beta = 1$ when $m = 0$, and $\beta = b$ once the first copy has overwritten the input's final 1),
$$0^m s_0 0^{n-m} Y^m \beta \vdash^* 0^m X 0^{n-m-1} Y^m s_1 \beta \vdash^* 0^m s_2 X 0^{n-m-1} Y^{m+1} b \vdash 0^{m+1} s_0 0^{n-(m+1)} Y^{m+1} b,$$
so that, for $n \ge 1$,
$$s_0 0^n 1 \vdash^* 0^n s_0 Y^n b \vdash 0^{n+1} s_3 Y^{n-1} b \vdash^* 0^n 0^n s_3 b \vdash 0^{2n} 1.$$
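A Python sketch of the same transducer, with the transition table read off the pseudocode (states 0 to 3). The halting pseudo-state `H` and the numeric moves (+1 right, -1 left, 0 stay) are our conventions for the sketch; "halt" in state 0 on 1 is modelled as rewriting the same 1 and stopping.

```python
# (state, scanned symbol) -> (symbol written, move, next state)
DELTA = {
    (0, "0"): ("X", +1, 1), (0, "Y"): ("0", +1, 3), (0, "1"): ("1", 0, "H"),
    (1, "0"): ("0", +1, 1), (1, "Y"): ("Y", +1, 1),
    (1, "1"): ("Y", -1, 2), (1, "b"): ("Y", -1, 2),
    (2, "0"): ("0", -1, 2), (2, "Y"): ("Y", -1, 2), (2, "X"): ("0", +1, 0),
    (3, "Y"): ("0", +1, 3), (3, "b"): ("1", 0, "H"),
}


def run_transducer(w, delta=DELTA, max_steps=100_000):
    """Run from state 0 on the first cell; on halting, return the tape
    contents with rightmost blanks ('b') ignored."""
    tape, state, head = list(w), 0, 0
    for _ in range(max_steps):
        if state == "H":
            return "".join(tape).rstrip("b")
        if head == len(tape):
            tape.append("b")
        write, move, state = delta[(state, tape[head])]   # KeyError: no move defined
        tape[head] = write
        head += move
    raise RuntimeError("step limit reached")


for n in range(4):
    print(n, run_transducer("0" * n + "1"))
```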

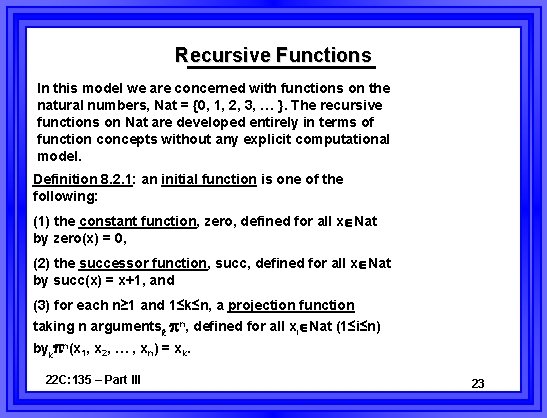

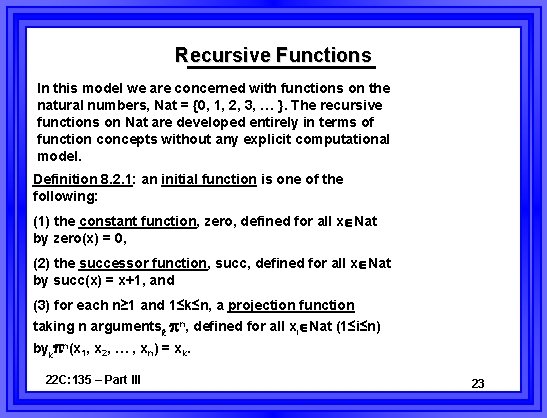

Recursive Functions

In this model we are concerned with functions on the natural numbers, Nat = {0, 1, 2, 3, …}. The recursive functions on Nat are developed entirely in terms of function concepts, without any explicit computational model.

Definition 8.2.1: an initial function is one of the following: (1) the constant function, zero, defined for all $x \in \mathrm{Nat}$ by $\mathrm{zero}(x) = 0$, (2) the successor function, succ, defined for all $x \in \mathrm{Nat}$ by $\mathrm{succ}(x) = x + 1$, and (3) for each $n \ge 1$ and $1 \le k \le n$, a projection function taking $n$ arguments, $\pi^n_k$, defined for all $x_i \in \mathrm{Nat}$ ($1 \le i \le n$) by $\pi^n_k(x_1, x_2, \ldots, x_n) = x_k$.

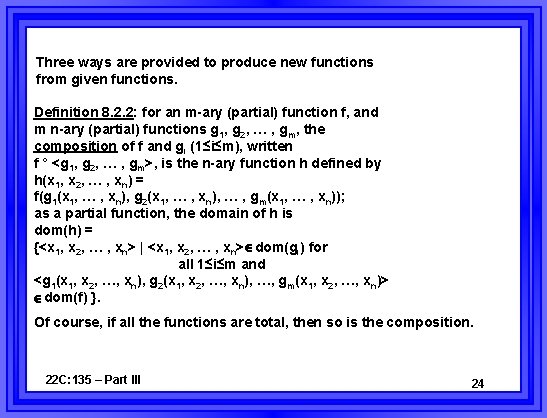

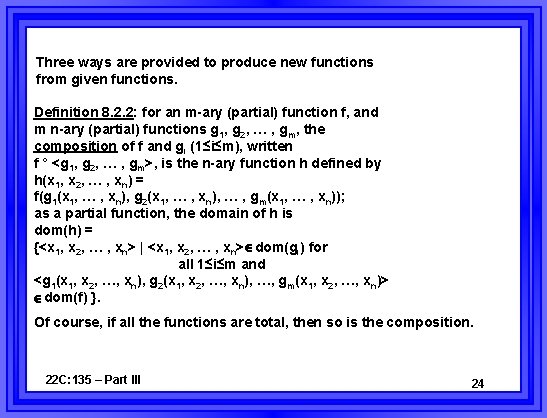

Three ways are provided to produce new functions from given functions.

Definition 8.2.2: for an $m$-ary (partial) function $f$, and $m$ $n$-ary (partial) functions $g_1, g_2, \ldots, g_m$, the composition of $f$ and the $g_i$ ($1 \le i \le m$), written $f \circ \langle g_1, g_2, \ldots, g_m\rangle$, is the $n$-ary function $h$ defined by
$$h(x_1, \ldots, x_n) = f(g_1(x_1, \ldots, x_n), g_2(x_1, \ldots, x_n), \ldots, g_m(x_1, \ldots, x_n));$$
as a partial function, the domain of $h$ is
$$\mathrm{dom}(h) = \{\langle x_1, \ldots, x_n\rangle \mid \langle x_1, \ldots, x_n\rangle \in \mathrm{dom}(g_i) \text{ for all } 1 \le i \le m, \text{ and } \langle g_1(x_1, \ldots, x_n), \ldots, g_m(x_1, \ldots, x_n)\rangle \in \mathrm{dom}(f)\}.$$
Of course, if all the functions are total, then so is the composition.

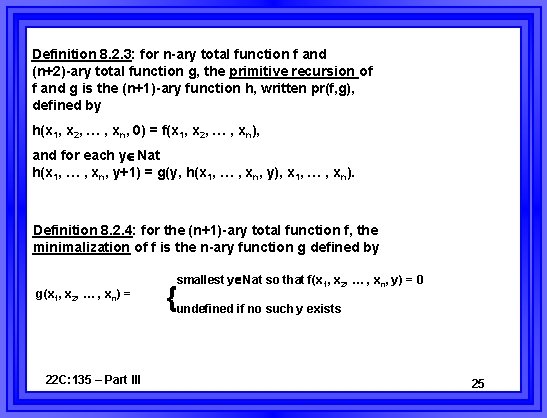

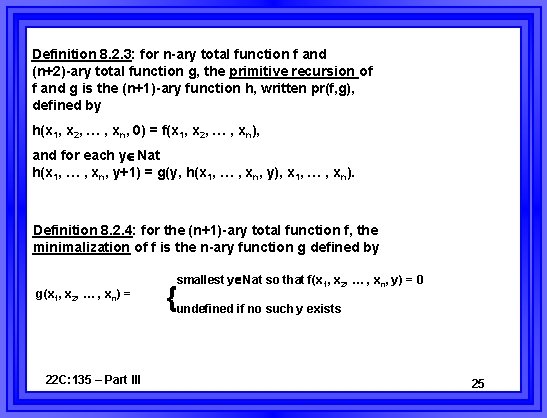

Definition 8.2.3: for $n$-ary total function $f$ and $(n+2)$-ary total function $g$, the primitive recursion of $f$ and $g$ is the $(n+1)$-ary function $h$, written $\mathrm{pr}(f, g)$, defined by $h(x_1, \ldots, x_n, 0) = f(x_1, \ldots, x_n)$, and for each $y \in \mathrm{Nat}$, $h(x_1, \ldots, x_n, y+1) = g(y, h(x_1, \ldots, x_n, y), x_1, \ldots, x_n)$.

Definition 8.2.4: for the $(n+1)$-ary total function $f$, the minimalization of $f$ is the $n$-ary function $g$ defined by
$$g(x_1, \ldots, x_n) = \begin{cases} \text{the smallest } y \in \mathrm{Nat} \text{ so that } f(x_1, \ldots, x_n, y) = 0, \\ \text{undefined if no such } y \text{ exists.} \end{cases}$$
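Minimalization is the operation that introduces partiality: even when $f$ is total, the search for a zero may never end. The Python sketch below implements Definition 8.2.4 directly as an unbounded search; the optional `search_limit` is our addition so that undefined values can be explored without hanging, and the example function is hypothetical.

```python
from itertools import count


def minimalize(f, search_limit=None):
    """Definition 8.2.4: g(x1, ..., xn) = the least y with f(x1, ..., xn, y) == 0.
    As in the definition, the search runs forever if no such y exists;
    search_limit is an addition for experimenting that raises instead."""
    def g(*xs):
        for y in count():
            if search_limit is not None and y >= search_limit:
                raise ValueError("no zero found below search_limit")
            if f(*xs, y) == 0:
                return y
    return g


# Hypothetical example: f(x, y) = |x - 2y| is total, but its minimalization
# half(x) is defined only for even x.
half = minimalize(lambda x, y: abs(x - 2 * y), search_limit=1_000)
print(half(10))   # 5
```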

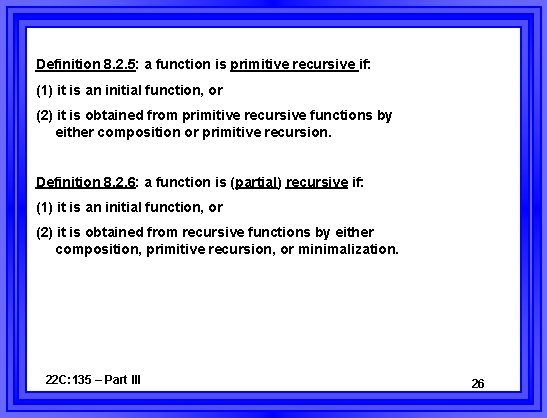

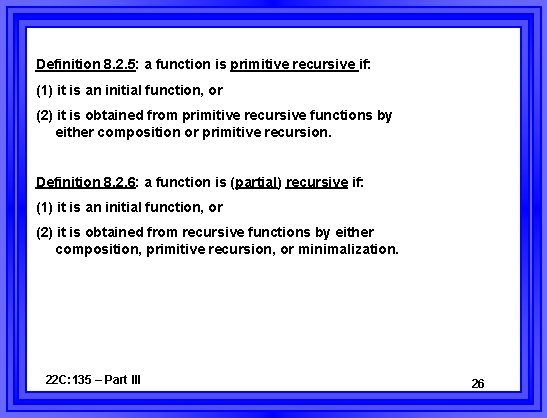

Definition 8.2.5: a function is primitive recursive if: (1) it is an initial function, or (2) it is obtained from primitive recursive functions by either composition or primitive recursion.

Definition 8.2.6: a function is (partial) recursive if: (1) it is an initial function, or (2) it is obtained from recursive functions by either composition, primitive recursion, or minimalization.

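Example 8.2.1. Using informal induction we can define the 'sum' (+) function using only initial functions: for all $x, y \in \mathrm{Nat}$, $\mathrm{sum}(x, 0) = x$ and $\mathrm{sum}(x, y+1) = \mathrm{succ}(\mathrm{sum}(x, y))$. Formally, this is a definition via primitive recursion, namely, $\mathrm{sum} = \mathrm{pr}(\pi^1_1, \mathrm{succ} \circ \pi^3_2)$. Once the sum function is defined we can use it to form other definitions. Informally, the 'product' (*) function can be expressed as $\mathrm{prod}(x, 0) = 0$, $\mathrm{prod}(x, y+1) = \mathrm{sum}(\mathrm{prod}(x, y), x)$. Formally this is again defined via primitive recursion, $\mathrm{prod} = \mathrm{pr}(\mathrm{zero}, \mathrm{sum} \circ \langle \pi^3_2, \pi^3_3\rangle)$. In both cases $g$ receives the arguments $(y, h(x, y), x)$ of Definition 8.2.3, so $\pi^3_2$ selects the previous value $h(x, y)$ and $\pi^3_3$ selects $x$.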
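The building blocks of Definitions 8.2.1 to 8.2.3 translate directly into higher-order Python functions. In this sketch (names ours), `pr` unrolls the recursion into a loop that computes $h(\ldots, 0), h(\ldots, 1), \ldots$ in turn, which yields the same values as the recursive equations, and sum and prod are assembled exactly as in Example 8.2.1.

```python
def zero(x):
    return 0


def succ(x):
    return x + 1


def proj(n, k):
    """pi^n_k(x1, ..., xn) = xk."""
    def p(*xs):
        assert len(xs) == n, "projection applied to the wrong number of arguments"
        return xs[k - 1]
    return p


def compose(f, *gs):
    """f o <g1, ..., gm>: give every g_i the same arguments, then apply f."""
    return lambda *xs: f(*(g(*xs) for g in gs))


def pr(f, g):
    """pr(f, g): h(xs, 0) = f(xs) and h(xs, y + 1) = g(y, h(xs, y), xs)."""
    def h(*args):
        *xs, y = args
        value = f(*xs)
        for i in range(y):              # value == h(xs, i) before this step
            value = g(i, value, *xs)
        return value
    return h


# The text's sum and prod (renamed to avoid shadowing Python's built-in sum).
add = pr(proj(1, 1), compose(succ, proj(3, 2)))
mul = pr(zero, compose(add, proj(3, 2), proj(3, 3)))
print(add(3, 4), mul(3, 4))   # 7 12
```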

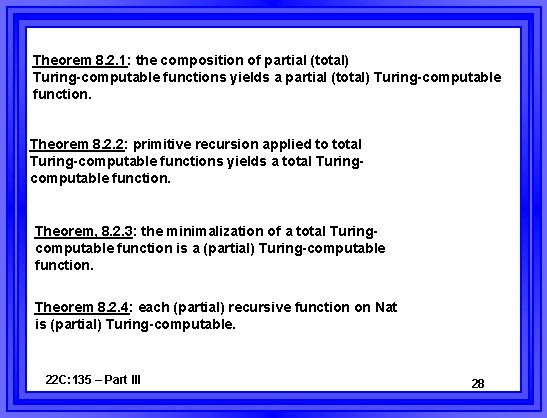

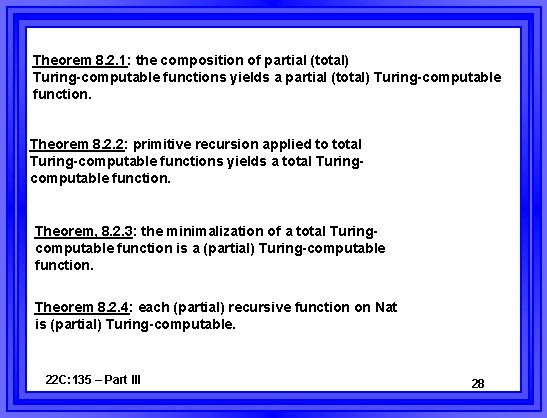

Theorem 8.2.1: the composition of partial (total) Turing-computable functions yields a partial (total) Turing-computable function.

Theorem 8.2.2: primitive recursion applied to total Turing-computable functions yields a total Turing-computable function.

Theorem 8.2.3: the minimalization of a total Turing-computable function is a (partial) Turing-computable function.

Theorem 8.2.4: each (partial) recursive function on Nat is (partial) Turing-computable.

RASP Model

The Random-Access Stored Program (RASP) model is an idealized version of the familiar von Neumann style computer. This model will be shown equivalent to the Turing machine model. We will be concerned here only with arithmetic computations involving Nat = {0, 1, 2, …}. Signed arithmetic and non-integers can be treated by the same means we employ here, but add technical complications that cloud the conceptual clarity of the relationship between models.

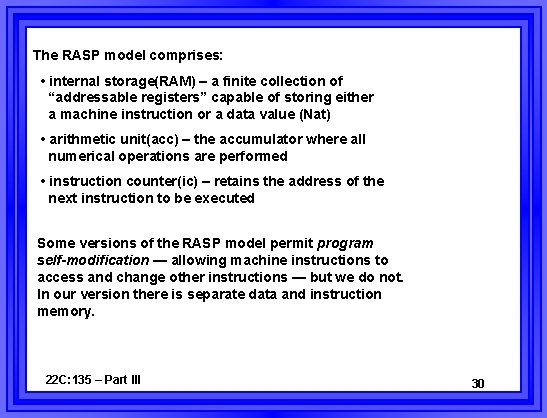

The RASP model comprises:

- internal storage (RAM): a finite collection of “addressable registers” capable of storing either a machine instruction or a data value (Nat)
- arithmetic unit (acc): the accumulator, where all numerical operations are performed
- instruction counter (ic): retains the address of the next instruction to be executed

Some versions of the RASP model permit program self-modification, allowing machine instructions to access and change other instructions, but we do not: in our version there are separate data and instruction memories.

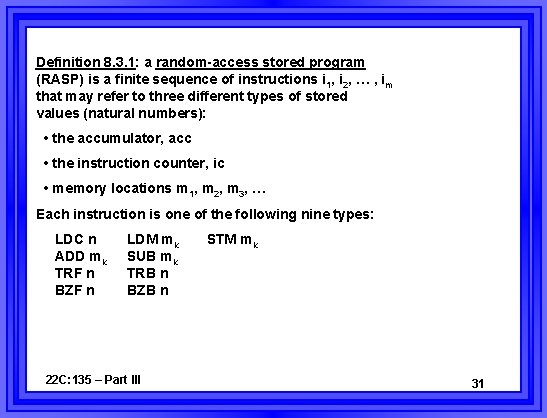

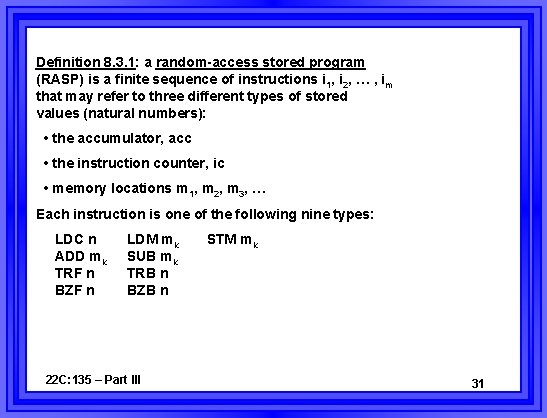

Definition 8.3.1: a random-access stored program (RASP) is a finite sequence of instructions $i_1, i_2, \ldots, i_m$ that may refer to three different types of stored values (natural numbers):

- the accumulator, acc
- the instruction counter, ic
- memory locations $m_1, m_2, m_3, \ldots$

Each instruction is one of the following nine types: LDC $n$, LDM $m_k$, STM $m_k$, ADD $m_k$, SUB $m_k$, TRF $n$, TRB $n$, BZF $n$, BZB $n$.

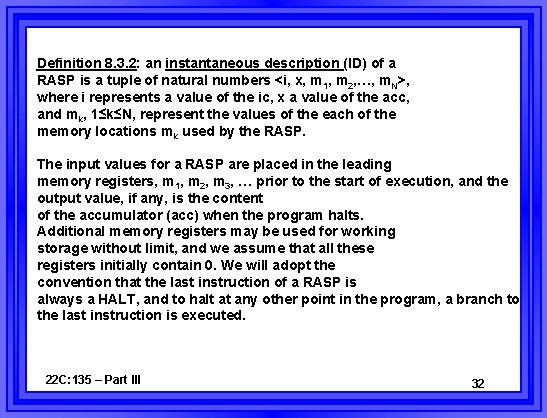

Definition 8.3.2: an instantaneous description (ID) of a RASP is a tuple of natural numbers $\langle i, x, m_1, m_2, \ldots, m_N\rangle$, where $i$ represents a value of the ic, $x$ a value of the acc, and $m_k$, $1 \le k \le N$, represent the values of each of the memory locations $m_k$ used by the RASP. The input values for a RASP are placed in the leading memory registers, $m_1, m_2, m_3, \ldots$, prior to the start of execution, and the output value, if any, is the content of the accumulator (acc) when the program halts. Additional memory registers may be used for working storage without limit, and we assume that all these registers initially contain 0. We will adopt the convention that the last instruction of a RASP is always a HALT, and to halt at any other point in the program, a branch to the last instruction is executed.

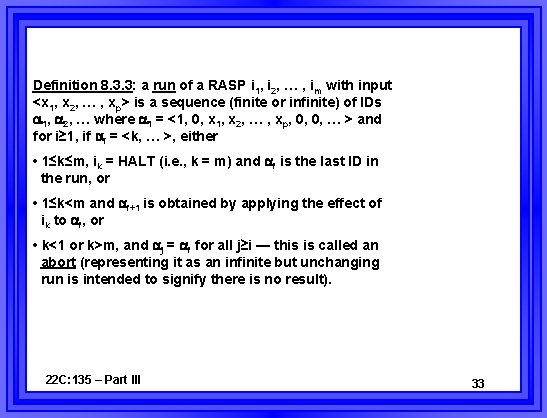

Definition 8.3.3: a run of a RASP $i_1, i_2, \ldots, i_m$ with input $\langle x_1, x_2, \ldots, x_p\rangle$ is a sequence (finite or infinite) of IDs $\iota_1, \iota_2, \ldots$ where $\iota_1 = \langle 1, 0, x_1, x_2, \ldots, x_p, 0, 0, \ldots\rangle$ and, for $i \ge 1$, if $\iota_i = \langle k, \ldots\rangle$, either

- $1 \le k \le m$, $i_k = \mathrm{HALT}$ (i.e., $k = m$), and $\iota_i$ is the last ID in the run, or
- $1 \le k < m$ and $\iota_{i+1}$ is obtained by applying the effect of $i_k$ to $\iota_i$, or
- $k < 1$ or $k > m$, and $\iota_j = \iota_i$ for all $j \ge i$; this is called an abort (representing it as an infinite but unchanging run is intended to signify there is no result).

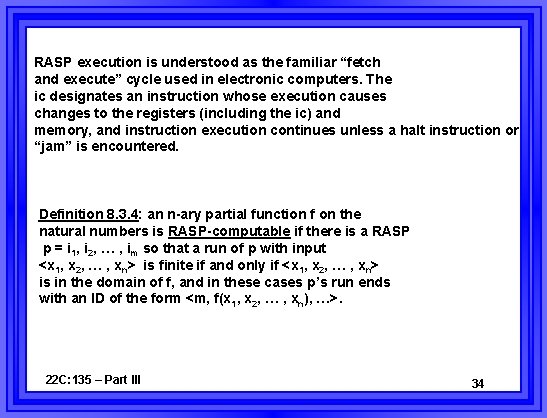

RASP execution is understood as the familiar “fetch and execute” cycle used in electronic computers. The ic designates an instruction whose execution causes changes to the registers (including the ic) and memory, and instruction execution continues unless a halt instruction or “jam” is encountered.

Definition 8.3.4: an $n$-ary partial function $f$ on the natural numbers is RASP-computable if there is a RASP $p = i_1, i_2, \ldots, i_m$ so that a run of $p$ with input $\langle x_1, x_2, \ldots, x_n\rangle$ is finite if and only if $\langle x_1, x_2, \ldots, x_n\rangle$ is in the domain of $f$, and in these cases $p$’s run ends with an ID of the form $\langle m, f(x_1, x_2, \ldots, x_n), \ldots\rangle$.
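The slides name the instructions but do not spell out their effects, so the following Python interpreter is a sketch under stated assumptions: LDC $n$ loads the constant $n$; LDM, STM, ADD and SUB act on memory cell $m_k$; SUB is truncated subtraction (monus) so values stay in Nat; TRF/TRB $n$ jump $n$ instructions forward/backward; and BZF/BZB $n$ jump only when acc is 0. Inputs, the HALT convention, and aborts follow Definitions 8.3.2 to 8.3.4. The sample program `SUM` is hypothetical.

```python
def run_rasp(program, inputs, max_steps=10_000):
    """Run a RASP given as (opcode, operand) pairs; instruction 1 is program[0].
    Returns acc on HALT, or None for an abort (ic outside 1..m).
    ASSUMED semantics: SUB is monus; TRF/TRB n move ic by +n/-n;
    BZF/BZB n do the same only when acc == 0."""
    mem = {k + 1: x for k, x in enumerate(inputs)}   # m1, m2, ...; others read as 0
    ic, acc = 1, 0
    for _ in range(max_steps):
        if not 1 <= ic <= len(program):
            return None                               # abort: no result
        op, arg = program[ic - 1]
        nxt = ic + 1
        if op == "HALT":
            return acc
        elif op == "LDC":
            acc = arg
        elif op == "LDM":
            acc = mem.get(arg, 0)
        elif op == "STM":
            mem[arg] = acc
        elif op == "ADD":
            acc += mem.get(arg, 0)
        elif op == "SUB":
            acc = max(acc - mem.get(arg, 0), 0)
        elif op == "TRF" or (op == "BZF" and acc == 0):
            nxt = ic + arg
        elif op == "TRB" or (op == "BZB" and acc == 0):
            nxt = ic - arg
        elif op not in ("BZF", "BZB"):                # BZF/BZB with acc != 0 fall through
            raise ValueError("unknown instruction " + op)
        ic = nxt
    raise RuntimeError("step limit reached")


# Hypothetical RASP for sum(x, y): x in m1, y in m2, m3 holds the constant 1.
SUM = [("LDC", 1), ("STM", 3),                  # 1-2:  m3 := 1
       ("LDM", 2), ("BZF", 7),                  # 3-4:  if m2 = 0 goto 11
       ("SUB", 3), ("STM", 2),                  # 5-6:  m2 := m2 - 1
       ("LDM", 1), ("ADD", 3), ("STM", 1),      # 7-9:  m1 := m1 + 1
       ("TRB", 7),                              # 10:   goto 3
       ("LDM", 1), ("HALT", None)]              # 11-12: acc := m1; halt
print(run_rasp(SUM, [2, 1]))   # 3
```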

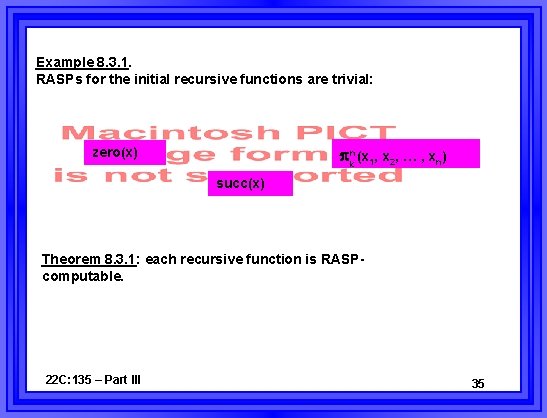

Example 8.3.1. RASPs for the initial recursive functions $\pi^n_k(x_1, x_2, \ldots, x_n)$, $\mathrm{zero}(x)$, and $\mathrm{succ}(x)$ are trivial.

Theorem 8.3.1: each recursive function is RASP-computable.

[Figure: RASP-program for $f \circ \langle g_1, g_2, \ldots, g_m\rangle$, assembled from a program for $f$ and a program for each $g_i$.]

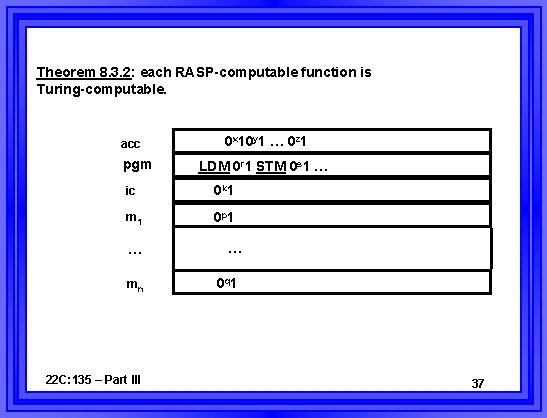

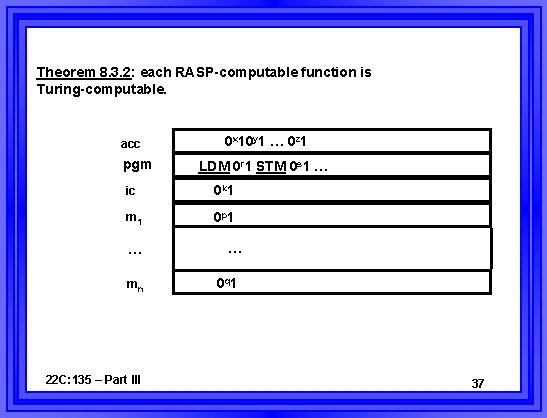

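Theorem 8.3.2: each RASP-computable function is Turing-computable.

[Figure: storage layout of the simulating Turing machine, with regions labeled acc, pgm, ic, and $m_1, \ldots, m_n$; values are held in unary (e.g., $0^x 1$), and the program region holds coded instructions such as LDM $0^r 1$ and STM $0^s 1$.]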

Turing Recognizers & Grammars

Theorem 8.4.1: for each Turing acceptor $T$ there exists a phrase structure grammar $G$ so that $L(T) = L(G)$.

Theorem 8.4.2: for each unrestricted phrase structure grammar $G$ there exists a Turing machine $T$ so that $L(G) = L(T)$.

Corollary 8.4.3: each context-sensitive language is total Turing-recognizable.

Theorem 8.4.4: each phrase structure language is the homomorphic image of the intersection of (deterministic) context-free languages.

Theorem 8.4.6: the unrestricted phrase structure languages are closed under union, concatenation, Kleene closure, positive closure, substitution, and intersection.

Theorem 8.4.7: if language $L$ is total Turing-recognizable, then $\neg L$ is also total Turing-recognizable.

Theorem 8.4.8: if both language $L$ and its complement $\neg L$ are (partial) Turing-recognizable languages, then $L$ (and hence $\neg L$) is total Turing-recognizable.

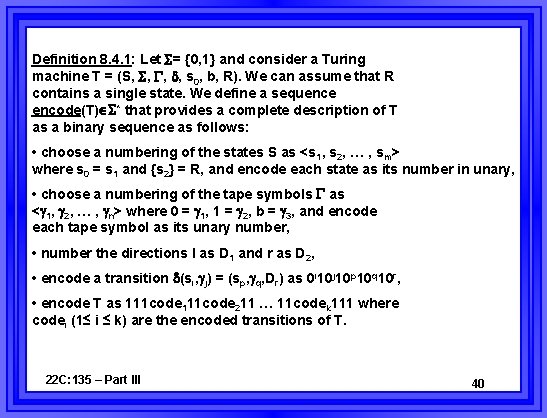

Definition 8.4.1: let $\Sigma = \{0, 1\}$ and consider a Turing machine $T = (S, \Sigma, \Gamma, \delta, s_0, b, R)$. We can assume that $R$ contains a single state. We define a sequence $\mathrm{encode}(T) \in \Sigma^*$ that provides a complete description of $T$ as a binary sequence as follows:

- choose a numbering of the states $S$ as $\langle s_1, s_2, \ldots, s_m\rangle$ where $s_0 = s_1$ and $\{s_2\} = R$, and encode each state as its number in unary,
- choose a numbering of the tape symbols as $\langle \gamma_1, \gamma_2, \ldots, \gamma_n\rangle$ where $0 = \gamma_1$, $1 = \gamma_2$, $b = \gamma_3$, and encode each tape symbol as its unary number,
- number the directions $l$ as $D_1$ and $r$ as $D_2$,
- encode a transition $\delta(s_i, \gamma_j) = (s_p, \gamma_q, D_r)$ as $0^i 1 0^j 1 0^p 1 0^q 1 0^r$,
- encode $T$ as $111\,\mathrm{code}_1\,11\,\mathrm{code}_2\,11 \cdots 11\,\mathrm{code}_k\,111$, where $\mathrm{code}_i$ ($1 \le i \le k$) are the encoded transitions of $T$.
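Definition 8.4.1 in Python, as a sketch: a machine is given as a list of index tuples $(i, j, p, q, r)$ standing for $\delta(s_i, \gamma_j) = (s_p, \gamma_q, D_r)$, with the numbering conventions above already applied (this input format is our assumption). Since every index is at least 1, each block of 0s is non-empty, so 11 never occurs inside a transition code and the separators can be recognized unambiguously.

```python
def encode_transition(i, j, p, q, r):
    """delta(s_i, gamma_j) = (s_p, gamma_q, D_r)  ->  0^i 1 0^j 1 0^p 1 0^q 1 0^r."""
    return "1".join("0" * n for n in (i, j, p, q, r))


def encode_tm(transitions):
    """111 code_1 11 code_2 11 ... 11 code_k 111 (Definition 8.4.1)."""
    return "111" + "11".join(encode_transition(*t) for t in transitions) + "111"


# delta(s1, gamma_1) = (s2, gamma_1, D2): in the start state, reading 0,
# write 0, move right, and enter the accepting state s2.
print(encode_tm([(1, 1, 2, 1, 2)]))   # 11101010010100111
```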

Definition 8.4.2: for $\Sigma = \{0, 1\}$ the diagonal language $L_d \subseteq \Sigma^*$ is defined as follows:

- let $\langle w_1, w_2, w_3, \ldots\rangle = \langle \varepsilon, 0, 1, 00, 01, 10, 11, 000, \ldots\rangle$ be an enumeration of $\Sigma^*$ (i.e., a one-to-one, onto mapping $\{1, 2, 3, \ldots\} \to \Sigma^*$) in canonical (length-then-lexicographic) order,
- regard each positive integer $i$ as a description of Turing recognizer $T_i$ if $\mathrm{encode}(T_i)$ = the binary expansion of $i$; if there is no such Turing recognizer, regard $i$ as a description of the Turing machine with no transitions,
- then $L_d = \{w_i \mid w_i \notin L(T_i)\}$.

Theorem 8.4.9: the diagonal language $L_d$ is not a partial Turing-recognizable language.
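The enumeration $w_1, w_2, \ldots$ has a convenient closed form that the slides do not state: $w_i$ is the binary expansion of $i$ with its leading 1 removed. A Python sketch (names ours) of the mapping and its inverse:

```python
def w(i):
    """The i-th string of {0,1}* in the order eps, 0, 1, 00, 01, 10, 11, 000, ...
    Write i in binary and drop the leading 1."""
    if i < 1:
        raise ValueError("indices start at 1")
    return bin(i)[3:]          # bin(i) is '0b1...'; skip '0b' and the leading 1


def index_of(word):
    """Inverse mapping: put a 1 in front and read the result in binary."""
    return int("1" + word, 2)


print([w(i) for i in range(1, 9)])   # ['', '0', '1', '00', '01', '10', '11', '000']
```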

A Universal Turing Machine $U$

The tape alphabet of $U$ includes the auxiliary symbols $L, R, X, Y, Z, A, B$. States of $T$ are represented as uniform-length binary state numbers.

[Figure: starting configuration of $U$.]

An outline of the operation of the Universal Turing machine is:

- locate a match for the current state/symbol within the description of $T$
- copy the portion of the description immediately following the match into the current state/symbol segment to update it
- move the current (overprint) symbol to the marked position of $T$’s tape, access and mark the next position of $T$’s tape, and move that symbol to the current position
- repeat these steps until no match is found

Decision Problems

A decision problem is a family of yes/no questions $p$ together with a “decision mapping” $d: p \to \{\text{yes}, \text{no}\}$.

Membership problem for Turing machines: given a Turing machine $T$, and an input $w \in \Sigma^*$ for $T$, is $w \in L(T)$? The membership problem is a “2-parameter” decision problem, since to identify an instance of the problem two independent arguments must be supplied.

Definition 9.2.1: a decision problem $(p, d)$ is decidable (or solvable) if there is a “straightforward” encoding $e(p)$ of the problem instances into sequences in some alphabet $\Sigma$ so that $d: e(p) \to \{\text{yes}, \text{no}\}$ is a total Turing-computable function; otherwise $(p, d)$ is undecidable (or unsolvable). Since the outcomes are binary, a decision problem $(p, d)$ can also be regarded as a language recognition problem $L(p) = \{w \in e(p) \mid w = e(x) \text{ and } d(x) = \text{yes}\} \subseteq \Sigma^*$.

Theorem 9.2.1: the membership problem for Turing machines is undecidable, or alternatively, the language $L(U) = \{\mathrm{encode}(T)\, w \mid T \text{ is a T.M. and } w \in L(T)\}$ is not total Turing-recognizable.

Corollary 9.2.2: $\neg L_d$, the complement of $L_d$, is (partial) Turing-recognizable.

The Halting Problem for Turing machines: given a Turing machine $T$, and an input $w \in \Sigma^*$ for $T$, does $T$ halt when started on $w$?

Theorem 9.2.3: the halting problem is undecidable for Turing machines.

The algorithm test for Turing machines: given a Turing machine $T$, does $T$ halt for every input $w \in \Sigma^*$?

Theorem 9.2.4: the algorithm test is undecidable.

The emptiness problem for Turing machines (also raised for grammars, etc.): given a Turing machine $T$, is $L(T) = \emptyset$? The language version of this problem is $L_e = \{\mathrm{encode}(T) \mid L(T) = \emptyset\}$, where Turing machine $T$ is encoded by the previously discussed means.

The non-emptiness problem for Turing machines (also raised for grammars, etc.): given a Turing machine $T$, is $L(T) \ne \emptyset$? The language version of this problem is $L_{ne} = \{\mathrm{encode}(T) \mid L(T) \ne \emptyset\}$, where Turing machine $T$ is encoded by the previously discussed means.

Theorem 9.2.5: $L_{ne}$ is (partial) Turing-recognizable.

Theorem 9.2.6: $L_e$ is not total Turing-recognizable (i.e., the emptiness problem is undecidable).

Corollary 9.2.7: $L_{ne}$ is not total Turing-recognizable (i.e., the non-emptiness problem is undecidable), and $L_e$ is not (partial) Turing-recognizable.

Theorem 9.2.9: it is undecidable for Turing machine $T$ whether or not $L(T)$ is regular.

Post’s Correspondence Problem

Definition 9.3.1: Post’s Correspondence Problem (PCP) is the decision problem: “given a finite alphabet $\Sigma$ and two $k$-tuples ($k \ge 1$) of sequences $A = \langle a_1, a_2, \ldots, a_k\rangle$ and $B = \langle b_1, b_2, \ldots, b_k\rangle$ where $a_i, b_i \in \Sigma^*$, do there exist positive integers $i_1, i_2, \ldots, i_m$ ($m \ge 1$, $1 \le i_j \le k$), called a solution, so that $a_{i_1} a_{i_2} \cdots a_{i_m} = b_{i_1} b_{i_2} \cdots b_{i_m}$?”

Example 9.3.1: $a_1 a_2 a_3 \cdots = 10101101\cdots$ and $b_1 b_2 b_3 \cdots = 101011011\cdots$

Theorem 9.3.1: PCP is undecidable if the alphabet has at least two letters. A Turing machine description can be used to create a PCP instance whose matching establishes a direct correspondence between a PCP solution and a terminating run of the Turing machine. Hence an algorithm to decide the existence of a PCP solution would also decide Turing machine halting, and is therefore impossible.
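Undecidability does not prevent searching for solutions; it only means that no bound on the search is guaranteed to suffice. The Python sketch below (names ours) performs a breadth-first search over index sequences up to a chosen length, pruning any partial sequence whose two strings already disagree. The instance $A = \langle 1, 10111, 10\rangle$, $B = \langle 111, 10, 0\rangle$ is our choice for illustration, not the instance of Example 9.3.1.

```python
from collections import deque


def pcp_search(A, B, max_len=8):
    """Shortest PCP solution (1-indexed) using at most max_len tiles, or None.
    None only means no solution within the bound; PCP is undecidable, so no
    fixed bound works for every instance."""
    queue = deque([((), "", "")])
    while queue:
        seq, top, bottom = queue.popleft()
        if len(seq) == max_len:
            continue
        for i, (a, b) in enumerate(zip(A, B), start=1):
            t, u = top + a, bottom + b
            if not (t.startswith(u) or u.startswith(t)):
                continue                    # the two strings already disagree
            if t == u:
                return list(seq + (i,))
            queue.append((seq + (i,), t, u))
    return None


print(pcp_search(("1", "10111", "10"), ("111", "10", "0")))   # [2, 1, 1, 3]
```

For this instance both sides of the solution 2, 1, 1, 3 spell 101111110.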

Theorem 9.3.2: it is undecidable for homomorphisms $h_1, h_2: \Sigma^* \to \Delta^*$ whether or not there exists $x \in \Sigma^+$ so that $h_1(x) = h_2(x)$.

Theorem 9.3.3: it is undecidable whether or not an arbitrary context-free grammar is ambiguous.

Corollary 9.3.4: it is undecidable if the intersection of the languages of two arbitrary context-free grammars is empty.

Corollary 9.3.5: it is undecidable for an arbitrary context-sensitive grammar $G$ whether or not $L(G) = \emptyset$.

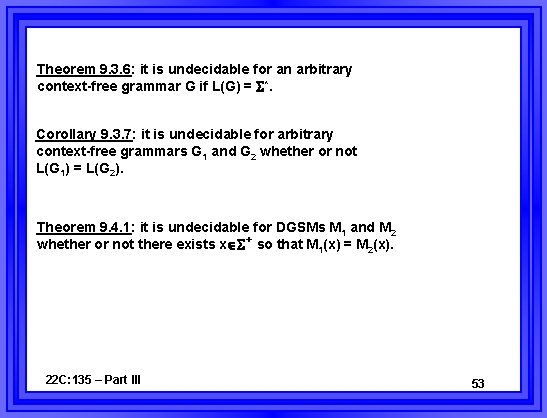

Theorem 9.3.6: it is undecidable for an arbitrary context-free grammar $G$ if $L(G) = \Sigma^*$.

Corollary 9.3.7: it is undecidable for arbitrary context-free grammars $G_1$ and $G_2$ whether or not $L(G_1) = L(G_2)$.

Theorem 9.4.1: it is undecidable for DGSMs $M_1$ and $M_2$ whether or not there exists $x \in \Sigma^+$ so that $M_1(x) = M_2(x)$.

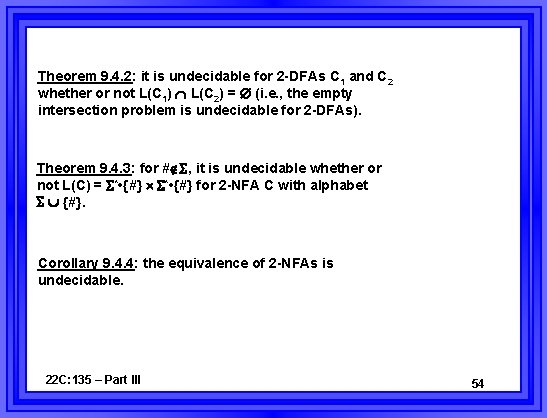

Theorem 9.4.2: it is undecidable for 2-DFAs $C_1$ and $C_2$ whether or not $L(C_1) \cap L(C_2) = \emptyset$ (i.e., the empty intersection problem is undecidable for 2-DFAs).

Theorem 9.4.3: for $\# \notin \Sigma$, it is undecidable whether or not $L(C) = \Sigma^* \cdot \{\#\}$ for a 2-NFA $C$ with alphabet $\Sigma \cup \{\#\}$.

Corollary 9.4.4: the equivalence of 2-NFAs is undecidable.