- Slides: 55

Assessment

What is Assessment?

Assessment is the use of systematic or standardized procedures for observing behavior. This means that the procedures are not random, but are followed in a consistent, replicable fashion. The purposes of assessment include:

• Description. Provide basic descriptions of the person, his/her strengths and weaknesses, or a functional analysis of a problem (as in behavioral assessment).
• Classification or diagnosis. Examples: a mental status exam or use of the Diagnostic and Statistical Manual of the American Psychiatric Association.
• Evaluation. A good example of this is assigning a grade for a class.
• Prediction or prognosis. Examples: determining how successful someone would be in school or in employment, estimating the outcome of treatment, and predicting suicide potential or the course of an illness/disorder.
• Decision making. Admit to a school or program, hospitalize or not, give medications, and so on.

Types of Assessment

• Behavior Observations
• Interviews
• Environmental Assessments
• Tests

• Behavior Observations are easy to perform, but suffer from restricted sampling (what I see in a clinical setting may not be representative of what I would see if I could follow a person around all day).
• They also have poor consistency (called reliability) among different raters.

• Interviews are actually a type of Behavior Observation, but rely on questioning the client.
• Interviews also suffer from the same consistency problems.

• Environmental Assessments are rarely used in counseling.
• Environmental assessments can be thought of as measuring the “personality” of the settings that clients inhabit (e.g., their school, their home, their family, their workplace).

Psychological Tests

A test is an objective and systematic measure of a sample of behavior.

An appropriate test includes:
• Standard conditions, so that whatever results we find can be attributed to the test taker and not to variations in the testing
• Directions for administering and scoring
• A standardization group, so that one person's performance can be compared to that of a group

Error

It is essential to realize that all tests include some type of variation due to chance (called error). In other words, the observed score (the test results obtained) equals the person's "true" test score plus error.

The error can result from:
• the test itself
• conditions of the testing
• errors in scoring or administration
• condition of the subject
• sampling error

Virtually all of the testing error is due to the fact that all psychological testing involves indirect measurement. In other words, it is based on the behavior of the subject rather than on measuring the construct or attribute directly.

Reliability and Validity

Reliability refers to the degree to which instruments are stable and repeatable. There are essentially three types of reliability:
• How well the instrument obtains the same score across time, called temporal consistency
• How well the test questions correlate with each other within the test, called internal consistency
• How well different versions/forms of the test correlate with each other, called alternate forms consistency
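As a rough illustration of internal consistency, the sketch below computes Cronbach's alpha, one commonly used index that the slides do not name. The function name `cronbach_alpha` and the small score table are hypothetical, made up for illustration only.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a table of item scores.

    rows: one list per respondent, holding that person's score on every
    item (all rows the same length, at least two items).
    """
    k = len(rows[0])                        # number of items
    columns = list(zip(*rows))              # scores regrouped by item
    item_variances = [variance(col) for col in columns]
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: 5 respondents answering 3 items on a 1-5 scale.
scores = [
    [2, 3, 3],
    [4, 4, 5],
    [1, 2, 2],
    [5, 5, 4],
    [3, 3, 3],
]
alpha = cronbach_alpha(scores)
print(round(alpha, 2))
```

Values closer to 1 suggest that the items vary together. Temporal and alternate forms consistency are usually estimated differently, by correlating two sets of scores from the same people.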

Validity

Definition: Does the test measure what it is designed to measure?

The Concept of Validation

• Validation is the process of gathering and evaluating validity information.
• The test developer supplies validity information in the test manual.
• A test user can also conduct validation research/studies.

When to Perform Validations

• During test construction.
• When the user is changing the format, instructions, language, or content of the test.
• If the population of test takers is significantly different from the original population of test takers.

How is validity determined?

Validity is determined by:
• scrutinizing content.
• relating scores obtained on the test to other test scores or other measures.
• executing a comprehensive analysis of how scores on the test relate to other scores.

Three Categories of Validity

• Content Validity.
• Criterion-related Validity.
• Construct Validity.

All three types contribute to the total picture of validity.

Face Validity

• Face validity relates more to what a test appears to measure than to what the test actually measures.
• Face validity is important because a lack of it can undermine confidence in the perceived effectiveness of the test.

Content Validity

Definition: content validity is a judgment concerning how adequately a test samples behavior representative of the universe of behavior the test was designed to sample.

Criterion-Related Validity

Definition: a judgment regarding how adequately a test score can be used to infer an individual’s most probable standing on some measure of interest.

Two types:
• concurrent validity.
• predictive validity.

Concurrent Validity

Concurrent validity is the form of criterion-related validity that indexes the degree to which a test score is related to some criterion measure obtained at the same time.

Predictive Validity

• Predictive validity is indicated by measures of the relationship between test scores and a criterion measure obtained at a future time.
• It describes how accurately scores on the test predict some criterion measure.
• Example: measures of the relationship between college admissions tests and freshman GPA provide evidence of the predictive validity of the admissions tests.
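One common way to express the relationship described above is a correlation coefficient between test scores and the later criterion. The sketch below computes a Pearson correlation by hand; the helper name `pearson_r` and all of the scores are hypothetical, and the slides themselves do not prescribe a particular statistic.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical data: admissions test scores and the same students'
# freshman GPA, collected a year later.
admission_scores = [1000, 1100, 1200, 1300, 1400]
freshman_gpa = [2.4, 2.9, 2.7, 3.4, 3.6]

validity_coefficient = pearson_r(admission_scores, freshman_gpa)
print(round(validity_coefficient, 2))
```

For concurrent validity the calculation would be the same; only the timing of the criterion measure differs.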

Incremental Validity

• Definition: the degree to which an additional predictor explains something about the criterion measure not explained by predictors already in use.
• It must be considered when predicting an outcome such as academic success in college.
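A minimal sketch of the idea, assuming exactly one predictor already in use and one added predictor: incremental validity can be expressed as the gain in explained variance (R squared) when the new predictor joins the old one. The function names and the data are hypothetical, and the two-predictor formula below only works when the two predictors are not perfectly correlated with each other.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def incremental_validity(criterion, existing, added):
    """Gain in R squared from adding `added` to `existing` as a predictor."""
    r_y1 = pearson_r(criterion, existing)
    r_y2 = pearson_r(criterion, added)
    r_12 = pearson_r(existing, added)
    # R squared for a regression on two predictors, from the correlations.
    r2_both = (r_y1 ** 2 + r_y2 ** 2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12 ** 2)
    return r2_both - r_y1 ** 2


# Hypothetical data: an admissions test is already in use, and high
# school GPA is the candidate additional predictor of freshman GPA.
admission_scores = [1000, 1100, 1200, 1300, 1400]
high_school_gpa = [3.0, 2.8, 3.5, 3.2, 3.9]
freshman_gpa = [2.4, 2.9, 2.7, 3.4, 3.6]

gain = incremental_validity(freshman_gpa, admission_scores, high_school_gpa)
print(round(gain, 3))
```

A gain near zero would suggest the added predictor tells us little beyond what the existing predictor already explains.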

Construct Validity

• Construct validity refers to a judgment about the appropriateness of inferences drawn from test scores regarding individual standings on a variable called a construct.
• Construct: an informed, scientific idea developed, or “constructed,” to describe or explain behavior.
  - Constructs are unobservable, underlying traits that a test developer may invoke to describe test behavior or criterion performance.

Evidence of Construct Validity

• The test is homogeneous, measuring a single construct.
• Test scores correlate with scores on other tests in accordance with what would be expected.
• Test scores increase or decrease as a function of age or passage of time as theoretically predicted.
• Posttest scores differ from pretest scores as theoretically predicted.
• Test scores obtained by people from distinct groups vary as predicted by theory.

Validity, Test Bias, and Fairness

Bias

• Bias is a factor inherent within a test that systematically prevents accurate and impartial measurement.
• Bias is a statistical characteristic of a test.
• A test is biased if it makes systematic or consistent errors in prediction or measurement. If a test shows differences between groups when no real differences exist, then the test is biased.

Types of Bias

• Psychological tests are usually used for two general purposes: to measure some attribute or to make a prediction.
• Tests can be biased in either of these areas.
• Bias in measurement occurs when a test makes systematic errors in measuring some attribute or characteristic.

  - For example, some people consider IQ tests to be biased against Black test takers because they show lower scores for this group.
• Bias in prediction occurs when a test makes systematic errors in predicting outcomes.
  - For example, predicting that males will do better in math classes than females.

Detecting Bias

• Test scores are different for different groups.
  - For example, males tend to have higher math SAT scores than females.
  - Asians score higher on IQ tests than Whites.
• An item analysis shows that different groups respond differently to the same question.
• A test systematically under-predicts or over-predicts the performance of a particular group. This is called intercept bias.
• A test produces different slopes for different groups. This is called slope bias.
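Intercept and slope bias can be pictured by fitting a separate regression line (criterion predicted from test score) for each group and comparing the lines. The sketch below does this with a hand-written least-squares fit; the function name `fit_line` and the two small data sets are hypothetical, and a real analysis would also test whether the differences are statistically significant.

```python
def fit_line(x, y):
    """Ordinary least-squares line: y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical test scores (first list) and criterion scores (second list).
group_a = ([1, 2, 3, 4], [2, 3, 4, 5])
group_b = ([1, 2, 3, 4], [3, 4, 5, 6])

slope_a, intercept_a = fit_line(*group_a)
slope_b, intercept_b = fit_line(*group_b)

# One common line fitted to everyone, as if group membership were ignored.
pooled_slope, pooled_intercept = fit_line(group_a[0] + group_b[0],
                                          group_a[1] + group_b[1])

print("slope difference:", slope_a - slope_b)
print("intercept difference:", intercept_a - intercept_b)
print("pooled line:", pooled_slope, pooled_intercept)
```

In this made-up data the two groups share a slope but not an intercept, so the pooled line over-predicts group A and under-predicts group B by a constant amount, which is the pattern the slide calls intercept bias.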

Fairness

“Fairness” refers to whether a difference in mean predictor scores between two groups represents a useful distinction for society, relative to a decision that must be made, or whether the difference represents a bias that is irrelevant to the objective at hand.

Fairness & Bias Issues in Intelligence Assessment

• Tests are culturally biased
• National norms are inappropriate for minorities
• Minorities are disadvantaged in test-taking skills
• Test results lead to inadequate and inferior education

Cultural Bias

• Mean differences exist between groups (Asian-Americans perform better than European-Americans; African-Americans and American Indians perform lower).
  - This is a doubtful argument if we believe there are disparities among groups, such as the effect of socioeconomic status (SES) upon test scores.
• Tests perform differently in predicting students’ outcomes (different criterion validity coefficients).

• Items within tests may favor one group over another in being answered correctly.
  - Removal of items does not make enough difference in overall scores or reduce group differences.
  - Items thought to be biased based upon inspection do not empirically separate groups.
  - “What should you do if a child smaller than

Inappropriateness of National Norms

This argument suggests using different norms for different groups. Opponents state that such an approach:
• would result in undesirable comparisons (conclusions) about different ethnic groups
• may lower expectations for ethnic minority groups
• may have little relevance for the subject once he/she leaves a specific geographic

Deficiencies in Test-Taking Skills

• This pertains to factors such as motivation, the achievement orientation of tests, or knowing when to choose proper problem-solving strategies.
• Numerous studies support the claim that ethnic minorities lack test-taking skills.
• Research is still needed to know the extent of this.

Inadequate and Inferior Educational Programs

Because of the concern for the impact of testing decisions, several legal considerations have appeared.

1973. Public Law 93-112.

Outlawed discrimination on the basis of disability.

1974. Family Educational Rights and Privacy Act.

School records are open to students and family members, and results can be challenged.

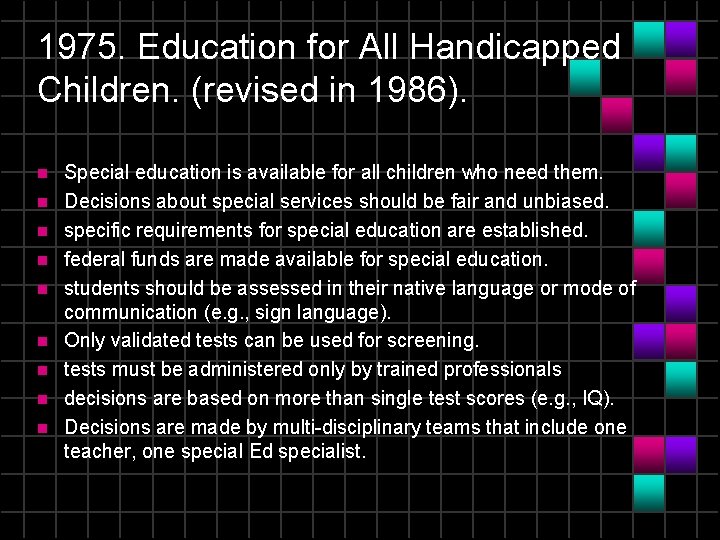

1975. Education for All Handicapped Children (revised in 1986).

• Special education is available for all children who need it.
• Decisions about special services should be fair and unbiased.
• Specific requirements for special education are established.
• Federal funds are made available for special education.
• Students should be assessed in their native language or mode of communication (e.g., sign language).
• Only validated tests can be used for screening.
• Tests must be administered only by trained professionals.
• Decisions are based on more than single test scores (e.g., IQ).
• Decisions are made by multi-disciplinary teams that include one teacher and one special education specialist.

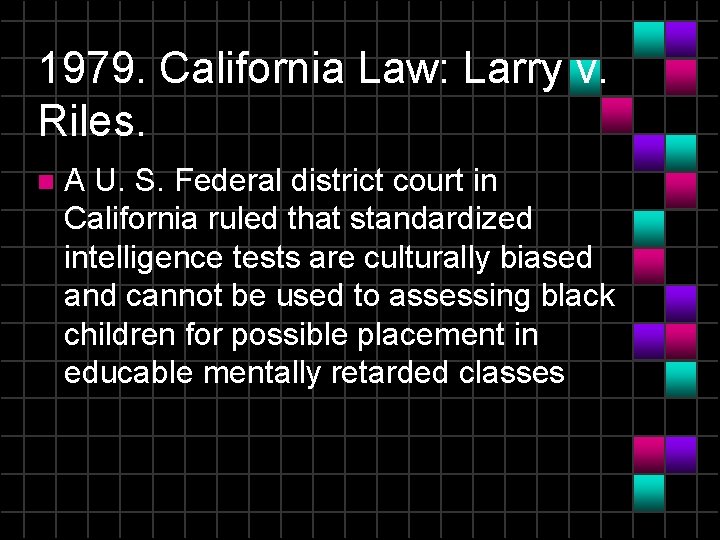

1979. California Law: Larry v. Riles.

A U.S. federal district court in California ruled that standardized intelligence tests are culturally biased and cannot be used to assess Black children for possible placement in classes for the educable mentally retarded.

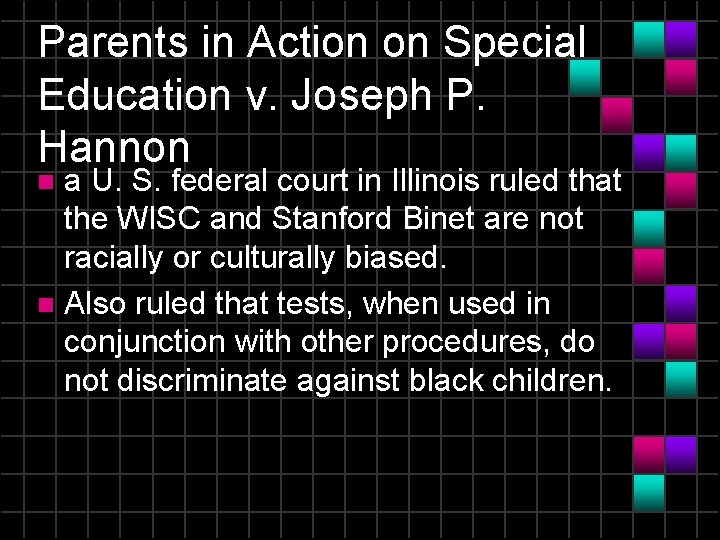

Parents in Action on Special Education v. Joseph P. Hannon

• A U.S. federal court in Illinois ruled that the WISC and Stanford-Binet are not racially or culturally biased.
• It also ruled that tests, when used in conjunction with other procedures, do not discriminate against Black children.

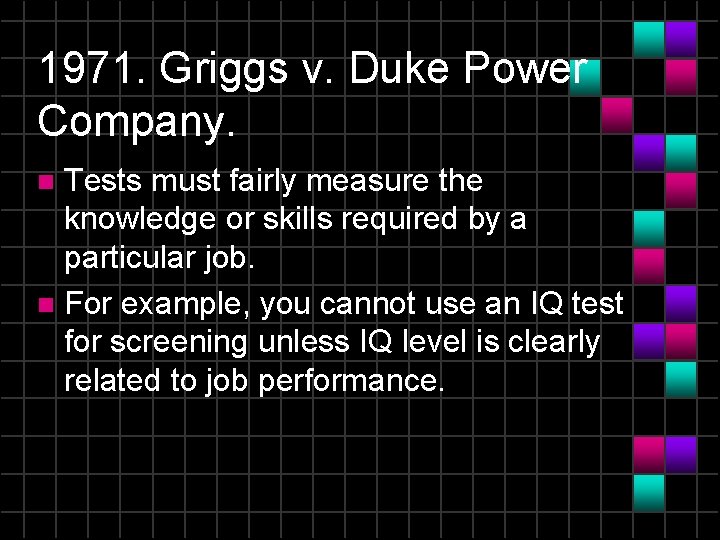

1971. Griggs v. Duke Power Company.

• Tests must fairly measure the knowledge or skills required by a particular job.
• For example, you cannot use an IQ test for screening unless IQ level is clearly related to job performance.

Gender Bias in Interest Measurement (Hood & Johnson)

During the 1960s and 1970s, charges of gender bias were leveled against vocational interest inventories and their use in the vocational and educational counseling of women.

Methods to reduce gender bias on interest inventories

First approach: use single-sex norms.
• For the Strong Interest Inventory and the Kuder Occupational Interest Survey, the occupational scales are based on separate criterion groups for each sex.
• All clients receive scores on both the male and female scales.

• The only exceptions are occupations in which it is very difficult to find a norm group of one sex, such as male home economics teachers or female pilots.
• Virtually all inventories have eliminated sexist language, for instance, replacing policeman with police officer and mailman with postal worker.

Second approach: include only interest items that are equally attractive to both sexes.
• For example, on an interest inventory containing items related to the six Holland themes, many more men than women respond to a realistic item such as "repairing an automobile," and many more women to a social item such as "taking care of very small children."

• The UNIACT (American College Testing Program, 1995) is a sex-balanced inventory, increasing the probability that men will obtain higher scores on the social scale and women on the realistic scale.
• Thus, each sex would be more likely to give consideration to occupations in a full range of fields.

• The use of the inventory affects how one would construe bias.
• Holland has resisted constructing sex-balanced scales on his Self-Directed Search, believing that the use of sex-balanced scales destroys much of the predictive validity of the instrument.

• An inventory that predicts that equal numbers of men and women will become automobile mechanics or elementary school teachers is going to have reduced predictive validity, given the male and female socialization and occupational patterns found in today's society.

• When such inventories are used primarily for vocational exploration, however, an instrument that channels interests into stereotypical male and female fields can be criticized for containing this gender bias.

Tư thế ngồi viết

Tư thế ngồi viết Công thức tiính động năng

Công thức tiính động năng Thiếu nhi thế giới liên hoan

Thiếu nhi thế giới liên hoan Tỉ lệ cơ thể trẻ em

Tỉ lệ cơ thể trẻ em đặc điểm cơ thể của người tối cổ

đặc điểm cơ thể của người tối cổ Các châu lục và đại dương trên thế giới

Các châu lục và đại dương trên thế giới Phản ứng thế ankan

Phản ứng thế ankan Thẻ vin

Thẻ vin Sơ đồ cơ thể người

Sơ đồ cơ thể người Môn thể thao bắt đầu bằng từ đua

Môn thể thao bắt đầu bằng từ đua Cái miệng xinh xinh thế chỉ nói điều hay thôi

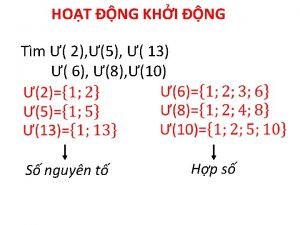

Cái miệng xinh xinh thế chỉ nói điều hay thôi Số.nguyên tố

Số.nguyên tố Hình ảnh bộ gõ cơ thể búng tay

Hình ảnh bộ gõ cơ thể búng tay Trời xanh đây là của chúng ta thể thơ

Trời xanh đây là của chúng ta thể thơ ưu thế lai là gì

ưu thế lai là gì Voi kéo gỗ như thế nào

Voi kéo gỗ như thế nào V cc cc

V cc cc Thơ thất ngôn tứ tuyệt đường luật

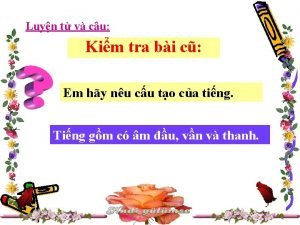

Thơ thất ngôn tứ tuyệt đường luật đại từ thay thế

đại từ thay thế Từ ngữ thể hiện lòng nhân hậu

Từ ngữ thể hiện lòng nhân hậu Thế nào là hệ số cao nhất

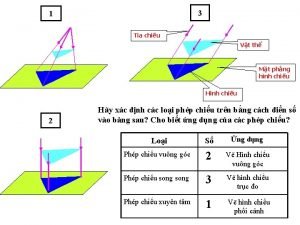

Thế nào là hệ số cao nhất Vẽ hình chiếu vuông góc của vật thể sau

Vẽ hình chiếu vuông góc của vật thể sau Tư thế ngồi viết

Tư thế ngồi viết Mật thư tọa độ 5x5

Mật thư tọa độ 5x5 Vẽ hình chiếu đứng bằng cạnh của vật thể

Vẽ hình chiếu đứng bằng cạnh của vật thể Khi nào hổ mẹ dạy hổ con săn mồi

Khi nào hổ mẹ dạy hổ con săn mồi Tư thế worm breton

Tư thế worm breton Quá trình desamine hóa có thể tạo ra

Quá trình desamine hóa có thể tạo ra Diễn thế sinh thái là

Diễn thế sinh thái là điện thế nghỉ

điện thế nghỉ Thế nào là mạng điện lắp đặt kiểu nổi

Thế nào là mạng điện lắp đặt kiểu nổi Các châu lục và đại dương trên thế giới

Các châu lục và đại dương trên thế giới Thế nào là sự mỏi cơ

Thế nào là sự mỏi cơ Lời thề hippocrates

Lời thề hippocrates Bổ thể

Bổ thể 101012 bằng

101012 bằng Fecboak

Fecboak Khi nào hổ mẹ dạy hổ con săn mồi

Khi nào hổ mẹ dạy hổ con săn mồi Chúa yêu trần thế

Chúa yêu trần thế Một số thể thơ truyền thống

Một số thể thơ truyền thống Các loại đột biến cấu trúc nhiễm sắc thể

Các loại đột biến cấu trúc nhiễm sắc thể Product principle in portfolio assessment

Product principle in portfolio assessment Static assessment vs dynamic assessment

Static assessment vs dynamic assessment Portfolio assessment matches assessment to teaching

Portfolio assessment matches assessment to teaching Pritchett merger integration

Pritchett merger integration Staar assessment management system dashboard

Staar assessment management system dashboard Command terms ib

Command terms ib Integrating communications assessment and tactics

Integrating communications assessment and tactics Fatigue risk management system template

Fatigue risk management system template Nqs assessment

Nqs assessment Nys assessment receivables po box 4128

Nys assessment receivables po box 4128 Task design examples

Task design examples Bsbpmg522 undertake project work

Bsbpmg522 undertake project work Career cruising matchmaker

Career cruising matchmaker Bureau of education assessment

Bureau of education assessment