An Empirical Study on the Correctness of Formally Verified Distributed Systems

- Slides: 53

An Empirical Study on the Correctness of Formally Verified Distributed Systems
- Authors: Pedro Fonseca, Kaiyuan Zhang, Xi Wang, Arvind Krishnamurthy
- In the proceedings of EuroSys '17
- Presented by: Haobin Ni

The Authors
- Pedro Fonseca, Kaiyuan Zhang, Xi Wang, Arvind Krishnamurthy
- University of Washington
- EuroSys 2017, April 23-26, 2017, Belgrade, Serbia

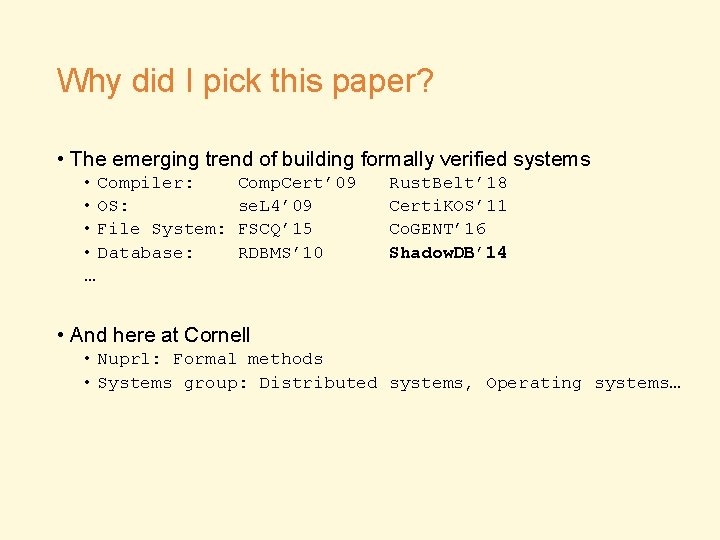

Why did I pick this paper?
- The emerging trend of building formally verified systems:
  - Compiler: CompCert '09
  - OS: seL4 '09, CertiKOS '11
  - File system: FSCQ '15, CoGENT '16
  - Database: RDBMS '10, ShadowDB '14
  - Others: RustBelt '18
- And here at Cornell:
  - Nuprl: formal methods
  - Systems group: distributed systems, operating systems, …

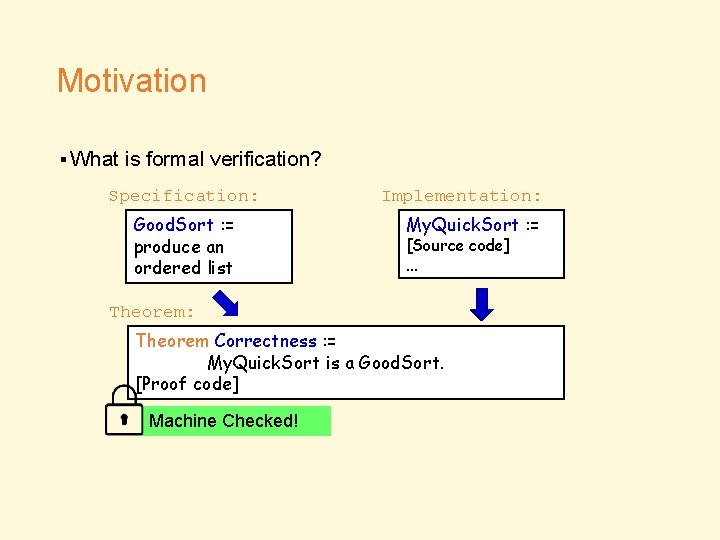

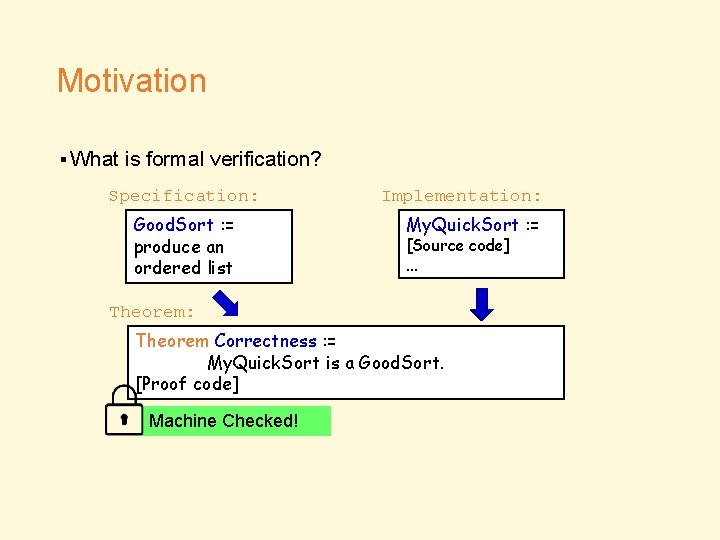

Motivation
- What is formal verification?
  - Specification: GoodSort := produce an ordered list
  - Implementation: MyQuickSort := [source code] …
  - Theorem: Theorem Correctness := MyQuickSort is a GoodSort. [proof code]
  - Machine checked!
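The specification / implementation / theorem split can be mimicked in Python as a rough analogue. The names `good_sort` and `my_quick_sort` are hypothetical, and the spec is deliberately strengthened with a permutation check, because "produce an ordered list" taken literally would also accept an implementation that returns `[]`. Random testing only samples the input space; a proof assistant such as Coq or Dafny would check a proof that the theorem holds for every input.

```python
import random
from collections import Counter

def good_sort(inp: list, out: list) -> bool:
    """Specification: `out` is ordered AND is a rearrangement of `inp`."""
    ordered = all(a <= b for a, b in zip(out, out[1:]))
    permutation = Counter(inp) == Counter(out)
    return ordered and permutation

def my_quick_sort(xs: list) -> list:
    """Implementation: a simple, non-in-place quicksort."""
    if len(xs) <= 1:
        return list(xs)
    pivot, rest = xs[0], xs[1:]
    smaller = [x for x in rest if x < pivot]
    larger = [x for x in rest if x >= pivot]
    return my_quick_sort(smaller) + [pivot] + my_quick_sort(larger)

# "Theorem Correctness": for ALL xs, good_sort(xs, my_quick_sort(xs)).
# A proof assistant checks a proof of this once; testing can only sample it.
rng = random.Random(0)
for _ in range(1_000):
    xs = [rng.randint(-50, 50) for _ in range(rng.randint(0, 30))]
    assert good_sort(xs, my_quick_sort(xs))
```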

Motivation
- Why use formal verification?
  - Pro: strong mathematical guarantees
  - Con: high labor cost
- Real-life use cases:
  - Life- and mission-critical systems
  - Military systems
  - Railway control
  - Web services

Motivation
- To what extent can we trust formally verified systems?
- E.g., formally verified distributed systems
- Why distributed systems?
  - Critical
  - Error-prone
  - Examples are available
- A good test ground for the reliability of formally verified systems!

Motivation
- To what extent can we trust formally verified systems?

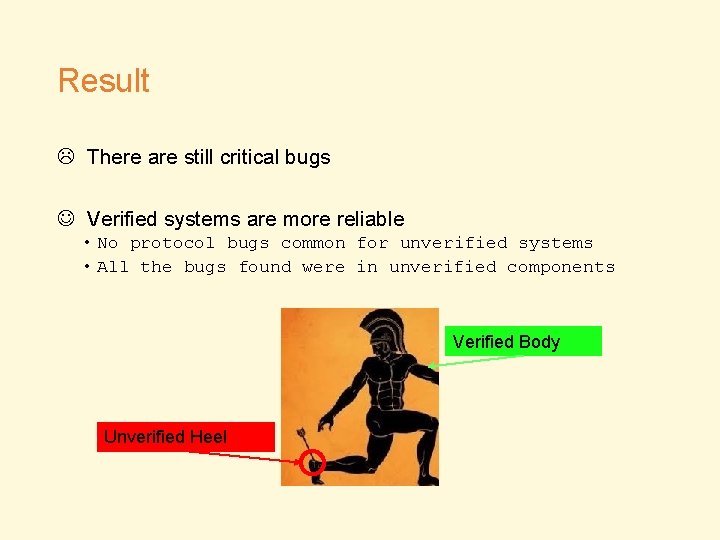

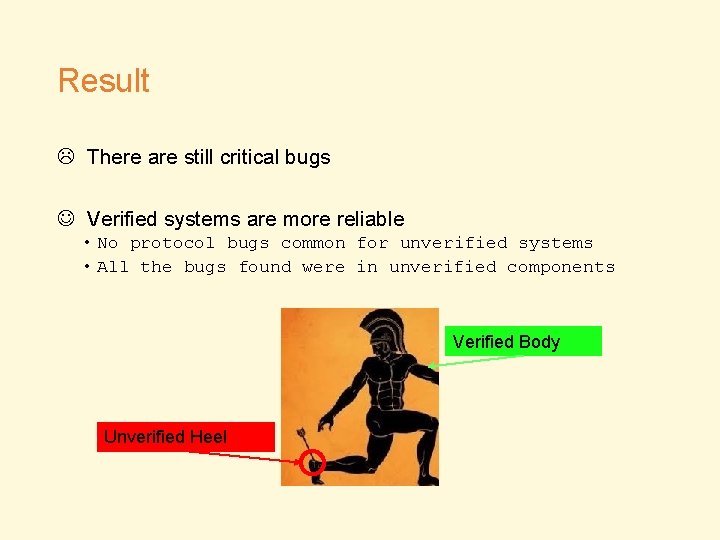

Result
- There are still critical bugs
- Verified systems are more reliable:
  - None of the protocol bugs that are common in unverified systems
  - All the bugs found were in unverified components
- Verified body, unverified heel

Outline
- Background
- Methodology
- Bugs
- Discussion
- Conclusion

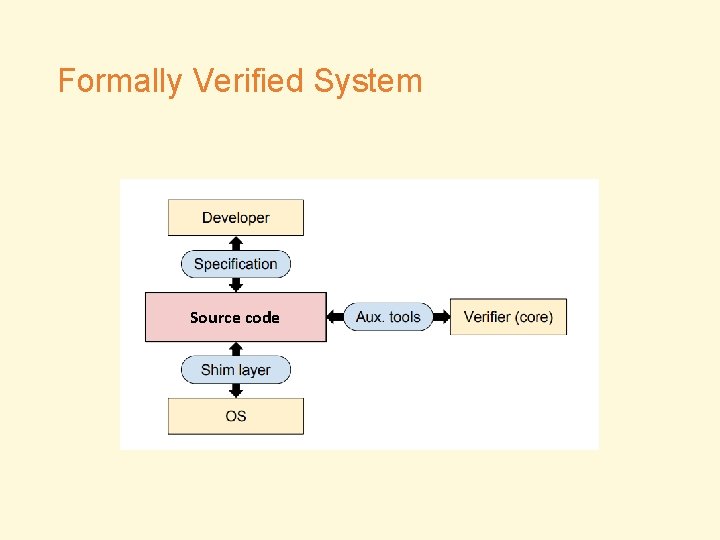

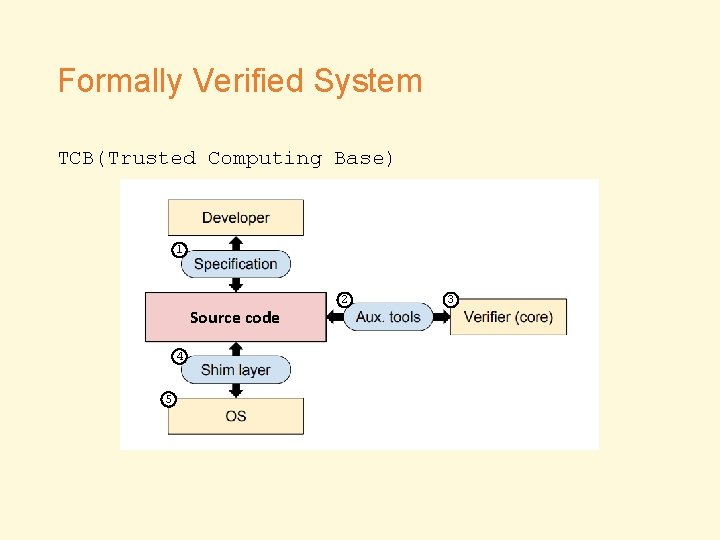

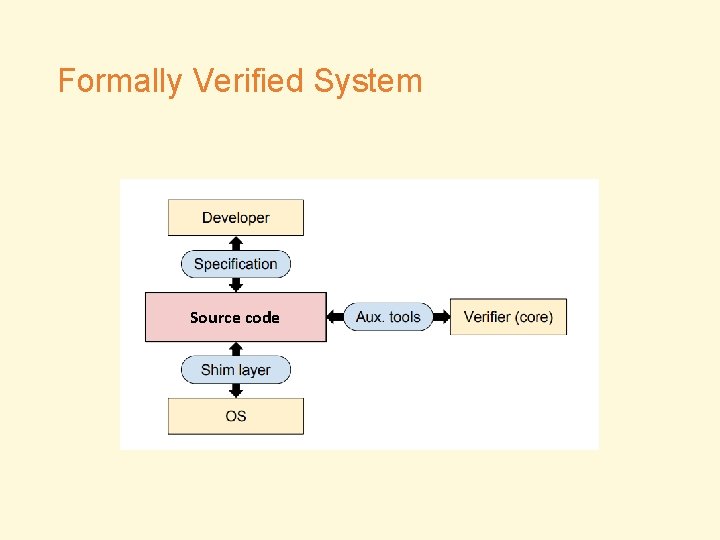

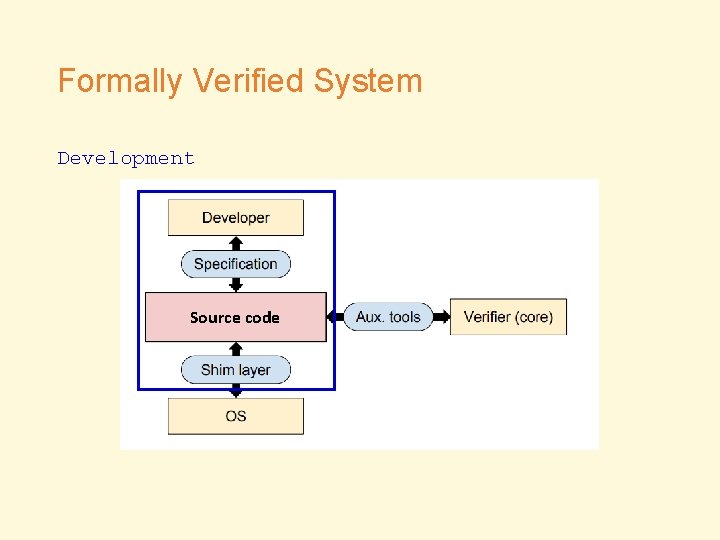

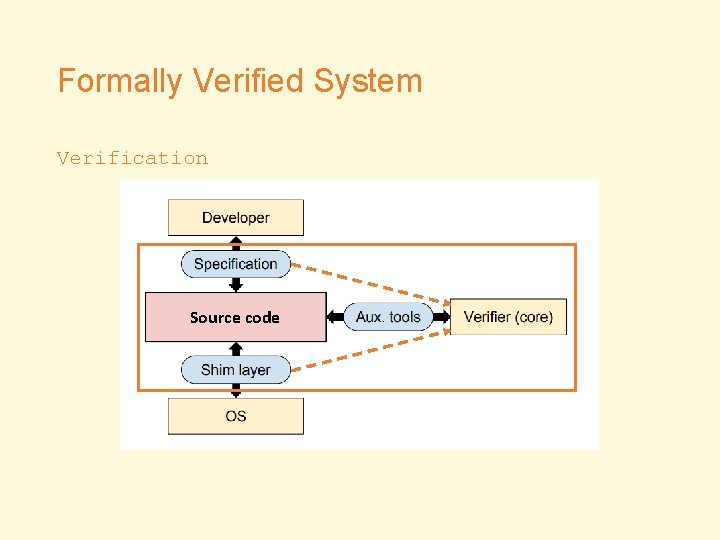

Formally Verified System (diagram: source code)

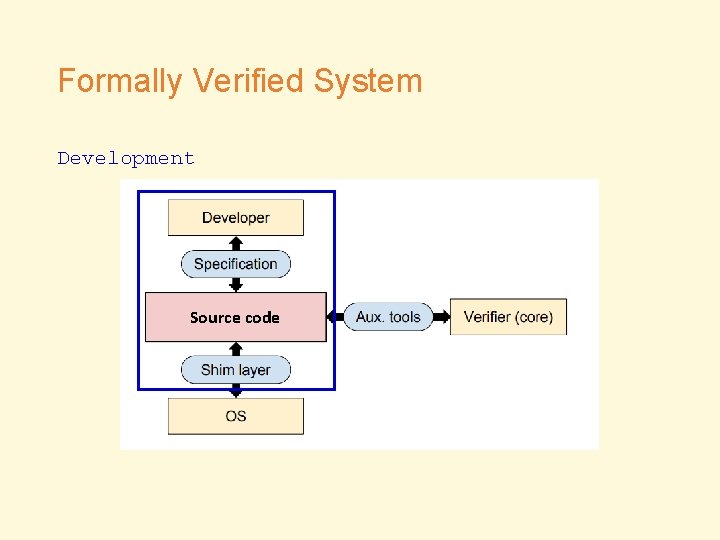

Formally Verified System: Development (diagram: source code)

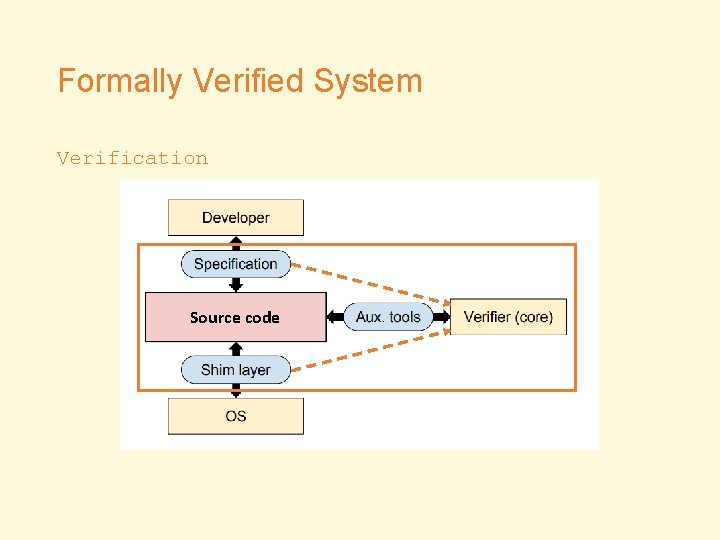

Formally Verified System: Verification (diagram: source code)

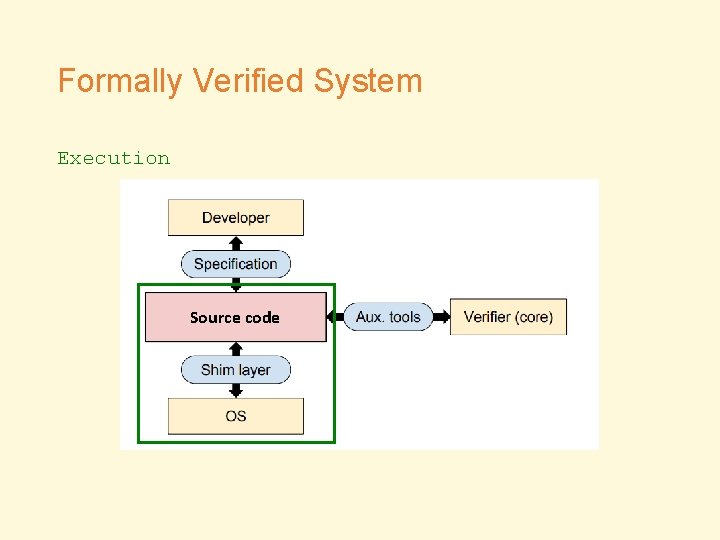

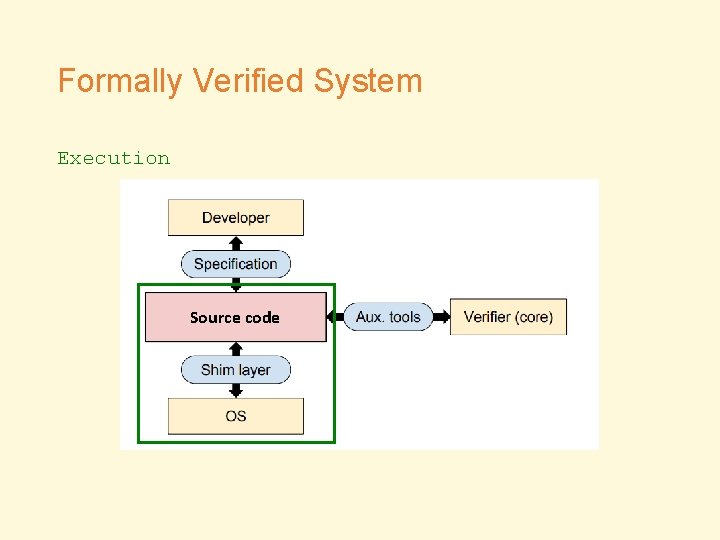

Formally Verified System: Execution (diagram: source code)

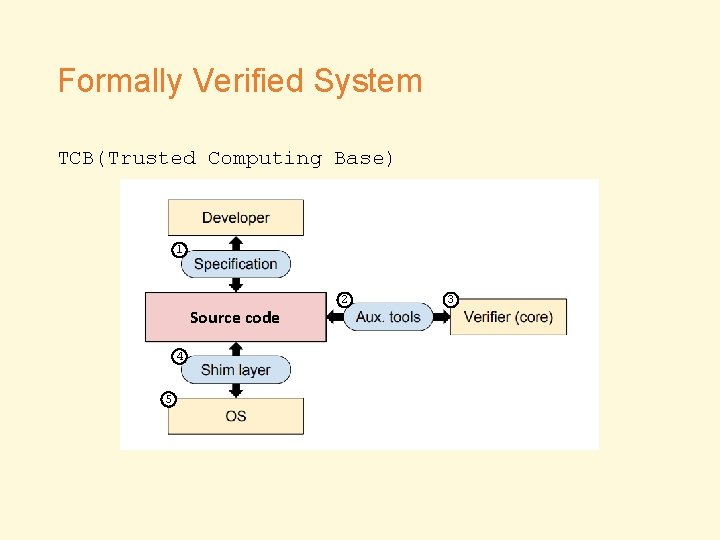

Formally Verified System: TCB (Trusted Computing Base) (diagram: source code surrounded by TCB components numbered 1-5)

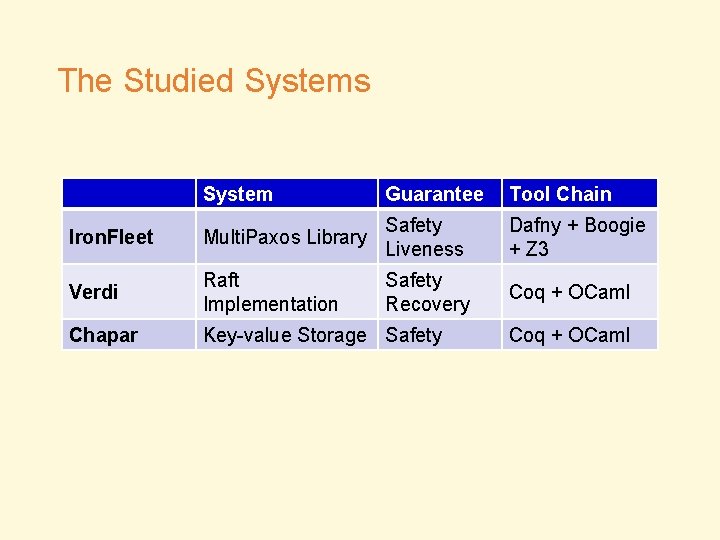

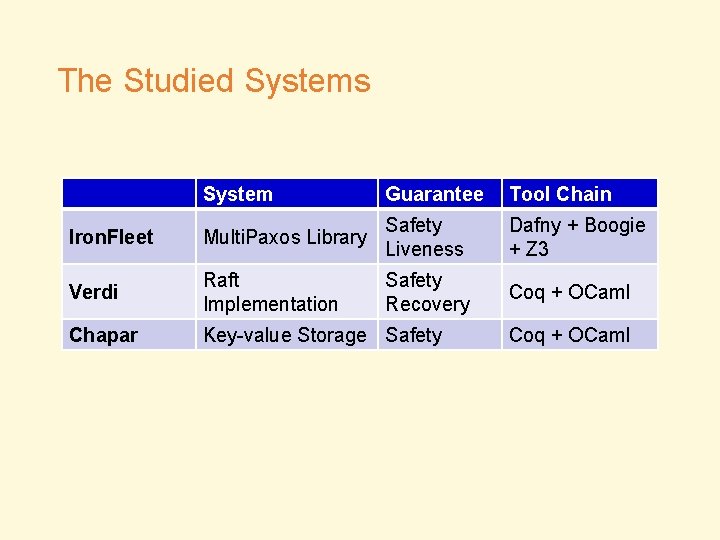

The Studied Systems

| System | Guarantee | Tool chain |
|---|---|---|
| IronFleet (MultiPaxos library) | Safety, liveness | Dafny + Boogie + Z3 |
| Verdi (Raft implementation) | Safety, recovery | Coq + OCaml |
| Chapar (key-value storage) | Safety | Coq + OCaml |

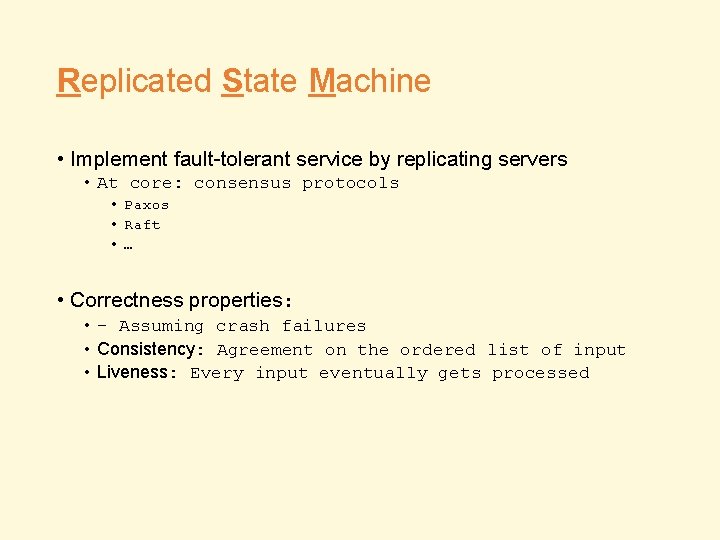

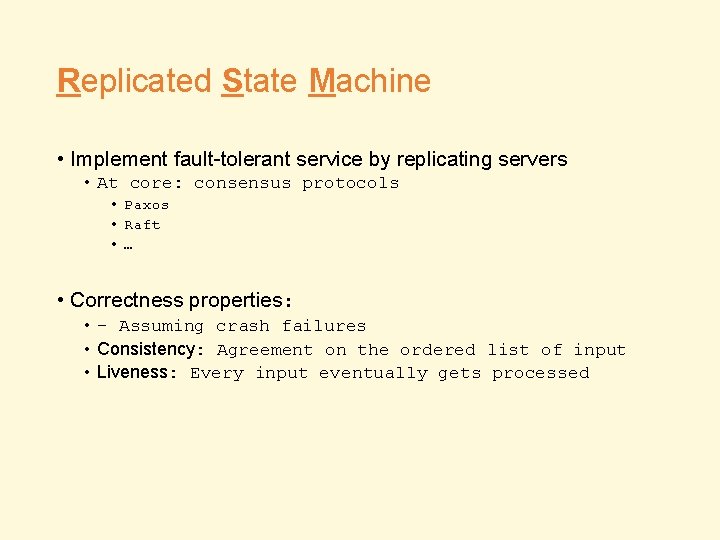

Replicated State Machine
- Implements a fault-tolerant service by replicating servers
- At its core: consensus protocols
  - Paxos
  - Raft
  - …
- Correctness properties (assuming crash failures):
  - Consistency: agreement on the ordered list of inputs
  - Liveness: every input is eventually processed
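Agreement on the ordered list of inputs is enough for consistency because every replica runs the same deterministic state machine over that list. The sketch below assumes the consensus protocol has already produced `committed_log`; the key-value service, `KVStateMachine`, and `catch_up` are hypothetical and not taken from any of the studied systems.

```python
from typing import Optional

class KVStateMachine:
    """A deterministic key-value state machine (a hypothetical example service)."""

    def __init__(self) -> None:
        self.store: dict = {}
        self.applied = 0  # how many log entries this replica has applied

    def apply(self, command: tuple) -> Optional[str]:
        op, key, *rest = command
        if op == "put":
            self.store[key] = rest[0]
            return None
        if op == "get":
            return self.store.get(key)
        raise ValueError(f"unknown operation: {op}")

def catch_up(replica: KVStateMachine, log: list) -> None:
    """Apply, in log order, every entry the replica has not applied yet."""
    while replica.applied < len(log):
        replica.apply(log[replica.applied])
        replica.applied += 1

# Assumed output of the consensus layer: the agreed-upon, ordered list of inputs.
committed_log = [("put", "x", "1"), ("put", "y", "2"), ("put", "x", "3")]

replicas = [KVStateMachine() for _ in range(3)]
catch_up(replicas[0], committed_log)
catch_up(replicas[1], committed_log[:2])  # a lagging replica ...
catch_up(replicas[1], committed_log)      # ... that later catches up
catch_up(replicas[2], committed_log)
assert all(r.store == {"x": "3", "y": "2"} for r in replicas)
```

Replicas may apply the log at different speeds; what matters is that they apply the same entries in the same order.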

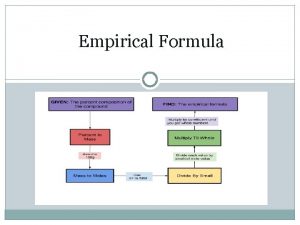

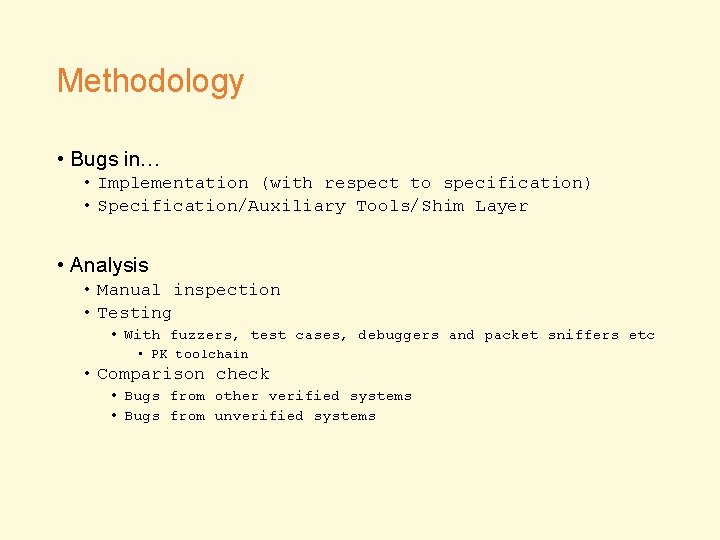

Methodology
- Bugs in…
  - Implementation (with respect to the specification)
  - Specification / auxiliary tools / shim layer
- Analysis
  - Manual inspection
  - Testing with fuzzers, test cases, debuggers, packet sniffers, etc.
  - PK toolchain
  - Comparison check
    - Bugs from other verified systems
    - Bugs from unverified systems

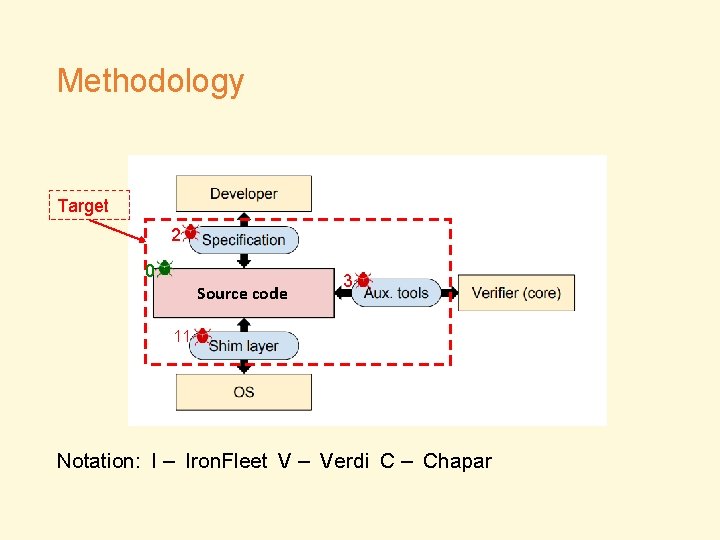

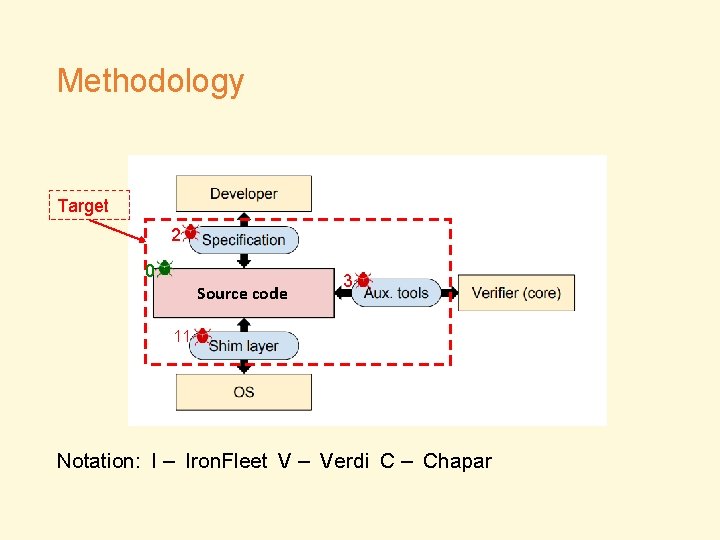

Methodology: Targets
- Diagram: number of bugs found in each TCB component around the source code: 2, 0, 3 and 11
- Notation: I = IronFleet, V = Verdi, C = Chapar

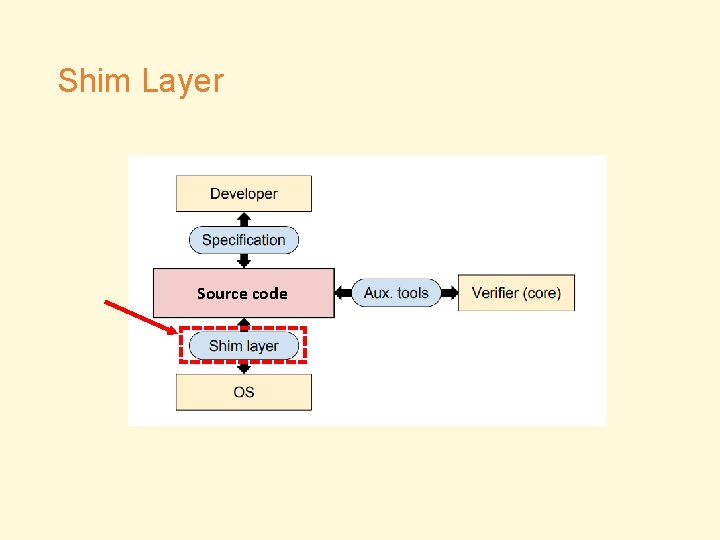

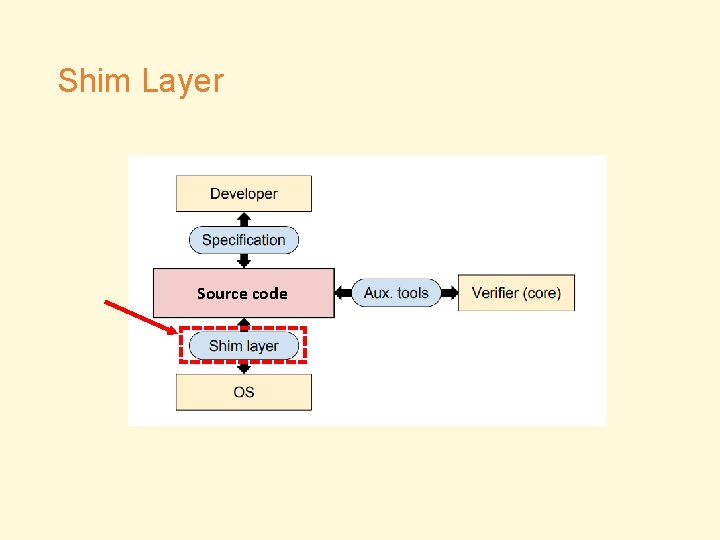

Shim Layer (diagram: source code)

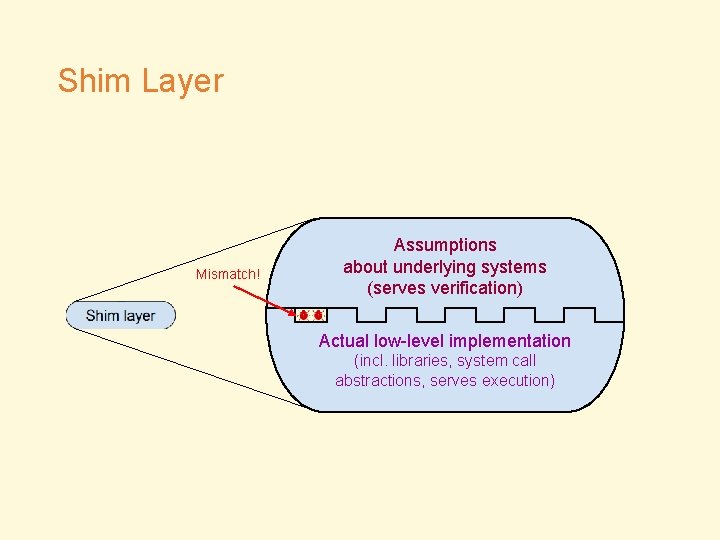

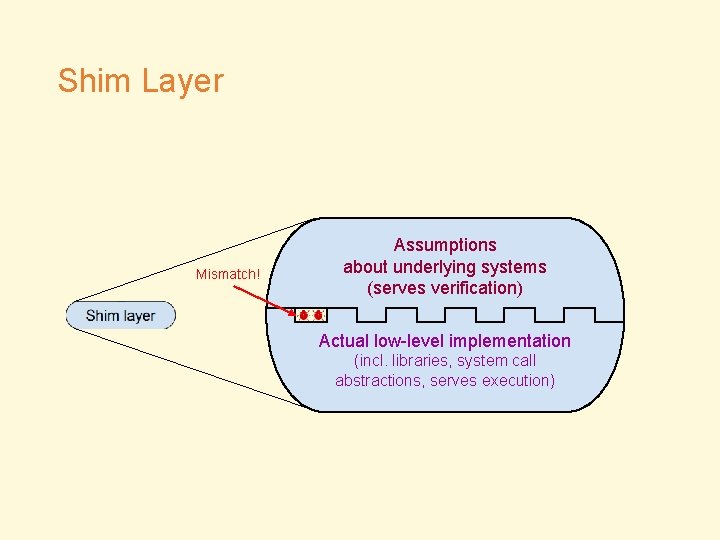

Shim Layer: Mismatch!
- Assumptions about the underlying systems (serve verification)
- Actual low-level implementation, including libraries and system-call abstractions (serves execution)

Shim Layer Bugs
- RPC (Remote Procedure Call) implementation
- Disk operations
- Resource limits

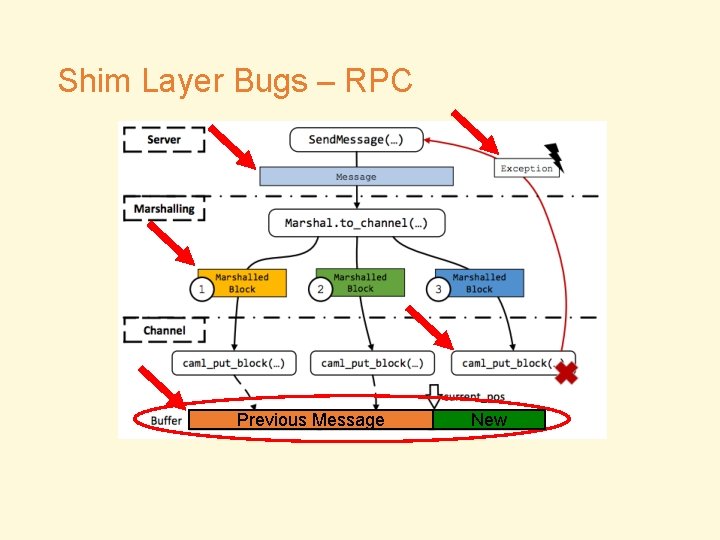

Shim Layer Bugs – RPC
- Bug V1: Incorrect unmarshaling of client requests
  - Assumption: the TCP recv system call returns a complete request
  - Reality: the TCP recv system call can return a partial request
  - Result: exception
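A minimal sketch of the usual fix: keep reading until the whole request has arrived. It assumes a hypothetical framing in which each request is a 4-byte big-endian length followed by the payload; the slides do not say how Verdi actually frames requests. `recv` is any function with `socket.recv` semantics (it may return fewer bytes than asked for, and `b""` once the peer closes), so with a real socket you would pass `sock.recv`.

```python
import struct
from typing import Callable

def recv_exact(recv: Callable[[int], bytes], n: int) -> bytes:
    """Call `recv` repeatedly until exactly n bytes have been received."""
    chunks = []
    remaining = n
    while remaining > 0:
        chunk = recv(remaining)
        if not chunk:
            raise ConnectionError(f"peer closed with {remaining} bytes outstanding")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)

def recv_request(recv: Callable[[int], bytes]) -> bytes:
    """Read one request: 4-byte big-endian length, then the payload (assumed framing)."""
    (length,) = struct.unpack("!I", recv_exact(recv, 4))
    return recv_exact(recv, length)

def make_trickling_recv(data: bytes, max_chunk: int) -> Callable[[int], bytes]:
    """Simulate TCP delivering a byte stream in small, arbitrary pieces."""
    pos = 0
    def recv(bufsize: int) -> bytes:
        nonlocal pos
        chunk = data[pos:pos + min(bufsize, max_chunk)]
        pos += len(chunk)
        return chunk
    return recv

payload = b"PUT key value"
wire = struct.pack("!I", len(payload)) + payload
assert recv_request(make_trickling_recv(wire, max_chunk=3)) == payload
```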

Shim Layer Bugs – RPC
- Bug C1: Duplicate packets cause a consistency violation
- Bug C2: Dropped packets cause liveness problems
- Bug V2: Incorrect marshaling enables command injection
  - Cause: the same as SQL injection
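The SQL-injection analogy can be illustrated with a hypothetical delimiter-based text format (not Verdi's actual wire format): if client data is pasted between delimiters, a value that contains a delimiter is parsed as an extra command. Length-prefixing each field, a netstring-like scheme chosen here for illustration, makes delimiters inside data harmless.

```python
def naive_marshal(op: str, key: str, value: str) -> bytes:
    # BUG (illustrative): user data is pasted into a delimiter-based format.
    return f"{op} {key} {value}\n".encode()

def naive_unmarshal(wire: bytes) -> list:
    return [line.split(" ") for line in wire.decode().splitlines()]

def safe_marshal(*fields: str) -> bytes:
    # Each field is written as b"<length>:<bytes>", so delimiters inside data are inert.
    out = b""
    for field in fields:
        data = field.encode()
        out += str(len(data)).encode() + b":" + data
    return out

def safe_unmarshal(wire: bytes) -> list:
    fields, pos = [], 0
    while pos < len(wire):
        colon = wire.index(b":", pos)  # raises ValueError on malformed input
        length = int(wire[pos:colon])
        start = colon + 1
        if start + length > len(wire):
            raise ValueError("truncated field")
        fields.append(wire[start:start + length].decode())
        pos = start + length
    return fields

evil_value = "x\nDELETE admin"
# The naive format turns one PUT into a PUT plus an injected DELETE.
assert naive_unmarshal(naive_marshal("PUT", "k", evil_value)) == [["PUT", "k", "x"], ["DELETE", "admin"]]
# The length-prefixed format round-trips the value unchanged.
assert safe_unmarshal(safe_marshal("PUT", "k", evil_value)) == ["PUT", "k", evil_value]
```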

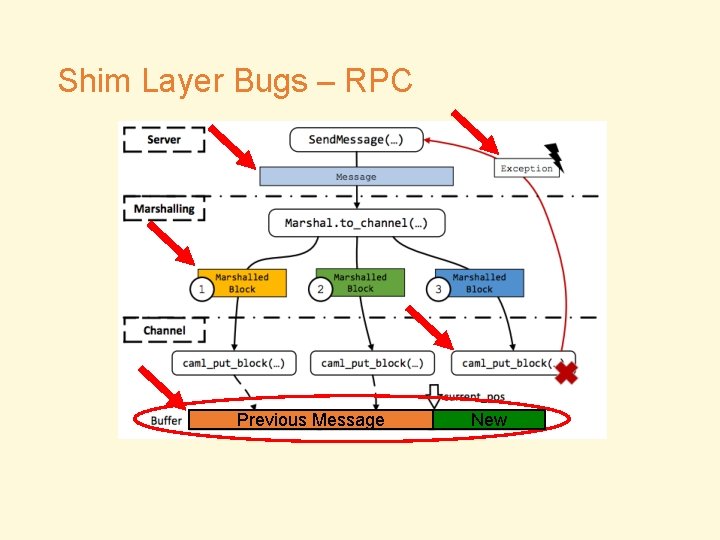

Shim Layer Bugs – RPC
- Bug C3: Library semantics cause safety violations
  - Assumption (implicit): the OCaml library function Marshal.to_channel() clears the packet buffer if it fails
  - Reality: it does not
  - Result: corrupted packets

Shim Layer Bugs – RPC (diagram: a corrupted packet carries the leftover bytes of the previous message, followed by the new message)
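Marshal.to_channel() is OCaml; the sketch below is only a Python analogue of the same failure mode. It uses `io.BytesIO` as the packet buffer and a hypothetical field-by-field serializer, `write_fields`, that can fail midway. The defensive pattern is to remember where the message started and roll the buffer back if serialization fails, so a fragment never rides along with the next message.

```python
import io

def write_fields(buf: io.BytesIO, fields: list) -> None:
    """Hypothetical serializer: writes one field at a time, so it can fail midway."""
    for f in fields:
        if not isinstance(f, str):
            raise TypeError(f"cannot marshal {type(f).__name__}")
        buf.write(f.encode() + b"\n")

def write_message_atomically(buf: io.BytesIO, fields: list) -> None:
    """Either the whole message lands in buf, or buf is left exactly as before."""
    start = buf.tell()
    try:
        write_fields(buf, fields)
    except Exception:
        buf.seek(start)
        buf.truncate()  # discard the partially written bytes
        raise

# Buggy pattern: the failed write leaves a fragment that is sent with the next message.
buggy = io.BytesIO()
try:
    write_fields(buggy, ["PUT", "k1", object()])
except TypeError:
    pass
write_fields(buggy, ["GET", "k2"])
assert buggy.getvalue() == b"PUT\nk1\nGET\nk2\n"  # previous fragment + new message

# Rolled-back pattern: only the new message is in the buffer.
fixed = io.BytesIO()
try:
    write_message_atomically(fixed, ["PUT", "k1", object()])
except TypeError:
    pass
write_message_atomically(fixed, ["GET", "k2"])
assert fixed.getvalue() == b"GET\nk2\n"
```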

Shim Layer Bugs – Disk Ops
- Bug V3: Incomplete log causes a crash during recovery
  - Assumption: each entry in the log is always complete
  - Reality: if the system crashes during a write system call, the log may contain incomplete entries
  - Result: crash during recovery
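A minimal sketch of recovery that tolerates a torn final write, assuming a hypothetical on-disk format in which each entry is a 4-byte length, a CRC32 checksum, and the payload (the slides do not describe Verdi's log format). Instead of crashing, recovery keeps the longest valid prefix and reports where it ends, so the file could be truncated there before new entries are appended.

```python
import struct
import zlib

HEADER = struct.Struct("!II")  # (payload length, CRC32 of payload): an assumed format

def encode_entry(payload: bytes) -> bytes:
    return HEADER.pack(len(payload), zlib.crc32(payload)) + payload

def recover(log: bytes) -> tuple:
    """Return (complete entries, byte offset where the valid prefix ends).

    A crash in the middle of write() shows up as a short header, a short
    payload, or a checksum mismatch; everything from that point on is
    treated as never written."""
    entries, pos = [], 0
    while pos + HEADER.size <= len(log):
        length, crc = HEADER.unpack_from(log, pos)
        start = pos + HEADER.size
        end = start + length
        if end > len(log):
            break  # payload cut short
        payload = log[start:end]
        if zlib.crc32(payload) != crc:
            break  # torn or corrupted entry
        entries.append(payload)
        pos = end
    return entries, pos

full = encode_entry(b"op1") + encode_entry(b"op2")
torn = full + encode_entry(b"op3")[:5]  # crash after 5 bytes of the third entry
entries, valid_end = recover(torn)
assert entries == [b"op1", b"op2"] and valid_end == len(full)
```

This sketch does not distinguish a torn tail from corruption in the middle of the log; a production design would likely treat the latter as a hard error.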

Shim Layer Bugs – Disk Ops
- Bug V4: Crash during a snapshot update causes data loss
  - Cause: the shim layer could truncate the existing snapshot before safely writing the new one
- Bug V5: System call error causes wrong results and data loss
  - Cause: the open system call can fail for reasons other than "file not found"
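Both bugs have well-known remedies on POSIX systems: write the new snapshot to a temporary file, fsync it, and atomically rename it over the old one with `os.replace`; and treat only "file not found" as "no snapshot yet". The sketch below is a Python illustration with hypothetical function names, not the fix applied to Verdi; the directory fsync assumes a POSIX platform.

```python
import os
import tempfile
from typing import Optional

def save_snapshot(path: str, data: bytes) -> None:
    """Replace the snapshot at `path` without ever truncating the old one first."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".snapshot-")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())  # the new snapshot is durable before it becomes visible
        os.replace(tmp_path, path)  # atomic rename over the old snapshot
    except BaseException:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)  # the old snapshot is still intact
        raise
    # Persist the rename itself by syncing the directory entry (POSIX only).
    dir_fd = os.open(directory, os.O_RDONLY)
    try:
        os.fsync(dir_fd)
    finally:
        os.close(dir_fd)

def load_snapshot(path: str) -> Optional[bytes]:
    """Return the snapshot bytes, or None only if no snapshot exists yet.

    Any other OSError (permission denied, too many open files, I/O error, ...)
    propagates: treating it as "no snapshot" would silently restart from an
    empty state and lose data."""
    try:
        with open(path, "rb") as f:
            return f.read()
    except FileNotFoundError:
        return None  # a genuinely fresh node
```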

Shim Layer Bugs – Resource Limits
- Bug V6: Large packets cause server crashes
  - Cause: overflowing the OCaml code buffer size
- Bug V7: Failing to send a packet causes the server to stop responding to clients
  - Cause: exceeding the maximum UDP packet size
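For Bug V7, the essential point is that a send failure has to be reported to the caller rather than leaving the server stuck. A minimal sketch, with a hypothetical `send_datagram` helper: it rejects payloads that can never fit in one IPv4 UDP datagram (65,535 bytes minus a 20-byte IPv4 header without options and an 8-byte UDP header) and turns `sendto` errors into a `False` result the caller must handle.

```python
MAX_UDP_PAYLOAD_IPV4 = 65_507  # 65,535 - 20 (IPv4 header, no options) - 8 (UDP header)

def send_datagram(sock, payload: bytes, addr) -> bool:
    """Try to send one UDP datagram; report failure instead of silently wedging.

    `sock` is anything with a socket-like sendto(). Returns False if the
    payload can never fit in a single datagram or if the send itself fails."""
    if len(payload) > MAX_UDP_PAYLOAD_IPV4:
        return False  # the caller must split the data, compress it, or switch transport
    try:
        sock.sendto(payload, addr)
    except OSError:
        return False  # e.g. EMSGSIZE or ENOBUFS from the kernel
    return True
```

Returning a result (rather than raising deep inside an event loop) forces each call site to decide what to do with a request that could not be answered.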

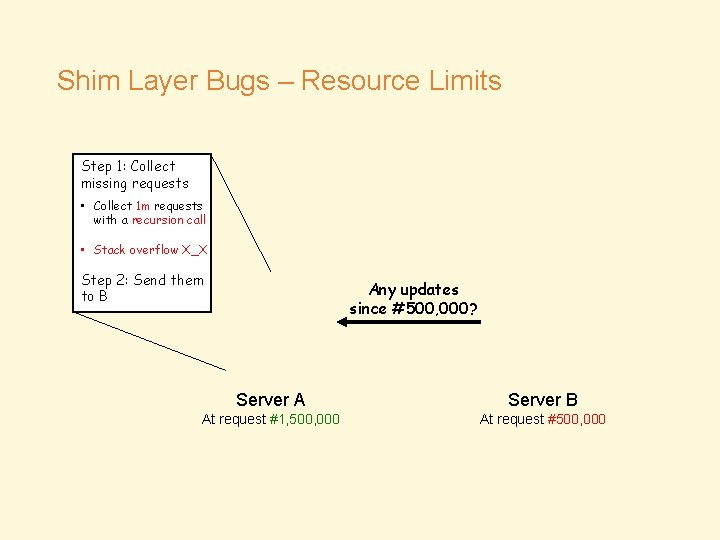

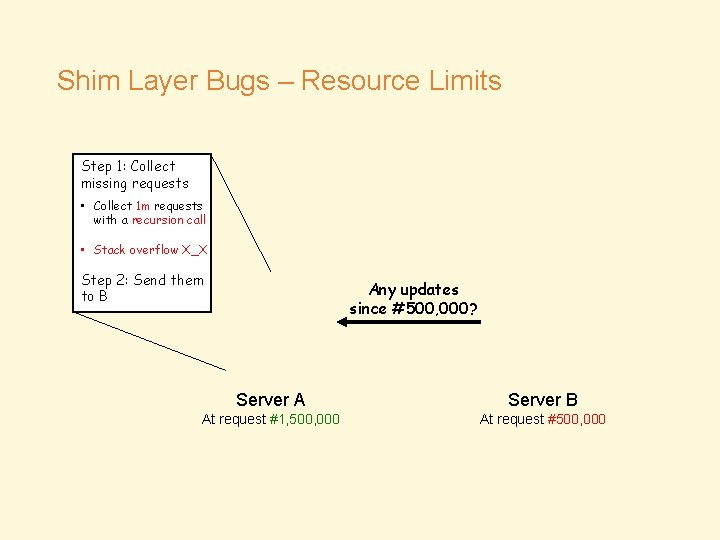

Shim Layer Bugs – Resource Limits
- Bug V8: Lagging follower causes a stack overflow on the leader
  - Assumption (implicit): recursion cannot overflow the stack
  - Reality: it can
  - But how?

Shim Layer Bugs – Resource Limits (example)
- Server A (leader) is at request #1,500,000; server B (lagging follower) is at request #500,000
- B asks A: "Any updates since #500,000?"
- Step 1: A collects the 1M missing requests with a recursive call: stack overflow X_X
- Step 2: A would then send them to B
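The scenario can be reproduced in miniature. In OCaml, a non-tail-recursive function uses one native stack frame per call, so collecting 1M entries this way can overflow the stack; Python's default recursion limit (about 1,000 frames) makes the same pattern fail far sooner, with a `RecursionError`. The function names below are hypothetical; the point is that an iterative collection uses constant stack depth no matter how far behind the follower is.

```python
def collect_recursive(log: list, since: int) -> list:
    """The risky pattern: one stack frame per missing entry (not tail-recursive)."""
    if since >= len(log):
        return []
    return [log[since]] + collect_recursive(log, since + 1)

def collect_iterative(log: list, since: int) -> list:
    """Same result with constant stack depth."""
    return log[since:]

leader_log = list(range(1_500_000))               # leader at request #1,500,000
missing = collect_iterative(leader_log, 500_000)  # follower at request #500,000
assert len(missing) == 1_000_000
```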

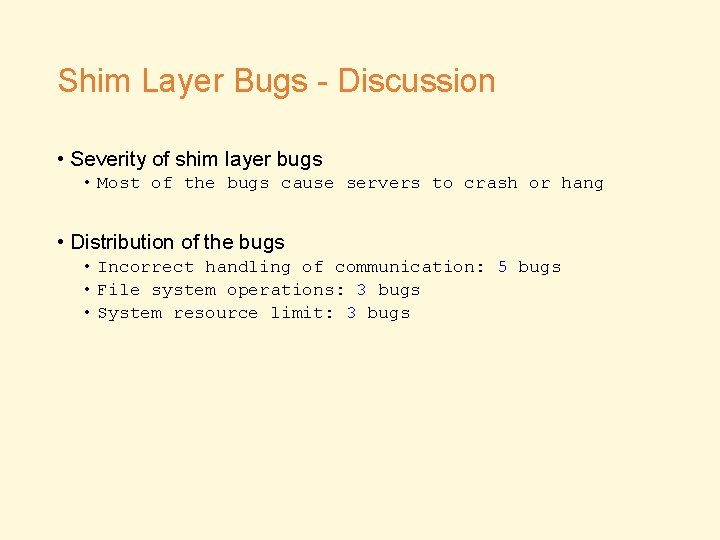

Shim Layer Bugs – Discussion
- Severity of shim layer bugs
  - Most of the bugs cause servers to crash or hang
- Distribution of the bugs
  - Incorrect handling of communication: 5 bugs
  - File system operations: 3 bugs
  - System resource limits: 3 bugs

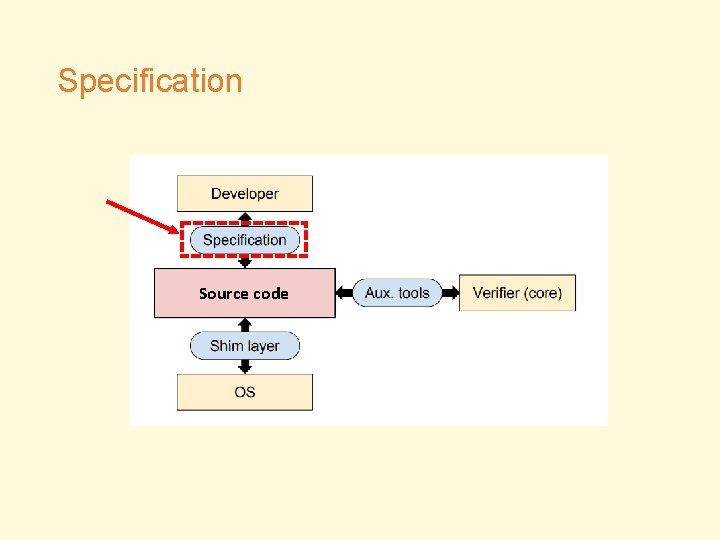

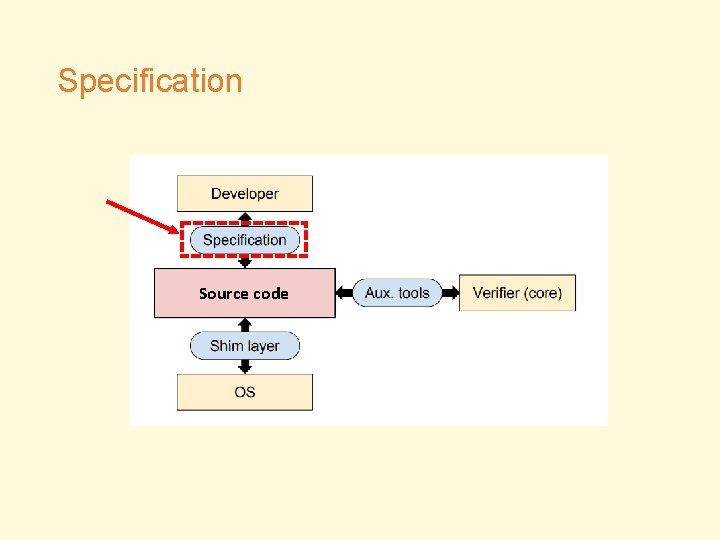

Specification (diagram: source code)

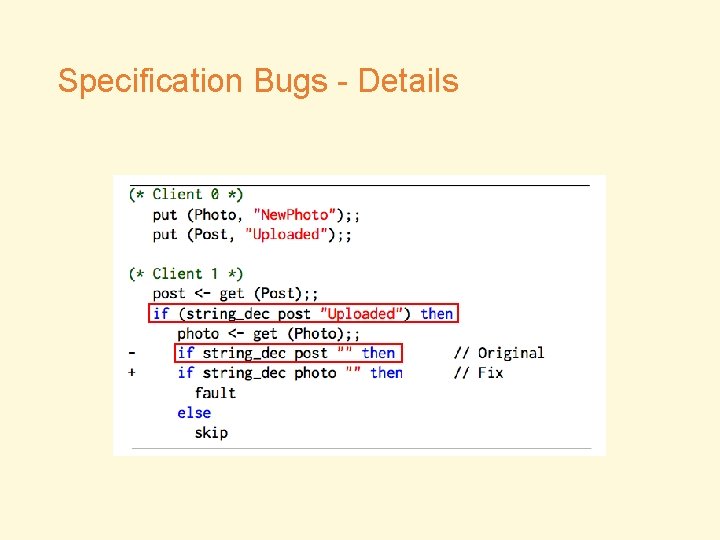

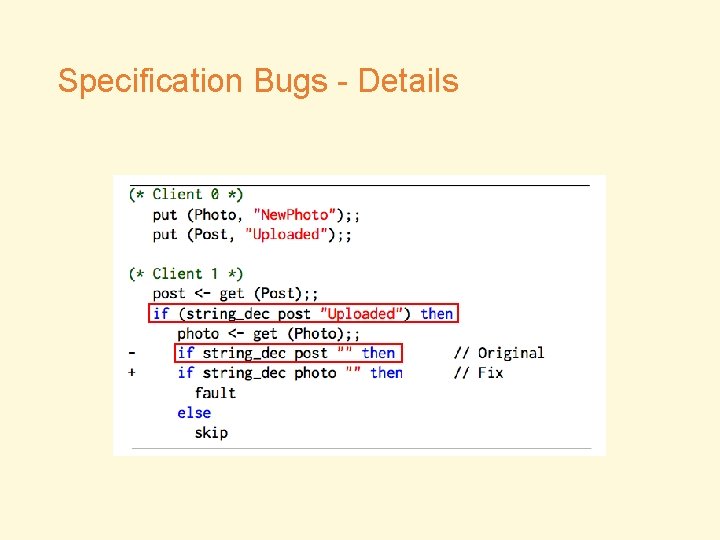

Specification Bugs – Details
- Bug I1: Incomplete high-level specification prevents verification of exactly-once semantics
- Bug C4: Incorrect assertion prevents verification of causal consistency in the client

Specification Bugs - Details

Specification Bugs – Discussion
- Severity of specification bugs
  - They let incorrect systems pass verification
- How do you say a specification is "wrong"?
  - Latin: Quis custodiet ipsos custodes? ("Who will guard the guards themselves?")
  - Chinese: 医者不能自医 ("A doctor cannot heal himself")
  - Japanese: 誰が見張りを見るのだろうか? ("Who watches the watchmen?")
  - English: Who watches the watchmen?
  - Maybe it's a feature you don't understand

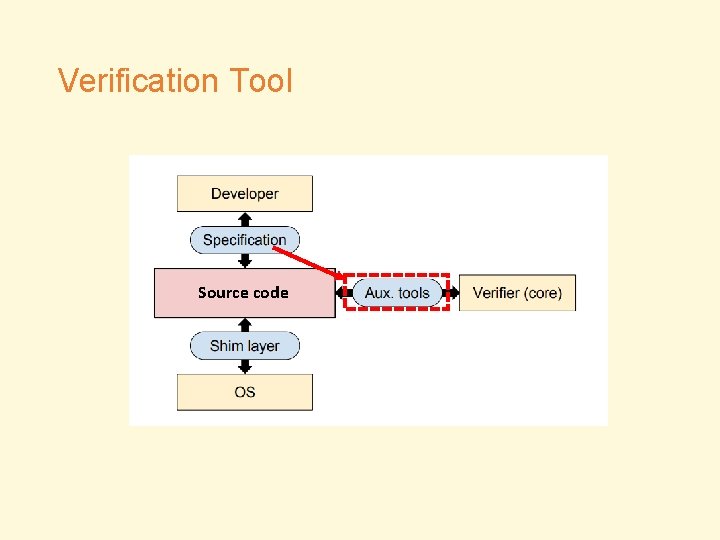

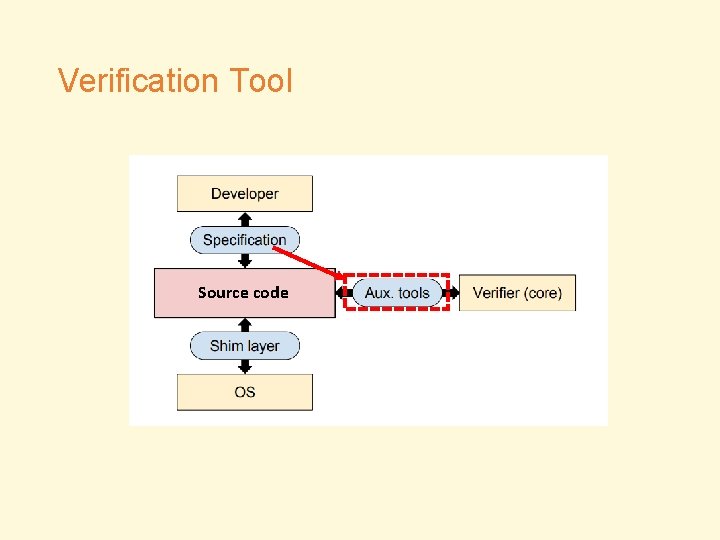

Verification Tool (diagram: source code)

Verification Tool
1. Call the verifier to verify your project
2. If verification passes, build the executable

It is more than a compiler, since it deals with both verified and unverified components.

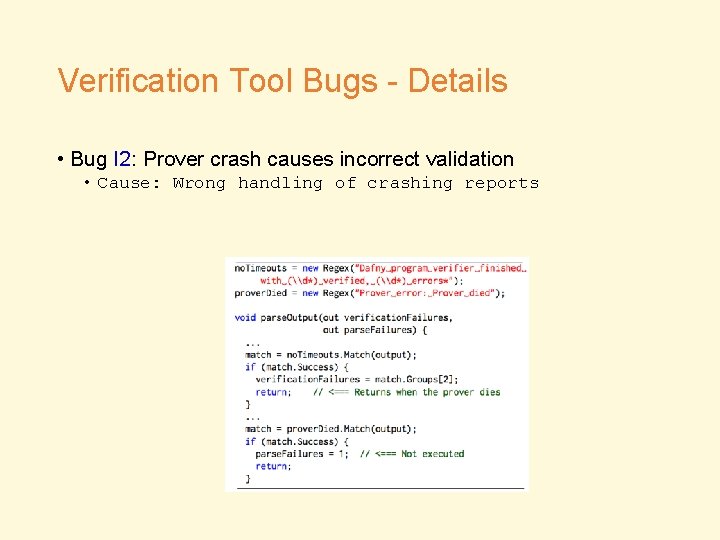

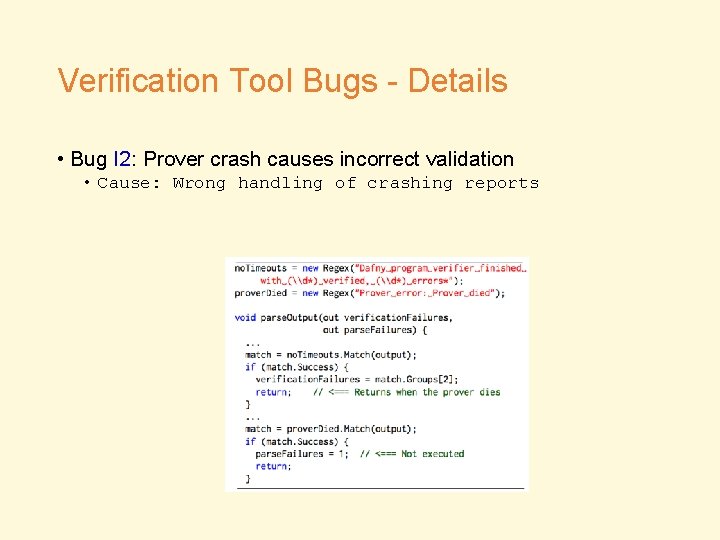

Verification Tool Bugs – Details
- Bug I2: Prover crash causes incorrect validation
  - Cause: wrong handling of crash reports

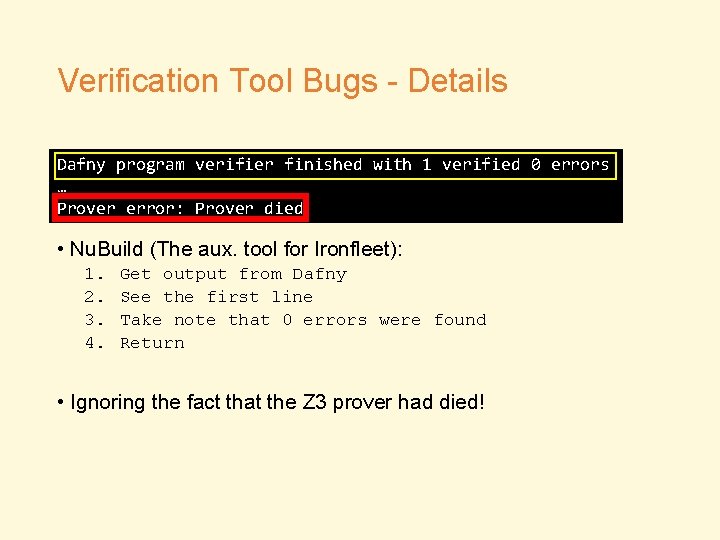

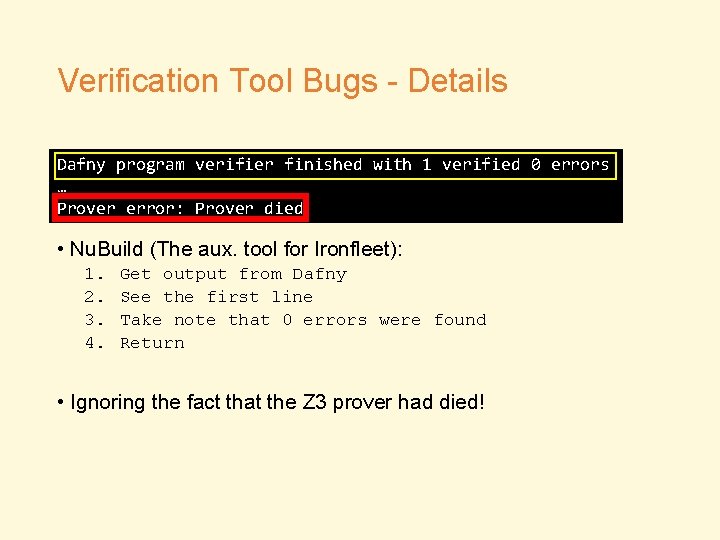

Verification Tool Bugs – Details
- Verifier output: "Dafny program verifier finished with 1 verified, 0 errors … Prover error: Prover died"
- NuBuild (the auxiliary build tool for IronFleet):
  1. Gets the output from Dafny
  2. Looks at the first line
  3. Notes that 0 errors were found
  4. Returns success
- It ignores the fact that the Z3 prover had died!
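The flaw is a "fail open" parse: success is inferred from one line, and everything after it is ignored. A hedged Python sketch of the contrast follows; the function names are hypothetical, and the message formats are taken from the slide's example output rather than from Dafny's documentation. The "fail closed" version passes only if the exit code is 0, at least one summary line exists, every summary reports 0 errors, and no prover error appears anywhere in the output.

```python
import re

# Matches summary lines such as "Dafny program verifier finished with 1 verified, 0 errors".
SUMMARY = re.compile(r"finished with (\d+) verified,? (\d+) errors?")

def first_line_check(output: str) -> bool:
    """The flawed logic described on the slide: trust the first line only."""
    lines = output.splitlines()
    m = SUMMARY.search(lines[0]) if lines else None
    return m is not None and int(m.group(2)) == 0

def fail_closed_check(output: str, exit_code: int) -> bool:
    """Pass only on a clean exit, at least one summary, zero errors, and no prover errors."""
    if exit_code != 0:
        return False
    if any(line.startswith("Prover error") for line in output.splitlines()):
        return False
    summaries = SUMMARY.findall(output)
    return bool(summaries) and all(int(errors) == 0 for _verified, errors in summaries)

crashed = (
    "Dafny program verifier finished with 1 verified, 0 errors\n"
    "(other output)\n"
    "Prover error: Prover died\n"
)
assert first_line_check(crashed) is True     # the bug: a dead prover "passes"
assert fail_closed_check(crashed, 0) is False
```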

Verification Tool Bugs – Details
- Bug I3: Signals cause validation of incorrect programs
  - Cause: signals differ between operating systems
- Bug I4: Incompatible libraries cause a prover crash
  - Together with Bug I2, this lets any program pass verification
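A build tool can avoid depending on how each OS reports a dying process by failing closed on any non-zero status. A minimal Python sketch, assuming a POSIX platform: Python's `subprocess` reports death by signal N as return code -N, while a shell wrapper would report 128+N; both are non-zero. `run_verifier` is a hypothetical helper, and a child process that kills itself stands in for a crashing prover.

```python
import signal
import subprocess
import sys

def run_verifier(cmd: list) -> tuple:
    """Run a verifier command; fail closed unless it exits normally with status 0."""
    proc = subprocess.run(cmd, capture_output=True, text=True)
    if proc.returncode == 0:
        return True, "ok"
    if proc.returncode < 0:  # POSIX: the process was terminated by a signal
        try:
            name = signal.Signals(-proc.returncode).name
        except ValueError:
            name = f"signal {-proc.returncode}"
        return False, f"killed by {name}"
    return False, f"exited with status {proc.returncode}"

# A child that SIGKILLs itself stands in for a prover that dies mid-run.
ok, reason = run_verifier(
    [sys.executable, "-c", "import os, signal; os.kill(os.getpid(), signal.SIGKILL)"]
)
```

A clean exit status is necessary but not sufficient; a real build tool would also check the verifier's reported results.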

Verification Tool Bugs – Discussion
- Severity of verification tool bugs
  - They let code that is incorrect (according to the specification) pass verification
- Verification tools can also be buggy
  - All the bugs found were in non-core components, not in the parts that reason about proofs

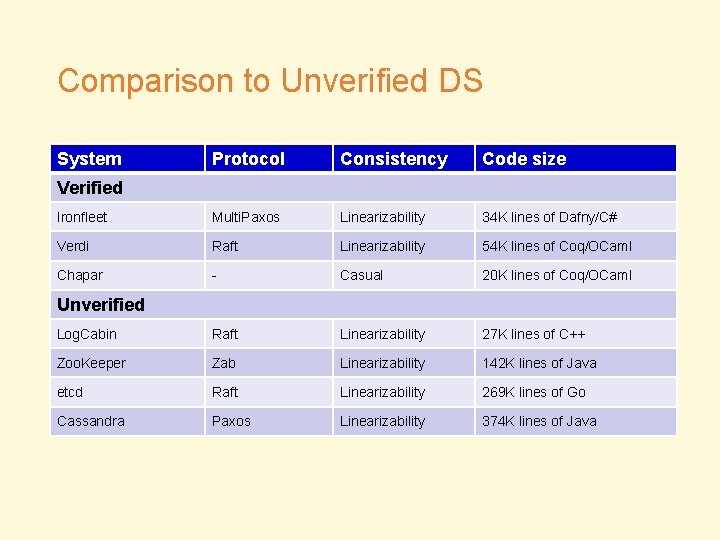

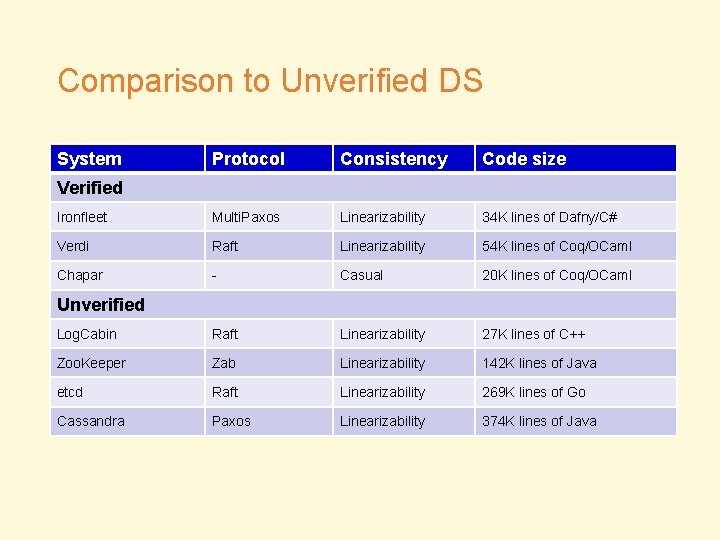

Comparison to Unverified DS

| Type | System | Protocol | Consistency | Code size |
|---|---|---|---|---|
| Verified | IronFleet | MultiPaxos | Linearizability | 34K lines of Dafny/C# |
| Verified | Verdi | Raft | Linearizability | 54K lines of Coq/OCaml |
| Verified | Chapar | - | Causal | 20K lines of Coq/OCaml |
| Unverified | LogCabin | Raft | Linearizability | 27K lines of C++ |
| Unverified | ZooKeeper | Zab | Linearizability | 142K lines of Java |
| Unverified | etcd | Raft | Linearizability | 269K lines of Go |
| Unverified | Cassandra | Paxos | Linearizability | 374K lines of Java |

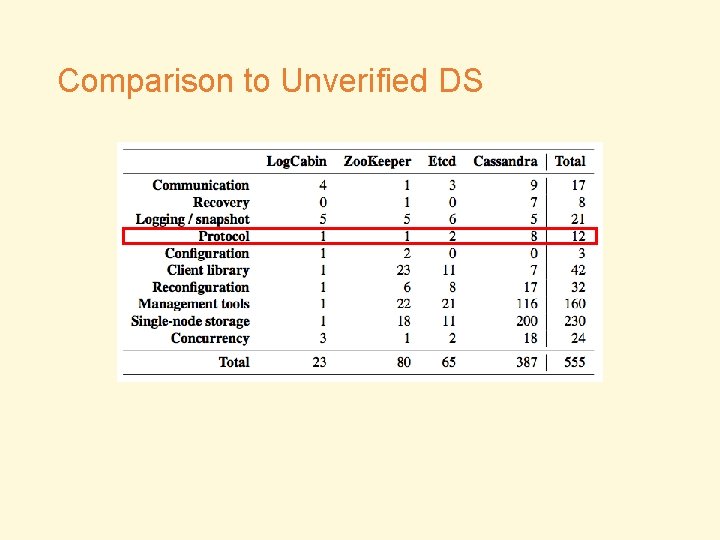

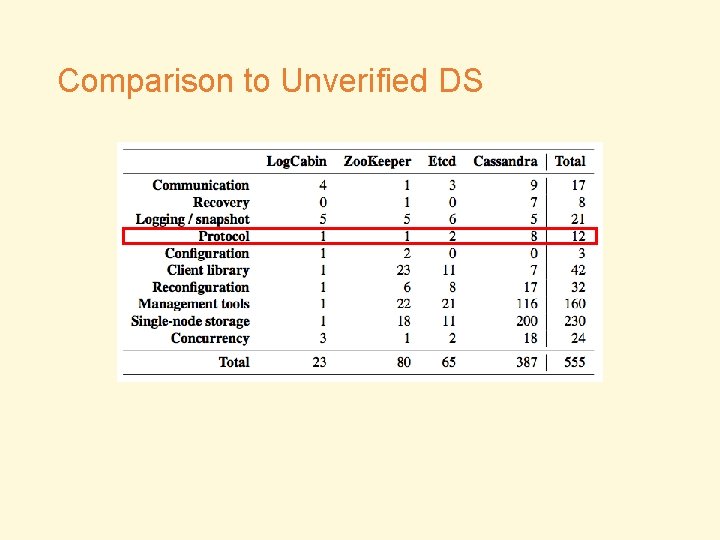

Comparison to Unverified DS

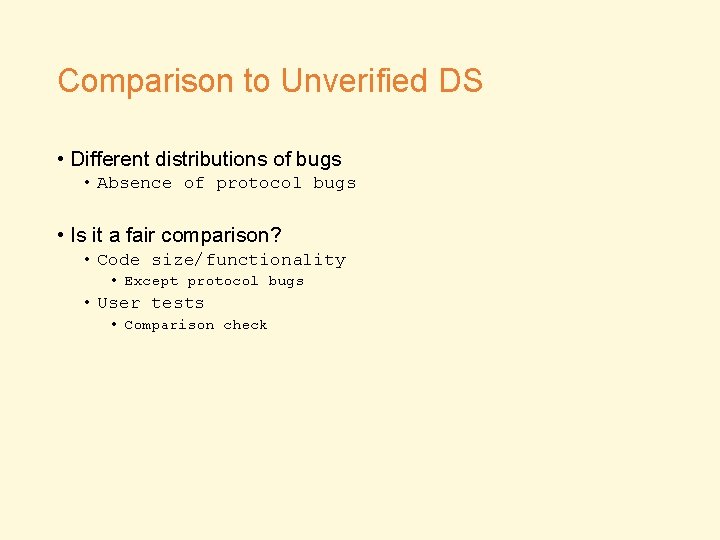

Comparison to Unverified DS
- Different distributions of bugs
- Absence of protocol bugs
- Is it a fair comparison?
  - Code size / functionality
  - Except for protocol bugs
  - User tests
  - Comparison check

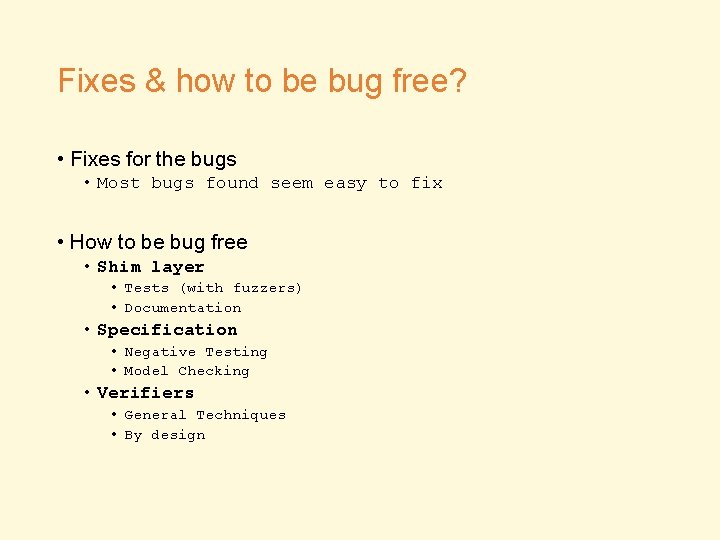

Fixes & how to be bug-free?
- Fixes for the bugs
  - Most bugs found seem easy to fix
- How to be bug-free
  - Shim layer
    - Tests (with fuzzers)
    - Documentation
  - Specification
    - Negative testing
    - Model checking
  - Verifiers
    - General techniques
    - By design
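Negative testing of a specification can be sketched as follows: write deliberately wrong implementations and check that the specification rejects each of them. The spec functions, `BAD_SORTS`, and `negative_test` are hypothetical and reuse the GoodSort example from the Motivation slide. Taken literally, "produce an ordered list" lets two of the three bad sorts through; adding a permutation requirement catches them all.

```python
from collections import Counter

def weak_spec(inp: list, out: list) -> bool:
    """'Produce an ordered list', taken literally."""
    return all(a <= b for a, b in zip(out, out[1:]))

def strong_spec(inp: list, out: list) -> bool:
    """Ordered AND a permutation of the input."""
    return weak_spec(inp, out) and Counter(inp) == Counter(out)

# Deliberately wrong implementations: a good specification must reject each one.
BAD_SORTS = {
    "returns empty list": lambda xs: [],
    "drops duplicates": lambda xs: sorted(set(xs)),
    "reverse order": lambda xs: sorted(xs, reverse=True),
}

def negative_test(spec, samples: list) -> list:
    """Names of bad implementations that the spec accepts on every sample."""
    return [
        name
        for name, bad in BAD_SORTS.items()
        if all(spec(xs, bad(xs)) for xs in samples)
    ]

samples = [[3, 1, 2], [2, 2, 1], [5]]
assert negative_test(weak_spec, samples) == ["returns empty list", "drops duplicates"]
assert negative_test(strong_spec, samples) == []
```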

Review
- Background
  - Studied distributed systems
  - Methodology
- Bugs
  - Shim layer
  - Specification
  - Auxiliary tools
- Discussion
  - Comparison to unverified distributed systems
  - Towards bug-free systems
- Conclusion

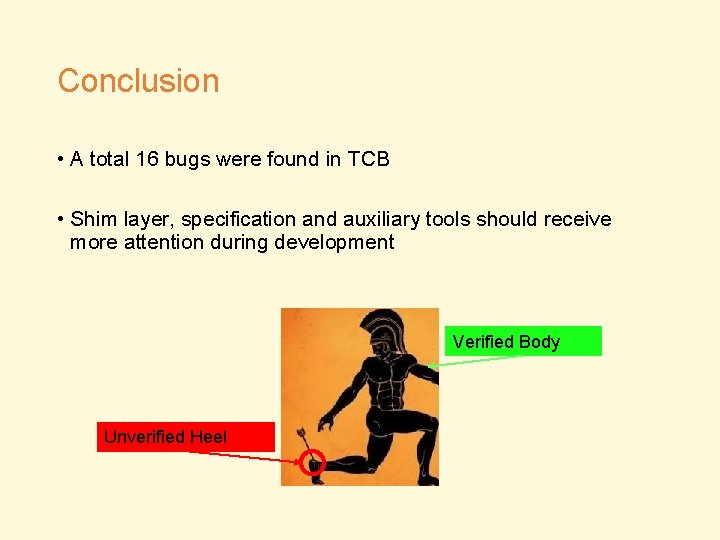

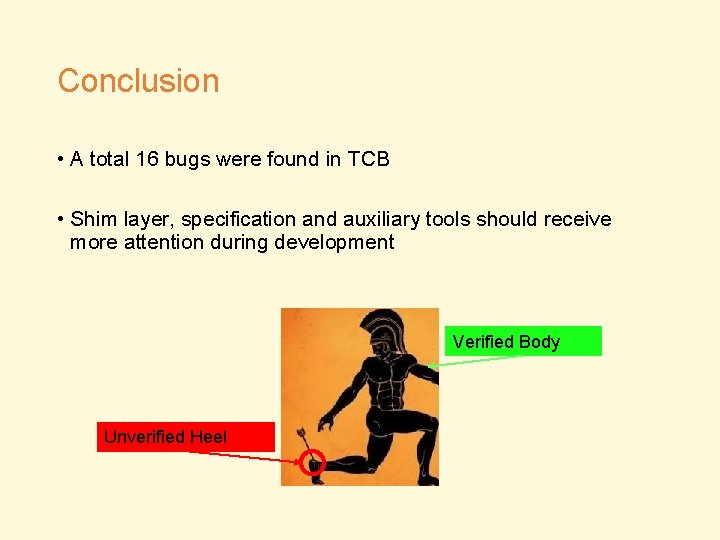

Conclusion
- A total of 16 bugs were found in the TCB
- The shim layer, specification and auxiliary tools should receive more attention during development
- Verified body, unverified heel

Conclusion
- Protocol bugs that occur in unverified systems were not found in the verified systems
- Verification is beneficial for reliability
  - Provided that its assumptions are well tested

My Thoughts
- Formal verification shouldn't be blamed for bugs found in unverified components
  - These bugs are not introduced by adopting formal verification
- In system design, formal verification should be considered both as a tool and as a perspective
  - Which components should be verified?
  - How should verified and unverified components be glued together?
- More research effort is needed to systematically reduce bugs in verifiers and shim layers
  - Cross-validation might work, since no two inspected systems had the same bugs

Conclusion
- Verification is beneficial for reliability
  - Provided that its assumptions are well tested

Acknowledgment

My sincere thanks to Prof. Robbert van Renesse and Prof. Greg Morrisett for your wonderful advice and instructions on the slides and the presentation!

References
- Related works
  - Finding and Understanding Bugs in C Compilers. Xuejun Yang et al.
  - Simple Testing Can Prevent Most Critical Failures: An Analysis of Production Failures in Distributed Data-Intensive Systems. Ding Yuan et al.
  - Minimizing Faulty Executions of Distributed Systems. Colin Scott et al.

References
- IronFleet: Proving Practical Distributed Systems Correct. Chris Hawblitzel et al.
- Verdi: A Framework for Implementing and Formally Verifying Distributed Systems. James R. Wilcox et al.
- Chapar: Certified Causally Consistent Distributed Key-Value Stores. Mohsen Lesani et al.

A formal reply is written in-

A formal reply is written in- Section 2 quiz formal amendment

Section 2 quiz formal amendment A trait is formally defined as

A trait is formally defined as Chapter 15 section 4

Chapter 15 section 4 An aggregation of ci's that has been formally reviewed

An aggregation of ci's that has been formally reviewed Correctness of fragmentation

Correctness of fragmentationFunctional correctness

Entity integrity ensures correctness of the data in a table

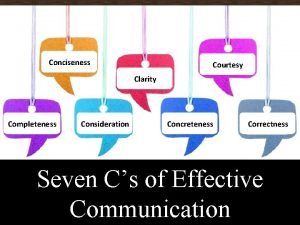

Entity integrity ensures correctness of the data in a table 7cs of effective communication

7cs of effective communication Prove correctness of divide and conquer

Prove correctness of divide and conquer Dynamic connectivity problem

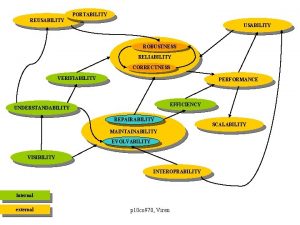

Dynamic connectivity problem Reliability vs correctness

Reliability vs correctness What is the correctness of algorithm

What is the correctness of algorithm Seven c's of communication

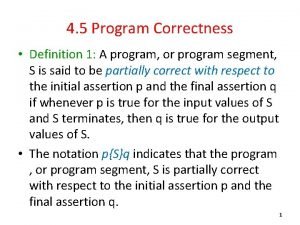

Seven c's of communication What is program correctness

What is program correctness Concreteness and conciseness

Concreteness and conciseness Software design principles correctness and robustness

Software design principles correctness and robustness Four loopy questions

Four loopy questions Emotional correctness definition

Emotional correctness definition What are the principles of business communication

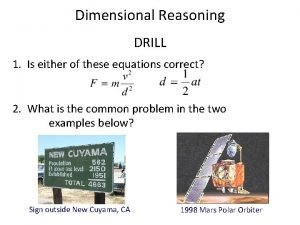

What are the principles of business communication V=u+at is dimensionally correct

V=u+at is dimensionally correct Proof of correctness examples

Proof of correctness examples What is completeness in communication

What is completeness in communication Principles of distributed database systems

Principles of distributed database systems Correctness of bubble sort

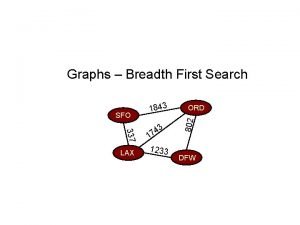

Correctness of bubble sort Bfs proof of correctness

Bfs proof of correctness Notion of correctness

Notion of correctness Correctness of fragmentation

Correctness of fragmentation Alleluia hat len nguoi oi

Alleluia hat len nguoi oi Sự nuôi và dạy con của hươu

Sự nuôi và dạy con của hươu đại từ thay thế

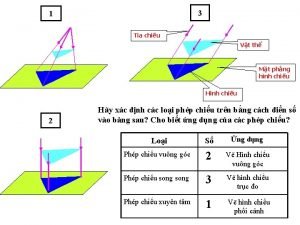

đại từ thay thế Vẽ hình chiếu vuông góc của vật thể sau

Vẽ hình chiếu vuông góc của vật thể sau Công của trọng lực

Công của trọng lực Hát kết hợp bộ gõ cơ thể

Hát kết hợp bộ gõ cơ thể Tỉ lệ cơ thể trẻ em

Tỉ lệ cơ thể trẻ em Thế nào là mạng điện lắp đặt kiểu nổi

Thế nào là mạng điện lắp đặt kiểu nổi Các loại đột biến cấu trúc nhiễm sắc thể

Các loại đột biến cấu trúc nhiễm sắc thể Lời thề hippocrates

Lời thề hippocrates Vẽ hình chiếu đứng bằng cạnh của vật thể

Vẽ hình chiếu đứng bằng cạnh của vật thể Quá trình desamine hóa có thể tạo ra

Quá trình desamine hóa có thể tạo ra độ dài liên kết

độ dài liên kết Môn thể thao bắt đầu bằng từ chạy

Môn thể thao bắt đầu bằng từ chạy Sự nuôi và dạy con của hổ

Sự nuôi và dạy con của hổ điện thế nghỉ

điện thế nghỉ Biện pháp chống mỏi cơ

Biện pháp chống mỏi cơ Trời xanh đây là của chúng ta thể thơ

Trời xanh đây là của chúng ta thể thơ Voi kéo gỗ như thế nào

Voi kéo gỗ như thế nào Thiếu nhi thế giới liên hoan

Thiếu nhi thế giới liên hoan Fecboak

Fecboak Các châu lục và đại dương trên thế giới

Các châu lục và đại dương trên thế giới Một số thể thơ truyền thống

Một số thể thơ truyền thống Thế nào là hệ số cao nhất

Thế nào là hệ số cao nhất Hệ hô hấp

Hệ hô hấp