An Attention Enhanced Graph Convolutional LSTM Network for Skeleton-Based Action Recognition

- Slides: 70

An Attention Enhanced Graph Convolutional LSTM Network for Skeleton-Based Action Recognition

Chenyang Si, Wentao Chen, Wei Wang, Liang Wang, Tieniu Tan

National Lab of Pattern Recognition (NLPR), Institute of Automation, Chinese Academy of Sciences (CASIA)

CVPR 2019

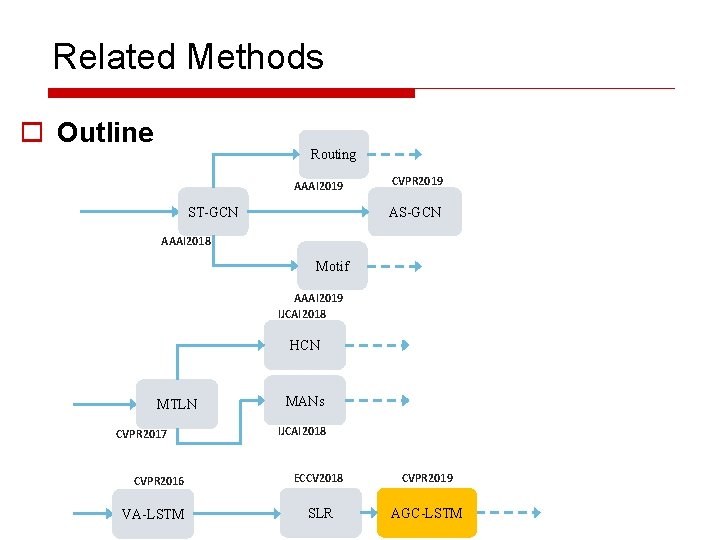

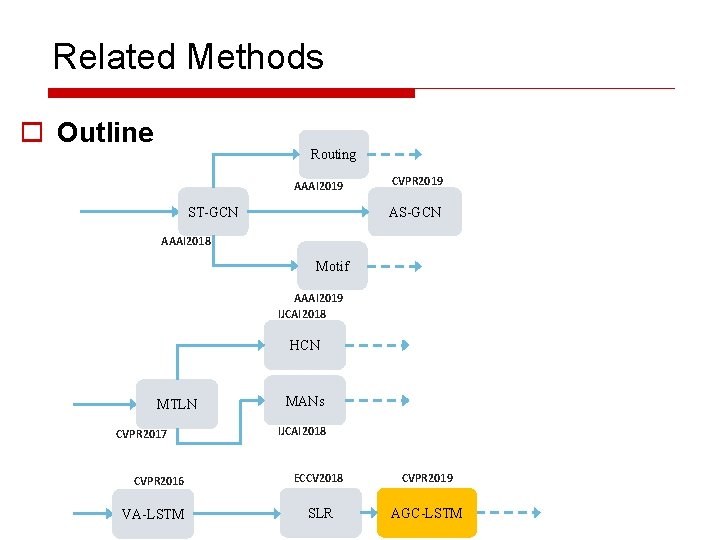

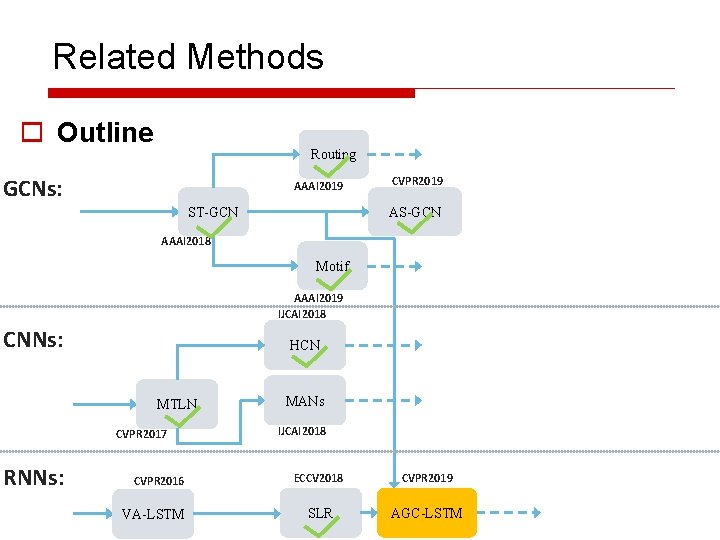


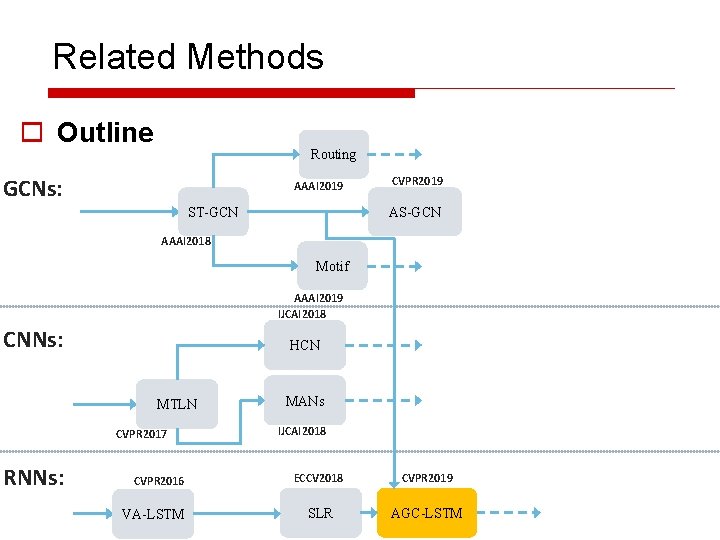

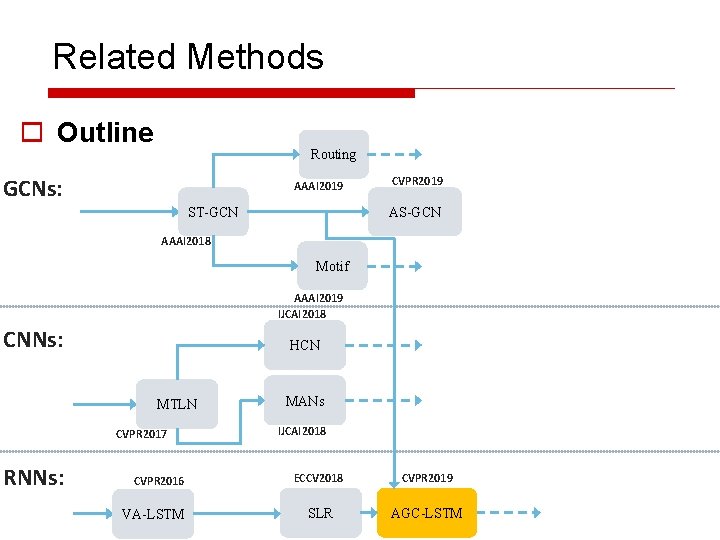

Related Methods (timeline from CVPR 2016 to CVPR 2019; venues shown: CVPR 2016, CVPR 2017, AAAI 2018, IJCAI 2018, ECCV 2018, AAAI 2019, CVPR 2019)
o GCNs: ST-GCN, AS-GCN, Routing, Motif
o CNNs: HCN, MTLN
o RNNs: VA-LSTM, MANs, SLR, AGC-LSTM

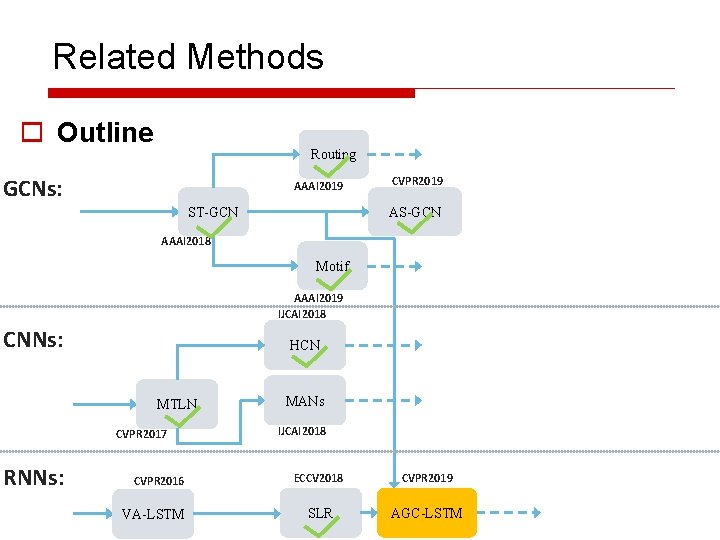


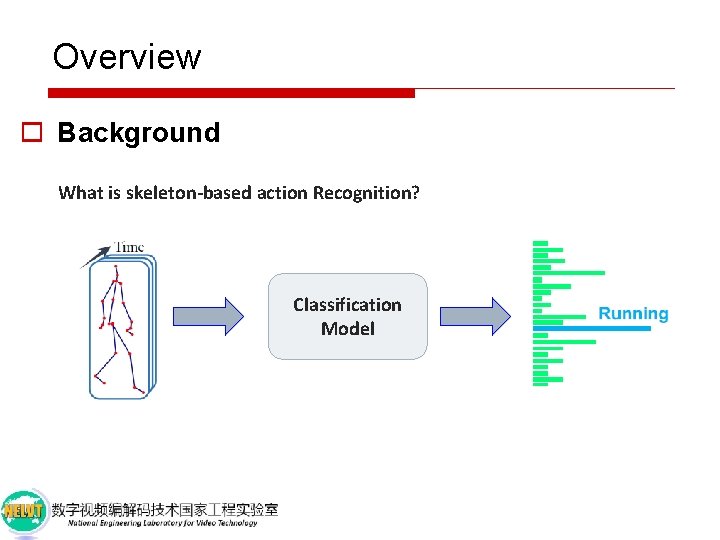

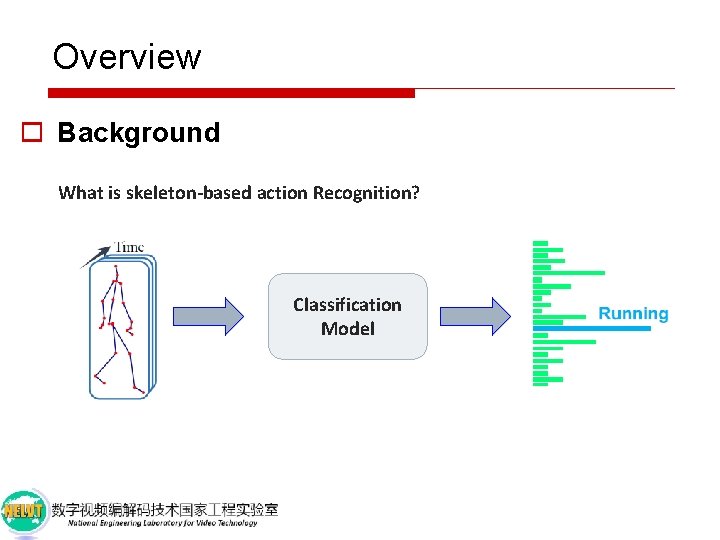

Overview o Background: What is skeleton-based action recognition? (figure: a skeleton sequence fed into a classification model)

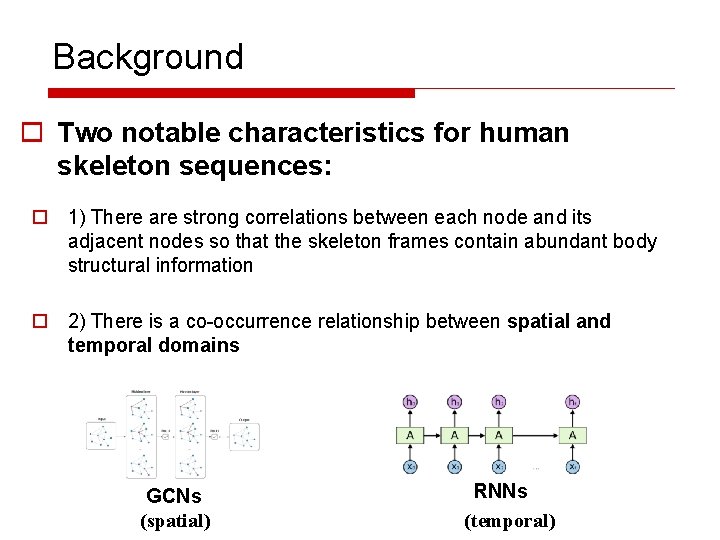

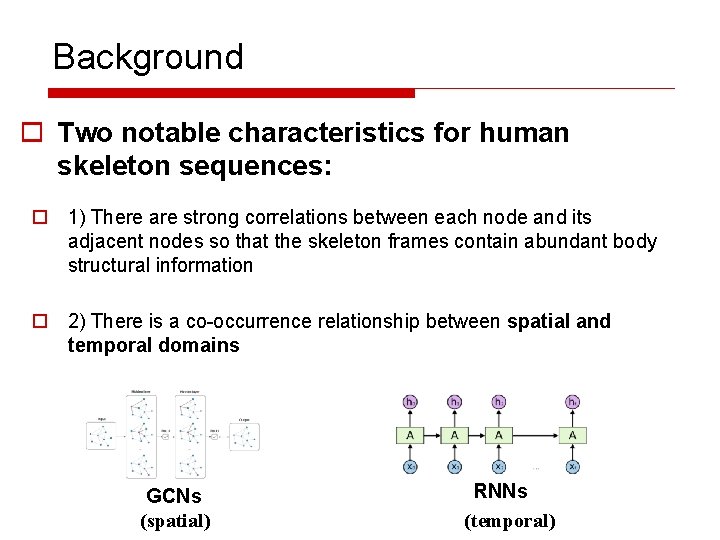


Background
o Two notable characteristics of human skeleton sequences:
o 1) Each node is strongly correlated with its adjacent nodes, so skeleton frames contain abundant body structural information.
o 2) There is a co-occurrence relationship between the spatial and temporal domains.
o GCNs model the spatial domain; RNNs model the temporal domain.

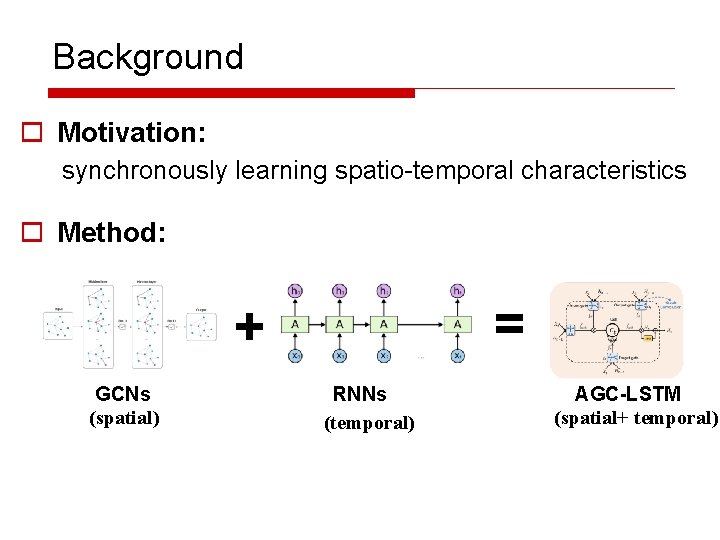

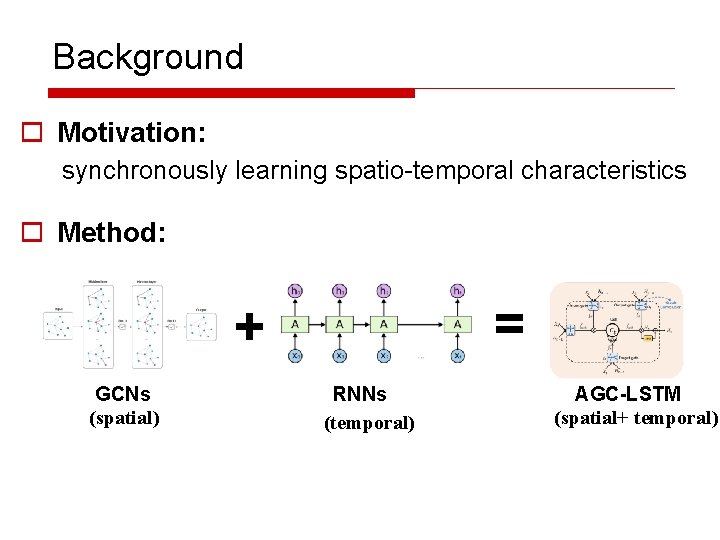

Background
o Motivation: learn spatial and temporal characteristics synchronously
o Method: AGC-LSTM (spatial + temporal) = GCNs (spatial) + RNNs (temporal)

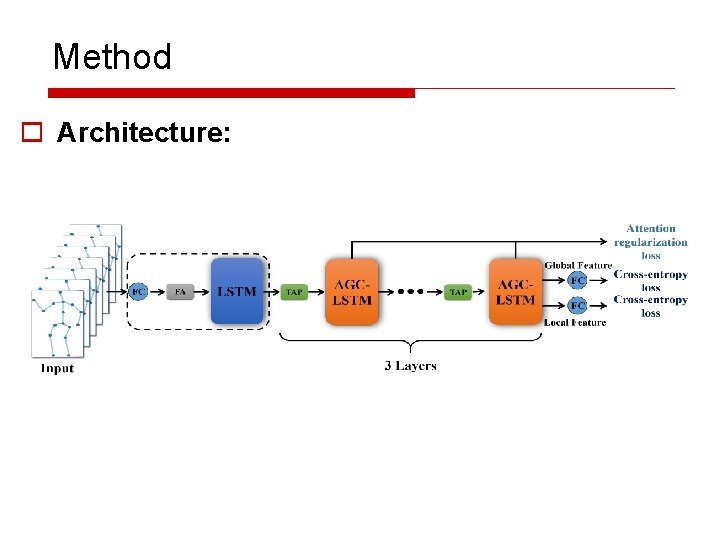

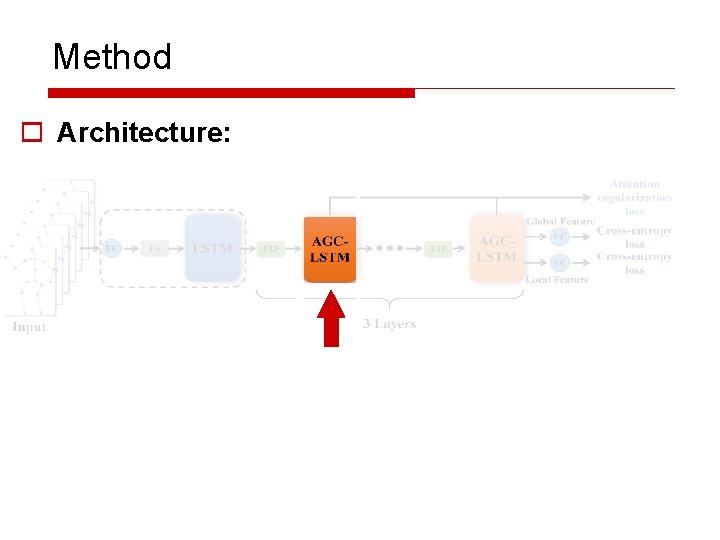

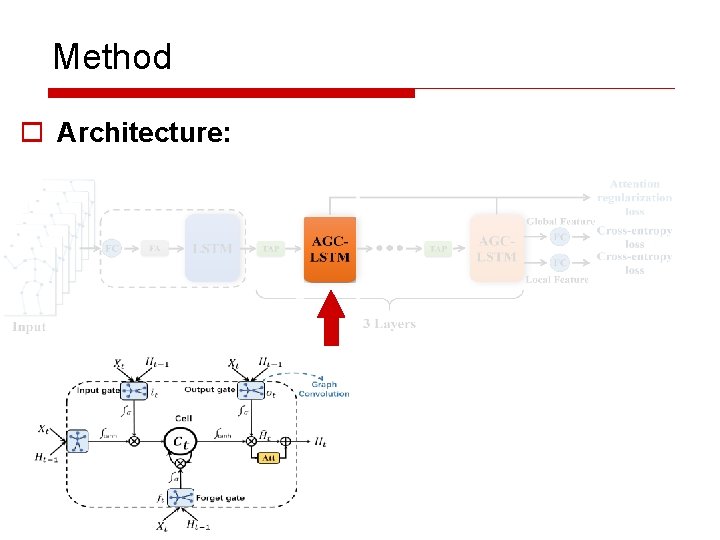

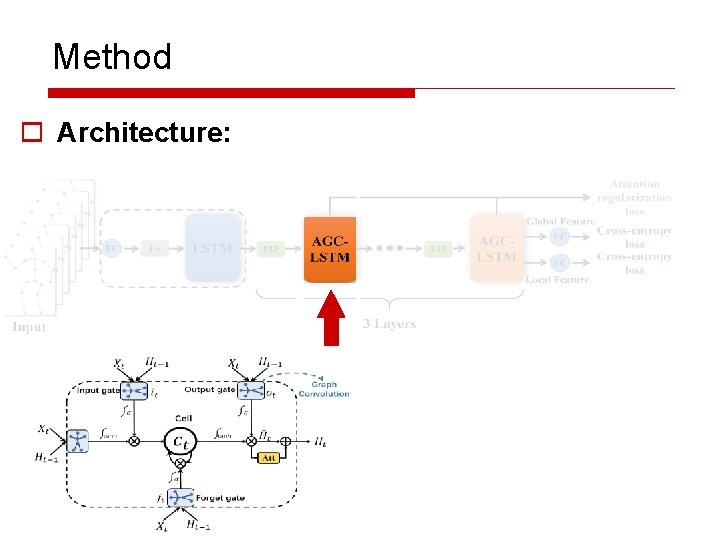

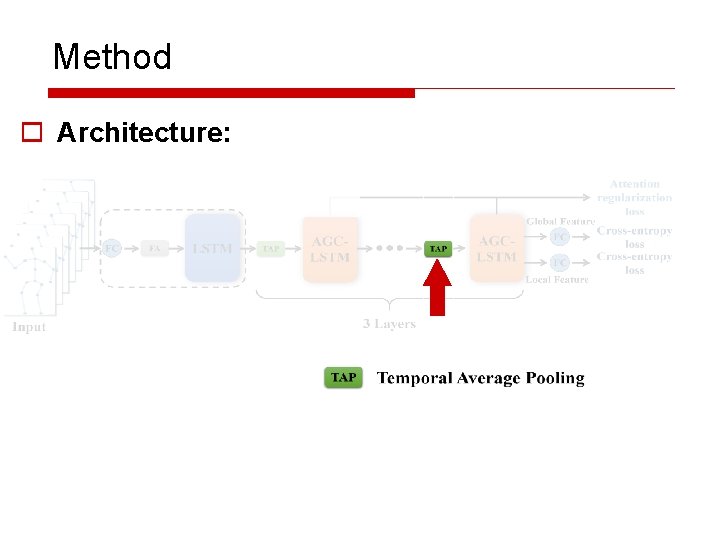

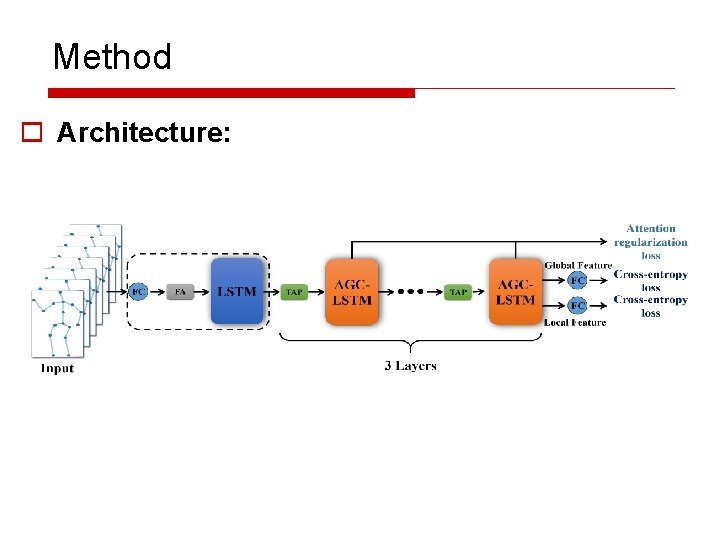

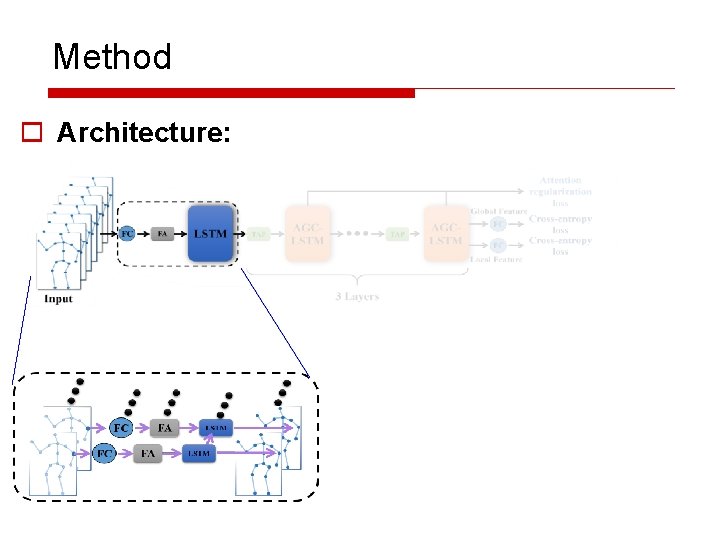

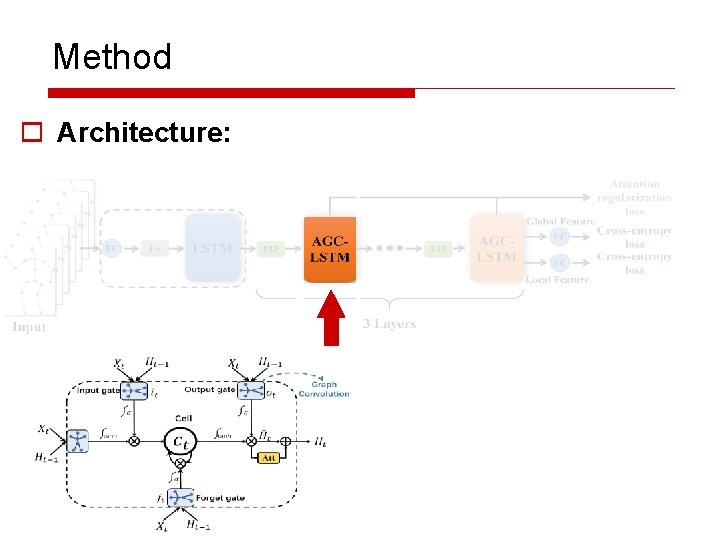

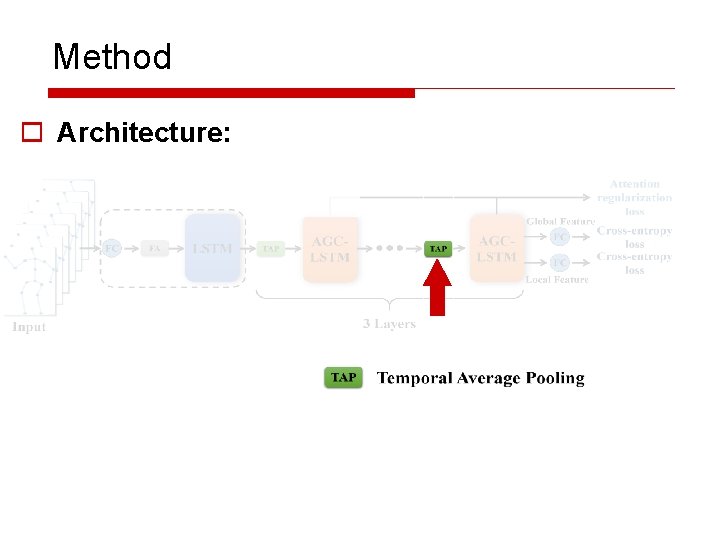

Method o Architecture:

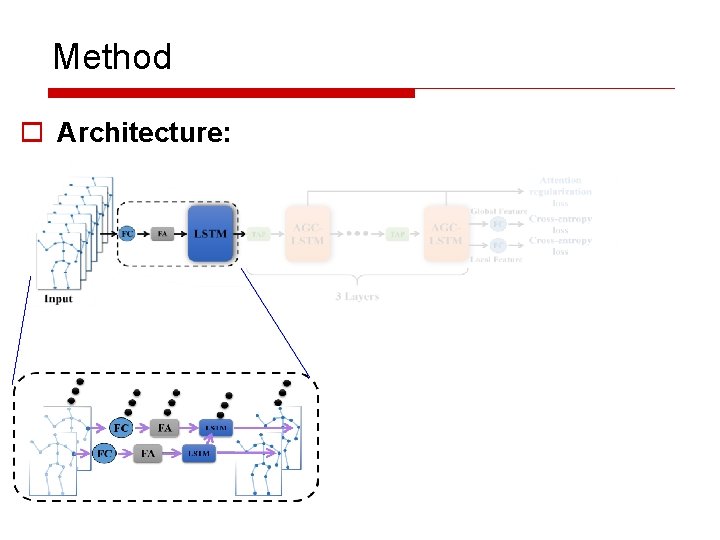

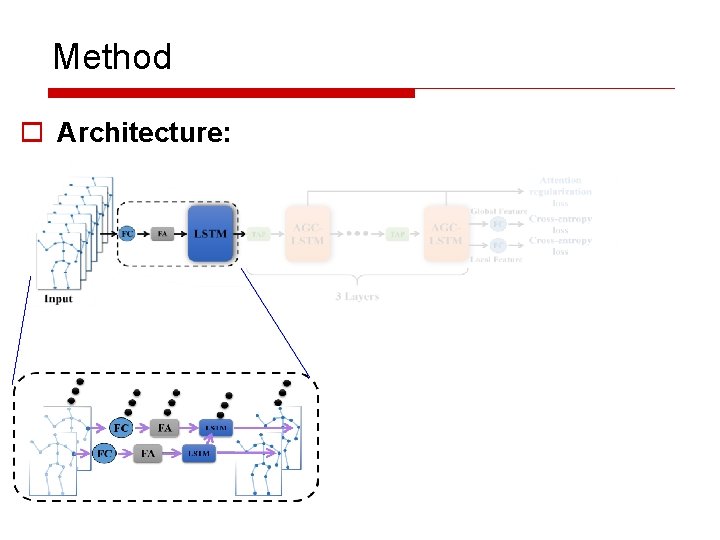


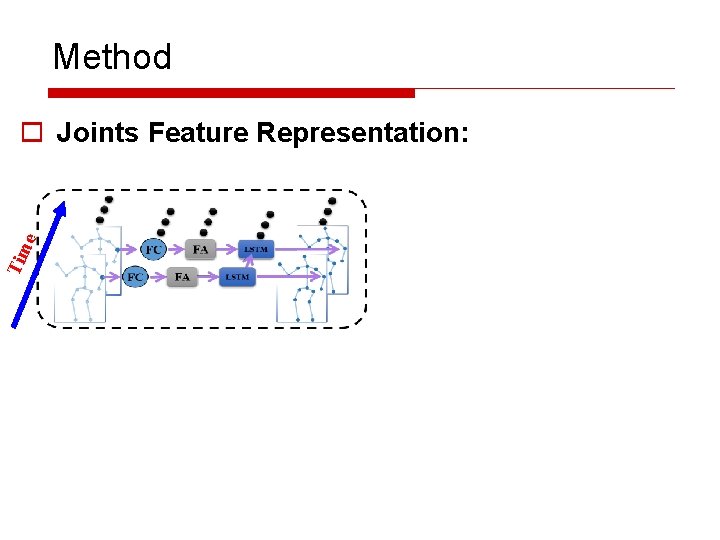

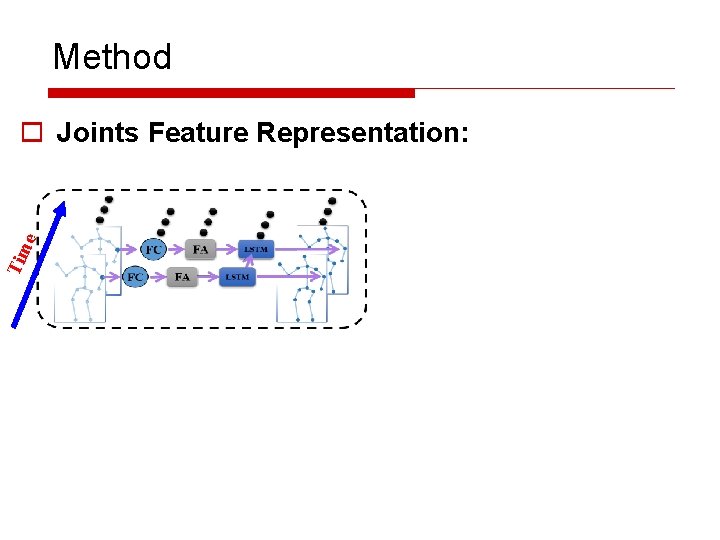

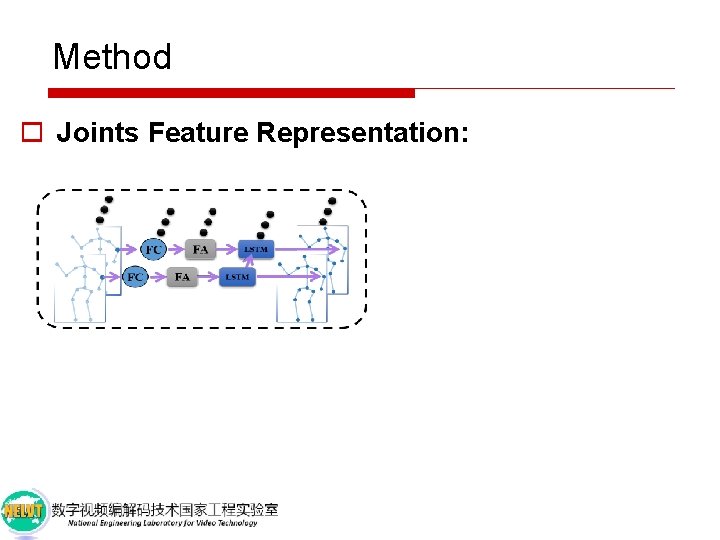

Method o Joints Feature Representation:

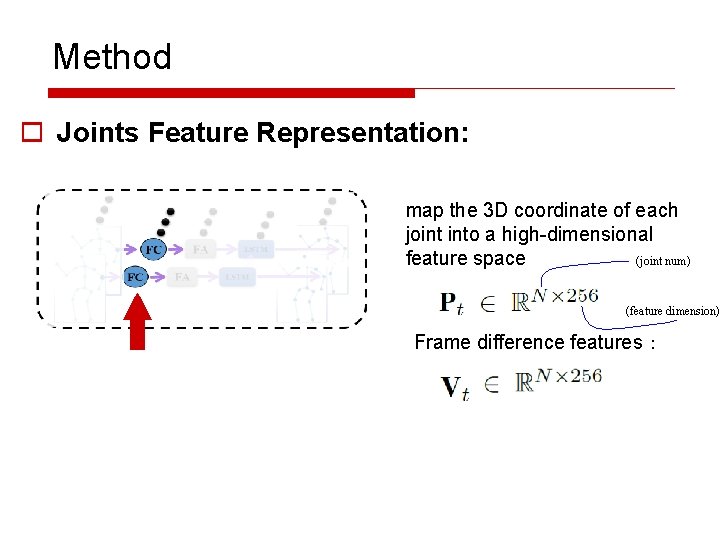

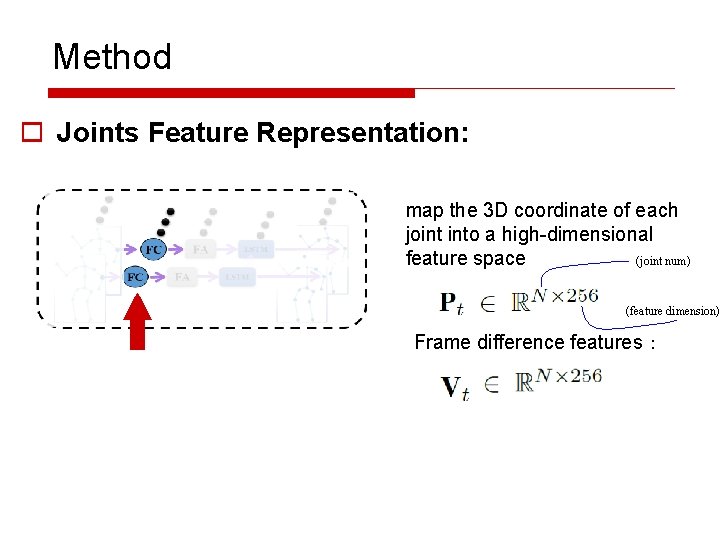

Method o Joints Feature Representation:
o Map the 3D coordinate of each joint into a high-dimensional feature space, giving an N × C feature matrix per frame (N: number of joints; C: feature dimension)
o Frame difference features:
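The two feature types can be sketched as follows. This is a minimal illustration under stated assumptions: a single linear map (hypothetical weights `W`, `b`) turns each joint's 3D coordinate into a C-dimensional feature, frame difference features are the difference between the features of consecutive frames, and the first frame gets zeros (the slide does not show how the first frame is handled).

```python
from typing import List

Matrix = List[List[float]]


def embed_joints(coords: Matrix, W: Matrix, b: List[float]) -> Matrix:
    """Map each joint's 3D coordinate (N x 3) to a C-dim feature (N x C).

    W (3 x C) and b (length C) are hypothetical linear-layer parameters.
    """
    C = len(b)
    return [
        [sum(xyz[k] * W[k][c] for k in range(3)) + b[c] for c in range(C)]
        for xyz in coords
    ]


def frame_differences(features: List[Matrix]) -> List[Matrix]:
    """Difference between consecutive frames; frame 0 gets zeros (assumption)."""
    diffs = []
    for t, F_t in enumerate(features):
        if t == 0:
            diffs.append([[0.0] * len(row) for row in F_t])
        else:
            F_prev = features[t - 1]
            diffs.append([[a - p for a, p in zip(row, prev)]
                          for row, prev in zip(F_t, F_prev)])
    return diffs


# Toy sequence: 2 frames, 2 joints, C = 2.
W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b = [0.0, 0.0]
frames = [
    [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]],
    [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0]],
]
P = [embed_joints(f, W, b) for f in frames]
V = frame_differences(P)
print(P[1])  # [[0.0, 1.0], [2.0, 1.0]]
print(V[1])  # [[0.0, 1.0], [1.0, 1.0]]
```

The coordinate features carry where each joint is; the differences carry how it moved since the previous frame.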

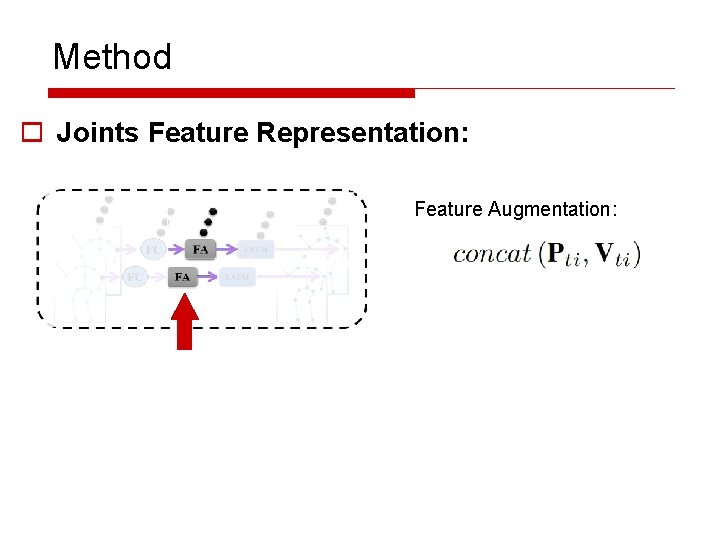

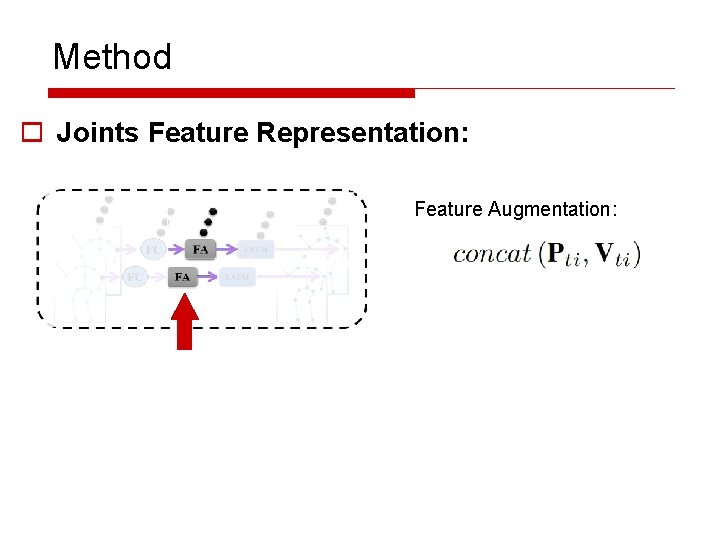


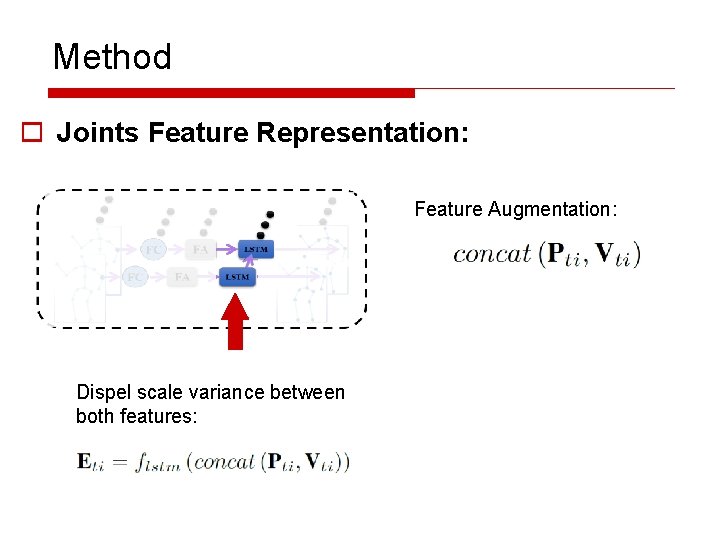

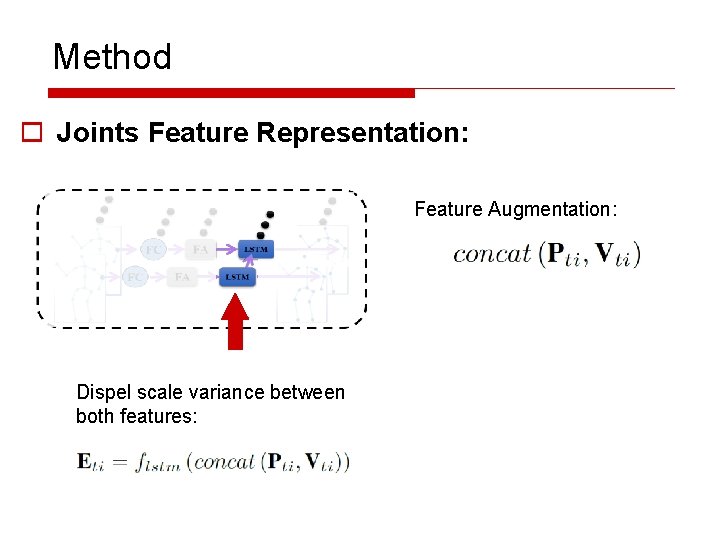

Method o Joints Feature Representation: Feature Augmentation: dispel the scale variance between both features (the joint coordinate features and the frame difference features):
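A minimal sketch of the augmentation input, assuming the two features are combined by per-joint concatenation before the layer that dispels their scale variance. Only the concatenation is shown; that subsequent layer is omitted, and the helper name `augment` is hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def augment(P_t: Matrix, V_t: Matrix) -> Matrix:
    """Concatenate coordinate features (N x C) and frame difference features
    (N x C) joint by joint, giving an N x 2C augmented feature for frame t."""
    if len(P_t) != len(V_t):
        raise ValueError("both features must cover the same joints")
    return [p + v for p, v in zip(P_t, V_t)]


# Toy frame: 2 joints, C = 2. The two halves live on very different scales,
# which is the scale variance the next layer has to absorb.
P_t = [[10.0, 20.0], [30.0, 40.0]]
V_t = [[0.1, -0.2], [0.0, 0.3]]
E_in = augment(P_t, V_t)
print(E_in)  # [[10.0, 20.0, 0.1, -0.2], [30.0, 40.0, 0.0, 0.3]]
```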

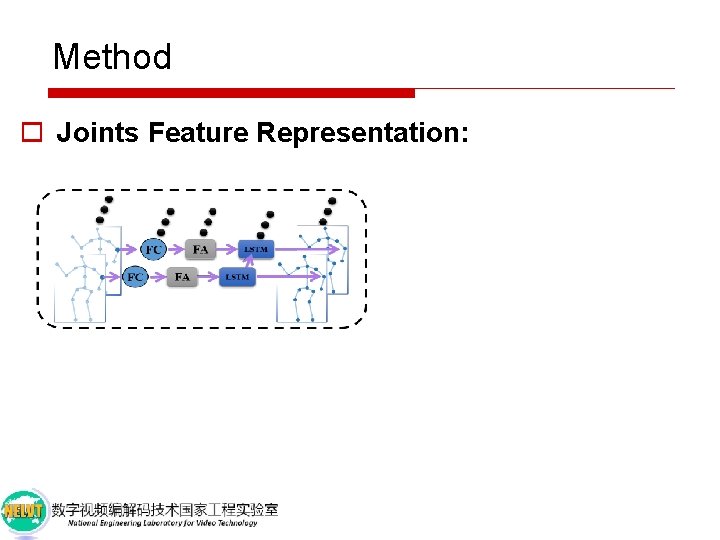


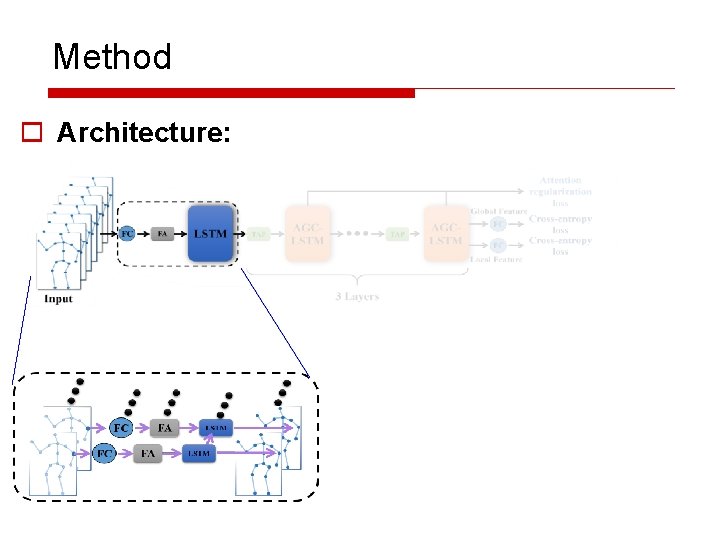

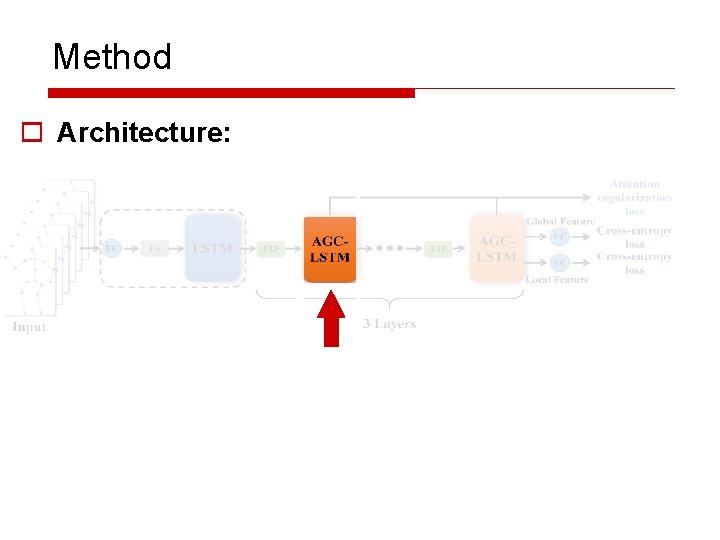

Method o Architecture:



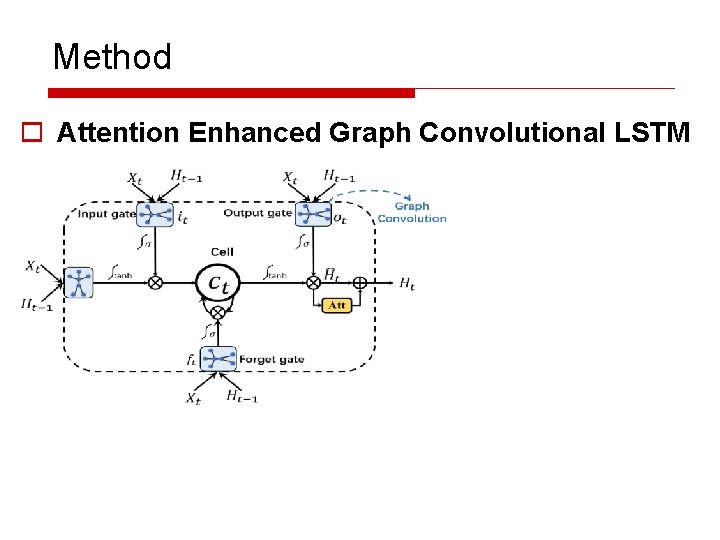

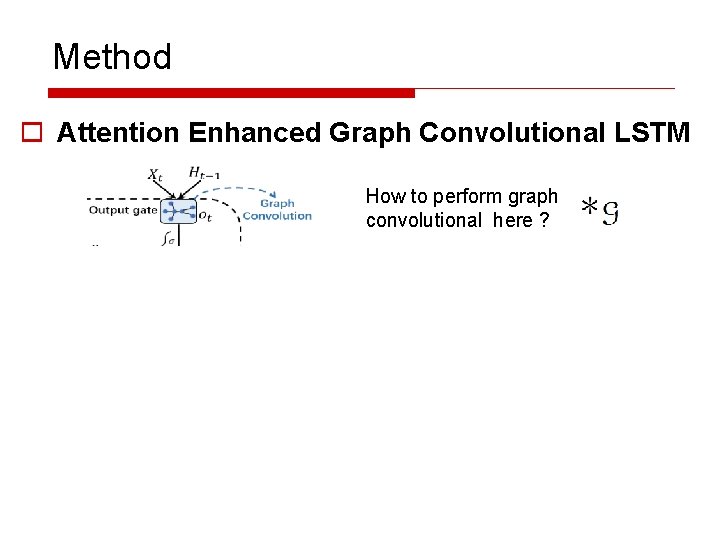

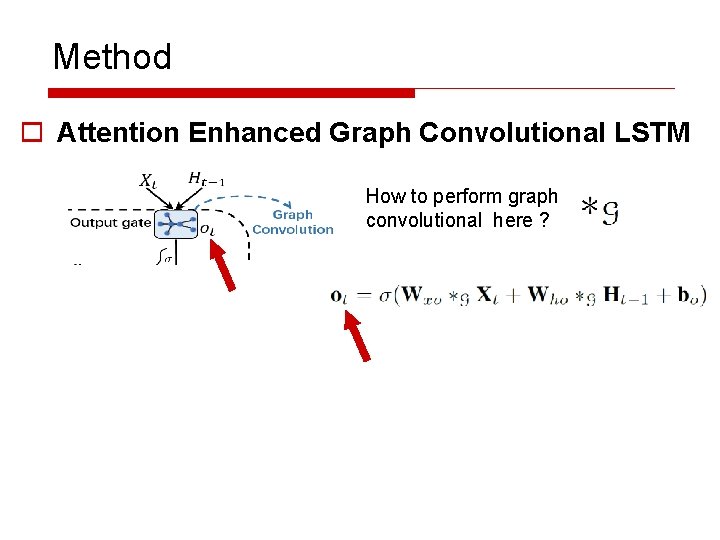

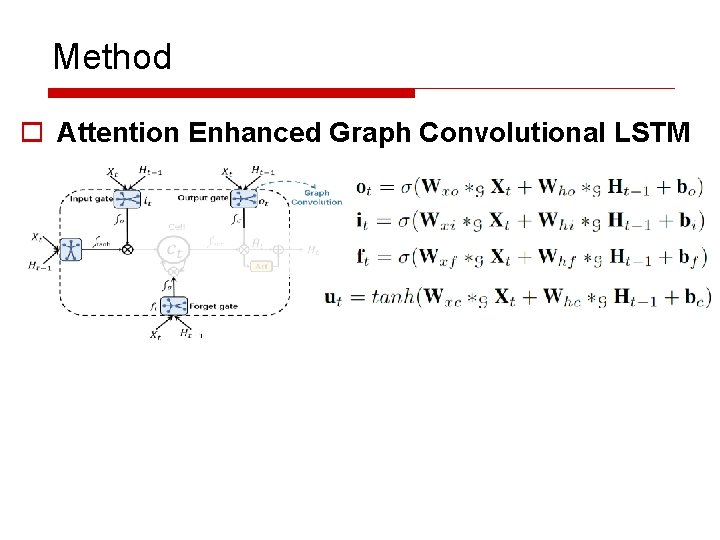

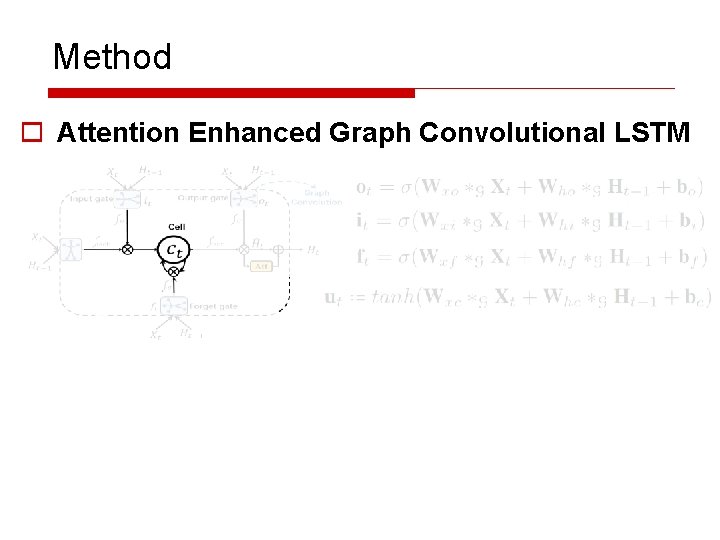

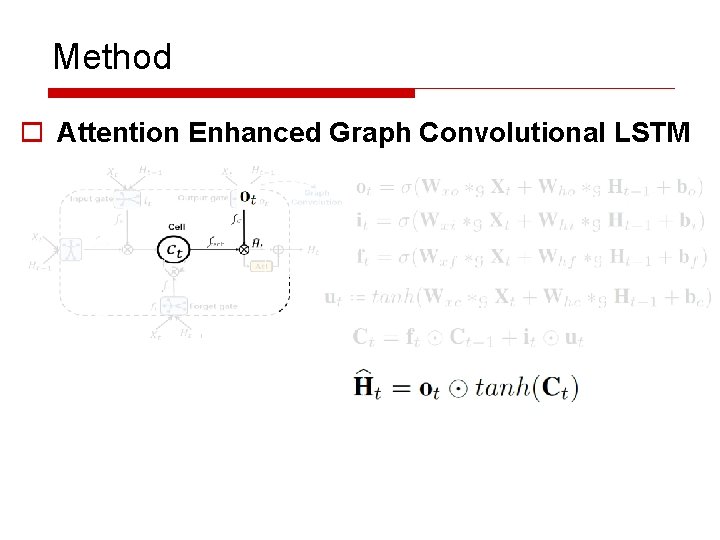

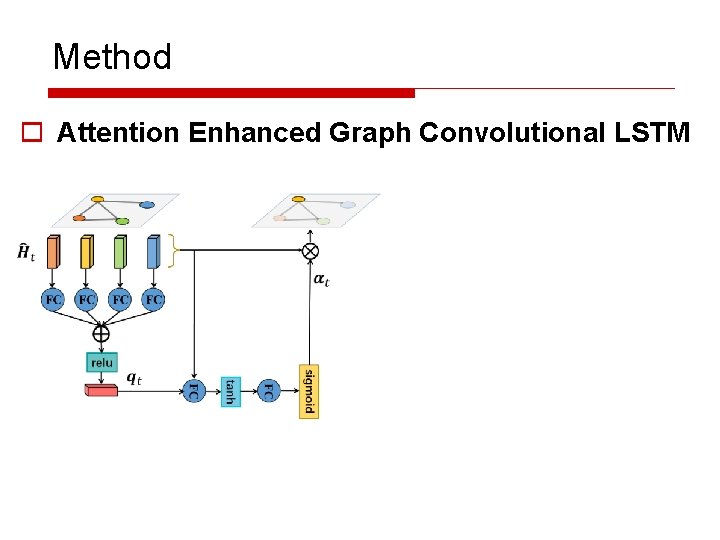

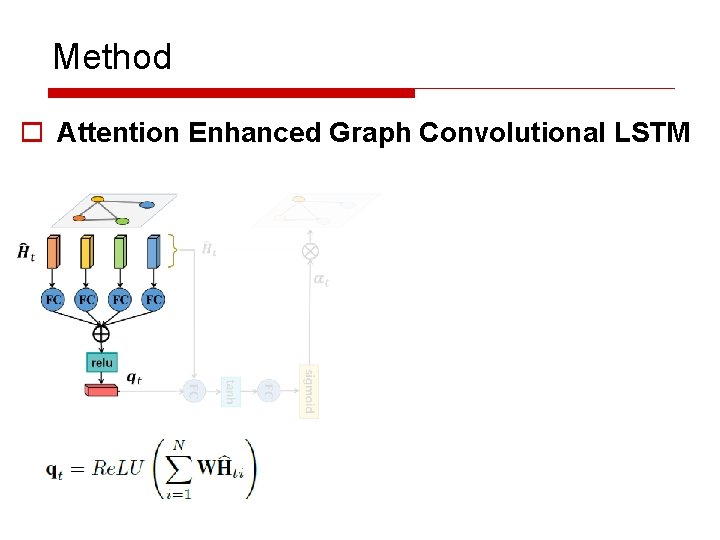

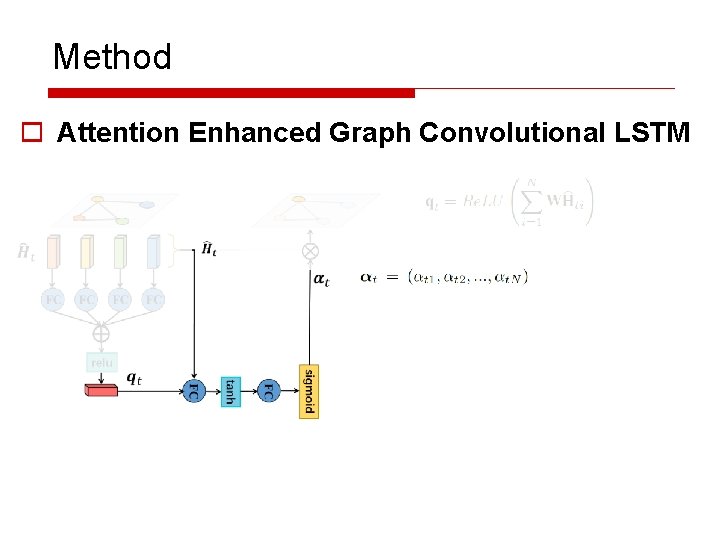

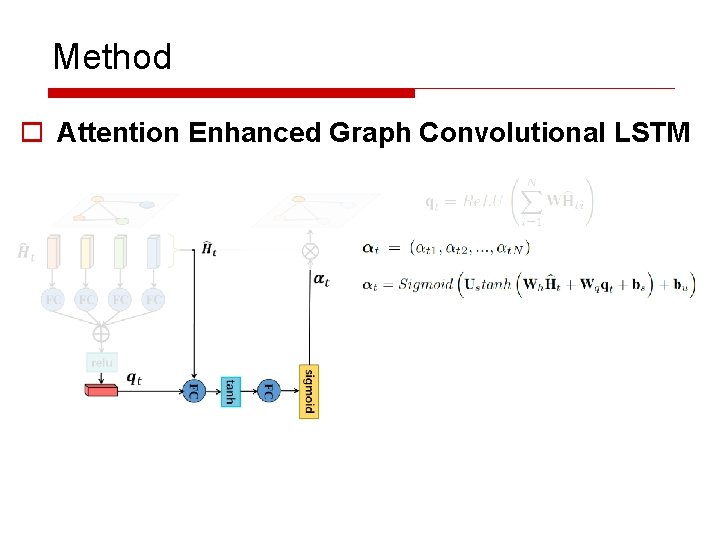

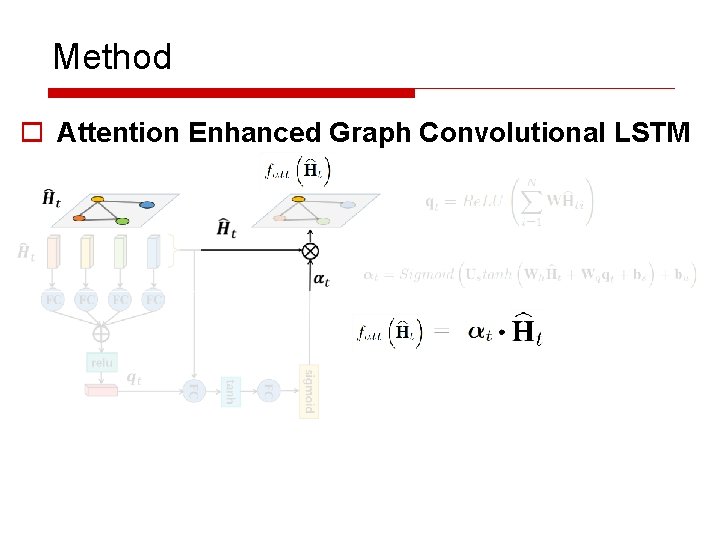

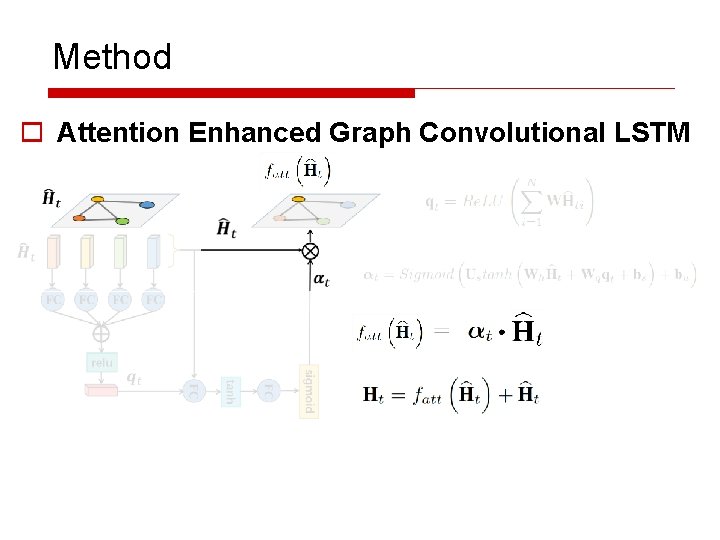

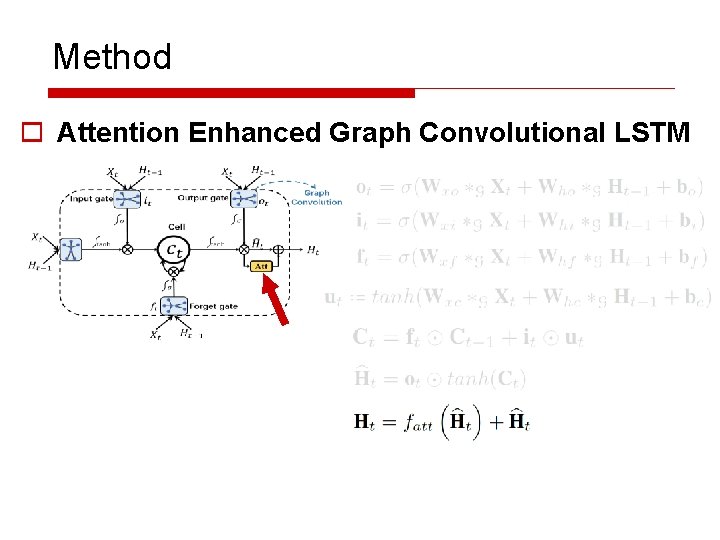

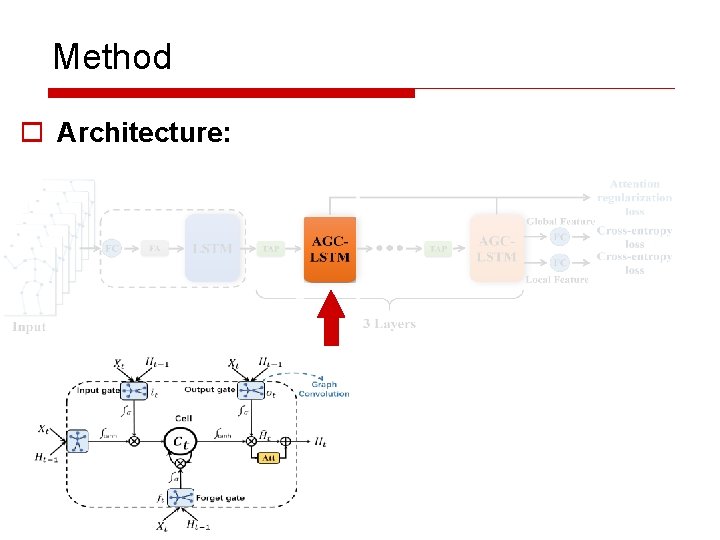

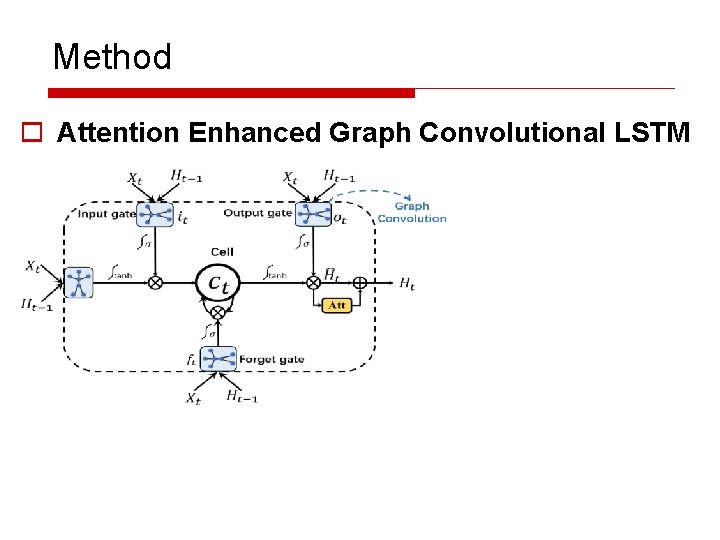

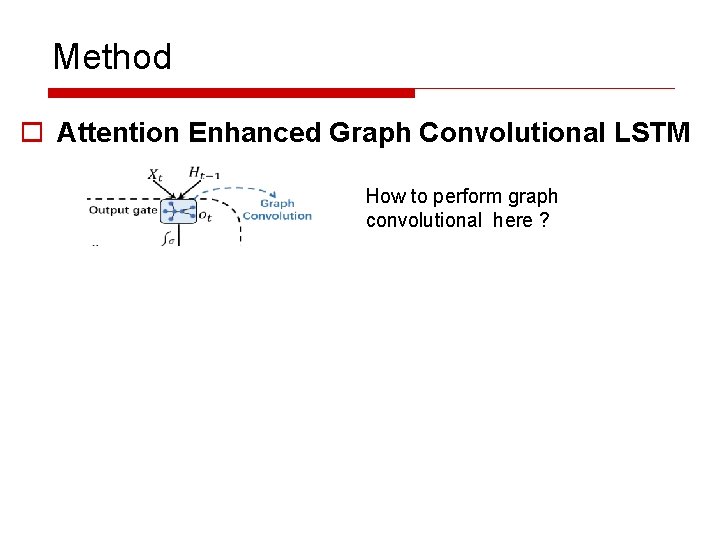

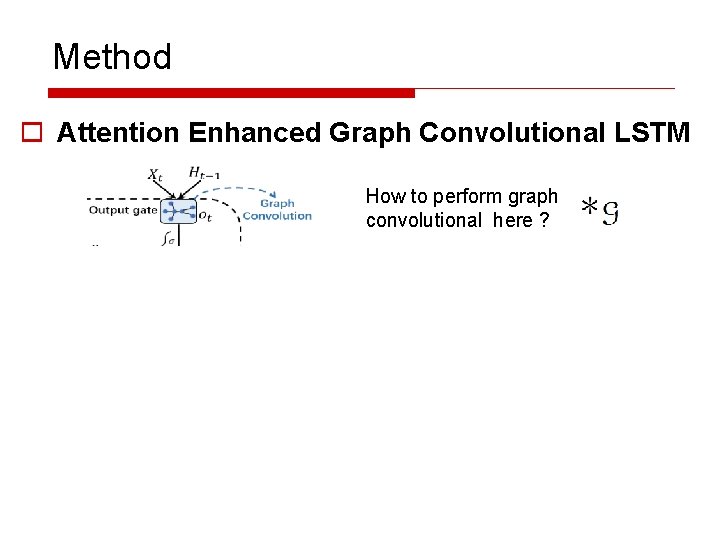

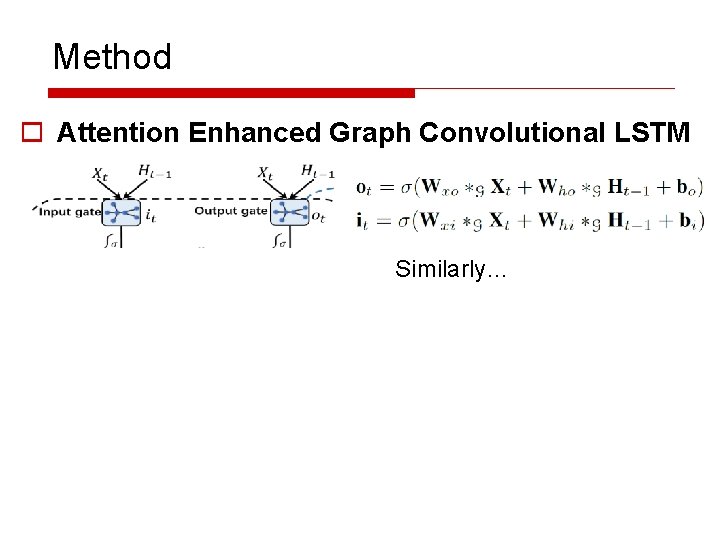

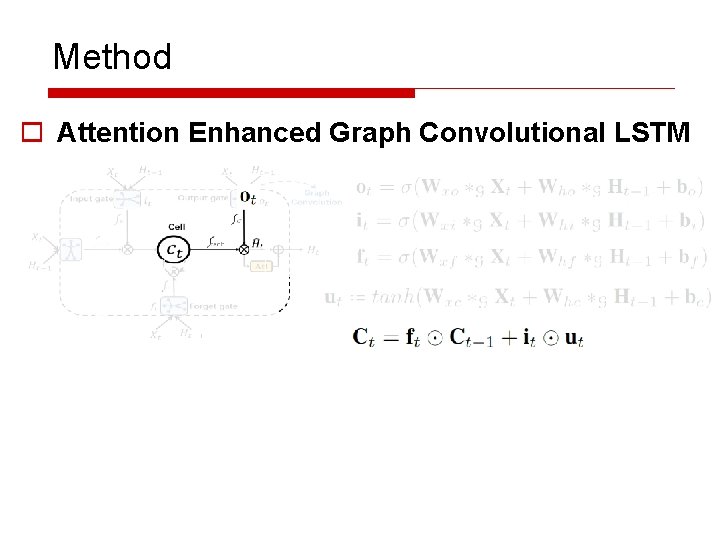

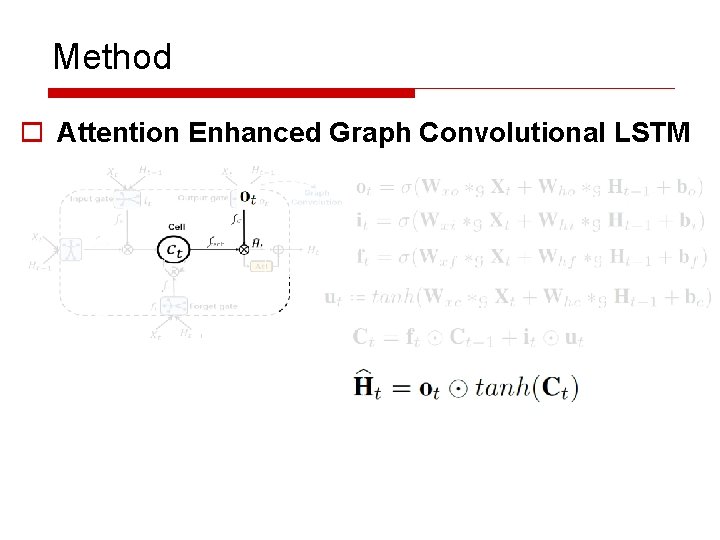

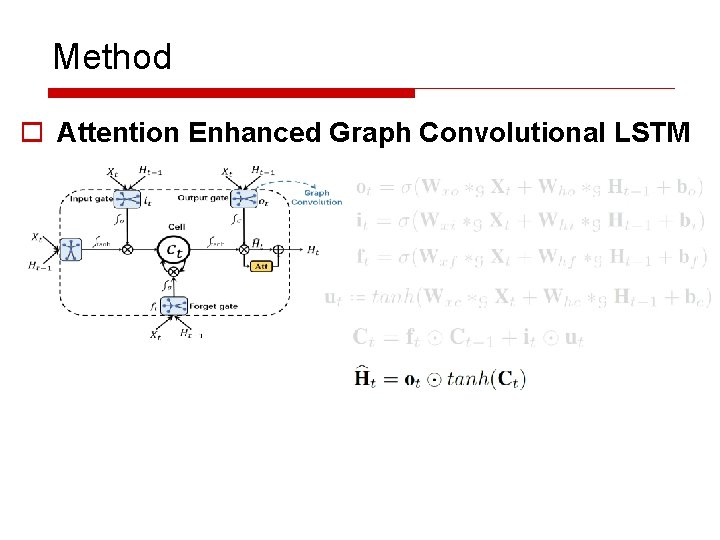

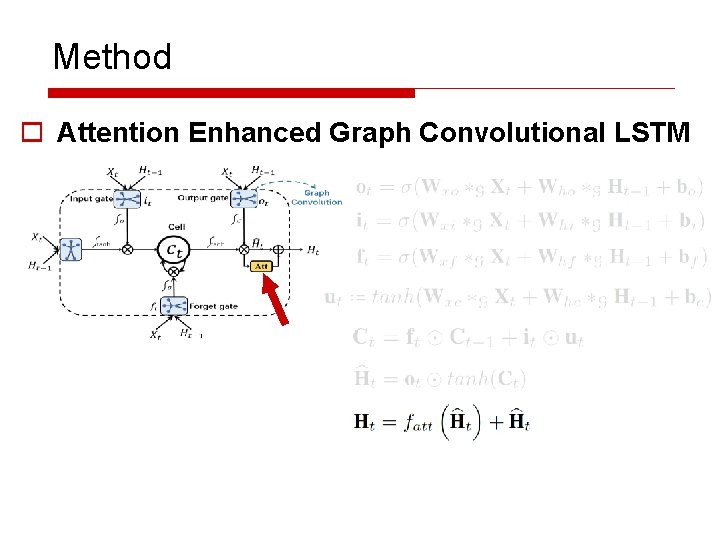

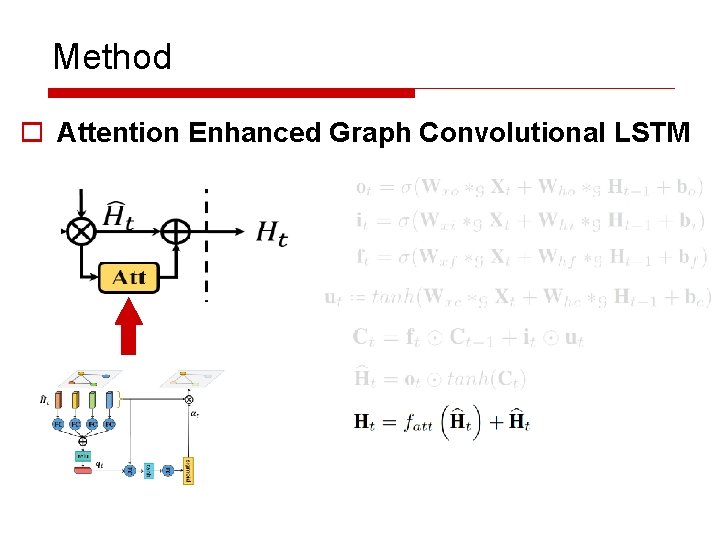

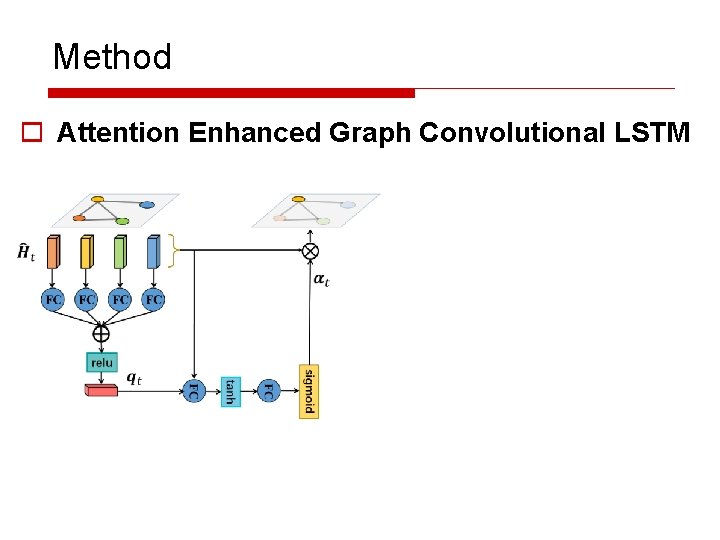

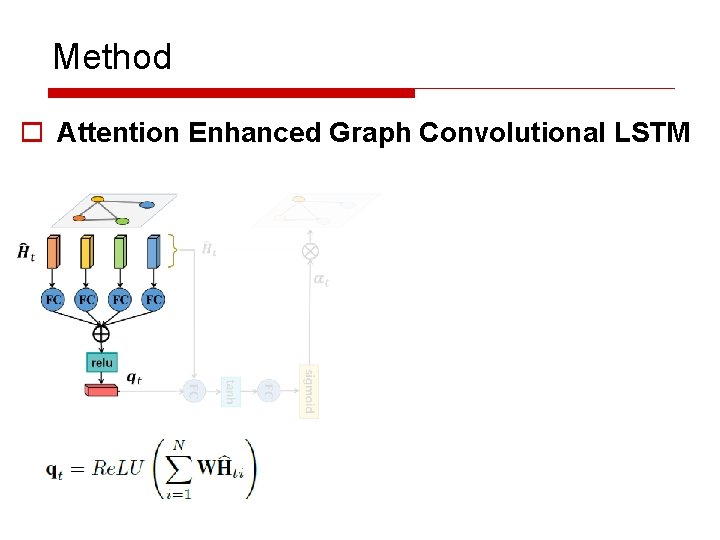

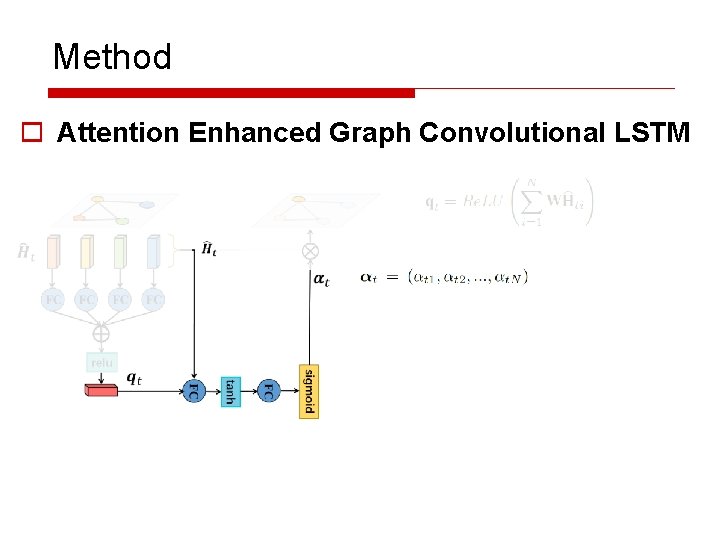

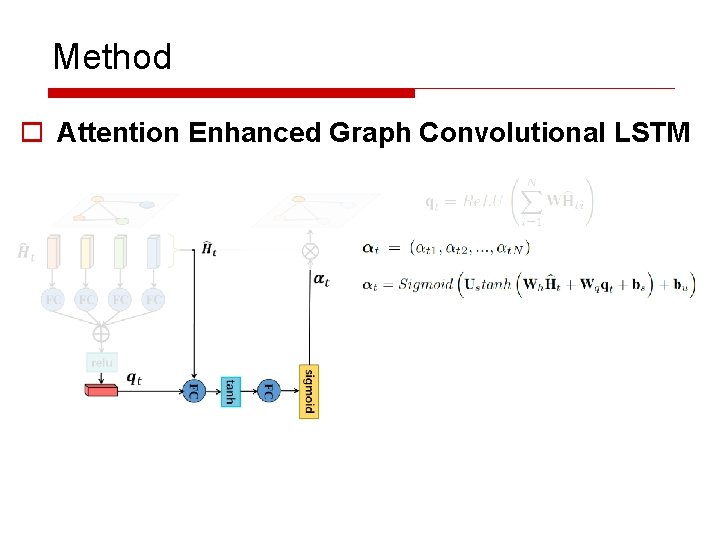

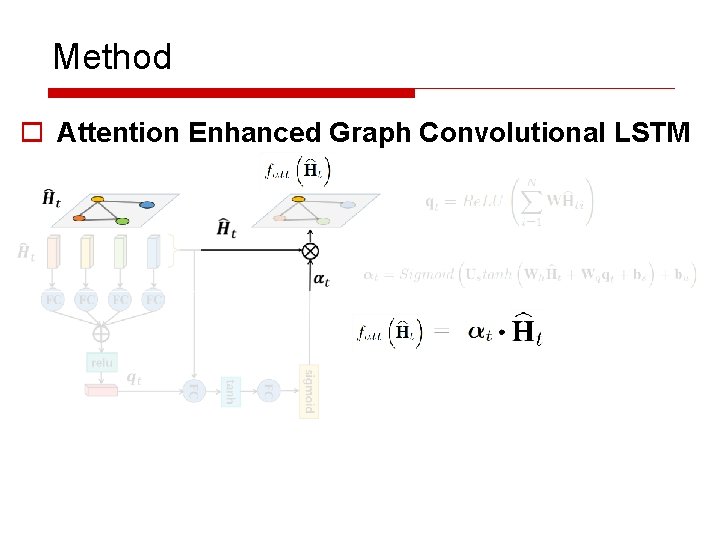

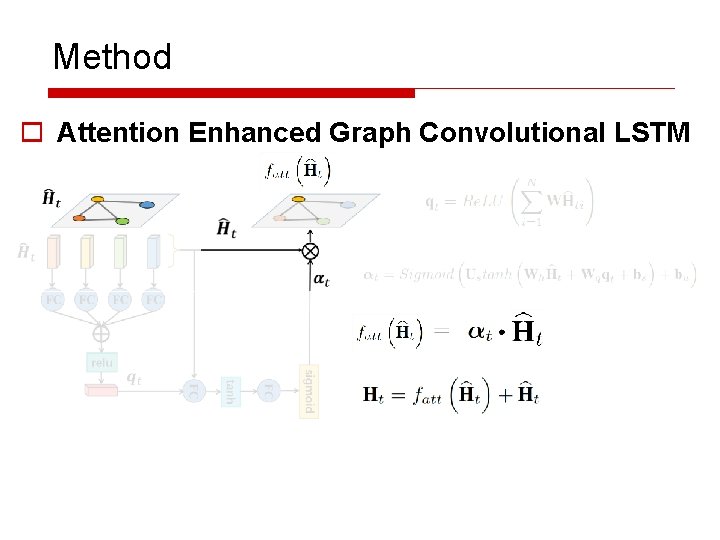

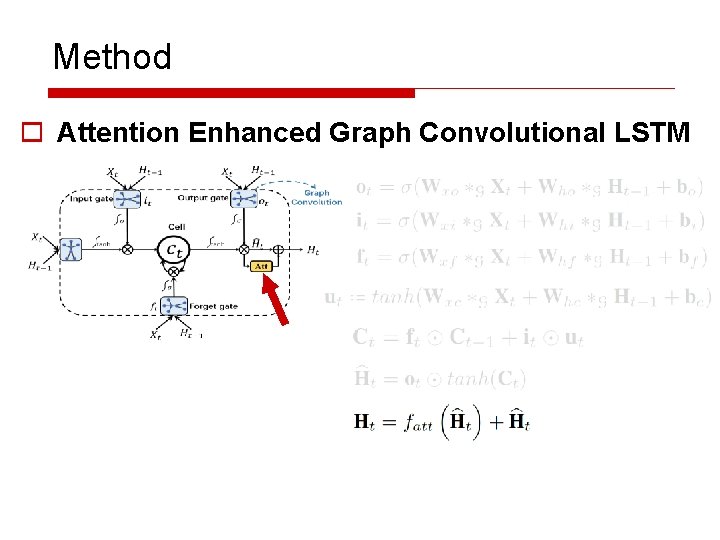

Method o Attention Enhanced Graph Convolutional LSTM

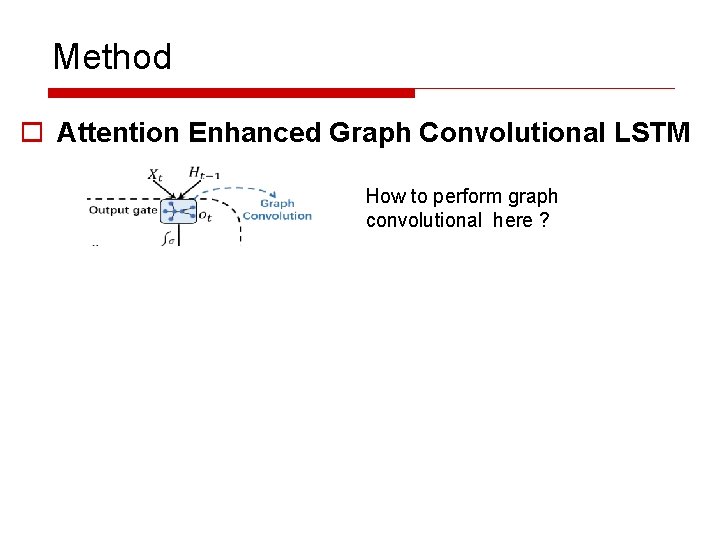

Method o Attention Enhanced Graph Convolutional LSTM: how is graph convolution performed here?

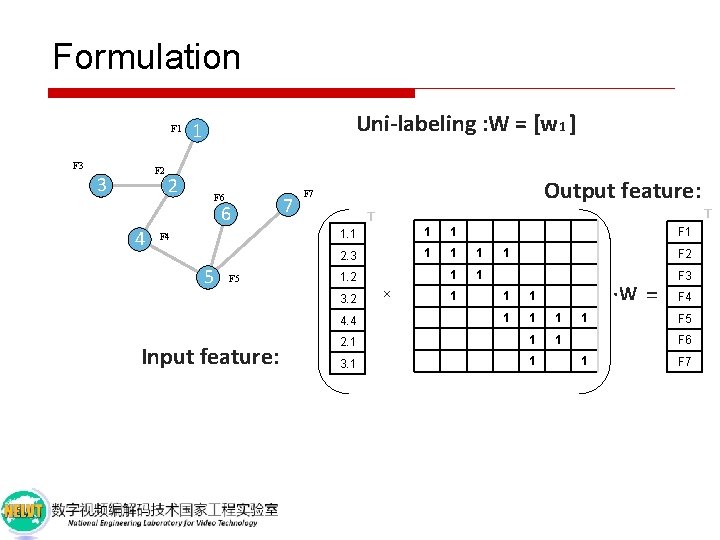

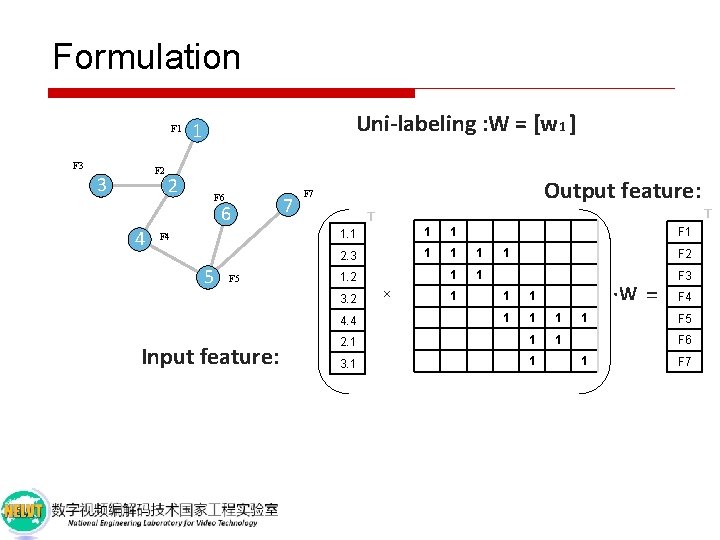

Formulation F 1 F 3 F 2 3 4 Uni-labeling : W = [w 1 ] 1 2 F 6 6 F 4 5 F 5 7 Output feature: F 7 1. 1 1 1 2. 3 1 1 1. 2 × 1 F 2 ·W 1 1 2. 1 1 1 3. 1 1 4. 4 1 F 1 1 3. 2 Input feature: T T 1 = F 3 F 4 F 5 F 6 1 F 7

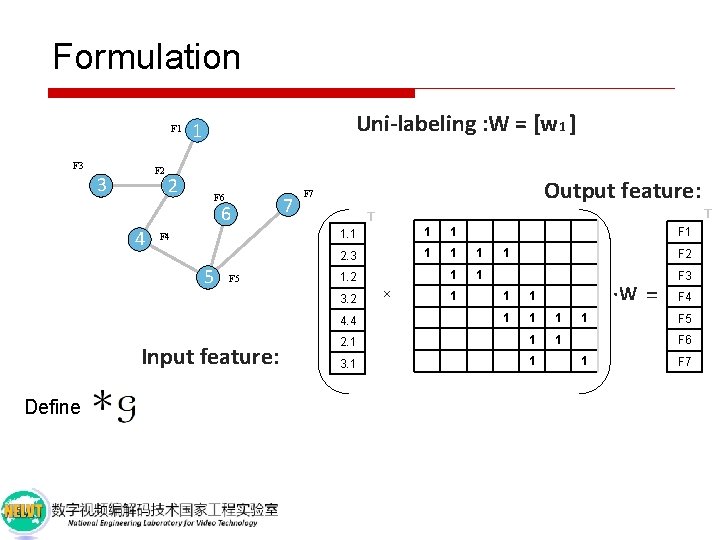

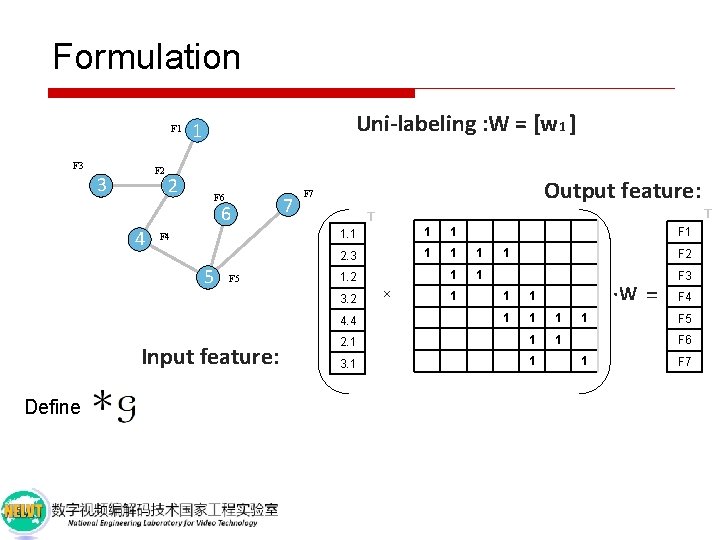

Formulation F 1 F 3 F 2 3 4 Uni-labeling : W = [w 1 ] 1 2 F 6 6 F 4 5 F 5 7 Output feature: F 7 1. 1 1 1 2. 3 1 1 1. 2 1 1 F 2 ·W 1 1 2. 1 1 1 3. 1 1 4. 4 Define × F 1 1 3. 2 Input feature: T T 1 = F 3 F 4 F 5 F 6 1 F 7

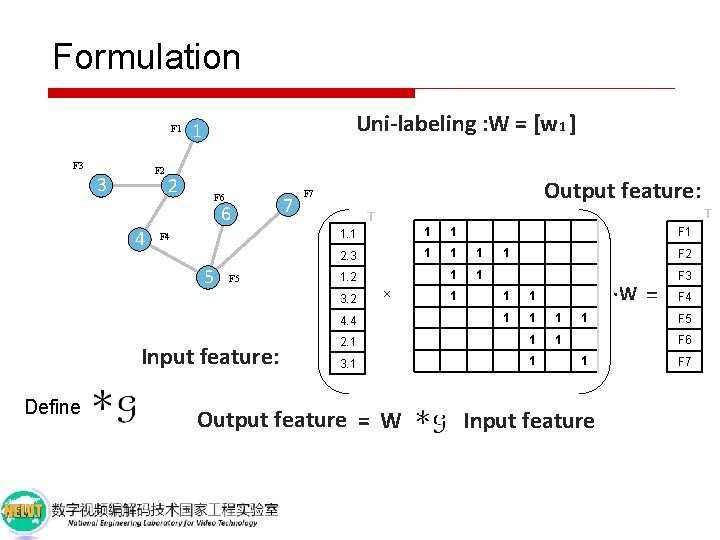

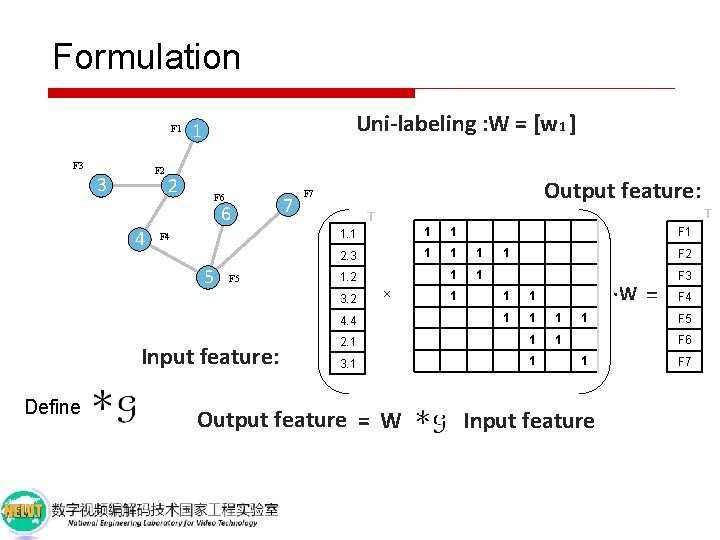

Formulation F 1 F 3 F 2 3 4 Uni-labeling : W = [w 1 ] 1 2 F 6 6 F 4 5 F 5 7 Output feature: F 7 T T 1. 1 1 1 2. 3 1 1 1. 2 × Define F 2 ·W 1 1 2. 1 1 1 3. 1 1 4. 4 Input feature: 1 1 3. 2 Output feature = W 1 F 1 1 = F 3 F 4 F 5 F 6 1 Input feature F 7
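The uni-labeling product can be checked numerically on a toy graph. This sketch assumes a hypothetical 3-joint chain (not the 7-joint graph on the slide) and an unnormalized adjacency-plus-identity matrix; practical implementations usually also normalize it by node degree.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(X: Matrix, Y: Matrix) -> Matrix:
    """Plain matrix product X @ Y."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def adjacency_with_self_loops(num_nodes: int, edges: List[Tuple[int, int]]) -> Matrix:
    A = [[0.0] * num_nodes for _ in range(num_nodes)]
    for i in range(num_nodes):
        A[i][i] = 1.0  # each joint is in its own neighbor set
    for i, j in edges:
        A[i][j] = A[j][i] = 1.0  # undirected bone
    return A


def graph_conv(A_hat: Matrix, F_in: Matrix, W: Matrix) -> Matrix:
    """Output feature = A_hat x Input feature x W (uni-labeling: one W)."""
    return matmul(matmul(A_hat, F_in), W)


# Hypothetical chain 0-1-2 with 2-dim input features and a 1-dim output.
A_hat = adjacency_with_self_loops(3, [(0, 1), (1, 2)])
F_in = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W = [[1.0], [2.0]]
print(graph_conv(A_hat, F_in, W))  # [[3.0], [6.0], [5.0]]
```

The middle joint has the most neighbors and therefore aggregates the most input; this imbalance is the usual motivation for degree normalization.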

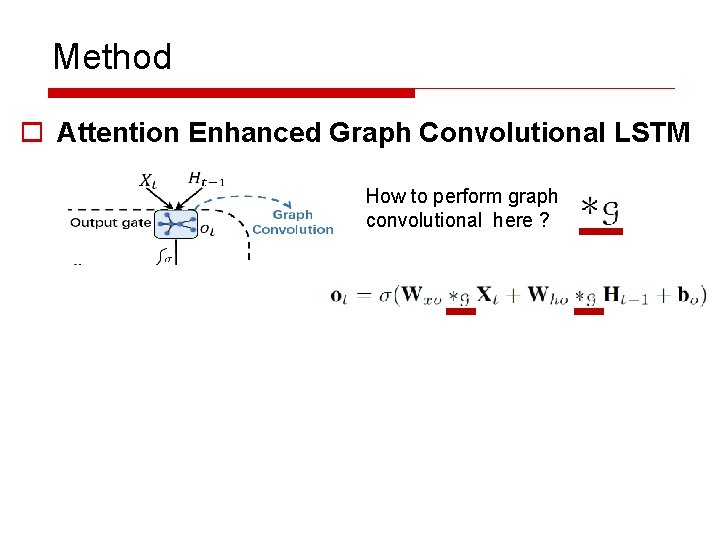

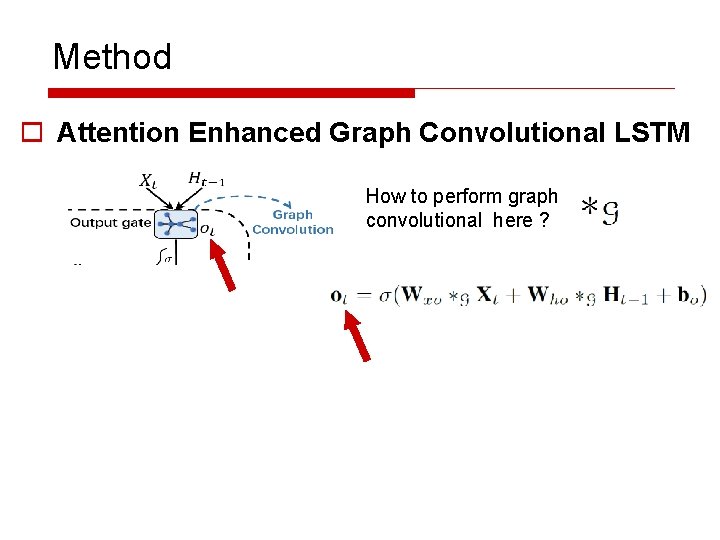

Method o Attention Enhanced Graph Convolutional LSTM: how is graph convolution performed here?


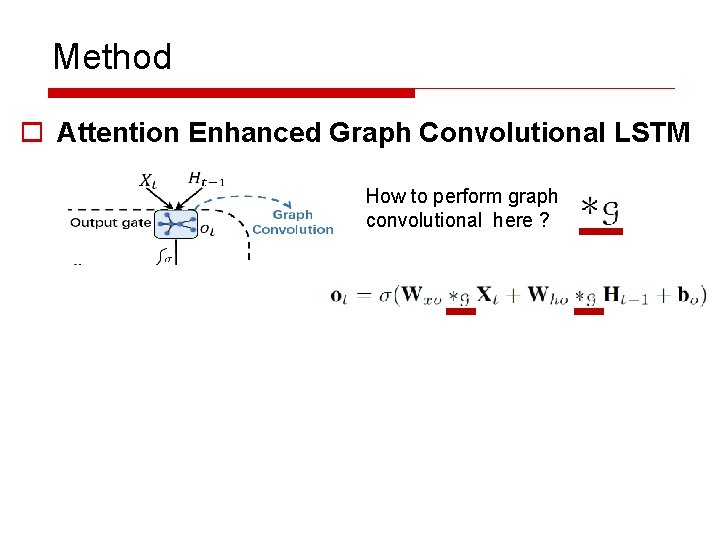


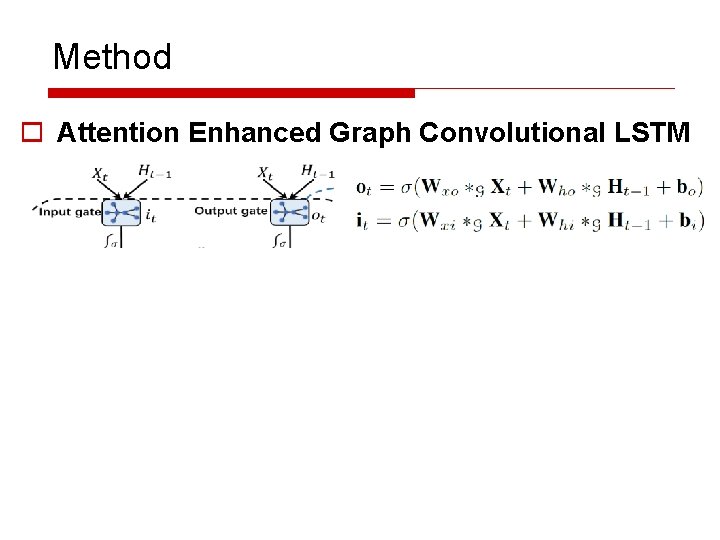

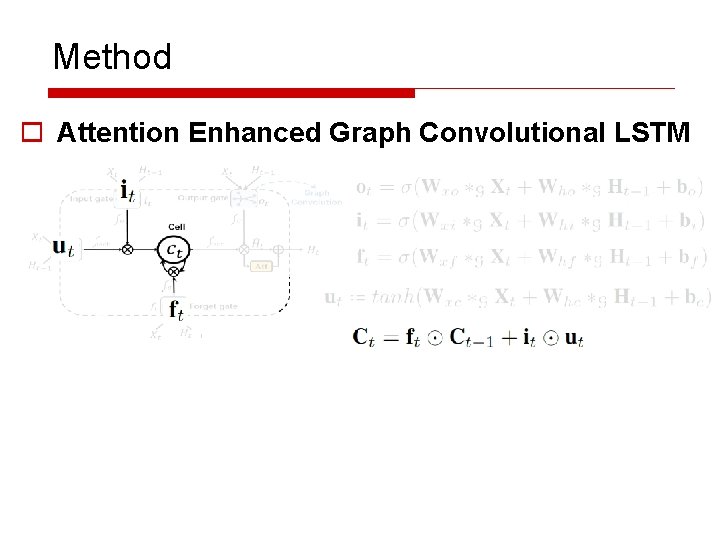

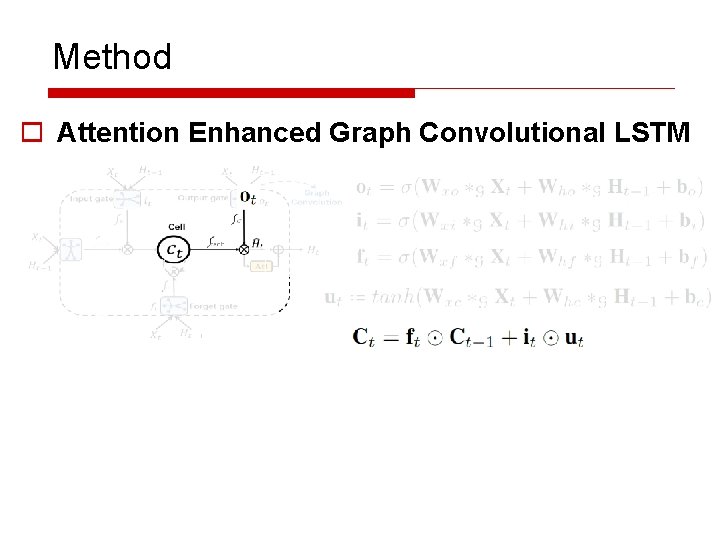

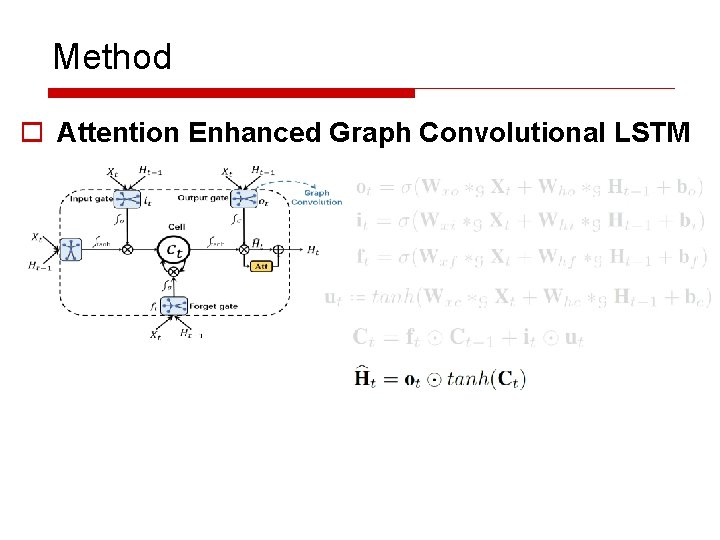

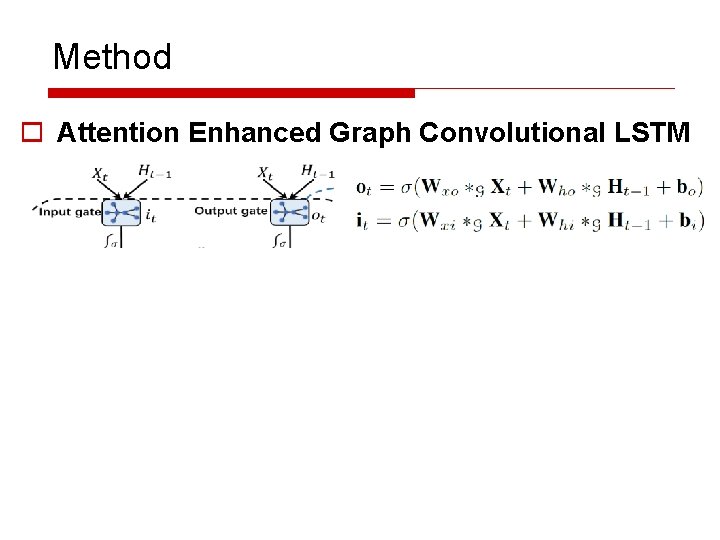

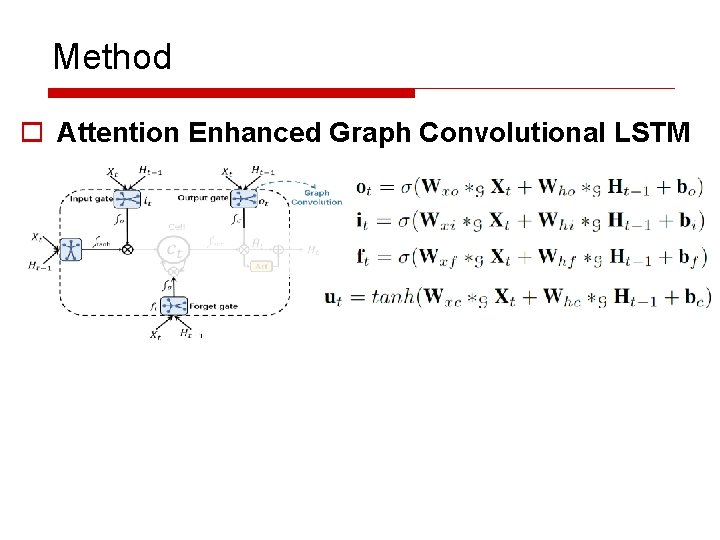

Method o Attention Enhanced Graph Convolutional LSTM

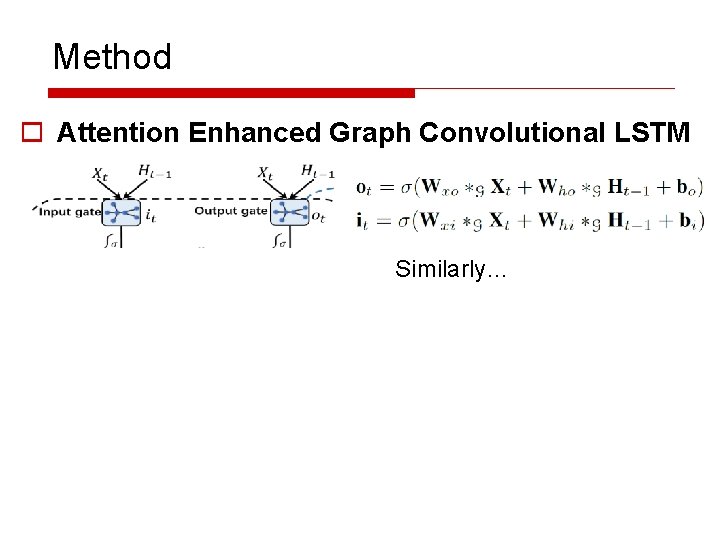

Method o Attention Enhanced Graph Convolutional LSTM Similarly…
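One step of a graph convolutional LSTM can be sketched by taking the standard LSTM gate equations and replacing every matrix product with the uni-labeling graph convolution from the formulation slides. This is an illustration under that assumption only: biases are omitted, the weights in `params` are hypothetical, and the attention enhancement of AGC-LSTM is not included.

```python
import math
from typing import Dict, List

Matrix = List[List[float]]


def matmul(X: Matrix, Y: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def add(*Ms: Matrix) -> Matrix:
    return [[sum(vals) for vals in zip(*rows)] for rows in zip(*Ms)]


def apply(fn, M: Matrix) -> Matrix:
    return [[fn(v) for v in row] for row in M]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def gconv(A_hat: Matrix, X: Matrix, W: Matrix) -> Matrix:
    """Graph convolution A_hat x X x W: joints mix only with their neighbors."""
    return matmul(matmul(A_hat, X), W)


def gc_lstm_step(A_hat: Matrix, X_t: Matrix, H_prev: Matrix, C_prev: Matrix,
                 params: Dict[str, Matrix]):
    """Every gate is a graph convolution of the input plus one of the hidden state."""
    def gate(name, act):
        pre = add(gconv(A_hat, X_t, params["Wx" + name]),
                  gconv(A_hat, H_prev, params["Wh" + name]))
        return apply(act, pre)

    i = gate("i", sigmoid)    # input gate
    f = gate("f", sigmoid)    # forget gate
    o = gate("o", sigmoid)    # output gate
    u = gate("u", math.tanh)  # candidate cell state
    C_t = [[fv * cv + iv * uv for fv, cv, iv, uv in zip(fr, cr, ir, ur)]
           for fr, cr, ir, ur in zip(f, C_prev, i, u)]
    H_t = [[ov * math.tanh(cv) for ov, cv in zip(orow, crow)]
           for orow, crow in zip(o, C_t)]
    return H_t, C_t


def zeros(rows: int, cols: int) -> Matrix:
    return [[0.0] * cols for _ in range(rows)]


# Hypothetical 3-joint chain, 2-dim inputs, 2-dim hidden state, all-zero weights.
A_hat = [[1.0, 1.0, 0.0], [1.0, 1.0, 1.0], [0.0, 1.0, 1.0]]
params = {k + g: zeros(2, 2) for k in ("Wx", "Wh") for g in "ifou"}
X_t = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
H, C = gc_lstm_step(A_hat, X_t, zeros(3, 2), [[1.0] * 2 for _ in range(3)], params)
```

With all-zero weights every gate equals sigmoid(0) = 0.5 and the candidate is 0, so the cell state halves (C = 0.5 everywhere), a quick sanity check that the gating is wired correctly. Unlike a fully connected LSTM, each joint's gates here depend only on that joint and its graph neighbors.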

Method o Attention Enhanced Graph Convolutional LSTM


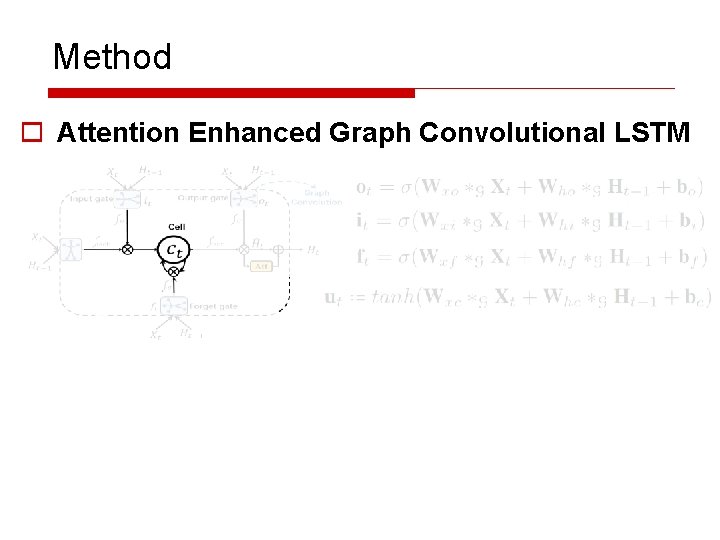

Method o Attention Enhanced Graph Convolutional LSTM


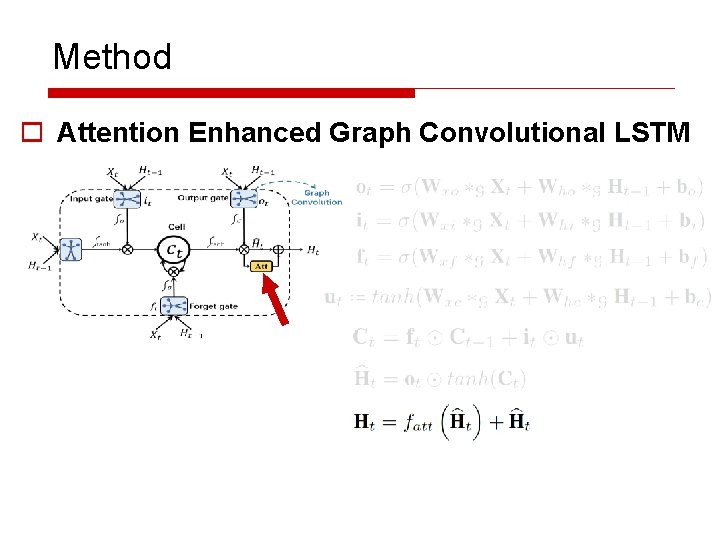

Method o Attention Enhanced Graph Convolutional LSTM

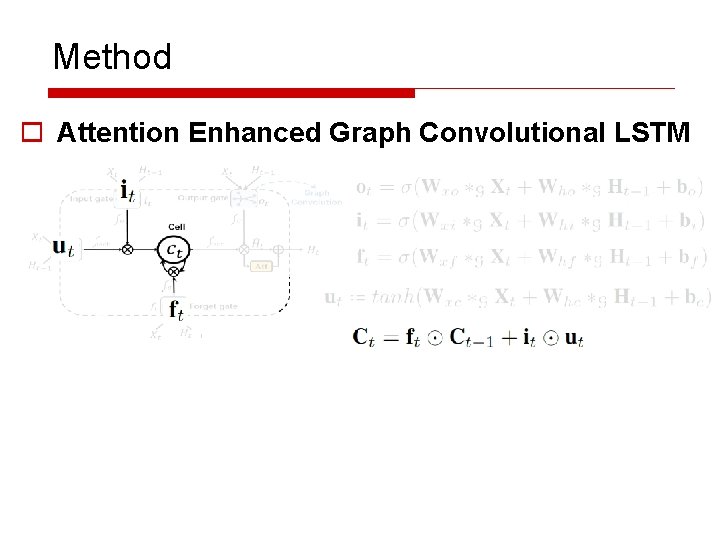

Method o Attention Enhanced Graph Convolutional LSTM
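The attention that enhances the hidden state can be sketched as a per-joint score followed by a residual re-weighting. This is a simplified illustration, assuming a sigmoid score for each joint (so several joints can be emphasized at once instead of competing as under a softmax) and the residual form (1 + α) · H; the scoring function itself, with its hypothetical vector `u` and a query built from the summed hidden states, is a stand-in rather than the exact formulation.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def joint_attention(H: Matrix, u: List[float]) -> Tuple[List[float], Matrix]:
    """Score each joint's hidden feature and re-weight it residually.

    H: N x C hidden states at one time step; u: hypothetical length-C
    scoring vector. Returns (alpha, H_enhanced).
    """
    C = len(u)
    # Query summarizing the whole skeleton: ReLU of the sum over joints.
    q = [max(0.0, sum(row[c] for row in H)) for c in range(C)]
    alpha = [
        sigmoid(sum(u[c] * math.tanh(row[c] + q[c]) for c in range(C)))
        for row in H
    ]
    # Residual form: a joint with alpha = 0 keeps its original feature.
    H_enh = [[(1.0 + a) * v for v in row] for a, row in zip(alpha, H)]
    return alpha, H_enh


H = [[0.0, 0.0], [1.0, -1.0], [2.0, 0.5]]
alpha, H_enh = joint_attention(H, u=[0.0, 0.0])
print(alpha)  # [0.5, 0.5, 0.5]  (a zero scoring vector gives neutral scores)
```

The residual form means attention can only amplify joints, never erase them, which keeps the graph structure of the hidden state intact.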


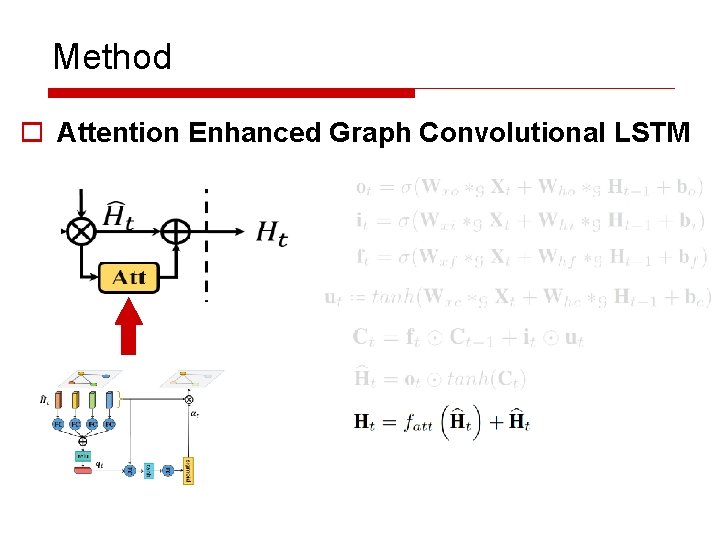

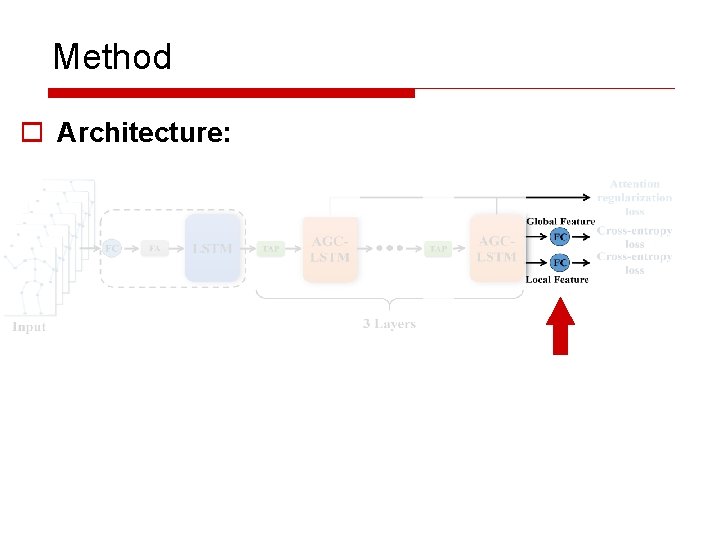

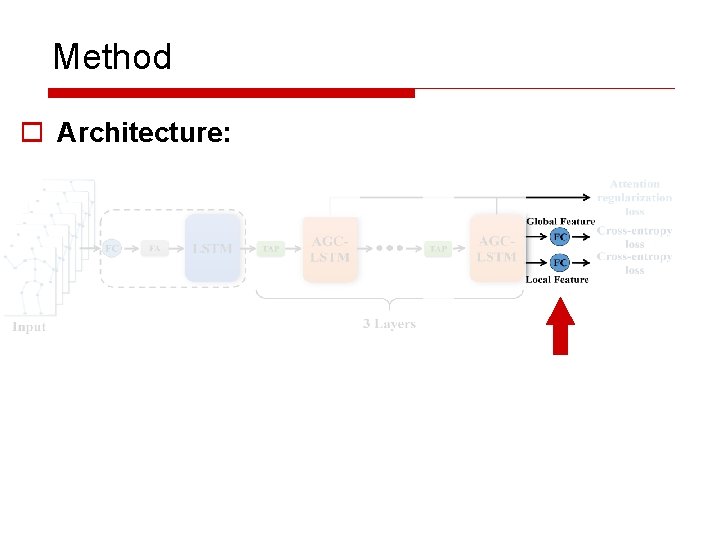

Method o Architecture:



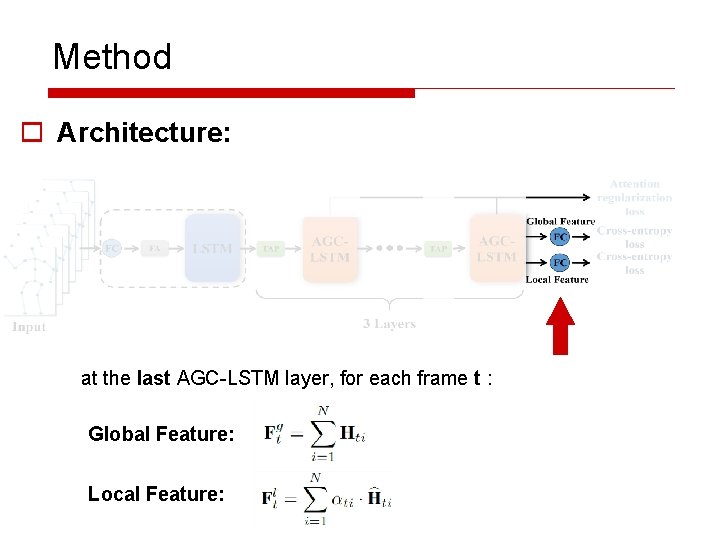

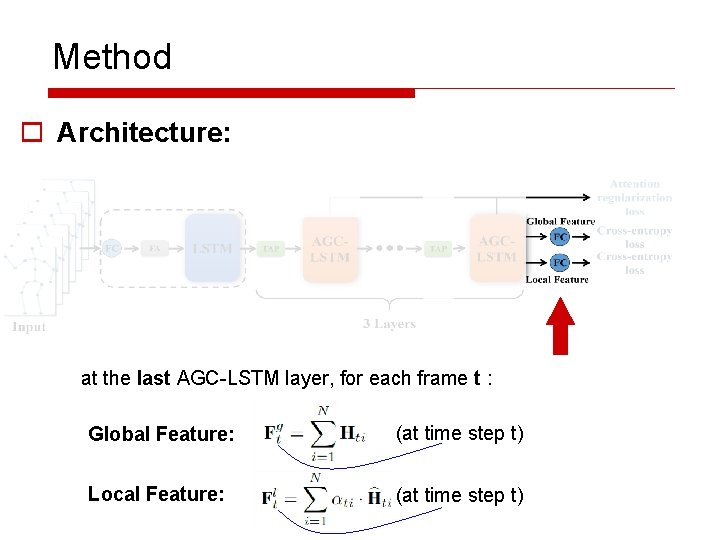

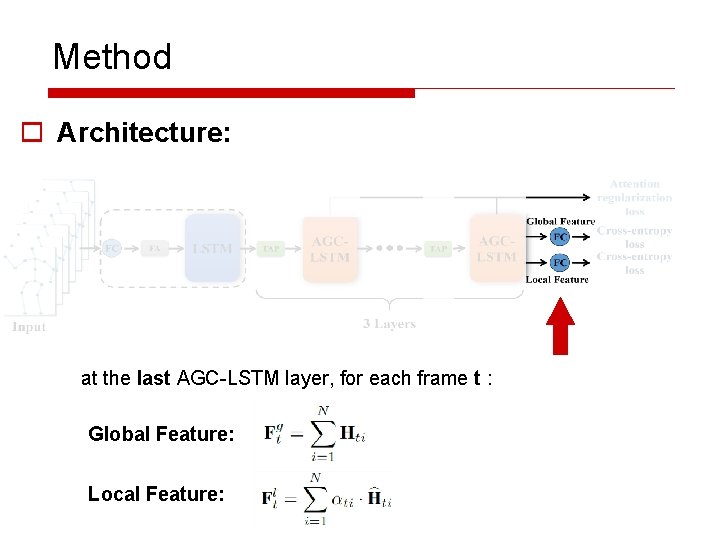

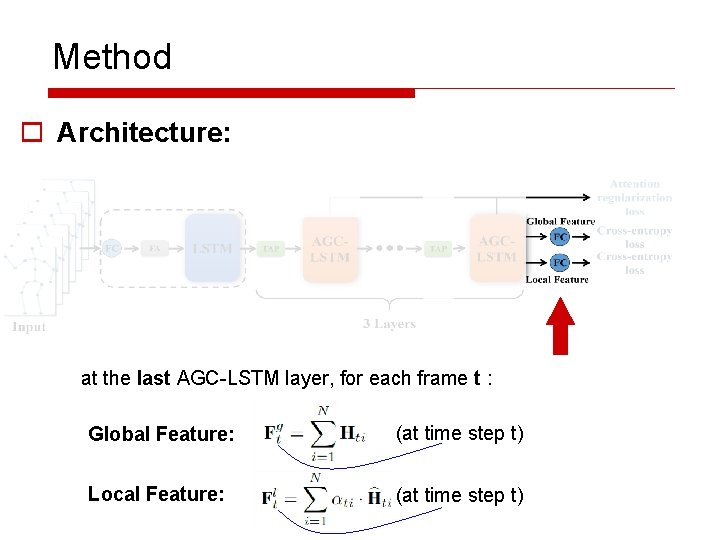


Method o Architecture: at the last AGC-LSTM layer, for each frame t, compute:
o Global feature (at time step t):
o Local feature (at time step t):
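A sketch of the two per-frame features, assuming the global feature sums the hidden features of all joints and the local feature is the attention-weighted sum of the same features. The slide shows the exact formulas only as images; the attention weights below are toy values.

```python
from typing import List

Matrix = List[List[float]]


def global_feature(H_t: Matrix) -> List[float]:
    """Aggregate all N joint features of frame t into one C-dim vector."""
    return [sum(col) for col in zip(*H_t)]


def local_feature(H_t: Matrix, alpha_t: List[float]) -> List[float]:
    """Attention-weighted sum: joints with larger alpha contribute more."""
    return [sum(a * h for a, h in zip(alpha_t, col)) for col in zip(*H_t)]


H_t = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # N = 3 joints, C = 2
alpha_t = [0.0, 0.5, 1.0]                    # toy attention weights
print(global_feature(H_t))          # [9.0, 12.0]
print(local_feature(H_t, alpha_t))  # [6.5, 8.0]
```

The global feature treats every joint alike, while the local one is dominated by the joints the attention selects (here joint 2).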

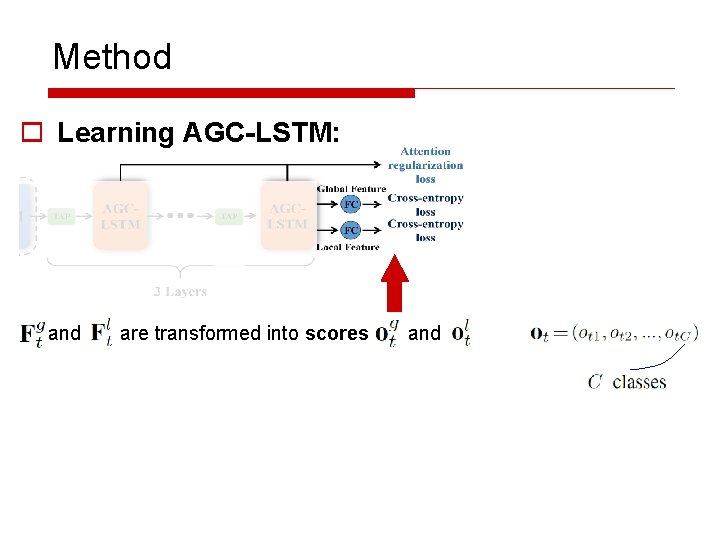

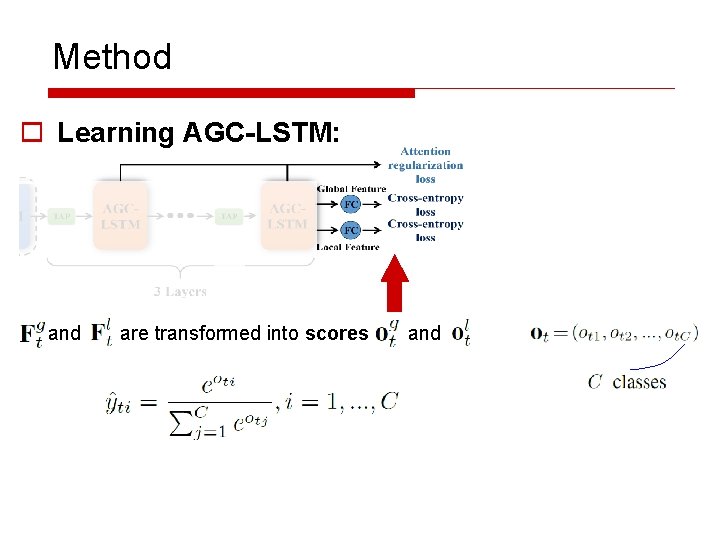

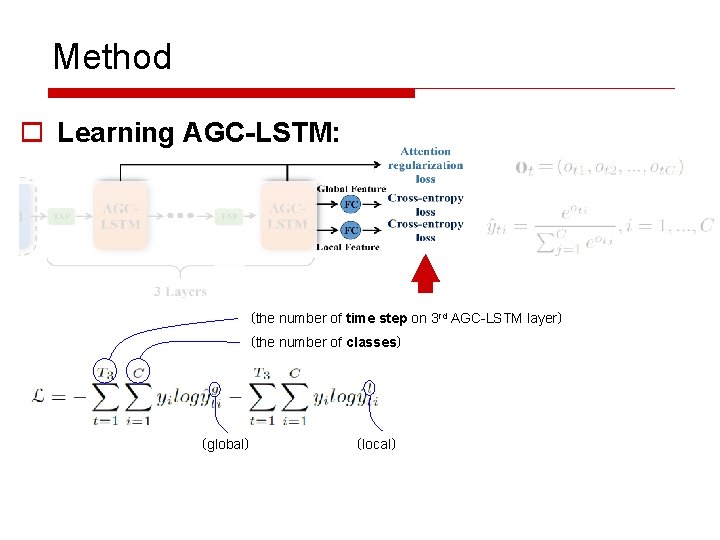

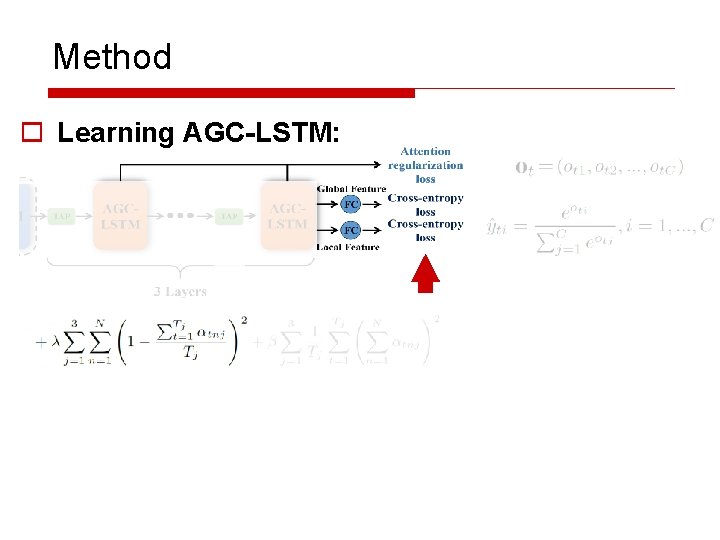

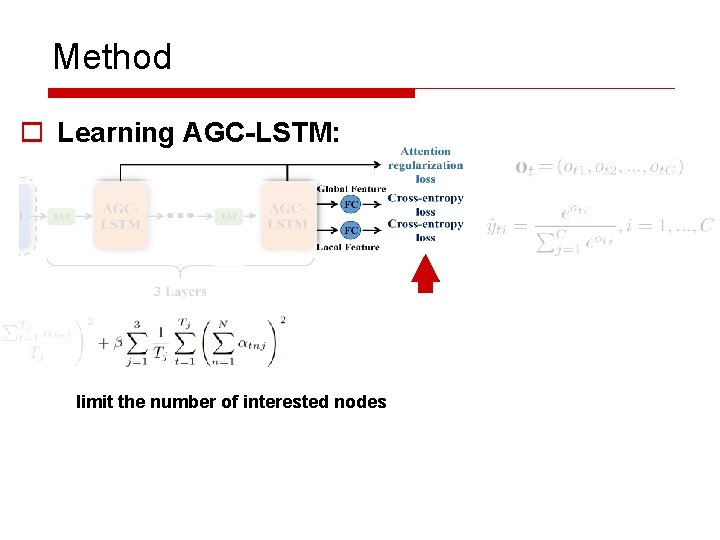

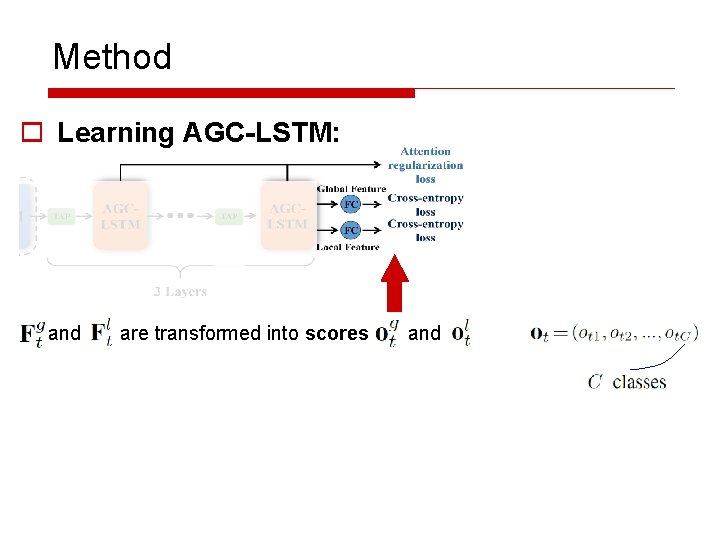

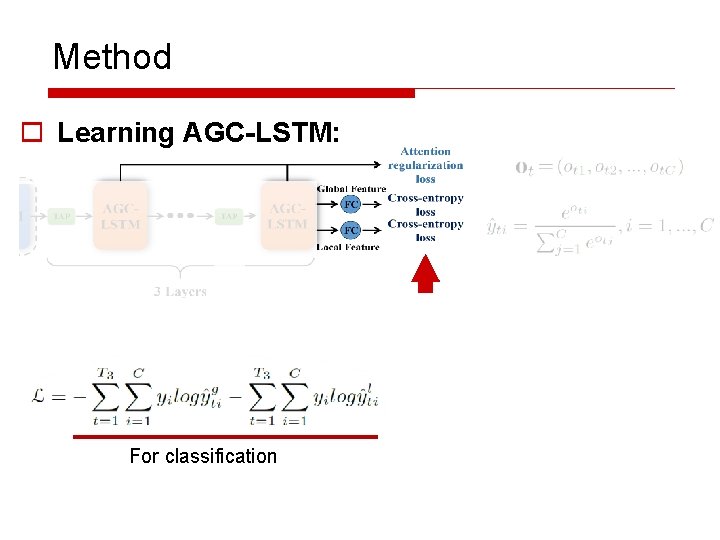

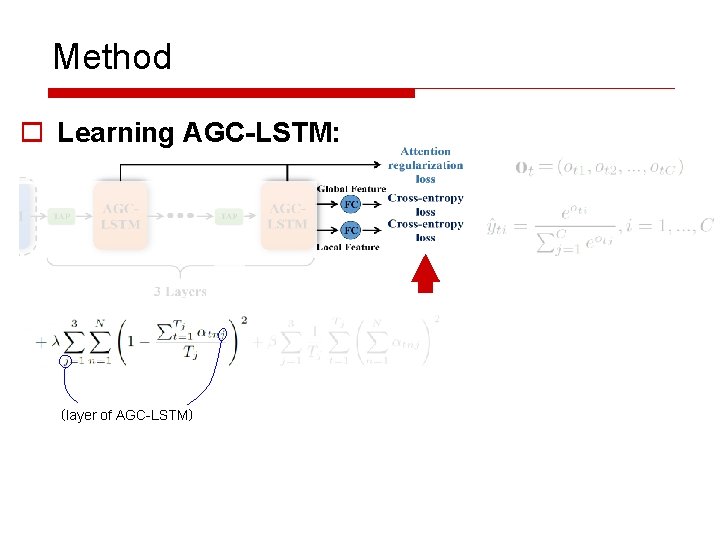

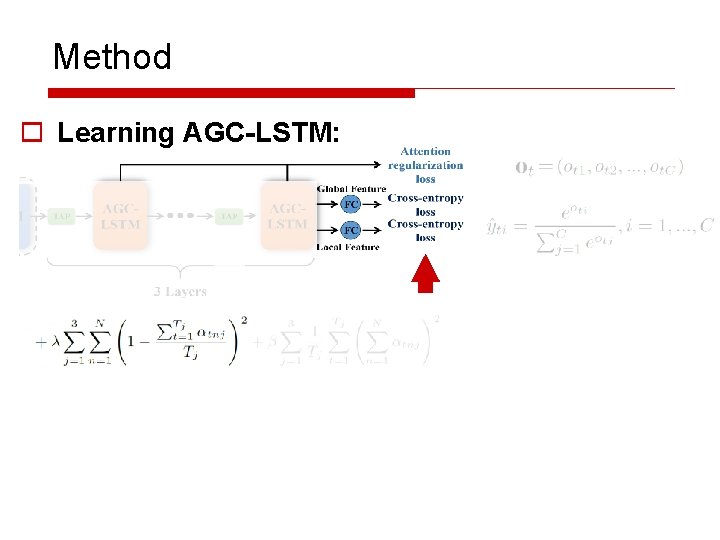

Method o Learning AGC-LSTM: the global and local features are transformed into class scores
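Turning a feature into class scores can be sketched with one linear layer followed by a softmax over the classes. The linear layer and its weights are assumptions; the slide only states that the features are transformed into scores. Each feature would normally have its own weights; they are shared here for brevity.

```python
import math
from typing import List


def scores(feature: List[float], W: List[List[float]], b: List[float]) -> List[float]:
    """Linear map to one score per class (W: num_classes x feature_dim)."""
    return [sum(w * x for w, x in zip(row, feature)) + bias for row, bias in zip(W, b)]


def softmax(o: List[float]) -> List[float]:
    m = max(o)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in o]
    s = sum(e)
    return [v / s for v in e]


F_g = [9.0, 12.0]   # toy global feature
F_l = [6.5, 8.0]    # toy local feature
W = [[0.1, 0.0], [0.0, 0.1], [0.0, 0.0]]  # 3 classes, hypothetical weights
b = [0.0, 0.0, 0.0]
p_g = softmax(scores(F_g, W, b))
p_l = softmax(scores(F_l, W, b))
print([round(p, 3) for p in p_g])
```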

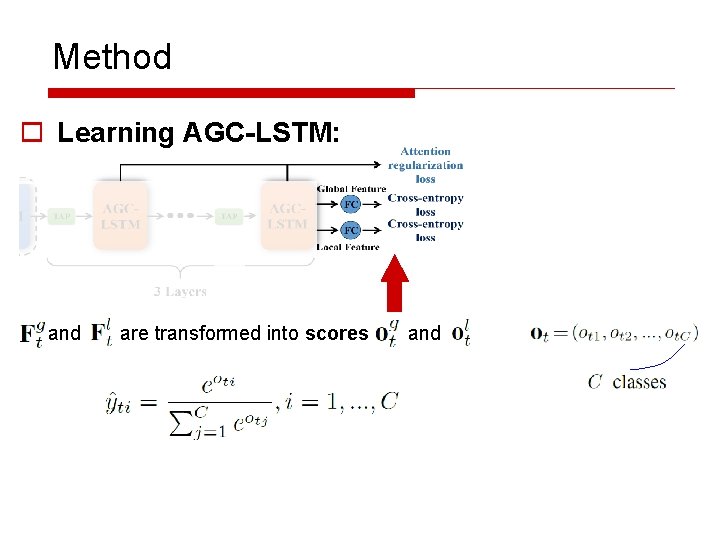


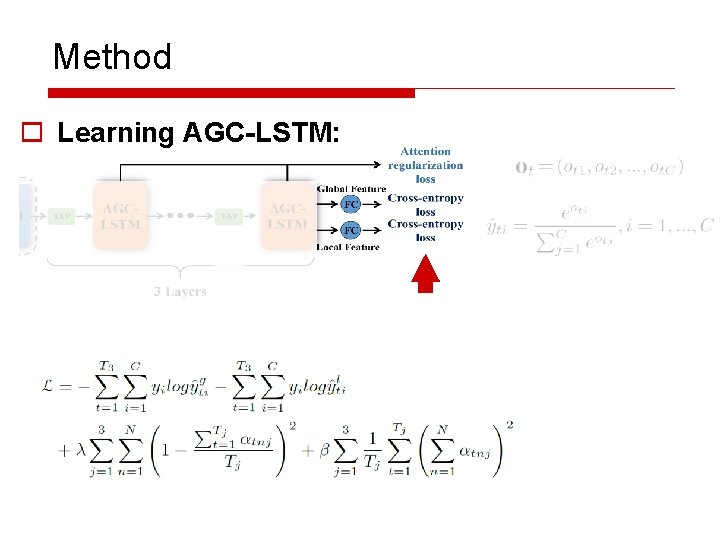

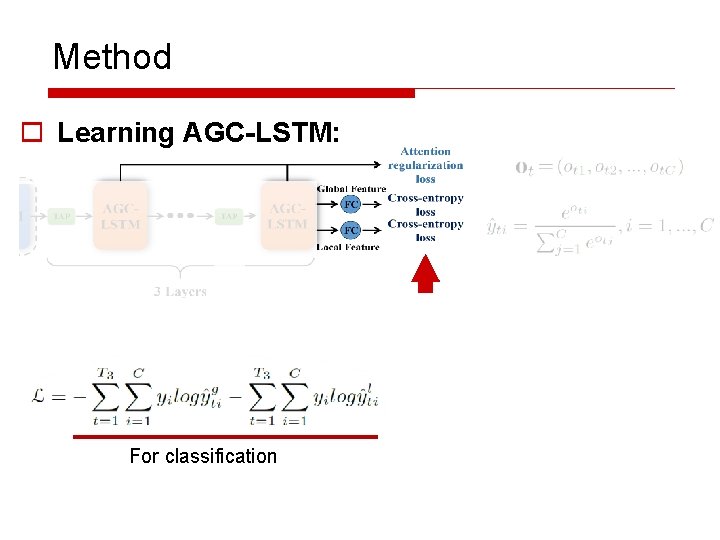


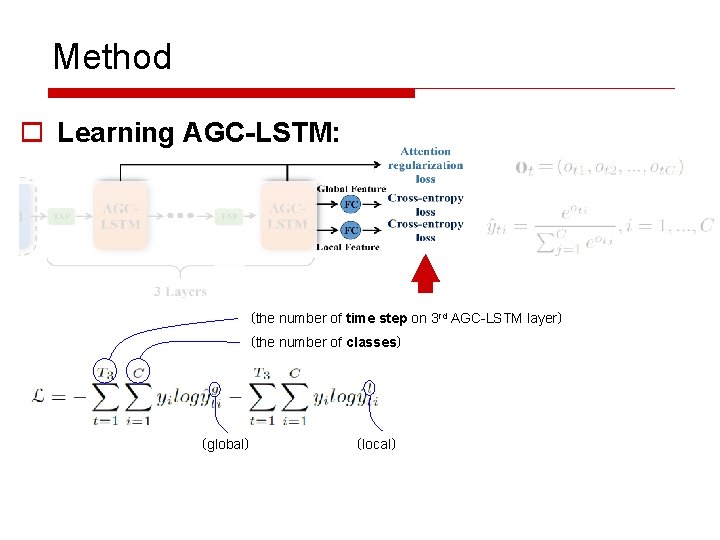

Method o Learning AGC-LSTM: For classification

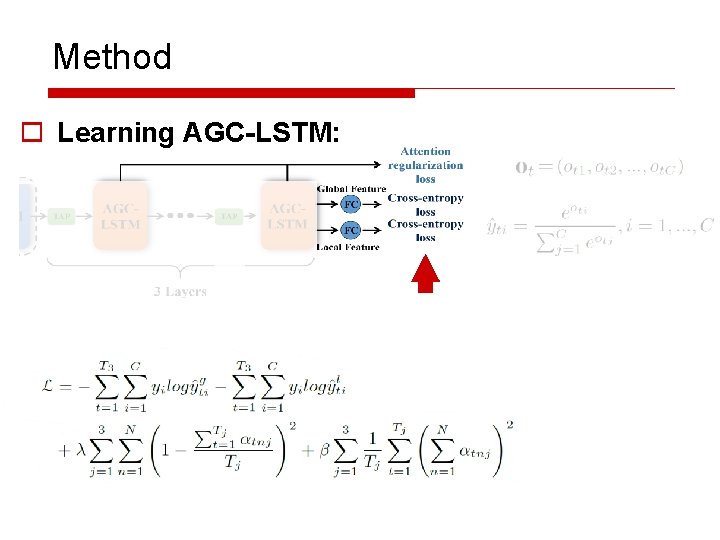

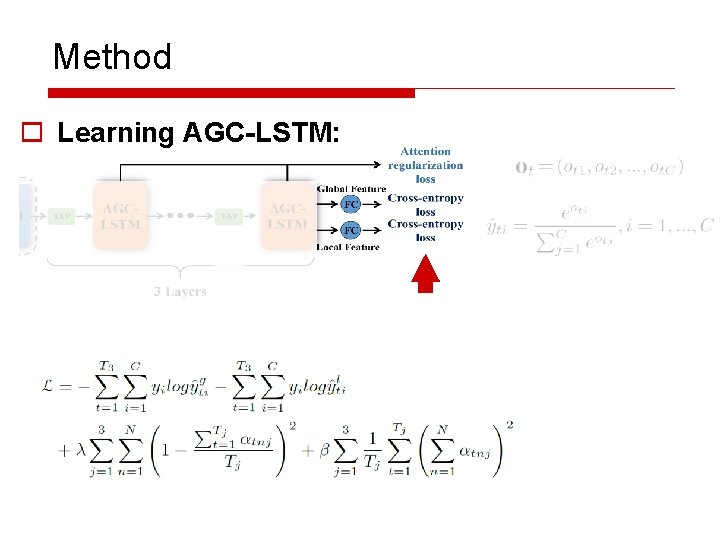

Method o Learning AGC-LSTM: (number of time steps on the 3rd AGC-LSTM layer) (number of classes) (global) (local)
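The classification part of the objective can be sketched as a cross-entropy accumulated over every time step of the 3rd AGC-LSTM layer and over the classes, once for the global probabilities and once for the local ones, following the labels on the slide. The function name and the toy probabilities are hypothetical.

```python
import math
from typing import List


def temporal_cross_entropy(y: List[float], probs_g: List[List[float]],
                           probs_l: List[List[float]]) -> float:
    """Sum over time steps and classes of -y_i * log(p), for the global and
    the local probabilities.

    y: one-hot label (length = number of classes);
    probs_g / probs_l: (number of time steps) x (number of classes).
    """
    loss = 0.0
    for p_g_t, p_l_t in zip(probs_g, probs_l):
        for y_i, pg, pl in zip(y, p_g_t, p_l_t):
            if y_i:  # classes with y_i = 0 contribute nothing; skip log(0)
                loss -= y_i * (math.log(pg) + math.log(pl))
    return loss


y = [0.0, 1.0]                      # true class is class 1 (2 classes)
probs_g = [[0.5, 0.5], [0.2, 0.8]]  # 2 time steps
probs_l = [[0.5, 0.5], [0.5, 0.5]]
print(round(temporal_cross_entropy(y, probs_g, probs_l), 4))  # 2.3026
```

Supervising every time step, not only the last one, gives the recurrent layers a training signal throughout the sequence.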

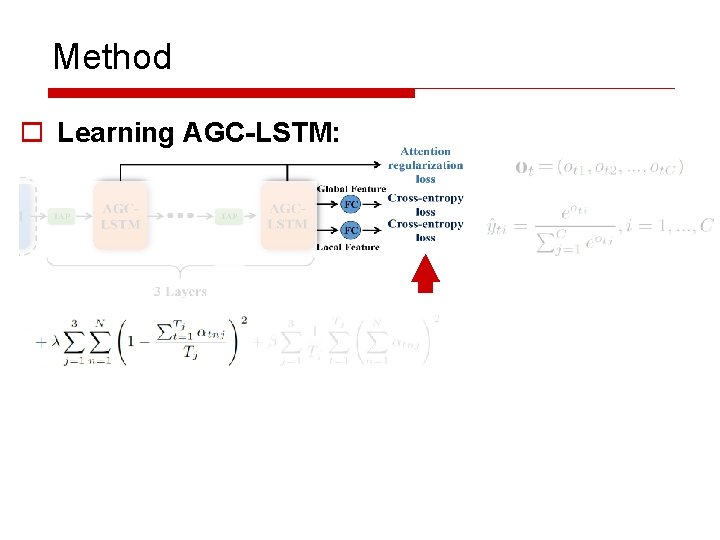

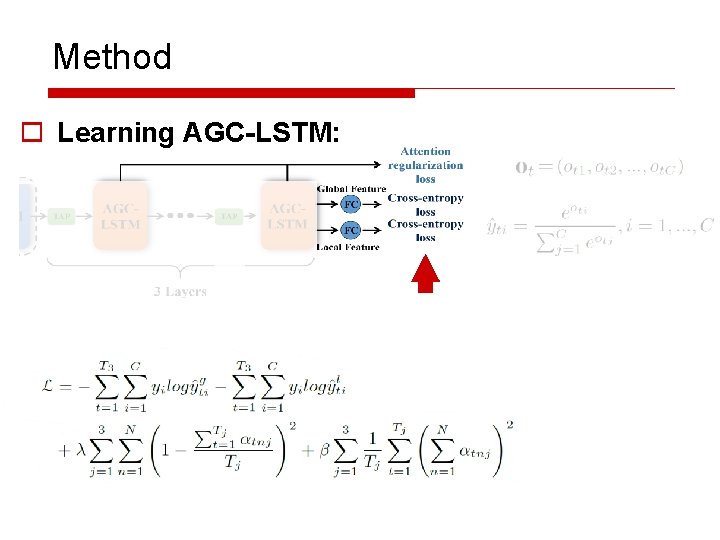



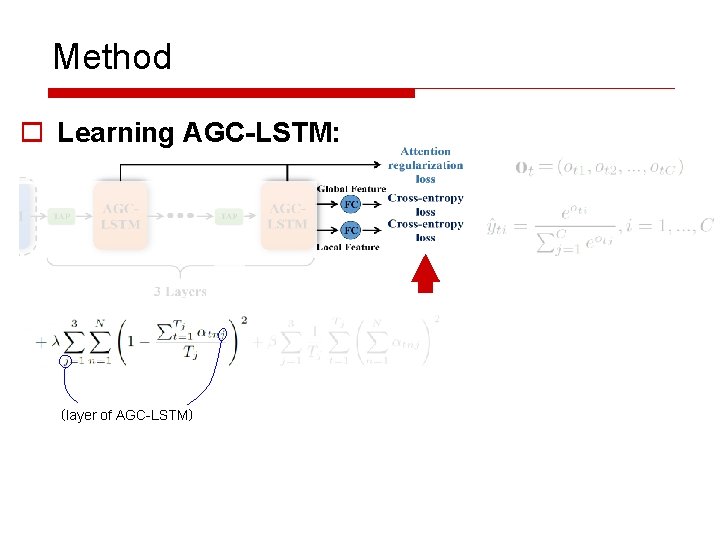

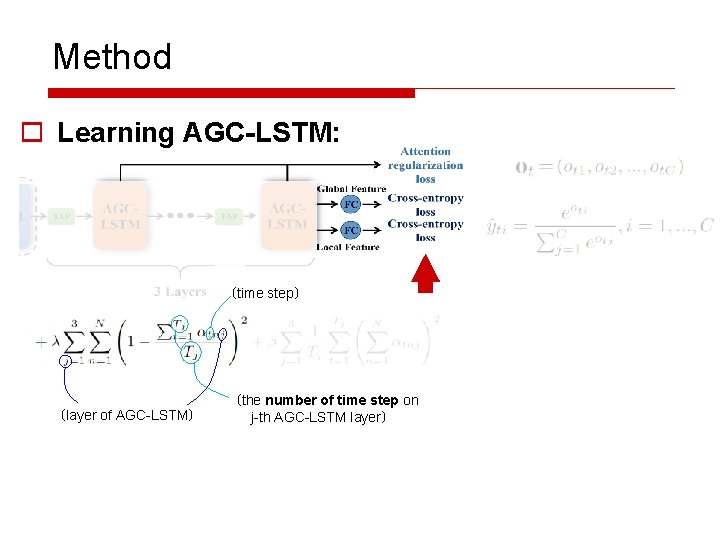

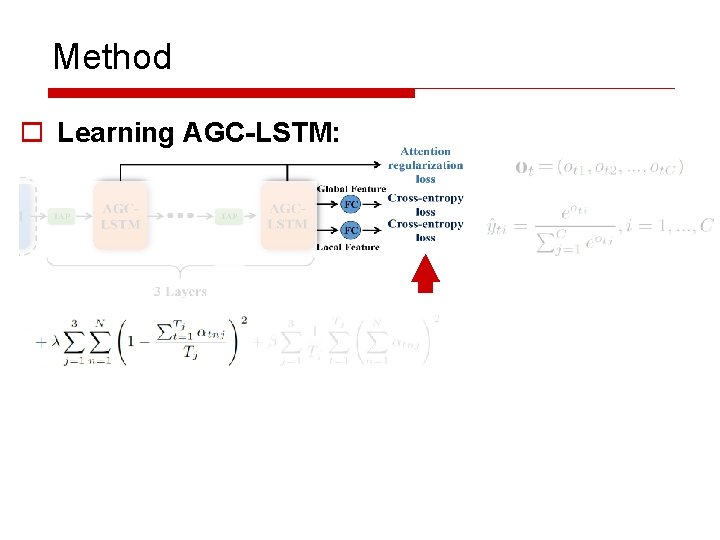

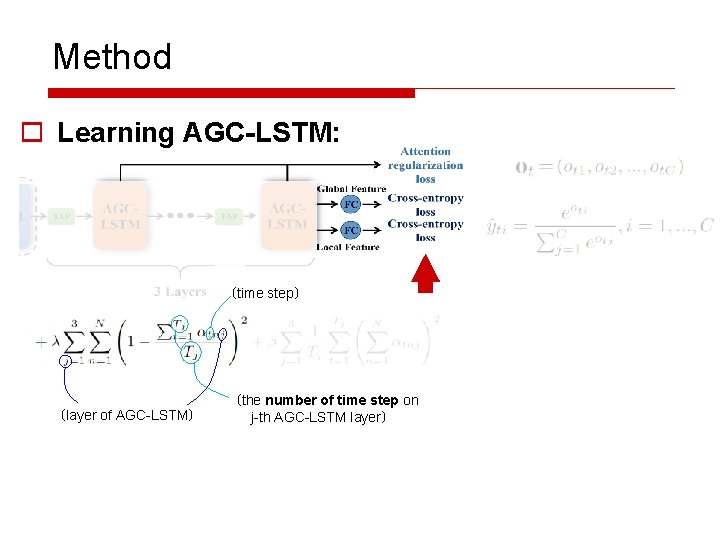


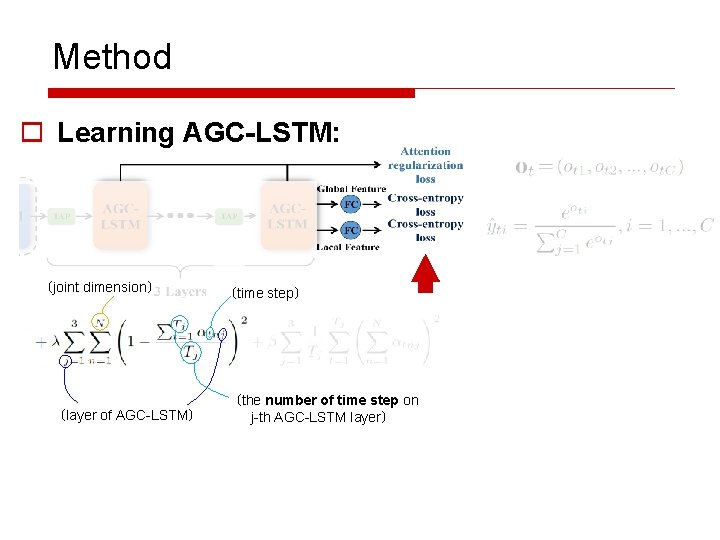

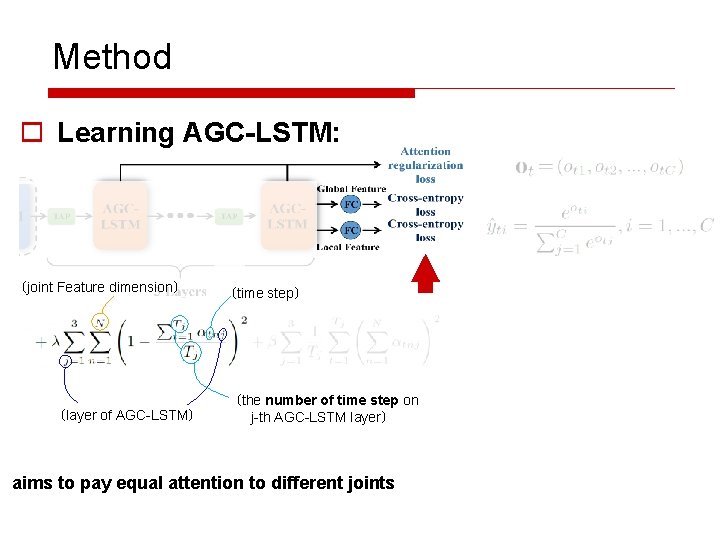

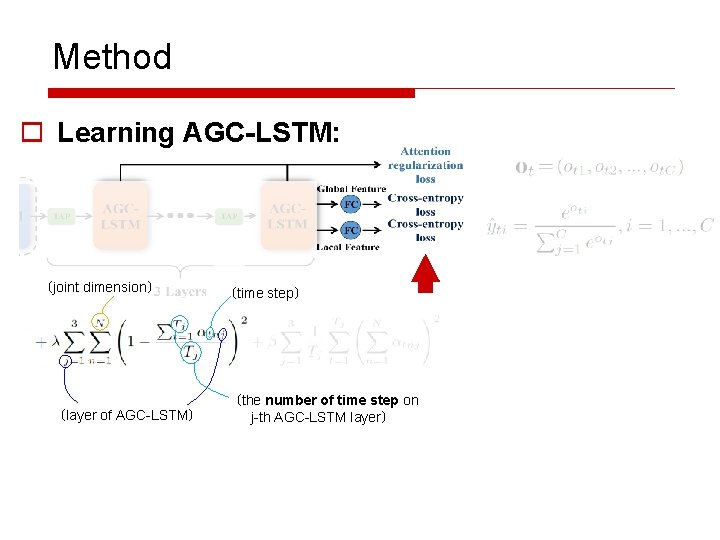

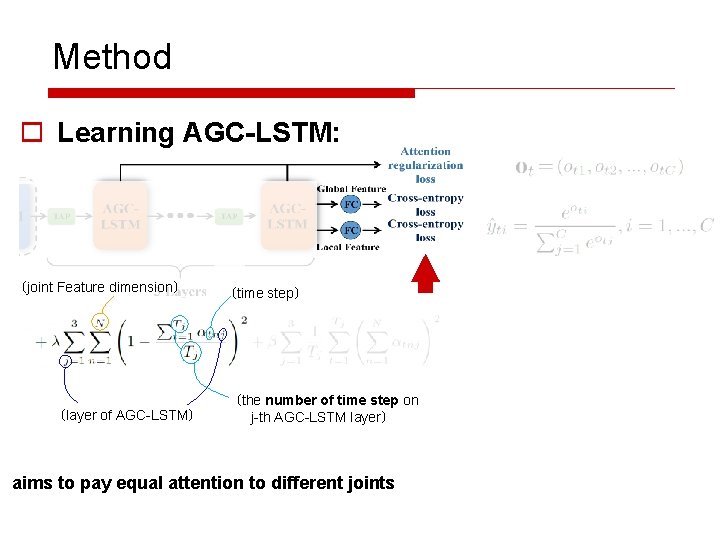



Method o Learning AGC-LSTM: (joint dimension) (layer of AGC-LSTM) (time step) (number of time steps on the j-th AGC-LSTM layer): this term aims to pay equal attention to different joints
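The equal-attention term can be sketched as follows, assuming it penalizes, for each AGC-LSTM layer and each joint, the squared gap between 1 and that joint's attention averaged over the layer's time steps. The weight `lam` is a hypothetical hyperparameter.

```python
from typing import List


def equal_attention_penalty(alphas: List[List[List[float]]], lam: float) -> float:
    """alphas[j][t][n]: attention on joint n at time step t of layer j.

    For each layer and joint, average the attention over that layer's time
    steps and penalize its squared distance from 1.
    """
    total = 0.0
    for layer in alphas:
        T_j = len(layer)          # number of time steps on this layer
        num_joints = len(layer[0])
        for n in range(num_joints):
            mean_n = sum(step[n] for step in layer) / T_j
            total += (1.0 - mean_n) ** 2
    return lam * total


# One layer, 2 time steps, 2 joints: joint 1 is mostly ignored.
alphas = [[[1.0, 0.0], [1.0, 0.5]]]
print(equal_attention_penalty(alphas, lam=0.01))
```

A joint that the attention ignores across the whole sequence (here joint 1, mean attention 0.25) raises the penalty, so no joint is permanently left out.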

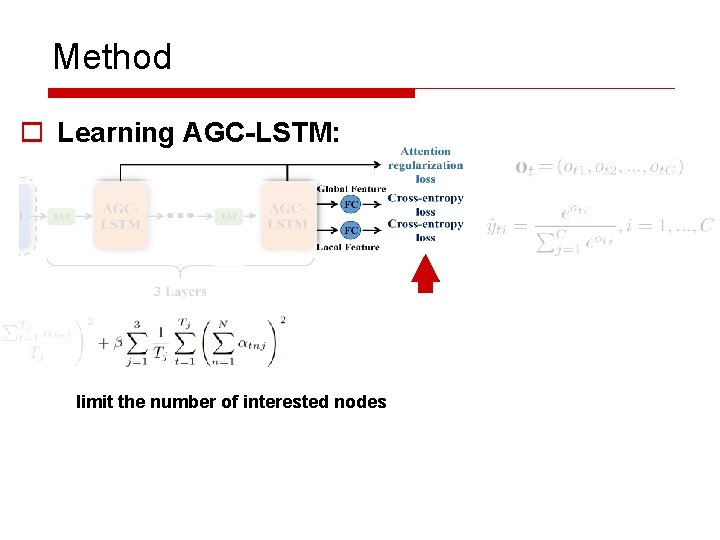


Method o Learning AGC-LSTM: this term limits the number of nodes the network attends to
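The term that limits the number of attended nodes can be sketched as a penalty on the squared total attention of each time step, averaged over each layer's time steps. This form is an assumption consistent with the slide's description; `beta` is a hypothetical weight.

```python
from typing import List


def attention_sparsity_penalty(alphas: List[List[List[float]]], beta: float) -> float:
    """alphas[j][t][n]: attention on joint n at time step t of layer j.

    Penalize the squared sum of attention over joints at each time step,
    averaged over the layer's time steps, then summed over layers.
    """
    total = 0.0
    for layer in alphas:
        T_j = len(layer)
        total += sum(sum(step) ** 2 for step in layer) / T_j
    return beta * total


focused = [[[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]]  # one joint per time step
diffuse = [[[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]]  # every joint at every step
print(attention_sparsity_penalty(focused, beta=1.0))  # 1.0
print(attention_sparsity_penalty(diffuse, beta=1.0))  # 9.0
```

This term pulls in the opposite direction of the equal-attention term: one discourages ignoring any joint over the sequence, the other discourages attending to many joints at once, so the network is pushed toward a few key joints per frame that still vary over time.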

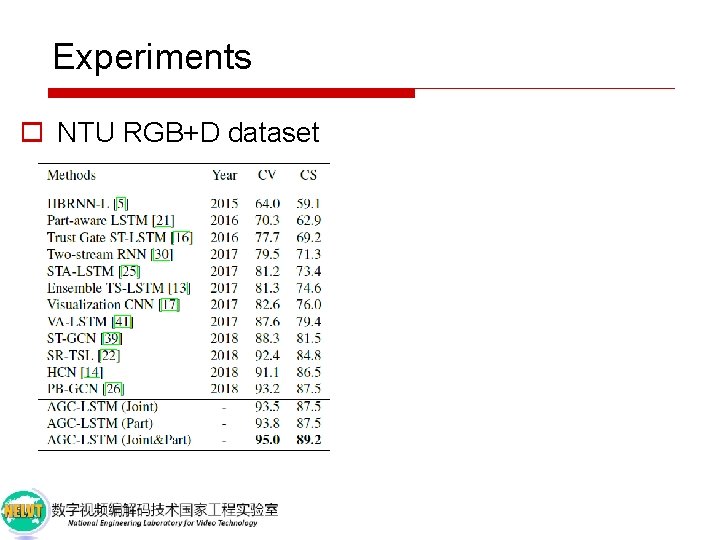

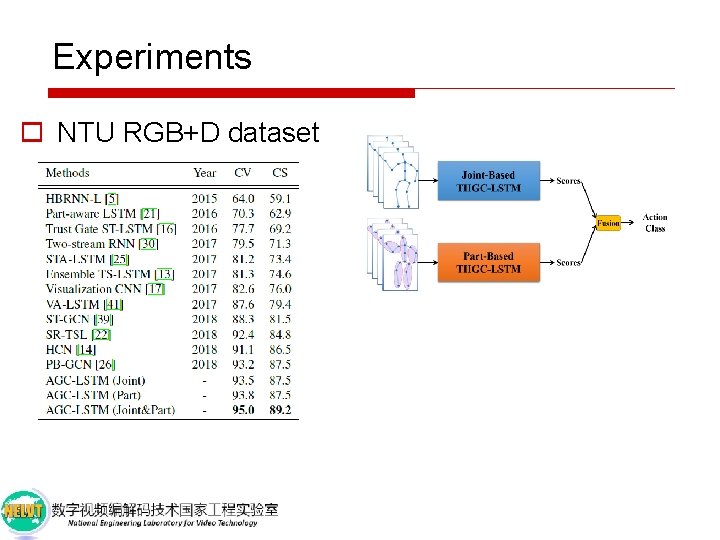

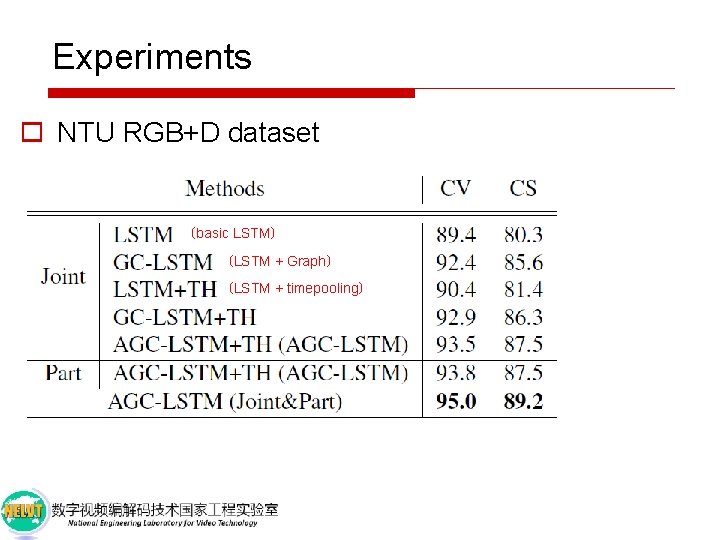

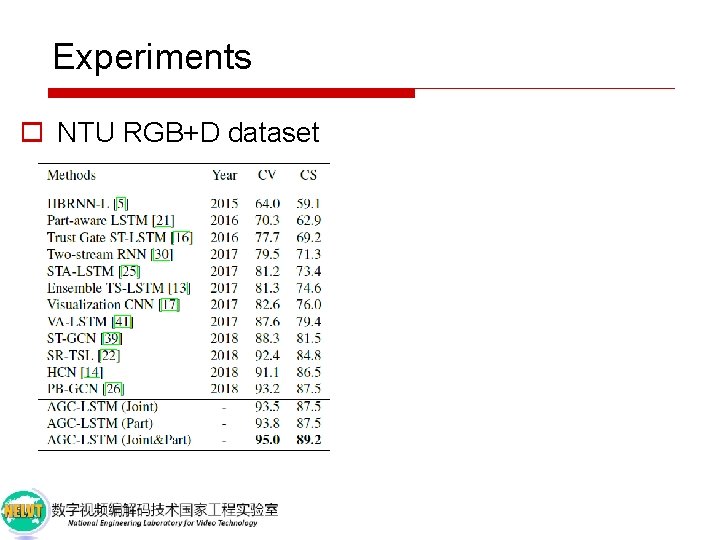

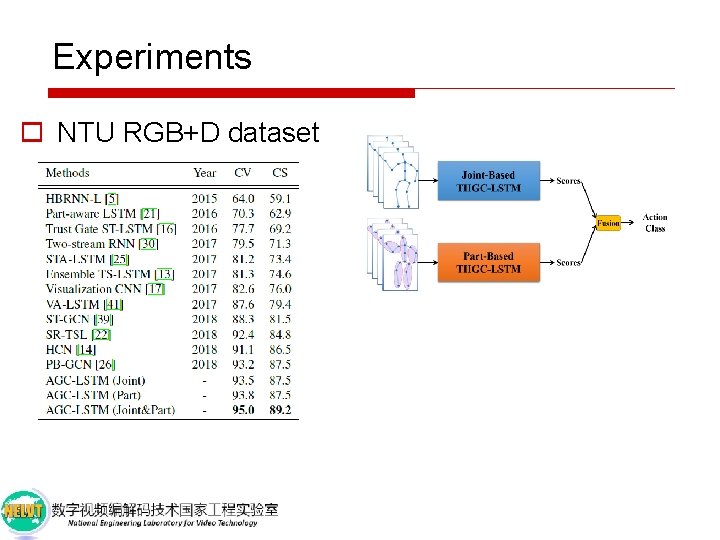

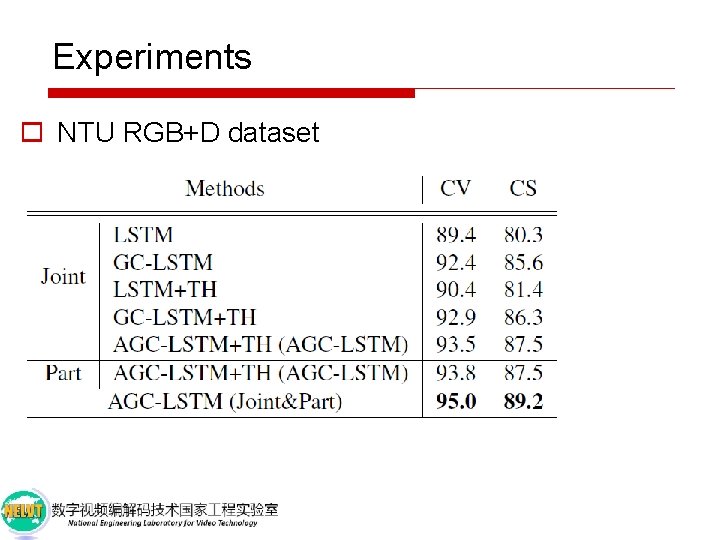

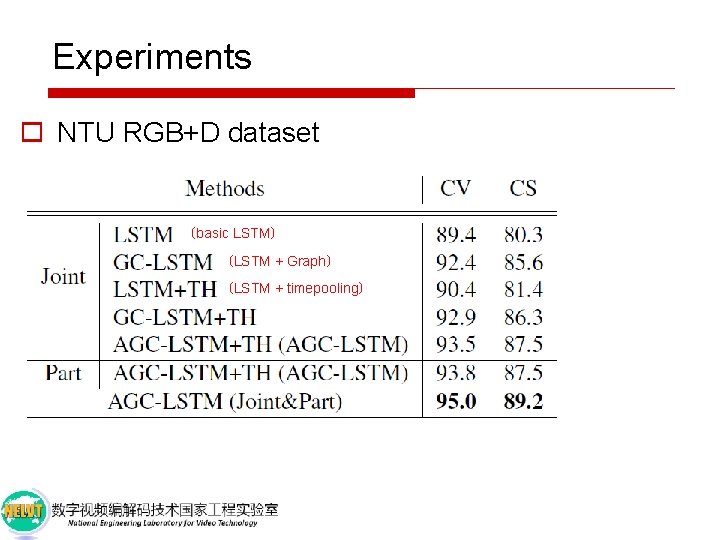

Experiments o NTU RGB+D dataset



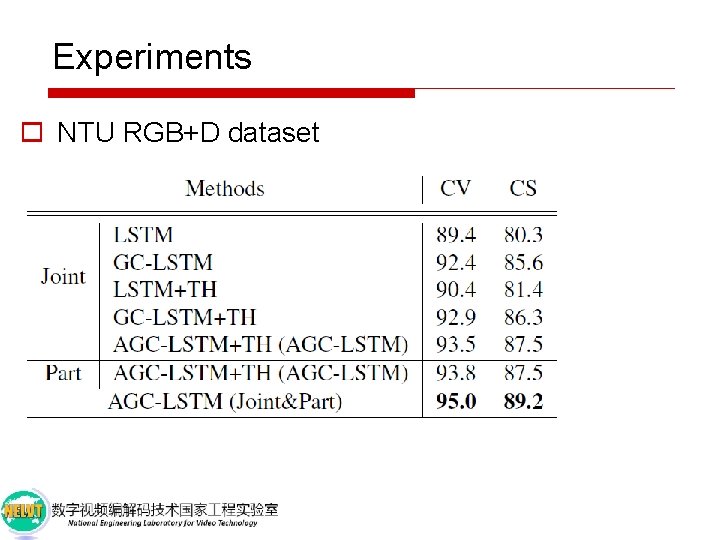

Experiments o NTU RGB+D dataset: (basic LSTM) (LSTM + graph) (LSTM + time pooling)

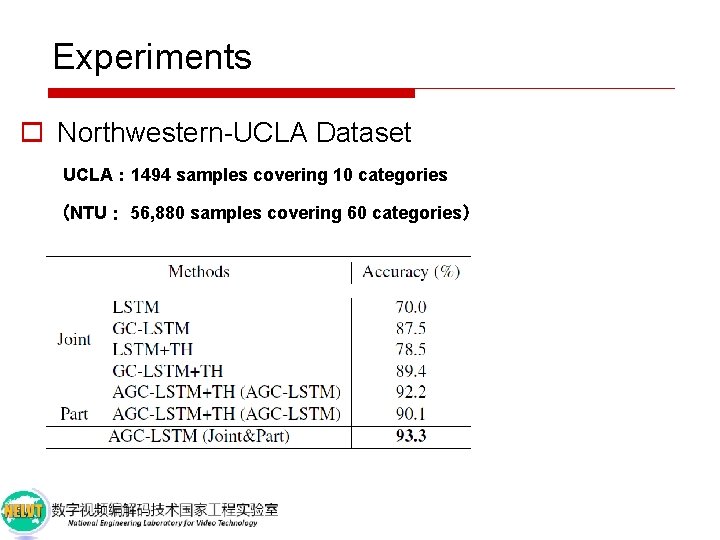

Experiments o Northwestern-UCLA Dataset

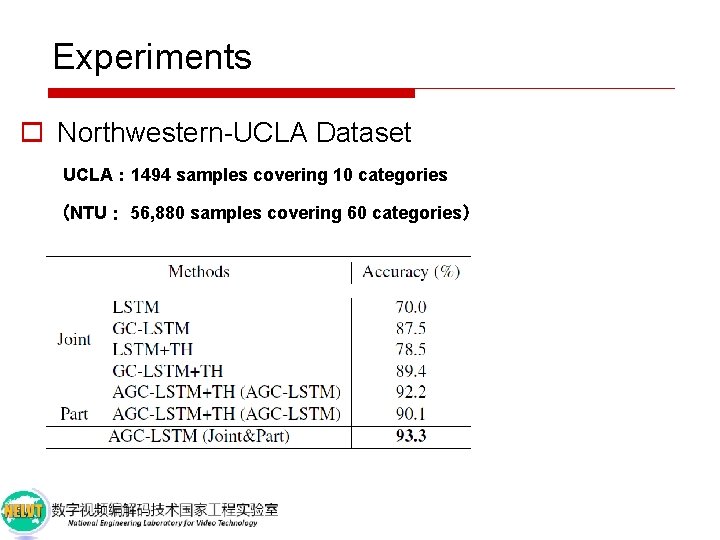

Experiments o Northwestern-UCLA Dataset: UCLA has 1,494 samples covering 10 categories (NTU: 56,880 samples covering 60 categories)


Thanks!