Recurrent Neural Networks & LSTM (Advisor: S. J. Wang)

- Slides: 23

Recurrent Neural Networks & LSTM

Advisors: S. J. Wang, F. T. Chien. Student: M. C. Sun. Date: 2015-02-26

Outline

- Neural Network
- Recurrent Neural Network (RNN)
  - Introduction
  - Training
- Long Short-Term Memory (LSTM)
  - Evolution
  - Architecture
- Connectionist Temporal Classification (CTC)
- Application
  - Speech recognition
  - Architecture

Neural Network

- Inspired by the human nervous system
- A complicated architecture
- With some specific limitations
- Examples: DBN, CNN…

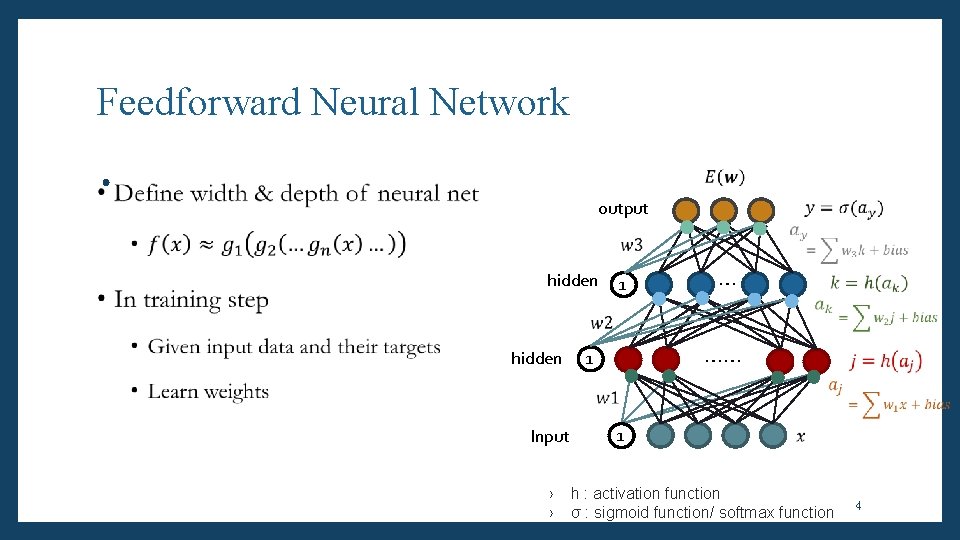

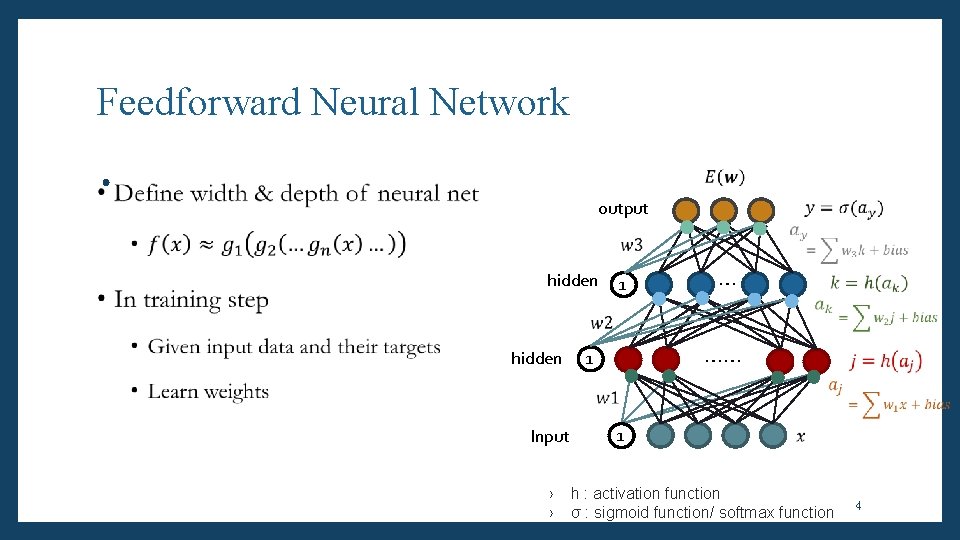

Feedforward Neural Network

- Layers: input, hidden, output
- h: activation function
- σ: sigmoid function / softmax function
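The slide's own equations did not survive extraction, so the following is only a minimal sketch of a feedforward pass. It assumes one hidden layer, tanh as the hidden activation h, and a sigmoid output σ. The weight names, values, and layer sizes are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense(weights, bias, inputs, activation):
    """One fully connected layer: activation(W @ inputs + bias)."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, bias)]

def feedforward(x, W_hidden, b_hidden, W_out, b_out):
    hidden = dense(W_hidden, b_hidden, x, math.tanh)  # h: hidden activation (assumed tanh)
    return dense(W_out, b_out, hidden, sigmoid)       # sigma: sigmoid output

# Hypothetical weights: 2 inputs -> 3 hidden units -> 1 output
W_hidden = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
b_hidden = [0.0, 0.1, -0.1]
W_out = [[0.7, -0.5, 0.2]]
b_out = [0.05]
y = feedforward([1.0, 2.0], W_hidden, b_hidden, W_out, b_out)
```

Information flows strictly from input to output here; there is no connection that feeds a layer's activity back into itself.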

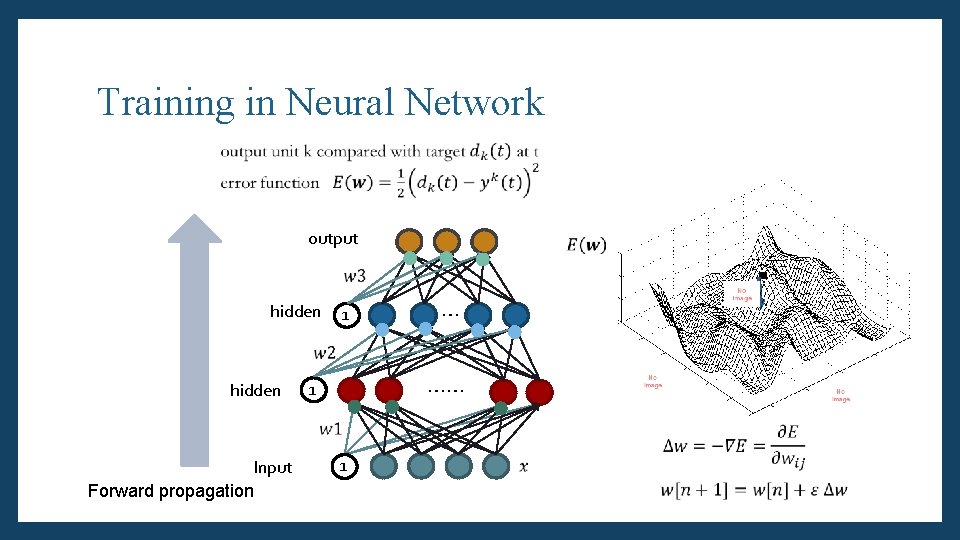

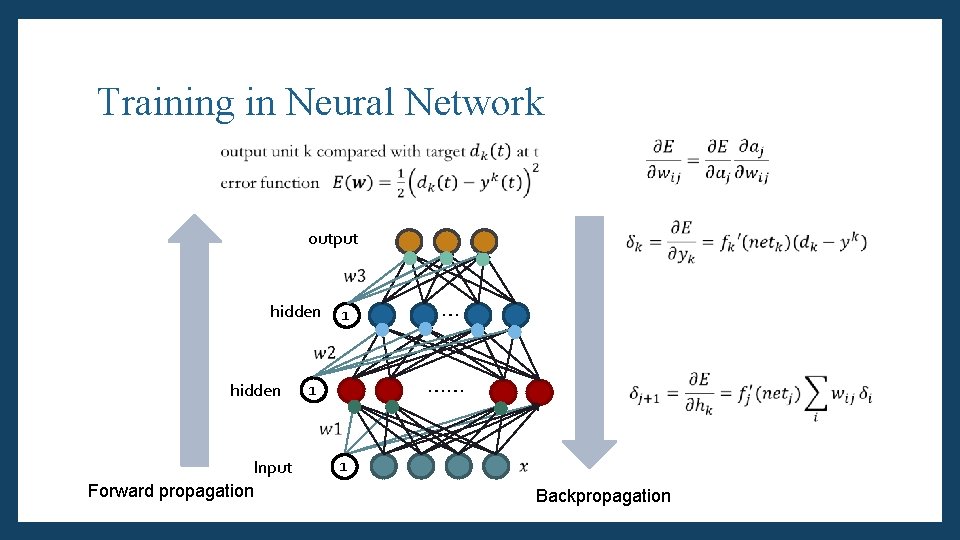

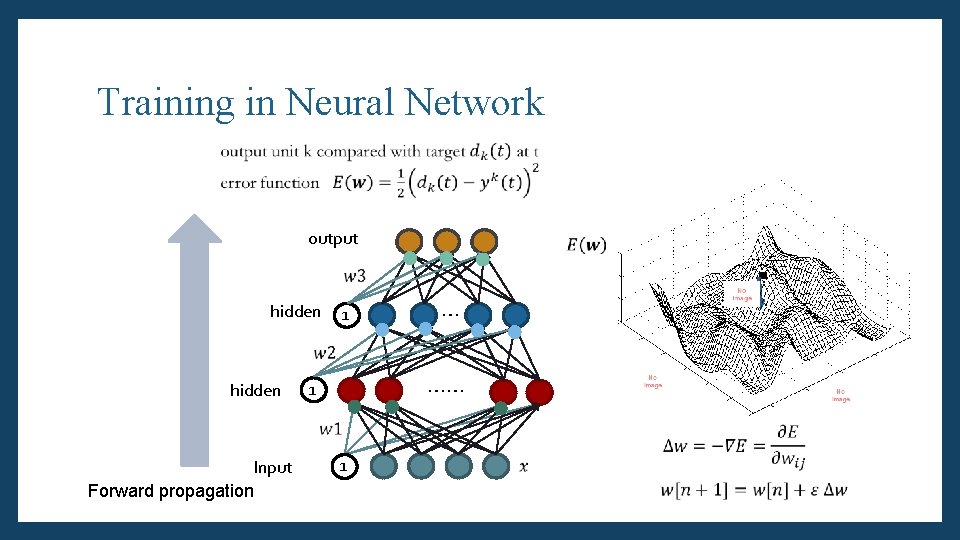

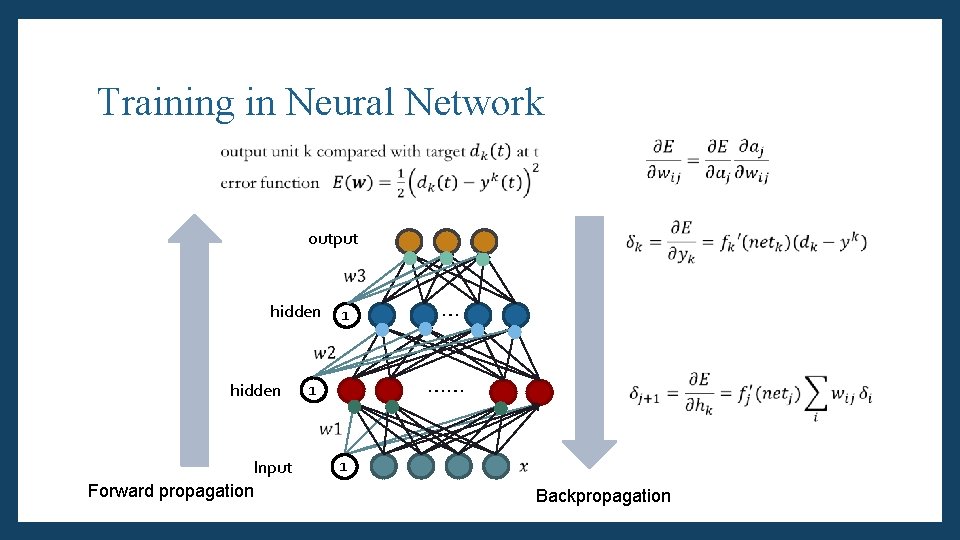

Training in Neural Network

- Forward propagation: input → hidden → output
- Backpropagation: the error is propagated from the output back toward the input
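To make the forward/backward pairing concrete, here is a small sketch for a single sigmoid neuron. The squared-error loss, the learning rate, and all numeric values are assumptions chosen for illustration and are not taken from the slides.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(w, b, x):
    """Forward propagation through one sigmoid neuron."""
    return sigmoid(w * x + b)

def loss(w, b, x, target):
    return 0.5 * (forward(w, b, x) - target) ** 2

def backward(w, b, x, target):
    """Backpropagation via the chain rule: returns (dL/dw, dL/db)."""
    y = forward(w, b, x)
    delta = (y - target) * y * (1.0 - y)  # dL/dy * dy/d(pre-activation)
    return delta * x, delta

# Hypothetical starting point and one gradient-descent step
w, b, x, target, lr = 0.5, 0.0, 1.5, 1.0, 0.5
loss_before = loss(w, b, x, target)
grad_w, grad_b = backward(w, b, x, target)
w, b = w - lr * grad_w, b - lr * grad_b
loss_after = loss(w, b, x, target)
```

One update step lowers the loss on this example, which is the behaviour training relies on when the same procedure is repeated over a dataset.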

RNN: the deepest neural network

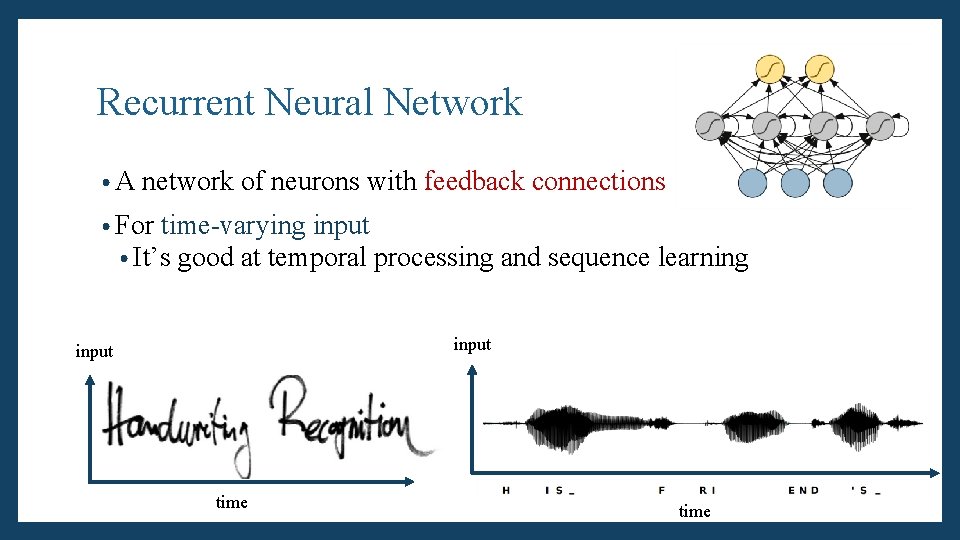

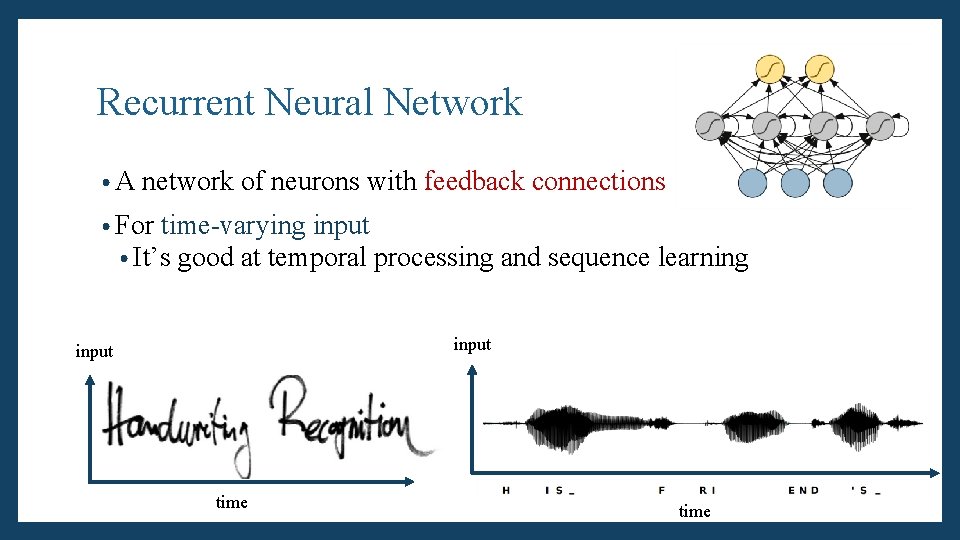

Recurrent Neural Network

- A network of neurons with feedback connections
- For time-varying input
- Good at temporal processing and sequence learning

[Figure: input plotted over time]

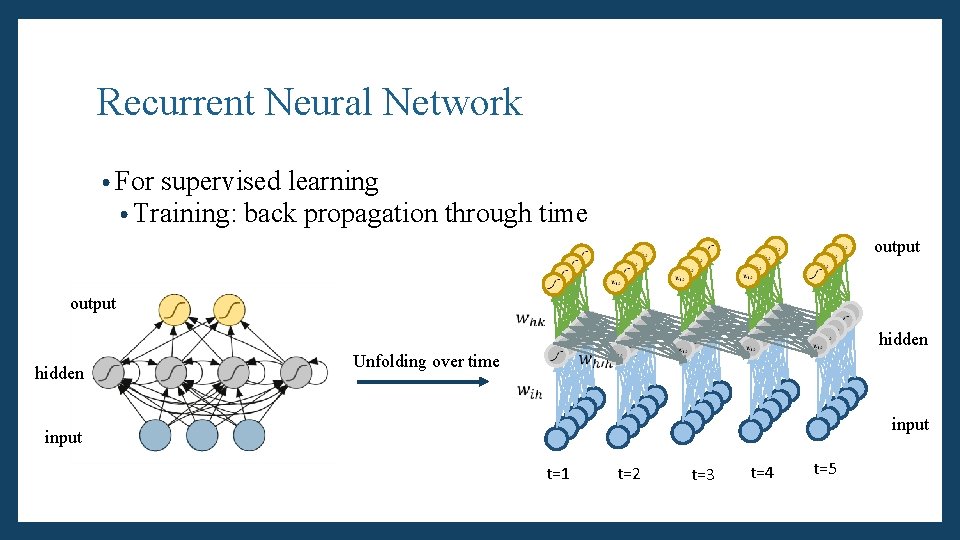

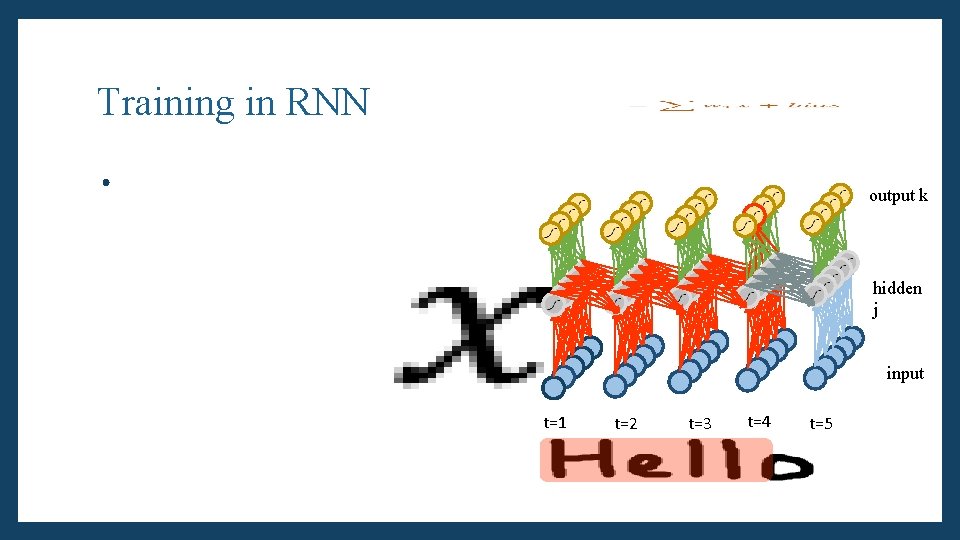

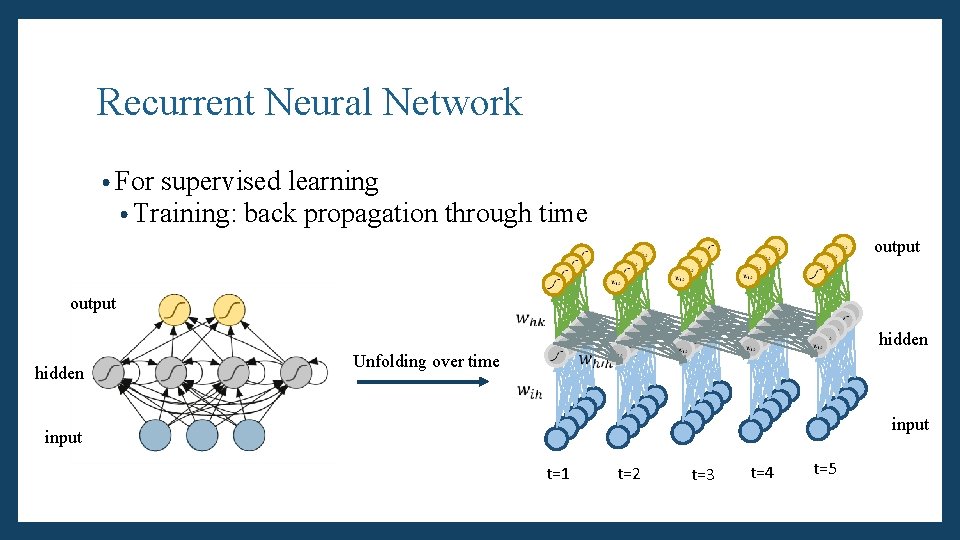

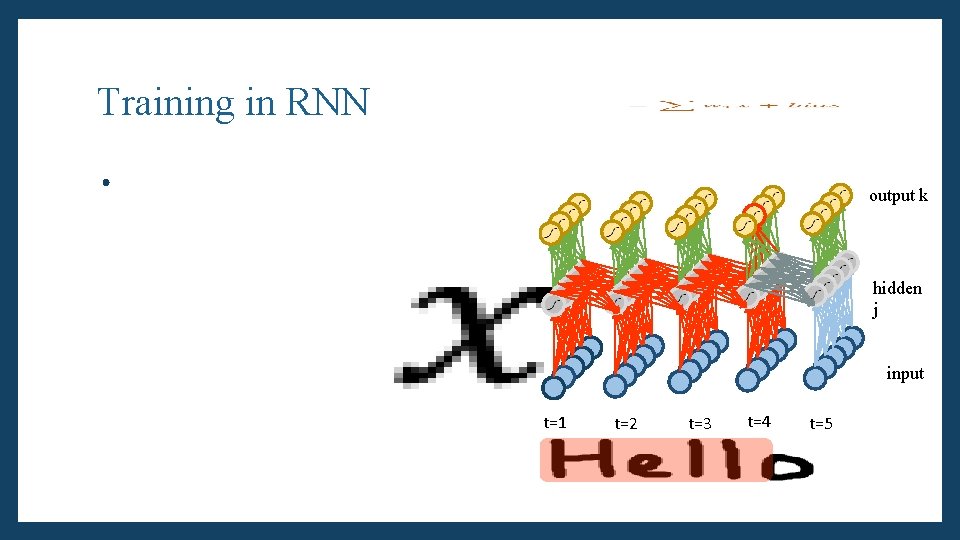

Recurrent Neural Network

- For supervised learning
- Training: backpropagation through time

[Figure: network unfolded over time, showing the input, hidden, and output layers at t=1 to t=5]

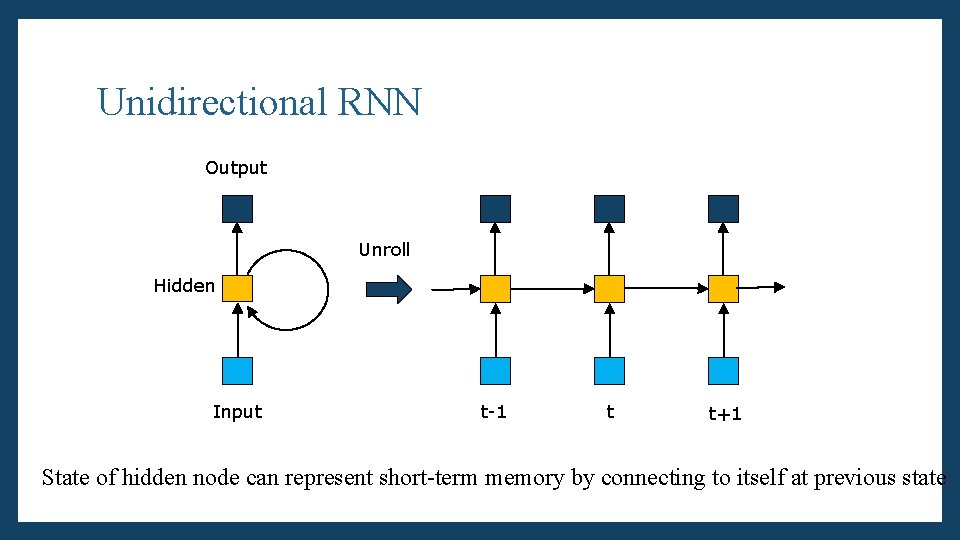

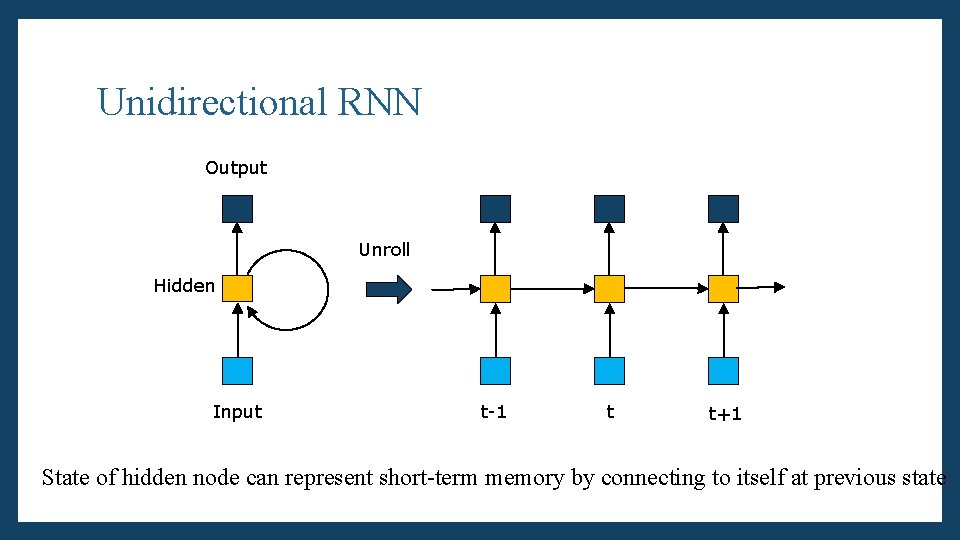

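Unidirectional RNN

- The state of a hidden node can represent short-term memory, because the node is connected to its own state at the previous time step

[Figure: network unrolled over time, showing the input, hidden, and output layers at t-1, t, t+1]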
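The self-connection can be sketched with a scalar RNN. This is an illustrative simplification: a single hidden unit, a tanh activation, and the weight names `w_in`, `w_rec`, `w_out` are all assumptions rather than the slide's notation.

```python
import math

def rnn_forward(inputs, w_in, w_rec, w_out, h0=0.0):
    """Scalar RNN: h_t = tanh(w_in * x_t + w_rec * h_{t-1}), y_t = w_out * h_t."""
    h, hidden, outputs = h0, [], []
    for x in inputs:
        h = math.tanh(w_in * x + w_rec * h)  # recurrent term carries the previous state
        hidden.append(h)
        outputs.append(w_out * h)
    return hidden, outputs

# Only the first time step has a non-zero input
hidden, outputs = rnn_forward([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5, w_out=2.0)
```

The hidden state stays positive at the second and third steps even though their inputs are zero, which is the short-term memory the slide refers to. It also shrinks at each step, hinting at why that memory is short.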

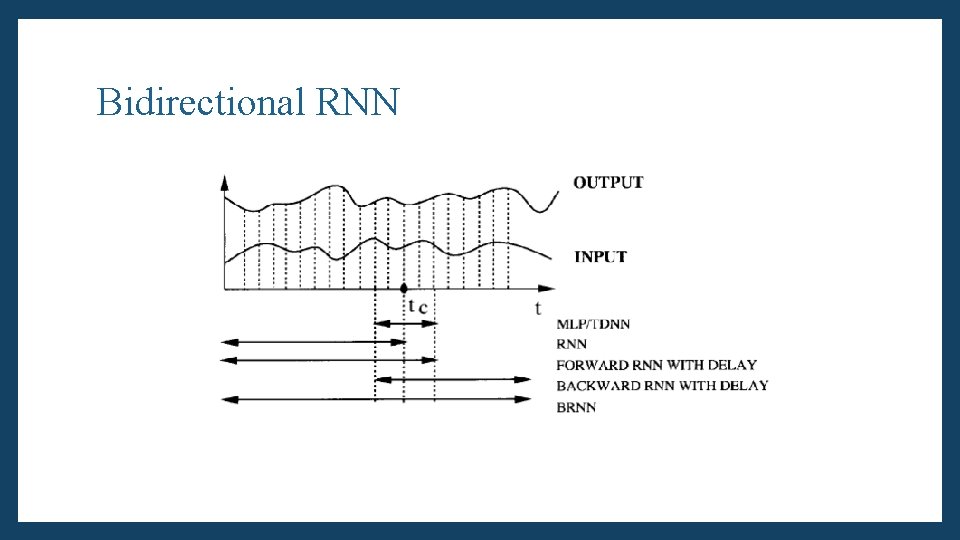

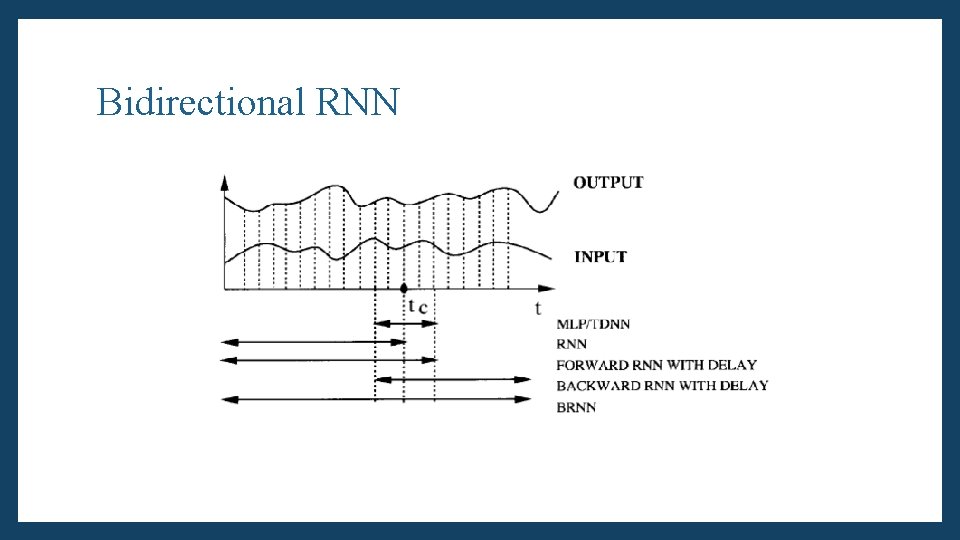

Bidirectional RNN

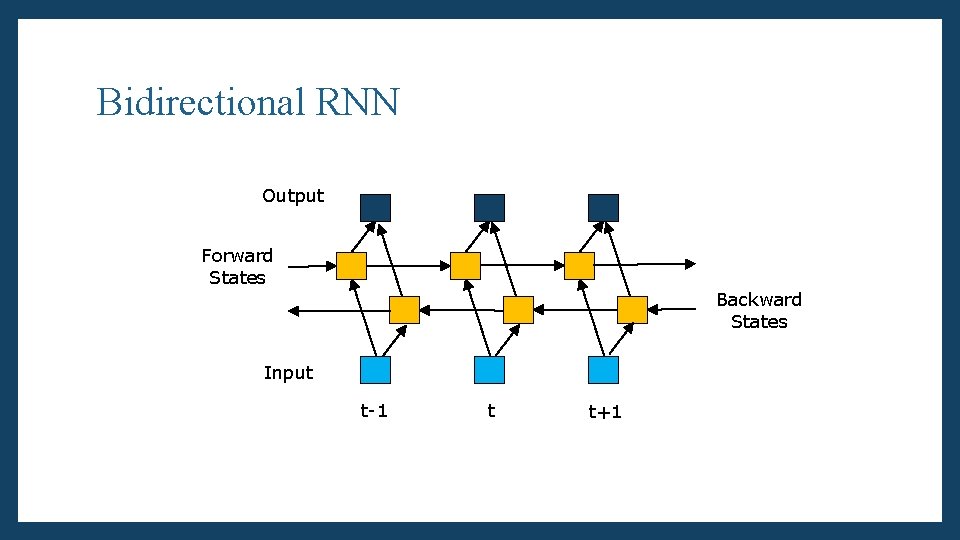

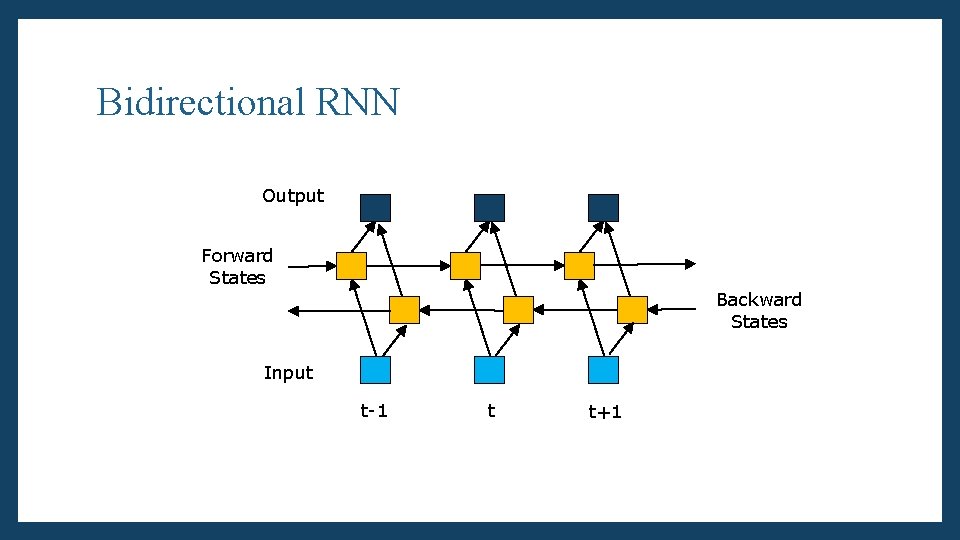

Bidirectional RNN

[Figure: input, forward states, backward states, and output layers at t-1, t, t+1]
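A bidirectional RNN can be sketched as two independent recurrent passes, one reading the sequence forward and one reading it backward, whose states are combined at each time step. The scalar units, the tanh activation, and summing the two weighted states into the output are assumptions made for this illustration.

```python
import math

def run_direction(inputs, w_in, w_rec):
    """Run a scalar tanh RNN over the inputs in the order given."""
    h, states = 0.0, []
    for x in inputs:
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states

def birnn_forward(inputs, fwd, bwd, w_out_f, w_out_b):
    forward_states = run_direction(inputs, *fwd)
    # Process the reversed sequence, then flip back so index t lines up with the input
    backward_states = run_direction(inputs[::-1], *bwd)[::-1]
    outputs = [w_out_f * f + w_out_b * b
               for f, b in zip(forward_states, backward_states)]
    return forward_states, backward_states, outputs

# Only the last time step has a non-zero input
xs = [0.0, 0.0, 1.0]
f_states, b_states, ys = birnn_forward(xs, fwd=(1.0, 0.5), bwd=(1.0, 0.5),
                                       w_out_f=1.0, w_out_b=1.0)
```

At the first time step the forward state is still zero, while the backward state already reflects the input that arrives later, so the output at each step can draw on both past and future context.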

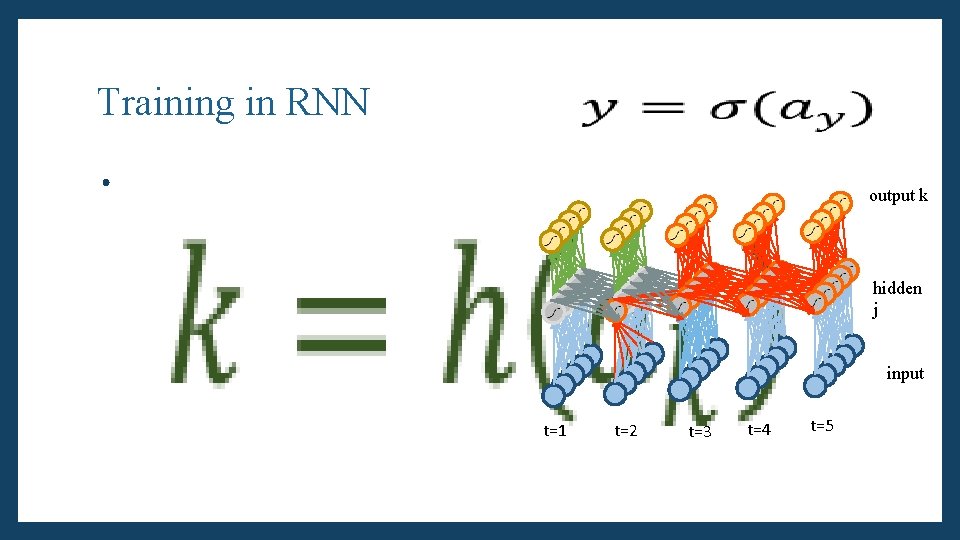

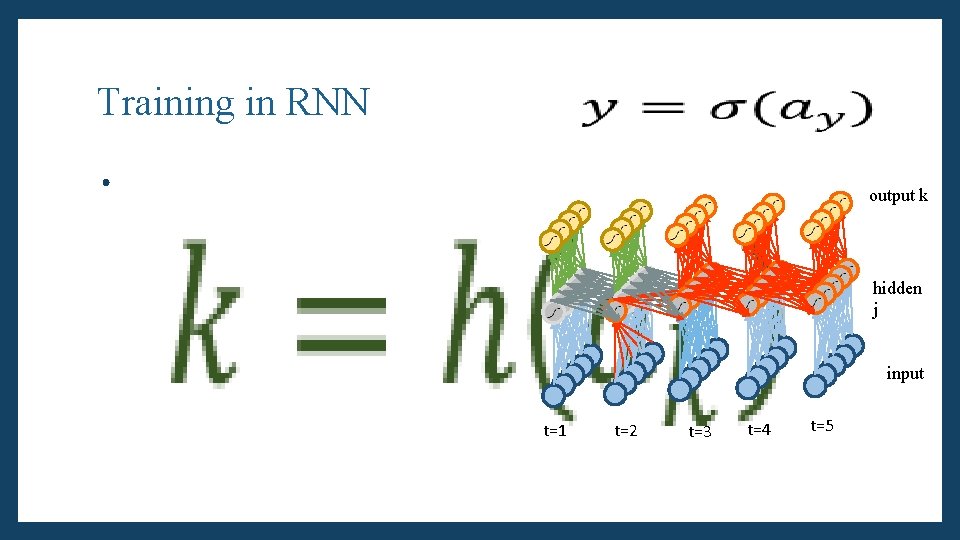

Training in RNN

[Figure: RNN unfolded over t=1 to t=5, with input units, hidden units j, and output units k]

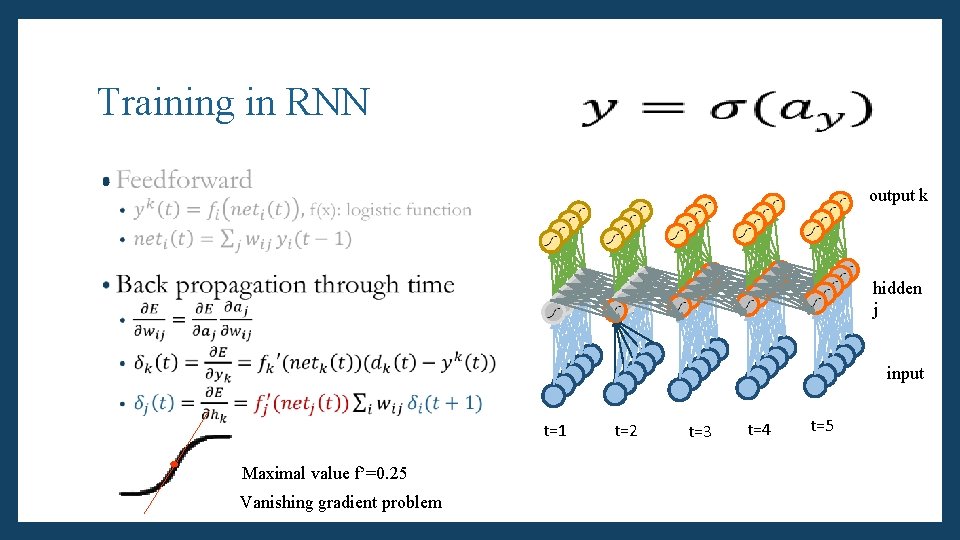

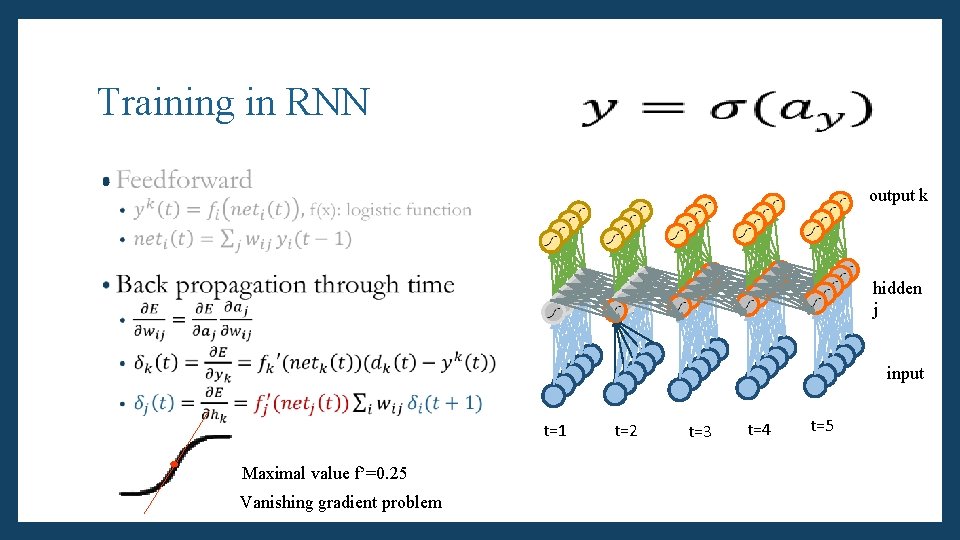

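Training in RNN

- The derivative of the sigmoid activation has a maximal value of f' = 0.25
- Vanishing gradient problem: the error is multiplied by f' at every step back in time, so it tends to shrink quickly as it is propagated toward earlier time steps

[Figure: RNN unfolded over t=1 to t=5, with input units, hidden units j, and output units k]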
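The effect of the 0.25 bound can be checked numerically. The sketch below assumes a scalar recurrent weight `w_rec` (a hypothetical name) and a sigmoid activation, and computes the factor by which an error is scaled when it travels back over several time steps.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_prime(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # peaks at x = 0 with value 0.25

def backprop_factor(pre_activations, w_rec):
    """Product of w_rec * f'(a_t) over the time steps the error travels back."""
    factor = 1.0
    for a in pre_activations:
        factor *= w_rec * sigmoid_prime(a)
    return factor

# Best case for the sigmoid: every pre-activation sits at 0, where f' is largest
best_case = backprop_factor([0.0] * 5, w_rec=1.0)
```

Even in this best case, with the recurrent weight assumed to be 1, the error is scaled by 0.25 at every step and so by 0.25 to the fifth power after five steps. Any other pre-activation makes the factor smaller still.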

LSTM: one solution to the vanishing gradient problem when training RNNs

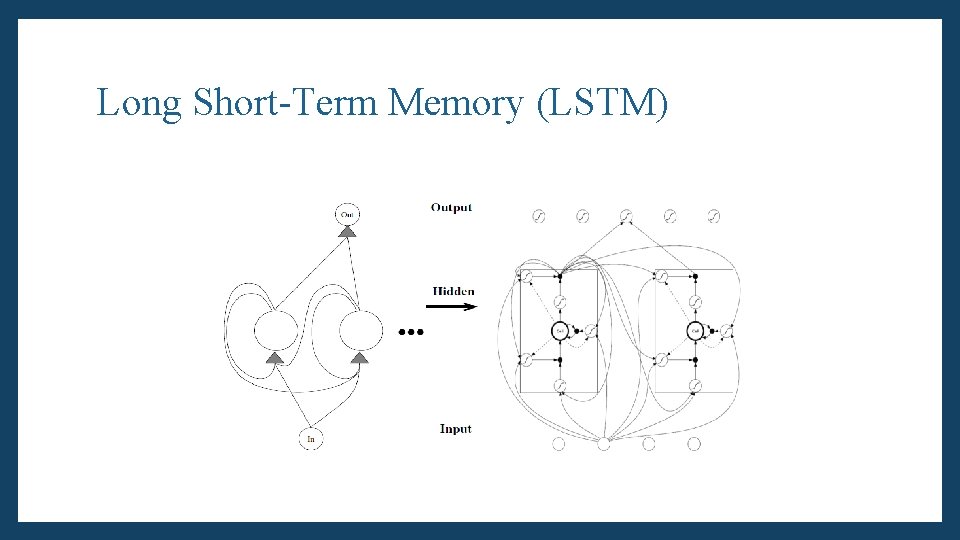

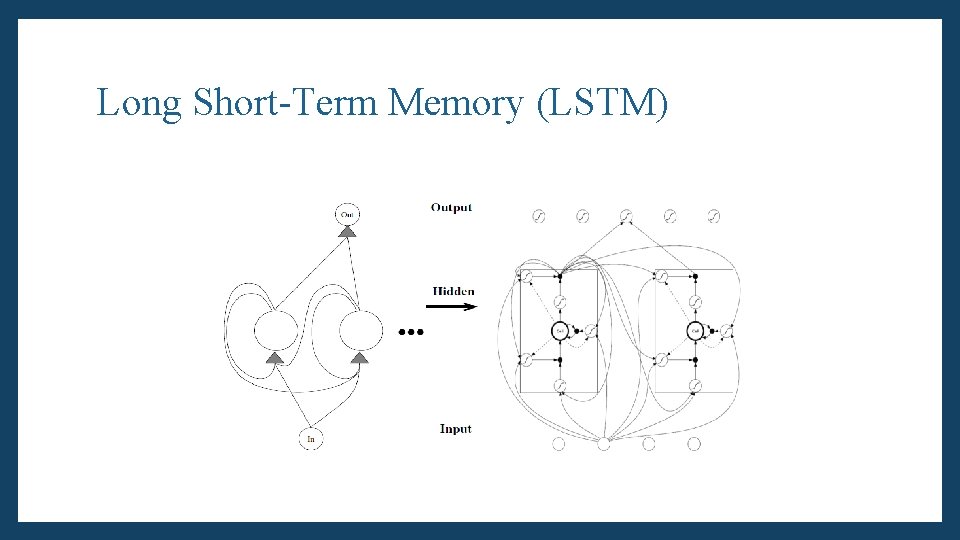

Long Short-Term Memory (LSTM)

- In a conventional RNN, a hidden state with a self-cycle can only represent short-term memory, because of the vanishing gradient problem
- Invented by Hochreiter & Schmidhuber (1997)
- Designed to let the hidden state retain important information over a longer period of time
- Replaces the structure of the neurons in the hidden layer of the RNN

Reference: Sepp Hochreiter, Juergen Schmidhuber. Long Short-Term Memory. (1997)

Long Short-Term Memory (LSTM)

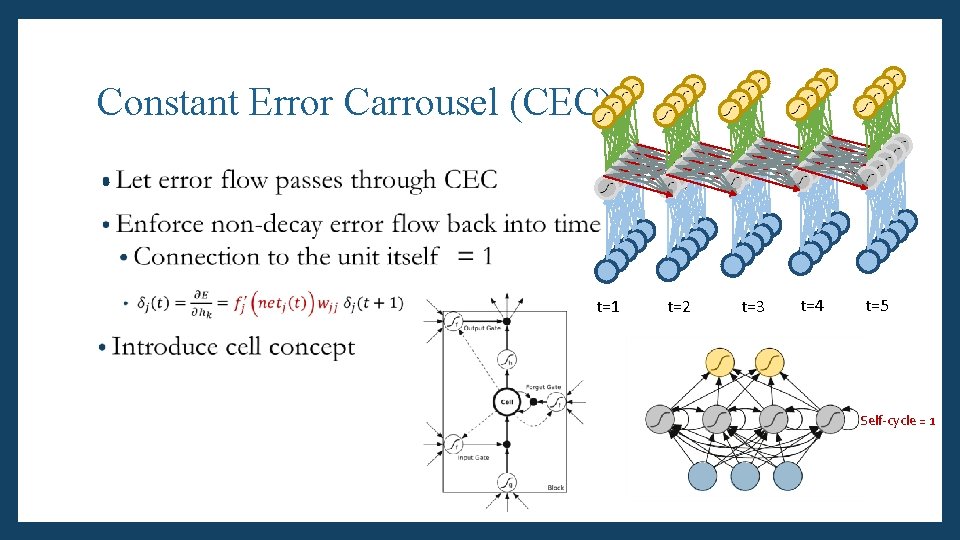

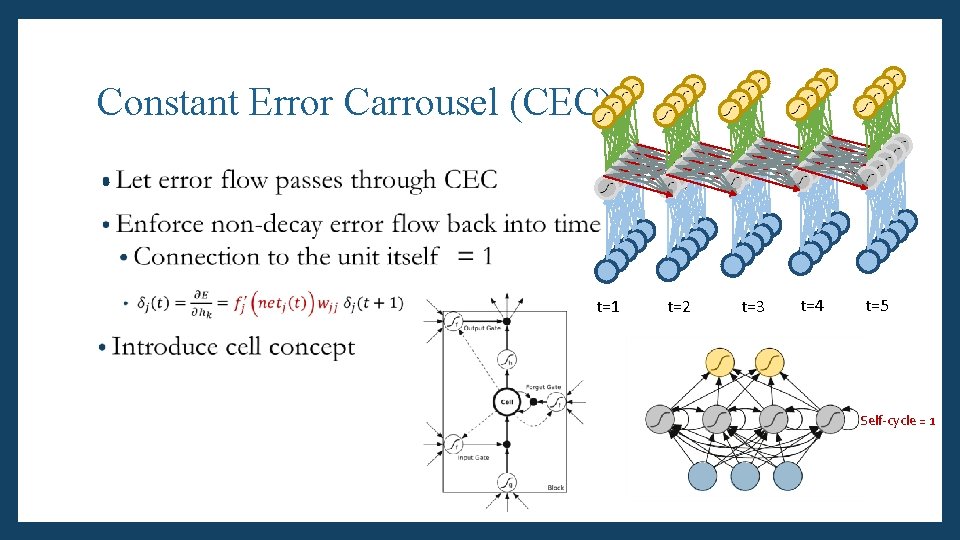

Constant Error Carrousel (CEC)

- Self-cycle weight = 1

[Figure: cell unfolded over t=1 to t=5]
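The point of a self-cycle of weight 1 can be sketched by tracking an error as it flows back through a unit's self-connection. The sketch assumes the unit is linear, so its activation derivative is 1. The function name and the comparison weight of 0.25 are illustrative choices.

```python
def cec_error_flow(error, steps, self_weight=1.0):
    """Error flowing back through a linear unit's self-connection.

    A linear activation has derivative 1, so each step back in time
    scales the error by self_weight alone.
    """
    history = [error]
    for _ in range(steps):
        error = error * self_weight * 1.0  # self_weight * f'(a), with f' = 1
        history.append(error)
    return history

constant = cec_error_flow(1.0, steps=5)                    # self-cycle = 1
decaying = cec_error_flow(1.0, steps=5, self_weight=0.25)  # for comparison
```

With a self-cycle of 1 the error is unchanged at every step, whereas a per-step factor of 0.25 leaves less than a thousandth of it after five steps.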

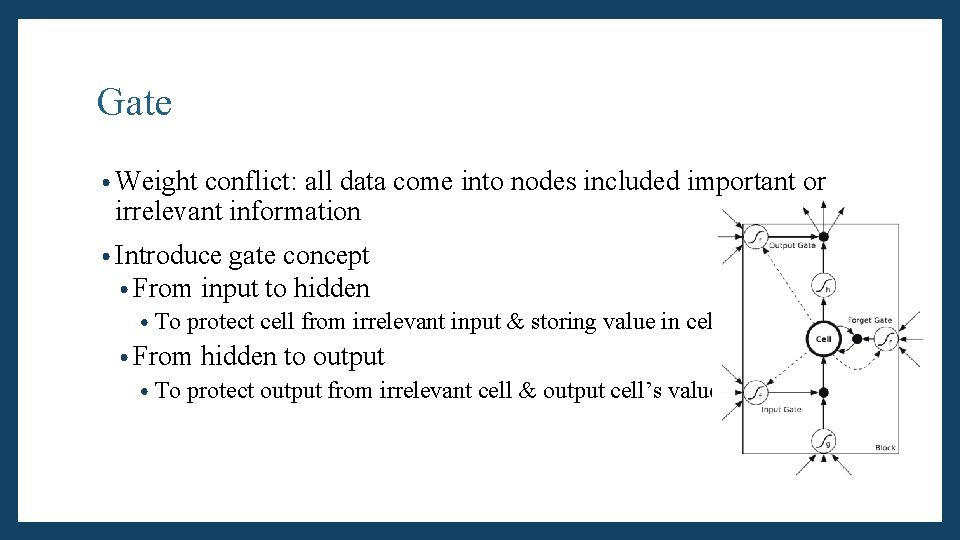

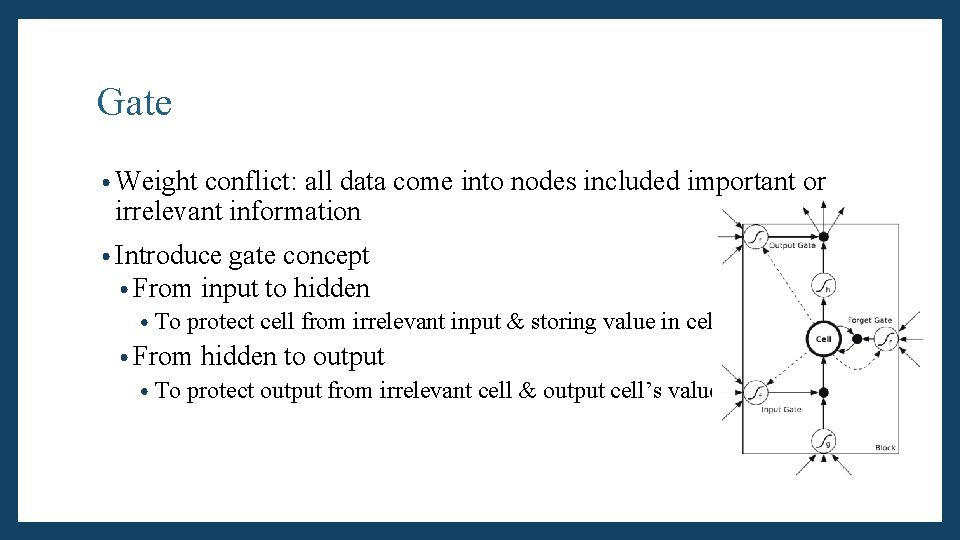

Gate

- Weight conflict: all data entering a node, whether important or irrelevant, passes through the same weights
- Introduce the gate concept
  - From input to hidden: protects the cell from irrelevant input and controls storing values in the cell
  - From hidden to output: protects the output from irrelevant cell contents and controls outputting the cell's value

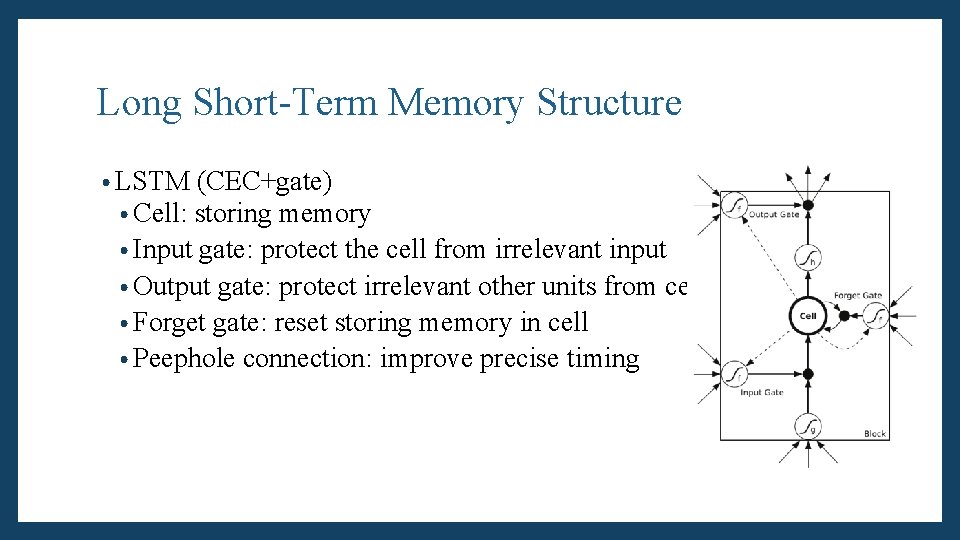

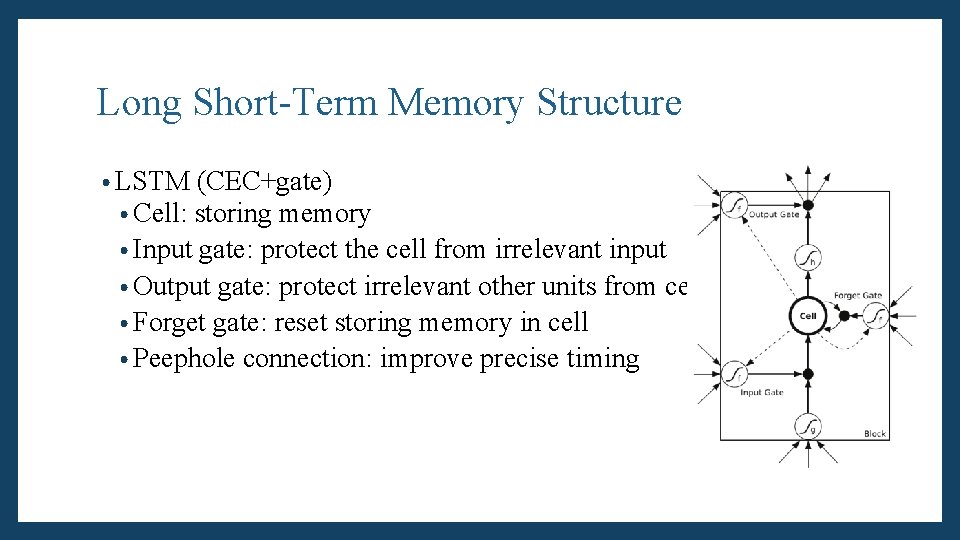

Long Short-Term Memory Structure

- LSTM (CEC + gates)
  - Cell: stores the memory
  - Input gate: protects the cell from irrelevant input
  - Output gate: protects other units from irrelevant cell contents
  - Forget gate: resets the memory stored in the cell
  - Peephole connection: improves precise timing
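The components listed above can be put together in a rough sketch of one LSTM step. It is a scalar cell with input, forget, and output gates and no peephole connections. The parameter names and values are hypothetical and do not come from the slides.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell (input, forget, output gates; no peepholes)."""
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate cell input
    c = f * c_prev + i * g   # cell: old memory scaled by f, new input admitted by i
    h = o * math.tanh(c)     # output gate controls what the cell exposes
    return h, c

# Hypothetical parameters: input gate nearly closed, forget and output gates nearly open
params = {"wi": 0.0, "ui": 0.0, "bi": -20.0,
          "wf": 0.0, "uf": 0.0, "bf": 20.0,
          "wo": 0.0, "uo": 0.0, "bo": 20.0,
          "wg": 1.0, "ug": 0.0, "bg": 0.0}
h, c = 0.0, 1.0
for x in [5.0, -3.0, 2.0]:
    h, c = lstm_step(x, h, c, params)
```

With the input gate closed and the forget gate open, the cell value stays essentially at its initial 1.0 despite three large inputs, which illustrates the input gate shielding the stored memory.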

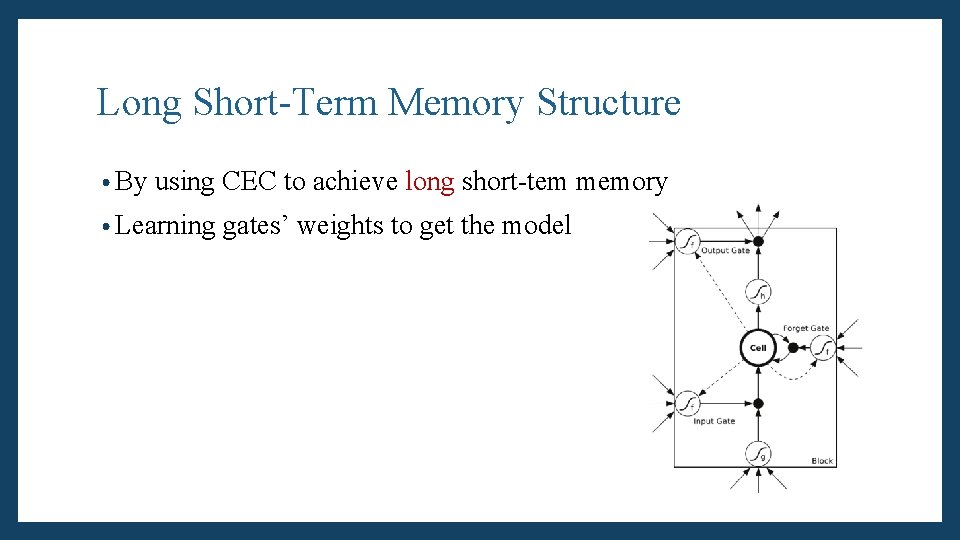

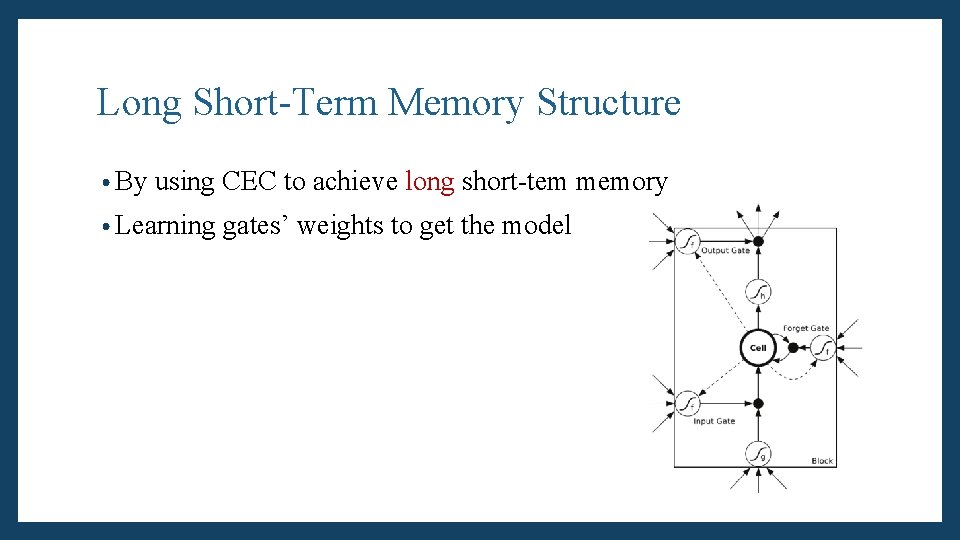

Long Short-Term Memory Structure

- The CEC is used to achieve long short-term memory
- The model is obtained by learning the gates' weights