Deep learning Recurrent Neural Networks CV 192 Lecturer


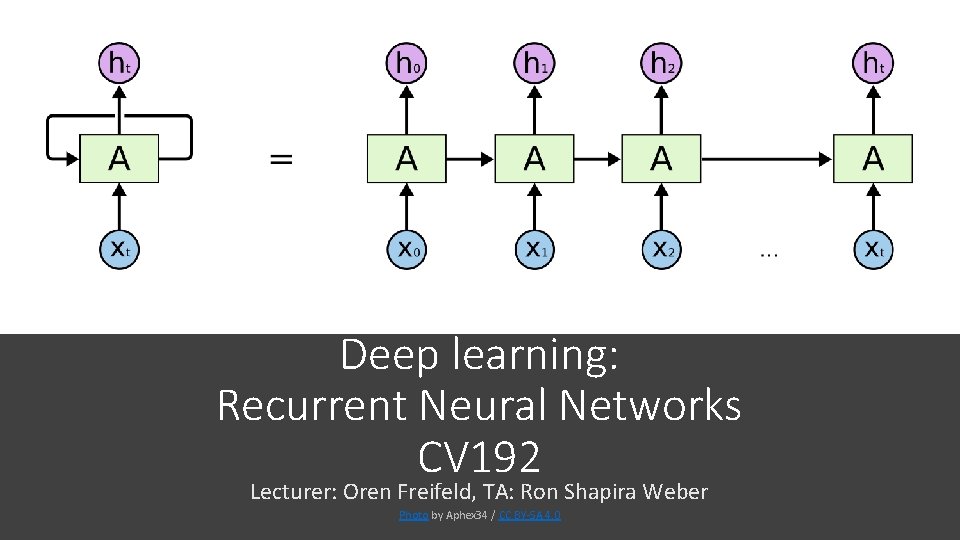

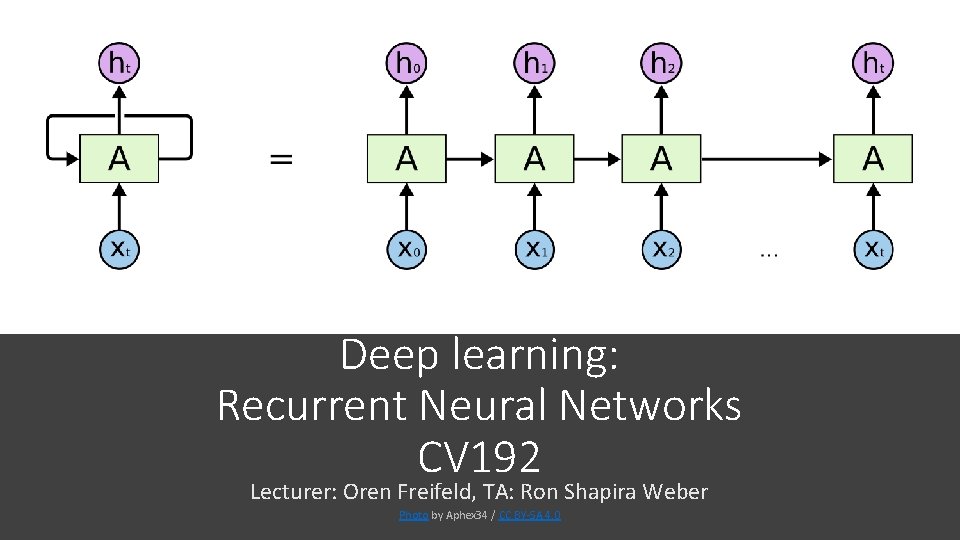

Deep learning: Recurrent Neural Networks. CV 192. Lecturer: Oren Freifeld, TA: Ron Shapira Weber. Photo by Aphex34 / CC BY-SA 4.0

Contents • Review of previous lecture – CNN • Recurrent Neural Networks • Back propagation through RNN • LSTM

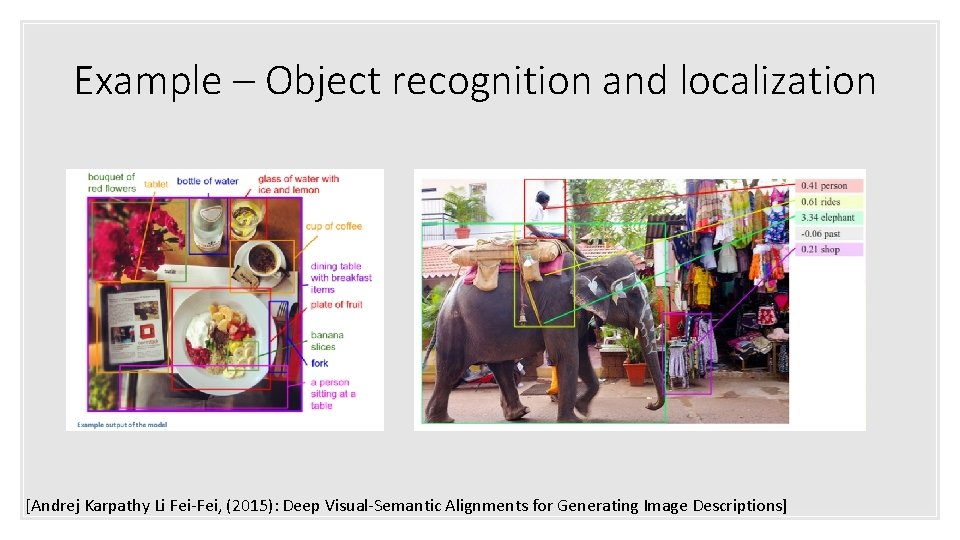

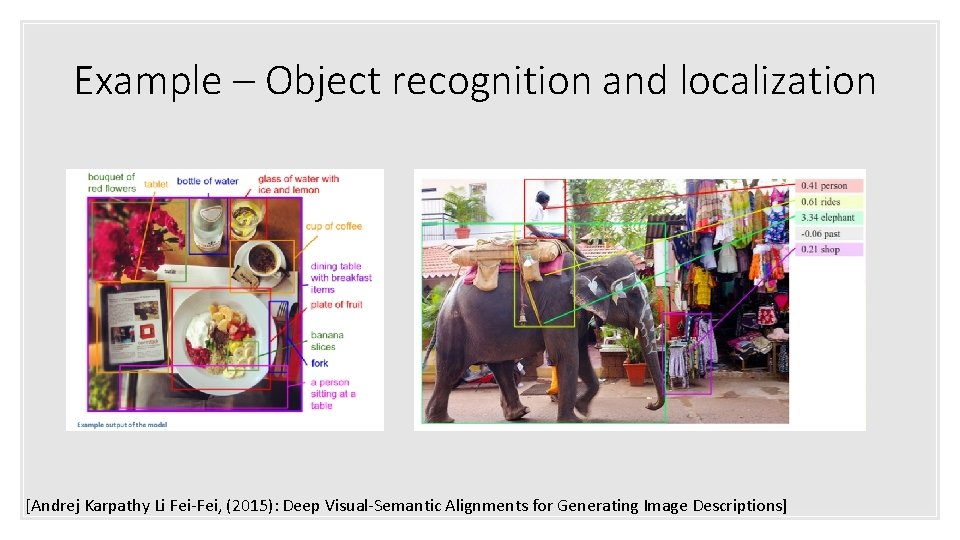

Example – Object recognition and localization [Karpathy, A., & Fei-Fei, L. (2015). Deep Visual-Semantic Alignments for Generating Image Descriptions]

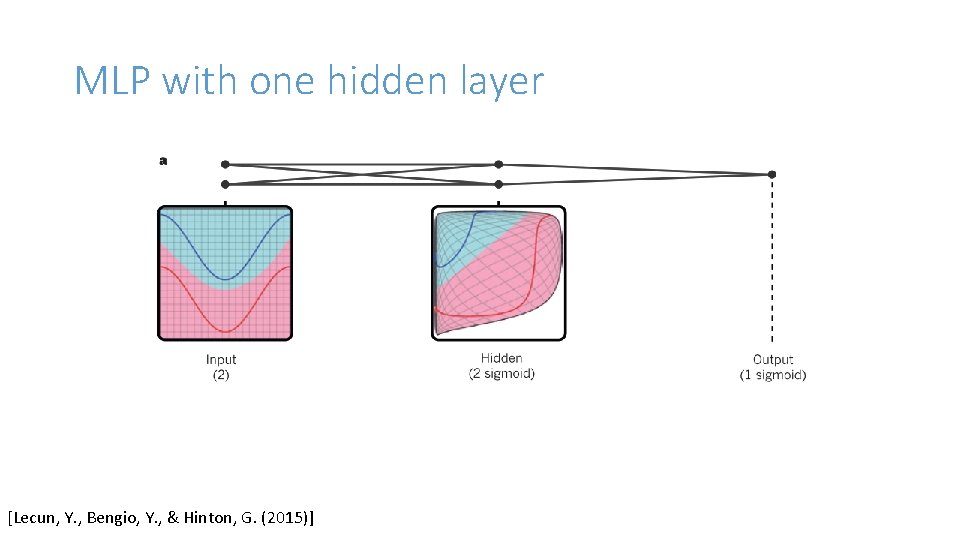

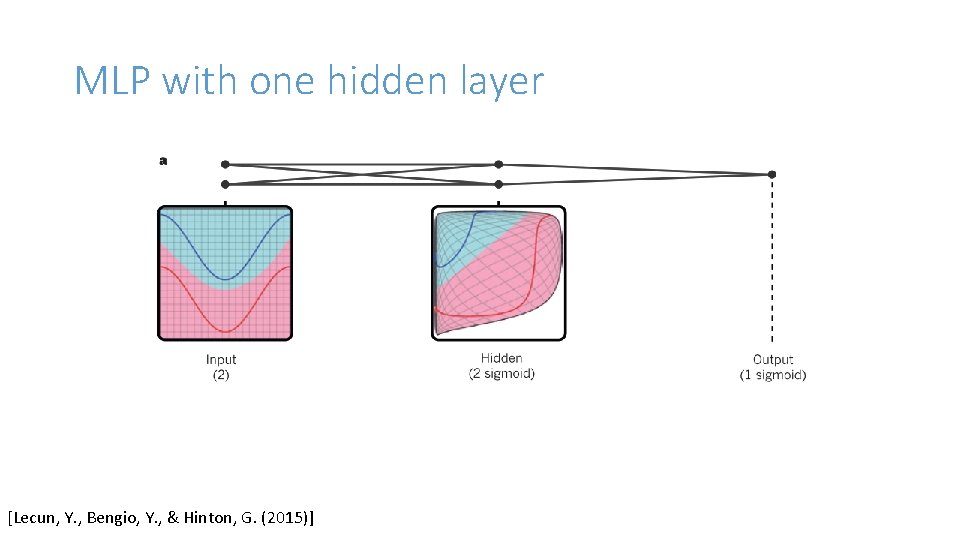

MLP with one hidden layer [LeCun, Y., Bengio, Y., & Hinton, G. (2015)]

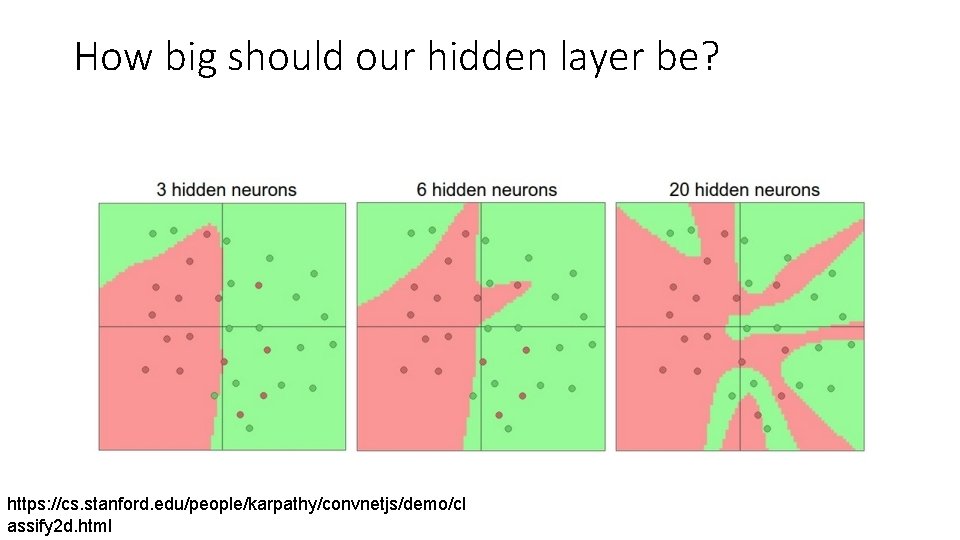

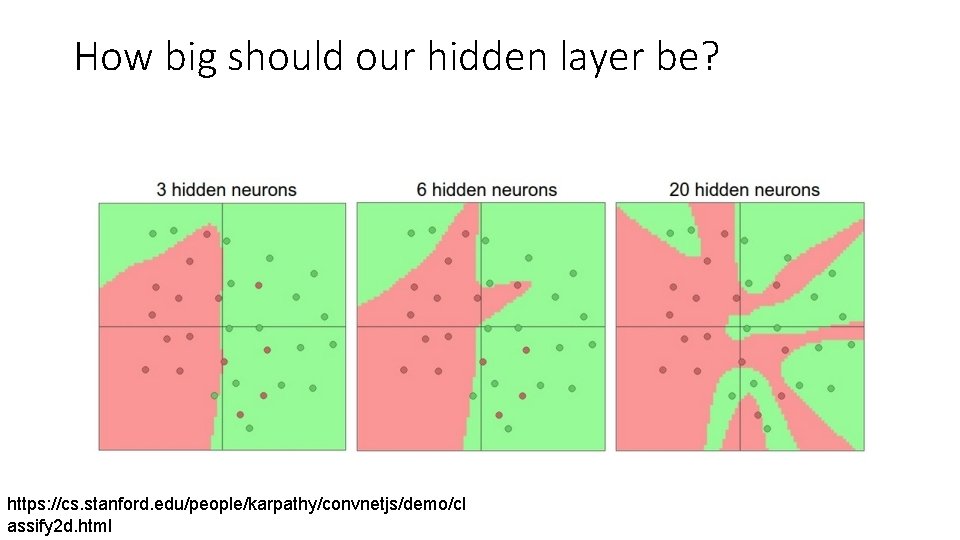

How big should our hidden layer be? https://cs.stanford.edu/people/karpathy/convnetjs/demo/classify2d.html

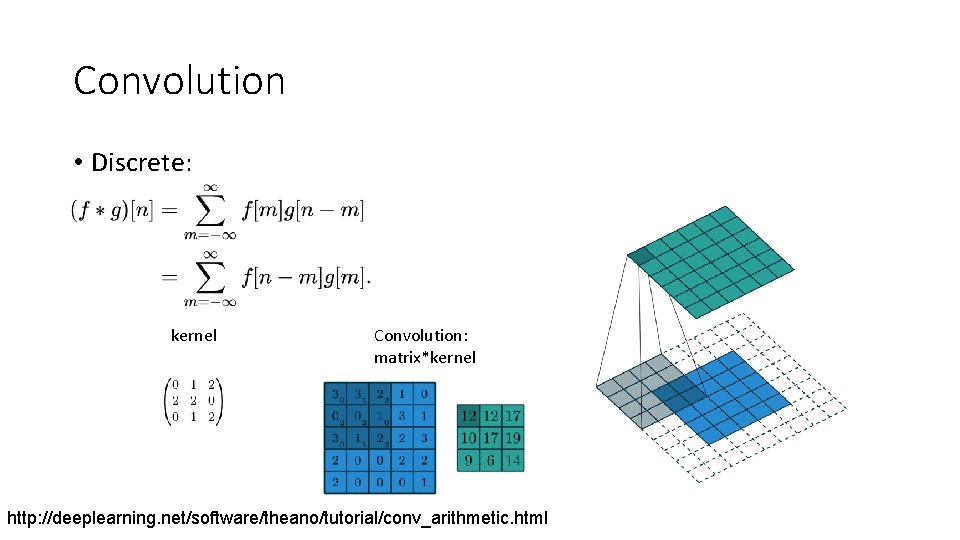

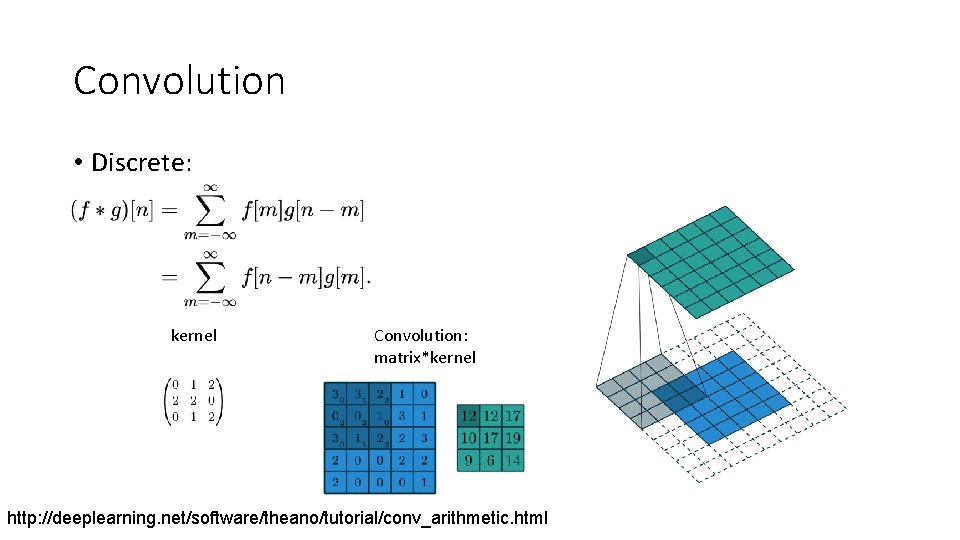

Convolution • Discrete: kernel Convolution: matrix*kernel http://deeplearning.net/software/theano/tutorial/conv_arithmetic.html
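The slide's formula did not survive extraction, so the following is only a minimal sketch of a discrete 2D convolution of a matrix with a kernel. It assumes no padding and a stride of 1; the function name `conv2d_valid` and the sample values are illustrative, not taken from the lecture.

```python
def conv2d_valid(image, kernel):
    """Discrete 2D convolution without padding ("valid" output size)."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    # True convolution flips the kernel along both axes. Many deep learning
    # libraries skip the flip and compute cross-correlation instead.
    flipped = [row[::-1] for row in kernel[::-1]]
    out = []
    for i in range(ih - kh + 1):
        out_row = []
        for j in range(iw - kw + 1):
            acc = 0
            for a in range(kh):
                for b in range(kw):
                    acc += image[i + a][j + b] * flipped[a][b]
            out_row.append(acc)
        out.append(out_row)
    return out


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]
result = conv2d_valid(image, kernel)
print(result)  # [[4, 4], [4, 4]]
```

A 3 x 3 input and a 2 x 2 kernel give a 2 x 2 output, since the kernel fits in two positions along each axis.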

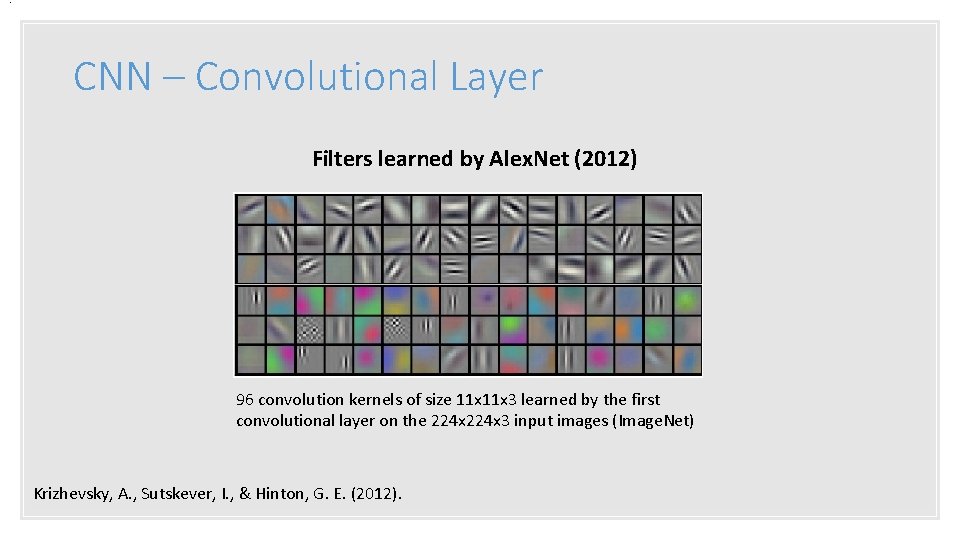

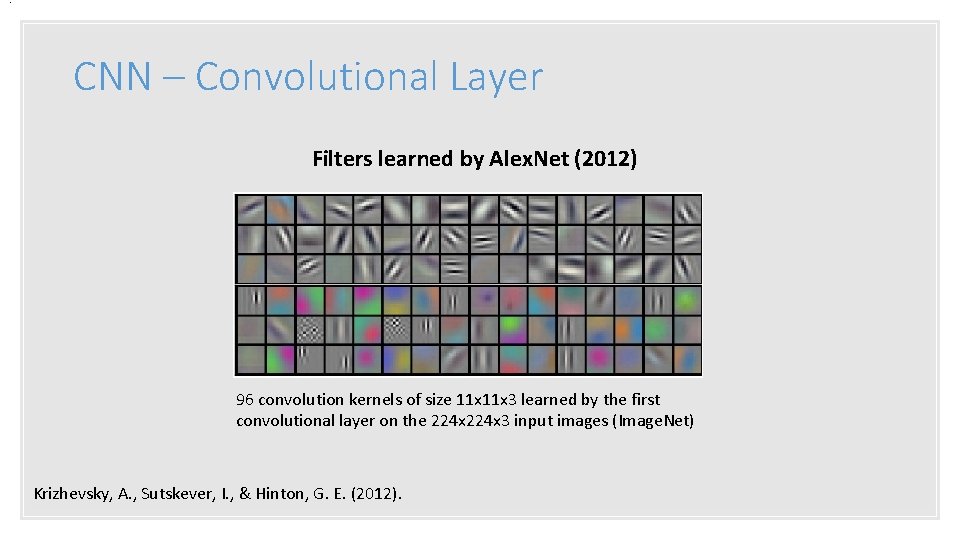

CNN – Convolutional Layer Filters learned by AlexNet (2012): 96 convolution kernels of size 11 x 11 x 3 learned by the first convolutional layer on the 224 x 224 x 3 input images (ImageNet) [Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012)]

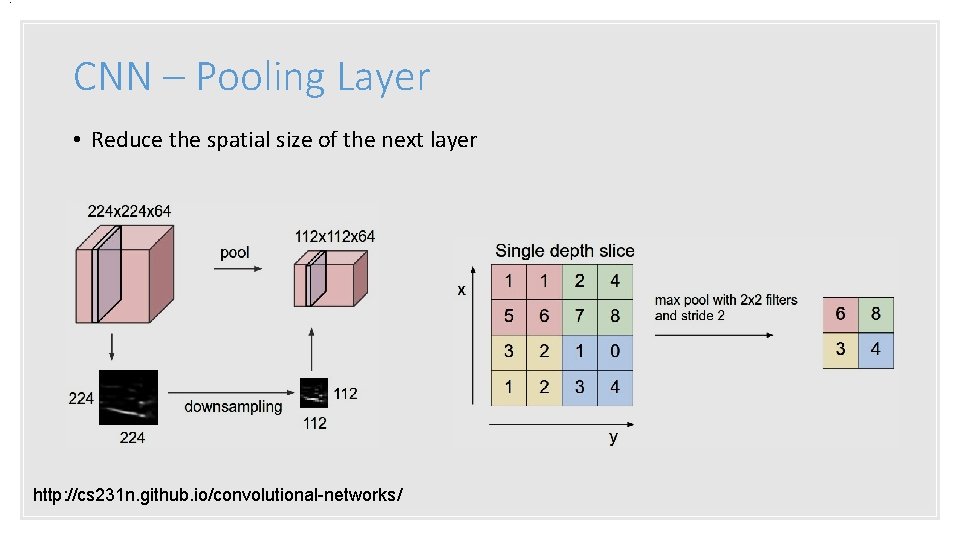

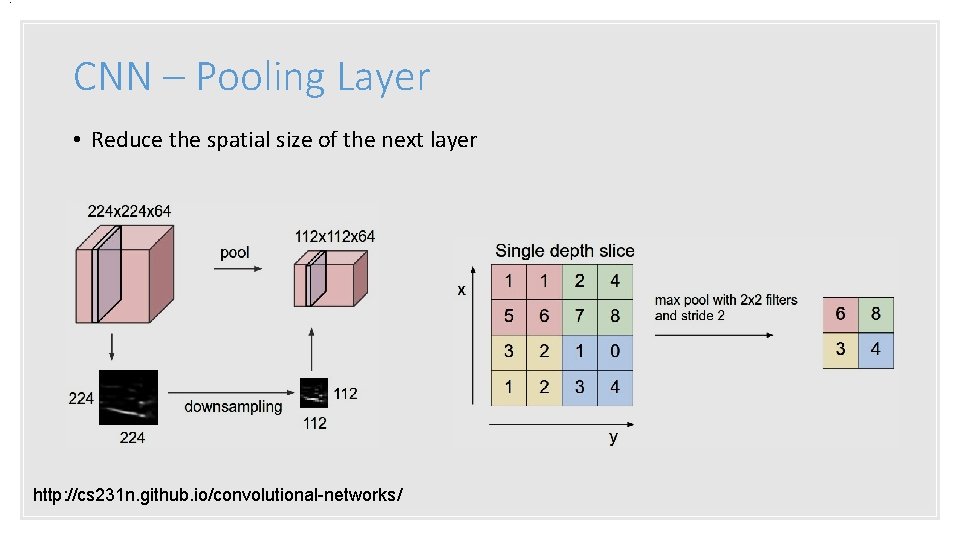

CNN – Pooling Layer • Reduce the spatial size of the next layer http://cs231n.github.io/convolutional-networks/
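As a rough illustration of how pooling reduces spatial size, here is a sketch of max pooling. The 2 x 2 window, the stride of 2 and the name `max_pool2d` are assumptions chosen for the example; the slide does not specify a pooling type or size.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Keep the maximum of each size x size window, moving by `stride`."""
    h, w = len(feature_map), len(feature_map[0])
    out = []
    for i in range(0, h - size + 1, stride):
        row = []
        for j in range(0, w - size + 1, stride):
            window = [feature_map[i + a][j + b]
                      for a in range(size) for b in range(size)]
            row.append(max(window))
        out.append(row)
    return out


fm = [[1, 3, 2, 4],
      [5, 6, 1, 2],
      [7, 2, 9, 0],
      [1, 8, 3, 4]]
pooled = max_pool2d(fm)
print(pooled)  # [[6, 4], [8, 9]]
```

With these settings a 4 x 4 map becomes 2 x 2, so the next layer sees a quarter as many spatial positions.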

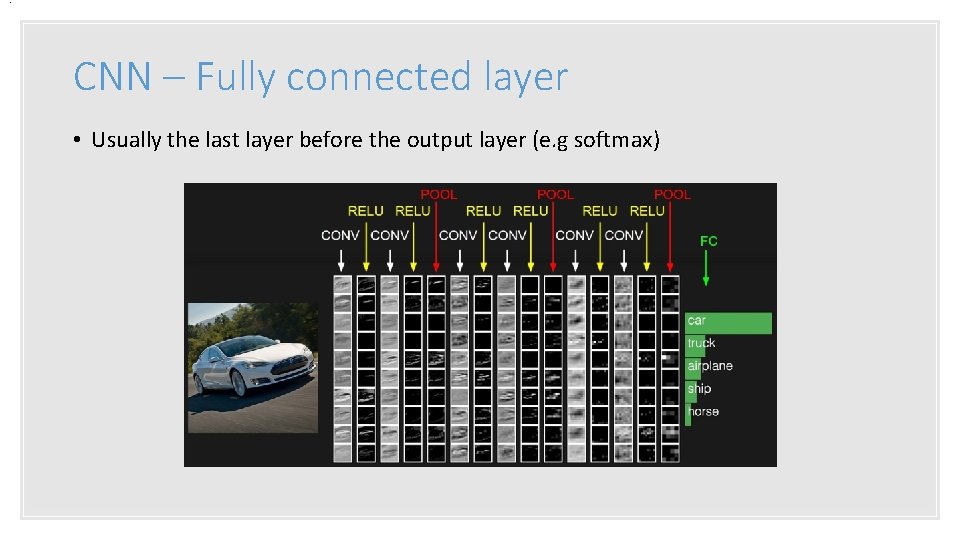

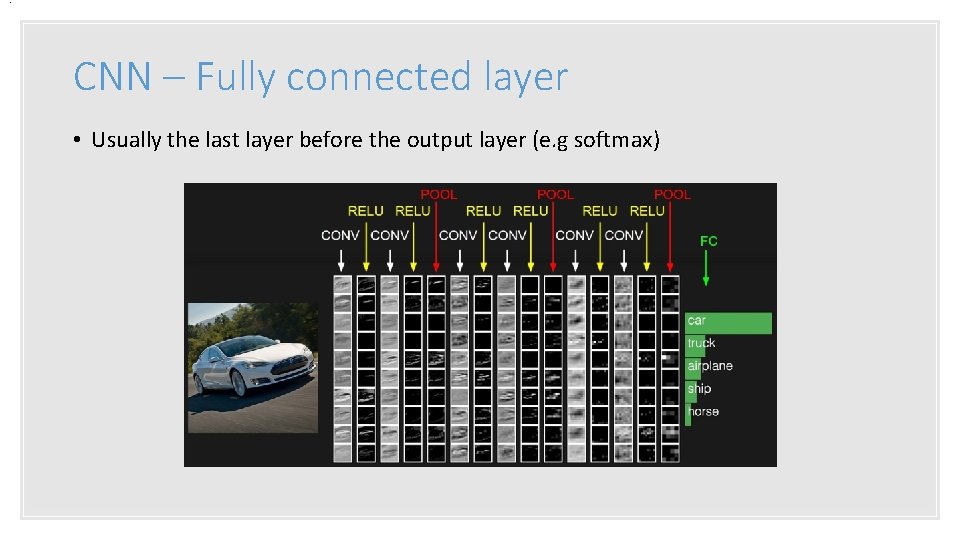

CNN – Fully connected layer • Usually the last layer before the output layer (e.g., softmax)
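A fully connected layer followed by a softmax output can be sketched as below. The weights, the feature vector and the helper names `dense` and `softmax` are hypothetical values picked for illustration only.

```python
import math


def dense(x, weights, bias):
    """Fully connected layer: every output depends on every input."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def softmax(logits):
    """Turn scores into probabilities; subtract the max for numerical stability."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


features = [1.0, 2.0]              # e.g. flattened CNN features (made up)
W = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]                   # 3 classes x 2 features (made up)
b = [0.0, 0.0, 0.0]
logits = dense(features, W, b)     # [1.0, 2.0, 3.0]
probs = softmax(logits)
print(probs)
```

The probabilities sum to 1 and the class with the largest logit receives the largest probability.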

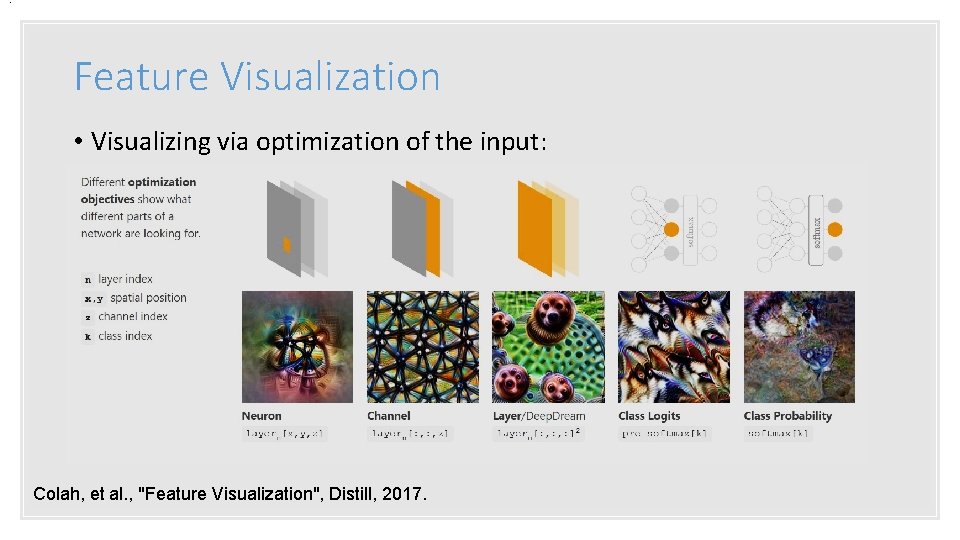

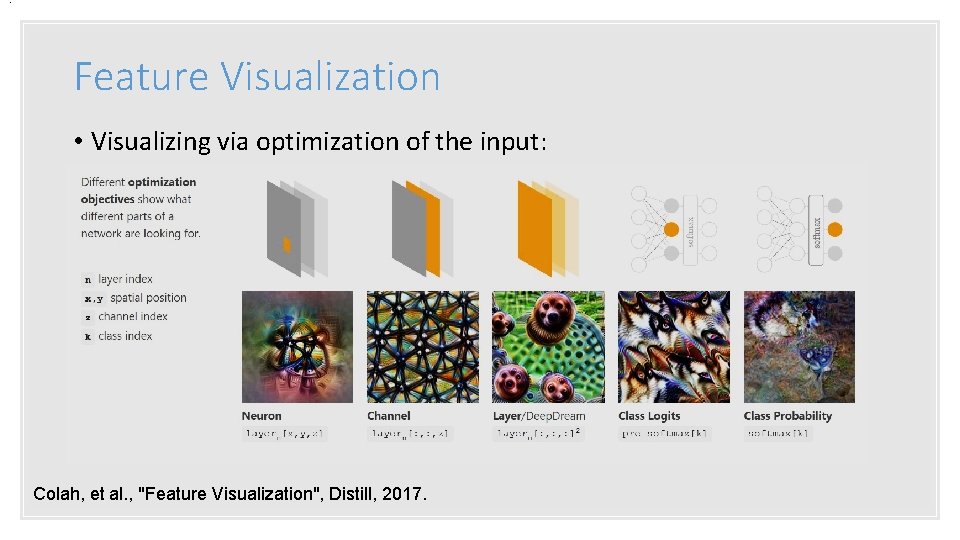

Feature Visualization • Visualizing via optimization of the input: Olah, et al., "Feature Visualization", Distill, 2017.

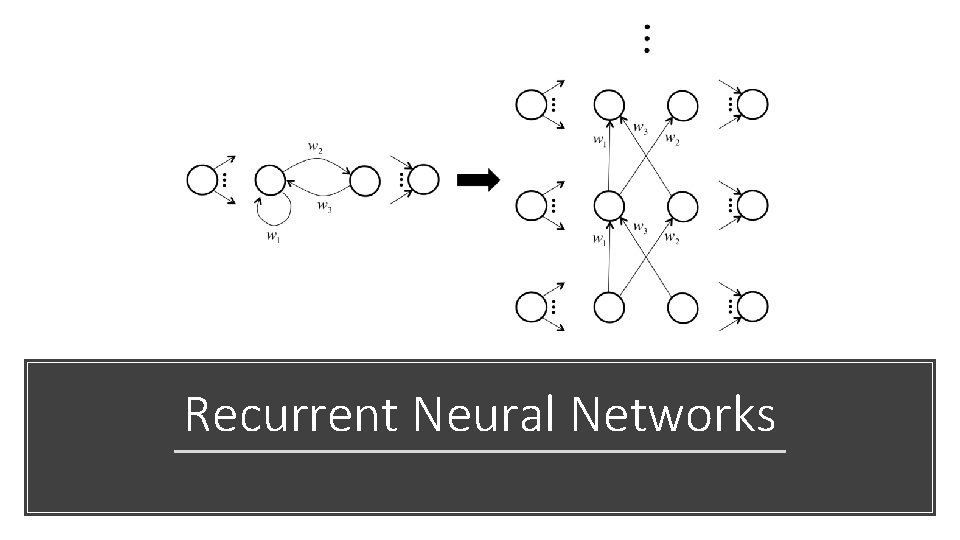

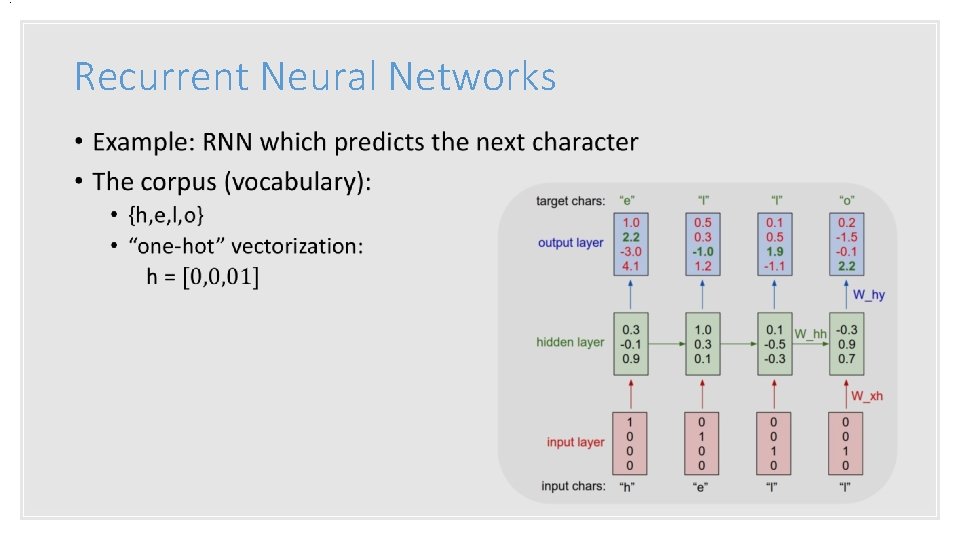

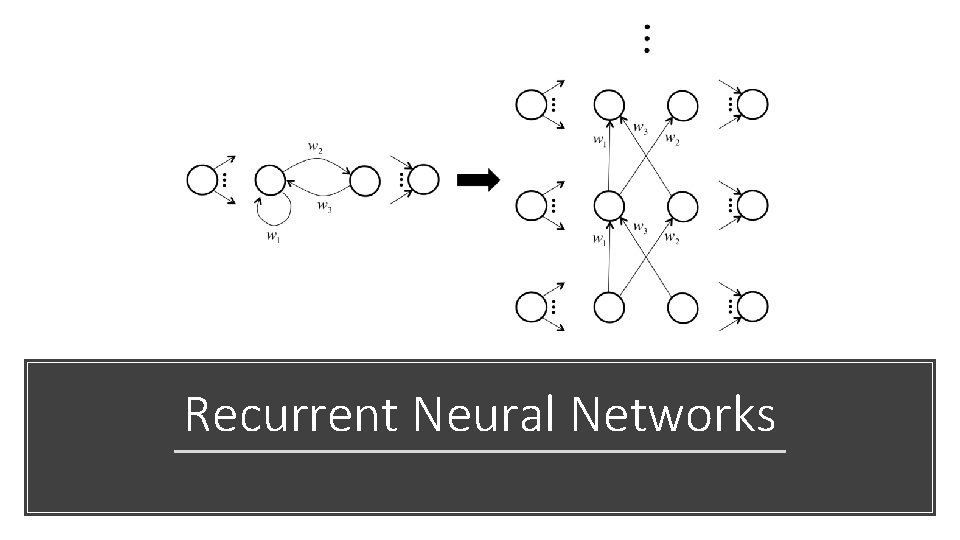

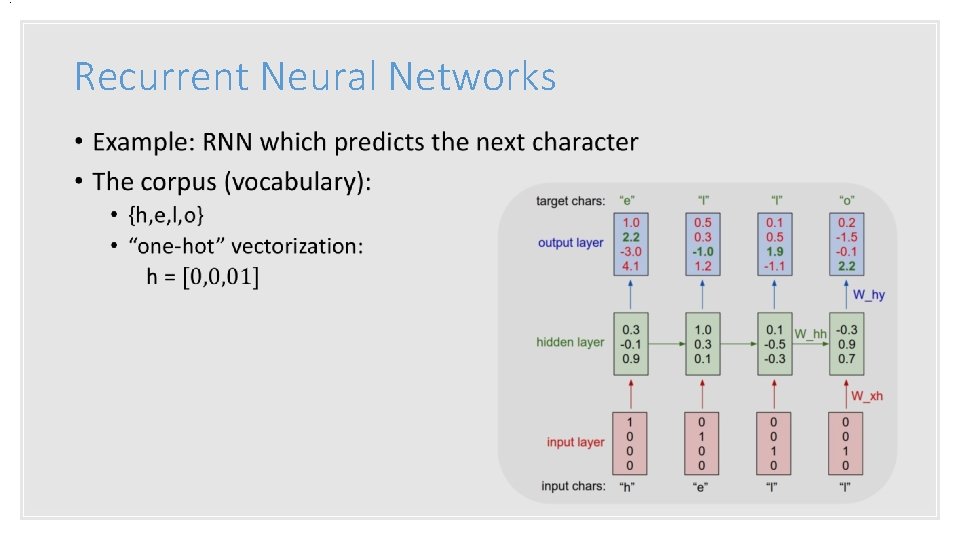

Recurrent Neural Networks

Sequence modeling Examples: • Image classification • Image captioning • Sentiment analysis • Machine translation • Video frame-by-frame classification http://karpathy.github.io/2015/05/21/rnn-effectiveness/

DRAW: A recurrent neural network for image generation [Gregor et al. (2015). DRAW: A recurrent neural network for image generation]

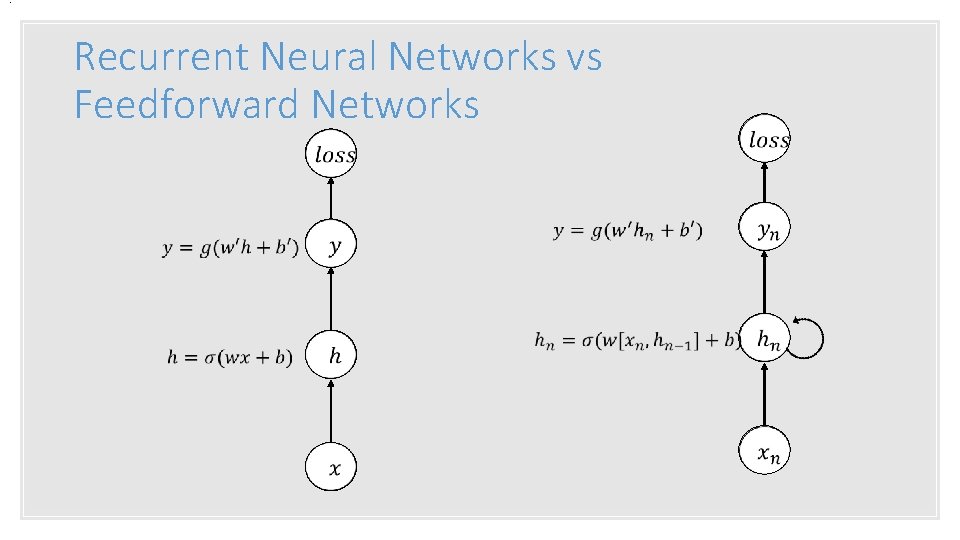

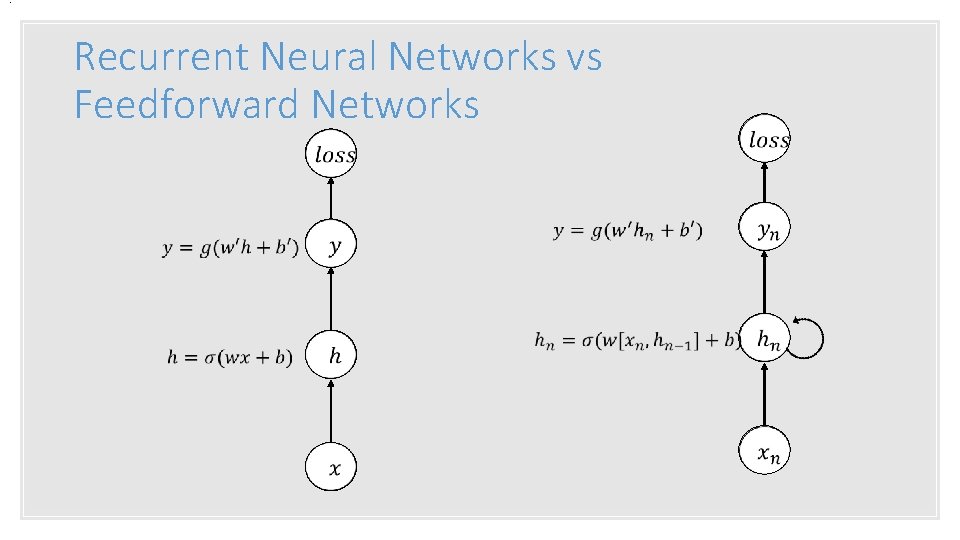

Recurrent Neural Networks vs Feedforward Networks

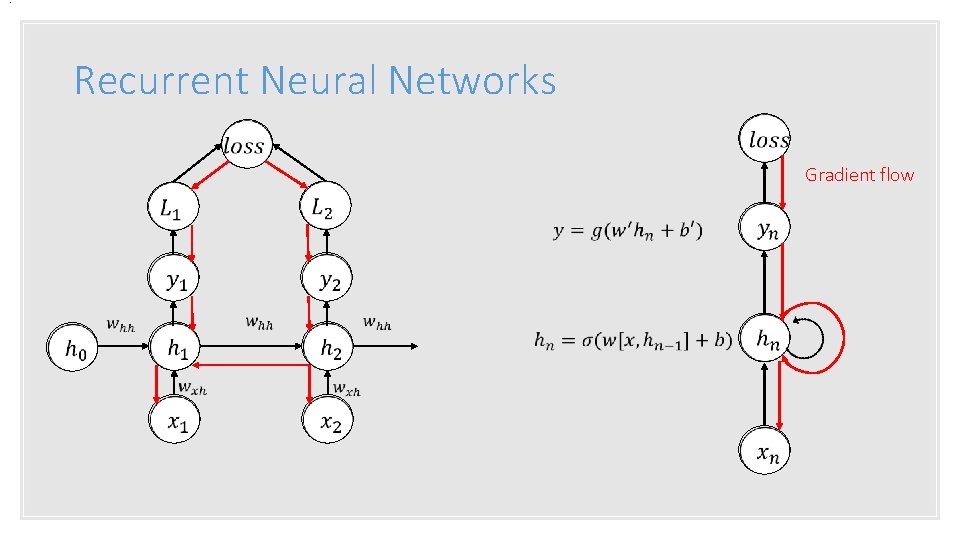

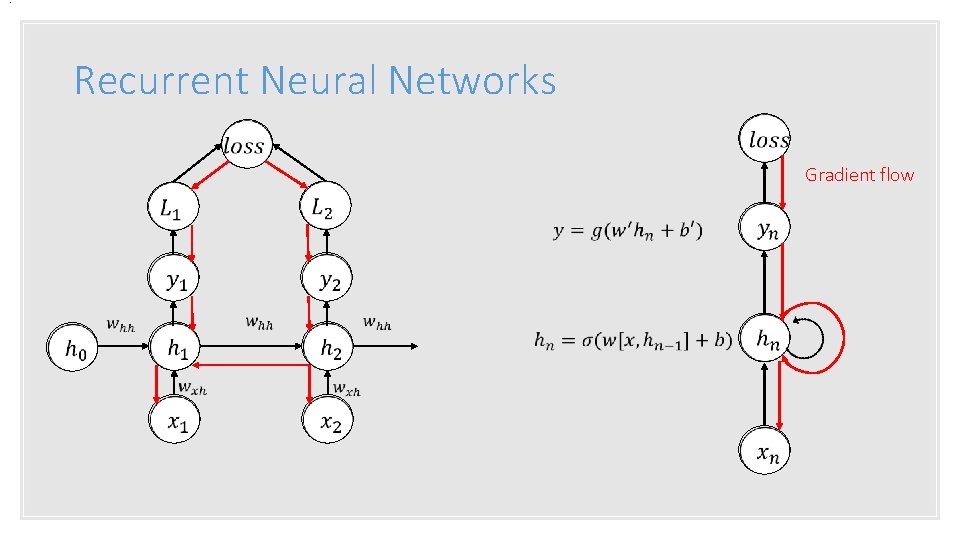

Recurrent Neural Networks Gradient flow

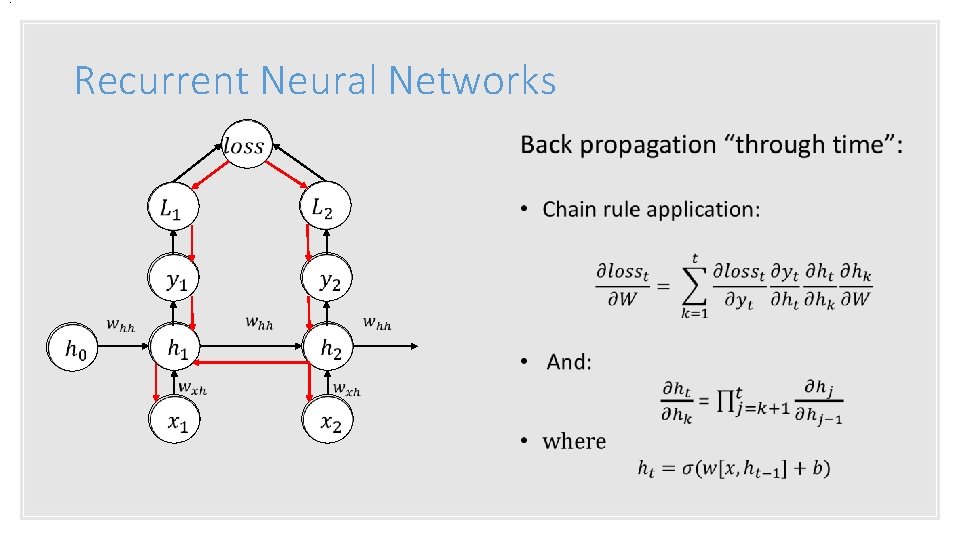

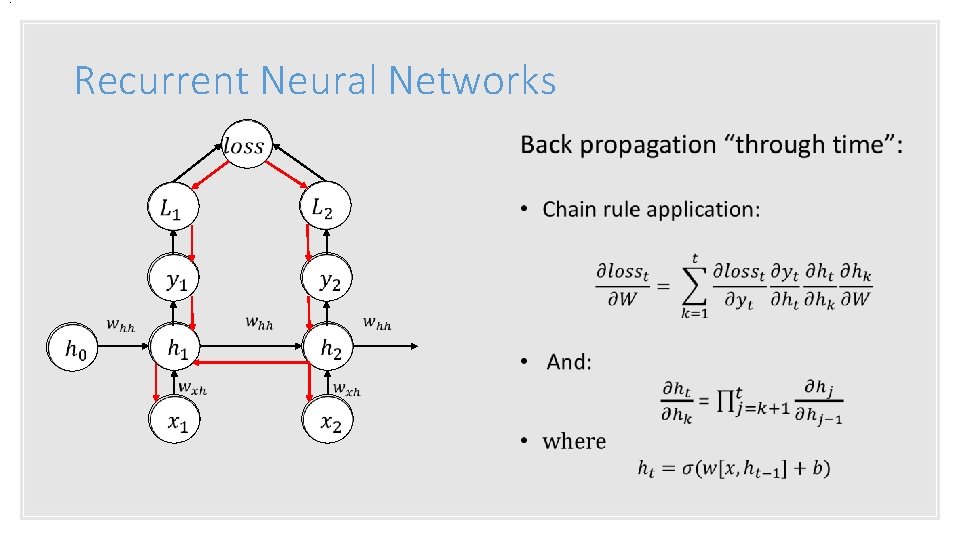

Recurrent Neural Networks

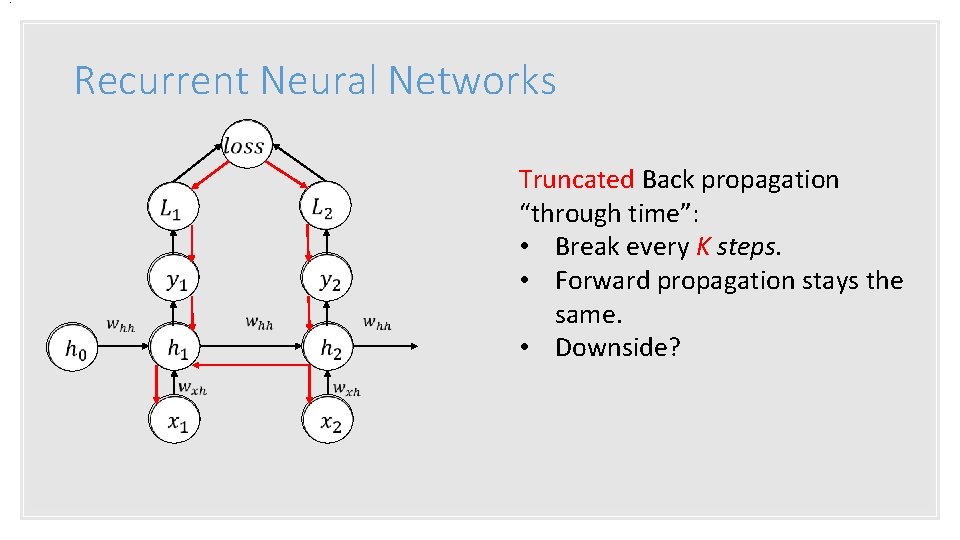

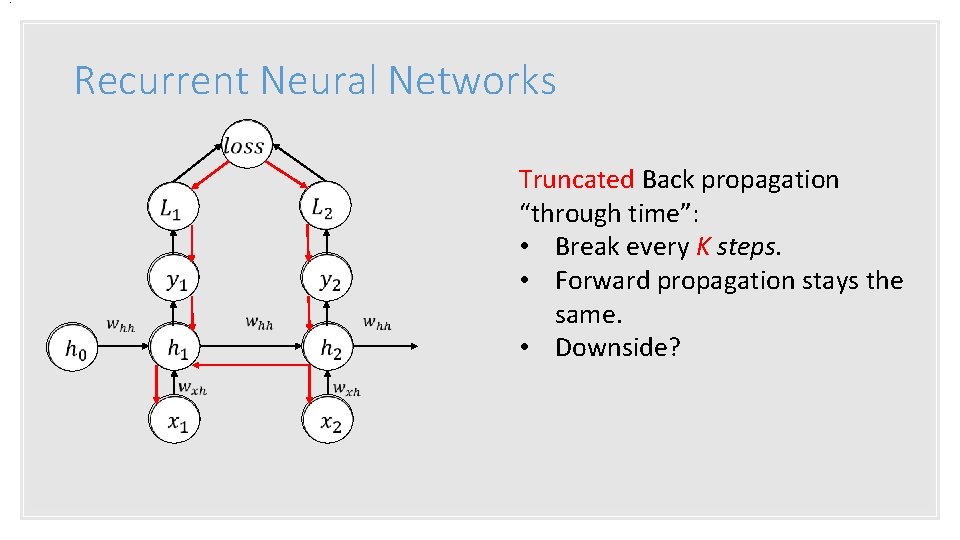

Recurrent Neural Networks Truncated backpropagation “through time”: • Break every K steps. • Forward propagation stays the same. • Downside?
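The idea of breaking the backward pass every K steps can be sketched on a toy model. Everything here is an assumption made for illustration: a scalar RNN `h_t = tanh(w * h_{t-1} + u * x_t)`, a squared-error loss summed over time, and the function name `truncated_bptt_grad`. The forward pass carries the hidden state across chunks unchanged, while gradients stop at chunk boundaries.

```python
import math


def truncated_bptt_grad(xs, ys, w, u, k):
    """Gradient of sum_t 0.5*(h_t - y_t)^2 w.r.t. w, cutting backprop every k steps."""
    grad_w = 0.0
    h = 0.0  # hidden state is carried forward across chunks
    for start in range(0, len(xs), k):
        chunk_x = xs[start:start + k]
        chunk_y = ys[start:start + k]
        # Forward through the chunk, storing states for the backward pass.
        hs = [h]
        for x in chunk_x:
            hs.append(math.tanh(w * hs[-1] + u * x))
        # Backward through this chunk only; hs[0] is treated as a constant.
        dh_next = 0.0  # gradient arriving from later steps inside the chunk
        for t in range(len(chunk_x), 0, -1):
            dh = (hs[t] - chunk_y[t - 1]) + dh_next
            dpre = dh * (1.0 - hs[t] ** 2)  # derivative through tanh
            grad_w += dpre * hs[t - 1]
            dh_next = dpre * w
        h = hs[-1]
    return grad_w


xs = [1.0, 0.5, -0.5, 1.0, 0.2, -1.0]
ys = [0.5, 0.0, -0.5, 0.5, 0.0, -0.5]
w, u = 0.9, 1.0
full = truncated_bptt_grad(xs, ys, w, u, k=len(xs))  # no truncation
truncated = truncated_bptt_grad(xs, ys, w, u, k=2)   # break every 2 steps
print(full, truncated)
```

Setting `k` to the sequence length recovers ordinary backpropagation through time. With a smaller `k`, the contribution of any dependency longer than `k` steps is dropped from the gradient, which is one commonly cited downside.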

Recurrent Neural Networks

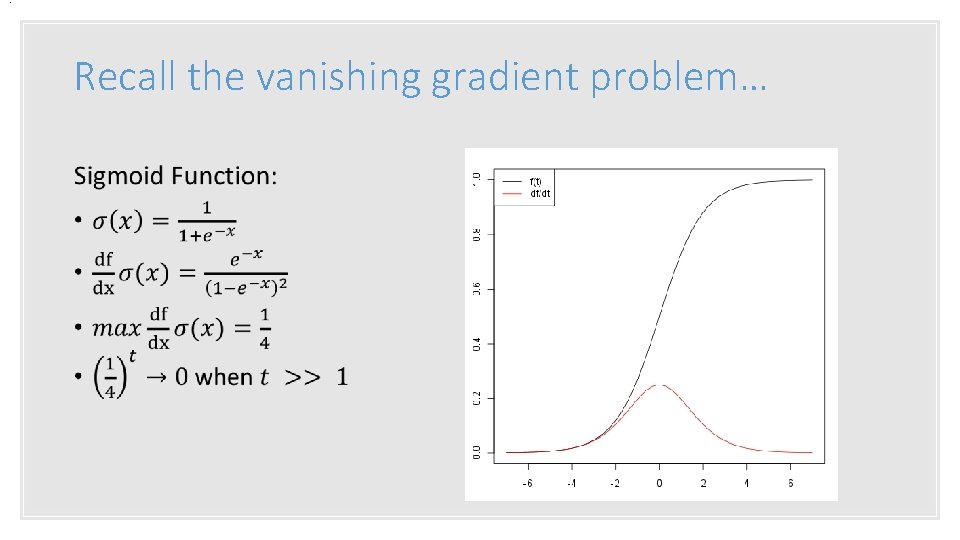

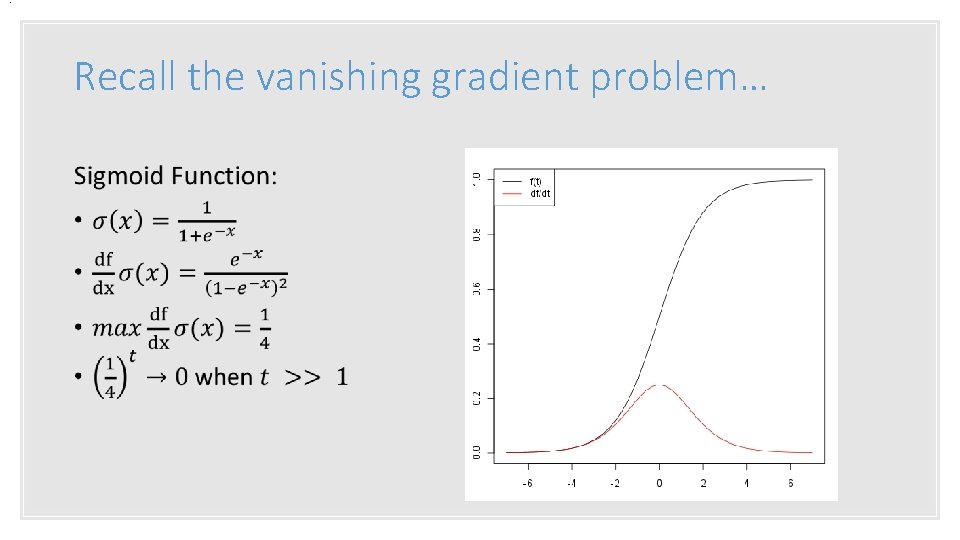

Recall the vanishing gradient problem…

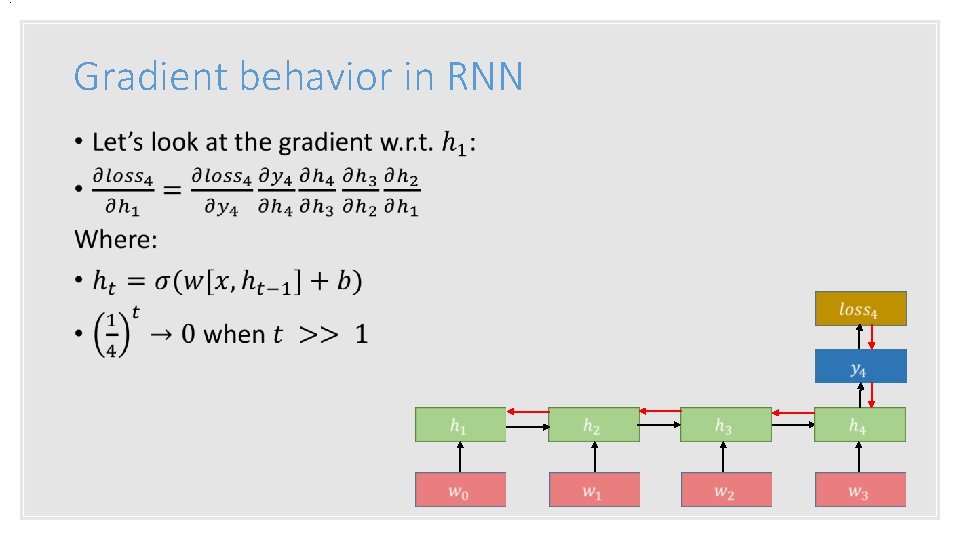

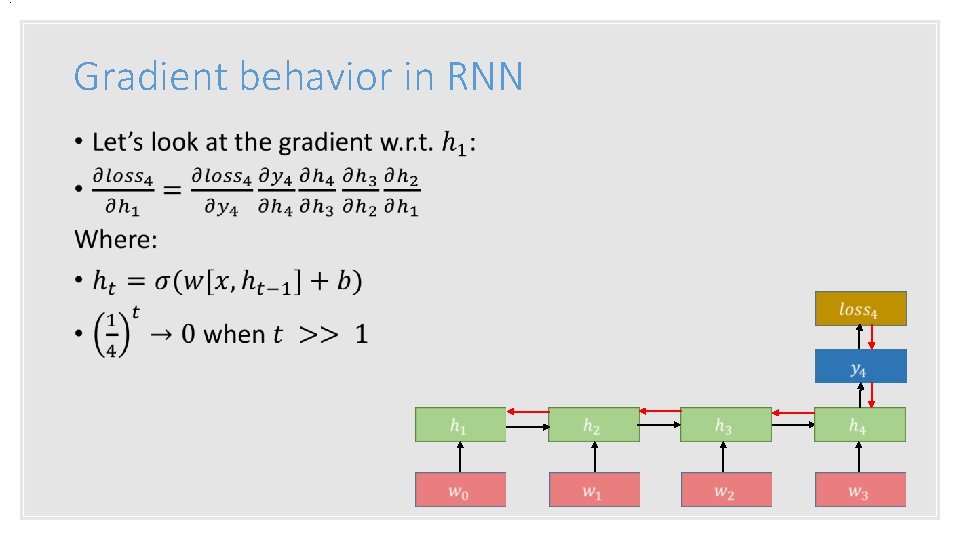

Gradient behavior in RNN

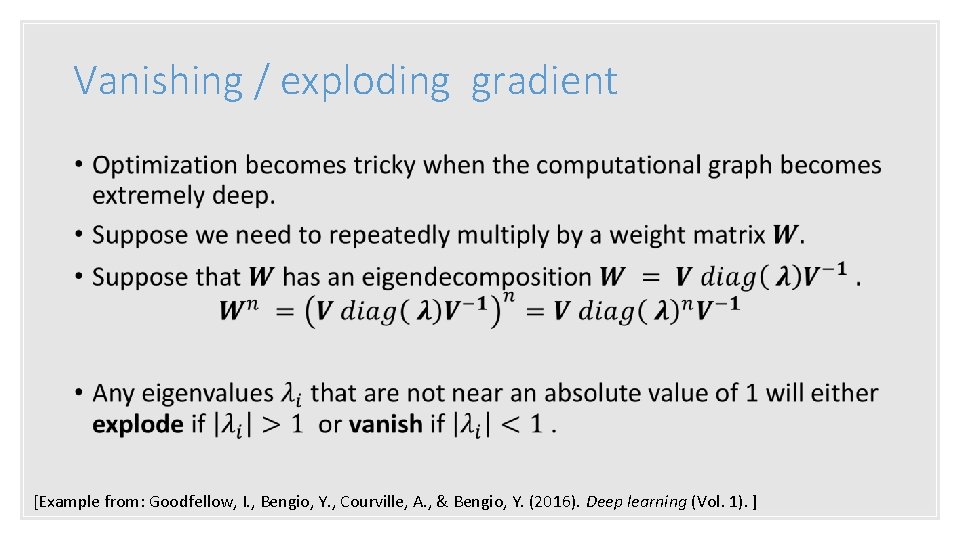

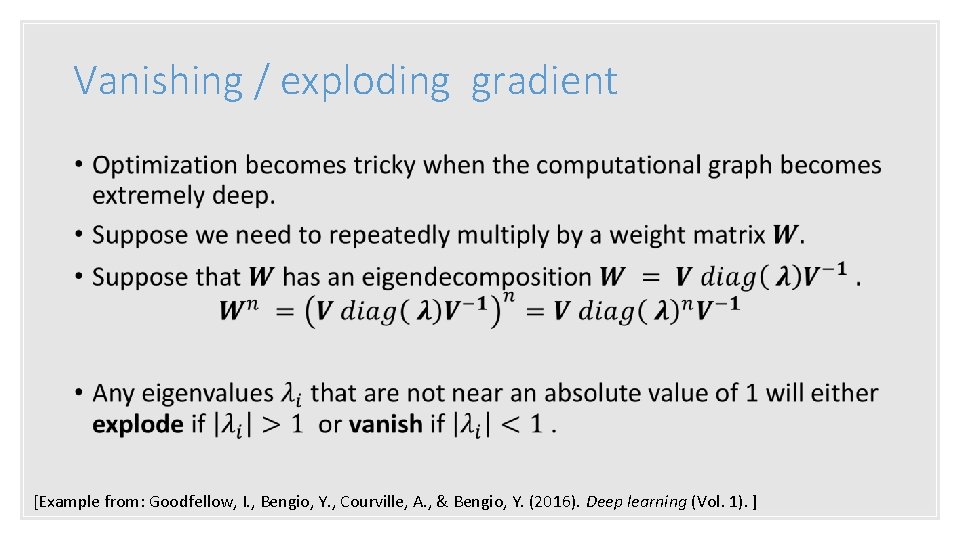

Vanishing / exploding gradient • [Example from: Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep learning (Vol. 1).]
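The slide's worked example is not recoverable from the text, so the following is only a simplified stand-in. It assumes a scalar linear recurrence `h_t = w * h_{t-1}`, for which the chain rule gives one factor of `w` per time step; the values 0.9, 1.1 and 50 steps are arbitrary choices.

```python
def gradient_through_time(w, steps):
    """d h_T / d h_0 for the linear recurrence h_t = w * h_{t-1}."""
    grad = 1.0
    for _ in range(steps):
        grad *= w  # one factor of w per time step (chain rule)
    return grad


vanishing = gradient_through_time(0.9, 50)  # |w| < 1: shrinks toward zero
exploding = gradient_through_time(1.1, 50)  # |w| > 1: grows without bound
print(vanishing, exploding)
```

In the matrix case the same reasoning applies to the eigenvalues of the recurrent weight matrix: magnitudes below 1 shrink the gradient over many steps and magnitudes above 1 amplify it.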

Vanishing / exploding gradient

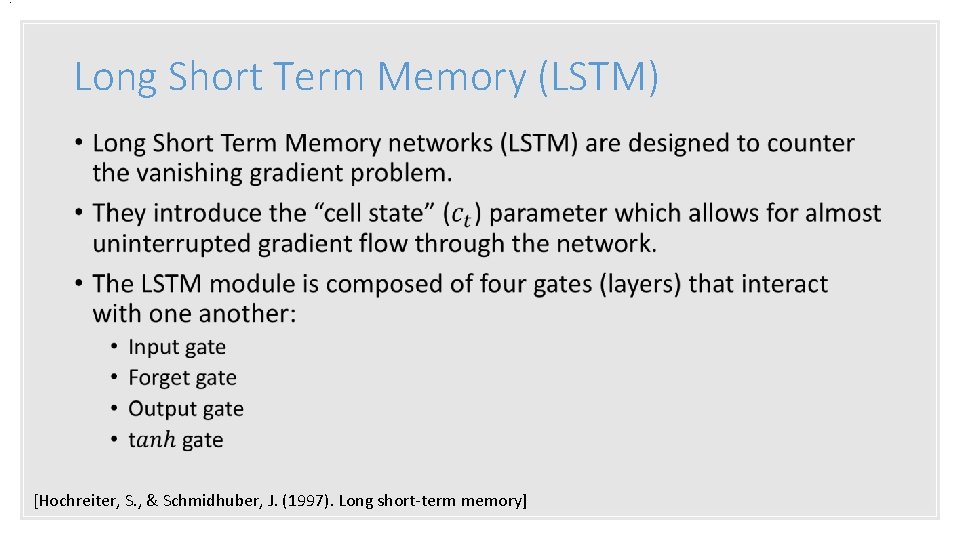

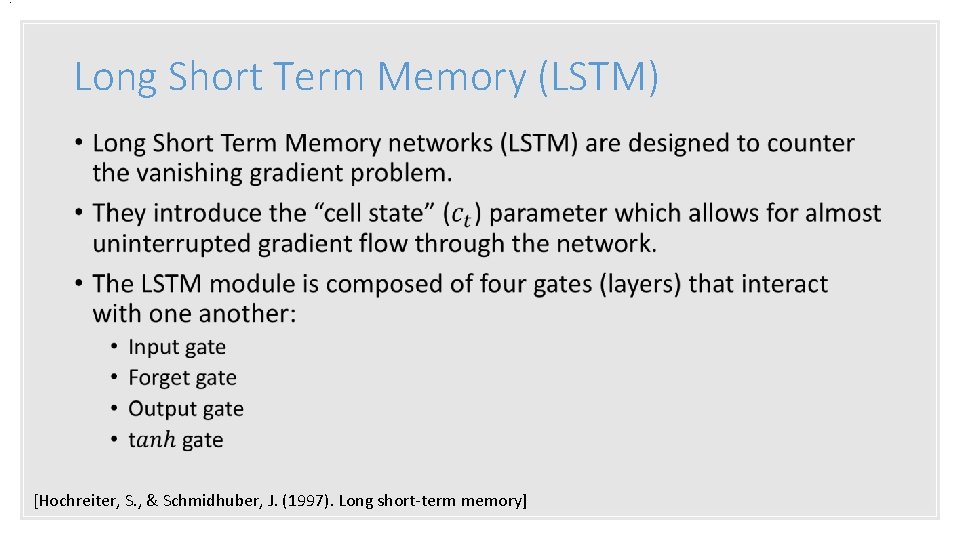

Long Short Term Memory (LSTM) • [Hochreiter, S., & Schmidhuber, J. (1997). Long short-term memory]

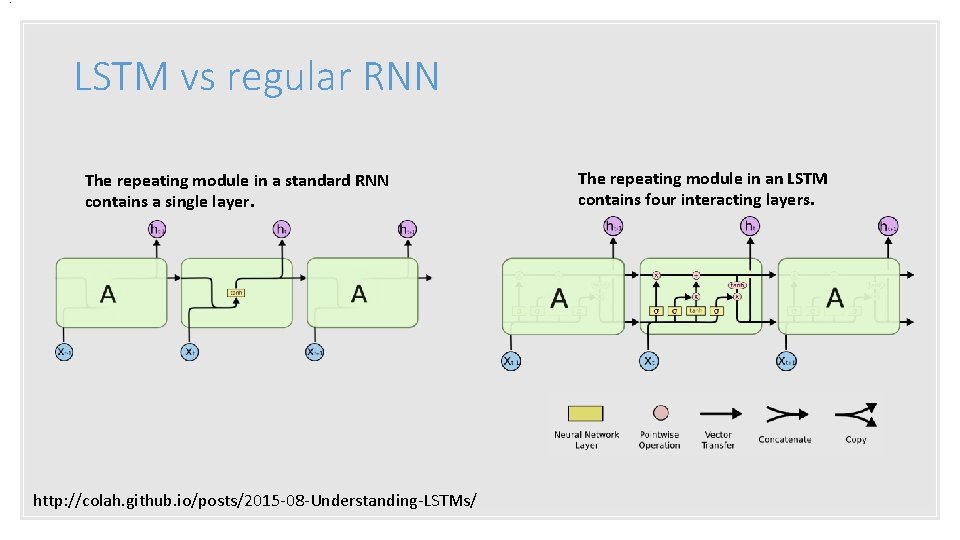

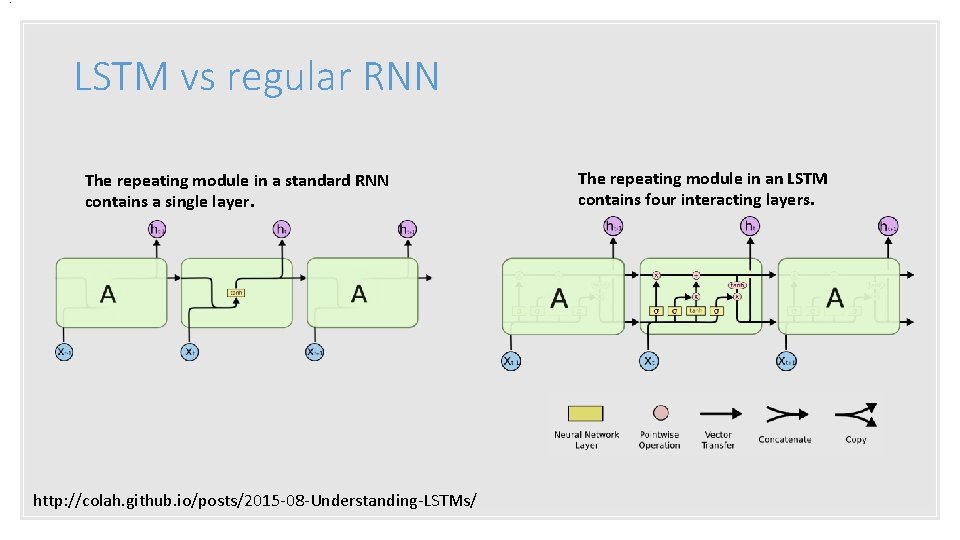

LSTM vs regular RNN • The repeating module in a standard RNN contains a single layer. • The repeating module in an LSTM contains four interacting layers. http://colah.github.io/posts/2015-08-Understanding-LSTMs/
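To make the contrast concrete, here is a sketch of one time step of each module, using the common LSTM formulation with forget, input, candidate (tanh) and output layers. The parameter names (`Wf`, `Wi`, `Wc`, `Wo` and matching biases), the list-based helpers and the all-zero weights are assumptions for illustration; the lecture's own notation is in the slide images.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, v, b):
    """Compute W @ v + b for a list-of-rows matrix W."""
    return [sum(wij * vj for wij, vj in zip(row, v)) + bi
            for row, bi in zip(W, b)]


def rnn_step(x, h_prev, W, b):
    """Standard RNN: a single tanh layer applied to [h_prev, x]."""
    return [math.tanh(a) for a in affine(W, h_prev + x, b)]


def lstm_step(x, h_prev, c_prev, p):
    """LSTM: four layers that all read the same input [h_prev, x]."""
    z = h_prev + x  # list concatenation
    f = [sigmoid(a) for a in affine(p["Wf"], z, p["bf"])]    # forget gate
    i = [sigmoid(a) for a in affine(p["Wi"], z, p["bi"])]    # input gate
    g = [math.tanh(a) for a in affine(p["Wc"], z, p["bc"])]  # candidate values
    o = [sigmoid(a) for a in affine(p["Wo"], z, p["bo"])]    # output gate
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


hidden, inputs = 2, 1
zeros_W = [[0.0] * (hidden + inputs) for _ in range(hidden)]
zeros_b = [0.0] * hidden
params = {"Wf": zeros_W, "bf": zeros_b, "Wi": zeros_W, "bi": zeros_b,
          "Wc": zeros_W, "bc": zeros_b, "Wo": zeros_W, "bo": zeros_b}

x, h_prev, c_prev = [1.0], [0.0, 0.0], [1.0, -2.0]
h_rnn = rnn_step(x, h_prev, zeros_W, zeros_b)
h, c = lstm_step(x, h_prev, c_prev, params)
print(h_rnn, h, c)
```

With all-zero weights every gate equals 0.5 and the candidate is 0, so the new cell state is simply half of the old one. The cell state update is additive, which is the path the later gradient-flow slides refer to.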

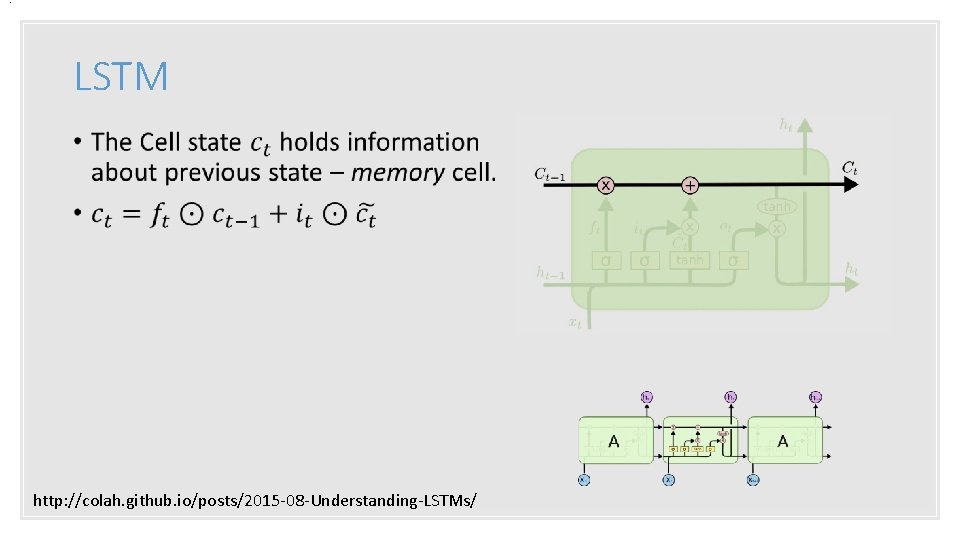

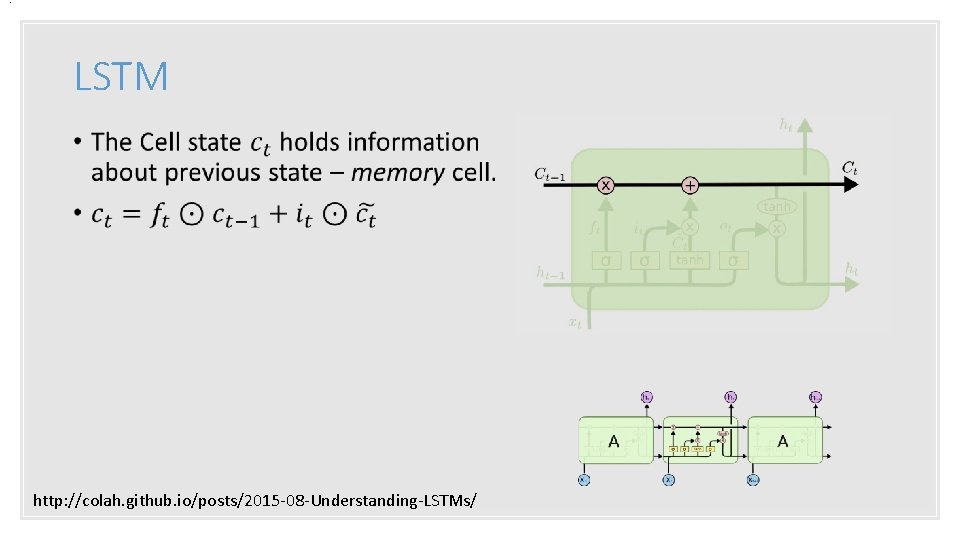

LSTM • http://colah.github.io/posts/2015-08-Understanding-LSTMs/

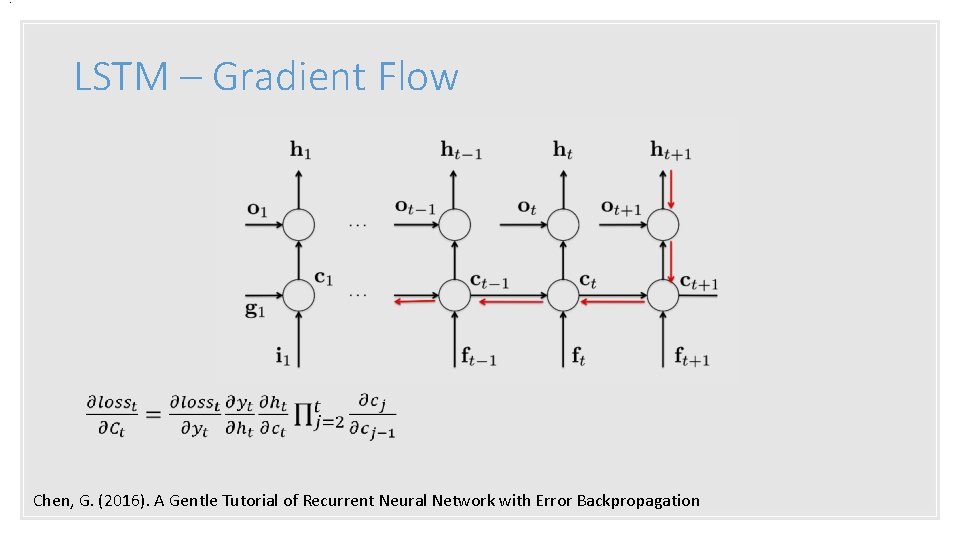

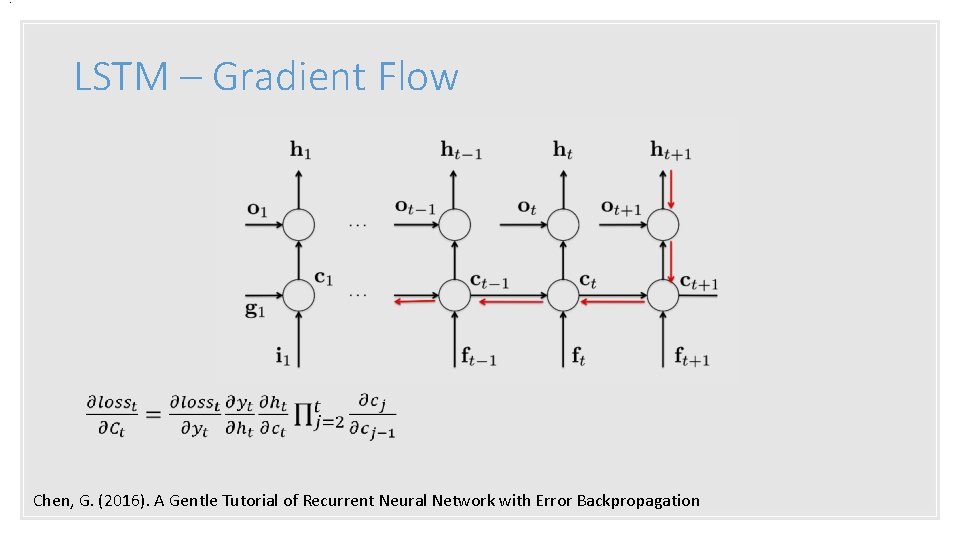

LSTM – Gradient Flow [Chen, G. (2016). A Gentle Tutorial of Recurrent Neural Network with Error Backpropagation]

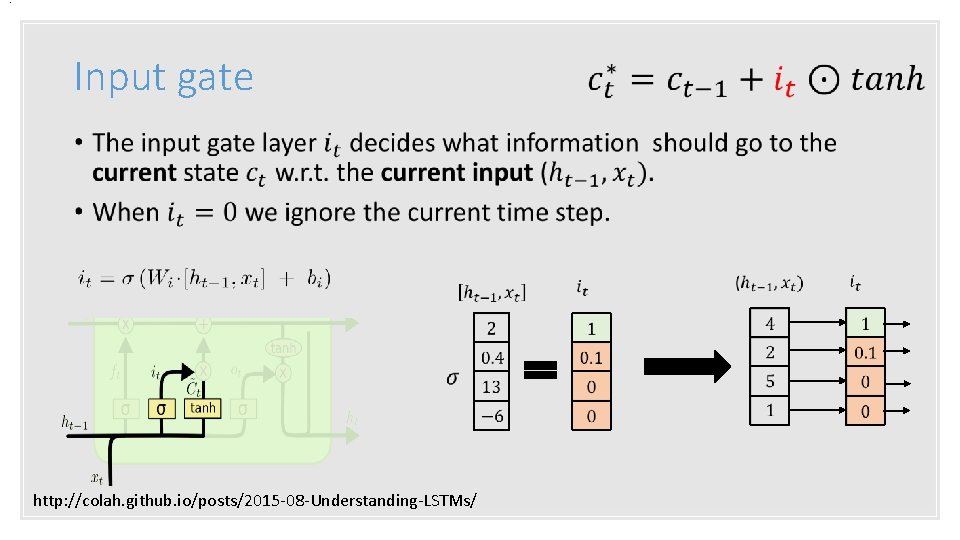

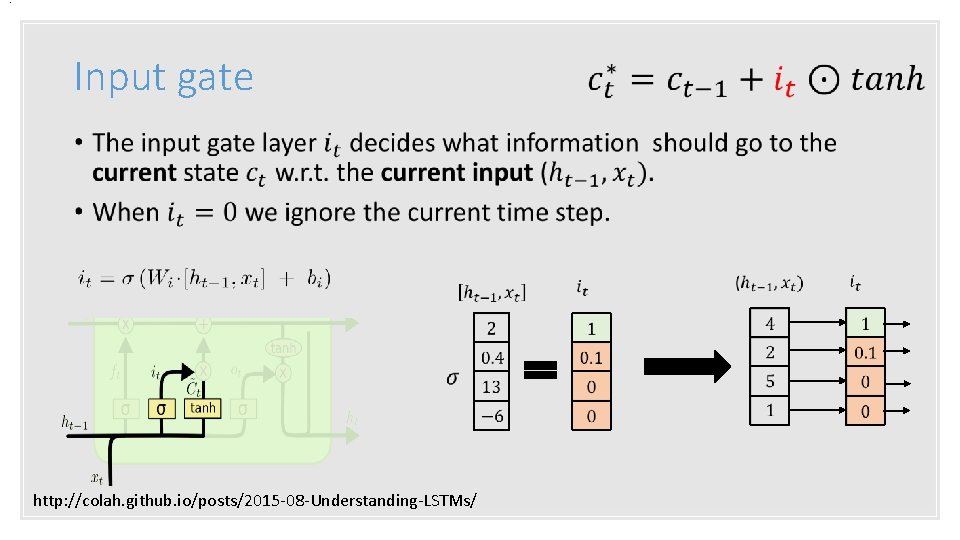

Input gate • http://colah.github.io/posts/2015-08-Understanding-LSTMs/

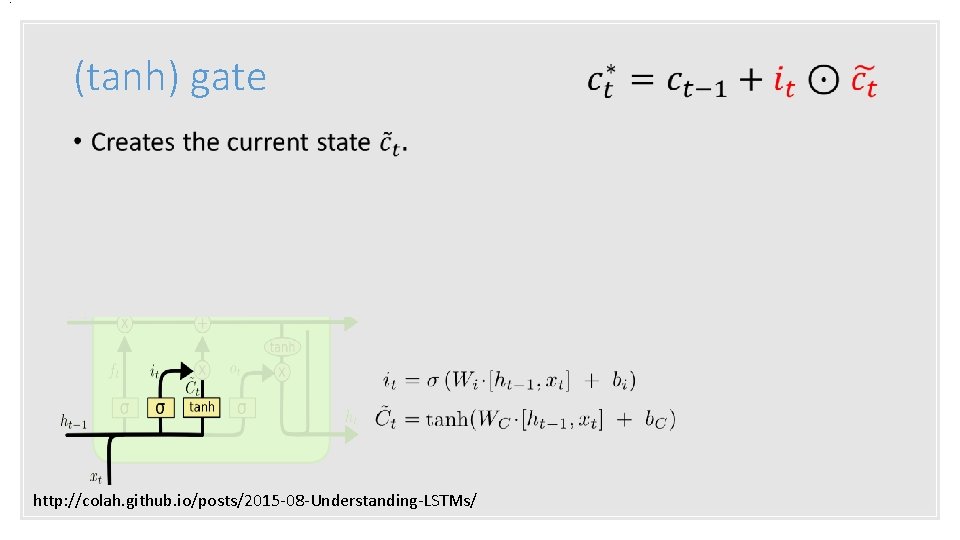

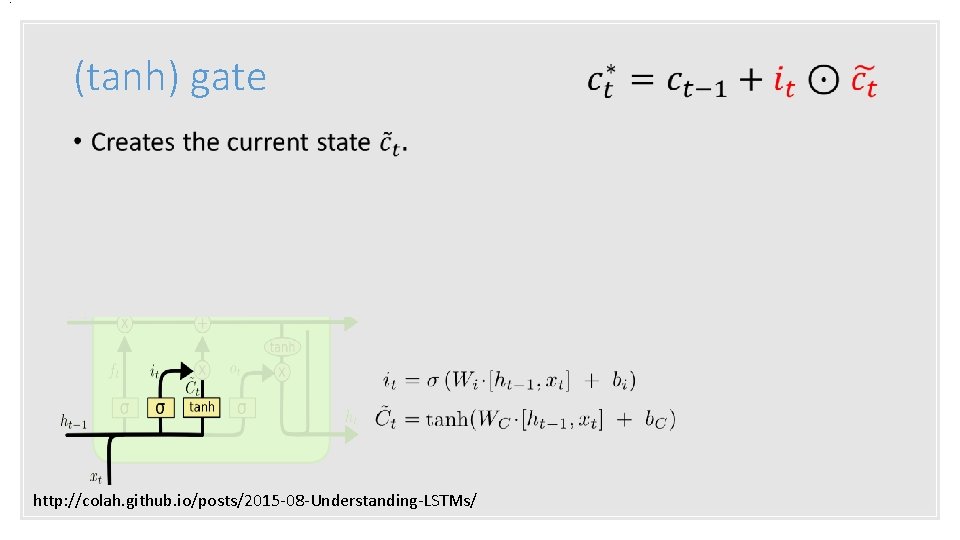

(tanh) gate • http://colah.github.io/posts/2015-08-Understanding-LSTMs/

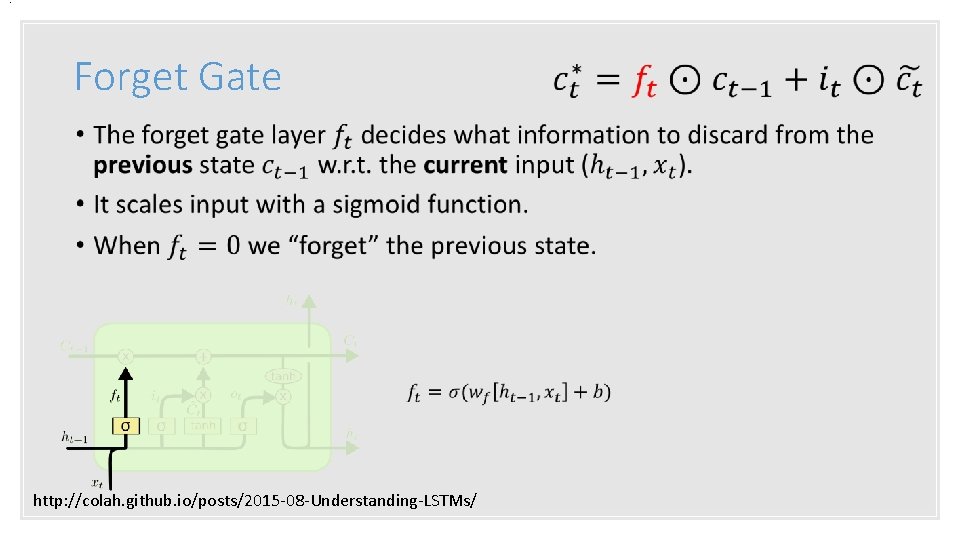

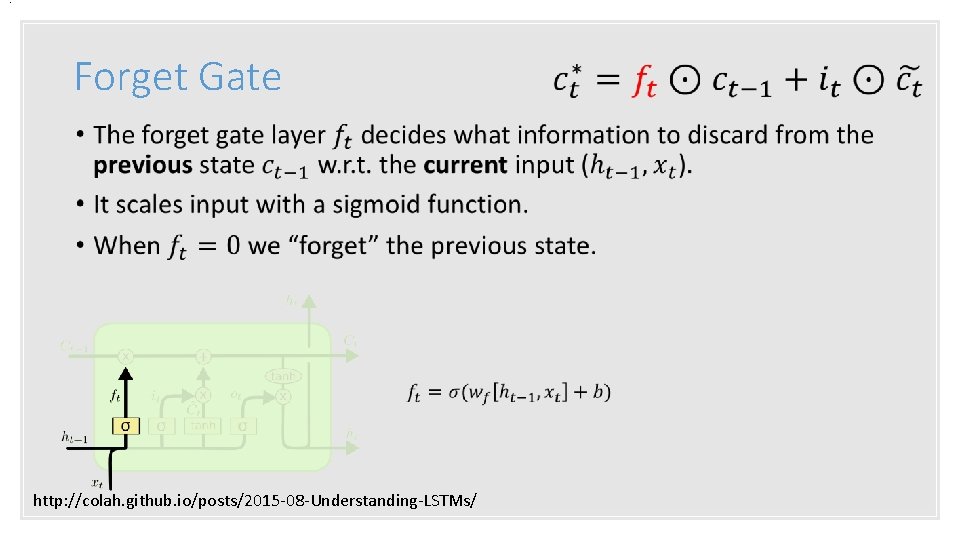

Forget Gate • http://colah.github.io/posts/2015-08-Understanding-LSTMs/

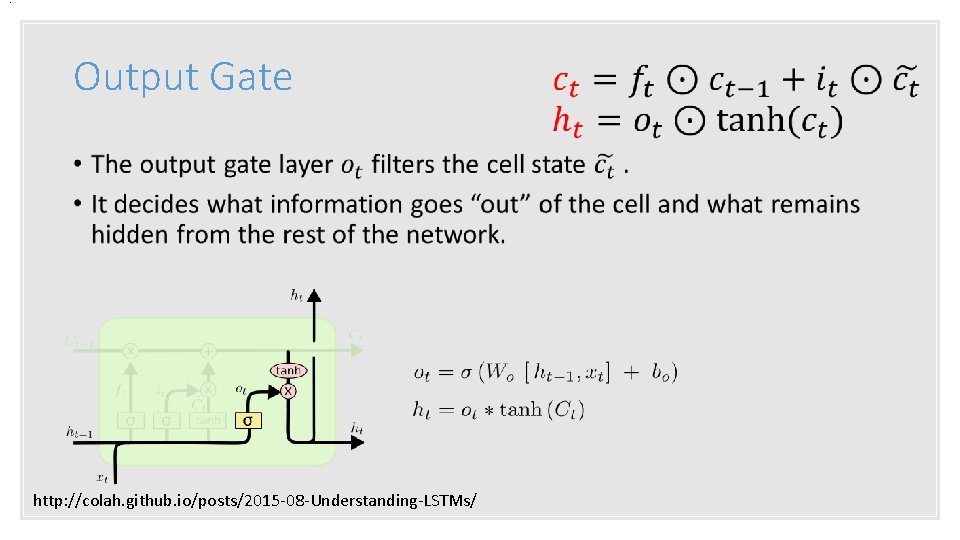

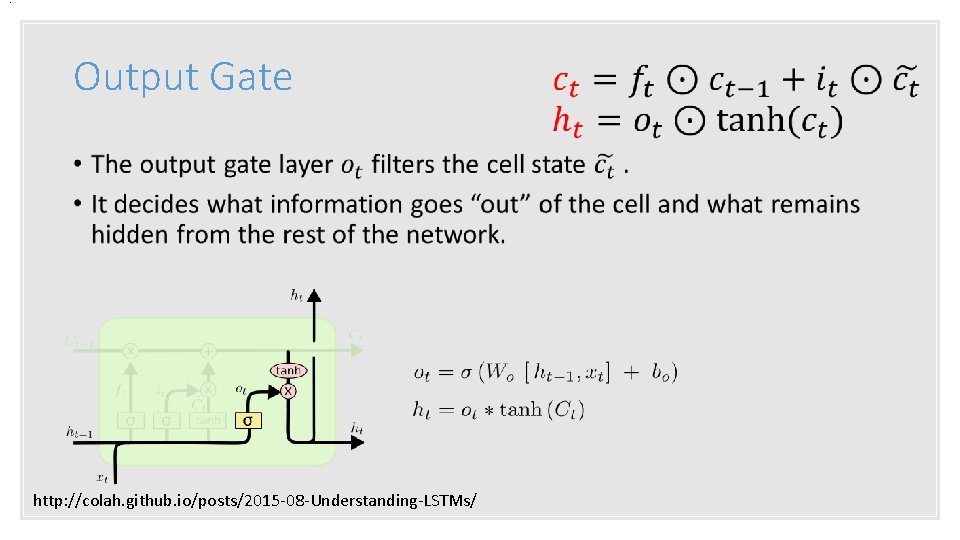

Output Gate • http://colah.github.io/posts/2015-08-Understanding-LSTMs/

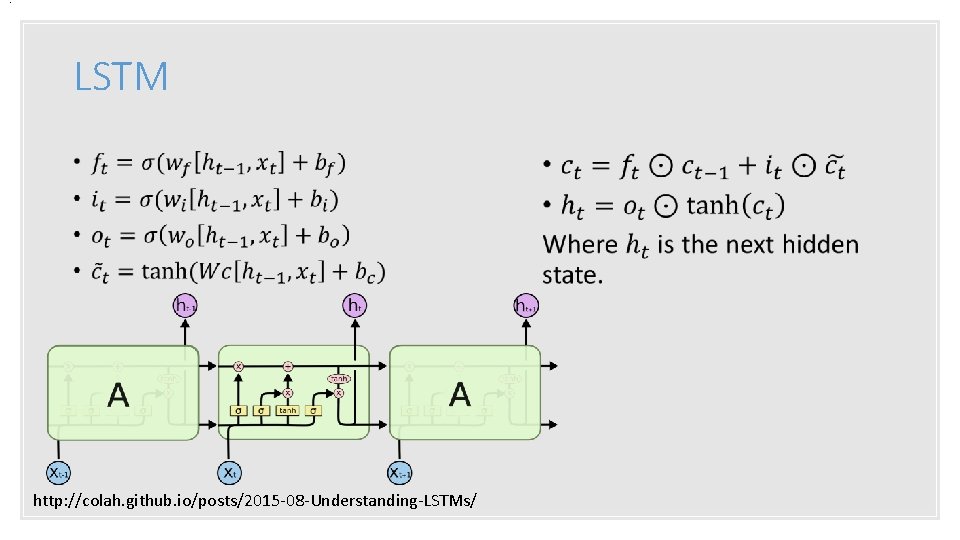

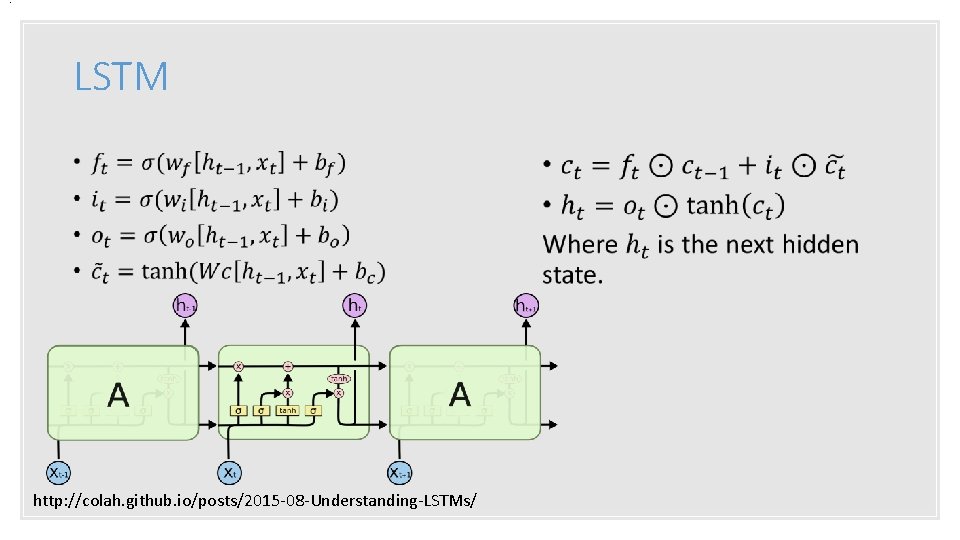

LSTM • http://colah.github.io/posts/2015-08-Understanding-LSTMs/

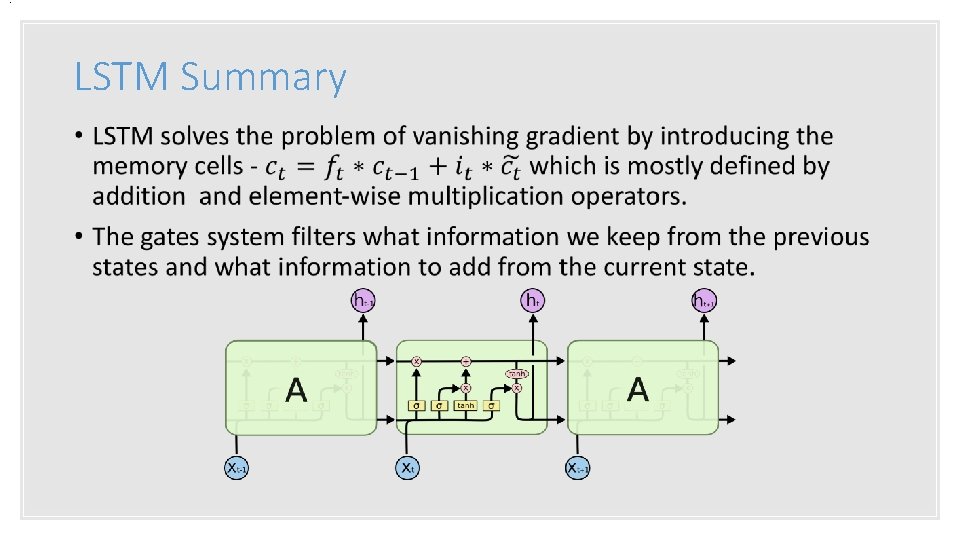

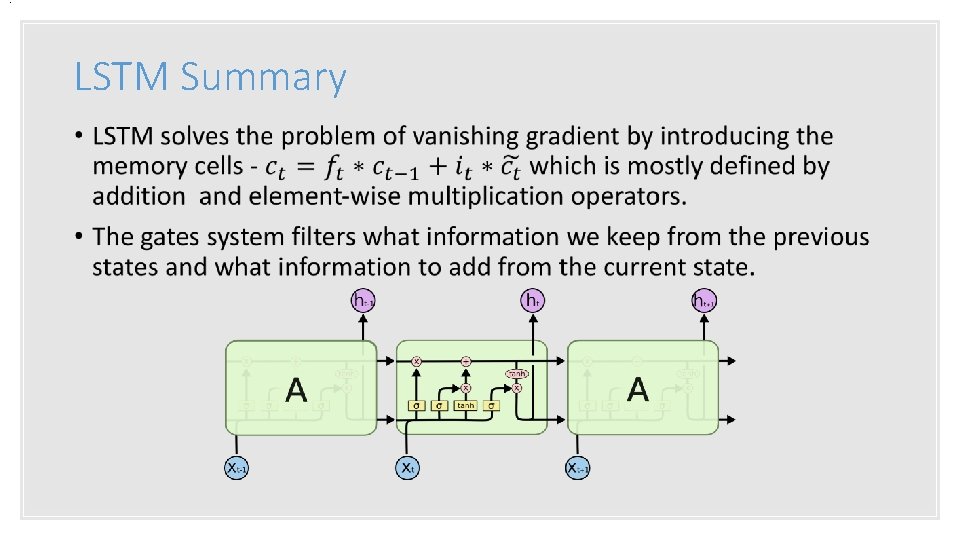

LSTM Summary

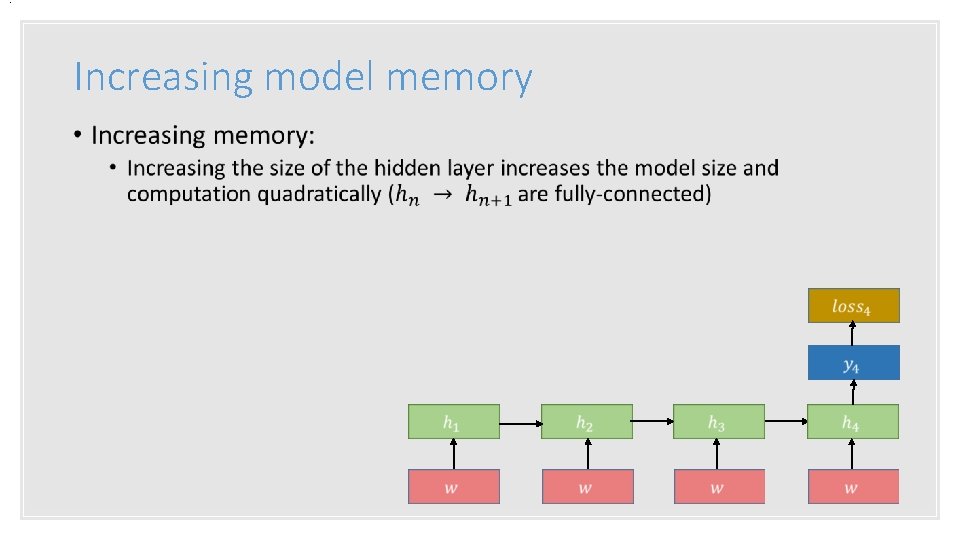

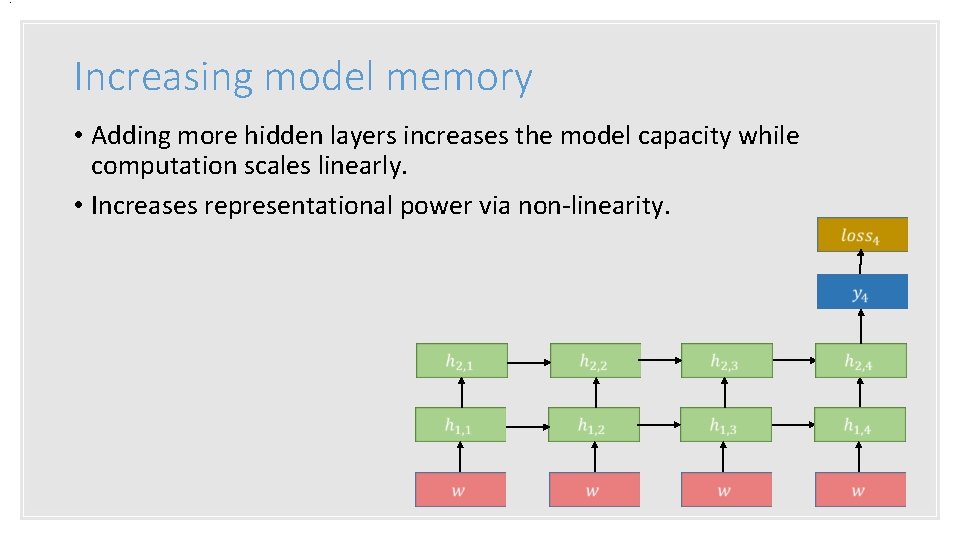

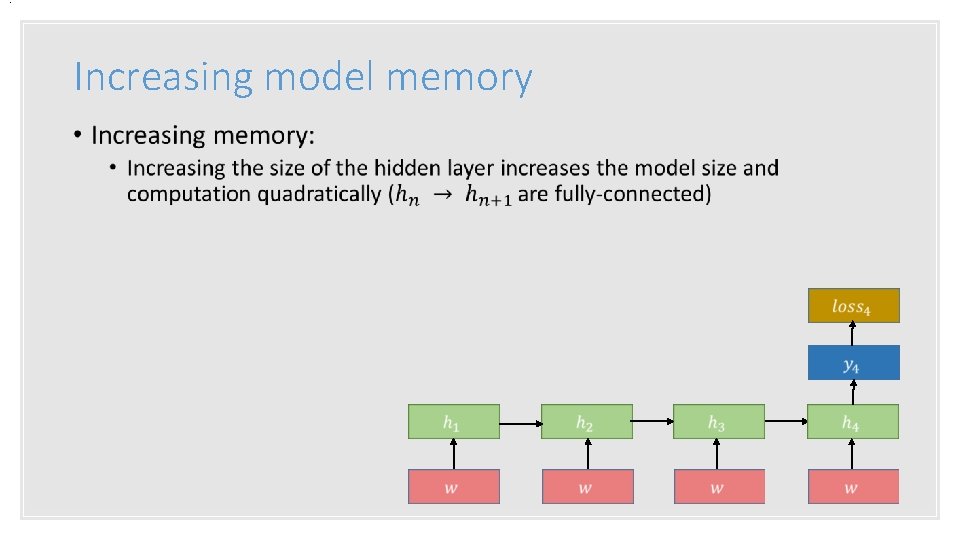

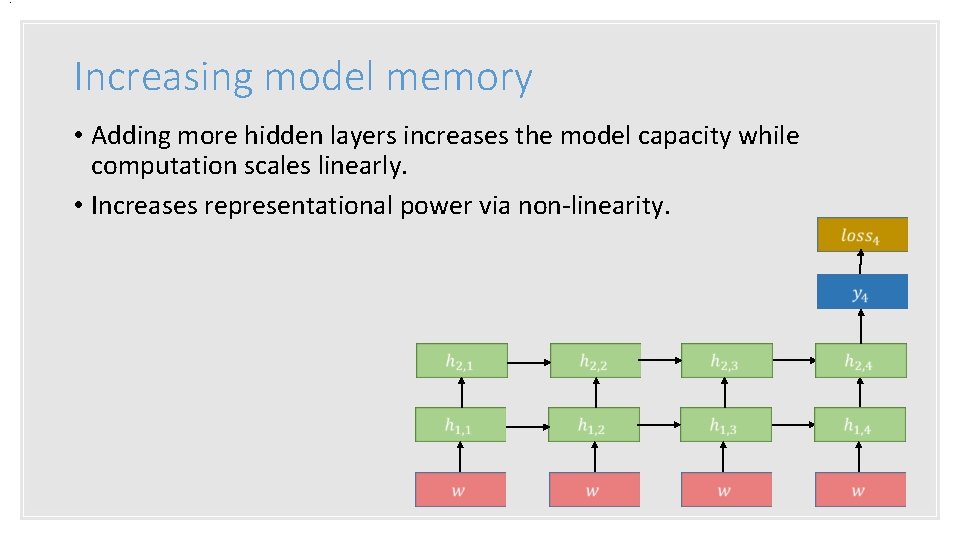

Increasing model memory • Adding more hidden layers increases the model capacity while computation scales linearly. • Increases representational power via non-linearity.

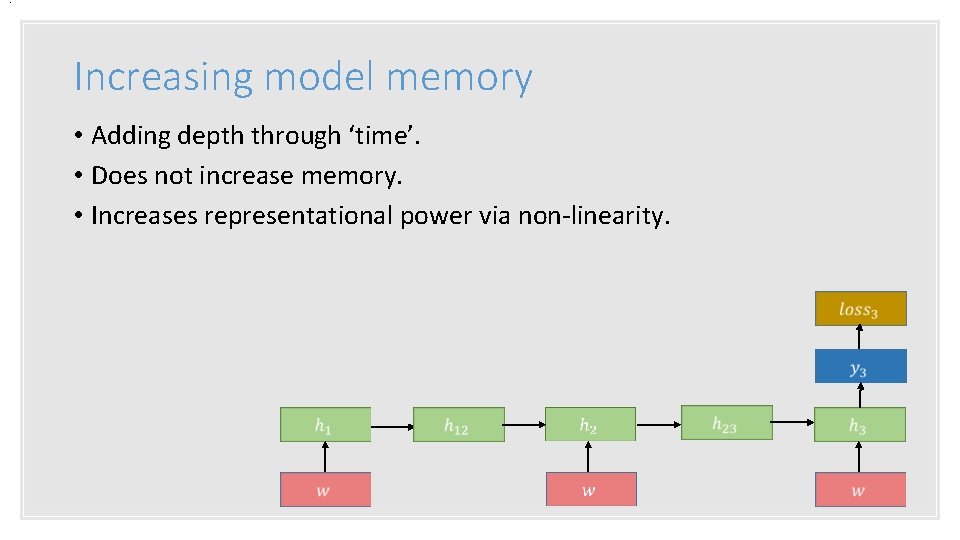

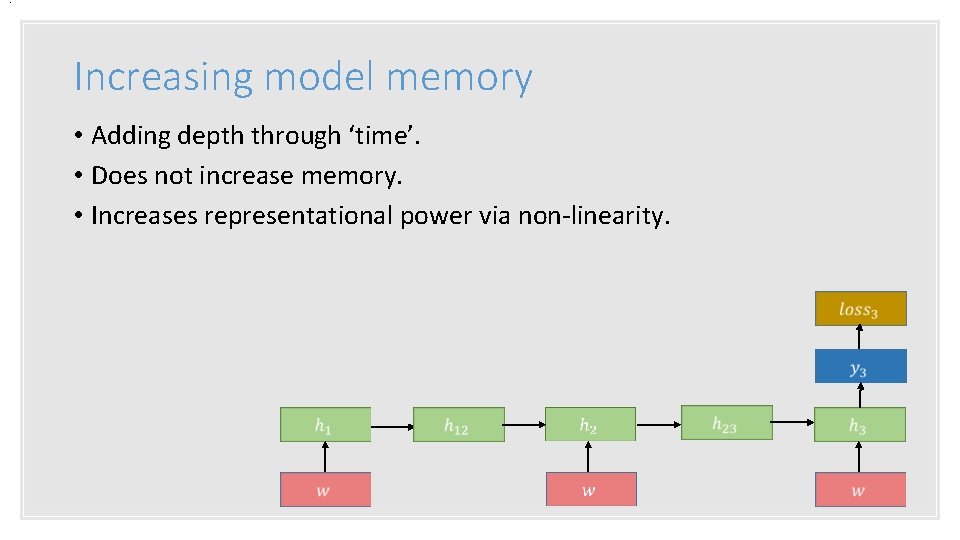

Increasing model memory • Adding depth through ‘time’. • Does not increase memory. • Increases representational power via non-linearity.

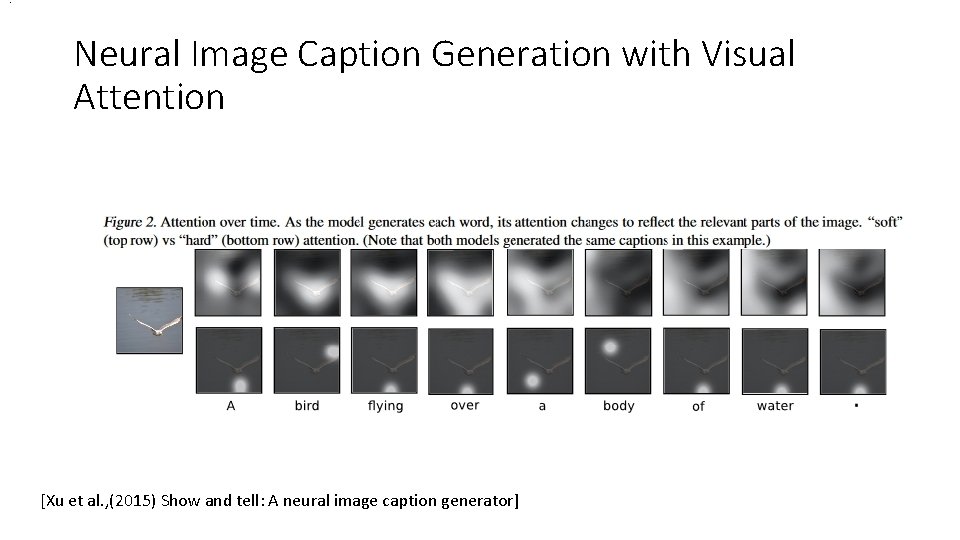

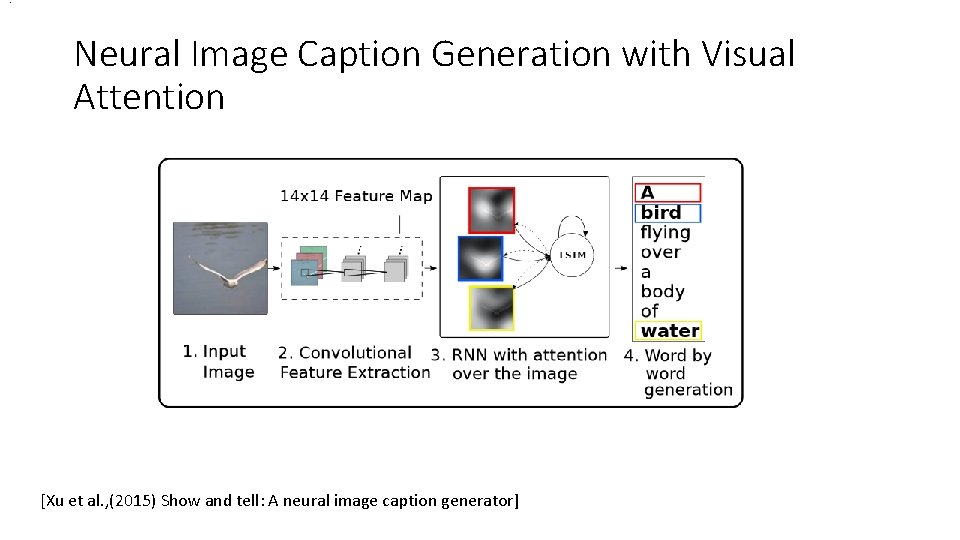

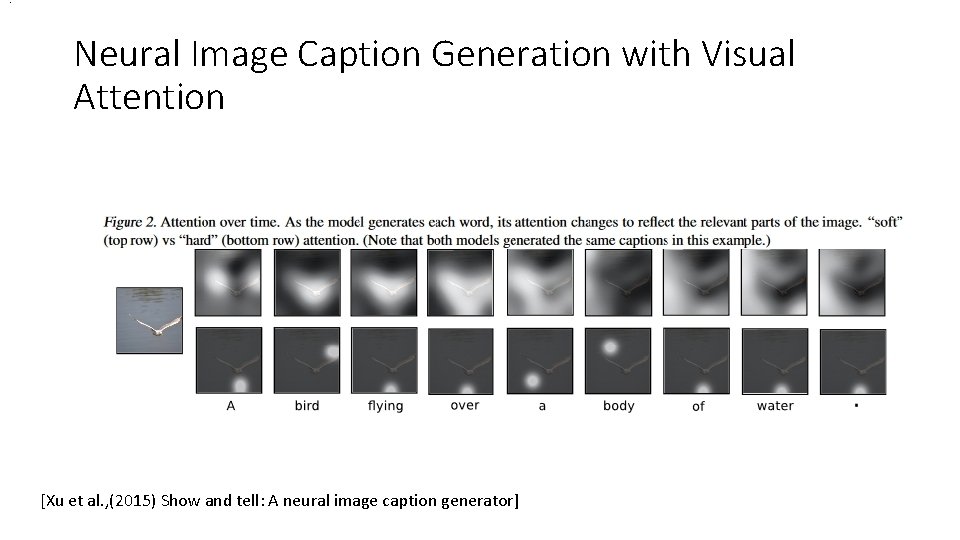

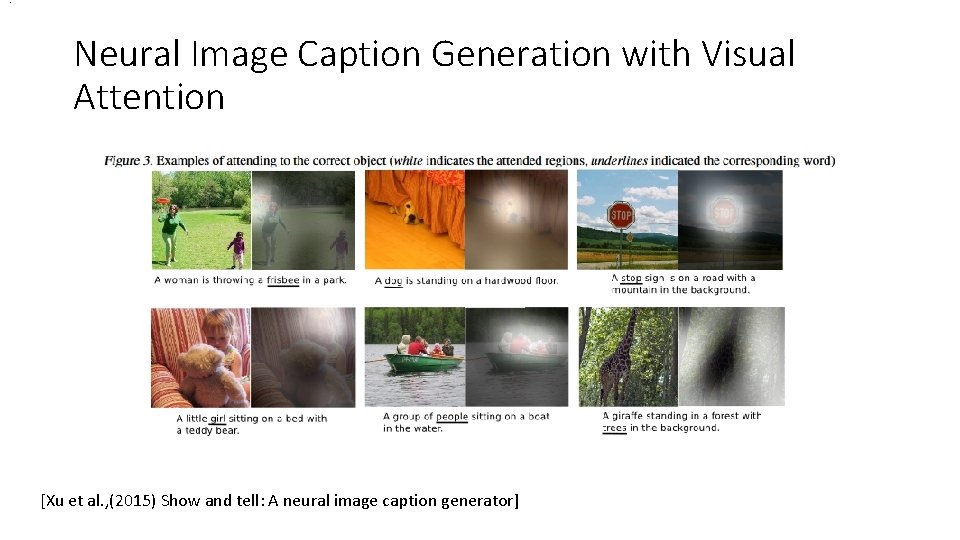

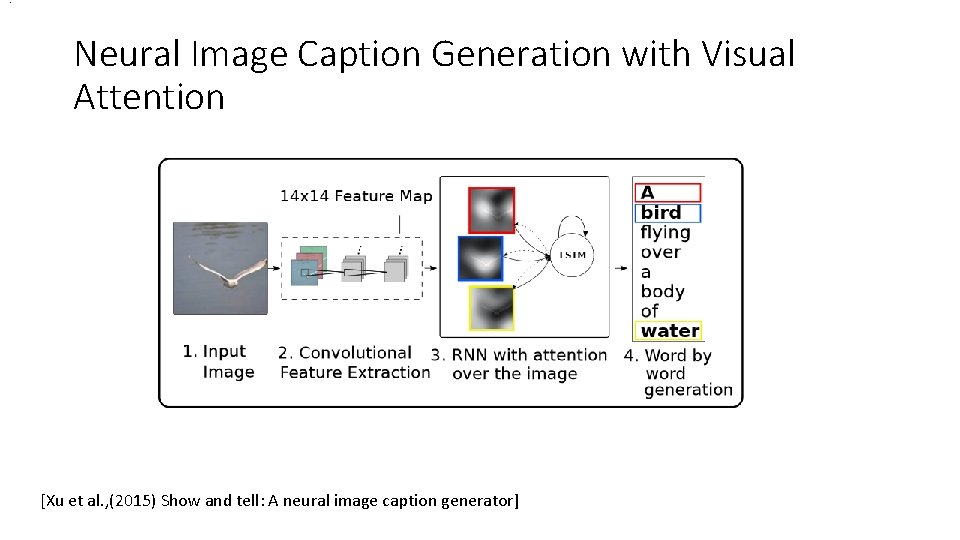

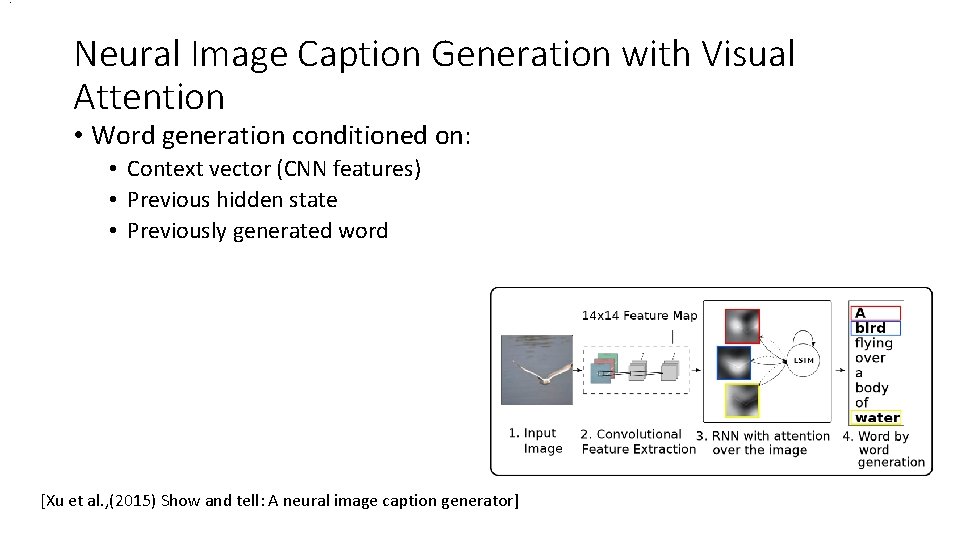

Neural Image Caption Generation with Visual Attention [Xu et al. (2015). Show, Attend and Tell: Neural Image Caption Generation with Visual Attention]

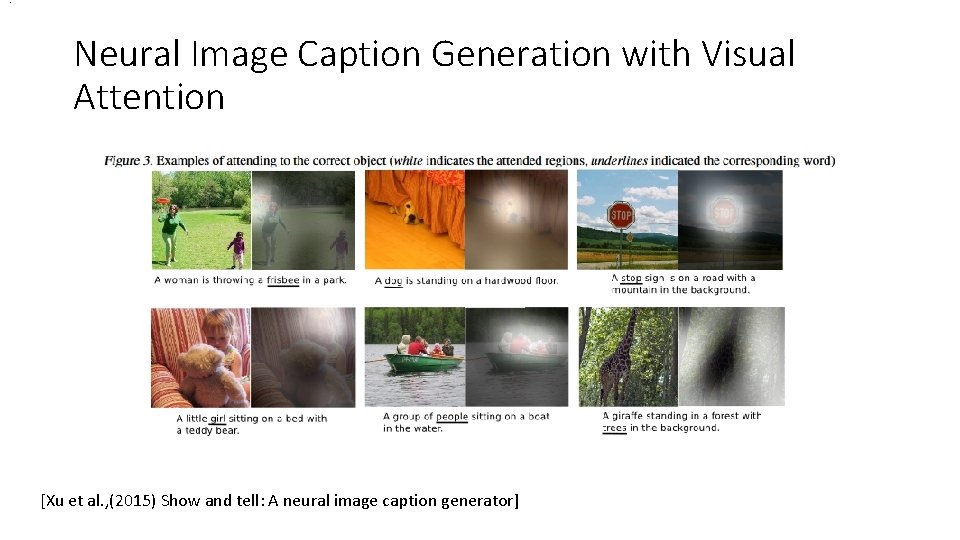

Neural Image Caption Generation with Visual Attention [Xu et al. (2015). Show, Attend and Tell: Neural Image Caption Generation with Visual Attention]

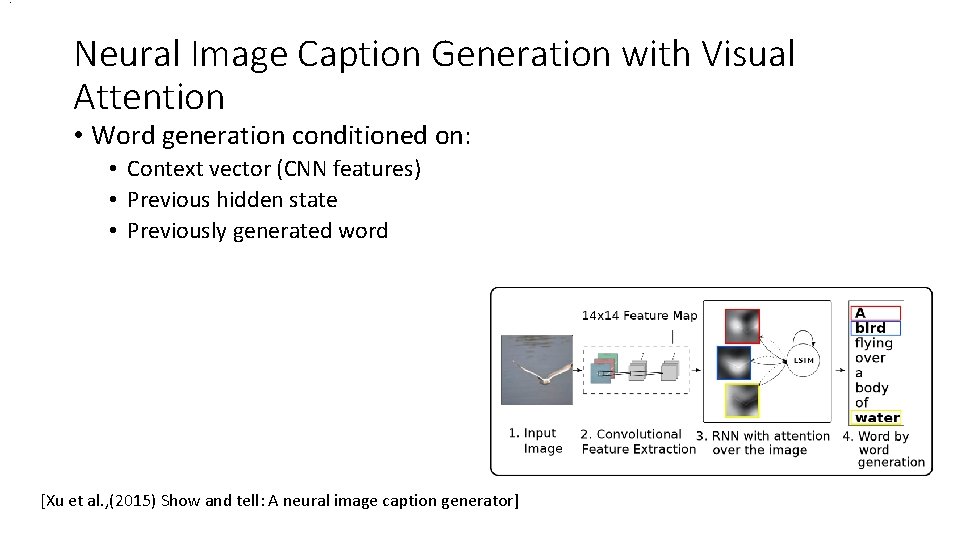

Neural Image Caption Generation with Visual Attention • Word generation conditioned on: • Context vector (CNN features) • Previous hidden state • Previously generated word [Xu et al. (2015). Show, Attend and Tell: Neural Image Caption Generation with Visual Attention]
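One way to picture the context vector is as a softmax-weighted average of CNN feature vectors, with weights that depend on the previous hidden state. The sketch below is a simplification under stated assumptions: it scores each image location with a plain dot product, whereas Xu et al. use a small learned network for the scoring, and the feature values and the name `soft_attention` are made up.

```python
import math


def soft_attention(features, h_prev):
    """Return (context vector, attention weights) over image locations."""
    # Score each location against the previous hidden state.
    # Dot-product scoring is an assumption made for this sketch.
    scores = [sum(f * h for f, h in zip(feat, h_prev)) for feat in features]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(features[0])
    context = [sum(w * feat[d] for w, feat in zip(weights, features))
               for d in range(dim)]
    return context, weights


# Three image locations with 2-dimensional features (made-up values).
features = [[1.0, 0.0],
            [0.0, 1.0],
            [1.0, 1.0]]
h_prev = [2.0, 0.0]
context, weights = soft_attention(features, h_prev)
print(context, weights)
```

The resulting context vector, together with the previous hidden state and the previously generated word, would then be fed to the recurrent decoder to predict the next word.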

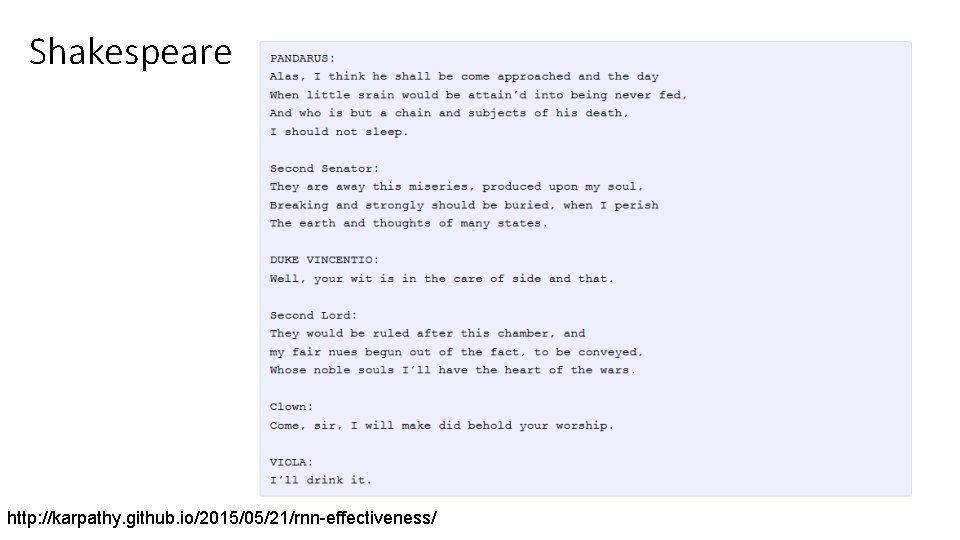

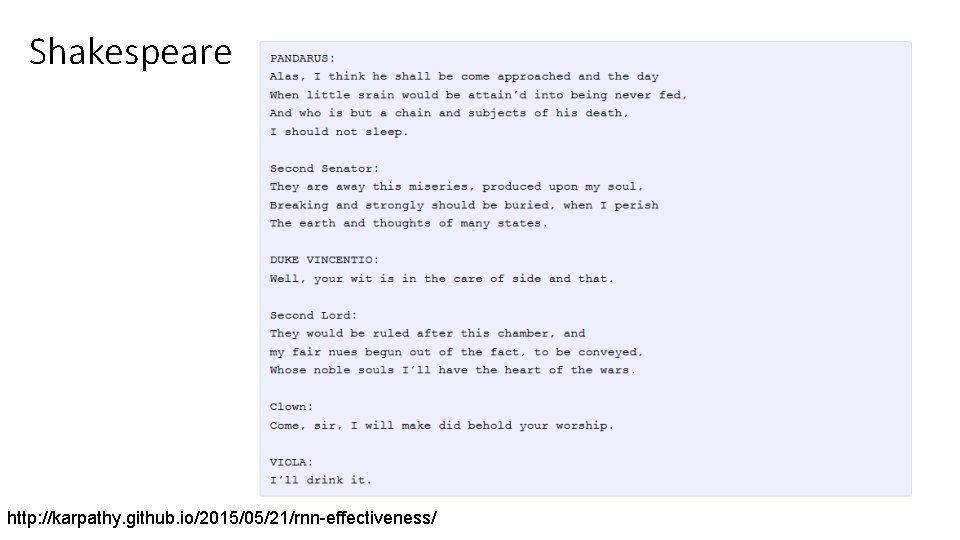

Shakespeare http://karpathy.github.io/2015/05/21/rnn-effectiveness/

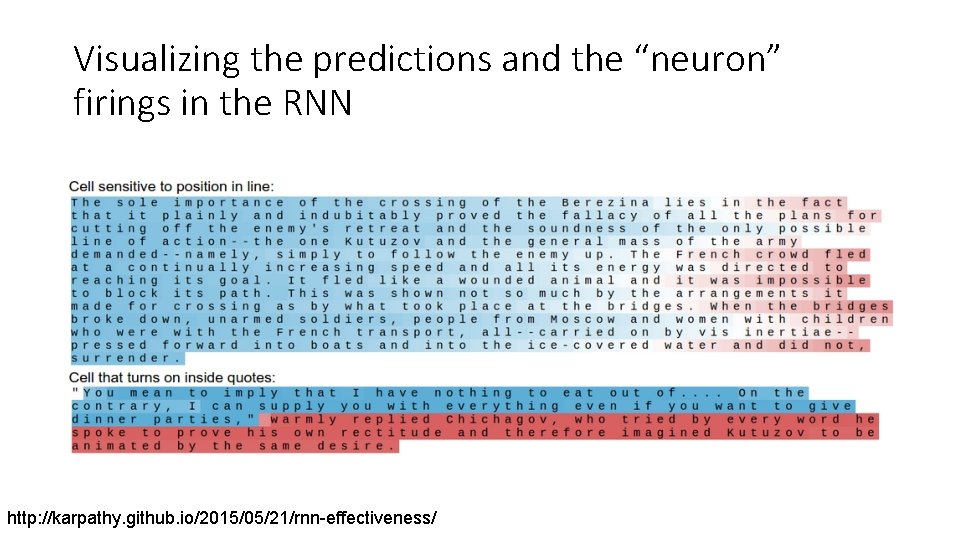

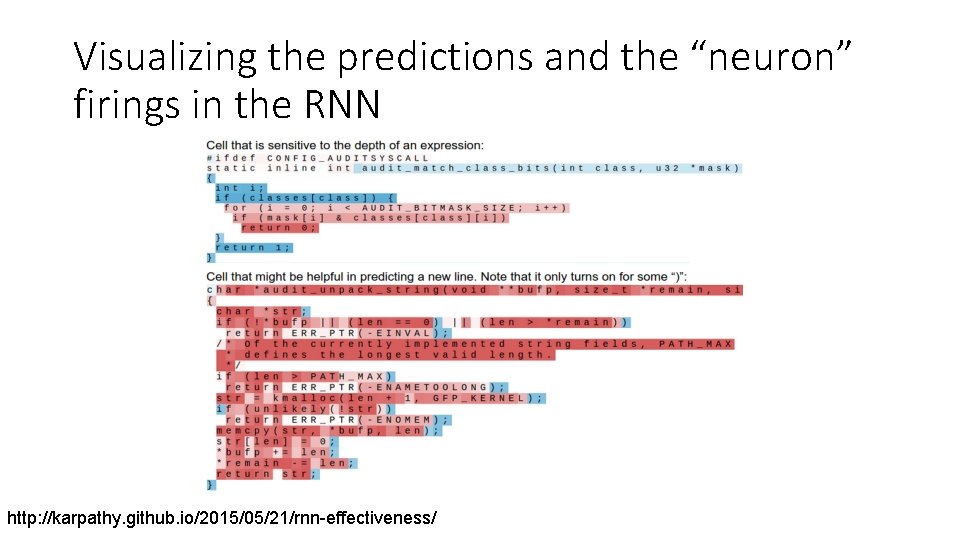

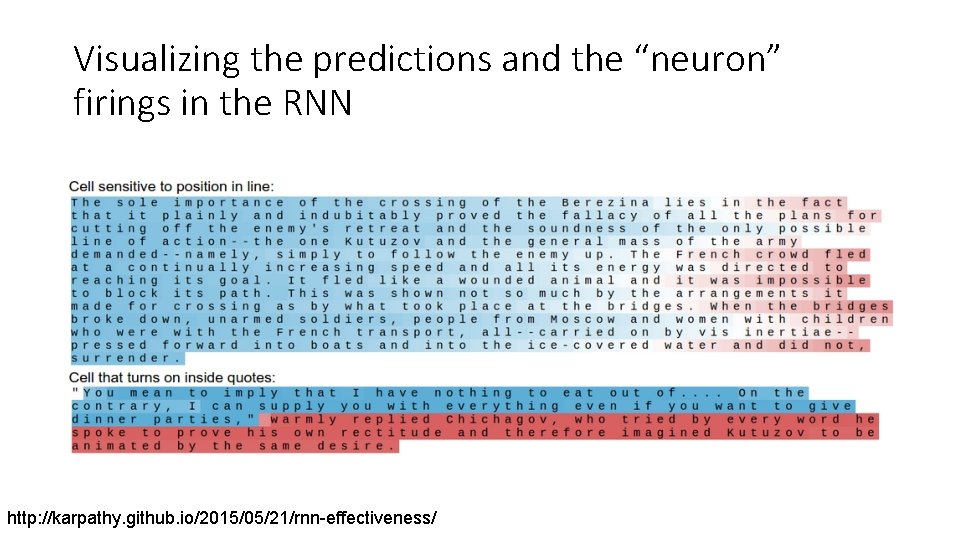

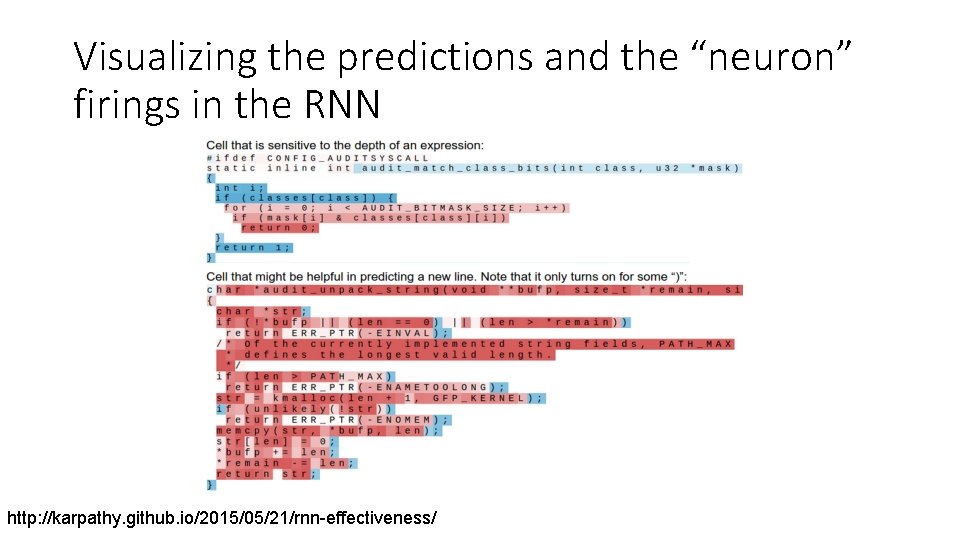

Visualizing the predictions and the “neuron” firings in the RNN http://karpathy.github.io/2015/05/21/rnn-effectiveness/

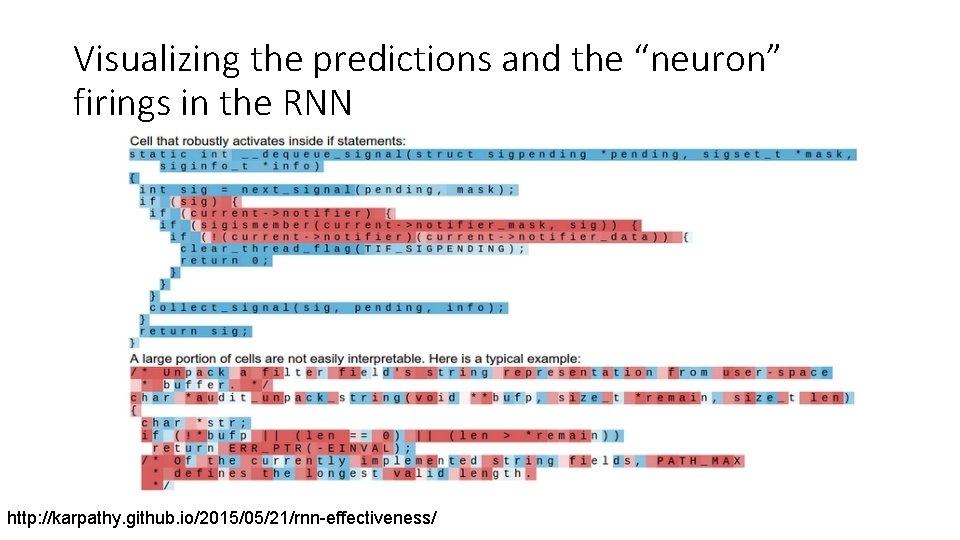

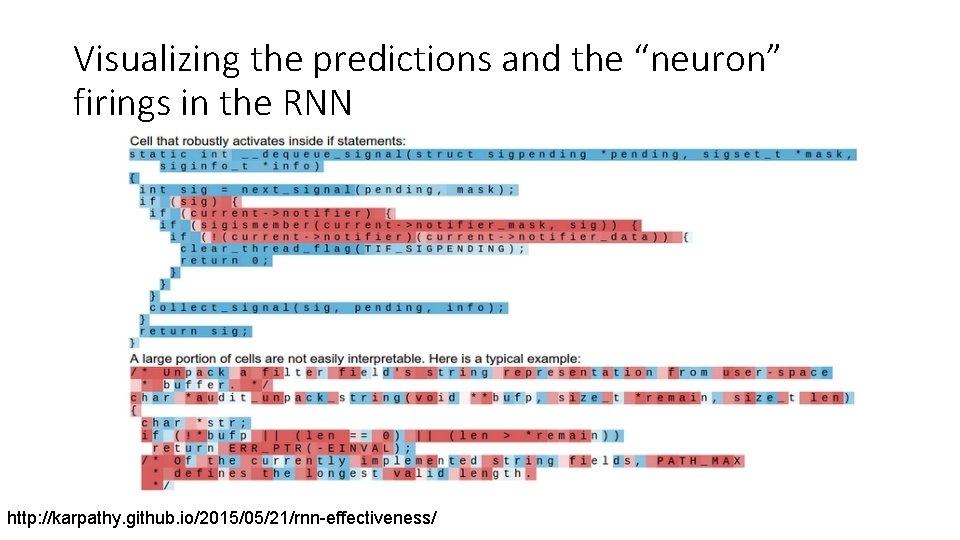


![http://norman-ai.mit.edu/](https://slidetodoc.com/presentation_image_h/b16eb6c3a944e909f2dd9aa58b3bb47a/image-44.jpg)

[http://norman-ai.mit.edu/]

![http://norman-ai.mit.edu/](https://slidetodoc.com/presentation_image_h/b16eb6c3a944e909f2dd9aa58b3bb47a/image-45.jpg)

[http://norman-ai.mit.edu/]

![http://norman-ai.mit.edu/](https://slidetodoc.com/presentation_image_h/b16eb6c3a944e909f2dd9aa58b3bb47a/image-46.jpg)

[http://norman-ai.mit.edu/]

Summary • Deep learning is a field of machine learning that uses artificial neural networks to learn representations in a hierarchical manner. • Convolutional neural networks achieved state-of-the-art results in the field of computer vision by: • Reducing the number of parameters via shared weights and local connectivity. • Using a hierarchical model. • Recurrent neural networks are used for sequence modelling. The vanishing gradient problem is addressed by a model called LSTM.
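The parameter savings from shared weights and local connectivity can be checked with simple arithmetic. The sizes below follow the AlexNet first layer (96 kernels of 11 x 11 x 3 on a 224 x 224 x 3 input) and are used only as an illustrative assumption, as is the comparison against a fully connected layer with just 96 outputs.

```python
# Input image: height x width x channels.
in_h, in_w, in_c = 224, 224, 3

# Convolutional layer: 96 kernels of 11 x 11 x 3, one bias per kernel.
# The same kernel weights are reused at every spatial position.
num_kernels, k = 96, 11
conv_params = num_kernels * (k * k * in_c + 1)

# Fully connected layer with only 96 outputs: every output unit has its
# own weight for every input value, plus one bias.
fc_outputs = 96
fc_params = fc_outputs * (in_h * in_w * in_c) + fc_outputs

print(conv_params)              # 34944
print(fc_params)                # 14450784
print(fc_params / conv_params)  # how many times more parameters
```

The fully connected layer here produces only 96 numbers per image, while the convolutional layer produces 96 full feature maps, so the real gap for a comparable output is far larger.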

Thanks!