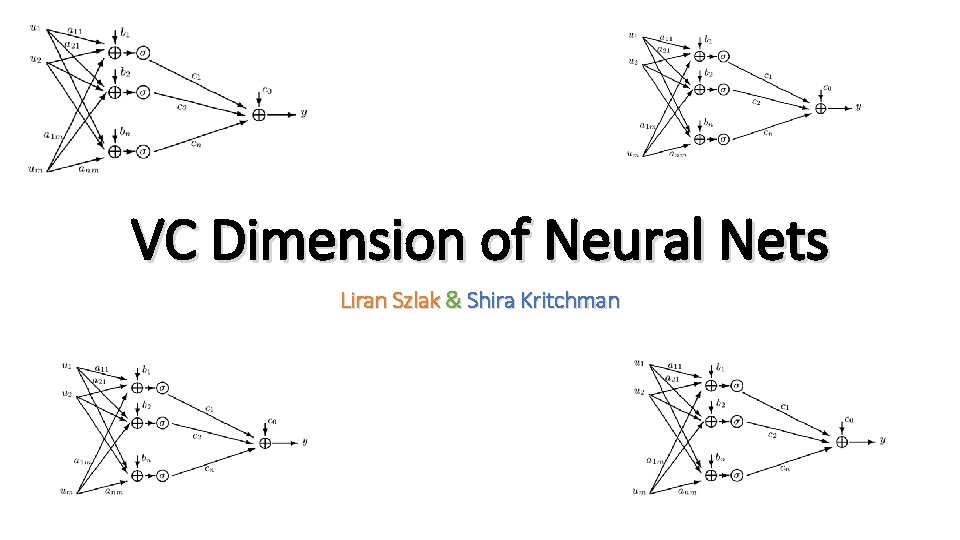

VC Dimension of Neural Nets Liran Szlak Shira

- Slides: 82

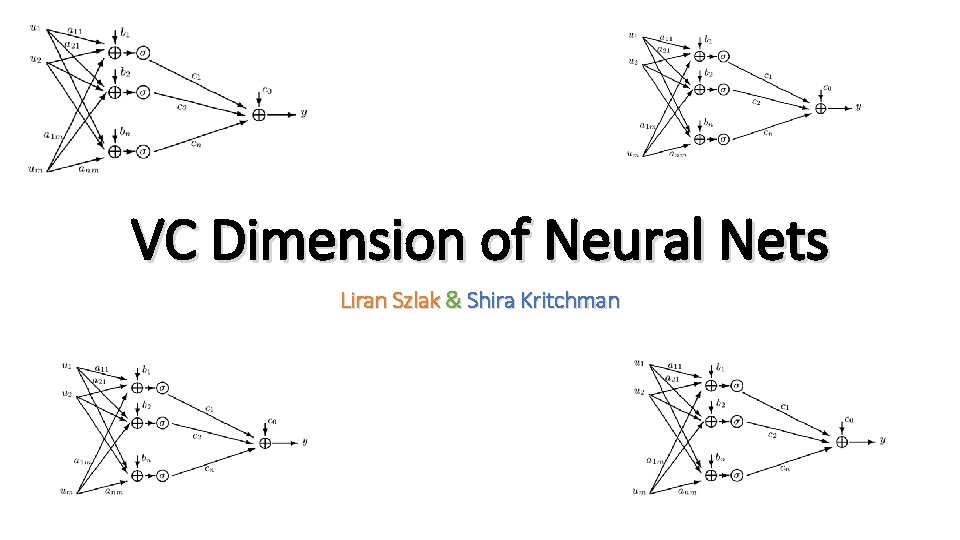

VC Dimension of Neural Nets Liran Szlak & Shira Kritchman

Outline • VC dimension & Sample Complexity • VC dimension & Generalization • VC dimension in neural nets • Fat-shattering – for real valued neural nets • Experiments

Part I: VC Dimension Theory

Motivation •

Motivation • What property makes a learning model good? • Can we learn from a finite sample? • How many examples do we need to be good? • How to choose a good model?

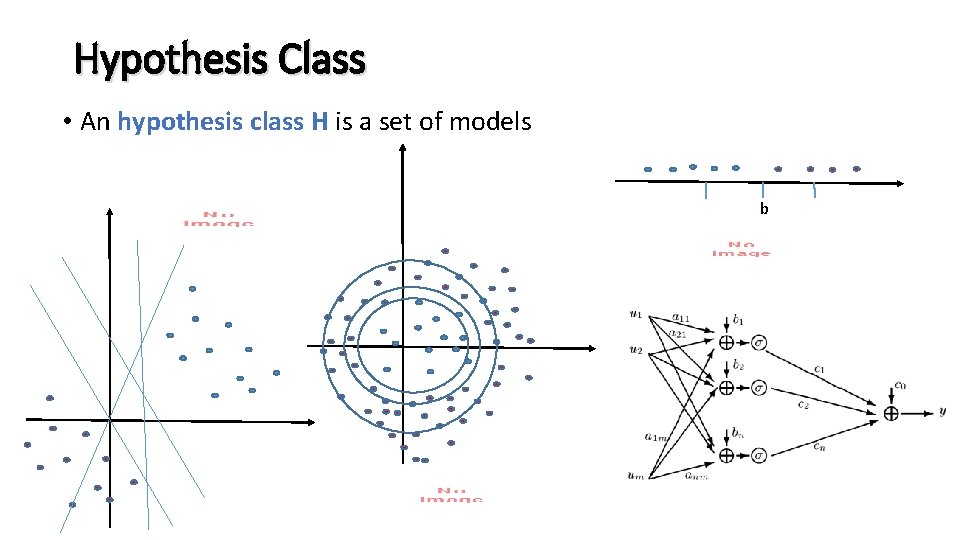

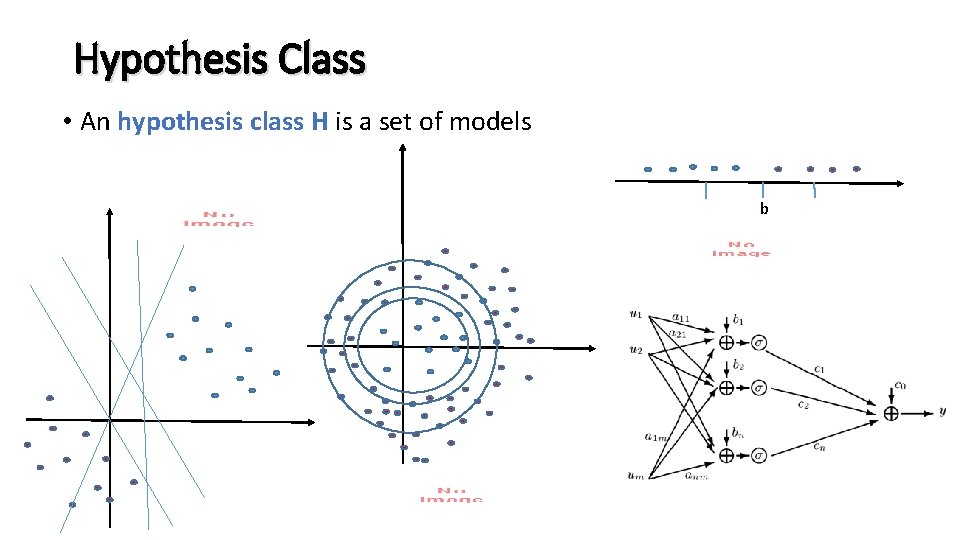

Hypothesis Class • An hypothesis class H is a set of models b

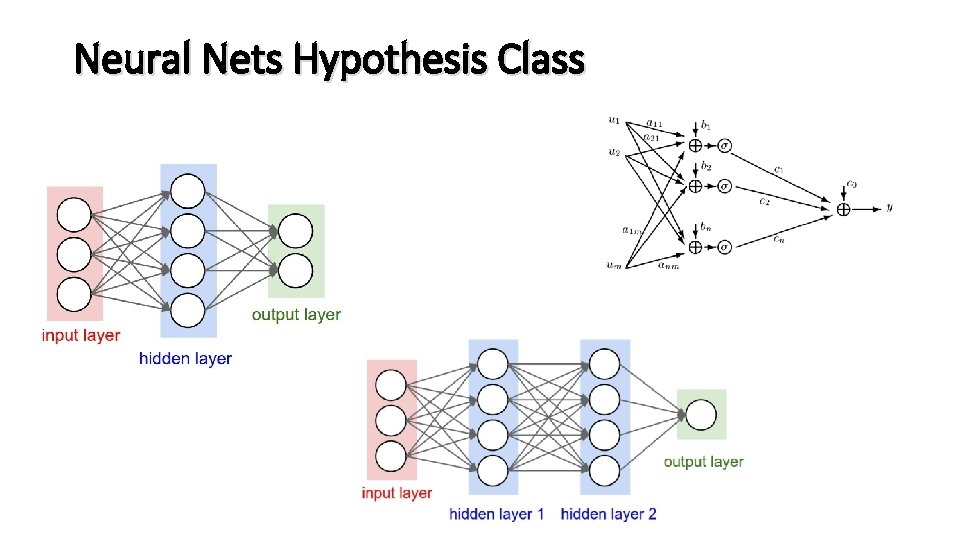

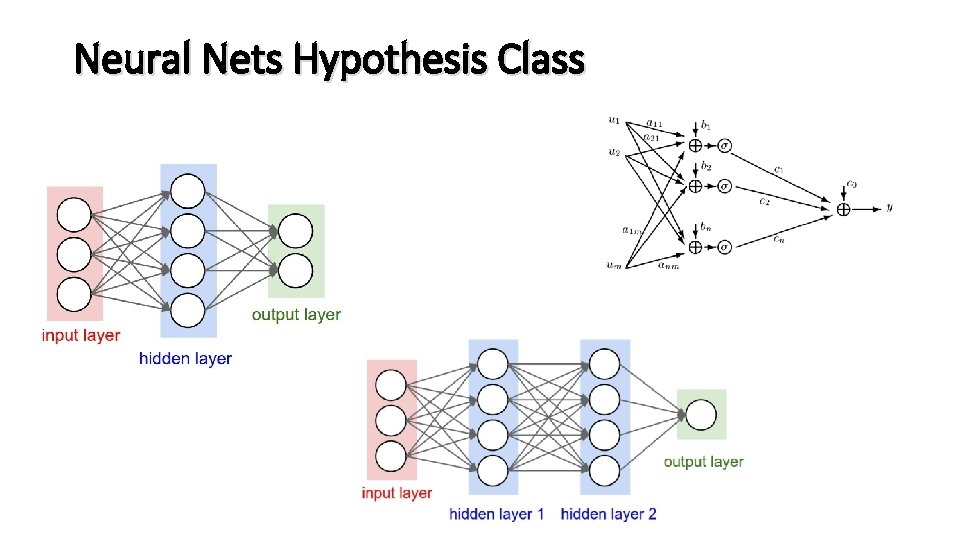

Neural Nets Hypothesis Class

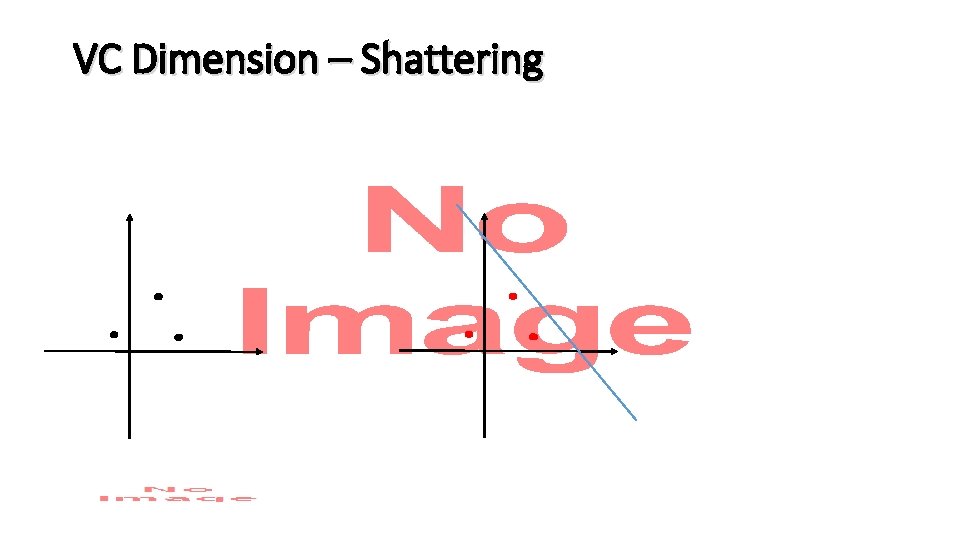

VC Dimension – Shattering •

VC Dimension – Shattering •

VC Dimension – Shattering •

VC Dimension – Shattering •

VC Dimension – Shattering •

VC Dimension – Shattering •

VC Dimension – Shattering •

VC Dimension – Shattering •

VC Dimension – Definition • Vapnik & Chervonenkis, 1971

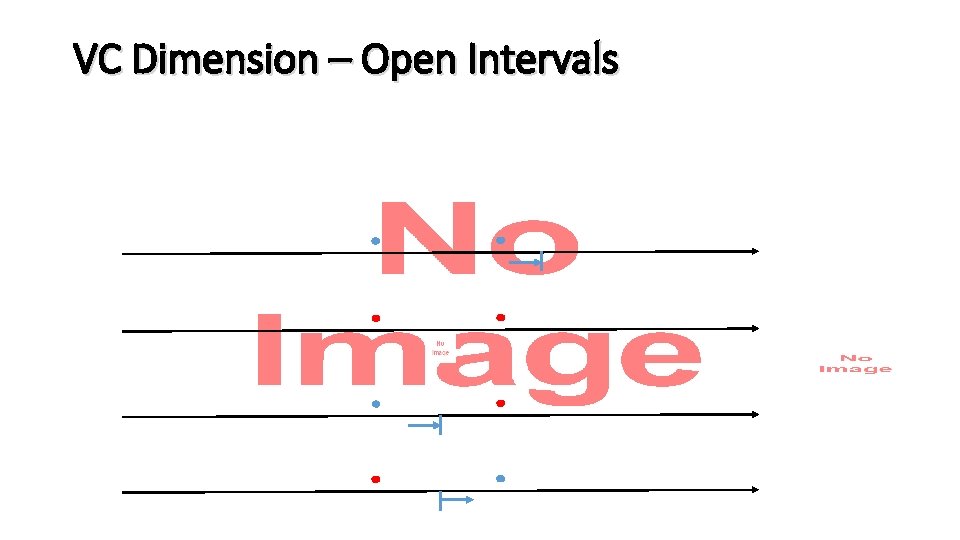

VC Dimension – Open Intervals •

VC Dimension – Open Intervals •

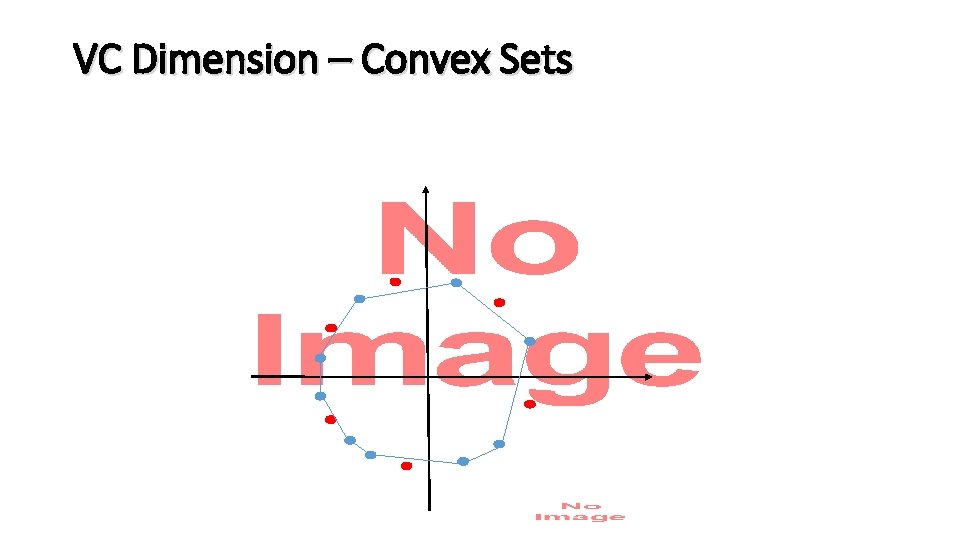

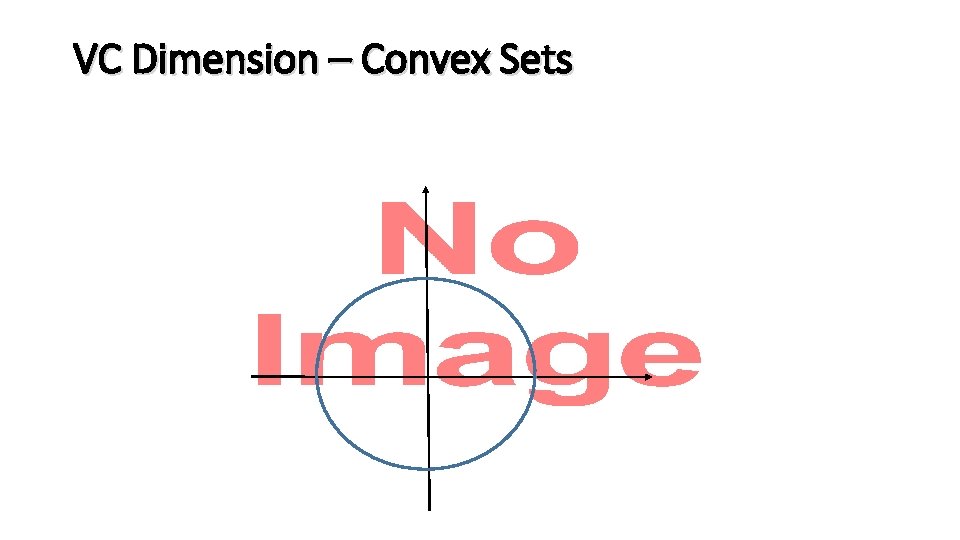

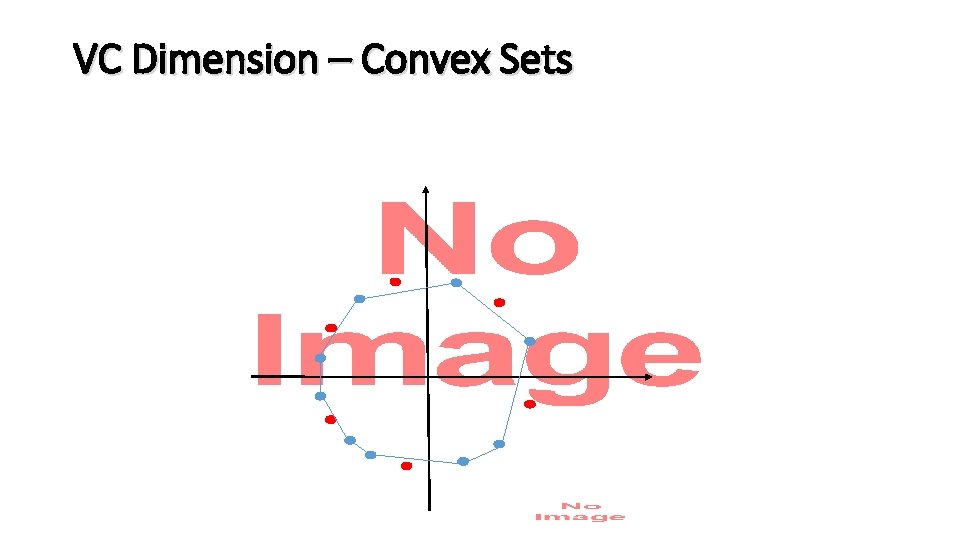

VC Dimension – Convex Sets •

VC Dimension – Convex Sets •

VC Dimension – Convex Sets •

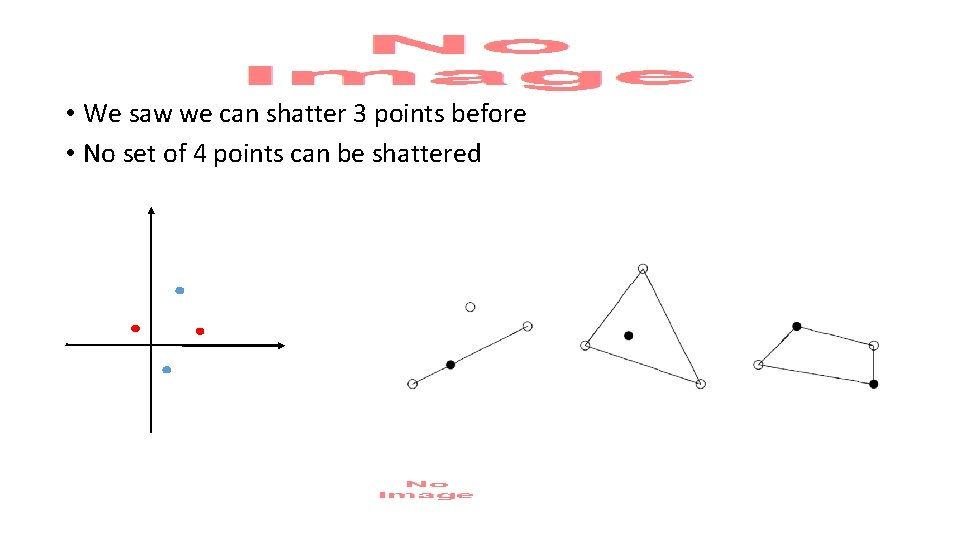

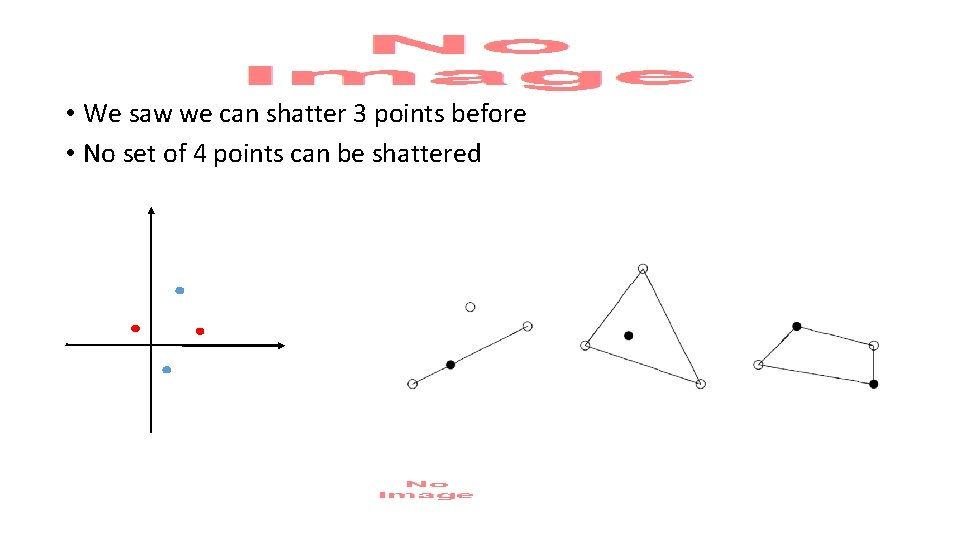

• We saw we can shatter 3 points before

• We saw we can shatter 3 points before • No set of 4 points can be shattered

• We saw we can shatter 3 points before • No set of 4 points can be shattered

Reminder! • What property makes a learning model good? • Can we learn from a finite sample? • How many examples do we need to be good? • How to choose a good model? VC Dimension Sample Complexity Generalization

What Is a Good Model? • x y h i. i. d

What Is a Good Model? PAC Probably Approximately Correct Intuition: We want to be close to the right function with high probability

What Is a Good Model? H

What Is a Good Model? Backpropagation Algorithm A H

What Is a Good Model? Backpropagation Algorithm A H

What Is a Good Model? Backpropagation Algorithm A H

What Is a Good Model? • PAC learnable H • Distribution free guarantee • Overly pessimistic…? . . . • Other measures of “goodness” might be relevant • How does the VC-dimension relate to PAC learnability? How expressive is H? Can we approximate any D with H, using a finite training set?

VC Dimension and PAC Learnability •

VC Dimension & Learning Guarantees Vapnik & Chervonenkis, 1971

VC Dimension & Learning Guarantees

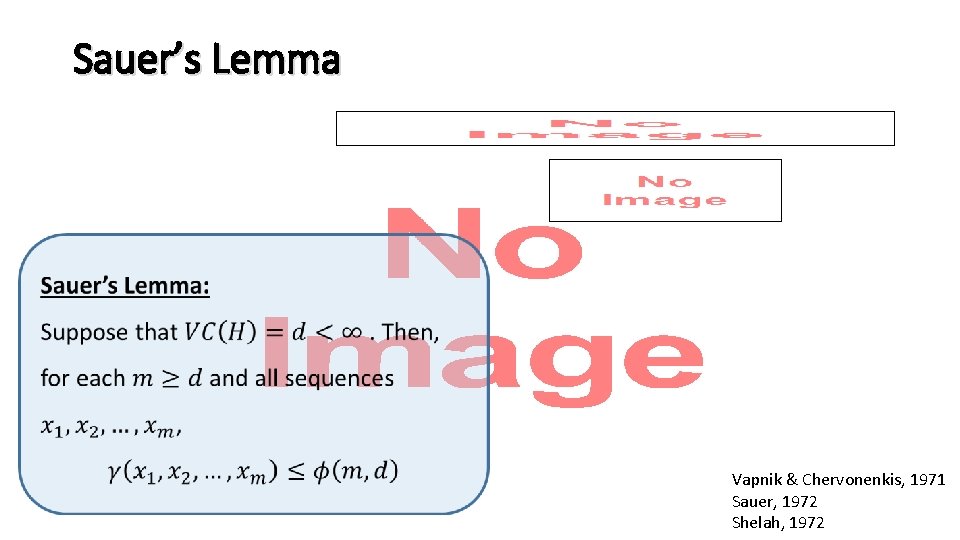

Sauer’s Lemma • H

Sauer’s Lemma • H

Sauer’s Lemma • H

Sauer’s Lemma • The number of different labeling strings that H can achieve on the set H

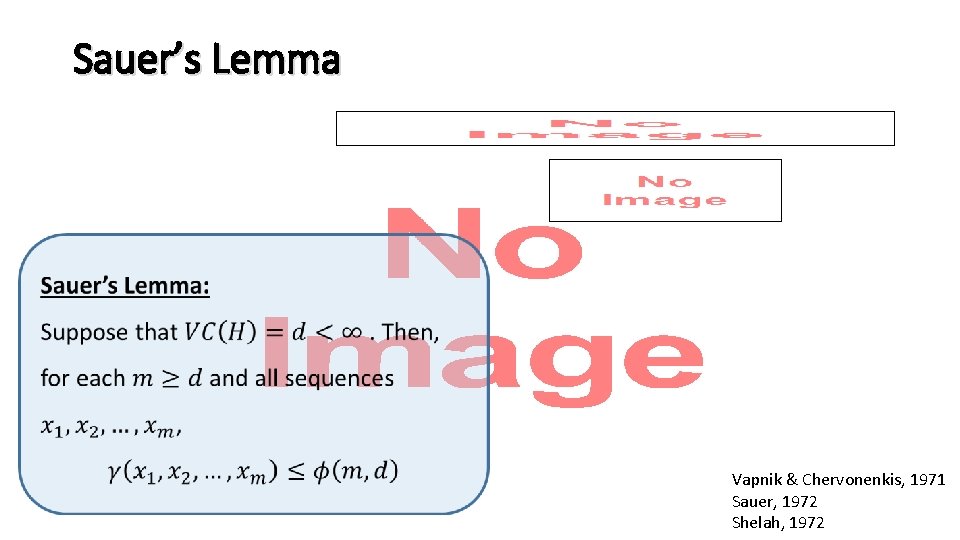

Sauer’s Lemma • Vapnik & Chervonenkis, 1971 Sauer, 1972 Shelah, 1972

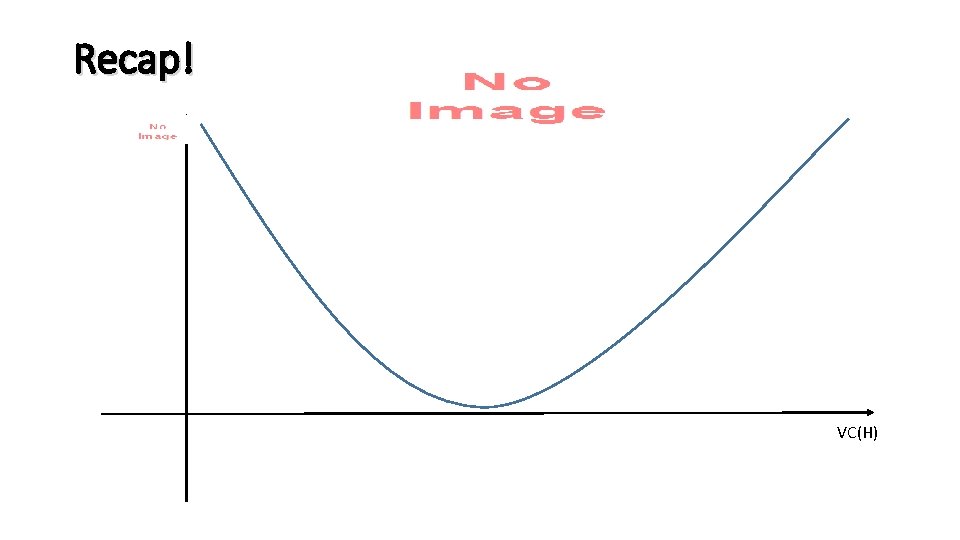

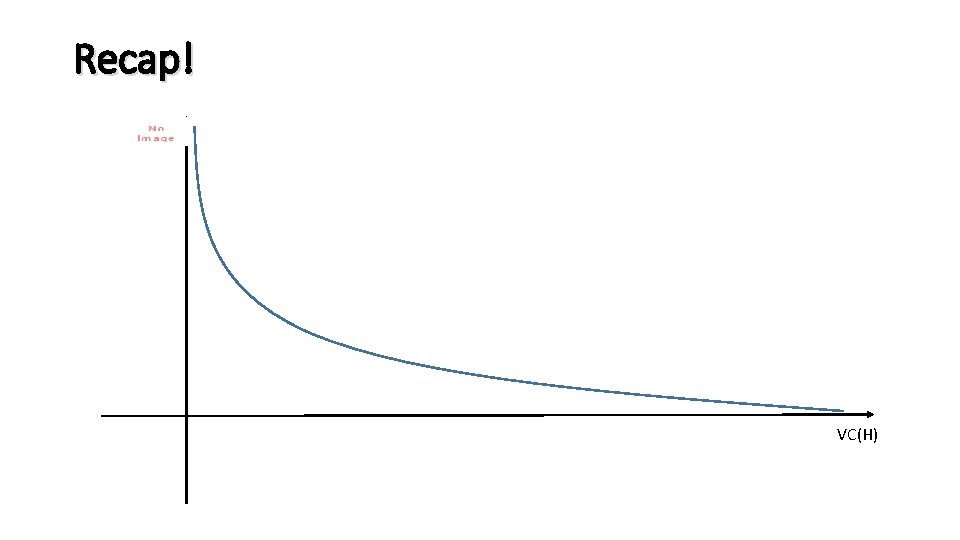

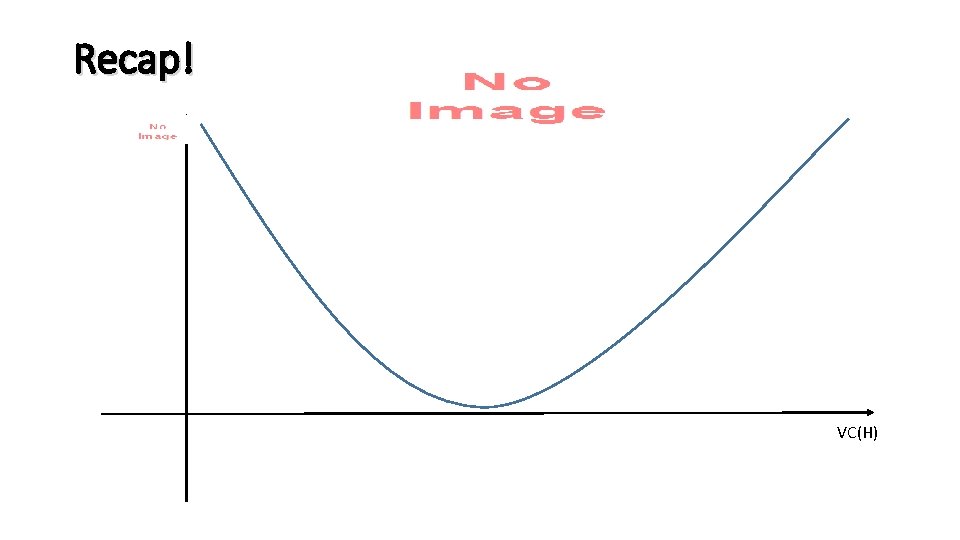

Recap! VC(H)

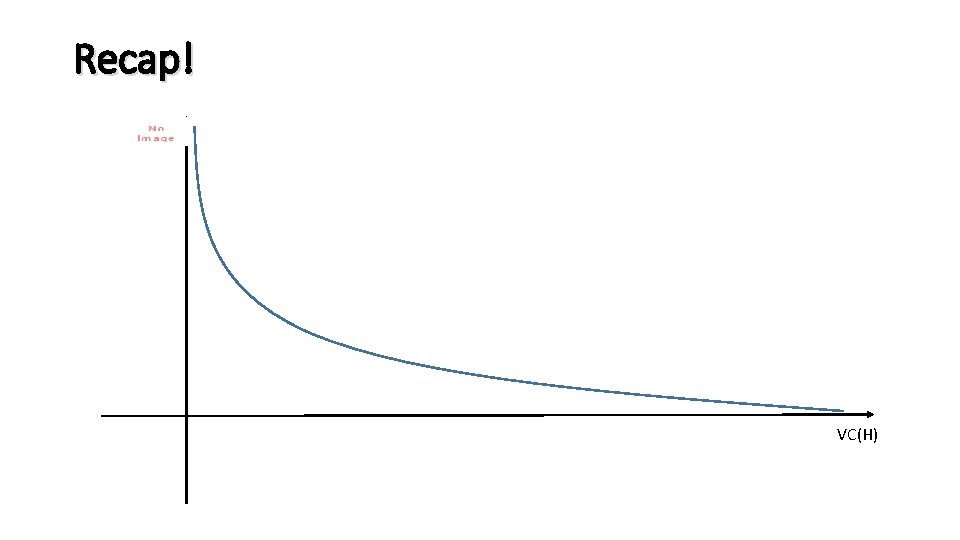

Recap! VC(H)

Recap! VC(H)

Recap! VC(H)

Recap! • What property makes a learning model good? • Can we learn from a finite sample? • How many examples do we need to be good? • How to choose a good model?

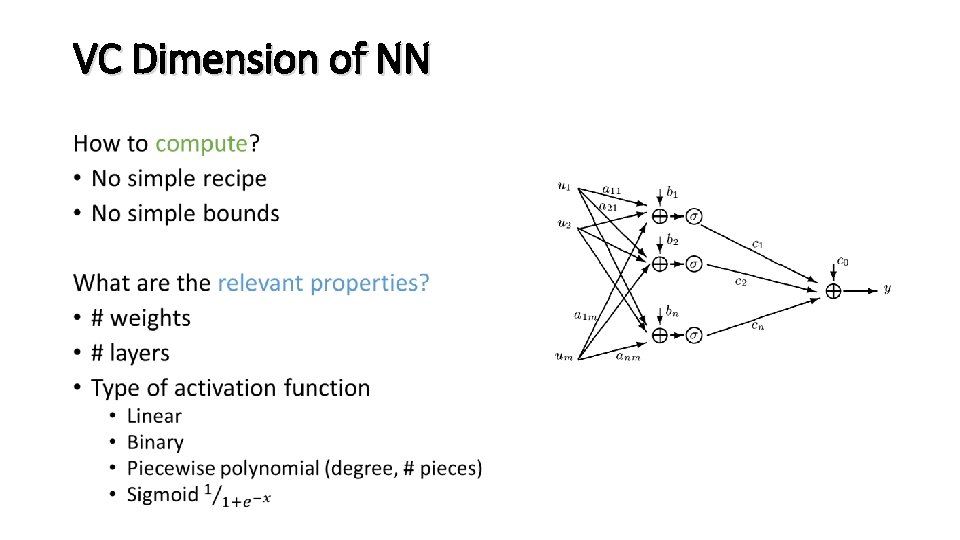

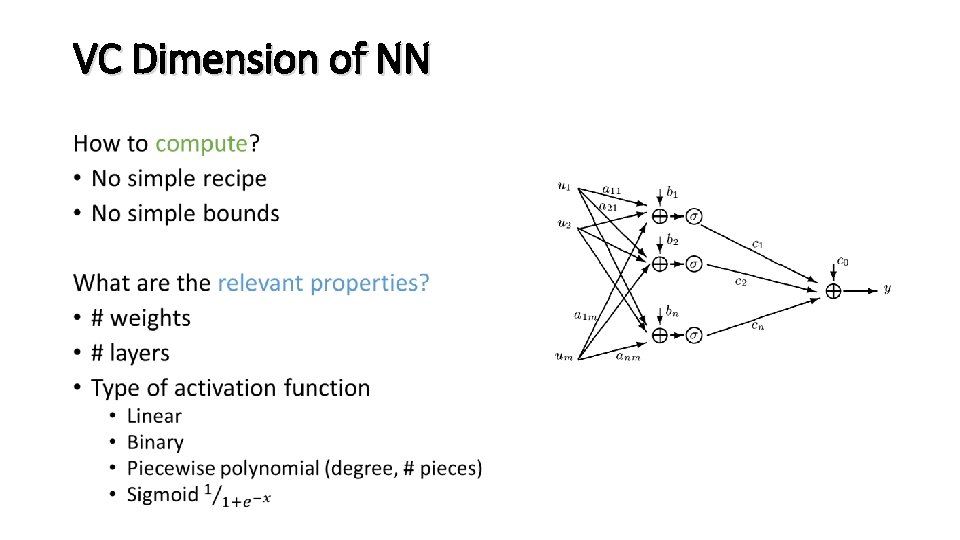

VC Dimension of NN •

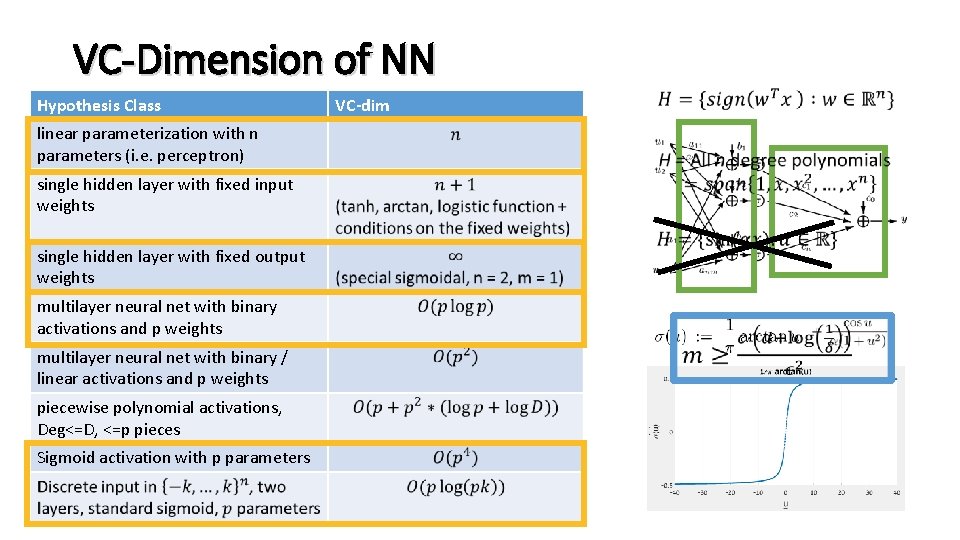

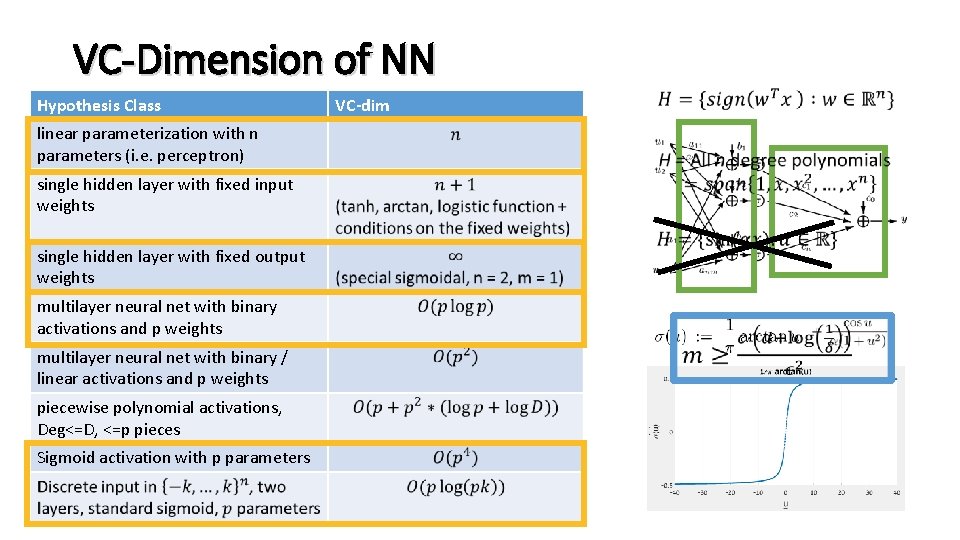

VC-Dimension of NN Hypothesis Class VC-dim linear parameterization with n parameters (i. e. perceptron) single hidden layer with fixed input weights single hidden layer with fixed output weights multilayer neural net with binary activations and p weights multilayer neural net with binary / linear activations and p weights piecewise polynomial activations, Deg<=D, <=p pieces Sigmoid activation with p parameters

Other Dimensions •

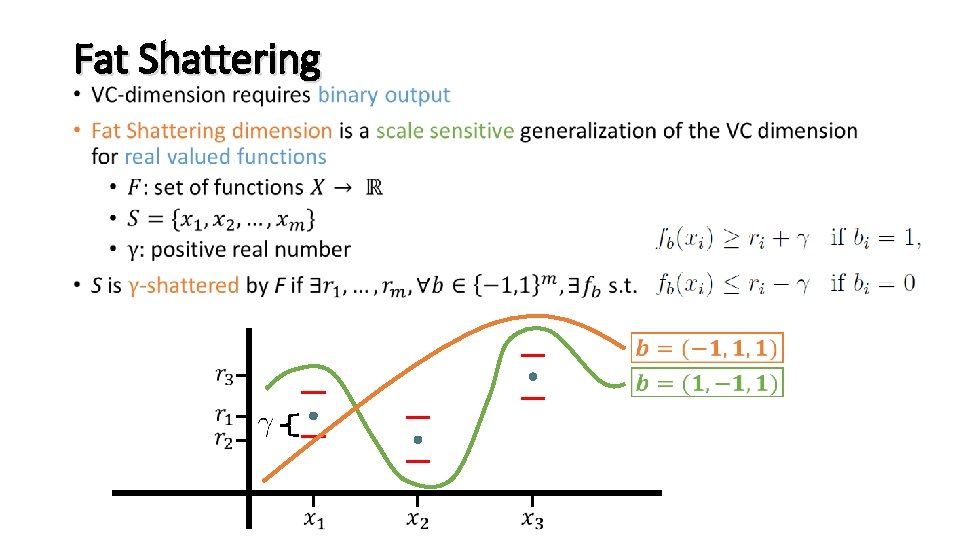

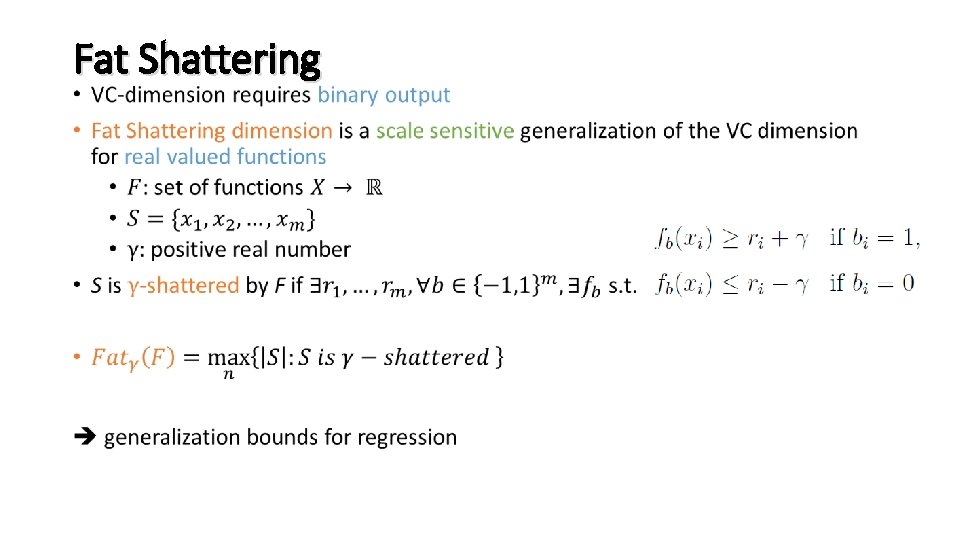

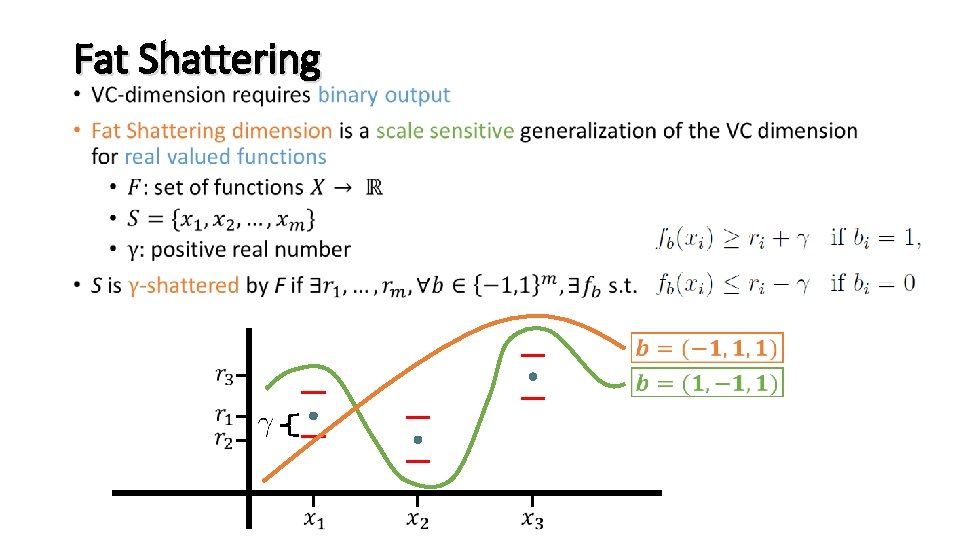

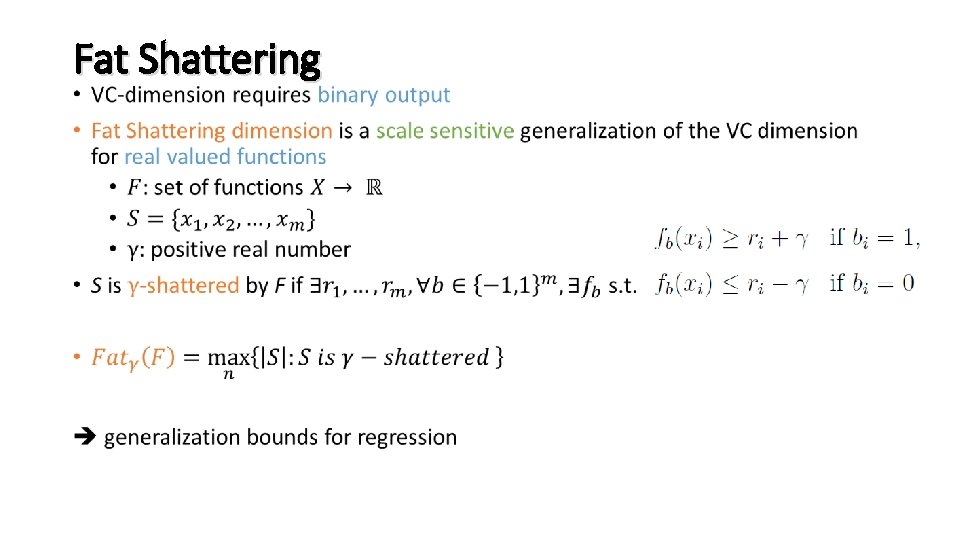

Fat Shattering •

Fat Shattering •

Fat Shattering •

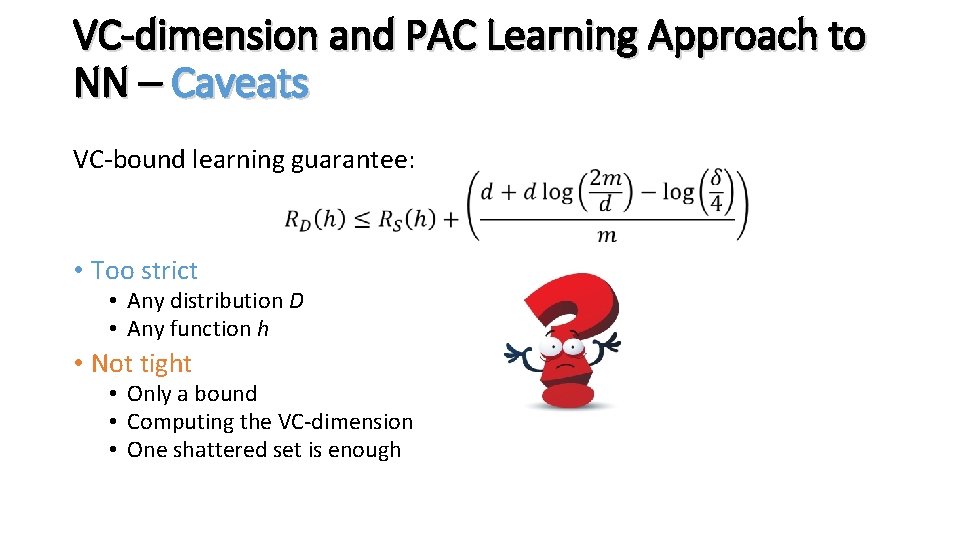

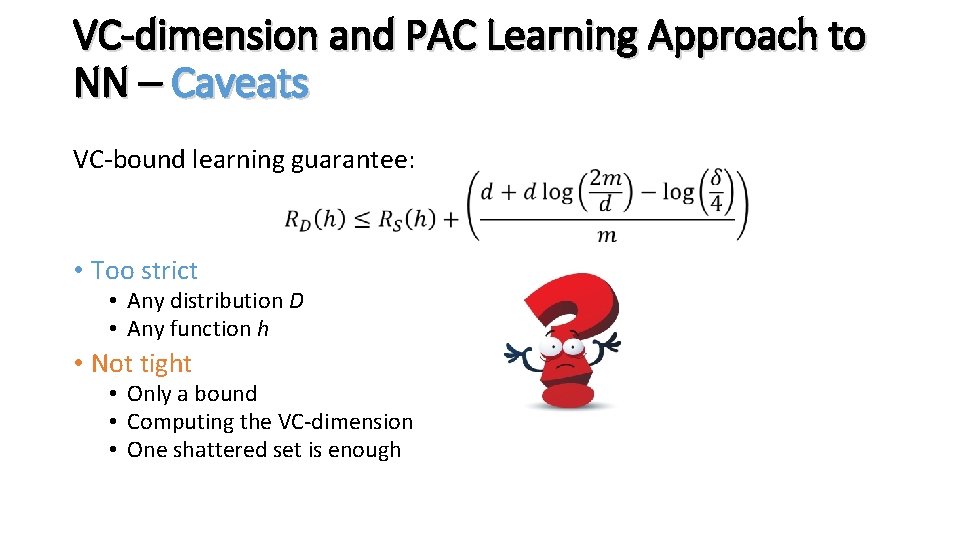

VC-dimension and PAC Learning Approach to NN – Caveats VC-bound learning guarantee: • Too strict • Any distribution D • Any function h • Not tight • Only a bound • Computing the VC-dimension • One shattered set is enough

Part II: Empirical Tests

Experiments’ Motivation •

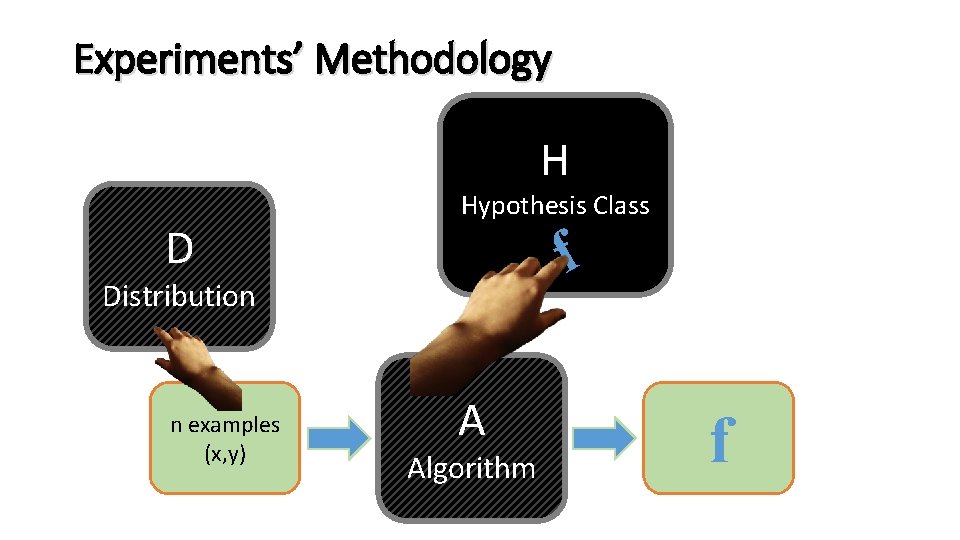

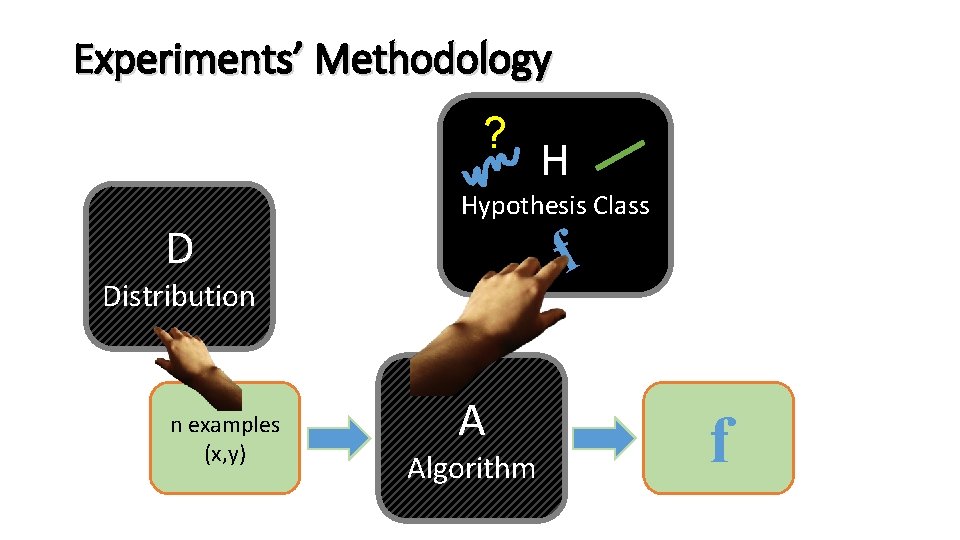

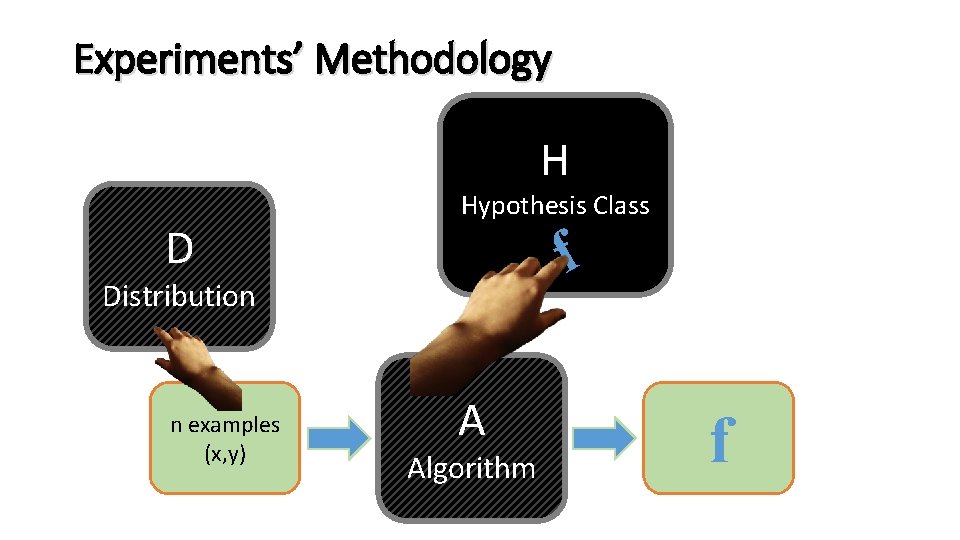

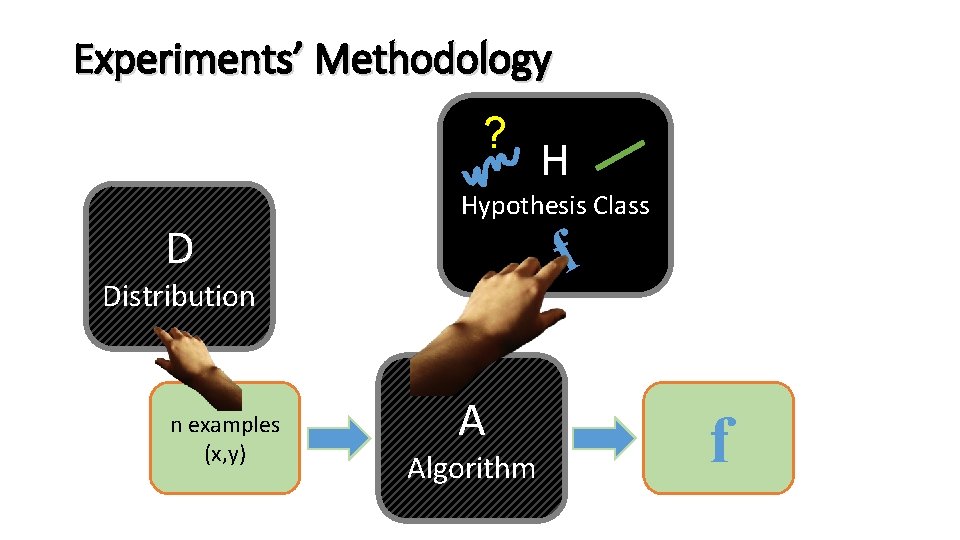

Experiments’ Methodology H D Hypothesis Class f Distribution n examples (x, y) A Algorithm f

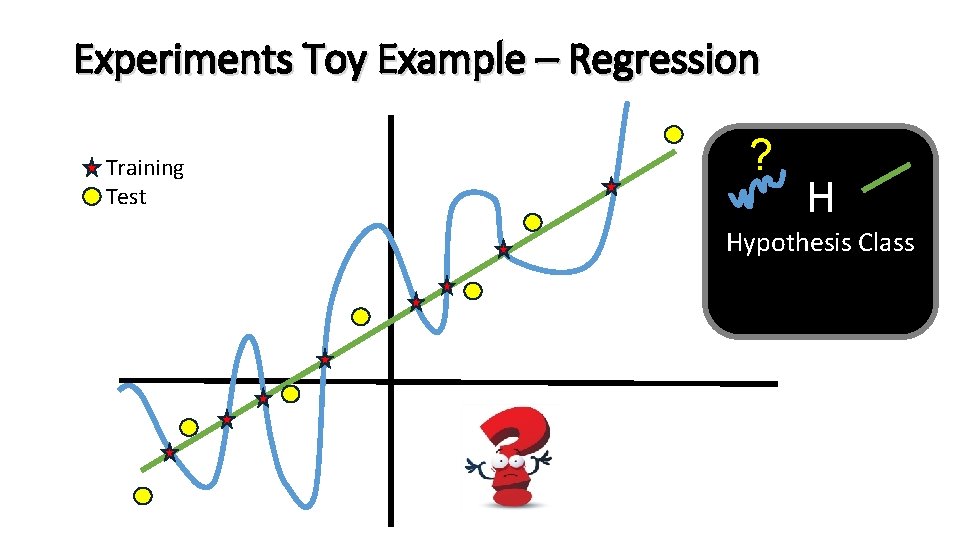

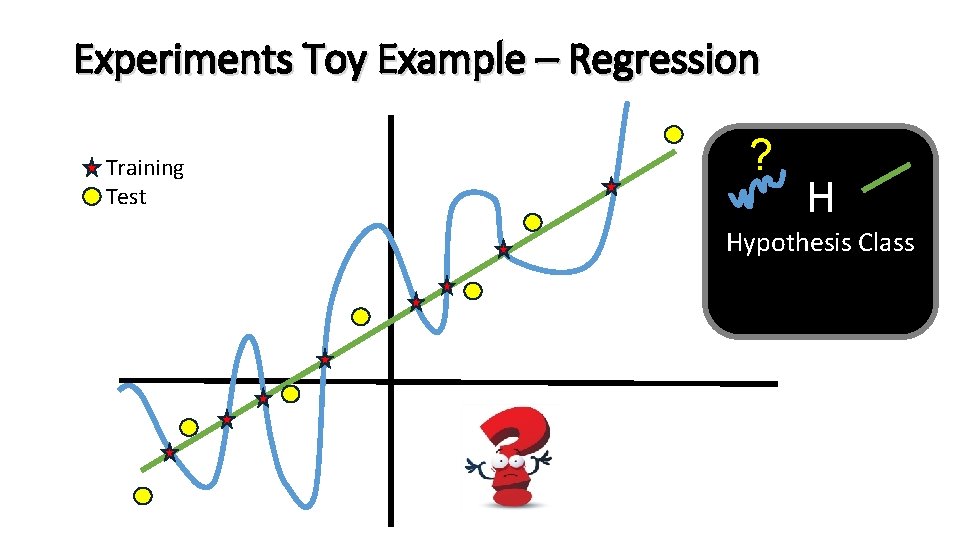

Experiments Toy Example – Regression Training Test ? H Hypothesis Class

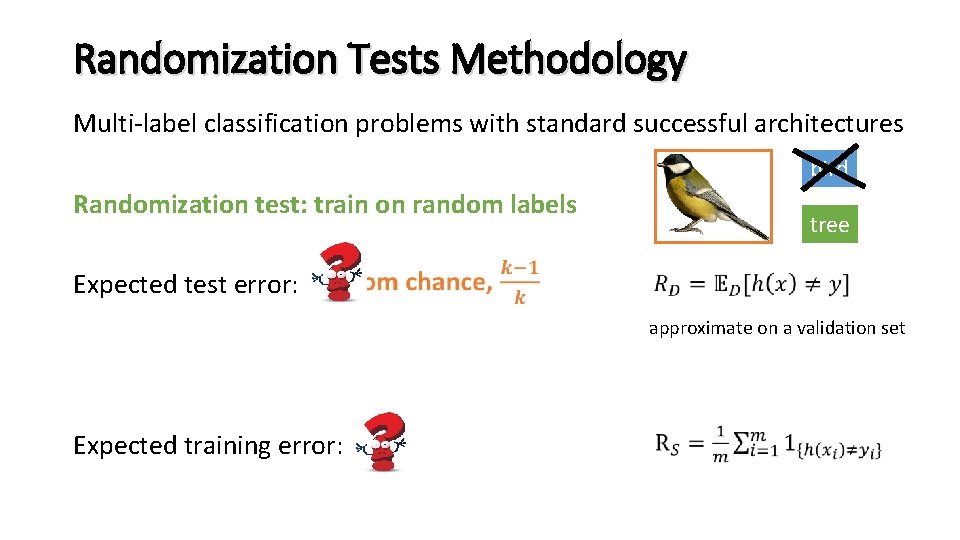

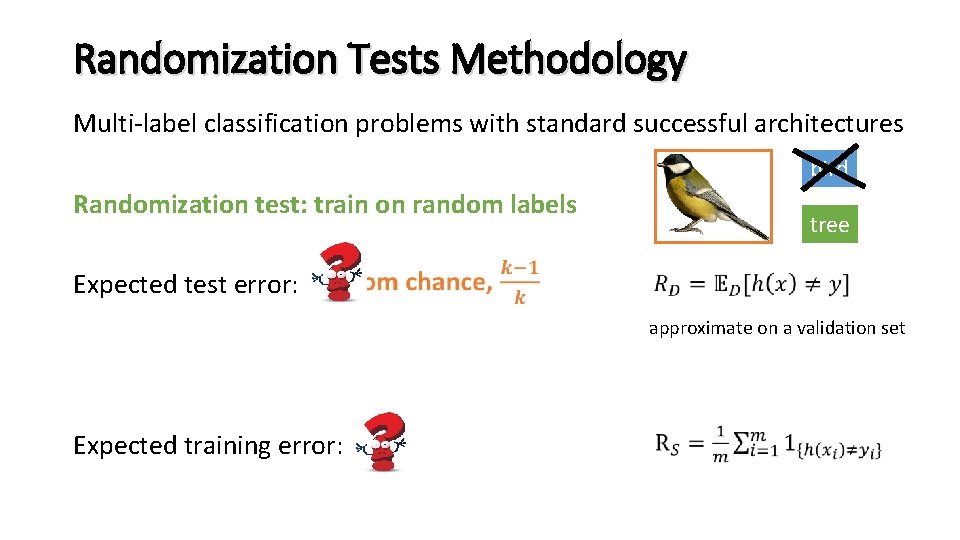

Randomization Tests Methodology Multi-label classification problems with standard successful architectures bird Randomization test: train on random labels Expected test error: tree approximate on a validation set Expected training error:

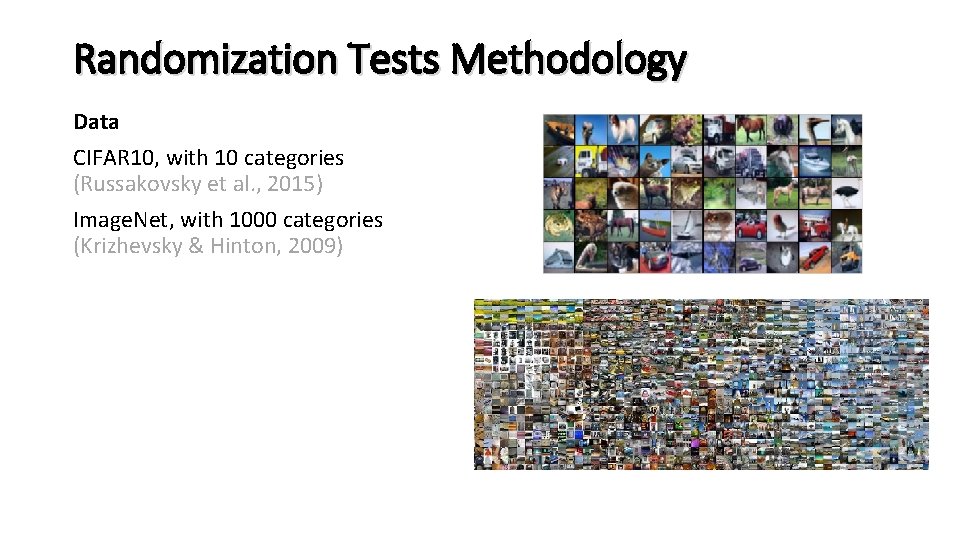

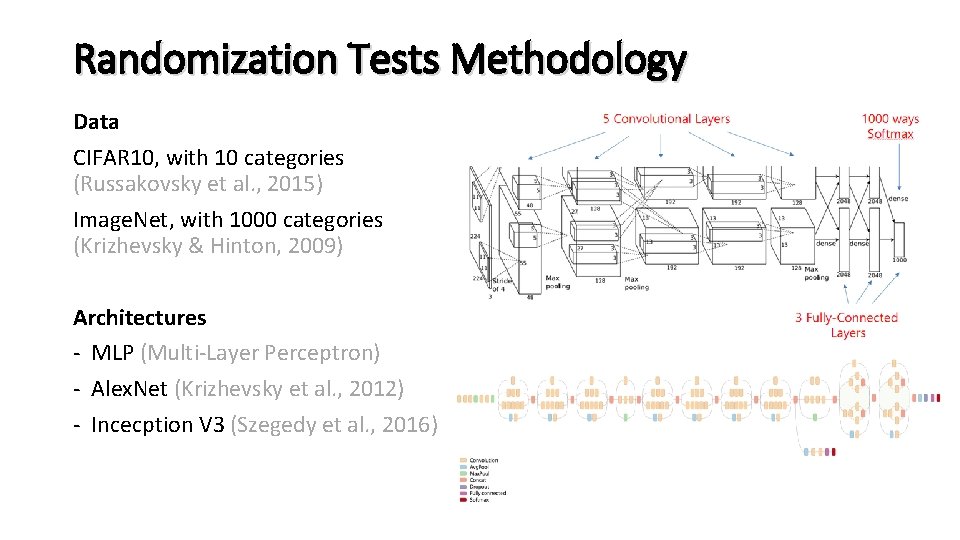

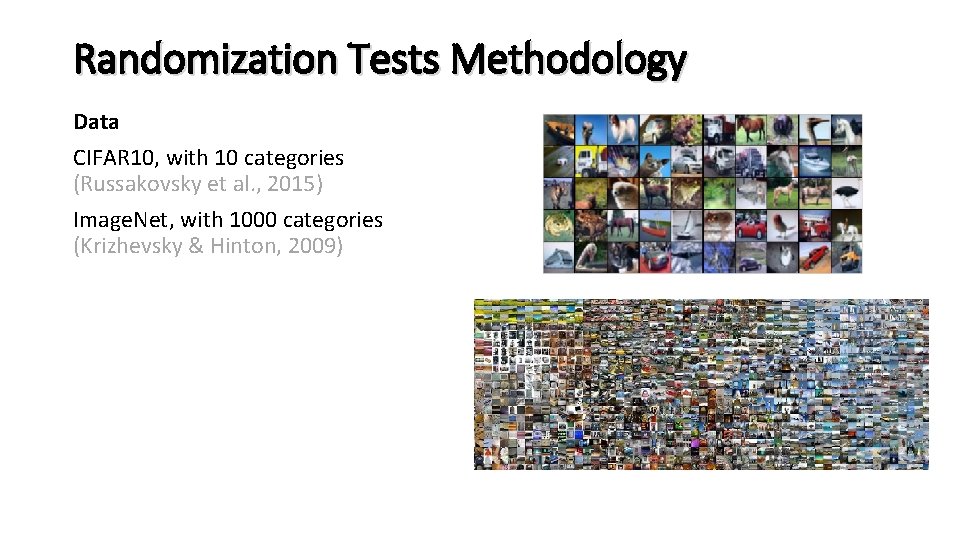

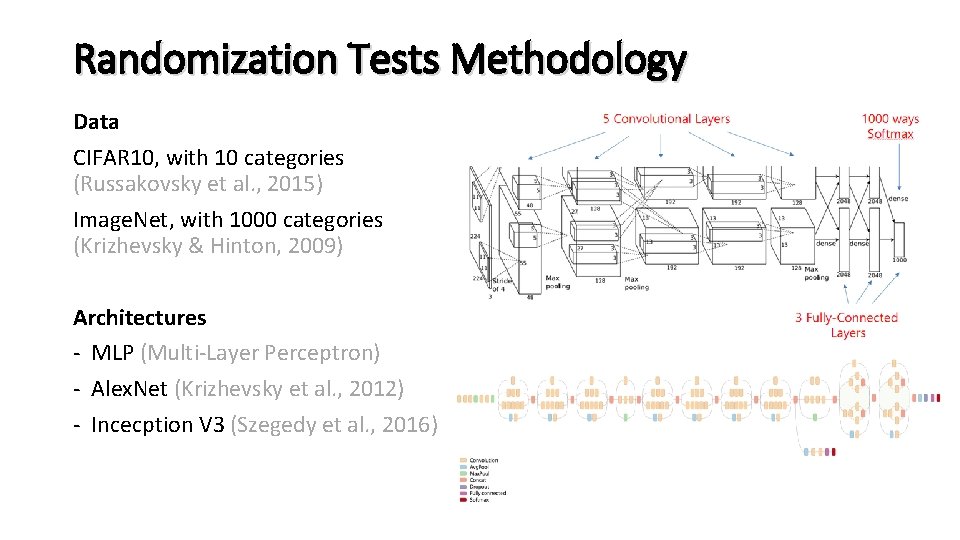

Randomization Tests Methodology Data CIFAR 10, with 10 categories (Russakovsky et al. , 2015) Image. Net, with 1000 categories (Krizhevsky & Hinton, 2009)

Randomization Tests Methodology Data CIFAR 10, with 10 categories (Russakovsky et al. , 2015) Image. Net, with 1000 categories (Krizhevsky & Hinton, 2009) Architectures - MLP (Multi-Layer Perceptron) - Alex. Net (Krizhevsky et al. , 2012) - Incecption V 3 (Szegedy et al. , 2016)

Randomization Tests Methodology Data CIFAR 10, with 10 categories (Russakovsky et al. , 2015) Image. Net, with 1000 categories (Krizhevsky & Hinton, 2009) Architectures - MLP (Multi-Layer Perceptron) - Alex. Net (Krizhevsky et al. , 2012) - Incecption V 3 (Szegedy et al. , 2016) Data manipulation - True labels - Random labels - Shuffled pixels - Random pixels - Gaussian bird tree Algorithm manipulation - Dropout - Data augmentation Generalization techniques: - Weight decay standard tools to confine - Early stopping our learning and encourage - Batch normalization generalization

Randomization Tests Methodology Multi-label classification problems with standard successful architectures Randomization test: train on random labels Expected test error: - CIFAR 10 (10 categories): 90% - Image. Net (1000 categories): 99. 9% Expected training error: approximate on a validation set

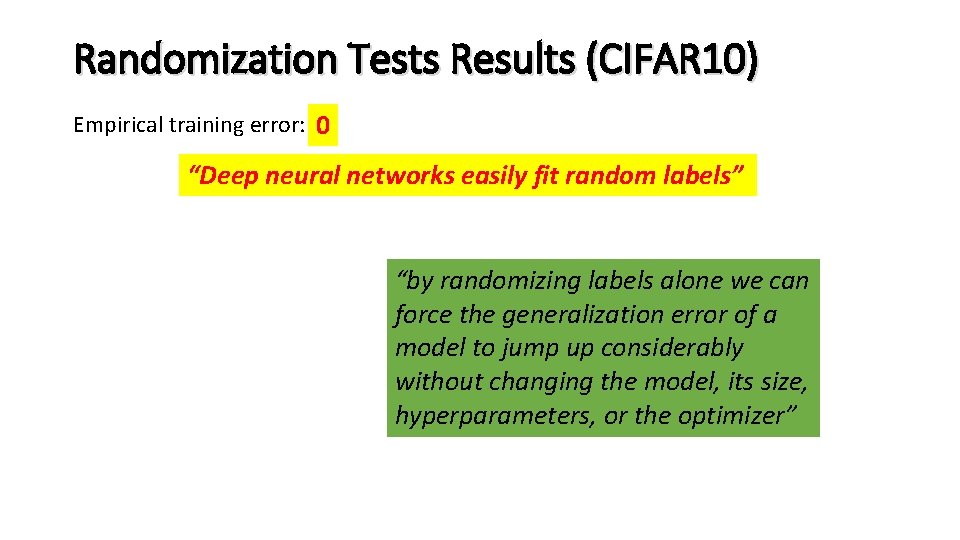

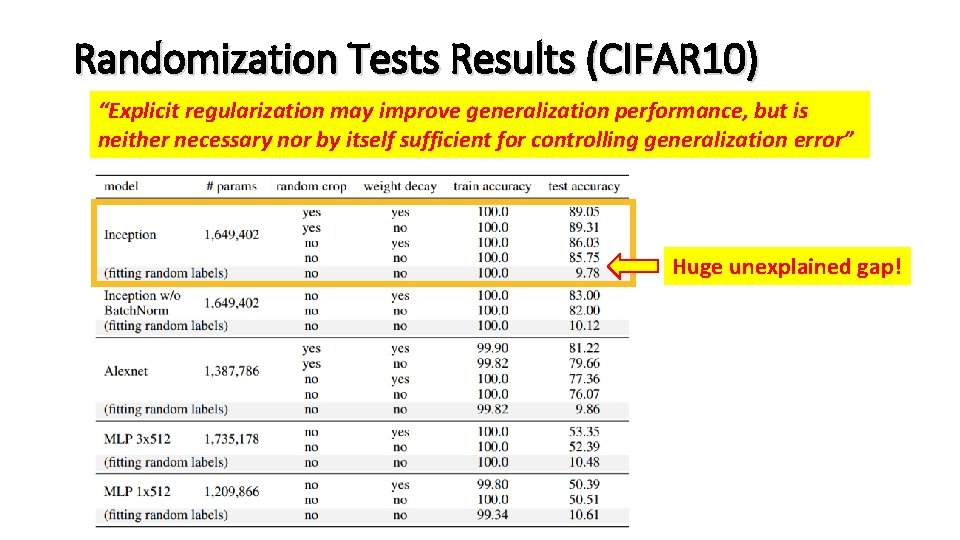

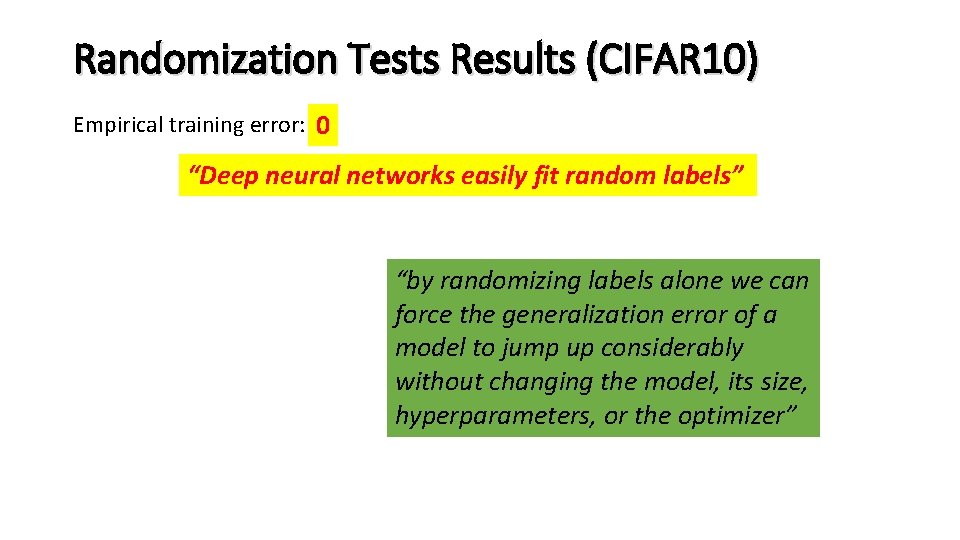

Randomization Tests Results (CIFAR 10) Empirical training error: 0 “Deep neural networks easily fit random labels” “by randomizing labels alone we can force the generalization error of a model to jump up considerably without changing the model, its size, hyperparameters, or the optimizer” (Inception)

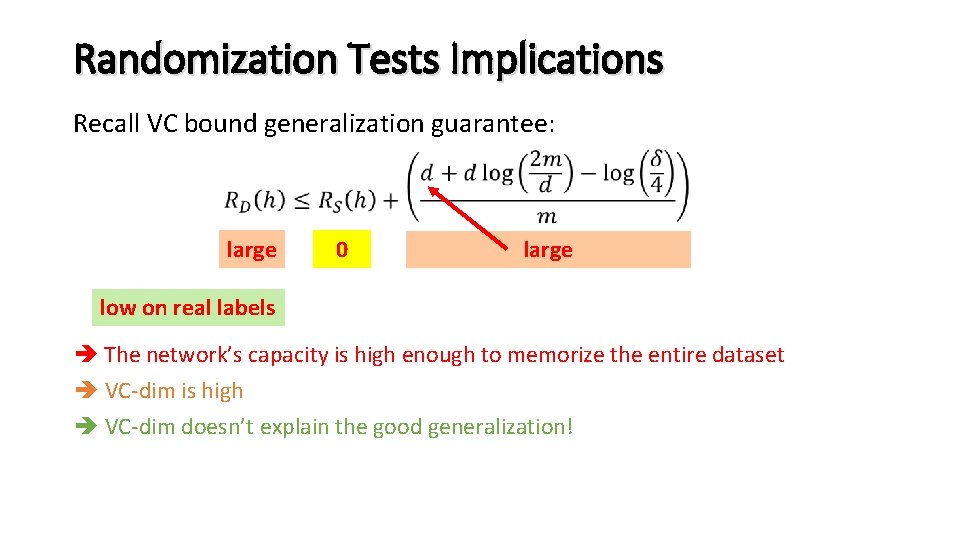

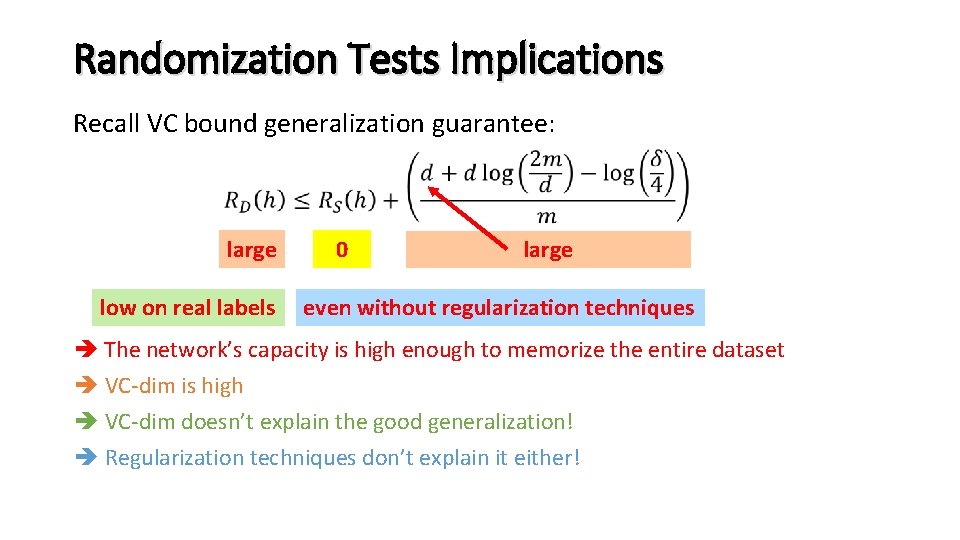

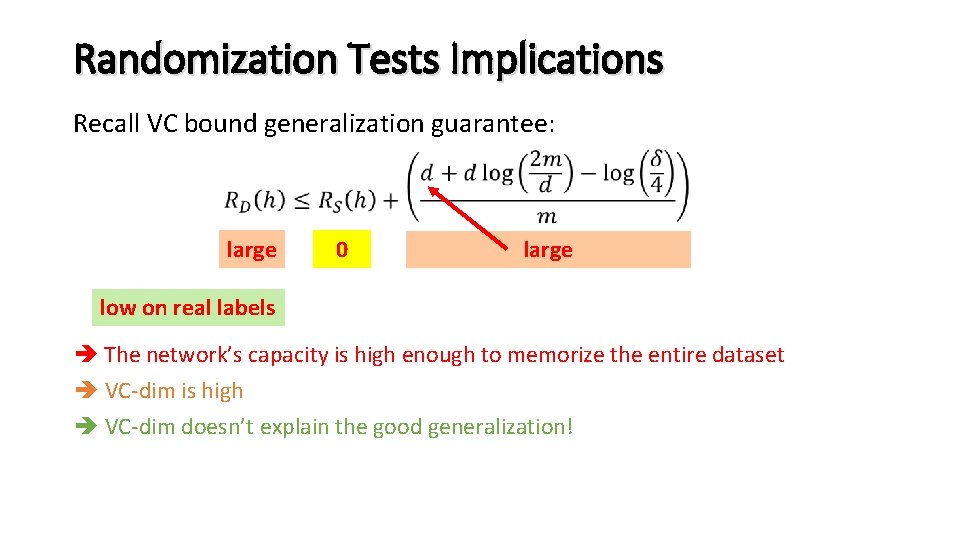

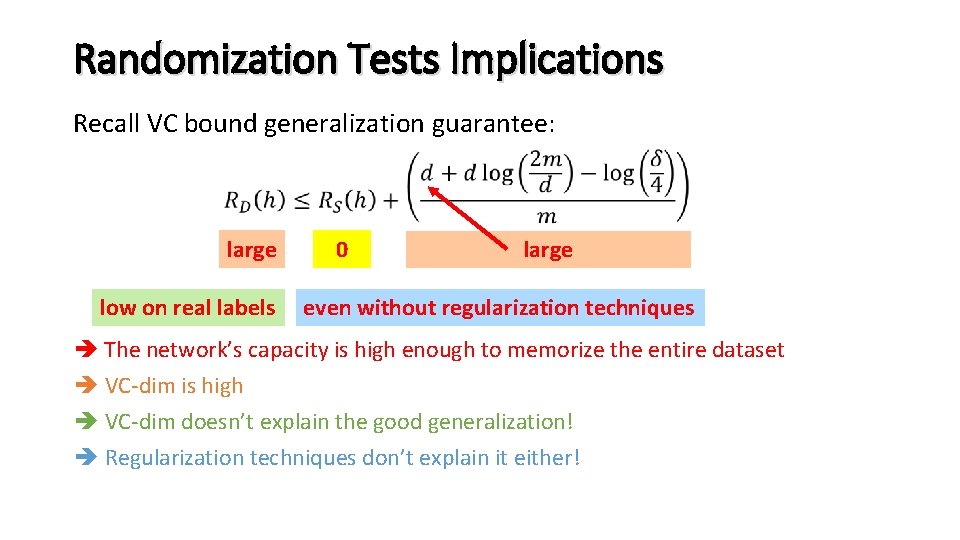

Randomization Tests Implications Recall VC bound generalization guarantee: large 0 large low on real labels The network’s capacity is high enough to memorize the entire dataset VC-dim is high VC-dim doesn’t explain the good generalization!

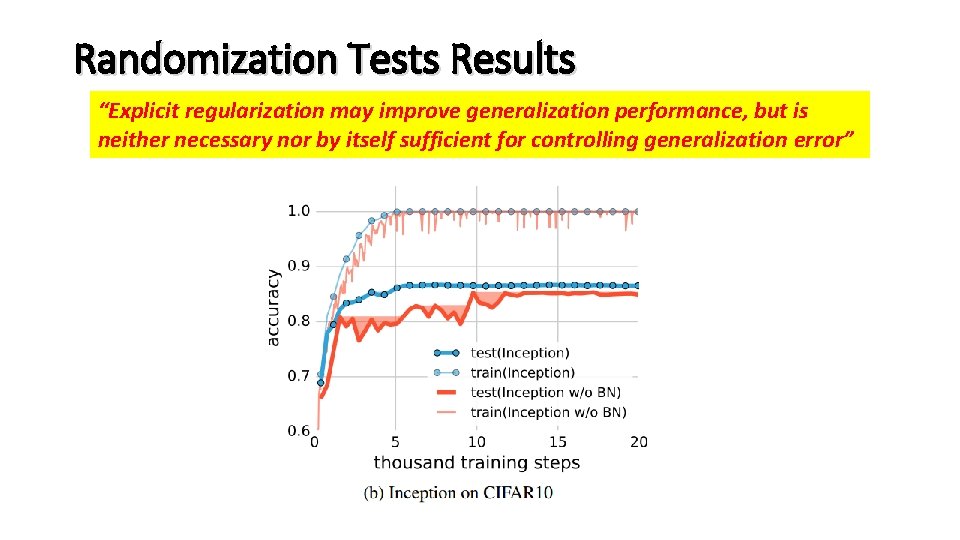

Randomization Tests Results (CIFAR 10) Empirical training error: 0 “Deep neural networks easily fit random labels” (Inception)

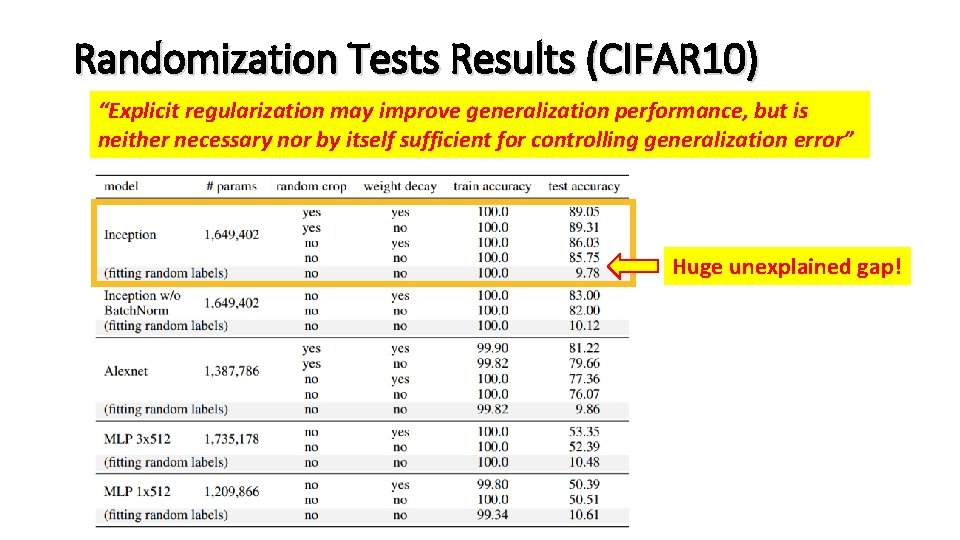

Randomization Tests Results (CIFAR 10) “Explicit regularization may improve generalization performance, but is neither necessary nor by itself sufficient for controlling generalization error” Huge unexplained gap!

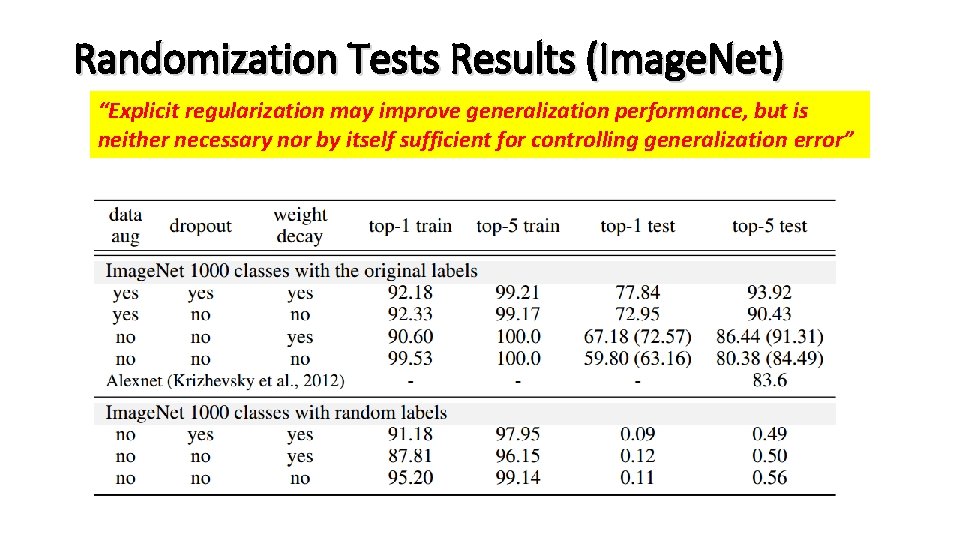

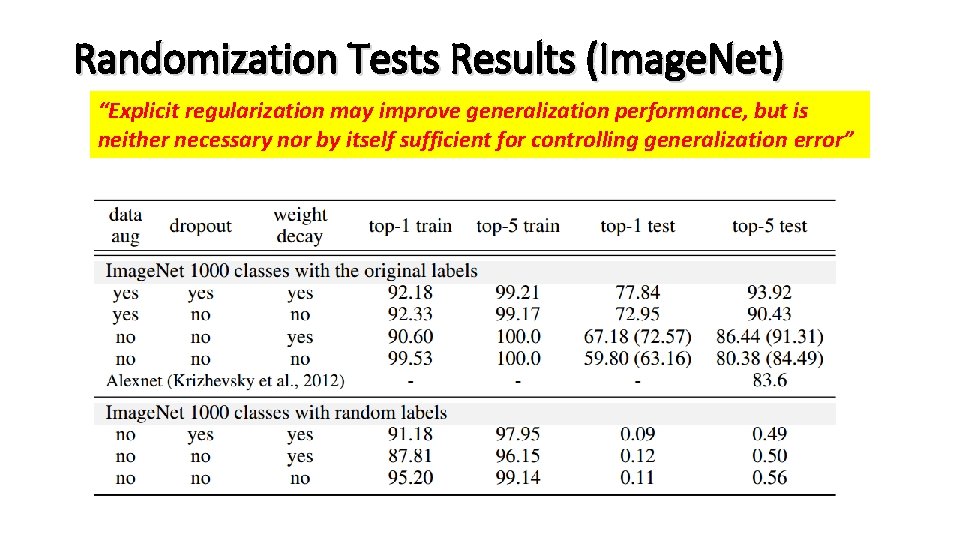

Randomization Tests Results (Image. Net) “Explicit regularization may improve generalization performance, but is neither necessary nor by itself sufficient for controlling generalization error”

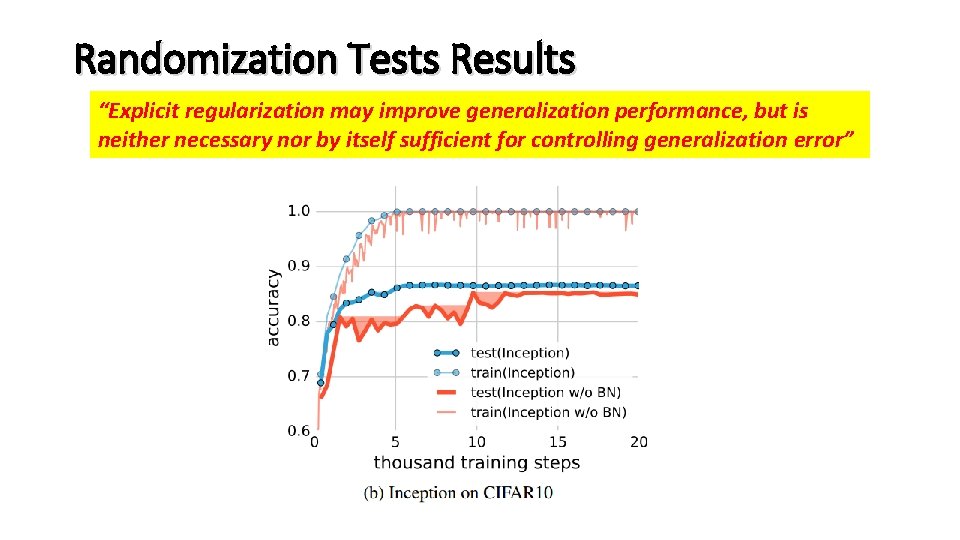

Randomization Tests Results “Explicit regularization may improve generalization performance, but is neither necessary nor by itself sufficient for controlling generalization error”

Randomization Tests Implications Recall VC bound generalization guarantee: large low on real labels 0 large even without regularization techniques The network’s capacity is high enough to memorize the entire dataset VC-dim is high VC-dim doesn’t explain the good generalization! Regularization techniques don’t explain it either!

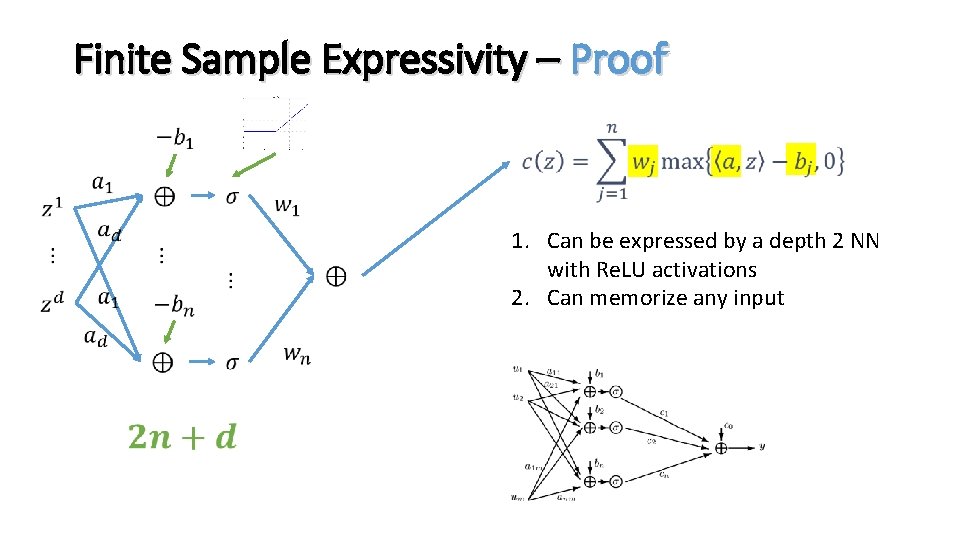

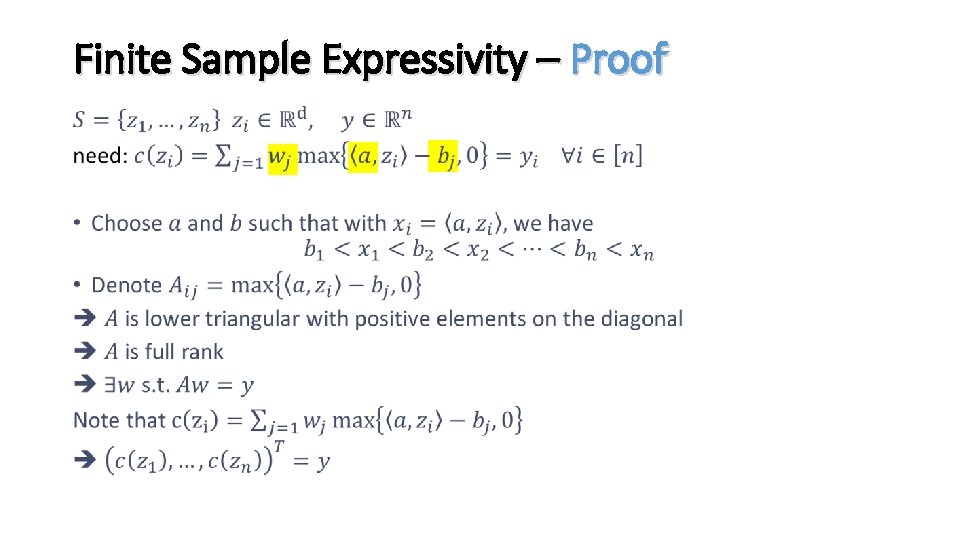

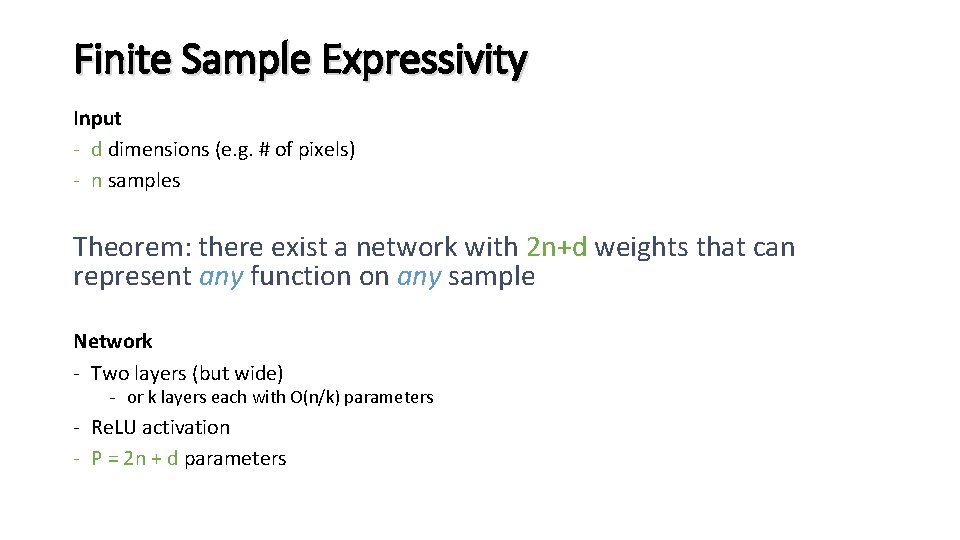

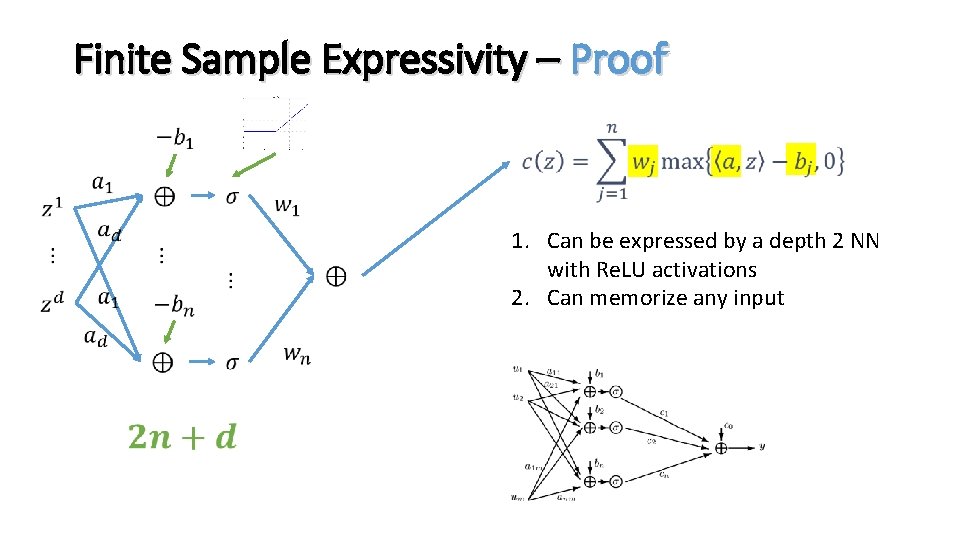

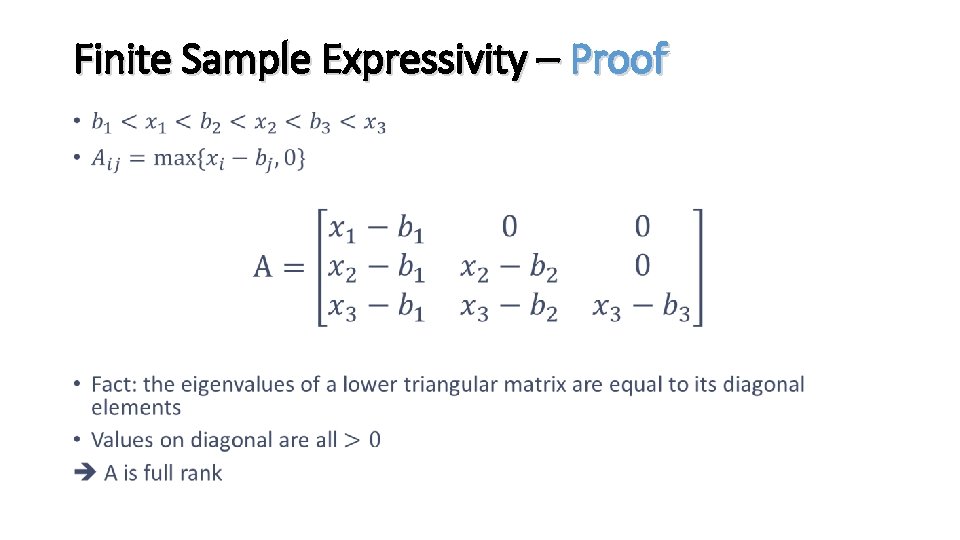

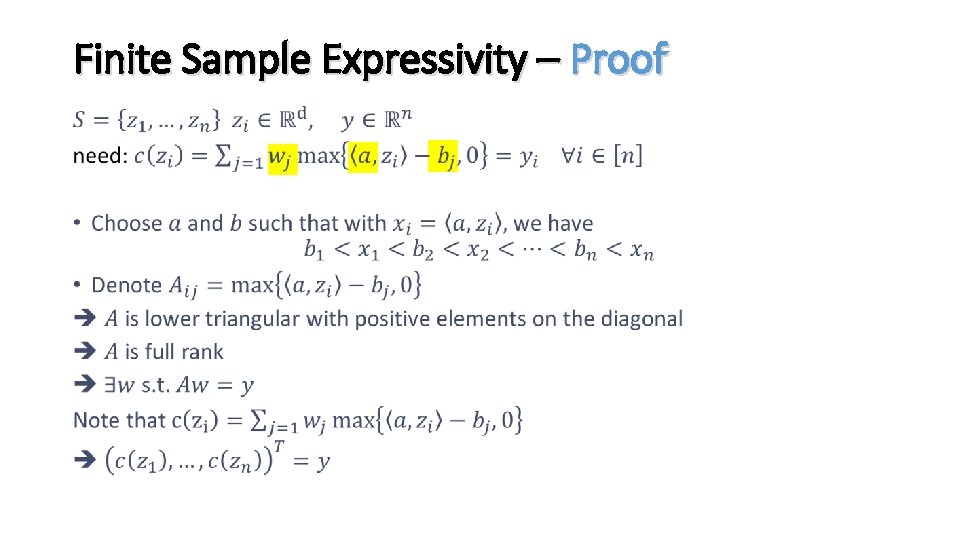

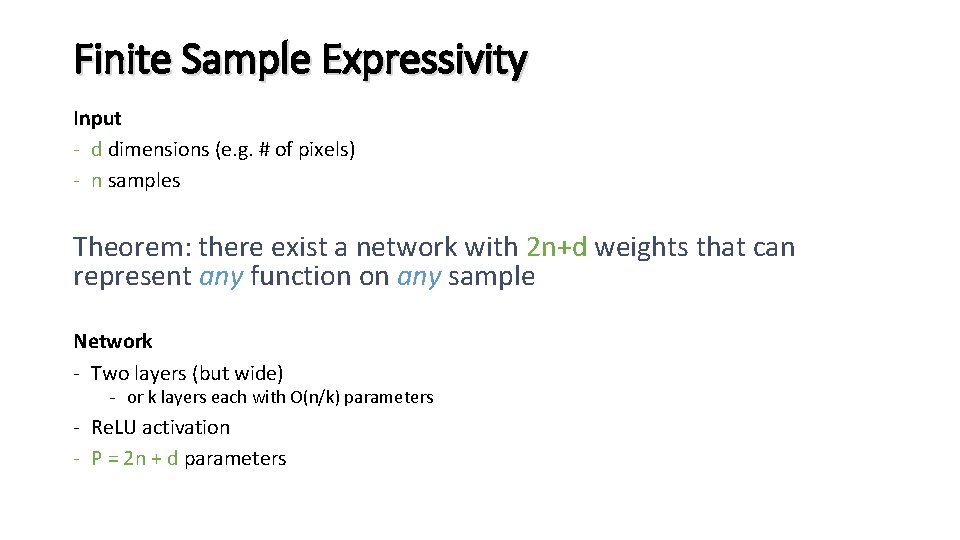

Finite Sample Expressivity Input - d dimensions (e. g. # of pixels) - n samples Theorem: there exist a network with 2 n+d weights that can represent any function on any sample Network - Two layers (but wide) - or k layers each with O(n/k) parameters - Re. LU activation - P = 2 n + d parameters

Finite Sample Expressivity – Proof 1. Can be expressed by a depth 2 NN with Re. LU activations 2. Can memorize any input

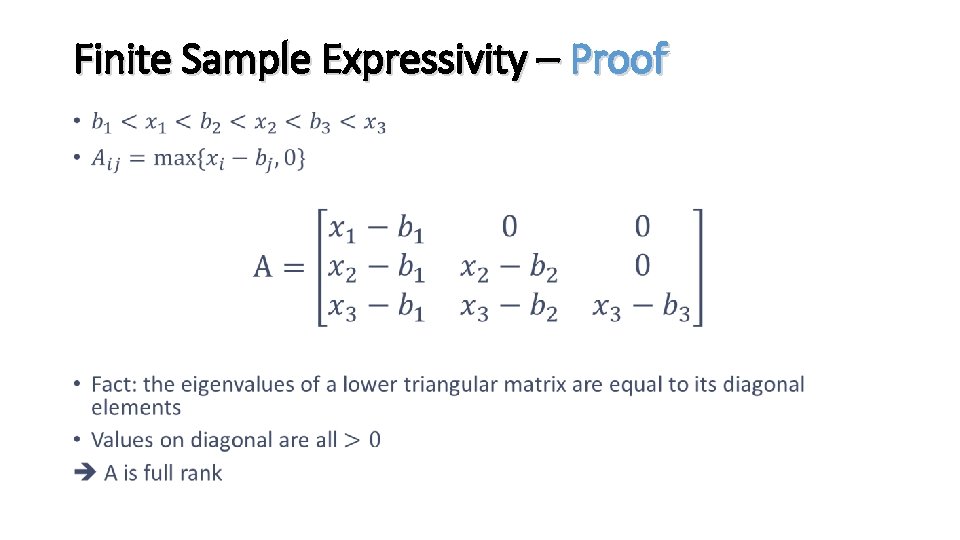

Finite Sample Expressivity – Proof •

Finite Sample Expressivity – Proof •

Finite Sample Expressivity Input - d dimensions (e. g. # of pixels) - n samples Theorem: there exist a network with 2 n+d weights that can represent any function on any sample Network - Two layers (but wide) - or k layers each with O(n/k) parameters - Re. LU activation - P = 2 n + d parameters

Experiments’ Methodology ? D Hypothesis Class f Distribution n examples (x, y) H A Algorithm f

SGD as an Implicit Regularizer •

SGD as an Implicit Regularizer •

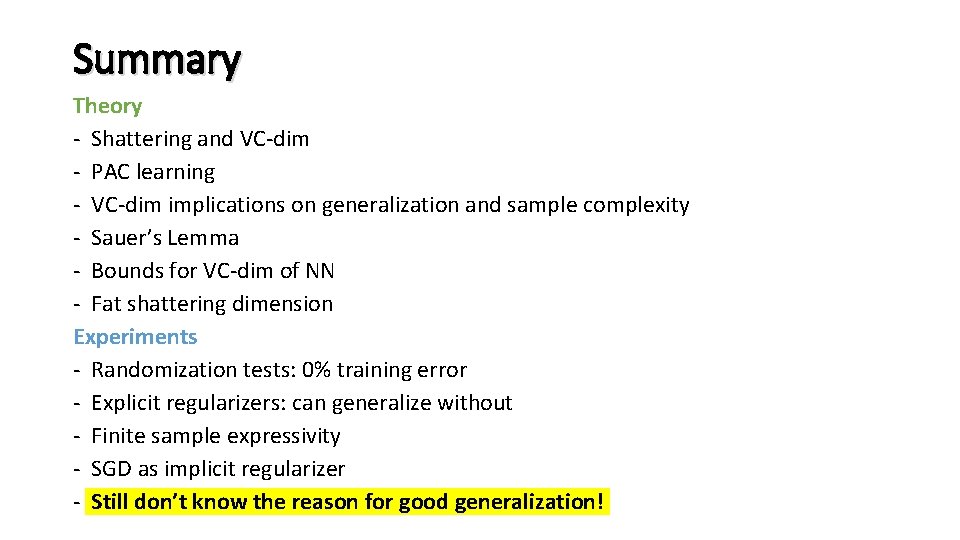

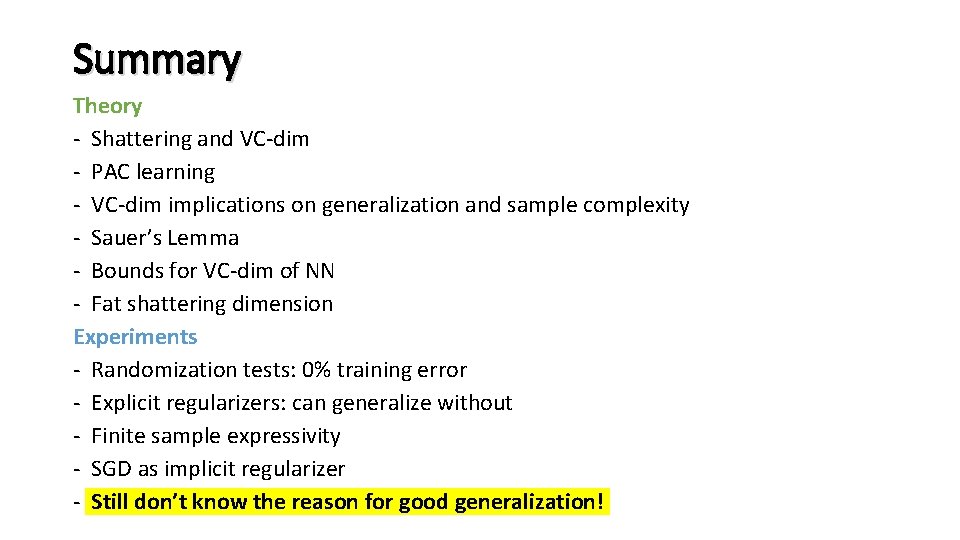

Summary Theory - Shattering and VC-dim - PAC learning - VC-dim implications on generalization and sample complexity - Sauer’s Lemma - Bounds for VC-dim of NN - Fat shattering dimension Experiments - Randomization tests: 0% training error - Explicit regularizers: can generalize without - Finite sample expressivity - SGD as implicit regularizer - Still don’t know the reason for good generalization!

THE END

Vc bound

Vc bound Shira be tsibur

Shira be tsibur Teaching english to arabic speakers

Teaching english to arabic speakers Idolity

Idolity Handel lewantyjski

Handel lewantyjski Strumień brzuszny i grzbietowy

Strumień brzuszny i grzbietowy Szlak poliketydowy

Szlak poliketydowy Compostelka

Compostelka Bobrowy szlak rzepin

Bobrowy szlak rzepin Diauksja

Diauksja Szlak sorbitolowy

Szlak sorbitolowy Szlak polichromii brzeskich

Szlak polichromii brzeskich Szlak brzuszny i grzbietowy

Szlak brzuszny i grzbietowy Lesson 1 nets and drawings for visualizing geometry

Lesson 1 nets and drawings for visualizing geometry Surface area using nets

Surface area using nets Nets blox

Nets blox What is a priori knowledge

What is a priori knowledge Nets church planting

Nets church planting Semantic nets and frames

Semantic nets and frames Nets and drawings for visualizing geometry

Nets and drawings for visualizing geometry Safety nets must be drop tested

Safety nets must be drop tested Apple sweatshop

Apple sweatshop List the 4 nets for better internet searching

List the 4 nets for better internet searching Nets and drawings for visualizing geometry

Nets and drawings for visualizing geometry Petri nets properties analysis and applications

Petri nets properties analysis and applications Bayes nets

Bayes nets Better convenience

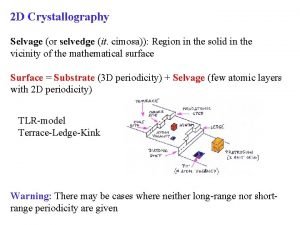

Better convenience Bravais nets

Bravais nets Hlf icao

Hlf icao Partitioned semantic network in artificial intelligence

Partitioned semantic network in artificial intelligence Cylinder net

Cylinder net Nets of square pyramid

Nets of square pyramid Represent solid figures using nets

Represent solid figures using nets Gabriel y petri

Gabriel y petri Ans

Ans The wake-sleep algorithm for unsupervised neural networks

The wake-sleep algorithm for unsupervised neural networks S1iisp36/s344crediamigo/inicial/geral/inicial

S1iisp36/s344crediamigo/inicial/geral/inicial Neural groove

Neural groove Alternatives to convolutional neural networks

Alternatives to convolutional neural networks Edlut

Edlut On the computational efficiency of training neural networks

On the computational efficiency of training neural networks Meshnet: mesh neural network for 3d shape representation

Meshnet: mesh neural network for 3d shape representation Information theory and neural coding

Information theory and neural coding Neural networks and fuzzy logic

Neural networks and fuzzy logic Neural and hormonal communication

Neural and hormonal communication Convolutional neural network

Convolutional neural network Neural circuits the organization of neuronal pools

Neural circuits the organization of neuronal pools Ann unsupervised learning

Ann unsupervised learning Two almond shaped neural clusters

Two almond shaped neural clusters Show and tell a neural image caption generator

Show and tell a neural image caption generator Predicting nba games using neural networks

Predicting nba games using neural networks Thomson learning

Thomson learning Artificial neural network in data mining

Artificial neural network in data mining Xor problem

Xor problem Plexus brachialis

Plexus brachialis Neural control of breathing

Neural control of breathing Large scale distributed deep networks

Large scale distributed deep networks Nerves are neural cables containing many

Nerves are neural cables containing many Intercellular connections

Intercellular connections Xkcd neural network

Xkcd neural network Neural network in data mining

Neural network in data mining Convolutional neural networks for visual recognition

Convolutional neural networks for visual recognition Neural groove

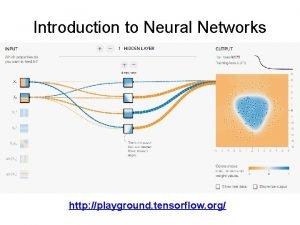

Neural groove Playground tensorflow.org

Playground tensorflow.org Refractory period neuron

Refractory period neuron Backpropagation andrew ng

Backpropagation andrew ng Tacotron 2

Tacotron 2 Neural net

Neural net Audio super resolution using neural networks

Audio super resolution using neural networks Andrew ng rnn

Andrew ng rnn Phases of neural communication like a toilet

Phases of neural communication like a toilet Decision boundary of neural network

Decision boundary of neural network Linear separability in neural network

Linear separability in neural network Neural network data preprocessing

Neural network data preprocessing Visualizing and understanding convolutional networks

Visualizing and understanding convolutional networks Fig 19

Fig 19 Neural crest

Neural crest Nerves are neural cables containing many

Nerves are neural cables containing many Deep forest: towards an alternative to deep neural networks

Deep forest: towards an alternative to deep neural networks Placa neural

Placa neural Neural impulse like a toilet

Neural impulse like a toilet Audio super resolution

Audio super resolution Feedforward neural

Feedforward neural