Artificial Neural Networks for RF and Microwave Design

- Slides: 55

Artificial Neural Networks for RF and Microwave Design: From Theory to Practice
Qi-Jun Zhang+, Kuldip C. Gupta* and Vijay K. Devabhaktuni+
+Department of Electronics, Carleton University, Ottawa, ON, Canada
*Department of ECE, University of Colorado, Boulder, CO, USA

Outline
• Introduction and overview
• Neural network structures
• Neural network model development process
• RF/Microwave component modeling using neural networks
• High-frequency circuit optimization using neural network models
• Conclusions

Introduction
• Accurate RF/microwave design is crucial for the current upsurge in VLSI, telecommunication and wireless technologies
• Design at microwave frequencies is significantly different from low-frequency and digital design
• Substantial development in RF/microwave CAD techniques has been made during the last decade
• Further advances in CAD are needed to address new design challenges, e.g., 3D-EM optimization, CPW and multilayered circuits, IC antenna modules, etc.
• Fast and accurate models are key to efficient CAD
• Neural network based modeling and design could significantly impact high-frequency CAD

A Quick Illustration Example: Neural Network Model for Delay Estimation in a High-Speed Interconnect Network

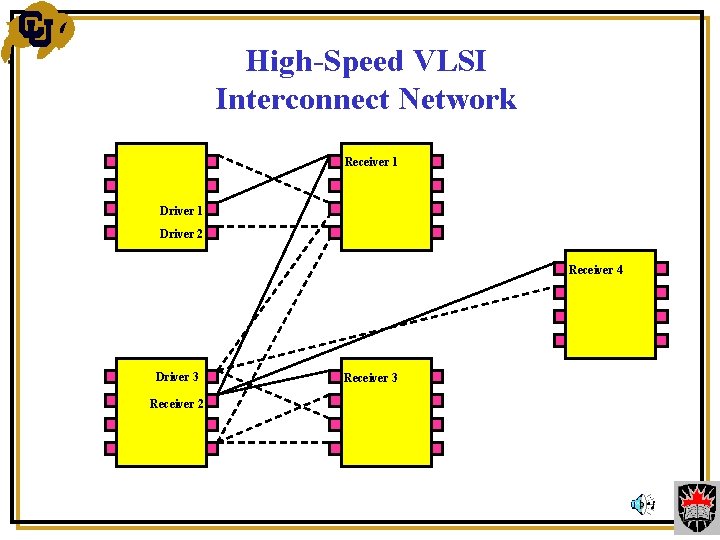

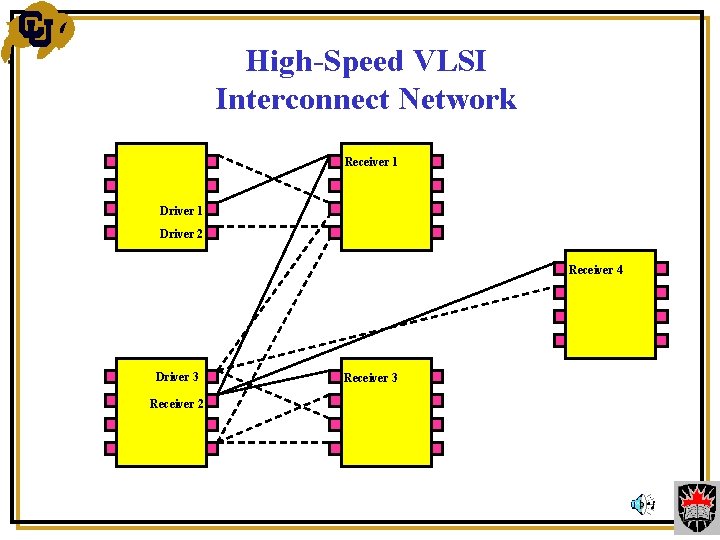

High-Speed VLSI Interconnect Network (figure: interconnect network connecting drivers to Receivers 1 to 4)

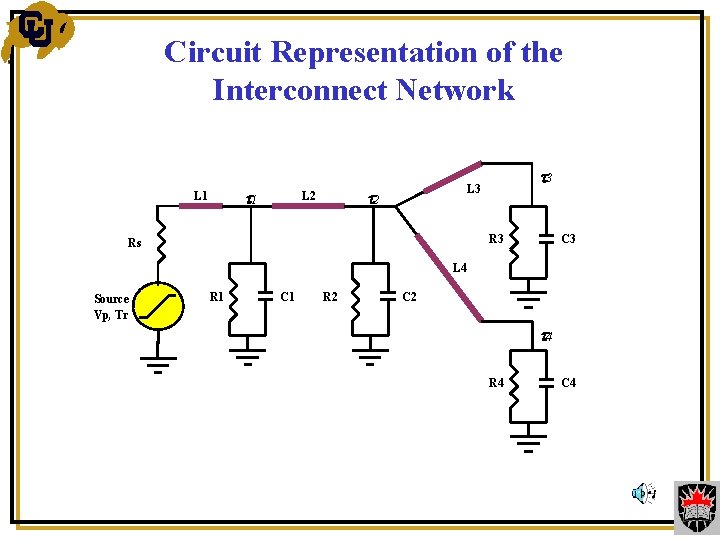

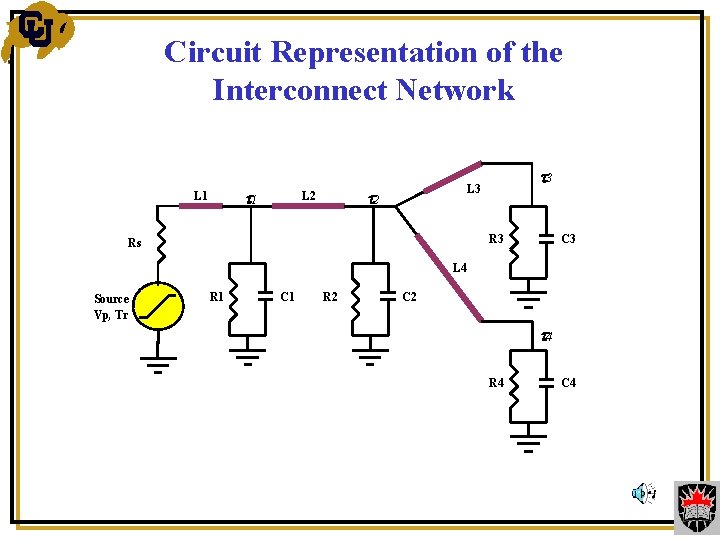

Circuit Representation of the Interconnect Network (figure: a source with parameters Vp and Tr and source resistance Rs drives interconnects L1 to L4; nodes 1 to 4 are terminated by R1, C1 through R4, C4)

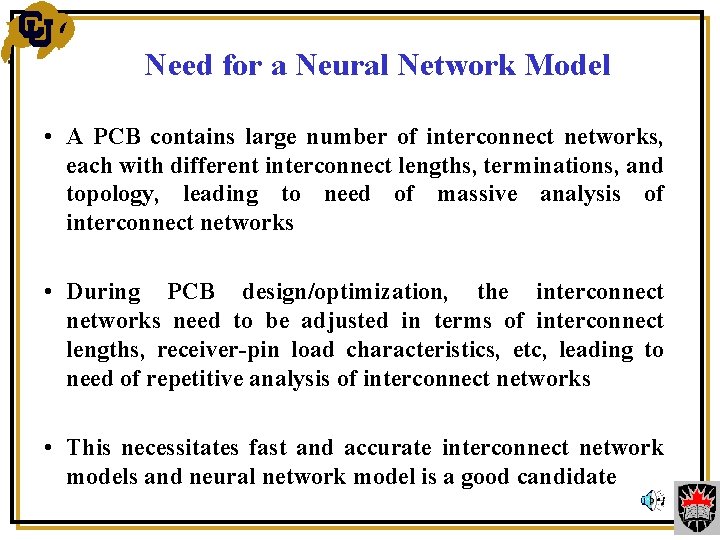

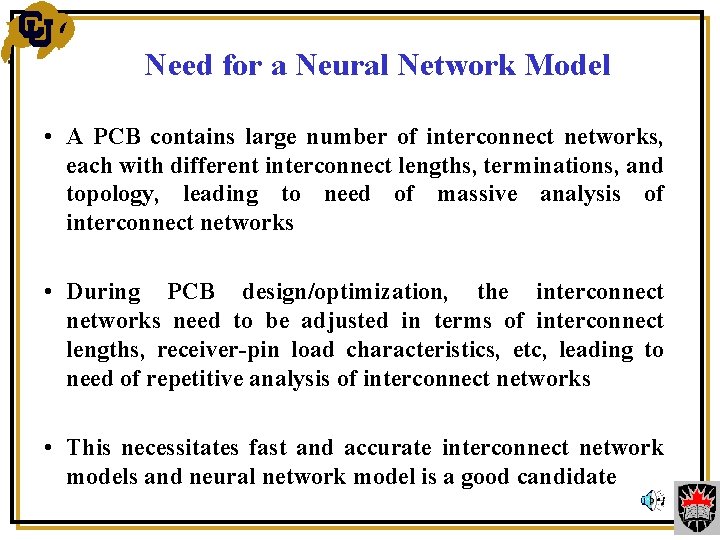

Need for a Neural Network Model
• A PCB contains a large number of interconnect networks, each with different interconnect lengths, terminations and topology, so a massive number of interconnect network analyses is needed
• During PCB design/optimization, the interconnect networks must be adjusted in terms of interconnect lengths, receiver-pin load characteristics, etc., so the interconnect networks must be analyzed repeatedly
• This calls for fast and accurate interconnect network models, and a neural network model is a good candidate

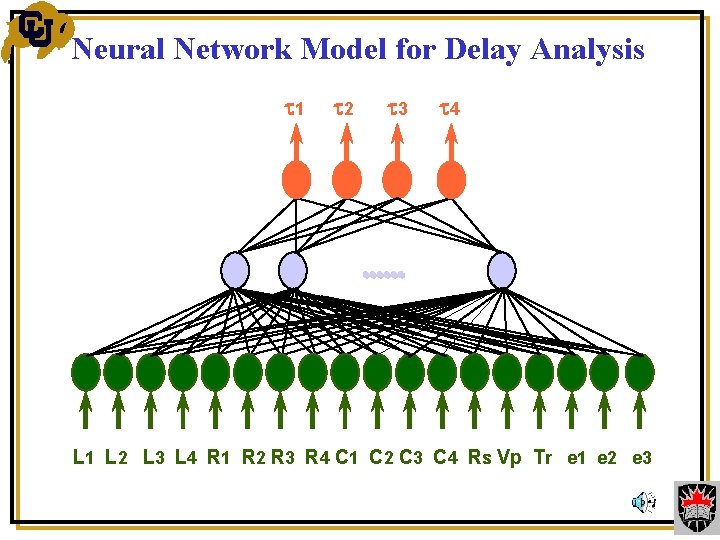

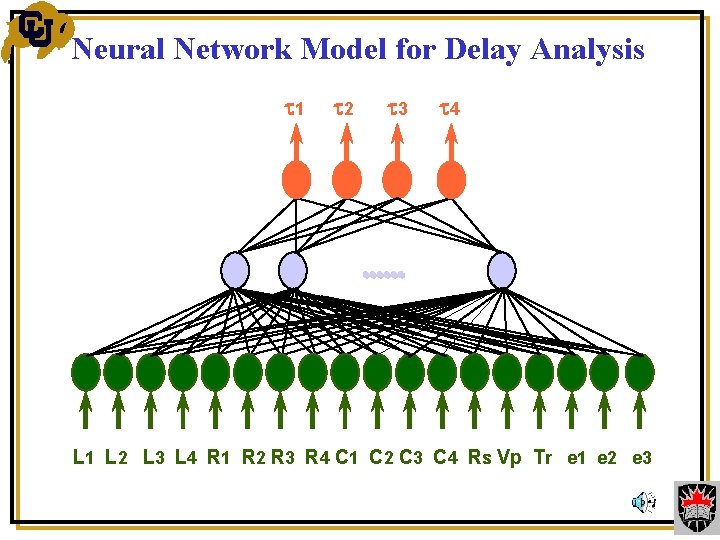

Neural Network Model for Delay Analysis (figure: neural network with inputs L1 to L4, R1 to R4, C1 to C4, Rs, Vp and Tr, and outputs e1, e2, e3, ...)

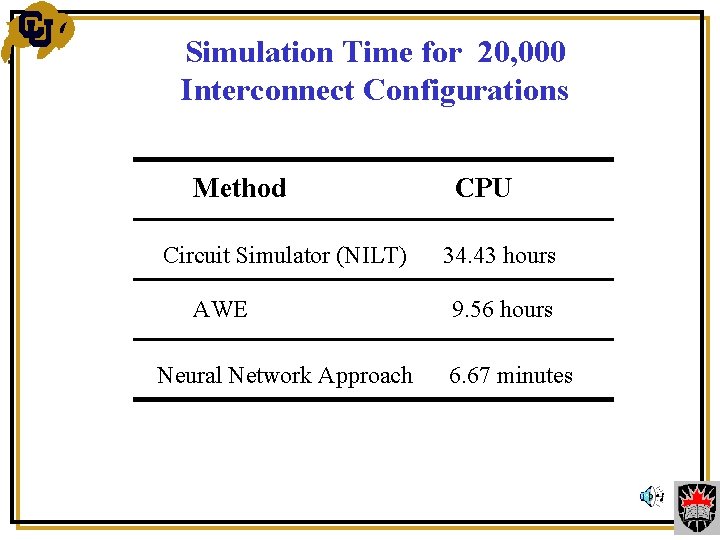

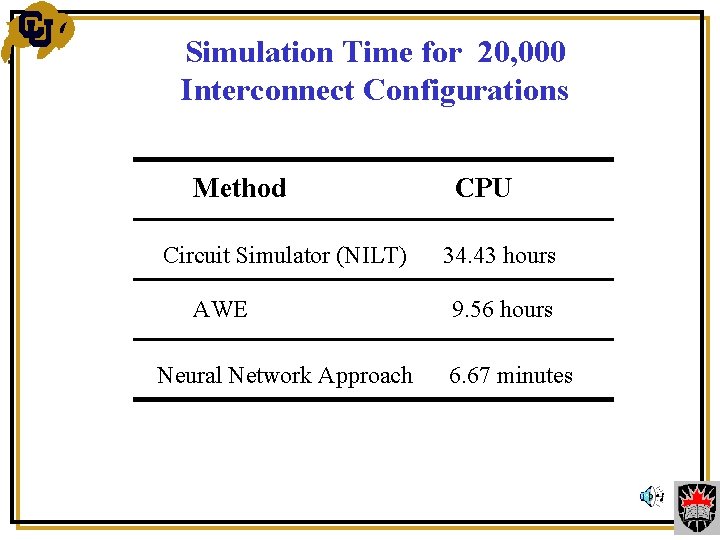

Simulation Time for 20,000 Interconnect Configurations

| Method | CPU Time |
|---|---|
| Circuit Simulator (NILT) | 34.43 hours |
| AWE | 9.56 hours |
| Neural Network Approach | 6.67 minutes |
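The speedups implied by the table follow from simple arithmetic on the three reported CPU times; a short Python sketch (the variable names are illustrative only):

```python
# CPU times reported in the table, converted to minutes
cpu_minutes = {
    "Circuit Simulator (NILT)": 34.43 * 60,
    "AWE": 9.56 * 60,
    "Neural Network Approach": 6.67,
}

nn_minutes = cpu_minutes["Neural Network Approach"]
speedup = {
    method: minutes / nn_minutes
    for method, minutes in cpu_minutes.items()
    if method != "Neural Network Approach"
}

for method, factor in speedup.items():
    print(f"Neural model vs {method}: about {factor:.0f}x faster")

# Average neural-model CPU time per interconnect configuration, in milliseconds
per_config_ms = nn_minutes * 60 * 1000 / 20_000
print(f"About {per_config_ms:.0f} ms per configuration")
```

This gives speedups of about 310x over NILT and about 86x over AWE, or roughly 20 ms of CPU time per configuration for the neural model.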

Important Features of Neural Networks
• Neural networks have the ability to model multidimensional nonlinear relationships
• Neural models are simple and the model computation is fast
• Neural networks can learn and generalize from available data, making model development possible even when component formulae are unavailable
• The neural network approach is generic, i.e., the same modeling technique can be reused for passive/active devices/circuits
• It is easier to update neural models whenever device or component technology changes

Neural Networks for RF/Microwave Applications: Overview
• Neural models are efficient alternatives to closed-form expressions, equivalent circuit models and look-up tables
• Neural network models can be developed from measured or simulated data
• Neural models can also be used to update or improve the accuracy of existing models
• Neural network models have been developed for active devices, passive components and interconnect networks
• These models have been used in circuit simulators for circuit-level simulation, design and optimization

Neural Network Structures

Neural Network Structures
• A neural network contains
  • neurons (processing elements)
  • connections (links between neurons)
• A neural network structure defines
  • how information is processed inside a neuron
  • how the neurons are connected
• Examples of neural network structures
  • multi-layer perceptrons (MLP)
  • radial basis function (RBF) networks
  • wavelet networks
  • recurrent neural networks
  • knowledge-based neural networks
• MLP is the basic and most frequently used structure

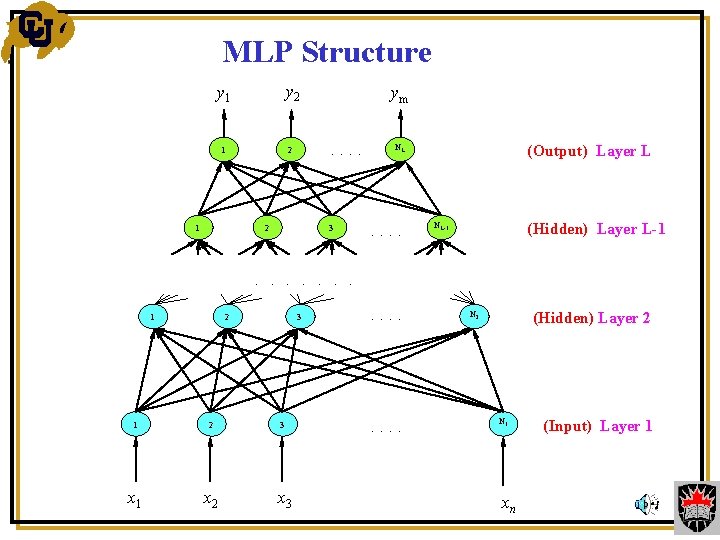

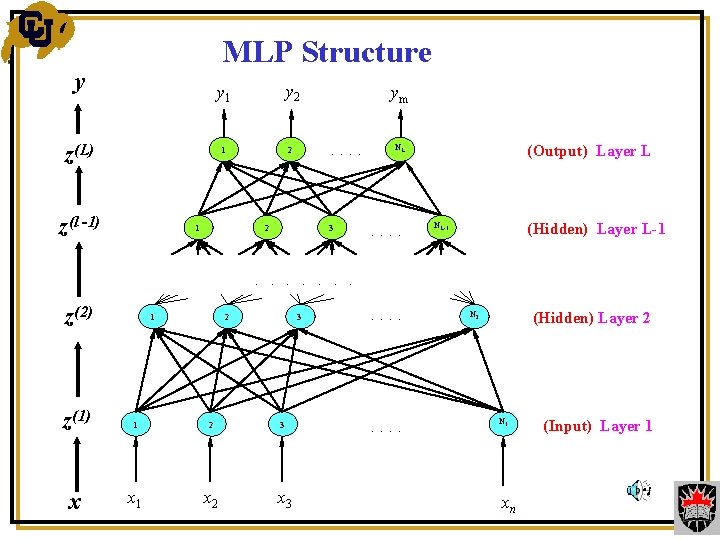

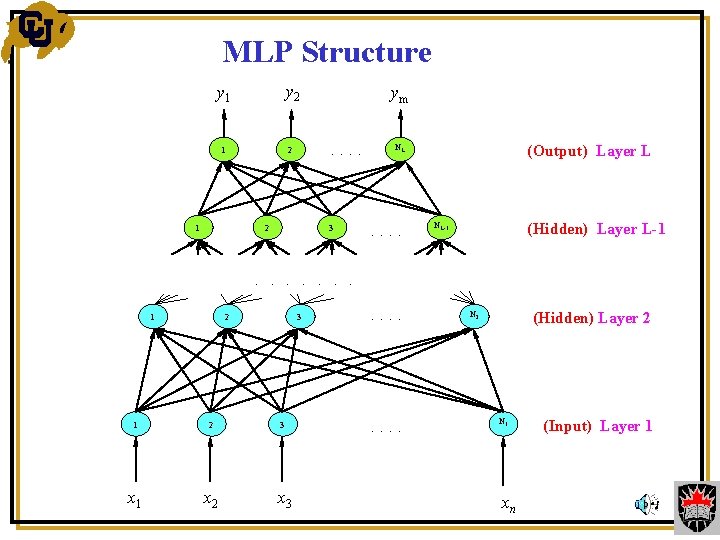

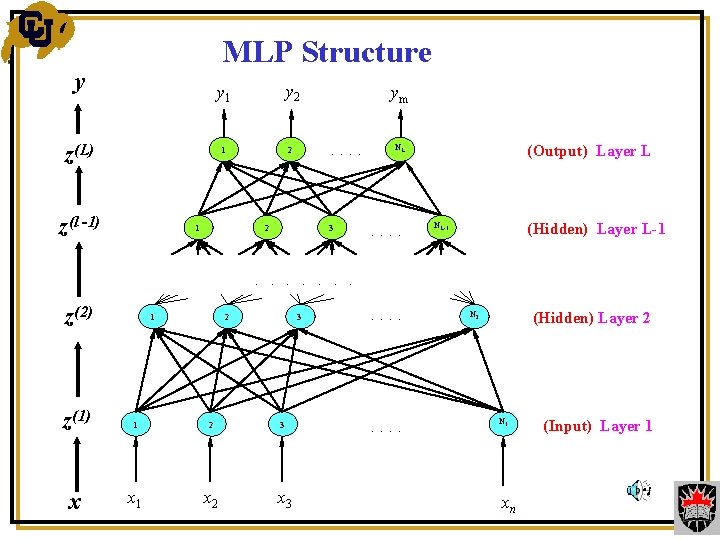

MLP Structure (figure: input layer 1 with N1 neurons receiving x1, x2, x3, ..., xn; hidden layers 2 to L-1 with N2, ..., N(L-1) neurons; output layer L with NL neurons producing y1, y2, ..., ym)

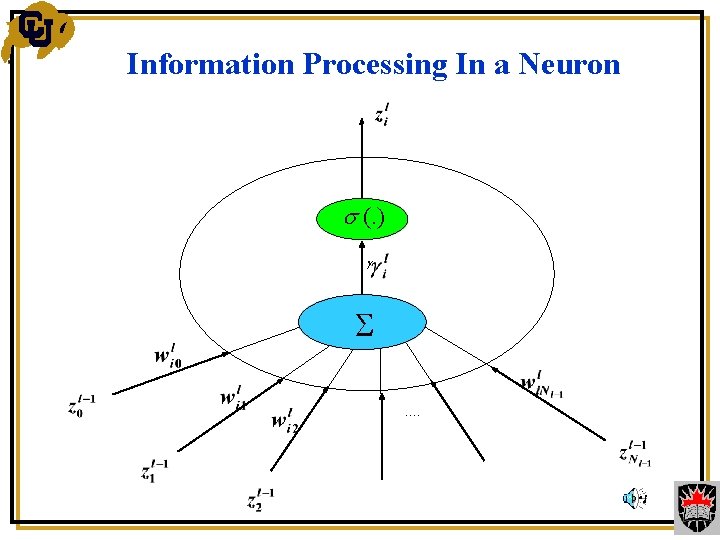

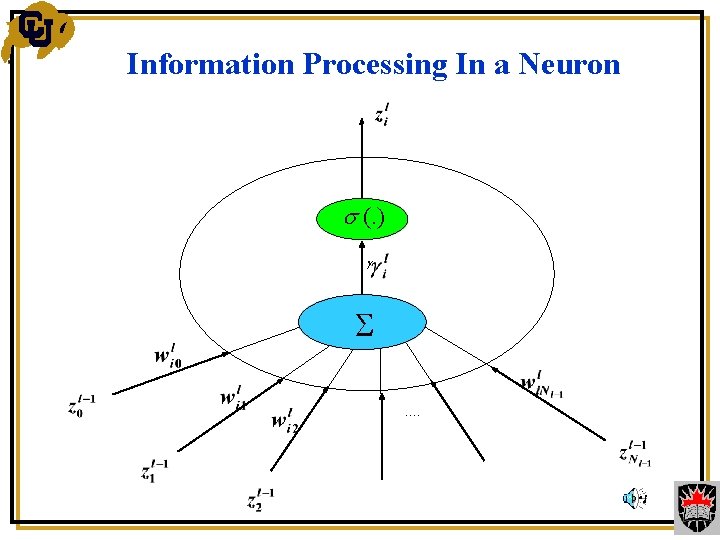

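Information Processing in a Neuron: a neuron combines its inputs into a weighted sum γ and passes γ through an activation function σ(·) to produce its output.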

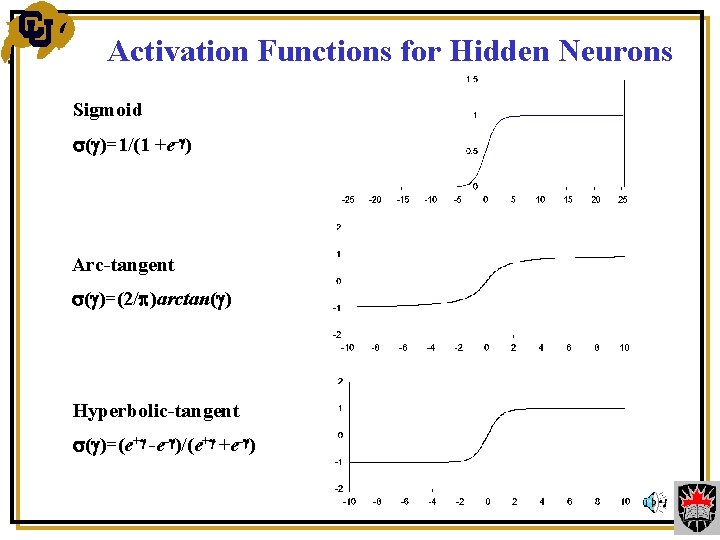

Neuron Activation Functions
• Input layer neurons simply relay the external inputs to the neural network
• Hidden layer neurons have smooth switch-type activation functions
• Output layer neurons can have simple linear activation functions

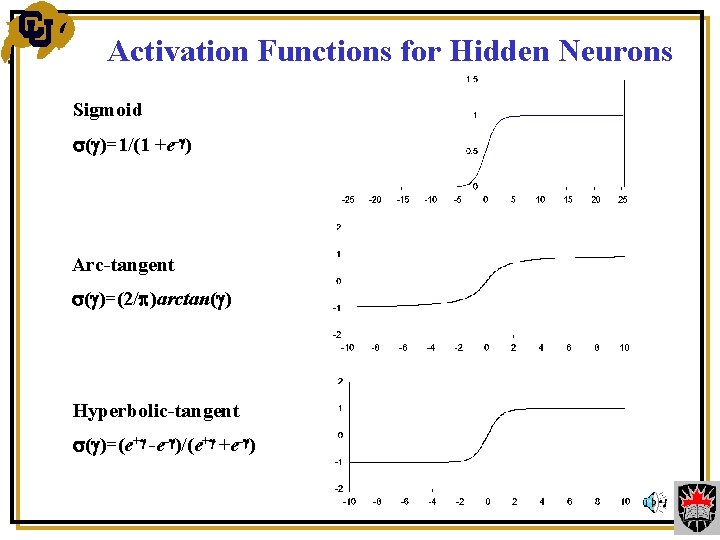

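Activation Functions for Hidden Neurons
• Sigmoid: $\sigma(\gamma) = \frac{1}{1 + e^{-\gamma}}$
• Arc-tangent: $\sigma(\gamma) = \frac{2}{\pi}\arctan(\gamma)$
• Hyperbolic tangent: $\sigma(\gamma) = \frac{e^{\gamma} - e^{-\gamma}}{e^{\gamma} + e^{-\gamma}}$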
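A minimal Python sketch of the three hidden-neuron activation functions, using only the standard `math` module; the function names are illustrative. The sigmoid is evaluated in a branch-split form that gives the same value as 1/(1 + e^(-γ)) but cannot overflow for large negative γ.

```python
import math

def sigmoid(g: float) -> float:
    """Sigmoid 1 / (1 + e^(-g)), range (0, 1); split by sign so exp never overflows."""
    if g >= 0:
        return 1.0 / (1.0 + math.exp(-g))
    e = math.exp(g)
    return e / (1.0 + e)

def arctan_act(g: float) -> float:
    """Arc-tangent (2/pi) * arctan(g), range (-1, 1)."""
    return (2.0 / math.pi) * math.atan(g)

def tanh_act(g: float) -> float:
    """Hyperbolic tangent (e^g - e^-g) / (e^g + e^-g), range (-1, 1)."""
    return math.tanh(g)  # library routine, numerically safer than the raw exponentials

# All three are smooth "switches": near one limit for very negative g, the other for large g
for g in (-5.0, 0.0, 5.0):
    print(f"g={g:+.1f}  sigmoid={sigmoid(g):.4f}  "
          f"arctan={arctan_act(g):.4f}  tanh={tanh_act(g):.4f}")
```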

MLP Structure with layer outputs labeled (figure: z(1) = x = (x1, ..., xn) at input layer 1; z(2), ..., z(L-1) are the outputs of hidden layers 2 to L-1; z(L) = y = (y1, ..., ym) at output layer L)

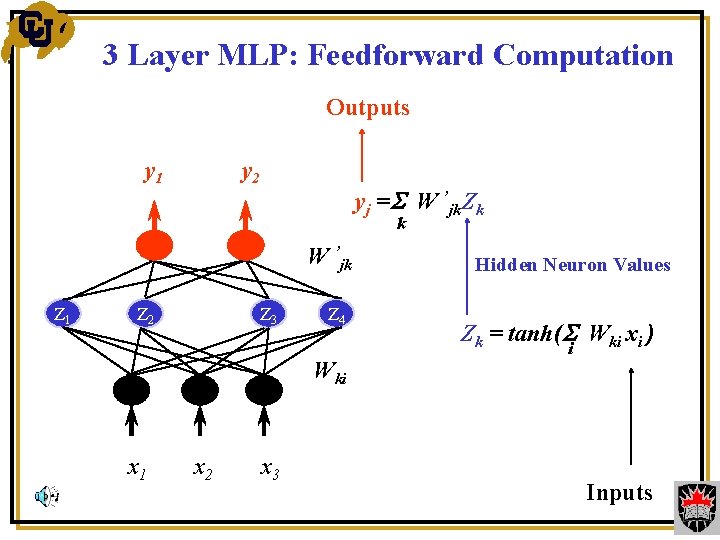

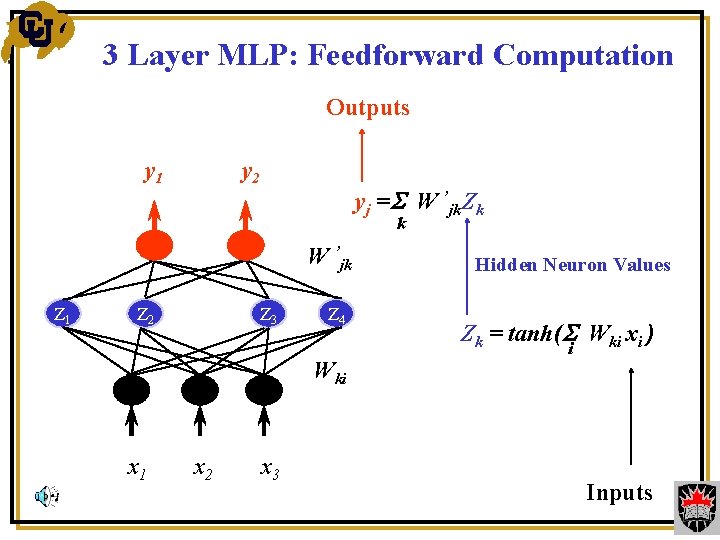

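3-Layer MLP: Feedforward Computation
Inputs $x_i$ feed the hidden neurons through weights $W_{ki}$; the hidden neuron values $Z_k$ (e.g., $Z_1, \ldots, Z_4$ for inputs $x_1, x_2, x_3$) feed the outputs $y_1, y_2$ through weights $W'_{jk}$:
$$Z_k = \tanh\Big(\sum_i W_{ki}\, x_i\Big), \qquad y_j = \sum_k W'_{jk}\, Z_k$$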
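The feedforward formulas translate directly into code. This is a pure-Python sketch with no bias terms, matching the formulas on this slide (practical MLPs usually include biases); the function name `mlp3_forward` and the weight values are arbitrary illustrations, not trained parameters.

```python
import math
from typing import List, Sequence

def mlp3_forward(x: Sequence[float],
                 W: Sequence[Sequence[float]],
                 W_prime: Sequence[Sequence[float]]) -> List[float]:
    """Feedforward pass of a 3-layer MLP without bias terms.

    W[k][i]       : weight from input x_i to hidden neuron k
    W_prime[j][k] : weight from hidden neuron k to output y_j
    """
    # Hidden neuron values: Z_k = tanh(sum_i W_ki * x_i)
    z = [math.tanh(sum(w_ki * x_i for w_ki, x_i in zip(row, x))) for row in W]
    # Linear output neurons: y_j = sum_k W'_jk * Z_k
    return [sum(w_jk * z_k for w_jk, z_k in zip(row, z)) for row in W_prime]

# 3 inputs, 4 hidden neurons, 2 outputs, as in the slide's diagram (weights are arbitrary)
W = [[0.5, -0.2, 0.1],
     [0.3, 0.8, -0.5],
     [-0.6, 0.1, 0.4],
     [0.2, 0.2, 0.2]]
W_prime = [[1.0, -1.0, 0.5, 0.0],
           [0.0, 0.5, 0.5, 1.0]]
print(mlp3_forward([1.0, 0.5, -1.0], W, W_prime))
```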

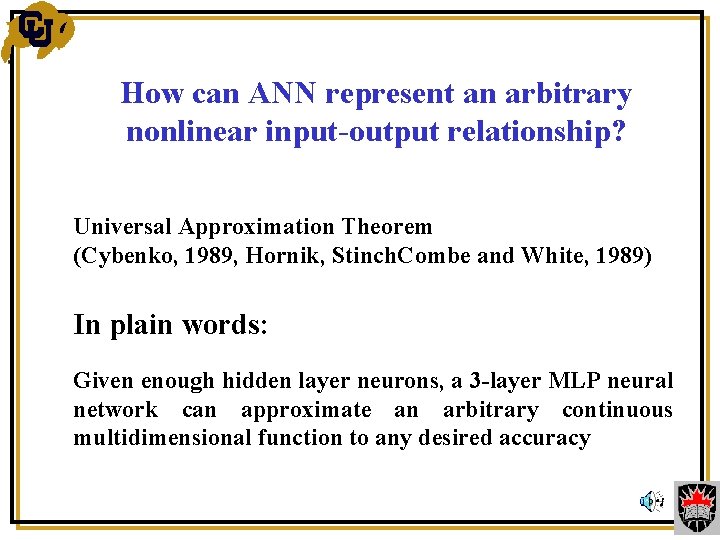

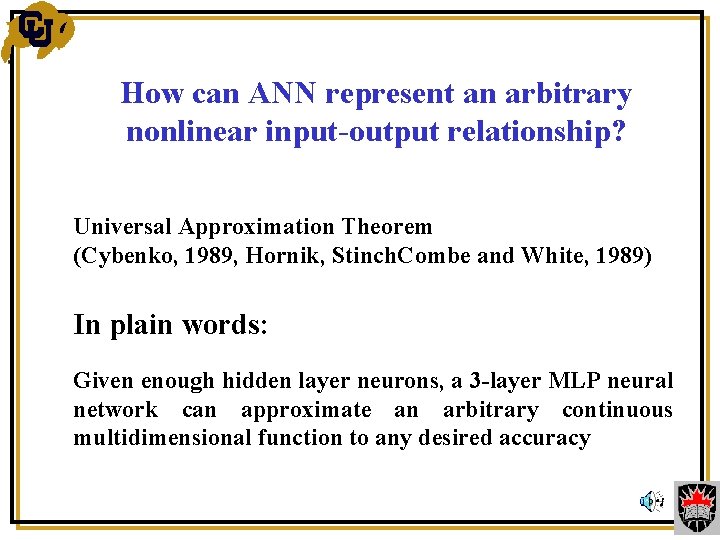

How can an ANN represent an arbitrary nonlinear input-output relationship?
Universal Approximation Theorem (Cybenko, 1989; Hornik, Stinchcombe and White, 1989)
In plain words: given enough hidden-layer neurons, a 3-layer MLP neural network can approximate an arbitrary continuous multidimensional function over a bounded input region to any desired accuracy

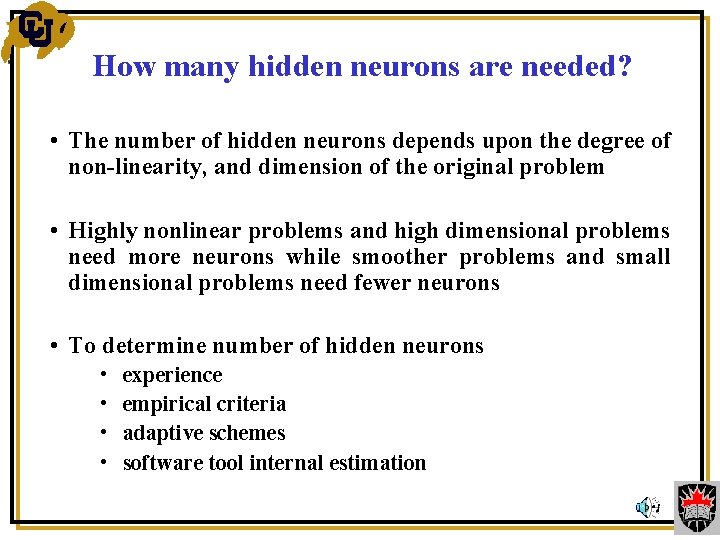

How many hidden neurons are needed?
• The number of hidden neurons depends on the degree of nonlinearity and the dimension of the original problem
• Highly nonlinear and high-dimensional problems need more neurons, while smoother and low-dimensional problems need fewer neurons
• Ways to determine the number of hidden neurons:
  • experience
  • empirical criteria
  • adaptive schemes
  • software tool internal estimation
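A constructive illustration (not a trained network and not the proof of the theorem): a 3-layer MLP with steep sigmoid hidden neurons can be assembled by hand as a smoothed staircase that approximates a continuous 1-D function, and its maximum error shrinks as hidden neurons are added. The doubling loop in `grow_hidden_neurons` is a hypothetical example of an adaptive scheme; the steepness a = 10/h, the sin(x) target and the tolerance are arbitrary choices. Unlike the slide's feedforward formula, each hidden neuron here has a bias (the -a·c_k offset), which the staircase construction needs.

```python
import math
from typing import Callable, Tuple

def stable_sigmoid(g: float) -> float:
    # Logistic sigmoid, written so that math.exp never overflows
    if g >= 0:
        return 1.0 / (1.0 + math.exp(-g))
    e = math.exp(g)
    return e / (1.0 + e)

def staircase_mlp(f: Callable[[float], float], lo: float, hi: float,
                  n_hidden: int) -> Callable[[float], float]:
    """Hand-built 3-layer MLP: each hidden neuron is a steep sigmoid step placed
    midway between grid points; its output weight is the jump of f across that step."""
    h = (hi - lo) / n_hidden
    grid = [lo + k * h for k in range(n_hidden + 1)]
    centers = [grid[k] - h / 2 for k in range(1, n_hidden + 1)]
    jumps = [f(grid[k]) - f(grid[k - 1]) for k in range(1, n_hidden + 1)]
    a = 10.0 / h  # steepness of each step (an arbitrary illustrative choice)
    f_lo = f(lo)  # constant output offset

    def model(x: float) -> float:
        # Hidden neuron k computes sigmoid(a*x - a*c_k); the "- a*c_k" term is a bias
        return f_lo + sum(w * stable_sigmoid(a * (x - c)) for w, c in zip(jumps, centers))
    return model

def max_error(f, model, lo: float, hi: float, n_test: int = 2001) -> float:
    # Maximum absolute error over a dense set of test points in [lo, hi]
    xs = [lo + (hi - lo) * i / (n_test - 1) for i in range(n_test)]
    return max(abs(f(x) - model(x)) for x in xs)

def grow_hidden_neurons(f, lo: float, hi: float, tol: float,
                        n_start: int = 2, n_max: int = 1024) -> Tuple[int, float]:
    """Hypothetical adaptive scheme: double the hidden-neuron count until the
    maximum error over [lo, hi] falls below tol."""
    n = n_start
    while n <= n_max:
        err = max_error(f, staircase_mlp(f, lo, hi, n), lo, hi)
        if err < tol:
            return n, err
        n *= 2
    raise RuntimeError("tolerance not reached within n_max hidden neurons")

for n in (4, 16, 64):
    print(n, max_error(math.sin, staircase_mlp(math.sin, 0.0, math.pi, n), 0.0, math.pi))
print(grow_hidden_neurons(math.sin, 0.0, math.pi, tol=0.05))
```

In this construction the maximum error shrinks roughly in proportion to the grid spacing, so a smoother target (smaller slopes) needs fewer steps for the same tolerance, in line with the bullet points above.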

Development of Neural Network Models

Notation
• y = y(x, w): ANN model
• x: inputs of the given modeling problem or ANN
• y: outputs of the given modeling problem or ANN
• w: weight parameters in the ANN
• d: data of y from simulation or measurement

Define Model Input-Output and Generate Data
• Define the model input-output (x, y), for example:
  x: physical/geometrical parameters of the component
  y: S-parameters of the component
• Generate (x, y) samples $(x_k, d_k)$, k = 1, 2, ..., P, such that the data set sufficiently represents the behavior of the given x-y problem
• Types of data generator: simulation and measurement

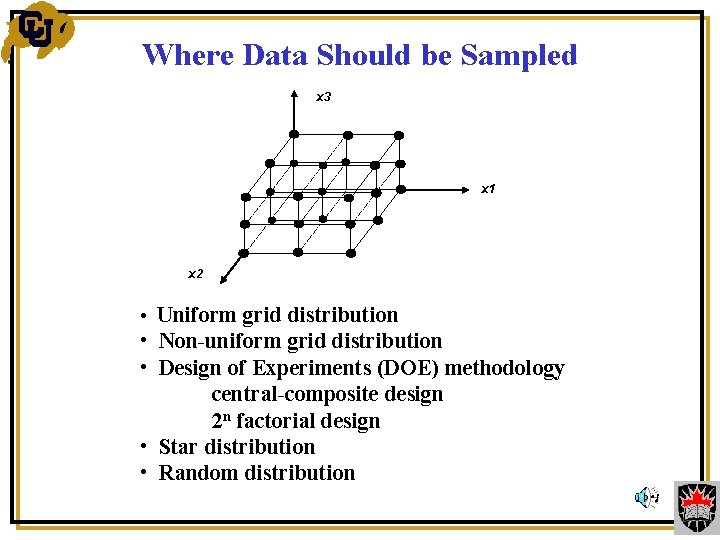

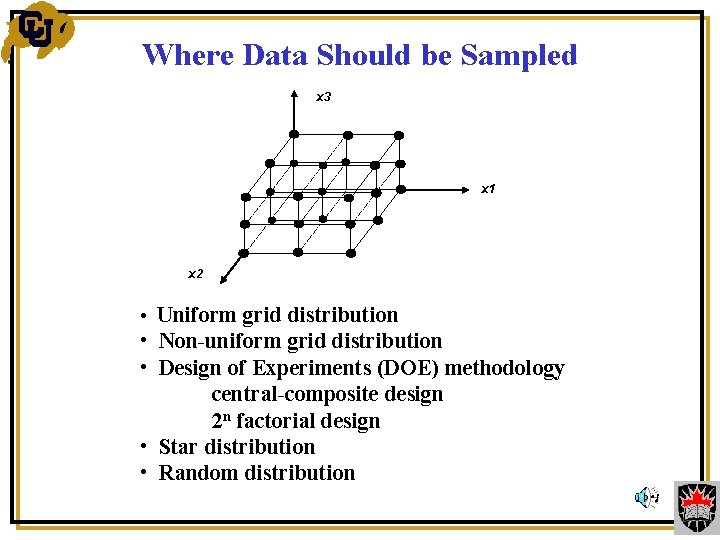

Where Data Should Be Sampled (figure: sample distributions in the x1-x2-x3 input space)
• Uniform grid distribution
• Non-uniform grid distribution
• Design of Experiments (DOE) methodology
  • central-composite design
  • 2^n factorial design
• Star distribution
• Random distribution
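Several of these sampling strategies can be sketched with `itertools.product` and the standard `random` module. This is a minimal illustration under stated assumptions: the central-composite design is the face-centered variant (axial points at the range limits), the non-uniform grid is omitted, and the function names are hypothetical. The example ranges are those of Win, Gin and frequency in the CPW T-junction example later in this deck.

```python
import itertools
import random
from typing import List, Sequence, Tuple

Range = Tuple[float, float]   # (minimum, maximum) of one input
Point = Tuple[float, ...]

def uniform_grid(ranges: Sequence[Range], levels: int) -> List[Point]:
    # `levels` equally spaced values per input, all combinations (levels ** n points)
    axes = [[lo + (hi - lo) * i / (levels - 1) for i in range(levels)] for lo, hi in ranges]
    return list(itertools.product(*axes))

def factorial_2n(ranges: Sequence[Range]) -> List[Point]:
    # 2^n factorial design: every combination of the minimum/maximum of each input
    return list(itertools.product(*ranges))

def star(ranges: Sequence[Range]) -> List[Point]:
    # Center point, plus each input moved to its minimum and maximum with the others at center
    center = [(lo + hi) / 2 for lo, hi in ranges]
    points = [tuple(center)]
    for i, (lo, hi) in enumerate(ranges):
        for value in (lo, hi):
            p = list(center)
            p[i] = value
            points.append(tuple(p))
    return points

def central_composite(ranges: Sequence[Range]) -> List[Point]:
    # Face-centered central-composite design: 2^n corner points plus star points
    return factorial_2n(ranges) + star(ranges)

def random_samples(ranges: Sequence[Range], n: int, seed: int = 0) -> List[Point]:
    rng = random.Random(seed)
    return [tuple(rng.uniform(lo, hi) for lo, hi in ranges) for _ in range(n)]

# Three inputs: Win (um), Gin (um) and frequency (GHz)
ranges = [(20.0, 120.0), (20.0, 60.0), (1.0, 50.0)]
for name, pts in [("grid", uniform_grid(ranges, 5)), ("2^n", factorial_2n(ranges)),
                  ("star", star(ranges)), ("CCD", central_composite(ranges)),
                  ("random", random_samples(ranges, 50))]:
    print(name, len(pts))
```

The point counts show the trade-off: a 5-level grid over three inputs already needs 125 samples, while the 2^n factorial, star and central-composite designs need 8, 7 and 15.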

Input/Output Scaling
The orders of magnitude of the various x and d values in microwave applications can differ greatly from one another (for example, a frequency in Hz versus a strip width in meters). Scaling the training data is desirable for efficient neural network training: the data can be scaled so that the various x (or d) values have similar orders of magnitude.
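A common choice is linear (min-max) scaling of each variable to a fixed interval. The sketch below maps every variable to [-1, 1] and back; the interval and the function names are assumptions for illustration, not a prescription from the slides.

```python
from typing import List, Sequence, Tuple

Range = Tuple[float, float]

def fit_ranges(samples: Sequence[Sequence[float]]) -> List[Range]:
    # Minimum and maximum of each variable over the data set
    return [(min(col), max(col)) for col in zip(*samples)]

def scale(v: Sequence[float], ranges: Sequence[Range],
          new_lo: float = -1.0, new_hi: float = 1.0) -> List[float]:
    # Linear map [lo, hi] -> [new_lo, new_hi]; assumes hi > lo for every variable
    return [new_lo + (x - lo) / (hi - lo) * (new_hi - new_lo)
            for x, (lo, hi) in zip(v, ranges)]

def unscale(s: Sequence[float], ranges: Sequence[Range],
            new_lo: float = -1.0, new_hi: float = 1.0) -> List[float]:
    # Inverse map, used to convert scaled values back to physical units
    return [lo + (t - new_lo) / (new_hi - new_lo) * (hi - lo)
            for t, (lo, hi) in zip(s, ranges)]

# Frequency in Hz and strip width in meters differ by many orders of magnitude
samples = [[1e9, 20e-6], [25e9, 70e-6], [50e9, 120e-6]]
ranges = fit_ranges(samples)
scaled = [scale(x, ranges) for x in samples]
print(scaled)
```

After scaling, both variables lie in [-1, 1]; the neural network is trained on scaled data, and its outputs are passed through `unscale` before use.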

Training, Validation and Test Data Sets
The overall data should be divided into 3 sets: training, validation and test. Notation: Tr, V and Te denote the index sets of the training, validation and test data, respectively. In RF/microwave cases where the overall data is limited, the validation and test (or training and validation) data can be shared.
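A minimal sketch of building the index sets Tr, V and Te by random shuffling. The 60/20/20 split, the fixed seed and the `share_val_test` option (V and Te identical, for small data sets) are illustrative assumptions.

```python
import random
from typing import List, Tuple

def split_indices(P: int, frac_train: float = 0.6, frac_val: float = 0.2,
                  share_val_test: bool = False, seed: int = 0
                  ) -> Tuple[List[int], List[int], List[int]]:
    """Randomly partition sample indices 0..P-1 into index sets Tr, V and Te.

    With share_val_test=True (for small data sets), everything not used for
    training serves as both validation and test data, so V and Te are the same set.
    """
    idx = list(range(P))
    random.Random(seed).shuffle(idx)
    n_tr = round(frac_train * P)
    Tr = sorted(idx[:n_tr])
    if share_val_test:
        rest = sorted(idx[n_tr:])
        return Tr, rest, list(rest)
    n_v = round(frac_val * P)
    V = sorted(idx[n_tr:n_tr + n_v])
    Te = sorted(idx[n_tr + n_v:])
    return Tr, V, Te

Tr, V, Te = split_indices(100)
print(len(Tr), len(V), len(Te))
```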

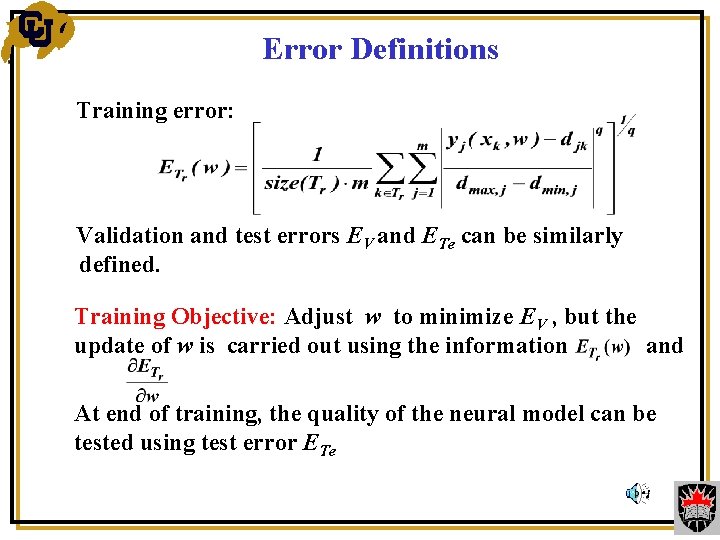

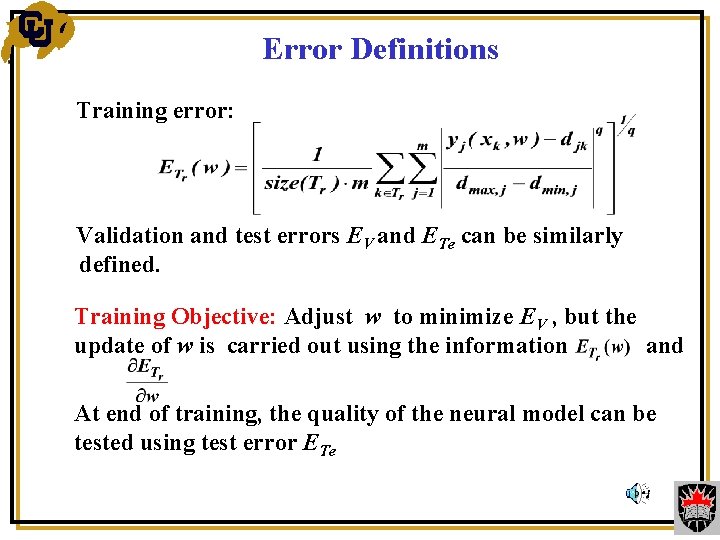

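Error Definitions
Training error:
$$E_{Tr}(w) = \frac{1}{2} \sum_{k \in Tr} \sum_{j=1}^{m} \big(y_j(x_k, w) - d_{jk}\big)^2$$
Validation and test errors $E_V$ and $E_{Te}$ can be defined similarly.
Training objective: adjust w to minimize $E_V$, but the update of w is carried out using the information $E_{Tr}$ and $\partial E_{Tr}/\partial w$. At the end of training, the quality of the neural model can be tested using the test error $E_{Te}$.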
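Assuming the sum-of-squares form of the error (the same expression evaluated over Tr, V or Te gives the training, validation or test error), the error is a short function of the index set. The toy linear model and data here are hypothetical, chosen only so the result is easy to check by hand.

```python
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float], Sequence[float]], List[float]]  # y = model(x, w)

def ann_error(model: Model, w: Sequence[float],
              X: Sequence[Sequence[float]], D: Sequence[Sequence[float]],
              index_set: Sequence[int]) -> float:
    """E(w) = 1/2 * sum over k in index_set, sum over outputs j, of (y_j(x_k, w) - d_jk)^2."""
    total = 0.0
    for k in index_set:
        y = model(X[k], w)
        total += sum((y_j - d_j) ** 2 for y_j, d_j in zip(y, D[k]))
    return 0.5 * total

# Toy one-output model y = w0 + w1 * x, just to exercise the function
def linear(x: Sequence[float], w: Sequence[float]) -> List[float]:
    return [w[0] + w[1] * x[0]]

X = [[0.0], [1.0], [2.0], [3.0]]
D = [[1.0], [3.0], [5.0], [7.5]]
Tr, V = [0, 1, 2], [3]
w = [1.0, 2.0]
print(ann_error(linear, w, X, D, Tr), ann_error(linear, w, X, D, V))
```

Here the model fits the three training samples exactly, so the training error is 0, while the validation sample gives 0.5 × (7 − 7.5)² = 0.125.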

Types of Training
• Sample-by-sample (or online) training: ANN weights are updated every time a training sample is presented to the network, i.e., the weight update is based on the training error from that sample
• Batch-mode (or offline) training: ANN weights are updated after each epoch, i.e., the weight update is based on the training error from all the samples in the training data set
• An epoch is a stage of ANN training in which all the samples in the training data set are presented to the neural network once for the purpose of learning
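The difference between the two training types shows up in where the weight update sits in the loop. A toy sketch with a one-weight model y = w·x, trained with plain gradient steps (the model, data and learning rate are hypothetical):

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x_k, d_k)

def grad_sample(w: float, x: float, d: float) -> float:
    # Derivative w.r.t. w of the per-sample error 1/2 * (w*x - d)^2
    return (w * x - d) * x

def train_online(data: List[Sample], w: float, eta: float, epochs: int) -> float:
    for _ in range(epochs):
        for x, d in data:                  # one weight update per training sample
            w -= eta * grad_sample(w, x, d)
    return w

def train_batch(data: List[Sample], w: float, eta: float, epochs: int) -> float:
    for _ in range(epochs):
        g = sum(grad_sample(w, x, d) for x, d in data)  # error from all samples
        w -= eta * g                       # one weight update per epoch
    return w

data = [(x, 2.0 * x) for x in (0.5, 1.0, 1.5, 2.0)]   # targets from d = 2x
print(train_online(data, w=0.0, eta=0.1, epochs=50))
print(train_batch(data, w=0.0, eta=0.1, epochs=50))
```

Both runs approach the exact weight w = 2; online training makes four updates per epoch, batch training makes one.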

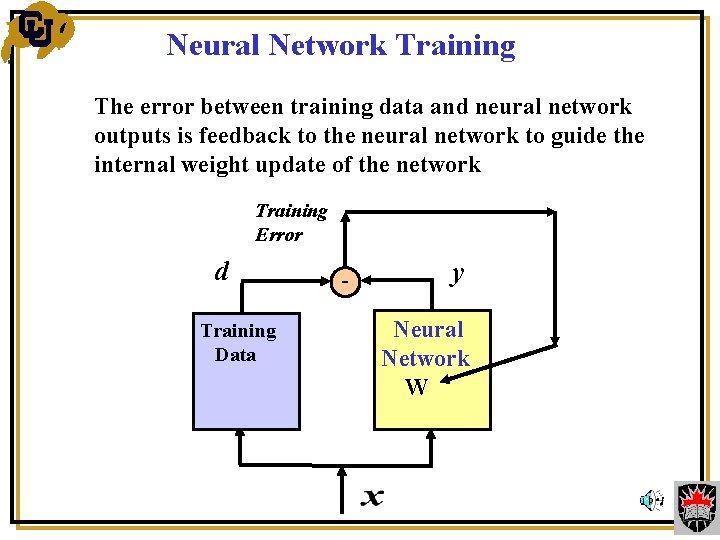

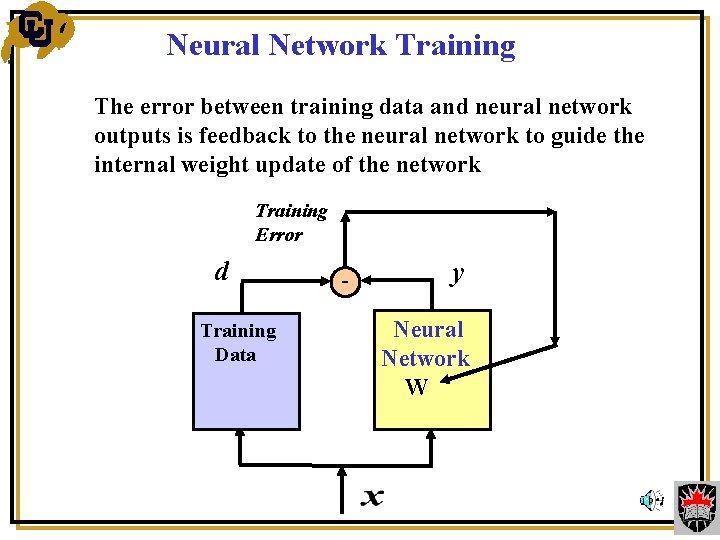

Neural Network Training
The error between the training data d and the neural network outputs y is fed back to the neural network to guide the update of its internal weights w.

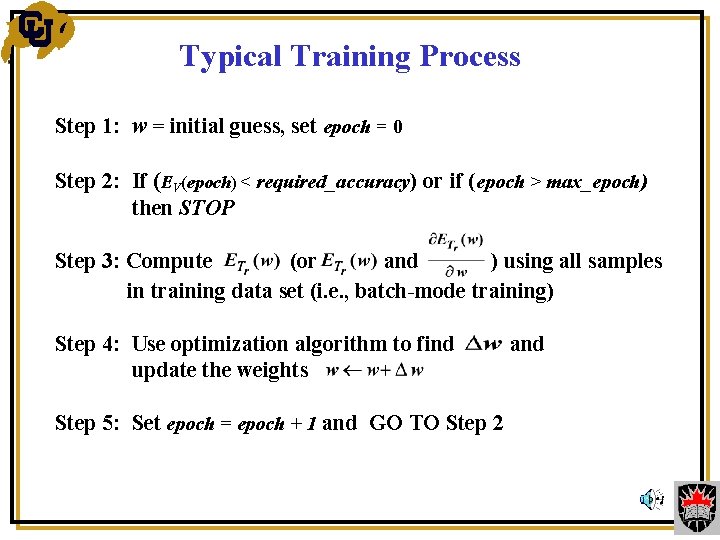

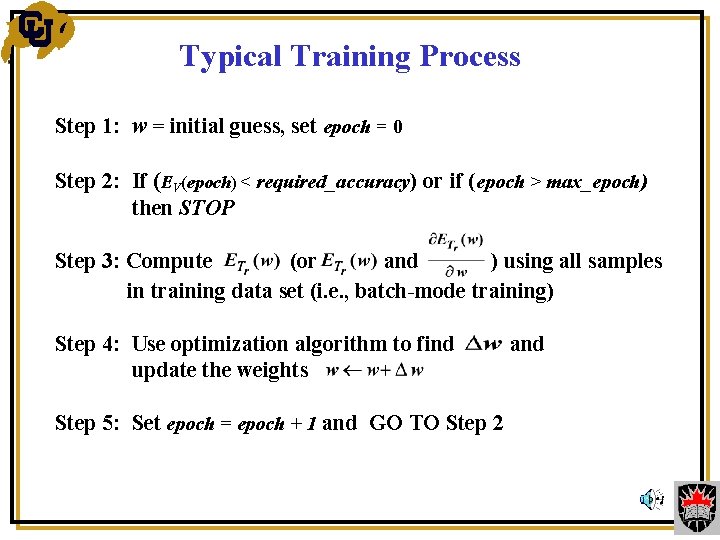

Typical Training Process
Step 1: w = initial guess; set epoch = 0
Step 2: If $E_V$(epoch) < required_accuracy or epoch > max_epoch, then STOP
Step 3: Compute $E_{Tr}(w)$ (or $E_{Tr}(w)$ and $\partial E_{Tr}/\partial w$) using all samples in the training data set (i.e., batch-mode training)
Step 4: Use an optimization algorithm to find the weight update Δw and update the weights, w = w + Δw
Step 5: Set epoch = epoch + 1 and GO TO Step 2
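The five steps map onto a short loop. In this sketch the optimizer in Step 4 is a plain gradient step with a fixed step size of 0.2, and the model is a hypothetical two-weight linear model rather than an MLP; any model with a training-error gradient could be plugged in through the `train_grad` and `val_error` callables.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Data = Sequence[Tuple[float, float]]   # (x_k, d_k) pairs

def train(w0: Vector,
          train_grad: Callable[[Vector], Vector],
          val_error: Callable[[Vector], float],
          update: Callable[[Vector, Vector], Vector],
          required_accuracy: float,
          max_epoch: int) -> Tuple[Vector, int]:
    w, epoch = list(w0), 0                                           # Step 1
    while True:
        if val_error(w) < required_accuracy or epoch > max_epoch:   # Step 2
            return w, epoch
        g = train_grad(w)        # Step 3: batch gradient over all training samples
        w = update(w, g)         # Step 4: optimizer step
        epoch += 1               # Step 5

# Toy model y = w0 + w1 * x with targets from d = 1 + 2x
train_set = [(x, 1.0 + 2.0 * x) for x in (0.0, 0.5, 1.0, 1.5)]
val_set = [(x, 1.0 + 2.0 * x) for x in (0.25, 1.25)]

def sse(w: Vector, data: Data) -> float:
    return 0.5 * sum((w[0] + w[1] * x - d) ** 2 for x, d in data)

def sse_grad(w: Vector, data: Data) -> Vector:
    r = [w[0] + w[1] * x - d for x, d in data]        # residuals y - d
    return [sum(r), sum(ri * x for ri, (x, _) in zip(r, data))]

w, epochs = train([0.0, 0.0],
                  train_grad=lambda w: sse_grad(w, train_set),
                  val_error=lambda w: sse(w, val_set),
                  update=lambda w, g: [wi - 0.2 * gi for wi, gi in zip(w, g)],
                  required_accuracy=1e-6, max_epoch=1000)
print(w, epochs)
```

Note that the stopping test uses the validation error while the update uses only the training data, as in the error definitions above.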

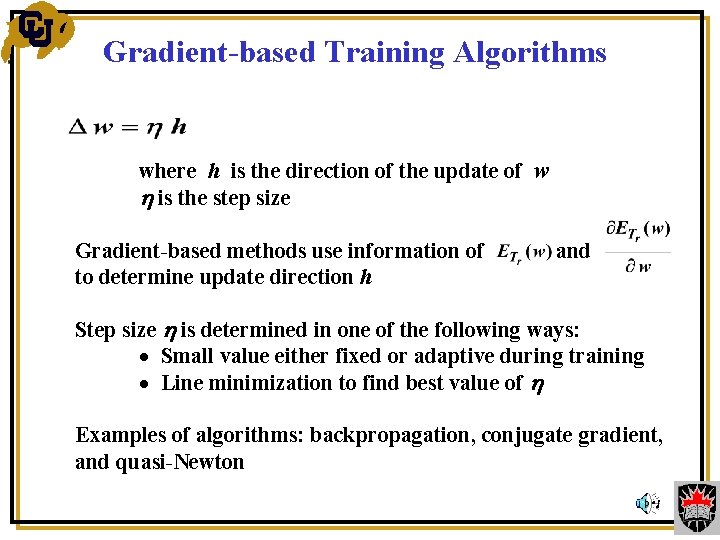

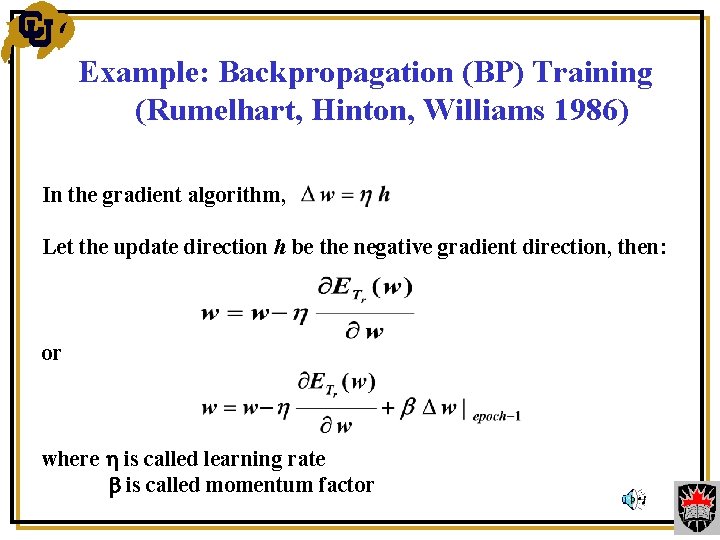

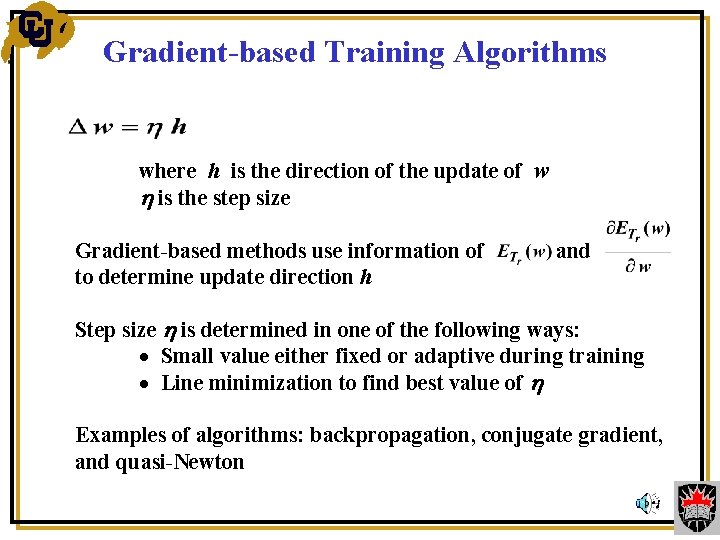

Gradient-based Training Algorithms
$$w_{next} = w_{now} + \eta\, h$$
where h is the direction of the update of w and η is the step size.
• Gradient-based methods use the information $E_{Tr}(w)$ and $\partial E_{Tr}/\partial w$ to determine the update direction h
• The step size η is determined in one of the following ways:
  · a small value, either fixed or adaptive during training
  · line minimization to find the best value of η
• Examples of algorithms: backpropagation, conjugate gradient, and quasi-Newton
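As an illustration of the line-minimization option, the sketch below chooses the step size along the negative gradient direction with golden-section search. The quadratic error surface, the search interval [0, 1] for the step size and the function names are assumptions; practical trainers often use cheaper inexact line searches.

```python
import math
from typing import Callable, List

Vector = List[float]

def golden_section(phi: Callable[[float], float], a: float, b: float,
                   tol: float = 1e-9) -> float:
    """Minimize a unimodal 1-D function phi on [a, b] by golden-section search."""
    r = (math.sqrt(5.0) - 1.0) / 2.0          # about 0.618
    c, d = b - r * (b - a), a + r * (b - a)
    while b - a > tol:
        if phi(c) < phi(d):                   # minimum lies in [a, d]
            b, d = d, c
            c = b - r * (b - a)
        else:                                 # minimum lies in [c, b]
            a, c = c, d
            d = a + r * (b - a)
    return (a + b) / 2.0

def steepest_descent_line_search(E: Callable[[Vector], float],
                                 grad: Callable[[Vector], Vector],
                                 w: Vector, iters: int, eta_max: float = 1.0) -> Vector:
    for _ in range(iters):
        h = [-g for g in grad(w)]             # update direction: negative gradient

        def phi(eta: float, w=w, h=h) -> float:
            return E([wi + eta * hi for wi, hi in zip(w, h)])

        eta = golden_section(phi, 0.0, eta_max)       # line minimization for the step size
        w = [wi + eta * hi for wi, hi in zip(w, h)]   # w_next = w_now + eta * h
    return w

def E(w: Vector) -> float:
    # Ill-conditioned quadratic error surface, minimum at w = 0
    return 0.5 * (w[0] ** 2 + 10.0 * w[1] ** 2)

def grad(w: Vector) -> Vector:
    return [w[0], 10.0 * w[1]]

w_final = steepest_descent_line_search(E, grad, [1.0, 1.0], iters=50)
print(w_final, E(w_final))
```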

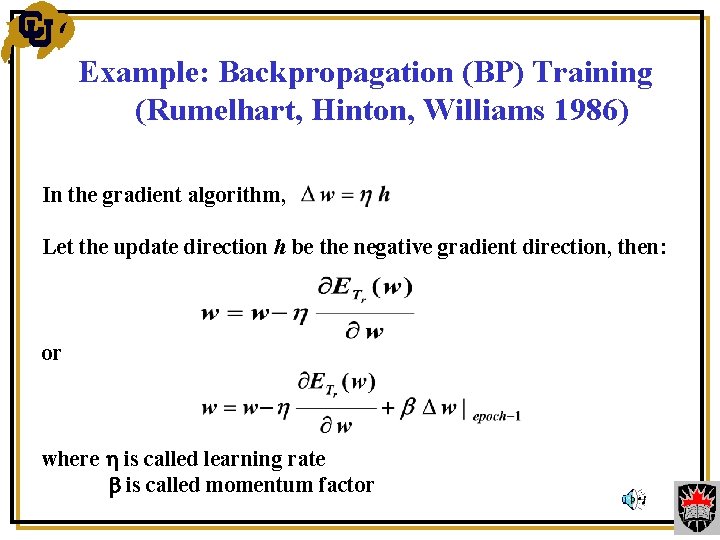

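Example: Backpropagation (BP) Training (Rumelhart, Hinton, Williams 1986)
In the gradient algorithm, let the update direction h be the negative gradient direction, $h = -\partial E_{Tr}/\partial w$. Then:
$$\Delta w = -\eta \frac{\partial E_{Tr}}{\partial w}$$
or, with momentum,
$$\Delta w_{epoch} = -\eta \frac{\partial E_{Tr}}{\partial w} + \alpha\, \Delta w_{epoch-1}$$
where η is called the learning rate and α is called the momentum factor.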
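A minimal sketch of the BP weight update with momentum on a hypothetical quadratic error surface; setting the momentum factor `alpha` to 0 recovers the plain update. The learning rate 0.05 and momentum factor 0.5 are arbitrary illustrative values, and the gradient is given in closed form rather than computed by backpropagating through an MLP.

```python
from typing import Callable, List

Vector = List[float]

def bp_momentum(grad: Callable[[Vector], Vector], w: Vector,
                eta: float, alpha: float, epochs: int) -> Vector:
    """Gradient descent with momentum:
    dw_epoch = -eta * dE/dw + alpha * dw_(epoch-1);  w = w + dw_epoch.
    alpha = 0 gives the plain update dw = -eta * dE/dw."""
    dw_prev = [0.0] * len(w)
    for _ in range(epochs):
        g = grad(w)
        dw = [-eta * gi + alpha * di for gi, di in zip(g, dw_prev)]
        w = [wi + dwi for wi, dwi in zip(w, dw)]
        dw_prev = dw
    return w

def E(w: Vector) -> float:
    # Ill-conditioned quadratic error surface, minimum at w = 0
    return 0.5 * (w[0] ** 2 + 10.0 * w[1] ** 2)

def grad_E(w: Vector) -> Vector:
    return [w[0], 10.0 * w[1]]

w_plain = bp_momentum(grad_E, [1.0, 1.0], eta=0.05, alpha=0.0, epochs=200)
w_mom = bp_momentum(grad_E, [1.0, 1.0], eta=0.05, alpha=0.5, epochs=200)
print(E(w_plain), E(w_mom))
```

On this surface the momentum run reaches a lower error than the plain run after the same number of epochs, because the momentum term accelerates progress along the shallow direction of the error surface.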

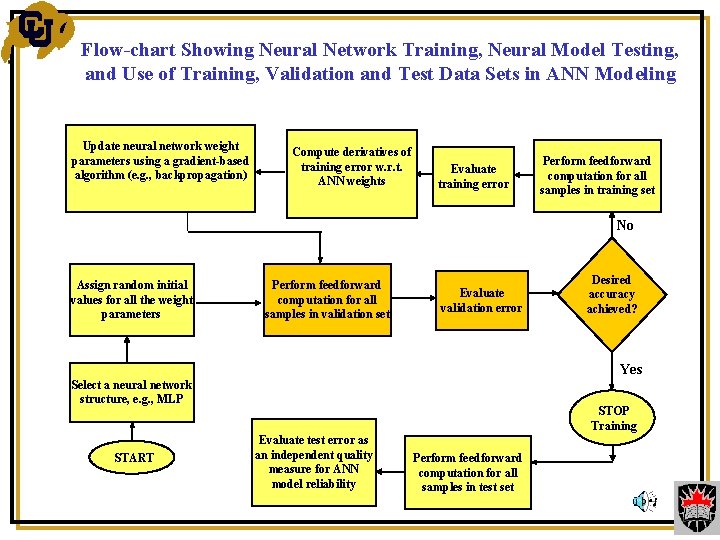

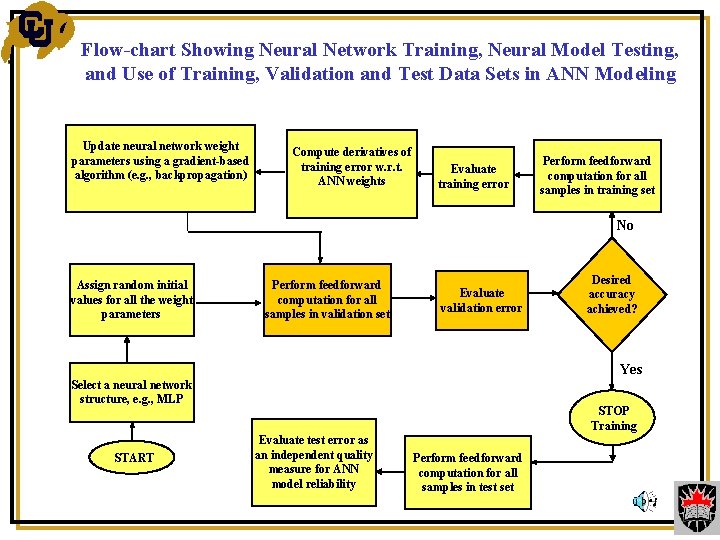

Flow-chart Showing Neural Network Training, Neural Model Testing, and Use of Training, Validation and Test Data Sets in ANN Modeling
START
Step 1: Select a neural network structure, e.g., MLP
Step 2: Assign random initial values for all the weight parameters
Step 3: Perform feedforward computation for all samples in the training set
Step 4: Evaluate the training error
Step 5: Compute derivatives of the training error w.r.t. the ANN weights
Step 6: Update the neural network weight parameters using a gradient-based algorithm (e.g., backpropagation, quasi-Newton)
Step 7: Perform feedforward computation for all samples in the validation set
Step 8: Evaluate the validation error
Step 9: Desired accuracy achieved? If no, go back to Step 3; if yes, STOP training
Step 10: Perform feedforward computation for all samples in the test set
Step 11: Evaluate the test error as an independent quality measure for ANN model reliability

Example: EM-ANN Models for CPW Circuit Design and Optimization

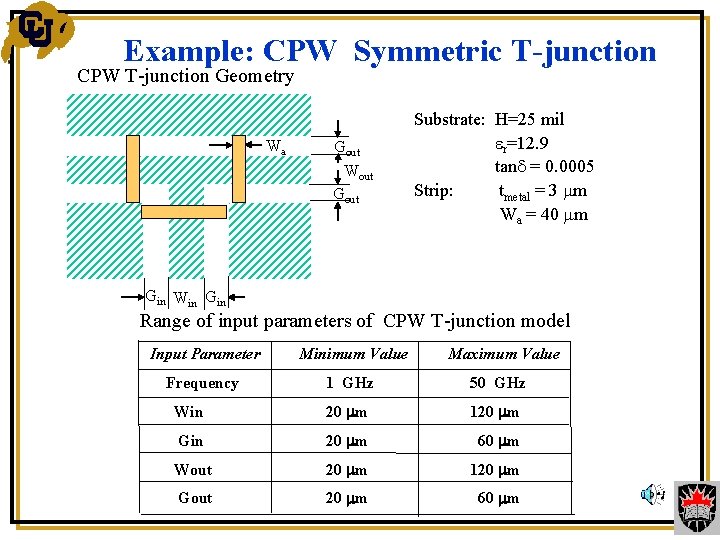

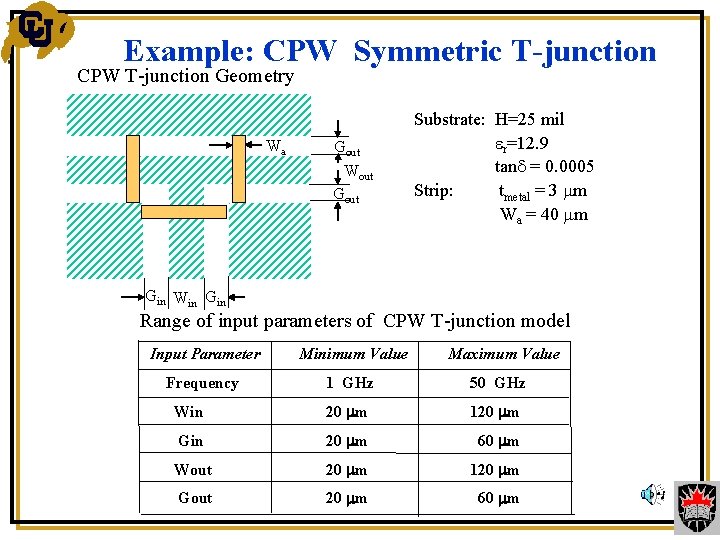

Example: CPW Symmetric T-junction
CPW T-junction geometry (figure: dimensions Wa, Win, Gin, Wout, Gout)
Substrate: H = 25 mil, εr = 12.9, tan δ = 0.0005
Strip: tmetal = 3 μm, Wa = 40 μm

Range of input parameters of the CPW T-junction model:

| Input Parameter | Minimum Value | Maximum Value |
|---|---|---|
| Frequency | 1 GHz | 50 GHz |
| Win | 20 μm | 120 μm |
| Gin | 20 μm | 60 μm |
| Wout | 20 μm | 120 μm |
| Gout | 20 μm | 60 μm |

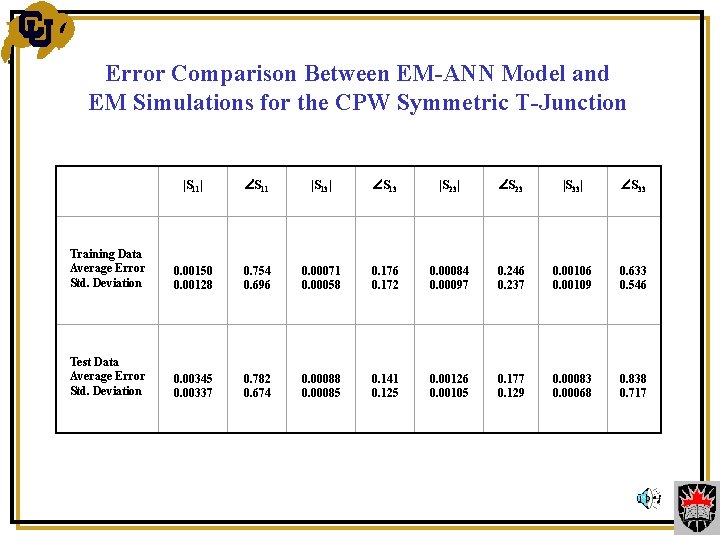

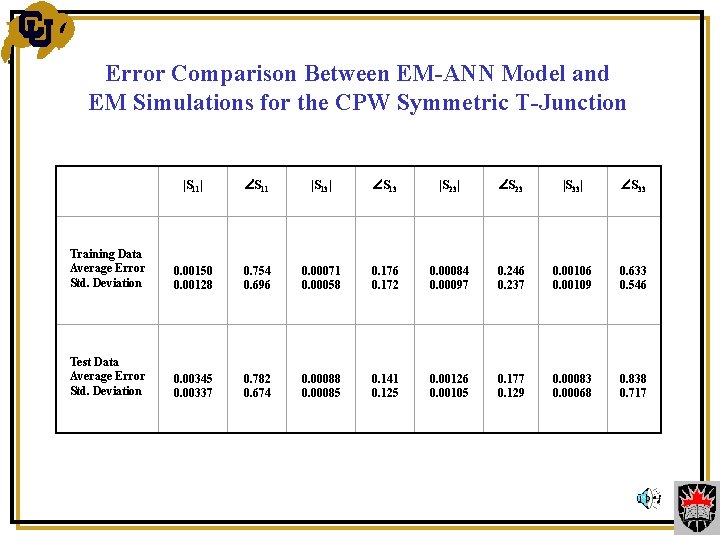

Error Comparison Between EM-ANN Model and EM Simulations for the CPW Symmetric T-Junction

| Output | Training Data: Average Error | Training Data: Std. Deviation | Test Data: Average Error | Test Data: Std. Deviation |
|---|---|---|---|---|
| S11 magnitude | 0.00150 | 0.00128 | 0.00345 | 0.00337 |
| S11 angle | 0.754 | 0.696 | 0.782 | 0.674 |
| S13 magnitude | 0.00071 | 0.00058 | 0.00088 | 0.00085 |
| S13 angle | 0.176 | 0.172 | 0.141 | 0.125 |
| S23 magnitude | 0.00084 | 0.00097 | 0.00126 | 0.00105 |
| S23 angle | 0.246 | 0.237 | 0.177 | 0.129 |
| S33 magnitude | 0.00106 | 0.00109 | 0.00083 | 0.00068 |
| S33 angle | 0.633 | 0.546 | 0.838 | 0.717 |

Example: ANN Based Design of a CPW Folded Double-Stub Filter

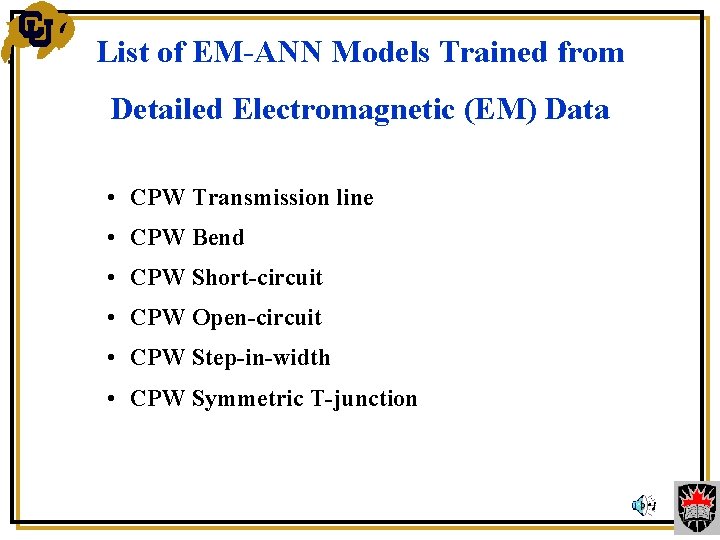

List of EM-ANN Models Trained from Detailed Electromagnetic (EM) Data
• CPW Transmission line
• CPW Bend
• CPW Short-circuit
• CPW Open-circuit
• CPW Step-in-width
• CPW Symmetric T-junction

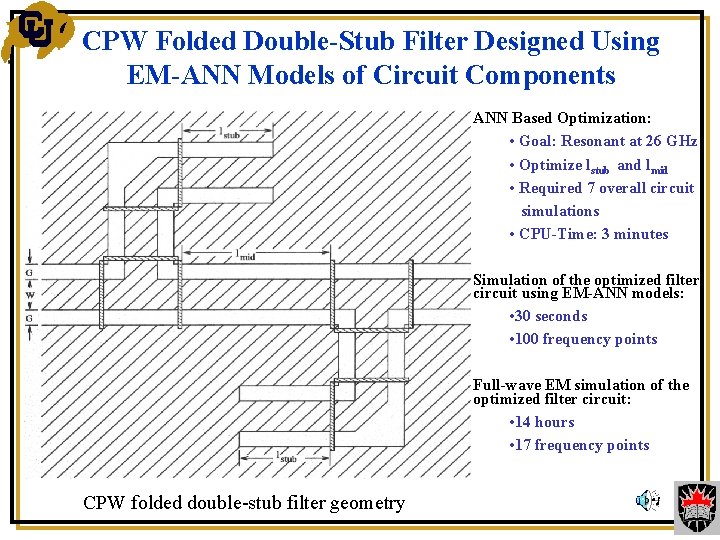

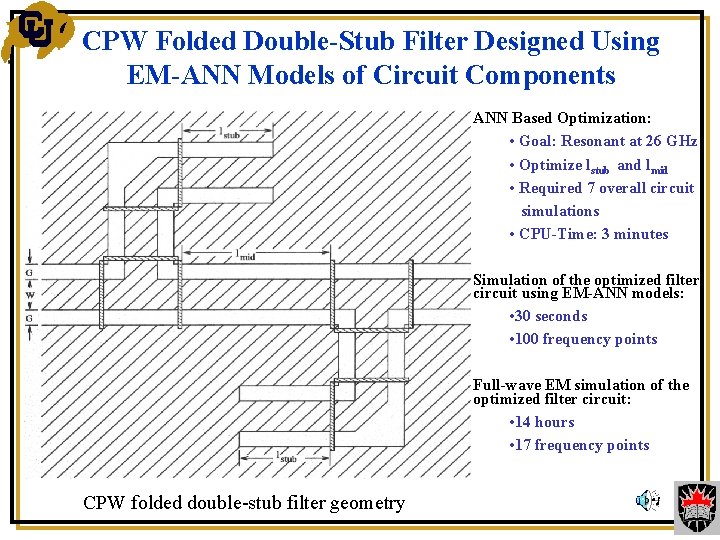

CPW Folded Double-Stub Filter Designed Using EM-ANN Models of Circuit Components
ANN-based optimization:
• Goal: resonant at 26 GHz
• Optimize lstub and lmid
• Required 7 overall circuit simulations
• CPU time: 3 minutes
Simulation of the optimized filter circuit using EM-ANN models:
• 30 seconds
• 100 frequency points
Full-wave EM simulation of the optimized filter circuit:
• 14 hours
• 17 frequency points
(figure: CPW folded double-stub filter geometry)

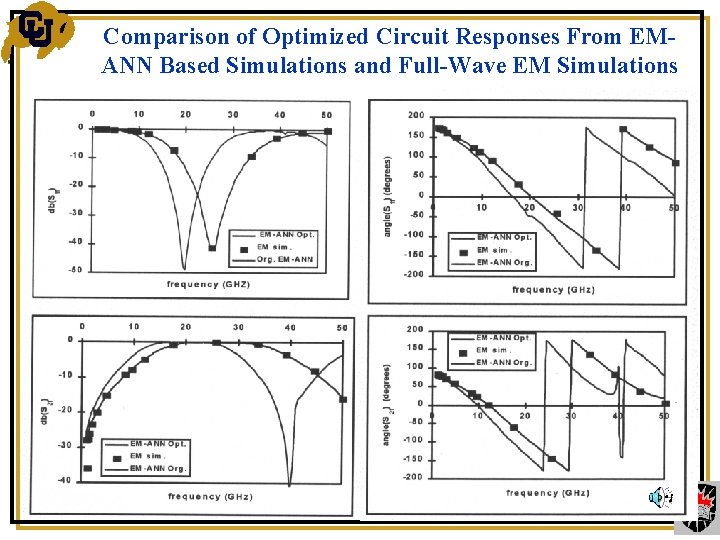

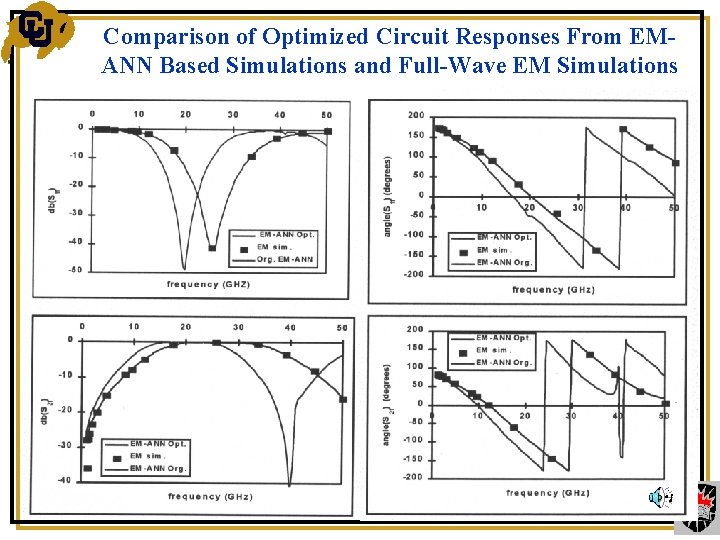

Comparison of Optimized Circuit Responses from EM-ANN Based Simulations and Full-Wave EM Simulations

Example: FET Modeling Using Neural Networks

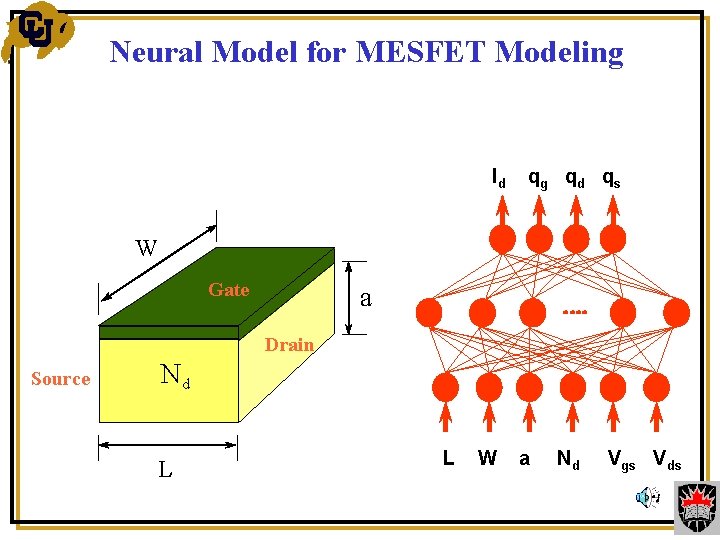

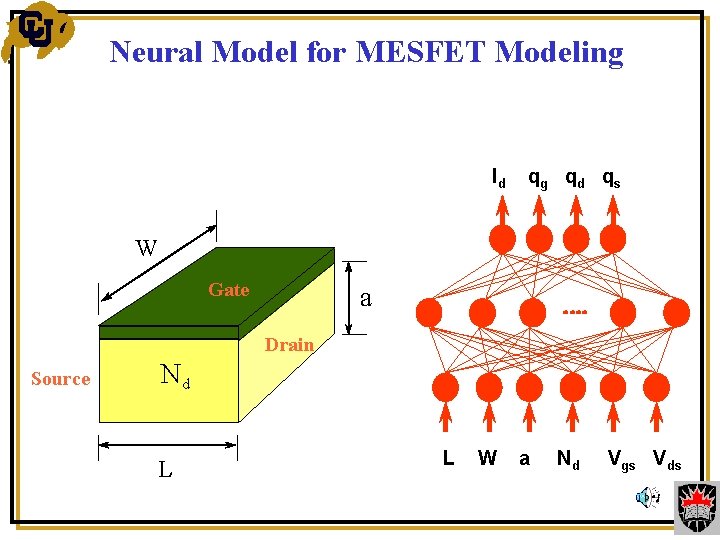

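Neural Model for MESFET Modeling (figure: MESFET with gate, source and drain, gate length L, gate width W, channel thickness a and doping density Nd; the neural model takes L, W, a, Nd, Vgs and Vds as inputs and produces the drain current Id and charges qg, qd and qs as outputs)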

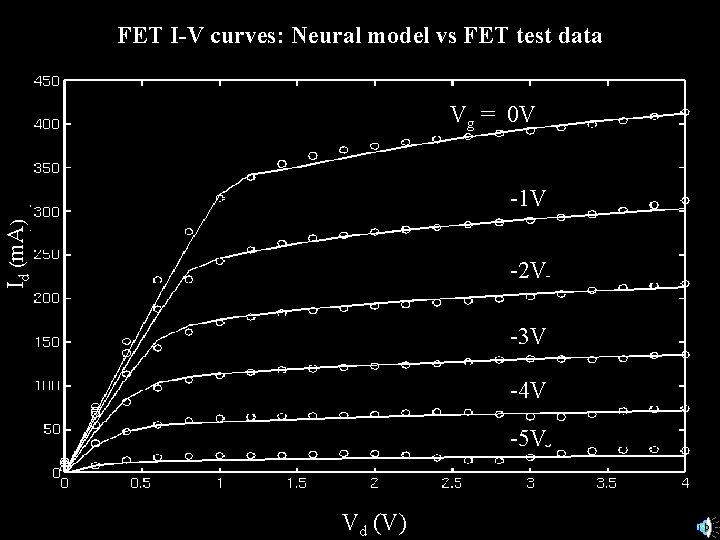

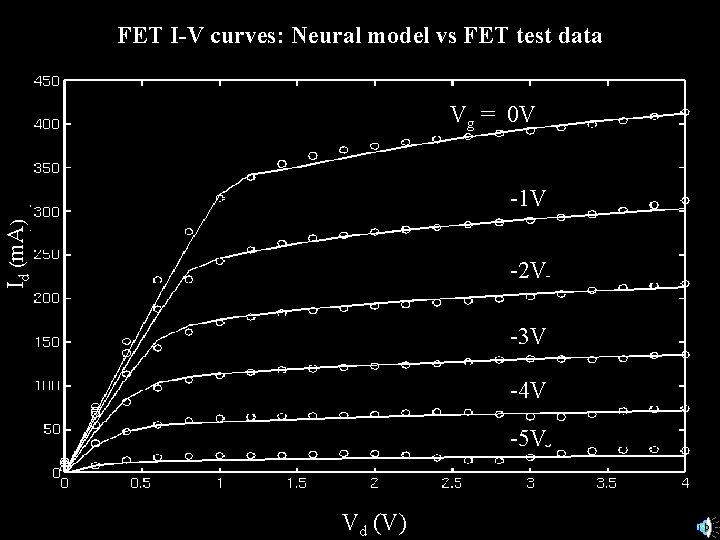

FET I-V curves: Neural model vs FET test data (figure: Id (mA) versus Vd (V) for Vg = 0, -1, -2, -3, -4 and -5 V)

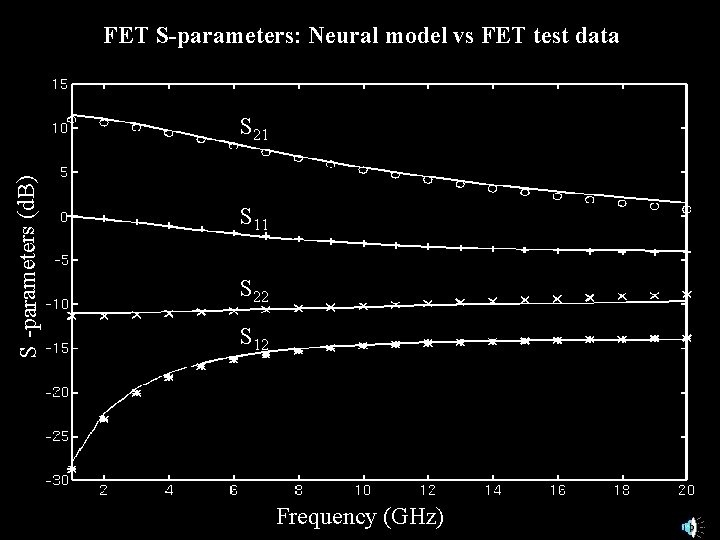

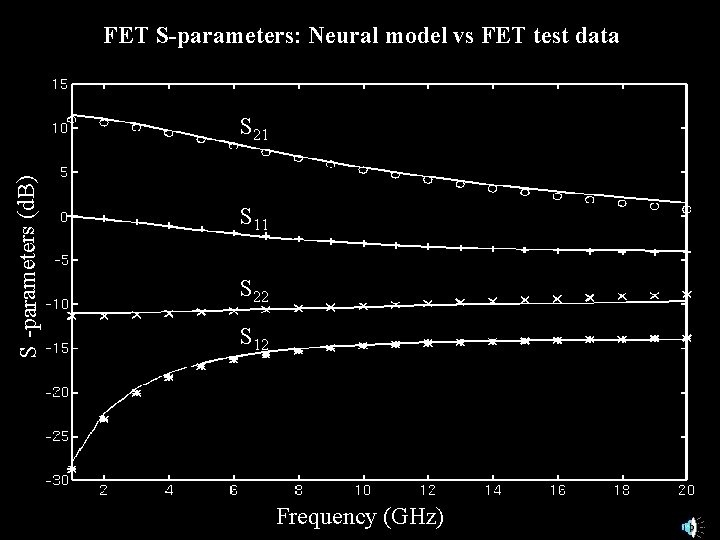

FET S-parameters: Neural model vs FET test data (figure: S11, S12, S21 and S22 in dB versus frequency in GHz)

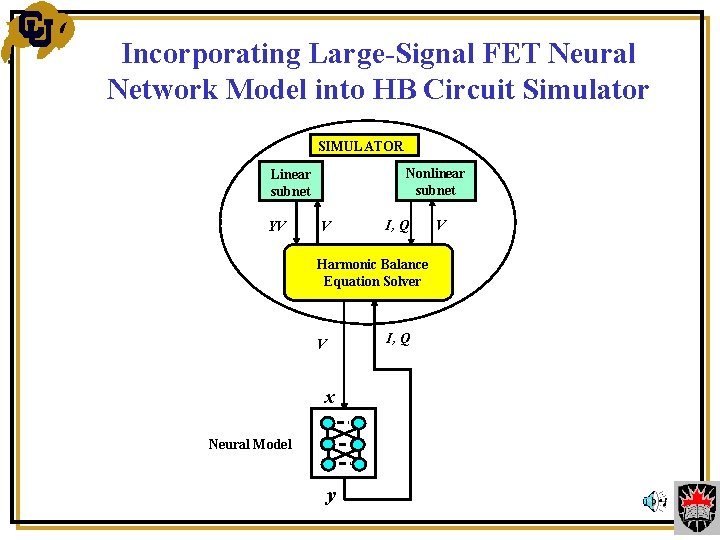

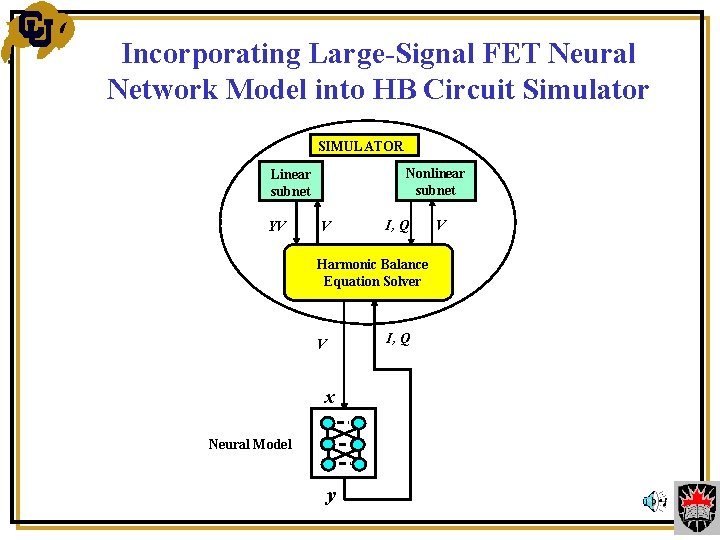

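Incorporating Large-Signal FET Neural Network Model into HB Circuit Simulator (figure: the harmonic balance equation solver exchanges voltages V with the linear subnet, which returns YV, and with the nonlinear subnet, where the neural model maps inputs x (voltages V) to outputs y (currents I and charges Q))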

Example: Yield Optimization of a 3-Stage MMIC Amplifier Using Neural Network Models

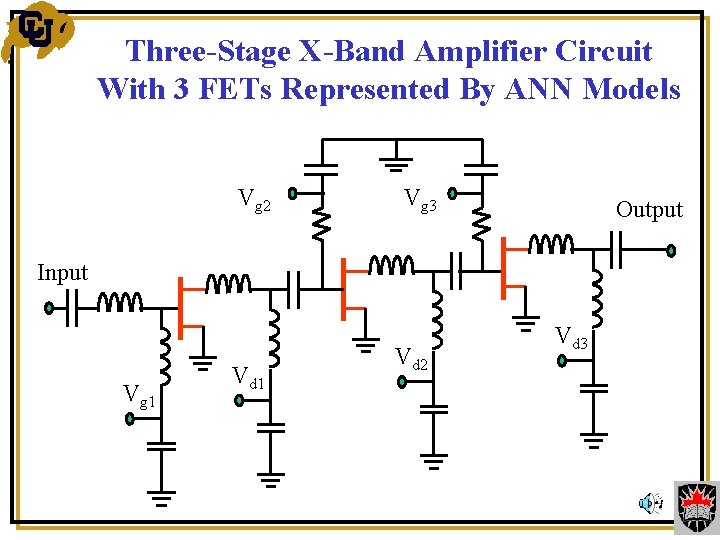

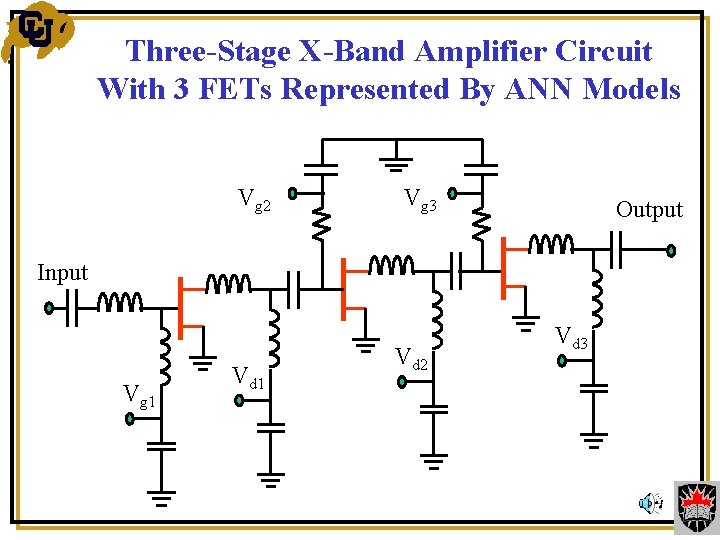

Three-Stage X-Band Amplifier Circuit with 3 FETs Represented by ANN Models (figure: input and output ports, gate biases Vg1, Vg2, Vg3 and drain biases Vd2, Vd3)

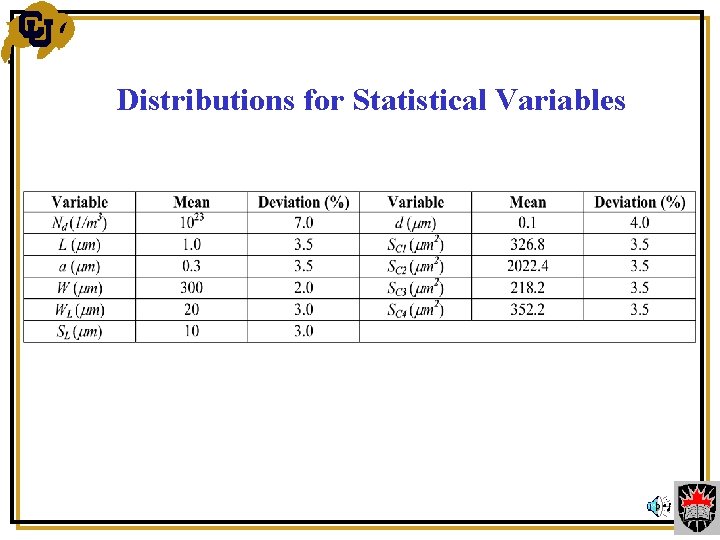

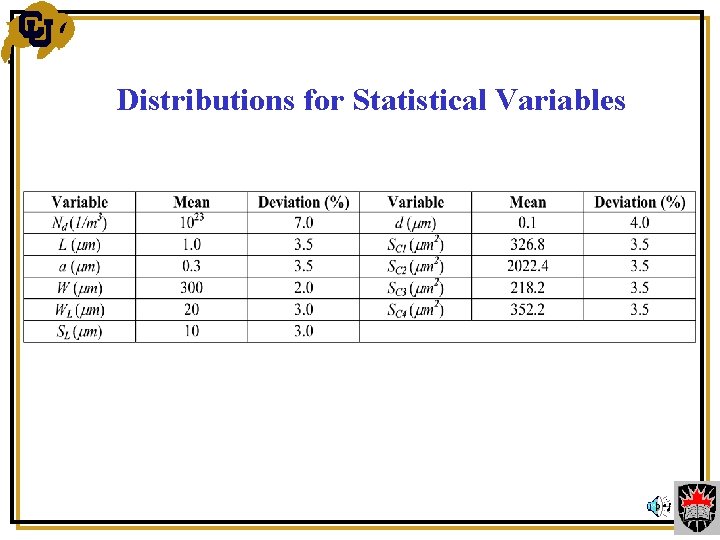

Distributions for Statistical Variables

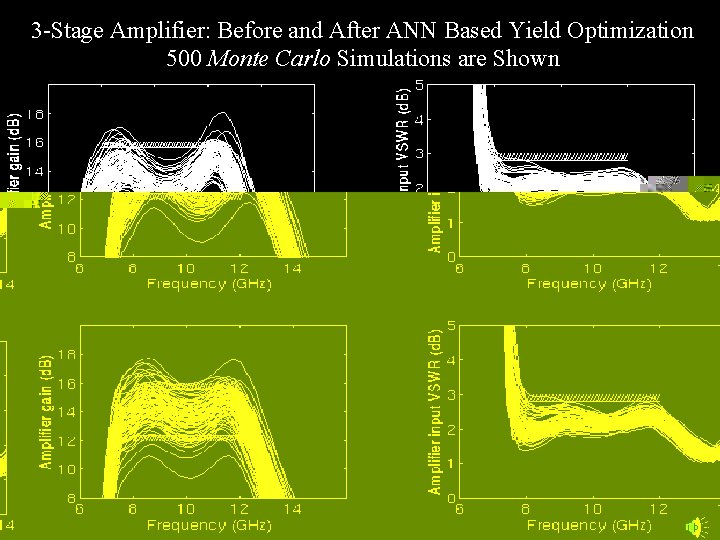

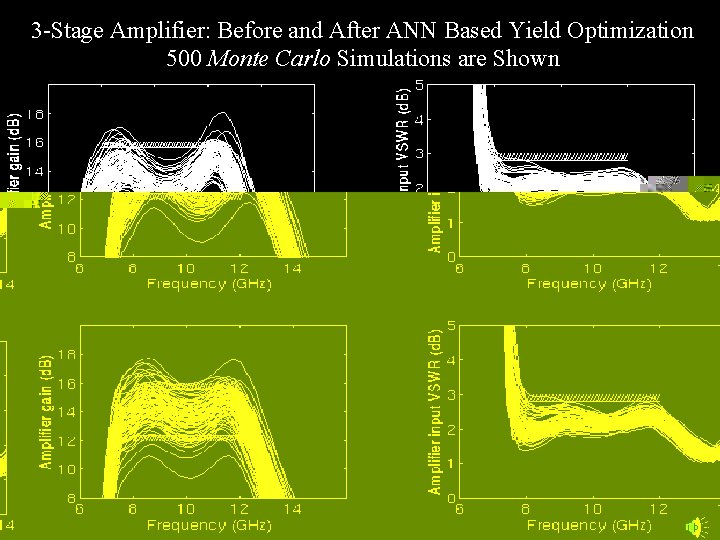

3-Stage Amplifier: Before and After ANN-Based Yield Optimization (500 Monte Carlo simulations shown)
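Yield is commonly estimated as the fraction of Monte Carlo samples that meet the specifications. The sketch below uses 500 samples, as on the slide, but the circuit response `gain_db`, the Gaussian spreads, the nominal values and the 19 dB specification are all hypothetical placeholders; in the actual example, the circuit responses would come from simulating the amplifier with its ANN FET models.

```python
import random
from typing import Callable, Sequence

def estimate_yield(nominal: Sequence[float], sigmas: Sequence[float],
                   meets_spec: Callable[[Sequence[float]], bool],
                   n_samples: int = 500, seed: int = 1) -> float:
    """Monte Carlo yield: fraction of randomly perturbed designs that meet the spec.
    Each statistical variable is assumed Gaussian around its nominal value."""
    rng = random.Random(seed)
    passed = 0
    for _ in range(n_samples):
        sample = [rng.gauss(mu, s) for mu, s in zip(nominal, sigmas)]
        if meets_spec(sample):
            passed += 1
    return passed / n_samples

def gain_db(p: Sequence[float]) -> float:
    # Hypothetical stand-in for a simulated circuit response: peak gain 20 dB at p = (1, 1)
    return 20.0 - 50.0 * (p[0] - 1.0) ** 2 - 30.0 * (p[1] - 1.0) ** 2

def spec(p: Sequence[float]) -> bool:
    return gain_db(p) >= 19.0   # hypothetical specification

sigmas = [0.05, 0.05]
before = estimate_yield([1.10, 0.95], sigmas, spec)   # off-center nominal design
after = estimate_yield([1.00, 1.00], sigmas, spec)    # re-centered nominal design
print(f"yield before: {before:.1%}, after: {after:.1%}")
```

Yield optimization then amounts to adjusting the nominal design values to maximize this estimate, which is only practical when each circuit evaluation is fast.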

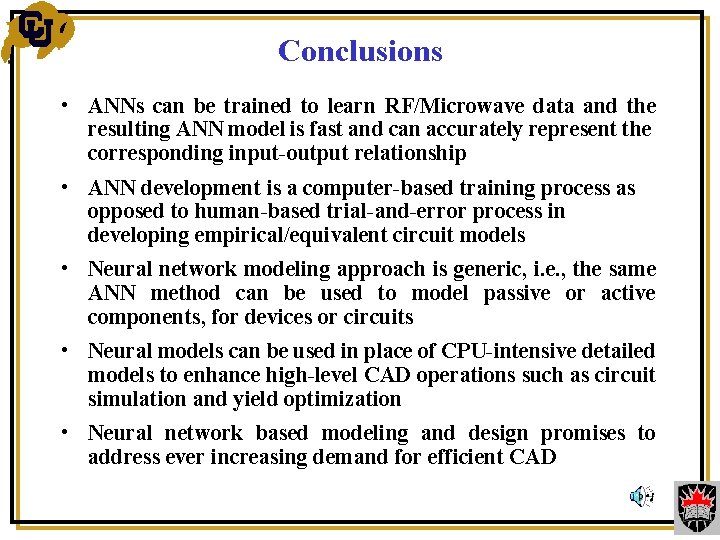

Conclusions
• ANNs can be trained to learn RF/microwave data, and the resulting ANN model is fast and can accurately represent the corresponding input-output relationship
• ANN development is a computer-based training process, as opposed to the human-based trial-and-error process used in developing empirical/equivalent circuit models
• The neural network modeling approach is generic, i.e., the same ANN method can be used to model passive or active components, for devices or circuits
• Neural models can be used in place of CPU-intensive detailed models to enhance high-level CAD operations such as circuit simulation and yield optimization
• Neural network based modeling and design promises to address the ever-increasing demand for efficient CAD

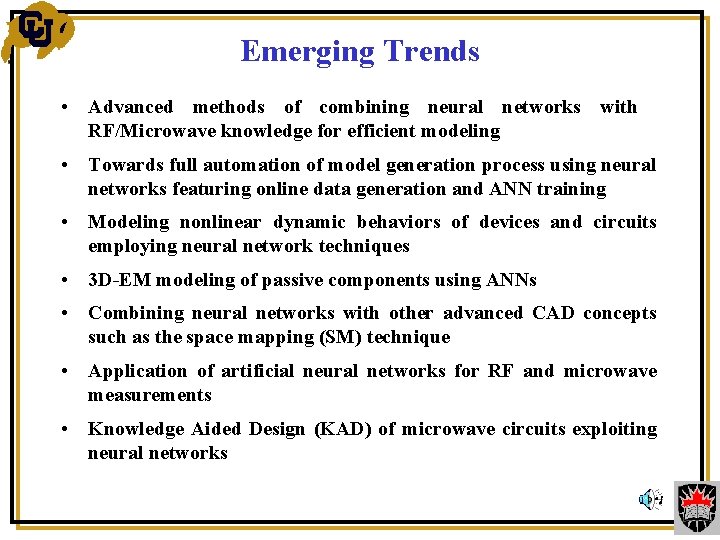

Emerging Trends
• Advanced methods of combining neural networks with RF/microwave knowledge for efficient modeling
• Towards full automation of the model generation process using neural networks, featuring online data generation and ANN training
• Modeling nonlinear dynamic behaviors of devices and circuits employing neural network techniques
• 3D-EM modeling of passive components using ANNs
• Combining neural networks with other advanced CAD concepts such as the space mapping (SM) technique
• Application of artificial neural networks to RF and microwave measurements
• Knowledge Aided Design (KAD) of microwave circuits exploiting neural networks

NeuroModeler
NeuroModeler is a software tool for developing neural network models of passive and active components/circuits for high-frequency circuit design. A Web demonstration version lets you train an MLP neural model, test it with test data, and see the neural model reproduce the input-output relationship it has learned.

Basic Steps of Running NeuroModeler
The demonstration version is self-explanatory; the basic steps are:
• Press the "New Neural Model" button to define the neural model structure and the number of input, output and hidden neurons
• Press the "Train Neural Model" button to train the neural model
• Press the "Test Neural Model" button to test the quality of the model
• Press the "Display Model Input-Output" button to see the input-output relationship reproduced by the neural model
