Deep learning: Recurrent Neural Networks, CV 201


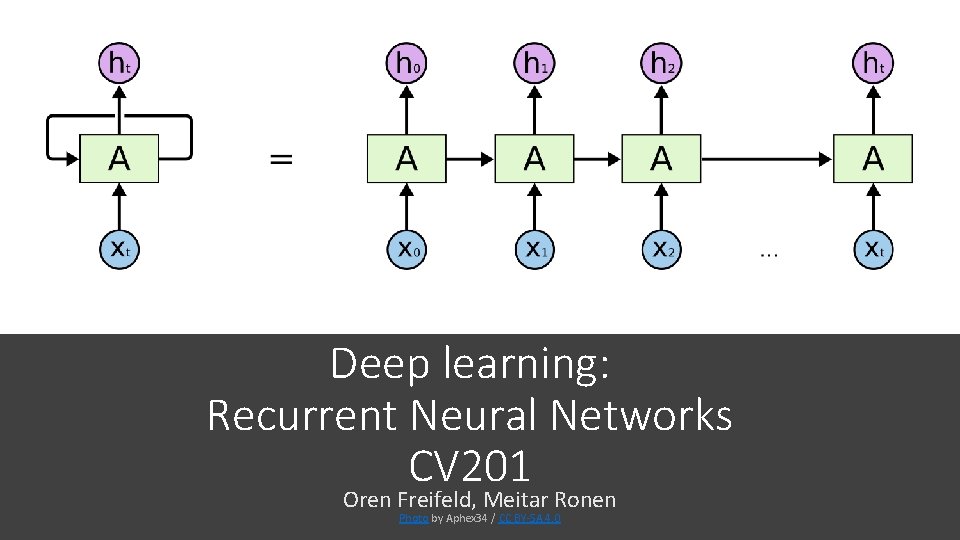

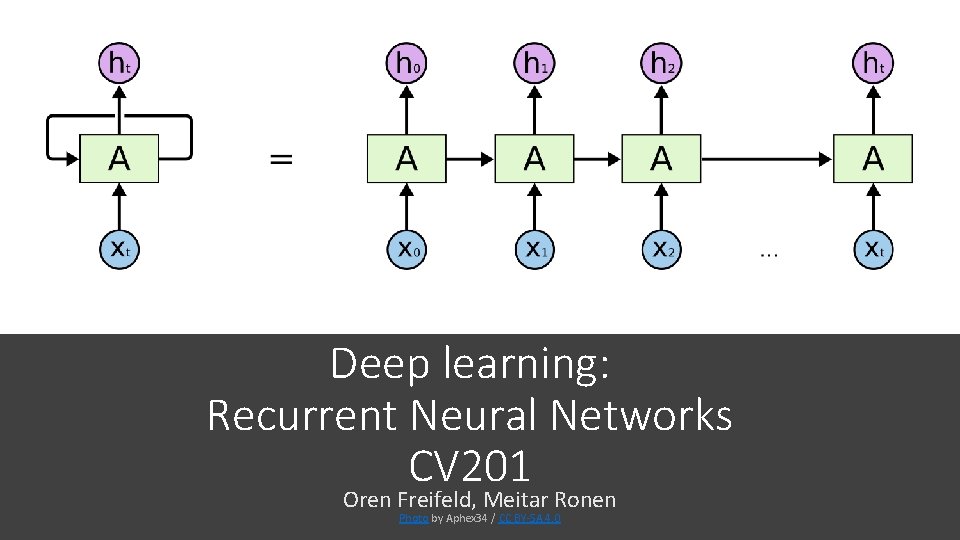

Deep learning: Recurrent Neural Networks
CV 201
Oren Freifeld, Meitar Ronen
Photo by Aphex34 / CC BY-SA 4.0

Contents
• CNN limitations
• Recurrent Neural Networks
• Backpropagation through RNN
• LSTM

Sequential data
• Text
• Video
• Audio
• Students’ activities
• Medical sensors
• Financial data
• …

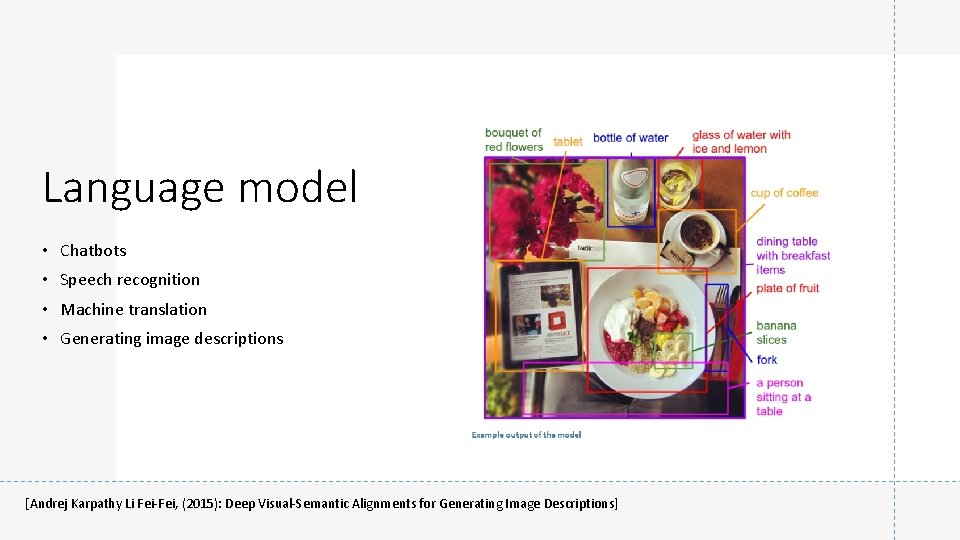

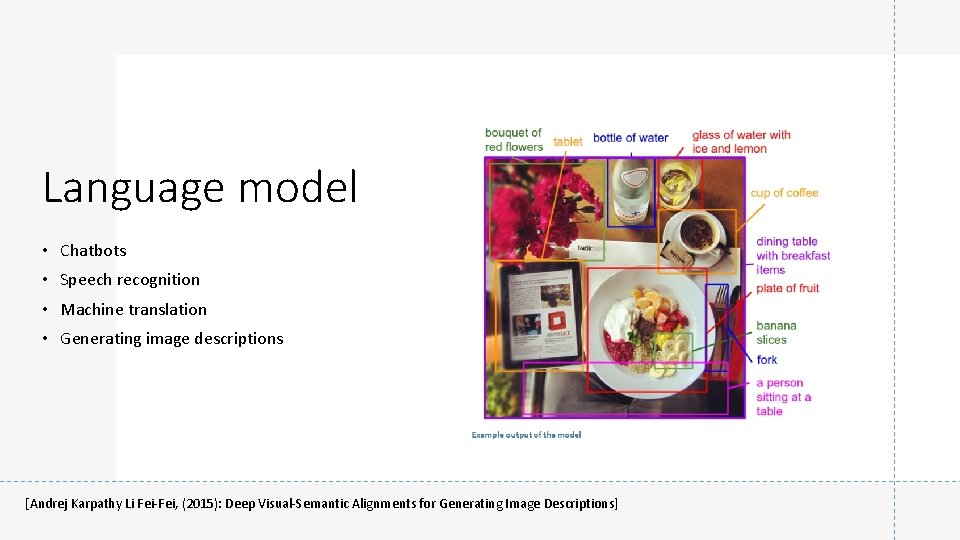

Language model
• Chatbots
• Speech recognition
• Machine translation
• Generating image descriptions
[Karpathy, A., & Fei-Fei, L. (2015). Deep Visual-Semantic Alignments for Generating Image Descriptions]

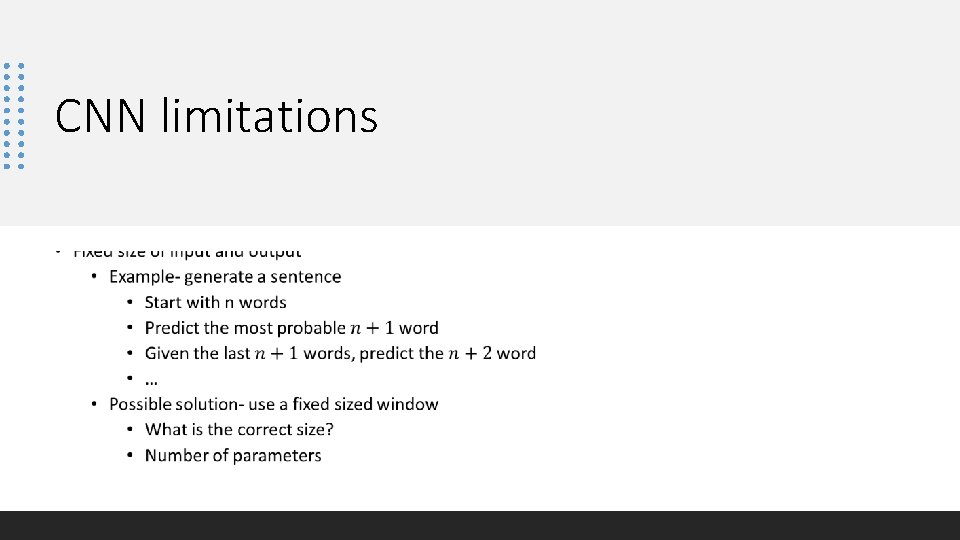

CNN limitations

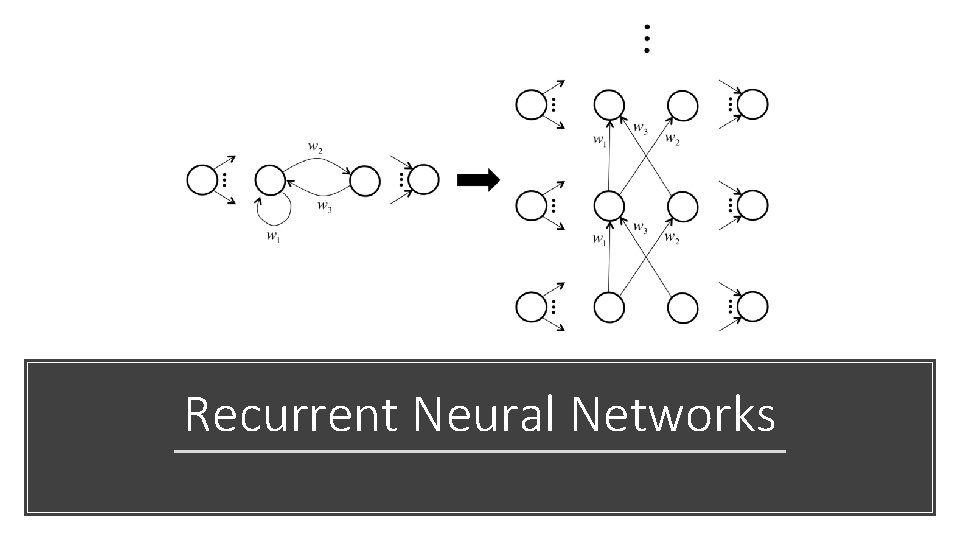

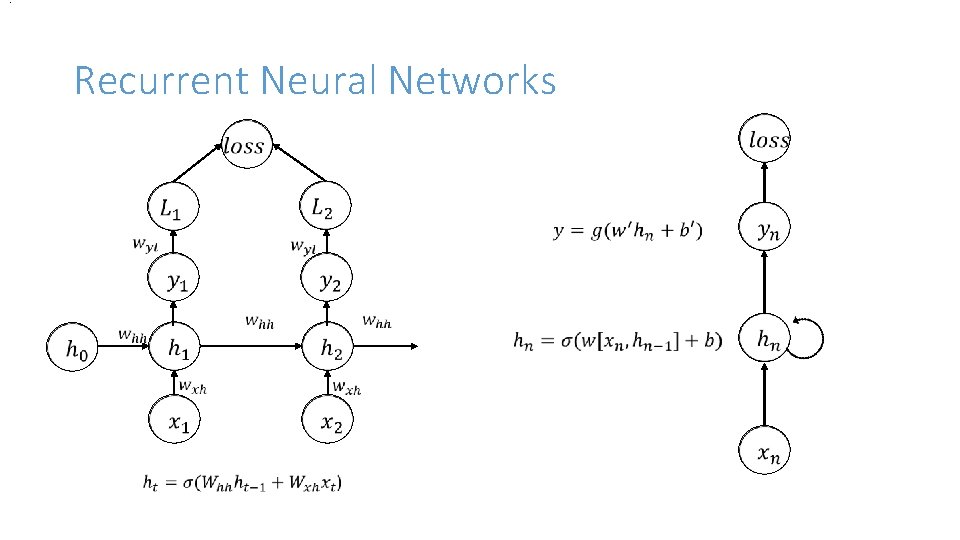

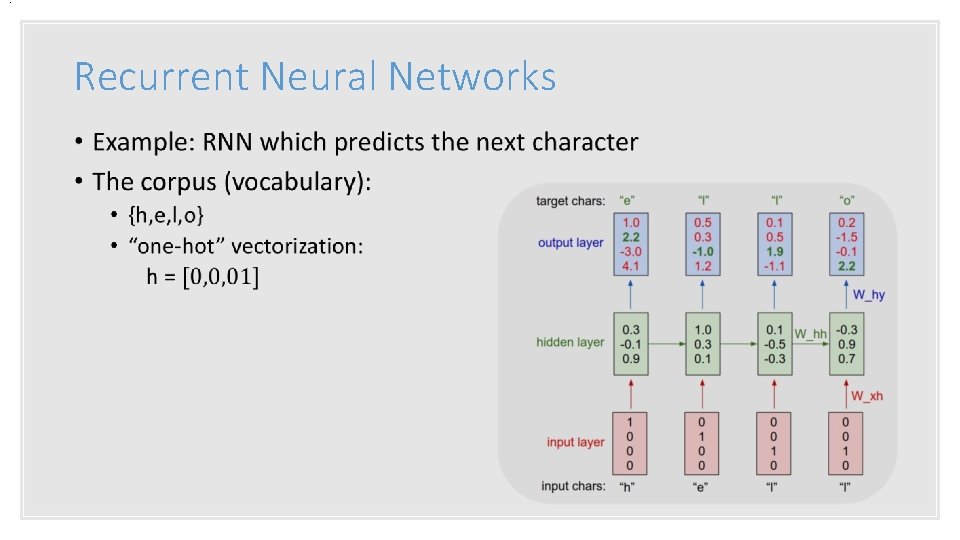

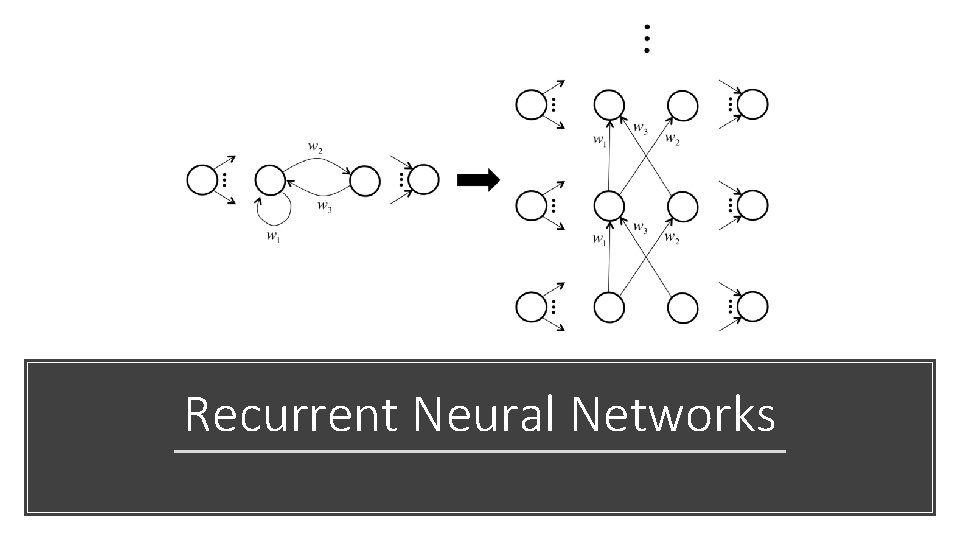

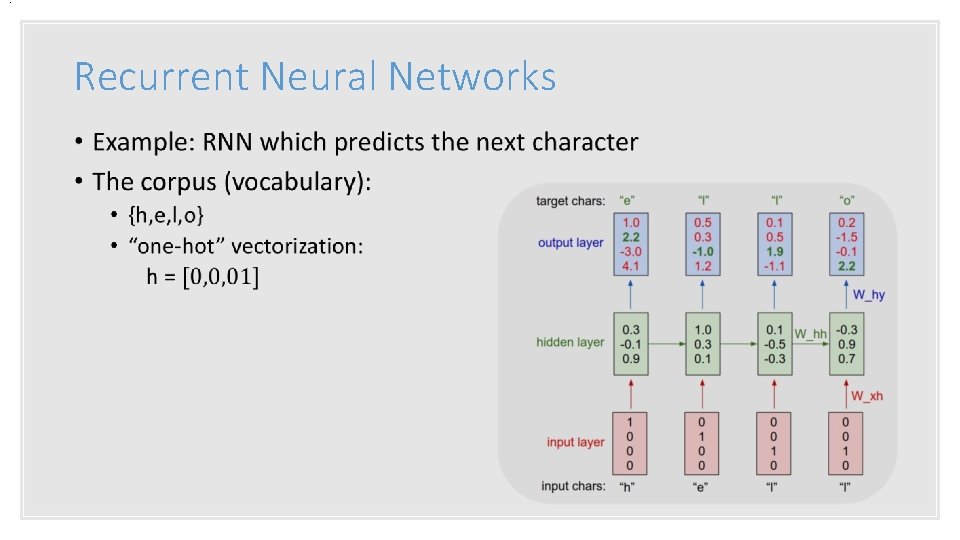

Recurrent Neural Networks

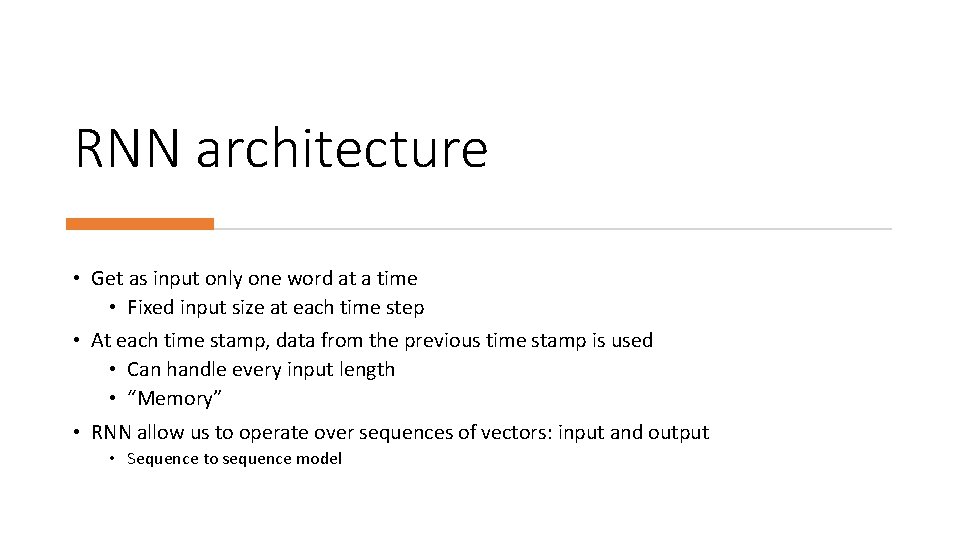

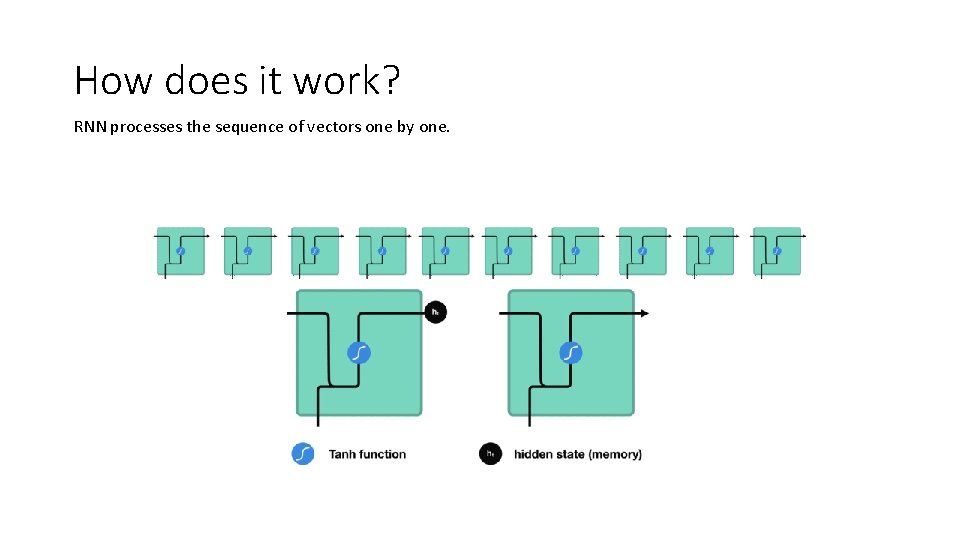

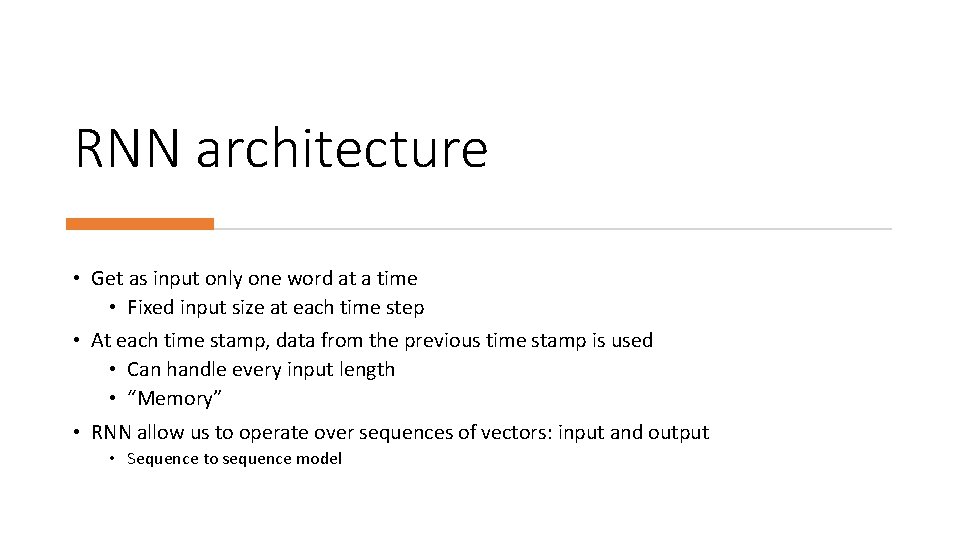

RNN architecture
• Receives only one word as input at a time
• Fixed input size at each time step
• At each time step, data from the previous time step is used
• Can handle inputs of any length
• “Memory”
• RNNs allow us to operate over sequences of vectors, both as input and as output
• Sequence-to-sequence model

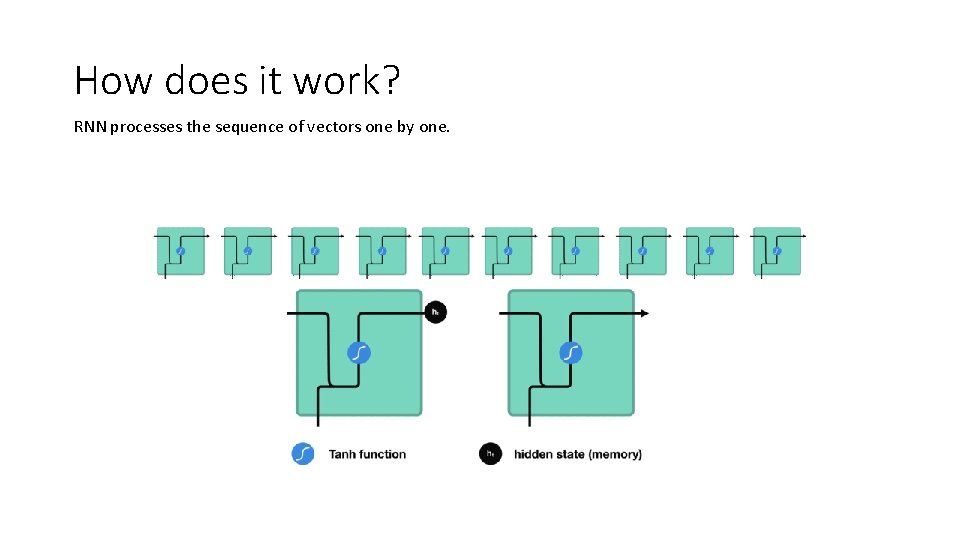

How does it work? RNN processes the sequence of vectors one by one.

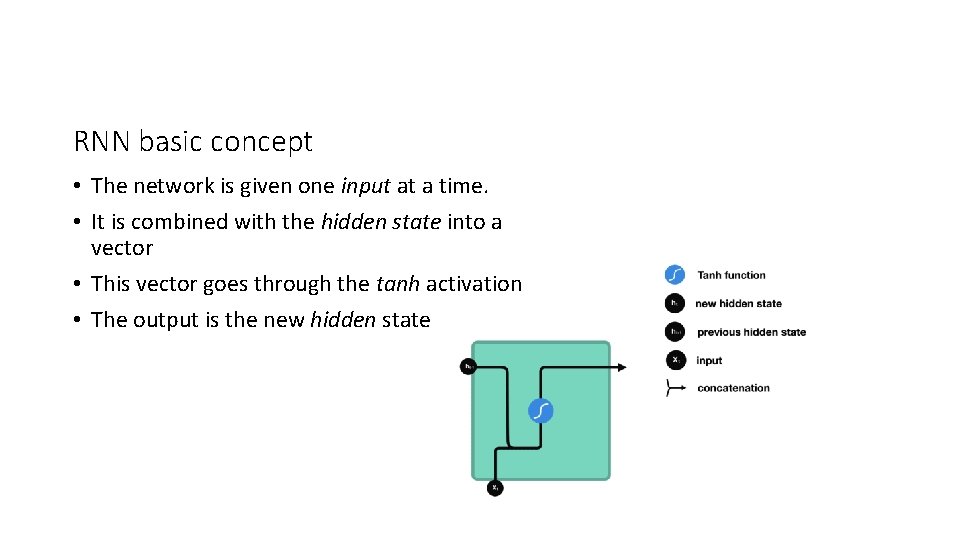

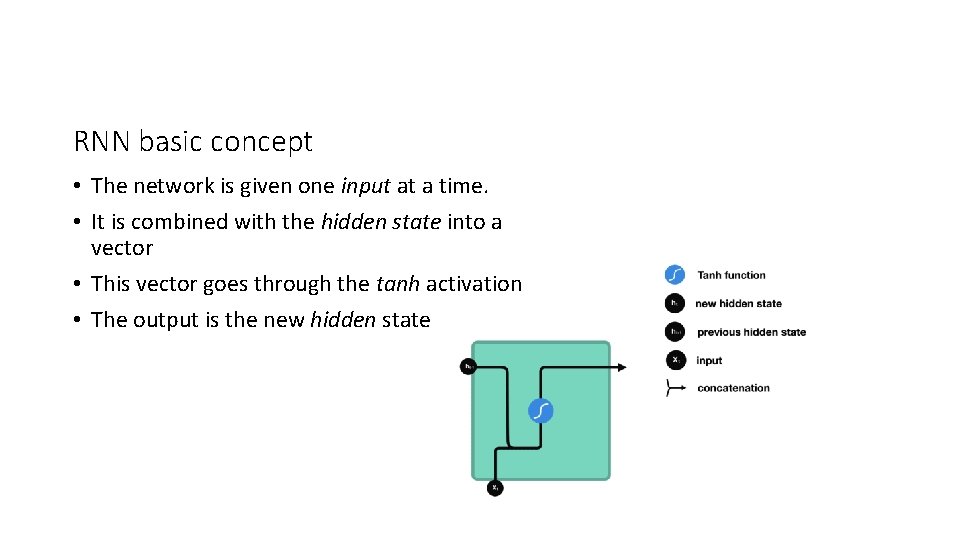

RNN basic concept
• The network is given one input at a time.
• The input is combined with the previous hidden state into a single vector.
• This vector goes through the tanh activation.
• The output is the new hidden state.

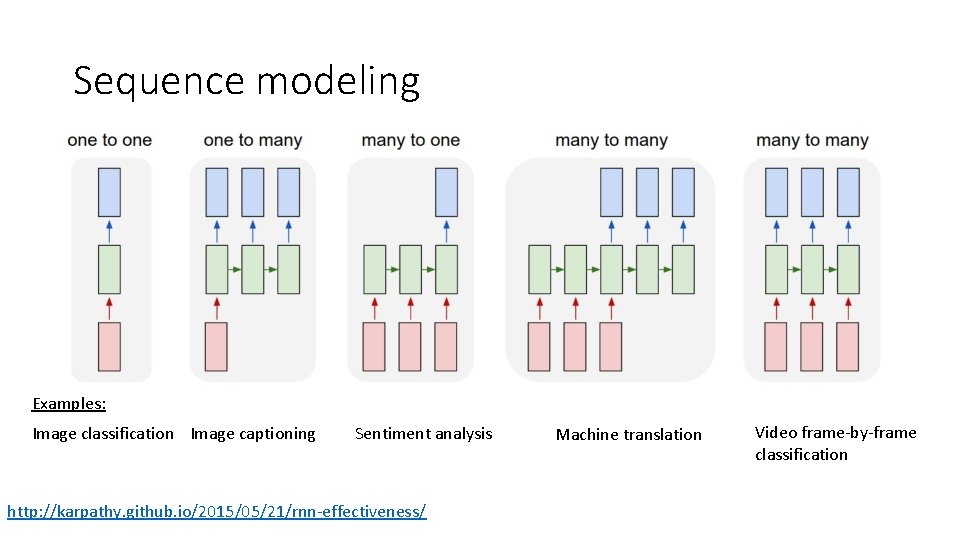

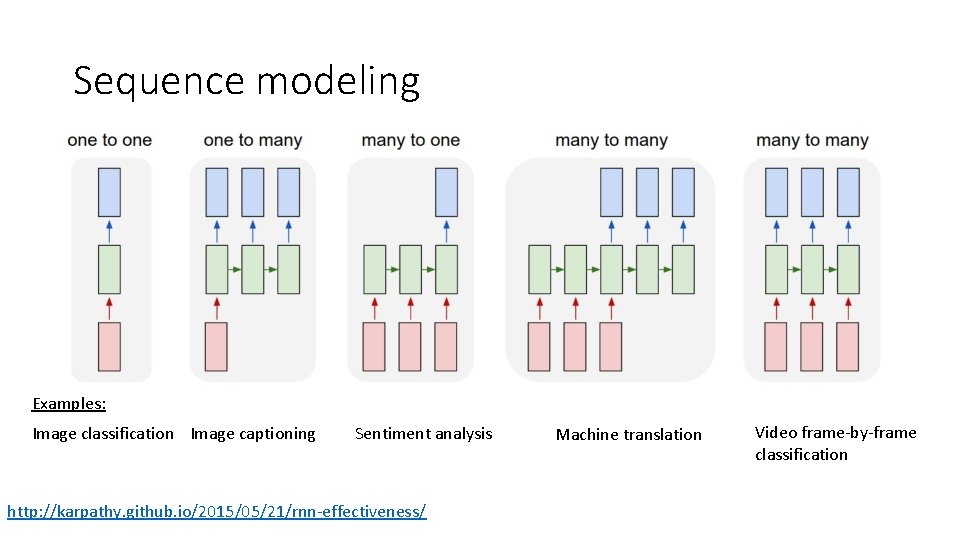
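The four steps of the basic concept can be sketched in NumPy. This is a minimal illustration, not the course's implementation: the names `rnn_step`, `rnn_forward`, `W`, and `b`, the affine map applied before tanh, and all sizes are assumptions made for the example.

```python
import numpy as np

def rnn_step(x_t, h_prev, W, b):
    """One vanilla RNN step (assumed form): concatenate, affine map, tanh."""
    combined = np.concatenate([h_prev, x_t])  # hidden state and input as one vector
    return np.tanh(W @ combined + b)          # the output is the new hidden state

def rnn_forward(xs, h0, W, b):
    """Apply the same step, with the same weights, to each input in turn."""
    h = h0
    states = []
    for x_t in xs:                            # one input at a time
        h = rnn_step(x_t, h, W, b)
        states.append(h)
    return np.stack(states)

# Illustrative sizes only.
rng = np.random.default_rng(0)
input_size, hidden_size, seq_len = 3, 4, 5
W = rng.normal(scale=0.5, size=(hidden_size, hidden_size + input_size))
b = np.zeros(hidden_size)
xs = rng.normal(size=(seq_len, input_size))

states = rnn_forward(xs, np.zeros(hidden_size), W, b)
print(states.shape)  # (5, 4): one hidden state per time step
```

Because the loop reuses `W` and `b` at every step, the same function handles a sequence of any length.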

Sequence modeling
Examples:
• Image classification
• Image captioning
• Sentiment analysis
• Machine translation
• Video frame-by-frame classification
http://karpathy.github.io/2015/05/21/rnn-effectiveness/

DRAW: A recurrent neural network for image generation
[Gregor et al. (2015). DRAW: A recurrent neural network for image generation]

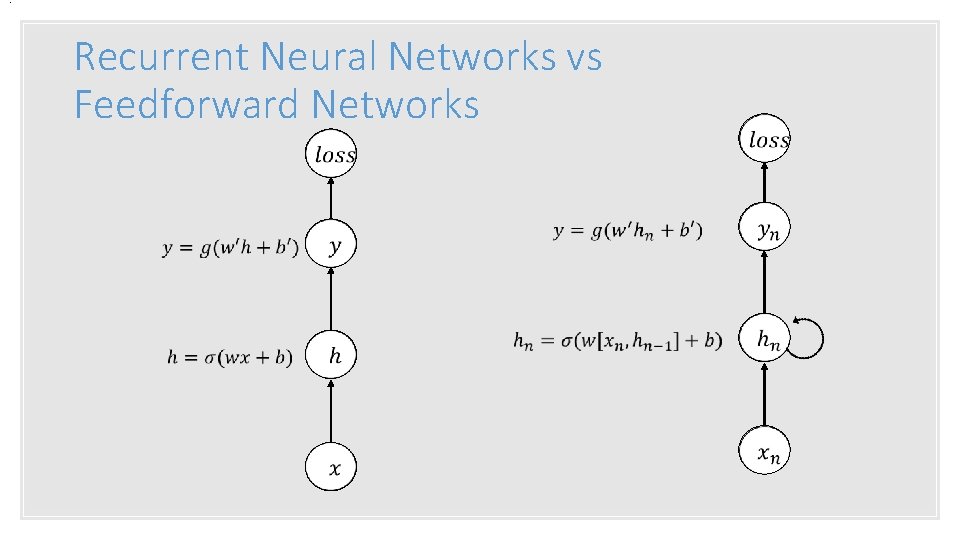

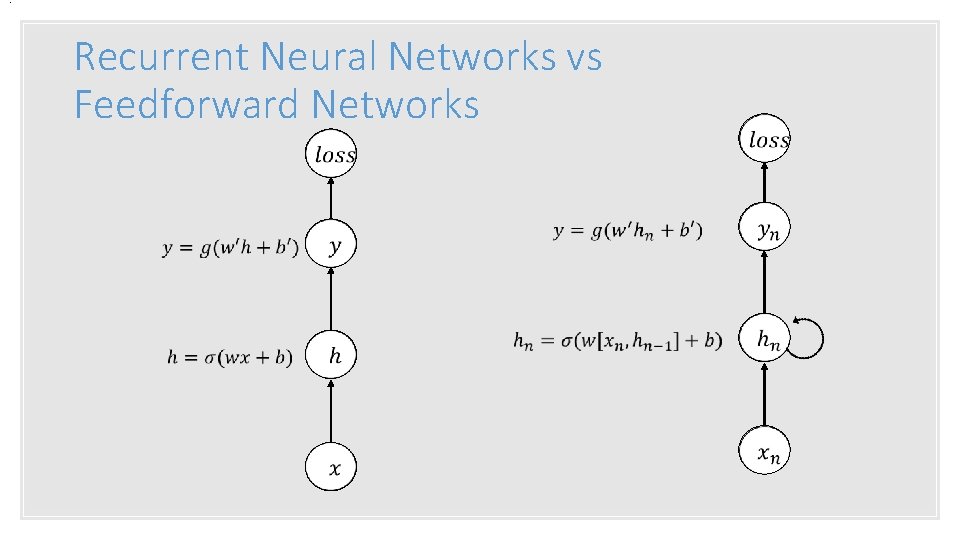

Recurrent Neural Networks vs Feedforward Networks

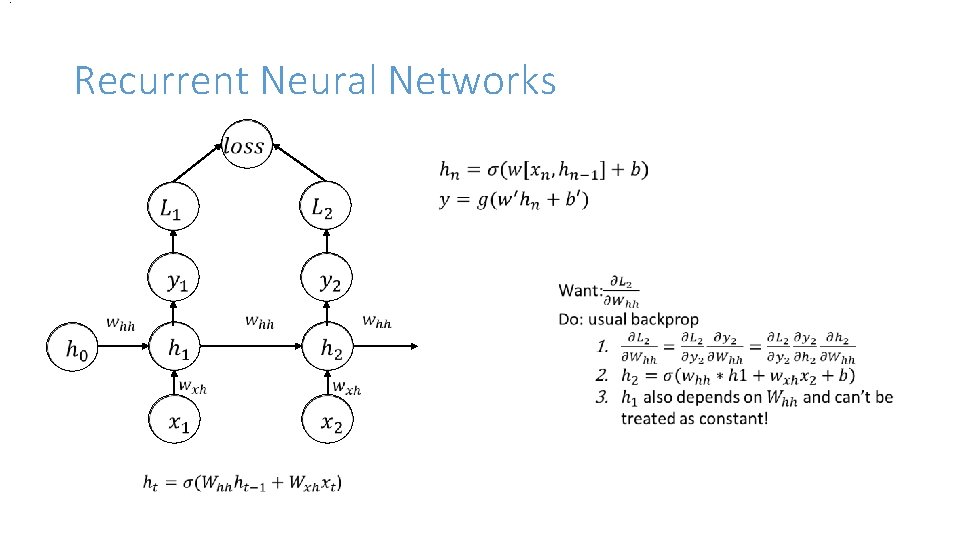

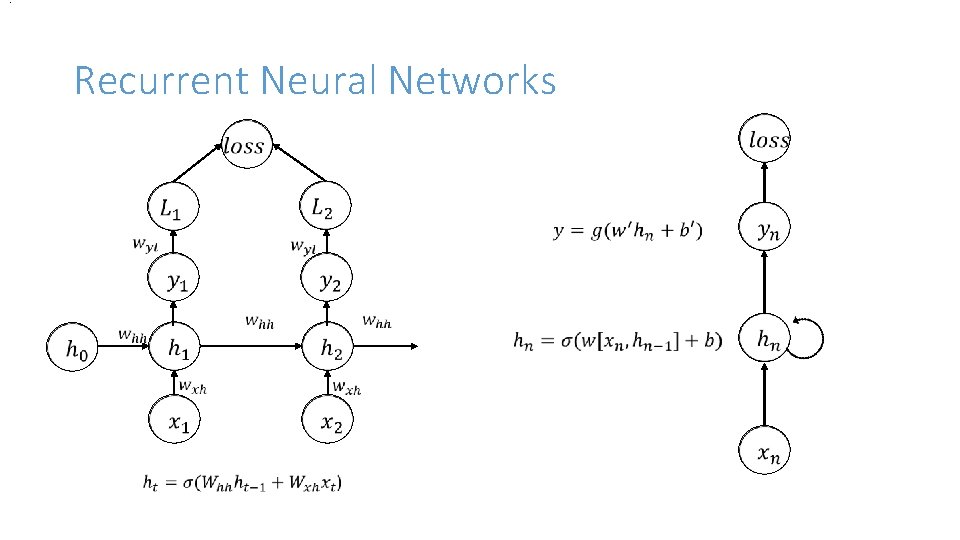

Recurrent Neural Networks

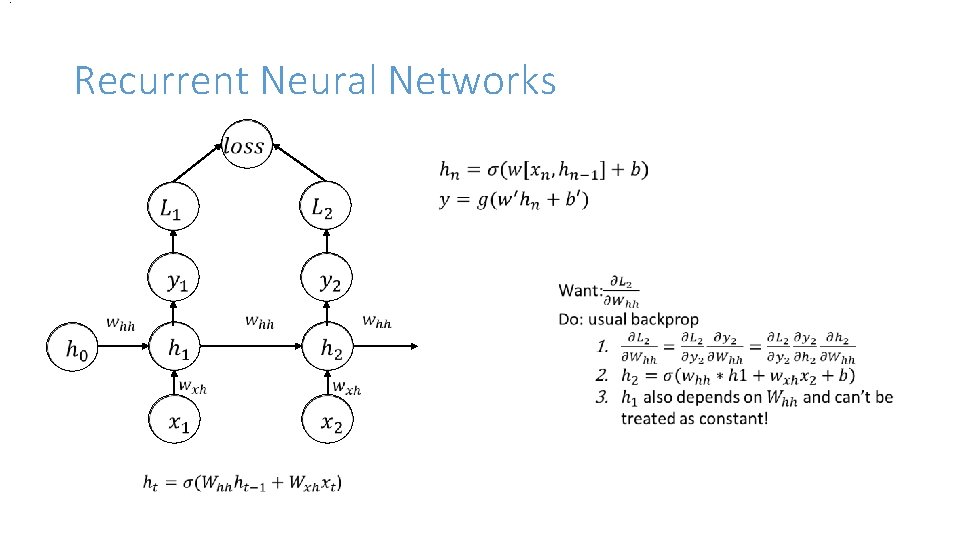

Recurrent Neural Networks

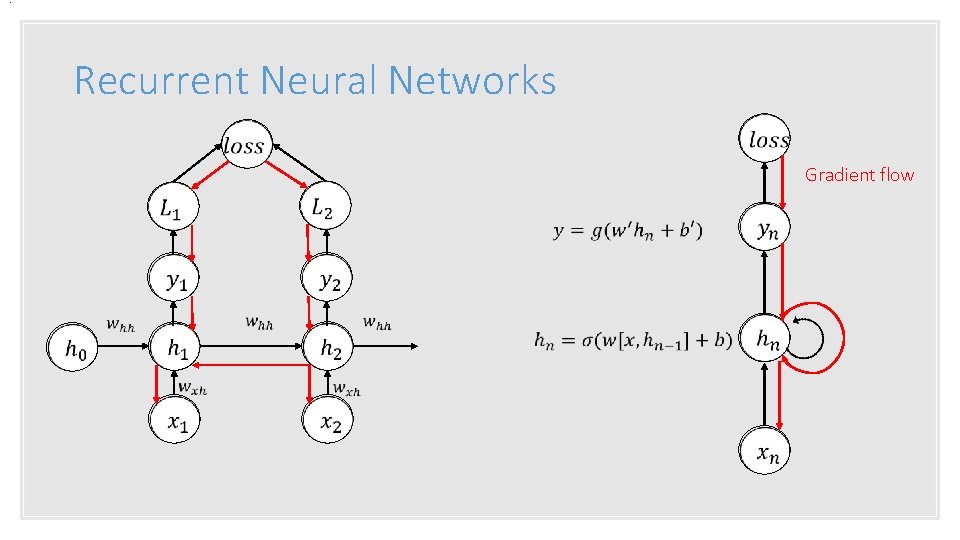

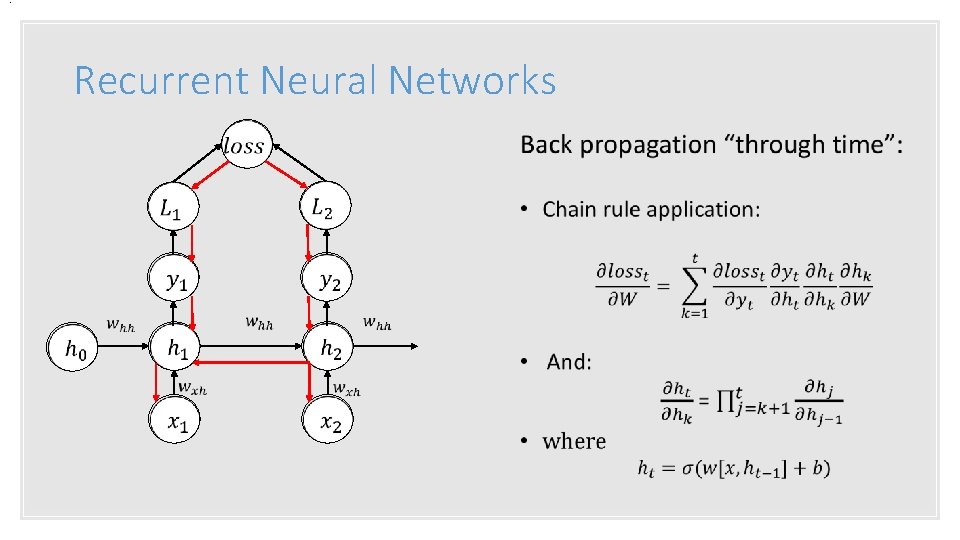

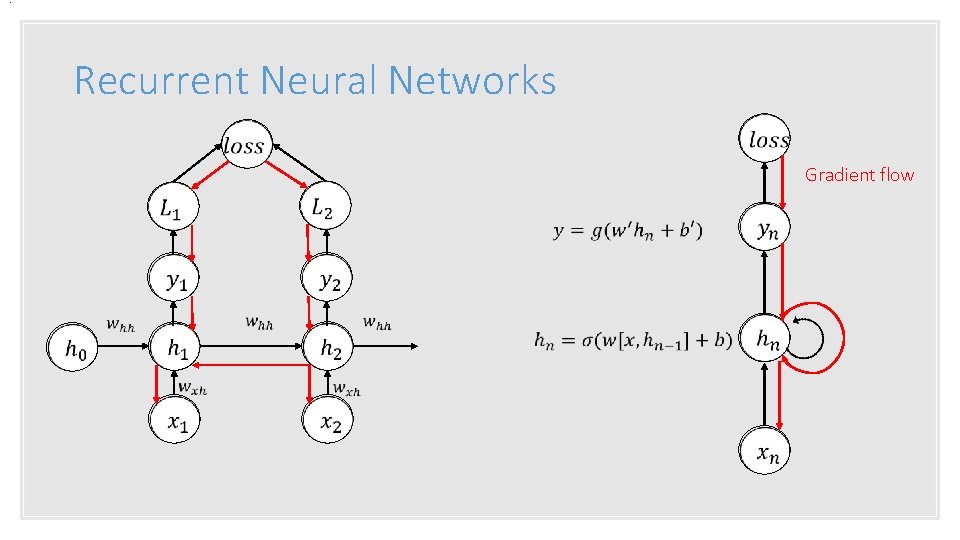

Recurrent Neural Networks: Gradient flow

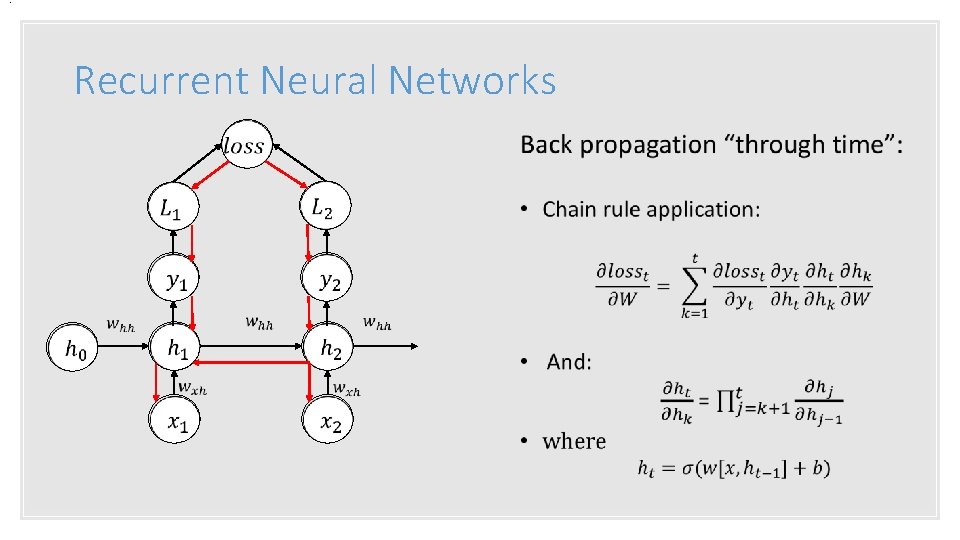

Recurrent Neural Networks

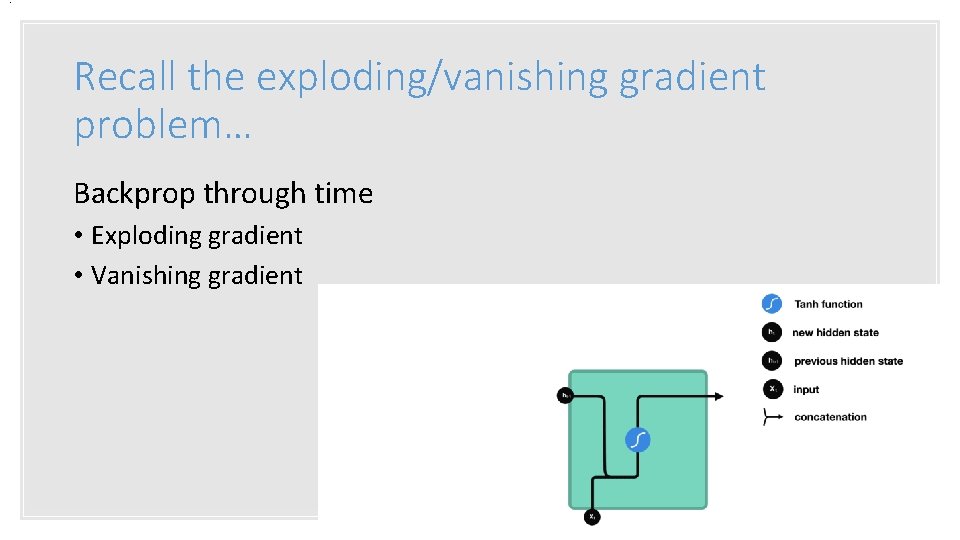

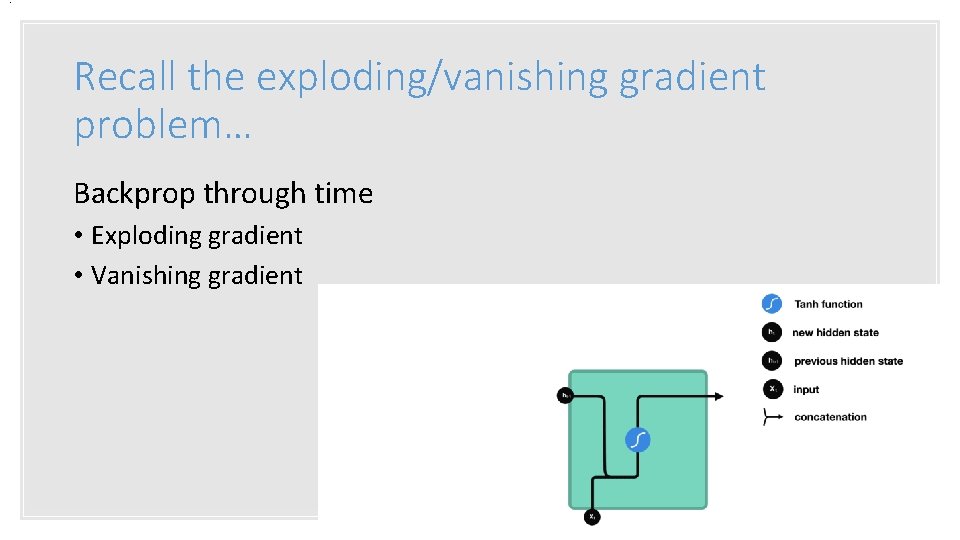

Recall the exploding/vanishing gradient problem…
Backprop through time
• Exploding gradient
• Vanishing gradient

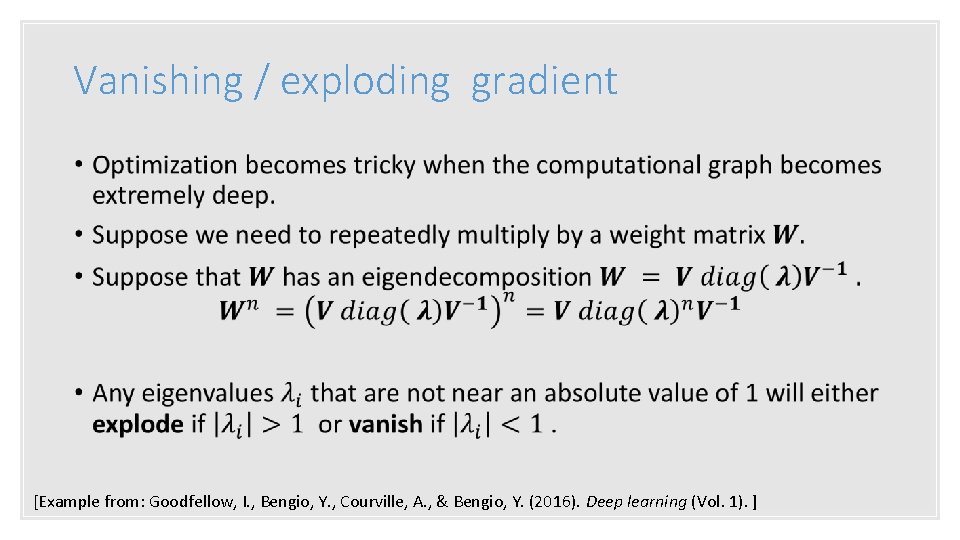

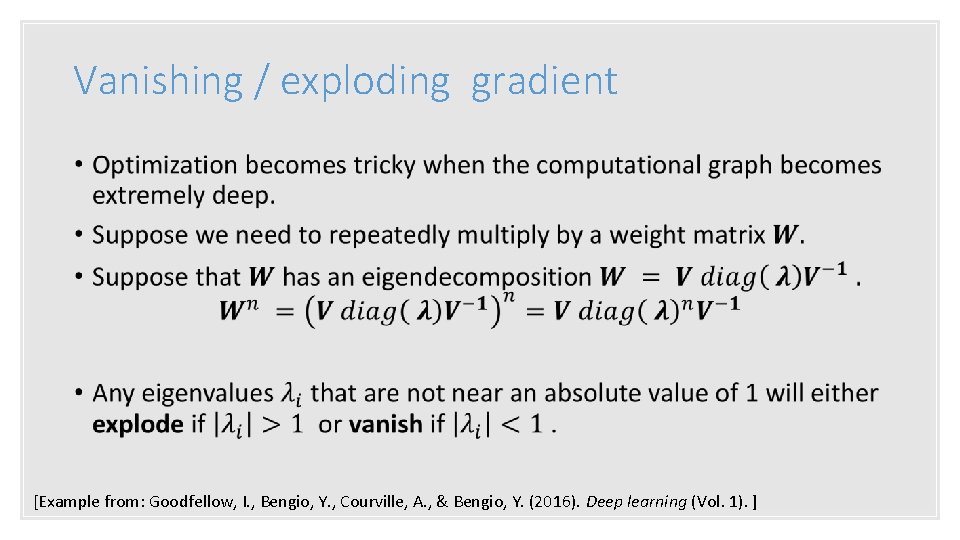

Vanishing / exploding gradient
[Example from: Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep Learning]

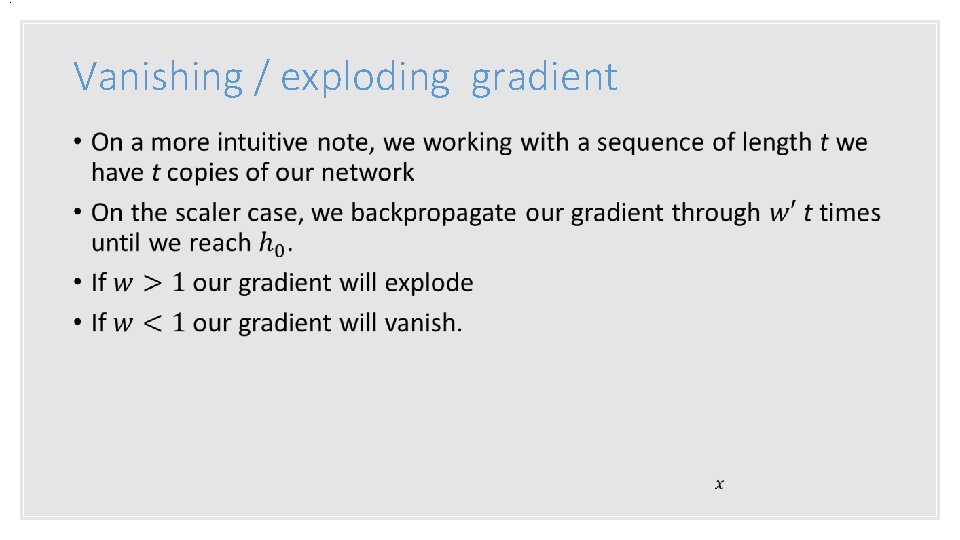

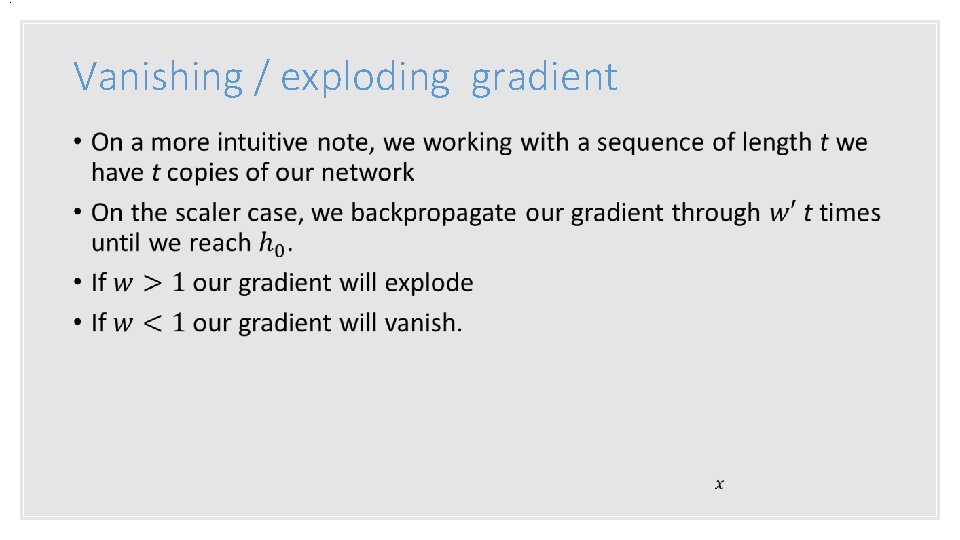
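The worked example on this slide did not survive extraction. As a stand-in, the sketch below assumes the usual simplified setting: a linear recurrence h_t = W h_{t-1} with a symmetric W, so that repeated application scales each eigen-direction by its eigenvalue raised to the number of steps. The function names and the eigenvalues 1.1 and 0.9 are chosen for illustration only.

```python
import numpy as np

def build_symmetric(eigenvalues, seed=0):
    """Build W = Q diag(eigenvalues) Q^T with a random orthogonal Q."""
    rng = np.random.default_rng(seed)
    n = len(eigenvalues)
    Q, _ = np.linalg.qr(rng.normal(size=(n, n)))
    return Q @ np.diag(eigenvalues) @ Q.T, Q

def component_sizes(W, Q, h0, steps):
    """Apply h <- W h repeatedly; return |coordinates| of h in the eigenbasis."""
    h = h0.copy()
    for _ in range(steps):
        h = W @ h
    return np.abs(Q.T @ h)

eigenvalues = np.array([1.1, 0.9])   # one slightly above 1, one slightly below
W, Q = build_symmetric(eigenvalues)
h0 = Q @ np.ones(2)                  # equal weight on both eigenvectors
after = component_sizes(W, Q, h0, steps=50)

print(after)  # first component grows like 1.1**50, second shrinks like 0.9**50
```

Even eigenvalues this close to 1 separate by several orders of magnitude after 50 steps, which is the mechanism behind both the exploding and the vanishing case.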


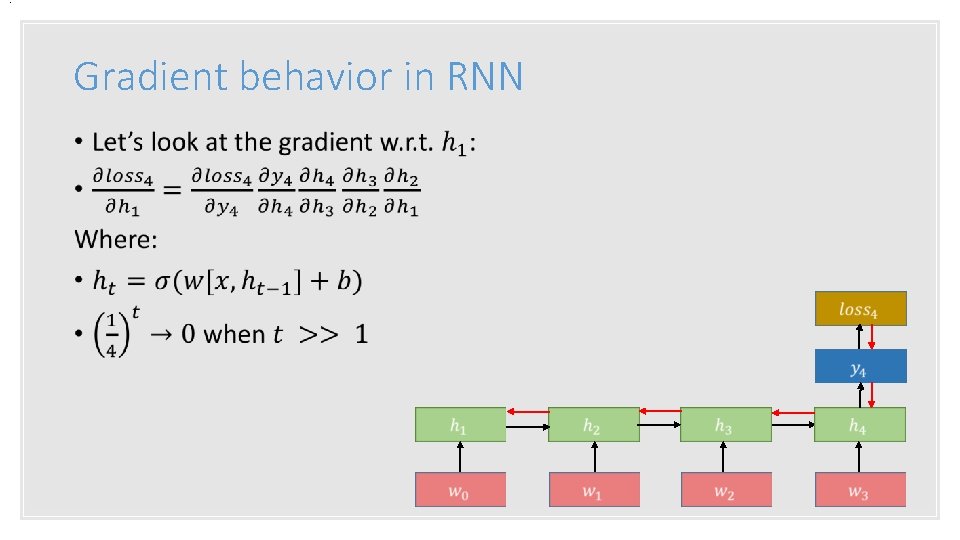

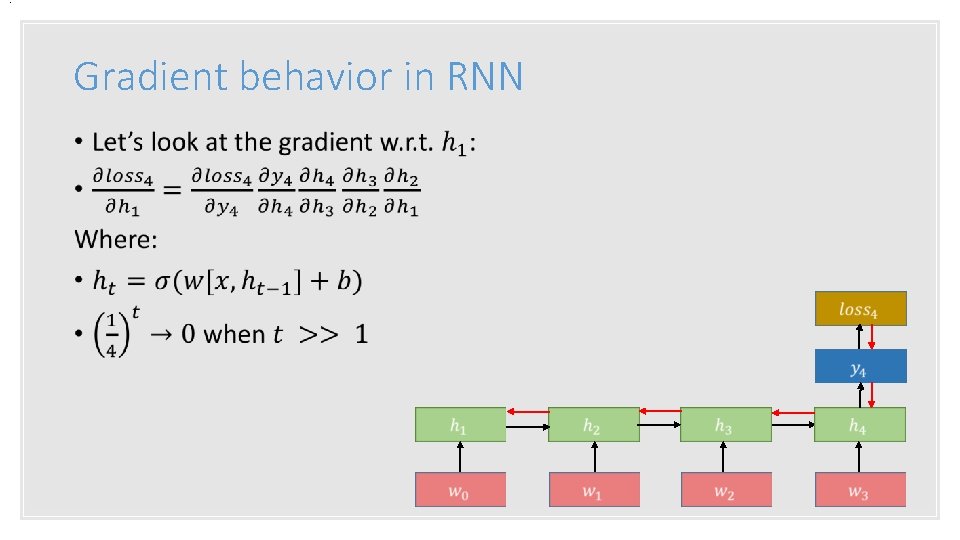

Gradient behavior in RNN

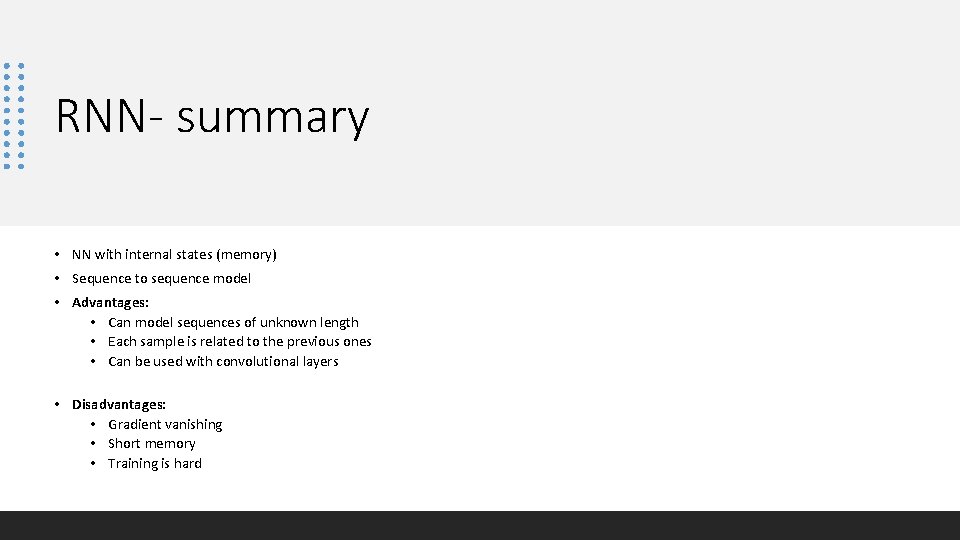
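The equations for this slide were lost in extraction. As a hedged sketch, assume a vanilla RNN with the update below; the symbols W_{hh}, W_{xh}, and b are notation introduced here, not taken from the slides. The gradient that flows from step T back to step k is a product of per-step Jacobians (later time steps on the left), and since the tanh derivative is at most 1, its norm is bounded by a power of the norm of W_{hh}.

```latex
\begin{align}
h_t &= \tanh\left(W_{hh} h_{t-1} + W_{xh} x_t + b\right) \\
\frac{\partial h_t}{\partial h_{t-1}} &= \operatorname{diag}\left(1 - h_t^{2}\right) W_{hh} \\
\frac{\partial h_T}{\partial h_k} &= \prod_{t=k+1}^{T} \operatorname{diag}\left(1 - h_t^{2}\right) W_{hh} \\
\left\lVert \frac{\partial h_T}{\partial h_k} \right\rVert_2 &\le \left\lVert W_{hh} \right\rVert_2^{\,T-k}
\end{align}
```

Under these assumptions, a spectral norm of W_{hh} below 1 forces the gradient to vanish exponentially in T - k. A norm above 1 permits, but does not guarantee, an exploding gradient.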

RNN summary
• NN with internal states (memory)
• Sequence-to-sequence model
• Advantages:
  • Can model sequences of unknown length
  • Each sample is related to the previous ones
  • Can be used with convolutional layers
• Disadvantages:
  • Vanishing gradient
  • Short memory
  • Training is hard

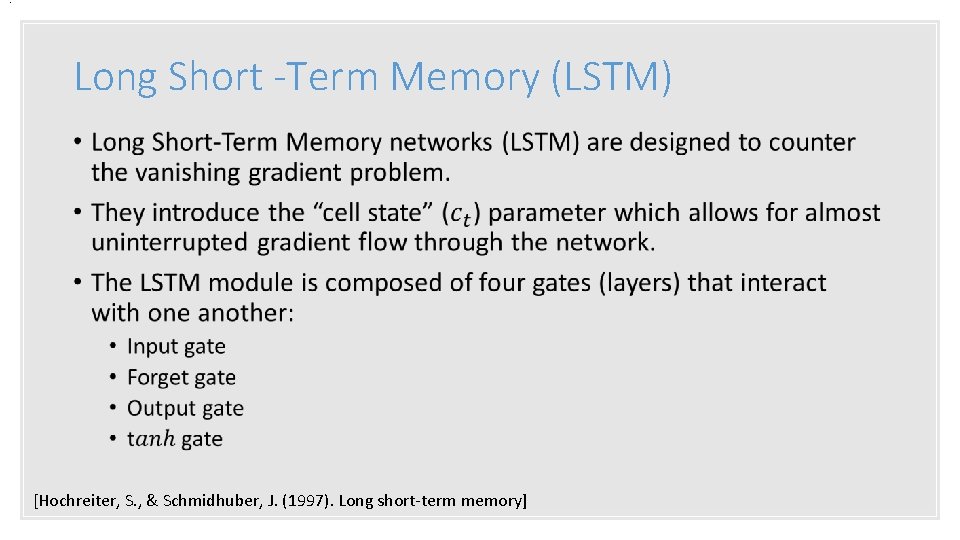

Long Short-Term Memory (LSTM)
[Hochreiter, S., & Schmidhuber, J. (1997). Long short-term memory]

Intuition
“This is the Star Wars atmosphere and feeling I've been waiting for. No dumb humor, cool characters, and a story I can take seriously. I'm a big fan of what they've done with this series so far and current Star Wars filmmakers need to take notes. The cinematography is amazing. You can tell they use practicality as much as possible and CGI is used only for the obvious like spaceships and creatures etc. It's a truly remarkable balance of old and new.”
IMDB review of “The Mandalorian” by jonahetheredgr
https://www.imdb.com/review/rw5252671/?ref_=tt_urv

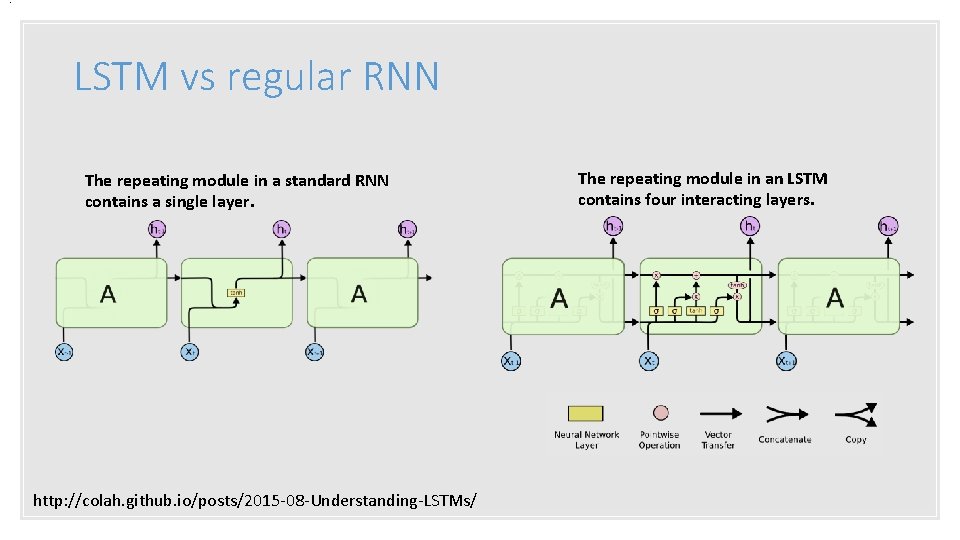

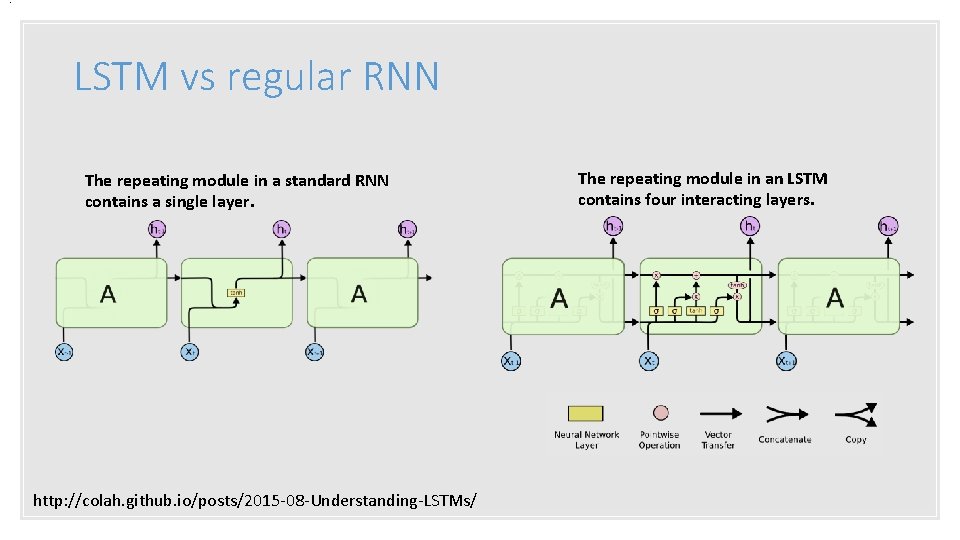

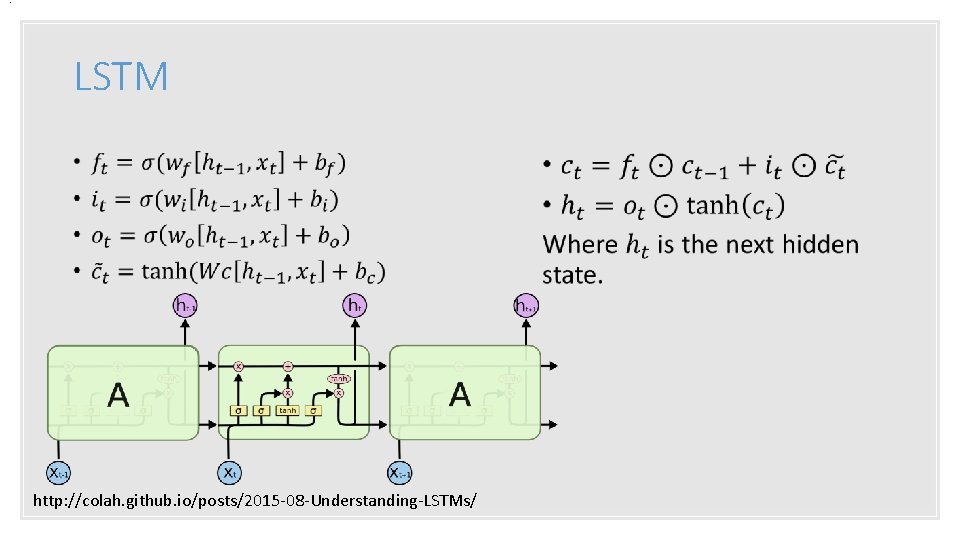

LSTM vs regular RNN
The repeating module in a standard RNN contains a single layer.
The repeating module in an LSTM contains four interacting layers.
http://colah.github.io/posts/2015-08-Understanding-LSTMs/

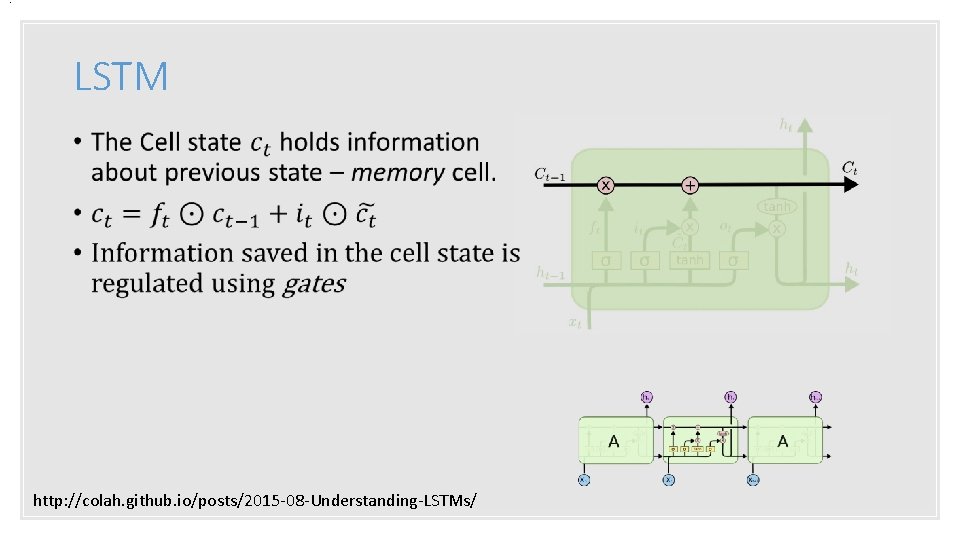

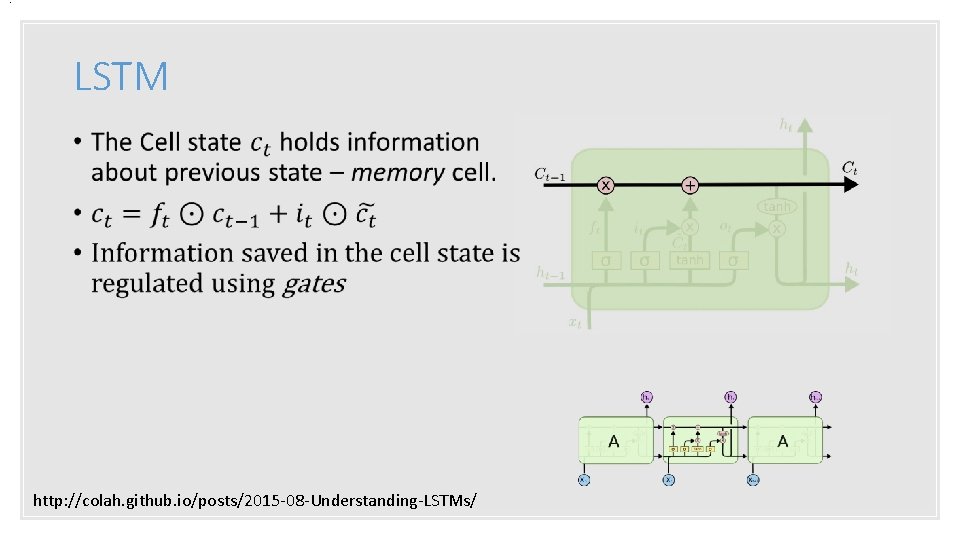

LSTM
http://colah.github.io/posts/2015-08-Understanding-LSTMs/

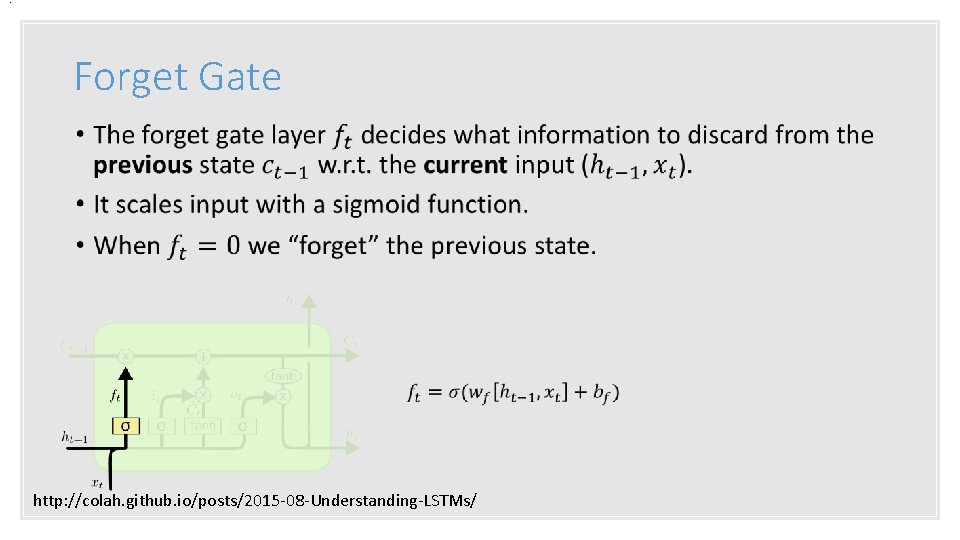

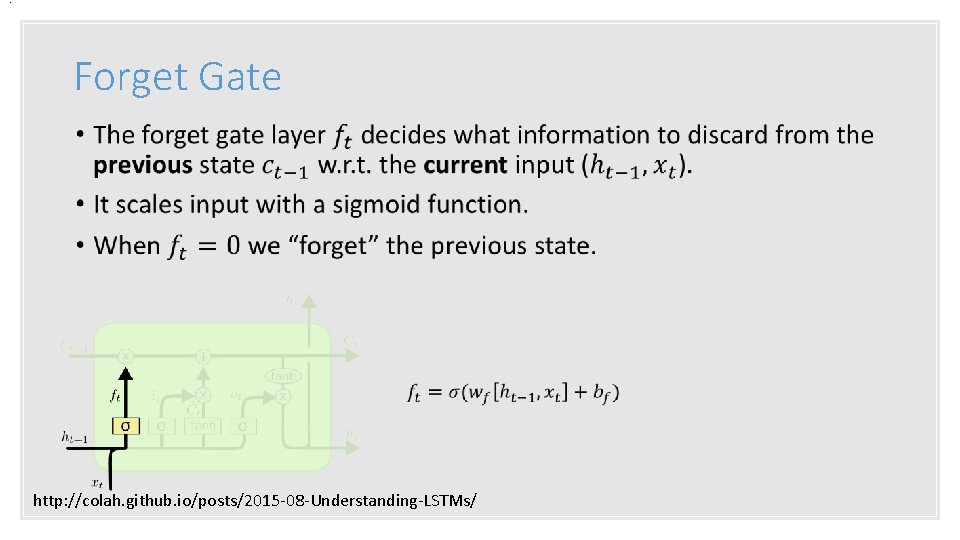

Forget gate
http://colah.github.io/posts/2015-08-Understanding-LSTMs/

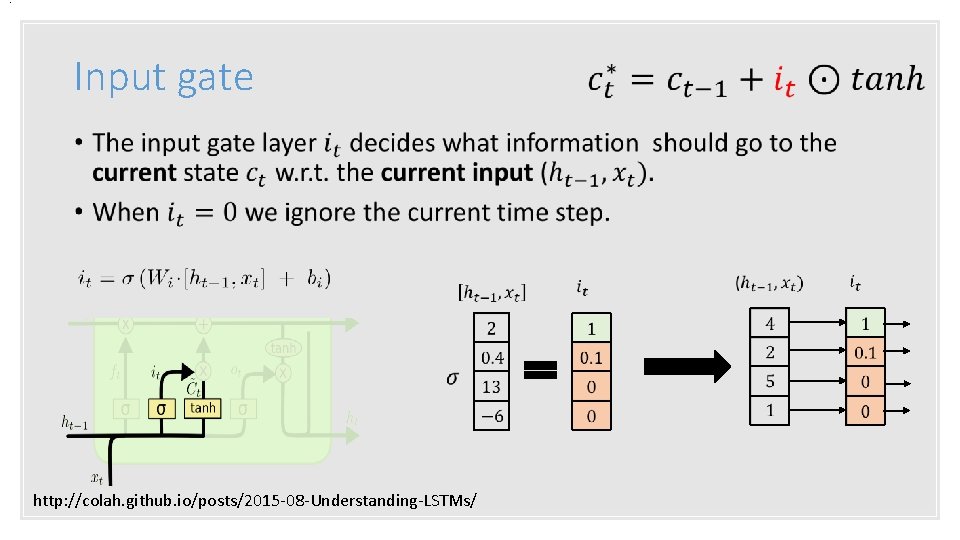

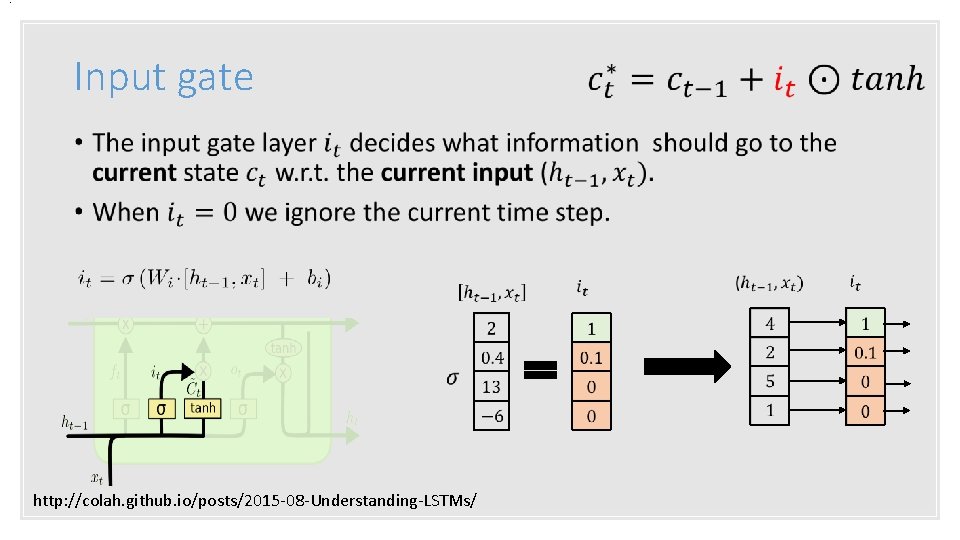

Input gate
http://colah.github.io/posts/2015-08-Understanding-LSTMs/

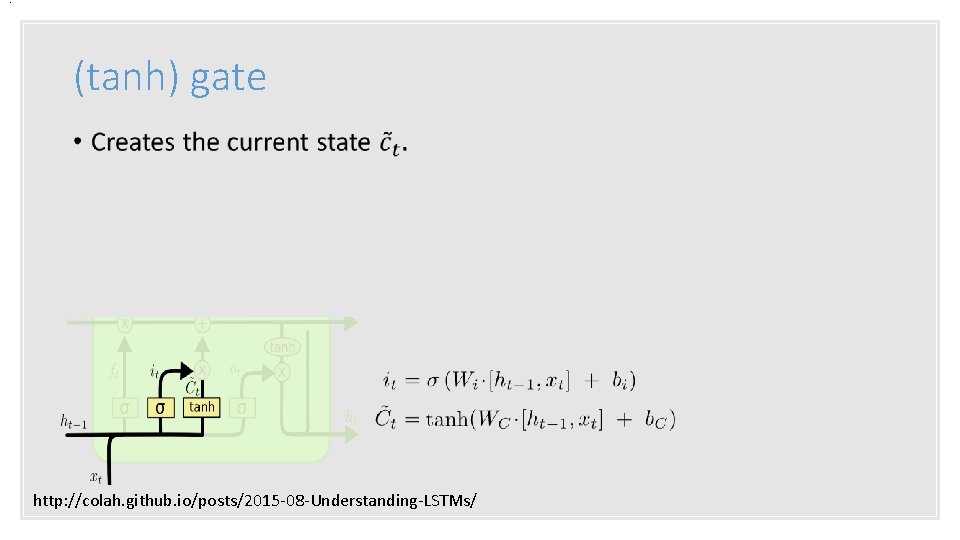

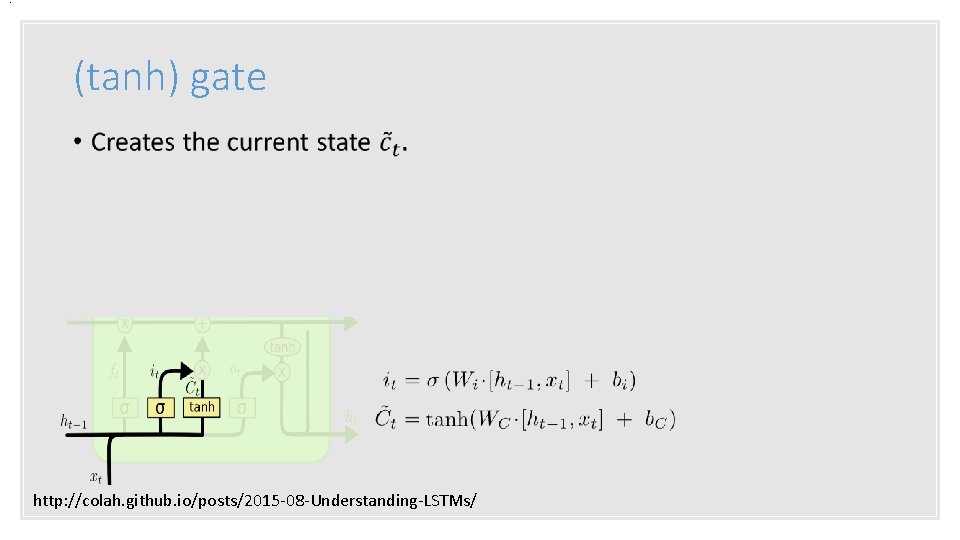

(tanh) gate
http://colah.github.io/posts/2015-08-Understanding-LSTMs/

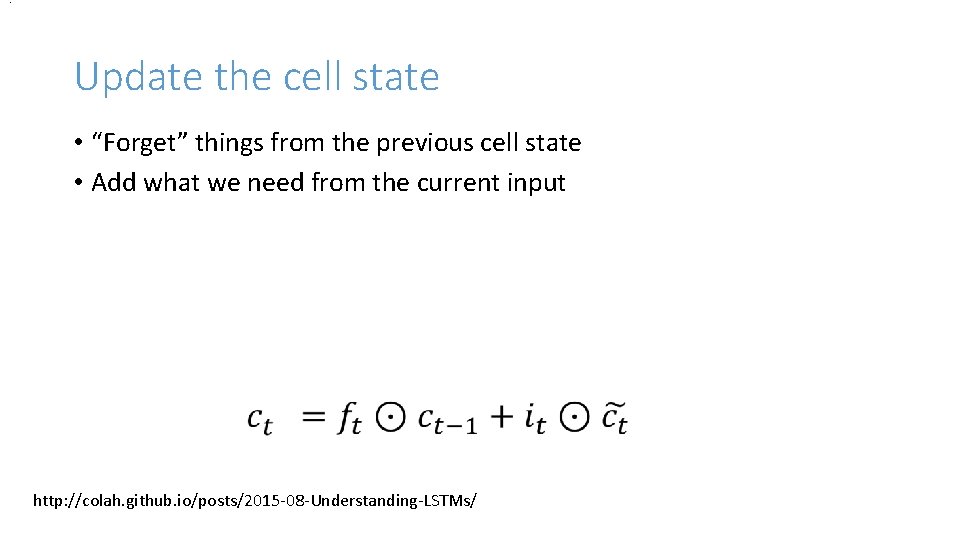

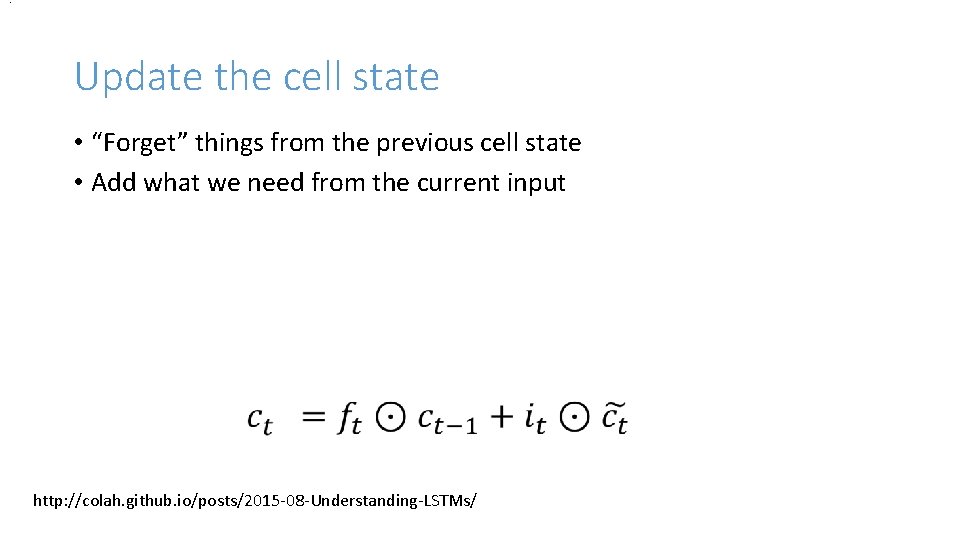

Update the cell state
• “Forget” things from the previous cell state
• Add what we need from the current input
http://colah.github.io/posts/2015-08-Understanding-LSTMs/

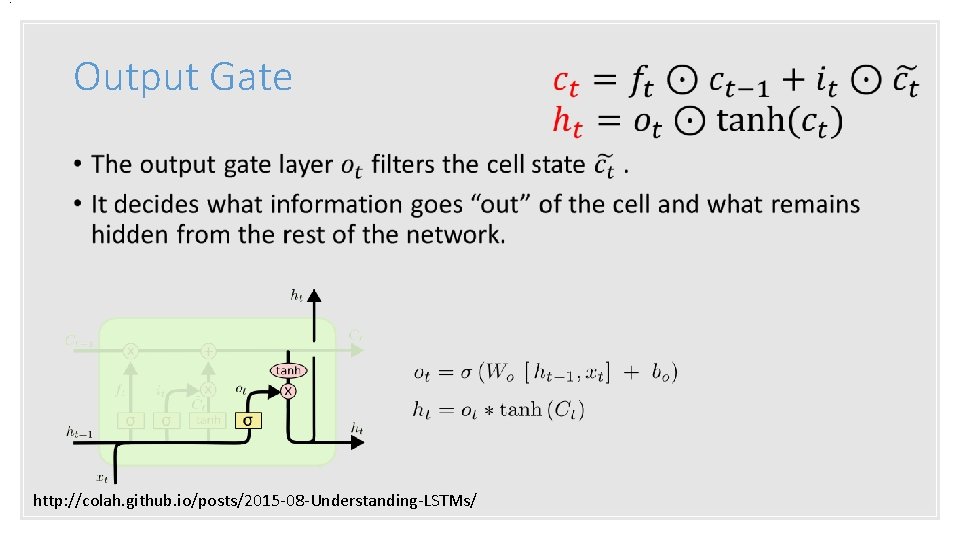

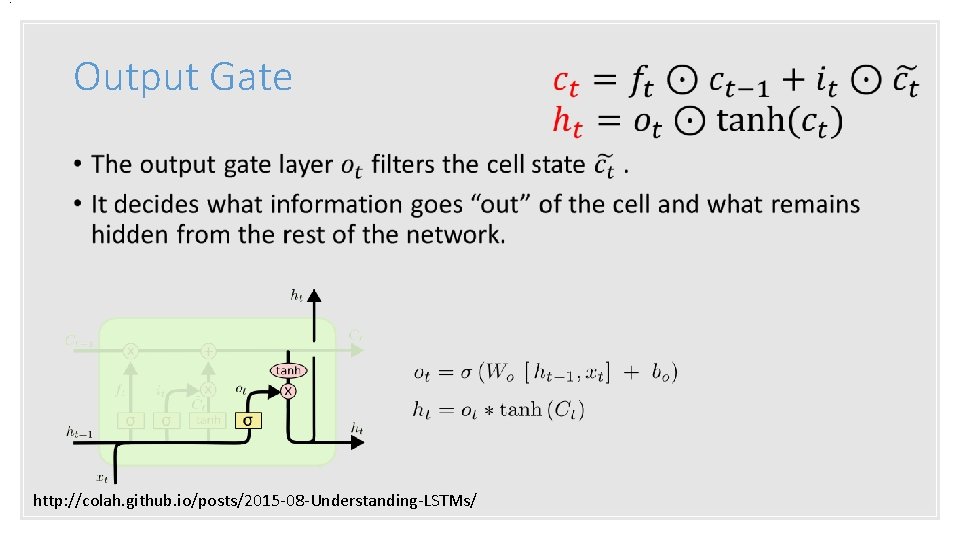
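The two bullets of the cell-state update correspond to the two terms of a single equation. The slide's own formula did not survive extraction, so the symbols below are assumed to follow the cited colah post: f_t is the forget gate output, i_t the input gate output, \tilde{C}_t the candidate values from the tanh layer, and \odot element-wise multiplication.

```latex
C_t = \underbrace{f_t \odot C_{t-1}}_{\text{forget from the previous cell state}}
    + \underbrace{i_t \odot \tilde{C}_t}_{\text{add from the current input}}
```

When f_t is close to 1 and i_t is close to 0, the cell state is carried forward almost unchanged, which is what lets information persist over many steps.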

Output gate
http://colah.github.io/posts/2015-08-Understanding-LSTMs/

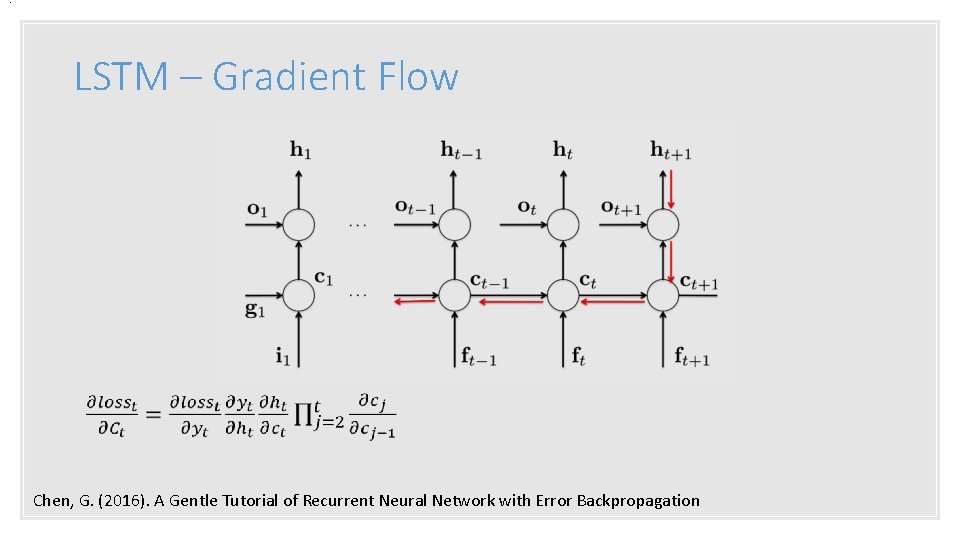

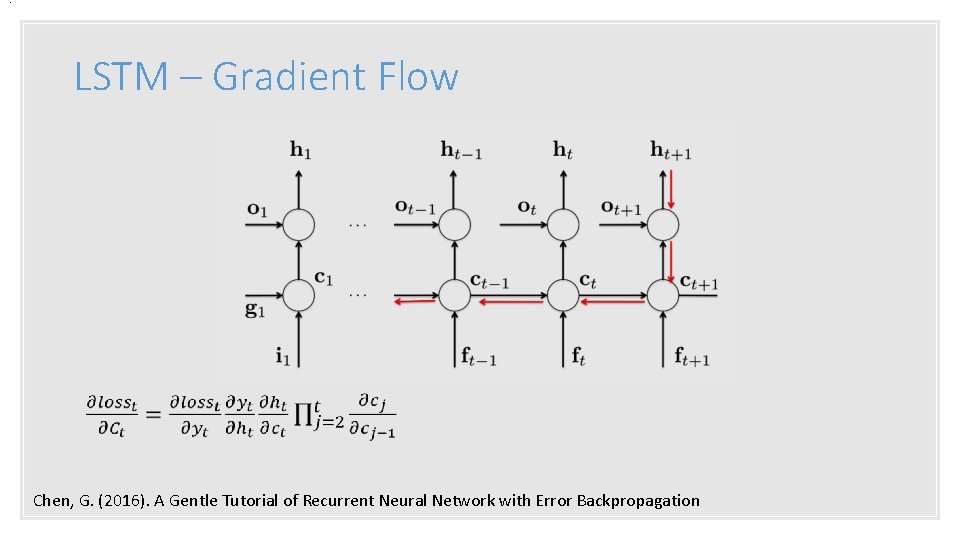

LSTM: Gradient flow
[Chen, G. (2016). A Gentle Tutorial of Recurrent Neural Network with Error Backpropagation]

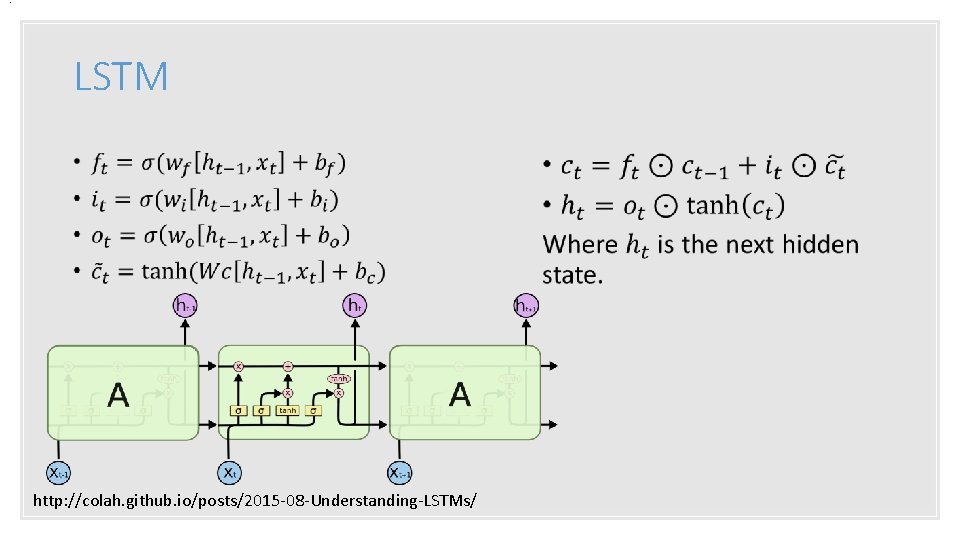

LSTM
http://colah.github.io/posts/2015-08-Understanding-LSTMs/

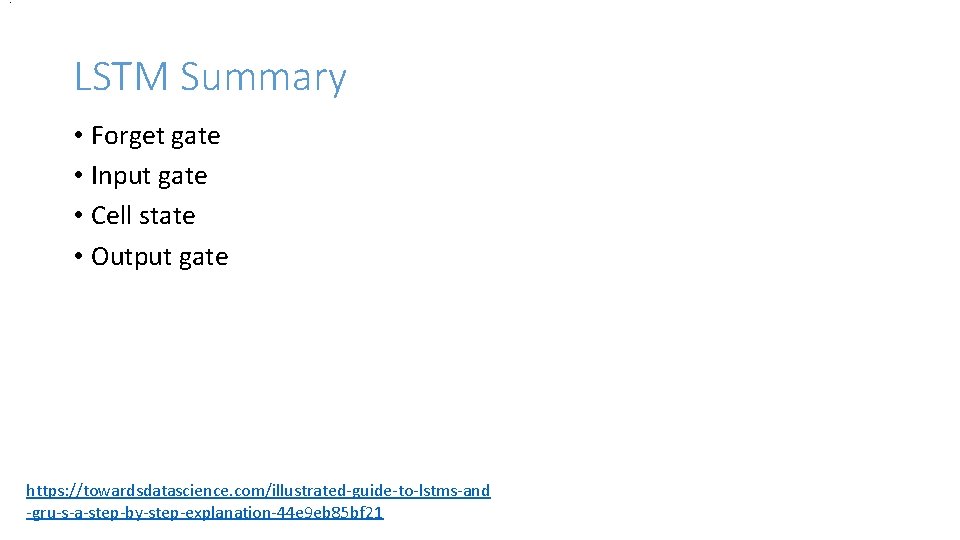

LSTM Summary
• Forget gate
• Input gate
• Cell state
• Output gate
https://towardsdatascience.com/illustrated-guide-to-lstms-and-gru-s-a-step-by-step-explanation-44e9eb85bf21

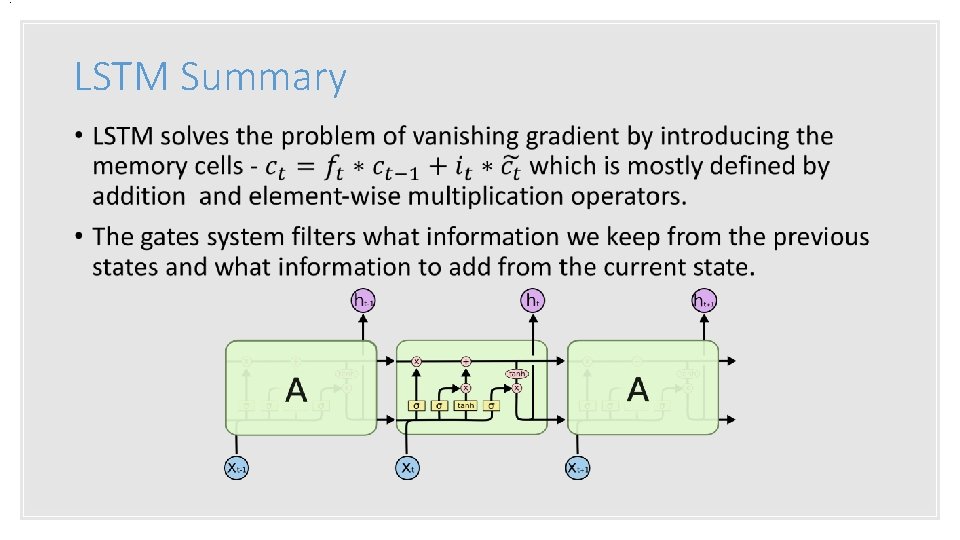

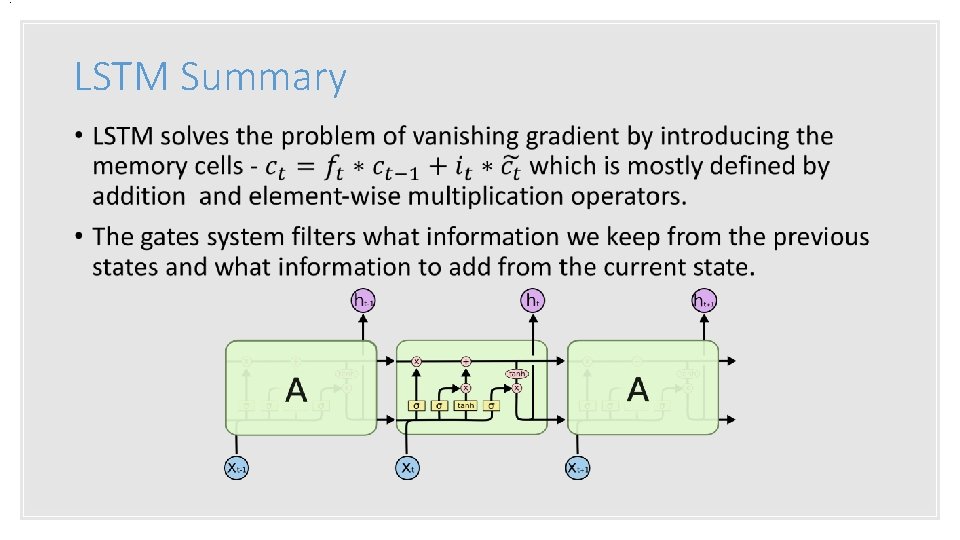
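The four components in the summary can be put together in one step function. The sketch below follows the standard LSTM formulation as presented in the cited colah post. The parameter names (`W_f`, `b_f`, and so on), the helper `init_params`, and the sizes are assumptions for illustration, not the course's code.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step; every gate reads the concatenation [h_prev, x_t]."""
    z = np.concatenate([h_prev, x_t])
    f = sigmoid(params["W_f"] @ z + params["b_f"])        # forget gate
    i = sigmoid(params["W_i"] @ z + params["b_i"])        # input gate
    c_tilde = np.tanh(params["W_c"] @ z + params["b_c"])  # candidate values (tanh layer)
    c = f * c_prev + i * c_tilde                          # cell state update
    o = sigmoid(params["W_o"] @ z + params["b_o"])        # output gate
    h = o * np.tanh(c)                                    # new hidden state
    return h, c

def init_params(input_size, hidden_size, seed=0):
    """Hypothetical helper: small random weights, zero biases."""
    rng = np.random.default_rng(seed)
    params = {}
    for name in ("f", "i", "c", "o"):
        params[f"W_{name}"] = rng.normal(
            scale=0.1, size=(hidden_size, hidden_size + input_size))
        params[f"b_{name}"] = np.zeros(hidden_size)
    return params

params = init_params(input_size=3, hidden_size=4)
h, c = np.zeros(4), np.zeros(4)
for x_t in np.random.default_rng(1).normal(size=(6, 3)):
    h, c = lstm_step(x_t, h, c, params)

print(h.shape, c.shape)  # (4,) (4,)
```

The cell state `c` is only touched by element-wise operations, while the hidden state `h` is a gated, squashed view of it.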

LSTM Summary

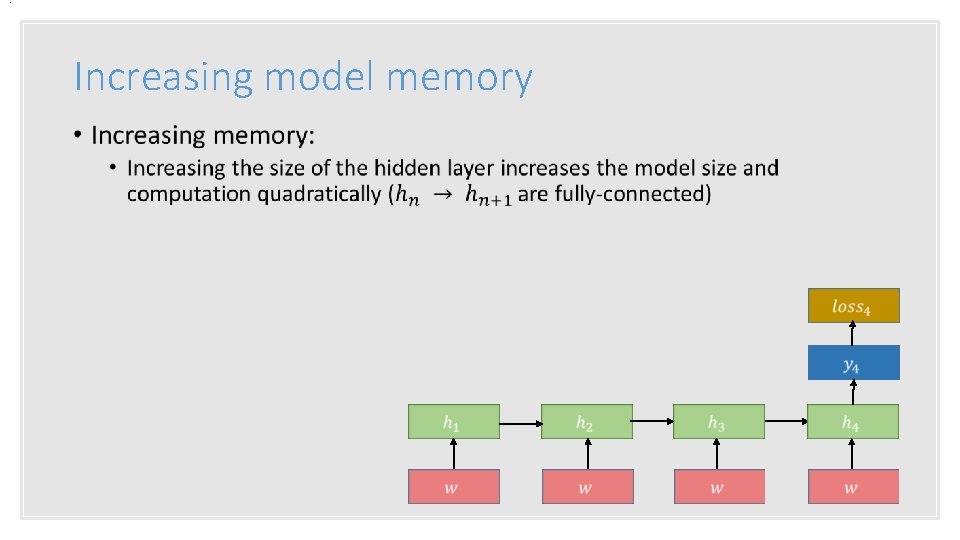

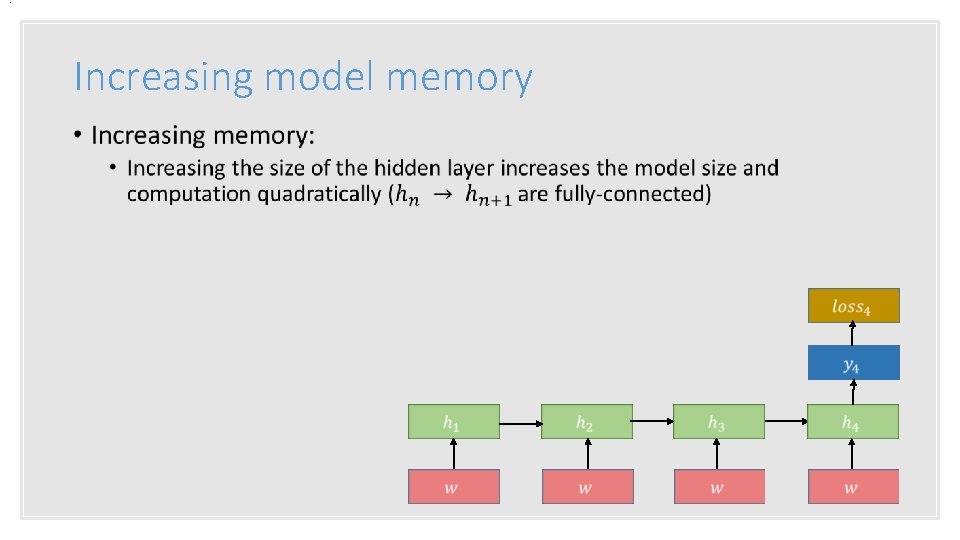

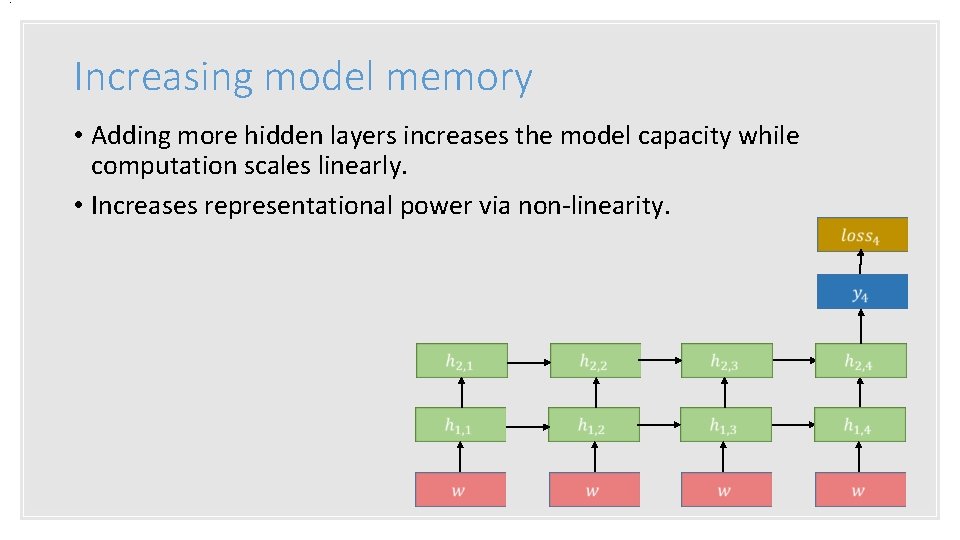

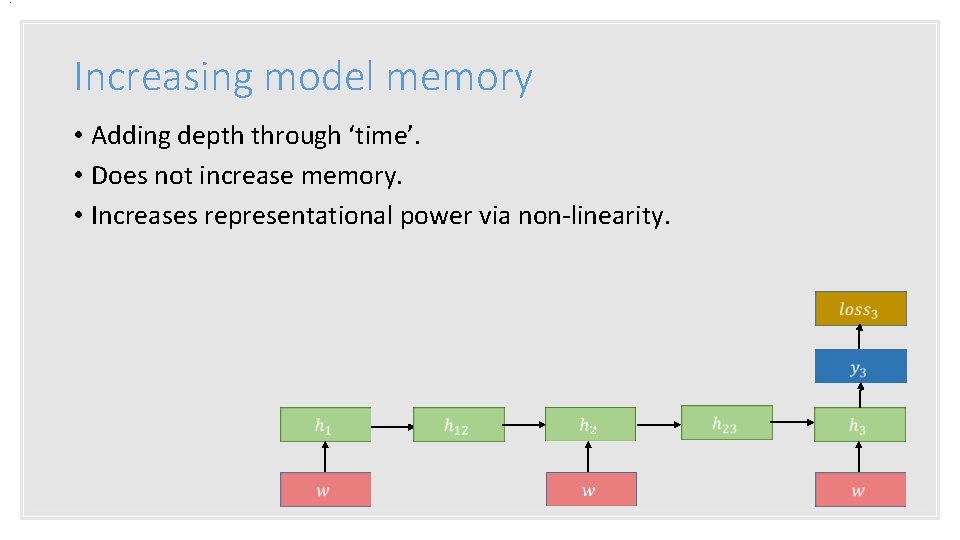

Increasing model memory

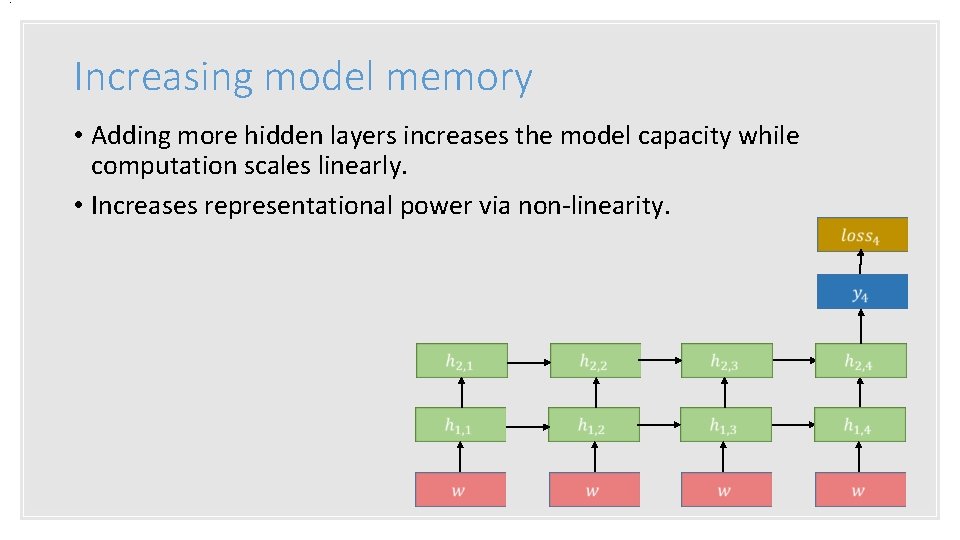

Increasing model memory
• Adding more hidden layers increases the model capacity while computation scales linearly.
• Increases representational power via non-linearity.

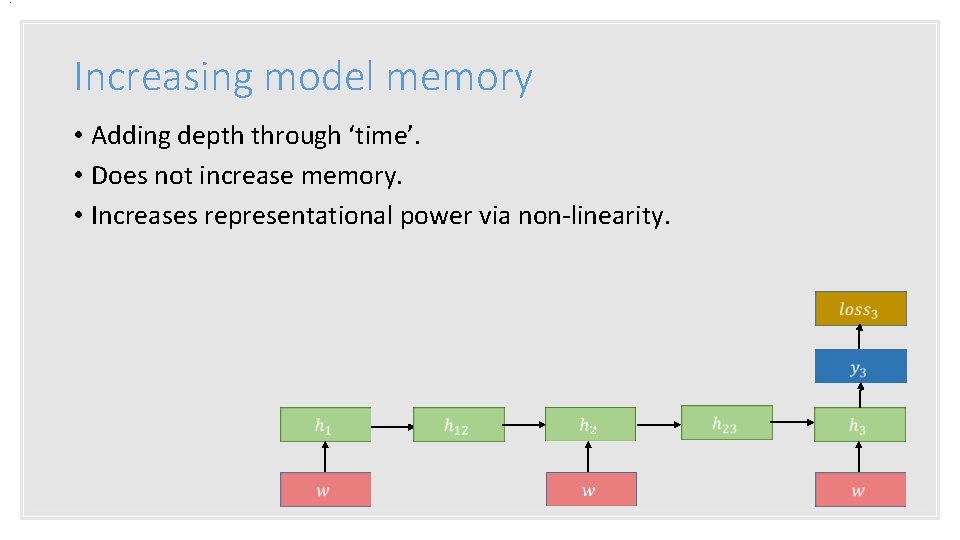
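One way to read "adding more hidden layers" is a stacked RNN, where each layer treats the hidden states of the layer below as its input sequence. The sketch below assumes that reading and uses plain tanh layers. The function names and sizes are illustrative only.

```python
import numpy as np

def stacked_rnn_forward(xs, layers):
    """layers: list of (W, b); layer k reads the hidden states of layer k-1."""
    inputs = xs
    for W, b in layers:                  # cost grows linearly with the number of layers
        h = np.zeros(b.shape[0])
        outputs = []
        for x_t in inputs:
            h = np.tanh(W @ np.concatenate([h, x_t]) + b)
            outputs.append(h)
        inputs = np.stack(outputs)       # becomes the input sequence of the next layer
    return inputs                        # hidden states of the top layer

def make_layers(input_size, hidden_size, num_layers, seed=0):
    """Hypothetical helper: random weights sized for each layer's input."""
    rng = np.random.default_rng(seed)
    layers = []
    in_size = input_size
    for _ in range(num_layers):
        W = rng.normal(scale=0.3, size=(hidden_size, hidden_size + in_size))
        layers.append((W, np.zeros(hidden_size)))
        in_size = hidden_size            # upper layers read hidden states
    return layers

xs = np.random.default_rng(1).normal(size=(5, 3))
top = stacked_rnn_forward(xs, make_layers(3, 4, num_layers=3))
print(top.shape)  # (5, 4)
```

Each extra layer adds one more pass over the sequence and one more hidden state per time step.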

Increasing model memory
• Adding depth through ‘time’.
• Does not increase memory.
• Increases representational power via non-linearity.

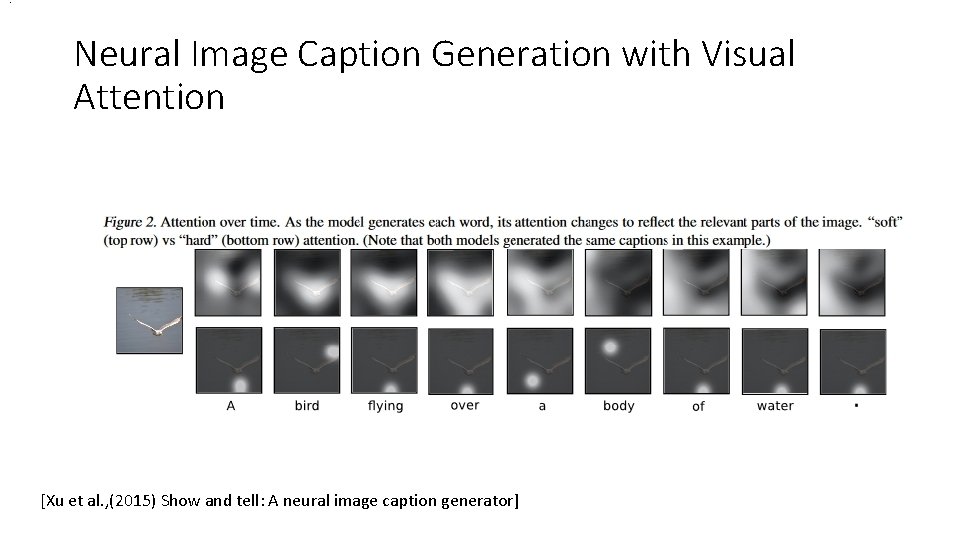

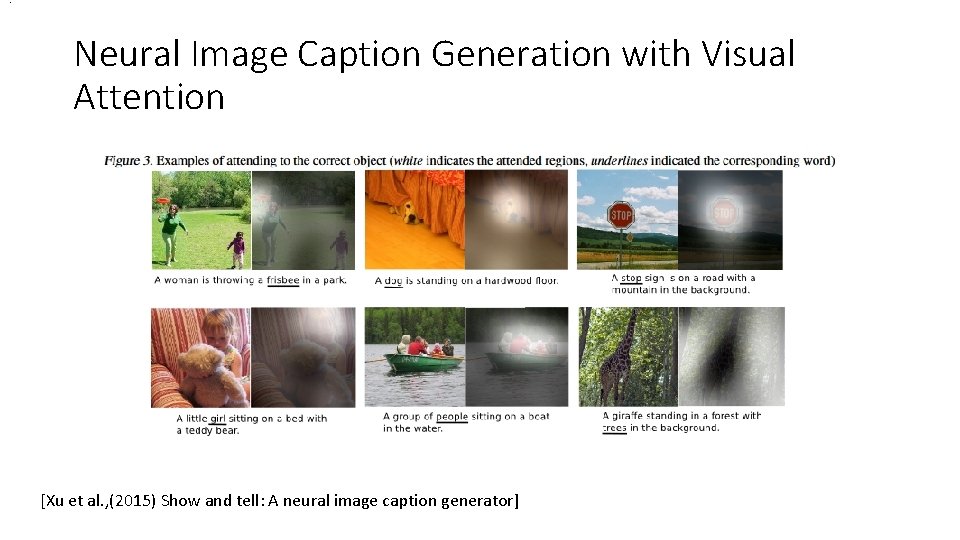

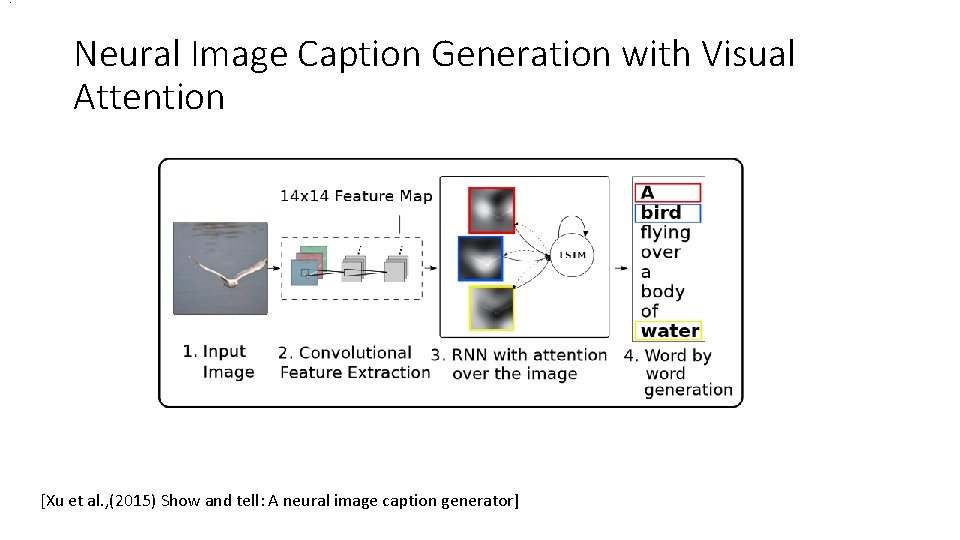

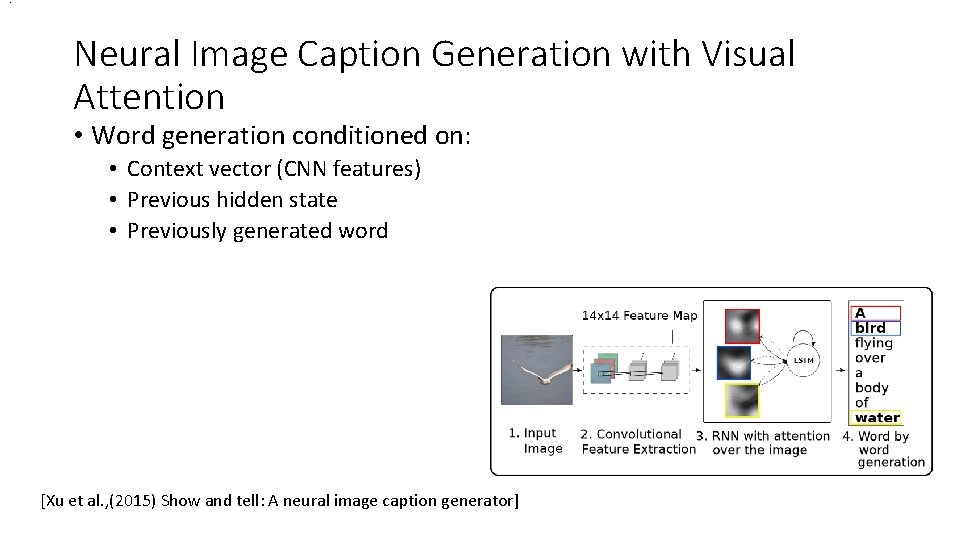

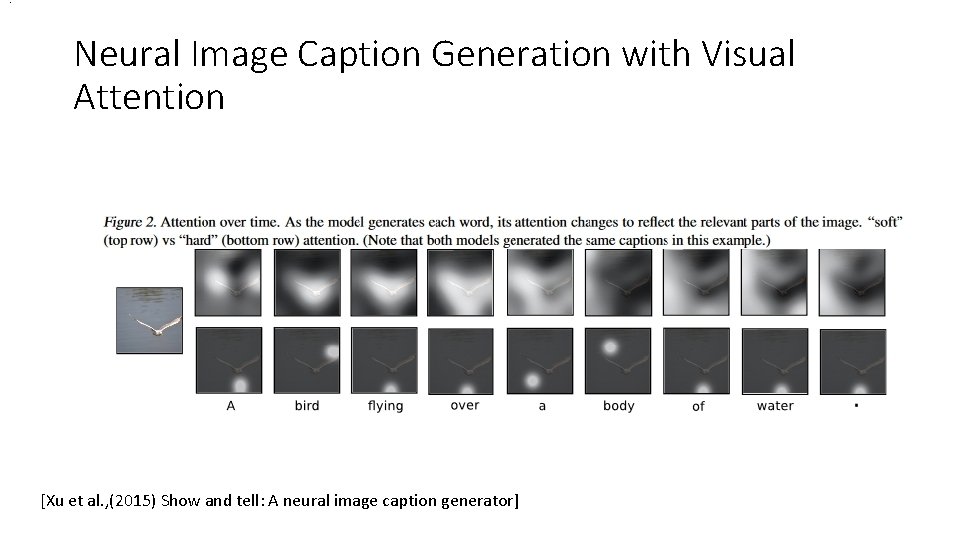

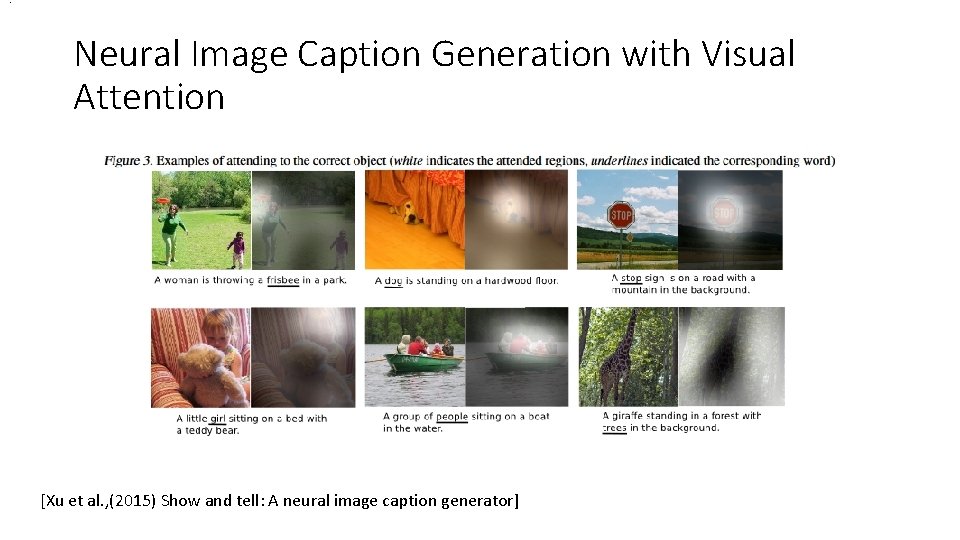

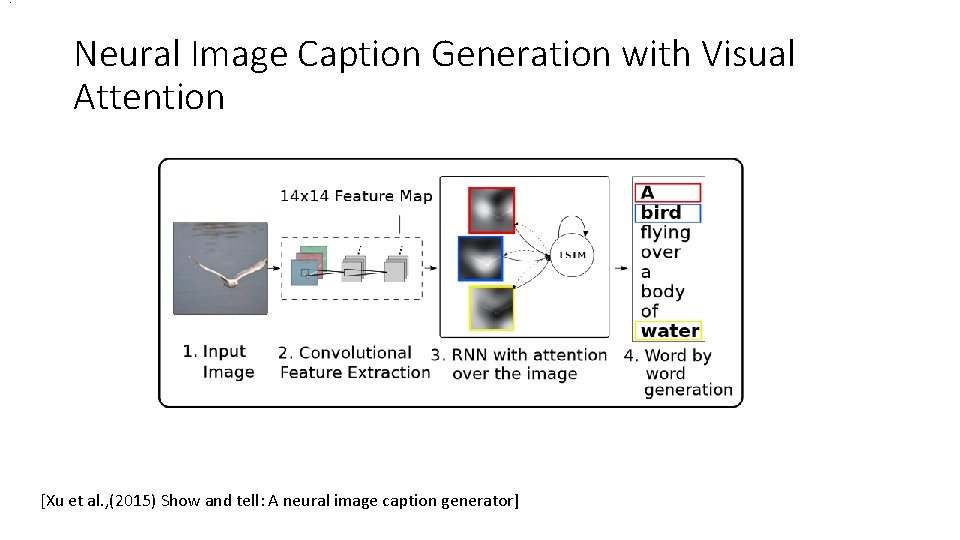

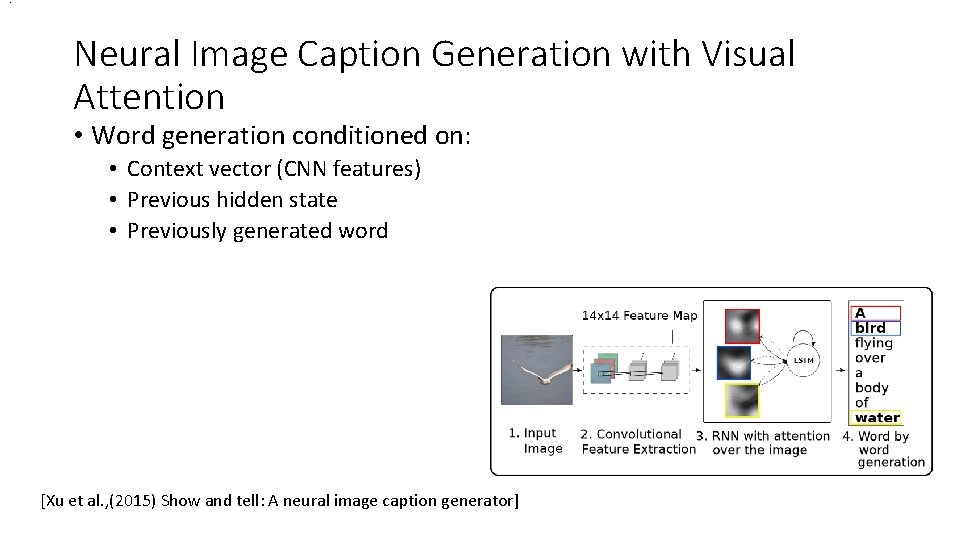

Neural Image Caption Generation with Visual Attention
[Xu et al. (2015). Show, Attend and Tell: Neural Image Caption Generation with Visual Attention]


Neural Image Caption Generation with Visual Attention
• Word generation conditioned on:
  • Context vector (CNN features)
  • Previous hidden state
  • Previously generated word
[Xu et al. (2015). Show, Attend and Tell: Neural Image Caption Generation with Visual Attention]
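The three conditioning signals can be illustrated with a single decoding step. This is a simplified sketch, not the paper's model: it assumes a soft attention that scores each image location with a small tanh layer, and it uses a plain tanh recurrence where the paper uses an LSTM. All parameter names and sizes are hypothetical.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def attention_context(features, h_prev, p):
    """features: (L, D) array, one CNN feature vector per image location."""
    scores = np.tanh(features @ p["W_a"] + h_prev @ p["U_a"]) @ p["v_a"]  # (L,)
    alpha = softmax(scores)            # attention weights over locations
    return alpha @ features, alpha     # context vector (D,), weights (L,)

def decoder_step(features, h_prev, prev_word_emb, p):
    """Next-word distribution from context, previous state, and previous word."""
    context, alpha = attention_context(features, h_prev, p)
    z = np.concatenate([context, h_prev, prev_word_emb])
    h = np.tanh(p["W_h"] @ z + p["b_h"])   # simplified recurrence (assumption)
    probs = softmax(p["W_out"] @ h)        # distribution over the vocabulary
    return probs, h, alpha

# Illustrative sizes: locations, feature dim, hidden, embedding, attention, vocabulary.
L, D, H, E, A, V = 6, 8, 5, 4, 7, 10
rng = np.random.default_rng(0)
p = {
    "W_a": rng.normal(size=(D, A)),
    "U_a": rng.normal(size=(H, A)),
    "v_a": rng.normal(size=A),
    "W_h": rng.normal(scale=0.3, size=(H, D + H + E)),
    "b_h": np.zeros(H),
    "W_out": rng.normal(size=(V, H)),
}
features = rng.normal(size=(L, D))
probs, h, alpha = decoder_step(features, np.zeros(H), rng.normal(size=E), p)
print(probs.shape, alpha.shape)  # (10,) (6,)
```

The weights `alpha` sum to 1 over image locations, so they can be read as where the model "looks" while producing the current word.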

![http://norman-ai.mit.edu/](https://slidetodoc.com/presentation_image_h/fe3db5c16df373b5699568d81755ffa0/image-45.jpg)

[http://norman-ai.mit.edu/]

![http://norman-ai.mit.edu/](https://slidetodoc.com/presentation_image_h/fe3db5c16df373b5699568d81755ffa0/image-46.jpg)

[http://norman-ai.mit.edu/]

![http://norman-ai.mit.edu/](https://slidetodoc.com/presentation_image_h/fe3db5c16df373b5699568d81755ffa0/image-47.jpg)

[http://norman-ai.mit.edu/]

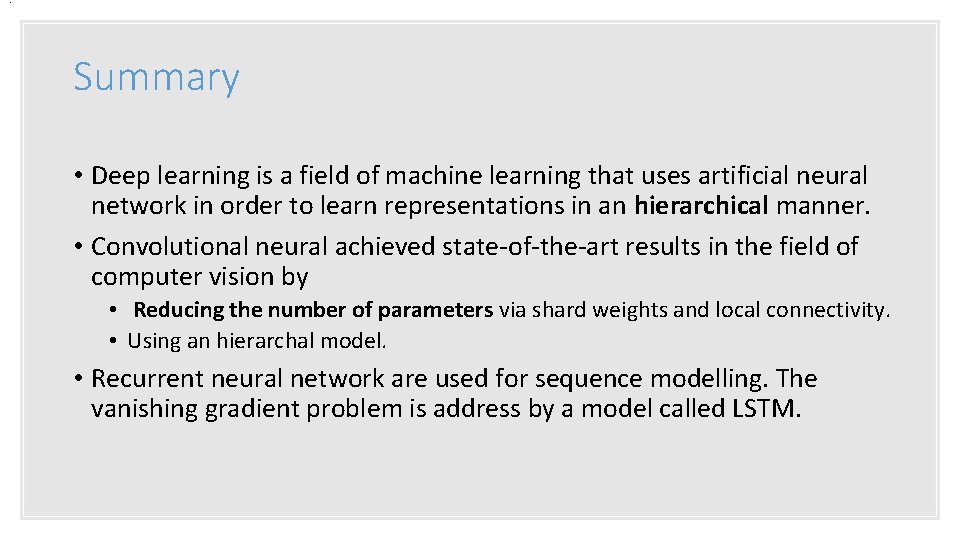

Summary
• Deep learning is a field of machine learning that uses artificial neural networks to learn representations in a hierarchical manner.
• Convolutional neural networks achieved state-of-the-art results in the field of computer vision by:
  • Reducing the number of parameters via shared weights and local connectivity.
  • Using a hierarchical model.
• Recurrent neural networks are used for sequence modelling. The vanishing gradient problem is addressed by a model called LSTM.

Thanks!