Modeling Relational Data with Graph Convolutional Networks 2017


2017.5.5 Zhang Yan
Schlichtkrull M, Kipf T N, Bloem P, et al. Modeling Relational Data with Graph Convolutional Networks[J]. arXiv, 30 May 2017.

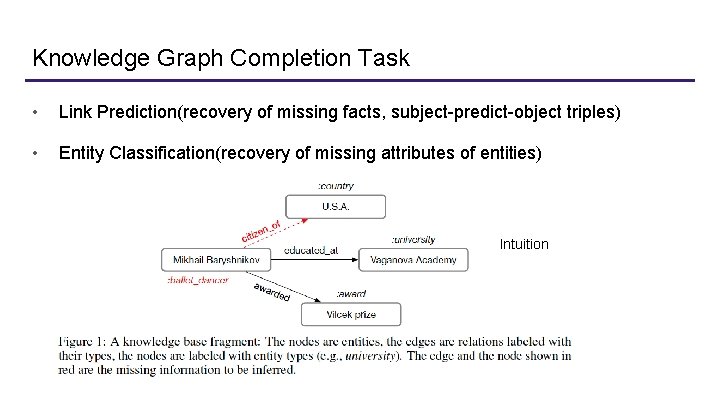

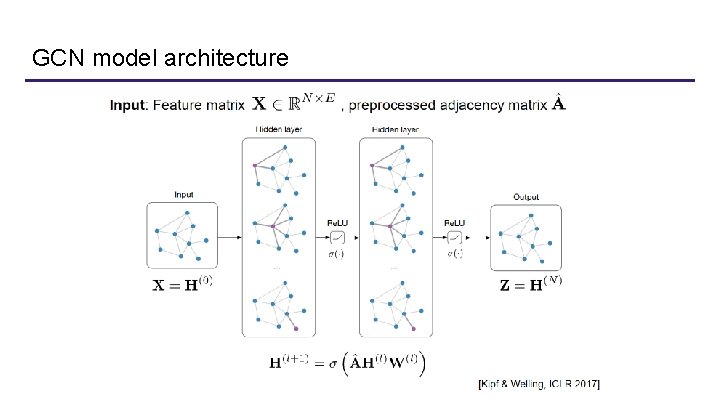

Knowledge Graph Completion Task
• Link Prediction (recovery of missing facts, i.e. subject-predicate-object triples)
• Entity Classification (recovery of missing attributes of entities)

Intuition

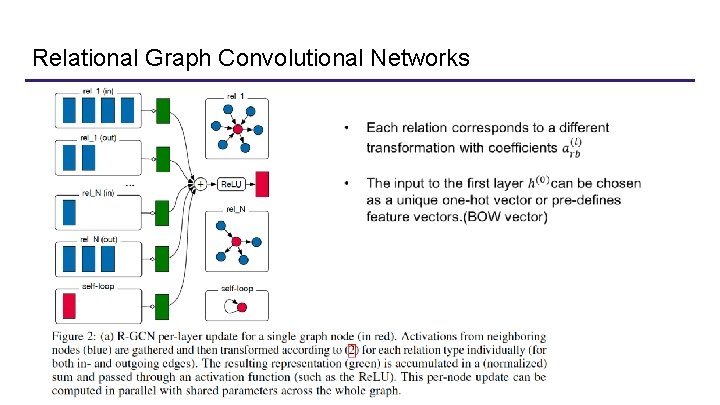

Relational Graph Convolutional Networks

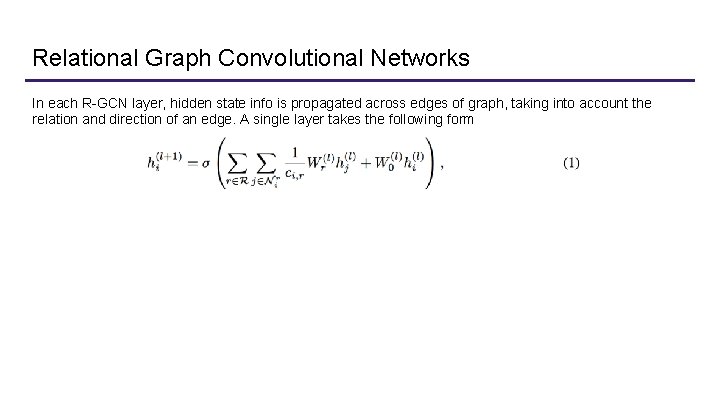

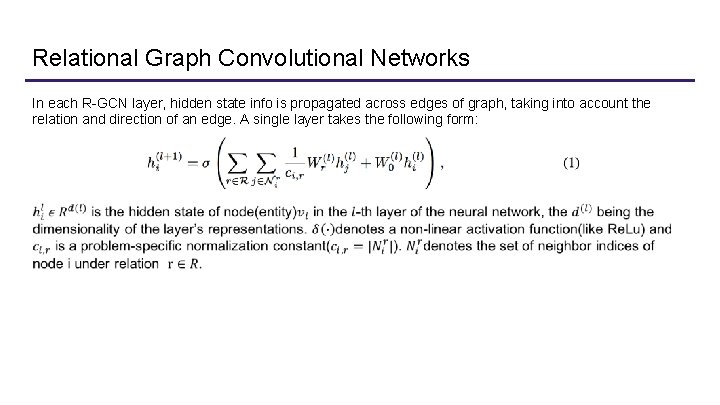

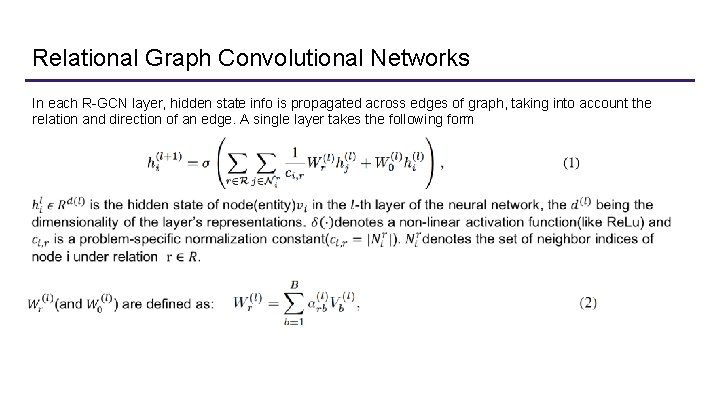

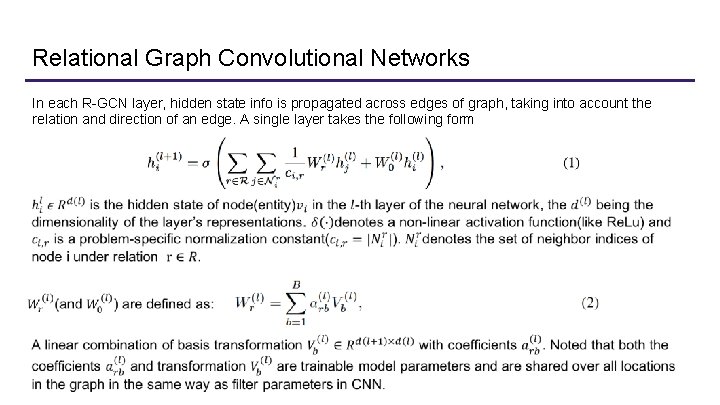

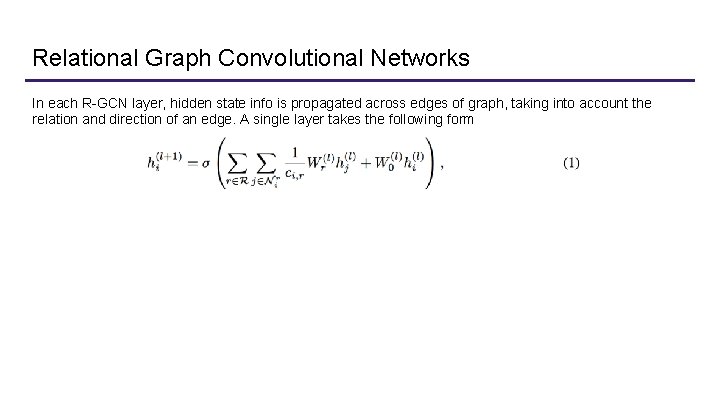

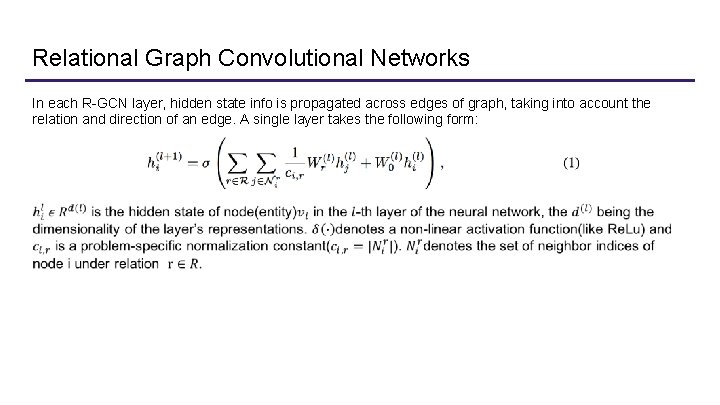

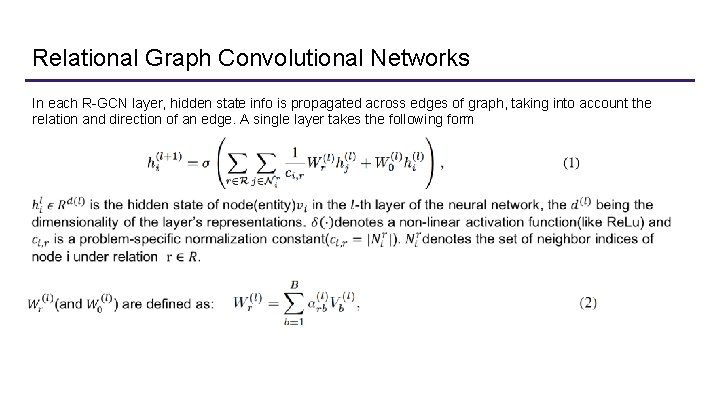

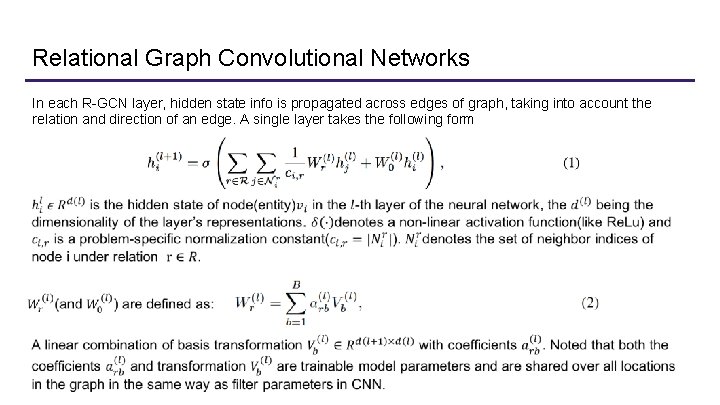

Relational Graph Convolutional Networks
In each R-GCN layer, hidden-state information is propagated along the edges of the graph, taking into account the relation type and the direction of each edge. A single layer takes the following form:
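For reference, the layer from the paper can be written out as follows. Here $\mathcal{N}_i^r$ is the set of neighbours of node $i$ under relation $r \in \mathcal{R}$ (where $\mathcal{R}$ contains each relation in both its canonical and its inverse direction), $W_0^{(l)}$ is a self-connection weight, $\sigma$ is an element-wise activation such as ReLU, and $c_{i,r}$ is a problem-specific normalization constant, for example $c_{i,r} = |\mathcal{N}_i^r|$:

$$
h_i^{(l+1)} = \sigma\left( \sum_{r \in \mathcal{R}} \sum_{j \in \mathcal{N}_i^r} \frac{1}{c_{i,r}} W_r^{(l)} h_j^{(l)} + W_0^{(l)} h_i^{(l)} \right)
$$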


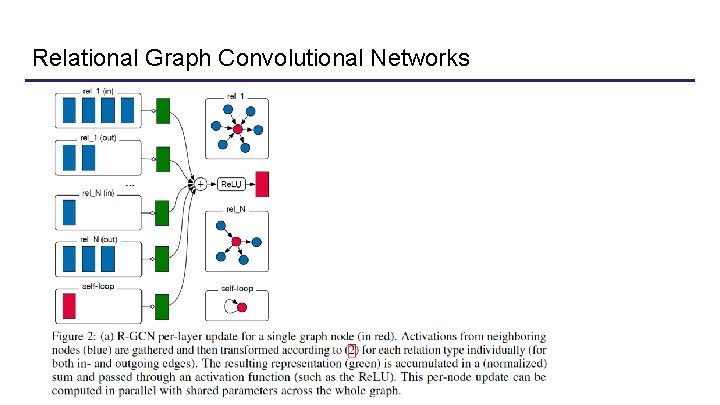

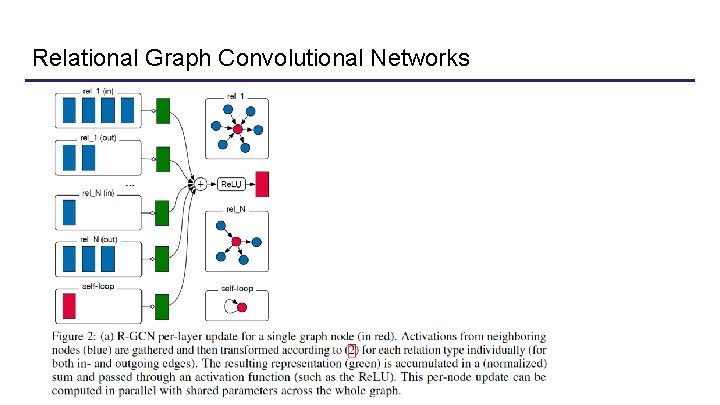

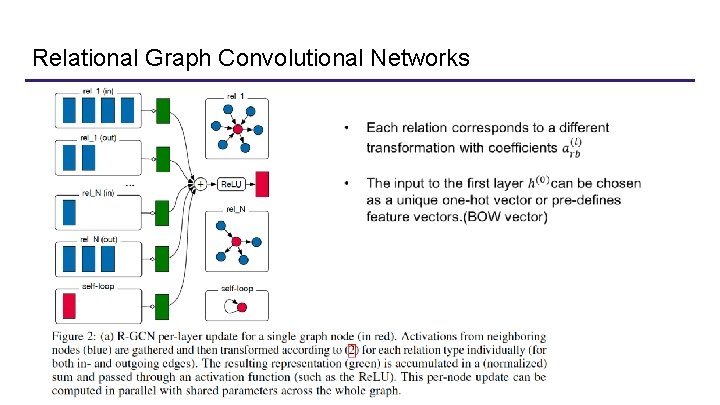
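The single-layer propagation described above can be illustrated with a minimal pure-Python sketch. It assumes $c_{i,r} = |\mathcal{N}_i^r|$, ReLU as $\sigma$, and dense per-relation weights without any basis decomposition. The helper names (`matvec`, `build_neighborhoods`, `rgcn_layer`) and the convention used for which endpoint receives the message under an inverse relation are illustrative assumptions, not the authors' code.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def build_neighborhoods(triples, num_relations):
    """Map (node, relation) -> list of neighbours.

    Assumed convention: for a triple (s, r, o), o receives a message from s
    under relation r, and s receives one from o under the inverse relation
    r + num_relations, so both edge directions are modelled.
    """
    nbrs = {}
    for s, r, o in triples:
        nbrs.setdefault((o, r), []).append(s)
        nbrs.setdefault((s, r + num_relations), []).append(o)
    return nbrs


def rgcn_layer(H, triples, W_rel, W_self, num_relations):
    """One R-GCN layer with c_{i,r} = |N_i^r| and ReLU as sigma.

    H: node feature vectors; W_rel: 2 * num_relations weight matrices
    (canonical + inverse directions); W_self: self-connection matrix W_0.
    """
    nbrs = build_neighborhoods(triples, num_relations)
    out = []
    for i, h_i in enumerate(H):
        acc = matvec(W_self, h_i)                    # self-connection W_0 h_i
        for r in range(2 * num_relations):
            js = nbrs.get((i, r), [])
            for j in js:
                msg = matvec(W_rel[r], H[j])         # relation-specific transform W_r h_j
                acc = [a + m / len(js) for a, m in zip(acc, msg)]  # scale by 1/c_{i,r}
        out.append([max(0.0, a) for a in acc])       # sigma = ReLU
    return out


# Usage: 3 toy entities with 2-dim features and one relation type (index 0).
I = [[1.0, 0.0], [0.0, 1.0]]                 # identity weights keep the arithmetic visible
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
triples = [(0, 0, 1), (2, 0, 1)]
print(rgcn_layer(H, triples, [I, I], I, num_relations=1))
# [[1.0, 1.0], [1.0, 1.5], [1.0, 2.0]]
```

Node 1 has two incoming neighbours under relation 0, so each message is scaled by 1/2 before being added to its self-connection term.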


Tasks
The model is applied to two tasks:
• Link Prediction: recover missing (subject, predicate, object) triples. An R-GCN encoder produces entity representations and a DistMult decoder scores candidate triples.
• Entity Classification: recover missing attributes of entities. Stacked R-GCN layers with a per-node softmax output predict a class for each entity.

![Link Prediction • Dataset [Bordes, NIPS’13]](https://slidetodoc.com/presentation_image_h2/78ae1fe880849a159bee5f0a8cf34a6b/image-11.jpg)

Link Prediction • Dataset [Bordes, NIPS’13]

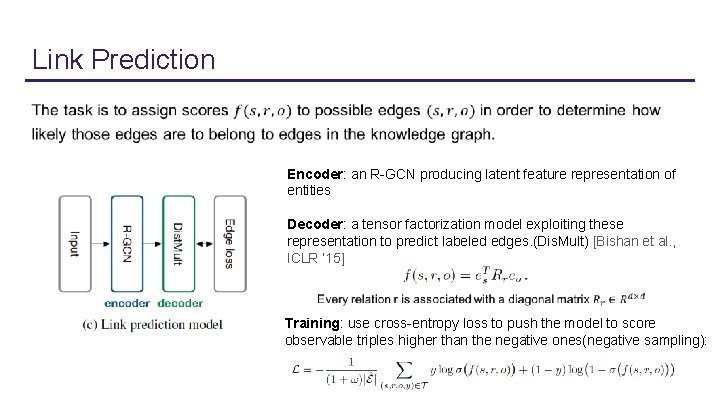

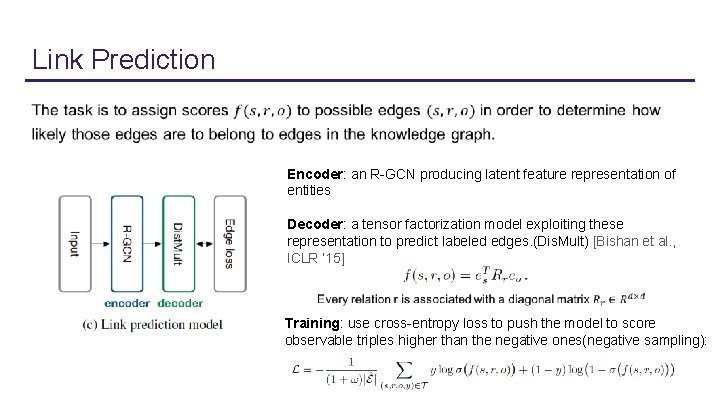

Link Prediction
Encoder: an R-GCN that produces latent feature representations of entities.
Decoder: a tensor factorization model (DistMult) [Yang et al., ICLR’15] that exploits these representations to predict labeled edges.
Training: a cross-entropy loss pushes the model to score observed triples higher than negative ones (negative sampling):
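Written out, the DistMult decoder and the training objective from the paper are as follows. Each relation $r$ has a diagonal matrix $R_r \in \mathbb{R}^{d \times d}$; $e_s$ and $e_o$ are the R-GCN encodings of the subject and object; $\omega$ negative samples are drawn per positive triple by corrupting its subject or object; $\mathcal{T}$ is the set of real and corrupted triples with labels $y \in \{0, 1\}$; $\hat{\mathcal{E}}$ is the set of observed training triples; and $l$ is the logistic sigmoid:

$$
f(s, r, o) = e_s^{\top} R_r \, e_o
$$

$$
\mathcal{L} = -\frac{1}{(1+\omega)\,|\hat{\mathcal{E}}|} \sum_{(s,r,o,y) \in \mathcal{T}} \Big[ y \log l\big(f(s,r,o)\big) + (1-y) \log\Big(1 - l\big(f(s,r,o)\big)\Big) \Big]
$$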

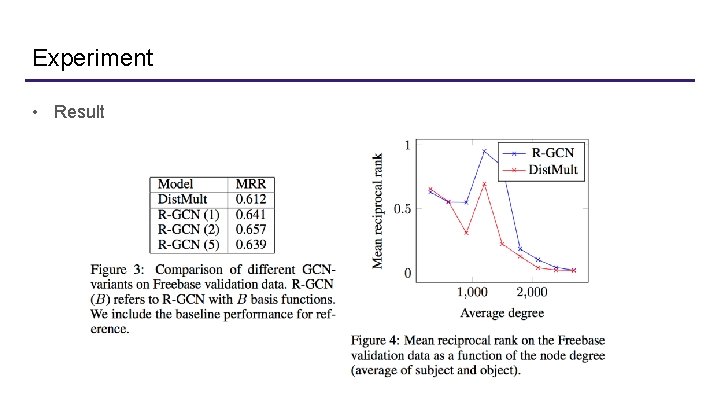

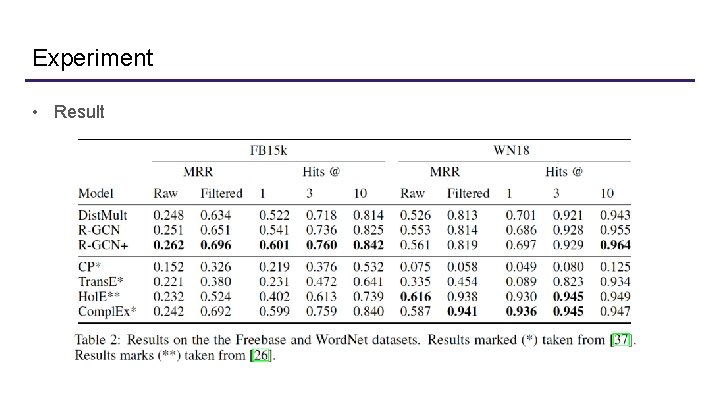

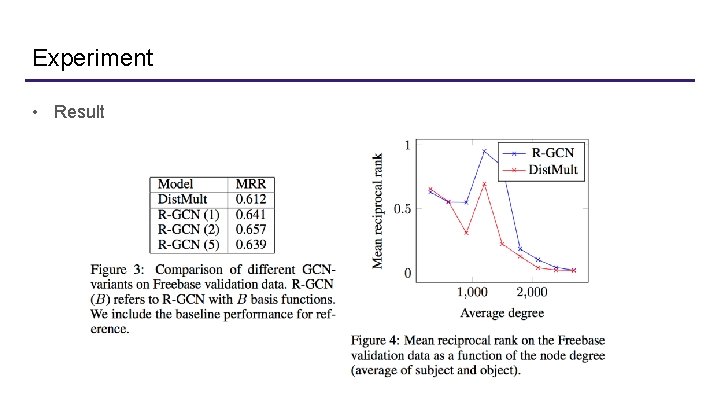
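The training procedure above (DistMult scoring plus cross-entropy with negative sampling) can be sketched in plain Python. This is a minimal illustration, assuming entity embeddings `E` are already given (in the model they would come from the R-GCN encoder) and each relation is stored as the diagonal of $R_r$. The function names and the `eps` clipping are assumptions for illustration. Corrupted triples that happen to be true facts are not filtered out here.

```python
import math
import random


def distmult_score(e_s, r_diag, e_o):
    """DistMult: f(s, r, o) = e_s^T R_r e_o with diagonal R_r = diag(r_diag)."""
    return sum(a * b * c for a, b, c in zip(e_s, r_diag, e_o))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def corrupt(triple, num_entities, rng):
    """Negative sample: replace the subject or the object with a random entity."""
    s, r, o = triple
    if rng.random() < 0.5:
        return (rng.randrange(num_entities), r, o)
    return (s, r, rng.randrange(num_entities))


def link_prediction_loss(pos_triples, E, R, omega, rng, eps=1e-12):
    """Binary cross-entropy over each positive (y = 1) and its omega
    corruptions (y = 0), averaged over all (1 + omega) * |pos| examples."""
    examples = []
    for t in pos_triples:
        examples.append((t, 1.0))
        examples.extend((corrupt(t, len(E), rng), 0.0) for _ in range(omega))
    total = 0.0
    for (s, r, o), y in examples:
        p = sigmoid(distmult_score(E[s], R[r], E[o]))
        p = min(max(p, eps), 1.0 - eps)              # keep log() finite
        total += y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
    return -total / len(examples)


# Usage with made-up 2-dim embeddings for 3 entities and 1 relation.
rng = random.Random(0)
E = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5]]
R = [[1.0, 0.5]]
print(link_prediction_loss([(0, 0, 1)], E, R, omega=1, rng=rng))
```

Storing only the diagonal of $R_r$ makes the score a three-way elementwise product, which is why DistMult needs just $d$ parameters per relation.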

Experiment • Result

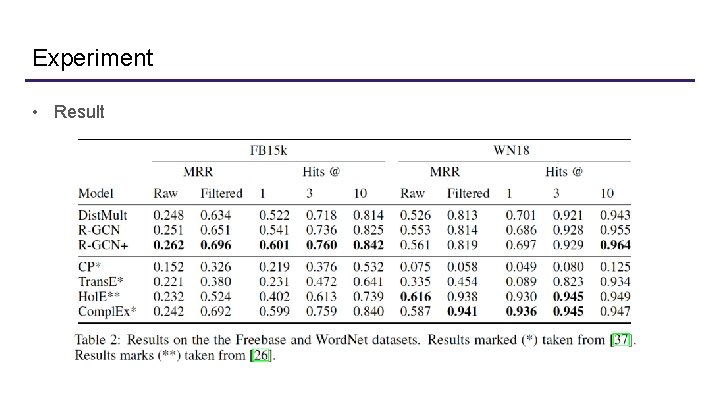


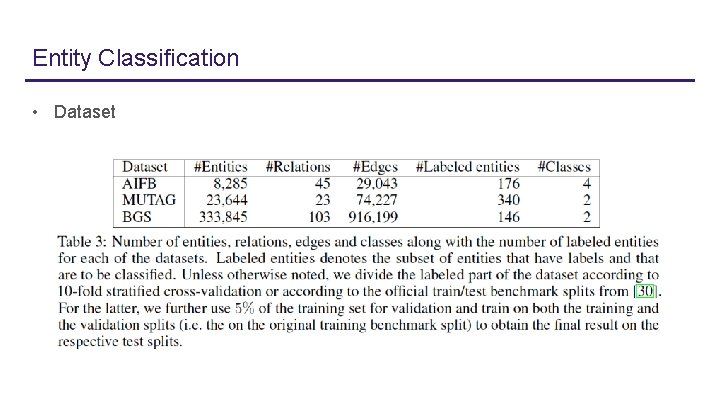

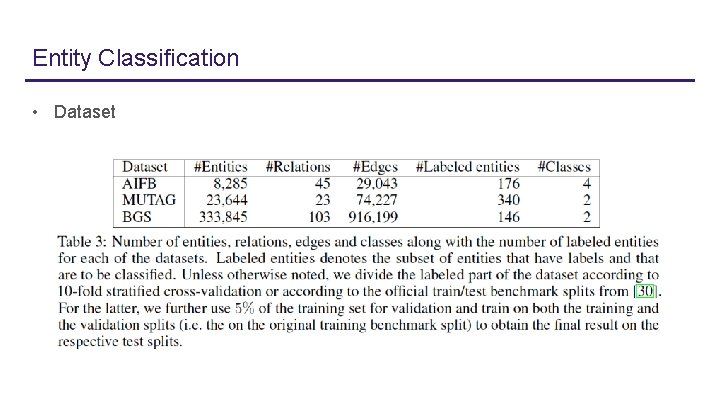

Entity Classification • Dataset

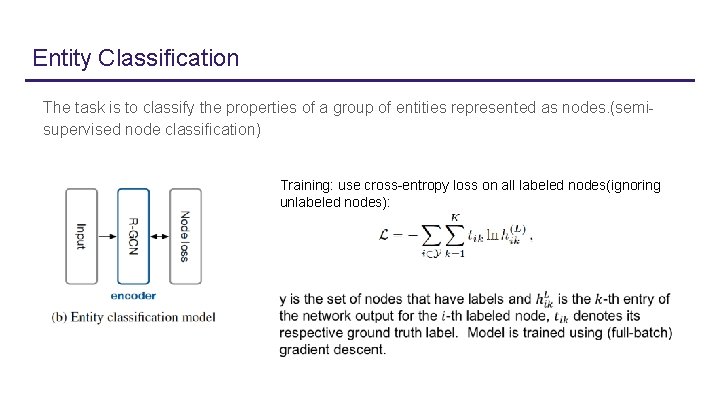

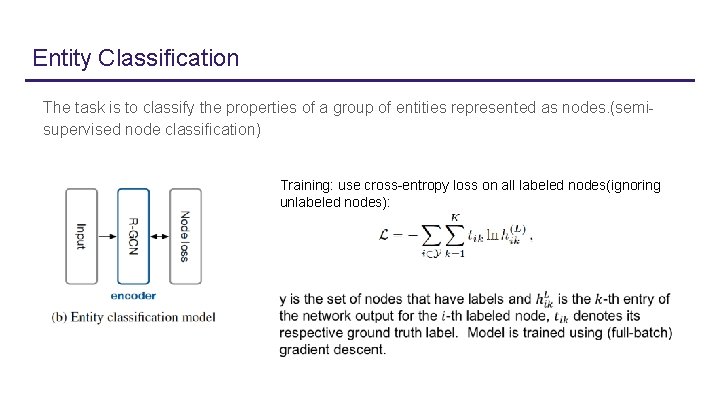

Entity Classification
The task is to classify the properties of a set of entities represented as nodes (semi-supervised node classification).
Training: a cross-entropy loss is computed over all labeled nodes (unlabeled nodes are ignored):
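Written out, with $\mathcal{Y}$ the set of labeled node indices, $K$ the number of classes, $t_{ik}$ the one-hot ground-truth label, and $h_{ik}^{(L)}$ the $k$-th entry of the softmax output of the last R-GCN layer for node $i$, the loss from the paper is:

$$
\mathcal{L} = -\sum_{i \in \mathcal{Y}} \sum_{k=1}^{K} t_{ik} \ln h_{ik}^{(L)}
$$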

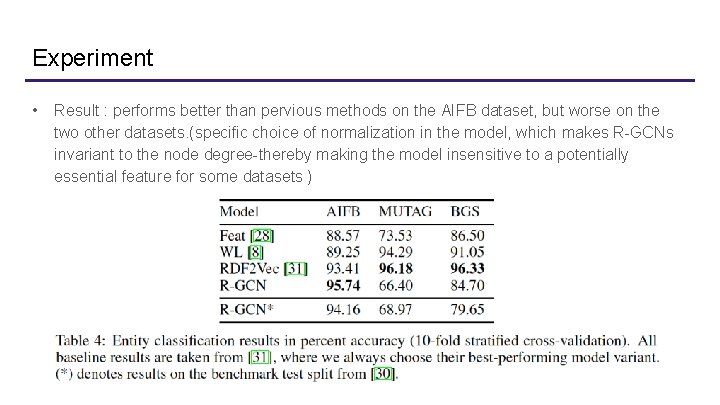

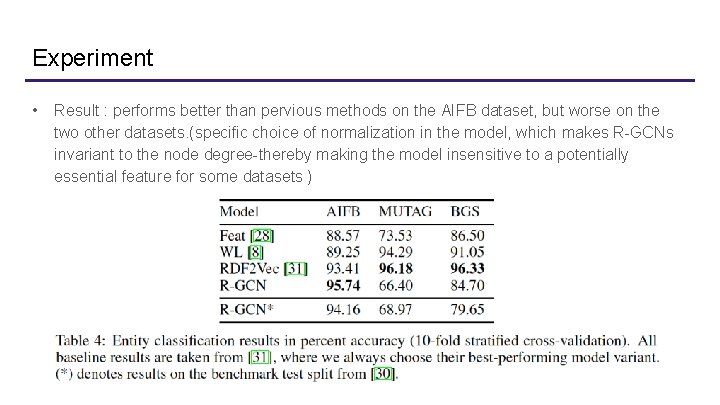
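The masked cross-entropy described above can be sketched as follows. This is a minimal illustration, assuming the last-layer outputs are given as raw logits per node and the labels as a dict from node index to class index. The function names are hypothetical. Because the ground truth is one-hot, only the log-probability of the true class survives the inner sum.

```python
import math


def softmax(z):
    """Numerically stable softmax of a list of logits."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def entity_classification_loss(logits, labels):
    """L = -sum_{i in Y} sum_k t_ik ln h_ik, summed over labeled nodes only.

    logits: last-layer outputs, one list per node (before the softmax).
    labels: dict node index -> class index; nodes absent from it are
    unlabeled and contribute nothing to the loss.
    """
    loss = 0.0
    for i, k in labels.items():
        h_i = softmax(logits[i])      # per-node softmax output h_i^(L)
        loss -= math.log(h_i[k])      # one-hot t_ik keeps only the true class
    return loss


# Usage with made-up outputs for 3 nodes; node 1 is unlabeled.
logits = [[2.0, 0.0], [0.0, 0.0], [0.0, 3.0]]
labels = {0: 0, 2: 1}
print(entity_classification_loss(logits, labels))
```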

Experiment • Result
• R-GCN performs better than previous methods on the AIFB dataset, but worse on two other datasets.
• A likely explanation is the specific choice of normalization in the model, which makes R-GCNs invariant to node degree and thereby insensitive to a feature that may be essential for some datasets.
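The degree-invariance explanation can be illustrated with a toy comparison. This sketch assumes $c_{i,r} = |\mathcal{N}_i^r|$ (so the normalized sum becomes a mean) and a hypothetical case where every neighbour sends the same message. It is not a reproduction of the paper's experiments. Under mean aggregation a node with 2 neighbours and a node with 10 neighbours receive identical input, while an unnormalized sum still reflects the difference in degree.

```python
def mean_aggregate(neighbour_msgs):
    """Normalised sum with c_{i,r} = |N_i^r|, i.e. the elementwise mean."""
    n = len(neighbour_msgs)
    return [sum(col) / n for col in zip(*neighbour_msgs)]


def sum_aggregate(neighbour_msgs):
    """Unnormalised sum, which grows with the number of neighbours."""
    return [sum(col) for col in zip(*neighbour_msgs)]


low_degree = [[1.0, 2.0]] * 2     # 2 identical neighbour messages
high_degree = [[1.0, 2.0]] * 10   # 10 identical neighbour messages

print(mean_aggregate(low_degree), mean_aggregate(high_degree))  # [1.0, 2.0] [1.0, 2.0]
print(sum_aggregate(low_degree), sum_aggregate(high_degree))    # [2.0, 4.0] [10.0, 20.0]
```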

Notes
• Integrate entity features into R-GCNs, which would benefit both link prediction and entity classification.
• Path features could be incorporated into R-GCNs for the link prediction task.
• To better understand how basis transformations are used to represent knowledge base relations, a more thorough analysis of the learned relation embeddings would be interesting.
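The basis transformations in the last note refer to the paper's basis-decomposition regularization, in which each relation weight is a combination of $B$ shared basis matrices, $W_r^{(l)} = \sum_{b=1}^{B} a_{rb}^{(l)} V_b^{(l)}$, and only the coefficients $a_{rb}^{(l)}$ are relation-specific. The sketch below is a minimal pure-Python illustration with made-up numbers; the function name `basis_decomposition` is hypothetical.

```python
def basis_decomposition(coeffs, bases):
    """Build W_r = sum_b a_rb * V_b for every relation r.

    coeffs: one coefficient vector [a_r1, ..., a_rB] per relation.
    bases: B shared basis matrices V_b, all of the same shape.
    """
    rows, cols = len(bases[0]), len(bases[0][0])
    W = []
    for a_r in coeffs:                     # coefficients a_{rb} of relation r
        W_r = [[sum(a * V[i][j] for a, V in zip(a_r, bases)) for j in range(cols)]
               for i in range(rows)]
        W.append(W_r)
    return W


# Usage: 2 relations sharing B = 2 bases of size 2x2.
bases = [[[1.0, 0.0], [0.0, 0.0]],
         [[0.0, 0.0], [0.0, 1.0]]]
coeffs = [[2.0, 3.0],     # a_{0b}
          [1.0, -1.0]]    # a_{1b}
print(basis_decomposition(coeffs, bases))
# [[[2.0, 0.0], [0.0, 3.0]], [[1.0, 0.0], [0.0, -1.0]]]
```

With $|\mathcal{R}|$ relations and $d \times d$ weights, a layer stores $B \cdot d^2 + |\mathcal{R}| \cdot B$ numbers instead of $|\mathcal{R}| \cdot d^2$, which is what makes the decomposition useful for knowledge bases with many relation types.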

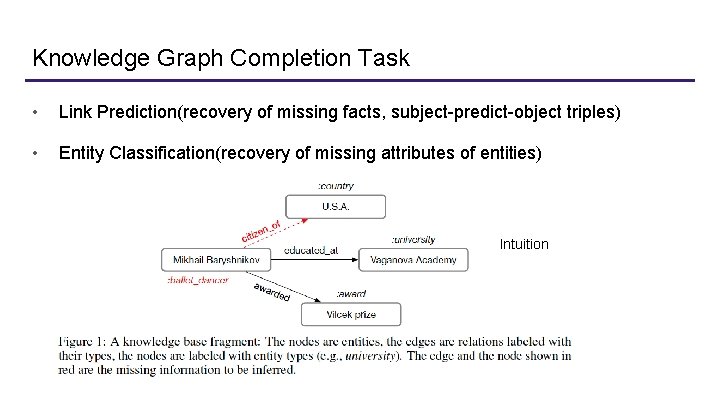

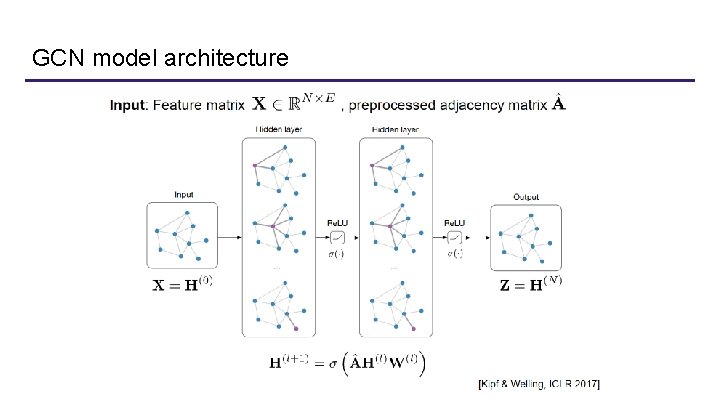

GCN model architecture