Trigger and Data Acquisition Summer Student Lectures 16

- Slides: 93

Trigger and Data Acquisition. Summer Student Lectures, 16, 17 & 19 July 2001. Clara Gaspar, CERN/EP-LBC

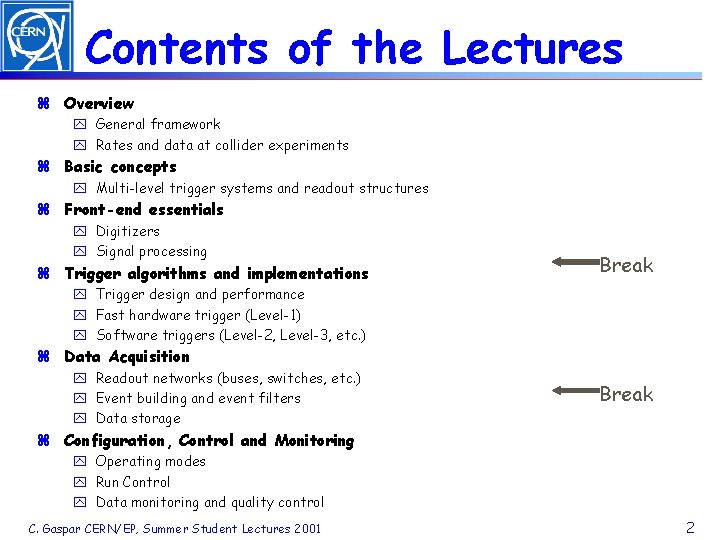

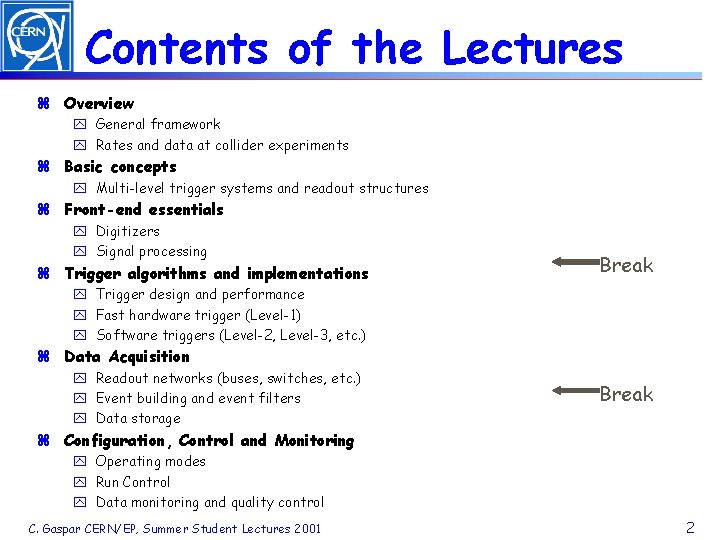

Contents of the Lectures

- Overview
  - General framework
  - Rates and data at collider experiments
- Basic concepts
  - Multi-level trigger systems and readout structures
- Front-end essentials
  - Digitizers
  - Signal processing
- (Break)
- Trigger algorithms and implementations
  - Trigger design and performance
  - Fast hardware trigger (Level-1)
  - Software triggers (Level-2, Level-3, etc.)
- Data Acquisition
  - Readout networks (buses, switches, etc.)
  - Event building and event filters
  - Data storage
- (Break)
- Configuration, Control and Monitoring
  - Operating modes
  - Run Control
  - Data monitoring and quality control

C. Gaspar CERN/EP, Summer Student Lectures 2001

Foreword

- This course presents the experience acquired through the work of many people and many teams who have been developing and building trigger and data acquisition systems for high energy physics experiments for many years.
- The subject of this course is not an exact science. The trigger and data acquisition system of each experiment is somehow unique (different experiments have different requirements, and even similar requirements may be fulfilled with different solutions).
- This is not a review of existing systems. References to existing or proposed systems are used as examples.
- To prepare this course I have taken material and presentation ideas from my predecessors in previous years: P. Mato and Ph. Charpentier.

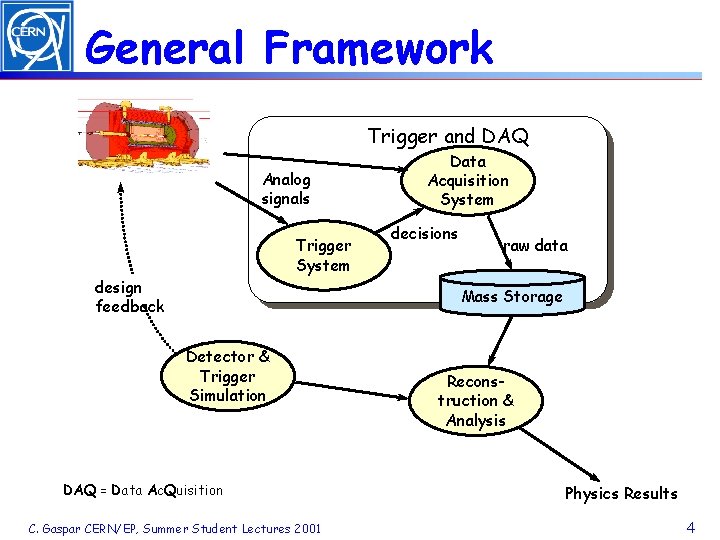

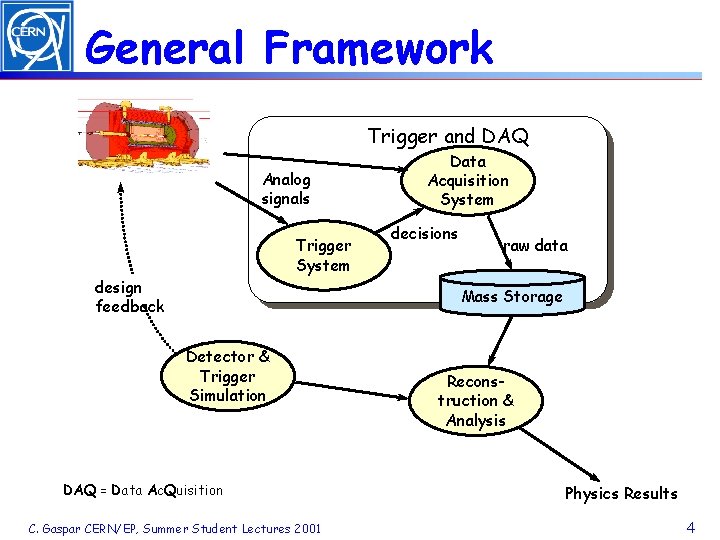

General Framework

Diagram labels: Trigger and DAQ, analog signals, Trigger System, design feedback, Data Acquisition System, decisions, raw data, Mass Storage, Detector & Trigger Simulation, Reconstruction & Analysis, Physics Results.

DAQ = Data Acquisition

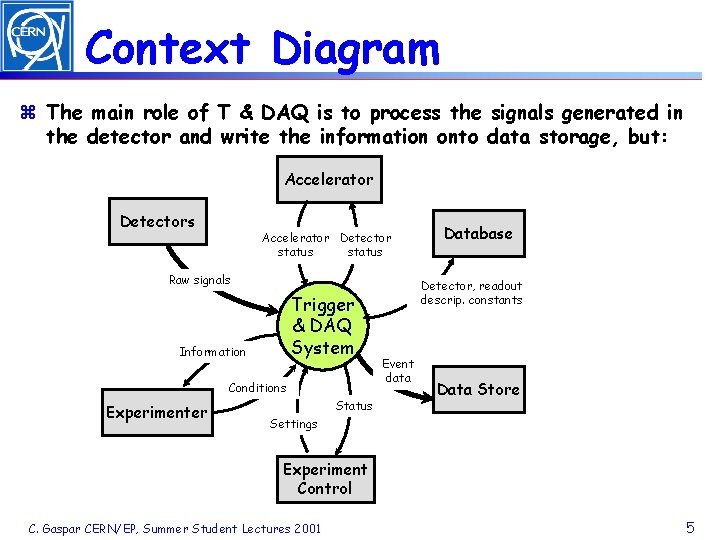

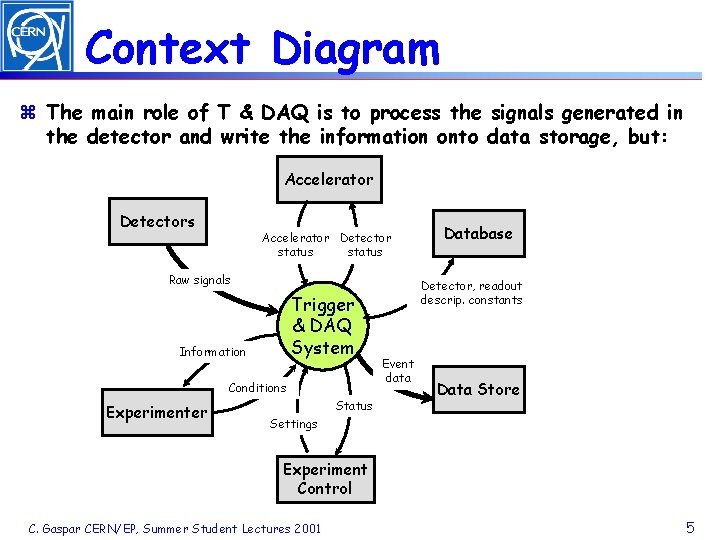

Context Diagram

- The main role of T & DAQ is to process the signals generated in the detector and write the information onto data storage, but:

Diagram labels: Accelerator, Detectors, accelerator status, detector status, raw signals, Trigger & DAQ System, information, conditions, Experimenter, status, Database (detector and readout description, constants), event data, Data Store, settings, Experiment Control.

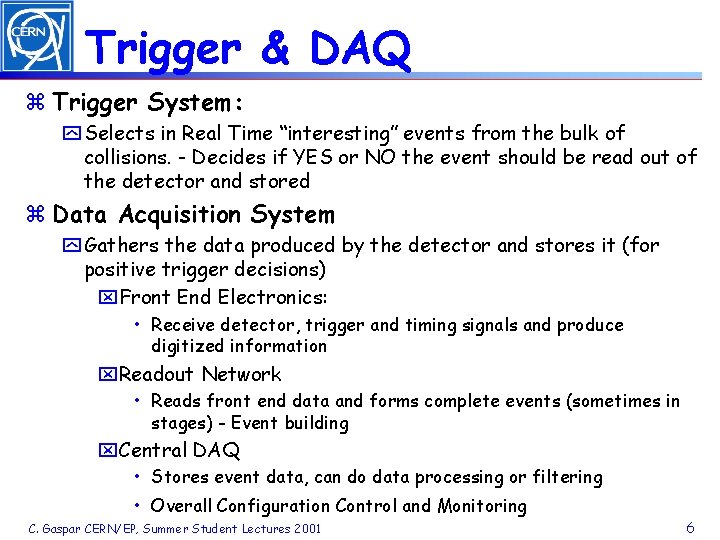

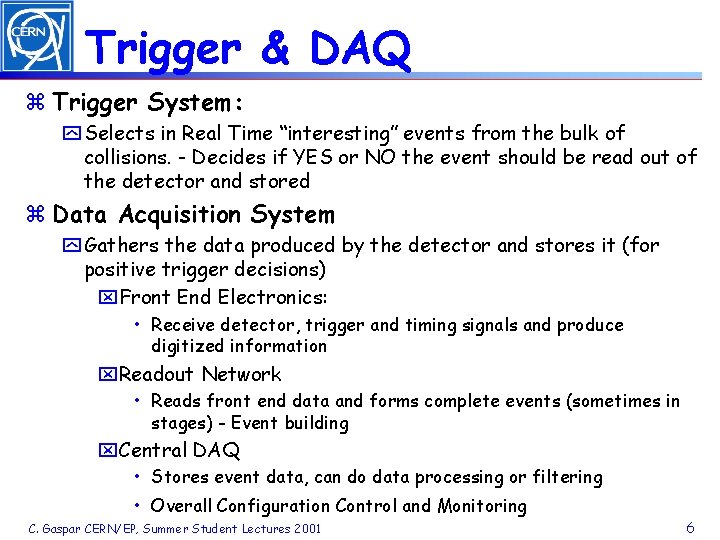

Trigger & DAQ

- Trigger System:
  - Selects "interesting" events in real time from the bulk of collisions: decides YES or NO whether the event should be read out of the detector and stored.
- Data Acquisition System:
  - Gathers the data produced by the detector and stores it (for positive trigger decisions).
  - Front-End Electronics: receive detector, trigger and timing signals and produce digitized information.
  - Readout Network: reads front-end data and forms complete events, sometimes in stages (event building).
  - Central DAQ: stores event data, can do data processing or filtering; overall configuration, control and monitoring.

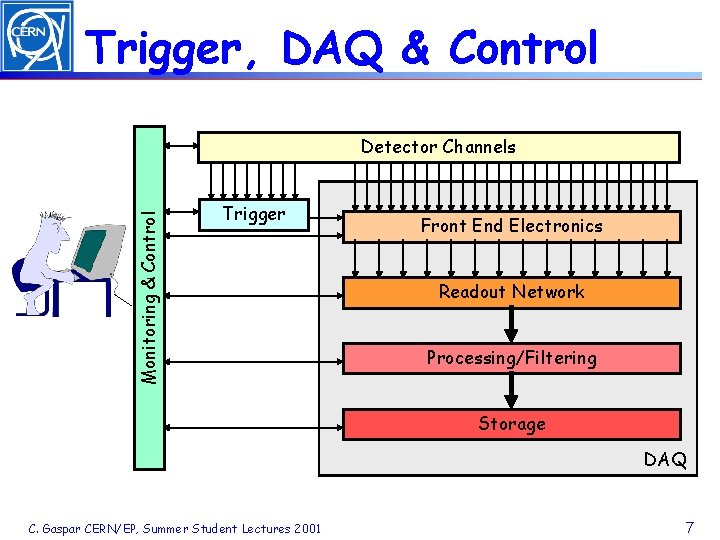

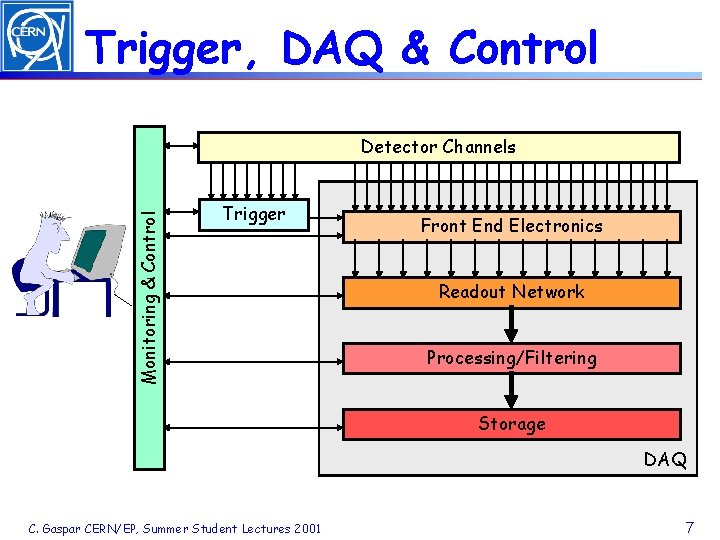

Trigger, DAQ & Control

Diagram labels: Detector Channels, Trigger, Front End Electronics, Readout Network, Processing/Filtering, Storage (together the DAQ), Monitoring & Control.

LEP & LHC in Numbers

Basic Concepts Clara Gaspar CERN/EP-LBC

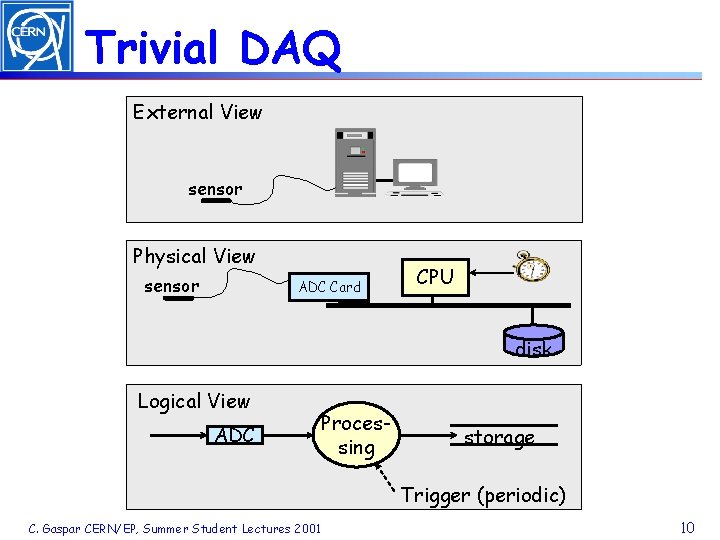

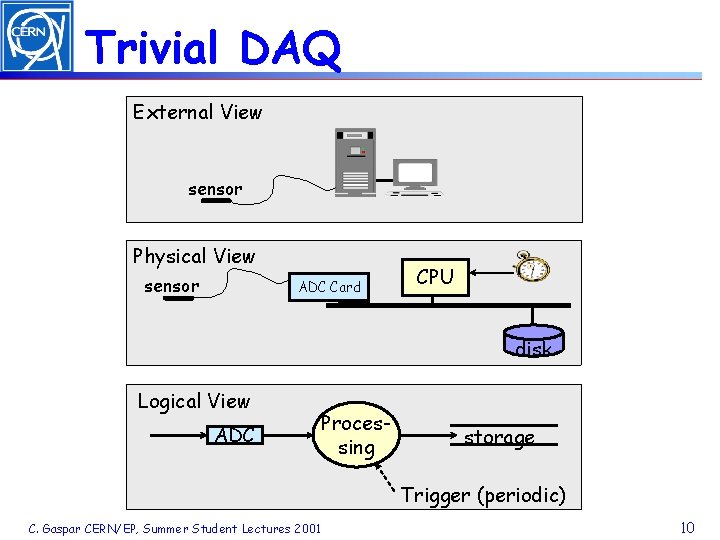

Trivial DAQ

- External view: sensor
- Physical view: sensor, ADC card, CPU, disk
- Logical view: ADC, processing, storage, trigger (periodic)

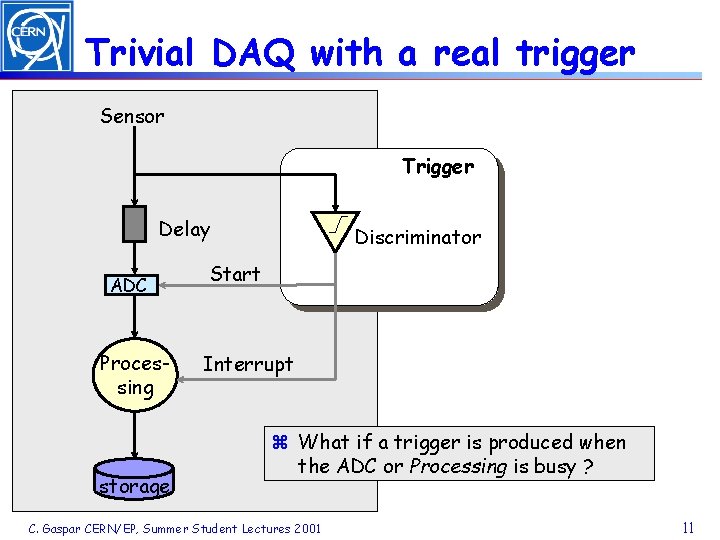

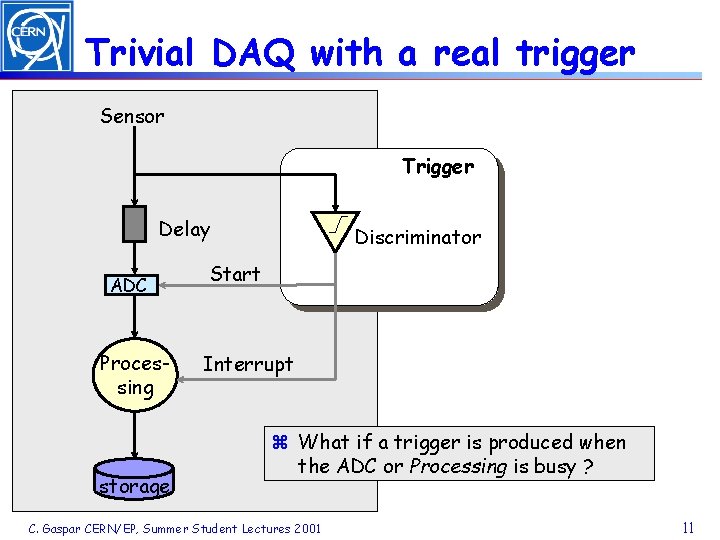

Trivial DAQ with a real trigger

Diagram labels: Sensor, Trigger, Discriminator, Delay, ADC (Start), Processing (Interrupt), storage.

- What if a trigger is produced when the ADC or the processing is busy?

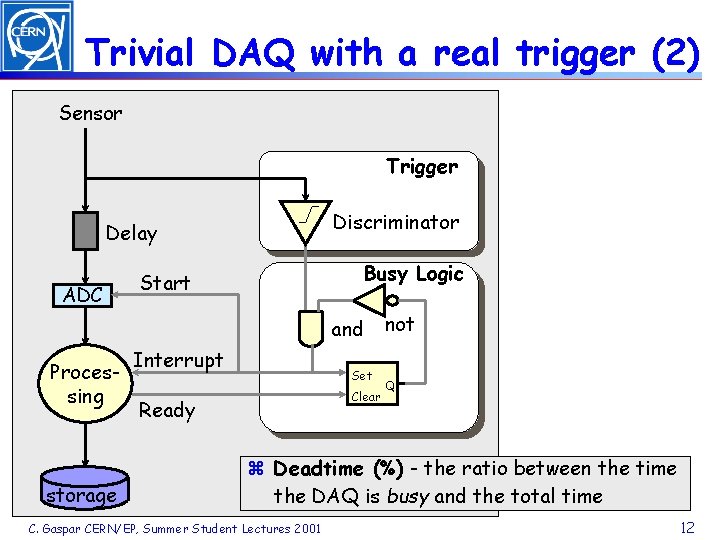

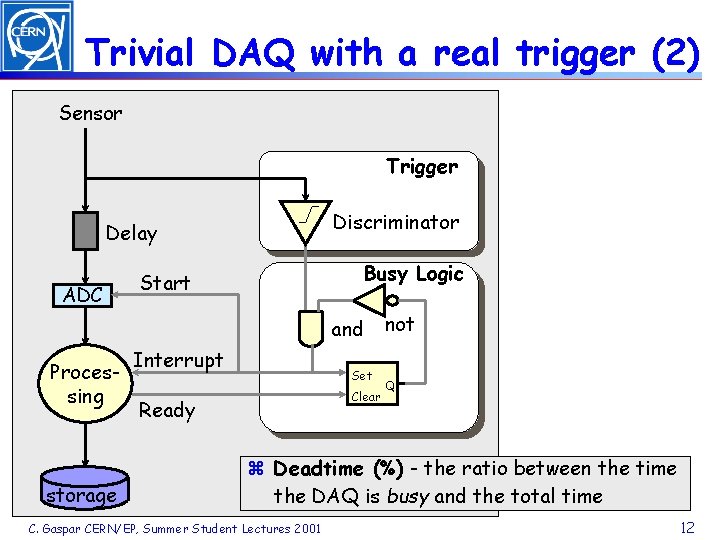

Trivial DAQ with a real trigger (2)

Diagram labels: Sensor, Trigger, Discriminator, Delay, ADC (Start), Busy Logic (AND, NOT, Set, Clear, Q), Processing (Interrupt, Ready), storage.

- Deadtime (%): the ratio between the time the DAQ is busy and the total time.
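The deadtime definition above can be turned into numbers with a small sketch. It assumes a non-paralyzable system (triggers arriving while busy are simply lost), Poisson triggers and a fixed busy time per accepted trigger; none of these assumptions, nor the function names, come from the slides.

```python
def accepted_rate(trigger_rate_hz: float, busy_time_s: float) -> float:
    """Rate of triggers actually accepted (non-paralyzable model, assumed)."""
    return trigger_rate_hz / (1.0 + trigger_rate_hz * busy_time_s)


def deadtime_fraction(trigger_rate_hz: float, busy_time_s: float) -> float:
    """Fraction of the total time during which the DAQ is busy."""
    # Each accepted trigger keeps the system busy for busy_time_s.
    return accepted_rate(trigger_rate_hz, busy_time_s) * busy_time_s


if __name__ == "__main__":
    # 1 kHz of triggers with 1 ms of conversion + processing per event.
    print(deadtime_fraction(1000.0, 1e-3))
    print(accepted_rate(1000.0, 1e-3))
```

With these illustrative inputs the system is busy half of the time, which is why the following slides introduce buffering.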

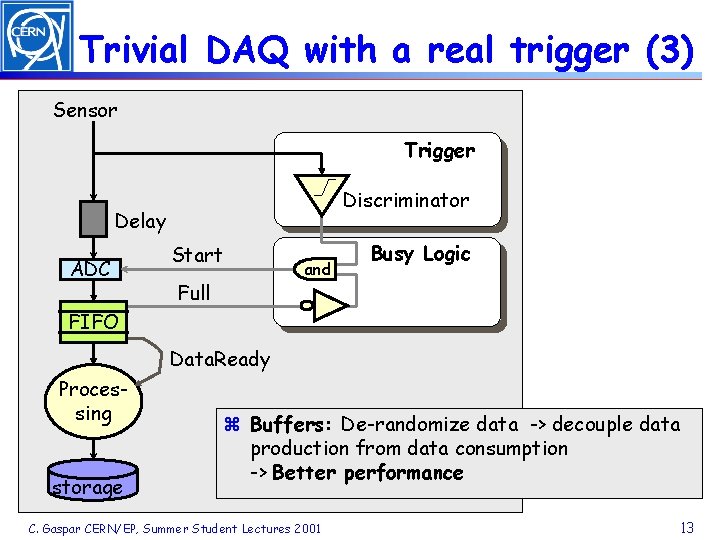

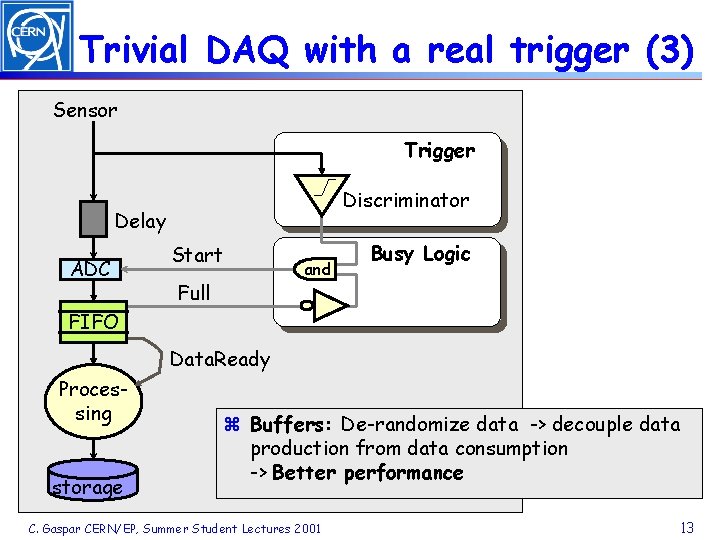

Trivial DAQ with a real trigger (3)

Diagram labels: Sensor, Trigger, Discriminator, Delay, ADC (Start), Busy Logic (AND), FIFO (Full, DataReady), Processing, storage.

- Buffers de-randomize the data: they decouple data production from data consumption, which gives better performance.

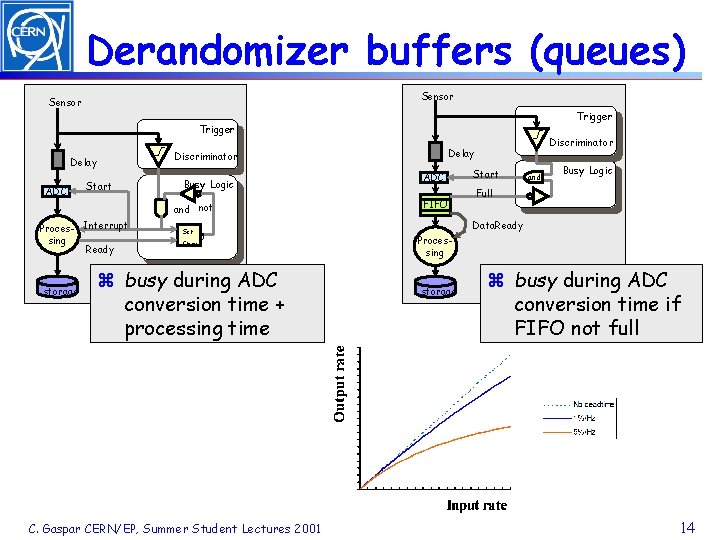

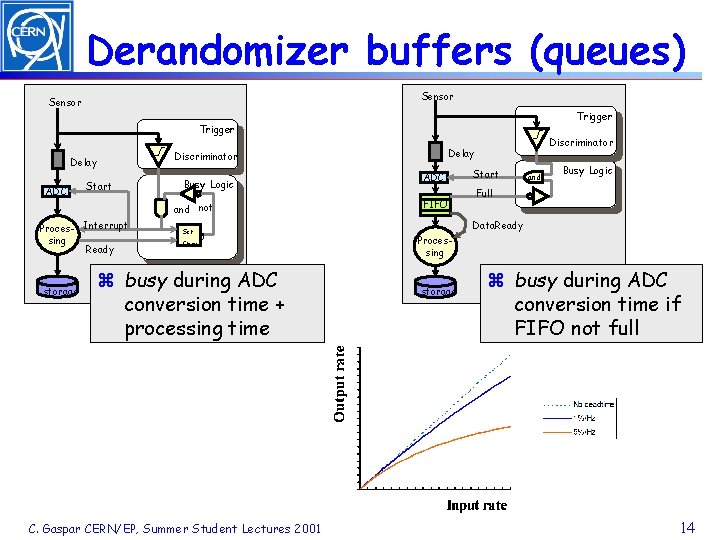

Derandomizer buffers (queues)

Two diagrams of the same chain (Sensor, Trigger, Discriminator, Delay, ADC, Busy Logic, Processing, storage), without and with a FIFO between ADC and processing.

- Without FIFO: busy during ADC conversion time + processing time.
- With FIFO: busy during ADC conversion time only, if the FIFO is not full.

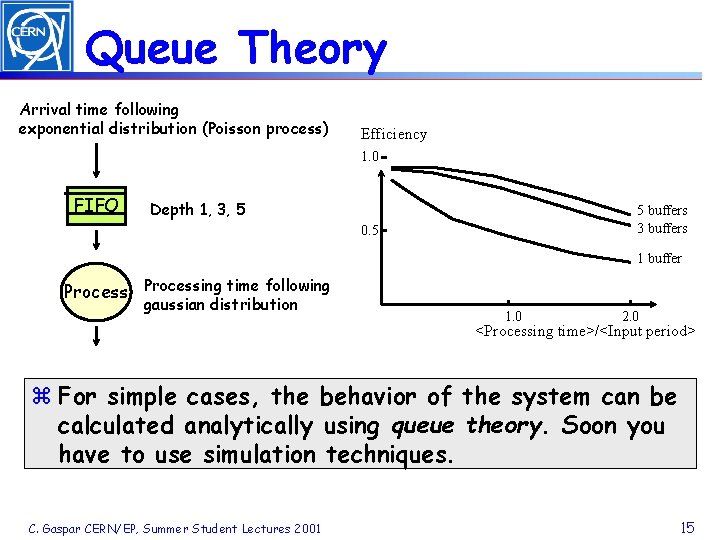

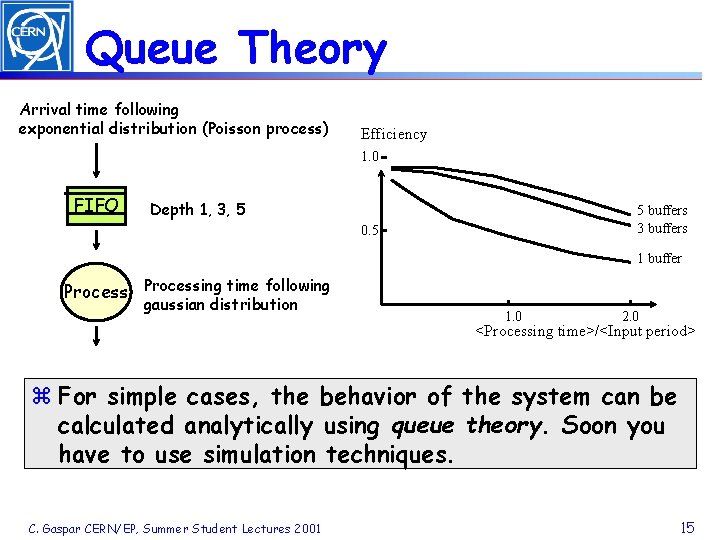

Queue Theory

Plot: efficiency versus <processing time>/<input period> for FIFO depths of 1, 3 and 5 buffers. Arrival times follow an exponential distribution (Poisson process); processing times follow a gaussian distribution.

- For simple cases, the behavior of the system can be calculated analytically using queue theory. Beyond that, you soon have to use simulation techniques.
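As an illustration of the simulation approach mentioned above, here is a minimal Monte Carlo sketch of a derandomizer FIFO. The gaussian width (10% of the mean), the convention that the FIFO depth includes the event being processed, and all names are assumptions made for this example.

```python
import random
from collections import deque


def fifo_efficiency(depth, load, n_events=20000, seed=1):
    """Fraction of triggers accepted by a FIFO with `depth` slots.

    `load` is <processing time>/<input period>; the mean input period is 1.
    The slot count includes the event currently being processed (assumed).
    """
    arrivals = random.Random(seed)      # exponential inter-arrival times
    service = random.Random(seed + 1)   # gaussian processing times
    now = 0.0
    last_finish = 0.0
    in_buffer = deque()                 # finish times of events not yet done
    accepted = 0
    for _ in range(n_events):
        now += arrivals.expovariate(1.0)
        while in_buffer and in_buffer[0] <= now:
            in_buffer.popleft()         # processing finished, slot is free
        if len(in_buffer) < depth:      # otherwise the trigger is lost
            duration = max(0.0, service.gauss(load, 0.1 * load))
            last_finish = max(now, last_finish) + duration
            in_buffer.append(last_finish)
            accepted += 1
    return accepted / n_events


if __name__ == "__main__":
    for depth in (1, 3, 5):
        print(depth, round(fifo_efficiency(depth, load=0.5), 3))
```

Running it for depths 1, 3 and 5 shows the efficiency rising with buffer depth at fixed load, the qualitative behavior of the curves on the slide.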

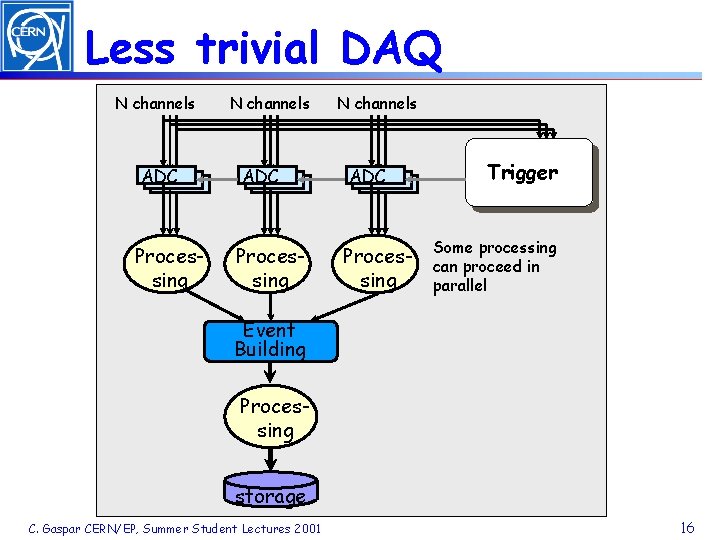

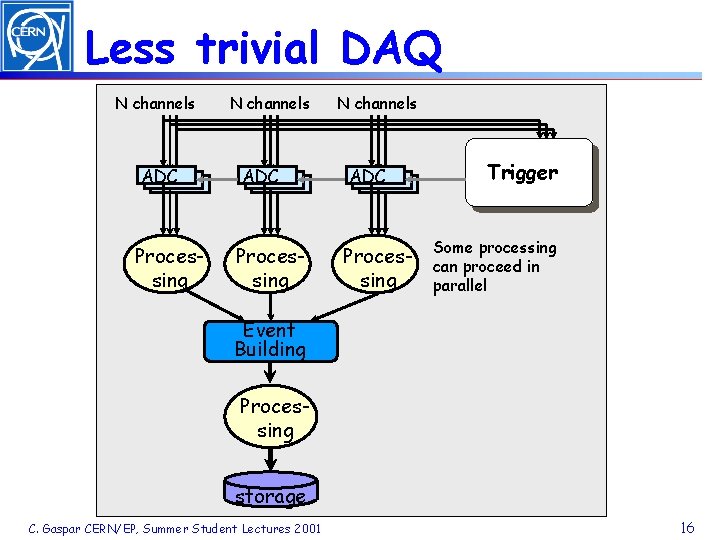

Less trivial DAQ

Diagram labels: N channels (each with ADC and Processing), Trigger, Event Building, Processing, storage.

- Some processing can proceed in parallel.

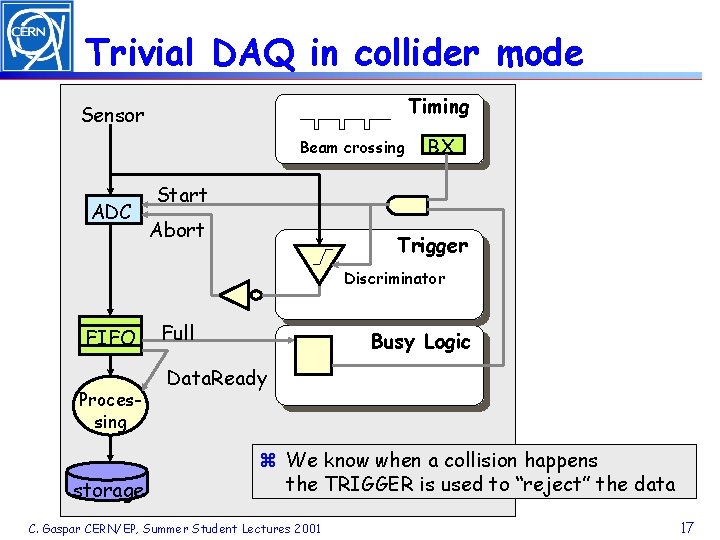

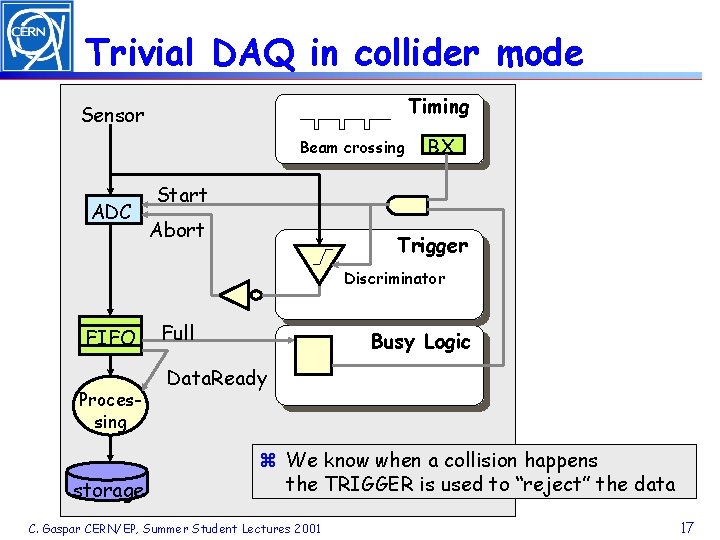

Trivial DAQ in collider mode

Diagram labels: Timing, Beam crossing (BX), Sensor, ADC (Start, Abort), Trigger, Discriminator, FIFO (Full, DataReady), Busy Logic, Processing, storage.

- We know when a collision happens; the TRIGGER is used to "reject" the data.

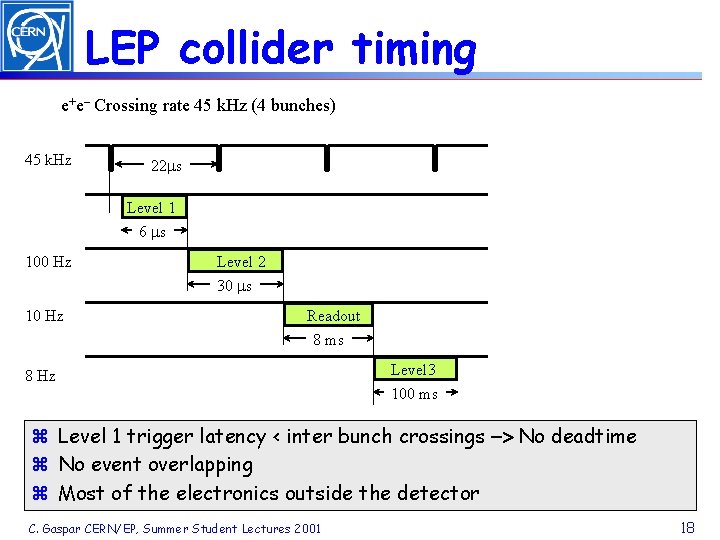

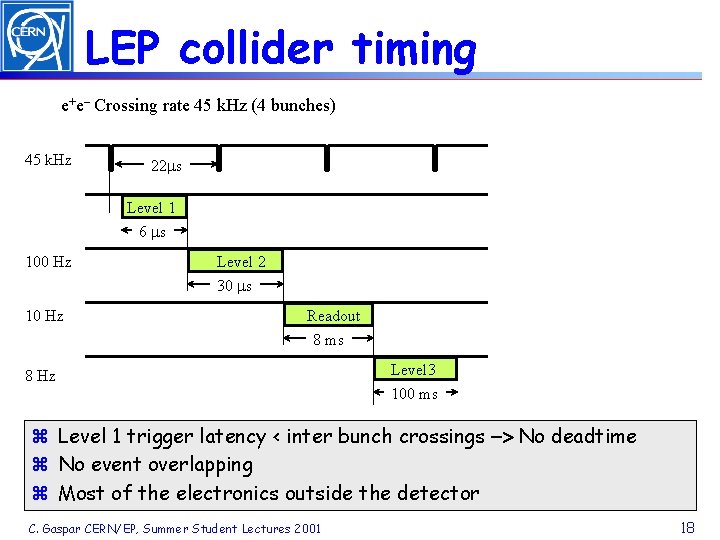

LEP collider timing

- e+e– crossing rate: 45 kHz (4 bunches), i.e. 22 µs between crossings
- Level 1: 6 µs, output rate 100 Hz
- Level 2: 30 µs, output rate 10 Hz
- Readout: 8 ms
- Level 3: 100 ms, output rate 8 Hz

- Level-1 trigger latency is shorter than the interval between bunch crossings, so there is no deadtime
- No event overlapping
- Most of the electronics is outside the detector

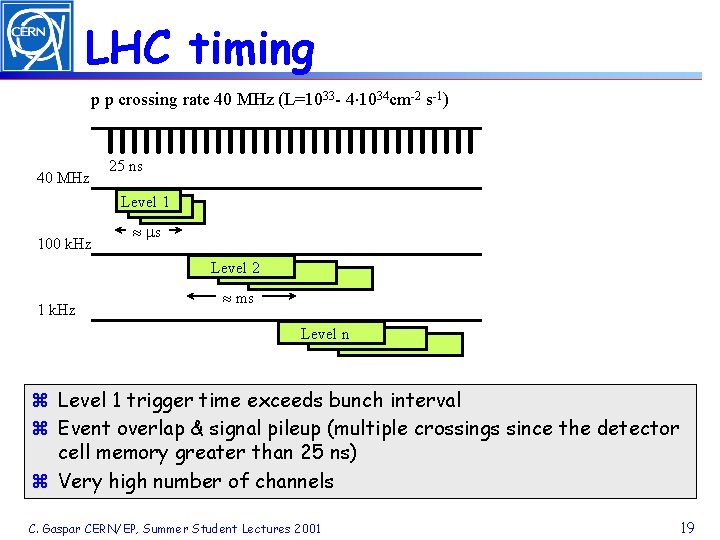

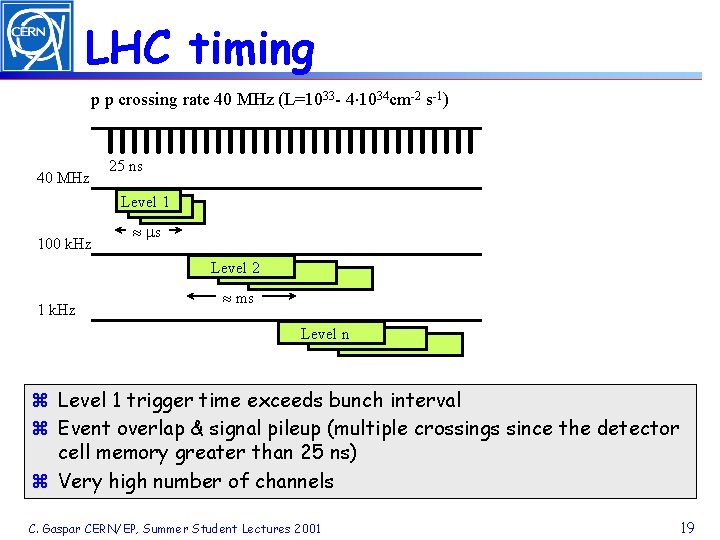

LHC timing

- pp crossing rate: 40 MHz ($L = 10^{33}$ to $4 \times 10^{34}$ cm$^{-2}$ s$^{-1}$), i.e. 25 ns between crossings
- Level 1: ≈ µs, output rate 100 kHz
- Level 2: ≈ ms, output rate 1 kHz
- Level n

- Level-1 trigger time exceeds the bunch interval
- Event overlap and signal pileup (multiple crossings, since the detector cell memory is greater than 25 ns)
- Very high number of channels

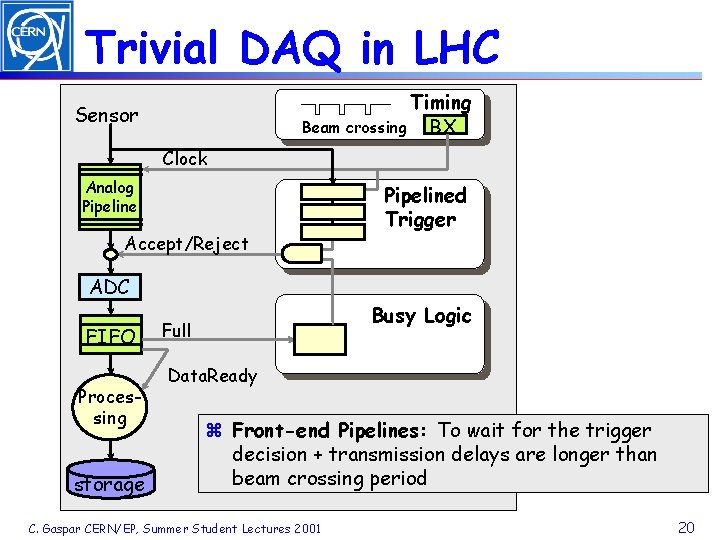

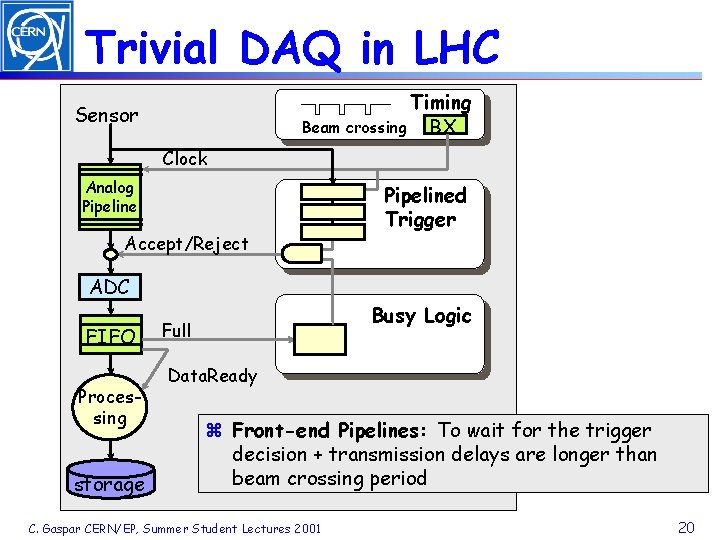

Trivial DAQ in LHC

Diagram labels: Timing, Beam crossing (BX), Clock, Sensor, Analog Pipeline, Pipelined Trigger (Accept/Reject), ADC, FIFO (Full, DataReady), Busy Logic, Processing, storage.

- Front-end pipelines: needed because the wait for the trigger decision plus the transmission delays is longer than the beam crossing period.
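The required pipeline length follows directly from the trigger latency and the bunch-crossing period. The sketch below assumes one pipeline cell per crossing; the function name and the example latencies are illustrative choices, not a specification of any experiment.

```python
def pipeline_depth(latency_ns: int, bx_period_ns: int = 25) -> int:
    """Number of pipeline cells needed to hold data for `latency_ns`.

    One cell is assumed per bunch crossing; integer arithmetic avoids
    floating-point rounding in the ceiling division.
    """
    return -(-latency_ns // bx_period_ns)


if __name__ == "__main__":
    print(pipeline_depth(2000))   # 2 us latency at 25 ns spacing
    print(pipeline_depth(4000))   # 4 us latency at 25 ns spacing
```

A latency of a few microseconds therefore implies on the order of a hundred cells per channel, multiplied by a very large number of channels.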

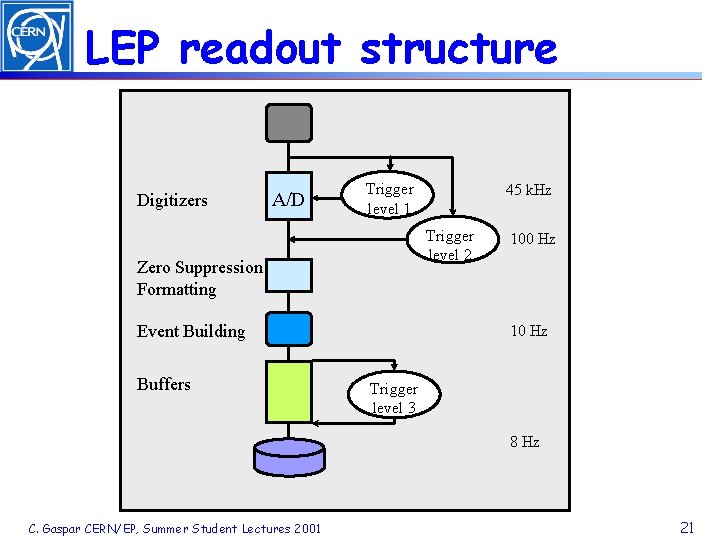

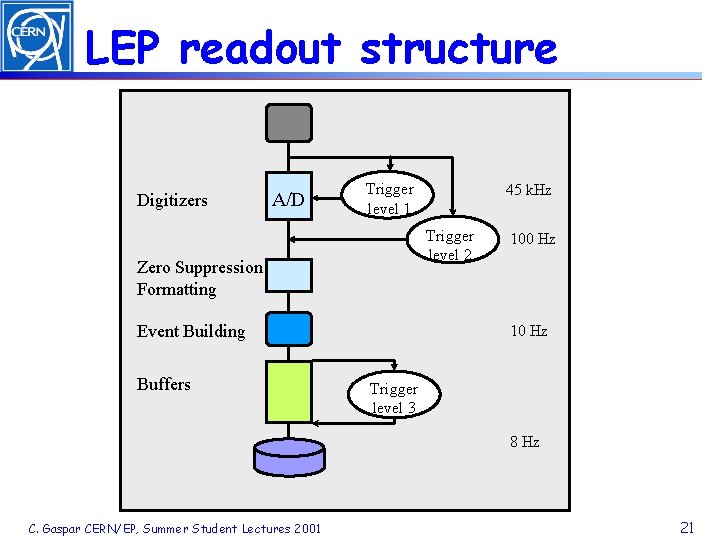

LEP readout structure

Diagram labels: Digitizers (A/D), Trigger level 1, Trigger level 2, Zero Suppression, Formatting, Event Building, Buffers, Trigger level 3. Rates along the chain: 45 kHz, 100 Hz, 10 Hz, 8 Hz.

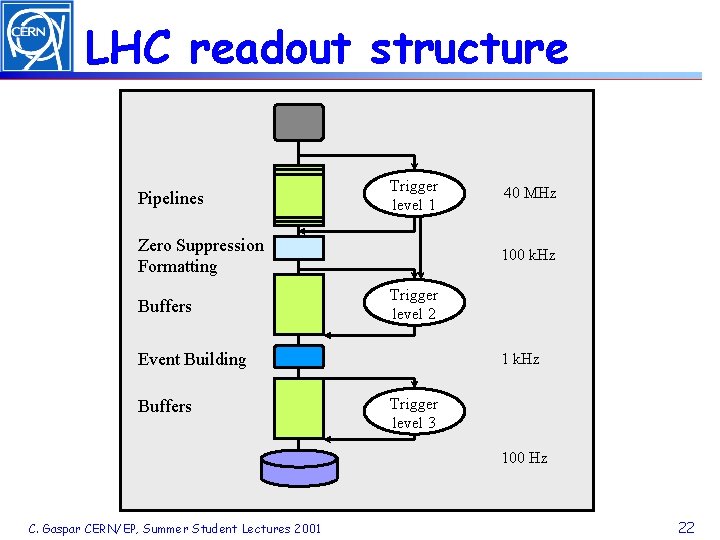

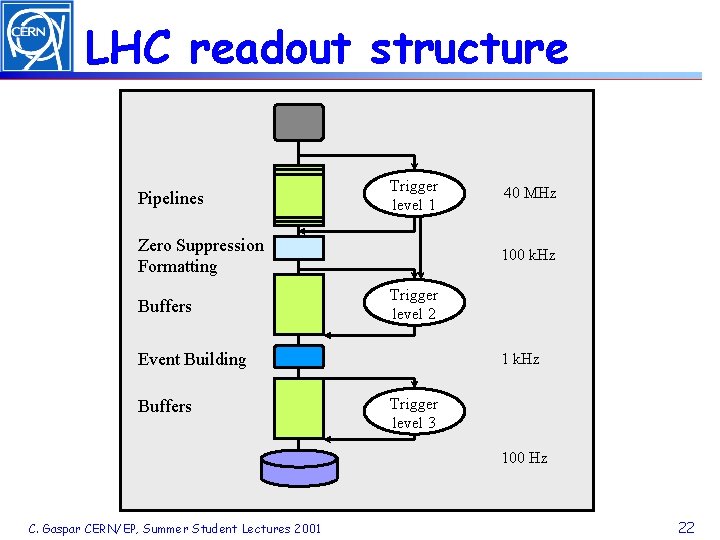

LHC readout structure

Diagram labels: Pipelines, Trigger level 1, Zero Suppression, Formatting, Buffers, Trigger level 2, Event Building, Buffers, Trigger level 3. Rates along the chain: 40 MHz, 100 kHz, 1 kHz, 100 Hz.

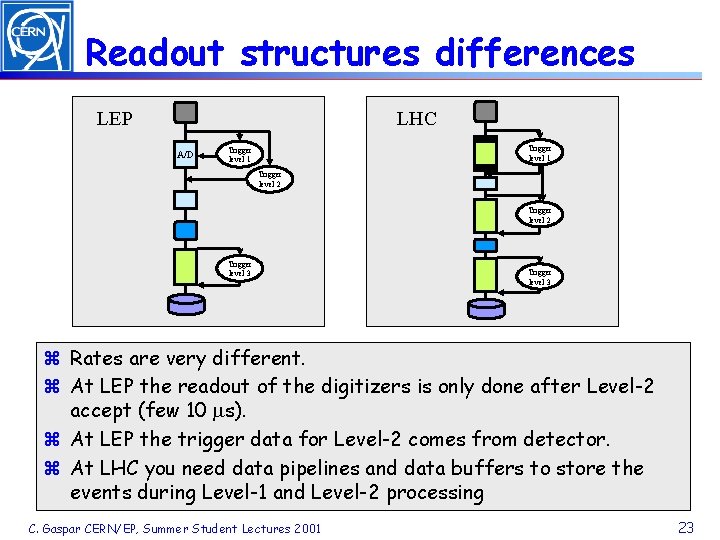

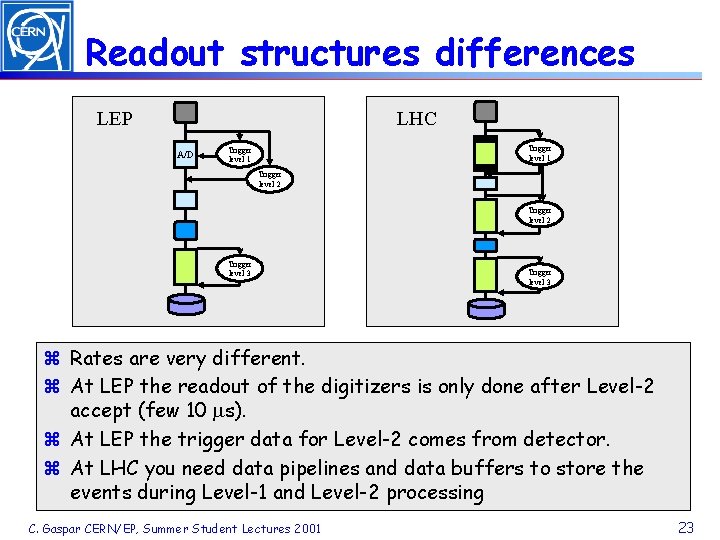

Readout structures differences

Diagram (LEP versus LHC): A/D, Trigger level 1, Trigger level 2, Trigger level 3.

- Rates are very different.
- At LEP the readout of the digitizers is only done after a Level-2 accept (a few tens of µs).
- At LEP the trigger data for Level-2 comes from the detector.
- At LHC you need data pipelines and data buffers to store the events during Level-1 and Level-2 processing.

Front-end essentials Clara Gaspar CERN/EP-LBC

Front-Ends

- Detector dependent (home made)
  - On detector: pre-amplification, discrimination, shaping amplification and multiplexing of a few channels
  - Transmission: long cables (50-100 m), electrical or fiber optics
  - In counting rooms: hundreds of FE crates for reception, A/D conversion and buffering

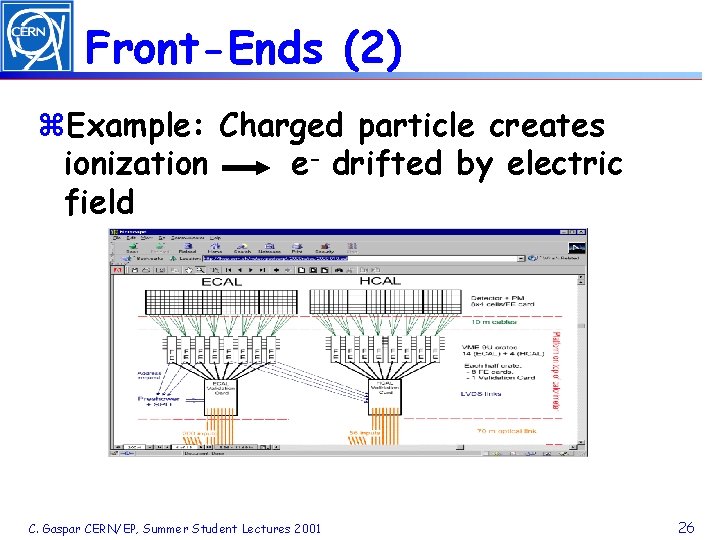

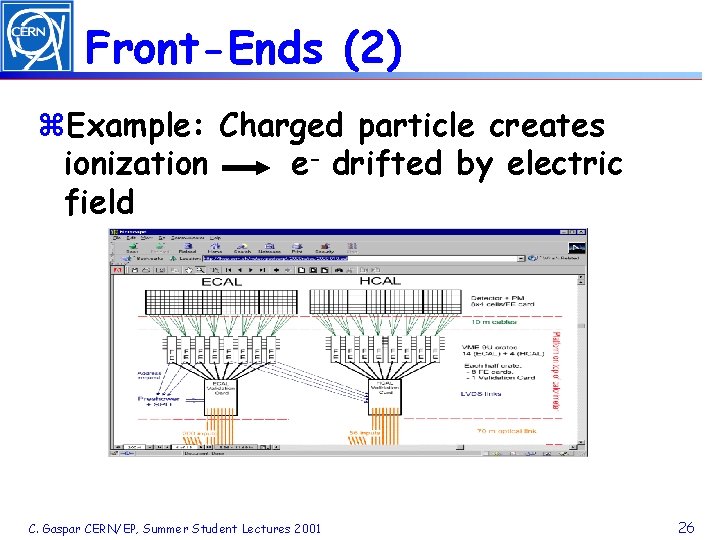

Front-Ends (2)

- Example: a charged particle creates ionization; the e- are drifted by the electric field.

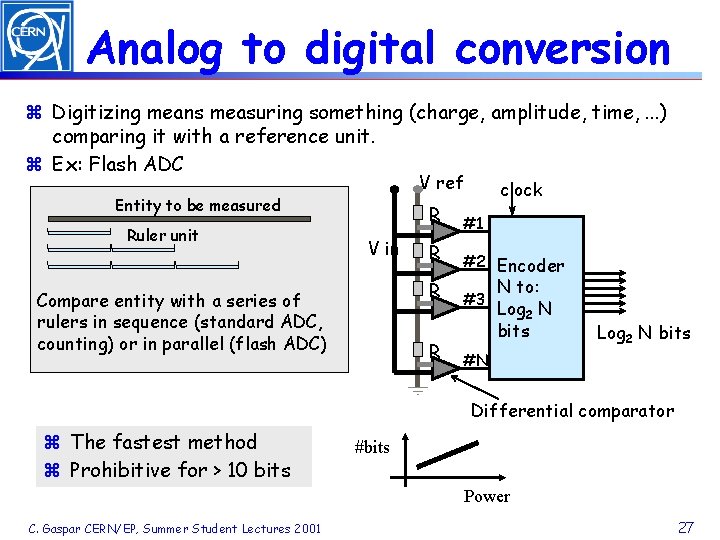

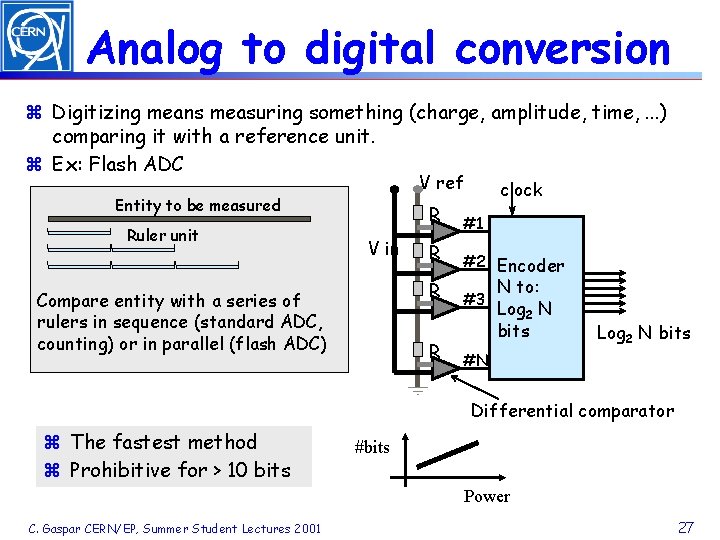

Analog to digital conversion

- Digitizing means measuring something (charge, amplitude, time, ...) by comparing it with a reference unit.
- Compare the entity to be measured with a series of rulers (ruler units), either in sequence (standard ADC, counting) or in parallel (flash ADC).
- Example: flash ADC
  - Diagram labels: V ref, V in, resistor chain (R), differential comparators #1 to #N, clock, encoder (N to $\log_2 N$ bits).
  - The fastest method
  - Prohibitive for > 10 bits (plot: power versus number of bits)
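The flash ADC principle (all comparisons made in parallel, then encoded) can be sketched in a few lines. This is an idealized model with an evenly spaced resistor ladder; the function names and the choice of 2^n - 1 comparators for n bits are assumptions of the example.

```python
def comparator_count(n_bits: int) -> int:
    """Comparators needed by an ideal flash ADC with n_bits of resolution."""
    return 2 ** n_bits - 1


def flash_adc(v_in: float, v_ref: float, n_bits: int) -> int:
    """Ideal flash conversion: compare v_in with every ladder tap at once."""
    n_levels = 2 ** n_bits
    # Reference voltages produced by the resistor chain.
    thresholds = [v_ref * k / n_levels for k in range(1, n_levels)]
    # Each comparator fires if the input is at or above its threshold
    # (a "thermometer code").
    thermometer = [v_in >= threshold for threshold in thresholds]
    # The encoder turns the thermometer code into a binary number.
    return sum(thermometer)


if __name__ == "__main__":
    print(flash_adc(0.5, 1.0, 3))
    print(comparator_count(10))
```

The comparator count doubles with every extra bit, which is one way to see why the slide calls the method prohibitive beyond about 10 bits.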

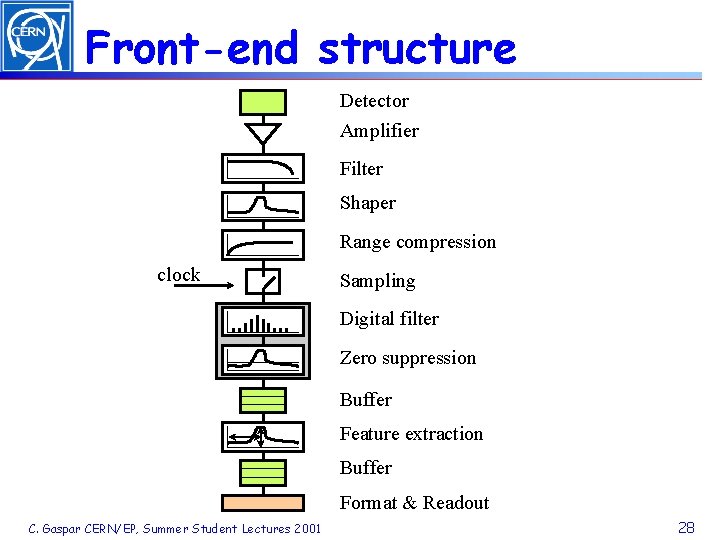

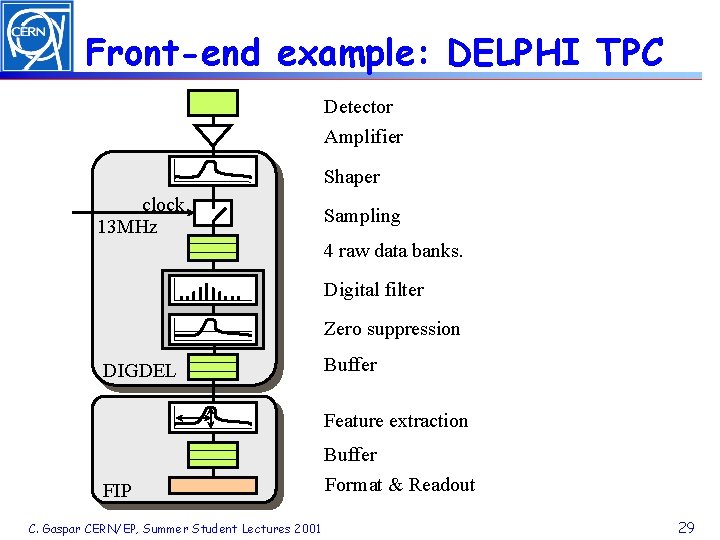

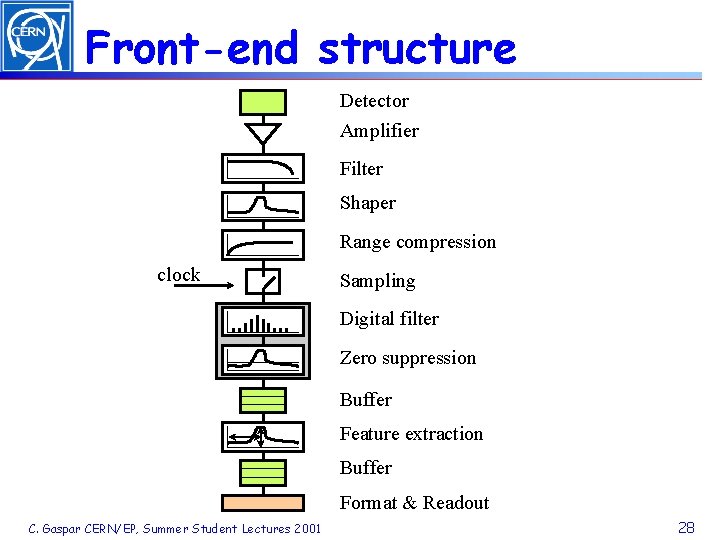

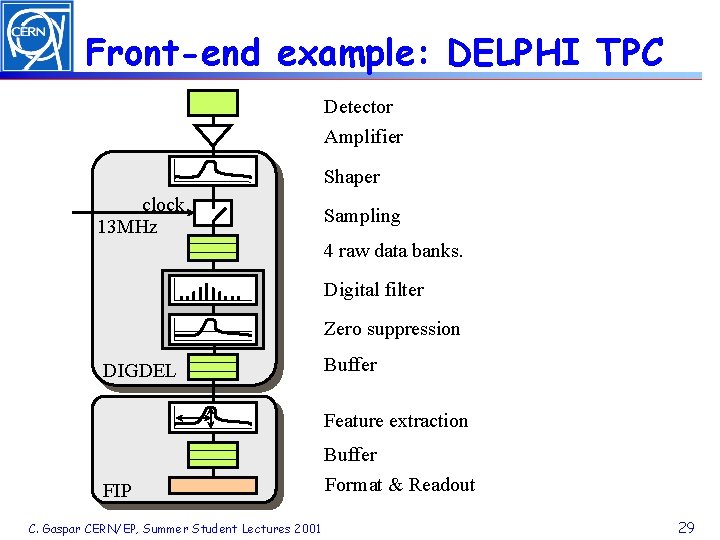

Front-end structure

Processing chain: Detector, Amplifier, Filter, Shaper, Range compression, Sampling (clock), Digital filter, Zero suppression, Buffer, Feature extraction, Buffer, Format & Readout.

Front-end example: DELPHI TPC

Diagram labels: Detector, Amplifier, Shaper, clock 13 MHz, Sampling, 4 raw data banks, Digital filter, Zero suppression, DIGDEL, Buffer, Feature extraction, FIP, Buffer, Format & Readout.

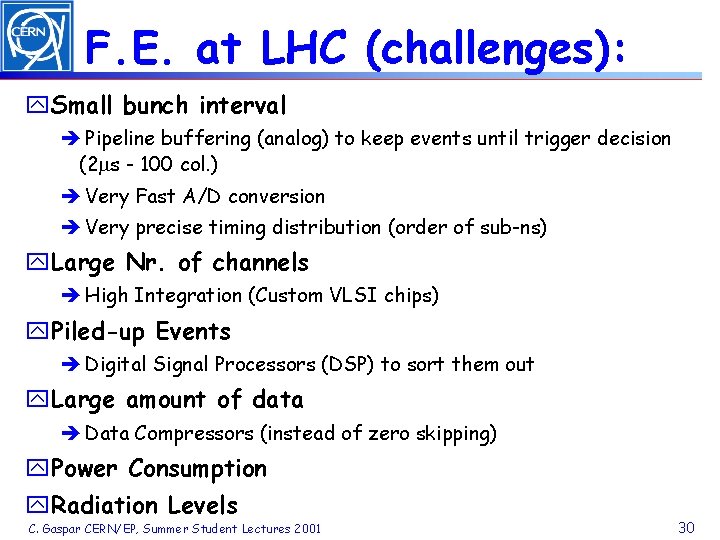

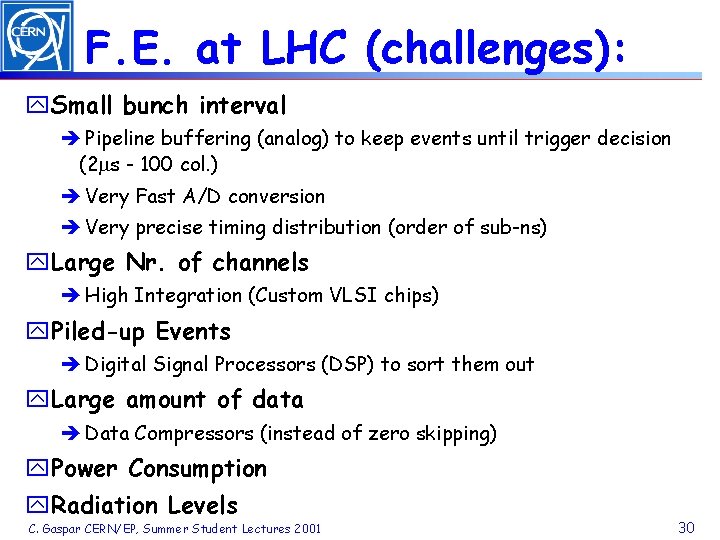

F.E. at LHC (challenges):

- Small bunch interval
  - Pipeline buffering (analog) to keep events until the trigger decision (2 µs, ~100 collisions)
  - Very fast A/D conversion
  - Very precise timing distribution (order of sub-ns)
- Large number of channels
  - High integration (custom VLSI chips)
- Piled-up events
  - Digital Signal Processors (DSP) to sort them out
- Large amount of data
  - Data compressors (instead of zero skipping)
- Power consumption
- Radiation levels

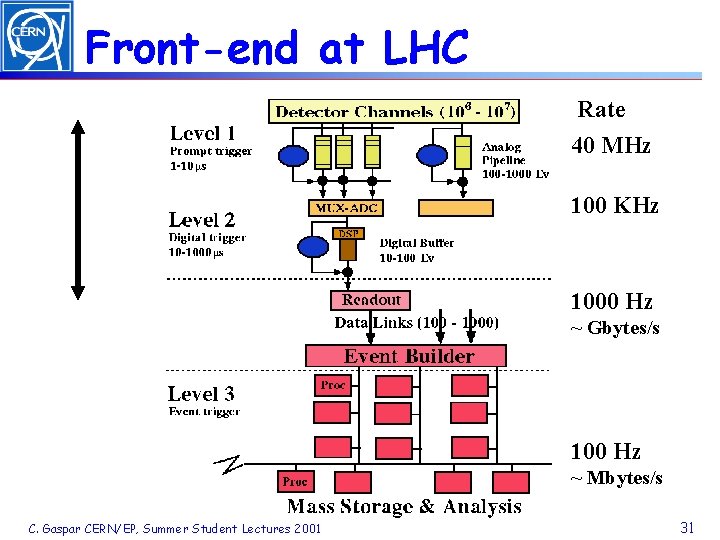

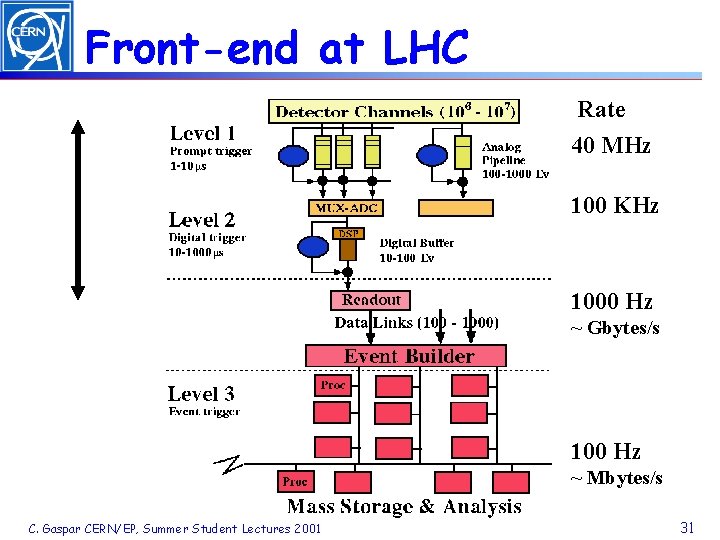

Front-end at LHC

Diagram labels: rate 40 MHz, 100 kHz, 1000 Hz, ~ Gbytes/s, 100 Hz, ~ Mbytes/s.

Trigger Clara Gaspar CERN/EP-LBC

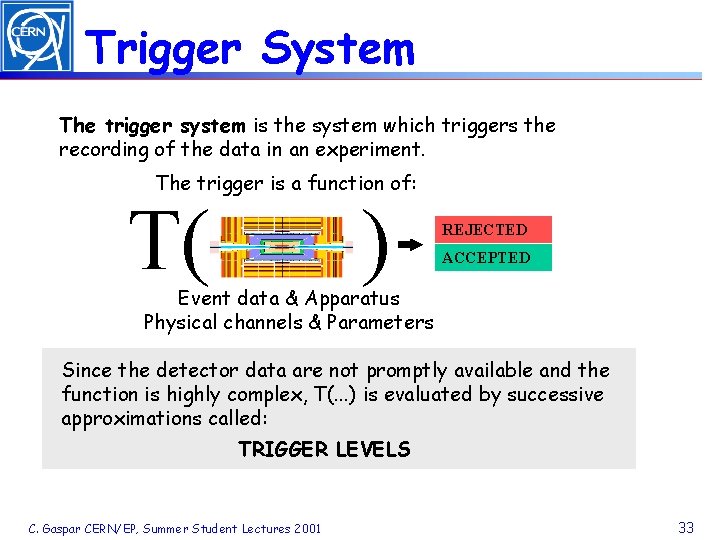

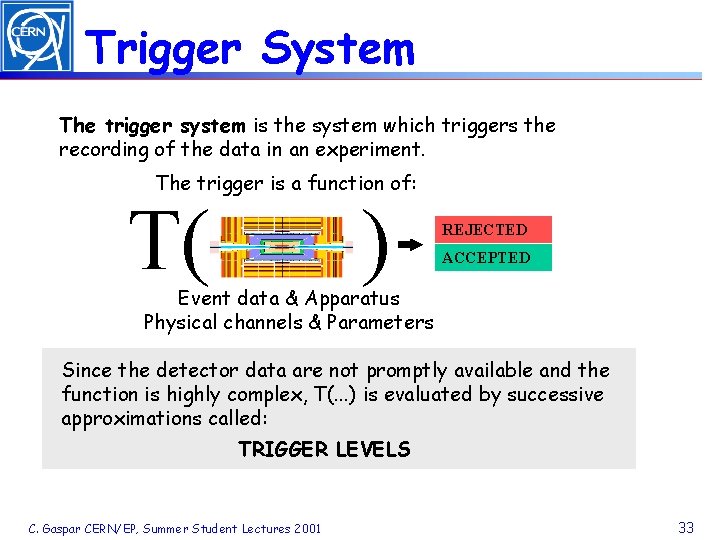

Trigger System

The trigger system is the system which triggers the recording of the data in an experiment. The trigger is a function T( ) of the event data and apparatus, and of the physical channels and parameters, whose result is ACCEPTED or REJECTED.

Since the detector data are not promptly available and the function is highly complex, T(...) is evaluated by successive approximations called TRIGGER LEVELS.

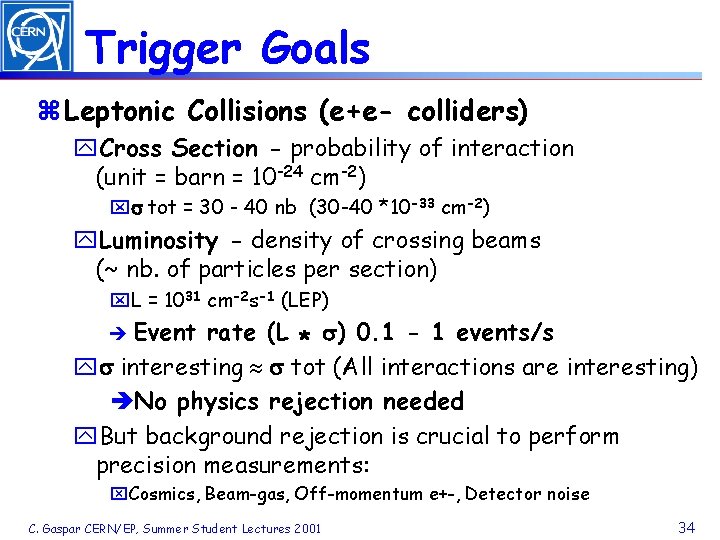

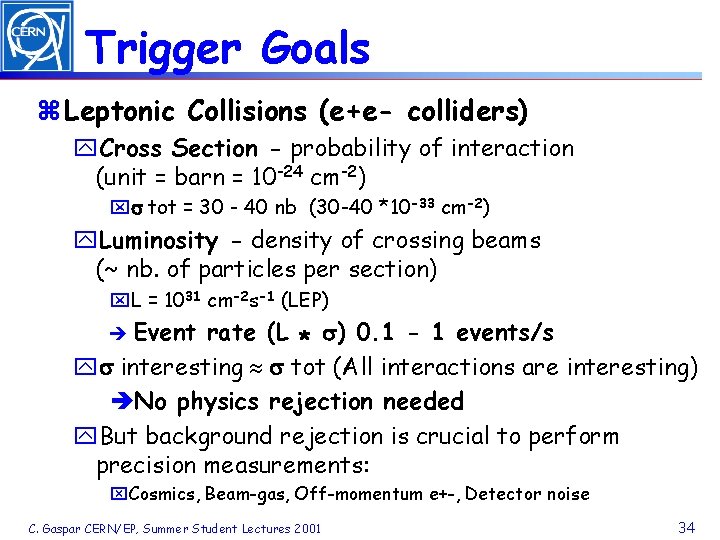

Trigger Goals

- Leptonic collisions (e+e- colliders)
  - Cross section: probability of interaction (unit = barn = $10^{-24}$ cm$^2$)
    - $\sigma_{tot}$ = 30-40 nb (30-40 $\times 10^{-33}$ cm$^2$)
  - Luminosity: density of the crossing beams (~ number of particles per section)
    - $L = 10^{31}$ cm$^{-2}$ s$^{-1}$ (LEP)
  - → Event rate ($L \times \sigma$): 0.1-1 events/s
  - $\sigma_{interesting} \approx \sigma_{tot}$ (all interactions are interesting)
  - → No physics rejection needed
  - But background rejection is crucial to perform precision measurements:
    - Cosmics, beam-gas, off-momentum e+-, detector noise

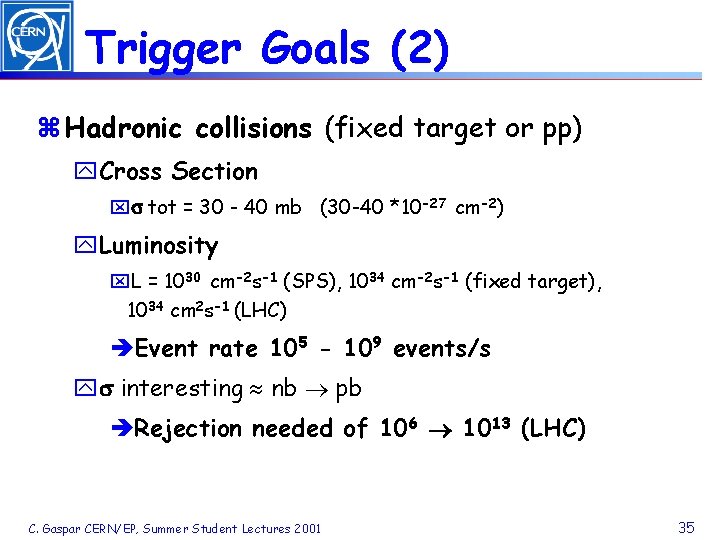

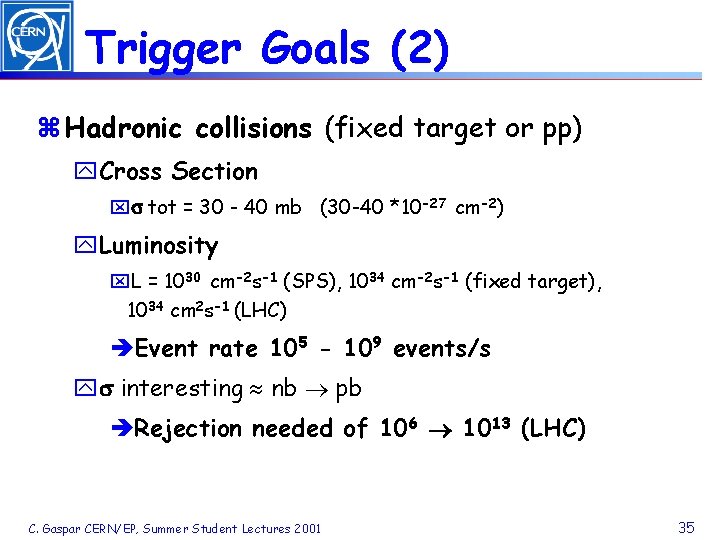

Trigger Goals (2)

- Hadronic collisions (fixed target or pp)
  - Cross section
    - $\sigma_{tot}$ = 30-40 mb (30-40 $\times 10^{-27}$ cm$^2$)
  - Luminosity
    - $L = 10^{30}$ cm$^{-2}$ s$^{-1}$ (SPS), $10^{34}$ cm$^{-2}$ s$^{-1}$ (fixed target), $10^{34}$ cm$^{-2}$ s$^{-1}$ (LHC)
  - → Event rate: $10^5$ to $10^9$ events/s
  - $\sigma_{interesting} \approx$ nb → pb
  - → Rejection needed of $10^6$ → $10^{13}$ (LHC)
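The event rates quoted on the two Trigger Goals slides follow from rate = luminosity × cross section. A minimal sketch of that arithmetic, with an illustrative function name and mid-range cross sections picked for the example:

```python
BARN_IN_CM2 = 1e-24  # 1 barn = 1e-24 cm^2


def event_rate_hz(luminosity_cm2_s: float, cross_section_barn: float) -> float:
    """Interaction rate = luminosity (cm^-2 s^-1) x cross section (cm^2)."""
    return luminosity_cm2_s * cross_section_barn * BARN_IN_CM2


if __name__ == "__main__":
    # LEP: L = 1e31, sigma_tot of about 35 nb (middle of the 30-40 nb range)
    print(event_rate_hz(1e31, 35e-9))
    # LHC: L = 1e34, sigma_tot of about 40 mb
    print(event_rate_hz(1e34, 40e-3))
```

The LEP case gives a fraction of an event per second, while the LHC case gives several hundred million interactions per second, which is the origin of the very different rejection requirements.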

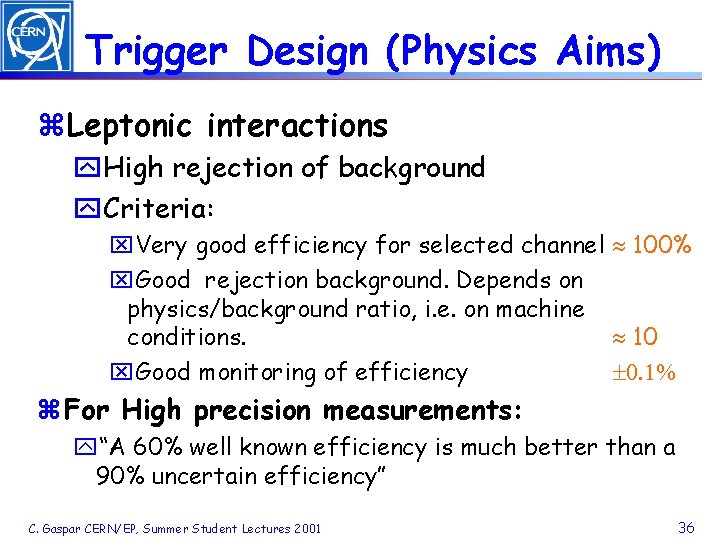

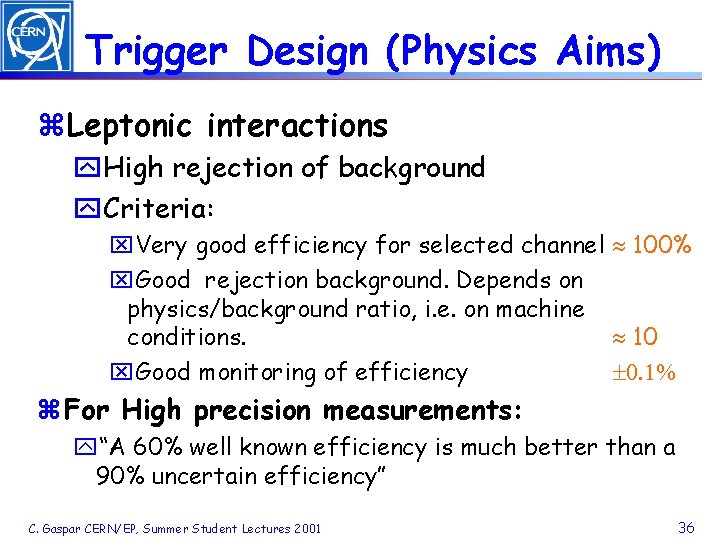

Trigger Design (Physics Aims)

- Leptonic interactions
  - High rejection of background
  - Criteria:
    - Very good efficiency for the selected channel: ≈ 100%
    - Good rejection of background: ≈ 10. Depends on the physics/background ratio, i.e. on machine conditions.
    - Good monitoring of efficiency: ± 0.1%
- For high-precision measurements:
  - "A 60% well known efficiency is much better than a 90% uncertain efficiency"

Trigger Design (Physics Aims)

- Hadronic interactions
  - High rejection of physics events
  - Criteria:
    - Good efficiency for the selected channel: ≥ 50%
    - Good rejection of uninteresting events: ≈ $10^6$
    - Good monitoring of efficiency: ± 0.1%
- Similar criteria, but in totally different ranges of values

Trigger Design (2)

- Simulation studies
  - It is essential to build the trigger simulation into the experiment's simulation.
  - For detector/machine background rejection:
    - Try to include detector noise simulation.
    - Include machine background (not easy).
    - For example, at LEP the trigger rate estimates were about 50-100 times too high.
  - For physics background rejection, the simulation must include the generation of these backgrounds.

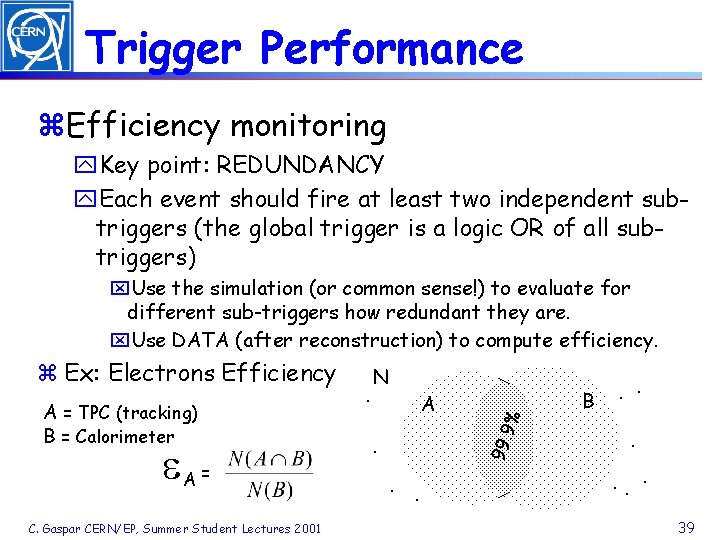

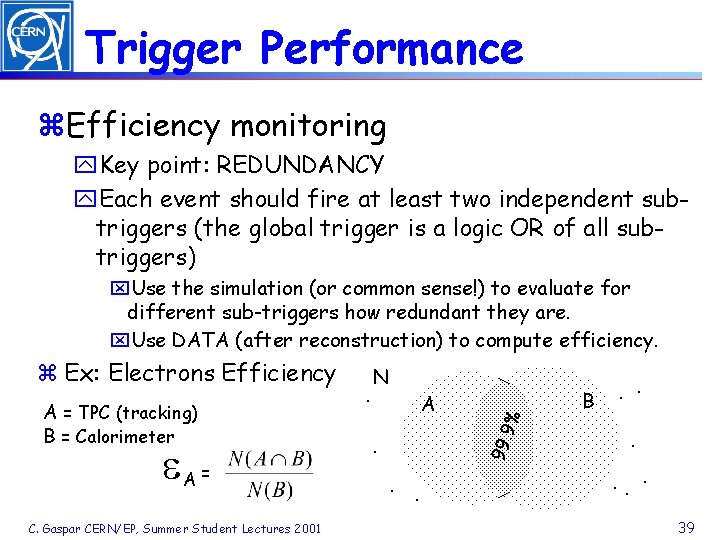

Trigger Performance

- Efficiency monitoring
  - Key point: REDUNDANCY
  - Each event should fire at least two independent subtriggers (the global trigger is a logic OR of all subtriggers).
    - Use the simulation (or common sense!) to evaluate how redundant the different sub-triggers are.
    - Use DATA (after reconstruction) to compute the efficiency.
- Example: electrons, with A = TPC (tracking) and B = Calorimeter
  - $\varepsilon_A = N_{A \cdot B} / N_B$, e.g. 99.9%
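The redundancy idea can be illustrated with a short sketch: the efficiency of subtrigger A is estimated on events selected independently by subtrigger B. This is one common way of doing it and is assumed here rather than taken from the slide; the event representation (a set of fired subtrigger names) is hypothetical.

```python
def subtrigger_efficiency(events, probe, reference):
    """Efficiency of `probe`, measured on events that fired `reference`.

    `events` is an iterable of sets of subtrigger names that fired
    (hypothetical representation of reconstructed data).
    """
    n_reference = 0
    n_both = 0
    for fired in events:
        if reference in fired:
            n_reference += 1
            if probe in fired:
                n_both += 1
    if n_reference == 0:
        raise ValueError("no events fired the reference subtrigger")
    return n_both / n_reference


if __name__ == "__main__":
    # A = TPC (tracking), B = Calorimeter: 999 electrons fired both,
    # one fired the calorimeter only.
    sample = [{"A", "B"}] * 999 + [{"B"}]
    print(subtrigger_efficiency(sample, probe="A", reference="B"))
```

The estimate is only unbiased if the two subtriggers are truly independent, which is why the slide stresses checking the redundancy first.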

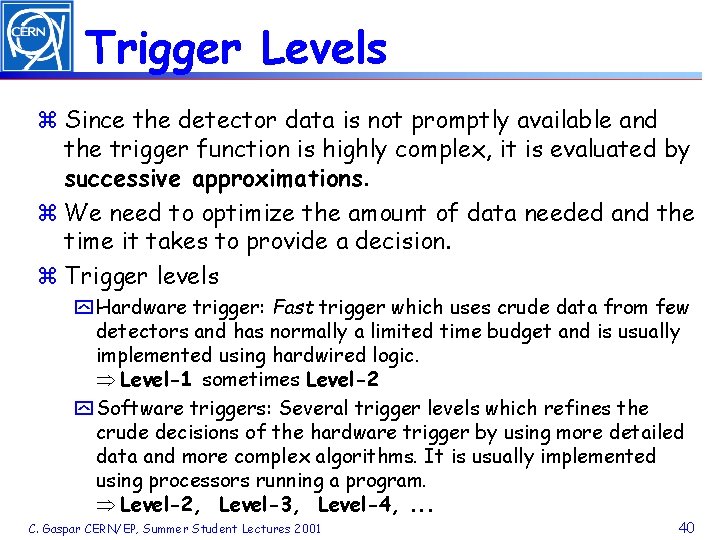

Trigger Levels

- Since the detector data is not promptly available and the trigger function is highly complex, it is evaluated by successive approximations.
- We need to optimize the amount of data needed and the time it takes to provide a decision.
- Trigger levels
  - Hardware trigger: a fast trigger which uses crude data from a few detectors, normally has a limited time budget, and is usually implemented using hardwired logic. → Level-1, sometimes Level-2
  - Software triggers: several trigger levels which refine the crude decisions of the hardware trigger by using more detailed data and more complex algorithms. They are usually implemented using processors running a program. → Level-2, Level-3, Level-4, ...

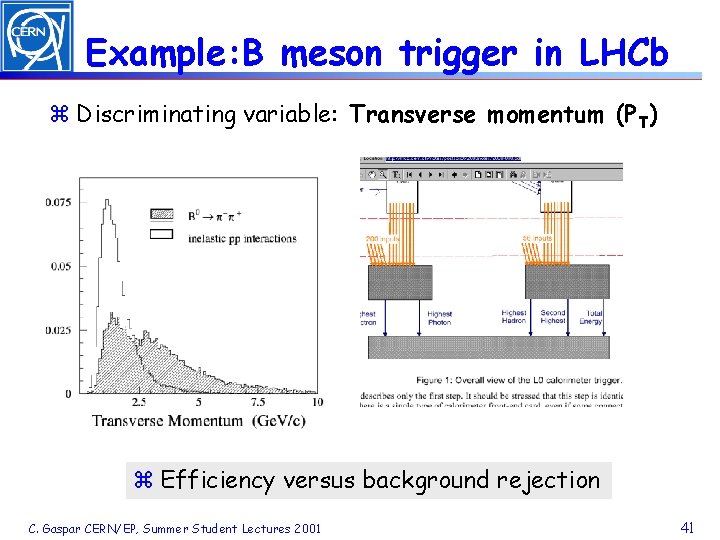

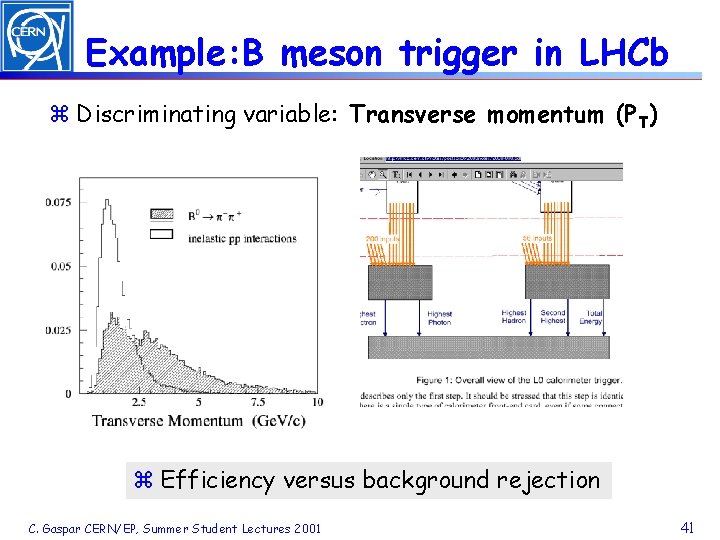

Example: B meson trigger in LHCb

- Discriminating variable: transverse momentum (PT)
- Efficiency versus background rejection

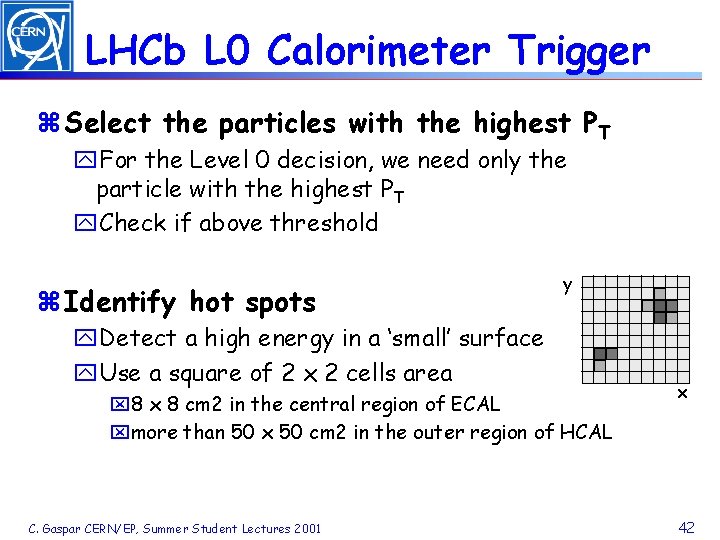

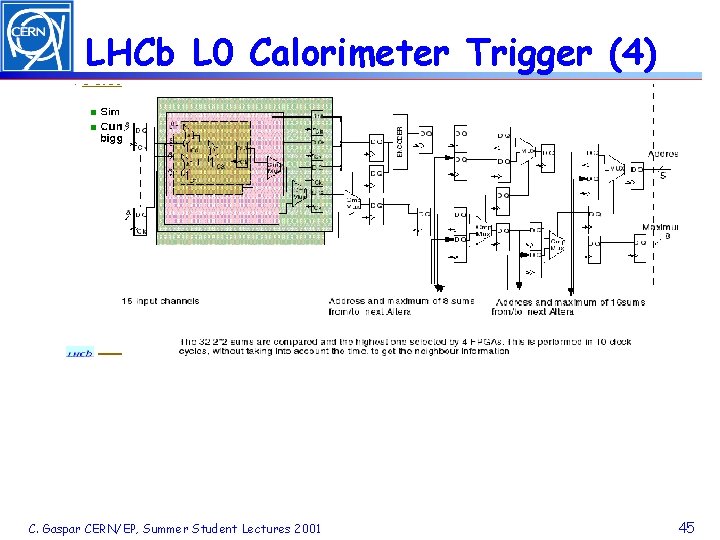

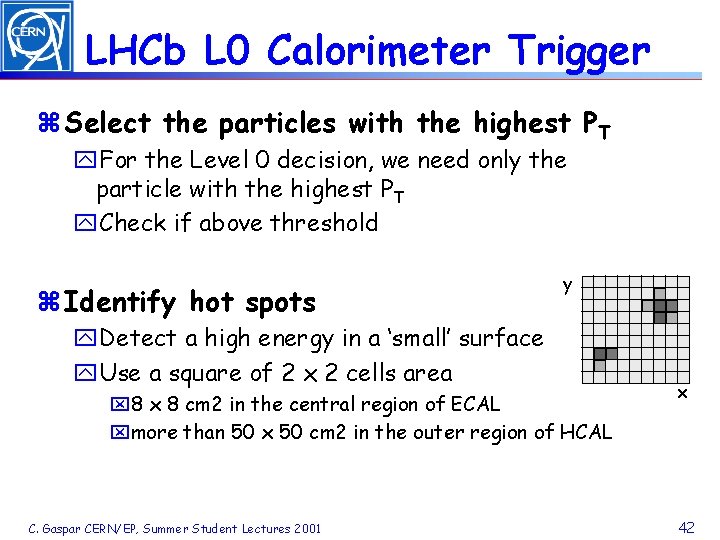

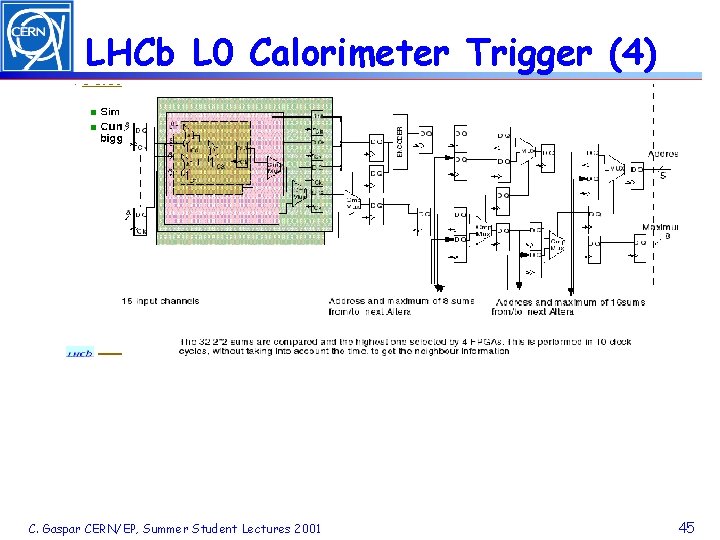

LHCb L0 Calorimeter Trigger

- Select the particles with the highest PT
  - For the Level 0 decision, we need only the particle with the highest PT
  - Check if it is above threshold
- Identify hot spots
  - Detect a high energy in a "small" surface
  - Use a square of 2 x 2 cells:
    - 8 x 8 cm² in the central region of ECAL
    - more than 50 x 50 cm² in the outer region of HCAL

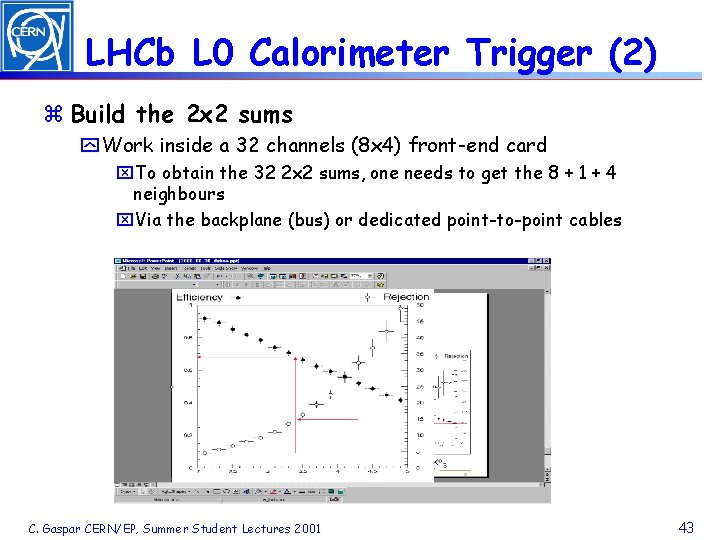

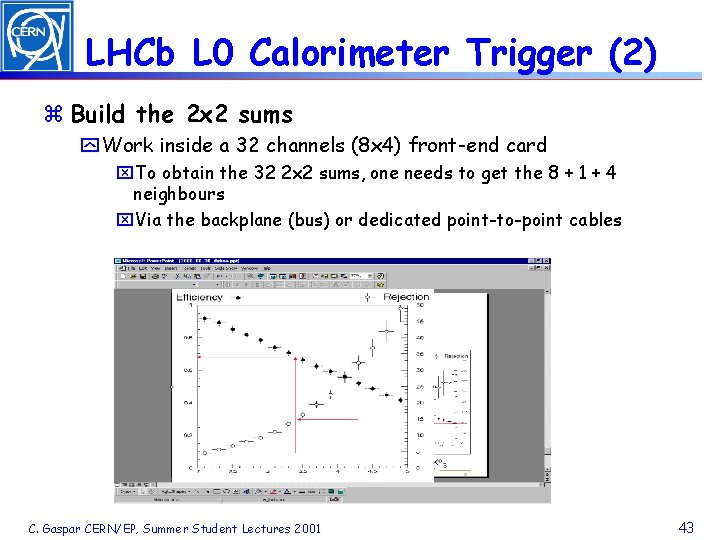

LHCb L0 Calorimeter Trigger (2)

- Build the 2 x 2 sums
  - Work inside a 32-channel (8 x 4) front-end card
    - To obtain the 32 2 x 2 sums, one needs to get the 8 + 1 + 4 neighbours
    - Via the backplane (bus) or dedicated point-to-point cables

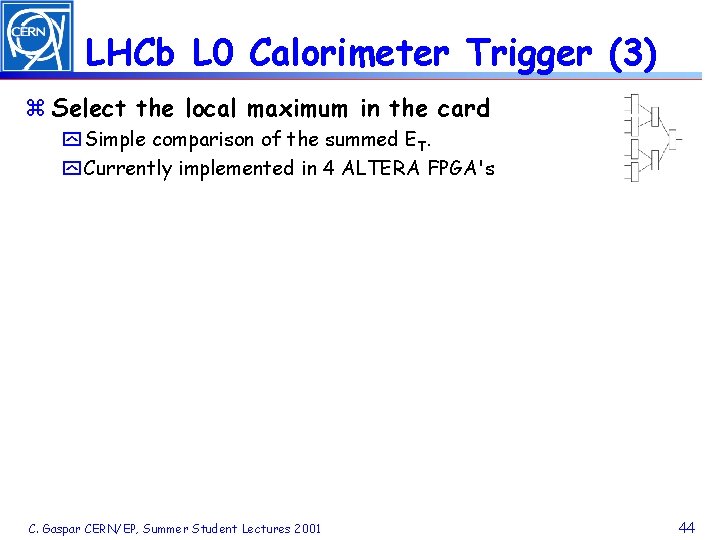

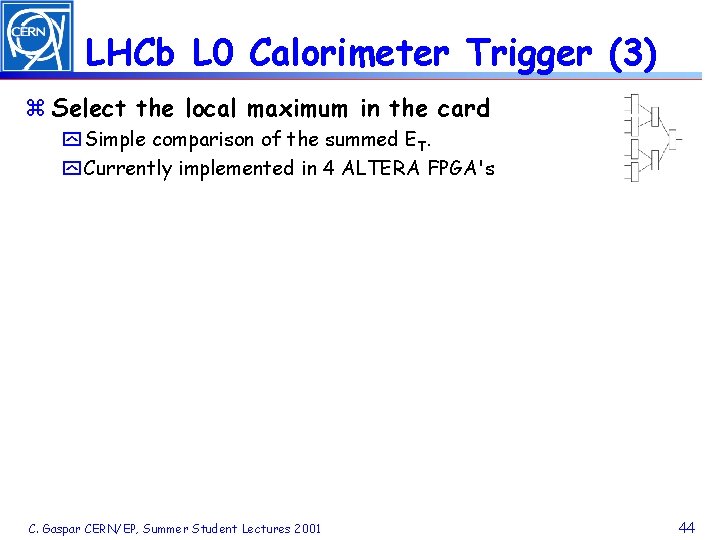

LHCb L0 Calorimeter Trigger (3)

- Select the local maximum in the card
  - Simple comparison of the summed ET
  - Currently implemented in 4 ALTERA FPGAs
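The 2 x 2 sums and the local-maximum selection described on these slides can be sketched in software as follows. This is only an illustration of the logic, not the FPGA implementation; the grid layout (the card's 8 x 4 cells plus one extra neighbour row and column, giving 8 + 1 + 4 neighbour cells) and the function names are assumptions.

```python
def sums_2x2(cells):
    """All 2 x 2 sums of a grid given as a list of rows.

    For an 8 x 4 card the input is assumed to be 5 rows x 9 columns:
    the card's own cells plus one neighbour row and column.
    """
    n_rows, n_cols = len(cells), len(cells[0])
    return [
        [
            cells[r][c] + cells[r][c + 1] + cells[r + 1][c] + cells[r + 1][c + 1]
            for c in range(n_cols - 1)
        ]
        for r in range(n_rows - 1)
    ]


def local_maximum(sums):
    """Return (value, row, column) of the largest 2 x 2 sum."""
    return max(
        (value, r, c)
        for r, row in enumerate(sums)
        for c, value in enumerate(row)
    )


if __name__ == "__main__":
    grid = [[0] * 9 for _ in range(5)]
    grid[2][3], grid[2][4], grid[3][3] = 10, 5, 2   # one small cluster
    sums = sums_2x2(grid)
    value, row, col = local_maximum(sums)
    print(len(sums) * len(sums[0]), value, row, col)
```

The selected maximum would then be compared with a threshold to form the Level-0 decision.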

LHCb L0 Calorimeter Trigger (4)

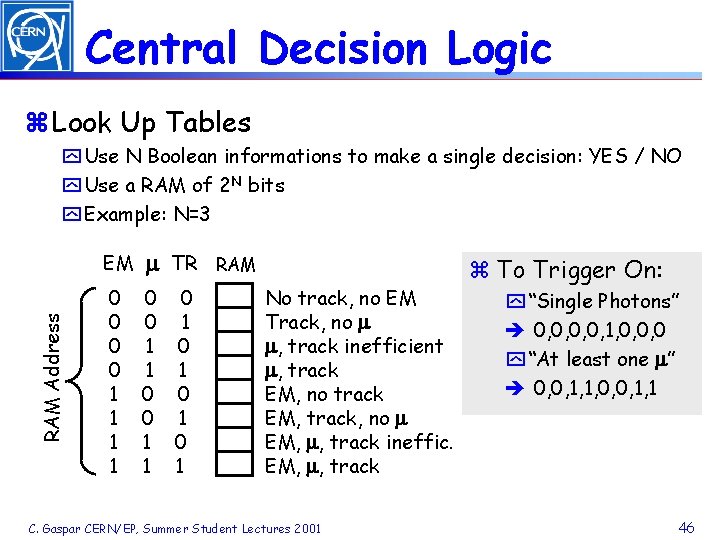

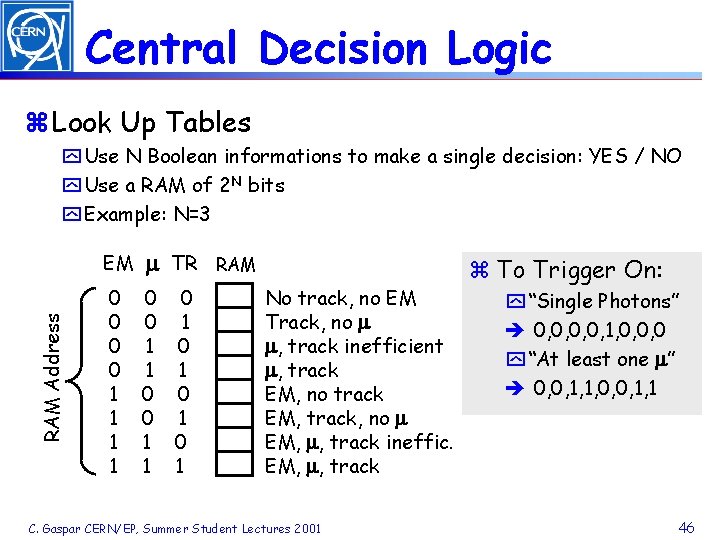

Central Decision Logic

- Look-up tables
  - Use N Boolean inputs to make a single decision: YES / NO
  - Use a RAM of $2^N$ bits, with the N inputs forming the RAM address
  - Example: N = 3 (EM, µ, TR)

| EM | µ | TR | Meaning |
|---|---|---|---|
| 0 | 0 | 0 | No track, no EM |
| 0 | 0 | 1 | Track, no µ |
| 0 | 1 | 0 | µ, track inefficient |
| 0 | 1 | 1 | µ, track |
| 1 | 0 | 0 | EM, no track |
| 1 | 0 | 1 | EM, track, no µ |
| 1 | 1 | 0 | EM, µ, track inefficient |
| 1 | 1 | 1 | EM, µ, track |

- To trigger on:
  - "Single photons" → RAM contents 0, 0, 0, 0, 1, 0, 0, 0
  - "At least one µ" → RAM contents 0, 0, 1, 1, 0, 0, 1, 1
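A look-up table decision can be sketched as a list indexed by the input bits. The bit order used here (EM as the most significant bit, then µ, then track) is an assumption, chosen because it reproduces the "at least one µ" pattern 0, 0, 1, 1, 0, 0, 1, 1 quoted on the slide; the function names are illustrative.

```python
def address(em: int, mu: int, tr: int) -> int:
    """RAM address formed from the three Boolean inputs (assumed bit order)."""
    return (em << 2) | (mu << 1) | tr


def build_lut(condition):
    """Fill a 2**3-entry table by evaluating `condition` for every address."""
    table = []
    for addr in range(8):
        em, mu, tr = (addr >> 2) & 1, (addr >> 1) & 1, addr & 1
        table.append(1 if condition(em, mu, tr) else 0)
    return table


single_photon = build_lut(lambda em, mu, tr: em and not mu and not tr)
at_least_one_muon = build_lut(lambda em, mu, tr: mu)

if __name__ == "__main__":
    print(single_photon)
    print(at_least_one_muon)
    # The decision for one event is a single memory read:
    print(at_least_one_muon[address(em=1, mu=1, tr=0)])
```

Changing the physics selection only requires reloading the table, not rewiring the logic, which is what makes the approach attractive for a programmable hardware trigger.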

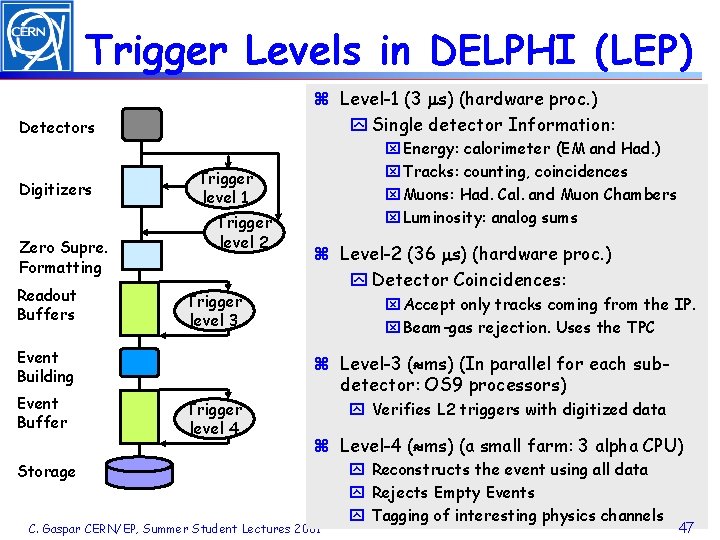

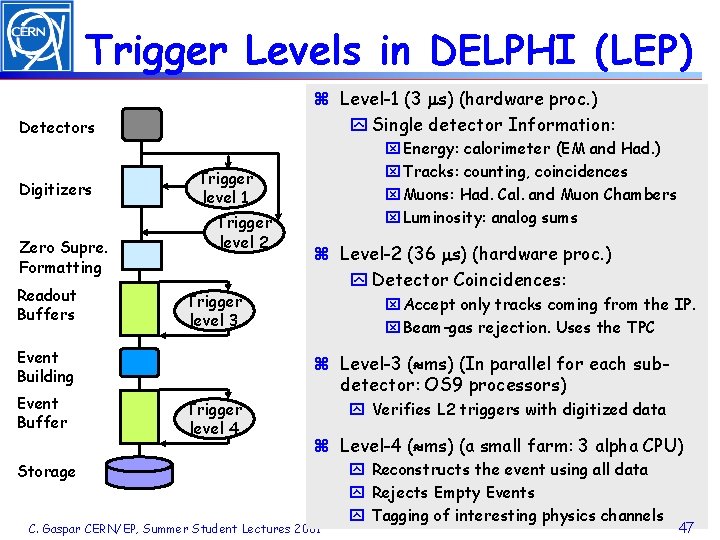

Trigger Levels in DELPHI (LEP)

Diagram labels: Detectors, Digitizers, Zero Suppression, Formatting, Readout Buffers, Trigger level 1, Trigger level 2, Trigger level 3, Event Building, Event Buffer, Trigger level 4, Storage.

- Level-1 (3 µs) (hardware processors)
  - Single detector information:
    - Energy: calorimeter (EM and Had.)
    - Tracks: counting, coincidences
    - Muons: Had. Cal. and Muon Chambers
    - Luminosity: analog sums
- Level-2 (36 µs) (hardware processors)
  - Detector coincidences:
    - Accept only tracks coming from the IP
    - Beam-gas rejection. Uses the TPC
- Level-3 (ms) (in parallel for each subdetector: OS9 processors)
  - Verifies L2 triggers with digitized data
- Level-4 (ms) (a small farm: 3 Alpha CPUs)
  - Reconstructs the event using all data
  - Rejects empty events
  - Tagging of interesting physics channels

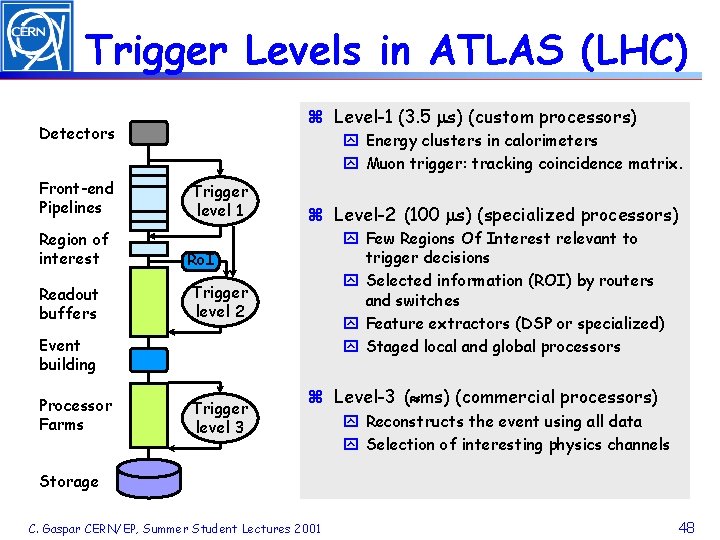

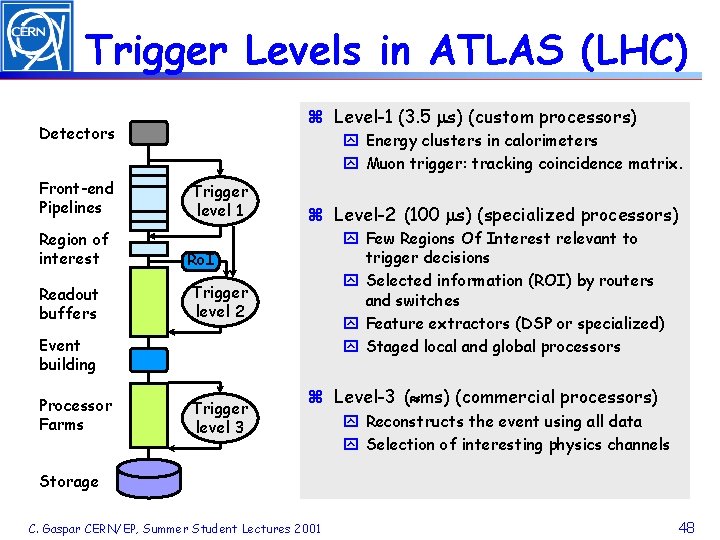

Trigger Levels in ATLAS (LHC)

Diagram labels: Detectors, Front-end Pipelines, Trigger level 1, Region of interest (RoI), Readout buffers, Trigger level 2, Event building, Processor Farms, Trigger level 3, Storage.

- Level-1 (3.5 µs) (custom processors)
  - Energy clusters in calorimeters
  - Muon trigger: tracking coincidence matrix
- Level-2 (100 µs) (specialized processors)
  - Few Regions Of Interest relevant to trigger decisions
  - Selected information (RoI) by routers and switches
  - Feature extractors (DSP or specialized)
  - Staged local and global processors
- Level-3 (ms) (commercial processors)
  - Reconstructs the event using all data
  - Selection of interesting physics channels

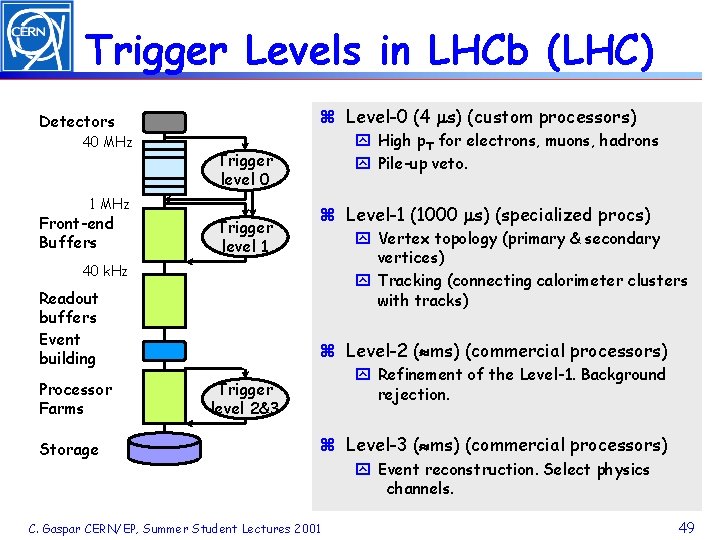

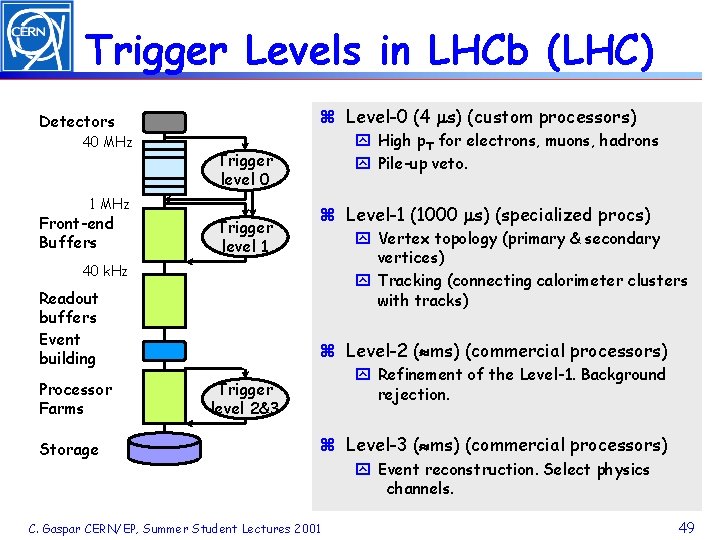

Trigger Levels in LHCb (LHC)

Diagram labels: Detectors, 40 MHz, Trigger level 0, 1 MHz, Front-end Buffers, Trigger level 1, 40 kHz, Readout buffers, Event building, Processor Farms, Trigger level 2&3, Storage.

- Level-0 (4 µs) (custom processors)
  - High pT for electrons, muons, hadrons
  - Pile-up veto
- Level-1 (1000 µs) (specialized processors)
  - Vertex topology (primary and secondary vertices)
  - Tracking (connecting calorimeter clusters with tracks)
- Level-2 (ms) (commercial processors)
  - Refinement of the Level-1. Background rejection.
- Level-3 (ms) (commercial processors)
  - Event reconstruction. Select physics channels.

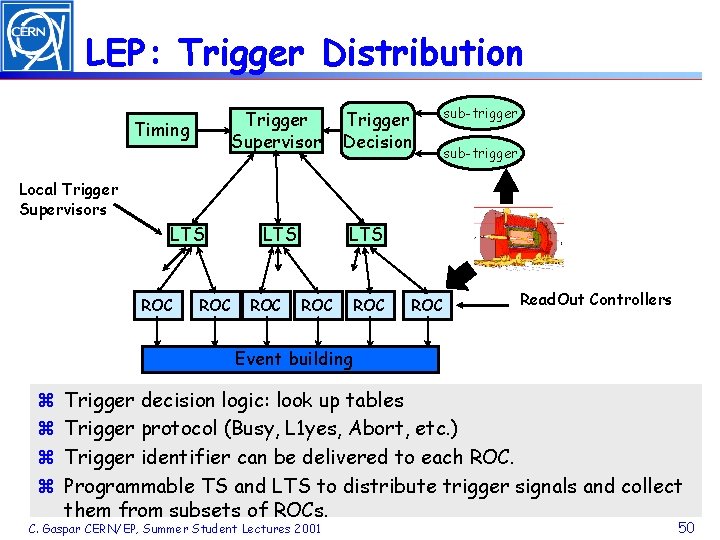

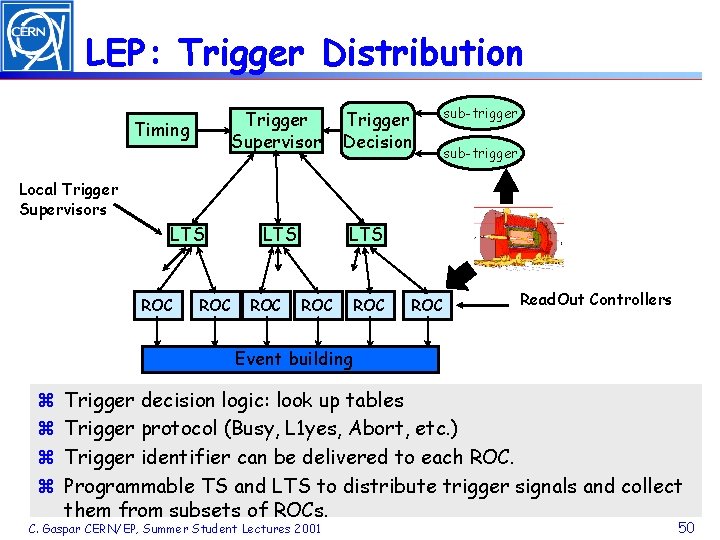

LEP: Trigger Distribution

Diagram labels: Trigger Supervisor (TS), Timing, Trigger Decision, sub-trigger, Local Trigger Supervisors (LTS), ReadOut Controllers (ROC), Event building.

- Trigger decision logic: look-up tables
- Trigger protocol (Busy, L1 yes, Abort, etc.)
- Trigger identifier can be delivered to each ROC.
- Programmable TS and LTS to distribute trigger signals and collect them from subsets of ROCs.

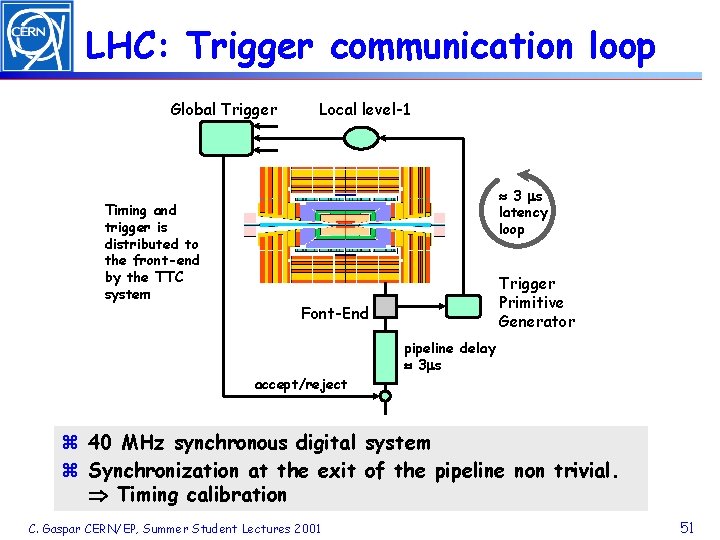

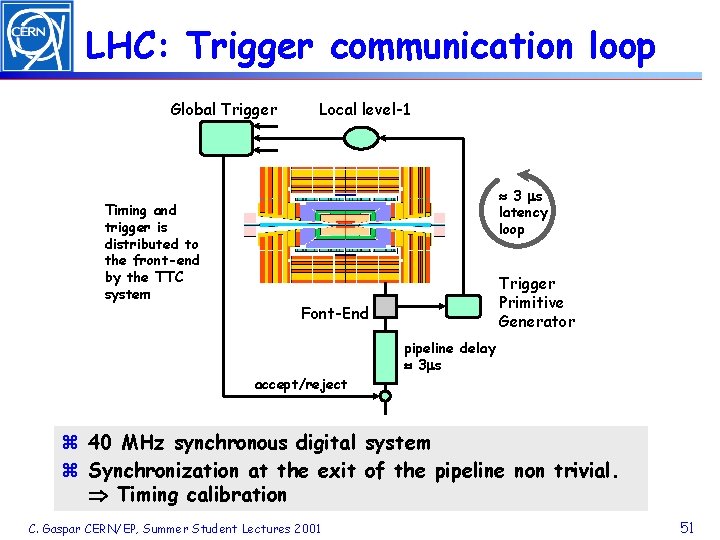

LHC: Trigger communication loop

Diagram labels: Global Trigger, Local level-1, Trigger Primitive Generator, Front-End, accept/reject, pipeline delay 3 µs, 3 µs latency loop.

- Timing and trigger are distributed to the front-end by the TTC system.
- 40 MHz synchronous digital system
- Synchronization at the exit of the pipeline is non-trivial: timing calibration is needed.

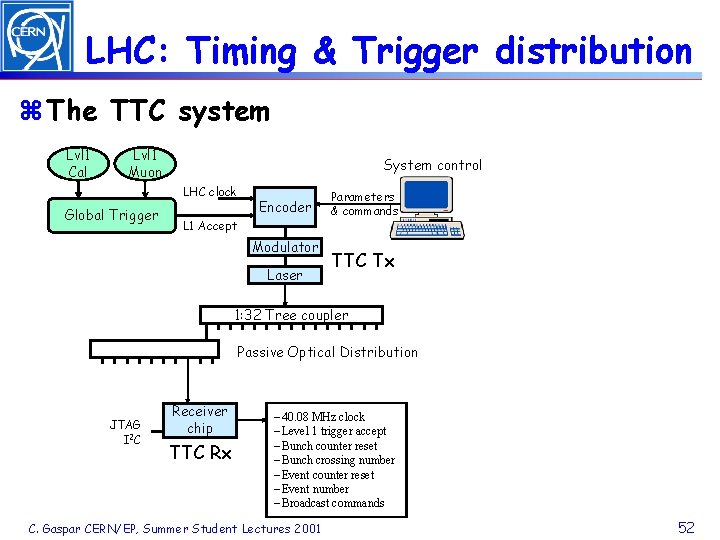

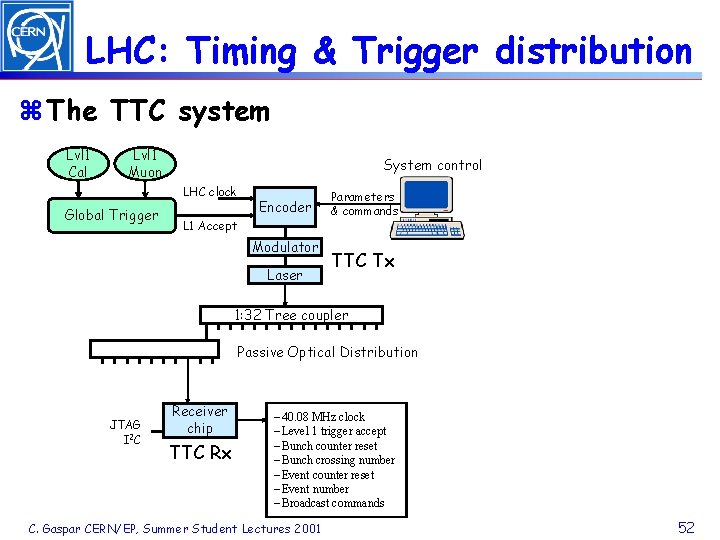

LHC: Timing & Trigger distribution

- The TTC system

Diagram labels: Lvl 1 Cal, Lvl 1 Muon, Global Trigger, L1 Accept, LHC clock, System control, Parameters & commands, Encoder, Modulator, Laser, TTCtx, 1:32 tree coupler, Passive Optical Distribution, TTCrx receiver chip, JTAG, I2C.

The TTCrx receiver chip provides:

- 40.08 MHz clock
- Level 1 trigger accept
- Bunch counter reset
- Bunch crossing number
- Event counter reset
- Event number
- Broadcast commands

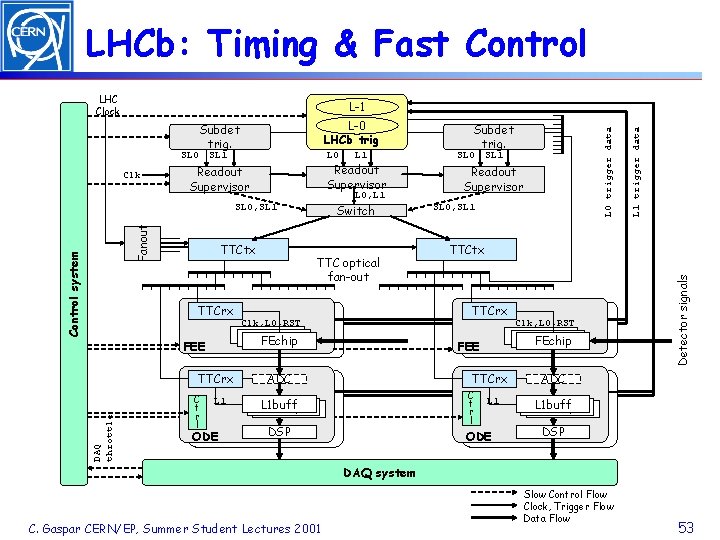

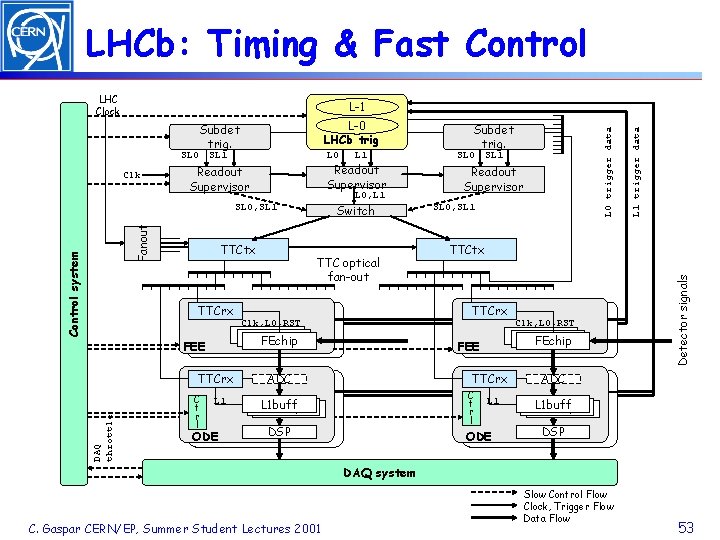

LHCb: Timing & Fast Control

Diagram labels: LHC clock, Readout Supervisors, L0/L1 switch, control system, fan-out, SL0, SL1, TTCtx, TTC optical fan-out, TTCrx, DAQ throttle, front-end electronics (FE chips, ADC, DSP, L1 buffer, control), L0 and L1 ODE, detector signals, sub-detector L0 and L1 triggers with their trigger data, LHCb L-0 and L-1 triggers, DAQ system.

Legend: slow control flow; clock and trigger flow; data flow.

Data Acquisition Clara Gaspar CERN/EP-LBC

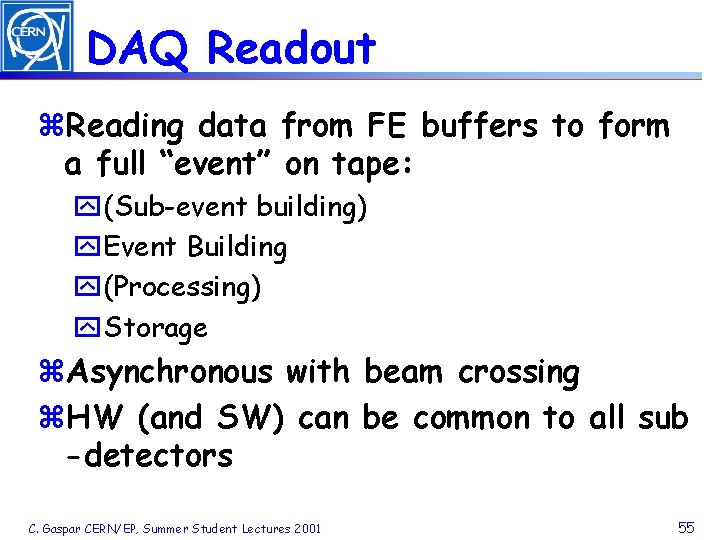

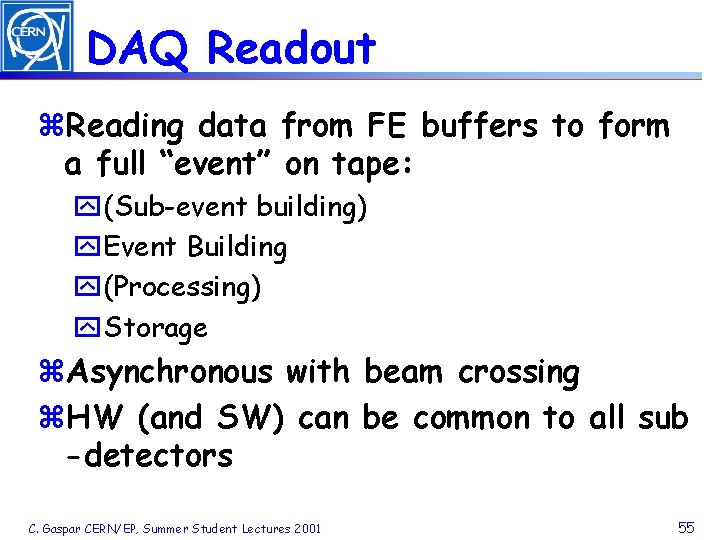

DAQ Readout

- Reading data from FE buffers to form a full "event" on tape:
  - (Sub-event building)
  - Event building
  - (Processing)
  - Storage
- Asynchronous with beam crossing
- HW (and SW) can be common to all sub-detectors

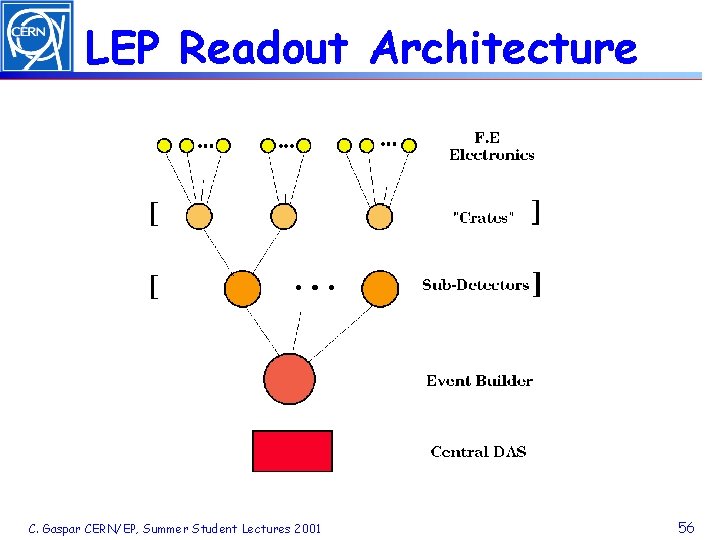

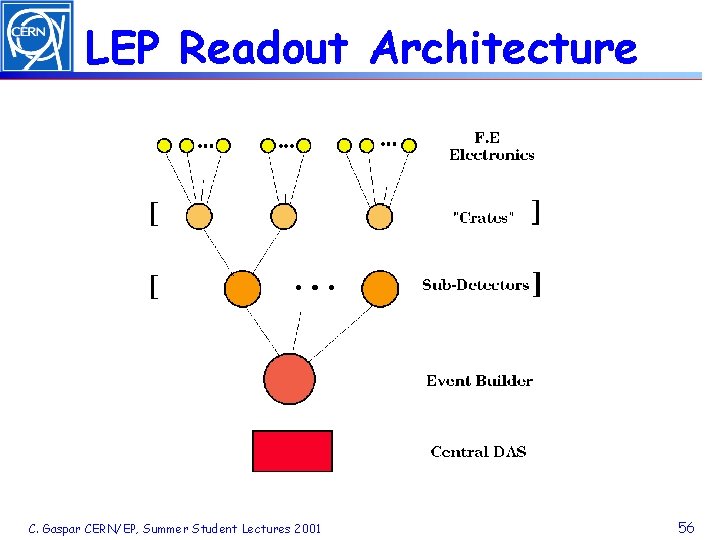

LEP Readout Architecture

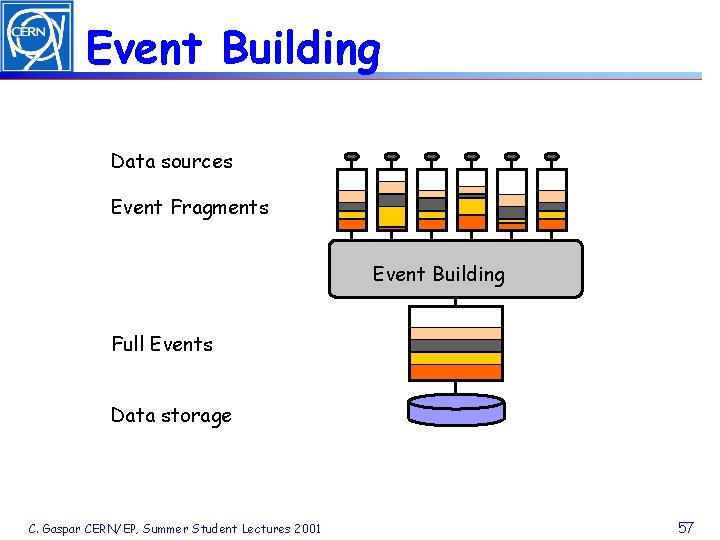

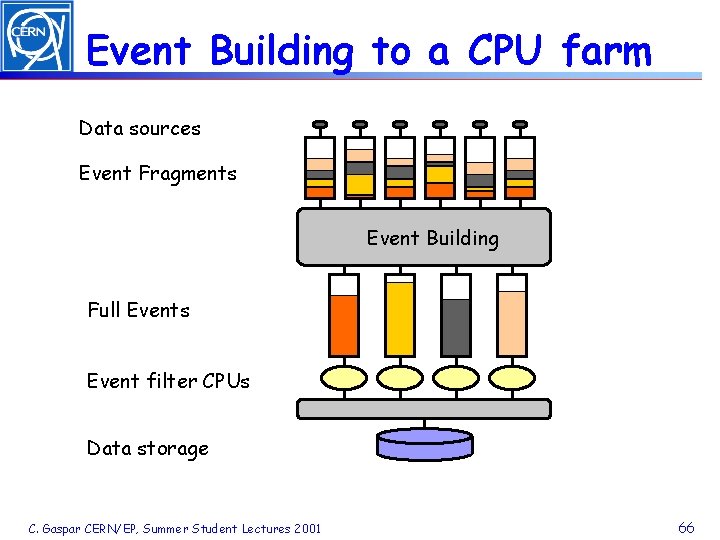

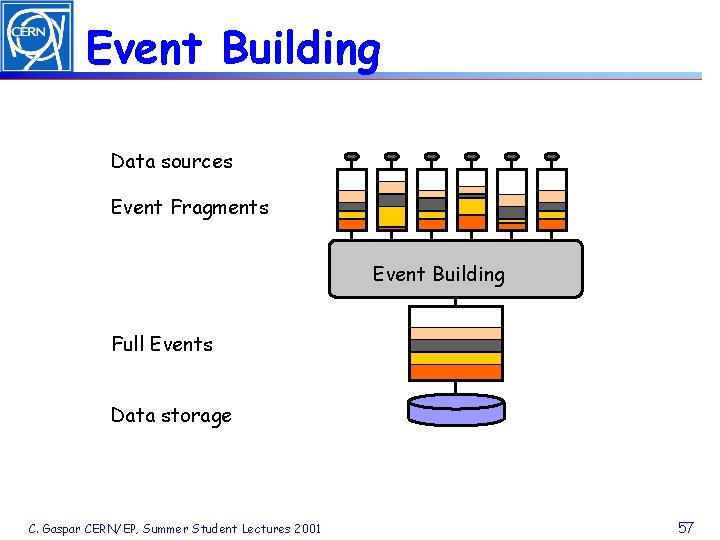

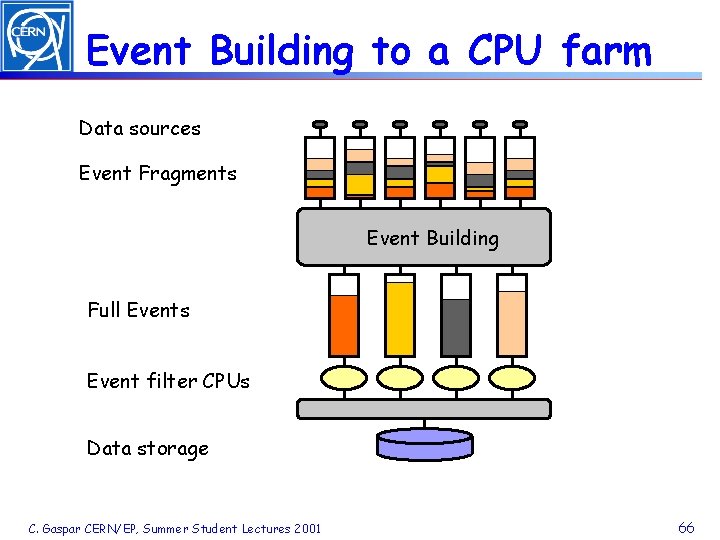

Event Building

Diagram labels: Data sources, Event Fragments, Event Building, Full Events, Data storage.

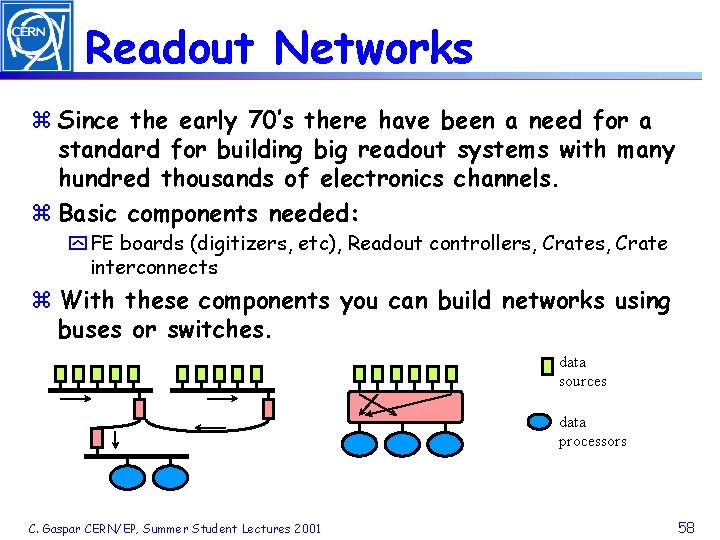

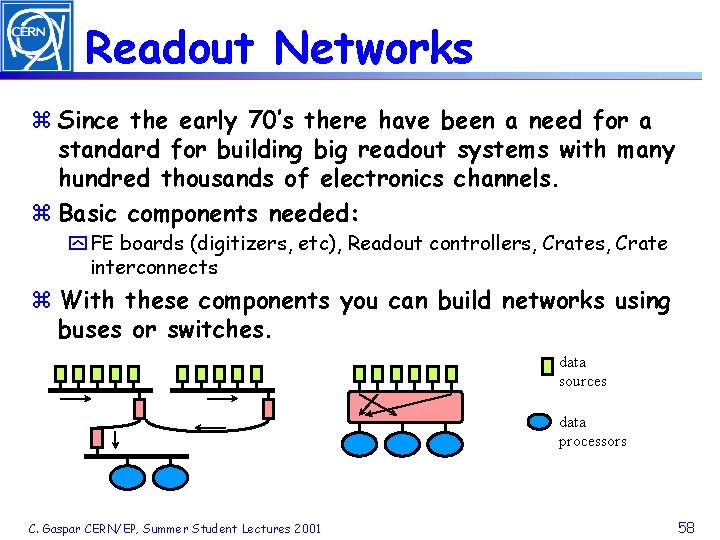

Readout Networks

- Since the early 70's there has been a need for a standard for building big readout systems with many hundreds of thousands of electronics channels.
- Basic components needed:
  - FE boards (digitizers, etc.), readout controllers, crate interconnects
- With these components you can build networks using buses or switches.

Diagram labels: data sources, data processors.

Buses used at LEP

- Camac
  - (+) Very robust system, still used by small/medium size experiments
  - (+) Large variety of front-end modules
  - (-) Low readout speed (0.5-2 Mbytes/s)
- Fastbus
  - (+) Fast data transfers (crate: 40 Mbyte/s, cable: 4 Mbyte/s)
  - (+) Large board surface (50-100 electronic channels)
  - (-) Few commercial modules (mostly interconnects)
- VME
  - (+) Large availability of modules: CPU boards (68k, PowerPC, Intel), memories, I/O interfaces (Ethernet, disk, ...), interfaces to other buses (Camac, Fastbus, ...), front-end boards
  - (-) Small board size and no standard crate interconnection
    - But VICbus provides crate interconnects
    - 9U crates provide large board space

Diagram labels: crate, branch, crate controller, S, M I, VICbus.

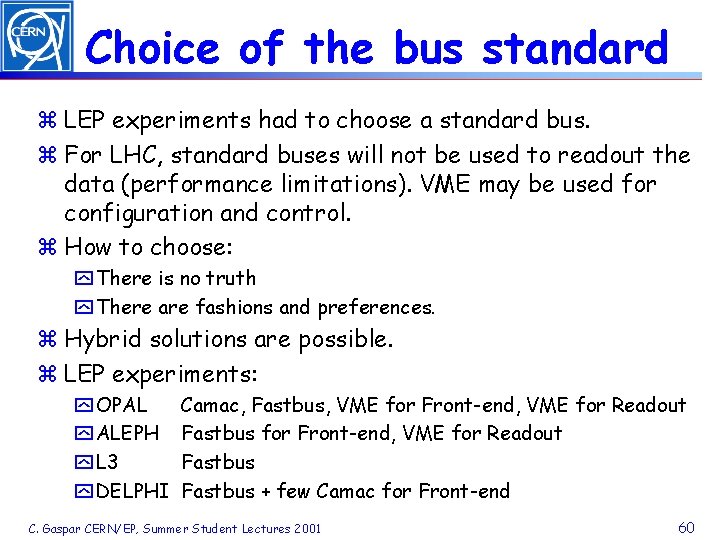

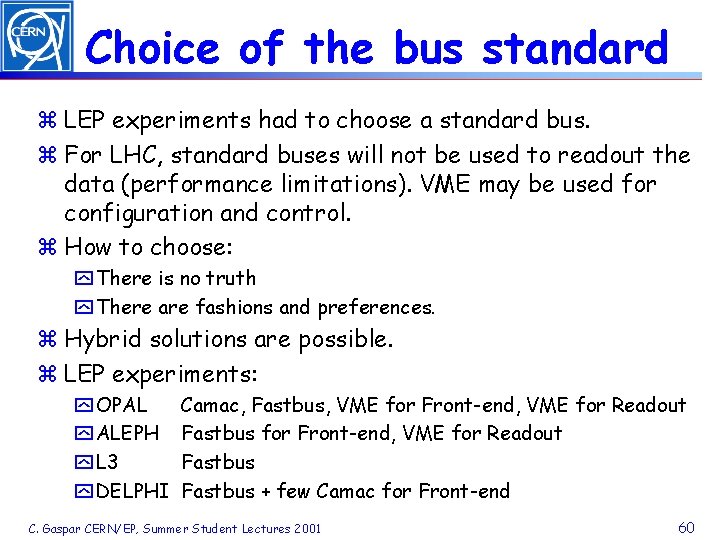

Choice of the bus standard

- LEP experiments had to choose a standard bus.
- For LHC, standard buses will not be used to read out the data (performance limitations). VME may be used for configuration and control.
- How to choose:
  - There is no truth
  - There are fashions and preferences
- Hybrid solutions are possible.
- LEP experiments: OPAL, ALEPH, L3, DELPHI
  - Camac, Fastbus, VME for front-end, VME for readout
  - Fastbus + few Camac for front-end

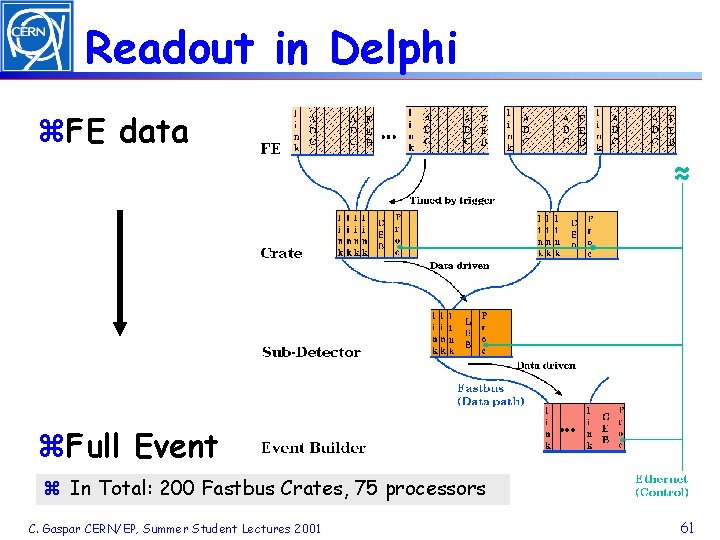

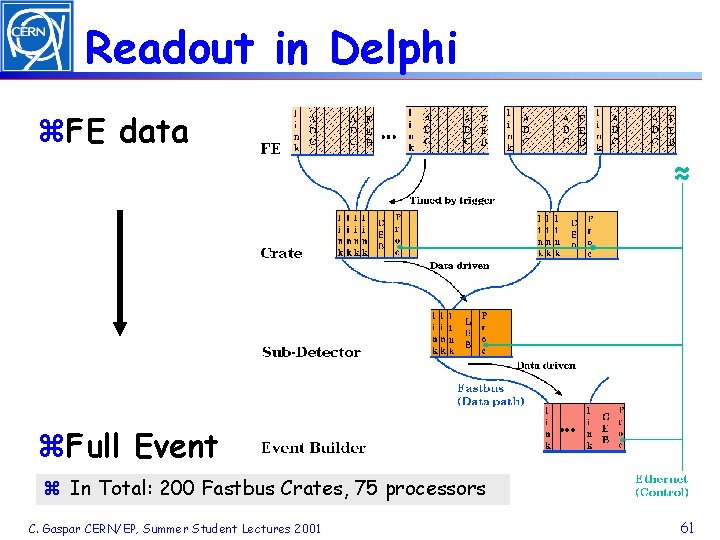

Readout in Delphi

Diagram labels: FE data, Full Event.

- In total: 200 Fastbus crates, 75 processors

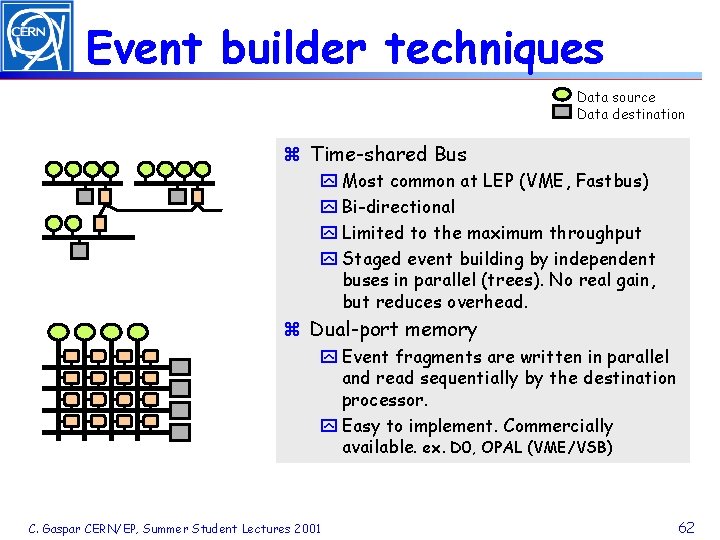

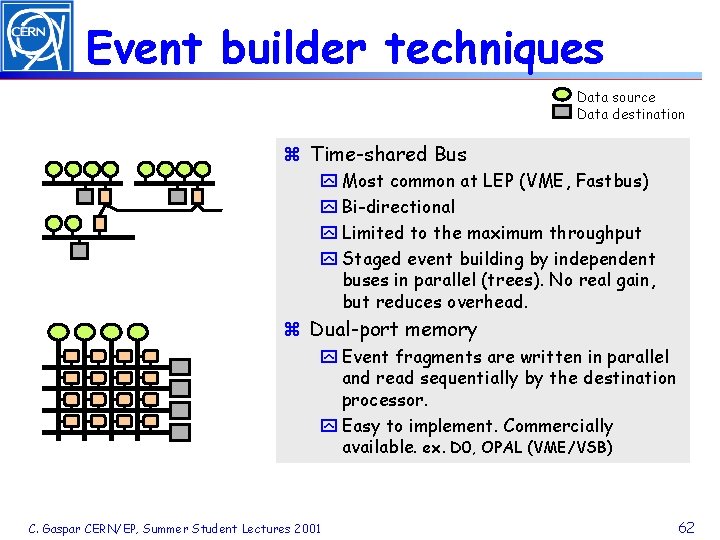

Event builder techniques

Diagram labels: data source, data destination.

- Time-shared bus
  - Most common at LEP (VME, Fastbus)
  - Bi-directional
  - Limited to the maximum throughput of the bus
  - Staged event building by independent buses in parallel (trees). No real gain, but reduces overhead.
- Dual-port memory
  - Event fragments are written in parallel and read sequentially by the destination processor.
  - Easy to implement. Commercially available, e.g. D0, OPAL (VME/VSB)

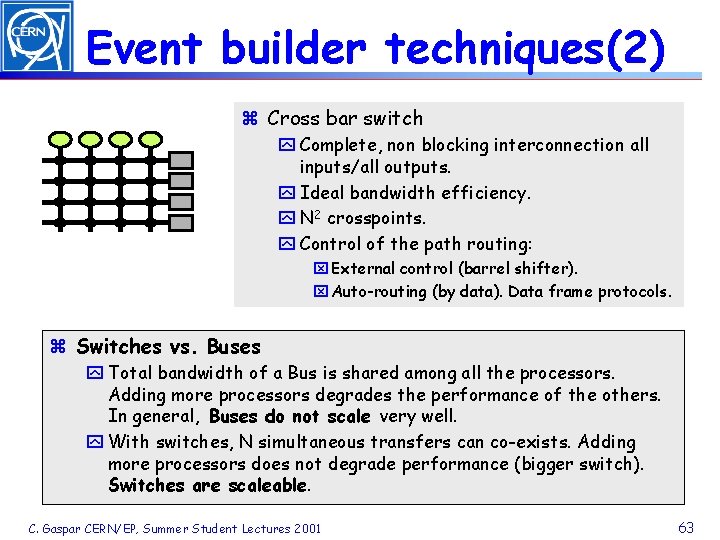

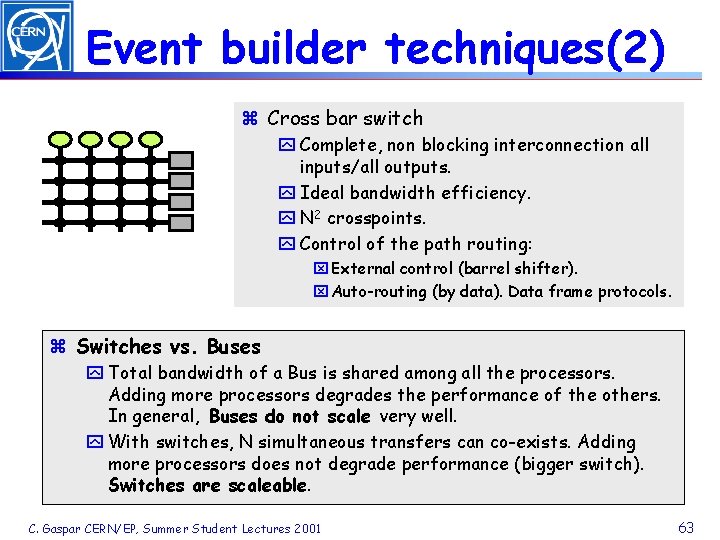

Event builder techniques (2)

- Cross bar switch
  - Complete, non-blocking interconnection of all inputs to all outputs
  - Ideal bandwidth efficiency
  - $N^2$ crosspoints
  - Control of the path routing:
    - External control (barrel shifter)
    - Auto-routing (by data). Data frame protocols.
- Switches vs. buses
  - The total bandwidth of a bus is shared among all the processors. Adding more processors degrades the performance of the others. In general, buses do not scale very well.
  - With switches, N simultaneous transfers can coexist. Adding more processors does not degrade performance (bigger switch). Switches are scalable.
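The externally controlled "barrel shifter" routing can be sketched as a cyclic schedule: in each time slot every source is connected to a different destination, and the pattern rotates by one port per slot. This interpretation of the term, and the function name, are assumptions made for the example.

```python
def barrel_shifter_schedule(n_ports: int, n_slots: int):
    """Destination of each source in each time slot (cyclic rotation).

    schedule[slot][source] is the destination port; within a slot all
    destinations are distinct, so N transfers proceed simultaneously.
    """
    return [
        [(source + slot) % n_ports for source in range(n_ports)]
        for slot in range(n_slots)
    ]


if __name__ == "__main__":
    for slot, mapping in enumerate(barrel_shifter_schedule(4, 4)):
        print(slot, mapping)
```

After N slots every source has been connected once to every destination, without two sources ever competing for the same output, which is the non-blocking property the slide attributes to switches.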

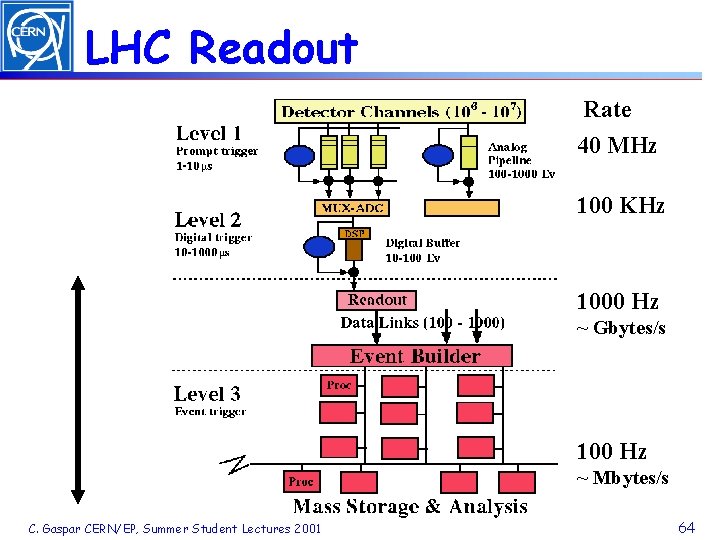

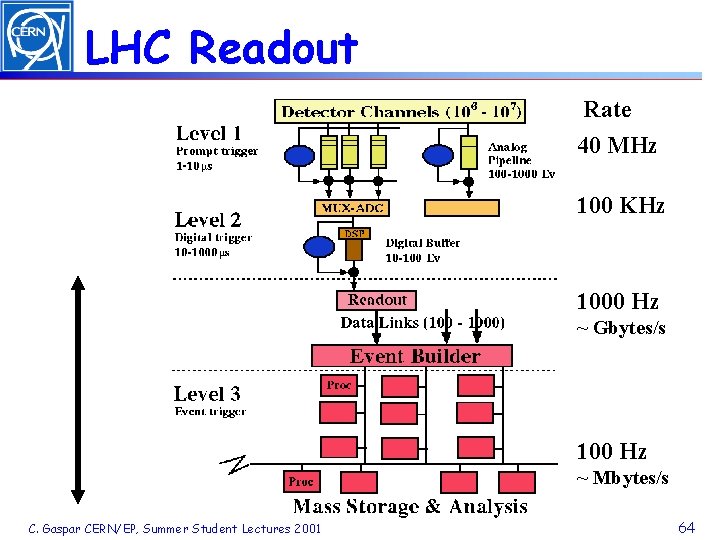

LHC Readout

Diagram labels: rate $40 \times 10^6$ (40 MHz), 100 kHz, 1000 Hz, ~ Gbytes/s, 100 Hz, ~ Mbytes/s.

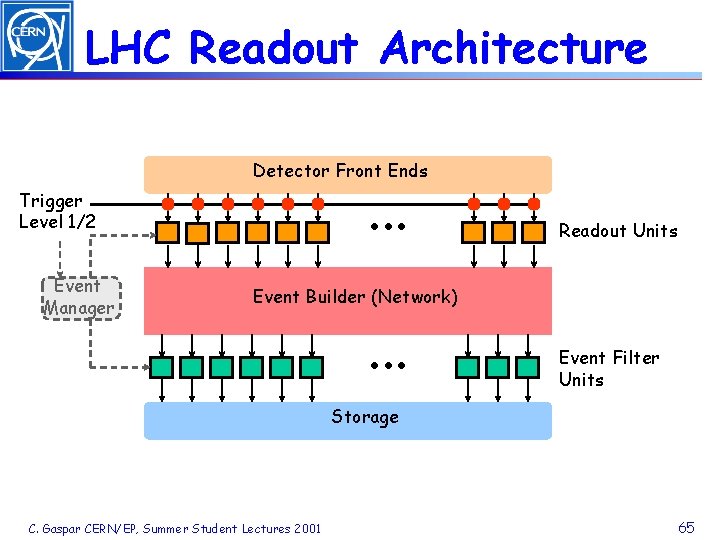

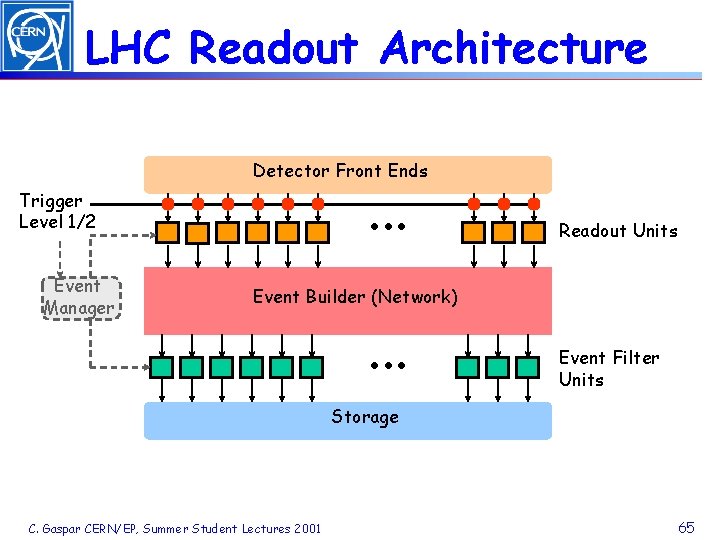

LHC Readout Architecture

Diagram labels: Detector Front Ends, Trigger Level 1/2, Event Manager, Readout Units, Event Builder (Network), Event Filter Units, Storage.

Event Building to a CPU farm

Diagram labels: Data sources, Event Fragments, Event Building, Full Events, Event filter CPUs, Data storage.

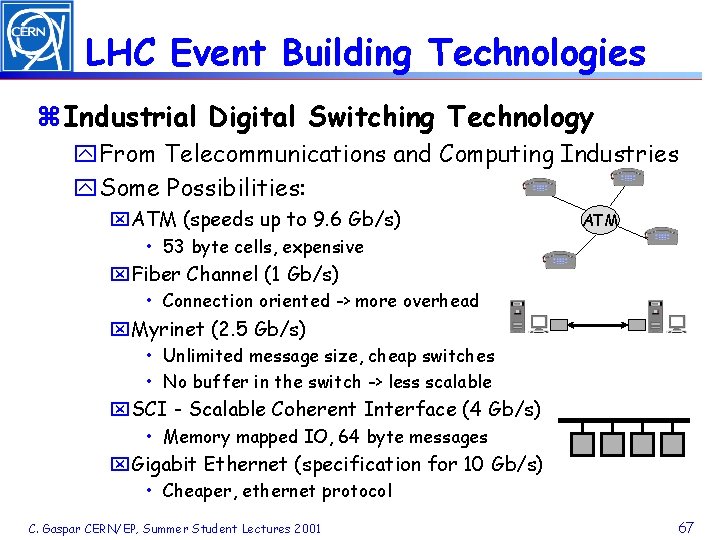

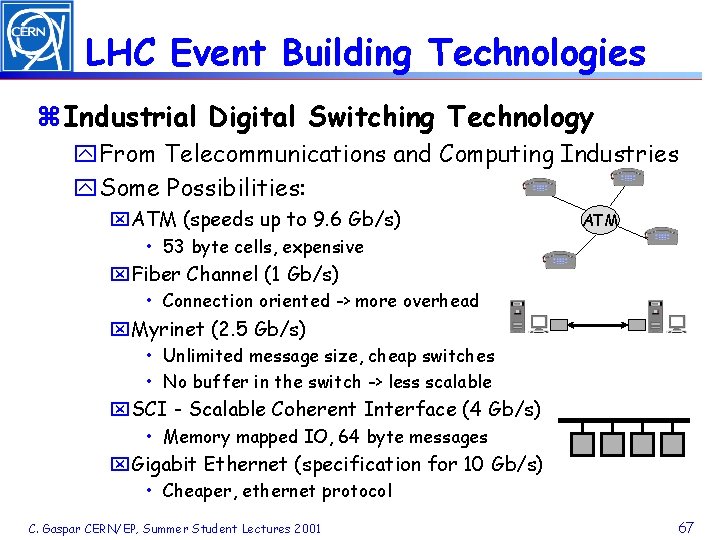

LHC Event Building Technologies

- Industrial digital switching technology
  - From the telecommunications and computing industries
  - Some possibilities:
    - ATM (speeds up to 9.6 Gb/s)
      - 53 byte cells, expensive
    - Fiber Channel (1 Gb/s)
      - Connection oriented, so more overhead
    - Myrinet (2.5 Gb/s)
      - Unlimited message size, cheap switches
      - No buffer in the switch, so less scalable
    - SCI, Scalable Coherent Interface (4 Gb/s)
      - Memory mapped IO, 64 byte messages
    - Gigabit Ethernet (specification for 10 Gb/s)
      - Cheaper, Ethernet protocol

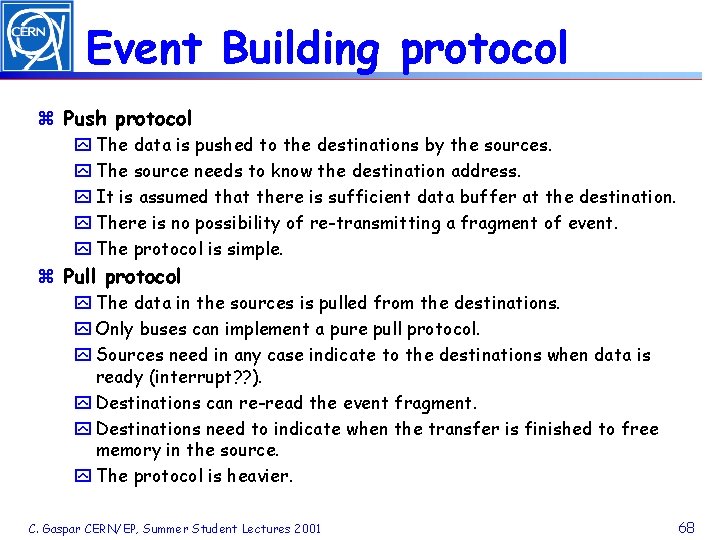

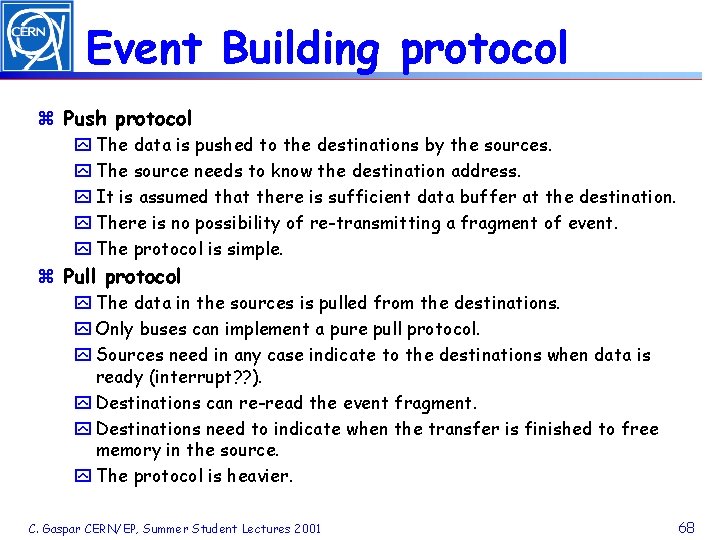

Event Building protocol

- Push protocol
  - The data is pushed to the destinations by the sources.
  - The source needs to know the destination address.
  - It is assumed that there is sufficient buffer space at the destination.
  - There is no possibility of re-transmitting an event fragment.
  - The protocol is simple.
- Pull protocol
  - The data in the sources is pulled by the destinations.
  - Only buses can implement a pure pull protocol.
  - Sources need in any case to indicate to the destinations when data is ready (interrupt?).
  - Destinations can re-read the event fragment.
  - Destinations need to indicate when the transfer is finished, to free memory in the source.
  - The protocol is heavier.
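A push-style event builder can be sketched in a few lines: every source sends its fragment to a destination computed from the event number, and the destination assembles the event once all fragments have arrived. The round-robin destination rule, the class and the method names are assumptions made for illustration, not the scheme of any particular experiment.

```python
from collections import defaultdict


def destination_for(event_id: int, n_destinations: int) -> int:
    """Every source computes the same destination without any handshake."""
    return event_id % n_destinations


class PushEventBuilder:
    """Destination side: collects fragments pushed by the sources."""

    def __init__(self, n_sources: int):
        self.n_sources = n_sources
        self.partial = defaultdict(dict)   # event_id -> {source_id: payload}

    def push(self, event_id, source_id, payload):
        """Store one fragment; return the full event when it is complete."""
        fragments = self.partial[event_id]
        fragments[source_id] = payload
        if len(fragments) < self.n_sources:
            return None
        del self.partial[event_id]          # free the buffer space
        return [fragments[s] for s in sorted(fragments)]


if __name__ == "__main__":
    builder = PushEventBuilder(n_sources=3)
    print(builder.push(7, 2, "c"))
    print(builder.push(7, 0, "a"))
    print(builder.push(7, 1, "b"))
```

Note that nothing in this sketch lets a destination ask for a lost fragment again, which mirrors the limitation of the push protocol listed above.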

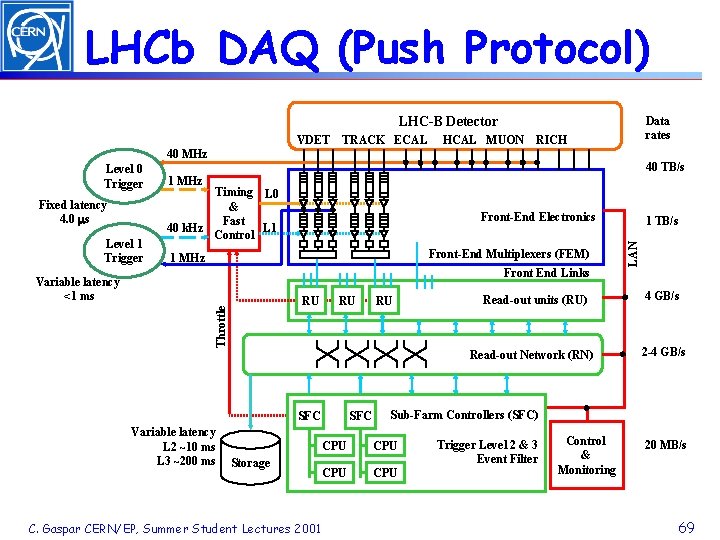

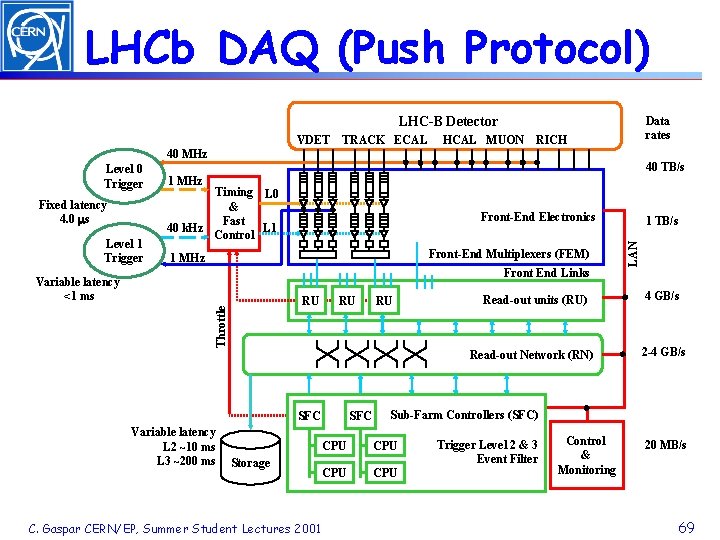

LHCb DAQ (Push Protocol)

Diagram labels:

- LHCb detector: VDET, TRACK, ECAL, HCAL, MUON, RICH
- Level 0 Trigger (fixed latency 4.0 µs) and Level 1 Trigger (variable latency < 1 ms); Timing & Fast Control; Throttle
- Rates: 40 MHz, 1 MHz (L0), 40 kHz (L1)
- Data rates: 40 TB/s, 1 TB/s, 4 GB/s, 2-4 GB/s, 20 MB/s
- Front-End Electronics, Front-End Multiplexers (FEM), Front End Links, Read-out Units (RU), Read-out Network (RN), Sub-Farm Controllers (SFC), CPUs
- Level 2 & 3 Event Filter (variable latency: L2 ~10 ms, L3 ~200 ms), Storage
- Control & Monitoring (LAN)

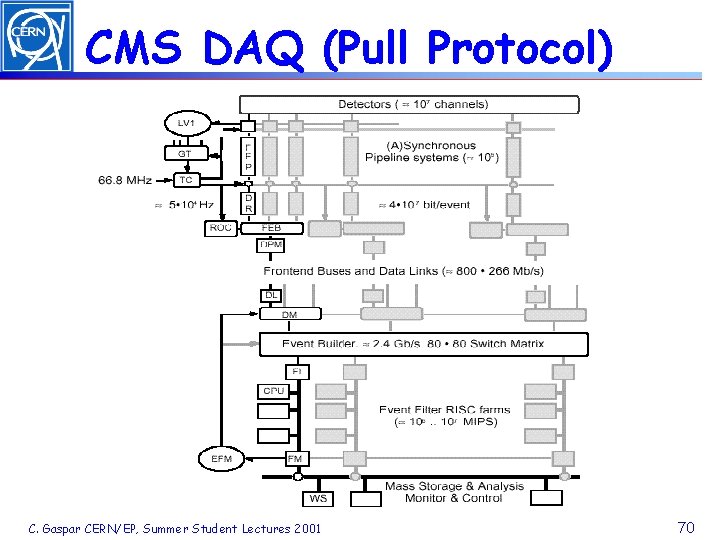

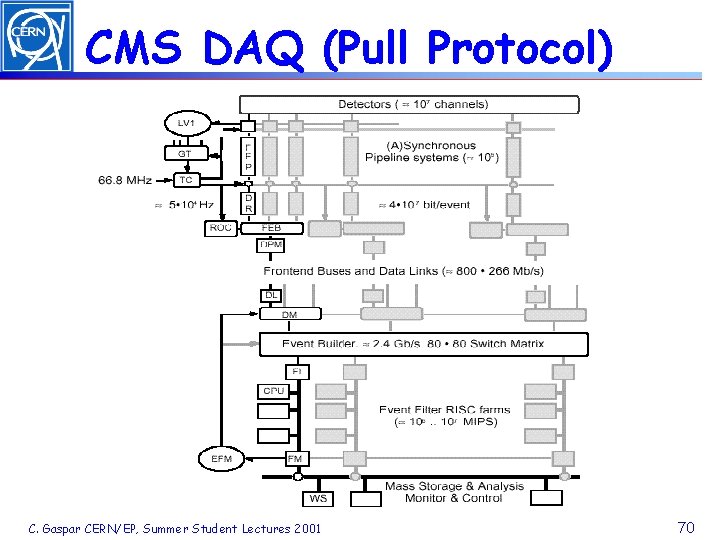

CMS DAQ (Pull Protocol)

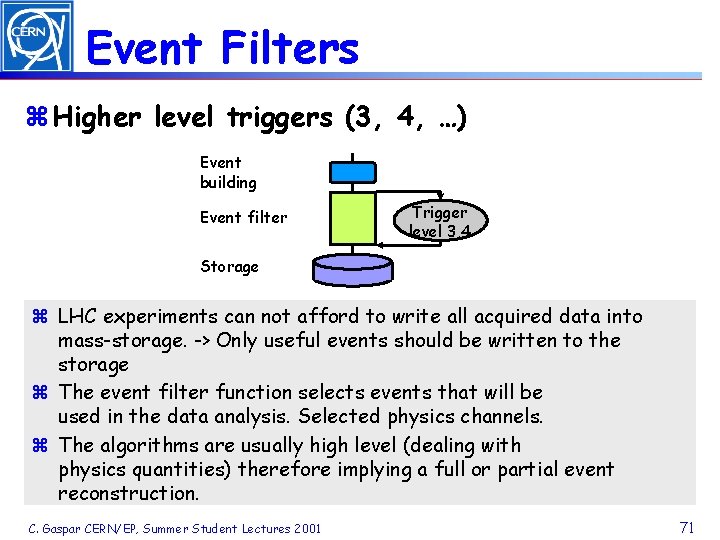

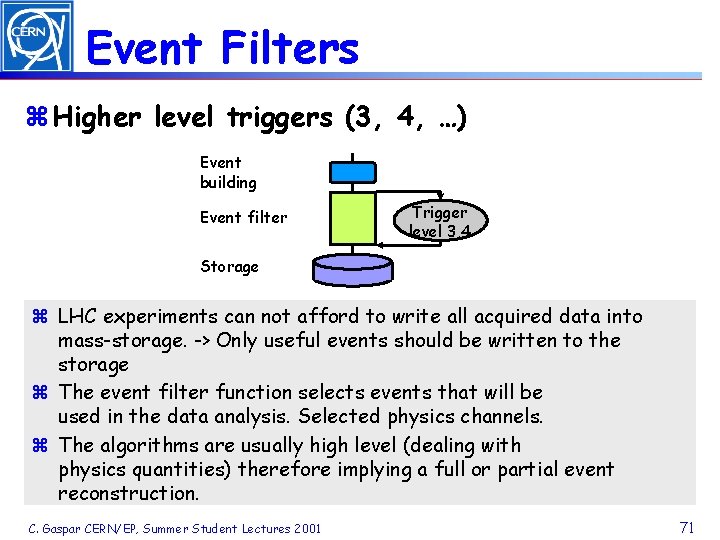

Event Filters

Diagram labels: Event building, Event filter, Trigger level 3, 4, Storage.

- Higher level triggers (3, 4, ...)
- LHC experiments cannot afford to write all acquired data into mass storage: only useful events should be written to storage.
- The event filter function selects the events that will be used in the data analysis (selected physics channels).
- The algorithms are usually high level (dealing with physics quantities), therefore implying a full or partial event reconstruction.

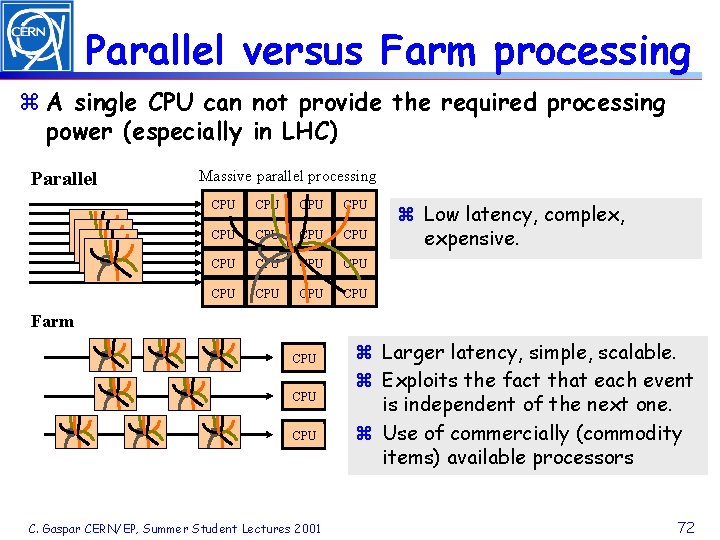

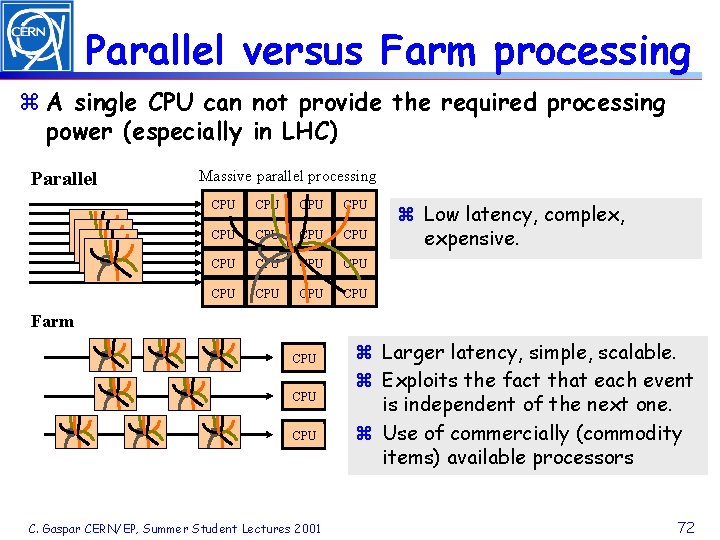

Parallel versus Farm processing

- A single CPU cannot provide the required processing power (especially at LHC).
- Parallel: massive parallel processing
  - Low latency, complex, expensive
- Farm
  - Larger latency, simple, scalable
  - Exploits the fact that each event is independent of the next one
  - Use of commercially available (commodity) processors
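Because events are independent, the size of a farm can be estimated from the input rate and the processing time per event: on average, rate × time CPUs are busy at any moment. The sketch below ignores queuing fluctuations and overheads; the function name and the example numbers are illustrative assumptions.

```python
def farm_size(input_rate_hz: int, time_per_event_us: int) -> int:
    """Minimum number of CPUs that keeps up with the input rate on average."""
    busy_cpu_us_per_second = input_rate_hz * time_per_event_us
    return -(-busy_cpu_us_per_second // 1_000_000)   # ceiling division


if __name__ == "__main__":
    # e.g. a 40 kHz input rate with 10 ms of processing per event
    print(farm_size(40_000, 10_000))
```

A real farm would need headroom on top of this figure, since processing times fluctuate and a fully loaded farm has no margin.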

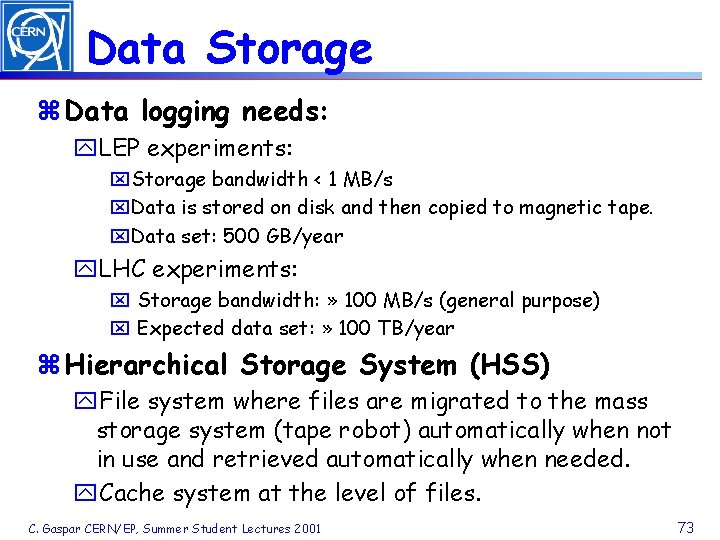

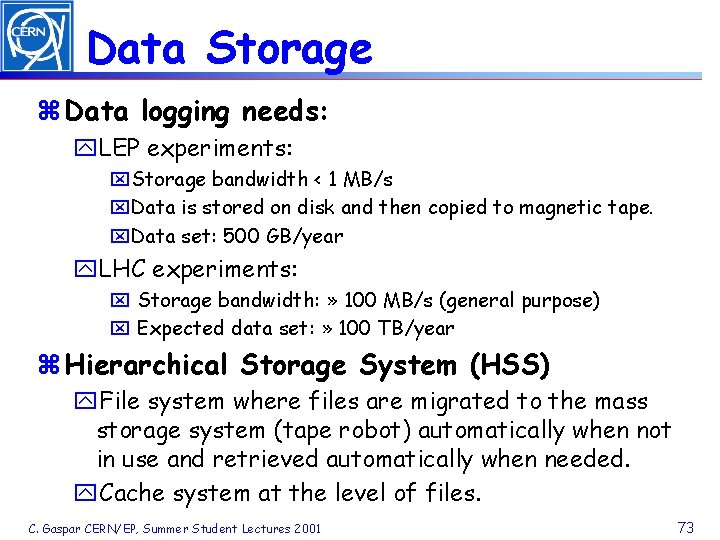

Data Storage

- Data logging needs:
  - LEP experiments:
    - Storage bandwidth < 1 MB/s
    - Data is stored on disk and then copied to magnetic tape.
    - Data set: 500 GB/year
  - LHC experiments:
    - Storage bandwidth: ≈ 100 MB/s (general purpose)
    - Expected data set: ≈ 100 TB/year
- Hierarchical Storage System (HSS)
  - File system where files are migrated to the mass storage system (tape robot) automatically when not in use and retrieved automatically when needed.
  - Cache system at the level of files.

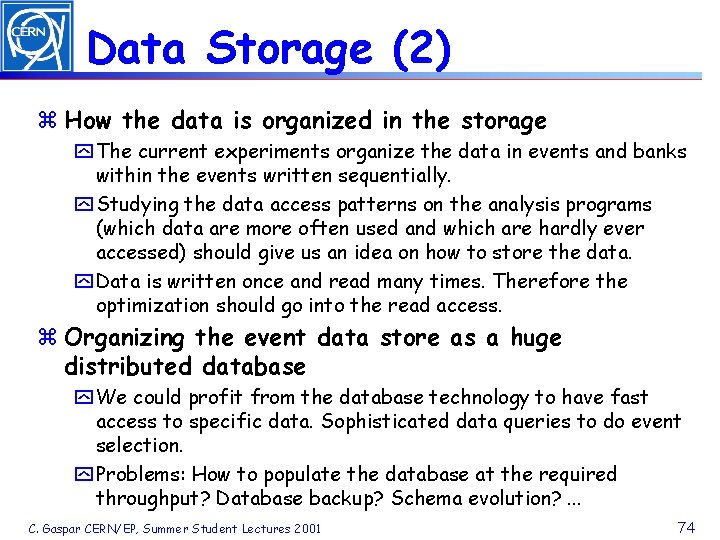

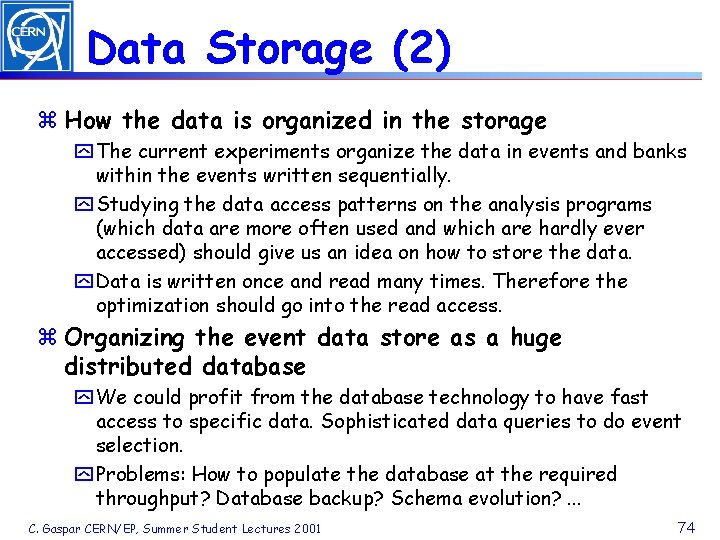

Data Storage (2)

- How the data is organized in the storage
  - The current experiments organize the data in events, and in banks within the events, written sequentially.
  - Studying the data access patterns of the analysis programs (which data are used most often and which are hardly ever accessed) should give us an idea of how to store the data.
  - Data is written once and read many times. Therefore the optimization should go into the read access.
- Organizing the event data store as a huge distributed database
  - We could profit from database technology to have fast access to specific data, and use sophisticated data queries to do event selection.
  - Problems: How to populate the database at the required throughput? Database backup? Schema evolution? ...

Configuration, Control and Monitoring Clara Gaspar CERN/EP-LBC

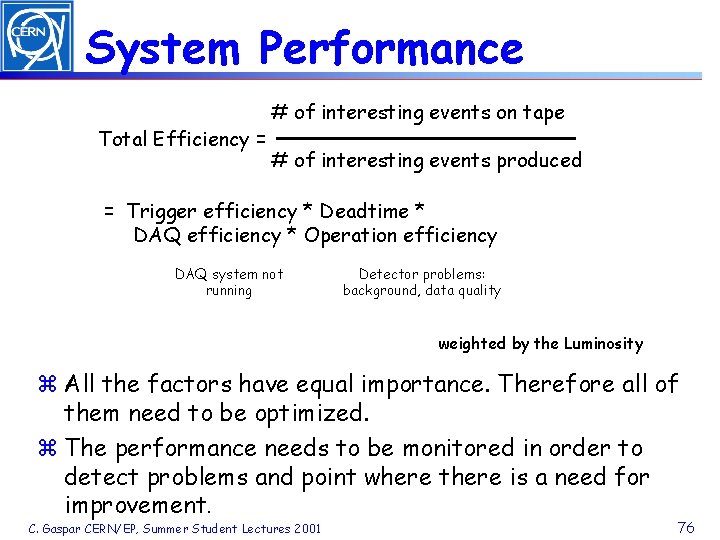

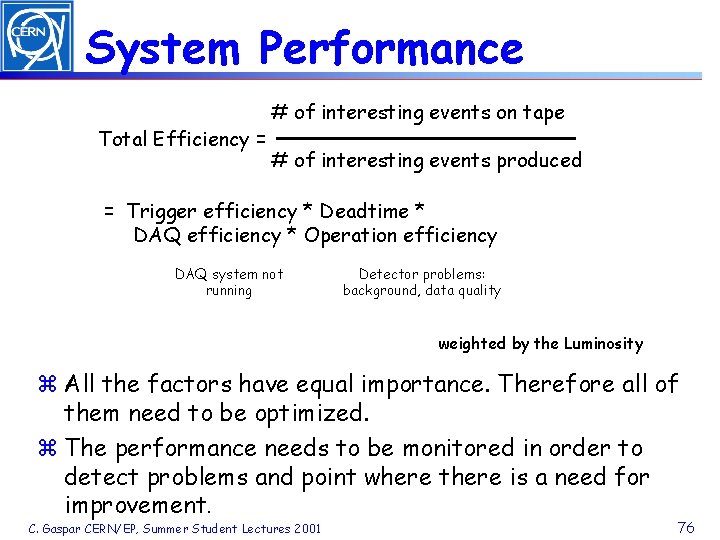

System Performance

Total efficiency = (# of interesting events on tape) / (# of interesting events produced) = Trigger efficiency × Deadtime × DAQ efficiency × Operation efficiency

Contributions noted on the diagram: DAQ system not running; detector problems (background, data quality); weighted by the luminosity.

- All the factors have equal importance. Therefore all of them need to be optimized.
- The performance needs to be monitored in order to detect problems and to point out where there is a need for improvement.
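Since the total efficiency is a product, a modest loss in each factor compounds quickly. The sketch below makes that arithmetic explicit; it assumes the deadtime factor is expressed as a live fraction (1 minus the deadtime), and the names and numbers are illustrative only.

```python
import math


def total_efficiency(trigger, live_fraction, daq, operation):
    """Product of the four factors, each given as a fraction between 0 and 1.

    `live_fraction` is 1 - deadtime (an assumption about how the slide's
    "Deadtime" factor enters the product).
    """
    return math.prod([trigger, live_fraction, daq, operation])


if __name__ == "__main__":
    # Four factors at 90% each already lose about a third of the events.
    print(total_efficiency(0.9, 0.9, 0.9, 0.9))
```

This is why the slide insists that every factor matters equally: improving only the trigger cannot compensate for, say, a DAQ that is frequently not running.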

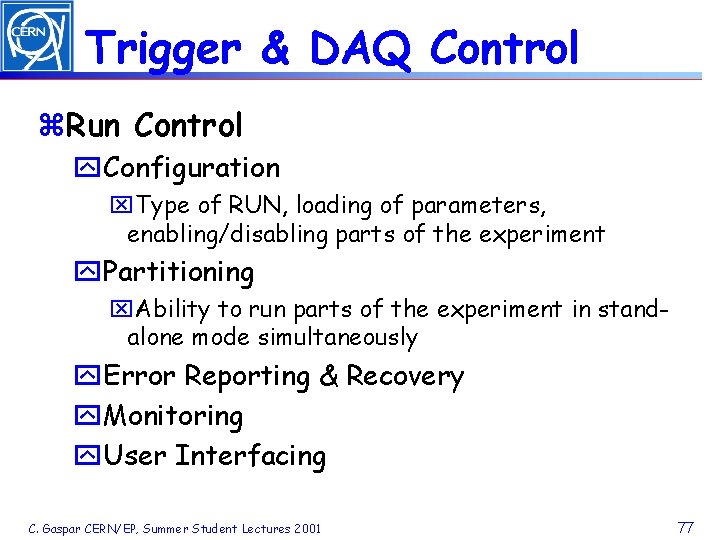

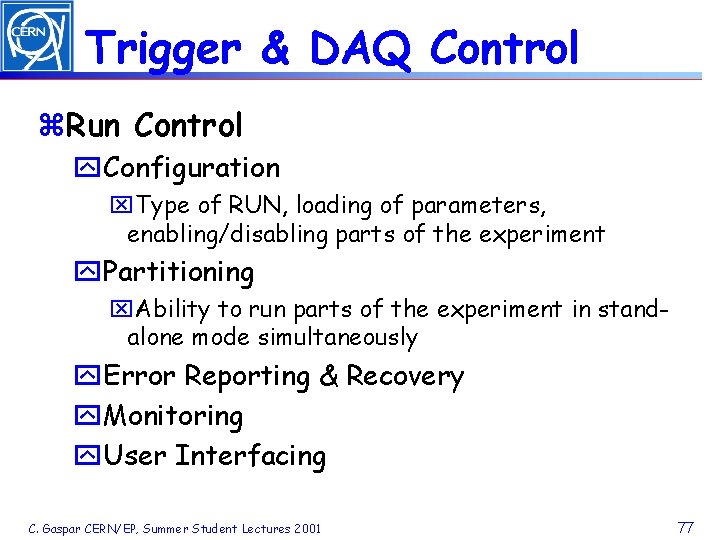

Trigger & DAQ Control

- Run Control
  - Configuration
    - Type of RUN, loading of parameters, enabling/disabling parts of the experiment
  - Partitioning
    - Ability to run parts of the experiment in stand-alone mode simultaneously
  - Error reporting & recovery
  - Monitoring
  - User interfacing

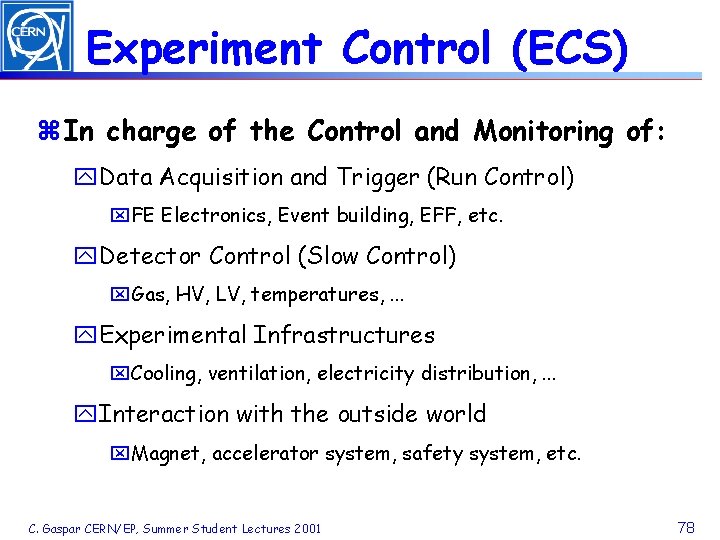

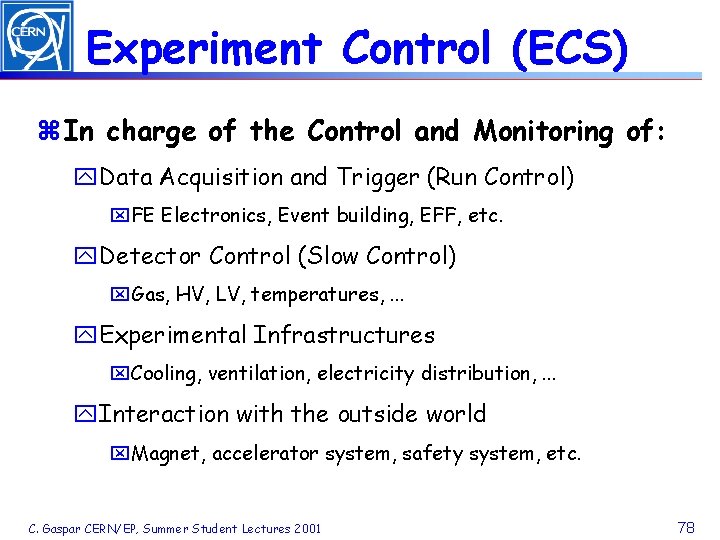

Experiment Control (ECS)

- In charge of the Control and Monitoring of:
  - Data Acquisition and Trigger (Run Control)
    - FE Electronics, Event building, EFF, etc.
  - Detector Control (Slow Control)
    - Gas, HV, LV, temperatures, ...
  - Experimental Infrastructures
    - Cooling, ventilation, electricity distribution, ...
  - Interaction with the outside world
    - Magnet, accelerator system, safety system, etc.

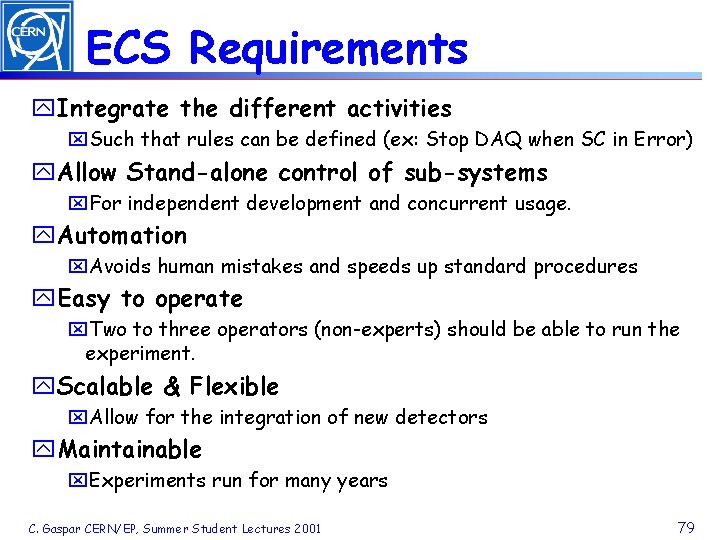

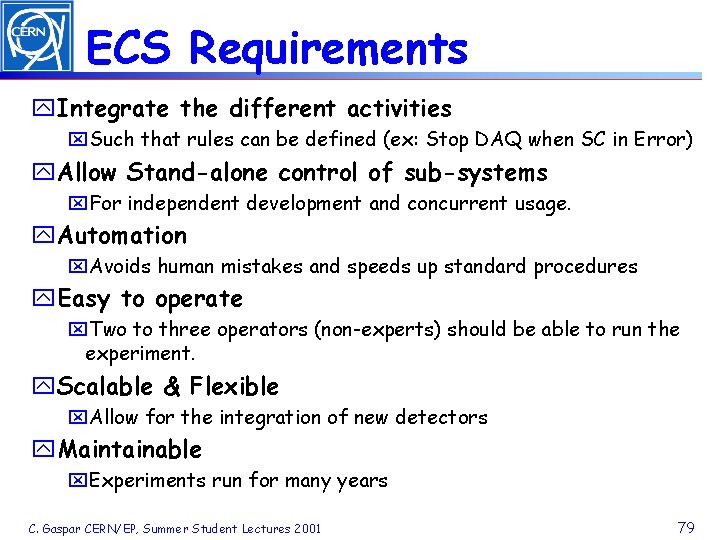

ECS Requirements

- Integrate the different activities
  - Such that rules can be defined (e.g. stop DAQ when SC is in Error)
- Allow stand-alone control of sub-systems
  - For independent development and concurrent usage.
- Automation
  - Avoids human mistakes and speeds up standard procedures
- Easy to operate
  - Two to three operators (non-experts) should be able to run the experiment.
- Scalable & Flexible
  - Allow for the integration of new detectors
- Maintainable
  - Experiments run for many years

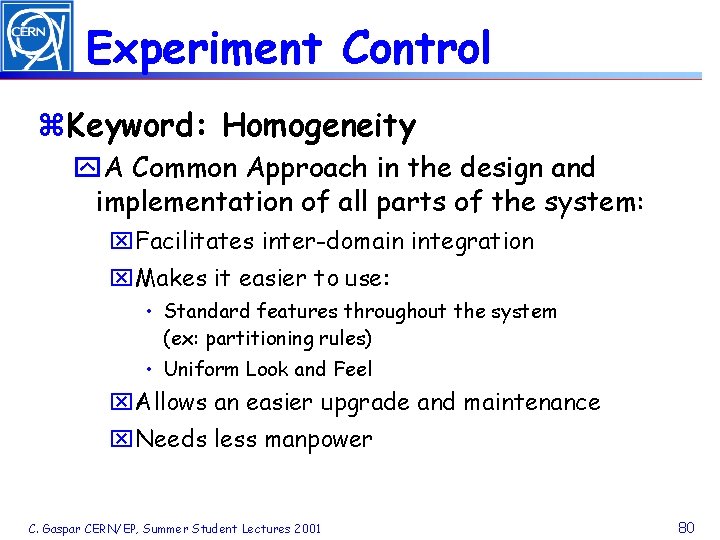

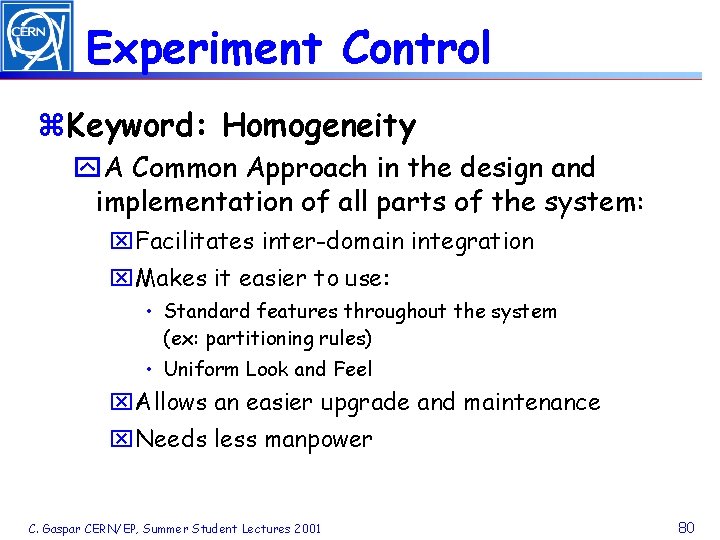

Experiment Control

- Keyword: Homogeneity
  - A common approach in the design and implementation of all parts of the system:
    - Facilitates inter-domain integration
    - Makes it easier to use:
      - Standard features throughout the system (e.g. partitioning rules)
      - Uniform look and feel
    - Allows easier upgrade and maintenance
    - Needs less manpower

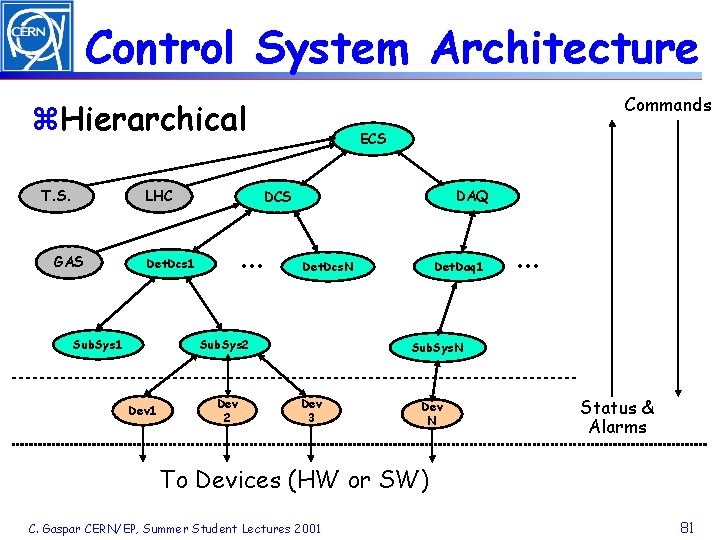

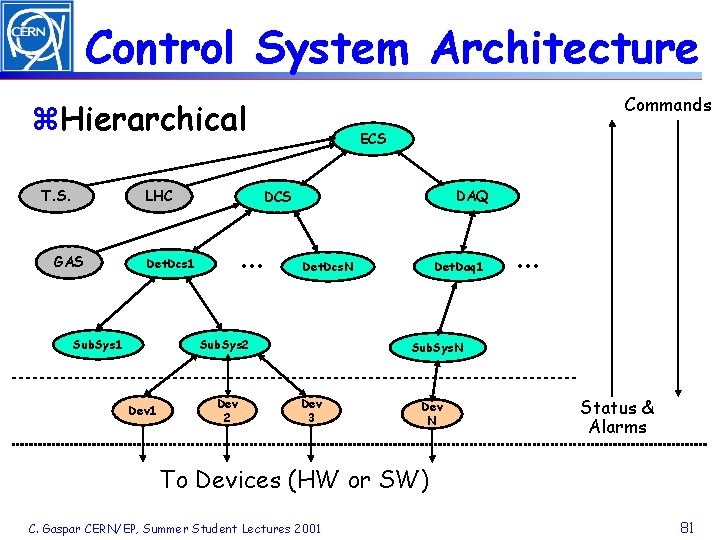

Control System Architecture

- Hierarchical

[Figure: control tree with ECS at the top; below it T.S., LHC, GAS, DAQ, DCS, ...; then DetDcs1 ... DetDcsN and DetDaq1 ...; then SubSys1, SubSys2, ... SubSysN; at the bottom Dev1, Dev2, Dev3, ... DevN, connected "To Devices (HW or SW)". Arrows are labelled "Commands" and "Status & Alarms".]
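A hierarchical control tree of this kind can be sketched in a few lines. This is a minimal illustration, assuming that commands are forwarded from parent to children and that status is summarized from children to parent; the class `ControlNode`, the state names `READY`/`ERROR` and the node names used below are hypothetical.

```python
class ControlNode:
    """Node of a control tree (hypothetical names, illustration only)."""

    def __init__(self, name, children=None):
        self.name = name
        self.children = list(children or [])
        self.own_state = "READY"   # meaningful only for leaf nodes (devices)
        self.received = []         # commands seen by this node

    def command(self, action):
        # Commands travel downwards: record locally, then forward to children.
        self.received.append(action)
        for child in self.children:
            child.command(action)

    def status(self):
        # Status travels upwards: a parent reports ERROR if any child does.
        if not self.children:
            return self.own_state
        states = [child.status() for child in self.children]
        return "ERROR" if "ERROR" in states else "READY"


dev1, dev2 = ControlNode("Dev1"), ControlNode("Dev2")
subsys = ControlNode("SubSys1", [dev1, dev2])
ecs = ControlNode("ECS", [ControlNode("DAQ", [subsys])])

ecs.command("CONFIGURE")      # reaches every device below ECS
dev2.own_state = "ERROR"      # a single faulty device ...
print(ecs.status())           # ... is visible at the top: ERROR
```

Each node only knows its direct children, so a sub-tree can be detached and operated on its own without changing the code.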

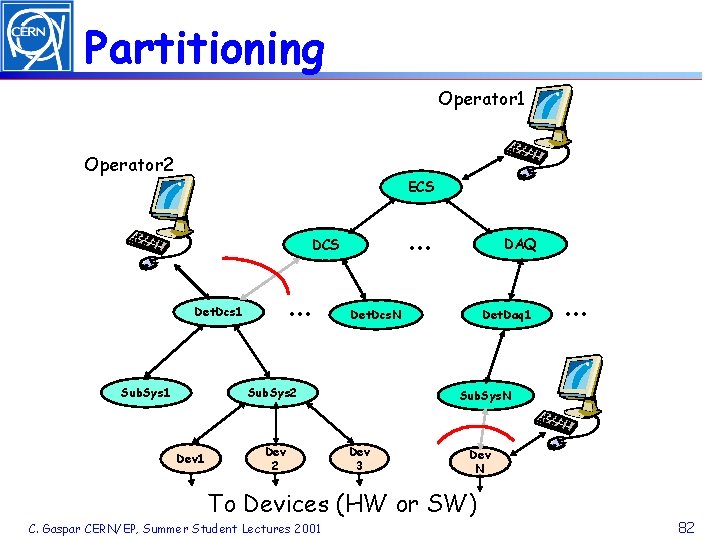

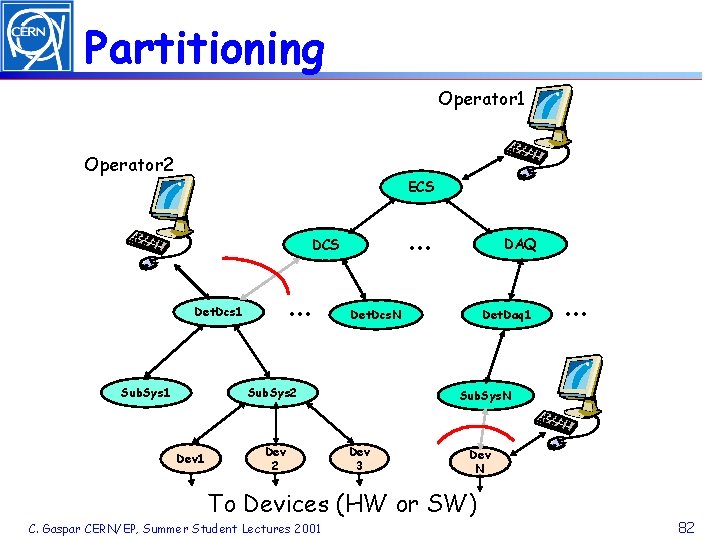

Partitioning

[Figure: the hierarchical control tree (ECS, DAQ, DetDcs1 ... DetDcsN, DetDaq1, SubSys1 ... SubSysN, Dev1 ... DevN, "To Devices (HW or SW)") with two operators, Operator 1 and Operator 2, controlling different parts of it.]

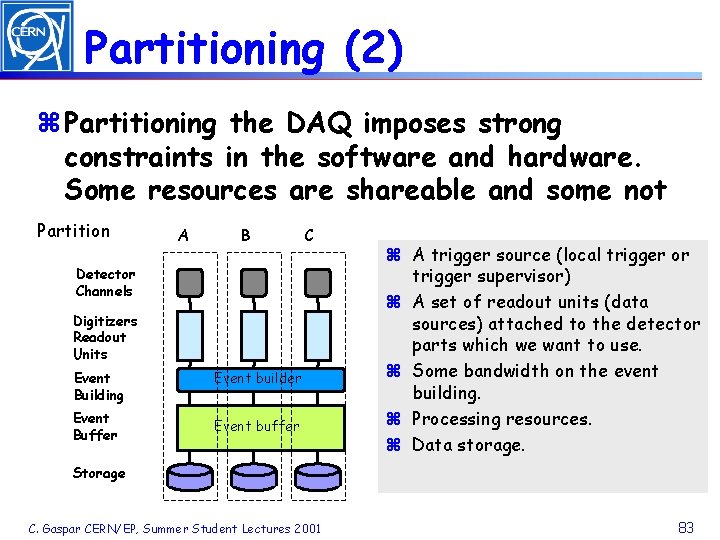

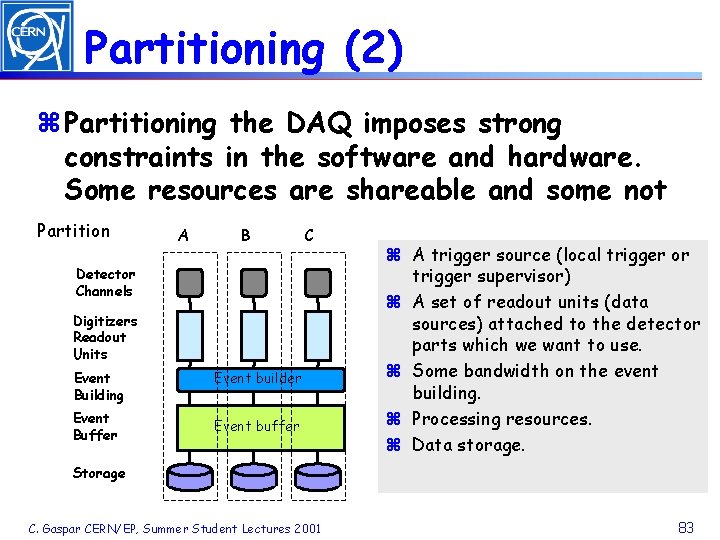

Partitioning (2)

- Partitioning the DAQ imposes strong constraints on the software and hardware. Some resources are shareable and some are not.

[Figure: partitions A, B and C spanning the readout chain: Detector Channels, Digitizers, Readout Units, Event Building (event builder), Event Buffer, Storage.]

- A trigger source (local trigger or trigger supervisor)
- A set of readout units (data sources) attached to the detector parts which we want to use.
- Some bandwidth on the event building.
- Processing resources.
- Data storage.
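The distinction between shareable and non-shareable resources can be sketched as a small allocator. Everything here is an assumption made for illustration: the class `PartitionManager`, the resource names, and in particular the choice of which resources are shareable (event building and storage) and which are exclusive (trigger sources and readout units).

```python
class PartitionManager:
    """Toy resource allocator for DAQ partitions (hypothetical, illustration only)."""

    def __init__(self, shareable):
        self.shareable = set(shareable)  # resources several partitions may use
        self.owner = {}                  # exclusive resource -> owning partition
        self.partitions = {}             # partition name -> set of resources

    def allocate(self, partition, resources):
        resources = set(resources)
        exclusive = resources - self.shareable
        conflicts = sorted(
            r for r in exclusive
            if r in self.owner and self.owner[r] != partition
        )
        if conflicts:
            raise ValueError(f"{partition}: already in use: {conflicts}")
        for r in exclusive:
            self.owner[r] = partition
        self.partitions.setdefault(partition, set()).update(resources)

    def release(self, partition):
        # Free the exclusive resources held by this partition.
        for r in self.partitions.pop(partition, set()):
            if self.owner.get(r) == partition:
                del self.owner[r]


mgr = PartitionManager(shareable={"event_builder", "storage"})
mgr.allocate("A", {"local_trigger_A", "readout_unit_1", "event_builder", "storage"})
mgr.allocate("B", {"local_trigger_B", "readout_unit_2", "event_builder", "storage"})
```

Partitions A and B run concurrently because they only overlap on shareable resources; a third partition asking for `readout_unit_1` would be refused until A releases it.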

System Configuration

- All the components of the system need to be configured before they can perform their function.
  - Detector channels: thresholds, calibration constants need to be downloaded.
  - Processing elements: programs and parameters.
  - Readout elements: destination and source addresses. Topology configuration.
  - Trigger elements: programs, thresholds, parameters.
- Configuration needs to be performed in a given sequence.
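One way to obtain "a given sequence" is to declare which components must be configured before which others and derive the order from that. The sketch below uses Python's standard `graphlib` module (Python 3.9 or later); the dependencies in `DEPENDS_ON` are invented for the example and do not describe any particular experiment.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical dependencies: each key is configured after the items in its set.
DEPENDS_ON = {
    "detector_channels": set(),
    "readout_elements": {"detector_channels"},
    "processing_elements": {"readout_elements"},
    "trigger_elements": {"detector_channels"},
}


def configuration_order(depends_on):
    """Return a configuration sequence that respects every dependency."""
    return list(TopologicalSorter(depends_on).static_order())


def configure_all(depends_on, configure):
    """Call configure(component) for every component, in a valid order."""
    done = []
    for component in configuration_order(depends_on):
        configure(component)
        done.append(component)
    return done


configure_all(DEPENDS_ON, lambda name: print("configuring", name))
```

A circular dependency makes `static_order()` raise `graphlib.CycleError`, so an inconsistent description is caught before any hardware is touched.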

System Configuration (2)

- Databases
  - The data to configure the hardware and software is retrieved from a database system. No data should be hardwired in the code (addresses, names, parameters, etc.).
- Data Driven Code
  - Generic software should be used wherever possible (it is the data that changes)
  - In Delphi all sub-detectors run the same DAQ & DAQ control software
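The idea of data-driven code can be sketched with a small configuration database. This example uses an in-memory SQLite database from the Python standard library; the table layout, the sub-detector names and the parameter values are all hypothetical and only stand in for a real configuration database.

```python
import sqlite3


def open_config_db():
    """Create an in-memory configuration database with made-up contents."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE parameters (subdetector TEXT, name TEXT, value REAL)"
    )
    db.executemany(
        "INSERT INTO parameters VALUES (?, ?, ?)",
        [
            ("tracker", "threshold", 12.5),
            ("tracker", "n_channels", 1024),
            ("calorimeter", "threshold", 40.0),
            ("calorimeter", "n_channels", 256),
        ],
    )
    return db


def load_parameters(db, subdetector):
    """Fetch all parameters of one sub-detector as a dictionary."""
    rows = db.execute(
        "SELECT name, value FROM parameters WHERE subdetector = ?",
        (subdetector,),
    )
    return dict(rows.fetchall())


def configure(db, subdetector):
    """Generic configuration routine: the same code for every sub-detector."""
    params = load_parameters(db, subdetector)
    settings = ", ".join(f"{k}={v}" for k, v in sorted(params.items()))
    return f"{subdetector}: {settings}"


db = open_config_db()
for name in ("tracker", "calorimeter"):
    print(configure(db, name))
```

Adding a sub-detector or changing a threshold then means inserting or updating rows; `configure` itself does not change.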

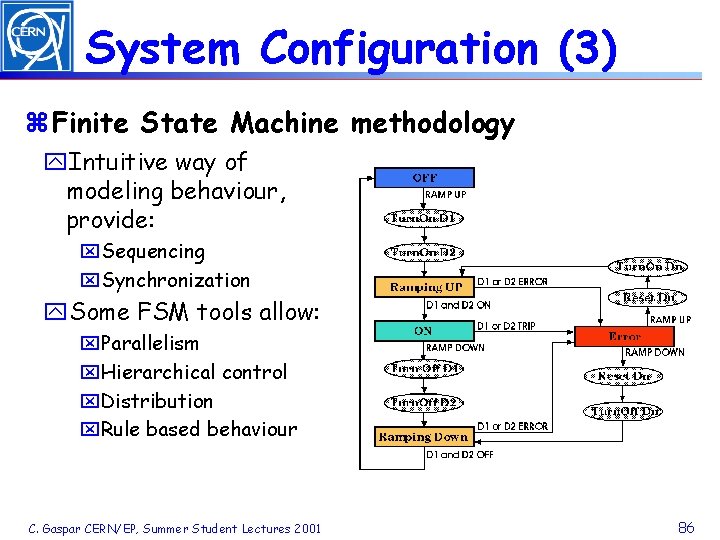

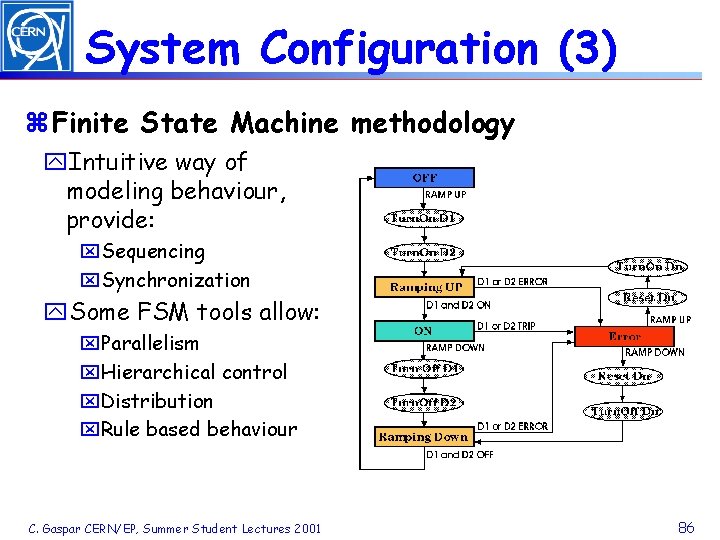

System Configuration (3)

- Finite State Machine methodology
  - Intuitive way of modeling behaviour, which provides:
    - Sequencing
    - Synchronization
  - Some FSM tools allow:
    - Parallelism
    - Hierarchical control
    - Distribution
    - Rule based behaviour
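A finite state machine enforces sequencing simply by refusing commands that are not valid in the current state. The sketch below is a minimal illustration; the state names, the command names and the transition table are assumptions and are not taken from any specific FSM tool.

```python
class RunStateMachine:
    """Minimal run-control state machine (hypothetical states and commands)."""

    # (current state, command) -> next state
    TRANSITIONS = {
        ("NOT_READY", "configure"): "READY",
        ("READY", "start"): "RUNNING",
        ("RUNNING", "stop"): "READY",
        ("READY", "reset"): "NOT_READY",
    }

    def __init__(self):
        self.state = "NOT_READY"

    def handle(self, command):
        key = (self.state, command)
        if key not in self.TRANSITIONS:
            raise ValueError(f"'{command}' not allowed in state {self.state}")
        self.state = self.TRANSITIONS[key]
        return self.state


fsm = RunStateMachine()
try:
    fsm.handle("start")              # rejected: not configured yet
except ValueError as err:
    print(err)
for command in ("configure", "start", "stop"):
    print(command, "->", fsm.handle(command))
```

Because the allowed sequence lives in the `TRANSITIONS` table rather than in scattered `if` statements, the behaviour can be read, reviewed and changed as data.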

Automation

- What can be automated
  - Standard Procedures:
    - Start of fill, End of fill
  - Detection and Recovery from (known) error situations
- How
  - Expert System (Aleph)
  - Finite State Machines (Delphi)

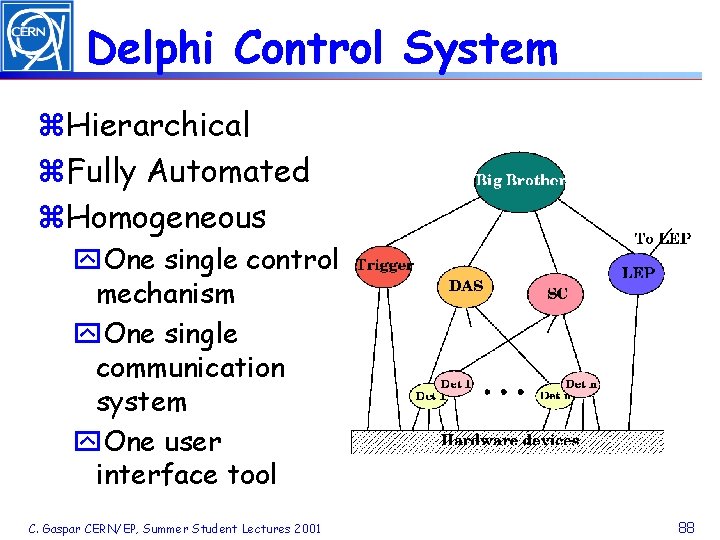

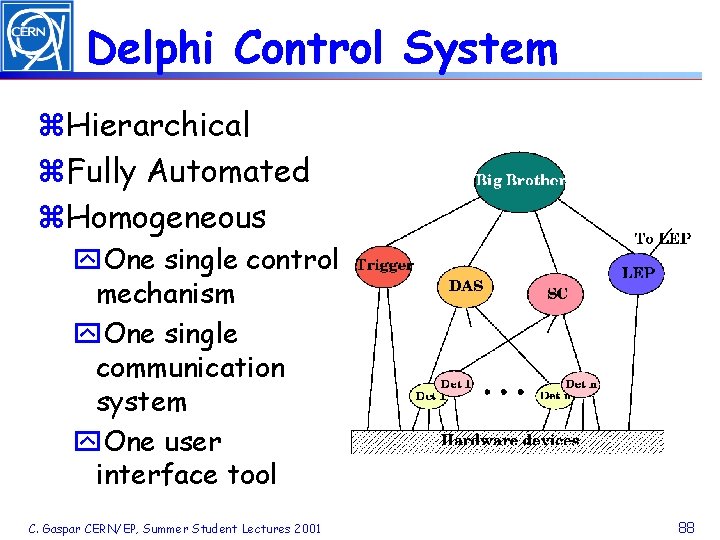

Delphi Control System

- Hierarchical
- Fully Automated
- Homogeneous
  - One single control mechanism
  - One single communication system
  - One user interface tool

Monitoring

- Two types of Monitoring
  - Monitor the experiment's behaviour
    - Automation tools whenever possible
    - Good User Interface
  - Monitor the quality of the data
    - Automatic histogram production and analysis
    - User-interfaced histogram analysis
    - Event displays (raw data)

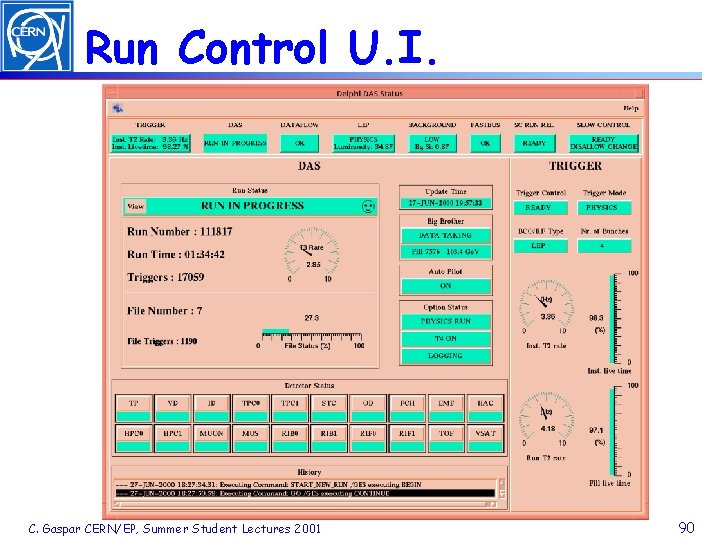

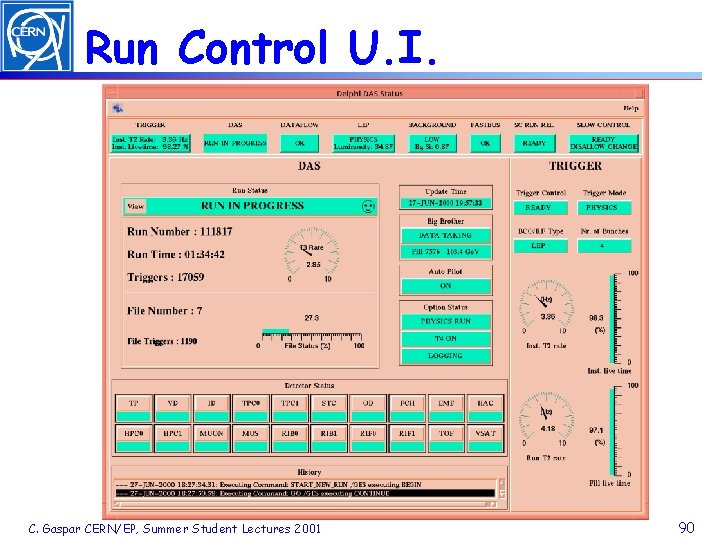

Run Control U.I.

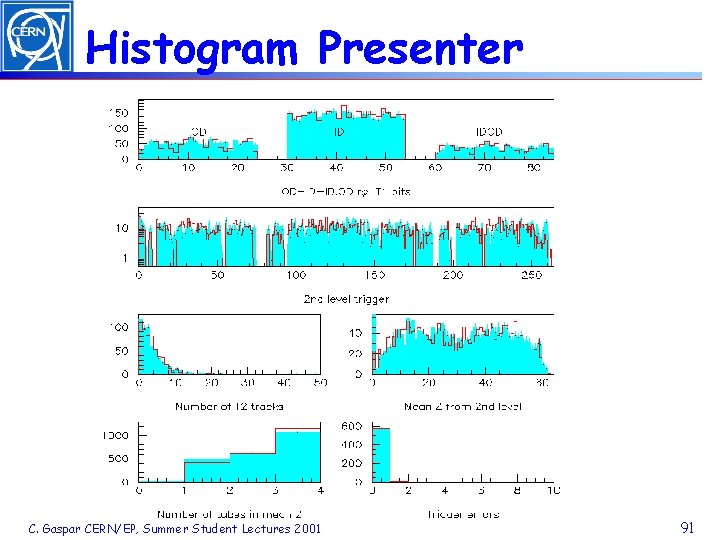

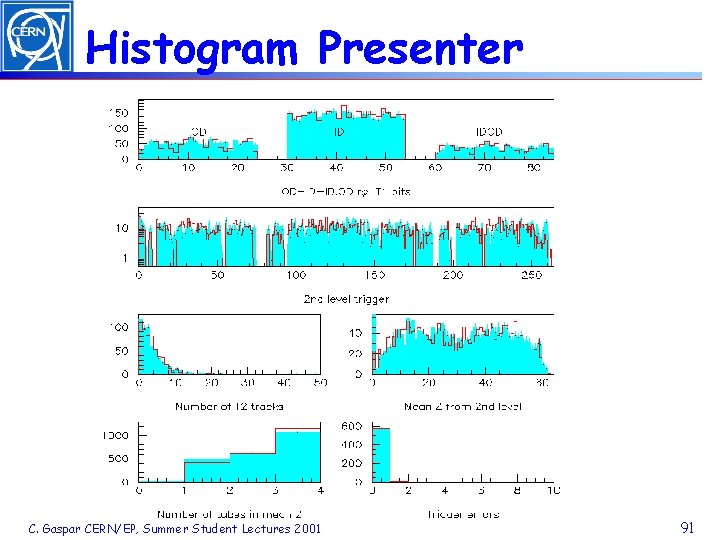

Histogram Presenter

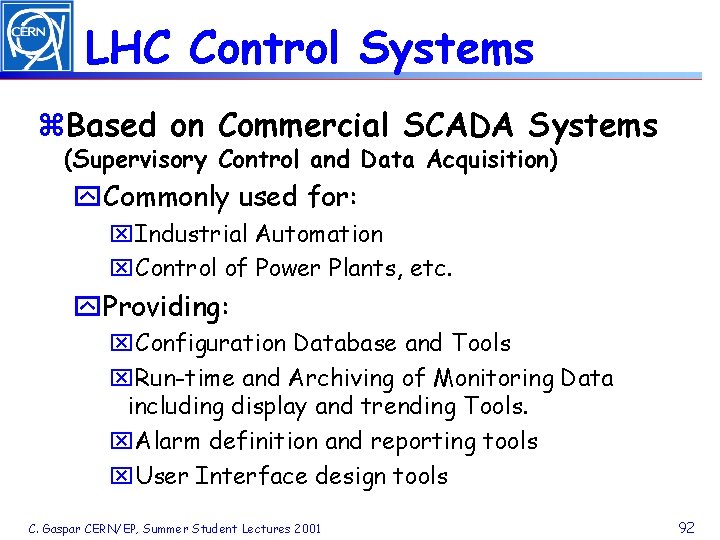

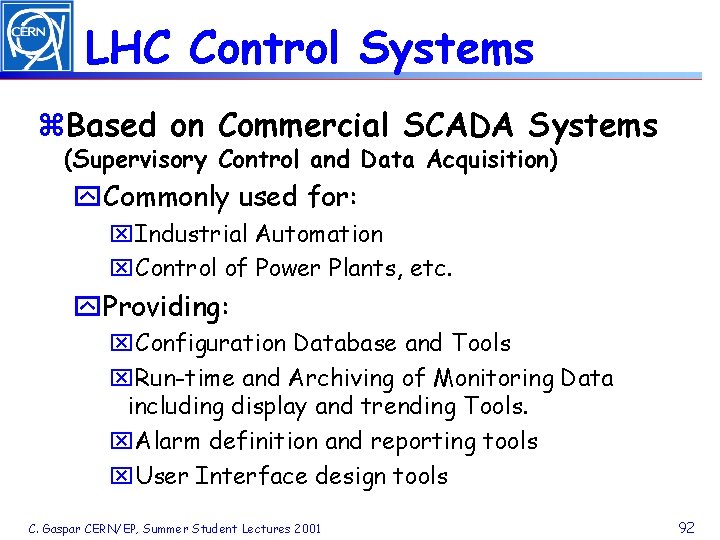

LHC Control Systems

- Based on commercial SCADA systems (Supervisory Control and Data Acquisition)
  - Commonly used for:
    - Industrial automation
    - Control of power plants, etc.
  - Providing:
    - Configuration database and tools
    - Run-time handling and archiving of monitoring data, including display and trending tools
    - Alarm definition and reporting tools
    - User interface design tools

Concluding Remarks

- Trigger and Data Acquisition systems are becoming increasingly complex as the scale of the experiments increases. Fortunately the advances being made and expected in the technology are just about sufficient for our requirements.
- Requirements of telecommunications and computing in general have strongly contributed to the development of standard technologies and mass production by industry.
  - Hardware: Flash ADC, Analog memory, PC, Helical scan recording, Data compression, Image processing, Cheap MIPS, ...
  - Software: Distributed computing, Integration technology, Software development environment, ...
- With all these off-the-shelf components and technologies we can architect a big fraction of the new DAQ systems for the LHC experiments. Customization will still be needed in the front-end.
- It is essential that we keep up-to-date with the progress being made by industry.