Data Acquisition and Integration in the DGRC’s Energy Data Collection Project

- Slides: 15

Data Acquisition and Integration in the DGRC’s Energy Data Collection Project

Eduard Hovy, Andrew Philpot, Jose Luis Ambite, Yigal Arens (USC/ISI)
Judith Klavans, Walter Bourne, Deniz Saros (Columbia University)

The EDC Project
• EDC members
  – Information Sciences Institute, USC
  – Dept. of Computer Science, Columbia University
• Government partners
  – Energy Information Administration (EIA)
  – Bureau of Labor Statistics (BLS)
  – Census Bureau
• Research challenge
  – Make the contents of thousands of data sets, represented in many different ways (web pages, PDF, MS Access, text…), accessible in a standardized way

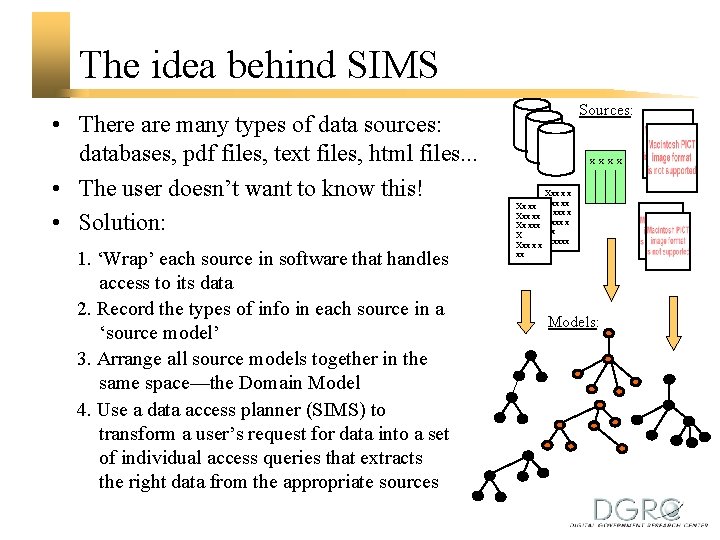

The idea behind SIMS
• There are many types of data sources: databases, PDF files, text files, HTML files…
• The user doesn’t want to know this!
• Solution:
  1. ‘Wrap’ each source in software that handles access to its data
  2. Record the types of information in each source in a ‘source model’
  3. Arrange all source models together in the same space: the Domain Model
  4. Use a data access planner (SIMS) to transform a user’s request for data into a set of individual access queries that extract the right data from the appropriate sources
(Figure: Sources, Models)

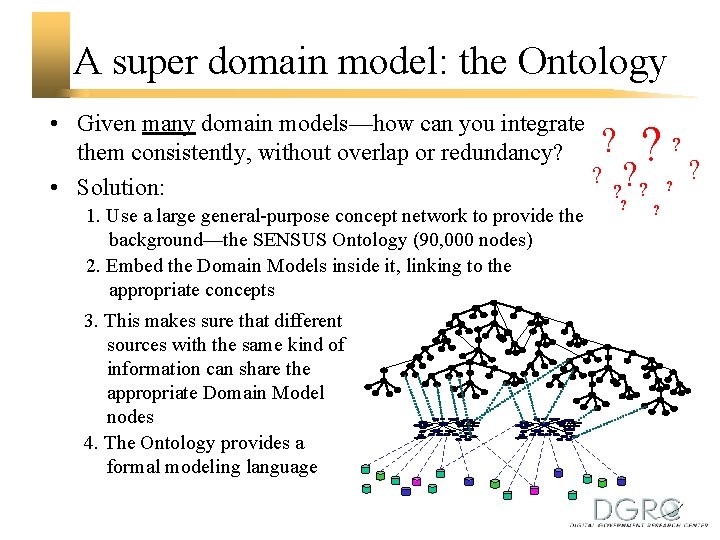

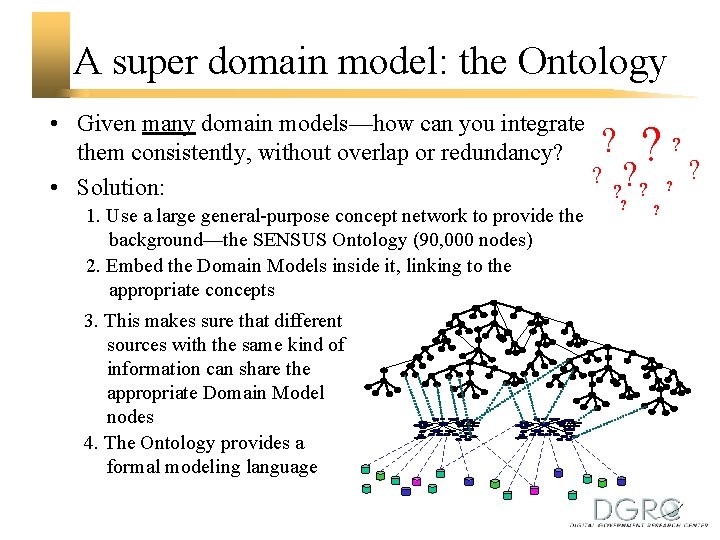
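A minimal Python sketch of the wrap / source model / domain model arrangement, assuming each wrapper can be reduced to a function that returns rows for a concept name. The class names (`WrappedSource`, `DomainModel`) are hypothetical, and `plan()` is a naive lookup standing in for the SIMS planner, which does real query reformulation.

```python
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class WrappedSource:
    """A data source hidden behind a uniform fetch() call (hypothetical API)."""
    name: str
    concepts: set[str]                  # source model: concepts this source can supply
    fetch: Callable[[str], list[dict]]  # wrapper: concept name -> rows; native format hidden


@dataclass
class DomainModel:
    """All source models arranged in one space, keyed by shared concept names."""
    sources: list[WrappedSource] = field(default_factory=list)

    def register(self, source: WrappedSource) -> None:
        self.sources.append(source)

    def plan(self, concept: str) -> list[WrappedSource]:
        # Naive planner: select every source whose model mentions the concept.
        return [s for s in self.sources if concept in s.concepts]

    def query(self, concept: str) -> list[dict]:
        # The user asks about a concept; which sources answer it stays hidden.
        rows: list[dict] = []
        for source in self.plan(concept):
            rows.extend(source.fetch(concept))
        return rows
```

Registering, say, an HTML-backed and a PDF-backed source that both list the same concept makes a single `query()` call return rows from both, without the caller knowing either format.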

A super domain model: the Ontology
• Given many domain models, how can you integrate them consistently, without overlap or redundancy?
• Solution:
  1. Use a large general-purpose concept network to provide the background: the SENSUS Ontology (90,000 nodes)
  2. Embed the Domain Models inside it, linking them to the appropriate concepts
  3. This ensures that different sources with the same kind of information can share the appropriate Domain Model nodes
  4. The Ontology provides a formal modeling language
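A toy illustration of why embedding domain models in a shared ontology helps: once two sources link their local concepts to the same ontology node, a request for that node (or for any more general node above it) reaches both. The tiny `ONTOLOGY_PARENT` isa table, the function names, and the source names are made up for this sketch and are not SENSUS content.

```python
# Hypothetical toy ontology: child -> parent ("isa") links.
ONTOLOGY_PARENT = {
    "gasoline": "fuel",
    "diesel": "fuel",
    "fuel": "substance",
}


def ancestors(node: str, parent: dict[str, str]) -> list[str]:
    """Return the node followed by its isa-ancestors, nearest first."""
    chain = [node]
    while chain[-1] in parent:
        chain.append(parent[chain[-1]])
    return chain


def link_domain_model(links: dict[str, str], source: str,
                      index: dict[str, set[str]]) -> None:
    """Embed a source's domain model: map each local concept to an ontology node."""
    for _local_concept, onto_node in links.items():
        index.setdefault(onto_node, set()).add(source)


def sources_under(concept: str, parent: dict[str, str],
                  index: dict[str, set[str]]) -> set[str]:
    """Sources linked to `concept` or to any node below it in the isa hierarchy."""
    found: set[str] = set()
    for node, sources in index.items():
        if concept in ancestors(node, parent):
            found |= sources
    return found
```

Two sources that call the same thing by different local names (for example "motor gasoline" and "gasoline, all grades") both link to `gasoline`, so they share that node instead of duplicating it.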

Three challenges
1. Wrapping new databases (lots of ’em)
2. Creating domain models for them
3. Linking the domain models into the Ontology

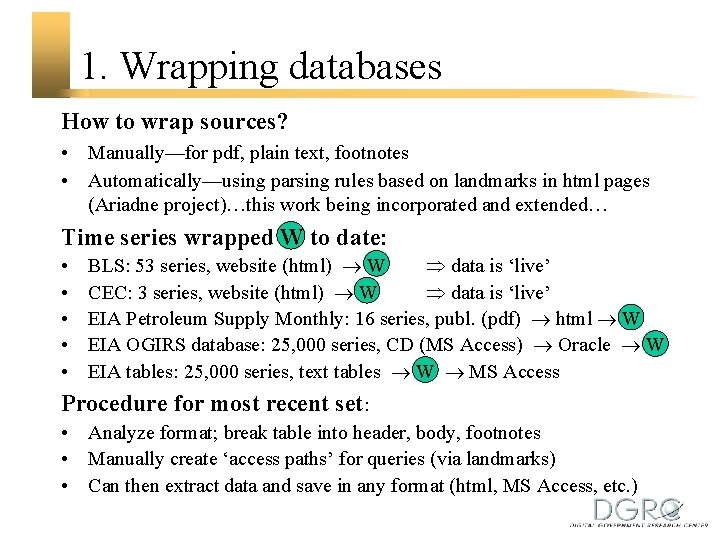

1. Wrapping databases
How to wrap sources?
• Manually: for PDF, plain text, footnotes
• Automatically: using parsing rules based on landmarks in HTML pages (Ariadne project); this work is being incorporated and extended
Time series wrapped to date:
• BLS: 53 series, website (HTML) → data is ‘live’
• CEC: 3 series, website (HTML) → data is ‘live’
• EIA Petroleum Supply Monthly: 16 series, publication (PDF) → HTML
• EIA OGIRS database: 25,000 series, CD (MS Access) → Oracle
• EIA tables: 25,000 series, text tables → MS Access
Procedure for most recent set:
• Analyze the format; break each table into header, body, footnotes
• Manually create ‘access paths’ for queries (via landmarks)
• Then extract the data and save it in any format (HTML, MS Access, etc.)

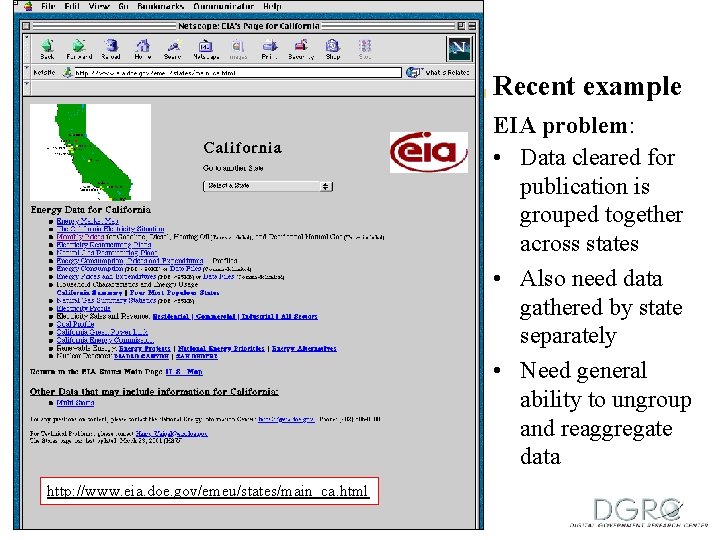
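A minimal sketch of landmark-based extraction in the spirit of the Ariadne wrappers, assuming an ‘access path’ is simply a sequence of start landmarks followed by an end landmark. The rule format, function names, and sample table are hypothetical; real wrapper rules, and how they are learned, are richer than this.

```python
def skip_to(text: str, landmarks: list[str], start: int = 0) -> int:
    """Skip past each landmark in turn; return the index just after the last one, or -1."""
    pos = start
    for landmark in landmarks:
        idx = text.find(landmark, pos)
        if idx < 0:
            return -1
        pos = idx + len(landmark)
    return pos


def extract_all(text: str, start_landmarks: list[str], end_landmark: str) -> list[str]:
    """Apply one access path repeatedly, collecting every value it reaches."""
    values: list[str] = []
    pos = 0
    while True:
        begin = skip_to(text, start_landmarks, pos)
        if begin < 0:
            break
        end = text.find(end_landmark, begin)
        if end < 0:
            break
        values.append(text[begin:end].strip())
        pos = end + len(end_landmark)  # continue after this value
    return values


# Hypothetical access paths for a two-column HTML table (year, value).
ACCESS_PATHS = {
    "year": (["<tr>", "<td>"], "</td>"),
    "value": (["<tr>", "<td>", "<td>"], "</td>"),
}
```

Once each field has an access path, the extracted columns can be written to whatever target format is needed, which matches the last step of the procedure above.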

Recent example
EIA problem:
• Data cleared for publication is grouped together across states
• Data gathered by state is also needed separately
• Need a general ability to ungroup and reaggregate data
http://www.eia.doe.gov/emeu/states/main_ca.html

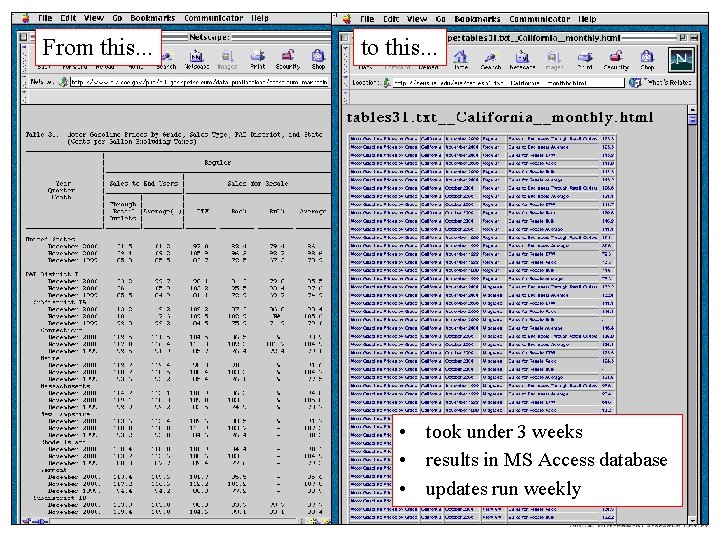

From this… to this…
• Took under 3 weeks
• Results stored in an MS Access database
• Updates run weekly

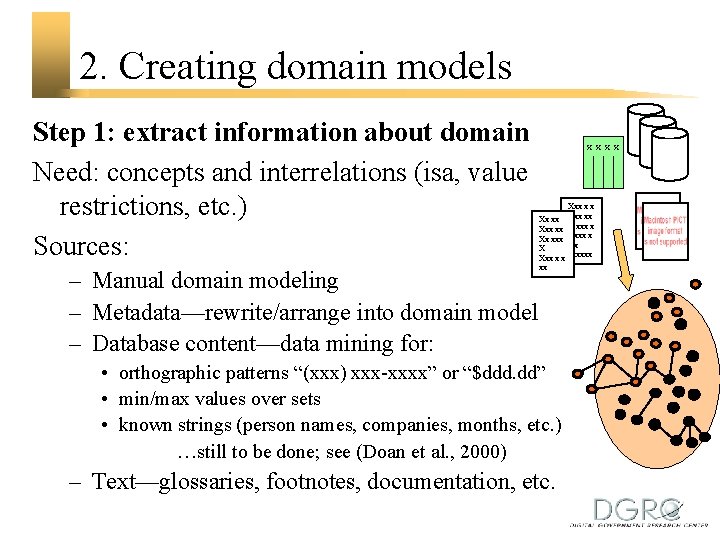
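One way to get the ‘ungroup and reaggregate’ ability is to keep data at the finest granularity available (here, per state and year) and compute any published grouping on demand. The sketch below assumes dictionary records with hypothetical field names (`state`, `year`, `value`) and a made-up state-to-region mapping; it is not the procedure used for the EIA tables.

```python
from collections import defaultdict
from collections.abc import Callable, Hashable, Iterable


def reaggregate(records: Iterable[dict],
                group_of: Callable[[dict], Hashable],
                value: str = "value") -> dict:
    """Sum `value` over records grouped by an arbitrary key function."""
    totals: defaultdict = defaultdict(float)
    for rec in records:
        totals[group_of(rec)] += rec[value]
    return dict(totals)


# Hypothetical mapping used to rebuild a multi-state grouping from state-level rows.
REGION = {"CA": "West", "NV": "West", "TX": "South"}
```

A published multi-state table then becomes just one `group_of` function among many, while per-state views use `lambda r: r["state"]` on the same rows.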

2. Creating domain models
Step 1: extract information about the domain
Need: concepts and interrelations (isa, value restrictions, etc.)
Sources:
– Manual domain modeling
– Metadata: rewrite/arrange into a domain model
– Database content: data mining for
  • orthographic patterns, e.g. “(xxx) xxx-xxxx” or “$ddd.dd”
  • min/max values over sets
  • known strings (person names, companies, months, etc.)
  …still to be done; see (Doan et al., 2000)
– Text: glossaries, footnotes, documentation, etc.

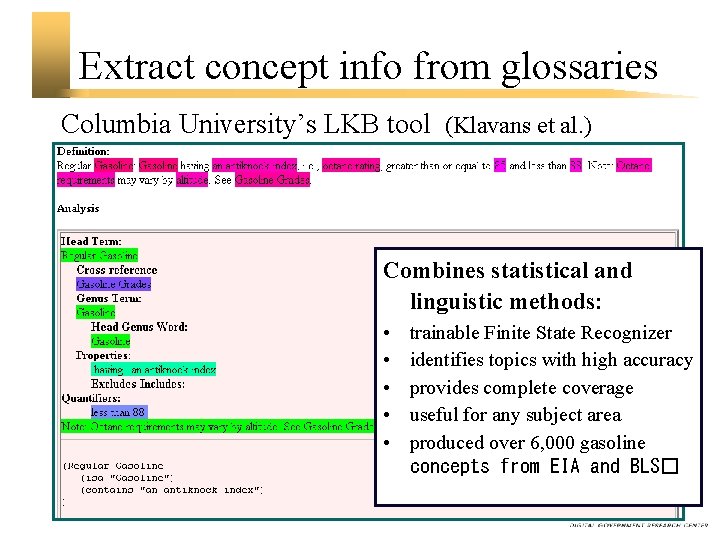

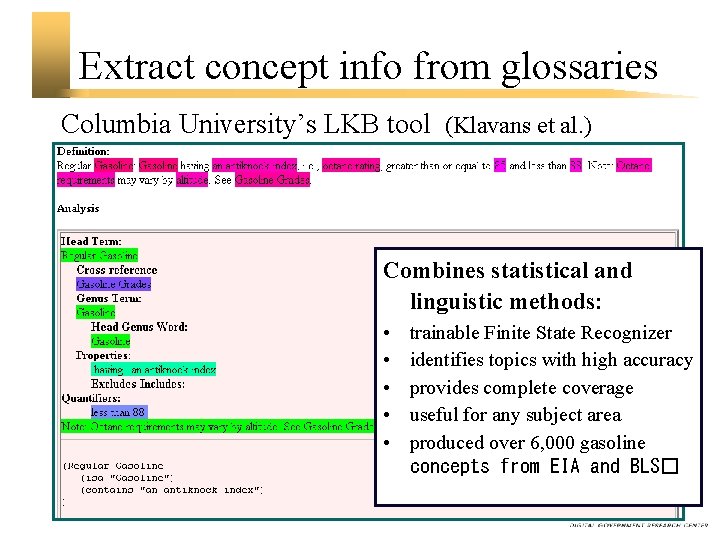
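A sketch of the orthographic-pattern and min/max mining listed above (marked there as still to be done), assuming a database column arrives as a list of strings. In this sketch digits map to `d` and letters to `x`/`X`, so the phone pattern shows up as `(ddd) ddd-dddd`; the function names and the profile fields are hypothetical.

```python
from collections import Counter


def shape(value: str) -> str:
    """Orthographic shape: digits -> 'd', letters -> 'x'/'X', punctuation kept."""
    out = []
    for ch in value:
        if ch.isdigit():
            out.append("d")
        elif ch.isalpha():
            out.append("X" if ch.isupper() else "x")
        else:
            out.append(ch)
    return "".join(out)


def profile_column(values: list[str]) -> dict:
    """Dominant shape plus min/max of the values that parse as numbers."""
    shapes = Counter(shape(v) for v in values)
    numbers = []
    for v in values:
        try:
            numbers.append(float(v.replace("$", "").replace(",", "")))
        except ValueError:
            pass  # not numeric; only its shape is informative
    return {
        "dominant_shape": shapes.most_common(1)[0][0] if shapes else None,
        "min": min(numbers) if numbers else None,
        "max": max(numbers) if numbers else None,
    }
```

A column whose dominant shape is `$d.dd` and whose range is narrow is a candidate for a price-like concept with a value restriction in the domain model.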

Extract concept info from glossaries
Columbia University’s LKB tool (Klavans et al.)
• Combines statistical and linguistic methods: trainable Finite State Recognizer
• Identifies topics with high accuracy
• Provides complete coverage
• Useful for any subject area
• Produced over 6,000 gasoline concepts from EIA and BLS

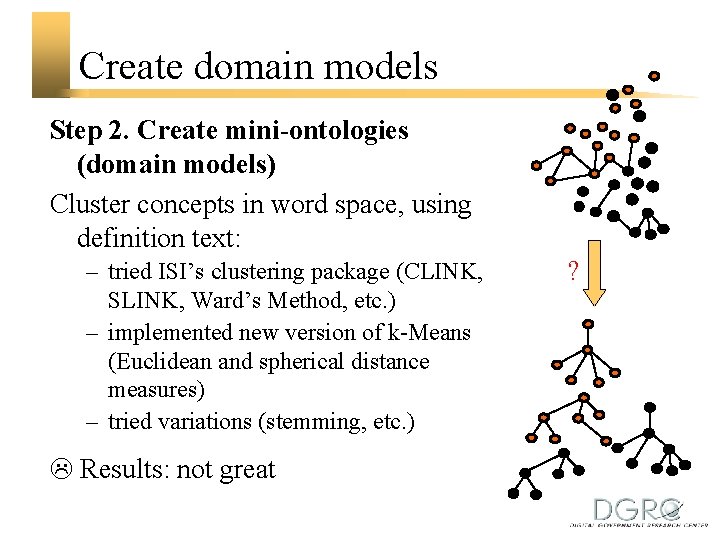
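A hand-written regular expression is itself a finite-state recognizer, though not a trainable one, so it can stand in for the first step of glossary processing: splitting a glossary into term/definition pairs. This is not the LKB tool; the one-entry-per-line `Term: definition` layout is an assumption about the glossary format.

```python
import re

# Hypothetical layout: one entry per line, "Term: definition".
# A regex without backreferences recognizes a regular language (finite-state).
ENTRY = re.compile(
    r"^(?P<term>[A-Z][^:\n]{0,80}):[ \t]+(?P<definition>\S.*)$",
    re.MULTILINE,
)


def glossary_entries(text: str) -> list[tuple[str, str]]:
    """Return (term, definition) pairs for every line matching the entry pattern."""
    return [(m.group("term").strip(), m.group("definition").strip())
            for m in ENTRY.finditer(text)]
```

Each extracted term becomes a candidate concept, and its definition text is what the later clustering and definition-match steps operate on.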

Create domain models
Step 2: Create mini-ontologies (domain models)
Cluster concepts in word space, using definition text:
– tried ISI’s clustering package (CLINK, SLINK, Ward’s method, etc.)
– implemented a new version of k-Means (Euclidean and spherical distance measures)
– tried variations (stemming, etc.)
Results: not great

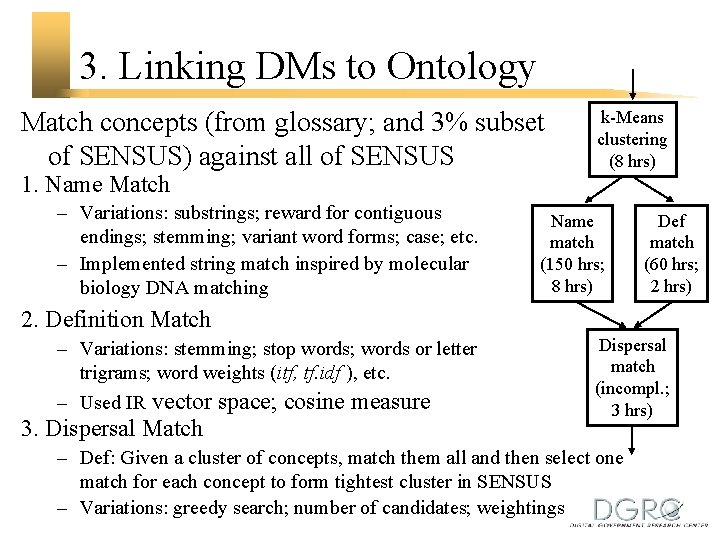

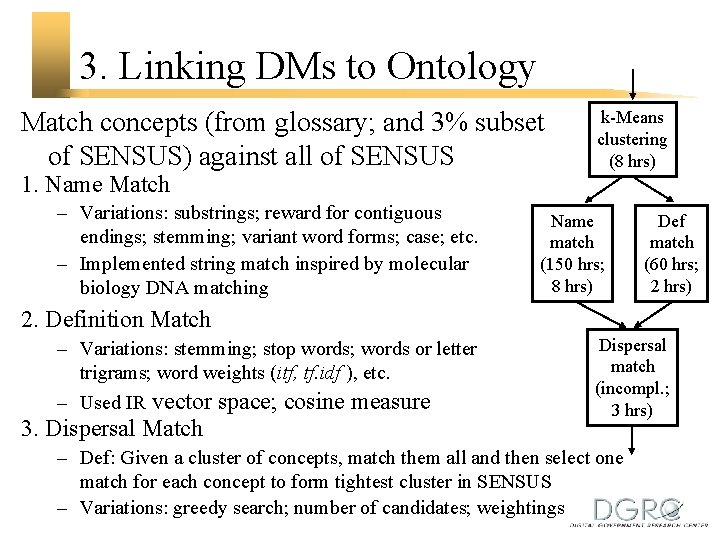
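A minimal spherical k-Means sketch over bag-of-words vectors built from definition text: vectors are scaled to unit length, each definition joins the centroid with the highest cosine similarity, and each centroid is the normalized sum of its members. Tokenization is a plain whitespace split (no stemming or stop words), and all names are hypothetical; this is not the ISI implementation.

```python
import math
import random
from collections import Counter


def unit(vec: dict[str, float]) -> dict[str, float]:
    """Scale a sparse vector to unit length (spherical k-Means works on the unit sphere)."""
    norm = math.sqrt(sum(v * v for v in vec.values()))
    return {k: v / norm for k, v in vec.items()} if norm else dict(vec)


def dot(a: dict[str, float], b: dict[str, float]) -> float:
    if len(a) > len(b):
        a, b = b, a  # iterate over the smaller vector
    return sum(v * b.get(k, 0.0) for k, v in a.items())


def spherical_kmeans(definitions: list[str], k: int,
                     iters: int = 20, seed: int = 0) -> list[int]:
    """Cluster definition texts; returns one cluster index per definition."""
    vecs = [unit(dict(Counter(d.lower().split()))) for d in definitions]
    rng = random.Random(seed)
    centroids = [dict(v) for v in rng.sample(vecs, k)]
    assign: list[int] = []
    for _ in range(iters):
        # Assignment step: for unit vectors, cosine similarity is the dot product.
        new_assign = [max(range(k), key=lambda j: dot(v, centroids[j])) for v in vecs]
        if new_assign == assign:
            break  # converged
        assign = new_assign
        # Update step: centroid = normalized sum (same direction as the mean) of members.
        for j in range(k):
            total: Counter = Counter()
            for v, a in zip(vecs, assign):
                if a == j:
                    total.update(v)
            if total:
                centroids[j] = unit(dict(total))
    return assign
```

Swapping `dot` for a Euclidean distance (and dropping the normalization) gives the ordinary k-Means variant the slide also mentions.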

3. Linking DMs to Ontology
Match concepts (from the glossary, and a 3% subset of SENSUS) against all of SENSUS
1. Name Match
– Variations: substrings; reward for contiguous endings; stemming; variant word forms; case; etc.
– Implemented a string match inspired by DNA matching in molecular biology
2. Definition Match
– Variations: stemming; stop words; words or letter trigrams; word weights (itf, tf.idf), etc.
– Used an IR vector space with the cosine measure
3. Dispersal Match
– Definition: given a cluster of concepts, match them all, then select one match for each concept so as to form the tightest cluster in SENSUS
– Variations: greedy search; number of candidates; weightings
(Figure, run times: k-Means clustering (8 hrs); Name match (150 hrs; 8 hrs); Def match (60 hrs; 2 hrs); Dispersal match (incompl.; 3 hrs))
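The slide does not say which DNA-style matcher was used; Smith-Waterman local alignment is a standard one, so this sketch uses it for name match, alongside tf.idf-weighted cosine similarity for definition match. The scoring parameters (`match`, `mismatch`, `gap`), the whitespace tokenizer, and the function names are assumptions.

```python
import math
from collections import Counter


def local_alignment_score(a: str, b: str, match: int = 2,
                          mismatch: int = -1, gap: int = -1) -> int:
    """Smith-Waterman local alignment score of two names (case-insensitive)."""
    a, b = a.lower(), b.lower()
    prev = [0] * (len(b) + 1)
    best = 0
    for i in range(1, len(a) + 1):
        cur = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            # Local alignment: scores never drop below zero, so a match can start anywhere.
            cur[j] = max(0, diag, prev[j] + gap, cur[j - 1] + gap)
            best = max(best, cur[j])
        prev = cur
    return best


def tfidf_vectors(definitions: list[str]) -> list[dict[str, float]]:
    """tf.idf-weighted bag-of-words vector for each definition."""
    docs = [d.lower().split() for d in definitions]
    df = Counter(term for doc in docs for term in set(doc))
    n = len(docs)
    return [{t: tf * math.log(n / df[t]) for t, tf in Counter(doc).items()}
            for doc in docs]


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine of the angle between two sparse vectors (0.0 if either is empty)."""
    num = sum(v * b.get(k, 0.0) for k, v in a.items())
    den = (math.sqrt(sum(v * v for v in a.values()))
           * math.sqrt(sum(v * v for v in b.values())))
    return num / den if den else 0.0
```

Because the alignment is local, a short concept name scores fully against a longer SENSUS label that contains it, which is one way to realize the "substrings" variation.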

Dispersal match experiment
• To find the best parameterization, try it with ‘perfect’ input: clustering + dispersal match on 3 clusters taken from SENSUS
• k-Means found 3 clusters…
• …but they are somewhat ‘smeared’…
• …though this one is OK
• Further work in progress
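The dispersal match defined on the previous slide can be approximated with a greedy search, one of the variations listed there. In this sketch, closeness is the number of isa links between two nodes; each concept starts at its top-ranked candidate and is repeatedly reassigned to whichever candidate minimizes the total distance to the other current choices, until nothing changes. The toy hierarchy, the concept names, and the function names are made up and are not SENSUS.

```python
import math

# Hypothetical toy hierarchy (child -> parent); not SENSUS content.
PARENT = {
    "gasoline": "fuel", "diesel": "fuel", "kerosene": "fuel",
    "fuel": "substance", "substance": "entity",
    "gasoline_engine": "engine", "engine": "machine",
    "machine": "artifact", "artifact": "entity",
}


def path_to_root(node: str, parent: dict[str, str]) -> list[str]:
    path = [node]
    while path[-1] in parent:
        path.append(parent[path[-1]])
    return path


def tree_distance(a: str, b: str, parent: dict[str, str]) -> float:
    """Number of isa links between two nodes via their lowest common ancestor."""
    depth_from_a = {n: i for i, n in enumerate(path_to_root(a, parent))}
    for j, n in enumerate(path_to_root(b, parent)):
        if n in depth_from_a:
            return depth_from_a[n] + j
    return math.inf  # no common ancestor


def dispersal_match(candidates: dict[str, list[str]], parent: dict[str, str],
                    max_rounds: int = 10) -> dict[str, str]:
    """Greedily pick one candidate node per concept so the picks cluster tightly."""
    choice = {c: cands[0] for c, cands in candidates.items()}  # start at top-ranked
    for _ in range(max_rounds):
        changed = False
        for concept, cands in candidates.items():
            def spread(node: str) -> float:
                # Total distance from `node` to the other concepts' current picks.
                return sum(tree_distance(node, choice[other], parent)
                           for other in candidates if other != concept)
            best = min(cands, key=spread)  # ties keep the earlier-ranked candidate
            if best != choice[concept]:
                choice[concept] = best
                changed = True
        if not changed:
            break
    return choice
```

Here a concept whose best name match is the wrong sense (an engine rather than a fuel) is pulled toward the sense its cluster-mates agree on; like any greedy search, it can stop at a local optimum.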

Conclusion
Large-scale homogenized access to collections of disparate data is feasible…
…but more work is needed to automate:
– data source wrapping
– domain modeling
  • extraction of concept information from text, databases, etc.
  • clustering, etc.
– model-to-ontology integration/matching

Thank you! Any questions?